├── .gitignore
├── resources
│   ├── readme
│   │   ├── qq.JPG
│   │   ├── qq2.jpg
│   │   ├── overview.png
│   │   └── result_allinone_small.png
│   ├── test
│   │   └── test.jpg
│   ├── frontend
│   │   ├── Roboto-Light.ttf
│   │   ├── index.js
│   │   ├── canvas.html
│   │   ├── tmp.html
│   │   └── canvas_double.html
│   └── workflow
│       ├── nobel_workflow.json
│       └── nobel_workflow_for_install.json
├── requirements.txt
├── package.json
├── README_model.md
├── main_without_openai.py
├── main.py
├── src
│   ├── slogan_agent.py
│   ├── html_modify.py
│   └── server.py
├── README.md
└── LICENSE

/.gitignore:

--------------------------------------------------------------------------------

input
output
node_modules
**/__pycache__

.env
package-lock.json

--------------------------------------------------------------------------------

/resources/readme/qq.JPG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/readme/qq.JPG

--------------------------------------------------------------------------------

/resources/readme/qq2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/readme/qq2.jpg

--------------------------------------------------------------------------------

/resources/test/test.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/test/test.jpg

--------------------------------------------------------------------------------

/resources/readme/overview.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/readme/overview.png

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

pypinyin==0.53.0
openai==1.40.3
beautifulsoup4==4.12.3
websocket-client==0.58.0
python-dotenv
requests-toolbelt
Pillow

--------------------------------------------------------------------------------

/resources/frontend/Roboto-Light.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/frontend/Roboto-Light.ttf

--------------------------------------------------------------------------------

/resources/readme/result_allinone_small.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/16131zzzzzzzz/EveryoneNobel/HEAD/resources/readme/result_allinone_small.png

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

{
  "name": "convert-test",
  "version": "1.0.0",
  "description": "",
  "main": "resources/frontend/index.js",
  "scripts": {
    "dev": "node resources/frontend/index.js"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "puppeteer": "^23.5.1"
  }
}

--------------------------------------------------------------------------------

/resources/frontend/index.js:

--------------------------------------------------------------------------------

const puppeteer = require('puppeteer');
const path = require('path');

// Read the HTML file path and the output file name from the command line.
const htmlFileName = process.argv[2]; // first argument: HTML file to render
const outputFileName = process.argv[3]; // second argument: output image file

(async () => {
  const browser = await puppeteer.launch({
    headless: true, // always run headless
    args: ['--no-sandbox', '--disable-setuid-sandbox'] // required in some environments
  });
  const page = await browser.newPage();

  // A file:// URL needs an absolute path, so resolve the argument first.
  const filePath = path.resolve(htmlFileName);

  await page.goto(`file://${filePath}`, { waitUntil: 'networkidle0' });

  // Screenshot only the #canvas element and write it to the requested output file.
  const element = await page.$("#canvas");
  await element.screenshot({ path: outputFileName });

  await browser.close();
})();

--------------------------------------------------------------------------------
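The script above turns its first argument into a `file://` URL, which only works for an absolute path. As a minimal sketch of how a caller could prepare such a URL on the Python side, `pathlib` can resolve and percent-encode the path. The helper name `to_file_url` is hypothetical and does not exist in the repository.

```python
from pathlib import Path


def to_file_url(html_path: str) -> str:
    """Return an absolute, percent-encoded file:// URL for a local HTML file.

    Hypothetical helper for illustration only; the repository builds the
    URL inside index.js.
    """
    # resolve() makes the path absolute; as_uri() rejects relative paths
    # and percent-encodes characters such as spaces.
    return Path(html_path).resolve().as_uri()


if __name__ == "__main__":
    print(to_file_url("resources/frontend/tmp.html"))
```

Resolving before building the URL means the result does not depend on the directory in which the browser process is started.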

/README_model.md:

--------------------------------------------------------------------------------

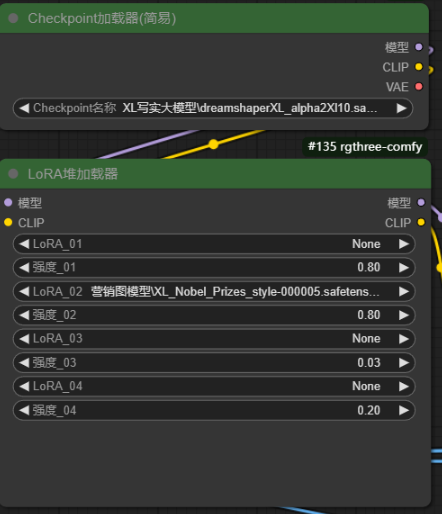

1 | # ComfyUI Models

2 | ## 1. Download Models

3 | Download all models below and put it into ComfyUI's `models` folder.

4 | ### SD model

5 | link: https://civitai.com/models/112902/dreamshaper-xl

6 |

7 | path: `models/checkpoints`

8 |

9 | ### Lora model

10 | link: https://civitai.com/models/875184?modelVersionId=979771

11 |

12 | path: `models/loras`

13 |

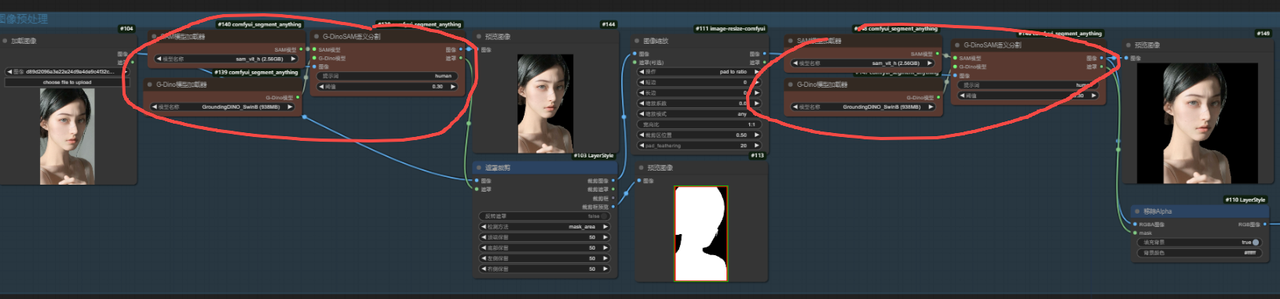

14 | ### Sam model

15 | link:https://pan.quark.cn/s/f6422ed31f96

16 |

17 | path: `models/sams`

18 |

19 | ### Ground-Dino model

20 | link:https://pan.quark.cn/s/269e85ea0785

21 |

22 | path: `models/grounding-dino`

23 |

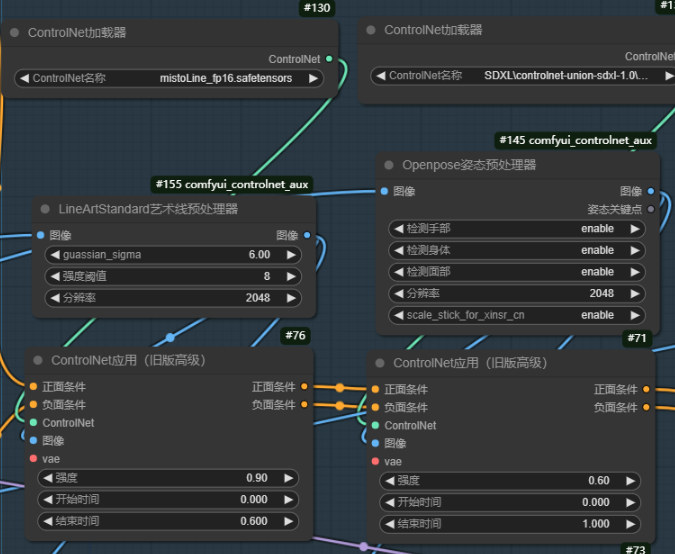

24 | ### ControlNet model 1 (Left in the workflow for lineart)

25 | link:https://pan.quark.cn/s/bab5376acd72

26 |

27 | path: `models/controlnet`

28 |

29 | ### ControlNet model 2 (Right in the workflow for pose)

30 | link:https://pan.quark.cn/s/3accad712c54

31 |

32 | path: `models/controlnet`

33 |

34 | ### VAE model

35 | link:https://pan.quark.cn/s/da810e21331e

36 |

37 | path: `models/vae`

38 |

39 | ## 2. Adapt the model paths

40 | Adapt the model paths in the workflow nodes below to the your own path.

41 |

42 | [](https://www.picgo.net/image/1280X1280-%281%29.oqa7JG)

43 |

44 | [](https://www.picgo.net/image/7fc490b0-5923-4dbc-a650-a3d31374050e.oqahXk)

45 |

46 | [](https://www.picgo.net/image/7787472d-c1b0-4947-896b-928146f3aabb.oqaOlw)

47 |

48 | [](https://www.picgo.net/image/15113787-baab-4a02-99ee-eccdd0011c11.oqaF5l)

49 |

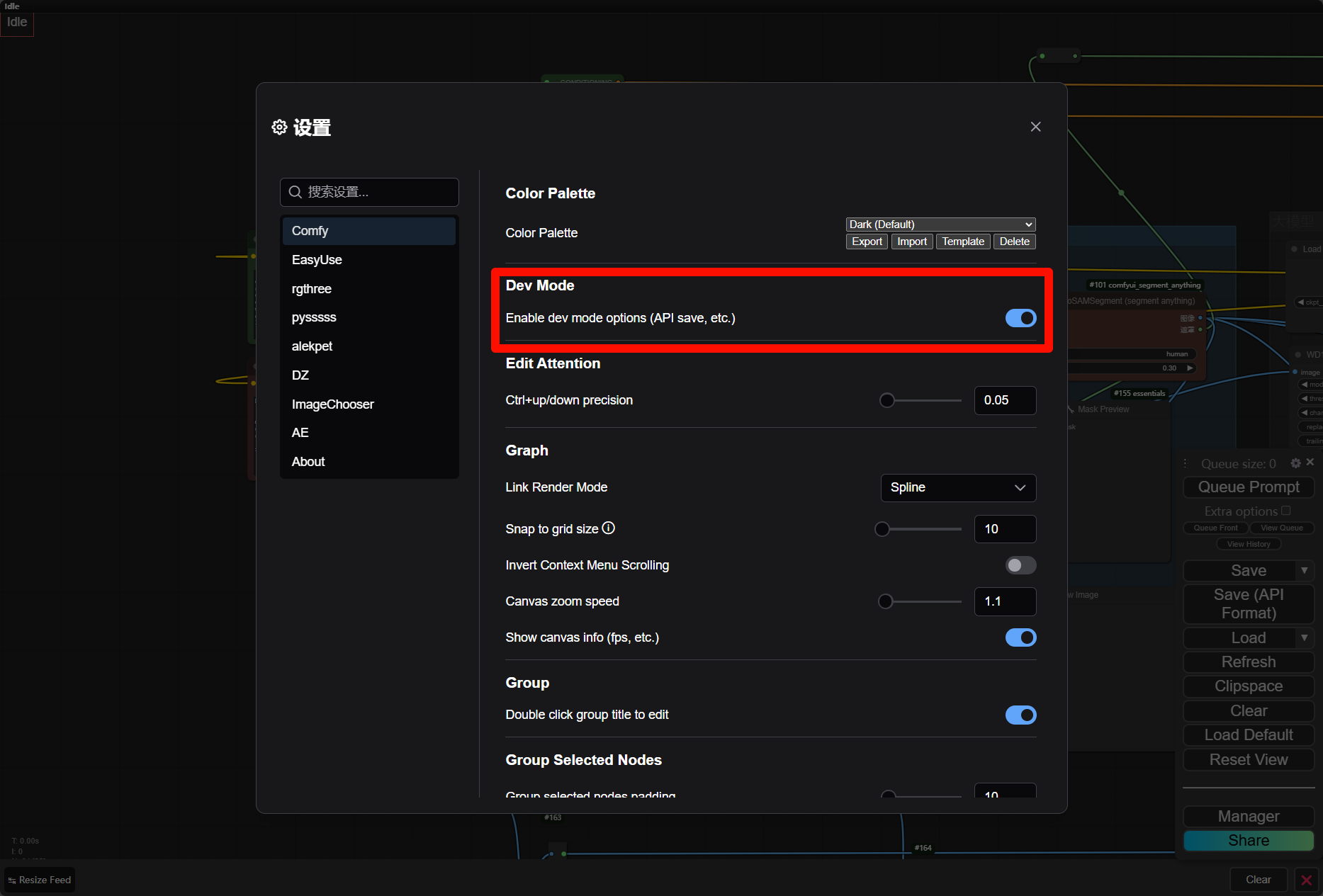

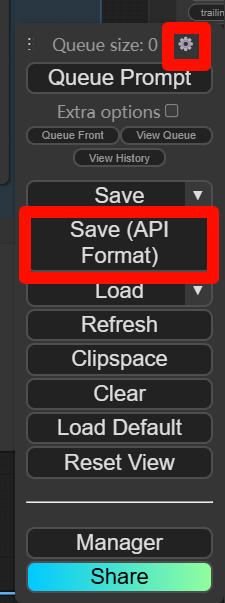

50 | ## 3. Save as API format

51 | Run ComfyUI locally in your browser. !!!Delete all preview image node except the final output preview image!!!. Once it’s running, go to the settings and enable the "Dev Mode Options". You can export the workflow in API format as a JSON file. Use this exported JSON to replace the original resources/workflow/nobel_workflow.json (don't change the json name).

52 |

53 | [](https://www.picgo.net/image/PixPin-2024-10-27-13-48-00.oqmTsi)

54 |

55 | [](https://www.picgo.net/image/PixPin-2024-10-27-13-48-22.oqmKLf)

56 |

--------------------------------------------------------------------------------
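Before replacing `resources/workflow/nobel_workflow.json`, it may help to confirm that the export really is in API format. The sketch below assumes that an API-format export maps node ids to objects with `class_type` and `inputs`, and that the image-loading node keeps the id `"104"`, which is the id `src/server.py` writes the uploaded file name into. The function name `check_api_workflow` is hypothetical.

```python
import json


def check_api_workflow(workflow: dict, image_node_id: str = "104") -> list:
    """Return a list of problems found in an API-format workflow dict.

    Hypothetical checker for illustration; an empty list means the basic
    shape looks right, not that the workflow will run.
    """
    problems = []
    # In API format every top-level value is a node with "class_type" and "inputs".
    for node_id, node in workflow.items():
        if not isinstance(node, dict) or "class_type" not in node or "inputs" not in node:
            problems.append(f"node {node_id!r} does not look like an API-format node")
    # src/server.py sets prompt["104"]["inputs"]["image"], so that node must exist.
    image_node = workflow.get(image_node_id)
    if not isinstance(image_node, dict) or "image" not in image_node.get("inputs", {}):
        problems.append(f"node {image_node_id!r} has no 'image' input")
    return problems


if __name__ == "__main__":
    with open("resources/workflow/nobel_workflow.json", "r", encoding="utf-8") as f:
        for problem in check_api_workflow(json.load(f)):
            print(problem)
```

A regular (non-API) export would typically fail the first check, because its top-level keys are not nodes.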

/main_without_openai.py:

--------------------------------------------------------------------------------

import os
import shutil
import subprocess
import threading
import json
import argparse

from src.html_modify import modifier_html
from src.server import prompt_image_to_image


def main(name, subject, content, image_path, comfy_server_address):
    proj_dir = os.path.dirname(os.path.abspath(__file__))

    name_safe = name.replace(" ", "_")
    output_path = os.path.join(proj_dir, "output", name_safe)
    os.makedirs(output_path, exist_ok=True)
    html_template_path = "resources/frontend/canvas.html"

    output_pic_path = f"nobel_{name_safe}_{(image_path.split('/')[-1]).split('.')[0]}.jpg"
    output_pic_path = os.path.join(output_path, output_pic_path)
    output_html_path = os.path.join(proj_dir, "resources/frontend/tmp.html")

    # Without an LLM, the subject line and contribution text are used exactly as given.
    subject_content = subject
    contribution_content = content

    # save config
    info = {
        "name": name,
        "content": subject_content,
        "image_path": image_path,
        "contribution": contribution_content,
        "output_image_path": output_pic_path,
    }
    print(info)
    json_file_path = os.path.join(
        output_path, f"info_{name}_{(image_path.split('/')[-1]).split('.')[0]}.json"
    )
    with open(json_file_path, "w", encoding="utf-8") as json_file:
        json.dump(info, json_file, ensure_ascii=False, indent=4)

    # comfy
    print("start comfy")
    output_filename = prompt_image_to_image(
        "resources/workflow/nobel_workflow.json",
        image_path,
        comfy_server_address,
        output_path=output_path,
        save_previews=True,
    )
    print("comfy image written into: ", output_filename)

    # template
    html_modifier = modifier_html(
        html_template_path, subject_content, name, contribution_content, output_filename
    )

    # save html
    html_modifier.save_changes(output_html_path)
    print("end generate image html")

    # Render the HTML to an image with `npm run dev` in a background thread.
    def start_npm_dev(input_html_path, output_pic_path):
        # Pass the arguments as a list (no shell) so that paths containing
        # spaces or parentheses reach index.js unchanged.
        npm = shutil.which("npm") or "npm"
        subprocess.run(
            [npm, "run", "dev", "--", input_html_path, output_pic_path],
            cwd=proj_dir,
        )

    threading.Thread(target=start_npm_dev, args=(output_html_path, output_pic_path)).start()


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description='Generate Nobel Prize Slogan and Image.')
    parser.add_argument('--name', required=True, help='Name of the individual')
    parser.add_argument('--subject', required=True, help='Subject of the prize')
    parser.add_argument('--content', required=True, help='Contribution description')
    parser.add_argument('--image_path', required=True, help='Path to the input image')
    parser.add_argument('--comfy_server_address', default="127.0.0.1:6006", help='Address of the Comfy server')

    args = parser.parse_args()

    main(args.name, args.subject, args.content, args.image_path, args.comfy_server_address)

--------------------------------------------------------------------------------
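The script above derives every output location from the project directory, the person's name, and the input image path. As a small sketch of that derivation in isolation, the function below reproduces the same naming rules. The helper name `derive_output_paths` is hypothetical and only restates what `main` does inline.

```python
import os


def derive_output_paths(proj_dir: str, name: str, image_path: str) -> dict:
    """Mirror the naming rules used by main(): returns the output locations.

    Hypothetical helper for illustration; main() computes these inline.
    """
    # Spaces in the name are replaced for the directory and the picture name.
    name_safe = name.replace(" ", "_")
    # Image base name up to the first dot, e.g. "test" for "imgs/test.jpg".
    image_stem = image_path.split("/")[-1].split(".")[0]
    output_dir = os.path.join(proj_dir, "output", name_safe)
    return {
        "output_dir": output_dir,
        "picture": os.path.join(output_dir, f"nobel_{name_safe}_{image_stem}.jpg"),
        # The info file keeps the original name, including any spaces.
        "info_json": os.path.join(output_dir, f"info_{name}_{image_stem}.json"),
    }


if __name__ == "__main__":
    for key, value in derive_output_paths(".", "Iron Man", "resources/test/test.jpg").items():
        print(key, "->", value)
```

Note that the stem is cut at the first dot, so an input such as `my.photo.jpg` yields `my`, and that splitting on `/` assumes POSIX-style input paths.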

/main.py:

--------------------------------------------------------------------------------

import os
import shutil
import subprocess
import threading
import json
import argparse
from dotenv import load_dotenv

from src.slogan_agent import get_slogan, prompt_dict
from src.html_modify import modifier_html
from src.server import prompt_image_to_image


def main(name, subject, content, image_path, comfy_server_address):
    # NOTE: `subject` is accepted for CLI compatibility but is not used here;
    # the subject line is generated by get_slogan() from `content`.
    load_dotenv()
    api_key = os.getenv("API_KEY")
    proj_dir = os.path.dirname(os.path.abspath(__file__))

    name_safe = name.replace(" ", "_")
    output_path = os.path.join(proj_dir, "output", name_safe)
    os.makedirs(output_path, exist_ok=True)
    html_template_path = "resources/frontend/canvas.html"

    output_pic_path = f"nobel_{name_safe}_{(image_path.split('/')[-1]).split('.')[0]}.jpg"
    output_pic_path = os.path.join(output_path, output_pic_path)
    output_html_path = os.path.join(proj_dir, "resources/frontend/tmp.html")

    # get slogan
    print("start get slogan")
    subject_content, name, contribution_content = get_slogan(
        content, name, prompt_dict, api_key
    )

    # save config
    info = {
        "name": name,
        "content": subject_content,
        "image_path": image_path,
        "contribution": contribution_content,
        "output_image_path": output_pic_path,
    }
    print(info)
    json_file_path = os.path.join(
        output_path, f"info_{name}_{(image_path.split('/')[-1]).split('.')[0]}.json"
    )
    with open(json_file_path, "w", encoding="utf-8") as json_file:
        json.dump(info, json_file, ensure_ascii=False, indent=4)

    # comfy
    print("start comfy")
    output_filename = prompt_image_to_image(
        "resources/workflow/nobel_workflow.json",
        image_path,
        comfy_server_address,
        output_path=output_path,
        save_previews=True,
    )
    print("comfy image written into: ", output_filename)

    # template
    html_modifier = modifier_html(
        html_template_path, subject_content, name, contribution_content, output_filename
    )

    # save html
    html_modifier.save_changes(output_html_path)
    print("end generate image html")

    # Render the HTML to an image with `npm run dev` in a background thread.
    def start_npm_dev(input_html_path, output_pic_path):
        # Pass the arguments as a list (no shell) so that paths containing
        # spaces or parentheses reach index.js unchanged.
        npm = shutil.which("npm") or "npm"
        subprocess.run(
            [npm, "run", "dev", "--", input_html_path, output_pic_path],
            cwd=proj_dir,
        )

    threading.Thread(target=start_npm_dev, args=(output_html_path, output_pic_path)).start()


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description='Generate Nobel Prize Slogan and Image.')
    parser.add_argument('--name', required=True, help='Name of the individual')
    parser.add_argument('--subject', required=True, help='Subject of the prize')
    parser.add_argument('--content', required=True, help='Contribution description')
    parser.add_argument('--image_path', required=True, help='Path to the input image')
    parser.add_argument('--comfy_server_address', default="127.0.0.1:6006", help='Address of the Comfy server')

    args = parser.parse_args()

    main(args.name, args.subject, args.content, args.image_path, args.comfy_server_address)

--------------------------------------------------------------------------------
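`main.py` reads `API_KEY` from the environment after `load_dotenv()`, and `os.getenv` silently returns `None` when the variable is missing. A minimal sketch of an early check is shown below; the function name `require_api_key` and the error wording are assumptions, not part of the repository.

```python
import os


def require_api_key(env=None, var: str = "API_KEY") -> str:
    """Return the API key from a mapping, or fail early with a clear message.

    Hypothetical helper for illustration; `env` defaults to os.environ so the
    function can be called right after load_dotenv().
    """
    env = os.environ if env is None else env
    # Treat a missing, empty, or whitespace-only value as "not configured".
    key = (env.get(var) or "").strip()
    if not key:
        raise RuntimeError(f"{var} is not set; add it to .env or the environment")
    return key


if __name__ == "__main__":
    try:
        print("key length:", len(require_api_key()))
    except RuntimeError as error:
        print(error)
```

Failing before the ComfyUI run starts avoids spending generation time on a job whose slogan request cannot succeed.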

/src/slogan_agent.py:

--------------------------------------------------------------------------------

from openai import OpenAI
from pypinyin import pinyin, Style


def call_llm(api_key: str, prompt: str, sys_prompt: str) -> str:
    """Send one system/user prompt pair to the chat model and return the reply text."""
    client = OpenAI(
        api_key=api_key,
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
    )
    completion = client.chat.completions.create(
        model="qwen-plus",
        messages=[
            {
                "role": "system",
                "content": sys_prompt,
            },
            {"role": "user", "content": prompt},
        ],
        temperature=0.8,
    )
    response = completion.choices[0].message.content
    return response


# Default prompts: English subject, Chinese contribution sentence.
# The Chinese system prompt says: "You are a reviewer who is good at reviewing
# other people's work." The Chinese user prompt says: "Based on the introduction
# above, mention no names, use no subject, and end without a period. Summarize
# the person's main contribution in one exaggerated, humorous Chinese sentence
# of at most 20 words with no subject; it may be very subjective."
prompt_dict = {
    "subject_prompt": {
        "sys_prompt": "Only one English word or phrase is returned",
        "prompt": "Use the above text to sort the person's description by topic and return just one English word or phrase:",
    },
    "contribution_prompt": {
        "sys_prompt": "你是评审人员,擅长评审他人的工作",
        "prompt": " 结合以上人物介绍,不要出现人名,不要主语,最后不要句号。用夸张且诙谐的一句中文不超过20个词不带主语的话总结他的主要贡献,可以非常主观: ",
    },
}

# English-only variant of the prompts above.
prompt_dict_en = {
    "subject_prompt": {
        "sys_prompt": "Only one English word or phrase is returned",
        "prompt": "Use the above text to sort the person's description by topic and return just one English word or phrase:",
    },
    "contribution_prompt": {
        "sys_prompt": "You're a reviewer, and you're good at reviewing other people's work. Only return English",
        "prompt": "Combined with the above character introduction, summarize his main contributions in a one-sentence short play without subject or punctuation. Exaggerate to emphasize the great contribution. Only return English And no more than 20 words: ",
    },
}


def is_chinese(char):
    """Return True if the character is a Chinese (CJK Unified Ideographs) character."""
    return "\u4e00" <= char <= "\u9fff"


def standardize_name(name):
    """Convert a name to the usual academic English format ("Given Family")."""
    if any(is_chinese(char) for char in name):
        # Convert the Chinese name to pinyin with pypinyin.
        parts = pinyin(name, style=Style.NORMAL)

        parts = [part[0] for part in parts]  # one lowercase syllable per character
        # Assume the first character is the family name and the rest is the given name.
        first_name = "".join(parts[1:]).capitalize()  # join the given name and capitalize it
        last_name = parts[0].capitalize()  # capitalize the family name
        # Return in "Given Family" order.
        return f"{first_name} {last_name}"
    else:
        # Names without Chinese characters are returned unchanged.
        return name


def get_slogan(content, name, prompt_dict, api_key):

    name = standardize_name(name)

    subject_prompt = prompt_dict["subject_prompt"]
    sys_prompt = subject_prompt["sys_prompt"]
    prompt = content + subject_prompt["prompt"]
    subject = call_llm(api_key, prompt, sys_prompt)
    subject_content = f"THE NOBEL PRIZE IN {subject.upper()} 2024"

    contribution_prompt = prompt_dict["contribution_prompt"]
    sys_prompt = contribution_prompt["sys_prompt"]
    prompt = content + contribution_prompt["prompt"] + content
    contribution_content = "”" + call_llm(api_key, prompt, sys_prompt).strip() + "“"

    return subject_content, name, contribution_content


if __name__ == "__main__":
    api_key = ""
    name = "somebody"
    content = "Do nothing"
    subject_content, name, contribution_content = get_slogan(
        content, name, prompt_dict, api_key
    )
    print(subject_content)
    print(name)
    print(contribution_content)

--------------------------------------------------------------------------------
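The name handling in `standardize_name` can be shown without `pypinyin` by starting from syllables that are already romanized. The sketch below assumes, as the original does, a single-character family name in first position. The function name `format_romanized_name` is hypothetical, and unlike the original it trims the leading space that appears when there is no given name.

```python
def format_romanized_name(syllables: list) -> str:
    """Turn romanized syllables (family name first) into "Given Family" order.

    Hypothetical helper for illustration; it assumes the first syllable is
    the whole family name, which is wrong for two-character family names.
    """
    if not syllables:
        return ""
    family = syllables[0].capitalize()
    # The remaining syllables are joined into one word and capitalized once.
    given = "".join(syllables[1:]).capitalize()
    # strip() removes the leading space when there is no given name.
    return f"{given} {family}".strip()


if __name__ == "__main__":
    print(format_romanized_name(["zhu", "zhi", "hong"]))
```

For the syllables `zhu`, `zhi`, `hong` this produces `Zhihong Zhu`.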

/resources/frontend/canvas.html:

--------------------------------------------------------------------------------

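(HTML markup not preserved; only the text content survives.)
Title: Canvas with Colored Bottom and Text Overlay
Subject: THE NOBEL PRIZE IN PHYSICS 2024
Name: Iron Man
Contribution: ”哥们发现了新元素,你呢?“ (English: "Bro discovered a new element, what about you?")

--------------------------------------------------------------------------------
/resources/frontend/tmp.html:
--------------------------------------------------------------------------------
(HTML markup not preserved; only the text content survives.)
Subject: THE NOBEL PRIZE IN DO NOTHING 2024
Name: somebody
Contribution: ”无所事事却能评出最有趣的作品,真是懒人界的奇迹“ (English: "Doing nothing yet picking out the most interesting work, truly a miracle among the lazy")

--------------------------------------------------------------------------------
/resources/frontend/canvas_double.html:
--------------------------------------------------------------------------------
(HTML markup not preserved; only the text content survives.)
Subject: THE NOBEL PRIZE IN MAGA 2024
Names: Donald Trump, Elon Musk
Contribution: ”MAKE AMERICAN GREAT AGAIN“

--------------------------------------------------------------------------------
/README.md:
--------------------------------------------------------------------------------
🏆 EveryoneNobel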

| **[Overview](#overview)** | **[News](#news)** | **[Requirements](#requirements)** | **[Quick Start](#quick-start)** | **[Contributors](#contributors)** |

## 💡 Overview

EveryoneNobel aims to generate **Nobel Prize images for everyone**. We use ComfyUI for image generation and HTML templates to render the text on the images. This project serves not only as a pipeline for generating Nobel images but also as **a potential universal framework**: it turns ComfyUI-generated visuals into final products, offering a structured approach for further applications and customization.

We describe how we built the entire app and sold the product in 30 hours in this blog post [here](https://mp.weixin.qq.com/s/t3v-h1MzpFKuh0RCMRmjEg).

You can generate the picture without text [here](https://civitai.com/models/875184?modelVersionId=979771).

## 🏄 Star History

[](https://star-history.com/#16131zzzzzzzz/EveryoneNobel&Date)

--------------------------------------------------------------------------------

/src/html_modify.py:

--------------------------------------------------------------------------------

from bs4 import BeautifulSoup


class HtmlModifier:
    def __init__(self, file_path):
        self.file_path = file_path
        self.load_html()

    def load_html(self):
        """Load and parse the HTML file."""
        with open(self.file_path, "r", encoding="utf-8") as file:
            self.html_content = file.read()
        self.soup = BeautifulSoup(self.html_content, "html.parser")

    def update_subject(self, new_text):
        """Update the subject text."""
        subject = self.soup.find(class_="subject")
        if subject:
            subject.string = new_text

    def update_name(self, new_text):
        """Update the name text."""
        name = self.soup.find(class_="name")
        if name:
            name.string = new_text

    def update_contribution(self, new_text):
        """Update the contribution text."""
        contribution = self.soup.find(class_="contribution")
        if contribution:
            contribution.string = new_text

    def update_footer(self, new_text):
        """Update the footer text."""
        footer = self.soup.find(class_="footer")
        if footer:
            footer.string = new_text

    def update_image_source(self, new_src):
        """Update the source of the first image."""
        img = self.soup.find("img", id="img1")
        if img:
            img["src"] = new_src

    def update_style(self, class_name, property_name, value):
        """Set one CSS property in the inline style of the first element with the class."""
        element = self.soup.find(class_=class_name)
        if not element:
            return
        # Split the existing inline style into non-empty declarations.
        declarations = [s.strip() for s in element.get("style", "").split(";") if s.strip()]
        # Drop any declaration of exactly this property, then append the new value.
        declarations = [
            d for d in declarations if d.split(":", 1)[0].strip() != property_name
        ]
        declarations.append(f"{property_name}: {value}")
        element["style"] = "; ".join(declarations) + ";"

    def save_changes(self, new_path):
        """Save the modified HTML."""
        with open(new_path, "w", encoding="utf-8") as file:
            file.write(str(self.soup))


class HtmlModifierDouble(HtmlModifier):
    """Modifier for the two-person template (second image and second name)."""

    def update_image_source2(self, new_src):
        """Update the source of the second image."""
        img = self.soup.find("img", id="img2")
        if img:
            img["src"] = new_src

    def update_name2(self, new_text):
        """Update the second name text."""
        name = self.soup.find(class_="name1")
        if name:
            name.string = new_text


def modifier_html_double(
    html_template_path,
    subject_content,
    name,
    name2,
    contribution_content,
    img_path,
    img_path2,
):
    html_modifier = HtmlModifierDouble(html_template_path)

    subject_content_second_row = subject_content.split("<br>")[-1]  # currently unused
    # Shrink the font once the text exceeds 60 (subject) or 120 (contribution) characters.
    subject_content_font = int(30 * min(60 / max(len(subject_content), 1), 1))

    contribution_content_font = int(20 * min(1, 120 / max(len(contribution_content), 1)))
    # Fill in the text and images.
    html_modifier.update_subject(subject_content)
    html_modifier.update_name(name)
    html_modifier.update_contribution(contribution_content)

    html_modifier.update_image_source(img_path)
    html_modifier.update_image_source2(img_path2)
    html_modifier.update_name2(name2)
    html_modifier.update_style(
        "subject", "font-size", f"{subject_content_font}px"
    )  # update the subject font size
    html_modifier.update_style(
        "contribution", "font-size", f"{contribution_content_font}px"
    )  # update the contribution font size
    return html_modifier


def modifier_html(
    html_template_path, subject_content, name, contribution_content, img_path
):
    html_modifier = HtmlModifier(html_template_path)

    subject_content_second_row = subject_content.split("<br>")[-1]  # currently unused
    # Shrink the font once the text exceeds 60 (subject) or 120 (contribution) characters.
    subject_content_font = int(30 * min(60 / max(len(subject_content), 1), 1))

    contribution_content_font = int(20 * min(1, 120 / max(len(contribution_content), 1)))
    # Fill in the text and image.
    html_modifier.update_subject(subject_content)
    html_modifier.update_name(name)
    html_modifier.update_contribution(contribution_content)

    html_modifier.update_image_source(img_path)
    html_modifier.update_style(
        "subject", "font-size", f"{subject_content_font}px"
    )  # update the subject font size
    html_modifier.update_style(
        "contribution", "font-size", f"{contribution_content_font}px"
    )  # update the contribution font size
    return html_modifier


if __name__ == "__main__":
    html_template_path = "canvas.html"
    new_path = "1.html"
    subject_content = "THE NOBEL PRIZE IN COMPUTER SCIENCE 2024"
    name = "Zhihong Zhu"
    contribution_content = "”玩原神玩的“"
    img_path = "zhu.png"
    img_path2 = "zhu.png"
    name2 = "原神"
    html_modifier = modifier_html(
        html_template_path, subject_content, name, contribution_content, img_path
    )
    # html_modifier = modifier_html_double(html_template_path,subject_content,name,name2,contribution_content,img_path,img_path2)
    html_modifier.save_changes(new_path)

--------------------------------------------------------------------------------
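The font sizes in `modifier_html` follow one rule: keep the base size while the text fits within a character budget, then shrink in proportion to the overflow. A minimal sketch of that rule as a standalone function follows. The name `scaled_font_px` is hypothetical, and the guard for empty text is an addition for illustration.

```python
def scaled_font_px(text: str, base_px: int, max_chars: int) -> int:
    """Return base_px while len(text) <= max_chars, otherwise scale it down.

    Hypothetical helper for illustration; it restates the formula
    int(base * min(max_chars / len(text), 1)) used in html_modify.py.
    """
    # Guard against empty text, which would otherwise divide by zero.
    length = max(len(text), 1)
    return int(base_px * min(max_chars / length, 1))


if __name__ == "__main__":
    subject = "THE NOBEL PRIZE IN COMPUTER SCIENCE 2024"
    print(scaled_font_px(subject, base_px=30, max_chars=60))
```

With the subject settings (30 px, 60 characters), a 120-character subject is rendered at 15 px; with the contribution settings (20 px, 120 characters), a 240-character sentence is rendered at 10 px.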

/src/server.py:

--------------------------------------------------------------------------------

import json
import websocket  # NOTE: websocket-client (https://github.com/websocket-client/websocket-client)
import uuid
import urllib.request
from requests_toolbelt import MultipartEncoder
import urllib.parse
import os
import io
from PIL import Image


def open_websocket_connection(comfy_server_address):
    server_address = comfy_server_address
    client_id = str(uuid.uuid4())
    ws = websocket.WebSocket()
    ws.connect("ws://{}/ws?clientId={}".format(server_address, client_id))
    return ws, server_address, client_id


def queue_prompt(prompt, client_id, server_address):
    p = {"prompt": prompt, "client_id": client_id}
    headers = {"Content-Type": "application/json"}
    data = json.dumps(p).encode("utf-8")
    req = urllib.request.Request(
        "http://{}/prompt".format(server_address), data=data, headers=headers
    )
    return json.loads(urllib.request.urlopen(req).read())


def get_history(prompt_id, server_address):
    with urllib.request.urlopen(
        "http://{}/history/{}".format(server_address, prompt_id)
    ) as response:
        return json.loads(response.read())


def get_image(filename, subfolder, folder_type, server_address):
    data = {"filename": filename, "subfolder": subfolder, "type": folder_type}
    url_values = urllib.parse.urlencode(data)
    with urllib.request.urlopen(
        "http://{}/view?{}".format(server_address, url_values)
    ) as response:
        return response.read()


def upload_image(input_path, name, server_address, image_type="input", overwrite=True):
    # The request is sent inside the `with` block because the encoder reads
    # from the open file while the body is being streamed.
    with open(input_path, "rb") as file:
        multipart_data = MultipartEncoder(
            fields={
                "image": (name, file, "image/png"),
                "type": image_type,
                "overwrite": str(overwrite).lower(),
            }
        )

        data = multipart_data
        headers = {"Content-Type": multipart_data.content_type}
        request = urllib.request.Request(
            "http://{}/upload/image".format(server_address), data=data, headers=headers
        )
        with urllib.request.urlopen(request) as response:
            return response.read()


def load_workflow(workflow_path):
    try:
        with open(workflow_path, "r") as file:
            workflow = json.load(file)
            return json.dumps(workflow)
    except FileNotFoundError:
        print(f"The file {workflow_path} was not found.")
        return None
    except json.JSONDecodeError:
        print(f"The file {workflow_path} contains invalid JSON.")
        return None


def prompt_image_to_image(
    workflow_path, input_path, comfy_server_address, output_path="./output/", save_previews=False
):
    with open(workflow_path, "r", encoding="utf-8") as workflow_file:
        prompt = json.load(workflow_file)
    filename = input_path.split("/")[-1]
    # Node "104" is the image-loading node of the workflow; point it at the uploaded file.
    prompt.get("104")["inputs"]["image"] = filename
    file_name = generate_image_by_prompt_and_image(
        prompt, output_path, input_path, filename, comfy_server_address, save_previews
    )
    return file_name


def generate_image_by_prompt_and_image(
    prompt, output_path, input_path, filename, comfy_server_address, save_previews=False
):
    # Open the connection before the try block so `ws` always exists in `finally`.
    ws, server_address, client_id = open_websocket_connection(comfy_server_address)
    try:
        upload_image(input_path, filename, server_address)
        prompt_id = queue_prompt(prompt, client_id, server_address)["prompt_id"]
        track_progress(prompt, ws, prompt_id)
        images = get_images(prompt_id, server_address, save_previews)
        save_image(images, output_path, save_previews)
    finally:
        ws.close()
    return os.path.join(output_path, images[-1]["file_name"])


def track_progress(prompt, ws, prompt_id):
    node_ids = list(prompt.keys())
    finished_nodes = []

    while True:
        out = ws.recv()
        if isinstance(out, str):
            message = json.loads(out)
            if message["type"] == "progress":
                data = message["data"]
                current_step = data["value"]
                print("In K-Sampler -> Step: ", current_step, " of: ", data["max"])
            if message["type"] == "execution_cached":
                data = message["data"]
                for itm in data["nodes"]:
                    if itm not in finished_nodes:
                        finished_nodes.append(itm)
                        print(
                            "Progress: ",
                            len(finished_nodes),
                            "/",
                            len(node_ids),
                            " Tasks done",
                        )
            if message["type"] == "executing":
                data = message["data"]
                if data["node"] not in finished_nodes:
                    finished_nodes.append(data["node"])
                    print(
                        "Progress: ",
                        len(finished_nodes),
                        "/",
                        len(node_ids),
                        " Tasks done",
                    )

                if data["node"] is None and data["prompt_id"] == prompt_id:
                    break  # Execution is done
        else:
            continue
    return


# Find the images in the history by prompt_id.
# TODO: (warning!!!) prompt_id must be different on every run
# TODO: (warning!!!) not sure whether a growing history causes slowdowns at scale; a cleanup mechanism may be needed
# TODO: with a fixed template the traversal could probably be skipped to save time
def get_images(prompt_id, server_address, allow_preview=False):
    output_images = []

    history = get_history(prompt_id, server_address)[prompt_id]
    for node_id in history["outputs"]:
        node_output = history["outputs"][node_id]
        if "images" in node_output:
            output_data = {}
            for image in node_output["images"]:
                if allow_preview and image["type"] == "temp":
                    preview_data = get_image(
                        image["filename"],
                        image["subfolder"],
                        image["type"],
                        server_address,
                    )
                    output_data["image_data"] = preview_data
                if image["type"] == "output":
                    image_data = get_image(
                        image["filename"],
                        image["subfolder"],
                        image["type"],
                        server_address,
                    )
                    output_data["image_data"] = image_data
            # One entry per node, named after the node's last image.
            output_data["file_name"] = image["filename"]
            output_data["type"] = image["type"]
            output_images.append(output_data)

    return output_images


def save_image(images, output_path, save_previews):
    # for itm in images:
    #     directory = os.path.join(output_path, 'temp/') if itm['type'] == 'temp' and save_previews else output_path
    #     os.makedirs(directory, exist_ok=True)
    #     try:
    #         image = Image.open(io.BytesIO(itm['image_data']))
    #         image.save(os.path.join(directory, itm['file_name']))
    #     except Exception as e:
    #         print(f"Failed to save image {itm['file_name']}: {e}")
    # TODO: this logic is not pretty, but it should not cause problems.
    # Only the last image (the final output) is saved.
    itm = images[-1]
    os.makedirs(output_path, exist_ok=True)
    try:
        image = Image.open(io.BytesIO(itm["image_data"]))
        image.save(os.path.join(output_path, itm["file_name"]))
    except Exception as e:
        print(f"Failed to save image {itm['file_name']}: {e}")


if __name__ == "__main__":
    IMAGE_PATH = "/root/autodl-tmp/comfy_api/image_tmp/images (2).jpeg"
    OUTPUT_PATH = "./output/"
    output_filename = prompt_image_to_image(
        "nobel_slight_workflow_api.json",
        IMAGE_PATH,
        "127.0.0.1:6006",  # same default address as main.py
        output_path=OUTPUT_PATH,
        save_previews=True,
    )
    print(output_filename)

--------------------------------------------------------------------------------
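The loop in `track_progress` ends on one specific websocket message: an `executing` message whose `node` is `None` and whose `prompt_id` matches the queued prompt. The sketch below isolates that decision as a pure function so it can be checked without a running server. The name `is_execution_done` is hypothetical, and the message shapes are taken only from the fields that `track_progress` reads.

```python
import json


def is_execution_done(raw_message, prompt_id: str) -> bool:
    """Return True if a websocket frame signals the end of this prompt's run.

    Hypothetical helper for illustration; it mirrors the break condition
    in track_progress().
    """
    # Non-text frames are skipped, as in track_progress().
    if not isinstance(raw_message, str):
        return False
    message = json.loads(raw_message)
    if message.get("type") != "executing":
        return False
    data = message.get("data", {})
    # "node" is None once no node is left to execute for this prompt.
    return data.get("node") is None and data.get("prompt_id") == prompt_id


if __name__ == "__main__":
    frame = json.dumps({"type": "executing", "data": {"node": None, "prompt_id": "p1"}})
    print(is_execution_done(frame, "p1"))
```

Matching on `prompt_id` matters when several prompts share one server, since the completion message of another prompt should not end this run.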

/LICENSE:

--------------------------------------------------------------------------------

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/resources/workflow/nobel_workflow.json:

--------------------------------------------------------------------------------

1 | {

2 | "8": {

3 | "inputs": {

4 | "samples": [

5 | "137",

6 | 0

7 | ],

8 | "vae": [

9 | "35",

10 | 0

11 | ]

12 | },

13 | "class_type": "VAEDecode",

14 | "_meta": {

15 | "title": "VAE Decode"

16 | }

17 | },

18 | "28": {

19 | "inputs": {

20 | "upscale_method": "nearest-exact",

21 | "width": 1024,

22 | "height": 1024,

23 | "crop": "center",

24 | "image": [

25 | "110",

26 | 0

27 | ]

28 | },

29 | "class_type": "ImageScale",

30 | "_meta": {

31 | "title": "Upscale Image"

32 | }

33 | },

34 | "35": {

35 | "inputs": {

36 | "vae_name": "sdxlVAE_sdxlVAE.safetensors"

37 | },

38 | "class_type": "VAELoader",

39 | "_meta": {

40 | "title": "Load VAE"

41 | }

42 | },

43 | "71": {

44 | "inputs": {

45 | "strength": 0.6,

46 | "start_percent": 0,

47 | "end_percent": 1,

48 | "positive": [

49 | "76",

50 | 0

51 | ],

52 | "negative": [

53 | "76",

54 | 1

55 | ],

56 | "control_net": [

57 | "131",

58 | 0

59 | ],

60 | "image": [

61 | "145",

62 | 0

63 | ]

64 | },

65 | "class_type": "ControlNetApplyAdvanced",

66 | "_meta": {

67 | "title": "Apply ControlNet (Advanced)"

68 | }

69 | },

70 | "76": {

71 | "inputs": {

72 | "strength": 0.9,

73 | "start_percent": 0,

74 | "end_percent": 0.8,

75 | "positive": [

76 | "128",

77 | 0

78 | ],

79 | "negative": [

80 | "129",

81 | 0

82 | ],

83 | "control_net": [

84 | "130",

85 | 0

86 | ],

87 | "image": [

88 | "155",

89 | 0

90 | ]

91 | },

92 | "class_type": "ControlNetApplyAdvanced",

93 | "_meta": {

94 | "title": "Apply ControlNet (Advanced)"

95 | }

96 | },

97 | "88": {

98 | "inputs": {

99 | "pixels": [

100 | "28",

101 | 0

102 | ],

103 | "vae": [

104 | "35",

105 | 0

106 | ]

107 | },

108 | "class_type": "VAEEncode",

109 | "_meta": {

110 | "title": "VAE Encode"

111 | }

112 | },

113 | "103": {

114 | "inputs": {

115 | "invert_mask": false,

116 | "detect": "mask_area",

117 | "top_reserve": 50,

118 | "bottom_reserve": 50,

119 | "left_reserve": 50,

120 | "right_reserve": 50,

121 | "image": [

122 | "104",

123 | 0

124 | ],

125 | "mask_for_crop": [

126 | "138",

127 | 1

128 | ]

129 | },

130 | "class_type": "LayerUtility: CropByMask",

131 | "_meta": {

132 | "title": "LayerUtility: CropByMask"

133 | }

134 | },

135 | "104": {

136 | "inputs": {

137 | "image": "858825fafb0fad48442506f3329cef28.jpg",

138 | "upload": "image"

139 | },

140 | "class_type": "LoadImage",

141 | "_meta": {

142 | "title": "Load Image"

143 | }

144 | },

145 | "110": {

146 | "inputs": {

147 | "fill_background": true,

148 | "background_color": "#ffffff",

149 | "RGBA_image": [

150 | "146",

151 | 0

152 | ],

153 | "mask": [

154 | "146",

155 | 1

156 | ]

157 | },

158 | "class_type": "LayerUtility: ImageRemoveAlpha",

159 | "_meta": {

160 | "title": "LayerUtility: ImageRemoveAlpha"

161 | }

162 | },

163 | "111": {

164 | "inputs": {

165 | "action": "pad to ratio",

166 | "smaller_side": 0,

167 | "larger_side": 0,

168 | "scale_factor": 0,

169 | "resize_mode": "any",

170 | "side_ratio": "1:1",

171 | "crop_pad_position": 0.5,

172 | "pad_feathering": 20,

173 | "pixels": [

174 | "103",

175 | 0

176 | ]

177 | },

178 | "class_type": "ImageResize",

179 | "_meta": {

180 | "title": "Image Resize"

181 | }

182 | },

183 | "128": {

184 | "inputs": {

185 | "text": "masterpiece,High quality,Nobel_Prizes_style,monochrome,bold black lines,2d retro illustration, watercolor, pencil crayon,Portrait illustration, this illustration is outlined with bold black lines, highlighted colors include shades of brown, black, and the background is a simple light color. The portrait shows the half.(young man:1.8)",

186 | "parser": "A1111",

187 | "mean_normalization": true,

188 | "multi_conditioning": false,

189 | "use_old_emphasis_implementation": false,

190 | "with_SDXL": false,

191 | "ascore": 6,

192 | "width": 1024,

193 | "height": 1024,

194 | "crop_w": 0,

195 | "crop_h": 0,

196 | "target_width": 1024,

197 | "target_height": 1024,

198 | "text_g": "",

199 | "text_l": "",

200 | "smZ_steps": 1,

201 | "clip": [

202 | "133",

203 | 0

204 | ]

205 | },

206 | "class_type": "smZ CLIPTextEncode",

207 | "_meta": {

208 | "title": "CLIP Text Encode++"

209 | }

210 | },

211 | "129": {

212 | "inputs": {

213 | "text": "yellow face,nsfw, lowres, (bad), text, error, fewer, extra, missing,\n\nworst quality, jpeg artifacts, low quality, watermark,\n\nunfinished, displeasing, oldest, early, chromatic aberration,\n\nsignature, extra digits, artistic error, username, scan, [abstract],\n\n(Shape is not compact, loose, weak volume, flat, thin, weak texture, no volume :1.3),\n\nWeak color, no color space, low level contrast,\n",

214 | "parser": "A1111",

215 | "mean_normalization": true,

216 | "multi_conditioning": false,

217 | "use_old_emphasis_implementation": false,

218 | "with_SDXL": false,

219 | "ascore": 6,

220 | "width": 1024,

221 | "height": 1024,

222 | "crop_w": 0,

223 | "crop_h": 0,

224 | "target_width": 1024,

225 | "target_height": 1024,

226 | "text_g": "",

227 | "text_l": "",

228 | "smZ_steps": 1,

229 | "clip": [

230 | "133",

231 | 0

232 | ]

233 | },

234 | "class_type": "smZ CLIPTextEncode",

235 | "_meta": {

236 | "title": "CLIP Text Encode++"

237 | }

238 | },

239 | "130": {

240 | "inputs": {

241 | "control_net_name": "mistoLine_fp16.safetensors"

242 | },

243 | "class_type": "ControlNetLoader",

244 | "_meta": {

245 | "title": "Load ControlNet Model"

246 | }

247 | },

248 | "131": {

249 | "inputs": {

250 | "control_net_name": "iffusion_pytorch_model_promax.safetensors"

251 | },

252 | "class_type": "ControlNetLoader",

253 | "_meta": {

254 | "title": "Load ControlNet Model"

255 | }

256 | },

257 | "132": {

258 | "inputs": {

259 | "ckpt_name": "dreamshaperXL_alpha2Xl10.safetensors"

260 | },

261 | "class_type": "CheckpointLoaderSimple",

262 | "_meta": {

263 | "title": "Load Checkpoint"

264 | }

265 | },

266 | "133": {

267 | "inputs": {

268 | "stop_at_clip_layer": -6,

269 | "clip": [

270 | "135",

271 | 1

272 | ]

273 | },

274 | "class_type": "CLIPSetLastLayer",

275 | "_meta": {

276 | "title": "CLIP Set Last Layer"

277 | }

278 | },

279 | "135": {

280 | "inputs": {

281 | "lora_01": "None",

282 | "strength_01": 0.8,

283 | "lora_02": "XL_Nobel_Prizes_style-000005.safetensors",

284 | "strength_02": 0.8,

285 | "lora_03": "None",

286 | "strength_03": 0.03,

287 | "lora_04": "None",

288 | "strength_04": 0.2,

289 | "model": [

290 | "132",

291 | 0

292 | ],

293 | "clip": [

294 | "132",

295 | 1

296 | ]

297 | },

298 | "class_type": "Lora Loader Stack (rgthree)",

299 | "_meta": {

300 | "title": "Lora Loader Stack (rgthree)"

301 | }

302 | },

303 | "137": {

304 | "inputs": {

305 | "seed": 414577579665607,

306 | "steps": 30,

307 | "cfg": 10,

308 | "sampler_name": "euler_ancestral",

309 | "scheduler": "normal",

310 | "denoise": 1,

311 | "model": [

312 | "135",

313 | 0

314 | ],

315 | "positive": [

316 | "71",

317 | 0

318 | ],

319 | "negative": [

320 | "71",

321 | 1

322 | ],

323 | "latent_image": [

324 | "153",

325 | 0

326 | ]

327 | },

328 | "class_type": "KSampler",

329 | "_meta": {

330 | "title": "KSampler"

331 | }

332 | },

333 | "138": {

334 | "inputs": {

335 | "prompt": "human",

336 | "threshold": 0.3,

337 | "sam_model": [

338 | "140",

339 | 0

340 | ],

341 | "grounding_dino_model": [

342 | "139",

343 | 0

344 | ],

345 | "image": [

346 | "104",

347 | 0

348 | ]

349 | },

350 | "class_type": "GroundingDinoSAMSegment (segment anything)",

351 | "_meta": {

352 | "title": "GroundingDinoSAMSegment (segment anything)"

353 | }

354 | },

355 | "139": {

356 | "inputs": {

357 | "model_name": "GroundingDINO_SwinB (938MB)"

358 | },

359 | "class_type": "GroundingDinoModelLoader (segment anything)",

360 | "_meta": {

361 | "title": "GroundingDinoModelLoader (segment anything)"

362 | }

363 | },

364 | "140": {

365 | "inputs": {

366 | "model_name": "sam_vit_h (2.56GB)"

367 | },

368 | "class_type": "SAMModelLoader (segment anything)",

369 | "_meta": {

370 | "title": "SAMModelLoader (segment anything)"

371 | }

372 | },

373 | "145": {

374 | "inputs": {

375 | "detect_hand": "enable",

376 | "detect_body": "enable",

377 | "detect_face": "enable",

378 | "resolution": 2048,

379 | "scale_stick_for_xinsr_cn": "enable",

380 | "image": [

381 | "110",

382 | 0

383 | ]

384 | },

385 | "class_type": "OpenposePreprocessor",

386 | "_meta": {

387 | "title": "OpenPose Pose"

388 | }

389 | },

390 | "146": {

391 | "inputs": {

392 | "prompt": "human",

393 | "threshold": 0.3,

394 | "sam_model": [

395 | "148",

396 | 0

397 | ],

398 | "grounding_dino_model": [

399 | "147",

400 | 0

401 | ],

402 | "image": [

403 | "111",

404 | 0

405 | ]

406 | },

407 | "class_type": "GroundingDinoSAMSegment (segment anything)",

408 | "_meta": {

409 | "title": "GroundingDinoSAMSegment (segment anything)"

410 | }

411 | },

412 | "147": {

413 | "inputs": {

414 | "model_name": "GroundingDINO_SwinB (938MB)"

415 | },

416 | "class_type": "GroundingDinoModelLoader (segment anything)",

417 | "_meta": {

418 | "title": "GroundingDinoModelLoader (segment anything)"

419 | }

420 | },

421 | "148": {

422 | "inputs": {

423 | "model_name": "sam_vit_h (2.56GB)"

424 | },

425 | "class_type": "SAMModelLoader (segment anything)",

426 | "_meta": {

427 | "title": "SAMModelLoader (segment anything)"

428 | }

429 | },

430 | "153": {

431 | "inputs": {

432 | "width": [

433 | "154",

434 | 0

435 | ],

436 | "height": [

437 | "154",

438 | 1

439 | ],

440 | "batch_size": 1

441 | },

442 | "class_type": "EmptyLatentImage",

443 | "_meta": {

444 | "title": "Empty Latent Image"

445 | }

446 | },

447 | "154": {

448 | "inputs": {

449 | "image": [

450 | "28",

451 | 0

452 | ]

453 | },

454 | "class_type": "GetImageSize+",

455 | "_meta": {

456 | "title": "🔧 Get Image Size"

457 | }

458 | },

459 | "155": {

460 | "inputs": {

461 | "guassian_sigma": 6,

462 | "intensity_threshold": 8,

463 | "resolution": 2048,

464 | "image": [

465 | "110",

466 | 0

467 | ]

468 | },

469 | "class_type": "LineartStandardPreprocessor",

470 | "_meta": {

471 | "title": "Standard Lineart"

472 | }

473 | },

474 | "161": {

475 | "inputs": {

476 | "brightness": 1.1,

477 | "contrast": 1.35,

478 | "saturation": 1.2,

479 | "image": [

480 | "8",

481 | 0

482 | ]

483 | },

484 | "class_type": "LayerColor: Brightness & Contrast",

485 | "_meta": {

486 | "title": "LayerColor: Brightness & Contrast"

487 | }

488 | },

489 | "162": {

490 | "inputs": {

491 | "amount": 0.8,

492 | "image": [

493 | "161",

494 | 0

495 | ]

496 | },

497 | "class_type": "ImageCASharpening+",

498 | "_meta": {

499 | "title": "🔧 Image Contrast Adaptive Sharpening"

500 | }

501 | },

502 | "164": {

503 | "inputs": {

504 | "filename_prefix": "ComfyUI",

505 | "images": [

506 | "162",

507 | 0

508 | ]

509 | },

510 | "class_type": "SaveImage",

511 | "_meta": {

512 | "title": "Save Image"

513 | }

514 | }

515 | }

--------------------------------------------------------------------------------

/resources/workflow/nobel_workflow_for_install.json:

--------------------------------------------------------------------------------

1 | {

2 | "last_node_id": 157,

3 | "last_link_id": 163,

4 | "nodes": [

5 | {

6 | "id": 147,

7 | "type": "GroundingDinoModelLoader (segment anything)",

8 | "pos": {

9 | "0": -700,

10 | "1": 70

11 | },

12 | "size": {

13 | "0": 361.20001220703125,

14 | "1": 58

15 | },

16 | "flags": {},

17 | "order": 0,

18 | "mode": 0,

19 | "inputs": [],

20 | "outputs": [

21 | {

22 | "name": "GROUNDING_DINO_MODEL",

23 | "type": "GROUNDING_DINO_MODEL",

24 | "links": [

25 | 136

26 | ],

27 | "shape": 3,

28 | "label": "G-Dino模型"

29 | }

30 | ],

31 | "properties": {

32 | "Node name for S&R": "GroundingDinoModelLoader (segment anything)"

33 | },

34 | "widgets_values": [

35 | "GroundingDINO_SwinB (938MB)"

36 | ],

37 | "color": "#332922",

38 | "bgcolor": "#593930"

39 | },

40 | {

41 | "id": 148,

42 | "type": "SAMModelLoader (segment anything)",

43 | "pos": {

44 | "0": -700,

45 | "1": -30

46 | },

47 | "size": {

48 | "0": 358.7974548339844,

49 | "1": 58

50 | },

51 | "flags": {},

52 | "order": 1,

53 | "mode": 0,

54 | "inputs": [],

55 | "outputs": [

56 | {

57 | "name": "SAM_MODEL",

58 | "type": "SAM_MODEL",

59 | "links": [

60 | 135

61 | ],

62 | "shape": 3,

63 | "label": "SAM模型"

64 | }

65 | ],

66 | "properties": {

67 | "Node name for S&R": "SAMModelLoader (segment anything)"

68 | },

69 | "widgets_values": [

70 | "sam_vit_h (2.56GB)"

71 | ],

72 | "color": "#332922",

73 | "bgcolor": "#593930"

74 | },

75 | {

76 | "id": 145,

77 | "type": "OpenposePreprocessor",

78 | "pos": {

79 | "0": 2450,

80 | "1": -220

81 | },

82 | "size": {

83 | "0": 315,

84 | "1": 174

85 | },

86 | "flags": {},

87 | "order": 25,

88 | "mode": 0,

89 | "inputs": [

90 | {

91 | "name": "image",

92 | "type": "IMAGE",

93 | "link": 132,

94 | "label": "图像"

95 | }

96 | ],

97 | "outputs": [

98 | {

99 | "name": "IMAGE",

100 | "type": "IMAGE",

101 | "links": [

102 | 133,

103 | 134

104 | ],

105 | "slot_index": 0,

106 | "shape": 3,

107 | "label": "图像"

108 | },

109 | {

110 | "name": "POSE_KEYPOINT",

111 | "type": "POSE_KEYPOINT",

112 | "links": null,

113 | "shape": 3,

114 | "label": "姿态关键点"

115 | }

116 | ],

117 | "properties": {

118 | "Node name for S&R": "OpenposePreprocessor"

119 | },

120 | "widgets_values": [

121 | "enable",

122 | "enable",

123 | "enable",

124 | 2048,

125 | "enable"

126 | ]

127 | },

128 | {

129 | "id": 155,

130 | "type": "LineartStandardPreprocessor",

131 | "pos": {

132 | "0": 2068.07373046875,

133 | "1": -171.20970153808594

134 | },

135 | "size": {

136 | "0": 315,

137 | "1": 106

138 | },

139 | "flags": {},

140 | "order": 26,

141 | "mode": 0,

142 | "inputs": [

143 | {

144 | "name": "image",

145 | "type": "IMAGE",

146 | "link": 149,

147 | "label": "图像"

148 | }

149 | ],

150 | "outputs": [

151 | {

152 | "name": "IMAGE",

153 | "type": "IMAGE",

154 | "links": [

155 | 150,

156 | 151

157 | ],

158 | "slot_index": 0,

159 | "shape": 3,

160 | "label": "图像"

161 | }

162 | ],

163 | "properties": {

164 | "Node name for S&R": "LineartStandardPreprocessor"

165 | },

166 | "widgets_values": [

167 | 6,

168 | 8,

169 | 2048

170 | ]

171 | },

172 | {

173 | "id": 130,

174 | "type": "ControlNetLoader",

175 | "pos": {

176 | "0": 2034.07373046875,

177 | "1": -367.20947265625

178 | },

179 | "size": {

180 | "0": 373.6316223144531,

181 | "1": 58

182 | },

183 | "flags": {},

184 | "order": 2,

185 | "mode": 0,

186 | "inputs": [],

187 | "outputs": [

188 | {

189 | "name": "CONTROL_NET",

190 | "type": "CONTROL_NET",

191 | "links": [

192 | 99

193 | ],

194 | "slot_index": 0,

195 | "shape": 3,

196 | "label": "ControlNet"

197 | }

198 | ],

199 | "properties": {

200 | "Node name for S&R": "ControlNetLoader"

201 | },

202 | "widgets_values": [

203 | "mistoLine_fp16.safetensors"

204 | ]

205 | },

206 | {

207 | "id": 76,

208 | "type": "ControlNetApplyAdvanced",

209 | "pos": {

210 | "0": 2060.07373046875,

211 | "1": -3.209773063659668

212 | },

213 | "size": {

214 | "0": 320,

215 | "1": 186

216 | },

217 | "flags": {},

218 | "order": 30,

219 | "mode": 0,

220 | "inputs": [

221 | {

222 | "name": "positive",

223 | "type": "CONDITIONING",

224 | "link": 95,

225 | "label": "正面条件"

226 | },

227 | {

228 | "name": "negative",

229 | "type": "CONDITIONING",

230 | "link": 97,

231 | "label": "负面条件"

232 | },

233 | {

234 | "name": "control_net",

235 | "type": "CONTROL_NET",

236 | "link": 99,

237 | "label": "ControlNet"

238 | },

239 | {

240 | "name": "image",

241 | "type": "IMAGE",

242 | "link": 150,

243 | "label": "图像"

244 | },

245 | {

246 | "name": "vae",

247 | "type": "VAE",

248 | "link": null

249 | }

250 | ],

251 | "outputs": [

252 | {

253 | "name": "positive",

254 | "type": "CONDITIONING",

255 | "links": [

256 | 24

257 | ],

258 | "shape": 3,

259 | "label": "正面条件"

260 | },

261 | {

262 | "name": "negative",

263 | "type": "CONDITIONING",

264 | "links": [

265 | 25

266 | ],

267 | "shape": 3,

268 | "label": "负面条件"

269 | }

270 | ],

271 | "properties": {

272 | "Node name for S&R": "ControlNetApplyAdvanced"

273 | },

274 | "widgets_values": [

275 | 0.9,

276 | 0,

277 | 0.6

278 | ]

279 | },

280 | {

281 | "id": 71,

282 | "type": "ControlNetApplyAdvanced",

283 | "pos": {

284 | "0": 2440,

285 | "1": 0

286 | },

287 | "size": {

288 | "0": 320,

289 | "1": 186

290 | },

291 | "flags": {},

292 | "order": 33,

293 | "mode": 0,

294 | "inputs": [

295 | {

296 | "name": "positive",

297 | "type": "CONDITIONING",

298 | "link": 24,

299 | "label": "正面条件"

300 | },

301 | {

302 | "name": "negative",

303 | "type": "CONDITIONING",

304 | "link": 25,

305 | "label": "负面条件"

306 | },

307 | {

308 | "name": "control_net",

309 | "type": "CONTROL_NET",

310 | "link": 100,

311 | "label": "ControlNet"

312 | },

313 | {

314 | "name": "image",

315 | "type": "IMAGE",

316 | "link": 133,

317 | "label": "图像"

318 | },

319 | {

320 | "name": "vae",

321 | "type": "VAE",

322 | "link": null

323 | }

324 | ],

325 | "outputs": [

326 | {

327 | "name": "positive",

328 | "type": "CONDITIONING",

329 | "links": [

330 | 114

331 | ],

332 | "shape": 3,

333 | "label": "正面条件"

334 | },

335 | {

336 | "name": "negative",

337 | "type": "CONDITIONING",

338 | "links": [

339 | 115

340 | ],

341 | "shape": 3,

342 | "label": "负面条件"

343 | }

344 | ],

345 | "properties": {

346 | "Node name for S&R": "ControlNetApplyAdvanced"

347 | },

348 | "widgets_values": [

349 | 0.6,

350 | 0,

351 | 1

352 | ]

353 | },

354 | {

355 | "id": 79,

356 | "type": "PreviewImage",

357 | "pos": {

358 | "0": 2072.07373046875,

359 | "1": 250.79006958007812

360 | },

361 | "size": {

362 | "0": 309.0509033203125,

363 | "1": 264.9362487792969

364 | },

365 | "flags": {},

366 | "order": 31,

367 | "mode": 0,

368 | "inputs": [

369 | {

370 | "name": "images",

371 | "type": "IMAGE",

372 | "link": 151,

373 | "label": "图像"

374 | }

375 | ],

376 | "outputs": [],

377 | "properties": {

378 | "Node name for S&R": "PreviewImage"

379 | },

380 | "widgets_values": []

381 | },

382 | {

383 | "id": 104,

384 | "type": "LoadImage",

385 | "pos": {

386 | "0": -2490,

387 | "1": -30

388 | },

389 | "size": {

390 | "0": 315,

391 | "1": 314

392 | },

393 | "flags": {},

394 | "order": 3,

395 | "mode": 0,

396 | "inputs": [],

397 | "outputs": [

398 | {

399 | "name": "IMAGE",

400 | "type": "IMAGE",

401 | "links": [

402 | 52,

403 | 129

404 | ],

405 | "slot_index": 0,

406 | "shape": 3,

407 | "label": "图像"

408 | },

409 | {

410 | "name": "MASK",

411 | "type": "MASK",

412 | "links": null,

413 | "shape": 3,

414 | "label": "遮罩"

415 | }

416 | ],

417 | "properties": {

418 | "Node name for S&R": "LoadImage"

419 | },

420 | "widgets_values": [

421 | "d89d2096a3e22e24d9a4de9c4f32ca53.jpg",

422 | "image"

423 | ]

424 | },

425 | {

426 | "id": 138,

427 | "type": "GroundingDinoSAMSegment (segment anything)",

428 | "pos": {

429 | "0": -1780,

430 | "1": -40

431 | },

432 | "size": {

433 | "0": 352.79998779296875,

434 | "1": 122

435 | },

436 | "flags": {},

437 | "order": 10,

438 | "mode": 0,

439 | "inputs": [

440 | {

441 | "name": "sam_model",

442 | "type": "SAM_MODEL",

443 | "link": 118,

444 | "slot_index": 0,

445 | "label": "SAM模型"

446 | },

447 | {

448 | "name": "grounding_dino_model",

449 | "type": "GROUNDING_DINO_MODEL",

450 | "link": 119,

451 | "slot_index": 1,

452 | "label": "G-Dino模型"

453 | },

454 | {

455 | "name": "image",

456 | "type": "IMAGE",

457 | "link": 129,

458 | "label": "图像"

459 | }

460 | ],

461 | "outputs": [

462 | {

463 | "name": "IMAGE",

464 | "type": "IMAGE",

465 | "links": [

466 | 131

467 | ],

468 | "slot_index": 0,

469 | "shape": 3,

470 | "label": " 图像"

471 | },

472 | {

473 | "name": "MASK",

474 | "type": "MASK",

475 | "links": [

476 | 130

477 | ],

478 | "slot_index": 1,

479 | "shape": 3,

480 | "label": "遮罩"

481 | }

482 | ],

483 | "properties": {

484 | "Node name for S&R": "GroundingDinoSAMSegment (segment anything)"

485 | },

486 | "widgets_values": [

487 | "human",

488 | 0.3

489 | ],

490 | "color": "#332922",

491 | "bgcolor": "#593930"

492 | },

493 | {

494 | "id": 139,

495 | "type": "GroundingDinoModelLoader (segment anything)",

496 | "pos": {

497 | "0": -2160,

498 | "1": 70

499 | },

500 | "size": {

501 | "0": 361.20001220703125,

502 | "1": 58

503 | },

504 | "flags": {},

505 | "order": 4,

506 | "mode": 0,

507 | "inputs": [],

508 | "outputs": [

509 | {

510 | "name": "GROUNDING_DINO_MODEL",

511 | "type": "GROUNDING_DINO_MODEL",

512 | "links": [

513 | 119

514 | ],

515 | "shape": 3,

516 | "label": "G-Dino模型"

517 | }

518 | ],

519 | "properties": {

520 | "Node name for S&R": "GroundingDinoModelLoader (segment anything)"

521 | },

522 | "widgets_values": [

523 | "GroundingDINO_SwinB (938MB)"

524 | ],

525 | "color": "#332922",

526 | "bgcolor": "#593930"

527 | },

528 | {

529 | "id": 103,

530 | "type": "LayerUtility: CropByMask",

531 | "pos": {

532 | "0": -1410,

533 | "1": 260

534 | },

535 | "size": {

536 | "0": 330,

537 | "1": 238

538 | },

539 | "flags": {},

540 | "order": 14,

541 | "mode": 0,

542 | "inputs": [

543 | {

544 | "name": "image",

545 | "type": "IMAGE",

546 | "link": 52,

547 | "slot_index": 0,

548 | "label": "图像"

549 | },

550 | {

551 | "name": "mask_for_crop",

552 | "type": "MASK",

553 | "link": 130,

554 | "label": "遮罩"

555 | }

556 | ],

557 | "outputs": [

558 | {

559 | "name": "croped_image",

560 | "type": "IMAGE",

561 | "links": [

562 | 61

563 | ],

564 | "slot_index": 0,

565 | "shape": 3,

566 | "label": "裁剪图像"

567 | },

568 | {

569 | "name": "croped_mask",

570 | "type": "MASK",

571 | "links": [],

572 | "slot_index": 1,

573 | "shape": 3,

574 | "label": "裁剪遮罩"

575 | },

576 | {

577 | "name": "crop_box",

578 | "type": "BOX",

579 | "links": [],

580 | "shape": 3,

581 | "label": "裁剪框"

582 | },

583 | {

584 | "name": "box_preview",

585 | "type": "IMAGE",

586 | "links": [

587 | 63

588 | ],

589 | "slot_index": 3,

590 | "shape": 3,

591 | "label": "裁剪框预览"

592 | }

593 | ],

594 | "properties": {

595 | "Node name for S&R": "LayerUtility: CropByMask"

596 | },

597 | "widgets_values": [

598 | false,

599 | "mask_area",

600 | 50,

601 | 50,

602 | 50,

603 | 50

604 | ],

605 | "color": "rgba(38, 73, 116, 0.7)"

606 | },

607 | {

608 | "id": 144,

609 | "type": "PreviewImage",

610 | "pos": {

611 | "0": -1410,

612 | "1": -40

613 | },

614 | "size": {

615 | "0": 333.3568420410156,

616 | "1": 251.85440063476562

617 | },

618 | "flags": {},

619 | "order": 13,

620 | "mode": 0,

621 | "inputs": [

622 | {

623 | "name": "images",

624 | "type": "IMAGE",

625 | "link": 131,

626 | "label": "图像"

627 | }

628 | ],

629 | "outputs": [],

630 | "properties": {

631 | "Node name for S&R": "PreviewImage"

632 | },

633 | "widgets_values": []

634 | },

635 | {

636 | "id": 111,

637 | "type": "ImageResize",

638 | "pos": {

639 | "0": -1050,

640 | "1": -30

641 | },

642 | "size": {

643 | "0": 315,

644 | "1": 246

645 | },

646 | "flags": {},

647 | "order": 16,

648 | "mode": 0,

649 | "inputs": [

650 | {

651 | "name": "pixels",

652 | "type": "IMAGE",

653 | "link": 61,

654 | "label": "图像"

655 | },

656 | {

657 | "name": "mask_optional",

658 | "type": "MASK",

659 | "link": null,

660 | "label": "遮罩(可选)"

661 | }

662 | ],

663 | "outputs": [

664 | {

665 | "name": "IMAGE",

666 | "type": "IMAGE",

667 | "links": [

668 | 137