├── .gitattributes
├── .gitignore
├── 2voctxt.py
├── LICENSE
├── README.md
├── bbox-regression.py
├── data
│   ├── MYSELF.py
│   ├── VOCdevkit
│   │   └── VOC2007
│   │       └── ImageSets
│   │           └── Main
│   │               ├── test.txt
│   │               ├── train.txt
│   │               ├── trainval.txt
│   │               └── val.txt
│   ├── VOCdevkitVOC2007
│   │   ├── annotations_cache
│   │   │   └── annots.pkl
│   │   └── results
│   │       ├── det_test_None.txt
│   │       ├── det_test_ship.txt
│   │       ├── det_train_None.txt
│   │       ├── det_train_ship.txt
│   │       ├── det_trainval_None.txt
│   │       ├── det_trainval_ship.txt
│   │       ├── det_val_None.txt
│   │       └── det_val_ship.txt
│   ├── __init__.py
│   ├── config.py
│   ├── example.jpg
│   ├── scripts
│   │   ├── COCO2014.sh
│   │   ├── VOC2007.sh
│   │   └── VOC2012.sh
│   └── voc0712.py
├── demo
│   ├── __init__.py
│   ├── demo.ipynb
│   └── live.py
├── doc
│   ├── SSD.jpg
│   ├── detection_example.png
│   ├── detection_example2.png
│   ├── detection_examples.png
│   └── ssd.png
├── eval.py
├── focal_loss.py
├── layers
│   ├── __init__.py
│   ├── box_utils.py
│   ├── functions
│   │   ├── __init__.py
│   │   ├── detection.py
│   │   └── prior_box.py
│   └── modules
│       ├── __init__.py
│       ├── l2norm.py
│       └── multibox_loss.py
├── loc-txt.ipynb
├── ssd.py
├── test.py
├── train.py
├── utils
│   ├── __init__.py
│   └── augmentations.py
├── xml2regresstxt.py
├── 代码详解blog.txt
├── 保存权重
│   ├── train.py
│   └── 代码详解blog.txt
├── 显示检测结果code.py
└── 训练步骤.txt

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.ipynb linguist-language=Python

2 | .ipynb_checkpoints/* linguist-documentation

3 | dev.ipynb linguist-documentation

4 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | *.egg-info/

24 | .installed.cfg

25 | *.egg

26 |

27 | # PyInstaller

28 | # Usually these files are written by a python script from a template

29 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

30 | *.manifest

31 | *.spec

32 |

33 | # Installer logs

34 | pip-log.txt

35 | pip-delete-this-directory.txt

36 |

37 | # Unit test / coverage reports

38 | htmlcov/

39 | .tox/

40 | .coverage

41 | .coverage.*

42 | .cache

43 | nosetests.xml

44 | coverage.xml

45 | *,cover

46 | .hypothesis/

47 |

48 | # Translations

49 | *.mo

50 | *.pot

51 |

52 | # Django stuff:

53 | *.log

54 | local_settings.py

55 |

56 | # Flask stuff:

57 | instance/

58 | .webassets-cache

59 |

60 | # Scrapy stuff:

61 | .scrapy

62 |

63 | # Sphinx documentation

64 | docs/_build/

65 |

66 | # PyBuilder

67 | target/

68 |

69 | # IPython Notebook

70 | .ipynb_checkpoints

71 |

72 | # pyenv

73 | .python-version

74 |

75 | # celery beat schedule file

76 | celerybeat-schedule

77 |

78 | # dotenv

79 | .env

80 |

81 | # virtualenv

82 | venv/

83 | ENV/

84 |

85 | # Spyder project settings

86 | .spyderproject

87 |

88 | # Rope project settings

89 | .ropeproject

90 |

91 | # atom remote-sync package

92 | .remote-sync.json

93 |

94 | # weights

95 | weights/

96 |

97 | #DS_Store

98 | .DS_Store

99 |

100 | # dev stuff

101 | eval/

102 | eval.ipynb

103 | dev.ipynb

104 | .vscode/

105 |

106 | # not ready

107 | videos/

108 | templates/

109 | data/ssd_dataloader.py

110 | data/datasets/

111 | doc/visualize.py

112 | read_results.py

113 | ssd300_120000/

114 | demos/live

115 | webdemo.py

116 | test_data_aug.py

117 |

118 | # attributes

119 |

120 | # pycharm

121 | .idea/

122 |

123 | # temp checkout soln

124 | data/datasets/

125 | data/ssd_dataloader.py

126 |

127 | # pylint

128 | .pylintrc

--------------------------------------------------------------------------------

/2voctxt.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- encoding: utf-8 -*-

3 | '''

4 | @File : 2voctxt.py

5 | @Version : 1.0

6 | @Author : 2014Vee

7 | @Contact : 1976535998@qq.com

8 | @License : (C)Copyright 2014Vee From UESTC

9 | @Modify Time : 2020/4/17 15:37

10 | @Description : None

11 | '''

12 | import os

13 | import random

14 |

15 | # https://blog.csdn.net/duanyajun987/article/details/81507656

16 | # Note: be careful here; the split ratios for the image-set files are set below.

17 | # The NWPU dataset already ships with a test set, so no extra data needs to be held out for testing.

18 | trainval_percent = 0.2

19 | train_percent = 0.8

20 | xmlfilepath = './data/VOCdevkit/VOC2007/Annotations'

21 | txtsavepath = './data/VOCdevkit/VOC2007/ImageSets/Main'

22 | total_xml = os.listdir(xmlfilepath)

23 |

24 | num = len(total_xml)

25 | indices = range(num)  # renamed from `list` to avoid shadowing the builtin

26 | tv = int(num * trainval_percent)

27 | tr = int(tv * train_percent)

28 | trainval = random.sample(indices, tv)

29 | train = random.sample(trainval, tr)

30 |

31 | ftrainval = open(txtsavepath + '/trainval.txt', 'w')

32 | ftest = open(txtsavepath + '/test.txt', 'w')

33 | ftrain = open(txtsavepath + '/train.txt', 'w')

34 | fval = open(txtsavepath + '/val.txt', 'w')

35 |

36 | for i in indices:

37 |     name = total_xml[i][:-4] + '\n'  # strip the '.xml' extension

38 |     if i in trainval:

39 |         ftrainval.write(name)

40 |         if i in train:

41 |             ftest.write(name)  # the `train` sample is written to test.txt

42 |         else:

43 |             fval.write(name)

44 |     else:

45 |         ftrain.write(name)  # everything outside `trainval` goes to train.txt

46 |

47 | ftrainval.close()

48 | ftrain.close()

49 | fval.close()

50 | ftest.close()

51 |

--------------------------------------------------------------------------------
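The routing in `2voctxt.py` is easy to misread, because the variable names do not match the files they feed. The sketch below restates the same split as a pure function so the resulting proportions can be checked without touching the filesystem. The function name `split_ids`, its parameter names, and the fixed seed are illustrative assumptions, not part of the repository.

```python
import random


def split_ids(ids, holdout_fraction=0.2, test_fraction=0.8, seed=0):
    """Partition ids the way 2voctxt.py routes them (hypothetical helper).

    holdout_fraction plays the role of trainval_percent and test_fraction
    the role of train_percent in the script.
    """
    rng = random.Random(seed)  # seeded so the split is reproducible
    ids = list(ids)
    n_holdout = int(len(ids) * holdout_fraction)
    n_test = int(n_holdout * test_fraction)

    holdout = rng.sample(ids, n_holdout)
    test = set(rng.sample(holdout, n_test))
    holdout_set = set(holdout)  # sets give O(1) membership tests

    splits = {"train": [], "trainval": [], "val": [], "test": []}
    for name in ids:
        if name in holdout_set:
            splits["trainval"].append(name)
            splits["test" if name in test else "val"].append(name)
        else:
            splits["train"].append(name)
    return splits


splits = split_ids([f"{i:06d}" for i in range(100)])
print({key: len(value) for key, value in splits.items()})
# {'train': 80, 'trainval': 20, 'val': 4, 'test': 16}
```

With the script's ratios, roughly 80% of the annotations land in `train.txt`, and the 20% written to `trainval.txt` is divided again between `test.txt` and `val.txt`.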

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Max deGroot, Ellis Brown

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # SSD: Single Shot MultiBox Object Detector, in PyTorch

2 | A [PyTorch](http://pytorch.org/) implementation of [Single Shot MultiBox Detector](http://arxiv.org/abs/1512.02325) from the 2016 paper by Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. The official and original Caffe code can be found [here](https://github.com/weiliu89/caffe/tree/ssd).

3 |

4 |

5 |

6 |

7 | ### Table of Contents

8 | - Installation

9 | - Datasets

10 | - Train

11 | - Evaluate

12 | - Performance

13 | - Demos

14 | - Future Work

15 | - Reference

16 |

17 |

18 |

19 |

20 |

21 |

22 | ## Installation

23 | - Install [PyTorch](http://pytorch.org/) by selecting your environment on the website and running the appropriate command.

24 | - Clone this repository.

25 | * Note: We currently only support Python 3+.

26 | - Then download the dataset by following the [instructions](#datasets) below.

27 | - We now support [Visdom](https://github.com/facebookresearch/visdom) for real-time loss visualization during training!

28 | * To use Visdom in the browser:

29 | ```Shell

30 | # First install Python server and client

31 | pip install visdom

32 | # Start the server (probably in a screen or tmux)

33 | python -m visdom.server

34 | ```

35 | * Then (during training) navigate to http://localhost:8097/ (see the Train section below for training details).

36 | - Note: For training, we currently support [VOC](http://host.robots.ox.ac.uk/pascal/VOC/) and [COCO](http://mscoco.org/), and aim to add [ImageNet](http://www.image-net.org/) support soon.

37 |

38 | ## Datasets

39 | To make things easy, we provide bash scripts to handle the dataset downloads and setup for you. We also provide simple dataset loaders that inherit `torch.utils.data.Dataset`, making them fully compatible with the `torchvision.datasets` [API](http://pytorch.org/docs/torchvision/datasets.html).

40 |

41 |

42 | ### COCO

43 | Microsoft COCO: Common Objects in Context

44 |

45 | ##### Download COCO 2014

46 | ```Shell

47 | # specify a directory for dataset to be downloaded into, else default is ~/data/

48 | sh data/scripts/COCO2014.sh

49 | ```

50 |

51 | ### VOC Dataset

52 | PASCAL VOC: Visual Object Classes

53 |

54 | ##### Download VOC2007 trainval & test

55 | ```Shell

56 | # specify a directory for dataset to be downloaded into, else default is ~/data/

57 | sh data/scripts/VOC2007.sh #

58 | ```

59 |

60 | ##### Download VOC2012 trainval

61 | ```Shell

62 | # specify a directory for dataset to be downloaded into, else default is ~/data/

63 | sh data/scripts/VOC2012.sh #

64 | ```

65 |

66 | ## Training SSD

67 | - First download the fc-reduced [VGG-16](https://arxiv.org/abs/1409.1556) PyTorch base network weights at: https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

68 | - By default, we assume you have downloaded the file in the `ssd.pytorch/weights` dir:

69 |

70 | ```Shell

71 | mkdir weights

72 | cd weights

73 | wget https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

74 | ```

75 |

76 | - To train SSD using the train script simply specify the parameters listed in `train.py` as a flag or manually change them.

77 |

78 | ```Shell

79 | python train.py

80 | ```

81 |

82 | - Note:

83 | * For training, an NVIDIA GPU is strongly recommended for speed.

84 | * For instructions on Visdom usage/installation, see the Installation section.

85 | * You can resume training from a checkpoint by specifying its path as one of the training parameters (again, see `train.py` for options).

86 |

87 | ## Evaluation

88 | To evaluate a trained network:

89 |

90 | ```Shell

91 | python eval.py

92 | ```

93 |

94 | You can specify the parameters listed in the `eval.py` file by flagging them or manually changing them.

95 |

96 |

97 |

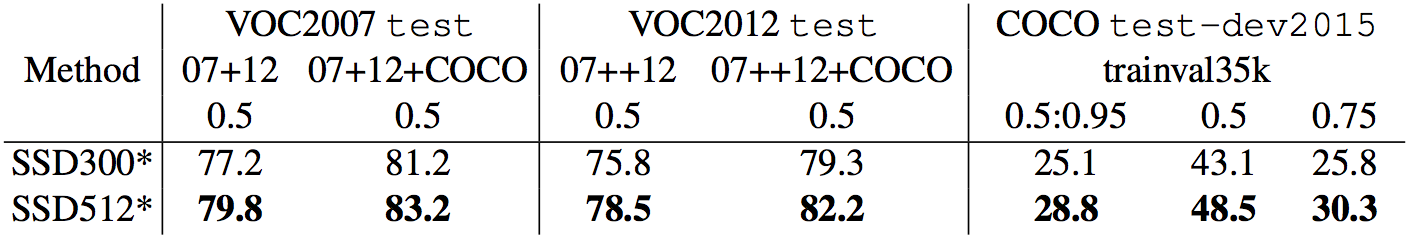

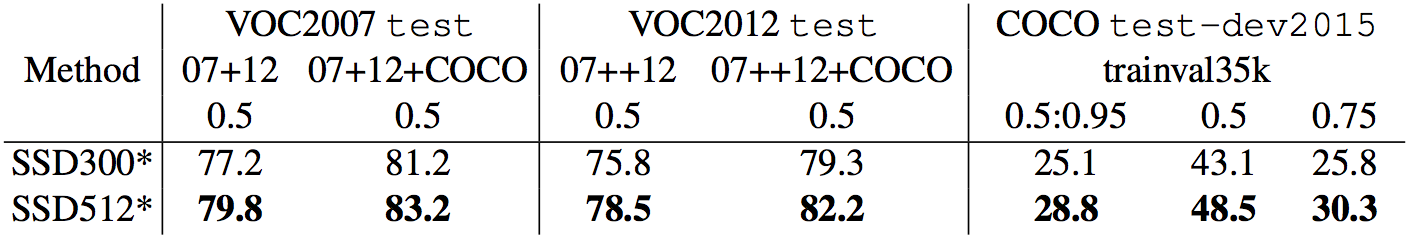

99 | ## Performance

100 |

101 | #### VOC2007 Test

102 |

103 | ##### mAP

104 |

105 | | Original | Converted weiliu89 weights | From scratch w/o data aug | From scratch w/ data aug |

106 | |:-:|:-:|:-:|:-:|

107 | | 77.2 % | 77.26 % | 58.12% | 77.43 % |

108 |

109 | ##### FPS

110 | **GTX 1060:** ~45.45 FPS

111 |

112 | ## Demos

113 |

114 | ### Use a pre-trained SSD network for detection

115 |

116 | #### Download a pre-trained network

117 | - We are trying to provide PyTorch `state_dicts` (dict of weight tensors) of the latest SSD model definitions trained on different datasets.

118 | - Currently, we provide the following PyTorch models:

119 | * SSD300 trained on VOC0712 (newest PyTorch weights)

120 | - https://s3.amazonaws.com/amdegroot-models/ssd300_mAP_77.43_v2.pth

121 | * SSD300 trained on VOC0712 (original Caffe weights)

122 | - https://s3.amazonaws.com/amdegroot-models/ssd_300_VOC0712.pth

123 | - Our goal is to reproduce this table from the [original paper](http://arxiv.org/abs/1512.02325)

124 |

125 |

126 |

127 | ### Try the demo notebook

128 | - Make sure you have [jupyter notebook](http://jupyter.readthedocs.io/en/latest/install.html) installed.

129 | - Two alternatives for installing jupyter notebook:

130 | 1. If you installed PyTorch with [conda](https://www.continuum.io/downloads) (recommended), then you should already have it. (Just navigate to the ssd.pytorch cloned repo and run):

131 | `jupyter notebook`

132 |

133 | 2. If using [pip](https://pypi.python.org/pypi/pip):

134 |

135 | ```Shell

136 | # make sure pip is upgraded

137 | pip3 install --upgrade pip

138 | # install jupyter notebook

139 | pip install jupyter

140 | # Run this inside ssd.pytorch

141 | jupyter notebook

142 | ```

143 |

144 | - Now navigate to `demo/demo.ipynb` at http://localhost:8888 (by default) and have at it!

145 |

146 | ### Try the webcam demo

147 | - Works on CPU (may have to tweak `cv2.waitKey` for optimal fps) or on an NVIDIA GPU

148 | - This demo currently requires opencv2+ w/ python bindings and an onboard webcam

149 | * You can change the default webcam in `demo/live.py`

150 | - Install the [imutils](https://github.com/jrosebr1/imutils) package to leverage multi-threading on CPU:

151 | * `pip install imutils`

152 | - Running `python -m demo.live` opens the webcam and begins detecting!

153 |

154 | ## TODO

155 | We have accumulated the following to-do list, which we hope to complete in the near future

156 | - Still to come:

157 | * [x] Support for the MS COCO dataset

158 | * [ ] Support for SSD512 training and testing

159 | * [ ] Support for training on custom datasets

160 |

161 | ## Authors

162 |

163 | * [**Max deGroot**](https://github.com/amdegroot)

164 | * [**Ellis Brown**](http://github.com/ellisbrown)

165 |

166 | ***Note:*** Unfortunately, this is just a hobby of ours and not a full-time job, so we'll do our best to keep things up to date, but no guarantees. That being said, thanks to everyone for your continued help and feedback as it is really appreciated. We will try to address everything as soon as possible.

167 |

168 | ## References

169 | - Wei Liu, et al. "SSD: Single Shot MultiBox Detector." [ECCV2016](http://arxiv.org/abs/1512.02325).

170 | - [Original Implementation (CAFFE)](https://github.com/weiliu89/caffe/tree/ssd)

171 | - A huge thank you to [Alex Koltun](https://github.com/alexkoltun) and his team at [Webyclip](http://www.webyclip.com) for their help in finishing the data augmentation portion.

172 | - A list of other great SSD ports that were sources of inspiration (especially the Chainer repo):

173 | * [Chainer](https://github.com/Hakuyume/chainer-ssd), [Keras](https://github.com/rykov8/ssd_keras), [MXNet](https://github.com/zhreshold/mxnet-ssd), [Tensorflow](https://github.com/balancap/SSD-Tensorflow)

174 |

--------------------------------------------------------------------------------

/bbox-regression.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- encoding: utf-8 -*-

3 | '''

4 | @File : bbox-regression.py

5 | @Version : 1.0

6 | @Author : 2014Vee

7 | @Contact : 1976535998@qq.com

8 | @License : (C)Copyright 2014Vee From UESTC

9 | @Modify Time : 2020/4/14 10:37

10 | @Description : None

11 | '''

12 |

13 | import cv2

14 | import numpy as np

15 | import xml.dom.minidom

16 | import tensorflow as tf

17 | import os

18 | import time

19 | from tensorflow.python.framework import graph_util

20 |

21 | slim = tf.contrib.slim

22 |

23 | # Read the txt files

24 | train_txt = open('/data/lp/project/ssd.pytorch/txtsave/train.txt')

25 | val_txt = open('/data/lp/project/ssd.pytorch/txtsave/val.txt')

26 | train_content = train_txt.readlines()  # lines of the saved train.txt

27 | val_content = val_txt.readlines()  # lines of the saved val.txt

28 | # for linetr in train_content:

29 | #     print ("train_content",linetr.rstrip('\n'))

30 | # for lineva in val_content:

31 | #     print ("val_content",lineva.rstrip('\n'))

32 |

33 | # Read the images listed in the txt files, resize them to a fixed size, and record the scaling ratios

34 | train_imgs=[]  # resized training images

35 | train_imgs_ratio=[]  # (height ratio, width ratio) per image

36 | val_imgs=[]

37 | val_imgs_ratio=[]

38 |

39 |

40 | h=48

41 | w=192  # target (resized) image size

42 | c=3  # number of channels

43 |

44 |

45 | for linetr in train_content:

46 |     img_path='/data/lp/project/ssd.pytorch/oripic/'+linetr.rstrip('\n')+'.jpg'

47 |     img = cv2.imread(img_path)  # read the original image

48 |     # print("image_name", str(linetr.rstrip('\n')))

49 |     # print("imgshape", img.shape)

50 |     imgresize= cv2.resize(img,(w,h))  # resize to the fixed size

51 |     ratio = np.array([imgresize.shape[0]/img.shape[0], imgresize.shape[1]/img.shape[1]],np.float32)  # height ratio, width ratio

52 |     train_imgs_ratio.append(ratio)

53 |     train_imgs.append(imgresize)

54 | train_img_arr = np.asarray(train_imgs,np.float32)  # training images as an array of shape (N, h, w, c)

55 | print(len(train_img_arr),len(train_imgs_ratio))

56 |

57 | for lineva in val_content:

58 |     img_path='/data/lp/project/ssd.pytorch/oripic/'+lineva.rstrip('\n')+'.jpg'

59 |     img = cv2.imread(img_path)  # h w c

60 |     imgresize= cv2.resize(img,(w,h))  # h w c

61 |     ratio = np.array([imgresize.shape[0]/img.shape[0], imgresize.shape[1]/img.shape[1]],np.float32)  # height ratio, width ratio

62 |     val_imgs_ratio.append(ratio)

63 |     val_imgs.append(imgresize)

64 |     # print(imgresize.shape[0], imgresize.shape[1], imgresize.shape[2])

65 | val_img_arr = np.asarray(val_imgs,np.float32)  # validation images as an array of shape (N, h, w, c)

66 |

67 | # print(len(val_img_arr),len(val_imgs_ratio))

68 |

69 | # Read the XML files listed in the txt files, extract the box coordinates (xmin, ymin, xmax, ymax; x runs along the width, y along the height), and compute the rescaled coordinates

70 | train_xml = []  # annotated box coordinates

71 | train_xml_resize = []  # box coordinates after scaling by the same ratios used to resize the image

72 | val_xml = []

73 | val_xml_resize = []

74 | for linetr in train_content:

75 |     xml_path = '/data/lp/project/ssd.pytorch/xml_zc_fz/' + linetr.rstrip(

76 |         '\n') + '.xml'

77 |     print(xml_path)

78 |     xml_DomTree = xml.dom.minidom.parse(xml_path)

79 |     xml_annotation = xml_DomTree.documentElement

80 |     xml_object = xml_annotation.getElementsByTagName('object')

81 |     xml_bndbox = xml_object[0].getElementsByTagName('bndbox')  # only the first object is used

82 |     xmin_list = xml_bndbox[0].getElementsByTagName('xmin')

83 |     xmin = int(xmin_list[0].childNodes[0].data)

84 |     ymin_list = xml_bndbox[0].getElementsByTagName('ymin')

85 |     ymin = int(ymin_list[0].childNodes[0].data)

86 |     xmax_list = xml_bndbox[0].getElementsByTagName('xmax')

87 |     xmax = int(xmax_list[0].childNodes[0].data)

88 |     ymax_list = xml_bndbox[0].getElementsByTagName('ymax')

89 |     ymax = int(ymax_list[0].childNodes[0].data)

90 |     coordinate = np.array([ymin, xmin, ymax, xmax], int)  # h w h w; np.int was removed in NumPy 1.24

91 |     train_xml.append(coordinate)  # store the XML box coordinates of the training image

92 |     # print("bbox:", coordinate)

93 | # print(len(train_xml))

94 |

95 | for lineva in val_content:

96 |     xml_path = '/data/lp/project/ssd.pytorch/xml_zc_fz/' + lineva.rstrip(

97 |         '\n') + '.xml'

98 |     print(xml_path)

99 |     xml_DomTree = xml.dom.minidom.parse(xml_path)

100 |     xml_annotation = xml_DomTree.documentElement

101 |     xml_object = xml_annotation.getElementsByTagName('object')

102 |     xml_bndbox = xml_object[0].getElementsByTagName('bndbox')

103 |     xmin_list = xml_bndbox[0].getElementsByTagName('xmin')

104 |     xmin = int(xmin_list[0].childNodes[0].data)

105 |     ymin_list = xml_bndbox[0].getElementsByTagName('ymin')

106 |     ymin = int(ymin_list[0].childNodes[0].data)

107 |     xmax_list = xml_bndbox[0].getElementsByTagName('xmax')

108 |     xmax = int(xmax_list[0].childNodes[0].data)

109 |     ymax_list = xml_bndbox[0].getElementsByTagName('ymax')

110 |     ymax = int(ymax_list[0].childNodes[0].data)

111 |     coordinate = np.array([ymin, xmin, ymax, xmax], int)  # np.int was removed in NumPy 1.24

112 |     val_xml.append(coordinate)  # store the XML box coordinates of the validation image

113 | # print(len(val_xml))

114 |

115 | for i in range(0, len(train_imgs_ratio)):

116 |     ymin_ratio = train_xml[i][0] * train_imgs_ratio[i][0]

117 |     xmin_ratio = train_xml[i][1] * train_imgs_ratio[i][1]

118 |     ymax_ratio = train_xml[i][2] * train_imgs_ratio[i][0]

119 |     xmax_ratio = train_xml[i][3] * train_imgs_ratio[i][1]

120 |     coordinate_ratio = np.array([ymin_ratio, xmin_ratio, ymax_ratio, xmax_ratio], np.float32)

121 |     train_xml_resize.append(coordinate_ratio)  # store the rescaled box coordinates of the training image

122 |

123 | for i in range(0, len(val_imgs_ratio)):

124 |     ymin_ratio = val_xml[i][0] * val_imgs_ratio[i][0]

125 |     xmin_ratio = val_xml[i][1] * val_imgs_ratio[i][1]

126 |     ymax_ratio = val_xml[i][2] * val_imgs_ratio[i][0]

127 |     xmax_ratio = val_xml[i][3] * val_imgs_ratio[i][1]

128 |     coordinate_ratio = np.array([ymin_ratio, xmin_ratio, ymax_ratio, xmax_ratio], np.float32)

129 |     val_xml_resize.append(coordinate_ratio)  # store the rescaled box coordinates of the validation image

130 |

131 |

132 | # Fetch the data in batches of batch_size

133 | # inputs: image data (after resizing)

134 | # targets: XML box coordinates (after rescaling)

135 | def getbatches(inputs=None, targets=None, batch_size=None, shuffle=False):

136 |     assert len(inputs) == len(targets)

137 |     if shuffle:

138 |         indices = np.arange(len(inputs))

139 |         np.random.shuffle(indices)

140 |     for start_idx in range(0, len(inputs) - batch_size + 1, batch_size):

141 |         if shuffle:

142 |             excerpt = indices[start_idx:start_idx + batch_size]  # simply a slice of batch_size indices

143 |         else:

144 |             excerpt = slice(start_idx, start_idx + batch_size)

145 |         yield inputs[excerpt], targets[excerpt]  # yield hands back a value much like return,

146 |         # but the next call resumes right after the yield, so this function is a generator rather than a regular function

147 |

148 |

149 | # Loss function: smooth L1 norm

150 | def abs_smooth(x):

151 |     """Smoothed absolute function. Useful to compute an L1 smooth error.

152 |

153 |     Defined as:

154 |         x^2 / 2 if abs(x) < 1

155 |         abs(x) - 0.5 if abs(x) >= 1

156 |     We use here a differentiable definition using min(x) and abs(x). Clearly

157 |     not optimal, but good enough for our purpose!

158 |     """

159 |     absx = tf.abs(x)

160 |     minx = tf.minimum(absx, 1)

161 |     r = 0.5 * ((absx - 1) * minx + absx)  # expanding this gives the squared term when abs(x) < 1

162 |     return r

163 |

164 | # Build the network

165 |

166 | input_data = tf.placeholder(tf.float32,shape=[None,h,w,c],name='x')  # input image data (resized images)

167 | input_bound = tf.placeholder(tf.float32,shape=[None,None],name='y')  # ground-truth box coordinates (rescaled XML coordinates)

168 | prob=tf.placeholder(tf.float32, name='keep_prob')

169 |

170 |

171 | # Conv layer 1 (width 192 -> 96, height 48 -> 24)

172 | #conv1 = slim.repeat(input_data, 2, slim.conv2d, 32, [3, 3], scope='conv1')

173 | conv1 = slim.conv2d(input_data, 32, [3, 3], scope='conv1')  # 32 filters, 3x3 kernel, default stride [1, 1]

174 | pool1 = slim.max_pool2d(conv1, [2, 2], scope='pool1')  # [2, 2] is the pooling window; the stride defaults to 2

175 |

176 | # Conv layer 2 (width 96 -> 48, height 24 -> 12)

177 | #conv2 = slim.repeat(pool1, 2, slim.conv2d, 64, [3, 3], scope='conv2')

178 | conv2 = slim.conv2d(pool1, 64, [3, 3], scope='conv2')

179 | pool2 = slim.max_pool2d(conv2, [2, 2], scope='pool2')

180 |

181 | # Conv layer 3 (width 48 -> 24, height 12 -> 6)

182 | #conv3 = slim.repeat(pool2, 2, slim.conv2d, 128, [3, 3], scope='conv3')

183 | conv3 = slim.conv2d(pool2, 128, [3, 3], scope='conv3')

184 | pool3 = slim.max_pool2d(conv3, [2, 2], scope='pool3')

185 |

186 | # Conv layer 4 (width 24, height 6; no pooling, so the size is unchanged)

187 | conv4 = slim.conv2d(pool3, 256 ,[3, 3], scope='conv4')

188 | dropout = tf.layers.dropout(conv4, rate=prob, training=True)  # `prob` is fed as the drop rate, despite the placeholder name 'keep_prob'

189 | #dropout = tf.nn.dropout(conv4,keep_prob)

190 | #pool4 = slim.max_pool2d(conv4, [2, 2], scope='pool4')

191 |

192 | # Conv layer 5 (width 24 -> 12, height 6 -> 3)

193 | #conv5 = slim.repeat(dropout, 2, slim.conv2d, 128, [3, 3], scope='conv5')

194 | conv5 = slim.conv2d(dropout , 128, [3, 3], scope='conv5')

195 | pool5 = slim.max_pool2d(conv5, [2, 2], scope='pool5')

196 |

197 | # Conv layer 6 (width 12 -> 6, height 3 -> 1)

198 | #conv6 = slim.repeat(pool5, 2, slim.conv2d, 64, [3, 3], scope='conv6')

199 | conv6 = slim.conv2d(pool5, 64, [3, 3], scope='conv6')

200 | pool6 = slim.max_pool2d(conv6, [2, 2], scope='pool6')

201 |

202 | reshape = tf.reshape(pool6, [-1, 6 * 1 * 64])

203 | # print(reshape.get_shape())

204 |

205 | fc = slim.fully_connected(reshape, 4, scope='fc')

206 | # print(fc)

207 | # print(input_data)

208 |

209 | '''

210 | # Conv layer 7 (width 6 -> 3, height 1 -> 1)

211 | conv7 = slim.conv2d(pool6, 32, [3, 3], scope='conv7')

212 | pool7 = slim.max_pool2d(conv7, [2, 2], scope='pool7')

213 |

214 | conv8 = slim.conv2d(pool7, 4, [3, 3], padding=None, activation_fn=None,scope='conv8')

215 | '''

216 |

217 |

218 | n_epoch =500

219 | batch_size= 32

220 | print (batch_size)

221 |

222 |

223 | weights = tf.expand_dims(1. * 1., axis=-1)

224 | loss = abs_smooth(fc - input_bound)  # difference between the fc output and the labels, passed through the smooth L1 loss

225 | # print(loss)

226 | train_op=tf.train.AdamOptimizer(learning_rate=0.001).minimize(loss)  # Adam optimizer, learning rate 0.001

227 |

228 | #correct_prediction = tf.equal(fc, input_bound)

229 | #correct_prediction = tf.equal(tf.cast(fc,tf.int32), tf.cast(input_bound, tf.int32))

230 |

231 | temp_acc = tf.abs(tf.cast(fc,tf.int32) - tf.cast(input_bound, tf.int32))  # absolute difference between the fc output and the labels

232 | compare_np = np.ones((batch_size,4), np.int32)  # threshold array of shape (batch_size, 4)

233 | compare_np[:] = 3

234 | print(compare_np)

235 | compare_tf = tf.convert_to_tensor(compare_np)

236 | # print(compare_tf)

237 | correct_prediction = tf.less(temp_acc,compare_tf)  # True wherever temp_acc is smaller than the threshold (3)

238 | # print(correct_prediction)

239 | loss = tf.div(tf.reduce_sum(loss * weights), batch_size, name='value')  # sum the loss over all elements, then divide by the batch size

240 | tf.summary.scalar('loss',loss)  # log the scalar loss for visualization

241 | # print(loss)

242 | accuracy= tf.reduce_mean(tf.cast(correct_prediction, tf.float32))  # tf.cast converts the booleans to floats

243 | #tf.summary.scalar('accuracy',accuracy)  # log the scalar accuracy for visualization

244 | # print(accuracy)

245 |

246 |

247 | # print(prob)

248 |

249 | # pb_file_path = '/data/liuan/jupyter/root/project/keras-retinanet-master/bbox_fz_zc_006000/bbox_pb_model/ocr_bboxregress_batch16_epoch10000.pb'

250 | pb_file_path = '/data/lp/project/ssd.pytorch/ocr_bbox_batch16_epoch'

251 |

252 | # Select the visible GPU

253 | gpu_no = '1' # or '1'

254 | os.environ["CUDA_VISIBLE_DEVICES"] = gpu_no

255 | # Define the TensorFlow configuration

256 | config = tf.ConfigProto()

257 | # Configure how GPU memory is allocated

258 | config.gpu_options.allow_growth = True

259 | config.gpu_options.per_process_gpu_memory_fraction = 0.6

260 | # config.gpu_options.per_process_gpu_memory_fraction = 0.8

261 |

262 |

263 | sess = tf.InteractiveSession(config=config)

264 |

265 | # ////////////////////////////////

266 | # ckpt = tf.train.get_checkpoint_state('/home/data/wangchongjin/ad_image/model_save/')

267 | # saver = tf.train.import_meta_graph(ckpt.model_checkpoint_path +'.meta') # load the graph structure stored in the .meta file

268 | # saver.restore(sess,ckpt.model_checkpoint_path)

269 | # //////////////////////////////////

270 | sess.run(tf.global_variables_initializer())

271 |

272 | merged = tf.summary.merge_all()

273 | writer = tf.summary.FileWriter(

274 | "/data/lp/project/ssd.pytorch/ocr_bbox_batch16_epoch/record_graph", sess.graph_def)

275 |

276 | # saver = tf.train.Saver() # create a tf.train.Saver for saving the model

277 |

278 |

279 | for epoch in range(n_epoch):

280 | start_time = time.time()

281 |

282 | # training

283 | train_loss, train_acc, n_batch = 0, 0, 0

284 | for x_train_a, y_train_a in getbatches(train_img_arr, train_xml_resize, batch_size, shuffle=False):

285 | _, err, acc = sess.run([train_op, loss, accuracy],

286 | feed_dict={input_data: x_train_a, input_bound: y_train_a, prob: 0.5})

287 | train_loss += err

288 | train_acc += acc

289 | n_batch += 1

290 |

291 | # print(epoch)

292 | # print(" train loss: %f" % (train_loss/ n_batch))

293 | # print(" train acc: %f" % (train_acc/ n_batch))

294 |

295 | # validation

296 | val_loss, val_acc, n_batch = 0, 0, 0

297 | for x_val_a, y_val_a in getbatches(val_img_arr, val_xml_resize, batch_size, shuffle=False):

298 | err, acc = sess.run([loss, accuracy], feed_dict={input_data: x_val_a, input_bound: y_val_a, prob: 0})

299 | # print(err)

300 | val_loss += err

301 | val_acc += acc

302 | n_batch += 1

303 |

304 | rs = sess.run([merged], feed_dict={input_data: x_val_a, input_bound: y_val_a, prob: 0})

305 | if n_batch == batch_size:  # compare integers by value; `is` tests object identity

306 | writer.add_summary(rs[0], epoch)

307 |

308 | # print(" validation loss: %f" % (val_loss/ n_batch))

309 | # print(" validation acc: %f" % (val_acc/ n_batch))

310 |

311 | # saver.save(sess, "/home/data/wangchongjin/ad_image/model_save_new/ad.ckpt")

312 | constant_graph = graph_util.convert_variables_to_constants(sess, sess.graph_def, ['fc/Relu'])

313 |

314 | with tf.gfile.FastGFile(pb_file_path + '_' + str(epoch) + '.pb', mode='wb') as f:

315 | f.write(constant_graph.SerializeToString())

316 |

317 | writer.close()

318 | sess.close()

--------------------------------------------------------------------------------
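
The training and validation loops above iterate over a `getbatches(...)` helper and average `err` and `acc` over `n_batch`. That helper is defined outside this excerpt, so the following is only a minimal sketch of how such a mini-batch generator might look. The name `iter_minibatches`, the `seed` parameter, and the choice to keep the final partial batch are assumptions for illustration, not the repository's implementation.

```python
import random


def iter_minibatches(inputs, targets, batch_size, shuffle=False, seed=None):
    """Yield (inputs, targets) chunks of at most `batch_size` items.

    Hypothetical stand-in for a `getbatches`-style helper; the final
    partial batch is kept here, which the real helper may not do.
    """
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same length")
    indices = list(range(len(inputs)))
    if shuffle:
        # A seeded RNG keeps the shuffled order reproducible.
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        chunk = indices[start:start + batch_size]
        yield [inputs[i] for i in chunk], [targets[i] for i in chunk]


def mean_over_batches(values):
    """Average per-batch metrics, as the loops do with loss / n_batch."""
    values = list(values)
    return sum(values) / len(values) if values else 0.0


batches = list(iter_minibatches(list(range(5)), list("abcde"), batch_size=2))
print(len(batches))
print(batches[-1])
```

Averaging per-batch values this way weights a short final batch the same as a full one; dropping the last partial batch avoids that at the cost of skipping a few samples.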

/data/MYSELF.py:

--------------------------------------------------------------------------------

1 | # # import os.path as osp

2 | # # import sys

3 | # # import torch

4 | # # import torch.utils.data as data

5 | # # import cv2

6 | # # import numpy as np

7 | # # if sys.version_info[0] == 2:

8 | # # import xml.etree.cElementTree as ET

9 | # # else:

10 | # # import xml.etree.ElementTree as ET

11 | # # image_sets=['2007', 'trainval'],#,('2012', 'trainval') datasets to use

12 | # # root="D:/Deep_learning/ssd.pytorch-master/data/VOCdevkit/"

13 | # # ids = list()

14 | # # for (year, name) in image_sets:

15 | # # rootpath = osp.join(root, 'VOC' + year)

16 | # # for line in open(osp.join(rootpath, 'ImageSets', 'Main', name + '.txt')):

17 | # # ids.append((rootpath, line.strip()))

18 | # # print(ids[0])

19 | # #

20 | # # img_id = ids[927] #('D:/Deep_learning/ssd.pytorch-master/data/VOCdevkit/VOC2007', '000001')

21 | # # anno = osp.join('%s', 'Annotations', '%s.xml')

22 | # # img = osp.join('%s', 'JPEGImages', '%s.jpg')

23 | # # target = ET.parse(anno % img_id).getroot() # read the XML file

24 | # # img = cv2.imread(img % img_id) # load the image

25 | # # cv2.imshow('pwn',img)

26 | # # height, width, channels = img.shape

27 | # # print(height)

28 | # # print(width)

29 | # # print(channels)

30 | # # cv2.waitKey (0)

31 | # #

32 | # # VOC_CLASSES1 = ( # always index 0

33 | # # 'aeroplane', 'bicycle', 'bird', 'boat',

34 | # # 'bottle', 'bus', 'car', 'cat', 'chair',

35 | # # 'cow', 'diningtable', 'dog', 'horse',

36 | # # 'motorbike', 'person', 'pottedplant',

37 | # # 'sheep', 'sofa', 'train', 'tvmonitor')

38 | # # VOC_CLASSES2=('ship','pwn')

39 | # #

40 | # # what=dict(zip(VOC_CLASSES1, range(len(VOC_CLASSES1))))

41 | # # what2=dict(zip(VOC_CLASSES2, range(len(VOC_CLASSES2))))

42 | # # print(what)

43 | # # print(what2)

44 | # #######################################################################################################################

45 | # # from __future__ import division

46 | # # from math import sqrt as sqrt

47 | # # from itertools import product as product

48 | # # import torch

49 | # # mean = []

50 | # # clip=True

51 | # # for i, j in product(range(5), repeat=2): # generate the grid cell coordinates of the feature map i=[0 0 0 0 0 1 1 1 1 1 2 2 2 2 2 3 3 3 3 3 4 4 4 4 4]

52 | # # f_k = 300 / 64 # 4.6875 j=[0 1 2 3 4 0 1 2 3 4 0 1 2 3 4 0 1 2 3 4 0 1 2 3 4]

53 | # # cx = (j + 0.5) / f_k #

54 | # # cy = (i + 0.5) / f_k #

55 | # # s_k = 162 / 300 # 0.54

56 | # # mean += [cx, cy, s_k, s_k]

57 | # # # aspect_ratio: 1

58 | # # # rel size: sqrt(s_k * s_(k+1))

59 | # # s_k_prime = sqrt(s_k * (213/300)) # ~0.62

60 | # # mean += [cx, cy, s_k_prime, s_k_prime]

61 | # #

62 | # # # rest of aspect ratios

63 | # # for ar in [2,3]: # 'aspect_ratios': [[2], [2, 3], [2, 3], [2, 3], [2], [2]],

64 | # # mean += [cx, cy, s_k * sqrt(ar), s_k / sqrt(ar)]

65 | # # mean += [cx, cy, s_k / sqrt(ar), s_k * sqrt(ar)]

66 | # #

67 | # # output = torch.Tensor(mean).view(-1, 4)

68 | # # if clip:

69 | # # output.clamp_(max=1, min=0)

70 | # import torch as t

71 | # list1=[t.full([2,2,2],1),t.full([2,2,2],2)]

72 | # list2=[t.full([2,2,2],3),t.full([2,2,2],4)]

73 | # list3=[t.full([2,2,2],5),t.full([2,2,2],6)]

74 | # loc=[]

75 | # conf=[]

76 | # pwn=zip(list1,list2,list3)

77 | # print(pwn)

78 | #

79 | # # for (x,l,c) in zip(list1,list2,list3):

80 | # # loc.append(l(x))

81 | # # conf.append(c(x))

82 | #

83 | # # import torch

84 | # # x = torch.tensor([[1,2,3],[4,5,6]])

85 | # # x.is_contiguous() # True

86 | # # print(x)

87 | # # print(x.transpose(0,1))

88 | # # print(x.transpose(0, 1).is_contiguous()) # False

89 | # # print(x.transpose(0, 1).contiguous().is_contiguous()) # True

90 |

91 | from data import *

92 | from utils.augmentations import SSDAugmentation

93 | from layers.modules import MultiBoxLoss

94 | from ssd import build_ssd

95 | import os

96 | import time

97 | import torch

98 | from torch.autograd import Variable

99 | import torch.nn as nn

100 | import torch.optim as optim

101 | import torch.backends.cudnn as cudnn

102 | import torch.nn.init as init

103 | import torch.utils.data as data

104 | import argparse

105 | import visdom as viz

106 |

107 | list1=torch.arange(0,8)

108 | x = torch.Tensor([[1], [2], [3]])

109 | y = x.expand(3, 4)

110 | print("x.size():", x.size())

111 | print("y.size():", y.size())

112 |

113 | print(x)

114 | print(y)

--------------------------------------------------------------------------------
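
The commented-out prior-box experiment in `MYSELF.py` (grid of 5x5 cells, `f_k = 300 / 64`, sizes 162 and 213, aspect ratios 2 and 3) can be restated as a small standalone function. This is a sketch under stated assumptions rather than the repository's `prior_box.py`: the function name `prior_boxes` and its parameters are hypothetical, and plain Python lists replace the `torch.Tensor` so that it runs without PyTorch.

```python
from itertools import product
from math import sqrt


def prior_boxes(feature_size, step, min_size, max_size, aspect_ratios,
                image_size=300, clip=True):
    """Return [cx, cy, w, h] boxes in relative coordinates for one feature map.

    Hypothetical helper mirroring the commented-out scratch code above.
    """
    f_k = image_size / step                          # cells per image side
    s_k = min_size / image_size                      # relative size of the small square box
    s_k_prime = sqrt(s_k * (max_size / image_size))  # relative size of the larger square box
    boxes = []
    for i, j in product(range(feature_size), repeat=2):
        cx = (j + 0.5) / f_k  # centre of cell (i, j), relative to image width
        cy = (i + 0.5) / f_k
        boxes.append([cx, cy, s_k, s_k])
        boxes.append([cx, cy, s_k_prime, s_k_prime])
        for ar in aspect_ratios:
            boxes.append([cx, cy, s_k * sqrt(ar), s_k / sqrt(ar)])
            boxes.append([cx, cy, s_k / sqrt(ar), s_k * sqrt(ar)])
    if clip:
        # Same effect as output.clamp_(max=1, min=0) in the scratch code.
        boxes = [[min(max(v, 0.0), 1.0) for v in box] for box in boxes]
    return boxes


boxes = prior_boxes(feature_size=5, step=64, min_size=162, max_size=213,
                    aspect_ratios=[2, 3])
print(len(boxes))  # 5 * 5 cells * 6 boxes per cell = 150
```

Each cell contributes two square boxes plus two boxes per aspect ratio, so with ratios `[2, 3]` there are six boxes per cell.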

/data/VOCdevkit/VOC2007/ImageSets/Main/test.txt:

--------------------------------------------------------------------------------

1 | 000009

2 | 000010

3 | 000012

4 | 000013

5 | 000014

6 | 000015

7 | 000023

8 | 000024

9 | 000028

10 | 000029

11 | 000031

12 | 000032

13 | 000034

14 | 000040

15 | 000053

16 | 000059

17 | 000067

18 | 000074

19 | 000075

20 | 000076

21 | 000090

22 | 000101

23 | 000105

24 | 000107

25 | 000116

26 | 000120

27 | 000131

28 | 000133

29 | 000135

30 | 000136

31 | 000137

32 | 000139

33 | 000149

34 | 000157

35 | 000163

36 | 000164

37 | 000181

38 | 000183

39 | 000187

40 | 000192

41 | 000197

42 | 000203

43 | 000219

44 | 000220

45 | 000229

46 | 000230

47 | 000231

48 | 000235

49 | 000237

50 | 000241

51 | 000244

52 | 000248

53 | 000255

54 | 000256

55 | 000262

56 | 000263

57 | 000264

58 | 000266

59 | 000268

60 | 000269

61 | 000274

62 | 000275

63 | 000282

64 | 000290

65 | 000291

66 | 000303

67 | 000306

68 | 000319

69 | 000334

70 | 000340

71 | 000346

72 | 000351

73 | 000352

74 | 000360

75 | 000366

76 | 000369

77 | 000384

78 | 000390

79 | 000392

80 | 000398

81 | 000408

82 | 000409

83 | 000423

84 | 000430

85 | 000431

86 | 000440

87 | 000444

88 | 000450

89 | 000454

90 | 000462

91 | 000470

92 | 000472

93 | 000486

94 | 000487

95 | 000488

96 | 000489

97 | 000499

98 | 000501

99 | 000505

100 | 000506

101 | 000507

102 | 000513

103 | 000530

104 | 000533

105 | 000540

106 | 000542

107 | 000544

108 | 000552

109 | 000561

110 | 000564

111 | 000567

112 | 000568

113 | 000569

114 | 000571

115 | 000574

116 | 000576

117 | 000584

118 | 000590

119 | 000593

120 | 000598

121 | 000602

122 | 000604

123 | 000605

124 | 000619

125 | 000620

126 | 000631

127 | 000635

128 | 000649

129 | 000661

130 | 000672

131 | 000676

132 | 000694

133 | 000697

134 | 000708

135 | 000711

136 | 000712

137 | 000713

138 | 000717

139 | 000727

140 | 000729

141 | 000731

142 | 000732

143 | 000739

144 | 000743

145 | 000746

146 | 000748

147 | 000753

148 | 000754

149 | 000756

150 | 000764

151 | 000769

152 | 000774

153 | 000775

154 | 000786

155 | 000793

156 | 000796

157 | 000802

158 | 000808

159 | 000809

160 | 000814

161 | 000819

162 | 000821

163 | 000823

164 | 000846

165 | 000848

166 | 000858

167 | 000863

168 | 000867

169 | 000869

170 | 000875

171 | 000880

172 | 000883

173 | 000884

174 | 000888

175 | 000893

176 | 000894

177 | 000897

178 | 000898

179 | 000900

180 | 000910

181 | 000912

182 | 000918

183 | 000919

184 | 000920

185 | 000921

186 | 000922

187 | 000926

188 | 000946

189 | 000947

190 | 000954

191 | 000960

192 | 000961

193 | 000967

194 | 000971

195 | 000977

196 | 000978

197 | 000982

198 | 000984

199 | 000986

200 | 000988

201 | 000989

202 | 000996

203 | 000997

204 | 001006

205 | 001007

206 | 001020

207 | 001029

208 | 001036

209 | 001043

210 | 001044

211 | 001047

212 | 001057

213 | 001059

214 | 001062

215 | 001068

216 | 001069

217 | 001075

218 | 001076

219 | 001078

220 | 001080

221 | 001084

222 | 001091

223 | 001096

224 | 001099

225 | 001104

226 | 001114

227 | 001127

228 | 001129

229 | 001130

230 | 001139

231 | 001146

232 | 001147

233 |

--------------------------------------------------------------------------------

/data/VOCdevkit/VOC2007/ImageSets/Main/train.txt:

--------------------------------------------------------------------------------

1 | 000001

2 | 000002

3 | 000003

4 | 000004

5 | 000005

6 | 000006

7 | 000007

8 | 000008

9 | 000011

10 | 000016

11 | 000018

12 | 000019

13 | 000020

14 | 000021

15 | 000025

16 | 000026

17 | 000027

18 | 000030

19 | 000033

20 | 000035

21 | 000036

22 | 000037

23 | 000038

24 | 000039

25 | 000041

26 | 000042

27 | 000043

28 | 000044

29 | 000045

30 | 000046

31 | 000047

32 | 000048

33 | 000049

34 | 000050

35 | 000051

36 | 000052

37 | 000054

38 | 000056

39 | 000057

40 | 000058

41 | 000060

42 | 000061

43 | 000062

44 | 000063

45 | 000064

46 | 000065

47 | 000066

48 | 000069

49 | 000070

50 | 000071

51 | 000072

52 | 000073

53 | 000077

54 | 000078

55 | 000079

56 | 000080

57 | 000081

58 | 000082

59 | 000083

60 | 000084

61 | 000085

62 | 000086

63 | 000087

64 | 000088

65 | 000089

66 | 000091

67 | 000093

68 | 000094

69 | 000095

70 | 000096

71 | 000097

72 | 000099

73 | 000100

74 | 000102

75 | 000103

76 | 000104

77 | 000106

78 | 000108

79 | 000109

80 | 000110

81 | 000111

82 | 000112

83 | 000113

84 | 000114

85 | 000115

86 | 000117

87 | 000121

88 | 000122

89 | 000123

90 | 000125

91 | 000126

92 | 000127

93 | 000128

94 | 000129

95 | 000130

96 | 000132

97 | 000134

98 | 000138

99 | 000140

100 | 000142

101 | 000143

102 | 000144

103 | 000145

104 | 000146

105 | 000147

106 | 000150

107 | 000151

108 | 000152

109 | 000153

110 | 000154

111 | 000156

112 | 000158

113 | 000159

114 | 000160

115 | 000162

116 | 000165

117 | 000166

118 | 000167

119 | 000168

120 | 000169

121 | 000170

122 | 000171

123 | 000172

124 | 000173

125 | 000174

126 | 000175

127 | 000176

128 | 000177

129 | 000178

130 | 000179

131 | 000180

132 | 000182

133 | 000184

134 | 000185

135 | 000186

136 | 000188

137 | 000189

138 | 000190

139 | 000191

140 | 000193

141 | 000194

142 | 000195

143 | 000196

144 | 000198

145 | 000199

146 | 000200

147 | 000201

148 | 000202

149 | 000204

150 | 000205

151 | 000206

152 | 000207

153 | 000208

154 | 000209

155 | 000210

156 | 000213

157 | 000215

158 | 000216

159 | 000217

160 | 000218

161 | 000221

162 | 000223

163 | 000225

164 | 000226

165 | 000227

166 | 000228

167 | 000232

168 | 000233

169 | 000234

170 | 000236

171 | 000238

172 | 000239

173 | 000240

174 | 000242

175 | 000243

176 | 000245

177 | 000246

178 | 000247

179 | 000249

180 | 000250

181 | 000251

182 | 000252

183 | 000253

184 | 000257

185 | 000258

186 | 000260

187 | 000261

188 | 000265

189 | 000267

190 | 000270

191 | 000271

192 | 000272

193 | 000273

194 | 000276

195 | 000277

196 | 000278

197 | 000279

198 | 000280

199 | 000281

200 | 000283

201 | 000284

202 | 000285

203 | 000286

204 | 000287

205 | 000288

206 | 000289

207 | 000292

208 | 000293

209 | 000294

210 | 000295

211 | 000296

212 | 000298

213 | 000299

214 | 000301

215 | 000302

216 | 000309

217 | 000310

218 | 000311

219 | 000312

220 | 000313

221 | 000314

222 | 000316

223 | 000317

224 | 000318

225 | 000320

226 | 000321

227 | 000322

228 | 000323

229 | 000324

230 | 000325

231 | 000326

232 | 000327

233 | 000328

234 | 000329

235 | 000331

236 | 000332

237 | 000335

238 | 000336

239 | 000337

240 | 000338

241 | 000339

242 | 000341

243 | 000342

244 | 000343

245 | 000344

246 | 000345

247 | 000347

248 | 000348

249 | 000349

250 | 000350

251 | 000353

252 | 000354

253 | 000355

254 | 000356

255 | 000357

256 | 000358

257 | 000359

258 | 000361

259 | 000362

260 | 000363

261 | 000364

262 | 000365

263 | 000367

264 | 000368

265 | 000370

266 | 000371

267 | 000372

268 | 000373

269 | 000374

270 | 000376

271 | 000377

272 | 000379

273 | 000380

274 | 000381

275 | 000383

276 | 000385

277 | 000386

278 | 000387

279 | 000388

280 | 000389

281 | 000391

282 | 000393

283 | 000394

284 | 000395

285 | 000396

286 | 000397

287 | 000399

288 | 000400

289 | 000401

290 | 000402

291 | 000403

292 | 000405

293 | 000406

294 | 000407

295 | 000410

296 | 000411

297 | 000412

298 | 000413

299 | 000414

300 | 000415

301 | 000416

302 | 000417

303 | 000418

304 | 000419

305 | 000420

306 | 000421

307 | 000422

308 | 000424

309 | 000426

310 | 000427

311 | 000428

312 | 000429

313 | 000432

314 | 000433

315 | 000434

316 | 000435

317 | 000438

318 | 000439

319 | 000441

320 | 000443

321 | 000445

322 | 000446

323 | 000447

324 | 000448

325 | 000449

326 | 000451

327 | 000452

328 | 000453

329 | 000455

330 | 000456

331 | 000457

332 | 000458

333 | 000459

334 | 000460

335 | 000463

336 | 000464

337 | 000465

338 | 000466

339 | 000467

340 | 000468

341 | 000469

342 | 000471

343 | 000473

344 | 000474

345 | 000475

346 | 000476

347 | 000477

348 | 000478

349 | 000479

350 | 000480

351 | 000482

352 | 000483

353 | 000484

354 | 000485

355 | 000490

356 | 000492

357 | 000493

358 | 000494

359 | 000495

360 | 000496

361 | 000497

362 | 000498

363 | 000500

364 | 000502

365 | 000503

366 | 000509

367 | 000510

368 | 000511

369 | 000512

370 | 000514

371 | 000515

372 | 000516

373 | 000517

374 | 000518

375 | 000520

376 | 000521

377 | 000522

378 | 000523

379 | 000525

380 | 000527

381 | 000528

382 | 000529

383 | 000531

384 | 000532

385 | 000534

386 | 000535

387 | 000536

388 | 000537

389 | 000538

390 | 000539

391 | 000541

392 | 000543

393 | 000545

394 | 000546

395 | 000547

396 | 000548

397 | 000549

398 | 000550

399 | 000551

400 | 000553

401 | 000554

402 | 000555

403 | 000556

404 | 000557

405 | 000558

406 | 000559

407 | 000560

408 | 000562

409 | 000563

410 | 000565

411 | 000566

412 | 000570

413 | 000572

414 | 000573

415 | 000575

416 | 000577

417 | 000578

418 | 000579

419 | 000580

420 | 000582

421 | 000583

422 | 000585

423 | 000586

424 | 000587

425 | 000588

426 | 000589

427 | 000591

428 | 000592

429 | 000594

430 | 000595

431 | 000596

432 | 000597

433 | 000599

434 | 000600

435 | 000601

436 | 000606

437 | 000607

438 | 000608

439 | 000609

440 | 000610

441 | 000611

442 | 000612

443 | 000613

444 | 000614

445 | 000615

446 | 000616

447 | 000617

448 | 000618

449 | 000621

450 | 000622

451 | 000623

452 | 000624

453 | 000625

454 | 000626

455 | 000627

456 | 000628

457 | 000630

458 | 000632

459 | 000633

460 | 000634

461 | 000636

462 | 000637

463 | 000638

464 | 000641

465 | 000642

466 | 000643

467 | 000645

468 | 000647

469 | 000648

470 | 000650

471 | 000651

472 | 000652

473 | 000653

474 | 000654

475 | 000656

476 | 000657

477 | 000658

478 | 000659

479 | 000663

480 | 000664

481 | 000665

482 | 000666

483 | 000667

484 | 000668

485 | 000669

486 | 000670

487 | 000671

488 | 000673

489 | 000674

490 | 000675

491 | 000677

492 | 000678

493 | 000679

494 | 000680

495 | 000681

496 | 000682

497 | 000683

498 | 000684

499 | 000685

500 | 000686

501 | 000687

502 | 000688

503 | 000689

504 | 000690

505 | 000691

506 | 000692

507 | 000693

508 | 000696

509 | 000698

510 | 000699

511 | 000700

512 | 000701

513 | 000702

514 | 000703

515 | 000704

516 | 000705

517 | 000706

518 | 000707

519 | 000709

520 | 000710

521 | 000714

522 | 000715

523 | 000718

524 | 000719

525 | 000720

526 | 000721

527 | 000722

528 | 000723

529 | 000724

530 | 000725

531 | 000726

532 | 000728

533 | 000730

534 | 000733

535 | 000734

536 | 000736

537 | 000738

538 | 000740

539 | 000741

540 | 000742

541 | 000744

542 | 000745

543 | 000747

544 | 000749

545 | 000750

546 | 000751

547 | 000755

548 | 000757

549 | 000758

550 | 000759

551 | 000760

552 | 000761

553 | 000762

554 | 000763

555 | 000765

556 | 000766

557 | 000767

558 | 000768

559 | 000770

560 | 000772

561 | 000776

562 | 000777

563 | 000778

564 | 000779

565 | 000780

566 | 000781

567 | 000782

568 | 000783

569 | 000784

570 | 000785

571 | 000787

572 | 000788

573 | 000789

574 | 000790

575 | 000791

576 | 000792

577 | 000794

578 | 000795

579 | 000797

580 | 000798

581 | 000799

582 | 000800

583 | 000801

584 | 000803

585 | 000804

586 | 000805

587 | 000806

588 | 000811

589 | 000813

590 | 000815

591 | 000816

592 | 000817

593 | 000818

594 | 000822

595 | 000824

596 | 000825

597 | 000826

598 | 000828

599 | 000829

600 | 000830

601 | 000831

602 | 000832

603 | 000833

604 | 000834

605 | 000835

606 | 000836

607 | 000837

608 | 000838

609 | 000839

610 | 000840

611 | 000841

612 | 000842

613 | 000843

614 | 000844

615 | 000845

616 | 000847

617 | 000849

618 | 000850

619 | 000851

620 | 000852

621 | 000853

622 | 000854

623 | 000855

624 | 000856

625 | 000857

626 | 000859

627 | 000860

628 | 000861

629 | 000862

630 | 000864

631 | 000865

632 | 000866

633 | 000868

634 | 000871

635 | 000872

636 | 000873

637 | 000874

638 | 000876

639 | 000877

640 | 000881

641 | 000885

642 | 000886

643 | 000887

644 | 000889

645 | 000890

646 | 000892

647 | 000895

648 | 000896

649 | 000899

650 | 000901

651 | 000902

652 | 000903

653 | 000904

654 | 000905

655 | 000907

656 | 000908

657 | 000913

658 | 000914

659 | 000915

660 | 000916

661 | 000917

662 | 000923

663 | 000924

664 | 000927

665 | 000929

666 | 000930

667 | 000931

668 | 000932

669 | 000933

670 | 000934

671 | 000935

672 | 000936

673 | 000937

674 | 000938

675 | 000939

676 | 000940

677 | 000941

678 | 000942

679 | 000943

680 | 000944

681 | 000945

682 | 000948

683 | 000949

684 | 000950

685 | 000951

686 | 000952

687 | 000953

688 | 000955

689 | 000956

690 | 000957

691 | 000958

692 | 000959

693 | 000962

694 | 000963

695 | 000964

696 | 000966

697 | 000969

698 | 000970

699 | 000972

700 | 000973

701 | 000974

702 | 000975

703 | 000976

704 | 000979

705 | 000980

706 | 000981

707 | 000983

708 | 000985

709 | 000987

710 | 000990

711 | 000992

712 | 000993

713 | 000994

714 | 000995

715 | 000998

716 | 001000

717 | 001001

718 | 001002

719 | 001003

720 | 001004

721 | 001005

722 | 001008

723 | 001009

724 | 001010

725 | 001012

726 | 001013

727 | 001014

728 | 001015

729 | 001016

730 | 001017

731 | 001018

732 | 001019

733 | 001021

734 | 001022

735 | 001024

736 | 001025

737 | 001026

738 | 001027

739 | 001028

740 | 001030

741 | 001032

742 | 001033

743 | 001034

744 | 001035

745 | 001037

746 | 001038

747 | 001039

748 | 001040

749 | 001041

750 | 001042

751 | 001046

752 | 001048

753 | 001049

754 | 001051

755 | 001052

756 | 001053

757 | 001055

758 | 001056

759 | 001058

760 | 001060

761 | 001061

762 | 001063

763 | 001064

764 | 001065

765 | 001066

766 | 001067

767 | 001070

768 | 001071

769 | 001072

770 | 001074

771 | 001077

772 | 001079

773 | 001081

774 | 001082

775 | 001083

776 | 001085

777 | 001086

778 | 001087

779 | 001088

780 | 001089

781 | 001090

782 | 001092

783 | 001093

784 | 001094

785 | 001095

786 | 001097

787 | 001098

788 | 001101

789 | 001102

790 | 001103

791 | 001105

792 | 001107

793 | 001108

794 | 001109

795 | 001110

796 | 001111

797 | 001112

798 | 001113

799 | 001115

800 | 001116

801 | 001117

802 | 001118

803 | 001119

804 | 001120

805 | 001121

806 | 001122

807 | 001123

808 | 001124

809 | 001125

810 | 001126

811 | 001128

812 | 001131

813 | 001132

814 | 001133

815 | 001134

816 | 001135

817 | 001136

818 | 001137

819 | 001138

820 | 001140

821 | 001141

822 | 001142

823 | 001143

824 | 001144

825 | 001145

826 | 001148

827 | 001149

828 | 001150

829 | 001151

830 | 001152

831 | 001154

832 | 001156

833 | 001158

834 | 001159

835 | 001160

836 |

--------------------------------------------------------------------------------

/data/VOCdevkit/VOC2007/ImageSets/Main/trainval.txt:

--------------------------------------------------------------------------------

1 | 000001

2 | 000002

3 | 000003

4 | 000004

5 | 000005

6 | 000006

7 | 000007

8 | 000008

9 | 000011

10 | 000016

11 | 000017

12 | 000018

13 | 000019

14 | 000020

15 | 000021

16 | 000022

17 | 000025

18 | 000026

19 | 000027

20 | 000030

21 | 000033

22 | 000035

23 | 000036

24 | 000037

25 | 000038

26 | 000039

27 | 000041

28 | 000042

29 | 000043

30 | 000044

31 | 000045

32 | 000046

33 | 000047

34 | 000048

35 | 000049

36 | 000050

37 | 000051

38 | 000052

39 | 000054

40 | 000055

41 | 000056

42 | 000057

43 | 000058

44 | 000060

45 | 000061

46 | 000062

47 | 000063

48 | 000064

49 | 000065

50 | 000066

51 | 000068

52 | 000069

53 | 000070

54 | 000071

55 | 000072

56 | 000073

57 | 000077

58 | 000078

59 | 000079

60 | 000080

61 | 000081

62 | 000082

63 | 000083

64 | 000084

65 | 000085

66 | 000086

67 | 000087

68 | 000088

69 | 000089

70 | 000091

71 | 000092

72 | 000093

73 | 000094

74 | 000095

75 | 000096

76 | 000097

77 | 000098

78 | 000099

79 | 000100

80 | 000102

81 | 000103

82 | 000104

83 | 000106

84 | 000108

85 | 000109

86 | 000110

87 | 000111

88 | 000112

89 | 000113

90 | 000114

91 | 000115

92 | 000117

93 | 000118

94 | 000119

95 | 000121

96 | 000122

97 | 000123

98 | 000124

99 | 000125

100 | 000126

101 | 000127

102 | 000128

103 | 000129

104 | 000130

105 | 000132

106 | 000134

107 | 000138

108 | 000140

109 | 000141

110 | 000142

111 | 000143

112 | 000144

113 | 000145

114 | 000146

115 | 000147

116 | 000148

117 | 000150

118 | 000151

119 | 000152

120 | 000153

121 | 000154

122 | 000155

123 | 000156

124 | 000158

125 | 000159

126 | 000160

127 | 000161

128 | 000162

129 | 000165

130 | 000166

131 | 000167

132 | 000168

133 | 000169

134 | 000170

135 | 000171

136 | 000172

137 | 000173

138 | 000174

139 | 000175

140 | 000176

141 | 000177

142 | 000178

143 | 000179

144 | 000180

145 | 000182

146 | 000184

147 | 000185

148 | 000186

149 | 000188

150 | 000189

151 | 000190

152 | 000191

153 | 000193

154 | 000194

155 | 000195

156 | 000196

157 | 000198

158 | 000199

159 | 000200

160 | 000201

161 | 000202

162 | 000204

163 | 000205

164 | 000206

165 | 000207

166 | 000208

167 | 000209

168 | 000210

169 | 000211

170 | 000212

171 | 000213

172 | 000214

173 | 000215

174 | 000216

175 | 000217

176 | 000218

177 | 000221

178 | 000222

179 | 000223

180 | 000224

181 | 000225

182 | 000226

183 | 000227

184 | 000228

185 | 000232

186 | 000233

187 | 000234

188 | 000236

189 | 000238

190 | 000239

191 | 000240

192 | 000242

193 | 000243

194 | 000245

195 | 000246

196 | 000247

197 | 000249

198 | 000250

199 | 000251

200 | 000252

201 | 000253

202 | 000254

203 | 000257

204 | 000258

205 | 000259

206 | 000260

207 | 000261

208 | 000265

209 | 000267

210 | 000270

211 | 000271

212 | 000272

213 | 000273

214 | 000276

215 | 000277

216 | 000278

217 | 000279

218 | 000280

219 | 000281

220 | 000283

221 | 000284

222 | 000285

223 | 000286

224 | 000287

225 | 000288

226 | 000289

227 | 000292

228 | 000293

229 | 000294

230 | 000295

231 | 000296

232 | 000297

233 | 000298

234 | 000299

235 | 000300

236 | 000301

237 | 000302

238 | 000304

239 | 000305

240 | 000307

241 | 000308

242 | 000309

243 | 000310

244 | 000311

245 | 000312

246 | 000313

247 | 000314

248 | 000315

249 | 000316

250 | 000317

251 | 000318

252 | 000320

253 | 000321

254 | 000322

255 | 000323

256 | 000324

257 | 000325

258 | 000326

259 | 000327

260 | 000328

261 | 000329

262 | 000330

263 | 000331

264 | 000332

265 | 000333

266 | 000335

267 | 000336

268 | 000337

269 | 000338

270 | 000339

271 | 000341

272 | 000342

273 | 000343

274 | 000344

275 | 000345

276 | 000347

277 | 000348

278 | 000349

279 | 000350

280 | 000353

281 | 000354

282 | 000355

283 | 000356

284 | 000357

285 | 000358

286 | 000359

287 | 000361

288 | 000362

289 | 000363

290 | 000364

291 | 000365

292 | 000367

293 | 000368

294 | 000370

295 | 000371

296 | 000372

297 | 000373

298 | 000374

299 | 000375

300 | 000376

301 | 000377

302 | 000378

303 | 000379

304 | 000380

305 | 000381

306 | 000382

307 | 000383

308 | 000385

309 | 000386

310 | 000387

311 | 000388

312 | 000389

313 | 000391

314 | 000393

315 | 000394

316 | 000395

317 | 000396

318 | 000397

319 | 000399

320 | 000400

321 | 000401

322 | 000402

323 | 000403

324 | 000404

325 | 000405

326 | 000406

327 | 000407

328 | 000410

329 | 000411

330 | 000412

331 | 000413

332 | 000414

333 | 000415

334 | 000416

335 | 000417

336 | 000418

337 | 000419

338 | 000420

339 | 000421

340 | 000422

341 | 000424

342 | 000425

343 | 000426

344 | 000427

345 | 000428

346 | 000429

347 | 000432

348 | 000433

349 | 000434

350 | 000435

351 | 000436

352 | 000437

353 | 000438

354 | 000439

355 | 000441

356 | 000442

357 | 000443

358 | 000445

359 | 000446

360 | 000447

361 | 000448

362 | 000449

363 | 000451

364 | 000452

365 | 000453

366 | 000455

367 | 000456

368 | 000457

369 | 000458

370 | 000459

371 | 000460

372 | 000461

373 | 000463

374 | 000464

375 | 000465

376 | 000466

377 | 000467

378 | 000468

379 | 000469

380 | 000471

381 | 000473

382 | 000474

383 | 000475

384 | 000476

385 | 000477

386 | 000478

387 | 000479

388 | 000480

389 | 000481

390 | 000482

391 | 000483

392 | 000484

393 | 000485

394 | 000490

395 | 000491

396 | 000492

397 | 000493

398 | 000494

399 | 000495

400 | 000496

401 | 000497

402 | 000498

403 | 000500

404 | 000502

405 | 000503

406 | 000504

407 | 000508

408 | 000509

409 | 000510

410 | 000511

411 | 000512

412 | 000514

413 | 000515

414 | 000516

415 | 000517

416 | 000518

417 | 000519

418 | 000520

419 | 000521

420 | 000522

421 | 000523

422 | 000524

423 | 000525

424 | 000526

425 | 000527

426 | 000528

427 | 000529

428 | 000531

429 | 000532

430 | 000534

431 | 000535

432 | 000536

433 | 000537

434 | 000538

435 | 000539

436 | 000541

437 | 000543

438 | 000545

439 | 000546

440 | 000547

441 | 000548

442 | 000549

443 | 000550

444 | 000551

445 | 000553

446 | 000554

447 | 000555

448 | 000556

449 | 000557

450 | 000558

451 | 000559

452 | 000560

453 | 000562

454 | 000563

455 | 000565

456 | 000566

457 | 000570

458 | 000572

459 | 000573

460 | 000575

461 | 000577

462 | 000578

463 | 000579

464 | 000580

465 | 000581

466 | 000582

467 | 000583

468 | 000585

469 | 000586

470 | 000587

471 | 000588

472 | 000589

473 | 000591

474 | 000592

475 | 000594

476 | 000595

477 | 000596

478 | 000597

479 | 000599

480 | 000600

481 | 000601

482 | 000603

483 | 000606

484 | 000607

485 | 000608

486 | 000609

487 | 000610

488 | 000611

489 | 000612

490 | 000613

491 | 000614

492 | 000615

493 | 000616

494 | 000617

495 | 000618

496 | 000621

497 | 000622

498 | 000623

499 | 000624

500 | 000625

501 | 000626

502 | 000627

503 | 000628

504 | 000629

505 | 000630

506 | 000632

507 | 000633

508 | 000634

509 | 000636

510 | 000637

511 | 000638

512 | 000639

513 | 000640

514 | 000641

515 | 000642

516 | 000643

517 | 000644

518 | 000645

519 | 000646

520 | 000647

521 | 000648

522 | 000650

523 | 000651

524 | 000652

525 | 000653

526 | 000654

527 | 000655

528 | 000656

529 | 000657

530 | 000658

531 | 000659

532 | 000660

533 | 000662

534 | 000663

535 | 000664

536 | 000665

537 | 000666

538 | 000667

539 | 000668

540 | 000669

541 | 000670

542 | 000671

543 | 000673

544 | 000674

545 | 000675

546 | 000677

547 | 000678

548 | 000679

549 | 000680

550 | 000681

551 | 000682

552 | 000683

553 | 000684

554 | 000685

555 | 000686

556 | 000687

557 | 000688

558 | 000689

559 | 000690

560 | 000691

561 | 000692

562 | 000693

563 | 000695

564 | 000696

565 | 000698

566 | 000699

567 | 000700

568 | 000701

569 | 000702

570 | 000703

571 | 000704

572 | 000705

573 | 000706

574 | 000707

575 | 000709

576 | 000710

577 | 000714

578 | 000715

579 | 000716

580 | 000718

581 | 000719

582 | 000720

583 | 000721

584 | 000722

585 | 000723

586 | 000724

587 | 000725

588 | 000726

589 | 000728

590 | 000730

591 | 000733

592 | 000734

593 | 000735

594 | 000736

595 | 000737

596 | 000738

597 | 000740

598 | 000741

599 | 000742

600 | 000744

601 | 000745

602 | 000747

603 | 000749

604 | 000750

605 | 000751

606 | 000752

607 | 000755

608 | 000757

609 | 000758

610 | 000759

611 | 000760

612 | 000761

613 | 000762

614 | 000763

615 | 000765

616 | 000766

617 | 000767

618 | 000768

619 | 000770

620 | 000771

621 | 000772

622 | 000773

623 | 000776

624 | 000777

625 | 000778

626 | 000779

627 | 000780

628 | 000781

629 | 000782

630 | 000783

631 | 000784

632 | 000785

633 | 000787

634 | 000788

635 | 000789

636 | 000790

637 | 000791

638 | 000792

639 | 000794

640 | 000795

641 | 000797

642 | 000798

643 | 000799

644 | 000800

645 | 000801

646 | 000803

647 | 000804

648 | 000805

649 | 000806

650 | 000807

651 | 000810

652 | 000811

653 | 000812

654 | 000813

655 | 000815

656 | 000816

657 | 000817

658 | 000818

659 | 000820

660 | 000822

661 | 000824

662 | 000825

663 | 000826

664 | 000827

665 | 000828

666 | 000829

667 | 000830

668 | 000831

669 | 000832

670 | 000833

671 | 000834

672 | 000835

673 | 000836

674 | 000837

675 | 000838

676 | 000839

677 | 000840

678 | 000841

679 | 000842

680 | 000843

681 | 000844

682 | 000845

683 | 000847

684 | 000849

685 | 000850

686 | 000851

687 | 000852

688 | 000853

689 | 000854

690 | 000855

691 | 000856

692 | 000857

693 | 000859

694 | 000860

695 | 000861

696 | 000862

697 | 000864

698 | 000865

699 | 000866

700 | 000868

701 | 000870

702 | 000871

703 | 000872

704 | 000873

705 | 000874

706 | 000876

707 | 000877

708 | 000878

709 | 000879

710 | 000881

711 | 000882

712 | 000885

713 | 000886

714 | 000887

715 | 000889

716 | 000890

717 | 000891

718 | 000892

719 | 000895

720 | 000896

721 | 000899

722 | 000901

723 | 000902

724 | 000903

725 | 000904

726 | 000905

727 | 000906

728 | 000907

729 | 000908

730 | 000909

731 | 000911

732 | 000913

733 | 000914

734 | 000915

735 | 000916

736 | 000917

737 | 000923

738 | 000924

739 | 000925

740 | 000927

741 | 000928

742 | 000929

743 | 000930

744 | 000931

745 | 000932

746 | 000933

747 | 000934

748 | 000935

749 | 000936

750 | 000937

751 | 000938

752 | 000939

753 | 000940

754 | 000941

755 | 000942

756 | 000943

757 | 000944

758 | 000945

759 | 000948

760 | 000949

761 | 000950

762 | 000951

763 | 000952

764 | 000953

765 | 000955

766 | 000956

767 | 000957

768 | 000958

769 | 000959

770 | 000962

771 | 000963

772 | 000964

773 | 000965

774 | 000966

775 | 000968

776 | 000969

777 | 000970

778 | 000972

779 | 000973

780 | 000974

781 | 000975

782 | 000976

783 | 000979

784 | 000980

785 | 000981

786 | 000983

787 | 000985

788 | 000987

789 | 000990

790 | 000991

791 | 000992

792 | 000993

793 | 000994