├── .gitattributes

├── .gitignore

├── .gitmodules

├── Dockerfile

├── Dockerfile-for-Mainland-China

├── LICENSE

├── README-zh.md

├── README.md

├── assets

├── favicon.ico

├── gitconfig-cn

└── tensorboard-example.png

├── config

├── default.toml

├── lora.toml

├── presets

│ └── example.toml

└── sample_prompts.txt

├── gui.py

├── huggingface

├── accelerate

│ └── default_config.yaml

└── hub

│ └── version.txt

├── install-cn.ps1

├── install.bash

├── install.ps1

├── interrogate.ps1

├── logs

└── .keep

├── mikazuki

├── app

│ ├── __init__.py

│ ├── api.py

│ ├── application.py

│ ├── config.py

│ ├── models.py

│ └── proxy.py

├── global.d.ts

├── hook

│ ├── i18n.json

│ └── sitecustomize.py

├── launch_utils.py

├── log.py

├── process.py

├── schema

│ ├── dreambooth.ts

│ ├── flux-lora.ts

│ ├── lora-basic.ts

│ ├── lora-master.ts

│ ├── lumina2-lora.ts

│ ├── sd3-lora.ts

│ └── shared.ts

├── scripts

│ ├── fix_scripts_python_executable_path.py

│ └── torch_check.py

├── tagger

│ ├── dbimutils.py

│ ├── format.py

│ └── interrogator.py

├── tasks.py

├── tsconfig.json

└── utils

│ ├── devices.py

│ ├── tk_window.py

│ └── train_utils.py

├── output

└── .keep

├── requirements.txt

├── resize.ps1

├── run.ipynb

├── run_gui.ps1

├── run_gui.sh

├── run_gui_cn.sh

├── scripts

├── dev

│ ├── .gitignore

│ ├── COMMIT_ID

│ ├── LICENSE.md

│ ├── README-ja.md

│ ├── README.md

│ ├── XTI_hijack.py

│ ├── _typos.toml

│ ├── fine_tune.py

│ ├── finetune

│ │ ├── blip

│ │ │ ├── blip.py

│ │ │ ├── med.py

│ │ │ ├── med_config.json

│ │ │ └── vit.py

│ │ ├── clean_captions_and_tags.py

│ │ ├── hypernetwork_nai.py

│ │ ├── make_captions.py

│ │ ├── make_captions_by_git.py

│ │ ├── merge_captions_to_metadata.py

│ │ ├── merge_dd_tags_to_metadata.py

│ │ ├── prepare_buckets_latents.py

│ │ └── tag_images_by_wd14_tagger.py

│ ├── flux_minimal_inference.py

│ ├── flux_train.py

│ ├── flux_train_control_net.py

│ ├── flux_train_network.py

│ ├── gen_img.py

│ ├── gen_img_diffusers.py

│ ├── library

│ │ ├── __init__.py

│ │ ├── adafactor_fused.py

│ │ ├── attention_processors.py

│ │ ├── config_util.py

│ │ ├── custom_offloading_utils.py

│ │ ├── custom_train_functions.py

│ │ ├── deepspeed_utils.py

│ │ ├── device_utils.py

│ │ ├── flux_models.py

│ │ ├── flux_train_utils.py

│ │ ├── flux_utils.py

│ │ ├── huggingface_util.py

│ │ ├── hypernetwork.py

│ │ ├── ipex

│ │ │ ├── __init__.py

│ │ │ ├── attention.py

│ │ │ ├── diffusers.py

│ │ │ ├── gradscaler.py

│ │ │ └── hijacks.py

│ │ ├── lpw_stable_diffusion.py

│ │ ├── model_util.py

│ │ ├── original_unet.py

│ │ ├── sai_model_spec.py

│ │ ├── sd3_models.py

│ │ ├── sd3_train_utils.py

│ │ ├── sd3_utils.py

│ │ ├── sdxl_lpw_stable_diffusion.py

│ │ ├── sdxl_model_util.py

│ │ ├── sdxl_original_control_net.py

│ │ ├── sdxl_original_unet.py

│ │ ├── sdxl_train_util.py

│ │ ├── slicing_vae.py

│ │ ├── strategy_base.py

│ │ ├── strategy_flux.py

│ │ ├── strategy_sd.py

│ │ ├── strategy_sd3.py

│ │ ├── strategy_sdxl.py

│ │ ├── train_util.py

│ │ └── utils.py

│ ├── networks

│ │ ├── check_lora_weights.py

│ │ ├── control_net_lllite.py

│ │ ├── control_net_lllite_for_train.py

│ │ ├── convert_flux_lora.py

│ │ ├── dylora.py

│ │ ├── extract_lora_from_dylora.py

│ │ ├── extract_lora_from_models.py

│ │ ├── flux_extract_lora.py

│ │ ├── flux_merge_lora.py

│ │ ├── lora.py

│ │ ├── lora_diffusers.py

│ │ ├── lora_fa.py

│ │ ├── lora_flux.py

│ │ ├── lora_interrogator.py

│ │ ├── lora_sd3.py

│ │ ├── merge_lora.py

│ │ ├── merge_lora_old.py

│ │ ├── oft.py

│ │ ├── oft_flux.py

│ │ ├── resize_lora.py

│ │ ├── sdxl_merge_lora.py

│ │ └── svd_merge_lora.py

│ ├── pytest.ini

│ ├── requirements.txt

│ ├── sd3_minimal_inference.py

│ ├── sd3_train.py

│ ├── sd3_train_network.py

│ ├── sdxl_gen_img.py

│ ├── sdxl_minimal_inference.py

│ ├── sdxl_train.py

│ ├── sdxl_train_control_net.py

│ ├── sdxl_train_control_net_lllite.py

│ ├── sdxl_train_control_net_lllite_old.py

│ ├── sdxl_train_network.py

│ ├── sdxl_train_textual_inversion.py

│ ├── setup.py

│ ├── tools

│ │ ├── cache_latents.py

│ │ ├── cache_text_encoder_outputs.py

│ │ ├── canny.py

│ │ ├── convert_diffusers20_original_sd.py

│ │ ├── convert_diffusers_to_flux.py

│ │ ├── detect_face_rotate.py

│ │ ├── latent_upscaler.py

│ │ ├── merge_models.py

│ │ ├── merge_sd3_safetensors.py

│ │ ├── original_control_net.py

│ │ ├── resize_images_to_resolution.py

│ │ └── show_metadata.py

│ ├── train_control_net.py

│ ├── train_controlnet.py

│ ├── train_db.py

│ ├── train_network.py

│ ├── train_textual_inversion.py

│ └── train_textual_inversion_XTI.py

└── stable

│ ├── .gitignore

│ ├── COMMIT_ID

│ ├── LICENSE.md

│ ├── README-ja.md

│ ├── README.md

│ ├── XTI_hijack.py

│ ├── _typos.toml

│ ├── fine_tune.py

│ ├── finetune

│ ├── blip

│ │ ├── blip.py

│ │ ├── med.py

│ │ ├── med_config.json

│ │ └── vit.py

│ ├── clean_captions_and_tags.py

│ ├── hypernetwork_nai.py

│ ├── make_captions.py

│ ├── make_captions_by_git.py

│ ├── merge_captions_to_metadata.py

│ ├── merge_dd_tags_to_metadata.py

│ ├── prepare_buckets_latents.py

│ └── tag_images_by_wd14_tagger.py

│ ├── gen_img.py

│ ├── gen_img_diffusers.py

│ ├── library

│ ├── __init__.py

│ ├── adafactor_fused.py

│ ├── attention_processors.py

│ ├── config_util.py

│ ├── custom_train_functions.py

│ ├── deepspeed_utils.py

│ ├── device_utils.py

│ ├── huggingface_util.py

│ ├── hypernetwork.py

│ ├── ipex

│ │ ├── __init__.py

│ │ ├── attention.py

│ │ ├── diffusers.py

│ │ ├── gradscaler.py

│ │ └── hijacks.py

│ ├── lpw_stable_diffusion.py

│ ├── model_util.py

│ ├── original_unet.py

│ ├── sai_model_spec.py

│ ├── sdxl_lpw_stable_diffusion.py

│ ├── sdxl_model_util.py

│ ├── sdxl_original_unet.py

│ ├── sdxl_train_util.py

│ ├── slicing_vae.py

│ ├── train_util.py

│ └── utils.py

│ ├── networks

│ ├── check_lora_weights.py

│ ├── control_net_lllite.py

│ ├── control_net_lllite_for_train.py

│ ├── dylora.py

│ ├── extract_lora_from_dylora.py

│ ├── extract_lora_from_models.py

│ ├── lora.py

│ ├── lora_diffusers.py

│ ├── lora_fa.py

│ ├── lora_interrogator.py

│ ├── merge_lora.py

│ ├── merge_lora_old.py

│ ├── oft.py

│ ├── resize_lora.py

│ ├── sdxl_merge_lora.py

│ └── svd_merge_lora.py

│ ├── requirements.txt

│ ├── sdxl_gen_img.py

│ ├── sdxl_minimal_inference.py

│ ├── sdxl_train.py

│ ├── sdxl_train_control_net_lllite.py

│ ├── sdxl_train_control_net_lllite_old.py

│ ├── sdxl_train_network.py

│ ├── sdxl_train_textual_inversion.py

│ ├── setup.py

│ ├── tools

│ ├── cache_latents.py

│ ├── cache_text_encoder_outputs.py

│ ├── canny.py

│ ├── convert_diffusers20_original_sd.py

│ ├── detect_face_rotate.py

│ ├── latent_upscaler.py

│ ├── merge_models.py

│ ├── original_control_net.py

│ ├── resize_images_to_resolution.py

│ └── show_metadata.py

│ ├── train_controlnet.py

│ ├── train_db.py

│ ├── train_network.py

│ ├── train_textual_inversion.py

│ └── train_textual_inversion_XTI.py

├── sd-models

└── put stable diffusion model here.txt

├── svd_merge.ps1

├── tagger.ps1

├── tagger.sh

├── tensorboard.ps1

├── train.ipynb

├── train.ps1

├── train.sh

├── train_by_toml.ps1

└── train_by_toml.sh

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.ps1 text eol=crlf

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .vscode

2 | .idea

3 |

4 | venv

5 | __pycache__

6 |

7 | output/*

8 | !output/.keep

9 |

10 | assets/config.json

11 |

12 | py310

13 | python

14 | git

15 | wd14_tagger_model

16 |

17 | train/*

18 | logs/*

19 | sd-models/*

20 | toml/autosave/*

21 | config/autosave/*

22 | config/presets/test*.toml

23 |

24 | !sd-models/put stable diffusion model here.txt

25 | !logs/.keep

26 |

27 | tests/

28 |

29 | huggingface/hub/models*

30 | huggingface/hub/version_diffusers_cache.txt

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "frontend"]

2 | path = frontend

3 | url = https://github.com/hanamizuki-ai/lora-gui-dist

4 | [submodule "mikazuki/dataset-tag-editor"]

5 | path = mikazuki/dataset-tag-editor

6 | url = https://github.com/Akegarasu/dataset-tag-editor

7 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM nvcr.io/nvidia/pytorch:24.07-py3

2 |

3 | EXPOSE 28000

4 |

5 | ENV TZ=Asia/Shanghai

6 | RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone && apt update && apt install python3-tk -y

7 |

8 | RUN mkdir /app

9 |

10 | WORKDIR /app

11 | RUN git clone --recurse-submodules https://github.com/Akegarasu/lora-scripts

12 |

13 | WORKDIR /app/lora-scripts

14 | RUN pip install xformers==0.0.27.post2 --no-deps && pip install -r requirements.txt

15 |

16 | WORKDIR /app/lora-scripts/scripts

17 | RUN pip install -r requirements.txt

18 |

19 | WORKDIR /app/lora-scripts

20 |

21 | CMD ["python", "gui.py", "--listen"]

--------------------------------------------------------------------------------

/Dockerfile-for-Mainland-China:

--------------------------------------------------------------------------------

1 | FROM nvcr.io/nvidia/pytorch:24.07-py3

2 |

3 | EXPOSE 28000

4 |

5 | ENV TZ=Asia/Shanghai

6 | RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone && apt update && apt install python3-tk -y

7 |

8 | RUN mkdir /app

9 |

10 | WORKDIR /app

11 | RUN git clone --recurse-submodules https://github.com/Akegarasu/lora-scripts

12 |

13 | WORKDIR /app/lora-scripts

14 |

15 | # 设置 Python pip 软件包国内镜像代理

16 | RUN pip config set global.index-url 'https://pypi.tuna.tsinghua.edu.cn/simple' && \

17 | pip config set install.trusted-host 'pypi.tuna.tsinghua.edu.cn'

18 |

19 | # 初次安装依赖

20 | RUN pip install xformers==0.0.27.post2 --no-deps && pip install -r requirements.txt

21 |

22 | # 更新 训练程序 stable 版本依赖

23 | WORKDIR /app/lora-scripts/scripts/stable

24 | RUN pip install -r requirements.txt

25 |

26 | # 更新 训练程序 dev 版本依赖

27 | WORKDIR /app/lora-scripts/scripts/dev

28 | RUN pip install -r requirements.txt

29 |

30 | WORKDIR /app/lora-scripts

31 |

32 | # 修正运行报错以及底包缺失的依赖

33 | # ref

34 | # - https://soulteary.com/2024/01/07/fix-opencv-dependency-errors-opencv-fixer.html

35 | # - https://blog.csdn.net/qq_50195602/article/details/124188467

36 | RUN pip install opencv-fixer==0.2.5 && python -c "from opencv_fixer import AutoFix; AutoFix()" \

37 | pip install opencv-python-headless && apt install ffmpeg libsm6 libxext6 libgl1 -y

38 |

39 | CMD ["python", "gui.py", "--listen"]

--------------------------------------------------------------------------------

/README-zh.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | # SD-Trainer

6 |

7 | _✨ 享受 Stable Diffusion 训练! ✨_

8 |

9 |

12 |

13 |  14 |

15 |

16 |

14 |

15 |

16 |  17 |

18 |

19 |

17 |

18 |

19 |  20 |

21 |

22 |

20 |

21 |

22 |  23 |

24 |

23 |

24 |

25 |

26 |

27 | 下载

28 | ·

29 | 文档

30 | ·

31 | 中文README

32 |

33 |

34 | LoRA-scripts(又名 SD-Trainer)

35 |

36 | LoRA & Dreambooth 训练图形界面 & 脚本预设 & 一键训练环境,用于 [kohya-ss/sd-scripts](https://github.com/kohya-ss/sd-scripts.git)

37 |

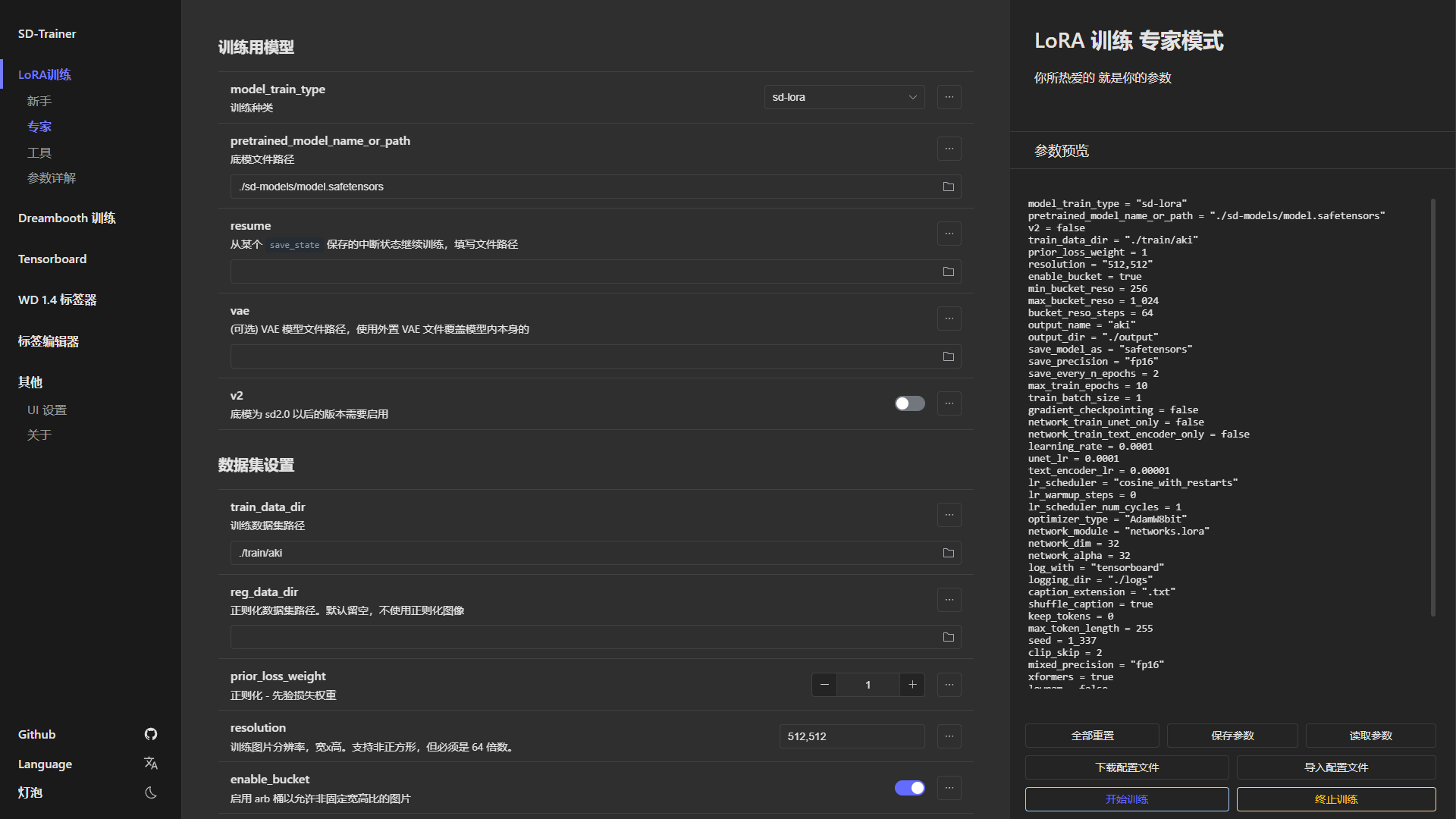

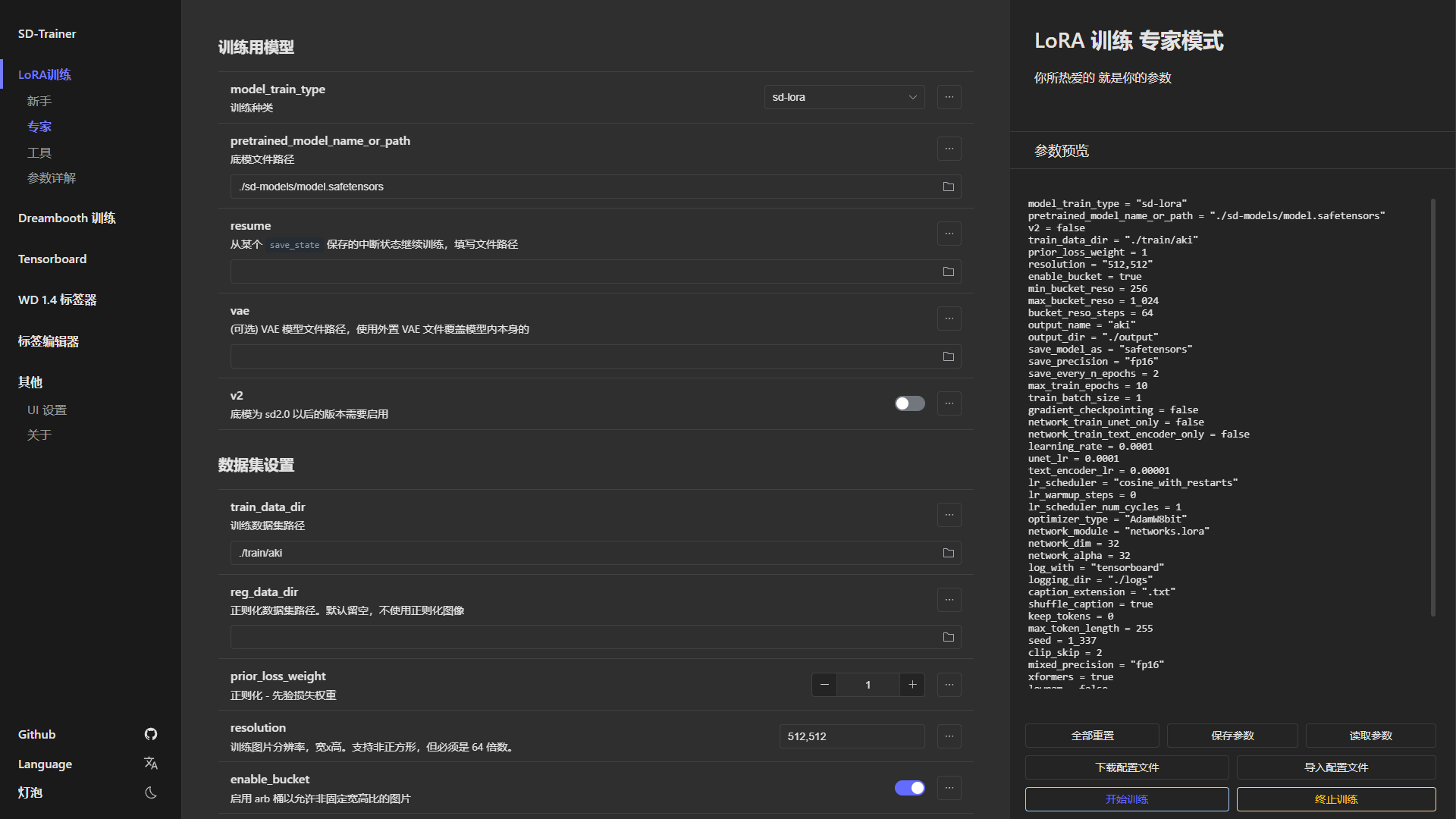

38 | ## ✨新特性: 训练 WebUI

39 |

40 | Stable Diffusion 训练工作台。一切集成于一个 WebUI 中。

41 |

42 | 按照下面的安装指南安装 GUI,然后运行 `run_gui.ps1`(Windows) 或 `run_gui.sh`(Linux) 来启动 GUI。

43 |

44 |

45 |

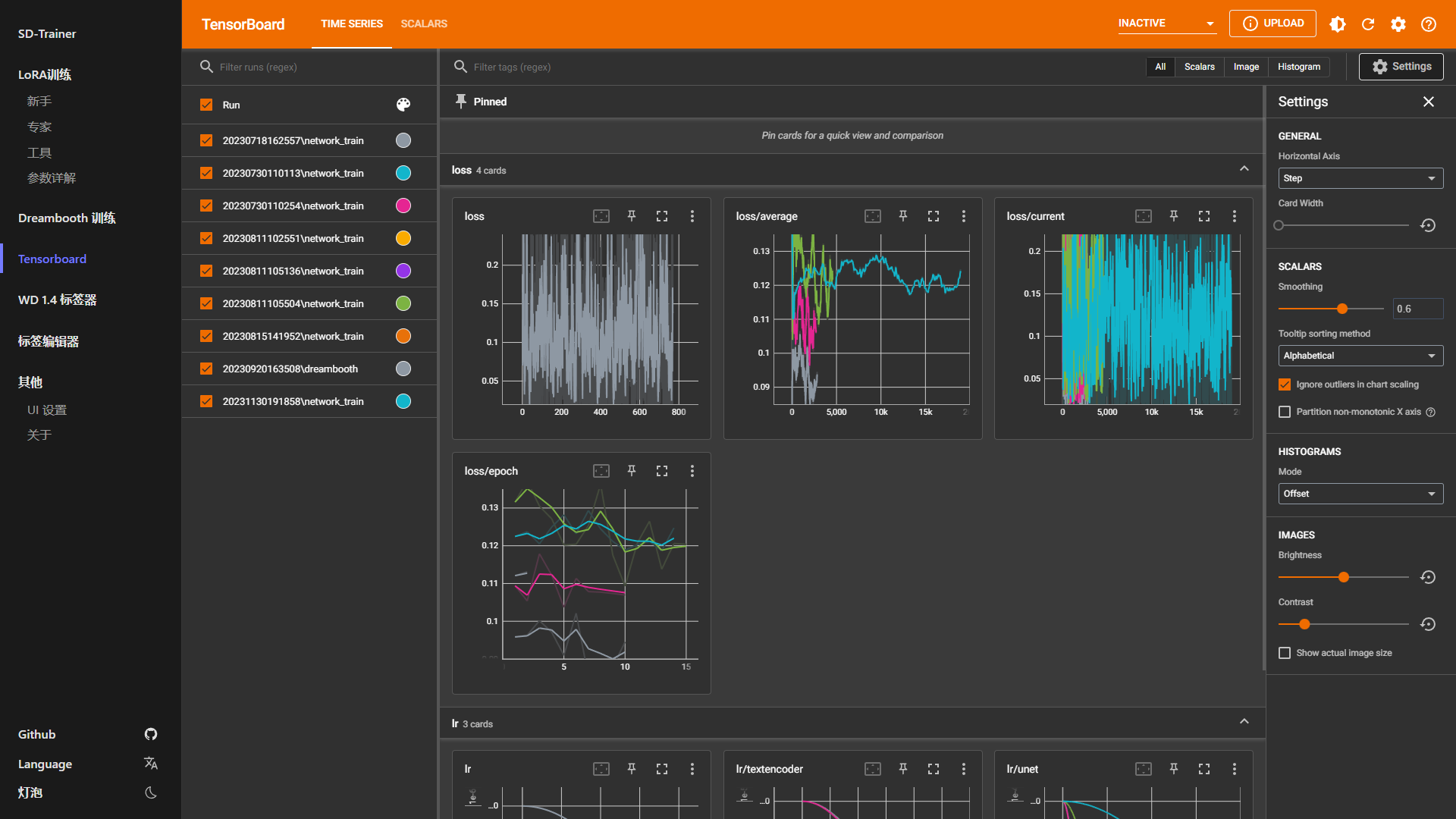

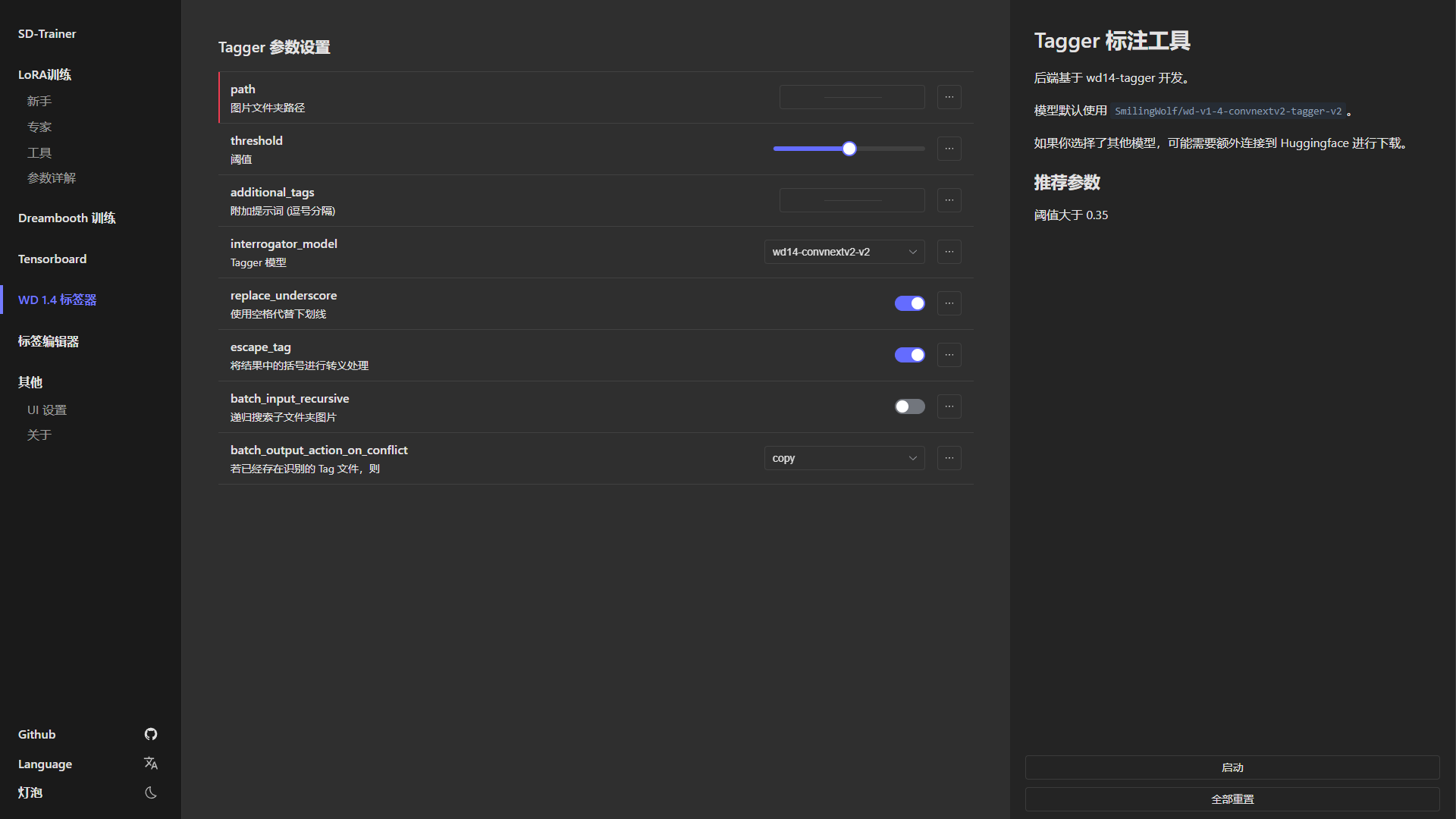

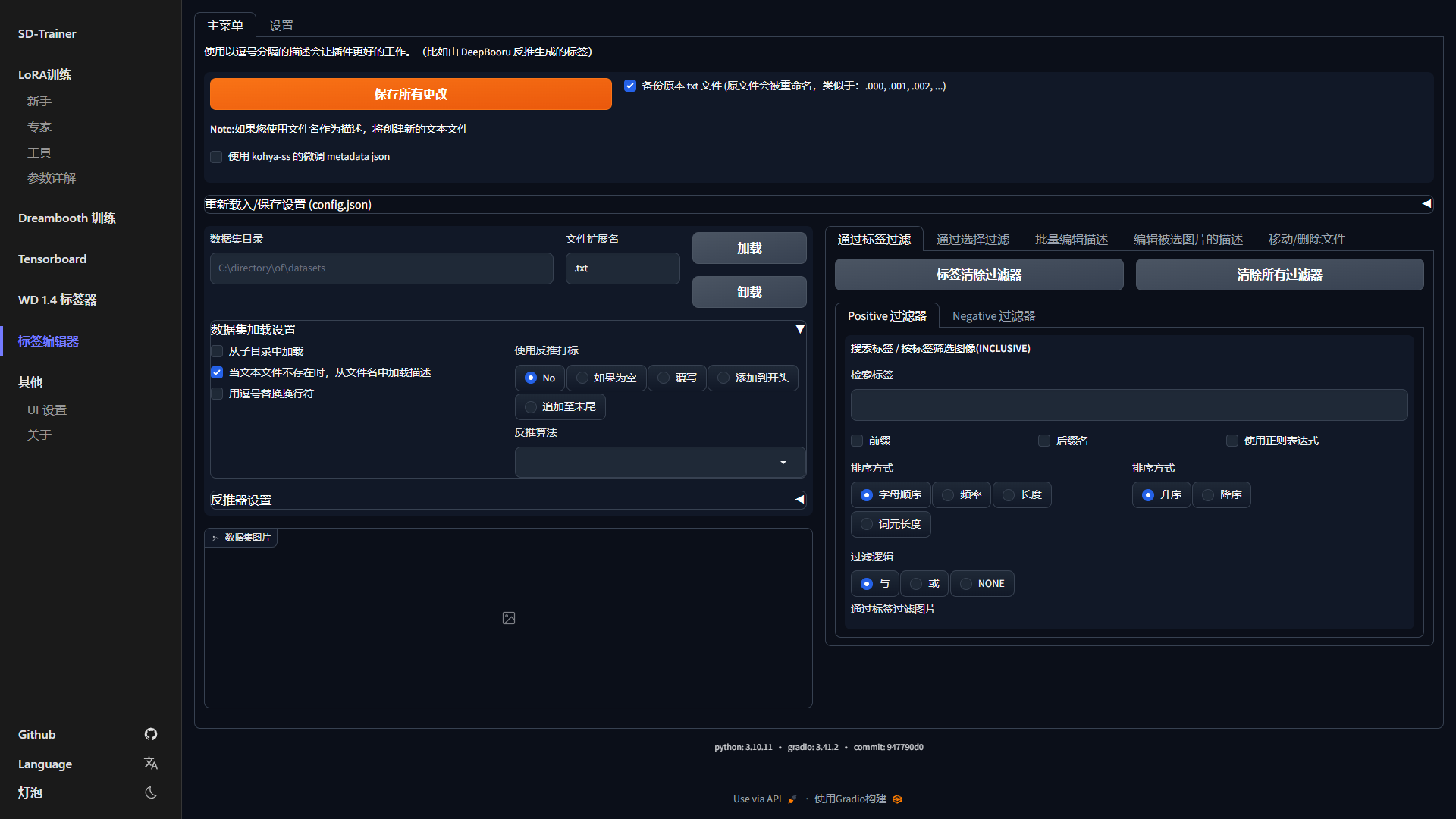

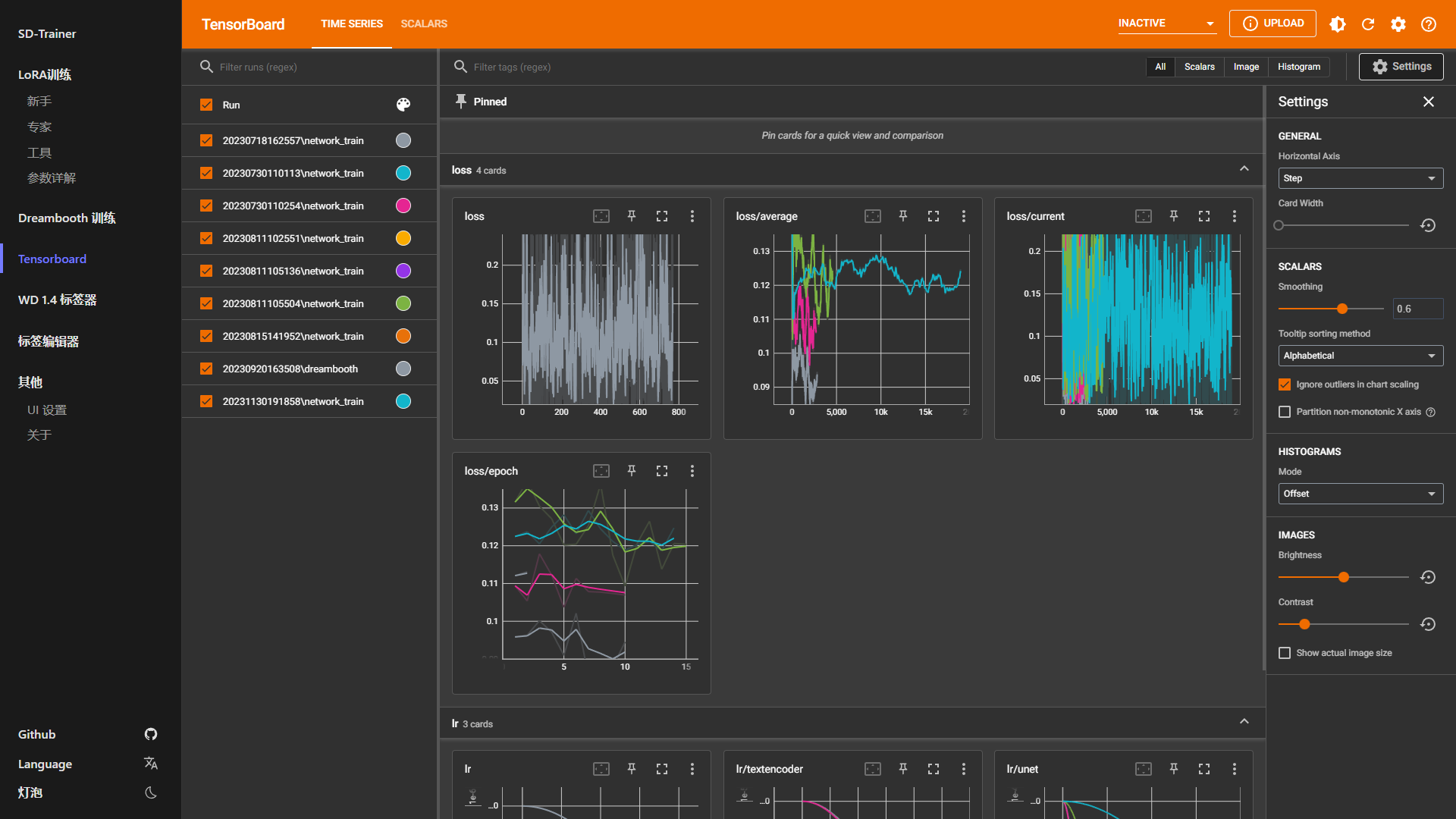

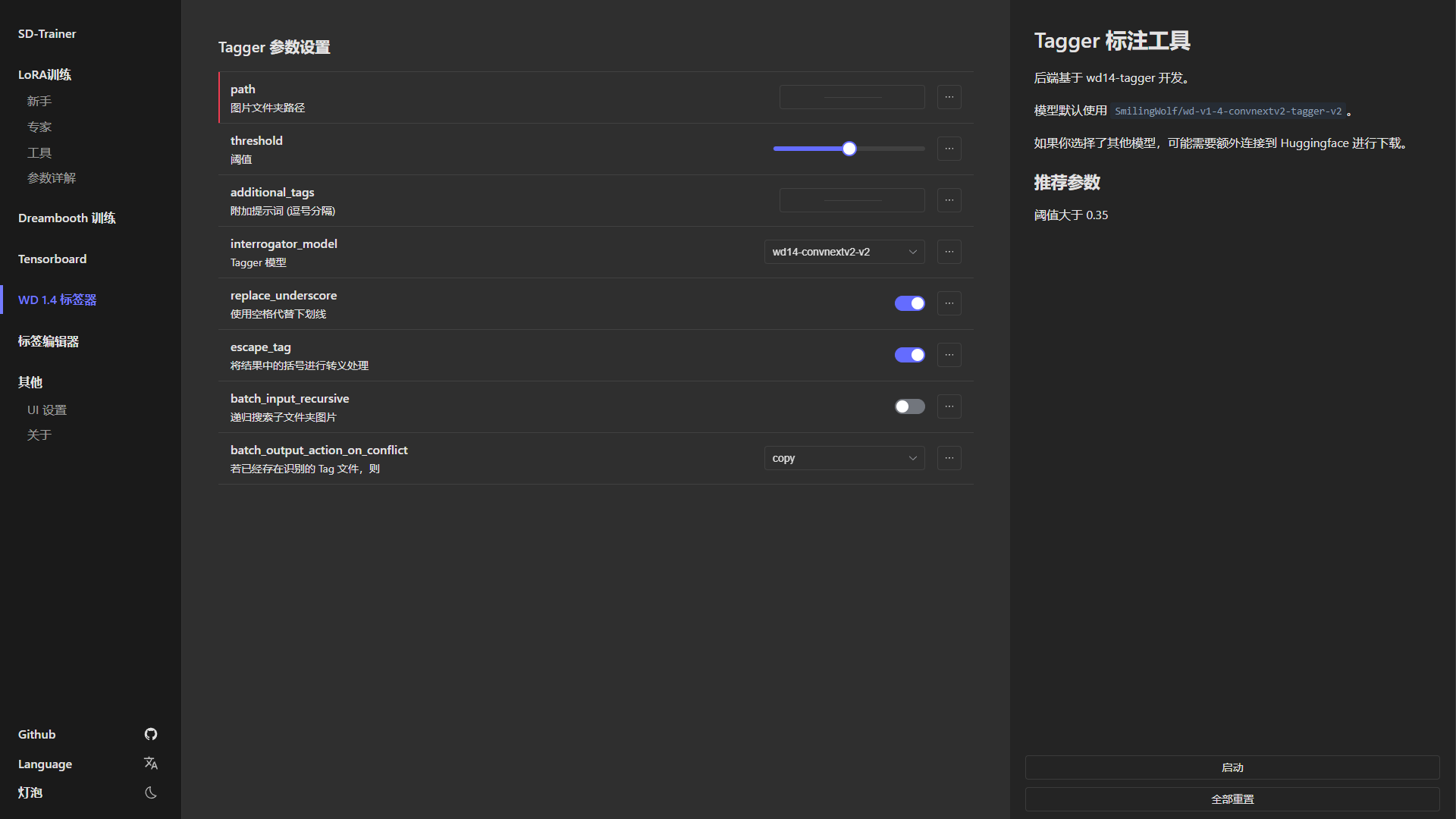

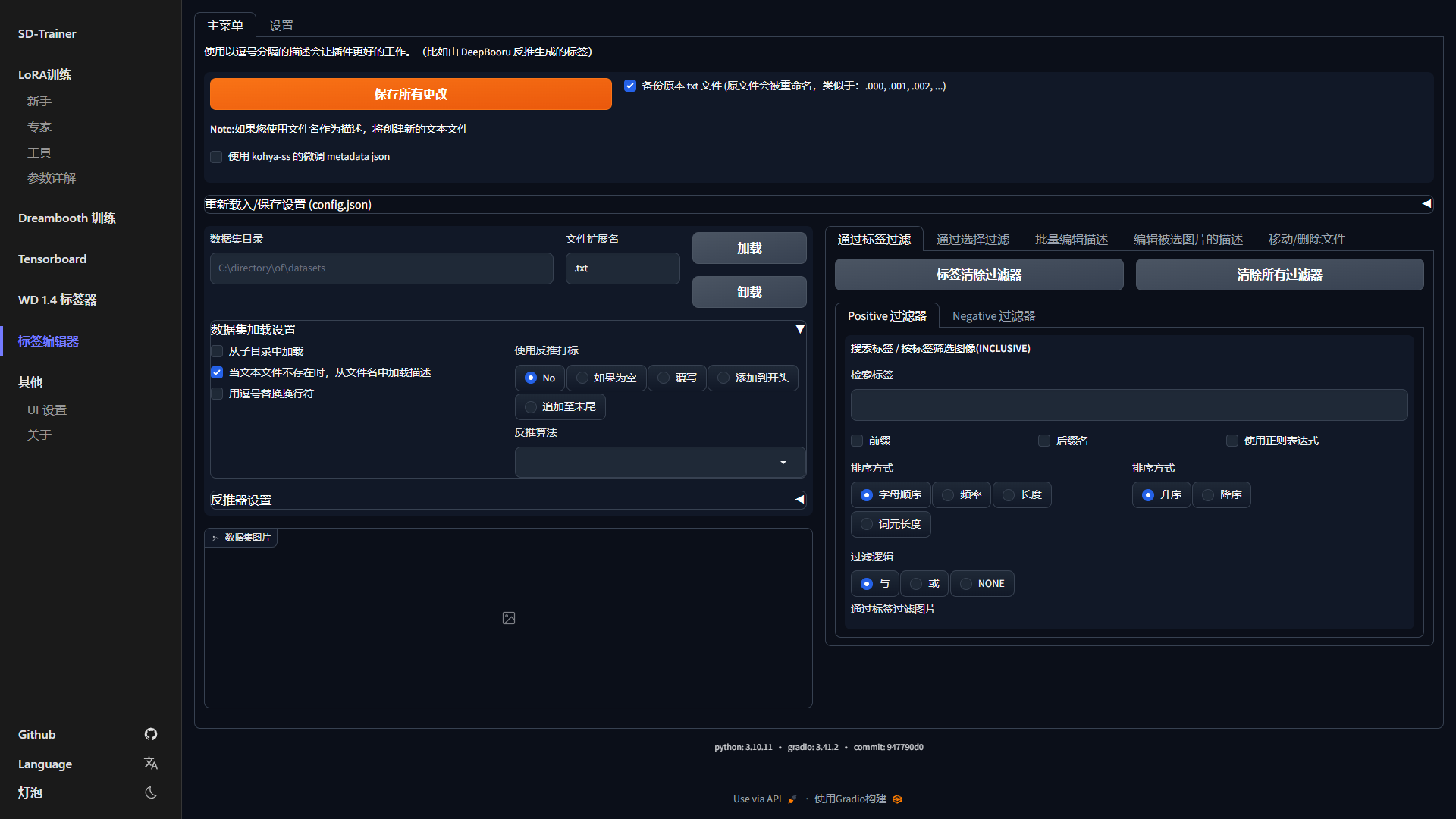

46 | | Tensorboard | WD 1.4 标签器 | 标签编辑器 |

47 | | ------------ | ------------ | ------------ |

48 | |  |  |  |

49 |

50 |

51 | # 使用方法

52 |

53 | ### 必要依赖

54 |

55 | Python 3.10 和 Git

56 |

57 | ### 克隆带子模块的仓库

58 |

59 | ```sh

60 | git clone --recurse-submodules https://github.com/Akegarasu/lora-scripts

61 | ```

62 |

63 | ## ✨ SD-Trainer GUI

64 |

65 | ### Windows

66 |

67 | #### 安装

68 |

69 | 运行 `install-cn.ps1` 将自动为您创建虚拟环境并安装必要的依赖。

70 |

71 | #### 训练

72 |

73 | 运行 `run_gui.ps1`,程序将自动打开 [http://127.0.0.1:28000](http://127.0.0.1:28000)

74 |

75 | ### Linux

76 |

77 | #### 安装

78 |

79 | 运行 `install.bash` 将创建虚拟环境并安装必要的依赖。

80 |

81 | #### 训练

82 |

83 | 运行 `bash run_gui.sh`,程序将自动打开 [http://127.0.0.1:28000](http://127.0.0.1:28000)

84 |

85 | ### Docker

86 |

87 | #### 编译镜像

88 |

89 | ```bash

90 | # 国内镜像优化版本

91 | # 其中 akegarasu_lora-scripts:latest 为镜像及其 tag 名,根据镜像托管服务商实际进行修改

92 | docker build -t akegarasu_lora-scripts:latest -f Dockfile-for-Mainland-China .

93 | docker push akegarasu_lora-scripts:latest

94 | ```

95 |

96 | #### 使用镜像

97 |

98 | > 提供一个本人已打包好并推送到 `aliyuncs` 上的镜像,此镜像压缩归档大小约 `10G` 左右,请耐心等待拉取。

99 |

100 | ```bash

101 | docker run --gpus all -p 28000:28000 -p 6006:6006 registry.cn-hangzhou.aliyuncs.com/go-to-mirror/akegarasu_lora-scripts:latest

102 | ```

103 |

104 | 或者使用 `docker-compose.yaml` 。

105 |

106 | ```yaml

107 | services:

108 | lora-scripts:

109 | container_name: lora-scripts

110 | build:

111 | context: .

112 | dockerfile: Dockerfile-for-Mainland-China

113 | image: "registry.cn-hangzhou.aliyuncs.com/go-to-mirror/akegarasu_lora-scripts:latest"

114 | ports:

115 | - "28000:28000"

116 | - "6006:6006"

117 | # 共享本地文件夹(请根据实际修改)

118 | #volumes:

119 | # - "/data/srv/lora-scripts:/app/lora-scripts"

120 | # 共享 comfyui 大模型

121 | # - "/data/srv/comfyui/models/checkpoints:/app/lora-scripts/sd-models/comfyui"

122 | # 共享 sd-webui 大模型

123 | # - "/data/srv/stable-diffusion-webui/models/Stable-diffusion:/app/lora-scripts/sd-models/sd-webui"

124 | environment:

125 | - HF_HOME=huggingface

126 | - PYTHONUTF8=1

127 | security_opt:

128 | - "label=type:nvidia_container_t"

129 | runtime: nvidia

130 | deploy:

131 | resources:

132 | reservations:

133 | devices:

134 | - driver: nvidia

135 | device_ids: ['0']

136 | capabilities: [gpu]

137 | ```

138 |

139 | 关于容器使用 GPU 相关依赖安装问题,请自行搜索查阅资料解决。

140 |

141 | ## 通过手动运行脚本的传统训练方式

142 |

143 | ### Windows

144 |

145 | #### 安装

146 |

147 | 运行 `install.ps1` 将自动为您创建虚拟环境并安装必要的依赖。

148 |

149 | #### 训练

150 |

151 | 编辑 `train.ps1`,然后运行它。

152 |

153 | ### Linux

154 |

155 | #### 安装

156 |

157 | 运行 `install.bash` 将创建虚拟环境并安装必要的依赖。

158 |

159 | #### 训练

160 |

161 | 训练

162 |

163 | 脚本 `train.sh` **不会** 为您激活虚拟环境。您应该先激活虚拟环境。

164 |

165 | ```sh

166 | source venv/bin/activate

167 | ```

168 |

169 | 编辑 `train.sh`,然后运行它。

170 |

171 | #### TensorBoard

172 |

173 | 运行 `tensorboard.ps1` 将在 http://localhost:6006/ 启动 TensorBoard

174 |

175 | ## 程序参数

176 |

177 | | 参数名称 | 类型 | 默认值 | 描述 |

178 | |------------------------------|-------|--------------|-------------------------------------------------|

179 | | `--host` | str | "127.0.0.1" | 服务器的主机名 |

180 | | `--port` | int | 28000 | 运行服务器的端口 |

181 | | `--listen` | bool | false | 启用服务器的监听模式 |

182 | | `--skip-prepare-environment` | bool | false | 跳过环境准备步骤 |

183 | | `--disable-tensorboard` | bool | false | 禁用 TensorBoard |

184 | | `--disable-tageditor` | bool | false | 禁用标签编辑器 |

185 | | `--tensorboard-host` | str | "127.0.0.1" | 运行 TensorBoard 的主机 |

186 | | `--tensorboard-port` | int | 6006 | 运行 TensorBoard 的端口 |

187 | | `--localization` | str | | 界面的本地化设置 |

188 | | `--dev` | bool | false | 开发者模式,用于禁用某些检查 |

189 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | # SD-Trainer

6 |

7 | _✨ Enjoy Stable Diffusion Train! ✨_

8 |

9 |

12 |

13 |  14 |

15 |

16 |

14 |

15 |

16 |  17 |

18 |

19 |

17 |

18 |

19 |  20 |

21 |

22 |

20 |

21 |

22 |  23 |

24 |

23 |

24 |

25 |

26 |

27 | Download

28 | ·

29 | Documents

30 | ·

31 | 中文README

32 |

33 |

34 | LoRA-scripts (a.k.a SD-Trainer)

35 |

36 | LoRA & Dreambooth training GUI & scripts preset & one key training environment for [kohya-ss/sd-scripts](https://github.com/kohya-ss/sd-scripts.git)

37 |

38 | ## ✨NEW: Train WebUI

39 |

40 | The **REAL** Stable Diffusion Training Studio. Everything in one WebUI.

41 |

42 | Follow the installation guide below to install the GUI, then run `run_gui.ps1`(windows) or `run_gui.sh`(linux) to start the GUI.

43 |

44 |

45 |

46 | | Tensorboard | WD 1.4 Tagger | Tag Editor |

47 | | ------------ | ------------ | ------------ |

48 | |  |  |  |

49 |

50 |

51 | # Usage

52 |

53 | ### Required Dependencies

54 |

55 | Python 3.10 and Git

56 |

57 | ### Clone repo with submodules

58 |

59 | ```sh

60 | git clone --recurse-submodules https://github.com/Akegarasu/lora-scripts

61 | ```

62 |

63 | ## ✨ SD-Trainer GUI

64 |

65 | ### Windows

66 |

67 | #### Installation

68 |

69 | Run `install.ps1` will automatically create a venv for you and install necessary deps.

70 | If you are in China mainland, please use `install-cn.ps1`

71 |

72 | #### Train

73 |

74 | run `run_gui.ps1`, then program will open [http://127.0.0.1:28000](http://127.0.0.1:28000) automanticlly

75 |

76 | ### Linux

77 |

78 | #### Installation

79 |

80 | Run `install.bash` will create a venv and install necessary deps.

81 |

82 | #### Train

83 |

84 | run `bash run_gui.sh`, then program will open [http://127.0.0.1:28000](http://127.0.0.1:28000) automanticlly

85 |

86 | ## Legacy training through run script manually

87 |

88 | ### Windows

89 |

90 | #### Installation

91 |

92 | Run `install.ps1` will automatically create a venv for you and install necessary deps.

93 |

94 | #### Train

95 |

96 | Edit `train.ps1`, and run it.

97 |

98 | ### Linux

99 |

100 | #### Installation

101 |

102 | Run `install.bash` will create a venv and install necessary deps.

103 |

104 | #### Train

105 |

106 | Training script `train.sh` **will not** activate venv for you. You should activate venv first.

107 |

108 | ```sh

109 | source venv/bin/activate

110 | ```

111 |

112 | Edit `train.sh`, and run it.

113 |

114 | #### TensorBoard

115 |

116 | Run `tensorboard.ps1` will start TensorBoard at http://localhost:6006/

117 |

118 | ## Program arguments

119 |

120 | | Parameter Name | Type | Default Value | Description |

121 | |-------------------------------|-------|---------------|--------------------------------------------------|

122 | | `--host` | str | "127.0.0.1" | Hostname for the server |

123 | | `--port` | int | 28000 | Port to run the server |

124 | | `--listen` | bool | false | Enable listening mode for the server |

125 | | `--skip-prepare-environment` | bool | false | Skip the environment preparation step |

126 | | `--disable-tensorboard` | bool | false | Disable TensorBoard |

127 | | `--disable-tageditor` | bool | false | Disable tag editor |

128 | | `--tensorboard-host` | str | "127.0.0.1" | Host to run TensorBoard |

129 | | `--tensorboard-port` | int | 6006 | Port to run TensorBoard |

130 | | `--localization` | str | | Localization settings for the interface |

131 | | `--dev` | bool | false | Developer mode to disale some checks |

132 |

--------------------------------------------------------------------------------

/assets/favicon.ico:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Akegarasu/lora-scripts/e0f5194815203093659d6ec280b9362b9792c070/assets/favicon.ico

--------------------------------------------------------------------------------

/assets/gitconfig-cn:

--------------------------------------------------------------------------------

1 | [url "https://jihulab.com/Akegarasu/lora-scripts"]

2 | insteadOf = https://github.com/Akegarasu/lora-scripts

3 |

4 | [url "https://jihulab.com/Akegarasu/sd-scripts"]

5 | insteadOf = https://github.com/Akegarasu/sd-scripts

6 |

7 | [url "https://jihulab.com/affair3547/sd-scripts"]

8 | insteadOf = https://github.com/kohya-ss/sd-scripts.git

9 |

10 | [url "https://jihulab.com/affair3547/lora-gui-dist"]

11 | insteadOf = https://github.com/hanamizuki-ai/lora-gui-dist

12 |

13 | [url "https://jihulab.com/Akegarasu/dataset-tag-editor"]

14 | insteadOf = https://github.com/Akegarasu/dataset-tag-editor

15 |

--------------------------------------------------------------------------------

/assets/tensorboard-example.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Akegarasu/lora-scripts/e0f5194815203093659d6ec280b9362b9792c070/assets/tensorboard-example.png

--------------------------------------------------------------------------------

/config/default.toml:

--------------------------------------------------------------------------------

1 | [model]

2 | v2 = false

3 | v_parameterization = false

4 | pretrained_model_name_or_path = "./sd-models/model.ckpt"

5 |

6 | [dataset]

7 | train_data_dir = "./train/input"

8 | reg_data_dir = ""

9 | prior_loss_weight = 1

10 | cache_latents = true

11 | shuffle_caption = true

12 | enable_bucket = true

13 |

14 | [additional_network]

15 | network_dim = 32

16 | network_alpha = 16

17 | network_train_unet_only = false

18 | network_train_text_encoder_only = false

19 | network_module = "networks.lora"

20 | network_args = []

21 |

22 | [optimizer]

23 | unet_lr = 1e-4

24 | text_encoder_lr = 1e-5

25 | optimizer_type = "AdamW8bit"

26 | lr_scheduler = "cosine_with_restarts"

27 | lr_warmup_steps = 0

28 | lr_restart_cycles = 1

29 |

30 | [training]

31 | resolution = "512,512"

32 | train_batch_size = 1

33 | max_train_epochs = 10

34 | noise_offset = 0.0

35 | keep_tokens = 0

36 | xformers = true

37 | lowram = false

38 | clip_skip = 2

39 | mixed_precision = "fp16"

40 | save_precision = "fp16"

41 |

42 | [sample_prompt]

43 | sample_sampler = "euler_a"

44 | sample_every_n_epochs = 1

45 |

46 | [saving]

47 | output_name = "output_name"

48 | save_every_n_epochs = 1

49 | save_n_epoch_ratio = 0

50 | save_last_n_epochs = 499

51 | save_state = false

52 | save_model_as = "safetensors"

53 | output_dir = "./output"

54 | logging_dir = "./logs"

55 | log_prefix = "output_name"

56 |

57 | [others]

58 | min_bucket_reso = 256

59 | max_bucket_reso = 1024

60 | caption_extension = ".txt"

61 | max_token_length = 225

62 | seed = 1337

63 |

--------------------------------------------------------------------------------

/config/lora.toml:

--------------------------------------------------------------------------------

1 | [model_arguments]

2 | v2 = false

3 | v_parameterization = false

4 | pretrained_model_name_or_path = "./sd-models/model.ckpt"

5 |

6 | [dataset_arguments]

7 | train_data_dir = "./train/aki"

8 | reg_data_dir = ""

9 | resolution = "512,512"

10 | prior_loss_weight = 1

11 |

12 | [additional_network_arguments]

13 | network_dim = 32

14 | network_alpha = 16

15 | network_train_unet_only = false

16 | network_train_text_encoder_only = false

17 | network_module = "networks.lora"

18 | network_args = []

19 |

20 | [optimizer_arguments]

21 | unet_lr = 1e-4

22 | text_encoder_lr = 1e-5

23 |

24 | optimizer_type = "AdamW8bit"

25 | lr_scheduler = "cosine_with_restarts"

26 | lr_warmup_steps = 0

27 | lr_restart_cycles = 1

28 |

29 | [training_arguments]

30 | train_batch_size = 1

31 | noise_offset = 0.0

32 | keep_tokens = 0

33 | min_bucket_reso = 256

34 | max_bucket_reso = 1024

35 | caption_extension = ".txt"

36 | max_token_length = 225

37 | seed = 1337

38 | xformers = true

39 | lowram = false

40 | max_train_epochs = 10

41 | resolution = "512,512"

42 | clip_skip = 2

43 | mixed_precision = "fp16"

44 |

45 | [sample_prompt_arguments]

46 | sample_sampler = "euler_a"

47 | sample_every_n_epochs = 5

48 |

49 | [saving_arguments]

50 | output_name = "output_name"

51 | save_every_n_epochs = 1

52 | save_state = false

53 | save_model_as = "safetensors"

54 | output_dir = "./output"

55 | logging_dir = "./logs"

56 | log_prefix = ""

57 | save_precision = "fp16"

58 |

59 | [others]

60 | cache_latents = true

61 | shuffle_caption = true

62 | enable_bucket = true

--------------------------------------------------------------------------------

/config/presets/example.toml:

--------------------------------------------------------------------------------

1 | # 模板显示的信息

2 | [metadata]

3 | name = "中分辨率训练" # 模板名称

4 | version = "0.0.1" # 模板版本

5 | author = "秋叶" # 模板作者

6 | # train_type 参数可以设置 lora-basic,lora-master,flux-lora 等内容。用于过滤显示的。不填就全部显示

7 | # train_type = ""

8 | description = "这是一个样例模板,提高训练分辨率。如果你想加入自己的模板,可以按照 config/presets 中的文件,修改后前往 Github 发起 PR"

9 |

10 | # 模板配置内容。请只填写修改了的内容,未修改无需填写。

11 | [data]

12 | resolution = "768,768"

13 | enable_bucket = true

14 | min_bucket_reso = 256

15 | max_bucket_reso = 2048

16 | bucket_no_upscale = true

--------------------------------------------------------------------------------

/config/sample_prompts.txt:

--------------------------------------------------------------------------------

1 | (masterpiece, best quality:1.2), 1girl, solo, --n lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, --w 512 --h 768 --l 7 --s 24 --d 1337

--------------------------------------------------------------------------------

/gui.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import locale

3 | import os

4 | import platform

5 | import subprocess

6 | import sys

7 |

8 | from mikazuki.launch_utils import (base_dir_path, catch_exception, git_tag,

9 | prepare_environment, check_port_avaliable, find_avaliable_ports)

10 | from mikazuki.log import log

11 |

12 | parser = argparse.ArgumentParser(description="GUI for stable diffusion training")

13 | parser.add_argument("--host", type=str, default="127.0.0.1")

14 | parser.add_argument("--port", type=int, default=28000, help="Port to run the server on")

15 | parser.add_argument("--listen", action="store_true")

16 | parser.add_argument("--skip-prepare-environment", action="store_true")

17 | parser.add_argument("--skip-prepare-onnxruntime", action="store_true")

18 | parser.add_argument("--disable-tensorboard", action="store_true")

19 | parser.add_argument("--disable-tageditor", action="store_true")

20 | parser.add_argument("--disable-auto-mirror", action="store_true")

21 | parser.add_argument("--tensorboard-host", type=str, default="127.0.0.1", help="Port to run the tensorboard")

22 | parser.add_argument("--tensorboard-port", type=int, default=6006, help="Port to run the tensorboard")

23 | parser.add_argument("--localization", type=str)

24 | parser.add_argument("--dev", action="store_true")

25 |

26 |

27 | @catch_exception

28 | def run_tensorboard():

29 | log.info("Starting tensorboard...")

30 | subprocess.Popen([sys.executable, "-m", "tensorboard.main", "--logdir", "logs",

31 | "--host", args.tensorboard_host, "--port", str(args.tensorboard_port)])

32 |

33 |

34 | @catch_exception

35 | def run_tag_editor():

36 | log.info("Starting tageditor...")

37 | cmd = [

38 | sys.executable,

39 | base_dir_path() / "mikazuki/dataset-tag-editor/scripts/launch.py",

40 | "--port", "28001",

41 | "--shadow-gradio-output",

42 | "--root-path", "/proxy/tageditor"

43 | ]

44 | if args.localization:

45 | cmd.extend(["--localization", args.localization])

46 | else:

47 | l = locale.getdefaultlocale()[0]

48 | if l and l.startswith("zh"):

49 | cmd.extend(["--localization", "zh-Hans"])

50 | subprocess.Popen(cmd)

51 |

52 |

53 | def launch():

54 | log.info("Starting SD-Trainer Mikazuki GUI...")

55 | log.info(f"Base directory: {base_dir_path()}, Working directory: {os.getcwd()}")

56 | log.info(f"{platform.system()} Python {platform.python_version()} {sys.executable}")

57 |

58 | if not args.skip_prepare_environment:

59 | prepare_environment(disable_auto_mirror=args.disable_auto_mirror)

60 |

61 | if not check_port_avaliable(args.port):

62 | avaliable = find_avaliable_ports(30000, 30000+20)

63 | if avaliable:

64 | args.port = avaliable

65 | else:

66 | log.error("port finding fallback error")

67 |

68 | log.info(f"SD-Trainer Version: {git_tag(base_dir_path())}")

69 |

70 | os.environ["MIKAZUKI_HOST"] = args.host

71 | os.environ["MIKAZUKI_PORT"] = str(args.port)

72 | os.environ["MIKAZUKI_TENSORBOARD_HOST"] = args.tensorboard_host

73 | os.environ["MIKAZUKI_TENSORBOARD_PORT"] = str(args.tensorboard_port)

74 | os.environ["MIKAZUKI_DEV"] = "1" if args.dev else "0"

75 |

76 | if args.listen:

77 | args.host = "0.0.0.0"

78 | args.tensorboard_host = "0.0.0.0"

79 |

80 | if not args.disable_tageditor:

81 | run_tag_editor()

82 |

83 | if not args.disable_tensorboard:

84 | run_tensorboard()

85 |

86 | import uvicorn

87 | log.info(f"Server started at http://{args.host}:{args.port}")

88 | uvicorn.run("mikazuki.app:app", host=args.host, port=args.port, log_level="error", reload=args.dev)

89 |

90 |

91 | if __name__ == "__main__":

92 | args, _ = parser.parse_known_args()

93 | launch()

94 |

--------------------------------------------------------------------------------

/huggingface/accelerate/default_config.yaml:

--------------------------------------------------------------------------------

1 | command_file: null

2 | commands: null

3 | compute_environment: LOCAL_MACHINE

4 | deepspeed_config: {}

5 | distributed_type: 'NO'

6 | downcast_bf16: 'no'

7 | dynamo_backend: 'NO'

8 | fsdp_config: {}

9 | gpu_ids: all

10 | machine_rank: 0

11 | main_process_ip: null

12 | main_process_port: null

13 | main_training_function: main

14 | megatron_lm_config: {}

15 | mixed_precision: fp16

16 | num_machines: 1

17 | num_processes: 1

18 | rdzv_backend: static

19 | same_network: true

20 | tpu_name: null

21 | tpu_zone: null

22 | use_cpu: false

23 |

--------------------------------------------------------------------------------

/huggingface/hub/version.txt:

--------------------------------------------------------------------------------

1 | 1

--------------------------------------------------------------------------------

/install-cn.ps1:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Akegarasu/lora-scripts/e0f5194815203093659d6ec280b9362b9792c070/install-cn.ps1

--------------------------------------------------------------------------------

/install.bash:

--------------------------------------------------------------------------------

1 | #!/usr/bin/bash

2 |

3 | script_dir="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null 2>&1 && pwd )"

4 | create_venv=true

5 |

6 | while [ -n "$1" ]; do

7 | case "$1" in

8 | --disable-venv)

9 | create_venv=false

10 | shift

11 | ;;

12 | *)

13 | shift

14 | ;;

15 | esac

16 | done

17 |

18 | if $create_venv; then

19 | echo "Creating python venv..."

20 | python3 -m venv venv

21 | source "$script_dir/venv/bin/activate"

22 | echo "active venv"

23 | fi

24 |

25 | echo "Installing torch & xformers..."

26 |

27 | cuda_version=$(nvidia-smi | grep -oiP 'CUDA Version: \K[\d\.]+')

28 |

29 | if [ -z "$cuda_version" ]; then

30 | cuda_version=$(nvcc --version | grep -oiP 'release \K[\d\.]+')

31 | fi

32 | cuda_major_version=$(echo "$cuda_version" | awk -F'.' '{print $1}')

33 | cuda_minor_version=$(echo "$cuda_version" | awk -F'.' '{print $2}')

34 |

35 | echo "CUDA Version: $cuda_version"

36 |

37 |

38 | if (( cuda_major_version >= 12 )); then

39 | echo "install torch 2.7.0+cu128"

40 | pip install torch==2.7.0+cu128 torchvision==0.22.0+cu128 --extra-index-url https://download.pytorch.org/whl/cu128

41 | pip install --no-deps xformers==0.0.30 --extra-index-url https://download.pytorch.org/whl/cu128

42 | elif (( cuda_major_version == 11 && cuda_minor_version >= 8 )); then

43 | echo "install torch 2.4.0+cu118"

44 | pip install torch==2.4.0+cu118 torchvision==0.19.0+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

45 | pip install --no-deps xformers==0.0.27.post2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

46 | elif (( cuda_major_version == 11 && cuda_minor_version >= 6 )); then

47 | echo "install torch 1.12.1+cu116"

48 | pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

49 | # for RTX3090+cu113/cu116 xformers, we need to install this version from source. You can also try xformers==0.0.18

50 | pip install --upgrade git+https://github.com/facebookresearch/xformers.git@0bad001ddd56c080524d37c84ff58d9cd030ebfd

51 | pip install triton==2.0.0.dev20221202

52 | elif (( cuda_major_version == 11 && cuda_minor_version >= 2 )); then

53 | echo "install torch 1.12.1+cu113"

54 | pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu116

55 | pip install --upgrade git+https://github.com/facebookresearch/xformers.git@0bad001ddd56c080524d37c84ff58d9cd030ebfd

56 | pip install triton==2.0.0.dev20221202

57 | else

58 | echo "Unsupported cuda version:$cuda_version"

59 | exit 1

60 | fi

61 |

62 | echo "Installing deps..."

63 |

64 | cd "$script_dir" || exit

65 | pip install --upgrade -r requirements.txt

66 |

67 | echo "Install completed"

68 |

--------------------------------------------------------------------------------

/install.ps1:

--------------------------------------------------------------------------------

1 | $Env:HF_HOME = "huggingface"

2 |

3 | if (!(Test-Path -Path "venv")) {

4 | Write-Output "Creating venv for python..."

5 | python -m venv venv

6 | }

7 | .\venv\Scripts\activate

8 |

9 | Write-Output "Installing deps..."

10 |

11 | pip install torch==2.7.0+cu128 torchvision==0.22.0+cu128 --extra-index-url https://download.pytorch.org/whl/cu128

12 | pip install -U -I --no-deps xformers==0.0.30 --extra-index-url https://download.pytorch.org/whl/cu128

13 | pip install --upgrade -r requirements.txt

14 |

15 | Write-Output "Install completed"

16 | Read-Host | Out-Null ;

17 |

--------------------------------------------------------------------------------

/interrogate.ps1:

--------------------------------------------------------------------------------

1 | # LoRA interrogate script by @bdsqlsz

2 |

3 | $v2 = 0 # load Stable Diffusion v2.x model / Stable Diffusion 2.x模型读取

4 | $sd_model = "./sd-models/sd_model.safetensors" # Stable Diffusion model to load: ckpt or safetensors file | 读取的基础SD模型, 保存格式 cpkt 或 safetensors

5 | $model = "./output/LoRA.safetensors" # LoRA model to interrogate: ckpt or safetensors file | 需要调查关键字的LORA模型, 保存格式 cpkt 或 safetensors

6 | $batch_size = 64 # batch size for processing with Text Encoder | 使用 Text Encoder 处理时的批量大小,默认16,推荐64/128

7 | $clip_skip = 1 # use output of nth layer from back of text encoder (n>=1) | 使用文本编码器倒数第 n 层的输出,n 可以是大于等于 1 的整数

8 |

9 |

10 | # Activate python venv

11 | .\venv\Scripts\activate

12 |

13 | $Env:HF_HOME = "huggingface"

14 | $ext_args = [System.Collections.ArrayList]::new()

15 |

16 | if ($v2) {

17 | [void]$ext_args.Add("--v2")

18 | }

19 |

20 | # run interrogate

21 | accelerate launch --num_cpu_threads_per_process=8 "./scripts/stable/networks/lora_interrogator.py" `

22 | --sd_model=$sd_model `

23 | --model=$model `

24 | --batch_size=$batch_size `

25 | --clip_skip=$clip_skip `

26 | $ext_args

27 |

28 | Write-Output "Interrogate finished"

29 | Read-Host | Out-Null ;

30 |

--------------------------------------------------------------------------------

/logs/.keep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Akegarasu/lora-scripts/e0f5194815203093659d6ec280b9362b9792c070/logs/.keep

--------------------------------------------------------------------------------

/mikazuki/app/__init__.py:

--------------------------------------------------------------------------------

1 | from . import application

2 |

3 | app = application.app

--------------------------------------------------------------------------------

/mikazuki/app/application.py:

--------------------------------------------------------------------------------

1 | import asyncio

2 | import mimetypes

3 | import os

4 | import sys

5 | import webbrowser

6 | from contextlib import asynccontextmanager

7 |

8 | from fastapi import FastAPI

9 | from fastapi.middleware.cors import CORSMiddleware

10 | from fastapi.responses import FileResponse

11 | from fastapi.staticfiles import StaticFiles

12 | from starlette.exceptions import HTTPException

13 |

14 | from mikazuki.app.config import app_config

15 | from mikazuki.app.api import load_schemas, load_presets

16 | from mikazuki.app.api import router as api_router

17 | # from mikazuki.app.ipc import router as ipc_router

18 | from mikazuki.app.proxy import router as proxy_router

19 | from mikazuki.utils.devices import check_torch_gpu

20 |

21 | mimetypes.add_type("application/javascript", ".js")

22 | mimetypes.add_type("text/css", ".css")

23 |

24 |

25 | class SPAStaticFiles(StaticFiles):

26 | async def get_response(self, path: str, scope):

27 | try:

28 | return await super().get_response(path, scope)

29 | except HTTPException as ex:

30 | if ex.status_code == 404:

31 | return await super().get_response("index.html", scope)

32 | else:

33 | raise ex

34 |

35 |

36 | async def app_startup():

37 | app_config.load_config()

38 |

39 | await load_schemas()

40 | await load_presets()

41 | await asyncio.to_thread(check_torch_gpu)

42 |

43 | if sys.platform == "win32" and os.environ.get("MIKAZUKI_DEV", "0") != "1":

44 | webbrowser.open(f'http://{os.environ["MIKAZUKI_HOST"]}:{os.environ["MIKAZUKI_PORT"]}')

45 |

46 |

47 | @asynccontextmanager

48 | async def lifespan(app: FastAPI):

49 | await app_startup()

50 | yield

51 |

52 |

53 | app = FastAPI(lifespan=lifespan)

54 | app.include_router(proxy_router)

55 |

56 |

57 | cors_config = os.environ.get("MIKAZUKI_APP_CORS", "")

58 | if cors_config != "":

59 | if cors_config == "1":

60 | cors_config = ["http://localhost:8004", "*"]

61 | else:

62 | cors_config = cors_config.split(";")

63 | app.add_middleware(

64 | CORSMiddleware,

65 | allow_origins=cors_config,

66 | allow_credentials=True,

67 | allow_methods=["*"],

68 | allow_headers=["*"],

69 | )

70 |

71 |

72 | @app.middleware("http")

73 | async def add_cache_control_header(request, call_next):

74 | response = await call_next(request)

75 | response.headers["Cache-Control"] = "max-age=0"

76 | return response

77 |

78 | app.include_router(api_router, prefix="/api")

79 | # app.include_router(ipc_router, prefix="/ipc")

80 |

81 |

82 | @app.get("/")

83 | async def index():

84 | return FileResponse("./frontend/dist/index.html")

85 |

86 |

87 | @app.get("/favicon.ico", response_class=FileResponse)

88 | async def favicon():

89 | return FileResponse("assets/favicon.ico")

90 |

91 | app.mount("/", SPAStaticFiles(directory="frontend/dist", html=True), name="static")

92 |

--------------------------------------------------------------------------------

/mikazuki/app/config.py:

--------------------------------------------------------------------------------

1 | import os

2 | import json

3 | from pathlib import Path

4 | from mikazuki.log import log

5 |

6 | class Config:

7 |

8 | def __init__(self, path: str):

9 | self.path = path

10 | self._stored = {}

11 | self._default = {

12 | "last_path": "",

13 | "saved_params": {}

14 | }

15 | self.lock = False

16 |

17 | def load_config(self):

18 | log.info(f"Loading config from {self.path}")

19 | if not os.path.exists(self.path):

20 | self._stored = self._default

21 | self.save_config()

22 | return

23 |

24 | try:

25 | with open(self.path, "r", encoding="utf-8") as f:

26 | self._stored = json.load(f)

27 | except Exception as e:

28 | log.error(f"Error loading config: {e}")

29 | self._stored = self._default

30 | return

31 |

32 | def save_config(self):

33 | try:

34 | with open(self.path, "w", encoding="utf-8") as f:

35 | json.dump(self._stored, f, indent=4, ensure_ascii=False)

36 | except Exception as e:

37 | log.error(f"Error saving config: {e}")

38 |

39 | def __getitem__(self, key):

40 |

41 | return self._stored.get(key, None)

42 |

43 | def __setitem__(self, key, value):

44 | self._stored[key] = value

45 |

46 |

47 | app_config = Config(Path(__file__).parents[2].absolute() / "assets" / "config.json")

48 |

--------------------------------------------------------------------------------

/mikazuki/app/models.py:

--------------------------------------------------------------------------------

1 | from pydantic import BaseModel, Field

2 | from typing import List, Optional, Union, Dict, Any

3 |

4 |

5 | class TaggerInterrogateRequest(BaseModel):

6 | path: str

7 | interrogator_model: str = Field(

8 | default="wd14-convnextv2-v2"

9 | )

10 | threshold: float = Field(

11 | default=0.35,

12 | ge=0,

13 | le=1

14 | )

15 | additional_tags: str = ""

16 | exclude_tags: str = ""

17 | escape_tag: bool = True

18 | batch_input_recursive: bool = False

19 | batch_output_action_on_conflict: str = "ignore"

20 | replace_underscore: bool = True

21 | replace_underscore_excludes: str = Field(

22 | default="0_0, (o)_(o), +_+, +_-, ._., _, <|>_<|>, =_=, >_<, 3_3, 6_9, >_o, @_@, ^_^, o_o, u_u, x_x, |_|, ||_||"

23 | )

24 |

25 |

26 | class APIResponse(BaseModel):

27 | status: str

28 | message: Optional[str]

29 | data: Optional[Dict]

30 |

31 |

32 | class APIResponseSuccess(APIResponse):

33 | status: str = "success"

34 |

35 |

36 | class APIResponseFail(APIResponse):

37 | status: str = "fail"

38 |

--------------------------------------------------------------------------------

/mikazuki/app/proxy.py:

--------------------------------------------------------------------------------

1 | import asyncio

2 | import os

3 |

4 | import httpx

5 | import starlette

6 | import websockets

7 | from fastapi import APIRouter, Request, WebSocket

8 | from httpx import ConnectError

9 | from starlette.background import BackgroundTask

10 | from starlette.requests import Request

11 | from starlette.responses import PlainTextResponse, StreamingResponse

12 |

13 | from mikazuki.log import log

14 |

15 | router = APIRouter()

16 |

17 |

18 | def reverse_proxy_maker(url_type: str, full_path: bool = False):

19 | if url_type == "tensorboard":

20 | host = os.environ.get("MIKAZUKI_TENSORBOARD_HOST", "127.0.0.1")

21 | port = os.environ.get("MIKAZUKI_TENSORBOARD_PORT", "6006")

22 | elif url_type == "tageditor":

23 | host = os.environ.get("MIKAZUKI_TAGEDITOR_HOST", "127.0.0.1")

24 | port = os.environ.get("MIKAZUKI_TAGEDITOR_PORT", "28001")

25 |

26 | client = httpx.AsyncClient(base_url=f"http://{host}:{port}/", proxies={}, trust_env=False, timeout=360)

27 |

28 | async def _reverse_proxy(request: Request):

29 | if full_path:

30 | url = httpx.URL(path=request.url.path, query=request.url.query.encode("utf-8"))

31 | else:

32 | url = httpx.URL(

33 | path=request.path_params.get("path", ""),

34 | query=request.url.query.encode("utf-8")

35 | )

36 | rp_req = client.build_request(

37 | request.method, url,

38 | headers=request.headers.raw,

39 | content=request.stream() if request.method != "GET" else None

40 | )

41 | try:

42 | rp_resp = await client.send(rp_req, stream=True)

43 | except ConnectError:

44 | return PlainTextResponse(

45 | content="The requested service not started yet or service started fail. This may cost a while when you first time startup\n请求的服务尚未启动或启动失败。若是第一次启动,可能需要等待一段时间后再刷新网页。",

46 | status_code=502

47 | )

48 | return StreamingResponse(

49 | rp_resp.aiter_raw(),

50 | status_code=rp_resp.status_code,

51 | headers=rp_resp.headers,

52 | background=BackgroundTask(rp_resp.aclose),

53 | )

54 |

55 | return _reverse_proxy

56 |

57 |

58 | async def proxy_ws_forward(ws_a: WebSocket, ws_b: websockets.WebSocketClientProtocol):

59 | while True:

60 | try:

61 | data = await ws_a.receive_text()

62 | await ws_b.send(data)

63 | except starlette.websockets.WebSocketDisconnect as e:

64 | break

65 | except Exception as e:

66 | log.error(f"Error when proxy data client -> backend: {e}")

67 | break

68 |

69 |

70 | async def proxy_ws_reverse(ws_a: WebSocket, ws_b: websockets.WebSocketClientProtocol):

71 | while True:

72 | try:

73 | data = await ws_b.recv()

74 | await ws_a.send_text(data)

75 | except websockets.exceptions.ConnectionClosedOK as e:

76 | break

77 | except Exception as e:

78 | log.error(f"Error when proxy data backend -> client: {e}")

79 | break

80 |

81 |

82 | @router.websocket("/proxy/tageditor/queue/join")

83 | async def websocket_a(ws_a: WebSocket):

84 | # for temp use

85 | ws_b_uri = "ws://127.0.0.1:28001/queue/join"

86 | await ws_a.accept()

87 | async with websockets.connect(ws_b_uri, timeout=360, ping_timeout=None) as ws_b_client:

88 | fwd_task = asyncio.create_task(proxy_ws_forward(ws_a, ws_b_client))

89 | rev_task = asyncio.create_task(proxy_ws_reverse(ws_a, ws_b_client))

90 | await asyncio.gather(fwd_task, rev_task)

91 |

92 | router.add_route("/proxy/tensorboard/{path:path}", reverse_proxy_maker("tensorboard"), ["GET", "POST"])

93 | router.add_route("/font-roboto/{path:path}", reverse_proxy_maker("tensorboard", full_path=True), ["GET", "POST"])

94 | router.add_route("/proxy/tageditor/{path:path}", reverse_proxy_maker("tageditor"), ["GET", "POST"])

95 |

--------------------------------------------------------------------------------

/mikazuki/global.d.ts:

--------------------------------------------------------------------------------

1 | interface Window {

2 | __MIKAZUKI__: any;

3 | }

4 |

5 | type Dict = {

6 | [key in K]: T;

7 | };

8 |

9 | declare const kSchema: unique symbol;

10 |

11 | declare namespace Schemastery {

12 | type From = X extends string | number | boolean ? SchemaI : X extends SchemaI ? X : X extends typeof String ? SchemaI : X extends typeof Number ? SchemaI : X extends typeof Boolean ? SchemaI : X extends typeof Function ? SchemaI any> : X extends Constructor ? SchemaI : never;

13 | type TypeS1 = X extends SchemaI ? S : never;

14 | type Inverse = X extends SchemaI ? (arg: Y) => void : never;

15 | type TypeS = TypeS1>;

16 | type TypeT = ReturnType>;

17 | type Resolve = (data: any, schema: SchemaI, options?: Options, strict?: boolean) => [any, any?];

18 | type IntersectS = From extends SchemaI ? S : never;

19 | type IntersectT = Inverse> extends ((arg: infer T) => void) ? T : never;

20 | type TupleS = X extends readonly [infer L, ...infer R] ? [TypeS?, ...TupleS] : any[];

21 | type TupleT = X extends readonly [infer L, ...infer R] ? [TypeT?, ...TupleT] : any[];

22 | type ObjectS = {

23 | [K in keyof X]?: TypeS | null;

24 | } & Dict;

25 | type ObjectT = {

26 | [K in keyof X]: TypeT;

27 | } & Dict;

28 | type Constructor = new (...args: any[]) => T;

29 | interface Static {

30 | (options: Partial>): SchemaI;

31 | new (options: Partial>): SchemaI;

32 | prototype: SchemaI;

33 | resolve: Resolve;

34 | from(source?: X): From;

35 | extend(type: string, resolve: Resolve): void;

36 | any(): SchemaI;

37 | never(): SchemaI;

38 | const(value: T): SchemaI;

39 | string(): SchemaI;

40 | number(): SchemaI;

41 | natural(): SchemaI;

42 | percent(): SchemaI;

43 | boolean(): SchemaI;

44 | date(): SchemaI;

45 | bitset(bits: Partial>): SchemaI;

46 | function(): SchemaI any>;

47 | is(constructor: Constructor): SchemaI;

48 | array(inner: X): SchemaI[], TypeT[]>;

49 | dict = SchemaI>(inner: X, sKey?: Y): SchemaI, TypeS>, Dict, TypeT>>;

50 | tuple(list: X): SchemaI, TupleT>;

51 | object(dict: X): SchemaI, ObjectT>;

52 | union(list: readonly X[]): SchemaI, TypeT>;

53 | intersect(list: readonly X[]): SchemaI, IntersectT>;

54 | transform(inner: X, callback: (value: TypeS) => T, preserve?: boolean): SchemaI, T>;

55 | }

56 | interface Options {

57 | autofix?: boolean;

58 | }

59 | interface Meta {

60 | default?: T extends {} ? Partial : T;

61 | required?: boolean;

62 | disabled?: boolean;

63 | collapse?: boolean;

64 | badges?: {

65 | text: string;

66 | type: string;

67 | }[];

68 | hidden?: boolean;

69 | loose?: boolean;

70 | role?: string;

71 | extra?: any;

72 | link?: string;

73 | description?: string | Dict;

74 | comment?: string;

75 | pattern?: {

76 | source: string;

77 | flags?: string;

78 | };

79 | max?: number;

80 | min?: number;

81 | step?: number;

82 | }

83 |

84 | interface Schemastery {

85 | (data?: S | null, options?: Schemastery.Options): T;

86 | new(data?: S | null, options?: Schemastery.Options): T;

87 | [kSchema]: true;

88 | uid: number;

89 | meta: Schemastery.Meta;

90 | type: string;

91 | sKey?: SchemaI;

92 | inner?: SchemaI;

93 | list?: SchemaI[];

94 | dict?: Dict;

95 | bits?: Dict;

96 | callback?: Function;

97 | value?: T;

98 | refs?: Dict;

99 | preserve?: boolean;

100 | toString(inline?: boolean): string;

101 | toJSON(): SchemaI;

102 | required(value?: boolean): SchemaI;

103 | hidden(value?: boolean): SchemaI;

104 | loose(value?: boolean): SchemaI;

105 | role(text: string, extra?: any): SchemaI;

106 | link(link: string): SchemaI;

107 | default(value: T): SchemaI;

108 | comment(text: string): SchemaI;

109 | description(text: string): SchemaI;

110 | disabled(value?: boolean): SchemaI;

111 | collapse(value?: boolean): SchemaI;

112 | deprecated(): SchemaI;

113 | experimental(): SchemaI;

114 | pattern(regexp: RegExp): SchemaI;

115 | max(value: number): SchemaI;

116 | min(value: number): SchemaI;

117 | step(value: number): SchemaI;

118 | set(key: string, value: SchemaI): SchemaI;

119 | push(value: SchemaI): SchemaI;

120 | simplify(value?: any): any;

121 | i18n(messages: Dict): SchemaI;

122 | extra(key: K, value: Schemastery.Meta[K]): SchemaI;

123 | }

124 |

125 | }

126 |

127 | type SchemaI = Schemastery.Schemastery;

128 |

129 | declare const Schema: Schemastery.Static

130 |

131 | declare const SHARED_SCHEMAS: Dict

132 |

133 | declare function UpdateSchema(origin: Record, modify?: Record, toDelete?: string[]): Record;

134 |

--------------------------------------------------------------------------------

/mikazuki/hook/i18n.json:

--------------------------------------------------------------------------------

1 | {

2 | "指定エポックまでのステップ数": "指定 epoch 之前的步数",

3 | "学習開始": "训练开始",

4 | "学習画像の数×繰り返し回数": "训练图像数量×重复次数",

5 | "正則化画像の数": "正则化图像数量",

6 | "正則化画像の数×繰り返し回数": "正则化图像数量×重复次数",

7 | "1epochのバッチ数": "每个 epoch 的 batch 数",

8 | "バッチサイズ": "batch 大小",

9 | "学習率": "学习率",

10 | "勾配を合計するステップ数": "梯度累积步数",

11 | "学習率の減衰率": "学习率衰减率",

12 | "学習ステップ数": "训练步数"

13 | }

--------------------------------------------------------------------------------

/mikazuki/hook/sitecustomize.py:

--------------------------------------------------------------------------------

1 |

2 |

3 | _origin_print = print

4 |

5 |

6 | def i18n_print(data, *args, **kwargs):

7 | _origin_print(data, *args, **kwargs)

8 | _origin_print("i18n_print")

9 |

10 |

11 | __builtins__["print"] = i18n_print

12 |

--------------------------------------------------------------------------------

/mikazuki/log.py:

--------------------------------------------------------------------------------

1 | import logging

2 |

3 |

4 | log = logging.getLogger('sd-trainer')

5 | log.setLevel(logging.DEBUG)

6 |

7 | try:

8 | from rich.console import Console

9 | from rich.logging import RichHandler

10 | from rich.pretty import install as pretty_install

11 | from rich.theme import Theme

12 |

13 | console = Console(

14 | log_time=True,

15 | log_time_format='%H:%M:%S-%f',

16 | theme=Theme(

17 | {

18 | 'traceback.border': 'black',

19 | 'traceback.border.syntax_error': 'black',

20 | 'inspect.value.border': 'black',

21 | }

22 | ),

23 | )

24 | pretty_install(console=console)

25 | rh = RichHandler(

26 | show_time=True,

27 | omit_repeated_times=False,

28 | show_level=True,

29 | show_path=False,

30 | markup=False,

31 | rich_tracebacks=True,

32 | log_time_format='%H:%M:%S-%f',

33 | level=logging.INFO,

34 | console=console,

35 | )

36 | rh.set_name(logging.INFO)

37 | while log.hasHandlers() and len(log.handlers) > 0:

38 | log.removeHandler(log.handlers[0])

39 | log.addHandler(rh)

40 |

41 | except ModuleNotFoundError:

42 | pass

43 |

44 |

--------------------------------------------------------------------------------

/mikazuki/process.py:

--------------------------------------------------------------------------------

1 |

2 | import asyncio

3 | import os

4 | import sys

5 | from typing import Optional

6 |

7 | from mikazuki.app.models import APIResponse

8 | from mikazuki.log import log

9 | from mikazuki.tasks import tm

10 | from mikazuki.launch_utils import base_dir_path

11 |

12 |

13 | def run_train(toml_path: str,

14 | trainer_file: str = "./scripts/train_network.py",

15 | gpu_ids: Optional[list] = None,

16 | cpu_threads: Optional[int] = 2):

17 | log.info(f"Training started with config file / 训练开始,使用配置文件: {toml_path}")

18 | args = [

19 | sys.executable, "-m", "accelerate.commands.launch", # use -m to avoid python script executable error

20 | "--num_cpu_threads_per_process", str(cpu_threads), # cpu threads

21 | "--quiet", # silence accelerate error message

22 | trainer_file,

23 | "--config_file", toml_path,

24 | ]

25 |

26 | customize_env = os.environ.copy()

27 | customize_env["ACCELERATE_DISABLE_RICH"] = "1"

28 | customize_env["PYTHONUNBUFFERED"] = "1"

29 | customize_env["PYTHONWARNINGS"] = "ignore::FutureWarning,ignore::UserWarning"

30 |

31 | if gpu_ids:

32 | customize_env["CUDA_VISIBLE_DEVICES"] = ",".join(gpu_ids)

33 | log.info(f"Using GPU(s) / 使用 GPU: {gpu_ids}")

34 |

35 | if len(gpu_ids) > 1:

36 | args[3:3] = ["--multi_gpu", "--num_processes", str(len(gpu_ids))]

37 | if sys.platform == "win32":

38 | customize_env["USE_LIBUV"] = "0"

39 | args[3:3] = ["--rdzv_backend", "c10d"]

40 |

41 | if not (task := tm.create_task(args, customize_env)):

42 | return APIResponse(status="error", message="Failed to create task / 无法创建训练任务")

43 |

44 | def _run():

45 | try:

46 | task.execute()

47 | result = task.communicate()

48 | if result.returncode != 0:

49 | log.error(f"Training failed / 训练失败")

50 | else:

51 | log.info(f"Training finished / 训练完成")

52 | except Exception as e:

53 | log.error(f"An error occurred when training / 训练出现致命错误: {e}")

54 |

55 | coro = asyncio.to_thread(_run)

56 | asyncio.create_task(coro)

57 |

58 | return APIResponse(status="success", message=f"Training started / 训练开始 ID: {task.task_id}")

59 |

--------------------------------------------------------------------------------

/mikazuki/schema/flux-lora.ts:

--------------------------------------------------------------------------------

1 | Schema.intersect([

2 | Schema.object({

3 | model_train_type: Schema.string().default("flux-lora").disabled().description("训练种类"),

4 | pretrained_model_name_or_path: Schema.string().role('filepicker', { type: "model-file" }).default("./sd-models/model.safetensors").description("Flux 模型路径"),

5 | ae: Schema.string().role('filepicker', { type: "model-file" }).description("AE 模型文件路径"),

6 | clip_l: Schema.string().role('filepicker', { type: "model-file" }).description("clip_l 模型文件路径"),

7 | t5xxl: Schema.string().role('filepicker', { type: "model-file" }).description("t5xxl 模型文件路径"),

8 | resume: Schema.string().role('filepicker', { type: "folder" }).description("从某个 `save_state` 保存的中断状态继续训练,填写文件路径"),

9 | }).description("训练用模型"),

10 |

11 | Schema.object({

12 | timestep_sampling: Schema.union(["sigma", "uniform", "sigmoid", "shift"]).default("sigmoid").description("时间步采样"),

13 | sigmoid_scale: Schema.number().step(0.001).default(1.0).description("sigmoid 缩放"),

14 | model_prediction_type: Schema.union(["raw", "additive", "sigma_scaled"]).default("raw").description("模型预测类型"),

15 | discrete_flow_shift: Schema.number().step(0.001).default(1.0).description("Euler 调度器离散流位移"),

16 | loss_type: Schema.union(["l1", "l2", "huber", "smooth_l1"]).default("l2").description("损失函数类型"),

17 | guidance_scale: Schema.number().step(0.01).default(1.0).description("CFG 引导缩放"),

18 | t5xxl_max_token_length: Schema.number().step(1).description("T5XXL 最大 token 长度(不填写使用自动)"),

19 | train_t5xxl: Schema.boolean().default(false).description("训练 T5XXL(不推荐)"),

20 | }).description("Flux 专用参数"),

21 |

22 | Schema.object(

23 | UpdateSchema(SHARED_SCHEMAS.RAW.DATASET_SETTINGS, {

24 | resolution: Schema.string().default("768,768").description("训练图片分辨率,宽x高。支持非正方形,但必须是 64 倍数。"),

25 | enable_bucket: Schema.boolean().default(true).description("启用 arb 桶以允许非固定宽高比的图片"),

26 | min_bucket_reso: Schema.number().default(256).description("arb 桶最小分辨率"),

27 | max_bucket_reso: Schema.number().default(2048).description("arb 桶最大分辨率"),

28 | bucket_reso_steps: Schema.number().default(64).description("arb 桶分辨率划分单位,FLUX 需大于 64"),

29 | })

30 | ).description("数据集设置"),

31 |

32 | // 保存设置

33 | SHARED_SCHEMAS.SAVE_SETTINGS,

34 |

35 | Schema.object({

36 | max_train_epochs: Schema.number().min(1).default(20).description("最大训练 epoch(轮数)"),

37 | train_batch_size: Schema.number().min(1).default(1).description("批量大小, 越高显存占用越高"),

38 | gradient_checkpointing: Schema.boolean().default(true).description("梯度检查点"),

39 | gradient_accumulation_steps: Schema.number().min(1).default(1).description("梯度累加步数"),

40 | network_train_unet_only: Schema.boolean().default(true).description("仅训练 U-Net"),

41 | network_train_text_encoder_only: Schema.boolean().default(false).description("仅训练文本编码器"),

42 | }).description("训练相关参数"),

43 |

44 | // 学习率&优化器设置

45 | SHARED_SCHEMAS.LR_OPTIMIZER,

46 |

47 | Schema.intersect([

48 | Schema.object({

49 | network_module: Schema.union(["networks.lora_flux", "networks.oft_flux", "lycoris.kohya"]).default("networks.lora_flux").description("训练网络模块"),

50 | network_weights: Schema.string().role('filepicker').description("从已有的 LoRA 模型上继续训练,填写路径"),

51 | network_dim: Schema.number().min(1).default(2).description("网络维度,常用 4~128,不是越大越好, 低dim可以降低显存占用"),

52 | network_alpha: Schema.number().min(1).default(16).description("常用值:等于 network_dim 或 network_dim*1/2 或 1。使用较小的 alpha 需要提升学习率"),

53 | network_dropout: Schema.number().step(0.01).default(0).description('dropout 概率 (与 lycoris 不兼容,需要用 lycoris 自带的)'),

54 | scale_weight_norms: Schema.number().step(0.01).min(0).description("最大范数正则化。如果使用,推荐为 1"),

55 | network_args_custom: Schema.array(String).role('table').description('自定义 network_args,一行一个'),

56 | enable_base_weight: Schema.boolean().default(false).description('启用基础权重(差异炼丹)'),

57 | }).description("网络设置"),

58 |

59 | // lycoris 参数

60 | SHARED_SCHEMAS.LYCORIS_MAIN,

61 | SHARED_SCHEMAS.LYCORIS_LOKR,

62 |

63 | SHARED_SCHEMAS.NETWORK_OPTION_BASEWEIGHT,

64 | ]),

65 |

66 | // 预览图设置

67 | SHARED_SCHEMAS.PREVIEW_IMAGE,

68 |

69 | // 日志设置

70 | SHARED_SCHEMAS.LOG_SETTINGS,

71 |

72 | // caption 选项

73 | // FLUX 去除 max_token_length

74 | Schema.object(UpdateSchema(SHARED_SCHEMAS.RAW.CAPTION_SETTINGS, {}, ["max_token_length"])).description("caption(Tag)选项"),

75 |

76 | // 噪声设置

77 | SHARED_SCHEMAS.NOISE_SETTINGS,

78 |

79 | // 数据增强

80 | SHARED_SCHEMAS.DATA_ENCHANCEMENT,

81 |

82 | // 其他选项

83 | SHARED_SCHEMAS.OTHER,

84 |

85 | // 速度优化选项

86 | Schema.object(

87 | UpdateSchema(SHARED_SCHEMAS.RAW.PRECISION_CACHE_BATCH, {

88 | fp8_base: Schema.boolean().default(true).description("对基础模型使用 FP8 精度"),

89 | fp8_base_unet: Schema.boolean().description("仅对 U-Net 使用 FP8 精度(CLIP-L不使用)"),

90 | sdpa: Schema.boolean().default(true).description("启用 sdpa"),

91 | cache_text_encoder_outputs: Schema.boolean().default(true).description("缓存文本编码器的输出,减少显存使用。使用时需要关闭 shuffle_caption"),

92 | cache_text_encoder_outputs_to_disk: Schema.boolean().default(true).description("缓存文本编码器的输出到磁盘"),

93 | }, ["xformers"])

94 | ).description("速度优化选项"),

95 |

96 | // 分布式训练

97 | SHARED_SCHEMAS.DISTRIBUTED_TRAINING

98 | ]);

99 |

--------------------------------------------------------------------------------

/mikazuki/schema/lora-basic.ts:

--------------------------------------------------------------------------------

1 | Schema.intersect([

2 | Schema.object({

3 | pretrained_model_name_or_path: Schema.string().role('filepicker', {type: "model-file"}).default("./sd-models/model.safetensors").description("底模文件路径"),

4 | }).description("训练用模型"),

5 |

6 | Schema.object({

7 | train_data_dir: Schema.string().role('filepicker', { type: "folder", internal: "train-dir" }).default("./train/aki").description("训练数据集路径"),

8 | reg_data_dir: Schema.string().role('filepicker', { type: "folder", internal: "train-dir" }).description("正则化数据集路径。默认留空,不使用正则化图像"),

9 | resolution: Schema.string().default("512,512").description("训练图片分辨率,宽x高。支持非正方形,但必须是 64 倍数。"),

10 | }).description("数据集设置"),

11 |

12 | Schema.object({

13 | output_name: Schema.string().default("aki").description("模型保存名称"),

14 | output_dir: Schema.string().default("./output").role('filepicker', { type: "folder" }).description("模型保存文件夹"),

15 | save_every_n_epochs: Schema.number().default(2).description("每 N epoch(轮)自动保存一次模型"),

16 | }).description("保存设置"),

17 |

18 | Schema.object({

19 | max_train_epochs: Schema.number().min(1).default(10).description("最大训练 epoch(轮数)"),

20 | train_batch_size: Schema.number().min(1).default(1).description("批量大小"),

21 | }).description("训练相关参数"),

22 |

23 | Schema.intersect([

24 | Schema.object({

25 | unet_lr: Schema.string().default("1e-4").description("U-Net 学习率"),

26 | text_encoder_lr: Schema.string().default("1e-5").description("文本编码器学习率"),

27 | lr_scheduler: Schema.union([

28 | "cosine",

29 | "cosine_with_restarts",

30 | "constant",

31 | "constant_with_warmup",

32 | ]).default("cosine_with_restarts").description("学习率调度器设置"),

33 | lr_warmup_steps: Schema.number().default(0).description('学习率预热步数'),

34 | }).description("学习率与优化器设置"),

35 | Schema.union([

36 | Schema.object({

37 | lr_scheduler: Schema.const('cosine_with_restarts'),

38 | lr_scheduler_num_cycles: Schema.number().default(1).description('重启次数'),

39 | }),

40 | Schema.object({}),

41 | ]),

42 | Schema.object({

43 | optimizer_type: Schema.union([

44 | "AdamW8bit",

45 | "Lion",

46 | ]).default("AdamW8bit").description("优化器设置"),

47 | })

48 | ]),

49 |

50 | Schema.intersect([

51 | Schema.object({

52 | enable_preview: Schema.boolean().default(false).description('启用训练预览图'),

53 | }).description('训练预览图设置'),

54 |

55 | Schema.union([

56 | Schema.object({

57 | enable_preview: Schema.const(true).required(),

58 | sample_prompts: Schema.string().role('textarea').default(window.__MIKAZUKI__.SAMPLE_PROMPTS_DEFAULT).description(window.__MIKAZUKI__.SAMPLE_PROMPTS_DESCRIPTION),

59 | sample_sampler: Schema.union(["ddim", "pndm", "lms", "euler", "euler_a", "heun", "dpm_2", "dpm_2_a", "dpmsolver", "dpmsolver++", "dpmsingle", "k_lms", "k_euler", "k_euler_a", "k_dpm_2", "k_dpm_2_a"]).default("euler_a").description("生成预览图所用采样器"),

60 | sample_every_n_epochs: Schema.number().default(2).description("每 N 个 epoch 生成一次预览图"),

61 | }),

62 | Schema.object({}),

63 | ]),

64 | ]),

65 |

66 | Schema.intersect([

67 | Schema.object({

68 | network_weights: Schema.string().role('filepicker', { type: "model-file", internal: "model-saved-file" }).description("从已有的 LoRA 模型上继续训练,填写路径"),

69 | network_dim: Schema.number().min(8).max(256).step(8).default(32).description("网络维度,常用 4~128,不是越大越好, 低dim可以降低显存占用"),

70 | network_alpha: Schema.number().min(1).default(32).description(

71 | "常用值:等于 network_dim 或 network_dim*1/2 或 1。使用较小的 alpha 需要提升学习率。"

72 | ),

73 | }).description("网络设置"),

74 | ]),

75 |

76 | Schema.object({

77 | shuffle_caption: Schema.boolean().default(true).description("训练时随机打乱 tokens"),

78 | keep_tokens: Schema.number().min(0).max(255).step(1).default(0).description("在随机打乱 tokens 时,保留前 N 个不变"),

79 | }).description("caption 选项"),

80 |

81 | Schema.object({

82 | mixed_precision: Schema.union(["no", "fp16", "bf16"]).default("fp16").description("混合精度, RTX30系列以后也可以指定`bf16`"),

83 | no_half_vae: Schema.boolean().description("不使用半精度 VAE,当出现 NaN detected in latents 报错时使用"),

84 | xformers: Schema.boolean().default(true).description("启用 xformers"),

85 | cache_latents: Schema.boolean().default(true).description("缓存图像 latent, 缓存 VAE 输出以减少 VRAM 使用")

86 | }).description("速度优化选项"),

87 | ]);

88 |

--------------------------------------------------------------------------------

/mikazuki/schema/lora-master.ts:

--------------------------------------------------------------------------------

1 | Schema.intersect([

2 | Schema.intersect([

3 | Schema.object({

4 | model_train_type: Schema.union(["sd-lora", "sdxl-lora"]).default("sd-lora").description("训练种类"),

5 | pretrained_model_name_or_path: Schema.string().role('filepicker', { type: "model-file" }).default("./sd-models/model.safetensors").description("底模文件路径"),

6 | resume: Schema.string().role('filepicker', { type: "folder" }).description("从某个 `save_state` 保存的中断状态继续训练,填写文件路径"),

7 | vae: Schema.string().role('filepicker', { type: "model-file" }).description("(可选) VAE 模型文件路径,使用外置 VAE 文件覆盖模型内本身的"),

8 | }).description("训练用模型"),

9 |

10 | Schema.union([

11 | Schema.object({

12 | model_train_type: Schema.const("sd-lora"),

13 | v2: Schema.boolean().default(false).description("底模为 sd2.0 以后的版本需要启用"),

14 | }),

15 | Schema.object({}),

16 | ]),

17 |

18 | Schema.union([

19 | Schema.object({

20 | model_train_type: Schema.const("sd-lora"),

21 | v2: Schema.const(true).required(),

22 | v_parameterization: Schema.boolean().default(false).description("v-parameterization 学习"),

23 | scale_v_pred_loss_like_noise_pred: Schema.boolean().default(false).description("缩放 v-prediction 损失(与v-parameterization配合使用)"),

24 | }),

25 | Schema.object({}),

26 | ]),

27 | ]),

28 |

29 | // 数据集设置

30 | Schema.object(SHARED_SCHEMAS.RAW.DATASET_SETTINGS).description("数据集设置"),

31 |

32 | // 保存设置

33 | SHARED_SCHEMAS.SAVE_SETTINGS,

34 |

35 | Schema.object({

36 | max_train_epochs: Schema.number().min(1).default(10).description("最大训练 epoch(轮数)"),

37 | train_batch_size: Schema.number().min(1).default(1).description("批量大小, 越高显存占用越高"),

38 | gradient_checkpointing: Schema.boolean().default(false).description("梯度检查点"),

39 | gradient_accumulation_steps: Schema.number().min(1).description("梯度累加步数"),

40 | network_train_unet_only: Schema.boolean().default(false).description("仅训练 U-Net 训练SDXL Lora时推荐开启"),

41 | network_train_text_encoder_only: Schema.boolean().default(false).description("仅训练文本编码器"),

42 | }).description("训练相关参数"),

43 |

44 | // 学习率&优化器设置

45 | SHARED_SCHEMAS.LR_OPTIMIZER,

46 |

47 | Schema.intersect([

48 | Schema.object({

49 | network_module: Schema.union(["networks.lora", "networks.dylora", "networks.oft", "lycoris.kohya"]).default("networks.lora").description("训练网络模块"),

50 | network_weights: Schema.string().role('filepicker').description("从已有的 LoRA 模型上继续训练,填写路径"),

51 | network_dim: Schema.number().min(1).default(32).description("网络维度,常用 4~128,不是越大越好, 低dim可以降低显存占用"),

52 | network_alpha: Schema.number().min(1).default(32).description("常用值:等于 network_dim 或 network_dim*1/2 或 1。使用较小的 alpha 需要提升学习率"),

53 | network_dropout: Schema.number().step(0.01).default(0).description('dropout 概率 (与 lycoris 不兼容,需要用 lycoris 自带的)'),

54 | scale_weight_norms: Schema.number().step(0.01).min(0).description("最大范数正则化。如果使用,推荐为 1"),

55 | network_args_custom: Schema.array(String).role('table').description('自定义 network_args,一行一个'),

56 | enable_block_weights: Schema.boolean().default(false).description('启用分层学习率训练(只支持网络模块 networks.lora)'),

57 | enable_base_weight: Schema.boolean().default(false).description('启用基础权重(差异炼丹)'),

58 | }).description("网络设置"),

59 |

60 | // lycoris 参数

61 | SHARED_SCHEMAS.LYCORIS_MAIN,

62 | SHARED_SCHEMAS.LYCORIS_LOKR,

63 |

64 | // dylora 参数

65 | SHARED_SCHEMAS.NETWORK_OPTION_DYLORA,

66 |

67 | // 分层学习率参数

68 | SHARED_SCHEMAS.NETWORK_OPTION_BLOCK_WEIGHTS,

69 |

70 | SHARED_SCHEMAS.NETWORK_OPTION_BASEWEIGHT,

71 | ]),

72 |

73 | // 预览图设置

74 | SHARED_SCHEMAS.PREVIEW_IMAGE,

75 |

76 | // 日志设置

77 | SHARED_SCHEMAS.LOG_SETTINGS,

78 |

79 | // caption 选项

80 | Schema.object(SHARED_SCHEMAS.RAW.CAPTION_SETTINGS).description("caption(Tag)选项"),

81 |

82 | // 噪声设置

83 | SHARED_SCHEMAS.NOISE_SETTINGS,

84 |

85 | // 数据增强

86 | SHARED_SCHEMAS.DATA_ENCHANCEMENT,

87 |

88 | // 其他选项

89 | SHARED_SCHEMAS.OTHER,

90 |

91 | // 速度优化选项

92 | Schema.object(SHARED_SCHEMAS.RAW.PRECISION_CACHE_BATCH).description("速度优化选项"),

93 |

94 | // 分布式训练