Neurons in Neural Network are inspired from biological neurons. This Neural Network would able to do various tasks like classifying images, prediction, and so on. Alexa and Siri use neural network.

5 |

6 | A Perceptron is an algorithm used for supervised learning of binary classifiers. Binary classifiers decide whether an input, usually represented by a series of vectors, belongs to a specific class. In short, a perceptron is a single-layer neural network.

7 |

Working of Perceptron

10 | 11 | Perceptron looks like the following structure: 12 | 13 | 14 |

15 | We have 3 inputs X,Y and Z. When this inputs get into the net input fucntion it firstly get weighted with some weigh value that is w1,w2 and w3 respectively. The net input fucntion(Z) sums up all the value i.e. Z = (Xi * Wi). This Z will determine if a neuron fires or not. Firing of the neuron depends on a function which is called Activation Function. If the sum of the input signals exceeds a certain threshold, it either outputs a signal or does not return an output.

16 |

17 | -

14 |

15 | We have 3 inputs X,Y and Z. When this inputs get into the net input fucntion it firstly get weighted with some weigh value that is w1,w2 and w3 respectively. The net input fucntion(Z) sums up all the value i.e. Z = (Xi * Wi). This Z will determine if a neuron fires or not. Firing of the neuron depends on a function which is called Activation Function. If the sum of the input signals exceeds a certain threshold, it either outputs a signal or does not return an output.

16 |

17 | -

Perceptron Function

18 | 19 | Perceptron is a function that maps its input “x,” which is multiplied with the learned weight coefficient; an output value ”f(x)”is generated. 20 | 21 | ``` If w.x + b > 0, then f(x) = 1 ; else f(x) = 0 ``` 22 | 23 | In the equation given above: 24 | 25 | “w” = vector of real-valued weights 26 | 27 | “b” = bias (an element that adjusts the boundary away from origin without any dependence on the input value) 28 | 29 | “x” = vector of input x values 30 | 31 | ``` Σ (Wi * Xi) ``` 32 | 33 | “m” = number of inputs to the Perceptron 34 | 35 | The output can be represented as “1” or “0.” It can also be represented as “1” or “-1” depending on which activation function is used. 36 | 37 | -

Perceptron Training

38 | Refer:

39 | [Perceptron Training](perceptron_training.py)

--------------------------------------------------------------------------------

/FP-Growth/README.md:

--------------------------------------------------------------------------------

1 | # FP GROWTH ALGORITHM

2 |

3 | ## Introduction

4 |

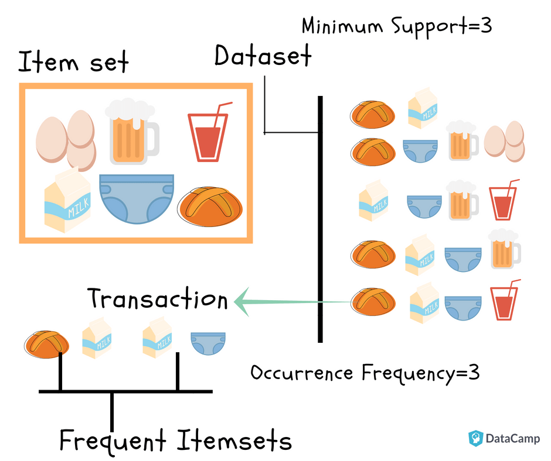

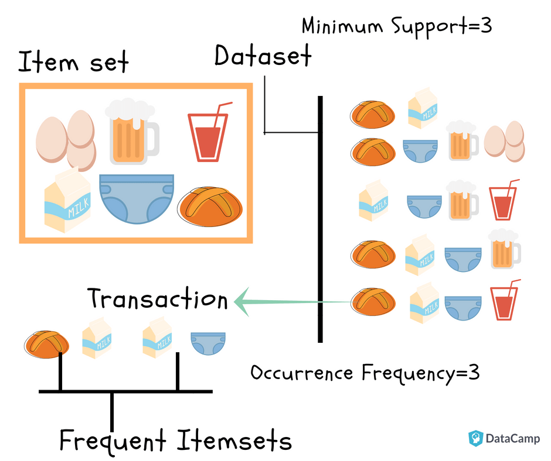

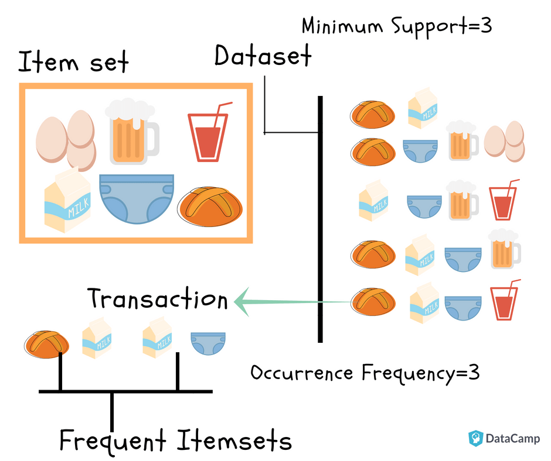

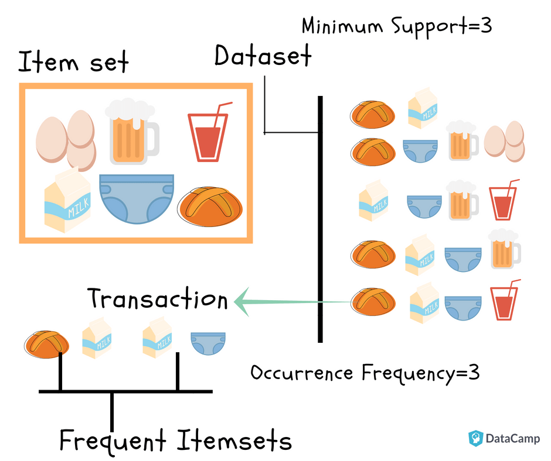

5 | FP growth algorithm or the Frequent Pattern Growth Algorithm is an improvement of apriori algorithm. The two primary drawbacks of the Apriori Algorithm were that at each step, candidate sets had to be rebuilt and to build those, the algorithm had to repeatedly scan the database. These properties in turn made the algorithm slower. FP algorithm overcomes the disadvantages of the Apriori algorithm by storing all the transactions in a Tree Data Structure.

6 |

7 | ## FP Tree

8 |

9 | FP tree is the core concept of the whole FP Growth algorithm. It is the compressed representation of the itemset database. The tree structure not only reserves the itemset in DB but also keeps track of the association between itemsets. The tree is constructed by taking each itemset and mapping it to a path in the tree one at a time. The whole idea behind this construction is that more frequently occurring items will have better chances of sharing items. We then mine the tree recursively to get the frequent pattern. Pattern growth, the name of the algorithm, is achieved by concatenating the frequent pattern generated from the conditional FP trees.

10 |

11 | A FP tree looks something like this:

12 |

13 |

14 |

15 | ## Advantages

16 |

17 | ▪ This algorithm needs to scan the database only twice when compared to Apriori which scans the transactions for each iteration.

18 |

19 | ▪ The pairing of items is not done in this algorithm and this makes it faster.

20 |

21 | ▪ The database is stored in a compact version in memory.

22 |

23 | ▪ It is efficient and scalable for mining both long and short frequent patterns.

24 |

25 | ## Disadvantages

26 |

27 | ▪ FP Tree is more cumbersome and difficult to build than Apriori.

28 |

29 | ▪ It may be expensive.

30 |

31 | ▪ When the database is large, the algorithm may not fit in the shared memory.

32 |

33 | ## References

34 |

35 | ▪ https://www.geeksforgeeks.org/ml-frequent-pattern-growth-algorithm/

36 |

37 | ▪ https://www.softwaretestinghelp.com/fp-growth-algorithm-data-mining/

38 |

39 | ▪ https://towardsdatascience.com/fp-growth-frequent-pattern-generation-in-data-mining-with-python-implementation-244e561ab1c3

40 |

--------------------------------------------------------------------------------

/Lowess Regression/README.md:

--------------------------------------------------------------------------------

1 | # LOWESS REGRESSION

2 |

3 | ## Introduction

4 |

5 | LOWESS are non-parametric regression methods that combine multiple regression models in a k-nearest-neighbor-based meta-model. They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOWESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression. It does this by fitting simple models to localized subsets of the data to build up a function that describes the variation in the data, point by point.

6 |

7 | ## Procedure

8 |

9 | A linear function is fitted only on a local set of points delimited by a region, using weighted least squares. The weights are given by the heights of a kernel function (i.e. weighting function) giving:

10 |

11 | ▪ more weights to points near the target point x0 whose response is being estimated

12 |

13 | ▪ less weight to points further away

14 |

15 | We obtain then a fitted model that retains only the point of the model that are close to the target point (x0). The target point then moves away on the x axis and the procedure repeats for each point.

16 |

17 | ## Advantages

18 |

19 | ▪ Allows us to put less care into selecting the features in order to avoid overfitting.

20 |

21 | ▪ Does not require specification of a function to fit a model to all of the data in the sample.

22 |

23 | ▪ Only a Kernel function and smoothing / bandwidth parameters are required.

24 |

25 | ▪ Very flexible, can model complex processes for which no theoretical model exists.

26 |

27 | ▪ Considered one of the most attractive of the modern regression methods for applications that fit the general framework of least squares regression but which have a complex deterministic structure.

28 |

29 | ## Disadvantages

30 |

31 | ▪ Requires to keep the entire training set in order to make future predictions.

32 |

33 | ▪ The number of parameters grows linearly with the size of the training set.

34 |

35 | ▪ Computationally intensive, as a regression model is computed for each point.

36 |

37 | ▪ Requires fairly large, densely sampled data sets in order to produce good models. This is because LOWESS relies on the local data structure when performing the local fitting.

38 |

39 | ## References

40 |

41 | ▪ https://xavierbourretsicotte.github.io/loess.html

42 |

--------------------------------------------------------------------------------

/Mini Batch K-means Clustering/README.md:

--------------------------------------------------------------------------------

1 | # MINI BATCH K-MEANS CLUSTERING

2 |

3 | ## Introduction

4 |

5 | Mini Batch K-means algorithm‘s main idea is to use small random batches of data of a fixed size, so they can be stored in memory. Each iteration a new random sample from the dataset is obtained and used to update the clusters and this is repeated until convergence. Each mini batch updates the clusters using a convex combination of the values of the prototypes and the data, applying a learning rate that decreases with the number of iterations. This learning rate is the inverse of the number of data assigned to a cluster during the process. As the number of iterations increases, the effect of new data is reduced, so convergence can be detected when no changes in the clusters occur in several consecutive iterations.

6 |

7 | ## Need for Mini-Batch K-Means Clustering Algorithm

8 |

9 | K-means is one of the most popular clustering algorithms, mainly because of its good time performance. With the increasing size of the datasets being analyzed, the computation time of K-means increases because of its constraint of needing the whole dataset in main memory. For this reason, several methods have been proposed to reduce the temporal and spatial cost of the algorithm. A different approach is the Mini batch K-means algorithm.

10 |

11 | The empirical results suggest that it can obtain a substantial saving of computational time at the expense of some loss of cluster quality, but not extensive study of the algorithm has been done to measure how the characteristics of the datasets, such as the number of clusters or its size, affect the partition quality.

12 |

13 |  14 |

15 | ## Advantages

16 |

17 | ▪ If variables are huge, then K-Means most of the times gets computationally faster than hierarchical clustering, if we keep k small.

18 |

19 | ▪ K-Means produce tighter clusters than hierarchical clustering.

20 |

21 | ## Disadvantages

22 |

23 | ▪ Difficult to predict K-Value.

24 |

25 | ▪ It may not work well with clusters (in the original data) of different size and different density.

26 |

27 | ## References

28 |

29 | ▪ https://www.geeksforgeeks.org/ml-mini-batch-k-means-clustering-algorithm/

30 |

31 | ▪ http://playwidtech.blogspot.com/2013/02/k-means-clustering-advantages-and.html

32 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing guidelines

2 |

3 | ## Before contributing

4 |

5 | Welcome to [Algo-Phantoms/Algo-ScriptML](https://github.com/Algo-Phantoms/Algo-ScriptML). Before sending your pull requests, make sure that you **read the whole guidelines**. If you have any doubt on the contributing guide, please feel free to reach out to us.

6 |

7 | ### Contribution

8 |

9 | We appreciate any contribution, from fixing a grammar mistake in a comment to implementing complex algorithms. Please read this section if you are contributing your work.

10 |

11 | #### Coding Style

12 |

13 | We want your work to be readable by others; therefore, we encourage you to note the following:

14 |

15 | - Follow PEP8 guidelines. Read more about it here.

16 | - Please write in Python 3.7+. __print()__ is a function in Python 3 so __print "Hello"__ will _not_ work but __print("Hello")__ will.

17 | - Please focus hard on naming of functions, classes, and variables. Help your reader by using __descriptive names__ that can help you to remove redundant comments.

18 | - Please follow the [Python Naming Conventions](https://pep8.org/#prescriptive-naming-conventions) so variable_names and function_names should be lower_case, CONSTANTS in UPPERCASE, ClassNames should be CamelCase, etc.

19 | - Expand acronyms because __gcf()__ is hard to understand but __greatest_common_factor()__ is not.

20 |

21 | - Avoid importing external libraries for basic algorithms. Only use those libraries for complicated algorithms. **Usage of NumPY is highly recommended.**

22 |

23 |

24 | #### Other points to remember while submitting your work:

25 |

26 | - File extension for code should be `.py`.

27 | - Strictly use snake_case (underscore_separated) in your file_name, as it will be easy to parse in future using scripts.

28 | - Please avoid creating new directories if at all possible. Try to fit your work into the existing directory structure. If you want to. Please contact us before doing so.

29 | - If you have modified/added code work, make sure the code compiles before submitting.

30 | - If you have modified/added documentation work, ensure your language is concise and contains no grammar errors.

31 | - Do not update the [README.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/README.md) and [Contributing_Guidelines.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/CONTRIBUTING.md).

32 |

33 | Happy Coding :)

34 |

35 |

--------------------------------------------------------------------------------

/stochastic gradient descent/README.md:

--------------------------------------------------------------------------------

1 | # Stochastic gradient descent(SGD):

2 | * stochastic gradient descent(SGD) is used for regression problems with **very large dataset(in millions).**

3 | * SGD is same as gradient discent algorithm but have difference in optimization function.

4 | * SGD is inspired by Robbins–Monro algorithm of the 1950s.

5 |

6 | ## Basic idea behind SGD:

7 | * SGD works as an iterative algorithm.

8 | * It starts from a random point in dataset

9 | * after that it tries to fit the training sets one by one.

10 |

11 | ## Working of SGD:

12 |

13 | * first it initialize the theta(weights) to some random values.

14 | * than it takes a dataset(a row) from training set and tries to fit perfectly and returns modefied theta(weights).

15 | * that returned theta(weights) are applied over next dataset and tries to fit it perfectly and returns the theta.

16 | * this loop runs untill last dataset.

17 |

18 | ## Intution behind SGD:

19 | * first the algo shuffles the data, so that there should be no pattern can be seen firstly.

20 | * than algo tries to fit nex data more accurately thn the previously.

21 |

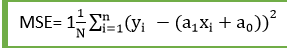

22 | ## SGD function:

23 |

24 |

25 |

26 | ## Difference between SGD and Gradient descent(Batch descent):

27 |

28 |

29 | * gradient descent goes from 1 to m(no of datasets) in every iterations while SGD iterates one time over one dataset.

30 |

31 | ## Main advantages of SGD:

32 | * computational efficient.

33 | * model with large dataset can be trained eaisly.

34 |

35 | ## Main disadvantages of SGD:

36 |

37 | * not effective over small datasets.

38 |

39 | ## Documentation:

40 |

41 | ```python

42 | Stochastic_gradient_descent(learning_rate=0.1)

43 | ```

44 | it takes the learning rate only, if you will not give it will be initialized to 0.1

45 | ```python

46 | object.fit(X,y)

47 | ```

48 | after making object of SGD type we have to call .fit() method in order to train model.

49 |

50 | * X: feature dataset(shuffeled)

51 | * y:label set(target set)(shuffled)

52 |

53 | ```python

54 | object.predict(X_pred)

55 | ```

56 | X_pred: features for which prediction is to be made.

57 |

58 | ## Example:

59 |

60 | ```python

61 |

62 | algo=Stochastic_gradient_descent(learning_rate=0.03)

63 | model=algo.fit(X,y)

64 | predicted_value=model.predict(X_pred)

65 | ```

66 |

--------------------------------------------------------------------------------

/Apriori/README.md:

--------------------------------------------------------------------------------

1 | # APRIORI ALGORITHM

2 |

3 | ## Introduction

4 |

5 | Apriori algorithm was given by R. Agrawal and R. Srikant in 1994 for finding frequent itemsets in a dataset for boolean association rule. The algorithm is called so because it uses prior knowledge of frequent itemset properties. With the help of the association rule, it determines how strongly or how weakly two objects are connected. This algorithm uses a breadth-first search and Hash Tree to calculate the itemset associations efficiently. It is the iterative process for finding the frequent itemsets from the large dataset.

6 |

7 | To improve the efficiency of level-wise generation of frequent itemsets, **Apriori Property** is used. It helps in reducing the search space.

8 |

9 | Frequent itemsets: Frequent itemsets are those items whose support is greater than the threshold value or user-specified minimum support. It means if A & B are the frequent itemsets together, then individually A and B should also be the frequent itemset. Suppose there are the two transactions: A= {1,2,3,4,5}, and B= {2,3,7}, in these two transactions, 2 and 3 are the frequent itemsets.

10 |

11 |

12 |

13 | ## Apriori Property

14 |

15 | According to this property, all subsets of a frequent itemset must also be frequent.

16 |

17 | ## Steps for Apriori Algorithm

18 |

19 | Step 1: Determine the support of itemsets in the transactional database, and select the minimum support and confidence.

20 |

21 | Step 2: Take all supports in the transaction with higher support value than the minimum or selected support value.

22 |

23 | Step 3: Find all the rules of these subsets that have higher confidence value than the threshold or minimum confidence.

24 |

25 | Step 4: Sort the rules as the decreasing order of lift.

26 |

27 | ## Advantages of Apriori Algorithm

28 |

29 | ▪ This is an easy to understand algorithm.

30 |

31 | ▪ The join and prune steps of the algorithm can be easily implemented on large datasets.

32 |

33 | ## Disadvantages of Apriori Algorithm

34 |

35 | ▪ The apriori algorithm works slowly as compared to other algorithms.

36 |

37 | ▪ The overall performance can be reduced as it scans the database for multiple times.

38 |

39 | ▪ The time complexity and space complexity of the apriori algorithm is O(2D), which is very high. Here D represents the horizontal width present in the database.

40 |

41 | ## References

42 |

43 | ▪ https://www.geeksforgeeks.org/apriori-algorithm/

44 |

45 | ▪ https://www.javatpoint.com/apriori-algorithm-in-machine-learning

46 |

--------------------------------------------------------------------------------

/K Nearest Neighbors/README.md:

--------------------------------------------------------------------------------

1 | # K Nearest Neighbors Algorithm

2 | # Introduction

3 | The K-nearest neighbors is a simple and easy-to-implement supervised machine learning algorithm. It can be used to solve both classification as well as regression problems. This algorithm assumes that similar data points are close to each other in the scatter plot.

4 |

5 | # Choosing the right value for K

6 | The best way to decide this is by trying out several values of K (number of nearest neighbors) before settling on one. Low values of K (like K = 1 or K = 2) can be noisy and subject to outliers. If we take large values of K, a category with only a few values in it will always be voted out by other categories. Choose the value of K that reduces the number of errors. We usually make K an odd number to have a tiebreaker.

7 |

8 | # Algorithm

9 | 1. Start with a dataset with known categories.

14 |

15 | ## Advantages

16 |

17 | ▪ If variables are huge, then K-Means most of the times gets computationally faster than hierarchical clustering, if we keep k small.

18 |

19 | ▪ K-Means produce tighter clusters than hierarchical clustering.

20 |

21 | ## Disadvantages

22 |

23 | ▪ Difficult to predict K-Value.

24 |

25 | ▪ It may not work well with clusters (in the original data) of different size and different density.

26 |

27 | ## References

28 |

29 | ▪ https://www.geeksforgeeks.org/ml-mini-batch-k-means-clustering-algorithm/

30 |

31 | ▪ http://playwidtech.blogspot.com/2013/02/k-means-clustering-advantages-and.html

32 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing guidelines

2 |

3 | ## Before contributing

4 |

5 | Welcome to [Algo-Phantoms/Algo-ScriptML](https://github.com/Algo-Phantoms/Algo-ScriptML). Before sending your pull requests, make sure that you **read the whole guidelines**. If you have any doubt on the contributing guide, please feel free to reach out to us.

6 |

7 | ### Contribution

8 |

9 | We appreciate any contribution, from fixing a grammar mistake in a comment to implementing complex algorithms. Please read this section if you are contributing your work.

10 |

11 | #### Coding Style

12 |

13 | We want your work to be readable by others; therefore, we encourage you to note the following:

14 |

15 | - Follow PEP8 guidelines. Read more about it here.

16 | - Please write in Python 3.7+. __print()__ is a function in Python 3 so __print "Hello"__ will _not_ work but __print("Hello")__ will.

17 | - Please focus hard on naming of functions, classes, and variables. Help your reader by using __descriptive names__ that can help you to remove redundant comments.

18 | - Please follow the [Python Naming Conventions](https://pep8.org/#prescriptive-naming-conventions) so variable_names and function_names should be lower_case, CONSTANTS in UPPERCASE, ClassNames should be CamelCase, etc.

19 | - Expand acronyms because __gcf()__ is hard to understand but __greatest_common_factor()__ is not.

20 |

21 | - Avoid importing external libraries for basic algorithms. Only use those libraries for complicated algorithms. **Usage of NumPY is highly recommended.**

22 |

23 |

24 | #### Other points to remember while submitting your work:

25 |

26 | - File extension for code should be `.py`.

27 | - Strictly use snake_case (underscore_separated) in your file_name, as it will be easy to parse in future using scripts.

28 | - Please avoid creating new directories if at all possible. Try to fit your work into the existing directory structure. If you want to. Please contact us before doing so.

29 | - If you have modified/added code work, make sure the code compiles before submitting.

30 | - If you have modified/added documentation work, ensure your language is concise and contains no grammar errors.

31 | - Do not update the [README.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/README.md) and [Contributing_Guidelines.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/CONTRIBUTING.md).

32 |

33 | Happy Coding :)

34 |

35 |

--------------------------------------------------------------------------------

/stochastic gradient descent/README.md:

--------------------------------------------------------------------------------

1 | # Stochastic gradient descent(SGD):

2 | * stochastic gradient descent(SGD) is used for regression problems with **very large dataset(in millions).**

3 | * SGD is same as gradient discent algorithm but have difference in optimization function.

4 | * SGD is inspired by Robbins–Monro algorithm of the 1950s.

5 |

6 | ## Basic idea behind SGD:

7 | * SGD works as an iterative algorithm.

8 | * It starts from a random point in dataset

9 | * after that it tries to fit the training sets one by one.

10 |

11 | ## Working of SGD:

12 |

13 | * first it initialize the theta(weights) to some random values.

14 | * than it takes a dataset(a row) from training set and tries to fit perfectly and returns modefied theta(weights).

15 | * that returned theta(weights) are applied over next dataset and tries to fit it perfectly and returns the theta.

16 | * this loop runs untill last dataset.

17 |

18 | ## Intution behind SGD:

19 | * first the algo shuffles the data, so that there should be no pattern can be seen firstly.

20 | * than algo tries to fit nex data more accurately thn the previously.

21 |

22 | ## SGD function:

23 |

24 |

25 |

26 | ## Difference between SGD and Gradient descent(Batch descent):

27 |

28 |

29 | * gradient descent goes from 1 to m(no of datasets) in every iterations while SGD iterates one time over one dataset.

30 |

31 | ## Main advantages of SGD:

32 | * computational efficient.

33 | * model with large dataset can be trained eaisly.

34 |

35 | ## Main disadvantages of SGD:

36 |

37 | * not effective over small datasets.

38 |

39 | ## Documentation:

40 |

41 | ```python

42 | Stochastic_gradient_descent(learning_rate=0.1)

43 | ```

44 | it takes the learning rate only, if you will not give it will be initialized to 0.1

45 | ```python

46 | object.fit(X,y)

47 | ```

48 | after making object of SGD type we have to call .fit() method in order to train model.

49 |

50 | * X: feature dataset(shuffeled)

51 | * y:label set(target set)(shuffled)

52 |

53 | ```python

54 | object.predict(X_pred)

55 | ```

56 | X_pred: features for which prediction is to be made.

57 |

58 | ## Example:

59 |

60 | ```python

61 |

62 | algo=Stochastic_gradient_descent(learning_rate=0.03)

63 | model=algo.fit(X,y)

64 | predicted_value=model.predict(X_pred)

65 | ```

66 |

--------------------------------------------------------------------------------

/Apriori/README.md:

--------------------------------------------------------------------------------

1 | # APRIORI ALGORITHM

2 |

3 | ## Introduction

4 |

5 | Apriori algorithm was given by R. Agrawal and R. Srikant in 1994 for finding frequent itemsets in a dataset for boolean association rule. The algorithm is called so because it uses prior knowledge of frequent itemset properties. With the help of the association rule, it determines how strongly or how weakly two objects are connected. This algorithm uses a breadth-first search and Hash Tree to calculate the itemset associations efficiently. It is the iterative process for finding the frequent itemsets from the large dataset.

6 |

7 | To improve the efficiency of level-wise generation of frequent itemsets, **Apriori Property** is used. It helps in reducing the search space.

8 |

9 | Frequent itemsets: Frequent itemsets are those items whose support is greater than the threshold value or user-specified minimum support. It means if A & B are the frequent itemsets together, then individually A and B should also be the frequent itemset. Suppose there are the two transactions: A= {1,2,3,4,5}, and B= {2,3,7}, in these two transactions, 2 and 3 are the frequent itemsets.

10 |

11 |

12 |

13 | ## Apriori Property

14 |

15 | According to this property, all subsets of a frequent itemset must also be frequent.

16 |

17 | ## Steps for Apriori Algorithm

18 |

19 | Step 1: Determine the support of itemsets in the transactional database, and select the minimum support and confidence.

20 |

21 | Step 2: Take all supports in the transaction with higher support value than the minimum or selected support value.

22 |

23 | Step 3: Find all the rules of these subsets that have higher confidence value than the threshold or minimum confidence.

24 |

25 | Step 4: Sort the rules as the decreasing order of lift.

26 |

27 | ## Advantages of Apriori Algorithm

28 |

29 | ▪ This is an easy to understand algorithm.

30 |

31 | ▪ The join and prune steps of the algorithm can be easily implemented on large datasets.

32 |

33 | ## Disadvantages of Apriori Algorithm

34 |

35 | ▪ The apriori algorithm works slowly as compared to other algorithms.

36 |

37 | ▪ The overall performance can be reduced as it scans the database for multiple times.

38 |

39 | ▪ The time complexity and space complexity of the apriori algorithm is O(2D), which is very high. Here D represents the horizontal width present in the database.

40 |

41 | ## References

42 |

43 | ▪ https://www.geeksforgeeks.org/apriori-algorithm/

44 |

45 | ▪ https://www.javatpoint.com/apriori-algorithm-in-machine-learning

46 |

--------------------------------------------------------------------------------

/K Nearest Neighbors/README.md:

--------------------------------------------------------------------------------

1 | # K Nearest Neighbors Algorithm

2 | # Introduction

3 | The K-nearest neighbors is a simple and easy-to-implement supervised machine learning algorithm. It can be used to solve both classification as well as regression problems. This algorithm assumes that similar data points are close to each other in the scatter plot.

4 |

5 | # Choosing the right value for K

6 | The best way to decide this is by trying out several values of K (number of nearest neighbors) before settling on one. Low values of K (like K = 1 or K = 2) can be noisy and subject to outliers. If we take large values of K, a category with only a few values in it will always be voted out by other categories. Choose the value of K that reduces the number of errors. We usually make K an odd number to have a tiebreaker.

7 |

8 | # Algorithm

9 | 1. Start with a dataset with known categories.

10 | 2. Initialize K(number of nearest neighbours).

11 | 3. For each data point in the training data:

12 | 3.1 calculate the distance between the data point and the current example from the data.

13 | 3.2 add the distance and the index of the example to an ordered collection.

14 | 4. Sort the ordered collection of distances and indices from smallest to largest (in ascending order) by the distances

15 | 5. Pick the first K entries from the sorted collection.

16 | 6. Get the labels of the selected K entries.

17 | 7. If regression, return the mean of the K labels. (return average of K labels)

18 | 8. If classification, return the mode of the K labels. (return mode of K labels)

19 |

20 |

14 |

15 | ## Advantages

16 |

17 | ▪ If variables are huge, then K-Means most of the times gets computationally faster than hierarchical clustering, if we keep k small.

18 |

19 | ▪ K-Means produce tighter clusters than hierarchical clustering.

20 |

21 | ## Disadvantages

22 |

23 | ▪ Difficult to predict K-Value.

24 |

25 | ▪ It may not work well with clusters (in the original data) of different size and different density.

26 |

27 | ## References

28 |

29 | ▪ https://www.geeksforgeeks.org/ml-mini-batch-k-means-clustering-algorithm/

30 |

31 | ▪ http://playwidtech.blogspot.com/2013/02/k-means-clustering-advantages-and.html

32 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing guidelines

2 |

3 | ## Before contributing

4 |

5 | Welcome to [Algo-Phantoms/Algo-ScriptML](https://github.com/Algo-Phantoms/Algo-ScriptML). Before sending your pull requests, make sure that you **read the whole guidelines**. If you have any doubt on the contributing guide, please feel free to reach out to us.

6 |

7 | ### Contribution

8 |

9 | We appreciate any contribution, from fixing a grammar mistake in a comment to implementing complex algorithms. Please read this section if you are contributing your work.

10 |

11 | #### Coding Style

12 |

13 | We want your work to be readable by others; therefore, we encourage you to note the following:

14 |

15 | - Follow PEP8 guidelines. Read more about it here.

16 | - Please write in Python 3.7+. __print()__ is a function in Python 3 so __print "Hello"__ will _not_ work but __print("Hello")__ will.

17 | - Please focus hard on naming of functions, classes, and variables. Help your reader by using __descriptive names__ that can help you to remove redundant comments.

18 | - Please follow the [Python Naming Conventions](https://pep8.org/#prescriptive-naming-conventions) so variable_names and function_names should be lower_case, CONSTANTS in UPPERCASE, ClassNames should be CamelCase, etc.

19 | - Expand acronyms because __gcf()__ is hard to understand but __greatest_common_factor()__ is not.

20 |

21 | - Avoid importing external libraries for basic algorithms. Only use those libraries for complicated algorithms. **Usage of NumPY is highly recommended.**

22 |

23 |

24 | #### Other points to remember while submitting your work:

25 |

26 | - File extension for code should be `.py`.

27 | - Strictly use snake_case (underscore_separated) in your file_name, as it will be easy to parse in future using scripts.

28 | - Please avoid creating new directories if at all possible. Try to fit your work into the existing directory structure. If you want to. Please contact us before doing so.

29 | - If you have modified/added code work, make sure the code compiles before submitting.

30 | - If you have modified/added documentation work, ensure your language is concise and contains no grammar errors.

31 | - Do not update the [README.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/README.md) and [Contributing_Guidelines.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/CONTRIBUTING.md).

32 |

33 | Happy Coding :)

34 |

35 |

--------------------------------------------------------------------------------

/stochastic gradient descent/README.md:

--------------------------------------------------------------------------------

1 | # Stochastic gradient descent(SGD):

2 | * stochastic gradient descent(SGD) is used for regression problems with **very large dataset(in millions).**

3 | * SGD is same as gradient discent algorithm but have difference in optimization function.

4 | * SGD is inspired by Robbins–Monro algorithm of the 1950s.

5 |

6 | ## Basic idea behind SGD:

7 | * SGD works as an iterative algorithm.

8 | * It starts from a random point in dataset

9 | * after that it tries to fit the training sets one by one.

10 |

11 | ## Working of SGD:

12 |

13 | * first it initialize the theta(weights) to some random values.

14 | * than it takes a dataset(a row) from training set and tries to fit perfectly and returns modefied theta(weights).

15 | * that returned theta(weights) are applied over next dataset and tries to fit it perfectly and returns the theta.

16 | * this loop runs untill last dataset.

17 |

18 | ## Intution behind SGD:

19 | * first the algo shuffles the data, so that there should be no pattern can be seen firstly.

20 | * than algo tries to fit nex data more accurately thn the previously.

21 |

22 | ## SGD function:

23 |

24 |

25 |

26 | ## Difference between SGD and Gradient descent(Batch descent):

27 |

28 |

29 | * gradient descent goes from 1 to m(no of datasets) in every iterations while SGD iterates one time over one dataset.

30 |

31 | ## Main advantages of SGD:

32 | * computational efficient.

33 | * model with large dataset can be trained eaisly.

34 |

35 | ## Main disadvantages of SGD:

36 |

37 | * not effective over small datasets.

38 |

39 | ## Documentation:

40 |

41 | ```python

42 | Stochastic_gradient_descent(learning_rate=0.1)

43 | ```

44 | it takes the learning rate only, if you will not give it will be initialized to 0.1

45 | ```python

46 | object.fit(X,y)

47 | ```

48 | after making object of SGD type we have to call .fit() method in order to train model.

49 |

50 | * X: feature dataset(shuffeled)

51 | * y:label set(target set)(shuffled)

52 |

53 | ```python

54 | object.predict(X_pred)

55 | ```

56 | X_pred: features for which prediction is to be made.

57 |

58 | ## Example:

59 |

60 | ```python

61 |

62 | algo=Stochastic_gradient_descent(learning_rate=0.03)

63 | model=algo.fit(X,y)

64 | predicted_value=model.predict(X_pred)

65 | ```

66 |

--------------------------------------------------------------------------------

/Apriori/README.md:

--------------------------------------------------------------------------------

1 | # APRIORI ALGORITHM

2 |

3 | ## Introduction

4 |

5 | Apriori algorithm was given by R. Agrawal and R. Srikant in 1994 for finding frequent itemsets in a dataset for boolean association rule. The algorithm is called so because it uses prior knowledge of frequent itemset properties. With the help of the association rule, it determines how strongly or how weakly two objects are connected. This algorithm uses a breadth-first search and Hash Tree to calculate the itemset associations efficiently. It is the iterative process for finding the frequent itemsets from the large dataset.

6 |

7 | To improve the efficiency of level-wise generation of frequent itemsets, **Apriori Property** is used. It helps in reducing the search space.

8 |

9 | Frequent itemsets: Frequent itemsets are those items whose support is greater than the threshold value or user-specified minimum support. It means if A & B are the frequent itemsets together, then individually A and B should also be the frequent itemset. Suppose there are the two transactions: A= {1,2,3,4,5}, and B= {2,3,7}, in these two transactions, 2 and 3 are the frequent itemsets.

10 |

11 |

12 |

13 | ## Apriori Property

14 |

15 | According to this property, all subsets of a frequent itemset must also be frequent.

16 |

17 | ## Steps for Apriori Algorithm

18 |

19 | Step 1: Determine the support of itemsets in the transactional database, and select the minimum support and confidence.

20 |

21 | Step 2: Take all supports in the transaction with higher support value than the minimum or selected support value.

22 |

23 | Step 3: Find all the rules of these subsets that have higher confidence value than the threshold or minimum confidence.

24 |

25 | Step 4: Sort the rules as the decreasing order of lift.

26 |

27 | ## Advantages of Apriori Algorithm

28 |

29 | ▪ This is an easy to understand algorithm.

30 |

31 | ▪ The join and prune steps of the algorithm can be easily implemented on large datasets.

32 |

33 | ## Disadvantages of Apriori Algorithm

34 |

35 | ▪ The apriori algorithm works slowly as compared to other algorithms.

36 |

37 | ▪ The overall performance can be reduced as it scans the database for multiple times.

38 |

39 | ▪ The time complexity and space complexity of the apriori algorithm is O(2D), which is very high. Here D represents the horizontal width present in the database.

40 |

41 | ## References

42 |

43 | ▪ https://www.geeksforgeeks.org/apriori-algorithm/

44 |

45 | ▪ https://www.javatpoint.com/apriori-algorithm-in-machine-learning

46 |

--------------------------------------------------------------------------------

/K Nearest Neighbors/README.md:

--------------------------------------------------------------------------------

1 | # K Nearest Neighbors Algorithm

2 | # Introduction

3 | The K-nearest neighbors is a simple and easy-to-implement supervised machine learning algorithm. It can be used to solve both classification as well as regression problems. This algorithm assumes that similar data points are close to each other in the scatter plot.

4 |

5 | # Choosing the right value for K

6 | The best way to decide this is by trying out several values of K (number of nearest neighbors) before settling on one. Low values of K (like K = 1 or K = 2) can be noisy and subject to outliers. If we take large values of K, a category with only a few values in it will always be voted out by other categories. Choose the value of K that reduces the number of errors. We usually make K an odd number to have a tiebreaker.

7 |

8 | # Algorithm

9 | 1. Start with a dataset with known categories.

14 |

15 | ## Advantages

16 |

17 | ▪ If variables are huge, then K-Means most of the times gets computationally faster than hierarchical clustering, if we keep k small.

18 |

19 | ▪ K-Means produce tighter clusters than hierarchical clustering.

20 |

21 | ## Disadvantages

22 |

23 | ▪ Difficult to predict K-Value.

24 |

25 | ▪ It may not work well with clusters (in the original data) of different size and different density.

26 |

27 | ## References

28 |

29 | ▪ https://www.geeksforgeeks.org/ml-mini-batch-k-means-clustering-algorithm/

30 |

31 | ▪ http://playwidtech.blogspot.com/2013/02/k-means-clustering-advantages-and.html

32 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing guidelines

2 |

3 | ## Before contributing

4 |

5 | Welcome to [Algo-Phantoms/Algo-ScriptML](https://github.com/Algo-Phantoms/Algo-ScriptML). Before sending your pull requests, make sure that you **read the whole guidelines**. If you have any doubt on the contributing guide, please feel free to reach out to us.

6 |

7 | ### Contribution

8 |

9 | We appreciate any contribution, from fixing a grammar mistake in a comment to implementing complex algorithms. Please read this section if you are contributing your work.

10 |

11 | #### Coding Style

12 |

13 | We want your work to be readable by others; therefore, we encourage you to note the following:

14 |

15 | - Follow PEP8 guidelines. Read more about it here.

16 | - Please write in Python 3.7+. __print()__ is a function in Python 3 so __print "Hello"__ will _not_ work but __print("Hello")__ will.

17 | - Please focus hard on naming of functions, classes, and variables. Help your reader by using __descriptive names__ that can help you to remove redundant comments.

18 | - Please follow the [Python Naming Conventions](https://pep8.org/#prescriptive-naming-conventions) so variable_names and function_names should be lower_case, CONSTANTS in UPPERCASE, ClassNames should be CamelCase, etc.

19 | - Expand acronyms because __gcf()__ is hard to understand but __greatest_common_factor()__ is not.

20 |

21 | - Avoid importing external libraries for basic algorithms. Only use those libraries for complicated algorithms. **Usage of NumPY is highly recommended.**

22 |

23 |

24 | #### Other points to remember while submitting your work:

25 |

26 | - File extension for code should be `.py`.

27 | - Strictly use snake_case (underscore_separated) in your file_name, as it will be easy to parse in future using scripts.

28 | - Please avoid creating new directories if at all possible. Try to fit your work into the existing directory structure. If you want to. Please contact us before doing so.

29 | - If you have modified/added code work, make sure the code compiles before submitting.

30 | - If you have modified/added documentation work, ensure your language is concise and contains no grammar errors.

31 | - Do not update the [README.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/README.md) and [Contributing_Guidelines.md](https://github.com/Algo-Phantoms/Algo-ScriptML/blob/main/CONTRIBUTING.md).

32 |

33 | Happy Coding :)

34 |

35 |

--------------------------------------------------------------------------------

/stochastic gradient descent/README.md:

--------------------------------------------------------------------------------

1 | # Stochastic gradient descent(SGD):

2 | * stochastic gradient descent(SGD) is used for regression problems with **very large dataset(in millions).**

3 | * SGD is same as gradient discent algorithm but have difference in optimization function.

4 | * SGD is inspired by Robbins–Monro algorithm of the 1950s.

5 |

6 | ## Basic idea behind SGD:

7 | * SGD works as an iterative algorithm.

8 | * It starts from a random point in dataset

9 | * after that it tries to fit the training sets one by one.

10 |

11 | ## Working of SGD:

12 |

13 | * first it initialize the theta(weights) to some random values.

14 | * than it takes a dataset(a row) from training set and tries to fit perfectly and returns modefied theta(weights).

15 | * that returned theta(weights) are applied over next dataset and tries to fit it perfectly and returns the theta.

16 | * this loop runs untill last dataset.

17 |

18 | ## Intution behind SGD:

19 | * first the algo shuffles the data, so that there should be no pattern can be seen firstly.

20 | * than algo tries to fit nex data more accurately thn the previously.

21 |

22 | ## SGD function:

23 |

24 |

25 |

26 | ## Difference between SGD and Gradient descent(Batch descent):

27 |

28 |

29 | * gradient descent goes from 1 to m(no of datasets) in every iterations while SGD iterates one time over one dataset.

30 |

31 | ## Main advantages of SGD:

32 | * computational efficient.

33 | * model with large dataset can be trained eaisly.

34 |

35 | ## Main disadvantages of SGD:

36 |

37 | * not effective over small datasets.

38 |

39 | ## Documentation:

40 |

41 | ```python

42 | Stochastic_gradient_descent(learning_rate=0.1)

43 | ```

44 | it takes the learning rate only, if you will not give it will be initialized to 0.1

45 | ```python

46 | object.fit(X,y)

47 | ```

48 | after making object of SGD type we have to call .fit() method in order to train model.

49 |

50 | * X: feature dataset(shuffeled)

51 | * y:label set(target set)(shuffled)

52 |

53 | ```python

54 | object.predict(X_pred)

55 | ```

56 | X_pred: features for which prediction is to be made.

57 |

58 | ## Example:

59 |

60 | ```python

61 |

62 | algo=Stochastic_gradient_descent(learning_rate=0.03)

63 | model=algo.fit(X,y)

64 | predicted_value=model.predict(X_pred)

65 | ```

66 |

--------------------------------------------------------------------------------

/Apriori/README.md:

--------------------------------------------------------------------------------

1 | # APRIORI ALGORITHM

2 |

3 | ## Introduction

4 |

5 | Apriori algorithm was given by R. Agrawal and R. Srikant in 1994 for finding frequent itemsets in a dataset for boolean association rule. The algorithm is called so because it uses prior knowledge of frequent itemset properties. With the help of the association rule, it determines how strongly or how weakly two objects are connected. This algorithm uses a breadth-first search and Hash Tree to calculate the itemset associations efficiently. It is the iterative process for finding the frequent itemsets from the large dataset.

6 |

7 | To improve the efficiency of level-wise generation of frequent itemsets, **Apriori Property** is used. It helps in reducing the search space.

8 |

9 | Frequent itemsets: Frequent itemsets are those items whose support is greater than the threshold value or user-specified minimum support. It means if A & B are the frequent itemsets together, then individually A and B should also be the frequent itemset. Suppose there are the two transactions: A= {1,2,3,4,5}, and B= {2,3,7}, in these two transactions, 2 and 3 are the frequent itemsets.

10 |

11 |

12 |

13 | ## Apriori Property

14 |

15 | According to this property, all subsets of a frequent itemset must also be frequent.

16 |

17 | ## Steps for Apriori Algorithm

18 |

19 | Step 1: Determine the support of itemsets in the transactional database, and select the minimum support and confidence.

20 |

21 | Step 2: Take all supports in the transaction with higher support value than the minimum or selected support value.

22 |

23 | Step 3: Find all the rules of these subsets that have higher confidence value than the threshold or minimum confidence.

24 |

25 | Step 4: Sort the rules as the decreasing order of lift.

26 |

27 | ## Advantages of Apriori Algorithm

28 |

29 | ▪ This is an easy to understand algorithm.

30 |

31 | ▪ The join and prune steps of the algorithm can be easily implemented on large datasets.

32 |

33 | ## Disadvantages of Apriori Algorithm

34 |

35 | ▪ The apriori algorithm works slowly as compared to other algorithms.

36 |

37 | ▪ The overall performance can be reduced as it scans the database for multiple times.

38 |

39 | ▪ The time complexity and space complexity of the apriori algorithm is O(2D), which is very high. Here D represents the horizontal width present in the database.

40 |

41 | ## References

42 |

43 | ▪ https://www.geeksforgeeks.org/apriori-algorithm/

44 |

45 | ▪ https://www.javatpoint.com/apriori-algorithm-in-machine-learning

46 |

--------------------------------------------------------------------------------

/K Nearest Neighbors/README.md:

--------------------------------------------------------------------------------

1 | # K Nearest Neighbors Algorithm

2 | # Introduction

3 | The K-nearest neighbors is a simple and easy-to-implement supervised machine learning algorithm. It can be used to solve both classification as well as regression problems. This algorithm assumes that similar data points are close to each other in the scatter plot.

4 |

5 | # Choosing the right value for K

6 | The best way to decide this is by trying out several values of K (number of nearest neighbors) before settling on one. Low values of K (like K = 1 or K = 2) can be noisy and subject to outliers. If we take large values of K, a category with only a few values in it will always be voted out by other categories. Choose the value of K that reduces the number of errors. We usually make K an odd number to have a tiebreaker.

7 |

8 | # Algorithm

9 | 1. Start with a dataset with known categories.10 | 2. Initialize K(number of nearest neighbours).

11 | 3. For each data point in the training data:

12 | 3.1 calculate the distance between the data point and the current example from the data.

13 | 3.2 add the distance and the index of the example to an ordered collection.

14 | 4. Sort the ordered collection of distances and indices from smallest to largest (in ascending order) by the distances

15 | 5. Pick the first K entries from the sorted collection.

16 | 6. Get the labels of the selected K entries.

17 | 7. If regression, return the mean of the K labels. (return average of K labels)

18 | 8. If classification, return the mode of the K labels. (return mode of K labels)

19 | 20 |

21 |  22 |

22 |

26 | 2. There is no need to build a model, tune parameters, or make additional assumptions.

27 | 3. New data can be added seamlessly which will not impact the accuracy of the algorithm.

28 | # Disadvantages 29 | 1. KNN algorithm does not work well with large datasets. In large datasets, the cost of calculating the distance between the new point and the existing points is huge which degrades the performance of the algorithm.

30 | 2. It is sensitive to noisy data, missing values and outliers.

31 | # References 32 | 1. https://www.youtube.com/watch?v=HVXime0nQeI

33 | 2. https://towardsdatascience.com/machine-learning-basics-with-the-k-nearest-neighbors-algorithm-6a6e71d01761

34 | -------------------------------------------------------------------------------- /K-Means/kmeans.py: -------------------------------------------------------------------------------- 1 | # -*- coding: utf-8 -*- 2 | import random 3 | from sklearn.cluster import KMeans 4 | import seaborn as sns 5 | import numpy as np 6 | import matplotlib.pyplot as plt 7 | from sklearn.datasets import make_blobs 8 | 9 | # Library Implementation of K Means 10 | 11 | 12 | X, y = make_blobs(centers=3, random_state=42) 13 | 14 | 15 | sns.scatterplot(X[:, 0], X[:, 1], hue=y) 16 | 17 | sns.scatterplot(X[:, 0], X[:, 1]) 18 | 19 | 20 | model = KMeans(n_clusters=4) 21 | 22 | model.fit(X) 23 | 24 | y_gen = model.labels_ 25 | 26 | sns.scatterplot(X[:, 0], X[:, 1], hue=y_gen) 27 | 28 | model.cluster_centers_ 29 | 30 | sns.scatterplot(X[:, 0], X[:, 1], hue=y_gen) 31 | 32 | for center in model.cluster_centers_: 33 | plt.scatter(center[0], center[1], s=60) 34 | 35 | sns.scatterplot(X[:, 0], X[:, 1]) 36 | 37 | 38 | # Custom Implementation of K Means 39 | 40 | class Cluster: 41 | 42 | def __init__(self, center): 43 | """Initialization of Clusters for K Means.""" 44 | self.center = center 45 | self.points = [] 46 | 47 | def distance(self, point): 48 | return np.sqrt(np.sum((point - self.center) ** 2)) 49 | 50 | 51 | class CustomKMeans: 52 | 53 | def __init__(self, n_clusters=3, max_iters=20): 54 | """Custom Implementation of K Means.""" 55 | self.n_clusters = n_clusters 56 | self.max_iters = max_iters 57 | 58 | def fit(self, X): 59 | 60 | clusters = [] 61 | for _ in range(self.n_clusters): 62 | cluster = Cluster(center=random.choice(X)) 63 | clusters.append(cluster) 64 | 65 | for _ in range(self.max_iters): 66 | 67 | labels = [] 68 | 69 | # going for each point 70 | for point in X: 71 | 72 | # collecting disctances form every cluster 73 | distances = [] 74 | for cluster in clusters: 75 | distances.append(cluster.distance(point)) 76 | 77 | # finding closest cluster 78 | closest_idx = np.argmin(distances) 79 | closest_cluster = clusters[closest_idx] 80 | closest_cluster.points.append(point) 81 | labels.append(closest_idx) 82 | 83 | for cluster in clusters: 84 | cluster.center = np.mean(cluster.points, axis=0) 85 | 86 | self.labels_ = labels 87 | self.cluster_centers_ = [cluster.center for cluster in clusters] 88 | 89 | 90 | model = CustomKMeans(n_clusters=2) 91 | 92 | model.fit(X) 93 | 94 | sns.scatterplot(X[:, 0], X[:, 1], hue=model.labels_) 95 | 96 | for center in model.cluster_centers_: 97 | plt.scatter(center[0], center[1], s=60) 98 | -------------------------------------------------------------------------------- /Neural Network/neural_network.py: -------------------------------------------------------------------------------- 1 | # -*- coding: utf-8 -*- 2 | """Neural Network.ipynb 3 | 4 | Automatically generated by Colaboratory. 5 | 6 | Original file is located at 7 | https://colab.research.google.com/drive/1HMxwkMbHBiP3DnVkR59bFIwQ7WLo8S51 8 | """ 9 | 10 | import pandas as pd 11 | import numpy as np 12 | class NeuralNetwork: 13 | 14 | def __init__(self,input_size,layers,output_size): 15 | np.random.seed(0) 16 | 17 | model = {} #Dictionary 18 | 19 | #First Layer 20 | model['W1'] = np.random.randn(input_size,layers[0]) 21 | model['b1'] = np.zeros((1,layers[0])) 22 | 23 | #Second Layer 24 | model['W2'] = np.random.randn(layers[0],layers[1]) 25 | model['b2'] = np.zeros((1,layers[1])) 26 | 27 | #Third/Output Layer 28 | model['W3'] = np.random.randn(layers[1],output_size) 29 | model['b3'] = np.zeros((1,output_size)) 30 | 31 | self.model = model 32 | self.activation_outputs = None 33 | 34 | def forward(self,x): 35 | 36 | W1,W2,W3 = self.model['W1'],self.model['W2'],self.model['W3'] 37 | b1, b2, b3 = self.model['b1'],self.model['b2'],self.model['b3'] 38 | 39 | z1 = np.dot(x,W1) + b1 40 | a1 = np.tanh(z1) 41 | 42 | z2 = np.dot(a1,W2) + b2 43 | a2 = np.tanh(z2) 44 | 45 | z3 = np.dot(a2,W3) + b3 46 | y_ = softmax(z3) 47 | 48 | self.activation_outputs = (a1,a2,y_) 49 | return y_ 50 | 51 | def backward(self,x,y,learning_rate=0.001): 52 | W1,W2,W3 = self.model['W1'],self.model['W2'],self.model['W3'] 53 | b1, b2, b3 = self.model['b1'],self.model['b2'],self.model['b3'] 54 | m = x.shape[0] 55 | 56 | a1,a2,y_ = self.activation_outputs 57 | 58 | delta3 = y_ - y 59 | dw3 = np.dot(a2.T,delta3) 60 | db3 = np.sum(delta3,axis=0) 61 | 62 | delta2 = (1-np.square(a2))*np.dot(delta3,W3.T) 63 | dw2 = np.dot(a1.T,delta2) 64 | db2 = np.sum(delta2,axis=0) 65 | 66 | delta1 = (1-np.square(a1))*np.dot(delta2,W2.T) 67 | dw1 = np.dot(X.T,delta1) 68 | db1 = np.sum(delta1,axis=0) 69 | 70 | 71 | #Update the Model Parameters using Gradient Descent 72 | self.model["W1"] -= learning_rate*dw1 73 | self.model['b1'] -= learning_rate*db1 74 | 75 | self.model["W2"] -= learning_rate*dw2 76 | self.model['b2'] -= learning_rate*db2 77 | 78 | self.model["W3"] -= learning_rate*dw3 79 | self.model['b3'] -= learning_rate*db3 80 | -------------------------------------------------------------------------------- /Linear Regression/Linear_Regression.py: -------------------------------------------------------------------------------- 1 | import pandas as pd 2 | import numpy as np 3 | import matplotlib.pyplot as plt 4 | 5 | 6 | class LinearRegression: 7 | 8 | def __init__(self): 9 | """ 10 | Linear regression 11 | Arguments - alpha (by default .001) 12 | epochs(by default 100) 13 | plot : bool - plot the cost vs epoch graph 14 | function - fit : (x, y) - train the data 15 | predict : (x) - predict the new y using previus trained model 16 | this module also normalize data brfore training that increase training efficiency 17 | """ 18 | self.costs = [] 19 | self.b = 0 20 | self.w = [] 21 | 22 | @classmethod 23 | def forward(x, w, b): 24 | y_pred = np.dot(x, w) + b 25 | return y_pred 26 | 27 | @classmethod 28 | def compute_cost(y_pred, y, n): 29 | cost = (1/(2*n)) * np.sum(np.square(y_pred - y)) 30 | return cost 31 | 32 | @classmethod 33 | def backward(y_pred, y, x, n): 34 | # print(y_pred.shape, y.shape, x.shape) 35 | dw = (1/n) * np.dot(x.T, (y_pred-y)) 36 | # print(dw.shape) 37 | db = (1/n) * np.sum((y_pred-y)) 38 | return dw, db 39 | 40 | @classmethod 41 | def update(w, b, dw, db, lr): 42 | w -= lr*dw 43 | b -= lr*db 44 | return w, b 45 | 46 | @classmethod 47 | def normalize(df): 48 | result = df.copy() 49 | for feature_name in df.columns: 50 | mean = np.mean(df[feature_name]) 51 | std = np.std(df[feature_name]) 52 | result[feature_name] = (df[feature_name] - mean) / std 53 | return result 54 | 55 | @classmethod 56 | def initialize(m): 57 | w = np.random.normal(size=(m, 1)) 58 | b = 0 59 | return (w, b) 60 | 61 | def fit(self, X, y, lr=.005, epochs=550): 62 | X = pd.DataFrame(X) 63 | X = self.normalize(X) 64 | y = np.reshape(np.array(y), (len(y), 1)) 65 | n, m = X.shape 66 | w, b = self.initialize(m) 67 | w = w 68 | b = b 69 | self.w, self.b = self.initialize(m) 70 | for _ in range(epochs): 71 | y_pred = self.forward(X, self.w, self.b) 72 | cost = self.compute_cost(y_pred, y, n) 73 | dw, db = self.backward(y_pred, y, X, n) 74 | self.w, self.b = self.update(self.w, self.b, dw, db, lr) 75 | self.costs.append(cost) 76 | plt.plot(self.costs) 77 | 78 | def predict(self, X): 79 | X = pd.DataFrame(X) 80 | X = self.normalize(X) 81 | y_pred = self.forward(X, self.w, self.b) 82 | return y_pred 83 | -------------------------------------------------------------------------------- /Preprocessing/standard_scaler.py: -------------------------------------------------------------------------------- 1 | import numpy as np 2 | np.seterr(divide='ignore', invalid='ignore') 3 | 4 | class StandardScaler: 5 | 6 | def __init__(self, *args): 7 | ''' 8 | >>> scaler = StandardScaler() 9 | >>> data = [[0, 0], [0, 0], [1, 1], [1, 1]] 10 | >>> print(scaler.fit(data)) 11 | StandardScaler() 12 | >>> print(scaler.mean_) 13 | [0.5 0.5] 14 | >>> print(scaler.transform(data)) 15 | [[-1. -1.] 16 | [-1. -1.] 17 | [ 1. 1.] 18 | [ 1. 1.]] 19 | >>> print(scaler.transform([[2, 2]])) 20 | [[3. 3.]] 21 | ''' 22 | self._sample_size = None 23 | self._means = None 24 | self._stds = None 25 | 26 | def fit(self, x, *args): 27 | try: 28 | x = np.array(x, dtype=np.float64) 29 | self._sample_size = x.shape[1] 30 | self._means = np.mean(x, axis=0) 31 | self._stds = np.std(x, axis=0) 32 | return self 33 | except Exception as e: 34 | raise e 35 | 36 | def transform(self, x, *args): 37 | try: 38 | x = np.array(x, dtype=np.float64) 39 | if self._means is None and self._stds is None: 40 | return f'NotFittedError: This StandardScaler instance is not fitted yet. Call \'fit\' with appropriate arguments before using this estimator.' 41 | elif x.shape[1] != self._sample_size: 42 | return f'ValueError: X has {x.shape[1]} features, but StandardScaler is expecting {self._sample_size} features as input' 43 | else: 44 | x = (x - self._means) / self._stds 45 | x = self.__remove_outlier_by_zero(x) 46 | return x 47 | except Exception as e: 48 | raise e 49 | 50 | def fit_transform(self, x, *args): 51 | try: 52 | self.fit(x) 53 | return self.transform(x) 54 | except Exception as e: 55 | raise e 56 | 57 | def inverse_transform(self, x, *args): 58 | try: 59 | x = np.array(x, dtype=np.float64) 60 | if self._means is None and self._stds is None: 61 | return f'NotFittedError: This StandardScaler instance is not fitted yet. Call \'fit\' with appropriate arguments before using this estimator.' 62 | else: 63 | x = (x * self._stds) + self._means 64 | x = self.__remove_outlier_by_zero(x) 65 | return x 66 | except Exception as e: 67 | raise e 68 | 69 | def __remove_outlier_by_zero(self, x): 70 | return np.nan_to_num(x, nan=0.0, posinf=0.0, neginf=0.0) 71 | 72 | @property 73 | def mean_(self): 74 | return self._means 75 | 76 | @property 77 | def scale_(self): 78 | return self._stds 79 | 80 | 81 | 82 | 83 | 84 | 85 | 86 | 87 | -------------------------------------------------------------------------------- /Ridge Regression/README.md: -------------------------------------------------------------------------------- 1 | # Ridge Regression 2 | 3 | ## Introduction 4 | - Ridge regression also known as L2 regularization is a special case of Tikhonov regularization in which all parameters are regularized equally. 5 | - It was first introduced by Hoerl and Kennard in 1970. 6 | - It is a technique which is used for analyzing multiple regression data that suffer from multicollinearity. 7 | 8 | 9 | ## Working of Ridge Regression 10 | - The objective of ridge regresion is to minimize the loss function plus the sum of square of the magnitude of coefficients. 11 | - The Ridge regression puts a constraint on the sum of suqare of values of the model parameters,the sum has to be less than a fixed value (upper bound). 12 | - In order to do so, it applies a shrinking (regularization) process where it penalizes the coefficients of the regression variables. 13 | - The goal of this process is to minimize the prediction error. 14 | 15 | 16 | ## Ridge Regression Cost Function 17 |

18 |  19 |

19 |

23 |  24 |

24 |

10 |  11 |

11 |

33 |  34 |

34 |

19 |  20 |

20 |

24 |  25 |

25 |

array, true values

22 | y_hat --> array, predicted values

23 | Returns:

24 | float, error

25 | '''

26 | error = 0

27 | for i in range(len(y)):

28 | error += (y[i] - y_hat[i]) ** 2

29 | return error / len(y)

30 |

31 | # method for calculating the coefficient of the linear regression model

32 | def fit(self, X, y):

33 | '''

34 | Input parameters:

35 | X --> array, features

36 | y --> array, true values

37 | Returns:

38 | None

39 | '''

40 | # 1. initializing weights and bias to zeros

41 | self.weights = np.zeros(X.shape[1])

42 | self.bias = 0

43 |

44 | # 2. performing gradient descent

45 | for i in range(self.n_iterations):

46 | # line equation

47 | y_hat = np.dot(X, self.weights) + self.bias

48 | loss = self._mean_squared_error(y, y_hat)

49 | self.loss.append(loss)

50 |

51 | # calculating derivatives

52 | partial_w = (1 / X.shape[0]) * (2 * np.dot(X.T, (y_hat - y)))

53 | partial_d = (1 / X.shape[0]) * (2 * np.sum(y_hat - y))

54 |

55 | # updating the coefficients

56 | self.weights -= self.learning_rate * partial_w

57 | self.bias -= self.learning_rate * partial_d

58 |

59 | # method for making predictions using the line equation

60 | def predict(self, X):

61 | '''

62 | Input parameters:

63 | X --> array, features

64 | Returns:

65 | array, predictions

66 | '''

67 | return np.dot(X, self.weights) + self.bias()

68 |

69 | '''

70 |

71 | EXAMPLE:

72 |

73 | # Importing Libraries

74 |

75 | import pandas as pd

76 | from sklearn import preprocessing

77 | from statsmodels.stats.outliers_influence import variance_inflation_factor

78 | from sklearn.model_selection import train_test_split

79 |

80 |

81 | # Getting our Data

82 |

83 | df = pd.read_csv('startups.csv')

84 |

85 |

86 | # Data Preprocessing

87 |

88 | # no null values are present

89 | # but, we need to encode 'State' attribute

90 | label_encoder = preprocessing.LabelEncoder() # encoding data

91 | df['State'] = df['State'].astype('|S')

92 | df['State'] = label_encoder.fit_transform(df['State'])

93 | # checking for null values

94 | df.isnull().any()

95 | # checking vif

96 | variables = df[['R&D Spend', 'Administration', 'Marketing Spend', 'State']]

97 | vif = pd.DataFrame()

98 | vif['VIF'] = [variance_inflation_factor(variables.values, i) for i in range(variables.shape[1])]

99 | vif['Features'] = variables.columns

100 | # as vif for all attributes<10, we need not drop any of them

101 |

102 |

103 | # Splitting Data for Training and Testing

104 |

105 | data = df.values

106 | X,y = data[:,:-1], data[:,-1]

107 | X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0) # splitting in the ration 80:20

108 |

109 |

110 | # Fitting the Data

111 |

112 | model = LinearRegression(learning_rate=0.01, n_iterations=10000)

113 | model.fit(X_train,y_train)

114 |

115 |

116 | # Making Predictions

117 |

118 | y_pred = model.predict(X_test)

119 |

120 | '''

--------------------------------------------------------------------------------

/Preprocessing/min_max_scaler.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | np.seterr(divide='ignore', invalid='ignore')

3 |

4 | class MinMaxScaler:

5 |

6 | def __init__(self, feature_range=(0,1), *args):

7 | '''

8 | >>> data = [[-1, 2], [-0.5, 6], [0, 10], [1, 18]]

9 | >>> scaler = MinMaxScaler()

10 | >>> print(scaler.fit(data))

11 | MinMaxScaler()

12 | >>> print(scaler.data_max_)

13 | [ 1. 18.]

14 | >>> print(scaler.transform(data))

15 | [[0. 0. ]

16 | [0.25 0.25]

17 | [0.5 0.5 ]

18 | [1. 1. ]]

19 | >>> print(scaler.transform([[2, 2]]))

20 | [[1.5 0. ]]

21 | '''

22 | try:

23 | if (feature_range[0] >= feature_range[1]):

24 | raise ValueError(f'Minimum of desired feature range must be smaller than maximum.')

25 | else:

26 | self._scale_min = feature_range[0]

27 | self._scale_max = feature_range[1]

28 | self._sample_size = None

29 | self._mins = None

30 | self._maxs = None

31 | except Exception as e:

32 | raise e

33 |

34 | def fit(self, x, *args):

35 | try:

36 | x = np.array(x, dtype=np.float64)

37 | self._sample_size = x.shape[1]

38 | self._mins = x.min(axis=0)

39 | self._maxs = x.max(axis=0)

40 | return self

41 | except Exception as e:

42 | raise e

43 |

44 | def transform(self, x, *args):

45 | try:

46 | x = np.array(x, dtype=np.float64)

47 | if self._maxs is None and self._mins is None:

48 | return f'NotFittedError: This MinMaxScaler instance is not fitted yet. Call \'fit\' with appropriate arguments before using this estimator.'

49 | elif x.shape[1] != self._sample_size:

50 | return f'ValueError: X has {x.shape[1]} features, but MinMaxScaler is expecting {self._sample_size} features as input'

51 | else:

52 | x = (x - self._mins) / (self._maxs - self._mins)

53 | x = (x * (self._scale_max - self._scale_min)) + self._scale_min

54 | x = self.__remove_outlier_by_zero(x)

55 | return x

56 | except Exception as e:

57 | raise e

58 |

59 | def fit_transform(self, x, *args):

60 | try:

61 | self.fit(x)

62 | return self.transform(x)

63 | except Exception as e:

64 | raise e

65 |

66 | def inverse_transform(self, x, *args):

67 | try:

68 | x = np.array(x, dtype=np.float64)

69 | if self._maxs is None and self._mins is None:

70 | return f'NotFittedError: This MinMaxScaler instance is not fitted yet. Call \'fit\' with appropriate arguments before using this estimator.'

71 | else:

72 | x = (x - self._scale_min) / (self._scale_max - self._scale_min)

73 | x = (x * (self._maxs - self._mins)) + self._mins

74 | return x

75 | except Exception as e:

76 | raise e

77 |

78 | def __remove_outlier_by_zero(self, x):

79 | return np.nan_to_num(x, nan=0.0, posinf=0.0, neginf=0.0)

80 |

81 | def __remove_outlier_by_one(self, x):

82 | return np.nan_to_num(x, nan=1.0, posinf=1.0, neginf=1.0)

83 |

84 | @property

85 | def min_(self):

86 | _min = self._scale_min - (self._mins * self.scale_)

87 | return _min

88 |

89 | @property

90 | def scale_(self):

91 | _scale = (self._scale_max - self._scale_min) / (self._maxs - self._mins)

92 | _scale = self.__remove_outlier_by_one(_scale)

93 | return _scale

94 |

95 | @property

96 | def data_min_(self):

97 | return self._mins

98 |

99 | @property

100 | def data_max_(self):

101 | return self._maxs

102 |

103 | @property

104 | def data_range_(self):

105 | data_range = self._maxs - self._mins

106 | return data_range

107 |

108 |

109 |

110 |

--------------------------------------------------------------------------------

/Elastic Net/Elastic_Net_Regression.py:

--------------------------------------------------------------------------------

1 | # # ELASTIC NET REGRESSION

2 | # # ''''''''''''''''''''''''''''''''''''''''''''''''''''''''

3 |

4 | # ## Definition

5 | # In statistics and in the fitting of linear or logistic regression models, the elastic net is a regularized regression method that linearly combines the L1 and L2 penalties of the lasso and ridge methods.The elastic net method performs variable selection and regularization simultaneously.

6 | #

7 | # ## Dataset Used

8 | # ### Dataset download link

9 | # https://www.kaggle.com/karthickveerakumar/salary-data-simple-linear-regression

10 | # ### Description

11 | # This dataset consists of company data with 30 employees(30 rows), and 2 columns. The 2 columns are of years of experience and the salary. Thus we aim at finding how years of experience affect salary of employees using elastic-net.

12 | #

13 | # ## Code

14 | # Importing required libraries

15 |

16 | import numpy as np

17 | import matplotlib.pyplot as plt

18 | import pandas as pd

19 | from sklearn.model_selection import train_test_split

20 |

21 | class Elastic_Net_Regression() :

22 | def __init__(self,learning_rate,iterations,l1_penality,l2_penality) :

23 | self.learning_rate=learning_rate

24 | self.iterations=iterations

25 | self.l1_penality=l1_penality

26 | self.l2_penality=l2_penality

27 |

28 | def fit(self,x,y):

29 | self.b=0

30 | self.x=x

31 | self.y=y

32 | self.m=x.shape[0]

33 | self.n=x.shape[1]

34 | self.W=np.zeros(self.n)

35 | self.weight_updation()

36 | return self

37 |

38 | def weight_updation(self):

39 | for i in range(self.iterations):

40 | self.update_weights()

41 |

42 | def update_weights(self):

43 | y_pred=self.predict(self.x)

44 | dW=np.zeros(self.n)

45 | for j in range(self.n):

46 | if self.W[j]<=0:

47 | dW[j] = -(2*(self.x[:,j]).dot(self.y-y_pred))-self.l1_penality+2*self.l2_penality*self.W[j]

48 | dW[j]//=self.m

49 | else :

50 | dW[j]=-(2*(self.x[:,j]).dot(self.y-y_pred))+self.l1_penality+2*self.l2_penality*self.W[j]

51 | dW[j]//=self.m

52 | db=-2*np.sum(self.y-y_pred)

53 | db//=self.m

54 | self.W-=self.learning_rate*dW

55 | self.b-=self.learning_rate*db

56 | return self

57 |

58 | def predict(self,x):

59 | ans=x.dot(self.W)+self.b

60 | return ans

61 |

62 | #UNCOMMENT THE BELOW LINES TO TEST THE ALGORITHM

63 | # def main() :

64 | # df=pd.read_csv("salary_data.csv")

65 | # x=df.iloc[:,:-1].values

66 | # y=df.iloc[:,1].values

67 | # x_train,x_test,y_train,y_test=train_test_split(x,y,test_size=1/3.5,random_state=0)

68 | # model = Elastic_Net_Regression(iterations=3000,

69 | # learning_rate=0.01,

70 | # l1_penality=500,

71 | # l2_penality=1)

72 | # model.fit(x_train,y_train)

73 | # y_pred=model.predict(x_test)

74 | # print("Predicted values of y:",np.round( y_pred[:3], 2))

75 | # print("Test values of y:",y_test[:3])

76 | # print("Trained Weight W:",round(model.W[0],2))

77 | # print("Trained bias b:",round(model.b,2))

78 | # plt.subplot(211)

79 | # plt.title('Salary vs Years of Experience')

80 | # plt.scatter(x_test,y_test,color='blue',label="Test Y")

81 | # plt.scatter(x_test,y_pred,color='red',label="Predicted Y")

82 | # plt.legend(loc=2)

83 | # plt.subplot(212)

84 | # plt.scatter(x_test,y_test,color='green',label="Test Y")

85 | # plt.plot(x_test,y_pred,color='yellow',label="Predicted Y")

86 | # plt.xlabel('Years of Experience')

87 | # plt.ylabel('Salary')

88 | # plt.legend(loc=2)

89 | # plt.show()

90 |

91 |

92 | # if __name__ == "__main__" :

93 | # main()

94 |

95 |

96 | # ### References taken from

97 | # https://corporatefinanceinstitute.com/resources/knowledge/other/elastic-net/

98 | # \

99 | # https://www.geeksforgeeks.org/implementation-of-elastic-net-regression-from-scratch/

100 |

--------------------------------------------------------------------------------

/Linear Regression/README.md:

--------------------------------------------------------------------------------

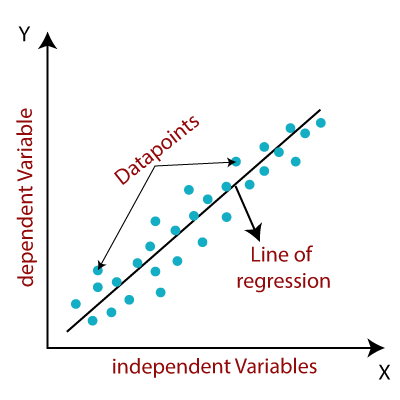

1 |

2 | Using the script

3 |

4 | ## import the module

5 |

6 | >import linearRegression

7 | >

8 | >linearReg_model = LinearRegression()

9 |

10 | ## train the data

11 |

12 | >linearReg_model.fit(x_train, y_train)

13 |

14 | ## predict the model

15 |

16 | >y_pred = linearReg_model.predict(x_test)

17 | ## Regression:

18 |

19 | Firstly let’s see what's regression. Regression is a technique for predicting a goal value using independent predictors. This method is primarily used for forecasting and determining cause and effect relationships among variables. The number of independent variables and the form of relationship between the independent and dependent variables is the key points that cause the differences in regression techniques.

20 |

21 | ## Linear regression

22 |

23 | One of the most fundamental and commonly used Machine Learning algorithms is linear regression. It's a statistical methodology for conducting the predictive analysis. The linear regression algorithm shows a linear relationship between a dependent (y) variable and one or more independent (y) variables, hence the name. Since linear regression reveals a linear relationship, it determines how the value of the dependent variable changes as the value of the independent variable changes.

24 |

25 |

26 |

27 | Linear regression is mathematically represented as:-

28 |

29 | y=a0 +a1.x

30 |

31 | Here,

32 | y= Dependent variable

33 | a0= Intercept of line

34 | a1= Linear regression coefficient

35 | x= Independent variable

36 |

37 | There are two types of linear regression:-

38 |

39 | Simple linear regression- It is a is Linear Regression algorithm that uses a single independent variable to predict the value of a numerical dependent variable.

40 |