├── .DS_Store

├── MultiTrans.py

├── README.md

├── checkpoints

├── GMM_pretrained

│ └── gmm.txt

└── TOM_pretrained

│ └── tom.txt

├── cp_dataset.py

├── data

├── test_pairs.txt

├── test_pairs_same.txt

└── train_pairs.txt

├── grid.png

├── metrics

├── PerceptualSimilarity

│ ├── .gitignore

│ ├── Dockerfile

│ ├── LICENSE

│ ├── README.md

│ ├── data

│ │ ├── __init__.py

│ │ ├── base_data_loader.py

│ │ ├── custom_dataset_data_loader.py

│ │ ├── data_loader.py

│ │ ├── dataset

│ │ │ ├── __init__.py

│ │ │ ├── base_dataset.py

│ │ │ ├── jnd_dataset.py

│ │ │ └── twoafc_dataset.py

│ │ └── image_folder.py

│ ├── example_dists.txt

│ ├── imgs

│ │ ├── ex_dir0

│ │ │ ├── 0.png

│ │ │ └── 1.png

│ │ ├── ex_dir1

│ │ │ ├── 0.png

│ │ │ └── 1.png

│ │ ├── ex_dir_pair

│ │ │ ├── ex_p0.png

│ │ │ ├── ex_p1.png

│ │ │ └── ex_ref.png

│ │ ├── ex_p0.png

│ │ ├── ex_p1.png

│ │ ├── ex_ref.png

│ │ ├── example_dists.txt

│ │ └── fig1.png

│ ├── lpips

│ │ ├── __init__.py

│ │ ├── lpips.py

│ │ ├── pretrained_networks.py

│ │ ├── trainer.py

│ │ └── weights

│ │ │ ├── v0.0

│ │ │ ├── alex.pth

│ │ │ ├── squeeze.pth

│ │ │ └── vgg.pth

│ │ │ └── v0.1

│ │ │ ├── alex.pth

│ │ │ ├── squeeze.pth

│ │ │ └── vgg.pth

│ ├── lpips_1dir_allpairs.py

│ ├── lpips_2dirs.py

│ ├── lpips_2imgs.py

│ ├── lpips_loss.py

│ ├── requirements.txt

│ ├── scripts

│ │ ├── download_dataset.sh

│ │ ├── download_dataset_valonly.sh

│ │ ├── eval_valsets.sh

│ │ ├── train_test_metric.sh

│ │ ├── train_test_metric_scratch.sh

│ │ └── train_test_metric_tune.sh

│ ├── setup.py

│ ├── testLPIPS.sh

│ ├── test_dataset_model.py

│ ├── test_network.py

│ ├── train.py

│ └── util

│ │ ├── __init__.py

│ │ ├── html.py

│ │ ├── util.py

│ │ └── visualizer.py

├── getIS.py

├── getJS.py

├── getSSIM.py

└── inception_score.py

├── modules

├── __pycache__

│ ├── multihead_attention.cpython-36.pyc

│ ├── position_embedding.cpython-36.pyc

│ └── transformer.cpython-36.pyc

├── multihead_attention.py

├── position_embedding.py

└── transformer.py

├── networks.py

├── requirements.txt

├── test.py

├── train.py

└── visualization.py

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/.DS_Store

--------------------------------------------------------------------------------

/MultiTrans.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch import nn

3 | import torch.nn.functional as F

4 |

5 | from einops import rearrange, repeat

6 | from einops.layers.torch import Rearrange

7 |

8 | from modules.transformer import TransformerEncoder

9 |

10 |

11 | class MULTModel(nn.Module):

12 | def __init__(self, img_H=256, img_W=192, patch_size = 32, dim = 1024):

13 | """

14 | """

15 | super(MULTModel, self).__init__()

16 |

17 | assert img_H % patch_size == 0, 'Image dimensions must be divisible by the patch size H.'

18 | assert img_W % patch_size == 0, 'Image dimensions must be divisible by the patch size W.'

19 |

20 | num_patches = (img_H // patch_size) * (img_W // patch_size) # (256 / 32) * (192 / 32) = 48

21 | patch_dim_22 = 22 * patch_size * patch_size # 22 * 32 * 32 = 22528

22 | patch_dim_3 = 3 * patch_size * patch_size # 3 * 32 * 32 = 3072

23 | patch_dim_1 = 1 * patch_size * patch_size # 1 * 32 * 32 = 1024

24 |

25 |

26 | self.to_patch_embedding_22 = nn.Sequential(

27 | # [B, 22, 256, 192] -> [B, 22, 8 * 32, 6 * 32] -> [B, 8 * 6, 32 * 32 * 22]

28 | Rearrange('b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_size, p2 = patch_size),

29 | # [B, 48, 32*32*22] -> [B, 48, 2048]

30 | nn.Linear(patch_dim_22, 11264),

31 | )

32 | self.to_patch_embedding_3 = nn.Sequential(

33 | # [B, 3, 256, 192] -> [B, 3, 8 * 32, 6 * 32] -> [B, 8 * 6, 32 * 32 * 3]

34 | Rearrange('b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_size, p2 = patch_size),

35 | # [B, 48, 3072] -> [B, 48, 1024]

36 | nn.Linear(patch_dim_3, dim),

37 | )

38 |

39 | self.to_patch_embedding_1 = nn.Sequential(

40 | # [B, 3, 256, 192] -> [B, 3, 8 * 32, 6 * 32] -> [B, 8 * 6, 32 * 32 * 3]

41 | Rearrange('b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_size, p2 = patch_size),

42 | # [B, 48, 3072] -> [B, 48, 1024]

43 | nn.Linear(patch_dim_1, dim),

44 | )

45 | # [B, 48, 32 * 32 * 26]

46 |

47 | self.backRearrange = nn.Sequential(Rearrange('b (h w) (p1 p2 c) -> b c (h p1) (w p2)', p1=patch_size, p2=patch_size, h=8, w=6))

48 |

49 | self.d_l, self.d_a, self.d_v = 1024, 1024, 1024

50 | combined_dim = self.d_l + self.d_a + self.d_v

51 |

52 | output_dim = 32 * 32 * 26

53 |

54 | self.num_heads = 8

55 | self.layers = 3

56 | self.attn_dropout = nn.Dropout(0.1)

57 | self.attn_dropout_a = nn.Dropout(0.0)

58 | self.attn_dropout_v = nn.Dropout(0.0)

59 | self.relu_dropout = nn.Dropout(0.1)

60 | self.embed_dropout = nn.Dropout(0.25)

61 | self.res_dropout = nn.Dropout(0.1)

62 | self.attn_mask = True

63 |

64 | # 2. Crossmodal Attentions

65 | # if self.lonly:

66 | self.trans_l_with_a = self.get_network(self_type='la')

67 | self.trans_l_with_v = self.get_network(self_type='lv')

68 | # if self.aonly:

69 | self.trans_a_with_l = self.get_network(self_type='al')

70 | self.trans_a_with_v = self.get_network(self_type='av')

71 | # if self.vonly:

72 | self.trans_v_with_l = self.get_network(self_type='vl')

73 | self.trans_v_with_a = self.get_network(self_type='va')

74 |

75 | # 3. Self Attentions (Could be replaced by LSTMs, GRUs, etc.)

76 | # [e.g., self.trans_x_mem = nn.LSTM(self.d_x, self.d_x, 1)

77 | self.trans_l_mem = self.get_network(self_type='l_mem', layers=3)

78 | self.trans_a_mem = self.get_network(self_type='a_mem', layers=3)

79 | self.trans_v_mem = self.get_network(self_type='v_mem', layers=3)

80 |

81 | # Projection layers

82 | self.proj1 = nn.Linear(6144, 6144)

83 | self.proj2 = nn.Linear(6144, 6144)

84 | self.out_layer = nn.Linear(6144, output_dim)

85 |

86 | self.projConv1 = nn.Conv1d(11264, 1024, kernel_size=1, padding=0, bias=False)

87 | self.projConv2 = nn.Conv1d(1024, 1024, kernel_size=1, padding=0, bias=False)

88 | self.projConv3 = nn.Conv1d(1024, 1024, kernel_size=1, padding=0, bias=False)

89 |

90 |

91 |

92 |

93 | def get_network(self, self_type='l', layers=-1):

94 | if self_type in ['l', 'al', 'vl']:

95 | embed_dim, attn_dropout = self.d_l, self.attn_dropout

96 | elif self_type in ['a', 'la', 'va']:

97 | embed_dim, attn_dropout = self.d_a, self.attn_dropout_a

98 | elif self_type in ['v', 'lv', 'av']:

99 | embed_dim, attn_dropout = self.d_v, self.attn_dropout_v

100 | elif self_type == 'l_mem':

101 | embed_dim, attn_dropout = self.d_l, self.attn_dropout

102 | elif self_type == 'a_mem':

103 | embed_dim, attn_dropout = self.d_a, self.attn_dropout

104 | elif self_type == 'v_mem':

105 | embed_dim, attn_dropout = self.d_v, self.attn_dropout

106 | else:

107 | raise ValueError("Unknown network type")

108 |

109 | return TransformerEncoder(embed_dim=embed_dim,

110 | num_heads=self.num_heads,

111 | layers=max(self.layers, layers),

112 | attn_dropout=attn_dropout,

113 | relu_dropout=self.relu_dropout,

114 | res_dropout=self.res_dropout,

115 | embed_dropout=self.embed_dropout,

116 | attn_mask=self.attn_mask)

117 |

118 |

119 | def forward(self, x1, x2, x3):

120 | # Input:

121 | # x1: [B, 22, 256, 192]

122 | # x2: [B, 3, 256, 192]

123 | # x3: [B, 1, 256, 192]

124 |

125 | # Step1: patch_embedding

126 | x1 = self.to_patch_embedding_22(x1) # [B, 22, 256, 192] -> [B, 48, 11264]

127 | x2 = self.to_patch_embedding_3(x2) # [B, 3, 256, 192] -> [B, 48, 1024]

128 | x3 = self.to_patch_embedding_1(x3) # [B, 1, 256, 192] -> [B, 48, 1024]

129 |

130 | # Step2: Project & 1D Conv & Permute

131 | # [B, 48, 1024] -> [B, 1024, 48]

132 | x1 = x1.transpose(1, 2) # [B, 11264, 48]

133 | x2 = x2.transpose(1, 2)

134 | x3 = x3.transpose(1, 2)

135 |

136 | # [1024]

137 | proj_x1 = self.projConv1(x1)

138 | proj_x2 = self.projConv2(x2)

139 | proj_x3 = self.projConv3(x3)

140 |

141 | # [48, B, 1024]

142 | proj_x1 = proj_x1.permute(2, 0, 1)

143 | proj_x2 = proj_x2.permute(2, 0, 1)

144 | proj_x3 = proj_x3.permute(2, 0, 1)

145 |

146 | # Self_att first [48, B, 1024]

147 | proj_x1_trans = self.trans_l_mem(proj_x1)

148 | proj_x2_trans = self.trans_a_mem(proj_x2)

149 | proj_x3_trans = self.trans_v_mem(proj_x3)

150 |

151 |

152 | # Step3: Cross Attention

153 | # (x3,x2) --> x1

154 | h_l_with_as = self.trans_l_with_a(proj_x1, proj_x2_trans, proj_x2_trans) # Dimension (L, N, d_l) [48, B, 1024]

155 | h_l_with_vs = self.trans_l_with_v(proj_x1, proj_x3_trans, proj_x3_trans) # Dimension (L, N, d_l) [48, B, 1024]

156 |

157 | cross1 = torch.cat([h_l_with_as, h_l_with_vs], 2) # [2048]

158 |

159 | # (x1, x3) --> x2

160 | h_a_with_ls = self.trans_a_with_l(proj_x2, proj_x1_trans, proj_x1_trans)

161 | h_a_with_vs = self.trans_a_with_v(proj_x2, proj_x3_trans, proj_x3_trans)

162 |

163 | cross2 = torch.cat([h_a_with_ls, h_a_with_vs], 2)

164 |

165 | # (x1,x2) --> x3

166 | h_v_with_ls = self.trans_v_with_l(proj_x3, proj_x1_trans, proj_x1_trans)

167 | h_v_with_as = self.trans_v_with_a(proj_x3, proj_x2_trans, proj_x2_trans)

168 |

169 | cross3 = torch.cat([h_v_with_ls, h_v_with_as], 2)

170 |

171 | # Combine by cat

172 | # 三个[48, B, 2048] -> [48, B, 6144]

173 | # last_hs = torch.cat([last_h_l, last_h_a, last_h_v], dim=1#[N,6144]

174 | last_hs = torch.cat([cross1, cross2, cross3], dim=2) #[48, B, 6144]

175 |

176 | # A residual block

177 | decompo_1 = self.proj1(last_hs)

178 | decompo_1_relu = F.relu(decompo_1)

179 | last_hs_proj = self.proj2(decompo_1_relu)

180 | last_hs_proj += last_hs

181 |

182 | # last_hs_proj = self.proj2(F.dropout(F.relu(self.proj1(last_hs)), p=self.out_dropout, training=self.training))

183 | # last_hs_proj += last_hs

184 |

185 | output = self.out_layer(last_hs_proj) # [48, B, 26624(26 * 32 * 32)]

186 | output = output.permute(1, 0, 2) # [B, 8 * 6, 32 * 32 * 26]

187 | output = self.backRearrange(output)

188 |

189 | return output

190 |

191 |

192 |

193 | if __name__ == '__main__':

194 | encoder = TransformerEncoder(300, 4, 2)

195 | x = torch.tensor(torch.rand(20, 2, 300))

196 | print(encoder(x).shape)

197 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | []((https://github.com/Amazingren/CIT/graphs/commit-activity))

5 |

6 |

7 |

8 | # Cloth Interactive Transformer (CIT)

9 |

10 | [Cloth Interactive Transformer for Virtual Try-On](https://arxiv.org/abs/2104.05519)

11 | [Bin Ren](https://scholar.google.com/citations?user=Md9maLYAAAAJ&hl=en)1, [Hao Tang](http://disi.unitn.it/~hao.tang/)1, Fanyang Meng2, Runwei Ding3, [Ling Shao](https://scholar.google.com/citations?user=z84rLjoAAAAJ&hl=en)4, [Philip H.S. Torr](https://scholar.google.com/citations?user=kPxa2w0AAAAJ&hl=en)5, [Nicu Sebe](https://scholar.google.com/citations?user=stFCYOAAAAAJ&hl=en)16.

12 | 1University of Trento, Italy,

13 | 2Peng Cheng Laboratory, China,

14 | 3Peking University Shenzhen Graduate School, China,

15 | 4Inception Institute of AI, UAE,

16 | 5University of Oxford, UK,

17 | 6Huawei Research Ireland, Ireland.

18 |

19 | The repository offers the official implementation of our paper in PyTorch.

20 | The code and pre-trained models are tested with pytorch 0.4.1, torchvision 0.2.1, opencv-python 4.1, and pillow 5.4 (Python 3.6).

21 |

22 | :t-rex:News!!! We have updated the pre-trained model(June 5th, 2021)!

23 |

24 | In the meantime, check out our recent paper [XingGAN](https://github.com/Ha0Tang/XingGAN) and [XingVTON](https://github.com/Ha0Tang/XingVTON).

25 |

26 | ## Usage

27 | This pipeline is a combination of consecutive training and testing of Cloth Interactive Transformer (CIT) Matching block based GMM and CIT Reasoning block based TOM. GMM generates the warped clothes according to the target human. Then, TOM blends the warped clothes outputs from GMM into the target human properties, to generate the final try-on output.

28 |

29 | 1) Install the requirements

30 | 2) Download/Prepare the dataset

31 | 3) Train the CIT Matching block based GMM network

32 | 4) Get warped clothes for training set with trained GMM network, and copy warped clothes & masks inside `data/train` directory

33 | 5) Train the CIT Reasoning block based TOM network

34 | 6) Test CIT Matching block based GMM for testing set

35 | 7) Get warped clothes for testing set, copy warped clothes & masks inside `data/test` directory

36 | 8) Test CIT Reasoning block based TOM testing set

37 |

38 | ## Installation

39 | This implementation is built and tested in PyTorch 0.4.1.

40 | Pytorch and torchvision are recommended to install with conda: `conda install pytorch=0.4.1 torchvision=0.2.1 -c pytorch`

41 |

42 | For all packages, run `pip install -r requirements.txt`

43 |

44 | ## Data Preparation

45 | For training/testing VITON dataset, our full and processed dataset is available here: https://1drv.ms/u/s!Ai8t8GAHdzVUiQQYX0azYhqIDPP6?e=4cpFTI. After downloading, unzip to your own data directory `./data/`.

46 |

47 | ## Training

48 | Run `python train.py` with your specific usage options for GMM and TOM stage.

49 |

50 | For example, GMM: ```python train.py --name GMM --stage GMM --workers 4 --save_count 5000 --shuffle```.

51 | Then run test.py for GMM network with the training dataset, which will generate the warped clothes and masks in "warp-cloth" and "warp-mask" folders inside the "result/GMM/train/" directory.

52 | Copy the "warp-cloth" and "warp-mask" folders into your data directory, for example inside "data/train" folder.

53 |

54 | Run TOM stage, ```python train.py --name TOM --stage TOM --workers 4 --save_count 5000 --shuffle```

55 |

56 | ## Evaluation

57 | We adopt four evaluation metrics in our work for evaluating the performance of the proposed XingVTON. There are Jaccard score (JS), structral similarity index measure (SSIM), learned perceptual image patch similarity (LPIPS), and Inception score (IS).

58 |

59 | Note that JS is used for the same clothing retry-on cases (with ground truth cases) in the first geometric matching stage, while SSIM and LPIPS are used for the same clothing retry-on cases (with ground truth cases) in the second try-on stage. In addition, IS is used for different clothing try-on (where no ground truth is available).

60 |

61 | ### For JS

62 | - Step1: Run```python test.py --name GMM --stage GMM --workers 4 --datamode test --data_list test_pairs_same.txt --checkpoint checkpoints/GMM_pretrained/gmm_final.pth```

63 | then the parsed segmentation area for current upper clothing is used as the reference image, accompanied with generated warped clothing mask then:

64 | - Step2: Run```python metrics/getJS.py```

65 |

66 | ### For SSIM

67 | After we run test.py for GMM network with the testibng dataset, the warped clothes and masks will be generated in "warp-cloth" and "warp-mask" folders inside the "result/GMM/test/" directory. Copy the "warp-cloth" and "warp-mask" folders into your data directory, for example inside "data/test" folder. Then:

68 | - Step1: Run TOM stage test ```python test.py --name TOM --stage TOM --workers 4 --datamode test --data_list test_pairs_same.txt --checkpoint checkpoints/TOM_pretrained/tom_final.pth```

69 | Then the original target human image is used as the reference image, accompanied with the generated retry-on image then:

70 | - Step2: Run ```python metrics/getSSIM.py```

71 |

72 | ### For LPIPS

73 | - Step1: You need to creat a new virtual enviriment, then install PyTorch 1.0+ and torchvision;

74 | - Step2: Run ```sh metrics/PerceptualSimilarity/testLPIPS.sh```;

75 |

76 | ### For IS

77 | - Step1: Run TOM stage test ```python test.py --name TOM --stage TOM --workers 4 --datamode test --data_list test_pairs.txt --checkpoint checkpoints/TOM_pretrained/tom_final.pth```

78 | - Step2: Run ```python metrics/getIS.py```

79 |

80 | ## Inference

81 | The pre-trained models are provided [here](https://drive.google.com/drive/folders/12SAalfaQ--osAIIEh-qE5TLOP_kJmIP8?usp=sharing). Download the pre-trained models and put them in this project (./checkpoints)

82 | Then just run the same step as Evaluation to test/inference our model.

83 |

84 | ## Acknowledgements

85 | This source code is inspired by [CP-VTON](https://github.com/sergeywong/cp-vton), [CP-VTON+](https://github.com/minar09/cp-vton-plus). We are extremely grateful for their public implementation.

86 |

87 | ## Citation

88 | If you use this code for your research, please consider giving a star :star: and citing our [paper](https://arxiv.org/abs/2104.05519) :t-rex::

89 |

90 | CIT

91 | ```

92 | @article{ren2021cloth,

93 | title={Cloth Interactive Transformer for Virtual Try-On},

94 | author={Ren, Bin and Tang, Hao and Meng, Fanyang and Ding, Runwei and Shao, Ling and Torr, Philip HS and Sebe, Nicu},

95 | journal={arXiv preprint arXiv:2104.05519},

96 | year={2021}

97 | }

98 | ```

99 |

100 |

101 | ## Contributions

102 | If you have any questions/comments/bug reports, feel free to open a github issue or pull a request or e-mail to the author Bin Ren ([bin.ren@unitn.it](bin.ren@unitn.it)).

--------------------------------------------------------------------------------

/checkpoints/GMM_pretrained/gmm.txt:

--------------------------------------------------------------------------------

1 | put the pre-trained gmm model here.

--------------------------------------------------------------------------------

/checkpoints/TOM_pretrained/tom.txt:

--------------------------------------------------------------------------------

1 | put the pre-trained tom model here.

--------------------------------------------------------------------------------

/cp_dataset.py:

--------------------------------------------------------------------------------

1 | # coding=utf-8

2 | import torch

3 | import torch.utils.data as data

4 | import torchvision.transforms as transforms

5 |

6 | from PIL import Image

7 | from PIL import ImageDraw

8 |

9 | import os.path as osp

10 | import numpy as np

11 | import json

12 |

13 |

14 | class CPDataset(data.Dataset):

15 | """Dataset for CP-VTON+.

16 | """

17 |

18 | def __init__(self, opt):

19 | super(CPDataset, self).__init__()

20 | # base setting

21 | self.opt = opt

22 | self.root = opt.dataroot

23 | self.datamode = opt.datamode # train or test or self-defined

24 | self.stage = opt.stage # GMM or TOM

25 | self.data_list = opt.data_list

26 | self.fine_height = opt.fine_height

27 | self.fine_width = opt.fine_width

28 | self.radius = opt.radius

29 | self.data_path = osp.join(opt.dataroot, opt.datamode)

30 | self.transform = transforms.Compose([

31 | transforms.ToTensor(),

32 | transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

33 |

34 | # load data list

35 | im_names = []

36 | c_names = []

37 | with open(osp.join(opt.dataroot, opt.data_list), 'r') as f:

38 | for line in f.readlines():

39 | im_name, c_name = line.strip().split()

40 | im_names.append(im_name)

41 | c_names.append(c_name)

42 |

43 | self.im_names = im_names

44 | self.c_names = c_names

45 |

46 | def name(self):

47 | return "CPDataset"

48 |

49 | def __getitem__(self, index):

50 | c_name = self.c_names[index]

51 | im_name = self.im_names[index]

52 | if self.stage == 'GMM':

53 | c = Image.open(osp.join(self.data_path, 'cloth', c_name))

54 | cm = Image.open(osp.join(self.data_path, 'cloth-mask', c_name)).convert('L')

55 | else:

56 | c = Image.open(osp.join(self.data_path, 'warp-cloth', im_name)) # c_name, if that is used when saved

57 | cm = Image.open(osp.join(self.data_path, 'warp-mask', im_name)).convert('L') # c_name, if that is used when saved

58 |

59 | c = self.transform(c) # [-1,1]

60 | cm_array = np.array(cm)

61 | cm_array = (cm_array >= 128).astype(np.float32)

62 | cm = torch.from_numpy(cm_array) # [0,1]

63 | cm.unsqueeze_(0)

64 |

65 | # person image

66 | im = Image.open(osp.join(self.data_path, 'image', im_name))

67 | im = self.transform(im) # [-1,1]

68 |

69 | """

70 | LIP labels

71 |

72 | [(0, 0, 0), # 0=Background

73 | (128, 0, 0), # 1=Hat

74 | (255, 0, 0), # 2=Hair

75 | (0, 85, 0), # 3=Glove

76 | (170, 0, 51), # 4=SunGlasses

77 | (255, 85, 0), # 5=UpperClothes

78 | (0, 0, 85), # 6=Dress

79 | (0, 119, 221), # 7=Coat

80 | (85, 85, 0), # 8=Socks

81 | (0, 85, 85), # 9=Pants

82 | (85, 51, 0), # 10=Jumpsuits

83 | (52, 86, 128), # 11=Scarf

84 | (0, 128, 0), # 12=Skirt

85 | (0, 0, 255), # 13=Face

86 | (51, 170, 221), # 14=LeftArm

87 | (0, 255, 255), # 15=RightArm

88 | (85, 255, 170), # 16=LeftLeg

89 | (170, 255, 85), # 17=RightLeg

90 | (255, 255, 0), # 18=LeftShoe

91 | (255, 170, 0) # 19=RightShoe

92 | (170, 170, 50) # 20=Skin/Neck/Chest (Newly added after running dataset_neck_skin_correction.py)

93 | ]

94 | """

95 |

96 | # load parsing image

97 | parse_name = im_name.replace('.jpg', '.png')

98 | im_parse = Image.open(

99 | # osp.join(self.data_path, 'image-parse', parse_name)).convert('L')

100 | osp.join(self.data_path, 'image-parse-new', parse_name)).convert('L') # updated new segmentation

101 | parse_array = np.array(im_parse)

102 | im_mask = Image.open(

103 | osp.join(self.data_path, 'image-mask', parse_name)).convert('L')

104 | mask_array = np.array(im_mask)

105 |

106 | # parse_shape = (parse_array > 0).astype(np.float32) # CP-VTON body shape

107 | # Get shape from body mask (CP-VTON+)

108 | parse_shape = (mask_array > 0).astype(np.float32)

109 |

110 | if self.stage == 'GMM':

111 | parse_head = (parse_array == 1).astype(np.float32) + \

112 | (parse_array == 4).astype(np.float32) + \

113 | (parse_array == 13).astype(

114 | np.float32) # CP-VTON+ GMM input (reserved regions)

115 | else:

116 | parse_head = (parse_array == 1).astype(np.float32) + \

117 | (parse_array == 2).astype(np.float32) + \

118 | (parse_array == 4).astype(np.float32) + \

119 | (parse_array == 9).astype(np.float32) + \

120 | (parse_array == 12).astype(np.float32) + \

121 | (parse_array == 13).astype(np.float32) + \

122 | (parse_array == 16).astype(np.float32) + \

123 | (parse_array == 17).astype(

124 | np.float32) # CP-VTON+ TOM input (reserved regions)

125 |

126 | parse_cloth = (parse_array == 5).astype(np.float32) + \

127 | (parse_array == 6).astype(np.float32) + \

128 | (parse_array == 7).astype(np.float32) # upper-clothes labels

129 |

130 | # shape downsample

131 | parse_shape_ori = Image.fromarray((parse_shape*255).astype(np.uint8))

132 | parse_shape = parse_shape_ori.resize(

133 | (self.fine_width//16, self.fine_height//16), Image.BILINEAR)

134 | parse_shape = parse_shape.resize(

135 | (self.fine_width, self.fine_height), Image.BILINEAR)

136 | parse_shape_ori = parse_shape_ori.resize(

137 | (self.fine_width, self.fine_height), Image.BILINEAR)

138 | shape_ori = self.transform(parse_shape_ori) # [-1,1]

139 | shape = self.transform(parse_shape) # [-1,1]

140 | phead = torch.from_numpy(parse_head) # [0,1]

141 | # phand = torch.from_numpy(parse_hand) # [0,1]

142 | pcm = torch.from_numpy(parse_cloth) # [0,1]

143 |

144 | # upper cloth

145 | im_c = im * pcm + (1 - pcm) # [-1,1], fill 1 for other parts

146 | im_h = im * phead - (1 - phead) # [-1,1], fill -1 for other parts

147 |

148 | # load pose points

149 | pose_name = im_name.replace('.jpg', '_keypoints.json')

150 | with open(osp.join(self.data_path, 'pose', pose_name), 'r') as f:

151 | pose_label = json.load(f)

152 | pose_data = pose_label['people'][0]['pose_keypoints']

153 | pose_data = np.array(pose_data)

154 | pose_data = pose_data.reshape((-1, 3))

155 |

156 | point_num = pose_data.shape[0]

157 | pose_map = torch.zeros(point_num, self.fine_height, self.fine_width)

158 | r = self.radius

159 | im_pose = Image.new('L', (self.fine_width, self.fine_height))

160 | pose_draw = ImageDraw.Draw(im_pose)

161 | for i in range(point_num):

162 | one_map = Image.new('L', (self.fine_width, self.fine_height))

163 | draw = ImageDraw.Draw(one_map)

164 | pointx = pose_data[i, 0]

165 | pointy = pose_data[i, 1]

166 | if pointx > 1 and pointy > 1:

167 | draw.rectangle((pointx-r, pointy-r, pointx +

168 | r, pointy+r), 'white', 'white')

169 | pose_draw.rectangle(

170 | (pointx-r, pointy-r, pointx+r, pointy+r), 'white', 'white')

171 | one_map = self.transform(one_map)

172 | pose_map[i] = one_map[0]

173 |

174 | # just for visualization

175 | im_pose = self.transform(im_pose)

176 |

177 | # cloth-agnostic representation

178 | agnostic = torch.cat([shape, im_h, pose_map], 0)

179 |

180 | if self.stage == 'GMM':

181 | im_g = Image.open('grid.png')

182 | im_g = self.transform(im_g)

183 | else:

184 | im_g = ''

185 |

186 | pcm.unsqueeze_(0) # CP-VTON+

187 |

188 | result = {

189 | 'c_name': c_name, # for visualization

190 | 'im_name': im_name, # for visualization or ground truth

191 | 'cloth': c, # for input

192 | 'cloth_mask': cm, # for input

193 | 'image': im, # for visualization

194 | 'agnostic': agnostic, # for input

195 | 'parse_cloth': im_c, # for ground truth

196 | 'shape': shape, # for visualization

197 | 'head': im_h, # for visualization

198 | 'pose_image': im_pose, # for visualization

199 | 'grid_image': im_g, # for visualization

200 | 'parse_cloth_mask': pcm, # for CP-VTON+, TOM input

201 | 'shape_ori': shape_ori, # original body shape without resize

202 | }

203 |

204 | return result

205 |

206 | def __len__(self):

207 | return len(self.im_names)

208 |

209 |

210 | class CPDataLoader(object):

211 | def __init__(self, opt, dataset):

212 | super(CPDataLoader, self).__init__()

213 |

214 | if opt.shuffle:

215 | train_sampler = torch.utils.data.sampler.RandomSampler(dataset)

216 | else:

217 | train_sampler = None

218 |

219 | self.data_loader = torch.utils.data.DataLoader(

220 | dataset, batch_size=opt.batch_size, shuffle=(

221 | train_sampler is None),

222 | num_workers=opt.workers, pin_memory=True, sampler=train_sampler)

223 | self.dataset = dataset

224 | self.data_iter = self.data_loader.__iter__()

225 |

226 | def next_batch(self):

227 | try:

228 | batch = self.data_iter.__next__()

229 | except StopIteration:

230 | self.data_iter = self.data_loader.__iter__()

231 | batch = self.data_iter.__next__()

232 |

233 | return batch

234 |

235 |

236 | if __name__ == "__main__":

237 | print("Check the dataset for geometric matching module!")

238 |

239 | import argparse

240 | parser = argparse.ArgumentParser()

241 | parser.add_argument("--dataroot", default="data")

242 | parser.add_argument("--datamode", default="train")

243 | parser.add_argument("--stage", default="GMM")

244 | parser.add_argument("--data_list", default="train_pairs.txt")

245 | parser.add_argument("--fine_width", type=int, default=192)

246 | parser.add_argument("--fine_height", type=int, default=256)

247 | parser.add_argument("--radius", type=int, default=3)

248 | parser.add_argument("--shuffle", action='store_true',

249 | help='shuffle input data')

250 | parser.add_argument('-b', '--batch-size', type=int, default=4)

251 | parser.add_argument('-j', '--workers', type=int, default=1)

252 |

253 | opt = parser.parse_args()

254 | dataset = CPDataset(opt)

255 | data_loader = CPDataLoader(opt, dataset)

256 |

257 | print('Size of the dataset: %05d, dataloader: %04d'

258 | % (len(dataset), len(data_loader.data_loader)))

259 | first_item = dataset.__getitem__(0)

260 | first_batch = data_loader.next_batch()

261 |

262 | from IPython import embed

263 | embed()

264 |

--------------------------------------------------------------------------------

/grid.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/grid.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 |

3 | checkpoints/*

4 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM nvidia/cuda:9.0-base-ubuntu16.04

2 |

3 | LABEL maintainer="Seyoung Park "

4 |

5 | # This Dockerfile is forked from Tensorflow Dockerfile

6 |

7 | # Pick up some PyTorch gpu dependencies

8 | RUN apt-get update && apt-get install -y --no-install-recommends \

9 | build-essential \

10 | cuda-command-line-tools-9-0 \

11 | cuda-cublas-9-0 \

12 | cuda-cufft-9-0 \

13 | cuda-curand-9-0 \

14 | cuda-cusolver-9-0 \

15 | cuda-cusparse-9-0 \

16 | curl \

17 | libcudnn7=7.1.4.18-1+cuda9.0 \

18 | libfreetype6-dev \

19 | libhdf5-serial-dev \

20 | libpng12-dev \

21 | libzmq3-dev \

22 | pkg-config \

23 | python \

24 | python-dev \

25 | rsync \

26 | software-properties-common \

27 | unzip \

28 | && \

29 | apt-get clean && \

30 | rm -rf /var/lib/apt/lists/*

31 |

32 |

33 | # Install miniconda

34 | RUN apt-get update && apt-get install -y --no-install-recommends \

35 | wget && \

36 | MINICONDA="Miniconda3-latest-Linux-x86_64.sh" && \

37 | wget --quiet https://repo.continuum.io/miniconda/$MINICONDA && \

38 | bash $MINICONDA -b -p /miniconda && \

39 | rm -f $MINICONDA

40 | ENV PATH /miniconda/bin:$PATH

41 |

42 | # Install PyTorch

43 | RUN conda update -n base conda && \

44 | conda install pytorch torchvision cuda90 -c pytorch

45 |

46 | # Install PerceptualSimilarity dependencies

47 | RUN conda install numpy scipy jupyter matplotlib && \

48 | conda install -c conda-forge scikit-image && \

49 | apt-get install -y python-qt4 && \

50 | pip install opencv-python

51 |

52 | # For CUDA profiling, TensorFlow requires CUPTI. Maybe PyTorch needs this too.

53 | ENV LD_LIBRARY_PATH /usr/local/cuda/extras/CUPTI/lib64:$LD_LIBRARY_PATH

54 |

55 | # IPython

56 | EXPOSE 8888

57 |

58 | WORKDIR "/notebooks"

59 |

60 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/LICENSE:

--------------------------------------------------------------------------------

1 | Copyright (c) 2018, Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, Oliver Wang

2 | All rights reserved.

3 |

4 | Redistribution and use in source and binary forms, with or without

5 | modification, are permitted provided that the following conditions are met:

6 |

7 | * Redistributions of source code must retain the above copyright notice, this

8 | list of conditions and the following disclaimer.

9 |

10 | * Redistributions in binary form must reproduce the above copyright notice,

11 | this list of conditions and the following disclaimer in the documentation

12 | and/or other materials provided with the distribution.

13 |

14 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

15 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

16 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

17 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

18 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

19 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

20 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

21 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

22 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

23 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

24 |

25 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/README.md:

--------------------------------------------------------------------------------

1 |

2 | ## Perceptual Similarity Metric and Dataset [[Project Page]](http://richzhang.github.io/PerceptualSimilarity/)

3 |

4 | **The Unreasonable Effectiveness of Deep Features as a Perceptual Metric**

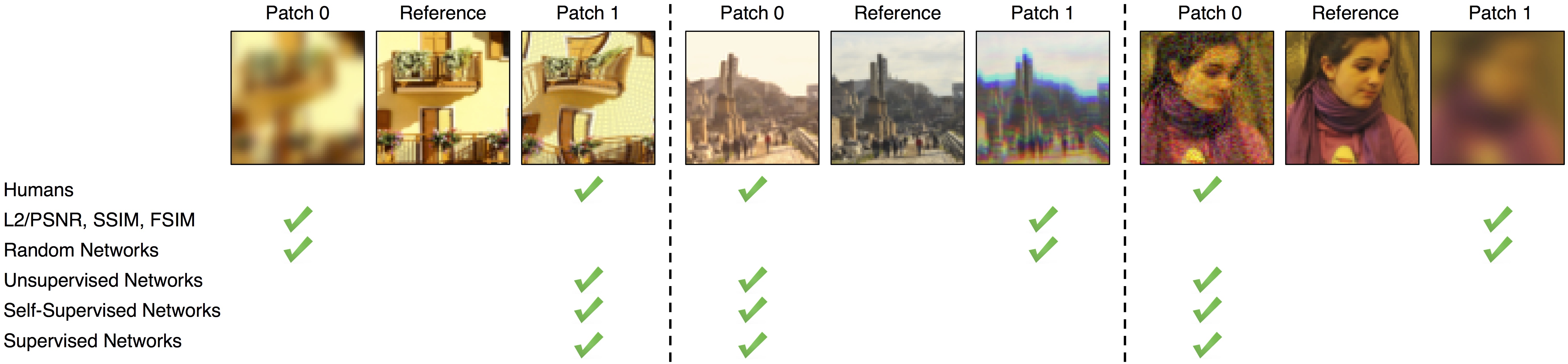

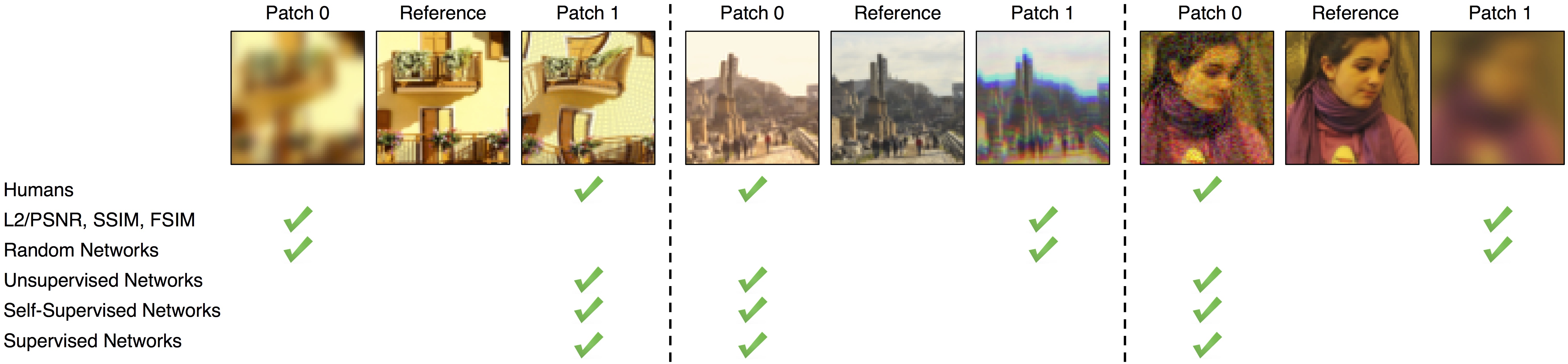

5 | [Richard Zhang](https://richzhang.github.io/), [Phillip Isola](http://web.mit.edu/phillipi/), [Alexei A. Efros](http://www.eecs.berkeley.edu/~efros/), [Eli Shechtman](https://research.adobe.com/person/eli-shechtman/), [Oliver Wang](http://www.oliverwang.info/). In [CVPR](https://arxiv.org/abs/1801.03924), 2018.

6 |

7 |  8 |

9 | ### Quick start

10 |

11 | Run `pip install lpips`. The following Python code is all you need.

12 |

13 | ```python

14 | import lpips

15 | loss_fn_alex = lpips.LPIPS(net='alex') # best forward scores

16 | loss_fn_vgg = lpips.LPIPS(net='vgg') # closer to "traditional" perceptual loss, when used for optimization

17 |

18 | import torch

19 | img0 = torch.zeros(1,3,64,64) # image should be RGB, IMPORTANT: normalized to [-1,1]

20 | img1 = torch.zeros(1,3,64,64)

21 | d = loss_fn_alex(img0, img1)

22 | ```

23 |

24 | More thorough information about variants is below. This repository contains our **perceptual metric (LPIPS)** and **dataset (BAPPS)**. It can also be used as a "perceptual loss". This uses PyTorch; a Tensorflow alternative is [here](https://github.com/alexlee-gk/lpips-tensorflow).

25 |

26 |

27 | **Table of Contents**

8 |

9 | ### Quick start

10 |

11 | Run `pip install lpips`. The following Python code is all you need.

12 |

13 | ```python

14 | import lpips

15 | loss_fn_alex = lpips.LPIPS(net='alex') # best forward scores

16 | loss_fn_vgg = lpips.LPIPS(net='vgg') # closer to "traditional" perceptual loss, when used for optimization

17 |

18 | import torch

19 | img0 = torch.zeros(1,3,64,64) # image should be RGB, IMPORTANT: normalized to [-1,1]

20 | img1 = torch.zeros(1,3,64,64)

21 | d = loss_fn_alex(img0, img1)

22 | ```

23 |

24 | More thorough information about variants is below. This repository contains our **perceptual metric (LPIPS)** and **dataset (BAPPS)**. It can also be used as a "perceptual loss". This uses PyTorch; a Tensorflow alternative is [here](https://github.com/alexlee-gk/lpips-tensorflow).

25 |

26 |

27 | **Table of Contents**

28 | 1. [Learned Perceptual Image Patch Similarity (LPIPS) metric](#1-learned-perceptual-image-patch-similarity-lpips-metric)

29 | a. [Basic Usage](#a-basic-usage) If you just want to run the metric through command line, this is all you need.

30 | b. ["Perceptual Loss" usage](#b-backpropping-through-the-metric)

31 | c. [About the metric](#c-about-the-metric)

32 | 2. [Berkeley-Adobe Perceptual Patch Similarity (BAPPS) dataset](#2-berkeley-adobe-perceptual-patch-similarity-bapps-dataset)

33 | a. [Download](#a-downloading-the-dataset)

34 | b. [Evaluation](#b-evaluating-a-perceptual-similarity-metric-on-a-dataset)

35 | c. [About the dataset](#c-about-the-dataset)

36 | d. [Train the metric using the dataset](#d-using-the-dataset-to-train-the-metric)

37 |

38 | ## (0) Dependencies/Setup

39 |

40 | ### Installation

41 | - Install PyTorch 1.0+ and torchvision fom http://pytorch.org

42 |

43 | ```bash

44 | pip install -r requirements.txt

45 | ```

46 | - Clone this repo:

47 | ```bash

48 | git clone https://github.com/richzhang/PerceptualSimilarity

49 | cd PerceptualSimilarity

50 | ```

51 |

52 | ## (1) Learned Perceptual Image Patch Similarity (LPIPS) metric

53 |

54 | Evaluate the distance between image patches. **Higher means further/more different. Lower means more similar.**

55 |

56 | ### (A) Basic Usage

57 |

58 | #### (A.I) Line commands

59 |

60 | Example scripts to take the distance between 2 specific images, all corresponding pairs of images in 2 directories, or all pairs of images within a directory:

61 |

62 | ```

63 | python lpips_2imgs.py -p0 imgs/ex_ref.png -p1 imgs/ex_p0.png --use_gpu

64 | python lpips_2dirs.py -d0 imgs/ex_dir0 -d1 imgs/ex_dir1 -o imgs/example_dists.txt --use_gpu

65 | python lpips_1dir_allpairs.py -d imgs/ex_dir_pair -o imgs/example_dists_pair.txt --use_gpu

66 | ```

67 |

68 | #### (A.II) Python code

69 |

70 | File [test_network.py](test_network.py) shows example usage. This snippet is all you really need.

71 |

72 | ```python

73 | import lpips

74 | loss_fn = lpips.LPIPS(net='alex')

75 | d = loss_fn.forward(im0,im1)

76 | ```

77 |

78 | Variables ```im0, im1``` is a PyTorch Tensor/Variable with shape ```Nx3xHxW``` (```N``` patches of size ```HxW```, RGB images scaled in `[-1,+1]`). This returns `d`, a length `N` Tensor/Variable.

79 |

80 | Run `python test_network.py` to take the distance between example reference image [`ex_ref.png`](imgs/ex_ref.png) to distorted images [`ex_p0.png`](./imgs/ex_p0.png) and [`ex_p1.png`](imgs/ex_p1.png). Before running it - which do you think *should* be closer?

81 |

82 | **Some Options** By default in `model.initialize`:

83 | - By default, `net='alex'`. Network `alex` is fastest, performs the best (as a forward metric), and is the default. For backpropping, `net='vgg'` loss is closer to the traditional "perceptual loss".

84 | - By default, `lpips=True`. This adds a linear calibration on top of intermediate features in the net. Set this to `lpips=False` to equally weight all the features.

85 |

86 | ### (B) Backpropping through the metric

87 |

88 | File [`lpips_loss.py`](lpips_loss.py) shows how to iteratively optimize using the metric. Run `python lpips_loss.py` for a demo. The code can also be used to implement vanilla VGG loss, without our learned weights.

89 |

90 | ### (C) About the metric

91 |

92 | **Higher means further/more different. Lower means more similar.**

93 |

94 | We found that deep network activations work surprisingly well as a perceptual similarity metric. This was true across network architectures (SqueezeNet [2.8 MB], AlexNet [9.1 MB], and VGG [58.9 MB] provided similar scores) and supervisory signals (unsupervised, self-supervised, and supervised all perform strongly). We slightly improved scores by linearly "calibrating" networks - adding a linear layer on top of off-the-shelf classification networks. We provide 3 variants, using linear layers on top of the SqueezeNet, AlexNet (default), and VGG networks.

95 |

96 | If you use LPIPS in your publication, please specify which version you are using. The current version is 0.1. You can set `version='0.0'` for the initial release.

97 |

98 | ## (2) Berkeley Adobe Perceptual Patch Similarity (BAPPS) dataset

99 |

100 | ### (A) Downloading the dataset

101 |

102 | Run `bash ./scripts/download_dataset.sh` to download and unzip the dataset into directory `./dataset`. It takes [6.6 GB] total. Alternatively, run `bash ./scripts/get_dataset_valonly.sh` to only download the validation set [1.3 GB].

103 | - 2AFC train [5.3 GB]

104 | - 2AFC val [1.1 GB]

105 | - JND val [0.2 GB]

106 |

107 | ### (B) Evaluating a perceptual similarity metric on a dataset

108 |

109 | Script `test_dataset_model.py` evaluates a perceptual model on a subset of the dataset.

110 |

111 | **Dataset flags**

112 | - `--dataset_mode`: `2afc` or `jnd`, which type of perceptual judgment to evaluate

113 | - `--datasets`: list the datasets to evaluate

114 | - if `--dataset_mode 2afc`: choices are [`train/traditional`, `train/cnn`, `val/traditional`, `val/cnn`, `val/superres`, `val/deblur`, `val/color`, `val/frameinterp`]

115 | - if `--dataset_mode jnd`: choices are [`val/traditional`, `val/cnn`]

116 |

117 | **Perceptual similarity model flags**

118 | - `--model`: perceptual similarity model to use

119 | - `lpips` for our LPIPS learned similarity model (linear network on top of internal activations of pretrained network)

120 | - `baseline` for a classification network (uncalibrated with all layers averaged)

121 | - `l2` for Euclidean distance

122 | - `ssim` for Structured Similarity Image Metric

123 | - `--net`: [`squeeze`,`alex`,`vgg`] for the `net-lin` and `net` models; ignored for `l2` and `ssim` models

124 | - `--colorspace`: choices are [`Lab`,`RGB`], used for the `l2` and `ssim` models; ignored for `net-lin` and `net` models

125 |

126 | **Misc flags**

127 | - `--batch_size`: evaluation batch size (will default to 1)

128 | - `--use_gpu`: turn on this flag for GPU usage

129 |

130 | An example usage is as follows: `python ./test_dataset_model.py --dataset_mode 2afc --datasets val/traditional val/cnn --model lpips --net alex --use_gpu --batch_size 50`. This would evaluate our model on the "traditional" and "cnn" validation datasets.

131 |

132 | ### (C) About the dataset

133 |

134 | The dataset contains two types of perceptual judgements: **Two Alternative Forced Choice (2AFC)** and **Just Noticeable Differences (JND)**.

135 |

136 | **(1) 2AFC** Evaluators were given a patch triplet (1 reference + 2 distorted). They were asked to select which of the distorted was "closer" to the reference.

137 |

138 | Training sets contain 2 judgments/triplet.

139 | - `train/traditional` [56.6k triplets]

140 | - `train/cnn` [38.1k triplets]

141 | - `train/mix` [56.6k triplets]

142 |

143 | Validation sets contain 5 judgments/triplet.

144 | - `val/traditional` [4.7k triplets]

145 | - `val/cnn` [4.7k triplets]

146 | - `val/superres` [10.9k triplets]

147 | - `val/deblur` [9.4k triplets]

148 | - `val/color` [4.7k triplets]

149 | - `val/frameinterp` [1.9k triplets]

150 |

151 | Each 2AFC subdirectory contains the following folders:

152 | - `ref`: original reference patches

153 | - `p0,p1`: two distorted patches

154 | - `judge`: human judgments - 0 if all preferred p0, 1 if all humans preferred p1

155 |

156 | **(2) JND** Evaluators were presented with two patches - a reference and a distorted - for a limited time. They were asked if the patches were the same (identically) or different.

157 |

158 | Each set contains 3 human evaluations/example.

159 | - `val/traditional` [4.8k pairs]

160 | - `val/cnn` [4.8k pairs]

161 |

162 | Each JND subdirectory contains the following folders:

163 | - `p0,p1`: two patches

164 | - `same`: human judgments: 0 if all humans thought patches were different, 1 if all humans thought patches were same

165 |

166 | ### (D) Using the dataset to train the metric

167 |

168 | See script `train_test_metric.sh` for an example of training and testing the metric. The script will train a model on the full training set for 10 epochs, and then test the learned metric on all of the validation sets. The numbers should roughly match the **Alex - lin** row in Table 5 in the [paper](https://arxiv.org/abs/1801.03924). The code supports training a linear layer on top of an existing representation. Training will add a subdirectory in the `checkpoints` directory.

169 |

170 | You can also train "scratch" and "tune" versions by running `train_test_metric_scratch.sh` and `train_test_metric_tune.sh`, respectively.

171 |

172 | ## Citation

173 |

174 | If you find this repository useful for your research, please use the following.

175 |

176 | ```

177 | @inproceedings{zhang2018perceptual,

178 | title={The Unreasonable Effectiveness of Deep Features as a Perceptual Metric},

179 | author={Zhang, Richard and Isola, Phillip and Efros, Alexei A and Shechtman, Eli and Wang, Oliver},

180 | booktitle={CVPR},

181 | year={2018}

182 | }

183 | ```

184 |

185 | ## Acknowledgements

186 |

187 | This repository borrows partially from the [pytorch-CycleGAN-and-pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) repository. The average precision (AP) code is borrowed from the [py-faster-rcnn](https://github.com/rbgirshick/py-faster-rcnn/blob/master/lib/datasets/voc_eval.py) repository. [Angjoo Kanazawa](https://github.com/akanazawa), [Connelly Barnes](http://www.connellybarnes.com/work/), [Gaurav Mittal](https://github.com/g1910), [wilhelmhb](https://github.com/wilhelmhb), [Filippo Mameli](https://github.com/mameli), [SuperShinyEyes](https://github.com/SuperShinyEyes), [Minyoung Huh](http://people.csail.mit.edu/minhuh/) helped to improve the codebase.

188 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/data/__init__.py

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/base_data_loader.py:

--------------------------------------------------------------------------------

1 |

2 | class BaseDataLoader():

3 | def __init__(self):

4 | pass

5 |

6 | def initialize(self):

7 | pass

8 |

9 | def load_data():

10 | return None

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/custom_dataset_data_loader.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data

2 | from data.base_data_loader import BaseDataLoader

3 | import os

4 |

5 | def CreateDataset(dataroots,dataset_mode='2afc',load_size=64,):

6 | dataset = None

7 | if dataset_mode=='2afc': # human judgements

8 | from data.dataset.twoafc_dataset import TwoAFCDataset

9 | dataset = TwoAFCDataset()

10 | elif dataset_mode=='jnd': # human judgements

11 | from data.dataset.jnd_dataset import JNDDataset

12 | dataset = JNDDataset()

13 | else:

14 | raise ValueError("Dataset Mode [%s] not recognized."%self.dataset_mode)

15 |

16 | dataset.initialize(dataroots,load_size=load_size)

17 | return dataset

18 |

19 | class CustomDatasetDataLoader(BaseDataLoader):

20 | def name(self):

21 | return 'CustomDatasetDataLoader'

22 |

23 | def initialize(self, datafolders, dataroot='./dataset',dataset_mode='2afc',load_size=64,batch_size=1,serial_batches=True, nThreads=1):

24 | BaseDataLoader.initialize(self)

25 | if(not isinstance(datafolders,list)):

26 | datafolders = [datafolders,]

27 | data_root_folders = [os.path.join(dataroot,datafolder) for datafolder in datafolders]

28 | self.dataset = CreateDataset(data_root_folders,dataset_mode=dataset_mode,load_size=load_size)

29 | self.dataloader = torch.utils.data.DataLoader(

30 | self.dataset,

31 | batch_size=batch_size,

32 | shuffle=not serial_batches,

33 | num_workers=int(nThreads))

34 |

35 | def load_data(self):

36 | return self.dataloader

37 |

38 | def __len__(self):

39 | return len(self.dataset)

40 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/data_loader.py:

--------------------------------------------------------------------------------

1 | def CreateDataLoader(datafolder,dataroot='./dataset',dataset_mode='2afc',load_size=64,batch_size=1,serial_batches=True,nThreads=4):

2 | from data.custom_dataset_data_loader import CustomDatasetDataLoader

3 | data_loader = CustomDatasetDataLoader()

4 | # print(data_loader.name())

5 | data_loader.initialize(datafolder,dataroot=dataroot+'/'+dataset_mode,dataset_mode=dataset_mode,load_size=load_size,batch_size=batch_size,serial_batches=serial_batches, nThreads=nThreads)

6 | return data_loader

7 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/dataset/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/data/dataset/__init__.py

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/dataset/base_dataset.py:

--------------------------------------------------------------------------------

1 | import torch.utils.data as data

2 |

3 | class BaseDataset(data.Dataset):

4 | def __init__(self):

5 | super(BaseDataset, self).__init__()

6 |

7 | def name(self):

8 | return 'BaseDataset'

9 |

10 | def initialize(self):

11 | pass

12 |

13 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/dataset/jnd_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | import torchvision.transforms as transforms

3 | from data.dataset.base_dataset import BaseDataset

4 | from data.image_folder import make_dataset

5 | from PIL import Image

6 | import numpy as np

7 | import torch

8 | from IPython import embed

9 |

10 | class JNDDataset(BaseDataset):

11 | def initialize(self, dataroot, load_size=64):

12 | self.root = dataroot

13 | self.load_size = load_size

14 |

15 | self.dir_p0 = os.path.join(self.root, 'p0')

16 | self.p0_paths = make_dataset(self.dir_p0)

17 | self.p0_paths = sorted(self.p0_paths)

18 |

19 | self.dir_p1 = os.path.join(self.root, 'p1')

20 | self.p1_paths = make_dataset(self.dir_p1)

21 | self.p1_paths = sorted(self.p1_paths)

22 |

23 | transform_list = []

24 | transform_list.append(transforms.Scale(load_size))

25 | transform_list += [transforms.ToTensor(),

26 | transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5))]

27 |

28 | self.transform = transforms.Compose(transform_list)

29 |

30 | # judgement directory

31 | self.dir_S = os.path.join(self.root, 'same')

32 | self.same_paths = make_dataset(self.dir_S,mode='np')

33 | self.same_paths = sorted(self.same_paths)

34 |

35 | def __getitem__(self, index):

36 | p0_path = self.p0_paths[index]

37 | p0_img_ = Image.open(p0_path).convert('RGB')

38 | p0_img = self.transform(p0_img_)

39 |

40 | p1_path = self.p1_paths[index]

41 | p1_img_ = Image.open(p1_path).convert('RGB')

42 | p1_img = self.transform(p1_img_)

43 |

44 | same_path = self.same_paths[index]

45 | same_img = np.load(same_path).reshape((1,1,1,)) # [0,1]

46 |

47 | same_img = torch.FloatTensor(same_img)

48 |

49 | return {'p0': p0_img, 'p1': p1_img, 'same': same_img,

50 | 'p0_path': p0_path, 'p1_path': p1_path, 'same_path': same_path}

51 |

52 | def __len__(self):

53 | return len(self.p0_paths)

54 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/dataset/twoafc_dataset.py:

--------------------------------------------------------------------------------

1 | import os.path

2 | import torchvision.transforms as transforms

3 | from data.dataset.base_dataset import BaseDataset

4 | from data.image_folder import make_dataset

5 | from PIL import Image

6 | import numpy as np

7 | import torch

8 | # from IPython import embed

9 |

10 | class TwoAFCDataset(BaseDataset):

11 | def initialize(self, dataroots, load_size=64):

12 | if(not isinstance(dataroots,list)):

13 | dataroots = [dataroots,]

14 | self.roots = dataroots

15 | self.load_size = load_size

16 |

17 | # image directory

18 | self.dir_ref = [os.path.join(root, 'ref') for root in self.roots]

19 | self.ref_paths = make_dataset(self.dir_ref)

20 | self.ref_paths = sorted(self.ref_paths)

21 |

22 | self.dir_p0 = [os.path.join(root, 'p0') for root in self.roots]

23 | self.p0_paths = make_dataset(self.dir_p0)

24 | self.p0_paths = sorted(self.p0_paths)

25 |

26 | self.dir_p1 = [os.path.join(root, 'p1') for root in self.roots]

27 | self.p1_paths = make_dataset(self.dir_p1)

28 | self.p1_paths = sorted(self.p1_paths)

29 |

30 | transform_list = []

31 | transform_list.append(transforms.Scale(load_size))

32 | transform_list += [transforms.ToTensor(),

33 | transforms.Normalize((0.5, 0.5, 0.5),(0.5, 0.5, 0.5))]

34 |

35 | self.transform = transforms.Compose(transform_list)

36 |

37 | # judgement directory

38 | self.dir_J = [os.path.join(root, 'judge') for root in self.roots]

39 | self.judge_paths = make_dataset(self.dir_J,mode='np')

40 | self.judge_paths = sorted(self.judge_paths)

41 |

42 | def __getitem__(self, index):

43 | p0_path = self.p0_paths[index]

44 | p0_img_ = Image.open(p0_path).convert('RGB')

45 | p0_img = self.transform(p0_img_)

46 |

47 | p1_path = self.p1_paths[index]

48 | p1_img_ = Image.open(p1_path).convert('RGB')

49 | p1_img = self.transform(p1_img_)

50 |

51 | ref_path = self.ref_paths[index]

52 | ref_img_ = Image.open(ref_path).convert('RGB')

53 | ref_img = self.transform(ref_img_)

54 |

55 | judge_path = self.judge_paths[index]

56 | # judge_img = (np.load(judge_path)*2.-1.).reshape((1,1,1,)) # [-1,1]

57 | judge_img = np.load(judge_path).reshape((1,1,1,)) # [0,1]

58 |

59 | judge_img = torch.FloatTensor(judge_img)

60 |

61 | return {'p0': p0_img, 'p1': p1_img, 'ref': ref_img, 'judge': judge_img,

62 | 'p0_path': p0_path, 'p1_path': p1_path, 'ref_path': ref_path, 'judge_path': judge_path}

63 |

64 | def __len__(self):

65 | return len(self.p0_paths)

66 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/data/image_folder.py:

--------------------------------------------------------------------------------

1 | ################################################################################

2 | # Code from

3 | # https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py

4 | # Modified the original code so that it also loads images from the current

5 | # directory as well as the subdirectories

6 | ################################################################################

7 |

8 | import torch.utils.data as data

9 |

10 | from PIL import Image

11 | import os

12 | import os.path

13 |

14 | IMG_EXTENSIONS = [

15 | '.jpg', '.JPG', '.jpeg', '.JPEG',

16 | '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',

17 | ]

18 |

19 | NP_EXTENSIONS = ['.npy',]

20 |

21 | def is_image_file(filename, mode='img'):

22 | if(mode=='img'):

23 | return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

24 | elif(mode=='np'):

25 | return any(filename.endswith(extension) for extension in NP_EXTENSIONS)

26 |

27 | def make_dataset(dirs, mode='img'):

28 | if(not isinstance(dirs,list)):

29 | dirs = [dirs,]

30 |

31 | images = []

32 | for dir in dirs:

33 | assert os.path.isdir(dir), '%s is not a valid directory' % dir

34 | for root, _, fnames in sorted(os.walk(dir)):

35 | for fname in fnames:

36 | if is_image_file(fname, mode=mode):

37 | path = os.path.join(root, fname)

38 | images.append(path)

39 |

40 | # print("Found %i images in %s"%(len(images),root))

41 | return images

42 |

43 | def default_loader(path):

44 | return Image.open(path).convert('RGB')

45 |

46 | class ImageFolder(data.Dataset):

47 | def __init__(self, root, transform=None, return_paths=False,

48 | loader=default_loader):

49 | imgs = make_dataset(root)

50 | if len(imgs) == 0:

51 | raise(RuntimeError("Found 0 images in: " + root + "\n"

52 | "Supported image extensions are: " + ",".join(IMG_EXTENSIONS)))

53 |

54 | self.root = root

55 | self.imgs = imgs

56 | self.transform = transform

57 | self.return_paths = return_paths

58 | self.loader = loader

59 |

60 | def __getitem__(self, index):

61 | path = self.imgs[index]

62 | img = self.loader(path)

63 | if self.transform is not None:

64 | img = self.transform(img)

65 | if self.return_paths:

66 | return img, path

67 | else:

68 | return img

69 |

70 | def __len__(self):

71 | return len(self.imgs)

72 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir0/0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir0/0.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir0/1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir0/1.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir1/0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir1/0.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir1/1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir1/1.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_p0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_p0.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_p1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_p1.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_ref.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_dir_pair/ex_ref.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_p0.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_p0.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_p1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_p1.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/ex_ref.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/ex_ref.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/example_dists.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/example_dists.txt

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/imgs/fig1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Amazingren/CIT/e7613e495cb60433fea28afd80d3cc70bcfa7ff1/metrics/PerceptualSimilarity/imgs/fig1.png

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/lpips/__init__.py:

--------------------------------------------------------------------------------

1 |

2 | from __future__ import absolute_import

3 | from __future__ import division

4 | from __future__ import print_function

5 |

6 | import numpy as np

7 | import torch

8 | # from torch.autograd import Variable

9 |

10 | from lpips.trainer import *

11 | from lpips.lpips import *

12 |

13 | # class PerceptualLoss(torch.nn.Module):

14 | # def __init__(self, model='lpips', net='alex', spatial=False, use_gpu=False, gpu_ids=[0], version='0.1'): # VGG using our perceptually-learned weights (LPIPS metric)

15 | # # def __init__(self, model='net', net='vgg', use_gpu=True): # "default" way of using VGG as a perceptual loss

16 | # super(PerceptualLoss, self).__init__()

17 | # print('Setting up Perceptual loss...')

18 | # self.use_gpu = use_gpu

19 | # self.spatial = spatial

20 | # self.gpu_ids = gpu_ids

21 | # self.model = dist_model.DistModel()

22 | # self.model.initialize(model=model, net=net, use_gpu=use_gpu, spatial=self.spatial, gpu_ids=gpu_ids, version=version)

23 | # print('...[%s] initialized'%self.model.name())

24 | # print('...Done')

25 |

26 | # def forward(self, pred, target, normalize=False):

27 | # """

28 | # Pred and target are Variables.

29 | # If normalize is True, assumes the images are between [0,1] and then scales them between [-1,+1]

30 | # If normalize is False, assumes the images are already between [-1,+1]

31 |

32 | # Inputs pred and target are Nx3xHxW

33 | # Output pytorch Variable N long

34 | # """

35 |

36 | # if normalize:

37 | # target = 2 * target - 1

38 | # pred = 2 * pred - 1

39 |

40 | # return self.model.forward(target, pred)

41 |

42 | def normalize_tensor(in_feat,eps=1e-10):

43 | norm_factor = torch.sqrt(torch.sum(in_feat**2,dim=1,keepdim=True))

44 | return in_feat/(norm_factor+eps)

45 |

46 | def l2(p0, p1, range=255.):

47 | return .5*np.mean((p0 / range - p1 / range)**2)

48 |

49 | def psnr(p0, p1, peak=255.):

50 | return 10*np.log10(peak**2/np.mean((1.*p0-1.*p1)**2))

51 |

52 | def dssim(p0, p1, range=255.):

53 | from skimage.measure import compare_ssim

54 | return (1 - compare_ssim(p0, p1, data_range=range, multichannel=True)) / 2.

55 |

56 | def rgb2lab(in_img,mean_cent=False):

57 | from skimage import color

58 | img_lab = color.rgb2lab(in_img)

59 | if(mean_cent):

60 | img_lab[:,:,0] = img_lab[:,:,0]-50

61 | return img_lab

62 |

63 | def tensor2np(tensor_obj):

64 | # change dimension of a tensor object into a numpy array

65 | return tensor_obj[0].cpu().float().numpy().transpose((1,2,0))

66 |

67 | def np2tensor(np_obj):

68 | # change dimenion of np array into tensor array

69 | return torch.Tensor(np_obj[:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

70 |

71 | def tensor2tensorlab(image_tensor,to_norm=True,mc_only=False):

72 | # image tensor to lab tensor

73 | from skimage import color

74 |

75 | img = tensor2im(image_tensor)

76 | img_lab = color.rgb2lab(img)

77 | if(mc_only):

78 | img_lab[:,:,0] = img_lab[:,:,0]-50

79 | if(to_norm and not mc_only):

80 | img_lab[:,:,0] = img_lab[:,:,0]-50

81 | img_lab = img_lab/100.

82 |

83 | return np2tensor(img_lab)

84 |

85 | def tensorlab2tensor(lab_tensor,return_inbnd=False):

86 | from skimage import color

87 | import warnings

88 | warnings.filterwarnings("ignore")

89 |

90 | lab = tensor2np(lab_tensor)*100.

91 | lab[:,:,0] = lab[:,:,0]+50

92 |

93 | rgb_back = 255.*np.clip(color.lab2rgb(lab.astype('float')),0,1)

94 | if(return_inbnd):

95 | # convert back to lab, see if we match

96 | lab_back = color.rgb2lab(rgb_back.astype('uint8'))

97 | mask = 1.*np.isclose(lab_back,lab,atol=2.)

98 | mask = np2tensor(np.prod(mask,axis=2)[:,:,np.newaxis])

99 | return (im2tensor(rgb_back),mask)

100 | else:

101 | return im2tensor(rgb_back)

102 |

103 | def load_image(path):

104 | if(path[-3:] == 'dng'):

105 | import rawpy

106 | with rawpy.imread(path) as raw:

107 | img = raw.postprocess()

108 | elif(path[-3:]=='bmp' or path[-3:]=='jpg' or path[-3:]=='png'):

109 | import cv2

110 | return cv2.imread(path)[:,:,::-1]

111 | else:

112 | img = (255*plt.imread(path)[:,:,:3]).astype('uint8')

113 |

114 | return img

115 |

116 | def rgb2lab(input):

117 | from skimage import color

118 | return color.rgb2lab(input / 255.)

119 |

120 | def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

121 | image_numpy = image_tensor[0].cpu().float().numpy()

122 | image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

123 | return image_numpy.astype(imtype)

124 |

125 | def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

126 | return torch.Tensor((image / factor - cent)

127 | [:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

128 |

129 | def tensor2vec(vector_tensor):

130 | return vector_tensor.data.cpu().numpy()[:, :, 0, 0]

131 |

132 |

133 | def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

134 | # def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=1.):

135 | image_numpy = image_tensor[0].cpu().float().numpy()

136 | image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

137 | return image_numpy.astype(imtype)

138 |

139 | def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

140 | # def im2tensor(image, imtype=np.uint8, cent=1., factor=1.):

141 | return torch.Tensor((image / factor - cent)

142 | [:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

143 |

144 |

145 |

146 | def voc_ap(rec, prec, use_07_metric=False):

147 | """ ap = voc_ap(rec, prec, [use_07_metric])

148 | Compute VOC AP given precision and recall.

149 | If use_07_metric is true, uses the

150 | VOC 07 11 point method (default:False).

151 | """

152 | if use_07_metric:

153 | # 11 point metric

154 | ap = 0.

155 | for t in np.arange(0., 1.1, 0.1):

156 | if np.sum(rec >= t) == 0:

157 | p = 0

158 | else:

159 | p = np.max(prec[rec >= t])

160 | ap = ap + p / 11.

161 | else:

162 | # correct AP calculation

163 | # first append sentinel values at the end

164 | mrec = np.concatenate(([0.], rec, [1.]))

165 | mpre = np.concatenate(([0.], prec, [0.]))

166 |

167 | # compute the precision envelope

168 | for i in range(mpre.size - 1, 0, -1):

169 | mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])

170 |

171 | # to calculate area under PR curve, look for points

172 | # where X axis (recall) changes value

173 | i = np.where(mrec[1:] != mrec[:-1])[0]

174 |

175 | # and sum (\Delta recall) * prec

176 | ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])

177 | return ap

178 |

179 |

--------------------------------------------------------------------------------

/metrics/PerceptualSimilarity/lpips/lpips.py:

--------------------------------------------------------------------------------

1 |

2 | from __future__ import absolute_import

3 |

4 | import torch

5 | import torch.nn as nn

6 | import torch.nn.init as init

7 | from torch.autograd import Variable

8 | import numpy as np

9 | from . import pretrained_networks as pn

10 | import torch.nn

11 |

12 | import lpips

13 |

14 | def spatial_average(in_tens, keepdim=True):

15 | return in_tens.mean([2,3],keepdim=keepdim)

16 |

17 | def upsample(in_tens, out_HW=(64,64)): # assumes scale factor is same for H and W

18 | in_H, in_W = in_tens.shape[2], in_tens.shape[3]

19 | return nn.Upsample(size=out_HW, mode='bilinear', align_corners=False)(in_tens)

20 |

21 | # Learned perceptual metric

22 | class LPIPS(nn.Module):

23 | def __init__(self, pretrained=True, net='alex', version='0.1', lpips=True, spatial=False,

24 | pnet_rand=False, pnet_tune=False, use_dropout=True, model_path=None, eval_mode=True, verbose=True):

25 | # lpips - [True] means with linear calibration on top of base network

26 | # pretrained - [True] means load linear weights

27 |

28 | super(LPIPS, self).__init__()

29 | if(verbose):

30 | print('Setting up [%s] perceptual loss: trunk [%s], v[%s], spatial [%s]'%

31 | ('LPIPS' if lpips else 'baseline', net, version, 'on' if spatial else 'off'))

32 |

33 | self.pnet_type = net

34 | self.pnet_tune = pnet_tune

35 | self.pnet_rand = pnet_rand

36 | self.spatial = spatial

37 | self.lpips = lpips # false means baseline of just averaging all layers

38 | self.version = version

39 | self.scaling_layer = ScalingLayer()

40 |

41 | if(self.pnet_type in ['vgg','vgg16']):

42 | net_type = pn.vgg16

43 | self.chns = [64,128,256,512,512]

44 | elif(self.pnet_type=='alex'):

45 | net_type = pn.alexnet

46 | self.chns = [64,192,384,256,256]

47 | elif(self.pnet_type=='squeeze'):

48 | net_type = pn.squeezenet

49 | self.chns = [64,128,256,384,384,512,512]

50 | self.L = len(self.chns)

51 |

52 | self.net = net_type(pretrained=not self.pnet_rand, requires_grad=self.pnet_tune)

53 |

54 | if(lpips):

55 | self.lin0 = NetLinLayer(self.chns[0], use_dropout=use_dropout)

56 | self.lin1 = NetLinLayer(self.chns[1], use_dropout=use_dropout)

57 | self.lin2 = NetLinLayer(self.chns[2], use_dropout=use_dropout)

58 | self.lin3 = NetLinLayer(self.chns[3], use_dropout=use_dropout)

59 | self.lin4 = NetLinLayer(self.chns[4], use_dropout=use_dropout)

60 | self.lins = [self.lin0,self.lin1,self.lin2,self.lin3,self.lin4]

61 | if(self.pnet_type=='squeeze'): # 7 layers for squeezenet

62 | self.lin5 = NetLinLayer(self.chns[5], use_dropout=use_dropout)

63 | self.lin6 = NetLinLayer(self.chns[6], use_dropout=use_dropout)

64 | self.lins+=[self.lin5,self.lin6]

65 | self.lins = nn.ModuleList(self.lins)

66 |

67 | if(pretrained):

68 | if(model_path is None):

69 | import inspect

70 | import os

71 | model_path = os.path.abspath(os.path.join(inspect.getfile(self.__init__), '..', 'weights/v%s/%s.pth'%(version,net)))

72 |

73 | if(verbose):

74 | print('Loading model from: %s'%model_path)

75 | self.load_state_dict(torch.load(model_path, map_location='cpu'), strict=False)

76 |

77 | if(eval_mode):

78 | self.eval()

79 |

80 | def forward(self, in0, in1, retPerLayer=False, normalize=False):

81 | if normalize: # turn on this flag if input is [0,1] so it can be adjusted to [-1, +1]

82 | in0 = 2 * in0 - 1

83 | in1 = 2 * in1 - 1

84 |

85 | # v0.0 - original release had a bug, where input was not scaled

86 | in0_input, in1_input = (self.scaling_layer(in0), self.scaling_layer(in1)) if self.version=='0.1' else (in0, in1)

87 | outs0, outs1 = self.net.forward(in0_input), self.net.forward(in1_input)

88 | feats0, feats1, diffs = {}, {}, {}

89 |

90 | for kk in range(self.L):

91 | feats0[kk], feats1[kk] = lpips.normalize_tensor(outs0[kk]), lpips.normalize_tensor(outs1[kk])

92 | diffs[kk] = (feats0[kk]-feats1[kk])**2

93 |

94 | if(self.lpips):

95 | if(self.spatial):

96 | res = [upsample(self.lins[kk](diffs[kk]), out_HW=in0.shape[2:]) for kk in range(self.L)]

97 | else:

98 | res = [spatial_average(self.lins[kk](diffs[kk]), keepdim=True) for kk in range(self.L)]

99 | else:

100 | if(self.spatial):

101 | res = [upsample(diffs[kk].sum(dim=1,keepdim=True), out_HW=in0.shape[2:]) for kk in range(self.L)]

102 | else:

103 | res = [spatial_average(diffs[kk].sum(dim=1,keepdim=True), keepdim=True) for kk in range(self.L)]

104 |

105 | val = res[0]

106 | for l in range(1,self.L):

107 | val += res[l]

108 |

109 | # a = spatial_average(self.lins[kk](diffs[kk]), keepdim=True)