├── #8Weeksqlchallange

├── Case Study # 1 - Danny's Diner

│ ├── Danny's Diner Solution.md

│ ├── Danny's Diner Solution.sql

│ ├── Diner_Schema.sql

│ ├── ERD.png

│ └── README.md

├── Case Study # 2 - Pizza Runner

│ ├── 0. Data Cleaning.md

│ ├── A. Pizza Metrics.md

│ ├── B. Runner and Customer Experience.md

│ ├── C. Ingredient Optimisation.md

│ ├── D. Pricing and Ratings.md

│ ├── Pizza Runner Schema.sql

│ └── Readme.md

├── Case Study # 3 - Foodie Fi

│ └── Readme.md

└── README.md

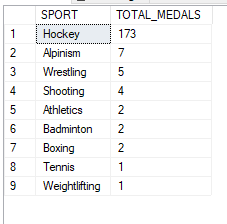

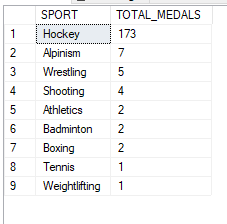

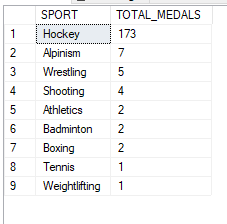

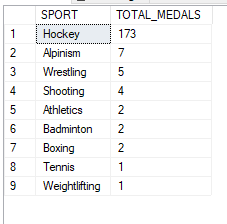

├── Analyze Incredible 120 Years of Olympic Games

├── Olympics_data.zip

└── README.md

├── Credit card spending habits in India

├── Credit Card Image.jpg

├── CreditCard_Sql_EDA_01June.pdf

├── Readme.md

└── SqlCode_Creditcard.sql

├── Cryptocurrency

├── Cryptocurrency Solutions.md

├── PostgresCode.sql

├── README.MD

├── Schema_Postgres.sql

├── crypto-erd.png

├── members.csv

├── prices.csv

└── transactions.csv

├── Indian Bank Case Study

├── IndianBank_Queries.sql

└── README.md

├── Murder Mystery Case Study

├── Crime Scene.csv

├── Drivers license.csv

├── GetFitNow check in.csv

├── GetFitNow members.csv

├── Interviews.csv

├── Person.csv

├── Readme.md

├── SQL Murder Mystery.sql

└── Schema.png

├── OYO business case study

├── Oyo Business Room Sales Analysis_Sql_18June.pdf

├── Oyo_City.xlsx

├── Oyo_Sales.xlsx

├── Readme.md

└── SqlCode_OYO_business.sql

├── README.md

├── School Case Study

├── README.md

└── School_Queries.sql

├── Sql_case_studies(Data In Motion, LLC)

├── 01Sql_Challenge(The Tiny Shop)

│ ├── PostgreSqlcode.sql

│ └── Readme.md

└── 02Sql_Challenge(Human Resources)

│ ├── PostgreSqlCode.sql

│ └── Readme.md

├── Sql_case_studies(Steel data challenge)

├── 01Sql_challenge (Steve's Car Showroom)

│ ├── 01Sql_challenge (Steve's Car Showroom).jpeg

│ └── Schema.sql

├── 02Sql_challenge (Esports Tournament)

│ ├── 02Sql_challenge (Esports Tournament).jpeg

│ └── Schema.sql

├── 03Sql_challenge (Customer Insights)

│ ├── 03Sql_challenge (Customer Insights).jpeg

│ └── Schema.sql

├── 04Sql_challenge (Finance Analysis)

│ ├── 04Sql_challenge (Finance Analysis)Part 1.jpeg

│ ├── 04Sql_challenge (Finance Analysis)Part 2.jpeg

│ ├── Schema.sql

│ └── Sqlcode(Finance analysis).sql

├── 05Sql_Challenge (Marketing Analysis)

│ ├── 06 Sql_Challenge (Marketing Analysis).sql

│ ├── Schema.sql

│ └── Sql Challenge_6_Steeldata.pdf

├── 05Sql_Challenge (Pub chain)

│ ├── Schema.sql

│ └── Sqlcode(PubPricinganalysis).sql

└── 05Sql_Challenge (Pub chain)

│ └── Sql Challenge_5_Steeldata.pdf

└── Supplier Case Study

├── README.md

└── Supplier_Queries.sql

/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/Danny's Diner Solution.md:

--------------------------------------------------------------------------------

1 | # :ramen: :curry: :sushi: Case Study #1: Danny's Diner

2 |

3 | ## Case Study Questions

4 |

5 | 1. What is the total amount each customer spent at the restaurant?

6 | 2. How many days has each customer visited the restaurant?

7 | 3. What was the first item from the menu purchased by each customer?

8 | 4. What is the most purchased item on the menu and how many times was it purchased by all customers?

9 | 5. Which item was the most popular for each customer?

10 | 6. Which item was purchased first by the customer after they became a member?

11 | 7. Which item was purchased just before the customer became a member?

12 | 8. What is the total items and amount spent for each member before they became a member?

13 | 9. If each $1 spent equates to 10 points and sushi has a 2x points multiplier - how many points would each customer have?

14 | 10. In the first week after a customer joins the program (including their join date) they earn 2x points on all items, not just sushi - how many points do customer A and B have at the end of January?

15 | ***

16 |

17 | ### 1. What is the total amount each customer spent at the restaurant?

18 |


20 |

21 | ```sql

22 | SELECT s.customer_id AS customer, SUM(m.price) AS spentamount_cust

23 | FROM sales s

24 | JOIN menu m

25 | ON s.product_id = m.product_id

26 | GROUP BY s.customer_id;

27 | ```

28 |

29 |

30 | #### Output:

31 | |customer|spentamount_cust|

32 | |--------|----------------|

33 | |A| 76

34 | |B |74

35 | |C |36

36 |

37 | ***

38 |

39 | ### 2. How many days has each customer visited the restaurant?

40 |


42 |

43 | ```sql

44 | SELECT customer_id, COUNT(DISTINCT order_date) AS Visit_frequency

45 | FROM sales

46 | GROUP BY customer_id;

47 | ```

48 |

49 |

50 | #### Output:

51 | |customer_id| Visit_frequency|

52 | |-----------|----------------|

53 | |A| 4

54 | |B |6

55 | |C |2

56 |
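Counting distinct dates rather than rows is what turns orders into visits: customer A placed two orders on 2021-01-01 and two on 2021-01-11. The sketch below is a hypothetical cross-check, not part of the original solution. It loads the sales rows from Diner_Schema.sql into an in-memory SQLite database, which stands in for SQL Server only for illustration and stores dates as TEXT. It then compares COUNT(*) with COUNT(DISTINCT order_date).

```python
import sqlite3

# Sales rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# COUNT(*) counts order rows; COUNT(DISTINCT order_date) counts visit days.
visits = conn.execute("""
    SELECT customer_id,
           COUNT(*) AS order_rows,
           COUNT(DISTINCT order_date) AS visit_frequency
    FROM sales
    GROUP BY customer_id
    ORDER BY customer_id
""").fetchall()

for customer_id, order_rows, visit_frequency in visits:
    print(f"{customer_id}: {order_rows} orders on {visit_frequency} days")
```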

57 | ***

58 |

59 | ### 3. What was the first item from the menu purchased by each customer?

60 | -- Assumption: the sales table has no timestamp, so if a customer bought several items on their first day, all of them are treated as the first item

61 |


63 |

64 | ```sql

65 | SELECT DISTINCT s.customer_id AS customer, m.product_name AS food_item

66 | FROM sales s

67 | JOIN menu m

68 | ON s.product_id = m.product_id

69 | WHERE s.order_date = (SELECT MIN(order_date) FROM sales WHERE customer_id = s.customer_id)

70 | ORDER BY customer, food_item;

71 | ```

72 |

73 | #### Output:

74 | |customer |food_item|

75 | |--------|----------|

76 | |A |curry|

77 | |A |sushi|

78 | |B |curry|

79 | |C |ramen|

80 |
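The sales table records no time of day. As a result, customer A's first visit yields two candidate items, and customer C ordered ramen twice on that same first day. The sketch below is a hypothetical SQLite cross-check using the Diner_Schema.sql rows, with SQLite standing in for SQL Server. It runs the question 3 query with and without DISTINCT to show where the duplicate row comes from.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# The correlated subquery finds each customer's earliest order_date.
FIRST_DAY_ITEMS = """
    SELECT {distinct} s.customer_id AS customer, m.product_name AS food_item
    FROM sales s
    JOIN menu m ON s.product_id = m.product_id
    WHERE s.order_date = (SELECT MIN(order_date) FROM sales
                          WHERE customer_id = s.customer_id)
    ORDER BY customer, food_item
"""

raw_rows = conn.execute(FIRST_DAY_ITEMS.format(distinct="")).fetchall()
first_items = conn.execute(FIRST_DAY_ITEMS.format(distinct="DISTINCT")).fetchall()

print(len(raw_rows), "rows without DISTINCT")  # C's two ramen orders appear twice
print(first_items)
```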

81 | ***

82 |

83 | ### 4. What is the most purchased item on the menu and how many times was it purchased by all customers?

84 |


86 |

87 | ```sql

88 | SELECT TOP 1 m.product_name, COUNT(s.product_id) AS order_count

89 | FROM sales s

90 | JOIN menu m ON s.product_id=m.product_id

91 | GROUP BY m.product_name

92 | ORDER BY order_count DESC;

93 | ```

94 |

95 |

96 | #### Output:

97 | |product_name| order_count|

98 | |------------|-------------|

99 | |ramen |8|

100 | ***

101 |

102 | ### 5. Which item was the most popular for each customer?

103 | -- Assumption: if several products tie for a customer's highest purchase count, all of them are treated as the most popular

104 |


106 |

107 | ```sql

108 | with cte_order_count as

109 | (

110 | select s.customer_id,

111 | m.product_name,

112 | count(*) as order_count

113 | from sales as s

114 | inner join menu as m

115 | on s.product_id = m.product_id

116 | group by s.customer_id,m.product_name

117 | ),

118 | cte_popular_rank AS (

119 | SELECT *,

120 | rank() over(partition by customer_id order by order_count desc) as rn

121 | from cte_order_count )

122 |

123 | SELECT customer_id as customer, product_name as food_item,order_count

124 | FROM cte_popular_rank

125 | WHERE rn = 1;

126 | ```

127 |

128 |

129 | #### Output:

130 |

131 | | customer | food_item | order_count |

132 | |----------|-----------|-------------|

133 | | A | ramen | 3 |

134 | | B | ramen | 2 |

135 | | B | curry | 2 |

136 | | B | sushi | 2 |

137 | | C | ramen | 3 |

138 |
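RANK() assigns the same rank to tied rows, which is why all three of customer B's products are returned. The sketch below is a hypothetical SQLite cross-check on the Diner_Schema.sql rows; window functions require SQLite 3.25 or later. It contrasts RANK() with ROW_NUMBER(), which would keep exactly one row per customer and pick arbitrarily among B's tied products.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

ranked = conn.execute("""
    WITH order_counts AS (
        SELECT s.customer_id, m.product_name, COUNT(*) AS order_count
        FROM sales s
        JOIN menu m ON s.product_id = m.product_id
        GROUP BY s.customer_id, m.product_name
    )
    SELECT customer_id, product_name, order_count,
           RANK() OVER (PARTITION BY customer_id ORDER BY order_count DESC) AS rnk,
           ROW_NUMBER() OVER (PARTITION BY customer_id ORDER BY order_count DESC) AS row_num
    FROM order_counts
""").fetchall()

# RANK keeps every tied product; ROW_NUMBER keeps exactly one row per customer.
by_rank = sorted((c, p, n) for c, p, n, rnk, _ in ranked if rnk == 1)
by_row_number = [c for c, _, _, _, row_num in ranked if row_num == 1]

print(by_rank)
print(sorted(by_row_number))
```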

139 | ***

140 |

141 | ### 6. Which item was purchased first by the customer after they became a member?

142 | -- Before answering question 6, I created a temporary table, #Membership_validation, that keeps only customers who joined the membership program and marks each order placed on or after the join date with membership = 'X':

143 |


145 |

146 | ```sql

147 | DROP TABLE IF EXISTS #Membership_validation; -- avoids an error on the first run (SQL Server 2016+)

148 | CREATE TABLE #Membership_validation

149 | (

150 | customer_id varchar(1),

151 | order_date date,

152 | product_name varchar(5),

153 | price int,

154 | join_date date,

155 | membership varchar(5)

156 | )

157 |

158 | INSERT INTO #Membership_validation

159 | SELECT s.customer_id, s.order_date, m.product_name, m.price, mem.join_date,

160 | CASE WHEN s.order_date >= mem.join_date THEN 'X' ELSE '' END AS membership

161 | FROM sales s

162 | INNER JOIN menu m ON s.product_id = m.product_id

163 | LEFT JOIN members mem ON mem.customer_id = s.customer_id

164 | WHERE join_date IS NOT NULL

165 | ORDER BY customer_id, order_date;

166 |

167 | select * from #Membership_validation;

168 | ```

169 |

170 |

171 | #### Temporary Table {#Membership_validation} Output:

172 |

173 | | customer_id| order_date | product_name | price | join_date |membership |

174 | |:------------:|:---------------:|:--------------:|:-------:|:--------------:|:-----------:|

175 | | A |2021-01-01| sushi| 10| 2021-01-07| |

176 | | A |2021-01-01 |curry | 15 |2021-01-07 | |

177 | | A |2021-01-07 |curry | 15 |2021-01-07 | X |

178 | | A |2021-01-10 |ramen | 12 |2021-01-07 | X

179 | | A |2021-01-11 | ramen | 12 |2021-01-07 | X

180 | | A |2021-01-11 | ramen |12 | 2021-01-07 | X

181 | | B |2021-01-01 | curry | 15 | 2021-01-09 | |

182 | | B | 2021-01-02 | curry | 15 | 2021-01-09 | |

183 | | B |2021-01-04 | sushi |10 | 2021-01-09 | |

184 | | B |2021-01-11 | sushi |10 | 2021-01-09 | X |

185 | | B |2021-01-16 | ramen | 12 | 2021-01-09| X|

186 | | B |2021-02-01 | ramen |12 |2021-01-09 | X|

187 |

188 |


190 |

191 | ```sql

192 | WITH cte_first_after_mem AS (

193 | SELECT

194 | customer_id,

195 | product_name,

196 | order_date,

197 | RANK() OVER(PARTITION BY customer_id ORDER BY order_date) AS purchase_order

198 | FROM #Membership_validation

199 | WHERE membership = 'X'

200 | )

201 | SELECT * FROM cte_first_after_mem

202 | WHERE purchase_order = 1;

203 | ```

204 |

205 |

206 | #### Output:

207 | |customer_id| product_name| order_date| purchase_order|

208 | |:---------:|:-----------:|:--------:|:-------------:|

209 | |A| curry| 2021-01-07| 1|

210 | |B |sushi| 2021-01-11 |1|

211 |
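The membership flag uses >=, so an order placed on the join date counts as a member purchase. That is why customer A's answer is curry (2021-01-07) rather than the ramen ordered on 2021-01-10. The sketch below is a hypothetical SQLite cross-check on the Diner_Schema.sql rows. It rebuilds #Membership_validation as a CTE rather than a temporary table and runs the question 6 logic with both >= and a strict >.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
CREATE TABLE members (customer_id TEXT, join_date TEXT);
INSERT INTO members VALUES ('A', '2021-01-07'), ('B', '2021-01-09');
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)


def first_member_purchase(comparison):
    """Question 6 logic; comparison is '>=' (join date counts) or '>' (strictly after)."""
    if comparison not in (">=", ">"):
        raise ValueError("comparison must be '>=' or '>'")
    # The inner join to members keeps only customers who joined, like the
    # LEFT JOIN ... WHERE join_date IS NOT NULL used for the temporary table.
    query = f"""
        WITH membership_validation AS (
            SELECT s.customer_id, s.order_date, m.product_name,
                   CASE WHEN s.order_date {comparison} mem.join_date
                        THEN 'X' ELSE '' END AS membership
            FROM sales s
            JOIN menu m ON s.product_id = m.product_id
            JOIN members mem ON mem.customer_id = s.customer_id
        ),
        ranked AS (
            SELECT customer_id, product_name, order_date,
                   RANK() OVER (PARTITION BY customer_id
                                ORDER BY order_date) AS purchase_order
            FROM membership_validation
            WHERE membership = 'X'
        )
        SELECT customer_id, product_name, order_date
        FROM ranked
        WHERE purchase_order = 1
        ORDER BY customer_id
    """
    return conn.execute(query).fetchall()


print(first_member_purchase(">="))  # join date counts as a member purchase
print(first_member_purchase(">"))   # only orders strictly after joining
```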

212 | ***

213 |

214 | ### 7. Which item was purchased just before the customer became a member?

215 |


217 |

218 | ```sql

219 | WITH cte_last_before_mem AS (

220 | SELECT

221 | customer_id,

222 | product_name,

223 | order_date,

224 | RANK() OVER( PARTITION BY customer_id ORDER BY order_date DESC) AS purchase_order

225 | FROM #Membership_validation

226 | WHERE membership = ''

227 | )

228 | SELECT * FROM cte_last_before_mem

229 | WHERE purchase_order = 1;

230 | ```

231 |

232 |

233 | #### Output:

234 | |customer_id |product_name| order_date |purchase_order|

235 | |:---------:|:------------:|:----------:|:-------------:|

236 | |A |sushi| 2021-01-01| 1|

237 | |A |curry| 2021-01-01| 1|

238 | |B| sushi| 2021-01-04| 1|

239 |

240 | ***

241 |

242 | ### 8. What is the total items and amount spent for each member before they became a member?

243 |


245 |

246 | ```sql

247 | WITH cte_spent_before_mem AS (

248 | SELECT

249 | customer_id,

250 | product_name,

251 | price

252 | FROM #Membership_validation

253 | WHERE membership = ''

254 | )

255 | SELECT

256 | customer_id,

257 | SUM(price) AS total_spent,

258 | COUNT(*) AS total_items

259 | FROM cte_spent_before_mem

260 | GROUP BY customer_id

261 | ORDER BY customer_id;

262 | ```

263 |

264 |

265 | #### Output:

266 | |customer_id |total_spent |total_items|

267 | |:---------:|:------------:|:---------:|

268 | |A| 25| 2|

269 | |B |40| 3|

270 |
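Questions 7 and 8 both read the orders flagged with an empty membership value, that is, orders placed before the join date. As a hypothetical cross-check on the Diner_Schema.sql rows, the sketch below expresses that filter as a SQLite CTE. The CTE is named before_joining, an illustrative name that does not appear in the original. The sketch reproduces both outputs, including the two tied rows RANK() keeps for customer A on 2021-01-01.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
CREATE TABLE members (customer_id TEXT, join_date TEXT);
INSERT INTO members VALUES ('A', '2021-01-07'), ('B', '2021-01-09');
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Orders placed before the join date (membership = '' in #Membership_validation).
BEFORE_JOINING = """
    WITH before_joining AS (
        SELECT s.customer_id, s.order_date, m.product_name, m.price
        FROM sales s
        JOIN menu m ON s.product_id = m.product_id
        JOIN members mem ON mem.customer_id = s.customer_id
        WHERE s.order_date < mem.join_date
    )
"""

# Question 7: the most recent purchase date before joining; RANK keeps ties.
last_before = conn.execute(BEFORE_JOINING + """
    SELECT customer_id, product_name, order_date
    FROM (
        SELECT customer_id, product_name, order_date,
               RANK() OVER (PARTITION BY customer_id
                            ORDER BY order_date DESC) AS purchase_order
        FROM before_joining
    ) AS ranked
    WHERE purchase_order = 1
    ORDER BY customer_id, product_name
""").fetchall()

# Question 8: total spent and number of items before joining.
spent_before = conn.execute(BEFORE_JOINING + """
    SELECT customer_id, SUM(price) AS total_spent, COUNT(*) AS total_items
    FROM before_joining
    GROUP BY customer_id
    ORDER BY customer_id
""").fetchall()

print(last_before)
print(spent_before)
```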

271 | ***

272 |

273 | ### 9. If each $1 spent equates to 10 points and sushi has a 2x points multiplier - how many points would each customer have?

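274 | Note: this query counts only orders placed on or after each customer's join date (the rows of #Membership_validation). Customer C, who never joined, therefore earns no points, and neither do purchases made before joining.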


276 |

277 | ```sql

278 | SELECT

279 | customer_id,

280 | SUM( CASE

281 | WHEN product_name = 'sushi' THEN (price * 20) ELSE (price * 10)

282 | END

283 | ) AS total_points

284 | FROM #Membership_validation

285 | WHERE order_date >= join_date

286 | GROUP BY customer_id

287 | ORDER BY customer_id;

288 | ```

289 |

290 |

291 | #### Output:

292 | |customer_id |total_points|

293 | |:----------:|:-----------:|

294 | |A| 510|

295 | |B| 440|

296 |
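The question itself does not say whether purchases made before joining earn points, and the totals depend on that choice. The sketch below is a hypothetical SQLite cross-check on the Diner_Schema.sql rows. It computes the same sushi-doubled points twice. The first pass is restricted to orders on or after the join date, as the query above does. The second pass covers every purchase by every customer.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
CREATE TABLE members (customer_id TEXT, join_date TEXT);
INSERT INTO members VALUES ('A', '2021-01-07'), ('B', '2021-01-09');
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Sushi earns 20 points per $1; every other item earns 10.
POINTS = "SUM(CASE WHEN m.product_name = 'sushi' THEN m.price * 20 ELSE m.price * 10 END)"

# Members only, orders on or after the join date (no end date, as in question 9).
member_only = conn.execute(f"""
    SELECT s.customer_id, {POINTS} AS total_points
    FROM sales s
    JOIN menu m ON s.product_id = m.product_id
    JOIN members mem ON mem.customer_id = s.customer_id
    WHERE s.order_date >= mem.join_date
    GROUP BY s.customer_id
    ORDER BY s.customer_id
""").fetchall()

# Every purchase by every customer, regardless of membership.
all_purchases = conn.execute(f"""
    SELECT s.customer_id, {POINTS} AS total_points
    FROM sales s
    JOIN menu m ON s.product_id = m.product_id
    GROUP BY s.customer_id
    ORDER BY s.customer_id
""").fetchall()

print(member_only)
print(all_purchases)
```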

297 | ### 10. In the first week after a customer joins the program (including their join date) they earn 2x points on all items, not just sushi - how many points do customer A and B have at the end of January?

298 | Assumption: Points are awarded only after the customer joins the membership program

299 | #### Steps

300 | 1. Find program_last_date, the last day of the customer's first week in the program. Counting the join date as day 1, this is join_date + 6 days.

301 | 2. Determine the points for each transaction made by a member:

302 | a. Order placed during the first week of membership -> points = price*20, irrespective of the purchased item

303 | b. Product = sushi and order_date is outside the first week -> points = price*20

304 | c. Product = not sushi and order_date is outside the first week -> points = price*10

305 | 3. Conditions in the WHERE clause

306 | a. order_date <= '2021-01-31' -> the order must be placed on or before 31 January 2021

307 | b. order_date >= join_date -> points are awarded only for orders placed after joining the membership program (join date included)

308 |

309 |

311 |

312 | ```sql

313 | with program_last_day_cte as

314 | (

315 | select join_date ,

316 | Dateadd(dd,6,join_date) as program_last_date, -- last day of the 7-day window that starts on join_date

317 | customer_id

318 | from members

319 | )

320 | SELECT s.customer_id,

321 | SUM(CASE

322 | WHEN order_date BETWEEN join_date AND program_last_date THEN price*10*2

323 | WHEN order_date NOT BETWEEN join_date AND program_last_date

324 | AND product_name = 'sushi' THEN price*10*2

325 | WHEN order_date NOT BETWEEN join_date AND program_last_date

326 | AND product_name != 'sushi' THEN price*10

327 | END) AS customer_points

328 | FROM MENU as m

329 | INNER JOIN sales as s on m.product_id = s.product_id

330 | INNER JOIN program_last_day_cte as k on k.customer_id = s.customer_id

331 | WHERE order_date <='2021-01-31'

332 | AND order_date >=join_date

333 | GROUP BY s.customer_id

334 | ORDER BY s.customer_id;

335 | ```

336 |

337 |

338 | #### Output:

339 | |customer_id |customer_points|

340 | |:----------:|:-------------:|

341 | |A| 1020|

342 | |B| 320|

343 |
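The length of the bonus window is easy to get off by one. BETWEEN includes both ends, so join_date through join_date + 6 days covers exactly 7 days, while join_date + 7 days covers 8. The sketch below is a hypothetical SQLite cross-check on the Diner_Schema.sql rows, with SQLite's date(join_date, '+N days') standing in for DATEADD. It scores only orders placed on or after joining and on or before 2021-01-31, for both window lengths. Only customer B's ramen order on 2021-01-16 changes sides.

```python
import sqlite3

# Rows copied from Diner_Schema.sql; dates stored as ISO-8601 TEXT in SQLite.
SCHEMA = """
CREATE TABLE sales (customer_id TEXT, order_date TEXT, product_id INTEGER);
INSERT INTO sales VALUES
  ('A', '2021-01-01', 1), ('A', '2021-01-01', 2), ('A', '2021-01-07', 2),
  ('A', '2021-01-10', 3), ('A', '2021-01-11', 3), ('A', '2021-01-11', 3),
  ('B', '2021-01-01', 2), ('B', '2021-01-02', 2), ('B', '2021-01-04', 1),
  ('B', '2021-01-11', 1), ('B', '2021-01-16', 3), ('B', '2021-02-01', 3),
  ('C', '2021-01-01', 3), ('C', '2021-01-01', 3), ('C', '2021-01-07', 3);
CREATE TABLE menu (product_id INTEGER, product_name TEXT, price INTEGER);
INSERT INTO menu VALUES (1, 'sushi', 10), (2, 'curry', 15), (3, 'ramen', 12);
CREATE TABLE members (customer_id TEXT, join_date TEXT);
INSERT INTO members VALUES ('A', '2021-01-07'), ('B', '2021-01-09');
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)


def january_points(extra_days):
    """Points up to 2021-01-31 with a bonus window of join_date .. join_date + extra_days."""
    return conn.execute(
        """
        SELECT s.customer_id,
               SUM(CASE
                     WHEN s.order_date BETWEEN mem.join_date
                                           AND date(mem.join_date, ?)
                          THEN m.price * 20   -- first-week bonus on any item
                     WHEN m.product_name = 'sushi'
                          THEN m.price * 20   -- sushi outside the window
                     ELSE m.price * 10
                   END) AS customer_points
        FROM sales s
        JOIN menu m ON s.product_id = m.product_id
        JOIN members mem ON mem.customer_id = s.customer_id
        WHERE s.order_date >= mem.join_date
          AND s.order_date <= '2021-01-31'
        GROUP BY s.customer_id
        ORDER BY s.customer_id
        """,
        (f"+{extra_days} days",),
    ).fetchall()


print(january_points(6))  # 7-day window: join date plus six more days
print(january_points(7))  # 8-day window
```

With the 7-day window, B's 2021-01-16 ramen earns the standard 120 points instead of the doubled 240.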

344 | ***

345 |

346 |

347 | Click [here](https://github.com/AmitPatel-analyst/SQL-Case-Study/tree/main/%238Weeksqlchallange) to move back to the 8-Week-SQL-Challenge repository!

348 |

349 |

350 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/Danny's Diner Solution.sql:

--------------------------------------------------------------------------------

1 | ----------------------------------

2 | -- CASE STUDY #1: DANNY'S DINER --

3 | ----------------------------------

4 |

5 | -- Author: Amit Patel

6 | -- Date: 25/04/2023

7 | -- Tool used: MSSQL Server

8 |

9 | --------------------------

10 | -- CASE STUDY QUESTIONS --

11 | --------------------------

12 |

13 | -- 1. What is the total amount each customer spent at the restaurant?

14 |

15 | SELECT s.customer_id AS customer, SUM(m.price) AS spentamount_cust

16 | FROM sales s

17 | JOIN menu m

18 | ON s.product_id = m.product_id

19 | GROUP BY s.customer_id;

20 |

21 |

22 | -- 2. How many days has each customer visited the restaurant?

23 |

24 | SELECT customer_id, COUNT(DISTINCT order_date) AS Visit_frequency

25 | FROM sales

26 | GROUP BY customer_id;

27 |

28 | -- 3. What was the first item from the menu purchased by each customer?

29 | -- Assumption: the sales table has no timestamp, so if a customer bought several items on their first day, all of them are treated as the first item

30 |

31 | SELECT DISTINCT s.customer_id AS customer, m.product_name AS food_item

32 | FROM sales s

33 | JOIN menu m

34 | ON s.product_id = m.product_id

35 | WHERE s.order_date = (SELECT MIN(order_date) FROM sales WHERE customer_id = s.customer_id)

36 | ORDER BY customer, food_item;

37 |

38 | -- 4. What is the most purchased item on the menu and how many times was it purchased by all customers?

39 |

40 | SELECT TOP 1 m.product_name, COUNT(s.product_id) AS order_count

41 | FROM sales s

42 | JOIN menu m ON s.product_id=m.product_id

43 | GROUP BY m.product_name

44 | ORDER BY order_count DESC;

45 |

46 | -- 5. Which item was the most popular for each customer?

47 | -- Assumption: if several products tie for a customer's highest purchase count, all of them are treated as the most popular

48 |

49 | with cte_order_count as

50 | (

51 | select s.customer_id,

52 | m.product_name,

53 | count(*) as order_count

54 | from sales as s

55 | inner join menu as m

56 | on s.product_id = m.product_id

57 | group by s.customer_id,m.product_name

58 | ),

59 | cte_popular_rank AS (

60 | SELECT *,

61 | rank() over(partition by customer_id order by order_count desc) as rn

62 | from cte_order_count )

63 |

64 | SELECT customer_id as customer, product_name as food_item,order_count

65 | FROM cte_popular_rank

66 | WHERE rn = 1;

67 |

68 | -- 6. Which item was purchased first by the customer after they became a member?

69 | -- Before answering question 6, I created a temporary table, #Membership_validation, that keeps only customers who joined the membership program and marks each order placed on or after the join date with membership = 'X':

70 |

71 | DROP TABLE IF EXISTS #Membership_validation; -- avoids an error on the first run (SQL Server 2016+)

72 | CREATE TABLE #Membership_validation

73 | (

74 | customer_id varchar(1),

75 | order_date date,

76 | product_name varchar(5),

77 | price int,

78 | join_date date,

79 | membership varchar(5)

80 | )

81 |

82 | INSERT INTO #Membership_validation

83 | SELECT s.customer_id, s.order_date, m.product_name, m.price, mem.join_date,

84 | CASE WHEN s.order_date >= mem.join_date THEN 'X' ELSE '' END AS membership

85 | FROM sales s

86 | INNER JOIN menu m ON s.product_id = m.product_id

87 | LEFT JOIN members mem ON mem.customer_id = s.customer_id

88 | WHERE join_date IS NOT NULL

89 | ORDER BY customer_id, order_date;

90 |

91 | select * from #Membership_validation;

92 | -- Solution for question 6

93 | WITH cte_first_after_mem AS (

94 | SELECT

95 | customer_id,

96 | product_name,

97 | order_date,

98 | RANK() OVER(PARTITION BY customer_id ORDER BY order_date) AS purchase_order

99 | FROM #Membership_validation

100 | WHERE membership = 'X'

101 | )

102 | SELECT * FROM cte_first_after_mem

103 | WHERE purchase_order = 1;

104 |

105 | -- 7. Which item was purchased just before the customer became a member?

106 |

107 | WITH cte_last_before_mem AS (

108 | SELECT

109 | customer_id,

110 | product_name,

111 | order_date,

112 | RANK() OVER( PARTITION BY customer_id ORDER BY order_date DESC) AS purchase_order

113 | FROM #Membership_validation

114 | WHERE membership = ''

115 | )

116 | SELECT * FROM cte_last_before_mem

117 | WHERE purchase_order = 1;

118 |

119 | -- 8. What is the total items and amount spent for each member before they became a member?

120 | WITH cte_spent_before_mem AS (

121 | SELECT

122 | customer_id,

123 | product_name,

124 | price

125 | FROM #Membership_validation

126 | WHERE membership = ''

127 | )

128 | SELECT

129 | customer_id,

130 | SUM(price) AS total_spent,

131 | COUNT(*) AS total_items

132 | FROM cte_spent_before_mem

133 | GROUP BY customer_id

134 | ORDER BY customer_id;

135 |

136 | -- 9. If each $1 spent equates to 10 points and sushi has a 2x points multiplier - how many points would each customer have?

137 |

138 | SELECT

139 | customer_id,

140 | SUM( CASE

141 | WHEN product_name = 'sushi' THEN (price * 20) ELSE (price * 10)

142 | END

143 | ) AS total_points

144 | FROM #Membership_validation

145 | WHERE order_date >= join_date

146 | GROUP BY customer_id

147 | ORDER BY customer_id;

148 |

149 | -- 10. In the first week after a customer joins the program (including their join date) they earn 2x points on all items, not just sushi - how many points do customer A and B have at the end of January

150 | -- Assumption: points are awarded only after the customer joins the membership program

151 |

152 | -- Steps

153 | -- 1. Find program_last_date, the last day of the first week: join_date + 6 days (the join date counts as day 1)

154 | -- 2. Points per member transaction: a. within the first week -> price*20 on any item; b. sushi outside the first week -> price*20; c. any other item outside the first week -> price*10

155 | -- 3. Filter conditions: a. order_date <= '2021-01-31' (orders on or before 31 January 2021); b. order_date >= join_date (only orders placed after joining, join date included)

156 |

157 | with program_last_day_cte as

158 | (

159 | select join_date ,

160 | Dateadd(dd,6,join_date) as program_last_date, -- last day of the 7-day window that starts on join_date

161 | customer_id

162 | from members

163 | )

164 | SELECT s.customer_id,

165 | SUM(CASE

166 | WHEN order_date BETWEEN join_date AND program_last_date THEN price*10*2

167 | WHEN order_date NOT BETWEEN join_date AND program_last_date

168 | AND product_name = 'sushi' THEN price*10*2

169 | WHEN order_date NOT BETWEEN join_date AND program_last_date

170 | AND product_name != 'sushi' THEN price*10

171 | END) AS customer_points

172 | FROM MENU as m

173 | INNER JOIN sales as s on m.product_id = s.product_id

174 | INNER JOIN program_last_day_cte as k on k.customer_id = s.customer_id

175 | AND order_date <='2021-01-31'

176 | AND order_date >=join_date

177 | GROUP BY s.customer_id

178 | ORDER BY s.customer_id;

179 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/Diner_Schema.sql:

--------------------------------------------------------------------------------

1 | ----------------------------------

2 | -- CASE STUDY #1: DANNY'S DINER --

3 | ----------------------------------

4 |

5 | -- Author: Amit Patel

6 | -- Date: 25/04/2023

7 | -- Tool used: MSSQL Server

8 |

9 |

10 |

11 | CREATE DATABASE dannys_diner; -- in SQL Server, CREATE SCHEMA does not create a database that USE can switch to

12 | GO

13 | USE dannys_diner;

14 | CREATE TABLE sales (

15 | customer_id VARCHAR(1),

16 | order_date DATE,

17 | product_id INTEGER

18 | );

19 |

20 | INSERT INTO sales (

21 | customer_id, order_date, product_id

22 | )

23 | VALUES

24 | ('A', '2021-01-01', '1'),

25 | ('A', '2021-01-01', '2'),

26 | ('A', '2021-01-07', '2'),

27 | ('A', '2021-01-10', '3'),

28 | ('A', '2021-01-11', '3'),

29 | ('A', '2021-01-11', '3'),

30 | ('B', '2021-01-01', '2'),

31 | ('B', '2021-01-02', '2'),

32 | ('B', '2021-01-04', '1'),

33 | ('B', '2021-01-11', '1'),

34 | ('B', '2021-01-16', '3'),

35 | ('B', '2021-02-01', '3'),

36 | ('C', '2021-01-01', '3'),

37 | ('C', '2021-01-01', '3'),

38 | ('C', '2021-01-07', '3');

39 |

40 | CREATE TABLE menu (

41 | product_id INTEGER,

42 | product_name VARCHAR(5),

43 | price INTEGER

44 | );

45 |

46 | INSERT INTO menu (product_id, product_name, price)

47 | VALUES

48 | (1, 'sushi', 10),

49 | (2, 'curry', 15),

50 | (3, 'ramen', 12);

51 |

52 |

53 | CREATE TABLE members (

54 | customer_id VARCHAR(1),

55 | join_date DATE

56 | );

57 |

58 | INSERT INTO members (customer_id, join_date)

59 | VALUES

60 | ('A', '2021-01-07'),

61 | ('B', '2021-01-09');

62 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/ERD.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AmitPatel-analyst/SQL-Case-Study/4dae8f080f537149fb3f2a7233519d9d17903659/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/ERD.png

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 1 - Danny's Diner/README.md:

--------------------------------------------------------------------------------

1 | # :ramen: :curry: :sushi: Case Study #1: Danny's Diner

2 |

3 |

4 |

5 | You can find the complete challenge here: https://8weeksqlchallenge.com/case-study-1/

6 |

7 | ## Table Of Contents

8 | - [Introduction](#introduction)

9 | - [Problem Statement](#problem-statement)

10 | - [Datasets used](#datasets-used)

11 | - [Entity Relationship Diagram](#entity-relationship-diagram)

12 | - [Case Study Questions](#case-study-questions)

13 |

14 | ## Introduction

15 | Danny seriously loves Japanese food so in the beginning of 2021, he decides to embark upon a risky venture and opens up a cute little restaurant that sells his 3 favourite foods: sushi, curry and ramen.

16 |

17 | Danny’s Diner is in need of your assistance to help the restaurant stay afloat - the restaurant has captured some very basic data from their few months of operation but have no idea how to use their data to help them run the business.

18 |

19 | ## Problem Statement

20 | Danny wants to use the data to answer a few simple questions about his customers, especially about their visiting patterns, how much money they’ve spent and also which menu items are their favourite. Having this deeper connection with his customers will help him deliver a better and more personalised experience for his loyal customers.

21 | He plans on using these insights to help him decide whether he should expand the existing customer loyalty program.

22 |

23 | ## Datasets used

24 | Three key datasets are used in this case study:

25 | - sales: The sales table captures all customer_id level purchases with a corresponding order_date and product_id information for when and what menu items were ordered.

26 | - menu: The menu table maps the product_id to the actual product_name and price of each menu item.

27 | - members: The members table captures the join_date when a customer_id joined the beta version of the Danny’s Diner loyalty program.

28 |

29 | ## Entity Relationship Diagram

30 |

31 |

32 |

33 | ## Case Study Questions

34 | 1. What is the total amount each customer spent at the restaurant?

35 | 2. How many days has each customer visited the restaurant?

36 | 3. What was the first item from the menu purchased by each customer?

37 | 4. What is the most purchased item on the menu and how many times was it purchased by all customers?

38 | 5. Which item was the most popular for each customer?

39 | 6. Which item was purchased first by the customer after they became a member?

40 | 7. Which item was purchased just before the customer became a member?

41 | 8. What is the total items and amount spent for each member before they became a member?

42 | 9. If each $1 spent equates to 10 points and sushi has a 2x points multiplier - how many points would each customer have?

43 | 10. In the first week after a customer joins the program (including their join date) they earn 2x points on all items, not just sushi - how many points do customer A and B have at the end of January?

44 |

45 | ## Insights

46 | ◼Of the three customers (A, B and C), only A and B joined the membership program. Danny's Diner offers three products: sushi, curry and ramen.

47 | ◼Customer A spent the most at Danny's Diner ($76), followed by B ($74) and C ($36).

48 | ◼Customer B visited most often (6 days), followed by A (4 days); customer C made the fewest visits (2 days).

49 | ◼Curry was among the first-day orders of two of the three customers (A and B), while C started with ramen; A also ordered sushi on the first day.

50 | ◼Ramen is the most purchased item at Danny's Diner (8 orders), followed by curry (4) and sushi (3).

51 | ◼The first items bought by customers A and B after joining the membership program (join date included) were curry and sushi respectively.

52 | ◼Just before joining, customer A's last orders were sushi and curry (on the same day), and customer B's was sushi.

53 | ◼Before joining, customer A bought 2 items for a total of $25 and customer B bought 3 items for a total of $40.

54 | ◼If points are counted on all purchases regardless of membership, customers A, B and C would earn 860, 940 and 360 points respectively.

55 | ◼Members earn double points on every item during their first week (the join date plus the following six days). On that basis, customers A and B reach 1020 and 320 points by the end of January 2021 if only purchases made after joining count. If earlier purchases also earn standard points, the totals are 1370 and 820.

56 |

57 | Click [here](https://github.com/AmitPatel-analyst/SQL-Case-Study/blob/main/%238Weeksqlchallange/Case%20Study%20%23%201%20-%20Danny's%20Diner/Danny's%20Diner%20Solution.md) to view the solution of the case study!

58 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/0. Data Cleaning.md:

--------------------------------------------------------------------------------

1 |

2 | # Data Cleaning

3 |

4 | ## customer_orders table

5 | - The exclusions and extras columns in the customer_orders table need to be cleaned before they are used in queries.

6 | - Both columns contain blank values, NULLs and the literal string 'null'.

7 |
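The CASE expressions map both a real NULL and the string 'null' to a single space, while values that are already blank pass through unchanged. The sketch below replays that rule in an in-memory SQLite table. The three sample rows are hypothetical, made up to cover each raw form; they are not the actual Pizza Runner data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_orders (order_id INTEGER, exclusions TEXT, extras TEXT)")

# Hypothetical rows: a blank value, a real NULL, the string 'null' and real data.
conn.executemany(
    "INSERT INTO customer_orders VALUES (?, ?, ?)",
    [(1, "", None), (2, "null", "null"), (3, "4", "1, 5")],
)

# Same CASE rule as the cleaning query above.
cleaned = conn.execute("""
    SELECT order_id,
           CASE WHEN exclusions IS NULL OR exclusions LIKE 'null' THEN ' '
                ELSE exclusions END AS exclusions,
           CASE WHEN extras IS NULL OR extras LIKE 'null' THEN ' '
                ELSE extras END AS extras
    FROM customer_orders
    ORDER BY order_id
""").fetchall()

for row in cleaned:
    print(row)
```

In SQL Server, '' and ' ' compare as equal under = because trailing spaces are ignored. In SQLite they stay distinct, as the first row shows.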

8 | ```sql

9 | SELECT

10 | [order_id],

11 | [customer_id],

12 | [pizza_id],

13 | CASE

14 | WHEN exclusions IS NULL OR

15 | exclusions LIKE 'null' THEN ' '

16 | ELSE exclusions

17 | END AS exclusions,

18 | CASE

19 | WHEN extras IS NULL OR

20 | extras LIKE 'null' THEN ' '

21 | ELSE extras

22 | END AS extras,

23 | [order_time] INTO Updated_customer_orders

24 | FROM [pizza_runner].[customer_orders];

25 |

26 | select * from Updated_customer_orders;

27 | ```

28 | #### Result set:

29 |

30 |

31 | ***

32 |

33 | ## runner_orders table

34 | - The pickup_time, distance, duration and cancellation columns in the runner_orders table need to be cleaned before they are used in queries.

35 | - The pickup_time column contains 'null' values.

36 | - The distance column contains 'null' values, and some values include the unit 'km', which must be stripped.

37 | - The duration column contains 'null' values, and some values include the units 'minutes', 'mins' or 'minute', which must be stripped.

38 | - In the cancellation column, there are blank spaces and null values.
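SUBSTRING(duration, 3, 10) assumes every duration starts with exactly two digits: it keeps the first two characters and strips the rest. That holds for two-digit values, but a hypothetical three-digit value such as '100 minutes' would be cut to '10'. The sketch below mimics the SQL expression in Python and contrasts it with a regular expression that keeps the whole leading number. The sample strings are illustrative, built from the units listed above.

```python
import re


def substring_rule(duration):
    """Mimics TRIM(REPLACE(duration, SUBSTRING(duration, 3, 10), ''))."""
    tail = duration[2:12]  # SUBSTRING is 1-based: start at 3, length 10
    return (duration.replace(tail, "") if tail else duration).strip()


# Optional whitespace, then an integer or decimal number at the start.
NUMBER = re.compile(r"\s*(\d+(?:\.\d+)?)")


def leading_number(value):
    """Returns the leading numeric part as a float, or None for null/blank values."""
    if value is None or value.strip().lower() in ("", "null"):
        return None
    match = NUMBER.match(value)
    return float(match.group(1)) if match else None


for raw in ["32 minutes", "25mins", "15 minute", "40", "100 minutes"]:
    print(repr(raw), "->", substring_rule(raw), "|", leading_number(raw))
print(leading_number("13.4 km"), leading_number("null"))
```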

39 |

40 | ```sql

41 | create table updated_runner_orders

42 | (

43 | [order_id] int,

44 | [runner_id] int,

45 | [pickup_time] datetime,

46 | [distance] float,

47 | [duration] int,

48 | [cancellation] varchar(23)

49 | );

50 |

51 | insert into updated_runner_orders

52 | select

53 | order_id,

54 | runner_id,

55 | case when pickup_time LIKE 'null' then null else pickup_time end as pickup_time,

56 | case when distance LIKE 'null' then null else trim(

57 | REPLACE(distance, 'km', '')

58 | ) end as distance,

59 | case when duration LIKE 'null' then null else trim(

60 | REPLACE(

61 | duration,

62 | substring(duration, 3, 10),

63 | ''

64 | )

65 | ) end as duration,

66 | CASE WHEN cancellation IN ('null', 'NaN', '') THEN null ELSE cancellation END AS cancellation

67 | from

68 | [pizza_runner].[runner_orders];

69 | ```

70 | #### Result set:

71 |

72 |

73 |

74 | ***

75 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/A. Pizza Metrics.md:

--------------------------------------------------------------------------------

1 | # :pizza: Case Study #2: Pizza runner - Pizza Metrics

2 |

3 | ## Case Study Questions

4 |

5 | 1. How many pizzas were ordered?

6 | 2. How many unique customer orders were made?

7 | 3. How many successful orders were delivered by each runner?

8 | 4. How many of each type of pizza was delivered?

9 | 5. How many Vegetarian and Meatlovers were ordered by each customer?

10 | 6. What was the maximum number of pizzas delivered in a single order?

11 | 7. For each customer, how many delivered pizzas had at least 1 change and how many had no changes?

12 | 8. How many pizzas were delivered that had both exclusions and extras?

13 | 9. What was the total volume of pizzas ordered for each hour of the day?

14 | 10. What was the volume of orders for each day of the week?

15 |

16 | ***

17 | Tables used in this section:

18 | SELECT * FROM updated_runner_orders;

19 | SELECT * FROM Updated_customer_orders;

20 | SELECT * FROM pizza_runner.pizza_toppings;

21 | SELECT * FROM pizza_runner.pizza_recipes;

22 | SELECT * FROM pizza_runner.pizza_names;

23 | SELECT * FROM pizza_runner.runners;

24 |

25 | ### 1. How many pizzas were ordered?

26 |

4 |

5 | You can find the complete challenge here: https://8weeksqlchallenge.com/case-study-1/

6 |

7 | ## Table Of Contents

8 | - [Introduction](#introduction)

9 | - [Problem Statement](#problem-statement)

10 | - [Datasets used](#datasets-used)

11 | - [Entity Relationship Diagram](#entity-relationship-diagram)

12 | - [Case Study Questions](#case-study-questions)

13 |

14 | ## Introduction

15 | Danny seriously loves Japanese food so in the beginning of 2021, he decides to embark upon a risky venture and opens up a cute little restaurant that sells his 3 favourite foods: sushi, curry and ramen.

16 |

17 | Danny’s Diner is in need of your assistance to help the restaurant stay afloat - the restaurant has captured some very basic data from their few months of operation but have no idea how to use their data to help them run the business.

18 |

19 | ## Problem Statement

20 | Danny wants to use the data to answer a few simple questions about his customers, especially about their visiting patterns, how much money they’ve spent and also which menu items are their favourite. Having this deeper connection with his customers will help him deliver a better and more personalised experience for his loyal customers.

21 | He plans on using these insights to help him decide whether he should expand the existing customer loyalty program.

22 |

23 | ## Datasets used

24 | Three key datasets for this case study

25 | - sales: The sales table captures all customer_id level purchases with a corresponding order_date and product_id information for when and what menu items were ordered.

26 | - menu: The menu table maps the product_id to the actual product_name and price of each menu item.

27 | - members: The members table captures the join_date when a customer_id joined the beta version of the Danny’s Diner loyalty program.

28 |

29 | ## Entity Relationship Diagram

30 |

31 |

32 |

33 | ## Case Study Questions

34 | 1. What is the total amount each customer spent at the restaurant?

35 | 2. How many days has each customer visited the restaurant?

36 | 3. What was the first item from the menu purchased by each customer?

37 | 4. What is the most purchased item on the menu and how many times was it purchased by all customers?

38 | 5. Which item was the most popular for each customer?

39 | 6. Which item was purchased first by the customer after they became a member?

40 | 7. Which item was purchased just before the customer became a member?

41 | 8. What are the total items and amount spent for each member before they became a member?

42 | 9. If each $1 spent equates to 10 points and sushi has a 2x points multiplier - how many points would each customer have?

43 | 10. In the first week after a customer joins the program (including their join date) they earn 2x points on all items, not just sushi - how many points do customers A and B have at the end of January?

44 |

45 | ## Insights

46 | ◼ Of the three customers (A, B and C), two (A and B) joined the loyalty program. Danny’s Diner offers three products: sushi, curry and ramen.

47 | ◼ Customer A spent the most at Danny’s Diner ($76), followed by customers B and C.

48 | ◼ Customers A and B visited most often (6 times each), while customer C visited the least.

49 | ◼ The first item most customers ordered at Danny’s Diner was curry, followed by ramen.

50 | ◼ The most popular item at Danny’s Diner is ramen, followed by curry and sushi.

51 | ◼ After joining, the first items bought by customers A and B were ramen and sushi respectively.

52 | ◼ The last items customer A bought before joining were sushi and curry; for customer B it was sushi.

53 | ◼ Before joining, customer A bought 2 items totalling $25 and customer B bought 3 items totalling $40.

54 | ◼ Under the base rule (10 points per $1, 2x on sushi), customers A, B and C would have 860, 940 and 360 points respectively.

55 | ◼ Because members earn 20 points per $1 on every item during their first week after joining, customers A and B had 1010 and 820 points respectively by the end of January 2021.

56 |
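Both points questions reduce to a per-row multiplier on the item price: 10 points per $1 with a 2x multiplier for sushi, and 20 points per $1 on every item from the join date through the following six days. The sketch below, in T-SQL like the rest of the repository, applies both rules to a few inline rows; the customer, dates and prices are illustrative assumptions, not the case-study data.

```sql
-- Sketch: the two loyalty-points rules as a per-row multiplier on price.
-- The inline rows are illustrative placeholders, not the case-study data.
SELECT p.customer_id,
       -- base rule: 10 points per $1, doubled (20 per $1) for sushi
       SUM(p.price * CASE WHEN p.product_name = 'sushi' THEN 20 ELSE 10 END) AS base_points,
       -- member rule: 20 points per $1 on every item from the join date
       -- through join date + 6 days; otherwise fall back to the base rule
       SUM(p.price * CASE
                         WHEN p.order_date BETWEEN p.join_date
                                               AND DATEADD(DAY, 6, p.join_date) THEN 20
                         WHEN p.product_name = 'sushi' THEN 20
                         ELSE 10
                     END) AS member_points
FROM (VALUES
        ('A', CAST('2021-01-07' AS date), CAST('2021-01-07' AS date), 'curry', 15),
        ('A', CAST('2021-01-20' AS date), CAST('2021-01-07' AS date), 'ramen', 12),
        ('A', CAST('2021-01-20' AS date), CAST('2021-01-07' AS date), 'sushi', 10)
     ) AS p (customer_id, order_date, join_date, product_name, price)
GROUP BY p.customer_id;
```

For these rows the query should return 470 base points and 620 member points: the curry bought on the join date earns 20 points per $1 instead of 10. Answering the actual questions would additionally join the sales, menu and members tables and restrict purchases to the end of January.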

57 | Click [here](https://github.com/AmitPatel-analyst/SQL-Case-Study/blob/main/%238Weeksqlchallange/Case%20Study%20%23%201%20-%20Danny's%20Diner/Danny's%20Diner%20Solution.md) to view the solution of the case study!

58 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/0. Data Cleaning.md:

--------------------------------------------------------------------------------

1 |

2 | # Data Cleaning

3 |

4 | ## customer_orders table

5 | - The exclusions and extras columns in the customer_orders table need to be cleaned before they are used in queries.

6 | - These columns contain empty strings, the literal string 'null' and real NULL values; the query below replaces both kinds of null with a single space (' ').

7 |

8 | ```sql

9 | SELECT

10 | [order_id],

11 | [customer_id],

12 | [pizza_id],

13 | CASE

14 | WHEN exclusions IS NULL OR

15 | exclusions LIKE 'null' THEN ' '

16 | ELSE exclusions

17 | END AS exclusions,

18 | CASE

19 | WHEN extras IS NULL OR

20 | extras LIKE 'null' THEN ' '

21 | ELSE extras

22 | END AS extras,

23 | [order_time] INTO Updated_customer_orders

24 | FROM [pizza_runner].[customer_orders];

25 |

26 | select * from Updated_customer_orders;

27 | ```

28 | #### Result set:

29 |

30 |
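After this step, a blank exclusions or extras value is either a single space (from a replaced NULL or 'null') or the original empty string ''. The Pizza Metrics queries for questions 7 and 8 compare these columns with `= ' '` and `<> ' '`, which treats both forms as blank because SQL Server ignores trailing spaces when comparing strings with `=`. `LIKE` and `DATALENGTH` do not ignore them, as this quick check illustrates:

```sql
-- Quick check of how SQL Server treats '' versus ' ' (a single space).
SELECT CASE WHEN '' = ' '    THEN 'equal' ELSE 'different' END AS equals_check,  -- trailing spaces ignored by =
       CASE WHEN '' LIKE ' ' THEN 'match' ELSE 'no match'  END AS like_check,    -- trailing spaces in the pattern count
       LEN(' ')        AS len_of_space,         -- LEN excludes trailing spaces
       DATALENGTH(' ') AS datalength_of_space;  -- DATALENGTH counts bytes
```

On SQL Server this should return equal, no match, 0 and 1, so filters written with `LIKE` or `DATALENGTH` would not treat '' and ' ' the same way.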

31 | ***

32 |

33 | ## runner_orders table

34 | - The pickup_time, distance, duration and cancellation columns in the runner_orders table need to be cleaned before they are used in queries.

35 | - pickup_time: missing values are stored as the string 'null' instead of NULL.

36 | - distance: missing values are stored as 'null', and some values carry a 'km' unit that must be stripped.

37 | - duration: missing values are stored as 'null', and the 'minutes', 'mins' and 'minute' suffixes must be stripped.

38 | - cancellation: contains empty strings, the string 'null' and real NULL values.

39 |

40 | ```sql

41 | create table updated_runner_orders

42 | (

43 | [order_id] int,

44 | [runner_id] int,

45 | [pickup_time] datetime,

46 | [distance] float,

47 | [duration] int,

48 | [cancellation] varchar(23)

49 | );

50 |

51 | insert into updated_runner_orders

52 | select

53 | order_id,

54 | runner_id,

55 | case when pickup_time LIKE 'null' then null else pickup_time end as pickup_time,

56 | case when distance LIKE 'null' then null else trim(

57 | REPLACE(distance, 'km', '')

58 | ) end as distance,

59 | case when duration LIKE 'null' then null else

60 | -- keep only the leading digits; the appended 'x' guarantees PATINDEX finds a non-digit

61 | left(

62 | duration,

63 | patindex('%[^0-9]%', duration + 'x') - 1

64 | )

65 | end as duration,

66 | CASE WHEN cancellation IN ('null', 'NaN', '') THEN null ELSE cancellation END AS cancellation

67 | from

68 | [pizza_runner].[runner_orders];

69 | ```

70 | #### Result set:

71 |

72 |

73 |
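One way to sanity-check the distance and duration cleaning is to run the parsing expressions over every raw format that appears in pizza_runner.runner_orders, copied into an inline VALUES list. This sketch assumes SQL Server 2017+ (for TRIM) and parses duration by keeping its leading digits with PATINDEX, so it does not depend on the number having exactly two digits. Literal 'null' strings still need the CASE guard used in the cleaning query, because TRY_CAST('' AS int) returns 0 rather than NULL.

```sql
-- Sanity check (sketch): parse each raw distance/duration format found in
-- pizza_runner.runner_orders. Assumes SQL Server 2017+ for TRIM.
SELECT raw.distance,
       raw.duration,
       -- drop the 'km' unit, trim leftover spaces, then convert
       TRY_CAST(TRIM(REPLACE(raw.distance, 'km', '')) AS float) AS distance_km,
       -- keep the leading digits; appending 'x' guarantees PATINDEX finds a non-digit
       TRY_CAST(LEFT(raw.duration, PATINDEX('%[^0-9]%', raw.duration + 'x') - 1) AS int) AS duration_min
FROM (VALUES
        ('20km',    '32 minutes'),
        ('13.4km',  '20 mins'),
        ('23.4',    '40'),
        ('25km',    '25mins'),
        ('23.4 km', '15 minute'),
        ('10km',    '10minutes')
     ) AS raw (distance, duration);
```

Every row should come back with a numeric distance_km and duration_min; for example '23.4 km' parses to 23.4 and '10minutes' to 10. A NULL in either parsed column would point to a format the cleaning rules miss.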

74 | ***

75 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/A. Pizza Metrics.md:

--------------------------------------------------------------------------------

1 | # :pizza: Case Study #2: Pizza runner - Pizza Metrics

2 |

3 | ## Case Study Questions

4 |

5 | 1. How many pizzas were ordered?

6 | 2. How many unique customer orders were made?

7 | 3. How many successful orders were delivered by each runner?

8 | 4. How many of each type of pizza was delivered?

9 | 5. How many Vegetarian and Meatlovers were ordered by each customer?

10 | 6. What was the maximum number of pizzas delivered in a single order?

11 | 7. For each customer, how many delivered pizzas had at least 1 change and how many had no changes?

12 | 8. How many pizzas were delivered that had both exclusions and extras?

13 | 9. What was the total volume of pizzas ordered for each hour of the day?

14 | 10. What was the volume of orders for each day of the week?

15 |

16 | ***

17 | Tables used:

18 | SELECT * FROM updated_runner_orders;

19 | SELECT * FROM Updated_customer_orders;

20 | SELECT * FROM pizza_runner.pizza_toppings;

21 | SELECT * FROM pizza_runner.pizza_recipes;

22 | SELECT * FROM pizza_runner.pizza_names;

23 | SELECT * FROM pizza_runner.runners;

24 |

25 | ### 1. How many pizzas were ordered?

26 |


28 |

29 | ```sql

30 | select count(pizza_id) as pizza_count from Updated_customer_orders;

31 | ```

32 |

33 |

34 | #### Output:

35 |

36 |

37 | ### 2. How many unique customer orders were made?

38 |


40 |

41 | ```sql

42 | select count(distinct order_id) as order_count from Updated_customer_orders;

43 | ```

44 |

45 |

46 | #### Output:

47 |

48 |

49 | ### 3. How many successful orders were delivered by each runner?

50 |


52 |

53 | ```sql

54 | SELECT

55 | runner_id,

56 | COUNT(order_id) [Successful Order]

57 | FROM updated_runner_orders

58 | WHERE cancellation IS NULL

59 | OR cancellation NOT IN ('Restaurant Cancellation', 'Customer Cancellation')

60 | GROUP BY runner_id

61 | ORDER BY 2 DESC;

62 | ```

63 |

64 |

65 | #### Output:

66 |

67 |

68 | ### 4. How many of each type of pizza was delivered?

69 |


71 |

72 | ```sql

73 | SELECT

74 | p.pizza_name,

75 | Pizza_count

76 | FROM (SELECT

77 | c.pizza_id,

78 | COUNT(r.order_id) AS Pizza_count

79 | FROM updated_runner_orders r

80 | JOIN Updated_customer_orders c

81 | ON r.order_id = c.order_id

82 | WHERE cancellation IS NULL

83 | OR cancellation NOT IN ('Restaurant Cancellation', 'Customer Cancellation')

84 | GROUP BY c.pizza_id) k

85 | INNER JOIN pizza_runner.[pizza_names] p

86 | ON k.pizza_id = p.pizza_id;

87 | ```

88 |

89 |

90 | #### Output:

91 |

92 |

93 | ### 5. How many Vegetarian and Meatlovers were ordered by each customer?

94 |


96 |

97 | ```sql

98 | SELECT

99 | customer_id,

100 | SUM(CASE

101 | WHEN pizza_id = 1 THEN 1

102 | ELSE 0

103 | END) AS Orderby_Meatlovers,

104 | SUM(CASE

105 | WHEN pizza_id = 2 THEN 1

106 | ELSE 0

107 | END) AS Orderby_Vegetarian

108 | FROM Updated_customer_orders

109 | GROUP BY customer_id;

110 | ```

111 |

112 |

113 | #### Output:

114 |

115 |

116 | ### 6. What was the maximum number of pizzas delivered in a single order?

117 |


119 |

120 | ```sql

121 | SELECT

122 | order_id, pizza_count AS max_count_delivered_pizza

123 | FROM (SELECT top 1

124 | r.order_id,

125 | COUNT(c.pizza_id) AS pizza_count

126 | FROM updated_runner_orders r

127 | JOIN Updated_customer_orders c

128 | ON r.order_id = c.order_id

129 | WHERE cancellation IS NULL

130 | OR cancellation NOT IN ('Restaurant Cancellation', 'Customer Cancellation')

131 | GROUP BY r.order_id

132 | ORDER BY pizza_count desc) k;

133 | ```

134 |

135 |

136 | #### Output:

137 |

138 |

139 | ### 7. For each customer, how many delivered pizzas had at least 1 change and how many had no changes?

140 | - at least 1 change -> the pizza has exclusions, extras, or both

141 | - no changes -> both exclusions and extras are blank after cleaning ('' or ' ', not NULL)

142 |


144 |

145 | ```sql

146 | SELECT

147 | c.customer_id,

148 | SUM(CASE WHEN c.exclusions <> ' ' OR

149 | c.extras <> ' ' THEN 1 ELSE 0 END) AS Changes,

150 | SUM(CASE WHEN c.exclusions = ' ' AND

151 | c.extras = ' ' THEN 1 ELSE 0 END) AS No_changes

152 | FROM updated_runner_orders r

153 | INNER JOIN Updated_customer_orders c

154 | ON r.order_id = c.order_id

155 | WHERE r.cancellation IS NULL

156 | OR r.cancellation NOT IN ('Restaurant Cancellation', 'Customer Cancellation')

157 | GROUP BY c.customer_id

158 | ORDER BY c.customer_id;

159 | ```

160 |

161 |

162 | #### Output:

163 |

164 |

165 |

166 | ### 8. How many pizzas were delivered that had both exclusions and extras?

167 |


169 |

170 | ```sql

171 | SELECT

172 | count(r.order_id) as Order_had_bothexclusions_and_extras

173 | FROM updated_runner_orders r

174 | inner JOIN Updated_customer_orders c

175 | ON r.order_id = c.order_id

176 | WHERE r.cancellation IS NULL and c.exclusions <> ' ' AND

177 | c.extras <> ' ';

178 | ```

179 |

180 |

181 | #### Output:

182 |

183 |

184 |

185 | ### 9. What was the total volume of pizzas ordered for each hour of the day?

186 |


188 |

189 | ```sql

190 |

191 | SELECT

192 | DATEPART(HOUR, order_time) AS Hour,

193 | COUNT(1) AS Pizza_Ordered_Count

194 | FROM Updated_customer_orders

195 | WHERE order_time IS NOT NULL

196 | GROUP BY DATEPART(HOUR, order_time)

197 | ORDER BY 1;

198 | ```

199 |

200 |

201 | #### Output:

202 |

203 |

204 |

205 | ### 10. What was the volume of orders for each day of the week?

206 |


208 |

209 | ```sql

210 | SELECT

211 | DATENAME(dw, order_time) AS Day_of_Week,

212 | COUNT(1) AS Pizza_Ordered_Count

213 | FROM Updated_customer_orders

214 | GROUP BY DATENAME(dw, order_time)

215 | ORDER BY 2 DESC;

216 |

217 | ```

218 |

219 |

220 | #### Output:

221 |

222 |

223 | ## Insights

224 |

225 | ◼ Customers placed 10 unique orders for a total of 14 pizzas.

226 | ◼ Runner 1 delivered the most orders, whereas runner 3 delivered the fewest.

227 | ◼ Meatlovers pizza was delivered 9 times, whereas Vegetarian pizza was delivered only 3 times.

228 | ◼ Customers 101, 102, 103 and 105 each ordered Vegetarian pizza only once, whereas customer 104 ordered Meatlovers pizza 3 times.

229 | ◼ Customers 101 and 102 had their pizzas as per the original recipe, whereas customers 103, 104 and 105 had their own topping preferences and requested at least 1 change.

231 | ◼ Only one customer had a delivered pizza with both exclusions and extras (1 pizza in total).

232 | ◼ The most pizzas were ordered at 1:00 pm, 6:00 pm, 9:00 pm and 11:00 pm, whereas the fewest were ordered at 11:00 am and 7:00 pm.

233 | ◼ The most pizzas were ordered on Saturday and Wednesday, whereas the fewest were ordered on Friday.

234 |

235 |

236 | ***

237 | Click [here](https://github.com/AmitPatel-analyst/SQL-Case-Study/blob/main/%238Weeksqlchallange/Case%20Study%20%23%202%20-%20Pizza%20Runner/B.%20Runner%20and%20Customer%20Experience.md) to view the solution of B. Runner and Customer Experience

238 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/B. Runner and Customer Experience.md:

--------------------------------------------------------------------------------

1 | # :pizza: Case Study #2: Pizza runner - Runner and Customer Experience

2 |

3 | ## Case Study Questions

4 |

5 | 1. How many runners signed up for each 1 week period? (i.e. week starts 2021-01-01)

6 | 2. What was the average time in minutes it took for each runner to arrive at the Pizza Runner HQ to pickup the order?

7 | 3. Is there any relationship between the number of pizzas and how long the order takes to prepare?

8 | 4. What was the average distance travelled for each customer?

9 | 5. What was the difference between the longest and shortest delivery times for all orders?

10 | 6. What was the average speed for each runner for each delivery and do you notice any trend for these values?

11 | 7. What is the successful delivery percentage for each runner?

12 |

13 | ***

14 | Tables used:

15 | select * from updated_runner_orders;

16 | select * from Updated_customer_orders;

17 | select * from pizza_runner.runners;

18 |

19 | ### 1. How many runners signed up for each 1 week period? (i.e. week starts 2021-01-01)

20 |


22 |

23 | ```sql

24 | SELECT

25 | CASE

26 | WHEN registration_date BETWEEN '2021-01-01' AND '2021-01-07' THEN '2021-01-01'

27 | WHEN registration_date BETWEEN '2021-01-08' AND '2021-01-14' THEN '2021-01-08'

28 | WHEN registration_date BETWEEN '2021-01-15' AND '2021-01-21' THEN '2021-01-15'

29 | END AS [Week Start_Period],count(runner_id) as cnt

30 | from pizza_runner.runners

31 | group by CASE

32 | WHEN registration_date BETWEEN '2021-01-01' AND '2021-01-07' THEN '2021-01-01'

33 | WHEN registration_date BETWEEN '2021-01-08' AND '2021-01-14' THEN '2021-01-08'

34 | WHEN registration_date BETWEEN '2021-01-15' AND '2021-01-21' THEN '2021-01-15'

35 | END ;

36 | ```

37 |

38 |

39 | #### Output:

40 |
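The CASE expression above hard-codes one branch per week, so a runner registering after 2021-01-21 would fall into a NULL bucket. A sketch of an arithmetic alternative: count whole days since 2021-01-01, integer-divide by 7, and add that many weeks back onto 2021-01-01. The registration dates are copied from pizza_runner.runners into an inline VALUES list so the block runs on its own; it assumes every date is on or after 2021-01-01, because integer division truncates toward zero and would misplace earlier dates.

```sql
-- Sketch: bucket registration dates into 7-day periods starting 2021-01-01.
SELECT w.week_start,
       COUNT(*) AS runners_signed_up
FROM (VALUES
        (1, CAST('2021-01-01' AS date)),
        (2, CAST('2021-01-03' AS date)),
        (3, CAST('2021-01-08' AS date)),
        (4, CAST('2021-01-15' AS date))
     ) AS r (runner_id, registration_date)
CROSS APPLY (
    -- whole weeks elapsed since 2021-01-01 (int / int truncates), converted back to a date
    SELECT DATEADD(DAY,
                   7 * (DATEDIFF(DAY, '2021-01-01', r.registration_date) / 7),
                   CAST('2021-01-01' AS date)) AS week_start
) AS w
GROUP BY w.week_start
ORDER BY w.week_start;
```

For these four dates the buckets should be 2021-01-01 (2 runners), 2021-01-08 (1) and 2021-01-15 (1), and later registrations get new buckets without editing the query.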

41 |

42 | ### 2. What was the average time in minutes it took for each runner to arrive at the Pizza Runner HQ to pick up the order?

43 |


45 |

46 | ```sql

47 | SELECT r.runner_id

48 | ,Avg_Arrival_minutes = avg(datepart(minute, (pickup_time - order_time)))

49 | FROM updated_runner_orders r

50 | INNER JOIN Updated_customer_orders c ON r.order_id = c.order_id

51 | WHERE r.cancellation IS NULL

52 | OR r.cancellation NOT IN (

53 | 'Restaurant Cancellation'

54 | ,'Customer Cancellation'

55 | )

56 | GROUP BY r.runner_id;

57 | ```

58 |

59 |

60 | #### Output:

61 |
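`datepart(minute, pickup_time - order_time)` returns only the minutes component of the elapsed time, so it equals the true wait only while every wait is under an hour, which holds for this dataset. A sketch with two hypothetical timestamps 65.5 minutes apart shows the difference from counting seconds with DATEDIFF and dividing by 60.0:

```sql
-- Compare the minutes component of an elapsed time with the total elapsed minutes.
-- The two timestamps are hypothetical, chosen so the wait exceeds one hour.
DECLARE @order_time  datetime = '2020-01-01 18:00:00',
        @pickup_time datetime = '2020-01-01 19:05:30';

SELECT DATEPART(MINUTE, @pickup_time - @order_time)       AS minutes_component,  -- 5: the hour is dropped
       DATEDIFF(SECOND, @order_time, @pickup_time) / 60.0 AS elapsed_minutes;    -- 65.5
```

Dividing by 60.0 rather than 60 also keeps the fractional minutes, which plain DATEDIFF(MINUTE, ...) would not, since it counts minute boundaries crossed.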

62 |

63 | ### 3. Is there any relationship between the number of pizzas and how long the order takes to prepare?

64 |


66 |

67 | ```sql

68 | with order_count as

69 | (

70 | select order_id,order_time,count(pizza_id) as pizza_order_count

71 | from Updated_customer_orders

72 | group by order_id,order_time

73 | ),

74 | prepare_time as

75 | (

76 | select c.*,r.pickup_time

77 | ,datepart(minute,(r.pickup_time-c.order_time)) as prepare_time

78 | from updated_runner_orders r

79 | join order_count c

80 | on r.order_id=c.order_id

81 | where r.pickup_time is not null

82 | )

83 | select pizza_order_count,avg(prepare_time) as avg_prepare_time from prepare_time

84 | group by pizza_order_count

85 | order by pizza_order_count;

86 | ```

87 |

88 |

89 | #### Output:

90 |

91 |

92 |

93 | ### 4. What was the average distance travelled for each runner?

94 |


96 |

97 | ```sql

98 | select runner_id,

99 | Avg_distance_travel = round(avg(distance),2)

100 | from updated_runner_orders

101 | where cancellation is null

102 | or cancellation not in ('Restaurant Cancellation','Customer Cancellation')

103 | group by runner_id

104 | order by runner_id;

105 | ```

106 |

107 |

108 | #### Output:

109 |

110 |

111 | ### 5. What was the difference between the longest and shortest delivery times for all orders?

112 |


114 |

115 | ```sql

116 | SELECT

117 | MAX(duration) - MIN(duration) AS Time_span

118 | FROM updated_runner_orders;

119 | ```

120 |

121 |

122 | #### Output:

123 |

124 |

125 | ### 6. What was the average speed for each runner for each delivery and do you notice any trend for these values?

126 |


128 |

129 | ```sql

130 | with order_count as

131 | (

132 | select order_id,order_time,count(pizza_id) as pizza_order_count

133 | from Updated_customer_orders

134 | group by order_id,order_time

135 | ), speed as

136 | ( select

137 | c.order_id

138 | ,r.runner_id

139 | ,c.pizza_order_count

140 | ,round((60* r.distance/ r.duration),2) as speed_kmph

141 | from updated_runner_orders r

142 | join order_count c

143 | on r.order_id = c.order_id

144 | where cancellation is null

145 |

146 | )

147 | select runner_id,pizza_order_count,speed_kmph,avg(speed_kmph) over(partition by runner_id) as speed_avg

148 | from speed

149 | order by runner_id;

150 | ```

151 |

152 |

153 | #### Output:

154 |
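The speed column converts a distance in kilometres and a duration in minutes to km/h as speed = 60 × distance / duration. A small worked check using runner 2's three delivered orders, with distance and duration values taken from pizza_runner.runner_orders, reproduces the 35.1 to 93.6 kmph range noted in the insights:

```sql
-- Worked check of speed_kmph = 60 * distance_km / duration_min for runner 2.
SELECT d.order_id,
       ROUND(60 * d.distance_km / d.duration_min, 2) AS speed_kmph
FROM (VALUES
        (4, 23.4, 40),   -- 23.4 km in 40 min
        (7, 25.0, 25),   -- 25 km in 25 min
        (8, 23.4, 15)    -- 23.4 km in 15 min
     ) AS d (order_id, distance_km, duration_min)
ORDER BY d.order_id;
```

The three rows should come out at 35.1, 60 and 93.6 kmph; the same 23.4 km trip taking 15 instead of 40 minutes is what drives the outlier.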

155 |

156 | ### 7. What is the successful delivery percentage for each runner?

157 |


159 |

160 | ```sql

161 | select runner_id,

162 | count(pickup_time) as delivered_orders,

163 | count(order_id) as total_orders,

164 | round(100.0 * count(pickup_time) / count(order_id), 0) as delivery_pct -- 100.0 avoids integer division

165 | from updated_runner_orders

166 | group by runner_id;

167 | ```

168 |

169 |

170 | #### Output:

171 |
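In T-SQL, dividing one integer by another truncates, so in an expression like 100 * delivered / total the fraction is dropped before ROUND ever sees it. The counts in this dataset happen to divide evenly (4 of 4, 3 of 4, 1 of 2), but a runner with 1 delivery out of 3 orders would show the difference. A quick check with those hypothetical counts:

```sql
-- Integer vs decimal division for a hypothetical runner with 1 delivery out of 3 orders.
DECLARE @delivered int = 1,
        @total     int = 3;

SELECT 100 * @delivered / @total             AS int_pct,      -- 33: truncated before rounding
       ROUND(100.0 * @delivered / @total, 1) AS decimal_pct;  -- 33.3
```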

172 |

173 | ## Insights

174 | 1. On average, an order with a single pizza took 12 minutes to prepare, whereas an order with 2 pizzas took 18 minutes in total, i.e. 9 minutes per pizza, the most efficient rate.

175 | 2. On average, runner 3 travelled the shortest distance, whereas runner 2 travelled the longest.

176 | 3. The difference between the longest (40 minutes) and shortest (10 minutes) delivery times across all orders is 30 minutes.

177 | 4. Runner 1's speed varied from 37.5 kmph to 60 kmph across deliveries.

178 | 5. Runner 2's speed varied from 35.1 kmph to 93.6 kmph, which is abnormal, so Danny should look into runner 2's deliveries seriously.

179 | 6. Runner 3's speed was 40 kmph.

180 |

181 |

182 | Click [here](https://github.com/AmitPatel-analyst/SQL-Case-Study/blob/main/%238Weeksqlchallange/Case%20Study%20%23%202%20-%20Pizza%20Runner/C.%20Ingredient%20Optimisation.md) to view the solution of C. Ingredient Optimisation

183 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/C. Ingredient Optimisation.md:

--------------------------------------------------------------------------------

1 | # :pizza: Case Study #2: Pizza runner - Ingredient Optimisation

2 |

3 | ## Case Study Questions

4 |

5 | 1. What are the standard ingredients for each pizza?

6 | 2. What was the most commonly added extra?

7 | 3. What was the most common exclusion?

8 | 4. Generate an order item for each record in the customer_orders table in the format of one of the following:

9 | ◼ Meat Lovers

10 | ◼ Meat Lovers - Exclude Beef

11 | ◼ Meat Lovers - Extra Bacon

12 | ◼ Meat Lovers - Exclude Cheese, Bacon - Extra Mushroom, Peppers

13 |

14 | ***

15 | Tables used:

16 | select * from updated_runner_orders;

17 | select * from Updated_customer_orders;

18 | SELECT * FROM pizza_runner.[pizza_toppings];

19 | SELECT * FROM pizza_runner.[pizza_recipes];

20 | SELECT * FROM pizza_runner.[pizza_names];

21 | select * from pizza_runner.runners;

22 | select * from customer_orders_new;

23 |

24 |

25 | ### 1. What are the standard ingredients for each pizza?

26 |


28 |

29 | ```sql

30 | with cte as

31 | ( select pizza_id,value as topping_id

32 | from pizza_runner.[pizza_recipes]

33 | cross apply string_split (toppings,',')

34 | ),

35 | final as

36 | (

37 | SELECT pizza_id,topping_name

38 | FROM pizza_runner.[pizza_toppings] as t

39 | inner join cte

40 | on cte.topping_id=t.topping_id

41 | )

42 | select c.pizza_name,STRING_AGG(topping_name,',') as Ingredients

43 | from final inner join pizza_runner.[pizza_names] as c on c.pizza_id=final.pizza_id

44 | group by c.pizza_name;

45 | ```

46 |

47 |

48 | #### Output:

49 |
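The toppings, exclusions and extras columns store lists such as '1, 5', so STRING_SPLIT on ',' returns pieces with a leading space (' 5'), and blank entries come back as '' or ' '. The joins in these queries rely on implicit conversion to int to absorb that. A sketch that makes the conversion explicit, assuming SQL Server 2017+ for TRIM, is below; note that a blank piece converts to 0 rather than NULL, which matches no topping_id, so blank entries simply drop out of an inner join.

```sql
-- Split a comma-separated topping list and convert each piece to an int explicitly.
SELECT s.value                        AS raw_value,   -- '1' and ' 5' (note the leading space)
       TRY_CAST(TRIM(s.value) AS int) AS topping_id   -- 1 and 5
FROM STRING_SPLIT('1, 5', ',') AS s;

-- A blank list yields one empty piece, which converts to 0 rather than NULL.
SELECT TRY_CAST(TRIM(s.value) AS int) AS topping_id
FROM STRING_SPLIT(' ', ',') AS s;
```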

50 |

51 | ### 2. What was the most commonly added extra?

52 |


54 |

55 | ```sql

56 | with Get_toppingid as

57 | (select value as topping_id

58 | from Updated_customer_orders

59 | cross apply string_split (extras,',')

60 | )

61 | select t2.topping_name ,count(1) as added_extras_count

62 | from Get_toppingid as t1 inner join pizza_runner.[pizza_toppings] as t2

63 | on t1.topping_id=t2.topping_id

64 | where t2.topping_name is not null

65 | group by t2.topping_name

66 | order by 2 desc;

67 | ```

68 |

69 |

70 | #### Output:

71 |

72 |

73 | ### 3. What was the most common exclusion?

74 |


76 |

77 | ```sql

78 | with Get_toppingid as

79 | (select value as topping_id

80 | from Updated_customer_orders

81 | cross apply string_split (exclusions,',')

82 | )

83 | select t2.topping_name ,count(1) as added_exclusions_count

84 | from Get_toppingid as t1 inner join pizza_runner.[pizza_toppings] as t2

85 | on t1.topping_id=t2.topping_id

86 | where t2.topping_name is not null

87 | group by t2.topping_name

88 | order by 2 desc;

89 | ```

90 |

91 |

92 | #### Output:

93 |

94 |

95 | ### 4. Generate an order item for each record in the customer_orders table

96 |


98 |

99 | ```sql

100 | with cte as

101 | (

102 | select c.order_id , p.pizza_name , c.exclusions , c.extras

103 | ,topp1.topping_name as exclude , topp2.topping_name as extra

104 | from customer_orders_new as c

105 | inner join pizza_runner.[pizza_names] as p

106 | on c.pizza_id = p.pizza_id

107 | left join pizza_runner.[pizza_toppings] as topp1

108 | on topp1.topping_id = c.exclusions

109 | left join pizza_runner.[pizza_toppings] as topp2

110 | on topp2.topping_id = c.extras

111 | )

112 |

113 | select order_id , pizza_name,

114 | case when pizza_name is not null and exclude is null and extra is null then pizza_name

115 | when pizza_name is not null and exclude is not null and extra is not null then concat(pizza_name,' - ','Exclude ',exclude,' - ','Extra ',extra)

116 | when pizza_name is not null and exclude is null and extra is not null then concat(pizza_name,' - ','Extra ',extra)

117 | when pizza_name is not null and exclude is not null and extra is null then concat(pizza_name,' - ','Exclude ',exclude)

118 | end as order_item

119 | from cte

120 | order by order_id;

121 | ```

122 |

123 |

124 | #### Output:

125 |
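The query above reads from customer_orders_new, whose definition is not included here, and joins a single topping per exclusion and per extra. A different approach, sketched below, aggregates each pizza's exclusions and extras into comma-separated names with STRING_SPLIT and STRING_AGG, producing labels such as 'Meatlovers - Exclude BBQ Sauce, Mushrooms - Extra Bacon, Cheese' in one row per pizza. It assumes SQL Server 2017+ (for STRING_AGG ... WITHIN GROUP and TRIM) and the default CONCAT_NULL_YIELDS_NULL ON setting. The sample rows are copied from the case-study schema (with blanks already cleaned to ' '), and row_id is a hypothetical key added because customer_orders has no unique row identifier.

```sql
-- Sketch: build order_item labels with STRING_SPLIT + STRING_AGG (SQL Server 2017+).
-- Sample rows come from the case-study schema; row_id is a hypothetical key.
WITH toppings AS (
    SELECT topping_id, topping_name
    FROM (VALUES (1, 'Bacon'), (2, 'BBQ Sauce'), (4, 'Cheese'),
                 (5, 'Chicken'), (6, 'Mushrooms')) AS t (topping_id, topping_name)
),
orders AS (
    SELECT row_id, order_id, pizza_name, exclusions, extras
    FROM (VALUES (1, 9,  'Meatlovers', '4',    '1, 5'),
                 (2, 10, 'Meatlovers', ' ',    ' '),
                 (3, 10, 'Meatlovers', '2, 6', '1, 4')
         ) AS o (row_id, order_id, pizza_name, exclusions, extras)
),
excluded AS (
    -- one comma-separated list of excluded topping names per pizza row
    SELECT o.row_id,
           STRING_AGG(t.topping_name, ', ') WITHIN GROUP (ORDER BY t.topping_id) AS names
    FROM orders AS o
    CROSS APPLY STRING_SPLIT(o.exclusions, ',') AS s
    JOIN toppings AS t ON t.topping_id = TRY_CAST(TRIM(s.value) AS int)
    GROUP BY o.row_id
),
added AS (
    -- the same for extras
    SELECT o.row_id,
           STRING_AGG(t.topping_name, ', ') WITHIN GROUP (ORDER BY t.topping_id) AS names
    FROM orders AS o
    CROSS APPLY STRING_SPLIT(o.extras, ',') AS s
    JOIN toppings AS t ON t.topping_id = TRY_CAST(TRIM(s.value) AS int)
    GROUP BY o.row_id
)
SELECT o.order_id,
       -- ' - Exclude ' + NULL is NULL and CONCAT skips NULL arguments,
       -- so a pizza without exclusions or extras keeps just its name
       CONCAT(o.pizza_name, ' - Exclude ' + e.names, ' - Extra ' + a.names) AS order_item
FROM orders AS o
LEFT JOIN excluded AS e ON e.row_id = o.row_id
LEFT JOIN added    AS a ON a.row_id = o.row_id
ORDER BY o.row_id;
```

For these rows the labels should be 'Meatlovers - Exclude Cheese - Extra Bacon, Chicken', 'Meatlovers' and 'Meatlovers - Exclude BBQ Sauce, Mushrooms - Extra Bacon, Cheese'. Against the real tables, the two VALUES CTEs would be replaced by pizza_runner.pizza_toppings and a ROW_NUMBER() over Updated_customer_orders joined to pizza_runner.pizza_names.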

126 |

127 | ### Insights Gathered

128 | 1. The most commonly added extra was "Bacon".

129 | 2. The most common exclusion was "Cheese".

130 |

131 |

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/D. Pricing and Ratings.md:

--------------------------------------------------------------------------------

1 | # :pizza: Case Study #2: Pizza runner - Pricing and Ratings

2 |

3 | ## Case Study Questions

4 | 1. If a Meat Lovers pizza costs $12 and Vegetarian costs $10 and there were no charges for changes - how much money has Pizza Runner made so far if there are no delivery fees?

5 | 2. What if there was an additional $1 charge for any pizza extras?

6 | - Add cheese is $1 extra

7 | 3. The Pizza Runner team now wants to add an additional ratings system that allows customers to rate their runner, how would you design an additional table for this new dataset - generate a schema for this new table and insert your own data for ratings for each successful customer order from 1 to 5.

8 | 4. Using your newly generated table - can you join all of the information together to form a table which has the following information for successful deliveries?

9 | ◼ customer_id

10 | ◼ order_id

11 | ◼ runner_id

12 | ◼ rating

13 | ◼ order_time

14 | ◼ pickup_time

15 | ◼ Time between order and pickup

16 | ◼ Delivery duration

17 | ◼ Average speed

18 | ◼ Total number of pizzas

19 | 5. If a Meat Lovers pizza was $12 and Vegetarian $10 fixed prices with no cost for extras and each runner is paid $0.30 per kilometre traveled - how much money does Pizza Runner have left over after these deliveries?

20 | ***

21 | Tables used:

22 | select * from updated_runner_orders;

23 | select * from Updated_customer_orders;

24 | SELECT * FROM pizza_runner.[pizza_toppings];

25 | SELECT * FROM pizza_runner.[pizza_recipes];

26 | SELECT * FROM pizza_runner.[pizza_names];

27 | select * from pizza_runner.runners;

28 | select * from customer_orders_new;

29 |

30 |

31 |
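Question 1 amounts to pricing each delivered pizza at a fixed rate and summing. A hedged sketch (not the author's solution), assuming the cleaned tables from 0. Data Cleaning.md, where a delivered order is one whose cancellation is NULL:

```sql
-- Sketch for question 1: revenue from delivered pizzas at fixed prices,
-- $12 for Meatlovers and $10 for Vegetarian, with no charge for changes.
SELECT SUM(CASE WHEN n.pizza_name = 'Meatlovers' THEN 12 ELSE 10 END) AS revenue_usd
FROM Updated_customer_orders AS c
JOIN updated_runner_orders AS r
  ON r.order_id = c.order_id
JOIN pizza_runner.pizza_names AS n
  ON n.pizza_id = c.pizza_id
WHERE r.cancellation IS NULL;   -- delivered orders only
```

With 9 Meatlovers and 3 Vegetarian pizzas delivered (the counts reported in the Pizza Metrics insights), this should return 9 × 12 + 3 × 10 = 138.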

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/Pizza Runner Schema.sql:

--------------------------------------------------------------------------------

1 | /********************************************************************************************

2 | #CASE STUDY - 2 'PIZZA RUNNER'

3 | *******************************************************************************************/

4 |

5 | CREATE SCHEMA pizza_runner

6 | go

7 |

8 | CREATE TABLE pizza_runner.runners (

9 | "runner_id" INTEGER,

10 | "registration_date" DATE

11 | );

12 | INSERT INTO pizza_runner.runners

13 | ("runner_id", "registration_date")

14 | VALUES

15 | (1, '2021-01-01'),

16 | (2, '2021-01-03'),

17 | (3, '2021-01-08'),

18 | (4, '2021-01-15');

19 |

20 | CREATE TABLE pizza_runner.customer_orders (

21 | "order_id" INTEGER,

22 | "customer_id" INTEGER,

23 | "pizza_id" INTEGER,

24 | "exclusions" VARCHAR(4),

25 | "extras" VARCHAR(4),

26 | "order_time" datetime

27 | );

28 | INSERT INTO pizza_runner.customer_orders

29 | ("order_id", "customer_id", "pizza_id", "exclusions", "extras", "order_time")

30 | VALUES

31 | ('1', '101', '1', '', '', '2020-01-01 18:05:02'),

32 | ('2', '101', '1', '', '', '2020-01-01 19:00:52'),

33 | ('3', '102', '1', '', '', '2020-01-02 23:51:23'),

34 | ('3', '102', '2', '', NULL, '2020-01-02 23:51:23'),

35 | ('4', '103', '1', '4', '', '2020-01-04 13:23:46'),

36 | ('4', '103', '1', '4', '', '2020-01-04 13:23:46'),

37 | ('4', '103', '2', '4', '', '2020-01-04 13:23:46'),

38 | ('5', '104', '1', 'null', '1', '2020-01-08 21:00:29'),

39 | ('6', '101', '2', 'null', 'null', '2020-01-08 21:03:13'),

40 | ('7', '105', '2', 'null', '1', '2020-01-08 21:20:29'),

41 | ('8', '102', '1', 'null', 'null', '2020-01-09 23:54:33'),

42 | ('9', '103', '1', '4', '1, 5', '2020-01-10 11:22:59'),

43 | ('10', '104', '1', 'null', 'null', '2020-01-11 18:34:49'),

44 | ('10', '104', '1', '2, 6', '1, 4', '2020-01-11 18:34:49');

45 |

46 |

47 |

48 | CREATE TABLE pizza_runner.runner_orders (

49 | "order_id" INTEGER,

50 | "runner_id" INTEGER,

51 | "pickup_time" VARCHAR(19),

52 | "distance" VARCHAR(7),

53 | "duration" VARCHAR(10),

54 | "cancellation" VARCHAR(23)

55 | );

56 |

57 | INSERT INTO pizza_runner.runner_orders

58 | ("order_id", "runner_id", "pickup_time", "distance", "duration", "cancellation")

59 | VALUES

60 | ('1', '1', '2020-01-01 18:15:34', '20km', '32 minutes', ''),

61 | ('2', '1', '2020-01-01 19:10:54', '20km', '27 minutes', ''),

62 | ('3', '1', '2020-01-03 00:12:37', '13.4km', '20 mins', NULL),

63 | ('4', '2', '2020-01-04 13:53:03', '23.4', '40', NULL),

64 | ('5', '3', '2020-01-08 21:10:57', '10', '15', NULL),

65 | ('6', '3', 'null', 'null', 'null', 'Restaurant Cancellation'),

66 | ('7', '2', '2020-01-08 21:30:45', '25km', '25mins', 'null'),

67 | ('8', '2', '2020-01-10 00:15:02', '23.4 km', '15 minute', 'null'),

68 | ('9', '2', 'null', 'null', 'null', 'Customer Cancellation'),

69 | ('10', '1', '2020-01-11 18:50:20', '10km', '10minutes', 'null');

70 |

71 | CREATE TABLE pizza_runner.pizza_names (

72 | "pizza_id" INTEGER,

73 | "pizza_name" varchar(50)

74 | );

75 | INSERT INTO pizza_runner.pizza_names

76 | ("pizza_id", "pizza_name")

77 | VALUES

78 | (1, 'Meatlovers'),

79 | (2, 'Vegetarian');

80 |

81 | CREATE TABLE pizza_runner.pizza_recipes (

82 | "pizza_id" INTEGER,

83 | "toppings" varchar(50)

84 | );

85 | INSERT INTO pizza_runner.pizza_recipes

86 | ("pizza_id", "toppings")

87 | VALUES

88 | (1, '1, 2, 3, 4, 5, 6, 8, 10'),

89 | (2, '4, 6, 7, 9, 11, 12');

90 |

91 | CREATE TABLE pizza_runner.pizza_toppings (

92 | "topping_id" INTEGER,

93 | "topping_name" varchar(50)

94 | );

95 | INSERT INTO pizza_runner.pizza_toppings

96 | ("topping_id", "topping_name")

97 | VALUES

98 | (1, 'Bacon'),

99 | (2, 'BBQ Sauce'),

100 | (3, 'Beef'),

101 | (4, 'Cheese'),

102 | (5, 'Chicken'),

103 | (6, 'Mushrooms'),

104 | (7, 'Onions'),

105 | (8, 'Pepperoni'),

106 | (9, 'Peppers'),

107 | (10, 'Salami'),

108 | (11, 'Tomatoes'),

109 | (12, 'Tomato Sauce');

-- Preview each table after loading the data
SELECT * FROM pizza_runner.runners;
SELECT * FROM pizza_runner.customer_orders;
SELECT * FROM pizza_runner.runner_orders;

SELECT * FROM pizza_runner.pizza_toppings;
SELECT * FROM pizza_runner.pizza_recipes;
SELECT * FROM pizza_runner.pizza_names;

--------------------------------------------------------------------------------

/#8Weeksqlchallange/Case Study # 2 - Pizza Runner/Readme.md:

--------------------------------------------------------------------------------

# :pizza: :runner: Case Study #2: Pizza Runner

You can find the complete challenge here: https://8weeksqlchallenge.com/case-study-2/

## Table of Contents
- [Introduction](#introduction)
- [Datasets used](#datasets-used)
- [Entity Relationship Diagram](#entity-relationship-diagram)
- [Data Cleaning](#data-cleaning)
- [Case Study Solutions](#case-study-solutions)

## Introduction
Danny was scrolling through his Instagram feed when something really caught his eye: “80s Retro Styling and Pizza Is The Future!”
Danny was sold on the idea, but he knew that pizza alone was not going to help him get seed funding to expand his new Pizza Empire, so he had one more genius idea to combine with it: he was going to Uberize it. And so Pizza Runner was launched!
Danny started by recruiting “runners” to deliver fresh pizza from Pizza Runner Headquarters (otherwise known as Danny’s house) and also maxed out his credit card to pay freelance developers to build a mobile app to accept orders from customers.

## Datasets used
Key datasets for this case study:
- **runners**: The table shows the registration_date for each new runner.
- **customer_orders**: Customer pizza orders are captured in the customer_orders table, with 1 row for each individual pizza that is part of the order. The pizza_id relates to the type of pizza ordered, the exclusions are the ingredient_id values to remove from the pizza, and the extras are the ingredient_id values to add to it.
- **runner_orders**: After each order is received through the system, it is assigned to a runner. However, not all orders are fully completed; some are cancelled by the restaurant or the customer. The pickup_time is the timestamp at which the runner arrives at Pizza Runner headquarters to pick up the freshly cooked pizzas. The distance and duration fields record how far and how long the runner had to travel to deliver the order to the customer.
- **pizza_names**: Pizza Runner only has 2 pizzas available: Meat Lovers or Vegetarian!
- **pizza_recipes**: Each pizza_id has a standard set of toppings which are used as part of the pizza recipe.
- **pizza_toppings**: The table contains all of the topping_name values with their corresponding topping_id value.
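Two tables need cleaning before they can be queried reliably: runner_orders mixes units and `'null'` strings in its text columns, and pizza_recipes stores each recipe as one comma-separated string. The sketch below is one possible approach, not the repository's own cleaning (that lives in `0. Data Cleaning.md`, not reproduced here). It assumes Python's built-in `sqlite3` module as a stand-in database, loads the runner_orders values from the schema file (ids written as integers rather than quoted strings), and names its helpers `clean_runner_orders` and `expand_recipe` purely for illustration. `REPLACE`, `TRIM`, `NULLIF` and `CAST` are standard functions, but check your own dialect before reusing the query.

```python
import sqlite3

# runner_orders rows from Pizza Runner Schema.sql; text values are kept as
# given ('null' strings, mixed units), only the ids are written as integers.
RUNNER_ORDERS = [
    (1, 1, "2020-01-01 18:15:34", "20km", "32 minutes", ""),
    (2, 1, "2020-01-01 19:10:54", "20km", "27 minutes", ""),
    (3, 1, "2020-01-03 00:12:37", "13.4km", "20 mins", None),
    (4, 2, "2020-01-04 13:53:03", "23.4", "40", None),
    (5, 3, "2020-01-08 21:10:57", "10", "15", None),
    (6, 3, "null", "null", "null", "Restaurant Cancellation"),
    (7, 2, "2020-01-08 21:30:45", "25km", "25mins", "null"),
    (8, 2, "2020-01-10 00:15:02", "23.4 km", "15 minute", "null"),
    (9, 2, "null", "null", "null", "Customer Cancellation"),
    (10, 1, "2020-01-11 18:50:20", "10km", "10minutes", "null"),
]

# 'null' and '' become real NULLs; 'km' and the minute suffixes are stripped
# ('minutes' before 'minute' so no stray 's' is left), then values are cast.
CLEAN_SQL = """
SELECT
    order_id,
    runner_id,
    NULLIF(pickup_time, 'null') AS pickup_time,
    CAST(NULLIF(TRIM(REPLACE(distance, 'km', '')), 'null') AS REAL) AS distance_km,
    CAST(NULLIF(TRIM(REPLACE(REPLACE(REPLACE(duration, 'minutes', ''),
         'minute', ''), 'mins', '')), 'null') AS INTEGER) AS duration_min,
    CASE WHEN cancellation IN ('', 'null') THEN NULL ELSE cancellation END AS cancellation
FROM runner_orders
ORDER BY order_id
"""


def clean_runner_orders():
    """Load the raw rows into an in-memory table and return the cleaned rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE runner_orders (order_id INTEGER, runner_id INTEGER, "
        "pickup_time TEXT, distance TEXT, duration TEXT, cancellation TEXT)"
    )
    conn.executemany("INSERT INTO runner_orders VALUES (?, ?, ?, ?, ?, ?)", RUNNER_ORDERS)
    rows = conn.execute(CLEAN_SQL).fetchall()
    conn.close()
    return rows


# pizza_recipes and pizza_toppings values from the schema file.
PIZZA_RECIPES = {1: "1, 2, 3, 4, 5, 6, 8, 10", 2: "4, 6, 7, 9, 11, 12"}
PIZZA_TOPPINGS = {
    1: "Bacon", 2: "BBQ Sauce", 3: "Beef", 4: "Cheese", 5: "Chicken", 6: "Mushrooms",
    7: "Onions", 8: "Pepperoni", 9: "Peppers", 10: "Salami", 11: "Tomatoes", 12: "Tomato Sauce",
}


def expand_recipe(pizza_id):
    """Split the comma-separated topping ids and map each one to its name."""
    # int() ignores the surrounding spaces, e.g. int(" 2") == 2
    return [PIZZA_TOPPINGS[int(t)] for t in PIZZA_RECIPES[pizza_id].split(",")]


if __name__ == "__main__":
    for row in clean_runner_orders():
        print(row)
    print(expand_recipe(2))
```

After cleaning, cancelled orders are simply the rows whose `cancellation` is not NULL, and `distance_km` and `duration_min` can be averaged directly.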

## Entity Relationship Diagram


## Case Study Questions
A. Pizza Metrics
1. How many pizzas were ordered?
2. How many unique customer orders were made?
3. How many successful orders were delivered by each runner?
4. How many of each type of pizza was delivered?
5. How many Vegetarian and Meatlovers were ordered by each customer?
6. What was the maximum number of pizzas delivered in a single order?
7. For each customer, how many delivered pizzas had at least 1 change and how many had no changes?
8. How many pizzas were delivered that had both exclusions and extras?
9. What was the total volume of pizzas ordered for each hour of the day?
10. What was the volume of orders for each day of the week?
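Question A3 can be answered from runner_orders alone, as long as the query accounts for the three ways "not cancelled" appears in the raw data (`NULL`, `''` and the string `'null'`). The sketch below is an illustration, not the repository's solution: it assumes Python's built-in `sqlite3` module as a stand-in database, loads the runner_orders values from the schema file with ids written as integers, and uses a hypothetical helper name, `successful_orders_per_runner`.

```python
import sqlite3

# runner_orders rows from Pizza Runner Schema.sql (ids written as integers).
RUNNER_ORDERS = [
    (1, 1, "2020-01-01 18:15:34", "20km", "32 minutes", ""),
    (2, 1, "2020-01-01 19:10:54", "20km", "27 minutes", ""),
    (3, 1, "2020-01-03 00:12:37", "13.4km", "20 mins", None),
    (4, 2, "2020-01-04 13:53:03", "23.4", "40", None),
    (5, 3, "2020-01-08 21:10:57", "10", "15", None),
    (6, 3, "null", "null", "null", "Restaurant Cancellation"),
    (7, 2, "2020-01-08 21:30:45", "25km", "25mins", "null"),
    (8, 2, "2020-01-10 00:15:02", "23.4 km", "15 minute", "null"),
    (9, 2, "null", "null", "null", "Customer Cancellation"),
    (10, 1, "2020-01-11 18:50:20", "10km", "10minutes", "null"),
]


def successful_orders_per_runner():
    """Return (runner_id, delivered_order_count) pairs, ordered by runner."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE runner_orders (order_id INTEGER, runner_id INTEGER, "
        "pickup_time TEXT, distance TEXT, duration TEXT, cancellation TEXT)"
    )
    conn.executemany("INSERT INTO runner_orders VALUES (?, ?, ?, ?, ?, ?)", RUNNER_ORDERS)
    # An order counts as delivered when cancellation carries no real value.
    rows = conn.execute(
        """
        SELECT runner_id, COUNT(*) AS successful_orders
        FROM runner_orders
        WHERE cancellation IS NULL OR cancellation IN ('', 'null')
        GROUP BY runner_id
        ORDER BY runner_id
        """
    ).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(successful_orders_per_runner())  # [(1, 4), (2, 3), (3, 1)]
```

Filtering on `cancellation IS NULL` alone would only count orders 3, 4 and 5, which is why the raw values need either this extra condition or a cleaning step first.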

B. Runner and Customer Experience
1. How many runners signed up for each 1 week period? (i.e. week starts 2021-01-01)
2. What was the average time in minutes it took for each runner to arrive at the Pizza Runner HQ to pick up the order?
3. Is there any relationship between the number of pizzas and how long the order takes to prepare?
4. What was the average distance travelled for each customer?