├── .circleci
│   └── config.yml
├── .gitignore
├── .gitmodules
├── Changelog.md
├── LICENSE
├── MANIFEST.in
├── Makefile
├── README.md
├── binder
│   ├── apt.txt
│   ├── index.ipynb
│   ├── postBuild
│   └── requirements.txt
├── conx
│   ├── __init__.py
│   ├── _version.py
│   ├── activations.py
│   ├── dataset.py
│   ├── datasets
│   │   ├── __init__.py
│   │   ├── _cifar10.py
│   │   ├── _cifar100.py
│   │   ├── _colors.py
│   │   ├── _fingers.py
│   │   ├── _gridfonts.py
│   │   ├── _mnist.py
│   │   └── cmu_faces.py
│   ├── layers.py
│   ├── network.py
│   ├── networks
│   │   ├── __init__.py
│   │   └── _keras.py
│   ├── tests
│   │   └── test_network.py
│   ├── utils.py
│   └── widgets.py
├── data
│   ├── cmu_faces_full_size.npz
│   ├── cmu_faces_half_size.npz
│   ├── cmu_faces_quarter_size.npz
│   ├── colors.csv
│   ├── figure_ground_a.dat
│   ├── figure_ground_a.npy
│   ├── fingers.npz
│   ├── grid.png
│   ├── gridfonts.dat
│   ├── gridfonts.npy
│   ├── gridfonts.py
│   ├── mnist.h5
│   ├── mnist.py
│   └── mnist_images.png
├── docker
│   ├── Dockerfile
│   ├── Makefile
│   └── README.md
├── docs
│   ├── Makefile
│   ├── requirements.txt
│   └── source
│       ├── _static
│       │   └── css
│       │       └── custom.css
│       ├── conf.py
│       ├── conx.rst
│       ├── examples.rst
│       ├── img
│       │   └── logo.gif
│       ├── index.rst
│       └── modules.rst
├── readthedocs.yaml
├── requirements.txt
├── setup.cfg
└── setup.py

/.circleci/config.yml:

--------------------------------------------------------------------------------

1 | # Python CircleCI 2.0 configuration file

2 | #

3 | # Check https://circleci.com/docs/2.0/language-python/ for more details

4 | #

5 | version: 2

6 | jobs:

7 |   build:

8 |     docker:

9 |       # specify the version you desire here

10 |       # use `-browsers` prefix for selenium tests, e.g. `3.6.1-browsers`

11 |       - image: circleci/python:3.6.1

12 |

13 |       # Specify service dependencies here if necessary

14 |       # CircleCI maintains a library of pre-built images

15 |       # documented at https://circleci.com/docs/2.0/circleci-images/

16 |       # - image: circleci/postgres:9.4

17 |

18 |     working_directory: ~/conx

19 |

20 |     steps:

21 |       - checkout

22 |

23 |       # Download and cache dependencies

24 |       - restore_cache:

25 |           keys:

26 |           - v1-dependencies-{{ checksum "requirements.txt" }}

27 |           # fallback to using the latest cache if no exact match is found

28 |           - v1-dependencies-

29 |

30 |       - run:

31 |           name: install dependencies

32 |           command: |

33 |             python3 -m venv venv

34 |             . venv/bin/activate

35 |             pip install -r requirements.txt

36 |             pip install nose

37 |             pip install tensorflow

38 |             pip install codecov

39 |

40 |       - save_cache:

41 |           paths:

42 |             - ./venv

43 |           key: v1-dependencies-{{ checksum "requirements.txt" }}

44 |

45 |       # run tests!

46 |       - run:

47 |           name: run tests

48 |           command: |

49 |             . venv/bin/activate

50 |             nosetests --with-coverage --nologcapture --with-doc conx

51 |             codecov

52 |

53 |       - store_artifacts:

54 |           path: test-reports

55 |           destination: test-reports

56 |

--------------------------------------------------------------------------------
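The `restore_cache` / `save_cache` pair in the config above keys the cached `venv` on a checksum of `requirements.txt`, with a bare prefix as a fallback. The following is a minimal sketch of that lookup idea, not CircleCI's implementation: `cache_key` and `restore` are hypothetical helper names, and the use of a SHA-256 hex digest is an assumption (the real checksum encoding may differ).

```python
import hashlib


def cache_key(prefix, file_bytes):
    """Build a key such as 'v1-dependencies-<digest>' from a file's contents."""
    # Assumption: SHA-256 hex digest; CircleCI's actual encoding may differ.
    return prefix + hashlib.sha256(file_bytes).hexdigest()


def restore(cache, keys):
    """Return the first cache entry matched by the keys, tried in order.

    Each key is treated as a prefix. When several stored keys share the
    prefix, the most recently saved one wins (dicts keep insertion order).
    """
    for key in keys:
        matches = [stored for stored in cache if stored.startswith(key)]
        if matches:
            return cache[matches[-1]]
    return None


old = cache_key("v1-dependencies-", b"keras\n")
new = cache_key("v1-dependencies-", b"keras\nnumpy\n")
cache = {old: "venv built from old requirements"}

# requirements.txt changed, so the exact key misses and the bare prefix hits.
restored = restore(cache, [new, "v1-dependencies-"])
print(restored)
```

Falling back to the prefix means a changed `requirements.txt` still starts from a mostly populated `venv`, so `pip install` only has to fetch the difference.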

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | *.egg-info/

24 | .installed.cfg

25 | *.egg

26 |

27 | # PyInstaller

28 | # Usually these files are written by a python script from a template

29 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

30 | *.manifest

31 | *.spec

32 |

33 | # Installer logs

34 | pip-log.txt

35 | pip-delete-this-directory.txt

36 |

37 | # Unit test / coverage reports

38 | htmlcov/

39 | .tox/

40 | .coverage

41 | .coverage.*

42 | .cache

43 | nosetests.xml

44 | coverage.xml

45 | *,cover

46 | .hypothesis/

47 |

48 | # Translations

49 | *.mo

50 | *.pot

51 |

52 | # Django stuff:

53 | *.log

54 | local_settings.py

55 |

56 | # Flask stuff:

57 | instance/

58 | .webassets-cache

59 |

60 | # Scrapy stuff:

61 | .scrapy

62 |

63 | # Sphinx documentation

64 | docs/_build/

65 |

66 | # PyBuilder

67 | target/

68 |

69 | # IPython Notebook

70 | .ipynb_checkpoints

71 |

72 | # pyenv

73 | .python-version

74 |

75 | # celery beat schedule file

76 | celerybeat-schedule

77 |

78 | # dotenv

79 | .env

80 |

81 | # virtualenv

82 | venv/

83 | ENV/

84 |

85 | # Spyder project settings

86 | .spyderproject

87 |

88 | # Rope project settings

89 | .ropeproject

90 |

91 | ## conx specific:

92 |

93 | docs/source/*.ipynb

94 | docs/source/*.jpg

95 | docs/source/*.gif

96 | docs/source/*.png

97 | docs/source/*.md

98 | docs/source/_static/*.mp4

99 | tmp/

100 | Makefile

101 | notebooks/*.conx/*

--------------------------------------------------------------------------------
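A few of the basename patterns in the listing above use shell-style character classes, such as `*.py[cod]`. As a rough illustration, Python's `fnmatch` module follows similar glob rules for simple patterns like these. It is only an approximation of Git's matching (it does not handle directory patterns such as `build/` or negation), and the `ignored` helper is a hypothetical name.

```python
from fnmatch import fnmatchcase

# Basename patterns copied from the .gitignore listing.
patterns = ["*.py[cod]", "*$py.class", "*.egg"]


def ignored(name):
    """Return True if the file name matches any of the patterns."""
    return any(fnmatchcase(name, pattern) for pattern in patterns)


for name in ["network.pyc", "network.pyo", "network.py", "Foo$py.class"]:
    print(name, ignored(name))
```

Here `*.py[cod]` matches `.pyc`, `.pyo`, and `.pyd` files but leaves plain `.py` sources tracked.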

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "notebooks"]

2 | path = notebooks

3 | url = https://github.com/calysto/conx-notebooks

4 |

--------------------------------------------------------------------------------

/Changelog.md:

--------------------------------------------------------------------------------

1 | # Changelog

2 |

3 | ## 3.7.5

4 |

5 | Released Wed September 12, 2018

6 |

7 | * Re-wrote reset_weights; just recompiles model

8 | * Fixed error in gridfont loader

9 | * All widgets/pictures are JupyterLab compatible

10 | * Added support for dynamic_pictures on/off; default is off

11 | * SVG arrows are now curves

12 | * New algorithm for bank layout in SVG

13 | * moved dataset.get() to Dataset.get() and net.get_dataset()

14 | * new virtual datasets API, including vmnist, H5Dataset (remote and local)

15 | * better cache in virtual datasets

16 | * Allow dropout to operate on 0, 1, 2, or 3 whole dims

17 | * Added cx.Layer(bidirectional=mode)

18 | * Show network banks as red until compiled

19 | * Rewrote and renamed net.test() to net.evaluate() and net.evaluate_and_label()

20 | * net.evaluate() for showing results

21 | * net.evaluate_and_label() for use in plots

22 |

23 | ## 3.7.4

24 |

25 | Released Sun August 19, 2018

26 |

27 | * net.pp() gives standard formatting for ints and floats

28 | * Allow negative position in virtual dataset vectors

29 | * Fixed error in colors dataset that truncated the target integer to 8 bits

30 | * Add internal error function to net.compile(error={...})

31 | * New spelling: ConX

32 | * cx.image_to_array() removes alpha

33 | * vshape can be three dimensions (for color images)

34 | * some new image functions: image_resize(), image_remove_alpha()

35 | * renamed "sequence" to "raw" in utils

36 | * Added cx.shape(summary=False), cx.get_ranges(array, form), and get_dim(array, DIMS)

37 | * Use kverbose in train() for all keras activity

38 |

39 | ## 3.7.3

40 |

41 | Released Mon August 13, 2018

42 |

43 | * Allow bool values with onehot

44 | * Unfix fixed crossentropy warning

45 | * Allow datasets to be composed of bools

46 | * added temperature to choice()

47 | * Added net.dataset.inputs.test(tolerance=0.2, index=True)

48 |

49 | ## 3.7.1

50 |

51 | Released Fri August 10, 2018

52 |

53 | * Separate build/compile --- compile() no longer resets weights;

54 | * added net.add_loss()

55 | * Remove additional_output_banks

56 | * refactor build/compile

57 | * add LambdaLayer with size

58 | * add prop_from_dict[(input, output)] = model

59 |

60 | ## 3.7.0

61 |

62 | Released Tue Aug 7, 2018

63 |

64 | * Allow additional output layers for network

65 | * Fix: crossentropy check

66 | * added identity layer for propagating to input layers

67 | * Include LICENSE.txt file in wheels

68 |

69 | ## 3.6.10

70 |

71 | Released Thu May 17, 2018

72 |

73 | * delete layers, InceptionV3, combine networks

74 | * ability to delete layers

75 | * ability to connect two networks together

76 | * rewrote SVG embedded images to use standard cairosvg

77 | * added inceptionv3 network

78 | * cx.download has new verbose flag

79 | * fixes for minimum and maximum

80 | * import_keras_model now forms proper connections

81 | * Warn when displaying an uncompiled network that activations won't be visible

82 | * array_to_image(colormap=) now returns RGBA image

83 |

84 | ## 3.6.9

85 |

86 | Released Fri May 4, 2018

87 |

88 | * propagate_to_features() scales layer[0]

89 | * added cx.array

90 | * fixed (somewhat) array_to_image(colormap=)

91 | * added VGG16 and ImageNet notebook

92 | * New Network.info()

93 | * Updated Network.propagate_to_features(), util.image()

94 | * Network.info() describes predefined networks

95 | * new utility image(filename)

96 | * rewrote Network.propagate_to_features() to be faster

97 | * added VGG preprocessing and postprocessing

98 | * Picture autoscales inputs by default

99 | * Add net.picture(minmax=)

100 | * Rebuild models on import_keras

101 | * Added VGG19

102 | * Added vgg16 and idea of applications as Network.networks

103 | * Bug in building intermediate hidden -> output models

104 |

105 | ## 3.6.7

106 |

107 | Released Tue April 17, 2018

108 |

109 | * Fixed bug in building hidden -> output intermediate models

110 |

111 | ## 3.6.6

112 |

113 | Released Fri April 13, 2018

114 |

115 | * Added cx.view_image_list(pivot) - rotates list and layout

116 | * Added colors dataset

117 | * Added Dataset.delete_bank(), Dataset.append_bank()

118 | * Added Dataset.ITEM[V] = value

119 |

120 | ## 3.6.5

121 |

122 | Released Fri April 6, 2018

123 |

124 | * Removed examples; use notebooks or help instead

125 | * cx.view_image_list() can have layout=None, (int, None), or (None, int)

126 | * Added cx.scale(vector, range, dtype, truncate)

127 | * Added cx.scatter_images(images, xys) - creates scatter plot of images

128 | * Fixed pca.translate(scale=SCALE) bug

129 | * downgrade tensorflow on readthedocs because memory hog kills build

130 |

131 | ## 3.6.4

132 |

133 | Released Thu April 5, 2018

134 |

135 | * changed "not allowed" warning on multi-dim outputs to

136 | "are you sure?"

137 | * fix colormap on array_to_image; added tests

138 | * fix cx.view(array)

139 | * Allow dataset to load generators, zips, etc.

140 |

141 | ## 3.6.3

142 |

143 | Released Tue April 3, 2018

144 |

145 | * Two fixes for array_to_image: div by float; move cmap conversion to end

146 | * Protection for list/array for range and shape

147 | * from kmader: Adding jyro to binder requirements

148 |

149 | ## 3.6.2

150 |

151 | Released Tue March 6, 2018

152 |

153 | * added raw=False to conx.utilities image_to_array(), frange(), and reshape()

154 |

155 | ## 3.6.1

156 |

157 | Released Mon March 5, 2018

158 |

159 | * SVG Network enhancements

160 | * vertical and horizontal space

161 | * fixed network drawing connection paths

162 | * save keras functions

163 | * don't crash in attempting to build propagate_from pathways

164 | * added binary_to_int

165 | * download() can rename file

166 | * fixed mislabeled MNIST image

167 | * better memory management when loading cifar

168 | * Network.train(verbose=0) returns proper values

169 | * labels for finger dataset are now strings

170 | * added labels for cx.view_image_list()

171 | * fixed bug in len(dataset.labels)

172 | * added layout to net.plot_layer_weights()

173 | * added ImageLayer(keep_aspect_ratio)

174 | * fixed bugs in datavector.shape

175 | * added dataset.load(generator, count)

176 | * fixed bugs in net.get_weights()/set_weights()

177 | * added network.propagate_to_image(feature=NUM)

178 |

179 | ## 3.6.0

180 |

181 | Released Mon Feb 12, 2018. Initial released version recommended for daily use.

182 |

183 | * fixed blurry activation network pictures

184 | * show "[layer(s) not shown]" when Layer(visible=False)

185 | * added fingers dataset

186 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | COPYRIGHT

2 |

3 | All contributions by Calysto Conx Project members:

4 | Copyright (c) 2017, Calysto Conx Project members.

5 | All rights reserved.

6 |

7 | All contributions by François Chollet:

8 | Copyright (c) 2015, François Chollet.

9 | All rights reserved.

10 |

11 | All contributions by Google:

12 | Copyright (c) 2015, Google, Inc.

13 | All rights reserved.

14 |

15 | All contributions by Microsoft:

16 | Copyright (c) 2017, Microsoft, Inc.

17 | All rights reserved.

18 |

19 | All other contributions:

20 | Copyright (c) 2015 - 2017, the respective contributors.

21 | All rights reserved.

22 |

23 | Each contributor holds copyright over their respective contributions.

24 | The project versioning (Git) records all such contribution source information.

25 |

26 | LICENSE

27 |

28 | The MIT License (MIT)

29 |

30 | Permission is hereby granted, free of charge, to any person obtaining a copy

31 | of this software and associated documentation files (the "Software"), to deal

32 | in the Software without restriction, including without limitation the rights

33 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

34 | copies of the Software, and to permit persons to whom the Software is

35 | furnished to do so, subject to the following conditions:

36 |

37 | The above copyright notice and this permission notice shall be included in all

38 | copies or substantial portions of the Software.

39 |

40 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

41 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

42 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

43 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

44 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

45 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

46 | SOFTWARE.

47 |

48 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include *.md

2 | include LICENSE

3 | prune .git

4 | prune dist

5 | prune build

6 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | export VERSION=`python3 setup.py --version 2>/dev/null`

2 |

3 | release:

4 | 	pip3 install wheel twine setuptools --user

5 | 	rm -rf dist

6 | 	python3 setup.py register

7 | 	python3 setup.py bdist_wheel --universal

8 | 	python3 setup.py sdist

9 | 	git commit -a -m "Release $(VERSION)"; true

10 | 	git tag v$(VERSION)

11 | 	git push origin --all

12 | 	git push origin --tags

13 | 	twine upload dist/*

14 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ConX Neural Networks

2 |

3 | ## The On-Ramp to Deep Learning

4 |

5 | Built in Python 3 on Keras 2.

6 |

7 | [Binder](https://mybinder.org/v2/gh/Calysto/conx/master?filepath=binder%2Findex.ipynb)

8 | [CircleCI](https://circleci.com/gh/Calysto/conx/tree/master)

9 | [codecov](https://codecov.io/gh/Calysto/conx)

10 | [Documentation Status](http://conx.readthedocs.io/en/latest/?badge=latest)

11 | [PyPI version](https://badge.fury.io/py/conx)

12 | [PyPI downloads](https://pypistats.org/packages/conx)

13 |

14 | Read the documentation at [conx.readthedocs.io](http://conx.readthedocs.io/)

15 |

16 | Ask questions on the mailing list: [conx-users](https://groups.google.com/forum/#!forum/conx-users)

17 |

18 | Implements Deep Learning neural network algorithms using a simple interface with easy visualizations and useful analytics. Built on top of Keras, which can use either [TensorFlow](https://www.tensorflow.org/), [Theano](http://www.deeplearning.net/software/theano/), or [CNTK](https://www.cntk.ai/pythondocs/).

19 |

20 | A network can be specified to the constructor by providing layer sizes. For example, `Network("XOR", 2, 5, 1)` specifies a network named "XOR" with a 2-unit input layer, a 5-unit hidden layer, and a 1-unit output layer. More complex networks can be constructed using the `net.connect()` method.

21 |

22 | Computing XOR via a target function:

23 |

24 | ```python

25 | import conx as cx

26 |

27 | dataset = [[[0, 0], [0]],

28 |            [[0, 1], [1]],

29 |            [[1, 0], [1]],

30 |            [[1, 1], [0]]]

31 |

32 | net = cx.Network("XOR", 2, 5, 1, activation="sigmoid")

33 | net.dataset.load(dataset)

34 | net.compile(error='mean_squared_error',

35 |             optimizer="sgd", lr=0.3, momentum=0.9)

36 | net.train(2000, report_rate=10, accuracy=1.0)

37 | net.evaluate(show=True)

38 | ```

39 |

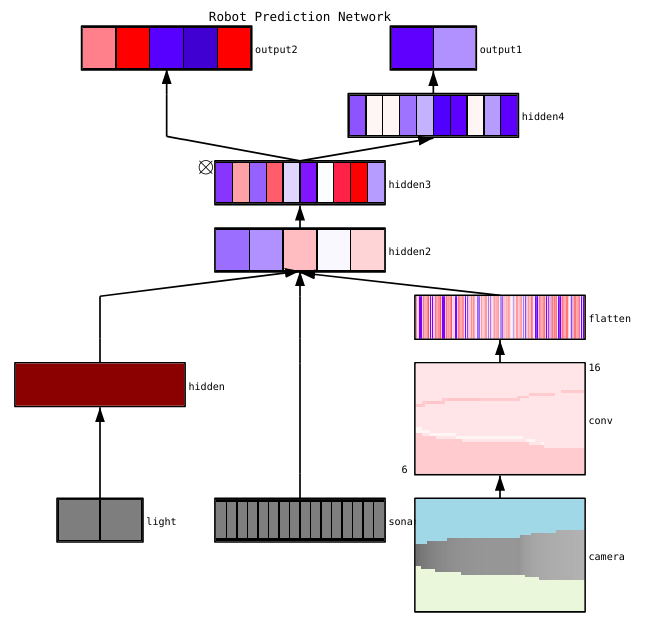

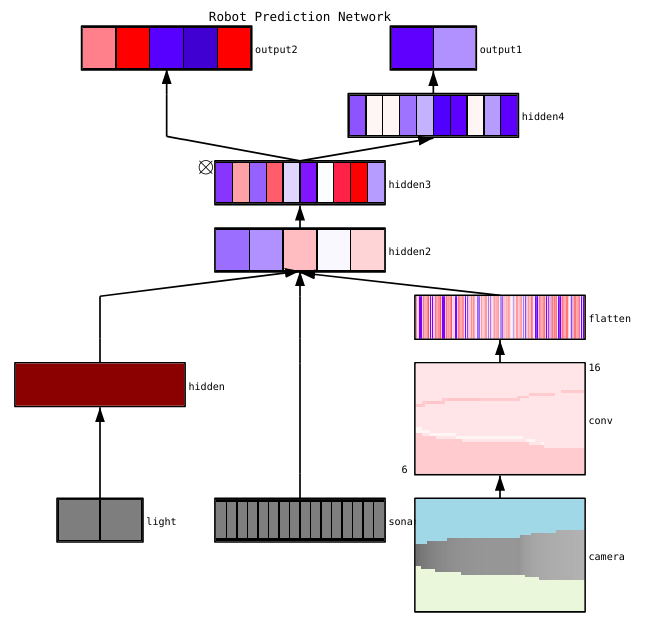

40 | Creates dynamic, rendered visualizations like this:

41 |

42 |  43 |

44 | ## Examples

45 |

46 | See [conx-notebooks](https://github.com/Calysto/conx-notebooks/blob/master/00_Index.ipynb) and the [documentation](http://conx.readthedocs.io/en/latest/) for additional examples.

47 |

48 | ## Installation

49 |

50 | See [How To Run Conx](https://github.com/Calysto/conx-notebooks/tree/master/HowToRun#how-to-run-conx)

51 | for options on running ConX in virtual machines, in the cloud, or as a personal

52 | installation.

53 |

--------------------------------------------------------------------------------
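The README describes `Network("XOR", 2, 5, 1)` as a 2-unit input layer, a 5-unit hidden layer, and a 1-unit output layer with sigmoid activations. The sketch below shows, in plain Python, what those sizes imply for the weight shapes and a forward pass. It is an illustration under stated assumptions, not ConX's or Keras's implementation: `make_network` and `propagate` are hypothetical names, and the uniform weight initialization is an arbitrary choice.

```python
import math
import random


def make_network(*sizes, seed=0):
    """Return one (weights, biases) pair per pair of consecutive layer sizes."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        # weights[i][j] connects unit i of one layer to unit j of the next.
        weights = [[rng.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def propagate(layers, inputs):
    """Feed the inputs forward through every layer, applying a sigmoid each time."""
    activations = list(inputs)
    for weights, biases in layers:
        outputs = []
        for j in range(len(biases)):
            total = sum(a * weights[i][j] for i, a in enumerate(activations))
            outputs.append(sigmoid(total + biases[j]))
        activations = outputs
    return activations


layers = make_network(2, 5, 1)

# Trainable parameters: (2*5 weights + 5 biases) + (5*1 weights + 1 bias).
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(n_params)  # 21

output = propagate(layers, [0, 1])
print(output)
```

Before training, the single output is just some value between 0 and 1; the training call in the README is what moves it toward the XOR targets.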

/binder/apt.txt:

--------------------------------------------------------------------------------

1 | libffi-dev

2 | libffi6

3 | ffmpeg

4 | texlive-latex-base

5 | texlive-latex-recommended

6 | texlive-science

7 | texlive-latex-extra

8 | texlive-fonts-recommended

9 | dvipng

10 | ghostscript

11 | graphviz

12 |

--------------------------------------------------------------------------------

/binder/index.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Welcome to Deep Learning with conx!\n",

8 | "\n",

9 | "This is a live Jupyter notebook running in the cloud via mybinder.\n",

10 | "\n",

11 | "To test Conx, and get your own copy of the conx-notebooks:"

12 | ]

13 | },

14 | {

15 | "cell_type": "code",

16 | "execution_count": 1,

17 | "metadata": {},

18 | "outputs": [

19 | {

20 | "name": "stderr",

21 | "output_type": "stream",

22 | "text": [

23 | "Using Theano backend.\n",

24 | "Conx, version 3.6.0\n"

25 | ]

26 | }

27 | ],

28 | "source": [

29 | "import conx as cx"

30 | ]

31 | },

32 | {

33 | "cell_type": "code",

34 | "execution_count": null,

35 | "metadata": {},

36 | "outputs": [],

37 | "source": [

38 | "cx.download(\"https://github.com/Calysto/conx-notebooks/archive/master.zip\")"

39 | ]

40 | },

41 | {

42 | "cell_type": "markdown",

43 | "metadata": {},

44 | "source": [

45 | "To \"trust\" these notebooks:"

46 | ]

47 | },

48 | {

49 | "cell_type": "code",

50 | "execution_count": null,

51 | "metadata": {},

52 | "outputs": [],

53 | "source": [

54 | "! jupyter trust conx-notebooks-master/*.ipynb"

55 | ]

56 | },

57 | {

58 | "cell_type": "markdown",

59 | "metadata": {},

60 | "source": [

61 | "You can then open \n",

62 | "\n",

63 | "* [conx-notebooks-master/00_Index.ipynb](conx-notebooks-master/00_Index.ipynb) \n",

64 | "* [conx-notebooks-master/collections/sigcse-2018/](conx-notebooks-master/collections/sigcse-2018/) \n",

65 | "\n",

66 | "and explore the notebooks.\n",

67 | "\n",

68 | "Welcome to Conx!\n",

69 | "\n",

70 | "For more help or information see:\n",

71 | "\n",

72 | "* http://conx.readthedocs.io/en/latest/ - online help\n",

73 | "* https://groups.google.com/forum/#!forum/conx-users - conx-users mailing list"

74 | ]

75 | }

76 | ],

77 | "metadata": {

78 | "kernelspec": {

79 | "display_name": "Python 3",

80 | "language": "python",

81 | "name": "python3"

82 | },

83 | "language_info": {

84 | "codemirror_mode": {

85 | "name": "ipython",

86 | "version": 3

87 | },

88 | "file_extension": ".py",

89 | "mimetype": "text/x-python",

90 | "name": "python",

91 | "nbconvert_exporter": "python",

92 | "pygments_lexer": "ipython3",

93 | "version": "3.6.3"

94 | },

95 | "widgets": {

96 | "application/vnd.jupyter.widget-state+json": {

97 | "state": {

98 | "0c01554263f740678e052dddc7baa232": {

99 | "model_module": "@jupyter-widgets/controls",

100 | "model_module_version": "1.1.0",

101 | "model_name": "SelectModel",

102 | "state": {

103 | "_options_labels": [

104 | "Test",

105 | "Train"

106 | ],

107 | "description": "Dataset:",

108 | "index": 1,

109 | "layout": "IPY_MODEL_b6d05976b5cb40ff8a4ddee3c5a861d9",

110 | "rows": 1,

111 | "style": "IPY_MODEL_51461b41bbd4492583f7fbd5d631835d"

112 | }

113 | },

114 | "136345188d7d4eeb97ad63b4e39206c0": {

115 | "model_module": "@jupyter-widgets/base",

116 | "model_module_version": "1.0.0",

117 | "model_name": "LayoutModel",

118 | "state": {}

119 | },

120 | "137cc55f71044b329686552e4d5ed5fe": {

121 | "model_module": "@jupyter-widgets/controls",

122 | "model_module_version": "1.1.0",

123 | "model_name": "HBoxModel",

124 | "state": {

125 | "children": [

126 | "IPY_MODEL_9bfc7d1321df402ebac286b2c8b7a47d",

127 | "IPY_MODEL_f3801cae672644048cd4eb49c89f6fb5",

128 | "IPY_MODEL_dd4b1f529c7941569816da9769084b90",

129 | "IPY_MODEL_5ef72263c5e247cbbb9916bffccf7485",

130 | "IPY_MODEL_c491fff0bf9f47339ab116e4a2b728c9",

131 | "IPY_MODEL_4cb4b63e98944567b0959241151670e8",

132 | "IPY_MODEL_44d99e7e5001431abc7161a689dd6ea5"

133 | ],

134 | "layout": "IPY_MODEL_f6b3e8fc467742ac88c9cf3bf03cfa03"

135 | }

136 | },

137 | "15dbc9bb00744ca8a4613fd572a49fc0": {

138 | "model_module": "@jupyter-widgets/controls",

139 | "model_module_version": "1.1.0",

140 | "model_name": "ButtonStyleModel",

141 | "state": {}

142 | },

143 | "15efb7a6a85b429c857fdc8e18c154c7": {

144 | "model_module": "@jupyter-widgets/output",

145 | "model_module_version": "1.0.0",

146 | "model_name": "OutputModel",

147 | "state": {

148 | "layout": "IPY_MODEL_e4910a4128764aad9d412609da7c9a53"

149 | }

150 | },

151 | "16832b10a05e46b19183f1726ed80faf": {

152 | "model_module": "@jupyter-widgets/base",

153 | "model_module_version": "1.0.0",

154 | "model_name": "LayoutModel",

155 | "state": {

156 | "justify_content": "center",

157 | "overflow_x": "auto",

158 | "overflow_y": "auto",

159 | "width": "95%"

160 | }

161 | },

162 | "17b44abf16704be4971f53e8160fce47": {

163 | "model_module": "@jupyter-widgets/base",

164 | "model_module_version": "1.0.0",

165 | "model_name": "LayoutModel",

166 | "state": {}

167 | },

168 | "18294f98de474bd794eb2b003add21f8": {

169 | "model_module": "@jupyter-widgets/controls",

170 | "model_module_version": "1.1.0",

171 | "model_name": "FloatTextModel",

172 | "state": {

173 | "description": "Leftmost color maps to:",

174 | "layout": "IPY_MODEL_17b44abf16704be4971f53e8160fce47",

175 | "step": null,

176 | "style": "IPY_MODEL_733f45e0a1c44fe1aa0aca4ee87c5217",

177 | "value": -1

178 | }

179 | },

180 | "21191f7705374908a284ccf55829951c": {

181 | "model_module": "@jupyter-widgets/base",

182 | "model_module_version": "1.0.0",

183 | "model_name": "LayoutModel",

184 | "state": {}

185 | },

186 | "24381fd59a954b2980c8347250859849": {

187 | "model_module": "@jupyter-widgets/controls",

188 | "model_module_version": "1.1.0",

189 | "model_name": "DescriptionStyleModel",

190 | "state": {

191 | "description_width": "initial"

192 | }

193 | },

194 | "26908fd67f194c21a968e035de73aa1a": {

195 | "model_module": "@jupyter-widgets/controls",

196 | "model_module_version": "1.1.0",

197 | "model_name": "SelectModel",

198 | "state": {

199 | "_options_labels": [

200 | "input",

201 | "hidden",

202 | "output"

203 | ],

204 | "description": "Layer:",

205 | "index": 2,

206 | "layout": "IPY_MODEL_da640a5ac3bc4a59ab8a82bc8fcbf746",

207 | "rows": 1,

208 | "style": "IPY_MODEL_5348b0f185bd4fa5a642e5121eb0feed"

209 | }

210 | },

211 | "2982cc6bc30940ff96f2f9131a4a6241": {

212 | "model_module": "@jupyter-widgets/base",

213 | "model_module_version": "1.0.0",

214 | "model_name": "LayoutModel",

215 | "state": {}

216 | },

217 | "29eb7d46cc034ca5abe9c2d759997fb5": {

218 | "model_module": "@jupyter-widgets/controls",

219 | "model_module_version": "1.1.0",

220 | "model_name": "FloatSliderModel",

221 | "state": {

222 | "continuous_update": false,

223 | "description": "Zoom",

224 | "layout": "IPY_MODEL_55ec8f78c85a4eb8af0a7bbd80cf4ce3",

225 | "max": 3,

226 | "min": 0.5,

227 | "step": 0.1,

228 | "style": "IPY_MODEL_bea9ec4c51724a9797030debbd44b595",

229 | "value": 1

230 | }

231 | },

232 | "2aa5894453424c4aacf351dd8c8ab922": {

233 | "model_module": "@jupyter-widgets/base",

234 | "model_module_version": "1.0.0",

235 | "model_name": "LayoutModel",

236 | "state": {}

237 | },

238 | "3156b5f6d28642bd9633e8e5518c9097": {

239 | "model_module": "@jupyter-widgets/controls",

240 | "model_module_version": "1.1.0",

241 | "model_name": "VBoxModel",

242 | "state": {

243 | "children": [

244 | "IPY_MODEL_0c01554263f740678e052dddc7baa232",

245 | "IPY_MODEL_29eb7d46cc034ca5abe9c2d759997fb5",

246 | "IPY_MODEL_dd45f7f9734f48fba70aaf8ae65dbb97",

247 | "IPY_MODEL_421ac3c41d5b46e6af897887dae323e3",

248 | "IPY_MODEL_b9be5483405141b88cf18e7d4f4963de",

249 | "IPY_MODEL_6c4dcc259efa4621aac46f4703595e4d",

250 | "IPY_MODEL_c712050936334e1d859ad0bdbba33a50",

251 | "IPY_MODEL_5a5d74e4200043f6ab928822b4346aa4"

252 | ],

253 | "layout": "IPY_MODEL_34cf92591c7545d3b47483fc9b50c798"

254 | }

255 | },

256 | "326c868bba2744109476ec1a641314b3": {

257 | "model_module": "@jupyter-widgets/controls",

258 | "model_module_version": "1.1.0",

259 | "model_name": "VBoxModel",

260 | "state": {

261 | "children": [

262 | "IPY_MODEL_26908fd67f194c21a968e035de73aa1a",

263 | "IPY_MODEL_7db6e0d0198d45f98e8c5a6f08d0791b",

264 | "IPY_MODEL_8193e107e49f49dda781c456011d9a78",

265 | "IPY_MODEL_b0b819e6de95419a9eeb8a0002353ee1",

266 | "IPY_MODEL_18294f98de474bd794eb2b003add21f8",

267 | "IPY_MODEL_a836973f20574c71b33aa3cef64e7f1e",

268 | "IPY_MODEL_71588c1ad11e42df844dff42b5ab041d"

269 | ],

270 | "layout": "IPY_MODEL_4b5e4ed95f04466e8685437a1f5593e7"

271 | }

272 | },

273 | "328d40ca9797496b91ee0685f8f59d05": {

274 | "model_module": "@jupyter-widgets/base",

275 | "model_module_version": "1.0.0",

276 | "model_name": "LayoutModel",

277 | "state": {

278 | "width": "100%"

279 | }

280 | },

281 | "32eb1f056b8a4311a1448f7fd4b7459e": {

282 | "model_module": "@jupyter-widgets/controls",

283 | "model_module_version": "1.1.0",

284 | "model_name": "ButtonStyleModel",

285 | "state": {}

286 | },

287 | "34cf92591c7545d3b47483fc9b50c798": {

288 | "model_module": "@jupyter-widgets/base",

289 | "model_module_version": "1.0.0",

290 | "model_name": "LayoutModel",

291 | "state": {

292 | "width": "100%"

293 | }

294 | },

295 | "3501c6717de1410aba4274dec484dde0": {

296 | "model_module": "@jupyter-widgets/controls",

297 | "model_module_version": "1.1.0",

298 | "model_name": "DescriptionStyleModel",

299 | "state": {

300 | "description_width": "initial"

301 | }

302 | },

303 | "364a4cc16b974d61b20102f7cbd4e9fe": {

304 | "model_module": "@jupyter-widgets/controls",

305 | "model_module_version": "1.1.0",

306 | "model_name": "DescriptionStyleModel",

307 | "state": {

308 | "description_width": "initial"

309 | }

310 | },

311 | "379c8a4fc2c04ec1aea72f9a38509632": {

312 | "model_module": "@jupyter-widgets/controls",

313 | "model_module_version": "1.1.0",

314 | "model_name": "DescriptionStyleModel",

315 | "state": {

316 | "description_width": "initial"

317 | }

318 | },

319 | "3b7e0bdd891743bda0dd4a9d15dd0a42": {

320 | "model_module": "@jupyter-widgets/controls",

321 | "model_module_version": "1.1.0",

322 | "model_name": "DescriptionStyleModel",

323 | "state": {

324 | "description_width": ""

325 | }

326 | },

327 | "402fa77b803048b2990b273187598d95": {

328 | "model_module": "@jupyter-widgets/controls",

329 | "model_module_version": "1.1.0",

330 | "model_name": "SliderStyleModel",

331 | "state": {

332 | "description_width": ""

333 | }

334 | },

335 | "421ac3c41d5b46e6af897887dae323e3": {

336 | "model_module": "@jupyter-widgets/controls",

337 | "model_module_version": "1.1.0",

338 | "model_name": "IntTextModel",

339 | "state": {

340 | "description": "Vertical space between layers:",

341 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

342 | "step": 1,

343 | "style": "IPY_MODEL_379c8a4fc2c04ec1aea72f9a38509632",

344 | "value": 30

345 | }

346 | },

347 | "4479c1d8a9d74da1800e20711176fe1a": {

348 | "model_module": "@jupyter-widgets/base",

349 | "model_module_version": "1.0.0",

350 | "model_name": "LayoutModel",

351 | "state": {}

352 | },

353 | "44d99e7e5001431abc7161a689dd6ea5": {

354 | "model_module": "@jupyter-widgets/controls",

355 | "model_module_version": "1.1.0",

356 | "model_name": "ButtonModel",

357 | "state": {

358 | "icon": "refresh",

359 | "layout": "IPY_MODEL_861d8540f11944d8995a9f5c1385c829",

360 | "style": "IPY_MODEL_eba95634dd3241f2911fc17342ddf924"

361 | }

362 | },

363 | "486e1a6b578b44b397b02f1dbafc907b": {

364 | "model_module": "@jupyter-widgets/controls",

365 | "model_module_version": "1.1.0",

366 | "model_name": "DescriptionStyleModel",

367 | "state": {

368 | "description_width": ""

369 | }

370 | },

371 | "4b5e4ed95f04466e8685437a1f5593e7": {

372 | "model_module": "@jupyter-widgets/base",

373 | "model_module_version": "1.0.0",

374 | "model_name": "LayoutModel",

375 | "state": {

376 | "width": "100%"

377 | }

378 | },

379 | "4cb4b63e98944567b0959241151670e8": {

380 | "model_module": "@jupyter-widgets/controls",

381 | "model_module_version": "1.1.0",

382 | "model_name": "ButtonModel",

383 | "state": {

384 | "description": "Play",

385 | "icon": "play",

386 | "layout": "IPY_MODEL_328d40ca9797496b91ee0685f8f59d05",

387 | "style": "IPY_MODEL_79f332bd05a2497eb63b8f6cc6acf8a3"

388 | }

389 | },

390 | "4e5807bdbf3d4dc2b611e26fa56b6101": {

391 | "model_module": "@jupyter-widgets/base",

392 | "model_module_version": "1.0.0",

393 | "model_name": "LayoutModel",

394 | "state": {

395 | "width": "100px"

396 | }

397 | },

398 | "51461b41bbd4492583f7fbd5d631835d": {

399 | "model_module": "@jupyter-widgets/controls",

400 | "model_module_version": "1.1.0",

401 | "model_name": "DescriptionStyleModel",

402 | "state": {

403 | "description_width": ""

404 | }

405 | },

406 | "5348b0f185bd4fa5a642e5121eb0feed": {

407 | "model_module": "@jupyter-widgets/controls",

408 | "model_module_version": "1.1.0",

409 | "model_name": "DescriptionStyleModel",

410 | "state": {

411 | "description_width": ""

412 | }

413 | },

414 | "55ec8f78c85a4eb8af0a7bbd80cf4ce3": {

415 | "model_module": "@jupyter-widgets/base",

416 | "model_module_version": "1.0.0",

417 | "model_name": "LayoutModel",

418 | "state": {}

419 | },

420 | "5a5d74e4200043f6ab928822b4346aa4": {

421 | "model_module": "@jupyter-widgets/controls",

422 | "model_module_version": "1.1.0",

423 | "model_name": "FloatTextModel",

424 | "state": {

425 | "description": "Feature scale:",

426 | "layout": "IPY_MODEL_6ee890b9a11c4ce0868fc8da9b510720",

427 | "step": null,

428 | "style": "IPY_MODEL_24381fd59a954b2980c8347250859849",

429 | "value": 2

430 | }

431 | },

432 | "5ef72263c5e247cbbb9916bffccf7485": {

433 | "model_module": "@jupyter-widgets/controls",

434 | "model_module_version": "1.1.0",

435 | "model_name": "ButtonModel",

436 | "state": {

437 | "icon": "forward",

438 | "layout": "IPY_MODEL_f5e9b479caa3491496610a1bca70f6f6",

439 | "style": "IPY_MODEL_32eb1f056b8a4311a1448f7fd4b7459e"

440 | }

441 | },

442 | "63880f7d52ea4c7da360d2269be5cbd2": {

443 | "model_module": "@jupyter-widgets/base",

444 | "model_module_version": "1.0.0",

445 | "model_name": "LayoutModel",

446 | "state": {

447 | "width": "100%"

448 | }

449 | },

450 | "66e29c1eb7fd494babefa05037841259": {

451 | "model_module": "@jupyter-widgets/controls",

452 | "model_module_version": "1.1.0",

453 | "model_name": "CheckboxModel",

454 | "state": {

455 | "description": "Errors",

456 | "disabled": false,

457 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

458 | "style": "IPY_MODEL_3501c6717de1410aba4274dec484dde0",

459 | "value": false

460 | }

461 | },

462 | "6b1a08b14f2647c3aace0739e77581de": {

463 | "model_module": "@jupyter-widgets/base",

464 | "model_module_version": "1.0.0",

465 | "model_name": "LayoutModel",

466 | "state": {}

467 | },

468 | "6c4dcc259efa4621aac46f4703595e4d": {

469 | "model_module": "@jupyter-widgets/controls",

470 | "model_module_version": "1.1.0",

471 | "model_name": "SelectModel",

472 | "state": {

473 | "_options_labels": [

474 | ""

475 | ],

476 | "description": "Features:",

477 | "index": 0,

478 | "layout": "IPY_MODEL_2982cc6bc30940ff96f2f9131a4a6241",

479 | "rows": 1,

480 | "style": "IPY_MODEL_c0a2f4ee45914dcaa1a8bdacac5046ed"

481 | }

482 | },

483 | "6ee890b9a11c4ce0868fc8da9b510720": {

484 | "model_module": "@jupyter-widgets/base",

485 | "model_module_version": "1.0.0",

486 | "model_name": "LayoutModel",

487 | "state": {}

488 | },

489 | "6f384b6b080b4a72bfaae3920f9b7163": {

490 | "model_module": "@jupyter-widgets/controls",

491 | "model_module_version": "1.1.0",

492 | "model_name": "HBoxModel",

493 | "state": {

494 | "children": [

495 | "IPY_MODEL_88d0c8c574214d258d52e6cf10c90587",

496 | "IPY_MODEL_8c242c24558644d68a3fa12cc2d805ba"

497 | ],

498 | "layout": "IPY_MODEL_a05f399be93e4461a73ff1e852749db5"

499 | }

500 | },

501 | "71588c1ad11e42df844dff42b5ab041d": {

502 | "model_module": "@jupyter-widgets/controls",

503 | "model_module_version": "1.1.0",

504 | "model_name": "IntTextModel",

505 | "state": {

506 | "description": "Feature to show:",

507 | "layout": "IPY_MODEL_136345188d7d4eeb97ad63b4e39206c0",

508 | "step": 1,

509 | "style": "IPY_MODEL_364a4cc16b974d61b20102f7cbd4e9fe"

510 | }

511 | },

512 | "733f45e0a1c44fe1aa0aca4ee87c5217": {

513 | "model_module": "@jupyter-widgets/controls",

514 | "model_module_version": "1.1.0",

515 | "model_name": "DescriptionStyleModel",

516 | "state": {

517 | "description_width": "initial"

518 | }

519 | },

520 | "788f81e5b21a4638b040e17ac78b8ce6": {

521 | "model_module": "@jupyter-widgets/controls",

522 | "model_module_version": "1.1.0",

523 | "model_name": "DescriptionStyleModel",

524 | "state": {

525 | "description_width": "initial"

526 | }

527 | },

528 | "79f332bd05a2497eb63b8f6cc6acf8a3": {

529 | "model_module": "@jupyter-widgets/controls",

530 | "model_module_version": "1.1.0",

531 | "model_name": "ButtonStyleModel",

532 | "state": {}

533 | },

534 | "7db6e0d0198d45f98e8c5a6f08d0791b": {

535 | "model_module": "@jupyter-widgets/controls",

536 | "model_module_version": "1.1.0",

537 | "model_name": "CheckboxModel",

538 | "state": {

539 | "description": "Visible",

540 | "disabled": false,

541 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

542 | "style": "IPY_MODEL_df5e3f91eea7415a888271f8fc68f9a5",

543 | "value": true

544 | }

545 | },

546 | "8193e107e49f49dda781c456011d9a78": {

547 | "model_module": "@jupyter-widgets/controls",

548 | "model_module_version": "1.1.0",

549 | "model_name": "SelectModel",

550 | "state": {

551 | "_options_labels": [

552 | "",

553 | "Accent",

554 | "Accent_r",

555 | "Blues",

556 | "Blues_r",

557 | "BrBG",

558 | "BrBG_r",

559 | "BuGn",

560 | "BuGn_r",

561 | "BuPu",

562 | "BuPu_r",

563 | "CMRmap",

564 | "CMRmap_r",

565 | "Dark2",

566 | "Dark2_r",

567 | "GnBu",

568 | "GnBu_r",

569 | "Greens",

570 | "Greens_r",

571 | "Greys",

572 | "Greys_r",

573 | "OrRd",

574 | "OrRd_r",

575 | "Oranges",

576 | "Oranges_r",

577 | "PRGn",

578 | "PRGn_r",

579 | "Paired",

580 | "Paired_r",

581 | "Pastel1",

582 | "Pastel1_r",

583 | "Pastel2",

584 | "Pastel2_r",

585 | "PiYG",

586 | "PiYG_r",

587 | "PuBu",

588 | "PuBuGn",

589 | "PuBuGn_r",

590 | "PuBu_r",

591 | "PuOr",

592 | "PuOr_r",

593 | "PuRd",

594 | "PuRd_r",

595 | "Purples",

596 | "Purples_r",

597 | "RdBu",

598 | "RdBu_r",

599 | "RdGy",

600 | "RdGy_r",

601 | "RdPu",

602 | "RdPu_r",

603 | "RdYlBu",

604 | "RdYlBu_r",

605 | "RdYlGn",

606 | "RdYlGn_r",

607 | "Reds",

608 | "Reds_r",

609 | "Set1",

610 | "Set1_r",

611 | "Set2",

612 | "Set2_r",

613 | "Set3",

614 | "Set3_r",

615 | "Spectral",

616 | "Spectral_r",

617 | "Vega10",

618 | "Vega10_r",

619 | "Vega20",

620 | "Vega20_r",

621 | "Vega20b",

622 | "Vega20b_r",

623 | "Vega20c",

624 | "Vega20c_r",

625 | "Wistia",

626 | "Wistia_r",

627 | "YlGn",

628 | "YlGnBu",

629 | "YlGnBu_r",

630 | "YlGn_r",

631 | "YlOrBr",

632 | "YlOrBr_r",

633 | "YlOrRd",

634 | "YlOrRd_r",

635 | "afmhot",

636 | "afmhot_r",

637 | "autumn",

638 | "autumn_r",

639 | "binary",

640 | "binary_r",

641 | "bone",

642 | "bone_r",

643 | "brg",

644 | "brg_r",

645 | "bwr",

646 | "bwr_r",

647 | "cool",

648 | "cool_r",

649 | "coolwarm",

650 | "coolwarm_r",

651 | "copper",

652 | "copper_r",

653 | "cubehelix",

654 | "cubehelix_r",

655 | "flag",

656 | "flag_r",

657 | "gist_earth",

658 | "gist_earth_r",

659 | "gist_gray",

660 | "gist_gray_r",

661 | "gist_heat",

662 | "gist_heat_r",

663 | "gist_ncar",

664 | "gist_ncar_r",

665 | "gist_rainbow",

666 | "gist_rainbow_r",

667 | "gist_stern",

668 | "gist_stern_r",

669 | "gist_yarg",

670 | "gist_yarg_r",

671 | "gnuplot",

672 | "gnuplot2",

673 | "gnuplot2_r",

674 | "gnuplot_r",

675 | "gray",

676 | "gray_r",

677 | "hot",

678 | "hot_r",

679 | "hsv",

680 | "hsv_r",

681 | "inferno",

682 | "inferno_r",

683 | "jet",

684 | "jet_r",

685 | "magma",

686 | "magma_r",

687 | "nipy_spectral",

688 | "nipy_spectral_r",

689 | "ocean",

690 | "ocean_r",

691 | "pink",

692 | "pink_r",

693 | "plasma",

694 | "plasma_r",

695 | "prism",

696 | "prism_r",

697 | "rainbow",

698 | "rainbow_r",

699 | "seismic",

700 | "seismic_r",

701 | "spectral",

702 | "spectral_r",

703 | "spring",

704 | "spring_r",

705 | "summer",

706 | "summer_r",

707 | "tab10",

708 | "tab10_r",

709 | "tab20",

710 | "tab20_r",

711 | "tab20b",

712 | "tab20b_r",

713 | "tab20c",

714 | "tab20c_r",

715 | "terrain",

716 | "terrain_r",

717 | "viridis",

718 | "viridis_r",

719 | "winter",

720 | "winter_r"

721 | ],

722 | "description": "Colormap:",

723 | "index": 0,

724 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

725 | "rows": 1,

726 | "style": "IPY_MODEL_a19b7c9088874ddaaf2efd2bce456ef7"

727 | }

728 | },

729 | "838f5f2263084d2eafad4d9a12fc3e7f": {

730 | "model_module": "@jupyter-widgets/controls",

731 | "model_module_version": "1.1.0",

732 | "model_name": "DescriptionStyleModel",

733 | "state": {

734 | "description_width": "initial"

735 | }

736 | },

737 | "861d8540f11944d8995a9f5c1385c829": {

738 | "model_module": "@jupyter-widgets/base",

739 | "model_module_version": "1.0.0",

740 | "model_name": "LayoutModel",

741 | "state": {

742 | "width": "25%"

743 | }

744 | },

745 | "86f142406e04427da6205fa66bac9620": {

746 | "model_module": "@jupyter-widgets/base",

747 | "model_module_version": "1.0.0",

748 | "model_name": "LayoutModel",

749 | "state": {

750 | "width": "100%"

751 | }

752 | },

753 | "88d0c8c574214d258d52e6cf10c90587": {

754 | "model_module": "@jupyter-widgets/controls",

755 | "model_module_version": "1.1.0",

756 | "model_name": "IntSliderModel",

757 | "state": {

758 | "continuous_update": false,

759 | "description": "Dataset index",

760 | "layout": "IPY_MODEL_b14873fb0b8347d2a1083d59fba8ad54",

761 | "max": 3,

762 | "style": "IPY_MODEL_402fa77b803048b2990b273187598d95",

763 | "value": 3

764 | }

765 | },

766 | "89b2034ca3124ff6848b20fb84a1a342": {

767 | "model_module": "@jupyter-widgets/controls",

768 | "model_module_version": "1.1.0",

769 | "model_name": "DescriptionStyleModel",

770 | "state": {

771 | "description_width": ""

772 | }

773 | },

774 | "8bdd74f89a3043e792eabd6a6226a6ab": {

775 | "model_module": "@jupyter-widgets/base",

776 | "model_module_version": "1.0.0",

777 | "model_name": "LayoutModel",

778 | "state": {

779 | "width": "100%"

780 | }

781 | },

782 | "8c242c24558644d68a3fa12cc2d805ba": {

783 | "model_module": "@jupyter-widgets/controls",

784 | "model_module_version": "1.1.0",

785 | "model_name": "LabelModel",

786 | "state": {

787 | "layout": "IPY_MODEL_4e5807bdbf3d4dc2b611e26fa56b6101",

788 | "style": "IPY_MODEL_486e1a6b578b44b397b02f1dbafc907b",

789 | "value": "of 4"

790 | }

791 | },

792 | "9b5de2cdaeba4cc2be73572dc68dd1e9": {

793 | "model_module": "@jupyter-widgets/controls",

794 | "model_module_version": "1.1.0",

795 | "model_name": "VBoxModel",

796 | "state": {

797 | "children": [

798 | "IPY_MODEL_b985873afa7a4297b6a3f47c5d6cdb89",

799 | "IPY_MODEL_d5b406961ccc42458002f37052e3d0a9",

800 | "IPY_MODEL_dea0a485bad246ce9e6f5273e581c7cf",

801 | "IPY_MODEL_15efb7a6a85b429c857fdc8e18c154c7"

802 | ],

803 | "layout": "IPY_MODEL_21191f7705374908a284ccf55829951c"

804 | }

805 | },

806 | "9bfc7d1321df402ebac286b2c8b7a47d": {

807 | "model_module": "@jupyter-widgets/controls",

808 | "model_module_version": "1.1.0",

809 | "model_name": "ButtonModel",

810 | "state": {

811 | "icon": "fast-backward",

812 | "layout": "IPY_MODEL_cc692e9fd5a3487ba19794423c815e29",

813 | "style": "IPY_MODEL_e7e084ac53694252b14094ad1bc1affd"

814 | }

815 | },

816 | "9fca8922d6a84010a73ddb46f2713c40": {

817 | "model_module": "@jupyter-widgets/base",

818 | "model_module_version": "1.0.0",

819 | "model_name": "LayoutModel",

820 | "state": {}

821 | },

822 | "a05f399be93e4461a73ff1e852749db5": {

823 | "model_module": "@jupyter-widgets/base",

824 | "model_module_version": "1.0.0",

825 | "model_name": "LayoutModel",

826 | "state": {

827 | "height": "40px"

828 | }

829 | },

830 | "a19b7c9088874ddaaf2efd2bce456ef7": {

831 | "model_module": "@jupyter-widgets/controls",

832 | "model_module_version": "1.1.0",

833 | "model_name": "DescriptionStyleModel",

834 | "state": {

835 | "description_width": ""

836 | }

837 | },

838 | "a836973f20574c71b33aa3cef64e7f1e": {

839 | "model_module": "@jupyter-widgets/controls",

840 | "model_module_version": "1.1.0",

841 | "model_name": "FloatTextModel",

842 | "state": {

843 | "description": "Rightmost color maps to:",

844 | "layout": "IPY_MODEL_d32a5abd74134a4686d75e191a1533cb",

845 | "step": null,

846 | "style": "IPY_MODEL_ac548405c8af4ee7a8ab8c38abae38c9",

847 | "value": 1

848 | }

849 | },

850 | "aa04171bbaa9441db93e9a7e35d43065": {

851 | "model_module": "@jupyter-widgets/controls",

852 | "model_module_version": "1.1.0",

853 | "model_name": "CheckboxModel",

854 | "state": {

855 | "description": "Show Targets",

856 | "disabled": false,

857 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

858 | "style": "IPY_MODEL_838f5f2263084d2eafad4d9a12fc3e7f",

859 | "value": false

860 | }

861 | },

862 | "ac548405c8af4ee7a8ab8c38abae38c9": {

863 | "model_module": "@jupyter-widgets/controls",

864 | "model_module_version": "1.1.0",

865 | "model_name": "DescriptionStyleModel",

866 | "state": {

867 | "description_width": "initial"

868 | }

869 | },

870 | "b0b819e6de95419a9eeb8a0002353ee1": {

871 | "model_module": "@jupyter-widgets/controls",

872 | "model_module_version": "1.1.0",

873 | "model_name": "HTMLModel",

874 | "state": {

875 | "layout": "IPY_MODEL_cc030e35783e49339e05ba715ee14e62",

876 | "style": "IPY_MODEL_de9d6e453fa14e79b965a4501ff69845",

877 | "value": "

43 |

44 | ## Examples

45 |

46 | See [conx-notebooks](https://github.com/Calysto/conx-notebooks/blob/master/00_Index.ipynb) and the [documentation](http://conx.readthedocs.io/en/latest/) for additional examples.

47 |

48 | ## Installation

49 |

50 | See [How To Run Conx](https://github.com/Calysto/conx-notebooks/tree/master/HowToRun#how-to-run-conx)

51 | for options on running Conx in a virtual machine, in the cloud, or as a personal

52 | installation.

53 |
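After installing Conx by any of those routes, it can help to confirm that the package is visible to Python before opening a notebook. The following is a minimal sketch, not part of Conx itself: the helper name `conx_is_installed` is hypothetical, and the only assumption is that the package is importable under the name `conx` (as in `import conx as cx`).

```python
import importlib.util


def conx_is_installed(package_name="conx"):
    """Return True if the named top-level package is on the import path.

    Hypothetical helper for illustration only. It looks the package up
    without importing it, so no backend start-up messages are printed.
    """
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    if conx_is_installed():
        print("conx appears to be installed; try `import conx as cx`.")
    else:
        print("conx was not found; see the How To Run Conx page.")
```

If the check succeeds, running `import conx as cx` in a notebook cell should report the installed Conx version.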

--------------------------------------------------------------------------------

/binder/apt.txt:

--------------------------------------------------------------------------------

1 | libffi-dev

2 | libffi6

3 | ffmpeg

4 | texlive-latex-base

5 | texlive-latex-recommended

6 | texlive-science

7 | texlive-latex-extra

8 | texlive-fonts-recommended

9 | dvipng

10 | ghostscript

11 | graphviz

12 |

--------------------------------------------------------------------------------

/binder/index.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Welcome to Deep Learning with conx!\n",

8 | "\n",

9 | "This is a live Jupyter notebook running in the cloud via mybinder.\n",

10 | "\n",

11 | "To test Conx and get your own copy of the conx-notebooks, run the following cells:"

12 | ]

13 | },

14 | {

15 | "cell_type": "code",

16 | "execution_count": 1,

17 | "metadata": {},

18 | "outputs": [

19 | {

20 | "name": "stderr",

21 | "output_type": "stream",

22 | "text": [

23 | "Using Theano backend.\n",

24 | "Conx, version 3.6.0\n"

25 | ]

26 | }

27 | ],

28 | "source": [

29 | "import conx as cx"

30 | ]

31 | },

32 | {

33 | "cell_type": "code",

34 | "execution_count": null,

35 | "metadata": {},

36 | "outputs": [],

37 | "source": [

38 | "cx.download(\"https://github.com/Calysto/conx-notebooks/archive/master.zip\")"

39 | ]

40 | },

41 | {

42 | "cell_type": "markdown",

43 | "metadata": {},

44 | "source": [

45 | "To \"trust\" these notebooks:"

46 | ]

47 | },

48 | {

49 | "cell_type": "code",

50 | "execution_count": null,

51 | "metadata": {},

52 | "outputs": [],

53 | "source": [

54 | "! jupyter trust conx-notebooks-master/*.ipynb"

55 | ]

56 | },

57 | {

58 | "cell_type": "markdown",

59 | "metadata": {},

60 | "source": [

61 | "You can then open \n",

62 | "\n",

63 | "* [conx-notebooks-master/00_Index.ipynb](conx-notebooks-master/00_Index.ipynb) \n",

64 | "* [conx-notebooks-master/collections/sigcse-2018/](conx-notebooks-master/collections/sigcse-2018/) \n",

65 | "\n",

66 | "and explore the notebooks.\n",

67 | "\n",

68 | "Welcome to Conx!\n",

69 | "\n",

70 | "For more help or information see:\n",

71 | "\n",

72 | "* http://conx.readthedocs.io/en/latest/ - online help\n",

73 | "* https://groups.google.com/forum/#!forum/conx-users - conx-users mailing list"

74 | ]

75 | }

76 | ],

77 | "metadata": {

78 | "kernelspec": {

79 | "display_name": "Python 3",

80 | "language": "python",

81 | "name": "python3"

82 | },

83 | "language_info": {

84 | "codemirror_mode": {

85 | "name": "ipython",

86 | "version": 3

87 | },

88 | "file_extension": ".py",

89 | "mimetype": "text/x-python",

90 | "name": "python",

91 | "nbconvert_exporter": "python",

92 | "pygments_lexer": "ipython3",

93 | "version": "3.6.3"

94 | },

95 | "widgets": {

96 | "application/vnd.jupyter.widget-state+json": {

97 | "state": {

98 | "0c01554263f740678e052dddc7baa232": {

99 | "model_module": "@jupyter-widgets/controls",

100 | "model_module_version": "1.1.0",

101 | "model_name": "SelectModel",

102 | "state": {

103 | "_options_labels": [

104 | "Test",

105 | "Train"

106 | ],

107 | "description": "Dataset:",

108 | "index": 1,

109 | "layout": "IPY_MODEL_b6d05976b5cb40ff8a4ddee3c5a861d9",

110 | "rows": 1,

111 | "style": "IPY_MODEL_51461b41bbd4492583f7fbd5d631835d"

112 | }

113 | },

114 | "136345188d7d4eeb97ad63b4e39206c0": {

115 | "model_module": "@jupyter-widgets/base",

116 | "model_module_version": "1.0.0",

117 | "model_name": "LayoutModel",

118 | "state": {}

119 | },

120 | "137cc55f71044b329686552e4d5ed5fe": {

121 | "model_module": "@jupyter-widgets/controls",

122 | "model_module_version": "1.1.0",

123 | "model_name": "HBoxModel",

124 | "state": {

125 | "children": [

126 | "IPY_MODEL_9bfc7d1321df402ebac286b2c8b7a47d",

127 | "IPY_MODEL_f3801cae672644048cd4eb49c89f6fb5",

128 | "IPY_MODEL_dd4b1f529c7941569816da9769084b90",

129 | "IPY_MODEL_5ef72263c5e247cbbb9916bffccf7485",

130 | "IPY_MODEL_c491fff0bf9f47339ab116e4a2b728c9",

131 | "IPY_MODEL_4cb4b63e98944567b0959241151670e8",

132 | "IPY_MODEL_44d99e7e5001431abc7161a689dd6ea5"

133 | ],

134 | "layout": "IPY_MODEL_f6b3e8fc467742ac88c9cf3bf03cfa03"

135 | }

136 | },

137 | "15dbc9bb00744ca8a4613fd572a49fc0": {

138 | "model_module": "@jupyter-widgets/controls",

139 | "model_module_version": "1.1.0",

140 | "model_name": "ButtonStyleModel",

141 | "state": {}

142 | },

143 | "15efb7a6a85b429c857fdc8e18c154c7": {

144 | "model_module": "@jupyter-widgets/output",

145 | "model_module_version": "1.0.0",

146 | "model_name": "OutputModel",

147 | "state": {

148 | "layout": "IPY_MODEL_e4910a4128764aad9d412609da7c9a53"

149 | }

150 | },

151 | "16832b10a05e46b19183f1726ed80faf": {

152 | "model_module": "@jupyter-widgets/base",

153 | "model_module_version": "1.0.0",

154 | "model_name": "LayoutModel",

155 | "state": {

156 | "justify_content": "center",

157 | "overflow_x": "auto",

158 | "overflow_y": "auto",

159 | "width": "95%"

160 | }

161 | },

162 | "17b44abf16704be4971f53e8160fce47": {

163 | "model_module": "@jupyter-widgets/base",

164 | "model_module_version": "1.0.0",

165 | "model_name": "LayoutModel",

166 | "state": {}

167 | },

168 | "18294f98de474bd794eb2b003add21f8": {

169 | "model_module": "@jupyter-widgets/controls",

170 | "model_module_version": "1.1.0",

171 | "model_name": "FloatTextModel",

172 | "state": {

173 | "description": "Leftmost color maps to:",

174 | "layout": "IPY_MODEL_17b44abf16704be4971f53e8160fce47",

175 | "step": null,

176 | "style": "IPY_MODEL_733f45e0a1c44fe1aa0aca4ee87c5217",

177 | "value": -1

178 | }

179 | },

180 | "21191f7705374908a284ccf55829951c": {

181 | "model_module": "@jupyter-widgets/base",

182 | "model_module_version": "1.0.0",

183 | "model_name": "LayoutModel",

184 | "state": {}

185 | },

186 | "24381fd59a954b2980c8347250859849": {

187 | "model_module": "@jupyter-widgets/controls",

188 | "model_module_version": "1.1.0",

189 | "model_name": "DescriptionStyleModel",

190 | "state": {

191 | "description_width": "initial"

192 | }

193 | },

194 | "26908fd67f194c21a968e035de73aa1a": {

195 | "model_module": "@jupyter-widgets/controls",

196 | "model_module_version": "1.1.0",

197 | "model_name": "SelectModel",

198 | "state": {

199 | "_options_labels": [

200 | "input",

201 | "hidden",

202 | "output"

203 | ],

204 | "description": "Layer:",

205 | "index": 2,

206 | "layout": "IPY_MODEL_da640a5ac3bc4a59ab8a82bc8fcbf746",

207 | "rows": 1,

208 | "style": "IPY_MODEL_5348b0f185bd4fa5a642e5121eb0feed"

209 | }

210 | },

211 | "2982cc6bc30940ff96f2f9131a4a6241": {

212 | "model_module": "@jupyter-widgets/base",

213 | "model_module_version": "1.0.0",

214 | "model_name": "LayoutModel",

215 | "state": {}

216 | },

217 | "29eb7d46cc034ca5abe9c2d759997fb5": {

218 | "model_module": "@jupyter-widgets/controls",

219 | "model_module_version": "1.1.0",

220 | "model_name": "FloatSliderModel",

221 | "state": {

222 | "continuous_update": false,

223 | "description": "Zoom",

224 | "layout": "IPY_MODEL_55ec8f78c85a4eb8af0a7bbd80cf4ce3",

225 | "max": 3,

226 | "min": 0.5,

227 | "step": 0.1,

228 | "style": "IPY_MODEL_bea9ec4c51724a9797030debbd44b595",

229 | "value": 1

230 | }

231 | },

232 | "2aa5894453424c4aacf351dd8c8ab922": {

233 | "model_module": "@jupyter-widgets/base",

234 | "model_module_version": "1.0.0",

235 | "model_name": "LayoutModel",

236 | "state": {}

237 | },

238 | "3156b5f6d28642bd9633e8e5518c9097": {

239 | "model_module": "@jupyter-widgets/controls",

240 | "model_module_version": "1.1.0",

241 | "model_name": "VBoxModel",

242 | "state": {

243 | "children": [

244 | "IPY_MODEL_0c01554263f740678e052dddc7baa232",

245 | "IPY_MODEL_29eb7d46cc034ca5abe9c2d759997fb5",

246 | "IPY_MODEL_dd45f7f9734f48fba70aaf8ae65dbb97",

247 | "IPY_MODEL_421ac3c41d5b46e6af897887dae323e3",

248 | "IPY_MODEL_b9be5483405141b88cf18e7d4f4963de",

249 | "IPY_MODEL_6c4dcc259efa4621aac46f4703595e4d",

250 | "IPY_MODEL_c712050936334e1d859ad0bdbba33a50",

251 | "IPY_MODEL_5a5d74e4200043f6ab928822b4346aa4"

252 | ],

253 | "layout": "IPY_MODEL_34cf92591c7545d3b47483fc9b50c798"

254 | }

255 | },

256 | "326c868bba2744109476ec1a641314b3": {

257 | "model_module": "@jupyter-widgets/controls",

258 | "model_module_version": "1.1.0",

259 | "model_name": "VBoxModel",

260 | "state": {

261 | "children": [

262 | "IPY_MODEL_26908fd67f194c21a968e035de73aa1a",

263 | "IPY_MODEL_7db6e0d0198d45f98e8c5a6f08d0791b",

264 | "IPY_MODEL_8193e107e49f49dda781c456011d9a78",

265 | "IPY_MODEL_b0b819e6de95419a9eeb8a0002353ee1",

266 | "IPY_MODEL_18294f98de474bd794eb2b003add21f8",

267 | "IPY_MODEL_a836973f20574c71b33aa3cef64e7f1e",

268 | "IPY_MODEL_71588c1ad11e42df844dff42b5ab041d"

269 | ],

270 | "layout": "IPY_MODEL_4b5e4ed95f04466e8685437a1f5593e7"

271 | }

272 | },

273 | "328d40ca9797496b91ee0685f8f59d05": {

274 | "model_module": "@jupyter-widgets/base",

275 | "model_module_version": "1.0.0",

276 | "model_name": "LayoutModel",

277 | "state": {

278 | "width": "100%"

279 | }

280 | },

281 | "32eb1f056b8a4311a1448f7fd4b7459e": {

282 | "model_module": "@jupyter-widgets/controls",

283 | "model_module_version": "1.1.0",

284 | "model_name": "ButtonStyleModel",

285 | "state": {}

286 | },

287 | "34cf92591c7545d3b47483fc9b50c798": {

288 | "model_module": "@jupyter-widgets/base",

289 | "model_module_version": "1.0.0",

290 | "model_name": "LayoutModel",

291 | "state": {

292 | "width": "100%"

293 | }

294 | },

295 | "3501c6717de1410aba4274dec484dde0": {

296 | "model_module": "@jupyter-widgets/controls",

297 | "model_module_version": "1.1.0",

298 | "model_name": "DescriptionStyleModel",

299 | "state": {

300 | "description_width": "initial"

301 | }

302 | },

303 | "364a4cc16b974d61b20102f7cbd4e9fe": {

304 | "model_module": "@jupyter-widgets/controls",

305 | "model_module_version": "1.1.0",

306 | "model_name": "DescriptionStyleModel",

307 | "state": {

308 | "description_width": "initial"

309 | }

310 | },

311 | "379c8a4fc2c04ec1aea72f9a38509632": {

312 | "model_module": "@jupyter-widgets/controls",

313 | "model_module_version": "1.1.0",

314 | "model_name": "DescriptionStyleModel",

315 | "state": {

316 | "description_width": "initial"

317 | }

318 | },

319 | "3b7e0bdd891743bda0dd4a9d15dd0a42": {

320 | "model_module": "@jupyter-widgets/controls",

321 | "model_module_version": "1.1.0",

322 | "model_name": "DescriptionStyleModel",

323 | "state": {

324 | "description_width": ""

325 | }

326 | },

327 | "402fa77b803048b2990b273187598d95": {

328 | "model_module": "@jupyter-widgets/controls",

329 | "model_module_version": "1.1.0",

330 | "model_name": "SliderStyleModel",

331 | "state": {

332 | "description_width": ""

333 | }

334 | },

335 | "421ac3c41d5b46e6af897887dae323e3": {

336 | "model_module": "@jupyter-widgets/controls",

337 | "model_module_version": "1.1.0",

338 | "model_name": "IntTextModel",

339 | "state": {

340 | "description": "Vertical space between layers:",

341 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

342 | "step": 1,

343 | "style": "IPY_MODEL_379c8a4fc2c04ec1aea72f9a38509632",

344 | "value": 30

345 | }

346 | },

347 | "4479c1d8a9d74da1800e20711176fe1a": {

348 | "model_module": "@jupyter-widgets/base",

349 | "model_module_version": "1.0.0",

350 | "model_name": "LayoutModel",

351 | "state": {}

352 | },

353 | "44d99e7e5001431abc7161a689dd6ea5": {

354 | "model_module": "@jupyter-widgets/controls",

355 | "model_module_version": "1.1.0",

356 | "model_name": "ButtonModel",

357 | "state": {

358 | "icon": "refresh",

359 | "layout": "IPY_MODEL_861d8540f11944d8995a9f5c1385c829",

360 | "style": "IPY_MODEL_eba95634dd3241f2911fc17342ddf924"

361 | }

362 | },

363 | "486e1a6b578b44b397b02f1dbafc907b": {

364 | "model_module": "@jupyter-widgets/controls",

365 | "model_module_version": "1.1.0",

366 | "model_name": "DescriptionStyleModel",

367 | "state": {

368 | "description_width": ""

369 | }

370 | },

371 | "4b5e4ed95f04466e8685437a1f5593e7": {

372 | "model_module": "@jupyter-widgets/base",

373 | "model_module_version": "1.0.0",

374 | "model_name": "LayoutModel",

375 | "state": {

376 | "width": "100%"

377 | }

378 | },

379 | "4cb4b63e98944567b0959241151670e8": {

380 | "model_module": "@jupyter-widgets/controls",

381 | "model_module_version": "1.1.0",

382 | "model_name": "ButtonModel",

383 | "state": {

384 | "description": "Play",

385 | "icon": "play",

386 | "layout": "IPY_MODEL_328d40ca9797496b91ee0685f8f59d05",

387 | "style": "IPY_MODEL_79f332bd05a2497eb63b8f6cc6acf8a3"

388 | }

389 | },

390 | "4e5807bdbf3d4dc2b611e26fa56b6101": {

391 | "model_module": "@jupyter-widgets/base",

392 | "model_module_version": "1.0.0",

393 | "model_name": "LayoutModel",

394 | "state": {

395 | "width": "100px"

396 | }

397 | },

398 | "51461b41bbd4492583f7fbd5d631835d": {

399 | "model_module": "@jupyter-widgets/controls",

400 | "model_module_version": "1.1.0",

401 | "model_name": "DescriptionStyleModel",

402 | "state": {

403 | "description_width": ""

404 | }

405 | },

406 | "5348b0f185bd4fa5a642e5121eb0feed": {

407 | "model_module": "@jupyter-widgets/controls",

408 | "model_module_version": "1.1.0",

409 | "model_name": "DescriptionStyleModel",

410 | "state": {

411 | "description_width": ""

412 | }

413 | },

414 | "55ec8f78c85a4eb8af0a7bbd80cf4ce3": {

415 | "model_module": "@jupyter-widgets/base",

416 | "model_module_version": "1.0.0",

417 | "model_name": "LayoutModel",

418 | "state": {}

419 | },

420 | "5a5d74e4200043f6ab928822b4346aa4": {

421 | "model_module": "@jupyter-widgets/controls",

422 | "model_module_version": "1.1.0",

423 | "model_name": "FloatTextModel",

424 | "state": {

425 | "description": "Feature scale:",

426 | "layout": "IPY_MODEL_6ee890b9a11c4ce0868fc8da9b510720",

427 | "step": null,

428 | "style": "IPY_MODEL_24381fd59a954b2980c8347250859849",

429 | "value": 2

430 | }

431 | },

432 | "5ef72263c5e247cbbb9916bffccf7485": {

433 | "model_module": "@jupyter-widgets/controls",

434 | "model_module_version": "1.1.0",

435 | "model_name": "ButtonModel",

436 | "state": {

437 | "icon": "forward",

438 | "layout": "IPY_MODEL_f5e9b479caa3491496610a1bca70f6f6",

439 | "style": "IPY_MODEL_32eb1f056b8a4311a1448f7fd4b7459e"

440 | }

441 | },

442 | "63880f7d52ea4c7da360d2269be5cbd2": {

443 | "model_module": "@jupyter-widgets/base",

444 | "model_module_version": "1.0.0",

445 | "model_name": "LayoutModel",

446 | "state": {

447 | "width": "100%"

448 | }

449 | },

450 | "66e29c1eb7fd494babefa05037841259": {

451 | "model_module": "@jupyter-widgets/controls",

452 | "model_module_version": "1.1.0",

453 | "model_name": "CheckboxModel",

454 | "state": {

455 | "description": "Errors",

456 | "disabled": false,

457 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

458 | "style": "IPY_MODEL_3501c6717de1410aba4274dec484dde0",

459 | "value": false

460 | }

461 | },

462 | "6b1a08b14f2647c3aace0739e77581de": {

463 | "model_module": "@jupyter-widgets/base",

464 | "model_module_version": "1.0.0",

465 | "model_name": "LayoutModel",

466 | "state": {}

467 | },

468 | "6c4dcc259efa4621aac46f4703595e4d": {

469 | "model_module": "@jupyter-widgets/controls",

470 | "model_module_version": "1.1.0",

471 | "model_name": "SelectModel",

472 | "state": {

473 | "_options_labels": [

474 | ""

475 | ],

476 | "description": "Features:",

477 | "index": 0,

478 | "layout": "IPY_MODEL_2982cc6bc30940ff96f2f9131a4a6241",

479 | "rows": 1,

480 | "style": "IPY_MODEL_c0a2f4ee45914dcaa1a8bdacac5046ed"

481 | }

482 | },

483 | "6ee890b9a11c4ce0868fc8da9b510720": {

484 | "model_module": "@jupyter-widgets/base",

485 | "model_module_version": "1.0.0",

486 | "model_name": "LayoutModel",

487 | "state": {}

488 | },

489 | "6f384b6b080b4a72bfaae3920f9b7163": {

490 | "model_module": "@jupyter-widgets/controls",

491 | "model_module_version": "1.1.0",

492 | "model_name": "HBoxModel",

493 | "state": {

494 | "children": [

495 | "IPY_MODEL_88d0c8c574214d258d52e6cf10c90587",

496 | "IPY_MODEL_8c242c24558644d68a3fa12cc2d805ba"

497 | ],

498 | "layout": "IPY_MODEL_a05f399be93e4461a73ff1e852749db5"

499 | }

500 | },

501 | "71588c1ad11e42df844dff42b5ab041d": {

502 | "model_module": "@jupyter-widgets/controls",

503 | "model_module_version": "1.1.0",

504 | "model_name": "IntTextModel",

505 | "state": {

506 | "description": "Feature to show:",

507 | "layout": "IPY_MODEL_136345188d7d4eeb97ad63b4e39206c0",

508 | "step": 1,

509 | "style": "IPY_MODEL_364a4cc16b974d61b20102f7cbd4e9fe"

510 | }

511 | },

512 | "733f45e0a1c44fe1aa0aca4ee87c5217": {

513 | "model_module": "@jupyter-widgets/controls",

514 | "model_module_version": "1.1.0",

515 | "model_name": "DescriptionStyleModel",

516 | "state": {

517 | "description_width": "initial"

518 | }

519 | },

520 | "788f81e5b21a4638b040e17ac78b8ce6": {

521 | "model_module": "@jupyter-widgets/controls",

522 | "model_module_version": "1.1.0",

523 | "model_name": "DescriptionStyleModel",

524 | "state": {

525 | "description_width": "initial"

526 | }

527 | },

528 | "79f332bd05a2497eb63b8f6cc6acf8a3": {

529 | "model_module": "@jupyter-widgets/controls",

530 | "model_module_version": "1.1.0",

531 | "model_name": "ButtonStyleModel",

532 | "state": {}

533 | },

534 | "7db6e0d0198d45f98e8c5a6f08d0791b": {

535 | "model_module": "@jupyter-widgets/controls",

536 | "model_module_version": "1.1.0",

537 | "model_name": "CheckboxModel",

538 | "state": {

539 | "description": "Visible",

540 | "disabled": false,

541 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

542 | "style": "IPY_MODEL_df5e3f91eea7415a888271f8fc68f9a5",

543 | "value": true

544 | }

545 | },

546 | "8193e107e49f49dda781c456011d9a78": {

547 | "model_module": "@jupyter-widgets/controls",

548 | "model_module_version": "1.1.0",

549 | "model_name": "SelectModel",

550 | "state": {

551 | "_options_labels": [

552 | "",

553 | "Accent",

554 | "Accent_r",

555 | "Blues",

556 | "Blues_r",

557 | "BrBG",

558 | "BrBG_r",

559 | "BuGn",

560 | "BuGn_r",

561 | "BuPu",

562 | "BuPu_r",

563 | "CMRmap",

564 | "CMRmap_r",

565 | "Dark2",

566 | "Dark2_r",

567 | "GnBu",

568 | "GnBu_r",

569 | "Greens",

570 | "Greens_r",

571 | "Greys",

572 | "Greys_r",

573 | "OrRd",

574 | "OrRd_r",

575 | "Oranges",

576 | "Oranges_r",

577 | "PRGn",

578 | "PRGn_r",

579 | "Paired",

580 | "Paired_r",

581 | "Pastel1",

582 | "Pastel1_r",

583 | "Pastel2",

584 | "Pastel2_r",

585 | "PiYG",

586 | "PiYG_r",

587 | "PuBu",

588 | "PuBuGn",

589 | "PuBuGn_r",

590 | "PuBu_r",

591 | "PuOr",

592 | "PuOr_r",

593 | "PuRd",

594 | "PuRd_r",

595 | "Purples",

596 | "Purples_r",

597 | "RdBu",

598 | "RdBu_r",

599 | "RdGy",

600 | "RdGy_r",

601 | "RdPu",

602 | "RdPu_r",

603 | "RdYlBu",

604 | "RdYlBu_r",

605 | "RdYlGn",

606 | "RdYlGn_r",

607 | "Reds",

608 | "Reds_r",

609 | "Set1",

610 | "Set1_r",

611 | "Set2",

612 | "Set2_r",

613 | "Set3",

614 | "Set3_r",

615 | "Spectral",

616 | "Spectral_r",

617 | "Vega10",

618 | "Vega10_r",

619 | "Vega20",

620 | "Vega20_r",

621 | "Vega20b",

622 | "Vega20b_r",

623 | "Vega20c",

624 | "Vega20c_r",

625 | "Wistia",

626 | "Wistia_r",

627 | "YlGn",

628 | "YlGnBu",

629 | "YlGnBu_r",

630 | "YlGn_r",

631 | "YlOrBr",

632 | "YlOrBr_r",

633 | "YlOrRd",

634 | "YlOrRd_r",

635 | "afmhot",

636 | "afmhot_r",

637 | "autumn",

638 | "autumn_r",

639 | "binary",

640 | "binary_r",

641 | "bone",

642 | "bone_r",

643 | "brg",

644 | "brg_r",

645 | "bwr",

646 | "bwr_r",

647 | "cool",

648 | "cool_r",

649 | "coolwarm",

650 | "coolwarm_r",

651 | "copper",

652 | "copper_r",

653 | "cubehelix",

654 | "cubehelix_r",

655 | "flag",

656 | "flag_r",

657 | "gist_earth",

658 | "gist_earth_r",

659 | "gist_gray",

660 | "gist_gray_r",

661 | "gist_heat",

662 | "gist_heat_r",

663 | "gist_ncar",

664 | "gist_ncar_r",

665 | "gist_rainbow",

666 | "gist_rainbow_r",

667 | "gist_stern",

668 | "gist_stern_r",

669 | "gist_yarg",

670 | "gist_yarg_r",

671 | "gnuplot",

672 | "gnuplot2",

673 | "gnuplot2_r",

674 | "gnuplot_r",

675 | "gray",

676 | "gray_r",

677 | "hot",

678 | "hot_r",

679 | "hsv",

680 | "hsv_r",

681 | "inferno",

682 | "inferno_r",

683 | "jet",

684 | "jet_r",

685 | "magma",

686 | "magma_r",

687 | "nipy_spectral",

688 | "nipy_spectral_r",

689 | "ocean",

690 | "ocean_r",

691 | "pink",

692 | "pink_r",

693 | "plasma",

694 | "plasma_r",

695 | "prism",

696 | "prism_r",

697 | "rainbow",

698 | "rainbow_r",

699 | "seismic",

700 | "seismic_r",

701 | "spectral",

702 | "spectral_r",

703 | "spring",

704 | "spring_r",

705 | "summer",

706 | "summer_r",

707 | "tab10",

708 | "tab10_r",

709 | "tab20",

710 | "tab20_r",

711 | "tab20b",

712 | "tab20b_r",

713 | "tab20c",

714 | "tab20c_r",

715 | "terrain",

716 | "terrain_r",

717 | "viridis",

718 | "viridis_r",

719 | "winter",

720 | "winter_r"

721 | ],

722 | "description": "Colormap:",

723 | "index": 0,

724 | "layout": "IPY_MODEL_9fca8922d6a84010a73ddb46f2713c40",

725 | "rows": 1,

726 | "style": "IPY_MODEL_a19b7c9088874ddaaf2efd2bce456ef7"

727 | }

728 | },

729 | "838f5f2263084d2eafad4d9a12fc3e7f": {

730 | "model_module": "@jupyter-widgets/controls",

731 | "model_module_version": "1.1.0",

732 | "model_name": "DescriptionStyleModel",

733 | "state": {

734 | "description_width": "initial"

735 | }

736 | },

737 | "861d8540f11944d8995a9f5c1385c829": {

738 | "model_module": "@jupyter-widgets/base",

739 | "model_module_version": "1.0.0",

740 | "model_name": "LayoutModel",

741 | "state": {

742 | "width": "25%"

743 | }

744 | },

745 | "86f142406e04427da6205fa66bac9620": {

746 | "model_module": "@jupyter-widgets/base",

747 | "model_module_version": "1.0.0",

748 | "model_name": "LayoutModel",

749 | "state": {

750 | "width": "100%"

751 | }

752 | },

753 | "88d0c8c574214d258d52e6cf10c90587": {

754 | "model_module": "@jupyter-widgets/controls",

755 | "model_module_version": "1.1.0",

756 | "model_name": "IntSliderModel",

757 | "state": {

758 | "continuous_update": false,

759 | "description": "Dataset index",

760 | "layout": "IPY_MODEL_b14873fb0b8347d2a1083d59fba8ad54",

761 | "max": 3,

762 | "style": "IPY_MODEL_402fa77b803048b2990b273187598d95",

763 | "value": 3

764 | }

765 | },

766 | "89b2034ca3124ff6848b20fb84a1a342": {

767 | "model_module": "@jupyter-widgets/controls",

768 | "model_module_version": "1.1.0",

769 | "model_name": "DescriptionStyleModel",

770 | "state": {

771 | "description_width": ""

772 | }

773 | },

774 | "8bdd74f89a3043e792eabd6a6226a6ab": {

775 | "model_module": "@jupyter-widgets/base",

776 | "model_module_version": "1.0.0",

777 | "model_name": "LayoutModel",

778 | "state": {

779 | "width": "100%"

780 | }

781 | },

782 | "8c242c24558644d68a3fa12cc2d805ba": {

783 | "model_module": "@jupyter-widgets/controls",

784 | "model_module_version": "1.1.0",

785 | "model_name": "LabelModel",

786 | "state": {