├── panda

├── rl

│ ├── policies

│ │ ├── __init__.py

│ │ ├── utils.py

│ │ ├── distributions.py

│ │ ├── mlp_actor_critic.py

│ │ └── actor_critic.py

│ ├── base_agent.py

│ ├── main.py

│ ├── dataset.py

│ ├── rollouts.py

│ ├── normalizer.py

│ ├── ppo_agent.py

│ ├── sac_agent.py

│ └── trainer.py

├── models

│ ├── robot

│ │ ├── __init__.py

│ │ ├── robot.py

│ │ └── panda_robot.py

│ ├── task

│ │ ├── __init__.py

│ │ ├── task.py

│ │ └── grasping_task.py

│ ├── arena

│ │ ├── __init__.py

│ │ ├── arena.py

│ │ └── table_arena.py

│ ├── gripper

│ │ ├── __init__.py

│ │ ├── panda_gripper.py

│ │ └── gripper.py

│ ├── __init__.py

│ ├── assets

│ │ ├── textures

│ │ │ ├── metal.png

│ │ │ ├── dark-wood.png

│ │ │ └── light-wood.png

│ │ ├── objects

│ │ │ ├── meshes

│ │ │ │ └── base.stl

│ │ │ ├── cube.xml

│ │ │ ├── cyl.xml

│ │ │ ├── cyl2.xml

│ │ │ └── basepart.xml

│ │ ├── robot

│ │ │ └── panda

│ │ │ │ ├── meshes

│ │ │ │ │ ├── hand.stl

│ │ │ │ │ ├── finger.stl

│ │ │ │ │ ├── link0.stl

│ │ │ │ │ ├── link1.stl

│ │ │ │ │ ├── link2.stl

│ │ │ │ │ ├── link3.stl

│ │ │ │ │ ├── link4.stl

│ │ │ │ │ ├── link5.stl

│ │ │ │ │ ├── link6.stl

│ │ │ │ │ ├── link7.stl

│ │ │ │ │ ├── hand_vis.stl

│ │ │ │ │ ├── link0_vis.stl

│ │ │ │ │ ├── link1_vis.stl

│ │ │ │ │ ├── link2_vis.stl

│ │ │ │ │ ├── link3_vis.stl

│ │ │ │ │ ├── link4_vis.stl

│ │ │ │ │ ├── link5_vis.stl

│ │ │ │ │ ├── link6_vis.stl

│ │ │ │ │ ├── link7_vis.stl

│ │ │ │ │ ├── pedestal.stl

│ │ │ │ │ └── finger_vis.stl

│ │ │ │ └── robot_torque.xml

│ │ ├── gripper

│ │ │ ├── meshes

│ │ │ │ └── panda_gripper

│ │ │ │ │ ├── hand.stl

│ │ │ │ │ ├── finger.stl

│ │ │ │ │ ├── hand_vis.stl

│ │ │ │ │ ├── finger_vis.stl

│ │ │ │ │ ├── finger_longer.stl

│ │ │ │ │ ├── finger_longer_a.stl

│ │ │ │ │ ├── finger_longer_b.stl

│ │ │ │ │ ├── finger_longer_c.stl

│ │ │ │ │ └── finger_longer_a_old.stl

│ │ │ └── panda_gripper.xml

│ │ ├── base.xml

│ │ └── arena

│ │ │ └── table_arena.xml

│ ├── objects

│ │ ├── __init__.py

│ │ ├── xml_objects.py

│ │ └── objects.py

│ ├── world.py

│ └── base.py

├── controller

│ ├── __init__.py

│ ├── controller.py

│ └── arm_controller.py

├── log

│ └── rl.111.

│ │ ├── ckpt_09000000.pt

│ │ ├── ckpt_09360000.pt

│ │ ├── replay_09000000.pkl

│ │ └── replay_09360000.pkl

├── environments

│ ├── __init__.py

│ ├── action_space.py

│ ├── base.py

│ ├── panda_grasping.py

│ └── panda.py

├── config

│ ├── controller_config.hjson

│ ├── grasping.py

│ └── __init__.py

├── utils

│ ├── __init__.py

│ ├── logger.py

│ ├── mpi.py

│ ├── mujoco_py_renderer.py

│ ├── mjcf_utils.py

│ ├── pytorch.py

│ └── transform_utils.py

├── README.md

└── environment.yml

├── base_policy.gif

├── exp_image.png

├── adapted_policy.gif

└── README.md

/panda/rl/policies/__init__.py:

--------------------------------------------------------------------------------

1 | from .mlp_actor_critic import MlpActor, MlpCritic

2 |

3 |

4 |

--------------------------------------------------------------------------------

/panda/models/robot/__init__.py:

--------------------------------------------------------------------------------

1 | from .robot import Robot

2 | from .panda_robot import Panda

3 |

--------------------------------------------------------------------------------

/base_policy.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/base_policy.gif

--------------------------------------------------------------------------------

/exp_image.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/exp_image.png

--------------------------------------------------------------------------------

/panda/controller/__init__.py:

--------------------------------------------------------------------------------

1 | from .controller import Controller

2 | from .arm_controller import *

3 |

--------------------------------------------------------------------------------

/panda/models/task/__init__.py:

--------------------------------------------------------------------------------

1 | from .task import Task

2 |

3 | from .grasping_task import GraspingTask

4 |

--------------------------------------------------------------------------------

/adapted_policy.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/adapted_policy.gif

--------------------------------------------------------------------------------

/panda/models/arena/__init__.py:

--------------------------------------------------------------------------------

1 | from .arena import Arena

2 | from .table_arena import TableArena

3 |

4 |

5 |

--------------------------------------------------------------------------------

/panda/models/gripper/__init__.py:

--------------------------------------------------------------------------------

1 | from .gripper import Gripper

2 | from .panda_gripper import PandaGripper

3 |

4 |

--------------------------------------------------------------------------------

/panda/log/rl.111./ckpt_09000000.pt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/log/rl.111./ckpt_09000000.pt

--------------------------------------------------------------------------------

/panda/log/rl.111./ckpt_09360000.pt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/log/rl.111./ckpt_09360000.pt

--------------------------------------------------------------------------------

/panda/log/rl.111./replay_09000000.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/log/rl.111./replay_09000000.pkl

--------------------------------------------------------------------------------

/panda/log/rl.111./replay_09360000.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/log/rl.111./replay_09360000.pkl

--------------------------------------------------------------------------------

/panda/models/__init__.py:

--------------------------------------------------------------------------------

1 | import os

2 | from .world import MujocoWorldBase

3 |

4 | assets_root = os.path.join(os.path.dirname(__file__), "assets")

5 |

--------------------------------------------------------------------------------

/panda/models/assets/textures/metal.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/textures/metal.png

--------------------------------------------------------------------------------

/panda/models/assets/textures/dark-wood.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/textures/dark-wood.png

--------------------------------------------------------------------------------

/panda/models/assets/objects/meshes/base.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/objects/meshes/base.stl

--------------------------------------------------------------------------------

/panda/models/assets/textures/light-wood.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/textures/light-wood.png

--------------------------------------------------------------------------------

/panda/environments/__init__.py:

--------------------------------------------------------------------------------

1 | from .base import MujocoEnv

2 | from .base import make

3 | from .panda import PandaEnv

4 | from .panda_grasping import PandaGrasp

5 |

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/hand.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/hand.stl

--------------------------------------------------------------------------------

/panda/models/objects/__init__.py:

--------------------------------------------------------------------------------

1 | from .objects import MujocoObject, MujocoXMLObject

2 | from .xml_objects import CubeObject, BasePartObject, CylObject, Cyl2Object

3 |

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/finger.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/finger.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link0.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link0.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link1.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link1.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link2.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link2.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link3.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link3.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link4.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link4.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link5.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link5.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link6.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link6.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link7.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link7.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/hand_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/hand_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link0_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link0_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link1_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link1_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link2_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link2_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link3_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link3_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link4_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link4_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link5_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link5_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link6_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link6_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/link7_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/link7_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/pedestal.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/pedestal.stl

--------------------------------------------------------------------------------

/panda/models/assets/robot/panda/meshes/finger_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/robot/panda/meshes/finger_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/hand.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/hand.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/hand_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/hand_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_vis.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_vis.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_longer.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_longer.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_a.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_a.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_b.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_b.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_c.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_c.stl

--------------------------------------------------------------------------------

/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_a_old.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Asad-Shahid/Intelligent-Task-Learning/HEAD/panda/models/assets/gripper/meshes/panda_gripper/finger_longer_a_old.stl

--------------------------------------------------------------------------------

/panda/models/world.py:

--------------------------------------------------------------------------------

1 | from models.base import MujocoXML

2 | from utils.mjcf_utils import xml_path_completion

3 |

4 |

5 | class MujocoWorldBase(MujocoXML):

6 | """Base class to inherit all mujoco worlds from."""

7 |

8 | def __init__(self):

9 | super().__init__(xml_path_completion("base.xml"))

10 |

--------------------------------------------------------------------------------

/panda/config/controller_config.hjson:

--------------------------------------------------------------------------------

1 | /* Default values for controller config parameters */

2 | {

3 | // Joint Velocity controller

4 | "joint_velocity":

5 | {

6 | "control_range": [1, 1, 1, 1, 1, 1, 1],

7 | "kv": [8.0, 7.0, 6.0, 4.0, 2.0, 0.5, 0.1],

8 | "interpolation": "linear"

9 | }

10 | }

11 |

--------------------------------------------------------------------------------
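The `kv` list above reads as one velocity gain per joint. The sketch below shows how such gains might turn a joint-velocity command into torques, assuming a plain proportional law (torque = kv × velocity error). `velocity_to_torque` is a hypothetical helper; the repository's actual conversion lives in `arm_controller.py`, which is not shown here and may differ.

```python
# Hypothetical illustration: proportional velocity control, one gain per joint.
KV = [8.0, 7.0, 6.0, 4.0, 2.0, 0.5, 0.1]  # "kv" values from controller_config.hjson


def velocity_to_torque(desired_vel, current_vel, kv=KV):
    """Return one torque per joint: kv_i * (desired_i - current_i)."""
    if not (len(desired_vel) == len(current_vel) == len(kv)):
        raise ValueError("expected one value per joint")
    return [k * (d - c) for k, d, c in zip(kv, desired_vel, current_vel)]


# All seven joints asked to move at 0.5 while currently at rest.
torques = velocity_to_torque([0.5] * 7, [0.0] * 7)
print(torques)
```

The decreasing gains mean the same velocity error produces a much smaller torque at the wrist joints than at the base joints.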

/panda/utils/__init__.py:

--------------------------------------------------------------------------------

1 | from .mujoco_py_renderer import MujocoPyRenderer

2 |

3 | def str2bool(v):

4 | return v.lower() == 'true'

5 |

6 |

7 | def str2intlist(value):

8 | if not value:

9 | return value

10 | else:

11 | return [int(num) for num in value.split(',')]

12 |

13 |

14 | def str2list(value):

15 | if not value:

16 | return value

17 | else:

18 | return [num for num in value.split(',')]

19 |

--------------------------------------------------------------------------------
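Helpers like `str2bool` and `str2intlist` are typically passed as `type=` converters to `argparse`. The sketch below copies the two functions from the file above and wires them to two flags; the flag names `--render` and `--hid_dims` are made up for this example and are not taken from the repository's config.

```python
import argparse


def str2bool(v):
    return v.lower() == 'true'


def str2intlist(value):
    if not value:
        return value
    return [int(num) for num in value.split(',')]


parser = argparse.ArgumentParser()
# Flag names are hypothetical; only the converter functions come from the source.
parser.add_argument('--render', type=str2bool, default=False)
parser.add_argument('--hid_dims', type=str2intlist, default=[64, 64])

# Parse an explicit argument list instead of sys.argv so the example is repeatable.
args = parser.parse_args(['--render', 'True', '--hid_dims', '256,128'])
print(args.render, args.hid_dims)
```

Note that `str2bool` returns `False` for anything other than a case-insensitive `"true"`, so values such as `yes` or `1` silently disable a flag.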

/panda/models/assets/base.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/panda/models/arena/arena.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from models.base import MujocoXML

3 | from utils.mjcf_utils import array_to_string, string_to_array

4 |

5 |

6 | class Arena(MujocoXML):

7 | """Base arena class."""

8 |

9 | def set_origin(self, offset):

10 | """Applies a constant offset to all objects."""

11 | offset = np.array(offset)

12 | for node in self.worldbody.findall("./*[@pos]"):

13 | cur_pos = string_to_array(node.get("pos"))

14 | new_pos = cur_pos + offset

15 | node.set("pos", array_to_string(new_pos))

16 |

--------------------------------------------------------------------------------
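The effect of `set_origin` can be reproduced with the standard library alone. In the sketch below, `string_to_floats` and `floats_to_string` are stand-ins for `string_to_array` and `array_to_string` from `utils.mjcf_utils` (their exact behaviour is assumed), and the XML is a made-up miniature world body.

```python
import xml.etree.ElementTree as ET


# Stand-ins for utils.mjcf_utils helpers (assumed behaviour: space-separated floats).
def string_to_floats(s):
    return [float(x) for x in s.split()]


def floats_to_string(values):
    return " ".join(str(v) for v in values)


worldbody = ET.fromstring(
    '<worldbody>'
    '<geom name="floor" pos="0 0 0"/>'
    '<body name="table" pos="0.5 0 0.4"><geom name="top" pos="0 0 0.1"/></body>'
    '<light name="sun"/>'
    '</worldbody>'
)

offset = [0.1, 0.0, -0.4]
# "./*[@pos]" matches only direct children that carry a pos attribute.
for node in worldbody.findall("./*[@pos]"):
    new_pos = [p + o for p, o in zip(string_to_floats(node.get("pos")), offset)]
    node.set("pos", floats_to_string(new_pos))

print([(n.get("name"), n.get("pos")) for n in worldbody])
```

Only the top-level `floor` and `table` nodes are shifted. The nested `top` geom keeps its own `pos`, which is correct because child positions are expressed relative to their parent body, and the `light` without a `pos` attribute is skipped.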

/panda/models/assets/objects/cube.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/panda/models/assets/objects/cyl.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/panda/models/assets/objects/cyl2.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/panda/models/assets/objects/basepart.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/panda/utils/logger.py:

--------------------------------------------------------------------------------

1 | import time

2 | import logging

3 |

4 | import numpy as np

5 | import colorlog

6 |

7 |

8 | formatter = colorlog.ColoredFormatter(

9 | "%(log_color)s[%(asctime)s] %(message)s",

10 | datefmt=None,

11 | reset=True,

12 | log_colors={'DEBUG': 'cyan', 'INFO': 'white', 'WARNING': 'yellow', 'ERROR': 'red,bold', 'CRITICAL': 'red,bg_white'},

13 | secondary_log_colors={},

14 | style='%')

15 |

16 | logger = colorlog.getLogger('gear_assembly')

17 | logger.setLevel(logging.DEBUG)

18 |

19 | #fh = logging.FileHandler('log')

20 | #fh.setLevel(logging.DEBUG)

21 | #fh.setFormatter(formatter)

22 | #logger.addHandler(fh)

23 |

24 | ch = colorlog.StreamHandler()

25 | ch.setLevel(logging.DEBUG)

26 | ch.setFormatter(formatter)

27 | logger.addHandler(ch)

28 |

--------------------------------------------------------------------------------

/panda/models/task/task.py:

--------------------------------------------------------------------------------

1 | from models.world import MujocoWorldBase

2 |

3 |

4 | class Task(MujocoWorldBase):

5 | """

6 | Base class for creating MJCF model of a task.

7 |

8 | A task typically involves a robot interacting with objects in an arena

9 | (workspace). The purpose of a task class is to generate an MJCF model

10 | of the task by combining the MJCF models of each component and

11 | placing them at the right positions.

12 | """

13 |

14 | def merge_robot(self, mujoco_robot):

15 | """Adds robot model to the MJCF model."""

16 | pass

17 |

18 | def merge_arena(self, mujoco_arena):

19 | """Adds arena model to the MJCF model."""

20 | pass

21 |

22 | def merge_objects(self, mujoco_objects):

23 | """Adds physical objects to the MJCF model."""

24 | pass

25 |

--------------------------------------------------------------------------------

/panda/utils/mpi.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from mpi4py import MPI

3 |

4 |

5 | def _mpi_average(x):

6 | buf = np.zeros_like(x)

7 | MPI.COMM_WORLD.Allreduce(x, buf, op=MPI.SUM)

8 | buf /= MPI.COMM_WORLD.Get_size()

9 | return buf

10 |

11 |

12 | # Average across the cpu's data

13 | def mpi_average(x):

14 | if isinstance(x, dict):

15 | keys = sorted(x.keys())

16 | return {k: _mpi_average(np.array(x[k])) for k in keys}

17 | else:

18 | return _mpi_average(np.array(x))

19 |

20 |

21 | def _mpi_sum(x):

22 | buf = np.zeros_like(x)

23 | MPI.COMM_WORLD.Allreduce(x, buf, op=MPI.SUM)

24 | return buf

25 |

26 |

27 | # Sum over the cpu's data

28 | def mpi_sum(x):

29 | if isinstance(x, dict):

30 | keys = sorted(x.keys())

31 | return {k: _mpi_sum(np.array(x[k])) for k in keys}

32 | else:

33 | return _mpi_sum(np.array(x))

34 |

--------------------------------------------------------------------------------
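What `mpi_average` and `mpi_sum` compute can be checked without launching MPI. The sketch below simulates three ranks as plain lists; `simulated_allreduce_sum` and `simulated_mpi_average` are hypothetical names that only mimic the arithmetic of `Allreduce` with `op=MPI.SUM`, not its communication.

```python
# Simulated ranks: in a real run each list would live in a separate MPI process.
per_rank_values = [
    [1.0, 10.0],  # rank 0
    [2.0, 20.0],  # rank 1
    [3.0, 30.0],  # rank 2
]


def simulated_allreduce_sum(values_by_rank):
    """Element-wise sum over ranks; every rank would receive this same result."""
    return [sum(column) for column in zip(*values_by_rank)]


def simulated_mpi_average(values_by_rank):
    """Sum over ranks, then divide by the number of ranks."""
    total = simulated_allreduce_sum(values_by_rank)
    return [t / len(values_by_rank) for t in total]


print(simulated_allreduce_sum(per_rank_values))
print(simulated_mpi_average(per_rank_values))
```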

/panda/models/objects/xml_objects.py:

--------------------------------------------------------------------------------

1 | from models.objects import MujocoXMLObject

2 | from utils.mjcf_utils import xml_path_completion

3 |

4 |

5 | class CubeObject(MujocoXMLObject):

6 | """

7 | Round Gear

8 | """

9 |

10 | def __init__(self):

11 | super().__init__(xml_path_completion("objects/cube.xml"))

12 |

13 |

14 | class BasePartObject(MujocoXMLObject):

15 | """

16 | Base of the assembly

17 | """

18 |

19 | def __init__(self):

20 | super().__init__(xml_path_completion("objects/basepart.xml"))

21 |

22 | class CylObject(MujocoXMLObject):

23 | """

24 | Clutter

25 | """

26 |

27 | def __init__(self):

28 | super().__init__(xml_path_completion("objects/cyl.xml"))

29 |

30 | class Cyl2Object(MujocoXMLObject):

31 | """

32 | Clutter

33 | """

34 |

35 | def __init__(self):

36 | super().__init__(xml_path_completion("objects/cyl2.xml"))

37 |

--------------------------------------------------------------------------------

/panda/controller/controller.py:

--------------------------------------------------------------------------------

1 | import abc # for abstract base class definitions

2 |

3 |

4 | class Controller(metaclass=abc.ABCMeta):

5 | """

6 | Base class for all robot controllers.

7 | Defines basic interface for all controllers to adhere to.

8 | """

9 |

10 | def __init__(self, bullet_data_path, robot_jpos_getter):

11 | """

12 | Args:

13 | bullet_data_path (str): base path to bullet data.

14 |

15 | robot_jpos_getter (function): function that returns the position of the joints

16 | as a numpy array of the right dimension.

17 | """

18 | raise NotImplementedError

19 |

20 | @abc.abstractmethod

21 | def get_control(self, *args, **kwargs):

22 | """

23 | Retrieve a control input from the controller.

24 | """

25 | raise NotImplementedError

26 |

27 | @abc.abstractmethod

28 | def sync_state(self):

29 | """

30 | This function does internal bookkeeping to maintain

31 | consistency between the robot being controlled and

32 | the controller state.

33 | """

34 | raise NotImplementedError

35 |

--------------------------------------------------------------------------------
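A concrete controller has to override both abstract methods and, because the base `__init__` raises `NotImplementedError`, must also provide its own constructor. The sketch below uses a trimmed copy of the base class and a hypothetical `HoldStillController` that is not part of the repository.

```python
import abc


class Controller(metaclass=abc.ABCMeta):
    """Trimmed copy of the base class above."""

    def __init__(self, bullet_data_path, robot_jpos_getter):
        raise NotImplementedError

    @abc.abstractmethod
    def get_control(self, *args, **kwargs):
        raise NotImplementedError

    @abc.abstractmethod
    def sync_state(self):
        raise NotImplementedError


class HoldStillController(Controller):
    """Hypothetical subclass: always commands zero for every joint."""

    def __init__(self, num_joints):
        # Deliberately skips super().__init__(), which raises NotImplementedError.
        self.num_joints = num_joints

    def get_control(self, *args, **kwargs):
        return [0.0] * self.num_joints

    def sync_state(self):
        pass  # nothing to track in this toy example


controller = HoldStillController(num_joints=7)
print(controller.get_control())

try:
    Controller(None, None)
except TypeError as err:
    # ABCs with unimplemented abstract methods cannot be instantiated at all.
    print("cannot instantiate base class:", err)
```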

/panda/rl/policies/utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | import torch.nn as nn

4 | import torch.nn.functional as F

5 |

6 | def fanin_init(tensor): # Initialization

7 | size = tensor.size()

8 | if len(size) == 2:

9 | fan_in = size[0]

10 | elif len(size) > 2:

11 | fan_in = np.prod(size[1:])

12 | else:

13 | raise Exception("Shape must have dimension at least 2.")

14 | bound = 1. / np.sqrt(fan_in)

15 | return tensor.data.uniform_(-bound, bound) # fills a tensor with a uniform distribution between the bounds

16 |

17 | class MLP(nn.Module):

18 | def __init__(self, config, input_dim, output_dim, hid_dims=[]):

19 | super().__init__()

20 | #activation_fn = getattr(F, config.activation)

21 | activation_fn = nn.ReLU()

22 |

23 | fc = []

24 | prev_dim = input_dim

25 | for d in hid_dims:

26 | fc.append(nn.Linear(prev_dim, d))

27 | fanin_init(fc[-1].weight)

28 | fc[-1].bias.data.fill_(0.1)

29 | fc.append(activation_fn)

30 | prev_dim = d

31 | fc.append(nn.Linear(prev_dim, output_dim))

32 | fc[-1].weight.data.uniform_(-1e-3, 1e-3)

33 | fc[-1].bias.data.uniform_(-1e-3, 1e-3)

34 | self.fc = nn.Sequential(*fc)

35 |

36 | def forward(self, ob):

37 | return self.fc(ob)

38 |

--------------------------------------------------------------------------------
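The initialisation bound in `fanin_init` is `1 / sqrt(fan_in)`, and it is easy to work out by hand. The sketch below mirrors that computation on plain shape tuples, with no PyTorch required; `fanin_bound` is a hypothetical helper. One thing worth knowing when reading the numbers: an `nn.Linear(in_features, out_features)` weight has shape `(out_features, in_features)`, so for 2-D weights `size[0]` is the layer's output width.

```python
import math


def fanin_bound(weight_shape):
    """Mirror of the bound computed in fanin_init, for a plain shape tuple."""
    if len(weight_shape) == 2:
        fan_in = weight_shape[0]
    elif len(weight_shape) > 2:
        fan_in = math.prod(weight_shape[1:])
    else:
        raise ValueError("Shape must have dimension at least 2.")
    return 1.0 / math.sqrt(fan_in)


# Shape of nn.Linear(10, 256).weight is (256, 10).
print(fanin_bound((256, 10)))   # bound as computed by the function above
print(1.0 / math.sqrt(10))      # bound if in_features were used instead
```

For this layer the function yields a bound of 1/16, noticeably tighter than the value based on the 10 input features. Whether that difference matters for training is not something the source states.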

/panda/README.md:

--------------------------------------------------------------------------------

1 | ## Config

2 | Config contains configuration details about environment, controller and learning algorithms.

3 |

4 | ## Controller

5 | Controller contains the joint velocity controller class. Actions coming from the learning algorithms are interpreted as joint velocities, but the real robot is equipped with a torque controller. The controller class converts the joint velocities to joint torques and, if needed, interpolates between successive actions coming from the policy.

6 |

7 | ## Environments

8 | Environments include the base environment and the environments for the Panda robot and the grasping task. The Panda robot environment defines everything robot related. The grasping task defines everything task related, including the reward function. Environments also contains an action space class that defines the size and limits of the robot's action space used in learning.

9 |

10 | ## Models

11 | Models is where everything is actually defined for simulation. Arena, Gripper, Objects and Robot are classes that load their respective XML files from Assets. Task merges all the pieces and instantiates a scene model for MuJoCo to simulate. Task is used later on in the environment.

12 |

13 | ## RL

14 | RL is where actual learning happens. PPO and SAC are the implemented algorithms. Neural network models are present in policies.

15 |

16 |

17 | ## Utils

18 | Utils contains helpers for both environment definition and learning.

19 |

20 | Note: all files are heavily commented (with example output where possible) to make the code easier to follow.

21 |

--------------------------------------------------------------------------------
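The Controller section of the README mentions interpolation between successive policy actions, and the controller config sets `"interpolation": "linear"`. The sketch below shows what a linear ramp between two actions could look like. `linear_ramp` and the step count are assumptions for illustration; the repository's own interpolation code is in `arm_controller.py`, which is not shown here.

```python
def linear_ramp(prev_action, new_action, num_steps):
    """Yield num_steps intermediate commands, ending exactly at new_action."""
    for step in range(1, num_steps + 1):
        alpha = step / num_steps  # fraction of the way from prev to new
        yield [p + alpha * (n - p) for p, n in zip(prev_action, new_action)]


# Two joints, four control steps between consecutive policy actions.
commands = list(linear_ramp([0.0, 0.0], [1.0, -0.5], num_steps=4))
for command in commands:
    print(command)
```

Ramping this way avoids a step change in the commanded velocity each time the policy emits a new action.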

/panda/models/robot/robot.py:

--------------------------------------------------------------------------------

1 | from collections import OrderedDict

2 | from models.base import MujocoXML

3 |

4 |

5 | class Robot(MujocoXML):

6 | """Base class for all robot models."""

7 |

8 | def __init__(self, fname):

9 | """Initializes from file @fname."""

10 | super().__init__(fname)

11 | # key: gripper name and value: gripper model

12 | self.grippers = OrderedDict()

13 |

14 | def add_gripper(self, arm_name, gripper):

15 | """

16 | Mounts gripper to arm.

17 |

18 | Throws error if robot already has a gripper or gripper type is incorrect.

19 |

20 | Args:

21 | arm_name (str): name of arm mount

22 | gripper (MujocoGripper instance): gripper MJCF model

23 | """

24 | if arm_name in self.grippers:

25 | raise ValueError("Attempts to add multiple grippers to one body")

26 |

27 | arm_subtree = self.worldbody.find(".//body[@name='{}']".format(arm_name))

28 |

29 | for body in gripper.worldbody:

30 | arm_subtree.append(body)

31 |

32 | self.merge(gripper, merge_body=False)

33 | self.grippers[arm_name] = gripper

34 |

35 | @property

36 | def dof(self):

37 | """Returns the number of DOF of the robot, not including gripper."""

38 | raise NotImplementedError

39 |

40 | @property

41 | def joints(self):

42 | """Returns a list of joint names of the robot."""

43 | raise NotImplementedError

44 |

45 | @property

46 | def init_qpos(self):

47 | """Returns default qpos."""

48 | raise NotImplementedError

49 |

--------------------------------------------------------------------------------

/panda/models/gripper/panda_gripper.py:

--------------------------------------------------------------------------------

1 | """

2 | Gripper for Franka's Panda (has two fingers).

3 | """

4 | import numpy as np

5 | from utils.mjcf_utils import xml_path_completion

6 | from models.gripper.gripper import Gripper

7 |

8 |

9 | class PandaGripperBase(Gripper):

10 | """

11 | Gripper for Franka's Panda (has two fingers).

12 | """

13 |

14 | def __init__(self):

15 | super().__init__(xml_path_completion("gripper/panda_gripper.xml"))

16 |

17 | def format_action(self, action):

18 | return action

19 |

20 | @property

21 | def init_qpos(self):

22 | return np.array([0.020833, -0.020833])

23 |

24 | @property

25 | def joints(self):

26 | return ["finger_joint1", "finger_joint2"]

27 |

28 | @property

29 | def dof(self):

30 | return 2

31 |

32 | @property

33 | def visualization_sites(self):

34 | return ["grip_site"]

35 |

36 | def contact_geoms(self):

37 | return ["hand_collision", "finger1_collision", "finger2_collision", "finger1_tip_collision", "finger2_tip_collision"]

38 |

39 | @property

40 | def left_finger_geoms(self):

41 | return ["finger1_tip_collision"]

42 |

43 | @property

44 | def right_finger_geoms(self):

45 | return ["finger2_tip_collision"]

46 |

47 |

48 | class PandaGripper(PandaGripperBase):

49 | """

50 | Modifies PandaGripperBase to only take one action.

51 | """

52 |

53 | def format_action(self, action):

54 | """

55 | 1 => closed, -1 => open

56 | """

57 | assert len(action) == 1

58 | return np.array([-1 * action[0], 1 * action[0]])

59 |

60 | @property

61 | def dof(self):

62 | return 1

63 |

--------------------------------------------------------------------------------

/panda/models/arena/table_arena.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from models.arena import Arena

3 | from utils.mjcf_utils import xml_path_completion

4 | from utils.mjcf_utils import array_to_string, string_to_array

5 |

6 |

7 | class TableArena(Arena):

8 | """Workspace that contains a tabletop."""

9 |

10 | def __init__(self, table_full_size=(0.35, 0.6, 0.02), table_friction=(1, 0.005, 0.0001)):

11 | """

12 | Args:

13 | table_full_size: full dimensions of the table

14 | table_friction: friction parameters of the table

15 | """

16 | super().__init__(xml_path_completion("arena/table_arena.xml"))

17 |

18 | self.table_full_size = np.array(table_full_size)

19 | self.table_half_size = self.table_full_size / 2

20 | self.table_friction = table_friction

21 |

22 | self.floor = self.worldbody.find("./geom[@name='floor']")

23 | self.table_body = self.worldbody.find("./body[@name='table']")

24 | self.table_collision = self.table_body.find("./geom[@name='table_collision']")

25 | self.table_view = self.table_body.find("./geom[@name='table_view']")

26 | self.configure_location()

27 |

28 | def configure_location(self):

29 | self.bottom_pos = np.array([0, 0, 0])

30 | self.floor.set("pos", array_to_string(self.bottom_pos))

31 | self.table_collision.set("size", array_to_string(self.table_full_size))

32 | self.table_view.set("size", array_to_string(self.table_full_size))

33 |

34 | @property

35 | def table_top_abs(self):

36 | """

37 | Returns the absolute position of table top.

38 | """

39 | return string_to_array(self.table_body.get("pos"))

40 |

--------------------------------------------------------------------------------

/panda/rl/policies/distributions.py:

--------------------------------------------------------------------------------

1 | from collections import OrderedDict

2 |

3 | import numpy as np

4 | import torch

5 | import torch.nn as nn

6 | import torch.distributions

7 |

8 |

9 | # Normal

10 | FixedNormal = torch.distributions.Normal

11 |

12 | normal_init = FixedNormal.__init__

13 | FixedNormal.__init__ = lambda self, mean, std: normal_init(self, mean.double(), std.double())

14 |

15 | log_prob_normal = FixedNormal.log_prob

16 | FixedNormal.log_probs = lambda self, actions: log_prob_normal(self, actions.double()).sum(-1, keepdim=True).float()

17 |

18 | normal_entropy = FixedNormal.entropy

19 | FixedNormal.entropy = lambda self: normal_entropy(self).sum(-1).float()

20 |

21 | FixedNormal.mode = lambda self: self.mean.float()

22 |

23 | normal_sample = FixedNormal.sample

24 | FixedNormal.sample = lambda self: normal_sample(self).float()

25 |

26 | normal_rsample = FixedNormal.rsample

27 | FixedNormal.rsample = lambda self: normal_rsample(self).float()

28 |

29 | class MixedDistribution(nn.Module):

30 | def __init__(self, distributions):

31 | super().__init__()

32 | assert isinstance(distributions, OrderedDict)

33 | self.distributions = distributions

34 |

35 | def mode(self):

36 | return OrderedDict([(k, dist.mode()) for k, dist in self.distributions.items()])

37 |

38 | def sample(self):

39 | return OrderedDict([(k, dist.sample()) for k, dist in self.distributions.items()])

40 |

41 | def rsample(self):

42 | return OrderedDict([(k, dist.rsample()) for k, dist in self.distributions.items()])

43 |

44 | def log_probs(self, x):

45 | assert isinstance(x, dict)

46 | return OrderedDict([(k, dist.log_probs(x[k])) for k, dist in self.distributions.items()])

47 |

48 | def entropy(self):

49 | return sum([dist.entropy() for dist in self.distributions.values()])

50 |

51 |

--------------------------------------------------------------------------------

/panda/rl/base_agent.py:

--------------------------------------------------------------------------------

1 | from collections import OrderedDict

2 | from rl.normalizer import Normalizer

3 |

4 |

5 | class BaseAgent():

6 | def __init__(self, config, ob_space): # ob_space is an ordered dict where keys are ob names and values are dimensions

7 | self._config = config

8 | self._ob_norm = Normalizer(ob_space, default_clip_range=config.clip_range, clip_obs=config.clip_obs)

9 |

10 | def normalize(self, ob):

11 | if self._config.ob_norm:

12 | return self._ob_norm.normalize(ob)

13 | return ob

14 |

15 | def act(self, ob, is_train=True):

16 | ob = self.normalize(ob)

17 | ac, activation = self._actor.act(ob, is_train=is_train)

18 | return ac, activation

19 |

20 | def update_normalizer(self, obs):

21 | if self._config.ob_norm:

22 | self._ob_norm.update(obs)

23 | self._ob_norm.recompute_stats()

24 |

25 | def store_episode(self, rollouts):

26 | raise NotImplementedError()

27 |

28 | def replay_buffer(self):

29 | return self._buffer.state_dict() # gives episodes buffer; buffer is like a rollout but can store more values for multiple episodes

30 |

31 | def load_replay_buffer(self, state_dict):

32 | self._buffer.load_state_dict(state_dict)

33 |

34 | def sync_networks(self):

35 | raise NotImplementedError()

36 |

37 | def train(self):

38 | raise NotImplementedError()

39 |

40 | def _soft_update_target_network(self, target, source, tau):

41 | for target_param, source_param in zip(target.parameters(), source.parameters()):

42 | target_param.data.copy_((1 - tau) * source_param.data + tau * target_param.data)

43 |

44 | def _copy_target_network(self, target, source):

45 | for target_param, source_param in zip(target.parameters(), source.parameters()):

46 | target_param.data.copy_(source_param.data)

47 |

--------------------------------------------------------------------------------

/panda/models/task/grasping_task.py:

--------------------------------------------------------------------------------

1 | from utils.mjcf_utils import new_joint, array_to_string

2 | from models.task import Task

3 |

4 |

5 | class GraspingTask(Task):

6 | """

7 | Creates MJCF model of a grasping task.

8 |

9 | A grasping task consists of a robot picking up a cube from a table. This class combines

10 | the robot, the arena with table, and the objects into a single MJCF model.

11 | """

12 |

13 | def __init__(self, mujoco_arena, mujoco_robot, mujoco_objects):

14 | """

15 | Args:

16 | mujoco_arena: MJCF model of robot workspace

17 | mujoco_robot: MJCF model of robot model

18 | mujoco_objects: a list of MJCF models of physical objects

19 | """

20 | super().__init__()

21 |

22 | self.merge_arena(mujoco_arena)

23 | self.merge_robot(mujoco_robot)

24 | self.merge_objects(mujoco_objects)

25 |

26 |

27 | def merge_robot(self, mujoco_robot):

28 | """Adds robot model to the MJCF model."""

29 | self.robot = mujoco_robot

30 | self.merge(mujoco_robot)

31 |

32 | def merge_arena(self, mujoco_arena):

33 | """Adds arena model to the MJCF model."""

34 | self.arena = mujoco_arena

35 | self.table_offset = mujoco_arena.table_top_abs

36 | self.table_size = mujoco_arena.table_full_size

37 | self.table_body = mujoco_arena.table_body

38 | self.merge(mujoco_arena)

39 |

40 | def merge_objects(self, mujoco_objects):

41 | """Adds physical objects to the MJCF model."""

42 | self.mujoco_objects = mujoco_objects

43 | self.objects = {} # xml manifestation

44 | self.max_horizontal_radius = 0

45 | for obj_name, obj_mjcf in mujoco_objects.items():

46 | self.merge_asset(obj_mjcf)

47 | # Load object

48 | obj = obj_mjcf.get_collision(name=obj_name, site=True)

49 | obj.append(new_joint(name=obj_name, type="free", damping="0.0005"))

50 | self.objects[obj_name] = obj

51 | self.worldbody.append(obj)

52 |

--------------------------------------------------------------------------------

/panda/environments/action_space.py:

--------------------------------------------------------------------------------

1 | """ Define ActionSpace class to represent action space. """

2 |

3 | from collections import OrderedDict

4 | import numpy as np

5 | from utils.logger import logger

6 |

7 |

8 | class ActionSpace(object):

9 | """

10 | Base class for action space

11 | This action space is used in the provided RL training code.

12 | """

13 |

14 | def __init__(self, size, minimum=-1., maximum=1.):

15 | """

16 | Initializes the action space with its size and limits.

17 |

18 | Args:

19 | size (int): action dimension.

20 | minimum: minimum values for action.

21 | maximum: maximum values for action.

22 | """

23 | self.size = size

24 | self.shape = OrderedDict([('default', size)])

25 |

26 | self._minimum = np.array(minimum)

27 | self._minimum.setflags(write=False)

28 |

29 | self._maximum = np.array(maximum)

30 | self._maximum.setflags(write=False)

31 |

32 | @property

33 | def minimum(self):

34 | """

35 | Returns the minimum values of the action.

36 | """

37 | return self._minimum

38 |

39 | @property

40 | def maximum(self):

41 | """

42 | Returns the maximum values of the action.

43 | """

44 | return self._maximum

45 |

46 | def keys(self):

47 | """

48 | Returns the keys of the action space.

49 | """

50 | return self.shape.keys()

51 |

52 | def __repr__(self):

53 | template = ('ActionSpace(shape={},''minimum={}, maximum={})')

54 | return template.format(self.shape, self._minimum, self._maximum)

55 |

56 | def __eq__(self, other):

57 | """

58 | Returns whether other action space is the same or not.

59 | """

60 | if not isinstance(other, ActionSpace):

61 | return False

62 | return (self.minimum == other.minimum).all() and (self.maximum == other.maximum).all()

63 |

64 | def sample(self):

65 | """

66 | Returns a sample from the action space.

67 | """

68 | return np.random.uniform(low=self.minimum, high=self.maximum, size=self.size)

69 |

--------------------------------------------------------------------------------

/panda/config/grasping.py:

--------------------------------------------------------------------------------

1 | from utils import str2bool, str2intlist

2 |

3 |

4 |

5 | def add_argument(parser):

6 | """

7 | Adds a list of arguments to argparser for the lift environment.

8 | """

9 |

10 | # training scene

11 |

12 | parser.add_argument('--mode', type=int, default=1,

13 | help='1: nominal cube scene, 2: collision avoidance scene')

14 |

15 |

16 | # mujoco simulation

17 |

18 | parser.add_argument('--table_full_size', type=float, nargs=3, default=(0.35, 0.46, 0.02),

19 | help='x, y, and z dimensions of the table')

20 | parser.add_argument('--gripper_type', type=str, default='PandaGripper',

21 | help='Gripper type of robot')

22 | parser.add_argument('--gripper_visualization', type=str2bool, default=True,

23 | help='using gripper visualization')

24 |

25 | # rendering

26 |

27 | parser.add_argument('--render_collision_mesh', type=str2bool, default=False,

28 | help='if rendering collision meshes in camera')

29 | parser.add_argument('--render_visual_mesh', type=str2bool, default=True,

30 | help='if rendering visual meshes in camera')

31 |

32 | # episode settings

33 |

34 | parser.add_argument('--horizon', type=int, default=600,

35 | help='Every episode lasts for exactly @horizon timesteps')

36 | parser.add_argument('--ignore_done', type=str2bool, default=True,

37 | help='if never terminating the environment (ignore @horizon)')

38 |

39 | # controller

40 |

41 | parser.add_argument('--control_freq', type=int, default= 250,

42 | help='control signals to receive in every simulated second, sets the amount of simulation time that passes between every action input')

43 |

44 |

45 | def get_default_config():

46 | """

47 | Gets default configurations for the lift environment.

48 | """

49 | import argparse

50 | parser = argparse.ArgumentParser("Default Configuration for lift Environment")

51 | add_argument(parser)

52 |

53 | config = parser.parse_args([])

54 | return config

55 |

--------------------------------------------------------------------------------

/panda/models/gripper/gripper.py:

--------------------------------------------------------------------------------

1 | """

2 | Defines the base class of gripper

3 | """

4 | from models.base import MujocoXML

5 |

6 |

7 | class Gripper(MujocoXML):

8 | """Base class for gripper"""

9 |

10 | def __init__(self, fname):

11 | super().__init__(fname)

12 |

13 | def format_action(self, action):

14 | """

15 | Given (-1,1) abstract control as np-array

16 | returns the (-1,1) control signals

17 | for underlying actuators as 1-d np array

18 | """

19 | raise NotImplementedError

20 |

21 | @property

22 | def init_qpos(self):

23 | """

24 | Returns rest(open) qpos of the gripper

25 | """

26 | raise NotImplementedError

27 |

28 | @property

29 | def dof(self):

30 | """

31 | Returns the number of DOF of the gripper

32 | """

33 | raise NotImplementedError

34 |

35 | @property

36 | def joints(self):

37 | """

38 | Returns a list of joint names of the gripper

39 | """

40 | raise NotImplementedError

41 |

42 | def contact_geoms(self):

43 | """

44 | Returns a list of names corresponding to the geoms

45 | used to determine contact with the gripper.

46 | """

47 | return []

48 |

49 | @property

50 | def visualization_sites(self):

51 | """

52 | Returns a list of sites corresponding to the geoms

53 | used to aid visualization by human.

54 | (and should be hidden from robots)

55 | """

56 | return []

57 |

58 | @property

59 | def left_finger_geoms(self):

60 | """

61 | Geoms corresponding to left finger of a gripper

62 | """

63 | raise NotImplementedError

64 |

65 | @property

66 | def right_finger_geoms(self):

67 | """

68 | Geoms corresponding to right finger of a gripper

69 | """

70 | raise NotImplementedError

71 |

72 | def hide_visualization(self):

73 | """

74 | Hides all visualization geoms and sites.

75 | This should be called before rendering to agents

76 | """

77 | for site_name in self.visualization_sites:

78 | site = self.worldbody.find(".//site[@name='{}']".format(site_name))

79 | site.set("rgba", "0 0 0 0")

80 |

--------------------------------------------------------------------------------

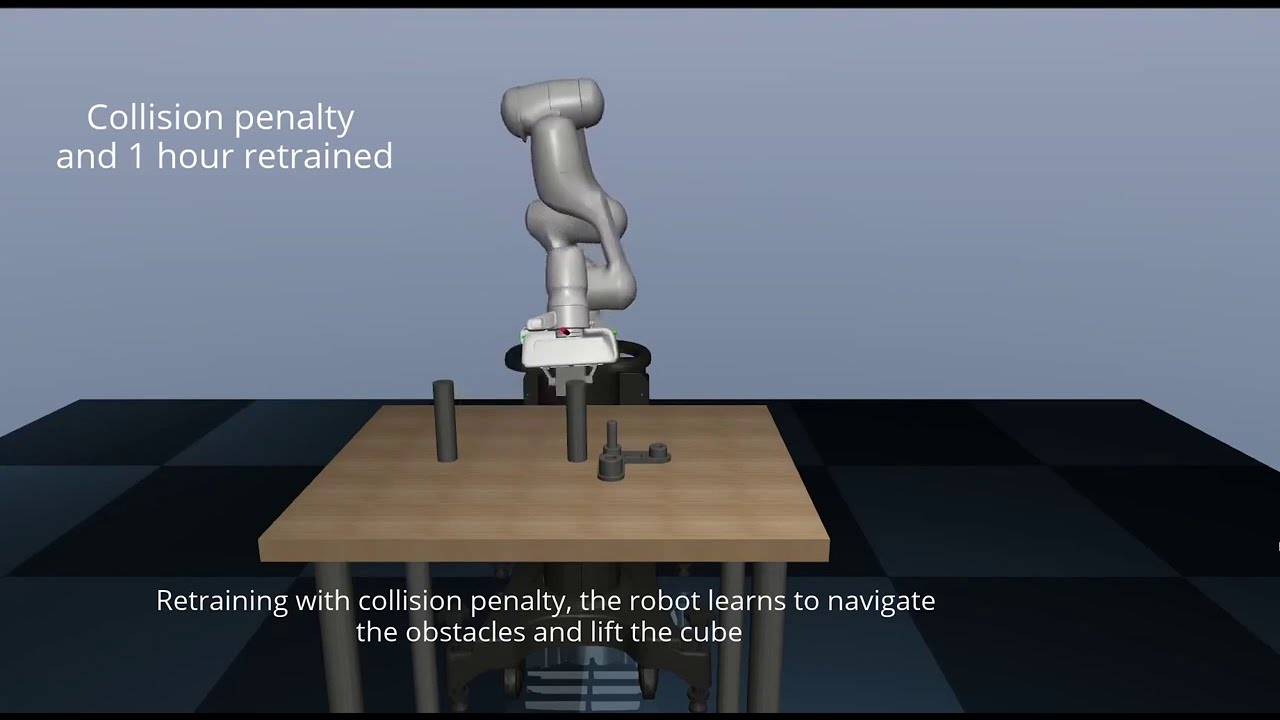

/README.md:

--------------------------------------------------------------------------------

1 | # Intelligent-Task-Learning

2 | The Repository for the _Autonomous Robots 2022 Journal article:_

3 |

4 | "[Continuous control actions learning and adaptation for robotic manipulation through reinforcement learning](https://link.springer.com/article/10.1007/s10514-022-10034-z)"

5 |

6 | This repository contains a Python toolkit for learning a grasping task with the Franka Emika Panda robot. The robot can be trained to grasp the cube, avoid obstacles and learn to manage redundancy using the modern Reinforcement Learning algorithms [Proximal Policy Optimization (PPO)](https://arxiv.org/abs/1707.06347) and [Soft Actor-Critic (SAC)](https://arxiv.org/abs/1812.05905). It is powered by the [MuJoCo physics engine](http://www.mujoco.org/).

7 |

8 |

9 | # How to cite

10 | ```

11 | @article{shahid2022continuous,

12 | title={Continuous control actions learning and adaptation for robotic manipulation through reinforcement learning},

13 | author={Shahid, Asad Ali and Piga, Dario and Braghin, Francesco and Roveda, Loris},

14 | journal={Autonomous Robots},

15 | pages={1--16},

16 | year={2022},

17 | publisher={Springer}

18 | }

19 | ```

20 |

21 | # New Experiment (Dynamic Environment)

22 | The adapted policy can grasp the moving cube after 30 minutes of retraining.

23 |

24 |

25 | Before adaptation, the base grasping policy trained on a static cube is not able to grasp the moving cube.

26 |

27 |

28 | # Simulation Video

29 | [Simulation video](https://www.youtube.com/watch?v=aX55Zc2XMTE)

30 |

31 | # Experimental validation

32 | [Experimental validation video](https://drive.google.com/file/d/1zlS-_HIWMlIAvrxqGNGRyMbuDfQrws8z/view)

33 |

34 |

35 | ## Installation

36 |

37 | To use this toolkit, first install [MuJoCo 200](https://www.roboti.us/index.html) and then [mujoco-py](https://github.com/openai/mujoco-py) from OpenAI. mujoco-py allows MuJoCo to be used from a Python interface.

38 | The installation requires Python 3.6 or higher. It is recommended to install all the required packages in a conda virtual environment.

39 |

40 |

41 | ## References

42 | This toolkit is mainly based on the [Surreal Robotics Suite](https://github.com/StanfordVL/robosuite), and the reinforcement learning part is adapted from

43 | [this repo](https://github.com/clvrai/furniture).

44 |

--------------------------------------------------------------------------------

/panda/models/robot/panda_robot.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from models.robot.robot import Robot

3 | from utils.mjcf_utils import xml_path_completion, array_to_string

4 |

5 |

6 | class Panda(Robot):

7 | """Panda is a sensitive single-arm robot designed by Franka."""

8 |

9 | def __init__(self, xml_path="robot/panda/robot_torque.xml"):

10 |

11 | super().__init__(xml_path_completion(xml_path))

12 |

13 | self.bottom_offset = np.array([0, 0, 0]) # ignore the bottom offset; directly define base_xpos in world frame

14 | self.set_joint_damping()

15 | self._init_qpos = np.array([0, np.pi / 16.0, 0.00, -np.pi / 2.0 - np.pi / 3.0, 0.00, np.pi - 0.2, -np.pi/4])

16 |

17 | def set_base_xpos(self, pos):

18 | """Places the robot on position @pos."""

19 | node = self.worldbody.find("./body[@name='link0']")

20 | node.set("pos", array_to_string(pos - self.bottom_offset))

21 |

22 | def set_joint_damping(self, damping=np.array((0.1, 0.1, 0.1, 0.1, 0.1, 0.01, 0.01))):

23 | """Set joint damping """

24 | body = self._base_body

25 | for i in range(len(self._link_body)):

26 | body = body.find("./body[@name='{}']".format(self._link_body[i]))

27 | joint = body.find("./joint[@name='{}']".format(self._joints[i]))

28 | joint.set("damping", array_to_string(np.array([damping[i]])))

29 |

30 | def set_joint_frictionloss(self, friction=np.array((0.1, 0.1, 0.1, 0.1, 0.1, 0.01, 0.01))):

31 | """Set joint friction loss (static friction)"""

32 | body = self._base_body

33 | for i in range(len(self._link_body)):

34 | body = body.find("./body[@name='{}']".format(self._link_body[i]))

35 | joint = body.find("./joint[@name='{}']".format(self._joints[i]))

36 | joint.set("frictionloss", array_to_string(np.array([friction[i]])))

37 |

38 | @property

39 | def dof(self):

40 | return 7

41 |

42 | @property

43 | def joints(self):

44 | return ["joint{}".format(x) for x in range(1, 8)]

45 |

46 | @property

47 | def init_qpos(self):

48 | return self._init_qpos

49 |

50 | @property

51 | def contact_geoms(self):

52 | return ["link{}_collision".format(x) for x in range(1, 8)]

53 |

54 | @property

55 | def _base_body(self):

56 | node = self.worldbody.find("./body[@name='link0']")

57 | return node

58 |

59 | @property

60 | def _link_body(self):

61 | return ["link1", "link2", "link3", "link4", "link5", "link6", "link7"]

62 |

63 | @property

64 | def _joints(self):

65 | return ["joint1", "joint2", "joint3", "joint4", "joint5", "joint6", "joint7"]

66 |

--------------------------------------------------------------------------------

/panda/environment.yml:

--------------------------------------------------------------------------------

1 | name: robo

2 | channels:

3 | - defaults

4 | dependencies:

5 | - _libgcc_mutex=0.1=main

6 | - _openmp_mutex=5.1=1_gnu

7 | - ca-certificates=2023.08.22=h06a4308_0

8 | - ld_impl_linux-64=2.38=h1181459_1

9 | - libffi=3.4.4=h6a678d5_0

10 | - libgcc-ng=11.2.0=h1234567_1

11 | - libgfortran-ng=7.5.0=ha8ba4b0_17

12 | - libgfortran4=7.5.0=ha8ba4b0_17

13 | - libgomp=11.2.0=h1234567_1

14 | - libstdcxx-ng=11.2.0=h1234567_1

15 | - mpi=1.0=mpich

16 | - mpi4py=3.1.4=py39hfc96bbd_0

17 | - mpich=3.3.2=hc856adb_0

18 | - ncurses=6.4=h6a678d5_0

19 | - openssl=3.0.10=h7f8727e_2

20 | - pip=23.2.1=py39h06a4308_0

21 | - python=3.9.18=h955ad1f_0

22 | - readline=8.2=h5eee18b_0

23 | - setuptools=68.0.0=py39h06a4308_0

24 | - sqlite=3.41.2=h5eee18b_0

25 | - tk=8.6.12=h1ccaba5_0

26 | - tzdata=2023c=h04d1e81_0

27 | - wheel=0.38.4=py39h06a4308_0

28 | - xz=5.4.2=h5eee18b_0

29 | - zlib=1.2.13=h5eee18b_0

30 | - pip:

31 | - appdirs==1.4.4

32 | - certifi==2023.7.22

33 | - cffi==1.15.1

34 | - charset-normalizer==3.2.0

35 | - click==8.1.7

36 | - cmake==3.27.4.1

37 | - colorlog==6.7.0

38 | - cython==0.29.36

39 | - docker-pycreds==0.4.0

40 | - fasteners==0.18

41 | - filelock==3.12.4

42 | - gitdb==4.0.10

43 | - gitpython==3.1.36

44 | - glfw==2.6.2

45 | - h5py==3.9.0

46 | - hjson==3.1.0

47 | - idna==3.4

48 | - imageio==2.31.3

49 | - jinja2==3.1.2

50 | - lit==16.0.6

51 | - markupsafe==2.1.3

52 | - mpmath==1.3.0

53 | - mujoco-py==2.1.2.14

54 | - networkx==3.1

55 | - numpy==1.25.2

56 | - nvidia-cublas-cu11==11.10.3.66

57 | - nvidia-cuda-cupti-cu11==11.7.101

58 | - nvidia-cuda-nvrtc-cu11==11.7.99

59 | - nvidia-cuda-runtime-cu11==11.7.99

60 | - nvidia-cudnn-cu11==8.5.0.96

61 | - nvidia-cufft-cu11==10.9.0.58

62 | - nvidia-curand-cu11==10.2.10.91

63 | - nvidia-cusolver-cu11==11.4.0.1

64 | - nvidia-cusparse-cu11==11.7.4.91

65 | - nvidia-nccl-cu11==2.14.3

66 | - nvidia-nvtx-cu11==11.7.91

67 | - pathtools==0.1.2

68 | - pillow==10.0.0

69 | - protobuf==4.24.3

70 | - psutil==5.9.5

71 | - pycparser==2.21

72 | - pyyaml==6.0.1

73 | - requests==2.31.0

74 | - scipy==1.11.2

75 | - sentry-sdk==1.31.0

76 | - setproctitle==1.3.2

77 | - six==1.16.0

78 | - smmap==5.0.0

79 | - sympy==1.12

80 | - torch==2.0.1

81 | - torchvision==0.15.2

82 | - tqdm==4.66.1

83 | - triton==2.0.0

84 | - typing-extensions==4.7.1

85 | - urllib3==2.0.4

86 | - wandb==0.15.10

87 | prefix: /home/asad/miniconda3/envs/robo

88 |

--------------------------------------------------------------------------------

/panda/models/assets/arena/table_arena.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 |

26 |

27 |

28 |

29 |

30 |

31 |

32 |

33 |

34 |

35 |

36 |

37 |

--------------------------------------------------------------------------------

/panda/rl/main.py:

--------------------------------------------------------------------------------

1 | """ Launch RL training and evaluation. """

2 |

3 | import sys

4 | import signal

5 | import os

6 | import json

7 | import numpy as np

8 | import torch

9 | from mpi4py import MPI

10 | from config import argparser

11 | from rl.trainer import Trainer

12 | from utils.logger import logger

13 |

14 |

15 | np.set_printoptions(precision=3)

16 | np.set_printoptions(suppress=True)

17 |

18 |

19 | def run(config):

20 | """

21 | Runs Trainer.

22 | """

23 | # for parallel workers training (distributed training)

24 | rank = MPI.COMM_WORLD.Get_rank() # Each process is assigned a rank that is unique within Communicator(group of processes)

25 | config.rank = rank

26 | config.is_chef = rank == 0 # Binary

27 | config.seed = config.seed + rank

28 | config.num_workers = MPI.COMM_WORLD.Get_size() # total no. of processes is a size of communicator

29 |

30 | if config.is_chef:

31 | logger.warn('Run a base worker.')

32 | make_log_files(config)

33 | else:

34 | logger.warn('Run worker %d and disable logger.', config.rank)

35 | import logging

36 | logger.setLevel(logging.CRITICAL)

37 |

38 | def shutdown(signal, frame):

39 | logger.warn('Received signal %s: exiting', signal)

40 | sys.exit(128+signal)

41 |

42 | signal.signal(signal.SIGHUP, shutdown)

43 | signal.signal(signal.SIGINT, shutdown)

44 | signal.signal(signal.SIGTERM, shutdown)

45 |

46 | # set global seed

47 | np.random.seed(config.seed)

48 | torch.manual_seed(config.seed)

49 | torch.cuda.manual_seed_all(config.seed)

50 |

51 | # set the display no. configured with gpu

52 | os.environ["DISPLAY"] = ":0"

53 | # use gpu or cpu

54 | if config.gpu is not None:

55 | os.environ["CUDA_VISIBLE_DEVICES"] = "{}".format(config.gpu)

56 | assert torch.cuda.is_available()

57 | config.device = torch.device("cuda")

58 | else:

59 | config.device = torch.device("cpu")

60 |

61 | # build a trainer

62 | trainer = Trainer(config)

63 | if config.is_train:

64 | trainer.train()

65 | logger.info("Finish training")

66 | else:

67 | trainer.evaluate()

68 | logger.info("Finish evaluating")

69 |

70 |

71 | def make_log_files(config):

72 | """

73 | Sets up log directories and saves git diff and command line.

74 | """

75 | config.run_name = '{}.{}.{}'.format(config.prefix, config.seed, config.suffix)

76 |

77 | config.log_dir = os.path.join(config.log_root_dir, config.run_name)

78 | logger.info('Create log directory: %s', config.log_dir)

79 | os.makedirs(config.log_dir, exist_ok=True)

80 |

81 | if config.is_train:

82 | # log config

83 | param_path = os.path.join(config.log_dir, 'params.json')

84 | logger.info('Store parameters in %s', param_path)

85 | with open(param_path, 'w') as fp:

86 | json.dump(config.__dict__, fp, indent=4, sort_keys=True)

87 |

88 |

89 | if __name__ == '__main__':

90 | args, unparsed = argparser()

91 | if len(unparsed):

92 | logger.error('Unparsed argument is detected:\n%s', unparsed)

93 | else:

94 | run(args)

95 |

--------------------------------------------------------------------------------

/panda/rl/policies/mlp_actor_critic.py:

--------------------------------------------------------------------------------

1 | from collections import OrderedDict

2 | import torch

3 | import torch.nn as nn

4 | import numpy as np

5 | from rl.policies.utils import MLP

6 | from rl.policies.actor_critic import Actor, Critic

7 |

8 |

9 | class MlpActor(Actor):

10 | def __init__(self, config, ob_space, ac_space, tanh_policy):

11 | super().__init__(config, ob_space, ac_space, tanh_policy)

12 |

13 | self._ac_space = ac_space # e.g. ActionSpace(shape=OrderedDict([('default', 8)]),minimum=-1.0, maximum=1.0)

14 |

15 | # observation # e.g. OrderedDict([('object-state', [10]), ('robot-state', [36])])

16 | input_dim = sum([np.prod(x) for x in ob_space.values()]) # [[226,226,3],[36],[10]] (226*226*3)+36+10

17 |

18 | # build sequential layers of neural network

19 | self.fc = MLP(config, input_dim, config.rl_hid_size, [config.rl_hid_size]) # inp= 46, output= 64

20 | self.fc_means = nn.ModuleDict()

21 | self.fc_log_stds = nn.ModuleDict()

22 |

23 | for k, size in ac_space.shape.items(): # shape=OrderedDict([('default', 8)])

24 | self.fc_means.update({k: MLP(config, config.rl_hid_size, size)}) # MLP here defines the out_layer of nn

25 | self.fc_log_stds.update({k: MLP(config, config.rl_hid_size, size)})

26 |

27 | def forward(self, ob): # extracts input values from ob odict and passes it to nn

28 | inp = list(ob.values()) # [tensor([-0.007,.....,-0.51]), tensor([-0.08,.....,0.08])]

29 | if len(inp[0].shape) == 1: #[36]

30 | inp = [x.unsqueeze(0) for x in inp] # change the tensor shape from e.g. [36] to [1, 36]

31 |

32 | # concatenates the tensor to [1, 46], passes to model and activation_fn; out is a tensor of shape (1, hid_size)

33 | out = self._activation_fn(self.fc(torch.cat(inp, dim=-1)))

34 | out = torch.reshape(out, (out.shape[0], -1)) # [1, 64]

35 |

36 | means, stds = OrderedDict(), OrderedDict()

37 | for k in self._ac_space.keys():

38 | mean = self.fc_means[k](out) # passes previous layers output to last layer to produce action tensor [1,8]

39 | log_std = self.fc_log_stds[k](out) # passes previous layers output to last layer for s.d. tensor

40 | log_std = torch.clamp(log_std, -10, 2) # clips between min and max

41 | std = torch.exp(log_std.double()) # exponential on s.d.

42 | means[k] = mean

43 | stds[k] = std

44 |

45 | return means, stds

46 |

47 |

48 | class MlpCritic(Critic):

49 | def __init__(self, config, ob_space, ac_space=None):

50 | super().__init__(config)

51 |

52 | input_dim = sum([np.prod(x) for x in ob_space.values()])

53 | if ac_space is not None:

54 | input_dim += ac_space.size

55 |

56 | self.fc = MLP(config, input_dim, 1, [config.rl_hid_size] * 2)

57 |

58 | def forward(self, ob, ac=None):

59 | inp = list(ob.values())

60 | if len(inp[0].shape) == 1:

61 | inp = [x.unsqueeze(0) for x in inp]

62 |

63 | if ac is not None:

64 | ac = list(ac.values())

65 | if len(ac[0].shape) == 1:

66 | ac = [x.unsqueeze(0) for x in ac]

67 | inp.extend(ac)

68 |

69 | out = self.fc(torch.cat(inp, dim=-1))

70 | out = torch.reshape(out, (out.shape[0], 1))

71 |

72 | return out

73 |

--------------------------------------------------------------------------------

/panda/rl/dataset.py:

--------------------------------------------------------------------------------

1 | from collections import defaultdict

2 | from time import time

3 | import numpy as np

4 |

5 | class ReplayBuffer:

6 | def __init__(self, keys, buffer_size, sample_func):

7 | self._size = buffer_size # buffer size in config

8 |

9 | # memory management

10 | self._idx = 0

11 | self._current_size = 0

12 | self._sample_func = sample_func

13 |

14 | # create the buffer to store info

15 | self._keys = keys

16 | self._buffers = defaultdict(list)

17 |

18 | def clear(self):

19 | self._idx = 0

20 | self._current_size = 0

21 | self._buffers = defaultdict(list)

22 |

23 | # store the episode

24 | def store_episode(self, rollout): # stores an episode given a rollout; calling each time adds a new episode

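25 | idx, self._idx = self._idx, (self._idx + 1) % self._size # write at the current slot, then advance, so the oldest episode (slot 0) is overwritten first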

26 | self._current_size += 1

27 |

28 | if self._current_size > self._size:

29 | for k in self._keys:

30 | self._buffers[k][idx] = rollout[k]

31 | else:

32 | for k in self._keys:

33 | self._buffers[k].append(rollout[k])

34 |

35 | # sample the data from the replay buffer

36 | def sample(self, batch_size):

37 | # sample transitions

38 | transitions = self._sample_func(self._buffers, batch_size) # buffers contains rollout(s), batch_size is in config

39 | return transitions

40 |

41 | def state_dict(self):

42 | return self._buffers

43 |

44 | def load_state_dict(self, state_dict):

45 | self._buffers = state_dict

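46 | self._current_size = len(self._buffers['ac']) # no. of episodes in buffer

47 | self._idx = self._current_size % self._size # resume the write index after the newest loaded episode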

48 |

49 | class RandomSampler:

50 | def sample_func(self, episode_batch, batch_size_in_transitions): # episode_batch is buffers

51 | rollout_batch_size = len(episode_batch['ac']) # no. of episodes in buffer

52 | batch_size = batch_size_in_transitions

53 |

54 | episode_idxs = np.random.randint(0, rollout_batch_size, batch_size) # selects a list of episode idxs i.e. [2,0,4,0...], length equal to batch_size

55 | t_samples = [np.random.randint(len(episode_batch['ac'][episode_idx])) for episode_idx in episode_idxs] # [135,78,54,180,...] list

56 |

57 | transitions = {}

58 | for key in episode_batch.keys(): # select the transitions at the sampled timesteps of the sampled episodes

59 | # values are lists {'ob': [], 'ac': [], 'done': []}; len of each list is equal to batch_size

60 | transitions[key] = [episode_batch[key][episode_idx][t] for episode_idx, t in zip(episode_idxs, t_samples)]

61 |

62 | # select the next observation (t + 1) for each sampled transition; assumes 'ob' holds one more entry per episode than 'ac'

63 | transitions['ob_next'] = [episode_batch['ob'][episode_idx][t + 1] for episode_idx, t in zip(episode_idxs, t_samples)]

64 |

65 | # join sequence of array values in transitions; ob values is a dict {'robot-state': array(), 'object-state': array()}, others are array

66 | new_transitions = {}

67 | for k, v in transitions.items():

68 | if isinstance(v[0], dict):

69 | sub_keys = v[0].keys()

70 | new_transitions[k] = {sub_key: np.stack([v_[sub_key] for v_ in v]) for sub_key in sub_keys}

71 | else:

72 | new_transitions[k] = np.stack(v)

73 | return new_transitions

74 |
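
Note on the sampler above: it draws an episode index and a timestep for each transition, gathers every key at that position, and stacks the results into arrays. The following self-contained sketch walks through the same steps on synthetic data. The function sample_transitions, the helper make_episode, and the array sizes are hypothetical and only mirror the structure described in the comments ('ob' entries are dicts, other keys are arrays, and each episode stores one more observation than actions).

```python
import numpy as np


def sample_transitions(buffers, batch_size, rng):
    """Uniformly sample (episode, timestep) pairs and stack them into batch arrays."""
    n_episodes = len(buffers["ac"])
    ep_idxs = rng.integers(0, n_episodes, size=batch_size)
    # A timestep is valid if an action exists for it, so t + 1 is a valid observation index.
    t_idxs = [int(rng.integers(len(buffers["ac"][e]))) for e in ep_idxs]

    batch = {k: [buffers[k][e][t] for e, t in zip(ep_idxs, t_idxs)] for k in buffers}
    batch["ob_next"] = [buffers["ob"][e][t + 1] for e, t in zip(ep_idxs, t_idxs)]

    stacked = {}
    for k, v in batch.items():
        if isinstance(v[0], dict):  # dict observations: stack each sub-key separately
            stacked[k] = {sk: np.stack([x[sk] for x in v]) for sk in v[0]}
        else:
            stacked[k] = np.stack(v)
    return stacked


def make_episode(length):
    """Synthetic episode: observation values encode the timestep for easy checking."""
    ob = [{"robot-state": np.full(3, float(t)), "object-state": np.full(2, float(t))}
          for t in range(length + 1)]
    ac = [np.zeros(8) for _ in range(length)]
    done = [np.array(float(t == length - 1)) for t in range(length)]
    return {"ob": ob, "ac": ac, "done": done}


buffers = {"ob": [], "ac": [], "done": []}
for length in (5, 7):
    episode = make_episode(length)
    for key in buffers:
        buffers[key].append(episode[key])

batch = sample_transitions(buffers, batch_size=4, rng=np.random.default_rng(0))
```

Because each synthetic observation stores its own timestep, batch["ob_next"] should always be exactly one step ahead of batch["ob"], which is a quick way to check the t + 1 indexing.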

--------------------------------------------------------------------------------

/panda/utils/mujoco_py_renderer.py:

--------------------------------------------------------------------------------

1 | from mujoco_py import MjViewer

2 | from mujoco_py.generated import const

3 | import glfw

4 | from collections import defaultdict

5 |

6 |

7 | class CustomMjViewer(MjViewer):

8 |

9 | keypress = defaultdict(list)

10 | keyup = defaultdict(list)

11 | keyrepeat = defaultdict(list)

12 |

13 | def key_callback(self, window, key, scancode, action, mods):

14 | if action == glfw.PRESS:

15 | tgt = self.keypress

16 | elif action == glfw.RELEASE:

17 | tgt = self.keyup

18 | elif action == glfw.REPEAT:

19 | tgt = self.keyrepeat

20 | else:

21 | return

22 | if tgt.get(key):

23 | for fn in tgt[key]:

24 | fn(window, key, scancode, action, mods)

25 | if tgt.get("any"):

26 | for fn in tgt["any"]:

27 | fn(window, key, scancode, action, mods)

28 | # retain functionality for closing the viewer

29 | if key == glfw.KEY_ESCAPE:

30 | super().key_callback(window, key, scancode, action, mods)

31 | else:

32 | # only use default mujoco callbacks if "any" callbacks are unset

33 | super().key_callback(window, key, scancode, action, mods)

34 |

35 |

36 | class MujocoPyRenderer:

37 | def __init__(self, sim):

38 | """

39 | Args:

40 | sim: MjSim object

41 | """

42 |

43 | self.viewer = CustomMjViewer(sim)

44 | self.viewer.cam.fixedcamid = 6

45 | self.callbacks = {}

46 |

47 |

48 | def set_camera(self, camera_id):

49 | """

50 | Set the camera view to the specified camera ID.

51 | """

52 | self.viewer.cam.fixedcamid = camera_id

53 | self.viewer.cam.type = const.CAMERA_FIXED

54 |

55 | def render(self):

56 | # safe for multiple calls

57 | self.viewer.render()

58 |

59 | def close(self):

60 | """

61 | Destroys the open window and renders (pun intended) the viewer useless.

62 | """

63 | glfw.destroy_window(self.viewer.window)

64 | self.viewer = None

65 |

66 | def add_keypress_callback(self, key, fn):

67 | """

68 | Allows for custom callback functions for the viewer. Called on key down.

69 | Parameter 'any' will ensure that the callback is called on any key down,

70 | and block default mujoco viewer callbacks from executing, except for

71 | the ESC callback to close the viewer.

72 | """

73 | self.viewer.keypress[key].append(fn)

74 |

75 | def add_keyup_callback(self, key, fn):

76 | """

77 | Allows for custom callback functions for the viewer. Called on key up.

78 | Parameter 'any' will ensure that the callback is called on any key up,

79 | and block default mujoco viewer callbacks from executing, except for

80 | the ESC callback to close the viewer.

81 | """

82 | self.viewer.keyup[key].append(fn)

83 |

84 | def add_keyrepeat_callback(self, key, fn):

85 | """

86 | Allows for custom callback functions for the viewer. Called on key repeat.

87 | Parameter 'any' will ensure that the callback is called on any key repeat,

88 | and block default mujoco viewer callbacks from executing, except for