├── .gitattributes

├── .github

├── README.md

└── workflows

│ └── sync-to-hf.yaml

├── .gitignore

├── LICENSE

├── README-main.md

├── README.md

├── autoagents

├── __init__.py

├── agents

│ ├── __init__.py

│ ├── agents

│ │ ├── __init__.py

│ │ ├── search.py

│ │ ├── search_v3.py

│ │ └── wiki_agent.py

│ ├── models

│ │ └── custom.py

│ ├── spaces

│ │ └── app.py

│ ├── tools

│ │ ├── __init__.py

│ │ └── tools.py

│ └── utils

│ │ ├── __init__.py

│ │ ├── constants.py

│ │ └── logger.py

├── data

│ ├── action_name_transformation.py

│ ├── create_sft_dataset.py

│ ├── dataset.py

│ ├── generate_action_data.py

│ └── generate_action_tasks

│ │ ├── REACT.ipynb

│ │ ├── README.md

│ │ ├── generate_data.py

│ │ ├── generate_data_chat_api.py

│ │ ├── goals_test.json

│ │ ├── goals_train.json

│ │ ├── goals_valid.json

│ │ ├── previous_goals.json

│ │ ├── prompt.txt

│ │ ├── requirements.txt

│ │ ├── run_genenerate_data.sh

│ │ ├── seed_tasks_test.jsonl

│ │ ├── seed_tasks_train.jsonl

│ │ ├── seed_tasks_valid.jsonl

│ │ └── utils.py

├── eval

│ ├── README.md

│ ├── __init__.py

│ ├── bamboogle.py

│ ├── hotpotqa

│ │ ├── README.md

│ │ ├── __init__.py

│ │ ├── constants.py

│ │ ├── eval_async.py

│ │ ├── hotpotqa_eval.py

│ │ └── run_eval.py

│ ├── metrics.py

│ ├── reward

│ │ ├── eval.py

│ │ └── get_scores.py

│ └── test.py

├── serve

│ ├── README.md

│ ├── action_api_server.py

│ ├── action_model_worker.py

│ ├── controller.sh

│ ├── model_worker.sh

│ ├── openai_api.sh

│ └── serve_rescale.py

└── train

│ ├── README.md

│ ├── scripts

│ ├── action_finetuning.sh

│ ├── action_finetuning_v3.sh

│ ├── conv_finetuning.sh

│ ├── longchat_action_finetuning.sh

│ └── longchat_conv_finetuning.sh

│ ├── test_v3_preprocess.py

│ ├── train.py

│ └── train_v3.py

├── requirements.txt

└── setup.py

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.7z filter=lfs diff=lfs merge=lfs -text

2 | *.arrow filter=lfs diff=lfs merge=lfs -text

3 | *.bin filter=lfs diff=lfs merge=lfs -text

4 | *.bz2 filter=lfs diff=lfs merge=lfs -text

5 | *.ckpt filter=lfs diff=lfs merge=lfs -text

6 | *.ftz filter=lfs diff=lfs merge=lfs -text

7 | *.gz filter=lfs diff=lfs merge=lfs -text

8 | *.h5 filter=lfs diff=lfs merge=lfs -text

9 | *.joblib filter=lfs diff=lfs merge=lfs -text

10 | *.lfs.* filter=lfs diff=lfs merge=lfs -text

11 | *.mlmodel filter=lfs diff=lfs merge=lfs -text

12 | *.model filter=lfs diff=lfs merge=lfs -text

13 | *.msgpack filter=lfs diff=lfs merge=lfs -text

14 | *.npy filter=lfs diff=lfs merge=lfs -text

15 | *.npz filter=lfs diff=lfs merge=lfs -text

16 | *.onnx filter=lfs diff=lfs merge=lfs -text

17 | *.ot filter=lfs diff=lfs merge=lfs -text

18 | *.parquet filter=lfs diff=lfs merge=lfs -text

19 | *.pb filter=lfs diff=lfs merge=lfs -text

20 | *.pickle filter=lfs diff=lfs merge=lfs -text

21 | *.pkl filter=lfs diff=lfs merge=lfs -text

22 | *.pt filter=lfs diff=lfs merge=lfs -text

23 | *.pth filter=lfs diff=lfs merge=lfs -text

24 | *.rar filter=lfs diff=lfs merge=lfs -text

25 | *.safetensors filter=lfs diff=lfs merge=lfs -text

26 | saved_model/**/* filter=lfs diff=lfs merge=lfs -text

27 | *.tar.* filter=lfs diff=lfs merge=lfs -text

28 | *.tflite filter=lfs diff=lfs merge=lfs -text

29 | *.tgz filter=lfs diff=lfs merge=lfs -text

30 | *.wasm filter=lfs diff=lfs merge=lfs -text

31 | *.xz filter=lfs diff=lfs merge=lfs -text

32 | *.zip filter=lfs diff=lfs merge=lfs -text

33 | *.zst filter=lfs diff=lfs merge=lfs -text

34 | *tfevents* filter=lfs diff=lfs merge=lfs -text

35 |

--------------------------------------------------------------------------------

/.github/README.md:

--------------------------------------------------------------------------------

1 | ../README-main.md

--------------------------------------------------------------------------------

/.github/workflows/sync-to-hf.yaml:

--------------------------------------------------------------------------------

1 | name: Sync to Hugging Face hub

2 | on:

3 | push:

4 | branches: [main]

5 |

6 | # to run this workflow manually from the Actions tab

7 | workflow_dispatch:

8 |

9 | jobs:

10 | sync-to-hub:

11 | runs-on: ubuntu-latest

12 | steps:

13 | - uses: actions/checkout@v3

14 | with:

15 | fetch-depth: 0

16 | lfs: true

17 | ref: hf-active

18 | - name: Push to hub

19 | env:

20 | HF_TOKEN: ${{ secrets.HF_TOKEN }}

21 | run: git push https://omkarenator:$HF_TOKEN@huggingface.co/spaces/AutoLLM/AutoAgents hf-active:main

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 | .DS_Store

29 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 AutoLLM

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README-main.md:

--------------------------------------------------------------------------------

1 | # AutoAgents

2 |

3 |

4 |

5 | Unlock complex question answering in LLMs with enhanced chain-of-thought reasoning and information-seeking capabilities.

6 |

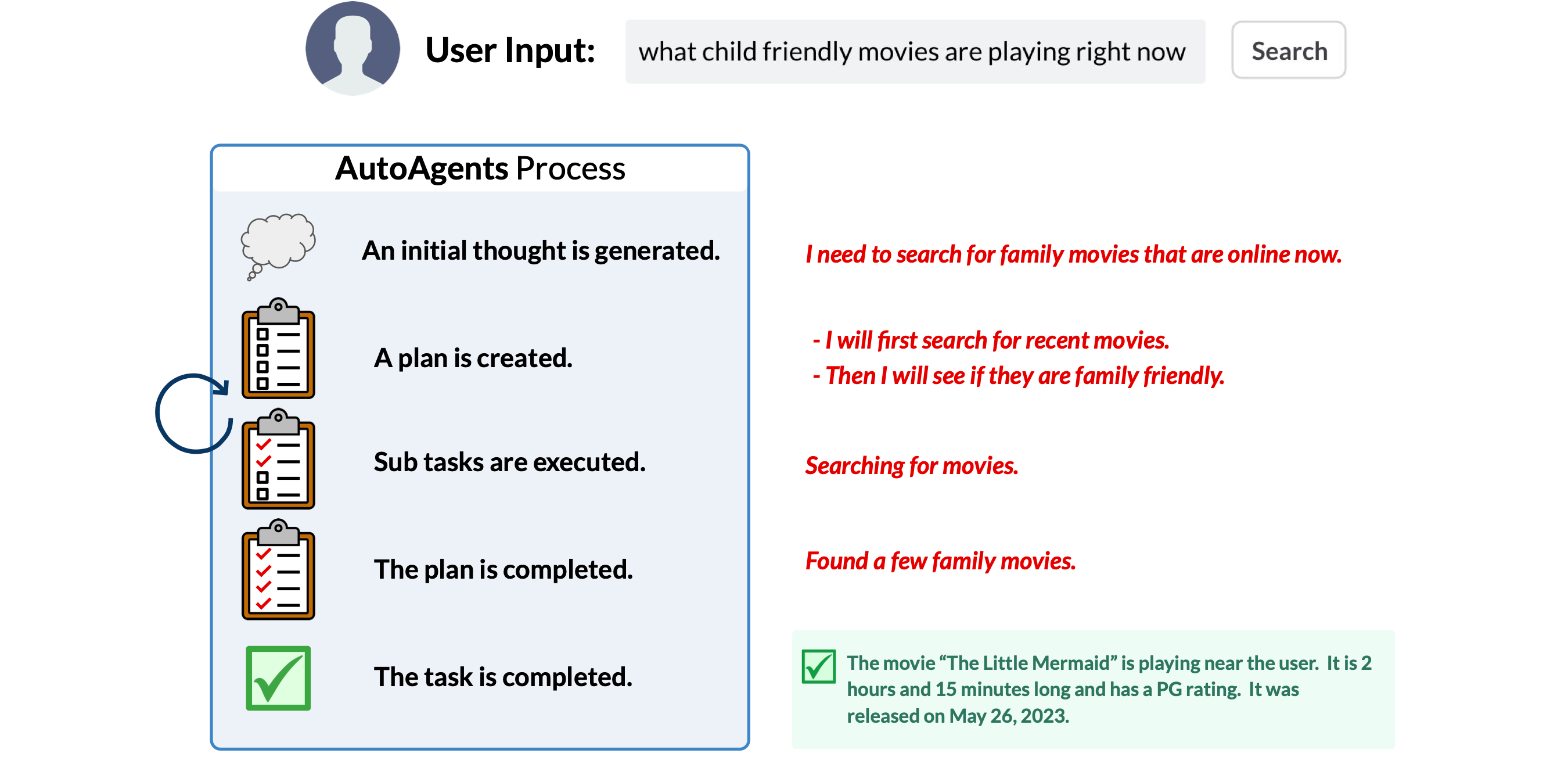

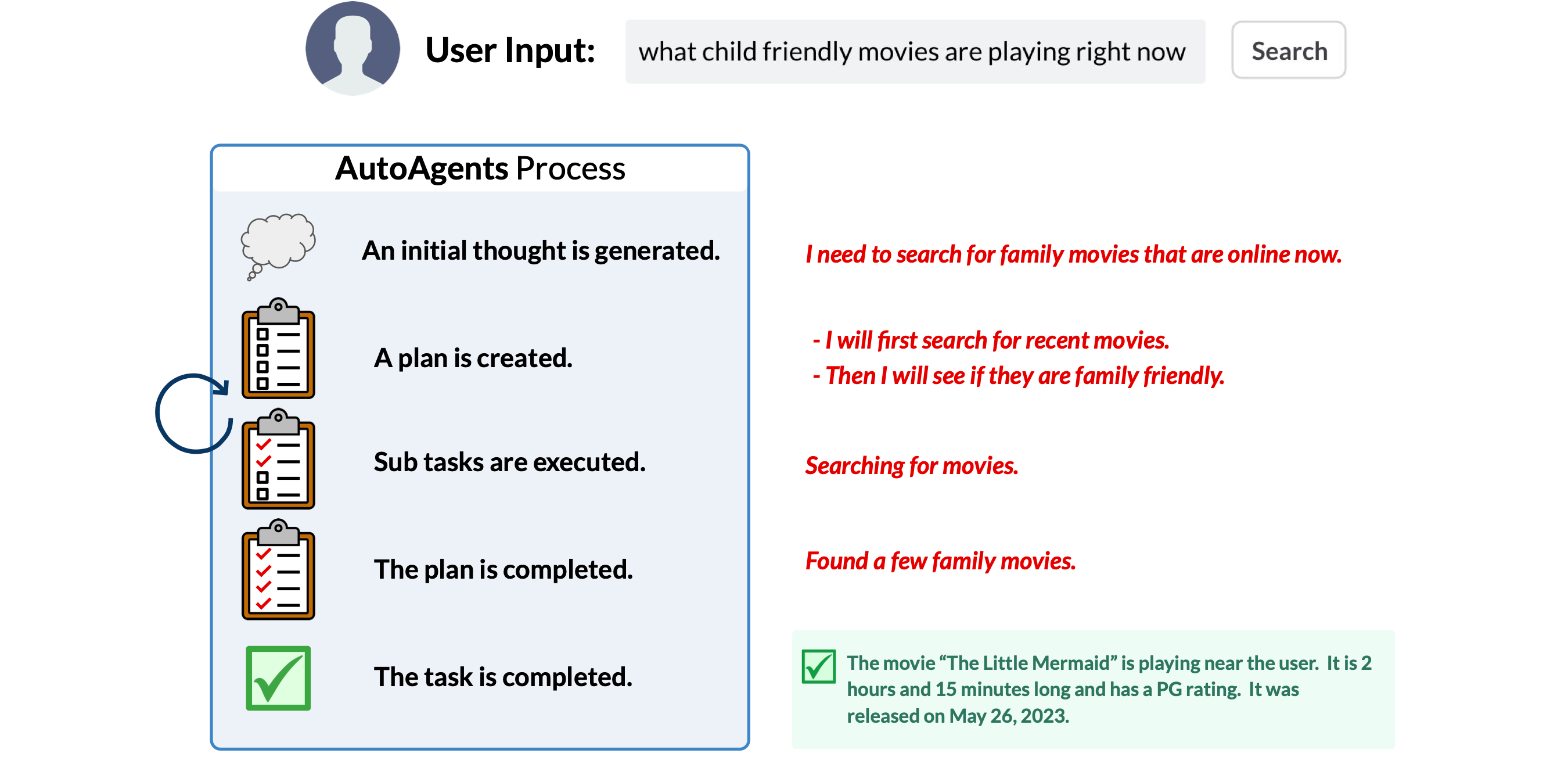

7 | ## 👉 Overview

8 |

9 | The purpose of this project is to extend LLMs ability to answer more complex questions through chain-of-thought reasoning and information-seeking actions.

10 |

11 | We are excited to release the initial version of AutoAgents, a proof-of-concept on what can be achieved with only well-written prompts. This is the initial step towards our first big milestone, releasing and open-sourcing the AutoAgents 7B model!

12 |

13 | Come try out our [Huggingface Space](https://huggingface.co/spaces/AutoLLM/AutoAgents)!

14 |

15 |

16 |

17 | ## 🤖 The AutoAgents Project

18 |

19 | This project demonstrates LLMs capability to execute a complex user goal: understand a user's goal, generate a plan, use proper tools, and deliver a final result.

20 |

21 | For simplicity, our first attempt starts with a Web Search Agent.

22 |

23 |

24 |

25 | ## 💫 How it works:

26 |

27 |

28 |

29 |

30 |

31 | ## 📔 Examples

32 |

33 | Ask your AutoAgent to do what a real person would do using the internet:

34 |

35 | For example:

36 |

37 | *1. Recommend a kid friendly movie that is playing at a theater near Sunnyvale. Give me the showtimes and a link to purchase the tickets*

38 |

39 | *2. What is the average age of the past three president when they took office*

40 |

41 | *3. What is the mortgage rate right now and how does that compare to the past two years*

42 |

43 |

44 |

45 | ## 💁 Roadmap

46 |

47 | * ~~HuggingFace Space demo using OpenAI models~~ [LINK](https://huggingface.co/spaces/AutoLLM/AutoAgents)

48 | * AutoAgents [7B] Model

49 | * Initial Release:

50 | * Finetune and release a 7B parameter fine-tuned search model

51 | * AutoAgents Dataset

52 | * A high-quality dataset for a diverse set of search scenarios (why quality and diversity?[1](https://arxiv.org/abs/2305.11206))

53 | * Reduce Model Inference Overhead

54 | * Affordance Modeling [2](https://en.wikipedia.org/wiki/Affordance)

55 | * Extend Support to Additional Tools

56 | * Customizable Document Search set (e.g. personal documents)

57 | * Support Multi-turn Dialogue

58 | * Advanced Flow Control in Plan Execution

59 |

60 | We are actively developing a few interesting things, check back here or follow us on [Twitter](https://twitter.com/AutoLLM) for any new development.

61 |

62 | If you are interested in any other problems, feel free to shoot us an issue.

63 |

64 |

65 |

66 | ## 🧭 How to use this repo?

67 |

68 | This repo contains the entire code to run the search agent from your local browser. All you need is an OpenAI API key to begin.

69 |

70 | To run the search agent locally:

71 |

72 | 1. Clone the repo and change the directory

73 |

74 | ```bash

75 | git clone https://github.com/AutoLLM/AutoAgents.git

76 | cd AutoAgents

77 | ```

78 |

79 | 2. Install the dependencies

80 |

81 | ```bash

82 | pip install -r requirements.txt

83 | ```

84 |

85 | 3. Install the `autoagents` package

86 |

87 | ```bash

88 | pip install -e .

89 | ```

90 |

91 | 4. Make sure you have your OpenAI API key set as an environment variable. Alternatively, you can also feed it through the input text-box on the sidebar.

92 |

93 | ```bash

94 | export OPENAI_API_KEY=sk-xxxxxx

95 | ```

96 |

97 | 5. Run the Streamlit app

98 |

99 | ```bash

100 | streamlit run autoagents/agents/spaces/app.py

101 | ```

102 |

103 | This should open a browser window where you can type your search query.

104 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: AutoAgents

3 | emoji: 🐢

4 | colorFrom: green

5 | colorTo: purple

6 | sdk: streamlit

7 | sdk_version: 1.21.0

8 | python_version: 3.10.11

9 | app_file: autoagents/spaces/app.py

10 | pinned: true

11 | ---

12 |

13 | Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

14 |

--------------------------------------------------------------------------------

/autoagents/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoLLM/AutoAgents/e1210c7884f951f1b90254e40c162e81fc1442f3/autoagents/__init__.py

--------------------------------------------------------------------------------

/autoagents/agents/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoLLM/AutoAgents/e1210c7884f951f1b90254e40c162e81fc1442f3/autoagents/agents/__init__.py

--------------------------------------------------------------------------------

/autoagents/agents/agents/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoLLM/AutoAgents/e1210c7884f951f1b90254e40c162e81fc1442f3/autoagents/agents/agents/__init__.py

--------------------------------------------------------------------------------

/autoagents/agents/agents/search.py:

--------------------------------------------------------------------------------

1 | import os

2 | import json

3 | import uuid

4 | import re

5 | from datetime import date

6 | import asyncio

7 | from collections import defaultdict

8 | from pprint import pprint

9 | from typing import List, Union, Any, Optional, Dict

10 |

11 | from langchain.agents import Tool, AgentExecutor, LLMSingleActionAgent, AgentOutputParser

12 | from langchain.prompts import StringPromptTemplate

13 | from langchain import LLMChain

14 | from langchain.chat_models import ChatOpenAI

15 | from langchain.schema import AgentAction, AgentFinish

16 | from langchain.callbacks import get_openai_callback

17 | from langchain.callbacks.base import AsyncCallbackHandler

18 | from langchain.callbacks.manager import AsyncCallbackManager

19 | from langchain.base_language import BaseLanguageModel

20 |

21 | from autoagents.agents.tools.tools import search_tool, note_tool, rewrite_search_query, finish_tool

22 | from autoagents.agents.utils.logger import InteractionsLogger

23 | from autoagents.agents.utils.constants import LOG_SAVE_DIR

24 |

25 | from pydantic import BaseModel, ValidationError, Extra # pydantic==1.10.11

26 |

27 |

28 | class InterOutputSchema(BaseModel):

29 | thought: str

30 | reasoning: str

31 | plan: List[str]

32 | action: str

33 | action_input: str

34 | class Config:

35 | extra = Extra.forbid

36 |

37 |

38 | class FinalOutputSchema(BaseModel):

39 | thought: str

40 | reasoning: str

41 | plan: List[str]

42 | action: str

43 | action_input: str

44 | citations: List[str]

45 | class Config:

46 | extra = Extra.forbid

47 |

48 |

49 | def check_valid(o):

50 | try:

51 | if o.get("action") == "Tool_Finish":

52 | FinalOutputSchema(**o)

53 | else:

54 | InterOutputSchema(**o)

55 | except ValidationError:

56 | return False

57 | return True

58 |

59 |

60 | # Set up the base template

61 | template = """We are working together to satisfy the user's original goal

62 | step-by-step. Play to your strengths as an LLM. Make sure the plan is

63 | achievable using the available tools. The final answer should be descriptive,

64 | and should include all relevant details.

65 |

66 | Today is {today}.

67 |

68 | ## Goal:

69 | {input}

70 |

71 | If you require assistance or additional information, you should use *only* one

72 | of the following tools: {tools}.

73 |

74 | ## History

75 | {agent_scratchpad}

76 |

77 | Do not repeat any past actions in History, because you will not get additional

78 | information. If the last action is Tool_Search, then you should use Tool_Notepad to keep

79 | critical information. If you have gathered all information in your plannings

80 | to satisfy the user's original goal, then respond immediately with the Finish

81 | Action.

82 |

83 | ## Output format

84 | You MUST produce JSON output with below keys:

85 | "thought": "current train of thought",

86 | "reasoning": "reasoning",

87 | "plan": [

88 | "short bulleted",

89 | "list that conveys",

90 | "next-step plan",

91 | ],

92 | "action": "the action to take",

93 | "action_input": "the input to the Action",

94 | """

95 |

96 |

97 | # Set up a prompt template

98 | class CustomPromptTemplate(StringPromptTemplate):

99 | # The template to use

100 | template: str

101 | # The list of tools available

102 | tools: List[Tool]

103 | ialogger: InteractionsLogger

104 |

105 | def format(self, **kwargs) -> str:

106 | # Get the intermediate steps [(AgentAction, Observation)]

107 | # Format them in a particular way

108 | intermediate_steps = kwargs.pop("intermediate_steps")

109 | history = []

110 | # Set the agent_scratchpad variable to that value

111 | for i, (action, observation) in enumerate(intermediate_steps):

112 | if action.tool not in [tool.name for tool in self.tools]:

113 | raise Exception("Invalid tool requested by the model.")

114 | parsed = json.loads(action.log)

115 | if i == len(intermediate_steps) - 1:

116 | # Add observation only for the last action

117 | parsed["observation"] = observation

118 | history.append(parsed)

119 | self.ialogger.add_history(history)

120 | kwargs["agent_scratchpad"] = json.dumps(history)

121 | # Create a tools variable from the list of tools provided

122 | kwargs["tools"] = "\n".join([f"{tool.name}: {tool.description}" for tool in self.tools])

123 | # Create a list of tool names for the tools provided

124 | kwargs["tool_names"] = ", ".join([tool.name for tool in self.tools])

125 | kwargs["today"] = date.today()

126 | final_prompt = self.template.format(**kwargs)

127 | self.ialogger.add_system(final_prompt)

128 | return final_prompt

129 |

130 |

131 | class CustomOutputParser(AgentOutputParser):

132 | class Config:

133 | arbitrary_types_allowed = True

134 | ialogger: InteractionsLogger

135 | llm: BaseLanguageModel

136 | new_action_input: Optional[str]

137 | action_history = defaultdict(set)

138 |

139 | def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:

140 | self.ialogger.add_ai(llm_output)

141 | parsed = json.loads(llm_output)

142 | if not check_valid(parsed):

143 | raise ValueError(f"Could not parse LLM output: `{llm_output}`")

144 |

145 | # Parse out the action and action input

146 | action = parsed["action"]

147 | action_input = parsed["action_input"]

148 |

149 | if action == "Tool_Finish":

150 | return AgentFinish(return_values={"output": action_input}, log=llm_output)

151 |

152 | if action_input in self.action_history[action]:

153 | new_action_input = rewrite_search_query(action_input,

154 | self.action_history[action],

155 | self.llm)

156 | self.ialogger.add_message({"query_rewrite": True})

157 | self.new_action_input = new_action_input

158 | self.action_history[action].add(new_action_input)

159 | return AgentAction(tool=action, tool_input=new_action_input, log=llm_output)

160 | else:

161 | # Return the action and action input

162 | self.action_history[action].add(action_input)

163 | return AgentAction(tool=action, tool_input=action_input, log=llm_output)

164 |

165 |

166 | class ActionRunner:

167 | def __init__(self,

168 | outputq,

169 | llm: BaseLanguageModel,

170 | persist_logs: bool = False,

171 | prompt_template: str = template,

172 | tools: List[Tool] = [search_tool, note_tool, finish_tool]):

173 | self.ialogger = InteractionsLogger(name=f"{uuid.uuid4().hex[:6]}", persist=persist_logs)

174 | prompt = CustomPromptTemplate(template=prompt_template,

175 | tools=tools,

176 | input_variables=["input", "intermediate_steps"],

177 | ialogger=self.ialogger)

178 |

179 | output_parser = CustomOutputParser(ialogger=self.ialogger, llm=llm)

180 | self.model_name = llm.model_name

181 |

182 | class MyCustomHandler(AsyncCallbackHandler):

183 | def __init__(self):

184 | pass

185 |

186 | async def on_chain_end(self, outputs, **kwargs) -> None:

187 | if "text" in outputs:

188 | await outputq.put(outputs["text"])

189 |

190 | async def on_agent_action(

191 | self,

192 | action: AgentAction,

193 | *,

194 | run_id: uuid.UUID,

195 | parent_run_id: Optional[uuid.UUID] = None,

196 | **kwargs: Any,

197 | ) -> None:

198 | if (new_action_input := output_parser.new_action_input):

199 | await outputq.put(RuntimeWarning(f"Action Input Rewritten: {new_action_input}"))

200 | # Notify users

201 | output_parser.new_action_input = None

202 |

203 | async def on_tool_start(

204 | self,

205 | serialized: Dict[str, Any],

206 | input_str: str,

207 | *,

208 | run_id: uuid.UUID,

209 | parent_run_id: Optional[uuid.UUID] = None,

210 | **kwargs: Any,

211 | ) -> None:

212 | pass

213 |

214 | async def on_tool_end(

215 | self,

216 | output: str,

217 | *,

218 | run_id: uuid.UUID,

219 | parent_run_id: Optional[uuid.UUID] = None,

220 | **kwargs: Any,

221 | ) -> None:

222 | await outputq.put(output)

223 |

224 | handler = MyCustomHandler()

225 |

226 | llm_chain = LLMChain(llm=llm, prompt=prompt, callbacks=[handler])

227 | tool_names = [tool.name for tool in tools]

228 | for tool in tools:

229 | tool.callbacks = [handler]

230 |

231 | agent = LLMSingleActionAgent(

232 | llm_chain=llm_chain,

233 | output_parser=output_parser,

234 | stop=["0xdeadbeef"], # required

235 | allowed_tools=tool_names

236 | )

237 | callback_manager = AsyncCallbackManager([handler])

238 |

239 | # Finally create the Executor

240 | self.agent_executor = AgentExecutor.from_agent_and_tools(agent=agent,

241 | tools=tools,

242 | verbose=False,

243 | callback_manager=callback_manager)

244 |

245 | async def run(self, goal: str, outputq, save_dir=LOG_SAVE_DIR):

246 | self.ialogger.set_goal(goal)

247 | try:

248 | with get_openai_callback() as cb:

249 | output = await self.agent_executor.arun(goal)

250 | self.ialogger.add_cost({"total_tokens": cb.total_tokens,

251 | "prompt_tokens": cb.prompt_tokens,

252 | "completion_tokens": cb.completion_tokens,

253 | "total_cost": cb.total_cost,

254 | "successful_requests": cb.successful_requests})

255 | self.ialogger.save(save_dir)

256 | except Exception as e:

257 | self.ialogger.add_message({"error": str(e)})

258 | self.ialogger.save(save_dir)

259 | await outputq.put(e)

260 | return

261 | return output

262 |

--------------------------------------------------------------------------------

/autoagents/agents/agents/search_v3.py:

--------------------------------------------------------------------------------

1 | from datetime import date

2 | import json

3 | import uuid

4 | from collections import defaultdict

5 | from typing import List, Union, Any, Optional, Dict

6 |

7 | from langchain.agents import Tool, AgentExecutor, LLMSingleActionAgent, AgentOutputParser

8 | from langchain.prompts import StringPromptTemplate

9 | from langchain import LLMChain

10 | from langchain.schema import AgentAction, AgentFinish

11 | from langchain.callbacks import get_openai_callback

12 | from langchain.callbacks.base import AsyncCallbackHandler

13 | from langchain.callbacks.manager import AsyncCallbackManager

14 | from langchain.base_language import BaseLanguageModel

15 |

16 | from autoagents.agents.tools.tools import search_tool_v3, note_tool_v3, finish_tool_v3

17 | from autoagents.agents.utils.logger import InteractionsLogger

18 | from autoagents.agents.utils.constants import LOG_SAVE_DIR

19 | from autoagents.agents.agents.search import check_valid

20 |

21 |

22 | # Set up a prompt template

23 | class CustomPromptTemplate(StringPromptTemplate):

24 | # The list of tools available

25 | tools: List[Tool]

26 | ialogger: InteractionsLogger

27 |

28 | def format(self, **kwargs) -> str:

29 | # Get the intermediate steps [(AgentAction, Observation)]

30 | # Format them in a particular way

31 | intermediate_steps = kwargs.pop("intermediate_steps")

32 | history = []

33 | # Set the agent_scratchpad variable to that value

34 | for i, (action, observation) in enumerate(intermediate_steps):

35 | if action.tool not in [tool.name for tool in self.tools]:

36 | raise Exception("Invalid tool requested by the model.")

37 | parsed = json.loads(action.log)

38 | if i == len(intermediate_steps) - 1:

39 | # Add observation only for the last action

40 | parsed["observation"] = observation

41 | history.append(parsed)

42 | self.ialogger.add_history(history)

43 | goal = kwargs["input"]

44 | goal = f"Today is {date.today()}. {goal}"

45 | list_prompt =[]

46 | list_prompt.append({"role": "goal", "content": goal})

47 | list_prompt.append({"role": "tools", "content": [{tool.name: tool.description} for tool in self.tools]})

48 | list_prompt.append({"role": "history", "content": history})

49 | return json.dumps(list_prompt)

50 |

51 |

52 | class CustomOutputParser(AgentOutputParser):

53 | class Config:

54 | arbitrary_types_allowed = True

55 | ialogger: InteractionsLogger

56 | llm: BaseLanguageModel

57 | new_action_input: Optional[str]

58 | action_history = defaultdict(set)

59 |

60 | def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:

61 | try:

62 | parsed = json.loads(llm_output)

63 | except json.decoder.JSONDecodeError:

64 | raise ValueError(f"Could not parse LLM output: `{llm_output}`")

65 | if not check_valid(parsed):

66 | raise ValueError(f"Could not parse LLM output: `{llm_output}`")

67 | self.ialogger.add_ai(llm_output)

68 | # Parse out the action and action input

69 | action = parsed["action"]

70 | action_input = parsed["action_input"]

71 |

72 | if action == "Tool_Finish":

73 | return AgentFinish(return_values={"output": action_input}, log=llm_output)

74 | return AgentAction(tool=action, tool_input=action_input, log=llm_output)

75 |

76 |

77 | class ActionRunnerV3:

78 | def __init__(self,

79 | outputq,

80 | llm: BaseLanguageModel,

81 | persist_logs: bool = False,

82 | tools = [search_tool_v3, note_tool_v3, finish_tool_v3]):

83 | self.ialogger = InteractionsLogger(name=f"{uuid.uuid4().hex[:6]}", persist=persist_logs)

84 | self.ialogger.set_tools([{tool.name: tool.description} for tool in tools])

85 | prompt = CustomPromptTemplate(tools=tools,

86 | input_variables=["input", "intermediate_steps"],

87 | ialogger=self.ialogger)

88 | output_parser = CustomOutputParser(ialogger=self.ialogger, llm=llm)

89 |

90 | class MyCustomHandler(AsyncCallbackHandler):

91 | def __init__(self):

92 | pass

93 |

94 | async def on_chain_end(self, outputs, **kwargs) -> None:

95 | if "text" in outputs:

96 | await outputq.put(outputs["text"])

97 |

98 | async def on_agent_action(

99 | self,

100 | action: AgentAction,

101 | *,

102 | run_id: uuid.UUID,

103 | parent_run_id: Optional[uuid.UUID] = None,

104 | **kwargs: Any,

105 | ) -> None:

106 | pass

107 |

108 | async def on_tool_start(

109 | self,

110 | serialized: Dict[str, Any],

111 | input_str: str,

112 | *,

113 | run_id: uuid.UUID,

114 | parent_run_id: Optional[uuid.UUID] = None,

115 | **kwargs: Any,

116 | ) -> None:

117 | pass

118 |

119 | async def on_tool_end(

120 | self,

121 | output: str,

122 | *,

123 | run_id: uuid.UUID,

124 | parent_run_id: Optional[uuid.UUID] = None,

125 | **kwargs: Any,

126 | ) -> None:

127 | await outputq.put(output)

128 |

129 | handler = MyCustomHandler()

130 | llm_chain = LLMChain(llm=llm, prompt=prompt, callbacks=[handler])

131 | tool_names = [tool.name for tool in tools]

132 | for tool in tools:

133 | tool.callbacks = [handler]

134 |

135 | agent = LLMSingleActionAgent(

136 | llm_chain=llm_chain,

137 | output_parser=output_parser,

138 | stop=["0xdeadbeef"], # required

139 | allowed_tools=tool_names

140 | )

141 | callback_manager = AsyncCallbackManager([handler])

142 | # Finally create the Executor

143 | self.agent_executor = AgentExecutor.from_agent_and_tools(agent=agent,

144 | tools=tools,

145 | verbose=False,

146 | callback_manager=callback_manager)

147 |

148 | async def run(self, goal: str, outputq, save_dir=LOG_SAVE_DIR):

149 | goal = f"Today is {date.today()}. {goal}"

150 | self.ialogger.set_goal(goal)

151 | try:

152 | with get_openai_callback() as cb:

153 | output = await self.agent_executor.arun(goal)

154 | self.ialogger.add_cost({"total_tokens": cb.total_tokens,

155 | "prompt_tokens": cb.prompt_tokens,

156 | "completion_tokens": cb.completion_tokens,

157 | "total_cost": cb.total_cost,

158 | "successful_requests": cb.successful_requests})

159 | self.ialogger.save(save_dir)

160 | except Exception as e:

161 | self.ialogger.add_message({"error": str(e)})

162 | self.ialogger.save(save_dir)

163 | await outputq.put(e)

164 | return

165 | return output

166 |

--------------------------------------------------------------------------------

/autoagents/agents/agents/wiki_agent.py:

--------------------------------------------------------------------------------

1 | from langchain.base_language import BaseLanguageModel

2 | from autoagents.agents.agents.search import ActionRunner

3 | from autoagents.agents.agents.search_v3 import ActionRunnerV3

4 | from autoagents.agents.tools.tools import wiki_dump_search_tool, wiki_note_tool, finish_tool

5 |

6 |

7 | # Set up the base template

8 | template = """We are working together to satisfy the user's original goal

9 | step-by-step. Play to your strengths as an LLM. Make sure the plan is

10 | achievable using the available tools. The final answer should be descriptive,

11 | and should include all relevant details.

12 |

13 | Today is {today}.

14 |

15 | ## Goal:

16 | {input}

17 |

18 | If you require assistance or additional information, you should use *only* one

19 | of the following tools: {tools}.

20 |

21 | ## History

22 | {agent_scratchpad}

23 |

24 | Do not repeat any past actions in History, because you will not get additional

25 | information. If the last action is Tool_Wikipedia, then you should use Tool_Notepad to keep

26 | critical information. If you have gathered all information in your plannings

27 | to satisfy the user's original goal, then respond immediately with the Finish

28 | Action.

29 |

30 | ## Output format

31 | You MUST produce JSON output with below keys:

32 | "thought": "current train of thought",

33 | "reasoning": "reasoning",

34 | "plan": [

35 | "short bulleted",

36 | "list that conveys",

37 | "next-step plan",

38 | ],

39 | "action": "the action to take",

40 | "action_input": "the input to the Action",

41 | """

42 |

43 |

44 | class WikiActionRunner(ActionRunner):

45 |

46 | def __init__(self, outputq, llm: BaseLanguageModel, persist_logs: bool = False):

47 |

48 | super().__init__(

49 | outputq, llm, persist_logs,

50 | prompt_template=template,

51 | tools=[wiki_dump_search_tool, wiki_note_tool, finish_tool]

52 | )

53 |

54 | class WikiActionRunnerV3(ActionRunnerV3):

55 | def __init__(self, outputq, llm: BaseLanguageModel, persist_logs: bool = False):

56 |

57 | super().__init__(

58 | outputq, llm, persist_logs,

59 | tools=[wiki_dump_search_tool, wiki_note_tool, finish_tool]

60 | )

61 |

--------------------------------------------------------------------------------

/autoagents/agents/models/custom.py:

--------------------------------------------------------------------------------

1 | import requests

2 | import json

3 |

4 | from langchain.llms.base import LLM

5 |

6 |

7 | class CustomLLM(LLM):

8 | model_name: str

9 | completions_url: str = "http://localhost:8000/v1/chat/completions"

10 | temperature: float = 0.

11 | max_tokens: int = 1024

12 |

13 | @property

14 | def _llm_type(self) -> str:

15 | return "custom"

16 |

17 | def _call(self, prompt: str, stop=None) -> str:

18 | r = requests.post(

19 | self.completions_url,

20 | json={

21 | "model": self.model_name,

22 | "messages": [{"role": "user", "content": prompt}],

23 | "stop": stop,

24 | "temperature": self.temperature,

25 | "max_tokens": self.max_tokens

26 | },

27 | )

28 | result = r.json()

29 | try:

30 | return result["choices"][0]["message"]["content"]

31 | except:

32 | raise RuntimeError(result)

33 |

34 | async def _acall(self, prompt: str, stop=None) -> str:

35 | return self._call(prompt, stop)

36 |

37 |

38 | class CustomLLMV3(LLM):

39 | model_name: str

40 | completions_url: str = "http://localhost:8004/v1/completions"

41 | temperature: float = 0.

42 | max_tokens: int = 1024

43 |

44 | @property

45 | def _llm_type(self) -> str:

46 | return "custom"

47 |

48 | def _call(self, prompt: str, stop=None) -> str:

49 | r = requests.post(

50 | self.completions_url,

51 | json={

52 | "model": self.model_name,

53 | "prompt": json.loads(prompt),

54 | "stop": "\n\n",

55 | "temperature": self.temperature,

56 | "max_tokens": self.max_tokens

57 | },

58 | )

59 | result = r.json()

60 | if result.get("object") == "error":

61 | raise RuntimeError(result.get("message"))

62 | else:

63 | return result["choices"][0]["text"]

64 |

65 | async def _acall(self, prompt: str, stop=None) -> str:

66 | return self._call(prompt, stop)

67 |

--------------------------------------------------------------------------------

/autoagents/agents/spaces/app.py:

--------------------------------------------------------------------------------

1 | import os

2 | import asyncio

3 | import json

4 | import random

5 | from datetime import date, datetime, timezone, timedelta

6 | from ast import literal_eval

7 |

8 | import streamlit as st

9 | import openai

10 |

11 | from autoagents.agents.utils.constants import MAIN_HEADER, MAIN_CAPTION, SAMPLE_QUESTIONS

12 | from autoagents.agents.agents.search import ActionRunner

13 |

14 | from langchain.chat_models import ChatOpenAI

15 |

16 |

17 | async def run():

18 | output_acc = ""

19 | st.session_state["random"] = random.randint(0, 99)

20 | if "task" not in st.session_state:

21 | st.session_state.task = None

22 | if "model_name" not in st.session_state:

23 | st.session_state.model_name = "gpt-3.5-turbo"

24 |

25 | st.set_page_config(

26 | page_title="Search Agent",

27 | page_icon="🤖",

28 | layout="wide",

29 | initial_sidebar_state="expanded",

30 | )

31 |

32 | st.title(MAIN_HEADER)

33 | st.caption(MAIN_CAPTION)

34 |

35 | with st.form("my_form", clear_on_submit=False):

36 | st.markdown("", unsafe_allow_html=True)

37 | user_input = st.text_input(

38 | "You: ",

39 | key="input",

40 | placeholder="Ask me anything ...",

41 | label_visibility="hidden",

42 | )

43 |

44 | submitted = st.form_submit_button(

45 | "Search", help="Hit to submit the search query."

46 | )

47 |

48 | # Ask the user to enter their OpenAI API key

49 | if (api_key := st.sidebar.text_input("OpenAI api-key", type="password")):

50 | api_org = None

51 | else:

52 | api_key, api_org = os.getenv("OPENAI_API_KEY"), os.getenv("OPENAI_API_ORG")

53 | with st.sidebar:

54 | model_dict = {

55 | "gpt-3.5-turbo": "GPT-3.5-turbo",

56 | "gpt-4": "GPT-4 (Better but slower)",

57 | }

58 | st.radio(

59 | "OpenAI model",

60 | model_dict.keys(),

61 | key="model_name",

62 | format_func=lambda x: model_dict[x],

63 | )

64 |

65 | time_zone = str(datetime.now(timezone(timedelta(0))).astimezone().tzinfo)

66 | st.markdown(f"**The system time zone is {time_zone} and the date is {date.today()}**")

67 |

68 | st.markdown("**Example Queries:**")

69 | for q in SAMPLE_QUESTIONS:

70 | st.markdown(f"*{q}*")

71 |

72 | if not api_key:

73 | st.warning(

74 | "API key required to try this app. The API key is not stored in any form. [This](https://help.openai.com/en/articles/4936850-where-do-i-find-my-secret-api-key) might help."

75 | )

76 | elif api_org and st.session_state.model_name == "gpt-4":

77 | st.warning(

78 | "The free API key does not support GPT-4. Please switch to GPT-3.5-turbo or input your own API key."

79 | )

80 | else:

81 | outputq = asyncio.Queue()

82 | runner = ActionRunner(outputq,

83 | ChatOpenAI(openai_api_key=api_key,

84 | openai_organization=api_org,

85 | temperature=0,

86 | model_name=st.session_state.model_name),

87 | persist_logs=True) # log to HF-dataset

88 |

89 | async def cleanup(e):

90 | st.error(e)

91 | await st.session_state.task

92 | st.session_state.task = None

93 | st.stop()

94 |

95 | placeholder = st.empty()

96 |

97 | if user_input and submitted:

98 | if st.session_state.task is not None:

99 | with placeholder.container():

100 | st.session_state.task.cancel()

101 | st.warning("Previous search aborted", icon="⚠️")

102 |

103 | st.session_state.task = asyncio.create_task(

104 | runner.run(user_input, outputq)

105 | )

106 | iterations = 0

107 | with st.expander("Search Results", expanded=True):

108 | while True:

109 | with st.spinner("Wait for it..."):

110 | output = await outputq.get()

111 | placeholder.empty()

112 | if isinstance(output, Exception):

113 | if isinstance(output, openai.error.AuthenticationError):

114 | await cleanup(f"AuthenticationError: Invalid OpenAI API key.")

115 | elif isinstance(output, openai.error.InvalidRequestError) \

116 | and output._message == "The model: `gpt-4` does not exist":

117 | await cleanup(f"The free API key does not support GPT-4. Please switch to GPT-3.5-turbo or input your own API key.")

118 | elif isinstance(output, openai.error.OpenAIError):

119 | await cleanup(output)

120 | elif isinstance(output, RuntimeWarning):

121 | st.warning(output)

122 | continue

123 | else:

124 | await cleanup("Something went wrong. Please try searching again.")

125 | return

126 | try:

127 | parsed = json.loads(output)

128 | st.json(output, expanded=True)

129 | st.write("---")

130 | iterations += 1

131 | if parsed.get("action") == "Finish":

132 | break

133 | except:

134 | output_fmt = literal_eval(output)

135 | st.json(output_fmt, expanded=False)

136 | if iterations >= runner.agent_executor.max_iterations:

137 | await cleanup(

138 | f"Maximum iterations ({iterations}) exceeded. You can try running the search again or try a variation of the query."

139 | )

140 | return

141 | # Found the answer

142 | final_answer = await st.session_state.task

143 | final_answer = final_answer.replace("$", "\$")

144 | # st.success accepts md

145 | st.success(final_answer, icon="✅")

146 | st.balloons()

147 | st.session_state.task = None

148 | st.stop()

149 |

150 | if __name__ == "__main__":

151 | loop = asyncio.new_event_loop()

152 | loop.set_debug(enabled=False)

153 | loop.run_until_complete(run())

154 |

--------------------------------------------------------------------------------

/autoagents/agents/tools/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoLLM/AutoAgents/e1210c7884f951f1b90254e40c162e81fc1442f3/autoagents/agents/tools/__init__.py

--------------------------------------------------------------------------------

/autoagents/agents/tools/tools.py:

--------------------------------------------------------------------------------

1 | import wikipedia

2 | import requests

3 | from elasticsearch import Elasticsearch

4 |

5 | from duckpy import Client

6 | from langchain import PromptTemplate, LLMChain, Wikipedia

7 | from langchain.agents import Tool

8 | from langchain.agents.react.base import DocstoreExplorer

9 |

10 | from langchain.base_language import BaseLanguageModel

11 |

12 |

13 | MAX_SEARCH_RESULTS = 5 # Number of search results to observe at a time

14 |

15 | INDEX_NAME = "wiki-dump-2017"

16 |

17 | # Create the client instance

18 | es_client = None

19 |

20 | search_description = """ Useful for when you need to ask with search. Use direct language and be

21 | EXPLICIT in what you want to search. Do NOT use filler words.

22 |

23 | ## Examples of incorrect use

24 | {

25 | "action": "Tool_Search",

26 | "action_input": "[name of bagel shop] menu"

27 | }

28 |

29 | The action_input cannot be None or empty.

30 | """

31 |

32 | notepad_description = """ Useful for when you need to note-down specific

33 | information for later reference. Please provide the website and full

34 | information you want to note-down in the action_input and all future prompts

35 | will remember it. This is the mandatory tool after using the Tool_Search.

36 | Using Tool_Notepad does not always lead to a final answer.

37 |

38 | ## Examples of using Notepad tool

39 | {

40 | "action": "Tool_Notepad",

41 | "action_input": "(www.website.com) the information you want to note-down"

42 | }

43 | """

44 |

45 | wiki_notepad_description = """ Useful for when you need to note-down specific

46 | information for later reference. Please provide the website and full

47 | information you want to note-down in the action_input and all future prompts

48 | will remember it. This is the mandatory tool after using the Tool_Wikipedia.

49 | Using Tool_Notepad does not always lead to a final answer.

50 |

51 | ## Examples of using Notepad tool

52 | {

53 | "action": "Tool_Notepad",

54 | "action_input": "(www.website.com) the information you want to note-down"

55 | }

56 | """

57 |

58 | wiki_search_description = """ Useful for when you need to get some information about a certain entity. Use direct language and be

59 | concise about what you want to retrieve. Note: the action input MUST be a wikipedia entity instead of a long sentence.

60 |

61 | ## Examples of correct use

62 | 1. Action: Tool_Wikipedia

63 | Action Input: Colorado orogeny

64 |

65 | The Action Input cannot be None or empty.

66 | """

67 |

68 | wiki_lookup_description = """ This tool is helpful when you want to retrieve sentences containing a specific text snippet after checking a Wikipedia entity.

69 | It should be utilized when a successful Wikipedia search does not provide sufficient information.

70 | Keep your lookup concise, using no more than three words.

71 |

72 | ## Examples of correct use

73 | 1. Action: Tool_Lookup

74 | Action Input: eastern sector

75 |

76 | The Action Input cannot be None or empty.

77 | """

78 |

79 |

80 | async def ddg(query: str):

81 | if query is None or query.lower().strip().strip('"') == "none" or query.lower().strip().strip('"') == "null":

82 | x = "The action_input field is empty. Please provide a search query."

83 | return [x]

84 | else:

85 | client = Client()

86 | return client.search(query)[:MAX_SEARCH_RESULTS]

87 |

88 | docstore=DocstoreExplorer(Wikipedia())

89 |

90 | async def notepad(x: str) -> str:

91 | return f"{[x]}"

92 |

93 | async def wikisearch(x: str) -> str:

94 | title_list = wikipedia.search(x)

95 | if not title_list:

96 | return docstore.search(x)

97 | title = title_list[0]

98 | return f"Wikipedia Page Title: {title}\nWikipedia Page Content: {docstore.search(title)}"

99 |

100 | async def wikilookup(x: str) -> str:

101 | return docstore.lookup(x)

102 |

103 | async def wikidumpsearch_es(x: str) -> str:

104 | global es_client

105 | if es_client is None:

106 | es_client = Elasticsearch("http://localhost:9200")

107 | resp = es_client.search(

108 | index=INDEX_NAME, query={"match": {"text": x}}, size=MAX_SEARCH_RESULTS

109 | )

110 | res = []

111 | for hit in resp['hits']['hits']:

112 | doc = hit["_source"]

113 | res.append({

114 | "title": doc["title"],

115 | "text": ''.join(sent for sent in doc["text"][1]),

116 | "url": doc["url"]

117 | })

118 | if doc["title"] == x:

119 | return [{

120 | "title": doc["title"],

121 | "text": '\n'.join(''.join(paras) for paras in doc["text"][1:3])

122 | if len(doc["text"]) > 2

123 | else '\n'.join(''.join(paras) for paras in doc["text"]),

124 | "url": doc["url"]

125 | }]

126 | return res

127 |

128 | async def wikidumpsearch_embed(x: str) -> str:

129 | res = []

130 | for obj in vector_search(x):

131 | paras = obj["text"].split('\n')

132 | cur = {

133 | "title": obj["sources"][0]["title"],

134 | "text": paras[min(1, len(paras) - 1)],

135 | "url": obj["sources"][0]["url"]

136 | }

137 | res.append(cur)

138 | if cur["title"] == x:

139 | return [{

140 | "title": obj["sources"][0]["title"],

141 | "text": '\n'.join(paras[1:3] if len(paras) > 2 else paras),

142 | "url": obj["sources"][0]["url"]

143 | }]

144 | return res

145 |

146 | def vector_search(

147 | query: str,

148 | url: str = "http://0.0.0.0:8080/query",

149 | max_candidates: int = MAX_SEARCH_RESULTS

150 | ):

151 | response = requests.post(

152 | url=url, json={"query_list": [query]}

153 | ).json()["result"][0]["top_answers"]

154 | return response[:min(max_candidates, len(response))]

155 |

156 |

157 | search_tool = Tool(name="Tool_Search",

158 | func=lambda x: x,

159 | coroutine=ddg,

160 | description=search_description)

161 |

162 | note_tool = Tool(name="Tool_Notepad",

163 | func=lambda x: x,

164 | coroutine=notepad,

165 | description=notepad_description)

166 |

167 | wiki_note_tool = Tool(name="Tool_Notepad",

168 | func=lambda x: x,

169 | coroutine=notepad,

170 | description=wiki_notepad_description)

171 |

172 | wiki_search_tool = Tool(

173 | name="Tool_Wikipedia",

174 | func=lambda x: x,

175 | coroutine=wikisearch,

176 | description=wiki_search_description

177 | )

178 |

179 | wiki_lookup_tool = Tool(

180 | name="Tool_Lookup",

181 | func=lambda x: x,

182 | coroutine=wikilookup,

183 | description=wiki_lookup_description

184 | )

185 |

186 | wiki_dump_search_tool = Tool(

187 | name="Tool_Wikipedia",

188 | func=lambda x: x,

189 | coroutine=wikidumpsearch_embed,

190 | description=wiki_search_description

191 | )

192 |

193 | async def final(x: str):

194 | pass

195 |

196 | finish_description = """ Useful when you have enough information to produce a

197 | final answer that achieves the original Goal.

198 |

199 | You must also include this key in the output for the Tool_Finish action

200 | "citations": ["www.example.com/a/list/of/websites: what facts you got from the website",

201 | "www.example.com/used/to/produce/the/action/and/action/input: "what facts you got from the website",

202 | "www.webiste.com/include/the/citations/from/the/previous/steps/as/well: "what facts you got from the website",

203 | "www.website.com": "this section is only needed for the final answer"]

204 |

205 | ## Examples of using Finish tool

206 | {

207 | "action": "Tool_Finish",

208 | "action_input": "final answer",

209 | "citations": ["www.example.com: what facts you got from the website"]

210 | }

211 | """

212 |

213 | finish_tool = Tool(name="Tool_Finish",

214 | func=lambda x: x,

215 | coroutine=final,

216 | description=finish_description)

217 |

218 | def rewrite_search_query(q: str, search_history, llm: BaseLanguageModel) -> str:

219 | history_string = '\n'.join(search_history)

220 | template ="""We are using the Search tool.

221 | # Previous queries:

222 | {history_string}. \n\n Rewrite query {action_input} to be

223 | different from the previous queries."""

224 | prompt = PromptTemplate(template=template,

225 | input_variables=["action_input", "history_string"])

226 | llm_chain = LLMChain(prompt=prompt, llm=llm)

227 | result = llm_chain.predict(action_input=q, history_string=history_string)

228 | return result

229 |

230 |

231 | ### Prompt V3 tools

232 |

233 | search_description_v3 = """Useful for when you need to ask with search."""

234 | notepad_description_v3 = """ Useful for when you need to note-down specific information for later reference."""

235 | finish_description_v3 = """Useful when you have enough information to produce a final answer that achieves the original Goal."""

236 |

237 | search_tool_v3 = Tool(name="Tool_Search",

238 | func=lambda x: x,

239 | coroutine=ddg,

240 | description=search_description_v3)

241 |

242 | note_tool_v3 = Tool(name="Tool_Notepad",

243 | func=lambda x: x,

244 | coroutine=notepad,

245 | description=notepad_description_v3)

246 |

247 | finish_tool_v3 = Tool(name="Tool_Finish",

248 | func=lambda x: x,

249 | coroutine=final,

250 | description=finish_description_v3)

251 |

--------------------------------------------------------------------------------

/autoagents/agents/utils/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoLLM/AutoAgents/e1210c7884f951f1b90254e40c162e81fc1442f3/autoagents/agents/utils/__init__.py

--------------------------------------------------------------------------------

/autoagents/agents/utils/constants.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 |

4 | MAIN_HEADER = "Web Search Agent"

5 |

6 | MAIN_CAPTION = """This is a proof-of-concept search agent that reasons, plans,

7 | and executes web searches to collect information on your behalf. It aims to

8 | resolve your question by breaking it down into step-by-step subtasks. All the

9 | intermediate results will be presented.

10 |

11 | *DISCLAIMER*: We are collecting search queries, so please refrain from

12 | providing any personal information. If you wish to avoid this, you can run the

13 | app locally by following the instructions on our

14 | [Github](https://github.com/AutoLLM/AutoAgents)."""

15 |

16 | SAMPLE_QUESTIONS = [

17 | "Recommend me a movie in theater now to watch with kids.",

18 | "Who is the most recent NBA MVP? Which team does he play for? What are his career stats?",

19 | "Who is the head coach of AC Milan now? How long has he been coaching the team?",

20 | "What is the mortgage rate right now and how does that compare to the past two years?",

21 | "What is the weather like in San Francisco today? What about tomorrow?",

22 | "When and where is the upcoming concert for Taylor Swift? Share a link to purchase tickets.",

23 | "Find me recent studies focusing on hallucination in large language models. Provide the link to each study found.",

24 | ]

25 |

26 | LOG_SAVE_DIR: str = os.path.join(os.getcwd(), "data")

27 |

--------------------------------------------------------------------------------

/autoagents/agents/utils/logger.py:

--------------------------------------------------------------------------------

1 | import os

2 | import json

3 | from typing import Dict, Any, List

4 | import uuid

5 | from datetime import datetime

6 | import pytz

7 |

8 | import huggingface_hub

9 | from huggingface_hub import Repository

10 |

11 | from autoagents.agents.utils.constants import LOG_SAVE_DIR

12 |

13 |

14 | class InteractionsLogger:

15 | def __init__(self, name: str, persist=False):

16 | self.persist = persist

17 | self.messages = []

18 | self.counter = 0

19 | self.name = name # unique id

20 | HF_TOKEN = os.environ.get("HF_TOKEN")

21 | HF_DATASET_REPO_URL = os.environ.get("HF_DATASET_REPO_URL")

22 | if (HF_TOKEN is not None) and (HF_DATASET_REPO_URL is not None):

23 | self.repo = Repository(

24 | local_dir="data", clone_from=HF_DATASET_REPO_URL, use_auth_token=HF_TOKEN

25 | )

26 | else:

27 | self.repo = None

28 |

29 | def set_goal(self, goal: str):

30 | self.messages.append({"goal": goal})

31 |

32 | def set_tools(self, tools: List):

33 | self.messages.append({"tools": tools})

34 |

35 | def add_history(self, hist: Dict):

36 | self.convos = [{"from": "history", "value": hist}]

37 |

38 | def add_ai(self, msg: Dict):

39 | self.convos.append({"from": "ai", "value": msg})

40 | self.messages.append({"id": f"{self.name}_{self.counter}", "conversations": self.convos})

41 | self.counter += 1

42 |

43 | def add_system(self, more: Dict):

44 | self.convos.append({"from": "system", "value": more})

45 |

46 | def add_message(self, data: Dict[str, Any]):

47 | self.messages.append(data)

48 |

49 | def save(self, save_dir=LOG_SAVE_DIR):

50 | self.add_message({"datetime": datetime.now(pytz.utc).strftime("%m/%d/%Y %H:%M:%S %Z%z")})

51 | if self.persist:

52 | if not os.path.isdir(save_dir):

53 | os.mkdir(save_dir)

54 | # TODO: want to add retry in a loop?

55 | if self.repo is not None:

56 | self.repo.git_pull()

57 | fname = uuid.uuid4().hex[:16]

58 | with open(os.path.join(save_dir, f"{fname}.json"), "w") as f:

59 | json.dump(self.messages, f, indent=2)

60 | if self.repo is not None:

61 | commit_url = self.repo.push_to_hub()

62 |

63 | def add_cost(self, cost):

64 | self.messages.append({"metrics": cost})

65 |

--------------------------------------------------------------------------------

/autoagents/data/action_name_transformation.py:

--------------------------------------------------------------------------------

1 | import json

2 | import uuid

3 | import argparse

4 |

5 |

6 | parser = argparse.ArgumentParser()

7 | parser.add_argument('input')

8 | parser.add_argument('output')

9 |

10 | args = parser.parse_args()

11 |

12 |

13 | def transform(conversation, search_word, notepad_word):

14 | for i, message in enumerate(conversation):

15 | conversation[i]["value"] = \

16 | message["value"].replace(

17 | "Tool_Search", search_word).replace(

18 | "Tool_Notepad", notepad_word)

19 | return conversation

20 |

21 |

22 | input_file = args.input

23 | output_file = args.output

24 |

25 | with open(input_file, "r") as f:

26 | body = json.load(f)

27 |

28 | result = []

29 | for elem in body:

30 | search_word = str(uuid.uuid4())[:6]

31 | notepad_word = str(uuid.uuid4())[:6]

32 | elem = {

33 | "id": elem["id"],

34 | "conversations": transform(elem["conversations"], search_word, notepad_word)}

35 | result.append(elem)

36 | with open(output_file, "w") as f:

37 | json.dump(result, f, indent=2)

38 |

--------------------------------------------------------------------------------

/autoagents/data/create_sft_dataset.py:

--------------------------------------------------------------------------------

1 | import json

2 | import glob

3 | from collections import Counter

4 | import argparse

5 |

6 | counts = Counter()

7 |

8 | Goals = set()

9 |

10 | def process_file(data, name):

11 | if name.startswith("train_data") or name.startswith("final") or name.startswith("ab_"):

12 | return

13 | for d in data:

14 | if "error" in d:

15 | counts["error"] += 1

16 | return

17 | elif "query_rewrite" in d:

18 | counts["query_rewrite"] += 1

19 | return

20 | output = json.loads(data[-3]["conversations"][2]["value"])

21 | if output["action"] != "Tool_Finish":

22 | counts["no_final_answer"] += 1

23 | return

24 | # remove dups in case

25 | goal = data[0]["goal"]

26 | if goal not in Goals:

27 | Goals.add(goal)

28 | else:

29 | return

30 | costs = data[-2]

31 | data = data[1:-2]

32 |

33 | counts["conv_len"] += len(data)

34 | counts["total"] += 1

35 |

36 | counts["totals_cost"] += costs["metrics"]["total_cost"]

37 | data_new = []

38 | for d in data:

39 | convs = []

40 | for conv in d["conversations"]:

41 | k, v = conv.values()

42 | if k == "system":

43 | convs.append({"from": "human", "value": v})

44 | elif k == "ai":

45 | convs.append({"from": "gpt", "value": v})

46 | assert len(convs) == 2

47 | data_new.append({"id": d["id"], "conversations": convs})

48 | return data_new

49 |

50 | def main(dir_path, save=False):

51 | assert dir_path is not None

52 | train_data = []

53 | for name in glob.glob(f"{dir_path}/*.json"):

54 | dname = name.split("/")[-1].split(".")[0]

55 | with open(f"{dir_path}/{dname}.json", "r") as file:

56 | data = json.load(file)

57 | if (filtered_data := process_file(data, dname)):

58 | train_data += filtered_data

59 | if save:

60 | with open(f"{dir_path}/sft_data.json", "w") as f:

61 | json.dump(train_data, f, indent=2)

62 | print(counts)

63 |

64 | if __name__ == "__main__":

65 | parser = argparse.ArgumentParser()

66 | parser.add_argument('data_dir_path', type=str)

67 | parser.add_argument("--save", action="store_true")

68 | args = parser.parse_args()

69 | main(args.data_dir_path, args.save)

70 |

71 |

--------------------------------------------------------------------------------

/autoagents/data/dataset.py:

--------------------------------------------------------------------------------

1 | import datetime

2 |

3 | BAMBOOGLE = {

4 | "questions": ['Who was president of the United States in the year that Citibank was founded?', 'What rocket was the first spacecraft that ever approached Uranus launched on?', 'In what year was the company that was founded as Sound of Music added to the S&P 500?', 'Who was the first African American mayor of the most populous city in the United States?', "When did the last king from Britain's House of Hanover die?", 'When did the president who set the precedent of a two term limit enter office?', 'When did the president who set the precedent of a two term limit leave office?', 'How many people died in the second most powerful earthquake ever recorded?', 'Can people who have celiac eat camel meat?', 'What was the final book written by the author of On the Origin of Species?', 'When was the company that built the first steam locomotive to carry passengers on a public rail line founded?', 'Which Theranos whistleblower is not related to a senior American government official?', 'What is the fastest air-breathing manned aircraft mostly made out of?', 'Who built the fastest air-breathing manned aircraft?', 'When was the author of The Population Bomb born?', 'When did the author of Annabel Lee enlist in the army?', 'What was the religion of the inventor of the Polio vaccine?', 'Who was the second wife of the founder of CNN?', 'When did the first prime minister of the Russian Empire come into office?', 'What is the primary male hormone derived from?', 'The Filipino statesman who established the government-in-exile during the outbreak of World War II was also the mayor of what city?', 'Where was the person who shared the Nobel Prize in Physics in 1954 with Max Born born?', 'When was the person who shared the Nobel Prize in Physics in 1954 with Max Born born?', 'What was the founding date of the university in which Plotonium was discovered?', 'The material out of which the Great Sphinx of Giza is made of is mainly composed of what mineral?', 'The husband of Lady Godiva was Earl of which Anglic kingdom?', 'The machine used to extract honey from honeycombs uses which physical force?', 'What is the third letter of the top level domain of the military?', 'In what year was the government department where the internet originated at founded?', 'The main actor of Indiana Jones is a licensed what?', 'When was the person after which the Hubble Space Telescope is named after born?', 'When did the person who gave the Checkers speech die?', 'When was the philosopher that formulated the hard problem of consciousness born?', 'What is the capital of the second largest state in the US by area?', 'What is the maximum airspeed (in km/h) of the third fastest bird?', 'Who founded the city where the founder of geometry lived?', 'What is the capital of the country where yoga originated?', 'The fourth largest city in Germany was originally called what?', "When did Nirvana's second most selling studio album come out?", 'What was the job of the father of the founder of psychoanalysis?', 'How much protein in four boiled egg yolks?', 'What is the political party of the American president who entered into the Paris agreement?', 'The most populous city in Punjab is how large (area wise)?', 'What was the death toll of the second largest volcanic eruption in the 20th century?', 'What was the death toll of the most intense Atlantic hurricane?', 'Who was the head of NASA during Apollo 11?', 'Who is the father of the father of George Washington?', 'Who is the mother of the father of George Washington?', 'Who is the father of the father of Barack Obama?', 'Who is the mother of the father of Barack Obama?', 'Who was mayor of New York City when Fiorello H. La Guardia was born?', 'Who was president of the U.S. when superconductivity was discovered?', 'When was the person Russ Hanneman is based on born?', "When was the first location of the world's largest coffeehouse chain opened?", 'Who directed the highest grossing film?', 'When was the longest bridge in the world opened?', 'Which company was responsible for the largest pharmaceutical settlement?', 'In what year was the tallest self-supporting tower completed?', 'In what year was the tallest fixed steel structure completed?', 'In what year was the tallest lattice tower completed?', 'In what year was the current tallest wooden lattice tower completed?', 'In what country is the second tallest statue in the world?', 'When was the tallest ferris wheel in the world completed?', 'In what year was the tallest lighthouse completed?', 'In what country is the world largest desalination plant?', 'The most populous national capital city was established in what year?', 'The third largest river (by discharge) in the world is in what countries?', 'What is the highest elevation (in meters) of the second largest island in the world?', 'What is the length of the second deepest river in the world?', 'In what country is the third largest stadium in the world?', 'Who is the largest aircraft carrier in the world is named after?', 'In what year did the oldest cat ever recorded with the Cat of the Year award?', 'In what year was the country that is the third largest exporter of coffee founded?', 'Who was the commander for the space mission that had the first spacewalk?', 'Who is the predecessor of the longest-reigning British monarch?', 'In 2016, who was the host of the longest running talk show?', 'In 2016, who was the host of the longest running American game show?', 'Who wrote the novel on which the longest running show in Broadway history is based on?', 'In what country was the only cruise line that flies the American flag incorporated in?', 'In what year did work begin on the second longest road tunnel in the world?', 'What is the official color of the third oldest surviving university?', 'Who succeeded the longest reigning Roman emperor?', 'Who preceded the Roman emperor that declared war on the sea?', 'Who produced the longest running video game franchise?', 'Who was the father of the father of psychoanalysis?', 'Who was the father of the father of empiricism?', 'Who is the father of the father of observational astronomy?', 'Who is the father of the father of modern Hebrew?', 'Who is the father of the father of modern experimental psychology?', 'Who is the father of the originator of cybernetics?', 'Who is the father of the father of the hydrogen bomb?', 'Who was the father of the father of computer science?', 'Who was the father of the father of behaviorism?', 'Who was the father of the founder of modern human anatomy?', 'What was the father of the last surviving Canadian father of Confederation?', 'When was the person who said “Now, I am become Death, the destroyer of worlds.” born?', 'Who was the father of the father of information theory?', 'When was the person who delivered the "Quit India" speech born?', 'When did the president who warned about the military industrial complex die?', 'When did the president who said Tear Down This Wall die?', 'What is the lowest elevation of the longest railway tunnel?', 'When did the person who said "Cogito, ergo sum." die?', 'When did the person who delivered the Gettysburg Address die?', 'Who was governor of Florida during Hurricane Irma?', "For which club did the winner of the 2007 Ballon d'Or play for in 2012?", "What's the capital city of the country that was the champion of the 2010 World Cup?", 'When was the anime studio that made Sword Art Online founded?', 'Who was the first king of the longest Chinese dynasty?', 'Who was the last emperor of the dynasty that succeeded the Song dynasty?', "What's the motto of the oldest California State university?", "What's the capital of the state that the College of William & Mary is in?", "What's the capital of the state that Washington University in St. Louis is in?", "What's the capital of the state that Harvard University is in?", "What's the capital of the state that the Space Needle is at?", "Which team won in women's volleyball in the most recent Summer Olympics that was held in London?", 'What is the nickname of the easternmost U.S. state?', 'What is the nickname for the state that is the home to the “Avocado Capital of the World"?', 'What rocket was used for the mission that landed the first humans on the moon?', 'When did the war that Neil Armstrong served in end?', 'What is the nickname for the state that Mount Rainier is located in?', 'When was the composer of Carol of the Bells born?', 'Who is the father of the scientist at MIT that won the Queen Elizabeth Prize for Engineering in 2013?', 'Who was the mother of the emperor of Japan during World War I?', 'Which element has an atomic number that is double that of hydrogen?', 'What was the motto of the Olympics that had Fuwa as the mascots?'],

5 | "answers": ['james madison', 'Titan IIIE', 1999, 'David Dinkins', '20 June 1837', 'April 30, 1789', 'March 4, 1797', 131, 'Yes', 'The Formation of Vegetable Mould Through the Action of Worms', 1823, 'Erika Cheung', 'Titanium', 'Lockheed Corporation', datetime.datetime(1932, 5, 29, 0, 0), 1827, 'Jewish', 'Jane Shirley Smith', datetime.datetime(1905, 11, 6, 0, 0), 'cholesterol', 'Quezon City', 'Oranienburg, Germany', 'January 8, 1891', 'March 23, 1868', 'calcite', 'Mercia', 'Centrifugal Force', 'l', 1947, 'pilot', 'November 20, 1889', datetime.datetime(1994, 4, 22, 0, 0), datetime.datetime(1966, 4, 20, 0, 0), 'Austin', '320 km/h', 'Alexander the Great', 'New Delhi', 'Colonia Claudia Ara Agrippinensium', datetime.datetime(1993, 9, 13, 0, 0), 'wool merchant', 10.8, 'Democratic Party', '310 square kilometers', 847, 52, 'Thomas O. Paine', 'Lawrence Washington', 'Mildred Warner', 'Hussein Onyango Obama', 'Habiba Akumu Nyanjango', 'William Russell Grace', 'William Howard Taft', datetime.datetime(1958, 7, 31, 0, 0), datetime.datetime(1971, 3, 30, 0, 0), 'James Cameroon', datetime.datetime(2011, 6, 30, 0, 0), 'GlaxoSmithKline', 2012, 1988, 2012, 1935, 'China', 2021, 1902, 'Saudi Arabia', '1045 BC', 'India and Bangladesh', '4,884 m', '6,300 km', 'United States', 'Gerald R. Ford', 1999, 1810, 'Pavel Belyayev', 'George VI\n', 'Jimmy Fallon', 'Drew Carey', 'Gaston Leroux', 'Bermuda', 1992, 'Cambridge Blue', 'Tiberius', 'Tiberius', 'MECC', 'Jacob Freud', 'Sir Nicholas Bacon', 'Vincenzo Galilei', 'Yehuda Leib', 'Maximilian Wundt', 'Leo Wiener', 'Max Teller', 'Julius Mathison Turing', 'Pickens Butler Watson', 'Anders van Wesel', 'Charles Tupper Sr.', datetime.datetime(1904, 4, 22, 0, 0), 'Claude Sr.', 'October 2, 1869', datetime.datetime(1969, 3, 28, 0, 0), datetime.datetime(2004, 6, 5, 0, 0), '312 m', 'February 11, 1650', 'April 15, 1865', 'Rick Scott', 'Real Madrid', 'Madrid', datetime.datetime(2005, 5, 9, 0, 0), 'King Wu of Zhou', 'Toghon Temür', 'Powering Silicon Valley', 'Richmond', 'Jefferson City', 'Boston', 'Olympia', 'Brazil', 'Pine Tree State', 'Golden State', 'Saturn V', datetime.datetime(1953, 7, 27, 0, 0), 'Evergreen State', 'December 13, 1877', 'Conway Berners-Lee', 'Yanagiwara Naruko', 'Helium', 'One World, One Dream']

6 | }

7 |

8 | DEFAULT_Q = [

9 | (0, "list 3 cities and their current populations where Paramore is playing this year."),

10 | (1, "Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?"),

11 | (2, "How many watermelons can fit in a Tesla Model S?"),

12 | (3, "Recommend me some laptops suitable for UI designers under $2000. Please include brand and price."),

13 | (4, "Build me a vacation plan for Rome and Milan this summer for seven days. Include place to visit and hotels to stay. "),

14 | (5, "What is the sum of ages of the wives of Barack Obama and Donald Trump?"),

15 | (6, "Who is the most recent NBA MVP? Which team does he play for? What is his season stats?"),

16 | (7, "What were the scores for the last three games for the Los Angeles Lakers? Provide the dates and opposing teams."),

17 | (8, "Which team won in women's volleyball in the Summer Olympics that was held in London?"),

18 | (9, "Provide a summary of the latest COVID-19 research paper published. Include the title, authors and abstract."),

19 | (10, "What is the top grossing movie in theatres this week? Provide the movie title, director, and a brief synopsis of the movie's plot. Attach a review for this movie."),

20 | (11, "Recommend a bagel shop near the Strip district in Pittsburgh that offer vegan food"),

21 | (12, "Who are some top researchers in the field of machine learning systems nowadays?"),

22 | ]

23 |

24 | FT = [

25 | (0, "Briefly explain the current global climate change adaptation strategy and its effectiveness."),

26 | (1, "What steps should be taken to prepare a backyard garden for spring planting?"),

27 | (2, "Report the critical reception of the latest superhero movie."),

28 | (3, "When is the next NBA or NFL finals game scheduled?"),

29 | (4, "Which national parks or nature reserves are currently open for visitors near Denver, Colorado?"),

30 | (5, "Who are the most recent Nobel Prize winners in physics, chemistry, and medicine, and what are their respective contributions?"),

31 | ]

32 |

33 | HF = [

34 | (0, "Recommend me a movie in theater now to watch with kids."),

35 | (1, "Who is the most recent NBA MVP? Which team does he play for? What are his career stats?"),

36 | (2, "Who is the head coach of AC Milan now? How long has he been coaching the team?"),

37 | (3, "What is the mortgage rate right now and how does that compare to the past two years?"),

38 | (4, "What is the weather like in San Francisco today? What about tomorrow?"),

39 | (5, "When and where is the upcoming concert for Taylor Swift? Share a link to purchase tickets."),

40 | (6, "Find me recent studies focusing on hallucination in large language models. Provide the link to each study found."),

41 | ]

42 |

--------------------------------------------------------------------------------

/autoagents/data/generate_action_data.py:

--------------------------------------------------------------------------------

1 | # Script generates action data from goals calling GPT-4

2 | import os

3 | import asyncio

4 | import argparse

5 | from tqdm import tqdm

6 |

7 | from multiprocessing import Pool

8 |

9 | from autoagents.agents.agents.search import ActionRunner

10 | from autoagents.eval.test import AWAIT_TIMEOUT

11 | from langchain.chat_models import ChatOpenAI

12 | import json

13 |

14 |

15 | async def work(user_input):

16 | outputq = asyncio.Queue()

17 | llm = ChatOpenAI(openai_api_key=os.getenv("OPENAI_API_KEY"),

18 | openai_organization=os.getenv("OPENAI_API_ORG"),

19 | temperature=0.,

20 | model_name="gpt-4")

21 | runner = ActionRunner(outputq, llm=llm, persist_logs=True)

22 | task = asyncio.create_task(runner.run(user_input, outputq))

23 |

24 | while True:

25 | try:

26 | output = await asyncio.wait_for(outputq.get(), AWAIT_TIMEOUT)

27 | except asyncio.TimeoutError:

28 | return

29 | if isinstance(output, RuntimeWarning):

30 | print(output)

31 | continue

32 | elif isinstance(output, Exception):

33 | print(output)

34 | return

35 | try:

36 | parsed = json.loads(output)

37 | if parsed["action"] in ("Tool_Finish", "Tool_Abort"):

38 | break

39 | except:

40 | pass

41 | await task

42 |

43 | def main(q):

44 | asyncio.run(work(q))

45 |

46 | if __name__ == "__main__":

47 | parser = argparse.ArgumentParser()

48 | parser.add_argument('--goals', type=str, help="file containing JSON array of goals", required=True)

49 | parser.add_argument("--num_data", type=int, default=-1, help="number of goals for generation")

50 | args = parser.parse_args()

51 | with open(args.goals, "r") as file:

52 | data = json.load(file)

53 | if args.num_data > -1 and len(data) > args.num_data:

54 | data = data[:args.num_data]

55 | with Pool(processes=4) as pool:

56 | for _ in tqdm(pool.imap_unordered(main, data), total=len(data)):

57 | pass

58 |

--------------------------------------------------------------------------------

/autoagents/data/generate_action_tasks/README.md:

--------------------------------------------------------------------------------

1 | # Generate_action_tasks

2 | Generate action tasks for AutoGPT following self-instruct.

3 |

4 |

5 | ## What does this repo do

6 |