├── .DS_Store
├── .github
│   └── workflows
│       └── python-package-conda.yml
├── .gitignore
├── .vscode
│   └── settings.json
├── CONTRIBUTING.md
├── Examples
│   ├── AutoViz_Bokeh_Interactive_Demo.ipynb
│   ├── AutoViz_Demo.ipynb
│   ├── Boston.csv
│   └── LCA Bokeh.ipynb
├── LICENSE
├── README.md
├── autoviz
│   ├── AutoViz_Class.py
│   ├── AutoViz_Holo.py
│   ├── AutoViz_NLP.py
│   ├── AutoViz_Utils.py
│   ├── __init__.py
│   ├── __version__.py
│   ├── classify_method.py
│   ├── test.png
│   └── tests
│       ├── __init__.py
│       ├── test_autoviz_class.py
│       └── test_deps.py
├── images
│   ├── bokeh_charts.JPG
│   ├── data_clean.png
│   ├── logo.JPG
│   ├── logo.png
│   ├── server_charts.JPG
│   └── var_charts.JPG
├── old_setup.py
├── requirements-py310.txt
├── requirements-py311.txt
├── requirements.txt
├── setup.py
└── updates.md

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutoViML/AutoViz/63f4b3c67ca80d0148b10b4079e3fd5609e32657/.DS_Store

--------------------------------------------------------------------------------

/.github/workflows/python-package-conda.yml:

--------------------------------------------------------------------------------

1 | name: Python Package using Conda
2 |
3 | on: [push]
4 |
5 | jobs:
6 |   build-linux:
7 |     runs-on: ubuntu-latest
8 |     strategy:
9 |       matrix:
10 |         os: [ubuntu-latest, macos-latest, windows-latest]
11 |       max-parallel: 5
12 |
13 |     steps:
14 |     - uses: actions/checkout@v3
15 |     - name: Set up Python 3.10
16 |       uses: actions/setup-python@v3
17 |       with:
18 |         python-version: '3.10'
19 |     - name: Add conda to system path
20 |       run: |
21 |         # $CONDA is an environment variable pointing to the root of the miniconda directory
22 |         echo $CONDA/bin >> $GITHUB_PATH
23 |     - name: Install dependencies
24 |       run: |
25 |         conda env update --file environment.yml --name base
26 |     - name: Lint with flake8
27 |       run: |
28 |         conda install flake8
29 |         # stop the build if there are Python syntax errors or undefined names
30 |         flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
31 |         # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide
32 |         flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics
33 |     - name: Test with pytest
34 |       run: |
35 |         conda install pytest
36 |         pytest
37 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .ipynb_checkpoints/

2 | __pycache__/

3 | .idea/

4 | dist/

5 | autoviz.egg-info/

6 | build/

7 |

--------------------------------------------------------------------------------

/.vscode/settings.json:

--------------------------------------------------------------------------------

1 | {
2 |     "python.pythonPath": "/opt/conda/bin/python"
3 | }

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing

2 |

3 | We welcome contributions from anyone, beginner or advanced. Before working on a feature, please:
4 |
5 | - search through past issues; your concern may already have been raised by others. Check
6 |   closed issues as well.
7 | - if there is no open issue for your feature request, please open one to coordinate with all collaborators
8 | - write your feature
9 | - submit a pull request on this repo with:
10 |   - a brief description
11 |   - **detail of the expected change(s) in behaviour**
12 |   - how to test it (if it's not obvious)
13 |
14 | Ask someone to test it.

15 |

--------------------------------------------------------------------------------
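
CONTRIBUTING.md asks each pull request to say how the change can be tested, and the CI workflow runs `pytest`. As a rough illustration only, the sketch below shows what a small pytest-style test could look like. It assumes nothing about AutoViz itself: `parse_boston_rows` is a hypothetical helper invented for this example, and the sample rows are the first two data rows of Examples/Boston.csv.

```python
import csv
import io

# First rows of Examples/Boston.csv, copied verbatim. The unnamed first
# column ("") is a row index, not a feature.
SAMPLE = (
    '"","crim","zn","indus","chas","nox","rm","age","dis","rad","tax",'
    '"ptratio","black","lstat","medv"\n'
    '"1",0.00632,18,2.31,0,0.538,6.575,65.2,4.09,1,296,15.3,396.9,4.98,24\n'
    '"2",0.02731,0,7.07,0,0.469,6.421,78.9,4.9671,2,242,17.8,396.9,9.14,21.6\n'
)


def parse_boston_rows(text):
    """Hypothetical helper (not part of AutoViz): parse CSV text into
    dicts of floats, dropping the unnamed row-index column."""
    reader = csv.DictReader(io.StringIO(text))
    rows = []
    for record in reader:
        record.pop("", None)  # drop the unnamed row-index column
        rows.append({name: float(value) for name, value in record.items()})
    return rows


def test_parse_boston_rows():
    """pytest collects functions named test_*; plain asserts are enough."""
    rows = parse_boston_rows(SAMPLE)
    assert len(rows) == 2
    assert len(rows[0]) == 14  # 15 header fields minus the index column
    assert rows[0]["medv"] == 24.0
    assert rows[1]["crim"] == 0.02731
```

Saved as a `test_*.py` file, a test like this would be picked up by the `pytest` step in the workflow; a real contribution would exercise the changed AutoViz code rather than a standalone helper.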

/Examples/Boston.csv:

--------------------------------------------------------------------------------

1 | "","crim","zn","indus","chas","nox","rm","age","dis","rad","tax","ptratio","black","lstat","medv"

2 | "1",0.00632,18,2.31,0,0.538,6.575,65.2,4.09,1,296,15.3,396.9,4.98,24

3 | "2",0.02731,0,7.07,0,0.469,6.421,78.9,4.9671,2,242,17.8,396.9,9.14,21.6

4 | "3",0.02729,0,7.07,0,0.469,7.185,61.1,4.9671,2,242,17.8,392.83,4.03,34.7

5 | "4",0.03237,0,2.18,0,0.458,6.998,45.8,6.0622,3,222,18.7,394.63,2.94,33.4

6 | "5",0.06905,0,2.18,0,0.458,7.147,54.2,6.0622,3,222,18.7,396.9,5.33,36.2

7 | "6",0.02985,0,2.18,0,0.458,6.43,58.7,6.0622,3,222,18.7,394.12,5.21,28.7

8 | "7",0.08829,12.5,7.87,0,0.524,6.012,66.6,5.5605,5,311,15.2,395.6,12.43,22.9

9 | "8",0.14455,12.5,7.87,0,0.524,6.172,96.1,5.9505,5,311,15.2,396.9,19.15,27.1

10 | "9",0.21124,12.5,7.87,0,0.524,5.631,100,6.0821,5,311,15.2,386.63,29.93,16.5

11 | "10",0.17004,12.5,7.87,0,0.524,6.004,85.9,6.5921,5,311,15.2,386.71,17.1,18.9

12 | "11",0.22489,12.5,7.87,0,0.524,6.377,94.3,6.3467,5,311,15.2,392.52,20.45,15

13 | "12",0.11747,12.5,7.87,0,0.524,6.009,82.9,6.2267,5,311,15.2,396.9,13.27,18.9

14 | "13",0.09378,12.5,7.87,0,0.524,5.889,39,5.4509,5,311,15.2,390.5,15.71,21.7

15 | "14",0.62976,0,8.14,0,0.538,5.949,61.8,4.7075,4,307,21,396.9,8.26,20.4

16 | "15",0.63796,0,8.14,0,0.538,6.096,84.5,4.4619,4,307,21,380.02,10.26,18.2

17 | "16",0.62739,0,8.14,0,0.538,5.834,56.5,4.4986,4,307,21,395.62,8.47,19.9

18 | "17",1.05393,0,8.14,0,0.538,5.935,29.3,4.4986,4,307,21,386.85,6.58,23.1

19 | "18",0.7842,0,8.14,0,0.538,5.99,81.7,4.2579,4,307,21,386.75,14.67,17.5

20 | "19",0.80271,0,8.14,0,0.538,5.456,36.6,3.7965,4,307,21,288.99,11.69,20.2

21 | "20",0.7258,0,8.14,0,0.538,5.727,69.5,3.7965,4,307,21,390.95,11.28,18.2

22 | "21",1.25179,0,8.14,0,0.538,5.57,98.1,3.7979,4,307,21,376.57,21.02,13.6

23 | "22",0.85204,0,8.14,0,0.538,5.965,89.2,4.0123,4,307,21,392.53,13.83,19.6

24 | "23",1.23247,0,8.14,0,0.538,6.142,91.7,3.9769,4,307,21,396.9,18.72,15.2

25 | "24",0.98843,0,8.14,0,0.538,5.813,100,4.0952,4,307,21,394.54,19.88,14.5

26 | "25",0.75026,0,8.14,0,0.538,5.924,94.1,4.3996,4,307,21,394.33,16.3,15.6

27 | "26",0.84054,0,8.14,0,0.538,5.599,85.7,4.4546,4,307,21,303.42,16.51,13.9

28 | "27",0.67191,0,8.14,0,0.538,5.813,90.3,4.682,4,307,21,376.88,14.81,16.6

29 | "28",0.95577,0,8.14,0,0.538,6.047,88.8,4.4534,4,307,21,306.38,17.28,14.8

30 | "29",0.77299,0,8.14,0,0.538,6.495,94.4,4.4547,4,307,21,387.94,12.8,18.4

31 | "30",1.00245,0,8.14,0,0.538,6.674,87.3,4.239,4,307,21,380.23,11.98,21

32 | "31",1.13081,0,8.14,0,0.538,5.713,94.1,4.233,4,307,21,360.17,22.6,12.7

33 | "32",1.35472,0,8.14,0,0.538,6.072,100,4.175,4,307,21,376.73,13.04,14.5

34 | "33",1.38799,0,8.14,0,0.538,5.95,82,3.99,4,307,21,232.6,27.71,13.2

35 | "34",1.15172,0,8.14,0,0.538,5.701,95,3.7872,4,307,21,358.77,18.35,13.1

36 | "35",1.61282,0,8.14,0,0.538,6.096,96.9,3.7598,4,307,21,248.31,20.34,13.5

37 | "36",0.06417,0,5.96,0,0.499,5.933,68.2,3.3603,5,279,19.2,396.9,9.68,18.9

38 | "37",0.09744,0,5.96,0,0.499,5.841,61.4,3.3779,5,279,19.2,377.56,11.41,20

39 | "38",0.08014,0,5.96,0,0.499,5.85,41.5,3.9342,5,279,19.2,396.9,8.77,21

40 | "39",0.17505,0,5.96,0,0.499,5.966,30.2,3.8473,5,279,19.2,393.43,10.13,24.7

41 | "40",0.02763,75,2.95,0,0.428,6.595,21.8,5.4011,3,252,18.3,395.63,4.32,30.8

42 | "41",0.03359,75,2.95,0,0.428,7.024,15.8,5.4011,3,252,18.3,395.62,1.98,34.9

43 | "42",0.12744,0,6.91,0,0.448,6.77,2.9,5.7209,3,233,17.9,385.41,4.84,26.6

44 | "43",0.1415,0,6.91,0,0.448,6.169,6.6,5.7209,3,233,17.9,383.37,5.81,25.3

45 | "44",0.15936,0,6.91,0,0.448,6.211,6.5,5.7209,3,233,17.9,394.46,7.44,24.7

46 | "45",0.12269,0,6.91,0,0.448,6.069,40,5.7209,3,233,17.9,389.39,9.55,21.2

47 | "46",0.17142,0,6.91,0,0.448,5.682,33.8,5.1004,3,233,17.9,396.9,10.21,19.3

48 | "47",0.18836,0,6.91,0,0.448,5.786,33.3,5.1004,3,233,17.9,396.9,14.15,20

49 | "48",0.22927,0,6.91,0,0.448,6.03,85.5,5.6894,3,233,17.9,392.74,18.8,16.6

50 | "49",0.25387,0,6.91,0,0.448,5.399,95.3,5.87,3,233,17.9,396.9,30.81,14.4

51 | "50",0.21977,0,6.91,0,0.448,5.602,62,6.0877,3,233,17.9,396.9,16.2,19.4

52 | "51",0.08873,21,5.64,0,0.439,5.963,45.7,6.8147,4,243,16.8,395.56,13.45,19.7

53 | "52",0.04337,21,5.64,0,0.439,6.115,63,6.8147,4,243,16.8,393.97,9.43,20.5

54 | "53",0.0536,21,5.64,0,0.439,6.511,21.1,6.8147,4,243,16.8,396.9,5.28,25

55 | "54",0.04981,21,5.64,0,0.439,5.998,21.4,6.8147,4,243,16.8,396.9,8.43,23.4

56 | "55",0.0136,75,4,0,0.41,5.888,47.6,7.3197,3,469,21.1,396.9,14.8,18.9

57 | "56",0.01311,90,1.22,0,0.403,7.249,21.9,8.6966,5,226,17.9,395.93,4.81,35.4

58 | "57",0.02055,85,0.74,0,0.41,6.383,35.7,9.1876,2,313,17.3,396.9,5.77,24.7

59 | "58",0.01432,100,1.32,0,0.411,6.816,40.5,8.3248,5,256,15.1,392.9,3.95,31.6

60 | "59",0.15445,25,5.13,0,0.453,6.145,29.2,7.8148,8,284,19.7,390.68,6.86,23.3

61 | "60",0.10328,25,5.13,0,0.453,5.927,47.2,6.932,8,284,19.7,396.9,9.22,19.6

62 | "61",0.14932,25,5.13,0,0.453,5.741,66.2,7.2254,8,284,19.7,395.11,13.15,18.7

63 | "62",0.17171,25,5.13,0,0.453,5.966,93.4,6.8185,8,284,19.7,378.08,14.44,16

64 | "63",0.11027,25,5.13,0,0.453,6.456,67.8,7.2255,8,284,19.7,396.9,6.73,22.2

65 | "64",0.1265,25,5.13,0,0.453,6.762,43.4,7.9809,8,284,19.7,395.58,9.5,25

66 | "65",0.01951,17.5,1.38,0,0.4161,7.104,59.5,9.2229,3,216,18.6,393.24,8.05,33

67 | "66",0.03584,80,3.37,0,0.398,6.29,17.8,6.6115,4,337,16.1,396.9,4.67,23.5

68 | "67",0.04379,80,3.37,0,0.398,5.787,31.1,6.6115,4,337,16.1,396.9,10.24,19.4

69 | "68",0.05789,12.5,6.07,0,0.409,5.878,21.4,6.498,4,345,18.9,396.21,8.1,22

70 | "69",0.13554,12.5,6.07,0,0.409,5.594,36.8,6.498,4,345,18.9,396.9,13.09,17.4

71 | "70",0.12816,12.5,6.07,0,0.409,5.885,33,6.498,4,345,18.9,396.9,8.79,20.9

72 | "71",0.08826,0,10.81,0,0.413,6.417,6.6,5.2873,4,305,19.2,383.73,6.72,24.2

73 | "72",0.15876,0,10.81,0,0.413,5.961,17.5,5.2873,4,305,19.2,376.94,9.88,21.7

74 | "73",0.09164,0,10.81,0,0.413,6.065,7.8,5.2873,4,305,19.2,390.91,5.52,22.8

75 | "74",0.19539,0,10.81,0,0.413,6.245,6.2,5.2873,4,305,19.2,377.17,7.54,23.4

76 | "75",0.07896,0,12.83,0,0.437,6.273,6,4.2515,5,398,18.7,394.92,6.78,24.1

77 | "76",0.09512,0,12.83,0,0.437,6.286,45,4.5026,5,398,18.7,383.23,8.94,21.4

78 | "77",0.10153,0,12.83,0,0.437,6.279,74.5,4.0522,5,398,18.7,373.66,11.97,20

79 | "78",0.08707,0,12.83,0,0.437,6.14,45.8,4.0905,5,398,18.7,386.96,10.27,20.8

80 | "79",0.05646,0,12.83,0,0.437,6.232,53.7,5.0141,5,398,18.7,386.4,12.34,21.2

81 | "80",0.08387,0,12.83,0,0.437,5.874,36.6,4.5026,5,398,18.7,396.06,9.1,20.3

82 | "81",0.04113,25,4.86,0,0.426,6.727,33.5,5.4007,4,281,19,396.9,5.29,28

83 | "82",0.04462,25,4.86,0,0.426,6.619,70.4,5.4007,4,281,19,395.63,7.22,23.9

84 | "83",0.03659,25,4.86,0,0.426,6.302,32.2,5.4007,4,281,19,396.9,6.72,24.8

85 | "84",0.03551,25,4.86,0,0.426,6.167,46.7,5.4007,4,281,19,390.64,7.51,22.9

86 | "85",0.05059,0,4.49,0,0.449,6.389,48,4.7794,3,247,18.5,396.9,9.62,23.9

87 | "86",0.05735,0,4.49,0,0.449,6.63,56.1,4.4377,3,247,18.5,392.3,6.53,26.6

88 | "87",0.05188,0,4.49,0,0.449,6.015,45.1,4.4272,3,247,18.5,395.99,12.86,22.5

89 | "88",0.07151,0,4.49,0,0.449,6.121,56.8,3.7476,3,247,18.5,395.15,8.44,22.2

90 | "89",0.0566,0,3.41,0,0.489,7.007,86.3,3.4217,2,270,17.8,396.9,5.5,23.6

91 | "90",0.05302,0,3.41,0,0.489,7.079,63.1,3.4145,2,270,17.8,396.06,5.7,28.7

92 | "91",0.04684,0,3.41,0,0.489,6.417,66.1,3.0923,2,270,17.8,392.18,8.81,22.6

93 | "92",0.03932,0,3.41,0,0.489,6.405,73.9,3.0921,2,270,17.8,393.55,8.2,22

94 | "93",0.04203,28,15.04,0,0.464,6.442,53.6,3.6659,4,270,18.2,395.01,8.16,22.9

95 | "94",0.02875,28,15.04,0,0.464,6.211,28.9,3.6659,4,270,18.2,396.33,6.21,25

96 | "95",0.04294,28,15.04,0,0.464,6.249,77.3,3.615,4,270,18.2,396.9,10.59,20.6

97 | "96",0.12204,0,2.89,0,0.445,6.625,57.8,3.4952,2,276,18,357.98,6.65,28.4

98 | "97",0.11504,0,2.89,0,0.445,6.163,69.6,3.4952,2,276,18,391.83,11.34,21.4

99 | "98",0.12083,0,2.89,0,0.445,8.069,76,3.4952,2,276,18,396.9,4.21,38.7

100 | "99",0.08187,0,2.89,0,0.445,7.82,36.9,3.4952,2,276,18,393.53,3.57,43.8

101 | "100",0.0686,0,2.89,0,0.445,7.416,62.5,3.4952,2,276,18,396.9,6.19,33.2

102 | "101",0.14866,0,8.56,0,0.52,6.727,79.9,2.7778,5,384,20.9,394.76,9.42,27.5

103 | "102",0.11432,0,8.56,0,0.52,6.781,71.3,2.8561,5,384,20.9,395.58,7.67,26.5

104 | "103",0.22876,0,8.56,0,0.52,6.405,85.4,2.7147,5,384,20.9,70.8,10.63,18.6

105 | "104",0.21161,0,8.56,0,0.52,6.137,87.4,2.7147,5,384,20.9,394.47,13.44,19.3

106 | "105",0.1396,0,8.56,0,0.52,6.167,90,2.421,5,384,20.9,392.69,12.33,20.1

107 | "106",0.13262,0,8.56,0,0.52,5.851,96.7,2.1069,5,384,20.9,394.05,16.47,19.5

108 | "107",0.1712,0,8.56,0,0.52,5.836,91.9,2.211,5,384,20.9,395.67,18.66,19.5

109 | "108",0.13117,0,8.56,0,0.52,6.127,85.2,2.1224,5,384,20.9,387.69,14.09,20.4

110 | "109",0.12802,0,8.56,0,0.52,6.474,97.1,2.4329,5,384,20.9,395.24,12.27,19.8

111 | "110",0.26363,0,8.56,0,0.52,6.229,91.2,2.5451,5,384,20.9,391.23,15.55,19.4

112 | "111",0.10793,0,8.56,0,0.52,6.195,54.4,2.7778,5,384,20.9,393.49,13,21.7

113 | "112",0.10084,0,10.01,0,0.547,6.715,81.6,2.6775,6,432,17.8,395.59,10.16,22.8

114 | "113",0.12329,0,10.01,0,0.547,5.913,92.9,2.3534,6,432,17.8,394.95,16.21,18.8

115 | "114",0.22212,0,10.01,0,0.547,6.092,95.4,2.548,6,432,17.8,396.9,17.09,18.7

116 | "115",0.14231,0,10.01,0,0.547,6.254,84.2,2.2565,6,432,17.8,388.74,10.45,18.5

117 | "116",0.17134,0,10.01,0,0.547,5.928,88.2,2.4631,6,432,17.8,344.91,15.76,18.3

118 | "117",0.13158,0,10.01,0,0.547,6.176,72.5,2.7301,6,432,17.8,393.3,12.04,21.2

119 | "118",0.15098,0,10.01,0,0.547,6.021,82.6,2.7474,6,432,17.8,394.51,10.3,19.2

120 | "119",0.13058,0,10.01,0,0.547,5.872,73.1,2.4775,6,432,17.8,338.63,15.37,20.4

121 | "120",0.14476,0,10.01,0,0.547,5.731,65.2,2.7592,6,432,17.8,391.5,13.61,19.3

122 | "121",0.06899,0,25.65,0,0.581,5.87,69.7,2.2577,2,188,19.1,389.15,14.37,22

123 | "122",0.07165,0,25.65,0,0.581,6.004,84.1,2.1974,2,188,19.1,377.67,14.27,20.3

124 | "123",0.09299,0,25.65,0,0.581,5.961,92.9,2.0869,2,188,19.1,378.09,17.93,20.5

125 | "124",0.15038,0,25.65,0,0.581,5.856,97,1.9444,2,188,19.1,370.31,25.41,17.3

126 | "125",0.09849,0,25.65,0,0.581,5.879,95.8,2.0063,2,188,19.1,379.38,17.58,18.8

127 | "126",0.16902,0,25.65,0,0.581,5.986,88.4,1.9929,2,188,19.1,385.02,14.81,21.4

128 | "127",0.38735,0,25.65,0,0.581,5.613,95.6,1.7572,2,188,19.1,359.29,27.26,15.7

129 | "128",0.25915,0,21.89,0,0.624,5.693,96,1.7883,4,437,21.2,392.11,17.19,16.2

130 | "129",0.32543,0,21.89,0,0.624,6.431,98.8,1.8125,4,437,21.2,396.9,15.39,18

131 | "130",0.88125,0,21.89,0,0.624,5.637,94.7,1.9799,4,437,21.2,396.9,18.34,14.3

132 | "131",0.34006,0,21.89,0,0.624,6.458,98.9,2.1185,4,437,21.2,395.04,12.6,19.2

133 | "132",1.19294,0,21.89,0,0.624,6.326,97.7,2.271,4,437,21.2,396.9,12.26,19.6

134 | "133",0.59005,0,21.89,0,0.624,6.372,97.9,2.3274,4,437,21.2,385.76,11.12,23

135 | "134",0.32982,0,21.89,0,0.624,5.822,95.4,2.4699,4,437,21.2,388.69,15.03,18.4

136 | "135",0.97617,0,21.89,0,0.624,5.757,98.4,2.346,4,437,21.2,262.76,17.31,15.6

137 | "136",0.55778,0,21.89,0,0.624,6.335,98.2,2.1107,4,437,21.2,394.67,16.96,18.1

138 | "137",0.32264,0,21.89,0,0.624,5.942,93.5,1.9669,4,437,21.2,378.25,16.9,17.4

139 | "138",0.35233,0,21.89,0,0.624,6.454,98.4,1.8498,4,437,21.2,394.08,14.59,17.1

140 | "139",0.2498,0,21.89,0,0.624,5.857,98.2,1.6686,4,437,21.2,392.04,21.32,13.3

141 | "140",0.54452,0,21.89,0,0.624,6.151,97.9,1.6687,4,437,21.2,396.9,18.46,17.8

142 | "141",0.2909,0,21.89,0,0.624,6.174,93.6,1.6119,4,437,21.2,388.08,24.16,14

143 | "142",1.62864,0,21.89,0,0.624,5.019,100,1.4394,4,437,21.2,396.9,34.41,14.4

144 | "143",3.32105,0,19.58,1,0.871,5.403,100,1.3216,5,403,14.7,396.9,26.82,13.4

145 | "144",4.0974,0,19.58,0,0.871,5.468,100,1.4118,5,403,14.7,396.9,26.42,15.6

146 | "145",2.77974,0,19.58,0,0.871,4.903,97.8,1.3459,5,403,14.7,396.9,29.29,11.8

147 | "146",2.37934,0,19.58,0,0.871,6.13,100,1.4191,5,403,14.7,172.91,27.8,13.8

148 | "147",2.15505,0,19.58,0,0.871,5.628,100,1.5166,5,403,14.7,169.27,16.65,15.6

149 | "148",2.36862,0,19.58,0,0.871,4.926,95.7,1.4608,5,403,14.7,391.71,29.53,14.6

150 | "149",2.33099,0,19.58,0,0.871,5.186,93.8,1.5296,5,403,14.7,356.99,28.32,17.8

151 | "150",2.73397,0,19.58,0,0.871,5.597,94.9,1.5257,5,403,14.7,351.85,21.45,15.4

152 | "151",1.6566,0,19.58,0,0.871,6.122,97.3,1.618,5,403,14.7,372.8,14.1,21.5

153 | "152",1.49632,0,19.58,0,0.871,5.404,100,1.5916,5,403,14.7,341.6,13.28,19.6

154 | "153",1.12658,0,19.58,1,0.871,5.012,88,1.6102,5,403,14.7,343.28,12.12,15.3

155 | "154",2.14918,0,19.58,0,0.871,5.709,98.5,1.6232,5,403,14.7,261.95,15.79,19.4

156 | "155",1.41385,0,19.58,1,0.871,6.129,96,1.7494,5,403,14.7,321.02,15.12,17

157 | "156",3.53501,0,19.58,1,0.871,6.152,82.6,1.7455,5,403,14.7,88.01,15.02,15.6

158 | "157",2.44668,0,19.58,0,0.871,5.272,94,1.7364,5,403,14.7,88.63,16.14,13.1

159 | "158",1.22358,0,19.58,0,0.605,6.943,97.4,1.8773,5,403,14.7,363.43,4.59,41.3

160 | "159",1.34284,0,19.58,0,0.605,6.066,100,1.7573,5,403,14.7,353.89,6.43,24.3

161 | "160",1.42502,0,19.58,0,0.871,6.51,100,1.7659,5,403,14.7,364.31,7.39,23.3

162 | "161",1.27346,0,19.58,1,0.605,6.25,92.6,1.7984,5,403,14.7,338.92,5.5,27

163 | "162",1.46336,0,19.58,0,0.605,7.489,90.8,1.9709,5,403,14.7,374.43,1.73,50

164 | "163",1.83377,0,19.58,1,0.605,7.802,98.2,2.0407,5,403,14.7,389.61,1.92,50

165 | "164",1.51902,0,19.58,1,0.605,8.375,93.9,2.162,5,403,14.7,388.45,3.32,50

166 | "165",2.24236,0,19.58,0,0.605,5.854,91.8,2.422,5,403,14.7,395.11,11.64,22.7

167 | "166",2.924,0,19.58,0,0.605,6.101,93,2.2834,5,403,14.7,240.16,9.81,25

168 | "167",2.01019,0,19.58,0,0.605,7.929,96.2,2.0459,5,403,14.7,369.3,3.7,50

169 | "168",1.80028,0,19.58,0,0.605,5.877,79.2,2.4259,5,403,14.7,227.61,12.14,23.8

170 | "169",2.3004,0,19.58,0,0.605,6.319,96.1,2.1,5,403,14.7,297.09,11.1,23.8

171 | "170",2.44953,0,19.58,0,0.605,6.402,95.2,2.2625,5,403,14.7,330.04,11.32,22.3

172 | "171",1.20742,0,19.58,0,0.605,5.875,94.6,2.4259,5,403,14.7,292.29,14.43,17.4

173 | "172",2.3139,0,19.58,0,0.605,5.88,97.3,2.3887,5,403,14.7,348.13,12.03,19.1

174 | "173",0.13914,0,4.05,0,0.51,5.572,88.5,2.5961,5,296,16.6,396.9,14.69,23.1

175 | "174",0.09178,0,4.05,0,0.51,6.416,84.1,2.6463,5,296,16.6,395.5,9.04,23.6

176 | "175",0.08447,0,4.05,0,0.51,5.859,68.7,2.7019,5,296,16.6,393.23,9.64,22.6

177 | "176",0.06664,0,4.05,0,0.51,6.546,33.1,3.1323,5,296,16.6,390.96,5.33,29.4

178 | "177",0.07022,0,4.05,0,0.51,6.02,47.2,3.5549,5,296,16.6,393.23,10.11,23.2

179 | "178",0.05425,0,4.05,0,0.51,6.315,73.4,3.3175,5,296,16.6,395.6,6.29,24.6

180 | "179",0.06642,0,4.05,0,0.51,6.86,74.4,2.9153,5,296,16.6,391.27,6.92,29.9

181 | "180",0.0578,0,2.46,0,0.488,6.98,58.4,2.829,3,193,17.8,396.9,5.04,37.2

182 | "181",0.06588,0,2.46,0,0.488,7.765,83.3,2.741,3,193,17.8,395.56,7.56,39.8

183 | "182",0.06888,0,2.46,0,0.488,6.144,62.2,2.5979,3,193,17.8,396.9,9.45,36.2

184 | "183",0.09103,0,2.46,0,0.488,7.155,92.2,2.7006,3,193,17.8,394.12,4.82,37.9

185 | "184",0.10008,0,2.46,0,0.488,6.563,95.6,2.847,3,193,17.8,396.9,5.68,32.5

186 | "185",0.08308,0,2.46,0,0.488,5.604,89.8,2.9879,3,193,17.8,391,13.98,26.4

187 | "186",0.06047,0,2.46,0,0.488,6.153,68.8,3.2797,3,193,17.8,387.11,13.15,29.6

188 | "187",0.05602,0,2.46,0,0.488,7.831,53.6,3.1992,3,193,17.8,392.63,4.45,50

189 | "188",0.07875,45,3.44,0,0.437,6.782,41.1,3.7886,5,398,15.2,393.87,6.68,32

190 | "189",0.12579,45,3.44,0,0.437,6.556,29.1,4.5667,5,398,15.2,382.84,4.56,29.8

191 | "190",0.0837,45,3.44,0,0.437,7.185,38.9,4.5667,5,398,15.2,396.9,5.39,34.9

192 | "191",0.09068,45,3.44,0,0.437,6.951,21.5,6.4798,5,398,15.2,377.68,5.1,37

193 | "192",0.06911,45,3.44,0,0.437,6.739,30.8,6.4798,5,398,15.2,389.71,4.69,30.5

194 | "193",0.08664,45,3.44,0,0.437,7.178,26.3,6.4798,5,398,15.2,390.49,2.87,36.4

195 | "194",0.02187,60,2.93,0,0.401,6.8,9.9,6.2196,1,265,15.6,393.37,5.03,31.1

196 | "195",0.01439,60,2.93,0,0.401,6.604,18.8,6.2196,1,265,15.6,376.7,4.38,29.1

197 | "196",0.01381,80,0.46,0,0.422,7.875,32,5.6484,4,255,14.4,394.23,2.97,50

198 | "197",0.04011,80,1.52,0,0.404,7.287,34.1,7.309,2,329,12.6,396.9,4.08,33.3

199 | "198",0.04666,80,1.52,0,0.404,7.107,36.6,7.309,2,329,12.6,354.31,8.61,30.3

200 | "199",0.03768,80,1.52,0,0.404,7.274,38.3,7.309,2,329,12.6,392.2,6.62,34.6

201 | "200",0.0315,95,1.47,0,0.403,6.975,15.3,7.6534,3,402,17,396.9,4.56,34.9

202 | "201",0.01778,95,1.47,0,0.403,7.135,13.9,7.6534,3,402,17,384.3,4.45,32.9

203 | "202",0.03445,82.5,2.03,0,0.415,6.162,38.4,6.27,2,348,14.7,393.77,7.43,24.1

204 | "203",0.02177,82.5,2.03,0,0.415,7.61,15.7,6.27,2,348,14.7,395.38,3.11,42.3

205 | "204",0.0351,95,2.68,0,0.4161,7.853,33.2,5.118,4,224,14.7,392.78,3.81,48.5

206 | "205",0.02009,95,2.68,0,0.4161,8.034,31.9,5.118,4,224,14.7,390.55,2.88,50

207 | "206",0.13642,0,10.59,0,0.489,5.891,22.3,3.9454,4,277,18.6,396.9,10.87,22.6

208 | "207",0.22969,0,10.59,0,0.489,6.326,52.5,4.3549,4,277,18.6,394.87,10.97,24.4

209 | "208",0.25199,0,10.59,0,0.489,5.783,72.7,4.3549,4,277,18.6,389.43,18.06,22.5

210 | "209",0.13587,0,10.59,1,0.489,6.064,59.1,4.2392,4,277,18.6,381.32,14.66,24.4

211 | "210",0.43571,0,10.59,1,0.489,5.344,100,3.875,4,277,18.6,396.9,23.09,20

212 | "211",0.17446,0,10.59,1,0.489,5.96,92.1,3.8771,4,277,18.6,393.25,17.27,21.7

213 | "212",0.37578,0,10.59,1,0.489,5.404,88.6,3.665,4,277,18.6,395.24,23.98,19.3

214 | "213",0.21719,0,10.59,1,0.489,5.807,53.8,3.6526,4,277,18.6,390.94,16.03,22.4

215 | "214",0.14052,0,10.59,0,0.489,6.375,32.3,3.9454,4,277,18.6,385.81,9.38,28.1

216 | "215",0.28955,0,10.59,0,0.489,5.412,9.8,3.5875,4,277,18.6,348.93,29.55,23.7

217 | "216",0.19802,0,10.59,0,0.489,6.182,42.4,3.9454,4,277,18.6,393.63,9.47,25

218 | "217",0.0456,0,13.89,1,0.55,5.888,56,3.1121,5,276,16.4,392.8,13.51,23.3

219 | "218",0.07013,0,13.89,0,0.55,6.642,85.1,3.4211,5,276,16.4,392.78,9.69,28.7

220 | "219",0.11069,0,13.89,1,0.55,5.951,93.8,2.8893,5,276,16.4,396.9,17.92,21.5

221 | "220",0.11425,0,13.89,1,0.55,6.373,92.4,3.3633,5,276,16.4,393.74,10.5,23

222 | "221",0.35809,0,6.2,1,0.507,6.951,88.5,2.8617,8,307,17.4,391.7,9.71,26.7

223 | "222",0.40771,0,6.2,1,0.507,6.164,91.3,3.048,8,307,17.4,395.24,21.46,21.7

224 | "223",0.62356,0,6.2,1,0.507,6.879,77.7,3.2721,8,307,17.4,390.39,9.93,27.5

225 | "224",0.6147,0,6.2,0,0.507,6.618,80.8,3.2721,8,307,17.4,396.9,7.6,30.1

226 | "225",0.31533,0,6.2,0,0.504,8.266,78.3,2.8944,8,307,17.4,385.05,4.14,44.8

227 | "226",0.52693,0,6.2,0,0.504,8.725,83,2.8944,8,307,17.4,382,4.63,50

228 | "227",0.38214,0,6.2,0,0.504,8.04,86.5,3.2157,8,307,17.4,387.38,3.13,37.6

229 | "228",0.41238,0,6.2,0,0.504,7.163,79.9,3.2157,8,307,17.4,372.08,6.36,31.6

230 | "229",0.29819,0,6.2,0,0.504,7.686,17,3.3751,8,307,17.4,377.51,3.92,46.7

231 | "230",0.44178,0,6.2,0,0.504,6.552,21.4,3.3751,8,307,17.4,380.34,3.76,31.5

232 | "231",0.537,0,6.2,0,0.504,5.981,68.1,3.6715,8,307,17.4,378.35,11.65,24.3

233 | "232",0.46296,0,6.2,0,0.504,7.412,76.9,3.6715,8,307,17.4,376.14,5.25,31.7

234 | "233",0.57529,0,6.2,0,0.507,8.337,73.3,3.8384,8,307,17.4,385.91,2.47,41.7

235 | "234",0.33147,0,6.2,0,0.507,8.247,70.4,3.6519,8,307,17.4,378.95,3.95,48.3

236 | "235",0.44791,0,6.2,1,0.507,6.726,66.5,3.6519,8,307,17.4,360.2,8.05,29

237 | "236",0.33045,0,6.2,0,0.507,6.086,61.5,3.6519,8,307,17.4,376.75,10.88,24

238 | "237",0.52058,0,6.2,1,0.507,6.631,76.5,4.148,8,307,17.4,388.45,9.54,25.1

239 | "238",0.51183,0,6.2,0,0.507,7.358,71.6,4.148,8,307,17.4,390.07,4.73,31.5

240 | "239",0.08244,30,4.93,0,0.428,6.481,18.5,6.1899,6,300,16.6,379.41,6.36,23.7

241 | "240",0.09252,30,4.93,0,0.428,6.606,42.2,6.1899,6,300,16.6,383.78,7.37,23.3

242 | "241",0.11329,30,4.93,0,0.428,6.897,54.3,6.3361,6,300,16.6,391.25,11.38,22

243 | "242",0.10612,30,4.93,0,0.428,6.095,65.1,6.3361,6,300,16.6,394.62,12.4,20.1

244 | "243",0.1029,30,4.93,0,0.428,6.358,52.9,7.0355,6,300,16.6,372.75,11.22,22.2

245 | "244",0.12757,30,4.93,0,0.428,6.393,7.8,7.0355,6,300,16.6,374.71,5.19,23.7

246 | "245",0.20608,22,5.86,0,0.431,5.593,76.5,7.9549,7,330,19.1,372.49,12.5,17.6

247 | "246",0.19133,22,5.86,0,0.431,5.605,70.2,7.9549,7,330,19.1,389.13,18.46,18.5

248 | "247",0.33983,22,5.86,0,0.431,6.108,34.9,8.0555,7,330,19.1,390.18,9.16,24.3

249 | "248",0.19657,22,5.86,0,0.431,6.226,79.2,8.0555,7,330,19.1,376.14,10.15,20.5

250 | "249",0.16439,22,5.86,0,0.431,6.433,49.1,7.8265,7,330,19.1,374.71,9.52,24.5

251 | "250",0.19073,22,5.86,0,0.431,6.718,17.5,7.8265,7,330,19.1,393.74,6.56,26.2

252 | "251",0.1403,22,5.86,0,0.431,6.487,13,7.3967,7,330,19.1,396.28,5.9,24.4

253 | "252",0.21409,22,5.86,0,0.431,6.438,8.9,7.3967,7,330,19.1,377.07,3.59,24.8

254 | "253",0.08221,22,5.86,0,0.431,6.957,6.8,8.9067,7,330,19.1,386.09,3.53,29.6

255 | "254",0.36894,22,5.86,0,0.431,8.259,8.4,8.9067,7,330,19.1,396.9,3.54,42.8

256 | "255",0.04819,80,3.64,0,0.392,6.108,32,9.2203,1,315,16.4,392.89,6.57,21.9

257 | "256",0.03548,80,3.64,0,0.392,5.876,19.1,9.2203,1,315,16.4,395.18,9.25,20.9

258 | "257",0.01538,90,3.75,0,0.394,7.454,34.2,6.3361,3,244,15.9,386.34,3.11,44

259 | "258",0.61154,20,3.97,0,0.647,8.704,86.9,1.801,5,264,13,389.7,5.12,50

260 | "259",0.66351,20,3.97,0,0.647,7.333,100,1.8946,5,264,13,383.29,7.79,36

261 | "260",0.65665,20,3.97,0,0.647,6.842,100,2.0107,5,264,13,391.93,6.9,30.1

262 | "261",0.54011,20,3.97,0,0.647,7.203,81.8,2.1121,5,264,13,392.8,9.59,33.8

263 | "262",0.53412,20,3.97,0,0.647,7.52,89.4,2.1398,5,264,13,388.37,7.26,43.1

264 | "263",0.52014,20,3.97,0,0.647,8.398,91.5,2.2885,5,264,13,386.86,5.91,48.8

265 | "264",0.82526,20,3.97,0,0.647,7.327,94.5,2.0788,5,264,13,393.42,11.25,31

266 | "265",0.55007,20,3.97,0,0.647,7.206,91.6,1.9301,5,264,13,387.89,8.1,36.5

267 | "266",0.76162,20,3.97,0,0.647,5.56,62.8,1.9865,5,264,13,392.4,10.45,22.8

268 | "267",0.7857,20,3.97,0,0.647,7.014,84.6,2.1329,5,264,13,384.07,14.79,30.7

269 | "268",0.57834,20,3.97,0,0.575,8.297,67,2.4216,5,264,13,384.54,7.44,50

270 | "269",0.5405,20,3.97,0,0.575,7.47,52.6,2.872,5,264,13,390.3,3.16,43.5

271 | "270",0.09065,20,6.96,1,0.464,5.92,61.5,3.9175,3,223,18.6,391.34,13.65,20.7

272 | "271",0.29916,20,6.96,0,0.464,5.856,42.1,4.429,3,223,18.6,388.65,13,21.1

273 | "272",0.16211,20,6.96,0,0.464,6.24,16.3,4.429,3,223,18.6,396.9,6.59,25.2

274 | "273",0.1146,20,6.96,0,0.464,6.538,58.7,3.9175,3,223,18.6,394.96,7.73,24.4

275 | "274",0.22188,20,6.96,1,0.464,7.691,51.8,4.3665,3,223,18.6,390.77,6.58,35.2

276 | "275",0.05644,40,6.41,1,0.447,6.758,32.9,4.0776,4,254,17.6,396.9,3.53,32.4

277 | "276",0.09604,40,6.41,0,0.447,6.854,42.8,4.2673,4,254,17.6,396.9,2.98,32

278 | "277",0.10469,40,6.41,1,0.447,7.267,49,4.7872,4,254,17.6,389.25,6.05,33.2

279 | "278",0.06127,40,6.41,1,0.447,6.826,27.6,4.8628,4,254,17.6,393.45,4.16,33.1

280 | "279",0.07978,40,6.41,0,0.447,6.482,32.1,4.1403,4,254,17.6,396.9,7.19,29.1

281 | "280",0.21038,20,3.33,0,0.4429,6.812,32.2,4.1007,5,216,14.9,396.9,4.85,35.1

282 | "281",0.03578,20,3.33,0,0.4429,7.82,64.5,4.6947,5,216,14.9,387.31,3.76,45.4

283 | "282",0.03705,20,3.33,0,0.4429,6.968,37.2,5.2447,5,216,14.9,392.23,4.59,35.4

284 | "283",0.06129,20,3.33,1,0.4429,7.645,49.7,5.2119,5,216,14.9,377.07,3.01,46

285 | "284",0.01501,90,1.21,1,0.401,7.923,24.8,5.885,1,198,13.6,395.52,3.16,50

286 | "285",0.00906,90,2.97,0,0.4,7.088,20.8,7.3073,1,285,15.3,394.72,7.85,32.2

287 | "286",0.01096,55,2.25,0,0.389,6.453,31.9,7.3073,1,300,15.3,394.72,8.23,22

288 | "287",0.01965,80,1.76,0,0.385,6.23,31.5,9.0892,1,241,18.2,341.6,12.93,20.1

289 | "288",0.03871,52.5,5.32,0,0.405,6.209,31.3,7.3172,6,293,16.6,396.9,7.14,23.2

290 | "289",0.0459,52.5,5.32,0,0.405,6.315,45.6,7.3172,6,293,16.6,396.9,7.6,22.3

291 | "290",0.04297,52.5,5.32,0,0.405,6.565,22.9,7.3172,6,293,16.6,371.72,9.51,24.8

292 | "291",0.03502,80,4.95,0,0.411,6.861,27.9,5.1167,4,245,19.2,396.9,3.33,28.5

293 | "292",0.07886,80,4.95,0,0.411,7.148,27.7,5.1167,4,245,19.2,396.9,3.56,37.3

294 | "293",0.03615,80,4.95,0,0.411,6.63,23.4,5.1167,4,245,19.2,396.9,4.7,27.9

295 | "294",0.08265,0,13.92,0,0.437,6.127,18.4,5.5027,4,289,16,396.9,8.58,23.9

296 | "295",0.08199,0,13.92,0,0.437,6.009,42.3,5.5027,4,289,16,396.9,10.4,21.7

297 | "296",0.12932,0,13.92,0,0.437,6.678,31.1,5.9604,4,289,16,396.9,6.27,28.6

298 | "297",0.05372,0,13.92,0,0.437,6.549,51,5.9604,4,289,16,392.85,7.39,27.1

299 | "298",0.14103,0,13.92,0,0.437,5.79,58,6.32,4,289,16,396.9,15.84,20.3

300 | "299",0.06466,70,2.24,0,0.4,6.345,20.1,7.8278,5,358,14.8,368.24,4.97,22.5

301 | "300",0.05561,70,2.24,0,0.4,7.041,10,7.8278,5,358,14.8,371.58,4.74,29

302 | "301",0.04417,70,2.24,0,0.4,6.871,47.4,7.8278,5,358,14.8,390.86,6.07,24.8

303 | "302",0.03537,34,6.09,0,0.433,6.59,40.4,5.4917,7,329,16.1,395.75,9.5,22

304 | "303",0.09266,34,6.09,0,0.433,6.495,18.4,5.4917,7,329,16.1,383.61,8.67,26.4

305 | "304",0.1,34,6.09,0,0.433,6.982,17.7,5.4917,7,329,16.1,390.43,4.86,33.1

306 | "305",0.05515,33,2.18,0,0.472,7.236,41.1,4.022,7,222,18.4,393.68,6.93,36.1

307 | "306",0.05479,33,2.18,0,0.472,6.616,58.1,3.37,7,222,18.4,393.36,8.93,28.4

308 | "307",0.07503,33,2.18,0,0.472,7.42,71.9,3.0992,7,222,18.4,396.9,6.47,33.4

309 | "308",0.04932,33,2.18,0,0.472,6.849,70.3,3.1827,7,222,18.4,396.9,7.53,28.2

310 | "309",0.49298,0,9.9,0,0.544,6.635,82.5,3.3175,4,304,18.4,396.9,4.54,22.8

311 | "310",0.3494,0,9.9,0,0.544,5.972,76.7,3.1025,4,304,18.4,396.24,9.97,20.3

312 | "311",2.63548,0,9.9,0,0.544,4.973,37.8,2.5194,4,304,18.4,350.45,12.64,16.1

313 | "312",0.79041,0,9.9,0,0.544,6.122,52.8,2.6403,4,304,18.4,396.9,5.98,22.1

314 | "313",0.26169,0,9.9,0,0.544,6.023,90.4,2.834,4,304,18.4,396.3,11.72,19.4

315 | "314",0.26938,0,9.9,0,0.544,6.266,82.8,3.2628,4,304,18.4,393.39,7.9,21.6

316 | "315",0.3692,0,9.9,0,0.544,6.567,87.3,3.6023,4,304,18.4,395.69,9.28,23.8

317 | "316",0.25356,0,9.9,0,0.544,5.705,77.7,3.945,4,304,18.4,396.42,11.5,16.2

318 | "317",0.31827,0,9.9,0,0.544,5.914,83.2,3.9986,4,304,18.4,390.7,18.33,17.8

319 | "318",0.24522,0,9.9,0,0.544,5.782,71.7,4.0317,4,304,18.4,396.9,15.94,19.8

320 | "319",0.40202,0,9.9,0,0.544,6.382,67.2,3.5325,4,304,18.4,395.21,10.36,23.1

321 | "320",0.47547,0,9.9,0,0.544,6.113,58.8,4.0019,4,304,18.4,396.23,12.73,21

322 | "321",0.1676,0,7.38,0,0.493,6.426,52.3,4.5404,5,287,19.6,396.9,7.2,23.8

323 | "322",0.18159,0,7.38,0,0.493,6.376,54.3,4.5404,5,287,19.6,396.9,6.87,23.1

324 | "323",0.35114,0,7.38,0,0.493,6.041,49.9,4.7211,5,287,19.6,396.9,7.7,20.4

325 | "324",0.28392,0,7.38,0,0.493,5.708,74.3,4.7211,5,287,19.6,391.13,11.74,18.5

326 | "325",0.34109,0,7.38,0,0.493,6.415,40.1,4.7211,5,287,19.6,396.9,6.12,25

327 | "326",0.19186,0,7.38,0,0.493,6.431,14.7,5.4159,5,287,19.6,393.68,5.08,24.6

328 | "327",0.30347,0,7.38,0,0.493,6.312,28.9,5.4159,5,287,19.6,396.9,6.15,23

329 | "328",0.24103,0,7.38,0,0.493,6.083,43.7,5.4159,5,287,19.6,396.9,12.79,22.2

330 | "329",0.06617,0,3.24,0,0.46,5.868,25.8,5.2146,4,430,16.9,382.44,9.97,19.3

331 | "330",0.06724,0,3.24,0,0.46,6.333,17.2,5.2146,4,430,16.9,375.21,7.34,22.6

332 | "331",0.04544,0,3.24,0,0.46,6.144,32.2,5.8736,4,430,16.9,368.57,9.09,19.8

333 | "332",0.05023,35,6.06,0,0.4379,5.706,28.4,6.6407,1,304,16.9,394.02,12.43,17.1

334 | "333",0.03466,35,6.06,0,0.4379,6.031,23.3,6.6407,1,304,16.9,362.25,7.83,19.4

335 | "334",0.05083,0,5.19,0,0.515,6.316,38.1,6.4584,5,224,20.2,389.71,5.68,22.2

336 | "335",0.03738,0,5.19,0,0.515,6.31,38.5,6.4584,5,224,20.2,389.4,6.75,20.7

337 | "336",0.03961,0,5.19,0,0.515,6.037,34.5,5.9853,5,224,20.2,396.9,8.01,21.1

338 | "337",0.03427,0,5.19,0,0.515,5.869,46.3,5.2311,5,224,20.2,396.9,9.8,19.5

339 | "338",0.03041,0,5.19,0,0.515,5.895,59.6,5.615,5,224,20.2,394.81,10.56,18.5

340 | "339",0.03306,0,5.19,0,0.515,6.059,37.3,4.8122,5,224,20.2,396.14,8.51,20.6

341 | "340",0.05497,0,5.19,0,0.515,5.985,45.4,4.8122,5,224,20.2,396.9,9.74,19

342 | "341",0.06151,0,5.19,0,0.515,5.968,58.5,4.8122,5,224,20.2,396.9,9.29,18.7

343 | "342",0.01301,35,1.52,0,0.442,7.241,49.3,7.0379,1,284,15.5,394.74,5.49,32.7

344 | "343",0.02498,0,1.89,0,0.518,6.54,59.7,6.2669,1,422,15.9,389.96,8.65,16.5

345 | "344",0.02543,55,3.78,0,0.484,6.696,56.4,5.7321,5,370,17.6,396.9,7.18,23.9

346 | "345",0.03049,55,3.78,0,0.484,6.874,28.1,6.4654,5,370,17.6,387.97,4.61,31.2

347 | "346",0.03113,0,4.39,0,0.442,6.014,48.5,8.0136,3,352,18.8,385.64,10.53,17.5

348 | "347",0.06162,0,4.39,0,0.442,5.898,52.3,8.0136,3,352,18.8,364.61,12.67,17.2

349 | "348",0.0187,85,4.15,0,0.429,6.516,27.7,8.5353,4,351,17.9,392.43,6.36,23.1

350 | "349",0.01501,80,2.01,0,0.435,6.635,29.7,8.344,4,280,17,390.94,5.99,24.5

351 | "350",0.02899,40,1.25,0,0.429,6.939,34.5,8.7921,1,335,19.7,389.85,5.89,26.6

352 | "351",0.06211,40,1.25,0,0.429,6.49,44.4,8.7921,1,335,19.7,396.9,5.98,22.9

353 | "352",0.0795,60,1.69,0,0.411,6.579,35.9,10.7103,4,411,18.3,370.78,5.49,24.1

354 | "353",0.07244,60,1.69,0,0.411,5.884,18.5,10.7103,4,411,18.3,392.33,7.79,18.6

355 | "354",0.01709,90,2.02,0,0.41,6.728,36.1,12.1265,5,187,17,384.46,4.5,30.1

356 | "355",0.04301,80,1.91,0,0.413,5.663,21.9,10.5857,4,334,22,382.8,8.05,18.2

357 | "356",0.10659,80,1.91,0,0.413,5.936,19.5,10.5857,4,334,22,376.04,5.57,20.6

358 | "357",8.98296,0,18.1,1,0.77,6.212,97.4,2.1222,24,666,20.2,377.73,17.6,17.8

359 | "358",3.8497,0,18.1,1,0.77,6.395,91,2.5052,24,666,20.2,391.34,13.27,21.7

360 | "359",5.20177,0,18.1,1,0.77,6.127,83.4,2.7227,24,666,20.2,395.43,11.48,22.7

361 | "360",4.26131,0,18.1,0,0.77,6.112,81.3,2.5091,24,666,20.2,390.74,12.67,22.6

362 | "361",4.54192,0,18.1,0,0.77,6.398,88,2.5182,24,666,20.2,374.56,7.79,25

363 | "362",3.83684,0,18.1,0,0.77,6.251,91.1,2.2955,24,666,20.2,350.65,14.19,19.9

364 | "363",3.67822,0,18.1,0,0.77,5.362,96.2,2.1036,24,666,20.2,380.79,10.19,20.8

365 | "364",4.22239,0,18.1,1,0.77,5.803,89,1.9047,24,666,20.2,353.04,14.64,16.8

366 | "365",3.47428,0,18.1,1,0.718,8.78,82.9,1.9047,24,666,20.2,354.55,5.29,21.9

367 | "366",4.55587,0,18.1,0,0.718,3.561,87.9,1.6132,24,666,20.2,354.7,7.12,27.5

368 | "367",3.69695,0,18.1,0,0.718,4.963,91.4,1.7523,24,666,20.2,316.03,14,21.9

369 | "368",13.5222,0,18.1,0,0.631,3.863,100,1.5106,24,666,20.2,131.42,13.33,23.1

370 | "369",4.89822,0,18.1,0,0.631,4.97,100,1.3325,24,666,20.2,375.52,3.26,50

371 | "370",5.66998,0,18.1,1,0.631,6.683,96.8,1.3567,24,666,20.2,375.33,3.73,50

372 | "371",6.53876,0,18.1,1,0.631,7.016,97.5,1.2024,24,666,20.2,392.05,2.96,50

373 | "372",9.2323,0,18.1,0,0.631,6.216,100,1.1691,24,666,20.2,366.15,9.53,50

374 | "373",8.26725,0,18.1,1,0.668,5.875,89.6,1.1296,24,666,20.2,347.88,8.88,50

375 | "374",11.1081,0,18.1,0,0.668,4.906,100,1.1742,24,666,20.2,396.9,34.77,13.8

376 | "375",18.4982,0,18.1,0,0.668,4.138,100,1.137,24,666,20.2,396.9,37.97,13.8

377 | "376",19.6091,0,18.1,0,0.671,7.313,97.9,1.3163,24,666,20.2,396.9,13.44,15

378 | "377",15.288,0,18.1,0,0.671,6.649,93.3,1.3449,24,666,20.2,363.02,23.24,13.9

379 | "378",9.82349,0,18.1,0,0.671,6.794,98.8,1.358,24,666,20.2,396.9,21.24,13.3

380 | "379",23.6482,0,18.1,0,0.671,6.38,96.2,1.3861,24,666,20.2,396.9,23.69,13.1

381 | "380",17.8667,0,18.1,0,0.671,6.223,100,1.3861,24,666,20.2,393.74,21.78,10.2

382 | "381",88.9762,0,18.1,0,0.671,6.968,91.9,1.4165,24,666,20.2,396.9,17.21,10.4

383 | "382",15.8744,0,18.1,0,0.671,6.545,99.1,1.5192,24,666,20.2,396.9,21.08,10.9

384 | "383",9.18702,0,18.1,0,0.7,5.536,100,1.5804,24,666,20.2,396.9,23.6,11.3

385 | "384",7.99248,0,18.1,0,0.7,5.52,100,1.5331,24,666,20.2,396.9,24.56,12.3

386 | "385",20.0849,0,18.1,0,0.7,4.368,91.2,1.4395,24,666,20.2,285.83,30.63,8.8

387 | "386",16.8118,0,18.1,0,0.7,5.277,98.1,1.4261,24,666,20.2,396.9,30.81,7.2

388 | "387",24.3938,0,18.1,0,0.7,4.652,100,1.4672,24,666,20.2,396.9,28.28,10.5

389 | "388",22.5971,0,18.1,0,0.7,5,89.5,1.5184,24,666,20.2,396.9,31.99,7.4

390 | "389",14.3337,0,18.1,0,0.7,4.88,100,1.5895,24,666,20.2,372.92,30.62,10.2

391 | "390",8.15174,0,18.1,0,0.7,5.39,98.9,1.7281,24,666,20.2,396.9,20.85,11.5

392 | "391",6.96215,0,18.1,0,0.7,5.713,97,1.9265,24,666,20.2,394.43,17.11,15.1

393 | "392",5.29305,0,18.1,0,0.7,6.051,82.5,2.1678,24,666,20.2,378.38,18.76,23.2

394 | "393",11.5779,0,18.1,0,0.7,5.036,97,1.77,24,666,20.2,396.9,25.68,9.7

395 | "394",8.64476,0,18.1,0,0.693,6.193,92.6,1.7912,24,666,20.2,396.9,15.17,13.8

396 | "395",13.3598,0,18.1,0,0.693,5.887,94.7,1.7821,24,666,20.2,396.9,16.35,12.7

397 | "396",8.71675,0,18.1,0,0.693,6.471,98.8,1.7257,24,666,20.2,391.98,17.12,13.1

398 | "397",5.87205,0,18.1,0,0.693,6.405,96,1.6768,24,666,20.2,396.9,19.37,12.5

399 | "398",7.67202,0,18.1,0,0.693,5.747,98.9,1.6334,24,666,20.2,393.1,19.92,8.5

400 | "399",38.3518,0,18.1,0,0.693,5.453,100,1.4896,24,666,20.2,396.9,30.59,5

401 | "400",9.91655,0,18.1,0,0.693,5.852,77.8,1.5004,24,666,20.2,338.16,29.97,6.3

402 | "401",25.0461,0,18.1,0,0.693,5.987,100,1.5888,24,666,20.2,396.9,26.77,5.6

403 | "402",14.2362,0,18.1,0,0.693,6.343,100,1.5741,24,666,20.2,396.9,20.32,7.2

404 | "403",9.59571,0,18.1,0,0.693,6.404,100,1.639,24,666,20.2,376.11,20.31,12.1

405 | "404",24.8017,0,18.1,0,0.693,5.349,96,1.7028,24,666,20.2,396.9,19.77,8.3

406 | "405",41.5292,0,18.1,0,0.693,5.531,85.4,1.6074,24,666,20.2,329.46,27.38,8.5

407 | "406",67.9208,0,18.1,0,0.693,5.683,100,1.4254,24,666,20.2,384.97,22.98,5

408 | "407",20.7162,0,18.1,0,0.659,4.138,100,1.1781,24,666,20.2,370.22,23.34,11.9

409 | "408",11.9511,0,18.1,0,0.659,5.608,100,1.2852,24,666,20.2,332.09,12.13,27.9

410 | "409",7.40389,0,18.1,0,0.597,5.617,97.9,1.4547,24,666,20.2,314.64,26.4,17.2

411 | "410",14.4383,0,18.1,0,0.597,6.852,100,1.4655,24,666,20.2,179.36,19.78,27.5

412 | "411",51.1358,0,18.1,0,0.597,5.757,100,1.413,24,666,20.2,2.6,10.11,15

413 | "412",14.0507,0,18.1,0,0.597,6.657,100,1.5275,24,666,20.2,35.05,21.22,17.2

414 | "413",18.811,0,18.1,0,0.597,4.628,100,1.5539,24,666,20.2,28.79,34.37,17.9

415 | "414",28.6558,0,18.1,0,0.597,5.155,100,1.5894,24,666,20.2,210.97,20.08,16.3

416 | "415",45.7461,0,18.1,0,0.693,4.519,100,1.6582,24,666,20.2,88.27,36.98,7

417 | "416",18.0846,0,18.1,0,0.679,6.434,100,1.8347,24,666,20.2,27.25,29.05,7.2

418 | "417",10.8342,0,18.1,0,0.679,6.782,90.8,1.8195,24,666,20.2,21.57,25.79,7.5

419 | "418",25.9406,0,18.1,0,0.679,5.304,89.1,1.6475,24,666,20.2,127.36,26.64,10.4

420 | "419",73.5341,0,18.1,0,0.679,5.957,100,1.8026,24,666,20.2,16.45,20.62,8.8

421 | "420",11.8123,0,18.1,0,0.718,6.824,76.5,1.794,24,666,20.2,48.45,22.74,8.4

422 | "421",11.0874,0,18.1,0,0.718,6.411,100,1.8589,24,666,20.2,318.75,15.02,16.7

423 | "422",7.02259,0,18.1,0,0.718,6.006,95.3,1.8746,24,666,20.2,319.98,15.7,14.2

424 | "423",12.0482,0,18.1,0,0.614,5.648,87.6,1.9512,24,666,20.2,291.55,14.1,20.8

425 | "424",7.05042,0,18.1,0,0.614,6.103,85.1,2.0218,24,666,20.2,2.52,23.29,13.4

426 | "425",8.79212,0,18.1,0,0.584,5.565,70.6,2.0635,24,666,20.2,3.65,17.16,11.7

427 | "426",15.8603,0,18.1,0,0.679,5.896,95.4,1.9096,24,666,20.2,7.68,24.39,8.3

428 | "427",12.2472,0,18.1,0,0.584,5.837,59.7,1.9976,24,666,20.2,24.65,15.69,10.2

429 | "428",37.6619,0,18.1,0,0.679,6.202,78.7,1.8629,24,666,20.2,18.82,14.52,10.9

430 | "429",7.36711,0,18.1,0,0.679,6.193,78.1,1.9356,24,666,20.2,96.73,21.52,11

431 | "430",9.33889,0,18.1,0,0.679,6.38,95.6,1.9682,24,666,20.2,60.72,24.08,9.5

432 | "431",8.49213,0,18.1,0,0.584,6.348,86.1,2.0527,24,666,20.2,83.45,17.64,14.5

433 | "432",10.0623,0,18.1,0,0.584,6.833,94.3,2.0882,24,666,20.2,81.33,19.69,14.1

434 | "433",6.44405,0,18.1,0,0.584,6.425,74.8,2.2004,24,666,20.2,97.95,12.03,16.1

435 | "434",5.58107,0,18.1,0,0.713,6.436,87.9,2.3158,24,666,20.2,100.19,16.22,14.3

436 | "435",13.9134,0,18.1,0,0.713,6.208,95,2.2222,24,666,20.2,100.63,15.17,11.7

437 | "436",11.1604,0,18.1,0,0.74,6.629,94.6,2.1247,24,666,20.2,109.85,23.27,13.4

438 | "437",14.4208,0,18.1,0,0.74,6.461,93.3,2.0026,24,666,20.2,27.49,18.05,9.6

439 | "438",15.1772,0,18.1,0,0.74,6.152,100,1.9142,24,666,20.2,9.32,26.45,8.7

440 | "439",13.6781,0,18.1,0,0.74,5.935,87.9,1.8206,24,666,20.2,68.95,34.02,8.4

441 | "440",9.39063,0,18.1,0,0.74,5.627,93.9,1.8172,24,666,20.2,396.9,22.88,12.8

442 | "441",22.0511,0,18.1,0,0.74,5.818,92.4,1.8662,24,666,20.2,391.45,22.11,10.5

443 | "442",9.72418,0,18.1,0,0.74,6.406,97.2,2.0651,24,666,20.2,385.96,19.52,17.1

444 | "443",5.66637,0,18.1,0,0.74,6.219,100,2.0048,24,666,20.2,395.69,16.59,18.4

445 | "444",9.96654,0,18.1,0,0.74,6.485,100,1.9784,24,666,20.2,386.73,18.85,15.4

446 | "445",12.8023,0,18.1,0,0.74,5.854,96.6,1.8956,24,666,20.2,240.52,23.79,10.8

447 | "446",10.6718,0,18.1,0,0.74,6.459,94.8,1.9879,24,666,20.2,43.06,23.98,11.8

448 | "447",6.28807,0,18.1,0,0.74,6.341,96.4,2.072,24,666,20.2,318.01,17.79,14.9

449 | "448",9.92485,0,18.1,0,0.74,6.251,96.6,2.198,24,666,20.2,388.52,16.44,12.6

450 | "449",9.32909,0,18.1,0,0.713,6.185,98.7,2.2616,24,666,20.2,396.9,18.13,14.1

451 | "450",7.52601,0,18.1,0,0.713,6.417,98.3,2.185,24,666,20.2,304.21,19.31,13

452 | "451",6.71772,0,18.1,0,0.713,6.749,92.6,2.3236,24,666,20.2,0.32,17.44,13.4

453 | "452",5.44114,0,18.1,0,0.713,6.655,98.2,2.3552,24,666,20.2,355.29,17.73,15.2

454 | "453",5.09017,0,18.1,0,0.713,6.297,91.8,2.3682,24,666,20.2,385.09,17.27,16.1

455 | "454",8.24809,0,18.1,0,0.713,7.393,99.3,2.4527,24,666,20.2,375.87,16.74,17.8

456 | "455",9.51363,0,18.1,0,0.713,6.728,94.1,2.4961,24,666,20.2,6.68,18.71,14.9

457 | "456",4.75237,0,18.1,0,0.713,6.525,86.5,2.4358,24,666,20.2,50.92,18.13,14.1

458 | "457",4.66883,0,18.1,0,0.713,5.976,87.9,2.5806,24,666,20.2,10.48,19.01,12.7

459 | "458",8.20058,0,18.1,0,0.713,5.936,80.3,2.7792,24,666,20.2,3.5,16.94,13.5

460 | "459",7.75223,0,18.1,0,0.713,6.301,83.7,2.7831,24,666,20.2,272.21,16.23,14.9

461 | "460",6.80117,0,18.1,0,0.713,6.081,84.4,2.7175,24,666,20.2,396.9,14.7,20

462 | "461",4.81213,0,18.1,0,0.713,6.701,90,2.5975,24,666,20.2,255.23,16.42,16.4

463 | "462",3.69311,0,18.1,0,0.713,6.376,88.4,2.5671,24,666,20.2,391.43,14.65,17.7

464 | "463",6.65492,0,18.1,0,0.713,6.317,83,2.7344,24,666,20.2,396.9,13.99,19.5

465 | "464",5.82115,0,18.1,0,0.713,6.513,89.9,2.8016,24,666,20.2,393.82,10.29,20.2

466 | "465",7.83932,0,18.1,0,0.655,6.209,65.4,2.9634,24,666,20.2,396.9,13.22,21.4

467 | "466",3.1636,0,18.1,0,0.655,5.759,48.2,3.0665,24,666,20.2,334.4,14.13,19.9

468 | "467",3.77498,0,18.1,0,0.655,5.952,84.7,2.8715,24,666,20.2,22.01,17.15,19

469 | "468",4.42228,0,18.1,0,0.584,6.003,94.5,2.5403,24,666,20.2,331.29,21.32,19.1

470 | "469",15.5757,0,18.1,0,0.58,5.926,71,2.9084,24,666,20.2,368.74,18.13,19.1

471 | "470",13.0751,0,18.1,0,0.58,5.713,56.7,2.8237,24,666,20.2,396.9,14.76,20.1

472 | "471",4.34879,0,18.1,0,0.58,6.167,84,3.0334,24,666,20.2,396.9,16.29,19.9

473 | "472",4.03841,0,18.1,0,0.532,6.229,90.7,3.0993,24,666,20.2,395.33,12.87,19.6

474 | "473",3.56868,0,18.1,0,0.58,6.437,75,2.8965,24,666,20.2,393.37,14.36,23.2

475 | "474",4.64689,0,18.1,0,0.614,6.98,67.6,2.5329,24,666,20.2,374.68,11.66,29.8

476 | "475",8.05579,0,18.1,0,0.584,5.427,95.4,2.4298,24,666,20.2,352.58,18.14,13.8

477 | "476",6.39312,0,18.1,0,0.584,6.162,97.4,2.206,24,666,20.2,302.76,24.1,13.3

478 | "477",4.87141,0,18.1,0,0.614,6.484,93.6,2.3053,24,666,20.2,396.21,18.68,16.7

479 | "478",15.0234,0,18.1,0,0.614,5.304,97.3,2.1007,24,666,20.2,349.48,24.91,12

480 | "479",10.233,0,18.1,0,0.614,6.185,96.7,2.1705,24,666,20.2,379.7,18.03,14.6

481 | "480",14.3337,0,18.1,0,0.614,6.229,88,1.9512,24,666,20.2,383.32,13.11,21.4

482 | "481",5.82401,0,18.1,0,0.532,6.242,64.7,3.4242,24,666,20.2,396.9,10.74,23

483 | "482",5.70818,0,18.1,0,0.532,6.75,74.9,3.3317,24,666,20.2,393.07,7.74,23.7

484 | "483",5.73116,0,18.1,0,0.532,7.061,77,3.4106,24,666,20.2,395.28,7.01,25

485 | "484",2.81838,0,18.1,0,0.532,5.762,40.3,4.0983,24,666,20.2,392.92,10.42,21.8

486 | "485",2.37857,0,18.1,0,0.583,5.871,41.9,3.724,24,666,20.2,370.73,13.34,20.6

487 | "486",3.67367,0,18.1,0,0.583,6.312,51.9,3.9917,24,666,20.2,388.62,10.58,21.2

488 | "487",5.69175,0,18.1,0,0.583,6.114,79.8,3.5459,24,666,20.2,392.68,14.98,19.1

489 | "488",4.83567,0,18.1,0,0.583,5.905,53.2,3.1523,24,666,20.2,388.22,11.45,20.6

490 | "489",0.15086,0,27.74,0,0.609,5.454,92.7,1.8209,4,711,20.1,395.09,18.06,15.2

491 | "490",0.18337,0,27.74,0,0.609,5.414,98.3,1.7554,4,711,20.1,344.05,23.97,7

492 | "491",0.20746,0,27.74,0,0.609,5.093,98,1.8226,4,711,20.1,318.43,29.68,8.1

493 | "492",0.10574,0,27.74,0,0.609,5.983,98.8,1.8681,4,711,20.1,390.11,18.07,13.6

494 | "493",0.11132,0,27.74,0,0.609,5.983,83.5,2.1099,4,711,20.1,396.9,13.35,20.1

495 | "494",0.17331,0,9.69,0,0.585,5.707,54,2.3817,6,391,19.2,396.9,12.01,21.8

496 | "495",0.27957,0,9.69,0,0.585,5.926,42.6,2.3817,6,391,19.2,396.9,13.59,24.5

497 | "496",0.17899,0,9.69,0,0.585,5.67,28.8,2.7986,6,391,19.2,393.29,17.6,23.1

498 | "497",0.2896,0,9.69,0,0.585,5.39,72.9,2.7986,6,391,19.2,396.9,21.14,19.7

499 | "498",0.26838,0,9.69,0,0.585,5.794,70.6,2.8927,6,391,19.2,396.9,14.1,18.3

500 | "499",0.23912,0,9.69,0,0.585,6.019,65.3,2.4091,6,391,19.2,396.9,12.92,21.2

501 | "500",0.17783,0,9.69,0,0.585,5.569,73.5,2.3999,6,391,19.2,395.77,15.1,17.5

502 | "501",0.22438,0,9.69,0,0.585,6.027,79.7,2.4982,6,391,19.2,396.9,14.33,16.8

503 | "502",0.06263,0,11.93,0,0.573,6.593,69.1,2.4786,1,273,21,391.99,9.67,22.4

504 | "503",0.04527,0,11.93,0,0.573,6.12,76.7,2.2875,1,273,21,396.9,9.08,20.6

505 | "504",0.06076,0,11.93,0,0.573,6.976,91,2.1675,1,273,21,396.9,5.64,23.9

506 | "505",0.10959,0,11.93,0,0.573,6.794,89.3,2.3889,1,273,21,393.45,6.48,22

507 | "506",0.04741,0,11.93,0,0.573,6.03,80.8,2.505,1,273,21,396.9,7.88,11.9

508 |

--------------------------------------------------------------------------------

/Examples/LCA Bokeh.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {},

7 | "outputs": [],

8 | "source": [

9 | "import pandas as pd\n",

10 | "import numpy as np\n", "from autoviz import AutoViz_Class\n", "AV = AutoViz_Class()"

11 | ]

12 | },

13 | {

14 | "cell_type": "code",

15 | "execution_count": null,

16 | "metadata": {},

17 | "outputs": [],

18 | "source": [

19 | "#datapath = 'C:/Users/Ram/Documents/Ram/Data_Sets/'\n",

20 | "datapath = ''\n",

21 | "filename = ''\n",

22 | "sep = ','\n",

23 | "target = 'chas'"

24 | ]

25 | },

26 | {

27 | "cell_type": "code",

28 | "execution_count": null,

29 | "metadata": {},

30 | "outputs": [],

31 | "source": [

32 | "df = pd.read_csv(datapath+filename,sep=sep)\n",

33 | "dft = df[:]\n",

34 | "print(df.shape)\n",

35 | "df.head(1)"

36 | ]

37 | },

38 | {

39 | "cell_type": "code",

40 | "execution_count": null,

41 | "metadata": {

42 | "scrolled": false

43 | },

44 | "outputs": [],

45 | "source": [

46 | "dft = AV.AutoViz(datapath+filename, sep, target, \"\",\n",

47 | " header=0, verbose=1,\n",

48 | " lowess=False,chart_format='bokeh',max_rows_analyzed=150000,max_cols_analyzed=30)\n"

49 | ]

50 | },

51 | {

52 | "cell_type": "code",

53 | "execution_count": null,

54 | "metadata": {},

55 | "outputs": [],

56 | "source": []

57 | },

58 | {

59 | "cell_type": "code",

60 | "execution_count": null,

61 | "metadata": {},

62 | "outputs": [],

63 | "source": []

64 | },

65 | {

66 | "cell_type": "code",

67 | "execution_count": null,

68 | "metadata": {},

69 | "outputs": [],

70 | "source": []

71 | }

72 | ],

73 | "metadata": {

74 | "kernelspec": {

75 | "display_name": "Python 3",

76 | "language": "python",

77 | "name": "python3"

78 | },

79 | "language_info": {

80 | "codemirror_mode": {

81 | "name": "ipython",

82 | "version": 3

83 | },

84 | "file_extension": ".py",

85 | "mimetype": "text/x-python",

86 | "name": "python",

87 | "nbconvert_exporter": "python",

88 | "pygments_lexer": "ipython3",

89 | "version": "3.10.13"

90 | }

91 | },

92 | "nbformat": 4,

93 | "nbformat_minor": 2

94 | }

95 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # AutoViz: The One-Line Automatic Data Visualization Library

2 |

3 |

4 |

5 | Unlock the power of **AutoViz** to visualize any dataset, any size, with just a single line of code! Plus, now you can get a quick assessment of your dataset's quality and fix DQ issues through the FixDQ() function.

6 |


14 |

15 | With AutoViz, you can easily and quickly generate insightful visualizations for your data. Whether you're a beginner or an expert in data analysis, AutoViz can help you explore your data and uncover valuable insights. Try it out and see the power of automated visualization for yourself!

16 |

17 | ## Table of Contents

18 |

32 |

33 | ## Latest

34 | The latest updates about the `autoviz` library can be found on the Updates page.

35 |

36 | ## Important Announcement

37 | ### Starting with version 0.1.901, an important update

38 | We've made significant updates to our `setup.py` script so that it uses the latest versions of our dependencies while still supporting older Python versions (you may want to check older versions). To install, run `pip install .` in the AutoViz directory; the script tailors the installation to your environment.

39 |

40 | ### Feedback

41 | Your feedback is crucial! If you encounter any issues or have suggestions, please let us know through [GitHub Issues](https://github.com/AutoViML/AutoViz/issues).

42 |

43 | Thank you for your continued support and happy visualizing!

44 |

45 | ## Citation

46 | If you use AutoViz in your research project or paper, please use the following format for citations:

47 | "Seshadri, Ram (2020). GitHub - AutoViML/AutoViz: Automatically Visualize any dataset, any size with a single line of code. source code: https://github.com/AutoViML/AutoViz"

48 | Current citations for AutoViz

49 |

50 | [Google Scholar](https://scholar.google.com/scholar?hl=en&as_sdt=0%2C31&q=autoviz&oq=autoviz)

51 |

52 | ## Motivation

53 | AutoViz was created to provide a more efficient, user-friendly, and automated approach to exploratory data analysis (EDA) through quick and easy data visualization combined with data quality checks. The library is designed to help users understand patterns, trends, and relationships in the data by creating insightful visualizations with minimal effort. AutoViz is particularly useful for beginners in data analysis because it abstracts away the complexities of various plotting libraries and techniques. For experts, it offers another tool for surfacing insights in the data that they may have missed.

54 |

55 | AutoViz is a powerful tool for generating insightful visualizations with minimal effort. Here are some of its key selling points compared to other automated EDA tools:

56 |

57 | - Ease of use: AutoViz is designed to be user-friendly and accessible to beginners in data analysis, abstracting away the complexities of various plotting libraries

58 | - Speed: AutoViz is optimized for speed and can generate multiple insightful plots with just a single line of code

59 | - Scalability: AutoViz is designed to work with datasets of any size and can handle large datasets efficiently

60 | - Automation: AutoViz automates the visualization process, requiring just a single line of code to generate multiple insightful plots

61 | - Customization: AutoViz provides several options for customizing the visualizations, such as changing the chart type, color palette, etc.

62 | - Data Quality: AutoViz now provides data quality assessment by default and helps you fix DQ issues with a single line of code using the FixDQ() function

63 |

64 | ## Installation

65 |

66 | **Prerequisites**

67 | - [Anaconda](https://docs.anaconda.com/anaconda/install/)

68 |

69 | Clone AutoViz, then create a new environment and install the required dependencies:

70 |

71 | **From source:**

72 | ```sh

73 | cd

74 | git clone git@github.com:AutoViML/AutoViz.git

75 | # or download and unzip https://github.com/AutoViML/AutoViz/archive/master.zip

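76 | conda create -n <env_name> python=3.7 anaconda  # <env_name> is a placeholder: choose your own environment name

77 | conda activate <env_name>  # with older conda releases: `source activate <env_name>` (Linux/macOS) or `activate <env_name>` (Windows)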

78 | cd AutoViz

79 | ```

80 | For Python versions below 3.10, install dependencies as follows:

81 |

82 | ```

83 | pip install -r requirements.txt

84 | ```

85 |

86 | For Python 3.10, please use:

87 |

88 | ```

89 | pip install -r requirements-py310.txt

90 | ```

91 |

92 | For Python 3.11 and above, it's recommended to use:

93 |

94 | ```

95 | pip install -r requirements-py311.txt

96 | ```

97 |

98 | These requirement files ensure that AutoViz works seamlessly with your Python environment by installing compatible versions of libraries like HoloViews, Bokeh, and hvPlot. Please select the requirement file that corresponds to your Python version to enjoy a smooth experience with AutoViz.
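
The version rules above can be sketched as a small helper. This is only an illustration of the selection logic described in this section; the function name `requirements_file` is hypothetical and not part of AutoViz.

```python
import sys


def requirements_file(version=None):
    """Return the requirements file this README recommends for a Python version.

    `version` is a (major, minor, ...) tuple; it defaults to the running interpreter.
    """
    info = sys.version_info if version is None else version
    major_minor = tuple(info[:2])
    if major_minor >= (3, 11):
        return "requirements-py311.txt"
    if major_minor == (3, 10):
        return "requirements-py310.txt"
    return "requirements.txt"


# Print the install command that matches the current interpreter.
print(f"pip install -r {requirements_file()}")
```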

99 |

100 | ## Usage

101 | Discover how to use AutoViz in this Medium article.

102 |

103 | In the AutoViz directory, open a Jupyter Notebook or a terminal and use the following code to instantiate `AutoViz_Class`. You can run this code step by step:

104 |

105 | ```python

106 | from autoviz import AutoViz_Class

107 | AV = AutoViz_Class()

108 | dft = AV.AutoViz(filename)  # filename: path to your data file

109 | ```

110 |

111 | AutoViz accepts either a filename (in CSV, txt, or JSON format) or a pandas dataframe as input. If you have a large dataset, you can set the `max_rows_analyzed` and `max_cols_analyzed` arguments to speed up the visualization by having AutoViz sample your dataset.

112 |

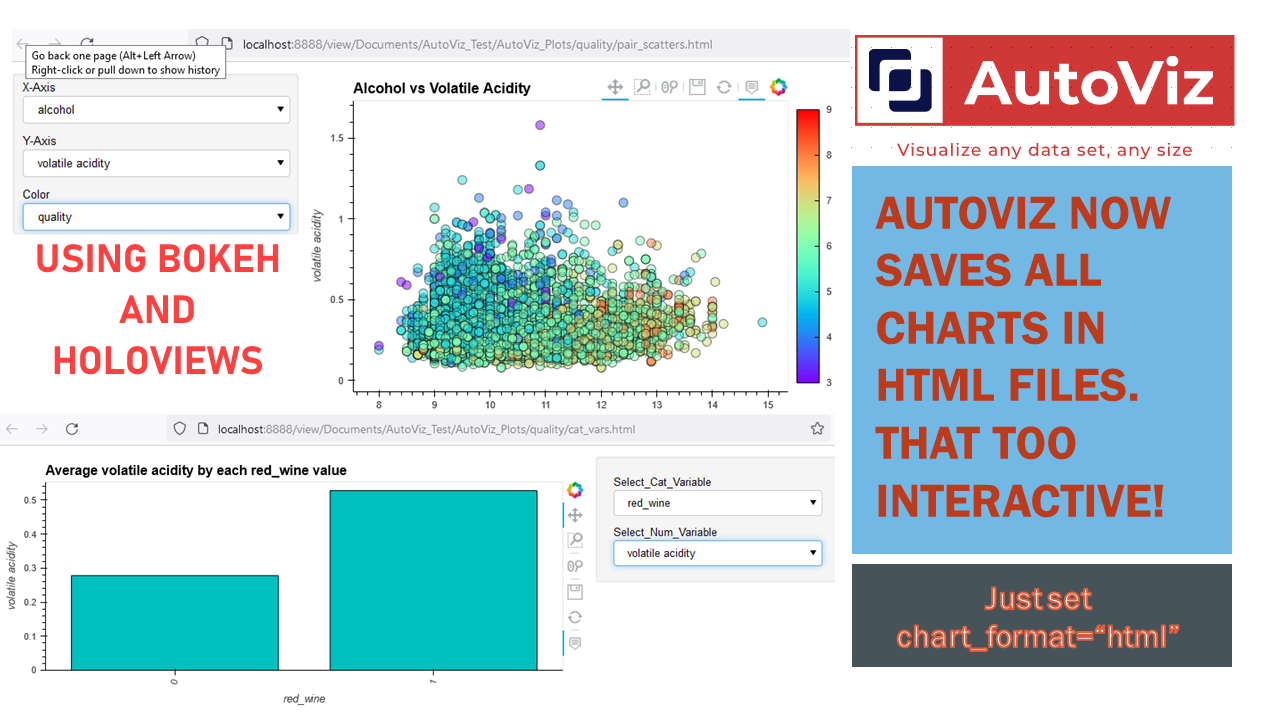

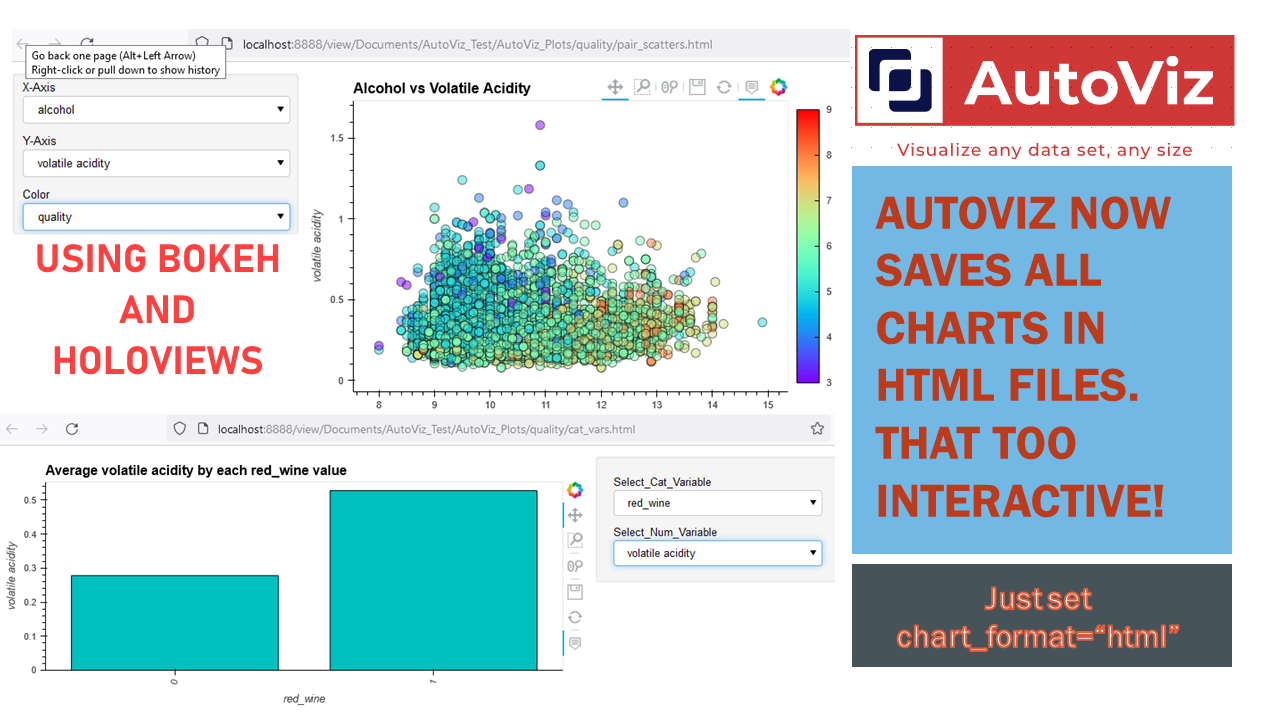

113 | AutoViz can also create charts in multiple formats using the `chart_format` setting:

114 | - If `chart_format='png'`, `'svg'`, or `'jpg'`: Matplotlib charts are plotted inline.

115 |     * Charts can be saved locally (`verbose=2`) or displayed in Jupyter Notebooks (`verbose=1`).

116 |     * This is the default behavior for AutoViz.

117 | - If `chart_format='bokeh'`: Interactive Bokeh charts are plotted in Jupyter Notebooks.

118 | - If `chart_format='server'`: Dashboards will pop up in your browser, one for each kind of chart.

119 | - If `chart_format='html'`: Interactive Bokeh charts will be created and silently saved as HTML files under the `AutoViz_Plots` directory (in the working folder) or any other directory that you specify with the `save_plot_dir` argument.
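
As a quick reference, the list above can be restated as a lookup. This is a minimal sketch that only mirrors the documented behavior; `describe_output` is a hypothetical helper, not an AutoViz function, and its default values are assumptions taken from the examples in this README.

```python
MATPLOTLIB_FORMATS = {"png", "svg", "jpg"}


def describe_output(chart_format="svg", verbose=1, save_plot_dir=None):
    """Summarize where charts end up for a given combination of settings."""
    # Saved charts go to save_plot_dir when given, else to AutoViz_Plots.
    target_dir = save_plot_dir or "AutoViz_Plots"
    if chart_format in MATPLOTLIB_FORMATS:
        if verbose == 2:
            return f"Matplotlib {chart_format} charts saved under {target_dir}"
        return f"Matplotlib {chart_format} charts displayed inline"
    if chart_format == "bokeh":
        return "interactive Bokeh charts displayed in the notebook"
    if chart_format == "server":
        return "one dashboard per chart type opened in the browser"
    if chart_format == "html":
        return f"interactive Bokeh charts saved as HTML under {target_dir}"
    raise ValueError(f"unsupported chart_format: {chart_format!r}")


print(describe_output("html", save_plot_dir="my_plots"))
```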

120 |

121 |

122 | ## API

123 | Arguments for `AV.AutoViz()` method:

124 |

125 | - `filename`: Path to the input file. Use an empty string ("") if there is no file and you want to pass a dataframe through the `dfte` argument instead. Otherwise, provide a filename and leave the `dfte` argument empty. Only one of the two can be used.

126 | - `sep`: File separator (comma, semi-colon, tab, or any column-separating value) if you use a filename above.

127 | - `depVar`: Target variable in your dataset; set it as an empty string if not applicable.

128 | - `dfte`: name of the pandas dataframe for plotting charts; leave it as empty string if using a filename.

129 | - `header`: set the row number of the header row in your file (0 for the first row). Otherwise leave it as 0.

130 | - `verbose`: 0 for minimal info and charts, 1 for more info and charts, or 2 for saving charts locally without display.

131 | - `lowess`: Use regression lines for each pair of continuous variables against the target variable in small datasets; avoid using for large datasets (>100,000 rows).

132 | - `chart_format`: 'svg', 'png', 'jpg', 'bokeh', 'server', or 'html' for displaying or saving charts in various formats, depending on the verbose option.

133 | - `max_rows_analyzed`: Limit the max number of rows to use for visualization when dealing with very large datasets (millions of rows). A statistically valid sample will be used by autoviz. Default is 150000 rows.

134 | - `max_cols_analyzed`: Limit the number of continuous variables to be analyzed. Default is 30 columns.

135 | - `save_plot_dir`: Directory for saving plots. Default is None, which saves plots under the current directory in a subfolder named AutoViz_Plots. If the save_plot_dir doesn't exist, it will be created.
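
Because only one of `filename` and `dfte` may be set, it can help to build the arguments in one place. The sketch below assumes the argument names and defaults listed above and used in the examples in this README; `build_autoviz_args` is a hypothetical helper, not part of the AutoViz API.

```python
def build_autoviz_args(source, target="", **overrides):
    """Build keyword arguments for AV.AutoViz from a file path or a dataframe.

    A string `source` is treated as a filename; anything else is passed as `dfte`.
    """
    args = {
        "filename": "",
        "sep": ",",
        "depVar": target,
        "dfte": None,
        "header": 0,
        "verbose": 1,
        "lowess": False,
        "chart_format": "svg",
        "max_rows_analyzed": 150000,
        "max_cols_analyzed": 30,
        "save_plot_dir": None,
    }
    if isinstance(source, str):
        args["filename"] = source
    else:
        args["dfte"] = source

    # The input source and target are set above, so they cannot be overridden.
    allowed = set(args) - {"filename", "dfte", "depVar"}
    unknown = set(overrides) - allowed
    if unknown:
        raise TypeError(f"unsupported arguments: {sorted(unknown)}")
    args.update(overrides)
    return args


# Usage (requires autoviz to be installed):
#   from autoviz import AutoViz_Class
#   AV = AutoViz_Class()
#   dft = AV.AutoViz(**build_autoviz_args("your_file.csv", target="your_target_variable"))
```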

136 |

137 | ## Examples

138 | Here are some examples to help you get started with AutoViz. Full Jupyter notebooks with code samples can be found in the [examples](https://github.com/AutoViML/AutoViz/tree/master/Examples) folder.

139 |

140 | ### Example 1: Visualize a CSV file with a target variable

141 |

142 | ```python

143 | from autoviz import AutoViz_Class

144 | AV = AutoViz_Class()

145 |

146 | filename = "your_file.csv"

147 | target_variable = "your_target_variable"

148 |

149 | dft = AV.AutoViz(

150 | filename,

151 | sep=",",

152 | depVar=target_variable,

153 | dfte=None,

154 | header=0,

155 | verbose=1,

156 | lowess=False,

157 | chart_format="svg",

158 | max_rows_analyzed=150000,

159 | max_cols_analyzed=30,

160 | save_plot_dir=None

161 | )

162 | ```

163 |

164 |

165 |

166 | ### Example 2: Visualize a Pandas DataFrame without a target variable

167 |

168 | ```python

169 | import pandas as pd

170 | from autoviz import AutoViz_Class

171 |

172 | AV = AutoViz_Class()

173 |

174 | data = {'col1': [1, 2, 3, 4, 5], 'col2': [5, 4, 3, 2, 1]}

175 | df = pd.DataFrame(data)

176 |

177 | dft = AV.AutoViz(

178 | "",

179 | sep=",",

180 | depVar="",

181 | dfte=df,

182 | header=0,

183 | verbose=1,

184 | lowess=False,

185 | chart_format="server",

186 | max_rows_analyzed=150000,

187 | max_cols_analyzed=30,

188 | save_plot_dir=None

189 | )

190 |

191 | ```

192 |

193 |

194 |

195 | ### Example 3: Generate interactive Bokeh charts and save them as HTML files in a custom directory

196 |

197 | ```python

198 | from autoviz import AutoViz_Class

199 | AV = AutoViz_Class()

200 |

201 | filename = "your_file.csv"

202 | target_variable = "your_target_variable"

203 | custom_plot_dir = "your_custom_plot_directory"

204 |

205 | dft = AV.AutoViz(

206 | filename,

207 | sep=",",

208 | depVar=target_variable,

209 | dfte=None,

210 | header=0,

211 | verbose=2,

212 | lowess=False,

213 | chart_format="html",

214 | max_rows_analyzed=150000,

215 | max_cols_analyzed=30,

216 | save_plot_dir=custom_plot_dir

217 | )

218 | ```

219 |

220 |

221 |

222 | These examples should give you an idea of how to use AutoViz with different scenarios and settings. By tailoring the options and settings, you can generate visualizations that best suit your needs, whether you're working with large datasets, interactive charts, or simply exploring the relationships between variables.

223 |

224 | ## Maintainers

225 | AutoViz is actively maintained and improved by a team of dedicated developers. If you have any questions, suggestions, or issues, feel free to reach out to the maintainers:

226 |

227 | - [@AutoViML](https://github.com/AutoViML)

228 | - [@morenoh149](https://github.com/morenoh149)

229 | - [@hironroy](https://github.com/hironroy)

230 |

231 | ## Contributing

232 | We welcome contributions from the community! If you're interested in contributing to AutoViz, please follow these steps:

233 |

234 | - Fork the repository on GitHub.

235 | - Clone your fork and create a new branch for your feature or bugfix.

236 | - Commit your changes to the new branch, ensuring that you follow coding standards and write appropriate tests.

237 | - Push your changes to your fork on GitHub.

238 | - Submit a pull request to the main repository, detailing your changes and referencing any related issues.

239 |

240 | See [the contributing file](CONTRIBUTING.md)!

241 |

242 | ## License

243 | AutoViz is released under the Apache License, Version 2.0. By using AutoViz, you agree to the terms and conditions specified in the license.

244 |

245 | ## Tips

246 | Here are some additional tips and reminders to help you make the most of the library:

247 |

248 | - **Make sure to regularly upgrade AutoViz** to benefit from the latest features, bug fixes, and improvements. You can update it using `pip install --upgrade autoviz`.

249 | - **AutoViz is highly customizable, so don't hesitate to explore and experiment with various settings**, such as `chart_format`, `verbose`, and `max_rows_analyzed`. This will allow you to create visualizations that better suit your specific needs and preferences.

250 | - **Remember to delete the `AutoViz_Plots` directory (or any custom directory you specified) periodically** if you used the `verbose=2` option, as it can accumulate a large number of saved charts over time.

251 | - **For further guidance or inspiration, check out the Medium article on AutoViz**, as well as other online resources and tutorials.

252 |

253 | - AutoViz will visualize a file of any size using a statistically valid sample.

254 | - A comma is the default separator for files, but you can change it with the `sep` argument.

255 | - AutoViz assumes the first row of the file is the header, but this can be changed with the `header` argument.

256 |

257 |

258 | - **By leveraging AutoViz's powerful and flexible features**, you can streamline your data visualization process and gain valuable insights more efficiently. Happy visualizing!
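
The cleanup tip above can be sketched with the standard library. The helper name `remove_saved_plots` is hypothetical; the default directory name follows the `AutoViz_Plots` folder mentioned in this README.

```python
import shutil
from pathlib import Path


def remove_saved_plots(plot_dir="AutoViz_Plots"):
    """Delete a directory of saved charts; return True if something was removed."""
    path = Path(plot_dir)
    if not path.is_dir():
        return False
    # Removes the directory and everything inside it, so check the path first.
    shutil.rmtree(path)
    return True


# Usage: remove_saved_plots() or remove_saved_plots("your_custom_plot_directory")
```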

259 |

260 | ## DISCLAIMER

261 | This project is not an official Google project. It is not supported by Google, and Google specifically disclaims all warranties as to its quality, merchantability, or fitness for a particular purpose.

--------------------------------------------------------------------------------

/autoviz/AutoViz_Class.py:

--------------------------------------------------------------------------------

1 | ############################################################################

2 | # Copyright 2019 Google LLC

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # https://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.