├── .gitignore

├── LICENSE.md

├── README.md

├── docs

├── README.md

├── assembly.md

├── assembly_joint.md

├── images

│ ├── assembly_contacts.jpg

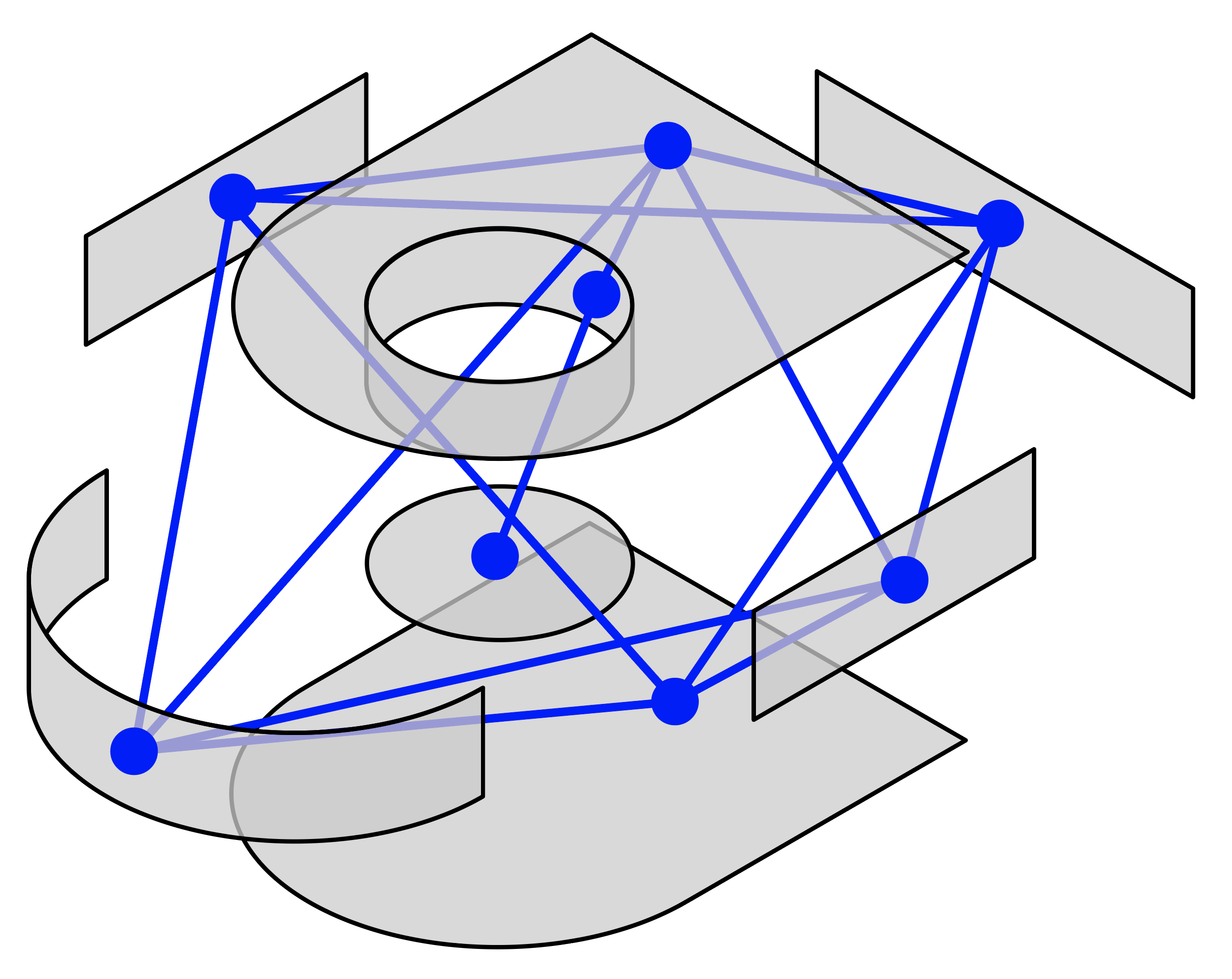

│ ├── assembly_graph.jpg

│ ├── assembly_joint_graph.png

│ ├── assembly_joint_labels.jpg

│ ├── assembly_joint_mosaic.jpg

│ ├── assembly_joint_motion_types.jpg

│ ├── assembly_joint_set.jpg

│ ├── assembly_mosaic.jpg

│ ├── fusion_gallery_mosaic.jpg

│ ├── reconstruction_extrude_operations.png

│ ├── reconstruction_extrude_types.png

│ ├── reconstruction_mosaic.jpg

│ ├── reconstruction_overview_extrude.png

│ ├── reconstruction_overview_sequence.png

│ ├── reconstruction_overview_sketch.png

│ ├── reconstruction_sketch_entities.png

│ ├── reconstruction_teaser.jpg

│ ├── reconstruction_timeline_icons.png

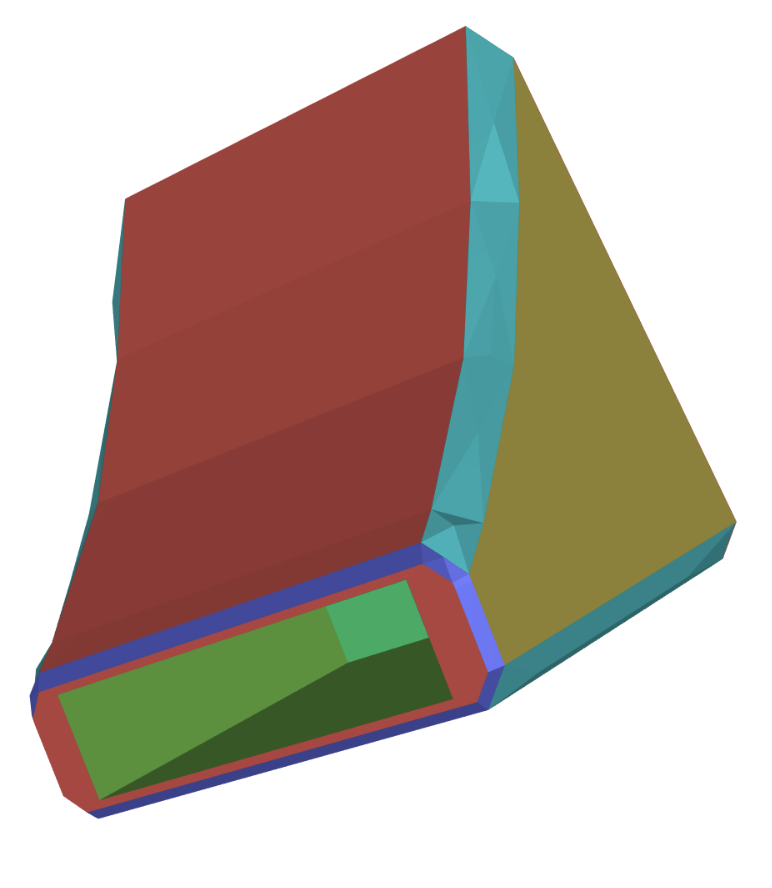

│ ├── segmentation_example.jpg

│ ├── segmentation_extended_dataset_stats.jpg

│ ├── segmentation_features_removed.jpg

│ ├── segmentation_mosaic.jpg

│ ├── segmentation_start_end_side_faces.jpg

│ └── segmentation_timeline.png

├── reconstruction.md

├── reconstruction_stats.md

├── segmentation.md

└── tags.json

└── tools

├── README.md

├── assembly2cad

├── README.md

├── assembly2cad.manifest

└── assembly2cad.py

├── assembly_download

├── README.md

└── assembly_download.py

├── assembly_graph

├── README.md

├── assembly2graph.py

├── assembly_graph.py

└── assembly_viewer.ipynb

├── common

├── __init__.py

├── assembly_importer.py

├── deserialize.py

├── exceptions.py

├── exporter.py

├── face_reconstructor.py

├── fusion_360_server.manifest

├── geometry.py

├── joint_importer.py

├── launcher.py

├── logger.py

├── match.py

├── name.py

├── regraph.py

├── serialize.py

├── sketch_extrude_importer.py

├── test

│ ├── common_test_base.py

│ ├── common_test_harness.manifest

│ ├── common_test_harness.py

│ └── test_geometry.py

└── view_control.py

├── fusion360gym

├── README.md

├── __init__.py

├── client

│ ├── __init__.py

│ ├── fusion360gym_client.py

│ └── gym_env.py

├── examples

│ ├── README.md

│ ├── client_example.py

│ ├── face_extrusion_example.py

│ ├── sketch_extrusion_example.py

│ └── sketch_extrusion_point_example.py

├── server

│ ├── .gitignore

│ ├── __init__.py

│ ├── command_base.py

│ ├── command_export.py

│ ├── command_face_extrusion.py

│ ├── command_reconstruct.py

│ ├── command_runner.py

│ ├── command_sketch_extrusion.py

│ ├── design_state.py

│ ├── fusion360gym_server.manifest

│ ├── fusion360gym_server.py

│ └── launch.py

└── test

│ ├── .gitignore

│ ├── README.md

│ ├── common_test.py

│ ├── test_detach_util.py

│ ├── test_fusion360gym_export.py

│ ├── test_fusion360gym_face_extrusion.py

│ ├── test_fusion360gym_randomized_reconstruction.py

│ ├── test_fusion360gym_reconstruct.py

│ ├── test_fusion360gym_server.py

│ └── test_fusion360gym_sketch_extrusion.py

├── joint2cad

├── README.md

├── joint2cad.manifest

└── joint2cad.py

├── reconverter

├── .gitignore

├── README.md

├── reconverter.manifest

└── reconverter.py

├── regraph

├── README.md

├── launch.py

├── regraph_exporter.manifest

├── regraph_exporter.py

└── regraph_viewer.ipynb

├── regraphnet

├── README.md

├── ckpt

│ ├── model_gat.ckpt

│ ├── model_gcn.ckpt

│ ├── model_gcn_aug.ckpt

│ ├── model_gcn_semisyn.ckpt

│ ├── model_gcn_syn.ckpt

│ ├── model_gin.ckpt

│ ├── model_mlp.ckpt

│ └── model_mlp_aug.ckpt

├── data

│ ├── 31962_e5291336_0054_0000.json

│ ├── 31962_e5291336_0054_0001.json

│ ├── 31962_e5291336_0054_0002.json

│ └── 31962_e5291336_0054_sequence.json

└── src

│ ├── inference.py

│ ├── inference_torch_geometric.py

│ ├── inference_vanilla.py

│ ├── train.py

│ ├── train_torch_geometric.py

│ └── train_vanilla.py

├── search

├── .gitignore

├── README.md

├── agent.py

├── agent_random.py

├── agent_supervised.py

├── evaluation

│ └── evaluation.ipynb

├── log.py

├── main.py

├── repl_env.py

├── search.py

├── search_beam.py

├── search_best.py

└── search_random.py

├── segmentation_viewer

├── README.md

├── segmentation_viewer.py

└── segmentation_viewer_demo.ipynb

├── sketch2image

├── README.md

├── sketch2image.py

└── sketch_plotter.py

└── testdata

├── .gitignore

├── Box.smt

├── Boxes.smt

├── Couch.json

├── Couch.smt

├── Couch.step

├── Hexagon.json

├── SingleSketchExtrude.json

├── SingleSketchExtrude_Invalid.json

├── assembly_examples

└── belt_clamp

│ ├── 8b4e1828-b296-11eb-9d3c-f21898acd3b7.smt

│ ├── 8b4e5752-b296-11eb-9d3c-f21898acd3b7.smt

│ └── assembly.json

├── common

├── BooleanAdjacent.f3d

├── BooleanExactOverlap.f3d

├── BooleanIntersectContained.f3d

├── BooleanIntersectDouble.f3d

├── BooleanIntersectMultiOverlap.f3d

├── BooleanIntersectMultiTool.f3d

├── BooleanIntersectOverlap.f3d

├── BooleanIntersectOverlapSelfIntersect.f3d

├── BooleanIntersectSeparate.f3d

├── BooleanOverlap.f3d

├── BooleanOverlap3Way.f3d

├── BooleanOverlapDouble.f3d

└── BooleanSeparate.f3d

├── joint_examples

├── 145132_7aea3b66_0024_1.smt

├── 145132_7aea3b66_0024_2.smt

└── joint_set_00119.json

└── segmentation_examples

├── 102673_56775b8e_5.obj

└── 102673_56775b8e_5.seg

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | .vscode/

3 | __pycache__/

4 | .ipynb_checkpoints/

5 | .env

--------------------------------------------------------------------------------

/LICENSE.md:

--------------------------------------------------------------------------------

1 |

2 | # Fusion 360 Gallery Dataset License

3 |

4 | The dataset to which this license is attached is data from the Fusion 360 Gallery Dataset (the "Dataset") provided by Autodesk, Inc. (“Autodesk”). You may only access and/or use the Dataset subject to the following terms and conditions (this “License”):

5 |

6 | 1. You may access, use, reproduce and modify the Dataset, in each case, only for non-commercial research purposes.

7 |

8 | 2. You may not redistribute or make available to others the Dataset in its entirety; however you may direct others to https://github.com/AutodeskAILab/Fusion360GalleryDataset to obtain the Dataset.

9 |

10 | 3. You may redistribute or make available to others portions of the Dataset or modifications of the Dataset (your “Modified Set”) only if you adhere to the following requirements:

11 |

12 | 3.1. You do not explicitly or implicitly represent that your Modified Set is the Dataset itself. One way to satisfy this requirement is, in connection with such redistribution, to prominently indicate that your Modified Set is a portion or modification of the Dataset and to provide the attribution in Item 5 below.

13 |

14 | 3.2. You may not allow others to access, use, reproduce or modify the Modified Set except for non-commercial research purposes.

15 |

16 | 3.3. If you allow others to redistribute or make available the Modified Set (or their modifications to the Modified Set), you must require them to:

17 | (i) do so only for non-commercial research purposes;

18 | (ii) restrict their recipients to non-commercial research purposes through terms at least as restrictive as provided in these Items 3.2 and 3.3;

19 | (iii) include terms at least as protective of Autodesk as provided in Items 7 and 8; and

20 | (iv) retain any notices or attributions associated with the Dataset that were provided by you (and to impose this requirement on any downstream recipients, if any).

21 |

22 | 4. You will comply with applicable data security and privacy laws. You shall not attempt to re-associate any model in the Dataset with the creator of the model or any identifiable individual.

23 |

24 | 5. At your discretion as subject to general principles of academic attribution, if your use of the Dataset was a substantial contributor to your research, provide attribution to the “Fusion 360 Gallery Dataset” and the relevant citations provided on the Dataset website.

25 |

26 | 6. This License does not grant you permission to use any Autodesk trade names, trademarks, service marks, or product names, except as required for reasonable and customary use in describing the origin of the Dataset and satisfying the request for attribution in Item 5 above.

27 |

28 | 7. Autodesk makes no representations or warranties regarding the Dataset, including but not limited to warranties of non-infringement, merchantability or fitness for a particular purpose.

29 |

30 | 8. You accept full responsibility for your use of the Dataset and shall defend and indemnify Autodesk, Inc. including its employees, officers and agents, against any and all claims arising from your use of the Dataset, including but not limited to your use of any copies of copyrighted images that you may create from the Dataset.

31 |

32 | 9. Autodesk reserves the right to terminate this license at any time and may cease access to the Dataset at any time in its sole discretion.

33 |

34 | 10. All rights and licenses to the Dataset not explicitly provided hereunder are reserved for Autodesk.

35 |

36 | 11. If you are employed by a for-profit, commercial entity, your employer shall also be bound by this License, and you hereby represent that you are fully authorized to enter into this License on behalf of such employer.

37 |

38 | 12. The laws of the State of California shall apply to all disputes under this License.

39 |

40 | _Updated 11/2021_

41 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Fusion 360 Gallery Dataset

2 |

3 |

4 | The *Fusion 360 Gallery Dataset* contains rich 2D and 3D geometry data derived from parametric CAD models. The dataset is produced from designs submitted by users of the CAD package [Autodesk Fusion 360](https://www.autodesk.com/products/fusion-360/overview) to the [Autodesk Online Gallery](https://gallery.autodesk.com/fusion360). The dataset provides valuable data for learning how people design, including sequential CAD design data, designs segmented by modeling operation, and assemblies containing hierarchy and joint connectivity information.

5 |

6 | ## Datasets

7 | From the approximately 20,000 designs available we derive several datasets focused on specific areas of research. Currently the following data subsets are available, with more to be released on an ongoing basis.

8 |

9 | ### [Assembly Dataset](docs/assembly.md) - NEW!

10 | Multi-part CAD assemblies containing rich information on joints, contact surfaces, holes, and the underlying assembly graph structure.

11 |

12 |

13 |

14 |

15 | ### [Reconstruction Dataset](docs/reconstruction.md)

16 | Construction sequence information from a subset of simple 'sketch and extrude' designs.

17 |

18 |

19 |

20 | ### [Segmentation Dataset](docs/segmentation.md)

21 |

22 | A segmentation of 3D models based on the modeling operation used to create each face, e.g. Extrude, Fillet, Chamfer, etc.

23 |

24 |

25 |

26 |

27 | ## Publications

28 | Please cite the relevant paper below if you use the Fusion 360 Gallery dataset in your research.

29 |

30 | ### Assembly Dataset

31 | [JoinABLe: Learning Bottom-up Assembly of Parametric CAD Joints](https://arxiv.org/abs/2111.12772)

32 |

33 | ```

34 | @article{willis2021joinable,

35 | title={JoinABLe: Learning Bottom-up Assembly of Parametric CAD Joints},

36 | author={Willis, Karl DD and Jayaraman, Pradeep Kumar and Chu, Hang and Tian, Yunsheng and Li, Yifei and Grandi, Daniele and Sanghi, Aditya and Tran, Linh and Lambourne, Joseph G and Solar-Lezama, Armando and Matusik, Wojciech},

37 | journal={arXiv preprint arXiv:2111.12772},

38 | year={2021}

39 | }

40 | ```

41 |

42 | ### Reconstruction Dataset

43 | [Fusion 360 Gallery: A Dataset and Environment for Programmatic CAD Construction from Human Design Sequences](https://arxiv.org/abs/2010.02392)

44 | ```

45 | @article{willis2020fusion,

46 | title={Fusion 360 Gallery: A Dataset and Environment for Programmatic CAD Construction from Human Design Sequences},

47 | author={Karl D. D. Willis and Yewen Pu and Jieliang Luo and Hang Chu and Tao Du and Joseph G. Lambourne and Armando Solar-Lezama and Wojciech Matusik},

48 | journal={ACM Transactions on Graphics (TOG)},

49 | volume={40},

50 | number={4},

51 | year={2021},

52 | publisher={ACM New York, NY, USA}

53 | }

54 | ```

55 |

56 | ### Segmentation Dataset

57 | [BRepNet: A Topological Message Passing System for Solid Models](https://arxiv.org/abs/2104.00706)

58 | ```

59 | @inproceedings{lambourne2021brepnet,

60 | author = {Lambourne, Joseph G. and Willis, Karl D.D. and Jayaraman, Pradeep Kumar and Sanghi, Aditya and Meltzer, Peter and Shayani, Hooman},

61 | title = {BRepNet: A Topological Message Passing System for Solid Models},

62 | booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

63 | month = {June},

64 | year = {2021},

65 | pages = {12773-12782}

66 | }

67 | ```

68 |

69 | ## Download

70 |

71 | | Dataset | Designs | Documentation | Download | Paper | Code |

72 | | - | - | - | - | - | - |

73 | | Assembly | 8,251 assemblies / 154,468 parts | [Documentation](docs/assembly.md) | [Instructions](tools/assembly_download) | [Paper](https://arxiv.org/abs/2111.12772) | [Code](tools) |

74 | | Assembly - Joint | 32,148 joints / 23,029 parts | [Documentation](docs/assembly_joint.md) | [j1.0.0 - 2.8 GB](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/assembly/j1.0.0/j1.0.0.7z) | [Paper](https://arxiv.org/abs/2111.12772) | [Code](https://github.com/AutodeskAILab/JoinABLe) |

75 | | Reconstruction | 8,625 sequences | [Documentation](docs/reconstruction.md) | [r1.0.1 - 2.0 GB](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/reconstruction/r1.0.1/r1.0.1.zip) | [Paper](https://arxiv.org/abs/2010.02392) | [Code](tools) |

76 | | Segmentation | 35,680 parts | [Documentation](docs/segmentation.md) | [s2.0.1 - 3.1 GB](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/segmentation/s2.0.1/s2.0.1.zip) | [Paper](https://arxiv.org/abs/2104.00706) | [Code](https://github.com/AutodeskAILab/BRepNet) |

77 |

78 | ### Additional Downloads

79 | - **Reconstruction Dataset Extrude Volumes** [(r1.0.1 - 152 MB)](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/reconstruction/r1.0.1/r1.0.1_extrude_tools.zip): The extrude volumes for each extrude operation in the design timeline.

80 | - **Reconstruction Dataset Face Extrusion Sequences** [(r1.0.1 - 41 MB)](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/reconstruction/r1.0.1/r1.0.1_regraph_05.zip): The pre-processed face extrusion sequences used to train our [reconstruction network](tools/regraphnet).

81 | - **Segmentation Extended STEP Dataset** [(s2.0.1 - 483 MB)](https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/segmentation/s2.0.1/s2.0.1_extended_step.zip): An extended collection of 42,912 STEP files with associated segmentation information. This includes all STEP data from s2.0.0 along with additional files for which triangle meshes with close to 2500 edges could not be created.
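The `.zip` archives listed above can be fetched and unpacked with the Python standard library alone. The following is a minimal sketch, not part of the repository's tools: the helper names `download` and `extract` and the `data/` output paths are illustrative assumptions, and only the URL is taken from the download table.

```python
import urllib.request
import zipfile
from pathlib import Path

# URL copied from the download table (Reconstruction Dataset, r1.0.1).
RECONSTRUCTION_URL = (
    "https://fusion-360-gallery-dataset.s3.us-west-2.amazonaws.com/"
    "reconstruction/r1.0.1/r1.0.1.zip"
)


def download(url: str, dest: Path) -> Path:
    """Download url to dest, skipping the request if dest already exists."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    if not dest.exists():
        urllib.request.urlretrieve(url, dest)
    return dest


def extract(archive: Path, out_dir: Path) -> list[str]:
    """Extract a zip archive into out_dir and return its member names."""
    out_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(out_dir)
        return zf.namelist()


if __name__ == "__main__":
    # Hypothetical local paths; adjust to your own layout.
    archive = download(RECONSTRUCTION_URL, Path("data/r1.0.1.zip"))
    members = extract(archive, Path("data"))
    print(f"Extracted {len(members)} files")
```

Note that the Assembly Joint download is a `.7z` archive, which `zipfile` cannot read; it needs a separate 7-Zip compatible tool.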

82 |

83 | ## Tools

84 | As part of the dataset we provide various tools for working with the data. These tools leverage the [Fusion 360 API](http://help.autodesk.com/view/fusion360/ENU/?guid=GUID-7B5A90C8-E94C-48DA-B16B-430729B734DC) to perform operations such as geometry reconstruction, traversing B-Rep data structures, and conversion to other formats. More information can be found in the [tools directory](tools).

85 |

86 |

87 | ## License

88 | Please refer to the [dataset license](LICENSE.md).

89 |

--------------------------------------------------------------------------------

/docs/README.md:

--------------------------------------------------------------------------------

1 | # Fusion 360 Gallery Dataset Documentation

2 | Here you will find documentation of the data released as part of the Fusion 360 Gallery Dataset. Each dataset is extracted from designs created in [Fusion 360](https://www.autodesk.com/products/fusion-360/overview) and then posted to the [Autodesk Online Gallery](https://gallery.autodesk.com/) by users.

3 |

4 |

5 | ## Datasets

6 | We derive several datasets focused on specific areas of research. The 3D model content between data subsets will overlap as they are drawn from the same source, but will likely be formatted differently. We provide the following datasets.

7 |

8 | ### [Assembly Dataset](assembly.md)

9 | The Assembly Dataset contains multi-part CAD assemblies with rich information on joints, contact surfaces, holes, and the underlying assembly graph structure.

10 |

11 |

12 | ### [Assembly Dataset - Joint Data](assembly_joint.md)

13 | The Assembly Dataset joint data contains pairs of parts extracted from CAD assemblies, with one or more joints defined between them.

14 |

15 |

16 | ### [Reconstruction Dataset](reconstruction.md)

17 | The Reconstruction Dataset contains construction sequence information from a subset of simple 'sketch and extrude' designs.

18 |

19 |

20 | ### [Segmentation Dataset](segmentation.md)

21 | The Segmentation Dataset contains segmented 3D models based on the modeling operation used to create each face, e.g. Extrude, Fillet, Chamfer, etc.

22 |

23 |

24 |

25 | ## Tools

26 | We provide [tools](../tools) to work with the data using Fusion 360. Full documentation of how to use these tools and write your own is provided in the [tools](../tools) directory.

27 |

--------------------------------------------------------------------------------

/docs/images/assembly_contacts.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_contacts.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_graph.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_graph.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_joint_graph.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_joint_graph.png

--------------------------------------------------------------------------------

/docs/images/assembly_joint_labels.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_joint_labels.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_joint_mosaic.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_joint_mosaic.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_joint_motion_types.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_joint_motion_types.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_joint_set.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_joint_set.jpg

--------------------------------------------------------------------------------

/docs/images/assembly_mosaic.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/assembly_mosaic.jpg

--------------------------------------------------------------------------------

/docs/images/fusion_gallery_mosaic.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/fusion_gallery_mosaic.jpg

--------------------------------------------------------------------------------

/docs/images/reconstruction_extrude_operations.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_extrude_operations.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_extrude_types.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_extrude_types.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_mosaic.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_mosaic.jpg

--------------------------------------------------------------------------------

/docs/images/reconstruction_overview_extrude.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_overview_extrude.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_overview_sequence.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_overview_sequence.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_overview_sketch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_overview_sketch.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_sketch_entities.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_sketch_entities.png

--------------------------------------------------------------------------------

/docs/images/reconstruction_teaser.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_teaser.jpg

--------------------------------------------------------------------------------

/docs/images/reconstruction_timeline_icons.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/reconstruction_timeline_icons.png

--------------------------------------------------------------------------------

/docs/images/segmentation_example.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_example.jpg

--------------------------------------------------------------------------------

/docs/images/segmentation_extended_dataset_stats.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_extended_dataset_stats.jpg

--------------------------------------------------------------------------------

/docs/images/segmentation_features_removed.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_features_removed.jpg

--------------------------------------------------------------------------------

/docs/images/segmentation_mosaic.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_mosaic.jpg

--------------------------------------------------------------------------------

/docs/images/segmentation_start_end_side_faces.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_start_end_side_faces.jpg

--------------------------------------------------------------------------------

/docs/images/segmentation_timeline.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/docs/images/segmentation_timeline.png

--------------------------------------------------------------------------------

/docs/reconstruction_stats.md:

--------------------------------------------------------------------------------

1 | # Reconstruction Dataset Statistics

2 |

3 | ## Design Complexity

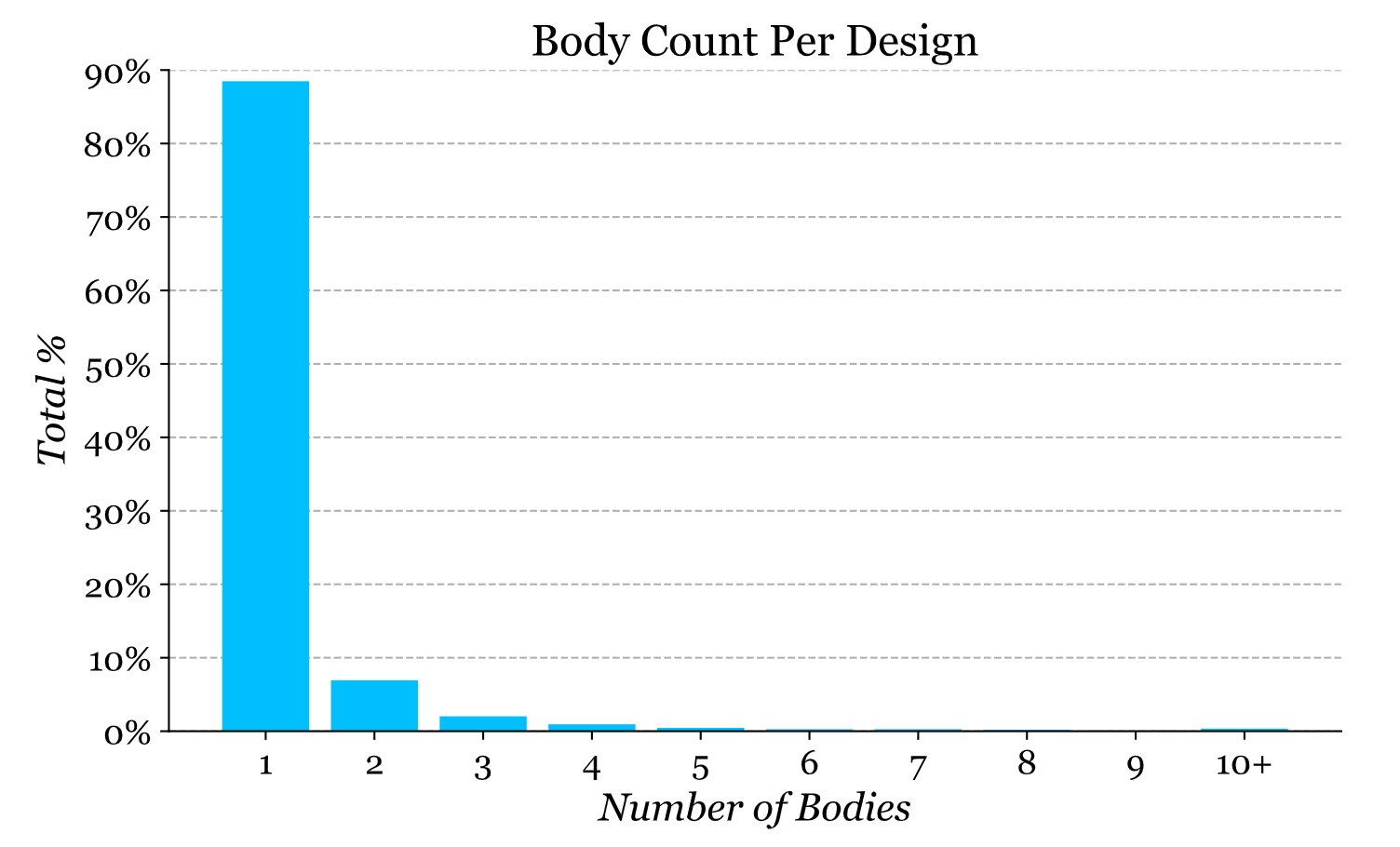

4 | A key goal of the reconstruction dataset is to provide a suitably scoped baseline for learning-based approaches to CAD reconstruction. Restricting the modeling operations to _sketch_ and _extrude_ vastly narrows the design space and enables simpler approaches for reconstruction. Each design represents a component in Fusion 360 that can have multiple geometric bodies. The vast majority of designs have a single body.

5 |

6 |

7 |

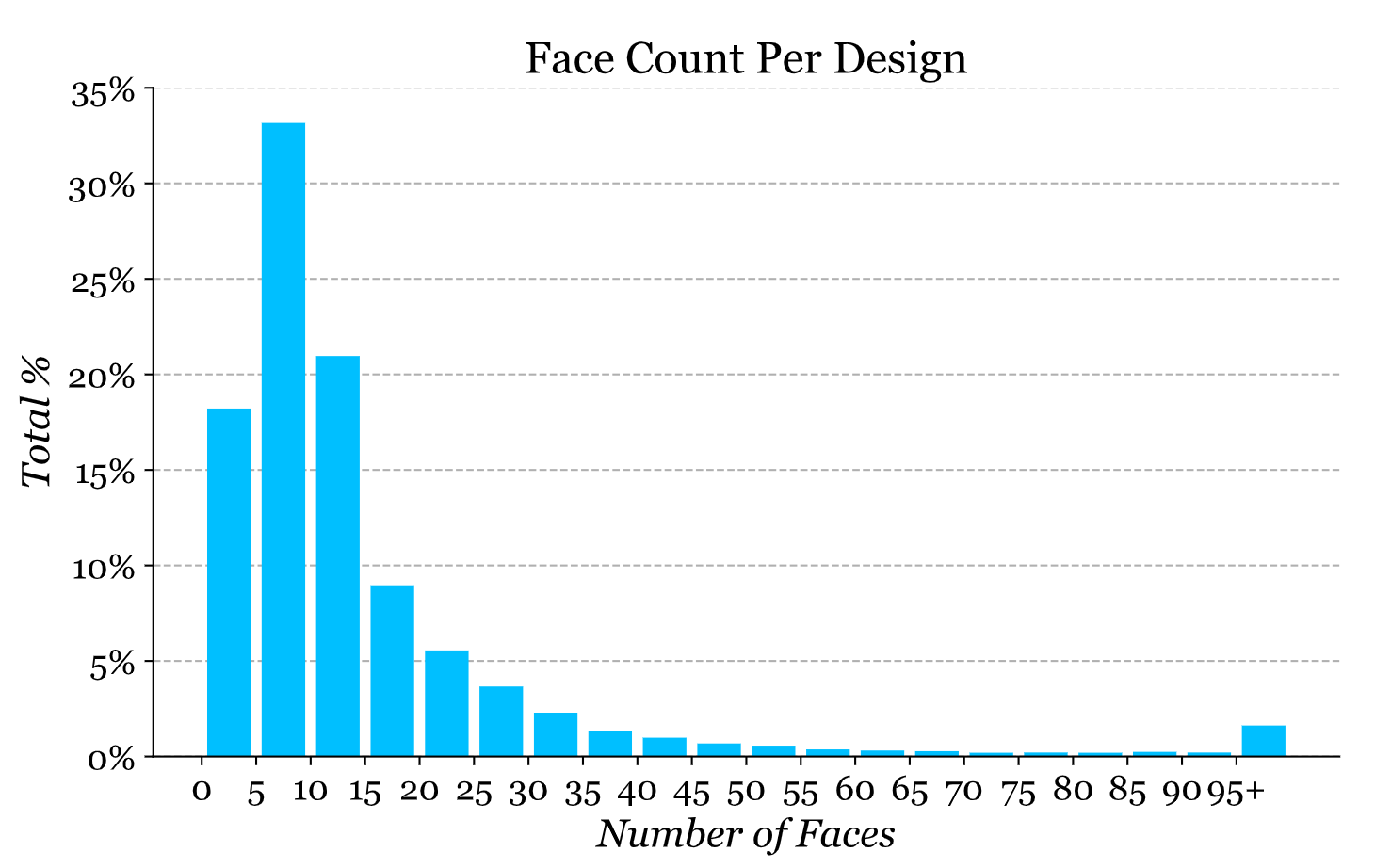

8 | The number of B-Rep faces in each design gives a good indication of the complexity of the dataset. Below we show the number of faces per design as a distribution, with the peak between 5 and 10 faces per design. As we do not filter any of the designs based on complexity, this distribution reflects real designs where simple washers and flat plates are common components in mechanical assemblies.

9 |

10 |

11 |

12 | ## Construction Sequence

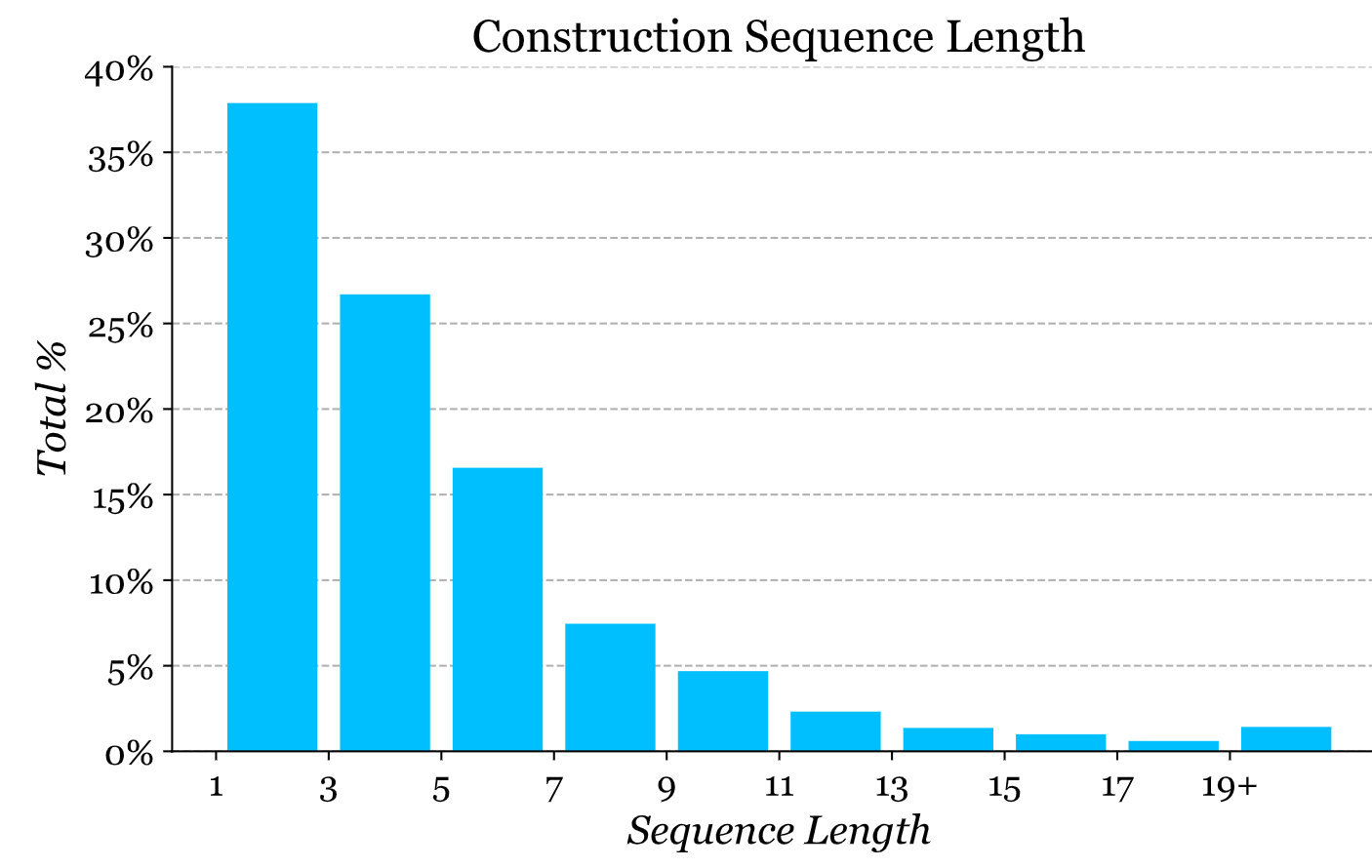

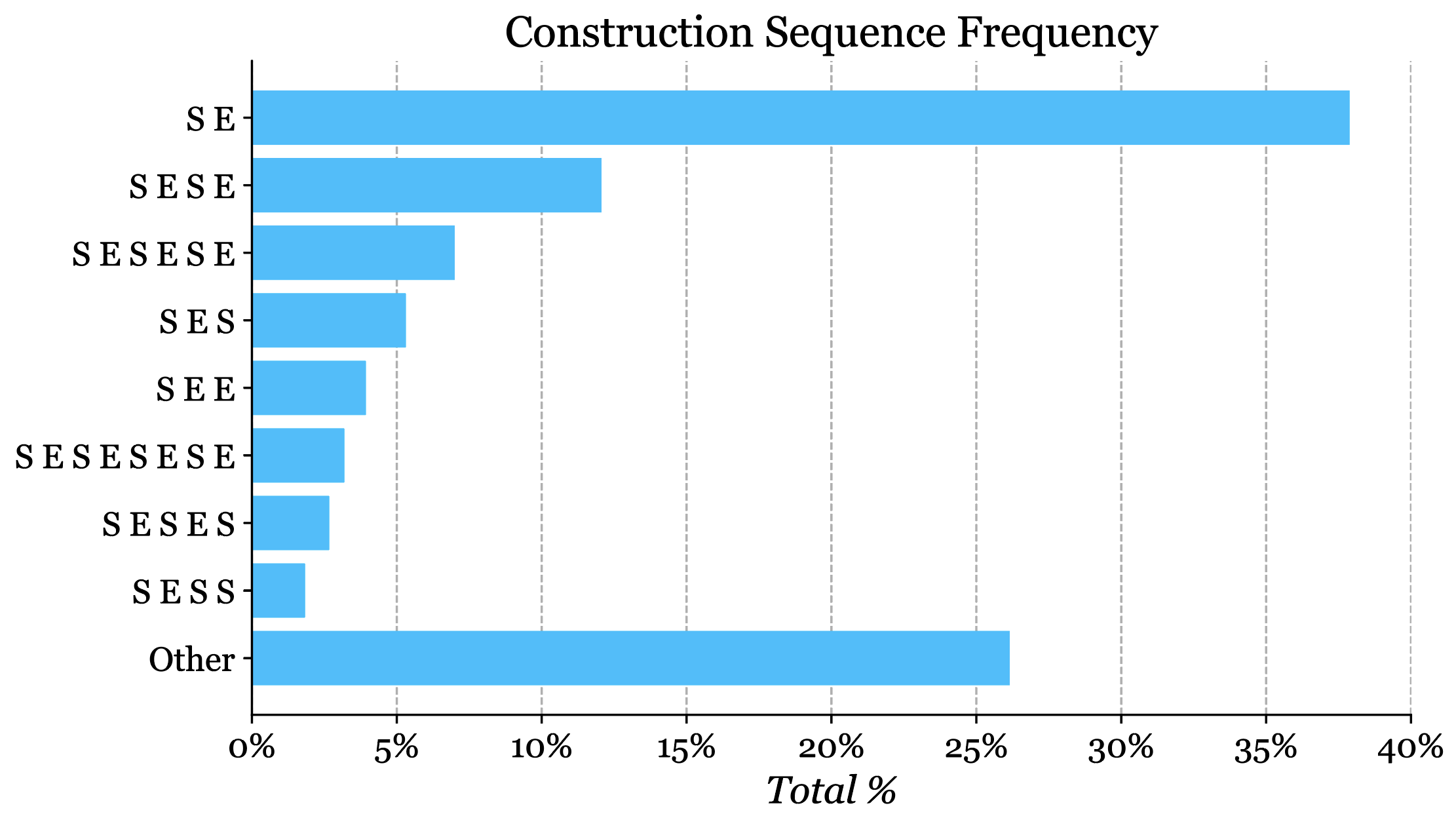

13 | The construction sequence is the series of _sketch_ and _extrude_ operations that are executed to produce the final geometry. Each construction sequence must have at least one _sketch_ and one _extrude_ step, for a minimum of two steps. The average number of steps is 4.74, the median 4, the mode 2, and the maximum 61. Below we illustrate the distribution of construction sequence length.

14 |

15 |

16 |

17 |

18 | The most frequent construction sequence combinations are shown below. S indicates a _sketch_ and E indicates an _extrude_ operation.
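As a rough illustration of how such statistics can be computed, the sketch below abbreviates each construction sequence to a string of S and E letters and then counts lengths and combinations. The `sequences` list is hypothetical sample data, not taken from the dataset, and `signature` is an illustrative helper name.

```python
from collections import Counter
from statistics import mean, median, mode

# Hypothetical input: one list of step types per design, in timeline order.
sequences = [
    ["sketch", "extrude"],
    ["sketch", "extrude"],
    ["sketch", "extrude", "sketch", "extrude"],
    ["sketch", "sketch", "extrude", "extrude", "extrude"],
]

LETTERS = {"sketch": "S", "extrude": "E"}


def signature(steps):
    """Abbreviate a sequence of steps, e.g. sketch, extrude -> 'SE'."""
    return "".join(LETTERS[step] for step in steps)


# Sequence length statistics (average, median, mode, maximum).
lengths = [len(steps) for steps in sequences]
print(mean(lengths), median(lengths), mode(lengths), max(lengths))

# Most frequent sequence combinations.
combos = Counter(signature(steps) for steps in sequences)
print(combos.most_common(3))
```

An unknown step type raises a `KeyError`, which is a deliberate choice here since the reconstruction dataset is restricted to sketch and extrude operations.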

19 |

20 |

21 |

22 | ## Sketch

23 | ### Curves

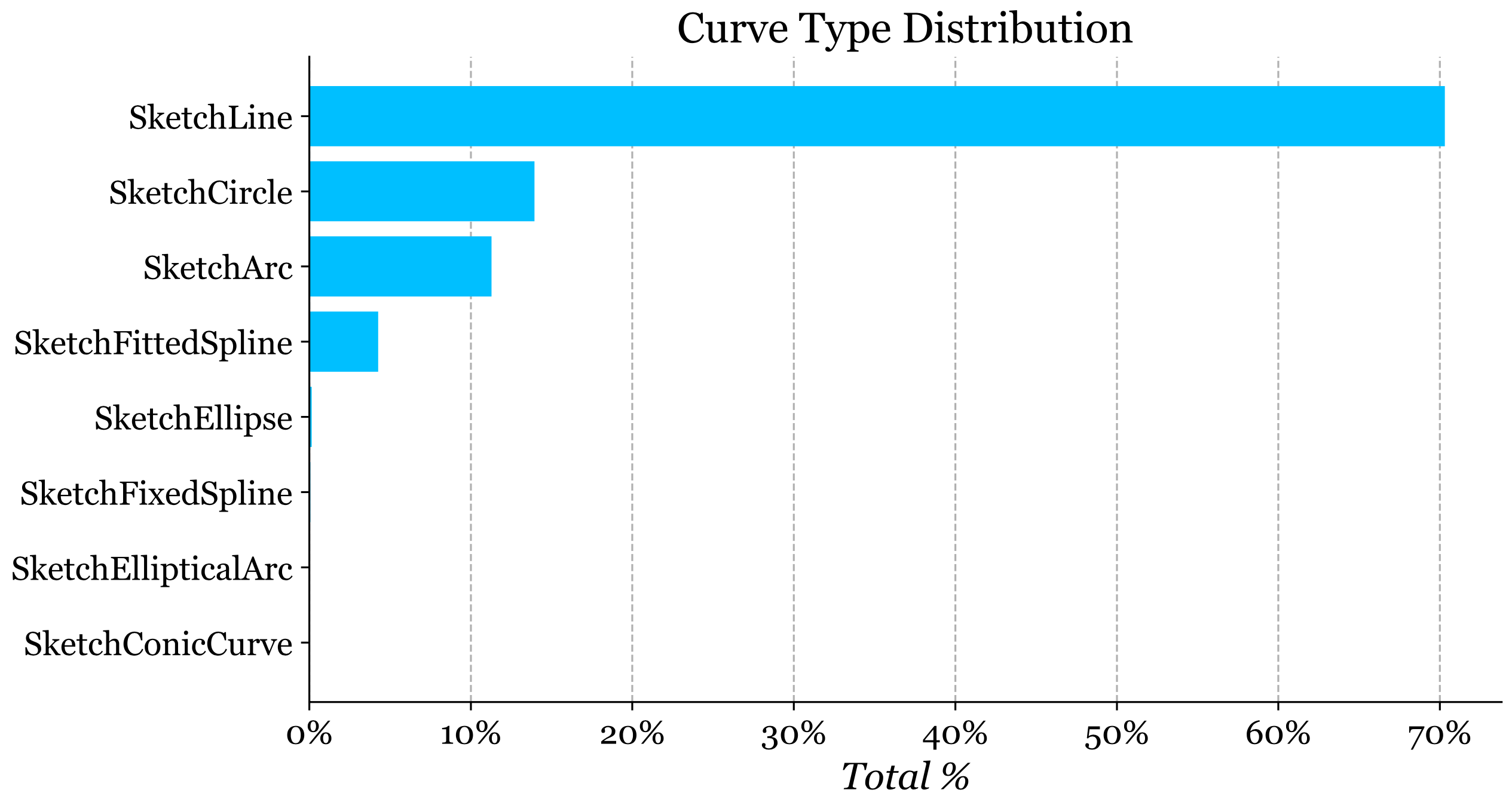

24 | Each sketch is made up of different types of curves, such as lines, arcs, and circles. It is notable that mechanical CAD sketches rely heavily on lines, circles, and arcs rather than spline curves.

25 | Below we show the overall distribution of different curve types in the reconstruction dataset.

26 |

27 |

28 |

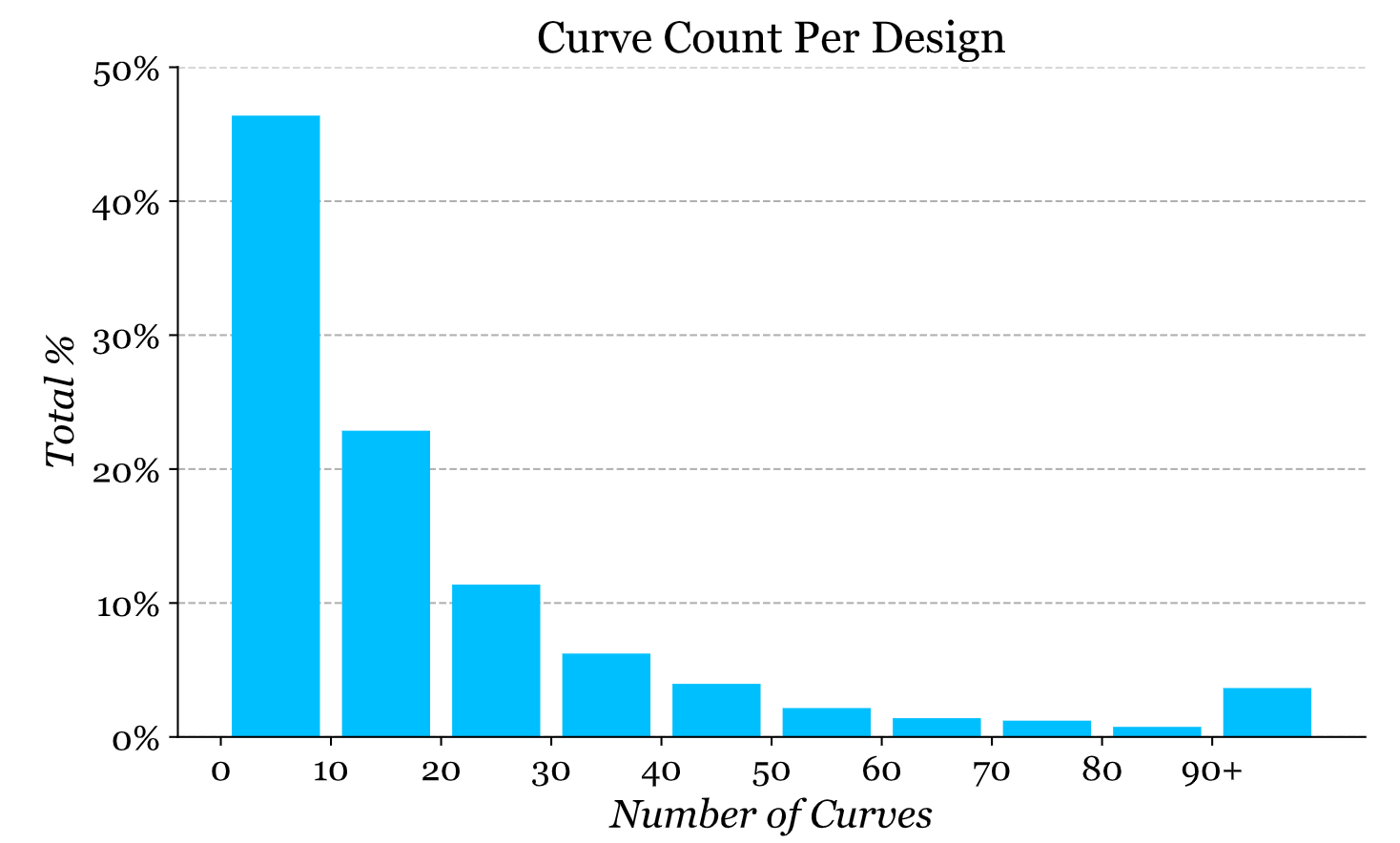

29 | The graph below illustrates the distribution of curve count per design, as another measure of design complexity.

30 |

31 |

32 |

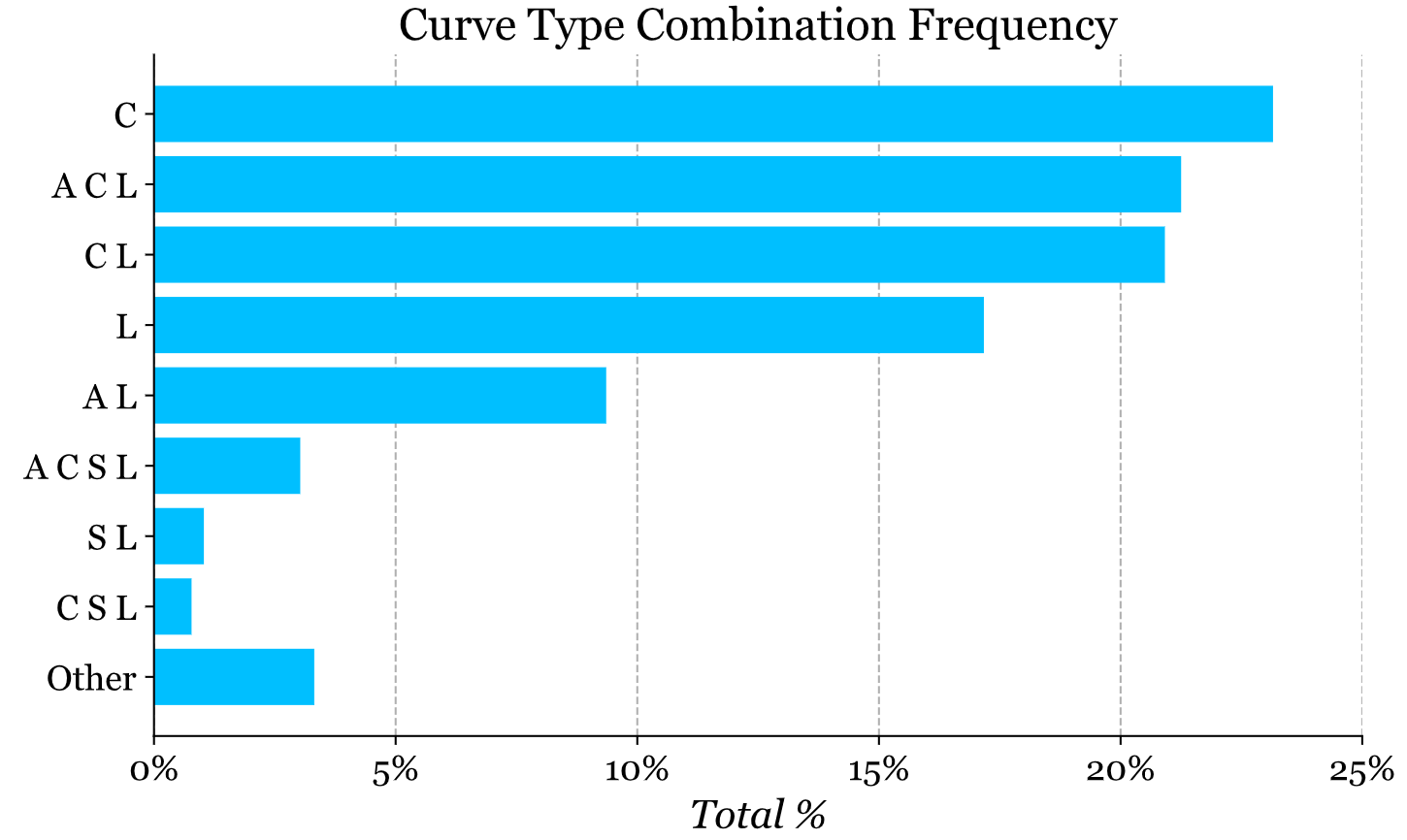

33 | Below we show the frequency that different curve combinations are used together in a design.

34 | Each curve type is abbreviated as follows:

35 | - C: `SketchCircle`

36 | - A: `SketchArc`

37 | - L: `SketchLine`

38 | - S: `SketchFittedSpline`
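The abbreviations above can be turned into a per-design combination label with a small lookup table. This is a minimal sketch: the `designs` data is hypothetical, and the letter order within a label (C, A, L, S, following the list above) is an assumption rather than something the statistics specify.

```python
from collections import Counter

# Curve type to abbreviation, as listed above.
ABBREVIATIONS = {
    "SketchCircle": "C",
    "SketchArc": "A",
    "SketchLine": "L",
    "SketchFittedSpline": "S",
}
ORDER = "CALS"  # assumed canonical letter order within a label


def curve_combination(curve_types):
    """Return the set of curve types used in a design as a label, e.g. 'AL'."""
    letters = {ABBREVIATIONS[curve_type] for curve_type in curve_types}
    return "".join(letter for letter in ORDER if letter in letters)


# Hypothetical designs, each given as the list of curve types in its sketches.
designs = [
    ["SketchLine", "SketchLine", "SketchLine", "SketchLine"],
    ["SketchLine", "SketchCircle"],
    ["SketchArc", "SketchLine", "SketchLine"],
    ["SketchLine", "SketchLine"],
]

combo_counts = Counter(curve_combination(design) for design in designs)
print(combo_counts)
```

Each label records which curve types appear in a design, not how many of each, so a design with four lines and a design with two lines both map to `L`.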

39 |

40 |

41 |

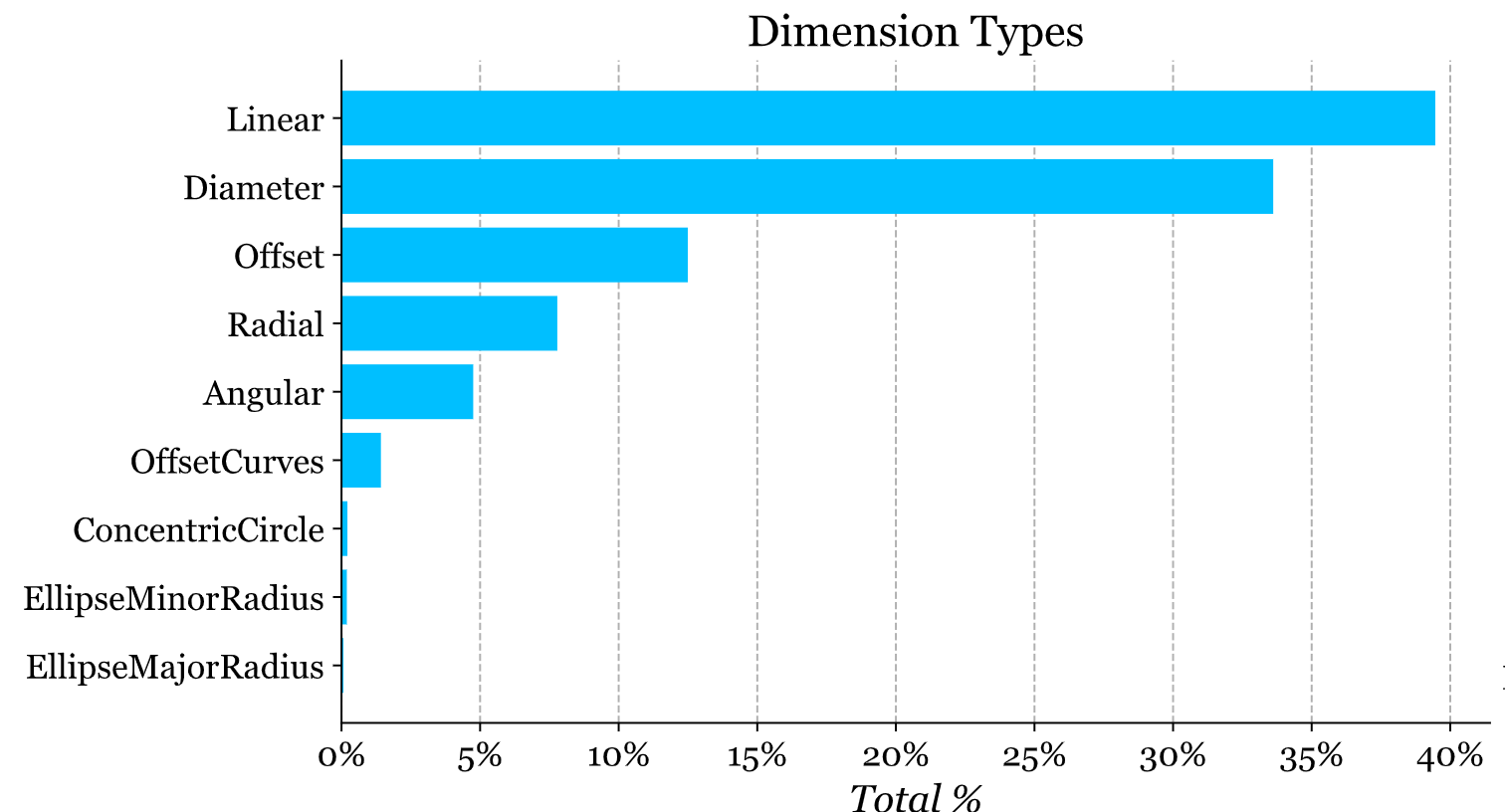

42 | ### Dimensions & Constraints

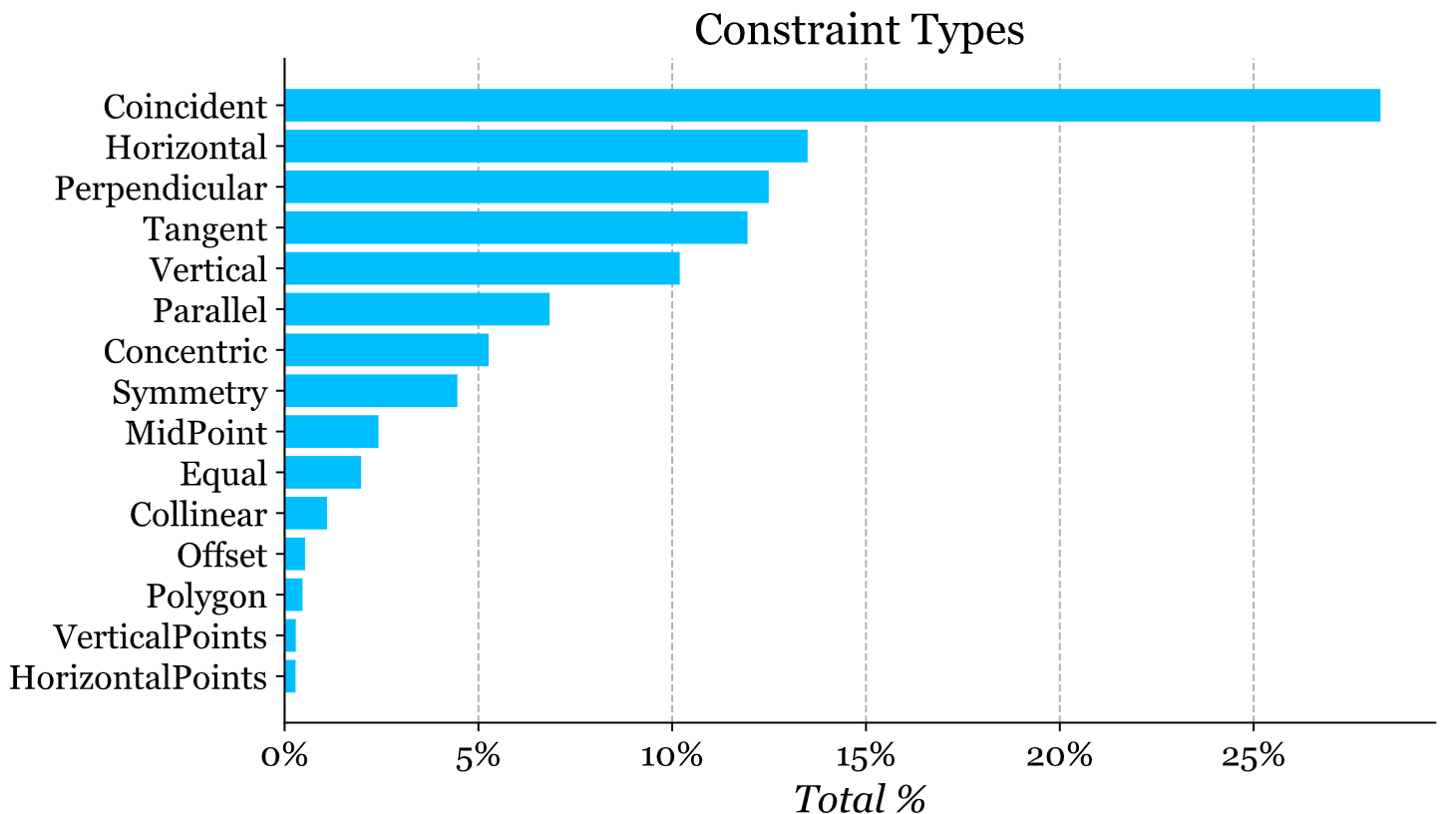

43 | Shown below are the distributions of dimension and constraint types in the dataset.

44 |

45 |

46 |

47 |

48 |

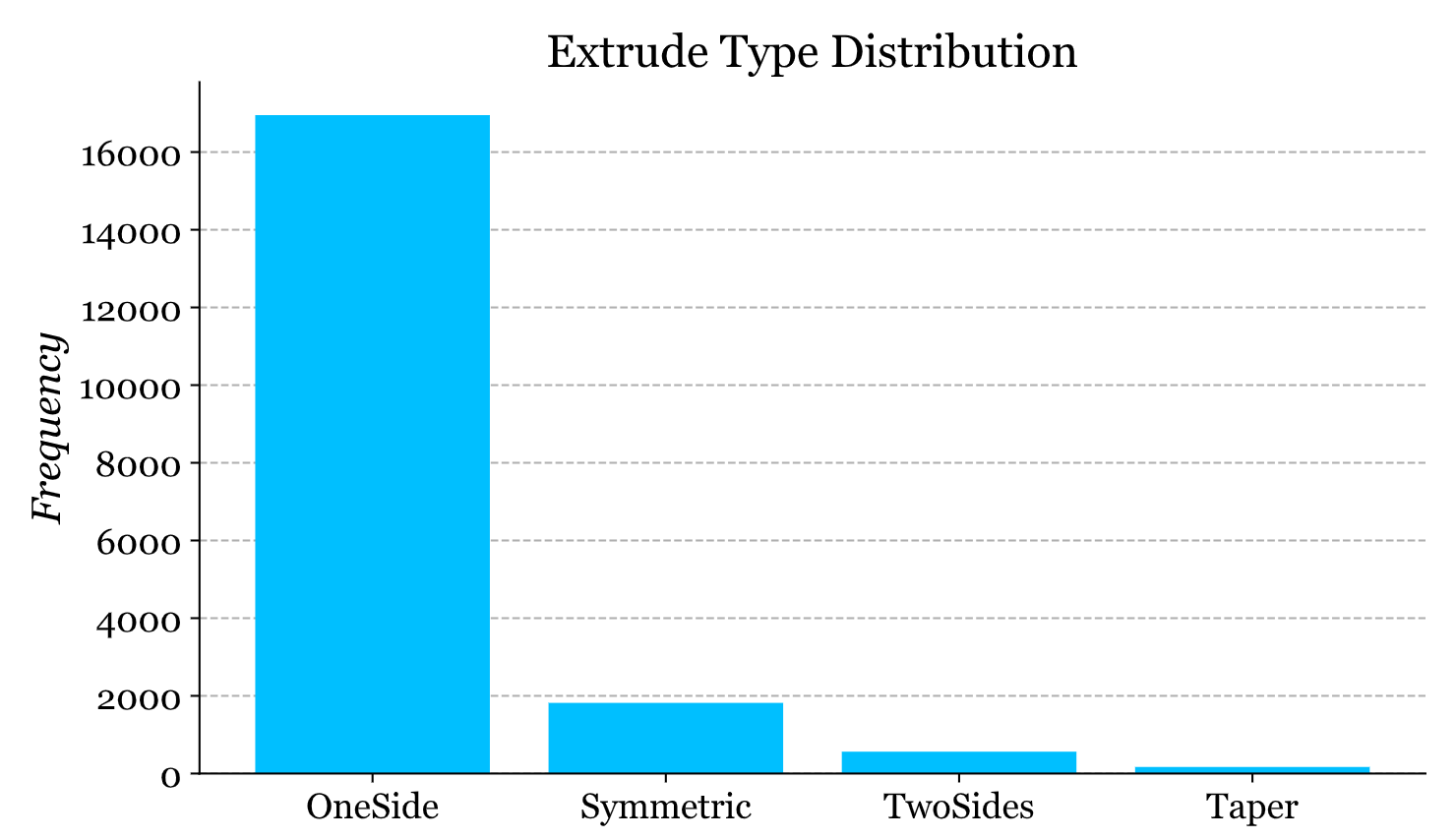

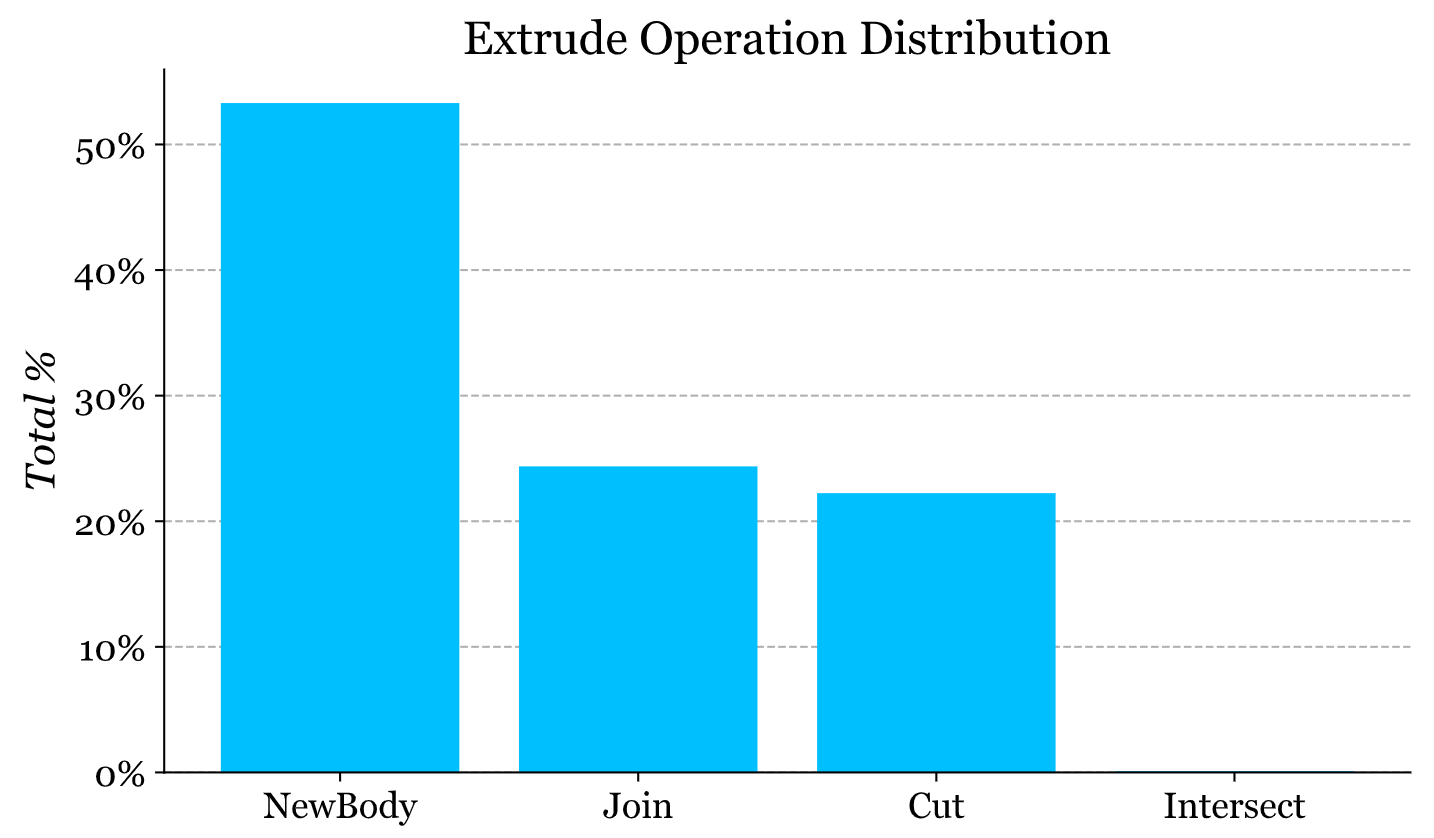

49 | ## Extrude

50 |

51 | Illustrated below is the distribution of different extrude types and operations. Note that tapers can be applied in addition to any extrude type, so the overall frequency of each is shown rather than a relative percentage.

52 |

53 |

54 |

55 |

56 |

--------------------------------------------------------------------------------

/docs/tags.json:

--------------------------------------------------------------------------------

1 | {

2 | "industry": [

3 | "Media & Entertainment",

4 | "Civil Infrastructure",

5 | "Architecture, Engineering & Construction",

6 | "Other Industries",

7 | "Product Design & Manufacturing"

8 | ],

9 | "category": [

10 | "3D Art + Illustration",

11 | "Game Asset Creation",

12 | "Utilities & Telecom",

13 | "Construction Verification",

14 | "Science",

15 | "Story Development",

16 | "Design",

17 | "Tools",

18 | "General",

19 | "Nature",

20 | "Sport",

21 | "Fantasy",

22 | "Land Development",

23 | "HVAC",

24 | "Automotive",

25 | "Virtual Reality",

26 | "Bridges",

27 | "Factory Layout",

28 | "Games/Film",

29 | "Product Design",

30 | "Digital Visualization",

31 | "Jewelry",

32 | "Concept Art",

33 | "Art",

34 | "Robotics",

35 | "Cartoon",

36 | "Advertising",

37 | "Furniture + Household",

38 | "Rapid Energy Modeling",

39 | "Infrastructure Visualization",

40 | "Game",

41 | "Conceptual Modeling",

42 | "Architecture",

43 | "Mechanical Engineering",

44 | "Aerospace",

45 | "City Model",

46 | "Energy + Power",

47 | "Toys",

48 | "Wood Working",

49 | "Film/TV/Post",

50 | "Character",

51 | "Fashion",

52 | "Archeology",

53 | "Engineering",

54 | "Museum",

55 | "Electronics",

56 | "Interior Design",

57 | "Heritage",

58 | "Infrastructure Design",

59 | "Props/Items",

60 | "Roads & Highways",

61 | "Building Renovation",

62 | "Oil & Gas",

63 | "Machine design",

64 | "Medical",

65 | "SCI-FI",

66 | "Industrial Asset Creation",

67 | "Marine",

68 | "Rail",

69 | "Miscellaneous",

70 | "Environments",

71 | "Gameplay",

72 | "Packaging",

73 | "Drainage",

74 | "Tourism",

75 | "Inspection",

76 | "Water & Wastewater",

77 | "Abstract",

78 | "Level Design",

79 | "Reality"

80 | ],

81 | "products": [

82 | "3ds Max",

83 | "3ds Max Design",

84 | "Alias",

85 | "Alias AutoStudio",

86 | "Alias Design",

87 | "Alias Surface",

88 | "AutoCAD",

89 | "AutoCAD Architecture",

90 | "AutoCAD LT",

91 | "AutoCAD Mechanical",

92 | "AutoCAD Plant 3D",

93 | "Autodesk® Rendering",

94 | "Fusion 360",

95 | "InfraWorks 360",

96 | "Inventor",

97 | "Inventor LT",

98 | "Inventor Professional",

99 | "Inventor Publisher",

100 | "Maya",

101 | "Not specified",

102 | "ReCap 360",

103 | "ReCap 360 Ultimate",

104 | "ReMake",

105 | "Revit",

106 | "Revit Architecture",

107 | "Revit LT"

108 | ]

109 | }

--------------------------------------------------------------------------------

/tools/README.md:

--------------------------------------------------------------------------------

1 | # Fusion 360 Gallery Dataset Tools

2 | Here we provide various tools for working with the Fusion 360 Gallery Dataset, including the CAD reconstruction code used in [our paper](https://arxiv.org/abs/2010.02392). Several tools leverage the [Fusion 360 API](http://help.autodesk.com/view/fusion360/ENU/?guid=GUID-7B5A90C8-E94C-48DA-B16B-430729B734DC) to perform geometry operations and require Fusion 360 to be installed.

3 |

4 | ## Getting Started

5 | Below are general instructions for getting set up with Fusion 360. Refer to the readme provided with each tool for specific instructions.

6 |

7 | ### Install Fusion 360

8 | The first step is to install Fusion 360 and set up an account. As Fusion 360 stores data in the cloud, an account is required to log in and use the application. Fusion 360 is available on Windows and Mac and is free for students and educators. [Follow these instructions](https://www.autodesk.com/products/fusion-360/students-teachers-educators) to create a free educational license.

9 |

10 | ### Running

11 | To run a script/add-in in Fusion 360:

12 |

13 | 1. Open Fusion 360

14 | 2. Go to Tools tab > Add-ins > Scripts and Add-ins

15 | 3. In the popup, select the Add-in panel, click the green '+' icon and select the appropriate directory in this repo

16 | 4. Click 'Run'

17 |

18 |

19 |

20 |

21 | ### Debugging

22 | To debug any of the tools that use Fusion 360 you need to install [Visual Studio Code](https://code.visualstudio.com/), a free open source editor. For a general overview of how to debug scripts in Fusion 360 from Visual Studio Code, check out [this post](https://modthemachine.typepad.com/my_weblog/2019/09/debug-fusion-360-add-ins.html) and refer to the readme provided along with each tool.

23 |

24 |

25 | ## Tools

26 | - [`Fusion 360 Gym`](fusion360gym): A 'gym' environment for training ML models to design using Fusion 360.

27 | - [`Reconverter`](reconverter): Demonstrates how to batch convert the raw data structure provided with the reconstruction dataset into other representations using Fusion 360.

28 | - [`Regraph`](regraph): Demonstrates how to create a B-Rep graph data structure from data provided with the reconstruction dataset using Fusion 360.

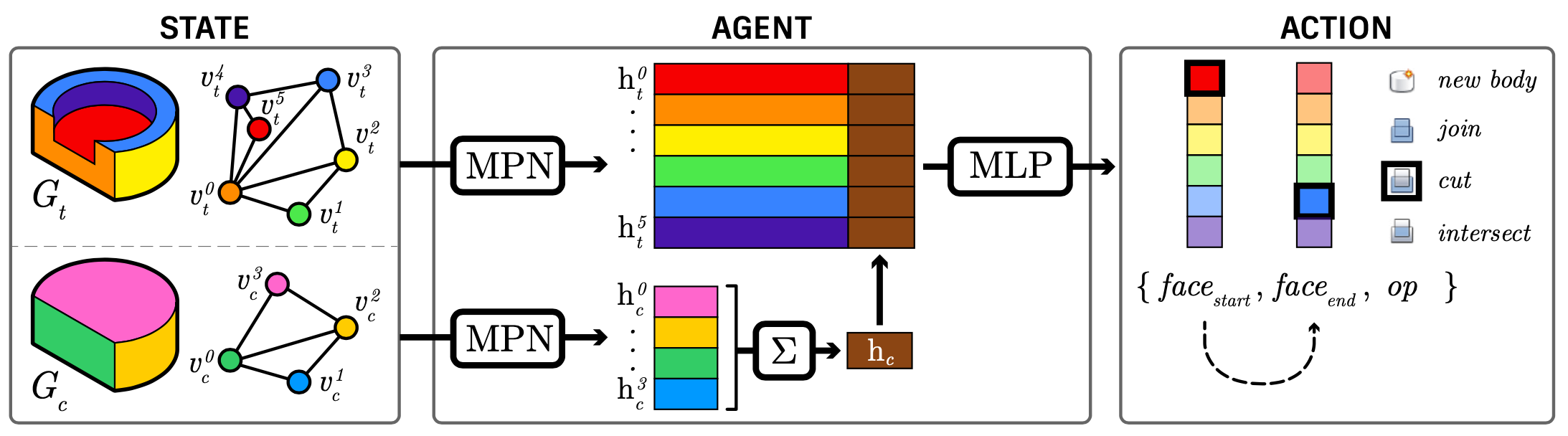

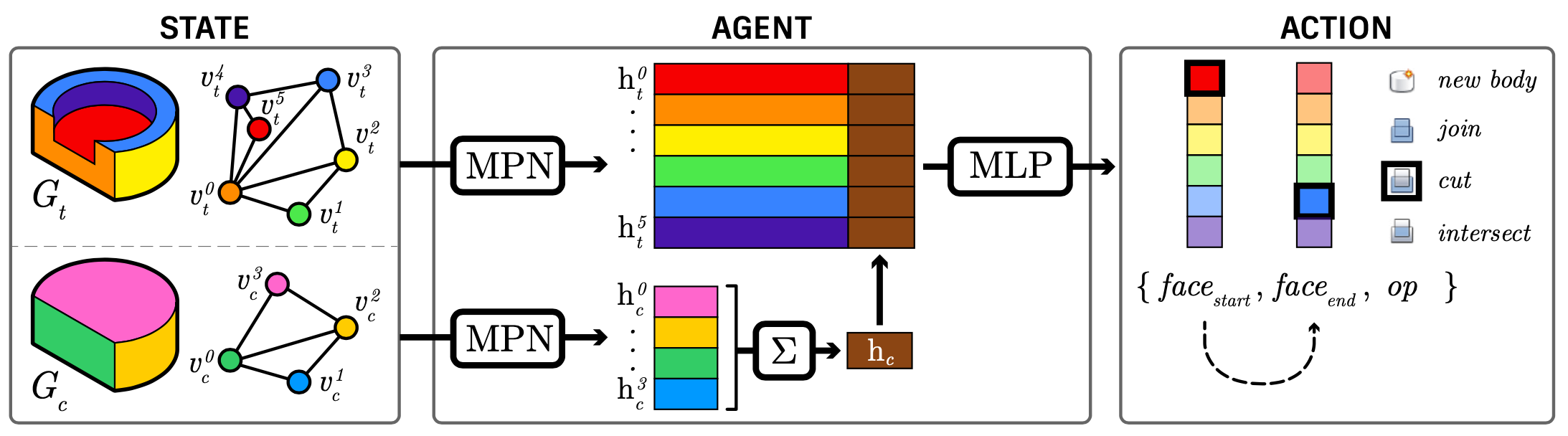

29 | - [`RegraphNet`](regraphnet): A neural network for predicting CAD reconstruction actions. This network takes the output from [`Regraph`](regraph) and is the underlying network used with neurally guided search in [our paper](https://arxiv.org/abs/2010.02392).

30 | - [`Segmentation Viewer`](segmentation_viewer): Viewer for the segmentation dataset to visualize the 3D models with different colors based on the modeling operation.

31 | - [`Search`](search): A framework for running neurally guided search to recover a construction sequence from B-Rep input. We use this code in [our paper](https://arxiv.org/abs/2010.02392).

32 | - [`sketch2image`](sketch2image): Convert sketches provided in json format to images using matplotlib.

33 | - [`Assembly Download`](assembly_download): Download and uncompress the Assembly Dataset.

34 | - [`Assembly Graph`](assembly_graph): Generate a graph representation from an assembly.

35 | - [`Assembly2CAD`](assembly2cad): Build a Fusion 360 CAD model from an assembly data sample.

36 | - [`Joint2CAD`](joint2cad): Build a Fusion 360 CAD model from a joint data sample.

37 |

--------------------------------------------------------------------------------

/tools/assembly2cad/README.md:

--------------------------------------------------------------------------------

1 | # Assembly2CAD

2 |

3 |

4 |

5 | [Assembly2CAD](assembly2cad.py) demonstrates how to build a Fusion 360 CAD model from the assembly data provided with the [Assembly Dataset](../../docs/assembly.md). The resulting CAD model has a complete assembly tree and fully specified parametric joints.

6 |

7 |

8 | ## Running

9 | [Assembly2CAD](assembly2cad.py) runs in Fusion 360 as a script with the following steps.

10 | 1. Follow the [general instructions here](../) to get set up with Fusion 360.

11 | 2. Optionally change the `assembly_file` in [`assembly2cad.py`](assembly2cad.py) to point towards an `assembly.json` provided with the [Assembly Dataset](../../docs/assembly.md).

12 | 3. Optionally change the `png_file` and `f3d_file` in [`assembly2cad.py`](assembly2cad.py) to your preferred name for each file that is exported.

13 | 4. Run the [`assembly2cad.py`](assembly2cad.py) script from within Fusion 360. When the script has finished running the design will be open in Fusion 360.

14 | 5. Check the contents of the `assembly2cad/` directory to find the exported .f3d file.

15 |

16 | ## How it Works

17 | If you look into the code you will notice that the hard work is performed by [`assembly_importer.py`](../common/assembly_importer.py), which does the following:

18 | 1. Opens and reads `assembly.json`.

19 | 2. Gets a list of all .smt files in the directory where `assembly.json` is located.

20 | 3. Looks into the `root` data of `assembly.json` and creates brep bodies from the smt files at the root level.

21 | 4. Looks into `tree` and `occurrences` data of the `assembly.json` and creates components/occurrences by importing the appropriate .smt files.

22 | 5. After the assembly tree is built it creates joints if specified in `assembly.json`.

23 |

24 |
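The first steps above can be sketched outside Fusion 360 with the standard library alone. This is only an illustrative sketch, not the code in `assembly_importer.py`: the helper name `summarize_assembly` is hypothetical, and it only checks for the `root`, `tree` and `occurrences` keys named above without assuming anything about their contents.

```python
import json
from pathlib import Path


def summarize_assembly(assembly_file):
    """Read assembly.json and list the .smt files stored alongside it.

    Hypothetical helper for illustration; it does not create any geometry.
    """
    assembly_file = Path(assembly_file)
    # Step 1: open and read assembly.json
    with open(assembly_file, encoding="utf8") as f:
        assembly_data = json.load(f)
    # Step 2: collect the .smt files in the same directory as assembly.json
    smt_files = sorted(p.name for p in assembly_file.parent.glob("*.smt"))
    # Steps 3-4: report which of the top-level keys named above are present
    keys = [k for k in ("root", "tree", "occurrences") if k in assembly_data]
    return {"smt_files": smt_files, "keys": keys}
```

Creating the B-Rep bodies, occurrences and joints themselves requires the Fusion 360 API and is left to the importer.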

--------------------------------------------------------------------------------

/tools/assembly2cad/assembly2cad.manifest:

--------------------------------------------------------------------------------

1 | {

2 | "autodeskProduct": "Fusion360",

3 | "type": "script",

4 | "author": "",

5 | "description": {

6 | "": ""

7 | },

8 | "supportedOS": "windows|mac",

9 | "editEnabled": true

10 | }

--------------------------------------------------------------------------------

/tools/assembly2cad/assembly2cad.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Construct a Fusion 360 CAD model from an assembly

4 | provided with the Fusion 360 Gallery Assembly Dataset

5 |

6 | """

7 |

8 |

9 | import os

10 | import sys

11 | from pathlib import Path

12 | import adsk.core

13 | import traceback

14 |

15 | # Add the common folder to sys.path

16 | COMMON_DIR = os.path.join(os.path.dirname(__file__), "..", "common")

17 | if COMMON_DIR not in sys.path:

18 | sys.path.append(COMMON_DIR)

19 |

20 | from assembly_importer import AssemblyImporter

21 | import exporter

22 |

23 |

24 | def run(context):

25 | ui = None

26 | try:

27 | app = adsk.core.Application.get()

28 | ui = app.userInterface

29 |

30 | current_dir = Path(__file__).resolve().parent

31 | data_dir = current_dir.parent / "testdata/assembly_examples/belt_clamp"

32 | assembly_file = data_dir / "assembly.json"

33 |

34 | assembly_importer = AssemblyImporter(assembly_file)

35 | assembly_importer.reconstruct()

36 |

37 | png_file = current_dir / f"{assembly_file.stem}.png"

38 | exporter.export_png_from_component(png_file, app.activeProduct.rootComponent)

39 |

40 | f3d_file = current_dir / f"{assembly_file.stem}.f3d"

41 | exporter.export_f3d(f3d_file)

42 |

43 | if ui:

44 | if f3d_file.exists():

45 | ui.messageBox(f"Exported to: {f3d_file}")

46 | else:

47 | ui.messageBox(f"Failed to export: {f3d_file}")

48 | except:

49 | if ui:

50 | ui.messageBox(f"Failed to export: {traceback.format_exc()}")

51 |

--------------------------------------------------------------------------------

/tools/assembly_download/README.md:

--------------------------------------------------------------------------------

1 | # Assembly Dataset Download

2 | The Assembly Dataset is provided as a series of 7z archives.

3 | Each archive contains approximately 750 assemblies as well as the training split and license information.

4 | The entire dataset is 146.53 GB uncompressed and 18.8 GB compressed.

5 | We provide a script to download and extract the files below.

6 |

7 | ## Download

8 | Below are the links to directly download each of the archive files. Each archive can be extracted independently if only a portion of the full dataset is required.

9 |

10 | - [a1.0.0_00.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_00.7z) (2.3 GB)

11 | - [a1.0.0_01.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_01.7z) (2.1 GB)

12 | - [a1.0.0_02.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_02.7z) (2.0 GB)

13 | - [a1.0.0_03.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_03.7z) (1.8 GB)

14 | - [a1.0.0_04.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_04.7z) (1.1 GB)

15 | - [a1.0.0_05.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_05.7z) (1.9 GB)

16 | - [a1.0.0_06.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_06.7z) (1.5 GB)

17 | - [a1.0.0_07.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_07.7z) (1.9 GB)

18 | - [a1.0.0_08.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_08.7z) (1.4 GB)

19 | - [a1.0.0_09.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_09.7z) (1.2 GB)

20 | - [a1.0.0_10.7z](https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_10.7z) (1.4 GB)

21 |

22 |

23 | ## Extraction

24 | Extracting each archive requires a tool that supports the 7z compression format.

25 |

26 | ### Linux

27 | Distributions of Ubuntu come with `p7zip` installed. However, with older versions (e.g. 16.02) extraction times can be excessively slow. If you experience extraction times of longer than 10 mins per archive, we suggest using the latest version provided on the [7-Zip](https://www.7-zip.org) website. The following commands can be used to download and install the latest version if not already available in your package manager:

28 |

29 | ```

30 | curl https://www.7-zip.org/a/7z2106-linux-x64.tar.xz -o 7z2106-linux-x64.tar.xz

31 | sudo apt install xz-utils

32 | tar -xf 7z2106-linux-x64.tar.xz

33 | ```

34 | and then extract the archives:

35 | ```

36 | 7zz x a1.0.0_00.7z

37 | ```

38 |

39 | ### Mac OS

40 | On recent versions of Mac OS, 7z is supported natively.

41 |

42 | ### Windows

43 | Windows users can download and install [7-Zip](https://www.7-zip.org), which offers both a GUI and command line interface.

44 |

45 | ## Download and Extraction Script

46 | We provide the python script [assembly_download.py](assembly_download.py) to download and extract all archive files.

47 |

48 | ### Installation

49 | The script calls [7-Zip](https://www.7-zip.org) directly, so if you encounter problems, ensure the path is set correctly in the `get_7z_path()` function or, on Linux, that `7z` is present in your path. To run the script you will need the following Python libraries, which can be installed using `pip`:

50 |

51 | - `requests`

52 | - `tqdm`

53 |

54 |

55 | ### Running

56 | The script can be run by passing in the output directory where the files will be extracted to.

57 |

58 | ```

59 | python assembly_download.py --output path/to/files

60 | ```

61 | Additionally the following optional arguments can be passed:

62 | - `--limit`: Limit the number of archive files to download.

63 | - `--threads`: Number of threads to use for downloading in parallel [default: 4].

64 | - `--download_only`: Download without extracting files.

65 |

66 | The script will not re-download and overwrite archive files that have already been downloaded.
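
The archive names listed under Download follow a regular pattern, so the URL list and the skip-if-present check can be sketched as below. This is a minimal sketch rather than the script itself: the helper names `archive_urls` and `pending_downloads` are hypothetical, and the count of 11 archives is taken from the list above.

```python
from pathlib import Path

# Base URL copied from the download links listed above
BASE_URL = "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0"


def archive_urls(limit=None, count=11):
    """Build the archive URLs a1.0.0_00.7z ... a1.0.0_10.7z (hypothetical helper)."""
    urls = [f"{BASE_URL}/a1.0.0_{i:02d}.7z" for i in range(count)]
    # Mirror the --limit option: keep only the first `limit` archives
    return urls if limit is None else urls[:limit]


def pending_downloads(urls, output_dir):
    """Return the URLs whose archive is not yet present in output_dir."""
    output_dir = Path(output_dir)
    return [u for u in urls if not (output_dir / u.rsplit("/", 1)[-1]).exists()]
```

For example, `pending_downloads(archive_urls(limit=2), "path/to/files")` lists whichever of the first two archives still need downloading.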

--------------------------------------------------------------------------------

/tools/assembly_download/assembly_download.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Download and extract the Fusion 360 Gallery Assembly Dataset

4 |

5 | """

6 |

7 | import argparse

8 | import sys

9 | import itertools

10 | import requests

11 | from requests.packages.urllib3.exceptions import InsecureRequestWarning

12 | import subprocess

13 | from pathlib import Path

14 | from multiprocessing.pool import ThreadPool

15 | from multiprocessing import Pool

16 | from tqdm import tqdm

17 | requests.packages.urllib3.disable_warnings(InsecureRequestWarning)

18 |

19 |

20 | def download_file(input):

21 | """Download a file and save it locally"""

22 | url, output_dir, position = input

23 | url_file = Path(url).name

24 | local_file = output_dir / url_file

25 | if not local_file.exists():

26 | tqdm.write(f"Downloading {url_file} to {local_file}")

27 | r = requests.get(url, stream=True, verify=False)

28 | if r.status_code == 200:

29 | total_length = int(r.headers.get("content-length"))

30 | pbar = tqdm(total=total_length, position=position)

31 | with open(local_file, "wb") as f:

32 | for chunk in r:

33 | pbar.update(len(chunk))

34 | f.write(chunk)

35 | pbar.close()

36 | tqdm.write(f"Finished downloading {local_file.name}")

37 | else:

38 | tqdm.write(f"Skipping download of {local_file.name} as local file already exists")

39 | return local_file

40 |

41 |

42 | def download_files(output_dir, limit, threads):

43 | """Download the assembly archive files"""

44 | assembly_urls = [

45 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_00.7z",

46 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_01.7z",

47 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_02.7z",

48 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_03.7z",

49 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_04.7z",

50 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_05.7z",

51 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_06.7z",

52 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_07.7z",

53 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_08.7z",

54 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_09.7z",

55 | "https://fusion-360-gallery-dataset.s3-us-west-2.amazonaws.com/assembly/a1.0.0/a1.0.0_10.7z",

56 | ]

57 | if limit is not None:

58 | assembly_urls = assembly_urls[:limit]

59 |

60 | local_files = []

61 | iter_data = zip(

62 | assembly_urls,

63 | itertools.repeat(output_dir),

64 | itertools.count(),

65 | )

66 |

67 | results = ThreadPool(threads).imap(download_file, iter_data)

68 | local_files = []

69 | for local_file in tqdm(results, total=len(assembly_urls)):

70 | local_files.append(local_file)

71 |

72 | # Serial Implementation

73 | # for index, url in enumerate(assembly_urls):

74 | # local_file = download_file((url, output_dir, index))

75 | # local_files.append(local_file)

76 | return local_files

77 |

78 |

79 | def get_7z_path():

80 | """Get the path to the 7-zip application"""

81 | # Edit the below paths to point to your install of 7-Zip

82 | if sys.platform == "darwin":

83 | zip_path = Path("/Applications/7z/7zz")

84 | assert zip_path.exists(), f"Could not find 7-Zip executable: {zip_path}"

85 | zip_path = str(zip_path.resolve())

86 | elif sys.platform == "win32":

87 | zip_path = Path("C:/Program Files/7-Zip/7z.exe")

88 | assert zip_path.exists(), f"Could not find 7-Zip executable: {zip_path}"

89 | zip_path = str(zip_path.resolve())

90 | elif sys.platform.startswith("linux"):

91 | # In linux the 7z executable is in the path

92 | zip_path = "7z"

93 | return zip_path

94 |

95 |

96 | def extract_file(zip_path, local_file, assembly_dir):

97 | """Extract a single archive"""

98 | tqdm.write(f"Extracting {local_file.name}...")

99 | args = [

100 | zip_path,

101 | "x",

102 | str(local_file.resolve()),

103 | "-aos" # Skip extracting of existing files

104 | ]

105 | p = subprocess.run(args, cwd=str(assembly_dir))

106 | return p.returncode == 0

107 |

108 |

109 | def extract_files(zip_path, local_files, output_dir):

110 | """Extract all files"""

111 | # Make a sub directory for the assembly files

112 | assembly_dir = output_dir / "assembly"

113 | if not assembly_dir.exists():

114 | assembly_dir.mkdir(parents=True)

115 | results = []

116 | for local_file in tqdm(local_files):

117 | result = extract_file(zip_path, local_file, assembly_dir)

118 | results.append(result)

119 | return results

120 |

121 |

122 | def main(output_dir, limit, threads, download_only):

123 | if not download_only:

124 | # Check we have a good path first

125 | zip_path = get_7z_path()

126 |

127 | # Download all the files, skipping those that have already been downloaded

128 | local_files = download_files(output_dir, limit, threads)

129 |

130 | if not download_only:

131 | # Extract all the files

132 | results = extract_files(zip_path, local_files, output_dir)

133 | tqdm.write(f"Extracted {sum(results)}/{len(results)} archives")

134 |

135 |

136 | if __name__ == "__main__":

137 | parser = argparse.ArgumentParser()

138 | parser.add_argument(

139 | "--output", type=str, required=True, help="Output folder to save compressed files."

140 | )

141 | parser.add_argument(

142 | "--limit", type=int, help="Limit the number of archive files to download."

143 | )

144 | parser.add_argument(

145 | "--threads", type=int, default=4, help="Number of threads to use for downloading in parallel [default: 4]."

146 | )

147 | parser.add_argument("--download_only", action="store_true", help="Download without extracting files.")

148 | args = parser.parse_args()

149 |

150 | output_dir = None

151 | if args.output is not None:

152 | # Prep the output directory

153 | output_dir = Path(args.output)

154 | if not output_dir.exists():

155 | output_dir.mkdir(parents=True)

156 |

157 | main(output_dir, args.limit, args.threads, args.download_only)

158 |

--------------------------------------------------------------------------------

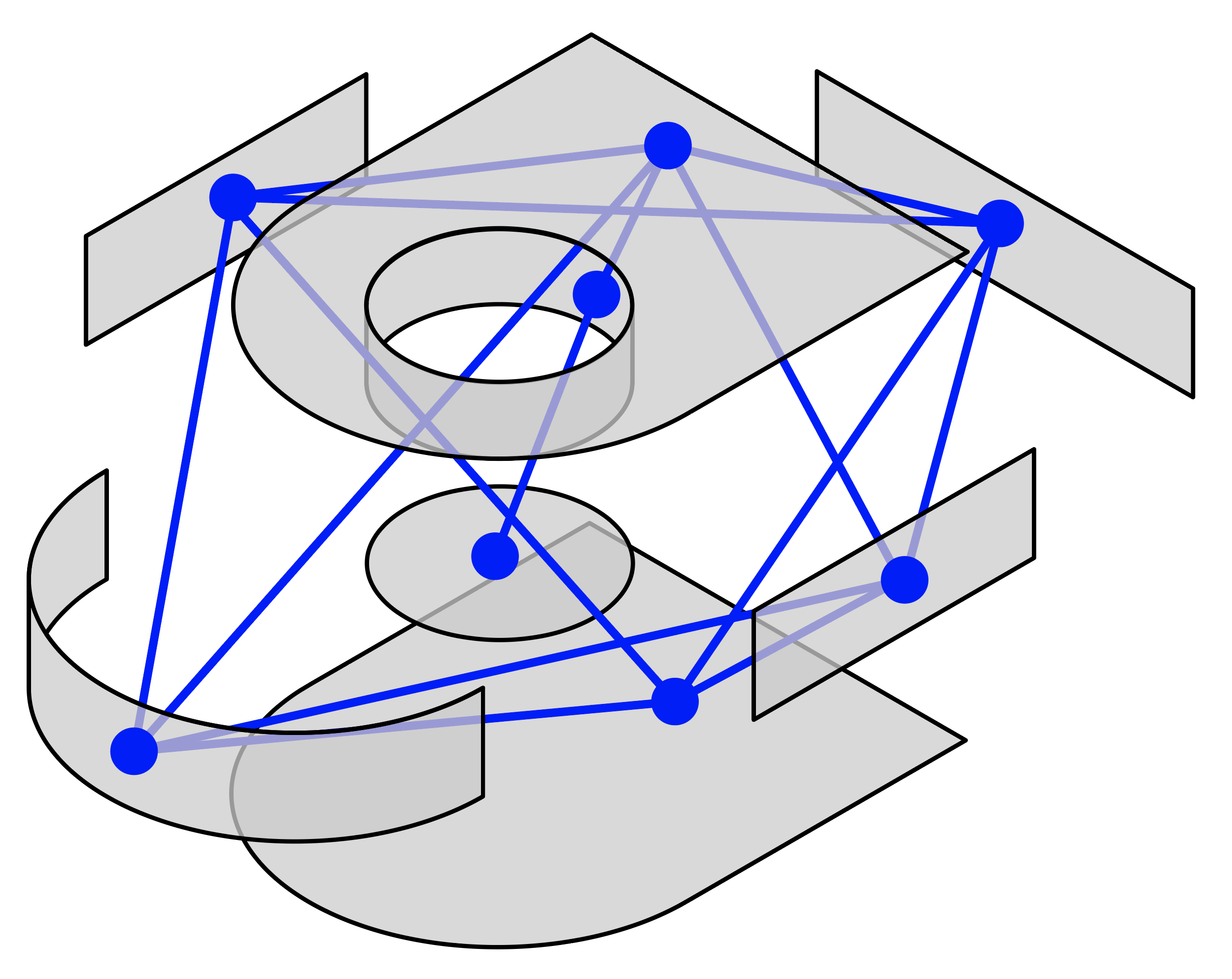

/tools/assembly_graph/README.md:

--------------------------------------------------------------------------------

1 | # AssemblyGraph

2 | Generate a graph representation from an assembly.

3 |

4 | ## Setup

5 | Install requirements:

6 | - `numpy`

7 | - `networkx`

8 | - `meshplot`

9 | - `trimesh`

10 | - `tqdm`

11 |

12 | ## [Assembly Viewer](assembly_viewer.ipynb)

13 | Example notebook showing how to use the [`AssemblyGraph`](assembly_graph.py) class to generate a NetworkX graph and visualize it as both a graph and a 3D model.

14 |

15 |

16 |

17 | ## [Assembly2Graph](assembly2graph.py)

18 | Utility script to convert a folder of assemblies into a NetworkX node-link graph JSON file format.

19 |

20 | ```

21 | python assembly2graph.py --input path/to/a1.0.0/assembly --output path/to/graphs

22 | ```

23 |

24 |
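The exported files can be inspected without NetworkX. The sketch below assumes the output follows the usual NetworkX node-link layout, with a top-level `nodes` list and a `links` list (some NetworkX versions name the latter `edges`, so both are accepted). The helper name `graph_summary` is hypothetical and the attributes stored on each node and link are not assumed.

```python
import json


def graph_summary(json_file):
    """Count the nodes and links in a node-link graph JSON file.

    Hypothetical helper; assumes top-level "nodes" and "links"/"edges" lists.
    """
    with open(json_file, encoding="utf8") as f:
        data = json.load(f)
    nodes = data.get("nodes", [])
    # Accept either key name for the edge list
    links = data.get("links", data.get("edges", []))
    return len(nodes), len(links)
```

Each assembly is written to `<assembly folder name>_graph.json` in the output folder, so the helper can be pointed at any of those files.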

--------------------------------------------------------------------------------

/tools/assembly_graph/assembly2graph.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Convert assemblies into a graph representation

4 |

5 | """

6 |

7 | import sys

8 | import time

9 | import argparse

10 | from pathlib import Path

11 | from tqdm import tqdm

12 |

13 | from assembly_graph import AssemblyGraph

14 |

15 |

16 | def get_input_files(input):

17 | """Get the input files to process"""

18 | input_path = Path(input)

19 | if not input_path.exists():

20 | sys.exit("Input folder/file does not exist")

21 | if input_path.is_dir():

22 | assembly_files = [f for f in input_path.glob("**/assembly.json")]

23 | if len(assembly_files) == 0:

24 | sys.exit("Input folder/file does not contain assembly.json files")

25 | return assembly_files

26 | elif input_path.name == "assembly.json":

27 | return [input_path]

28 | else:

29 | sys.exit("Input folder/file invalid")

30 |

31 |

32 | def assembly2graph(args):

33 | """Convert assemblies to graph format"""

34 | input_files = get_input_files(args.input)

35 | if args.limit is not None:

36 | input_files = input_files[:args.limit]

37 | output_dir = Path(args.output)

38 | if not output_dir.exists():

39 | output_dir.mkdir(parents=True)

40 | tqdm.write(f"Converting {len(input_files)} assemblies...")

41 | start_time = time.time()

42 | for input_file in tqdm(input_files):

43 | ag = AssemblyGraph(input_file)

44 | json_file = output_dir / f"{input_file.parent.stem}_graph.json"

45 | ag.export_graph_json(json_file)

46 | print(f"Time taken: {time.time() - start_time}")

47 |

48 |

49 | if __name__ == "__main__":

50 | parser = argparse.ArgumentParser()

51 | parser.add_argument(

52 | "--input", type=str, required=True, help="Input folder/file with assembly data."

53 | )

54 | parser.add_argument(

55 | "--output", type=str, default="data", help="Output folder to save graphs."

56 | )

57 | parser.add_argument(

58 | "--limit", type=int, help="Limit the number of assembly files to convert."

59 | )

60 | args = parser.parse_args()

61 | assembly2graph(args)

62 |

--------------------------------------------------------------------------------

/tools/common/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/AutodeskAILab/Fusion360GalleryDataset/1084b881f3bb710267801d812d6e9286b8667059/tools/common/__init__.py

--------------------------------------------------------------------------------

/tools/common/deserialize.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Deserialize dictionary data from json to Fusion 360 entities

4 |

5 | """

6 |

7 | import adsk.core

8 | import adsk.fusion

9 |

10 |

11 | def point2d(point_data):

12 | return adsk.core.Point2D.create(

13 | point_data["x"],

14 | point_data["y"]

15 | )

16 |

17 |

18 | def point3d(point_data):

19 | return adsk.core.Point3D.create(

20 | point_data["x"],

21 | point_data["y"],

22 | point_data["z"]

23 | )

24 |

25 |

26 | def point3d_list(point_list, xform=None):

27 | points = []

28 | for point_data in point_list:

29 | point = point3d(point_data)

30 | if xform is not None:

31 | point.transformBy(xform)

32 | points.append(point)

33 | return points

34 |

35 |

36 | def vector3d(vector_data):

37 | return adsk.core.Vector3D.create(

38 | vector_data["x"],

39 | vector_data["y"],

40 | vector_data["z"]

41 | )

42 |

43 |

44 | def line2d(start_point_data, end_point_data):

45 | start_point = point2d(start_point_data)

46 | end_point = point2d(end_point_data)

47 | return adsk.core.Line2D.create(start_point, end_point)

48 |

49 |

50 | def plane(plane_data):

51 | origin = point3d(plane_data["origin"])

52 | normal = vector3d(plane_data["normal"])

53 | u_direction = vector3d(plane_data["u_direction"])

54 | v_direction = vector3d(plane_data["v_direction"])

55 | plane = adsk.core.Plane.create(origin, normal)

56 | plane.setUVDirections(u_direction, v_direction)

57 | return plane

58 |

59 |

60 | def matrix3d(matrix_data):

61 | matrix = adsk.core.Matrix3D.create()

62 | origin = point3d(matrix_data["origin"])

63 | x_axis = vector3d(matrix_data["x_axis"])

64 | y_axis = vector3d(matrix_data["y_axis"])

65 | z_axis = vector3d(matrix_data["z_axis"])

66 | matrix.setWithCoordinateSystem(origin, x_axis, y_axis, z_axis)

67 | return matrix

68 |

69 |

70 | def feature_operations(operation_data):

71 | if operation_data == "JoinFeatureOperation":

72 | return adsk.fusion.FeatureOperations.JoinFeatureOperation

73 | if operation_data == "CutFeatureOperation":

74 | return adsk.fusion.FeatureOperations.CutFeatureOperation

75 | if operation_data == "IntersectFeatureOperation":

76 | return adsk.fusion.FeatureOperations.IntersectFeatureOperation

77 | if operation_data == "NewBodyFeatureOperation":

78 | return adsk.fusion.FeatureOperations.NewBodyFeatureOperation

79 | if operation_data == "NewComponentFeatureOperation":

80 | return adsk.fusion.FeatureOperations.NewComponentFeatureOperation

81 | return None

82 |

83 |

84 | def construction_plane(name):

85 | """Return a construction plane given a name"""

86 | app = adsk.core.Application.get()

87 | design = adsk.fusion.Design.cast(app.activeProduct)

88 | construction_planes = {

89 | "xy": design.rootComponent.xYConstructionPlane,

90 | "xz": design.rootComponent.xZConstructionPlane,

91 | "yz": design.rootComponent.yZConstructionPlane

92 | }

93 | name_lower = name.lower()

94 | if name_lower in construction_planes:

95 | return construction_planes[name_lower]

96 | return None

97 |

98 |

99 | def face_by_point3d(point3d_data):

100 | """Find a face with given serialized point3d that sits on that face"""

101 | point_on_face = point3d(point3d_data)

102 | app = adsk.core.Application.get()

103 | design = adsk.fusion.Design.cast(app.activeProduct)

104 | for component in design.allComponents:

105 | try:

106 | entities = component.findBRepUsingPoint(

107 | point_on_face,

108 | adsk.fusion.BRepEntityTypes.BRepFaceEntityType,

109 | 0.01, # -1.0 is the default tolerance

110 | False

111 | )

112 | if entities is None or len(entities) == 0:

113 | continue

114 | else:

115 | # Return the first face

116 | # although there could be multiple matches

117 | return entities[0]

118 | except Exception as ex:

119 | print("Exception finding BRepFace", ex)

120 | # Ignore and keep looking

121 | pass

122 | return None

123 |

124 |

125 | def view_orientation(name):

126 | """Return a camera view orientation given a name"""

127 | view_orientations = {

128 | "ArbitraryViewOrientation": adsk.core.ViewOrientations.ArbitraryViewOrientation,

129 | "BackViewOrientation": adsk.core.ViewOrientations.BackViewOrientation,

130 | "BottomViewOrientation": adsk.core.ViewOrientations.BottomViewOrientation,

131 | "FrontViewOrientation": adsk.core.ViewOrientations.FrontViewOrientation,

132 | "IsoBottomLeftViewOrientation": adsk.core.ViewOrientations.IsoBottomLeftViewOrientation,

133 | "IsoBottomRightViewOrientation": adsk.core.ViewOrientations.IsoBottomRightViewOrientation,

134 | "IsoTopLeftViewOrientation": adsk.core.ViewOrientations.IsoTopLeftViewOrientation,

135 | "IsoTopRightViewOrientation": adsk.core.ViewOrientations.IsoTopRightViewOrientation,

136 | "LeftViewOrientation": adsk.core.ViewOrientations.LeftViewOrientation,

137 | "RightViewOrientation": adsk.core.ViewOrientations.RightViewOrientation,

138 | "TopViewOrientation": adsk.core.ViewOrientations.TopViewOrientation,

139 | }

140 | # Keys are case sensitive, so look up the name exactly as given

141 | if name in view_orientations:

142 | return view_orientations[name]

143 | return None

144 |

145 |

146 | def get_key_point_type(key_point_str):

147 | """Return Key Point Type used in a Joint"""

148 | if key_point_str == "CenterKeyPoint":

149 | return adsk.fusion.JointKeyPointTypes.CenterKeyPoint

150 | elif key_point_str == "EndKeyPoint":

151 | return adsk.fusion.JointKeyPointTypes.EndKeyPoint

152 | elif key_point_str == "MiddleKeyPoint":

153 | return adsk.fusion.JointKeyPointTypes.MiddleKeyPoint

154 | elif key_point_str == "StartKeyPoint":

155 | return adsk.fusion.JointKeyPointTypes.StartKeyPoint

156 | else:

157 | raise Exception(f"Unknown keyPointType type: {key_point_str}")

158 |

159 |

160 | def get_rotation_axis(rotation_axis):

161 | """Return the joint direction type for the given string"""

162 | if rotation_axis == "XAxisJointDirection":

163 | return adsk.fusion.JointDirections.XAxisJointDirection

164 | elif rotation_axis == "YAxisJointDirection":

165 | return adsk.fusion.JointDirections.YAxisJointDirection

166 | elif rotation_axis == "ZAxisJointDirection":

167 | return adsk.fusion.JointDirections.ZAxisJointDirection

168 | elif rotation_axis == "CustomJointDirection":

169 | return adsk.fusion.JointDirections.CustomJointDirection

170 | else:

171 | raise Exception(f"Unknown JointDirections type: {rotation_axis}")

--------------------------------------------------------------------------------

/tools/common/exceptions.py:

--------------------------------------------------------------------------------

1 | class UnsupportedException(Exception):

2 | """Raised when an unsupported feature is used"""

3 | pass

4 |

--------------------------------------------------------------------------------

/tools/common/face_reconstructor.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Face Reconstructor

4 | Reconstruct via face extrusion to match a target design

5 |

6 | """

7 |

8 | import adsk.core

9 | import adsk.fusion

10 |

11 | import name

12 | import deserialize

13 |

14 |

15 | class FaceReconstructor():

16 |

17 | def __init__(self, target, reconstruction, use_temp_id=True):

18 | self.target = target

19 | self.reconstruction = reconstruction

20 | self.use_temp_id = use_temp_id

21 | self.app = adsk.core.Application.get()

22 | self.design = adsk.fusion.Design.cast(self.app.activeProduct)

23 | # Populate the cache with a map from uuids to face indices

24 | self.target_uuid_to_face_map = self.get_target_uuid_to_face_map()

25 |

26 | def set_reconstruction_component(self, reconstruction):

27 | """Set the reconstruction component"""

28 | self.reconstruction = reconstruction

29 |

30 | def reconstruct(self, graph_data):

31 | """Reconstruct from the sequence of faces"""

32 | self.sequence = graph_data["sequences"][0]

33 | for seq in self.sequence["sequence"]:

34 | self.add_extrude_from_uuid(

35 | seq["start_face"],

36 | seq["end_face"],

37 | seq["operation"]

38 | )

39 |

40 | def get_face_from_uuid(self, face_uuid):

41 | """Get a face in the target from its uuid"""

42 | if face_uuid not in self.target_uuid_to_face_map:

43 | return None

44 | uuid_data = self.target_uuid_to_face_map[face_uuid]

45 | # We get the face by following the entity token

46 | face_token = uuid_data["face_token"]

47 | entities = self.design.findEntityByToken(face_token)

48 | if entities is None:

49 | return None

50 | return entities[0]

51 | # body_index = uuid_data["body_index"]

52 | # face_index = uuid_data["face_index"]

53 | # body = self.target.bRepBodies[body_index]

54 | # face = body.faces[face_index]

55 | # return face

56 |

57 | def get_target_uuid_to_face_map(self):

58 | """As we have to find faces multiple times we first

59 | make a map between uuids and face indices"""

60 | target_uuid_to_face_map = {}

61 | for body_index, body in enumerate(self.target.bRepBodies):

62 | for face_index, face in enumerate(body.faces):

63 | face_uuid = self.get_regraph_uuid(face)

64 | assert face_uuid is not None

65 | target_uuid_to_face_map[face_uuid] = {

66 | "body_index": body_index,

67 | "face_index": face_index,

68 | "face_token": face.entityToken

69 | }

70 | return target_uuid_to_face_map

71 |

72 | def add_extrude_from_uuid(self, start_face_uuid, end_face_uuid, operation):

73 | """Create an extrude from a start face uuid to an end face uuid"""

74 | # Start and end face have to reference the occurrence

75 | # in order to perform extrude operations between components

76 | start_face = self.get_face_from_uuid(start_face_uuid)

77 | end_face = self.get_face_from_uuid(end_face_uuid)

78 | operation = deserialize.feature_operations(operation)

79 | return self.add_extrude(start_face, end_face, operation)

80 |

81 | def add_extrude(self, start_face, end_face, operation):

82 | """Create an extrude from a start face to an end face"""

83 | # If there are no bodies to cut or intersect, do nothing

84 | if ((operation == adsk.fusion.FeatureOperations.CutFeatureOperation or

85 | operation == adsk.fusion.FeatureOperations.IntersectFeatureOperation) and

86 | self.reconstruction.bRepBodies.count == 0):

87 | return None

88 | # We generate the extrude bodies in the reconstruction component

89 | extrudes = self.reconstruction.component.features.extrudeFeatures

90 | extrude_input = extrudes.createInput(start_face, operation)

91 | extent = adsk.fusion.ToEntityExtentDefinition.create(end_face, False)

92 | extrude_input.setOneSideExtent(extent, adsk.fusion.ExtentDirections.PositiveExtentDirection)

93 | extrude_input.creationOccurrence = self.reconstruction

94 | tools = []

95 | for body in self.reconstruction.bRepBodies:

96 | tools.append(body)

97 | extrude_input.participantBodies = tools

98 | extrude = extrudes.add(extrude_input)

99 | return extrude

100 |

101 | def get_regraph_uuid(self, entity):

102 | """Get a uuid or a tempid depending on a flag"""

103 | is_face = isinstance(entity, adsk.fusion.BRepFace)

104 | is_edge = isinstance(entity, adsk.fusion.BRepEdge)

105 | if self.use_temp_id and (is_face or is_edge):

106 | return str(entity.tempId)

107 | else:

108 | return name.get_uuid(entity)

109 |
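
The uuid lookup used above can be tried outside Fusion 360 with stand-in objects. The following is a minimal sketch, not part of the repository: `build_uuid_to_face_map`, `lookup_token`, `make_fake_bodies`, and the `uuid` attribute on the fake faces are hypothetical names introduced for illustration. Only the map layout (`body_index`, `face_index`, `face_token`) follows `get_target_uuid_to_face_map`.

```python
from types import SimpleNamespace


def build_uuid_to_face_map(bodies, get_uuid):
    """Map each face uuid to its body index, face index, and entity token."""
    uuid_to_face = {}
    for body_index, body in enumerate(bodies):
        for face_index, face in enumerate(body.faces):
            face_uuid = get_uuid(face)
            if face_uuid is None:
                raise ValueError(f"Face {face_index} of body {body_index} has no uuid")
            uuid_to_face[face_uuid] = {
                "body_index": body_index,
                "face_index": face_index,
                "face_token": face.entityToken,
            }
    return uuid_to_face


def lookup_token(uuid_to_face, face_uuid):
    """Return the entity token for a uuid, or None if the uuid is unknown."""
    entry = uuid_to_face.get(face_uuid)
    return None if entry is None else entry["face_token"]


def make_fake_bodies():
    """Build stand-in bodies and faces (hypothetical, no Fusion 360 required)."""
    body0 = SimpleNamespace(faces=[
        SimpleNamespace(uuid="f0", entityToken="t0"),
        SimpleNamespace(uuid="f1", entityToken="t1"),
    ])
    body1 = SimpleNamespace(faces=[
        SimpleNamespace(uuid="f2", entityToken="t2"),
    ])
    return [body0, body1]


if __name__ == "__main__":
    face_map = build_uuid_to_face_map(make_fake_bodies(), lambda face: face.uuid)
    print(lookup_token(face_map, "f2"))
    print(lookup_token(face_map, "missing"))
```

Building the map once keeps each later lookup to a single dictionary access, which is the motivation given in the docstring of `get_target_uuid_to_face_map`.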

--------------------------------------------------------------------------------

/tools/common/fusion_360_server.manifest:

--------------------------------------------------------------------------------

1 |

2 | {

3 | "autodeskProduct": "Fusion360",

4 | "type": "addin",

5 | "id": "",

6 | "author": "",

7 | "description": {

8 | "": ""

9 | },

10 | "version": "",

11 | "runOnStartup": true,

12 | "supportedOS": "windows|mac",

13 | "editEnabled": true

14 | }

--------------------------------------------------------------------------------

/tools/common/launcher.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Find and launch Fusion 360

4 |

5 | """

6 |

7 | import os

8 | import sys

9 | from pathlib import Path

10 | import subprocess

11 | import importlib

12 |

13 |

14 | class Launcher():

15 |

16 | def __init__(self):

17 | self.fusion_app = self.find_fusion()

18 | if self.fusion_app is None:

19 | print("Error: Fusion 360 could not be found")

20 | elif not self.fusion_app.exists():

21 | print(f"Error: Fusion 360 does not exist at {self.fusion_app}")

22 | else:

23 | print(f"Fusion 360 found at {self.fusion_app}")

24 |

25 | def launch(self):

26 | """Opens a new instance of Fusion 360"""

27 | if self.fusion_app is None:

28 | print("Error: Fusion 360 could not be found")

29 | return None

30 | elif not self.fusion_app.exists():

31 | print(f"Error: Fusion 360 does not exist at {self.fusion_app}")

32 | return None

33 | else:

34 | fusion_path = str(self.fusion_app.resolve())

35 | args = []

36 | if sys.platform == "darwin":

37 | # -W is to wait for the app to finish

38 | # -n is to open a new app

39 | args = ["open", "-W", "-n", fusion_path]

40 | elif sys.platform == "win32":

41 | args = [fusion_path]

42 |

43 | print(f"Fusion launching from {fusion_path}")

44 | # Turn off output from Fusion

45 | return subprocess.Popen(

46 | args, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)

47 |

48 | def find_fusion(self):

49 | """Find the Fusion app"""

50 | if sys.platform == "darwin":

51 | return self.find_fusion_mac()

52 | elif sys.platform == "win32":

53 | return self.find_fusion_windows()

54 |

55 | def find_fusion_mac(self):

56 | """Find the Fusion app on mac"""

57 | # Shortcut location that links to the latest version

58 | user_path = Path(os.path.expanduser("~"))

59 | fusion_app = user_path / "Library/Application Support/Autodesk/webdeploy/production/Autodesk Fusion 360.app"

60 | return fusion_app

61 |

62 | def find_fusion_windows(self):

63 | """Find the Fusion app

64 | by looking in a windows FusionLauncher.exe.ini file"""

65 | fusion_launcher = self.find_fusion_launcher()

66 | if fusion_launcher is None:

67 | return None

68 | # FusionLauncher.exe.ini looks like this (encoding is UTF-16):

69 | # [Launcher]

70 | # stream = production

71 | # auid = AutodeskInc.Fusion360

72 | # cmd = ""C:\path\to\Fusion360.exe""

73 | # global = False

74 | with open(fusion_launcher, "r", encoding="utf16") as f:

75 | lines = f.readlines()

76 | lines = [x.strip() for x in lines]

77 |

78 | for line in lines:

79 | if line.startswith("cmd") and "Fusion360.exe" in line:

80 | pieces = line.split("\"")

81 | for piece in pieces:

82 | if "Fusion360.exe" in piece:

83 | return Path(piece)

84 | return None

85 |

86 | def find_fusion_launcher(self):

87 | """Find the FusionLauncher.exe.ini file on windows"""

88 | user_dir = Path(os.environ["LOCALAPPDATA"])

89 | production_dir = user_dir / "Autodesk/webdeploy/production/"

90 | production_contents = Path(production_dir).iterdir()

91 | for item in production_contents:

92 | if item.is_dir():

93 | fusion_launcher = item / "FusionLauncher.exe.ini"

94 | if fusion_launcher.exists():

95 | return fusion_launcher

96 | return None

97 |
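
The parsing step in `find_fusion_windows` can be exercised without a Fusion 360 install. This is a minimal sketch under stated assumptions: `parse_fusion_cmd` and `write_sample_ini` are hypothetical helpers, and the sample file contents are copied from the comment in `find_fusion_windows` rather than from a real installation.

```python
import tempfile
from pathlib import Path


def parse_fusion_cmd(ini_path):
    """Return the Fusion360.exe path found in a FusionLauncher.exe.ini file, or None."""
    # The launcher file is UTF-16 encoded, as noted in the original comment
    with open(ini_path, "r", encoding="utf16") as f:
        lines = [line.strip() for line in f]
    for line in lines:
        if line.startswith("cmd") and "Fusion360.exe" in line:
            # The path is wrapped in double quotes, so split on them
            for piece in line.split('"'):
                if "Fusion360.exe" in piece:
                    return Path(piece)
    return None


def write_sample_ini(directory):
    """Write a sample launcher file (contents assumed from the source comment)."""
    ini_path = Path(directory) / "FusionLauncher.exe.ini"
    text = "\n".join([
        "[Launcher]",
        "stream = production",
        "auid = AutodeskInc.Fusion360",
        'cmd = ""C:\\path\\to\\Fusion360.exe""',
        "global = False",
    ])
    with open(ini_path, "w", encoding="utf16") as f:
        f.write(text)
    return ini_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(parse_fusion_cmd(write_sample_ini(tmp)))
```

Splitting on the double quote character avoids depending on how many quotes surround the path, which matters because the sample line doubles them.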

--------------------------------------------------------------------------------

/tools/common/logger.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Logger utility class to output log info to the Fusion TextCommands window

4 |

5 | """

6 |

7 | import adsk.core

8 | import adsk.fusion

9 | import time

10 |

11 |

12 | class Logger:

13 |

14 | def __init__(self):

15 | app = adsk.core.Application.get()

16 | ui = app.userInterface

17 | self.text_palette = ui.palettes.itemById('TextCommands')

18 |

19 | # Make sure the palette is visible.

20 | if not self.text_palette.isVisible:

21 | self.text_palette.isVisible = True

22 |

23 | def log(self, txt_str=""):

24 | print(txt_str)

25 | self.text_palette.writeText(txt_str)

26 | adsk.doEvents()

27 |

28 | def log_time(self, txt_str=""):

29 | time_stamp = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())

30 | time_txt_str = f"{time_stamp} {txt_str}"

31 | print(time_txt_str)

32 | self.text_palette.writeText(time_txt_str)

33 | adsk.doEvents()

34 |
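
The timestamp prefix built in `log_time` can be checked outside Fusion 360. A minimal sketch, assuming a hypothetical helper `format_log_line` that takes an optional `time.struct_time` so the output is reproducible; the format string is the one used in `log_time`.

```python
import time


def format_log_line(txt_str="", when=None):
    """Prefix a message with a local timestamp, as log_time does."""
    if when is None:
        when = time.localtime()
    time_stamp = time.strftime("%Y-%m-%d %H:%M:%S", when)
    return f"{time_stamp} {txt_str}"


if __name__ == "__main__":
    print(format_log_line("Reconstruction started"))
```

Separating the formatting from the palette write lets the text be tested without the `adsk` modules, which are only importable inside Fusion 360.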

--------------------------------------------------------------------------------

/tools/common/match.py:

--------------------------------------------------------------------------------

1 | """

2 |

3 | Match Fusion 360 entities to ids

4 |

5 | """

6 |

7 |

8 | import adsk.core

9 | import adsk.fusion

10 |

11 | import deserialize

12 | import name

13 |

14 |

15 | def sketch_by_name(sketch_name, sketches=None):

16 | """Return a sketch with a given name"""

17 | app = adsk.core.Application.get()

18 | design = adsk.fusion.Design.cast(app.activeProduct)

19 | if sketches is None:

20 | sketches = design.rootComponent.sketches

21 | return sketches.itemByName(sketch_name)

22 |

23 |

24 | def sketch_by_id(sketch_id, sketches=None):

25 | """Return a sketch with a given sketch id"""

26 | app = adsk.core.Application.get()

27 | design = adsk.fusion.Design.cast(app.activeProduct)

28 | if sketches is None:

29 | sketches = design.rootComponent.sketches

30 | for sketch in sketches:

31 | uuid = name.get_uuid(sketch)

32 | if uuid is not None and uuid == sketch_id:

33 | return sketch

34 | return None

35 |

36 |

37 | def sketch_profile_by_id(sketch_profile_id, sketches=None):

38 | """Return a sketch profile with a given id"""

39 | app = adsk.core.Application.get()

40 | design = adsk.fusion.Design.cast(app.activeProduct)

41 | if sketches is None:

42 | sketches = design.rootComponent.sketches

43 | for sketch in sketches:

44 | for profile in sketch.profiles:

45 | uuid = name.get_profile_uuid(profile)