├── .gitmodules

├── blocky

├── .gitignore

├── .prettierrc

├── README.md

├── package.json

├── src

│ ├── block.ts

│ ├── block_grid.test.ts

│ ├── block_grid.ts

│ ├── index.html

│ └── index.tsx

├── tsconfig.json

└── yarn.lock

├── cryptography-engineer

├── .clang-format

├── .gitignore

├── CMakeLists.txt

├── README.md

├── bootstrap.sh

├── cmake

│ ├── arch.cmake

│ ├── barretenberg.cmake

│ ├── benchmark.cmake

│ ├── build.cmake

│ ├── gtest.cmake

│ ├── module.cmake

│ ├── threading.cmake

│ ├── toolchain.cmake

│ └── toolchains

│ │ ├── arm-apple-clang.cmake

│ │ ├── arm64-linux-gcc.cmake

│ │ ├── i386-linux-clang.cmake

│ │ ├── wasm-linux-clang.cmake

│ │ ├── x86_64-apple-clang.cmake

│ │ ├── x86_64-linux-clang.cmake

│ │ ├── x86_64-linux-gcc.cmake

│ │ └── x86_64-linux-gcc10.cmake

├── format.sh

└── src

│ ├── CMakeLists.txt

│ ├── ec_fft

│ ├── CMakeLists.txt

│ ├── README.md

│ ├── ec_fft.cpp

│ ├── ec_fft.hpp

│ └── ec_fft.test.cpp

│ └── indexed_merkle_tree

│ ├── CMakeLists.txt

│ ├── README.md

│ ├── indexed_merkle_tree.cpp

│ ├── indexed_merkle_tree.hpp

│ ├── indexed_merkle_tree.test.cpp

│ └── leaf.hpp

├── eng-sessions

├── merkle-tree-cpp

│ ├── .clang-format

│ ├── .clangd

│ ├── .gitignore

│ ├── README.md

│ ├── run.sh

│ └── src

│ │ ├── hash_path.hpp

│ │ ├── main.cpp

│ │ ├── merkle_tree.hpp

│ │ ├── mock_db.hpp

│ │ ├── sha256.cpp

│ │ ├── sha256.hpp

│ │ └── sha256_hasher.hpp

└── merkle-tree

│ ├── .gitignore

│ ├── .prettierrc

│ ├── README.md

│ ├── package.json

│ ├── src

│ ├── hash_path.ts

│ ├── index.ts

│ ├── merkle_tree.test.ts

│ ├── merkle_tree.ts

│ └── sha256_hasher.ts

│ ├── tsconfig.json

│ └── yarn.lock

├── senior-applied-cryptography-engineer

└── README.md

├── senior-software-engineer

└── solidity

├── .gitattributes

├── .gitignore

├── .solhint.json

├── README.md

├── contracts

├── DefiBridgeProxy.sol

├── ERC20Mintable.sol

├── Types.sol

├── UniswapBridge.sol

└── interfaces

│ └── IDefiBridge.sol

├── ensure_versions.js

├── hardhat.config.ts

├── package.json

├── src

├── contracts

│ ├── defi_bridge_proxy.ts

│ └── uniswap_bridge.test.ts

└── deploy

│ ├── deploy_erc20.ts

│ └── deploy_uniswap.ts

├── tsconfig.json

└── yarn.lock

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "cryptography-engineer/bb"]

2 | path = cryptography-engineer/barretenberg

3 | url = https://github.com/AztecProtocol/barretenberg

4 | branch = sb/defi-bridge-project

5 |

--------------------------------------------------------------------------------

/blocky/.gitignore:

--------------------------------------------------------------------------------

1 | node_modules

2 | dist

3 | .parcel-cache

--------------------------------------------------------------------------------

/blocky/.prettierrc:

--------------------------------------------------------------------------------

1 | {

2 | "singleQuote": true,

3 | "trailingComma": "all",

4 | "printWidth": 120,

5 | "arrowParens": "avoid"

6 | }

7 |

--------------------------------------------------------------------------------

/blocky/README.md:

--------------------------------------------------------------------------------

1 | # Aztec Blocky Test

2 |

3 | **WARNING: Do not fork this repository or make a public repository containing your solution. Either copy it to a private repository or submit your solution via other means.**

4 |

5 | Links to solutions may be sent to charlie@aztecprotocol.com.

6 |

7 | ## To get started

8 |

9 | ```sh

10 | yarn install

11 | yarn start

12 | ```

13 |

14 | Navigate to `http://localhost:8080`.

15 |

16 | Run `yarn test` or `yarn test --watch` to run the unit tests in the terminal.

17 |

18 | ## Time

19 |

20 | It's expected you take no more than half a day. If you complete the algorithm sooner, feel free to be creative to improve the game further.

21 |

22 | ## Task

23 |

24 | Implement `clicked` to remove all blocks of the same colour that are connected to the target element, then allow the blocks above the removed ones to "fall down".

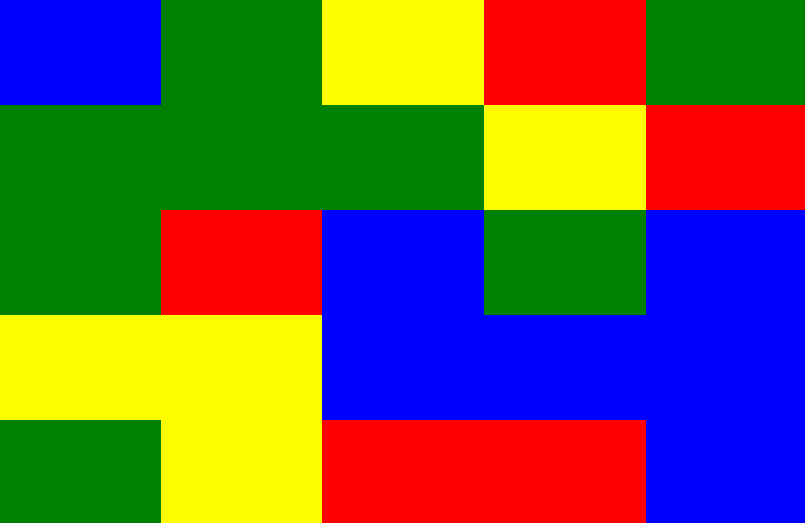

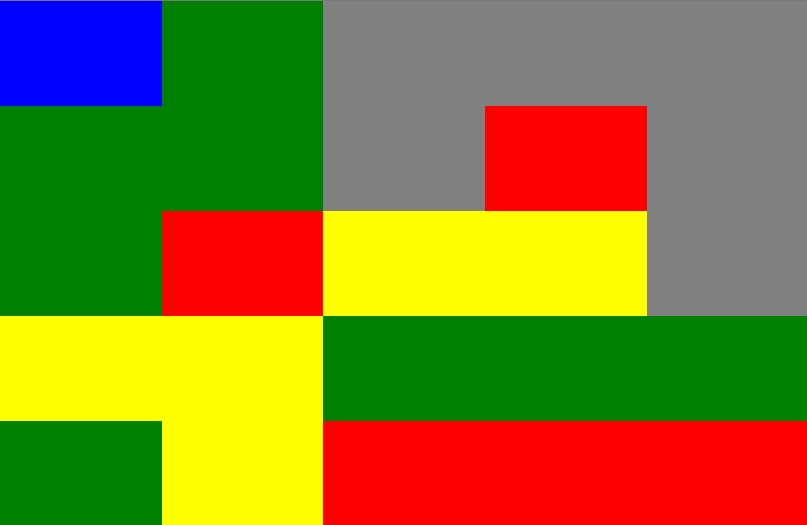

25 |

26 | E.g. given:

27 |

28 |

29 |

30 | After clicking one of the bottom right blue boxes it should then look like this:

31 |

32 |

33 |

--------------------------------------------------------------------------------
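The task described in the README above amounts to a flood fill over same-coloured neighbours followed by a per-column collapse. The following is a minimal, standalone sketch of that idea, not the repository's solution. `Cell` and `removeAndCollapse` are hypothetical names that do not appear in the repository, and the sketch assumes that `grid[x][y]` addresses column `x` with `y = 0` at the bottom of the column, which the source does not state.

```typescript
// Hypothetical standalone types; these are not the repository's Block/BlockGrid classes.
type Cell = number | null; // a colour id, or null for an empty slot

// Assumption: grid[x][y] is column x, with y = 0 at the bottom of the column.
function removeAndCollapse(grid: Cell[][], x: number, y: number): Cell[][] {
  // Copy so the input grid is left untouched.
  const next = grid.map(col => [...col]);

  const target = grid[x]?.[y];
  if (target === null || target === undefined) return next;

  // Iterative flood fill over the four orthogonal neighbours.
  const stack: Array<[number, number]> = [[x, y]];
  while (stack.length > 0) {
    const [cx, cy] = stack.pop()!;
    if (cx < 0 || cx >= next.length) continue;
    if (cy < 0 || cy >= next[cx].length) continue;
    if (next[cx][cy] !== target) continue; // different colour or already removed
    next[cx][cy] = null;
    stack.push([cx + 1, cy], [cx - 1, cy], [cx, cy + 1], [cx, cy - 1]);
  }

  // Gravity: keep surviving cells in order, then pad the top of the column with nulls.
  return next.map(col => {
    const kept = col.filter(c => c !== null);
    const padding: Cell[] = new Array(col.length - kept.length).fill(null);
    return [...kept, ...padding];
  });
}
```

Returning a new grid rather than mutating in place keeps the sketch easy to unit test; an in-place variant would work equally well if the renderer holds a reference to the grid.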

/blocky/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "front-end-dev-test",

3 | "version": "1.0.0",

4 | "license": "MIT",

5 | "description": "Aztec blocky test.",

6 | "main": "index.js",

7 | "scripts": {

8 | "build": "parcel build ./src/index.html",

9 | "start": "parcel --port 8080 ./src/index.html",

10 | "test": "jest"

11 | },

12 | "jest": {

13 | "transform": {

14 | "^.+\\.ts$": "ts-jest"

15 | },

16 | "testRegex": ".*\\.test\\.ts$",

17 | "rootDir": "./src"

18 | },

19 | "devDependencies": {

20 | "@types/jest": "^26.0.23",

21 | "@types/react-dom": "^17.0.8",

22 | "@types/styled-components": "^5.1.10",

23 | "jest": "^27.0.6",

24 | "parcel": "^2.0.0-beta.2",

25 | "prettier": "^2.3.2",

26 | "react": "^17.0.2",

27 | "react-dom": "^17.0.2",

28 | "styled-components": "^5.3.0",

29 | "ts-jest": "^27.0.3",

30 | "typescript": "^4.3.4"

31 | }

32 | }

33 |

--------------------------------------------------------------------------------

/blocky/src/block.ts:

--------------------------------------------------------------------------------

1 | export enum Colour {

2 | RED,

3 | GREEN,

4 | BLUE,

5 | ORANGE,

6 | }

7 |

8 | export class Block {

9 | public colour: Colour;

10 |

11 | constructor() {

12 | this.colour = Colour[Colour[Math.floor(Math.random() * (Colour.ORANGE + 1))] as keyof typeof Colour];

13 | }

14 | }

15 |

--------------------------------------------------------------------------------
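The constructor in `block.ts` above picks a random colour by going through the numeric enum twice: `Colour[index]` yields the member name (reverse mapping), and `Colour[name]` yields the numeric value again. The sketch below isolates that round trip so it can be checked deterministically. `colourFromUnit` is a hypothetical helper introduced only for illustration; it takes the random value as a parameter instead of calling `Math.random()`.

```typescript
enum Colour {
  RED,
  GREEN,
  BLUE,
  ORANGE,
}

// Hypothetical helper: maps a value u in [0, 1) to one of the Colour members.
function colourFromUnit(u: number): Colour {
  // Colour.ORANGE is the last member, so ORANGE + 1 is the number of colours.
  const index = Math.floor(u * (Colour.ORANGE + 1));
  // Reverse mapping: numeric value -> member name, e.g. 2 -> "BLUE".
  const name = Colour[index] as keyof typeof Colour;
  // Forward mapping: member name -> numeric value, e.g. "BLUE" -> 2.
  return Colour[name];
}
```

Because the two lookups cancel out, the result equals `index` for any in-range value; the round trip mainly serves to give the expression the `Colour` type.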

/blocky/src/block_grid.test.ts:

--------------------------------------------------------------------------------

1 | import { Colour } from './block';

2 | import { BlockGrid } from './block_grid';

3 |

4 | describe('BlockGrid', () => {

5 | it('should create blocks with one of the valid colours', () => {

6 | const blockGrid = new BlockGrid(10, 10);

7 |

8 | blockGrid.grid.forEach(col => {

9 | col.forEach(block => {

10 | expect(block).not.toBeNull();

11 | expect(block!.colour).toBeLessThanOrEqual(Colour.ORANGE);

12 | });

13 | });

14 | });

15 |

16 | it('should perform correct algorithm when clicked', () => {

17 | // Implement me.

18 | });

19 | });

20 |

--------------------------------------------------------------------------------

/blocky/src/block_grid.ts:

--------------------------------------------------------------------------------

1 | import { Block } from './block';

2 |

3 | export class BlockGrid {

4 | public grid: Block[][] = [];

5 |

6 | constructor(public numCols: number, public numRows: number) {

7 | for (let x = 0; x < numCols; x++) {

8 | const col = [];

9 | for (let y = 0; y < numRows; y++) {

10 | col.push(new Block());

11 | }

12 | this.grid.push(col);

13 | }

14 | }

15 |

16 | clicked(x: number, y: number) {

17 | console.log(`(${x}, ${y}): Implement me...`);

18 | }

19 | }

20 |

--------------------------------------------------------------------------------

/blocky/src/index.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | Aztec Blocky Test

5 |

6 |

7 |

8 |

9 |

10 |

11 |

--------------------------------------------------------------------------------

/blocky/src/index.tsx:

--------------------------------------------------------------------------------

1 | import React from 'react';

2 | import ReactDOM from 'react-dom';

3 | import { Colour } from './block';

4 | import { BlockGrid } from './block_grid';

5 | import styled, { createGlobalStyle } from 'styled-components';

6 |

7 | const GlobalStyle = createGlobalStyle`

8 | html, body, #root {

9 | background: grey;

10 | margin: 0;

11 | padding: 0;

12 | width: 100%;

13 | height: 100%;

14 | }

15 | `;

16 |

17 | const StyledGrid = styled.div`

18 | width: 100%;

19 | height: 100%;

20 | background: grey;

21 | `;

22 |

23 | const StyledColumn = styled.div`

24 | float: left;

25 | background: grey;

26 | width: 10%;

27 | height: 100%;

28 | `;

29 |

30 | const StyledBlock = styled.div`

31 | width: 100%;

32 | height: 10%;

33 | margin: 0;

34 | padding: 0;

35 | `;

36 |

37 | function Blocky({ grid }: { grid: BlockGrid }) {

38 | return (

39 |

40 | {grid.grid.map((col, i) => (

41 |

42 | {col.map((block, j) => (

43 | grid.clicked(i, j)}

47 | >

48 | ))}

49 |

50 | ))}

51 |

52 | );

53 | }

54 |

55 | async function main() {

56 | const blockGrid = new BlockGrid(10, 10);

57 | console.log(blockGrid);

58 | ReactDOM.render(

59 | <>

60 |

61 |

62 | ,

63 | document.getElementById('root'),

64 | );

65 | }

66 |

67 | main().catch(console.error);

68 |

--------------------------------------------------------------------------------

/blocky/tsconfig.json:

--------------------------------------------------------------------------------

1 | {

2 | "compilerOptions": {

3 | "target": "es2020",

4 | "module": "es6",

5 | "lib": ["es2020", "dom"],

6 | "moduleResolution": "node",

7 | "jsx": "react",

8 | "noEmit": true,

9 | "strict": true,

10 | "esModuleInterop": true

11 | }

12 | }

13 |

--------------------------------------------------------------------------------

/cryptography-engineer/.clang-format:

--------------------------------------------------------------------------------

1 | PointerAlignment: Left

2 | ColumnLimit: 120

3 | BreakBeforeBraces: Allman

4 | IndentWidth: 4

5 | BinPackArguments: false

6 | BinPackParameters: false

7 | AllowShortFunctionsOnASingleLine: None

8 | Cpp11BracedListStyle: false

9 | AlwaysBreakAfterReturnType: None

10 | AlwaysBreakAfterDefinitionReturnType: None

11 | PenaltyReturnTypeOnItsOwnLine: 1000000

12 | BreakConstructorInitializers: BeforeComma

13 | BreakBeforeBraces: Custom

14 | BraceWrapping:

15 | AfterClass: false

16 | AfterEnum: false

17 | AfterFunction: true

18 | AfterNamespace: false

19 | AfterStruct: false

20 | AfterUnion: false

21 | AfterExternBlock: false

22 | BeforeCatch: false

23 | BeforeElse: false

24 | SplitEmptyFunction: false

25 | SplitEmptyRecord: false

26 | SplitEmptyNamespace: false

27 | AllowShortFunctionsOnASingleLine : Inline

28 | SortIncludes: false

--------------------------------------------------------------------------------

/cryptography-engineer/.gitignore:

--------------------------------------------------------------------------------

1 | .cache/

2 | build*/

3 | src/wasi-sdk-*

4 | src/aztec/proof_system/proving_key/fixtures

5 | src/aztec/rollup/proofs/*/fixtures

6 | srs_db/ignition/transcript*

7 | srs_db/lagrange

8 | srs_db/coset_lagrange

9 | srs_db/modified_lagrange

10 | # to be unignored when we agree on clang-tidy rules

11 | .clangd

--------------------------------------------------------------------------------

/cryptography-engineer/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | # aztec-connect-cpp

2 | # copyright 2019 Spilsbury Holdings Ltd

3 |

4 | cmake_minimum_required(VERSION 3.16)

5 |

6 | # Get the full path to barretenberg. This is helpful because the required

7 | # relative path changes based on where in cmake the path is used.

8 | # `BBERG_DIR` must be set before toolchain.cmake is imported because

9 | # `BBERG_DIR` is used in toolchain.cmake to determine `WASI_SDK_PREFIX`

10 | get_filename_component(BBERG_DIR ../barretenberg/cpp

11 | REALPATH BASE_DIR "${CMAKE_BINARY_DIR}")

12 |

13 | include(cmake/toolchain.cmake)

14 |

15 | set(PROJECT_VERSION 0.1.0)

16 | project(AztecInterviewTests

17 | DESCRIPTION "Project containing C++ technical tests for the position of Cryptography Engineer"

18 | LANGUAGES CXX C)

19 |

20 | # include barretenberg as ExternalProject

21 | include(cmake/barretenberg.cmake)

22 |

23 | option(DISABLE_ASM "Disable custom assembly" OFF)

24 | option(DISABLE_ADX "Disable ADX assembly variant" OFF)

25 | option(MULTITHREADING "Enable multi-threading" ON)

26 | option(TESTING "Build tests" ON)

27 |

28 | if(ARM)

29 | message(STATUS "Compiling for ARM.")

30 | set(DISABLE_ASM ON)

31 | set(DISABLE_ADX ON)

32 | set(RUN_HAVE_STD_REGEX 0)

33 | set(RUN_HAVE_POSIX_REGEX 0)

34 | endif()

35 |

36 | if(WASM)

37 | message(STATUS "Compiling for WebAssembly.")

38 | set(DISABLE_ASM ON)

39 | set(MULTITHREADING OFF)

40 | endif()

41 |

42 | set(CMAKE_C_STANDARD 11)

43 | set(CMAKE_C_EXTENSIONS ON)

44 | set(CMAKE_CXX_STANDARD 20)

45 | set(CMAKE_CXX_STANDARD_REQUIRED TRUE)

46 | set(CMAKE_CXX_EXTENSIONS ON)

47 |

48 | include(cmake/build.cmake)

49 | include(cmake/arch.cmake)

50 | include(cmake/threading.cmake)

51 | include(cmake/gtest.cmake)

52 | include(cmake/module.cmake)

53 |

54 | add_subdirectory(src)

--------------------------------------------------------------------------------

/cryptography-engineer/README.md:

--------------------------------------------------------------------------------

1 | ## Cryptography Take-Home Tests

2 |

3 | Welcome to Aztec's cryptography tests as a part of your interview process. This module contains some coding tests designed to be attempted by candidates for either of the roles listed below.

4 |

5 | 1. Cryptography Engineer

6 | 2. Applied Cryptography Engineer

7 | 3. Applied Cryptographer

8 |

9 | This module contains the following exercises:

10 |

11 | 1. [Indexed Merkle Tree](./src/indexed_merkle_tree/README.md)

12 | 2. [EC-FFT](./src/ec_fft/README.md)

13 |

14 | Since these exercises use our in-house cryptography library barretenberg in the backend, you need to run the following commands to get started after you have cloned this repository. Please _do not_ fork the original repository; instead, clone it and push it to your private repository.

15 |

16 | ```console

17 | $ cd cryptography-engineer

18 | $ ./bootstrap.sh # this clones the barretenberg submodule and builds it

19 | $ cd build

20 | $ make <module_name>_tests # this compiles the given test module

21 | $ ./bin/<module_name>_tests # this runs the tests in that module

22 | ```

23 |

24 | Here, `module_name` must be replaced with `indexed_merkle_tree` for the first exercise. In case you face any issues with setting up this framework, feel free to reach out to [suyash@aztecprotocol.com](mailto:suyash@aztecprotocol.com) or [cody@aztecprotocol.com](mailto:cody@aztecprotocol.com).

25 |

--------------------------------------------------------------------------------

/cryptography-engineer/bootstrap.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -e

3 |

4 | # Update the submodule

5 | git submodule init

6 | git submodule update --init --recursive

7 |

8 | # Clean.

9 | rm -rf ./build

10 | rm -rf ./build-wasm

11 | rm -rf ./src/wasi-sdk-*

12 |

13 | # Clean barretenberg.

14 | rm -rf ./barretenberg/cpp/build

15 | rm -rf ./barretenberg/cpp/build-wasm

16 | rm -rf ./barretenberg/cpp/src/wasi-sdk-*

17 |

18 | # Install formatting git hook.

19 | HOOKS_DIR=$(git rev-parse --git-path hooks)

20 | echo "cd \$(git rev-parse --show-toplevel)/cryptography-engineer && ./format.sh staged" > $HOOKS_DIR/pre-commit

21 | chmod +x $HOOKS_DIR/pre-commit

22 |

23 | # Determine system.

24 | if [[ "$OSTYPE" == "darwin"* ]]; then

25 | OS=macos

26 | elif [[ "$OSTYPE" == "linux-gnu" ]]; then

27 | OS=linux

28 | else

29 | echo "Unknown OS: $OSTYPE"

30 | exit 1

31 | fi

32 |

33 | # Download ignition transcripts.

34 | (cd barretenberg/cpp/srs_db && ./download_ignition.sh 3)

35 |

36 | # Pick native toolchain file.

37 | if [ "$OS" == "macos" ]; then

38 | export BREW_PREFIX=$(brew --prefix)

39 | # Ensure we have toolchain.

40 | if [ ! "$?" -eq 0 ] || [ ! -f "$BREW_PREFIX/opt/llvm/bin/clang++" ]; then

41 | echo "Default clang not sufficient. Install homebrew, and then: brew install llvm libomp clang-format"

42 | exit 1

43 | fi

44 | ARCH=$(uname -m)

45 | if [ "$ARCH" = "arm64" ]; then

46 | TOOLCHAIN=arm-apple-clang

47 | else

48 | TOOLCHAIN=x86_64-apple-clang

49 | fi

50 | else

51 | TOOLCHAIN=x86_64-linux-clang

52 | fi

53 |

54 | # Build native.

55 | mkdir -p build && cd build

56 | cmake -DCMAKE_BUILD_TYPE=RelWithAssert -DTOOLCHAIN=$TOOLCHAIN ..

57 | cmake --build . --parallel ${@/#/--target }

58 | cd ..

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/arch.cmake:

--------------------------------------------------------------------------------

1 | if(WASM)

2 | # Disable SLP vectorization on WASM as it's brokenly slow. To give an idea, with this off it still takes

3 | # 2m:18s to compile scalar_multiplication.cpp, and with it on I estimate it's 50-100 times longer. I never

4 | # had the patience to wait it out...

5 | add_compile_options(-fno-exceptions -fno-slp-vectorize)

6 | endif()

7 |

8 | if(NOT WASM AND NOT APPLE)

9 | add_compile_options(-march=skylake-avx512)

10 | endif()

11 |

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/barretenberg.cmake:

--------------------------------------------------------------------------------

1 | # Here we set up barretenberg as an ExternalProject

2 | # - Point to its source and build directories

3 | # - Construct its `configure` and `build` command lines

4 | # - Include its `src/` in the search path for includes

5 | # - Depend on specific libraries from barretenberg

6 | #

7 | # If barretenberg's cmake files change, its configure and build are triggered

8 | # If barretenberg's source files change, build is triggered

9 |

10 | include(ExternalProject)

11 |

12 | if (WASM)

13 | set(BBERG_BUILD_DIR ${BBERG_DIR}/build-wasm)

14 | else()

15 | set(BBERG_BUILD_DIR ${BBERG_DIR}/build)

16 | endif()

17 |

18 | # If the OpenMP library is included via this option, propagate to ExternalProject configure

19 | if (OpenMP_omp_LIBRARY)

20 | set(LIB_OMP_OPTION -DOpenMP_omp_LIBRARY=${OpenMP_omp_LIBRARY})

21 | endif()

22 |

23 | # Make sure barretenberg doesn't set its own WASI_SDK_PREFIX

24 | if (WASI_SDK_PREFIX)

25 | set(WASI_SDK_OPTION -DWASI_SDK_PREFIX=${WASI_SDK_PREFIX})

26 | endif()

27 |

28 | # cmake configure cli args for ExternalProject

29 | set(BBERG_CONFIGURE_ARGS -DTOOLCHAIN=${TOOLCHAIN} ${WASI_SDK_OPTION} ${LIB_OMP_OPTION} -DCI=${CI})

30 |

31 | # Naming: Project: Barretenberg, Libraries: barretenberg, env

32 | # Need BUILD_ALWAYS to ensure that barretenberg is automatically reconfigured when its CMake files change

33 | # "Enabling this option forces the build step to always be run. This can be the easiest way to robustly

34 | # ensure that the external project's own build dependencies are evaluated rather than relying on the

35 | # default success timestamp-based method." - https://cmake.org/cmake/help/latest/module/ExternalProject.html

36 | ExternalProject_Add(Barretenberg

37 | SOURCE_DIR ${BBERG_DIR}

38 | BINARY_DIR ${BBERG_BUILD_DIR} # build directory

39 | BUILD_ALWAYS TRUE

40 | UPDATE_COMMAND ""

41 | INSTALL_COMMAND ""

42 | CONFIGURE_COMMAND ${CMAKE_COMMAND} ${BBERG_CONFIGURE_ARGS} ..

43 | BUILD_COMMAND ${CMAKE_COMMAND} --build . --parallel --target barretenberg --target env)

44 |

45 | include_directories(${BBERG_DIR}/src/aztec)

46 |

47 | # Add the imported barretenberg and env libraries, point to their library archives,

48 | # and add a dependency of these libraries on the imported project

49 | add_library(barretenberg STATIC IMPORTED)

50 | set_target_properties(barretenberg PROPERTIES IMPORTED_LOCATION ${BBERG_BUILD_DIR}/lib/libbarretenberg.a)

51 | add_dependencies(barretenberg Barretenberg)

52 |

53 | # env is needed for logstr in native executables and wasm tests

54 | # It is otherwise omitted from wasm to prevent use of C++ logstr instead of imported/Typescript

55 | add_library(env STATIC IMPORTED)

56 | set_target_properties(env PROPERTIES IMPORTED_LOCATION ${BBERG_BUILD_DIR}/lib/libenv.a)

57 | add_dependencies(env Barretenberg)

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/benchmark.cmake:

--------------------------------------------------------------------------------

1 | if(NOT TESTING)

2 | set(BENCHMARKS OFF)

3 | endif()

4 |

5 | if(BENCHMARKS)

6 | include(FetchContent)

7 |

8 | FetchContent_Declare(

9 | benchmark

10 | GIT_REPOSITORY https://github.com/google/benchmark

11 | GIT_TAG v1.6.1

12 | )

13 |

14 | FetchContent_GetProperties(benchmark)

15 | if(NOT benchmark_POPULATED)

16 | fetchcontent_populate(benchmark)

17 | set(BENCHMARK_ENABLE_TESTING OFF CACHE BOOL "Benchmark tests off")

18 | add_subdirectory(${benchmark_SOURCE_DIR} ${benchmark_BINARY_DIR} EXCLUDE_FROM_ALL)

19 | endif()

20 | endif()

21 |

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/build.cmake:

--------------------------------------------------------------------------------

1 | if(NOT CMAKE_BUILD_TYPE AND NOT CMAKE_CONFIGURATION_TYPES)

2 | set(CMAKE_BUILD_TYPE "Release" CACHE STRING "Choose the type of build." FORCE)

3 | endif()

4 | message(STATUS "Build type: ${CMAKE_BUILD_TYPE}")

5 |

6 | if(CMAKE_BUILD_TYPE STREQUAL "RelWithAssert")

7 | add_compile_options(-O3)

8 | remove_definitions(-DNDEBUG)

9 | endif()

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/gtest.cmake:

--------------------------------------------------------------------------------

1 | if(TESTING)

2 | include(GoogleTest)

3 | include(FetchContent)

4 |

5 | FetchContent_Declare(

6 | googletest

7 | GIT_REPOSITORY https://github.com/google/googletest.git

8 | GIT_TAG release-1.10.0

9 | )

10 |

11 | FetchContent_GetProperties(googletest)

12 | if(NOT googletest_POPULATED)

13 | FetchContent_Populate(googletest)

14 | add_subdirectory(${googletest_SOURCE_DIR} ${googletest_BINARY_DIR} EXCLUDE_FROM_ALL)

15 | endif()

16 |

17 | if(WASM)

18 | target_compile_definitions(

19 | gtest

20 | PRIVATE

21 | -DGTEST_HAS_EXCEPTIONS=0

22 | -DGTEST_HAS_STREAM_REDIRECTION=0)

23 | endif()

24 |

25 | mark_as_advanced(

26 | BUILD_GMOCK BUILD_GTEST BUILD_SHARED_LIBS

27 | gmock_build_tests gtest_build_samples gtest_build_tests

28 | gtest_disable_pthreads gtest_force_shared_crt gtest_hide_internal_symbols

29 | )

30 |

31 | enable_testing()

32 | endif()

33 |

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/module.cmake:

--------------------------------------------------------------------------------

1 | # copyright 2019 Spilsbury Holdings

2 | #

3 | # usage: barretenberg_module(module_name [dependencies ...])

4 | #

5 | # Scans for all .cpp files in a subdirectory, and creates a library named <module_name>.

6 | # Scans for all .test.cpp files in a subdirectory, and creates a gtest binary named <module_name>_tests.

7 | # Scans for all .bench.cpp files in a subdirectory, and creates a benchmark binary named <module_name>_bench.

8 | #

9 | # We have to get a bit complicated here, due to the fact CMake will not parallelise the building of object files

10 | # between dependent targets, due to the potential of post-build code generation steps etc.

11 | # To work around this, we create "object libraries" containing the object files.

12 | # Then we declare executables/libraries that are to be built from these object files.

13 | # These assets will only be linked as their dependencies complete, but we can parallelise the compilation at least.

14 |

15 | function(barretenberg_module MODULE_NAME)

16 | file(GLOB_RECURSE SOURCE_FILES *.cpp)

17 | file(GLOB_RECURSE HEADER_FILES *.hpp)

18 | list(FILTER SOURCE_FILES EXCLUDE REGEX ".*\.(fuzzer|test|bench).cpp$")

19 |

20 | if(SOURCE_FILES)

21 | add_library(

22 | ${MODULE_NAME}_objects

23 | OBJECT

24 | ${SOURCE_FILES}

25 | )

26 |

27 | add_library(

28 | ${MODULE_NAME}

29 | STATIC

30 | $<TARGET_OBJECTS:${MODULE_NAME}_objects>

31 | )

32 |

33 | target_link_libraries(

34 | ${MODULE_NAME}

35 | PUBLIC

36 | ${ARGN}

37 | barretenberg

38 | ${TBB_IMPORTED_TARGETS}

39 | )

40 |

41 | set(MODULE_LINK_NAME ${MODULE_NAME})

42 | endif()

43 |

44 | file(GLOB_RECURSE TEST_SOURCE_FILES *.test.cpp)

45 | if(TESTING AND TEST_SOURCE_FILES)

46 | add_library(

47 | ${MODULE_NAME}_test_objects

48 | OBJECT

49 | ${TEST_SOURCE_FILES}

50 | )

51 |

52 | target_link_libraries(

53 | ${MODULE_NAME}_test_objects

54 | PRIVATE

55 | gtest

56 | barretenberg

57 | env

58 | ${TBB_IMPORTED_TARGETS}

59 | )

60 |

61 | add_executable(

62 | ${MODULE_NAME}_tests

63 | $<TARGET_OBJECTS:${MODULE_NAME}_test_objects>

64 | )

65 |

66 | if(WASM)

67 | target_link_options(

68 | ${MODULE_NAME}_tests

69 | PRIVATE

70 | -Wl,-z,stack-size=8388608

71 | )

72 | endif()

73 |

74 | if(CI)

75 | target_compile_definitions(

76 | ${MODULE_NAME}_test_objects

77 | PRIVATE

78 | -DCI=1

79 | )

80 | endif()

81 |

82 | if(DISABLE_HEAVY_TESTS)

83 | target_compile_definitions(

84 | ${MODULE_NAME}_test_objects

85 | PRIVATE

86 | -DDISABLE_HEAVY_TESTS=1

87 | )

88 | endif()

89 |

90 | target_link_libraries(

91 | ${MODULE_NAME}_tests

92 | PRIVATE

93 | ${MODULE_LINK_NAME}

94 | ${ARGN}

95 | gtest

96 | gtest_main

97 | barretenberg

98 | env

99 | ${TBB_IMPORTED_TARGETS}

100 | )

101 |

102 | if(NOT WASM AND NOT CI)

103 | # Currently haven't found a way to easily wrap the calls in wasmtime when run from ctest.

104 | gtest_discover_tests(${MODULE_NAME}_tests WORKING_DIRECTORY ${CMAKE_BINARY_DIR})

105 | endif()

106 |

107 | add_custom_target(

108 | run_${MODULE_NAME}_tests

109 | COMMAND ${MODULE_NAME}_tests

110 | WORKING_DIRECTORY ${CMAKE_BINARY_DIR}

111 | )

112 | endif()

113 |

114 | file(GLOB_RECURSE FUZZERS_SOURCE_FILES *.fuzzer.cpp)

115 | if(FUZZING AND FUZZERS_SOURCE_FILES)

116 | foreach(FUZZER_SOURCE_FILE ${FUZZERS_SOURCE_FILES})

117 | get_filename_component(FUZZER_NAME_STEM ${FUZZER_SOURCE_FILE} NAME_WE)

118 | add_executable(

119 | ${MODULE_NAME}_${FUZZER_NAME_STEM}_fuzzer

120 | ${FUZZER_SOURCE_FILE}

121 | )

122 |

123 | target_link_options(

124 | ${MODULE_NAME}_${FUZZER_NAME_STEM}_fuzzer

125 | PRIVATE

126 | "-fsanitize=fuzzer"

127 | ${SANITIZER_OPTIONS}

128 | )

129 |

130 | target_link_libraries(

131 | ${MODULE_NAME}_${FUZZER_NAME_STEM}_fuzzer

132 | PRIVATE

133 | ${MODULE_LINK_NAME}

134 | barretenberg

135 | env

136 | )

137 | endforeach()

138 | endif()

139 |

140 | file(GLOB_RECURSE BENCH_SOURCE_FILES *.bench.cpp)

141 | if(BENCHMARKS AND BENCH_SOURCE_FILES)

142 | add_library(

143 | ${MODULE_NAME}_bench_objects

144 | OBJECT

145 | ${BENCH_SOURCE_FILES}

146 | )

147 |

148 | target_link_libraries(

149 | ${MODULE_NAME}_bench_objects

150 | PRIVATE

151 | benchmark

152 | barretenberg

153 | env

154 | ${TBB_IMPORTED_TARGETS}

155 | )

156 |

157 | add_executable(

158 | ${MODULE_NAME}_bench

159 | $<TARGET_OBJECTS:${MODULE_NAME}_bench_objects>

160 | )

161 |

162 | target_link_libraries(

163 | ${MODULE_NAME}_bench

164 | PRIVATE

165 | ${MODULE_LINK_NAME}

166 | ${ARGN}

167 | benchmark

168 | barretenberg

169 | env

170 | ${TBB_IMPORTED_TARGETS}

171 | )

172 |

173 | add_custom_target(

174 | run_${MODULE_NAME}_bench

175 | COMMAND ${MODULE_NAME}_bench

176 | WORKING_DIRECTORY ${CMAKE_BINARY_DIR}

177 | )

178 | endif()

179 | endfunction()

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/threading.cmake:

--------------------------------------------------------------------------------

1 | if(APPLE)

2 | if(CMAKE_C_COMPILER_ID MATCHES "Clang")

3 | set(OpenMP_C_FLAGS "-fopenmp")

4 | set(OpenMP_C_FLAGS_WORK "-fopenmp")

5 | set(OpenMP_C_LIB_NAMES "libomp")

6 | set(OpenMP_C_LIB_NAMES_WORK "libomp")

7 | set(OpenMP_libomp_LIBRARY "$ENV{BREW_PREFIX}/opt/libomp/lib/libomp.dylib")

8 | endif()

9 | if(CMAKE_CXX_COMPILER_ID MATCHES "Clang")

10 | set(OpenMP_CXX_FLAGS "-fopenmp")

11 | set(OpenMP_CXX_FLAGS_WORK "-fopenmp")

12 | set(OpenMP_CXX_LIB_NAMES "libomp")

13 | set(OpenMP_CXX_LIB_NAMES_WORK "libomp")

14 | set(OpenMP_libomp_LIBRARY "$ENV{BREW_PREFIX}/opt/libomp/lib/libomp.dylib")

15 | endif()

16 | endif()

17 |

18 | if(MULTITHREADING)

19 | find_package(OpenMP REQUIRED)

20 | message(STATUS "Multithreading is enabled.")

21 | link_libraries(OpenMP::OpenMP_CXX)

22 | else()

23 | message(STATUS "Multithreading is disabled.")

24 | add_definitions(-DNO_MULTITHREADING -DBOOST_SP_NO_ATOMIC_ACCESS)

25 | endif()

26 |

27 | if(DISABLE_TBB)

28 | message(STATUS "Intel Thread Building Blocks is disabled.")

29 | add_definitions(-DNO_TBB)

30 | else()

31 | find_package(TBB REQUIRED tbb)

32 | if(${TBB_FOUND})

33 | message(STATUS "Intel Thread Building Blocks is enabled.")

34 | else()

35 | message(STATUS "Could not locate TBB.")

36 | add_definitions(-DNO_TBB)

37 | endif()

38 | endif()

39 |

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchain.cmake:

--------------------------------------------------------------------------------

1 | if (CMAKE_C_COMPILER AND CMAKE_CXX_COMPILER)

2 | message(STATUS "Toolchain: manually chosen ${CMAKE_C_COMPILER} and ${CMAKE_CXX_COMPILER}")

3 | else()

4 | if(NOT TOOLCHAIN)

5 | set(TOOLCHAIN "x86_64-linux-clang" CACHE STRING "Build toolchain." FORCE)

6 | endif()

7 | message(STATUS "Toolchain: ${TOOLCHAIN}")

8 |

9 | include("./cmake/toolchains/${TOOLCHAIN}.cmake")

10 | endif()

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/arm-apple-clang.cmake:

--------------------------------------------------------------------------------

1 | set(APPLE ON)

2 | set(ARM ON)

3 | set(CMAKE_CXX_COMPILER "$ENV{BREW_PREFIX}/opt/llvm/bin/clang++")

4 | set(CMAKE_C_COMPILER "$ENV{BREW_PREFIX}/opt/llvm/bin/clang")

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/arm64-linux-gcc.cmake:

--------------------------------------------------------------------------------

1 | set(ARM ON)

2 | set(CMAKE_SYSTEM_NAME Linux)

3 | set(CMAKE_SYSTEM_VERSION 1)

4 | set(CMAKE_SYSTEM_PROCESSOR aarch64)

5 |

6 | set(cross_triple "aarch64-unknown-linux-gnu")

7 | set(cross_root /usr/xcc/${cross_triple})

8 |

9 | set(CMAKE_C_COMPILER $ENV{CC})

10 | set(CMAKE_CXX_COMPILER $ENV{CXX})

11 | set(CMAKE_Fortran_COMPILER $ENV{FC})

12 |

13 | set(CMAKE_CXX_FLAGS "-I ${cross_root}/include/")

14 |

15 | set(CMAKE_FIND_ROOT_PATH ${cross_root} ${cross_root}/${cross_triple})

16 | set(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)

17 | set(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY BOTH)

18 | set(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE BOTH)

19 | set(CMAKE_SYSROOT ${cross_root}/${cross_triple}/sysroot)

20 |

21 | set(CMAKE_CROSSCOMPILING_EMULATOR /usr/bin/qemu-aarch64)

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/i386-linux-clang.cmake:

--------------------------------------------------------------------------------

1 | # Sometimes we need to set compilers manually, for example for fuzzing

2 | if(NOT CMAKE_C_COMPILER)

3 | set(CMAKE_C_COMPILER "clang")

4 | endif()

5 |

6 | if(NOT CMAKE_CXX_COMPILER)

7 | set(CMAKE_CXX_COMPILER "clang++")

8 | endif()

9 |

10 | add_compile_options("-m32")

11 | add_link_options("-m32")

12 | set(MULTITHREADING OFF)

13 | add_definitions(-DDISABLE_SHENANIGANS=1)

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/wasm-linux-clang.cmake:

--------------------------------------------------------------------------------

1 | # Cmake toolchain description file for the Makefile

2 |

3 | # This is arbitrary, AFAIK, for now.

4 | cmake_minimum_required(VERSION 3.4.0)

5 |

6 | set(WASM ON)

7 | set(CMAKE_SYSTEM_NAME Generic)

8 | set(CMAKE_SYSTEM_VERSION 1)

9 | set(CMAKE_SYSTEM_PROCESSOR wasm32)

10 | set(triple wasm32-wasi)

11 |

12 | set(WASI_SDK_PREFIX "${CMAKE_CURRENT_SOURCE_DIR}/src/wasi-sdk-12.0")

13 | set(CMAKE_C_COMPILER ${WASI_SDK_PREFIX}/bin/clang)

14 | set(CMAKE_CXX_COMPILER ${WASI_SDK_PREFIX}/bin/clang++)

15 | set(CMAKE_AR ${WASI_SDK_PREFIX}/bin/llvm-ar CACHE STRING "wasi-sdk build")

16 | set(CMAKE_RANLIB ${WASI_SDK_PREFIX}/bin/llvm-ranlib CACHE STRING "wasi-sdk build")

17 | set(CMAKE_C_COMPILER_TARGET ${triple} CACHE STRING "wasi-sdk build")

18 | set(CMAKE_CXX_COMPILER_TARGET ${triple} CACHE STRING "wasi-sdk build")

19 | #set(CMAKE_EXE_LINKER_FLAGS "-Wl,--no-threads" CACHE STRING "wasi-sdk build")

20 |

21 | set(CMAKE_SYSROOT ${WASI_SDK_PREFIX}/share/wasi-sysroot CACHE STRING "wasi-sdk build")

22 | set(CMAKE_STAGING_PREFIX ${WASI_SDK_PREFIX}/share/wasi-sysroot CACHE STRING "wasi-sdk build")

23 |

24 | # Don't look in the sysroot for executables to run during the build

25 | set(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)

26 | # Only look in the sysroot (not in the host paths) for the rest

27 | set(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY ONLY)

28 | set(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE ONLY)

29 | set(CMAKE_FIND_ROOT_PATH_MODE_PACKAGE ONLY)

30 |

31 | # Some other hacks

32 | set(CMAKE_C_COMPILER_WORKS ON)

33 | set(CMAKE_CXX_COMPILER_WORKS ON)

34 |

35 | add_definitions(-D_WASI_EMULATED_PROCESS_CLOCKS=1)

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/x86_64-apple-clang.cmake:

--------------------------------------------------------------------------------

1 | set(APPLE ON)

2 | set(CMAKE_CXX_COMPILER "$ENV{BREW_PREFIX}/opt/llvm/bin/clang++")

3 | set(CMAKE_C_COMPILER "$ENV{BREW_PREFIX}/opt/llvm/bin/clang")

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/x86_64-linux-clang.cmake:

--------------------------------------------------------------------------------

1 | set(CMAKE_C_COMPILER "clang")

2 | set(CMAKE_CXX_COMPILER "clang++")

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/x86_64-linux-gcc.cmake:

--------------------------------------------------------------------------------

1 | set(CMAKE_C_COMPILER "gcc")

2 | set(CMAKE_CXX_COMPILER "g++")

3 | # TODO(Cody): get rid of this when Adrian's work goes in

4 | add_compile_options(-Wno-uninitialized)

5 | add_compile_options(-Wno-maybe-uninitialized)

--------------------------------------------------------------------------------

/cryptography-engineer/cmake/toolchains/x86_64-linux-gcc10.cmake:

--------------------------------------------------------------------------------

1 | set(CMAKE_C_COMPILER "gcc-10")

2 | set(CMAKE_CXX_COMPILER "g++-10")

3 | # TODO(Cody): get rid of this when Adrian's work goes in

4 | add_compile_options(-Wno-uninitialized)

5 | add_compile_options(-Wno-maybe-uninitialized)

--------------------------------------------------------------------------------

/cryptography-engineer/format.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -e

3 |

4 | if [ "$1" == "staged" ]; then

5 | echo Formatting staged files...

6 | for FILE in $(git diff-index --diff-filter=d --relative --cached --name-only HEAD | grep -e '\.\(cpp\|hpp\|tcc\)$'); do

7 | clang-format -i $FILE

8 | sed -i.bak 's/\r$//' $FILE && rm ${FILE}.bak

9 | git add $FILE

10 | done

11 | elif [ -n "$1" ]; then

12 | for FILE in $(git diff-index --relative --name-only $1 | grep -e '\.\(cpp\|hpp\|tcc\)$'); do

13 | clang-format -i $FILE

14 | sed -i.bak 's/\r$//' $FILE && rm ${FILE}.bak

15 | done

16 | else

17 | for FILE in $(find ./src -iname '*.hpp' -o -iname '*.cpp' -o -iname '*.tcc' | grep -v src/boost); do

18 | clang-format -i $FILE

19 | sed -i.bak 's/\r$//' $FILE && rm ${FILE}.bak

20 | done

21 | fi

--------------------------------------------------------------------------------

/cryptography-engineer/src/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR}/bin)

2 |

3 | add_compile_options(-Werror -Wall -Wextra -Wconversion -Wsign-conversion -Wno-deprecated -Wno-tautological-compare -Wfatal-errors)

4 |

5 | if(CMAKE_CXX_COMPILER_ID MATCHES "Clang")

6 | add_compile_options(-Wno-unguarded-availability-new -Wno-c99-extensions -fconstexpr-steps=100000000)

7 | if(MEMORY_CHECKS)

8 | message(STATUS "Compiling with memory checks.")

9 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fsanitize=address")

10 | endif()

11 | endif()

12 |

13 | if(CMAKE_CXX_COMPILER_ID MATCHES "GNU")

14 | add_compile_options(-Wno-deprecated-copy -fconstexpr-ops-limit=100000000)

15 | endif()

16 |

17 | include_directories(${CMAKE_CURRENT_SOURCE_DIR})

18 |

19 | # I feel this should be limited to ecc, however it's currently used in headers that go across libraries,

20 | # and there currently isn't an easy way to inherit the DDISABLE_SHENANIGANS parameter.

21 | if(DISABLE_ASM)

22 | message(STATUS "Using fallback non-assembly methods for field multiplications.")

23 | add_definitions(-DDISABLE_SHENANIGANS=1)

24 | else()

25 | message(STATUS "Using optimized assembly for field arithmetic.")

26 | endif()

27 |

28 | add_subdirectory(indexed_merkle_tree)

29 | add_subdirectory(ec_fft)

30 |

31 | if(BENCHMARKS)

32 | add_subdirectory(benchmark)

33 | endif()

34 |

--------------------------------------------------------------------------------

/cryptography-engineer/src/ec_fft/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | # create a barretenberg_module for ec_fft

2 | barretenberg_module(ec_fft)

--------------------------------------------------------------------------------

/cryptography-engineer/src/ec_fft/README.md:

--------------------------------------------------------------------------------

1 | ## EC-FFT Test

2 |

3 | Hi there! Welcome to the `ec_fft` test that you are about to take. We will guide you through the concept of EC-FFT before you begin crushing this exercise. This exercise consists of two parts:

4 |

5 | 1. Implement the FFT algorithm for curve points:

6 | ```cpp

7 | void ec_fft_inner(g1::element* g1_elements, const size_t n, const std::vector<fr*>& root_table);

8 | ```

9 | 2. Using the `ec_fft` function, convert a monomial reference string to a Lagrange reference string without knowing the secret $x$.

10 | ```cpp

11 | void convert_srs(g1::affine_element* monomial_srs, g1::affine_element* lagrange_srs, const evaluation_domain& domain);

12 | ```

13 | Objective: Implement the above functions to get the four pre-written tests in `ec_fft.test.cpp` passing.

14 |

15 | Pro-tip: You can take a look at the pre-written tests if you need help with the syntax.

16 |

17 | #### Monomial Reference String

18 |

19 | Universal zk-SNARKs like PlonK need to run a one-time trusted setup ceremony to generate a Structured Reference String (SRS). Any number of participants can participate in this ceremony but only _one_ of all the participants needs to be _honest_. As a part of this ceremony, each participant contributes to the setup with their own _secret_ which they are free to choose. If even one of the participants generates this secret randomly and destroys it successfully, the setup ceremony is considered to be successful. For our purposes, the output of the setup ceremony is a structured reference string of the form:

20 |

21 | $$

22 | \begin{aligned}

23 | \mathbb{G}_1 \text{ points: }

24 | \big([1]_1,[x]_1, [x^2]_1, [x^3]_1, \dots, [x^{N-1}]_1\big), \\

25 | \end{aligned}

26 | $$

27 |

28 | Here, $\mathbb{G}_1$ is a cyclic group, $\mathbb{F}$ is a finite field, and the order of $\mathbb{G}_1$ is the same as the order of $\mathbb{F}$. The element $x \in \mathbb{F}$ is the combined secret of all the participants and is assumed to be unknown to anyone in the world. Further, we define $[a]_1 := aG_1$, where $G_1\in \mathbb{G}_1$ is a fixed generator of $\mathbb{G}_1$.

29 |

30 | The monomial reference string is used to commit to polynomials in their coefficient/monomial form. For example, given a polynomial $f(X) = f_0 + f_1X + f_2X^2 + \dots + f_{n-1}X^{n-1}$ with $n \le N$ coefficients, we can compute its commitment as:

31 |

32 | $$

33 | \textsf{commit}(f) := [f(x)]_1 = \sum_{i=0}^{n-1} f_i \cdot [x^i]_1.

34 | $$

35 |
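
The commitment formula above only needs scalar multiplications and additions of SRS points. A minimal sketch of that idea follows, under loud assumptions: the "curve" is replaced by the additive group $\mathbb{Z}_{17}$ with generator 3 (insecure, for illustration only), and the names `Point`, `make_monomial_srs`, `commit` and `eval` are hypothetical helpers, not part of barretenberg.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy parameters, chosen only for illustration (this is NOT BN254).
constexpr uint64_t R = 17; // order of both the toy field and the toy group
constexpr uint64_t G = 3;  // generator of the additive group Z_17

// Toy "curve point": an element of Z_17 under addition. Discrete logs are
// trivial here, so this only mirrors the algebra and offers no security.
struct Point {
    uint64_t v;
};

Point add(Point a, Point b) { return Point{ (a.v + b.v) % R }; }
Point mul(Point a, uint64_t s) { return Point{ (a.v * (s % R)) % R }; }

// Builds ([1], [x], ..., [x^{N-1}]) from a known secret x (test setups only).
std::vector<Point> make_monomial_srs(uint64_t x, size_t N)
{
    std::vector<Point> srs;
    uint64_t power = 1;
    for (size_t i = 0; i < N; ++i) {
        srs.push_back(mul(Point{ G }, power));
        power = (power * x) % R;
    }
    return srs;
}

// commit(f) = sum_i f_i * [x^i], computed without ever touching x.
Point commit(const std::vector<uint64_t>& f, const std::vector<Point>& srs)
{
    assert(f.size() <= srs.size());
    Point acc{ 0 };
    for (size_t i = 0; i < f.size(); ++i) {
        acc = add(acc, mul(srs[i], f[i]));
    }
    return acc;
}

// Horner evaluation of f at x in F_17, used only to cross-check the result.
uint64_t eval(const std::vector<uint64_t>& f, uint64_t x)
{
    uint64_t acc = 0;
    for (size_t i = f.size(); i-- > 0;) {
        acc = (acc * x + f[i]) % R;
    }
    return acc;
}
```

With `f = {1, 2, 3}` and secret `x = 5`, `commit(f, make_monomial_srs(5, 4))` matches `mul(Point{G}, eval(f, 5))`, i.e. the commitment equals $[f(x)]_1$ in the toy group.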

36 | #### Lagrange Representation

37 |

38 | An alternative way to represent a polynomial is in its Lagrange form. Let $\{\omega^0, \omega^1, \omega^2, \dots, \omega^{n-1}\}$ be the $n$-th roots of unity and assume $n$ is a power of two. Then we can write the same polynomial $f(X)$ as:

39 |

40 | $$

41 | f(X) = \sum_{i=0}^{n-1} f(\omega^i) \cdot L_{n,i}(X)

42 | $$

43 |

44 | where $L_{n,i}(X)$ is the $i$-th Lagrange basis polynomial over the $n$-th roots of unity, defined by:

45 |

46 | $$

47 | L_{n,i}(\omega^j) =

48 | \begin{cases}

49 | 1 & \text{if }j = i \\

50 | 0 & \text{otherwise}

51 | \end{cases}.

52 | $$

53 |

54 | In other words, the Lagrange basis polynomial $L_{n,i}(X)$ is $1$ on $\omega^i$ and $0$ on the other roots $\{\omega^j\}_{j \neq i}$. The Lagrange form of a polynomial is sometimes more useful than the monomial form.

55 |

56 | #### Fast Fourier Transform

57 |

58 | Given the coefficient form of a polynomial, it is possible to convert it to the Lagrange form using the Fast Fourier Transform (FFT) operation. Similarly, we can take an inverse FFT to convert the Lagrange form to its coefficient form.

59 |

60 | $$

61 | \begin{aligned}

62 | \{f_i\}_{i=0}^{n-1} \xrightarrow{\textsf{FFT}} \{f(\omega^i)\}_{i=0}^{n-1}, \\

63 | \{f(\omega^i)\}_{i=0}^{n-1} \xrightarrow{\textsf{iFFT}} \{f_i\}_{i=0}^{n-1}.

64 | \end{aligned}

65 | $$

66 |

67 | Note that this $\textsf{FFT}$ operation is defined on scalars in the field $\mathbb{F}$.

68 |
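
To make the scalar FFT and iFFT concrete, here is a small recursive radix-2 sketch. It is an illustration under assumptions, not barretenberg's `polynomial_arithmetic::fft`: the field is the toy prime field $\mathbb{F}_{17}$ (where $2$ is a primitive 8th root of unity), and `fft`, `ifft`, `eval` and `pow_mod` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr uint64_t P = 17; // toy prime field F_17 (assumption for illustration)

uint64_t pow_mod(uint64_t base, uint64_t exp)
{
    uint64_t result = 1;
    base %= P;
    while (exp > 0) {
        if (exp & 1) result = result * base % P;
        base = base * base % P;
        exp >>= 1;
    }
    return result;
}

// Recursive radix-2 FFT: returns {f(w^0), ..., f(w^{n-1})}.
// Assumes f.size() is a power of two and w is a primitive n-th root of unity.
std::vector<uint64_t> fft(const std::vector<uint64_t>& f, uint64_t w)
{
    const size_t n = f.size();
    if (n == 1) return f;
    std::vector<uint64_t> even, odd;
    for (size_t i = 0; i < n; i += 2) {
        even.push_back(f[i]);
        odd.push_back(f[i + 1]);
    }
    // f(X) = E(X^2) + X * O(X^2), and w^2 is a primitive (n/2)-th root.
    const std::vector<uint64_t> e = fft(even, w * w % P);
    const std::vector<uint64_t> o = fft(odd, w * w % P);
    std::vector<uint64_t> out(n);
    uint64_t t = 1; // t = w^k
    for (size_t k = 0; k < n / 2; ++k) {
        const uint64_t x = t * o[k] % P;
        out[k] = (e[k] + x) % P;
        out[k + n / 2] = (e[k] + P - x) % P; // w^{k + n/2} = -w^k
        t = t * w % P;
    }
    return out;
}

// Inverse FFT: run the FFT with w^{-1} and scale every entry by 1/n.
std::vector<uint64_t> ifft(const std::vector<uint64_t>& evals, uint64_t w)
{
    const uint64_t w_inv = pow_mod(w, P - 2);
    const uint64_t n_inv = pow_mod(evals.size() % P, P - 2);
    std::vector<uint64_t> out = fft(evals, w_inv);
    for (uint64_t& c : out) c = c * n_inv % P;
    return out;
}

// Horner evaluation, used to cross-check the FFT output.
uint64_t eval(const std::vector<uint64_t>& f, uint64_t x)
{
    uint64_t acc = 0;
    for (size_t i = f.size(); i-- > 0;) acc = (acc * x + f[i]) % P;
    return acc;
}
```

For coefficients `{1, ..., 8}` and `w = 2`, entry `k` of `fft(f, 2)` agrees with `eval(f, 2^k)`, and `ifft` recovers the original coefficients.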

69 | #### EC-FFT

70 |

71 | Suppose we are given a bunch of elliptic curve points:

72 |

73 | $$

74 | \{a_0G_1, a_1G_1, \dots, a_{n-1}G_1\} \in \mathbb{G}_1^{n}

75 | $$

76 |

77 | for some scalars $\{a_i\}_{i=0}^{n-1}\in \mathbb{F}^n$. You can think of these scalars $\{a_i\}_{i=0}^{n-1}$ as coefficients of some polynomial $A(X)$. Note that you only have access to the given curve points and not the actual coefficients $\{a_i\}_{i=0}^{n-1}$. The question is: can you convert this set of curve points to another set of curve points defined as:

78 |

79 | $$

80 | \{A(\omega^0)\cdot G_1, \ A(\omega^1)\cdot G_1, \ \dots, \ A(\omega^{n-1})\cdot G_1\} \in \mathbb{G}_1^{n}.

81 | $$

82 |

83 | Since you do not have access to the coefficients $\{a_i\}_{i=0}^{n-1}$, you cannot just compute their FFT. But if we can instead do an FFT operation directly on the curve points, that will give us the desired result. EC-FFT is exactly that: FFT on Elliptic Curve points!

84 |

85 | In this exercise, you have to implement the function `ec_fft_inner` (called by `ec_fft`), which takes in a set of points `g1_elements` and modifies the same points in place to get the FFT form.

86 |
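
The key observation is that an FFT butterfly only ever adds, subtracts and scales its inputs by known roots of unity, so it works unchanged when the inputs are group elements. The sketch below is one possible iterative butterfly, not the expected solution: it uses the insecure toy group $\mathbb{Z}_{17}$ (generator 3) instead of BN254, recomputes twiddles with `pow_mod` instead of reading a `root_table`, and all names (`Point`, `ec_fft_toy`, `eval`) are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

constexpr uint64_t R = 17; // order of the toy field and toy group (assumption)
constexpr uint64_t G = 3;  // generator of the additive group Z_17

struct Point {
    uint64_t v;
};
Point add(Point a, Point b) { return Point{ (a.v + b.v) % R }; }
Point sub(Point a, Point b) { return Point{ (a.v + R - b.v) % R }; }
Point mul(Point a, uint64_t s) { return Point{ (a.v * (s % R)) % R }; }

uint64_t pow_mod(uint64_t base, uint64_t exp)
{
    uint64_t result = 1;
    base %= R;
    while (exp > 0) {
        if (exp & 1) result = result * base % R;
        base = base * base % R;
        exp >>= 1;
    }
    return result;
}

// Reverses the lowest `bit_length` bits of x.
size_t reverse_bits(size_t x, size_t bit_length)
{
    size_t r = 0;
    for (size_t b = 0; b < bit_length; ++b) r = (r << 1) | ((x >> b) & 1);
    return r;
}

// In-place decimation-in-time FFT on group elements.
// Assumes pts.size() is a power of two and w is a primitive n-th root of unity.
void ec_fft_toy(std::vector<Point>& pts, uint64_t w)
{
    const size_t n = pts.size();
    size_t log_n = 0;
    while ((size_t(1) << log_n) < n) ++log_n;
    // Bit-reversal permutation of the inputs.
    for (size_t i = 0; i < n; ++i) {
        const size_t j = reverse_bits(i, log_n);
        if (i < j) std::swap(pts[i], pts[j]);
    }
    // log2(n) rounds of butterflies on blocks of size len = 2, 4, ..., n.
    for (size_t len = 2; len <= n; len <<= 1) {
        const uint64_t w_len = pow_mod(w, n / len); // primitive len-th root
        for (size_t start = 0; start < n; start += len) {
            uint64_t t = 1; // t = w_len^k
            for (size_t k = 0; k < len / 2; ++k) {
                const Point u = pts[start + k];
                const Point v = mul(pts[start + k + len / 2], t);
                pts[start + k] = add(u, v);
                pts[start + k + len / 2] = sub(u, v);
                t = t * w_len % R;
            }
        }
    }
}

// Horner evaluation of the hidden polynomial, used only for cross-checking.
uint64_t eval(const std::vector<uint64_t>& f, uint64_t x)
{
    uint64_t acc = 0;
    for (size_t i = f.size(); i-- > 0;) acc = (acc * x + f[i]) % R;
    return acc;
}
```

Starting from points $a_iG$ for scalars `{3, 1, 4, 1, 5, 9, 2, 6}` and `w = 2`, entry `k` of the transformed vector equals $A(\omega^k)\cdot G$, which mirrors what `test_compare_ffts` checks against the scalar FFT.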

87 | #### Application of EC-FFT

88 |

89 | Recall that our monomial SRS (of size $n$) was of the form:

90 |

91 | $$

92 | \mathbb{G}_1 \text{ monomial points: }

93 | \big([1]_1,[x]_1, [x^2]_1, [x^3]_1, \dots, [x^{n-1}]_1\big).

94 | $$

95 |

96 | Let's say we want to convert this monomial SRS to the Lagrange SRS:

97 |

98 | $$

99 | \mathbb{G}_1 \text{ lagrange points: }

100 | \big([L_{n,0}(x)]_1,[L_{n,1}(x)]_1, [L_{n,2}(x)]_1, \dots, [L_{n,n-1}(x)]_1\big).

101 | $$

102 |

103 | without knowing the scalar $x\in \mathbb{F}$. We can do this by using the EC-FFT functionality. For that, let's define a polynomial with coefficients $\{1, x, x^2, \dots, x^{n-1}\}$:

104 |

105 | $$

106 | P(Y) := 1 + xY + x^2Y^2 + \dots + x^{n-1}Y^{n-1}.

107 | $$

108 |

109 | Now, we can write the Lagrange polynomial $L_{n,i}(X)$ as:

110 |

111 | $$

112 | L_{n,i}(X) := \frac{1}{n}\left( \left(\frac{X}{\omega^i}\right)^0 + \left(\frac{X}{\omega^i}\right)^1 + \dots + \left(\frac{X}{\omega^i}\right)^{n-1} \right)

113 | $$

114 |

115 | This is because when $X=\omega^i$ every term equals 1, so $L_{n,i}(\omega^i)=\frac{1 + 1 + \dots + 1}{n} = 1$. On the other hand, if $X = \omega^j$ with $j\neq i$, the numerator is the geometric sum $\sum_{k=0}^{n-1}\omega^{(j-i)k}$, which vanishes because $\omega^{j-i} \neq 1$ is an $n$-th root of unity, i.e. $L_{n,i}(\omega^j)=\frac{1}{n}\sum_{k=0}^{n-1}\omega^{(j-i)k} = 0$. Using this definition of the Lagrange polynomial, we have:

116 |

117 | $$

118 | L_{n,i}(X) := \frac{1}{n}\sum_{j=0}^{n-1}\left(\frac{X}{\omega^i}\right)^j = \frac{1}{n}\sum_{j=0}^{n-1}\left(\omega^{-i}X\right)^j.

119 | $$

120 |

121 | By the definition of $P(Y)$, we can write:

122 |

123 | $$

124 | \begin{aligned}

125 | P(Y) &= \sum_{j=0}^{n-1} (x \cdot Y)^j \\

126 | \implies P(\omega^{-i}) &= \sum_{j=0}^{n-1} (\omega^{-i} \cdot x)^j =: n \cdot L_{n,i}(x) \\

127 | \therefore \quad L_{n,i}(x) &:= \frac{1}{n} \cdot P(\omega^{-i}) \qquad \text{(1)}

128 | \end{aligned}

129 | $$

130 |
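
As a quick sanity check of equation $(1)$, consider the smallest non-trivial case $n = 2$, where $\omega = -1$ and hence $\omega^{-1} = -1$. This worked example is an illustration added for intuition; it uses only the definitions above.

$$
\begin{aligned}
P(Y) &= 1 + xY, \\
L_{2,0}(X) &= \tfrac{1}{2}(1 + X), \qquad L_{2,1}(X) = \tfrac{1}{2}(1 - X), \\
\tfrac{1}{2} \cdot P(\omega^{0}) &= \tfrac{1}{2}(1 + x) = L_{2,0}(x), \\
\tfrac{1}{2} \cdot P(\omega^{-1}) &= \tfrac{1}{2}(1 - x) = L_{2,1}(x).
\end{aligned}
$$

Both basis polynomials behave as required: $L_{2,0}(1) = 1$, $L_{2,0}(-1) = 0$, $L_{2,1}(1) = 0$ and $L_{2,1}(-1) = 1$.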

131 | Therefore, we can compute the Lagrange SRS from the monomial SRS by first taking the EC-FFT on the monomial SRS and applying the transform shown in equation $(1)$.

132 |

133 | 1. Given the monomial SRS, take its EC-FFT;

134 |

135 | $$

136 | \Big([1]_1, [x]_1, [x^2]_1, \dots, [x^{n-1}]_1\Big)

137 | \xrightarrow{\textsf{ec-FFT}}

138 | \Big([P(\omega^0)]_1, [P(\omega^1)]_1, [P(\omega^2)]_1, \dots, [P(\omega^{n-1})]_1\Big)

139 | $$

140 |

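141 | 2. Apply the transformation shown in equation $(1)$. Since $\omega^{-i} = \omega^{(n-i) \bmod n}$, the point $[P(\omega^{-i})]_1$ is the entry at index $(n-i) \bmod n$ of the EC-FFT output: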

142 |

143 | $$

144 | [L_{n,i}(x)]_1 := \frac{1}{n} \cdot [P(\omega^{-i})]_1 \quad \forall i \in [0, n)

145 | $$

146 |

147 | As a part of this exercise, you also have to implement the function `convert_srs` that takes in a `monomial_srs` and converts it to a `lagrange_srs` for a given size as explained above.

148 |
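
The conversion described above can be sanity-checked end to end on a toy group. The sketch below is an illustration under assumptions rather than the intended solution: it works in the insecure additive group $\mathbb{Z}_{17}$ with generator 3, evaluates $\frac{1}{n}P(\omega^{-i})$ with a naive $O(n^2)$ sum instead of the butterfly, and uses hypothetical names (`convert_srs_toy`, `msm`, `eval`). The same sum is what an inverse transform (roots $\omega^{-1}$, scaling by $1/n$) computes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr uint64_t R = 17; // order of the toy field and toy group (assumption)
constexpr uint64_t G = 3;  // generator of the additive group Z_17

struct Point {
    uint64_t v;
};
Point add(Point a, Point b) { return Point{ (a.v + b.v) % R }; }
Point mul(Point a, uint64_t s) { return Point{ (a.v * (s % R)) % R }; }

uint64_t pow_mod(uint64_t base, uint64_t exp)
{
    uint64_t result = 1;
    base %= R;
    while (exp > 0) {
        if (exp & 1) result = result * base % R;
        base = base * base % R;
        exp >>= 1;
    }
    return result;
}

// lagrange[i] = (1/n) * sum_j (w^{-i})^j * [x^j] = [L_{n,i}(x)], using only
// group operations on the monomial SRS (the secret x is never needed).
std::vector<Point> convert_srs_toy(const std::vector<Point>& monomial, uint64_t w)
{
    const size_t n = monomial.size();
    const uint64_t w_inv = pow_mod(w, R - 2);
    const uint64_t n_inv = pow_mod(n % R, R - 2);
    std::vector<Point> lagrange(n, Point{ 0 });
    for (size_t i = 0; i < n; ++i) {
        const uint64_t w_neg_i = pow_mod(w_inv, i); // w^{-i}
        uint64_t t = 1;                             // t = (w^{-i})^j
        for (size_t j = 0; j < n; ++j) {
            lagrange[i] = add(lagrange[i], mul(monomial[j], t));
            t = t * w_neg_i % R;
        }
        lagrange[i] = mul(lagrange[i], n_inv);
    }
    return lagrange;
}

// Naive multi-scalar multiplication: sum_i scalars[i] * points[i].
Point msm(const std::vector<uint64_t>& scalars, const std::vector<Point>& points)
{
    Point acc{ 0 };
    for (size_t i = 0; i < scalars.size(); ++i) acc = add(acc, mul(points[i], scalars[i]));
    return acc;
}

// Horner evaluation in F_17.
uint64_t eval(const std::vector<uint64_t>& f, uint64_t x)
{
    uint64_t acc = 0;
    for (size_t i = f.size(); i-- > 0;) acc = (acc * x + f[i]) % R;
    return acc;
}
```

As in `test_convert_srs`, a useful check is that committing to the coefficients of $f$ with the monomial SRS gives the same point as committing to the evaluations $f(\omega^i)$ with the Lagrange SRS.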

--------------------------------------------------------------------------------

/cryptography-engineer/src/ec_fft/ec_fft.cpp:

--------------------------------------------------------------------------------

1 | #include "ec_fft.hpp"

2 |

3 | #pragma GCC diagnostic ignored "-Wunused-variable"

4 | #pragma GCC diagnostic ignored "-Wunused-parameter"

5 |

6 | namespace waffle {

7 | namespace g1_fft {

8 |

9 | using namespace barretenberg;

10 |

11 | inline bool is_power_of_two(uint64_t x)

12 | {

13 | return x && !(x & (x - 1));

14 | }

15 |

16 | inline uint32_t reverse_bits(uint32_t x, uint32_t bit_length)

17 | {

18 | x = (((x & 0xaaaaaaaa) >> 1) | ((x & 0x55555555) << 1));

19 | x = (((x & 0xcccccccc) >> 2) | ((x & 0x33333333) << 2));

20 | x = (((x & 0xf0f0f0f0) >> 4) | ((x & 0x0f0f0f0f) << 4));

21 | x = (((x & 0xff00ff00) >> 8) | ((x & 0x00ff00ff) << 8));

22 | return (((x >> 16) | (x << 16))) >> (32 - bit_length);

23 | }

24 |

25 | inline void ec_fft_inner(g1::element* g1_elements, const size_t n, const std::vector<fr*>& root_table)

26 | {

27 | ASSERT(is_power_of_two(n));

28 | ASSERT(!root_table.empty());

29 |

30 | // Exercise: implement the butterfly structure to perform ec-fft

31 | }

32 |

33 | void ec_fft(g1::element* g1_elements, const evaluation_domain& domain)

34 | {

35 | ec_fft_inner(g1_elements, domain.size, domain.get_round_roots());

36 | }

37 |

38 | void ec_ifft(g1::element* g1_elements, const evaluation_domain& domain)

39 | {

40 | ec_fft_inner(g1_elements, domain.size, domain.get_inverse_round_roots());

41 | for (size_t i = 0; i < domain.size; i++) {

42 | g1_elements[i] *= domain.domain_inverse;

43 | }

44 | }

45 |

46 | void convert_srs(g1::affine_element* monomial_srs, g1::affine_element* lagrange_srs, const evaluation_domain& domain)

47 | {

48 | const size_t n = domain.size;

49 | ASSERT(is_power_of_two(n));

50 |

51 | // Exercise: implement the conversion of monomial to Lagrange SRS

52 | // Note that you can convert from g1::affine_element form to g1::element by just doing:

53 | // g1::affine_element x_affine = g1::affine_one;

54 | // auto x_elem = g1::element(x_affine);

55 | // Conversion from g1::element to g1::affine_element can be done similarly.

56 | }

57 |

58 | } // namespace g1_fft

59 | } // namespace waffle

--------------------------------------------------------------------------------

/cryptography-engineer/src/ec_fft/ec_fft.hpp:

--------------------------------------------------------------------------------

1 | #pragma once

2 | #include

3 | #include

4 | #include

5 |

6 | namespace waffle {

7 | namespace g1_fft {

8 |

9 | using namespace barretenberg;

10 |

11 | /**

12 | * Computes FFT (butterfly-structure) on EC (elliptic curve) points `g1_elements`.

13 | *

14 | * @param g1_elements: Given set of curve points on the BN254 curve

15 | * @param n: Number of curve points (assumed to be a power of two)

16 | * @param root_table: Contains roots of unity required in each round of the butterfly structure

17 | * @details:

18 | * root_table[0]: [1 ω₁]

19 | * root_table[1]: [1 ω₂ (ω₂)² (ω₂)³]

20 | * .

21 | * .

22 | * .

23 | * root_table[m]: [1 ωᵣ (ωᵣ)² (ωᵣ)³ ... (ωᵣ)ᴿ⁻² (ωᵣ)ᴿ⁻¹]

24 | *

25 | * where r = log2(n) - 1, R = 2^r and ωᵢ = i-th root of unity.

26 | */

27 | void ec_fft_inner(g1::element* g1_elements, const size_t n, const std::vector<fr*>& root_table);

28 |

29 | /**

30 | * Computes EC-FFT of `g1_elements` given the evaluation domain `domain`.

31 | *

32 | * @details:

33 | * The domain contains the following:

34 | * n = domain.size (number of `g1_elements`, assumed to be a power of two)

35 | * ω = domain.root (n-th root of unity)

36 | * 1/ω = domain.root_inverse (multiplicative inverse of ω)

37 | */

38 | void ec_fft(g1::element* g1_elements, const evaluation_domain& domain);

39 |

40 | /**

41 | * Computes inverse EC-FFT of `g1_elements` given the evaluation domain `domain`.

42 | */

43 | void ec_ifft(g1::element* g1_elements, const evaluation_domain& domain);

44 |

45 | /**

46 | * Using `ec_fft`, computes the Lagrange form of the SRS given the monomial form SRS `monomial_srs`.

47 | *

48 | * @param monomial_srs: Monomial SRS of the form: ([1]₁, [x]₁, [x²]₁, [x³]₁, ..., [xⁿ⁻¹]₁)

49 | * @param lagrange_srs: Result must be stored in this, it should be of the form: ([L₀(x)]₁, [L₁(x)]₁, ..., [Lₙ₋₁(x)]₁)

50 | * @param domain: contains the information about n-th roots of unity

51 | */

52 | void convert_srs(g1::affine_element* monomial_srs, g1::affine_element* lagrange_srs, const evaluation_domain& domain);

53 |

54 | } // namespace g1_fft

55 | } // namespace waffle

--------------------------------------------------------------------------------

/cryptography-engineer/src/ec_fft/ec_fft.test.cpp:

--------------------------------------------------------------------------------

1 | #include "ec_fft.hpp"

2 | #include

3 |

4 | #include

5 | #include

6 | #include

7 | #include

8 | #include

9 | #include

10 | #include

11 |

12 | using namespace barretenberg;

13 |

14 | TEST(ec_fft, test_fft_ifft)

15 | {

16 | constexpr size_t n = 256;

17 | std::vector<g1::element> monomial_points;

18 | std::vector<g1::element> lagrange_points;

19 |

20 | for (size_t i = 0; i < n; i++) {

21 | fr multiplicand = fr::random_element();

22 | g1::element monomial_term = g1::one * multiplicand;

23 | monomial_points.push_back(monomial_term);

24 | lagrange_points.push_back(monomial_term);

25 | }

26 |

27 | auto domain = evaluation_domain(n);

28 | domain.compute_lookup_table();

29 |

30 | // Do EC-FFT and then EC-iFFT

31 | waffle::g1_fft::ec_fft(&lagrange_points[0], domain);

32 | waffle::g1_fft::ec_ifft(&lagrange_points[0], domain);

33 |

34 | // Compare the results

35 | for (size_t i = 0; i < n; i++) {

36 | EXPECT_EQ(monomial_points[i].normalize(), lagrange_points[i].normalize());

37 | }

38 | }

39 |

40 | TEST(ec_fft, test_compare_ffts)

41 | {

42 | constexpr size_t n = 256;

43 | std::vector<g1::element> monomial_points;

44 | std::vector<g1::element> lagrange_points;

45 | std::vector<fr> poly_monomial;

46 | std::vector<fr> poly_lagrange;

47 |

48 | for (size_t i = 0; i < n; i++) {

49 | fr multiplicand = fr::random_element();

50 | poly_monomial.push_back(multiplicand);

51 | poly_lagrange.push_back(multiplicand);

52 |

53 | g1::element monomial_term = g1::one * multiplicand;

54 | monomial_points.push_back(monomial_term);

55 | lagrange_points.push_back(monomial_term);

56 | }

57 |

58 | auto domain = evaluation_domain(n);

59 | domain.compute_lookup_table();

60 |

61 | // Do EC FFT

62 | waffle::g1_fft::ec_fft(&lagrange_points[0], domain);

63 |

64 | // Do fr FFT

65 | polynomial_arithmetic::fft(&poly_lagrange[0], domain);

66 |

67 | // Compare the results

68 | for (size_t i = 0; i < n; i++) {

69 | fr scalar = poly_lagrange[i];

70 | g1::element expected = g1::one * scalar;

71 | EXPECT_EQ(expected.normalize(), lagrange_points[i].normalize());

72 | }

73 | }

74 |

75 | TEST(ec_fft, test_compare_iffts)

76 | {

77 | constexpr size_t n = 256;

78 | std::vector<g1::element> monomial_points;

79 | std::vector<g1::element> lagrange_points;

80 | std::vector<fr> poly_monomial;

81 | std::vector<fr> poly_lagrange;

82 |

83 | for (size_t i = 0; i < n; i++) {

84 | fr multiplicand = fr::random_element();

85 | poly_monomial.push_back(multiplicand);

86 | poly_lagrange.push_back(multiplicand);

87 |

88 | g1::element monomial_term = g1::one * multiplicand;

89 | monomial_points.push_back(monomial_term);

90 | lagrange_points.push_back(monomial_term);

91 | }

92 |

93 | auto domain = evaluation_domain(n);

94 | domain.compute_lookup_table();

95 |

96 | // Do EC iFFT

97 | waffle::g1_fft::ec_ifft(&monomial_points[0], domain);

98 |

99 | // Do fr iFFT

100 | polynomial_arithmetic::ifft(&poly_monomial[0], domain);

101 |

102 | // Compare the results

103 | for (size_t i = 0; i < n; i++) {

104 | fr scalar = poly_monomial[i];

105 | g1::element expected = g1::one * scalar;

106 | EXPECT_EQ(expected.normalize(), monomial_points[i].normalize());

107 | }

108 | }

109 |

110 | TEST(ec_fft, test_convert_srs)

111 | {

112 | constexpr size_t n = 512;

113 | std::vector<g1::element> monomial_points;

114 | std::vector<g1::element> lagrange_points;

115 | std::vector<fr> poly_monomial;

116 | std::vector<fr> poly_lagrange;

117 |

118 | const fr x = fr::random_element();

119 | fr multiplicand = 1;

120 | for (size_t i = 0; i < n; i++) {

121 | // Fill the polynomials with random coefficients

122 | fr coefficient = fr::random_element();

123 | poly_monomial.push_back(coefficient);

124 | poly_lagrange.push_back(coefficient);

125 |

126 | // Create a fake srs with secret x

127 | g1::element monomial_term = g1::one * multiplicand;

128 | monomial_points.push_back(monomial_term);

129 | lagrange_points.push_back(monomial_term);

130 | multiplicand *= x;

131 | }

132 |

133 | auto domain = evaluation_domain(n);

134 | domain.compute_lookup_table();

135 |

136 | std::vector<g1::affine_element> lagrange_srs;

137 | std::vector<g1::affine_element> monomial_srs;

138 | lagrange_srs.resize(2 * n);

139 | monomial_srs.resize(2 * n);

140 |

141 | // Copy over the monomial points in monomial_srs

142 | for (size_t i = 0; i < n; i++) {

143 | monomial_srs[i] = g1::affine_element(monomial_points[i]);

144 | }

145 |

146 | // Convert from monomial to lagrange srs

147 | waffle::g1_fft::convert_srs(&monomial_srs[0], &lagrange_srs[0], domain);

148 | polynomial_arithmetic::fft(&poly_lagrange[0], domain);

149 |

150 | scalar_multiplication::pippenger_runtime_state state(n);

151 | scalar_multiplication::generate_pippenger_point_table(&monomial_srs[0], &monomial_srs[0], n);

152 | scalar_multiplication::generate_pippenger_point_table(&lagrange_srs[0], &lagrange_srs[0], n);

153 |

154 | // Check ==

155 | g1::element expected = scalar_multiplication::pippenger(&poly_monomial[0], &monomial_srs[0], n, state);

156 | g1::element result = scalar_multiplication::pippenger(&poly_lagrange[0], &lagrange_srs[0], n, state);

157 | expected = expected.normalize();

158 | result = result.normalize();

159 |

160 | EXPECT_EQ(result, expected);

161 | }

--------------------------------------------------------------------------------

/cryptography-engineer/src/indexed_merkle_tree/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | # create a barretenberg_module for merkle tree

2 | barretenberg_module(indexed_merkle_tree)

--------------------------------------------------------------------------------

/cryptography-engineer/src/indexed_merkle_tree/README.md:

--------------------------------------------------------------------------------

1 | ## Indexed Merkle Tree Test

2 |

3 | Hi there! Welcome to this Indexed Merkle tree test that you are about to take. We will guide you through the concept of Indexed Merkle trees before you begin crushing this exercise.

4 |

5 | #### Pre-requisites

6 |

7 | We assume that you are familiar with the concept of Merkle trees. If not, please read [this](https://decentralizedthoughts.github.io/2020-12-22-what-is-a-merkle-tree/) excellent blog post.

8 |

9 | #### Indexed Merkle Tree

10 |

11 | An indexed Merkle tree is a variant of the basic Merkle tree where the leaf structure changes slightly. Each leaf in the indexed Merkle tree not only stores some value $v \in \mathbb{F}$ but also points to the leaf with the next higher value:

12 |

13 | $$

14 | \textsf{leaf} = \{v, i_{\textsf{next}}, v_{\textsf{next}}\}.

15 | $$

16 |

17 | where $i_{\textsf{next}}$ is the index of the leaf with the next higher value $v_{\textsf{next}} > v$. By design, we assume that there are no leaves in the tree with a value in the open interval $(v, v_{\textsf{next}})$. Let us look at a toy example of the state transitions in an indexed Merkle tree of depth 3.

18 |

19 | [Note: Please check out [this](https://hackmd.io/@suyash67/ByXqvJI12) hackmd for the images, they're missing from the dependencies.]

20 |

21 | 1. Initial state

22 |

23 | 2. Add a new value $v=30$

24 |

25 | 3. Add a new value $v=10$

26 |

27 | 4. Add a new value $v=20$

28 |

29 | 5. Add a new value $v=50$

30 |

31 |
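
The state transitions in the toy example can be reproduced on the leaf pre-images alone, ignoring hashing. The following is a minimal sketch under assumptions: values are plain `uint64_t` instead of field elements, `ToyLeaf` and `insert_value` are hypothetical names (the real `leaf` struct lives in `leaf.hpp`), and the predecessor is found with a linear scan for simplicity.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the leaf pre-image {v, i_next, v_next}.
struct ToyLeaf {
    uint64_t value;
    size_t next_index;
    uint64_t next_value;
};

// Appends a leaf holding `v` and re-links its predecessor.
// Assumes leaves[0] is the initial {0, 0, 0} leaf, v > 0, and v is not present yet.
void insert_value(std::vector<ToyLeaf>& leaves, uint64_t v)
{
    // Predecessor: the leaf with the largest value strictly below v.
    size_t pred = 0;
    for (size_t i = 0; i < leaves.size(); ++i) {
        if (leaves[i].value < v && leaves[i].value > leaves[pred].value) {
            pred = i;
        }
    }
    // The new leaf inherits the predecessor's old "next" pointer ...
    const ToyLeaf fresh{ v, leaves[pred].next_index, leaves[pred].next_value };
    // ... and the predecessor now points at the new leaf.
    leaves[pred].next_index = leaves.size();
    leaves[pred].next_value = v;
    leaves.push_back(fresh);
}
```

Starting from a single `{0, 0, 0}` leaf and inserting 30, 10, 20 and 50 in that order yields the leaves `{0, 2, 10}`, `{30, 4, 50}`, `{10, 3, 20}`, `{20, 1, 30}` and `{50, 0, 0}`, which matches state 5 of the toy example as tabulated in `indexed_merkle_tree.hpp`. In the actual exercise, both the new leaf and the updated predecessor leaf also have to be re-hashed into the tree.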

32 | #### Exercise

33 |

34 | In this exercise, you will implement the indexed Merkle tree as a class called `IndexedMerkleTree`. The class definition and the boilerplate code are given in the files `indexed_merkle_tree.*pp`. The aim of this exercise is to fill in the pre-defined functions and get the two tests passing in `indexed_merkle_tree.test.cpp`. Note that the structure of the `leaf` is already implemented in `leaf.hpp` for you.

35 |

36 | To compile this module and run the tests, do:

37 |

38 | ```bash

39 | $ cd interview-tests/cryptography-engineer

40 | $ ./bootstrap.sh

41 | $ cd build

42 | $ make indexed_merkle_tree_tests # This compiles the module

43 | $ ./bin/indexed_merkle_tree_tests # This runs the tests

44 | ```

45 |

46 | In case of any questions or suggestions related to this exercise, feel free to reach out to [suyash@aztecprotocol.com](mailto:suyash@aztecprotocol.com) or [cody@aztecprotocol.com](mailto:cody@aztecprotocol.com).

47 |
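
For orientation, the flat `hashes_` layout documented in `indexed_merkle_tree.hpp` (layer 0 at offset 0, each following layer appended after it, root kept separately) can be sketched as below. This is only an illustration under assumptions: `toy_hash` is a made-up non-cryptographic mixer standing in for the real pairwise hash, hashes are `uint64_t` rather than `fr`, the empty leaf hash is taken to be 0, and `ToyTree` and `verify_path` are hypothetical names. The path stores one (left, right) pair per layer, as the `.first`/`.second` accesses in the test's `check_hash_path` suggest.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Made-up mixing function; NOT cryptographic, only a stand-in for the real hash.
uint64_t toy_hash(uint64_t a, uint64_t b)
{
    uint64_t h = a * 0x9e3779b97f4a7c15ULL + 0x7f4a7c15ULL;
    h ^= b + 0x9e3779b97f4a7c15ULL + (h << 6) + (h >> 2);
    return h;
}

using ToyPath = std::vector<std::pair<uint64_t, uint64_t>>;

struct ToyTree {
    size_t depth;
    std::vector<uint64_t> hashes; // layers 0..depth-1, concatenated
    uint64_t root;

    explicit ToyTree(size_t d)
        : depth(d)
        , hashes((size_t(2) << d) - 2, 0)
        , root(0)
    {
        // All leaves start equal, so every node within a layer shares one hash.
        uint64_t cur = 0;
        size_t offset = 0;
        for (size_t layer = 0; layer < depth; ++layer) {
            const size_t layer_size = size_t(1) << (depth - layer);
            for (size_t j = 0; j < layer_size; ++j) hashes[offset + j] = cur;
            cur = toy_hash(cur, cur);
            offset += layer_size;
        }
        root = cur;
    }

    // Writes a leaf hash at `index` and recomputes the nodes up to the root.
    uint64_t update(size_t index, uint64_t leaf_hash)
    {
        size_t offset = 0;
        size_t layer_size = size_t(1) << depth;
        uint64_t cur = leaf_hash;
        for (size_t layer = 0; layer < depth; ++layer) {
            hashes[offset + index] = cur;
            const uint64_t sibling = hashes[offset + (index ^ 1)];
            cur = (index & 1) ? toy_hash(sibling, cur) : toy_hash(cur, sibling);
            offset += layer_size;
            layer_size >>= 1;
            index >>= 1;
        }
        root = cur;
        return root;
    }

    // Collects the (left, right) sibling pair of every layer for `index`.
    ToyPath hash_path(size_t index) const
    {
        ToyPath path;
        size_t offset = 0;
        size_t layer_size = size_t(1) << depth;
        for (size_t layer = 0; layer < depth; ++layer) {
            const size_t left = index & ~size_t(1);
            path.push_back({ hashes[offset + left], hashes[offset + left + 1] });
            offset += layer_size;
            layer_size >>= 1;
            index >>= 1;
        }
        return path;
    }
};

// Recomputes the root from a leaf hash and its path.
bool verify_path(uint64_t root, const ToyPath& path, uint64_t leaf_hash, size_t index)
{
    uint64_t cur = leaf_hash;
    for (size_t i = 0; i < path.size(); ++i) {
        cur = (index & 1) ? toy_hash(path[i].first, cur) : toy_hash(cur, path[i].second);
        index >>= 1;
    }
    return cur == root;
}
```

For a tree of depth 3, `hashes` has 14 entries, and after `update(5, h)` every leaf's hash path still verifies against the new root.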

--------------------------------------------------------------------------------

/cryptography-engineer/src/indexed_merkle_tree/indexed_merkle_tree.cpp:

--------------------------------------------------------------------------------

1 | #include "indexed_merkle_tree.hpp"

2 | #include

3 |

4 | namespace plonk {

5 | namespace stdlib {

6 | namespace indexed_merkle_tree {

7 |

8 | /**

9 | * Initialise an indexed merkle tree state with all the leaf values: H({0, 0, 0}).

10 | * Note that the leaf pre-image vector `leaves_` must be filled with {0, 0, 0} only at index 0.

11 | */

12 | IndexedMerkleTree::IndexedMerkleTree(size_t depth)

13 | : depth_(depth)

14 | {

15 | ASSERT(depth_ >= 1 && depth <= 32);

16 | total_size_ = 1UL << depth_;

17 | hashes_.resize(total_size_ * 2 - 2);

18 |

19 | // Exercise: Build the initial state of the entire tree.

20 | }

21 |

22 | /**

23 | * Fetches a hash-path from a given index in the tree.

24 | * Note that the size of the fr_hash_path vector should be equal to the depth of the tree.

25 | */

26 | fr_hash_path IndexedMerkleTree::get_hash_path(size_t)

27 | {

28 | // Exercise: fill the hash path for a given index.

29 | fr_hash_path path(depth_);

30 | return path;

31 | }

32 |

33 | /**

34 | * Update the node values (i.e. `hashes_`) given the leaf hash `value` and its index `index`.

35 | * Note that indexing in the tree starts from 0.

36 | * This function should return the updated root of the tree.

37 | */

38 | fr IndexedMerkleTree::update_element_internal(size_t, fr const&)

39 | {

40 | // Exercise: insert the leaf hash `value` at `index`.

41 | return 0;

42 | }

43 |

44 | /**

45 | * Insert a new `value` in a new leaf in the `leaves_` vector in the form: {value, nextIdx, nextVal}

46 | * You will need to compute `nextIdx, nextVal` according to the way indexed merkle trees work.

47 | * Further, you will need to update one old leaf pre-image on inserting a new leaf.

48 | * Lastly, insert the new leaf hash in the tree as well as update the existing leaf hash of the old leaf.

49 | */

50 | fr IndexedMerkleTree::update_element(fr const&)

51 | {

52 | // Exercise: add a new leaf with value `value` to the tree.

53 | return 0;

54 | }

55 |

56 | } // namespace indexed_merkle_tree

57 | } // namespace stdlib

58 | } // namespace plonk

--------------------------------------------------------------------------------

/cryptography-engineer/src/indexed_merkle_tree/indexed_merkle_tree.hpp:

--------------------------------------------------------------------------------

1 | #pragma once

2 | #include

3 | #include "leaf.hpp"

4 |

5 | namespace plonk {

6 | namespace stdlib {

7 | namespace indexed_merkle_tree {

8 |

9 | using namespace barretenberg;

10 | using namespace plonk::stdlib::merkle_tree;

11 |

12 | /**

13 | * An IndexedMerkleTree is structured just like a usual merkle tree:

14 | *

15 | * hashes_

16 | * +------------------------------------------------------------------------------+

17 | * | 0 -> h_{0,0} h_{0,1} h_{0,2} h_{0,3} h_{0,4} h_{0,5} h_{0,6} h_{0,7} |

18 | * i | |

19 | * n | 8 -> h_{1,0} h_{1,1} h_{1,2} h_{1,3} |

20 | * d | |

21 | * e | 12 -> h_{2,0} h_{2,1} |

22 | * x | |

23 | * | 14 -> h_{3,0} |

24 | * +------------------------------------------------------------------------------+

25 | *

26 | * Here, depth_ = 3 and {h_{0,j}}_{j=0..7} are leaf values.

27 | * Also, root_ = h_{3,0}, total_size_ = 8 and hashes_ holds (2 * 8 - 2) = 14 entries.

28 | * Lastly, h_{i,j} = hash( h_{i-1,2j}, h_{i-1,2j+1} ) where i >= 1.

29 | *

30 | * 1. Initial state:

31 | *

32 | * #

33 | *

34 | * # #

35 | *

36 | * # # # #

37 | *

38 | * # # # # # # # #

39 | *

40 | * index 0 1 2 3 4 5 6 7

41 | *

42 | * val 0 0 0 0 0 0 0 0

43 | * nextIdx 0 0 0 0 0 0 0 0

44 | * nextVal 0 0 0 0 0 0 0 0

45 | *

46 | * 2. Add new leaf with value 30

47 | *

48 | * val 0 30 0 0 0 0 0 0

49 | * nextIdx 1 0 0 0 0 0 0 0

50 | * nextVal 30 0 0 0 0 0 0 0

51 | *

52 | * 3. Add new leaf with value 10

53 | *

54 | * val 0 30 10 0 0 0 0 0

55 | * nextIdx 2 0 1 0 0 0 0 0

56 | * nextVal 10 0 30 0 0 0 0 0

57 | *

58 | * 4. Add new leaf with value 20

59 | *

60 | * val 0 30 10 20 0 0 0 0

61 | * nextIdx 2 0 3 1 0 0 0 0

62 | * nextVal 10 0 20 30 0 0 0 0

63 | *

64 | * 5. Add new leaf with value 50

65 | *

66 | * val 0 30 10 20 50 0 0 0

67 | * nextIdx 2 4 3 1 0 0 0 0

68 | * nextVal 10 50 20 30 0 0 0 0

69 | */

70 | class IndexedMerkleTree {

71 | public:

72 | IndexedMerkleTree(size_t depth);

73 |

74 | fr_hash_path get_hash_path(size_t index);

75 |

76 | fr update_element_internal(size_t index, fr const& value);

77 |

78 | fr update_element(fr const& value);

79 |

80 | fr root() const { return root_; }

81 |

82 | const std::vector<barretenberg::fr>& get_hashes() { return hashes_; }

83 | const std::vector<leaf>& get_leaves() { return leaves_; }

84 |

85 | private:

86 | // The depth or height of the tree

87 | size_t depth_;

88 |

89 | // The total number of leaves in the tree

90 | size_t total_size_;

91 |

92 | // The root of the merkle tree

93 | barretenberg::fr root_;

94 |

95 | // Vector of pre-images of leaf values of the form {val, nextIdx, nextVal}

96 | // Size: grows by one per inserted value, up to total_size_

97 | std::vector<leaf> leaves_;

98 |

99 | // Vector that stores all the leaf hashes as well as intermediate node values

100 | // Size: total_size_ + (total_size_ / 2) + (total_size_ / 4) + ... + 2 = 2 * total_size_ - 2

101 | std::vector<barretenberg::fr> hashes_;

102 | };

103 |

104 | } // namespace indexed_merkle_tree

105 | } // namespace stdlib

106 | } // namespace plonk
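
The insertion walk-through in the header comment (steps 1 to 5) can be read as a sorted linked list stored in an append-only vector. The following is a minimal sketch of that bookkeeping only, not the repository's `update_element`: `SketchLeaf`, `find_low_leaf` and `insert_value` are hypothetical names, plain integers stand in for `fr` field elements, the low leaf is found by a linear scan, and no hashing or hash-path update is performed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the repository's `leaf` type: plain integers
// replace field elements, and nothing is hashed.
struct SketchLeaf {
    uint64_t val;
    size_t next_idx;
    uint64_t next_val;
};

// Returns the index of the "low leaf": the leaf with the largest val <= value.
// Index 0 is assumed to hold val = 0, so a low leaf always exists.
size_t find_low_leaf(const std::vector<SketchLeaf>& leaves, uint64_t value)
{
    assert(!leaves.empty());
    size_t low = 0;
    for (size_t i = 1; i < leaves.size(); ++i) {
        if (leaves[i].val <= value && leaves[i].val > leaves[low].val) {
            low = i;
        }
    }
    return low;
}

// Appends `value` and re-links the low leaf to it. Returns false, leaving the
// leaves untouched, if the value is already present.
bool insert_value(std::vector<SketchLeaf>& leaves, uint64_t value)
{
    const size_t low = find_low_leaf(leaves, value);
    if (leaves[low].val == value) {
        return false;
    }
    const size_t new_idx = leaves.size();
    // The new leaf inherits the low leaf's old successor...
    leaves.push_back({ value, leaves[low].next_idx, leaves[low].next_val });
    // ...and the low leaf now points at the new leaf.
    leaves[low].next_idx = new_idx;
    leaves[low].next_val = value;
    return true;
}
```

Starting from a single zero leaf `{0, 0, 0}` and inserting 30, 10, 20 and 50 in that order reproduces the `val` / `nextIdx` / `nextVal` rows shown in the header comment; inserting 20 a second time returns `false` and changes nothing.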

--------------------------------------------------------------------------------

/cryptography-engineer/src/indexed_merkle_tree/indexed_merkle_tree.test.cpp:

--------------------------------------------------------------------------------

1 | #include "indexed_merkle_tree.hpp"

2 | #include

3 | #include

4 |

5 | using namespace barretenberg;

6 | using namespace plonk::stdlib::indexed_merkle_tree;

7 |

8 | void print_tree(const size_t depth, std::vector<fr> const& hashes, std::string const& msg)

9 | {

10 | info("\n", msg);

11 | size_t offset = 0;

12 | for (size_t i = 0; i < depth; i++) {

13 | info("i = ", i);

14 | size_t layer_size = (1UL << (depth - i));

15 | for (size_t j = 0; j < layer_size; j++) {

16 | info("j = ", j, ": ", hashes[offset + j]);

17 | }

18 | offset += layer_size;

19 | }

20 | }

21 |

22 | bool check_hash_path(const fr& root, const fr_hash_path& path, const leaf& leaf_value, const size_t idx)

23 | {

24 | auto current = leaf_value.hash();

25 | size_t depth_ = path.size();

26 | size_t index = idx;

27 | for (size_t i = 0; i < depth_; ++i) {

28 | fr left = (index & 1) ? path[i].first : current;

29 | fr right = (index & 1) ? current : path[i].second;

30 | current = compress_pair(left, right);

31 | index >>= 1;

32 | }

33 | return current == root;

34 | }

35 |

36 | TEST(stdlib_indexed_merkle_tree, test_toy_example)

37 | {

38 | // Create a depth-3 indexed merkle tree

39 | constexpr size_t depth = 3;

40 | IndexedMerkleTree tree(depth);

41 |

42 | /**

43 | * Initial state:

44 | *

45 | * index 0 1 2 3 4 5 6 7

46 | * ---------------------------------------------------------------------

47 | * val 0 0 0 0 0 0 0 0

48 | * nextIdx 0 0 0 0 0 0 0 0

49 | * nextVal 0 0 0 0 0 0 0 0

50 | */

51 | leaf zero_leaf = { 0, 0, 0 };

52 | EXPECT_EQ(tree.get_leaves().size(), 1);

53 | EXPECT_EQ(tree.get_leaves()[0], zero_leaf);

54 |

55 | /**

56 | * Add new value 30:

57 | *

58 | * index 0 1 2 3 4 5 6 7

59 | * ---------------------------------------------------------------------

60 | * val 0 30 0 0 0 0 0 0

61 | * nextIdx 1 0 0 0 0 0 0 0

62 | * nextVal 30 0 0 0 0 0 0 0

63 | */

64 | tree.update_element(30);

65 | EXPECT_EQ(tree.get_leaves().size(), 2);

66 | EXPECT_EQ(tree.get_leaves()[0].hash(), leaf({ 0, 1, 30 }).hash());

67 | EXPECT_EQ(tree.get_leaves()[1].hash(), leaf({ 30, 0, 0 }).hash());

68 |

69 | /**

70 | * Add new value 10:

71 | *

72 | * index 0 1 2 3 4 5 6 7

73 | * ---------------------------------------------------------------------

74 | * val 0 30 10 0 0 0 0 0

75 | * nextIdx 2 0 1 0 0 0 0 0

76 | * nextVal 10 0 30 0 0 0 0 0

77 | */

78 | tree.update_element(10);

79 | EXPECT_EQ(tree.get_leaves().size(), 3);

80 | EXPECT_EQ(tree.get_leaves()[0].hash(), leaf({ 0, 2, 10 }).hash());

81 | EXPECT_EQ(tree.get_leaves()[1].hash(), leaf({ 30, 0, 0 }).hash());

82 | EXPECT_EQ(tree.get_leaves()[2].hash(), leaf({ 10, 1, 30 }).hash());

83 |

84 | /**

85 | * Add new value 20:

86 | *

87 | * index 0 1 2 3 4 5 6 7

88 | * ---------------------------------------------------------------------

89 | * val 0 30 10 20 0 0 0 0

90 | * nextIdx 2 0 3 1 0 0 0 0

91 | * nextVal 10 0 20 30 0 0 0 0

92 | */

93 | tree.update_element(20);

94 | EXPECT_EQ(tree.get_leaves().size(), 4);

95 | EXPECT_EQ(tree.get_leaves()[0].hash(), leaf({ 0, 2, 10 }).hash());

96 | EXPECT_EQ(tree.get_leaves()[1].hash(), leaf({ 30, 0, 0 }).hash());

97 | EXPECT_EQ(tree.get_leaves()[2].hash(), leaf({ 10, 3, 20 }).hash());

98 | EXPECT_EQ(tree.get_leaves()[3].hash(), leaf({ 20, 1, 30 }).hash());

99 |

100 | // Adding the same value must not affect anything

101 | tree.update_element(20);

102 | EXPECT_EQ(tree.get_leaves().size(), 4);

103 | EXPECT_EQ(tree.get_leaves()[0].hash(), leaf({ 0, 2, 10 }).hash());

104 | EXPECT_EQ(tree.get_leaves()[1].hash(), leaf({ 30, 0, 0 }).hash());

105 | EXPECT_EQ(tree.get_leaves()[2].hash(), leaf({ 10, 3, 20 }).hash());

106 | EXPECT_EQ(tree.get_leaves()[3].hash(), leaf({ 20, 1, 30 }).hash());

107 |

108 | /**

109 | * Add new value 50:

110 | *

111 | * index 0 1 2 3 4 5 6 7

112 | * ---------------------------------------------------------------------

113 | * val 0 30 10 20 50 0 0 0

114 | * nextIdx 2 4 3 1 0 0 0 0

115 | * nextVal 10 50 20 30 0 0 0 0

116 | */

117 | tree.update_element(50);

118 | EXPECT_EQ(tree.get_leaves().size(), 5);

119 | EXPECT_EQ(tree.get_leaves()[0].hash(), leaf({ 0, 2, 10 }).hash());

120 | EXPECT_EQ(tree.get_leaves()[1].hash(), leaf({ 30, 4, 50 }).hash());

121 | EXPECT_EQ(tree.get_leaves()[2].hash(), leaf({ 10, 3, 20 }).hash());

122 | EXPECT_EQ(tree.get_leaves()[3].hash(), leaf({ 20, 1, 30 }).hash());

123 | EXPECT_EQ(tree.get_leaves()[4].hash(), leaf({ 50, 0, 0 }).hash());

124 |

125 | // Manually compute the node values

126 | auto e000 = tree.get_leaves()[0].hash();

127 | auto e001 = tree.get_leaves()[1].hash();

128 | auto e010 = tree.get_leaves()[2].hash();

129 | auto e011 = tree.get_leaves()[3].hash();