├── .DS_Store

├── .gitignore

├── LICENSE

├── README-CN.md

├── README.md

├── audio_denoising

├── .DS_Store

└── dtln

│ ├── .DS_Store

│ ├── README.md

│ ├── dtln.cpp

│ └── models

│ ├── .DS_Store

│ ├── convert1.py

│ ├── convert2.py

│ ├── dtln_1.param

│ └── dtln_2.param

├── contribute.md

├── diffusion

└── stablediffuson

│ └── README.md

├── docs

├── .DS_Store

├── Cmakelists.txt

└── images

│ └── logo.png

├── face_dection

├── .DS_Store

├── Anime_Face

│ ├── .DS_Store

│ ├── README.md

│ ├── anime_face.cpp

│ └── models

│ │ ├── anime-face_hrnetv2.param

│ │ └── convert.py

├── pfld

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── pfld-sim.param

│ └── pfld.cpp

└── ultraface

│ ├── .DS_Store

│ ├── README.md

│ ├── UltraFace.cpp

│ ├── UltraFace.h

│ ├── main.cpp

│ └── models

│ ├── .DS_Store

│ ├── convert.py

│ ├── version-RFB-320.param

│ └── version-RFB-640.param

├── face_restoration

├── .DS_Store

├── codeformer

│ └── README.md

└── gfpgan

│ ├── .DS_Store

│ ├── README.md

│ ├── gfpgan.cpp

│ ├── gfpgan.h

│ ├── main.cpp

│ └── models

│ └── downlaod.txt

├── face_swap

└── roop

│ ├── README.md

│ └── inswapper_128.param

├── image_classification

├── .DS_Store

├── cait

│ ├── .DS_Store

│ ├── Cait.cpp

│ ├── README.md

│ └── models

│ │ ├── cait_xxs36_384.param

│ │ ├── convert.py

│ │ └── download.txt

├── denseNet

│ ├── .DS_Store

│ ├── README.md

│ ├── densenet.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── densenet121.param

├── efficientnet

│ ├── .DS_Store

│ ├── README.md

│ ├── efficientnet.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── efficientnet_b0.param

├── mobilenet_v2

│ ├── .DS_Store

│ ├── README.md

│ ├── mobilenet_v2.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── mobilenet_v2.param

├── mobilenet_v3

│ ├── .DS_Store

│ ├── README.md

│ ├── mobilenet_v3.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── mobilenet_v3.param

├── res2net

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── convert.py

│ │ └── res2net101_26w_4s.param

│ └── res2net.cpp

├── res2next50

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── convert.py

│ │ └── res2next50.param

│ └── res2next50.cpp

├── resnet18

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ ├── download.txt

│ │ └── resnet18.param

│ └── resnet18.cpp

├── shufflenetv2

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── shufflenet_v2.param

│ └── shufflenetv2.cpp

└── vgg19

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ ├── convert.py

│ ├── download.txt

│ └── vgg16.param

│ └── vgg16.cpp

├── image_inpainting

├── .DS_Store

├── deoldify

│ ├── .DS_Store

│ ├── README.md

│ ├── deoldify.cpp

│ ├── input.png

│ ├── models

│ │ ├── convert.py

│ │ ├── deoldify.256.param

│ │ └── download.txt

│ └── output.jpg

└── mat

│ ├── README.md

│ └── mat.cpp

├── image_matting

├── .DS_Store

├── RVM

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── convert.py

│ │ └── download.txt

│ └── rvm.cpp

├── deeplabv3

│ ├── .DS_Store

│ ├── README.md

│ ├── deeplabv3.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ ├── deeplabv3_mobilenet_v3_large.param

│ │ ├── deeplabv3_resnet101.param

│ │ ├── deeplabv3_resnet50.param

│ │ └── download.txt

├── vitae

│ ├── .DS_Store

│ ├── README.md

│ └── models

│ │ ├── P3M-Net_ViTAE-S_trained_on_P3M-10k.param

│ │ └── convert.py

└── yolov7_mask

│ ├── README.md

│ └── yolov7_mask.cpp

├── nlp

├── .DS_Store

└── gpt2-chinese

│ ├── README.md

│ └── gpt2.cpp

├── object_dection

├── .DS_Store

├── fastestdet

│ ├── README.md

│ ├── fastestdet.cpp

│ ├── fastestdet.png

│ └── pt2ncnn.sh

├── hybridnets

│ ├── .DS_Store

│ ├── README.md

│ ├── hybridnets.cpp

│ └── models

│ │ └── convert.py

├── nanodet

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── nanodet416.param

│ └── nanodet.cpp

├── yolo-fastestv2

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ └── yolo-fastestv2.param

│ └── yolo-fastestv2.cpp

├── yolop

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ └── convert.py

│ └── yolop.cpp

├── yolov5

│ ├── .DS_Store

│ ├── README.md

│ ├── color.cpp

│ ├── models

│ │ ├── .DS_Store

│ │ ├── convert.sh

│ │ ├── yolov5n-7.ncnn.param

│ │ └── yolov5s.ncnn.param

│ └── yolov5.cpp

├── yolov6

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ │ └── convert.py

│ └── yolov6.cpp

├── yolov7

│ ├── README.md

│ ├── pt2ncnn.sh

│ └── yolov7.cpp

└── yolox

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ ├── .DS_Store

│ ├── convert.py

│ └── yolox_nano.param

│ └── yolox.cpp

├── style_transfer

├── .DS_Store

├── anime2real

│ ├── .DS_Store

│ ├── README.md

│ ├── anime2real.cpp

│ └── models

│ │ ├── convert.py

│ │ ├── netG_A2B.param

│ │ ├── netG_B2A.param

│ │ └── out.jpg

├── animeganv2

│ ├── .DS_Store

│ ├── README.md

│ ├── animeganv2.cpp

│ └── models

│ │ ├── .DS_Store

│ │ ├── convert.py

│ │ ├── face_paint_512_v2.param

│ │ └── paprika.param

├── animeganv3

│ ├── .DS_Store

│ ├── README.md

│ ├── animeganv3.cpp

│ ├── input.jpg

│ └── models

│ │ └── .DS_Store

└── styletransfer

│ ├── .DS_Store

│ ├── README.md

│ ├── models

│ ├── .DS_Store

│ ├── candy9.param

│ ├── convert.py

│ ├── mosaic-9.param

│ ├── pointilism-9.param

│ ├── rain-princess-9.param

│ └── udnie-9.param

│ └── styletransfer.cpp

├── tts

├── sherpa

│ └── README.md

└── vits

│ └── README.md

└── video

├── .DS_Store

├── ifrnet

├── 0.png

├── 1.png

├── README.md

└── main.cpp

├── nerf

└── README.md

└── rife

├── .DS_Store

├── README.md

├── models

├── .DS_Store

└── flownet.param

├── rife.cpp

├── rife_ops.h

├── warp.comp.hex.h

├── warp.cpp

├── warp_pack4.comp.hex.h

└── warp_pack8.comp.hex.h

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/.DS_Store

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # ignore vscode

2 | .vscode

3 | # ignore large file

4 | deoldify.256.bin

5 | deeplabv3_resnet50.bin

6 | res2net101_26w_4s.bin

7 | res2next50.bin

8 | vgg16.bin

9 | cait_xxs36_384.bin

10 | anime-face_hrnetv2.bin

11 | netG_A2B.bin

12 | netG_B2A.bin

13 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2022 佰阅

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README-CN.md:

--------------------------------------------------------------------------------

1 | ## ncnn models

2 |

3 | A collection of pre-trained AI models, with notes on how each was converted and deployed.

4 |

5 |

6 |

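7 | ### About

8 |

9 | With Vulkan, the ncnn framework can be deployed on every platform. We take pre-trained models from PyTorch, TensorFlow, PaddlePaddle and other frameworks, convert them to the generic ncnn format, and deploy them on Windows, macOS, Linux, Android, iOS, WebAssembly and uni-app. Model conversion is not a one-click process, though, and often needs manual work. To push ncnn into more applications we created this repository; conversion cases are welcome whether they succeeded or failed.

10 |

11 | ### How can I contribute?

12 |

13 | Fork the repository, then submit your model in the following layout, preferably as a minimal C++20 demo without embedded concurrency.

14 |

15 | yolov5 # project name

16 | - models/xx.bin or xx.param # model

17 | - input.png # input

18 | - out.png # output

19 | - README.md # inference notes, conversion steps, related examples

20 | - convert.py # code that reproduces the conversion from PyTorch or another framework

21 |

22 | Inspired by [ailia](https://github.com/axinc-ai/ailia-models). Because this repository mainly collects failed cases, failed cases are listed first and successful cases are sorted by category.

23 |

24 | ### Notable contributors

25 |

26 | - [nihui](https://github.com/nihui) author of ncnn

27 | - [飞哥](https://github.com/feigechuanshu) ncnn on Android series

28 | - [EdVince](https://github.com/EdVince) ncnn natural-language series

29 | - [baiyue](https://github.com/Baiyuetribe/paper2gui) ncnn desktop GUI series on PC

30 | - [670***@qq.com](https://ext.dcloud.net.cn/plugin?id=5243) ncnn in uni-app

31 | - [nihui](https://github.com/nihui/ncnn_on_esp32) ncnn on embedded devices

32 | - [nihui](https://github.com/nihui/ncnn-webassembly-yolov5) ncnn on wasm example

33 |

34 | ### Why cross-device matters

35 |

36 | > Maintain the code once, run it on many devices.

37 |

38 | - With Vulkan, no heavyweight CUDA driver is needed

39 | - With wasm, models can be deployed on any device

40 | - With uni-app, multi-platform deployment is possible without Java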

--------------------------------------------------------------------------------

/audio_denoising/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/audio_denoising/.DS_Store

--------------------------------------------------------------------------------

/audio_denoising/dtln/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/audio_denoising/dtln/.DS_Store

--------------------------------------------------------------------------------

/audio_denoising/dtln/README.md:

--------------------------------------------------------------------------------

1 | # DTLN

2 |

3 | ## Compare input audio with output audio

4 |

5 |

6 |

7 |

8 | ## Convert DTLN from ONNX to NCNN

9 |

10 | - Part 1

11 |

12 | ```python

13 | import os

14 | import torch

15 | from DTLN_model_ncnn_compat import Pytorch_DTLN_P1_stateful, Pytorch_DTLN_P2_stateful

16 |

17 | if __name__ == '__main__':

18 |     import argparse

19 |

20 |     parser = argparse.ArgumentParser()

21 |     parser.add_argument("--model_path",

22 |                         type=str,

23 |                         help="model dir",

24 |                         default=os.path.dirname(__file__) + "/pretrained/model.pth")

25 |     parser.add_argument("--model_1",

26 |                         type=str,

27 |                         help="model part 1 save path",

28 |                         default=os.path.dirname(__file__) + "/pretrained/model_p1.pt")

29 |

30 |     parser.add_argument("--model_2",

31 |                         type=str,

32 |                         help="model part 2 save path",

33 |                         default=os.path.dirname(__file__) + "/pretrained/model_p2.pt")

34 |

35 |     args = parser.parse_args()

36 |

37 |     model1 = Pytorch_DTLN_P1_stateful()

38 |     print('==> load model from: ', args.model_path)

39 |     model1.load_state_dict(torch.load(args.model_path), strict=False)

40 |     model1.eval()

41 |     model2 = Pytorch_DTLN_P2_stateful()

42 |     model2.load_state_dict(torch.load(args.model_path), strict=False)

43 |     model2.eval()

44 |

45 |     block_len = 512

46 |     hidden_size = 128

47 |     # in_state1 = torch.zeros(2, 1, hidden_size, 2)

48 |     # in_state2 = torch.zeros(2, 1, hidden_size, 2)

49 |     in_state1 = torch.zeros(4, 1, 1, 128)

50 |     in_state2 = torch.zeros(4, 1, 1, 128)

51 |

52 |     mag = torch.zeros(1, 1, (block_len // 2 + 1))

53 |     phase = torch.zeros(1, 1, (block_len // 2 + 1))

54 |     y1 = torch.zeros(1, block_len, 1)

55 |

56 |     # ncnn does not support Gather

57 |     input_names = ["mag", "h1_in", "c1_in", "h2_in", "c2_in"]

58 |     output_names = ["y1", "out_state1"]

59 |

60 |     print("==> export to: ", args.model_1)

61 |     # torch.onnx.export(model1,

62 |     #                   (mag, in_state1[0], in_state1[1], in_state1[2], in_state1[3]),

63 |     #                   args.model_1,

64 |     #                   input_names=input_names, output_names=output_names)

65 |     # torch.save(model1.state_dict(), args.model_1)

66 |     # 1. pt --> torchscript

67 |     traced_script_module = torch.jit.trace(model1, (mag, in_state1[0], in_state1[1], in_state1[2], in_state1[3]))

68 |     traced_script_module.save("ts1.pt")

69 |     # 2. ts --> pnnx --> ncnn

70 |     os.system("pnnx ts1.pt inputshape=[1,1,257],[1,1,128],[1,1,128],[1,1,128],[1,1,128]")

71 | ```
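The `inputshape=` argument passed to pnnx is a comma-separated list of bracketed shapes, which is easy to mistype by hand. A minimal sketch of building it from the shapes of the tensors used for tracing; `pnnx_inputshape` is a hypothetical helper written for this note, not part of pnnx.

```python
def pnnx_inputshape(shapes):
    """Format a list of tensor shapes as a pnnx `inputshape=` argument."""
    parts = ["[" + ",".join(str(d) for d in shape) + "]" for shape in shapes]
    return "inputshape=" + ",".join(parts)


# Shapes of (mag, h1_in, c1_in, h2_in, c2_in) used when tracing part 1.
block_len = 512
hidden_size = 128
shapes = [(1, 1, block_len // 2 + 1)] + [(1, 1, hidden_size)] * 4
arg = pnnx_inputshape(shapes)
print(arg)
```

The resulting string can be appended to the `pnnx ts1.pt ...` command in place of a hand-written shape list.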

72 |

73 | - Part 2

74 |

75 | ```python

76 | import os

77 |

78 | # 2. onnx --> onnxsim

79 | os.system("python -m onnxsim model_p2.onnx sim.onnx")

80 |

81 | # 3. onnx --> ncnn

82 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

83 |

84 | # 4. ncnn --> optimize --> ncnn

85 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32, 1 = fp16

86 |

87 | # Converted from the ONNX model shipped with the original project; the pnnx route fails there because pnnx does not support the aten::square operator

88 |

89 | ```
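`os.system` ignores failures, so a broken `onnxsim` step would still be followed by `onnx2ncnn`. A minimal sketch of the same three steps with `subprocess.run(..., check=True)`, which stops at the first failing command. The function names are hypothetical; the commands and file names are the ones used above, and the tools are assumed to be on `PATH`.

```python
import subprocess


def build_pipeline(onnx_path, fp16=True):
    """Return the three conversion commands as argument lists."""
    flag = "1" if fp16 else "0"  # ncnnoptimize: 0 = fp32, 1 = fp16
    return [
        ["python", "-m", "onnxsim", onnx_path, "sim.onnx"],
        ["onnx2ncnn", "sim.onnx", "ncnn.param", "ncnn.bin"],
        ["ncnnoptimize", "ncnn.param", "ncnn.bin", "opt.param", "opt.bin", flag],
    ]


def run_pipeline(onnx_path, fp16=True):
    """Run each step in order; raises CalledProcessError on the first failure."""
    for cmd in build_pipeline(onnx_path, fp16):
        subprocess.run(cmd, check=True)


# Only print the commands here; call run_pipeline("model_p2.onnx") to execute them.
commands = build_pipeline("model_p2.onnx")
for cmd in commands:
    print(" ".join(cmd))
```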

90 |

91 | ## Example project

92 |

93 | ## Reference

94 |

95 | - [lhwcv/DTLN_pytorch](https://github.com/lhwcv/DTLN_pytorch)

96 | - [breizhn/DTLN](https://github.com/breizhn/DTLN)

97 |

--------------------------------------------------------------------------------

/audio_denoising/dtln/dtln.cpp:

--------------------------------------------------------------------------------

1 | // The hard part of this project is the audio pre-processing. There is no minimal demo yet; see the original author's deployment code.

2 | // PRs welcome

3 |

4 | // https://github.com/lhwcv/DTLN_pytorch/tree/main/deploy

5 |

--------------------------------------------------------------------------------

/audio_denoising/dtln/models/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/audio_denoising/dtln/models/.DS_Store

--------------------------------------------------------------------------------

/audio_denoising/dtln/models/convert1.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | from DTLN_model_ncnn_compat import Pytorch_DTLN_P1_stateful, Pytorch_DTLN_P2_stateful

4 |

5 | if __name__ == '__main__':

6 |     import argparse

7 |

8 |     parser = argparse.ArgumentParser()

9 |     parser.add_argument("--model_path",

10 |                         type=str,

11 |                         help="model dir",

12 |                         default=os.path.dirname(__file__) + "/pretrained/model.pth")

13 |     parser.add_argument("--model_1",

14 |                         type=str,

15 |                         help="model part 1 save path",

16 |                         default=os.path.dirname(__file__) + "/pretrained/model_p1.pt")

17 |

18 |     parser.add_argument("--model_2",

19 |                         type=str,

20 |                         help="model part 2 save path",

21 |                         default=os.path.dirname(__file__) + "/pretrained/model_p2.pt")

22 |

23 |     args = parser.parse_args()

24 |

25 |     model1 = Pytorch_DTLN_P1_stateful()

26 |     print('==> load model from: ', args.model_path)

27 |     model1.load_state_dict(torch.load(args.model_path), strict=False)

28 |     model1.eval()

29 |     model2 = Pytorch_DTLN_P2_stateful()

30 |     model2.load_state_dict(torch.load(args.model_path), strict=False)

31 |     model2.eval()

32 |

33 |     block_len = 512

34 |     hidden_size = 128

35 |     # in_state1 = torch.zeros(2, 1, hidden_size, 2)

36 |     # in_state2 = torch.zeros(2, 1, hidden_size, 2)

37 |     in_state1 = torch.zeros(4, 1, 1, 128)

38 |     in_state2 = torch.zeros(4, 1, 1, 128)

39 |

40 |     mag = torch.zeros(1, 1, (block_len // 2 + 1))

41 |     phase = torch.zeros(1, 1, (block_len // 2 + 1))

42 |     y1 = torch.zeros(1, block_len, 1)

43 |

44 |     # ncnn does not support Gather

45 |     input_names = ["mag", "h1_in", "c1_in", "h2_in", "c2_in"]

46 |     output_names = ["y1", "out_state1"]

47 |

48 |     print("==> export to: ", args.model_1)

49 |     # torch.onnx.export(model1,

50 |     #                   (mag, in_state1[0], in_state1[1], in_state1[2], in_state1[3]),

51 |     #                   args.model_1,

52 |     #                   input_names=input_names, output_names=output_names)

53 |     # torch.save(model1.state_dict(), args.model_1)

54 |     # 1. pt --> torchscript

55 |     traced_script_module = torch.jit.trace(model1, (mag, in_state1[0], in_state1[1], in_state1[2], in_state1[3]))

56 |     traced_script_module.save("ts1.pt")

57 |     # 2. ts --> pnnx --> ncnn

58 |     os.system("pnnx ts1.pt inputshape=[1,1,257],[1,1,128],[1,1,128],[1,1,128],[1,1,128]")

59 |

60 | # input_names = ["y1", "h1_in", "c1_in", "h2_in", "c2_in"]

61 | # output_names = ["y", "out_state2"]

62 |

63 | # print("==> export to: ", args.model_2)

64 | # torch.onnx.export(model2,

65 | # (y1, in_state2[0], in_state2[1], in_state2[2], in_state2[3]),

66 | # args.model_2,

67 | # input_names=input_names, output_names=output_names)

68 |

69 | # traced_script_module = torch.jit.trace(model2, (y1, in_state2[0], in_state2[1], in_state2[2], in_state2[3]))

70 | # traced_script_module.save("ts2.pt")

71 | # # 2. ts --> pnnx --> ncnn

72 | # os.system("pnnx ts2.pt inputshape=[1,512,1],[1,1,128],[1,1,128],[1,1,128],[1,1,128]")

73 |

74 | # Place this file in the original project's directory and run the conversion there

75 |

--------------------------------------------------------------------------------

/audio_denoising/dtln/models/convert2.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | # 2. onnx --> onnxsim

4 | os.system("python -m onnxsim model_p2.onnx sim.onnx")

5 |

6 | # 3. onnx --> ncnn

7 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

8 |

9 | # 4. ncnn --> optimize --> ncnn

10 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32, 1 = fp16

11 |

12 | # Converted from the ONNX model shipped with the original project; the pnnx route fails there because pnnx does not support the aten::square operator

13 |

--------------------------------------------------------------------------------

/audio_denoising/dtln/models/dtln_1.param:

--------------------------------------------------------------------------------

1 | 7767517

2 | 13 18

3 | Input in0 0 1 in0

4 | Split splitncnn_0 1 2 in0 1 2

5 | Input in1 0 1 in1

6 | Input in2 0 1 in2

7 | Input in3 0 1 in3

8 | Input in4 0 1 in4

9 | LSTM lstm_1 3 3 1 in1 in2 7 8 9 0=128 1=131584 2=0

10 | LSTM lstm_2 3 3 7 in3 in4 10 11 12 0=128 1=65536 2=0

11 | Concat cat_0 4 1 8 9 11 12 out1 0=0

12 | InnerProduct fcsigmoid_0 1 1 10 14 0=257 1=1 2=32896 9=4

13 | Reshape reshape_5 1 1 14 15 0=-1

14 | Reshape reshape_4 1 1 2 16 0=-1

15 | BinaryOp mul_0 2 1 15 16 out0 0=2

16 |

--------------------------------------------------------------------------------

/audio_denoising/dtln/models/dtln_2.param:

--------------------------------------------------------------------------------

1 | 7767517

2 | 33 41

3 | Input y1 0 1 y1

4 | Input h1_in 0 1 h1_in

5 | Input c1_in 0 1 c1_in

6 | Input h2_in 0 1 h2_in

7 | Input c2_in 0 1 c2_in

8 | MemoryData encoder_norm1.beta 0 1 encoder_norm1.beta 0=256 1=1

9 | MemoryData encoder_norm1.gamma 0 1 encoder_norm1.gamma 0=256 1=1

10 | MemoryData sep2.dense.bias 0 1 sep2.dense.bias 0=256

11 | Convolution1D Conv_0 1 1 y1 19 0=256 1=1 6=131072

12 | Permute Transpose_1 1 1 19 20 0=1

13 | Split splitncnn_0 1 3 20 20_splitncnn_0 20_splitncnn_1 20_splitncnn_2

14 | Reduction ReduceMean_2 1 1 20_splitncnn_2 21 0=3

15 | BinaryOp Sub_3 2 1 20_splitncnn_1 21 22 0=1

16 | Split splitncnn_1 1 3 22 22_splitncnn_0 22_splitncnn_1 22_splitncnn_2

17 | BinaryOp Mul_4 2 1 22_splitncnn_2 22_splitncnn_1 23 0=2

18 | Reduction ReduceMean_5 1 1 23 24 0=3

19 | BinaryOp Add_7 1 1 24 26 1=1 2=1.000000e-07

20 | UnaryOp Sqrt_8 1 1 26 27 0=5

21 | BinaryOp Div_9 2 1 22_splitncnn_0 27 28 0=3

22 | BinaryOp Mul_10 2 1 28 encoder_norm1.gamma 29 0=2

23 | BinaryOp Add_11 2 1 29 encoder_norm1.beta 30

24 | LSTM LSTM_13 3 3 30 h1_in c1_in 56 54 55 0=128 1=131072

25 | LSTM LSTM_15 3 3 56 h2_in c2_in 82 79 80 0=128 1=65536

26 | InnerProduct MatMul_18 1 1 82 84 0=256 2=32768

27 | BinaryOp Add_19 2 1 sep2.dense.bias 84 85

28 | Sigmoid Sigmoid_20 1 1 85 86

29 | Concat Concat_21 4 1 54 55 79 80 out_state2

30 | Reshape Reshape_23 1 1 86 89 0=-1

31 | Reshape Reshape_25 1 1 20_splitncnn_0 91 0=-1

32 | BinaryOp Mul_26 2 1 89 91 92 0=2

33 | Reshape Reshape_28 1 1 92 94 0=256 1=1

34 | Permute Transpose_29 1 1 94 95 0=1

35 | Convolution1D Conv_30 1 1 95 y 0=512 1=1 6=131072

36 |

--------------------------------------------------------------------------------
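The two `.param` files above are plain text: a magic number (7767517), a line with the layer count and blob count, then one line per layer in the form `type name input_count output_count inputs... outputs... key=value...`. A minimal parsing sketch under that reading of the format; `parse_ncnn_param` and the three-layer sample are illustrative and not part of ncnn.

```python
def parse_ncnn_param(text):
    """Parse the text of an ncnn .param file into a plain dict."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    magic = int(lines[0])
    layer_count, blob_count = (int(x) for x in lines[1].split())
    layers = []
    for ln in lines[2:]:
        tok = ln.split()
        n_in, n_out = int(tok[2]), int(tok[3])
        inputs = tok[4:4 + n_in]
        outputs = tok[4 + n_in:4 + n_in + n_out]
        # Remaining tokens are key=value layer parameters, kept as strings.
        params = dict(p.split("=", 1) for p in tok[4 + n_in + n_out:])
        layers.append({
            "type": tok[0],
            "name": tok[1],
            "inputs": inputs,
            "outputs": outputs,
            "params": params,
        })
    return {
        "magic": magic,
        "layer_count": layer_count,
        "blob_count": blob_count,
        "layers": layers,
    }


# Hypothetical three-layer network, written only to exercise the parser.
SAMPLE = """7767517
3 3
Input in0 0 1 in0
Input in1 0 1 in1
BinaryOp mul_0 2 1 in0 in1 out0 0=2
"""
net = parse_ncnn_param(SAMPLE)
print(net["layer_count"], [layer["type"] for layer in net["layers"]])
```

Comparing `len(net["layers"])` with `net["layer_count"]` is a quick sanity check after editing a `.param` file by hand.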

/contribute.md:

--------------------------------------------------------------------------------

1 | ### How can I get involved in contributing?

2 |

3 | Fork the repository, then submit your model in the following layout, preferably as a minimal C++20 demo without embedded concurrency.

4 |

5 | ```

6 | git clone https://github.com/Baiyuetribe/ncnn-models.git --depth=1

7 | cd ncnn-models

8 | code .

9 | ```

10 |

11 | ### Examples

12 |

13 | yolov5 # model name

14 | - models/xx.bin or xx.param # model

15 | - input.png

16 | - out.png

17 | - README.md

18 | - convert.py
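The layout above can be checked before opening a pull request. A minimal sketch, assuming every listed entry is required and that `models/` must hold at least one `.bin` or `.param` file; `missing_items` is a hypothetical helper, not a script from this repository.

```python
import tempfile
from pathlib import Path

REQUIRED_FILES = ["input.png", "out.png", "README.md", "convert.py"]


def missing_items(project_dir):
    """Return the layout entries that are absent from a submission folder."""
    root = Path(project_dir)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]
    models = root / "models"
    has_model = models.is_dir() and any(
        p.suffix in (".bin", ".param") for p in models.iterdir()
    )
    if not has_model:
        missing.append("models/*.bin or models/*.param")
    return missing


# Demo on a throwaway folder that only contains README.md and one .param file.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "yolov5"
    (demo / "models").mkdir(parents=True)
    (demo / "README.md").write_text("# yolov5\n")
    (demo / "models" / "yolov5s.param").write_text("7767517\n")
    result = missing_items(demo)
print(result)
```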

19 |

--------------------------------------------------------------------------------

/diffusion/stablediffuson/README.md:

--------------------------------------------------------------------------------

1 | # Stable Diffusion

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Input/output information

8 |

9 | ### Input shapes

10 |

11 | [1,4,-1,-1],[1],[1,77,768]

12 |

13 | ## Model conversion

14 |

15 | ```python

16 | import os

17 | import torch

18 | from diffusers import StableDiffusionPipeline

19 |

20 | # config

21 | device = "cuda"

22 | from_model = "../diffusers-model"

23 | to_model = "model"

24 | height, width = 512, 512

25 |

26 | # check

27 | assert height % 8 == 0 and width % 8 == 0

28 | height, width = height // 8, width // 8

29 | os.makedirs(to_model, exist_ok=True)

30 |

31 | # load model

32 | pipe = StableDiffusionPipeline.from_pretrained(from_model, torch_dtype=torch.float32)

33 | pipe = pipe.to(device)

34 |

35 | # jit unet

36 | unet = torch.jit.trace(pipe.unet, (torch.rand(1,4,height,width).to(device),torch.rand(1).to(device),torch.rand(1,77,768).to(device)))

37 | unet.save(os.path.join(to_model,"unet.pt"))

38 |

39 | # 4. fp16 quantization: convert the traced UNet with pnnx

40 | os.system(f"pnnx {os.path.join(to_model, 'unet.pt')} inputshape=[1,4,64,64],[1],[1,77,768]")

41 |

42 | ```
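The script divides the image size by 8 to get the latent size, which is where the `[1,4,64,64]` in the pnnx command comes from for a 512x512 image. A minimal sketch of that arithmetic; `unet_input_shapes` is a hypothetical helper, and the defaults of 77 tokens and 768 embedding channels are taken from the shapes listed in this README.

```python
def unet_input_shapes(height, width, tokens=77, embed_dim=768):
    """Return the (latent, timestep, text embedding) shapes for a given image size."""
    if height % 8 != 0 or width % 8 != 0:
        raise ValueError("height and width must be multiples of 8")
    latent = (1, 4, height // 8, width // 8)
    timestep = (1,)
    text_embedding = (1, tokens, embed_dim)
    return [latent, timestep, text_embedding]


shapes = unet_input_shapes(512, 512)
print(shapes)
```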

43 |

44 | ## C++ implementation

45 |

46 | See the projects listed under Reference.

47 |

48 | ## Example project

49 |

50 | ## Reference

51 |

52 | - [EdVince/Stable-Diffusion-NCNN](https://github.com/EdVince/Stable-Diffusion-NCNN)

53 | - [Tencent/ncnn](https://github.com/Tencent/ncnn)

54 |

--------------------------------------------------------------------------------

/docs/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/docs/.DS_Store

--------------------------------------------------------------------------------

/docs/Cmakelists.txt:

--------------------------------------------------------------------------------

1 | cmake_minimum_required(VERSION "3.5.1") # minimum required CMake version

2 | add_compile_options("$<$<CXX_COMPILER_ID:MSVC>:/source-charset:utf-8>") # avoid MSVC errors when sources contain Chinese characters

3 |

4 | set(CMAKE_BUILD_TYPE Release)

5 |

6 | # Create project

7 | set(ProjectName "demo") # define a variable

8 | project(${ProjectName}) # set the project name

9 | message("ProjectName: ${ProjectName}") # print the project name

10 | add_executable(${ProjectName} yolov5.cpp) # source files to compile

11 |

12 |

13 | # OpenCV

14 | set(OpenCV_DIR "C:\\Temp\\cinclude\\opencv\\build\\x64\\vc15\\lib") # from https://github.com/opencv/opencv/releases/tag/4.5.5

15 | find_package(OpenCV REQUIRED)

16 | target_link_libraries(${ProjectName} ${OpenCV_LIBS})

17 |

18 | # ncnn

19 | set(ncnn_DIR "C:\\Temp\\cinclude\\ncnn\\x64\\lib\\cmake\\ncnn") # from https://github.com/Tencent/ncnn/releases/tag/20220420

20 | find_package(ncnn REQUIRED)

21 | target_link_libraries(${ProjectName} ncnn)

22 |

--------------------------------------------------------------------------------

/docs/images/logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/docs/images/logo.png

--------------------------------------------------------------------------------

/face_dection/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/face_dection/.DS_Store

--------------------------------------------------------------------------------

/face_dection/Anime_Face/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/face_dection/Anime_Face/.DS_Store

--------------------------------------------------------------------------------

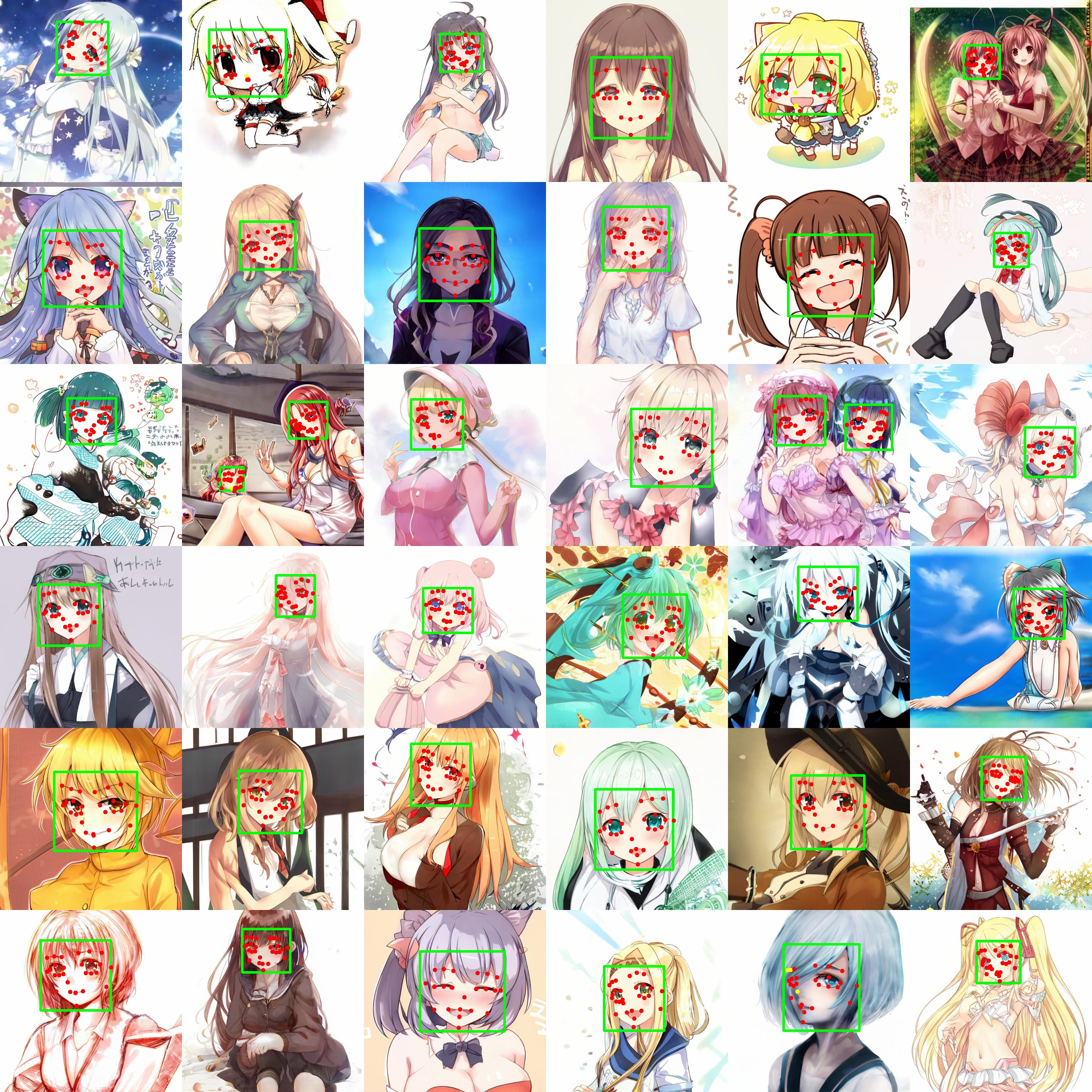

/face_dection/Anime_Face/README.md:

--------------------------------------------------------------------------------

1 | # Anime Face Detector

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Convert

8 |

9 | pt --> onnx --> onnx-sim --> ncnnOptimize --> ncnn

10 |

11 | ```python

12 | import os

13 |

14 | # 1. download -- anime-face_hrnetv2.onnx \ anime-face_yolov3.onnx \anime-face_faster-rcnn.onnx

15 | # download_url = https://storage.googleapis.com/ailia-models/anime-face-detector/anime-face_hrnetv2.onnx

16 | os.system("")

17 |

18 | # 2. onnx --> onnxsim

19 | os.system("python -m onnxsim anime-face_hrnetv2.onnx sim.onnx --input-shape 1,3,256,256")

20 |

21 | # 3. onnx --> ncnn

22 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

23 |

24 | # 4. ncnn --> optimize --> ncnn

25 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32, 1 = fp16

26 | ```

27 |

28 | ## Example project

29 |

30 |

31 |

32 | ## Reference

33 |

34 | - [face_detection/anime-face-detector](https://github.com/axinc-ai/ailia-models/blob/master/face_detection/anime-face-detector/README.md)

35 | - [hysts/anime-face-detector](https://github.com/hysts/anime-face-detector)

36 |

37 |

38 |

--------------------------------------------------------------------------------

/face_dection/Anime_Face/anime_face.cpp:

--------------------------------------------------------------------------------

1 | #include <iostream>

2 | // opencv2

3 | #include <opencv2/core/core.hpp>

4 | #include <opencv2/highgui/highgui.hpp>

5 | #include <opencv2/imgproc/imgproc.hpp>

6 | // ncnn

7 | #include "net.h"

8 |

9 | int main(int argc, char **argv)

10 | {

11 |     cv::Mat image = cv::imread("demo.jpg"); // read the image

12 |     if (image.empty())

13 |     {

14 |         std::cout << "read image failed" << std::endl;

15 |         return -1;

16 |     }

17 |     // load the model

18 |     ncnn::Net net;

19 |     net.load_param("models/anime-face_hrnetv2.param");

20 |     net.load_model("models/anime-face_hrnetv2.bin");

21 |     // pre-processing

22 |     ncnn::Mat in = ncnn::Mat::from_pixels_resize(image.data, ncnn::Mat::PIXEL_BGR2RGB, image.cols, image.rows, 256, 256);

23 |     const float mean_vals[3] = {127.5f, 127.5f, 127.5f};

24 |     const float norm_vals[3] = {1 / 127.5f, 1 / 127.5f, 1 / 127.5f};

25 |     // const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};

26 |     in.substract_mean_normalize(mean_vals, norm_vals); // these values may be incorrect

27 |

28 |     ncnn::Extractor ex = net.create_extractor(); // create the extractor

29 |     ex.input("img", in); // input blob

30 |     ncnn::Mat out;

31 |     ex.extract("heatmap", out); // output blob

32 |     std::cout << out.w << " " << out.h << " " << out.dims << std::endl; // 28 * 64 * 64

33 |     // post-processing -- corrections welcome

34 |

35 |     // std::vector<float> keypoints;

36 |     // keypoints.resize(out.w);

37 |     // for (int j = 0; j < out.w; j++)

38 |     // {

39 |     //     // std::cout << out[j] << std::endl;

40 |     //     keypoints[j] = out[j] * 256; // each keypoint is stored as two consecutive values (x, y)

41 |     // }

42 |     // size_t max_len = keypoints.size(); // draw the points

43 |     // cv::Mat res;

44 |     // cv::resize(image, res, cv::Size(256, 256));

45 |     // // for (size_t i = 0; i < max_len; i += 2)

46 |     // for (size_t i = 0; i < max_len; i += 2)

47 |     // {

48 |     //     cv::circle(res, cv::Point(keypoints[i] * image.cols / 256, keypoints[i + 1] * image.rows / 256), 3, cv::Scalar(255, 255, 255), -1);

49 |     // }

50 |     // cv::imshow("demo", res);

51 |     // cv::waitKey();

52 |     return 0;

53 | }

--------------------------------------------------------------------------------
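The post-processing in `anime_face.cpp` is left open. If the `heatmap` output really is 28 channels of 64x64 (as the comment in the code suggests), one common way to decode it is to take the arg-max of each channel and scale it back to the 256x256 input. The sketch below shows only that idea in plain Python; `decode_heatmaps` is hypothetical, it skips any sub-pixel refinement, and it has not been checked against the original project's decoder.

```python
def decode_heatmaps(heatmaps, input_size=256):
    """heatmaps: list of K 2-D lists (H rows x W cols). Returns [(x, y, score)]."""
    points = []
    for hm in heatmaps:
        h, w = len(hm), len(hm[0])
        best, bx, by = hm[0][0], 0, 0
        for y in range(h):
            for x in range(w):
                if hm[y][x] > best:
                    best, bx, by = hm[y][x], x, y
        # Scale heatmap coordinates back to the network input resolution.
        points.append((bx * input_size / w, by * input_size / h, best))
    return points


# Tiny 4x4 example with a single peak at column 2, row 1.
hm = [[0.0] * 4 for _ in range(4)]
hm[1][2] = 0.9
pts = decode_heatmaps([hm], input_size=256)
print(pts)
```

To draw on the original image, each `(x, y)` would still need to be scaled by `image.cols / 256` and `image.rows / 256`, as the commented-out C++ does.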

/face_dection/Anime_Face/models/convert.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | # 1. download -- anime-face_hrnetv2.onnx \ anime-face_yolov3.onnx \anime-face_faster-rcnn.onnx

4 | # download_url = https://storage.googleapis.com/ailia-models/anime-face-detector/anime-face_hrnetv2.onnx

5 | os.system("")

6 |

7 | # 2. onnx --> onnxsim

8 | os.system("python -m onnxsim anime-face_hrnetv2.onnx sim.onnx --input-shape 1,3,256,256")

9 |

10 | # 3. onnx --> ncnn

11 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

12 |

13 | # 4. ncnn --> optimize --> ncnn

14 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32, 1 = fp16

15 |

--------------------------------------------------------------------------------

/face_dection/pfld/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/face_dection/pfld/.DS_Store

--------------------------------------------------------------------------------

/face_dection/pfld/README.md:

--------------------------------------------------------------------------------

1 | # pfld

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Convert

8 |

9 | pt --> onnx --> onnx-sim --> ncnnOptimize --> ncnn

10 |

11 | ```python

12 | # import os

13 | # import torch

14 | # # 0. Download and initialize the PyTorch model

15 | # # 1. Download the ONNX model (run manually on Windows)

16 | # os.system("wget xxxx.onnx -O xxx.onnx")

17 |

18 | # # 2. onnx --> onnxsim

19 | # os.system("python3 -m onnxsim xxx.onnx sim.onnx")

20 |

21 | # # 3. onnx --> ncnn

22 | # os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

23 |

24 | # # 4. ncnn --> optimize --> ncnn

25 | # os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32, 1 = fp16

26 | ```

27 |

28 | ## Example project

29 |

30 | - [abyssss52/PFLD_ncnn_test](https://github.com/abyssss52/PFLD_ncnn_test)

31 |

32 |

33 | ## Reference

34 |

35 | - [polarisZhao/PFLD-pytorch](https://github.com/polarisZhao/PFLD-pytorch)

36 |

37 |

38 |

--------------------------------------------------------------------------------

/face_dection/pfld/models/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/face_dection/pfld/models/.DS_Store

--------------------------------------------------------------------------------

/face_dection/pfld/models/convert.py:

--------------------------------------------------------------------------------

1 | # PRs welcome

2 |

--------------------------------------------------------------------------------

/face_dection/pfld/pfld.cpp:

--------------------------------------------------------------------------------

1 | #include

2 | // opencv2

3 | #include

4 | #include

5 | #include

6 | // ncnn

7 | #include "net.h"

8 |

9 | int main(int argc, char **argv)

10 | {

11 | cv::Mat image = cv::imread("demo.jpg"); // 读取图片

12 | if (image.empty())

13 | {

14 | std::cout << "read image failed" << std::endl;

15 | return -1;

16 | }

17 | // 推理模型定义

18 | ncnn::Net net;

19 | net.load_param("models/pfld-sim.param");

20 | net.load_model("models/pfld-sim.bin");

21 | // 前处理

22 | ncnn::Mat in = ncnn::Mat::from_pixels_resize(image.data, ncnn::Mat::PIXEL_BGR, image.cols, image.rows, 112, 112);

23 | const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};

24 | in.substract_mean_normalize(0, norm_vals);

25 |

26 | ncnn::Extractor ex = net.create_extractor(); // create the extractor

27 | ex.input("input_1", in); // input

28 | ncnn::Mat out;

29 | ex.extract("415", out); // output

30 | // postprocessing

31 | std::vector<float> keypoints;

32 | keypoints.resize(out.w);

33 | for (int j = 0; j < out.w; j++)

34 | {

35 | keypoints[j] = out[j] * 112;

36 | }

37 | size_t max_len = keypoints.size(); // draw the points

38 | for (size_t i = 0; i < max_len; i += 2)

39 | {

40 | cv::circle(image, cv::Point(keypoints[i] * image.cols / 112, keypoints[i + 1] * image.rows / 112), 3, cv::Scalar(255, 255, 255), -1);

41 | }

42 | cv::imshow("demo", image);

43 | cv::waitKey();

44 | return 0;

45 | }
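The post-processing in `pfld.cpp` multiplies each output value by 112 and then by `image.cols / 112` (or `image.rows / 112`), so the two factors cancel. A minimal Python sketch of the same mapping, assuming the network emits a flat list of normalized `x, y` pairs (which the scaling in the C++ code implies but the repo does not state); `scale_landmarks` is a hypothetical helper name:

```python
def scale_landmarks(flat, width, height):
    """Map a flat [x0, y0, x1, y1, ...] list of normalized landmarks to pixels.

    Assumes each value is a fraction of the image width (x) or height (y).
    """
    if len(flat) % 2 != 0:
        raise ValueError("expected an even number of values (x, y pairs)")
    # Pair up consecutive values and scale x by width, y by height.
    return [(flat[i] * width, flat[i + 1] * height) for i in range(0, len(flat), 2)]
```

Checking for an even length guards against reading past the end of the buffer, which the `i += 2` loop in the C++ version does not do.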

--------------------------------------------------------------------------------

/face_dection/ultraface/README.md:

--------------------------------------------------------------------------------

1 | # UltraFace

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Convert

8 |

9 | pt --> onnx --> onnx-sim --> ncnnOptimize --> ncnn

10 |

11 | ```python

12 | import os

13 | import torch

14 | # 0. Download and initialize the PyTorch model

15 | # 1. Download the ONNX model (run this step manually on Windows)

16 | os.system("wget https://raw.githubusercontent.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB/master/models/onnx/version-RFB-320.onnx -O version-RFB-320.onnx")

17 |

18 | # 2. onnx --> onnxsim

19 | os.system("python3 -m onnxsim version-RFB-320.onnx sim.onnx")

20 |

21 | # 3. onnx --> ncnn

22 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

23 |

24 | # 4. ncnn --> optimize --> ncnn

25 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32; 1 = fp16

26 | ```
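The conversion script above chains three command-line tools through `os.system`, which ignores failures. A hedged sketch of the same steps using `subprocess.run` with `check=True`, so a failing tool stops the pipeline; the helper names `build_commands` and `run_pipeline` are hypothetical, while the commands and file names are the ones used in the README:

```python
import subprocess


def build_commands(onnx_path, fp16=True):
    """Return the argv lists for onnxsim, onnx2ncnn and ncnnoptimize."""
    return [
        ["python3", "-m", "onnxsim", onnx_path, "sim.onnx"],
        ["onnx2ncnn", "sim.onnx", "ncnn.param", "ncnn.bin"],
        # Last argument: 0 = fp32, 1 = fp16 (as noted in the README).
        ["ncnnoptimize", "ncnn.param", "ncnn.bin", "opt.param", "opt.bin",
         "1" if fp16 else "0"],
    ]


def run_pipeline(commands, runner=subprocess.run):
    """Run each command in order; check=True raises on a non-zero exit code."""
    for argv in commands:
        runner(argv, check=True)
```

Calling `run_pipeline(build_commands("version-RFB-320.onnx"))` would run the three steps, assuming the tools are on `PATH`. The `runner` parameter exists only so the sequence can be exercised without the tools installed.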

27 |

28 | ## Example project

29 |

30 | - [Android: oaup/ncnn-android-ultraface](https://github.com/oaup/ncnn-android-ultraface)

31 |

32 |

33 | ## Reference

34 |

35 | - [Tencent/ncnn](https://github.com/Tencent/ncnn/blob/master/examples/rvm.cpp)

36 | - [Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB](https://github.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB)

37 |

38 |

39 |

--------------------------------------------------------------------------------

/face_dection/ultraface/UltraFace.h:

--------------------------------------------------------------------------------

1 | //

2 | // UltraFace.hpp

3 | // UltraFaceTest

4 | //

5 | // Created by vealocia on 2019/10/17.

6 | // Copyright © 2019 vealocia. All rights reserved.

7 | //

8 |

9 | #ifndef UltraFace_hpp

10 | #define UltraFace_hpp

11 |

12 | #pragma once

13 |

14 | #include "gpu.h"

15 | #include "net.h"

16 | #include <algorithm>

17 | #include <iostream>

18 | #include <string>

19 | #include <vector>

20 |

21 | #define num_featuremap 4

22 | #define hard_nms 1

23 | #define blending_nms 2 /* blending NMS was proposed in the BlazeFace paper; it aims to minimize temporal jitter */

24 |

25 | typedef struct FaceInfo

26 | {

27 | float x1;

28 | float y1;

29 | float x2;

30 | float y2;

31 | float score;

32 |

33 | float *landmarks;

34 | } FaceInfo;

35 |

36 | class UltraFace

37 | {

38 | public:

39 | UltraFace(const std::string &bin_path, const std::string &param_path,

40 | int input_width, int input_length, int num_thread_ = 4, float score_threshold_ = 0.7, float iou_threshold_ = 0.3, int topk_ = -1);

41 |

42 | ~UltraFace();

43 |

44 | int detect(ncnn::Mat &img, std::vector<FaceInfo> &face_list);

45 |

46 | private:

47 | void generateBBox(std::vector<FaceInfo> &bbox_collection, ncnn::Mat scores, ncnn::Mat boxes, float score_threshold, int num_anchors);

48 |

49 | void nms(std::vector<FaceInfo> &input, std::vector<FaceInfo> &output, int type = blending_nms);

50 |

51 | private:

52 | ncnn::Net ultraface;

53 |

54 | int num_thread;

55 | int image_w;

56 | int image_h;

57 |

58 | int in_w;

59 | int in_h;

60 | int num_anchors;

61 |

62 | int topk;

63 | float score_threshold;

64 | float iou_threshold;

65 |

66 | const float mean_vals[3] = {127, 127, 127};

67 | const float norm_vals[3] = {1.0 / 128, 1.0 / 128, 1.0 / 128};

68 |

69 | const float center_variance = 0.1;

70 | const float size_variance = 0.2;

71 | const std::vector<std::vector<float>> min_boxes = {

72 | {10.0f, 16.0f, 24.0f},

73 | {32.0f, 48.0f},

74 | {64.0f, 96.0f},

75 | {128.0f, 192.0f, 256.0f}};

76 | const std::vector<float> strides = {8.0, 16.0, 32.0, 64.0};

77 | std::vector<std::vector<float>> featuremap_size;

78 | std::vector<std::vector<float>> shrinkage_size;

79 | std::vector<int> w_h_list;

80 |

81 | std::vector<std::vector<float>> priors = {};

82 | };

83 |

84 | #endif /* UltraFace_hpp */
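The `min_boxes` and `strides` tables in the header determine how many prior boxes (`num_anchors`) the detector evaluates. A small sketch of that count, assuming the layout used by the upstream Ultra-Light-Fast-Generic-Face-Detector-1MB code: each feature map has `ceil(input / stride)` cells per axis, and every cell gets one prior per entry in the matching `min_boxes` row. `count_priors` is a hypothetical helper, not part of the repo:

```python
import math

# Values copied from UltraFace.h.
MIN_BOXES = [[10.0, 16.0, 24.0], [32.0, 48.0], [64.0, 96.0], [128.0, 192.0, 256.0]]
STRIDES = [8.0, 16.0, 32.0, 64.0]


def count_priors(in_w, in_h):
    """Count prior boxes for a given network input size (assumed layout)."""
    total = 0
    for stride, boxes in zip(STRIDES, MIN_BOXES):
        fm_w = math.ceil(in_w / stride)  # feature-map width in cells
        fm_h = math.ceil(in_h / stride)  # feature-map height in cells
        total += fm_w * fm_h * len(boxes)
    return total
```

Under these assumptions, the 320x240 input used in `main.cpp` gives 3600 + 600 + 160 + 60 = 4420 priors.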

85 |

--------------------------------------------------------------------------------

/face_dection/ultraface/main.cpp:

--------------------------------------------------------------------------------

1 | //

2 | // main.cpp

3 | // UltraFaceTest

4 | //

5 | // Created by vealocia on 2019/10/17.

6 | // Copyright © 2019 vealocia. All rights reserved.

7 | //

8 |

9 | #include "UltraFace.h"

10 | #include <iostream>

11 | #include <opencv2/opencv.hpp>

12 |

13 | int main(int argc, char **argv)

14 | {

15 | UltraFace ultraface("models/version-RFB-320.bin", "models/version-RFB-320.param", 320, 240, 1, 0.7); // config model input

16 | std::string image_file = "input.jpg"; // input image

17 | cv::Mat frame = cv::imread(image_file);

18 | if (frame.empty())

19 | {

20 | std::cout << "read image failed" << std::endl;

21 | return -1;

22 | }

23 | ncnn::Mat inmat = ncnn::Mat::from_pixels(frame.data, ncnn::Mat::PIXEL_BGR2RGB, frame.cols, frame.rows);

24 |

25 | std::vector<FaceInfo> face_info;

26 | ultraface.detect(inmat, face_info);

27 | for (size_t i = 0; i < face_info.size(); i++)

28 | {

29 | auto face = face_info[i];

30 | cv::Point pt1(face.x1, face.y1);

31 | cv::Point pt2(face.x2, face.y2);

32 | cv::rectangle(frame, pt1, pt2, cv::Scalar(0, 255, 0), 2);

33 | }

34 |

35 | cv::imshow("UltraFace", frame);

36 | cv::waitKey();

37 | cv::imwrite("result.jpg", frame);

38 | return 0;

39 | }

40 |

--------------------------------------------------------------------------------

/face_dection/ultraface/models/convert.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | # 0. Download and initialize the PyTorch model

4 | # 1. Download the ONNX model (run this step manually on Windows)

5 | os.system("wget https://raw.githubusercontent.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB/master/models/onnx/version-RFB-320.onnx -O version-RFB-320.onnx")

6 |

7 | # 2. onnx --> onnxsim

8 | os.system("python3 -m onnxsim version-RFB-320.onnx sim.onnx")

9 |

10 | # 3. onnx --> ncnn

11 | os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

12 |

13 | # 4. ncnn --> optimize --> ncnn

14 | os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32; 1 = fp16

15 |

--------------------------------------------------------------------------------

/face_restoration/codeformer/README.md:

--------------------------------------------------------------------------------

1 | # Codeformer

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 |

8 | ## Input/Output info

9 |

10 | ## Model conversion

11 |

12 | ```python

13 | # PRs welcome

14 | ```

15 |

16 | ## C++ implementation

17 |

18 | See https://github.com/FeiGeChuanShu/CodeFormer-ncnn

19 |

20 | ## Example project

21 |

22 | ## Reference

23 |

24 | - [FeiGeChuanShu/CodeFormer-ncnn](https://github.com/FeiGeChuanShu/CodeFormer-ncnn)

25 | - [Tencent/ncnn](https://github.com/Tencent/ncnn)

26 |

--------------------------------------------------------------------------------

/face_restoration/gfpgan/README.md:

--------------------------------------------------------------------------------

1 | # GFPGAN

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 |

8 | ## Convert

9 |

10 | pt --> onnx --> onnx-sim --> ncnnOptimize --> ncnn

11 |

12 | ```python

13 | # need pr

14 | ```

15 | Due to limitations of the model conversion, the output is currently fixed at 512*512 pixels.

16 |

17 | ## Example project

18 |

19 | - [GFPGAN-GUI](https://github.com/Baiyuetribe/paper2gui/blob/main/FaceRestoration/readme.md)

20 |

21 | ## Reference

22 |

23 | - [TencentARC/GFPGAN](https://github.com/TencentARC/GFPGAN)

24 | - [FeiGeChuanShu/GFPGAN-ncnn](https://github.com/FeiGeChuanShu/GFPGAN-ncnn)

25 |

26 |

27 |

--------------------------------------------------------------------------------

/face_restoration/gfpgan/gfpgan.h:

--------------------------------------------------------------------------------

1 | // gfpgan implemented with ncnn library

2 |

3 | #ifndef GFPGAN_H

4 | #define GFPGAN_H

5 |

6 | #include

7 | #include

8 | #include

9 | #include

10 | #include

11 | #include

12 | #include

13 |

14 | // ncnn

15 | #include "net.h"

16 | #include "cpu.h"

17 | #include "layer.h"

18 |

19 | typedef struct StyleConvWeights

20 | {

21 | int data_size;

22 | int inc;

23 | int hid_dim;

24 | int num_output;

25 | std::vector<float> style_convs_modulated_conv_weight;

26 | std::vector<float> style_convs_modulated_conv_modulation_weight;

27 | std::vector<float> style_convs_modulated_conv_modulation_bias;

28 | std::vector<float> style_convs_weight;

29 | std::vector<float> style_convs_bias;

30 | } StyleConvWeights;

31 | typedef struct ToRgbConvWeights

32 | {

33 | int data_size;

34 | int inc;

35 | int hid_dim;

36 | int num_output;

37 | std::vector<float> to_rgbs_modulated_conv_weight;

38 | std::vector<float> to_rgbs_modulated_conv_modulation_weight;

39 | std::vector<float> to_rgbs_modulated_conv_modulation_bias;

40 | std::vector<float> to_rgbs_bias;

41 | } ToRgbConvWeights;

42 | const int style_conv_sizes[][5] = {{512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 0

43 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 1

44 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 2

45 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 3

46 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 4

47 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 5

48 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 6

49 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}, // 7

50 | {256 * 512 * 3 * 3, 512 * 512, 512, 1, 256}, // 8

51 | {256 * 256 * 3 * 3, 256 * 512, 256, 1, 256}, // 9

52 | {128 * 256 * 3 * 3, 256 * 512, 256, 1, 128}, // 10

53 | {128 * 128 * 3 * 3, 128 * 512, 128, 1, 128}, // 11

54 | {64 * 128 * 3 * 3, 128 * 512, 128, 1, 64}, // 12

55 | {64 * 64 * 3 * 3, 64 * 512, 64, 1, 64}, // 13

56 | {512 * 512 * 3 * 3, 512 * 512, 512, 1, 512}}; // 14

57 | const int to_rgb_sizes[][4] = {{3 * 512 * 1 * 1, 512 * 512, 512, 3}, // 0

58 | {3 * 512 * 1 * 1, 512 * 512, 512, 3}, // 1

59 | {3 * 512 * 1 * 1, 512 * 512, 512, 3}, // 2

60 | {3 * 512 * 1 * 1, 512 * 512, 512, 3}, // 3

61 | {3 * 256 * 1 * 1, 256 * 512, 256, 3}, // 4

62 | {3 * 128 * 1 * 1, 128 * 512, 128, 3}, // 5

63 | {3 * 64 * 1 * 1, 64 * 512, 64, 3}, // 6

64 | {3 * 512 * 1 * 1, 512 * 512, 512, 3}}; // 7

65 | const int style_conv_channels[][3] = {{512, 512, 512}, // 0

66 | {512, 512, 512}, // 1

67 | {512, 512, 512}, // 2

68 | {512, 512, 512}, // 3

69 | {512, 512, 512}, // 4

70 | {512, 512, 512}, // 5

71 | {512, 512, 512}, // 6

72 | {512, 512, 512}, // 7

73 | {512, 512, 256}, // 8

74 | {512, 256, 256}, // 9

75 | {512, 256, 128}, // 10

76 | {512, 128, 128}, // 11

77 | {512, 128, 64}, // 12

78 | {512, 64, 64}, // 13

79 | {512, 512, 512}}; // 14

80 |

81 | const int to_rgb_channels[][3] = {{512, 512, 3}, // 0

82 | {512, 512, 3}, // 1

83 | {512, 512, 3}, // 2

84 | {512, 512, 3}, // 3

85 | {512, 256, 3}, // 4

86 | {512, 128, 3}, // 5

87 | {512, 64, 3}, // 6

88 | {512, 512, 3}}; // 7

89 |

90 | class GFPGAN

91 | {

92 | public:

93 | GFPGAN();

94 | ~GFPGAN();

95 |

96 | int load(const std::string &param_path, const std::string &model_path, const std::string &style_path);

97 |

98 | int process(const cv::Mat &img, ncnn::Mat &outimage);

99 |

100 | private:

101 | int modulated_conv(ncnn::Mat &x, ncnn::Mat &style,

102 | const float *self_weight, const float *weights,

103 | const float *bias, int sample_mode, int demodulate,

104 | int inc, int num_output, int kernel_size, int hid_dim, ncnn::Mat &out);

105 | int to_rgbs(ncnn::Mat &out, ncnn::Mat &latent, ncnn::Mat &skip,

106 | int inc, int hid_dim, int num_output,

107 | const float *to_rgbs_modulated_conv_weight,

108 | const float *to_rgbs_modulated_conv_modulation_weight,

109 | const float *to_rgbs_modulated_conv_modulation_bias,

110 | const float *to_rgbs_bias);

111 | int style_convs_modulated_conv(ncnn::Mat &x, ncnn::Mat style, int sample_mode,

112 | int demodulate, ncnn::Mat &out, int inc, int hid_dim, int num_output,

113 | const float *style_convs_modulated_conv_weight,

114 | const float *style_convs_modulated_conv_modulation_weight,

115 | const float *style_convs_modulated_conv_modulation_bias,

116 | const float *style_convs_weight,

117 | const float *style_convs_bias);

118 | int load_weights(const char *model_path, std::vector<StyleConvWeights> &style_conv_weights,

119 | std::vector<ToRgbConvWeights> &to_rgbs_conv_weights, ncnn::Mat &const_input);

120 |

121 | private:

122 | const float mean_vals[3] = {127.5f, 127.5f, 127.5f};

123 | const float norm_vals[3] = {1 / 127.5f, 1 / 127.5f, 1 / 127.5f};

124 | std::vector<StyleConvWeights> style_conv_weights;

125 | std::vector<ToRgbConvWeights> to_rgbs_conv_weights;

126 | ncnn::Mat const_input;

127 | ncnn::Net net;

128 | };

129 |

130 | #endif // GFPGAN_H

131 |

--------------------------------------------------------------------------------

/face_restoration/gfpgan/main.cpp:

--------------------------------------------------------------------------------

1 | #include

2 |

3 | #include

4 | #include "gfpgan.h" // project header

5 |

6 | static void to_ocv(const ncnn::Mat &result, cv::Mat &out)

7 | {

8 | cv::Mat cv_result_32F = cv::Mat::zeros(cv::Size(512, 512), CV_32FC3);

9 | for (int i = 0; i < result.h; i++)

10 | {

11 | for (int j = 0; j < result.w; j++)

12 | {

13 | cv_result_32F.at<cv::Vec3f>(i, j)[2] = (result.channel(0)[i * result.w + j] + 1) / 2;

14 | cv_result_32F.at<cv::Vec3f>(i, j)[1] = (result.channel(1)[i * result.w + j] + 1) / 2;

15 | cv_result_32F.at<cv::Vec3f>(i, j)[0] = (result.channel(2)[i * result.w + j] + 1) / 2;

16 | }

17 | }

18 |

19 | cv::Mat cv_result_8U;

20 | cv_result_32F.convertTo(cv_result_8U, CV_8UC3, 255.0, 0);

21 | cv_result_8U.copyTo(out);

22 | }

23 |

24 | // Test GFPGAN first

25 | int main(int argc, char **argv)

26 | {

27 | std::string in_path = "in.png";

28 | std::string out_path = "out.png";

29 | cv::Mat img = cv::imread(in_path);

30 | if (img.empty())

31 | {

32 | std::cout << "image not found" << std::endl;

33 | return -1;

34 | }

35 | // std::cout << "input size: " << img.size() << std::endl;

36 | // Test GFPGAN

37 | GFPGAN gfpgan;

38 | // gfpgan.load("models/encoder.param", "models/encoder.bin", "models/style.bin"); // about 4 s per image, but GPU usage is low

39 | // static std::string exe_path = getExeDir(); // getExeDir() is not defined in this file; exe_path is unused

40 | gfpgan.load("models/encoder.param", "models/encoder.bin", "models/style.bin"); // about 4 s per image, but GPU usage is low

41 | ncnn::Mat res3;

42 | auto start = std::chrono::system_clock::now();

43 | gfpgan.process(img, res3); // any input size; the output is always 512*512

44 | auto end = std::chrono::system_clock::now();

45 | // total elapsed time

46 | std::chrono::duration<double> elapsed_seconds = (end - start);

47 | std::cout << "Total elapsed time: " << elapsed_seconds.count() << "s" << std::endl;

48 | // std::cout << "res3 size" << res3.w << "h:" << res3.h << std::endl;

49 | // convert to OpenCV format

50 | cv::Mat res3_cv;

51 | to_ocv(res3, res3_cv);

52 | // cv::imshow("res3", res3_cv);

53 | // cv::waitKey(0);

54 | cv::imwrite(out_path, res3_cv); // save the result

55 | return 0;

56 | }
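The `to_ocv` helper in `main.cpp` converts the planar RGB network output into an interleaved 8-bit BGR image by mapping each value `v` to `(v + 1) / 2 * 255` and reversing the channel order. A pure-Python sketch of that mapping on nested lists, assuming (as the arithmetic implies) that the network output lies roughly in `[-1, 1]`; `to_bgr8` is a hypothetical name, and the explicit clamp stands in for the saturation that OpenCV's `convertTo` applies:

```python
def to_bgr8(chw_rgb):
    """Convert three planes (R, G, B) of floats in [-1, 1] to rows of (B, G, R) bytes."""
    r, g, b = chw_rgb
    height, width = len(r), len(r[0])

    def to_u8(v):
        # [-1, 1] -> [0, 255], rounded and clamped to the 8-bit range.
        return max(0, min(255, round((v + 1.0) / 2.0 * 255.0)))

    return [
        [(to_u8(b[y][x]), to_u8(g[y][x]), to_u8(r[y][x])) for x in range(width)]
        for y in range(height)
    ]
```

Reversing the channel order matters because OpenCV stores and writes images as BGR.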

--------------------------------------------------------------------------------

/face_restoration/gfpgan/models/downlaod.txt:

--------------------------------------------------------------------------------

1 | see releases

--------------------------------------------------------------------------------

/face_swap/roop/README.md:

--------------------------------------------------------------------------------

1 | # ROOP face swap

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Input/Output info

8 | ### Input shapes

9 | target [1,3,128,128]

10 | source [1,512]

11 | ### Output shape

12 | output [1,3,128,128]

13 |

14 | ## Model conversion

15 |

16 |

17 | ```shell

18 |

19 | ## 1. Download the ONNX model

20 | wget https://huggingface.co/optobsafetens/inswapper_128/resolve/main/roopVideoFace_v10.onnx

21 |

22 | ## 2. Simplify with onnxsim

23 | onnxsim roopVideoFace_v10.onnx sim.onnx

24 |

25 | ## 3. onnx2ncnn

26 | onnx2ncnn sim.onnx sim.param sim.bin

27 |

28 | ## 4. fp16 quantization

29 | ncnnoptimize sim.param sim.bin opt.param opt.bin 1

30 |

31 | ## 5. Model info

32 | Op. Total arm loongarch mips riscv vulkan x86

33 | BinaryOp 92 Y Y Y Y Y Y

34 | Split 43

35 | Reduction 24

36 | Crop 24 Y Y Y Y Y Y

37 | Convolution 20 Y Y Y Y Y Y

38 | Padding 14 Y Y Y Y Y Y

39 | UnaryOp 13 Y Y Y Y Y Y

40 | InnerProduct 12 Y Y Y Y Y Y

41 | ExpandDims 12

42 | ReLU 6 Y Y Y Y Y Y

43 | Interp 2 Y Y Y Y Y Y

44 |

45 | ```
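The model-info table above lists each operator with its count and a `Y` under every backend column that is marked for it. A short sketch that picks out the operators with no `Y` at all (here `Split`, `Reduction` and `ExpandDims`); what an empty column means is not stated in this README, so treating those rows as "no backend-specific implementation listed" is an assumption. `ops_without_backend_marks` is a hypothetical helper:

```python
TABLE = """\
Op. Total arm loongarch mips riscv vulkan x86
BinaryOp 92 Y Y Y Y Y Y
Split 43
Reduction 24
Crop 24 Y Y Y Y Y Y
Convolution 20 Y Y Y Y Y Y
Padding 14 Y Y Y Y Y Y
UnaryOp 13 Y Y Y Y Y Y
InnerProduct 12 Y Y Y Y Y Y
ExpandDims 12
ReLU 6 Y Y Y Y Y Y
Interp 2 Y Y Y Y Y Y
"""


def ops_without_backend_marks(table):
    """Return (op, count) for rows that carry only a name and a count."""
    missing = []
    for line in table.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) == 2:  # no per-backend "Y" columns on this row
            missing.append((fields[0], int(fields[1])))
    return missing
```

Such a list can help decide which layers to inspect first if the converted model behaves differently across devices.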

46 |

47 | ## Python implementation

48 | https://github.com/s0md3v/sd-webui-roop/blob/main/scripts/faceswap.py

49 |

50 | ## C++ implementation

51 |

52 | PRs welcome

53 |

54 | ## Example project

55 |

56 |

57 |

58 | ## Reference

59 |

60 | - [s0md3v/roop](https://github.com/s0md3v/roop)

61 | - [Tencent/ncnn](https://github.com/Tencent/ncnn)

62 |

63 |

64 |

--------------------------------------------------------------------------------

/image_classification/cait/Cait.cpp:

--------------------------------------------------------------------------------

1 | #include "net.h" // ncnn

2 | #include <algorithm>

3 | #include <opencv2/opencv.hpp>

4 | #include <vector>

5 | #include "iostream"

6 |

7 | static int print_topk(const std::vector<float> &cls_scores, int topk)

8 | {

9 | // partial sort topk with index

10 | int size = cls_scores.size();

11 | std::vector<std::pair<float, int>> vec;

12 | vec.resize(size);

13 | for (int i = 0; i < size; i++)

14 | {

15 | vec[i] = std::make_pair(cls_scores[i], i);

16 | }

17 |

18 | std::partial_sort(vec.begin(), vec.begin() + topk, vec.end(),

19 | std::greater<std::pair<float, int>>());

20 |

21 | // print topk and score

22 | for (int i = 0; i < topk; i++)

23 | {

24 | float score = vec[i].first;

25 | int index = vec[i].second;

26 | fprintf(stderr, "%d = %f\n", index, score);

27 | }

28 |

29 | return 0;

30 | }

31 |

32 | int main(int argc, char **argv)

33 | {

34 | cv::Mat m = cv::imread("input.png"); // read an input image (BGR format)

35 | if (m.empty())

36 | {

37 | std::cout << "read image failed" << std::endl;

38 | return -1;

39 | }

40 |

41 | ncnn::Net net;

42 | net.opt.use_vulkan_compute = true; // run on the GPU (Vulkan)

43 | net.load_param("models/cait_xxs36_384.param"); // load the model

44 | net.load_model("models/cait_xxs36_384.bin");

45 |

46 | ncnn::Mat in = ncnn::Mat::from_pixels_resize(m.data, ncnn::Mat::PIXEL_BGR2RGB, m.cols, m.rows, 384, 384); // resize to the 384x384 model input

47 | const float mean_vals[3] = {0.485f * 255.f, 0.456f * 255.f, 0.406f * 255.f}; // Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

48 | const float norm_vals[3] = {1.0 / 0.229f / 255.f, 1.0 / 0.224f / 255.f, 1.0 / 0.225f / 255.f};

49 | in.substract_mean_normalize(mean_vals, norm_vals); // pixel values are in the range 0~255

50 |

51 | ncnn::Mat out;

52 | ncnn::Extractor ex = net.create_extractor();

53 | ex.input("in0", in); // input

54 | ex.extract("out0", out); // output

55 | std::vector<float> cls_scores; // scores for all classes

56 | cls_scores.resize(out.w); // number of classes

57 | for (int j = 0; j < out.w; j++)

58 | {

59 | cls_scores[j] = out[j];

60 | }

61 | print_topk(cls_scores, 5);

62 | return 0;

63 | }
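`print_topk` pairs each score with its class index, partially sorts in descending order, and prints the first `topk` entries. A compact Python sketch of the same selection using `heapq.nlargest`; unlike the C++ version, it clamps `k` to the number of scores, which is an added safeguard rather than something the original does. `topk` is a hypothetical function name:

```python
import heapq


def topk(scores, k):
    """Return the k highest (index, score) pairs, best first."""
    k = min(k, len(scores))  # avoid asking for more entries than exist
    return heapq.nlargest(k, enumerate(scores), key=lambda pair: pair[1])
```

For example, `topk(scores, 5)` yields the five most likely class indices with their scores, in the same order `print_topk` would print them when there are no ties.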

--------------------------------------------------------------------------------

/image_classification/cait/README.md:

--------------------------------------------------------------------------------

1 | # CaiT

2 |

3 | ## Input --> Output

4 |

5 | ## Convert

6 |

7 | pt --> torchscript--> pnnx --> ncnn

8 |

9 | ```python

10 | import torch

11 | import timm

12 | import os

13 |

14 | model = timm.create_model('cait_xxs36_384', pretrained=True)

15 | model.eval()

16 |

17 | x = torch.rand(1, 3, 384, 384)

18 | traced_script_module = torch.jit.trace(model, x, strict=False)

19 | traced_script_module.save("ts.pt")

20 |

21 | # 2. ts --> pnnx --> ncnn

22 | os.system("pnnx ts.pt inputshape=[1,3,384,384]")

23 | ```

24 |

25 | ## Example project

26 |

27 |

28 | ## Reference

29 |

30 | - [lucidrains/vit-pytorch](https://github.com/lucidrains/vit-pytorch)

31 | - [rwightman/pytorch-image-models](https://github.com/rwightman/pytorch-image-models)

32 |

33 |

34 |

--------------------------------------------------------------------------------

/image_classification/cait/models/convert.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import timm

3 | import os

4 |

5 | model = timm.create_model('cait_xxs36_384', pretrained=True)

6 | model.eval()

7 |

8 | x = torch.rand(1, 3, 384, 384)

9 | traced_script_module = torch.jit.trace(model, x, strict=False)

10 | traced_script_module.save("ts.pt")

11 |

12 | # 2. ts --> pnnx --> ncnn

13 | os.system("pnnx ts.pt inputshape=[1,3,384,384]")

14 |

15 | # The following models are supported:

16 | # "cait_m36_384",

17 | # "cait_m48_448",

18 | # "cait_s24_224",

19 | # "cait_s24_384",

20 | # "cait_s36_384",

21 | # "cait_xs24_384",

22 | # "cait_xxs24_224",

23 | # "cait_xxs24_384",

24 | # "cait_xxs36_224",

25 | # "cait_xxs36_384",

26 |

--------------------------------------------------------------------------------

/image_classification/cait/models/download.txt:

--------------------------------------------------------------------------------

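1 | Model conversion succeeded, but the models in this series are very large (60 MB to 1 GB); their accuracy currently ranks first among the classification models.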

--------------------------------------------------------------------------------

/image_classification/denseNet/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/image_classification/denseNet/.DS_Store

--------------------------------------------------------------------------------

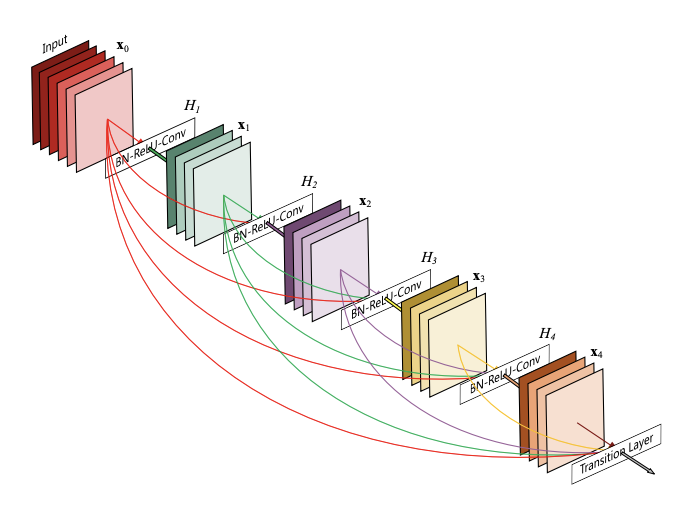

/image_classification/denseNet/README.md:

--------------------------------------------------------------------------------

1 | # densenet121

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Convert

8 |

9 | pt --> torchscript --> pnnx --> ncnn

10 |

11 | ```python

12 | import os

13 | import torch

14 | # 0. Download and initialize the PyTorch model

15 | model = torch.hub.load('pytorch/vision:v0.10.0', 'densenet121', pretrained=True)

16 | model.eval()

17 | x = torch.rand(1, 3, 224, 224)

18 | # Method 1: pnnx

19 | # 1. pt --> torchscript

20 | traced_script_module = torch.jit.trace(model, x, strict=False)

21 | traced_script_module.save("ts.pt")

22 |

23 | # 2. ts --> pnnx --> ncnn

24 | os.system("pnnx ts.pt inputshape=[1,3,224,224]") # since 2022-05-25 pnnx quantizes automatically by default, so no further ncnnoptimize pass is needed

25 |

26 | # # Method 2: onnx

27 | # # 1. pt --> onnx

28 | # torch.onnx.export(model, x, "densenet121.onnx", export_params=True)

29 |

30 | # # 2. onnx --> onnxsim

31 | # os.system("python3 -m onnxsim densenet121.onnx sim.onnx")

32 |

33 | # # 3. onnx --> ncnn

34 | # os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

35 |

36 | # # 4. ncnn --> optimize --> ncnn

37 | # os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32; 1 = fp16

38 |

39 | # Both conversion methods succeeded

40 | ```

41 |

42 | ## Example project

43 |

44 |

45 | ## Reference

46 |

47 | - [pytorch_vision_densenet](https://pytorch.org/hub/pytorch_vision_densenet/)

48 |

49 |

50 |

--------------------------------------------------------------------------------

/image_classification/denseNet/densenet.cpp:

--------------------------------------------------------------------------------

1 | #include "net.h" // ncnn

2 | #include <algorithm>

3 | #include <opencv2/opencv.hpp>

4 | #include <vector>

5 | #include "iostream"

6 |

7 | static int print_topk(const std::vector<float> &cls_scores, int topk)

8 | {

9 | // partial sort topk with index

10 | int size = cls_scores.size();

11 | std::vector<std::pair<float, int>> vec;

12 | vec.resize(size);

13 | for (int i = 0; i < size; i++)

14 | {

15 | vec[i] = std::make_pair(cls_scores[i], i);

16 | }

17 |

18 | std::partial_sort(vec.begin(), vec.begin() + topk, vec.end(),

19 | std::greater<std::pair<float, int>>());

20 |

21 | // print topk and score

22 | for (int i = 0; i < topk; i++)

23 | {

24 | float score = vec[i].first;

25 | int index = vec[i].second;

26 | fprintf(stderr, "%d = %f\n", index, score);

27 | }

28 |

29 | return 0;

30 | }

31 |

32 | int main(int argc, char **argv)

33 | {

34 | cv::Mat m = cv::imread("input.png"); // read an input image (BGR format)

35 | if (m.empty())

36 | {

37 | std::cout << "read image failed" << std::endl;

38 | return -1;

39 | }

40 |

41 | ncnn::Net net;

42 | net.opt.use_vulkan_compute = true; // run on the GPU (Vulkan)

43 | net.load_param("models/densenet121.param"); // load the model

44 | net.load_model("models/densenet121.bin");

45 |

46 | ncnn::Mat in = ncnn::Mat::from_pixels_resize(m.data, ncnn::Mat::PIXEL_BGR2RGB, m.cols, m.rows, 224, 224); // resize the image

47 | const float mean_vals[3] = {0.485f * 255.f, 0.456f * 255.f, 0.406f * 255.f}; // Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

48 | const float norm_vals[3] = {1.0 / 0.229f / 255.f, 1.0 / 0.224f / 255.f, 1.0 / 0.225f / 255.f};

49 | in.substract_mean_normalize(mean_vals, norm_vals); // pixel values are in the range 0~255

50 |

51 | ncnn::Mat out;

52 | ncnn::Extractor ex = net.create_extractor();

53 | ex.input("input.1", in); // input

54 | ex.extract("626", out); // output

55 | std::vector<float> cls_scores; // scores for all classes

56 | cls_scores.resize(out.w); // number of classes

57 | for (int j = 0; j < out.w; j++)

58 | {

59 | cls_scores[j] = out[j];

60 | }

61 | print_topk(cls_scores, 5);

62 | return 0;

63 | }
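The preprocessing comment cites `Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])`, which in torchvision acts on values already scaled to `[0, 1]`. ncnn's `substract_mean_normalize(mean_vals, norm_vals)` computes `(x - mean) * norm` on raw 0~255 pixels, so the equivalent constants are `mean * 255` and `1 / (std * 255)`. A minimal sketch that checks this equivalence numerically; the function names are hypothetical:

```python
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def ncnn_mean_norm(mean=MEAN, std=STD):
    """Derive ncnn-style constants for 0~255 pixels from [0, 1]-based mean/std."""
    mean_vals = [m * 255.0 for m in mean]
    norm_vals = [1.0 / (s * 255.0) for s in std]
    return mean_vals, norm_vals


def torch_style(pixel, channel):
    """Scale to [0, 1], then normalize with mean/std."""
    return (pixel / 255.0 - MEAN[channel]) / STD[channel]


def ncnn_style(pixel, channel, mean_vals, norm_vals):
    """(x - mean) * norm applied directly to a 0~255 pixel value."""
    return (pixel - mean_vals[channel]) * norm_vals[channel]
```

Both functions return the same value for any pixel, which is a quick way to validate hand-written `mean_vals` and `norm_vals` before running inference.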

--------------------------------------------------------------------------------

/image_classification/denseNet/models/convert.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | # 0. Download and initialize the PyTorch model

4 | model = torch.hub.load('pytorch/vision:v0.10.0', 'densenet121', pretrained=True)

5 | model.eval()

6 | x = torch.rand(1, 3, 224, 224)

7 | # Method 1: pnnx

8 | # 1. pt --> torchscript

9 | traced_script_module = torch.jit.trace(model, x, strict=False)

10 | traced_script_module.save("ts.pt")

11 |

12 | # 2. ts --> pnnx --> ncnn

13 | os.system("pnnx ts.pt inputshape=[1,3,224,224]") # since 2022-05-25 pnnx quantizes automatically by default, so no further ncnnoptimize pass is needed

14 |

15 | # # Method 2: onnx

16 | # # 1. pt --> onnx

17 | # torch.onnx.export(model, x, "densenet121.onnx", export_params=True)

18 |

19 | # # 2. onnx --> onnxsim

20 | # os.system("python3 -m onnxsim densenet121.onnx sim.onnx")

21 |

22 | # # 3. onnx --> ncnn

23 | # os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

24 |

25 | # # 4. ncnn --> optimize --> ncnn

26 | # os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1") # 0 = fp32; 1 = fp16

27 |

28 | # Both conversion methods succeeded

29 |

--------------------------------------------------------------------------------

/image_classification/efficientnet/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Baiyuetribe/ncnn-models/7fff7655adef31b39f93653d4e73251c2dc9ba00/image_classification/efficientnet/.DS_Store

--------------------------------------------------------------------------------

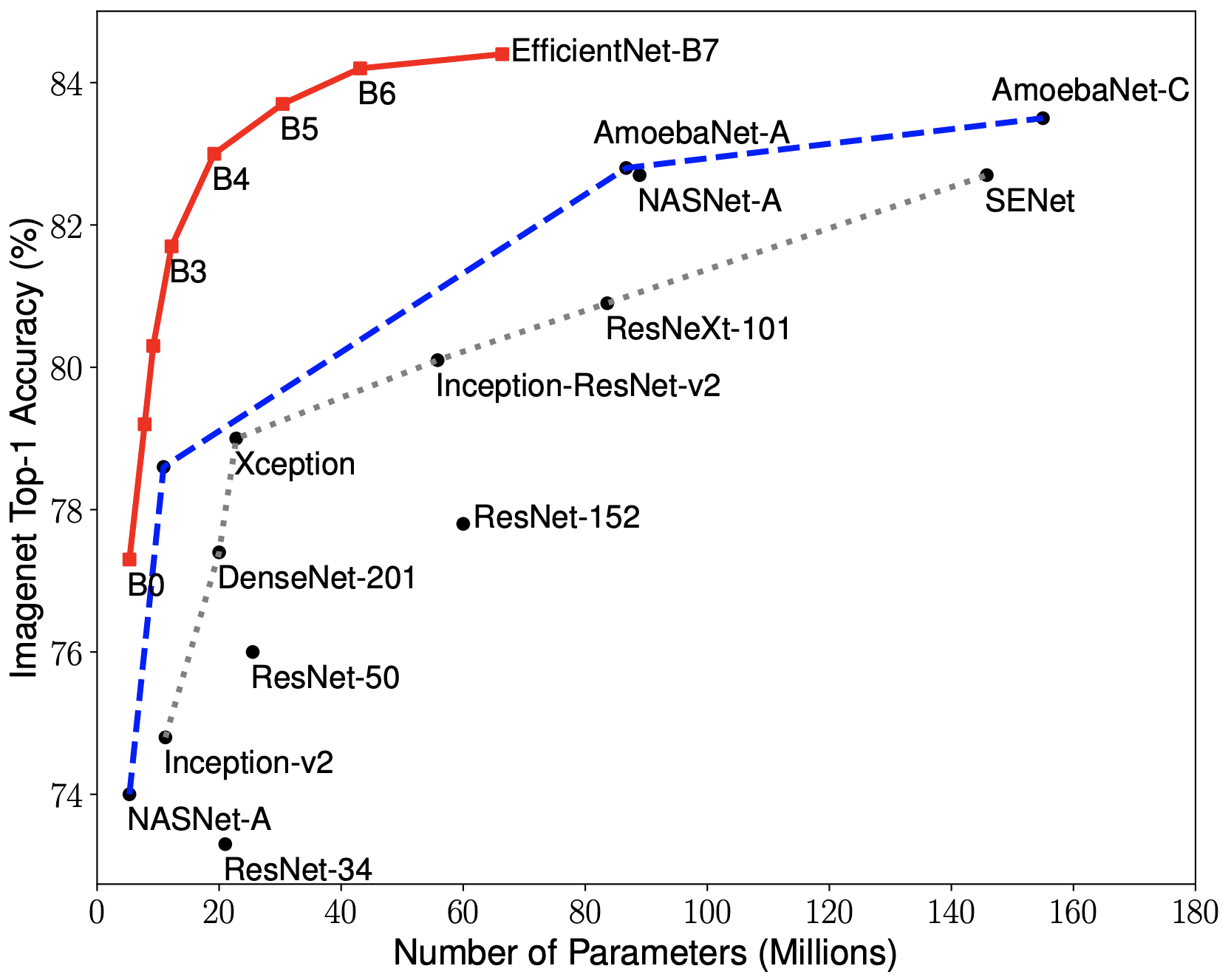

/image_classification/efficientnet/README.md:

--------------------------------------------------------------------------------

1 | # efficientnet

2 |

3 | ## Input --> Output

4 |

5 |

6 |

7 | ## Convert

8 |

9 | pt --> torchscript--> pnnx --> ncnn

10 |

11 | ```python

12 | import torch

13 | import timm # an excellent library of pretrained models

14 | import os

15 |

16 | model = timm.create_model('efficientnet_b0', pretrained=True)

17 | model.eval()

18 |

19 | x = torch.rand(1, 3, 224, 224)

20 | traced_script_module = torch.jit.trace(model, x, strict=False)

21 | traced_script_module.save("ts.pt")

22 |

23 | # 2. ts --> pnnx --> ncnn

24 | os.system("pnnx ts.pt inputshape=[1,3,224,224]")

25 | ```

26 |

27 | ## Example project

28 |

29 |

30 | ## Reference

31 |

32 | - [qubvel/efficientnet](https://github.com/qubvel/efficientnet)

33 | - [rwightman/pytorch-image-models](https://github.com/rwightman/pytorch-image-models)

34 |

35 |

36 |

--------------------------------------------------------------------------------

/image_classification/efficientnet/efficientnet.cpp:

--------------------------------------------------------------------------------

1 | #include "net.h" // ncnn

2 | #include <algorithm>

3 | #include <opencv2/opencv.hpp>

4 | #include <vector>

5 | #include "iostream"

6 |

7 | static int print_topk(const std::vector<float> &cls_scores, int topk)

8 | {

9 | // partial sort topk with index

10 | int size = cls_scores.size();

11 | std::vector<std::pair<float, int>> vec;

12 | vec.resize(size);

13 | for (int i = 0; i < size; i++)

14 | {

15 | vec[i] = std::make_pair(cls_scores[i], i);

16 | }

17 |

18 | std::partial_sort(vec.begin(), vec.begin() + topk, vec.end(),

19 | std::greater<std::pair<float, int>>());

20 |

21 | // print topk and score

22 | for (int i = 0; i < topk; i++)

23 | {

24 | float score = vec[i].first;

25 | int index = vec[i].second;

26 | fprintf(stderr, "%d = %f\n", index, score);

27 | }

28 |

29 | return 0;

30 | }

31 |

32 | int main(int argc, char **argv)

33 | {

34 | cv::Mat m = cv::imread("input.png"); // read an input image (BGR format)

35 | if (m.empty())

36 | {

37 | std::cout << "read image failed" << std::endl;

38 | return -1;

39 | }

40 |

41 | ncnn::Net net;

42 | net.opt.use_vulkan_compute = true; // run on the GPU (Vulkan)

43 | net.load_param("models/efficientnet_b0.param"); // load the model

44 | net.load_model("models/efficientnet_b0.bin");

45 |

46 | ncnn::Mat in = ncnn::Mat::from_pixels_resize(m.data, ncnn::Mat::PIXEL_BGR2RGB, m.cols, m.rows, 224, 224); // resize the image

47 | const float mean_vals[3] = {0.485f * 255.f, 0.456f * 255.f, 0.406f * 255.f}; // Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

48 | const float norm_vals[3] = {1.0 / 0.229f / 255.f, 1.0 / 0.224f / 255.f, 1.0 / 0.225f / 255.f};

49 | in.substract_mean_normalize(mean_vals, norm_vals); // pixel values are in the range 0~255

50 |

51 | ncnn::Mat out;

52 | ncnn::Extractor ex = net.create_extractor();

53 | ex.input("in0", in); // input

54 | ex.extract("out0", out); // output

55 | std::vector<float> cls_scores; // scores for all classes

56 | cls_scores.resize(out.w); // number of classes

57 | for (int j = 0; j < out.w; j++)

58 | {

59 | cls_scores[j] = out[j];

60 | }

61 | print_topk(cls_scores, 5);

62 | return 0;

63 | }
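ncnn's `substract_mean_normalize(mean_vals, norm_vals)` computes `(x - mean) * norm` per channel on raw 0~255 pixel values, while the torchvision-style `Normalize` quoted in the comment computes `(x / 255 - mean) / std`. The following is a minimal Python sketch of that arithmetic, not part of the repository; the helper names `torch_style`, `ncnn_style`, and `max_difference` are hypothetical. It checks that the two forms agree when the mean is multiplied by 255 and the norm is `1 / (std * 255)`.

```python
MEAN = (0.485, 0.456, 0.406)  # ImageNet mean, per RGB channel
STD = (0.229, 0.224, 0.225)   # ImageNet std, per RGB channel


def torch_style(pixel, channel):
    """Scale the pixel to 0~1 first, then apply (x - mean) / std."""
    return (pixel / 255.0 - MEAN[channel]) / STD[channel]


def ncnn_style(pixel, channel):
    """Apply (x - mean_val) * norm_val directly to a raw 0~255 pixel."""
    mean_val = MEAN[channel] * 255.0
    norm_val = 1.0 / STD[channel] / 255.0
    return (pixel - mean_val) * norm_val


def max_difference():
    """Largest gap between the two forms over every pixel value and channel."""
    return max(
        abs(torch_style(p, c) - ncnn_style(p, c))
        for p in range(256)
        for c in range(3)
    )


if __name__ == "__main__":
    print(f"max difference: {max_difference():.2e}")
```

Dividing the mean by 255 instead of multiplying would leave the input almost unshifted, so the constants are worth checking whenever a preprocessing pipeline is ported from PyTorch.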

--------------------------------------------------------------------------------

/image_classification/efficientnet/models/convert.py:

--------------------------------------------------------------------------------

import torch
import timm  # an excellent library of pretrained models
import os

model = timm.create_model('efficientnet_b0', pretrained=True)  # efficientnet_b0~...
model.eval()

# 1. pt --> torchscript
x = torch.rand(1, 3, 224, 224)
traced_script_module = torch.jit.trace(model, x, strict=False)
traced_script_module.save("ts.pt")

# 2. ts --> pnnx --> ncnn
os.system("pnnx ts.pt inputshape=[1,3,224,224]")

# All of the following models are supported
# "efficientnet_b0",
# "efficientnet_b1",
# "efficientnet_b1_pruned",
# "efficientnet_b2",
# "efficientnet_b2_pruned",
# "efficientnet_b3",
# "efficientnet_b3_pruned",
# "efficientnet_b4",
# "efficientnet_el",
# "efficientnet_el_pruned",
# "efficientnet_em",
# "efficientnet_es",
# "efficientnet_es_pruned",
# "efficientnet_lite0",

--------------------------------------------------------------------------------

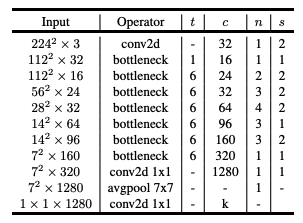

/image_classification/mobilenet_v2/README.md:

--------------------------------------------------------------------------------

# mobilenet_v2

## Input --> Output

## Convert

pt --> torchscript --> pnnx --> ncnn

```python
import os
import torch
# 0. Download and initialize the pt model
model = torch.hub.load('pytorch/vision:v0.10.0', 'mobilenet_v2', pretrained=True)
model.eval()  # both methods below succeed, and the inference results are the same
# inference
x = torch.randn(1, 3, 224, 224)
# Method 1: pnnx
# 1. pt --> torchscript
traced_script_module = torch.jit.trace(model, x, strict=False)
traced_script_module.save("ts.pt")

# 2. ts --> pnnx --> ncnn
os.system("pnnx ts.pt inputshape=[1,3,224,224]")  # since 2022-05-25 pnnx quantizes automatically by default, so no separate optimize step is needed

# Method 2: onnx
# # 1. pt ---> onnx
# torch_out = torch.onnx._export(model, x, "mobilenet_v2.onnx", export_params=True)

# # 2. onnx --> onnxsim
# os.system("python3 -m onnxsim mobilenet_v2.onnx sim.onnx")

# # 3. onnx --> ncnn
# os.system("onnx2ncnn sim.onnx ncnn.param ncnn.bin")

# # 4. ncnn --> optimize ---> ncnn
# os.system("ncnnoptimize ncnn.param ncnn.bin opt.param opt.bin 1")  # 0 means fp32; 1 means fp16

# Both conversions succeed
```

Both conversion methods succeed and produce the same inference results.
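One way to check that two converted models agree is to compare their output score vectors directly. The sketch below is a plain-Python illustration, assuming the scores from each model have already been collected into lists. `top_k` and `outputs_match` are hypothetical helper names, the sample values are made up, and the tolerance is an arbitrary choice (fp16 weights will generally need a looser one than fp32).

```python
def top_k(scores, k):
    """Return the k highest (index, score) pairs, best first."""
    k = min(k, len(scores))
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def outputs_match(scores_a, scores_b, k=5, tol=1e-2):
    """True if both vectors rank the same top-k classes and differ by at most tol."""
    if len(scores_a) != len(scores_b):
        return False
    classes_a = [index for index, _ in top_k(scores_a, k)]
    classes_b = [index for index, _ in top_k(scores_b, k)]
    max_gap = max((abs(a - b) for a, b in zip(scores_a, scores_b)), default=0.0)
    return classes_a == classes_b and max_gap <= tol


if __name__ == "__main__":
    pnnx_scores = [0.1, 2.5, -0.3, 1.7]      # made-up values for illustration
    onnx_scores = [0.1, 2.501, -0.3, 1.699]  # made-up values for illustration
    print(top_k(pnnx_scores, 2))
    print(outputs_match(pnnx_scores, onnx_scores, k=2))
```

Comparing both the top-k ranking and the largest element-wise gap catches the two common failure modes: a preprocessing mismatch usually changes the ranking, while precision differences only move the scores slightly.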

## Example project

## Reference

- [pytorch_vision_mobilenet_v2](https://pytorch.org/hub/pytorch_vision_mobilenet_v2/)

--------------------------------------------------------------------------------

/image_classification/mobilenet_v2/mobilenet_v2.cpp:

--------------------------------------------------------------------------------

#include "net.h" // ncnn
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <cstdio>
#include <functional>
#include <iostream>
#include <vector>

static int print_topk(const std::vector<float> &cls_scores, int topk)
{
    // partial sort topk with index
    int size = cls_scores.size();
    topk = std::min(topk, size); // never sort past the end of the vector
    std::vector<std::pair<float, int>> vec;
    vec.resize(size);
    for (int i = 0; i < size; i++)
    {
        vec[i] = std::make_pair(cls_scores[i], i);
    }

    std::partial_sort(vec.begin(), vec.begin() + topk, vec.end(),
                      std::greater<std::pair<float, int>>());

    // print topk and score
    for (int i = 0; i < topk; i++)
    {
        float score = vec[i].first;
        int index = vec[i].second;
        fprintf(stderr, "%d = %f\n", index, score);
    }
