├── .github

│   └── ISSUE_TEMPLATE

│       ├── bug_report.md

│       ├── custom.md

│       └── feature_request.md

├── EVMOverview.md

├── Internal-ethapi

├── README.md

├── accounts源码分析.md

├── a黄皮书里面出现的所有的符号索引.md

├── blockvalidator&blockprocessor.md

├── cmd-geth.md

├── cmd.md

├── consensus.md

├── core-blockchain源码分析.md

├── core-bloombits源码分析.md

├── core-chain_indexer源码解析.md

├── core-genesis创世区块源码分析.md

├── core-state-process源码分析.md

├── core-state源码分析.md

├── core-txlist交易池的一些数据结构源码分析.md

├── core-txpool交易池源码分析.md

├── core-vm-jumptable-instruction.md

├── core-vm-stack-memory源码分析.md

├── core-vm源码分析.md

├── eth-bloombits和filter源码分析.md

├── eth-downloader-peer源码分析.md

├── eth-downloader-queue.go源码分析.md

├── eth-downloader-statesync.md

├── eth-downloader源码分析.md

├── eth-fetcher源码分析.md

├── ethdb源码分析.md

├── eth以太坊协议分析.md

├── eth源码分析.md

├── event源码分析.md

├── geth启动流程分析.md

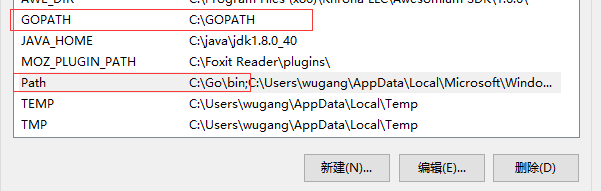

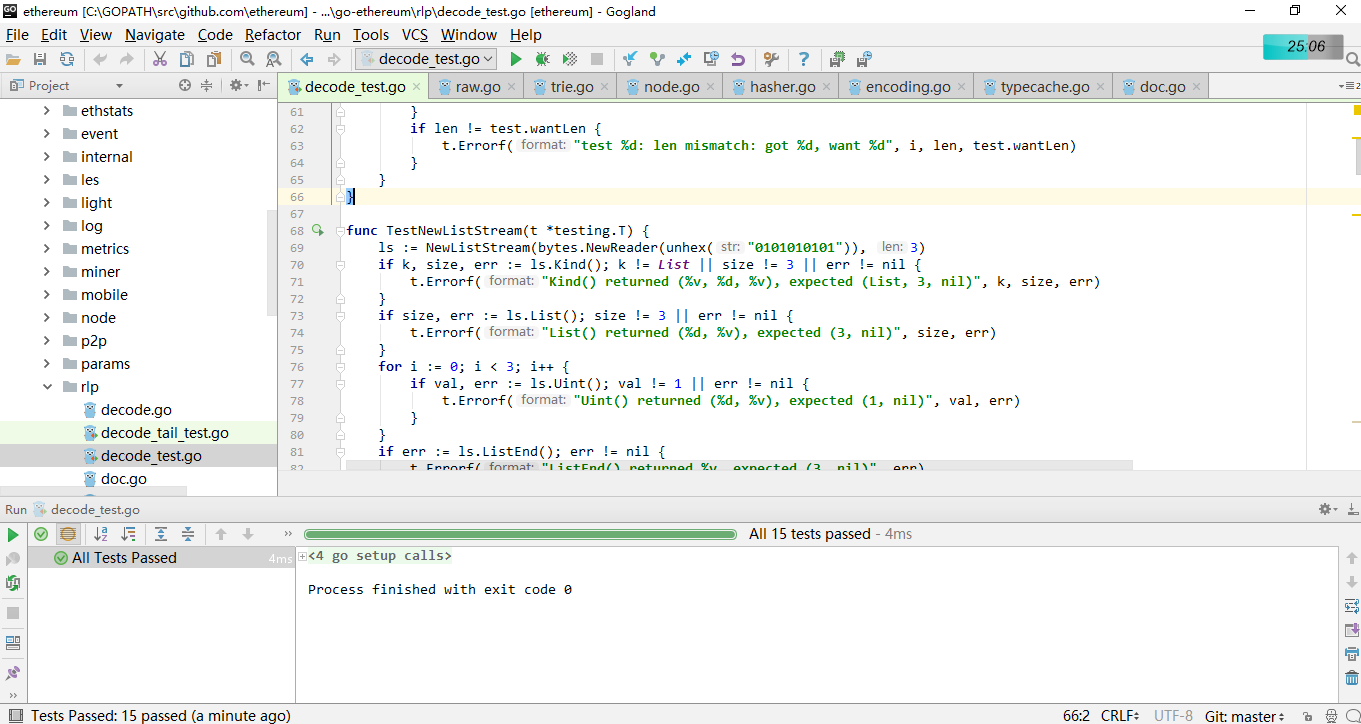

├── go-ethereum源码阅读环境搭建.md

├── hashimoto.md

├── miner-module.md

├── node源码分析.md

├── p2p-database.go源码分析.md

├── p2p-dial.go源码分析.md

├── p2p-nat源码分析.md

├── p2p-peer.go源码分析.md

├── p2p-rlpx节点之间的加密链路.md

├── p2p-server.go源码分析.md

├── p2p-table.go源码分析.md

├── p2p-udp.go源码分析.md

├── p2p源码分析.md

├── picture

│   ├── Consensus-architecture.png

│   ├── EVM-1.jpg

│   ├── EVM-2.png

│   ├── README.md

│   ├── accounts.png

│   ├── arch.jpg

│   ├── block-seal-process.png

│   ├── block-verification-process.png

│   ├── bloom_1.png

│   ├── bloom_2.png

│   ├── bloom_3.png

│   ├── bloom_4.png

│   ├── bloom_5.png

│   ├── bloom_6.png

│   ├── chainindexer_1.png

│   ├── chainindexer_2.png

│   ├── geth_1.png

│   ├── go_env_1.png

│   ├── go_env_2.png

│   ├── hashimoto-flow.png

│   ├── hp_1.png

│   ├── matcher_1.png

│   ├── nat_1.png

│   ├── nat_2.png

│   ├── nat_3.png

│   ├── patricia_tire.png

│   ├── pow_hashimoto.png

│   ├── rlp_1.png

│   ├── rlp_2.png

│   ├── rlp_3.png

│   ├── rlp_4.png

│   ├── rlp_5.png

│   ├── rlp_6.png

│   ├── rlpx_1.png

│   ├── rlpx_2.png

│   ├── rlpx_3.png

│   ├── rpc_1.png

│   ├── rpc_2.png

│   ├── sign_ether.png

│   ├── sign_ether_value.png

│   ├── sign_exec_func.png

│   ├── sign_exec_model.png

│   ├── sign_func_1.png

│   ├── sign_func_2.png

│   ├── sign_gas_log.png

│   ├── sign_gas_total.png

│   ├── sign_h_b.png

│   ├── sign_h_c.png

│   ├── sign_h_d.png

│   ├── sign_h_e.png

│   ├── sign_h_g.png

│   ├── sign_h_i.png

│   ├── sign_h_l.png

│   ├── sign_h_m.png

│   ├── sign_h_n.png

│   ├── sign_h_o.png

│   ├── sign_h_p.png

│   ├── sign_h_r.png

│   ├── sign_h_s.png

│   ├── sign_h_t.png

│   ├── sign_h_x.png

│   ├── sign_homestead.png

│   ├── sign_i_a.png

│   ├── sign_i_b.png

│   ├── sign_i_d.png

│   ├── sign_i_e.png

│   ├── sign_i_h.png

│   ├── sign_i_o.png

│   ├── sign_i_p.png

│   ├── sign_i_s.png

│   ├── sign_i_v.png

│   ├── sign_l1.png

│   ├── sign_last_item.png

│   ├── sign_last_item_1.png

│   ├── sign_ls.png

│   ├── sign_m_g.png

│   ├── sign_m_w.png

│   ├── sign_machine_state.png

│   ├── sign_math_and.png

│   ├── sign_math_any.png

│   ├── sign_math_or.png

│   ├── sign_memory.png

│   ├── sign_pa.png

│   ├── sign_placeholder_1.png

│   ├── sign_placeholder_2.png

│   ├── sign_placeholder_3.png

│   ├── sign_placeholder_4.png

│   ├── sign_r_bloom.png

│   ├── sign_r_gasused.png

│   ├── sign_r_i.png

│   ├── sign_r_log.png

│   ├── sign_r_logentry.png

│   ├── sign_r_state.png

│   ├── sign_receipt.png

│   ├── sign_seq_item.png

│   ├── sign_set_b.png

│   ├── sign_set_b32.png

│   ├── sign_set_p.png

│   ├── sign_set_p256.png

│   ├── sign_stack.png

│   ├── sign_stack_added.png

│   ├── sign_stack_removed.png

│   ├── sign_state_1.png

│   ├── sign_state_10.png

│   ├── sign_state_2.png

│   ├── sign_state_3.png

│   ├── sign_state_4.png

│   ├── sign_state_5.png

│   ├── sign_state_6.png

│   ├── sign_state_7.png

│   ├── sign_state_8.png

│   ├── sign_state_9.png

│   ├── sign_state_balance.png

│   ├── sign_state_code.png

│   ├── sign_state_nonce.png

│   ├── sign_state_root.png

│   ├── sign_substate_a.png

│   ├── sign_substate_al.png

│   ├── sign_substate_ar.png

│   ├── sign_substate_as.png

│   ├── sign_t_data.png

│   ├── sign_t_gaslimit.png

│   ├── sign_t_gasprice.png

│   ├── sign_t_lt.png

│   ├── sign_t_nonce.png

│   ├── sign_t_ti.png

│   ├── sign_t_to.png

│   ├── sign_t_tr.png

│   ├── sign_t_ts.png

│   ├── sign_t_value.png

│   ├── sign_t_w.png

│   ├── sign_u_i.png

│   ├── sign_u_m.png

│   ├── sign_u_pc.png

│   ├── sign_u_s.png

│   ├── state_1.png

│   ├── trie_1.jpg

│   ├── trie_1.png

│   ├── trie_10.png

│   ├── trie_2.png

│   ├── trie_3.png

│   ├── trie_4.png

│   ├── trie_5.png

│   ├── trie_6.png

│   ├── trie_7.png

│   ├── trie_8.png

│   ├── trie_9.png

│   └── worldstatetrie.png

├── pos介绍proofofstake.md

├── pow一致性算法.md

├── readinguide4rlp.md

├── references

│   ├── Kademlia协议原理简介.pdf

│   └── readme.md

├── rlp文件解析.md

├── rpc源码分析.md

├── todo-p2p加密算法.md

├── todo-用户账户-密钥-签名的关系.md

├── trie源码分析.md

├── types.md

├── 以太坊fast sync算法.md

├── 以太坊测试网络Clique_PoA介绍.md

├── 以太坊随机数生成方式.md

└── 封装的一些基础工具.md

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Desktop (please complete the following information):**

27 | - OS: [e.g. iOS]

28 | - Browser [e.g. chrome, safari]

29 | - Version [e.g. 22]

30 |

31 | **Smartphone (please complete the following information):**

32 | - Device: [e.g. iPhone6]

33 | - OS: [e.g. iOS8.1]

34 | - Browser [e.g. stock browser, safari]

35 | - Version [e.g. 22]

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/custom.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Custom issue template

3 | about: Describe this issue template's purpose here.

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 |

11 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/EVMOverview.md:

--------------------------------------------------------------------------------

1 | # EVM Overview

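2 | ## 1. Design goals

3 |

4 | Ethereum's design rationale lists the following design goals for the EVM:

5 |

6 | - Simplicity: as few and as low-level opcodes as possible, as few data types as possible, and as few virtual-machine constructs as possible.

7 | - Determinism: the VM specification leaves no room for ambiguity, and results must be completely deterministic. Computation steps must also be precise so that gas consumption can be measured.

8 | - Space efficiency: EVM assembly should be as compact as possible.

9 | - Specialization for expected applications: applications built on the VM must be able to handle 20-byte addresses and custom cryptography with 32-byte values, perform the modular arithmetic used in custom cryptography, read block and transaction data, and interact with state.

10 | - Simple security: it should be easy to build a gas cost model for operations so that the VM cannot be exploited.

11 | - Optimization friendliness: the VM should be easy to optimize so that just-in-time (JIT) compilation and other accelerated versions of the VM can be built.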

12 |

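13 | ## 2. Characteristics

14 |

15 | - Distinguishes temporary storage (Memory, which exists per VM instance and disappears when VM execution ends) from permanent storage (Storage, which lives in the blockchain state layer).

16 | - Uses a stack-based architecture.

17 | - The machine word size is 32 bytes.

18 | - Uses a custom virtual machine instead of reusing the Java VM, a Lisp dialect, or the Lua VM.

19 | - Uses variable, expandable memory.

20 | - Limits the call depth to 1024.

21 | - Has no types.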

22 |

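23 | ## 3. How it works

24 | A smart contract is usually developed by writing the logic in Solidity, compiling it to bytecode, and publishing it to Ethereum. Ethereum's EVM module executes and calls contracts: on a call it looks up the code (the contract's bytecode) by contract address, sets up an execution environment, and loads the code into the EVM for execution.

25 |

26 | Figure 1 shows the overall flow and Figure 2 shows how instructions execute: instructions are fetched from the EVM code one after another and executed, gas bounds loops, the stack holds operands, memory holds temporary variables, and the storage in the account state holds persistent data.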
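The fetch-and-execute loop described above can be sketched as a toy stack machine. This is a minimal illustration, not the go-ethereum interpreter: the opcodes, their numeric values, and the flat cost of 1 gas per instruction are all assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
)

// Hypothetical opcodes for this sketch; they are not the real EVM opcode values.
const (
	opStop byte = iota
	opPush      // pushes the next code byte onto the stack
	opAdd       // pops two values and pushes their sum
	opJump      // pops a target offset and jumps to it
)

var (
	errOutOfGas       = errors.New("out of gas")
	errStackUnderflow = errors.New("stack underflow")
	errBadJump        = errors.New("invalid jump target")
	errTruncated      = errors.New("truncated push")
)

// run executes code until STOP, the end of the code, or gas exhaustion.
// Every instruction costs 1 gas here; real EVM costs differ per opcode.
func run(code []byte, gas uint64) (stack []uint64, gasLeft uint64, err error) {
	for pc := 0; pc < len(code); {
		if gas == 0 {
			return stack, 0, errOutOfGas
		}
		gas--
		switch op := code[pc]; op {
		case opStop:
			return stack, gas, nil
		case opPush:
			if pc+1 >= len(code) {
				return stack, gas, errTruncated
			}
			stack = append(stack, uint64(code[pc+1]))
			pc += 2
		case opAdd:
			if len(stack) < 2 {
				return stack, gas, errStackUnderflow
			}
			n := len(stack)
			stack = append(stack[:n-2], stack[n-2]+stack[n-1])
			pc++
		case opJump:
			if len(stack) < 1 {
				return stack, gas, errStackUnderflow
			}
			target := stack[len(stack)-1]
			stack = stack[:len(stack)-1]
			if target >= uint64(len(code)) {
				return stack, gas, errBadJump
			}
			pc = int(target)
		default:
			return stack, gas, fmt.Errorf("invalid opcode %d", op)
		}
	}
	return stack, gas, nil
}

func main() {
	// 2 + 3, then stop.
	stack, gasLeft, err := run([]byte{opPush, 2, opPush, 3, opAdd, opStop}, 10)
	fmt.Println(stack, gasLeft, err)

	// An endless loop (push 0, jump to 0) is cut short once the gas runs out.
	_, _, err = run([]byte{opPush, 0, opJump}, 100)
	fmt.Println(err)
}
```

The second call shows why gas matters: the program itself never terminates, so the gas budget is the only thing that stops it.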

27 |

28 |

29 |

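30 | ## 4. Code structure

31 | The EVM module contains many files. A short description of each file comes first, to give a rough idea of what each one provides.

32 |

33 | ├── analysis.go // jump destination analysis

34 | ├── common.go

35 | ├── contract.go // contract data structure

36 | ├── contracts.go // precompiled contracts

37 | ├── errors.go

38 | ├── evm.go // externally exposed interface

39 | ├── gas.go // computes the gas consumed by instructions

40 | ├── gas_table.go // table of gas cost functions for instructions

41 | ├── gen_structlog.go

42 | ├── instructions.go // instruction implementations

43 | ├── interface.go // defines the StateDB interface

44 | ├── interpreter.go // interpreter

45 | ├── intpool.go // pool of big integers

46 | ├── int_pool_verifier_empty.go

47 | ├── int_pool_verifier.go

48 | ├── jump_table.go // table mapping opcodes to their operations (execution, cost, validation)

49 | ├── logger.go // state logging

50 | ├── memory.go // EVM memory

51 | ├── memory_table.go // EVM memory size table, used to measure the memory an operation needs

52 | ├── noop.go

53 | ├── opcodes.go // opcodes and related mappings

54 | ├── runtime

55 | │ ├── env.go // execution environment

56 | │ ├── fuzz.go

57 | │ └── runtime.go // run interface, used for testing

58 | ├── stack.go // stack

59 | └── stack_table.go // stack validation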

60 |

61 |

--------------------------------------------------------------------------------

/Internal-ethapi:

--------------------------------------------------------------------------------

1 | In internal/ethapi/api.go, NewAccount creates a new account. This API can be called from the interactive console or over the RPC interface.

2 |

3 | func (s *PrivateAccountAPI) NewAccount(password string) (common.Address, error) {

4 | acc, err := fetchKeystore(s.am).NewAccount(password)

5 | if err == nil {

6 | return acc.Address, nil

7 | }

8 | return common.Address{}, err

9 | }

10 |

11 | It first calls fetchKeystore to obtain the KeyStore object from the account manager's backends, then calls NewAccount in keystore.go to create the new account.

12 | func (ks *KeyStore) NewAccount(passphrase string) (accounts.Account, error) {

13 | _, account, err := storeNewKey(ks.storage, crand.Reader, passphrase)

14 | if err != nil {

15 | return accounts.Account{}, err

16 | }

17 | ks.cache.add(account)

18 | ks.refreshWallets()

19 | return account, nil

20 | }

21 | NewAccount calls storeNewKey.

22 |

23 | func storeNewKey(ks keyStore, rand io.Reader, auth string) (*Key, accounts.Account, error) {

24 | key, err := newKey(rand)

25 | if err != nil {

26 | return nil, accounts.Account{}, err

27 | }

28 | a := accounts.Account{Address: key.Address, URL: accounts.URL{Scheme: KeyStoreScheme, Path: ks.JoinPath(keyFileName(key.Address))}}

29 | if err := ks.StoreKey(a.URL.Path, key, auth); err != nil {

30 | zeroKey(key.PrivateKey)

31 | return nil, a, err

32 | }

33 | return key, a, err

34 | }

35 | Note that the first parameter is a keyStore, which is an interface type.

36 |

37 | type keyStore interface {

38 | GetKey(addr common.Address, filename string, auth string) (*Key, error)

39 | StoreKey(filename string, k *Key, auth string) error

40 | JoinPath(filename string) string

41 | }

42 | storeNewKey first calls newKey, which generates a public/private key pair using elliptic-curve cryptography.

43 |

44 | func newKey(rand io.Reader) (*Key, error) {

45 | privateKeyECDSA, err := ecdsa.GenerateKey(crypto.S256(), rand)

46 | if err != nil {

47 | return nil, err

48 | }

49 | return newKeyFromECDSA(privateKeyECDSA), nil

50 | }

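51 | It then calls the StoreKey implementation that matches the concrete type of ks. When a new account is created with the geth account new command, the implementation in accounts/keystore/keystore_passphrase.go is used: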

52 |

53 | func (ks keyStorePassphrase) StoreKey(filename string, key *Key, auth string) error {

54 | keyjson, err := EncryptKey(key, auth, ks.scryptN, ks.scryptP)

55 | if err != nil {

56 | return err

57 | }

58 | return writeKeyFile(filename, keyjson)

59 | }

60 |

61 | Let's look into EncryptKey:

62 | func EncryptKey(key *Key, auth string, scryptN, scryptP int) ([]byte, error) {

63 | authArray := []byte(auth)

64 | salt := randentropy.GetEntropyCSPRNG(32)

65 | derivedKey, err := scrypt.Key(authArray, salt, scryptN, scryptR, scryptP, scryptDKLen)

66 | if err != nil {

67 | return nil, err

68 | }

69 | encryptKey := derivedKey[:16]

70 | keyBytes := math.PaddedBigBytes(key.PrivateKey.D, 32)

71 | iv := randentropy.GetEntropyCSPRNG(aes.BlockSize) // 16

72 | cipherText, err := aesCTRXOR(encryptKey, keyBytes, iv)

73 | if err != nil {

74 | return nil, err

75 | }

76 | mac := crypto.Keccak256(derivedKey[16:32], cipherText)

77 | scryptParamsJSON := make(map[string]interface{}, 5)

78 | scryptParamsJSON["n"] = scryptN

79 | scryptParamsJSON["r"] = scryptR

80 | scryptParamsJSON["p"] = scryptP

81 | scryptParamsJSON["dklen"] = scryptDKLen

82 | scryptParamsJSON["salt"] = hex.EncodeToString(salt)

83 | cipherParamsJSON := cipherparamsJSON{

84 | IV: hex.EncodeToString(iv),

85 | }

86 | cryptoStruct := cryptoJSON{

87 | Cipher: "aes-128-ctr",

88 | CipherText: hex.EncodeToString(cipherText),

89 | CipherParams: cipherParamsJSON,

90 | KDF: keyHeaderKDF,

91 | KDFParams: scryptParamsJSON,

92 | MAC: hex.EncodeToString(mac),

93 | }

94 | encryptedKeyJSONV3 := encryptedKeyJSONV3{

95 | hex.EncodeToString(key.Address[:]),

96 | cryptoStruct,

97 | key.Id.String(),

98 | version,

99 | }

100 | return json.Marshal(encryptedKeyJSONV3)

101 | }

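102 | The key parameter of EncryptKey is the account key to encrypt, including its ID, key pair, and address. The auth parameter is the password entered by the user, and scryptN and scryptP are the N and P parameters of the scrypt algorithm. The function first derives derivedKey from the password with scrypt. It then encrypts the private key with AES-128 in CTR mode, using the first 16 bytes of derivedKey as the key, to obtain cipherText. Finally it hashes bytes 16 to 32 of derivedKey together with cipherText to obtain mac. The mac acts as an integrity check: on decryption it verifies the password and detects tampering. EncryptKey returns a JSON string, which StoreKey then writes to a file.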
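The encrypt-then-MAC step can be tried out with the standard library alone. The sketch below assumes the 32-byte derivedKey has already been produced by scrypt and substitutes SHA-256 for Keccak-256, so its MAC values will not match a real keystore file. The names seal, open, and computeMAC are hypothetical helpers, not geth functions.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/sha256"
	"crypto/subtle"
	"errors"
	"fmt"
)

var errMAC = errors.New("MAC mismatch: wrong password or modified ciphertext")

// aesCTRXOR encrypts or decrypts with AES in CTR mode; the operation is its own inverse.
func aesCTRXOR(key, in, iv []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	out := make([]byte, len(in))
	cipher.NewCTR(block, iv).XORKeyStream(out, in)
	return out, nil
}

// computeMAC hashes the second half of the derived key together with the ciphertext.
// Assumption: SHA-256 stands in for the Keccak-256 hash that geth uses.
func computeMAC(derivedKey, cipherText []byte) []byte {
	h := sha256.New()
	h.Write(derivedKey[16:32])
	h.Write(cipherText)
	return h.Sum(nil)
}

// seal encrypts the private key with the first 16 bytes of derivedKey and returns the MAC.
func seal(derivedKey, privateKey, iv []byte) (cipherText, mac []byte, err error) {
	cipherText, err = aesCTRXOR(derivedKey[:16], privateKey, iv)
	if err != nil {
		return nil, nil, err
	}
	return cipherText, computeMAC(derivedKey, cipherText), nil
}

// open verifies the MAC before decrypting, so tampering is detected first.
func open(derivedKey, cipherText, iv, mac []byte) ([]byte, error) {
	if subtle.ConstantTimeCompare(computeMAC(derivedKey, cipherText), mac) != 1 {
		return nil, errMAC
	}
	return aesCTRXOR(derivedKey[:16], cipherText, iv)
}

func main() {
	derivedKey := make([]byte, 32) // placeholder for the scrypt output
	for i := range derivedKey {
		derivedKey[i] = byte(i)
	}
	iv := make([]byte, aes.BlockSize) // fixed only to keep the demo deterministic; use a random IV in practice
	privateKey := []byte("0123456789abcdef0123456789abcdef")

	cipherText, mac, err := seal(derivedKey, privateKey, iv)
	if err != nil {
		panic(err)
	}
	plain, err := open(derivedKey, cipherText, iv, mac)
	fmt.Println(string(plain), err)

	cipherText[0] ^= 1 // simulate tampering with the stored file
	_, err = open(derivedKey, cipherText, iv, mac)
	fmt.Println(err)
}
```

Checking the MAC before decrypting means a wrong password and a modified file fail in the same way, without ever producing a bogus private key.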

103 |

104 | Listing all accounts

105 | The entry point for listing all accounts is also in internal/ethapi/api.go.

106 | func (s *PrivateAccountAPI) ListAccounts() []common.Address {

107 | addresses := make([]common.Address, 0) // return [] instead of nil if empty

108 | for _, wallet := range s.am.Wallets() {

109 | for _, account := range wallet.Accounts() {

110 | addresses = append(addresses, account.Address)

111 | }

112 | }

113 | return addresses

114 | }

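115 | This method reads all wallets from the account manager and collects the addresses of all their accounts.

116 |

117 | As readers familiar with the geth account command may recall, it also has subcommands such as update and import; they are not discussed here.

118 |

119 | Sending a transaction

120 | The entry point for sending a transaction is in internal/ethapi/api.go.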

121 | func (s *PublicTransactionPoolAPI) SendTransaction(ctx context.Context, args SendTxArgs) (common.Hash, error) {

122 | account := accounts.Account{Address: args.From}

123 | wallet, err := s.b.AccountManager().Find(account)

124 | if err != nil {

125 | return common.Hash{}, err

126 | }

127 | if args.Nonce == nil {

128 | s.nonceLock.LockAddr(args.From)

129 | defer s.nonceLock.UnlockAddr(args.From)

130 | }

131 | if err := args.setDefaults(ctx, s.b); err != nil {

132 | return common.Hash{}, err

133 | }

134 | tx := args.toTransaction()

135 | var chainID *big.Int

136 | if config := s.b.ChainConfig(); config.IsEIP155(s.b.CurrentBlock().Number()) {

137 | chainID = config.ChainId

138 | }

139 | signed, err := wallet.SignTx(account, tx, chainID)

140 | if err != nil {

141 | return common.Hash{}, err

142 | }

143 | return submitTransaction(ctx, s.b, signed)

144 | }

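145 | To send a transaction, the function first builds an account from the from argument, representing the sender. It then obtains that account's wallet through the account manager's Find method (described earlier). The next step is slightly unusual. Ethereum uses an account-balance model. In a UTXO model, preventing double spending is straightforward: an output cannot be referenced by two inputs, which naturally rules out double spends when a transfer is made. Ethereum's account model has no such convenience. Instead, every account has a nonce equal to the number of transactions the account has sent, and every transaction must carry a nonce. A transaction whose nonce is lower than the account's nonce is invalid, and a transaction whose nonce is higher than the account's nonce cannot be included in a block until the gap is filled, which ensures that an account's transactions execute in order. If two transactions carry the same nonce, only one of them can succeed. When the caller does not supply a nonce, the code locks the nonce for the address so that concurrent calls cannot be assigned the same value. Next, args.setDefaults(ctx, s.b) fills in default values for the transaction. Finally, toTransaction creates the transaction: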
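The nonce rule can be illustrated with a small classifier. This sketch assumes the account nonce counts the transactions the account has already sent, so the next executable transaction carries exactly that value. The type and function names are hypothetical and do not come from go-ethereum.

```go
package main

import "fmt"

// nonceStatus describes how a transaction nonce relates to the account nonce.
type nonceStatus int

const (
	nonceStale      nonceStatus = iota // lower than the account nonce: already used, invalid
	nonceExecutable                    // equal to the account nonce: can be included next
	nonceQueued                        // higher than the account nonce: must wait for the gap to fill
)

func (s nonceStatus) String() string {
	switch s {
	case nonceStale:
		return "stale"
	case nonceExecutable:
		return "executable"
	default:
		return "queued"
	}
}

// classifyNonce compares the nonce carried by a transaction with the
// number of transactions the sending account has already sent.
func classifyNonce(accountNonce, txNonce uint64) nonceStatus {
	switch {
	case txNonce < accountNonce:
		return nonceStale
	case txNonce == accountNonce:
		return nonceExecutable
	default:
		return nonceQueued
	}
}

func main() {
	const accountNonce = 5 // the account has sent five transactions so far
	for _, txNonce := range []uint64{4, 5, 7} {
		fmt.Println(txNonce, classifyNonce(accountNonce, txNonce))
	}
}
```

Two transactions with the same nonce both classify as executable against the same account state, but once one of them is included the account nonce advances and the other becomes stale.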

146 |

147 | func (args *SendTxArgs) toTransaction() *types.Transaction {

148 | var input []byte

149 | if args.Data != nil {

150 | input = *args.Data

151 | } else if args.Input != nil {

152 | input = *args.Input

153 | }

154 | if args.To == nil {

155 | return types.NewContractCreation(uint64(*args.Nonce), (*big.Int)(args.Value), uint64(*args.Gas), (*big.Int)(args.GasPrice), input)

156 | }

157 | return types.NewTransaction(uint64(*args.Nonce), *args.To, (*big.Int)(args.Value), uint64(*args.Gas), (*big.Int)(args.GasPrice), input)

158 | }

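159 | There are two branches. If the to argument is absent, the transaction is a contract creation; if it is present, the transaction is an ordinary transfer. Digging deeper, both cases end up calling newTransaction.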

160 |

161 | func NewTransaction(nonce uint64, to common.Address, amount *big.Int, gasLimit uint64, gasPrice *big.Int, data []byte) *Transaction {

162 | return newTransaction(nonce, &to, amount, gasLimit, gasPrice, data)

163 | }

164 | func NewContractCreation(nonce uint64, amount *big.Int, gasLimit uint64, gasPrice *big.Int, data []byte) *Transaction {

165 | return newTransaction(nonce, nil, amount, gasLimit, gasPrice, data)

166 | }

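167 | newTransaction is simple: it just returns a Transaction instance. Back in SendTransaction, once the transaction is created, ChainConfig returns the chain configuration. If EIP155 is active at the current block, the chain ID is passed to the signer (to be explored further). SignTx then signs the transaction so that its authenticity can be verified. The SignTx interface is defined in accounts/accounts.go; here we look at the keystore implementation.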

168 |

169 | func (ks *KeyStore) SignTx(a accounts.Account, tx *types.Transaction, chainID *big.Int) (*types.Transaction, error) {

170 | ks.mu.RLock()

171 | defer ks.mu.RUnlock()

172 | unlockedKey, found := ks.unlocked[a.Address]

173 | if !found {

174 | return nil, ErrLocked

175 | }

176 | if chainID != nil {

177 | return types.SignTx(tx, types.NewEIP155Signer(chainID), unlockedKey.PrivateKey)

178 | }

179 | return types.SignTx(tx, types.HomesteadSigner{}, unlockedKey.PrivateKey)

180 | }

181 | It first checks whether the account is unlocked and returns ErrLocked if it is not. It then picks a signer based on chainID and calls types.SignTx to sign.

182 |

183 | func SignTx(tx *Transaction, s Signer, prv *ecdsa.PrivateKey) (*Transaction, error) {

184 | h := s.Hash(tx)

185 | sig, err := crypto.Sign(h[:], prv)

186 | if err != nil {

187 | return nil, err

188 | }

189 | return tx.WithSignature(s, sig)

190 | }

191 | SignTx signs the transaction hash with the ECDSA signing function. Once the signed transaction is available, it is submitted through submitTransaction.

192 |

193 |

194 | func submitTransaction(ctx context.Context, b Backend, tx *types.Transaction) (common.Hash, error) {

195 | if err := b.SendTx(ctx, tx); err != nil {

196 | return common.Hash{}, err

197 | }

198 | if tx.To() == nil {

199 | signer := types.MakeSigner(b.ChainConfig(), b.CurrentBlock().Number())

200 | from, err := types.Sender(signer, tx)

201 | if err != nil {

202 | return common.Hash{}, err

203 | }

204 | addr := crypto.CreateAddress(from, tx.Nonce())

205 | log.Info("Submitted contract creation", "fullhash", tx.Hash().Hex(), "contract", addr.Hex())

206 | } else {

207 | log.Info("Submitted transaction", "fullhash", tx.Hash().Hex(), "recipient", tx.To())

208 | }

209 | return tx.Hash(), nil

210 | }

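211 | submitTransaction first calls SendTx. This interface is defined in internal/ethapi/backend.go and implemented in eth/api_backend.go. That code involves the transaction pool, which is covered in the separate transaction pool chapter, so we stop here.

212 |

213 | Once the transaction has been written to the transaction pool without error, submitTransaction finishes and returns the transaction hash. That completes sending a transaction; the rest is up to miners, who include it in a block.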

214 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Ethereum Tutorial

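2 | This tutorial combines material found online with the author's own original work. If you have questions or spot mistakes, please point them out.

3 |

4 | **For readers who are not familiar with Chinese, an English version is available: [link](https://github.com/Billy1900/Ethereum-tutorial-EN)**

5 |

6 | ## Table of contents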

7 |

8 | - [Setting up a go-ethereum source reading environment](/go-ethereum源码阅读环境搭建.md)

9 | - [Ethereum Yellow Paper symbol index](a黄皮书里面出现的所有的符号索引.md)

10 | - [accounts walkthrough](/accounts源码分析.md)

11 | - build: used mainly for compiling and installing

12 | - [cmd walkthrough](/cmd.md)

13 |   - [geth](/cmd-geth.md)

14 | - common: general-purpose utilities (utils) for the system

15 | - [consensus walkthrough](/consensus.md)

16 | - console: Console is a JavaScript interpreted runtime environment.

17 | - contract: Package checkpointoracle is an on-chain light client checkpoint oracle.

18 | - core source code analysis

19 |   - [types walkthrough](/types.md)

20 |   - [state analysis](/core-state源码分析.md)

21 |   - [core/genesis.go](/core-genesis创世区块源码分析.md)

22 |   - [core/blockchain.go](/core-blockchain源码分析.md)

23 |   - [core/tx_list.go & tx_journal.go](/core-txlist交易池的一些数据结构源码分析.md)

24 |   - [core/tx_pool.go](/core-txpool交易池源码分析.md)

25 |   - [core/block_processor.go & block_validator.go](/blockvalidator&blockprocessor.md)

26 |   - [chain_indexer.go](/core-chain_indexer源码解析.md)

27 |   - [bloombits source code analysis](/core-bloombits源码分析.md)

28 |   - [statetransition.go & stateprocess.go](/core-state-process源码分析.md)

29 |   - vm: virtual machine source code analysis

30 |     - [EVM Overview](/EVMOverview.md)

31 |     - [Virtual machine stack and memory data structures](/core-vm-stack-memory源码分析.md)

32 |     - [Virtual machine instructions, jump table, and interpreter](/core-vm-jumptable-instruction.md)

33 |     - [Virtual machine source code analysis](/core-vm源码分析.md)

34 | - crypto: the cryptography-related configuration used across the whole system

35 | - Dashboard: The dashboard is a data visualizer integrated into geth, intended to collect and visualize useful information of an Ethereum node. It consists of two parts: 1) The client visualizes the collected data. 2) The server collects the data, and updates the clients.

36 | - [eth source code analysis](/eth源码分析.md)

37 | - [ethdb source code analysis](/ethdb源码分析.md)

38 | - [miner walkthrough](/miner-module.md)

39 | - [p2p source code analysis](/p2p源码分析.md)

40 | - [rlp source code analysis](/rlp文件解析.md)

41 | - [rpc source code analysis](/rpc源码分析.md)

42 | - [trie source code analysis](/trie源码分析.md)

43 | - [PoW consensus algorithm](/pow一致性算法.md)

44 | - [Introduction to Clique PoA on the Ethereum test network](/以太坊测试网络Clique_PoA介绍.md)

45 |

46 |

47 |

--------------------------------------------------------------------------------

/a黄皮书里面出现的所有的符号索引.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

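5 | $\sigma_{t+1}$ is the state at time t+1 (the account trie).

6 |

7 | $\Upsilon$ is the state transition function; it can also be thought of as the execution engine.

8 |

9 | $T$ is a transaction.

10 |

11 |

12 |

13 | $\Pi$ is the block-level state transition function.

14 |

15 | $B$ is a block, made up of many transactions.

16 |

17 | $T_0$ is the transaction at index 0.

18 |

19 | $\Omega$ is the block finalization state transition function (a function that rewards the miner).

20 |

21 | The symbol for Ether.

22 |

23 | The conversion between the units used in Ethereum and Wei (for example, one Finney equals 10^15 Wei).

24 |

25 | $\mu$: machine-state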

26 |

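27 | ## Some basic rules

28 |

29 | - Most functions are denoted by uppercase letters.

30 | - Tuples are generally denoted by uppercase letters.

31 | - Scalars and fixed-size byte arrays are denoted by lowercase letters. For example, n denotes a transaction's nonce. There are some exceptions, such as δ, which denotes the number of stack items a given instruction requires.

32 | - Variable-length byte arrays are generally denoted by bold lowercase letters. For example, **o** denotes the output data of a message call. Bold uppercase letters may be used for some particularly important ones.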

33 |

34 |

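35 | $\mathbb{B}$: byte sequences

36 | $\mathbb{P}$: positive integers

37 | $\mathbb{B}_{32}$: byte sequences of length 32

38 | $\mathbb{P}_{256}$: positive integers smaller than 2^256

39 | **[ ]** indexes the corresponding element of an array

40 | $\mu_s[0]$: the first item on the machine's stack

41 | $\mu_m[0..31]$: the first 32 items of the machine's memory

42 | $\Box$: a placeholder that can stand for any character or any object

43 |

44 | $\Box'$: the modified value of the object

45 | $\Box^*$: an intermediate state

46 | $\Box^{**}$: a second intermediate state

47 | $f$, $f^*$: if f denotes a function, then f* denotes a similar function that applies f to each element of a sequence in turn.

48 |

49 | $\ell(\mathbf{x})$: the last item of a list

50 | $\ell(\mathbf{x}) \equiv \mathbf{x}[\lVert \mathbf{x} \rVert - 1]$: the definition of the last item of a list

51 | $\lVert x \rVert$: the length of x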

52 |

53 |

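54 | $\sigma[a]_n$: a is an address; this is the nonce of that account

55 | $\sigma[a]_b$: balance

56 | $\sigma[a]_s$: the root hash of the storage trie

57 | $\sigma[a]_c$: the hash of the code. If the code is **b**, then KEC(**b**) equals this hash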

58 |

59 |

60 |

61 |

62 | $L_S$: world state collapse function

63 |

64 |

65 |

66 | $\forall$: for all (any)

67 | $\cup$: union (or)

68 | $\cap$: intersection (and)

69 |

70 | $N_H$: Homestead

71 | ## Transactions

72 |

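73 | $T_n$: the transaction nonce

74 | $T_p$: gasPrice

75 | $T_g$: gasLimit

76 | $T_t$: to

77 | $T_v$: value

78 |

79 | $T_w$, $T_r$, $T_s$: the sender's address can be recovered from these three values

80 |

81 | $T_i$: the contract's initialization code

82 | $T_d$: the input data of the method call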

83 |

84 |

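85 | ## Block header

86 |

87 | $H_p$: ParentHash

88 | $H_o$: OmmersHash

89 | $H_c$: beneficiary, the miner's address

90 | $H_r$: stateRoot

91 | $H_t$: transactionRoot

92 | $H_e$: receiptRoot

93 | $H_b$: logsBloom

94 | $H_d$: difficulty

95 | $H_i$: number, the block height

96 | $H_l$: gasLimit

97 | $H_g$: gasUsed

98 | $H_s$: timestamp

99 | $H_x$: extraData

100 | $H_m$: mixHash

101 | $H_n$: nonce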

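102 | ## Receipts

103 |

104 | $B_R[i]$: the receipt of the i-th transaction

105 |

106 |

107 | $R_\sigma$: the world state after the transaction executes

108 | $R_u$: the cumulative gas used in the block after the transaction executes

109 | $R_b$: the Bloom filter of all logs created by this transaction

110 | $R_l$: the set of logs created by the transaction

111 |

112 | $O$: a log entry. $O_a$ is the address that created the log, $O_t$ the topics, and $O_d$ the data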

113 |

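114 | ## Transaction execution

115 | $A$: substate

116 | $A_s$: suicide set

117 | $A_l$: log series

118 | $A_r$: refund balance

119 |

120 | $\Upsilon^g$: the total gas used during the transaction.

121 | $\Upsilon^l$: the logs created by the transaction.

122 |

123 | $I_a$: the owner of the executing code

124 | $I_o$: the originator of the transaction

125 | $I_p$: gasPrice

126 | $I_d$: input data

127 | $I_s$: the address that caused the code to execute; for a transaction, this is the transaction sender

128 | $I_v$: value

129 | $I_b$: the code to execute

130 | $I_H$: the current block header

131 | $I_e$: the current call depth

132 |

133 |

134 | The execution model: s is the suicide set, l the log series, **o** the output, and r the refund

135 |

136 | $\Xi$: the execution function

137 |

138 | $\mu_g$: the gas currently available

139 | $\mu_{pc}$: the program counter

140 | $\mu_m$: the memory contents

141 | $\mu_i$: the number of active words in memory

142 | $\mu_s$: the stack contents

143 |

144 | $w$: the instruction to execute next

145 |

146 | $\delta$: the number of stack items the instruction removes

147 | $\alpha$: the number of stack items the instruction adds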

148 |

--------------------------------------------------------------------------------

/blockvalidator&blockprocessor.md:

--------------------------------------------------------------------------------

1 | # block_validator.go

2 |

3 | ## core/BlockValidator.ValidateBody()

4 | func (v *BlockValidator) ValidateBody(block *types.Block) error {

5 | // Check whether the block's known, and if not, that it's linkable

6 | if v.bc.HasBlockAndState(block.Hash(), block.NumberU64()) {

7 | return ErrKnownBlock

8 | }

9 | if !v.bc.HasBlockAndState(block.ParentHash(), block.NumberU64()-1) {

10 | if !v.bc.HasBlock(block.ParentHash(), block.NumberU64()-1) {

11 | return consensus.ErrUnknownAncestor

12 | }

13 | return consensus.ErrPrunedAncestor

14 | }

15 | // Header validity is known at this point, check the uncles and transactions

16 | header := block.Header()

17 | if err := v.engine.VerifyUncles(v.bc, block); err != nil {

18 | return err

19 | }

20 | if hash := types.CalcUncleHash(block.Uncles()); hash != header.UncleHash {

21 | return fmt.Errorf("uncle root hash mismatch: have %x, want %x", hash, header.UncleHash)

22 | }

23 | if hash := types.DeriveSha(block.Transactions()); hash != header.TxHash {

24 | return fmt.Errorf("transaction root hash mismatch: have %x, want %x", hash, header.TxHash)

25 | }

26 | return nil

27 | }

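28 | This code validates the block body.

29 | - First it checks whether the database already contains this block together with its state; if so, it returns ErrKnownBlock.

30 | - Next it checks whether the database contains the parent block and its state. If the parent block is missing altogether it returns ErrUnknownAncestor; if the block exists but its state has been pruned it returns ErrPrunedAncestor.

31 | - Then it verifies the uncle blocks and their hash, and finally computes the hash of the block's transactions and checks that it matches the hash in the block header.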

32 |

33 | ## core/BlockValidator.ValidateState()

34 | func (v *BlockValidator) ValidateState(block, parent *types.Block, statedb *state.StateDB, receipts types.Receipts, usedGas uint64) error {

35 | header := block.Header()

36 | if block.GasUsed() != usedGas {

37 | return fmt.Errorf("invalid gas used (remote: %d local: %d)", block.GasUsed(), usedGas)

38 | }

39 | // Validate the received block's bloom with the one derived from the generated receipts.

40 | // For valid blocks this should always validate to true.

41 | rbloom := types.CreateBloom(receipts)

42 | if rbloom != header.Bloom {

43 | return fmt.Errorf("invalid bloom (remote: %x local: %x)", header.Bloom, rbloom)

44 | }

45 | // The receipt Trie's root (R = (Tr [[H1, R1], ... [Hn, Rn]]))

46 | receiptSha := types.DeriveSha(receipts)

47 | if receiptSha != header.ReceiptHash {

48 | return fmt.Errorf("invalid receipt root hash (remote: %x local: %x)", header.ReceiptHash, receiptSha)

49 | }

50 | // Validate the state root against the received state root and throw

51 | // an error if they don't match.

52 | if root := statedb.IntermediateRoot(v.config.IsEIP158(header.Number)); header.Root != root {

53 | return fmt.Errorf("invalid merkle root (remote: %x local: %x)", header.Root, root)

54 | }

55 | return nil

56 | }

57 |

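58 | This code checks that the block fields related to the state transition are correct. It consists of the following steps:

59 |

60 | - Check that the gas consumed by the transactions just executed equals the value in the block header.

61 | - Build a Bloom filter from the receipts of the transactions just executed and check that it equals the Bloom filter in the block header (the Bloom filter is a 2048-bit, that is 256-byte, array).

62 | - Check that the hash of the transaction receipts equals the value in the block header.

63 | - Compute the Merkle root of the MPT in StateDB and check that it equals the value in the block header.

64 |

65 | This completes block validation. The new block is written to the database and the world state is updated.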
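The Bloom comparison in the second step relies on the filter being deterministic: the same logs always set the same bits. The following sketch of a 2048-bit filter sets three bits per item, each taken from 11 bits of a hash. It assumes SHA-256 as a stand-in for the Keccak-256 hash that geth uses, so the bit positions differ from real header blooms. The names bloom, add, and mayContain are hypothetical.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

const bloomBits = 2048

// bloom is a 2048-bit (256-byte) filter.
type bloom [bloomBits / 8]byte

// bitPositions derives three bit indexes from the first six bytes of the hash,
// taking the low 11 bits of each byte pair (2^11 = 2048).
func bitPositions(data []byte) [3]uint {
	h := sha256.Sum256(data) // stand-in hash, see the assumption above
	var pos [3]uint
	for i := range pos {
		pos[i] = (uint(h[2*i])<<8 | uint(h[2*i+1])) & (bloomBits - 1)
	}
	return pos
}

// add sets the three bits that belong to data.
func (b *bloom) add(data []byte) {
	for _, p := range bitPositions(data) {
		b[len(b)-1-int(p/8)] |= 1 << (p % 8)
	}
}

// mayContain reports false only if data was definitely never added.
func (b *bloom) mayContain(data []byte) bool {
	for _, p := range bitPositions(data) {
		if b[len(b)-1-int(p/8)]&(1<<(p%8)) == 0 {
			return false
		}
	}
	return true
}

func main() {
	var fromReceipts, fromHeader bloom
	for _, topic := range []string{"Transfer", "Approval"} {
		fromReceipts.add([]byte(topic))
		fromHeader.add([]byte(topic))
	}
	// Arrays are comparable, so validation reduces to an equality check.
	fmt.Println(fromReceipts == fromHeader)
	fmt.Println(fromReceipts.mayContain([]byte("Transfer")))
}
```

A filter can report false positives but never false negatives, which is why it is useful for quickly skipping blocks that cannot contain a log of interest.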

66 |

67 |

68 | # state_processor.go

69 |

70 | ## core/StateProcessor.Process()

71 | func (p *StateProcessor) Process(block *types.Block, statedb *state.StateDB, cfg vm.Config) (types.Receipts, []*types.Log, uint64, error) {

72 | var (

73 | receipts types.Receipts

74 | usedGas = new(uint64)

75 | header = block.Header()

76 | allLogs []*types.Log

77 | gp = new(GasPool).AddGas(block.GasLimit())

78 | )

79 | // Mutate the block and state according to any hard-fork specs

80 | if p.config.DAOForkSupport && p.config.DAOForkBlock != nil && p.config.DAOForkBlock.Cmp(block.Number()) == 0 {

81 | misc.ApplyDAOHardFork(statedb)

82 | }

83 | // Iterate over and process the individual transactions

84 | for i, tx := range block.Transactions() {

85 | statedb.Prepare(tx.Hash(), block.Hash(), i)

86 | receipt, _, err := ApplyTransaction(p.config, p.bc, nil, gp, statedb, header, tx, usedGas, cfg)

87 | if err != nil {

88 | return nil, nil, 0, err

89 | }

90 | receipts = append(receipts, receipt)

91 | allLogs = append(allLogs, receipt.Logs...)

92 | }

93 | // Finalize the block, applying any consensus engine specific extras (e.g. block rewards)

94 | p.engine.Finalize(p.bc, header, statedb, block.Transactions(), block.Uncles(), receipts)

95 |

96 | return receipts, allLogs, *usedGas, nil

97 | }

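98 | This code is essentially the same as the transaction execution in the mining code. For each transaction it first calls statedb.Prepare() to record the transaction hash, block hash, and transaction index, then calls ApplyTransaction() to execute the transaction and obtain its receipt and the gas consumed. Finally, Finalize() applies consensus-engine-specific post-processing such as block rewards.

99 |

100 | Note that the StateDB passed in is the world state of the parent block. Executing the transactions changes this state, which prepares for the next step of validating the fields related to the state transition.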
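The gas accounting inside the Process loop can be sketched on its own. This simplified version assumes each transaction consumes a known amount of gas and ignores refunds. The gasPool type is modeled on the idea of geth's GasPool, but the code below is an illustration with hypothetical names rather than the library's implementation.

```go
package main

import (
	"errors"
	"fmt"
)

var errGasLimitReached = errors.New("gas limit reached")

// gasPool tracks the gas still available in the block.
type gasPool uint64

// subGas takes amount out of the pool or fails if the pool is too small.
func (gp *gasPool) subGas(amount uint64) error {
	if uint64(*gp) < amount {
		return errGasLimitReached
	}
	*gp -= gasPool(amount)
	return nil
}

// process charges every transaction against the block gas limit and returns
// the cumulative gas used after each transaction, as a receipt would record it.
// The first failure aborts the whole block, as in Process.
func process(blockGasLimit uint64, txGas []uint64) (cumulative []uint64, usedGas uint64, err error) {
	gp := gasPool(blockGasLimit)
	for _, g := range txGas {
		if err := gp.subGas(g); err != nil {
			return nil, 0, err
		}
		usedGas += g
		cumulative = append(cumulative, usedGas)
	}
	return cumulative, usedGas, nil
}

func main() {
	fmt.Println(process(100, []uint64{21, 30}))
	fmt.Println(process(50, []uint64{21, 30}))
}
```

The final usedGas value is what ValidateState later compares with the GasUsed field of the block header.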

101 |

--------------------------------------------------------------------------------

/cmd.md:

--------------------------------------------------------------------------------

1 | # cmd

2 |

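3 | |Directory|package|Description|

4 | |-----|----------|-----------------------------------------------------------------------------------|

5 | |cmd | |Command-line tools; the directory is further divided into many individual tools|

6 | |cmd |abigen |Converts smart contract source code into easy-to-use, compile-time type-safe Go packages|

7 | |cmd |bootnode |Starts a node that implements only network discovery|

8 | |cmd |checkpoint-admin |checkpoint-admin is a utility that can be used to query checkpoint information and register stable checkpoints into an oracle contract.|

9 | |cmd |clef |Clef is an account management tool|

10 | |cmd |devp2p |Ethereum p2p tool|

11 | |cmd |ethkey |An Ethereum key manager|

12 | |cmd |evm |Developer tool for the Ethereum Virtual Machine; provides a configurable, isolated environment for debugging code|

13 | |cmd |faucet |faucet is an Ether faucet backed by a light client.|

14 | |cmd |geth |The Ethereum command-line client, the most important tool|

15 | |cmd |p2psim |Provides a command-line client for the simulation HTTP API|

16 | |cmd |puppeth |A wizard for creating a new Ethereum network; a single command for assembling and maintaining private networks|

17 | |cmd |rlpdump |Pretty-prints RLP data|

18 | |cmd |swarm |Entry point to the swarm network|

19 | |cmd |util |Shared utilities and usage descriptions for the go-ethereum commands|

20 | |cmd |wnode |A simple Whisper node. It can be used as a standalone bootstrap node and also for various testing and diagnostic purposes.|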

21 |

--------------------------------------------------------------------------------

/core-genesis创世区块源码分析.md:

--------------------------------------------------------------------------------

1 | # core/genesis.go

2 |

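3 | Genesis means the genesis block. A blockchain grows from a single genesis block according to the protocol rules. Different networks have different genesis blocks; the main network and the test networks do not share one.

4 |

5 | Given an initial genesis value and a database, this module sets up the genesis state. If no genesis block exists yet, it creates one in the database.

6 |

7 | Data structures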
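The choice between a stored and a supplied genesis block follows the small decision table documented on SetupGenesisBlock. The sketch below restates that table as a function. It uses plain strings for hashes, with the empty string meaning "absent", and every name in it is hypothetical.

```go
package main

import "fmt"

// choice names the genesis block that ends up being used.
type choice string

const (
	useMainnetDefault choice = "main-net default"
	useSupplied       choice = "supplied genesis"
	useStored         choice = "from DB"
	mismatch          choice = "error: genesis mismatch"
)

// chooseGenesis mirrors the decision table: storedHash is "" when the
// database has no genesis block, suppliedHash is "" when genesis == nil.
func chooseGenesis(storedHash, suppliedHash string) choice {
	switch {
	case storedHash == "" && suppliedHash == "":
		return useMainnetDefault
	case storedHash == "":
		return useSupplied
	case suppliedHash == "":
		return useStored
	case suppliedHash != storedHash:
		return mismatch
	default:
		return useSupplied
	}
}

func main() {
	fmt.Println(chooseGenesis("", ""))
	fmt.Println(chooseGenesis("", "0xabc"))
	fmt.Println(chooseGenesis("0xabc", ""))
	fmt.Println(chooseGenesis("0xabc", "0xdef"))
}
```

The mismatch case corresponds to the GenesisMismatchError that the real function returns when the supplied block hashes differently from the one already in the database.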

8 |

9 | // Genesis specifies the header fields, state of a genesis block. It also defines hard

10 | // fork switch-over blocks through the chain configuration.

12 | type Genesis struct {

13 | Config *params.ChainConfig `json:"config"`

14 | Nonce uint64 `json:"nonce"`

15 | Timestamp uint64 `json:"timestamp"`

16 | ExtraData []byte `json:"extraData"`

17 | GasLimit uint64 `json:"gasLimit" gencodec:"required"`

18 | Difficulty *big.Int `json:"difficulty" gencodec:"required"`

19 | Mixhash common.Hash `json:"mixHash"`

20 | Coinbase common.Address `json:"coinbase"`

21 | Alloc GenesisAlloc `json:"alloc" gencodec:"required"`

22 |

23 | // These fields are used for consensus tests. Please don't use them

24 | // in actual genesis blocks.

25 | Number uint64 `json:"number"`

26 | GasUsed uint64 `json:"gasUsed"`

27 | ParentHash common.Hash `json:"parentHash"`

28 | }

29 |

30 | // GenesisAlloc specifies the initial state that is part of the genesis block.

32 | type GenesisAlloc map[common.Address]GenesisAccount

33 |

34 | GenesisAccount:

36 | type GenesisAccount struct {

37 | Code []byte `json:"code,omitempty"`

38 | Storage map[common.Hash]common.Hash `json:"storage,omitempty"`

39 | Balance *big.Int `json:"balance" gencodec:"required"`

40 | Nonce uint64 `json:"nonce,omitempty"`

41 | PrivateKey []byte `json:"secretKey,omitempty"`

42 | }

43 |

44 |

45 | SetupGenesisBlock:

46 |

47 | // SetupGenesisBlock writes or updates the genesis block in db.

48 | //

49 | // The block that will be used is:

50 | //

51 | // genesis == nil genesis != nil

52 | // +------------------------------------------

53 | // db has no genesis | main-net default | genesis

54 | // db has genesis | from DB | genesis (if compatible)

55 | //

56 | // The stored chain configuration will be updated if it is compatible (i.e. does not

57 | // specify a fork block below the local head block). In case of a conflict, the

58 | // error is a *params.ConfigCompatError and the new, unwritten config is returned.

60 | // The returned chain configuration is never nil.

61 |

62 | // genesis is non-nil in testnet, dev, or rinkeby mode; it is nil for mainnet or for a private chain.

63 | func SetupGenesisBlock(db ethdb.Database, genesis *Genesis) (*params.ChainConfig, common.Hash, error) {

64 | if genesis != nil && genesis.Config == nil {

65 | return params.AllProtocolChanges, common.Hash{}, errGenesisNoConfig

66 | }

67 |

68 | // Just commit the new block if there is no stored genesis block.

69 | stored := GetCanonicalHash(db, 0) // hash of the canonical block 0 (the stored genesis), if any

70 | if (stored == common.Hash{}) { // no genesis stored yet: geth takes this branch on its very first start

71 | if genesis == nil {

72 | // genesis is nil and nothing is stored either, so fall back to the main network.

73 | // In testnet, dev, or rinkeby mode genesis is non-nil and holds that network's genesis.

74 | log.Info("Writing default main-net genesis block")

75 | genesis = DefaultGenesisBlock()

76 | } else { // otherwise use the supplied genesis block

77 | log.Info("Writing custom genesis block")

78 | }

79 | // write it to the database

80 | block, err := genesis.Commit(db)

81 | return genesis.Config, block.Hash(), err

82 | }

83 |

84 | // Check whether the genesis block is already written.

85 | if genesis != nil { // a genesis was supplied and one is already stored: verify that the two blocks match

86 | block, _ := genesis.ToBlock()

87 | hash := block.Hash()

88 | if hash != stored {

89 | return genesis.Config, block.Hash(), &GenesisMismatchError{stored, hash}

90 | }

91 | }

92 |

93 | // Get the existing chain configuration.

94 | // newcfg is the config to apply: the supplied genesis config, or a default chosen from the stored hash

95 | newcfg := genesis.configOrDefault(stored)

96 | // storedcfg is the chain config currently stored in the database

97 | storedcfg, err := GetChainConfig(db, stored)

98 | if err != nil {

99 | if err == ErrChainConfigNotFound {

100 | // This case happens if a genesis write was interrupted.

101 | log.Warn("Found genesis block without chain config")

102 | err = WriteChainConfig(db, stored, newcfg)

103 | }

104 | return newcfg, stored, err

105 | }

106 | // Special case: don't change the existing config of a non-mainnet chain if no new

107 | // config is supplied. These chains would get AllProtocolChanges (and a compat error)

108 | // if we just continued here.

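109 | // In short: if no new config is supplied, leave a non-mainnet chain's stored config untouched.

110 | // Continuing past this point would hand such chains AllProtocolChanges and a compat error.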

111 | if genesis == nil && stored != params.MainnetGenesisHash {

112 | return storedcfg, stored, nil // a private chain returns here

113 | }

114 |

115 | // Check config compatibility and write the config. Compatibility errors

116 | // are returned to the caller unless we're already at block zero.

117 | // That is, a compatibility error is reported only when the chain has advanced past block zero.

118 | height := GetBlockNumber(db, GetHeadHeaderHash(db))

119 | if height == missingNumber {

120 | return newcfg, stored, fmt.Errorf("missing block number for head header hash")

121 | }

122 | compatErr := storedcfg.CheckCompatible(newcfg, height)

123 | // Once blocks beyond genesis exist, an incompatible config change is rejected.

124 | if compatErr != nil && height != 0 && compatErr.RewindTo != 0 {

125 | return newcfg, stored, compatErr

126 | }

127 | // the main network returns here

128 | return newcfg, stored, WriteChainConfig(db, stored, newcfg)

129 | }

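130 | SetupGenesisBlock returns the chain configuration that belongs to the genesis block. If db holds no genesis block, it commits a new one and returns at once. Otherwise it calls genesis.configOrDefault(stored) to obtain the new configuration, checks it for compatibility against the stored one, writes it back to the database, and returns it.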
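
The four-cell table in the SetupGenesisBlock comment can be sketched as a small pure function. This is only an illustration of the decision, not geth code: `genesisSource` and its two boolean parameters are hypothetical names introduced here.

```go
package main

import "fmt"

// genesisSource mirrors the table in the SetupGenesisBlock comment.
// hasStored: the database already holds a canonical block 0.
// supplied:  the caller passed a non-nil *Genesis.
// Both the function and its parameters are illustrative assumptions.
func genesisSource(hasStored, supplied bool) string {
	switch {
	case !hasStored && !supplied:
		return "main-net default"
	case !hasStored && supplied:
		return "supplied genesis"
	case hasStored && !supplied:
		return "from DB"
	default:
		return "supplied genesis (hash must match DB)"
	}
}

func main() {
	for _, hasStored := range []bool{false, true} {
		for _, supplied := range []bool{false, true} {
			fmt.Printf("stored=%v supplied=%v -> %s\n",
				hasStored, supplied, genesisSource(hasStored, supplied))
		}
	}
}
```

The last case corresponds to the GenesisMismatchError branch: a supplied genesis is accepted only if its hash equals the stored one.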

131 |

132 | ToBlock builds the state from the genesis data in an in-memory database, then creates the block with types.NewBlock and returns it together with that state.

133 |

134 |

135 | // ToBlock creates the block and state of a genesis specification.

136 | func (g *Genesis) ToBlock() (*types.Block, *state.StateDB) {

137 | db, _ := ethdb.NewMemDatabase()

138 | statedb, _ := state.New(common.Hash{}, state.NewDatabase(db))

139 | for addr, account := range g.Alloc {

140 | statedb.AddBalance(addr, account.Balance)

141 | statedb.SetCode(addr, account.Code)

142 | statedb.SetNonce(addr, account.Nonce)

143 | for key, value := range account.Storage {

144 | statedb.SetState(addr, key, value)

145 | }

146 | }

147 | root := statedb.IntermediateRoot(false)

148 | head := &types.Header{

149 | Number: new(big.Int).SetUint64(g.Number),

150 | Nonce: types.EncodeNonce(g.Nonce),

151 | Time: new(big.Int).SetUint64(g.Timestamp),

152 | ParentHash: g.ParentHash,

153 | Extra: g.ExtraData,

154 | GasLimit: new(big.Int).SetUint64(g.GasLimit),

155 | GasUsed: new(big.Int).SetUint64(g.GasUsed),

156 | Difficulty: g.Difficulty,

157 | MixDigest: g.Mixhash,

158 | Coinbase: g.Coinbase,

159 | Root: root,

160 | }

161 | if g.GasLimit == 0 {

162 | head.GasLimit = params.GenesisGasLimit

163 | }

164 | if g.Difficulty == nil {

165 | head.Difficulty = params.GenesisDifficulty

166 | }

167 | return types.NewBlock(head, nil, nil, nil), statedb

168 | }

169 |

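170 | Commit and MustCommit. Commit writes the block and state of the given genesis to the database and marks the block as the canonical chain head. MustCommit does the same but panics on error.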

171 |

172 | // Commit writes the block and state of a genesis specification to the database.

173 | // The block is committed as the canonical head block.

174 | func (g *Genesis) Commit(db ethdb.Database) (*types.Block, error) {

175 | block, statedb := g.ToBlock()

176 | if block.Number().Sign() != 0 {

177 | return nil, fmt.Errorf("can't commit genesis block with number > 0")

178 | }

179 | if _, err := statedb.CommitTo(db, false); err != nil {

180 | return nil, fmt.Errorf("cannot write state: %v", err)

181 | }

182 | // write the total difficulty

183 | if err := WriteTd(db, block.Hash(), block.NumberU64(), g.Difficulty); err != nil {

184 | return nil, err

185 | }

186 | // write the block

187 | if err := WriteBlock(db, block); err != nil {

188 | return nil, err

189 | }

190 | // write the block receipts (none for the genesis block)

191 | if err := WriteBlockReceipts(db, block.Hash(), block.NumberU64(), nil); err != nil {

192 | return nil, err

193 | }

194 | // write headerPrefix + num (uint64 big endian) + numSuffix -> hash

195 | if err := WriteCanonicalHash(db, block.Hash(), block.NumberU64()); err != nil {

196 | return nil, err

197 | }

198 | // write "LastBlock" -> hash

199 | if err := WriteHeadBlockHash(db, block.Hash()); err != nil {

200 | return nil, err

201 | }

202 | // write "LastHeader" -> hash

203 | if err := WriteHeadHeaderHash(db, block.Hash()); err != nil {

204 | return nil, err

205 | }

206 | config := g.Config

207 | if config == nil {

208 | config = params.AllProtocolChanges

209 | }

210 | // write ethereum-config-hash -> config

211 | return block, WriteChainConfig(db, block.Hash(), config)

212 | }

213 |

214 | // MustCommit writes the genesis block and state to db, panicking on error.

215 | // The block is committed as the canonical head block.

216 | func (g *Genesis) MustCommit(db ethdb.Database) *types.Block {

217 | block, err := g.Commit(db)

218 | if err != nil {

219 | panic(err)

220 | }

221 | return block

222 | }
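
The Commit / MustCommit pair follows the common Go "Must" convention: one function returns an error, the wrapper panics on it. A minimal sketch of that convention follows; `commit`, `mustCommit`, and `panics` are stand-ins invented here and do not touch any database.

```go
package main

import (
	"errors"
	"fmt"
)

// commit stands in for Genesis.Commit: it returns a value and an error.
// The string result is a placeholder for *types.Block.
func commit(number uint64) (string, error) {
	if number != 0 {
		return "", errors.New("can't commit genesis block with number > 0")
	}
	return "genesis-block", nil
}

// mustCommit converts the error into a panic, as MustCommit does.
func mustCommit(number uint64) string {
	block, err := commit(number)
	if err != nil {
		panic(err)
	}
	return block
}

// panics reports whether f panicked.
func panics(f func()) (did bool) {
	defer func() {
		if recover() != nil {
			did = true
		}
	}()
	f()
	return false
}

func main() {
	fmt.Println(mustCommit(0))
	fmt.Println(panics(func() { mustCommit(1) }))
}
```

The panicking variant suits tests and fixed, known-good genesis specs, where an error can only mean a programming mistake.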

223 |

224 | The functions below return the default Genesis for each network mode.

225 |

226 | // DefaultGenesisBlock returns the Ethereum main net genesis block.

227 | func DefaultGenesisBlock() *Genesis {

228 | return &Genesis{

229 | Config: params.MainnetChainConfig,

230 | Nonce: 66,

231 | ExtraData: hexutil.MustDecode("0x11bbe8db4e347b4e8c937c1c8370e4b5ed33adb3db69cbdb7a38e1e50b1b82fa"),

232 | GasLimit: 5000,

233 | Difficulty: big.NewInt(17179869184),

234 | Alloc: decodePrealloc(mainnetAllocData),

235 | }

236 | }

237 |

238 | // DefaultTestnetGenesisBlock returns the Ropsten network genesis block.

239 | func DefaultTestnetGenesisBlock() *Genesis {

240 | return &Genesis{

241 | Config: params.TestnetChainConfig,

242 | Nonce: 66,

243 | ExtraData: hexutil.MustDecode("0x3535353535353535353535353535353535353535353535353535353535353535"),

244 | GasLimit: 16777216,

245 | Difficulty: big.NewInt(1048576),

246 | Alloc: decodePrealloc(testnetAllocData),

247 | }

248 | }

249 |

250 | // DefaultRinkebyGenesisBlock returns the Rinkeby network genesis block.

251 | func DefaultRinkebyGenesisBlock() *Genesis {

252 | return &Genesis{

253 | Config: params.RinkebyChainConfig,

254 | Timestamp: 1492009146,

255 | ExtraData: hexutil.MustDecode("0x52657370656374206d7920617574686f7269746168207e452e436172746d616e42eb768f2244c8811c63729a21a3569731535f067ffc57839b00206d1ad20c69a1981b489f772031b279182d99e65703f0076e4812653aab85fca0f00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000"),

256 | GasLimit: 4700000,

257 | Difficulty: big.NewInt(1),

258 | Alloc: decodePrealloc(rinkebyAllocData),

259 | }

260 | }

261 |

262 | // DevGenesisBlock returns the 'geth --dev' genesis block.

263 | func DevGenesisBlock() *Genesis {

264 | return &Genesis{

265 | Config: params.AllProtocolChanges,

266 | Nonce: 42,

267 | GasLimit: 4712388,

268 | Difficulty: big.NewInt(131072),

269 | Alloc: decodePrealloc(devAllocData),

270 | }

271 | }

272 |

--------------------------------------------------------------------------------

/core-vm-stack-memory源码分析.md:

--------------------------------------------------------------------------------

1 | The VM uses the Stack type from stack.go as its operand stack. Memory represents the memory object used inside the VM.

2 |

3 | ## stack.go

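4 | This is fairly simple: the stack is backed by a slice of *big.Int with an initial capacity of 1024. The 1024-item limit is checked by the validation functions, not by the slice itself.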

5 |

6 | Construction

7 |

8 | // stack is an object for basic stack operations. Items popped to the stack are

9 | // expected to be changed and modified. stack does not take care of adding newly

10 | // initialised objects.

11 | type Stack struct {

12 | data []*big.Int

13 | }

14 |

15 | func newstack() *Stack {

16 | return &Stack{data: make([]*big.Int, 0, 1024)}

17 | }

18 |

19 | push

20 |

21 | func (st *Stack) push(d *big.Int) { // append to the end

22 | // NOTE push limit (1024) is checked in baseCheck

23 | //stackItem := new(big.Int).Set(d)

24 | //st.data = append(st.data, stackItem)

25 | st.data = append(st.data, d)

26 | }

27 | func (st *Stack) pushN(ds ...*big.Int) {

28 | st.data = append(st.data, ds...)

29 | }

30 |

31 | pop

32 |

33 |

34 | func (st *Stack) pop() (ret *big.Int) { // take from the end

35 | ret = st.data[len(st.data)-1]

36 | st.data = st.data[:len(st.data)-1]

37 | return

38 | }

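39 | swap exchanges two elements in place, an operation a textbook stack does not offer.

40 |

41 | func (st *Stack) swap(n int) { // exchange the top element with the element n-1 positions below it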

42 | st.data[st.len()-n], st.data[st.len()-1] = st.data[st.len()-1], st.data[st.len()-n]

43 | }

44 |

45 | dup copies the value at the given position onto the top of the stack

46 |

47 | func (st *Stack) dup(pool *intPool, n int) {

48 | st.push(pool.get().Set(st.data[st.len()-n]))

49 | }

50 |

51 | peek returns the top element without removing it

52 |

53 | func (st *Stack) peek() *big.Int {

54 | return st.data[st.len()-1]

55 | }

56 | Back returns the element at the given depth without removing it

57 |

58 | // Back returns the n'th item in stack

59 | func (st *Stack) Back(n int) *big.Int {

60 | return st.data[st.len()-n-1]

61 | }

62 |

63 | require checks that the stack holds at least n elements.

64 |

65 | func (st *Stack) require(n int) error {

66 | if st.len() < n {

67 | return fmt.Errorf("stack underflow (%d <=> %d)", len(st.data), n)

68 | }

69 | return nil

70 | }
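
The index arithmetic of swap, dup, and Back is easy to misread, so here is a small self-contained sketch. The lower-case `stack` type is a simplified stand-in written for this note (no intPool, no bounds checks), and `demo` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"math/big"
)

// stack is a simplified stand-in for the VM's Stack type.
type stack struct{ data []*big.Int }

func (s *stack) push(v *big.Int) { s.data = append(s.data, v) }

func (s *stack) pop() *big.Int {
	v := s.data[len(s.data)-1]
	s.data = s.data[:len(s.data)-1]
	return v
}

// swap exchanges the top item with the item n-1 positions below it.
func (s *stack) swap(n int) {
	top := len(s.data) - 1
	s.data[len(s.data)-n], s.data[top] = s.data[top], s.data[len(s.data)-n]
}

// dup pushes a copy of the n-th item counted from the top (n=1 is the top).
func (s *stack) dup(n int) { s.push(new(big.Int).Set(s.data[len(s.data)-n])) }

// back returns the item n positions below the top (n=0 is the top).
func (s *stack) back(n int) *big.Int { return s.data[len(s.data)-n-1] }

// demo runs a short sequence and reports what it observed.
func demo() (top, bottom, popped int64, size int) {
	s := &stack{data: make([]*big.Int, 0, 1024)}
	for i := int64(1); i <= 3; i++ {
		s.push(big.NewInt(i)) // bottom -> top: 1 2 3
	}
	s.swap(3) // 3 2 1
	s.dup(2)  // 3 2 1 2
	top, bottom = s.back(0).Int64(), s.back(3).Int64()
	popped = s.pop().Int64()
	return top, bottom, popped, len(s.data)
}

func main() {
	fmt.Println(demo())
}
```

Note that dup copies the value into a fresh big.Int, while swap only exchanges pointers.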

71 |

72 | ## intpool.go

73 | Very simple: a pool of roughly 256 big.Int values, used to speed up big.Int allocation.

74 |

75 | var checkVal = big.NewInt(-42)

76 |

77 | const poolLimit = 256

78 |

79 | // intPool is a pool of big integers that

80 | // can be reused for all big.Int operations.

81 | type intPool struct {

82 | pool *Stack

83 | }

84 |

85 | func newIntPool() *intPool {

86 | return &intPool{pool: newstack()}

87 | }

88 |

89 | func (p *intPool) get() *big.Int {

90 | if p.pool.len() > 0 {

91 | return p.pool.pop()

92 | }

93 | return new(big.Int)

94 | }

95 | func (p *intPool) put(is ...*big.Int) {

96 | if len(p.pool.data) > poolLimit {

97 | return

98 | }

99 |

100 | for _, i := range is {

101 | // verifyPool is a build flag. Pool verification makes sure the integrity

102 | // of the integer pool by comparing values to a default value.

103 | if verifyPool {

104 | i.Set(checkVal)

105 | }

106 |

107 | p.pool.push(i)

108 | }

109 | }
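
A free-list sketch of the same idea, assuming a plain slice instead of the VM's Stack and leaving out the verifyPool build flag. The `reused` helper is hypothetical and only demonstrates that a returned integer is handed out again.

```go
package main

import (
	"fmt"
	"math/big"
)

const poolLimit = 256 // same cap as in the listing above

// intPool is a simplified free list of *big.Int values.
type intPool struct{ free []*big.Int }

// get reuses a pooled integer if one is available, else allocates.
func (p *intPool) get() *big.Int {
	if n := len(p.free); n > 0 {
		v := p.free[n-1]
		p.free = p.free[:n-1]
		return v
	}
	return new(big.Int)
}

// put returns integers to the pool unless it is already over the limit.
func (p *intPool) put(is ...*big.Int) {
	if len(p.free) > poolLimit {
		return
	}
	p.free = append(p.free, is...)
}

// reused reports whether a get after a put hands back the same object.
func reused() bool {
	p := &intPool{}
	a := p.get()
	p.put(a)
	return p.get() == a
}

func main() {
	fmt.Println(reused())
}
```

A pooled integer keeps whatever value it last held, so a caller should overwrite it (for example with Set) before reading it.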

110 |

111 | ## memory.go

112 |

113 | Construction. Memory is backed by a []byte and also records lastGasCost.

114 |

115 | type Memory struct {

116 | store []byte

117 | lastGasCost uint64

118 | }

119 |

120 | func NewMemory() *Memory {

121 | return &Memory{}

122 | }

123 |

124 | Before use, space must be allocated with Resize

125 |

126 | // Resize resizes the memory to size

127 | func (m *Memory) Resize(size uint64) {

128 | if uint64(m.Len()) < size {

129 | m.store = append(m.store, make([]byte, size-uint64(m.Len()))...)

130 | }

131 | }

132 |

133 | Then Set writes values

134 |

135 | // Set sets offset + size to value

136 | func (m *Memory) Set(offset, size uint64, value []byte) {

137 | // length of store may never be less than offset + size.

138 | // The store should be resized PRIOR to setting the memory

139 | if size > uint64(len(m.store)) {

140 | panic("INVALID memory: store empty")

141 | }

142 |

143 | // It's possible the offset is greater than 0 and size equals 0. This is because

144 | // the calcMemSize (common.go) could potentially return 0 when size is zero (NO-OP)

145 | if size > 0 {

146 | copy(m.store[offset:offset+size], value)

147 | }

148 | }

149 | Get and GetPtr read values: Get returns a copy, GetPtr returns a slice that aliases the underlying store.

150 |

151 | // Get returns offset + size as a new slice

152 | func (self *Memory) Get(offset, size int64) (cpy []byte) {

153 | if size == 0 {

154 | return nil

155 | }

156 |

157 | if len(self.store) > int(offset) {

158 | cpy = make([]byte, size)

159 | copy(cpy, self.store[offset:offset+size])

160 |

161 | return

162 | }

163 |

164 | return

165 | }

166 |

167 | // GetPtr returns the offset + size

168 | func (self *Memory) GetPtr(offset, size int64) []byte {

169 | if size == 0 {

170 | return nil

171 | }

172 |

173 | if len(self.store) > int(offset) {

174 | return self.store[offset : offset+size]

175 | }

176 |

177 | return nil

178 | }
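
The practical difference between Get and GetPtr is aliasing. The sketch below uses a cut-down `memory` type written for this note (no gas accounting, no bounds checks beyond slicing); `aliasDemo` is a hypothetical helper that writes through both results.

```go
package main

import "fmt"

// memory is a simplified stand-in for the VM's Memory type.
type memory struct{ store []byte }

// resize grows the store to at least size bytes; it never shrinks.
func (m *memory) resize(size uint64) {
	if uint64(len(m.store)) < size {
		m.store = append(m.store, make([]byte, size-uint64(len(m.store)))...)
	}
}

// set copies value into store[offset:offset+size]; resize must come first.
func (m *memory) set(offset, size uint64, value []byte) {
	if size > 0 {
		copy(m.store[offset:offset+size], value)
	}
}

// get returns a fresh copy of the requested range.
func (m *memory) get(offset, size int64) []byte {
	if size == 0 {
		return nil
	}
	cpy := make([]byte, size)
	copy(cpy, m.store[offset:offset+size])
	return cpy
}

// getPtr returns a slice that shares storage with the memory.
func (m *memory) getPtr(offset, size int64) []byte {
	if size == 0 {
		return nil
	}
	return m.store[offset : offset+size]
}

// aliasDemo returns the store's first byte after writing through each result.
func aliasDemo() (afterCopyWrite, afterPtrWrite byte) {
	m := &memory{}
	m.resize(32)
	m.set(0, 2, []byte{0xAA, 0xBB})
	m.get(0, 2)[0] = 0x01 // modifies only the copy
	afterCopyWrite = m.store[0]
	m.getPtr(0, 2)[0] = 0x02 // modifies the memory itself
	afterPtrWrite = m.store[0]
	return
}

func main() {
	a, b := aliasDemo()
	fmt.Printf("%#x %#x\n", a, b)
}
```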

179 |

180 |

181 | ## Some extra helper functions: stack_table.go

182 |

183 |

184 | func makeStackFunc(pop, push int) stackValidationFunc {

185 | return func(stack *Stack) error {

186 | if err := stack.require(pop); err != nil {

187 | return err

188 | }

189 |

190 | if stack.len()+push-pop > int(params.StackLimit) {

191 | return fmt.Errorf("stack limit reached %d (%d)", stack.len(), params.StackLimit)

192 | }

193 | return nil

194 | }

195 | }

196 |

197 | func makeDupStackFunc(n int) stackValidationFunc {

198 | return makeStackFunc(n, n+1)

199 | }

200 |

201 | func makeSwapStackFunc(n int) stackValidationFunc {

202 | return makeStackFunc(n, n)

203 | }
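
The validation closure boils down to two comparisons on the current stack size. A minimal sketch, assuming a limit of 1024 (the value of params.StackLimit) and using a plain function, `validate`, that takes the size directly instead of a *Stack:

```go
package main

import "fmt"

const stackLimit = 1024 // assumed to equal params.StackLimit

// validate reports whether an operation that pops `pop` items and pushes
// `push` items may run on a stack currently holding `size` items.
func validate(size, pop, push int) error {
	if size < pop {
		return fmt.Errorf("stack underflow (%d <=> %d)", size, pop)
	}
	if size+push-pop > stackLimit {
		return fmt.Errorf("stack limit reached %d (%d)", size, stackLimit)
	}
	return nil
}

func main() {
	fmt.Println(validate(1, 2, 1))    // two operands needed, one present: underflow
	fmt.Println(validate(1024, 1, 2)) // dup on a full stack (n, n+1 with n=1): overflow
	fmt.Println(validate(1024, 2, 2)) // swap on a full stack (n, n): allowed
}
```

A dup is modelled as popping n and pushing n+1, so it grows the stack by one; a swap pops and pushes the same count, so it can never overflow.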

204 |

205 |

206 |

--------------------------------------------------------------------------------

/eth-downloader-peer源码分析.md:

--------------------------------------------------------------------------------

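1 | The peer module contains the peer type used by the downloader. It wraps each peer's throughput and idle state, and records which earlier requests failed.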

2 |

3 |

4 | ## peer

5 |

6 | // peerConnection represents an active peer from which hashes and blocks are retrieved.

7 | type peerConnection struct {

8 | id string // Unique identifier of the peer

9 |

10 | headerIdle int32 // Current header activity state of the peer (idle = 0, active = 1)

11 | blockIdle int32 // Current block activity state of the peer (idle = 0, active = 1)

12 | receiptIdle int32 // Current receipt activity state of the peer (idle = 0, active = 1)

13 | stateIdle int32 // Current node data activity state of the peer (idle = 0, active = 1)

14 |

15 | headerThroughput float64 // Number of headers measured to be retrievable per second

16 | blockThroughput float64 // Number of blocks (bodies) measured to be retrievable per second

17 | receiptThroughput float64 // Number of receipts measured to be retrievable per second

18 | stateThroughput float64 // Number of node data pieces measured to be retrievable per second

19 |

20 | rtt time.Duration // Request round trip time to track responsiveness (QoS)

21 |

22 | headerStarted time.Time // Time instance when the last header fetch was started

23 | blockStarted time.Time // Time instance when the last block (body) fetch was started

24 | receiptStarted time.Time // Time instance when the last receipt fetch was started

25 | stateStarted time.Time // Time instance when the last node data fetch was started

26 |

27 | lacking map[common.Hash]struct{} // Set of hashes not to request (didn't have previously), usually because an earlier request for them failed

28 |

29 | peer Peer // the underlying eth peer

30 |

31 | version int // Eth protocol version number to switch strategies

32 | log log.Logger // Contextual logger to add extra infos to peer logs

33 | lock sync.RWMutex

34 | }

35 |

36 |

37 |

38 | FetchXXX

39 | FetchHeaders, FetchBodies, and the other FetchXXX functions mainly call into eth.peer to send the data request.

40 |

41 | // FetchHeaders sends a header retrieval request to the remote peer.

42 | func (p *peerConnection) FetchHeaders(from uint64, count int) error {

43 | // Sanity check the protocol version

44 | if p.version < 62 {

45 | panic(fmt.Sprintf("header fetch [eth/62+] requested on eth/%d", p.version))

46 | }

47 | // Short circuit if the peer is already fetching

48 | if !atomic.CompareAndSwapInt32(&p.headerIdle, 0, 1) {

49 | return errAlreadyFetching

50 | }

51 | p.headerStarted = time.Now()

52 |

53 | // Issue the header retrieval request (absolute, upwards, without gaps)

54 | go p.peer.RequestHeadersByNumber(from, count, 0, false)

55 |

56 | return nil

57 | }

58 |

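59 | SetXXXIdle functions

60 | SetHeadersIdle, SetBlocksIdle, and the related functions mark the peer as idle so that it may take new requests. They also use the amount of data just delivered to re-estimate the throughput of the link.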

61 |

62 | // SetHeadersIdle sets the peer to idle, allowing it to execute new header retrieval

63 | // requests. Its estimated header retrieval throughput is updated with that measured

64 | // just now.

65 | func (p *peerConnection) SetHeadersIdle(delivered int) {

66 | p.setIdle(p.headerStarted, delivered, &p.headerThroughput, &p.headerIdle)

67 | }

68 |

69 | setIdle

70 |

71 | // setIdle sets the peer to idle, allowing it to execute new retrieval requests.

72 | // Its estimated retrieval throughput is updated with that measured just now.

73 | func (p *peerConnection) setIdle(started time.Time, delivered int, throughput *float64, idle *int32) {

74 | // Irrelevant of the scaling, make sure the peer ends up idle

75 | defer atomic.StoreInt32(idle, 0)

76 |

77 | p.lock.Lock()

78 | defer p.lock.Unlock()

79 |

80 | // If nothing was delivered (hard timeout / unavailable data), reduce throughput to minimum

81 | if delivered == 0 {

82 | *throughput = 0

83 | return

84 | }

85 | // Otherwise update the throughput with a new measurement

86 | elapsed := time.Since(started) + 1 // +1 (ns) to ensure non-zero divisor

87 | measured := float64(delivered) / (float64(elapsed) / float64(time.Second))

88 |

89 | // measurementImpact = 0.1, so new throughput = old throughput*0.9 + this measurement*0.1

90 | *throughput = (1-measurementImpact)*(*throughput) + measurementImpact*measured

91 | // update the RTT the same way

92 | p.rtt = time.Duration((1-measurementImpact)*float64(p.rtt) + measurementImpact*float64(elapsed))

93 |

94 | p.log.Trace("Peer throughput measurements updated",

95 | "hps", p.headerThroughput, "bps", p.blockThroughput,

96 | "rps", p.receiptThroughput, "sps", p.stateThroughput,

97 | "miss", len(p.lacking), "rtt", p.rtt)

98 | }
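
The throughput update in setIdle is an exponential moving average. The following sketch isolates that arithmetic; `updateThroughput` is a hypothetical free function (the original mutates fields under a lock), and the constant 0.1 is taken from the comment in the listing.

```go
package main

import (
	"fmt"
	"time"
)

const measurementImpact = 0.1 // weight of the newest sample, per the listing

// updateThroughput folds one measurement (items delivered over the elapsed
// time) into the running estimate. Delivering nothing resets it to zero.
func updateThroughput(old float64, delivered int, elapsed time.Duration) float64 {
	if delivered == 0 {
		return 0
	}
	measured := float64(delivered) / elapsed.Seconds()
	return (1-measurementImpact)*old + measurementImpact*measured
}

func main() {
	// Old estimate: 100 items/s. The peer just delivered 400 items in 2 s,
	// which is 200 items/s, so the estimate moves a tenth of the way up.
	fmt.Println(updateThroughput(100, 400, 2*time.Second))
	// A request that delivered nothing drops the estimate to zero at once.
	fmt.Println(updateThroughput(100, 0, 2*time.Second))
}
```

The asymmetry is deliberate in the original: good measurements raise the estimate slowly, while a failed delivery wipes it out immediately.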

99 |

100 |

101 | The XXXCapacity functions return how many items the connection may be asked for in one request, based on its measured throughput.

102 |

103 | // HeaderCapacity retrieves the peers header download allowance based on its

104 | // previously discovered throughput.

105 | func (p *peerConnection) HeaderCapacity(targetRTT time.Duration) int {

106 | p.lock.RLock()

107 | defer p.lock.RUnlock()

108 | // A larger targetRTT yields a larger request: capacity is throughput multiplied by the time budget.

109 | return int(math.Min(1+math.Max(1, p.headerThroughput*float64(targetRTT)/float64(time.Second)), float64(MaxHeaderFetch)))

110 | }
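
A worked version of the capacity formula may help. It is a sketch that assumes MaxHeaderFetch is 192 (the value in the downloader package of this geth generation) and takes the throughput as a parameter instead of reading it from a peer.

```go
package main

import (
	"fmt"
	"math"
	"time"
)

const maxHeaderFetch = 192 // assumption: the downloader's MaxHeaderFetch

// headerCapacity returns how many headers to request so that, at the
// measured throughput, the reply should arrive within about targetRTT.
func headerCapacity(throughput float64, targetRTT time.Duration) int {
	want := throughput * float64(targetRTT) / float64(time.Second)
	return int(math.Min(1+math.Max(1, want), maxHeaderFetch))
}

func main() {
	fmt.Println(headerCapacity(50, 2*time.Second))   // 50/s for 2 s, plus one
	fmt.Println(headerCapacity(0, 2*time.Second))    // unknown peer: small probe
	fmt.Println(headerCapacity(1000, 2*time.Second)) // capped at the maximum
}
```

The `1 +` term keeps the request slightly above the measured rate, so a peer that has become faster can prove it on the next round.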

111 |

112 |

113 | MarkLacking and Lacks record that the peer failed to deliver an item, so the same request is not sent to this peer again.

114 |

115 | // MarkLacking appends a new entity to the set of items (blocks, receipts, states)

116 | // that a peer is known not to have (i.e. have been requested before). If the

117 | // set reaches its maximum allowed capacity, items are randomly dropped off.

118 | func (p *peerConnection) MarkLacking(hash common.Hash) {

119 | p.lock.Lock()

120 | defer p.lock.Unlock()

121 |

122 | for len(p.lacking) >= maxLackingHashes {

123 | for drop := range p.lacking {

124 | delete(p.lacking, drop)

125 | break

126 | }

127 | }

128 | p.lacking[hash] = struct{}{}

129 | }

130 |

131 | // Lacks retrieves whether the hash of a blockchain item is on the peers lacking

132 | // list (i.e. whether we know that the peer does not have it).

133 | func (p *peerConnection) Lacks(hash common.Hash) bool {

134 | p.lock.RLock()

135 | defer p.lock.RUnlock()

136 |

137 | _, ok := p.lacking[hash]

138 | return ok

139 | }
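
MarkLacking keeps the set bounded by evicting an arbitrary entry, relying on Go's unspecified map iteration order. A minimal sketch of that pattern with string keys and a deliberately tiny cap; `lackSet`, `maxLacking`, and `fill` are names made up for this illustration, and locking is omitted.

```go
package main

import "fmt"

const maxLacking = 3 // small illustrative cap; the original uses maxLackingHashes

// lackSet is a bounded set of keys.
type lackSet map[string]struct{}

// mark records key, first evicting arbitrary entries while the set is full.
// Map iteration order is unspecified, so the evicted entry is effectively random.
func (s lackSet) mark(key string) {
	for len(s) >= maxLacking {
		for drop := range s {
			delete(s, drop)
			break
		}
	}
	s[key] = struct{}{}
}

// lacks reports whether key is in the set.
func (s lackSet) lacks(key string) bool {
	_, ok := s[key]
	return ok
}

// fill marks five keys and reports the final size and whether the last
// key survived.
func fill() (size int, hasLast bool) {
	s := lackSet{}
	for _, k := range []string{"a", "b", "c", "d", "e"} {
		s.mark(k)
	}
	return len(s), s.lacks("e")
}

func main() {
	fmt.Println(fill())
}
```

The newest key is always present after mark returns, because eviction happens before insertion.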

140 |

141 |

142 | ## peerSet

143 |

144 | // peerSet represents the collection of active peer participating in the chain

145 | // download procedure.

146 | type peerSet struct {

147 | peers map[string]*peerConnection

148 | newPeerFeed event.Feed

149 | peerDropFeed event.Feed

150 | lock sync.RWMutex

151 | }

152 |

153 |

154 | Register and Unregister

155 |

156 | // Register injects a new peer into the working set, or returns an error if the

157 | // peer is already known.

158 | //

159 | // The method also sets the starting throughput values of the new peer to the

160 | // average of all existing peers, to give it a realistic chance of being used

161 | // for data retrievals.

162 | func (ps *peerSet) Register(p *peerConnection) error {

163 | // Retrieve the current median RTT as a sane default

164 | p.rtt = ps.medianRTT()

165 |

166 | // Register the new peer with some meaningful defaults

167 | ps.lock.Lock()

168 | if _, ok := ps.peers[p.id]; ok {

169 | ps.lock.Unlock()

170 | return errAlreadyRegistered

171 | }

172 | if len(ps.peers) > 0 {

173 | p.headerThroughput, p.blockThroughput, p.receiptThroughput, p.stateThroughput = 0, 0, 0, 0

174 |

175 | for _, peer := range ps.peers {

176 | peer.lock.RLock()

177 | p.headerThroughput += peer.headerThroughput

178 | p.blockThroughput += peer.blockThroughput

179 | p.receiptThroughput += peer.receiptThroughput

180 | p.stateThroughput += peer.stateThroughput

181 | peer.lock.RUnlock()

182 | }

183 | p.headerThroughput /= float64(len(ps.peers))

184 | p.blockThroughput /= float64(len(ps.peers))

185 | p.receiptThroughput /= float64(len(ps.peers))

186 | p.stateThroughput /= float64(len(ps.peers))

187 | }

188 | ps.peers[p.id] = p

189 | ps.lock.Unlock()

190 |

191 | ps.newPeerFeed.Send(p)

192 | return nil

193 | }

194 |

195 | // Unregister removes a remote peer from the active set, disabling any further

196 | // actions to/from that particular entity.

197 | func (ps *peerSet) Unregister(id string) error {

198 | ps.lock.Lock()

199 | p, ok := ps.peers[id]

200 | if !ok {

201 | defer ps.lock.Unlock()

202 | return errNotRegistered

203 | }

204 | delete(ps.peers, id)

205 | ps.lock.Unlock()

206 |

207 | ps.peerDropFeed.Send(p)

208 | return nil

209 | }

210 |

211 | XXXIdlePeers

212 |

213 | // HeaderIdlePeers retrieves a flat list of all the currently header-idle peers

214 | // within the active peer set, ordered by their reputation.

215 | func (ps *peerSet) HeaderIdlePeers() ([]*peerConnection, int) {

216 | idle := func(p *peerConnection) bool {

217 | return atomic.LoadInt32(&p.headerIdle) == 0

218 | }

219 | throughput := func(p *peerConnection) float64 {

220 | p.lock.RLock()

221 | defer p.lock.RUnlock()

222 | return p.headerThroughput

223 | }

224 | return ps.idlePeers(62, 64, idle, throughput)

225 | }

226 |

227 | // idlePeers retrieves a flat list of all currently idle peers satisfying the

228 | // protocol version constraints, using the provided function to check idleness.

229 | // The resulting set of peers are sorted by their measure throughput.

230 | func (ps *peerSet) idlePeers(minProtocol, maxProtocol int, idleCheck func(*peerConnection) bool, throughput func(*peerConnection) float64) ([]*peerConnection, int) {

231 | ps.lock.RLock()

232 | defer ps.lock.RUnlock()

233 |

234 | idle, total := make([]*peerConnection, 0, len(ps.peers)), 0

235 | for _, p := range ps.peers { // first collect the idle peers

236 | if p.version >= minProtocol && p.version <= maxProtocol {

237 | if idleCheck(p) {

238 | idle = append(idle, p)

239 | }

240 | total++

241 | }

242 | }

243 | for i := 0; i < len(idle); i++ { // simple O(n^2) exchange sort, highest throughput first

244 | for j := i + 1; j < len(idle); j++ {

245 | if throughput(idle[i]) < throughput(idle[j]) {

246 | idle[i], idle[j] = idle[j], idle[i]

247 | }

248 | }

249 | }

250 | return idle, total

251 | }
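
The nested loops in idlePeers order the peers by descending throughput. The same ordering can be expressed with the standard library, as in this sketch. It swaps in sort.SliceStable in place of the hand-written loops, and the `peer` struct here is a two-field stand-in, not the downloader's peerConnection.

```go
package main

import (
	"fmt"
	"sort"
)

// peer is a minimal stand-in carrying only what the ordering needs.
type peer struct {
	id         string
	throughput float64
}

// byThroughput returns a copy of peers sorted from highest to lowest
// throughput, leaving the input slice untouched.
func byThroughput(peers []peer) []peer {
	out := append([]peer(nil), peers...)
	sort.SliceStable(out, func(i, j int) bool {
		return out[i].throughput > out[j].throughput
	})
	return out
}

// order returns the ids of three sample peers in sorted order.
func order() string {
	out := byThroughput([]peer{{"a", 10}, {"b", 30}, {"c", 20}})
	return out[0].id + out[1].id + out[2].id
}

func main() {
	fmt.Println(order())
}
```

One difference worth noting: the original calls the throughput closure, which takes a read lock, on every comparison, whereas this sketch compares plain fields.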

252 |

253 | medianRTT computes the median RTT of the peer set.

254 |

255 | // medianRTT returns the median RTT of the peerset, considering only the tuning

256 | // peers if there are more peers available.

257 | func (ps *peerSet) medianRTT() time.Duration {

258 | // Gather all the currently measured round trip times

259 | ps.lock.RLock()

260 | defer ps.lock.RUnlock()

261 |

262 | rtts := make([]float64, 0, len(ps.peers))

263 | for _, p := range ps.peers {

264 | p.lock.RLock()

265 | rtts = append(rtts, float64(p.rtt))

266 | p.lock.RUnlock()

267 | }

268 | sort.Float64s(rtts)

269 |

270 | median := rttMaxEstimate

271 | if qosTuningPeers <= len(rtts) {

272 | median = time.Duration(rtts[qosTuningPeers/2]) // Median of our tuning peers

273 | } else if len(rtts) > 0 {

274 | median = time.Duration(rtts[len(rtts)/2]) // Median of our connected peers (maintain even like this some baseline qos)

275 | }

276 | // Restrict the RTT into some QoS defaults, irrelevant of true RTT

277 | if median < rttMinEstimate {

278 | median = rttMinEstimate

279 | }

280 | if median > rttMaxEstimate {

281 | median = rttMaxEstimate

282 | }

283 | return median

284 | }
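
The selection and clamping rules of medianRTT can be tried out on plain durations. This sketch assumes the constant values 2 s, 20 s, and 5 for rttMinEstimate, rttMaxEstimate, and qosTuningPeers; those values are not shown in the listing and are assumptions here. `medianSeconds` is a hypothetical convenience wrapper.

```go
package main

import (
	"fmt"
	"sort"
	"time"
)

// Assumed values; the listing references these names without defining them.
const (
	rttMinEstimate = 2 * time.Second
	rttMaxEstimate = 20 * time.Second
	qosTuningPeers = 5
)

// medianRTT picks the median of the fastest qosTuningPeers round trip times
// (or of all of them when fewer are known) and clamps it to the QoS range.
func medianRTT(rtts []time.Duration) time.Duration {
	sorted := append([]time.Duration(nil), rtts...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] < sorted[j] })

	median := rttMaxEstimate // default when no peer has been measured
	if qosTuningPeers <= len(sorted) {
		median = sorted[qosTuningPeers/2]
	} else if len(sorted) > 0 {
		median = sorted[len(sorted)/2]
	}
	if median < rttMinEstimate {
		median = rttMinEstimate
	}
	if median > rttMaxEstimate {
		median = rttMaxEstimate
	}
	return median
}

// medianSeconds is a wrapper that works in whole seconds.
func medianSeconds(secs ...int) int {
	rtts := make([]time.Duration, len(secs))
	for i, s := range secs {
		rtts[i] = time.Duration(s) * time.Second
	}
	return int(medianRTT(rtts) / time.Second)
}

func main() {
	fmt.Println(medianSeconds())                     // no peers
	fmt.Println(medianSeconds(1, 1, 1))              // clamped up to the minimum
	fmt.Println(medianSeconds(3, 9, 4, 30, 5, 1, 8)) // median of the five fastest
}
```

Because the slice is sorted ascending, index qosTuningPeers/2 is the median of the fastest peers only, which keeps a few slow peers from inflating the target.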

285 |

--------------------------------------------------------------------------------

/eth-downloader-statesync.md:

--------------------------------------------------------------------------------

1 | statesync downloads the entire state trie of the block chosen as the pivot point, that is, the data of every account, both ordinary accounts and contract accounts.

2 |

3 | ## Data structures

4 | stateSync schedules the requests that download the particular state trie defined by a given state root.

5 |

6 | // stateSync schedules requests for downloading a particular state trie defined

7 | // by a given state root.

8 | type stateSync struct {

9 | d *Downloader // Downloader instance to access and manage current peerset

10 |

11 | sched *trie.TrieSync // State trie sync scheduler defining the tasks

12 | keccak hash.Hash // Keccak256 hasher to verify deliveries with

13 | tasks map[common.Hash]*stateTask // Set of tasks currently queued for retrieval

14 |

15 | numUncommitted int

16 | bytesUncommitted int

17 |

18 | deliver chan *stateReq // Delivery channel multiplexing peer responses

19 | cancel chan struct{} // Channel to signal a termination request

20 | cancelOnce sync.Once // Ensures cancel only ever gets called once

21 | done chan struct{} // Channel to signal termination completion

22 | err error // Any error hit during sync (set before completion)

23 | }

24 |

25 | Constructor

26 |

27 | func newStateSync(d *Downloader, root common.Hash) *stateSync {

28 | return &stateSync{

29 | d: d,

30 | sched: state.NewStateSync(root, d.stateDB),

31 | keccak: sha3.NewKeccak256(),

32 | tasks: make(map[common.Hash]*stateTask),

33 | deliver: make(chan *stateReq),

34 | cancel: make(chan struct{}),

35 | done: make(chan struct{}),

36 | }

37 | }

38 |

39 | NewStateSync

40 |

41 | // NewStateSync create a new state trie download scheduler.

42 | func NewStateSync(root common.Hash, database trie.DatabaseReader) *trie.TrieSync {

43 | var syncer *trie.TrieSync

44 | callback := func(leaf []byte, parent common.Hash) error {

45 | var obj Account

46 | if err := rlp.Decode(bytes.NewReader(leaf), &obj); err != nil {

47 | return err

48 | }

49 | syncer.AddSubTrie(obj.Root, 64, parent, nil)

50 | syncer.AddRawEntry(common.BytesToHash(obj.CodeHash), 64, parent)

51 | return nil

52 | }

53 | syncer = trie.NewTrieSync(root, database, callback)

54 | return syncer

55 | }

56 |

57 | syncState is the function the downloader calls.

58 |

59 | // syncState starts downloading state with the given root hash.

60 | func (d *Downloader) syncState(root common.Hash) *stateSync {

61 | s := newStateSync(d, root)

62 | select {

63 | case d.stateSyncStart <- s:

64 | case <-d.quitCh:

65 | s.err = errCancelStateFetch

66 | close(s.done)

67 | }

68 | return s

69 | }

70 |

71 | ## Startup

72 | The downloader starts a new goroutine that runs stateFetcher. That function first waits to receive from the stateSyncStart channel, and syncState is what sends on that channel.

73 |

74 | // stateFetcher manages the active state sync and accepts requests

75 | // on its behalf.

76 | func (d *Downloader) stateFetcher() {

77 | for {

78 | select {

79 | case s := <-d.stateSyncStart:

80 | for next := s; next != nil; { // this loop lets the downloader switch the sync target at any time by sending a new stateSync

81 | next = d.runStateSync(next)

82 | }

83 | case <-d.stateCh:

84 | // Ignore state responses while no sync is running.

85 | case <-d.quitCh:

86 | return

87 | }

88 | }

89 | }

90 |

91 | Next, where is syncState() called? processFastSyncContent is started when a peer is first found at the beginning of a sync.

92 |

93 | // processFastSyncContent takes fetch results from the queue and writes them to the

94 | // database. It also controls the synchronisation of state nodes of the pivot block.

95 | func (d *Downloader) processFastSyncContent(latest *types.Header) error {

96 | // Start syncing state of the reported head block.

97 | // This should get us most of the state of the pivot block.

98 | stateSync := d.syncState(latest.Root)

99 |

100 |

101 |

102 | runStateSync receives downloaded state data from stateCh and forwards it on the deliver channel, where the sync loop picks it up.

103 |

104 | // runStateSync runs a state synchronisation until it completes or another root

105 | // hash is requested to be switched over to.

106 | func (d *Downloader) runStateSync(s *stateSync) *stateSync {

107 | var (

108 | active = make(map[string]*stateReq) // Currently in-flight requests

109 | finished []*stateReq // Completed or failed requests

110 | timeout = make(chan *stateReq) // Timed out active requests

111 | )

112 | defer func() {

113 | // Cancel active request timers on exit. Also set peers to idle so they're

114 | // available for the next sync.

115 | for _, req := range active {

116 | req.timer.Stop()

117 | req.peer.SetNodeDataIdle(len(req.items))

118 | }

119 | }()

120 | // Run the state sync.

121 | // start the state sync in its own goroutine

122 | go s.run()

123 | defer s.Cancel()

124 |

125 | // Listen for peer departure events to cancel assigned tasks

126 | peerDrop := make(chan *peerConnection, 1024)

127 | peerSub := s.d.peers.SubscribePeerDrops(peerDrop)

128 | defer peerSub.Unsubscribe()

129 |

130 | for {

131 | // Enable sending of the first buffered element if there is one.

132 | var (

133 | deliverReq *stateReq

134 | deliverReqCh chan *stateReq

135 | )

136 | if len(finished) > 0 {

137 | deliverReq = finished[0]

138 | deliverReqCh = s.deliver

139 | }

140 |

141 | select {

142 | // The stateSync lifecycle:

143 | // another stateSync asked to run, so this one exits

144 | case next := <-d.stateSyncStart:

145 | return next

146 |

147 | case <-s.done:

148 | return nil

149 |

150 | // Send the next finished request to the current sync:

151 | // hand the downloaded data to the sync

152 | case deliverReqCh <- deliverReq:

153 | finished = append(finished[:0], finished[1:]...)

154 |

155 | // Handle incoming state packs:

156 | // the downloader sends state data it receives onto this channel

157 | case pack := <-d.stateCh:

158 | // Discard any data not requested (or previously timed out)

159 | req := active[pack.PeerId()]

160 | if req == nil {

161 | log.Debug("Unrequested node data", "peer", pack.PeerId(), "len", pack.Items())

162 | continue

163 | }

164 | // Finalize the request and queue up for processing

165 | req.timer.Stop()

166 | req.response = pack.(*statePack).states

167 |

168 | finished = append(finished, req)

169 | delete(active, pack.PeerId())

170 |

171 | // Handle dropped peer connections:

172 | case p := <-peerDrop:

173 | // Skip if no request is currently pending

174 | req := active[p.id]

175 | if req == nil {

176 | continue

177 | }

178 | // Finalize the request and queue up for processing

179 | req.timer.Stop()

180 | req.dropped = true

181 |

182 | finished = append(finished, req)

183 | delete(active, p.id)

184 |

185 | // Handle timed-out requests:

186 | case req := <-timeout:

187 | // If the peer is already requesting something else, ignore the stale timeout.

188 | // This can happen when the timeout and the delivery happen simultaneously,

189 | // causing both pathways to trigger.

190 | if active[req.peer.id] != req {

191 | continue

192 | }

193 | // Move the timed out data back into the download queue

194 | finished = append(finished, req)

195 | delete(active, req.peer.id)

196 |

197 | // Track outgoing state requests:

198 | case req := <-d.trackStateReq:

199 | // If an active request already exists for this peer, we have a problem. In

200 | // theory the trie node schedule must never assign two requests to the same

201 | // peer. In practice however, a peer might receive a request, disconnect and

202 | // immediately reconnect before the previous times out. In this case the first

203 | // request is never honored, alas we must not silently overwrite it, as that

204 | // causes valid requests to go missing and sync to get stuck.

205 | if old := active[req.peer.id]; old != nil {

206 | log.Warn("Busy peer assigned new state fetch", "peer", old.peer.id)

207 |

208 | // Make sure the previous one doesn't get silently lost

209 | old.timer.Stop()

210 | old.dropped = true

211 |

212 | finished = append(finished, old)

213 | }

214 | // Start a timer to notify the sync loop if the peer stalled.

215 | req.timer = time.AfterFunc(req.timeout, func() {

216 | select {

217 | case timeout <- req:

218 | case <-s.done:

219 | // Prevent leaking of timer goroutines in the unlikely case where a

220 | // timer is fired just before exiting runStateSync.

221 | }

222 | })

223 | active[req.peer.id] = req

224 | }

225 | }

226 | }
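The `deliverReqCh <- deliverReq` case in the loop above is only live when `finished` is non-empty, because a send on a nil channel never proceeds and `select` therefore skips it. Below is a minimal sketch of that pattern in isolation. The names `forward`, `in`, `out`, and `sendCh` are hypothetical and do not come from go-ethereum.

```go
package main

import "fmt"

// forward copies values from in to out through an unbounded in-memory buffer.
// The send case is only armed when the buffer is non-empty: sendCh stays nil
// otherwise, and a send on a nil channel is never selected.
// All names here are hypothetical and for illustration only.
func forward(in <-chan int, out chan<- int) {
	var buffered []int
	for in != nil || len(buffered) > 0 {
		var (
			next   int
			sendCh chan<- int // nil unless there is something to send
		)
		if len(buffered) > 0 {
			next = buffered[0]
			sendCh = out
		}
		select {
		case v, ok := <-in:
			if !ok {
				in = nil // a nil receive channel disables this case
				continue
			}
			buffered = append(buffered, v)
		case sendCh <- next:
			buffered = buffered[1:]
		}
	}
	close(out)
}

func main() {
	in := make(chan int)
	out := make(chan int)
	go forward(in, out)
	go func() {
		for i := 1; i <= 3; i++ {
			in <- i
		}
		close(in)
	}()
	for v := range out {
		fmt.Println(v)
	}
}
```

The benefit of this shape is that the producer never blocks on a slow consumer, while the consumer still receives items in arrival order.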

227 |

228 |
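The timer handling in `runStateSync` combines two ideas: the `time.AfterFunc` callback selects on a done channel so it cannot block forever, and the receiver ignores a timeout whose request is no longer the active one for that peer. The sketch below shows both under simplified assumptions. The `request` type and the `arm` helper are hypothetical stand-ins, not go-ethereum code.

```go
package main

import (
	"fmt"
	"time"
)

// request is a hypothetical stand-in for stateReq.
type request struct {
	peer  string
	timer *time.Timer
}

// arm starts a timer that reports req on the timeout channel after d, unless
// done is closed first. Selecting on done keeps the callback goroutine from
// blocking forever once nobody reads from timeout any more.
func arm(req *request, d time.Duration, timeout chan<- *request, done <-chan struct{}) {
	req.timer = time.AfterFunc(d, func() {
		select {
		case timeout <- req:
		case <-done:
		}
	})
}

func main() {
	timeout := make(chan *request)
	done := make(chan struct{})
	active := map[string]*request{}

	slow := &request{peer: "peer-a"}
	arm(slow, 10*time.Millisecond, timeout, done)
	active[slow.peer] = slow

	req := <-timeout
	// Ignore stale timeouts: act only if this request is still the active one.
	if active[req.peer] == req {
		delete(active, req.peer)
		fmt.Println("timed out:", req.peer)
	}
	close(done)
}
```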

229 | The run and loop methods fetch tasks, assign them to peers, and collect the results.

230 |

231 | func (s *stateSync) run() {

232 | s.err = s.loop()

233 | close(s.done)

234 | }

235 |

236 | // loop is the main event loop of a state trie sync. It is responsible for the

237 | // assignment of new tasks to peers (including sending it to them) as well as

238 | // for the processing of inbound data. Note, that the loop does not directly

239 | // receive data from peers, rather those are buffered up in the downloader and

240 | // pushed here async. The reason is to decouple processing from data receipt

241 | // and timeouts.

242 | func (s *stateSync) loop() error {

243 | // Listen for new peer events to assign tasks to them

244 | newPeer := make(chan *peerConnection, 1024)

245 | peerSub := s.d.peers.SubscribeNewPeers(newPeer)

246 | defer peerSub.Unsubscribe()

247 |

248 | // Keep assigning new tasks until the sync completes or aborts

249 | // That is, loop until the sync has finished or has been terminated.

250 | for s.sched.Pending() > 0 {

251 | // Flush data from the in-memory cache to persistent storage. This cache is the one sized by the --cache command-line flag.

252 | if err := s.commit(false); err != nil {

253 | return err

254 | }

255 | // Assign tasks to peers.

256 | s.assignTasks()

257 | // Tasks assigned, wait for something to happen

258 | select {

259 | case <-newPeer:

260 | // New peer arrived, try to assign it download tasks

261 |

262 | case <-s.cancel:

263 | return errCancelStateFetch

264 |

265 | case req := <-s.deliver:

266 | // Receive a result delivered by runStateSync. Note that results cover both successful and failed requests.

267 | // Response, disconnect or timeout triggered, drop the peer if stalling

268 | log.Trace("Received node data response", "peer", req.peer.id, "count", len(req.response), "dropped", req.dropped, "timeout", !req.dropped && req.timedOut())

269 | if len(req.items) <= 2 && !req.dropped && req.timedOut() {

270 | // 2 items are the minimum requested, if even that times out, we've no use of

271 | // this peer at the moment.

272 | log.Warn("Stalling state sync, dropping peer", "peer", req.peer.id)

273 | s.d.dropPeer(req.peer.id)

274 | }

275 | // Process all the received blobs and check for stale delivery

276 | stale, err := s.process(req)

277 | if err != nil {

278 | log.Warn("Node data write error", "err", err)

279 | return err

280 | }

281 | // If the delivery contains requested data, mark the node idle (otherwise it's a timed out delivery)

282 | if !stale {

283 | req.peer.SetNodeDataIdle(len(req.response))

284 | }

285 | }

286 | }

287 | return s.commit(true)

288 | }
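The control flow of `loop` can be boiled down to: while work is pending, hand out tasks, then block until a result arrives or the sync is cancelled, and put failed work back in the queue. The sketch below is a simplified illustration of that shape, not the go-ethereum implementation. `syncLoop`, `flakyFetcher`, and `errCancelled` are hypothetical names, and peers are replaced by plain goroutines.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

var errCancelled = errors.New("fetch cancelled") // hypothetical error value

type result struct {
	task string
	ok   bool
}

// syncLoop keeps assigning tasks until none are pending or in flight.
// Failed tasks are queued again, loosely mirroring how timed-out or dropped
// requests are rescheduled. It returns the number of completed tasks.
func syncLoop(tasks []string, fetch func(string) bool, cancel <-chan struct{}) (int, error) {
	deliver := make(chan result)
	pending := append([]string(nil), tasks...)
	inflight, completed := 0, 0

	for len(pending) > 0 || inflight > 0 {
		// Assign every queued task to a worker goroutine.
		for _, t := range pending {
			inflight++
			go func(t string) {
				select {
				case deliver <- result{t, fetch(t)}:
				case <-cancel: // do not leak the worker after cancellation
				}
			}(t)
		}
		pending = pending[:0]

		// Tasks assigned, wait for something to happen.
		select {
		case <-cancel:
			return completed, errCancelled
		case res := <-deliver:
			inflight--
			if res.ok {
				completed++
			} else {
				pending = append(pending, res.task) // retry later
			}
		}
	}
	return completed, nil
}

// flakyFetcher returns a fetch function that fails the first attempt for each
// task and succeeds afterwards. It is safe for concurrent use.
func flakyFetcher() func(string) bool {
	var mu sync.Mutex
	seen := map[string]bool{}
	return func(task string) bool {
		mu.Lock()
		defer mu.Unlock()
		if !seen[task] {
			seen[task] = true
			return false
		}
		return true
	}
}

func main() {
	n, err := syncLoop([]string{"a", "b", "c"}, flakyFetcher(), make(chan struct{}))
	fmt.Println(n, err)
}
```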

--------------------------------------------------------------------------------

/ethdb源码分析.md:

--------------------------------------------------------------------------------

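1 | go-ethereum stores all of its data in levelDB, Google's open-source key-value file database. The entire blockchain lives in a single levelDB database. levelDB splits its storage into files by size, so the blockchain data we see on disk is a set of small files, all of which belong to the same levelDB instance. Here we take a brief look at the Go wrapper code around levelDB.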

2 |

3 | Characteristics described on the official levelDB website

4 |

5 | **Features**:

6 |

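7 | - Keys and values are byte arrays of arbitrary length;

8 | - Entries (i.e. individual K-V records) are stored in lexicographic key order by default, although developers can override the comparison function;

9 | - Basic operations provided: Put(), Delete(), Get(), Batch();

10 | - Batch operations can be applied atomically;

11 | - A snapshot of the full data set can be created, and lookups can be run against the snapshot;

12 | - Data can be traversed with a forward (or backward) iterator (an iterator implicitly creates a snapshot);

13 | - Data is automatically compressed with Snappy;

14 | - Portability;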

15 |

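16 | **Limitations**:

17 |

18 | - It is a non-relational (NoSQL) data model: there is no support for SQL statements or for indexes;

19 | - Only one process at a time may access a given database;

20 | - There is no built-in client/server architecture, but developers can wrap the LevelDB library in a server of their own;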
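Two of the properties listed above, key-ordered traversal and snapshots that are isolated from later writes, can be illustrated with a toy in-memory store. This is only a sketch of the semantics. `toyStore` and its methods are hypothetical and are neither LevelDB nor the ethdb API.

```go
package main

import (
	"fmt"
	"sort"
)

// toyStore is a hypothetical in-memory stand-in used only to illustrate the
// semantics described above.
type toyStore struct {
	data map[string][]byte
}

func newToyStore() *toyStore {
	return &toyStore{data: map[string][]byte{}}
}

// Put stores a private copy of value under key.
func (s *toyStore) Put(key, value []byte) {
	s.data[string(key)] = append([]byte(nil), value...)
}

// Delete removes key if it is present.
func (s *toyStore) Delete(key []byte) {
	delete(s.data, string(key))
}

// Snapshot copies the current contents, so later writes to s are not visible
// through the returned store.
func (s *toyStore) Snapshot() *toyStore {
	snap := newToyStore()
	for k, v := range s.data {
		snap.data[k] = v
	}
	return snap
}

// Keys returns all keys in lexicographic (byte-wise) order, which is the
// order an iterator would visit them in.
func (s *toyStore) Keys() []string {
	keys := make([]string, 0, len(s.data))
	for k := range s.data {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	db := newToyStore()
	db.Put([]byte("b"), []byte("2"))
	db.Put([]byte("a"), []byte("1"))
	db.Put([]byte("c"), []byte("3"))

	snap := db.Snapshot()
	db.Delete([]byte("a"))

	fmt.Println(db.Keys())   // keys after the delete
	fmt.Println(snap.Keys()) // keys as of the snapshot
}
```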

21 |

22 |

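23 | The source code lives in the ethereum/ethdb directory. The code is fairly simple and is split into the following files

24 |

25 | - database.go: the wrapper code around levelDB

26 | - memory_database.go: an in-memory database that is never persisted to files and is intended for testing only

27 | - interface.go: defines the database interfaces

28 | - database_test.go: test cases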
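To make the division of labour between these files concrete, here is a hedged sketch of what a small key-value interface, an in-memory implementation, and a write batch could look like. The names `KV`, `memDB`, `batch`, and `errNotFound` are assumptions made for illustration. The real definitions in interface.go and memory_database.go may differ.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// KV is a hypothetical, trimmed-down key-value interface.
type KV interface {
	Put(key, value []byte) error
	Get(key []byte) ([]byte, error)
	Delete(key []byte) error
}

var errNotFound = errors.New("not found")

// memDB is an in-memory implementation guarded by a read-write mutex.
type memDB struct {
	mu   sync.RWMutex
	data map[string][]byte
}

func newMemDB() *memDB { return &memDB{data: map[string][]byte{}} }

func (db *memDB) Put(key, value []byte) error {
	db.mu.Lock()
	defer db.mu.Unlock()
	db.data[string(key)] = append([]byte(nil), value...)
	return nil
}

func (db *memDB) Get(key []byte) ([]byte, error) {
	db.mu.RLock()
	defer db.mu.RUnlock()
	v, ok := db.data[string(key)]
	if !ok {
		return nil, errNotFound
	}
	return append([]byte(nil), v...), nil
}

func (db *memDB) Delete(key []byte) error {
	db.mu.Lock()
	defer db.mu.Unlock()
	delete(db.data, string(key))
	return nil
}

type kvPair struct {
	k string
	v []byte
}

// batch buffers writes and applies them all under a single lock in Write, so
// readers see either none or all of them.
type batch struct {
	db     *memDB
	writes []kvPair
}

func (db *memDB) NewBatch() *batch { return &batch{db: db} }

func (b *batch) Put(key, value []byte) error {
	b.writes = append(b.writes, kvPair{string(key), append([]byte(nil), value...)})
	return nil
}

func (b *batch) Write() error {
	b.db.mu.Lock()
	defer b.db.mu.Unlock()
	for _, w := range b.writes {
		b.db.data[w.k] = w.v
	}
	b.writes = b.writes[:0]
	return nil
}

func main() {
	var db KV = newMemDB()
	db.Put([]byte("k"), []byte("v"))
	v, err := db.Get([]byte("k"))
	fmt.Println(string(v), err)
}
```

Programming against the small interface rather than the concrete type is what lets tests swap the persistent database for the in-memory one.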