├── .github

└── workflows

│ ├── black.yml

│ ├── python_publish.yml

│ └── tests.yml

├── .gitignore

├── .readthedocs.yaml

├── LICENSE

├── README.md

├── demos

├── density_estimation_demo.ipynb

├── gaussian_fitting_demo.ipynb

├── gibbs_sampling_demo.ipynb

├── gp_linear_inversion_demo.ipynb

├── gp_optimisation_demo.ipynb

├── gp_regression_demo.ipynb

├── hamiltonian_mcmc_demo.ipynb

├── heteroscedastic_noise.ipynb

├── parallel_tempering_demo.ipynb

└── scripts

│ ├── ChainPool_demo.py

│ ├── GaussianKDE_demo.py

│ ├── GibbsChain_demo.py

│ ├── GpOptimiser_demo.py

│ ├── HamiltonianChain_demo.py

│ ├── ParallelTempering_demo.py

│ └── gaussian_fitting_demo.py

├── docs

├── Makefile

├── docs_requirements.txt

├── make.bat

└── source

│ ├── EnsembleSampler.rst

│ ├── GibbsChain.rst

│ ├── GpLinearInverter.rst

│ ├── GpOptimiser.rst

│ ├── GpRegressor.rst

│ ├── HamiltonianChain.rst

│ ├── ParallelTempering.rst

│ ├── PcaChain.rst

│ ├── acquisition_functions.rst

│ ├── approx.rst

│ ├── conf.py

│ ├── covariance_functions.rst

│ ├── distributions.rst

│ ├── getting_started.rst

│ ├── gp.rst

│ ├── images

│ ├── GibbsChain_images

│ │ ├── GibbsChain_image_production.py

│ │ ├── burned_scatter.png

│ │ ├── gibbs_diagnostics.png

│ │ ├── gibbs_marginals.png

│ │ └── initial_scatter.png

│ ├── GpOptimiser_images

│ │ ├── GpOptimiser_image_production.py

│ │ └── GpOptimiser_iteration.gif

│ ├── GpRegressor_images

│ │ ├── GpRegressor_image_production.py

│ │ ├── gradient_prediction.png

│ │ ├── posterior_samples.png

│ │ ├── regression_estimate.png

│ │ └── sampled_data.png

│ ├── HamiltonianChain_images

│ │ ├── HamiltonianChain_image_production.py

│ │ ├── hmc_matrix_plot.png

│ │ └── hmc_scatterplot.html

│ ├── ParallelTempering_images

│ │ ├── ParallelTempering_image_production.py

│ │ ├── parallel_tempering_matrix.png

│ │ └── parallel_tempering_trace.png

│ ├── gallery_images

│ │ ├── gallery_density_estimation.png

│ │ ├── gallery_density_estimation.py

│ │ ├── gallery_gibbs_sampling.png

│ │ ├── gallery_gibbs_sampling.py

│ │ ├── gallery_gpr.png

│ │ ├── gallery_gpr.py

│ │ ├── gallery_hdi.png

│ │ ├── gallery_hdi.py

│ │ ├── gallery_hmc.png

│ │ ├── gallery_hmc.py

│ │ └── gallery_matrix.py

│ ├── getting_started_images

│ │ ├── gaussian_data.png

│ │ ├── getting_started_image_production.py

│ │ ├── matrix_plot_example.png

│ │ ├── pdf_summary_example.png

│ │ ├── plot_diagnostics_example.png

│ │ └── prediction_uncertainty_example.png

│ └── matrix_plot_images

│ │ ├── matrix_plot_example.png

│ │ └── matrix_plot_image_production.py

│ ├── index.rst

│ ├── likelihoods.rst

│ ├── mcmc.rst

│ ├── pdf.rst

│ ├── plotting.rst

│ ├── posterior.rst

│ └── priors.rst

├── inference

├── __init__.py

├── approx

│ ├── __init__.py

│ └── conditional.py

├── gp

│ ├── __init__.py

│ ├── acquisition.py

│ ├── covariance.py

│ ├── inversion.py

│ ├── mean.py

│ ├── optimisation.py

│ └── regression.py

├── likelihoods.py

├── mcmc

│ ├── __init__.py

│ ├── base.py

│ ├── ensemble.py

│ ├── gibbs.py

│ ├── hmc

│ │ ├── __init__.py

│ │ ├── epsilon.py

│ │ └── mass.py

│ ├── parallel.py

│ ├── pca.py

│ └── utilities.py

├── pdf

│ ├── __init__.py

│ ├── base.py

│ ├── hdi.py

│ ├── kde.py

│ └── unimodal.py

├── plotting.py

├── posterior.py

└── priors.py

├── pyproject.toml

├── setup.py

└── tests

├── approx

└── test_conditional.py

├── gp

├── test_GpLinearInverter.py

├── test_GpOptimiser.py

└── test_GpRegressor.py

├── mcmc

├── mcmc_utils.py

├── test_bounds.py

├── test_ensemble.py

├── test_gibbs.py

├── test_hamiltonian.py

├── test_mass.py

└── test_pca.py

├── test_covariance.py

├── test_likelihoods.py

├── test_pdf.py

├── test_plotting.py

├── test_posterior.py

└── test_priors.py

/.github/workflows/black.yml:

--------------------------------------------------------------------------------

1 | name: black

2 |

3 | on:

4 | push:

5 | paths:

6 | - '**.py'

7 |

8 | defaults:

9 | run:

10 | shell: bash

11 |

12 | jobs:

13 | black:

14 | runs-on: ubuntu-latest

15 | steps:

16 | - uses: actions/checkout@v4

17 | with:

18 | ref: ${{ github.head_ref }}

19 | - name: Setup Python

20 | uses: actions/setup-python@v4

21 | with:

22 | python-version: 3.x

23 | - name: Install black

24 | run: |

25 | python -m pip install --upgrade pip

26 | pip install black

27 | - name: Version

28 | run: |

29 | python --version

30 | black --version

31 | - name: Run black

32 | run: |

33 | black inference setup.py tests

34 | - uses: stefanzweifel/git-auto-commit-action@v4

35 | with:

36 | commit_message: "[skip ci] Apply black changes"

37 |

--------------------------------------------------------------------------------

/.github/workflows/python_publish.yml:

--------------------------------------------------------------------------------

1 | name: Upload Python Package

2 |

3 | on:

4 | release:

5 | types: [published]

6 |

7 | jobs:

8 | deploy:

9 | runs-on: ubuntu-latest

10 | steps:

11 | - uses: actions/checkout@v4

12 | - name: Set up Python

13 | uses: actions/setup-python@v4

14 | with:

15 | python-version: '3.x'

16 | - name: Install dependencies

17 | run: |

18 | python -m pip install --upgrade pip

19 | pip install build twine

20 | - name: Build package

21 | run: python -m build --sdist --wheel

22 | - name: Check build

23 | run: twine check dist/*

24 | - name: Publish package

25 | uses: pypa/gh-action-pypi-publish@release/v1

26 | with:

27 | user: __token__

28 | password: ${{ secrets.PYPI_DEPLOYMENT_TOKEN }}

29 |

--------------------------------------------------------------------------------

/.github/workflows/tests.yml:

--------------------------------------------------------------------------------

1 | name: tests

2 |

3 | on:

4 | push:

5 | paths:

6 | - '**.py'

7 | pull_request:

8 | paths:

9 | - '**.py'

10 |

11 | jobs:

12 | pytest:

13 | runs-on: ubuntu-latest

14 | strategy:

15 | fail-fast: false

16 | matrix:

17 | python-version: ['3.9', '3.10', '3.11', '3.12']

18 |

19 | steps:

20 | - uses: actions/checkout@v4

21 | - name: Set up Python ${{ matrix.python-version }}

22 | uses: actions/setup-python@v4

23 | with:

24 | python-version: ${{ matrix.python-version }}

25 | - name: Install dependencies

26 | run: |

27 | python -m pip install --upgrade pip

28 | pip install .[tests]

29 | - name: Test with pytest

30 | run: |

31 | pytest -v --cov=inference

32 |

33 | build-test:

34 | runs-on: ubuntu-latest

35 | steps:

36 | - uses: actions/checkout@v4

37 | - name: Set up Python

38 | uses: actions/setup-python@v4

39 | with:

40 | python-version: '3.x'

41 | - name: Install dependencies

42 | run: |

43 | python -m pip install --upgrade pip

44 | pip install build twine

45 | - name: Build package

46 | run: python -m build --sdist --wheel

47 | - name: Check build

48 | run: twine check dist/*

49 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # -*- mode: gitignore; -*-

2 |

3 | # Auto-generated by setuptools_scm

4 | inference/_version.py

5 |

6 | *~

7 | \#*\#

8 | /.emacs.desktop

9 | /.emacs.desktop.lock

10 | *.elc

11 | auto-save-list

12 | tramp

13 | .\#*

14 |

15 | # Org-mode

16 | .org-id-locations

17 | *_archive

18 |

19 | # flymake-mode

20 | *_flymake.*

21 |

22 | # eshell files

23 | /eshell/history

24 | /eshell/lastdir

25 |

26 | # elpa packages

27 | /elpa/

28 |

29 | # reftex files

30 | *.rel

31 |

32 | # AUCTeX auto folder

33 | /auto/

34 |

35 | # cask packages

36 | .cask/

37 | dist/

38 |

39 | # Flycheck

40 | flycheck_*.el

41 |

42 | # server auth directory

43 | /server/

44 |

45 | # projectiles files

46 | .projectile

47 |

48 | # directory configuration

49 | .dir-locals.el

50 |

51 | # network security

52 | /network-security.data

53 |

54 | # Byte-compiled / optimized / DLL files

55 | __pycache__/

56 | *.py[cod]

57 | *$py.class

58 |

59 | # C extensions

60 | *.so

61 |

62 | # Distribution / packaging

63 | .Python

64 | build/

65 | develop-eggs/

66 | dist/

67 | downloads/

68 | eggs/

69 | .eggs/

70 | lib/

71 | lib64/

72 | parts/

73 | sdist/

74 | var/

75 | wheels/

76 | share/python-wheels/

77 | *.egg-info/

78 | .installed.cfg

79 | *.egg

80 | MANIFEST

81 |

82 | # PyInstaller

83 | # Usually these files are written by a python script from a template

84 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

85 | *.manifest

86 | *.spec

87 |

88 | # Installer logs

89 | pip-log.txt

90 | pip-delete-this-directory.txt

91 |

92 | # Unit test / coverage reports

93 | htmlcov/

94 | .tox/

95 | .nox/

96 | .coverage

97 | .coverage.*

98 | .cache

99 | nosetests.xml

100 | coverage.xml

101 | *.cover

102 | *.py,cover

103 | .hypothesis/

104 | .pytest_cache/

105 | cover/

106 |

107 | # Translations

108 | *.mo

109 | *.pot

110 |

111 | # Django stuff:

112 | *.log

113 | local_settings.py

114 | db.sqlite3

115 | db.sqlite3-journal

116 |

117 | # Flask stuff:

118 | instance/

119 | .webassets-cache

120 |

121 | # Scrapy stuff:

122 | .scrapy

123 |

124 | # Sphinx documentation

125 | docs/_build/

126 |

127 | # PyBuilder

128 | .pybuilder/

129 | target/

130 |

131 | # Jupyter Notebook

132 | .ipynb_checkpoints

133 |

134 | # IPython

135 | profile_default/

136 | ipython_config.py

137 |

138 | # pyenv

139 | # For a library or package, you might want to ignore these files since the code is

140 | # intended to run in multiple environments; otherwise, check them in:

141 | # .python-version

142 |

143 | # pipenv

144 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

145 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

146 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

147 | # install all needed dependencies.

148 | #Pipfile.lock

149 |

150 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

151 | __pypackages__/

152 |

153 | # Celery stuff

154 | celerybeat-schedule

155 | celerybeat.pid

156 |

157 | # SageMath parsed files

158 | *.sage.py

159 |

160 | # Environments

161 | .env

162 | .venv

163 | env/

164 | venv/

165 | ENV/

166 | env.bak/

167 | venv.bak/

168 |

169 | # Spyder project settings

170 | .spyderproject

171 | .spyproject

172 |

173 | # Rope project settings

174 | .ropeproject

175 |

176 | # mkdocs documentation

177 | /site

178 |

179 | # mypy

180 | .mypy_cache/

181 | .dmypy.json

182 | dmypy.json

183 |

184 | # Pyre type checker

185 | .pyre/

186 |

187 | # pytype static type analyzer

188 | .pytype/

189 |

190 | # Cython debug symbols

191 | cython_debug/

192 |

193 | *~

194 |

195 | # temporary files which can be created if a process still has a handle open of a deleted file

196 | .fuse_hidden*

197 |

198 | # KDE directory preferences

199 | .directory

200 |

201 | # Linux trash folder which might appear on any partition or disk

202 | .Trash-*

203 |

204 | # .nfs files are created when an open file is removed but is still being accessed

205 | .nfs*

206 |

--------------------------------------------------------------------------------

/.readthedocs.yaml:

--------------------------------------------------------------------------------

1 | # .readthedocs.yaml

2 | # Read the Docs configuration file

3 | # See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

4 |

5 | # Required

6 | version: 2

7 |

8 | # Set the version of Python and other tools you might need

9 | build:

10 | os: ubuntu-22.04

11 | tools:

12 | python: "3.11"

13 |

14 | # Build documentation in the docs/ directory with Sphinx

15 | sphinx:

16 | configuration: docs/source/conf.py

17 |

18 | # Optionally declare the Python requirements required to build your docs

19 | python:

20 | install:

21 | - method: pip

22 | path: .

23 | - requirements: docs/docs_requirements.txt

24 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2018 Chris Bowman

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # inference-tools

2 |

3 | [](https://inference-tools.readthedocs.io/en/stable/?badge=stable)

4 | [](https://github.com/C-bowman/inference-tools/blob/master/LICENSE)

5 | [](https://pypi.org/project/inference-tools/)

6 |

7 | [](https://zenodo.org/badge/latestdoi/149741362)

8 |

9 | This package provides a set of Python-based tools for Bayesian data analysis

10 | which are simple to use, allowing them to applied quickly and easily.

11 |

12 | Inference-tools is not a framework for Bayesian modelling (e.g. like [PyMC](https://docs.pymc.io/)),

13 | but instead provides tools to sample from user-defined models using MCMC, and to analyse and visualise

14 | the sampling results.

15 |

16 | ## Features

17 |

18 | - Implementations of MCMC algorithms like Gibbs sampling and Hamiltonian Monte-Carlo for

19 | sampling from user-defined posterior distributions.

20 |

21 | - Density estimation and plotting tools for analysing and visualising inference results.

22 |

23 | - Gaussian-process regression and optimisation.

24 |

25 |

26 | | | | |

27 | |:-------------------------:|:-------------------------:|:-------------------------:|

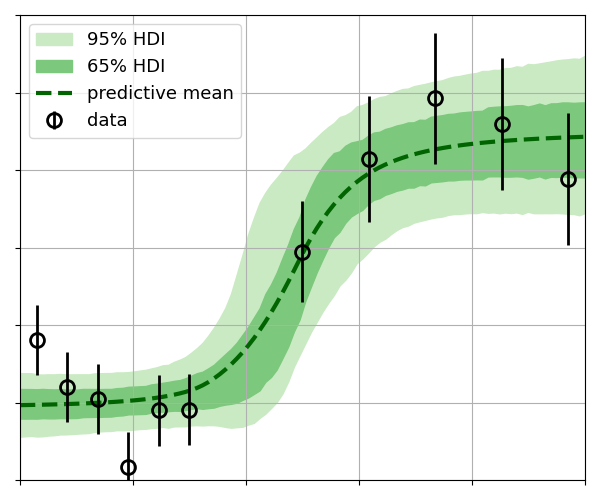

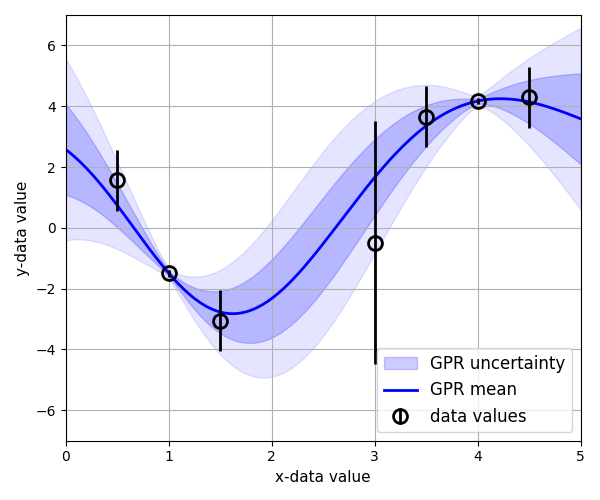

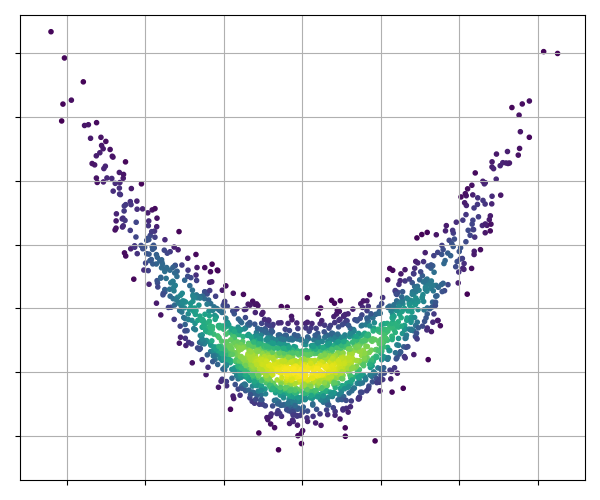

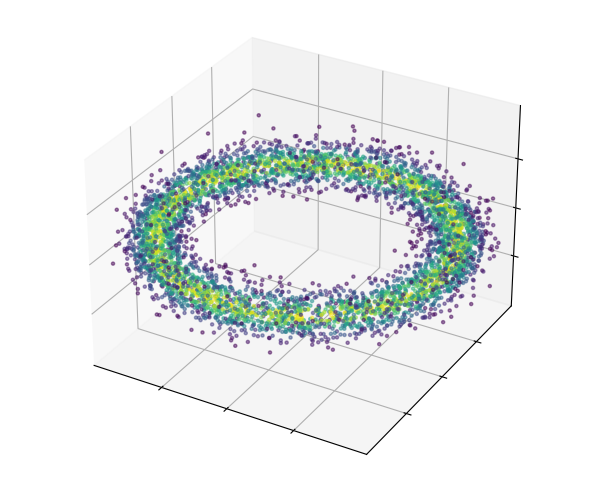

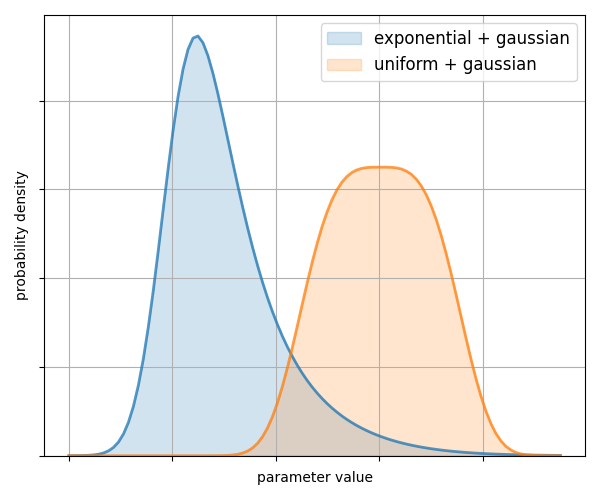

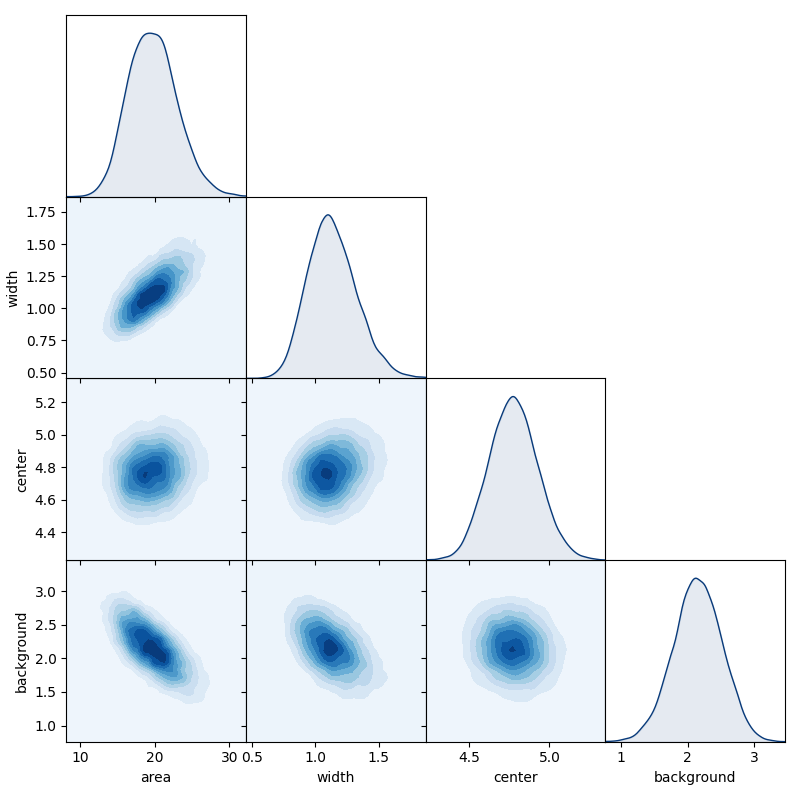

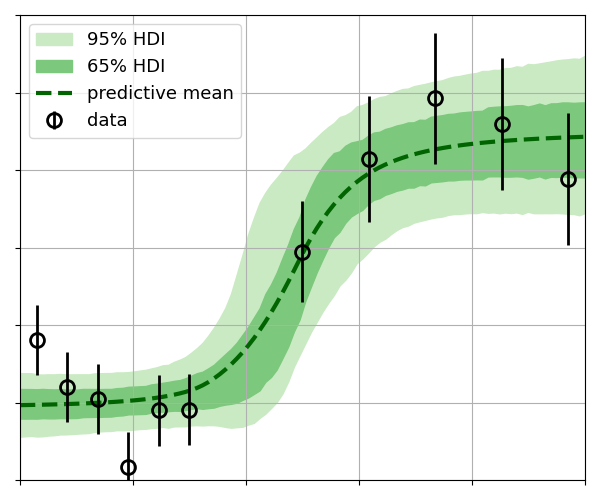

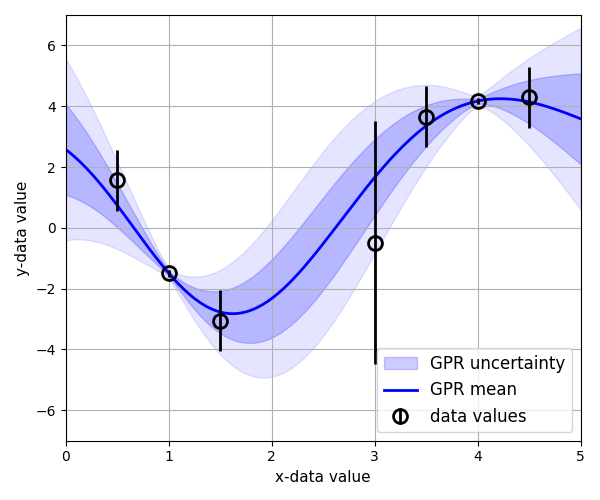

28 | | [Gibbs Sampling](https://github.com/C-bowman/inference-tools/blob/master/demos/gibbs_sampling_demo.ipynb)  | [Hamiltonian Monte-Carlo](https://github.com/C-bowman/inference-tools/blob/master/demos/hamiltonian_mcmc_demo.ipynb)

| [Hamiltonian Monte-Carlo](https://github.com/C-bowman/inference-tools/blob/master/demos/hamiltonian_mcmc_demo.ipynb)  | [Density estimation](https://github.com/C-bowman/inference-tools/blob/master/demos/density_estimation_demo.ipynb)

| [Density estimation](https://github.com/C-bowman/inference-tools/blob/master/demos/density_estimation_demo.ipynb)  |

29 | | Matrix plotting

|

29 | | Matrix plotting  | Highest-density intervals

| Highest-density intervals  | [GP regression](https://github.com/C-bowman/inference-tools/blob/master/demos/gp_regression_demo.ipynb)

| [GP regression](https://github.com/C-bowman/inference-tools/blob/master/demos/gp_regression_demo.ipynb)  |

30 |

31 | ## Installation

32 |

33 | inference-tools is available from [PyPI](https://pypi.org/project/inference-tools/),

34 | so can be easily installed using [pip](https://pip.pypa.io/en/stable/) as follows:

35 | ```bash

36 | pip install inference-tools

37 | ```

38 |

39 | ## Documentation

40 |

41 | Full documentation is available at [inference-tools.readthedocs.io](https://inference-tools.readthedocs.io/en/stable/).

--------------------------------------------------------------------------------

/demos/scripts/ChainPool_demo.py:

--------------------------------------------------------------------------------

1 | from inference.mcmc import GibbsChain, ChainPool

2 | from time import time

3 |

4 |

5 | def rosenbrock(t):

6 | # This is a modified form of the rosenbrock function, which

7 | # is commonly used to test optimisation algorithms

8 | X, Y = t

9 | X2 = X**2

10 | b = 15 # correlation strength parameter

11 | v = 3 # variance of the gaussian term

12 | return -X2 - b * (Y - X2) ** 2 - 0.5 * (X2 + Y**2) / v

13 |

14 |

15 | # required for multi-process code when running on windows

16 | if __name__ == "__main__":

17 | """

18 | The ChainPool class provides a convenient means to store multiple

19 | chain objects, and simultaneously advance those chains using multiple

20 | python processes.

21 | """

22 |

23 | # for example, here we create a singular chain object

24 | chain = GibbsChain(posterior=rosenbrock, start=[0.0, 0.0])

25 | # then advance it for some number of samples, and note the run-time

26 | t1 = time()

27 | chain.advance(150000)

28 | t2 = time()

29 | print("time elapsed, single chain:", t2 - t1)

30 |

31 | # We may want to run a number of chains in parallel - for example multiple chains

32 | # over different posteriors, or on a single posterior with different starting locations.

33 |

34 | # Here we create two chains with different starting points:

35 | chain_1 = GibbsChain(posterior=rosenbrock, start=[0.5, 0.0])

36 | chain_2 = GibbsChain(posterior=rosenbrock, start=[0.0, 0.5])

37 |

38 | # now we pass those chains to ChainPool in a list

39 | cpool = ChainPool([chain_1, chain_2])

40 |

41 | # if we now wish to advance both of these chains some number of steps, and do so in

42 | # parallel, we can use the advance() method of the ChainPool instance:

43 | t1 = time()

44 | cpool.advance(150000)

45 | t2 = time()

46 | print("time elapsed, two chains:", t2 - t1)

47 |

48 | # assuming you are running this example on a machine with two free cores, advancing

49 | # both chains in this way should have taken a comparable time to advancing just one.

50 |

--------------------------------------------------------------------------------

/demos/scripts/GaussianKDE_demo.py:

--------------------------------------------------------------------------------

1 | from numpy import linspace, zeros, exp, sqrt, pi

2 | from numpy.random import normal

3 | import matplotlib.pyplot as plt

4 | from inference.pdf import GaussianKDE

5 |

6 | """

7 | Code to demonstrate the use of the GaussianKDE class.

8 | """

9 |

10 | # first generate a test sample

11 | N = 150000

12 | sample = zeros(N)

13 | sample[: N // 3] = normal(size=N // 3) * 0.5 + 1.8

14 | sample[N // 3 :] = normal(size=2 * (N // 3)) * 0.5 + 3.5

15 |

16 | # GaussianKDE takes an array of sample values as its only argument

17 | pdf = GaussianKDE(sample)

18 |

19 | # much like the UnimodalPdf class, GaussianKDE returns a density estimator object

20 | # which can be called as a function to return an estimate of the PDF at a set of

21 | # points:

22 | x = linspace(0, 6, 1000)

23 | p = pdf(x)

24 |

25 | # GaussianKDE is fast even for large samples, as it uses a binary tree search to

26 | # match any given spatial location with a slice of the sample array which contains

27 | # all samples that have a non-negligible contribution to the density estimate.

28 |

29 | # We could plot (x, P) manually, but for convenience the plot_summary

30 | # method will generate a plot automatically as well as summary statistics:

31 | pdf.plot_summary()

32 |

33 | # The summary statistics can be accessed via properties or methods:

34 | # the location of the mode is a property

35 | mode = pdf.mode

36 |

37 | # The highest-density interval for any fraction of total probability

38 | # can is returned by the interval() method

39 | hdi_95 = pdf.interval(frac=0.95)

40 |

41 | # the mean, variance, skewness and excess kurtosis are returned

42 | # by the moments() method:

43 | mu, var, skew, kurt = pdf.moments()

44 |

45 | # By default, GaussianKDE uses a simple but easy to compute estimate of the

46 | # bandwidth (the standard deviation of each Gaussian kernel). However, when

47 | # estimating strongly non-normal distributions, this simple approach will

48 | # over-estimate required bandwidth.

49 |

50 | # In these cases, the cross-validation bandwidth selector can be used to

51 | # obtain better results, but with higher computational cost.

52 |

53 | # to demonstrate, lets create a new sample:

54 | N = 30000

55 | sample = zeros(N)

56 | sample[: N // 3] = normal(size=N // 3)

57 | sample[N // 3 :] = normal(size=2 * (N // 3)) + 10

58 |

59 | # now construct estimators using the simple and cross-validation estimators

60 | pdf_simple = GaussianKDE(sample)

61 | pdf_crossval = GaussianKDE(sample, cross_validation=True)

62 |

63 | # now build an axis on which to evaluate the estimates

64 | x = linspace(-4, 14, 500)

65 |

66 | # for comparison also compute the real distribution

67 | exact = (exp(-0.5 * x**2) / 3 + 2 * exp(-0.5 * (x - 10) ** 2) / 3) / sqrt(2 * pi)

68 |

69 | # plot everything together

70 | plt.plot(x, pdf_simple(x), label="simple")

71 | plt.plot(x, pdf_crossval(x), label="cross-validation")

72 | plt.plot(x, exact, label="exact")

73 |

74 | plt.ylabel("probability density")

75 | plt.xlabel("x")

76 |

77 | plt.grid()

78 | plt.legend()

79 | plt.show()

80 |

--------------------------------------------------------------------------------

/demos/scripts/GibbsChain_demo.py:

--------------------------------------------------------------------------------

1 | import matplotlib.pyplot as plt

2 | from numpy import array, exp, linspace

3 |

4 | from inference.mcmc import GibbsChain

5 |

6 |

7 | def rosenbrock(t):

8 | # This is a modified form of the rosenbrock function, which

9 | # is commonly used to test optimisation algorithms

10 | X, Y = t

11 | X2 = X**2

12 | b = 15 # correlation strength parameter

13 | v = 3 # variance of the gaussian term

14 | return -X2 - b * (Y - X2) ** 2 - 0.5 * (X2 + Y**2) / v

15 |

16 |

17 | """

18 | # Gibbs sampling example

19 |

20 | In order to use the GibbsChain sampler from the mcmc module, we must

21 | provide a log-posterior function to sample from, a point in the parameter

22 | space to start the chain, and an initial guess for the proposal width

23 | for each parameter.

24 |

25 | In this example a modified version of the Rosenbrock function (shown

26 | above) is used as the log-posterior.

27 | """

28 |

29 | # The maximum of the rosenbrock function is [0, 0] - here we intentionally

30 | # start the chain far from the mode.

31 | start_location = array([2.0, -4.0])

32 |

33 | # Here we make our initial guess for the proposal widths intentionally

34 | # poor, to demonstrate that gibbs sampling allows each proposal width

35 | # to be adjusted individually toward an optimal value.

36 | width_guesses = array([5.0, 0.05])

37 |

38 | # create the chain object

39 | chain = GibbsChain(posterior=rosenbrock, start=start_location, widths=width_guesses)

40 |

41 | # advance the chain 150k steps

42 | chain.advance(150000)

43 |

44 | # the samples for the n'th parameter can be accessed through the

45 | # get_parameter(n) method. We could use this to plot the path of

46 | # the chain through the 2D parameter space:

47 |

48 | p = chain.get_probabilities() # color the points by their probability value

49 | point_colors = exp(p - p.max())

50 | plt.scatter(

51 | chain.get_parameter(0), chain.get_parameter(1), c=point_colors, marker="."

52 | )

53 | plt.xlabel("parameter 1")

54 | plt.ylabel("parameter 2")

55 | plt.grid()

56 | plt.tight_layout()

57 | plt.show()

58 |

59 |

60 | # We can see from this plot that in order to take a representative sample,

61 | # some early portion of the chain must be removed. This is referred to as

62 | # the 'burn-in' period. This period allows the chain to both find the high

63 | # density areas, and adjust the proposal widths to their optimal values.

64 |

65 | # The plot_diagnostics() method can help us decide what size of burn-in to use:

66 | chain.plot_diagnostics()

67 |

68 | # Occasionally samples are also 'thinned' by a factor of n (where only every

69 | # n'th sample is used) in order to reduce the size of the data set for

70 | # storage, or to produce uncorrelated samples.

71 |

72 | # based on the diagnostics we can choose burn and thin values,

73 | # which can be passed as arguments to methods which act on the samples

74 | burn = 2000

75 | thin = 5

76 |

77 | # After discarding burn-in, what we have left should be a representative

78 | # sample drawn from the posterior. Repeating the previous plot as a

79 | # scatter-plot shows the sample:

80 | p = chain.get_probabilities(burn=burn, thin=thin) # color the points by their probability value

81 | plt.scatter(

82 | chain.get_parameter(index=0, burn=burn, thin=thin),

83 | chain.get_parameter(index=1, burn=burn, thin=thin),

84 | c=exp(p - p.max()),

85 | marker="."

86 | )

87 | plt.xlabel("parameter 1")

88 | plt.ylabel("parameter 2")

89 | plt.grid()

90 | plt.tight_layout()

91 | plt.show()

92 |

93 |

94 | # We can easily estimate 1D marginal distributions for any parameter

95 | # using the 'get_marginal' method:

96 | pdf_1 = chain.get_marginal(0, burn=burn, thin=thin, unimodal=True)

97 | pdf_2 = chain.get_marginal(1, burn=burn, thin=thin, unimodal=True)

98 |

99 | # get_marginal returns a density estimator object, which can be called

100 | # as a function to return the value of the pdf at any point.

101 | # Make an axis on which to evaluate the PDFs:

102 | ax = linspace(-3, 4, 500)

103 |

104 | # plot the results

105 | plt.plot(ax, pdf_1(ax), label="param #1 marginal", lw=2)

106 | plt.plot(ax, pdf_2(ax), label="param #2 marginal", lw=2)

107 |

108 | plt.xlabel("parameter value")

109 | plt.ylabel("probability density")

110 | plt.legend()

111 | plt.grid()

112 | plt.tight_layout()

113 | plt.show()

114 |

115 | # chain objects can be saved in their entirety as a single .npz file using

116 | # the save() method, and then re-built using the load() class method, so

117 | # that to save the chain you may write:

118 |

119 | # chain.save('chain_data.npz')

120 |

121 | # and to re-build a chain object at it was before you would write

122 |

123 | # chain = GibbsChain.load('chain_data.npz')

124 |

125 | # This allows you to advance a chain, store it, then re-load it at a later

126 | # time to analyse the chain data, or re-start the chain should you decide

127 | # more samples are required.

128 |

--------------------------------------------------------------------------------

/demos/scripts/GpOptimiser_demo.py:

--------------------------------------------------------------------------------

1 | from inference.gp import GpOptimiser

2 |

3 | import matplotlib.pyplot as plt

4 | import matplotlib as mpl

5 | from numpy import sin, cos, linspace, array, meshgrid

6 |

7 | mpl.rcParams["axes.autolimit_mode"] = "round_numbers"

8 | mpl.rcParams["axes.xmargin"] = 0

9 | mpl.rcParams["axes.ymargin"] = 0

10 |

11 |

12 | def example_plot_1d():

13 | mu, sig = GP(x_gp)

14 | fig, (ax1, ax2, ax3) = plt.subplots(

15 | 3, 1, gridspec_kw={"height_ratios": [1, 3, 1]}, figsize=(10, 8)

16 | )

17 |

18 | ax1.plot(

19 | evaluations,

20 | max_values,

21 | marker="o",

22 | ls="solid",

23 | c="orange",

24 | label="highest observed value",

25 | zorder=5,

26 | )

27 | ax1.plot(

28 | [2, 12], [max(y_func), max(y_func)], ls="dashed", label="actual max", c="black"

29 | )

30 | ax1.set_xlabel("function evaluations")

31 | ax1.set_xlim([2, 12])

32 | ax1.set_ylim([max(y) - 0.3, max(y_func) + 0.3])

33 | ax1.xaxis.set_label_position("top")

34 | ax1.yaxis.set_label_position("right")

35 | ax1.xaxis.tick_top()

36 | ax1.set_yticks([])

37 | ax1.legend(loc=4)

38 |

39 | ax2.plot(GP.x, GP.y, "o", c="red", label="observations", zorder=5)

40 | ax2.plot(x_gp, y_func, lw=1.5, c="red", ls="dashed", label="actual function")

41 | ax2.plot(x_gp, mu, lw=2, c="blue", label="GP prediction")

42 | ax2.fill_between(

43 | x_gp,

44 | (mu - 2 * sig),

45 | y2=(mu + 2 * sig),

46 | color="blue",

47 | alpha=0.15,

48 | label="95% confidence interval",

49 | )

50 | ax2.set_ylim([-1.5, 4])

51 | ax2.set_ylabel("y")

52 | ax2.set_xticks([])

53 |

54 | aq = array([abs(GP.acquisition(array([k]))) for k in x_gp]).squeeze()

55 | proposal = x_gp[aq.argmax()]

56 | ax3.fill_between(x_gp, 0.9 * aq / aq.max(), color="green", alpha=0.15)

57 | ax3.plot(x_gp, 0.9 * aq / aq.max(), color="green", label="acquisition function")

58 | ax3.plot(

59 | [proposal] * 2, [0.0, 1.0], c="green", ls="dashed", label="acquisition maximum"

60 | )

61 | ax2.plot([proposal] * 2, [-1.5, search_function(proposal)], c="green", ls="dashed")

62 | ax2.plot(

63 | proposal,

64 | search_function(proposal),

65 | "o",

66 | c="green",

67 | label="proposed observation",

68 | )

69 | ax3.set_ylim([0, 1])

70 | ax3.set_yticks([])

71 | ax3.set_xlabel("x")

72 | ax3.legend(loc=1)

73 | ax2.legend(loc=2)

74 |

75 | plt.tight_layout()

76 | plt.subplots_adjust(hspace=0)

77 | plt.show()

78 |

79 |

80 | def example_plot_2d():

81 | fig, (ax1, ax2) = plt.subplots(

82 | 2, 1, gridspec_kw={"height_ratios": [1, 3]}, figsize=(10, 8)

83 | )

84 | plt.subplots_adjust(hspace=0)

85 |

86 | ax1.plot(

87 | evaluations,

88 | max_values,

89 | marker="o",

90 | ls="solid",

91 | c="orange",

92 | label="optimum value",

93 | zorder=5,

94 | )

95 | ax1.plot(

96 | [5, 30],

97 | [z_func.max(), z_func.max()],

98 | ls="dashed",

99 | label="actual max",

100 | c="black",

101 | )

102 | ax1.set_xlabel("function evaluations")

103 | ax1.set_xlim([5, 30])

104 | ax1.set_ylim([max(y) - 0.3, z_func.max() + 0.3])

105 | ax1.xaxis.set_label_position("top")

106 | ax1.yaxis.set_label_position("right")

107 | ax1.xaxis.tick_top()

108 | ax1.set_yticks([])

109 | ax1.legend(loc=4)

110 |

111 | ax2.contour(*mesh, z_func, 40)

112 | ax2.plot(

113 | [i[0] for i in GP.x],

114 | [i[1] for i in GP.x],

115 | "D",

116 | c="red",

117 | markeredgecolor="black",

118 | )

119 | plt.show()

120 |

121 |

122 | """

123 | GpOptimiser extends the functionality of GpRegressor to perform 'Bayesian optimisation'.

124 |

125 | Bayesian optimisation is suited to problems for which a single evaluation of the function

126 | being explored is expensive, such that the total number of function evaluations must be

127 | made as small as possible.

128 | """

129 |

130 |

131 | # define the function whose maximum we will search for

132 | def search_function(x):

133 | return sin(0.5 * x) + 3 / (1 + (x - 1) ** 2)

134 |

135 |

136 | # define bounds for the optimisation

137 | bounds = [(-8.0, 8.0)]

138 |

139 | # create some initialisation data

140 | x = array([-8, 8])

141 | y = search_function(x)

142 |

143 | # create an instance of GpOptimiser

144 | GP = GpOptimiser(x, y, bounds=bounds)

145 |

146 |

147 | # here we evaluate the search function for plotting purposes

148 | M = 500

149 | x_gp = linspace(*bounds[0], M)

150 | y_func = search_function(x_gp)

151 | max_values = [max(GP.y)]

152 | evaluations = [len(GP.y)]

153 |

154 |

155 | for i in range(11):

156 | # plot the current state of the optimisation

157 | example_plot_1d()

158 |

159 | # request the proposed evaluation

160 | new_x = GP.propose_evaluation()

161 |

162 | # evaluate the new point

163 | new_y = search_function(new_x)

164 |

165 | # update the gaussian process with the new information

166 | GP.add_evaluation(new_x, new_y)

167 |

168 | # track the optimum value for plotting

169 | max_values.append(max(GP.y))

170 | evaluations.append(len(GP.y))

171 |

172 |

173 | """

174 | 2D example

175 | """

176 | from mpl_toolkits.mplot3d import Axes3D

177 |

178 |

179 | # define a new 2D search function

180 | def search_function(v):

181 | x, y = v

182 | z = ((x - 1) / 2) ** 2 + ((y + 3) / 1.5) ** 2

183 | return sin(0.5 * x) + cos(0.4 * y) + 5 / (1 + z)

184 |

185 |

186 | # set bounds

187 | bounds = [(-8, 8), (-8, 8)]

188 |

189 | # evaluate function for plotting

190 | N = 80

191 | x = linspace(*bounds[0], N)

192 | y = linspace(*bounds[1], N)

193 | mesh = meshgrid(x, y)

194 | z_func = search_function(mesh)

195 |

196 |

197 | # create some initialisation data

198 | # we've picked a point at each corner and one in the middle

199 | x = [(-8, -8), (8, -8), (-8, 8), (8, 8), (0, 0)]

200 | y = [search_function(k) for k in x]

201 |

202 | # initiate the optimiser

203 | GP = GpOptimiser(x, y, bounds=bounds)

204 |

205 |

206 | max_values = [max(GP.y)]

207 | evaluations = [len(GP.y)]

208 |

209 | for i in range(25):

210 | new_x = GP.propose_evaluation()

211 | new_y = search_function(new_x)

212 | GP.add_evaluation(new_x, new_y)

213 |

214 | # track the optimum value for plotting

215 | max_values.append(max(GP.y))

216 | evaluations.append(len(GP.y))

217 |

218 | # plot the results

219 | example_plot_2d()

220 |

--------------------------------------------------------------------------------

/demos/scripts/HamiltonianChain_demo.py:

--------------------------------------------------------------------------------

1 | from mpl_toolkits.mplot3d import Axes3D

2 | import matplotlib.pyplot as plt

3 | from numpy import sqrt, exp, array

4 | from inference.mcmc import HamiltonianChain

5 |

6 | """

7 | # Hamiltonian sampling example

8 |

9 | Hamiltonian Monte-Carlo (HMC) is a MCMC algorithm which is able to

10 | efficiently sample from complex PDFs which present difficulty for

11 | other algorithms, such as those which strong non-linear correlations.

12 |

13 | However, this requires not only the log-posterior probability but also

14 | its gradient in order to function. In cases where this gradient can be

15 | calculated analytically HMC can be very effective.

16 |

17 | The implementation of HMC shown here as HamiltonianChain is somewhat

18 | naive, and should at some point be replaced with a more advanced

19 | self-tuning version, such as the NUTS algorithm.

20 | """

21 |

22 |

23 | # define a non-linearly correlated posterior distribution

24 | class ToroidalGaussian:

25 | def __init__(self):

26 | self.R0 = 1.0 # torus major radius

27 | self.ar = 10.0 # torus aspect ratio

28 | self.inv_w2 = (self.ar / self.R0) ** 2

29 |

30 | def __call__(self, theta):

31 | x, y, z = theta

32 | r_sqr = z**2 + (sqrt(x**2 + y**2) - self.R0) ** 2

33 | return -0.5 * r_sqr * self.inv_w2

34 |

35 | def gradient(self, theta):

36 | x, y, z = theta

37 | R = sqrt(x**2 + y**2)

38 | K = 1 - self.R0 / R

39 | g = array([K * x, K * y, z])

40 | return -g * self.inv_w2

41 |

42 |

43 | # create an instance of our posterior class

44 | posterior = ToroidalGaussian()

45 |

46 | # create the chain object

47 | chain = HamiltonianChain(

48 | posterior=posterior, grad=posterior.gradient, start=array([1, 0.1, 0.1])

49 | )

50 |

51 | # advance the chain to generate the sample

52 | chain.advance(6000)

53 |

54 | # choose how many samples will be thrown away from the start

55 | # of the chain as 'burn-in'

56 | burn = 2000

57 |

58 | # extract sample and probability data from the chain

59 | probs = chain.get_probabilities(burn=burn)

60 | colors = exp(probs - probs.max())

61 | xs, ys, zs = [chain.get_parameter(i, burn=burn) for i in [0, 1, 2]]

62 |

63 | # Plot the sample we've generated as a 3D scatterplot

64 | fig = plt.figure(figsize=(10, 10))

65 | ax = fig.add_subplot(111, projection="3d")

66 | L = 1.2

67 | ax.set_xlim([-L, L])

68 | ax.set_ylim([-L, L])

69 | ax.set_zlim([-L, L])

70 | ax.set_xlabel("x")

71 | ax.set_ylabel("y")

72 | ax.set_zlabel("z")

73 | ax.scatter(xs, ys, zs, c=colors)

74 | plt.tight_layout()

75 | plt.show()

76 |

77 | # The plot_diagnostics() and matrix_plot() methods described in the GibbsChain demo

78 | # also work for HamiltonianChain:

79 | chain.plot_diagnostics()

80 |

81 | chain.matrix_plot()

82 |

--------------------------------------------------------------------------------

/demos/scripts/ParallelTempering_demo.py:

--------------------------------------------------------------------------------

1 | from numpy import log, sqrt, sin, arctan2, pi

2 |

3 | # define a posterior with multiple separate peaks

4 | def multimodal_posterior(theta):

5 | x, y = theta

6 | r = sqrt(x**2 + y**2)

7 | phi = arctan2(y, x)

8 | z = (r - (0.5 + pi - phi*0.5)) / 0.1

9 | return -0.5*z**2 + 4*log(sin(phi*2.)**2)

10 |

11 |

12 | # required for multi-process code when running on windows

13 | if __name__ == "__main__":

14 |

15 | from inference.mcmc import GibbsChain, ParallelTempering

16 |

17 | # define a set of temperature levels

18 | N_levels = 6

19 | temps = [10**(2.5*k/(N_levels-1.)) for k in range(N_levels)]

20 |

21 | # create a set of chains - one with each temperature

22 | chains = [GibbsChain(posterior=multimodal_posterior, start=[0.5, 0.5], temperature=T) for T in temps]

23 |

24 | # When an instance of ParallelTempering is created, a dedicated process for each chain is spawned.

25 | # These separate processes will automatically make use of the available cpu cores, such that the

26 | # computations to advance the separate chains are performed in parallel.

27 | PT = ParallelTempering(chains=chains)

28 |

29 | # These processes wait for instructions which can be sent using the methods of the

30 | # ParallelTempering object:

31 | PT.run_for(minutes=0.5)

32 |

33 | # To recover a copy of the chains held by the processes

34 | # we can use the return_chains method:

35 | chains = PT.return_chains()

36 |

37 | # by looking at the trace plot for the T = 1 chain, we see that it makes

38 | # large jumps across the parameter space due to the swaps.

39 | chains[0].trace_plot()

40 |

41 | # Even though the posterior has strongly separated peaks, the T = 1 chain

42 | # was able to explore all of them due to the swaps.

43 | chains[0].matrix_plot()

44 |

45 | # We can also visualise the acceptance rates of proposed position swaps between

46 | # each chain using the swap_diagnostics method:

47 | PT.swap_diagnostics()

48 |

49 | # Because each process waits for instructions from the ParallelTempering object,

50 | # they will not self-terminate. To terminate all the processes we have to trigger

51 | # a shutdown even using the shutdown method:

52 | PT.shutdown()

--------------------------------------------------------------------------------

/demos/scripts/gaussian_fitting_demo.py:

--------------------------------------------------------------------------------

1 | from numpy import array, exp, linspace, sqrt, pi

2 | import matplotlib.pyplot as plt

3 |

4 | # Suppose we have the following dataset, which we believe is described by a

5 | # Gaussian peak plus a constant background. Our goal in this example is to

6 | # infer the area of the Gaussian.

7 |

8 | x_data = array([

9 | 0.00, 0.80, 1.60, 2.40, 3.20, 4.00, 4.80, 5.60,

10 | 6.40, 7.20, 8.00, 8.80, 9.60, 10.4, 11.2, 12.0

11 | ])

12 |

13 | y_data = array([

14 | 2.473, 1.329, 2.370, 1.135, 5.861, 7.045, 9.942, 7.335,

15 | 3.329, 5.348, 1.462, 2.476, 3.096, 0.784, 3.342, 1.877

16 | ])

17 |

18 | y_error = array([

19 | 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0,

20 | 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0

21 | ])

22 |

23 | plt.errorbar(

24 | x_data,

25 | y_data,

26 | yerr=y_error,

27 | ls="dashed",

28 | marker="D",

29 | c="red",

30 | markerfacecolor="none",

31 | )

32 | plt.ylabel("y")

33 | plt.xlabel("x")

34 | plt.grid()

35 | plt.show()

36 |

37 | # The first step is to implement our model. For simple models like this one

38 | # this can be done using just a function, but as models become more complex

39 | # it becomes useful to build them as classes.

40 |

41 |

42 | class PeakModel:

43 | def __init__(self, x_data):

44 | """

45 | The __init__ should be used to pass in any data which is required

46 | by the model to produce predictions of the y-data values.

47 | """

48 | self.x = x_data

49 |

50 | def __call__(self, theta):

51 | return self.forward_model(self.x, theta)

52 |

53 | @staticmethod

54 | def forward_model(x, theta):

55 | """

56 | The forward model must make a prediction of the experimental data we would expect to measure

57 | given a specific set model parameters 'theta'.

58 | """

59 | # unpack the model parameters

60 | area, width, center, background = theta

61 | # return the prediction of the data

62 | z = (x - center) / width

63 | gaussian = exp(-0.5 * z**2) / (sqrt(2 * pi) * width)

64 | return area * gaussian + background

65 |

66 |

67 | # inference-tools has a variety of Likelihood classes which allow you to easily construct a

68 | # likelihood function given the measured data and your forward-model.

69 | from inference.likelihoods import GaussianLikelihood

70 |

71 | likelihood = GaussianLikelihood(

72 | y_data=y_data, sigma=y_error, forward_model=PeakModel(x_data)

73 | )

74 |

75 | # Instances of the likelihood classes can be called as functions, and return the log-likelihood

76 | # when passed a vector of model parameters:

77 | initial_guess = array([10.0, 2.0, 5.0, 2.0])

78 | guess_log_likelihood = likelihood(initial_guess)

79 | print(guess_log_likelihood)

80 |

81 | # We could at this stage pair the likelihood object with an optimiser in order to obtain

82 | # the maximum-likelihood estimate of the parameters. In this example however, we want to

83 | # construct the posterior distribution for the model parameters, and that means we need

84 | # a prior.

85 |

86 | # The inference.priors module contains classes which allow for easy construction of

87 | # prior distributions across all model parameters.

88 | from inference.priors import ExponentialPrior, UniformPrior, JointPrior

89 |

90 | # If we want different model parameters to have different prior distributions, as in this

91 | # case where we give three variables an exponential prior and one a uniform prior, we first

92 | # construct each type of prior separately:

93 | prior_components = [

94 | ExponentialPrior(beta=[50.0, 20.0, 20.0], variable_indices=[0, 1, 3]),

95 | UniformPrior(lower=0.0, upper=12.0, variable_indices=[2]),

96 | ]

97 | # Now we use the JointPrior class to combine the various components into a single prior

98 | # distribution which covers all the model parameters.

99 | prior = JointPrior(components=prior_components, n_variables=4)

100 |

101 | # As with the likelihood, prior objects can also be called as function to return a

102 | # log-probability value when passed a vector of model parameters. We can also draw

103 | # samples from the prior directly using the sample() method:

104 | prior_sample = prior.sample()

105 | print(prior_sample)

106 |

107 | # The likelihood and prior can be easily combined into a posterior distribution

108 | # using the Posterior class:

109 | from inference.posterior import Posterior

110 | posterior = Posterior(likelihood=likelihood, prior=prior)

111 |

112 | # Now we have constructed a posterior distribution, we can sample from it

113 | # using Markov-chain Monte-Carlo (MCMC).

114 |

115 | # The inference.mcmc module contains implementations of various MCMC sampling algorithms.

116 | # Here we import the PcaChain class and use it to create a Markov-chain object:

117 | from inference.mcmc import PcaChain

118 | chain = PcaChain(posterior=posterior, start=initial_guess)

119 |

120 | # We generate samples by advancing the chain by a chosen number of steps using the advance method:

121 | chain.advance(25000)

122 |

123 | # we can check the status of the chain using the plot_diagnostics method:

124 | chain.plot_diagnostics()

125 |

126 | # The burn-in (how many samples from the start of the chain are discarded)

127 | # can be specified as an argument to methods which act on the samples:

128 | burn = 5000

129 |

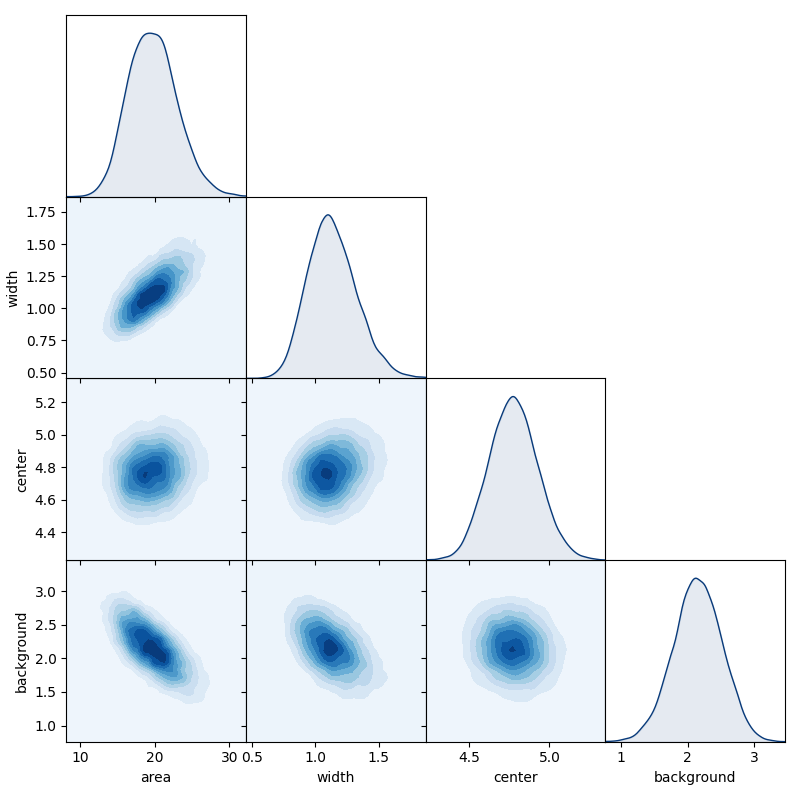

130 | # we can get a quick overview of the posterior using the matrix_plot method

131 | # of chain objects, which plots all possible 1D & 2D marginal distributions

132 | # of the full parameter set (or a chosen sub-set).

133 | chain.matrix_plot(labels=["area", "width", "center", "background"], burn=burn)

134 |

135 | # We can easily estimate 1D marginal distributions for any parameter

136 | # using the get_marginal method:

137 | area_pdf = chain.get_marginal(0, burn=burn)

138 | area_pdf.plot_summary(label="Gaussian area")

139 |

140 |

141 | # We can assess the level of uncertainty in the model predictions by passing each sample

142 | # through the forward-model and observing the distribution of model expressions that result:

143 |

144 | # generate an axis on which to evaluate the model

145 | x_fits = linspace(-2, 14, 500)

146 | # get the sample

147 | sample = chain.get_sample(burn=burn)

148 | # pass each through the forward model

149 | curves = array([PeakModel.forward_model(x_fits, theta) for theta in sample])

150 |

151 | # We could plot the predictions for each sample all on a single graph, but this is

152 | # often cluttered and difficult to interpret.

153 |

154 | # A better option is to use the hdi_plot function from the plotting module to plot

155 | # highest-density intervals for each point where the model is evaluated:

156 | from inference.plotting import hdi_plot

157 |

158 | fig = plt.figure(figsize=(8, 6))

159 | ax = fig.add_subplot(111)

160 | hdi_plot(x_fits, curves, intervals=[0.68, 0.95], axis=ax)

161 |

162 | # plot the MAP estimate (the sample with the single highest posterior probability)

163 | MAP_prediction = PeakModel.forward_model(x_fits, chain.mode())

164 | ax.plot(x_fits, MAP_prediction, ls="dashed", lw=3, c="C0", label="MAP estimate")

165 | # build the rest of the plot

166 | ax.errorbar(

167 | x_data,

168 | y_data,

169 | yerr=y_error,

170 | linestyle="none",

171 | c="red",

172 | label="data",

173 | marker="o",

174 | markerfacecolor="none",

175 | markeredgewidth=1.5,

176 | markersize=8,

177 | )

178 | ax.set_xlabel("x")

179 | ax.set_ylabel("y")

180 | ax.set_xlim([-0.5, 12.5])

181 | ax.legend()

182 | ax.grid()

183 | plt.tight_layout()

184 | plt.show()

185 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line.

5 | SPHINXOPTS =

6 | SPHINXBUILD = sphinx-build

7 | SOURCEDIR = source

8 | BUILDDIR = build

9 |

10 | # Put it first so that "make" without argument is like "make help".

11 | help:

12 | @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

13 |

14 | .PHONY: help Makefile

15 |

16 | # Catch-all target: route all unknown targets to Sphinx using the new

17 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

18 | %: Makefile

19 | @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

--------------------------------------------------------------------------------

/docs/docs_requirements.txt:

--------------------------------------------------------------------------------

1 | sphinx==5.3.0

2 | sphinx_rtd_theme==1.1.1

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=source

11 | set BUILDDIR=build

12 |

13 | if "%1" == "" goto help

14 |

15 | %SPHINXBUILD% >NUL 2>NUL

16 | if errorlevel 9009 (

17 | echo.

18 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

19 | echo.installed, then set the SPHINXBUILD environment variable to point

20 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

21 | echo.may add the Sphinx directory to PATH.

22 | echo.

23 | echo.If you don't have Sphinx installed, grab it from

24 | echo.http://sphinx-doc.org/

25 | exit /b 1

26 | )

27 |

28 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

29 | goto end

30 |

31 | :help

32 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

33 |

34 | :end

35 | popd

36 |

--------------------------------------------------------------------------------

/docs/source/EnsembleSampler.rst:

--------------------------------------------------------------------------------

1 |

2 | EnsembleSampler

3 | ~~~~~~~~~~~~~~~

4 |

5 | .. autoclass:: inference.mcmc.EnsembleSampler

6 | :members: advance, get_sample, get_parameter, get_probabilities, mode, plot_diagnostics, matrix_plot, trace_plot

7 |

--------------------------------------------------------------------------------

/docs/source/GibbsChain.rst:

--------------------------------------------------------------------------------

1 |

2 | GibbsChain

3 | ~~~~~~~~~~

4 |

5 | .. autoclass:: inference.mcmc.GibbsChain

6 | :members: advance, run_for, mode, get_marginal, get_sample, get_parameter, get_interval, plot_diagnostics, matrix_plot, trace_plot, set_non_negative, set_boundaries

7 |

8 |

9 | GibbsChain example code

10 | ^^^^^^^^^^^^^^^^^^^^^^^

11 |

12 | Define the Rosenbrock density to use as a test case:

13 |

14 | .. code-block:: python

15 |

16 | from numpy import linspace, exp

17 | import matplotlib.pyplot as plt

18 |

19 | def rosenbrock(t):

20 | X, Y = t

21 | X2 = X**2

22 | return -X2 - 15.*(Y - X2)**2 - 0.5*(X2 + Y**2) / 3.

23 |

24 | Create the chain object:

25 |

26 | .. code-block:: python

27 |

28 | from inference.mcmc import GibbsChain

29 | chain = GibbsChain(posterior = rosenbrock, start = [2., -4.])

30 |

31 | Advance the chain 150k steps to generate a sample from the posterior:

32 |

33 | .. code-block:: python

34 |

35 | chain.advance(150000)

36 |

37 | The samples for any parameter can be accessed through the

38 | ``get_parameter`` method. We could use this to plot the path of

39 | the chain through the 2D parameter space:

40 |

41 | .. code-block:: python

42 |

43 | p = chain.get_probabilities() # color the points by their probability value

44 | pnt_colors = exp(p - p.max())

45 | plt.scatter(chain.get_parameter(0), chain.get_parameter(1), c=pnt_colors, marker='.')

46 | plt.xlabel('parameter 1')

47 | plt.ylabel('parameter 2')

48 | plt.grid()

49 | plt.show()

50 |

51 | .. image:: ./images/GibbsChain_images/initial_scatter.png

52 |

53 | We can see from this plot that in order to take a representative sample,

54 | some early portion of the chain must be removed. This is referred to as

55 | the 'burn-in' period. This period allows the chain to both find the high

56 | density areas, and adjust the proposal widths to their optimal values.

57 |

58 | The ``plot_diagnostics`` method can help us decide what size of burn-in to use:

59 |

60 | .. code-block:: python

61 |

62 | chain.plot_diagnostics()

63 |

64 | .. image:: ./images/GibbsChain_images/gibbs_diagnostics.png

65 |

66 | Occasionally samples are also 'thinned' by a factor of n (where only every

67 | n'th sample is used) in order to reduce the size of the data set for

68 | storage, or to produce uncorrelated samples.

69 |

70 | Based on the diagnostics we can choose burn and thin values, which can be passed

71 | to methods of the chain that access or operate on the sample data.

72 |

73 | .. code-block:: python

74 |

75 | burn = 2000

76 | thin = 10

77 |

78 |

79 | By specifying the ``burn`` and ``thin`` values, we can generate a new version of

80 | the earlier plot with the burn-in and thinned samples discarded:

81 |

82 | .. code-block:: python

83 |

84 | p = chain.get_probabilities(burn=burn, thin=thin)

85 | pnt_colors = exp(p - p.max())

86 | plt.scatter(

87 | chain.get_parameter(0, burn=burn, thin=thin),

88 | chain.get_parameter(1, burn=burn, thin=thin),

89 | c=pnt_colors,

90 | marker = '.'

91 | )

92 | plt.xlabel('parameter 1')

93 | plt.ylabel('parameter 2')

94 | plt.grid()

95 | plt.show()

96 |

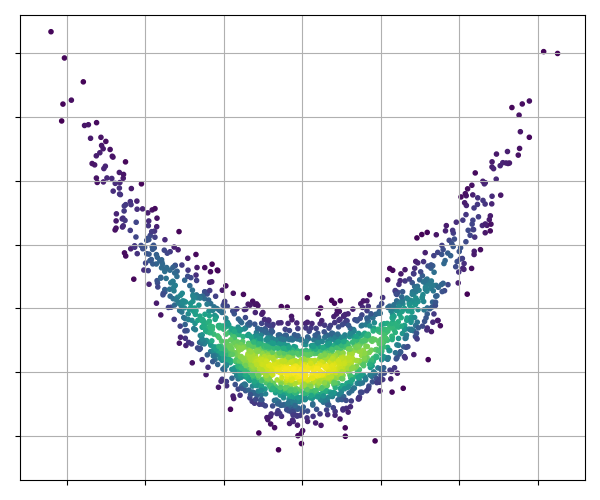

97 | .. image:: ./images/GibbsChain_images/burned_scatter.png

98 |

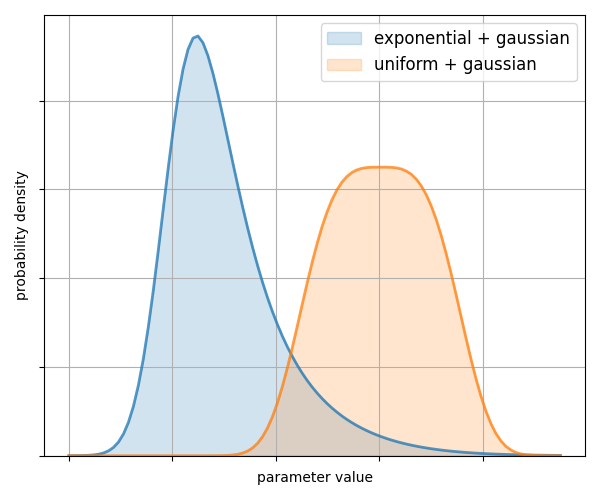

99 | We can easily estimate 1D marginal distributions for any parameter

100 | using the ``get_marginal`` method:

101 |

102 | .. code-block:: python

103 |

104 | pdf_1 = chain.get_marginal(0, burn=burn, thin=thin, unimodal=True)

105 | pdf_2 = chain.get_marginal(1, burn=burn, thin=thin, unimodal=True)

106 |

107 | ``get_marginal`` returns a density estimator object, which can be called

108 | as a function to return the value of the pdf at any point:

109 |

110 | .. code-block:: python

111 |

112 | axis = linspace(-3, 4, 500) # axis on which to evaluate the marginal PDFs

113 | # plot the marginal distributions

114 | plt.plot(axis, pdf_1(axis), label='param #1 marginal', lw=2)

115 | plt.plot(axis, pdf_2(axis), label='param #2 marginal', lw=2)

116 | plt.xlabel('parameter value')

117 | plt.ylabel('probability density')

118 | plt.legend()

119 | plt.grid()

120 | plt.show()

121 |

122 | .. image:: ./images/GibbsChain_images/gibbs_marginals.png

--------------------------------------------------------------------------------

/docs/source/GpLinearInverter.rst:

--------------------------------------------------------------------------------

1 |

2 | GpLinearInverter

3 | ~~~~~~~~~~~~~~~~

4 |

5 | .. autoclass:: inference.gp.GpLinearInverter

6 | :members: calculate_posterior, calculate_posterior_mean, optimize_hyperparameters, marginal_likelihood

7 |

8 |

9 | Example code

10 | ^^^^^^^^^^^^

11 |

12 | Example code can be found in the `Gaussian-process linear inversion jupyter notebook demo `_.

--------------------------------------------------------------------------------

/docs/source/GpOptimiser.rst:

--------------------------------------------------------------------------------

1 |

2 | GpOptimiser

3 | ~~~~~~~~~~~

4 |

5 | .. autoclass:: inference.gp.GpOptimiser

6 | :members: propose_evaluation, add_evaluation

7 |

8 | Example code

9 | ^^^^^^^^^^^^

10 |

11 | Gaussian-process optimisation efficiently searches for the global maximum of a function

12 | by iteratively 'learning' the structure of that function as new evaluations are made.

13 |

14 | As an example, define a simple 1D function:

15 |

16 | .. code-block:: python

17 |

18 | from numpy import sin

19 |

20 | def search_function(x): # Lorentzian plus a sine wave

21 | return sin(0.5 * x) + 3 / (1 + (x - 1)**2)

22 |

23 |

24 | Define some bounds for the optimisation, and make some evaluations of the function

25 | that will be used to build the initial gaussian-process estimate:

26 |

27 | .. code-block:: python

28 |

29 | # define bounds for the optimisation

30 | bounds = [(-8.0, 8.0)]

31 |

32 | # create some initialisation data

33 | x = array([-8.0, 8.0])

34 | y = search_function(x)

35 |

36 | Create an instance of GpOptimiser:

37 |

38 | .. code-block:: python

39 |

40 | from inference.gp import GpOptimiser

41 | GP = GpOptimiser(x, y, bounds=bounds)

42 |

43 | By using the ``propose_evaluation`` method, GpOptimiser will propose a new evaluation of

44 | the function. This proposed evaluation is generated by maximising an `acquisition function`,

45 | in this case the 'expected improvement' function. The new evaluation can be used to update

46 | the estimate by using the ``add_evaluation`` method, which leads to the following loop:

47 |

48 | .. code-block:: python

49 |

50 | for i in range(11):

51 | # request the proposed evaluation

52 | new_x = GP.propose_evaluation()

53 |

54 | # evaluate the new point

55 | new_y = search_function(new_x)

56 |

57 | # update the gaussian process with the new information

58 | GP.add_evaluation(new_x, new_y)

59 |

60 |

61 | Here we plot the state of the estimate at each iteration:

62 |

63 | .. image:: ./images/GpOptimiser_images/GpOptimiser_iteration.gif

--------------------------------------------------------------------------------

/docs/source/GpRegressor.rst:

--------------------------------------------------------------------------------

1 |

2 |

3 | GpRegressor

4 | ~~~~~~~~~~~

5 |

6 | .. autoclass:: inference.gp.GpRegressor

7 | :members: __call__, gradient, build_posterior

8 |

9 |

10 | Example code

11 | ^^^^^^^^^^^^

12 |

13 | Example code can be found in the `Gaussian-process regression jupyter notebook demo `_.

--------------------------------------------------------------------------------

/docs/source/HamiltonianChain.rst:

--------------------------------------------------------------------------------

1 |

2 | HamiltonianChain

3 | ~~~~~~~~~~~~~~~~

4 |

5 | .. autoclass:: inference.mcmc.HamiltonianChain

6 | :members: advance, run_for, mode, get_marginal, get_parameter, plot_diagnostics, matrix_plot, trace_plot

7 |

8 |

9 | HamiltonianChain example code

10 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

11 |

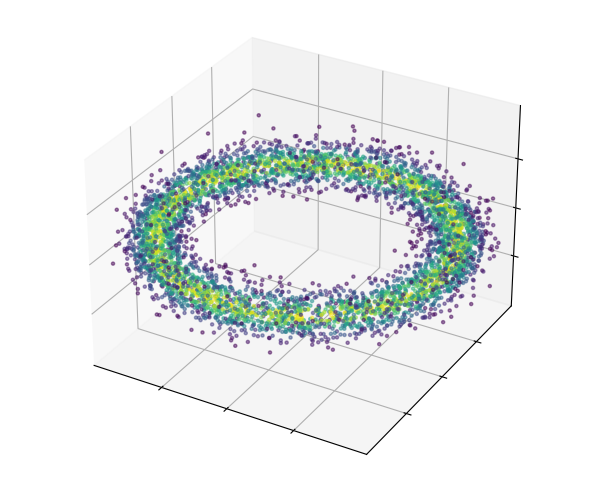

12 | Here we define a toroidal (donut-shaped!) posterior distribution which has strong non-linear correlation:

13 |

14 | .. code-block:: python

15 |

16 | from numpy import array, sqrt

17 |

18 | class ToroidalGaussian:

19 | def __init__(self):

20 | self.R0 = 1. # torus major radius

21 | self.ar = 10. # torus aspect ratio

22 | self.inv_w2 = (self.ar / self.R0)**2

23 |

24 | def __call__(self, theta):

25 | x, y, z = theta

26 | r_sqr = z**2 + (sqrt(x**2 + y**2) - self.R0)**2

27 | return -0.5 * self.inv_w2 * r_sqr

28 |

29 | def gradient(self, theta):

30 | x, y, z = theta

31 | R = sqrt(x**2 + y**2)

32 | K = 1 - self.R0 / R

33 | g = array([K*x, K*y, z])

34 | return -g * self.inv_w2

35 |

36 |

37 | Build the posterior and chain objects then generate the sample:

38 |

39 | .. code-block:: python

40 |

41 | # create an instance of our posterior class

42 | posterior = ToroidalGaussian()

43 |

44 | # create the chain object

45 | chain = HamiltonianChain(

46 | posterior = posterior,

47 | grad=posterior.gradient,

48 | start = [1, 0.1, 0.1]

49 | )

50 |

51 | # advance the chain to generate the sample

52 | chain.advance(6000)

53 |

54 | # choose how many samples will be thrown away from the start of the chain as 'burn-in'

55 | burn = 2000

56 |

57 | We can use the `Plotly `_ library to generate an interactive 3D scatterplot of our sample:

58 |

59 | .. code-block:: python

60 |

61 | # extract sample and probability data from the chain

62 | probs = chain.get_probabilities(burn=burn)

63 | point_colors = exp(probs - probs.max())

64 | x, y, z = [chain.get_parameter(i) for i in [0, 1, 2]]

65 |

66 | # build the scatterplot using plotly

67 | import plotly.graph_objects as go

68 |

69 | fig = go.Figure(data=1[go.Scatter3d(

70 | x=x, y=y, z=z, mode='markers',

71 | marker=dict(size=5, color=point_colors, colorscale='Viridis', opacity=0.6)

72 | )])

73 |

74 | fig.update_layout(margin=dict(l=0, r=0, b=0, t=0)) # set a tight layout

75 | fig.show()

76 |

77 | .. raw:: html

78 | :file: ./images/HamiltonianChain_images/hmc_scatterplot.html

79 |

80 | We can view all the corresponding 1D & 2D marginal distributions using the ``matrix_plot`` method of the chain:

81 |

82 | .. code-block:: python

83 |

84 | chain.matrix_plot(burn=burn)

85 |

86 |

87 | .. image:: ./images/HamiltonianChain_images/hmc_matrix_plot.png

--------------------------------------------------------------------------------

/docs/source/ParallelTempering.rst:

--------------------------------------------------------------------------------

1 | ParallelTempering

2 | ~~~~~~~~~~~~~~~~~

3 |

4 | .. autoclass:: inference.mcmc.ParallelTempering

5 | :members: advance, run_for, shutdown, return_chains

6 |

7 |

8 | ParallelTempering example code

9 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

10 |

11 | Define a posterior with separated maxima, which is difficult

12 | for a single chain to explore:

13 |

14 | .. code-block:: python

15 |

16 | from numpy import log, sqrt, sin, arctan2, pi

17 |

18 | # define a posterior with multiple separate peaks

19 | def multimodal_posterior(theta):

20 | x, y = theta

21 | r = sqrt(x**2 + y**2)

22 | phi = arctan2(y, x)

23 | z = (r - (0.5 + pi - phi*0.5)) / 0.1

24 | return -0.5*z**2 + 4*log(sin(phi*2.)**2)

25 |

26 | Define a set of temperature levels:

27 |

28 | .. code-block:: python

29 |

30 | N_levels = 6

31 | temperatures = [10**(2.5*k/(N_levels-1.)) for k in range(N_levels)]

32 |

33 | Create a set of chains - one with each temperature:

34 |

35 | .. code-block:: python

36 |

37 | from inference.mcmc import GibbsChain, ParallelTempering

38 | chains = [

39 | GibbsChain(posterior=multimodal_posterior, start=[0.5, 0.5], temperature=T)

40 | for T in temperatures

41 | ]

42 |

43 | When an instance of ``ParallelTempering`` is created, a dedicated process for each

44 | chain is spawned. These separate processes will automatically make use of the available

45 | cpu cores, such that the computations to advance the separate chains are performed in parallel.

46 |

47 | .. code-block:: python

48 |

49 | PT = ParallelTempering(chains=chains)

50 |

51 | These processes wait for instructions which can be sent using the methods of the

52 | ``ParallelTempering`` object:

53 |

54 | .. code-block:: python

55 |

56 | PT.run_for(minutes=0.5)

57 |

58 | To recover a copy of the chains held by the processes we can use the

59 | ``return_chains`` method:

60 |

61 | .. code-block:: python

62 |

63 | chains = PT.return_chains()

64 |

65 | By looking at the trace plot for the T = 1 chain, we see that it makes

66 | large jumps across the parameter space due to the swaps:

67 |

68 | .. code-block:: python

69 |

70 | chains[0].trace_plot()

71 |

72 | .. image:: ./images/ParallelTempering_images/parallel_tempering_trace.png

73 |

74 | Even though the posterior has strongly separated peaks, the T = 1 chain

75 | was able to explore all of them due to the swaps.

76 |

77 | .. code-block:: python

78 |

79 | chains[0].matrix_plot()

80 |

81 | .. image:: ./images/ParallelTempering_images/parallel_tempering_matrix.png

82 |

83 | Because each process waits for instructions from the ``ParallelTempering`` object,

84 | they will not self-terminate. To terminate all the processes we have to trigger

85 | a shutdown even using the ``shutdown`` method:

86 |

87 | .. code-block:: python

88 |

89 | PT.shutdown()

90 |

--------------------------------------------------------------------------------

/docs/source/PcaChain.rst:

--------------------------------------------------------------------------------

1 |

2 | PcaChain

3 | ~~~~~~~~

4 |

5 | .. autoclass:: inference.mcmc.PcaChain

6 | :members: advance, run_for, mode, get_marginal, get_sample, get_parameter, get_interval, plot_diagnostics, matrix_plot, trace_plot

7 |

--------------------------------------------------------------------------------

/docs/source/acquisition_functions.rst:

--------------------------------------------------------------------------------

1 |

2 | Acquisition functions

3 | ~~~~~~~~~~~~~~~~~~~~~

4 | Acquisition functions are used to select new points in the search-space to evaluate in

5 | Gaussian-process optimisation.

6 |

7 | The available acquisition functions are implemented as classes within ``inference.gp``,

8 | and can be passed to ``GpOptimiser`` via the ``acquisition`` keyword argument as follows:

9 |

10 | .. code-block:: python

11 |

12 | from inference.gp import GpOptimiser, ExpectedImprovement

13 | GP = GpOptimiser(x, y, bounds=bounds, acquisition=ExpectedImprovement)

14 |

15 | The acquisition function classes can also be passed as instances, allowing settings of the

16 | acquisition function to be altered:

17 |

18 | .. code-block:: python

19 |

20 | from inference.gp import GpOptimiser, UpperConfidenceBound

21 | UCB = UpperConfidenceBound(kappa = 2.)

22 | GP = GpOptimiser(x, y, bounds=bounds, acquisition=UCB)

23 |

24 | ExpectedImprovement

25 | ^^^^^^^^^^^^^^^^^^^

26 |

27 | .. autoclass:: inference.gp.ExpectedImprovement

28 |

29 |

30 | UpperConfidenceBound

31 | ^^^^^^^^^^^^^^^^^^^^

32 |

33 | .. autoclass:: inference.gp.UpperConfidenceBound

--------------------------------------------------------------------------------

/docs/source/approx.rst:

--------------------------------------------------------------------------------

1 | Approximate inference

2 | =====================

3 |

4 | This module provides tools for approximate inference.

5 |

6 |

7 | conditional_sample

8 | ------------------

9 |

10 | .. autofunction:: inference.approx.conditional_sample

11 |

12 | get_conditionals

13 | ----------------

14 |

15 | .. autofunction:: inference.approx.get_conditionals

16 |

17 |

18 | conditional_moments

19 | -------------------

20 |

21 | .. autofunction:: inference.approx.conditional_moments

--------------------------------------------------------------------------------

/docs/source/conf.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | #

3 | # Configuration file for the Sphinx documentation builder.

4 | #

5 | # This file does only contain a selection of the most common options. For a

6 | # full list see the documentation:

7 | # http://www.sphinx-doc.org/en/master/config

8 |

9 | # -- Path setup --------------------------------------------------------------

10 |

11 | # If extensions (or modules to document with autodoc) are in another directory,

12 | # add these directories to sys.path here. If the directory is relative to the

13 | # documentation root, use os.path.abspath to make it absolute, like shown here.

14 | #

15 | import os

16 | import sys

17 |

18 | from importlib.metadata import version as get_version

19 |

20 | sys.path.insert(0, os.path.abspath('../../'))

21 | sys.path.insert(0, os.path.abspath('./'))

22 |

23 | # -- Project information -----------------------------------------------------

24 |

25 | project = 'inference-tools'

26 | copyright = '2019, Chris Bowman'

27 | author = 'Chris Bowman'

28 |

29 | # The full version, including alpha/beta/rc tags

30 | release = get_version(project)

31 | # Major.minor version

32 | version = ".".join(release.split(".")[:2])

33 |

34 | # -- General configuration ---------------------------------------------------

35 |

36 | # If your documentation needs a minimal Sphinx version, state it here.

37 | #

38 | # needs_sphinx = '1.0'

39 |

40 | # Add any Sphinx extension module names here, as strings. They can be

41 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

42 | # ones.

43 | extensions = [

44 | 'sphinx.ext.autodoc',

45 | 'sphinx.ext.githubpages',

46 | ]

47 |

48 | # Add any paths that contain templates here, relative to this directory.

49 | templates_path = ['_templates']

50 |

51 | # The suffix(es) of source filenames.

52 | # You can specify multiple suffix as a list of string:

53 | #

54 | # source_suffix = ['.rst', '.md']

55 | source_suffix = '.rst'

56 |

57 | # The master toctree document.

58 | master_doc = 'index'

59 |

60 | # The language for content autogenerated by Sphinx. Refer to documentation

61 | # for a list of supported languages.

62 | #

63 | # This is also used if you do content translation via gettext catalogs.

64 | # Usually you set "language" from the command line for these cases.

65 | language = None

66 |

67 | # List of patterns, relative to source directory, that match files and

68 | # directories to ignore when looking for source files.

69 | # This pattern also affects html_static_path and html_extra_path.

70 | exclude_patterns = []

71 |

72 | # The name of the Pygments (syntax highlighting) style to use.

73 | pygments_style = None

74 |

75 |

76 | # -- Options for HTML output -------------------------------------------------

77 |

78 | # The theme to use for HTML and HTML Help pages. See the documentation for

79 | # a list of builtin themes.

80 | #

81 | html_theme = 'sphinx_rtd_theme'

82 | # html_theme = 'default'

83 | # Theme options are theme-specific and customize the look and feel of a theme

84 | # further. For a list of options available for each theme, see the

85 | # documentation.

86 | #

87 | # html_theme_options = {}

88 |