├── rabies

├── __init__.py

├── analysis_pkg

│ ├── __init__.py

│ ├── diagnosis_pkg

│ │ ├── __init__.py

│ │ └── diagnosis_wf.py

│ ├── utils.py

│ └── analysis_math.py

├── preprocess_pkg

│ ├── __init__.py

│ ├── stc.py

│ ├── resampling.py

│ ├── bold_ref.py

│ └── registration.py

├── confound_correction_pkg

│ ├── __init__.py

│ └── mod_ICA_AROMA

│ │ ├── __init__.py

│ │ ├── Manual.pdf

│ │ ├── requirements.txt

│ │ ├── Dockerfile

│ │ ├── ica-aroma-via-docker.py

│ │ ├── README.md

│ │ └── classification_plots.py

├── __version__.py

└── visualization.py

├── .gitignore

├── MANIFEST.in

├── dependencies.txt

├── docs

├── bibliography.md

├── pics

│ ├── QC_framework.png

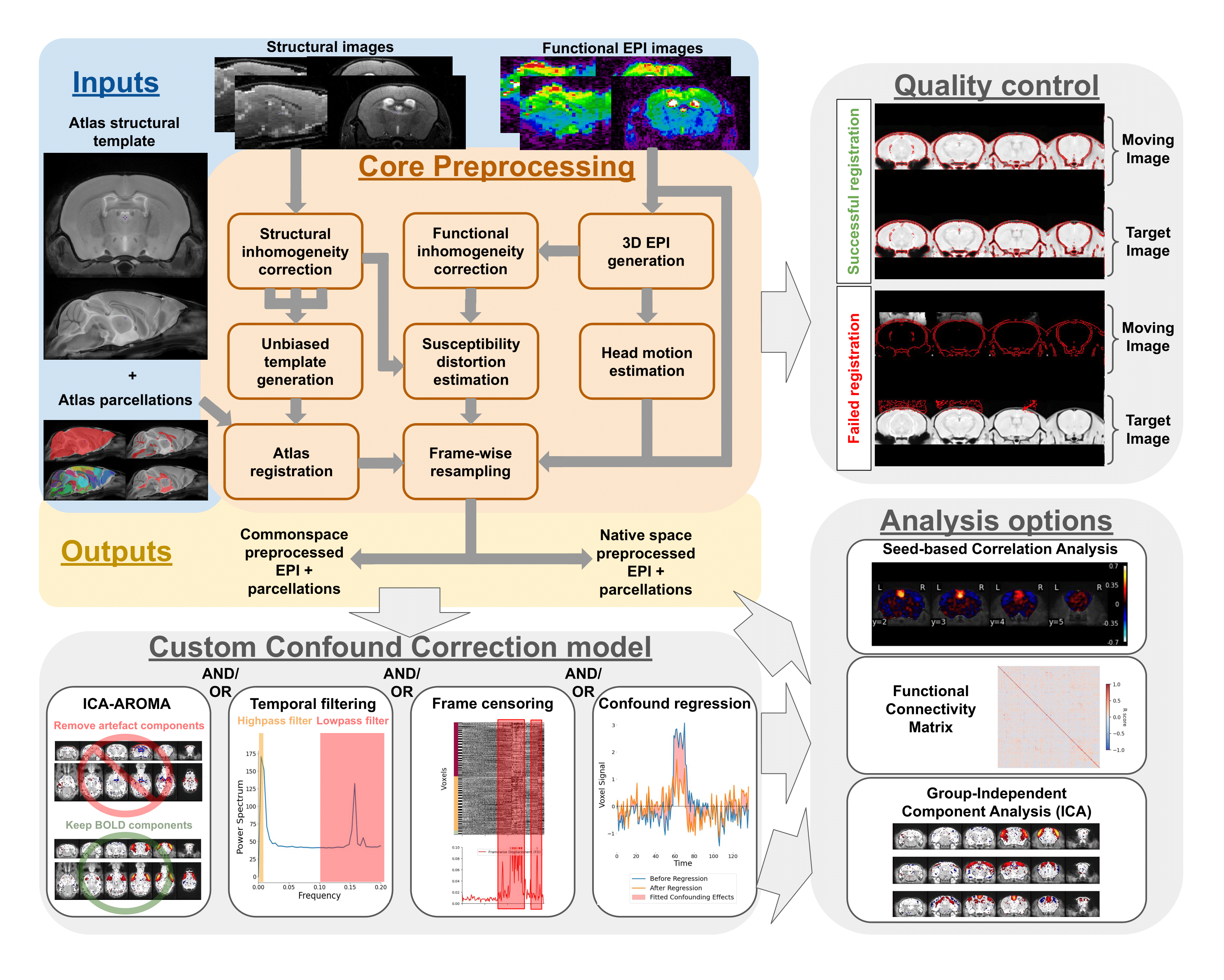

│ ├── RABIES_schema.png

│ ├── preprocessing.png

│ ├── template_files.png

│ ├── distribution_plot.png

│ ├── atlas_registration.png

│ ├── confound_correction.png

│ ├── scan_QC_thresholds.png

│ ├── diagnosis_key_markers.png

│ ├── spatiotemporal_diagnosis.png

│ ├── example_motion_parameters.png

│ ├── example_temporal_features.png

│ ├── sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor.png

│ ├── sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor_registration.png

│ ├── sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_inho_cor.png

│ └── sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_registration.png

├── requirements.txt

├── index.md

├── Makefile

├── make.bat

├── nested_docs

│ ├── optim_CR.md

│ ├── distribution_plot.md

│ ├── group_stats.md

│ ├── registration_troubleshoot.md

│ └── scan_diagnosis.md

├── installation.md

├── troubleshooting.md

├── preproc_QC.md

├── conf.py

├── analysis.md

├── confound_correction.md

├── preprocessing.md

├── analysis_QC.md

├── metrics.md

├── contributing.md

└── outputs.md

├── scripts

├── rabies

├── preprocess_scripts

│ ├── plot_overlap.sh

│ ├── null_nonlin.sh

│ ├── multistage_otsu_cor.py

│ └── EPI-preprocessing.sh

├── debug_workflow.py

├── install_DSURQE.sh

├── zeropad

└── gen_DSURQE_masks.py

├── .dockerignore

├── .gitmodules

├── rabies_environment.dev.yml

├── rabies_environment.yml

├── .github

├── workflows

│ ├── docker-build-PR.yml

│ ├── apptainer-attach-on-release.yml

│ └── docker-publish.yml

└── ISSUE_TEMPLATE

│ └── standard-bug-report.md

├── .readthedocs.yml

├── CITATION.cff

├── Dockerfile

├── README.md

└── setup.py

/rabies/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *zip

2 | *sif

3 |

--------------------------------------------------------------------------------

/rabies/analysis_pkg/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/rabies/preprocess_pkg/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include README.md LICENSE

2 |

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/rabies/analysis_pkg/diagnosis_pkg/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/mod_ICA_AROMA/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/dependencies.txt:

--------------------------------------------------------------------------------

1 | minc-toolkit-v2>=1.9.18

2 | ANTs>=v2.4.3

3 | FSL

4 | AFNI

5 |

--------------------------------------------------------------------------------

/docs/bibliography.md:

--------------------------------------------------------------------------------

1 | # Bibliography

2 |

3 | ```{bibliography} _static/refs.bib

4 |

5 | ```

6 |

--------------------------------------------------------------------------------

/docs/pics/QC_framework.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/QC_framework.png

--------------------------------------------------------------------------------

/docs/pics/RABIES_schema.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/RABIES_schema.png

--------------------------------------------------------------------------------

/docs/pics/preprocessing.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/preprocessing.png

--------------------------------------------------------------------------------

/docs/pics/template_files.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/template_files.png

--------------------------------------------------------------------------------

/docs/pics/distribution_plot.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/distribution_plot.png

--------------------------------------------------------------------------------

/docs/pics/atlas_registration.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/atlas_registration.png

--------------------------------------------------------------------------------

/docs/pics/confound_correction.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/confound_correction.png

--------------------------------------------------------------------------------

/docs/pics/scan_QC_thresholds.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/scan_QC_thresholds.png

--------------------------------------------------------------------------------

/scripts/rabies:

--------------------------------------------------------------------------------

1 | #! /usr/bin/env python

2 | from rabies.run_main import execute_workflow

3 | execute_workflow()

4 |

--------------------------------------------------------------------------------

/docs/pics/diagnosis_key_markers.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/diagnosis_key_markers.png

--------------------------------------------------------------------------------

/docs/pics/spatiotemporal_diagnosis.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/spatiotemporal_diagnosis.png

--------------------------------------------------------------------------------

/docs/pics/example_motion_parameters.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/example_motion_parameters.png

--------------------------------------------------------------------------------

/docs/pics/example_temporal_features.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/example_temporal_features.png

--------------------------------------------------------------------------------

/docs/pics/sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor.png

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/mod_ICA_AROMA/Manual.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/rabies/confound_correction_pkg/mod_ICA_AROMA/Manual.pdf

--------------------------------------------------------------------------------

/docs/pics/sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor_registration.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/sub-MFC067_ses-1_acq-FLASH_T1w_inho_cor_registration.png

--------------------------------------------------------------------------------

/docs/pics/sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_inho_cor.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_inho_cor.png

--------------------------------------------------------------------------------

/docs/pics/sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_registration.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/CoBrALab/RABIES/HEAD/docs/pics/sub-MFC068_ses-1_task-rest_acq-EPI_run-1_bold_registration.png

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/mod_ICA_AROMA/requirements.txt:

--------------------------------------------------------------------------------

1 | future

2 | matplotlib==2.2

3 | numpy==1.14

4 | pandas==0.23

5 | seaborn==0.9.0

6 |

7 | ###added dependencies for rodent adaptation

8 | nibabel

9 |

--------------------------------------------------------------------------------

/docs/requirements.txt:

--------------------------------------------------------------------------------

1 | sphinx==5.0

2 | myst-parser

3 | sphinx-rtd-dark-mode

4 | sphinx-rtd-theme

5 | sphinxcontrib-bibtex

6 | sphinxcontrib-programoutput

7 | jinja2==3.1.1

8 | pillow==10.1.0

9 | rabies==0.5.4

10 | traits<7.0

11 |

--------------------------------------------------------------------------------

/.dockerignore:

--------------------------------------------------------------------------------

1 | *zip

2 | *sif

3 | work_dir/

4 |

5 | __pycache__

6 | *.pyc

7 | *.pyo

8 | *.pyd

9 | .Python

10 | env

11 | pip-log.txt

12 | pip-delete-this-directory.txt

13 | .tox

14 | .coverage

15 | .coverage.*

16 | .cache

17 | nosetests.xml

18 | coverage.xml

19 | *.cover

20 | *.log

21 | .git

22 | .mypy_cache

23 | .pytest_cache

24 | .hypothesis

25 |

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "minc-toolkit-extras"]

2 | path = minc-toolkit-extras

3 | url = https://github.com/CoBrALab/minc-toolkit-extras.git

4 | [submodule "optimized_antsMultivariateTemplateConstruction"]

5 | path = optimized_antsMultivariateTemplateConstruction

6 | url = https://github.com/Gab-D-G/optimized_antsMultivariateTemplateConstruction.git

7 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | ```{include} ../README.md

2 | ```

3 |

4 | ```{toctree}

5 | ---

6 | maxdepth: 3

7 | caption: Content

8 | ---

9 | installation.md

10 | running_the_software.md

11 | preprocessing.md

12 | preproc_QC.md

13 | confound_correction.md

14 | analysis.md

15 | analysis_QC.md

16 | outputs.md

17 | metrics.md

18 | troubleshooting.md

19 | contributing.md

20 | bibliography.md

21 |

--------------------------------------------------------------------------------

/rabies/__version__.py:

--------------------------------------------------------------------------------

1 | # 8b d8 Yb dP 88""Yb db dP""b8 88 dP db dP""b8 888888

2 | # 88b d88 YbdP 88__dP dPYb dP `" 88odP dPYb dP `" 88__

3 | # 88YbdP88 8P 88""" dP__Yb Yb 88"Yb dP__Yb Yb "88 88""

4 | # 88 YY 88 dP 88 dP""""Yb YboodP 88 Yb dP""""Yb YboodP 888888

5 |

6 | VERSION = (0, 5, 4)

7 |

8 | __version__ = '.'.join(map(str, VERSION))

9 |

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/mod_ICA_AROMA/Dockerfile:

--------------------------------------------------------------------------------

1 | # Installs ICA-AROMA to a centos image with FSL pre-installed

2 |

3 | # function provided by Tristan A.A., ttaa9 on github

4 |

5 | FROM mcin/docker-fsl:latest

6 |

7 | # Install necessary python packages

8 | RUN yum update -y; yum clean all

9 | RUN yum install -y numpy scipy

10 |

11 | # Add everything to the container

12 | ADD . /ICA-AROMA

13 |

14 |

--------------------------------------------------------------------------------

/rabies_environment.dev.yml:

--------------------------------------------------------------------------------

1 | name: rabies

2 | channels:

3 |   - conda-forge

4 |   - simpleitk

5 |   - https://fsl.fmrib.ox.ac.uk/fsldownloads/fslconda/public/

6 | dependencies:

7 |   - python=3.9

8 |   - pip

9 |   - future

10 |   - matplotlib

11 |   - nibabel

12 |   - nilearn

13 |   - nipype

14 |   - numpy

15 |   - pandas

16 |   - pathos

17 |   - pybids

18 |   - scikit-learn

19 |   - scikit-image

20 |   - scipy

21 |   - seaborn

22 |   - simpleitk

23 |   - fsl-base

24 |   - fsl-melodic

25 |   - traits<7.0

26 |   - pip:

27 |     - qbatch

28 |

--------------------------------------------------------------------------------

/rabies_environment.yml:

--------------------------------------------------------------------------------

1 | name: rabies

2 | channels:

3 |   - conda-forge

4 |   - simpleitk

5 |   - https://fsl.fmrib.ox.ac.uk/fsldownloads/fslconda/public/

6 | dependencies:

7 |   - python=3.9

8 |   - pip

9 |   - future

10 |   - matplotlib=3.3.4

11 |   - nibabel=3.2.1

12 |   - nilearn=0.7.1

13 |   - nipype=1.10.0

14 |   - numpy=1.26.4

15 |   - pandas=1.2.4

16 |   - pathos=0.2.7

17 |   - pybids=0.16.3

18 |   - scikit-learn=0.24.1

19 |   - scikit-image=0.19.3

20 |   - scipy=1.13.1

21 |   - seaborn=0.11.1

22 |   - simpleitk=2.0.2

23 |   - networkx<3

24 |   - fsl-base=2309.1

25 |   - fsl-melodic=2111.3

26 |   - traits<7.0

27 |   - pip:

28 |     - qbatch==2.3

29 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line, and also

5 | # from the environment for the first two.

6 | SPHINXOPTS ?= -n -v -j auto

7 | SPHINXBUILD ?= sphinx-build

8 | SOURCEDIR = .

9 | BUILDDIR = _build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help:

13 | 	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

14 |

15 | .PHONY: help Makefile

16 |

17 | # Catch-all target: route all unknown targets to Sphinx using the new

18 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

19 | %: Makefile

20 | 	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

21 |

--------------------------------------------------------------------------------

/.github/workflows/docker-build-PR.yml:

--------------------------------------------------------------------------------

1 | name: PR Workflow - test that dockerfile will build on a PR to master

2 |

3 | on:

4 |   workflow_dispatch:

5 |   pull_request:

6 |     branches:

7 |       - master

8 | jobs:

9 |   build:

10 |     runs-on: ubuntu-latest

11 |     steps:

12 |       - name: Checkout repository

13 |         uses: actions/checkout@v3

14 |         with:

15 |           submodules: recursive

16 |

17 |       - name: Set up Docker Buildx

18 |         uses: docker/setup-buildx-action@v3

19 |

20 |       - name: Build and tag image

21 |         uses: docker/build-push-action@v4

22 |         with:

23 |           context: .

24 |           push: false

25 |           tags: rabies:pr-${{ github.event.pull_request.number || 'manual' }}

26 |           cache-from: type=gha

27 |           cache-to: type=gha,mode=max

28 |

--------------------------------------------------------------------------------

/.readthedocs.yml:

--------------------------------------------------------------------------------

1 | # .readthedocs.yaml

2 | # Read the Docs configuration file

3 | # See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

4 |

5 | # Required

6 | version: 2

7 |

8 | # Set the version of Python and other tools you might need

9 | build:

10 |   os: ubuntu-20.04

11 |   tools:

12 |     python: "3.9"

13 |     # You can also specify other tool versions:

14 |     # nodejs: "16"

15 |     # rust: "1.55"

16 |     # golang: "1.17"

17 |

18 | # Build documentation in the docs/ directory with Sphinx

19 | sphinx:

20 |   configuration: docs/conf.py

21 |

22 | # If using Sphinx, optionally build your docs in additional formats such as PDF

23 | formats:

24 |   - pdf

25 |

26 | # Optionally declare the Python requirements required to build your docs

27 | python:

28 |   install:

29 |     - requirements: docs/requirements.txt

--------------------------------------------------------------------------------

/rabies/analysis_pkg/utils.py:

--------------------------------------------------------------------------------

1 | def compute_edge_mask(mask_array, num_edge_voxels=1):

2 |     import numpy as np

3 |     #custom function for computing edge mask from an input brain mask

4 |     shape = mask_array.shape

5 |

6 |     #iterate through all voxels from the three dimensions and look if it contains surrounding voxels

7 |     edge_mask = np.zeros(shape, dtype=bool)

8 |     num_voxel = 0

9 |     while num_voxel < num_edge_voxels:

10 |         for x in range(shape[0]):

11 |             for y in range(shape[1]):

12 |                 for z in range(shape[2]):

13 |                     #only look if the voxel is part of the mask

14 |                     if mask_array[x, y, z]:

15 |                         if (mask_array[x-1:x+2, y-1:y+2, z-1:z+2] == 0).sum() > 0:

16 |                             edge_mask[x, y, z] = 1

17 |         mask_array = mask_array-edge_mask

18 |         num_voxel += 1

19 |

20 |     return edge_mask

--------------------------------------------------------------------------------
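
The triple loop in `compute_edge_mask` above inspects each voxel's 3x3x3 neighbourhood in pure Python, which can be slow on full-resolution masks. The following is a minimal vectorized sketch of the same idea, not part of RABIES: the name `edge_mask_vectorized` is hypothetical, the input is assumed to be convertible to a boolean array, and neighbours that fall outside the array are ignored (the padding is filled with `True`). The original handles the first index along each axis differently (a negative slice start yields an empty window), so results may differ for masks that touch that face of the array.

```python
import numpy as np


def edge_mask_vectorized(mask_array, num_edge_voxels=1):
    """Return the mask voxels lying within `num_edge_voxels` layers of the mask edge."""
    mask = np.asarray(mask_array).astype(bool)
    remaining = mask.copy()
    nx, ny, nz = remaining.shape
    for _ in range(num_edge_voxels):
        # Pad with True so the array border itself is not treated as background.
        padded = np.pad(remaining, 1, mode="constant", constant_values=True)
        eroded = np.ones_like(remaining)
        # A voxel survives only if it and all 26 neighbours are inside the mask.
        for dx in range(3):
            for dy in range(3):
                for dz in range(3):
                    eroded &= padded[dx:dx + nx, dy:dy + ny, dz:dz + nz]
        remaining = eroded
    # Edge voxels are those in the input mask that were peeled away.
    return mask & ~remaining


# Usage on a toy 5x5x5 cube embedded in a 7x7x7 volume.
cube = np.zeros((7, 7, 7), dtype=bool)
cube[1:6, 1:6, 1:6] = True
one_layer = edge_mask_vectorized(cube, num_edge_voxels=1)
two_layers = edge_mask_vectorized(cube, num_edge_voxels=2)
```

Peeling one layer at a time mirrors the `while` loop of the original: each pass removes the current boundary, and the accumulated difference from the input mask is returned.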

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=.

11 | set BUILDDIR=_build

12 |

13 | if "%1" == "" goto help

14 |

15 | %SPHINXBUILD% >NUL 2>NUL

16 | if errorlevel 9009 (

17 | echo.

18 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

19 | echo.installed, then set the SPHINXBUILD environment variable to point

20 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

21 | echo.may add the Sphinx directory to PATH.

22 | echo.

23 | echo.If you don't have Sphinx installed, grab it from

24 | echo.http://sphinx-doc.org/

25 | exit /b 1

26 | )

27 |

28 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

29 | goto end

30 |

31 | :help

32 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

33 |

34 | :end

35 | popd

36 |

--------------------------------------------------------------------------------

/rabies/confound_correction_pkg/mod_ICA_AROMA/ica-aroma-via-docker.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | # Simple wrapper for the ICA-AROMA python scripts, to hand them absolute paths.

3 | # Required to make the tool work in cbrain via the docker container

4 |

5 | # function provided by Tristan A.A., ttaa9 on github

6 |

7 | import os, sys

8 | from subprocess import Popen

9 |

10 | # Input arguments

11 | args = sys.argv[1:]

12 |

13 | # Modify the arguments for existent files and the output dir to be absolute paths

14 | mod_args = [(os.path.abspath(f) if os.path.exists(f) else f) for f in args]

15 | targ_ind = mod_args.index("-out") + 1

16 | mod_args[targ_ind] = os.path.abspath(mod_args[targ_ind])

17 |

18 | # Call the ICA-AROMA process

19 | cmd = "python /ICA-AROMA/ICA_AROMA.py " + " ".join(mod_args)

20 | print("Running: " + cmd + "\n")

21 | process = Popen( cmd.split() )

22 | sys.exit( process.wait() )

23 |

24 |

25 |

--------------------------------------------------------------------------------

/scripts/preprocess_scripts/plot_overlap.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | #credit to Joanes Grandjean https://github.com/grandjeanlab/MouseMRIPrep

3 | anat=$1

4 | standard=$2

5 | out=$3

6 |

7 | slicer $anat ${standard} -s 2 -x 0.35 sla.png -x 0.45 slb.png -x 0.55 slc.png -x 0.65 sld.png -y 0.35 sle.png -y 0.45 slf.png -y 0.55 slg.png -y 0.65 slh.png -z 0.35 sli.png -z 0.45 slj.png -z 0.55 slk.png -z 0.65 sll.png

8 | pngappend sla.png + slb.png + slc.png + sld.png + sle.png + slf.png + slg.png + slh.png + sli.png + slj.png + slk.png + sll.png highres2standard1.png

9 | slicer ${standard} $anat -s 2 -x 0.35 sla.png -x 0.45 slb.png -x 0.55 slc.png -x 0.65 sld.png -y 0.35 sle.png -y 0.45 slf.png -y 0.55 slg.png -y 0.65 slh.png -z 0.35 sli.png -z 0.45 slj.png -z 0.55 slk.png -z 0.65 sll.png

10 | pngappend sla.png + slb.png + slc.png + sld.png + sle.png + slf.png + slg.png + slh.png + sli.png + slj.png + slk.png + sll.png highres2standard2.png

11 | pngappend highres2standard1.png - highres2standard2.png $out

12 | rm -f sl?.png highres2standard2.png

13 | rm highres2standard1.png

14 |

--------------------------------------------------------------------------------

/scripts/preprocess_scripts/null_nonlin.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Script that does not perform any registration and only provides null transform files.

4 | # This keeps the nipype workflow's inputs/outputs consistent, even when a step does not require registration in a particular case.

5 |

6 | if [[ -n ${__mb_debug:-} ]]; then

7 |

8 | set -x

9 |

10 | fi

11 |

12 | set -euo pipefail

13 |

14 |

15 | moving=$1

16 | movingmask=$2

17 | fixed=$3

18 | fixedmask=$4

19 | filename_template=$5

20 |

21 | antsRegistration --dimensionality 3 \

22 | --output [${filename_template}_output_,${filename_template}_output_warped_image.nii.gz] \

23 | --transform Rigid[0.1] --metric Mattes[$fixed,$moving,1,128,None] --convergence [0,1e-6,10] --shrink-factors 1 --smoothing-sigmas 1vox \

24 | --transform Affine[0.1] --metric Mattes[$fixed,$moving,1,128,None] --convergence [0,1e-6,10] --shrink-factors 1 --smoothing-sigmas 0vox --masks [$fixedmask,$movingmask] \

25 | --transform SyN[0.2,2,0] --metric CC[$fixed,$moving,1,4] --convergence [0,1e-6,10] \

26 | --shrink-factors 1 \

27 | --smoothing-sigmas 1 \

28 | -z 1

29 |

--------------------------------------------------------------------------------

/CITATION.cff:

--------------------------------------------------------------------------------

1 | cff-version: 1.2.0

2 | message: "If you use this software, please cite it as below."

3 | authors:

4 |   - family-names: "Desrosiers-Gregoire"

5 |     given-names: "Gabriel"

6 |   - family-names: "Devenyi"

7 |     given-names: "Gabriel A."

8 |   - family-names: "Grandjean"

9 |     given-names: "Joanes"

10 |   - family-names: "Chakravarty"

11 |     given-names: "M. Mallar"

12 | title: "RABIES: Rodent Automated Bold Improvement of EPI Sequences."

13 | version: 0.5.4

14 | date-released: 2025-10-22

15 | url: "https://github.com/CoBrALab/RABIES"

16 |

17 |

18 | preferred-citation:

19 |   type: article

20 |   authors:

21 |     - family-names: "Desrosiers-Gregoire"

22 |       given-names: "Gabriel"

23 |     - family-names: "Devenyi"

24 |       given-names: "Gabriel A."

25 |     - family-names: "Grandjean"

26 |       given-names: "Joanes"

27 |     - family-names: "Chakravarty"

28 |       given-names: "M. Mallar"

29 |   doi: "10.1038/s41467-024-50826-8"

30 |   journal: "Nat. Commun."

31 |   month: 8

32 |   start: 1 # First page number

33 |   end: 15 # Last page number

34 |   title: "A standardized image processing and data quality platform for rodent fMRI"

35 |   volume: 15

36 |   year: 2024

37 |

--------------------------------------------------------------------------------

/.github/workflows/apptainer-attach-on-release.yml:

--------------------------------------------------------------------------------

1 | name: Build and Attach Apptainer Image from Docker URL to Release

2 | on:

3 |   release:

4 |     types:

5 |       - published

6 |   workflow_dispatch:

7 |     inputs:

8 |       tag_name:

9 |         description: 'Tag name (e.g., 0.5.0)'

10 |         required: true

11 |         type: string

12 |

13 | jobs:

14 |   build-and-attach:

15 |     runs-on: ubuntu-latest

16 |     steps:

17 |       - name: Checkout code

18 |         uses: actions/checkout@v4

19 |

20 |       - name: Install Apptainer

21 |         run: |

22 |           sudo apt-get update

23 |           sudo apt-get install -y software-properties-common

24 |           sudo add-apt-repository -y ppa:apptainer/ppa

25 |           sudo apt-get install -y apptainer

26 |

27 |       - name: Get release version

28 |         id: get_version

29 |         run: |

30 |           if [ "${{ github.event_name }}" = "workflow_dispatch" ]; then

31 |             echo "VERSION=${{ inputs.tag_name }}" >> "$GITHUB_OUTPUT"

32 |           else

33 |             echo "VERSION=${GITHUB_REF#refs/tags/}" >> "$GITHUB_OUTPUT"

34 |           fi

35 |

36 |       - name: Build Apptainer image from Docker URL

37 |         run: |

38 |           REPO_LOWERCASE=$(echo "${{ github.repository }}" | tr '[:upper:]' '[:lower:]')

39 |           VERSION=${{ steps.get_version.outputs.VERSION }}

40 |           sudo apptainer build "app-${VERSION}.sif" "docker://ghcr.io/${REPO_LOWERCASE}:${VERSION}"

41 |

42 |       - name: Upload image to release

43 |         uses: softprops/action-gh-release@v2

44 |         with:

45 |           tag_name: ${{ steps.get_version.outputs.VERSION }}

46 |           files: app-${{ steps.get_version.outputs.VERSION }}.sif

47 |         env:

48 |           GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

49 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/standard-bug-report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Standard bug report

3 | about: Standard template to report bugs that are encountered

4 | title: ""

5 | labels: ""

6 | assignees: ""

7 | ---

8 |

9 | **Before submitting, please check our [Troubleshooting Guide](https://github.com/CoBrALab/RABIES/blob/master/docs/troubleshooting.md) for common issues and solutions.**

10 |

11 | **Have you checked original image orientation?**

12 | RABIES assumes properly oriented data.

13 | Please ensure image orientation is correct by visualizing images with [ITK-snap](https://www.itksnap.org/pmwiki/pmwiki.php).

14 | See the [troubleshooting guide](https://github.com/CoBrALab/RABIES/blob/master/docs/troubleshooting.md#checking-image-orientation-with-itk-snap) for detailed instructions on verifying image orientation.

15 |

16 | **Describe the bug**

17 | A clear and concise description of what the bug is.

18 |

19 | **Describe your system**

20 |

21 | - RABIES version (e.g., release number or container tag)

22 | - Execution method (e.g., Docker, Singularity/Apptainer, or local install)

23 | - Operating system (e.g., Ubuntu 22.04, macOS 13.5, Windows WSL2)

24 | - (Optional) CPU threads and RAM available; container/runtime version

25 |

26 | **Describe RABIES call**

27 | Include a copy of the command call you executed from the terminal, and any additional information that could be relevant to your execution.

28 |

29 | **Attach log file**

30 | Attach to your issue the .log files present in the output folder. (e.g. rabies_out/rabies_preprocess.log)

31 |

32 | **Attach QC_report**

33 | Attach to your issue the QC_report folder present in the output folder. If QC_report is too large, mention that it couldn't be shared, and if possible, provide an alternative access to the files.

34 |

35 | **Is the bug reproducible?**

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/docs/nested_docs/optim_CR.md:

--------------------------------------------------------------------------------

1 | # Optimization of confound correction strategy

2 |

3 | (optim_CR)=

4 |

5 | On this page is a procedure for improving confound correction design based on observations from the data quality assessment reports. These recommendations were originally developed in {cite}`Desrosiers-Gregoire2024-ou`, and consist of a stepwise protocol where confound correction is improved incrementally while referring to data quality reports and the table found on this page, relating data quality features to corresponding corrections. The protocol is as follows:

6 |

7 | 1. Initiate a **minimal** confound correction, and generate data quality reports at the analysis stage. Correction should be minimal at first to mitigate potential issues of over-correction, where network activity itself can be removed by excessive correction. A minimal correction can consist of applying frame censoring using framewise displacement and the regression of 6 motion parameters together with spatial smoothing.

8 | 2. Evaluate the data quality reports (as described in the [guidelines on the main page](analysis_QC_target)).

9 | 3. Select the most sensible additional correction based on these observations, using the table below.

10 | 4. Re-run the confound correction pipeline stage with **one** additional correction at a time, and re-evaluate the data quality reports. Testing a single correction at a time isolates its impact; keep the correction only if it was beneficial.

11 | 5. Repeat steps 3 and 4 until the desired quality outcomes are met, or no further confound correction options remain.

12 |

13 | The table below offers guidance for prioritizing additional corrections based on observations from the data quality reports. The confound correction workflow and the various strategies available are described elsewhere in the [confound correction pipeline](confound_pipeline_target).

14 |

15 |

--------------------------------------------------------------------------------
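
The stepwise protocol in `optim_CR.md` above is essentially a greedy loop: start from a minimal correction, try one addition at a time, and keep it only if quality improves. The sketch below illustrates that control flow under loud assumptions: `optimize_corrections`, `evaluate`, and the correction names are hypothetical and not part of RABIES, the candidate order stands in for the priority table, and a single numeric score stands in for what is in practice a visual inspection of the data quality reports.

```python
from typing import Callable, Iterable, List


def optimize_corrections(
    minimal: List[str],
    candidates: Iterable[str],
    evaluate: Callable[[List[str]], float],
    target: float,
) -> List[str]:
    """Greedy one-at-a-time selection of corrections (hypothetical helper).

    `evaluate` stands in for re-running the confound correction and analysis
    stages and summarizing the quality reports as one score (higher is better).
    """
    kept = list(minimal)                  # step 1: minimal correction
    best = evaluate(kept)                 # step 2: evaluate quality reports
    for correction in candidates:         # step 3: next correction by priority
        if best >= target:                # stop once quality is satisfactory
            break
        score = evaluate(kept + [correction])   # step 4: test ONE addition
        if score > best:                  # keep it only if it was beneficial
            kept.append(correction)
            best = score
    return kept                           # step 5: loop ends when options run out


# Toy usage with made-up scores for made-up correction names.
toy_gain = {"corr_a": 1.0, "corr_b": -0.5, "corr_c": 2.0}
toy_eval = lambda cs: sum(toy_gain.get(c, 0.0) for c in cs)
selected = optimize_corrections(["minimal"], ["corr_a", "corr_b", "corr_c"], toy_eval, target=10.0)
```

In the toy run, `corr_b` lowers the score and is discarded, which mirrors the rule that a correction is retained only when its impact is beneficial.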

/rabies/confound_correction_pkg/mod_ICA_AROMA/README.md:

--------------------------------------------------------------------------------

1 | # ICA-AROMA

2 | ICA-AROMA (i.e. ‘ICA-based Automatic Removal Of Motion Artifacts’) concerns a data-driven method to identify and remove motion-related independent components from fMRI data. To that end it exploits a small, but robust set of theoretically motivated features, preventing the need for classifier re-training and therefore providing direct and easy applicability. This beta-version package requires installation of Python2.7 and FSL. Read the provided 'Manual.pdf' for a description on how to run ICA-AROMA. Make sure to first install all required python packages: `python2.7 -m pip install -r requirements.txt`.

3 |

4 | **! NOTE**: Previous versions of the ICA-AROMA scripts (v0.1-beta & v0.2-beta) contained a crucial mistake at the denoising stage of the method. Unfortunately this means that the output of these scripts is incorrect! The issue is solved in version v0.3-beta onwards. It concerns the Python scripts uploaded before the 27th of April 2015.

5 |

6 | **Log report (applied changes from v0.2-beta to v0.3-beta):**

7 |

8 | 1) Correct for incorrect definition of the string of indices of the components to be removed by *fsl_regfilt*:

9 |

10 | changed denIdxStr = np.char.mod('%i',denIdx)

11 | to denIdxStr = np.char.mod('%i',(denIdx+1))

12 | 2) Now take the maximum of the 'absolute' value of the correlation between the component time-course and set of realignment parameters:

13 |

14 | changed maxTC[i,:] = corMatrix.max(axis=1)

15 | to corMatrixAbs = np.abs(corMatrix)

16 | maxTC[i,:] = corMatrixAbs.max(axis=1)

17 | 3) Correct for the fact that the defined frequency-range, used for the high-frequency content feature, in few cases did not include the final Nyquist frequency due to limited numerical precision:

18 |

19 | changed step = Ny / FT.shape[0]

20 | f = np.arange(step,Ny,step)

21 | to f = Ny*(np.array(range(1,FT.shape[0]+1)))/(FT.shape[0])

22 |
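The three changes listed above can be illustrated with a small NumPy sketch. This is not the ICA-AROMA source: the array values, the snake_case variable names, and the comma-joined index string are assumptions made for illustration only.

```python
import numpy as np

# 1) Shift 0-based NumPy indices by one before formatting them as strings
#    (change 1 in the log: denIdx -> denIdx + 1).
den_idx = np.array([0, 3, 7])                      # hypothetical component indices
den_idx_str = ",".join(np.char.mod("%i", den_idx + 1))
print(den_idx_str)

# 2) Use the absolute correlation so that strong negative correlations
#    with the realignment parameters are not ignored.
cor_matrix = np.array([[0.2, -0.9, 0.1],
                       [0.4, 0.3, -0.5]])          # hypothetical correlations
max_signed = cor_matrix.max(axis=1)                # misses the -0.9 and -0.5 entries
max_abs = np.abs(cor_matrix).max(axis=1)
print(max_signed, max_abs)

# 3) Build the frequency axis by scaling integers instead of accumulating a
#    floating-point step, so the last entry is the Nyquist frequency.
n_freqs = 100                                      # stands in for FT.shape[0]
ny = 0.5                                           # hypothetical Nyquist frequency (Hz)
f = ny * np.arange(1, n_freqs + 1) / n_freqs
print(len(f), f[-1])
```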

--------------------------------------------------------------------------------

/scripts/debug_workflow.py:

--------------------------------------------------------------------------------

1 | #! /usr/bin/env python

2 |

3 | from rabies.utils import generate_token_data

4 | from rabies.run_main import execute_workflow

5 |

6 | import tempfile

7 | tmppath = tempfile.mkdtemp()

8 |

9 | #### increase the number of scans generated to 3 if running group analysis

10 | generate_token_data(tmppath, number_scans=3)

11 |

12 | output_folder = f'{tmppath}/outputs'

13 |

14 | #### SET THE DESIRED PREPROCESSING PARAMETERS HERE

15 | args = [

16 | f'--exclusion_ids',f'{tmppath}/inputs/sub-token1_bold.nii.gz',

17 | '-f',

18 | #'--debug',

19 | 'preprocess', f'{tmppath}/inputs', output_folder,

20 | '--anat_inho_cor', 'method=disable,otsu_thresh=2,multiotsu=false',

21 | '--bold_inho_cor', 'method=disable,otsu_thresh=2,multiotsu=false',

22 | '--bold2anat_coreg', 'registration=no_reg,masking=false,brain_extraction=false,winsorize_lower_bound=0.005,winsorize_upper_bound=0.995',

23 | '--commonspace_reg', 'masking=false,brain_extraction=false,fast_commonspace=true,template_registration=no_reg,winsorize_lower_bound=0.005,winsorize_upper_bound=0.995',

24 | '--data_type', 'int16',

25 | '--anat_template', f'{tmppath}/inputs/sub-token1_T1w.nii.gz',

26 | '--brain_mask', f'{tmppath}/inputs/token_mask.nii.gz',

27 | '--WM_mask', f'{tmppath}/inputs/token_mask.nii.gz',

28 | '--CSF_mask', f'{tmppath}/inputs/token_mask.nii.gz',

29 | '--vascular_mask', f'{tmppath}/inputs/token_mask.nii.gz',

30 | '--labels', f'{tmppath}/inputs/token_mask.nii.gz',

31 | ]

32 |

33 | execute_workflow(args=args)

34 |

35 |

36 |

37 | '''

38 |

39 | args = [

40 | f'--exclusion_ids',f'{tmppath}/inputs/sub-token1_bold.nii.gz',f'{tmppath}/inputs/sub-token2_bold.nii.gz',

41 | '-f',

42 | 'confound_correction', output_folder, output_folder,

43 | '--nativespace_analysis',

44 | ]

45 | execute_workflow(args=args)

46 |

47 |

48 | args = [

49 | f'--exclusion_ids',f'{tmppath}/inputs/sub-token3_bold.nii.gz',

50 | '-f',

51 | 'analysis', output_folder, output_folder,

52 | '--data_diagnosis'

53 | ]

54 | execute_workflow(args=args)

55 |

56 | '''

57 |

--------------------------------------------------------------------------------

/docs/installation.md:

--------------------------------------------------------------------------------

1 | # Installation

2 |

3 | ## Container (Apptainer/Docker) \*\*RECOMMENDED\*\*

4 | For most uses, we recommend a containerized installation with [Apptainer](https://apptainer.org/) when possible on a Linux system (see their [quick start guidelines](https://apptainer.org/docs/user/main/quick_start.html)), or [Docker](https://www.docker.com) on other platforms. Containers make it possible to build entire computing environments that bundle all dependencies required to run the software. This reduces the burden of installing dependencies manually and ensures reproducible behavior of the software. Apptainer is generally preferred over Docker because it does not require root permissions, and is thus more broadly compatible across computing platforms (e.g. high performance computing clusters).

5 |

6 | A [containerized version](https://github.com/CoBrALab/RABIES/pkgs/container/rabies) of RABIES is available from GitHub. After installing Apptainer or Docker, one of the following commands will pull and build the container:

7 | * Install Apptainer .sif file:

8 | ```

9 | apptainer build rabies-latest.sif docker://ghcr.io/cobralab/rabies:latest

10 | ```

11 | * Install Docker image:

12 | ```

13 | docker pull ghcr.io/cobralab/rabies:latest

14 | ```

15 | A specific tag version can be selected (instead of `latest`) from the [list online](https://github.com/CoBrALab/RABIES/pkgs/container/rabies). Versions prior to 0.5.0 are found on [Docker Hub](https://hub.docker.com/r/gabdesgreg/rabies).

16 |

17 | ## PyPi

18 | The software is available on [PyPi](https://pypi.org/project/rabies/), which makes the rabies python package widely accessible with

19 | ```

20 | pip install rabies

21 | ```

22 | However, this does not account for non-python dependencies found in `dependencies.txt`.

23 |

24 | ## Neurodesk

25 | RABIES is also made available on the [Neurodesk platform](https://neurodesk.github.io/), as part of the [built-in tools](https://neurodesk.github.io/applications/) for neuroimaging. The Neurodesk platform allows for an entirely browser-based neuroimaging computing environment, with pre-built neuroimaging tools from the community, and aims at reducing needs for manual development of computing environments and at improving reproducible neuroimaging. More details on Neurodesk here .

--------------------------------------------------------------------------------

/docs/troubleshooting.md:

--------------------------------------------------------------------------------

1 | # Troubleshooting

2 |

3 | This page provides guidance on common issues encountered when using RABIES.

4 |

5 | ## Checking Image Orientation with ITK-SNAP

6 |

7 | RABIES assumes that input data are properly oriented according to the NIfTI standard (RAS+ orientation).

8 | Incorrectly oriented images are a common source of processing failures and unexpected results.

9 | Before reporting bugs or troubleshooting other issues, it is critical to verify that your images have the correct orientation.

10 |

11 | ### Installing ITK-SNAP

12 |

13 | [ITK-SNAP](https://www.itksnap.org/pmwiki/pmwiki.php) is a free, open-source medical image viewer that properly displays NIfTI orientation information.

14 | RABIES uses ANTs/ITK tools under the hood.

15 | Incorrect image orientation is one of the most common causes of registration failures in RABIES.

16 | Since RABIES expects RAS orientation (Right–Anterior–Superior), you should always verify your anatomical scans in ITK-SNAP before running the pipeline.

17 |

18 | ### How to Check Orientation in ITK-SNAP

19 |

20 | 1. **Open your image in ITK-SNAP**

21 | - Go to **File → Open Main Image…**

22 | - Load your NIfTI anatomical scan.

23 |

24 | 2. **Verify anatomical orientation**

25 | - ITK-SNAP shows three orthogonal views (Axial, Coronal, Sagittal).

26 | - Ensure anatomical structures appear where you expect them (e.g., nose = anterior, top of head = superior).

27 |

28 | 3. **Compare your scan to a correctly oriented atlas/template**

29 | - Open your reference atlas (e.g., SIGMA, Fischer rat, etc.) in another ITK-SNAP window.

30 | - Verify that structures appear in similar positions and that the orientation labels match.

31 |

32 | 4. **Check the orientation labels**

33 | - Inspect axes labels around each view (**R/L**, **A/P**, **S/I**).

34 | - Confirm they correspond to the real anatomical directions in your scan.

35 | - Move the cursor: the crosshair should move consistently across all views (e.g., dragging right corresponds to anatomical right).
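As a complement to the visual check above, the orientation labels stored in a NIfTI header can also be read programmatically from the image affine. The function below is a simplified, NumPy-only sketch (the name `axis_codes` and the example affines are hypothetical); it assigns each voxel axis to the world axis it is most closely aligned with, which is adequate for near-axis-aligned affines but not a substitute for a full orientation routine on strongly oblique images.

```python
import numpy as np

def axis_codes(affine):
    """Return one letter per voxel axis: the direction of increasing index.

    World axes follow the NIfTI convention: +x = Right, +y = Anterior,
    +z = Superior.
    """
    labels = (("L", "R"), ("P", "A"), ("I", "S"))
    rotation = np.asarray(affine, dtype=float)[:3, :3]
    codes = []
    for voxel_axis in range(3):
        column = rotation[:, voxel_axis]
        # World axis with the largest contribution to this voxel axis.
        world_axis = int(np.argmax(np.abs(column)))
        positive = column[world_axis] > 0
        codes.append(labels[world_axis][1 if positive else 0])
    return "".join(codes)

# Hypothetical affines with 0.1 mm isotropic voxels.
ras_affine = np.diag([0.1, 0.1, 0.1, 1.0])
flipped_affine = np.diag([-0.1, 0.1, 0.1, 1.0])   # first axis runs right-to-left

print(axis_codes(ras_affine))
print(axis_codes(flipped_affine))
```

For a file on disk, nibabel's `aff2axcodes(img.affine)` reports the same kind of axis codes. Note that the header only describes how the data claim to be oriented; if the anatomy was stored incorrectly at conversion, the codes can read as expected while the image is still wrong, which is why the visual comparison in ITK-SNAP remains necessary.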

36 |

37 | ## See Also

38 |

39 | - For registration-specific troubleshooting, see [Registration Troubleshooting](nested_docs/registration_troubleshoot.md)

40 | - For QC-related guidance, see [Preprocessing QC](preproc_QC.md)

41 | - When reporting bugs, refer to the [issue template](https://github.com/CoBrALab/RABIES/blob/master/.github/ISSUE_TEMPLATE/standard-bug-report.md)

42 |

--------------------------------------------------------------------------------

/docs/nested_docs/distribution_plot.md:

--------------------------------------------------------------------------------

1 | # Distribution plot

2 |

3 | (dist_plot_target)=

4 |

5 |

6 |

7 | The distribution plot allows visualizing the distribution of data quality measures across the dataset, where measures of network connectivity (specificity and amplitude) are contrasted with measures of confounds across samples (each point in the plot is a scan). Data points labelled in gray were removed using `--scan_QC_thresholds`, where the gray dotted lines correspond to the QC thresholds selected for network specificity (Dice overlap) and DR confound correlation. Among the remaining samples and for each metric separately, scans presenting outlier values are detected based on a modified Z-score threshold (set with `--outlier_threshold`, 3.5 by default) and labelled in orange. The derivation of the quality metrics is described in detail in the [metrics documentation](dist_plot_metrics).
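To make the outlier rule concrete, here is a minimal sketch of a modified Z-score test, assuming the common median/MAD definition with the 0.6745 scaling constant. The exact formula used by RABIES is given in the metrics documentation and may differ; the function names and the mean FD values below are hypothetical.

```python
import numpy as np

def modified_zscore(values):
    """Modified Z-score based on the median and the median absolute deviation."""
    values = np.asarray(values, dtype=float)
    median = np.median(values)
    mad = np.median(np.abs(values - median))
    if mad == 0:
        # Choice made for this sketch: with no spread, nothing is flagged.
        return np.zeros_like(values)
    return 0.6745 * (values - median) / mad

def flag_outliers(values, threshold=3.5):
    """Boolean mask of samples whose |modified Z-score| exceeds the threshold."""
    return np.abs(modified_zscore(values)) > threshold

# Hypothetical mean framewise displacement for six scans; the last one stands out.
mean_fd = [0.031, 0.028, 0.035, 0.030, 0.033, 0.120]
print(flag_outliers(mean_fd))
```

Because the median and MAD are barely affected by a few extreme values, this rule is less likely than a mean/standard-deviation Z-score to have outliers mask themselves.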

8 |

9 |

10 | The report was designed to serve two main functions: (1) verify that network specificity is sufficient and that the temporal correlation with confounds (i.e. DR confound corr.) is minimal, and set thresholds for scan inclusion using `--scan_QC_thresholds` (top right subplot, more details on this below); and (2) complement the group statistical report by visualizing the association between connectivity and the three confound measures included in the report ($CR_{SD}$, mean FD and tDOF). In the latter case, it is possible, for instance, to determine whether a group-wise correlation in the statistical report is driven by outliers.

11 |

12 | ## Scan-level thresholds based on network specificity and confound temporal correlation

13 |

14 |

15 |

16 | The measures of network specificity (using Dice overlap) and temporal correlation with confounds (where confound timecourses are extracted using confound components specified with `--conf_prior_idx` and measured through dual regression) were defined in {cite}`Desrosiers-Gregoire2024-ou` for conducting scan-level QC (the figure above is reproduced from the study). They were selected as ideal measures for quantifying issues of network detectability and spurious connectivity (the figure above demonstrates how [categories of scan quality outcomes](quality_marker_target) can be distinguished with these metrics), and for applying inclusion thresholds to select scans that respect assumptions of network detectability and minimal effects from confounds.
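For readers unfamiliar with the Dice overlap, the sketch below computes it for two binary masks. How RABIES thresholds the fitted and reference network maps before comparing them is not covered here, so the masks and the function name `dice_overlap` are purely illustrative.

```python
import numpy as np

def dice_overlap(mask_a, mask_b):
    """Dice coefficient: 2 * |A and B| / (|A| + |B|), ranging from 0 to 1."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        # Convention chosen for this sketch when both masks are empty.
        return 0.0
    return float(2.0 * np.logical_and(a, b).sum() / total)

# Hypothetical thresholded maps flattened to 1D: 3 voxels each, 2 in common.
fitted_network = np.array([1, 1, 1, 0, 0, 0], dtype=bool)
reference_network = np.array([0, 1, 1, 1, 0, 0], dtype=bool)

print(dice_overlap(fitted_network, reference_network))  # 2 * 2 / (3 + 3)
```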

17 |

--------------------------------------------------------------------------------

/.github/workflows/docker-publish.yml:

--------------------------------------------------------------------------------

1 | name: Docker

2 |

3 | # This workflow uses actions that are not certified by GitHub.

4 | # They are provided by a third-party and are governed by

5 | # separate terms of service, privacy policy, and support

6 | # documentation.

7 |

8 | on:

9 | workflow_dispatch:

10 | schedule:

11 | - cron: '31 1 * * *'

12 | push:

13 | branches: [ "master" ]

14 | # Publish semver tags as releases.

15 | tags: [ '*.*.*' ]

16 | pull_request:

17 | branches: [ "master" ]

18 |

19 | env:

20 | # Use docker.io for Docker Hub if empty

21 | REGISTRY: ghcr.io

22 | # github.repository as /

23 | IMAGE_NAME: ${{ github.repository }}

24 |

25 |

26 | jobs:

27 | build:

28 |

29 | runs-on: ubuntu-latest

30 | permissions:

31 | contents: read

32 | packages: write

33 | # This is used to complete the identity challenge

34 | # with sigstore/fulcio when running outside of PRs.

35 | id-token: write

36 |

37 | steps:

38 | - name: Checkout repository

39 | uses: actions/checkout@v3

40 | with:

41 | submodules: recursive

42 |

43 | # Set up BuildKit Docker container builder to be able to build

44 | # multi-platform images and export cache

45 | # https://github.com/docker/setup-buildx-action

46 | - name: Set up Docker Buildx

47 | uses: docker/setup-buildx-action@f95db51fddba0c2d1ec667646a06c2ce06100226 # v3.0.0

48 |

49 | # Login against a Docker registry except on PR

50 | # https://github.com/docker/login-action

51 | - name: Log into registry ${{ env.REGISTRY }}

52 | if: github.event_name != 'pull_request'

53 | uses: docker/login-action@343f7c4344506bcbf9b4de18042ae17996df046d # v3.0.0

54 | with:

55 | registry: ${{ env.REGISTRY }}

56 | username: ${{ github.actor }}

57 | password: ${{ secrets.GITHUB_TOKEN }}

58 |

59 | # Extract metadata (tags, labels) for Docker

60 | # https://github.com/docker/metadata-action

61 | - name: Extract Docker metadata

62 | id: meta

63 | uses: docker/metadata-action@96383f45573cb7f253c731d3b3ab81c87ef81934 # v5.0.0

64 | with:

65 | images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}

66 |

67 | # Build and push Docker image with Buildx (don't push on PR)

68 | # https://github.com/docker/build-push-action

69 | - name: Build and push Docker image

70 | id: build-and-push

71 | uses: docker/build-push-action@0565240e2d4ab88bba5387d719585280857ece09 # v5.0.0

72 | with:

73 | context: .

74 | push: ${{ github.event_name != 'pull_request' }}

75 | tags: ${{ steps.meta.outputs.tags }}

76 | labels: ${{ steps.meta.outputs.labels }}

77 | cache-from: type=gha

78 | cache-to: type=gha,mode=max

79 |

--------------------------------------------------------------------------------

/docs/nested_docs/group_stats.md:

--------------------------------------------------------------------------------

1 | # Group stats

2 |

3 | (group_stats_target)=

4 |

5 |

6 |

7 | Inspecting scan-level features is insufficient to conclude that inter-scan variability in connectivity isn't itself impacted (which is of primary interest for group analysis). This final report is aimed at inspecting features of connectivity variability at the group level, and focuses on two aspects:

8 |

9 | 1. **Specificity of network variability:** the standard deviation in connectivity across scans is computed voxelwise. This allows visualizing the spatial contrast of network variability. If primarily driven by network connectivity, the contrast should reflect the anatomical extent of the network of interest (as in the example above for the mouse somatomotor network), or otherwise may display spurious or absent features. For more details on the development of this metric, consult {cite}`Desrosiers-Gregoire2024-ou`.

10 | - **Relationship to sample size**: {cite}`Desrosiers-Gregoire2024-ou` demonstrate that the contrast of the network variability map depends on sample size. If network connectivity is observed in individual scans, but not in this statistical report, increasing sample size may improve this contrast.

11 | 2. **Correlation with confounds:** Connectivity is correlated across subjects, for each voxel, with each of the three confound measures included: variance explained from confound correction at a given voxel ($CR_{SD}$, see [predicted confound timeseries $Y_{CR}$](CR_target)), mean framewise displacement (FD), and temporal degrees of freedom. This allows establishing the importance of the association with potential confounds. What constitutes a 'concerning' correlation may depend on the study and the effect size of interest (i.e. is the effect size of interest much higher than, or similar to, the effect size of confounds?).

12 |

13 | **Quantitative CSV report**: A CSV file is also automatically generated alongside the figure, which records a quantitative assessment of these two aspects. More specifically, the overlap between the network variability map and the reference network map is measured using Dice overlap, and for confound measures, the mean correlation is measured within the area of the network (consult the [metric details elsewhere](group_QC_metrics)). These measures can be referred to for a quantitative summary instead (although visualization is preferred, as the Dice overlap for network variability may not perfectly distinguish network and spurious features).

14 |

15 | **IMPORTANT**: the validity of this report depends on whether [scan-level assumptions](dist_plot_target) of network detectability and minimal confound effects are met. This is because either the lack of network activity or spurious effects in a subset of scans can drive 'apparent' network variability: there will be differences in the presence vs. absence of the network across scans, but these differences may actually be driven by divergences in data quality.
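The two group-level maps described above can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the RABIES implementation: the data are random, the array names are hypothetical, and a per-scan scalar confound (such as mean FD) is used for the correlation. A voxelwise confound such as $CR_{SD}$ would instead be correlated voxel by voxel.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scans, n_voxels = 20, 500

# Hypothetical inputs: one connectivity map per scan (scans x voxels)
# and one confound value per scan.
connectivity = rng.normal(size=(n_scans, n_voxels))
mean_fd = rng.uniform(0.02, 0.10, size=n_scans)

# 1. Network variability: standard deviation across scans at each voxel.
variability_map = connectivity.std(axis=0)

# 2. Correlation with a confound: Pearson r across scans at each voxel.
conn_centered = connectivity - connectivity.mean(axis=0)
fd_centered = mean_fd - mean_fd.mean()
corr_map = (fd_centered @ conn_centered) / (
    np.linalg.norm(fd_centered) * np.linalg.norm(conn_centered, axis=0)
)

print(variability_map.shape, corr_map.shape)
```

Averaging `corr_map` within a network mask would give a single summary value of the kind recorded in the CSV report.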

--------------------------------------------------------------------------------

/docs/preproc_QC.md:

--------------------------------------------------------------------------------

1 | # Preprocessing quality control (QC)

2 |

3 | Several registration operations during preprocessing are prone to fail in accurately aligning images, and it is thus necessary to visually inspect the quality of registration to prevent errors arising from failed alignment, or biased analyses downstream. For this purpose, RABIES automatically generates a set of PNG images allowing for efficient visual assessment of key registration steps. These are found in the `{output_folder}/preprocess_QC_report/` folder, which contains several subfolders, each corresponding to a registration step in the pipeline or providing supportive information about the files:

4 |

5 | - `anat_inho_cor/`: intensity inhomogeneities are corrected for prior to important registration operations. This folder allows assessing the quality of the inhomogeneity correction, which is crucial for the performance of downstream registration. The figure is divided into 4 columns, showing 1-the raw image, 2-an initial correction of the image, 3-an overlay of the anatomical mask used to conduct a final correction (by default obtained through a preliminary registration to the commonspace template), and 4-the final corrected output.

6 |

7 | - `Native2Unbiased/`: alignment between each anatomical image and the generated unbiased template. This registration step controls for the overlap between different scanning sessions.

8 |

9 | - `Unbiased2Atlas/`: alignment of the generated unbiased template to the external anatomical template in commonspace. This step ensures proper alignment with the commonspace and the associated brain parcellation.

10 |

11 | - `bold_inho_cor/`: same as `anat_inho_cor/`, but conducted on the 3D reference EPI image which is used for estimating the alignment of the EPI.

12 |

13 | - `EPI2Anat/`: shows the alignment of the EPI image to its associated anatomical image of the same scanning session. This step resamples the EPI into native space, and corrects for susceptibility distortions through non-linear registration. An example is shown below:

14 |

15 | - `template_files/`: displays the overlap of the provided external anatomical template with its associated masks and labels. This allows validating that the proper template files were provided, and sharing them alongside the RABIES report.

16 |

17 | - `temporal_features/`: includes the timecourse of the head motion realignment parameters together with framewise displacement, to observe subject motion. Also includes a spatial map of the signal variability at each voxel and then the temporal signal-to-noise ratio (tSNR).
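As an illustration of the tSNR map mentioned in `temporal_features/`, the sketch below uses the common definition of tSNR as the temporal mean divided by the temporal standard deviation at each voxel. The exact computation in RABIES may differ, and the function name and synthetic data are hypothetical.

```python
import numpy as np

def temporal_snr(bold):
    """Voxelwise tSNR for a 4D array with time as the last axis."""
    bold = np.asarray(bold, dtype=float)
    mean = bold.mean(axis=-1)
    std = bold.std(axis=-1)
    # Voxels with zero temporal variance (e.g. background) are set to 0.
    return np.divide(mean, std, out=np.zeros_like(mean), where=std > 0)

# Synthetic timeseries: signal of about 100 with noise SD of about 2.
rng = np.random.default_rng(0)
bold = rng.normal(loc=100.0, scale=2.0, size=(4, 4, 4, 200))
tsnr = temporal_snr(bold)
print(tsnr.shape, float(np.median(tsnr)))
```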

18 |

19 |

20 |

21 |

22 | ```{toctree}

23 | ---

24 | maxdepth: 3

25 | ---

26 | nested_docs/registration_troubleshoot.md

27 | ```

--------------------------------------------------------------------------------

/docs/nested_docs/registration_troubleshoot.md:

--------------------------------------------------------------------------------

1 | # Recommendations for registration troubleshooting

2 | When first attempting preprocessing with RABIES, we recommend following the default parameters, as they involve less stringent modifications of the images and mostly rely on the original quality of the MR images at acquisition. However, the default parameters do not offer a robust workflow that generalizes to every dataset, and to reach ideal outcomes, the workflow parameters may require tuning. We provide below recommendations for common types of registration failures that may be found from the QC report.

3 |

4 |

5 | ## Inhomogeneity correction (anat or BOLD) `--anat_inho_cor`, `--bold_inho_cor`, `--anat_robust_inho_cor`, `--bold_robust_inho_cor`

6 |

7 | * **Only a subset of the scans have failed masking, or the mask is partially misregistered:** Consider using the `--anat_robust_inho_cor/--bold_robust_inho_cor` option, which registers all corrected images to generate a temporary template representing the average of all scans. This template is then itself masked, and becomes the new target for masking during a second iteration of inhomogeneity correction. This should provide a more robust registration target for masking. The parameters for handling this step are the same as `--commonspace_reg` below.

8 | * **The inhomogeneity biases are not completely corrected:** if you observe that drops in signal are still present after the correction, you should consider applying `multiotsu=true`. This option will better correct low intensities in an image with important signal drops.

9 | * **Tissue outside the brain is provoking registration failures:** if the intensity of tissue outside the brain was enhanced during the initial inhomogeneity correction and leads to masking failures, you can consider using `--anat_autobox/--bold_autobox` which can automatically crop out extra tissue. You can also modify the `otsu_thresh` to set the threshold for the automatic masking during the initial correction, and attempt to select a threshold that is more specific to the brain tissue.

10 | * **There is still a large proportion of masking failures (mismatched brain sizes or non-linear warps, or mask outside of the brain):** Consider applying a less stringent registration `method`, going down from `SyN` -> `Affine` -> `Rigid` -> `no_reg`. If `no_reg` is selected, you may also have to adjust the `otsu_thresh` to obtain an automatically-generated brain mask covering only the brain tissues.

11 |

12 |

13 | ## Commonspace registration `--commonspace_reg` or susceptibility distortion correction `--bold2anat_coreg`

14 |

15 | * **Many scans are misregistered, or brain edges are not well-matched:** First, inspect the quality of inhomogeneity correction for those scans, and refer to instructions above if the correction or brain masking was poor. If good quality masks were obtained during inhomogeneity correction, they can be used to improve registration quality by using `masking=true`. If registration errors persist, in particular if brain edges are not well-matched, `brain_extraction=true` can be used to further constrain the matching of brain edges after removing tissue outside the brain. However, the quality of brain edge delineation depends on masks derived during inhomogeneity correction, so this option depends on high quality masking during this previous step.

16 | * **Scans have incomplete brain coverage (e.g. cerebellum/olfactory bulbs), and surrounding brain tissue is stretched to fill in missing regions:** The non-linear registration assumes corresponding brain anatomy between the moving image and the target. If brain regions are missing, the surrounding tissue may be improperly stretched to fill missing areas. Using `brain_extraction=true` can largely mitigate this issue.

17 |

18 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM mambaorg/micromamba:jammy

2 |

3 | USER root

4 |

5 | # System-level dependencies

6 | RUN apt-get update && apt-get install -y --no-install-recommends \

7 | perl \

8 | imagemagick \

9 | parallel \

10 | gdebi-core \

11 | curl \

12 | ca-certificates \

13 | patch \

14 | rsync \

15 | unzip \

16 | && rm -rf /var/lib/apt/lists/*

17 |

18 | # Install a few minimal AFNI components

19 | RUN curl -L -O https://afni.nimh.nih.gov/pub/dist/tgz/linux_ubuntu_16_64.tgz && \

20 | mkdir -p /opt/afni && \

21 | tar xzvf linux_ubuntu_16_64.tgz -C /opt/afni linux_ubuntu_16_64/{libmri.so,libf2c.so,3dDespike,3dTshift,3dWarp,3dAutobox} --strip-components=1 && \

22 | rm -f linux_ubuntu_16_64.tgz

23 | ENV PATH=/opt/afni${PATH:+:$PATH}

24 |

25 | # Install minc-toolkit

26 | RUN curl -L --output /tmp/minc-toolkit-1.9.18.deb \

27 | https://packages.bic.mni.mcgill.ca/minc-toolkit/min/minc-toolkit-1.9.18-20200813-Ubuntu_18.04-x86_64.deb && \

28 | gdebi -n /tmp/minc-toolkit-1.9.18.deb && \

29 | rm -f /tmp/minc-toolkit-1.9.18.deb && \

30 | rm -f /opt/minc/1.9.18/bin/{ants*,ANTS*,ImageMath,AverageImages,ThresholdImage,ExtractRegionFromImageByMask,ConvertImage,AverageAffine*,ResampleImage}

31 |

32 | # minc-toolkit configuration parameters for 1.9.18-20200813

33 | ENV MINC_TOOLKIT=/opt/minc/1.9.18 \

34 | MINC_TOOLKIT_VERSION="1.9.18-20200813"

35 | ENV PATH=${MINC_TOOLKIT}/bin:${MINC_TOOLKIT}/pipeline:${PATH} \

36 | PERL5LIB=${MINC_TOOLKIT}/perl:${MINC_TOOLKIT}/pipeline${PERL5LIB:+:$PERL5LIB} \

37 | LD_LIBRARY_PATH=${MINC_TOOLKIT}/lib:${MINC_TOOLKIT}/lib/InsightToolkit${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH} \

38 | MNI_DATAPATH=${MINC_TOOLKIT}/../share:${MINC_TOOLKIT}/share \

39 | MINC_FORCE_V2=1 \

40 | MINC_COMPRESS=4 \

41 | VOLUME_CACHE_THRESHOLD=-1 \

42 | MANPATH=${MINC_TOOLKIT}/man${MANPATH:+:$MANPATH}

43 |

44 | # add a patch to nu_estimate_np_and_em

45 | COPY patch/nu_estimate_np_and_em.diff nu_estimate_np_and_em.diff

46 | RUN (cd / && patch -p0) < nu_estimate_np_and_em.diff && rm nu_estimate_np_and_em.diff

47 |

48 | # ANTs install

49 | RUN curl -L --output /tmp/ants.zip https://github.com/ANTsX/ANTs/releases/download/v2.5.0/ants-2.5.0-ubuntu-22.04-X64-gcc.zip && \

50 | unzip -d /opt /tmp/ants.zip && \

51 | rm -rf /opt/ants-2.5.0/lib && \

52 | rm -f /tmp/ants.zip

53 |

54 | ENV PATH=/opt/ants-2.5.0/bin:${PATH}

55 |

56 | USER $MAMBA_USER

57 | ENV HOME=/home/$MAMBA_USER

58 |

59 | # install RABIES

60 | ENV RABIES=${HOME}/RABIES

61 | RUN mkdir $RABIES

62 |

63 | COPY rabies_environment.yml setup.py MANIFEST.in README.md LICENSE dependencies.txt $RABIES/

64 |

65 | COPY rabies $RABIES/rabies

66 | COPY minc-toolkit-extras $RABIES/minc-toolkit-extras

67 | COPY optimized_antsMultivariateTemplateConstruction $RABIES/optimized_antsMultivariateTemplateConstruction

68 | COPY scripts $RABIES/scripts

69 |

70 | RUN micromamba install -y -n base -f $RABIES/rabies_environment.yml && \

71 | micromamba run -n base pip install -e $RABIES && \

72 | micromamba clean --all --yes

73 |

74 | # FSL conda packages don't properly setup FSL, do it manually

75 | ENV FSLDIR=/opt/conda

76 | ENV FSLWISH=/opt/conda/bin/fslwish

77 | ENV FSLTCLSH=/opt/conda/bin/fsltclsh

78 | ENV FSLMULTIFILEQUIT=TRUE

79 | ENV FSL_LOAD_NIFTI_EXTENSIONS=0

80 | ENV FSLGECUDAQ=

81 | ENV FSL_SKIP_GLOBAL=0

82 | ENV FSLOUTPUTTYPE=NIFTI_GZ

83 |

84 | # adding 'agg' as default backend to avoid matplotlib errors

85 | ENV MPLBACKEND=agg

86 |

87 | # pre-install the template defaults

88 | ENV XDG_DATA_HOME=${HOME}/.local/share

89 |

90 | RUN micromamba run -n base install_DSURQE.sh $XDG_DATA_HOME/rabies

91 |

92 | # Run a basic test

93 | RUN micromamba run -n base error_check_rabies.py --complete

94 |

95 | ENTRYPOINT ["/usr/local/bin/_entrypoint.sh", "rabies"]

96 |

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

1 | # Configuration file for the Sphinx documentation builder.

2 | #

3 | # This file only contains a selection of the most common options. For a full

4 | # list see the documentation:

5 | # https://www.sphinx-doc.org/en/master/usage/configuration.html

6 |

7 | # -- Path setup --------------------------------------------------------------

8 |

9 | # If extensions (or modules to document with autodoc) are in another directory,

10 | # add these directories to sys.path here. If the directory is relative to the

11 | # documentation root, use os.path.abspath to make it absolute, like shown here.

12 | #

13 | # import os

14 | # import sys

15 | # sys.path.insert(0, os.path.abspath('.'))

16 |

17 |

18 | # -- Project information -----------------------------------------------------

19 |

20 | project = 'RABIES Documentation'

21 | copyright = '2019, CoBrALab and Gabriel Desrosiers-Gregoire and Gabriel A. Devenyi and Mallar Chakravarty'

22 | author = 'CoBrALab'

23 |

24 | # The full version, including alpha/beta/rc tags

25 | release = '0.5.4'

26 |

27 |

28 | # -- General configuration ---------------------------------------------------

29 |

30 | # Add any Sphinx extension module names here, as strings. They can be

31 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

32 | # ones.

33 | extensions = [

34 | "myst_parser",

35 | "sphinx.ext.githubpages",

36 | "sphinx_rtd_dark_mode",

37 | "sphinx.ext.autosectionlabel",

38 | "sphinx.ext.todo",

39 | 'sphinxcontrib.bibtex',

40 | 'sphinxcontrib.programoutput',

41 | ]

42 |

43 | # to get bibliography

44 | bibtex_bibfiles = ['_static/refs.bib']

45 |

46 | # Choose to generate TODO notices or not. Defaults to False

47 | todo_include_todos = False

48 |

49 | # Set MyST specific extensions

50 | myst_enable_extensions = [

51 | "tasklist",

52 | "amsmath",

53 | "dollarmath",

54 | ]

55 |

56 | # enable equation rendering inline

57 | myst_dmath_double_inline = True

58 |

59 | # Make sure the target is unique

60 | autosectionlabel_prefix_document = True

61 |

62 | # Add any paths that contain templates here, relative to this directory.

63 | templates_path = ['_templates']

64 |

65 | # List of patterns, relative to source directory, that match files and

66 | # directories to ignore when looking for source files.

67 | # This pattern also affects html_static_path and html_extra_path.

68 | exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

69 |

70 |

71 | # -- Options for HTML output -------------------------------------------------

72 |

73 | # The theme to use for HTML and HTML Help pages. See the documentation for

74 | # a list of builtin themes.

75 | #

76 | html_theme = 'groundwork'

77 |

78 | # Add any paths that contain custom static files (such as style sheets) here,

79 | # relative to this directory. They are copied after the builtin static files,

80 | # so a file named "default.css" will overwrite the builtin "default.css".

81 | html_static_path = ['_static']

82 |

83 |

84 | # -- Options for sphinx_rtd_dark_mode -------

85 | default_dark_mode = False

86 |

87 | # Set some RTD theme config. This includes the entire navigation structure

88 | # into the sidebar of all pages. However, expanding the sections isn't

89 | # provided yet on the RTD theme (see

90 | # https://github.com/readthedocs/sphinx_rtd_theme/issues/455).

91 | html_theme_options = {

92 | 'collapse_navigation': False,

93 | 'navigation_depth': 3,

94 | }

95 |

96 | # Set some RTD theme config. This includes the entire navigation structure

97 | # into the sidebar of all pages. However, expanding the sections isn't

98 | # provided yet on the RTD theme (see

99 | # https://github.com/readthedocs/sphinx_rtd_theme/issues/455).

100 | html_theme_options = {

101 | 'collapse_navigation': False,

102 | 'navigation_depth': 2,

103 | }

104 |

--------------------------------------------------------------------------------

/scripts/install_DSURQE.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -euo pipefail

3 |

4 | out_dir=$1

5 | mkdir -p "$out_dir"

6 |

7 |

8 | files=(

9 | "DSURQE_40micron_average.nii"

10 | "DSURQE_40micron_labels.nii"

11 | "DSURQE_40micron_mask.nii"

12 | "DSURQE_40micron_R_mapping.csv"

13 | )

14 | fallback_files=(

15 | "DSURQE_40micron_average.nii.gz"

16 | "DSURQE_40micron_labels.nii.gz"

17 | "DSURQE_40micron_mask.nii.gz"

18 | "DSURQE_40micron_R_mapping.csv"

19 | )

20 |

21 | # Primary URLs (mouseimaging.ca)

22 | primary_urls=(

23 | "https://www.mouseimaging.ca/repo/DSURQE_40micron/Dorr_2008_Steadman_2013_Ullmann_2013_Richards_2011_Qiu_2016_Egan_2015_40micron/nifti/DSURQE_40micron_average.nii"

24 | "https://www.mouseimaging.ca/repo/DSURQE_40micron/Dorr_2008_Steadman_2013_Ullmann_2013_Richards_2011_Qiu_2016_Egan_2015_40micron/nifti/DSURQE_40micron_labels.nii"

25 | "https://www.mouseimaging.ca/repo/DSURQE_40micron/Dorr_2008_Steadman_2013_Ullmann_2013_Richards_2011_Qiu_2016_Egan_2015_40micron/nifti/DSURQE_40micron_mask.nii"

26 | "https://www.mouseimaging.ca/repo/DSURQE_40micron/Dorr_2008_Steadman_2013_Ullmann_2013_Richards_2011_Qiu_2016_Egan_2015_40micron/mappings/DSURQE_40micron_R_mapping.csv"

27 | )

28 |

29 | # Fallback URLs (github)

30 | fallback_urls=(

31 | "https://github.com/CoBrALab/RABIES/releases/download/0.5.1/DSURQE_40micron.nii.gz"

32 | "https://github.com/CoBrALab/RABIES/releases/download/0.5.1/DSURQE_40micron_labels.nii.gz"

33 | "https://github.com/CoBrALab/RABIES/releases/download/0.5.1/DSURQE_40micron_mask.nii.gz"

34 | "https://github.com/CoBrALab/RABIES/releases/download/0.5.1/DSURQE_40micron_R_mapping.csv"

35 | )

36 |

37 | # # Try to download from mouseimaging first, if that doesn't work download from github

38 | # primary_success=true

39 | # for i in {0..3}; do

40 | # if ! curl -L --retry 5 --fail --silent --show-error -o "${out_dir}/${files[$i]}" "${primary_urls[$i]}"; then

41 | # primary_success=false

42 | # break

43 | # fi

44 | # done

45 | #

46 | # if [ "$primary_success" = true ]; then

47 | # for f in "${out_dir}"/DSURQE_40micron_*.nii; do

48 | # gzip -f "$f"

49 | # done

50 | # fi

51 |

52 |

53 | # Download from the fallback URLs; exit 1 if any of these downloads fails.

54 | #

55 | # Temporary hotfix: force the download from fallback_urls.

56 | # Remove line 57 and uncomment lines 38-50 once primary_urls can be used again.

57 | primary_success=false

58 | if [ "$primary_success" = false ]; then

59 | for i in {0..3}; do

60 | if ! curl -L --retry 5 --fail --silent --show-error -o "${out_dir}/${fallback_files[$i]}" "${fallback_urls[$i]}"; then

61 | exit 1

62 | fi

63 | done

64 | fi

65 |

66 | # create regional masks

67 | gen_DSURQE_masks.py "${out_dir}/DSURQE_40micron_labels.nii.gz" "${out_dir}/DSURQE_40micron_R_mapping.csv" "${out_dir}/DSURQE_40micron"

68 |

69 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/melodic_IC.nii.gz" -o "${out_dir}/melodic_IC.nii.gz"

70 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/vascular_mask.nii.gz" -o "${out_dir}/vascular_mask.nii.gz"

71 |

72 | # download atlas file versions for the EPI template

73 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_template.nii.gz" -o "${out_dir}/EPI_template.nii.gz"

74 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_brain_mask.nii.gz" -o "${out_dir}/EPI_brain_mask.nii.gz"

75 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_WM_mask.nii.gz" -o "${out_dir}/EPI_WM_mask.nii.gz"

76 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_CSF_mask.nii.gz" -o "${out_dir}/EPI_CSF_mask.nii.gz"

77 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_vascular_mask.nii.gz" -o "${out_dir}/EPI_vascular_mask.nii.gz"

78 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/EPI_labels.nii.gz" -o "${out_dir}/EPI_labels.nii.gz"

79 | curl -L --retry 5 "https://zenodo.org/record/5118030/files/melodic_IC_resampled.nii.gz" -o "${out_dir}/melodic_IC_resampled.nii.gz"

80 |
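
The primary-then-fallback download logic in this script (currently forced to the fallback set by the hotfix) can be sketched in Python as follows. This is an illustration under stated assumptions, not part of RABIES: `download_with_fallback` and the injectable `fetch` callable are hypothetical names, the default fetcher uses `urllib.request.urlretrieve` as a stand-in for `curl`, and the gzip step that follows a successful primary download is omitted.

```python
import os
import urllib.request
from typing import Callable, Sequence


def _default_fetch(url: str, dest: str) -> None:
    # Stand-in for `curl -L --fail -o dest url`; raises on HTTP or network errors.
    urllib.request.urlretrieve(url, dest)


def download_with_fallback(
    out_dir: str,
    primary: Sequence[tuple],   # (url, filename) pairs tried first
    fallback: Sequence[tuple],  # (url, filename) pairs used if any primary fails
    fetch: Callable[[str, str], None] = _default_fetch,
) -> str:
    """Fetch every primary file; if one fails, fetch the whole fallback set.

    Returns "primary" or "fallback". A failure in the fallback set propagates
    to the caller, which mirrors the script's `exit 1`.
    """
    os.makedirs(out_dir, exist_ok=True)
    try:
        for url, name in primary:
            fetch(url, os.path.join(out_dir, name))
        return "primary"
    except Exception:
        # Any primary failure abandons the primary set as a whole.
        pass
    for url, name in fallback:
        fetch(url, os.path.join(out_dir, name))
    return "fallback"
```

Passing `fetch` as a parameter keeps the control flow testable without network access; in real use the default fetcher would be left in place.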

--------------------------------------------------------------------------------

/rabies/visualization.py:

--------------------------------------------------------------------------------

1 | import os

2 | import numpy as np

3 | import SimpleITK as sitk

4 | import matplotlib.pyplot as plt

5 | # set a dark background

6 | plt.rcParams.update({

7 | "lines.color": "white",

8 | "patch.edgecolor": "white",

9 | "text.color": "black",

10 | "axes.facecolor": "white",

11 | "axes.edgecolor": "lightgray",

12 | "axes.labelcolor": "white",

13 | "xtick.color": "white",

14 | "ytick.color": "white",

15 | "grid.color": "lightgray",

16 | "figure.facecolor": "black",

17 | "figure.edgecolor": "black",

18 | "savefig.facecolor": "black",

19 | "savefig.edgecolor": "black"})

20 |

21 |

22 | def otsu_scaling(image_file):

23 | from skimage.filters import threshold_multiotsu

24 | img = sitk.ReadImage(image_file)

25 | array = sitk.GetArrayFromImage(img)

26 |

27 | thresholds = threshold_multiotsu(array.astype(float).flatten(), classes=5, nbins=100)

28 | voxel_subset=array[array>thresholds[1]] # clip off the background using otsu thresholds

29 | # select a maximal value which encompasses 90% of the voxels

30 | voxel_subset.sort()

31 | vmax=voxel_subset[int(len(voxel_subset)*0.9)]

32 |

33 | scaled = array/vmax

34 | scaled_img=sitk.GetImageFromArray(scaled, isVector=False)

35 | scaled_img.CopyInformation(img)

36 | return scaled_img

37 |

38 |

39 | def plot_3d(axes,sitk_img,fig,vmin=0,vmax=1,cmap='gray', alpha=1, cbar=False, threshold=None, planes=('sagittal', 'coronal', 'horizontal'), num_slices=4, slice_spacing=0.1):

40 | physical_dimensions = (np.array(sitk_img.GetSpacing())*np.array(sitk_img.GetSize()))[::-1] # invert because the array is inverted indices

41 | array=sitk.GetArrayFromImage(sitk_img)

42 |

43 | array[array==0]=None # set 0 values to be empty

44 |

45 | if threshold is not None:

46 | array[np.abs(array) "

75 | echo "e.g. zeropad 1 4 gives 0001"

76 | echo ""

77 | exit 1

78 | }

79 |

80 | [ "$1" = "" ] && Usage

81 | [ "$2" = "" ] && Usage

82 |

83 |

84 | i=`echo $1 | wc -c`;

85 | j=0;

86 | k=` expr $2 - $i`;

87 | k=` expr $k + 1`;

88 | num=$1;

89 | while [ "$j" -lt "$k" ];do

90 | num=0$num;

91 | j=` expr $j + 1`

92 | done

93 | echo $num

94 |
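
For readers less familiar with `expr` arithmetic, the padding loop above can be sketched in Python. The function name `zeropad` is reused here purely for illustration. `str.rjust` is used rather than `str.zfill` because, like the shell loop, it prepends zeros without treating a leading sign specially and never truncates input that is already long enough.

```python
def zeropad(number, length: int) -> str:
    """Left-pad `number` with zeros until it is `length` characters long."""
    # rjust adds zeros only while the string is shorter than `length`,
    # matching the shell loop, which runs (length - len(number)) times.
    return str(number).rjust(length, "0")


print(zeropad("1", 4))  # prints 0001, as in the script's usage message
```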

--------------------------------------------------------------------------------

/scripts/preprocess_scripts/multistage_otsu_cor.py:

--------------------------------------------------------------------------------

1 | #! /usr/bin/env python

2 | import sys

3 | import SimpleITK as sitk

4 | from rabies.utils import run_command

5 |

6 | def otsu_bias_cor(target, otsu_ref, out_dir, b_value, mask=None, n_iter=200):

7 | command = f'ImageMath 3 {out_dir}/null_mask.mnc ThresholdAtMean {otsu_ref} 0'

8 | rc = run_command(command)

9 | command = f'ThresholdImage 3 {otsu_ref} {out_dir}/otsu_weight.mnc Otsu 4'

10 | rc = run_command(command)

11 |

12 | otsu_img = sitk.ReadImage(

13 | f'{out_dir}/otsu_weight.mnc', sitk.sitkUInt8)

14 | otsu_array = sitk.GetArrayFromImage(otsu_img)

15 |

16 | if mask is not None:

17 | resampled_mask_img = sitk.ReadImage(

18 | mask, sitk.sitkUInt8)

19 | resampled_mask_array = sitk.GetArrayFromImage(resampled_mask_img)

20 |

21 | otsu_array = otsu_array*resampled_mask_array

22 |

23 | combined_mask=(otsu_array==1.0)+(otsu_array==2.0)

24 | mask_img=sitk.GetImageFromArray(combined_mask.astype('uint8'), isVector=False)

25 | mask_img.CopyInformation(otsu_img)

26 | sitk.WriteImage(mask_img, f'{out_dir}/mask12.mnc')

27 |

28 | combined_mask=(otsu_array==1.0)+(otsu_array==2.0)+(otsu_array==3.0)

29 | mask_img=sitk.GetImageFromArray(combined_mask.astype('uint8'), isVector=False)

30 | mask_img.CopyInformation(otsu_img)

31 | sitk.WriteImage(mask_img, f'{out_dir}/mask123.mnc')

32 |