├── .github

└── workflows

│ └── ci_pipeline.yml

├── .gitignore

├── .pre-commit-config.yaml

├── LICENSE.txt

├── Makefile

├── README.md

├── README_ru.md

├── examples

├── calculate_adjacency_matrix.ipynb

├── calculate_provision.ipynb

├── coverage_zones.ipynb

├── examples_data

│ ├── buildings.parquet

│ ├── matrix_time.parquet

│ ├── services.parquet

│ └── trees.parquet

├── isochrone_generator.ipynb

├── noise_simulation.ipynb

├── point_clusterization.ipynb

└── visibility_analysis.ipynb

├── pyproject.toml

└── src

├── objectnat

├── __init__.py

├── _api.py

├── _config.py

├── _version.py

└── methods

│ ├── __init__.py

│ ├── coverage_zones

│ ├── __init__.py

│ ├── graph_coverage.py

│ ├── radius_voronoi_coverage.py

│ └── stepped_coverage.py

│ ├── isochrones

│ ├── __init__.py

│ ├── isochrone_utils.py

│ └── isochrones.py

│ ├── noise

│ ├── __init__.py

│ ├── noise_exceptions.py

│ ├── noise_init_data.py

│ ├── noise_reduce.py

│ └── noise_sim.py

│ ├── point_clustering

│ ├── __init__.py

│ └── cluster_points_in_polygons.py

│ ├── provision

│ ├── __init__.py

│ ├── provision.py

│ ├── provision_exceptions.py

│ └── provision_model.py

│ ├── utils

│ ├── __init__.py

│ ├── geom_utils.py

│ ├── graph_utils.py

│ └── math_utils.py

│ └── visibility

│ ├── __init__.py

│ └── visibility_analysis.py

└── tests

├── __init__.py

├── conftest.py

├── test_clusterization.py

├── test_coverage_zones.py

├── test_isochrones.py

├── test_noise_simulation.py

├── test_provision.py

└── test_visibility.py

/.github/workflows/ci_pipeline.yml:

--------------------------------------------------------------------------------

1 | name: CI Pipeline

2 |

3 | on:

4 | push:

5 | branches:

6 | - master

7 | - dev

8 | pull_request:

9 | branches:

10 | - master

11 | - dev

12 |

13 | permissions:

14 | contents: write

15 |

16 | jobs:

17 | check-version:

18 | runs-on: ubuntu-latest

19 |

20 | steps:

21 | - name: Checkout repository

22 | uses: actions/checkout@v3

23 |

24 | - name: Set up Python

25 | uses: actions/setup-python@v4

26 | with:

27 | python-version: "3.10"

28 |

29 | - name: Install dependencies

30 | run: |

31 | pip install toml

32 |

33 | - name: Extract version from pyproject.toml

34 | id: pyproject-version

35 | run: |

36 | PYPROJECT_VERSION=$(python -c "import toml; print(toml.load('pyproject.toml')['tool']['poetry']['version'])")

37 | echo "Version in pyproject.toml: $PYPROJECT_VERSION"

38 | echo "pyproject_version=$PYPROJECT_VERSION" >> $GITHUB_OUTPUT

39 |

40 | - name: Extract version from _version.py

41 | id: version-py

42 | run: |

43 | VERSION_PY=$(grep -oP 'VERSION = "\K[^"]+' src/objectnat/_version.py)

44 | echo "Version in _version.py: $VERSION_PY"

45 | echo "version_py=$VERSION_PY" >> $GITHUB_OUTPUT

46 |

47 | - name: Compare versions

48 | run: |

49 | if [ "${{ steps.pyproject-version.outputs.pyproject_version }}" != "${{ steps.version-py.outputs.version_py }}" ]; then

50 | echo "Versions do not match!"

51 | echo "pyproject.toml: ${{ steps.pyproject-version.outputs.pyproject_version }}"

52 | echo "_version.py: ${{ steps.version-py.outputs.version_py }}"

53 | exit 1

54 | else

55 | echo "Versions match!"

56 | fi

57 |

58 | check-formatting:

59 | runs-on: ubuntu-latest

60 |

61 | steps:

62 | - name: Checkout repository

63 | uses: actions/checkout@v3

64 |

65 | - name: Set up Python

66 | uses: actions/setup-python@v4

67 | with:

68 | python-version: "3.10"

69 |

70 | - name: Install Poetry

71 | run: pip install poetry

72 |

73 | - name: Install dependencies

74 | run: poetry install

75 |

76 | - name: Run pylint

77 | run: |

78 | poetry run pylint src --exit-zero > pylint-report.txt

79 |

80 | - name: Upload pylint report

81 | uses: actions/upload-artifact@v4

82 | with:

83 | name: pylint-report

84 | path: pylint-report.txt

85 |

86 | - name: Run isort

87 | run: |

88 | poetry run isort --check-only src

89 |

90 | - name: Run black

91 | run: |

92 | poetry run black --check src

93 |

94 | test:

95 | runs-on: ubuntu-latest

96 | needs: [check-version, check-formatting]

97 |

98 | steps:

99 | - name: Checkout repository

100 | uses: actions/checkout@v3

101 |

102 | - name: Set up Python

103 | uses: actions/setup-python@v4

104 | with:

105 | python-version: "3.10"

106 |

107 | - name: Install Poetry

108 | run: pip install poetry

109 |

110 | - name: Install dependencies

111 | run: poetry install

112 |

113 | - name: Run tests with coverage

114 | run: |

115 | poetry run pytest src/tests --cov=objectnat --cov-report=xml

116 |

117 | - name: Upload coverage report

118 | uses: actions/upload-artifact@v4.6.1

119 | with:

120 | name: coverage-report

121 | path: coverage.xml

122 |

123 | - name: Upload coverage to Codecov

124 | uses: codecov/codecov-action@v3

125 | with:

126 | token: ${{ secrets.CODECOV_TOKEN }}

127 | file: coverage.xml

128 | slug: DDonnyy/ObjectNat

129 |

130 | - name: Commit and push test images to assets branch

131 | run: |

132 | git config --global user.name "github-actions"

133 | git config --global user.email "github-actions@github.com"

134 |

135 | if git ls-remote --exit-code --heads origin assets; then

136 | git fetch origin assets

137 | git checkout assets

138 | else

139 | git checkout --orphan assets

140 | git reset --hard

141 | fi

142 | rm -f ./*.png || echo "No old PNGs to remove"

143 | git rm -f ./*.png || echo "No old PNGs to remove from Git"

144 | cp -r src/tests/test_output/*.png . || echo "No images to copy"

145 | git add ./*.png

146 | git commit -m "Update stepped coverage zone test images" || echo "No changes to commit"

147 | git push --force origin assets

148 | env:

149 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

150 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | cache

2 | /dist

3 | /dev

4 | /.idea

5 | /.venv

6 | /.vscode

7 | /poetry.lock

8 | __pycache__

9 | /.ipynb_checkpoints

10 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | repos:

2 | - repo: https://github.com/psf/black

3 | rev: 25.1.0

4 | hooks:

5 | - id: black

6 | language_version: python3.10

7 |

8 | - repo: https://github.com/pycqa/isort

9 | rev: 6.0.1

10 | hooks:

11 | - id: isort

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2023, iduprojects

4 |

5 | Redistribution and use in source and binary forms, with or without

6 | modification, are permitted provided that the following conditions are met:

7 |

8 | 1. Redistributions of source code must retain the above copyright notice, this

9 | list of conditions and the following disclaimer.

10 |

11 | 2. Redistributions in binary form must reproduce the above copyright notice,

12 | this list of conditions and the following disclaimer in the documentation

13 | and/or other materials provided with the distribution.

14 |

15 | 3. Neither the name of the copyright holder nor the names of its

16 | contributors may be used to endorse or promote products derived from

17 | this software without specific prior written permission.

18 |

19 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

20 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

21 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

22 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

23 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

24 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

25 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

26 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

27 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

28 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

29 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | CODE := src

2 |

3 | build-and-publish: clean build publish

4 |

5 | lint:

6 | poetry run pylint $(CODE)

7 |

8 | format:

9 | poetry run isort $(CODE)

10 | poetry run black $(CODE)

11 |

12 | install:

13 | pip install .

14 |

15 | install-dev:

16 | poetry install --with dev

17 |

18 | install-dev-pip:

19 | pip install -e . --config-settings editable_mode=strict

20 |

21 | clean:

22 | rm -rf ./dist

23 |

24 | build:

25 | poetry build

26 |

27 | publish:

28 | poetry publish

29 |

30 | update:

31 | poetry update

32 |

33 | install-from-build:

34 | python -m wheel install dist/graph_lib-*.whl

35 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ObjectNat

2 |

3 | [](https://github.com/psf/black)

4 | [](https://pypi.org/project/objectnat/)

5 | [](https://github.com/DDonnyy/ObjecNat/actions/workflows/ci_pipeline.yml)

6 | [](https://codecov.io/gh/DDonnyy/ObjectNat)

7 | [](https://opensource.org/licenses/MIT)

8 |

9 | - [РИДМИ (Russian)](README_ru.md)

10 |

11 |  12 |

12 |

13 |

14 | #### **ObjectNat** is an open-source library created for geospatial analysis created by **IDU team**

15 |

16 | ## Features and how to use

17 |

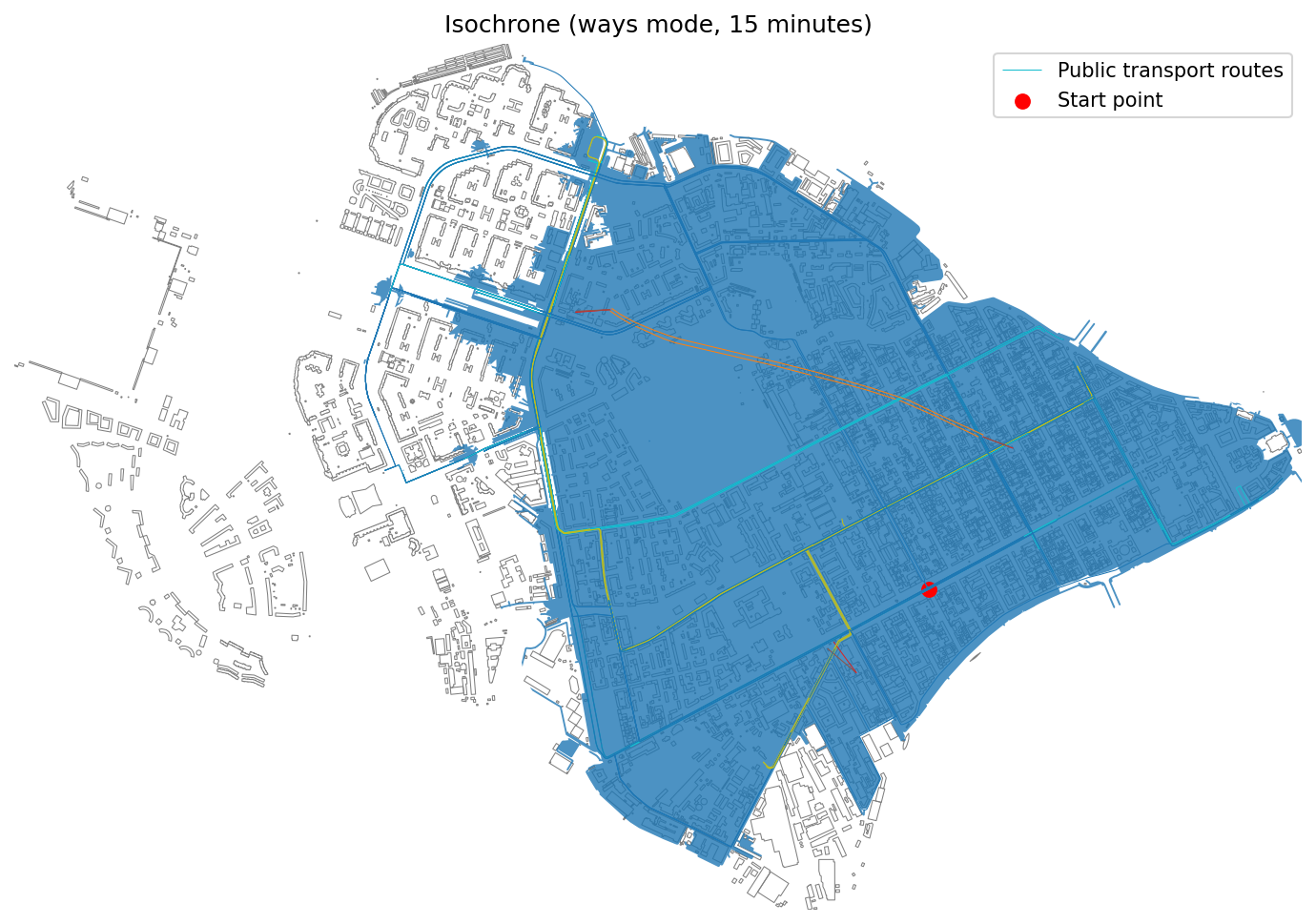

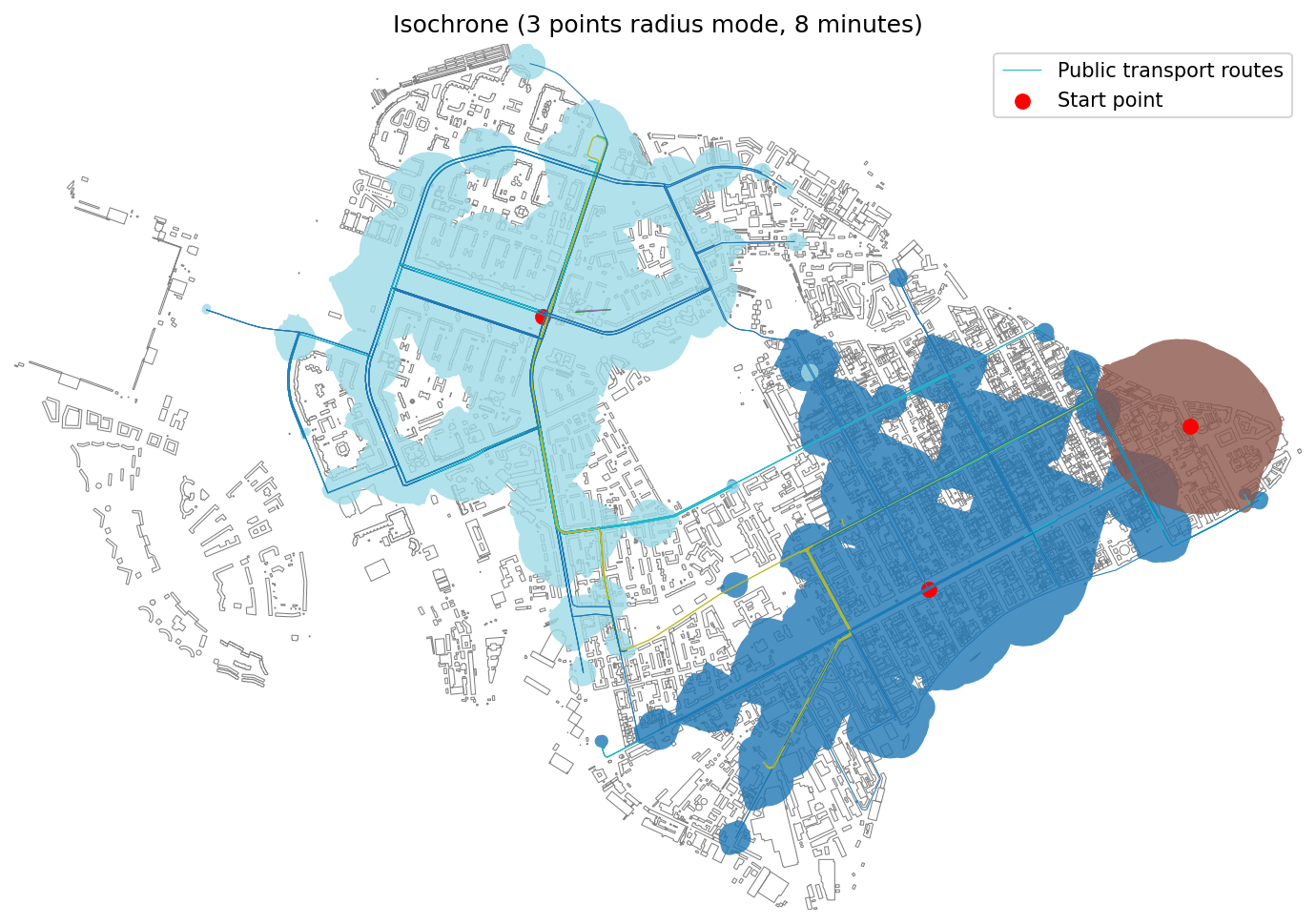

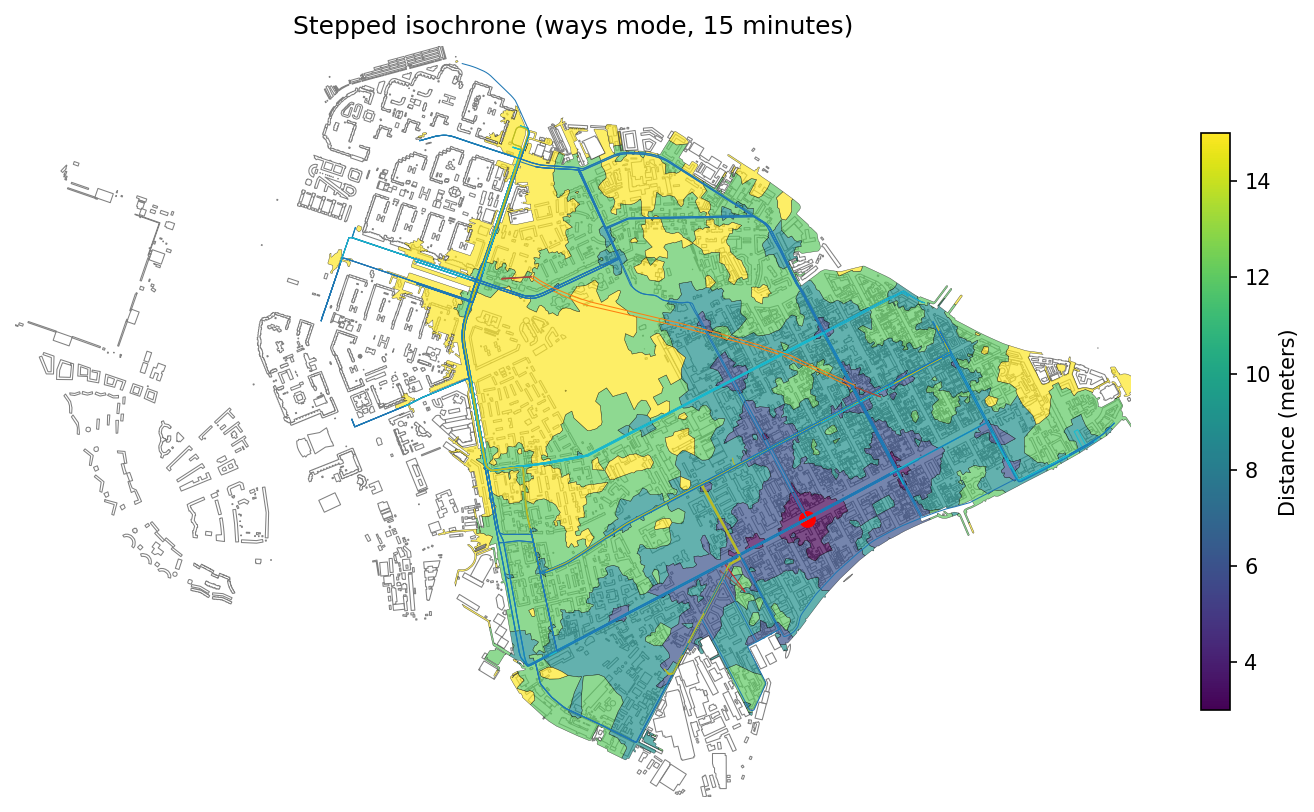

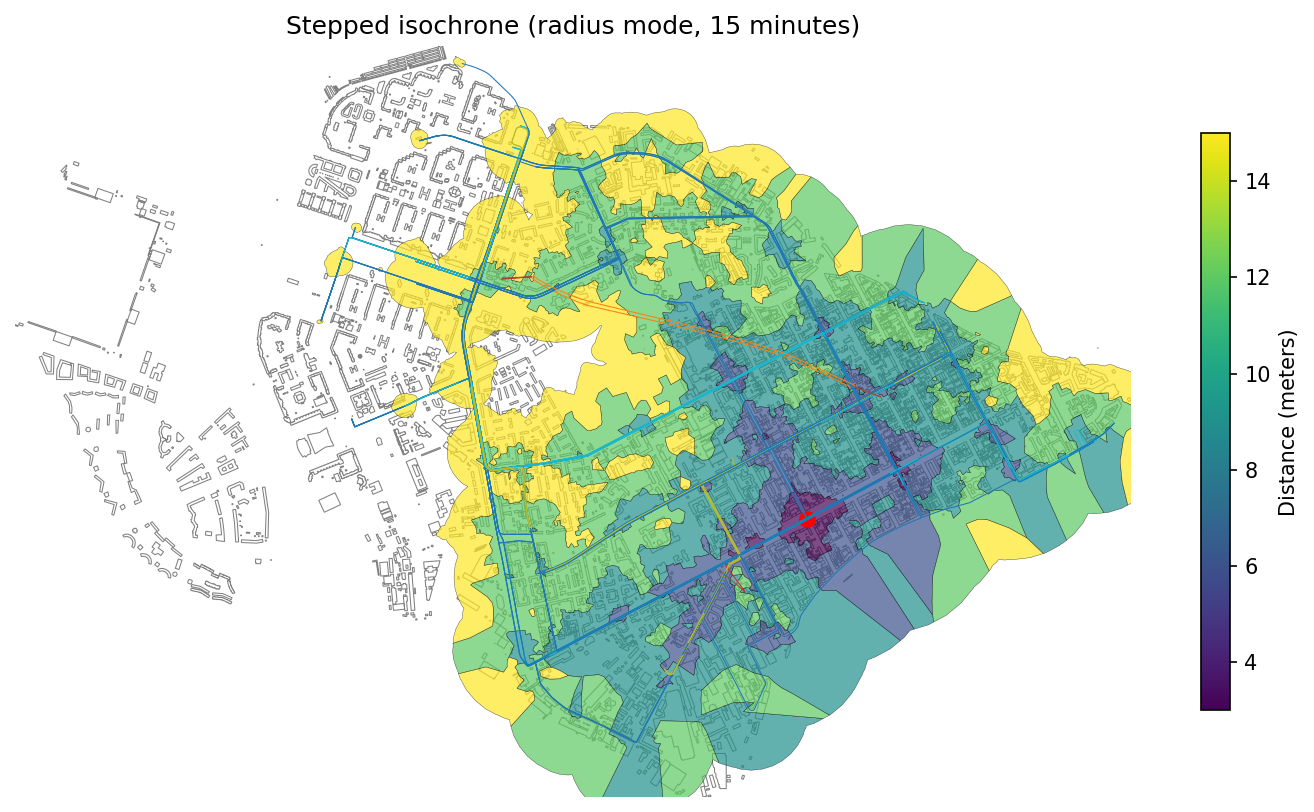

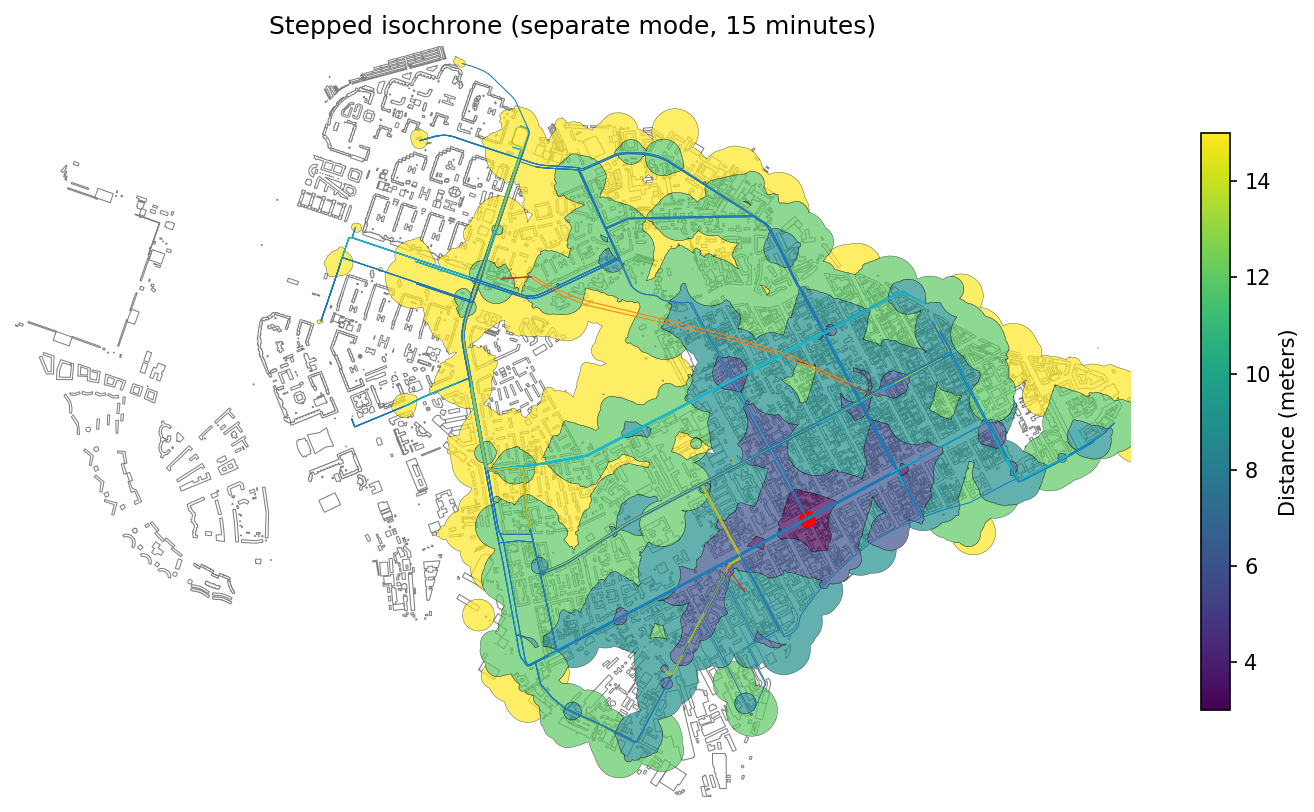

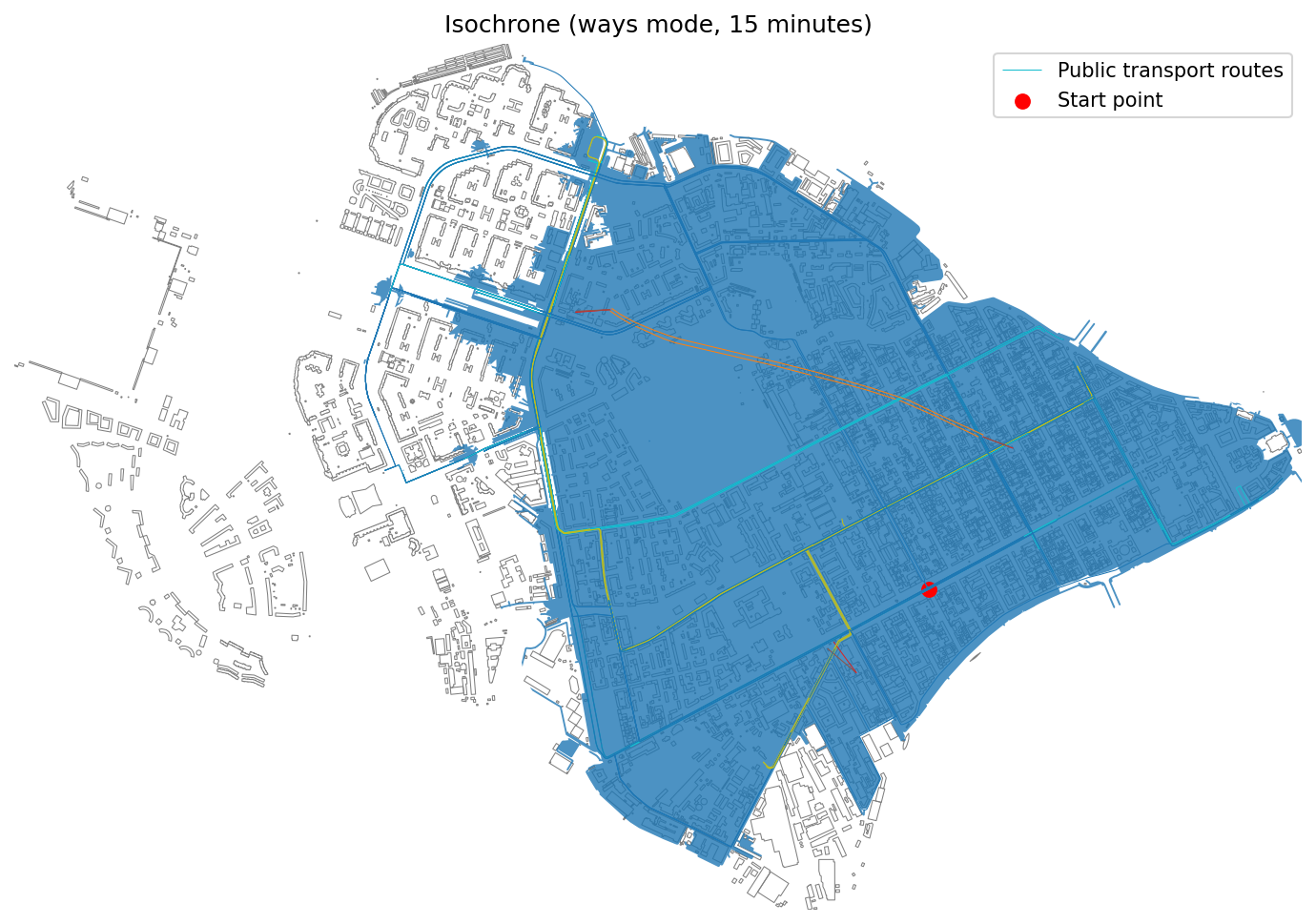

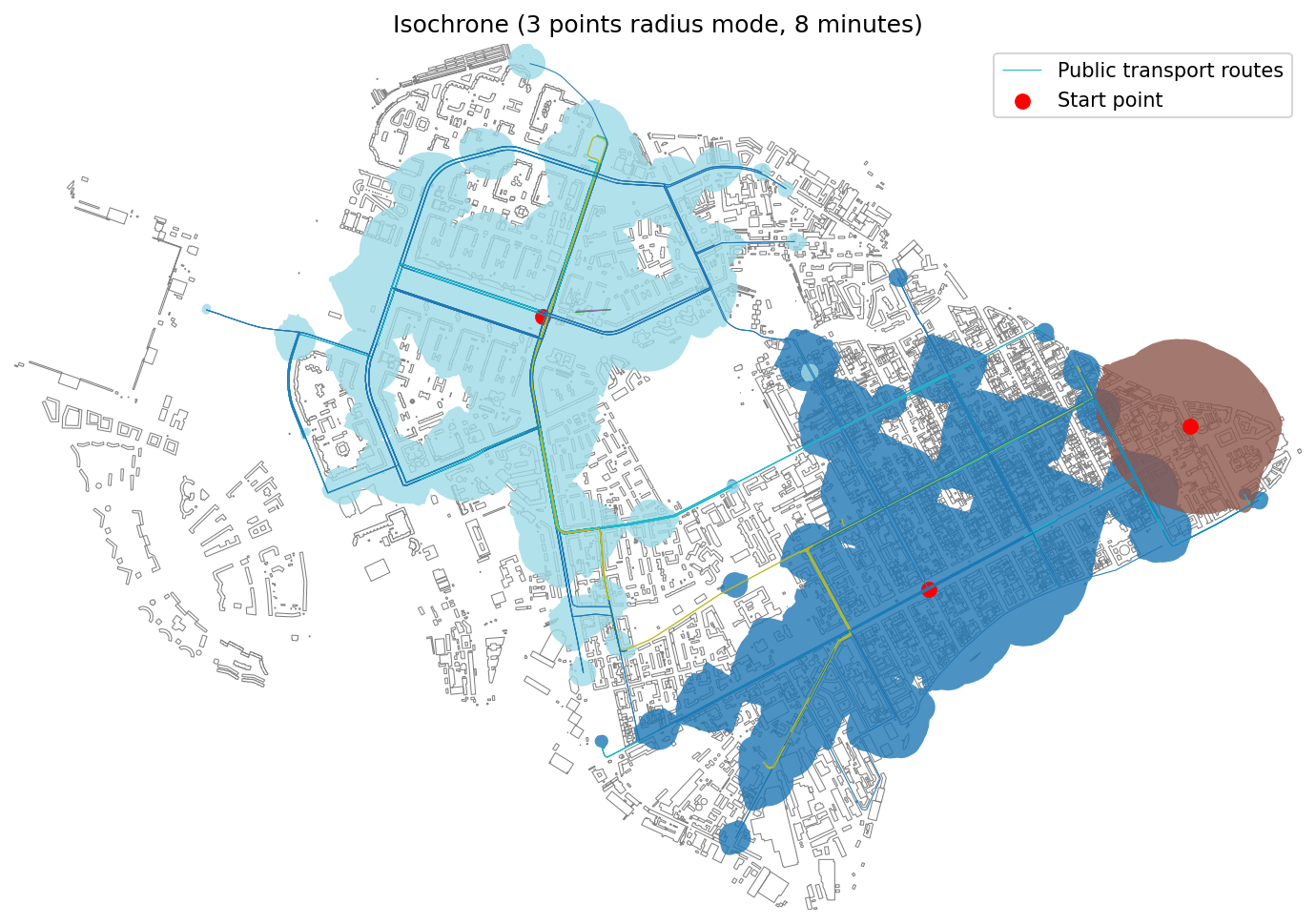

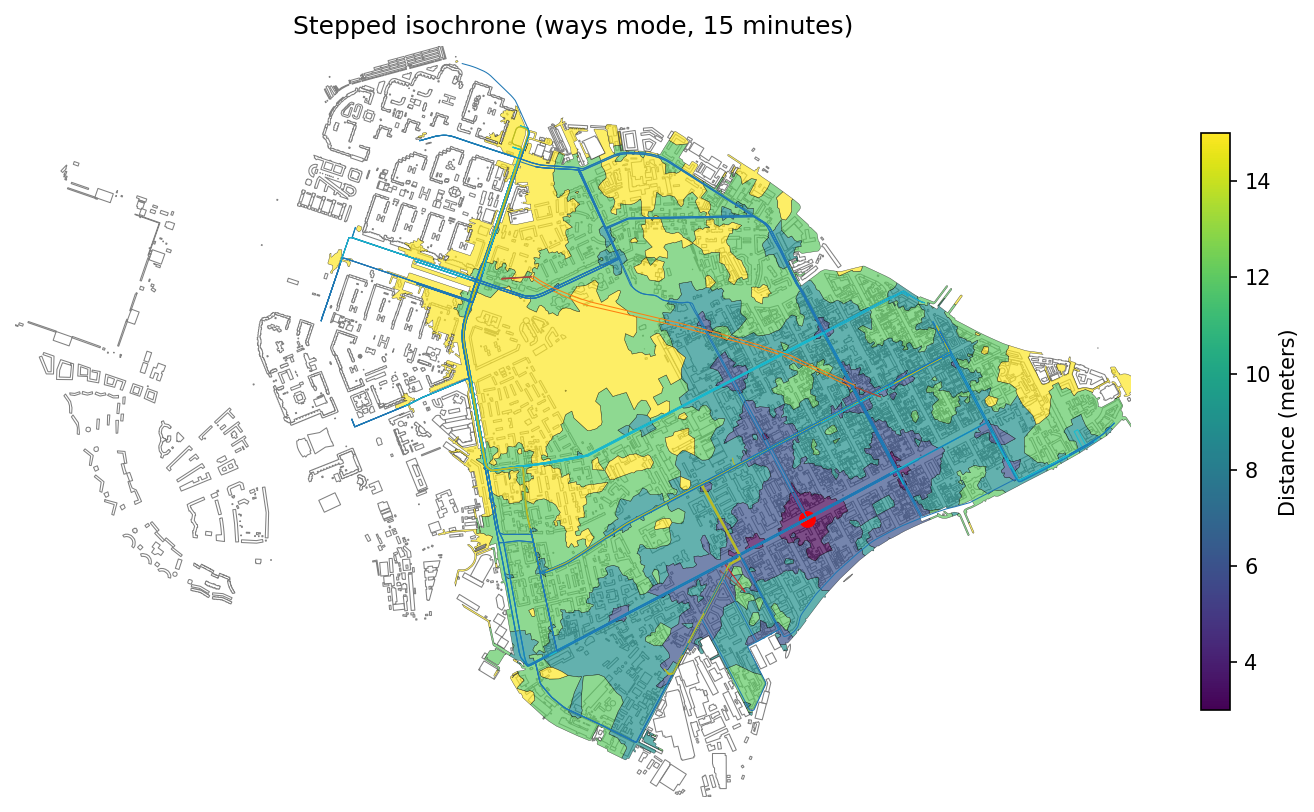

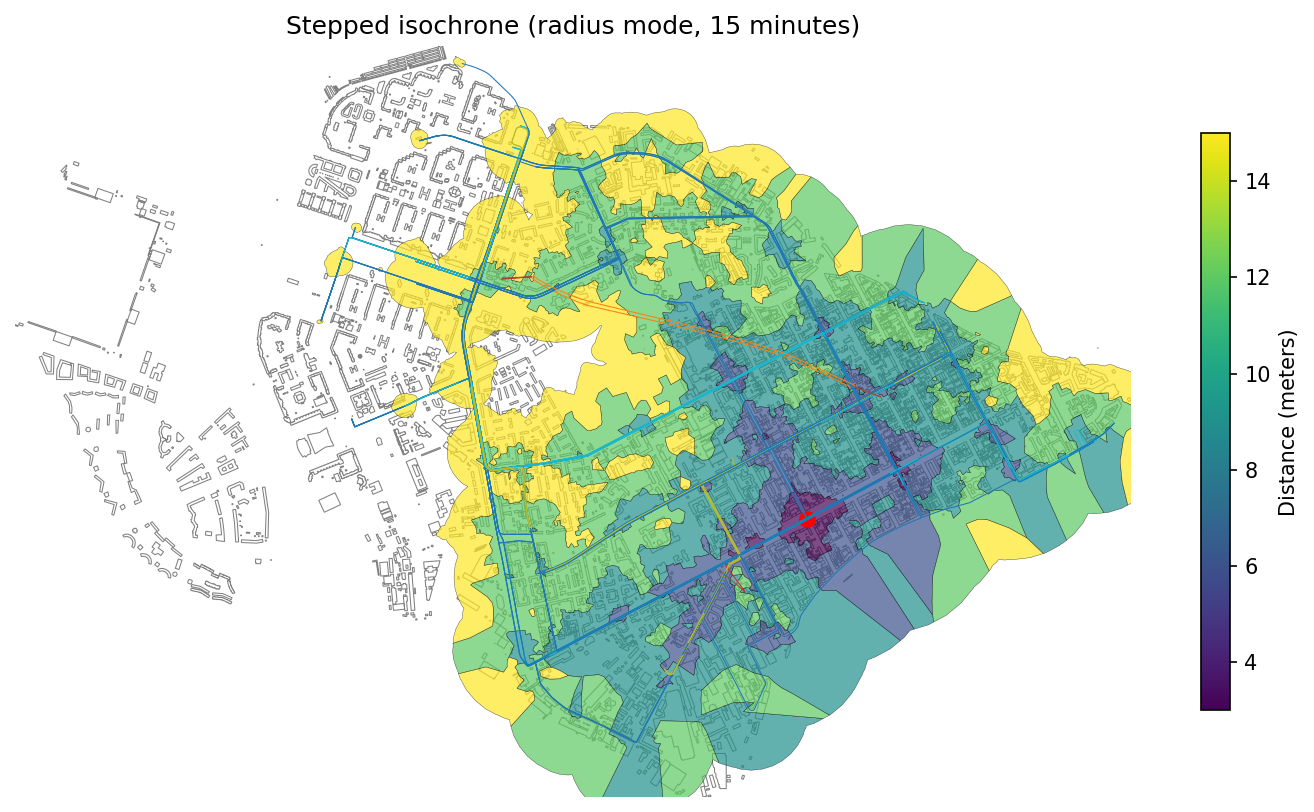

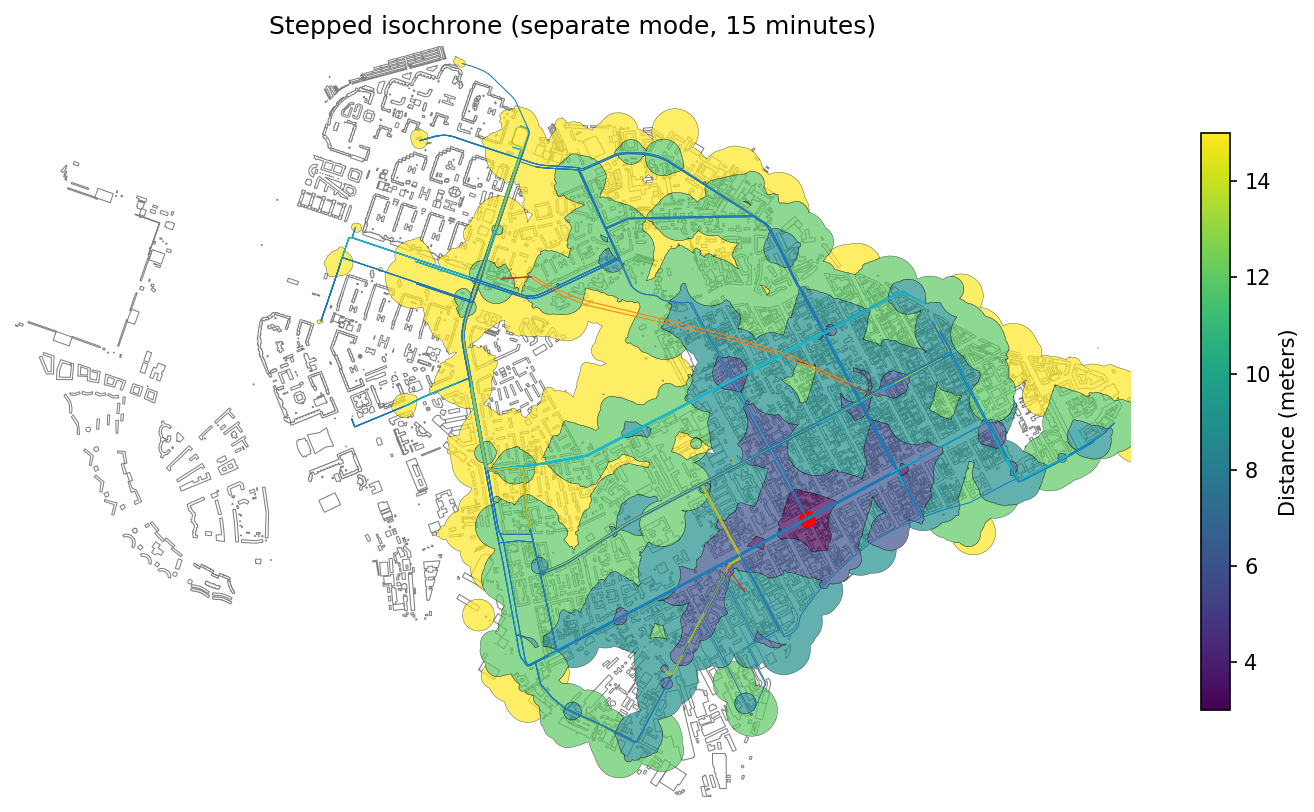

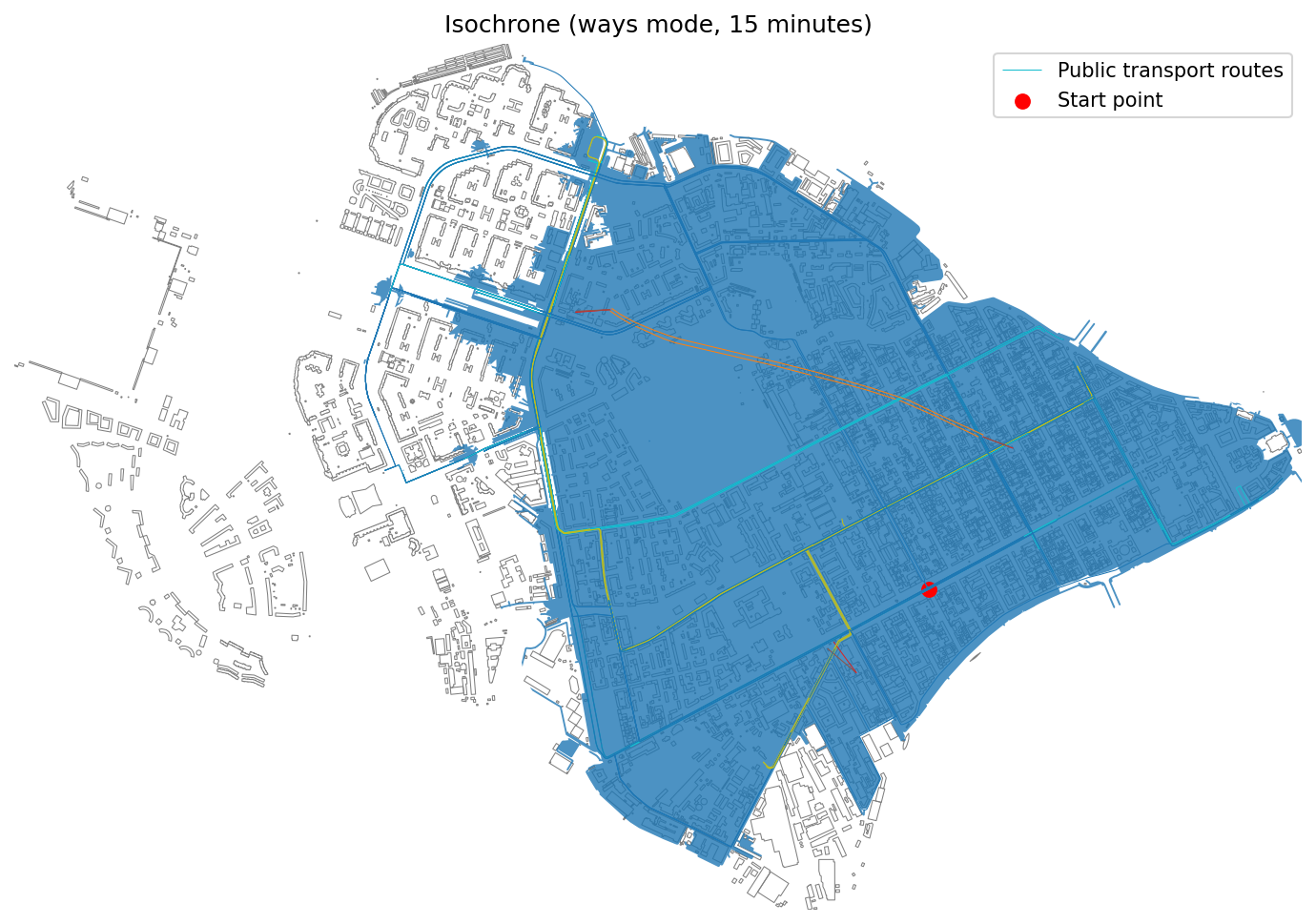

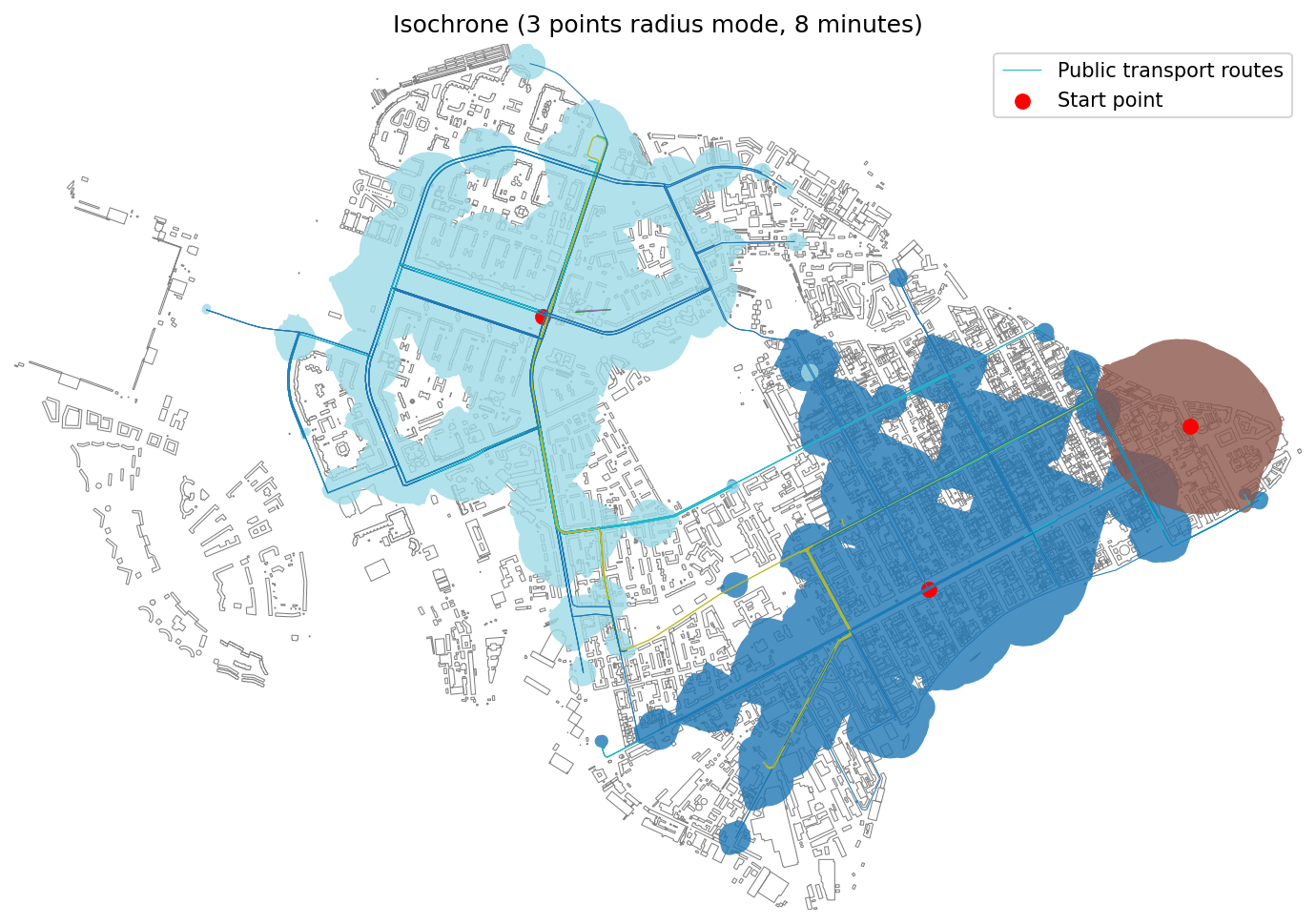

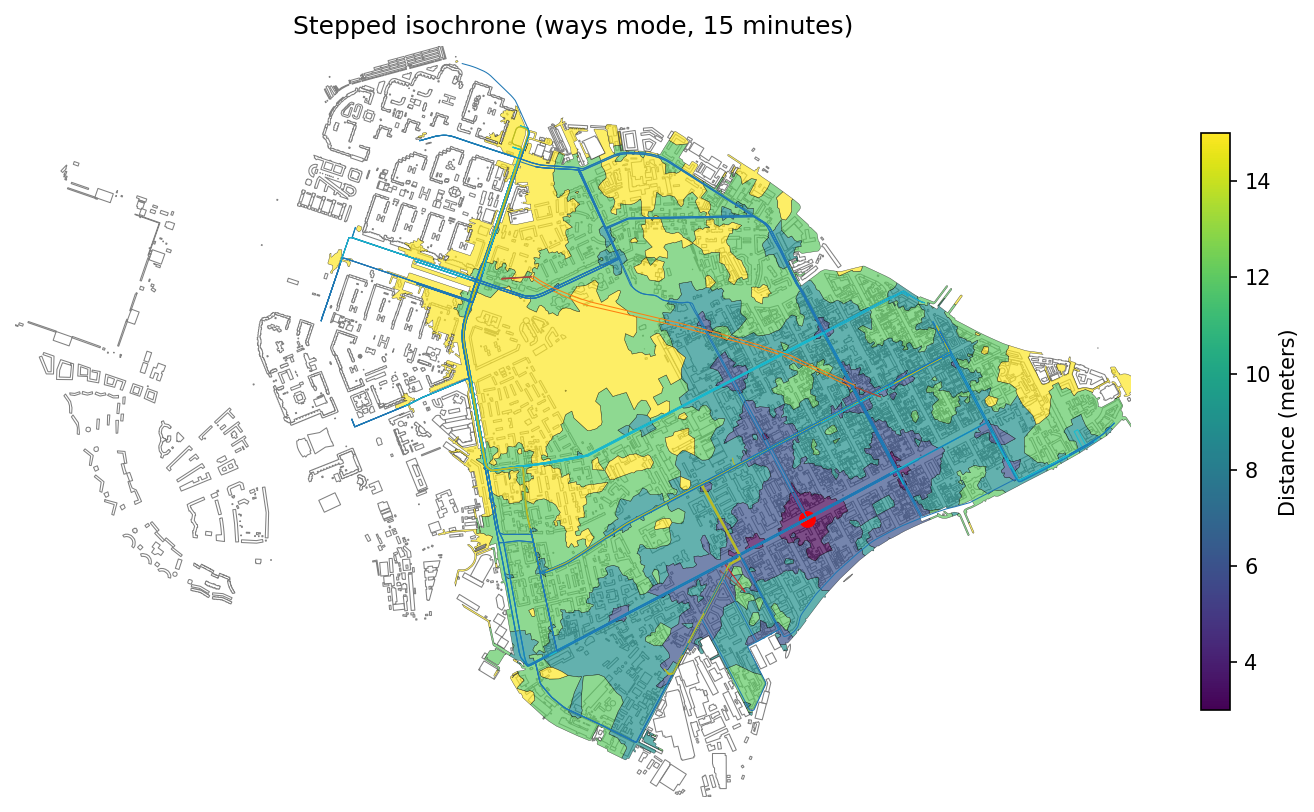

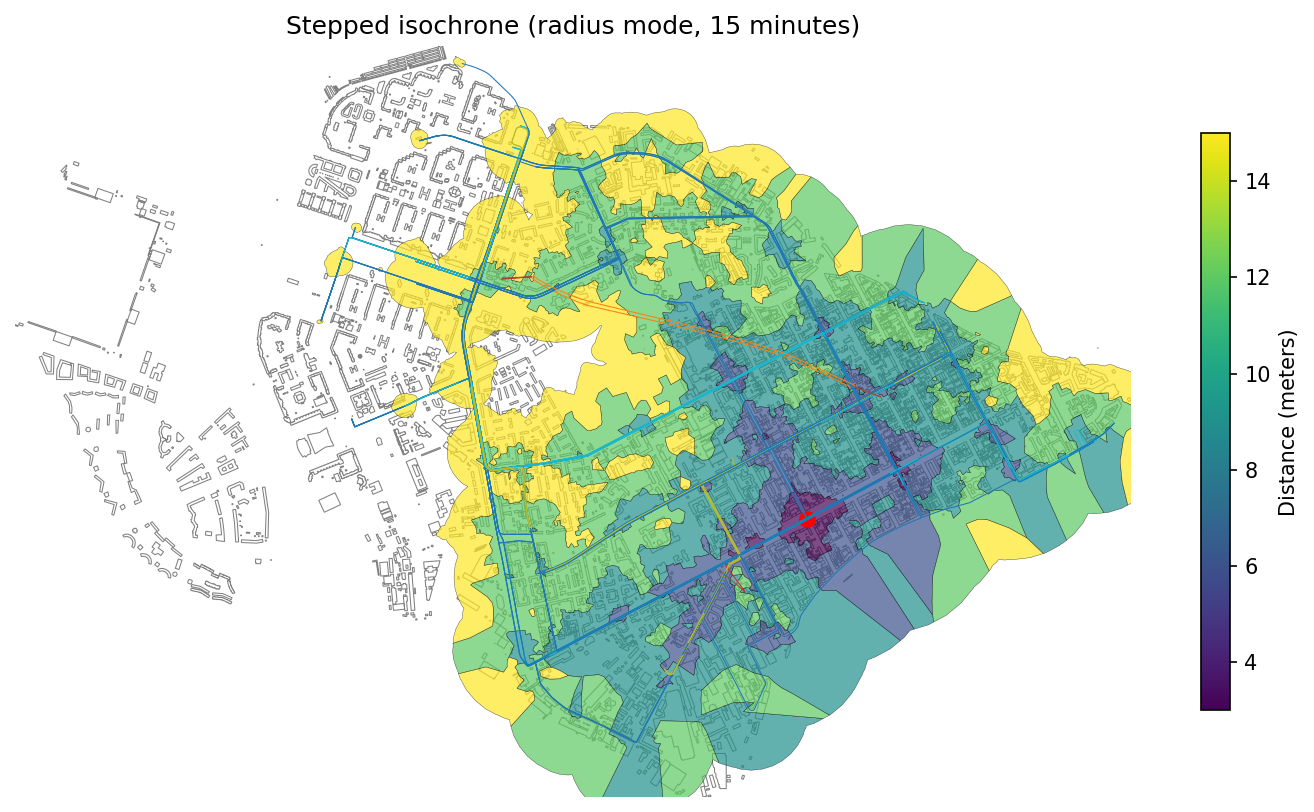

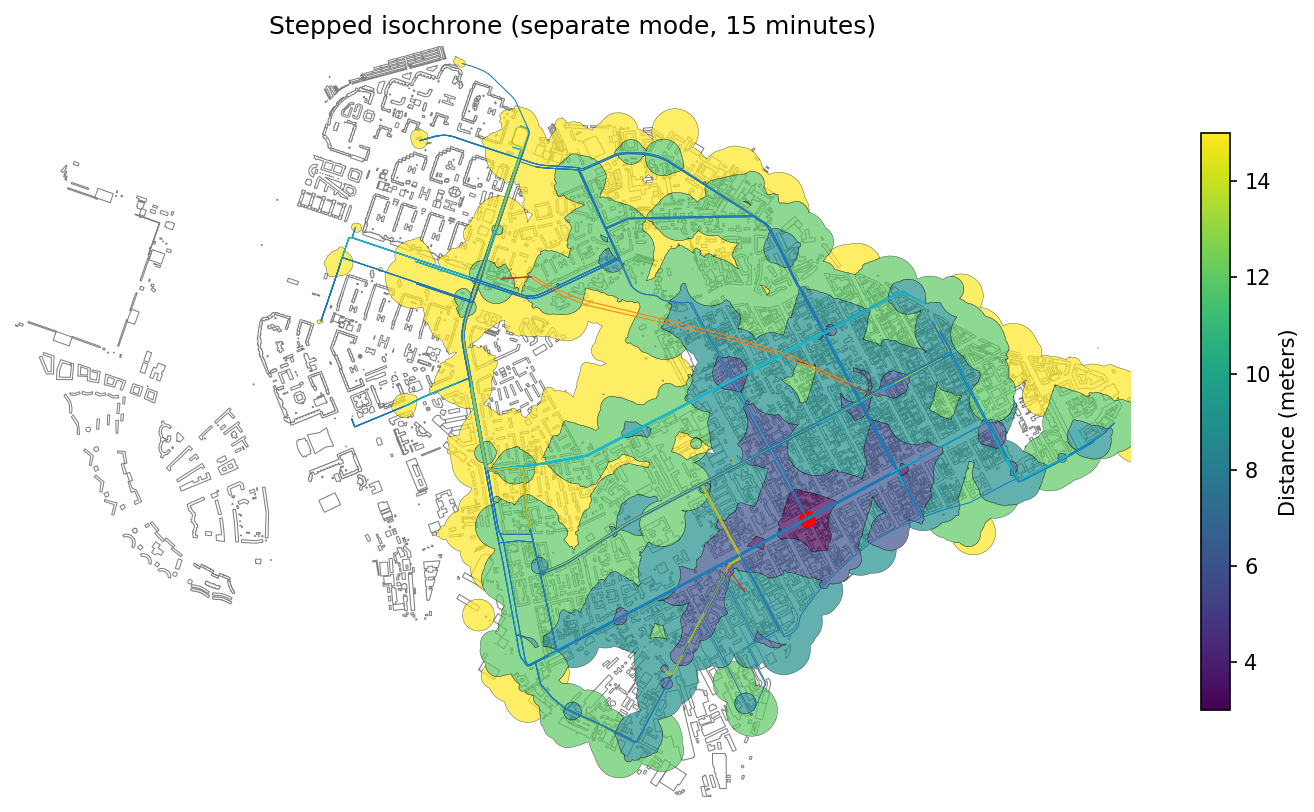

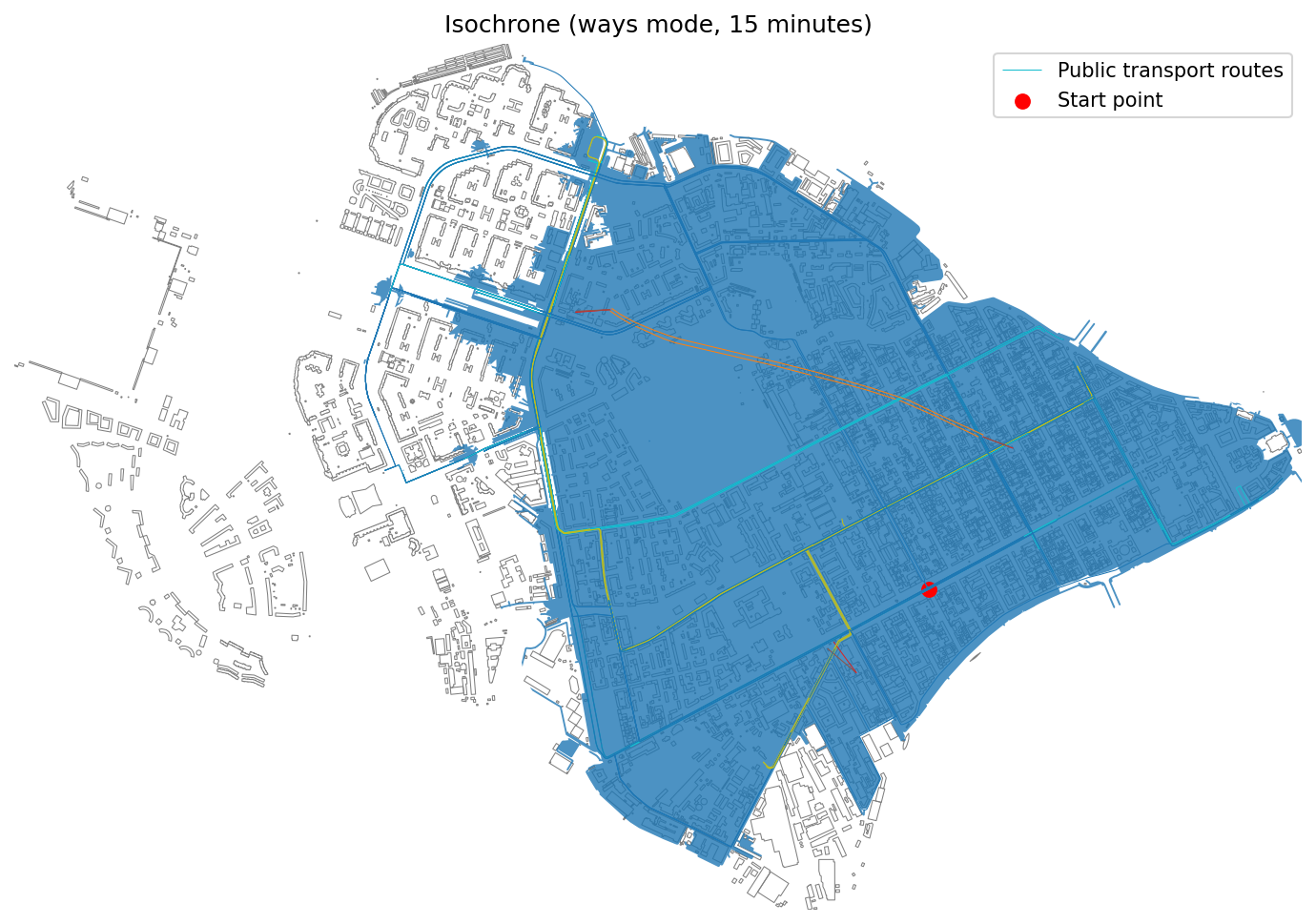

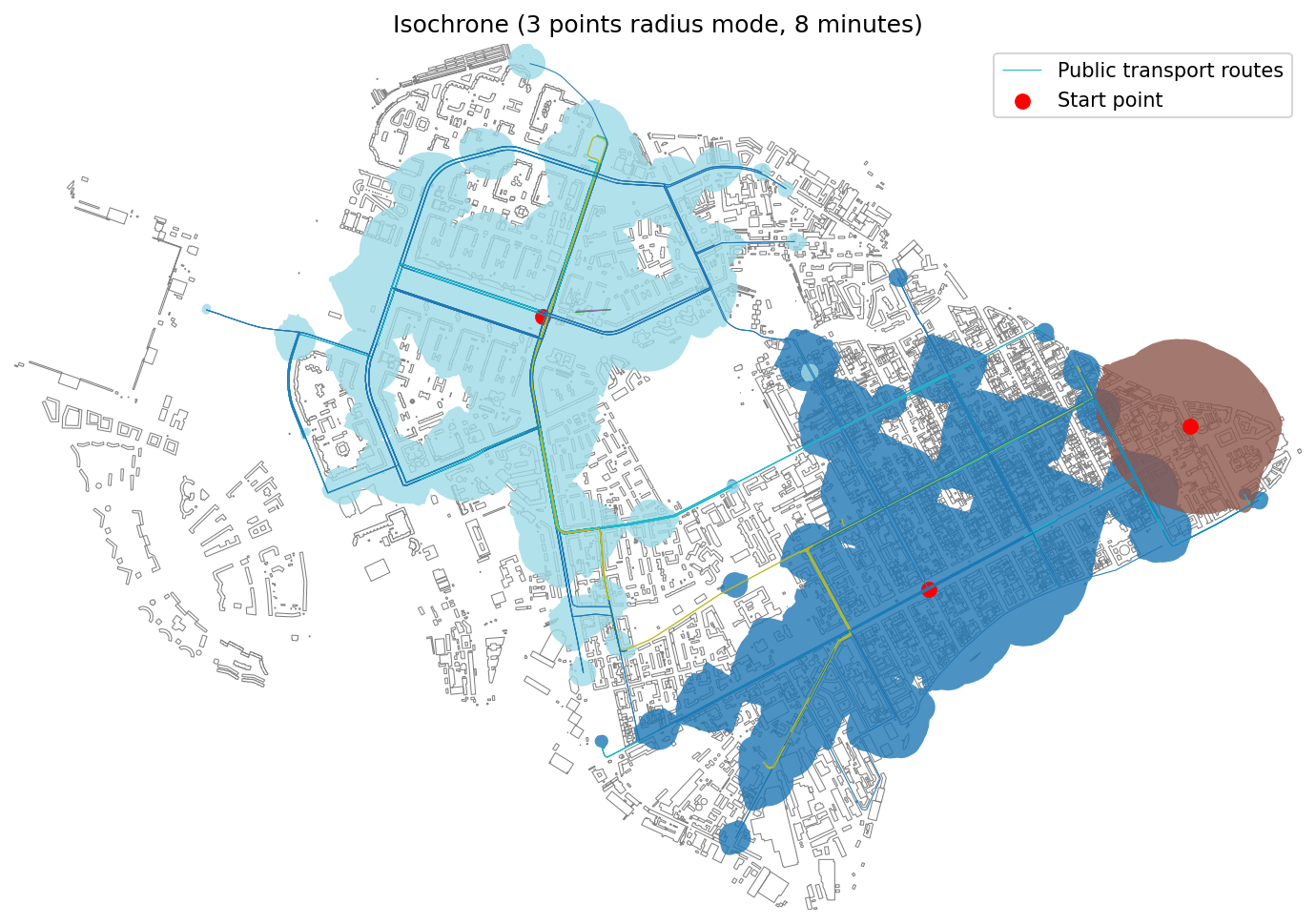

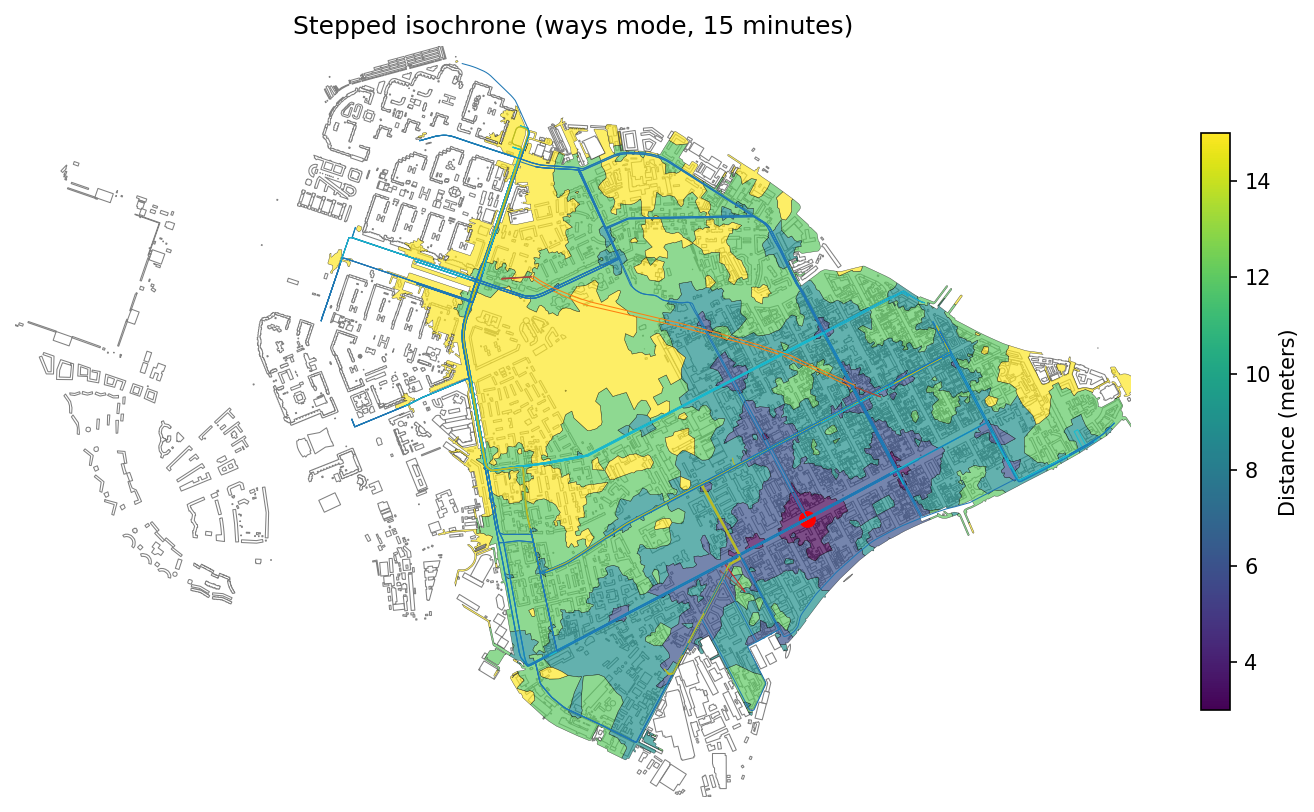

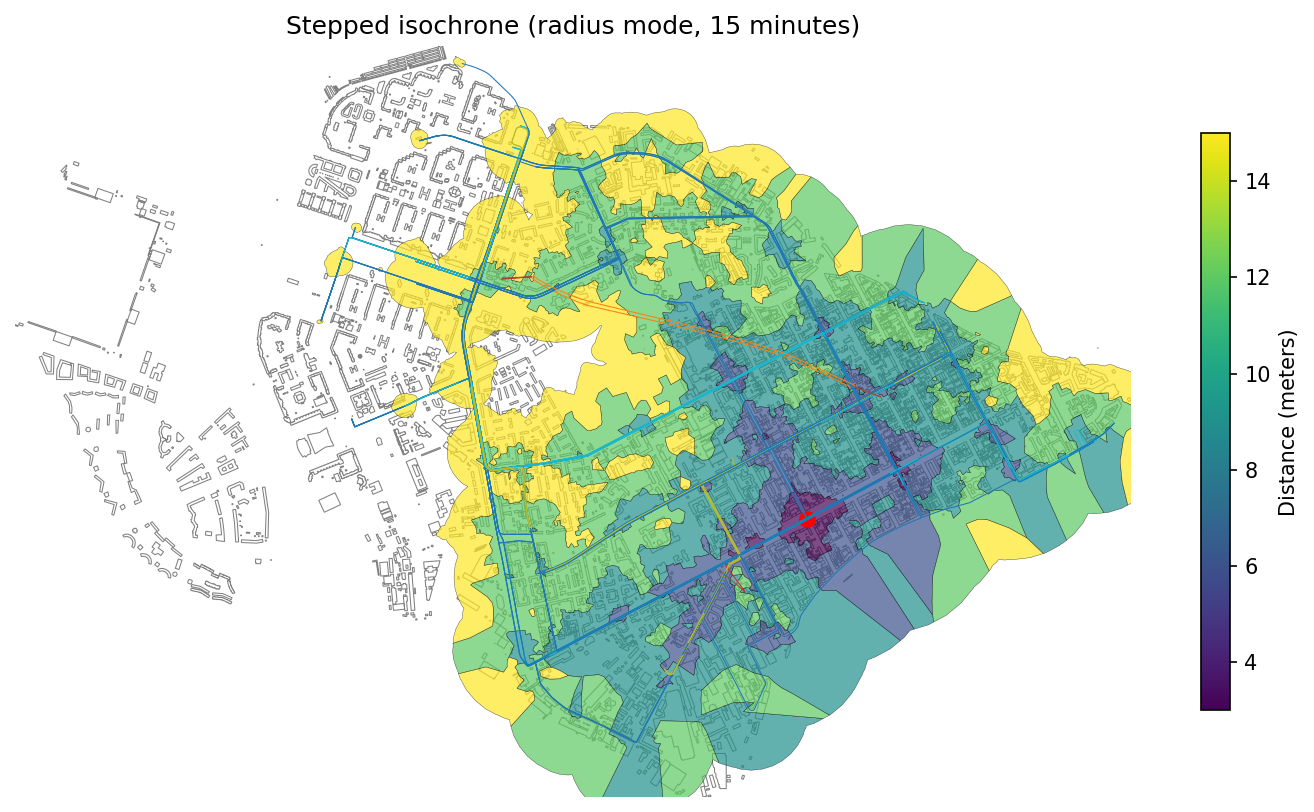

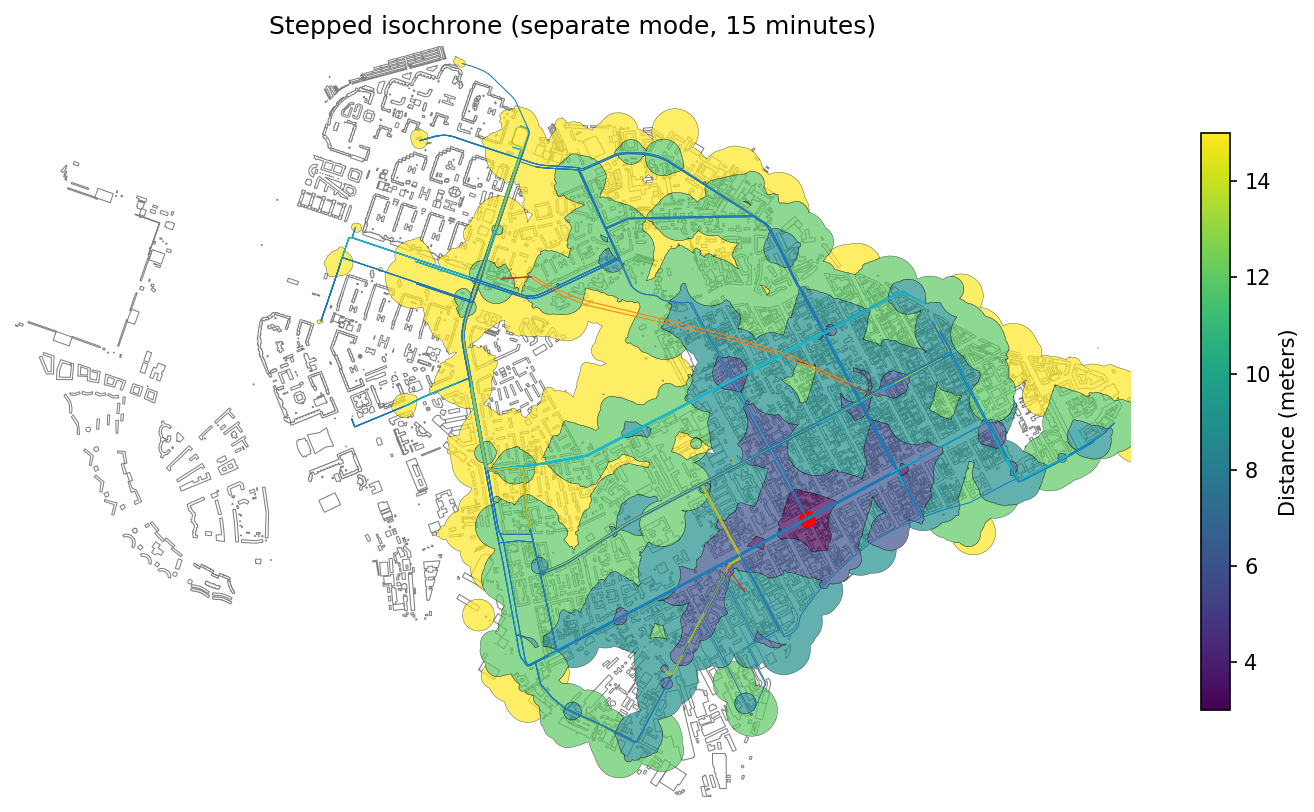

18 | 1. **[Isochrones and Transport Accessibility](./examples/isochrone_generator.ipynb)** — Isochrones represent areas reachable from a starting point within a given time limit along a transport network. This function enables analysis of transport accessibility using pedestrian, automobile, public transport graphs, or their combination.

19 |

20 | The library offers multiple isochrone generation methods:

21 | - **Baseline isochrones**: show a single area reachable within a specified time.

22 | - **Stepped isochrones**: show accessibility ranges divided into time intervals (e.g., 5, 10, 15 minutes).

23 |

24 |

25 |  26 |

26 |  27 |

27 |  28 |

28 |

29 |

30 |  31 |

31 |  32 |

32 |  33 |

33 |

34 |

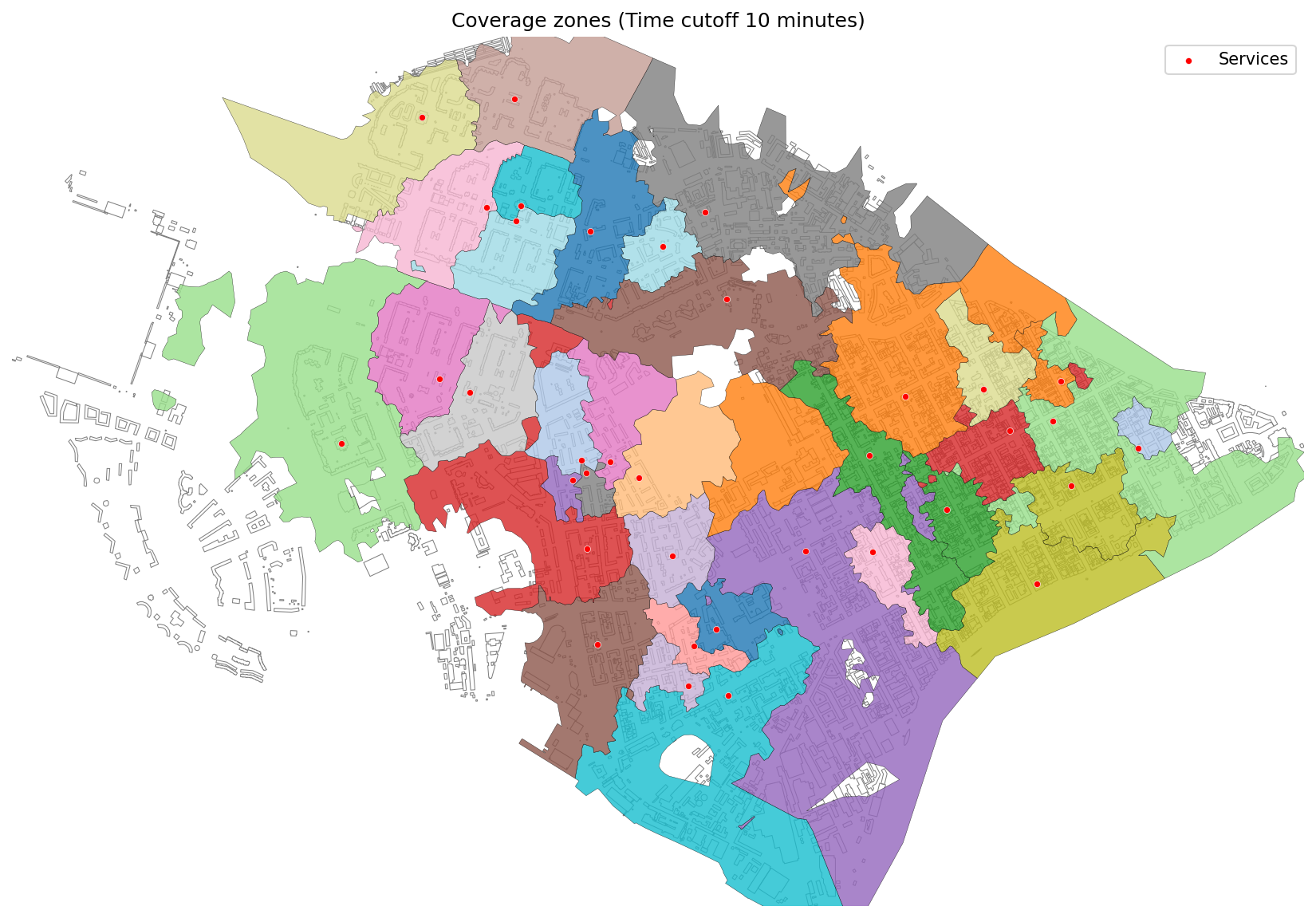

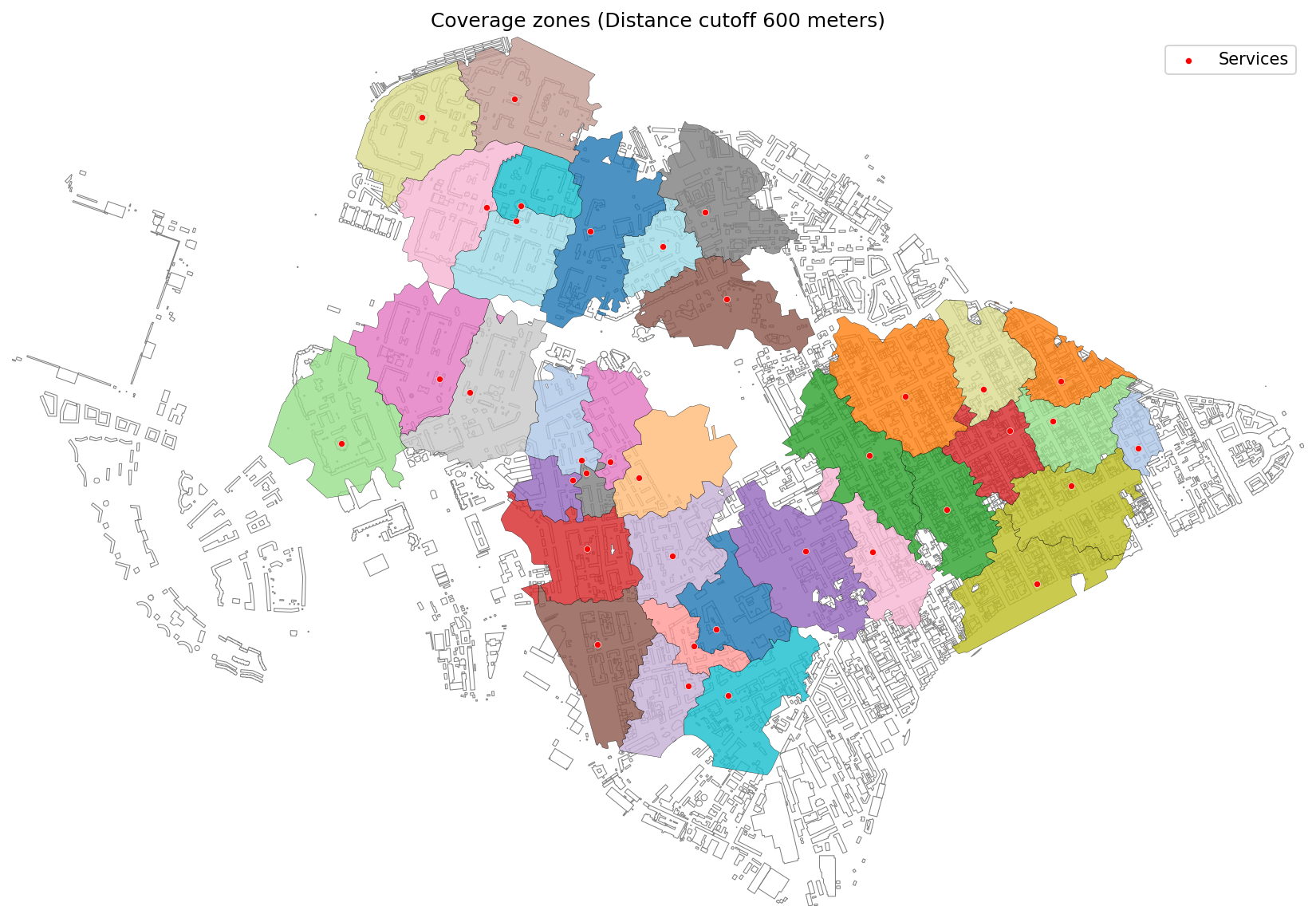

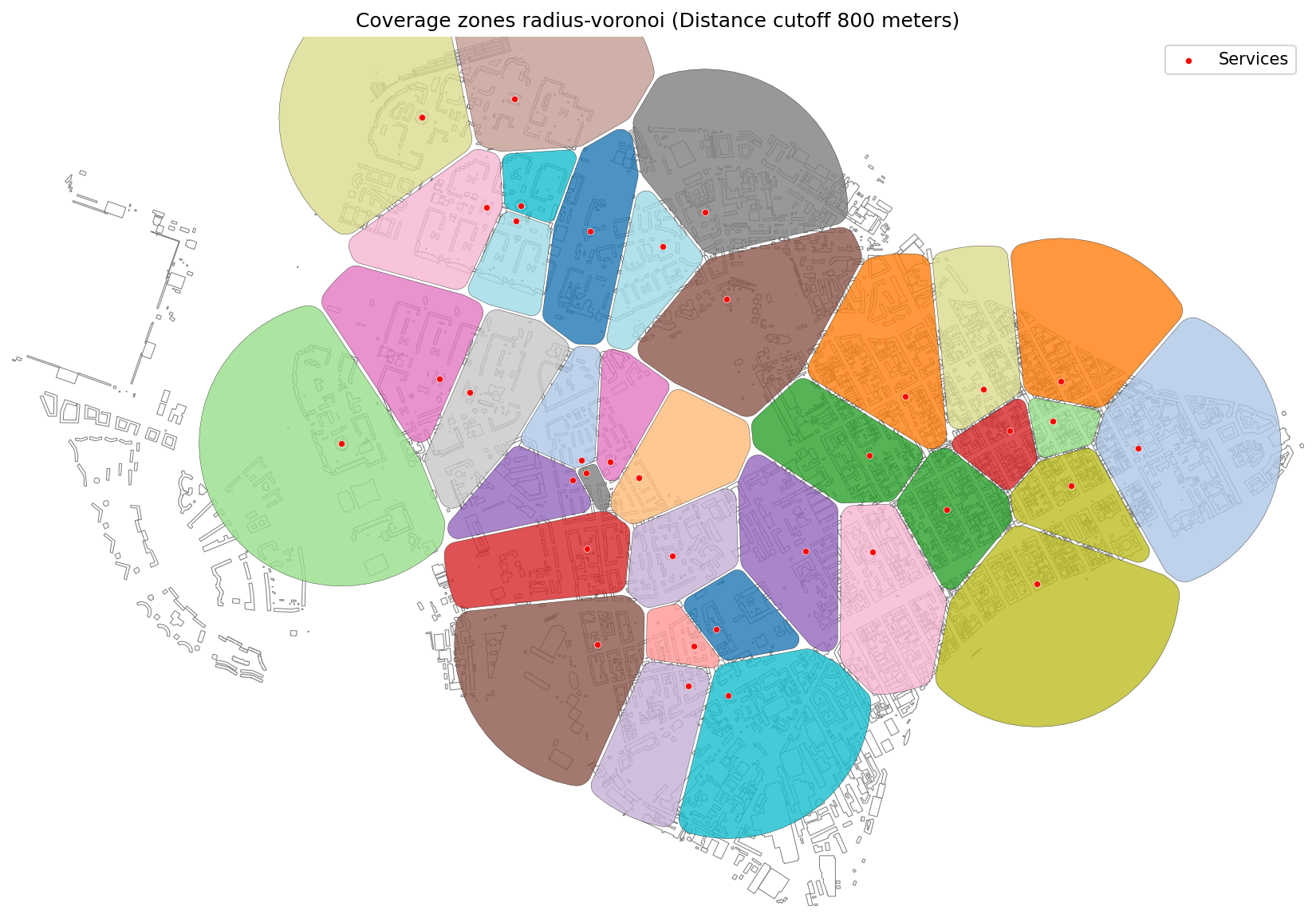

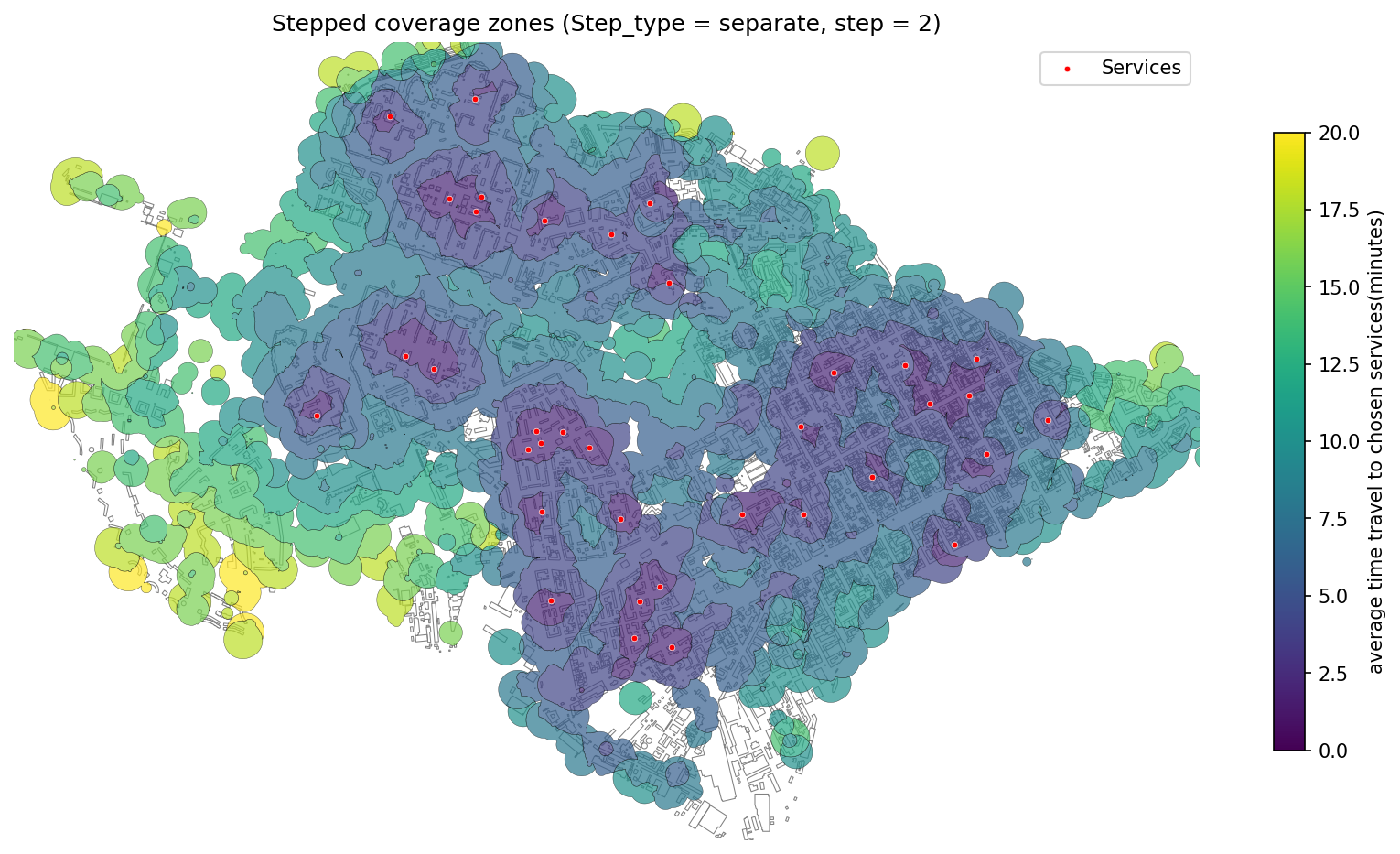

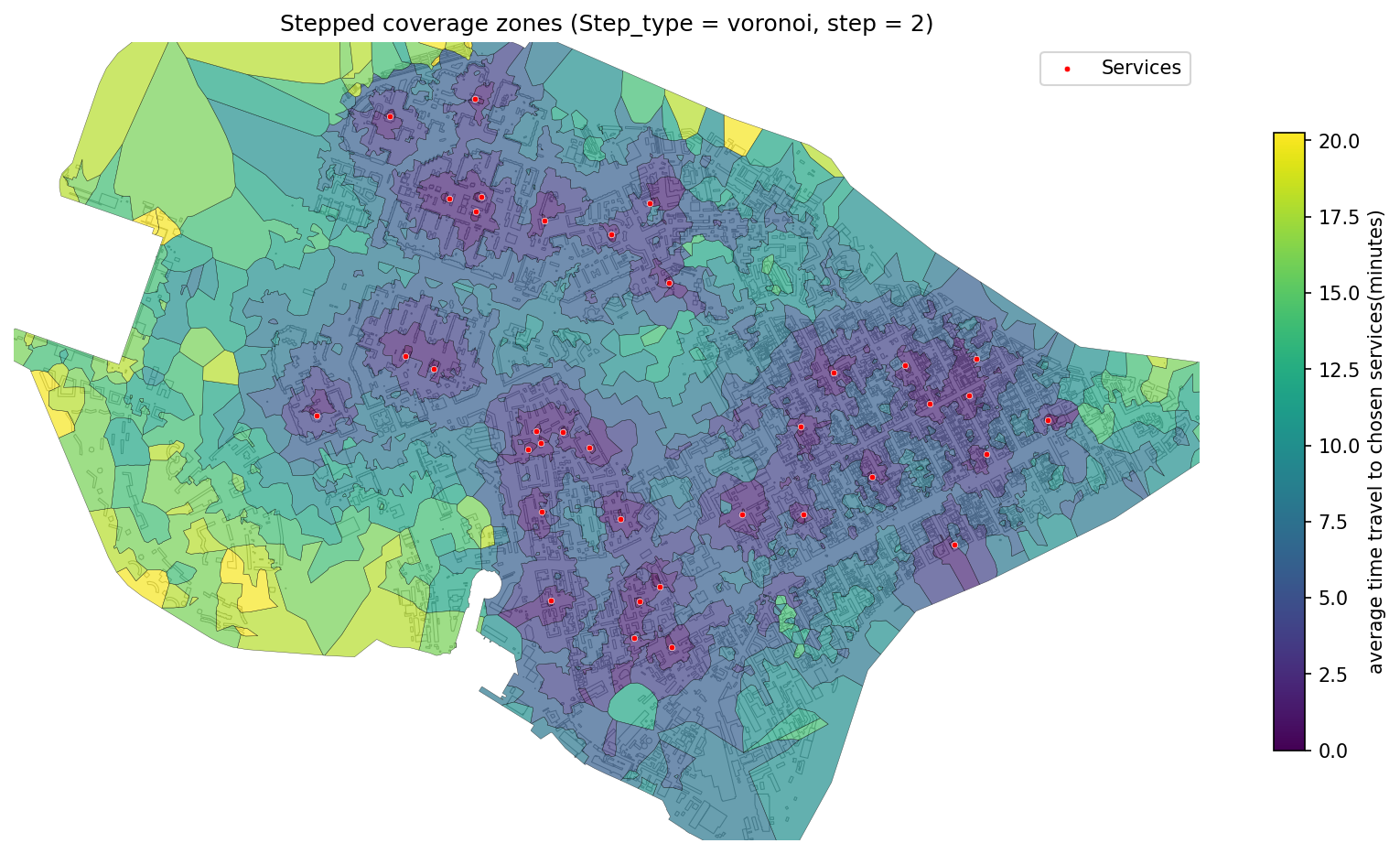

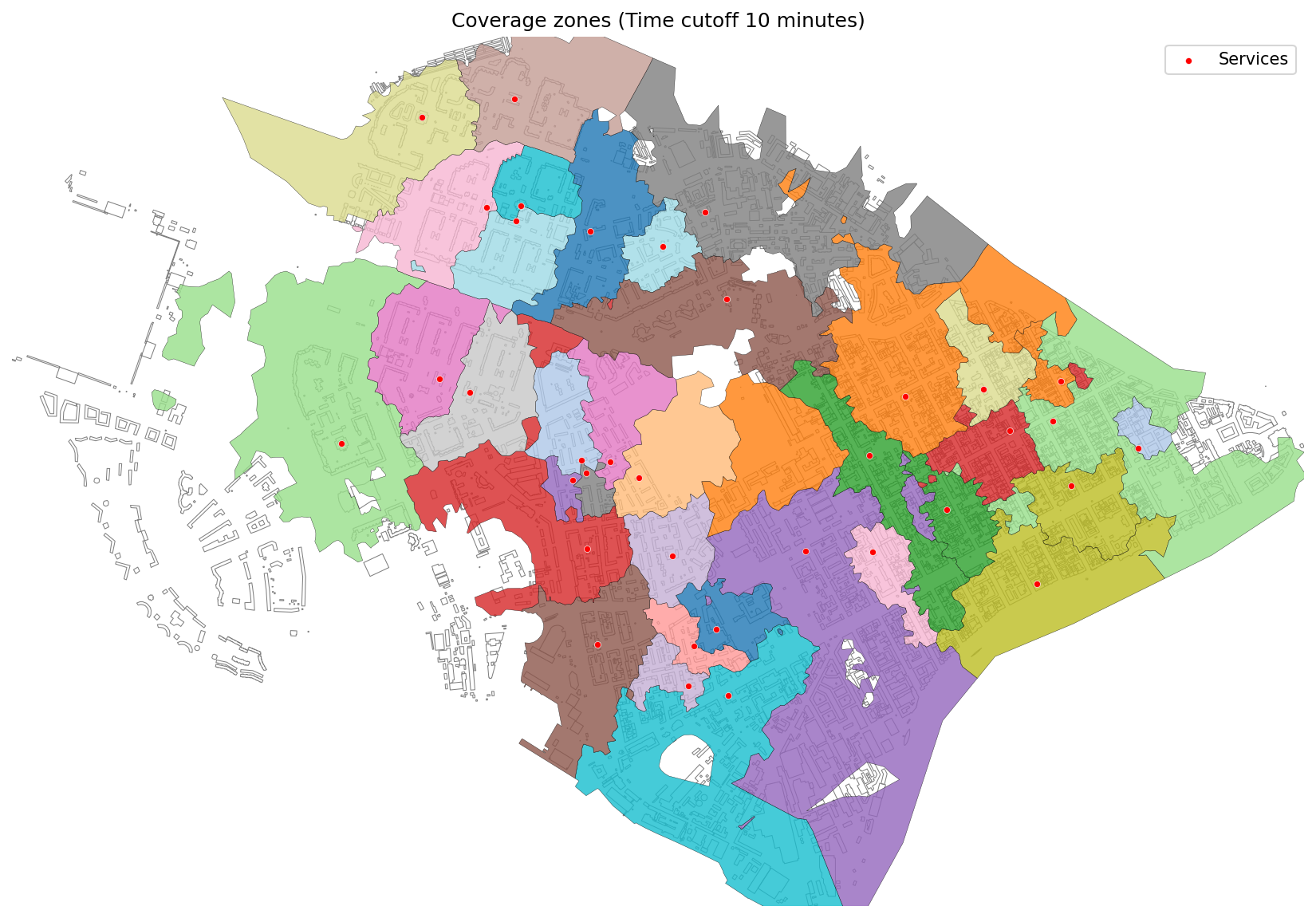

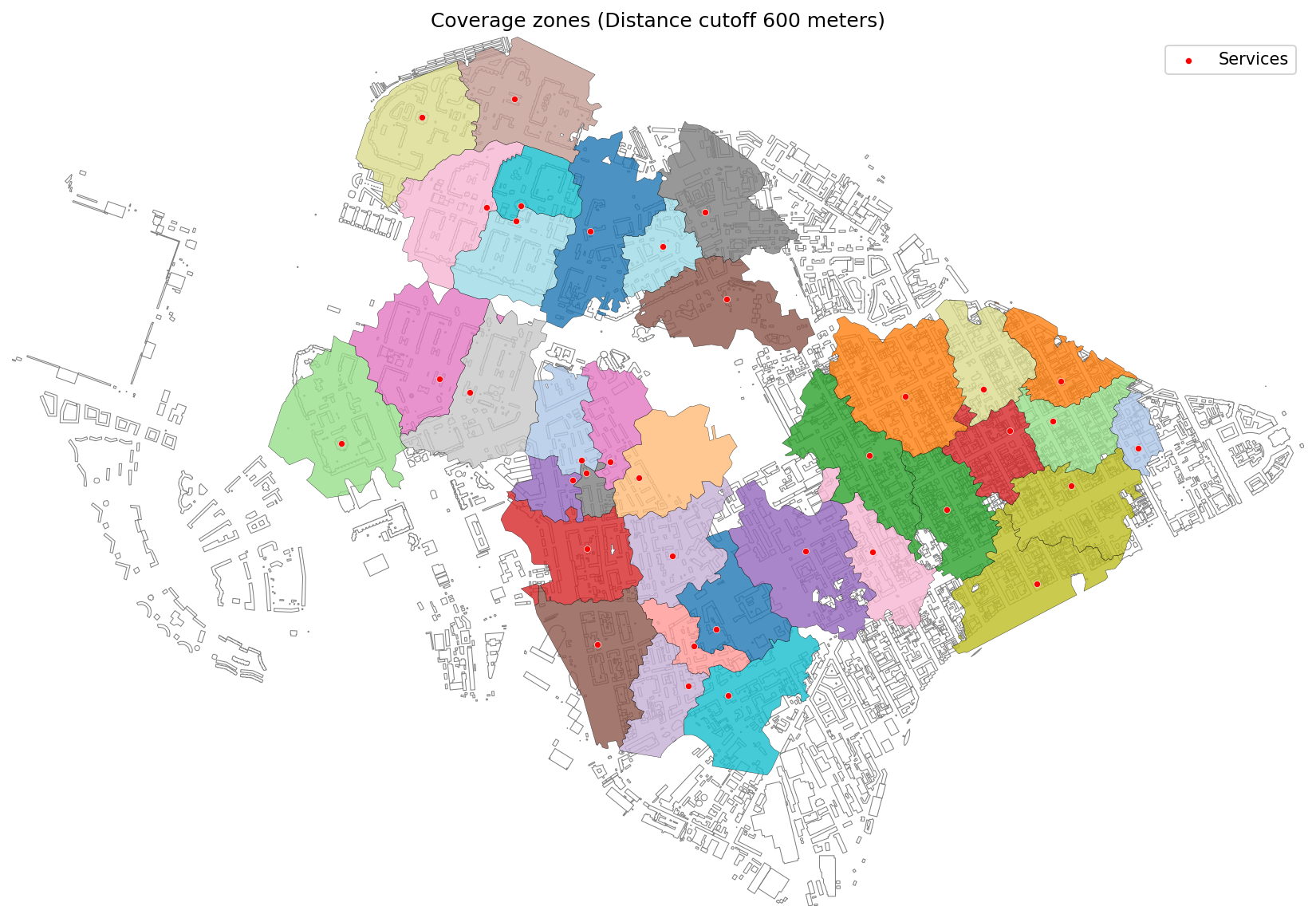

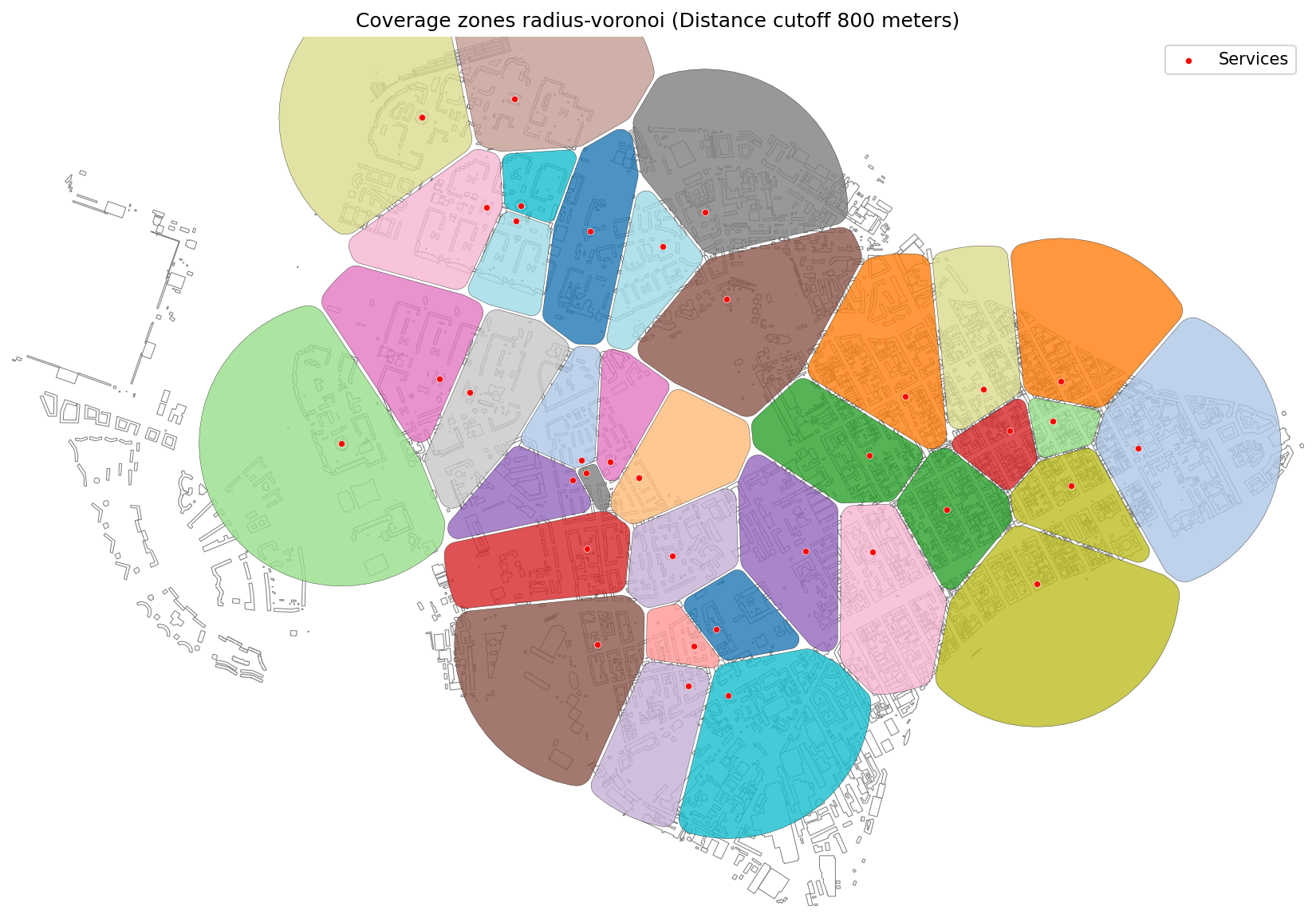

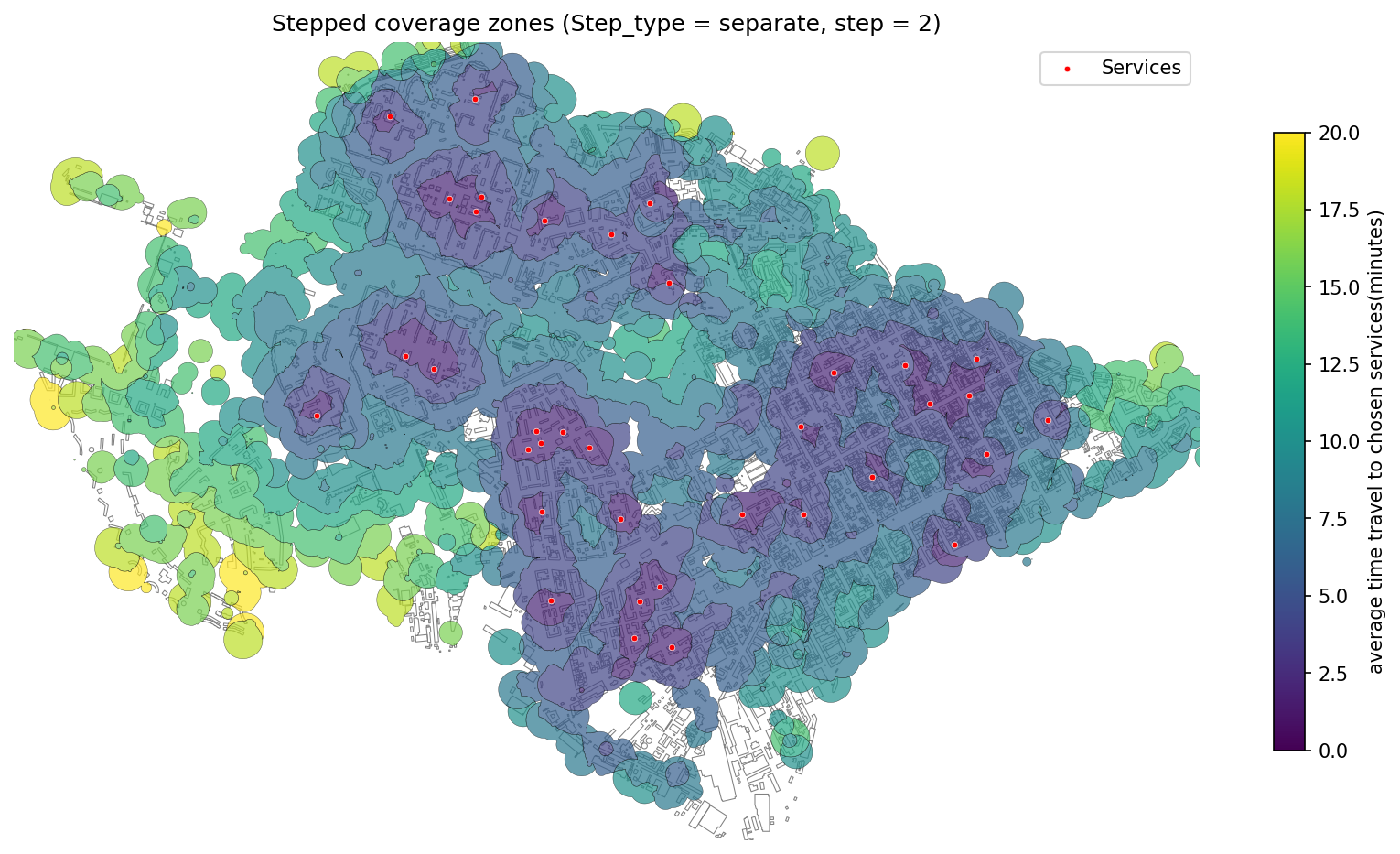

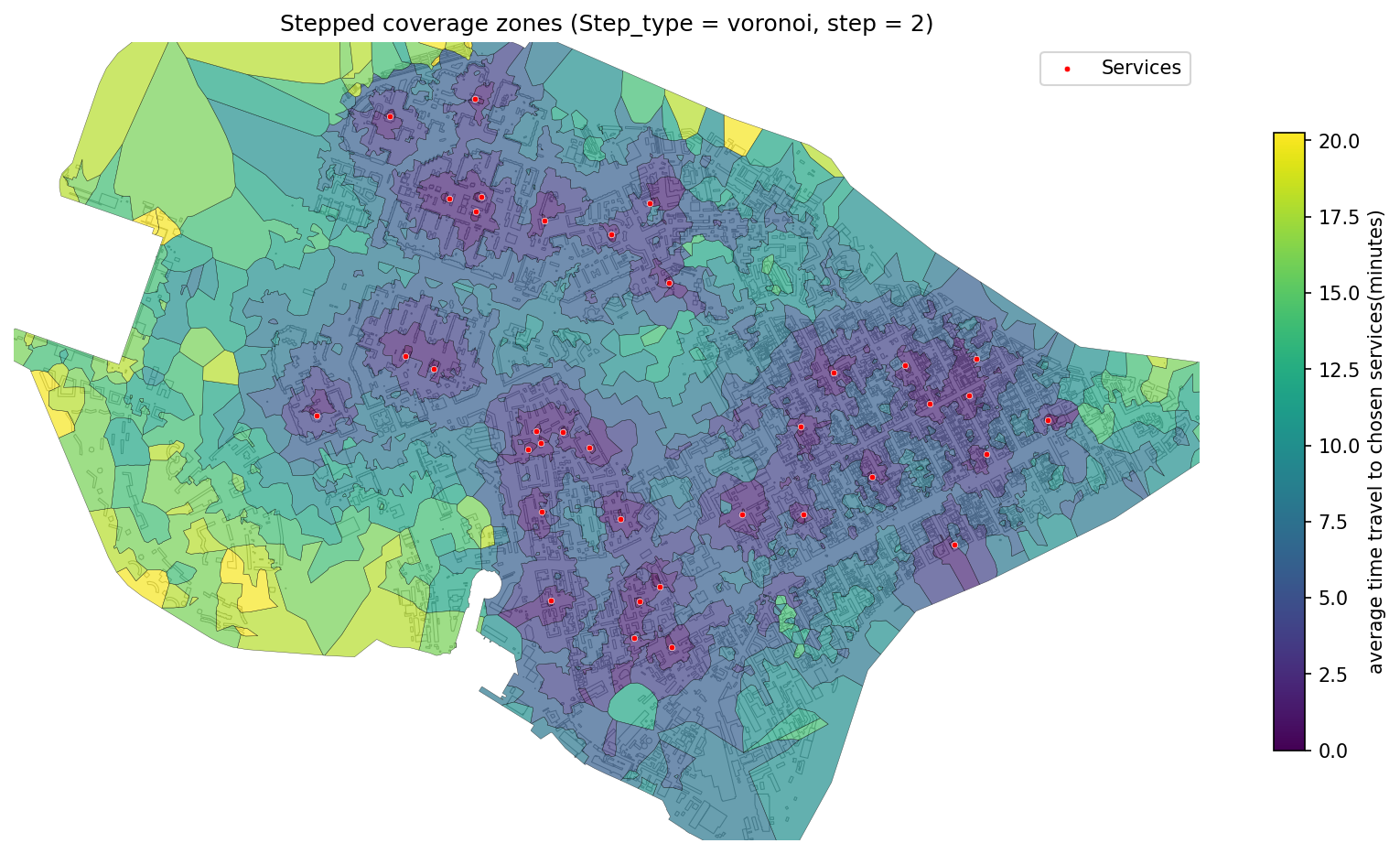

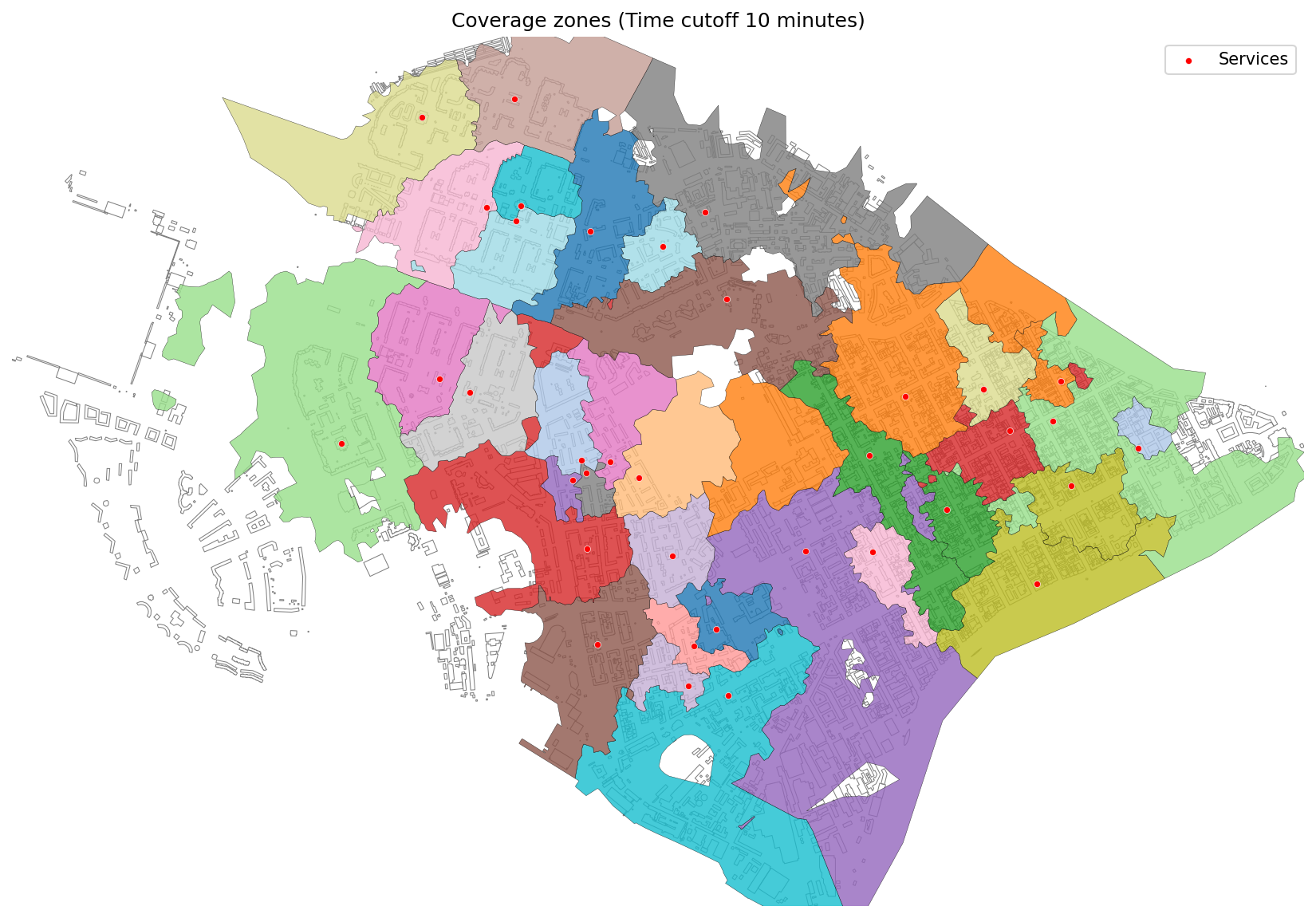

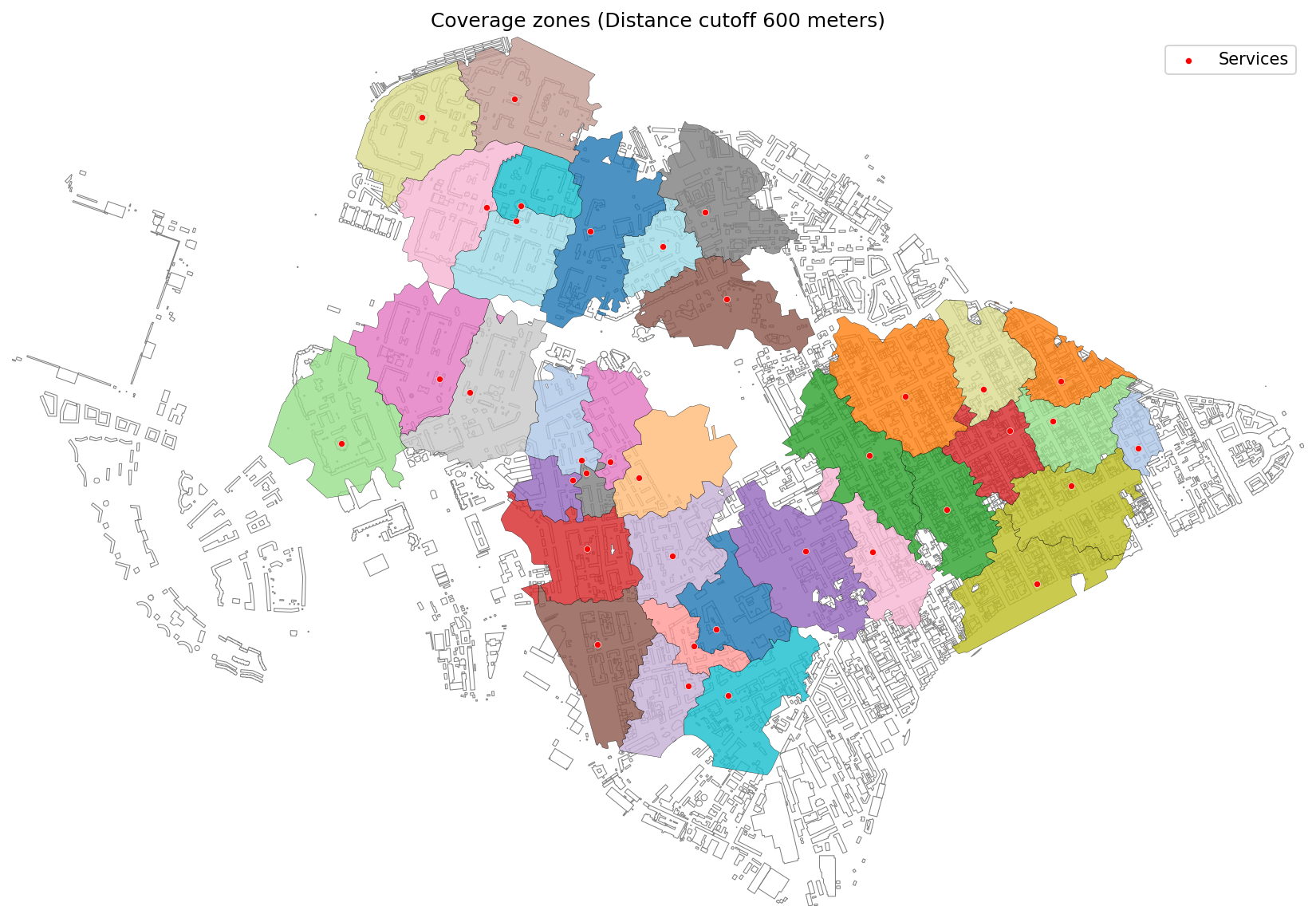

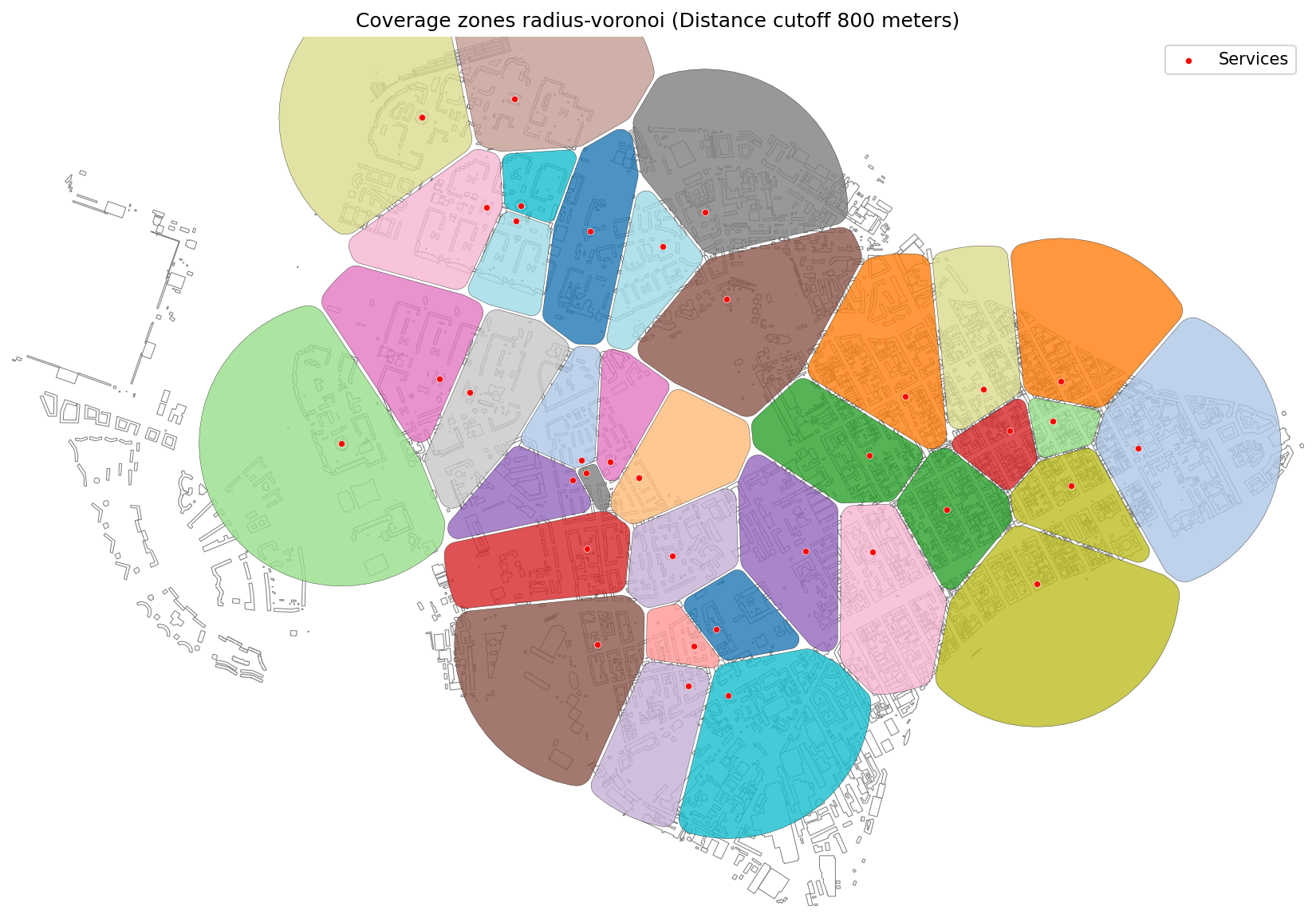

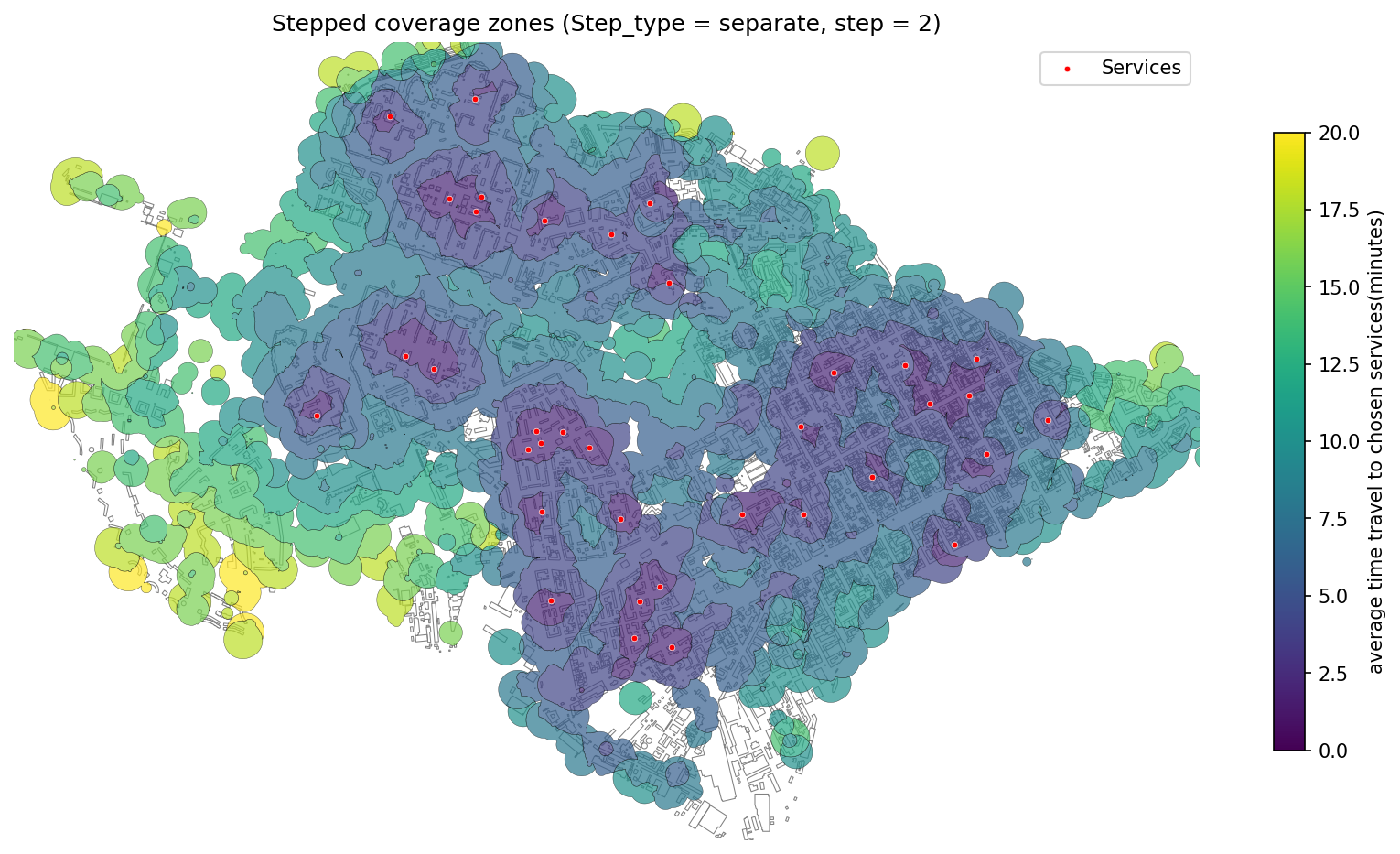

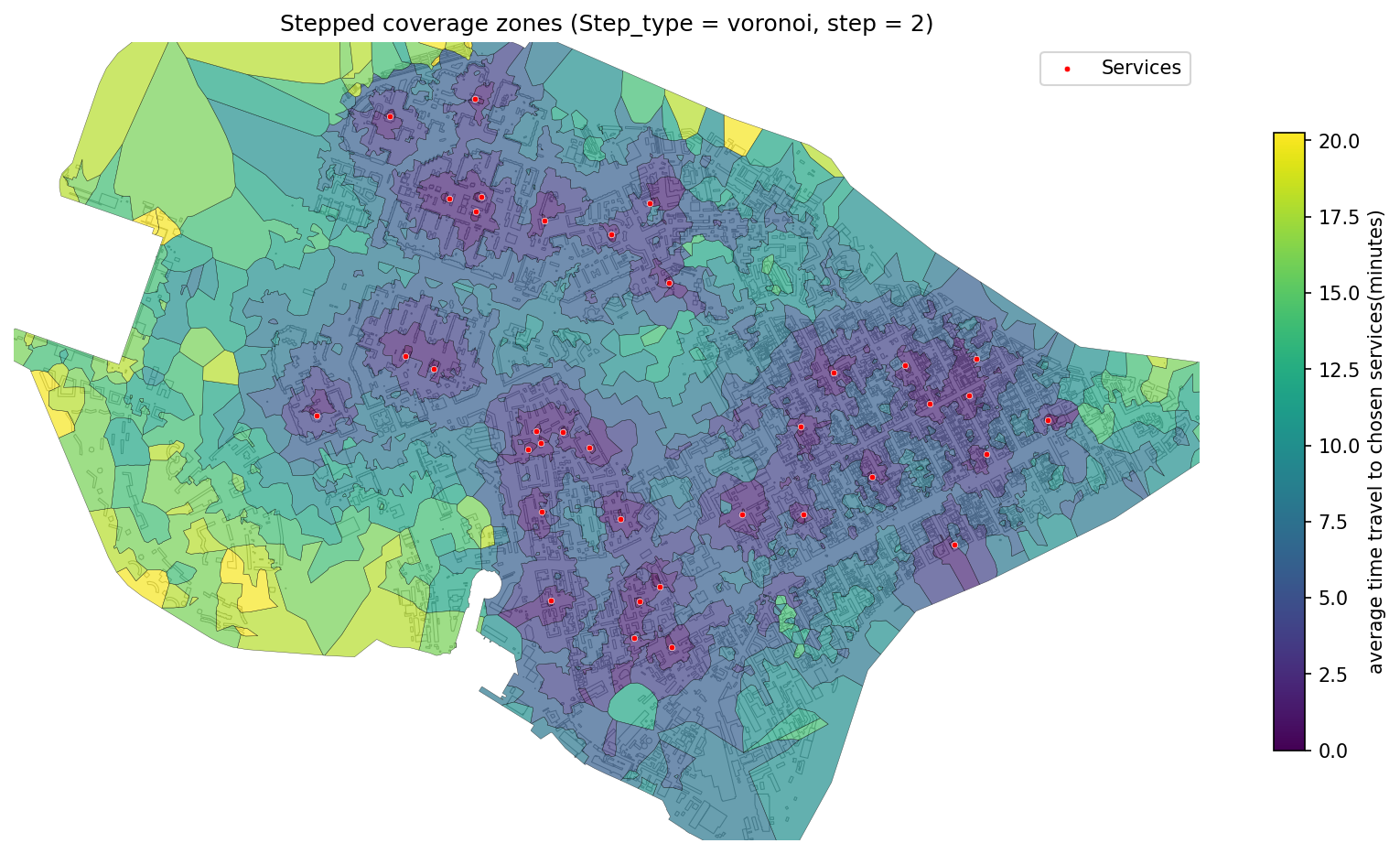

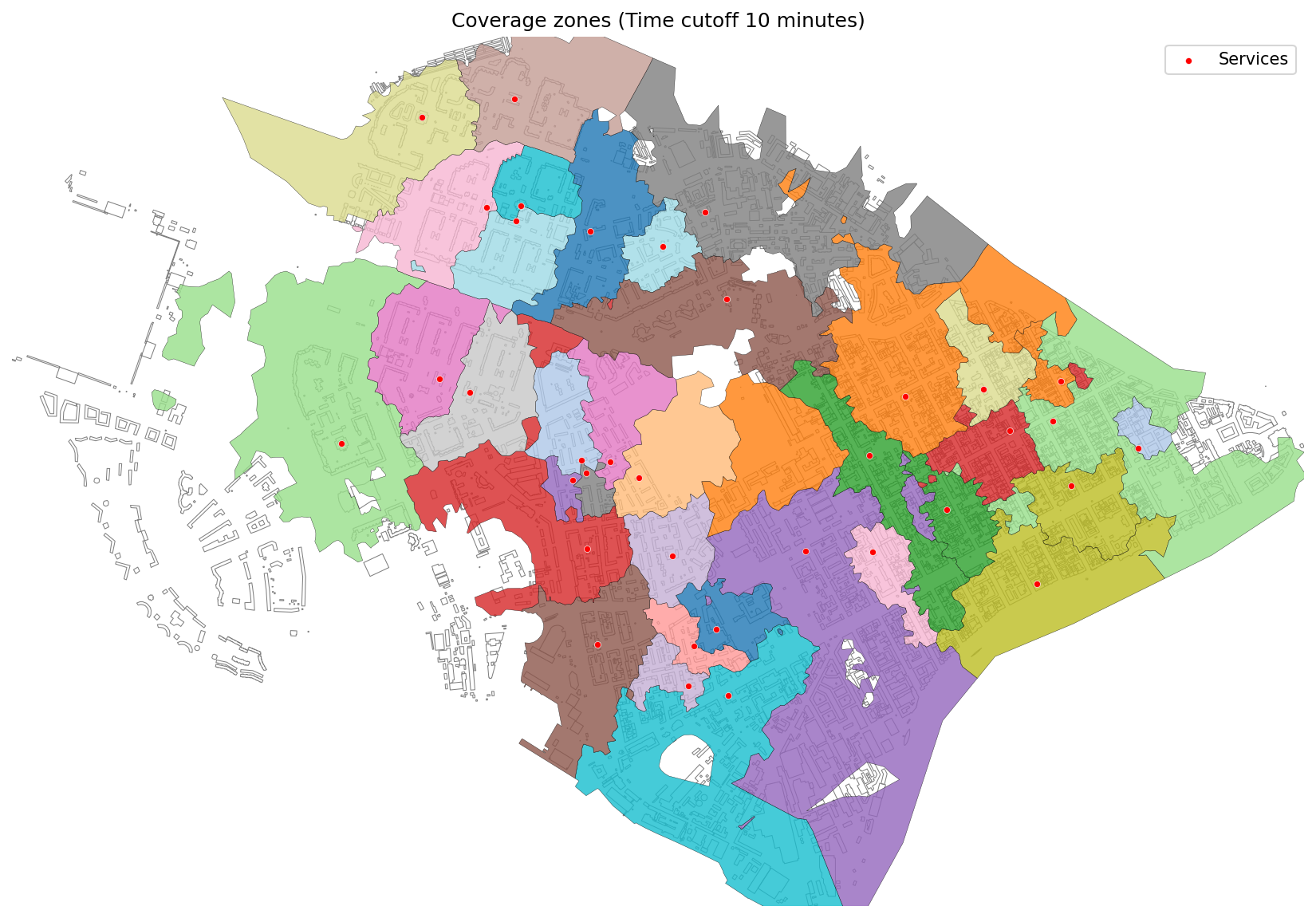

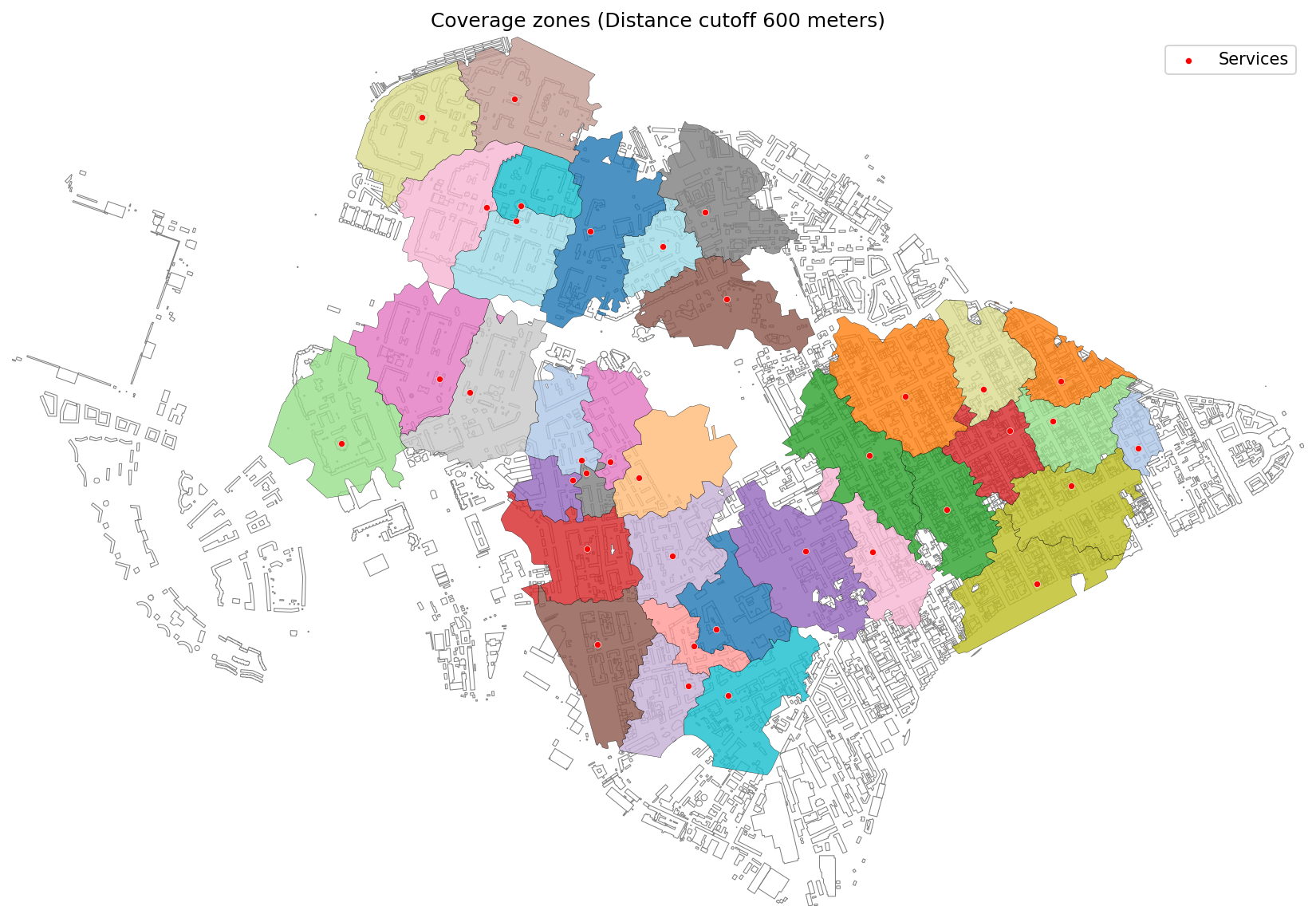

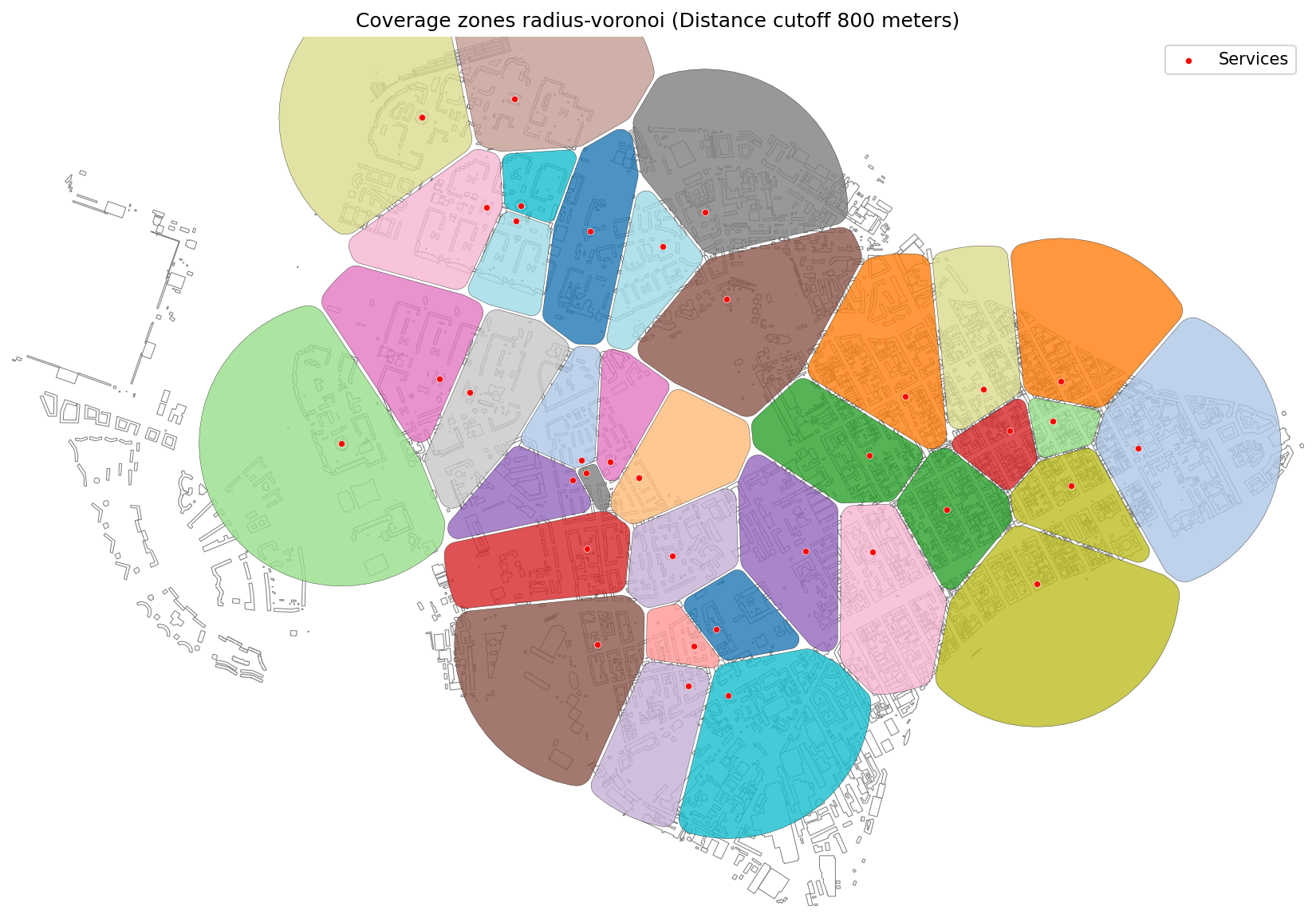

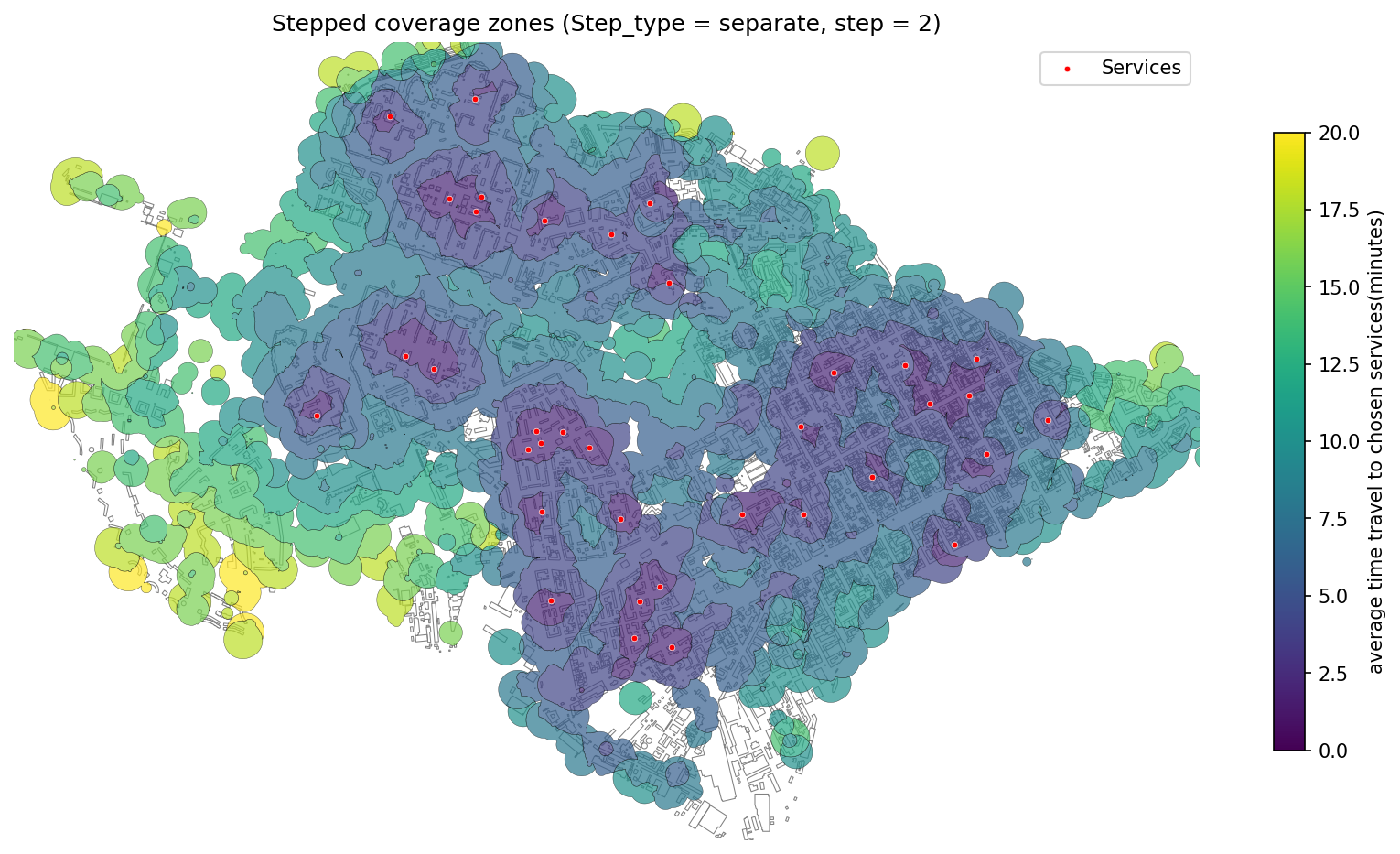

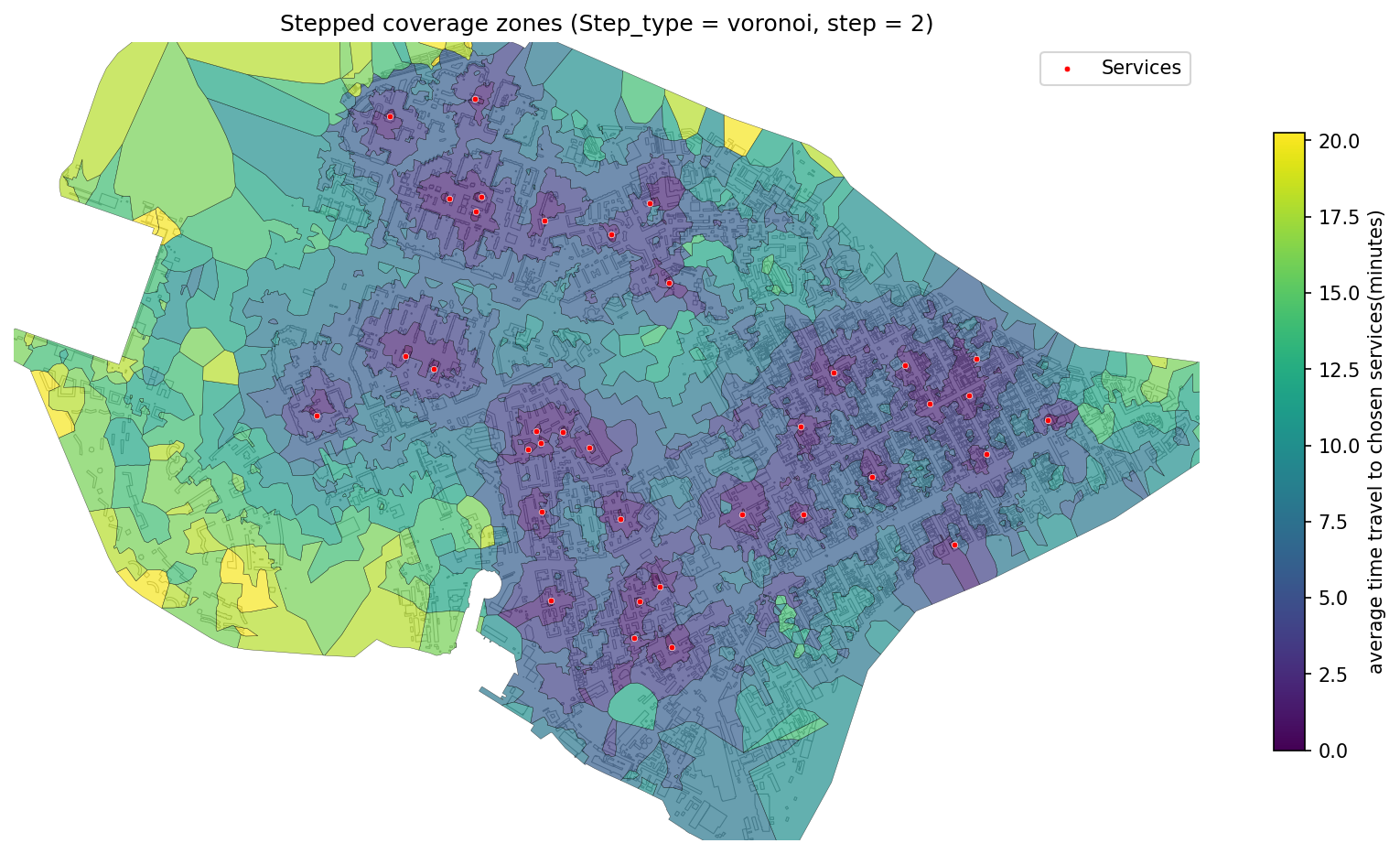

35 | 2. **[Coverage Zones](./examples/coverage_zones.ipynb)** — Function for generating **coverage zones** from a set of source points using a transport network. It calculates the area each point can reach based on **travel time** or **distance**, then builds polygons via **Voronoi diagrams** and clips them to a custom boundary if provided.

36 |

37 |

38 |  39 |

39 |  40 |

40 |  41 |

41 |

42 |

43 |  44 |

44 |  45 |

45 |

46 |

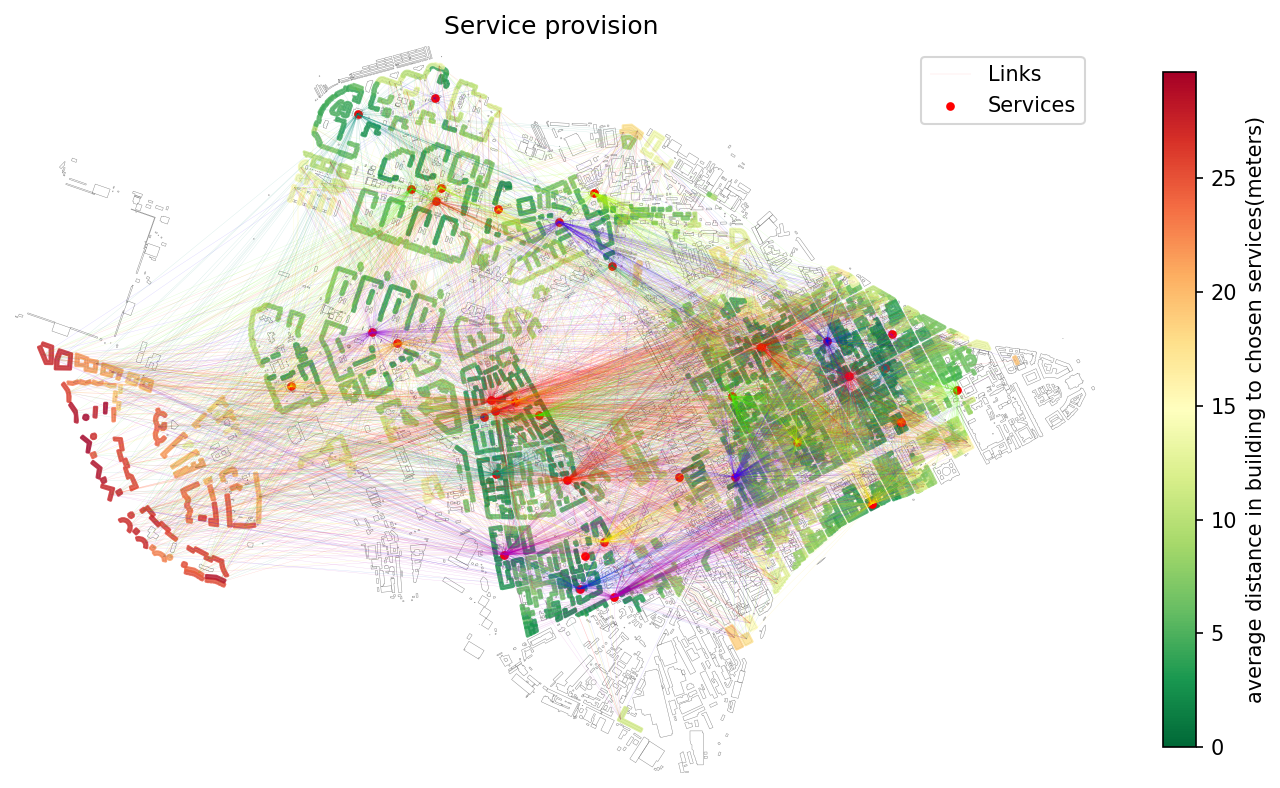

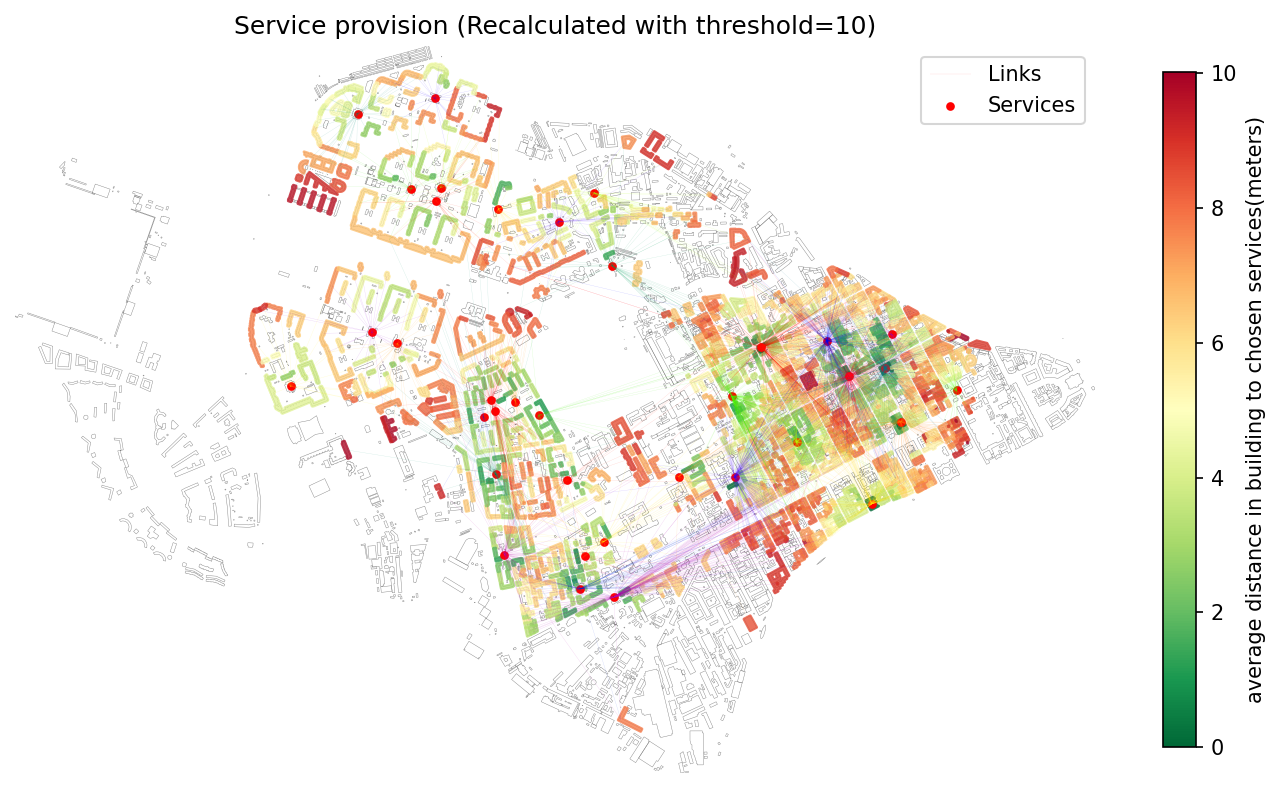

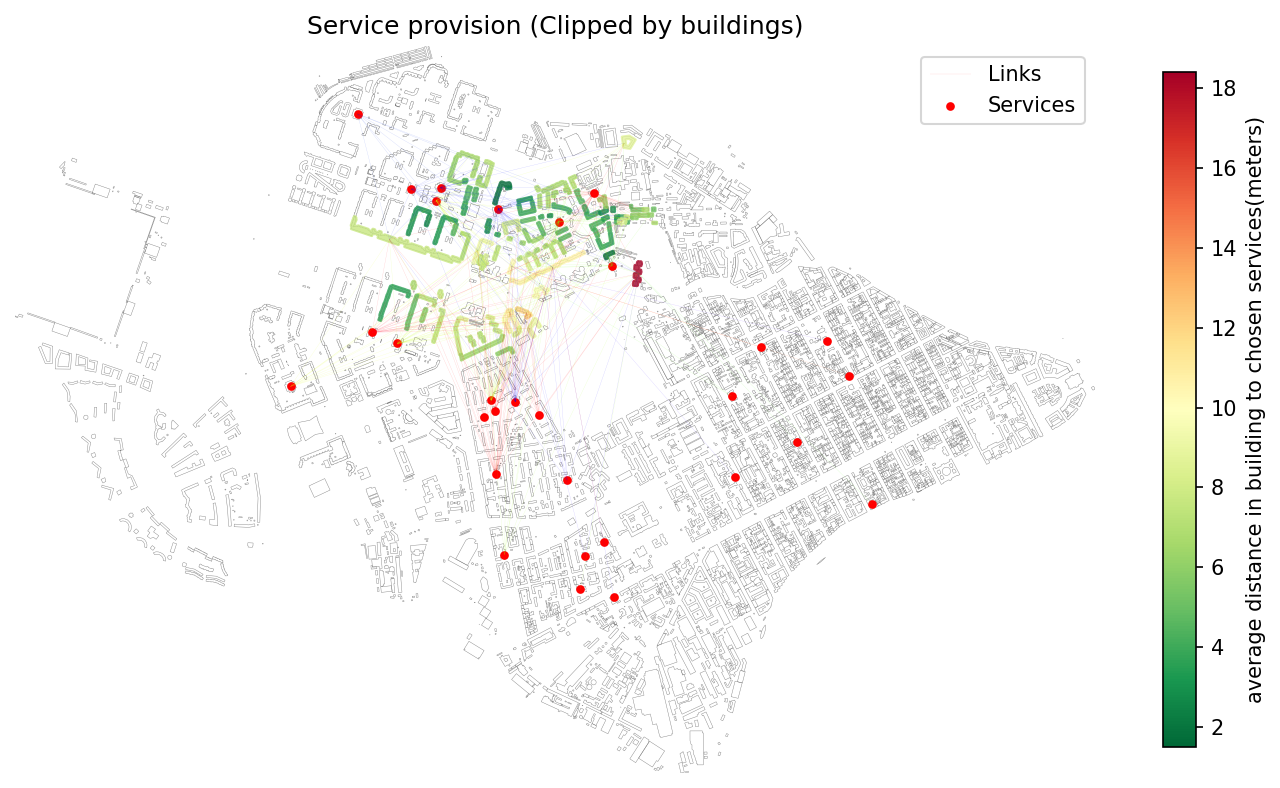

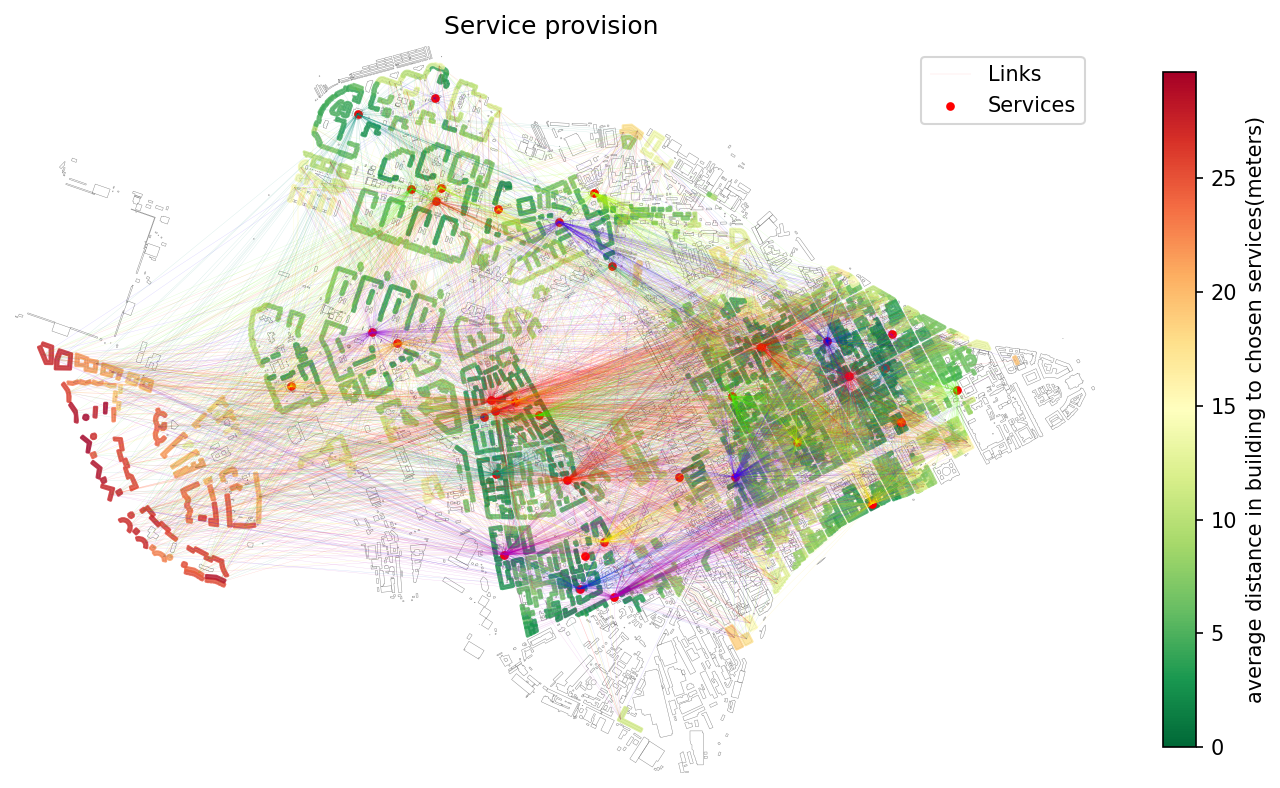

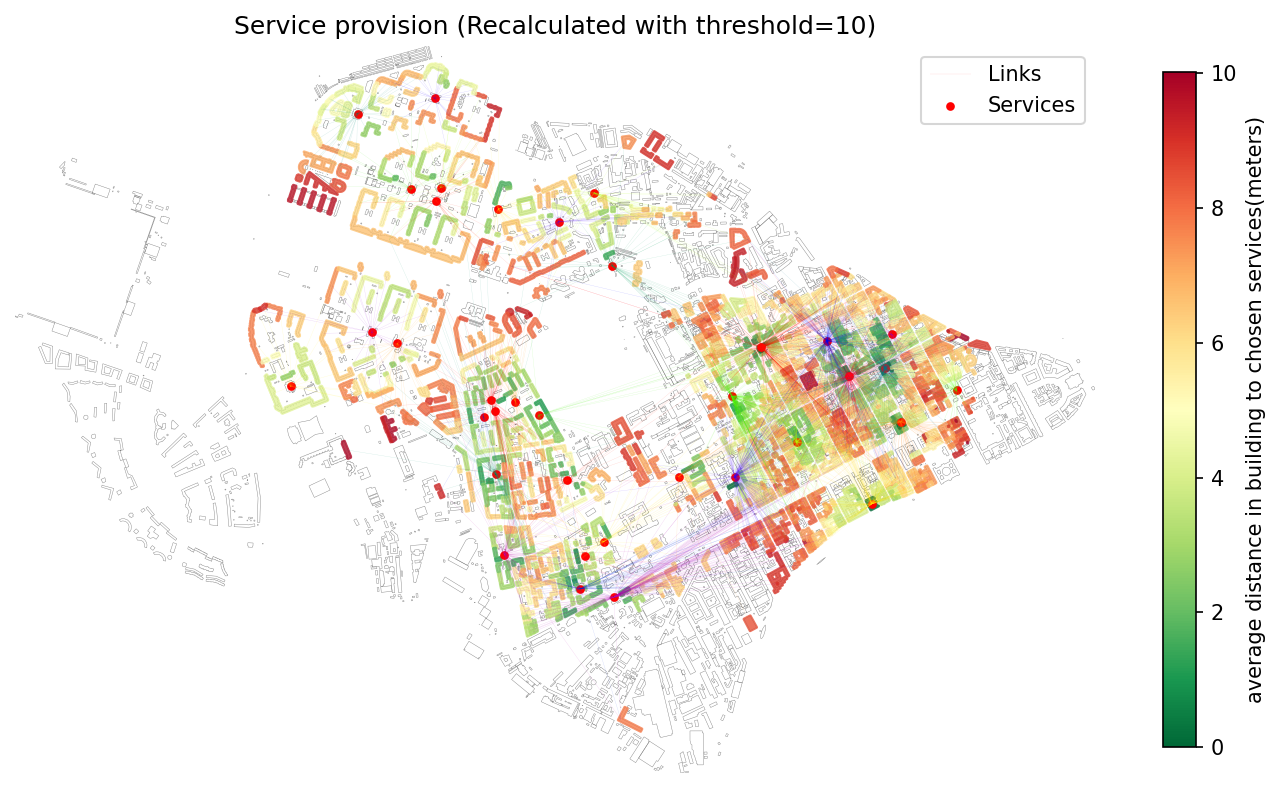

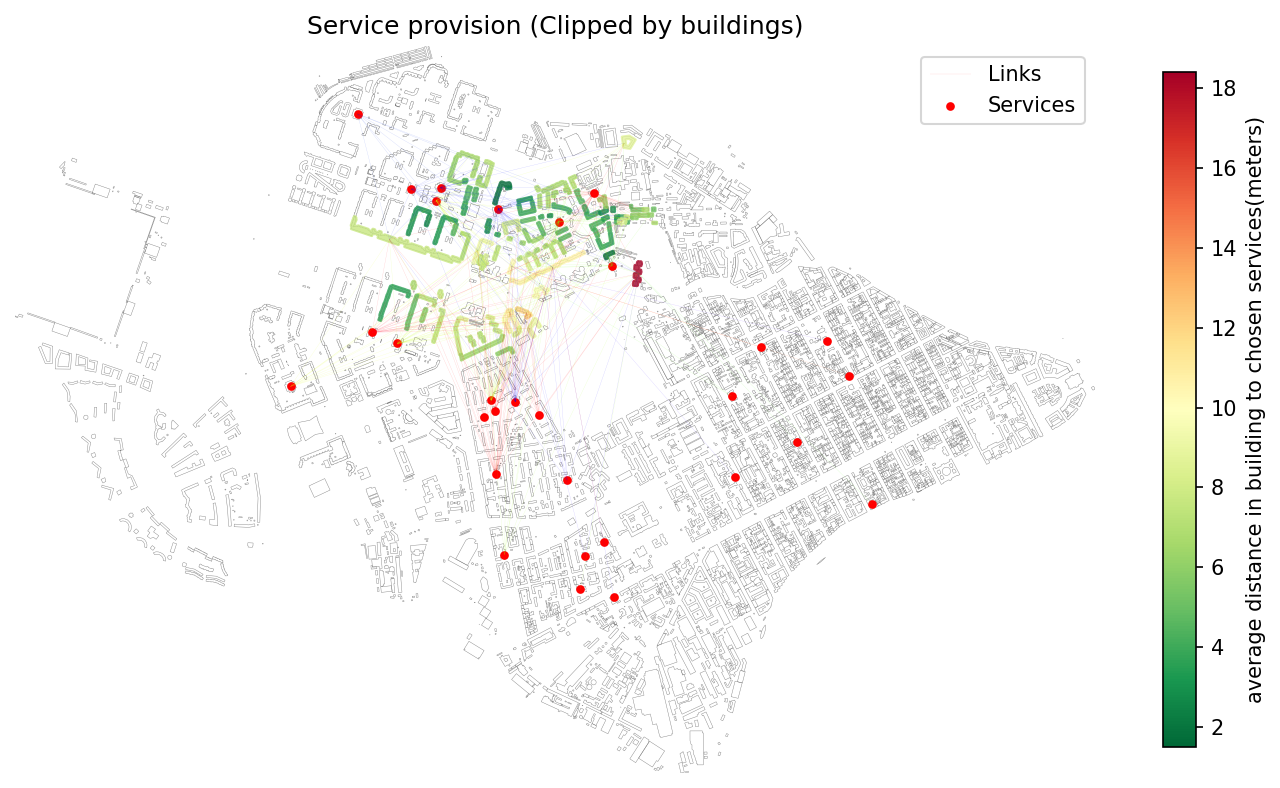

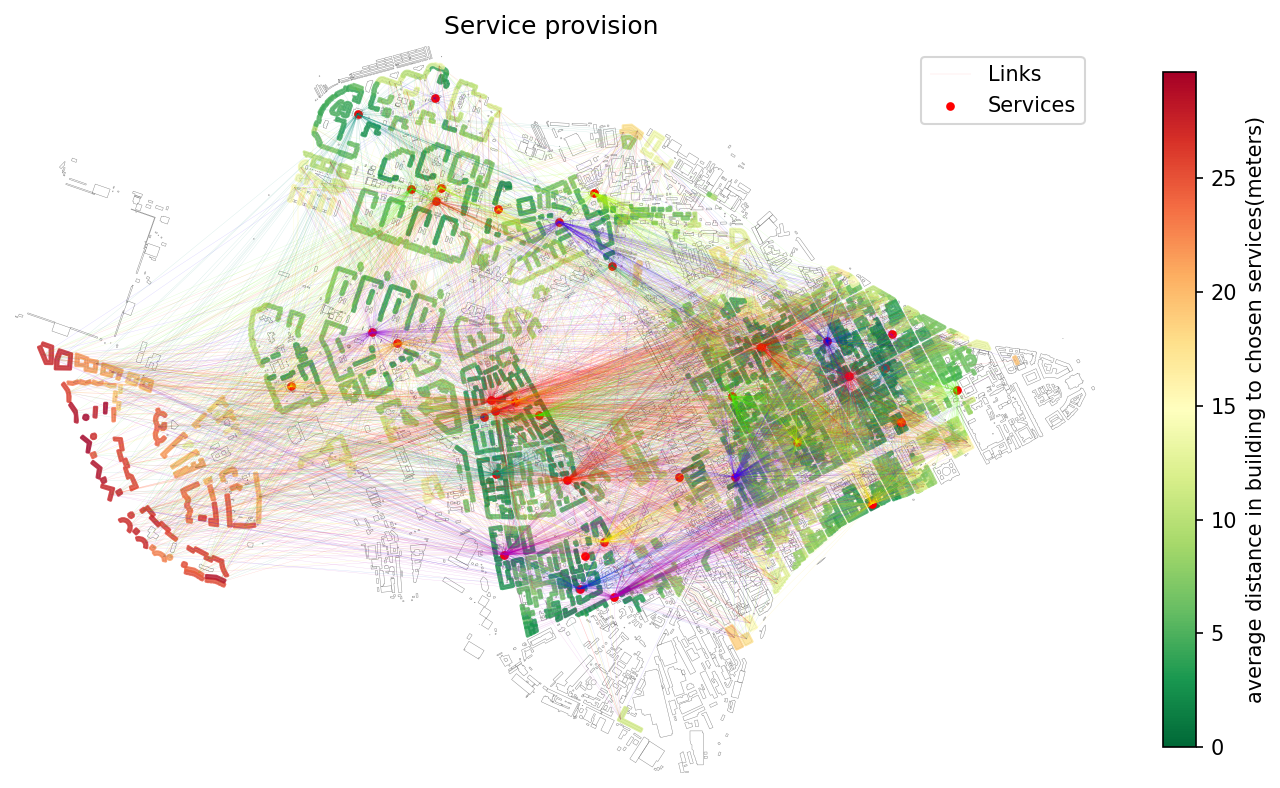

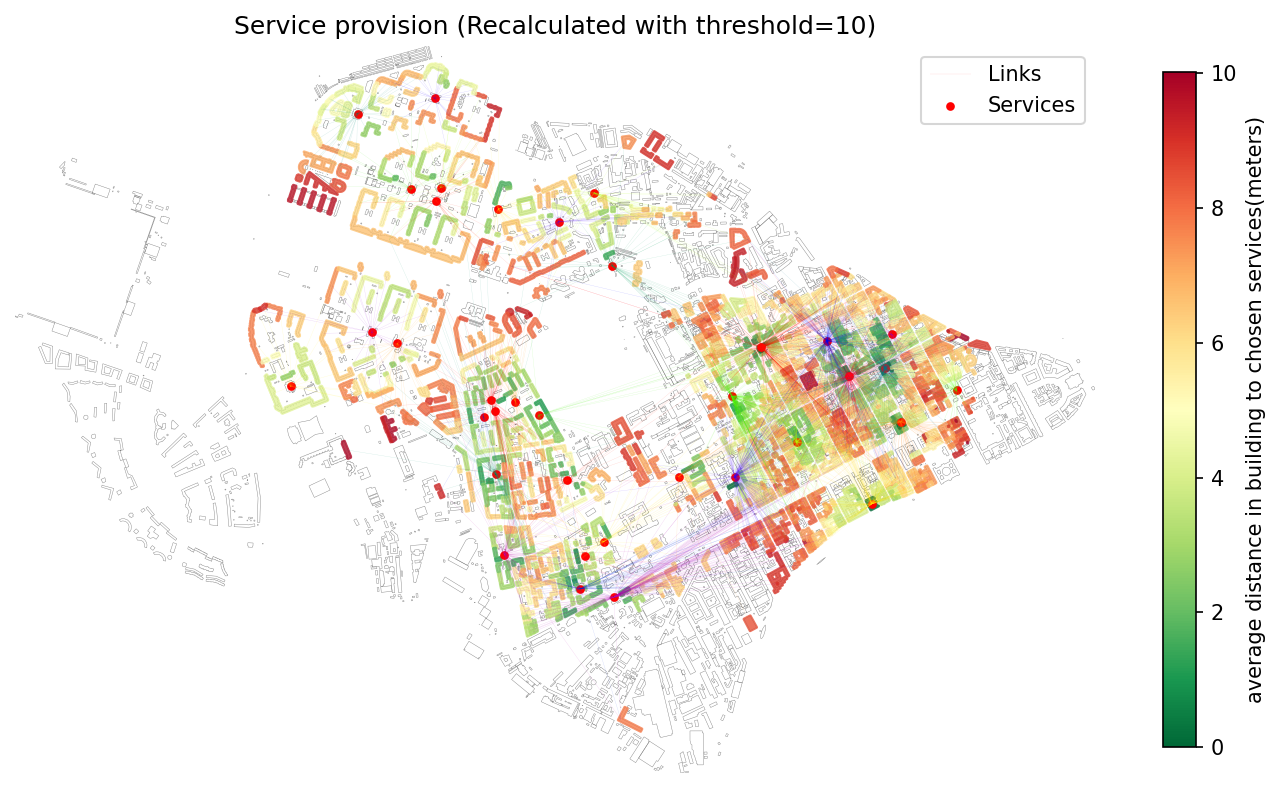

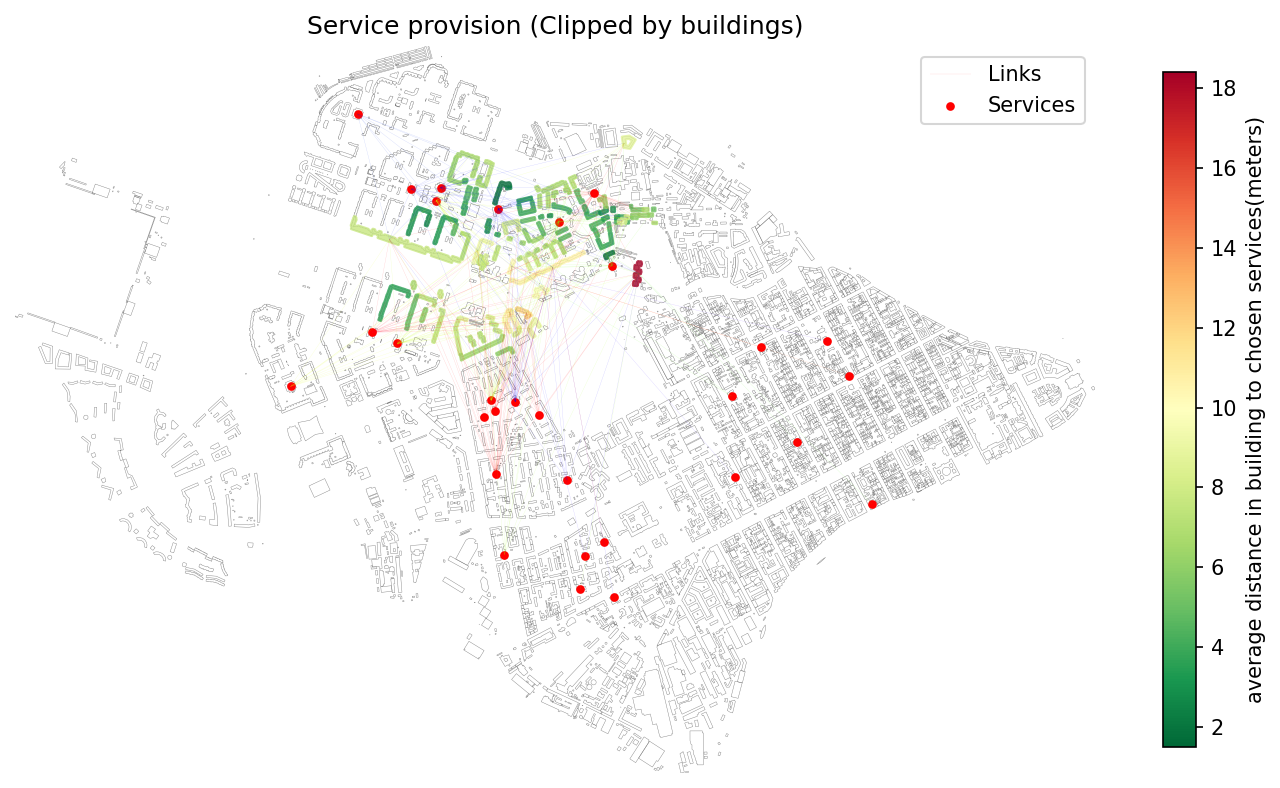

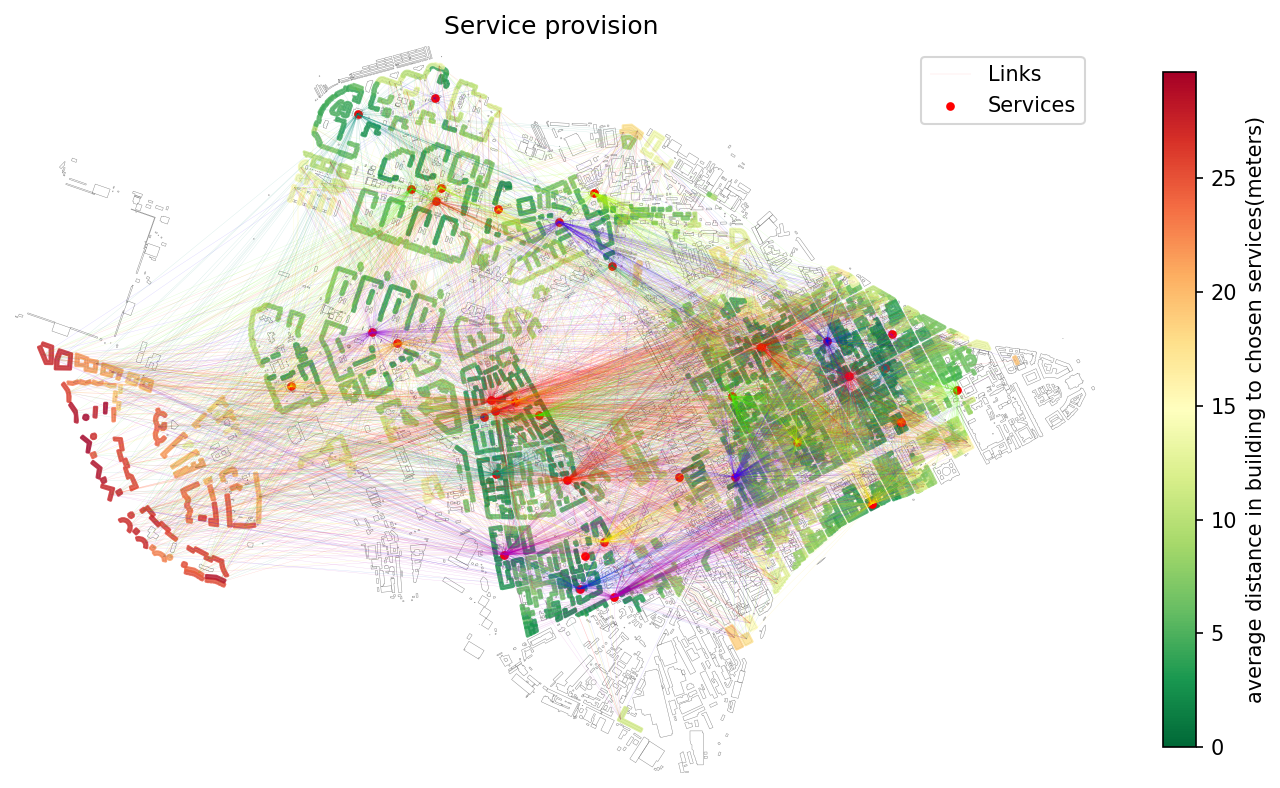

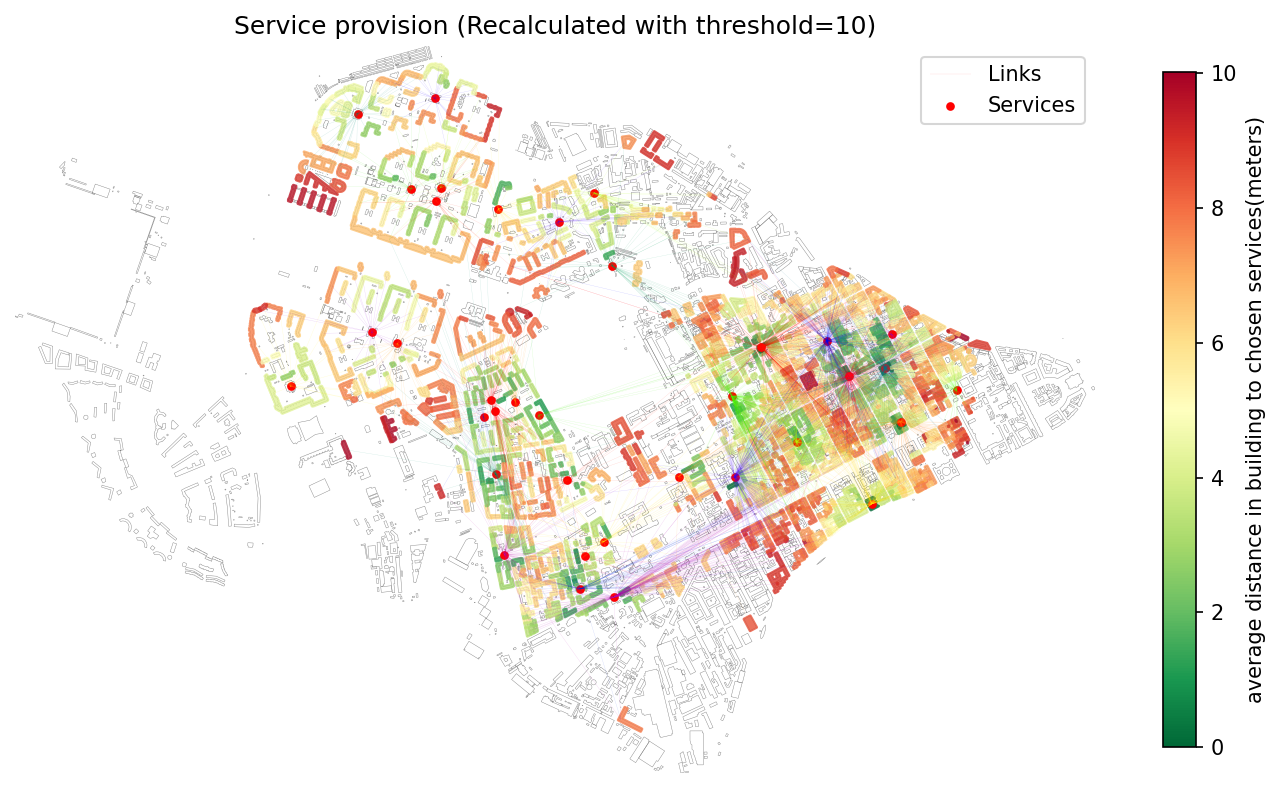

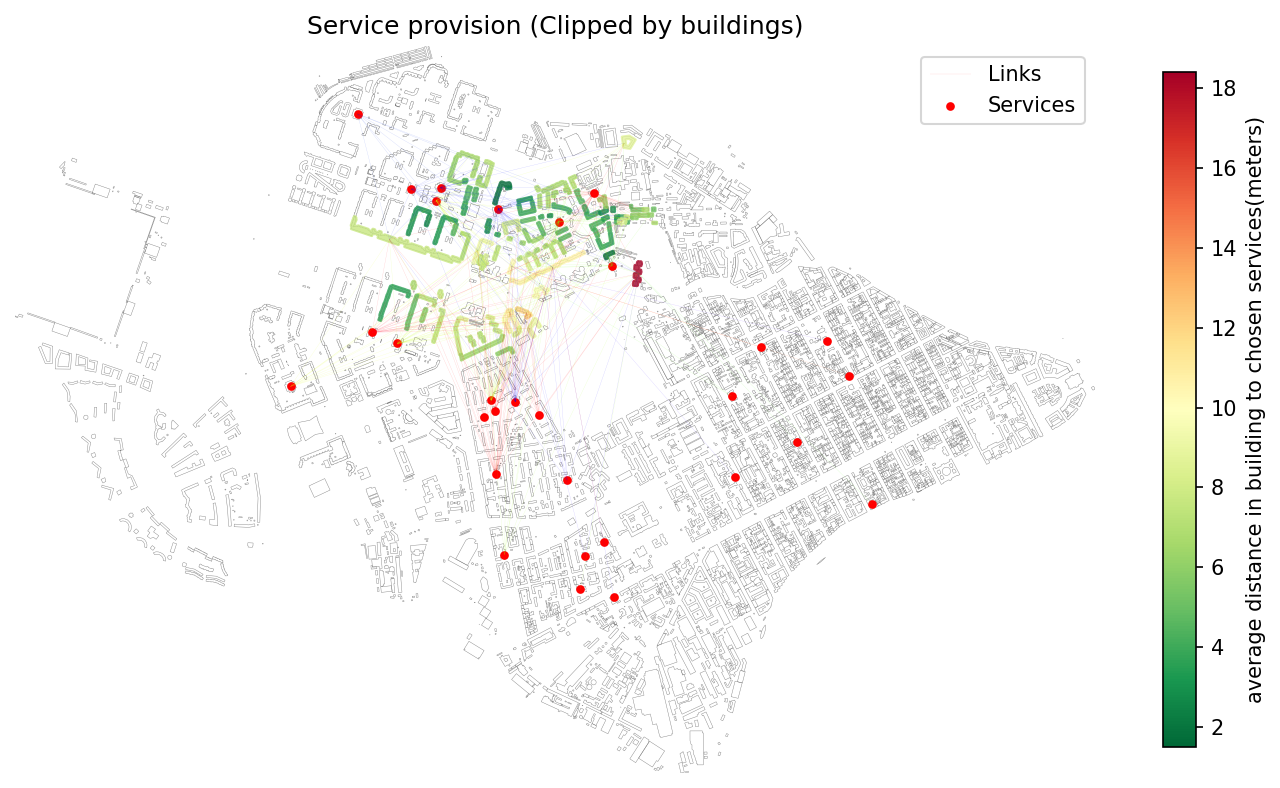

47 | 3. **[Service Provision Analysis](./examples/calculate_provision.ipynb)** — Function for evaluating the provision of residential buildings and their population with services (e.g., schools, clinics)

48 | that have limited **capacity** and a defined **accessibility threshold** (in minutes or distance). The function models **demand-supply balance**, estimating how well services meet the needs of nearby buildings within the allowed time.

49 |

50 | The library also supports:

51 | - **Recalculation** of existing provision results using a new time threshold.

52 | - **Clipping** of provision results to a custom analysis area (e.g., administrative boundaries).

53 |

54 |

55 |  56 |

56 |  57 |

57 |  58 |

58 |

59 |

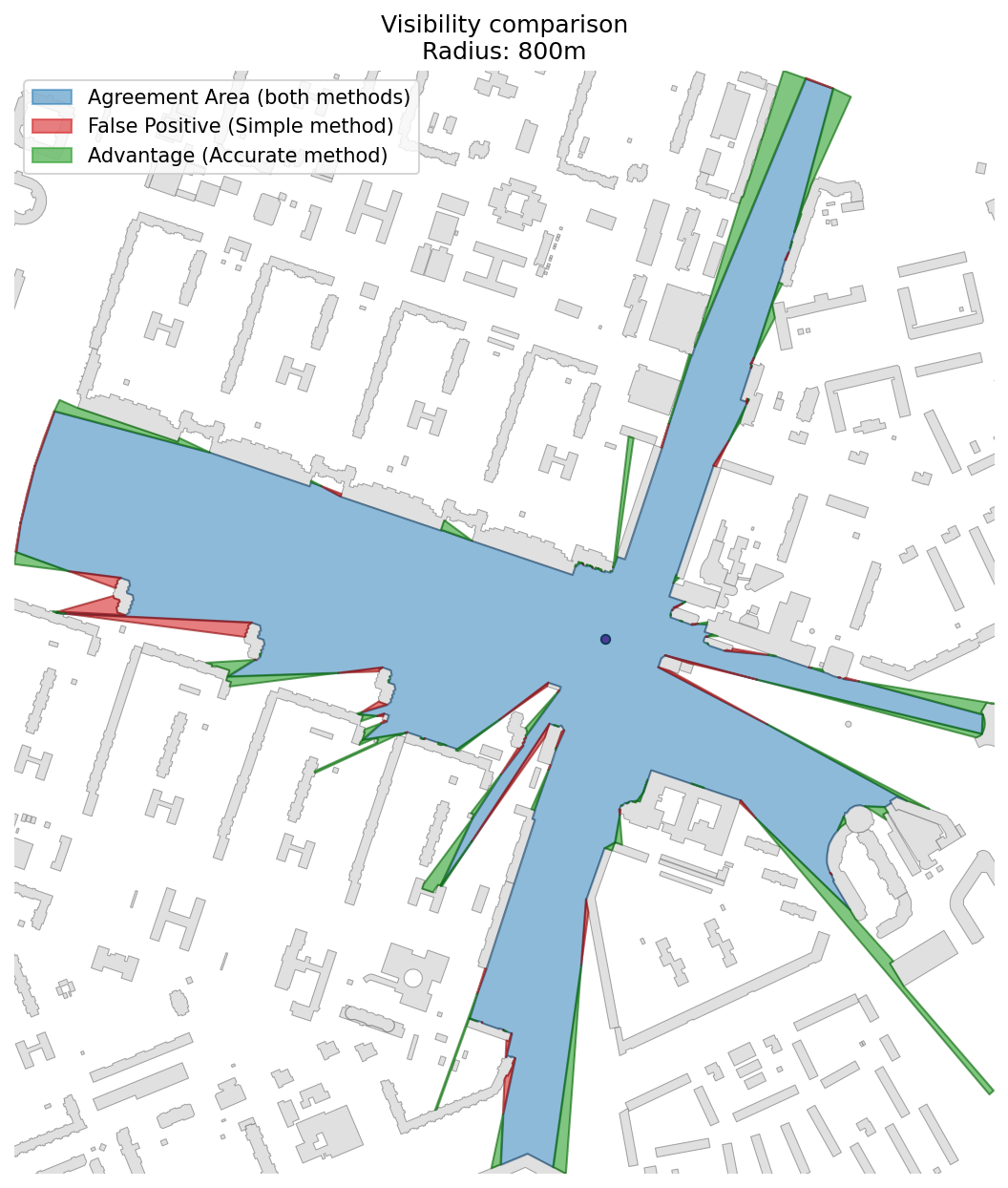

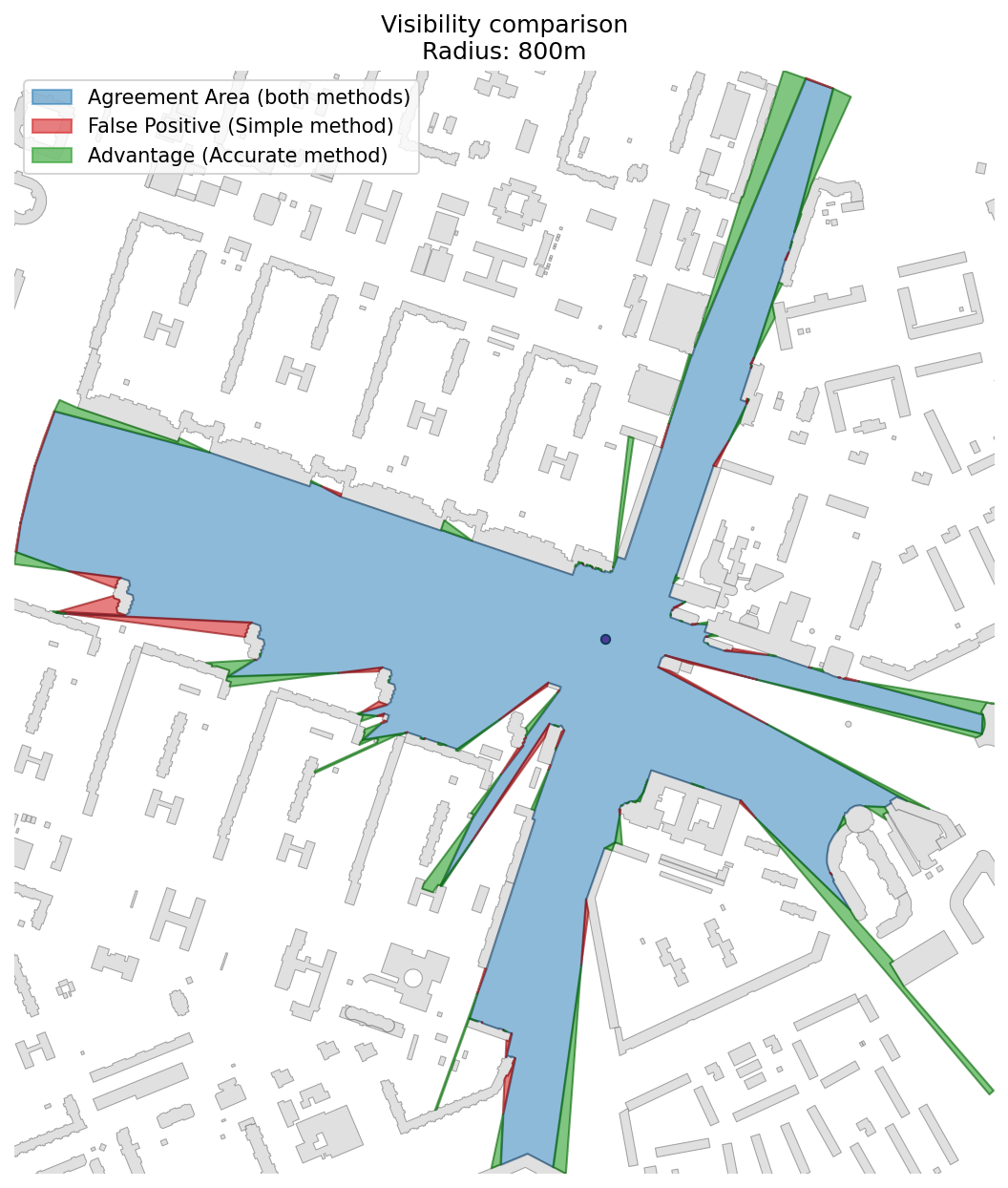

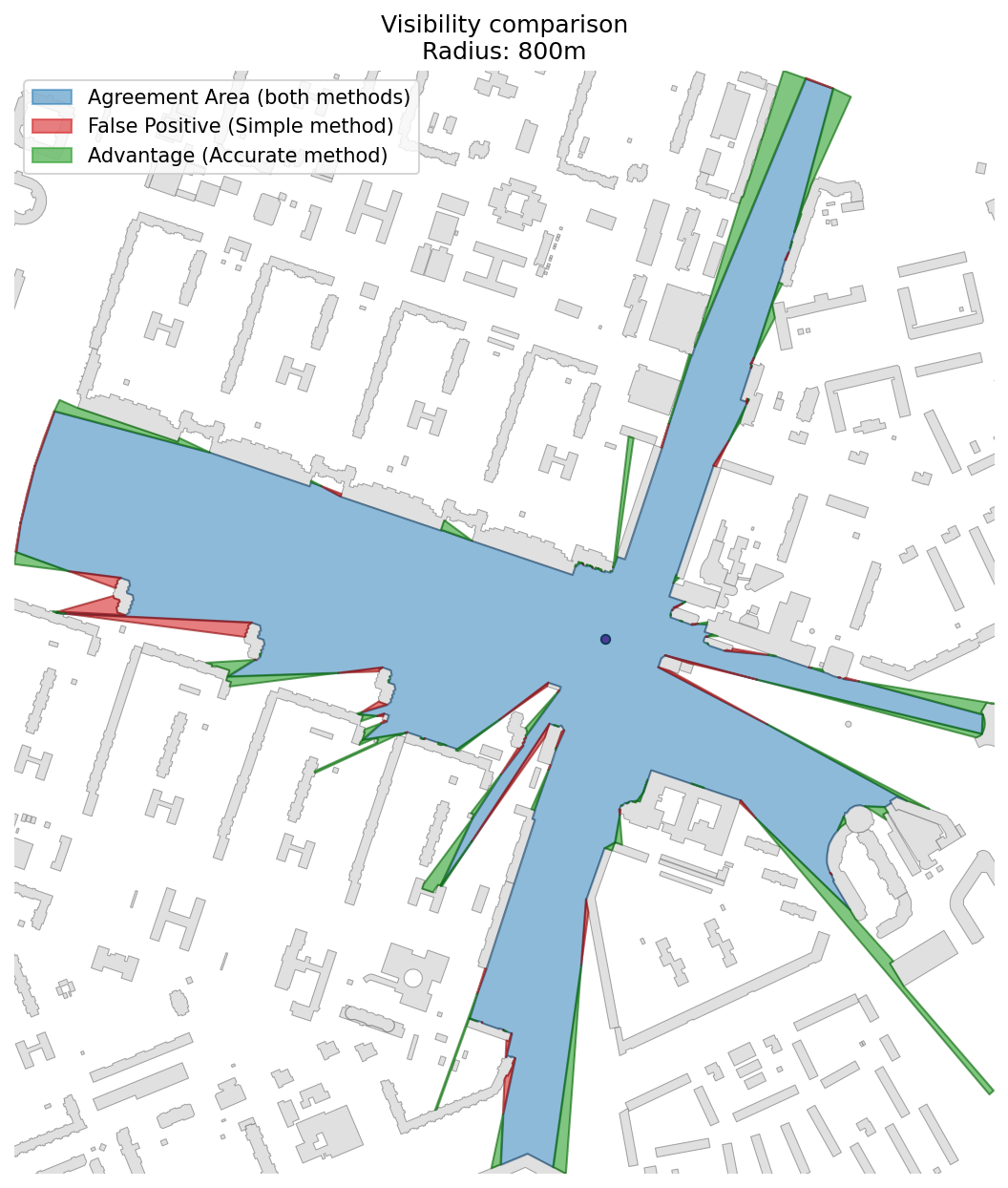

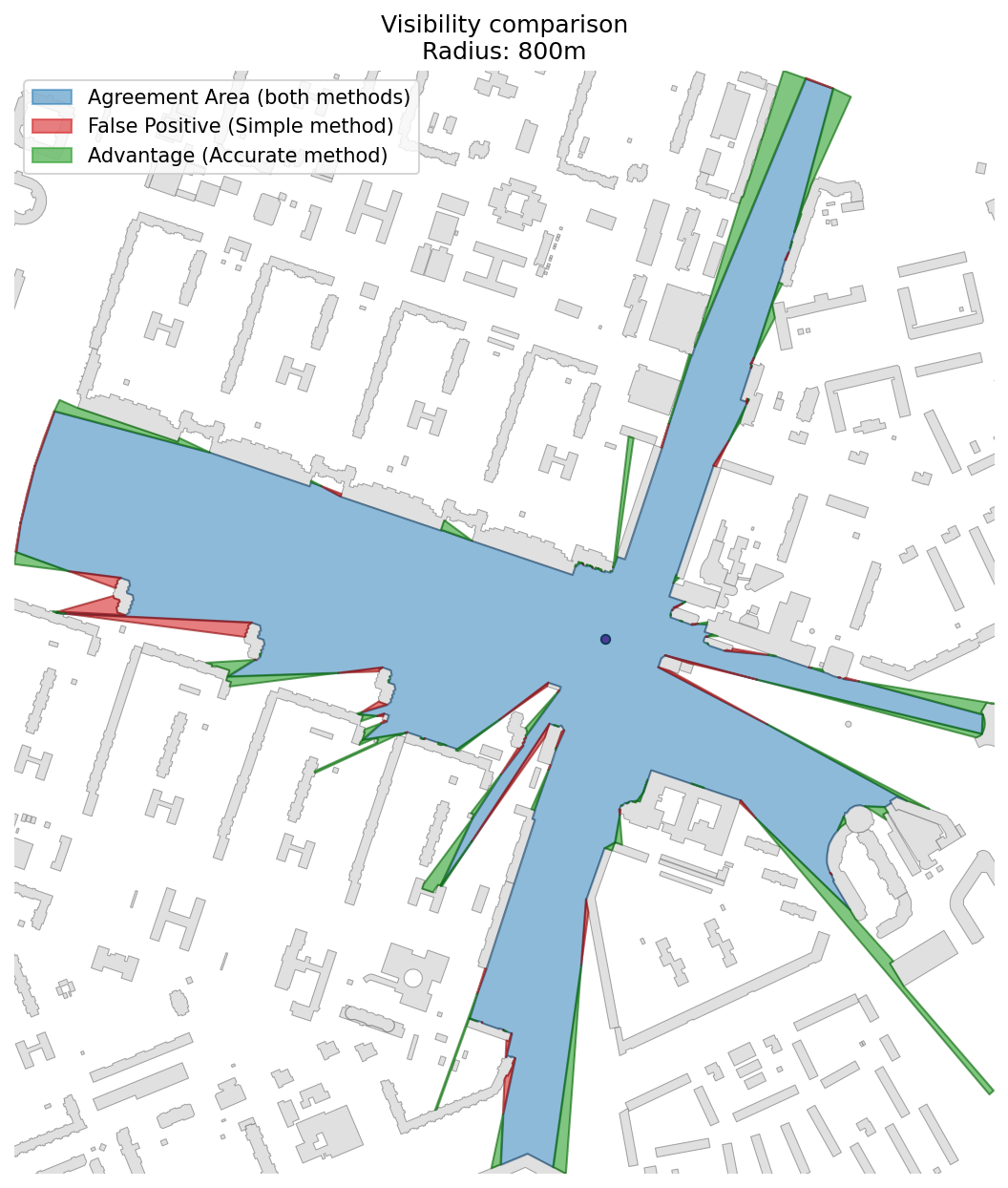

60 | 4. **[Visibility Analysis](./examples/visibility_analysis.ipynb)** — Function for estimating visibility from a given point or multiple points to nearby buildings within a certain distance.

61 | This can be used to assess visual accessibility in urban environments.

62 | The library also includes a **catchment area calculator** for large-scale visibility analysis based on a dense grid of observer points (recommended: ~1000 points spaced 10–20 meters apart).

63 | Points can be generated using a road network and distributed along edges.

64 |

65 | The module includes:

66 | - A **fast approximate method** for large datasets.

67 | - A **accurate method** for detailed local analysis.

68 |

69 |

70 |  71 |

71 |  72 |

72 |

73 |

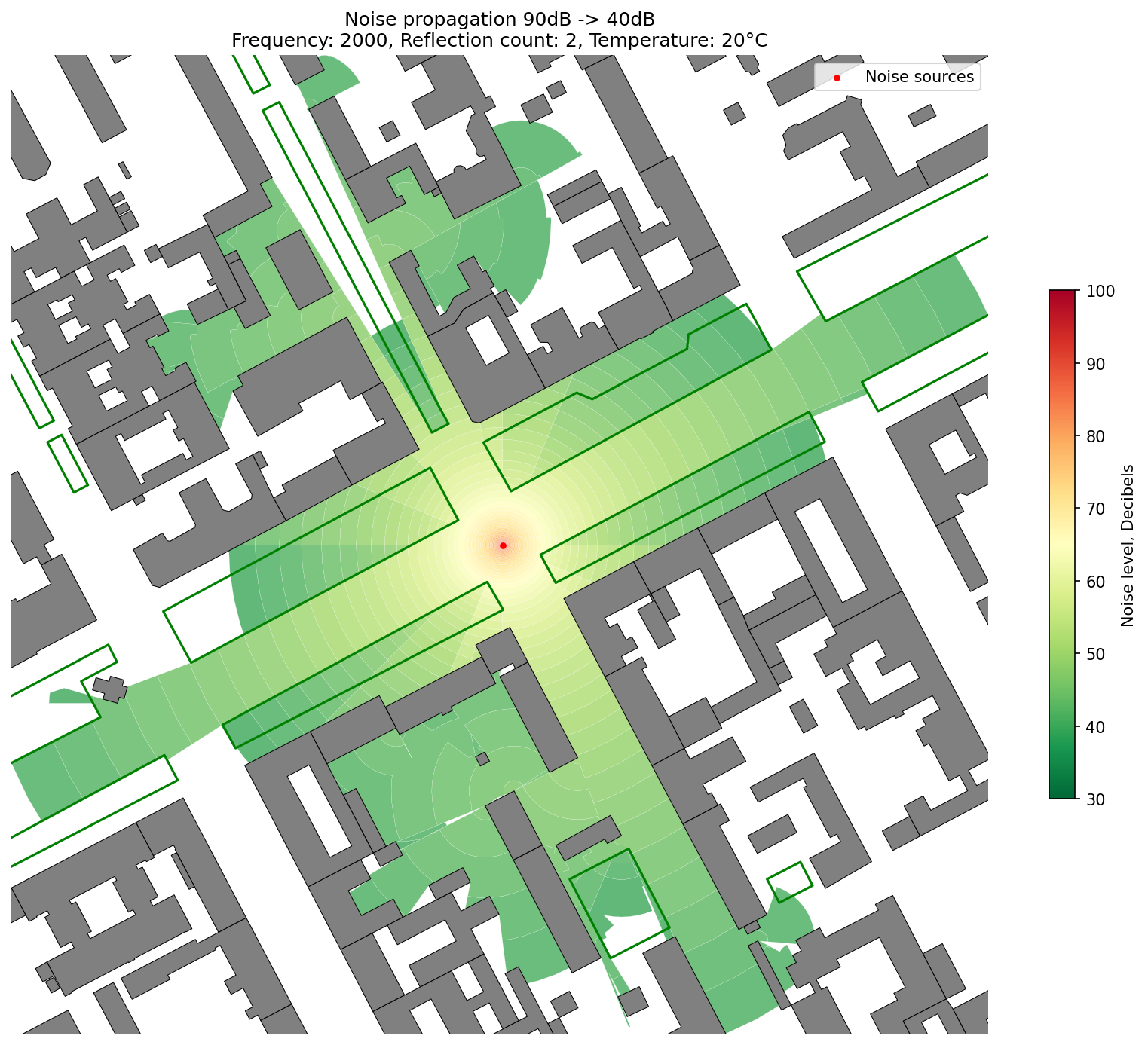

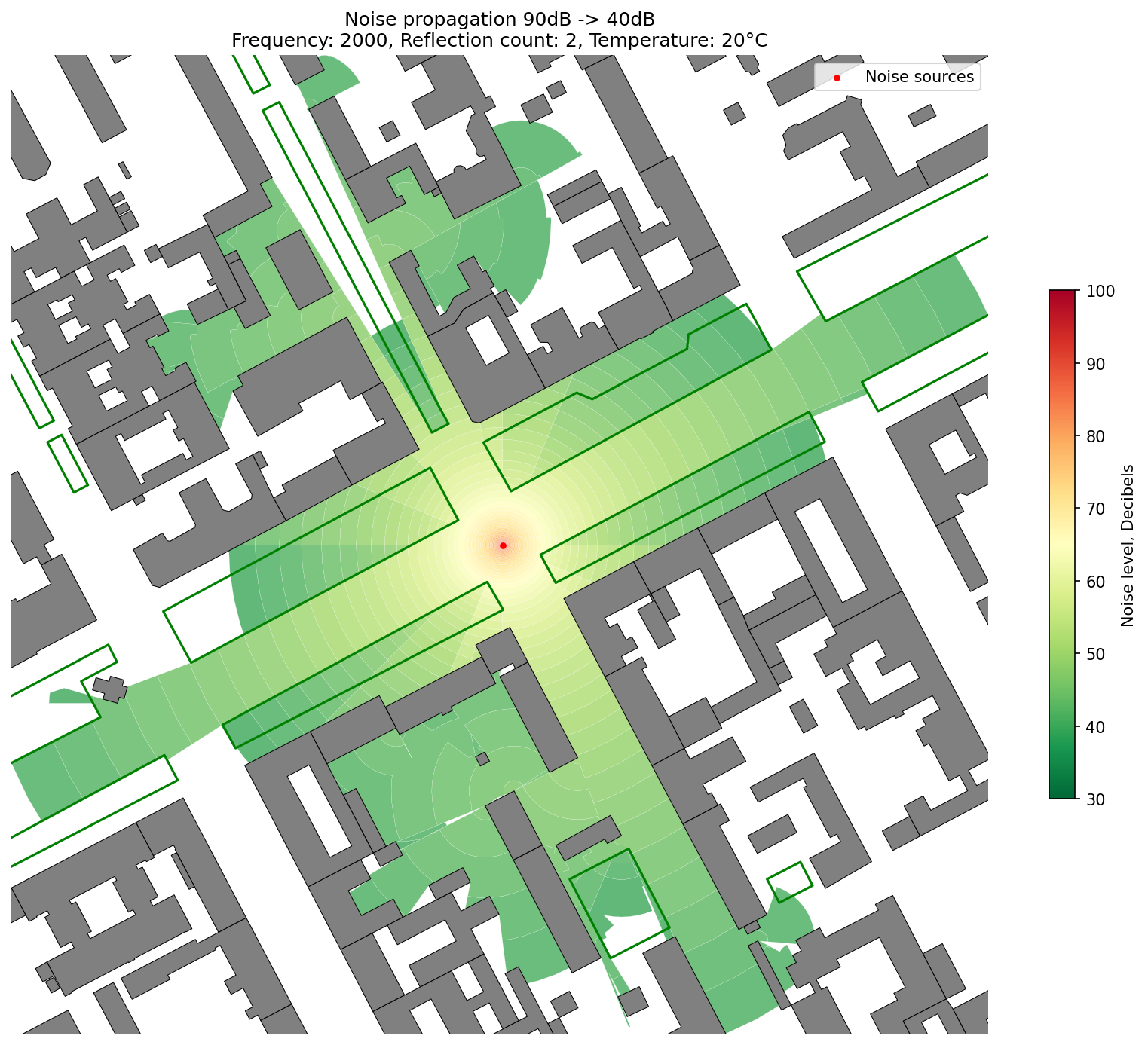

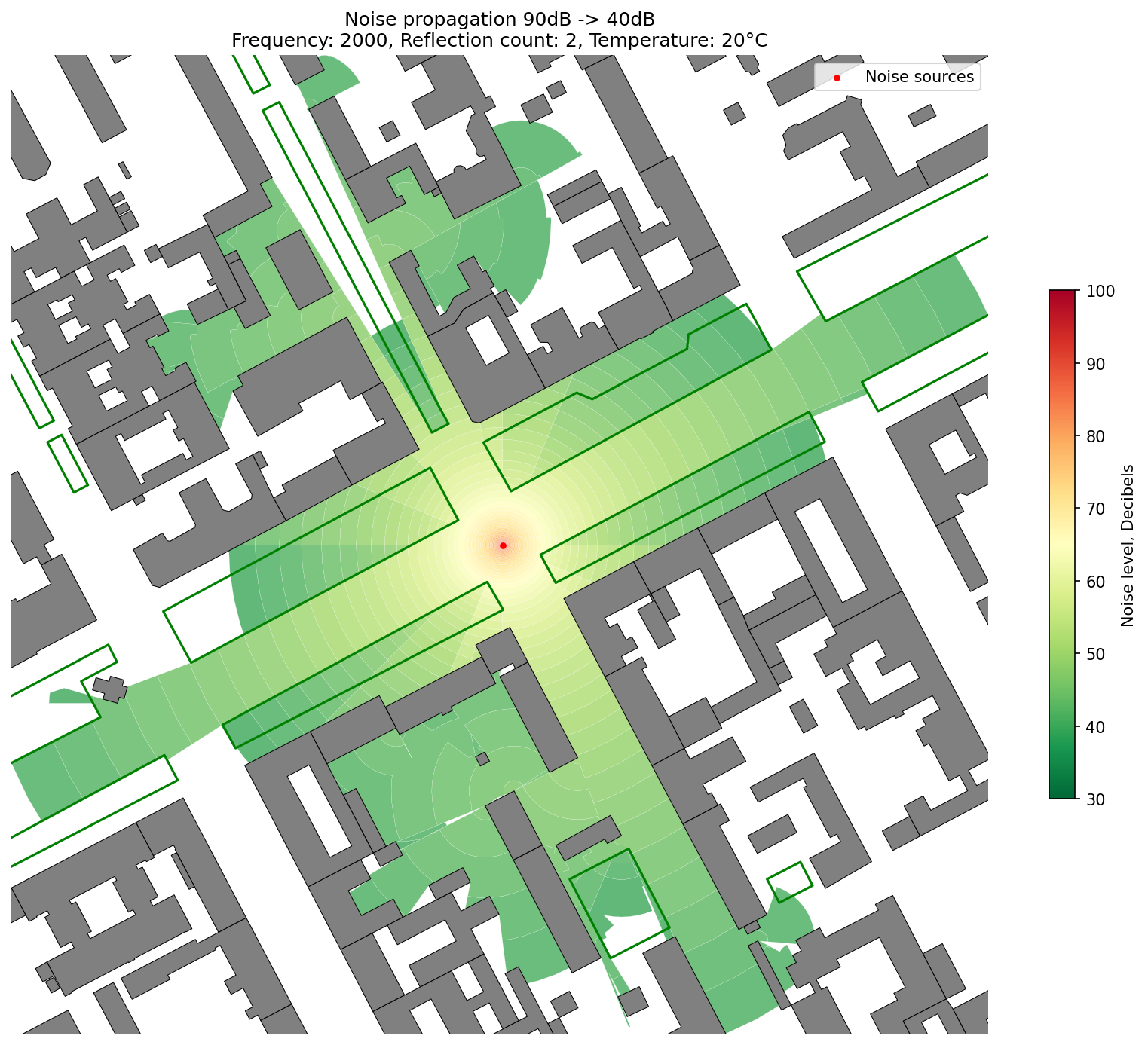

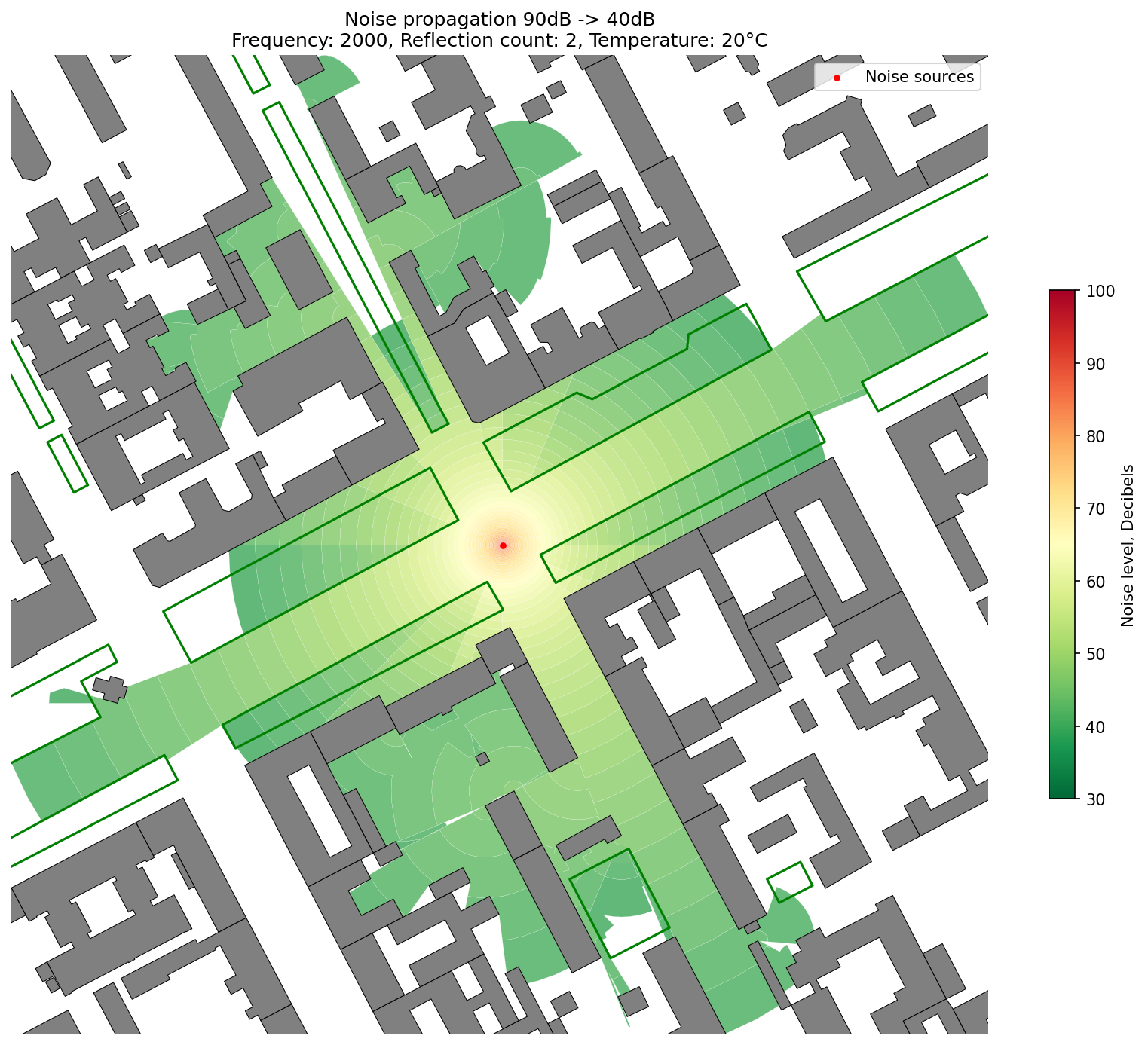

74 | 5. **[Noise Simulation](./examples/noise_simulation.ipynb)** — Simulates noise propagation from a set of source points, taking into account **obstacles**, **vegetation**, and **environmental factors**.

75 |

76 | 🔗 **[See detailed explanation in the Wiki](https://github.com/DDonnyy/ObjectNat/wiki/Noise-simulation)**

77 |

78 |

79 |  80 |

80 |

81 |

82 |

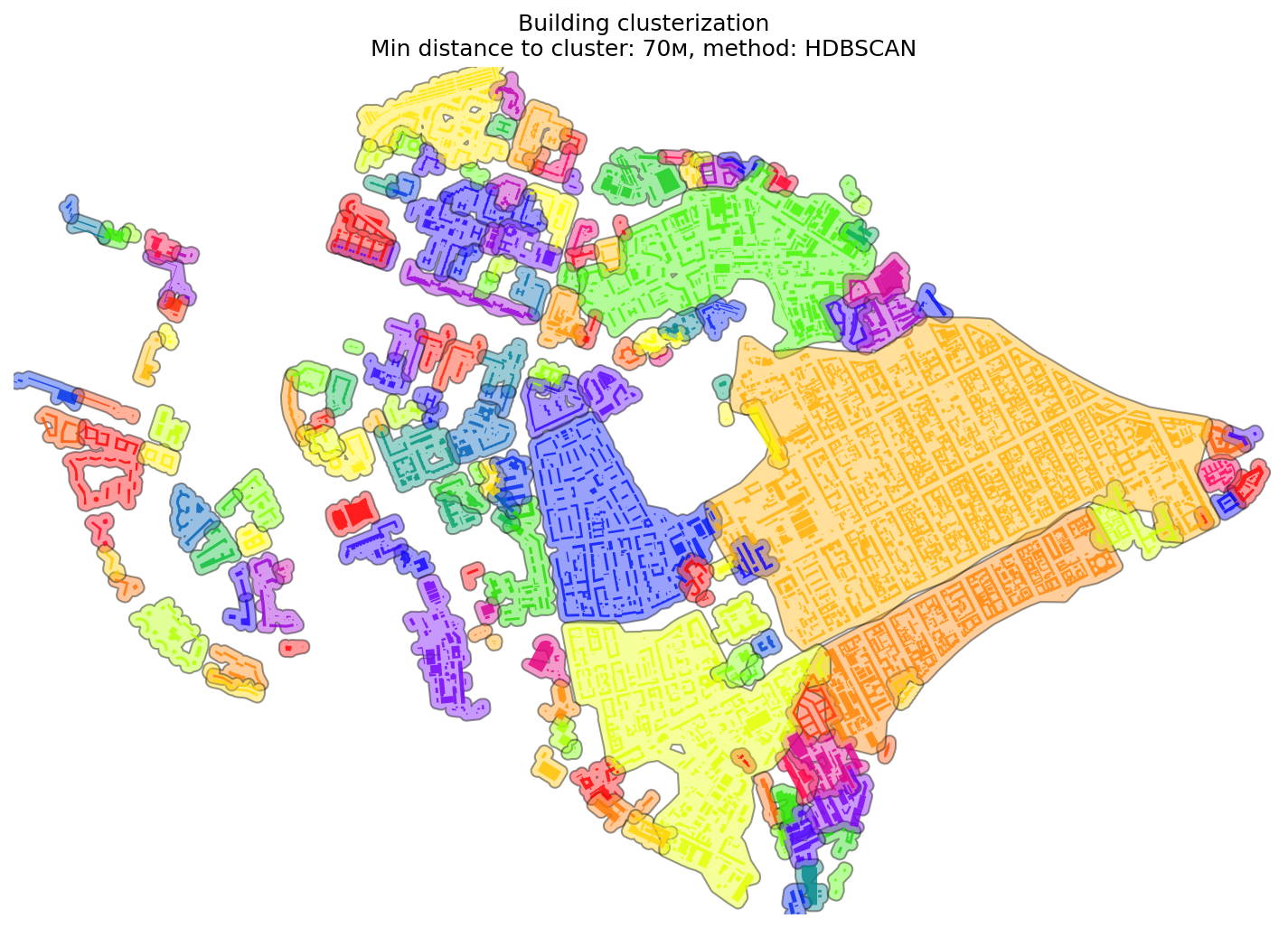

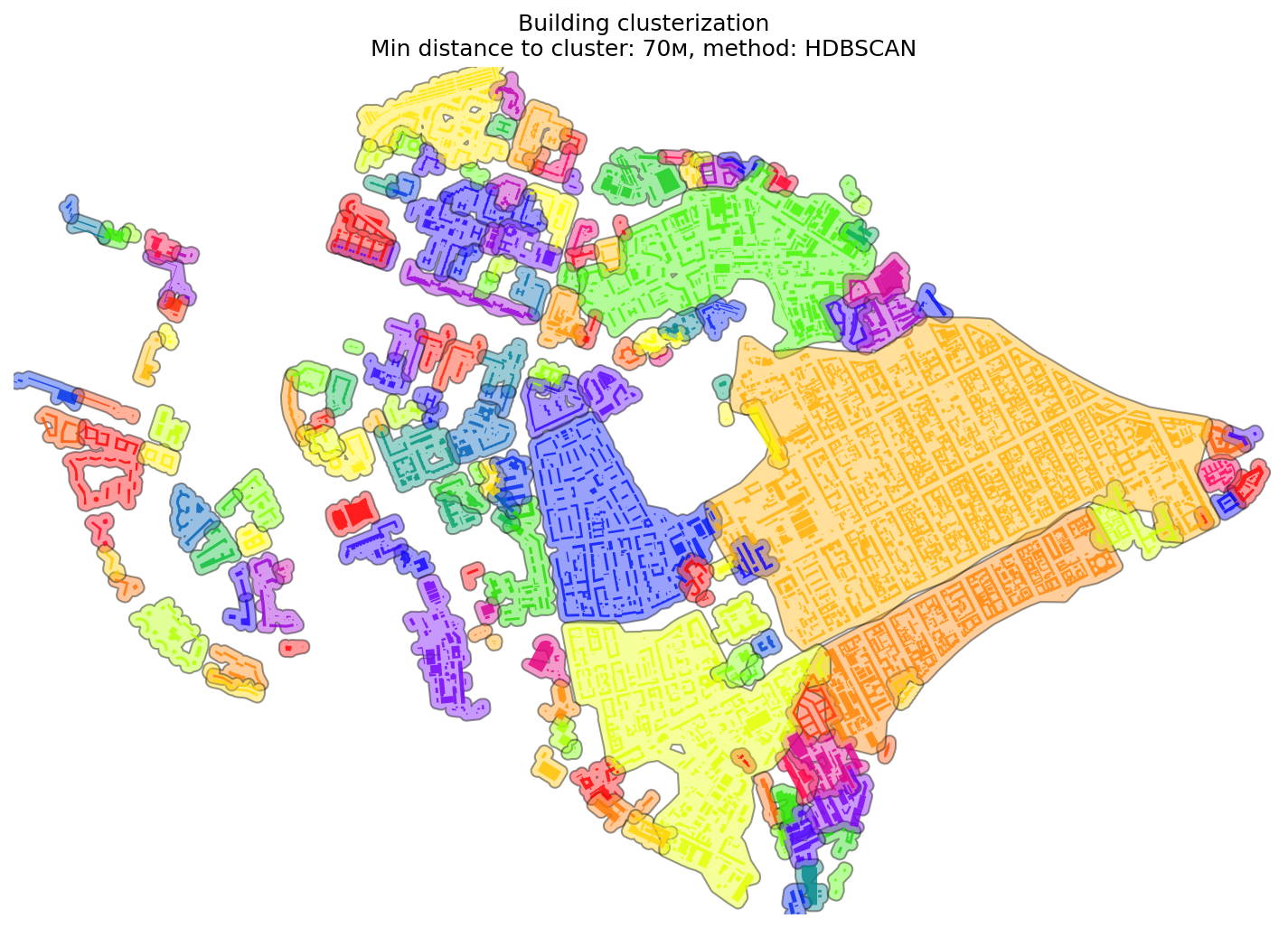

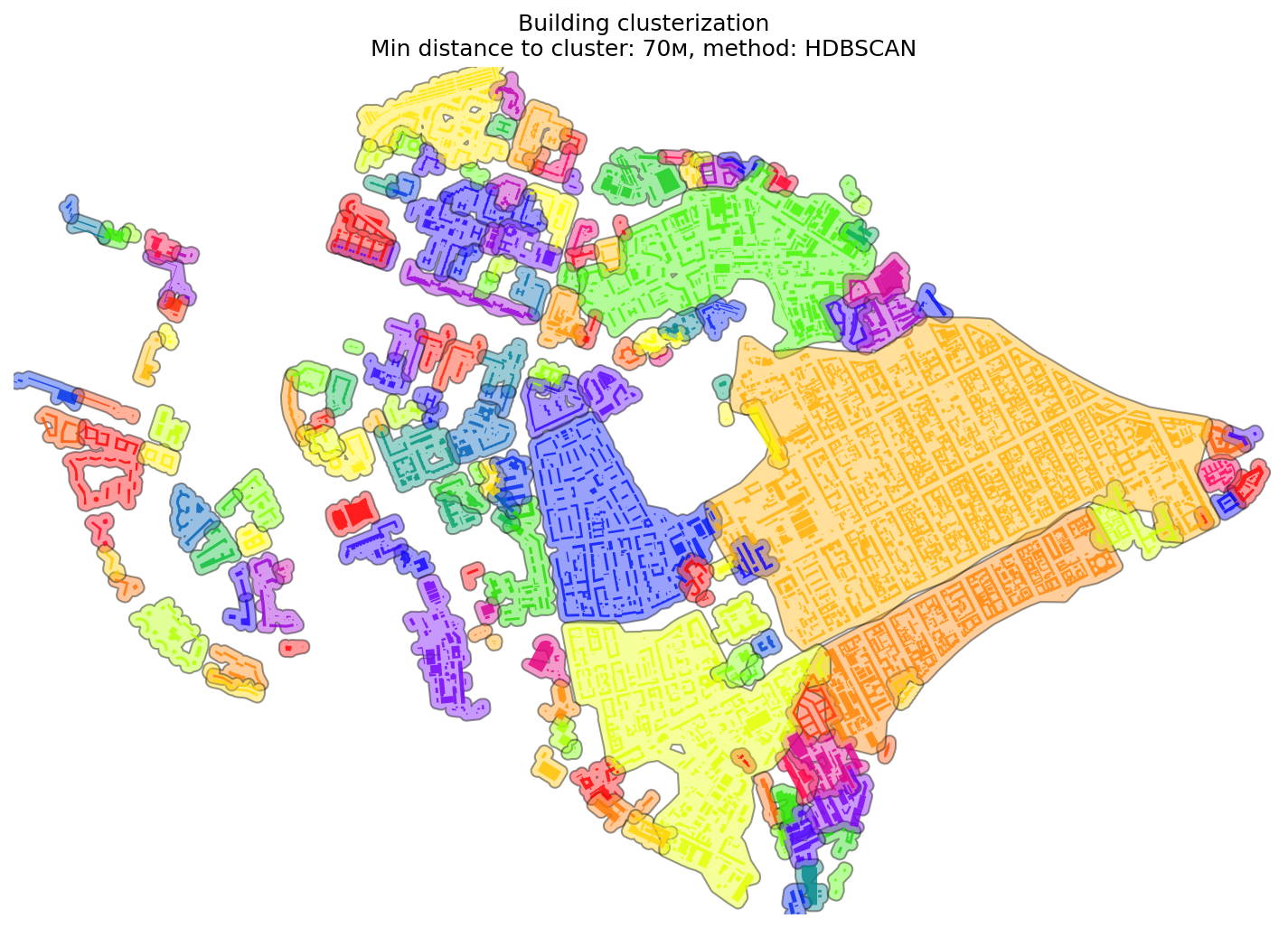

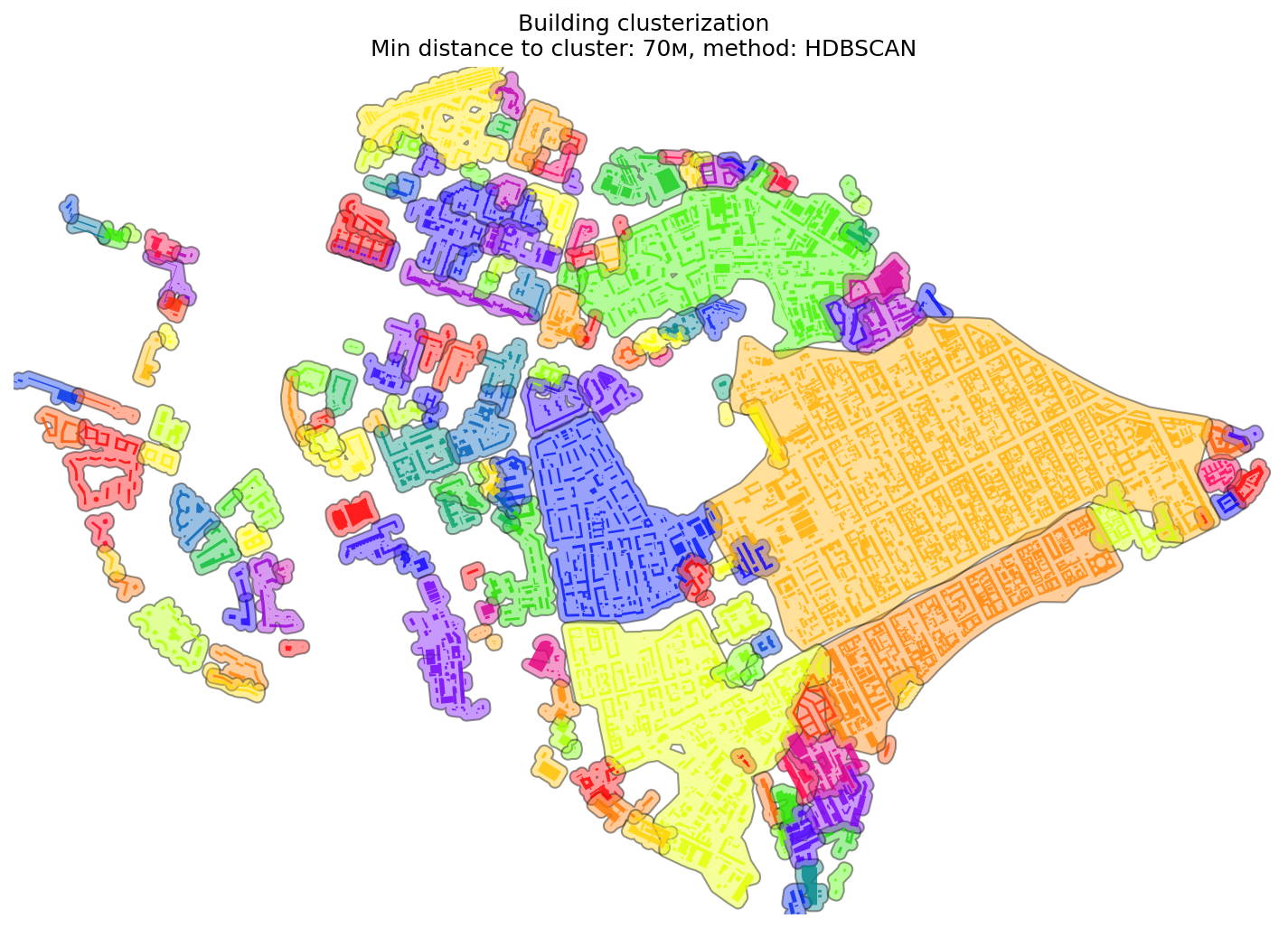

83 | 6. **[Point Clusterization](./examples/point_clusterization.ipynb)** — Function to generate **cluster polygons** from a set of input points based on:

84 | - Minimum **distance** between points.

85 | - Minimum **number of points** per cluster.

86 |

87 | Additionally, the function can calculate the **relative ratio** of different service types within each cluster, enabling spatial analysis of service composition.

88 |

89 |

90 |  91 |

91 |

92 |

93 | ## City graphs

94 |

95 | To ensure optimal performance of ObjectNat's geospatial analysis functions, it's recommended to utilize urban graphs sourced from the [IduEdu](https://github.com/DDonnyy/IduEdu) library.

96 | **IduEdu** is an open-source Python library designed for the creation and manipulation of complex city networks derived from OpenStreetMap data.

97 |

98 | **IduEdu** can be installed with ``pip``:

99 | ```

100 | pip install IduEdu

101 | ```

102 | ## Installation

103 |

104 | **ObjectNat** can be installed with ``pip``:

105 |

106 | ```

107 | pip install ObjectNat

108 | ```

109 | ### Configuration changes

110 |

111 | ```python

112 | from objectnat import config

113 |

114 | config.change_logger_lvl('INFO') # To mute all debug msgs

115 | config.set_enable_tqdm(False) # To mute all tqdm's progress bars

116 | ```

117 | ## Contacts

118 |

119 | - [NCCR](https://actcognitive.org/) - National Center for Cognitive Research

120 | - [IDU](https://idu.itmo.ru/) - Institute of Design and Urban Studies

121 | - [Natalya Chichkova](https://t.me/nancy_nat) - project manager

122 | - [Danila Oleynikov (Donny)](https://t.me/ddonny_dd) - lead software engineer

123 |

124 | ## Publications

125 |

--------------------------------------------------------------------------------

/README_ru.md:

--------------------------------------------------------------------------------

1 | # ObjectNat

2 |

3 | [](https://github.com/psf/black)

4 | [](https://pypi.org/project/objectnat/)

5 | [](https://github.com/DDonnyy/ObjecNat/actions/workflows/ci_pipeline.yml)

6 | [](https://codecov.io/gh/DDonnyy/ObjectNat)

7 | [](https://opensource.org/licenses/MIT)

8 |

9 |

10 |  11 |

11 |

12 |

13 | #### **ObjectNat** — это библиотека с открытым исходным кодом, разработанная командой **IDU** для пространственного анализа.

14 |

15 | ## Функции и как использовать

16 |

17 | 1. **[Изохроны и транспортная доступность](./examples/isochrone_generator.ipynb)** — Изохроны представляют собой области,

18 | достижимые из исходной точки за заданное время по транспортной сети.

19 | Эта функция позволяет анализировать транспортную доступность с использованием графов пешеходного, автомобильного,

20 | общественного транспорта или их комбинации.

21 |

22 | Библиотека поддерживает несколько методов построения изохрон:

23 | - **Базовые изохроны**: отображают одну зону, достижимую за заданное время.

24 | - **Шаговые изохроны**: делят зону доступности на интервалы времени (например, 3, 5, 10 минут).

25 |

26 |

27 |  28 |

28 |  29 |

29 |  30 |

30 |

31 |

32 |  33 |

33 |  34 |

34 |  35 |

35 |

36 |

37 | 2. **[Зоны покрытия](./examples/graph_coverage.ipynb)** — Функция генерации **зон покрытия** от набора исходных точек

38 | с использованием транспортной сети. Вычисляет область, достижимую из каждой точки по **времени в пути** или **дистанции**,

39 | затем строит полигоны с помощью **диаграмм Вороного** и обрезает их по заданной границе, если она указана.

40 |

41 |

42 |  43 |

43 |  44 |

44 |  45 |

45 |

46 |

47 |  48 |

48 |  49 |

49 |

50 |

51 | 3. **[Анализ обеспеченности сервисами](./examples/calculate_provision.ipynb)** — Функция оценки обеспеченности жилых зданий

52 | и их населения услугами (например, школы, поликлиники), которые имеют ограниченную **вместимость**

53 | и заданный **порог доступности** (в минутах или метрах). Функция моделирует **баланс спроса и предложения**,

54 | оценивая, насколько хорошо услуги удовлетворяют потребности близлежащих зданий в пределах допустимого времени.

55 |

56 | Библиотека также поддерживает:

57 | - **Перерасчёт** текущих результатов при изменении порога времени.

58 | - **Обрезку** результатов анализа по заданной зоне (например, границе района).

59 |

60 |

61 |  62 |

62 |  63 |

63 |  64 |

64 |

65 |

66 | 4. **[Анализ видимости](./examples/visibility_analysis.ipynb)** — Функция оценки видимости от заданной точки или множества

67 | точек до близлежащих зданий в пределах заданного радиуса. Применяется для оценки визуальной доступности в городской среде.

68 | Также реализован модуль для расчёта **зоны охвата** по видимости с использованием плотной сетки наблюдателей (рекомендуется ~1000 точек с шагом 10–20 метров).

69 | Точки можно сгенерировать по транспортной сети и распределить по её рёбрам.

70 |

71 | Модуль включает:

72 | - **Быстрый приближённый метод** для больших объёмов данных.

73 | - **Точный метод** для локального детального анализа.

74 |

75 |

76 |  77 |

77 |  78 |

78 |

79 |

80 | 5. **[Моделирование шума](./examples/noise_simulation.ipynb)** — Симуляция распространения шума от источников с учётом **препятствий**,

81 | **растительности** и **факторов окружающей среды**.

82 |

83 | 🔗 **[Подробное описание в Wiki](https://github.com/DDonnyy/ObjectNat/wiki/Симуляция-шумового-распространения)**

84 |

85 |

86 |  87 |

87 |

88 |

89 | 6. **[Кластеризация точек](./examples/point_clusterization.ipynb)** — Функция построения **кластерных полигонов** по множеству точек на основе:

90 | - Минимального **расстояния** между точками.

91 | - Минимального **числа точек** в кластере.

92 |

93 | Также функция может рассчитывать **соотношение типов услуг** в каждом кластере для пространственного анализа состава услуг.

94 |

95 |

96 |  97 |

97 |

98 |

99 | ## Городские графы

100 |

101 | Для достижения оптимальной производительности функций пространственного анализа ObjectNat рекомендуется использовать городские графы,

102 | полученные с помощью библиотеки [IduEdu](https://github.com/DDonnyy/IduEdu).

103 | **IduEdu** — это библиотека на Python с открытым исходным кодом, предназначенная для построения и обработки

104 | сложных городских сетей на основе данных OpenStreetMap.

105 |

106 | **IduEdu** можно установить с помощью ``pip``:

107 | ```

108 | pip install IduEdu

109 | ```

110 |

111 | ## Установка

112 |

113 | **ObjectNat** можно установить с помощью ``pip``:

114 |

115 | ```

116 | pip install ObjectNat

117 | ```

118 |

119 | ### Изменения конфигурации

120 |

121 | ```python

122 | from objectnat import config

123 |

124 | config.change_logger_lvl('INFO') # Чтобы отключить отладочные сообщения

125 | config.set_enable_tqdm(False) # Чтобы отключить прогресс-бары tqdm

126 | ```

127 |

128 | ## Контакты

129 |

130 | - [НЦКР](https://actcognitive.org/) — Национальный центр когнитивных разработок

131 | - [ИДУ](https://idu.itmo.ru/) — Институт дизайна и урбанистики

132 | - [Наталья Чичкова](https://t.me/nancy_nat) — менеджер проекта

133 | - [Данила Олейников (Donny)](https://t.me/ddonny_dd) — ведущий инженер-разработчик

134 |

135 | ## Публикации

136 |

--------------------------------------------------------------------------------

/examples/calculate_adjacency_matrix.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Building-to-Service Travel Time Matrix with Intermodal Graph\n",

8 | "This notebook demonstrates how to compute a time-based adjacency matrix between two GeoDataFrames\n",

9 | "(e.g., buildings and services) using a multimodal transport graph.\n",

10 | "\n",

11 | "The method utilizes the `IduEdu` library to:\n",

12 | "- Construct a multimodal graph (e.g., walk + public transport)\n",

13 | "- Calculate travel time-based adjacency matrix from one GeoDataFrame to another\n",

14 | "\n",

15 | "This matrix can be used in `ObjectNat` for further service provision analysis."

16 | ],

17 | "id": "9ca78071b77f245e"

18 | },

19 | {

20 | "metadata": {},

21 | "cell_type": "code",

22 | "source": [

23 | "# %%\n",

24 | "# Install required packages (uncomment if needed)\n",

25 | "# !pip install iduedu pyarrow"

26 | ],

27 | "id": "a3fd404cc3b83edd",

28 | "outputs": [],

29 | "execution_count": null

30 | },

31 | {

32 | "metadata": {},

33 | "cell_type": "code",

34 | "source": [

35 | "# Import necessary libraries\n",

36 | "from iduedu import get_intermodal_graph, get_adj_matrix_gdf_to_gdf\n",

37 | "import geopandas as gpd\n",

38 | "import pandas as pd\n",

39 | "from shapely.ops import unary_union"

40 | ],

41 | "id": "9a3e15f423bedc31",

42 | "outputs": [],

43 | "execution_count": null

44 | },

45 | {

46 | "metadata": {},

47 | "cell_type": "markdown",

48 | "source": [

49 | "### 1. Load Input Geospatial Data\n",

50 | "Load the GeoDataFrames of buildings (origins) and services (destinations).\n"

51 | ],

52 | "id": "68af599b21a7895d"

53 | },

54 | {

55 | "metadata": {},

56 | "cell_type": "code",

57 | "source": [

58 | "# Read building and service datasets\n",

59 | "buildings = gpd.read_parquet('examples_data/buildings.parquet')\n",

60 | "services = gpd.read_parquet('examples_data/services.parquet')"

61 | ],

62 | "id": "ecaca9093632eb44",

63 | "outputs": [],

64 | "execution_count": null

65 | },

66 | {

67 | "metadata": {},

68 | "cell_type": "markdown",

69 | "source": [

70 | "### 2. Create Coverage Polygon for Graph Download\n",

71 | "Compute a polygon that encompasses both datasets to define the spatial extent for graph download.\n",

72 | "This is done by computing a convex hull over all geometries and buffering it slightly.\n"

73 | ],

74 | "id": "5146507282cd8082"

75 | },

76 | {

77 | "metadata": {},

78 | "cell_type": "code",

79 | "source": [

80 | "polygon = unary_union(\n",

81 | " buildings.to_crs(4326).geometry.to_list() + services.to_crs(4326).geometry.to_list()\n",

82 | ").convex_hull.buffer(0.001)"

83 | ],

84 | "id": "74e684470ea483a1",

85 | "outputs": [],

86 | "execution_count": null

87 | },

88 | {

89 | "metadata": {},

90 | "cell_type": "markdown",

91 | "source": [

92 | "### 3. Download and Clip Intermodal Graph\n",

93 | "Download the intermodal (multi-modal) network graph using the defined polygon.\n",

94 | "This includes walking paths and public transport networks."

95 | ],

96 | "id": "b6b58fffdd714d38"

97 | },

98 | {

99 | "metadata": {},

100 | "cell_type": "code",

101 | "source": [

102 | "# Load multimodal graph clipped to polygon\n",

103 | "G_intermodal = get_intermodal_graph(polygon=polygon, clip_by_bounds=True)"

104 | ],

105 | "id": "1e643d3fdc052876",

106 | "outputs": [],

107 | "execution_count": null

108 | },

109 | {

110 | "metadata": {},

111 | "cell_type": "markdown",

112 | "source": [

113 | "### 4. Compute Adjacency Matrix (Travel Time)\n",

114 | "Calculate a travel-time-based adjacency matrix from buildings to services.\n",

115 | "\n",

116 | "Parameters:\n",

117 | "- `weight`: edge attribute used for cost (e.g., \"time_min\")\n",

118 | "- `threshold`: maximum allowed travel time (in minutes)"

119 | ],

120 | "id": "812757b2e10fe745"

121 | },

122 | {

123 | "metadata": {},

124 | "cell_type": "code",

125 | "source": [

126 | "# Compute travel time matrix (in minutes)\n",

127 | "matrix: pd.DataFrame = get_adj_matrix_gdf_to_gdf(\n",

128 | " gdf_from=buildings,\n",

129 | " gdf_to=services,\n",

130 | " nx_graph=G_intermodal,\n",

131 | " weight=\"time_min\",\n",

132 | " threshold=45\n",

133 | ")"

134 | ],

135 | "id": "f763ed1656707714",

136 | "outputs": [],

137 | "execution_count": null

138 | },

139 | {

140 | "metadata": {},

141 | "cell_type": "markdown",

142 | "source": [

143 | "### 5. Save Adjacency Matrix\n",

144 | "Export the result for further processing, e.g., with `ObjectNat`'s service provision tools."

145 | ],

146 | "id": "8c56b37f6c2f508f"

147 | },

148 | {

149 | "cell_type": "code",

150 | "source": [

151 | "# Save matrix to Parquet format\n",

152 | "matrix.to_parquet('examples_data/matrix_time.parquet')"

153 | ],

154 | "metadata": {},

155 | "id": "371f4607ed8ec9c9",

156 | "outputs": [],

157 | "execution_count": null

158 | }

159 | ],

160 | "metadata": {

161 | "kernelspec": {

162 | "display_name": "Python 3",

163 | "language": "python",

164 | "name": "python3"

165 | },

166 | "language_info": {

167 | "codemirror_mode": {

168 | "name": "ipython",

169 | "version": 2

170 | },

171 | "file_extension": ".py",

172 | "mimetype": "text/x-python",

173 | "name": "python",

174 | "nbconvert_exporter": "python",

175 | "pygments_lexer": "ipython2",

176 | "version": "2.7.6"

177 | }

178 | },

179 | "nbformat": 4,

180 | "nbformat_minor": 5

181 | }

182 |

--------------------------------------------------------------------------------

/examples/calculate_provision.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Service Provision Analysis\n",

8 | "This notebook demonstrates how to analyze service accessibility from buildings using precomputed distances:\n",

9 | "- Compute basic service provision\n",

10 | "- Adjust provision thresholds\n",

11 | "- Clip provision to specific subareas"

12 | ],

13 | "id": "8412dc445f8b77de"

14 | },

15 | {

16 | "metadata": {},

17 | "cell_type": "code",

18 | "source": [

19 | "# Import necessary libraries\n",

20 | "from objectnat import get_service_provision, recalculate_links, clip_provision\n",

21 | "import geopandas as gpd\n",

22 | "import pandas as pd"

23 | ],

24 | "id": "8bd677b0f6ad1c1",

25 | "outputs": [],

26 | "execution_count": null

27 | },

28 | {

29 | "metadata": {},

30 | "cell_type": "markdown",

31 | "source": [

32 | "### 1. Load Input Data\n",

33 | "Load buildings, services, and an adjacency matrix of distances or travel times between them. All layers are reprojected to UTM (EPSG:32636) for consistency.\n"

34 | ],

35 | "id": "133bb319e2fd5ec3"

36 | },

37 | {

38 | "metadata": {},

39 | "cell_type": "code",

40 | "source": [

41 | "# Load datasets\n",

42 | "buildings = gpd.read_parquet(\"examples_data/buildings.parquet\")\n",

43 | "services = gpd.read_parquet(\"examples_data/services.parquet\")\n",

44 | "adjacency_matrix = pd.read_parquet(\"examples_data/matrix_time.parquet\")"

45 | ],

46 | "id": "4f2059500cec3f8b",

47 | "outputs": [],

48 | "execution_count": null

49 | },

50 | {

51 | "metadata": {},

52 | "cell_type": "markdown",

53 | "source": [

54 | "### 2. Compute Initial Service Provision\n",

55 | "Compute how well buildings are served by nearby services using the `get_service_provision()` function.\n",

56 | "The `threshold` parameter defines the maximum distance or time for service availability."

57 | ],

58 | "id": "3072fc796157aab5"

59 | },

60 | {

61 | "metadata": {},

62 | "cell_type": "code",

63 | "source": [

64 | "# Compute service provision using a threshold of 10 (e.g., minutes)\n",

65 | "buildings_prov, services_prov, links_prov = get_service_provision(\n",

66 | " buildings=buildings,\n",

67 | " services=services,\n",

68 | " adjacency_matrix=adjacency_matrix,\n",

69 | " threshold=10\n",

70 | ")\n",

71 | "# This returns updated buildings, services, and links GeoDataFrames with provision status and metrics."

72 | ],

73 | "id": "5495c4c389c1d17",

74 | "outputs": [],

75 | "execution_count": null

76 | },

77 | {

78 | "metadata": {},

79 | "cell_type": "markdown",

80 | "source": [

81 | "### 3. Visualize Service Provision\n",

82 | "Use an interactive map to inspect which buildings are well-served and which are underserved.\n"

83 | ],

84 | "id": "f267ccc667dea286"

85 | },

86 | {

87 | "metadata": {},

88 | "cell_type": "code",

89 | "source": [

90 | "# Visualize provision by average distance to services\n",

91 | "m = buildings_prov.reset_index().explore(column=\"avg_dist\", cmap=\"RdYlGn_r\", tiles=\"CartoDB positron\")\n",

92 | "\n",

93 | "# Overlay service locations (in red)\n",

94 | "services_prov.explore(m=m, color=\"red\")\n",

95 | "\n",

96 | "# Uncomment to show service links (color-coded by service index)\n",

97 | "# links_prov.explore(m=m, column='service_index', cmap='prism', style_kwds={'opacity': 0.5})"

98 | ],

99 | "id": "dbdacc6bef92bc83",

100 | "outputs": [],

101 | "execution_count": null

102 | },

103 | {

104 | "metadata": {},

105 | "cell_type": "markdown",

106 | "source": [

107 | "### 4. Recalculate Provision with New Threshold\n",

108 | "Update the service provision based on a new threshold (e.g., longer acceptable walking or travel time).\n"

109 | ],

110 | "id": "9f941a72e57af722"

111 | },

112 | {

113 | "metadata": {},

114 | "cell_type": "code",

115 | "source": [

116 | "# Determine color scaling from original results\n",

117 | "vmax = buildings_prov['avg_dist'].max()\n",

118 | "\n",

119 | "# Recompute provision using a threshold of 15\n",

120 | "buildings_prov2, services_prov2, links_prov2 = recalculate_links(\n",

121 | " buildings_prov,\n",

122 | " services_prov,\n",

123 | " links_prov,\n",

124 | " new_max_dist=15\n",

125 | ")\n",

126 | "\n",

127 | "# Visualize updated provision with consistent color scale\n",

128 | "m2 = buildings_prov2.reset_index().explore(column=\"avg_dist\", cmap=\"RdYlGn_r\", tiles=\"CartoDB positron\", vmax=vmax)\n",

129 | "\n",

130 | "services_prov2.explore(m=m2, color=\"red\")\n",

131 | "# Uncomment to show service links (color-coded by service index)\n",

132 | "# links_prov2.explore(m=m2, column='service_index', cmap='prism', style_kwds={'opacity': 0.5})"

133 | ],

134 | "id": "ddeb3b14e59993",

135 | "outputs": [],

136 | "execution_count": null

137 | },

138 | {

139 | "metadata": {},

140 | "cell_type": "markdown",

141 | "source": [

142 | "### 5. Clip Provision to a Subarea\n",

143 | "Limit the analysis to a specific geographic region using any interested area.\n"

144 | ],

145 | "id": "4d8eaec54833393"

146 | },

147 | {

148 | "metadata": {},

149 | "cell_type": "code",

150 | "source": [

151 | "# Select a few buildings and buffer them to define a clipping area\n",

152 | "clip_area = buildings.iloc[500:503].copy()\n",

153 | "clip_area[\"geometry\"] = clip_area.geometry.buffer(500)\n",

154 | "\n",

155 | "# Clip provision to selected subarea\n",

156 | "buildings_prov_clipped, services_prov_clipped, links_prov_clipped = clip_provision(\n",

157 | " buildings_prov2,\n",

158 | " services_prov2,\n",

159 | " links_prov2,\n",

160 | " selection_zone=clip_area\n",

161 | ")\n",

162 | "\n",

163 | "# Visualize the clipped results\n",

164 | "m3 = buildings_prov_clipped.reset_index().explore(column=\"avg_dist\", cmap=\"RdYlGn_r\", tiles=\"CartoDB positron\",\n",

165 | " vmax=vmax)\n",

166 | "\n",

167 | "\n",

168 | "services_prov_clipped.explore(m=m3, color=\"red\")\n",

169 | "# Uncomment to show service links (color-coded by service index)\n",

170 | "# links_prov_clipped.explore(m=m3, column='service_index', cmap='prism', style_kwds={'opacity': 0.5})"

171 | ],

172 | "id": "950866ceb91eb982",

173 | "outputs": [],

174 | "execution_count": null

175 | }

176 | ],

177 | "metadata": {

178 | "kernelspec": {

179 | "display_name": "Python 3",

180 | "language": "python",

181 | "name": "python3"

182 | },

183 | "language_info": {

184 | "codemirror_mode": {

185 | "name": "ipython",

186 | "version": 2

187 | },

188 | "file_extension": ".py",

189 | "mimetype": "text/x-python",

190 | "name": "python",

191 | "nbconvert_exporter": "python",

192 | "pygments_lexer": "ipython2",

193 | "version": "2.7.6"

194 | }

195 | },

196 | "nbformat": 4,

197 | "nbformat_minor": 5

198 | }

199 |

--------------------------------------------------------------------------------

/examples/coverage_zones.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Graph Coverage Analysis for Service Points\n",

8 | "\n",

9 | "This notebook demonstrates how to calculate coverage zones from service points through a multimodal transportation network using Dijkstra's algorithm and Voronoi diagrams."

10 | ],

11 | "id": "4dc8b5b9755684aa"

12 | },

13 | {

14 | "metadata": {},

15 | "cell_type": "code",

16 | "source": [

17 | "# Install required packages (uncomment if needed)\n",

18 | "# !pip install iduedu pyarrow objectnat"

19 | ],

20 | "id": "db56d2861ab407be",

21 | "outputs": [],

22 | "execution_count": null

23 | },

24 | {

25 | "metadata": {},

26 | "cell_type": "code",

27 | "source": [

28 | "# Import dependencies\n",

29 | "from iduedu import get_intermodal_graph, get_boundary\n",

30 | "import geopandas as gpd\n",

31 | "from objectnat import get_graph_coverage,get_stepped_graph_coverage,get_radius_coverage"

32 | ],

33 | "id": "d568e9b461c6086a",

34 | "outputs": [],

35 | "execution_count": null

36 | },

37 | {

38 | "metadata": {},

39 | "cell_type": "markdown",

40 | "source": [

41 | "### 1. Load Transportation Network\n",

42 | "First, we retrieve the multimodal graph (roads, public transport, etc.) for a specified region using its OSM ID."

43 | ],

44 | "id": "5084078ebb6beef2"

45 | },

46 | {

47 | "cell_type": "code",

48 | "id": "initial_id",

49 | "metadata": {

50 | "collapsed": true

51 | },

52 | "source": [

53 | "# Get city boundary and transportation network\n",

54 | "poly = get_boundary(osm_id=1114252) # Example OSM ID for a city\n",

55 | "G_intermodal = get_intermodal_graph(polygon=poly, clip_by_bounds=True)"

56 | ],

57 | "outputs": [],

58 | "execution_count": null

59 | },

60 | {

61 | "metadata": {},

62 | "cell_type": "markdown",

63 | "source": [

64 | "### 2. Load Service Points\n",

65 | "These represent locations (e.g., healthcare facilities, schools) for which we want to calculate coverage zones."

66 | ],

67 | "id": "18c5a199616284b0"

68 | },

69 | {

70 | "metadata": {},

71 | "cell_type": "code",

72 | "source": [

73 | "# Load service points (replace with your actual data path)\n",

74 | "services = gpd.read_parquet('examples_data/services.parquet')"

75 | ],

76 | "id": "dc4ae5fbd2374af4",

77 | "outputs": [],

78 | "execution_count": null

79 | },

80 | {

81 | "metadata": {},

82 | "cell_type": "markdown",

83 | "source": [

84 | "### 3. Calculate Coverage by Distance\n",

85 | "Creates service areas based on maximum travel distance (800 meters in this example)."

86 | ],

87 | "id": "9e3ebbd07107a149"

88 | },

89 | {

90 | "metadata": {},

91 | "cell_type": "code",

92 | "source": [

93 | "# Calculate coverage zones by distance (800m cutoff)\n",

94 | "result_length = get_graph_coverage(\n",

95 | " gdf_to=services,\n",

96 | " nx_graph=G_intermodal,\n",

97 | " weight_type=\"length_meter\",\n",

98 | " weight_value_cutoff=800\n",

99 | ")\n",

100 | "\n",

101 | "# Visualize results\n",

102 | "result_length.explore(column='name', tiles='CartoDB Positron')"

103 | ],

104 | "id": "239ae7c0ed8604a1",

105 | "outputs": [],

106 | "execution_count": null

107 | },

108 | {

109 | "metadata": {},

110 | "cell_type": "markdown",

111 | "source": [

112 | "### 4. Calculate Coverage by Travel Time\n",

113 | "Creates service areas based on maximum travel time (10 minutes in this example), clipped to the city boundary."

114 | ],

115 | "id": "5f8d6888bdbf4716"

116 | },

117 | {

118 | "metadata": {},

119 | "cell_type": "code",

120 | "source": [

121 | "# Prepare zone boundary\n",

122 | "zone = gpd.GeoDataFrame(geometry=[poly], crs=4326)\n",

123 | "\n",

124 | "# Calculate coverage zones by time (10min cutoff)\n",

125 | "result_time = get_graph_coverage(\n",

126 | " gdf_to=services,\n",

127 | " nx_graph=G_intermodal,\n",

128 | " weight_type=\"time_min\",\n",

129 | " weight_value_cutoff=10,\n",

130 | " zone=zone\n",

131 | ")\n",

132 | "# Visualize results\n",

133 | "result_time.explore(column='name', tiles='CartoDB Positron')"

134 | ],

135 | "id": "3fe99dad99d0c066",

136 | "outputs": [],

137 | "execution_count": null

138 | },

139 | {

140 | "metadata": {},

141 | "cell_type": "markdown",

142 | "source": [

143 | "### Key Parameters Explained:\n",

144 | "- `weight_type`: \n",

145 | " - `\"length_meter\"` for distance-based coverage\n",

146 | " - `\"time_min\"` for time-based coverage\n",

147 | "- `weight_value_cutoff`: Maximum travel distance/time threshold\n",

148 | "- `zone` (optional): Boundary polygon to clip results"

149 | ],

150 | "id": "ac980132a47eb200"

151 | },

152 | {

153 | "metadata": {},

154 | "cell_type": "markdown",

155 | "source": [

156 | "### 5. Calculate stepped Coverage by Travel Time\n",

157 | "This method divides the total travel time threshold into steps (e.g. every 2 minutes), creating incremental zones.\n",

158 | "Useful for visualizing service accessibility gradients.\n",

159 | "\n",

160 | "You can choose the visualization method:\n",

161 | "- `\"voronoi\"`: polygons based on proximity to reachable network nodes\n",

162 | "- `\"separate\"`: independent buffer zones for each interval\n"

163 | ],

164 | "id": "f6965db2be4d9e73"

165 | },

166 | {

167 | "metadata": {},

168 | "cell_type": "code",

169 | "source": [

170 | "stepped_cov_voronoi = get_stepped_graph_coverage(\n",

171 | " gdf_to=services,\n",

172 | " nx_graph=G_intermodal,\n",

173 | " weight_type=\"time_min\",\n",

174 | " step_type='voronoi',\n",

175 | " weight_value_cutoff=15,\n",

176 | " step=2,\n",

177 | " zone=zone\n",

178 | ")\n",

179 | "# Visualize stepped coverage\n",

180 | "stepped_cov_voronoi.explore(column='dist', tiles='CartoDB Positron')"

181 | ],

182 | "id": "4f8d216ed2c9b472",

183 | "outputs": [],

184 | "execution_count": null

185 | },

186 | {

187 | "metadata": {},

188 | "cell_type": "code",

189 | "source": [

190 | "stepped_cov_voronoi = get_stepped_graph_coverage(\n",

191 | " gdf_to=services,\n",

192 | " nx_graph=G_intermodal,\n",

193 | " weight_type=\"time_min\",\n",

194 | " step_type='separate',\n",

195 | " weight_value_cutoff=15,\n",

196 | " step=2,\n",

197 | ")\n",

198 | "# Visualize stepped coverage\n",

199 | "stepped_cov_voronoi.explore(column='dist', tiles='CartoDB Positron',vmin=0)"

200 | ],

201 | "id": "208ee93a136558ac",

202 | "outputs": [],

203 | "execution_count": null

204 | },

205 | {

206 | "metadata": {},

207 | "cell_type": "markdown",

208 | "source": [

209 | "### 6. Calculate Radius-Based Coverage\n",

210 | "If a transport network is unavailable or unnecessary, use simple circular buffers around service points.\n",

211 | "\n",

212 | "This method creates geometric buffers with specified radius (e.g., 500 meters)."

213 | ],

214 | "id": "53ecdf19492c5b74"

215 | },

216 | {

217 | "metadata": {},

218 | "cell_type": "code",

219 | "source": [

220 | "radius_cov = get_radius_coverage(gdf_from=services, radius=500)\n",

221 | "# Visualize radius coverage\n",

222 | "radius_cov.explore(column='name', tiles='CartoDB Positron')"

223 | ],

224 | "id": "2908f41d0603713f",

225 | "outputs": [],

226 | "execution_count": null

227 | }

228 | ],

229 | "metadata": {

230 | "kernelspec": {

231 | "display_name": "Python 3",

232 | "language": "python",

233 | "name": "python3"

234 | },

235 | "language_info": {

236 | "codemirror_mode": {

237 | "name": "ipython",

238 | "version": 2

239 | },

240 | "file_extension": ".py",

241 | "mimetype": "text/x-python",

242 | "name": "python",

243 | "nbconvert_exporter": "python",

244 | "pygments_lexer": "ipython2",

245 | "version": "2.7.6"

246 | }

247 | },

248 | "nbformat": 4,

249 | "nbformat_minor": 5

250 | }

251 |

--------------------------------------------------------------------------------

/examples/examples_data/buildings.parquet:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DDonnyy/ObjectNat/3c29a7b7c0ea5c944fe2f98c368fcfc99b51bf96/examples/examples_data/buildings.parquet

--------------------------------------------------------------------------------

/examples/examples_data/matrix_time.parquet:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DDonnyy/ObjectNat/3c29a7b7c0ea5c944fe2f98c368fcfc99b51bf96/examples/examples_data/matrix_time.parquet

--------------------------------------------------------------------------------

/examples/examples_data/services.parquet:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DDonnyy/ObjectNat/3c29a7b7c0ea5c944fe2f98c368fcfc99b51bf96/examples/examples_data/services.parquet

--------------------------------------------------------------------------------

/examples/examples_data/trees.parquet:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DDonnyy/ObjectNat/3c29a7b7c0ea5c944fe2f98c368fcfc99b51bf96/examples/examples_data/trees.parquet

--------------------------------------------------------------------------------

/examples/isochrone_generator.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Isochrone Analysis from Points of Interest\n",

8 | "This notebook demonstrates how to generate accessibility isochrones from single or multiple points using different methods:\n",

9 | "- Simple `radius` and `ways` isochrones\n",

10 | "- Stepped isochrones with customizable intervals"

11 | ],

12 | "id": "fa4563c984470740"

13 | },

14 | {

15 | "metadata": {},

16 | "cell_type": "code",

17 | "source": [

18 | "# Install required packages (uncomment if needed)\n",

19 | "# !pip install objectnat iduedu"

20 | ],

21 | "id": "9e7d002c63864de6",

22 | "outputs": [],

23 | "execution_count": null

24 | },

25 | {

26 | "metadata": {},

27 | "cell_type": "code",

28 | "source": [

29 | "# Import necessary libraries\n",

30 | "from iduedu import get_intermodal_graph, get_boundary\n",

31 | "import geopandas as gpd\n",

32 | "from shapely import Point\n",

33 | "from objectnat import get_accessibility_isochrones, get_accessibility_isochrone_stepped"

34 | ],

35 | "id": "ebe2025fab286b4e",

36 | "outputs": [],

37 | "execution_count": null

38 | },

39 | {

40 | "metadata": {},

41 | "cell_type": "markdown",

42 | "source": [

43 | "### 1. Load Intermodal Graph\n",

44 | "Load a multimodal transportation graph (roads, public transport, etc.) for a specific region using its OSM ID."

45 | ],

46 | "id": "494425a561aa50ec"

47 | },

48 | {

49 | "cell_type": "code",

50 | "source": [

51 | "# Load boundary and graph for a specific region using OSM ID 1114252.\n",

52 | "poly = get_boundary(osm_id=1114252)\n",

53 | "G_intermodal = get_intermodal_graph(polygon=poly, clip_by_bounds=True)"

54 | ],

55 | "metadata": {

56 | "collapsed": false

57 | },

58 | "id": "df20dec8ac67c8da",

59 | "outputs": [],

60 | "execution_count": null

61 | },

62 | {

63 | "metadata": {},

64 | "cell_type": "markdown",

65 | "source": [

66 | "### 2. Create Points of Interest\n",

67 | "Define one or more source points from which isochrones will be generated."

68 | ],

69 | "id": "917bf19ff192faac"

70 | },

71 | {

72 | "metadata": {},

73 | "cell_type": "code",

74 | "source": [

75 | "# Define a single point of interest\n",

76 | "point = gpd.GeoDataFrame(geometry=[Point(30.27060176, 59.93546846)], crs=4326)"

77 | ],

78 | "id": "f6045b6fa1bc7c37",

79 | "outputs": [],

80 | "execution_count": null

81 | },

82 | {

83 | "metadata": {},

84 | "cell_type": "markdown",

85 | "source": [

86 | "### 3. Generate Radius Isochrones\n",

87 | "Create circular isochrones using a travel time threshold (e.g. 10 minutes)."

88 | ],

89 | "id": "7127732b199acab6"

90 | },

91 | {

92 | "metadata": {},

93 | "cell_type": "code",

94 | "source": [

95 | "isochrones_radius, stops_r, routes_r = get_accessibility_isochrones(\n",

96 | " isochrone_type='radius',\n",

97 | " points=point,\n",

98 | " weight_type=\"time_min\",\n",

99 | " weight_value=10,\n",

100 | " nx_graph=G_intermodal\n",

101 | ")\n",

102 | "\n",

103 | "# Visualize\n",

104 | "m = isochrones_radius.explore(tiles='CartoDB Positron')\n",

105 | "stops_r.explore(m=m)\n",

106 | "routes_r.explore(m=m, column='type')"

107 | ],

108 | "id": "f71a6d15e3dc7eed",

109 | "outputs": [],

110 | "execution_count": null

111 | },

112 | {

113 | "metadata": {},

114 | "cell_type": "markdown",

115 | "source": [

116 | "### 4. Generate Ways Isochrones\n",

117 | "Create road network-based polygons representing reachable areas within a time or distance threshold."

118 | ],

119 | "id": "1e9a3026b7537291"

120 | },

121 | {

122 | "metadata": {},

123 | "cell_type": "code",

124 | "source": [

125 | "isochrones_ways, stops_w, routes_w = get_accessibility_isochrones(\n",

126 | " isochrone_type='ways',\n",

127 | " points=point,\n",

128 | " weight_type=\"time_min\",\n",

129 | " weight_value=10,\n",

130 | " nx_graph=G_intermodal\n",

131 | ")\n",

132 | "\n",

133 | "# Visualize\n",

134 | "m = isochrones_ways.explore(tiles='CartoDB Positron')\n",

135 | "stops_w.explore(m=m)\n",

136 | "routes_w.explore(m=m, column='type')"

137 | ],

138 | "id": "b4bd257c0bafea8a",

139 | "outputs": [],

140 | "execution_count": null

141 | },

142 | {

143 | "metadata": {},

144 | "cell_type": "markdown",

145 | "source": [

146 | "### 5. Compare Isochrone Types\n",

147 | "Overlay both types of isochrones to compare coverage."

148 | ],

149 | "id": "38499d16fdc1991e"

150 | },

151 | {

152 | "metadata": {},

153 | "cell_type": "code",

154 | "source": [

155 | "m = isochrones_radius.explore(tiles='CartoDB Positron', color='blue', name='Radius')\n",

156 | "isochrones_ways.explore(m=m, color='red', name='Ways')"

157 | ],

158 | "id": "3565f5290601d78b",

159 | "outputs": [],

160 | "execution_count": null

161 | },

162 | {

163 | "metadata": {},

164 | "cell_type": "markdown",

165 | "source": [

166 | "### 6. Generate Stepped Isochrones (Radius)\n",

167 | "Create concentric buffer zones with stepped intervals (e.g. every 3 minutes).\n"

168 | ],

169 | "id": "739b0d17e9be6e02"

170 | },

171 | {

172 | "metadata": {},

173 | "cell_type": "code",

174 | "source": [

175 | "stepped_radius, stops_s1, routes_s1 = get_accessibility_isochrone_stepped(\n",

176 | " isochrone_type='radius',\n",

177 | " point=point,\n",

178 | " weight_type=\"time_min\",\n",

179 | " weight_value=15,\n",

180 | " nx_graph=G_intermodal,\n",

181 | " step=3\n",

182 | ")\n",

183 | "\n",

184 | "stepped_radius.explore(tiles='CartoDB Positron', column='dist')\n"

185 | ],

186 | "id": "8e0792ac42ed50d4",

187 | "outputs": [],

188 | "execution_count": null

189 | },

190 | {

191 | "metadata": {},

192 | "cell_type": "markdown",

193 | "source": [

194 | "### 7. Generate Stepped Isochrones (Ways)\n",

195 | "Create layered polygons in the road network with custom intervals (e.g. every 3 minutes).\n"

196 | ],

197 | "id": "5320bc04bdc43135"

198 | },

199 | {

200 | "metadata": {},

201 | "cell_type": "code",

202 | "source": [

203 | "stepped_ways, stops_s2, routes_s2 = get_accessibility_isochrone_stepped(\n",

204 | " isochrone_type='ways',\n",

205 | " point=point,\n",

206 | " weight_type=\"time_min\",\n",

207 | " weight_value=15,\n",

208 | " nx_graph=G_intermodal,\n",

209 | " step=3\n",

210 | ")\n",

211 | "stepped_ways.explore(tiles='CartoDB Positron', column='dist')"

212 | ],

213 | "id": "adb0b77046b2301e",

214 | "outputs": [],

215 | "execution_count": null

216 | },

217 | {

218 | "metadata": {},

219 | "cell_type": "markdown",

220 | "source": [

221 | "### 8. Generate Stepped Isochrones (Separate)\n",

222 | "Create distinct buffer rings for each interval."

223 | ],

224 | "id": "350e096ecc82ec2f"

225 | },

226 | {

227 | "metadata": {},

228 | "cell_type": "code",

229 | "source": [

230 | "stepped_separate, stops_s3, routes_s3 = get_accessibility_isochrone_stepped(\n",

231 | " isochrone_type='separate',\n",

232 | " point=point,\n",

233 | " weight_type=\"time_min\",\n",

234 | " weight_value=10,\n",

235 | " nx_graph=G_intermodal,\n",

236 | " step=2\n",

237 | ")\n",

238 | "\n",

239 | "stepped_separate.explore(tiles='CartoDB Positron', column='dist')"

240 | ],

241 | "id": "ae3cd6bd7384375e",

242 | "outputs": [],

243 | "execution_count": null

244 | },

245 | {

246 | "metadata": {},

247 | "cell_type": "markdown",

248 | "source": [

249 | "### Key Parameter Summary:\n",

250 | "- `isochrone_type`: `'radius'`, `'ways'`, or `'separate'`\n",

251 | "- `weight_type`: `'time_min'` (minutes) or `'length_meter'` (meters)\n",

252 | "- `weight_value`: total cutoff (e.g. 10 minutes)\n",

253 | "- `step`: interval size for stepped isochrones (optional)\n",

254 | "- Additional: `buffer_factor`, `road_buffer_size`"

255 | ],

256 | "id": "5881fcd1fe589d48"

257 | },

258 | {

259 | "metadata": {},

260 | "cell_type": "markdown",

261 | "source": "## Animation for stepped isochrones:",

262 | "id": "dbdc88b0d14ec294"

263 | },

264 | {

265 | "metadata": {},

266 | "cell_type": "code",

267 | "source": [

268 | "from objectnat.methods.utils.graph_utils import graph_to_gdf\n",

269 | "import matplotlib.pyplot as plt\n",

270 | "from matplotlib.animation import FuncAnimation\n",

271 | "from shapely import Point\n",

272 | "import geopandas as gpd\n",

273 | "from objectnat import get_accessibility_isochrone_stepped\n",

274 | "\n",

275 | "edges = graph_to_gdf(G_intermodal, nodes=False)\n",

276 | "point = gpd.GeoDataFrame(geometry=[Point(30.27060176, 59.93546846)], crs=4326).to_crs(edges.crs)\n",

277 | "bbox = gpd.GeoDataFrame(geometry=[poly], crs=4326).to_crs(edges.crs)\n",

278 | "\n",

279 | "type_colors = {\n",

280 | " 'walk': '#a3a3a3',\n",

281 | " 'bus': '#1f77b4',\n",

282 | " 'trolleybus': '#2ca02c',\n",

283 | " 'tram': '#ff7f0e',\n",

284 | " 'subway': '#9467bd',\n",

285 | " 'boarding': '#8c564b'\n",

286 | "}\n",

287 | "\n",

288 | "edges['color'] = edges['type'].map(type_colors)\n",

289 | "\n",

290 | "steps = [0.1, 0.5, 1, 2, 3, 4, 5]\n",

291 | "\n",

292 | "fig, ax = plt.subplots(figsize=(10, 8), dpi=150)\n",

293 | "plt.subplots_adjust(left=0.05, right=0.95, top=0.95, bottom=0.05)\n",

294 | "\n",

295 | "edges_plot = edges.plot(ax=ax, color=edges['color'], alpha=0.5, linewidth=0.1, legend=True)\n",

296 | "bbox.boundary.plot(ax=ax, color='black', linestyle='--', linewidth=1)\n",

297 | "point.plot(ax=ax, color='red', markersize=50)\n",

298 | "ax.set_axis_off()\n",

299 | "\n",

300 | "\n",

301 | "def update(step):\n",

302 | " for coll in ax.collections:\n",

303 | " if coll.get_label() == 'isochrone':\n",

304 | " coll.remove()\n",

305 | "\n",

306 | " result = get_accessibility_isochrone_stepped(\n",

307 | " isochrone_type='separate',\n",

308 | " point=point,\n",

309 | " weight_type=\"time_min\",\n",

310 | " weight_value=15,\n",

311 | " nx_graph=G_intermodal,\n",

312 | " step=step\n",

313 | " )\n",

314 | " result.plot(ax=ax, alpha=1, label='isochrone', column='dist', legend=False)\n",

315 | " ax.set_title(f'Isochrone with step = {step} minutes')\n",

316 | "\n",

317 | "\n",

318 | "ani = FuncAnimation(\n",

319 | " fig,\n",

320 | " update,\n",

321 | " frames=steps,\n",

322 | " repeat=True,\n",

323 | " interval=2000\n",

324 | ")\n",

325 | "\n",

326 | "ani.save('isochrone_animation.gif', writer='pillow', fps=1)"

327 | ],

328 | "id": "ac24793342e314e1",

329 | "outputs": [],

330 | "execution_count": null

331 | }

332 | ],

333 | "metadata": {

334 | "kernelspec": {

335 | "display_name": "Python 3",

336 | "language": "python",

337 | "name": "python3"

338 | },

339 | "language_info": {

340 | "codemirror_mode": {

341 | "name": "ipython",

342 | "version": 2

343 | },

344 | "file_extension": ".py",

345 | "mimetype": "text/x-python",

346 | "name": "python",

347 | "nbconvert_exporter": "python",

348 | "pygments_lexer": "ipython2",

349 | "version": "2.7.6"

350 | }

351 | },

352 | "nbformat": 4,

353 | "nbformat_minor": 5

354 | }

355 |

--------------------------------------------------------------------------------

/examples/noise_simulation.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Noise Propagation Simulation\n",

8 | "This section demonstrates how to simulate the propagation of noise in an urban environment using a point source,\n",

9 | "obstacles (e.g., buildings), and optional vegetation (e.g., trees). The `simulate_noise` function models the \n",

10 | "attenuation of noise based on geometry, absorption, reflections, and environmental parameters.\n"

11 | ],

12 | "id": "dcd797a362c7bfcc"

13 | },

14 | {

15 | "metadata": {},

16 | "cell_type": "code",

17 | "source": [

18 | "# Import necessary libraries\n",

19 | "import geopandas as gpd\n",

20 | "from shapely.geometry import Point\n",

21 | "from objectnat import simulate_noise"

22 | ],

23 | "id": "c9d41c96e9e29d25",

24 | "outputs": [],

25 | "execution_count": null

26 | },

27 | {

28 | "metadata": {},

29 | "cell_type": "markdown",

30 | "source": "### 1. Define Noise Source\n",

31 | "id": "d17c9f5c9d0a1e32"

32 | },

33 | {

34 | "metadata": {},

35 | "cell_type": "code",

36 | "source": [

37 | "# Define the starting point(s) of the noise source, and their parameters \n",

38 | "\n",

39 | "start_p = gpd.GeoDataFrame(data=[[90, 2000], [80, 200]],\n",

40 | " geometry=[Point(30.27060176, 59.93546846), Point(30.27213917, 59.93575345)],\n",

41 | " columns=['source_noise_db', 'geometric_mean_freq_hz'], crs=4326)"

42 | ],

43 | "id": "26de7874d08a9edc",

44 | "outputs": [],

45 | "execution_count": null

46 | },

47 | {

48 | "metadata": {},

49 | "cell_type": "markdown",

50 | "source": [

51 | "### 2. Load Obstacle and Tree Data\n",

52 | "Load obstacle (building) and tree layers from local files, and project them to the same CRS used for simulation.\n"

53 | ],

54 | "id": "2b92b561b2fc0613"

55 | },

56 | {

57 | "metadata": {},

58 | "cell_type": "code",

59 | "source": [

60 | "# Load obstacle geometries (e.g., buildings)\n",

61 | "obstacles = gpd.read_parquet('examples_data/buildings.parquet')\n",

62 | "\n",

63 | "# Load vegetation geometries (e.g., trees)\n",

64 | "trees = gpd.read_parquet('examples_data/trees.parquet')"

65 | ],

66 | "id": "2d28aed860ee113f",

67 | "outputs": [],

68 | "execution_count": null

69 | },

70 | {

71 | "metadata": {},

72 | "cell_type": "markdown",

73 | "source": [

74 | "### 3. Run Noise Simulation\n",

75 | "Simulate the propagation of noise using a point source with specified parameters. The simulation accounts for:\n",

76 | "- Obstacles and their absorption\n",

77 | "- Tree-based scattering\n",

78 | "- Environmental factors like air temperature\n",

79 | "- Sound reflection and attenuation with distance\n"

80 | ],

81 | "id": "cee8754172564e3d"

82 | },

83 | {

84 | "metadata": {},

85 | "cell_type": "code",

86 | "source": [

87 | "# Run the simulation\n",

88 | "noise = simulate_noise(\n",

89 | " source_points=start_p,\n",

90 | " obstacles=obstacles,\n",

91 | " # Alternatively use these args if not specified per-point\n",

92 | " # source_noise_db=90, # Initial noise level in decibels\n",

93 | " # geometric_mean_freq_hz=2000, # Frequency of the noise signal\n",

94 | " standart_absorb_ratio=0.05, # Default absorption coefficient for obstacles\n",

95 | " trees=trees, # Vegetation data\n",

96 | " tree_resolution=4, # Resolution of vegetation scattering\n",

97 | " air_temperature=20, # Air temperature in °C\n",

98 | " target_noise_db=40, # Simulation stops at this minimum noise level\n",

99 | " db_sim_step=1, # Step size in decibel for simulation granularity\n",

100 | " reflection_n=2, # Number of allowed reflections\n",

101 | " dead_area_r=5 # Radius of reflection-free dead zones (in meters)\n",

102 | ")\n"

103 | ],

104 | "id": "39e652ae619945c0",

105 | "outputs": [],

106 | "execution_count": null

107 | },

108 | {

109 | "metadata": {},

110 | "cell_type": "markdown",

111 | "source": [

112 | "### 4. Visualize the Result\n",

113 | "Visualize the noise propagation result on a map using a color scale that reflects noise levels (in dB).\n"

114 | ],

115 | "id": "7b5484096b6d206d"

116 | },

117 | {

118 | "metadata": {},

119 | "cell_type": "code",

120 | "source": [

121 | "# Visualize the result using the 'plasma' colormap and a fixed lower bound\n",

122 | "noise.explore(column='noise_level', cmap='plasma', vmin=40)"

123 | ],

124 | "id": "b747a45d091122cd",

125 | "outputs": [],

126 | "execution_count": null

127 | },

128 | {

129 | "metadata": {},

130 | "cell_type": "markdown",

131 | "source": "### Section for GIF creation",

132 | "id": "31cb77ec9dc93fc3"

133 | },

134 | {

135 | "metadata": {},

136 | "cell_type": "code",

137 | "outputs": [],

138 | "execution_count": null,

139 | "source": [

140 | "import matplotlib.pyplot as plt\n",

141 | "import matplotlib.animation as animation\n",

142 | "\n",

143 | "import numpy as np\n",

144 | "from matplotlib import cm\n",

145 | "\n",

146 | "\n",

147 | "def create_noise_animation(gdf_noise, gdf_obstacles, start_p, buffer_p, gdf_trees=None,\n",

148 | " output_file=\"noise_animation.gif\"):\n",

149 | " if gdf_trees is None:\n",

150 | " gdf_trees = gpd.GeoDataFrame()\n",

151 | "\n",

152 | " bounds = start_p.unary_union.buffer(buffer_p).bounds\n",

153 | " minx, miny, maxx, maxy = bounds\n",

154 | " vmin = gdf_noise['noise_level'].min()\n",

155 | " vmax = gdf_noise['noise_level'].max()\n",

156 | " cmap = cm.plasma\n",

157 | "\n",

158 | " fig, ax = plt.subplots(figsize=(10, 10))\n",

159 | " plt.subplots_adjust(left=0.05, right=0.95, top=0.95, bottom=0.05)\n",

160 | "\n",

161 | " def update_frame(frame):\n",

162 | " ax.clear()\n",

163 | " ax.set_xlim(minx, maxx)\n",

164 | " ax.set_ylim(miny, maxy)\n",

165 | "\n",

166 | " gdf_trees.plot(ax=ax, edgecolor='green', facecolor='none', linewidth=3)\n",

167 | " gdf_obstacles.plot(ax=ax, facecolor='gray')\n",

168 | "\n",

169 | " gdf_noise[gdf_noise['noise_level'] > frame].plot(ax=ax, column='noise_level', cmap=cmap, alpha=0.8, vmin=vmin,\n",

170 | " vmax=vmax)\n",

171 | " gdf_noise[gdf_noise['noise_level'] == frame].plot(ax=ax, column='noise_level', cmap=cmap, alpha=1, vmin=vmin,\n",

172 | " vmax=vmax)\n",

173 | " gdf_noise[gdf_noise['noise_level'] == frame - 1].plot(ax=ax, column='noise_level', cmap=cmap, alpha=0.5,\n",

174 | " vmin=vmin, vmax=vmax)\n",

175 | " gdf_noise[gdf_noise['noise_level'] < frame - 1].plot(ax=ax, column='noise_level', cmap=cmap, alpha=0.3,\n",

176 | " vmin=vmin, vmax=vmax)\n",

177 | "\n",

178 | " ax.set_title(f\"Noise Level: {frame} dB\", fontsize=20)\n",

179 | " ax.set_axis_off()\n",

180 | "\n",

181 | " frames = np.arange(gdf_noise['noise_level'].max(), gdf_noise['noise_level'].min() - 1, -1)\n",

182 | " ani = animation.FuncAnimation(fig, update_frame, frames=frames, repeat=False)\n",

183 | " ani.save(output_file, writer='imagemagick', fps=15)\n",

184 | "\n",

185 | " plt.close()\n",

186 | "\n",

187 | "\n",

188 | "# Call the function to create the noise animation, using the noise, obstacles, and trees data\n",

189 | "# Fill in the buffer_p parameter close to the value specified in the logs when running the simulation.\n",

190 | "create_noise_animation(noise, obstacles, start_p, 350, trees)\n"

191 | ],

192 | "id": "8266509e1bd32c7"

193 | }

194 | ],

195 | "metadata": {

196 | "kernelspec": {

197 | "display_name": "Python 3",

198 | "language": "python",

199 | "name": "python3"

200 | },

201 | "language_info": {

202 | "codemirror_mode": {

203 | "name": "ipython",

204 | "version": 2

205 | },

206 | "file_extension": ".py",

207 | "mimetype": "text/x-python",

208 | "name": "python",

209 | "nbconvert_exporter": "python",

210 | "pygments_lexer": "ipython2",

211 | "version": "2.7.6"

212 | }

213 | },

214 | "nbformat": 4,

215 | "nbformat_minor": 5

216 | }

217 |

--------------------------------------------------------------------------------

/examples/point_clusterization.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Spatial Clustering of GeoDataFrame into Polygons\n",

8 | "This example demonstrates how to cluster spatial point data (e.g., buildings or services) into polygons using density-based algorithms:\n",

9 | "- DBSCAN or HDBSCAN methods\n",

10 | "- Parameters for minimum distance and minimum points per cluster"

11 | ],

12 | "id": "cf34b03e60843e6a"

13 | },

14 | {

15 | "metadata": {},

16 | "cell_type": "code",

17 | "source": [

18 | "# Import necessary libraries\n",

19 | "from objectnat import get_clusters_polygon\n",

20 | "import geopandas as gpd"

21 | ],

22 | "id": "1a1dbf25992ecf52",

23 | "outputs": [],

24 | "execution_count": null

25 | },

26 | {

27 | "metadata": {},

28 | "cell_type": "markdown",

29 | "source": [

30 | "### 1. Load Point Dataset\n",

31 | "Load a set of points (e.g., buildings) for spatial clustering.\n"

32 | ],

33 | "id": "fb6c09592d642382"

34 | },

35 | {

36 | "metadata": {},

37 | "cell_type": "code",

38 | "source": [

39 | "# Load building data\n",

40 | "buildings = gpd.read_parquet('examples_data/buildings.parquet')"

41 | ],

42 | "id": "a4e1407403d83325",

43 | "outputs": [],

44 | "execution_count": null

45 | },

46 | {

47 | "metadata": {},

48 | "cell_type": "markdown",

49 | "source": [

50 | " ### 2. Perform Clustering and Create Cluster Polygons\n",

51 | " Use the `get_clusters_polygon()` function to cluster points into groups based on spatial proximity.\n",

52 | " \n",

53 | " Parameters:\n",

54 | " - `min_dist`: maximum distance between neighboring points (e.g., 20 meters)\n",

55 | " - `min_point`: minimum number of points to form a cluster (e.g., 10)\n",

56 | " - `method`: clustering algorithm ('DBSCAN' or 'HDBSCAN')"

57 | ],

58 | "id": "9149bdc20488bbe5"

59 | },

60 | {

61 | "metadata": {},

62 | "cell_type": "code",

63 | "source": [

64 | "# Apply clustering with DBSCAN\n",

65 | "clusters, buildings_clustered = get_clusters_polygon(\n",

66 | " points=buildings,\n",

67 | " min_dist=70,\n",

68 | " min_point=2,\n",

69 | " method='DBSCAN'\n",

70 | ")\n",

71 | "# Show cluster polygons\n",

72 | "m = clusters.explore()\n",

73 | "\n",

74 | "# Optional: show clustered buildings colored by cluster ID\n",

75 | "# buildings_clustered.explore(m=m, column='cluster', categorical=True)\n",

76 | "m"

77 | ],

78 | "id": "f990273f2f2e26c",

79 | "outputs": [],

80 | "execution_count": null

81 | }

82 | ],

83 | "metadata": {

84 | "kernelspec": {

85 | "display_name": "Python 3",

86 | "language": "python",

87 | "name": "python3"

88 | },

89 | "language_info": {

90 | "codemirror_mode": {

91 | "name": "ipython",

92 | "version": 2

93 | },

94 | "file_extension": ".py",

95 | "mimetype": "text/x-python",

96 | "name": "python",

97 | "nbconvert_exporter": "python",

98 | "pygments_lexer": "ipython2",

99 | "version": "2.7.6"

100 | }

101 | },

102 | "nbformat": 4,

103 | "nbformat_minor": 5

104 | }

105 |

--------------------------------------------------------------------------------

/examples/visibility_analysis.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "metadata": {},

5 | "cell_type": "markdown",

6 | "source": [

7 | "## Line-of-Sight Visibility Analysis\n",

8 | "This notebook demonstrates how to compute visible areas from a viewpoint or multiple points using:\n",

9 | "- Fast approximate visibility (suitable for quick overviews)\n",

10 | "- Accurate visibility analysis (respecting occlusions)\n",

11 | "- Parallelized visibility from multiple locations"

12 | ],

13 | "id": "f4c531a4c9e094ec"

14 | },

15 | {

16 | "metadata": {},

17 | "cell_type": "code",

18 | "source": [

19 | "# Import necessary libraries\n",

20 | "from objectnat import get_visibility, get_visibility_accurate, get_visibilities_from_points\n",

21 | "import geopandas as gpd\n",

22 | "from shapely.geometry import Point"

23 | ],

24 | "id": "7b840bc7edf14b2c",

25 | "outputs": [],

26 | "execution_count": null

27 | },

28 | {

29 | "metadata": {},

30 | "cell_type": "markdown",

31 | "source": [

32 | "### 1. Load Obstacle Data\n",

33 | "Load a building layer representing line-of-sight obstacles. This dataset is used to compute occlusions in the urban environment.\n"

34 | ],

35 | "id": "1afa4218134b2c84"

36 | },

37 | {

38 | "metadata": {},

39 | "cell_type": "code",

40 | "source": [

41 | "# Load buildings as obstacles\n",

42 | "obstacles = gpd.read_parquet('examples_data/buildings.parquet')"

43 | ],

44 | "id": "588dc80a1b941474",

45 | "outputs": [],

46 | "execution_count": null

47 | },

48 | {

49 | "metadata": {},

50 | "cell_type": "markdown",

51 | "source": [

52 | "### 2. Define Viewpoint\n",

53 | "Specify the observation point from which visibility will be computed. Coordinates must match the CRS of the obstacles dataset.\n"

54 | ],

55 | "id": "c7d345277339355a"

56 | },

57 | {

58 | "metadata": {},

59 | "cell_type": "code",

60 | "source": [

61 | "# Define a single viewpoint in WGS 84\n",

62 | "point_from = gpd.GeoDataFrame(geometry=[Point(30.2312112, 59.9482336)], crs=4326)"

63 | ],

64 | "id": "784128c4f7c5fe89",

65 | "outputs": [],

66 | "execution_count": null

67 | },

68 | {

69 | "metadata": {},

70 | "cell_type": "markdown",

71 | "source": [

72 | "### 3. Fast Visibility Calculation\n",

73 | "Compute visibility using a fast, approximate method. This is suitable for real-time feedback or exploratory analysis.\n",

74 | "**Note:** May produce artifacts (e.g., visibility behind walls).\n"

75 | ],

76 | "id": "a8026dc4e3dfba19"

77 | },

78 | {

79 | "metadata": {},

80 | "cell_type": "code",

81 | "source": [

82 | "# Fast visibility (less accurate)\n",

83 | "result_fast = get_visibility(point_from, obstacles, view_distance=500)\n",

84 | "# Computes visibility polygon from the viewpoint with a 500-meter radius using low-resolution simulation."

85 | ],

86 | "id": "8797859dfe469ace",

87 | "outputs": [],

88 | "execution_count": null

89 | },

90 | {

91 | "metadata": {},

92 | "cell_type": "markdown",

93 | "source": [

94 | "### 4. Accurate Visibility Calculation\n",

95 | "Use the more precise `get_visibility_accurate()` function, which simulates occlusion and limited sightlines.\n",

96 | "This method is slower but produces more reliable results.\n"

97 | ],

98 | "id": "ebc68021e8caed4f"

99 | },

100 | {

101 | "metadata": {},

102 | "cell_type": "code",

103 | "source": [

104 | "# Accurate visibility (includes occlusion and bottleneck modeling)\n",

105 | "result_accurate = get_visibility_accurate(point_from, obstacles, view_distance=500)\n",

106 | "# Simulates realistic visibility by tracing around buildings and respecting occlusions."

107 | ],

108 | "id": "4c08935e3e1bf3ca",

109 | "outputs": [],

110 | "execution_count": null

111 | },

112 | {

113 | "metadata": {},

114 | "cell_type": "markdown",

115 | "source": [

116 | "### 5. Visualization\n",

117 | "Visualize obstacles and both visibility methods on an interactive map using GeoPandas.\n"

118 | ],

119 | "id": "e1fdf25b75fbe716"

120 | },

121 | {

122 | "metadata": {},