├── .gitignore

├── 01-intro

├── README.md

├── documents-llm.json

├── documents.json

├── elastic-search.md

├── open-ai-alternatives.md

├── parse-faq.ipynb

└── rag-intro.ipynb

├── 02-vector-search

└── README.md

├── 03-evaluation

└── README.md

├── 04-monitoring

└── README.md

├── 05-best-practices

├── README.md

├── documents-with-ids.json

├── ground-truth-data.csv

├── hybrid-search-and-reranking-es.ipynb

├── hybrid-search-langchain.ipynb

└── llm-zoomcamp-best-practicies.pdf

├── 06-project-example

├── README.md

└── content-processing-summary.md

├── README.md

├── after-sign-up.md

├── asking-questions.md

├── awesome-llms.md

├── cohorts

├── 2024

│ ├── 01-intro

│ │ └── homework.md

│ ├── 02-open-source

│ │ ├── README.md

│ │ ├── docker-compose.yaml

│ │ ├── homework.md

│ │ ├── huggingface-flan-t5.ipynb

│ │ ├── huggingface-mistral-7b.ipynb

│ │ ├── huggingface-phi3.ipynb

│ │ ├── ollama.ipynb

│ │ ├── prompt.md

│ │ ├── qa_faq.py

│ │ ├── rag-intro.ipynb

│ │ ├── serving-hugging-face-models.md

│ │ └── starter.ipynb

│ ├── 03-vector-search

│ │ ├── README.md

│ │ ├── demo_es.ipynb

│ │ ├── homework.md

│ │ └── homework_solution.ipynb

│ ├── 04-monitoring

│ │ ├── README.md

│ │ ├── app

│ │ │ ├── .env

│ │ │ ├── Dockerfile

│ │ │ ├── README.MD

│ │ │ ├── app.py

│ │ │ ├── assistant.py

│ │ │ ├── db.py

│ │ │ ├── docker-compose.yaml

│ │ │ ├── generate_data.py

│ │ │ ├── prep.py

│ │ │ └── requirements.txt

│ │ ├── code.md

│ │ ├── dashboard.json

│ │ ├── data

│ │ │ ├── evaluations-aqa.csv

│ │ │ ├── evaluations-qa.csv

│ │ │ ├── results-gpt35-cosine.csv

│ │ │ ├── results-gpt35.csv

│ │ │ ├── results-gpt4o-cosine.csv

│ │ │ ├── results-gpt4o-mini-cosine.csv

│ │ │ ├── results-gpt4o-mini.csv

│ │ │ └── results-gpt4o.csv

│ │ ├── grafana.md

│ │ ├── homework.md

│ │ ├── offline-rag-evaluation.ipynb

│ │ └── solution.ipynb

│ ├── 05-orchestration

│ │ ├── README.md

│ │ ├── code

│ │ │ └── 06_retrieval.py

│ │ ├── homework.md

│ │ └── parse-faq-llm.ipynb

│ ├── README.md

│ ├── competition

│ │ ├── README.md

│ │ ├── data

│ │ │ ├── test.csv

│ │ │ └── train.csv

│ │ ├── scorer.py

│ │ ├── starter_notebook.ipynb

│ │ └── starter_notebook_submission.csv

│ ├── project.md

│ └── workshops

│ │ └── dlt.md

└── 2025

│ ├── 01-intro

│ └── homework.md

│ ├── README.md

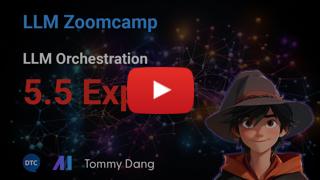

│ ├── course-launch-stream-summary.md

│ ├── pre-course-q-a-stream-summary.md

│ └── project.md

├── etc

└── chunking.md

├── images

├── llm-zoomcamp-2025.jpg

├── llm-zoomcamp.jpg

├── qdrant.png

└── saturn-cloud.png

├── learning-in-public.md

└── project.md

/.gitignore:

--------------------------------------------------------------------------------

1 | .ipynb_checkpoints/

2 | __pycache__/

3 | .venv

4 | .envrc

5 |

--------------------------------------------------------------------------------

/01-intro/README.md:

--------------------------------------------------------------------------------

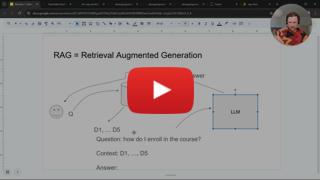

1 | # Module 1: Introduction

2 |

3 | In this module, we will learn what LLM and RAG are and

4 | implement a simple RAG pipeline to answer questions about

5 | the FAQ Documents from our Zoomcamp courses

6 |

7 | What we will do:

8 |

9 | * Index Zoomcamp FAQ documents

10 | * DE Zoomcamp: https://docs.google.com/document/d/19bnYs80DwuUimHM65UV3sylsCn2j1vziPOwzBwQrebw/edit

11 | * ML Zoomcamp: https://docs.google.com/document/d/1LpPanc33QJJ6BSsyxVg-pWNMplal84TdZtq10naIhD8/edit

12 | * MLOps Zoomcamp: https://docs.google.com/document/d/12TlBfhIiKtyBv8RnsoJR6F72bkPDGEvPOItJIxaEzE0/edit

13 | * Create a Q&A system for answering questions about these documents

14 |

15 | ## 1.1 Introduction to LLM and RAG

16 |

17 |

18 |  19 |

20 |

21 | * LLM

22 | * RAG

23 | * RAG architecture

24 | * Course outcome

25 |

26 |

27 | ## 1.2 Preparing the Environment

28 |

29 |

30 |

19 |

20 |

21 | * LLM

22 | * RAG

23 | * RAG architecture

24 | * Course outcome

25 |

26 |

27 | ## 1.2 Preparing the Environment

28 |

29 |

30 |  31 |

32 |

33 | * Installing libraries

34 | * Alternative: installing anaconda or miniconda

35 |

36 | ```bash

37 | pip install tqdm notebook==7.1.2 openai elasticsearch==8.13.0 pandas scikit-learn ipywidgets

38 | ```

39 |

40 | ## 1.3 Retrieval

41 |

42 |

43 |

31 |

32 |

33 | * Installing libraries

34 | * Alternative: installing anaconda or miniconda

35 |

36 | ```bash

37 | pip install tqdm notebook==7.1.2 openai elasticsearch==8.13.0 pandas scikit-learn ipywidgets

38 | ```

39 |

40 | ## 1.3 Retrieval

41 |

42 |

43 |  44 |

45 |

46 | Note: as of now, you can install minsearch with pip:

47 |

48 | ```bash

49 | pip install minsearch

50 | ```

51 |

52 | * We will use the search engine we build in the [build-your-own-search-engine workshop](https://github.com/alexeygrigorev/build-your-own-search-engine): [minsearch](https://github.com/alexeygrigorev/minsearch)

53 | * Indexing the documents

54 | * Peforming the search

55 |

56 |

57 | ## 1.4 Generation with OpenAI

58 |

59 |

60 |

44 |

45 |

46 | Note: as of now, you can install minsearch with pip:

47 |

48 | ```bash

49 | pip install minsearch

50 | ```

51 |

52 | * We will use the search engine we build in the [build-your-own-search-engine workshop](https://github.com/alexeygrigorev/build-your-own-search-engine): [minsearch](https://github.com/alexeygrigorev/minsearch)

53 | * Indexing the documents

54 | * Peforming the search

55 |

56 |

57 | ## 1.4 Generation with OpenAI

58 |

59 |

60 |  61 |

62 |

63 | * Invoking OpenAI API

64 | * Building the prompt

65 | * Getting the answer

66 |

67 |

68 | If you don't want to use a service, you can run an LLM locally

69 | refer to [module 2](../02-open-source/) for more details.

70 |

71 | In particular, check "2.7 Ollama - Running LLMs on a CPU" -

72 | it can work with OpenAI API, so to make the example from 1.4

73 | work locally, you only need to change a few lines of code.

74 |

75 |

76 | ## 1.4.2 OpenAI API Alternatives

77 |

78 |

79 |

61 |

62 |

63 | * Invoking OpenAI API

64 | * Building the prompt

65 | * Getting the answer

66 |

67 |

68 | If you don't want to use a service, you can run an LLM locally

69 | refer to [module 2](../02-open-source/) for more details.

70 |

71 | In particular, check "2.7 Ollama - Running LLMs on a CPU" -

72 | it can work with OpenAI API, so to make the example from 1.4

73 | work locally, you only need to change a few lines of code.

74 |

75 |

76 | ## 1.4.2 OpenAI API Alternatives

77 |

78 |

79 |  80 |

81 |

82 | [Open AI Alternatives](../awesome-llms.md#openai-api-alternatives)

83 |

84 |

85 | ## 1.5 Cleaned RAG flow

86 |

87 |

88 |

80 |

81 |

82 | [Open AI Alternatives](../awesome-llms.md#openai-api-alternatives)

83 |

84 |

85 | ## 1.5 Cleaned RAG flow

86 |

87 |

88 |  89 |

90 |

91 | * Cleaning the code we wrote so far

92 | * Making it modular

93 |

94 | ## 1.6 Searching with ElasticSearch

95 |

96 |

97 |

89 |

90 |

91 | * Cleaning the code we wrote so far

92 | * Making it modular

93 |

94 | ## 1.6 Searching with ElasticSearch

95 |

96 |

97 |  98 |

99 |

100 | * Run ElasticSearch with Docker

101 | * Index the documents

102 | * Replace MinSearch with ElasticSearch

103 |

104 | Running ElasticSearch:

105 |

106 | ```bash

107 | docker run -it \

108 | --rm \

109 | --name elasticsearch \

110 | -m 4GB \

111 | -p 9200:9200 \

112 | -p 9300:9300 \

113 | -e "discovery.type=single-node" \

114 | -e "xpack.security.enabled=false" \

115 | docker.elastic.co/elasticsearch/elasticsearch:8.4.3

116 | ```

117 |

118 | If the previous command doesn't work (i.e. you see "error pulling image configuration"), try to run ElasticSearch directly from Docker Hub:

119 |

120 | ```bash

121 | docker run -it \

122 | --rm \

123 | --name elasticsearch \

124 | -p 9200:9200 \

125 | -p 9300:9300 \

126 | -e "discovery.type=single-node" \

127 | -e "xpack.security.enabled=false" \

128 | elasticsearch:8.4.3

129 | ```

130 |

131 | Index settings:

132 |

133 | ```python

134 | {

135 | "settings": {

136 | "number_of_shards": 1,

137 | "number_of_replicas": 0

138 | },

139 | "mappings": {

140 | "properties": {

141 | "text": {"type": "text"},

142 | "section": {"type": "text"},

143 | "question": {"type": "text"},

144 | "course": {"type": "keyword"}

145 | }

146 | }

147 | }

148 | ```

149 |

150 | Query:

151 |

152 | ```python

153 | {

154 | "size": 5,

155 | "query": {

156 | "bool": {

157 | "must": {

158 | "multi_match": {

159 | "query": query,

160 | "fields": ["question^3", "text", "section"],

161 | "type": "best_fields"

162 | }

163 | },

164 | "filter": {

165 | "term": {

166 | "course": "data-engineering-zoomcamp"

167 | }

168 | }

169 | }

170 | }

171 | }

172 | ```

173 |

174 | We use `"type": "best_fields"`. You can read more about

175 | different types of `multi_match` search in [elastic-search.md](elastic-search.md).

176 |

177 | # 1.7 Homework

178 | More information [here](../cohorts/2025/01-intro/homework.md).

179 |

180 |

181 | # Extra materials

182 |

183 | * If you're curious to know how the code for parsing the FAQ works, check [this video](https://www.loom.com/share/ff54d898188b402d880dbea2a7cb8064)

184 |

185 | # Open-Source LLMs (optional)

186 |

187 | It's also possible to run LLMs locally. For that, we

188 | can use Ollama. Check these videos from LLM Zoomcamp 2024

189 | if you're interested in learning more about it:

190 |

191 | * [Ollama - Running LLMs on a CPU](https://www.youtube.com/watch?v=PVpBGs_iSjY&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

192 | * [Ollama & Phi3 + Elastic in Docker-Compose](https://www.youtube.com/watch?v=4juoo_jk96U&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

193 | * [UI for RAG](https://www.youtube.com/watch?v=R6L8PZ-7bGo&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

194 |

195 | To see the command lines used in the videos,

196 | see [2024 cohort folder](../cohorts/2024/02-open-source#27-ollama---running-llms-on-a-cpu)

197 |

198 | # Notes

199 |

200 | * [Notes by slavaheroes](https://github.com/slavaheroes/llm-zoomcamp/blob/homeworks/01-intro/notes.md)

201 | * [Notes by Pham Nguyen Hung](https://hung.bearblog.dev/llm-zoomcamp-1-rag/)

202 | * [Notes by dimzachar](https://github.com/dimzachar/llm_zoomcamp/blob/master/notes/01-intro/README.md)

203 | * [Notes by Olawale Ogundeji](https://github.com/presiZHai/LLM-Zoomcamp/blob/main/01-intro/notes.md)

204 | * [Notes by Uchechukwu](https://medium.com/@njokuuchechi/an-intro-to-large-language-models-llms-0c51c09abe10)

205 | * [Notes by Kamal](https://github.com/mk-hassan/llm-zoomcamp/blob/main/Module-1%3A%20Introduction%20to%20LLMs%20and%20RAG/README.md)

206 | * [Notes by Marat](https://machine-mind-ml.medium.com/discovering-semantic-search-and-rag-with-large-language-models-be7d9ba5bef4)

207 | * [Notes by Waleed](https://waleedayoub.com/post/llmzoomcamp_week1-intro_notes/)

208 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

209 |

--------------------------------------------------------------------------------

/01-intro/elastic-search.md:

--------------------------------------------------------------------------------

1 | # Elastic Search

2 |

3 | This document contains useful things about Elasticsearch

4 |

5 | # `multi_match` Query in Elasticsearch

6 |

7 | The `multi_match` query is used to search for a given text across multiple fields in an Elasticsearch index.

8 |

9 | It provides various types to control how the matching is executed and scored.

10 |

11 | There are multiple types of `multi_match` queries:

12 |

13 | - `best_fields`: Returns the highest score from any one field.

14 | - `most_fields`: Combines the scores from all fields.

15 | - `cross_fields`: Treats fields as one big field for scoring.

16 | - `phrase`: Searches for the query as an exact phrase.

17 | - `phrase_prefix`: Searches for the query as a prefix of a phrase.

18 |

19 |

20 | ## `best_fields`

21 |

22 | The `best_fields` type searches each field separately and returns the highest score from any one of the fields.

23 |

24 | This type is useful when you want to find documents where at least one field matches the query well.

25 |

26 |

27 | ```json

28 | {

29 | "size": 5,

30 | "query": {

31 | "bool": {

32 | "must": {

33 | "multi_match": {

34 | "query": "How do I run docker on Windows?",

35 | "fields": ["question", "text"],

36 | "type": "best_fields"

37 | }

38 | }

39 | }

40 | }

41 | }

42 | ```

43 |

44 | ## `most_fields`

45 |

46 | The `most_fields` type searches each field and combines the scores from all fields.

47 |

48 | This is useful when the relevance of a document increases with more matching fields.

49 |

50 | ```json

51 | {

52 | "multi_match": {

53 | "query": "How do I run docker on Windows?",

54 | "fields": ["question^4", "text"],

55 | "type": "most_fields"

56 | }

57 | }

58 | ```

59 |

60 | ## `cross_fields`

61 |

62 | The `cross_fields` type treats fields as though they were one big field.

63 |

64 | It is suitable for cases where you have fields representing the same text in different ways, such as synonyms.

65 |

66 | ```json

67 | {

68 | "multi_match": {

69 | "query": "How do I run docker on Windows?",

70 | "fields": ["question", "text"],

71 | "type": "cross_fields"

72 | }

73 | }

74 | ```

75 |

76 | ## `phrase`

77 |

78 | The `phrase` type looks for the query as an exact phrase within the fields.

79 |

80 | It is useful for exact match searches.

81 |

82 | ```json

83 | {

84 | "multi_match": {

85 | "query": "How do I run docker on Windows?",

86 | "fields": ["question", "text"],

87 | "type": "phrase"

88 | }

89 | }

90 | ```

91 |

92 | ## `phrase_prefix`

93 |

94 | The `phrase_prefix` type searches for documents that contain the query as a prefix of a phrase.

95 |

96 | This is useful for autocomplete or typeahead functionality.

97 |

98 |

99 | ```json

100 | {

101 | "multi_match": {

102 | "query": "How do I run docker on Windows?",

103 | "fields": ["question", "text"],

104 | "type": "phrase_prefix"

105 | }

106 | }

107 | ```

--------------------------------------------------------------------------------

/01-intro/open-ai-alternatives.md:

--------------------------------------------------------------------------------

1 | moved [here](https://github.com/DataTalksClub/llm-zoomcamp/blob/main/awesome-llms.md#openai-api-alternatives)

2 |

--------------------------------------------------------------------------------

/01-intro/parse-faq.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 8,

6 | "id": "4cd1eaa8-3424-41ad-9cf2-3e8548712865",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "import io\n",

11 | "\n",

12 | "import requests\n",

13 | "import docx"

14 | ]

15 | },

16 | {

17 | "cell_type": "code",

18 | "execution_count": 24,

19 | "id": "8180e7e4-b90d-4900-a59b-d22e5d6537c4",

20 | "metadata": {},

21 | "outputs": [],

22 | "source": [

23 | "def clean_line(line):\n",

24 | " line = line.strip()\n",

25 | " line = line.strip('\\uFEFF')\n",

26 | " return line\n",

27 | "\n",

28 | "def read_faq(file_id):\n",

29 | " url = f'https://docs.google.com/document/d/{file_id}/export?format=docx'\n",

30 | " \n",

31 | " response = requests.get(url)\n",

32 | " response.raise_for_status()\n",

33 | " \n",

34 | " with io.BytesIO(response.content) as f_in:\n",

35 | " doc = docx.Document(f_in)\n",

36 | "\n",

37 | " questions = []\n",

38 | "\n",

39 | " question_heading_style = 'heading 2'\n",

40 | " section_heading_style = 'heading 1'\n",

41 | " \n",

42 | " heading_id = ''\n",

43 | " section_title = ''\n",

44 | " question_title = ''\n",

45 | " answer_text_so_far = ''\n",

46 | " \n",

47 | " for p in doc.paragraphs:\n",

48 | " style = p.style.name.lower()\n",

49 | " p_text = clean_line(p.text)\n",

50 | " \n",

51 | " if len(p_text) == 0:\n",

52 | " continue\n",

53 | " \n",

54 | " if style == section_heading_style:\n",

55 | " section_title = p_text\n",

56 | " continue\n",

57 | " \n",

58 | " if style == question_heading_style:\n",

59 | " answer_text_so_far = answer_text_so_far.strip()\n",

60 | " if answer_text_so_far != '' and section_title != '' and question_title != '':\n",

61 | " questions.append({\n",

62 | " 'text': answer_text_so_far,\n",

63 | " 'section': section_title,\n",

64 | " 'question': question_title,\n",

65 | " })\n",

66 | " answer_text_so_far = ''\n",

67 | " \n",

68 | " question_title = p_text\n",

69 | " continue\n",

70 | " \n",

71 | " answer_text_so_far += '\\n' + p_text\n",

72 | " \n",

73 | " answer_text_so_far = answer_text_so_far.strip()\n",

74 | " if answer_text_so_far != '' and section_title != '' and question_title != '':\n",

75 | " questions.append({\n",

76 | " 'text': answer_text_so_far,\n",

77 | " 'section': section_title,\n",

78 | " 'question': question_title,\n",

79 | " })\n",

80 | "\n",

81 | " return questions"

82 | ]

83 | },

84 | {

85 | "cell_type": "code",

86 | "execution_count": 25,

87 | "id": "7d3c2dd7-f64a-4dc7-a4e3-3e8aadfa720f",

88 | "metadata": {},

89 | "outputs": [],

90 | "source": [

91 | "faq_documents = {\n",

92 | " 'data-engineering-zoomcamp': '19bnYs80DwuUimHM65UV3sylsCn2j1vziPOwzBwQrebw',\n",

93 | " 'machine-learning-zoomcamp': '1LpPanc33QJJ6BSsyxVg-pWNMplal84TdZtq10naIhD8',\n",

94 | " 'mlops-zoomcamp': '12TlBfhIiKtyBv8RnsoJR6F72bkPDGEvPOItJIxaEzE0',\n",

95 | "}"

96 | ]

97 | },

98 | {

99 | "cell_type": "code",

100 | "execution_count": 27,

101 | "id": "f94efe26-05e8-4ae5-a0fa-0a8e16852816",

102 | "metadata": {},

103 | "outputs": [

104 | {

105 | "name": "stdout",

106 | "output_type": "stream",

107 | "text": [

108 | "data-engineering-zoomcamp\n",

109 | "machine-learning-zoomcamp\n",

110 | "mlops-zoomcamp\n"

111 | ]

112 | }

113 | ],

114 | "source": [

115 | "documents = []\n",

116 | "\n",

117 | "for course, file_id in faq_documents.items():\n",

118 | " print(course)\n",

119 | " course_documents = read_faq(file_id)\n",

120 | " documents.append({'course': course, 'documents': course_documents})"

121 | ]

122 | },

123 | {

124 | "cell_type": "code",

125 | "execution_count": 29,

126 | "id": "06b8d8be-f656-4cc3-893f-b159be8fda21",

127 | "metadata": {},

128 | "outputs": [],

129 | "source": [

130 | "import json"

131 | ]

132 | },

133 | {

134 | "cell_type": "code",

135 | "execution_count": 32,

136 | "id": "30d50bc1-8d26-44ee-8734-cafce05e0523",

137 | "metadata": {},

138 | "outputs": [],

139 | "source": [

140 | "with open('documents.json', 'wt') as f_out:\n",

141 | " json.dump(documents, f_out, indent=2)"

142 | ]

143 | },

144 | {

145 | "cell_type": "code",

146 | "execution_count": 33,

147 | "id": "0eabb1c6-5cc6-4d4d-a6da-e27d41cea546",

148 | "metadata": {},

149 | "outputs": [

150 | {

151 | "name": "stdout",

152 | "output_type": "stream",

153 | "text": [

154 | "[\n",

155 | " {\n",

156 | " \"course\": \"data-engineering-zoomcamp\",\n",

157 | " \"documents\": [\n",

158 | " {\n",

159 | " \"text\": \"The purpose of this document is to capture frequently asked technical questions\\nThe exact day and hour of the course will be 15th Jan 2024 at 17h00. The course will start with the first \\u201cOffice Hours'' live.1\\nSubscribe to course public Google Calendar (it works from Desktop only).\\nRegister before the course starts using this link.\\nJoin the course Telegram channel with announcements.\\nDon\\u2019t forget to register in DataTalks.Club's Slack and join the channel.\",\n",

160 | " \"section\": \"General course-related questions\",\n",

161 | " \"question\": \"Course - When will the course start?\"\n",

162 | " },\n",

163 | " {\n"

164 | ]

165 | }

166 | ],

167 | "source": [

168 | "!head documents.json"

169 | ]

170 | },

171 | {

172 | "cell_type": "code",

173 | "execution_count": null,

174 | "id": "1b21af5c-2f6d-49e7-92e9-ca229e2473b9",

175 | "metadata": {},

176 | "outputs": [],

177 | "source": []

178 | }

179 | ],

180 | "metadata": {

181 | "kernelspec": {

182 | "display_name": "Python 3 (ipykernel)",

183 | "language": "python",

184 | "name": "python3"

185 | },

186 | "language_info": {

187 | "codemirror_mode": {

188 | "name": "ipython",

189 | "version": 3

190 | },

191 | "file_extension": ".py",

192 | "mimetype": "text/x-python",

193 | "name": "python",

194 | "nbconvert_exporter": "python",

195 | "pygments_lexer": "ipython3",

196 | "version": "3.9.13"

197 | }

198 | },

199 | "nbformat": 4,

200 | "nbformat_minor": 5

201 | }

202 |

--------------------------------------------------------------------------------

/02-vector-search/README.md:

--------------------------------------------------------------------------------

1 | # Vector Search

2 |

3 | TBA

4 |

5 | ## Homework

6 |

7 | See [here](../cohorts/2025/02-vector-search/homework.md)

8 |

9 |

10 | # Notes

11 |

12 | * Notes from [2024 edition](../cohorts/2024/03-vector-search/)

13 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

14 |

--------------------------------------------------------------------------------

/03-evaluation/README.md:

--------------------------------------------------------------------------------

1 | # RAG and LLM Evaluation

2 |

3 | TBA

4 |

5 | ## Homework

6 |

7 | TBA

8 |

9 | # Notes

10 |

11 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

12 |

--------------------------------------------------------------------------------

/04-monitoring/README.md:

--------------------------------------------------------------------------------

1 | # Module 4: Evaluation and Monitoring

2 |

3 | In this module, we will learn how to evaluate and monitor our LLM and RAG system.

4 |

5 | In the evaluation part, we assess the quality of our entire RAG

6 | system before it goes live.

7 |

8 | In the monitoring part, we collect, store and visualize

9 | metrics to assess the answer quality of a deployed LLM. We also

10 | collect chat history and user feedback.

11 |

12 |

13 | TBA

14 |

15 | # Notes

16 |

17 | * Notes from [2024 edition](../cohorts/2024/04-monitoring/)

18 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

19 |

--------------------------------------------------------------------------------

/05-best-practices/README.md:

--------------------------------------------------------------------------------

1 | # Module 6: Best practices

2 |

3 | In this module, we'll cover the techniques that could improve your RAG pipeline.

4 |

5 | ## 6.1 Techniques to Improve RAG Pipeline

6 |

7 |

8 |

98 |

99 |

100 | * Run ElasticSearch with Docker

101 | * Index the documents

102 | * Replace MinSearch with ElasticSearch

103 |

104 | Running ElasticSearch:

105 |

106 | ```bash

107 | docker run -it \

108 | --rm \

109 | --name elasticsearch \

110 | -m 4GB \

111 | -p 9200:9200 \

112 | -p 9300:9300 \

113 | -e "discovery.type=single-node" \

114 | -e "xpack.security.enabled=false" \

115 | docker.elastic.co/elasticsearch/elasticsearch:8.4.3

116 | ```

117 |

118 | If the previous command doesn't work (i.e. you see "error pulling image configuration"), try to run ElasticSearch directly from Docker Hub:

119 |

120 | ```bash

121 | docker run -it \

122 | --rm \

123 | --name elasticsearch \

124 | -p 9200:9200 \

125 | -p 9300:9300 \

126 | -e "discovery.type=single-node" \

127 | -e "xpack.security.enabled=false" \

128 | elasticsearch:8.4.3

129 | ```

130 |

131 | Index settings:

132 |

133 | ```python

134 | {

135 | "settings": {

136 | "number_of_shards": 1,

137 | "number_of_replicas": 0

138 | },

139 | "mappings": {

140 | "properties": {

141 | "text": {"type": "text"},

142 | "section": {"type": "text"},

143 | "question": {"type": "text"},

144 | "course": {"type": "keyword"}

145 | }

146 | }

147 | }

148 | ```

149 |

150 | Query:

151 |

152 | ```python

153 | {

154 | "size": 5,

155 | "query": {

156 | "bool": {

157 | "must": {

158 | "multi_match": {

159 | "query": query,

160 | "fields": ["question^3", "text", "section"],

161 | "type": "best_fields"

162 | }

163 | },

164 | "filter": {

165 | "term": {

166 | "course": "data-engineering-zoomcamp"

167 | }

168 | }

169 | }

170 | }

171 | }

172 | ```

173 |

174 | We use `"type": "best_fields"`. You can read more about

175 | different types of `multi_match` search in [elastic-search.md](elastic-search.md).

176 |

177 | # 1.7 Homework

178 | More information [here](../cohorts/2025/01-intro/homework.md).

179 |

180 |

181 | # Extra materials

182 |

183 | * If you're curious to know how the code for parsing the FAQ works, check [this video](https://www.loom.com/share/ff54d898188b402d880dbea2a7cb8064)

184 |

185 | # Open-Source LLMs (optional)

186 |

187 | It's also possible to run LLMs locally. For that, we

188 | can use Ollama. Check these videos from LLM Zoomcamp 2024

189 | if you're interested in learning more about it:

190 |

191 | * [Ollama - Running LLMs on a CPU](https://www.youtube.com/watch?v=PVpBGs_iSjY&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

192 | * [Ollama & Phi3 + Elastic in Docker-Compose](https://www.youtube.com/watch?v=4juoo_jk96U&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

193 | * [UI for RAG](https://www.youtube.com/watch?v=R6L8PZ-7bGo&list=PL3MmuxUbc_hIB4fSqLy_0AfTjVLpgjV3R)

194 |

195 | To see the command lines used in the videos,

196 | see [2024 cohort folder](../cohorts/2024/02-open-source#27-ollama---running-llms-on-a-cpu)

197 |

198 | # Notes

199 |

200 | * [Notes by slavaheroes](https://github.com/slavaheroes/llm-zoomcamp/blob/homeworks/01-intro/notes.md)

201 | * [Notes by Pham Nguyen Hung](https://hung.bearblog.dev/llm-zoomcamp-1-rag/)

202 | * [Notes by dimzachar](https://github.com/dimzachar/llm_zoomcamp/blob/master/notes/01-intro/README.md)

203 | * [Notes by Olawale Ogundeji](https://github.com/presiZHai/LLM-Zoomcamp/blob/main/01-intro/notes.md)

204 | * [Notes by Uchechukwu](https://medium.com/@njokuuchechi/an-intro-to-large-language-models-llms-0c51c09abe10)

205 | * [Notes by Kamal](https://github.com/mk-hassan/llm-zoomcamp/blob/main/Module-1%3A%20Introduction%20to%20LLMs%20and%20RAG/README.md)

206 | * [Notes by Marat](https://machine-mind-ml.medium.com/discovering-semantic-search-and-rag-with-large-language-models-be7d9ba5bef4)

207 | * [Notes by Waleed](https://waleedayoub.com/post/llmzoomcamp_week1-intro_notes/)

208 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

209 |

--------------------------------------------------------------------------------

/01-intro/elastic-search.md:

--------------------------------------------------------------------------------

1 | # Elastic Search

2 |

3 | This document contains useful things about Elasticsearch

4 |

5 | # `multi_match` Query in Elasticsearch

6 |

7 | The `multi_match` query is used to search for a given text across multiple fields in an Elasticsearch index.

8 |

9 | It provides various types to control how the matching is executed and scored.

10 |

11 | There are multiple types of `multi_match` queries:

12 |

13 | - `best_fields`: Returns the highest score from any one field.

14 | - `most_fields`: Combines the scores from all fields.

15 | - `cross_fields`: Treats fields as one big field for scoring.

16 | - `phrase`: Searches for the query as an exact phrase.

17 | - `phrase_prefix`: Searches for the query as a prefix of a phrase.

18 |

19 |

20 | ## `best_fields`

21 |

22 | The `best_fields` type searches each field separately and returns the highest score from any one of the fields.

23 |

24 | This type is useful when you want to find documents where at least one field matches the query well.

25 |

26 |

27 | ```json

28 | {

29 | "size": 5,

30 | "query": {

31 | "bool": {

32 | "must": {

33 | "multi_match": {

34 | "query": "How do I run docker on Windows?",

35 | "fields": ["question", "text"],

36 | "type": "best_fields"

37 | }

38 | }

39 | }

40 | }

41 | }

42 | ```

43 |

44 | ## `most_fields`

45 |

46 | The `most_fields` type searches each field and combines the scores from all fields.

47 |

48 | This is useful when the relevance of a document increases with more matching fields.

49 |

50 | ```json

51 | {

52 | "multi_match": {

53 | "query": "How do I run docker on Windows?",

54 | "fields": ["question^4", "text"],

55 | "type": "most_fields"

56 | }

57 | }

58 | ```

59 |

60 | ## `cross_fields`

61 |

62 | The `cross_fields` type treats fields as though they were one big field.

63 |

64 | It is suitable for cases where you have fields representing the same text in different ways, such as synonyms.

65 |

66 | ```json

67 | {

68 | "multi_match": {

69 | "query": "How do I run docker on Windows?",

70 | "fields": ["question", "text"],

71 | "type": "cross_fields"

72 | }

73 | }

74 | ```

75 |

76 | ## `phrase`

77 |

78 | The `phrase` type looks for the query as an exact phrase within the fields.

79 |

80 | It is useful for exact match searches.

81 |

82 | ```json

83 | {

84 | "multi_match": {

85 | "query": "How do I run docker on Windows?",

86 | "fields": ["question", "text"],

87 | "type": "phrase"

88 | }

89 | }

90 | ```

91 |

92 | ## `phrase_prefix`

93 |

94 | The `phrase_prefix` type searches for documents that contain the query as a prefix of a phrase.

95 |

96 | This is useful for autocomplete or typeahead functionality.

97 |

98 |

99 | ```json

100 | {

101 | "multi_match": {

102 | "query": "How do I run docker on Windows?",

103 | "fields": ["question", "text"],

104 | "type": "phrase_prefix"

105 | }

106 | }

107 | ```

--------------------------------------------------------------------------------

/01-intro/open-ai-alternatives.md:

--------------------------------------------------------------------------------

1 | moved [here](https://github.com/DataTalksClub/llm-zoomcamp/blob/main/awesome-llms.md#openai-api-alternatives)

2 |

--------------------------------------------------------------------------------

/01-intro/parse-faq.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 8,

6 | "id": "4cd1eaa8-3424-41ad-9cf2-3e8548712865",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "import io\n",

11 | "\n",

12 | "import requests\n",

13 | "import docx"

14 | ]

15 | },

16 | {

17 | "cell_type": "code",

18 | "execution_count": 24,

19 | "id": "8180e7e4-b90d-4900-a59b-d22e5d6537c4",

20 | "metadata": {},

21 | "outputs": [],

22 | "source": [

23 | "def clean_line(line):\n",

24 | " line = line.strip()\n",

25 | " line = line.strip('\\uFEFF')\n",

26 | " return line\n",

27 | "\n",

28 | "def read_faq(file_id):\n",

29 | " url = f'https://docs.google.com/document/d/{file_id}/export?format=docx'\n",

30 | " \n",

31 | " response = requests.get(url)\n",

32 | " response.raise_for_status()\n",

33 | " \n",

34 | " with io.BytesIO(response.content) as f_in:\n",

35 | " doc = docx.Document(f_in)\n",

36 | "\n",

37 | " questions = []\n",

38 | "\n",

39 | " question_heading_style = 'heading 2'\n",

40 | " section_heading_style = 'heading 1'\n",

41 | " \n",

42 | " heading_id = ''\n",

43 | " section_title = ''\n",

44 | " question_title = ''\n",

45 | " answer_text_so_far = ''\n",

46 | " \n",

47 | " for p in doc.paragraphs:\n",

48 | " style = p.style.name.lower()\n",

49 | " p_text = clean_line(p.text)\n",

50 | " \n",

51 | " if len(p_text) == 0:\n",

52 | " continue\n",

53 | " \n",

54 | " if style == section_heading_style:\n",

55 | " section_title = p_text\n",

56 | " continue\n",

57 | " \n",

58 | " if style == question_heading_style:\n",

59 | " answer_text_so_far = answer_text_so_far.strip()\n",

60 | " if answer_text_so_far != '' and section_title != '' and question_title != '':\n",

61 | " questions.append({\n",

62 | " 'text': answer_text_so_far,\n",

63 | " 'section': section_title,\n",

64 | " 'question': question_title,\n",

65 | " })\n",

66 | " answer_text_so_far = ''\n",

67 | " \n",

68 | " question_title = p_text\n",

69 | " continue\n",

70 | " \n",

71 | " answer_text_so_far += '\\n' + p_text\n",

72 | " \n",

73 | " answer_text_so_far = answer_text_so_far.strip()\n",

74 | " if answer_text_so_far != '' and section_title != '' and question_title != '':\n",

75 | " questions.append({\n",

76 | " 'text': answer_text_so_far,\n",

77 | " 'section': section_title,\n",

78 | " 'question': question_title,\n",

79 | " })\n",

80 | "\n",

81 | " return questions"

82 | ]

83 | },

84 | {

85 | "cell_type": "code",

86 | "execution_count": 25,

87 | "id": "7d3c2dd7-f64a-4dc7-a4e3-3e8aadfa720f",

88 | "metadata": {},

89 | "outputs": [],

90 | "source": [

91 | "faq_documents = {\n",

92 | " 'data-engineering-zoomcamp': '19bnYs80DwuUimHM65UV3sylsCn2j1vziPOwzBwQrebw',\n",

93 | " 'machine-learning-zoomcamp': '1LpPanc33QJJ6BSsyxVg-pWNMplal84TdZtq10naIhD8',\n",

94 | " 'mlops-zoomcamp': '12TlBfhIiKtyBv8RnsoJR6F72bkPDGEvPOItJIxaEzE0',\n",

95 | "}"

96 | ]

97 | },

98 | {

99 | "cell_type": "code",

100 | "execution_count": 27,

101 | "id": "f94efe26-05e8-4ae5-a0fa-0a8e16852816",

102 | "metadata": {},

103 | "outputs": [

104 | {

105 | "name": "stdout",

106 | "output_type": "stream",

107 | "text": [

108 | "data-engineering-zoomcamp\n",

109 | "machine-learning-zoomcamp\n",

110 | "mlops-zoomcamp\n"

111 | ]

112 | }

113 | ],

114 | "source": [

115 | "documents = []\n",

116 | "\n",

117 | "for course, file_id in faq_documents.items():\n",

118 | " print(course)\n",

119 | " course_documents = read_faq(file_id)\n",

120 | " documents.append({'course': course, 'documents': course_documents})"

121 | ]

122 | },

123 | {

124 | "cell_type": "code",

125 | "execution_count": 29,

126 | "id": "06b8d8be-f656-4cc3-893f-b159be8fda21",

127 | "metadata": {},

128 | "outputs": [],

129 | "source": [

130 | "import json"

131 | ]

132 | },

133 | {

134 | "cell_type": "code",

135 | "execution_count": 32,

136 | "id": "30d50bc1-8d26-44ee-8734-cafce05e0523",

137 | "metadata": {},

138 | "outputs": [],

139 | "source": [

140 | "with open('documents.json', 'wt') as f_out:\n",

141 | " json.dump(documents, f_out, indent=2)"

142 | ]

143 | },

144 | {

145 | "cell_type": "code",

146 | "execution_count": 33,

147 | "id": "0eabb1c6-5cc6-4d4d-a6da-e27d41cea546",

148 | "metadata": {},

149 | "outputs": [

150 | {

151 | "name": "stdout",

152 | "output_type": "stream",

153 | "text": [

154 | "[\n",

155 | " {\n",

156 | " \"course\": \"data-engineering-zoomcamp\",\n",

157 | " \"documents\": [\n",

158 | " {\n",

159 | " \"text\": \"The purpose of this document is to capture frequently asked technical questions\\nThe exact day and hour of the course will be 15th Jan 2024 at 17h00. The course will start with the first \\u201cOffice Hours'' live.1\\nSubscribe to course public Google Calendar (it works from Desktop only).\\nRegister before the course starts using this link.\\nJoin the course Telegram channel with announcements.\\nDon\\u2019t forget to register in DataTalks.Club's Slack and join the channel.\",\n",

160 | " \"section\": \"General course-related questions\",\n",

161 | " \"question\": \"Course - When will the course start?\"\n",

162 | " },\n",

163 | " {\n"

164 | ]

165 | }

166 | ],

167 | "source": [

168 | "!head documents.json"

169 | ]

170 | },

171 | {

172 | "cell_type": "code",

173 | "execution_count": null,

174 | "id": "1b21af5c-2f6d-49e7-92e9-ca229e2473b9",

175 | "metadata": {},

176 | "outputs": [],

177 | "source": []

178 | }

179 | ],

180 | "metadata": {

181 | "kernelspec": {

182 | "display_name": "Python 3 (ipykernel)",

183 | "language": "python",

184 | "name": "python3"

185 | },

186 | "language_info": {

187 | "codemirror_mode": {

188 | "name": "ipython",

189 | "version": 3

190 | },

191 | "file_extension": ".py",

192 | "mimetype": "text/x-python",

193 | "name": "python",

194 | "nbconvert_exporter": "python",

195 | "pygments_lexer": "ipython3",

196 | "version": "3.9.13"

197 | }

198 | },

199 | "nbformat": 4,

200 | "nbformat_minor": 5

201 | }

202 |

--------------------------------------------------------------------------------

/02-vector-search/README.md:

--------------------------------------------------------------------------------

1 | # Vector Search

2 |

3 | TBA

4 |

5 | ## Homework

6 |

7 | See [here](../cohorts/2025/02-vector-search/homework.md)

8 |

9 |

10 | # Notes

11 |

12 | * Notes from [2024 edition](../cohorts/2024/03-vector-search/)

13 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

14 |

--------------------------------------------------------------------------------

/03-evaluation/README.md:

--------------------------------------------------------------------------------

1 | # RAG and LLM Evaluation

2 |

3 | TBA

4 |

5 | ## Homework

6 |

7 | TBA

8 |

9 | # Notes

10 |

11 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

12 |

--------------------------------------------------------------------------------

/04-monitoring/README.md:

--------------------------------------------------------------------------------

1 | # Module 4: Evaluation and Monitoring

2 |

3 | In this module, we will learn how to evaluate and monitor our LLM and RAG system.

4 |

5 | In the evaluation part, we assess the quality of our entire RAG

6 | system before it goes live.

7 |

8 | In the monitoring part, we collect, store and visualize

9 | metrics to assess the answer quality of a deployed LLM. We also

10 | collect chat history and user feedback.

11 |

12 |

13 | TBA

14 |

15 | # Notes

16 |

17 | * Notes from [2024 edition](../cohorts/2024/04-monitoring/)

18 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

19 |

--------------------------------------------------------------------------------

/05-best-practices/README.md:

--------------------------------------------------------------------------------

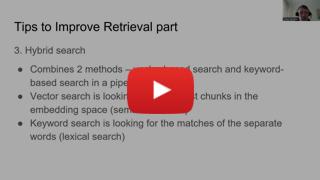

1 | # Module 6: Best practices

2 |

3 | In this module, we'll cover the techniques that could improve your RAG pipeline.

4 |

5 | ## 6.1 Techniques to Improve RAG Pipeline

6 |

7 |

8 |  9 |

10 |

11 | * Small-to-Big chunk retrieval

12 | * Leveraging document metadata

13 | * Hybrid search

14 | * User query rewriting

15 | * Document reranking

16 |

17 | Links:

18 | * [Slides](llm-zoomcamp-best-practicies.pdf)

19 | * [Five Techniques for Improving RAG Chatbots - Nikita Kozodoi [Video]](https://www.youtube.com/watch?v=xPYmClWk5O8)

20 | * [Survey on RAG techniques [Article]](https://arxiv.org/abs/2312.10997)

21 |

22 |

23 | ## 6.2 Hybrid search

24 |

25 |

26 |

9 |

10 |

11 | * Small-to-Big chunk retrieval

12 | * Leveraging document metadata

13 | * Hybrid search

14 | * User query rewriting

15 | * Document reranking

16 |

17 | Links:

18 | * [Slides](llm-zoomcamp-best-practicies.pdf)

19 | * [Five Techniques for Improving RAG Chatbots - Nikita Kozodoi [Video]](https://www.youtube.com/watch?v=xPYmClWk5O8)

20 | * [Survey on RAG techniques [Article]](https://arxiv.org/abs/2312.10997)

21 |

22 |

23 | ## 6.2 Hybrid search

24 |

25 |

26 |  27 |

28 |

29 | * Hybrid search strategy

30 | * Hybrid search in Elasticsearch

31 |

32 | Links:

33 | * [Notebook](hybrid-search-and-reranking-es.ipynb)

34 | * [Hybrid search [Elasticsearch Guide]](https://www.elastic.co/guide/en/elasticsearch/reference/current/knn-search.html#_combine_approximate_knn_with_other_features)

35 | * [Hybrid search [Tutorial]](https://www.elastic.co/search-labs/tutorials/search-tutorial/vector-search/hybrid-search)

36 |

37 |

38 | ## 6.3 Document Reranking

39 |

40 |

41 |

27 |

28 |

29 | * Hybrid search strategy

30 | * Hybrid search in Elasticsearch

31 |

32 | Links:

33 | * [Notebook](hybrid-search-and-reranking-es.ipynb)

34 | * [Hybrid search [Elasticsearch Guide]](https://www.elastic.co/guide/en/elasticsearch/reference/current/knn-search.html#_combine_approximate_knn_with_other_features)

35 | * [Hybrid search [Tutorial]](https://www.elastic.co/search-labs/tutorials/search-tutorial/vector-search/hybrid-search)

36 |

37 |

38 | ## 6.3 Document Reranking

39 |

40 |

41 |  42 |

43 |

44 | * Reranking concept and metrics

45 | * Reciprocal Rank Fusion (RRF)

46 | * Handmade raranking implementation

47 |

48 | Links:

49 | * [Reciprocal Rank Fusion (RRF) method [Elasticsearch Guide]](https://www.elastic.co/guide/en/elasticsearch/reference/current/rrf.html)

50 | * [RRF method [Article]](https://plg.uwaterloo.ca/~gvcormac/cormacksigir09-rrf.pdf)

51 | * [Elasticsearch subscription plans](https://www.elastic.co/subscriptions)

52 |

53 | We should pull and run a docker container with Elasticsearch 8.9.0 or higher in order to use reranking based on RRF algorithm:

54 |

55 | ```bash

56 | docker run -it \

57 | --rm \

58 | --name elasticsearch \

59 | -m 4GB \

60 | -p 9200:9200 \

61 | -p 9300:9300 \

62 | -e "discovery.type=single-node" \

63 | -e "xpack.security.enabled=false" \

64 | docker.elastic.co/elasticsearch/elasticsearch:8.9.0

65 | ```

66 |

67 |

68 | ## 6.4 Hybrid search with LangChain

69 |

70 |

71 |

42 |

43 |

44 | * Reranking concept and metrics

45 | * Reciprocal Rank Fusion (RRF)

46 | * Handmade raranking implementation

47 |

48 | Links:

49 | * [Reciprocal Rank Fusion (RRF) method [Elasticsearch Guide]](https://www.elastic.co/guide/en/elasticsearch/reference/current/rrf.html)

50 | * [RRF method [Article]](https://plg.uwaterloo.ca/~gvcormac/cormacksigir09-rrf.pdf)

51 | * [Elasticsearch subscription plans](https://www.elastic.co/subscriptions)

52 |

53 | We should pull and run a docker container with Elasticsearch 8.9.0 or higher in order to use reranking based on RRF algorithm:

54 |

55 | ```bash

56 | docker run -it \

57 | --rm \

58 | --name elasticsearch \

59 | -m 4GB \

60 | -p 9200:9200 \

61 | -p 9300:9300 \

62 | -e "discovery.type=single-node" \

63 | -e "xpack.security.enabled=false" \

64 | docker.elastic.co/elasticsearch/elasticsearch:8.9.0

65 | ```

66 |

67 |

68 | ## 6.4 Hybrid search with LangChain

69 |

70 |

71 |  72 |

73 |

74 | * LangChain: Introduction

75 | * ElasticsearchRetriever

76 | * Hybrid search implementation

77 |

78 | ```bash

79 | pip install -qU langchain langchain-elasticsearch langchain-huggingface

80 | ```

81 |

82 | Links:

83 | * [Notebook](hybrid-search-langchain.ipynb)

84 | * [Chatbot Implementation [Tutorial]](https://www.elastic.co/search-labs/tutorials/chatbot-tutorial/implementation)

85 | * [ElasticsearchRetriever](https://python.langchain.com/v0.2/docs/integrations/retrievers/elasticsearch_retriever/)

86 |

87 |

88 | ## Homework

89 |

90 | TBD

91 |

92 | # Notes

93 |

94 | * First link goes here

95 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

96 |

--------------------------------------------------------------------------------

/05-best-practices/llm-zoomcamp-best-practicies.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DataTalksClub/llm-zoomcamp/b9d8bd63621736c75fa8d6d0def5ad6656c0b981/05-best-practices/llm-zoomcamp-best-practicies.pdf

--------------------------------------------------------------------------------

/06-project-example/README.md:

--------------------------------------------------------------------------------

1 | # 7. End-to-End Project Example

2 |

3 | Links:

4 |

5 | * [Project alexeygrigorev/fitness-assistant](https://github.com/alexeygrigorev/fitness-assistant)

6 | * [Project criteria](../project.md#evaluation-criteria)

7 |

8 |

9 | Note: check the final result, it's a bit different

10 | from what we showed in the videos: we further improved it

11 | by doing some small things here and there, like improved

12 | README, code readability, etc.

13 |

14 |

15 | ## 7.1. Fitness assistant project

16 |

17 |

18 |

72 |

73 |

74 | * LangChain: Introduction

75 | * ElasticsearchRetriever

76 | * Hybrid search implementation

77 |

78 | ```bash

79 | pip install -qU langchain langchain-elasticsearch langchain-huggingface

80 | ```

81 |

82 | Links:

83 | * [Notebook](hybrid-search-langchain.ipynb)

84 | * [Chatbot Implementation [Tutorial]](https://www.elastic.co/search-labs/tutorials/chatbot-tutorial/implementation)

85 | * [ElasticsearchRetriever](https://python.langchain.com/v0.2/docs/integrations/retrievers/elasticsearch_retriever/)

86 |

87 |

88 | ## Homework

89 |

90 | TBD

91 |

92 | # Notes

93 |

94 | * First link goes here

95 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

96 |

--------------------------------------------------------------------------------

/05-best-practices/llm-zoomcamp-best-practicies.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/DataTalksClub/llm-zoomcamp/b9d8bd63621736c75fa8d6d0def5ad6656c0b981/05-best-practices/llm-zoomcamp-best-practicies.pdf

--------------------------------------------------------------------------------

/06-project-example/README.md:

--------------------------------------------------------------------------------

1 | # 7. End-to-End Project Example

2 |

3 | Links:

4 |

5 | * [Project alexeygrigorev/fitness-assistant](https://github.com/alexeygrigorev/fitness-assistant)

6 | * [Project criteria](../project.md#evaluation-criteria)

7 |

8 |

9 | Note: check the final result, it's a bit different

10 | from what we showed in the videos: we further improved it

11 | by doing some small things here and there, like improved

12 | README, code readability, etc.

13 |

14 |

15 | ## 7.1. Fitness assistant project

16 |

17 |

18 |  19 |

20 |

21 | * Generating data for the project

22 | * Setting up the project

23 | * Implementing the initial version of the RAG flow

24 |

25 | ## 7.2. Evaluating retrieval

26 |

27 |

28 |

19 |

20 |

21 | * Generating data for the project

22 | * Setting up the project

23 | * Implementing the initial version of the RAG flow

24 |

25 | ## 7.2. Evaluating retrieval

26 |

27 |

28 |  29 |

30 |

31 | * Preparing the README file

32 | * Generating gold standard evaluation data

33 | * Evaluting retrieval

34 | * Findning the best boosting coefficients

35 |

36 |

37 | ## 7.3 Evaluating RAG

38 |

39 |

40 |

29 |

30 |

31 | * Preparing the README file

32 | * Generating gold standard evaluation data

33 | * Evaluting retrieval

34 | * Findning the best boosting coefficients

35 |

36 |

37 | ## 7.3 Evaluating RAG

38 |

39 |

40 |  41 |

42 |

43 | * Using LLM-as-a-Judge (type 2)

44 | * Comparing gpt-4o-mini with gpt-4o

45 |

46 | ## 7.4 Interface and ingestion pipeline

47 |

48 |

49 |

41 |

42 |

43 | * Using LLM-as-a-Judge (type 2)

44 | * Comparing gpt-4o-mini with gpt-4o

45 |

46 | ## 7.4 Interface and ingestion pipeline

47 |

48 |

49 |  50 |

51 |

52 | * Turnining the jupyter notebook into a script

53 | * Creating the ingestion pipeline

54 | * Creating the API interface with Flask

55 | * Improving README

56 |

57 |

58 | ## 7.5 Monitoring and containerization

59 |

60 |

61 |

50 |

51 |

52 | * Turnining the jupyter notebook into a script

53 | * Creating the ingestion pipeline

54 | * Creating the API interface with Flask

55 | * Improving README

56 |

57 |

58 | ## 7.5 Monitoring and containerization

59 |

60 |

61 |  62 |

63 |

64 | * Creating a Docker image for our application

65 | * Putting everything in docker compose

66 | * Logging all the information for monitoring purposes

67 |

68 |

69 | ## 7.6 Summary and closing remarks

70 |

71 |

72 |

62 |

63 |

64 | * Creating a Docker image for our application

65 | * Putting everything in docker compose

66 | * Logging all the information for monitoring purposes

67 |

68 |

69 | ## 7.6 Summary and closing remarks

70 |

71 |

72 |  73 |

74 |

75 | * Changes between 7.5 and 7.6 (postres logging, grafara, cli.py, etc)

76 | * README file improvements

77 | * Total cost of the project (~$2) and how to lower it

78 | * Using generated data for real-life projects

79 |

80 |

81 | ## 7.7 Chunking for longer texts

82 |

83 |

84 |

73 |

74 |

75 | * Changes between 7.5 and 7.6 (postres logging, grafara, cli.py, etc)

76 | * README file improvements

77 | * Total cost of the project (~$2) and how to lower it

78 | * Using generated data for real-life projects

79 |

80 |

81 | ## 7.7 Chunking for longer texts

82 |

83 |

84 |  85 |

86 |

87 | * Different chunking strategies

88 | * [Use cases: multiple articles, one article, slide decks](content-processing-summary.md)

89 |

90 | Links:

91 |

92 | * https://chatgpt.com/share/a4616f6b-43f4-4225-9d03-bb69c723c210

93 | * https://chatgpt.com/share/74217c02-95e6-46ae-b5a5-ca79f9a07084

94 | * https://chatgpt.com/share/8cf0ebde-c53f-4c6f-82ae-c6cc52b2fd0b

95 |

96 | # Notes

97 |

98 | * First link goes here

99 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

100 |

--------------------------------------------------------------------------------

/06-project-example/content-processing-summary.md:

--------------------------------------------------------------------------------

1 | # Content Processing Cases and Steps

2 |

3 | ## Case: Multiple Articles

4 |

5 | - Assign each article a document id

6 | - Chunk the articles

7 | - Assign each chunk a unique chunk id (could be doc_id + chunk_number)

8 | - Evaluate retrieval: separate hitrate for both doc_id and chunk_id

9 | - Evaluate RAG: LLM as a Judge

10 | - Tuning chunk size: use metrics from Evaluate RAG

11 |

12 | Example JSON structure for a chunk:

13 | ```json

14 | {

15 | "doc_id": "ashdiasdh",

16 | "chunk_id": "ashdiasdh_1",

17 | "text": "actual text"

18 | }

19 | ```

20 |

21 | ## Case: Single Article / Transcript / Etc.

22 |

23 | Example: the user provides YouTubeID, you initialize the system and now you can talk to it

24 |

25 | - Chunk it

26 | - Evaluation as for multiple articles

27 |

28 |

29 | ## Case: Book or Very Long Form Content

30 |

31 | - Experiment with it

32 | - Each chapter / section can be a separate document

33 | - Use LLM as a Judge to see which approach works best

34 |

35 | ## Case: Images

36 |

37 | - Describe the images using gpt-4o-mini

38 | - [CLIP](https://openai.com/index/clip/)

39 | - Each image is a separate document

40 |

41 | ## Case: Slides

42 |

43 | - Same as with images + multiple articles

44 | - "Chunking": slide deck = document, slide = chunk

45 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

85 |

86 |

87 | * Different chunking strategies

88 | * [Use cases: multiple articles, one article, slide decks](content-processing-summary.md)

89 |

90 | Links:

91 |

92 | * https://chatgpt.com/share/a4616f6b-43f4-4225-9d03-bb69c723c210

93 | * https://chatgpt.com/share/74217c02-95e6-46ae-b5a5-ca79f9a07084

94 | * https://chatgpt.com/share/8cf0ebde-c53f-4c6f-82ae-c6cc52b2fd0b

95 |

96 | # Notes

97 |

98 | * First link goes here

99 | * Did you take notes? Add them above this line (Send a PR with *links* to your notes)

100 |

--------------------------------------------------------------------------------

/06-project-example/content-processing-summary.md:

--------------------------------------------------------------------------------

1 | # Content Processing Cases and Steps

2 |

3 | ## Case: Multiple Articles

4 |

5 | - Assign each article a document id

6 | - Chunk the articles

7 | - Assign each chunk a unique chunk id (could be doc_id + chunk_number)

8 | - Evaluate retrieval: separate hitrate for both doc_id and chunk_id

9 | - Evaluate RAG: LLM as a Judge

10 | - Tuning chunk size: use metrics from Evaluate RAG

11 |

12 | Example JSON structure for a chunk:

13 | ```json

14 | {

15 | "doc_id": "ashdiasdh",

16 | "chunk_id": "ashdiasdh_1",

17 | "text": "actual text"

18 | }

19 | ```

20 |

21 | ## Case: Single Article / Transcript / Etc.

22 |

23 | Example: the user provides YouTubeID, you initialize the system and now you can talk to it

24 |

25 | - Chunk it

26 | - Evaluation as for multiple articles

27 |

28 |

29 | ## Case: Book or Very Long Form Content

30 |

31 | - Experiment with it

32 | - Each chapter / section can be a separate document

33 | - Use LLM as a Judge to see which approach works best

34 |

35 | ## Case: Images

36 |

37 | - Describe the images using gpt-4o-mini

38 | - [CLIP](https://openai.com/index/clip/)

39 | - Each image is a separate document

40 |

41 | ## Case: Slides

42 |

43 | - Same as with images + multiple articles

44 | - "Chunking": slide deck = document, slide = chunk

45 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 |

5 |

6 | LLM Zoomcamp: A Free Course on Real-Life Applications of LLMs

7 |

8 |

9 |

10 | In 10 weeks, learn how to build AI systems that answer questions about your knowledge base. Gain hands-on experience with LLMs, RAG, vector search, evaluation, monitoring, and more.

11 |

12 |

13 |

14 |  15 |

15 |

16 |

17 |

18 | Join Slack •

19 | #course-llm-zoomcamp Channel •

20 | Telegram Announcements •

21 | Course Playlist •

22 | FAQ

23 |

24 |

25 | ## How to Take LLM Zoomcamp

26 |

27 | ### 2025 Cohort

28 | - **Start Date**: June 2, 2025, 17:00 CET

29 | - **Register Here**: [Sign up](https://airtable.com/appPPxkgYLH06Mvbw/shr7WtxHEPXxaui0Q)

30 |

31 | 2025 cohort checklist:

32 | - Subscribe to our [Google Calendar](https://calendar.google.com/calendar/?cid=NjkxOThkOGFhZmUyZmQwMzZjNDFkNmE2ZDIyNjE5YjdiMmQyZDVjZTYzOGMxMzQyZmNkYjE5Y2VkNDYxOTUxY0Bncm91cC5jYWxlbmRhci5nb29nbGUuY29t)

33 | - Check [2025 cohort folder](https://github.com/DataTalksClub/llm-zoomcamp/tree/main/cohorts/2025) to stay updated

34 | - Watch [live Q&A](https://youtube.com/live/8lgiOLMMKcY) about the course

35 | - Watch [live course launch](https://www.youtube.com/live/FgnelhEJFj0) stream

36 | - Save the [2025 course playlist](https://youtube.com/playlist?list=PL3MmuxUbc_hIoBpuc900htYF4uhEAbaT-&si=n7CuD0DEgPtnbtsI) on YouTube

37 | - Check course content by navigating to the right module on GitHub

38 | - Share this course with a friend!

39 |

40 | ### Self-Paced Learning

41 | You can follow the course at your own pace:

42 | 1. Watch the course videos.

43 | 2. Complete the homework assignments.

44 | 3. Work on a project and share it in Slack for feedback.

45 |

46 | ## Syllabus

47 |

48 | ### Pre-course Workshops

49 | - [Build a Search Engine](https://www.youtube.com/watch?v=nMrGK5QgPVE) ([Code](https://github.com/alexeygrigorev/build-your-own-search-engine))

50 |

51 | ### Modules

52 |

53 | #### [Module 1: Introduction to LLMs and RAG](01-intro/)

54 | - Basics of LLMs and Retrieval-Augmented Generation (RAG)

55 | - OpenAI API and text search with Elasticsearch

56 |

57 | #### [Module 2: Vector Search](02-vector-search/)

58 |

59 | - Vector search and embeddings

60 | - Indexing and retrieving data efficiently

61 | - Using Qdrant as the vestor database

62 |

63 | #### [Module 3: Evaluation](03-evaluation/)

64 |

65 | - Search evaluation

66 | - Online vs offline evaluation

67 | - LLM as a Judge

68 |

69 | #### [Module 4: Monitoring](04-monitoring/)

70 |

71 | - Online evaluation techniques

72 | - Monitoring user feedback with dashboards

73 |

74 |

75 | #### [Module 5: Best Practices](05-best-practices/)

76 | - Hybrid search

77 | - Document reranking

78 |

79 | #### [Module 6: Bonus - End-to-End Project](06-project-example/)

80 | - Build a fitness assistant using LLMs

81 |

82 | ### [Capstone Project](project.md)

83 |

84 | Put eveything you learned into practice

85 |

86 | ## Meet the Instructors

87 | - [Alexey Grigorev](https://linkedin.com/in/agrigorev/)

88 | - [Timur Kamaliev](https://www.linkedin.com/in/timurkamaliev/)

89 |

90 | ## Community & Support

91 |

92 | ### **Getting Help on Slack**

93 | Join the [`#course-llm-zoomcamp`](https://app.slack.com/client/T01ATQK62F8/C06TEGTGM3J) channel on [DataTalks.Club Slack](https://datatalks.club/slack.html) for discussions, troubleshooting, and networking.

94 |

95 | To keep discussions organized:

96 | - Follow [our guidelines](asking-questions.md) when posting questions.

97 | - Review the [community guidelines](https://datatalks.club/slack/guidelines.html).

98 |

99 | ## Sponsors & Supporters

100 | A special thanks to our course sponsors for making this initiative possible!

101 |

102 |

103 |

104 |  105 |

106 |

105 |

106 |

107 |

108 |

109 |

110 |  111 |

112 |

111 |

112 |

113 |

114 |

115 |

116 |  117 |

118 |

117 |

118 |

119 |

120 |

121 | Interested in supporting our community? Reach out to [alexey@datatalks.club](mailto:alexey@datatalks.club).

122 |

123 | ## About DataTalks.Club

124 |

125 |

126 |  127 |

127 |

128 |

129 |

130 | DataTalks.Club is a global online community of data enthusiasts. It's a place to discuss data, learn, share knowledge, ask and answer questions, and support each other.

131 |

132 |

133 |

134 | Website •

135 | Join Slack Community •

136 | Newsletter •

137 | Upcoming Events •

138 | Google Calendar •

139 | YouTube •

140 | GitHub •

141 | LinkedIn •

142 | Twitter

143 |

144 |

145 | All the activity at DataTalks.Club mainly happens on [Slack](https://datatalks.club/slack.html). We post updates there and discuss different aspects of data, career questions, and more.

146 |

147 | At DataTalksClub, we organize online events, community activities, and free courses. You can learn more about what we do at [DataTalksClub Community Navigation](https://www.notion.so/DataTalksClub-Community-Navigation-bf070ad27ba44bf6bbc9222082f0e5a8?pvs=21).

148 |

--------------------------------------------------------------------------------

/after-sign-up.md:

--------------------------------------------------------------------------------

1 | ## Thank you!

2 |

3 | Thanks for signining up for the course.

4 |

5 | Here are some things you should do before you start the course:

6 |

7 | - Register in [DataTalks.Club's Slack](https://datatalks.club/slack.html)

8 | - Join the [`#course-llm-zoomcamp`](https://app.slack.com/client/T01ATQK62F8/C06TEGTGM3J) channel

9 | - Join the [course Telegram channel with announcements](https://t.me/llm_zoomcamp)

10 | - Subscribe to [DataTalks.Club's YouTube channel](https://www.youtube.com/c/DataTalksClub) and check [the course playlist](https://www.youtube.com/playlist?list=PL3MmuxUbc_hKiIVNf7DeEt_tGjypOYtKV)

11 | - Subscribe to our [Course Calendar](https://calendar.google.com/calendar/?cid=NjkxOThkOGFhZmUyZmQwMzZjNDFkNmE2ZDIyNjE5YjdiMmQyZDVjZTYzOGMxMzQyZmNkYjE5Y2VkNDYxOTUxY0Bncm91cC5jYWxlbmRhci5nb29nbGUuY29t)

12 | - Check our [Technical FAQ](https://docs.google.com/document/d/1m2KexowAXTmexfC5rVTCSnaShvdUQ8Ag2IEiwBDHxN0/edit?usp=sharing) if you have questions

13 |

14 | See you in the course!

15 |

--------------------------------------------------------------------------------

/asking-questions.md:

--------------------------------------------------------------------------------

1 | ## Asking questions

2 |

3 | If you have any questions, ask them

4 | in the [`#course-llm-zoomcamp`](https://app.slack.com/client/T01ATQK62F8/C06TEGTGM3J) channel in [DataTalks.Club](https://datatalks.club) slack.

5 |

6 | To keep our discussion in Slack more organized, we ask you to follow these suggestions:

7 |

8 | * Before asking a question, check [FAQ](https://docs.google.com/document/d/1m2KexowAXTmexfC5rVTCSnaShvdUQ8Ag2IEiwBDHxN0/edit?usp=sharing).

9 | * Use threads. When you have a problem, first describe the problem shortly

10 | and then put the actual error in the thread - so it doesn't take the entire screen.

11 | * Instead of screenshots, it's better to copy-paste the error you're getting in text.

12 | Use ` ``` ` for formatting your code.

13 | It's very difficult to read text from screenshots.

14 | * Please don't take pictures of your code with a phone. It's even harder to read. Follow the previous suggestion,

15 | and in rare cases when you need to show what happens on your screen, take a screenshot.

16 | * You don't need to tag the instructors when you have a problem. We will see it eventually.

17 | * If somebody helped you with your problem and it's not in [FAQ](https://docs.google.com/document/d/1m2KexowAXTmexfC5rVTCSnaShvdUQ8Ag2IEiwBDHxN0/edit?usp=sharing), please add it there.

18 | It'll help other students.

19 |

--------------------------------------------------------------------------------

/awesome-llms.md:

--------------------------------------------------------------------------------

1 | # Awesome LLMs

2 |

3 | In this file, we will collect all interesting links

4 |

5 | ## OpenAI API Alternatives

6 |

7 | OpenAI and GPT are not the only hosted LLMs that we can use.

8 | There are other services that we can use

9 |

10 |

11 | * [mistral.ai](https://mistral.ai) (5€ free credit on sign up)

12 | * [Groq](https://console.groq.com) (can inference from open source LLMs with rate limits)

13 | * [TogetherAI](https://api.together.ai) (can inference from variety of open source LLMs, 25$ free credit on sign up)

14 | * [Google Gemini](https://ai.google.dev/gemini-api/docs/get-started/tutorial?lang=python) (2 months unlimited access)

15 | * [OpenRouterAI](https://openrouter.ai/) (some small open-source models, such as Gemma 7B, are free)

16 | * [HuggingFace API](https://huggingface.co/docs/api-inference/index) (over 150,000 open-source models, rate-limited and free)

17 | * [Cohere](https://cohere.com/) (provides a developer trail key which allows upto 100 reqs/min for generating, summarizing, and classifying text. Read more [here](https://cohere.com/blog/free-developer-tier-announcement))

18 | * [wit](https://wit.ai/) (Facebook AI Afiliate - free)

19 | * [Anthropic API](https://www.anthropic.com/pricing#anthropic-api) (starting from $0.25 / MTok for input and $1.25 / MTok for the output for the most affordable model)

20 | * [AI21Labs API](https://www.ai21.com/pricing#foundation-models) (Free trial including $10 credits for 3 months)

21 | * [Replicate](https://replicate.com/) (faster inference, can host any ML model. charges 0.10$ per 1M input tokens for llama/Mistral model)

22 |

23 |

24 | ## Local LLMs on CPUs

25 |

26 | These services help run LLMs locally, also without GPUs

27 |

28 | - [ollama](https://github.com/ollama/ollama)

29 | - [Jan.AI](https://jan.ai/)

30 | - [h2oGPT](https://github.com/h2oai/h2ogpt)

31 |

32 |

33 | ## Applications

34 | - **Text Generation**

35 | - [OpenAI GPT-3 Playground](https://platform.openai.com/playground)

36 | - [AI Dungeon](https://play.aidungeon.io/)

37 | - **Chatbots**

38 | - [Rasa](https://rasa.com/)

39 | - [Microsoft Bot Framework](https://dev.botframework.com/)

40 | - **Sentiment Analysis**

41 | - [VADER Sentiment Analysis](https://github.com/cjhutto/vaderSentiment)

42 | - [TextBlob](https://textblob.readthedocs.io/en/dev/)

43 | - **Summarization**

44 | - [Sumy](https://github.com/miso-belica/sumy)

45 | - [Hugging Face Transformers Summarization](https://huggingface.co/transformers/task_summary.html)

46 | - **Translation**

47 | - [MarianMT by Hugging Face](https://huggingface.co/transformers/model_doc/marian.html)

48 |

49 | ## Fine-Tuning

50 | - **Guides and Tutorials**

51 | - [Fine-Tuning GPT-3](https://platform.openai.com/docs/guides/fine-tuning)

52 | - [Hugging Face Fine-Tuning Tutorial](https://huggingface.co/transformers/training.html)

53 | - **Tools and Frameworks**

54 | - [Hugging Face Trainer](https://huggingface.co/transformers/main_classes/trainer.html)

55 | - [Fastai](https://docs.fast.ai/text.learner.html)

56 | - **Colab Notebooks**

57 | - [Fine-Tuning BERT on Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/text_classification.ipynb)

58 | - [Fine-Tuning GPT-2 on Colab](https://colab.research.google.com/github/fastai/course-v3/blob/master/nbs/dl2/12a_ulmfit.ipynb)

59 |

60 | ## Prompt Engineering

61 | - **Techniques and Best Practices**

62 | - [OpenAI Prompt Engineering Guide](https://platform.openai.com/docs/guides/completions/best-practices)

63 | - [Prompt Design for GPT-3](https://beta.openai.com/docs/guides/prompt-design)

64 | - **Tools**

65 | - [Prompt Designer](https://promptdesigner.com/)

66 | - [Prompt Engineering Toolkit](https://github.com/prompt-engineering/awesome-prompt-engineering)

67 | - **Examples and Case Studies**

68 | - [Awesome ChatGPT Prompts](https://github.com/f/awesome-chatgpt-prompts)

69 | - [GPT-3 Prompt Engineering Examples](https://github.com/shreyashankar/gpt-3-sandbox)

70 |

71 | ## Deployment

72 | - **Hosting Services**

73 | - [Hugging Face Inference API](https://huggingface.co/inference-api)

74 | - [AWS SageMaker](https://aws.amazon.com/sagemaker/)

75 | - **Serverless Deployments**

76 | - [Serverless GPT-3 with AWS Lambda](https://towardsdatascience.com/building-serverless-gpt-3-powered-apis-with-aws-lambda-f2d4b8a91058)

77 | - [Deploying on Vercel](https://vercel.com/guides/deploying-next-and-vercel-api-with-openai-gpt-3)

78 | - **Containerization**

79 | - [Dockerizing a GPT Model](https://medium.com/swlh/dockerize-your-gpt-3-chatbot-28dd48c19c91)

80 | - [Kubernetes for ML Deployments](https://towardsdatascience.com/kubernetes-for-machine-learning-6c7f5c5466a2)

81 |

82 | ## Monitoring and Logging

83 | - **Best Practices**

84 | - [Logging and Monitoring AI Models](https://www.dominodatalab.com/resources/whitepapers/logging-and-monitoring-for-machine-learning)

85 | - [Monitor Your NLP Models](https://towardsdatascience.com/monitor-your-nlp-models-40c2fb141a51)

86 |

87 | ## Ethics and Bias

88 | - **Frameworks and Guidelines**

89 | - [AI Ethics Guidelines Global Inventory](https://algorithmwatch.org/en/project/ai-ethics-guidelines-global-inventory/)

90 | - [Google AI Principles](https://ai.google/principles/)

91 | - **Tools**

92 | - [Fairness Indicators](https://www.tensorflow.org/tfx/guide/fairness_indicators)

93 | - [IBM AI Fairness 360](https://aif360.mybluemix.net/)

94 | - **Research Papers**

95 | - [Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification](http://gendershades.org/overview.html)

96 | - [AI Fairness and Bias](https://arxiv.org/abs/1908.09635)

97 |

98 |

99 |

100 |

--------------------------------------------------------------------------------

/cohorts/2024/01-intro/homework.md:

--------------------------------------------------------------------------------

1 | ## Homework: Introduction

2 |

3 | In this homework, we'll learn more about search and use Elastic Search for practice.

4 |

5 | > It's possible that your answers won't match exactly. If it's the case, select the closest one.

6 |

7 | ## Q1. Running Elastic

8 |

9 | Run Elastic Search 8.4.3, and get the cluster information. If you run it on localhost, this is how you do it:

10 |

11 | ```bash

12 | curl localhost:9200

13 | ```

14 |

15 | What's the `version.build_hash` value?

16 |

17 |

18 | ## Getting the data

19 |

20 | Now let's get the FAQ data. You can run this snippet:

21 |

22 | ```python

23 | import requests

24 |

25 | docs_url = 'https://github.com/DataTalksClub/llm-zoomcamp/blob/main/01-intro/documents.json?raw=1'

26 | docs_response = requests.get(docs_url)

27 | documents_raw = docs_response.json()

28 |

29 | documents = []

30 |

31 | for course in documents_raw:

32 | course_name = course['course']

33 |

34 | for doc in course['documents']:

35 | doc['course'] = course_name

36 | documents.append(doc)

37 | ```

38 |

39 | Note that you need to have the `requests` library:

40 |

41 | ```bash

42 | pip install requests

43 | ```

44 |