├── JarvisAI
    ├── __init__.py
    ├── JarvisAI
    │   ├── brain
    │   │   ├── __init__.py
    │   │   ├── ner.py
    │   │   ├── auth.py
    │   │   ├── intent_classification.py
    │   │   └── chatbot_premium.py
    │   ├── features
    │   │   ├── __init__.py
    │   │   ├── iambored.py
    │   │   ├── youtube_play.py
    │   │   ├── greet.py
    │   │   ├── date_time.py
    │   │   ├── places_near_me.py
    │   │   ├── games.py
    │   │   ├── joke.py
    │   │   ├── whatsapp_message.py
    │   │   ├── youtube_video_downloader.py
    │   │   ├── website_open.py
    │   │   ├── screenshot.py
    │   │   ├── send_email.py
    │   │   ├── news.py
    │   │   ├── weather.py
    │   │   ├── internet_speed_test.py
    │   │   ├── click_photo.py
    │   │   ├── covid_cases.py
    │   │   ├── tell_me_about.py
    │   │   └── volume_controller.py
    │   ├── utils
    │   │   ├── __init__.py
    │   │   ├── speech_to_text
    │   │   │   ├── __init__.py
    │   │   │   ├── speech_to_text_whisper.py
    │   │   │   └── speech_to_text.py
    │   │   ├── text_to_speech
    │   │   │   ├── __init__.py
    │   │   │   └── text_to_speech.py
    │   │   └── input_output.py
    │   ├── actions.json
    │   ├── features_manager.py
    │   └── __init__.py
    ├── TODO
    ├── MANIFEST.in
    ├── License.txt
    ├── setup.py
    ├── README for JarvisAI 4.3 and below.md
    └── README.md
├── .idea
    ├── .gitignore
    ├── vcs.xml
    ├── inspectionProfiles
    │   └── profiles_settings.xml
    ├── modules.xml
    ├── misc.xml
    └── Jarvis_AI.iml
├── .gitattributes
├── cmd_twine.txt
├── License.txt
├── requirements.txt
├── .gitignore
└── README.md

/JarvisAI/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/brain/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/TODO:

--------------------------------------------------------------------------------

1 | Setup Premium Features

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/speech_to_text/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/text_to_speech/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/JarvisAI/MANIFEST.in:

--------------------------------------------------------------------------------

1 | recursive-include JarvisAI *.dll *.so *.dylib

--------------------------------------------------------------------------------

/.idea/.gitignore:

--------------------------------------------------------------------------------

1 | # Default ignored files

2 | /shelf/

3 | /workspace.xml

4 |

--------------------------------------------------------------------------------

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Auto detect text files and perform LF normalization

2 | * text=auto

3 |

--------------------------------------------------------------------------------

/.idea/vcs.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/.idea/inspectionProfiles/profiles_settings.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/iambored.py:

--------------------------------------------------------------------------------

1 | import requests

2 |

3 |

4 | def get_me_suggestion(*args, **kwargs):

5 | url = 'http://www.boredapi.com/api/activity'

6 | response = requests.get(url)

7 | return response.json()['activity']

8 |

--------------------------------------------------------------------------------

/cmd_twine.txt:

--------------------------------------------------------------------------------

1 | python setup.py sdist bdist_wheel

2 | twine upload dist/* --skip-existing

3 | pyarmor obfuscate --bootstrap 3 --exact --platform windows.x86_64 --platform windows.x86 --platform linux.x86_64 --platform linux.x86 --platform darwin.x86_64 manager.py

4 |

--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/youtube_play.py:

--------------------------------------------------------------------------------

1 | import pywhatkit as kit

2 |

3 |

4 | def yt_play(*arg, **kwargs):

5 | inp_command = kwargs.get("query")

6 | kit.playonyt(inp_command)

7 | return "Playing Video on Youtube"

8 |

9 |

10 | if __name__ == "__main__":

11 | yt_play(query='play on youtube shape of you')

12 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/greet.py:

--------------------------------------------------------------------------------

1 | import datetime

2 |

3 |

4 | def greet(*args, **kwargs):

5 | time = datetime.datetime.now().hour

6 | if time < 12:

7 | return "Hi, Good Morning"

8 | elif 12 <= time < 18:

9 | return "Hi, Good Afternoon"

10 | else:

11 | return "Hi, Good Evening"

12 |

13 |

14 | def goodbye(*args, **kwargs):

15 | return "Goodbye"

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/date_time.py:

--------------------------------------------------------------------------------

1 | import datetime

2 |

3 |

4 | def date_time(*args, **kwargs):

5 | query = kwargs['query']

6 | if 'time' in query:

7 | return datetime.datetime.now().strftime("%H:%M:%S")

8 | elif 'date' in query:

9 | return datetime.datetime.now().strftime("%d/%m/%Y")

10 | else:

11 | return "Sorry, I don't know how to handle this intent."

12 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/places_near_me.py:

--------------------------------------------------------------------------------

1 | import webbrowser

2 |

3 |

4 | def get_places_near_me(*args, **kwargs):

5 | inp_command = kwargs.get("query")

6 | map_base_url = f"https://www.google.com/maps/search/{inp_command}"

7 | webbrowser.open(map_base_url)

8 |

9 | return "Opening Google Maps"

10 |

11 |

12 | if __name__ == "__main__":

13 | print(get_places_near_me(query="nearest coffee shop"))

14 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/games.py:

--------------------------------------------------------------------------------

1 | import webbrowser

2 |

3 |

4 | def play_games(*args, **kwargs):

5 | url = 'https://poki.com/'

6 | try:

7 | webbrowser.open(url)

8 | return "Successfully opened Poki.com, Play your games!"

9 | except Exception as e:

10 | print(e)

11 | return "Failed to open Poki.com, please try again!"

12 |

13 |

14 | if __name__ == '__main__':

15 | play_games('inp_command')

16 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/joke.py:

--------------------------------------------------------------------------------

1 | import pyjokes

2 |

3 |

4 | def tell_me_joke(*args, **kwargs):

5 | """

6 | Function to tell a joke

7 | Read https://pyjok.es/api/ for more details

8 | :return: str

9 | """

10 | lang = kwargs.get("lang", "en")

11 | cat = kwargs.get("cat", "neutral")

12 | return pyjokes.get_joke(language=lang, category=cat)

13 |

14 |

15 | if __name__ == '__main__':

16 | print(tell_me_joke())

17 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/whatsapp_message.py:

--------------------------------------------------------------------------------

1 | import pywhatkit as kit

2 |

3 |

4 | def send_whatsapp_message(*arg, **kwargs):

5 | country_code = input("Enter country code (Default=+91): ") or "+91"

6 | number = input("Enter whatsapp number: ")

7 | message = input("Enter message: ")

8 | print("Sending message...")

9 | kit.sendwhatmsg_instantly(f"{country_code}{number}", message, wait_time=20)

10 | print("Message sent successfully!")

11 |

12 |

13 | if __name__ == "__main__":

14 | send_whatsapp_message()

15 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/youtube_video_downloader.py:

--------------------------------------------------------------------------------

1 | from pytube import YouTube

2 | import os

3 |

4 |

5 | def download_yt_video(*arg, **kwargs):

6 | ytURL = input("Enter the URL of the YouTube video: ")

7 | yt = YouTube(ytURL)

8 | try:

9 | print("Downloading...")

10 | yt.streams.filter(progressive=True, file_extension="mp4").order_by("resolution")[-1].download()

11 | except Exception:

12 | return "ERROR | Please try again later"

13 | return f"Download Complete | Saved at {os.getcwd()}"

14 |

15 |

16 | if __name__ == "__main__":

17 | download_yt_video()

18 |

--------------------------------------------------------------------------------

/.idea/Jarvis_AI.iml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/website_open.py:

--------------------------------------------------------------------------------

1 | import webbrowser

2 | import re

3 |

4 |

5 | def website_opener(*args, **kwargs):

6 | input_text = kwargs.get("query")

7 | domain = input_text.lower().split(" ")[-1]

8 | extension = re.search(r"[.]", domain)

9 | if not extension:

10 | if not domain.endswith(".com"):

11 | domain = domain + ".com"

12 | try:

13 | url = 'https://www.' + domain

14 | webbrowser.open(url)

15 | return True

16 | except Exception as e:

17 | print(e)

18 | return False

19 |

20 |

21 | if __name__ == '__main__':

22 | website_opener(query="facebook")

23 |

--------------------------------------------------------------------------------
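The domain handling in `website_opener` can be separated from the browser call, which makes it easy to check without opening a window. The following is a minimal sketch under that assumption; `build_url` is a hypothetical helper and not part of JarvisAI.

```python
def build_url(query):
    """Turn a spoken command such as 'open facebook' into a URL string."""
    # Hypothetical helper: take the last word of the query as the domain,
    # mirroring how website_opener picks the final token.
    domain = query.lower().split()[-1]
    # Append ".com" only when the word has no dot (no extension given).
    if "." not in domain:
        domain += ".com"
    return "https://www." + domain


if __name__ == "__main__":
    print(build_url("open facebook"))
```

Keeping the string logic in a pure function means the `webbrowser.open` call is the only part that needs a desktop environment.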

/JarvisAI/JarvisAI/features/screenshot.py:

--------------------------------------------------------------------------------

1 | import pyscreenshot

2 | import os

3 | import datetime

4 |

5 |

6 | def take_screenshot(*args, **kwargs):

7 | try:

8 | image = pyscreenshot.grab()

9 | image.show()

10 | a = datetime.datetime.now()

11 | if not os.path.exists("screenshot"):

12 | os.mkdir("screenshot")

13 | image.save(f"screenshot/{a.day}-{a.month}-{a.year}_screenshot.png")

14 | print(f"Screenshot taken: screenshot/{a.day}-{a.month}-{a.year}_screenshot.png")

15 | return "Screenshot taken"

16 | except Exception as e:

17 | print(e)

18 | return "Unable to take screenshot"

19 |

20 |

21 | if __name__ == "__main__":

22 | take_screenshot()

23 |

--------------------------------------------------------------------------------
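Timestamped file names are often easier to work with when built with `strftime`, since the result sorts chronologically and contains no parentheses or commas. A minimal sketch, assuming a hypothetical helper `screenshot_path` that is not part of JarvisAI:

```python
import datetime
import os


def screenshot_path(now, folder="screenshot"):
    """Build a file path like screenshot/2023-03-05_14-07-09_screenshot.png."""
    # Hypothetical helper: format the timestamp as year-month-day_hour-minute-second
    stamp = now.strftime("%Y-%m-%d_%H-%M-%S")
    return os.path.join(folder, f"{stamp}_screenshot.png")


if __name__ == "__main__":
    print(screenshot_path(datetime.datetime.now()))
```

Including the time of day also avoids overwriting an earlier screenshot taken on the same date.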

/JarvisAI/JarvisAI/utils/speech_to_text/speech_to_text_whisper.py:

--------------------------------------------------------------------------------

1 | import whisper

2 | import sounddevice

3 | from scipy.io.wavfile import write

4 | import time

5 |

6 | model = whisper.load_model("base")

7 |

8 |

9 | def recorder(second=5):

10 | fs = 16000

11 | print("Recording.....")

12 | record_voice = sounddevice.rec(int(second * fs), samplerate=fs, channels=2)

13 | sounddevice.wait()

14 | write("./recording.wav", fs, record_voice)

15 | print("Finished.....")

16 | time.sleep(1)

17 |

18 |

19 | def speech_to_text_whisper(duration=5):

20 | try:

21 | recorder(second=duration)

22 | result = model.transcribe("./recording.wav")

23 | return result.get("text"), True

24 | except Exception as e:

25 | print(e)

26 | return e, False

27 |

28 |

29 | if __name__ == "__main__":

30 | speech_to_text_whisper()

31 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/send_email.py:

--------------------------------------------------------------------------------

1 | import pywhatkit

2 |

3 |

4 | def send_email(*args, **kwargs):

5 | try:

6 | my_email = input("Enter your email address: ")

7 | my_password = input("Enter your password: ")

8 | mail_to = input("Enter the email address you want to send to: ")

9 | subject = input("Enter the subject of the email: ")

10 | content = input("Enter the content of the email: ")

11 | print("Sending email...")

12 | pywhatkit.send_mail(email_sender=my_email,

13 | password=my_password,

14 | subject=subject,

15 | message=content,

16 | email_receiver=mail_to)

17 | print("Email sent!")

18 | return 'Email sent!'

19 | except Exception as e:

20 | print(e)

21 | return 'Email not sent! Check the error above, or check that secure apps access is enabled.'

22 |

23 |

24 | if __name__ == "__main__":

25 | send_email(None)

26 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/news.py:

--------------------------------------------------------------------------------

1 | import webbrowser

2 |

3 |

4 | def news(*args, **kwargs):

5 | """

6 | This method will open the browser and show the news "https://thetechport.in/"

7 | :return: bool

8 | """

9 | try:

10 | url = "https://thetechport.in/"

11 | webbrowser.open(url)

12 | return True

13 | except Exception as e:

14 | print(e)

15 | return False

16 |

17 |

18 | def show_me_some_tech_news():

19 | try:

20 | url = "https://thetechport.in/"

21 | webbrowser.open(url)

22 | return True

23 | except Exception as e:

24 | print(e)

25 | return False

26 |

27 |

28 | def show_me_some_tech_videos():

29 | try:

30 | url = "https://www.youtube.com/c/TechPortOfficial"

31 | webbrowser.open(url)

32 | return True

33 | except Exception as e:

34 | print(e)

35 | return False

36 |

37 |

38 | if __name__ == "__main__":

39 | print(news())

40 | print(show_me_some_tech_news())

41 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/brain/ner.py:

--------------------------------------------------------------------------------

1 | import spacy

2 | import os

3 |

4 | try:

5 | nlp = spacy.load("en_core_web_trf")

6 | except OSError:

7 | print("Downloading spaCy NLP model...")

8 | print("This may take a few minutes and it's a one-time process...")

9 | os.system("pip install https://huggingface.co/spacy/en_core_web_trf/resolve/main/en_core_web_trf-any-py3-none-any.whl")

10 | nlp = spacy.load("en_core_web_trf")

11 |

12 |

13 | def perform_ner(*args, **kwargs):

14 | query = kwargs['query']

15 | # Process the input text with spaCy NLP model

16 | doc = nlp(query)

17 |

18 | # Extract named entities and categorize them

19 | entities = [(entity.text, entity.label_) for entity in doc.ents]

20 |

21 | return entities

22 |

23 |

24 | if __name__ == "__main__":

25 | # Example input text

26 | input_text = "I want to buy a new iPhone 12 Pro Max from Apple."

27 |

28 | # Perform NER on input text

29 | entities = perform_ner(query=input_text)

30 |

31 | # Print the extracted entities

32 | print(entities)

33 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/brain/auth.py:

--------------------------------------------------------------------------------

1 | import shutup

2 | import requests

3 | import json

4 |

5 | shutup.please()

6 |

7 |

8 | def verify_user(func):

9 | def inner(self, *args, **kwargs):

10 | with open('api_key.txt', 'r') as f:

11 | api_key = f.read()

12 | url = f'https://jarvisai.in/check_secret_key?secret_key={api_key}'

13 | response = requests.get(url)

14 | if response.status_code == 200:

15 | status = json.loads(response.content)

16 | if status:

17 | print("Authentication Successful")

18 | return func(self, *args, **kwargs) # Return the result of calling func

19 | else:

20 | print("Authentication Failed. Please check your API key.")

21 | exit()

22 | else:

23 | print(f'Error: {response.status_code}')

24 | exit()

25 |

26 | return inner

27 |

28 |

29 | if __name__ == "__main__":

30 | data = verify_user("527557f2-0b67-4500-8ca0-03766ade589a")

31 | print(data, type(data))

32 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/weather.py:

--------------------------------------------------------------------------------

1 | import requests

2 |

3 |

4 | def get_weather(*args, **kwargs):

5 | print("Getting weather")

6 | query = kwargs.get("query")

7 | entities = kwargs.get("entities")

8 | city = "Indore"

9 | if entities: # only look for a city when NER returned entities

10 | city = next((entity[0] for entity in entities if entity[1] == "GPE"), city)

11 | if len(city) == 0:

12 | return "Unable to determine which city you want the weather for"

13 | geo_url = f"https://geocoding-api.open-meteo.com/v1/search?name={city}&count=1"

14 | geo_data = requests.get(geo_url).json()

15 | lat = geo_data['results'][0]["latitude"]

16 | lon = geo_data['results'][0]["longitude"]

17 | weather_url = f"https://api.open-meteo.com/v1/forecast?latitude={lat}&longitude={lon}&hourly=temperature_2m"

18 | weather_data = requests.get(weather_url).json()

19 | temp = weather_data["hourly"]["temperature_2m"][-1]

20 | return f"The temperature in {city} is {temp} degrees Celsius."

21 |

22 |

23 | if __name__ == "__main__":

24 | print(get_weather(entities=[("London", "GPE")]))

25 |

--------------------------------------------------------------------------------
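The city lookup in `get_weather` can be isolated into a small pure function, which makes the fallback behaviour easy to check without any network call. A minimal sketch, assuming `entities` is a list of `(text, label)` tuples as returned by `perform_ner`; `pick_city` is a hypothetical helper, not part of JarvisAI.

```python
def pick_city(entities, default="Indore"):
    """Return the first GPE entity text, or the default when none is present."""
    # Hypothetical helper: entities is assumed to be a list of (text, label)
    # tuples; None or an empty list falls through to the default city.
    for text, label in entities or []:
        if label == "GPE":
            return text
    return default


if __name__ == "__main__":
    print(pick_city([("London", "GPE")]))
    print(pick_city([]))
```

Returning the default instead of indexing into a possibly empty list avoids an `IndexError` when the query mentions entities but no place name.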

/License.txt:

--------------------------------------------------------------------------------

1 | Copyright (c) 2018 The Python Packaging Authority

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy

4 | of this software and associated documentation files (the "Software"), to deal

5 | in the Software without restriction, including without limitation the rights

6 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

7 | copies of the Software, and to permit persons to whom the Software is

8 | furnished to do so, subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in all

11 | copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

18 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

19 | SOFTWARE.

--------------------------------------------------------------------------------

/JarvisAI/License.txt:

--------------------------------------------------------------------------------

1 | Copyright (c) 2018 The Python Packaging Authority

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy

4 | of this software and associated documentation files (the "Software"), to deal

5 | in the Software without restriction, including without limitation the rights

6 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

7 | copies of the Software, and to permit persons to whom the Software is

8 | furnished to do so, subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in all

11 | copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

18 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

19 | SOFTWARE.

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/internet_speed_test.py:

--------------------------------------------------------------------------------

1 | import speedtest # pip install speedtest-cli

2 |

3 | try:

4 | st = speedtest.Speedtest()

5 | except:

6 | print("Please check your internet connection.")

7 | pass

8 |

9 |

10 | def download_speed():

11 | down = round(st.download() / 10 ** 6, 2)

12 | return down

13 |

14 |

15 | def upload_speed():

16 | up = round(st.upload() / 10 ** 6, 2)

17 | return up

18 |

19 |

20 | def ping():

21 | servernames = []

22 | st.get_servers(servernames)

23 | results = st.results.ping

24 | return results

25 |

26 |

27 | def speed_test(*args, **kwargs):

28 | try:

29 | print("Checking internet speed. Please wait...")

30 | # print('Download Speed: ' + str(download_speed()) + ' Mbps')

31 | # print('Upload Speed: ' + str(upload_speed()) + ' Mbps')

32 | # print('Ping: ' + str(ping()) + ' ms')

33 | return "Download Speed: " + str(download_speed()) + " Mbps" + "\n Upload Speed: " + str(

34 | upload_speed()) + " Mbps" + "\n Ping: " + str(ping()) + " ms"

35 | except Exception as e:

36 | print(e)

37 | return "Error in internet speed test"

38 |

39 |

40 | if __name__ == '__main__':

41 | print(speed_test())

42 |

--------------------------------------------------------------------------------
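speedtest-cli reports throughput in bits per second, so dividing by `10 ** 6` gives megabits per second; megabytes per second needs a further division by 8. The sketch below shows both conversions; the function names are hypothetical and not part of JarvisAI.

```python
def to_megabits_per_second(bits_per_second):
    """Convert a raw bits-per-second reading to Mbps (megabits per second)."""
    return round(bits_per_second / 10 ** 6, 2)


def to_megabytes_per_second(bits_per_second):
    """Convert a raw bits-per-second reading to MB/s (megabytes per second)."""
    # 8 bits per byte, then scale to mega
    return round(bits_per_second / 8 / 10 ** 6, 2)


if __name__ == "__main__":
    reading = 50_000_000  # example value in bits per second
    print(to_megabits_per_second(reading), "Mbps")
    print(to_megabytes_per_second(reading), "MB/s")
```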

/JarvisAI/JarvisAI/features/click_photo.py:

--------------------------------------------------------------------------------

1 | import datetime

2 | import cv2

3 | import os

4 |

5 |

6 | def click_pic(*args, **kwargs):

7 | try:

8 | t = datetime.datetime.now()

9 | # Taking a video from the webcam

10 | camera = cv2.VideoCapture(0)

11 | # Taking first 20 frames of the video

12 | for i in range(20):

13 | return_value, image = camera.read()

14 | if not os.path.exists("photos"):

15 | os.mkdir("photos")

16 | # Using 20th frame as the picture and now saving the image as the time in seconds,minute,hour,day and month of the year

17 | # Giving the camera around 20 frames to adjust to the surroundings for better picture quality

18 | cv2.imwrite(f"photos/{t.second}-{t.minute}-{t.hour}-{t.day}-{t.month}_photo.png", image)

19 | # As soon as the image is saved we will stop recording

20 | camera.release()

21 | print(f"Photo taken: photos/{t.second}-{t.minute}-{t.hour}-{t.day}-{t.month}_photo.png")

22 | return "Photo taken"

23 | except Exception as e:

24 | return "Error: " + str(e) + "\n Unable to take photo"

25 |

26 |

27 | # Calling the click_pic function

28 | if __name__ == "__main__":

29 | click_pic()

30 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/covid_cases.py:

--------------------------------------------------------------------------------

1 | import requests

2 | import pycountry

3 |

4 |

5 | def check_command_is_for_covid_cases(*args, **kwargs):

6 | command = kwargs.get('query')

7 | try:

8 | command = command.title()

9 | entities = kwargs.get('entities')

10 | if len(entities) > 0:

11 | country = [entity[0] for entity in entities if entity[1] == 'GPE'][0]

12 | else:

13 | country = get_country(command)

14 | cases = get_covid_cases(country)

15 | return f"The current active cases in {country} are {cases}"

16 | except Exception as e:

17 | print("Error: ", e)

18 | return "Sorry, I couldn't find the country you are looking for, or the server is down."

19 |

20 |

21 | def get_country(command): # For getting only the country name for the whole query

22 | for country in pycountry.countries:

23 | if country.name in command:

24 | return country.name

25 |

26 |

27 | def get_covid_cases(country): # For getting current covid cases

28 | totalActiveCases = 0

29 | response = requests.get('https://api.covid19api.com/live/country/' + country + '/status/confirmed').json()

30 | for data in response:

31 | totalActiveCases += data.get('Active')

32 | return totalActiveCases

33 |

34 |

35 | if __name__ == '__main__':

36 | print(check_command_is_for_covid_cases(query='active Covid India cases?', entities=[])) # Example

37 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/actions.json:

--------------------------------------------------------------------------------

1 | [

2 | {

3 | "intent": "greet and hello hi kind of things",

4 | "example": []

5 | },

6 | {

7 | "intent": "goodbye",

8 | "example": []

9 | },

10 | {

11 | "intent": "asking date",

12 | "example": []

13 | },

14 | {

15 | "intent": "tell me joke",

16 | "example": []

17 | },

18 | {

19 | "intent": "asking time",

20 | "example": []

21 | },

22 | {

23 | "intent": "tell me about",

24 | "example": []

25 | },

26 | {

27 | "intent": "i am bored",

28 | "example": []

29 | },

30 | {

31 | "intent": "volume control",

32 | "example": []

33 | },

34 | {

35 | "intent": "tell me news",

36 | "example": []

37 | },

38 | {

39 | "intent": "click photo",

40 | "example": []

41 | },

42 | {

43 | "intent": "places near me",

44 | "example": []

45 | },

46 | {

47 | "intent": "play on youtube",

48 | "example": []

49 | },

50 | {

51 | "intent": "play games",

52 | "example": []

53 | },

54 | {

55 | "intent": "what can you do",

56 | "example": []

57 | },

58 | {

59 | "intent": "send email",

60 | "example": []

61 | },

62 | {

63 | "intent": "download youtube video",

64 | "example": []

65 | },

66 | {

67 | "intent": "asking weather",

68 | "example": []

69 | },

70 | {

71 | "intent": "take screenshot",

72 | "example": []

73 | },

74 | {

75 | "intent": "open website",

76 | "example": []

77 | },

78 | {

79 | "intent": "send whatsapp message",

80 | "example": []

81 | },

82 | {

83 | "intent": "covid cases",

84 | "example": []

85 | },

86 | {

87 | "intent": "asking weather",

88 | "example": []

89 | },

90 | {

91 | "intent": "others",

92 | "example": []

93 | }

94 | ]

--------------------------------------------------------------------------------
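In the listing above, the intent `"asking weather"` appears twice. A short check like the one below can flag repeated intents before the file is used. This is a minimal sketch: `duplicate_intents` is a hypothetical helper, and the sample data is a trimmed stand-in for `actions.json`, not the full file.

```python
import json
from collections import Counter


def duplicate_intents(actions):
    """Return the sorted intent names that occur more than once."""
    counts = Counter(item["intent"] for item in actions)
    return sorted(name for name, n in counts.items() if n > 1)


# Trimmed sample in the same shape as actions.json (assumption: the real file
# would be read with json.load from disk instead).
SAMPLE = json.loads("""
[
  {"intent": "asking weather", "example": []},
  {"intent": "covid cases", "example": []},
  {"intent": "asking weather", "example": []},
  {"intent": "others", "example": []}
]
""")

if __name__ == "__main__":
    print(duplicate_intents(SAMPLE))
```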

/JarvisAI/JarvisAI/features/tell_me_about.py:

--------------------------------------------------------------------------------

1 | import wikipedia

2 | import re

3 |

4 |

5 | def tell_me_about(*args, **kwargs):

6 | # topic = kwargs.get("query")

7 | entities = kwargs.get("entities")

8 | if len(entities) == 0:

9 | return "Entity not found"

10 | li = ['EVENT', 'FAC', 'GPE', 'LANGUAGE', 'LAW', 'LOC', 'MONEY', 'NORP', 'ORDINAL', 'ORG',

11 | 'PERCENT', 'PERSON', 'PRODUCT', 'TIME', 'WORK_OF_ART']

12 | topic = [entity[0] for entity in entities if entity[1] in li][0]

13 | try:

14 | ny = wikipedia.page(topic)

15 | res = str(ny.content[:500].encode('utf-8'))

16 | res = re.sub(r'[^a-zA-Z.\d\s]', '', res)[1:]

17 | return res

18 | except Exception as e:

19 | print(e)

20 | return False

21 |

22 |

23 | if __name__ == '__main__':

24 | import spacy

25 | import os

26 |

27 | try:

28 | nlp = spacy.load("en_core_web_trf")

29 | except OSError:

30 | print("Downloading spaCy NLP model...")

31 | print("This may take a few minutes and it's a one-time process...")

32 | os.system(

33 | "pip install https://huggingface.co/spacy/en_core_web_trf/resolve/main/en_core_web_trf-any-py3-none-any.whl")

34 | nlp = spacy.load("en_core_web_trf")

35 |

36 |

37 | def perform_ner(*args, **kwargs):

38 | query = kwargs['query']

39 | # Process the input text with spaCy NLP model

40 | doc = nlp(query)

41 |

42 | # Extract named entities and categorize them

43 | entities = [(entity.text, entity.label_) for entity in doc.ents]

44 |

45 | return entities

46 |

47 |

48 | query = "tell me about Narendra Modi"

49 | # Perform NER on input text

50 | entities = perform_ner(query=query)

51 |

52 | print(tell_me_about(query=query, entities=entities))

53 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | absl-py==1.4.0

2 | aiohttp==3.8.3

3 | aiosignal==1.3.1

4 | async-timeout==4.0.2

5 | attrs==22.2.0

6 | beautifulsoup4==4.11.2

7 | blis==0.7.9

8 | catalogue==2.0.8

9 | certifi==2022.12.7

10 | charset-normalizer==2.1.1

11 | click==8.1.3

12 | colorama==0.4.6

13 | comtypes==1.1.14

14 | confection==0.0.4

15 | contourpy==1.0.7

16 | cycler==0.11.0

17 | cymem==2.0.7

18 | EasyProcess==1.1

19 | entrypoint2==1.1

20 | Flask==2.2.2

21 | flatbuffers==23.1.21

22 | fonttools==4.38.0

23 | frozenlist==1.3.3

24 | gTTS==2.3.1

25 | idna==3.4

26 | importlib-metadata==6.0.0

27 | itsdangerous==2.1.2

28 | Jinja2==3.1.2

29 | kiwisolver==1.4.4

30 | langcodes==3.3.0

31 | lazyme==0.0.27

32 | MarkupSafe==2.1.2

33 | matplotlib==3.6.3

34 | mediapipe==0.9.1.0

35 | MouseInfo==0.1.3

36 | mss==7.0.1

37 | multidict==6.0.4

38 | murmurhash==1.0.9

39 | numpy==1.24.2

40 | openai==0.26.4

41 | opencv-contrib-python==4.7.0.68

42 | opencv-python==4.7.0.68

43 | packaging==23.0

44 | pathy==0.10.1

45 | Pillow==9.4.0

46 | playsound==1.3.0

47 | preshed==3.0.8

48 | protobuf==3.20.3

49 | psutil==5.9.4

50 | PyAudio==0.2.13

51 | PyAutoGUI==0.9.53

52 | pycaw==20220416

53 | pycountry==22.3.5

54 | pydantic==1.10.4

55 | PyGetWindow==0.0.9

56 | pyjokes==0.6.0

57 | PyMsgBox==1.0.9

58 | pyparsing==3.0.9

59 | pyperclip==1.8.2

60 | pypiwin32==223

61 | PyRect==0.2.0

62 | pyscreenshot==3.0

63 | PyScreeze==0.1.28

64 | python-dateutil==2.8.2

65 | pyttsx3==2.90

66 | pytube==12.1.2

67 | pytweening==1.0.4

68 | pywhatkit==5.4

69 | pywin32==305

70 | requests==2.28.2

71 | six==1.16.0

72 | smart-open==6.3.0

73 | soupsieve==2.3.2.post1

74 | spacy==3.5.0

75 | spacy-legacy==3.0.12

76 | spacy-loggers==1.0.4

77 | SpeechRecognition==3.9.0

78 | speedtest-cli==2.1.3

79 | srsly==2.4.5

80 | thinc==8.1.7

81 | tqdm==4.64.1

82 | typer==0.7.0

83 | typing_extensions==4.4.0

84 | urllib3==1.26.14

85 | wasabi==1.1.1

86 | Werkzeug==2.2.2

87 | wikipedia==1.4.0

88 | yarl==1.8.2

89 | zipp==3.12.1

90 | sourcedefender==10.0.13

--------------------------------------------------------------------------------

/JarvisAI/setup.py:

--------------------------------------------------------------------------------

1 | import setuptools

2 | from setuptools import find_namespace_packages

3 |

4 | with open("README.md", "r", encoding='utf-8') as fh:

5 | long_description = fh.read()

6 |

7 | setuptools.setup(

8 | name="JarvisAI",

9 | version="4.9",

10 | author="Dipesh",

11 | author_email="dipeshpal17@gmail.com",

12 | description="JarvisAI is a Python library to build your own AI virtual assistant with natural language processing.",

13 | long_description=long_description,

14 | long_description_content_type="text/markdown",

15 | url="https://github.com/Dipeshpal/Jarvis_AI",

16 | include_package_data=True,

17 | packages=find_namespace_packages(include=['JarvisAI.*', 'JarvisAI']),

18 | install_requires=['numpy', 'gtts', 'playsound', 'pyscreenshot', "opencv-python",

19 | 'SpeechRecognition', 'pyjokes', 'wikipedia', 'scipy', 'lazyme',

20 | "requests", "pyttsx3", "spacy==3.5.0", 'pywhatkit', 'speedtest-cli',

21 | 'pytube', 'pycountry', 'pyaudio', 'mediapipe==0.8.11',

22 | 'pycaw', 'openai-whisper', 'shutup', 'sounddevice', 'html2text==2020.1.16',

23 | 'wikipedia==1.4.0', 'Markdown==3.4.1', 'markdown2==2.4.8',

24 | 'lxml==4.9.2', 'googlesearch-python==1.2.3', 'selenium', 'selenium-pro',

25 | 'element-manager'],

26 | classifiers=[

27 | "Programming Language :: Python :: 3",

28 | "License :: OSI Approved :: MIT License",

29 | "Operating System :: OS Independent",

30 | ],

31 | python_requires='>=3.6',

32 | project_urls={

33 | 'Official Website': 'https://jarvisai.in',

34 | 'Documentation': 'https://github.com/Dipeshpal/Jarvis_AI',

35 | 'Donate': 'https://www.buymeacoffee.com/dipeshpal',

36 | 'Say Thanks!': 'https://youtube.com/techportofficial',

37 | 'Source': 'https://github.com/Dipeshpal/Jarvis_AI',

38 | 'Contact': 'https://www.dipeshpal.in/social',

39 | },

40 | )

41 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/speech_to_text/speech_to_text.py:

--------------------------------------------------------------------------------

1 | import speech_recognition as sr

2 | import pyaudio

3 | import wave

4 |

5 |

6 | def record_audio(duration=5):

7 | filename = "recording.wav"

8 | # set the chunk size of 1024 samples

9 | chunk = 1024

10 | # sample format

11 | FORMAT = pyaudio.paInt16

12 | # mono, change to 2 if you want stereo

13 | channels = 1

14 | # 44100 samples per second

15 | sample_rate = 44100

16 | record_seconds = duration

17 | # initialize PyAudio object

18 | p = pyaudio.PyAudio()

19 | # open stream object as input & output

20 | stream = p.open(format=FORMAT,

21 | channels=channels,

22 | rate=sample_rate,

23 | input=True,

24 | output=True,

25 | frames_per_buffer=chunk)

26 | frames = []

27 | for i in range(int(sample_rate / chunk * record_seconds)):

28 | data = stream.read(chunk)

29 | # if you want to hear your voice while recording

30 | # stream.write(data)

31 | frames.append(data)

32 | # print("Finished recording.")

33 | # stop and close stream

34 | stream.stop_stream()

35 | stream.close()

36 | # terminate pyaudio object

37 | p.terminate()

38 | # save audio file

39 | # open the file in 'write bytes' mode

40 | wf = wave.open(filename, "wb")

41 | # set the channels

42 | wf.setnchannels(channels)

43 | # set the sample format

44 | wf.setsampwidth(p.get_sample_size(FORMAT))

45 | # set the sample rate

46 | wf.setframerate(sample_rate)

47 | # write the frames as bytes

48 | wf.writeframes(b"".join(frames))

49 | # close the file

50 | wf.close()

51 |

52 |

53 | def speech_to_text_google(input_lang='en', key=None, duration=5):

54 | try:

55 | print(f"Listening for next {duration} seconds...")

56 | record_audio(duration=duration)

57 | r = sr.Recognizer()

58 | with sr.AudioFile("recording.wav") as source:

59 | audio = r.record(source)

60 | command = r.recognize_google(audio, language=input_lang, key=key)

61 | # TODO: Translate command to target language

62 | # if input_lang != 'en':

63 | # translator = googletrans.Translator()

64 | # command = translator.translate("command", dest='hi').text

65 | return command, True

66 | except Exception as e:

67 | print(e)

68 | return e, False

69 |

70 |

71 | if __name__ == "__main__":

72 | print(speech_to_text_google())

73 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 | manager.py

30 | manager_code.py

31 |

32 | # PyInstaller

33 | # Usually these files are written by a python script from a template

34 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

35 | *.manifest

36 | *.spec

37 |

38 | # Installer logs

39 | pip-log.txt

40 | pip-delete-this-directory.txt

41 |

42 | # Unit test / coverage reports

43 | htmlcov/

44 | .tox/

45 | .nox/

46 | .coverage

47 | .coverage.*

48 | .cache

49 | nosetests.xml

50 | coverage.xml

51 | *.cover

52 | *.py,cover

53 | .hypothesis/

54 | .pytest_cache/

55 |

56 | # Translations

57 | *.mo

58 | *.pot

59 |

60 | # Django stuff:

61 | *.log

62 | local_settings.py

63 | db.sqlite3

64 | db.sqlite3-journal

65 |

66 | # Flask stuff:

67 | instance/

68 | .webassets-cache

69 |

70 | # Scrapy stuff:

71 | .scrapy

72 |

73 | # Sphinx documentation

74 | docs/_build/

75 |

76 | # PyBuilder

77 | target/

78 |

79 | # Jupyter Notebook

80 | .ipynb_checkpoints

81 |

82 | # IPython

83 | profile_default/

84 | ipython_config.py

85 |

86 | # pyenv

87 | .python-version

88 |

89 | # pipenv

90 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

91 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

92 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

93 | # install all needed dependencies.

94 | #Pipfile.lock

95 |

96 | # celery beat schedule file

97 | celerybeat-schedule

98 |

99 | # SageMath parsed files

100 | *.sage.py

101 |

102 | # Environments

103 | .env

104 | .venv

105 | env/

106 | venv/

107 | ENV/

108 | env.bak/

109 | venv.bak/

110 |

111 | # Spyder project settings

112 | .spyderproject

113 | .spyproject

114 |

115 | # Rope project settings

116 | .ropeproject

117 |

118 | # mkdocs documentation

119 | /site

120 |

121 | # mypy

122 | .mypy_cache/

123 | .dmypy.json

124 | dmypy.json

125 |

126 | # Pyre type checker

127 | .pyre/

128 | JarvisAI/JarvisAI/user_configs/speech_engine.txt

129 | JarvisAI/JarvisAI/user_configs/api_key.txt

130 | JarvisAI/.idea/misc.xml

131 | JarvisAI/.idea/JarvisAI.iml

132 | *.iml

133 | *.iml

134 | *.xml

135 | *.iml

136 | *.iml

137 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features_manager.py:

--------------------------------------------------------------------------------

1 | try:

2 | from features.date_time import date_time

3 | from features.greet import greet, goodbye

4 | from features.joke import tell_me_joke

5 | from features.click_photo import click_pic

6 | from features.covid_cases import check_command_is_for_covid_cases

7 | from features.games import play_games

8 | from features.iambored import get_me_suggestion

9 | from features.internet_speed_test import speed_test

10 | from features.news import news

11 | from features.places_near_me import get_places_near_me

12 | from features.screenshot import take_screenshot

13 | from features.send_email import send_email

14 | from features.tell_me_about import tell_me_about

15 | from features.volume_controller import start_volume_control

16 | from features.weather import get_weather

17 | from features.website_open import website_opener

18 | from features.whatsapp_message import send_whatsapp_message

19 | from features.youtube_play import yt_play

20 | from features.youtube_video_downloader import download_yt_video

21 | from brain import chatbot_premium

22 | except Exception as e:

23 | from .features.date_time import date_time

24 | from .features.greet import greet, goodbye

25 | from .features.joke import tell_me_joke

26 | from .features.click_photo import click_pic

27 | from .features.covid_cases import check_command_is_for_covid_cases

28 | from .features.games import play_games

29 | from .features.iambored import get_me_suggestion

30 | from .features.internet_speed_test import speed_test

31 | from .features.news import news

32 | from .features.places_near_me import get_places_near_me

33 | from .features.screenshot import take_screenshot

34 | from .features.send_email import send_email

35 | from .features.tell_me_about import tell_me_about

36 | from .features.volume_controller import start_volume_control

37 | from .features.weather import get_weather

38 | from .features.website_open import website_opener

39 | from .features.whatsapp_message import send_whatsapp_message

40 | from .features.youtube_play import yt_play

41 | from .features.youtube_video_downloader import download_yt_video

42 | from .brain import chatbot_premium

43 |

44 |

45 | def show_what_can_i_do(*args, **kwargs):

46 | print("I can do following things:")

47 | for key in action_map.keys():

48 | print(key)

49 |

50 |

51 | action_map = {

52 | "asking time": date_time,

53 | "asking date": date_time,

54 | "greet and hello hi kind of things": greet,

55 | "goodbye": goodbye,

56 | "tell me joke": tell_me_joke,

57 | "tell me about": tell_me_about, # TODO: improve this

58 | "i am bored": get_me_suggestion,

59 | "volume control": start_volume_control,

60 | "tell me news": news,

61 | "click photo": click_pic,

62 | "places near me": get_places_near_me,

63 | "play on youtube": yt_play,

64 | "play games": play_games,

65 | "what can you do": show_what_can_i_do,

66 | "send email": send_email,

67 | "download youtube video": download_yt_video,

68 | "asking weather": get_weather,

69 | "take screenshot": take_screenshot,

70 | "open website": website_opener,

71 | "send whatsapp message": send_whatsapp_message,

72 | "covid cases": check_command_is_for_covid_cases,

73 | "check internet speed": speed_test,

74 | "others": chatbot_premium.premium_chat,

75 | }

76 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/text_to_speech/text_to_speech.py:

--------------------------------------------------------------------------------

1 | import os

2 | from gtts import gTTS

3 | import pyttsx3

4 | from playsound import playsound

5 | from lazyme.string import color_print as cprint

6 |

7 | USER_CONFIG_FOLDER = './user_configs/'

8 | SPEECH_ENGINE_PATH = f'{USER_CONFIG_FOLDER}speech_engine.txt'

9 |

10 |

11 | def text_to_speech(text, lang='en', backend_tts_api='gtts'):

12 | """

13 | Convert any text to speech

14 | You can use GTTS or PYTTSX3 as backend for Text to Speech.

15 | PYTTSX3 may support different voices (male/female) depending on your system.

16 | You can set backend of tts while creating object of JarvisAI class. Default is PYTTSX3.

17 | :param backend_tts_api:

18 | :param text: str

19 | text (String)

20 | :param lang: str

21 | default 'en'

22 | :return: Bool

23 | True / False (Play sound if True otherwise write exception to log and return False)

24 | """

25 | if backend_tts_api == 'gtts':

26 | # for gtts Backend

27 | try:

28 | myobj = gTTS(text=text, lang=lang, slow=False)

29 | myobj.save("tmp.mp3")

30 | playsound("tmp.mp3")

31 | os.remove("tmp.mp3")

32 | return True

33 | except Exception as e:

34 | print(e)

35 | print("Or you may have reached the free limit of the 'gtts' API. Use 'pyttsx3' as the backend for unlimited use.")

36 | return False

37 | else:

38 | # for pyttsx3 Backend

39 | engine = pyttsx3.init()

40 | voices = engine.getProperty('voices')

41 |

42 | try:

43 | if not os.path.exists("configs"):

44 | os.mkdir("configs")

45 |

46 | voice_file_name = "configs/Edith-Voice.txt"

47 | if not os.path.exists(voice_file_name):

48 | cprint("You can try different voices. This is a one-time setup. You can reset your voice by deleting "

49 | "'configs/Edith-Voice.txt' file in your working directory.",

50 | color='blue')

51 | cprint("Your system supports the following voices: ",

52 | color='blue')

53 | voices_dict = {}

54 | for index, voice in enumerate(voices):

55 | print(f"{index}: ", voice.id)

56 | voices_dict[str(index)] = voice.id

57 | option = input(f"Choose any- {list(voices_dict.keys())}: ")

58 | with open(voice_file_name, 'w') as f:

59 | f.write(voices_dict.get(option, voices[0].id))

60 | with open(voice_file_name, 'r') as f:

61 | voice_property = f.read()

62 | else:

63 | with open(voice_file_name, 'r') as f:

64 | voice_property = f.read()

65 | except Exception as e:

66 | print(e)

67 | print("Error occurred while creating config file for voices in pyttsx3 in 'text2speech'.",

68 | "Contact maintainer/developer of JarvisAI")

69 | try:

70 | engine.setProperty('voice', voice_property)

71 | engine.say(text)

72 | engine.runAndWait()

73 | return True

74 | except Exception as e:

75 | print(e)

76 | print("Error occurred while using pyttsx3 in 'text2speech'.",

77 | "or Your system may not support pyttsx3 backend. Use 'gtts' as backend.",

78 | "Contact maintainer/developer of JarvisAI.")

79 | return False

80 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/brain/intent_classification.py:

--------------------------------------------------------------------------------

1 | import os

2 | import requests

3 | import json

4 | from selenium import webdriver

5 | from selenium.webdriver.common.by import By

6 | from selenium.webdriver.chrome.options import Options

7 | from element_manager import *

8 | import time

9 |

10 |

11 |

12 | def check_local_intent(text):

13 | """Check if the intent of a text string is available locally.

14 |

15 | Args:

16 | text (str): The text string to check.

17 |

18 | Returns:

19 | str: The intent of the text string.

20 | """

21 | try:

22 | if not os.path.exists('actions.json'):

23 | return None

24 | else:

25 | with open('actions.json', 'r') as f:

26 | actions = json.load(f)

27 | # Below is sample actions.json file

28 | # [

29 | # {

30 | # "intent": "greet and hello hi kind of things",

31 | # "example": []

32 | # },

33 | # {

34 | # "intent": "goodbye",

35 | # "example": ['bye', 'goodbye', 'see you later']

36 | # }

37 | # ]

38 | # if text in example then return intent

39 | for action in actions:

40 | if text in action['example']:

41 | return action['intent']

42 | if action['intent'] in text:

43 | return action['intent']

44 | if action['example'] == "":

45 | continue

46 | except Exception as e:

47 | return None

48 |

49 |

50 | def try_to_classify_intent(secret_key, text):

51 | """Classify the intent of a text string using the JarvisAI API.

52 |

53 | Args:

54 | text (str): The text string to classify.

55 |

56 | Returns:

57 | tuple: The intent of the text string and the score returned with it (1.0 for a local match).

58 | """

59 | try:

60 | intent = check_local_intent(text)

61 | if intent is not None:

62 | return intent, 1.0

63 | except Exception as e:

64 | pass

65 |

66 | try:

67 | url = f'https://jarvisai.in/intent_classifier?secret_key={secret_key}&text={text}'

68 | response = requests.get(url)

69 | data = response.json()

70 | if data['status'] == 'success':

71 | return data['data'][0], data['data'][1]

72 | except Exception as e:

73 | raise Exception('Something went wrong while classifying the intent.')

74 |

75 |

76 | def restart_server():

77 | try:

78 | chrome_options = Options()

79 | chrome_options.add_argument("--headless")

80 | driver = webdriver.Chrome(options=chrome_options)

81 |

82 | driver.get('https://huggingface.co/spaces/dipesh/dipesh-Intent-Classification-large')

83 |

84 | time.sleep(4)

85 | # to click on the element(Restart this Space) found

86 | driver.find_element(By.XPATH, get_xpath(driver, 'd7n5Js111lAwV_U')).click()

87 | return True, 'Server restarted successfully'

88 | except Exception as e:

89 | print(e)

90 | print("Make sure chromedriver.exe is in the same folder as your script")

91 | return False, 'Server restart failed'

92 |

93 |

94 | def classify_intent(secret_key, text):

95 | for i in range(3):

96 | try:

97 | return try_to_classify_intent(secret_key, text)

98 | except Exception as e:

99 | restart_server()

100 | return try_to_classify_intent(secret_key, text)

101 |

102 |

103 | if __name__ == "__main__":

104 | intent, _ = classify_intent('99f605ce-5bf9-4e80-93a3-f367df65aa27', "custom function")

105 | print(intent, _)

106 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/utils/input_output.py:

--------------------------------------------------------------------------------

1 | try:

2 | from utils.speech_to_text.speech_to_text import speech_to_text_google

3 | from utils.text_to_speech.text_to_speech import text_to_speech

4 | from utils.speech_to_text.speech_to_text_whisper import speech_to_text_whisper

5 | except ImportError:

6 | from JarvisAI.utils.speech_to_text.speech_to_text import speech_to_text_google

7 | from JarvisAI.utils.text_to_speech.text_to_speech import text_to_speech

8 | from JarvisAI.utils.speech_to_text.speech_to_text_whisper import speech_to_text_whisper

9 |

10 |

11 | class JarvisInputOutput:

12 | def __init__(self, input_mechanism='text', output_mechanism='text', logging=None,

13 | google_speech_api_key=None, google_speech_recognition_input_lang='en',

14 | duration_listening=5, backend_tts_api='pyttsx3',

15 | use_whisper_asr=False, display_logs=False,

16 | api_key=None):

17 | self.input_mechanism = input_mechanism

18 | self.output_mechanism = output_mechanism

19 | self.google_speech_api_key = google_speech_api_key

20 | self.google_speech_recognition_input_lang = google_speech_recognition_input_lang

21 | self.duration_listening = duration_listening

22 | self.backend_tts_api = backend_tts_api

23 | self.logging = logging

24 | self.use_whisper_asr = use_whisper_asr

25 | self.display_logs = display_logs

26 | self.api_key = api_key

27 | with open('api_key.txt', 'w') as f:

28 | f.write(api_key or '')  # avoid a TypeError when api_key is None

29 | # print("JarvisInputOutput initialized")

30 | # print(f"Input mechanism: {self.input_mechanism}")

31 | # print(f"Output mechanism: {self.output_mechanism}")

32 | # print(f"Google Speech API Key: {self.google_speech_api_key}")

33 | # print(f"Backend TTS API: {self.backend_tts_api}")

34 |

35 | def text_input(self):

36 | if self.input_mechanism == 'text':

37 | return input("Enter your query: ")

38 | else:

39 | self.logging.exception("Invalid input mechanism")

40 | raise ValueError("Invalid input mechanism")

41 |

42 | def text_output(self, text):

43 | if self.output_mechanism == 'text' or self.output_mechanism == 'both':

44 | print(text)

45 | else:

46 | self.logging.exception("Invalid output mechanism")

47 | raise ValueError("Invalid output mechanism")

48 |

49 | def voice_input(self, *args, **kwargs):

50 | if self.input_mechanism == 'voice':

51 | if self.use_whisper_asr:

52 | if self.display_logs:

53 | print("Using Whisper ASR")

54 | command, status = speech_to_text_whisper(duration=self.duration_listening)

55 | else:

56 | if self.display_logs:

57 | print("Using Google ASR")

58 | command, status = speech_to_text_google(input_lang=self.google_speech_recognition_input_lang,

59 | key=self.google_speech_api_key,

60 | duration=self.duration_listening)

61 | print(f"You Said: {command}")

62 | if status:

63 | return command

64 | else:

65 | return None

66 | else:

67 | self.logging.exception("Invalid input mechanism")

68 | raise ValueError("Invalid input mechanism")

69 |

70 | def voice_output(self, *args, **kwargs):

71 | if self.output_mechanism == 'voice' or self.output_mechanism == 'both':

72 | text = kwargs.get('text', None)

73 | text_to_speech(text=text, lang='en', backend_tts_api=self.backend_tts_api)

74 | else:

75 | self.logging.exception("Invalid output mechanism")

76 | raise ValueError("Invalid output mechanism")

77 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/features/volume_controller.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import mediapipe as mp

3 | from math import hypot

4 | from ctypes import cast, POINTER

5 | from comtypes import CLSCTX_ALL

6 | from pycaw.pycaw import AudioUtilities, IAudioEndpointVolume

7 | import numpy as np

8 |

9 |

10 | def start_volume_control(*args, **kwargs):

11 | cap = cv2.VideoCapture(0) # Checks for camera

12 |

13 | mpHands = mp.solutions.hands # detects hand/finger

14 | hands = mpHands.Hands() # complete the initialization configuration of hands

15 | mpDraw = mp.solutions.drawing_utils

16 |

17 | # To access speaker through the library pycaw

18 | devices = AudioUtilities.GetSpeakers()

19 | interface = devices.Activate(IAudioEndpointVolume._iid_, CLSCTX_ALL, None)

20 | volume = cast(interface, POINTER(IAudioEndpointVolume))

21 | volbar = 400

22 | volper = 0

23 |

24 | volMin, volMax = volume.GetVolumeRange()[:2]

25 |

26 | while True:

27 | success, img = cap.read() # If camera works capture an image

28 | img = cv2.flip(img, 1)

29 | imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB) # Convert to rgb

30 | cv2.putText(img, "Press SPACE to stop", (20, 80), cv2.FONT_ITALIC, 1, (0, 255, 98), 3)

31 |

32 | # Collection of gesture information

33 | results = hands.process(imgRGB) # completes the image processing.

34 |

35 | lmList = [] # empty list

36 | if results.multi_hand_landmarks: # list of all hands detected.

37 | # By accessing the list, we can get the information of each hand's corresponding flag bit

38 | for handlandmark in results.multi_hand_landmarks:

39 | for id, lm in enumerate(handlandmark.landmark): # adding counter and returning it

40 | # Get finger joint points

41 | h, w, _ = img.shape

42 | cx, cy = int(lm.x * w), int(lm.y * h)

43 | lmList.append([id, cx, cy]) # adding to the empty list 'lmList'

44 | mpDraw.draw_landmarks(img, handlandmark, mpHands.HAND_CONNECTIONS)

45 |

46 | if lmList != []:

47 | # getting the value at a point

48 | # x #y

49 | x1, y1 = lmList[4][1], lmList[4][2] # thumb

50 | x2, y2 = lmList[8][1], lmList[8][2] # index finger

51 | # creating circle at the tips of thumb and index finger

52 | cv2.circle(img, (x1, y1), 13, (255, 0, 0), cv2.FILLED) # image, center point, radius, BGR color, thickness

53 | cv2.circle(img, (x2, y2), 13, (255, 0, 0), cv2.FILLED) # image, center point, radius, BGR color, thickness

54 | cv2.line(img, (x1, y1), (x2, y2), (255, 0, 0), 3) # create a line b/w tips of index finger and thumb

55 |

56 | length = hypot(x2 - x1, y2 - y1) # distance b/w tips using hypotenuse

57 | # from numpy we find our length,by converting hand range in terms of volume range ie b/w -63.5 to 0

58 | vol = np.interp(length, [30, 350], [volMin, volMax])

59 | volbar = np.interp(length, [30, 350], [400, 150])

60 | volper = np.interp(length, [30, 350], [0, 100])

61 |

62 | print(vol, int(length))

63 | volume.SetMasterVolumeLevel(vol, None)

64 |

65 | # Hand range 30 - 350

66 | # Volume range -63.5 - 0.0

67 | # creating volume bar for volume level

68 |

69 | cv2.rectangle(img, (50, 150), (85, 400), (0, 0, 255),

70 | 4) # vid ,initial position ,ending position ,rgb ,thickness

71 | cv2.rectangle(img, (50, int(volbar)), (85, 400), (0, 0, 255), cv2.FILLED)

72 | cv2.putText(img, f"{int(volper)}%", (10, 40), cv2.FONT_ITALIC, 1, (0, 255, 98), 3)

73 | # tell the volume percentage ,location,font of text,length,rgb color,thickness

74 | # flip the image

75 | cv2.imshow('Image', img) # Show the video

76 | if cv2.waitKey(1) & 0xff == ord(' '): # Pressing the spacebar stops the loop

77 | break

78 |

79 | cap.release() # stop cam

80 | cv2.destroyAllWindows() # close window

81 | return "Volume control stopped"

82 |

83 |

84 | if __name__ == '__main__':

85 | start_volume_control()

86 |

--------------------------------------------------------------------------------

/JarvisAI/JarvisAI/brain/chatbot_premium.py:

--------------------------------------------------------------------------------

1 | import requests

2 | from googlesearch import search

3 | import wikipedia

4 | import html2text

5 | from bs4 import BeautifulSoup

6 | from markdown import markdown

7 | import re

8 |

9 |

10 | def search_google_description(query):

11 | try:

12 | ans = search(query, advanced=True, num_results=5)

13 |

14 | desc = ''

15 | for i in ans:

16 | desc += i.description

17 |

18 | return desc

19 | except:

20 | return ''

21 |

22 |

23 | def query_pages(query):

24 | return list(search(query))

25 |

26 |

27 | def markdown_to_text(markdown_string):

28 | """ Converts a markdown string to plaintext """

29 |

30 | # md -> html -> text since BeautifulSoup can extract text cleanly

31 | html = markdown(markdown_string)

32 |

33 | # remove code snippets

34 | html = re.sub(r'<pre>(.*?)</pre>', ' ', html)

35 | html = re.sub(r'<code>(.*?)</code>', ' ', html)

36 |

37 | # extract text

38 | soup = BeautifulSoup(html, "html.parser")

39 | for e in soup.find_all():

40 | if e.name not in ['p']:

41 | e.unwrap()

42 | text = ''.join([i.strip() for i in soup.findAll(text=True)])

43 | return text

44 |

45 |

46 | def format_text(text):

47 | text = markdown_to_text(text)

48 | text = text.replace('\n', ' ')

49 | return text

50 |

51 |

52 | def search_google(query):

53 | try:

54 | def query_to_text(query):

55 | html_conv = html2text.HTML2Text()

56 | html_conv.ignore_links = True

57 | html_conv.escape_all = True

58 | text = []

59 | for link in query_pages(query)[0:3]:

60 | try:

61 | headers = {

62 | 'User-Agent': 'Mozilla/5.0 (Windows NT 6.2; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'}

63 |

64 | req = requests.get(link, headers=headers)

65 | text.append(html_conv.handle(req.text))

66 | text[-1] = format_text(text[-1])

67 | except:

68 | pass

69 | return text

70 |

71 | return query_to_text(query)

72 | except:

73 | return ''

74 |

75 |

76 | def search_wiki(query):

77 | try:

78 | ans = wikipedia.summary(query, sentences=2)

79 | return ans

80 | except:

81 | return ''

82 |

83 |

84 | def search_all(query, advance_search=False):

85 | combined = search_google_description(query) + "\n" + search_wiki(query)

86 | if advance_search:

87 | combined += "\n" + ' '.join(search_google(query))

88 | return combined

89 |

90 |

91 | def try_to_get_response(*args, **kwargs):

92 | api_key = kwargs.get("api_key")

93 | query = kwargs.get('query')

94 | context = search_all(query, advance_search=False)

95 | headers = {

96 | 'accept': 'application/json',

97 | 'content-type': 'application/x-www-form-urlencoded',

98 | }

99 | params = {

100 | 'secret_key': api_key,

101 | 'text': query,

102 | 'context': context,

103 | }

104 | response = requests.post('https://www.jarvisai.in/chatbot_premium_api', params=params, headers=headers)

105 | if response.status_code == 200:

106 | return response.json()['data']

107 | else:

108 | return response.json().get("message", "Server is facing some issues. Please try again later.")

109 |

110 |

111 | def premium_chat(*args, **kwargs):

112 | # try 3 times to call try_to_get_response(*args, **kwargs) function and if it fails then return error message

113 | for i in range(3):

114 | try:

115 | return try_to_get_response(*args, **kwargs)

116 | except Exception as e:

117 | pass

118 | return "Server is facing some issues. Please try again later."

119 | # try:

120 | # try_to_get_response(*args, **kwargs)

121 | # except Exception as e:

122 | # return f"An error occurred while performing premium_chat, connect with developer. Error: {e}"

123 |

124 |

125 | if __name__ == "__main__":

126 | print(premium_chat(query="who is naredra modi", api_key='ae44cc6e-0d5c-45c1-b8a3-fe412469510f'))

127 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [](http://jarvis-ai-api.herokuapp.com/)

2 |

3 |

4 |

5 |

6 | # Hello, folks!

7 | This project is for anyone interested in building a Virtual Assistant. Writing one from scratch generally takes a lot of time, so I have built a library called "JarvisAI", which gives you easy-to-use functionality to build your own Virtual Assistant.

8 | # Content-

9 |

10 | 1. What is JarvisAI?

11 | 2. Prerequisite

12 | 3. Architecture

13 | 4. Getting Started- How to use it?

14 | 5. What it can do (Features it supports)

15 | 6. Future / Request Features

16 | 7. Contribute

17 | 8. Contact me

18 | 9. Donate

19 | 10. Thank me on-

20 |

21 | ## YouTube Tutorial-

22 |

23 | Click on the links below to watch the tutorials on YouTube-

24 |

25 | **Tutorial 1-**

26 |

27 | [Watch Tutorial 1 on YouTube](https://www.youtube.com/watch?v=p2hdqB11S-8)

28 |

29 | **Tutorial 2-**

30 |

31 | [Watch Tutorial 2 on YouTube](https://www.youtube.com/watch?v=6p8bhNGtVbA)

32 |

33 |

34 |

35 | ## **1. What is Jarvis AI?**

36 | Jarvis AI is a Python module that can perform tasks such as acting as a chatbot or an assistant. It provides base functionality for any assistant application. JarvisAI is built using Tensorflow, Pytorch, Transformers, and other open-source libraries and frameworks. You can contribute to this project to make it more powerful.

37 |

38 | * Official Website: [Click Here](https://jarvisai.in)

39 |

40 | * Official Instagram Page: [Click Here](https://www.instagram.com/_jarvisai_)

41 |

42 |

43 | ## 2. Prerequisite

44 | - Get your Free API key from [https://jarvisai.in](https://jarvisai.in)

45 |

46 | - To use it, only Python (>= 3.6) is required.

47 |

48 | - To contribute to the project: Python is the only prerequisite for basic scripting. Machine Learning and Deep Learning knowledge will help with the AI-ML parts of the project. Read the How to Contribute section of this page.

49 |

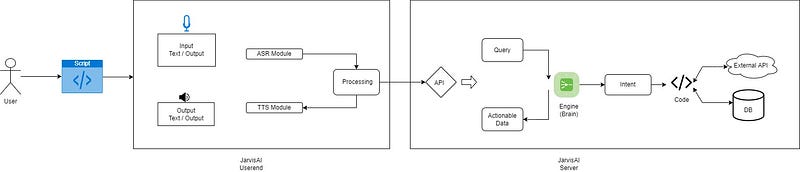

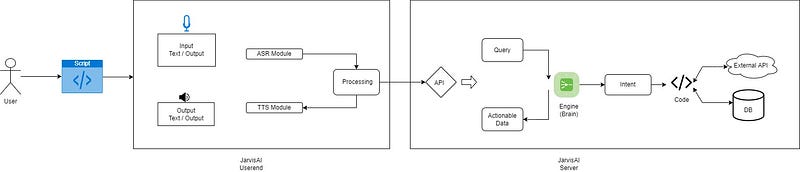

50 | ## 3. Architecture

51 |

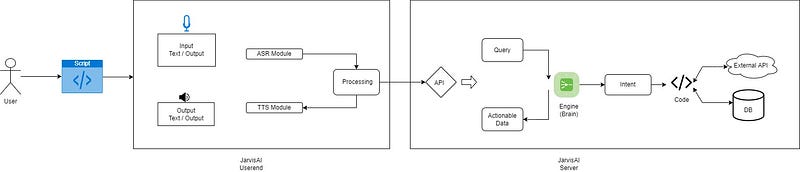

52 | JarvisAI’s architecture is divided into two parts.

53 |

54 | 1. User End- It is responsible for getting input from the user, preprocessing it, and sending it to JarvisAI’s server. Once the server sends its response back, it produces output on the user's screen/system.

55 | 2. Server Side- The server is responsible for handling various kinds of AI-ML and NLP tasks. It mainly identifies user intent by analyzing the user input and interacting with other external APIs.
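
The user-end flow described above can be sketched roughly as follows. This is a minimal illustration, not the library's actual code: `greet`, `fallback_chat`, `ACTION_MAP`, `LOCAL_ACTIONS`, `resolve_intent`, and `handle` are hypothetical stand-ins, loosely modelled on `check_local_intent` and `action_map` from the source files. The real library asks the JarvisAI server to classify anything it cannot match locally; here that step is stubbed out as the `"others"` intent.

```python
# Hypothetical, simplified stand-ins for the real feature functions.
def greet(*args, **kwargs):
    return "Hello!"


def fallback_chat(*args, **kwargs):
    # In the real library this would call the server-side chatbot.
    return f"(server reply for: {kwargs.get('query')})"


# Maps an intent name to the function that handles it.
ACTION_MAP = {
    "greet and hello hi kind of things": greet,
    "others": fallback_chat,
}

# Same shape as the sample actions.json shown in intent_classification.py.
LOCAL_ACTIONS = [
    {"intent": "greet and hello hi kind of things", "example": ["hi", "hello"]},
]


def resolve_intent(text, actions=LOCAL_ACTIONS):
    """Return the locally matched intent, or 'others' when nothing matches."""
    for action in actions:
        if text in action["example"] or action["intent"] in text:
            return action["intent"]
    return "others"


def handle(text):
    """Resolve the intent for `text` and call the matching handler."""
    intent = resolve_intent(text)
    handler = ACTION_MAP.get(intent, ACTION_MAP["others"])
    return handler(query=text)


if __name__ == "__main__":
    print(handle("hi"))
    print(handle("what is the tallest mountain"))
```

Keeping intent resolution separate from dispatch means a local lookup, a server call, or a custom classifier can be swapped in without touching the handlers.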

56 |

57 |

58 |

59 |

60 | ## 4. Getting Started- How to use it?

61 |

62 | #### NOTE: Old versions are deprecated; use the latest version of JarvisAI

63 |

64 | ### 4.1. Installation-

65 |

66 | * Install the latest version-

67 |

68 | ```bash

69 | pip install JarvisAI

70 | ```

71 |

72 | #### Optional Steps (Common Installation Issues)-

73 |

74 | * [Optional Step] If Pyaudio is not working or not installed you might need to install it separately-

75 |

76 | In the case of Mac OSX do the following:

77 |

78 | ```

79 | brew install portaudio

80 | pip install pyaudio

81 | ```

82 |

83 | In the case of Windows or Linux do the following:

84 |

85 | - Download pyaudio from: lfd.uci.edu/~gohlke/pythonlibs/#pyaudio

86 |

87 | - ```pip install PyAudio-0.2.11-cp310-cp310-win_amd64.whl```

88 |

89 | * [Optional Step] If pycountry is not working or not installed then Install "python3-pycountry" Package on Ubuntu/Linux-

90 |

91 | ```

92 | sudo apt-get update -y

93 | sudo apt-get install -y python3-pycountry

94 | ```

95 |

96 |

97 | * [Optional Step] You might need to Install [Microsoft Visual C++ Redistributable for Visual Studio 2022](https://visualstudio.microsoft.com/downloads/#microsoft-visual-c-redistributable-for-visual-studio-2022)

98 |

99 | ### 4.2. Code You Need-

100 |

101 | You need only this piece of code-

102 |

103 |     import JarvisAI

104 |     def custom_function(*args, **kwargs):

105 |         command = kwargs.get('query')

106 |         entities = kwargs.get('entities')

107 |         print(entities)

108 |         # write your code here to do something with the command

109 |         # perform some tasks; returning a value is optional

110 |         return command + ' Executed'

111 |

112 |

113 |     jarvis = JarvisAI.Jarvis(input_mechanism='voice', output_mechanism='both',

114 |                              google_speech_api_key=None, backend_tts_api='pyttsx3',

115 |                              use_whisper_asr=False, display_logs=False,

116 |                              api_key='527557f2-0b67-4500-8ca0-03766ade589a')

117 |     # add_action("general", custom_function) # OPTIONAL

118 |     jarvis.start()

119 |
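
To see what such a handler does without starting the assistant, it can be called directly. The sketch below assumes, based only on the snippet above, that the handler receives `query` and `entities` as keyword arguments; the sample values are made up.

```python
def custom_function(*args, **kwargs):
    # 'query' and 'entities' are the keyword arguments read in the snippet above.
    # The defaults are an addition here so a missing key does not raise an error.
    command = kwargs.get('query', '')
    entities = kwargs.get('entities', [])
    print(entities)
    return command + ' Executed'


if __name__ == "__main__":
    # Hypothetical sample values; real entities would come from JarvisAI.
    result = custom_function(query='turn on the lights', entities=[])
    print(result)
```

Giving `kwargs.get` a default keeps the string concatenation safe when `query` is absent; without it, `None + ' Executed'` would raise a `TypeError`.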

120 |

121 |

122 | ### 4.3. **What's next?**

123 |

124 | This starts your AI: it asks you for input and produces output accordingly.

125 | You can configure the `input_mechanism` and `output_mechanism` parameters for voice input/output or text input/output.

126 |

127 | ### 4.4. Let's understand the Parameters-

128 |

129 | ```bash
:param input_method: (object) method to get input from user
:param output_method: (object) method to give output to user
:param api_key: (str) [Default ''] api key to use JarvisAI get it from http://jarvis-ai-api.herokuapp.com
:param detect_wake_word: (bool) [Default True] detect wake word or not
:param wake_word_detection_method: (object) [Default None] method to detect wake word
:param google_speech_recognition_input_lang: (str) [Default 'en'] language of the input
       Check supported languages here: https://cloud.google.com/speech-to-text/docs/languages
:param google_speech_recognition_key: (str) [Default None] api key to use Google Speech API
:param google_speech_recognition_duration_listening: (int) [Default 5] duration of the listening

130 | READ MORE: Google Speech API (Pricing and Key) at: https://cloud.google.com/speech-to-text

131 | ```

132 | ## 5. What it can do (Features it supports)-

133 |

134 | 1. Currently, it supports only the English language.

135 | 2. Supports voice and text input/output.

136 | 3. Supports AI-based voice input (using Whisper ASR) as well as voice input through the Google API.

137 | 4. All AI processing is done on the JarvisAI server, so it puts no load on your system.

138 | 5. Lightweight and able to understand natural language (commands)

139 | 6. Ability to add your own custom functions.

140 |

141 | ### 5.1. Supported Commands-

142 |

143 | Below are the supported intents that the AI can handle; you can ask in natural language.

144 |

145 | **Example- "What is the time now", "make me laugh", "click a photo", etc.**

146 |

147 | **Note: Some features/commands might not work yet (WIP). Please report any bugs you find.**

148 |

149 | 1. asking time

150 | 2. asking date

151 | 3. greetings (hello, hi, goodbye, and the like)

152 | 4. tell me joke

153 | 5. tell me about

154 | 6. i am bored

155 | 7. volume control

156 | 8. tell me news

157 | 9. click photo

158 | 10. places near me

159 | 11. play on youtube

160 | 12. play games

161 | 13. what can you do

162 | 14. send email

163 | 15. download youtube video

164 | 16. asking weather

165 | 17. take screenshot

166 | 18. open website

167 | 19. send whatsapp message

168 | 20. covid cases

169 | 21. check internet speed

170 | 22. others / Unknown Intent (IN PROGRESS)

171 |

172 |

173 | ### 5.2. Supported Input/Output Methods (Which option do I need to choose?)-

174 |

175 | You can set the parameters below while creating the JarvisAI object-

176 |

177 |     jarvis = JarvisAI.Jarvis(input_mechanism='voice', output_mechanism='both',

178 |                              google_speech_api_key=None, backend_tts_api='pyttsx3',

179 |                              use_whisper_asr=False, display_logs=False,

180 |                              api_key='527557f2-0b67-4500-8ca0-03766ade589a')

181 |

182 | 1. **For text input-**

183 |

184 | input_mechanism='text'

185 |

186 | 2. **For voice input-**

187 |

188 | input_mechanism='voice'

189 |

190 | 3. **For text output-**

191 |

192 | output_mechanism='text'

193 |

194 | 4. **For voice output-**

195 |

196 | output_mechanism='voice'

197 |

198 | 5. **For voice and text output-**

199 |

200 | output_mechanism='both'

201 |
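
The choices above can be collected in a small helper that rejects typos before the assistant is created. This is only a sketch and not part of JarvisAI: `build_io_options` is a hypothetical name, the accepted values are the ones listed in this section, and `'voice'` as an output value is an assumption.

```python
# Hypothetical helper; the value sets are taken from this section,
# with 'voice' as an output value being an assumption.
VALID_INPUT = {"text", "voice"}
VALID_OUTPUT = {"text", "voice", "both"}


def build_io_options(input_mechanism: str = "voice",
                     output_mechanism: str = "both") -> dict:
    """Validate the two mechanism settings and return them as keyword arguments."""
    if input_mechanism not in VALID_INPUT:
        raise ValueError(f"input_mechanism must be one of {sorted(VALID_INPUT)}")
    if output_mechanism not in VALID_OUTPUT:
        raise ValueError(f"output_mechanism must be one of {sorted(VALID_OUTPUT)}")
    return {"input_mechanism": input_mechanism,
            "output_mechanism": output_mechanism}


if __name__ == "__main__":
    options = build_io_options("text", "text")
    print(options)
```

The returned dictionary could then be unpacked into the constructor call shown earlier, for example `JarvisAI.Jarvis(**options, api_key=...)`.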

202 | ## 6. Future/Request Features-

203 | **WIP**

204 | **You tell me**

205 |

206 | ## 7. Contribute-

207 | **Instructions Coming Soon**

208 | ## 8. Contact me-

209 | - [Instagram](https://www.instagram.com/dipesh_pal17)

210 |

211 | - [YouTube](https://www.youtube.com/dipeshpal17)

212 |

213 |

214 |

215 | ## 9. Donate-

216 | [Donate to help me run this project and buy a domain](https://www.buymeacoffee.com/dipeshpal)

217 |

218 | **_Feel free to use my code, but don't forget to give credit. All contributors will be credited in this repo._**

219 | **_Mention below line for credits-_**

220 | ***Credits-***