├── 0-install_guide

└── README.md

├── 0-torch_base

├── act_demo.py

├── grad_descend.py

├── loss_demo.py

├── op.md

├── op_demo.py

└── optimizer.md

├── 1-tensor_guide

├── README.md

├── pytorch_tutorial.py

├── tensor_demo.py

└── tensors_deeper_tutorial.py

├── 10-tensorboard_guide

├── README.md

├── minist_loss_tensorboard.py

├── tensorboard_demo.py

└── tensorboardyt_tutorial.py

├── 11-distribute_guide

├── README.md

├── ddp_demo

│ ├── README.md

│ ├── command_demo.py

│ ├── multi_machine_command_launch.py

│ ├── multi_machine_one_process_one_gpu.py

│ ├── multi_machine_one_process_with_mgpu.py

│ ├── process_demo.py

│ ├── spawn_demo.py

│ └── torchrun.md

├── deep_speed

│ └── README.md

├── dp_demo

│ ├── README.md

│ ├── dp_demo.py

│ ├── dp_hello.py

│ └── image.png

├── megatron

│ └── README.md

├── model_parallel

│ ├── README.md

│ ├── model_parallel_demo.py

│ └── model_parallel_demo2.py

├── tensor_parallelism

│ ├── README.md

│ ├── example.py

│ └── requirements.txt

└── zero

│ └── READEME.md

├── 12-pytorch2_dynomo

├── README.md

├── dynamo_hello.py

├── model_demo.py

└── torch2_demo.py

├── 13-model_deploy_guide

├── cloud_deploy

│ └── README.md

├── container_deploy

│ ├── Dockerfile

│ └── README.md

├── cpp_deploy

│ ├── README.md

│ └── mnist

│ │ ├── CMakeLists.txt

│ │ ├── README.md

│ │ ├── mnist-infer.cpp

│ │ └── mnist-train.cpp

├── flask_deploy

│ ├── README.md

│ └── flask_demo

│ │ ├── hello.py

│ │ ├── imagenet_lable.py

│ │ ├── resnet_infer.py

│ │ ├── templates

│ │ ├── upload.html

│ │ └── upload_ok.html

│ │ └── upload_image.py

└── java_deploy

│ └── README.md

├── 14-docker_image_container_guide

├── README.md

└── test_mtn.py

├── 15-model_learning

├── README.md

├── chatGLM

│ └── README.md

└── miniGPT

│ ├── README.md

│ └── chatgpt_demo.py

├── 2-autograd_guide

├── README.md

└── autograd_demo.py

├── 3-module_guide

├── README.md

├── minist_main.py

├── module_demo.py

└── op_demo.py

├── 4-data_guide

├── README.md

├── data

│ └── FashionMNIST

│ │ └── raw

│ │ └── train-images-idx3-ubyte.gz

├── data_loading_tutorial.py

└── data_prepare.py

├── 5-optim_guide

├── README.md

└── stepLR_demo.py

├── 6-save_load_guide

├── README.md

└── save_load_demo.py

├── 7-pytorch_modes

├── README.md

└── mode_demo.py

├── 8-model_train_guide

├── README.md

├── bert_train

│ ├── README.md

│ ├── requirements.txt

│ ├── run.sh

│ └── run_ner.py

├── imagenet-train

│ ├── README.md

│ ├── extract_ILSVRC.sh

│ ├── main.py

│ └── requirements.txt

├── introyt1_tutorial.py

├── mnist

│ ├── README.md

│ └── mnist-main.py

├── nlp

│ ├── README.md

│ ├── advanced_tutorial.py

│ ├── deep_learning_tutorial.py

│ ├── sequence_models_tutorial.py

│ └── word_embeddings_tutorial.py

├── regression

│ ├── README.md

│ └── main.py

├── transformer_encoder

│ └── encoder.py

├── vit-train

│ ├── README.md

│ ├── main.py

│ └── requirements.txt

├── word_language_model

│ ├── README.md

│ ├── data.py

│ ├── generate.py

│ ├── main.py

│ ├── model.py

│ └── requirements.txt

└── yolov8

│ └── inference_demo.py

├── 9-model_infer

├── README.md

└── onnx_utils.py

├── README.md

└── python_demo

├── class_demo

├── Property整体继承.py

├── descriptor_0.py

├── descriptor_demo.py

├── discriptor_1.py

├── item相关魔术方法.py

├── lazyproperty.py

├── nonlocal用法.py

├── property_1.py

├── property_2.py

├── property_3.py

├── property_4.py

├── super_1.py

├── super_2.py

├── super_demo.py

├── super_test.py

├── super指向兄弟类的方法.py

├── super菱形继承.py

├── test.py

├── yield_from_demo.py

├── 函数装饰器.py

├── 函数装饰器2.py

├── 函数装饰器应用.py

├── 可迭代对象和迭代器.py

├── 多继承兄弟类.py

├── 多继承解决方案.py

├── 多继承问题.py

├── 定义数据类.py

├── 属性的代理访问.py

├── 抽象基类.py

├── 生成器yield.py

├── 生成器函数.py

├── 简化数据初始化.py

├── 类方法构造实例.py

├── 类的一般操作.py

├── 闭包的应用.py

├── 闭包陷阱.py

└── 闭包陷阱解决方案.py

├── design_pattern

├── README.md

├── abs_factory.jpg

├── abs_factory_pattern.py

├── adapter_pattern.py

├── factory_pattern.py

├── iterator_pattern.py

├── observer_pattern.py

└── singleton_demo.py

├── magic_method

├── README.md

├── __attr__.py

├── __call__方法展示.py

├── __dict__and_dir().py

├── __enter__and__exit__.py

├── __getitem__迭代器.py

├── __item__.py

├── __iter__and__next__.py

├── __iter__and_generator.py

├── __new__魔术方法展示.py

├── __private的继承.py

├── __slots__.py

├── __str__and__repr__.py

├── attr_method.py

└── property_demo.py

├── meta_program

├── README.md

└── class_demo.py

└── thread_and_process

├── README.md

├── corutine.py

├── multi_process.py

├── multi_process_v2.py

└── multi_thread.py

/0-install_guide/README.md:

--------------------------------------------------------------------------------

1 | # pytorch 介绍

2 |

3 |

4 | #安装

5 | [安装链接](https://pytorch.org/)

6 |

7 | # 安装注意事项

8 | 1. 选Stable(选择稳定版本)

9 | 2. linux 和 windows;linux 工作时候;

10 | 3. pckage:pip install,

11 | 4. libtorch:它是pytorch的c++版本,c++ 部署的时候可能会用到;

12 | 5. language: Python

13 | 6. compute platform:

14 | cuda: 在Nvidia 的卡上跑模型要用到;

15 | cpu: 在cpu上跑模型;

16 | rocm: amd 显卡对应的计算平台;

17 | 7. cuda 和 driver 版本对应:

18 | [cuda driver 对照表](https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html)

19 |

20 | # conda 环境管理

21 | 1. (base) C:\Users\86183> : base : 我们现在在base 这个环境里;

22 | 2. conda env list : 查看conda 环境;

23 | 3. conda create -n python=3.9

24 | 4. conda activate base

25 |

26 | # pytorch 的软件栈

27 | 1. pytorch 软件本身;

28 | 2. cpu 版本 直接安装,依赖比较少:pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

29 | 3. cuda:nvidia 开发的一个在 GPU 上进行编程的一种语言, 和 C语言很类似;*.cu

30 | 4. cuda 最终要的作用就是加速,在GPU上进行加速;

31 | 5. GPU 非常善于处理结构化的数据,深度学习中 这些计算 : 矩阵运算 --> 特别适合我们GPU来处理;

32 | 6. cuda --> nvcc 编译器;装好cuda 后 nvcc 自动安装;

33 | 7. cuda 一定要有nvidia的卡支持,不一定所有的nvidia的卡都支持cuda;

34 | 8. cuda 要依赖于一个 driver();

35 |

36 | [驱动选择](https://www.nvidia.cn/Download/index.aspx?lang=cn)

37 |

38 | # pytorch version

39 | - 2.0

40 | - 1.9.0 1.10 1.11 1.12 1.13 --> 2.0

41 | - 2023年

42 | - 加速 pip install pytorch -i 镜像地址

43 |

44 | # 安装其他版本

45 | [安装链接1](https://pytorch.org/get-started/previous-versions/)

46 | [安装链接2](https://download.pytorch.org/whl/torch/)

47 |

48 | # 常用的pytorch 模块

49 | - nn

50 | - utils

51 | - optim

52 | - autograd : 对用户不感知

53 | - distributed

54 | - profiler : 算子性能追踪

55 | - quantization: 量化模块

56 | - jit、onnx:模型保存相关;

57 |

58 | # 学习pytorch 相关模块

59 | 1. [pytorch doc](https://pytorch.org/docs/stable/search.html?q=&check_keywords=yes&area=default#)

60 | 2. .conda/envs/mmcv/lib/python3.8/site-packages/torch/

61 | 3. torch/_C/_VariableFunctions.pyi : pyi c++ 程序的python 接口

62 | 4. 其它 。。。

63 |

64 | # torchvision

65 |

--------------------------------------------------------------------------------

/0-torch_base/act_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 |

4 | def elu_demo():

5 | m = nn.ELU()

6 | input = torch.randn(2)

7 | output = m(input)

8 | print(output)

9 |

10 | if __name__ == "__main__":

11 | elu_demo()

12 | print("run successfully")

--------------------------------------------------------------------------------

/0-torch_base/grad_descend.py:

--------------------------------------------------------------------------------

1 | '''

2 | 如果 X W 两个矩阵:

3 | W[n,k] @ X[k,m] = Y[n,m] #

4 | 则: dW = dY @ XT; dX = WT @ dY # 矩阵反向传播公式

5 | output = a + b

6 | '''

7 | import numpy as np

8 |

9 |

10 | def bp_demo():

11 | def sigmoid(input):

12 | return 1 / (1 + np.e**(-input))

13 |

14 | def sigmoid_backward(out):

15 | return out*(1- out)

16 |

17 | w1 = np.array([[0.1, 0.15], [0.2, 0.25], [0.3, 0.35]])

18 | w2 = np.array([[0.4, 0.45, 0.5], [0.55, 0.6, 0.65]])

19 | b1 = 0.35

20 | b2 = 0.65

21 | input = np.array([5, 10]).reshape(2, 1)

22 | label = np.array([0.01, 0.99]).reshape(2, 1)

23 |

24 | for i in range(100):

25 | net_h = w1 @ input + b1

26 | out_h = sigmoid(net_h)

27 |

28 | net_o = w2 @ out_h + b2

29 | out_o = sigmoid(net_o)

30 |

31 | loss = np.sum((out_o - label)**2)

32 | print(loss)

33 |

34 | dw2 = (out_o - label) * sigmoid_backward(out_o) @ out_h.T

35 |

36 | # (out_o - label) * sigmoid_backward(out_o) --> dloss/net_o

37 | dout_h = w2.T @ ((out_o - label) * sigmoid_backward(out_o))

38 |

39 | dw1 = dout_h * sigmoid_backward(out_h) @ input.T

40 |

41 | w1 = w1 - 0.5 * dw1

42 | w2 = w2 - 0.5 * dw2

43 | print(f"loss[{i}]: {loss}")

44 |

45 | print(w1)

46 |

47 |

48 | def matmul_grad():

49 | W = np.array([[4, 5], [7, 2]])

50 | X = np.array([2, 6.0]).reshape(2, 1)

51 | label = np.array([14, 11]).reshape(2, 1)

52 |

53 | for i in range(100):

54 | Y = W @ X # 线性相乘

55 | Loss = 0.5 * np.sum((Y-label)**2) # 损失值 --> 标量

56 | # dY = Y - label # 向量

57 | dW = (Y-label) @ X.T # 矩阵求导公式

58 | W = W - 0.01*dW # 更新weight

59 | print(f"============= loss[{i}]: ", Loss)

60 |

61 | print(W)

62 |

63 | def loss_grad(o1, o2, label):

64 | # loss = (o1 - 14)**2 + (o2 -11)**2 # 距离越远,值越大

65 | # Loss = np.sum((O - Lable)**2)

66 | grad = [2 * (o1 - label[0]), 2 * (o2 - label[1])]

67 | return np.array(grad)

68 |

69 | def matrix_grad_demo():

70 | """

71 | 4x + 5y = O1

72 | 7x + 2y = O1

73 |

74 | [[4, 5], [7, 2]] * [x, y]T = [01, 02] --> A*x = O

75 | label = [14, 11] # 代表我们期望的那个值

76 | loss = (o1 - 14)**2 + (o2 -11)**2 # 距离越远,值越大

77 | """

78 | A = np.array([[4.0, 5], [7.0, 2]])

79 | X = np.array([2, 6.0]) # 随便给的初始值

80 |

81 | Lable = np.array([14, 11])

82 | lr = 0.001

83 |

84 | for i in range(1000):

85 | O = A @ X # 前向

86 | grad = A.T @ loss_grad(O[0], O[1], Lable)

87 | X[0] = X[0] - lr*grad[0]

88 | X[1] = X[1] - lr*grad[1]

89 | print("x[0]: {}, x[1]: {}".format(X[0], X[1]))

90 | Loss = np.sum((O - Lable)**2)

91 | print("Loss: ", Loss)

92 |

93 | if __name__ == "__main__":

94 | # grad_demo()

95 | # matrix_grad_demo()

96 | # matmul_grad()

97 | bp_demo()

98 | print("run grad_descend.py successfully !!!")

99 |

100 |

--------------------------------------------------------------------------------

/0-torch_base/op.md:

--------------------------------------------------------------------------------

1 | # conv2d

2 | ```python

3 | torch.nn.Conv2d(

4 | in_channels, # 输入通道

5 | out_channels, # 输出通道

6 | kernel_size, # kernel 大小

7 | stride=1, # 步长

8 | padding=0, # padding

9 | dilation=1, # 膨胀系数

10 | groups=1, # 分组

11 | bias=True, # 偏置

12 | padding_mode='zeros', # padding mode

13 | device=None, # 设备

14 | dtype=None # 数据类型

15 | )

16 | ```

--------------------------------------------------------------------------------

/0-torch_base/optimizer.md:

--------------------------------------------------------------------------------

1 | # 优化器展示

2 |

3 |

4 | ## 1 adam

5 | [参考链接](https://pytorch.org/docs/master/generated/torch.optim.Adam.html?highlight=adam#torch.optim.Adam)

6 |

7 | ```python

8 | CLASS torch.optim.Adam(

9 | params, # 可迭代的parameters, 或者是装有parameter组的字典

10 | lr=0.001, # 学习率

11 | betas=(0.9, 0.999), # 用于计算梯度及其平方的移动平均值系数

12 | eps=1e-08, # 防止分母为0

13 | weight_decay=0, # 权重衰减系数,L2 惩罚, 默认为0

14 | amsgrad=False, # 是否使用该算法的amsgrad 变体

15 | *,

16 | foreach=None, #

17 | maximize=False, # 最大化梯度--> 梯度提升

18 | capturable=False, # 在CUDA图中捕获此实例是否安全

19 | differentiable=False, # 是否可进行自动微分

20 | fused=None # 是否使用融合实现(仅CUDA)。

21 | )

22 |

23 | add_param_group(param_group)

24 | '''

25 | 用于向优化器中添加新的参数组;

26 | 参数组:一组共享相同超参数(学习率、权重衰减等)的模型参数;

27 | 通过定义不同的参数组,可以为模型的不同部分或不同层,设置不同的超参数;

28 | 这在微调预训练的网络时很有用.

29 | '''

30 |

31 | ```

32 |

33 | *添加参数组

34 | ```python

35 | import torch

36 | import torch.optim as optim

37 |

38 | # 创建模型和优化器

39 | model = torch.nn.Linear(10, 2)

40 | optimizer = optim.SGD(model.parameters(), lr=0.1)

41 |

42 | # 创建新的参数组

43 | new_params = [{'params': model.parameters(), 'lr': 0.01}]

44 |

45 | # 将新的参数组添加到优化器中

46 | optimizer.add_param_group(new_params)

47 | ```

48 |

49 | ## 2 sgd

50 | ```python

51 | # 定义两个模型和它们的参数

52 | model1 = ...

53 | model2 = ...

54 | model_params1 = model1.parameters()

55 | model_params2 = model2.parameters()

56 |

57 | # 创建优化器,并传递使用新的参数组名称

58 | optimizer = optim.SGD([{'params': model_params1}, {'params': model_params2}], lr=0.01)

59 | optimizer = optim.SGD([

60 | {'params': params1, 'lr': 0.01, 'weight_decay': 0.001},

61 | {'params': params2, 'lr': 0.1, 'weight_decay': 0.0001}

62 | ])

63 | ```

64 |

65 |

--------------------------------------------------------------------------------

/1-tensor_guide/README.md:

--------------------------------------------------------------------------------

1 | # pytorch Tensor guide

2 |

3 | ## tensor 是什么

4 | - 张量 (weight activationg)

5 | - 多维数据,numpy --> ndarray

6 | - pytorch 里最大的一个类(class)

7 | - 属性、和方法

8 |

9 | ## 初始化一个tensor

10 | - torch.Tensor(***) 调用 Tensor 这个类的init方法完成初始化

11 | - torch.ones() 调用torch本身自带的一些函数来生成特殊类型的tensor

12 | - torch.ones_like() # 也是调用torch 自带的函数来生成,shape等信息采用另外一种tensor的;

13 | - numpy 与 tensor之间的互相转换:优先选用 from_numpy: 数据共享

14 |

15 | # tensor 的结构

16 | 1. meta_data :dtype、shape、dims、device、stride()

17 | 2. raw_data : 内存中的数据

18 | 3. data_ptr 查询的是我们raw_data;

19 | 4. id : raw_data 或者 mete_data 有一个改变就会改变

20 |

21 | # tensor 的视图

22 | - transpose storage 之后没有发生任何的变化,raw_data 没有变;

23 | - stride: 正常情况下某一维度的stride的值,后几个维度相乘;

24 |

25 | # reshape/view/permute/transpose

26 | - 这四个算子(方法) raw_data 都没变;

27 | - 变化的都是 shape 和 stride;

28 | - reshape 和 view 可以改变维度的;

29 | - permute/transpose 换轴之后就不满足这种规律(stride)了 --> 数据不连续 uncontiguous;

30 | - reshape 和 view : 在大部分情况下都是一样的;数据不连续的情况下就有区别了。

31 | - view: 永远不会生成新的数据,永远是一个view,视图;

32 | - reshape:如何可能返回一个视图的话,它就返回一个视图,如果出现数据不连续的情况导致返回不了视图,

33 | - reshape就会返回一个新的tensor(新的一份raw_data)

34 | - uncontiguous: 对我们硬件不有好的,会导致我们的性能下降;

35 |

36 | ## pytorch 相关代码

37 | - /site-packages/torch/_C/_VariableFunctions.pyi --> tensor 的c++接口

38 | - /lib/python3.8/site-packages/torch/_tensor.py

39 |

40 | ## 如何学习API(tensor api 举例)

41 | - 软件工程中 api, file name 都不是随便取的;

42 | - tensor的接口在哪里 --> _tensor.py 中;

43 | - _tensor.py 中的 class Tensor 只是子类,

44 | - 父类: class _TensorBase 在__init__.pyi 中

45 | - 常用的属性:

46 | **requires_grad、shape、dytpe、layout、ndim、grad_fn**

47 | - 常用的方法

48 | **pointewise类型的方法:abs、acos、add、addcdiv**

49 | **投票函数:all any**

50 | **bit相关的操作**

51 | **clone**

52 | **统计学上的运算:mean var median min max**

53 | **backward:反向传播的时候使用**

54 | **register_hook: 钩子函数**

55 | ** retain_grad: 保留梯度**

56 | ** resize:通过插值的方式进行尺度缩放 **

57 | ** grad 查询梯度信息**

58 |

59 | ## tensor 的构成

60 | - meta_data : 描述一个tensor(shape/dtype/ndim/stride)

61 | - raw_data : 内存中的数据,以裸指针;

62 | *ndarray.ctypes.data numpy 获取方式

63 | *tensor.data_ptr() torch tensor的获取方式

64 |

65 | ## pytorch tensor 与 numpy的 相互转化 与 数据共享

66 | - from_numpy 形成的 torch.tensor 和numpy 公用一套数据

67 | - .numpy() 返回到 numpy 格式

68 |

69 | ## to 的理解

70 | - 数据类型的转化: int --> float

71 | **数据要从cpu copy 到 gpu上:h2d**

72 | **数据从 gpu copy 到 cpu:d2h**

73 | - 设备的转化 host(cpu) 和 device(GPU)的 概念

74 | **d2h**

75 | - tensor.cpu()

76 | - 不同设备上的tensor,不能进行运算;

77 |

78 | ##自动选择后端

79 | ```python

80 | if torch.cuda.is_available():

81 | device = torch.device("cuda:0")

82 | else:

83 | device = torch.device("cpu")

84 | ```

85 |

86 | ## Tensor 的 id 和 ptr

87 | - id: tensor 的地址 (meta data 和 raw data 一个变就会变)

88 | - data_ptr: 数据的地址(meta_data 变换不影响它)

89 | - 视图要拿最终的raw data来看, id 经常出现问题。

90 |

91 | ## tensor的计算方式:matmul 、 pointwise 、 elementwise

92 | - 矩阵形式的计算:matmul、rashpe/ permute/ transpose

93 | - pointewise / elementwise (元素之间的操作)

94 | - broadcast: 1. shape 右对齐;2. 对应dim 有一个是1 或者 相同;

95 |

96 | ## inplace 操作

97 | - 原地操作(raw data 不变),新计算出来的结果,替换掉原来结果;

98 | - 节约内存,提升性能

99 | - 不推荐使用,除非非常了解。

100 |

101 | ## 理解tensor的视图

102 | **reshape、permute、transpose、view**

103 | - reshape 和 view 是一组:;

104 | - permute 和 transpose 是一组:数据不连续的现象;

105 | **这四个算子raw data 都一样**

106 | **通常我们根据shape 就可以推断出 stride**

107 | **但是 transpose 和 permute 不能通过shape 来推断出来**

108 | **此时发生数据不连续**

109 | - 影响我们计算结果吗?no

110 | - 性能不好;

111 | - 我们应该怎么办?contiguous

112 |

113 | **contiguous发生了什么**

114 | *1. 重新申请了内存*

115 | *2.数据重排了*

116 |

117 | **reshape vs view**

118 | - reshape 不管数据是否连续,都可以用;

119 | - view 智能用于数据连续的情况下;

120 | - 如果必须用view:数据要先进行 contiguous

121 | - 数据不连续的情况下:reshape = contiguous + view

122 | - reshape :数据不连续去情况下 reshape 自动进行 数据copy 和 reorder

123 | - contiguous:本身也就是 数据copy 和重排

124 |

125 | ## 扩展

126 | *_tensor.py*

127 | *data到底存储在哪里呢?*

128 |

--------------------------------------------------------------------------------

/10-tensorboard_guide/README.md:

--------------------------------------------------------------------------------

1 | # tensorboard

2 | [torch 链接](https://pytorch.org/docs/stable/tensorboard.html?highlight=tensorboard)

3 | - board:展板

4 | - tensorflow 率先采用个

5 | - 效果很好,pytorch 也采用了这个 -->

6 | - 只要我们把我们需要保存的信息 dump 成tensorboard支持的格式就行;

7 | - pytorch 里面还有一个叫 tensorboardX 的东西,和 tensorboard 很类似,我们用tensorboard就行

8 |

9 | # 安装方式

10 | - 我们安装好了 tensorflow 的话,tensorboard会自动安装;

11 | - pip install tensorboard

12 |

13 | #执行方式:

14 | tensorboard --logdir=./logsm

15 | python -m tensorboard.main --logdir=./logs

16 |

17 | # torch 中的tensorboard

18 | - 作用: 用于 dump 文件

19 | - 代码位置:from torch.utils.tensorboard import SummaryWriter

20 |

21 | # tensorboard 单独的包

22 | - 用来展示数据的

23 | - site-packages/tensorboard/__init__.py

24 |

25 | # run 指令:

26 | tensorboard --logdir=runs

27 |

28 | # 注意事项

29 | - 名字:以名字来区分windows的,

30 | - 数据是可以按照window的名字来追加

31 | - loss 是以追加的形式来的,信息不回丢失

32 |

--------------------------------------------------------------------------------

/10-tensorboard_guide/minist_loss_tensorboard.py:

--------------------------------------------------------------------------------

1 | # PyTorch model and training necessities

2 | import torch

3 | import torch.nn as nn

4 | import torch.nn.functional as F

5 | import torch.optim as optim

6 |

7 | # Image datasets and image manipulation

8 | import torchvision

9 | import torchvision.transforms as transforms

10 |

11 | # Image display

12 | import matplotlib.pyplot as plt

13 | import numpy as np

14 |

15 | # PyTorch TensorBoard support

16 | from torch.utils.tensorboard import SummaryWriter

17 |

18 | # Gather datasets and prepare them for consumption

19 | transform = transforms.Compose(

20 | [transforms.ToTensor(),

21 | transforms.Normalize((0.5,), (0.5,))])

22 |

23 | # Store separate training and validations splits in ./data

24 | training_set = torchvision.datasets.FashionMNIST('./data',

25 | download=True,

26 | train=True,

27 | transform=transform)

28 | validation_set = torchvision.datasets.FashionMNIST('./data',

29 | download=True,

30 | train=False,

31 | transform=transform)

32 |

33 | training_loader = torch.utils.data.DataLoader(training_set,

34 | batch_size=4,

35 | shuffle=True,

36 | num_workers=2)

37 |

38 |

39 | validation_loader = torch.utils.data.DataLoader(validation_set,

40 | batch_size=4,

41 | shuffle=False,

42 | num_workers=2)

43 |

44 | # Class labels

45 | classes = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

46 | 'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot')

47 |

48 | class Net(nn.Module):

49 | def __init__(self):

50 | super(Net, self).__init__()

51 | self.conv1 = nn.Conv2d(1, 6, 5)

52 | self.pool = nn.MaxPool2d(2, 2)

53 | self.conv2 = nn.Conv2d(6, 16, 5)

54 | self.fc1 = nn.Linear(16 * 4 * 4, 120)

55 | self.fc2 = nn.Linear(120, 84)

56 | self.fc3 = nn.Linear(84, 10)

57 |

58 | def forward(self, x):

59 | x = self.pool(F.relu(self.conv1(x)))

60 | x = self.pool(F.relu(self.conv2(x)))

61 | x = x.view(-1, 16 * 4 * 4)

62 | x = F.relu(self.fc1(x))

63 | x = F.relu(self.fc2(x))

64 | x = self.fc3(x)

65 | return x

66 |

67 | writer = SummaryWriter('mnist_log')

68 |

69 | net = Net()

70 | criterion = nn.CrossEntropyLoss()

71 | optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

72 |

73 | print(len(validation_loader))

74 | for epoch in range(10): # loop over the dataset multiple times

75 | running_loss = 0.0

76 |

77 | for i, data in enumerate(training_loader, 0):

78 | # basic training loop

79 | inputs, labels = data

80 | optimizer.zero_grad()

81 | outputs = net(inputs)

82 | loss = criterion(outputs, labels)

83 | loss.backward()

84 | optimizer.step()

85 |

86 | running_loss = loss.item()

87 | if i % 1000 == 999: # Every 1000 mini-batches...

88 | print('Batch {}'.format(i + 1))

89 | # Check against the validation set

90 | running_vloss = 0.0

91 |

92 | net.train(False) # Don't need to track gradents for validation

93 | for j, vdata in enumerate(validation_loader, 0):

94 | vinputs, vlabels = vdata

95 | voutputs = net(vinputs)

96 | vloss = criterion(voutputs, vlabels)

97 | running_vloss = vloss.item()

98 |

99 | net.train(True) # Turn gradients back on for training

100 |

101 | # avg_train_loss = running_loss / 1000

102 | # avg_val_loss = running_vloss / len(validation_loader)

103 | avg_train_loss = running_loss

104 | avg_val_loss = running_vloss

105 |

106 | # Log the running loss averaged per batch

107 | writer.add_scalars('Training vs. Validation Loss',

108 | { 'Training' : avg_train_loss, 'Validation' : avg_val_loss },

109 | epoch * len(training_loader) + i)

110 |

111 | running_loss = 0.0

112 | print('Finished Training')

113 |

114 | writer.flush()

115 |

116 |

117 |

118 |

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/command_demo.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | import sys

4 | import tempfile

5 | from urllib.parse import urlparse

6 |

7 | import torch

8 | import torch.distributed as dist

9 | import torch.nn as nn

10 | import torch.optim as optim

11 | import torch.distributed.launch as launch

12 |

13 | from torch.nn.parallel import DistributedDataParallel as DDP

14 |

15 | class ToyModel(nn.Module):

16 | def __init__(self):

17 | super(ToyModel, self).__init__()

18 | self.net1 = nn.Linear(10, 10)

19 | self.relu = nn.ReLU()

20 | self.net2 = nn.Linear(10, 5)

21 |

22 | def forward(self, x):

23 | return self.net2(self.relu(self.net1(x)))

24 |

25 |

26 | def demo_basic(local_world_size, local_rank):

27 |

28 | # setup devices for this process. For local_world_size = 2, num_gpus = 8,

29 | # rank 0 uses GPUs [0, 1, 2, 3] and

30 | # rank 1 uses GPUs [4, 5, 6, 7].

31 | n = torch.cuda.device_count() // local_world_size

32 | device_ids = list(range(local_rank * n, (local_rank + 1) * n))

33 |

34 | print(

35 | f"[{os.getpid()}] rank = {dist.get_rank()}, "

36 | + f"world_size = {dist.get_world_size()}, n = {n}, device_ids = {device_ids} \n", end=''

37 | )

38 |

39 | model = ToyModel().cuda(device_ids[0])

40 | ddp_model = DDP(model, device_ids)

41 |

42 | loss_fn = nn.MSELoss()

43 | optimizer = optim.SGD(ddp_model.parameters(), lr=0.001)

44 |

45 | optimizer.zero_grad()

46 | outputs = ddp_model(torch.randn(20, 10))

47 | labels = torch.randn(20, 5).to(device_ids[0])

48 | loss_fn(outputs, labels).backward()

49 | optimizer.step()

50 |

51 |

52 | def spmd_main(local_world_size, local_rank):

53 | # These are the parameters used to initialize the process group

54 | env_dict = {

55 | key: os.environ[key]

56 | for key in ("MASTER_ADDR", "MASTER_PORT", "RANK", "WORLD_SIZE")

57 | }

58 |

59 | if sys.platform == "win32":

60 | # Distributed package only covers collective communications with Gloo

61 | # backend and FileStore on Windows platform. Set init_method parameter

62 | # in init_process_group to a local file.

63 | if "INIT_METHOD" in os.environ.keys():

64 | print(f"init_method is {os.environ['INIT_METHOD']}")

65 | url_obj = urlparse(os.environ["INIT_METHOD"])

66 | if url_obj.scheme.lower() != "file":

67 | raise ValueError("Windows only supports FileStore")

68 | else:

69 | init_method = os.environ["INIT_METHOD"]

70 | else:

71 | # It is a example application, For convience, we create a file in temp dir.

72 | temp_dir = tempfile.gettempdir()

73 | init_method = f"file:///{os.path.join(temp_dir, 'ddp_example')}"

74 | dist.init_process_group(backend="gloo", init_method=init_method, rank=int(env_dict["RANK"]), world_size=int(env_dict["WORLD_SIZE"]))

75 | else:

76 | print(f"[{os.getpid()}] Initializing process group with: {env_dict}")

77 | dist.init_process_group(backend="nccl")

78 |

79 | print(

80 | f"[{os.getpid()}]: world_size = {dist.get_world_size()}, "

81 | + f"rank = {dist.get_rank()}, backend={dist.get_backend()} \n", end=''

82 | )

83 |

84 | demo_basic(local_world_size, local_rank)

85 |

86 | # Tear down the process group

87 | dist.destroy_process_group()

88 |

89 |

90 | if __name__ == "__main__":

91 | parser = argparse.ArgumentParser()

92 | # This is passed in via launch.py

93 | parser.add_argument("--local_rank", type=int, default=0)

94 | # This needs to be explicitly passed in

95 | parser.add_argument("--local_world_size", type=int, default=1)

96 | args = parser.parse_args()

97 | # The main entry point is called directly without using subprocess

98 | spmd_main(args.local_world_size, args.local_rank)

99 | print("run command_demo.py successfully !!!")

100 |

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/multi_machine_command_launch.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torchvision

3 | import torch.utils.data.distributed

4 | import argparse

5 | import torch.distributed as dist

6 | from torchvision import transforms

7 |

8 | parser = argparse.ArgumentParser()

9 | parser.add_argument("--local_rank", type=int) # 增加local_rank

10 | args = parser.parse_args()

11 | torch.cuda.set_device(args.local_rank)

12 |

13 | def main():

14 | dist.init_process_group("nccl", init_method='env://') # init_method方式修改

15 | trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])

16 | data_set = torchvision.datasets.MNIST('~/DATA/', train=True,

17 | transform=trans, target_transform=None, download=True)

18 | train_sampler = torch.utils.data.distributed.DistributedSampler(data_set)

19 | data_loader_train = torch.utils.data.DataLoader(dataset=data_set,

20 | batch_size=256,

21 | sampler=train_sampler,

22 | num_workers=16,

23 | pin_memory=True)

24 | net = torchvision.models.resnet101(num_classes=10)

25 | net.conv1 = torch.nn.Conv1d(1, 64, (7, 7), (2, 2), (3, 3), bias=False)

26 | net = net.cuda()

27 | # DDP 输出方式修改:

28 | net = torch.nn.parallel.DistributedDataParallel(net, device_ids=[args.local_rank],

29 | output_device=args.local_rank)

30 | criterion = torch.nn.CrossEntropyLoss()

31 | opt = torch.optim.Adam(net.parameters(), lr=0.001)

32 | for epoch in range(1):

33 | for i, data in enumerate(data_loader_train):

34 | images, labels = data

35 | # 要将数据送入指定的对应的gpu中

36 | images.to(args.local_rank, non_blocking=True)

37 | labels.to(args.local_rank, non_blocking=True)

38 | opt.zero_grad()

39 | outputs = net(images)

40 | loss = criterion(outputs, labels)

41 | loss.backward()

42 | opt.step()

43 | if i % 10 == 0:

44 | print("loss: {}".format(loss.item()))

45 |

46 |

47 | if __name__ == "__main__":

48 | main()

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/multi_machine_one_process_one_gpu.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.distributed as dist

3 | import torch.multiprocessing as mp

4 | import torch.nn as nn

5 | import torch.optim as optim

6 | from torch.nn.parallel import DistributedDataParallel as DDP

7 | import argparse

8 |

9 | parser = argparse.ArgumentParser()

10 | parser.add_argument("--world_size", type=int)

11 | parser.add_argument("--node_rank", type=int)

12 | parser.add_argument("--master_addr", default="127.0.0.1", type=str)

13 | parser.add_argument("--master_port", default="12355", type=str)

14 | args = parser.parse_args()

15 |

16 |

17 | def example(local_rank, node_rank, local_size, world_size):

18 | # 初始化

19 | rank = local_rank + node_rank * local_size

20 | torch.cuda.set_device(local_rank)

21 | dist.init_process_group("nccl",

22 | init_method="tcp://{}:{}".format(args.master_addr, args.master_port),

23 | rank=rank,

24 | world_size=world_size)

25 | # 创建模型

26 | model = nn.Linear(10, 10).to(local_rank)

27 | # 放入DDP

28 | ddp_model = DDP(model, device_ids=[local_rank], output_device=local_rank)

29 | loss_fn = nn.MSELoss()

30 | optimizer = optim.SGD(ddp_model.parameters(), lr=0.001)

31 | # 进行前向后向计算

32 | for i in range(1000):

33 | outputs = ddp_model(torch.randn(20, 10).to(local_rank))

34 | labels = torch.randn(20, 10).to(local_rank)

35 | loss_fn(outputs, labels).backward()

36 | optimizer.step()

37 |

38 |

39 | def main():

40 | local_size = torch.cuda.device_count()

41 | print("local_size: %s" % local_size)

42 | mp.spawn(example,

43 | args=(args.node_rank, local_size, args.world_size,),

44 | nprocs=local_size,

45 | join=True)

46 |

47 |

48 | if __name__=="__main__":

49 | main()

50 |

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/multi_machine_one_process_with_mgpu.py:

--------------------------------------------------------------------------------

1 | # singe proc with multi gpu

2 | import torchvision

3 | from torchvision import transforms

4 | import torch.distributed as dist

5 | import torch.utils.data.distributed

6 | import argparse

7 |

8 | parser = argparse.ArgumentParser()

9 | parser.add_argument("--rank", default=0, type=int)

10 | parser.add_argument("--world_size", default=1, type=int)

11 | parser.add_argument("--master_addr", default="127.0.0.1", type=str)

12 | parser.add_argument("--master_port", default="12355", type=str)

13 | args = parser.parse_args()

14 |

15 | def main(rank, world_size):

16 | # 一个节点就一个rank,节点的数量等于world_size

17 | dist.init_process_group("gloo",

18 | init_method="tcp://{}:{}".format(args.master_addr, args.master_port),

19 | rank=rank,

20 | world_size=world_size)

21 | trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])

22 | data_set = torchvision.datasets.MNIST('~/DATA/', train=True,

23 | transform=trans, target_transform=None, download=True)

24 | train_sampler = torch.utils.data.distributed.DistributedSampler(data_set)

25 | data_loader_train = torch.utils.data.DataLoader(dataset=data_set,

26 | batch_size=256,

27 | sampler=train_sampler,

28 | num_workers=16,

29 | pin_memory=True)

30 | net = torchvision.models.resnet101(num_classes=10)

31 | net.conv1 = torch.nn.Conv1d(1, 64, (7, 7), (2, 2), (3, 3), bias=False)

32 | net = net.cuda()

33 | # net中不需要指定设备!

34 | net = torch.nn.parallel.DistributedDataParallel(net)

35 | criterion = torch.nn.CrossEntropyLoss()

36 | opt = torch.optim.Adam(net.parameters(), lr=0.001)

37 | for epoch in range(1):

38 | for i, data in enumerate(data_loader_train):

39 | images, labels = data

40 | images, labels = images.cuda(), labels.cuda()

41 | opt.zero_grad()

42 | outputs = net(images)

43 | loss = criterion(outputs, labels)

44 | loss.backward()

45 | opt.step()

46 | if i % 10 == 0:

47 | print("loss: {}".format(loss.item()))

48 |

49 |

50 | if __name__ == '__main__':

51 | main(args.rank, args.world_size)

52 |

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/process_demo.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | import torchvision

4 | import torch.distributed as dist

5 | import torch.utils.data.distributed

6 | from torchvision import transforms

7 | from torch.multiprocessing import Process

8 |

9 | # 主机地址 和 端口(端口) 设置

10 | os.environ['MASTER_ADDR'] = 'localhost'

11 | os.environ['MASTER_PORT'] = '12355'

12 |

13 | WORLD_SIZE = 1 # 一共启动多少个进程

14 |

15 | def main(rank):

16 | dist.init_process_group("sccl", rank=rank, world_size= WORLD_SIZE) # 初始化进程组(后端选择,rank,world_size)

17 | torch.cuda.set_device(rank) #device 设置

18 | trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])

19 | data_set = torchvision.datasets.MNIST("./data", train=True, transform=trans, target_transform=None, download=True)

20 | train_sampler = torch.utils.data.distributed.DistributedSampler(data_set) # 同 DP 相同的接口

21 | data_loader_train = torch.utils.data.DataLoader(dataset=data_set, batch_size=64, sampler=train_sampler)

22 |

23 | net = torchvision.models.resnet50(num_classes=10) # 适配mnist 数据集 --> class num: 10

24 |

25 | # monkey patch --> 猴子补丁

26 | net.conv1 = torch.nn.Conv2d(1, 64, (7, 7), (2, 2), (3, 3), bias=False) # 修改模型: --> minst 是一个灰度图(channel = 1)

27 | net = net.cuda()

28 | net = torch.nn.parallel.DistributedDataParallel(net, device_ids=[rank]) # 和 DP 类似了,将原来的model 转化为 distribute model

29 | criterion = torch.nn.CrossEntropyLoss()

30 | opt = torch.optim.Adam(net.parameters(), lr=0.001)

31 | for epoch in range(10):

32 | for i, data in enumerate(data_loader_train):

33 | images, labels = data

34 | images, labels = images.cuda(), labels.cuda()

35 | opt.zero_grad()

36 | outputs = net(images)

37 | loss = criterion(outputs, labels)

38 | loss.backward()

39 | opt.step()

40 | if i % 10 == 0:

41 | print("loss: {}".format(loss.item()))

42 |

43 | # 指定一个device 保存模型

44 | if rank == 0:

45 | torch.save(net, "my_net.pth")

46 |

47 | if __name__ == "__main__":

48 | size = 1

49 | processes = []

50 | # for 循环启动进程

51 | for rank in range(size):

52 | p = Process(target=main, args=(rank,))

53 | p.start()

54 | processes.append(p)

55 |

56 | # join 起来

57 | for p in processes:

58 | p.join()

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/spawn_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.distributed as dist

3 | import torch.multiprocessing as mp

4 | import torch.nn as nn

5 | import torch.optim as optim

6 | from torch.nn.parallel import DistributedDataParallel as DDP

7 | import os

8 |

9 | # rank 表示当前节点的编号,从0开始,在分布式训练中,每个计算节点有一个唯一的rank编号;

10 | # rank 编号用于区分不同的节点, rank 可以通过 torch.distributed.get_rank()来获取

11 | # world_size 表示分布式训练中计算节点的总数,包括主节点和工作节点,可以通过torch.distributed.get_world_size()来获取;

12 | # 通过rank 和world_size 这两个参数,不同的计算节点可以进行通信和协调,共同完成模型训练的任务;

13 | def example(rank, world_size):

14 | # create default process group

15 | import pdb

16 | pdb.set_trace()

17 | print("=========rank: {}, world_size: {}".format(rank, world_size))

18 | dist.init_process_group("gloo", rank=rank, world_size=world_size)

19 | # create local model

20 | model = nn.Linear(10, 10).to(rank) #分发到不同的节点

21 | # construct DDP model

22 | ddp_model = DDP(model, device_ids=[rank]) #拿到不同节点的model

23 | # define loss function and optimizer

24 | loss_fn = nn.MSELoss()

25 | optimizer = optim.SGD(ddp_model.parameters(), lr=0.001)

26 |

27 | # forward pass

28 | outputs = ddp_model(torch.randn(20, 10).to(rank))

29 | labels = torch.randn(20, 10).to(rank)

30 | # backward pass

31 | loss_fn(outputs, labels).backward()

32 | # update parameters

33 | optimizer.step()

34 |

35 | def main():

36 | world_size = 1

37 | mp.spawn(example,

38 | args=(world_size,),

39 | nprocs=world_size,

40 | join=True)

41 |

42 | if __name__=="__main__":

43 | # Environment variables which need to be

44 | # set when using c10d's default "env"

45 | # initialization mode.

46 | '''

47 | 在进行分布式训练时,需要进行进程间通信来实现数据的交换和同步。为了使不同进程能够相互通信,需要指定一个进程作为主节点,其他进程则连接到主节点。

48 | os.environ["MASTER_ADDR"]和os.environ["MASTER_PORT"]就是用来指定主节点地址和端口的环境变量。

49 | '''

50 | os.environ["MASTER_ADDR"] = "localhost"

51 | os.environ["MASTER_PORT"] = "29500"

52 | main()

53 | print("run ddp_hello.py successfully !!!")

54 |

--------------------------------------------------------------------------------

/11-distribute_guide/ddp_demo/torchrun.md:

--------------------------------------------------------------------------------

1 | #

2 |

--------------------------------------------------------------------------------

/11-distribute_guide/deep_speed/README.md:

--------------------------------------------------------------------------------

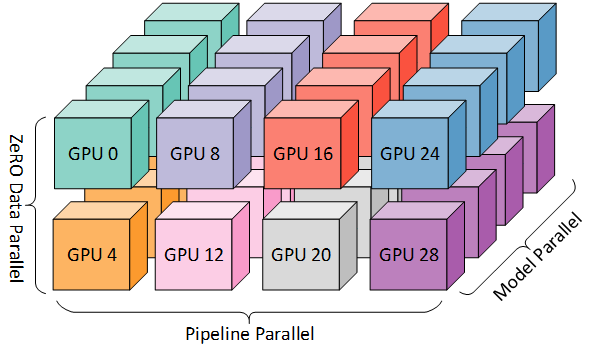

1 | # 概述

2 | DeepSpeed团队通过将

3 | - DeepSpeed库中的ZeRO分片(ZeRO sharding)、数据并行(Data Parallelism)、管道并行(也称流水线并行,Pipeline Parallelism)

4 | - 与Megatron-LM中的张量并行(Tensor Parallelism,可以理解为模型并行的一种)相结合;

5 | - 开发了一种基于3D并行的实现,这就是Megatron-Deepspeed,它使得千亿级参数量以上的大规模语言模型比如BLOOM的分布式训练变得更简单、高效和有效。

6 |

7 | # 3D并行

8 |

9 | - 数据并行 (Data Parallelism,DP) - 相同的设置和模型被复制多份,每份每次都被馈送不同的一份数据。处理是并行完成的,所有份在每个训练步结束时同步

10 | - 张量并行 (Tensor Parallelism,TP) - 每个张量都被分成多个块,因此张量的每个分片都位于其指定的 GPU 上,而不是让整个张量驻留在单个 GPU 上。在处理过程中,每个分片在不同的 GPU 上分别并行处理,结果在步骤结束时同步。这就是所谓的水平并行,因为是做的水平拆分

11 | - 流水线并行 (Pipeline Parallelism,PP) - 模型在多个 GPU 上垂直 (即按层) 拆分,因此只有一个或多个模型层放置在单个 GPU 上。每个 GPU 并行处理流水线的不同阶段,并处理 batch 的一部分数据

12 | - 零冗余优化器 (Zero Redundancy Optimizer,ZeRO) - 也执行与 TP 相类似的张量分片,但整个张量会及时重建以进行前向或反向计算,因此不需要修改模型。它还支持各种卸载技术以补偿有限的 GPU 内存

13 |

14 | # 参考链接:

15 | https://huggingface.co/blog/zh/bloom-megatron-deepspeed

16 |

--------------------------------------------------------------------------------

/11-distribute_guide/dp_demo/README.md:

--------------------------------------------------------------------------------

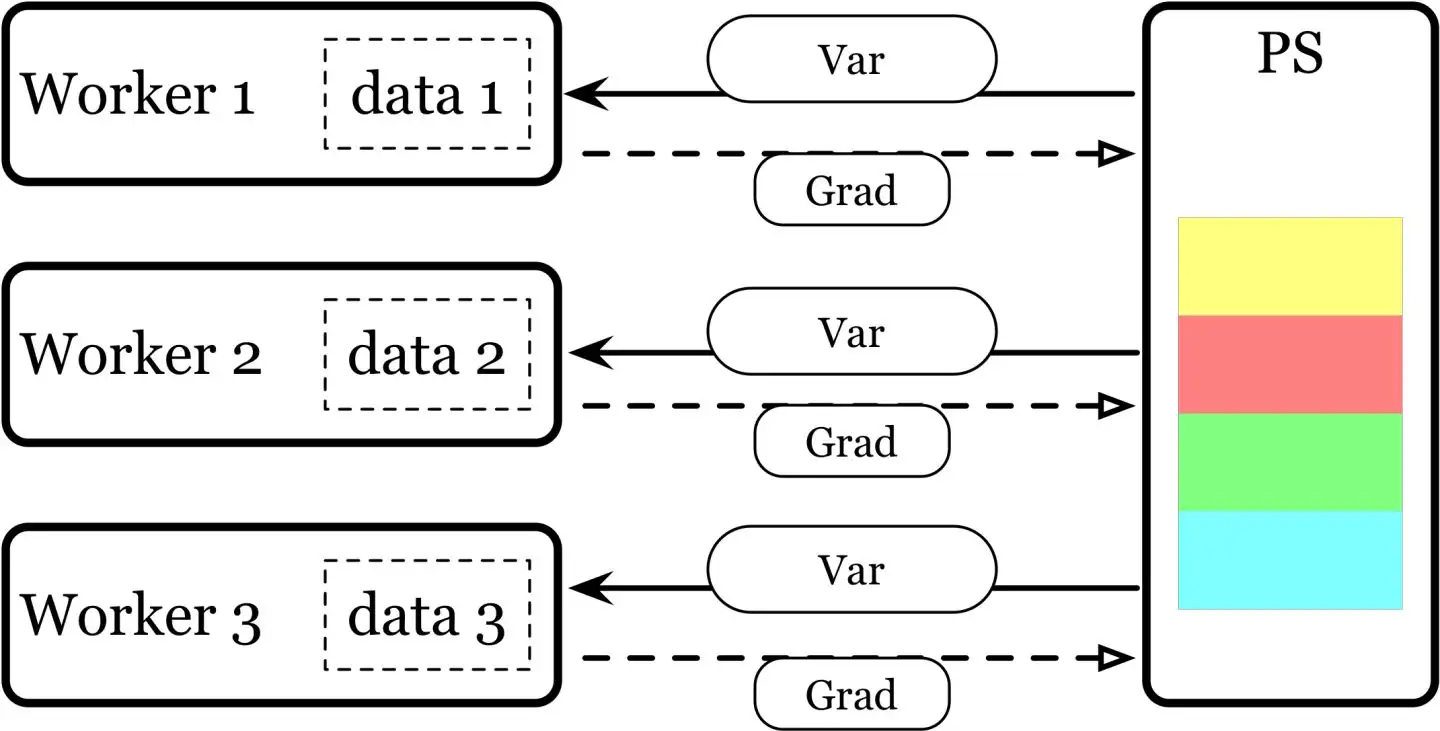

1 | # 1. DP(DataParalle)Summary

2 |

3 | ## 数据并行的概念

4 | 当一张 GPU 可以存储一个模型时,可以采用数据并行得到更准确的梯度或者加速训练:

5 | 即每个 GPU 复制一份模型,将一批样本分为多份输入各个模型并行计算。

6 | 因为求导以及加和都是线性的,数据并行在数学上也有效。

7 |

8 | ## DP原理及步骤

9 | - Parameter Server 架构 --> 单进程 多线程的方式 --> 只能在单机多卡上使用;

10 | - DP 基于单机多卡,所有设备都负责计算和训练网络;

11 | - 除此之外, device[0] (并非 GPU 真实标号而是输入参数 device_ids 首位) 还要负责整合梯度,更新参数。

12 | - 大体步骤:

13 | 1. 各卡分别计算损失和梯度;

14 | 2. 所有梯度整合到 device[0];

15 | 3. device[0] 进行参数更新,其他卡拉取 device[0] 的参数进行更新;

16 |

17 |

18 |

19 |

20 | # 2. code implement

21 | ## pytorch 相关源码

22 | ```python

23 | import torch.nn as nn

24 | model = nn.DataParallel(model) # 只需要将原来单卡的 module 用 DP 改成多卡

25 | class DataParallel(Module):

26 | ```

27 |

28 | ## train mode use pytorch DP

29 | **运行 dp_hello.py**

30 | ```shell

31 | python dp_hello.py

32 |

33 | output:

34 | >>> output: Let's use 2 GPUs!

35 | ```

36 |

37 | **运行 dp_demo.py**

38 | ```shell

39 | python dp_demo.py

40 |

41 | result:

42 | >>> data shape: torch.Size([64, 1, 28, 28])

43 | >>> =============x shape: torch.Size([32, 1, 28, 28])

44 | >>> =============x shape: torch.Size([32, 1, 28, 28])

45 | ```

46 |

47 | # 3. DP 的优缺点

48 | - 负载不均衡:device[0] 负载大一些;

49 | - 通信开销大;

50 | - 单进程;

51 | - Global Interpreter Lock (GIL)全局解释器锁,简单来说就是,一个 Python 进程只能利用一个 CPU kernel,

52 | 即单核多线程并发时,只能执行一个线程。考虑多核,多核多线程可能出现线程颠簸 (thrashing) 造成资源浪费,

53 | 所以 Python 想要利用多核最好是多进程。

54 |

55 | # 4. [references]

56 | 1. [pytorch 源码](https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/data_parallel.py)

57 | 2. [torch.nn.DataParallel](https://pytorch.org/docs/stable/generated/torch.nn.DataParallel.html?highlight=data+parallel#torch.nn.DataParallel)

58 | 3. [代码参考链接](https://pytorch.org/tutorials/beginner/blitz/data_parallel_tutorial.html#create-model-and-dataparallel)

59 | 4. [DP 和 DDP](https://link.zhihu.com/?target=https%3A//pytorch.org/docs/stable/notes/cuda.html%3Fhighlight%3Dbuffer)

60 |

--------------------------------------------------------------------------------

/11-distribute_guide/dp_demo/dp_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.optim as optim

4 | # import torch.distributed as dist

5 | import torchvision.datasets as datasets

6 | import torchvision.transforms as transforms

7 |

8 | # 定义分布式环境

9 | # dist.init_process_group(backend="gloo", rank=0, world_size=2)

10 |

11 | USE_CUDA = torch.cuda.is_available()

12 | device = torch.device("cuda:0" if USE_CUDA else "cpu")

13 |

14 | # 加载数据集

15 | train_dataset = datasets.MNIST(root="./data", train=True, transform=transforms.ToTensor(), download=True)

16 | # train_sampler = torch.utils.data.distributed.DistributedSampler(train_dataset, num_replicas=2, rank=0) # update num_replicas to gpus num

17 | # train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=False, sampler=train_sampler)

18 | train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=False)

19 |

20 | # 定义模型

21 | class Net(nn.Module):

22 | def __init__(self):

23 | super(Net, self).__init__()

24 | self.fc1 = nn.Linear(784, 512)

25 | self.fc2 = nn.Linear(512, 10)

26 | self.relu = nn.ReLU()

27 |

28 | def forward(self, x):

29 | print("=============x shape: ", x.shape)

30 | x = x.view(-1, 784)

31 | x = self.relu(self.fc1(x))

32 | x = self.fc2(x)

33 | return x

34 |

35 | model = Net()

36 |

37 | # 将模型复制到多个GPU上

38 | model = nn.DataParallel(model).to(device)

39 |

40 | # 定义损失函数和优化器

41 | content = nn.CrossEntropyLoss()

42 | optimizer = optim.SGD(model.parameters(), lr=0.01)

43 |

44 | # 训练模型

45 | for epoch in range(10):

46 | # train_sampler.set_epoch(epoch)

47 | for data, target in train_loader:

48 | print("data shape: ", data.shape)

49 | data = data.to(device)

50 | target = target.to(device)

51 | optimizer.zero_grad()

52 | output = model(data)

53 | loss = content(output, target)

54 | loss.backward()

55 | optimizer.step()

56 |

57 | print("Epoch {} completed".format(epoch))

--------------------------------------------------------------------------------

/11-distribute_guide/dp_demo/dp_hello.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.optim as optim

4 | from torch.autograd import Variable

5 | from torch.utils.data import Dataset, DataLoader

6 |

7 |

8 | class RandomDataset(Dataset):

9 | def __init__(self, size, length):

10 | self.len = length

11 | self.data = torch.randn(length, size)

12 |

13 | def __getitem__(self, index):

14 | return self.data[index]

15 |

16 | def __len__(self):

17 | return self.len

18 |

19 |

20 | class Model(nn.Module):

21 | def __init__(self, input_size, output_size):

22 | super(Model, self).__init__()

23 | self.fc = nn.Linear(input_size, output_size)

24 | self.sigmoid = nn.Sigmoid()

25 | # self.modules = [self.fc, self.sigmoid]

26 |

27 | def forward(self, input):

28 | return self.sigmoid(self.fc(input))

29 |

30 |

31 | if __name__ == '__main__':

32 | # Parameters and DataLoaders

33 | input_size = 5

34 | output_size = 1

35 | batch_size = 30

36 | data_size = 100

37 |

38 | rand_loader = DataLoader(dataset=RandomDataset(input_size, data_size),

39 | batch_size=batch_size, shuffle=True)

40 |

41 | model = Model(input_size, output_size)

42 | if torch.cuda.device_count() > 1:

43 | print("Let's use", torch.cuda.device_count(), "GPUs!")

44 | model = nn.DataParallel(model).cuda()

45 |

46 | optimizer = optim.SGD(params=model.parameters(), lr=1e-3)

47 | cls_criterion = nn.BCELoss()

48 |

49 | for data in rand_loader:

50 | targets = torch.empty(data.size(0)).random_(2).view(-1, 1)

51 |

52 | if torch.cuda.is_available():

53 | input = Variable(data.cuda())

54 | with torch.no_grad():

55 | targets = Variable(targets.cuda())

56 | else:

57 | input = Variable(data)

58 | with torch.no_grad():

59 | targets = Variable(targets)

60 |

61 | output = model(input)

62 |

63 | optimizer.zero_grad()

64 | loss = cls_criterion(output, targets)

65 | loss.backward()

66 | optimizer.step()

67 |

--------------------------------------------------------------------------------

/11-distribute_guide/dp_demo/image.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Elvin-Ma/pytorch_guide/da12d191ab1dae88e67c2c30246be9f29492f4c5/11-distribute_guide/dp_demo/image.png

--------------------------------------------------------------------------------

/11-distribute_guide/megatron/README.md:

--------------------------------------------------------------------------------

1 |

2 | # megatron 论文下载

3 | https://arxiv.org/pdf/1909.08053.pdf

4 |

5 | # 所有并行优化手段

6 | https://blog.csdn.net/weixin_42764932/article/details/131007832

7 |

8 | # 参考文献

9 | [中文简介](https://zhuanlan.zhihu.com/p/366906920)

10 | [中文简介](https://zhuanlan.zhihu.com/p/622212228)

11 |

--------------------------------------------------------------------------------

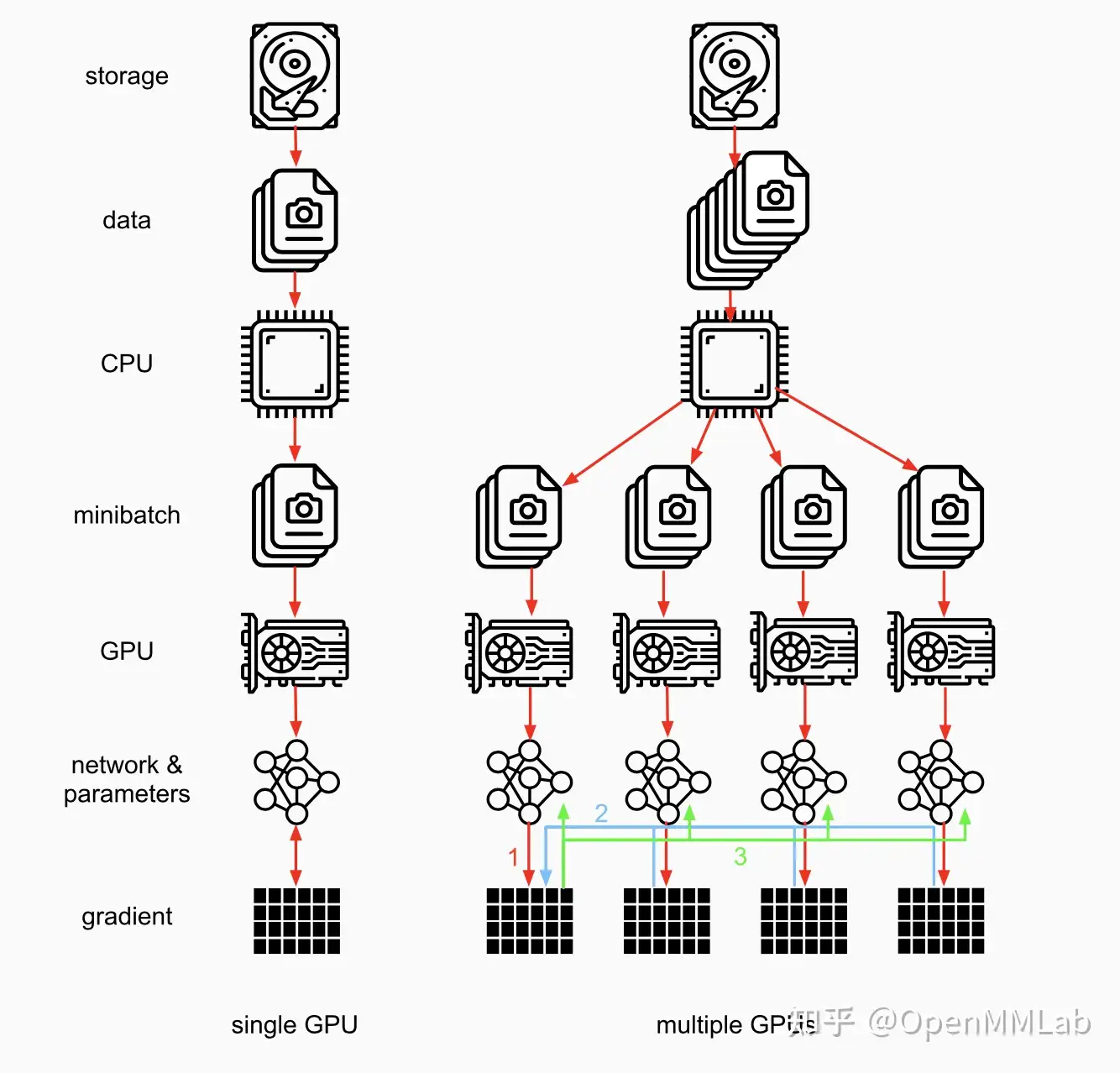

/11-distribute_guide/model_parallel/README.md:

--------------------------------------------------------------------------------

1 | # pytorch model parallel summary

2 |

3 |

4 |

5 | ## 数据并行(DataParallel)的优缺点

6 | - 优点:将相同的模型复制到所有GPU,其中每个GPU消耗输入数据的不同分区,可以极大地加快训练过程。

7 | - 缺点:不适用于某些模型太大而无法容纳单个GPU的用例。

8 |

9 | ## 模型并行(model parallel)介绍

10 | - *模型并行的高级思想是将模型的不同子网(sub net)放置到不同的设备上,并相应地实现该 forward方法以在设备之间移动中间输出。*

11 | - *由于模型的一部分只能在任何单个设备上运行,因此一组设备可以共同为更大的模型服务。*

12 | - *在本文中,我们不会尝试构建庞大的模型并将其压缩到有限数量的GPU中。*

13 | - *取而代之的是,本文着重展示模型并行的思想。读者可以将这些想法应用到实际应用中。*

14 |

15 | # 代码展示

16 | ```shell

17 | python model_parallel_demo.py

18 | ```

19 |

20 | ## 性能展示

21 | **没有pipline时**

22 |

23 |

24 | **有pipline时**

25 |

26 |

27 | **异步执行pytorch kernel**

28 | As PyTorch launches CUDA operations asynchronously,

29 | the implementation does not need to spawn multiple threads to achieve concurrency.

30 |

31 | ## rpc:

32 | pytorch官方model parallel 库,类似与DDP,帮助我们完成模型并行的训练。

33 | [参考链接](https://pytorch.org/tutorials/intermediate/rpc_tutorial.html)

34 |

35 | ## pytorch 提供的模型并行方案

36 | - 模型划分不容易;

37 | - 相互沟通成本高;

38 | - bubble

39 |

40 | # [references]

41 | [参考文献1-pytorch](https://pytorch.org/tutorials/intermediate/model_parallel_tutorial.html)

42 | [参考文献2-pytorchRPC](https://pytorch.org/tutorials/intermediate/rpc_tutorial.html)

43 | [参考文献3](https://pytorch.org/tutorials/intermediate/rpc_param_server_tutorial.html)

44 | [参考文献4](https://juejin.cn/post/7043601075307282462)

45 | [参考文献5](https://pytorch.org/tutorials/intermediate/dist_pipeline_parallel_tutorial.html)

46 |

--------------------------------------------------------------------------------

/11-distribute_guide/model_parallel/model_parallel_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.optim as optim

4 |

5 | class ToyModel(nn.Module):

6 | def __init__(self):

7 | super(ToyModel, self).__init__()

8 | self.net1 = torch.nn.Linear(10, 10).to('cuda:0') # net1 --> gpu0 上

9 | self.relu = torch.nn.ReLU() # 没有任何可学习参数所以不需要to

10 | self.net2 = torch.nn.Linear(10, 5).to('cuda:0') # net2 --> gpu1 上

11 |

12 | def forward(self, x):

13 | x = x.to('cuda:0') # h2d : gpu0 上;

14 | x = self.net1(x) # 运算

15 | x = self.relu(x) # relu操作 x --> gpu0 上

16 | x = x.to('cuda:0') # 把 x --> gpu1 上

17 | x = self.net2(x) # 在 GPU1 上执行此操作

18 | return x # x 在gpu1 --> cuda:1

19 |

20 | if __name__ == "__main__":

21 | print("start to run model_parallel_demo.py !!!")

22 | model = ToyModel() # 实例化一个模型

23 | loss_fn = nn.MSELoss() # 损失函数定义

24 | optimizer = optim.SGD(model.parameters(), lr=0.001) # 定义优化器

25 |

26 | optimizer.zero_grad() # 梯度清0

27 | outputs = model(torch.randn(20, 10)) # forward 过程

28 | labels = torch.randn(20, 5).to('cuda:0') # label --> cuda:1 上

29 | loss = loss_fn(outputs, labels) # 计算损失

30 | loss.backward() # loss 的反向传播

31 | optimizer.step() # 更新权重

32 | print("run model_parallel_demo.py successfully !!!")

33 |

34 |

35 |

--------------------------------------------------------------------------------

/11-distribute_guide/model_parallel/model_parallel_demo2.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 | import torch.optim as optim

4 | from torchvision.models.resnet import ResNet, Bottleneck

5 |

6 | num_classes = 1000

7 |

8 | class ModelParallelResNet50(ResNet):

9 | def __init__(self, *args, **kwargs):

10 | super(ModelParallelResNet50, self).__init__(

11 | Bottleneck, [3, 4, 6, 3], num_classes=num_classes, *args, **kwargs)

12 |

13 | self.seq1 = nn.Sequential(

14 | self.conv1,

15 | self.bn1,

16 | self.relu,

17 | self.maxpool,

18 |

19 | self.layer1,

20 | self.layer2

21 | ).to('cuda:0')

22 |

23 | self.seq2 = nn.Sequential(

24 | self.layer3,

25 | self.layer4,

26 | self.avgpool,

27 | ).to('cuda:1')

28 |

29 | self.fc.to('cuda:1')

30 |

31 | def forward(self, x):

32 | x = self.seq2(self.seq1(x).to('cuda:1'))

33 | return self.fc(x.view(x.size(0), -1))

34 |

35 | class PipelineParallelResNet50(ModelParallelResNet50):

36 | def __init__(self, split_size=20, *args, **kwargs):

37 | super(PipelineParallelResNet50, self).__init__(*args, **kwargs)

38 | self.split_size = split_size

39 |

40 | def forward(self, x):

41 | splits = iter(x.split(self.split_size, dim=0)) # 对batch 维度进行split

42 | s_next = next(splits)

43 | s_prev = self.seq1(s_next) # s_prev --> cuda:0

44 | s_prev = s_prev.to('cuda:1') # s_prev --> cuda:0

45 | ret = []

46 |

47 | for s_next in splits:

48 | # A. ``s_prev`` runs on ``cuda:1``

49 | s_prev = self.seq2(s_prev) # s_prev: cuda:1

50 | ret.append(self.fc(s_prev.view(s_prev.size(0), -1)))

51 |

52 | # B. ``s_next`` runs on ``cuda:0``, which can run concurrently with A

53 | s_prev = self.seq1(s_next).to('cuda:1') # 执行seq1

54 |

55 | s_prev = self.seq2(s_prev)

56 | ret.append(self.fc(s_prev.view(s_prev.size(0), -1)))

57 |

58 | return torch.cat(ret)

59 |

60 |

61 | # setup = "model = PipelineParallelResNet50()"

62 | # pp_run_times = timeit.repeat(

63 | # stmt, setup, number=1, repeat=num_repeat, globals=globals())

64 | # pp_mean, pp_std = np.mean(pp_run_times), np.std(pp_run_times)

65 |

66 | # plot([mp_mean, rn_mean, pp_mean],

67 | # [mp_std, rn_std, pp_std],

68 | # ['Model Parallel', 'Single GPU', 'Pipelining Model Parallel'],

69 | # 'mp_vs_rn_vs_pp.png')

70 |

71 | # import torchvision.models as models

72 |

73 | # num_batches = 3

74 | # batch_size = 120

75 | # image_w = 128

76 | # image_h = 128

77 |

78 |

79 | # def train(model):

80 | # model.train(True)

81 | # loss_fn = nn.MSELoss()

82 | # optimizer = optim.SGD(model.parameters(), lr=0.001)

83 |

84 | # one_hot_indices = torch.LongTensor(batch_size) \

85 | # .random_(0, num_classes) \

86 | # .view(batch_size, 1)

87 |

88 | # for _ in range(num_batches):

89 | # # generate random inputs and labels

90 | # inputs = torch.randn(batch_size, 3, image_w, image_h)

91 | # labels = torch.zeros(batch_size, num_classes) \

92 | # .scatter_(1, one_hot_indices, 1)

93 |

94 | # # run forward pass

95 | # optimizer.zero_grad()

96 | # outputs = model(inputs.to('cuda:0'))

97 |

98 | # # run backward pass

99 | # labels = labels.to(outputs.device)

100 | # loss_fn(outputs, labels).backward()

101 | # optimizer.step()

--------------------------------------------------------------------------------

/11-distribute_guide/tensor_parallelism/README.md:

--------------------------------------------------------------------------------

1 | # PyTorch Tensor Parallelism for distributed training

2 |

3 | # Megatron-LM

4 | [Megatron-LM](https://github.com/NVIDIA/Megatron-LM#gpt-3-example)

5 |

6 | This example demonstrates SPMD Megatron-LM style tensor parallel by using

7 | PyTorch native Tensor Parallelism APIs, which include:

8 |

9 | 1. High-level APIs for module-level parallelism with a dummy MLP model.

10 | 2. Model agnostic ops for `DistributedTensor`, such as `Linear` and `RELU`.

11 | 3. A E2E demo of tensor parallel for a given toy model (Forward/backward + optimization).

12 |

13 | More details about the design can be found:

14 | https://github.com/pytorch/pytorch/issues/89884

15 |

16 | ```

17 | pip install -r requirements.txt

18 | python example.py

19 | ```

20 |

--------------------------------------------------------------------------------

/11-distribute_guide/tensor_parallelism/example.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | import torch

4 | import torch.distributed as dist

5 | import torch.multiprocessing as mp

6 | import torch.nn as nn

7 |

8 | TP_AVAILABLE = False

9 | try:

10 | from torch.distributed._tensor import (

11 | DeviceMesh,

12 | )

13 | from torch.distributed.tensor.parallel import (

14 | PairwiseParallel,

15 | parallelize_module,

16 | )

17 | TP_AVAILABLE = True

18 | except BaseException as e:

19 | pass

20 |

21 |

22 | """

23 | This is the script to test Tensor Parallel(TP) on a toy model in a

24 | Megetron-LM SPMD style. We show an E2E working flow from forward,

25 | backward and optimization.

26 |

27 | More context about API designs can be found in the design:

28 |

29 | https://github.com/pytorch/pytorch/issues/89884.

30 |

31 | And it is built on top of Distributed Tensor which is proposed in:

32 |

33 | https://github.com/pytorch/pytorch/issues/88838.

34 |

35 | We use the example of two `nn.Linear` layers with an element-wise `nn.RELU`

36 | in between to show an example of Megatron-LM, which was proposed in paper:

37 |

38 | https://arxiv.org/abs/1909.08053.

39 |

40 | The basic idea is that we parallelize the first linear layer by column

41 | and also parallelize the second linear layer by row so that we only need

42 | one all reduce in the end of the second linear layer.

43 |

44 | We can speed up the model training by avoiding communications between

45 | two layers.

46 |

47 | To parallelize a nn module, we need to specify what parallel style we want

48 | to use and our `parallelize_module` API will parse and parallelize the modules

49 | based on the given `ParallelStyle`. We are using this PyTorch native Tensor

50 | Parallelism APIs in this example to show users how to use them.

51 | """

52 |

53 |

54 | def setup(rank, world_size):

55 | os.environ['MASTER_ADDR'] = 'localhost'

56 | os.environ['MASTER_PORT'] = '12355'

57 |

58 | # initialize the process group

59 | dist.init_process_group("nccl", rank=rank, world_size=world_size)

60 | torch.cuda.set_device(rank)

61 |

62 | def cleanup():

63 | dist.destroy_process_group()

64 |

65 |

66 | class ToyModel(nn.Module):

67 | def __init__(self):

68 | super(ToyModel, self).__init__()

69 | self.net1 = nn.Linear(10, 32)

70 | self.relu = nn.ReLU()

71 | self.net2 = nn.Linear(32, 5)

72 |

73 | def forward(self, x):

74 | return self.net2(self.relu(self.net1(x)))

75 |

76 |

77 | def demo_tp(rank, args):

78 | """

79 | Main body of the demo of a basic version of tensor parallel by using

80 | PyTorch native APIs.

81 | """

82 | print(f"Running basic Megatron style TP example on rank {rank}.")

83 | setup(rank, args.world_size)

84 | # create a sharding plan based on the given world_size.

85 | device_mesh = DeviceMesh(

86 | "cuda",

87 | torch.arange(args.world_size),

88 | )

89 |

90 | # create model and move it to GPU with id rank

91 | model = ToyModel().cuda(rank)

92 | # Create a optimizer for the parallelized module.

93 | LR = 0.25

94 | optimizer = torch.optim.SGD(model.parameters(), lr=LR)

95 | # Parallelize the module based on the given Parallel Style.

96 | model = parallelize_module(model, device_mesh, PairwiseParallel())

97 |

98 | # Perform a num of iterations of forward/backward

99 | # and optimizations for the sharded module.

100 | for _ in range(args.iter_nums):

101 | inp = torch.rand(20, 10).cuda(rank)

102 | output = model(inp)

103 | output.sum().backward()

104 | optimizer.step()

105 |

106 | cleanup()

107 |

108 |

109 | def run_demo(demo_fn, args):

110 | mp.spawn(demo_fn,

111 | args=(args,),

112 | nprocs=args.world_size,

113 | join=True)

114 |

115 |

116 | if __name__ == "__main__":

117 | n_gpus = torch.cuda.device_count()

118 | parser = argparse.ArgumentParser()

119 | # This is passed in via cmd

120 | parser.add_argument("--world_size", type=int, default=n_gpus)

121 | parser.add_argument("--iter_nums", type=int, default=10)

122 | args = parser.parse_args()

123 | # The main entry point is called directly without using subprocess

124 | if n_gpus < 2:

125 | print("Requires at least 2 GPUs to run.")

126 | elif not TP_AVAILABLE:

127 | print(

128 | "PyTorch doesn't have Tensor Parallelism available,"

129 | " need nightly build."

130 | )

131 | else:

132 | run_demo(demo_tp, args)

133 |

134 |

--------------------------------------------------------------------------------

/11-distribute_guide/tensor_parallelism/requirements.txt:

--------------------------------------------------------------------------------

1 | # Python dependencies required for running the example

2 |

3 | --pre

4 | --extra-index-url https://download.pytorch.org/whl/nightly/cu113

5 | --extra-index-url https://download.pytorch.org/whl/nightly/cu116

6 | torch >= 1.14.0.dev0; sys_platform == "linux"

--------------------------------------------------------------------------------

/11-distribute_guide/zero/READEME.md:

--------------------------------------------------------------------------------

1 | # 1. Zero(零冗余优化)

2 | - 它是DeepSpeed这一分布式训练框架的核心,被用来解决大模型训练中的显存开销问题。ZeRO的思想就是用通讯换显存。

3 | - ZeRO-1只对优化器状态进行分区。

4 | - ZeRO-2除了对优化器状态进行分区外,还对梯度进行分区,

5 | - ZeRO-3对所有模型状态进行分区。

6 |

7 | # 2. zero offload

8 |

9 |

10 | # 3 zero-infinity

11 | 1. ZeRO(ZeRO-3)的第3阶段允许通过跨数据并行过程划分模型状态来消除数据并行训练中的所有内存冗余。

12 | 2. Infinity Offload Engine是一个新颖的数据卸载库,通过将分区模型状态卸载到比GPU内存大得多的CPU或NVMe设备内存中,可以完全利用现代异构内存体系结构。

13 | 3.带有CPU卸载的激活检查点可以减少激活内存占用空间,在ZeRO-3和Infinity Offload Engine解决了模型状态所需的内存之后,这可能成为GPU上的内存瓶颈。

14 | 4.以内存为中心的运算符平铺,这是一种新颖的计算重新调度技术,可与ZeRO数据访问和通信调度配合使用,可减少难以置信的庞大单个层的内存占用,

15 | 这些单个层可能太大而无法容纳GPU内存,即使是一个时间。

16 |

17 | # 4. zero vs 模型并行

18 | - 知道模型并行的朋友,可能会想,既然ZeRO都把参数W给切了,那它应该是个模型并行呀?为什么要归到数据并行里呢?

19 | - 其实ZeRO是模型并行的形式,数据并行的实质。

20 | - 模型并行,是指在forward和backward的过程中,我只需要用自己维护的那块W来计算就行。即同样的输入X,每块GPU上各算模型的一部分,最后通过某些方式聚合结果。

21 | - 但对ZeRO来说,它做forward和backward的时候,是需要把各GPU上维护的W聚合起来的,即本质上还是用完整的W进行计算。它是不同的输入X,完整的参数W,最终再做聚合。

22 | - 因为下一篇要写模型并行Megatron-LM,因此现在这里罗列一下两者的对比。

23 |

24 | # 4. 参考文献:

25 | [zero 论文1](https://arxiv.org/pdf/1910.02054.pdf)

26 | [zero-offload](https://arxiv.org/pdf/2101.06840.pdf)

27 | [zero-infinity](https://arxiv.org/pdf/2104.07857.pdf)

28 | [zero-offload]https://www.usenix.org/conference/atc21/presentation/ren-jie

29 | [deepspeed1](https://www.microsoft.com/en-us/research/blog/deepspeed-extreme-scale-model-training-for-everyone/)

30 | [deepspeed2](https://www.microsoft.com/en-us/research/blog/ZeRO-deepspeed-new-system-optimizations-enable-training-models-with-over-100-billion-parameters/)

31 | [zero++](https://www.microsoft.com/en-us/research/publication/zero-extremely-efficient-collective-communication-for-giant-model-training/)

32 | [zero++ 中文](https://zhuanlan.zhihu.com/p/641297077)

33 | - DeepSpeed 在 https://github.com/microsoft/DeepSpeed/pull/3784 引入了 zero++的支持

34 | [中文简介](https://zhuanlan.zhihu.com/p/513571706)

35 |

--------------------------------------------------------------------------------

/12-pytorch2_dynomo/dynamo_hello.py:

--------------------------------------------------------------------------------

1 | import torch

2 | from torch._dynamo import optimize #

3 | import torch._inductor.config

4 |

5 | torch._inductor.config.debug = True

6 | torch._dynamo.config.suppress_errors = True

7 |

8 | # 对这个function 进行加速

9 | def fn(x):

10 | a = torch.sin(x).cuda()

11 | b = torch.sin(a).cuda()

12 | return b

13 |

14 | new_fn = optimize("inductor")(fn) # new_fn

15 | input_tensor = torch.randn(10000).to(device="cuda:0")

16 | a = new_fn(input_tensor)

17 | print("run dynamo_hell.py successfully !!!")

18 |

--------------------------------------------------------------------------------

/12-pytorch2_dynomo/model_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import time

3 | from torchvision.models import resnet18

4 |

5 | # Returns the result of running `fn()` and the time it took for `fn()` to run,

6 | # in seconds. We use CUDA events and synchronization for the most accurate

7 | # measurements.

8 | def timed(fn):

9 | # start = torch.cuda.Event(enable_timing=True)

10 | # end = torch.cuda.Event(enable_timing=True)

11 | a = time.time()

12 | # start.record()

13 | result = fn()

14 | # end.record()

15 | b = time.time()

16 | # torch.cuda.synchronize()

17 | return result, (b-a)

18 |

19 | # Generates random input and targets data for the model, where `b` is

20 | # batch size.

21 | def generate_data(b):

22 | return (

23 | torch.randn(b, 3, 128, 128).to(torch.float32).cuda(),

24 | torch.randint(1000, (b,)).cuda(),

25 | )

26 |

27 | def init_model():

28 | return resnet18().to(torch.float32).cuda()

29 |

30 | def evaluate(mod, inp):

31 | return mod(inp)

32 |

33 | if __name__ == "__main__":

34 |

35 | model = init_model()

36 |

37 | # # Reset since we are using a different mode.

38 | import torch._dynamo

39 | torch._dynamo.reset()

40 |

41 | evaluate_opt = torch.compile(evaluate, mode="reduce-overhead")

42 |

43 | # 验证一次

44 | # inp = generate_data(16)[0]

45 | # print("eager:", timed(lambda: evaluate(model, inp))[1])

46 | # print("compile:", timed(lambda: evaluate_opt(model, inp))[1])

47 |

48 | N_ITERS = 10

49 |

50 | eager_times = []

51 | for i in range(N_ITERS):

52 | inp = generate_data(16)[0]

53 | _, eager_time = timed(lambda: evaluate(model, inp))

54 | eager_times.append(eager_time)

55 | print(f"eager eval time {i}: {eager_time}")

56 |

57 | print("~" * 10)

58 |

59 | compile_times = []

60 | for i in range(N_ITERS):

61 | inp = generate_data(16)[0]

62 | _, compile_time = timed(lambda: evaluate_opt(model, inp))

63 | compile_times.append(compile_time)

64 | print(f"compile eval time {i}: {compile_time}")

65 | print("~" * 10)

66 |

67 | import numpy as np

68 | eager_med = np.median(eager_times)

69 | compile_med = np.median(compile_times)

70 | speedup = eager_med / compile_med

71 | print(f"(eval) eager median: {eager_med}, compile median: {compile_med}, speedup: {speedup}x")

72 | print("~" * 10)

73 |

--------------------------------------------------------------------------------

/12-pytorch2_dynomo/torch2_demo.py:

--------------------------------------------------------------------------------

1 | import torch

2 |

3 | def demo_1():

4 | def foo(x, y):

5 | a = torch.sin(x)

6 | b = torch.cos(x)

7 | return a + b

8 |

9 | opt_foo1 = torch.compile(foo)

10 | print(opt_foo1(torch.randn(10, 10), torch.randn(10, 10)))

11 |

12 | def demo_2():

13 | @torch.compile

14 | def opt_foo2(x, y):

15 | a = torch.sin(x)

16 | b = torch.cos(x)

17 | return a + b

18 | print(opt_foo2(torch.randn(10, 10), torch.randn(10, 10)))

19 |

20 | def demo_3():

21 | class MyModule(torch.nn.Module):

22 | def __init__(self):

23 | super().__init__()

24 | self.lin = torch.nn.Linear(100, 10)

25 |

26 | def forward(self, x):

27 | return torch.nn.functional.relu(self.lin(x))

28 |

29 | mod = MyModule()

30 | opt_mod = torch.compile(mod)

31 | print(opt_mod(torch.randn(10, 100)))

32 |

33 | if __name__ == "__main__":

34 | # demo_1()

35 | # demo_2()

36 | demo_3()

37 | print("run torch2_demo.py successfully !!!")

--------------------------------------------------------------------------------

/13-model_deploy_guide/cloud_deploy/README.md:

--------------------------------------------------------------------------------

1 | # 相关网址

2 | - [阿里云首页](https://www.aliyun.com/?utm_content=se_1013083955)

3 | - [机器学习平台pai](https://www.aliyun.com/product/bigdata/learn?spm=5176.28055625.J_3207526240.255.6a27154aXUTslL&scm=20140722.M_9003148._.V_1)

4 | - [机器学习平台pai帮助文档](https://help.aliyun.com/document_detail/69223.html?spm=5176.14066474.J_5834642020.6.71d5426as0DTyC)

5 | - [控制台部署说明](https://help.aliyun.com/document_detail/110985.html?spm=a2c4g.433127.0.0.5f5d5dffNclYQe#section-66n-18i-lmm)

6 | - [用python 部署](https://help.aliyun.com/document_detail/2261532.html?spm=a2c4g.2261500.0.0.3d535dff7DWrbQ)

7 |

8 | # PAI的部署方式EAS

9 | step1. 登录 EAS;

10 | step2. [python processor](https://help.aliyun.com/document_detail/130248.html?spm=a2c4g.113696.0.0.37b255caYgyZaT)

11 | step3. [所有参数说明](https://help.aliyun.com/document_detail/450525.html?spm=a2c4g.130248.0.0.7bf97788Oixfjd)

12 | step4. [服务调用](https://help.aliyun.com/document_detail/250807.html?spm=a2c4g.110984.0.0.51657baehuab6g)

13 | step5. [状态码说明](https://help.aliyun.com/document_detail/449809.html?spm=a2c4g.250807.0.0.153783b8bF8slo)

14 |

15 |

16 | # aliyun 三种部署方式

17 | - 镜像部署服务: 其依赖项打包成一个镜像文件,然后在目标环境中运行

18 | - 镜像部署AI-Web应用:将 AI 模型部署为服务,通过 API 接口提供服务,并使用容器镜像来提供 Web 界面。\n

19 | 这种方式需要将 AI 模型封装为 API 接口,然后使用 Flask、Django 等 Web 框架将接口暴露出来。

20 | 可以使用 Dockerfile 构建一个镜像,其中包含了 Flask、Django 等 Web 框架和 AI 模型服务。

21 | 然后将镜像上传到阿里云容器镜像服务中,并创建容器实例来运行镜像。

22 | - 模型+processor部署服务:另一种方式是将 AI 模型和处理器部署在同一个容器中,这种方式可以更好地控制模型和处理器的交互和数据流,可以提高系统的性能和可靠性。

23 | 可以使用 Dockerfile 构建一个镜像,其中包含了 AI 模型和处理器服务。然后将镜像上传到阿里云容器镜像服务中,并创建容器实例来运行镜像。

24 |

25 |

26 | # 我们采用自定义processor的方式

27 | [自定义processor](https://help.aliyun.com/document_detail/130248.html?spm=a2c4g.2250005.0.0.25121af2JsiwXd)

28 |

29 |

30 | # 事先准备:

31 | - aliyun 账号;

32 | - aliyun unix 服务器

33 | 登录阿里云官网,进入控制台页面:https://www.aliyun.com/

34 | 在控制台页面中,找到“产品与服务”菜单,选择“云服务器 ECS”。

35 | 在 ECS 页面中,单击“创建实例”按钮,进入创建实例页面。

36 | 在创建实例页面中,您需要选择实例的配置信息,包括实例规格、操作系统、网络等。您可以根据自己的实际需求进行选择。

37 | 完成配置后,单击“立即购买”按钮,确认订单信息并完成支付。

38 | 在支付完成后,您可以在 ECS 页面中找到您创建的实例,获取实例的公网 IP 地址和登录密码。

39 | 使用 SSH 工具(如 PuTTY)连接到您的 ECS 服务器,输入公网 IP 地址和登录密码即可登录。

40 | - 在aliyun ACR中创建一个保存镜像的hub;

41 | [aliyun ACR](https://help.aliyun.com/document_detail/257112.htm?spm=a2c4g.258246.0.0.2aea607bOIuVPJ)

42 | [容器镜像服务台](https://cr.console.aliyun.com/cn-shanghai/instance/dashboard)

43 | - 准备一个aliyun OSS 账户;

44 |

45 | # docker 镜像准备(模型、代码、数据全部放入到镜像中)

46 | [参考文献1](https://help.aliyun.com/document_detail/130248.html?spm=a2c4g.258246.0.0.2b127c54StN1RA)

47 | [参考文献2](https://help.aliyun.com/document_detail/258246.html?spm=a2c4g.468735.0.0.4ea15f29hGMxua)

48 | **操作步骤**

49 | - service docker start

50 | - 注册号个人的镜像服务:(设定命名空间、访问凭证)

51 | - 得到并登录aliyun image hub:sudo docker login --username=天才孤星000 registry.cn-shanghai.aliyuncs.com

52 | - 操作步骤:

53 | [详细操作步骤](https://cr.console.aliyun.com/repository/cn-shanghai/mtn_ai/test/details) --> 推送自己的镜像

54 | - 端口映射:主机的端口和容器内部的端口;

55 |

56 | # pai-eas部署

57 | - 编写processor 对应的 py 文件;不能叫 app.py

58 | - 将代码、模型传入 oss 对应文件中;

59 | - 配置编辑里 写上我们的 "data_image": *.v0.1

60 | - 连接测试:eas http sdk 三种测试;

61 |

62 | # 挂载

63 | **挂载都是将云存储服务挂载到服务器上,以便在服务器上像使用本地磁盘一样访问云存储中的数据。**

64 | [oss 挂载](https://help.aliyun.com/document_detail/153892.html?spm=a2c4g.11186623.6.750.2b03142bM5YPG3)

65 | [网页版教程](https://www.qycn.com/about/hd/5658.html)

66 |

67 | ##OSS挂载

68 | - OSS(Object Storage Service)是阿里云提供的对象存储服务。

69 | - OSS 挂载是将 OSS 存储桶(bucket)挂载到服务器上,以便在服务器上像使用本地磁盘一样访问 OSS 中的数据。

70 | - OSS 挂载支持 NFS 和 SMB 两种协议,可以通过在服务器上安装相应的客户端软件来实现挂载。

71 |

72 | ##NAS挂载

73 | - NAS(Network Attached Storage)是一种网络附加存储设备,可以提供文件级别的数据访问。

74 | - NAS 挂载是将 NAS 存储设备挂载到服务器上,以便在服务器上像使用本地磁盘一样访问 NAS 中的数据。

75 | - NAS 挂载支持 NFS、SMB 和 FTP 等多种协议,可以通过在服务器上安装相应的客户端软件来实现挂载。

76 |

77 | **镜像管理:**

78 | [镜像管理教程](https://help.aliyun.com/document_detail/213570.htm?spm=a2c4g.110985.0.0.6ee7167fYIeRzs#task-2074428)

79 | **自定义镜像:**

80 | [自定义镜像教程](https://help.aliyun.com/document_detail/258246.html?spm=a2c4g.465149.0.0.223c167fb7fVD3)

81 | **镜像拉取凭证**

82 | [凭证官方获取步骤](https://help.aliyun.com/document_detail/142247.html)

83 | **使用Python开发自定义Processor**

84 | [python Processor doc](https://help.aliyun.com/document_detail/130248.html?spm=a2c4g.69223.0.i1)

85 | **管理自己的镜像**

86 | [阿里云自己的镜像](https://cr.console.aliyun.com/cn-shanghai/instance/repositories)

87 |

88 | #aliyun docker 管理

89 | 1. 登录:docker login --username=*** registry.cn-shanghai.aliyuncs.com*

90 | 2. 查看:docker search registry.cn-hangzhou.aliyuncs.com//*

91 | *docker search registry.cn-hangzhou.aliyuncs.com/tensorflow/tensorflow*

92 | 3. 拉取:docker pull registry.cn-hangzhou.aliyuncs.com//:

93 | 4. 推送:docker push

94 |

95 | # RAM 是什么???

96 | - RAM(Resource Access Management)是阿里云提供的一种访问控制服务;

97 | - 它允许用户创建和管理多个用户身份(即RAM用户),并对这些用户的访问权限进行细粒度的控制;

98 | - RAM用户可以被视为与主账号(即阿里云账号)相对独立的子账号;

99 | - 它们可以拥有自己的登录密码和访问密钥,并且可以被授予访问阿里云各种资源的权限;

100 | - RAM用户常用于企业内部的权限管理和资源隔离,以及多人协作场景下的访问控制。

101 |

102 | # 部署调试的三种方式测试:

103 |

104 | 1. python 调用调试: [python sdk](https://help.aliyun.com/document_detail/250807.html?spm=a2c4g.30347.0.0.51844a41SA02Jt)