├── .DS_Store
├── .gitignore
├── LICENSE
├── README.md
├── assets
│   ├── ios.gif
│   └── live.png
├── iOS
│   ├── .DS_Store
│   ├── AppML.xcodeproj
│   │   ├── .xcodesamplecode.plist
│   │   ├── project.pbxproj
│   │   ├── project.xcworkspace
│   │   │   ├── contents.xcworkspacedata
│   │   │   ├── xcshareddata
│   │   │   │   ├── IDEWorkspaceChecks.plist
│   │   │   │   └── swiftpm
│   │   │   │       └── Package.resolved
│   │   │   └── xcuserdata
│   │   │       └── filippoaleotti.xcuserdatad
│   │   │           └── UserInterfaceState.xcuserstate
│   │   ├── xcshareddata
│   │   │   └── xcschemes
│   │   │       └── AppML.xcscheme
│   │   └── xcuserdata
│   │       └── filippoaleotti.xcuserdatad
│   │           └── xcdebugger
│   │               └── Breakpoints_v2.xcbkptlist
│   ├── AppML.xcworkspace
│   │   ├── contents.xcworkspacedata
│   │   └── xcshareddata
│   │       ├── IDEWorkspaceChecks.plist
│   │       └── swiftpm
│   │           └── Package.resolved
│   └── AppML
│       ├── .DS_Store
│       ├── AppDelegate.swift
│       ├── Assets.xcassets
│       │   ├── .DS_Store
│       │   ├── AppIcon.appiconset
│       │   │   ├── Contents.json
│       │   │   ├── Icon-App-20x20@1x.png
│       │   │   ├── Icon-App-20x20@2x.png
│       │   │   ├── Icon-App-20x20@3x.png
│       │   │   ├── Icon-App-29x29@1x.png
│       │   │   ├── Icon-App-29x29@2x.png
│       │   │   ├── Icon-App-29x29@3x.png
│       │   │   ├── Icon-App-40x40@1x.png
│       │   │   ├── Icon-App-40x40@2x.png
│       │   │   ├── Icon-App-40x40@3x.png
│       │   │   ├── Icon-App-60x60@2x.png
│       │   │   ├── Icon-App-60x60@3x.png
│       │   │   ├── Icon-App-76x76@1x.png
│       │   │   ├── Icon-App-76x76@2x.png
│       │   │   ├── Icon-App-83.5x83.5@2x.png
│       │   │   └── ItunesArtwork@2x.png
│       │   ├── ColorFilterOff.imageset
│       │   │   ├── ColorFilterOff@2x.png
│       │   │   └── Contents.json
│       │   ├── ColorFilterOn.imageset
│       │   │   ├── ColorFilterOn@2x.png
│       │   │   └── Contents.json
│       │   ├── Contents.json
│       │   ├── SettingsIcon.imageset
│       │   │   ├── Contents.json
│       │   │   └── icons8-services-2.pdf
│       │   └── Trash.imageset
│       │       ├── Contents.json
│       │       └── Medium-S.pdf
│       ├── CameraLayer
│       │   ├── CameraStream.swift
│       │   └── Extensions
│       │       └── CGImage+CVPixelBuffer.swift
│       ├── Extensions
│       │   ├── CV
│       │   │   └── CVPixelBuffer+createCGImage.swift
│       │   ├── RxSwift
│       │   │   ├── RxSwiftBidirectionalBinding.swift
│       │   │   └── UITextView+textColor.swift
│       │   └── UI
│       │       └── UIAlertController+Ext.swift
│       ├── GraphicLayer
│       │   ├── ColorMapApplier.swift
│       │   ├── DepthToColorMap.metal
│       │   └── MetalColorMapApplier.swift
│       ├── Info.plist
│       ├── Models
│       │   ├── .DS_Store
│       │   └── Pydnet.mlmodel
│       ├── Mods
│       │   ├── CameraOutput.swift
│       │   ├── MonoCameraOutput.swift
│       │   ├── NeuralNetwork+Ext.swift
│       │   ├── NeuralNetwork.swift
│       │   └── StereoCameraOutput.swift
│       ├── NeuralNetworkRepository
│       │   ├── FileSystemNeuralNetworkRepository.swift
│       │   └── NeuralNetworkRepository.swift
│       ├── NeuralNetworks
│       │   ├── .DS_Store
│       │   ├── Helpers
│       │   │   ├── CVPixelBuffer+createCGImage.swift
│       │   │   ├── MonoInputFeatureProvider.swift
│       │   │   └── StereoInputFeatureProvider.swift
│       │   ├── Pydnet
│       │   │   └── OptimizedPydnet.mlmodel
│       │   └── QuantizedPydnet
│       │       └── PydnetQuantized.mlmodel
│       ├── Storyboards
│       │   ├── .DS_Store
│       │   └── Base.lproj
│       │       ├── .DS_Store
│       │       ├── LaunchScreen.storyboard
│       │       └── Main.storyboard
│       └── View
│           └── Main
│               ├── MainViewController.swift
│               ├── MainViewModel.swift
│               └── PreviewMode.swift
└── single_inference
    ├── .gitignore
    ├── LICENSE
    ├── README.md
    ├── eval_utils.py
    ├── export.py
    ├── inference.py
    ├── modules.py
    ├── network.py
    ├── requirements.txt
    ├── run.sh
    ├── test
    │   ├── 0.png
    │   ├── 3.png
    │   ├── 4.png
    │   └── 6.png
    ├── test_kitti.py
    ├── test_kitti.txt
    ├── test_nyu.py
    ├── test_tum.py
    ├── test_tum.txt
    └── train_files.txt

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/.DS_Store

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | *.egg-info/

24 | .installed.cfg

25 | *.egg

26 | MANIFEST

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 | .pytest_cache/

49 |

50 | # Translations

51 | *.mo

52 | *.pot

53 |

54 | # Django stuff:

55 | *.log

56 | local_settings.py

57 | db.sqlite3

58 |

59 | # Flask stuff:

60 | instance/

61 | .webassets-cache

62 |

63 | # Scrapy stuff:

64 | .scrapy

65 |

66 | # Sphinx documentation

67 | docs/_build/

68 |

69 | # PyBuilder

70 | target/

71 |

72 | # Jupyter Notebook

73 | .ipynb_checkpoints

74 |

75 | # pyenv

76 | .python-version

77 |

78 | # celery beat schedule file

79 | celerybeat-schedule

80 |

81 | # SageMath parsed files

82 | *.sage.py

83 |

84 | # Environments

85 | .env

86 | .venv

87 | env/

88 | venv/

89 | ENV/

90 | env.bak/

91 | venv.bak/

92 |

93 | # Spyder project settings

94 | .spyderproject

95 | .spyproject

96 |

97 | # Rope project settings

98 | .ropeproject

99 |

100 | # mkdocs documentation

101 | /site

102 |

103 | # mypy

104 | .mypy_cache/

105 | */Android/app/build/*

106 | ckpt/*

107 | frozen_models/*

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 |

2 | Apache License

3 | Version 2.0, January 2004

4 | http://www.apache.org/licenses/

5 |

6 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

7 |

8 | 1. Definitions.

9 |

10 | "License" shall mean the terms and conditions for use, reproduction,

11 | and distribution as defined by Sections 1 through 9 of this document.

12 |

13 | "Licensor" shall mean the copyright owner or entity authorized by

14 | the copyright owner that is granting the License.

15 |

16 | "Legal Entity" shall mean the union of the acting entity and all

17 | other entities that control, are controlled by, or are under common

18 | control with that entity. For the purposes of this definition,

19 | "control" means (i) the power, direct or indirect, to cause the

20 | direction or management of such entity, whether by contract or

21 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

22 | outstanding shares, or (iii) beneficial ownership of such entity.

23 |

24 | "You" (or "Your") shall mean an individual or Legal Entity

25 | exercising permissions granted by this License.

26 |

27 | "Source" form shall mean the preferred form for making modifications,

28 | including but not limited to software source code, documentation

29 | source, and configuration files.

30 |

31 | "Object" form shall mean any form resulting from mechanical

32 | transformation or translation of a Source form, including but

33 | not limited to compiled object code, generated documentation,

34 | and conversions to other media types.

35 |

36 | "Work" shall mean the work of authorship, whether in Source or

37 | Object form, made available under the License, as indicated by a

38 | copyright notice that is included in or attached to the work

39 | (an example is provided in the Appendix below).

40 |

41 | "Derivative Works" shall mean any work, whether in Source or Object

42 | form, that is based on (or derived from) the Work and for which the

43 | editorial revisions, annotations, elaborations, or other modifications

44 | represent, as a whole, an original work of authorship. For the purposes

45 | of this License, Derivative Works shall not include works that remain

46 | separable from, or merely link (or bind by name) to the interfaces of,

47 | the Work and Derivative Works thereof.

48 |

49 | "Contribution" shall mean any work of authorship, including

50 | the original version of the Work and any modifications or additions

51 | to that Work or Derivative Works thereof, that is intentionally

52 | submitted to Licensor for inclusion in the Work by the copyright owner

53 | or by an individual or Legal Entity authorized to submit on behalf of

54 | the copyright owner. For the purposes of this definition, "submitted"

55 | means any form of electronic, verbal, or written communication sent

56 | to the Licensor or its representatives, including but not limited to

57 | communication on electronic mailing lists, source code control systems,

58 | and issue tracking systems that are managed by, or on behalf of, the

59 | Licensor for the purpose of discussing and improving the Work, but

60 | excluding communication that is conspicuously marked or otherwise

61 | designated in writing by the copyright owner as "Not a Contribution."

62 |

63 | "Contributor" shall mean Licensor and any individual or Legal Entity

64 | on behalf of whom a Contribution has been received by Licensor and

65 | subsequently incorporated within the Work.

66 |

67 | 2. Grant of Copyright License. Subject to the terms and conditions of

68 | this License, each Contributor hereby grants to You a perpetual,

69 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

70 | copyright license to reproduce, prepare Derivative Works of,

71 | publicly display, publicly perform, sublicense, and distribute the

72 | Work and such Derivative Works in Source or Object form.

73 |

74 | 3. Grant of Patent License. Subject to the terms and conditions of

75 | this License, each Contributor hereby grants to You a perpetual,

76 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

77 | (except as stated in this section) patent license to make, have made,

78 | use, offer to sell, sell, import, and otherwise transfer the Work,

79 | where such license applies only to those patent claims licensable

80 | by such Contributor that are necessarily infringed by their

81 | Contribution(s) alone or by combination of their Contribution(s)

82 | with the Work to which such Contribution(s) was submitted. If You

83 | institute patent litigation against any entity (including a

84 | cross-claim or counterclaim in a lawsuit) alleging that the Work

85 | or a Contribution incorporated within the Work constitutes direct

86 | or contributory patent infringement, then any patent licenses

87 | granted to You under this License for that Work shall terminate

88 | as of the date such litigation is filed.

89 |

90 | 4. Redistribution. You may reproduce and distribute copies of the

91 | Work or Derivative Works thereof in any medium, with or without

92 | modifications, and in Source or Object form, provided that You

93 | meet the following conditions:

94 |

95 | (a) You must give any other recipients of the Work or

96 | Derivative Works a copy of this License; and

97 |

98 | (b) You must cause any modified files to carry prominent notices

99 | stating that You changed the files; and

100 |

101 | (c) You must retain, in the Source form of any Derivative Works

102 | that You distribute, all copyright, patent, trademark, and

103 | attribution notices from the Source form of the Work,

104 | excluding those notices that do not pertain to any part of

105 | the Derivative Works; and

106 |

107 | (d) If the Work includes a "NOTICE" text file as part of its

108 | distribution, then any Derivative Works that You distribute must

109 | include a readable copy of the attribution notices contained

110 | within such NOTICE file, excluding those notices that do not

111 | pertain to any part of the Derivative Works, in at least one

112 | of the following places: within a NOTICE text file distributed

113 | as part of the Derivative Works; within the Source form or

114 | documentation, if provided along with the Derivative Works; or,

115 | within a display generated by the Derivative Works, if and

116 | wherever such third-party notices normally appear. The contents

117 | of the NOTICE file are for informational purposes only and

118 | do not modify the License. You may add Your own attribution

119 | notices within Derivative Works that You distribute, alongside

120 | or as an addendum to the NOTICE text from the Work, provided

121 | that such additional attribution notices cannot be construed

122 | as modifying the License.

123 |

124 | You may add Your own copyright statement to Your modifications and

125 | may provide additional or different license terms and conditions

126 | for use, reproduction, or distribution of Your modifications, or

127 | for any such Derivative Works as a whole, provided Your use,

128 | reproduction, and distribution of the Work otherwise complies with

129 | the conditions stated in this License.

130 |

131 | 5. Submission of Contributions. Unless You explicitly state otherwise,

132 | any Contribution intentionally submitted for inclusion in the Work

133 | by You to the Licensor shall be under the terms and conditions of

134 | this License, without any additional terms or conditions.

135 | Notwithstanding the above, nothing herein shall supersede or modify

136 | the terms of any separate license agreement you may have executed

137 | with Licensor regarding such Contributions.

138 |

139 | 6. Trademarks. This License does not grant permission to use the trade

140 | names, trademarks, service marks, or product names of the Licensor,

141 | except as required for reasonable and customary use in describing the

142 | origin of the Work and reproducing the content of the NOTICE file.

143 |

144 | 7. Disclaimer of Warranty. Unless required by applicable law or

145 | agreed to in writing, Licensor provides the Work (and each

146 | Contributor provides its Contributions) on an "AS IS" BASIS,

147 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

148 | implied, including, without limitation, any warranties or conditions

149 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

150 | PARTICULAR PURPOSE. You are solely responsible for determining the

151 | appropriateness of using or redistributing the Work and assume any

152 | risks associated with Your exercise of permissions under this License.

153 |

154 | 8. Limitation of Liability. In no event and under no legal theory,

155 | whether in tort (including negligence), contract, or otherwise,

156 | unless required by applicable law (such as deliberate and grossly

157 | negligent acts) or agreed to in writing, shall any Contributor be

158 | liable to You for damages, including any direct, indirect, special,

159 | incidental, or consequential damages of any character arising as a

160 | result of this License or out of the use or inability to use the

161 | Work (including but not limited to damages for loss of goodwill,

162 | work stoppage, computer failure or malfunction, or any and all

163 | other commercial damages or losses), even if such Contributor

164 | has been advised of the possibility of such damages.

165 |

166 | 9. Accepting Warranty or Additional Liability. While redistributing

167 | the Work or Derivative Works thereof, You may choose to offer,

168 | and charge a fee for, acceptance of support, warranty, indemnity,

169 | or other liability obligations and/or rights consistent with this

170 | License. However, in accepting such obligations, You may act only

171 | on Your own behalf and on Your sole responsibility, not on behalf

172 | of any other Contributor, and only if You agree to indemnify,

173 | defend, and hold each Contributor harmless for any liability

174 | incurred by, or claims asserted against, such Contributor by reason

175 | of your accepting any such warranty or additional liability.

176 |

177 | END OF TERMS AND CONDITIONS

178 |

179 | APPENDIX: How to apply the Apache License to your work.

180 |

181 | To apply the Apache License to your work, attach the following

182 | boilerplate notice, with the fields enclosed by brackets "[]"

183 | replaced with your own identifying information. (Don't include

184 | the brackets!) The text should be enclosed in the appropriate

185 | comment syntax for the file format. We also recommend that a

186 | file or class name and description of purpose be included on the

187 | same "printed page" as the copyright notice for easier

188 | identification within third-party archives.

189 |

190 | Copyright [yyyy] [name of copyright owner]

191 |

192 | Licensed under the Apache License, Version 2.0 (the "License");

193 | you may not use this file except in compliance with the License.

194 | You may obtain a copy of the License at

195 |

196 | http://www.apache.org/licenses/LICENSE-2.0

197 |

198 | Unless required by applicable law or agreed to in writing, software

199 | distributed under the License is distributed on an "AS IS" BASIS,

200 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

201 | See the License for the specific language governing permissions and

202 | limitations under the License.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

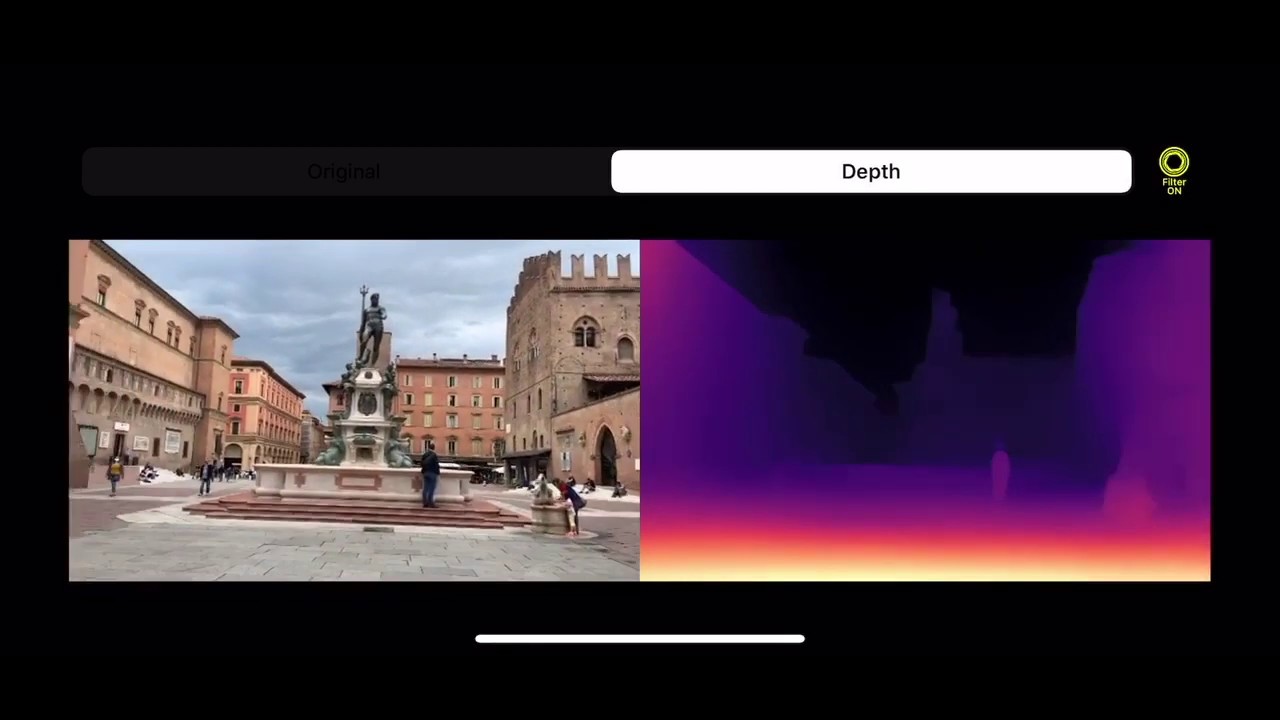

1 | # PyDNet on mobile devices v2.0

2 | This repository contains the source code to run PyDNet on mobile devices.

3 |

4 | # What's new?

5 | In v2.0, we changed the procedure and the data used for training. More information will be provided soon...

6 |

7 | Moreover, we also built a web-based demonstration of the same network! You can try it now [here](https://filippoaleotti.github.io/demo_live/).

8 | The model runs directly in your browser, so there is nothing to install!

9 |

10 |

11 |

12 |

13 |

14 | ## iOS

15 | The iOS demo has been developed by [Giulio Zaccaroni](https://github.com/GZaccaroni).

16 |

17 | Xcode is required to build the app. You also need to sign in with your Apple ID and trust yourself as a certified developer.

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 |

26 | ## Android

27 | The code will be released soon.

28 |

29 | # License

30 | Code is licensed under the Apache License, Version 2.0.

31 | Weights of the network can be used for research purposes only.

32 |

33 | # Contacts and links

34 | If you use this code in your projects, please cite our paper:

35 |

36 | ```

37 | @article{aleotti2020real,

38 | title={Real-time single image depth perception in the wild with handheld devices},

39 | author={Aleotti, Filippo and Zaccaroni, Giulio and Bartolomei, Luca and Poggi, Matteo and Tosi, Fabio and Mattoccia, Stefano},

40 | journal={Sensors},

41 | volume={21},

42 | year={2021}

43 | }

44 |

45 | @inproceedings{pydnet18,

46 | title = {Towards real-time unsupervised monocular depth estimation on CPU},

47 | author = {Poggi, Matteo and

48 | Aleotti, Filippo and

49 | Tosi, Fabio and

50 | Mattoccia, Stefano},

51 | booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

52 | year = {2018}

53 | }

54 | ```

55 |

56 | More info about the work can be found at these links:

57 | * [Real-time single image depth perception in the wild with handheld devices, arXiv](https://arxiv.org/pdf/2006.05724.pdf)

58 | * [PyDNet paper](https://arxiv.org/pdf/1806.11430.pdf)

59 | * [PyDNet code](https://github.com/mattpoggi/pydnet)

60 |

61 | For questions, please send an email to filippo.aleotti2@unibo.it

62 |

--------------------------------------------------------------------------------

/assets/ios.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/assets/ios.gif

--------------------------------------------------------------------------------

/assets/live.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/assets/live.png

--------------------------------------------------------------------------------

/iOS/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/.DS_Store

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/.xcodesamplecode.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/project.pbxproj:

--------------------------------------------------------------------------------

1 | // !$*UTF8*$!

2 | {

3 | archiveVersion = 1;

4 | classes = {

5 | };

6 | objectVersion = 52;

7 | objects = {

8 |

9 | /* Begin PBXBuildFile section */

10 | 3C04E71122EC943000EA471B /* CoreImage.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 3C04E71022EC943000EA471B /* CoreImage.framework */; };

11 | 3C04E71322EC943800EA471B /* CoreVideo.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 3C04E71222EC943800EA471B /* CoreVideo.framework */; };

12 | 3C04E71522EC943C00EA471B /* Accelerate.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 3C04E71422EC943C00EA471B /* Accelerate.framework */; };

13 | 3C140CA32322CD8F00D27DFD /* RxRelay in Frameworks */ = {isa = PBXBuildFile; productRef = 3C140CA22322CD8F00D27DFD /* RxRelay */; };

14 | 3C140CA52322CD8F00D27DFD /* RxSwift in Frameworks */ = {isa = PBXBuildFile; productRef = 3C140CA42322CD8F00D27DFD /* RxSwift */; };

15 | 3C140CA72322CD8F00D27DFD /* RxCocoa in Frameworks */ = {isa = PBXBuildFile; productRef = 3C140CA62322CD8F00D27DFD /* RxCocoa */; };

16 | 3C31317F2321499A006F9963 /* PreviewMode.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C31317E2321499A006F9963 /* PreviewMode.swift */; };

17 | 3C44D82B22FEBBE100F57013 /* UIAlertController+Ext.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C44D82A22FEBBE100F57013 /* UIAlertController+Ext.swift */; };

18 | 3C5C90332299A75800C2E814 /* DepthToColorMap.metal in Sources */ = {isa = PBXBuildFile; fileRef = 3C5C90322299A75800C2E814 /* DepthToColorMap.metal */; };

19 | 3C5EFCCE2320680E004F6F7A /* RxSwiftBidirectionalBinding.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C5EFCCD2320680E004F6F7A /* RxSwiftBidirectionalBinding.swift */; };

20 | 3C5EFCD02320F208004F6F7A /* UITextView+textColor.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C5EFCCF2320F208004F6F7A /* UITextView+textColor.swift */; };

21 | 3C6BB34B2322E7E70041D581 /* CVPixelBuffer+createCGImage.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C6BB34A2322E7E70041D581 /* CVPixelBuffer+createCGImage.swift */; };

22 | 3C6D91E6232132BF008D987C /* MainViewModel.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3C6D91E5232132BF008D987C /* MainViewModel.swift */; };

23 | 3CB56B5C2479566A00143CD8 /* Pydnet.mlmodel in Sources */ = {isa = PBXBuildFile; fileRef = 3CB56B5B2479566A00143CD8 /* Pydnet.mlmodel */; };

24 | 3CC9262322991C22001C75CE /* MetalColorMapApplier.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3CC9262222991C22001C75CE /* MetalColorMapApplier.swift */; };

25 | 3CE77C082325061A00DBAAD5 /* ColorMapApplier.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3CE77C072325061A00DBAAD5 /* ColorMapApplier.swift */; };

26 | 3CEECB0E2486A7FF00535292 /* CameraStream.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3CEECB0A2486A7FF00535292 /* CameraStream.swift */; };

27 | 3CEECB102486A7FF00535292 /* CGImage+CVPixelBuffer.swift in Sources */ = {isa = PBXBuildFile; fileRef = 3CEECB0D2486A7FF00535292 /* CGImage+CVPixelBuffer.swift */; };

28 | 7AA677151CFF765600B353FB /* AppDelegate.swift in Sources */ = {isa = PBXBuildFile; fileRef = 7AA677141CFF765600B353FB /* AppDelegate.swift */; };

29 | 7AA677171CFF765600B353FB /* MainViewController.swift in Sources */ = {isa = PBXBuildFile; fileRef = 7AA677161CFF765600B353FB /* MainViewController.swift */; };

30 | 7AA6771A1CFF765600B353FB /* Main.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = 7AA677181CFF765600B353FB /* Main.storyboard */; };

31 | 7AA6771C1CFF765600B353FB /* Assets.xcassets in Resources */ = {isa = PBXBuildFile; fileRef = 7AA6771B1CFF765600B353FB /* Assets.xcassets */; };

32 | 7AA6771F1CFF765600B353FB /* LaunchScreen.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = 7AA6771D1CFF765600B353FB /* LaunchScreen.storyboard */; };

33 | /* End PBXBuildFile section */

34 |

35 | /* Begin PBXFileReference section */

36 | 3C04E71022EC943000EA471B /* CoreImage.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = CoreImage.framework; path = System/Library/Frameworks/CoreImage.framework; sourceTree = SDKROOT; };

37 | 3C04E71222EC943800EA471B /* CoreVideo.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = CoreVideo.framework; path = System/Library/Frameworks/CoreVideo.framework; sourceTree = SDKROOT; };

38 | 3C04E71422EC943C00EA471B /* Accelerate.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = Accelerate.framework; path = System/Library/Frameworks/Accelerate.framework; sourceTree = SDKROOT; };

39 | 3C31317E2321499A006F9963 /* PreviewMode.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = PreviewMode.swift; sourceTree = "<group>"; };

40 | 3C44D82A22FEBBE100F57013 /* UIAlertController+Ext.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = "UIAlertController+Ext.swift"; sourceTree = "<group>"; };

41 | 3C5C90322299A75800C2E814 /* DepthToColorMap.metal */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.metal; path = DepthToColorMap.metal; sourceTree = "<group>"; };

42 | 3C5EFCCD2320680E004F6F7A /* RxSwiftBidirectionalBinding.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = RxSwiftBidirectionalBinding.swift; sourceTree = "<group>"; };

43 | 3C5EFCCF2320F208004F6F7A /* UITextView+textColor.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = "UITextView+textColor.swift"; sourceTree = "<group>"; };

44 | 3C6BB34A2322E7E70041D581 /* CVPixelBuffer+createCGImage.swift */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.swift; path = "CVPixelBuffer+createCGImage.swift"; sourceTree = "<group>"; };

45 | 3C6D91E5232132BF008D987C /* MainViewModel.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = MainViewModel.swift; sourceTree = "<group>"; };

46 | 3CB56B5B2479566A00143CD8 /* Pydnet.mlmodel */ = {isa = PBXFileReference; lastKnownFileType = file.mlmodel; name = Pydnet.mlmodel; path = AppML/Models/Pydnet.mlmodel; sourceTree = SOURCE_ROOT; };

47 | 3CC9262222991C22001C75CE /* MetalColorMapApplier.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = MetalColorMapApplier.swift; sourceTree = "<group>"; };

48 | 3CE77C072325061A00DBAAD5 /* ColorMapApplier.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = ColorMapApplier.swift; sourceTree = "<group>"; };

49 | 3CEECB0A2486A7FF00535292 /* CameraStream.swift */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.swift; path = CameraStream.swift; sourceTree = "<group>"; };

50 | 3CEECB0D2486A7FF00535292 /* CGImage+CVPixelBuffer.swift */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.swift; path = "CGImage+CVPixelBuffer.swift"; sourceTree = "<group>"; };

51 | 7AA677111CFF765600B353FB /* MobilePydnet.app */ = {isa = PBXFileReference; explicitFileType = wrapper.application; includeInIndex = 0; path = MobilePydnet.app; sourceTree = BUILT_PRODUCTS_DIR; };

52 | 7AA677141CFF765600B353FB /* AppDelegate.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = AppDelegate.swift; sourceTree = "<group>"; };

53 | 7AA677161CFF765600B353FB /* MainViewController.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = MainViewController.swift; sourceTree = "<group>"; };

54 | 7AA677191CFF765600B353FB /* Base */ = {isa = PBXFileReference; lastKnownFileType = file.storyboard; name = Base; path = Base.lproj/Main.storyboard; sourceTree = "<group>"; };

55 | 7AA6771B1CFF765600B353FB /* Assets.xcassets */ = {isa = PBXFileReference; lastKnownFileType = folder.assetcatalog; path = Assets.xcassets; sourceTree = "<group>"; };

56 | 7AA6771E1CFF765600B353FB /* Base */ = {isa = PBXFileReference; lastKnownFileType = file.storyboard; name = Base; path = Base.lproj/LaunchScreen.storyboard; sourceTree = "<group>"; };

57 | 7AA677201CFF765600B353FB /* Info.plist */ = {isa = PBXFileReference; lastKnownFileType = text.plist.xml; path = Info.plist; sourceTree = "<group>"; };

58 | /* End PBXFileReference section */

59 |

60 | /* Begin PBXFrameworksBuildPhase section */

61 | 7AA6770E1CFF765500B353FB /* Frameworks */ = {

62 | isa = PBXFrameworksBuildPhase;

63 | buildActionMask = 2147483647;

64 | files = (

65 | 3C140CA52322CD8F00D27DFD /* RxSwift in Frameworks */,

66 | 3C140CA32322CD8F00D27DFD /* RxRelay in Frameworks */,

67 | 3C04E71522EC943C00EA471B /* Accelerate.framework in Frameworks */,

68 | 3C140CA72322CD8F00D27DFD /* RxCocoa in Frameworks */,

69 | 3C04E71122EC943000EA471B /* CoreImage.framework in Frameworks */,

70 | 3C04E71322EC943800EA471B /* CoreVideo.framework in Frameworks */,

71 | );

72 | runOnlyForDeploymentPostprocessing = 0;

73 | };

74 | /* End PBXFrameworksBuildPhase section */

75 |

76 | /* Begin PBXGroup section */

77 | 2563FCD02563FC9000000001 /* Configuration */ = {

78 | isa = PBXGroup;

79 | children = (

80 | );

81 | name = Configuration;

82 | sourceTree = "<group>";

83 | };

84 | 3C3FBB7F23225E8800DA8B32 /* UI */ = {

85 | isa = PBXGroup;

86 | children = (

87 | 3C44D82A22FEBBE100F57013 /* UIAlertController+Ext.swift */,

88 | );

89 | path = UI;

90 | sourceTree = "<group>";

91 | };

92 | 3C5EFCC823205F50004F6F7A /* GraphicLayer */ = {

93 | isa = PBXGroup;

94 | children = (

95 | 3CE77C072325061A00DBAAD5 /* ColorMapApplier.swift */,

96 | 3CC9262222991C22001C75CE /* MetalColorMapApplier.swift */,

97 | 3C5C90322299A75800C2E814 /* DepthToColorMap.metal */,

98 | );

99 | path = GraphicLayer;

100 | sourceTree = "<group>";

101 | };

102 | 3C5EFCCB232067F7004F6F7A /* Extensions */ = {

103 | isa = PBXGroup;

104 | children = (

105 | 3C6BB3472322E7BD0041D581 /* CV */,

106 | 3C3FBB7F23225E8800DA8B32 /* UI */,

107 | 3C5EFCCC232067FF004F6F7A /* RxSwift */,

108 | );

109 | path = Extensions;

110 | sourceTree = "<group>";

111 | };

112 | 3C5EFCCC232067FF004F6F7A /* RxSwift */ = {

113 | isa = PBXGroup;

114 | children = (

115 | 3C5EFCCD2320680E004F6F7A /* RxSwiftBidirectionalBinding.swift */,

116 | 3C5EFCCF2320F208004F6F7A /* UITextView+textColor.swift */,

117 | );

118 | path = RxSwift;

119 | sourceTree = "<group>";

120 | };

121 | 3C6BB3442322E7680041D581 /* DefaultNeuralNetworks */ = {

122 | isa = PBXGroup;

123 | children = (

124 | 3CB56B5B2479566A00143CD8 /* Pydnet.mlmodel */,

125 | );

126 | path = DefaultNeuralNetworks;

127 | sourceTree = "<group>";

128 | };

129 | 3C6BB3472322E7BD0041D581 /* CV */ = {

130 | isa = PBXGroup;

131 | children = (

132 | 3C6BB34A2322E7E70041D581 /* CVPixelBuffer+createCGImage.swift */,

133 | );

134 | path = CV;

135 | sourceTree = "<group>";

136 | };

137 | 3CEECB092486A7F100535292 /* CameraLayer */ = {

138 | isa = PBXGroup;

139 | children = (

140 | 3CEECB0C2486A7FF00535292 /* Extensions */,

141 | 3CEECB0A2486A7FF00535292 /* CameraStream.swift */,

142 | );

143 | path = CameraLayer;

144 | sourceTree = "<group>";

145 | };

146 | 3CEECB0C2486A7FF00535292 /* Extensions */ = {

147 | isa = PBXGroup;

148 | children = (

149 | 3CEECB0D2486A7FF00535292 /* CGImage+CVPixelBuffer.swift */,

150 | );

151 | path = Extensions;

152 | sourceTree = "<group>";

153 | };

154 | 3CF695B8229FC4CE00E4115E /* View */ = {

155 | isa = PBXGroup;

156 | children = (

157 | 3CF695B9229FC4DE00E4115E /* Main */,

158 | );

159 | path = View;

160 | sourceTree = "<group>";

161 | };

162 | 3CF695B9229FC4DE00E4115E /* Main */ = {

163 | isa = PBXGroup;

164 | children = (

165 | 7AA677161CFF765600B353FB /* MainViewController.swift */,

166 | 3C6D91E5232132BF008D987C /* MainViewModel.swift */,

167 | 3C31317E2321499A006F9963 /* PreviewMode.swift */,

168 | );

169 | path = Main;

170 | sourceTree = "<group>";

171 | };

172 | 3CF695BA229FC4F700E4115E /* Storyboards */ = {

173 | isa = PBXGroup;

174 | children = (

175 | 7AA677181CFF765600B353FB /* Main.storyboard */,

176 | 7AA6771D1CFF765600B353FB /* LaunchScreen.storyboard */,

177 | );

178 | path = Storyboards;

179 | sourceTree = "<group>";

180 | };

181 | 7AA677081CFF765500B353FB = {

182 | isa = PBXGroup;

183 | children = (

184 | 7AA677131CFF765600B353FB /* AppML */,

185 | 7AA677121CFF765600B353FB /* Products */,

186 | 2563FCD02563FC9000000001 /* Configuration */,

187 | EF473959B157DAEEB0DB064A /* Frameworks */,

188 | );

189 | sourceTree = "<group>";

190 | };

191 | 7AA677121CFF765600B353FB /* Products */ = {

192 | isa = PBXGroup;

193 | children = (

194 | 7AA677111CFF765600B353FB /* MobilePydnet.app */,

195 | );

196 | name = Products;

197 | sourceTree = "<group>";

198 | };

199 | 7AA677131CFF765600B353FB /* AppML */ = {

200 | isa = PBXGroup;

201 | children = (

202 | 7AA677141CFF765600B353FB /* AppDelegate.swift */,

203 | 3C6BB3442322E7680041D581 /* DefaultNeuralNetworks */,

204 | 3CEECB092486A7F100535292 /* CameraLayer */,

205 | 3C5EFCC823205F50004F6F7A /* GraphicLayer */,

206 | 3CF695B8229FC4CE00E4115E /* View */,

207 | 3CF695BA229FC4F700E4115E /* Storyboards */,

208 | 3C5EFCCB232067F7004F6F7A /* Extensions */,

209 | 7AA6771B1CFF765600B353FB /* Assets.xcassets */,

210 | 7AA677201CFF765600B353FB /* Info.plist */,

211 | );

212 | path = AppML;

213 | sourceTree = "<group>";

214 | };

215 | EF473959B157DAEEB0DB064A /* Frameworks */ = {

216 | isa = PBXGroup;

217 | children = (

218 | 3C04E71422EC943C00EA471B /* Accelerate.framework */,

219 | 3C04E71222EC943800EA471B /* CoreVideo.framework */,

220 | 3C04E71022EC943000EA471B /* CoreImage.framework */,

221 | );

222 | name = Frameworks;

223 | sourceTree = "<group>";

224 | };

225 | /* End PBXGroup section */

226 |

227 | /* Begin PBXNativeTarget section */

228 | 7AA677101CFF765500B353FB /* AppML */ = {

229 | isa = PBXNativeTarget;

230 | buildConfigurationList = 7AA677231CFF765600B353FB /* Build configuration list for PBXNativeTarget "AppML" */;

231 | buildPhases = (

232 | 7AA6770D1CFF765500B353FB /* Sources */,

233 | 7AA6770E1CFF765500B353FB /* Frameworks */,

234 | 7AA6770F1CFF765500B353FB /* Resources */,

235 | );

236 | buildRules = (

237 | );

238 | dependencies = (

239 | );

240 | name = AppML;

241 | packageProductDependencies = (

242 | 3C140CA22322CD8F00D27DFD /* RxRelay */,

243 | 3C140CA42322CD8F00D27DFD /* RxSwift */,

244 | 3C140CA62322CD8F00D27DFD /* RxCocoa */,

245 | );

246 | productName = AVCam;

247 | productReference = 7AA677111CFF765600B353FB /* MobilePydnet.app */;

248 | productType = "com.apple.product-type.application";

249 | };

250 | /* End PBXNativeTarget section */

251 |

252 | /* Begin PBXProject section */

253 | 7AA677091CFF765500B353FB /* Project object */ = {

254 | isa = PBXProject;

255 | attributes = {

256 | LastSwiftUpdateCheck = 0800;

257 | LastUpgradeCheck = 1020;

258 | ORGANIZATIONNAME = Apple;

259 | TargetAttributes = {

260 | 7AA677101CFF765500B353FB = {

261 | CreatedOnToolsVersion = 8.0;

262 | LastSwiftMigration = 1020;

263 | ProvisioningStyle = Automatic;

264 | };

265 | };

266 | };

267 | buildConfigurationList = 7AA6770C1CFF765500B353FB /* Build configuration list for PBXProject "AppML" */;

268 | compatibilityVersion = "Xcode 3.2";

269 | developmentRegion = en;

270 | hasScannedForEncodings = 0;

271 | knownRegions = (

272 | en,

273 | Base,

274 | );

275 | mainGroup = 7AA677081CFF765500B353FB;

276 | packageReferences = (

277 | 3C140CA12322CD8F00D27DFD /* XCRemoteSwiftPackageReference "RxSwift" */,

278 | );

279 | productRefGroup = 7AA677121CFF765600B353FB /* Products */;

280 | projectDirPath = "";

281 | projectRoot = "";

282 | targets = (

283 | 7AA677101CFF765500B353FB /* AppML */,

284 | );

285 | };

286 | /* End PBXProject section */

287 |

288 | /* Begin PBXResourcesBuildPhase section */

289 | 7AA6770F1CFF765500B353FB /* Resources */ = {

290 | isa = PBXResourcesBuildPhase;

291 | buildActionMask = 2147483647;

292 | files = (

293 | 7AA6771F1CFF765600B353FB /* LaunchScreen.storyboard in Resources */,

294 | 7AA6771C1CFF765600B353FB /* Assets.xcassets in Resources */,

295 | 7AA6771A1CFF765600B353FB /* Main.storyboard in Resources */,

296 | );

297 | runOnlyForDeploymentPostprocessing = 0;

298 | };

299 | /* End PBXResourcesBuildPhase section */

300 |

301 | /* Begin PBXSourcesBuildPhase section */

302 | 7AA6770D1CFF765500B353FB /* Sources */ = {

303 | isa = PBXSourcesBuildPhase;

304 | buildActionMask = 2147483647;

305 | files = (

306 | 3CEECB102486A7FF00535292 /* CGImage+CVPixelBuffer.swift in Sources */,

307 | 3CC9262322991C22001C75CE /* MetalColorMapApplier.swift in Sources */,

308 | 3C5EFCCE2320680E004F6F7A /* RxSwiftBidirectionalBinding.swift in Sources */,

309 | 3CB56B5C2479566A00143CD8 /* Pydnet.mlmodel in Sources */,

310 | 3CEECB0E2486A7FF00535292 /* CameraStream.swift in Sources */,

311 | 3C5EFCD02320F208004F6F7A /* UITextView+textColor.swift in Sources */,

312 | 3C6BB34B2322E7E70041D581 /* CVPixelBuffer+createCGImage.swift in Sources */,

313 | 3C5C90332299A75800C2E814 /* DepthToColorMap.metal in Sources */,

314 | 7AA677171CFF765600B353FB /* MainViewController.swift in Sources */,

315 | 3C31317F2321499A006F9963 /* PreviewMode.swift in Sources */,

316 | 3CE77C082325061A00DBAAD5 /* ColorMapApplier.swift in Sources */,

317 | 7AA677151CFF765600B353FB /* AppDelegate.swift in Sources */,

318 | 3C6D91E6232132BF008D987C /* MainViewModel.swift in Sources */,

319 | 3C44D82B22FEBBE100F57013 /* UIAlertController+Ext.swift in Sources */,

320 | );

321 | runOnlyForDeploymentPostprocessing = 0;

322 | };

323 | /* End PBXSourcesBuildPhase section */

324 |

325 | /* Begin PBXVariantGroup section */

326 | 7AA677181CFF765600B353FB /* Main.storyboard */ = {

327 | isa = PBXVariantGroup;

328 | children = (

329 | 7AA677191CFF765600B353FB /* Base */,

330 | );

331 | name = Main.storyboard;

332 | sourceTree = "<group>";

333 | };

334 | 7AA6771D1CFF765600B353FB /* LaunchScreen.storyboard */ = {

335 | isa = PBXVariantGroup;

336 | children = (

337 | 7AA6771E1CFF765600B353FB /* Base */,

338 | );

339 | name = LaunchScreen.storyboard;

340 | sourceTree = "<group>";

341 | };

342 | /* End PBXVariantGroup section */

343 |

344 | /* Begin XCBuildConfiguration section */

345 | 7AA677211CFF765600B353FB /* Debug */ = {

346 | isa = XCBuildConfiguration;

347 | buildSettings = {

348 | ALWAYS_SEARCH_USER_PATHS = NO;

349 | CLANG_ANALYZER_LOCALIZABILITY_NONLOCALIZED = YES;

350 | CLANG_ANALYZER_NONNULL = YES;

351 | CLANG_CXX_LANGUAGE_STANDARD = "gnu++0x";

352 | CLANG_CXX_LIBRARY = "libc++";

353 | CLANG_ENABLE_MODULES = YES;

354 | CLANG_ENABLE_OBJC_ARC = YES;

355 | CLANG_WARN_BLOCK_CAPTURE_AUTORELEASING = YES;

356 | CLANG_WARN_BOOL_CONVERSION = YES;

357 | CLANG_WARN_COMMA = YES;

358 | CLANG_WARN_CONSTANT_CONVERSION = YES;

359 | CLANG_WARN_DEPRECATED_OBJC_IMPLEMENTATIONS = YES;

360 | CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;

361 | CLANG_WARN_DOCUMENTATION_COMMENTS = YES;

362 | CLANG_WARN_EMPTY_BODY = YES;

363 | CLANG_WARN_ENUM_CONVERSION = YES;

364 | CLANG_WARN_INFINITE_RECURSION = YES;

365 | CLANG_WARN_INT_CONVERSION = YES;

366 | CLANG_WARN_NON_LITERAL_NULL_CONVERSION = YES;

367 | CLANG_WARN_OBJC_IMPLICIT_RETAIN_SELF = YES;

368 | CLANG_WARN_OBJC_LITERAL_CONVERSION = YES;

369 | CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;

370 | CLANG_WARN_RANGE_LOOP_ANALYSIS = YES;

371 | CLANG_WARN_STRICT_PROTOTYPES = YES;

372 | CLANG_WARN_SUSPICIOUS_MOVE = YES;

373 | CLANG_WARN_UNREACHABLE_CODE = YES;

374 | CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;

375 | "CODE_SIGN_IDENTITY[sdk=iphoneos*]" = "iPhone Developer";

376 | COPY_PHASE_STRIP = NO;

377 | DEBUG_INFORMATION_FORMAT = dwarf;

378 | ENABLE_STRICT_OBJC_MSGSEND = YES;

379 | ENABLE_TESTABILITY = YES;

380 | GCC_C_LANGUAGE_STANDARD = gnu99;

381 | GCC_DYNAMIC_NO_PIC = NO;

382 | GCC_NO_COMMON_BLOCKS = YES;

383 | GCC_OPTIMIZATION_LEVEL = 0;

384 | GCC_PREPROCESSOR_DEFINITIONS = (

385 | "DEBUG=1",

386 | "$(inherited)",

387 | );

388 | GCC_WARN_64_TO_32_BIT_CONVERSION = YES;

389 | GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;

390 | GCC_WARN_UNDECLARED_SELECTOR = YES;

391 | GCC_WARN_UNINITIALIZED_AUTOS = YES_AGGRESSIVE;

392 | GCC_WARN_UNUSED_FUNCTION = YES;

393 | GCC_WARN_UNUSED_VARIABLE = YES;

394 | IPHONEOS_DEPLOYMENT_TARGET = 12.0;

395 | MTL_ENABLE_DEBUG_INFO = YES;

396 | ONLY_ACTIVE_ARCH = YES;

397 | SDKROOT = iphoneos;

398 | SWIFT_ACTIVE_COMPILATION_CONDITIONS = DEBUG;

399 | SWIFT_OPTIMIZATION_LEVEL = "-Onone";

400 | SWIFT_VERSION = 4.2;

401 | TARGETED_DEVICE_FAMILY = "1,2";

402 | };

403 | name = Debug;

404 | };

405 | 7AA677221CFF765600B353FB /* Release */ = {

406 | isa = XCBuildConfiguration;

407 | buildSettings = {

408 | ALWAYS_SEARCH_USER_PATHS = NO;

409 | CLANG_ANALYZER_LOCALIZABILITY_NONLOCALIZED = YES;

410 | CLANG_ANALYZER_NONNULL = YES;

411 | CLANG_CXX_LANGUAGE_STANDARD = "gnu++0x";

412 | CLANG_CXX_LIBRARY = "libc++";

413 | CLANG_ENABLE_MODULES = YES;

414 | CLANG_ENABLE_OBJC_ARC = YES;

415 | CLANG_WARN_BLOCK_CAPTURE_AUTORELEASING = YES;

416 | CLANG_WARN_BOOL_CONVERSION = YES;

417 | CLANG_WARN_COMMA = YES;

418 | CLANG_WARN_CONSTANT_CONVERSION = YES;

419 | CLANG_WARN_DEPRECATED_OBJC_IMPLEMENTATIONS = YES;

420 | CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;

421 | CLANG_WARN_DOCUMENTATION_COMMENTS = YES;

422 | CLANG_WARN_EMPTY_BODY = YES;

423 | CLANG_WARN_ENUM_CONVERSION = YES;

424 | CLANG_WARN_INFINITE_RECURSION = YES;

425 | CLANG_WARN_INT_CONVERSION = YES;

426 | CLANG_WARN_NON_LITERAL_NULL_CONVERSION = YES;

427 | CLANG_WARN_OBJC_IMPLICIT_RETAIN_SELF = YES;

428 | CLANG_WARN_OBJC_LITERAL_CONVERSION = YES;

429 | CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;

430 | CLANG_WARN_RANGE_LOOP_ANALYSIS = YES;

431 | CLANG_WARN_STRICT_PROTOTYPES = YES;

432 | CLANG_WARN_SUSPICIOUS_MOVE = YES;

433 | CLANG_WARN_UNREACHABLE_CODE = YES;

434 | CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;

435 | "CODE_SIGN_IDENTITY[sdk=iphoneos*]" = "iPhone Developer";

436 | COPY_PHASE_STRIP = NO;

437 | DEBUG_INFORMATION_FORMAT = "dwarf-with-dsym";

438 | ENABLE_NS_ASSERTIONS = NO;

439 | ENABLE_STRICT_OBJC_MSGSEND = YES;

440 | GCC_C_LANGUAGE_STANDARD = gnu99;

441 | GCC_NO_COMMON_BLOCKS = YES;

442 | GCC_WARN_64_TO_32_BIT_CONVERSION = YES;

443 | GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;

444 | GCC_WARN_UNDECLARED_SELECTOR = YES;

445 | GCC_WARN_UNINITIALIZED_AUTOS = YES_AGGRESSIVE;

446 | GCC_WARN_UNUSED_FUNCTION = YES;

447 | GCC_WARN_UNUSED_VARIABLE = YES;

448 | IPHONEOS_DEPLOYMENT_TARGET = 12.0;

449 | MTL_ENABLE_DEBUG_INFO = NO;

450 | SDKROOT = iphoneos;

451 | SWIFT_COMPILATION_MODE = wholemodule;

452 | SWIFT_OPTIMIZATION_LEVEL = "-O";

453 | SWIFT_VERSION = 4.2;

454 | TARGETED_DEVICE_FAMILY = "1,2";

455 | VALIDATE_PRODUCT = YES;

456 | };

457 | name = Release;

458 | };

459 | 7AA677241CFF765600B353FB /* Debug */ = {

460 | isa = XCBuildConfiguration;

461 | buildSettings = {

462 | ASSETCATALOG_COMPILER_APPICON_NAME = AppIcon;

463 | CLANG_ENABLE_MODULES = YES;

464 | CODE_SIGN_IDENTITY = "iPhone Developer";

465 | CURRENT_PROJECT_VERSION = 1;

466 | DEAD_CODE_STRIPPING = NO;

467 | DEVELOPMENT_TEAM = TD7S8QYFSY;

468 | INFOPLIST_FILE = AppML/Info.plist;

469 | IPHONEOS_DEPLOYMENT_TARGET = 13.0;

470 | LD_RUNPATH_SEARCH_PATHS = (

471 | "$(inherited)",

472 | "@executable_path/Frameworks",

473 | );

474 | LD_VERIFY_BITCODE = NO;

475 | MARKETING_VERSION = 1.3;

476 | PRODUCT_BUNDLE_IDENTIFIER = it.filippoaleotti;

477 | PRODUCT_NAME = MobilePydnet;

478 | PROVISIONING_PROFILE_SPECIFIER = "";

479 | SDKROOT = iphoneos;

480 | SWIFT_OBJC_BRIDGING_HEADER = "";

481 | SWIFT_OPTIMIZATION_LEVEL = "-Onone";

482 | SWIFT_VERSION = 5.0;

483 | TARGETED_DEVICE_FAMILY = "1,2";

484 | };

485 | name = Debug;

486 | };

487 | 7AA677251CFF765600B353FB /* Release */ = {

488 | isa = XCBuildConfiguration;

489 | buildSettings = {

490 | ASSETCATALOG_COMPILER_APPICON_NAME = AppIcon;

491 | CLANG_ENABLE_MODULES = YES;

492 | CODE_SIGN_IDENTITY = "iPhone Developer";

493 | CURRENT_PROJECT_VERSION = 1;

494 | DEAD_CODE_STRIPPING = NO;

495 | DEVELOPMENT_TEAM = ZF4U32B6GT;

496 | INFOPLIST_FILE = AppML/Info.plist;

497 | IPHONEOS_DEPLOYMENT_TARGET = 13.0;

498 | LD_RUNPATH_SEARCH_PATHS = (

499 | "$(inherited)",

500 | "@executable_path/Frameworks",

501 | );

502 | LD_VERIFY_BITCODE = NO;

503 | MARKETING_VERSION = 1.3;

504 | PRODUCT_BUNDLE_IDENTIFIER = it.gzaccaroni.mlapp;

505 | PRODUCT_NAME = MobilePydnet;

506 | PROVISIONING_PROFILE_SPECIFIER = "";

507 | SDKROOT = iphoneos;

508 | SWIFT_OBJC_BRIDGING_HEADER = "";

509 | SWIFT_VERSION = 5.0;

510 | TARGETED_DEVICE_FAMILY = "1,2";

511 | };

512 | name = Release;

513 | };

514 | /* End XCBuildConfiguration section */

515 |

516 | /* Begin XCConfigurationList section */

517 | 7AA6770C1CFF765500B353FB /* Build configuration list for PBXProject "AppML" */ = {

518 | isa = XCConfigurationList;

519 | buildConfigurations = (

520 | 7AA677211CFF765600B353FB /* Debug */,

521 | 7AA677221CFF765600B353FB /* Release */,

522 | );

523 | defaultConfigurationIsVisible = 0;

524 | defaultConfigurationName = Release;

525 | };

526 | 7AA677231CFF765600B353FB /* Build configuration list for PBXNativeTarget "AppML" */ = {

527 | isa = XCConfigurationList;

528 | buildConfigurations = (

529 | 7AA677241CFF765600B353FB /* Debug */,

530 | 7AA677251CFF765600B353FB /* Release */,

531 | );

532 | defaultConfigurationIsVisible = 0;

533 | defaultConfigurationName = Release;

534 | };

535 | /* End XCConfigurationList section */

536 |

537 | /* Begin XCRemoteSwiftPackageReference section */

538 | 3C140CA12322CD8F00D27DFD /* XCRemoteSwiftPackageReference "RxSwift" */ = {

539 | isa = XCRemoteSwiftPackageReference;

540 | repositoryURL = "git@github.com:ReactiveX/RxSwift.git";

541 | requirement = {

542 | kind = upToNextMajorVersion;

543 | minimumVersion = 5.0.1;

544 | };

545 | };

546 | /* End XCRemoteSwiftPackageReference section */

547 |

548 | /* Begin XCSwiftPackageProductDependency section */

549 | 3C140CA22322CD8F00D27DFD /* RxRelay */ = {

550 | isa = XCSwiftPackageProductDependency;

551 | package = 3C140CA12322CD8F00D27DFD /* XCRemoteSwiftPackageReference "RxSwift" */;

552 | productName = RxRelay;

553 | };

554 | 3C140CA42322CD8F00D27DFD /* RxSwift */ = {

555 | isa = XCSwiftPackageProductDependency;

556 | package = 3C140CA12322CD8F00D27DFD /* XCRemoteSwiftPackageReference "RxSwift" */;

557 | productName = RxSwift;

558 | };

559 | 3C140CA62322CD8F00D27DFD /* RxCocoa */ = {

560 | isa = XCSwiftPackageProductDependency;

561 | package = 3C140CA12322CD8F00D27DFD /* XCRemoteSwiftPackageReference "RxSwift" */;

562 | productName = RxCocoa;

563 | };

564 | /* End XCSwiftPackageProductDependency section */

565 | };

566 | rootObject = 7AA677091CFF765500B353FB /* Project object */;

567 | }

568 |

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/project.xcworkspace/contents.xcworkspacedata:

--------------------------------------------------------------------------------

1 |

2 |

4 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/project.xcworkspace/xcshareddata/IDEWorkspaceChecks.plist:

--------------------------------------------------------------------------------

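1 | <?xml version="1.0" encoding="UTF-8"?>

2 | <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

3 | <plist version="1.0">

4 | <dict>

5 | <key>IDEDidComputeMac32BitWarning</key>

6 | <true/>

7 | </dict>

8 | </plist>

9 |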

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/project.xcworkspace/xcshareddata/swiftpm/Package.resolved:

--------------------------------------------------------------------------------

1 | {

2 | "object": {

3 | "pins": [

4 | {

5 | "package": "RxSwift",

6 | "repositoryURL": "git@github.com:ReactiveX/RxSwift.git",

7 | "state": {

8 | "branch": null,

9 | "revision": "b3e888b4972d9bc76495dd74d30a8c7fad4b9395",

10 | "version": "5.0.1"

11 | }

12 | }

13 | ]

14 | },

15 | "version": 1

16 | }

17 |

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/project.xcworkspace/xcuserdata/filippoaleotti.xcuserdatad/UserInterfaceState.xcuserstate:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML.xcodeproj/project.xcworkspace/xcuserdata/filippoaleotti.xcuserdatad/UserInterfaceState.xcuserstate

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/xcshareddata/xcschemes/AppML.xcscheme:

--------------------------------------------------------------------------------

1 |

2 |

5 |

8 |

9 |

15 |

21 |

22 |

23 |

24 |

25 |

30 |

31 |

37 |

38 |

39 |

40 |

41 |

42 |

53 |

55 |

61 |

62 |

63 |

64 |

70 |

72 |

78 |

79 |

80 |

81 |

83 |

84 |

87 |

88 |

89 |

--------------------------------------------------------------------------------

/iOS/AppML.xcodeproj/xcuserdata/filippoaleotti.xcuserdatad/xcdebugger/Breakpoints_v2.xcbkptlist:

--------------------------------------------------------------------------------

1 |

2 |

6 |

7 |

--------------------------------------------------------------------------------

/iOS/AppML.xcworkspace/contents.xcworkspacedata:

--------------------------------------------------------------------------------

1 |

2 |

4 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/iOS/AppML.xcworkspace/xcshareddata/IDEWorkspaceChecks.plist:

--------------------------------------------------------------------------------

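1 | <?xml version="1.0" encoding="UTF-8"?>

2 | <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

3 | <plist version="1.0">

4 | <dict>

5 | <key>IDEDidComputeMac32BitWarning</key>

6 | <true/>

7 | </dict>

8 | </plist>

9 |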

--------------------------------------------------------------------------------

/iOS/AppML.xcworkspace/xcshareddata/swiftpm/Package.resolved:

--------------------------------------------------------------------------------

1 | {

2 | "object": {

3 | "pins": [

4 | {

5 | "package": "RxSwift",

6 | "repositoryURL": "git@github.com:ReactiveX/RxSwift.git",

7 | "state": {

8 | "branch": null,

9 | "revision": "002d325b0bdee94e7882e1114af5ff4fe1e96afa",

10 | "version": "5.1.1"

11 | }

12 | }

13 | ]

14 | },

15 | "version": 1

16 | }

17 |

--------------------------------------------------------------------------------

/iOS/AppML/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/.DS_Store

--------------------------------------------------------------------------------

/iOS/AppML/AppDelegate.swift:

--------------------------------------------------------------------------------

1 | //

2 | // AppDelegate.swift

3 | // AppML

4 | //

5 | // Created by Giulio Zaccaroni on 21/04/2019.

6 | // Copyright © 2019 Apple. All rights reserved.

7 | //

8 | import UIKit

9 | @UIApplicationMain

10 | class AppDelegate: UIResponder, UIApplicationDelegate {

11 | var window: UIWindow?

12 | func application(_ application: UIApplication, didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey : Any]? = nil) -> Bool {

13 | UIApplication.shared.isIdleTimerDisabled = true

14 |

15 | self.window = UIWindow(frame: UIScreen.main.bounds)

16 |

17 | let storyboard = UIStoryboard(name: "Main", bundle: nil)

18 |

19 | let initialViewController = storyboard.instantiateInitialViewController()!

20 |

21 | self.window?.rootViewController = initialViewController

22 | self.window?.makeKeyAndVisible()

23 |

24 | return true

25 | }

26 | }

27 |

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/.DS_Store

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "size" : "20x20",

5 | "idiom" : "iphone",

6 | "filename" : "Icon-App-20x20@2x.png",

7 | "scale" : "2x"

8 | },

9 | {

10 | "size" : "20x20",

11 | "idiom" : "iphone",

12 | "filename" : "Icon-App-20x20@3x.png",

13 | "scale" : "3x"

14 | },

15 | {

16 | "size" : "29x29",

17 | "idiom" : "iphone",

18 | "filename" : "Icon-App-29x29@1x.png",

19 | "scale" : "1x"

20 | },

21 | {

22 | "size" : "29x29",

23 | "idiom" : "iphone",

24 | "filename" : "Icon-App-29x29@2x.png",

25 | "scale" : "2x"

26 | },

27 | {

28 | "size" : "29x29",

29 | "idiom" : "iphone",

30 | "filename" : "Icon-App-29x29@3x.png",

31 | "scale" : "3x"

32 | },

33 | {

34 | "size" : "40x40",

35 | "idiom" : "iphone",

36 | "filename" : "Icon-App-40x40@2x.png",

37 | "scale" : "2x"

38 | },

39 | {

40 | "size" : "40x40",

41 | "idiom" : "iphone",

42 | "filename" : "Icon-App-40x40@3x.png",

43 | "scale" : "3x"

44 | },

45 | {

46 | "size" : "60x60",

47 | "idiom" : "iphone",

48 | "filename" : "Icon-App-60x60@2x.png",

49 | "scale" : "2x"

50 | },

51 | {

52 | "size" : "60x60",

53 | "idiom" : "iphone",

54 | "filename" : "Icon-App-60x60@3x.png",

55 | "scale" : "3x"

56 | },

57 | {

58 | "size" : "20x20",

59 | "idiom" : "ipad",

60 | "filename" : "Icon-App-20x20@1x.png",

61 | "scale" : "1x"

62 | },

63 | {

64 | "size" : "20x20",

65 | "idiom" : "ipad",

66 | "filename" : "Icon-App-20x20@2x.png",

67 | "scale" : "2x"

68 | },

69 | {

70 | "size" : "29x29",

71 | "idiom" : "ipad",

72 | "filename" : "Icon-App-29x29@1x.png",

73 | "scale" : "1x"

74 | },

75 | {

76 | "size" : "29x29",

77 | "idiom" : "ipad",

78 | "filename" : "Icon-App-29x29@2x.png",

79 | "scale" : "2x"

80 | },

81 | {

82 | "size" : "40x40",

83 | "idiom" : "ipad",

84 | "filename" : "Icon-App-40x40@1x.png",

85 | "scale" : "1x"

86 | },

87 | {

88 | "size" : "40x40",

89 | "idiom" : "ipad",

90 | "filename" : "Icon-App-40x40@2x.png",

91 | "scale" : "2x"

92 | },

93 | {

94 | "size" : "76x76",

95 | "idiom" : "ipad",

96 | "filename" : "Icon-App-76x76@1x.png",

97 | "scale" : "1x"

98 | },

99 | {

100 | "size" : "76x76",

101 | "idiom" : "ipad",

102 | "filename" : "Icon-App-76x76@2x.png",

103 | "scale" : "2x"

104 | },

105 | {

106 | "size" : "83.5x83.5",

107 | "idiom" : "ipad",

108 | "filename" : "Icon-App-83.5x83.5@2x.png",

109 | "scale" : "2x"

110 | },

111 | {

112 | "size" : "1024x1024",

113 | "idiom" : "ios-marketing",

114 | "filename" : "ItunesArtwork@2x.png",

115 | "scale" : "1x"

116 | }

117 | ],

118 | "info" : {

119 | "version" : 1,

120 | "author" : "xcode"

121 | }

122 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@1x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@1x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@3x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-20x20@3x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@1x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@1x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@3x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-29x29@3x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@1x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@1x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@3x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-40x40@3x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-60x60@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-60x60@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-60x60@3x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-60x60@3x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-76x76@1x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-76x76@1x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-76x76@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-76x76@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-83.5x83.5@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/Icon-App-83.5x83.5@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/AppIcon.appiconset/ItunesArtwork@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/AppIcon.appiconset/ItunesArtwork@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/ColorFilterOff.imageset/ColorFilterOff@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/ColorFilterOff.imageset/ColorFilterOff@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/ColorFilterOff.imageset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "idiom" : "universal",

5 | "scale" : "1x"

6 | },

7 | {

8 | "idiom" : "universal",

9 | "filename" : "ColorFilterOff@2x.png",

10 | "scale" : "2x"

11 | },

12 | {

13 | "idiom" : "universal",

14 | "scale" : "3x"

15 | }

16 | ],

17 | "info" : {

18 | "version" : 1,

19 | "author" : "xcode"

20 | },

21 | "properties" : {

22 | "template-rendering-intent" : "template"

23 | }

24 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/ColorFilterOn.imageset/ColorFilterOn@2x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/ColorFilterOn.imageset/ColorFilterOn@2x.png

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/ColorFilterOn.imageset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "idiom" : "universal",

5 | "scale" : "1x"

6 | },

7 | {

8 | "idiom" : "universal",

9 | "filename" : "ColorFilterOn@2x.png",

10 | "scale" : "2x"

11 | },

12 | {

13 | "idiom" : "universal",

14 | "scale" : "3x"

15 | }

16 | ],

17 | "info" : {

18 | "version" : 1,

19 | "author" : "xcode"

20 | },

21 | "properties" : {

22 | "template-rendering-intent" : "template"

23 | }

24 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "info" : {

3 | "version" : 1,

4 | "author" : "xcode"

5 | }

6 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/SettingsIcon.imageset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "idiom" : "universal",

5 | "filename" : "icons8-services-2.pdf"

6 | }

7 | ],

8 | "info" : {

9 | "version" : 1,

10 | "author" : "xcode"

11 | }

12 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/SettingsIcon.imageset/icons8-services-2.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/SettingsIcon.imageset/icons8-services-2.pdf

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/Trash.imageset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "idiom" : "universal",

5 | "filename" : "Medium-S.pdf"

6 | }

7 | ],

8 | "info" : {

9 | "version" : 1,

10 | "author" : "xcode"

11 | },

12 | "properties" : {

13 | "template-rendering-intent" : "template"

14 | }

15 | }

--------------------------------------------------------------------------------

/iOS/AppML/Assets.xcassets/Trash.imageset/Medium-S.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/FilippoAleotti/mobilePydnet/9ab405806630341106514277f4d61156dd697c59/iOS/AppML/Assets.xcassets/Trash.imageset/Medium-S.pdf

--------------------------------------------------------------------------------

/iOS/AppML/CameraLayer/CameraStream.swift:

--------------------------------------------------------------------------------

1 | //

2 | // CameraStream.swift

3 | // AppML

4 | //

5 | // Created by Giulio Zaccaroni on 27/07/2019.

6 | // Copyright © 2019 Apple. All rights reserved.

7 | //

8 |

9 | import AVFoundation

10 | import Photos

11 | import Accelerate

12 | import CoreML

13 | import RxSwift

14 | public class CameraStream: NSObject {

15 | private let session = AVCaptureSession()

16 | private var isSessionRunning = false

17 | private let dataOutputQueue = DispatchQueue(label: "data output queue")

18 | private let sessionQueue = DispatchQueue(label: "session queue") // Communicate with the session and other session objects on this queue.

19 | private var subject: PublishSubject<CVPixelBuffer>?

20 |

21 | public func configure() -> Completable{

22 | return Completable.create { completable in

23 | return self.sessionQueue.sync {

24 | return self.configureSession(completable: completable)

25 | }

26 | }

27 | }

28 | public func start() -> Observable<CVPixelBuffer> {

29 | let subject = PublishSubject<CVPixelBuffer>()

30 | sessionQueue.sync {

31 | self.subject = subject

32 | session.startRunning()

33 | self.isSessionRunning = self.session.isRunning

34 | }

35 | return subject

36 | }

37 | public func stop(){

38 | sessionQueue.sync {

39 | self.session.stopRunning()

40 | self.isSessionRunning = self.session.isRunning

41 | subject?.dispose()

42 | self.subject = nil

43 | }

44 | }

45 |

46 | private let videoOutput = AVCaptureVideoDataOutput()

47 | @objc private dynamic var videoDeviceInput: AVCaptureDeviceInput!

48 | private func configureSession(completable: ((CompletableEvent) -> ())) -> Cancelable{

49 |

50 | session.beginConfiguration()

51 |

52 | session.sessionPreset = .hd1920x1080

53 |

54 | do {

55 |

56 | // Default to the back wide-angle camera.

57 |

58 | guard let videoDevice = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back) else{

59 | print("Camera video device is unavailable.")

60 | session.commitConfiguration()

61 | completable(.error(SessionSetupError.configurationFailed))

62 | return Disposables.create {}

63 | }

64 | let videoDeviceInput = try AVCaptureDeviceInput(device: videoDevice)

65 |

66 | if session.canAddInput(videoDeviceInput) {

67 | session.addInput(videoDeviceInput)

68 | self.videoDeviceInput = videoDeviceInput

69 |

70 | try videoDeviceInput.device.lockForConfiguration()

71 | videoDeviceInput.device.focusMode = .continuousAutoFocus

72 | videoDeviceInput.device.unlockForConfiguration()

73 | } else {

74 | print("Couldn't add video device input to the session.")

75 | session.commitConfiguration()

76 | completable(.error(SessionSetupError.configurationFailed))

77 | return Disposables.create {} }

78 |

79 | } catch {

80 | print("Couldn't create video device input: \(error)")

81 | session.commitConfiguration()

82 | completable(.error(SessionSetupError.configurationFailed))

83 | return Disposables.create {}

84 | }

85 | videoOutput.setSampleBufferDelegate(self, queue: dataOutputQueue)

86 | videoOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]

87 |

88 | session.addOutput(videoOutput)

89 |

90 | let videoConnection = videoOutput.connection(with: .video)

91 | videoConnection?.videoOrientation = .landscapeLeft

92 |

93 | session.commitConfiguration()

94 |

95 | completable(.completed)

96 | return Disposables.create {}

97 | }

98 |

99 | }

100 | extension CameraStream: AVCaptureVideoDataOutputSampleBufferDelegate {

101 | public func captureOutput(_ output: AVCaptureOutput,

102 | didOutput sampleBuffer: CMSampleBuffer,

103 | from connection: AVCaptureConnection) {

104 | guard let imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

105 | subject?.onNext(imageBuffer)

106 | }

107 | }

108 |

109 | public enum SessionSetupError: Error {

110 | case needAuthorization

111 | case authorizationDenied

112 | case configurationFailed

113 | case multiCamNotSupported

114 | }

115 |

--------------------------------------------------------------------------------

/iOS/AppML/CameraLayer/Extensions/CGImage+CVPixelBuffer.swift:

--------------------------------------------------------------------------------

1 | //

2 | // CGImage+CVPixelBuffer.swift

3 | // AppML

4 | //

5 | // Created by Giulio Zaccaroni on 07/08/2019.

6 | // Copyright © 2019 Apple. All rights reserved.

7 | //

8 |

9 |

10 | import CoreGraphics

11 | import CoreImage

12 | import VideoToolbox

13 | extension CGImage {

14 | /**

15 | Resizes the image to width x height and converts it to an RGB CVPixelBuffer.

16 | */