├── .github

├── ISSUE_TEMPLATE

│ ├── bug_report.md

│ └── feature_request.md

└── workflows

│ └── ubuntu.yml

├── .gitignore

├── .gitmodules

├── CODE_OF_CONDUCT.md

├── LICENSE

├── README.md

├── build.py

├── dingo

├── MetabolicNetwork.py

├── PolytopeSampler.py

├── __init__.py

├── __main__.py

├── bindings

│ ├── bindings.cpp

│ ├── bindings.h

│ └── hmc_sampling.h

├── illustrations.py

├── loading_models.py

├── nullspace.py

├── parser.py

├── preprocess.py

├── pyoptinterface_based_impl.py

├── scaling.py

├── utils.py

└── volestipy.pyx

├── doc

├── aconta_ppc_copula.png

├── e_coli_aconta.png

└── logo

│ └── dingo.jpg

├── ext_data

├── e_coli_core.json

├── e_coli_core.mat

├── e_coli_core.xml

├── e_coli_core_dingo.mat

└── matlab_model_wrapper.m

├── poetry.lock

├── pyproject.toml

├── setup.py

├── tests

├── correlation.py

├── fba.py

├── full_dimensional.py

├── max_ball.py

├── preprocess.py

├── rounding.py

├── sampling.py

├── sampling_no_multiphase.py

└── scaling.py

└── tutorials

├── CONTRIBUTING.md

├── README.md

├── dingo_tutorial.ipynb

└── figs

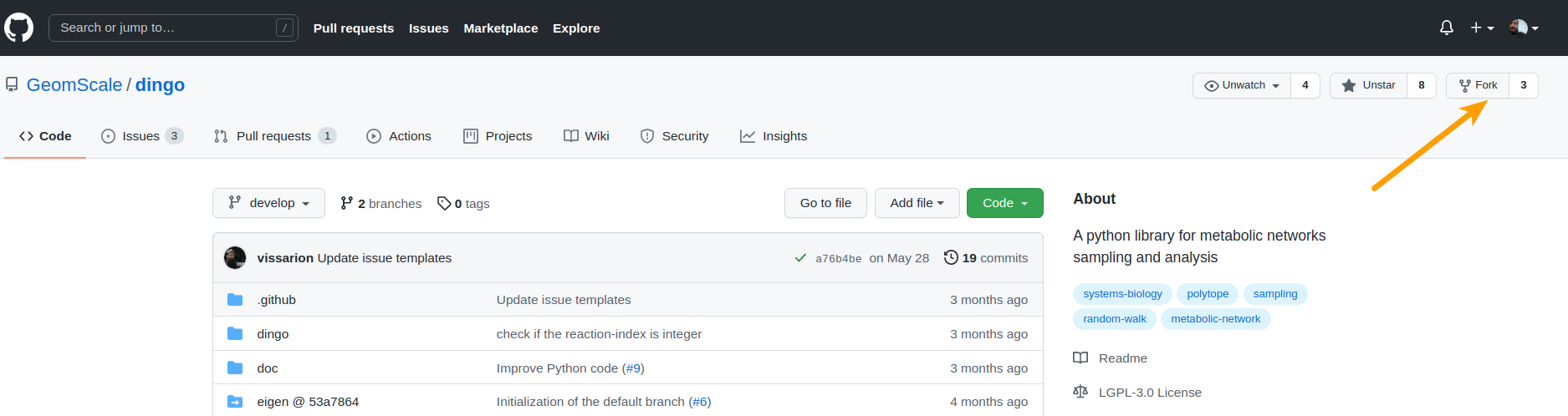

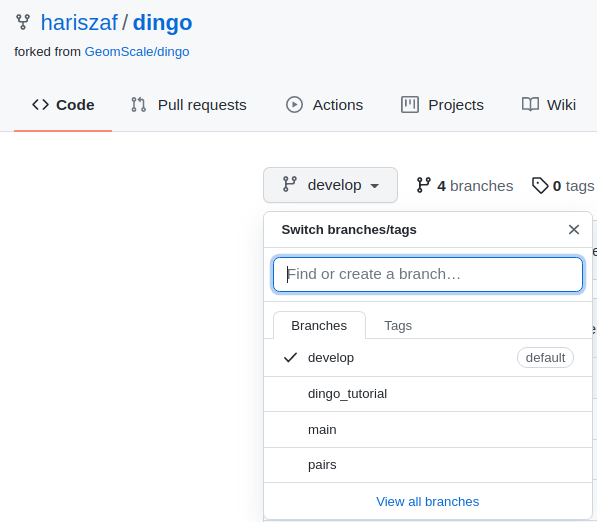

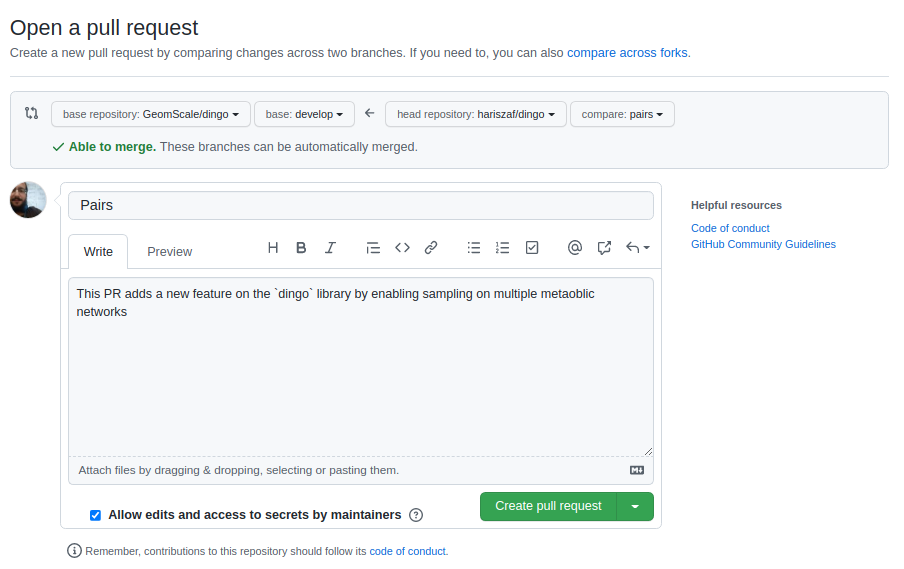

├── branches_github.png

├── fork.png

└── pr.png

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Desktop (please complete the following information):**

27 | - OS: [e.g. iOS]

28 | - Browser [e.g. chrome, safari]

29 | - Version [e.g. 22]

30 |

31 | **Smartphone (please complete the following information):**

32 | - Device: [e.g. iPhone6]

33 | - OS: [e.g. iOS8.1]

34 | - Browser [e.g. stock browser, safari]

35 | - Version [e.g. 22]

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/.github/workflows/ubuntu.yml:

--------------------------------------------------------------------------------

1 | # dingo : a python library for metabolic networks sampling and analysis

2 | # dingo is part of GeomScale project

3 |

4 | # Copyright (c) 2021-2022 Vissarion Fisikopoulos

5 |

6 | # Licensed under GNU LGPL.3, see LICENCE file

7 |

8 | name: dingo-ubuntu

9 |

10 | on: [push, pull_request]

11 |

12 | jobs:

13 |   build:

14 |

15 |     runs-on: ubuntu-latest

16 |     strategy:

17 |       matrix:

18 |         #python-version: [2.7, 3.5, 3.6, 3.7, 3.8]

19 |         python-version: [3.8]

20 |

21 |     steps:

22 |     - uses: actions/checkout@v2

23 |     - name: Set up Python ${{ matrix.python-version }}

24 |       uses: actions/setup-python@v2

25 |       with:

26 |         python-version: ${{ matrix.python-version }}

27 |     - name: Load submodules

28 |       run: |

29 |         git submodule update --init;

30 |     - name: Download and unzip the boost library

31 |       run: |

32 |         wget -O boost_1_76_0.tar.bz2 https://archives.boost.io/release/1.76.0/source/boost_1_76_0.tar.bz2;

33 |         tar xjf boost_1_76_0.tar.bz2;

34 |         rm boost_1_76_0.tar.bz2;

35 |     - name: Download and unzip the lp-solve library

36 |       run: |

37 |         wget https://sourceforge.net/projects/lpsolve/files/lpsolve/5.5.2.11/lp_solve_5.5.2.11_source.tar.gz

38 |         tar xzvf lp_solve_5.5.2.11_source.tar.gz

39 |         rm lp_solve_5.5.2.11_source.tar.gz

40 |     - name: Install dependencies

41 |       run: |

42 |         sudo apt-get install libsuitesparse-dev;

43 |         curl -sSL https://install.python-poetry.org | python3 - --version 1.3.2;

44 |         poetry --version

45 |         poetry show -v

46 |         source $(poetry env info --path)/bin/activate

47 |         poetry install;

48 |         pip3 install numpy scipy;

49 |     - name: Run tests

50 |       run: |

51 |         poetry run python3 tests/fba.py;

52 |         poetry run python3 tests/full_dimensional.py;

53 |         poetry run python3 tests/max_ball.py;

54 |         poetry run python3 tests/scaling.py;

55 |         poetry run python3 tests/sampling.py;

56 |         poetry run python3 tests/sampling_no_multiphase.py;

57 | # currently we do not test with gurobi

58 | # python3 tests/fast_implementation_test.py;

59 |

60 | #run all tests

61 | #python -m unittest discover test

62 | #TODO: use pytest

63 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | build

2 | dist

3 | boost_1_76_0

4 | dingo.egg-info

5 | *.pyc

6 | *.so

7 | volestipy.cpp

8 | volestipy.egg-info

9 | *.npy

10 | .ipynb_checkpoints/

11 | .vscode

12 | venv

13 | lp_solve_5.5/

14 | .devcontainer/

15 | .github/dependabot.yml

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "eigen"]

2 | path = eigen

3 | url = https://gitlab.com/libeigen/eigen.git

4 | branch = 3.3

5 | [submodule "volesti"]

6 | path = volesti

7 | url = https://github.com/GeomScale/volesti.git

8 | branch = develop

9 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | # Contributor Covenant Code of Conduct

2 |

3 | ## Our Pledge

4 |

5 | We as members, contributors, and leaders pledge to make participation in our

6 | community a harassment-free experience for everyone, regardless of age, body

7 | size, visible or invisible disability, ethnicity, sex characteristics, gender

8 | identity and expression, level of experience, education, socio-economic status,

9 | nationality, personal appearance, race, religion, or sexual identity

10 | and orientation.

11 |

12 | We pledge to act and interact in ways that contribute to an open, welcoming,

13 | diverse, inclusive, and healthy community.

14 |

15 | ## Our Standards

16 |

17 | Examples of behavior that contributes to a positive environment for our

18 | community include:

19 |

20 | * Demonstrating empathy and kindness toward other people

21 | * Being respectful of differing opinions, viewpoints, and experiences

22 | * Giving and gracefully accepting constructive feedback

23 | * Accepting responsibility and apologizing to those affected by our mistakes,

24 | and learning from the experience

25 | * Focusing on what is best not just for us as individuals, but for the

26 | overall community

27 |

28 | Examples of unacceptable behavior include:

29 |

30 | * The use of sexualized language or imagery, and sexual attention or

31 | advances of any kind

32 | * Trolling, insulting or derogatory comments, and personal or political attacks

33 | * Public or private harassment

34 | * Publishing others' private information, such as a physical or email

35 | address, without their explicit permission

36 | * Other conduct which could reasonably be considered inappropriate in a

37 | professional setting

38 |

39 | ## Enforcement Responsibilities

40 |

41 | Community leaders are responsible for clarifying and enforcing our standards of

42 | acceptable behavior and will take appropriate and fair corrective action in

43 | response to any behavior that they deem inappropriate, threatening, offensive,

44 | or harmful.

45 |

46 | Community leaders have the right and responsibility to remove, edit, or reject

47 | comments, commits, code, wiki edits, issues, and other contributions that are

48 | not aligned to this Code of Conduct, and will communicate reasons for moderation

49 | decisions when appropriate.

50 |

51 | ## Scope

52 |

53 | This Code of Conduct applies within all community spaces, and also applies when

54 | an individual is officially representing the community in public spaces.

55 | Examples of representing our community include using an official e-mail address,

56 | posting via an official social media account, or acting as an appointed

57 | representative at an online or offline event.

58 |

59 | ## Enforcement

60 |

61 | Instances of abusive, harassing, or otherwise unacceptable behavior may be

62 | reported to the community leaders responsible for enforcement at

63 | geomscale@gmail.com.

64 | All complaints will be reviewed and investigated promptly and fairly.

65 |

66 | All community leaders are obligated to respect the privacy and security of the

67 | reporter of any incident.

68 |

69 | ## Enforcement Guidelines

70 |

71 | Community leaders will follow these Community Impact Guidelines in determining

72 | the consequences for any action they deem in violation of this Code of Conduct:

73 |

74 | ### 1. Correction

75 |

76 | **Community Impact**: Use of inappropriate language or other behavior deemed

77 | unprofessional or unwelcome in the community.

78 |

79 | **Consequence**: A private, written warning from community leaders, providing

80 | clarity around the nature of the violation and an explanation of why the

81 | behavior was inappropriate. A public apology may be requested.

82 |

83 | ### 2. Warning

84 |

85 | **Community Impact**: A violation through a single incident or series

86 | of actions.

87 |

88 | **Consequence**: A warning with consequences for continued behavior. No

89 | interaction with the people involved, including unsolicited interaction with

90 | those enforcing the Code of Conduct, for a specified period of time. This

91 | includes avoiding interactions in community spaces as well as external channels

92 | like social media. Violating these terms may lead to a temporary or

93 | permanent ban.

94 |

95 | ### 3. Temporary Ban

96 |

97 | **Community Impact**: A serious violation of community standards, including

98 | sustained inappropriate behavior.

99 |

100 | **Consequence**: A temporary ban from any sort of interaction or public

101 | communication with the community for a specified period of time. No public or

102 | private interaction with the people involved, including unsolicited interaction

103 | with those enforcing the Code of Conduct, is allowed during this period.

104 | Violating these terms may lead to a permanent ban.

105 |

106 | ### 4. Permanent Ban

107 |

108 | **Community Impact**: Demonstrating a pattern of violation of community

109 | standards, including sustained inappropriate behavior, harassment of an

110 | individual, or aggression toward or disparagement of classes of individuals.

111 |

112 | **Consequence**: A permanent ban from any sort of public interaction within

113 | the community.

114 |

115 | ## Attribution

116 |

117 | This Code of Conduct is adapted from the [Contributor Covenant][homepage],

118 | version 2.0, available at

119 | https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

120 |

121 | Community Impact Guidelines were inspired by [Mozilla's code of conduct

122 | enforcement ladder](https://github.com/mozilla/diversity).

123 |

124 | [homepage]: https://www.contributor-covenant.org

125 |

126 | For answers to common questions about this code of conduct, see the FAQ at

127 | https://www.contributor-covenant.org/faq. Translations are available at

128 | https://www.contributor-covenant.org/translations.

129 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | GNU LESSER GENERAL PUBLIC LICENSE

2 | Version 3, 29 June 2007

3 |

4 | Copyright (C) 2007 Free Software Foundation, Inc.

5 | Everyone is permitted to copy and distribute verbatim copies

6 | of this license document, but changing it is not allowed.

7 |

8 |

9 | This version of the GNU Lesser General Public License incorporates

10 | the terms and conditions of version 3 of the GNU General Public

11 | License, supplemented by the additional permissions listed below.

12 |

13 | 0. Additional Definitions.

14 |

15 | As used herein, "this License" refers to version 3 of the GNU Lesser

16 | General Public License, and the "GNU GPL" refers to version 3 of the GNU

17 | General Public License.

18 |

19 | "The Library" refers to a covered work governed by this License,

20 | other than an Application or a Combined Work as defined below.

21 |

22 | An "Application" is any work that makes use of an interface provided

23 | by the Library, but which is not otherwise based on the Library.

24 | Defining a subclass of a class defined by the Library is deemed a mode

25 | of using an interface provided by the Library.

26 |

27 | A "Combined Work" is a work produced by combining or linking an

28 | Application with the Library. The particular version of the Library

29 | with which the Combined Work was made is also called the "Linked

30 | Version".

31 |

32 | The "Minimal Corresponding Source" for a Combined Work means the

33 | Corresponding Source for the Combined Work, excluding any source code

34 | for portions of the Combined Work that, considered in isolation, are

35 | based on the Application, and not on the Linked Version.

36 |

37 | The "Corresponding Application Code" for a Combined Work means the

38 | object code and/or source code for the Application, including any data

39 | and utility programs needed for reproducing the Combined Work from the

40 | Application, but excluding the System Libraries of the Combined Work.

41 |

42 | 1. Exception to Section 3 of the GNU GPL.

43 |

44 | You may convey a covered work under sections 3 and 4 of this License

45 | without being bound by section 3 of the GNU GPL.

46 |

47 | 2. Conveying Modified Versions.

48 |

49 | If you modify a copy of the Library, and, in your modifications, a

50 | facility refers to a function or data to be supplied by an Application

51 | that uses the facility (other than as an argument passed when the

52 | facility is invoked), then you may convey a copy of the modified

53 | version:

54 |

55 | a) under this License, provided that you make a good faith effort to

56 | ensure that, in the event an Application does not supply the

57 | function or data, the facility still operates, and performs

58 | whatever part of its purpose remains meaningful, or

59 |

60 | b) under the GNU GPL, with none of the additional permissions of

61 | this License applicable to that copy.

62 |

63 | 3. Object Code Incorporating Material from Library Header Files.

64 |

65 | The object code form of an Application may incorporate material from

66 | a header file that is part of the Library. You may convey such object

67 | code under terms of your choice, provided that, if the incorporated

68 | material is not limited to numerical parameters, data structure

69 | layouts and accessors, or small macros, inline functions and templates

70 | (ten or fewer lines in length), you do both of the following:

71 |

72 | a) Give prominent notice with each copy of the object code that the

73 | Library is used in it and that the Library and its use are

74 | covered by this License.

75 |

76 | b) Accompany the object code with a copy of the GNU GPL and this license

77 | document.

78 |

79 | 4. Combined Works.

80 |

81 | You may convey a Combined Work under terms of your choice that,

82 | taken together, effectively do not restrict modification of the

83 | portions of the Library contained in the Combined Work and reverse

84 | engineering for debugging such modifications, if you also do each of

85 | the following:

86 |

87 | a) Give prominent notice with each copy of the Combined Work that

88 | the Library is used in it and that the Library and its use are

89 | covered by this License.

90 |

91 | b) Accompany the Combined Work with a copy of the GNU GPL and this license

92 | document.

93 |

94 | c) For a Combined Work that displays copyright notices during

95 | execution, include the copyright notice for the Library among

96 | these notices, as well as a reference directing the user to the

97 | copies of the GNU GPL and this license document.

98 |

99 | d) Do one of the following:

100 |

101 | 0) Convey the Minimal Corresponding Source under the terms of this

102 | License, and the Corresponding Application Code in a form

103 | suitable for, and under terms that permit, the user to

104 | recombine or relink the Application with a modified version of

105 | the Linked Version to produce a modified Combined Work, in the

106 | manner specified by section 6 of the GNU GPL for conveying

107 | Corresponding Source.

108 |

109 | 1) Use a suitable shared library mechanism for linking with the

110 | Library. A suitable mechanism is one that (a) uses at run time

111 | a copy of the Library already present on the user's computer

112 | system, and (b) will operate properly with a modified version

113 | of the Library that is interface-compatible with the Linked

114 | Version.

115 |

116 | e) Provide Installation Information, but only if you would otherwise

117 | be required to provide such information under section 6 of the

118 | GNU GPL, and only to the extent that such information is

119 | necessary to install and execute a modified version of the

120 | Combined Work produced by recombining or relinking the

121 | Application with a modified version of the Linked Version. (If

122 | you use option 4d0, the Installation Information must accompany

123 | the Minimal Corresponding Source and Corresponding Application

124 | Code. If you use option 4d1, you must provide the Installation

125 | Information in the manner specified by section 6 of the GNU GPL

126 | for conveying Corresponding Source.)

127 |

128 | 5. Combined Libraries.

129 |

130 | You may place library facilities that are a work based on the

131 | Library side by side in a single library together with other library

132 | facilities that are not Applications and are not covered by this

133 | License, and convey such a combined library under terms of your

134 | choice, if you do both of the following:

135 |

136 | a) Accompany the combined library with a copy of the same work based

137 | on the Library, uncombined with any other library facilities,

138 | conveyed under the terms of this License.

139 |

140 | b) Give prominent notice with the combined library that part of it

141 | is a work based on the Library, and explaining where to find the

142 | accompanying uncombined form of the same work.

143 |

144 | 6. Revised Versions of the GNU Lesser General Public License.

145 |

146 | The Free Software Foundation may publish revised and/or new versions

147 | of the GNU Lesser General Public License from time to time. Such new

148 | versions will be similar in spirit to the present version, but may

149 | differ in detail to address new problems or concerns.

150 |

151 | Each version is given a distinguishing version number. If the

152 | Library as you received it specifies that a certain numbered version

153 | of the GNU Lesser General Public License "or any later version"

154 | applies to it, you have the option of following the terms and

155 | conditions either of that published version or of any later version

156 | published by the Free Software Foundation. If the Library as you

157 | received it does not specify a version number of the GNU Lesser

158 | General Public License, you may choose any version of the GNU Lesser

159 | General Public License ever published by the Free Software Foundation.

160 |

161 | If the Library as you received it specifies that a proxy can decide

162 | whether future versions of the GNU Lesser General Public License shall

163 | apply, that proxy's public statement of acceptance of any version is

164 | permanent authorization for you to choose that version for the

165 | Library.

166 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | **dingo** is a Python package that analyzes metabolic networks.

4 | It relies on high dimensional sampling with Markov Chain Monte Carlo (MCMC)

5 | methods and fast optimization methods to analyze the possible states of a

6 | metabolic network. To perform MCMC sampling, `dingo` relies on the `C++` library

7 | [volesti](https://github.com/GeomScale/volume_approximation), which provides

8 | several algorithms for sampling convex polytopes.

9 | `dingo` also performs two standard methods to analyze the flux space of a

10 | metabolic network, namely Flux Balance Analysis and Flux Variability Analysis.

11 |

12 | `dingo` is part of the [GeomScale](https://geomscale.github.io/) project.

13 |

14 | [Unit tests (dingo-ubuntu workflow)](https://github.com/GeomScale/dingo/actions?query=workflow%3Adingo-ubuntu)

15 | [Tutorial on Google Colab](https://colab.research.google.com/github/GeomScale/dingo/blob/develop/tutorials/dingo_tutorial.ipynb)

16 | [Chat on Gitter](https://gitter.im/GeomScale/community?utm_source=share-link&utm_medium=link&utm_campaign=share-link)

17 |

18 |

19 | ## Installation

20 |

21 | **Note:** Python version should be 3.8.x. You can check this by running the following command in your terminal:

22 | ```bash

23 | python --version

24 | ```

25 | If you have a different version of Python installed, you'll need to install Python 3.8 ([start here](https://linuxize.com/post/how-to-install-python-3-8-on-ubuntu-18-04/)) and select it with `update-alternatives` ([start here](https://linuxhint.com/update_alternatives_ubuntu/)).

26 |

27 | **Note:** If you are using `GitHub Codespaces`, start [here](https://docs.github.com/en/codespaces/setting-up-your-project-for-codespaces/adding-a-dev-container-configuration/setting-up-your-python-project-for-codespaces) to set the Python version. Once your Python version is `3.8.x`, you can follow the instructions below.

28 |

29 |

30 |

31 | To load the submodules that dingo uses, run

32 |

33 | ````bash

34 | git submodule update --init

35 | ````

36 |

37 | You will need to download and unzip the Boost library:

38 | ```

39 | wget -O boost_1_76_0.tar.bz2 https://archives.boost.io/release/1.76.0/source/boost_1_76_0.tar.bz2

40 | tar xjf boost_1_76_0.tar.bz2

41 | rm boost_1_76_0.tar.bz2

42 | ```

43 |

44 | You will also need to download and unzip the lpsolve library:

45 | ```

46 | wget https://sourceforge.net/projects/lpsolve/files/lpsolve/5.5.2.11/lp_solve_5.5.2.11_source.tar.gz

47 | tar xzvf lp_solve_5.5.2.11_source.tar.gz

48 | rm lp_solve_5.5.2.11_source.tar.gz

49 | ```

50 |

51 | Then, you need to install the dependencies for the PySPQR library; for Debian/Ubuntu Linux, run

52 |

53 | ```bash

54 | sudo apt-get update -y

55 | sudo apt-get install -y libsuitesparse-dev

56 | ```

57 |

58 | `dingo` uses [Poetry](https://python-poetry.org/) to manage its Python dependencies. To install them, run

59 | ```

60 | curl -sSL https://install.python-poetry.org | python3 - --version 1.3.2

61 | poetry shell

62 | poetry install

63 | ```

64 |

65 | You can install the [Gurobi solver](https://www.gurobi.com/) for faster linear programming optimization. Run

66 |

67 | ```

68 | pip3 install -i https://pypi.gurobi.com gurobipy

69 | ```

70 |

71 | Then, you will need a [license](https://www.gurobi.com/downloads/end-user-license-agreement-academic/). For more information, we refer to the Gurobi [download center](https://www.gurobi.com/downloads/).

72 |

73 |

74 |

75 |

76 | ## Unit tests

77 |

78 | Now you can run the unit tests with the following commands (using the default solver `highs`):

79 | ```

80 | python3 tests/fba.py

81 | python3 tests/full_dimensional.py

82 | python3 tests/max_ball.py

83 | python3 tests/scaling.py

84 | python3 tests/rounding.py

85 | python3 tests/sampling.py

86 | ```

87 |

88 | If you have installed Gurobi successfully, then run

89 | ```

90 | python3 tests/fba.py gurobi

91 | python3 tests/full_dimensional.py gurobi

92 | python3 tests/max_ball.py gurobi

93 | python3 tests/scaling.py gurobi

94 | python3 tests/rounding.py gurobi

95 | python3 tests/sampling.py gurobi

96 | ```

97 |

98 | ## Tutorial

99 |

100 | You can have a look at our [Google Colab notebook](https://colab.research.google.com/github/GeomScale/dingo/blob/develop/tutorials/dingo_tutorial.ipynb)

101 | on how to use `dingo`.

102 |

103 |

104 | ## Documentation

105 |

106 |

107 | It is quite simple to use dingo in your code. In general, dingo provides two classes:

108 |

109 | - `MetabolicNetwork` represents a metabolic network

110 | - `PolytopeSampler` can be used to sample from the flux space of a metabolic network or from a general convex polytope.

111 |

112 | The following script shows how to sample steady states of a metabolic network with dingo. To initialize a metabolic network object, provide the path to a `json` file, such as those in the [BiGG](http://bigg.ucsd.edu/models) dataset, or to a `mat` file (use the `matlab` wrapper in the `/ext_data` folder to convert a standard `mat` model file, such as those in the BiGG dataset):

113 |

114 | ```python

115 | from dingo import MetabolicNetwork, PolytopeSampler

116 |

117 | model = MetabolicNetwork.from_json('path/to/model_file.json')

118 | sampler = PolytopeSampler(model)

119 | steady_states = sampler.generate_steady_states()

120 | ```

121 |

122 | `dingo` can also load a model given in `.sbml` format using the following command,

123 |

124 | ```python

125 | model = MetabolicNetwork.from_sbml('path/to/model_file.sbml')

126 | ```

127 |

128 | The output variable `steady_states` is a `numpy` array that contains the steady states of the model column-wise. You can ask the `sampler` for stronger statistical guarantees on the sample:

129 |

130 | ```python

131 | steady_states = sampler.generate_steady_states(ess=2000, psrf = True)

132 | ```

133 |

134 | The `ess` stands for the effective sample size (ESS) (default value is `1000`) and the `psrf` is a flag to request an upper bound equal to 1.1 for the value of the *potential scale reduction factor* of each marginal flux (default option is `False`).
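
To give a feel for what the `psrf` bound checks, here is a minimal sketch of the textbook Gelman-Rubin potential scale reduction factor for a single flux, computed from several chains. It is an illustration only: the function name `psrf` and the multi-chain input layout are assumptions, and the estimator used inside `dingo` and `volesti` may differ in detail.

```python
from statistics import mean, variance


def psrf(chains):
    """Gelman-Rubin potential scale reduction factor for one scalar quantity.

    `chains` is a list of equally long lists of samples, one list per chain.
    This is the textbook formula, not dingo's own code.
    """
    n = len(chains[0])                          # samples per chain
    chain_means = [mean(c) for c in chains]
    w = mean(variance(c) for c in chains)       # average within-chain variance
    b = n * variance(chain_means)               # between-chain variance
    var_plus = (n - 1) / n * w + b / n          # pooled variance estimate
    return (var_plus / w) ** 0.5


# Two chains stuck in different regions give a value far above 1.1.
disagreeing = psrf([[0, 1, 0, 1], [10, 11, 10, 11]])
# Two chains that explore the same values give a value below 1.1.
agreeing = psrf([[1, 2, 3, 4], [1, 2, 3, 4]])
```

Values close to 1 suggest that the chains agree on the marginal distribution; with `psrf = True` the sampler is asked to keep this quantity below 1.1 for every flux.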

135 |

136 | You can also request the parallel MMCS algorithm:

137 |

138 | ```python

139 | steady_states = sampler.generate_steady_states(ess=2000, psrf = True,

140 |                                                parallel_mmcs = True, num_threads = 2)

141 | ```

142 |

143 | The default option is to run the sequential [Multiphase Monte Carlo Sampling](https://arxiv.org/abs/2012.05503) (MMCS) algorithm.

144 |

145 | **Tip**: After the first run of the MMCS algorithm, the polytope stored in the `sampler` object is usually more rounded than the initial one. Thus, the function `generate_steady_states()` becomes more efficient from run to run.

146 |

147 |

148 | #### Rounding the polytope

149 |

150 | `dingo` provides three methods to round a polytope: (i) bring the polytope to John position by applying to it the transformation that maps the largest inscribed ellipsoid of the polytope to the unit ball, (ii) bring the polytope to near-isotropic position by using uniform sampling with Billiard Walk, (iii) apply to the polytope the transformation that maps the smallest enclosing ellipsoid of a uniform sample from the interior of the polytope to the unit ball.

151 |

152 | ```python

153 | from dingo import MetabolicNetwork, PolytopeSampler

154 |

155 | model = MetabolicNetwork.from_json('path/to/model_file.json')

156 | sampler = PolytopeSampler(model)

157 | A, b, N, N_shift = sampler.get_polytope()

158 |

159 | A_rounded, b_rounded, Tr, Tr_shift = sampler.round_polytope(A, b, method="john_position")

160 | A_rounded, b_rounded, Tr, Tr_shift = sampler.round_polytope(A, b, method="isotropic_position")

161 | A_rounded, b_rounded, Tr, Tr_shift = sampler.round_polytope(A, b, method="min_ellipsoid")

162 | ```

163 |

164 | Then, to sample from the rounded polytope, call the following static method of the `PolytopeSampler` class:

165 |

166 | ```python

167 | samples = PolytopeSampler.sample_from_polytope(A_rounded, b_rounded)

168 | ```

169 |

170 | Finally, you can map the samples back to steady states:

171 |

172 | ```python

173 | from dingo import map_samples_to_steady_states

174 |

175 | steady_states = map_samples_to_steady_states(samples, N, N_shift, Tr, Tr_shift)

176 | ```
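
The mapping back to the flux space can be pictured as two affine maps applied in sequence. The sketch below assumes the convention `v = N (Tr x + Tr_shift) + N_shift` for a single sample `x`. That convention and the helper names `matvec` and `to_steady_state` are assumptions made for illustration, not a description of how `map_samples_to_steady_states` is implemented.

```python
def matvec(M, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def to_steady_state(x, N, N_shift, Tr, Tr_shift):
    """Map one sample from the rounded polytope back to a flux vector."""
    # Undo the rounding transformation: y = Tr x + Tr_shift
    y = [a + s for a, s in zip(matvec(Tr, x), Tr_shift)]
    # Lift from the low-dimensional polytope to the flux space: v = N y + N_shift
    return [a + s for a, s in zip(matvec(N, y), N_shift)]


# Toy data (made up): 3 fluxes, 2-dimensional polytope.
N = [[1, 0], [0, 1], [1, 1]]
N_shift = [0.5, 0, 0]
Tr = [[2, 0], [0, 3]]
Tr_shift = [1, -1]

v = to_steady_state([1, 1], N, N_shift, Tr, Tr_shift)
```

With an array of samples stored column-wise, the same two steps are applied to every column.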

177 |

178 | #### Other MCMC sampling methods

179 |

180 | To use any other MCMC sampling method that `dingo` provides you can use the following piece of code:

181 |

182 | ```python

183 | sampler = PolytopeSampler(model)

184 | steady_states = sampler.generate_steady_states_no_multiphase() #default parameters (method = 'billiard_walk', n=1000, burn_in=0, thinning=1)

185 | ```

186 |

187 | The MCMC methods that dingo (through `volesti` library) provides are the following: (i) 'cdhr': Coordinate Directions Hit-and-Run, (ii) 'rdhr': Random Directions Hit-and-Run,

188 | (iii) 'billiard_walk', (iv) 'ball_walk', (v) 'dikin_walk', (vi) 'john_walk', (vii) 'vaidya_walk'.
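
As an illustration of the simplest walk in this list, the following is a minimal pure-Python sketch of Coordinate Directions Hit-and-Run on a polytope `{x : A x <= b}`. It is not the `volesti` implementation: the names `cdhr_step` and `cdhr_sample` are hypothetical, and the polytope is assumed to be bounded, with a starting point in its interior.

```python
import random


def cdhr_step(A, b, x, rng):
    """One Coordinate Directions Hit-and-Run step inside {x : A x <= b}."""
    i = rng.randrange(len(x))                # pick a coordinate direction e_i
    lo, hi = float("-inf"), float("inf")
    for row, b_k in zip(A, b):
        slack = b_k - sum(a * v for a, v in zip(row, x))
        a_i = row[i]
        if a_i > 0:
            hi = min(hi, slack / a_i)        # moving along +e_i hits this facet
        elif a_i < 0:
            lo = max(lo, slack / a_i)        # moving along -e_i hits this facet
    t = rng.uniform(lo, hi)                  # uniform point on the chord
    y = list(x)
    y[i] += t
    return y


def cdhr_sample(A, b, x0, n, seed=0):
    """Run n CDHR steps from x0 and return the visited points."""
    rng = random.Random(seed)
    x, points = list(x0), []
    for _ in range(n):
        x = cdhr_step(A, b, x, rng)
        points.append(x)
    return points


# Unit square: 0 <= x_1 <= 1, 0 <= x_2 <= 1
A = [[1, 0], [-1, 0], [0, 1], [0, -1]]
b = [1, 0, 1, 0]
points = cdhr_sample(A, b, [0.5, 0.5], 500, seed=1)
```

Each step changes a single coordinate, so one step is cheap. The other walks in the list differ mainly in how the next point is proposed.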

189 |

190 |

191 |

192 | #### Switch the linear programming solver

193 |

194 | We use `pyoptinterface` to interface with the linear programming solvers. To switch the solver that `dingo` uses, you can use the `set_default_solver` function. The default solver is `highs` and you can switch to `gurobi` by running,

195 |

196 | ```python

197 | from dingo import set_default_solver

198 | set_default_solver("gurobi")

199 | ```

200 |

201 | You can also switch to other solvers that `pyoptinterface` supports, but we recommend using `highs` or `gurobi`. If you have issues with the solver, you can check the `pyoptinterface` [documentation](https://metab0t.github.io/PyOptInterface/getting_started.html).

202 |

203 | ### Apply FBA and FVA methods

204 |

205 | To apply the FVA and FBA methods, use the `MetabolicNetwork` class:

206 |

207 | ```python

208 | from dingo import MetabolicNetwork

209 |

210 | model = MetabolicNetwork.from_json('path/to/model_file.json')

211 | fva_output = model.fva()

212 |

213 | min_fluxes = fva_output[0]

214 | max_fluxes = fva_output[1]

215 | max_biomass_flux_vector = fva_output[2]

216 | max_biomass_objective = fva_output[3]

217 | ```

218 |

219 | The output of the FVA method is a tuple that contains `numpy` arrays. The vectors `min_fluxes` and `max_fluxes` contain the minimum and the maximum values of each flux. The vector `max_biomass_flux_vector` is the optimal flux vector according to the biomass objective function and `max_biomass_objective` is the value of that optimal solution.

220 |

221 | To apply FBA method,

222 |

223 | ```python

224 | fba_output = model.fba()

225 |

226 | max_biomass_flux_vector = fba_output[0]

227 | max_biomass_objective = fba_output[1]

228 | ```

229 |

230 | The output values have the same meaning as in the previous example.

231 |

232 |

233 |

234 | ### Set the restriction in the flux space

235 |

236 | FVA and FBA restrict the flux space to the set of flux vectors that have an objective value equal to the optimal value of the function. dingo also allows a more relaxed option, where you ask for flux vectors whose objective value is at least a given percentage of the optimal value,

237 |

238 | ```python

239 | model.set_opt_percentage(90)

240 | fva_output = model.fva()

241 |

242 | # the same restriction in the flux space holds for the sampler

243 | sampler = PolytopeSampler(model)

244 | steady_states = sampler.generate_steady_states()

245 | ```

246 |

247 | The default percentage is `100%`.
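
The snippet below sketches how the percentage turns into a lower bound on the objective value. It mirrors the bound that `PolytopeSampler.get_polytope` appends to the polytope (the optimal value is floored to a multiple of the tolerance and then scaled). The function name `objective_lower_bound` is hypothetical and is not part of dingo.

```python
import math


def objective_lower_bound(max_objective, opt_percentage=100, tol=1e-6):
    """Smallest objective value a flux vector may have and still be kept.

    max_objective  -- the optimal objective value returned by FBA
    opt_percentage -- the percentage passed to set_opt_percentage
    tol            -- numerical tolerance used to round the optimum down
    """
    floored_optimum = math.floor(max_objective / tol) * tol
    return floored_optimum * opt_percentage / 100


# With an optimum of about 0.874, asking for 90% keeps every flux vector
# whose objective value is at least roughly 0.787.
bound = objective_lower_bound(0.8739215, opt_percentage=90)
```

Rounding the optimum down before scaling leaves a small numerical margin, so the optimal flux vector itself is not excluded by floating-point error.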

248 |

249 |

250 |

251 | ### Change the objective function

252 |

253 | You could also set an alternative objective function. For example, to maximize the 1st reaction of the model,

254 |

255 | ```python

256 | n = model.num_of_reactions()

257 | obj_fun = np.zeros(n)

258 | obj_fun[0] = 1

259 | model.objective_function = obj_fun  # `objective_function` is a property with a setter

260 |

261 | # apply FVA using the new objective function

262 | fva_output = model.fva()

263 | # sample from the flux space by restricting

264 | # the fluxes according to the new objective function

265 | sampler = PolytopeSampler(model)

266 | steady_states = sampler.generate_steady_states()

267 | ```

268 |

269 |

270 |

271 | ### Plot flux marginals

272 |

273 | The generated steady states can be used to estimate the marginal density function of each flux. You can plot the histogram using the samples,

274 |

275 | ```python

276 | from dingo import MetabolicNetwork, PolytopeSampler, plot_histogram

277 |

278 | model = MetabolicNetwork.from_json('path/to/e_coli_core.json')

279 | sampler = PolytopeSampler(model)

280 | steady_states = sampler.generate_steady_states(ess = 3000)

281 |

282 | # plot the histogram for the 14th reaction in e-coli (ACONTa)

283 | reactions = model.reactions

284 | plot_histogram(

285 | steady_states[13],

286 | reactions[13],

287 | n_bins = 60,

288 | )

289 | ```

290 |

291 | The default number of bins is 60. dingo uses the package `matplotlib` for plotting.
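
As an illustration of what such a histogram estimates, the sketch below bins the samples of one flux and normalises the counts so that the bars integrate to one. It is a plain-Python approximation, not the code behind `plot_histogram`; the function name `histogram_density` is hypothetical.

```python
def histogram_density(samples, n_bins=60):
    """Estimate a marginal density from flux samples with equal-width bins.

    Returns the bin edges (n_bins + 1 values) and one density per bin.
    """
    lo, hi = min(samples), max(samples)
    if hi == lo:
        raise ValueError("all samples are identical; no density to estimate")
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for value in samples:
        # The largest sample falls exactly on the last edge; keep it in the last bin.
        k = min(int((value - lo) / width), n_bins - 1)
        counts[k] += 1
    total = len(samples)
    densities = [count / (total * width) for count in counts]
    edges = [lo + k * width for k in range(n_bins + 1)]
    return edges, densities


# Example with evenly spread values in [0, 1)
edges, densities = histogram_density([i / 1000 for i in range(1000)], n_bins=10)
```

More bins give a finer picture of the marginal but need more samples per bin to be reliable.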

292 |

293 |

294 |

295 | ### Plot a copula between two fluxes

296 |

297 | The generated steady states can be used to estimate and plot the copula between two fluxes. You can plot the copula using the samples,

298 |

299 | ```python

300 | from dingo import MetabolicNetwork, PolytopeSampler, plot_copula

301 |

302 | model = MetabolicNetwork.from_json('path/to/e_coli_core.json')

303 | sampler = PolytopeSampler(model)

304 | steady_states = sampler.generate_steady_states(ess = 3000)

305 |

306 | # plot the copula between the 13th (PPC) and the 14th (ACONTa) reaction in e-coli

307 | reactions = model.reactions

308 |

309 | data_flux2 = [steady_states[12], reactions[12]]

310 | data_flux1 = [steady_states[13], reactions[13]]

311 |

312 | plot_copula(data_flux1, data_flux2, n=10)

313 | ```

314 |

315 | The default number of cells is 5x5=25. dingo uses the package `plotly` for plotting.
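
To make the notion of a copula concrete, here is a small sketch that estimates it on an `n x n` grid: each flux is replaced by its rank among the samples, and the share of samples falling in each cell is counted. This is an illustrative approximation under the assumption that both fluxes have the same number of samples; it is not the implementation used by `plot_copula`, and the name `empirical_copula` is hypothetical.

```python
def empirical_copula(flux1, flux2, n=5):
    """Estimate the copula of two fluxes on an n x n grid.

    Returns a list of n rows with n cells each; cell [i][j] holds the
    fraction of samples whose rank in flux1 falls in the i-th slice and
    whose rank in flux2 falls in the j-th slice.
    """
    m = len(flux1)
    if m != len(flux2):
        raise ValueError("both fluxes need the same number of samples")

    def ranks(values):
        # Position of every sample in the sorted order (0 .. m - 1).
        order = sorted(range(m), key=lambda i: values[i])
        result = [0] * m
        for rank, index in enumerate(order):
            result[index] = rank
        return result

    ranks1, ranks2 = ranks(flux1), ranks(flux2)
    grid = [[0.0] * n for _ in range(n)]
    for r1, r2 in zip(ranks1, ranks2):
        grid[r1 * n // m][r2 * n // m] += 1.0 / m
    return grid


# Two perfectly correlated fluxes put all the mass on the diagonal.
flux_a = [float(i) for i in range(100)]
flux_b = [2.0 * value for value in flux_a]
grid = empirical_copula(flux_a, flux_b, n=5)
```

Mass concentrated along the diagonal indicates a positive dependence between the two fluxes, while mass along the anti-diagonal indicates a negative one.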

316 |

317 |

318 |

319 |

320 |

--------------------------------------------------------------------------------

/build.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | # See if Cython is installed

4 | try:

5 | from Cython.Build import cythonize

6 | # Do nothing if Cython is not available

7 | except ImportError:

8 | # Got to provide this function. Otherwise, poetry will fail

9 | def build(setup_kwargs):

10 | pass

11 |

12 |

13 | # Cython is installed. Compile

14 | else:

15 | from setuptools import Extension

16 | from setuptools.dist import Distribution

17 | from distutils.command.build_ext import build_ext

18 |

19 | # This function will be executed in setup.py:

20 | def build(setup_kwargs):

21 | # The file you want to compile

22 | extensions = ["dingo/volestipy.pyx"]

23 |

24 | # gcc arguments hack: enable optimizations

25 | os.environ["CFLAGS"] = " ".join([  # os.environ values must be strings, not lists

26 | "-std=c++17",

27 | "-O3",

28 | "-DBOOST_NO_AUTO_PTR",

29 | "-ldl",

30 | "-lm",

31 | ])

32 |

33 | # Build

34 | setup_kwargs.update(

35 | {

36 | "ext_modules": cythonize(

37 | extensions,

38 | language_level=3,

39 | compiler_directives={"linetrace": True},

40 | ),

41 | "cmdclass": {"build_ext": build_ext},

42 | }

43 | )

44 |

--------------------------------------------------------------------------------

/dingo/MetabolicNetwork.py:

--------------------------------------------------------------------------------

1 | # dingo : a python library for metabolic networks sampling and analysis

2 | # dingo is part of GeomScale project

3 |

4 | # Copyright (c) 2021 Apostolos Chalkis

5 | # Copyright (c) 2021 Vissarion Fisikopoulos

6 | # Copyright (c) 2024 Ke Shi

7 |

8 | # Licensed under GNU LGPL.3, see LICENCE file

9 |

10 | import numpy as np

11 | import sys

12 | from typing import Dict

13 | import cobra

14 | from dingo.loading_models import read_json_file, read_mat_file, read_sbml_file, parse_cobra_model

15 | from dingo.pyoptinterface_based_impl import fba,fva,inner_ball,remove_redundant_facets

16 |

17 | class MetabolicNetwork:

18 | def __init__(self, tuple_args):

19 |

20 | self._parameters = {}

21 | self._parameters["opt_percentage"] = 100

22 | self._parameters["distribution"] = "uniform"

23 | self._parameters["nullspace_method"] = "sparseQR"

24 | self._parameters["solver"] = None

25 |

26 | if len(tuple_args) != 10:

27 | raise Exception(

28 | "An unknown input format given to initialize a metabolic network object."

29 | )

30 |

31 | self._lb = tuple_args[0]

32 | self._ub = tuple_args[1]

33 | self._S = tuple_args[2]

34 | self._metabolites = tuple_args[3]

35 | self._reactions = tuple_args[4]

36 | self._biomass_index = tuple_args[5]

37 | self._objective_function = tuple_args[6]

38 | self._medium = tuple_args[7]

39 | self._medium_indices = tuple_args[8]

40 | self._exchanges = tuple_args[9]

41 |

42 | try:

43 | if self._biomass_index is not None and (

44 | self._lb.size != self._ub.size

45 | or self._lb.size != self._S.shape[1]

46 | or len(self._metabolites) != self._S.shape[0]

47 | or len(self._reactions) != self._S.shape[1]

48 | or self._objective_function.size != self._S.shape[1]

49 | or (self._biomass_index < 0)

50 | or (self._biomass_index >= self._objective_function.size)

51 | ):

52 | raise Exception(

53 | "Wrong tuple format given to initialize a metabolic network object."

54 | )

55 | except LookupError as error:

56 | raise error.with_traceback(sys.exc_info()[2])

57 |

58 | @classmethod

59 | def from_json(cls, arg):

60 | if (not isinstance(arg, str)) or (arg[-4:] != "json"):

61 | raise Exception(

62 | "An unknown input format given to initialize a metabolic network object."

63 | )

64 |

65 | return cls(read_json_file(arg))

66 |

67 | @classmethod

68 | def from_mat(cls, arg):

69 | if (not isinstance(arg, str)) or (arg[-3:] != "mat"):

70 | raise Exception(

71 | "An unknown input format given to initialize a metabolic network object."

72 | )

73 |

74 | return cls(read_mat_file(arg))

75 |

76 | @classmethod

77 | def from_sbml(cls, arg):

78 | if (not isinstance(arg, str)) or ((arg[-3:] != "xml") and (arg[-4:] != "sbml")):

79 | raise Exception(

80 | "An unknown input format given to initialize a metabolic network object."

81 | )

82 |

83 | return cls(read_sbml_file(arg))

84 |

85 | @classmethod

86 | def from_cobra_model(cls, arg):

87 | if (not isinstance(arg, cobra.core.model.Model)):

88 | raise Exception(

89 | "An unknown input format given to initialize a metabolic network object."

90 | )

91 |

92 | return cls(parse_cobra_model(arg))

93 |

94 | def fva(self):

95 | """A member function to apply the FVA method on the metabolic network."""

96 |

97 | return fva(

98 | self._lb,

99 | self._ub,

100 | self._S,

101 | self._objective_function,

102 | self._parameters["opt_percentage"],

103 | self._parameters["solver"]

104 | )

105 |

106 | def fba(self):

107 | """A member function to apply the FBA method on the metabolic network."""

108 | return fba(self._lb, self._ub, self._S, self._objective_function, self._parameters["solver"])

109 |

110 | @property

111 | def lb(self):

112 | return self._lb

113 |

114 | @property

115 | def ub(self):

116 | return self._ub

117 |

118 | @property

119 | def S(self):

120 | return self._S

121 |

122 | @property

123 | def metabolites(self):

124 | return self._metabolites

125 |

126 | @property

127 | def reactions(self):

128 | return self._reactions

129 |

130 | @property

131 | def biomass_index(self):

132 | return self._biomass_index

133 |

134 | @property

135 | def objective_function(self):

136 | return self._objective_function

137 |

138 | @property

139 | def medium(self):

140 | return self._medium

141 |

142 | @property

143 | def exchanges(self):

144 | return self._exchanges

145 |

146 | @property

147 | def parameters(self):

148 | return self._parameters

149 |

150 | @property

151 | def get_as_tuple(self):

152 | return (

153 | self._lb,

154 | self._ub,

155 | self._S,

156 | self._metabolites,

157 | self._reactions,

158 | self._biomass_index,

159 | self._objective_function,

160 | self._medium,

161 | self._medium_indices,

162 | self._exchanges

163 | )

164 |

165 | def num_of_reactions(self):

166 | return len(self._reactions)

167 |

168 | def num_of_metabolites(self):

169 | return len(self._metabolites)

170 |

171 | @lb.setter

172 | def lb(self, value):

173 | self._lb = value

174 |

175 | @ub.setter

176 | def ub(self, value):

177 | self._ub = value

178 |

179 | @S.setter

180 | def S(self, value):

181 | self._S = value

182 |

183 | @metabolites.setter

184 | def metabolites(self, value):

185 | self._metabolites = value

186 |

187 | @reactions.setter

188 | def reactions(self, value):

189 | self._reactions = value

190 |

191 | @biomass_index.setter

192 | def biomass_index(self, value):

193 | self._biomass_index = value

194 |

195 | @objective_function.setter

196 | def objective_function(self, value):

197 | self._objective_function = value

198 |

199 |

200 | @medium.setter

201 | def medium(self, medium: Dict[str, float]) -> None:

202 | """Set the constraints on the model exchanges.

203 |

204 | `model.medium` returns a dictionary of the bounds for each of the

205 | boundary reactions, in the form of `{rxn_id: rxn_bound}`, where `rxn_bound`

206 | specifies the absolute value of the bound in direction of metabolite

207 | creation (i.e., lower_bound for `met <--`, upper_bound for `met -->`)

208 |

209 | Parameters

210 | ----------

211 | medium: dict

212 | The medium to initialize. medium should be a dictionary defining

213 | `{rxn_id: bound}` pairs.

214 | """

215 |

216 | def set_active_bound(reaction: str, reac_index: int, bound: float) -> None:

217 | """Set active bound.

218 |

219 | Parameters

220 | ----------

221 | reaction: str

222 | Id of the reaction to set; `reac_index` is its column index in S

223 | bound: float

224 | Value to set bound to. The bound is reversed and set as lower bound

225 | if reaction has reactants (metabolites that are consumed). If reaction

226 | has reactants, it seems the upper bound won't be set.

227 | """

228 | if any(x < 0 for x in list(self._S[:, reac_index])):

229 | self._lb[reac_index] = -bound

230 | elif any(x > 0 for x in list(self._S[:, reac_index])):

231 | self._ub[reac_index] = bound

232 |

233 | # Set the given media bounds

234 | import logging  # local import: `logger` was never defined in this module

235 | media_rxns, exchange_rxns = [], frozenset(self.exchanges)

236 | for rxn_id, rxn_bound in medium.items():

237 | if rxn_id not in exchange_rxns:

238 | logging.getLogger(__name__).warning(

239 | f"{rxn_id} does not seem to be an exchange reaction. "

240 | f"Applying bounds anyway."

241 | )

242 | media_rxns.append(rxn_id)

243 |

244 | reac_index = self._reactions.index(rxn_id)

245 |

246 | set_active_bound(rxn_id, reac_index, rxn_bound)

247 |

248 | frozen_media_rxns = frozenset(media_rxns)

249 |

250 | # Turn off reactions not present in media

251 | for rxn_id in exchange_rxns - frozen_media_rxns:

252 | """

253 | is_export for us, needs to check on the S

254 | order reactions to their lb and ub

255 | """

256 | # is_export = rxn.reactants and not rxn.products

257 | reac_index = self._reactions.index(rxn_id)

258 | products = np.any(self._S[:,reac_index] > 0)

259 | reactants_exist = np.any(self._S[:,reac_index] < 0)

260 | is_export = bool(reactants_exist and not products)

261 | set_active_bound(

262 | rxn_id, reac_index, min(0.0, -self._lb[reac_index] if is_export else self._ub[reac_index])

263 | )

264 |

265 | def set_solver(self, solver: str):

266 | self._parameters["solver"] = solver

267 |

268 | def set_nullspace_method(self, value):

269 |

270 | self._parameters["nullspace_method"] = value

271 |

272 | def set_opt_percentage(self, value):

273 |

274 | self._parameters["opt_percentage"] = value

275 |

276 | def shut_down_reaction(self, index_val):

277 |

278 | if (

279 | (not isinstance(index_val, int))

280 | or index_val < 0

281 | or index_val >= self._S.shape[1]

282 | ):

283 | raise Exception("The input does not correspond to a proper reaction index.")

284 |

285 | self._lb[index_val] = 0

286 | self._ub[index_val] = 0

287 |

--------------------------------------------------------------------------------

/dingo/PolytopeSampler.py:

--------------------------------------------------------------------------------

1 | # dingo : a python library for metabolic networks sampling and analysis

2 | # dingo is part of GeomScale project

3 |

4 | # Copyright (c) 2021 Apostolos Chalkis

5 | # Copyright (c) 2024 Ke Shi

6 |

7 | # Licensed under GNU LGPL.3, see LICENCE file

8 |

9 |

10 | import numpy as np

11 | import warnings

12 | import math

13 | from dingo.MetabolicNetwork import MetabolicNetwork

14 | from dingo.utils import (

15 | map_samples_to_steady_states,

16 | get_matrices_of_low_dim_polytope,

17 | get_matrices_of_full_dim_polytope,

18 | )

19 |

20 | from dingo.pyoptinterface_based_impl import fba,fva,inner_ball,remove_redundant_facets

21 |

22 | from volestipy import HPolytope

23 |

24 |

25 | class PolytopeSampler:

26 | def __init__(self, metabol_net):

27 |

28 | if not isinstance(metabol_net, MetabolicNetwork):

29 | raise Exception("An unknown input object given for initialization.")

30 |

31 | self._metabolic_network = metabol_net

32 | self._A = []

33 | self._b = []

34 | self._N = []

35 | self._N_shift = []

36 | self._T = []

37 | self._T_shift = []

38 | self._parameters = {}

39 | self._parameters["nullspace_method"] = "sparseQR"

40 | self._parameters["opt_percentage"] = self.metabolic_network.parameters[

41 | "opt_percentage"

42 | ]

43 | self._parameters["distribution"] = "uniform"

44 | self._parameters["first_run_of_mmcs"] = True

45 | self._parameters["remove_redundant_facets"] = True

46 |

47 | self._parameters["tol"] = 1e-06

48 | self._parameters["solver"] = None

49 |

50 | def get_polytope(self):

51 | """A member function to derive the corresponding full dimensional polytope

52 | and an isometric linear transformation that maps the latter to the initial space.

53 | """

54 |

55 | if (

56 | len(self._A) == 0

57 | or len(self._b) == 0

58 | or len(self._N) == 0

59 | or len(self._N_shift) == 0

60 | or len(self._T) == 0

61 | or len(self._T_shift) == 0

62 | ):

63 |

64 | (

65 | max_flux_vector,

66 | max_objective,

67 | ) = self._metabolic_network.fba()

68 |

69 | if (

70 | self._parameters["remove_redundant_facets"]

71 | ):

72 |

73 | A, b, Aeq, beq = remove_redundant_facets(

74 | self._metabolic_network.lb,

75 | self._metabolic_network.ub,

76 | self._metabolic_network.S,

77 | self._metabolic_network.objective_function,

78 | self._parameters["opt_percentage"],

79 | self._parameters["solver"],

80 | )

81 | else:

82 |

83 | (

84 | min_fluxes,

85 | max_fluxes,

86 | max_flux_vector,

87 | max_objective,

88 | ) = self._metabolic_network.fva()

89 |

90 | A, b, Aeq, beq = get_matrices_of_low_dim_polytope(

91 | self._metabolic_network.S,

92 | self._metabolic_network.lb,

93 | self._metabolic_network.ub,

94 | min_fluxes,

95 | max_fluxes,

96 | )

97 |

98 | if (

99 | A.shape[0] != b.size

100 | or A.shape[1] != Aeq.shape[1]

101 | or Aeq.shape[0] != beq.size

102 | ):

103 | raise Exception("Preprocess for full dimensional polytope failed.")

104 |

105 | A = np.vstack((A, -self._metabolic_network.objective_function))

106 |

107 | b = np.append(

108 | b,

109 | -np.floor(max_objective / self._parameters["tol"])

110 | * self._parameters["tol"]

111 | * self._parameters["opt_percentage"]

112 | / 100,

113 | )

114 |

115 | (

116 | self._A,

117 | self._b,

118 | self._N,

119 | self._N_shift,

120 | ) = get_matrices_of_full_dim_polytope(A, b, Aeq, beq)

121 |

122 | n = self._A.shape[1]

123 | self._T = np.eye(n)

124 | self._T_shift = np.zeros(n)

125 |

126 | return self._A, self._b, self._N, self._N_shift

127 |

128 | def generate_steady_states(

129 | self, ess=1000, psrf=False, parallel_mmcs=False, num_threads=1

130 | ):

131 | """A member function to sample steady states.

132 |

133 | Keyword arguments:

134 | ess -- the target effective sample size

135 | psrf -- a boolean flag to request PSRF smaller than 1.1 for all marginal fluxes

136 | parallel_mmcs -- a boolean flag to request the parallel mmcs

137 | num_threads -- the number of threads to use for parallel mmcs

138 | """

139 |

140 | self.get_polytope()

141 |

142 | P = HPolytope(self._A, self._b)

143 |

144 | self._A, self._b, Tr, Tr_shift, samples = P.mmcs(

145 | ess, psrf, parallel_mmcs, num_threads, self._parameters["solver"]

146 | )

147 |

148 | if self._parameters["first_run_of_mmcs"]:

149 | steady_states = map_samples_to_steady_states(

150 | samples, self._N, self._N_shift

151 | )

152 | self._parameters["first_run_of_mmcs"] = False

153 | else:

154 | steady_states = map_samples_to_steady_states(

155 | samples, self._N, self._N_shift, self._T, self._T_shift

156 | )

157 |

158 | self._T = np.dot(self._T, Tr)

159 | self._T_shift = np.add(self._T_shift, Tr_shift)

160 |

161 | return steady_states

162 |

163 | def generate_steady_states_no_multiphase(

164 | self, method = 'billiard_walk', n=1000, burn_in=0, thinning=1, variance=1.0, bias_vector=None, ess=1000

165 | ):

166 | """A member function to sample steady states.

167 |

168 | Keyword arguments:

169 | method -- An MCMC method to sample, i.e. {'billiard_walk', 'cdhr', 'rdhr', 'ball_walk', 'dikin_walk', 'john_walk', 'vaidya_walk', 'gaussian_hmc_walk', 'exponential_hmc_walk', 'hmc_leapfrog_gaussian', 'hmc_leapfrog_exponential'}

170 | n -- the number of steady states to sample

171 | burn_in -- the number of points to burn before sampling

172 | thinning -- the walk length of the chain

173 | """

174 |

175 | self.get_polytope()

176 |

177 | P = HPolytope(self._A, self._b)

178 |

179 | if bias_vector is None:

180 | bias_vector = np.ones(self._A.shape[1], dtype=np.float64)

181 | else:

182 | bias_vector = bias_vector.astype('float64')

183 |

184 | samples = P.generate_samples(method.encode('utf-8'), n, burn_in, thinning, variance, bias_vector, self._parameters["solver"], ess)

185 | samples_T = samples.T

186 |

187 | steady_states = map_samples_to_steady_states(

188 | samples_T, self._N, self._N_shift

189 | )

190 |

191 | return steady_states

192 |

193 | @staticmethod

194 | def sample_from_polytope(

195 | A, b, ess=1000, psrf=False, parallel_mmcs=False, num_threads=1, solver=None

196 | ):

197 | """A static function to sample from a full dimensional polytope.

198 |

199 | Keyword arguments:

200 | A -- an mxn matrix that contains the normal vectors of the facets of the polytope row-wise

201 | b -- a m-dimensional vector, s.t. A*x <= b

202 | ess -- the target effective sample size

203 | psrf -- a boolean flag to request PSRF smaller than 1.1 for all marginal fluxes

204 | parallel_mmcs -- a boolean flag to request the parallel mmcs

205 | num_threads -- the number of threads to use for parallel mmcs

206 | """

207 |

208 | P = HPolytope(A, b)

209 |

210 | A, b, Tr, Tr_shift, samples = P.mmcs(

211 | ess, psrf, parallel_mmcs, num_threads, solver

212 | )

213 |

214 |

215 | return samples

216 |

217 | @staticmethod

218 | def sample_from_polytope_no_multiphase(

219 | A, b, method = 'billiard_walk', n=1000, burn_in=0, thinning=1, variance=1.0, bias_vector=None, solver=None, ess=1000

220 | ):

221 | """A static function to sample from a full dimensional polytope with an MCMC method.

222 |

223 | Keyword arguments:

224 | A -- an mxn matrix that contains the normal vectors of the facets of the polytope row-wise

225 | b -- a m-dimensional vector, s.t. A*x <= b

226 | method -- An MCMC method to sample, i.e. {'billiard_walk', 'cdhr', 'rdhr', 'ball_walk', 'dikin_walk', 'john_walk', 'vaidya_walk', 'gaussian_hmc_walk', 'exponential_hmc_walk', 'hmc_leapfrog_gaussian', 'hmc_leapfrog_exponential'}

227 | n -- the number of steady states to sample

228 | burn_in -- the number of points to burn before sampling

229 | thinning -- the walk length of the chain

230 | """

231 | if bias_vector is None:

232 | bias_vector = np.ones(A.shape[1], dtype=np.float64)

233 | else:

234 | bias_vector = bias_vector.astype('float64')

235 |

236 | P = HPolytope(A, b)

237 |

238 | samples = P.generate_samples(method.encode('utf-8'), n, burn_in, thinning, variance, bias_vector, solver, ess)

239 |

240 | samples_T = samples.T

241 | return samples_T

242 |

243 | @staticmethod

244 | def round_polytope(

245 | A, b, method = "john_position", solver = None

246 | ):

247 | P = HPolytope(A, b)

248 | A, b, Tr, Tr_shift, round_value = P.rounding(method, solver)

249 |

250 | return A, b, Tr, Tr_shift

251 |

252 | @staticmethod

253 | def sample_from_fva_output(

254 | min_fluxes,

255 | max_fluxes,

256 | objective_function,

257 | max_objective,

258 | S,

259 | opt_percentage=100,

260 | ess=1000,

261 | psrf=False,

262 | parallel_mmcs=False,

263 | num_threads=1,

264 | solver = None

265 | ):

266 | """A static function to sample steady states when the output of FVA is given.

267 |

268 | Keyword arguments:

269 | min_fluxes -- minimum values of the fluxes, i.e., a n-dimensional vector

270 | max_fluxes -- maximum values for the fluxes, i.e., a n-dimensional vector

271 | objective_function -- the objective function

272 | max_objective -- the maximum value of the objective function

273 | S -- stoichiometric matrix

274 | opt_percentage -- consider solutions that give you at least a certain

275 | percentage of the optimal solution (default is to consider

276 | optimal solutions only)

277 | ess -- the target effective sample size

278 | psrf -- a boolean flag to request PSRF smaller than 1.1 for all marginal fluxes

279 | parallel_mmcs -- a boolean flag to request the parallel mmcs

280 | num_threads -- the number of threads to use for parallel mmcs

281 | """

282 | tol = 1e-06  # same default as the sampler's "tol" parameter; a static method has no `self`

283 | A, b, Aeq, beq = get_matrices_of_low_dim_polytope(

284 | S, min_fluxes, max_fluxes, opt_percentage, tol

285 | )

286 |

287 | A = np.vstack((A, -objective_function))

288 | b = np.append(

289 | b,

290 | -(opt_percentage / 100)

291 | * tol

292 | * math.floor(max_objective / tol),

293 | )

294 |

295 | A, b, N, N_shift = get_matrices_of_full_dim_polytope(A, b, Aeq, beq)

296 |

297 | P = HPolytope(A, b)

298 |

299 | A, b, Tr, Tr_shift, samples = P.mmcs(

300 | ess, psrf, parallel_mmcs, num_threads, solver

301 | )

302 |

303 | steady_states = map_samples_to_steady_states(samples, N, N_shift)

304 |

305 | return steady_states

306 |

307 | @property

308 | def A(self):

309 | return self._A

310 |

311 | @property

312 | def b(self):

313 | return self._b

314 |

315 | @property

316 | def T(self):

317 | return self._T

318 |

319 | @property

320 | def T_shift(self):

321 | return self._T_shift

322 |

323 | @property

324 | def N(self):

325 | return self._N

326 |

327 | @property

328 | def N_shift(self):

329 | return self._N_shift

330 |

331 | @property

332 | def metabolic_network(self):

333 | return self._metabolic_network

334 |

335 | def facet_redundancy_removal(self, value):

336 | self._parameters["remove_redundant_facets"] = value

337 |

338 | def set_solver(self, solver):

339 | self._parameters["solver"] = solver

340 |

341 | def set_distribution(self, value):

342 |

343 | self._parameters["distribution"] = value

344 |

345 | def set_nullspace_method(self, value):

346 |

347 | self._parameters["nullspace_method"] = value

348 |

349 | def set_tol(self, value):

350 |

351 | self._parameters["tol"] = value

352 |

353 | def set_opt_percentage(self, value):

354 |

355 | self._parameters["opt_percentage"] = value

356 |

--------------------------------------------------------------------------------

/dingo/__init__.py:

--------------------------------------------------------------------------------

1 | # dingo : a python library for metabolic networks sampling and analysis

2 | # dingo is part of GeomScale project

3 |

4 | # Copyright (c) 2021 Apostolos Chalkis

5 |

6 | # Licensed under GNU LGPL.3, see LICENCE file

7 |

8 | import numpy as np

9 | import sys

10 | import os

11 | import pickle

12 | from dingo.loading_models import read_json_file

13 | from dingo.nullspace import nullspace_dense, nullspace_sparse

14 | from dingo.scaling import gmscale

15 | from dingo.utils import (

16 | apply_scaling,

17 | remove_almost_redundant_facets,

18 | map_samples_to_steady_states,

19 | get_matrices_of_low_dim_polytope,

20 | get_matrices_of_full_dim_polytope,

21 | )

22 | from dingo.illustrations import (

23 | plot_copula,

24 | plot_histogram,

25 | )

26 | from dingo.parser import dingo_args

27 | from dingo.MetabolicNetwork import MetabolicNetwork

28 | from dingo.PolytopeSampler import PolytopeSampler

29 |

30 | from dingo.pyoptinterface_based_impl import fba, fva, inner_ball, remove_redundant_facets, set_default_solver

31 |

32 | from volestipy import HPolytope

33 |

34 |

35 | def get_name(args_network):

36 |

37 | position = [pos for pos, char in enumerate(args_network) if char == "/"]

38 |

39 | if args_network[-4:] == "json":

40 | if position == []:

41 | name = args_network[0:-5]

42 | else:

43 | name = args_network[(position[-1] + 1) : -5]

44 | elif args_network[-3:] == "mat":

45 | if position == []:

46 | name = args_network[0:-4]

47 | else:

48 | name = args_network[(position[-1] + 1) : -4]

49 |

50 | return name

51 |

52 |

53 | def dingo_main():

54 | """A function that (a) reads the inputs using argparse package, (b) calls the proper dingo pipeline

55 | and (c) saves the outputs using pickle package

56 | """

57 |

58 | args = dingo_args()

59 |

60 | if args.metabolic_network is None and args.polytope is None and not args.histogram:

61 | raise Exception(

62 | "You have to give as input either a model or a polytope derived from a model."

63 | )

64 |

65 | if args.metabolic_network is None and ((args.fva) or (args.fba)):

66 | raise Exception("You have to give as input a model to apply FVA or FBA method.")

67 |

68 | if args.output_directory is None:

69 | output_path_dir = os.getcwd()

70 | else:

71 | output_path_dir = args.output_directory

72 |

73 | if not os.path.isdir(output_path_dir):

74 | os.mkdir(output_path_dir)

75 |

76 | # Move to the output directory

77 | os.chdir(output_path_dir)

78 |

79 | set_default_solver(args.solver)

80 |

81 | if args.model_name is None:

82 | if args.metabolic_network is not None:

83 | name = get_name(args.metabolic_network)

84 | else:

85 | name = args.model_name

86 |

87 | if args.histogram:

88 |

89 | if args.steady_states is None:

90 | raise Exception(

91 | "A path to a pickle file that contains steady states of the model has to be given."

92 | )

93 |

94 | if args.metabolites_reactions is None:

95 | raise Exception(

96 | "A path to a pickle file that contains the names of the metabolites and the reactions of the model has to be given."

97 | )

98 |

99 | if int(args.reaction_index) <= 0:

100 | raise Exception("The index of the reaction has to be a positive integer.")

101 |

102 | file = open(args.steady_states, "rb")

103 | steady_states = pickle.load(file)

104 | file.close()

105 |

106 | file = open(args.metabolites_reactions, "rb")

107 | model = pickle.load(file)

108 | file.close()

109 |

110 | reactions = model.reactions

111 |

112 | if int(args.reaction_index) > len(reactions):

113 | raise Exception(

114 | "The index of the reaction must not exceed the number of reactions."

115 | )

116 |

117 | if int(args.n_bins) <= 0:

118 | raise Exception("The number of bins has to be a positive integer.")

119 |

120 | plot_histogram(

121 | steady_states[int(args.reaction_index) - 1],

122 | reactions[int(args.reaction_index) - 1],

123 | int(args.n_bins),

124 | )

125 |

126 | elif args.fva:

127 |

128 | if args.metabolic_network[-4:] == "json":

129 | model = MetabolicNetwork.from_json(args.metabolic_network)

130 | elif args.metabolic_network[-3:] == "mat":

131 | model = MetabolicNetwork.from_mat(args.metabolic_network)

132 | else:

133 | raise Exception("An unknown format file given.")

134 |

135 | model.set_solver(args.solver)

136 |

137 | result_obj = model.fva()

138 |

139 | with open("dingo_fva_" + name + ".pckl", "wb") as dingo_fva_file:

140 | pickle.dump(result_obj, dingo_fva_file)

141 |

142 | elif args.fba:

143 |

144 | if args.metabolic_network[-4:] == "json":

145 | model = MetabolicNetwork.from_json(args.metabolic_network)

146 | elif args.metabolic_network[-3:] == "mat":

147 | model = MetabolicNetwork.from_mat(args.metabolic_network)

148 | else:

149 | raise Exception("An unknown format file given.")

150 |

151 | model.set_solver(args.solver)

152 |

153 | result_obj = model.fba()

154 |

155 | with open("dingo_fba_" + name + ".pckl", "wb") as dingo_fba_file:

156 | pickle.dump(result_obj, dingo_fba_file)

157 |

158 | elif args.metabolic_network is not None:

159 |

160 | if args.metabolic_network[-4:] == "json":

161 | model = MetabolicNetwork.from_json(args.metabolic_network)

162 | elif args.metabolic_network[-3:] == "mat":

163 | model = MetabolicNetwork.from_mat(args.metabolic_network)

164 | else:

165 | raise Exception("An unknown format file given.")

166 |

167 | sampler = PolytopeSampler(model)

168 |

169 | if args.preprocess_only:

170 |

171 | sampler.get_polytope()

172 |

173 | polytope_info = (

174 | sampler,

175 | name,

176 | )

177 |

178 | with open("dingo_model_" + name + ".pckl", "wb") as dingo_model_file:

179 | pickle.dump(model, dingo_model_file)

180 |

181 | with open(

182 | "dingo_polytope_sampler_" + name + ".pckl", "wb"

183 | ) as dingo_polytope_file:

184 | pickle.dump(polytope_info, dingo_polytope_file)

185 |

186 | else:

187 |

188 | steady_states = sampler.generate_steady_states(

189 | int(args.effective_sample_size),

190 | args.psrf_check,

191 | args.parallel_mmcs,

192 | int(args.num_threads),

193 | )

194 |

195 | polytope_info = (

196 | sampler,

197 | name,

198 | )

199 |

200 | with open("dingo_model_" + name + ".pckl", "wb") as dingo_model_file:

201 | pickle.dump(model, dingo_model_file)

202 |

203 | with open(

204 | "dingo_polytope_sampler_" + name + ".pckl", "wb"

205 | ) as dingo_polytope_file:

206 | pickle.dump(polytope_info, dingo_polytope_file)

207 |

208 | with open(

209 | "dingo_steady_states_" + name + ".pckl", "wb"

210 | ) as dingo_steadystates_file:

211 | pickle.dump(steady_states, dingo_steadystates_file)

212 |

213 | else:

214 |

215 | file = open(args.polytope, "rb")

216 | input_obj = pickle.load(file)

217 | file.close()

218 | sampler = input_obj[0]

219 |

220 | if isinstance(sampler, PolytopeSampler):

221 |

222 | steady_states = sampler.generate_steady_states(

223 | int(args.effective_sample_size),

224 | args.psrf_check,

225 | args.parallel_mmcs,

226 | int(args.num_threads),

227 | )

228 |

229 | else:

230 | raise Exception("The input file has to be generated by the dingo package.")

231 |

232 | if args.model_name is None:

233 | name = input_obj[-1]

234 |

235 | polytope_info = (

236 | sampler,

237 | name,

238 | )

239 |

240 | with open(

241 | "dingo_polytope_sampler" + name + "_improved.pckl", "wb"

242 | ) as dingo_polytope_file:

243 | pickle.dump(polytope_info, dingo_polytope_file)

244 |

245 | with open("dingo_steady_states_" + name + ".pckl", "wb") as dingo_network_file:

246 | pickle.dump(steady_states, dingo_network_file)

247 |

248 |

249 | if __name__ == "__main__":

250 |

251 | dingo_main()

252 |

--------------------------------------------------------------------------------

/dingo/__main__.py:

--------------------------------------------------------------------------------

1 | # dingo : a python library for metabolic networks sampling and analysis

2 | # dingo is part of GeomScale project

3 |

4 | # Copyright (c) 2021 Apostolos Chalkis

5 |

6 | # Licensed under GNU LGPL.3, see LICENCE file

7 |

8 | from dingo import dingo_main

9 |

10 | dingo_main()

11 |

--------------------------------------------------------------------------------

/dingo/bindings/bindings.cpp:

--------------------------------------------------------------------------------

1 | // This is the binding file for the C++ library volesti

2 | // volesti (volume computation and sampling library)

3 |

4 | // Copyright (c) 2012-2021 Vissarion Fisikopoulos

5 | // Copyright (c) 2018-2021 Apostolos Chalkis

6 |

7 | // Contributed and/or modified by Haris Zafeiropoulos

8 | // Contributed and/or modified by Pedro Zuidberg Dos Martires

9 |

10 | // Licensed under GNU LGPL.3, see LICENCE file

11 |

12 | #include

13 | #include

14 | #include

15 | #include "bindings.h"

16 | #include "hmc_sampling.h"

17 |

18 |

19 | using namespace std;

20 |

21 | // >>> Main HPolytopeCPP class; compute_volume(), rounding() and generate_samples() volesti methods are included <<<

22 |

23 | // Here is the initialization of the HPolytopeCPP class

24 | HPolytopeCPP::HPolytopeCPP() {}

25 | HPolytopeCPP::HPolytopeCPP(double *A_np, double *b_np, int n_hyperplanes, int n_variables){

26 |

27 | MT A;

28 | VT b;

29 | A.resize(n_hyperplanes,n_variables);

30 | b.resize(n_hyperplanes);

31 |

32 | int index = 0;

33 | for (int i = 0; i < n_hyperplanes; i++){

34 | b(i) = b_np[i];

35 | for (int j=0; j < n_variables; j++){

36 | A(i,j) = A_np[index];

37 | index++;

38 | }

39 | }

40 |

41 | HP = Hpolytope(n_variables, A, b);

42 | }

43 | // Use a destructor for the HPolytopeCPP object

44 | HPolytopeCPP::~HPolytopeCPP(){}

45 |

46 | ////////// Start of "compute_volume()" //////////

47 | double HPolytopeCPP::compute_volume(char* vol_method, char* walk_method,

48 | int walk_len, double epsilon, int seed) const {

49 |

50 | double volume = 0.0; // stays 0.0 if no volume method / walk combination matches

51 |

52 | if (strcmp(vol_method,"sequence_of_balls") == 0){

53 | if (strcmp(walk_method,"uniform_ball") == 0){

54 | volume = volume_sequence_of_balls(HP, epsilon, walk_len);

55 | } else if (strcmp(walk_method,"CDHR") == 0){

56 | volume = volume_sequence_of_balls(HP, epsilon, walk_len);

57 | } else if (strcmp(walk_method,"RDHR") == 0){

58 | volume = volume_sequence_of_balls(HP, epsilon, walk_len);

59 | }

60 | }

61 | else if (strcmp(vol_method,"cooling_gaussian") == 0){

62 | if (strcmp(walk_method,"gaussian_ball") == 0){

63 | volume = volume_cooling_gaussians(HP, epsilon, walk_len);

64 | } else if (strcmp(walk_method,"gaussian_CDHR") == 0){

65 | volume = volume_cooling_gaussians(HP, epsilon, walk_len);

66 | } else if (strcmp(walk_method,"gaussian_RDHR") == 0){

67 | volume = volume_cooling_gaussians(HP, epsilon, walk_len);

68 | }

69 | } else if (strcmp(vol_method,"cooling_balls") == 0){

70 | if (strcmp(walk_method,"uniform_ball") == 0){

71 | volume = volume_cooling_balls(HP, epsilon, walk_len).second;

72 | } else if (strcmp(walk_method,"CDHR") == 0){

73 | volume = volume_cooling_balls(HP, epsilon, walk_len).second;

74 | } else if (strcmp(walk_method,"RDHR") == 0){

75 | volume = volume_cooling_balls(HP, epsilon, walk_len).second;

76 | } else if (strcmp(walk_method,"billiard") == 0){

77 | volume = volume_cooling_balls(HP, epsilon, walk_len).second;

78 | }

79 | }

80 | return volume;

81 | }

82 | ////////// End of "compute_volume()" //////////

83 |

84 |

85 | ////////// Start of "generate_samples()" //////////

86 | double HPolytopeCPP::apply_sampling(int walk_len,

87 | int number_of_points,

88 | int number_of_points_to_burn,

89 | char* method,

90 | double* inner_point,

91 | double radius,

92 | double* samples,

93 | double variance_value,

94 | double* bias_vector_,

95 | int ess){

96 |

97 | RNGType rng(HP.dimension());

98 | HP.normalize();

99 | int d = HP.dimension();

100 | Point starting_point;

101 | VT inner_vec(d);

102 |

103 | for (int i = 0; i < d; i++){

104 | inner_vec(i) = inner_point[i];

105 | }

106 |

107 | Point inner_point2(inner_vec);

108 | CheBall = std::pair<Point, NT>(inner_point2, radius);

109 | HP.set_InnerBall(CheBall);

110 | starting_point = inner_point2;

111 | std::list<Point> rand_points;

112 |

113 | NT variance = variance_value;

114 |

115 | if (strcmp(method, "cdhr") == 0) { // cdhr

116 | uniform_sampling(rand_points, HP, rng, walk_len, number_of_points,

117 | starting_point, number_of_points_to_burn);

118 | } else if (strcmp(method, "rdhr") == 0) { // rdhr

119 | uniform_sampling(rand_points, HP, rng, walk_len, number_of_points,