├── .gitattributes

├── ComoSVC.py

├── Content

└── put_the_checkpoint_here

├── Features.py

├── LICENSE

├── README.md

├── Readme_CN.md

├── Vocoder.py

├── como.py

├── configs

└── the_config_files

├── configs_template

└── diffusion_template.yaml

├── data_loaders.py

├── dataset

└── the_prprocessed_data

├── dataset_slice

└── if_you_need_to_slice

├── filelists

└── put_the_txt_here

├── infer_tool.py

├── inference_main.py

├── logs

└── the_log_files

├── mel_processing.py

├── meldataset.py

├── pitch_extractor.py

├── preparation_slice.py

├── preprocessing1_resample.py

├── preprocessing2_flist.py

├── preprocessing3_feature.py

├── requirements.txt

├── saver.py

├── slicer.py

├── solver.py

├── train.py

├── utils.py

├── vocoder

├── __init__.py

└── m4gan

│ ├── __init__.py

│ ├── hifigan.py

│ └── parallel_wavegan.py

└── wavenet.py

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Auto detect text files and perform LF normalization

2 | * text=auto

3 |

--------------------------------------------------------------------------------

/ComoSVC.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | import torch.nn as nn

4 | import yaml

5 | from Vocoder import Vocoder

6 | from como import Como

7 |

8 |

9 | class DotDict(dict):

10 | def __getattr__(*args):

11 | val = dict.get(*args)

12 | return DotDict(val) if type(val) is dict else val

13 |

14 | __setattr__ = dict.__setitem__

15 | __delattr__ = dict.__delitem__

16 |

17 |

18 | def load_model_vocoder(

19 | model_path,

20 | device='cpu',

21 | config_path = None,

22 | total_steps=1,

23 | teacher=False

24 | ):

25 | if config_path is None:

26 | config_file = os.path.join(os.path.split(model_path)[0], 'config.yaml')

27 | else:

28 | config_file = config_path

29 |

30 | with open(config_file, "r") as config:

31 | args = yaml.safe_load(config)

32 | args = DotDict(args)

33 |

34 | # load vocoder

35 | vocoder = Vocoder(args.vocoder.type, args.vocoder.ckpt, device=device)

36 |

37 | # load model

38 | model = ComoSVC(

39 | args.data.encoder_out_channels,

40 | args.model.n_spk,

41 | args.model.use_pitch_aug,

42 | vocoder.dimension,

43 | args.model.n_layers,

44 | args.model.n_chans,

45 | args.model.n_hidden,

46 | total_steps,

47 | teacher

48 | )

49 |

50 | print(' [Loading] ' + model_path)

51 | ckpt = torch.load(model_path, map_location=torch.device(device))

52 | model.to(device)

53 | model.load_state_dict(ckpt['model'],strict=False)

54 | model.eval()

55 | return model, vocoder, args

56 |

57 |

58 | class ComoSVC(nn.Module):

59 | def __init__(

60 | self,

61 | input_channel,

62 | n_spk,

63 | use_pitch_aug=True,

64 | out_dims=128, # define in como

65 | n_layers=20,

66 | n_chans=384,

67 | n_hidden=100,

68 | total_steps=1,

69 | teacher=True

70 | ):

71 | super().__init__()

72 |

73 | self.unit_embed = nn.Linear(input_channel, n_hidden)

74 | self.f0_embed = nn.Linear(1, n_hidden)

75 | self.volume_embed = nn.Linear(1, n_hidden)

76 | self.teacher=teacher

77 |

78 | if use_pitch_aug:

79 | self.aug_shift_embed = nn.Linear(1, n_hidden, bias=False)

80 | else:

81 | self.aug_shift_embed = None

82 | self.n_spk = n_spk

83 | if n_spk is not None and n_spk > 1:

84 | self.spk_embed = nn.Embedding(n_spk, n_hidden)

85 | self.n_hidden = n_hidden

86 | self.decoder = Como(out_dims, n_layers, n_chans, n_hidden, total_steps, teacher)

87 | self.input_channel = input_channel

88 |

89 | def forward(self, units, f0, volume, spk_id = None, aug_shift = None,

90 | gt_spec=None, infer=True):

91 |

92 | '''

93 | input:

94 | B x n_frames x n_unit

95 | return:

96 | dict of B x n_frames x feat

97 | '''

98 |

99 | x = self.unit_embed(units) + self.f0_embed((1+ f0 / 700).log()) + self.volume_embed(volume)

100 |

101 | if self.n_spk is not None and self.n_spk > 1:

102 | if spk_id.shape[1] > 1:

103 | g = spk_id.reshape((spk_id.shape[0], spk_id.shape[1], 1, 1, 1)) # [N, S, B, 1, 1]

104 | g = g * self.speaker_map # [N, S, B, 1, H]

105 | g = torch.sum(g, dim=1) # [N, 1, B, 1, H]

106 | g = g.transpose(0, -1).transpose(0, -2).squeeze(0) # [B, H, N]

107 | x = x + g

108 | else:

109 | x = x + self.spk_embed(spk_id)

110 |

111 | if self.aug_shift_embed is not None and aug_shift is not None:

112 | x = x + self.aug_shift_embed(aug_shift / 5)

113 |

114 | if not infer:

115 | output = self.decoder(gt_spec,x,infer=False)

116 | else:

117 | output = self.decoder(gt_spec,x,infer=True)

118 |

119 | return output

120 |

121 |

--------------------------------------------------------------------------------

/Content/put_the_checkpoint_here:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/Content/put_the_checkpoint_here

--------------------------------------------------------------------------------

/Features.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import pyworld

4 | from fairseq import checkpoint_utils

5 |

6 |

7 | class SpeechEncoder(object):

8 | def __init__(self, vec_path="Content/checkpoint_best_legacy_500.pt", device=None):

9 | self.model = None # This is Model

10 | self.hidden_dim = 768

11 | pass

12 |

13 |

14 | def encoder(self, wav):

15 | """

16 | input: wav:[signal_length]

17 | output: embedding:[batchsize,hidden_dim,wav_frame]

18 | """

19 | pass

20 |

21 |

22 |

23 | class ContentVec768L12(SpeechEncoder):

24 | def __init__(self, vec_path="Content/checkpoint_best_legacy_500.pt", device=None):

25 | super().__init__()

26 | print("load model(s) from {}".format(vec_path))

27 | self.hidden_dim = 768

28 | models, saved_cfg, task = checkpoint_utils.load_model_ensemble_and_task(

29 | [vec_path],

30 | suffix="",

31 | )

32 | if device is None:

33 | self.dev = torch.device("cuda" if torch.cuda.is_available() else "cpu")

34 | else:

35 | self.dev = torch.device(device)

36 | self.model = models[0].to(self.dev)

37 | self.model.eval()

38 |

39 | def encoder(self, wav):

40 | feats = wav

41 | if feats.dim() == 2: # double channels

42 | feats = feats.mean(-1)

43 | assert feats.dim() == 1, feats.dim()

44 | feats = feats.view(1, -1)

45 | padding_mask = torch.BoolTensor(feats.shape).fill_(False)

46 | inputs = {

47 | "source": feats.to(wav.device),

48 | "padding_mask": padding_mask.to(wav.device),

49 | "output_layer": 12, # layer 12

50 | }

51 | with torch.no_grad():

52 | logits = self.model.extract_features(**inputs)

53 | return logits[0].transpose(1, 2)

54 |

55 |

56 |

57 | class F0Predictor(object):

58 | def compute_f0(self,wav,p_len):

59 | '''

60 | input: wav:[signal_length]

61 | p_len:int

62 | output: f0:[signal_length//hop_length]

63 | '''

64 | pass

65 |

66 | def compute_f0_uv(self,wav,p_len):

67 | '''

68 | input: wav:[signal_length]

69 | p_len:int

70 | output: f0:[signal_length//hop_length],uv:[signal_length//hop_length]

71 | '''

72 | pass

73 |

74 |

75 | class DioF0Predictor(F0Predictor):

76 | def __init__(self,hop_length=512,f0_min=50,f0_max=1100,sampling_rate=44100):

77 | self.hop_length = hop_length

78 | self.f0_min = f0_min

79 | self.f0_max = f0_max

80 | self.sampling_rate = sampling_rate

81 | self.name = "dio"

82 |

83 | def interpolate_f0(self,f0):

84 | '''

85 | 对F0进行插值处理

86 | '''

87 | vuv_vector = np.zeros_like(f0, dtype=np.float32)

88 | vuv_vector[f0 > 0.0] = 1.0

89 | vuv_vector[f0 <= 0.0] = 0.0

90 |

91 | nzindex = np.nonzero(f0)[0]

92 | data = f0[nzindex]

93 | nzindex = nzindex.astype(np.float32)

94 | time_org = self.hop_length / self.sampling_rate * nzindex

95 | time_frame = np.arange(f0.shape[0]) * self.hop_length / self.sampling_rate

96 |

97 | if data.shape[0] <= 0:

98 | return np.zeros(f0.shape[0], dtype=np.float32),vuv_vector

99 |

100 | if data.shape[0] == 1:

101 | return np.ones(f0.shape[0], dtype=np.float32) * f0[0],vuv_vector

102 |

103 | f0 = np.interp(time_frame, time_org, data, left=data[0], right=data[-1])

104 |

105 | return f0,vuv_vector

106 |

107 | def resize_f0(self,x, target_len):

108 | source = np.array(x)

109 | source[source<0.001] = np.nan

110 | target = np.interp(np.arange(0, len(source)*target_len, len(source))/ target_len, np.arange(0, len(source)), source)

111 | res = np.nan_to_num(target)

112 | return res

113 |

114 | def compute_f0(self,wav,p_len=None):

115 | if p_len is None:

116 | p_len = wav.shape[0]//self.hop_length

117 | f0, t = pyworld.dio(

118 | wav.astype(np.double),

119 | fs=self.sampling_rate,

120 | f0_floor=self.f0_min,

121 | f0_ceil=self.f0_max,

122 | frame_period=1000 * self.hop_length / self.sampling_rate,

123 | )

124 | f0 = pyworld.stonemask(wav.astype(np.double), f0, t, self.sampling_rate)

125 | for index, pitch in enumerate(f0):

126 | f0[index] = round(pitch, 1)

127 | return self.interpolate_f0(self.resize_f0(f0, p_len))[0]

128 |

129 | def compute_f0_uv(self,wav,p_len=None):

130 | if p_len is None:

131 | p_len = wav.shape[0]//self.hop_length

132 | f0, t = pyworld.dio(

133 | wav.astype(np.double),

134 | fs=self.sampling_rate,

135 | f0_floor=self.f0_min,

136 | f0_ceil=self.f0_max,

137 | frame_period=1000 * self.hop_length / self.sampling_rate,

138 | )

139 | f0 = pyworld.stonemask(wav.astype(np.double), f0, t, self.sampling_rate)

140 | for index, pitch in enumerate(f0):

141 | f0[index] = round(pitch, 1)

142 | return self.interpolate_f0(self.resize_f0(f0, p_len))

143 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 Yiwen LU

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

CoMoSVC: Consistency Model Based Singing Voice Conversion

3 |

4 | [中文文档](./Readme_CN.md)

5 |

6 |

7 | A consistency model based Singing Voice Conversion system is composed, which is inspired by [CoMoSpeech](https://github.com/zhenye234/CoMoSpeech): One-Step Speech and Singing Voice Synthesis via Consistency Model.

8 |

9 | This is an implemention of the paper [CoMoSVC](https://arxiv.org/pdf/2401.01792.pdf).

10 | ## Improvements

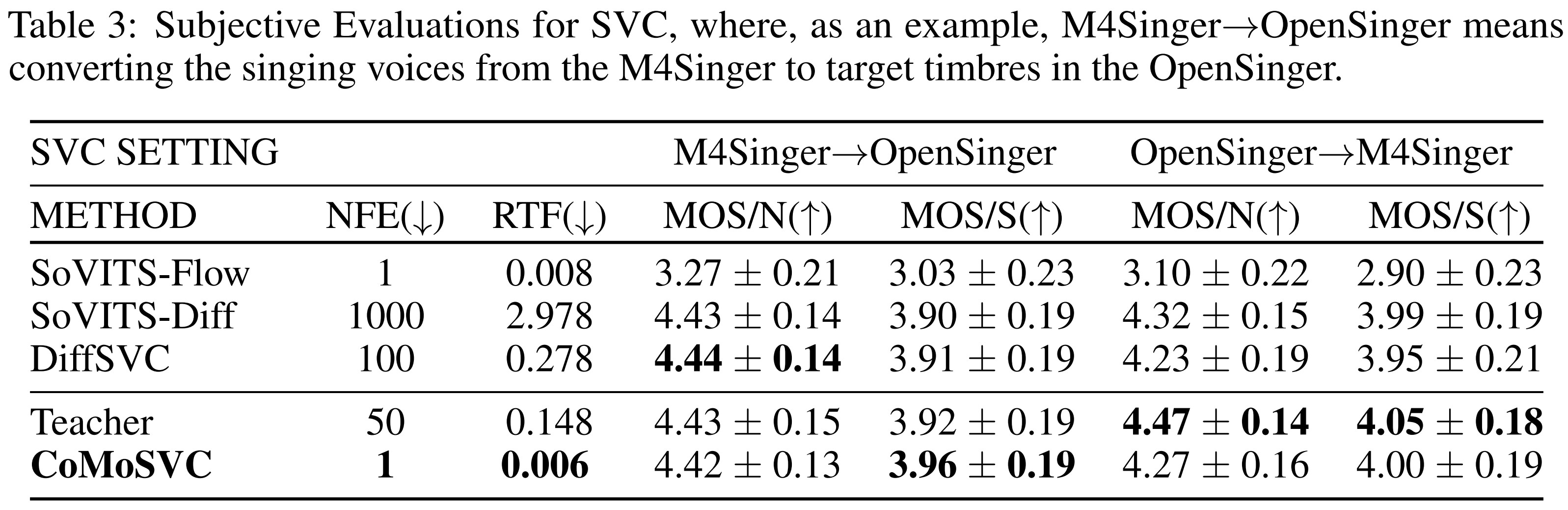

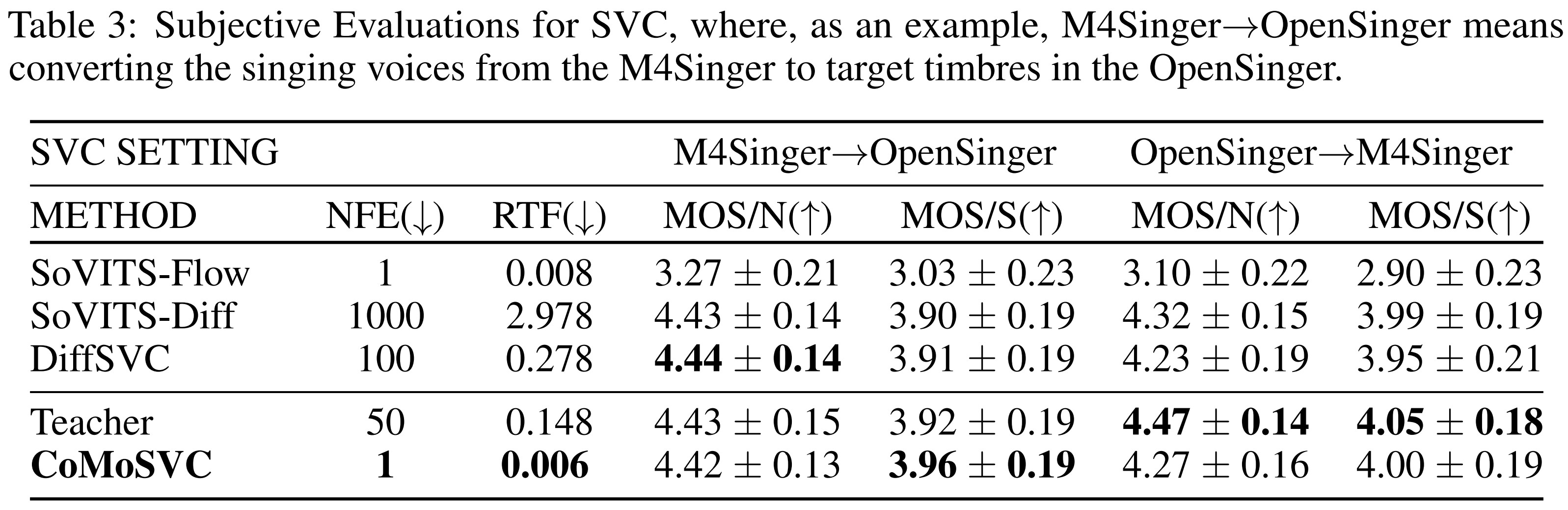

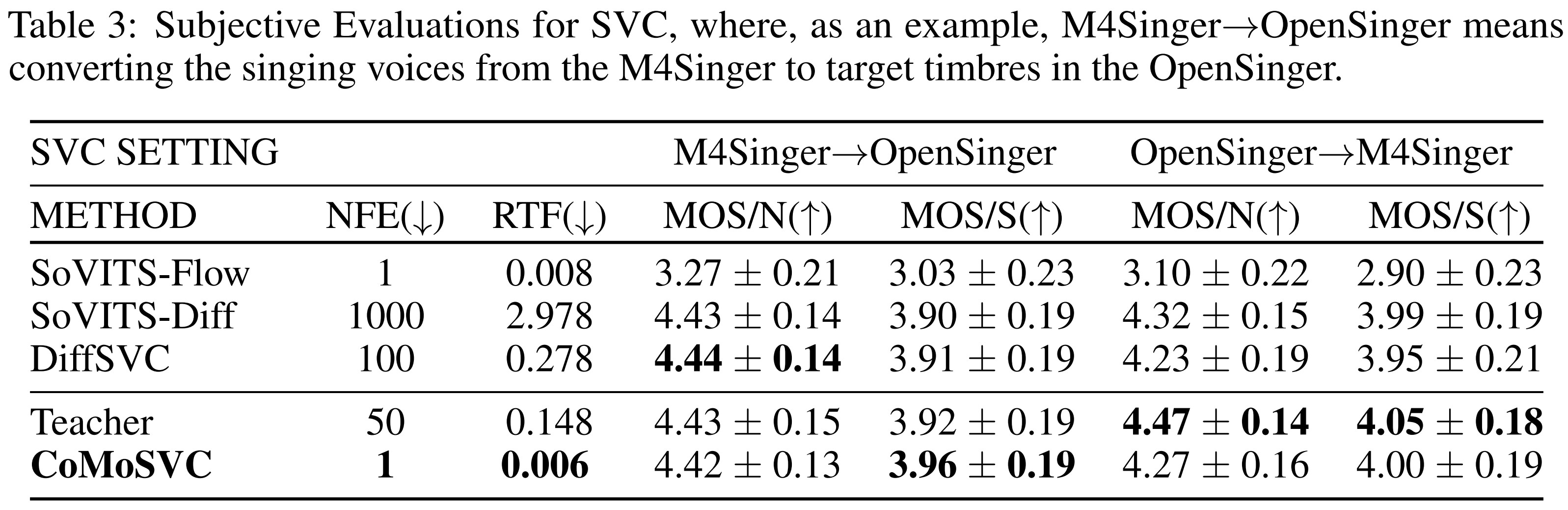

11 | The subjective evaluations are illustrated through the table below.

12 |  13 |

14 | ## Environment

15 | We have tested the code and it runs successfully on Python 3.8, so you can set up your Conda environment using the following command:

16 |

17 | ```shell

18 | conda create -n Your_Conda_Environment_Name python=3.8

19 | ```

20 | Then after activating your conda environment, you can install the required packages under it by:

21 |

22 | ```shell

23 | pip install -r requirements.txt

24 | ```

25 |

26 | ## Download the Checkpoints

27 | ### 1. m4singer_hifigan

28 |

29 | You should first download [m4singer_hifigan](https://drive.google.com/file/d/10LD3sq_zmAibl379yTW5M-LXy2l_xk6h/view) and then unzip the zip file by

30 | ```shell

31 | unzip m4singer_hifigan.zip

32 | ```

33 | The checkpoints of the vocoder will be in the `m4singer_hifigan` directory

34 |

35 | ### 2. ContentVec

36 | You should download the checkpoint [ContentVec](https://ibm.box.com/s/z1wgl1stco8ffooyatzdwsqn2psd9lrr) and the put it in the `Content` directory to extract the content feature.

37 |

38 | ### 3. m4singer_pe

39 | You should download the pitch_extractor checkpoint of the [m4singer_pe](https://drive.google.com/file/d/19QtXNeqUjY3AjvVycEt3G83lXn2HwbaJ/view) and then unzip the zip file by

40 |

41 | ```shell

42 | unzip m4singer_pe.zip

43 | ```

44 |

45 | ## Dataset Preparation

46 |

47 | You should first create the folders by

48 |

49 | ```shell

50 | mkdir dataset_raw

51 | mkdir dataset

52 | ```

53 | You can refer to different preparation methods based on your needs.

54 |

55 | Preparation With Slicing can help you remove the silent parts and slice the audio for stable training.

56 |

57 |

58 | ### 0. Preparation With Slicing

59 |

60 | Please place your original dataset in the `dataset_slice` directory.

61 |

62 | The original audios can be in any waveformat which should be specified in the command line. You can designate the length of slices you want, the unit of slice_size is milliseconds. The default wavformat and slice_size is mp3 and 10000 respectively.

63 |

64 | ```shell

65 | python preparation_slice.py -w your_wavformat -s slice_size

66 | ```

67 |

68 | ### 1. Preparation Without Slicing

69 |

70 | You can just place the dataset in the `dataset_raw` directory with the following file structure:

71 |

72 | ```

73 | dataset_raw

74 | ├───speaker0

75 | │ ├───xxx1-xxx1.wav

76 | │ ├───...

77 | │ └───Lxx-0xx8.wav

78 | └───speaker1

79 | ├───xx2-0xxx2.wav

80 | ├───...

81 | └───xxx7-xxx007.wav

82 | ```

83 |

84 |

85 | ## Preprocessing

86 |

87 | ### 1. Resample to 24000Hz and mono

88 |

89 | ```shell

90 | python preprocessing1_resample.py -n num_process

91 | ```

92 | num_process is the number of processes, the default num_process is 5.

93 |

94 | ### 2. Split the Training and Validation Datasets, and Generate Configuration Files.

95 |

96 | ```shell

97 | python preprocessing2_flist.py

98 | ```

99 |

100 |

101 | ### 3. Generate Features

102 |

103 | ```shell

104 | python preprocessing3_feature.py -c your_config_file -n num_processes

105 | ```

106 |

107 |

108 | ## Training

109 |

110 | ### 1. Train the Teacher Model

111 |

112 | ```shell

113 | python train.py

114 | ```

115 | The checkpoints will be saved in the `logs/teacher` directory

116 |

117 | ### 2. Train the Consistency Model

118 |

119 | If you want to adjust the config file, you can duplicate a new config file and modify some parameters.

120 |

121 |

122 | ```shell

123 | python train.py -t -c Your_new_configfile_path -p The_teacher_model_checkpoint_path

124 | ```

125 |

126 | ## Inference

127 | You should put the audios you want to convert under the `raw` directory firstly.

128 |

129 | ### Inference by the Teacher Model

130 |

131 | ```shell

132 | python inference_main.py -ts 50 -tm "logs/teacher/model_800000.pt" -tc "logs/teacher/config.yaml" -n "src.wav" -k 0 -s "target_singer"

133 | ```

134 | -ts refers to the total number of iterative steps during inference for the teacher model

135 |

136 | -tm refers to the teacher_model_path

137 |

138 | -tc refers to the teacher_config_path

139 |

140 | -n refers to the source audio

141 |

142 | -k refers to the pitch shift, it can be positive and negative (semitone) values

143 |

144 | -s refers to the target singer

145 |

146 | ### Inference by the Consistency Model

147 |

148 | ```shell

149 | python inference_main.py -ts 1 -cm "logs/como/model_800000.pt" -cc "logs/como/config.yaml" -n "src.wav" -k 0 -s "target_singer" -t

150 | ```

151 | -ts refers to the total number of iterative steps during inference for the student model

152 |

153 | -cm refers to the como_model_path

154 |

155 | -cc refers to the como_config_path

156 |

157 | -t means it is not the teacher model and you don't need to specify anything after it

158 |

--------------------------------------------------------------------------------

/Readme_CN.md:

--------------------------------------------------------------------------------

1 |

13 |

14 | ## Environment

15 | We have tested the code and it runs successfully on Python 3.8, so you can set up your Conda environment using the following command:

16 |

17 | ```shell

18 | conda create -n Your_Conda_Environment_Name python=3.8

19 | ```

20 | Then after activating your conda environment, you can install the required packages under it by:

21 |

22 | ```shell

23 | pip install -r requirements.txt

24 | ```

25 |

26 | ## Download the Checkpoints

27 | ### 1. m4singer_hifigan

28 |

29 | You should first download [m4singer_hifigan](https://drive.google.com/file/d/10LD3sq_zmAibl379yTW5M-LXy2l_xk6h/view) and then unzip the zip file by

30 | ```shell

31 | unzip m4singer_hifigan.zip

32 | ```

33 | The checkpoints of the vocoder will be in the `m4singer_hifigan` directory

34 |

35 | ### 2. ContentVec

36 | You should download the checkpoint [ContentVec](https://ibm.box.com/s/z1wgl1stco8ffooyatzdwsqn2psd9lrr) and the put it in the `Content` directory to extract the content feature.

37 |

38 | ### 3. m4singer_pe

39 | You should download the pitch_extractor checkpoint of the [m4singer_pe](https://drive.google.com/file/d/19QtXNeqUjY3AjvVycEt3G83lXn2HwbaJ/view) and then unzip the zip file by

40 |

41 | ```shell

42 | unzip m4singer_pe.zip

43 | ```

44 |

45 | ## Dataset Preparation

46 |

47 | You should first create the folders by

48 |

49 | ```shell

50 | mkdir dataset_raw

51 | mkdir dataset

52 | ```

53 | You can refer to different preparation methods based on your needs.

54 |

55 | Preparation With Slicing can help you remove the silent parts and slice the audio for stable training.

56 |

57 |

58 | ### 0. Preparation With Slicing

59 |

60 | Please place your original dataset in the `dataset_slice` directory.

61 |

62 | The original audios can be in any waveformat which should be specified in the command line. You can designate the length of slices you want, the unit of slice_size is milliseconds. The default wavformat and slice_size is mp3 and 10000 respectively.

63 |

64 | ```shell

65 | python preparation_slice.py -w your_wavformat -s slice_size

66 | ```

67 |

68 | ### 1. Preparation Without Slicing

69 |

70 | You can just place the dataset in the `dataset_raw` directory with the following file structure:

71 |

72 | ```

73 | dataset_raw

74 | ├───speaker0

75 | │ ├───xxx1-xxx1.wav

76 | │ ├───...

77 | │ └───Lxx-0xx8.wav

78 | └───speaker1

79 | ├───xx2-0xxx2.wav

80 | ├───...

81 | └───xxx7-xxx007.wav

82 | ```

83 |

84 |

85 | ## Preprocessing

86 |

87 | ### 1. Resample to 24000Hz and mono

88 |

89 | ```shell

90 | python preprocessing1_resample.py -n num_process

91 | ```

92 | num_process is the number of processes, the default num_process is 5.

93 |

94 | ### 2. Split the Training and Validation Datasets, and Generate Configuration Files.

95 |

96 | ```shell

97 | python preprocessing2_flist.py

98 | ```

99 |

100 |

101 | ### 3. Generate Features

102 |

103 | ```shell

104 | python preprocessing3_feature.py -c your_config_file -n num_processes

105 | ```

106 |

107 |

108 | ## Training

109 |

110 | ### 1. Train the Teacher Model

111 |

112 | ```shell

113 | python train.py

114 | ```

115 | The checkpoints will be saved in the `logs/teacher` directory

116 |

117 | ### 2. Train the Consistency Model

118 |

119 | If you want to adjust the config file, you can duplicate a new config file and modify some parameters.

120 |

121 |

122 | ```shell

123 | python train.py -t -c Your_new_configfile_path -p The_teacher_model_checkpoint_path

124 | ```

125 |

126 | ## Inference

127 | You should put the audios you want to convert under the `raw` directory firstly.

128 |

129 | ### Inference by the Teacher Model

130 |

131 | ```shell

132 | python inference_main.py -ts 50 -tm "logs/teacher/model_800000.pt" -tc "logs/teacher/config.yaml" -n "src.wav" -k 0 -s "target_singer"

133 | ```

134 | -ts refers to the total number of iterative steps during inference for the teacher model

135 |

136 | -tm refers to the teacher_model_path

137 |

138 | -tc refers to the teacher_config_path

139 |

140 | -n refers to the source audio

141 |

142 | -k refers to the pitch shift, it can be positive and negative (semitone) values

143 |

144 | -s refers to the target singer

145 |

146 | ### Inference by the Consistency Model

147 |

148 | ```shell

149 | python inference_main.py -ts 1 -cm "logs/como/model_800000.pt" -cc "logs/como/config.yaml" -n "src.wav" -k 0 -s "target_singer" -t

150 | ```

151 | -ts refers to the total number of iterative steps during inference for the student model

152 |

153 | -cm refers to the como_model_path

154 |

155 | -cc refers to the como_config_path

156 |

157 | -t means it is not the teacher model and you don't need to specify anything after it

158 |

--------------------------------------------------------------------------------

/Readme_CN.md:

--------------------------------------------------------------------------------

1 |

2 |

CoMoSVC: One-Step Consistency Model Based Singing Voice Conversion

3 |

4 |

5 | 基于一致性模型的歌声转换及克隆系统,可以一步diffusion采样进行歌声转换,是对论文[CoMoSVC](https://arxiv.org/pdf/2401.01792.pdf)的实现。工作基于[CoMoSpeech](https://github.com/zhenye234/CoMoSpeech): One-Step Speech and Singing Voice Synthesis via Consistency Model.

6 |

7 |

8 |

9 | # 环境配置

10 | Python 3.8环境下创建Conda虚拟环境:

11 |

12 | ```shell

13 | conda create -n Your_Conda_Environment_Name python=3.8

14 | ```

15 | 安装相关依赖库:

16 |

17 | ```shell

18 | pip install -r requirements.txt

19 | ```

20 | ## 下载checkpoints

21 | ### 1. m4singer_hifigan

22 | 下载vocoder [m4singer_hifigan](https://drive.google.com/file/d/10LD3sq_zmAibl379yTW5M-LXy2l_xk6h/view) 并解压

23 |

24 | ```shell

25 | unzip m4singer_hifigan.zip

26 | ```

27 |

28 | vocoder的checkoint将在`m4singer_hifigan`目录中

29 |

30 | ### 2. ContentVec

31 |

32 | 下载 [ContentVec](https://ibm.box.com/s/z1wgl1stco8ffooyatzdwsqn2psd9lrr) 放置在`Content`路径,以提取歌词内容特征。

33 |

34 | ### 3. m4singer_pe

35 |

36 | 下载pitch_extractor [m4singer_pe](https://drive.google.com/file/d/19QtXNeqUjY3AjvVycEt3G83lXn2HwbaJ/view) ,并解压到根目录

37 |

38 | ```shell

39 | unzip m4singer_pe.zip

40 | ```

41 |

42 |

43 | ## 数据准备

44 |

45 |

46 | 构造两个空文件夹

47 |

48 | ```shell

49 | mkdir dataset_raw

50 | mkdir dataset

51 | ```

52 |

53 | 请自行准备歌手的清唱录音数据,随后按照如下操作。

54 |

55 | ### 0. 带切片的数据准备流程

56 |

57 | 请将你的原始数据集放在 dataset_slice 目录下。

58 |

59 | 原始音频可以是任何波形格式,应在命令行中指定。你可以指定你想要的切片长度,切片大小的单位是毫秒。默认的文件格式和切片大小分别是mp3和10000。

60 |

61 | ```shell

62 | python preparation_slice.py -w 你的文件格式 -s 切片大小

63 | ```

64 |

65 | ### 1. 不带切片的数据准备流程

66 |

67 | 你可以只将数据集放在 `dataset_raw` 目录下,按照以下文件结构:

68 |

69 |

70 | ```

71 | dataset_raw

72 | ├───speaker0

73 | │ ├───xxx1-xxx1.wav

74 | │ ├───...

75 | │ └───Lxx-0xx8.wav

76 | └───speaker1

77 | ├───xx2-0xxx2.wav

78 | ├───...

79 | └───xxx7-xxx007.wav

80 | ```

81 |

82 |

83 | ## 预处理

84 |

85 | ### 1. 重采样为24000Hz和单声道

86 |

87 | ```shell

88 | python preprocessing1_resample.py -n num_process

89 | ```

90 | num_process 是进程数,默认5。

91 |

92 |

93 | ### 2. 分割训练和验证数据集,并生成配置文件

94 |

95 | ```shell

96 | python preprocessing2_flist.py

97 | ```

98 |

99 |

100 | ### 3. 生成特征

101 |

102 |

103 |

104 |

105 | 执行下面代码以提取所有特征

106 |

107 | ```shell

108 | python preprocessing3_feature.py -c your_config_file -n num_processes

109 | ```

110 |

111 |

112 |

113 |

114 | ## 训练

115 |

116 | ### 1. 训练 teacher model

117 |

118 | ```shell

119 | python train.py

120 | ```

121 | Checkpoint将存于 `logs/teacher` 目录中

122 |

123 | ### 2. 训练 consistency model

124 |

125 | #### 如果你想调整配置文件,你可以复制一个新的配置文件并修改一些参数。

126 |

127 |

128 |

129 | ```shell

130 | python train.py -t -c Your_new_configfile_path -p The_teacher_model_checkpoint_path

131 | ```

132 |

133 | ## 推理

134 |

135 | 你应该首先将你想要转换的音频放在`raw`目录下。

136 |

137 | ### 采用教师模型的推理

138 |

139 | ```shell

140 | python inference_main.py -ts 50 -tm "logs/teacher/model_800000.pt" -tc "logs/teacher/config.yaml" -n "src.wav" -k 0 -s "target_singer"

141 | ```

142 | -ts 教师模型推理时的迭代步数

143 |

144 | -tm 教师模型路径

145 |

146 | -tc 教师模型配置文件

147 |

148 | -n source音频路径

149 |

150 | -k pitch shift,可以是正负semitone值

151 |

152 | -s 目标歌手

153 |

154 |

155 |

156 | ### 采用CoMoSVC进行推理

157 |

158 | ```shell

159 | python inference_main.py -ts 1 -cm "logs/como/model_800000.pt" -cc "logs/como/config.yaml" -n "src.wav" -k 0 -s "target_singer" -t

160 | ```

161 | -ts 学生模型推理时的迭代步数

162 |

163 | -cm CoMoSVC模型路径

164 |

165 | -cc CoMoSVC模型配置文件

166 |

167 | -t 加上该参数并保留后续为空代表不是教师模型

168 |

--------------------------------------------------------------------------------

/Vocoder.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | from torchaudio.transforms import Resample

4 | from vocoder.m4gan.hifigan import HifiGanGenerator

5 |

6 | from mel_processing import mel_spectrogram, MAX_WAV_VALUE

7 | import utils

8 |

9 | class Vocoder:

10 | def __init__(self, vocoder_type, vocoder_ckpt, device = None):

11 | if device is None:

12 | device = 'cuda' if torch.cuda.is_available() else 'cpu'

13 | self.device = device # device

14 | self.vocodertype = vocoder_type

15 | if vocoder_type == 'm4-gan':

16 | self.vocoder = M4GAN(vocoder_ckpt, device = device)

17 | else:

18 | raise ValueError(f" [x] Unknown vocoder: {vocoder_type}")

19 |

20 | self.resample_kernel = {}

21 | self.vocoder_sample_rate = self.vocoder.sample_rate()

22 | self.vocoder_hop_size = self.vocoder.hop_size()

23 | self.dimension = self.vocoder.dimension()

24 |

25 | def extract(self, audio, sample_rate, keyshift=0):

26 | # resample

27 | if sample_rate == self.vocoder_sample_rate:

28 | audio_res = audio

29 | else:

30 | key_str = str(sample_rate)# 这里是24k

31 | if key_str not in self.resample_kernel:

32 | self.resample_kernel[key_str] = Resample(sample_rate, self.vocoder_sample_rate, lowpass_filter_width = 128).to(self.device)

33 |

34 | audio_res = self.resample_kernel[key_str](audio) # 对原始audio进行resample

35 |

36 | # extract

37 | mel = self.vocoder.extract(audio_res, keyshift=keyshift) # B, n_frames, bins

38 | return mel

39 |

40 | def infer(self, mel, f0):

41 | f0 = f0[:,:mel.size(1),0]

42 | audio = self.vocoder(mel,f0)

43 | return audio

44 |

45 |

46 | class M4GAN(torch.nn.Module):

47 | def __init__(self, model_path, device=None):

48 | super().__init__()

49 | if device is None:

50 | device = 'cuda' if torch.cuda.is_available() else 'cpu'

51 | self.device = device

52 | self.model_path = model_path

53 | self.model = None

54 | self.h = utils.load_config(os.path.join(os.path.split(model_path)[0], 'config.yaml'))

55 |

56 | def sample_rate(self):

57 | return self.h.audio_sample_rate

58 |

59 | def hop_size(self):

60 | return self.h.hop_size

61 |

62 | def dimension(self):

63 | return self.h.audio_num_mel_bins

64 |

65 | def extract(self, audio, keyshift=0):

66 |

67 | mel= mel_spectrogram(audio, self.h.fft_size, self.h.audio_num_mel_bins, self.h.audio_sample_rate, self.h.hop_size, self.h.win_size, self.h.fmin, self.h.fmax, keyshift=keyshift).transpose(1,2)

68 | # mel= mel_spectrogram(audio, 512, 80, 24000, 128, 512, 30, 12000, keyshift=keyshift).transpose(1,2)

69 | return mel

70 |

71 | def load_checkpoint(self, filepath, device):

72 | assert os.path.isfile(filepath)

73 | print("Loading '{}'".format(filepath))

74 | checkpoint_dict = torch.load(filepath, map_location=device)

75 | print("Complete.")

76 | return checkpoint_dict

77 |

78 |

79 | def forward(self, mel, f0):

80 | ckpt_dict = torch.load(self.model_path, map_location=self.device)

81 | state = ckpt_dict["state_dict"]["model_gen"]

82 | self.model = HifiGanGenerator(self.h).to(self.device)

83 | self.model.load_state_dict(state, strict=True)

84 | self.model.remove_weight_norm()

85 | self.model = self.model.eval()

86 | c = mel.transpose(2, 1)

87 | y = self.model(c,f0).view(-1)

88 |

89 | return y[None]

90 |

--------------------------------------------------------------------------------

/como.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | import copy

4 | from pitch_extractor import PitchExtractor

5 | from wavenet import WaveNet

6 |

7 | import numpy as np

8 | import torch

9 |

10 |

11 | class BaseModule(torch.nn.Module):

12 | def __init__(self):

13 | super(BaseModule, self).__init__()

14 |

15 | @property

16 | def nparams(self):

17 | """

18 | Returns number of trainable parameters of the module.

19 | """

20 | num_params = 0

21 | for name, param in self.named_parameters():

22 | if param.requires_grad:

23 | num_params += np.prod(param.detach().cpu().numpy().shape)

24 | return num_params

25 |

26 |

27 | def relocate_input(self, x: list):

28 | """

29 | Relocates provided tensors to the same device set for the module.

30 | """

31 | device = next(self.parameters()).device

32 | for i in range(len(x)):

33 | if isinstance(x[i], torch.Tensor) and x[i].device != device:

34 | x[i] = x[i].to(device)

35 | return x

36 |

37 |

38 | class Como(BaseModule):

39 | def __init__(self, out_dims, n_layers, n_chans, n_hidden,total_steps,teacher = True ):

40 | super().__init__()

41 | self.denoise_fn = WaveNet(out_dims, n_layers, n_chans, n_hidden)

42 | self.pe= PitchExtractor()

43 | self.teacher = teacher

44 | if not teacher:

45 | self.denoise_fn_ema = copy.deepcopy(self.denoise_fn)

46 | self.denoise_fn_pretrained = copy.deepcopy(self.denoise_fn)

47 |

48 | self.P_mean =-1.2

49 | self.P_std =1.2

50 | self.sigma_data =0.5

51 |

52 | self.sigma_min= 0.002

53 | self.sigma_max= 80

54 | self.rho=7

55 | self.N = 25

56 | self.total_steps=total_steps

57 | self.spec_min=-6

58 | self.spec_max=1.5

59 | step_indices = torch.arange(self.N)

60 | t_steps = (self.sigma_min ** (1 / self.rho) + step_indices / (self.N - 1) * (self.sigma_max ** (1 / self.rho) - self.sigma_min ** (1 / self.rho))) ** self.rho

61 | self.t_steps = torch.cat([torch.zeros_like(t_steps[:1]), self.round_sigma(t_steps)]) # round_tensorj将数据转为tensor

62 |

63 | def norm_spec(self, x):

64 | return (x - self.spec_min) / (self.spec_max - self.spec_min) * 2 - 1

65 |

66 | def denorm_spec(self, x):

67 | return (x + 1) / 2 * (self.spec_max - self.spec_min) + self.spec_min

68 |

69 | def EDMPrecond(self, x, sigma ,cond,denoise_fn):

70 | sigma = sigma.reshape(-1, 1, 1 )

71 | c_skip = self.sigma_data ** 2 / ((sigma-self.sigma_min) ** 2 + self.sigma_data ** 2)

72 | c_out = (sigma-self.sigma_min) * self.sigma_data / (sigma ** 2 + self.sigma_data ** 2).sqrt()

73 | c_in = 1 / (self.sigma_data ** 2 + sigma ** 2).sqrt()

74 | c_noise = sigma.log() / 4

75 | F_x = denoise_fn((c_in * x), c_noise.flatten(),cond)

76 | D_x = c_skip * x + c_out * (F_x .squeeze(1) )

77 | return D_x

78 |

79 | def EDMLoss(self, x_start, cond):

80 | rnd_normal = torch.randn([x_start.shape[0], 1, 1], device=x_start.device)

81 | sigma = (rnd_normal * self.P_std + self.P_mean).exp()

82 | weight = (sigma ** 2 + self.sigma_data ** 2) / (sigma * self.sigma_data) ** 2

83 | n = (torch.randn_like(x_start) ) * sigma # Generate Gaussian Noise

84 | D_yn = self.EDMPrecond(x_start + n, sigma ,cond,self.denoise_fn) # After Denoising

85 | loss = (weight * ((D_yn - x_start) ** 2))

86 | loss=loss.unsqueeze(1).unsqueeze(1)

87 | loss=loss.mean()

88 | return loss

89 |

90 | def round_sigma(self, sigma):

91 | return torch.as_tensor(sigma)

92 |

93 | def edm_sampler(self,latents, cond,num_steps=50, sigma_min=0.002, sigma_max=80, rho=7, S_churn=0, S_min=0, S_max=float('inf'), S_noise=1):

94 | # Time step discretization.

95 | step_indices = torch.arange(num_steps, device=latents.device)

96 |

97 | num_steps=num_steps + 1

98 | t_steps = (sigma_max ** (1 / rho) + step_indices / (num_steps - 1) * (sigma_min ** (1 / rho) - sigma_max ** (1 / rho))) ** rho

99 | t_steps = torch.cat([self.round_sigma(t_steps), torch.zeros_like(t_steps[:1])])

100 | # Main sampling loop.

101 | x_next = latents * t_steps[0]

102 | x_next = x_next.transpose(1,2)

103 | for i, (t_cur, t_next) in enumerate(zip(t_steps[:-1], t_steps[1:])):

104 | x_cur = x_next

105 | gamma = min(S_churn / num_steps, np.sqrt(2) - 1) if S_min <= t_cur <= S_max else 0

106 | t_hat = self.round_sigma(t_cur + gamma * t_cur)

107 | x_hat = x_cur + (t_hat ** 2 - t_cur ** 2).sqrt() * S_noise * torch.randn_like(x_cur)

108 | denoised = self.EDMPrecond(x_hat, t_hat, cond, self.denoise_fn) # mel,sigma,cond

109 | d_cur = (x_hat - denoised) / t_hat # 7th step

110 | x_next = x_hat + (t_next - t_hat) * d_cur

111 |

112 | return x_next

113 |

114 | def CTLoss_D(self,y, cond): # y is the gt_spec

115 | with torch.no_grad():

116 | mu = 0.95

117 | for p, ema_p in zip(self.denoise_fn.parameters(), self.denoise_fn_ema.parameters()):

118 | ema_p.mul_(mu).add_(p, alpha=1 - mu)

119 | n = torch.randint(1, self.N, (y.shape[0],))

120 |

121 | z = torch.randn_like(y) # Gaussian Noise

122 | tn_1 = self.c_t_d(n + 1).reshape(-1, 1, 1).to(y.device)

123 | f_theta = self.EDMPrecond(y + tn_1 * z, tn_1, cond, self.denoise_fn)

124 |

125 | with torch.no_grad():

126 | tn = self.c_t_d(n ).reshape(-1, 1, 1).to(y.device)

127 | #euler step

128 | x_hat = y + tn_1 * z

129 | denoised = self.EDMPrecond(x_hat, tn_1 , cond,self.denoise_fn_pretrained)

130 | d_cur = (x_hat - denoised) / tn_1

131 | y_tn = x_hat + (tn - tn_1) * d_cur

132 | f_theta_ema = self.EDMPrecond( y_tn, tn,cond, self.denoise_fn_ema)

133 |

134 | loss = (f_theta - f_theta_ema.detach()) ** 2 # For consistency model, lembda=1

135 | loss=loss.unsqueeze(1).unsqueeze(1)

136 | loss=loss.mean()

137 |

138 | return loss

139 |

140 | def c_t_d(self, i ):

141 | return self.t_steps[i]

142 |

143 | def get_t_steps(self,N):

144 | N=N+1

145 | step_indices = torch.arange( N ) #, device=latents.device)

146 | t_steps = (self.sigma_min ** (1 / self.rho) + step_indices / (N- 1) * (self.sigma_max ** (1 / self.rho) - self.sigma_min ** (1 / self.rho))) ** self.rho

147 |

148 | return t_steps.flip(0)# FLIP t_step

149 |

150 | def CT_sampler(self, latents, cond, t_steps=1):

151 | if t_steps ==1:

152 | t_steps=[80]

153 | else:

154 | t_steps=self.get_t_steps(t_steps)

155 | t_steps = torch.as_tensor(t_steps).to(latents.device)

156 | latents = latents * t_steps[0]

157 | latents = latents.transpose(1,2)

158 | x = self.EDMPrecond(latents, t_steps[0],cond,self.denoise_fn)

159 | for t in t_steps[1:-1]: # N-1 to 1

160 | z = torch.randn_like(x)

161 | x_tn = x + (t ** 2 - self.sigma_min ** 2).sqrt()*z

162 | x = self.EDMPrecond(x_tn, t,cond,self.denoise_fn)

163 | return x

164 |

165 | def forward(self, x, cond, infer=False):

166 |

167 | if self.teacher: # teacher model

168 | if not infer: # training

169 | x=self.norm_spec(x)

170 | loss = self.EDMLoss(x, cond)

171 | return loss

172 | else: # infer

173 | shape = (cond.shape[0], 80, cond.shape[1])

174 | x = torch.randn(shape, device=cond.device)

175 | x=self.edm_sampler(x, cond, self.total_steps)

176 | return self.denorm_spec(x)

177 | else: #Consistency distillation

178 | if not infer: # training

179 | x=self.norm_spec(x)

180 | loss = self.CTLoss_D(x, cond)

181 | return loss

182 | else: # infer

183 | shape = (cond.shape[0], 80, cond.shape[1])

184 | x = torch.randn(shape, device=cond.device) # The Input is the Random Noise

185 | x=self.CT_sampler(x,cond,self.total_steps)

186 | return self.denorm_spec(x)

187 |

188 |

189 |

--------------------------------------------------------------------------------

/configs/the_config_files:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/configs/the_config_files

--------------------------------------------------------------------------------

/configs_template/diffusion_template.yaml:

--------------------------------------------------------------------------------

1 | data:

2 | sampling_rate: 24000

3 | hop_length: 128

4 | duration: 2 # Audio duration during training, must be less than the duration of the shortest audio clip

5 | filter_length: 512

6 | win_length: 512

7 | encoder: 'vec768l12' #

8 | cnhubertsoft_gate: 10

9 | encoder_sample_rate: 16000

10 | encoder_hop_size: 320

11 | encoder_out_channels: 768 #

12 | training_files: "filelists/train.txt"

13 | validation_files: "filelists/val.txt"

14 | extensions: # List of extension included in the data collection

15 | - wav

16 | unit_interpolate_mode: "nearest"

17 | model:

18 | type: 'Diffusion'

19 | n_layers: 20

20 | n_chans: 512

21 | n_hidden: 256

22 | use_pitch_aug: true

23 | n_spk: 1 # max number of different speakers

24 | device: cuda

25 | vocoder:

26 | type: 'm4-gan'

27 | ckpt: 'm4singer_hifigan/model_ckpt_steps_1970000.ckpt'

28 | infer:

29 | method: 'dpm-solver++' #

30 | env:

31 | comodir: logs/como

32 | expdir: logs/teacher

33 | gpu_id: 0

34 | train:

35 | num_workers: 4 # If your cpu and gpu are both very strong, set to 0 may be faster!

36 | amp_dtype: fp32 # fp32, fp16 or bf16 (fp16 or bf16 may be faster if it is supported by your gpu)

37 | batch_size: 48

38 | cache_all_data: true # Save Internal-Memory or Graphics-Memory if it is false, but may be slow

39 | cache_device: 'cpu' # Set to 'cuda' to cache the data into the Graphics-Memory, fastest speed for strong gpu

40 | cache_fp16: true

41 | epochs: 100000

42 | interval_log: 10

43 | interval_val: 2000

44 | interval_force_save: 2000

45 | lr: 0.0001

46 | comolr: 0.00005

47 | decay_step: 100000

48 | gamma: 0.5

49 | weight_decay: 0

50 | save_opt: false

51 | spk:

52 | 'SPEAKER1': 0

--------------------------------------------------------------------------------

/data_loaders.py:

--------------------------------------------------------------------------------

1 | import os

2 | import random

3 |

4 | import librosa

5 | import numpy as np

6 | import torch

7 | from torch.utils.data import Dataset

8 | from tqdm import tqdm

9 |

10 | from utils import repeat_expand_2d

11 |

12 |

13 | def traverse_dir(

14 | root_dir,

15 | extensions,

16 | amount=None,

17 | str_include=None,

18 | str_exclude=None,

19 | is_pure=False,

20 | is_sort=False,

21 | is_ext=True):

22 |

23 | file_list = []

24 | cnt = 0

25 | for root, _, files in os.walk(root_dir):

26 | for file in files:

27 | if any([file.endswith(f".{ext}") for ext in extensions]):

28 | # path

29 | mix_path = os.path.join(root, file)

30 | pure_path = mix_path[len(root_dir)+1:] if is_pure else mix_path

31 |

32 | # amount

33 | if (amount is not None) and (cnt == amount):

34 | if is_sort:

35 | file_list.sort()

36 | return file_list

37 |

38 | # check string

39 | if (str_include is not None) and (str_include not in pure_path):

40 | continue

41 | if (str_exclude is not None) and (str_exclude in pure_path):

42 | continue

43 |

44 | if not is_ext:

45 | ext = pure_path.split('.')[-1]

46 | pure_path = pure_path[:-(len(ext)+1)]

47 | file_list.append(pure_path)

48 | cnt += 1

49 | if is_sort:

50 | file_list.sort()

51 | return file_list

52 |

53 |

54 | def get_data_loaders(args, whole_audio=False):

55 | data_train = AudioDataset(

56 | filelists = args.data.training_files,

57 | waveform_sec=args.data.duration,

58 | hop_size=args.data.hop_length,

59 | sample_rate=args.data.sampling_rate,

60 | load_all_data=args.train.cache_all_data,

61 | whole_audio=whole_audio,

62 | extensions=args.data.extensions,

63 | n_spk=args.model.n_spk,

64 | spk=args.spk,

65 | device=args.train.cache_device,

66 | fp16=args.train.cache_fp16,

67 | unit_interpolate_mode = args.data.unit_interpolate_mode,

68 | use_aug=True)

69 | loader_train = torch.utils.data.DataLoader(

70 | data_train ,

71 | batch_size=args.train.batch_size if not whole_audio else 1,

72 | shuffle=True,

73 | num_workers=args.train.num_workers if args.train.cache_device=='cpu' else 0,

74 | persistent_workers=(args.train.num_workers > 0) if args.train.cache_device=='cpu' else False,

75 | pin_memory=True if args.train.cache_device=='cpu' else False

76 | )

77 | data_valid = AudioDataset(

78 | filelists = args.data.validation_files,

79 | waveform_sec=args.data.duration,

80 | hop_size=args.data.hop_length,

81 | sample_rate=args.data.sampling_rate,

82 | load_all_data=args.train.cache_all_data,

83 | whole_audio=True,

84 | spk=args.spk,

85 | extensions=args.data.extensions,

86 | unit_interpolate_mode = args.data.unit_interpolate_mode,

87 | n_spk=args.model.n_spk)

88 | loader_valid = torch.utils.data.DataLoader(

89 | data_valid,

90 | batch_size=1,

91 | shuffle=False,

92 | num_workers=0,

93 | pin_memory=True

94 | )

95 | return loader_train, loader_valid

96 |

97 |

98 | class AudioDataset(Dataset):

99 | def __init__(

100 | self,

101 | filelists,

102 | waveform_sec,

103 | hop_size,

104 | sample_rate,

105 | spk,

106 | load_all_data=True,

107 | whole_audio=False,

108 | extensions=['wav'],

109 | n_spk=1,

110 | device='cpu',

111 | fp16=False,

112 | use_aug=False,

113 | unit_interpolate_mode = 'left'

114 | ):

115 | super().__init__()

116 |

117 | self.waveform_sec = waveform_sec

118 | self.sample_rate = sample_rate

119 | self.hop_size = hop_size

120 | self.filelists = filelists

121 | self.whole_audio = whole_audio

122 | self.use_aug = use_aug

123 | self.data_buffer={}

124 | self.pitch_aug_dict = {}

125 | self.unit_interpolate_mode = unit_interpolate_mode

126 | # np.load(os.path.join(self.path_root, 'pitch_aug_dict.npy'), allow_pickle=True).item()

127 | if load_all_data:

128 | print('Load all the data filelists:', filelists)

129 | else:

130 | print('Load the f0, volume data filelists:', filelists)

131 | with open(filelists,"r") as f:

132 | self.paths = f.read().splitlines()

133 | for name_ext in tqdm(self.paths, total=len(self.paths)):

134 | path_audio = name_ext

135 | duration = librosa.get_duration(filename = path_audio, sr = self.sample_rate)

136 |

137 | path_f0 = name_ext + ".f0.npy"

138 | f0,_ = np.load(path_f0,allow_pickle=True)

139 | f0 = torch.from_numpy(np.array(f0,dtype=float)).float().unsqueeze(-1).to(device)

140 |

141 | path_volume = name_ext + ".vol.npy"

142 | volume = np.load(path_volume)

143 | volume = torch.from_numpy(volume).float().unsqueeze(-1).to(device)

144 |

145 | path_augvol = name_ext + ".aug_vol.npy"

146 | aug_vol = np.load(path_augvol)

147 | aug_vol = torch.from_numpy(aug_vol).float().unsqueeze(-1).to(device)

148 |

149 | if n_spk is not None and n_spk > 1:

150 | spk_name = name_ext.split("/")[-2]

151 | spk_id = spk[spk_name] if spk_name in spk else 0

152 | if spk_id < 0 or spk_id >= n_spk:

153 | raise ValueError(' [x] Muiti-speaker traing error : spk_id must be a positive integer from 0 to n_spk-1 ')

154 | else:

155 | spk_id = 0

156 | spk_id = torch.LongTensor(np.array([spk_id])).to(device)

157 |

158 | if load_all_data:

159 | '''

160 | audio, sr = librosa.load(path_audio, sr=self.sample_rate)

161 | if len(audio.shape) > 1:

162 | audio = librosa.to_mono(audio)

163 | audio = torch.from_numpy(audio).to(device)

164 | '''

165 | path_mel = name_ext + ".mel.npy"

166 | mel = np.load(path_mel)

167 | mel = torch.from_numpy(mel).to(device)

168 |

169 | path_augmel = name_ext + ".aug_mel.npy"

170 | aug_mel,keyshift = np.load(path_augmel, allow_pickle=True)

171 | aug_mel = np.array(aug_mel,dtype=float)

172 | aug_mel = torch.from_numpy(aug_mel).to(device)

173 | self.pitch_aug_dict[name_ext] = keyshift

174 |

175 | path_units = name_ext + ".soft.pt"

176 | units = torch.load(path_units).to(device)

177 | units = units[0]

178 | units = repeat_expand_2d(units,f0.size(0),unit_interpolate_mode).transpose(0,1)

179 |

180 | if fp16:

181 | mel = mel.half()

182 | aug_mel = aug_mel.half()

183 | units = units.half()

184 |

185 | self.data_buffer[name_ext] = {

186 | 'duration': duration,

187 | 'mel': mel,

188 | 'aug_mel': aug_mel,

189 | 'units': units,

190 | 'f0': f0,

191 | 'volume': volume,

192 | 'aug_vol': aug_vol,

193 | 'spk_id': spk_id

194 | }

195 | else:

196 | path_augmel = name_ext + ".aug_mel.npy"

197 | aug_mel,keyshift = np.load(path_augmel, allow_pickle=True)

198 | self.pitch_aug_dict[name_ext] = keyshift

199 | self.data_buffer[name_ext] = {

200 | 'duration': duration,

201 | 'f0': f0,

202 | 'volume': volume,

203 | 'aug_vol': aug_vol,

204 | 'spk_id': spk_id

205 | }

206 |

207 |

208 | def __getitem__(self, file_idx):

209 | name_ext = self.paths[file_idx]

210 | data_buffer = self.data_buffer[name_ext]

211 | # check duration. if too short, then skip

212 | if data_buffer['duration'] < (self.waveform_sec + 0.1):

213 | return self.__getitem__( (file_idx + 1) % len(self.paths))

214 |

215 | # get item

216 | return self.get_data(name_ext, data_buffer)

217 |

218 | def get_data(self, name_ext, data_buffer):

219 | name = os.path.splitext(name_ext)[0]

220 | frame_resolution = self.hop_size / self.sample_rate

221 | duration = data_buffer['duration']

222 | waveform_sec = duration if self.whole_audio else self.waveform_sec

223 |

224 | # load audio

225 | idx_from = 0 if self.whole_audio else random.uniform(0, duration - waveform_sec - 0.1)

226 | start_frame = int(idx_from / frame_resolution)

227 | units_frame_len = int(waveform_sec / frame_resolution)

228 | aug_flag = random.choice([True, False]) and self.use_aug

229 | '''

230 | audio = data_buffer.get('audio')

231 | if audio is None:

232 | path_audio = os.path.join(self.path_root, 'audio', name) + '.wav'

233 | audio, sr = librosa.load(

234 | path_audio,

235 | sr = self.sample_rate,

236 | offset = start_frame * frame_resolution,

237 | duration = waveform_sec)

238 | if len(audio.shape) > 1:

239 | audio = librosa.to_mono(audio)

240 | # clip audio into N seconds

241 | audio = audio[ : audio.shape[-1] // self.hop_size * self.hop_size]

242 | audio = torch.from_numpy(audio).float()

243 | else:

244 | audio = audio[start_frame * self.hop_size : (start_frame + units_frame_len) * self.hop_size]

245 | '''

246 | # load mel

247 | mel_key = 'aug_mel' if aug_flag else 'mel'

248 | mel = data_buffer.get(mel_key)

249 | if mel is None:

250 | mel = name_ext + ".mel.npy"

251 | mel = np.load(mel)

252 | mel = mel[start_frame : start_frame + units_frame_len]

253 | mel = torch.from_numpy(mel).float()

254 | else:

255 | mel = mel[start_frame : start_frame + units_frame_len]

256 |

257 | # load f0

258 | f0 = data_buffer.get('f0')

259 | aug_shift = 0

260 | if aug_flag:

261 | aug_shift = self.pitch_aug_dict[name_ext]

262 | f0_frames = 2 ** (aug_shift / 12) * f0[start_frame : start_frame + units_frame_len]

263 |

264 | # load units

265 | units = data_buffer.get('units')

266 | if units is None:

267 | path_units = name_ext + ".soft.pt"

268 | units = torch.load(path_units)

269 | units = units[0]

270 | units = repeat_expand_2d(units,f0.size(0),self.unit_interpolate_mode).transpose(0,1)

271 |

272 | units = units[start_frame : start_frame + units_frame_len]

273 |

274 | # load volume

275 | vol_key = 'aug_vol' if aug_flag else 'volume'

276 | volume = data_buffer.get(vol_key)

277 | volume_frames = volume[start_frame : start_frame + units_frame_len]

278 |

279 | # load spk_id

280 | spk_id = data_buffer.get('spk_id')

281 |

282 | # load shift

283 | aug_shift = torch.from_numpy(np.array([[aug_shift]])).float()

284 |

285 | return dict(mel=mel, f0=f0_frames, volume=volume_frames, units=units, spk_id=spk_id, aug_shift=aug_shift, name=name, name_ext=name_ext)

286 |

287 | def __len__(self):

288 | return len(self.paths)

--------------------------------------------------------------------------------

/dataset/the_prprocessed_data:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/dataset/the_prprocessed_data

--------------------------------------------------------------------------------

/dataset_slice/if_you_need_to_slice:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/dataset_slice/if_you_need_to_slice

--------------------------------------------------------------------------------

/filelists/put_the_txt_here:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/filelists/put_the_txt_here

--------------------------------------------------------------------------------

/infer_tool.py:

--------------------------------------------------------------------------------

1 | import io

2 | import logging

3 | import os

4 | import time

5 | from pathlib import Path

6 |

7 | import librosa

8 | import numpy as np

9 |

10 | import soundfile

11 | import torch

12 | import torchaudio

13 |

14 | import utils

15 | from ComoSVC import load_model_vocoder

16 | import slicer

17 |

18 | logging.getLogger('matplotlib').setLevel(logging.WARNING)

19 |

20 |

21 | def format_wav(audio_path):

22 | if Path(audio_path).suffix == '.wav':

23 | return

24 | raw_audio, raw_sample_rate = librosa.load(audio_path, mono=True, sr=None)

25 | soundfile.write(Path(audio_path).with_suffix(".wav"), raw_audio, raw_sample_rate)

26 |

27 |

28 | def get_end_file(dir_path, end):

29 | file_lists = []

30 | for root, dirs, files in os.walk(dir_path):

31 | files = [f for f in files if f[0] != '.']

32 | dirs[:] = [d for d in dirs if d[0] != '.']

33 | for f_file in files:

34 | if f_file.endswith(end):

35 | file_lists.append(os.path.join(root, f_file).replace("\\", "/"))

36 | return file_lists

37 |

38 |

39 | def fill_a_to_b(a, b):

40 | if len(a) < len(b):

41 | for _ in range(0, len(b) - len(a)):

42 | a.append(a[0])

43 |

44 | def mkdir(paths: list):

45 | for path in paths:

46 | if not os.path.exists(path):

47 | os.mkdir(path)

48 |

49 | def pad_array(arr, target_length):

50 | current_length = arr.shape[0]

51 | if current_length >= target_length:

52 | return arr

53 | else:

54 | pad_width = target_length - current_length

55 | pad_left = pad_width // 2

56 | pad_right = pad_width - pad_left

57 | padded_arr = np.pad(arr, (pad_left, pad_right), 'constant', constant_values=(0, 0))

58 | return padded_arr

59 |

60 | def split_list_by_n(list_collection, n, pre=0):

61 | for i in range(0, len(list_collection), n):

62 | yield list_collection[i-pre if i-pre>=0 else i: i + n]

63 |

64 |

65 | class F0FilterException(Exception):

66 | pass

67 |

68 | class Svc(object):

69 | def __init__(self,

70 | diffusion_model_path="logs/como/model_8000.pt",

71 | diffusion_config_path="configs/diffusion.yaml",

72 | total_steps=1,

73 | teacher = False

74 | ):

75 |

76 | self.teacher = teacher

77 | self.total_steps=total_steps

78 | self.dev = torch.device("cuda" if torch.cuda.is_available() else "cpu")

79 | self.diffusion_model,self.vocoder,self.diffusion_args = load_model_vocoder(diffusion_model_path,self.dev,config_path=diffusion_config_path,total_steps=self.total_steps,teacher=self.teacher)

80 | self.target_sample = self.diffusion_args.data.sampling_rate

81 | self.hop_size = self.diffusion_args.data.hop_length

82 | self.spk2id = self.diffusion_args.spk

83 | self.dtype = torch.float32

84 | self.speech_encoder = self.diffusion_args.data.encoder

85 | self.unit_interpolate_mode = self.diffusion_args.data.unit_interpolate_mode if self.diffusion_args.data.unit_interpolate_mode is not None else 'left'

86 |

87 | # load hubert and model

88 |

89 | from Features import ContentVec768L12

90 | self.hubert_model = ContentVec768L12(device = self.dev)

91 | self.volume_extractor= utils.Volume_Extractor(self.hop_size)

92 |

93 |

94 |

95 | def get_unit_f0(self, wav, tran):

96 |

97 | if not hasattr(self,"f0_predictor_object") or self.f0_predictor_object is None:

98 | from Features import DioF0Predictor

99 | self.f0_predictor_object = DioF0Predictor(hop_length=self.hop_size,sampling_rate=self.target_sample)

100 | f0, uv = self.f0_predictor_object.compute_f0_uv(wav)

101 | f0 = torch.FloatTensor(f0).to(self.dev)

102 | uv = torch.FloatTensor(uv).to(self.dev)

103 |

104 | f0 = f0 * 2 ** (tran / 12)

105 | f0 = f0.unsqueeze(0)

106 | uv = uv.unsqueeze(0)

107 |

108 | wav = torch.from_numpy(wav).to(self.dev)

109 | if not hasattr(self,"audio16k_resample_transform"):

110 | self.audio16k_resample_transform = torchaudio.transforms.Resample(self.target_sample, 16000).to(self.dev)

111 | wav16k = self.audio16k_resample_transform(wav[None,:])[0]

112 |

113 | c = self.hubert_model.encoder(wav16k)

114 | c = utils.repeat_expand_2d(c.squeeze(0), f0.shape[1],self.unit_interpolate_mode)

115 | c = c.unsqueeze(0)

116 | return c, f0, uv

117 |

118 | def infer(self, speaker, tran, raw_path):

119 | torchaudio.set_audio_backend("soundfile")

120 | wav, sr = torchaudio.load(raw_path)

121 | if not hasattr(self,"audio_resample_transform") or self.audio16k_resample_transform.orig_freq != sr:

122 | self.audio_resample_transform = torchaudio.transforms.Resample(sr,self.target_sample)

123 | wav = self.audio_resample_transform(wav).numpy()[0]# (100080,)

124 | speaker_id = self.spk2id.get(speaker)

125 |

126 | if not speaker_id and type(speaker) is int:

127 | if len(self.spk2id.__dict__) >= speaker:

128 | speaker_id = speaker

129 | if speaker_id is None:

130 | raise RuntimeError("The name you entered is not in the speaker list!")

131 | sid = torch.LongTensor([int(speaker_id)]).to(self.dev).unsqueeze(0)

132 |

133 | c, f0, uv = self.get_unit_f0(wav, tran)

134 | n_frames = f0.size(1)

135 | c = c.to(self.dtype)

136 | f0 = f0.to(self.dtype)

137 | uv = uv.to(self.dtype)

138 |

139 | with torch.no_grad():

140 | start = time.time()

141 | vol = None

142 | audio = torch.FloatTensor(wav).to(self.dev)

143 | audio_mel = None

144 | vol = self.volume_extractor.extract(audio[None,:])[None,:,None].to(self.dev) if vol is None else vol[:,:,None]

145 | f0 = f0[:,:,None] # torch.Size([1, 390]) to torch.Size([1, 390, 1])

146 | c = c.transpose(-1,-2)

147 | audio_mel = self.diffusion_model(c, f0, vol,spk_id = sid,gt_spec=audio_mel,infer=True)

148 | # print("inferencetool_audiomel",audio_mel.shape)

149 | audio = self.vocoder.infer(audio_mel, f0).squeeze()

150 | use_time = time.time() - start

151 | print("inference_time is:{}".format(use_time))

152 | return audio, audio.shape[-1], n_frames

153 |

154 | def clear_empty(self):

155 | # clean up vram

156 | torch.cuda.empty_cache()

157 |

158 |

159 | def slice_inference(self,

160 | raw_audio_path,

161 | spk,

162 | tran,

163 | slice_db=-40, # -40

164 | pad_seconds=0.5,

165 | clip_seconds=0,

166 | ):

167 |

168 | wav_path = Path(raw_audio_path).with_suffix('.wav')

169 | chunks = slicer.cut(wav_path, db_thresh=slice_db)

170 | audio_data, audio_sr = slicer.chunks2audio(wav_path, chunks)

171 | per_size = int(clip_seconds*audio_sr)

172 | lg_size = 0

173 | global_frame = 0

174 | audio = []

175 | for (slice_tag, data) in audio_data:

176 | print(f'#=====segment start, {round(len(data) / audio_sr, 3)}s======')

177 | # padd

178 | length = int(np.ceil(len(data) / audio_sr * self.target_sample))

179 | if slice_tag:

180 | print('jump empty segment')

181 | _audio = np.zeros(length)

182 | audio.extend(list(pad_array(_audio, length)))

183 | global_frame += length // self.hop_size

184 | continue

185 | if per_size != 0:

186 | datas = split_list_by_n(data, per_size,lg_size)

187 | else:

188 | datas = [data]

189 | for k,dat in enumerate(datas):

190 | per_length = int(np.ceil(len(dat) / audio_sr * self.target_sample)) if clip_seconds!=0 else length

191 | if clip_seconds!=0:

192 | print(f'###=====segment clip start, {round(len(dat) / audio_sr, 3)}s======')

193 | # padd

194 | pad_len = int(audio_sr * pad_seconds)

195 | dat = np.concatenate([np.zeros([pad_len]), dat, np.zeros([pad_len])])

196 | raw_path = io.BytesIO()

197 | soundfile.write(raw_path, dat, audio_sr, format="wav")

198 | raw_path.seek(0)

199 | out_audio, out_sr, out_frame = self.infer(spk, tran, raw_path)

200 | global_frame += out_frame

201 | _audio = out_audio.cpu().numpy()

202 | pad_len = int(self.target_sample * pad_seconds)

203 | _audio = _audio[pad_len:-pad_len]

204 | _audio = pad_array(_audio, per_length)

205 | audio.extend(list(_audio))

206 | return np.array(audio)

207 |

--------------------------------------------------------------------------------

/inference_main.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import soundfile

3 | import os

4 | os.environ["CUDA_VISIBLE_DEVICES"]='1'

5 |

6 | import infer_tool

7 | from infer_tool import Svc

8 |

9 | logging.getLogger('numba').setLevel(logging.WARNING)

10 |

11 |

12 | def main():

13 | import argparse

14 |

15 | parser = argparse.ArgumentParser(description='comosvc inference')

16 | parser.add_argument('-t', '--teacher', action="store_false",help='if it is teacher model')

17 | parser.add_argument('-ts', '--total_steps', type=int,default=1,help='the total number of iterative steps during inference')

18 |

19 |

20 | parser.add_argument('--clip', type=float, default=0, help='Slicing the audios which are to be converted')

21 | parser.add_argument('-n','--clean_names', type=str, nargs='+', default=['1.wav'], help='The audios to be converted,should be put in "raw" directory')

22 | parser.add_argument('-k','--keys', type=int, nargs='+', default=[0], help='To Adjust the Key')

23 | parser.add_argument('-s','--spk_list', type=str, nargs='+', default=['singer1'], help='The target singer')

24 | parser.add_argument('-cm','--como_model_path', type=str, default="./logs/como/model_800000.pt", help='the path to checkpoint of CoMoSVC')

25 | parser.add_argument('-cc','--como_config_path', type=str, default="./logs/como/config.yaml", help='the path to config file of CoMoSVC')

26 | parser.add_argument('-tm','--teacher_model_path', type=str, default="./logs/teacher/model_800000.pt", help='the path to checkpoint of Teacher Model')

27 | parser.add_argument('-tc','--teacher_config_path', type=str, default="./logs/teacher/config.yaml", help='the path to config file of Teacher Model')

28 |

29 | args = parser.parse_args()

30 |

31 | clean_names = args.clean_names

32 | keys = args.keys

33 | spk_list = args.spk_list

34 | slice_db =-40

35 | wav_format = 'wav' # the format of the output audio

36 | pad_seconds = 0.5

37 | clip = args.clip

38 |

39 | if args.teacher:

40 | diffusion_model_path = args.teacher_model_path

41 | diffusion_config_path = args.teacher_config_path

42 | resultfolder='result_teacher'

43 | else:

44 | diffusion_model_path = args.como_model_path

45 | diffusion_config_path = args.como_config_path

46 | resultfolder='result_teacher'

47 |

48 | svc_model = Svc(diffusion_model_path,

49 | diffusion_config_path,

50 | args.total_steps,

51 | args.teacher)

52 |

53 | infer_tool.mkdir(["raw", resultfolder])

54 |

55 | infer_tool.fill_a_to_b(keys, clean_names)

56 | for clean_name, tran in zip(clean_names, keys):

57 | raw_audio_path = f"raw/{clean_name}"

58 | if "." not in raw_audio_path:

59 | raw_audio_path += ".wav"

60 | infer_tool.format_wav(raw_audio_path)

61 | for spk in spk_list:

62 | kwarg = {

63 | "raw_audio_path" : raw_audio_path,

64 | "spk" : spk,

65 | "tran" : tran,

66 | "slice_db" : slice_db,# -40

67 | "pad_seconds" : pad_seconds, # 0.5

68 | "clip_seconds" : clip, #0

69 |

70 | }

71 | audio = svc_model.slice_inference(**kwarg)

72 | step_num=diffusion_model_path.split('/')[-1].split('.')[0]

73 | if args.teacher:

74 | isdiffusion = "teacher"

75 | else:

76 | isdiffusion= "como"

77 | res_path = f'{resultfolder}/{clean_name}_{spk}_{isdiffusion}_{step_num}.{wav_format}'

78 | soundfile.write(res_path, audio, svc_model.target_sample, format=wav_format)

79 | svc_model.clear_empty()

80 |

81 | if __name__ == '__main__':

82 | main()

83 |

--------------------------------------------------------------------------------

/logs/the_log_files:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Grace9994/CoMoSVC/2ea8e644e2c5b3a8afc0762e870b9daacf3b5be5/logs/the_log_files

--------------------------------------------------------------------------------

/mel_processing.py:

--------------------------------------------------------------------------------

1 | import math

2 | import os

3 | import random

4 | import torch

5 | import torch.utils.data

6 | import torch.nn.functional as F

7 | import numpy as np

8 | from librosa.util import normalize

9 | from scipy.io.wavfile import read

10 | from librosa.filters import mel as librosa_mel_fn

11 | import pathlib

12 | from tqdm import tqdm

13 |

14 | MAX_WAV_VALUE = 32768.0

15 |

16 |

17 | def dynamic_range_compression_torch(x, C=1, clip_val=1e-5):

18 | return torch.log10(torch.clamp(x, min=clip_val) * C)

19 |

20 |

21 | def dynamic_range_decompression_torch(x, C=1):

22 | return torch.exp(x) / C

23 |

24 |

25 | def spectral_normalize_torch(magnitudes):

26 | output = dynamic_range_compression_torch(magnitudes)

27 | return output

28 |

29 |

30 | def spectral_de_normalize_torch(magnitudes):

31 | output = dynamic_range_decompression_torch(magnitudes)

32 | return output

33 |

34 |

35 | mel_basis = {}

36 | hann_window = {}

37 |

38 | def mel_spectrogram(y, n_fft, num_mels, sampling_rate, hop_size, win_size, fmin, fmax, keyshift=0, speed=1,center=False):

39 |

40 | factor = 2 ** (keyshift / 12)

41 | n_fft_new = int(np.round(n_fft * factor))

42 | win_size_new = int(np.round(win_size * factor))

43 | hop_length_new = int(np.round(hop_size * speed))

44 |

45 |

46 | if torch.min(y) < -1.:

47 | print('min value is ', torch.min(y))

48 | if torch.max(y) > 1.:

49 | print('max value is ', torch.max(y))

50 |

51 | global mel_basis, hann_window

52 | mel_basis_key = str(fmax)+'_'+str(y.device)

53 | if mel_basis_key not in mel_basis:

54 | mel = librosa_mel_fn(sr=sampling_rate, n_fft=n_fft, n_mels=num_mels, fmin=fmin, fmax=fmax) # 一个mel转换器,即这是一个函数,可以用来提取mel谱

55 | mel_basis[mel_basis_key] = torch.from_numpy(mel).float().to(y.device) # 建 Mel 转换器,并将其转换为 PyTorch 的张量,并根据 y.device 将其放置在相应的设备上。

56 |

57 | keyshift_key = str(keyshift)+'_'+str(y.device)

58 | if keyshift_key not in hann_window:

59 | hann_window[keyshift_key] = torch.hann_window(win_size_new).to(y.device)

60 |

61 | pad_left = (win_size_new - hop_length_new) //2

62 | pad_right = max((win_size_new- hop_length_new + 1) //2, win_size_new - y.size(-1) - pad_left)

63 | if pad_right < y.size(-1):

64 | mode = 'reflect'

65 | else:

66 | mode = 'constant'

67 | y = torch.nn.functional.pad(y.unsqueeze(1), (pad_left, pad_right), mode = mode)

68 | y = y.squeeze(1)

69 |

70 |

71 | spec = torch.stft(y, n_fft_new, hop_length=hop_length_new, win_length=win_size_new, window=hann_window[keyshift_key],

72 | center=center, pad_mode='reflect', normalized=False, onesided=True, return_complex=False)

73 |

74 | spec = torch.sqrt(spec.pow(2).sum(-1)+(1e-9))

75 | if keyshift != 0:

76 | size = n_fft // 2 + 1

77 | resize = spec.size(1)

78 | if resize < size:

79 | spec = F.pad(spec, (0, 0, 0, size-resize))

80 | spec = spec[:, :size, :] * win_size / win_size_new

81 | spec = torch.matmul(mel_basis[mel_basis_key], spec)

82 |

83 | spec = spectral_normalize_torch(spec)

84 |

85 | return spec

86 |

87 |

88 | def spectrogram_torch(y, n_fft, sampling_rate, hop_size, win_size, center=False):

89 | if torch.min(y) < -1.:

90 | print('min value is ', torch.min(y))

91 | if torch.max(y) > 1.:

92 | print('max value is ', torch.max(y))

93 |

94 | global hann_window

95 | dtype_device = str(y.dtype) + '_' + str(y.device)

96 | wnsize_dtype_device = str(win_size) + '_' + dtype_device

97 | if wnsize_dtype_device not in hann_window:

98 | hann_window[wnsize_dtype_device] = torch.hann_window(win_size).to(dtype=y.dtype, device=y.device)

99 |

100 | y = torch.nn.functional.pad(y.unsqueeze(1), (int((n_fft-hop_size)/2), int((n_fft-hop_size)/2)), mode='reflect')

101 | y = y.squeeze(1)

102 |

103 | y_dtype = y.dtype

104 | if y.dtype == torch.bfloat16:

105 | y = y.to(torch.float32)

106 |

107 | spec = torch.stft(y, n_fft, hop_length=hop_size, win_length=win_size, window=hann_window[wnsize_dtype_device],

108 | center=center, pad_mode='reflect', normalized=False, onesided=True, return_complex=True)

109 | spec = torch.view_as_real(spec).to(y_dtype)

110 |

111 | spec = torch.sqrt(spec.pow(2).sum(-1) + 1e-6)

112 | return spec

113 |

114 |

115 |

--------------------------------------------------------------------------------

/meldataset.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.utils.data

3 | import torch.nn.functional as F

4 | import numpy as np

5 | from librosa.filters import mel as librosa_mel_fn

6 |

7 |

8 | def spectral_normalize_torch(magnitudes):

9 | output = dynamic_range_compression_torch(magnitudes)

10 | return output

11 |

12 | def dynamic_range_compression_torch(x, C=1, clip_val=1e-5):

13 | return torch.log10(torch.clamp(x, min=clip_val) * C)

14 |

15 |

16 | def mel_spectrogram(y, n_fft, num_mels, sampling_rate, hop_size, win_size, fmin, fmax, keyshift=0, speed=1,center=False):

17 |

18 | factor = 2 ** (keyshift / 12)

19 | n_fft_new = int(np.round(n_fft * factor))

20 | win_size_new = int(np.round(win_size * factor))

21 | hop_length_new = int(np.round(hop_size * speed))

22 |

23 |

24 | if torch.min(y) < -1.:

25 | print('min value is ', torch.min(y))

26 | if torch.max(y) > 1.:

27 | print('max value is ', torch.max(y))

28 |

29 | global mel_basis, hann_window

30 | mel_basis_key = str(fmax)+'_'+str(y.device)

31 | if mel_basis_key not in mel_basis:

32 | mel = librosa_mel_fn(sr=sampling_rate, n_fft=n_fft, n_mels=num_mels, fmin=fmin, fmax=fmax) # 一个mel转换器,即这是一个函数,可以用来提取mel谱

33 | mel_basis[mel_basis_key] = torch.from_numpy(mel).float().to(y.device) # 建 Mel 转换器,并将其转换为 PyTorch 的张量,并根据 y.device 将其放置在相应的设备上。

34 |

35 | keyshift_key = str(keyshift)+'_'+str(y.device)

36 | if keyshift_key not in hann_window:

37 | hann_window[keyshift_key] = torch.hann_window(win_size_new).to(y.device)

38 |

39 | pad_left = (win_size_new - hop_length_new) //2

40 | pad_right = max((win_size_new- hop_length_new + 1) //2, win_size_new - y.size(-1) - pad_left)

41 | if pad_right < y.size(-1):

42 | mode = 'reflect'

43 | else:

44 | mode = 'constant'

45 | y = torch.nn.functional.pad(y.unsqueeze(1), (pad_left, pad_right), mode = mode)

46 | y = y.squeeze(1)

47 | spec = torch.stft(y, n_fft_new, hop_length=hop_length_new, win_length=win_size_new, window=hann_window[keyshift_key],

48 | center=center, pad_mode='reflect', normalized=False, onesided=True, return_complex=False)

49 |

50 | spec = torch.sqrt(spec.pow(2).sum(-1)+(1e-9))

51 | if keyshift != 0:

52 | size = n_fft // 2 + 1

53 | resize = spec.size(1)

54 | if resize < size:

55 | spec = F.pad(spec, (0, 0, 0, size-resize))

56 | spec = spec[:, :size, :] * win_size / win_size_new

57 | spec = torch.matmul(mel_basis[mel_basis_key], spec)

58 |

59 | spec = spectral_normalize_torch(spec)

60 |

61 | return spec

62 |

--------------------------------------------------------------------------------

/pitch_extractor.py:

--------------------------------------------------------------------------------

1 | import math

2 | import torch

3 | from torch import nn

4 | from torch.nn import Parameter

5 | import torch.onnx.operators

6 | import torch.nn.functional as F

7 | import utils

8 |

9 |

10 | class Reshape(nn.Module):

11 | def __init__(self, *args):

12 | super(Reshape, self).__init__()

13 | self.shape = args

14 |

15 | def forward(self, x):

16 | return x.view(self.shape)

17 |

18 |

19 | class Permute(nn.Module):

20 | def __init__(self, *args):

21 | super(Permute, self).__init__()

22 | self.args = args

23 |

24 | def forward(self, x):

25 | return x.permute(self.args)

26 |

27 |

28 | class LinearNorm(torch.nn.Module):

29 | def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'):