├── GenerateApp

├── GuernikaModelConverter.version

├── GuernikaModelConverter_AppIcon.png

├── exportOptions.plist

└── GuernikaTools.entitlements

├── GuernikaTools

├── guernikatools

│ ├── _version.py

│ ├── __init__.py

│ ├── models

│ │ ├── layer_norm.py

│ │ ├── attention.py

│ │ └── controlnet.py

│ ├── convert

│ │ ├── convert_text_encoder.py

│ │ ├── convert_t2i_adapter.py

│ │ ├── convert_safety_checker.py

│ │ ├── convert_controlnet.py

│ │ ├── convert_vae.py

│ │ └── convert_unet.py

│ └── utils

│ │ ├── merge_lora.py

│ │ ├── utils.py

│ │ └── chunk_mlprogram.py

├── requirements.txt

├── build.sh

├── GuernikaTools.entitlements

├── setup.py

└── GuernikaTools.spec

├── GuernikaModelConverter

├── Assets.xcassets

│ ├── Contents.json

│ ├── AppIcon.appiconset

│ │ ├── AppIcon-128.png

│ │ ├── AppIcon-16.png

│ │ ├── AppIcon-256.png

│ │ ├── AppIcon-32.png

│ │ ├── AppIcon-512.png

│ │ ├── AppIcon-64.png

│ │ ├── AppIcon-1024.png

│ │ └── Contents.json

│ └── AccentColor.colorset

│ │ └── Contents.json

├── Preview Content

│ └── Preview Assets.xcassets

│ │ └── Contents.json

├── Model

│ ├── LoRAInfo.swift

│ ├── Compression.swift

│ ├── ModelOrigin.swift

│ ├── ComputeUnits.swift

│ ├── Version.swift

│ ├── Logger.swift

│ └── ConverterProcess.swift

├── GuernikaModelConverter.entitlements

├── GuernikaModelConverterApp.swift

├── Navigation

│ ├── ContentView.swift

│ ├── DetailColumn.swift

│ └── Sidebar.swift

├── Views

│ ├── CircularProgress.swift

│ ├── DestinationToolbarButton.swift

│ ├── IntegerField.swift

│ └── DecimalField.swift

├── Log

│ └── LogView.swift

├── ConvertControlNet

│ └── ConvertControlNetViewModel.swift

└── ConvertModel

│ └── ConvertModelViewModel.swift

├── GuernikaModelConverter.xcodeproj

├── project.xcworkspace

│ ├── contents.xcworkspacedata

│ └── xcshareddata

│ │ └── IDEWorkspaceChecks.plist

└── xcuserdata

│ └── guiye.xcuserdatad

│ └── xcschemes

│ └── xcschememanagement.plist

├── .github

└── FUNDING.yml

├── GuernikaModelConverterUITests

├── GuernikaModelConverterUITestsLaunchTests.swift

└── GuernikaModelConverterUITests.swift

├── GuernikaModelConverterTests

└── GuernikaModelConverterTests.swift

├── .gitignore

└── README.md

/GenerateApp/GuernikaModelConverter.version:

--------------------------------------------------------------------------------

1 | 6.5.0

2 |

--------------------------------------------------------------------------------

/GuernikaTools/guernikatools/_version.py:

--------------------------------------------------------------------------------

1 | __version__ = "7.0.0"

2 |

--------------------------------------------------------------------------------

/GuernikaTools/guernikatools/__init__.py:

--------------------------------------------------------------------------------

1 | from ._version import __version__

2 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "info" : {

3 | "author" : "xcode",

4 | "version" : 1

5 | }

6 | }

7 |

--------------------------------------------------------------------------------

/GenerateApp/GuernikaModelConverter_AppIcon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GenerateApp/GuernikaModelConverter_AppIcon.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Preview Content/Preview Assets.xcassets/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "info" : {

3 | "author" : "xcode",

4 | "version" : 1

5 | }

6 | }

7 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-128.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-128.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-16.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-16.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-256.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-256.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-32.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-32.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-512.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-512.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-64.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-64.png

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-1024.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/GuernikaCore/GuernikaModelConverter/HEAD/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/AppIcon-1024.png

--------------------------------------------------------------------------------

/GuernikaModelConverter.xcodeproj/project.xcworkspace/contents.xcworkspacedata:

--------------------------------------------------------------------------------

1 |

2 |

4 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/GuernikaTools/requirements.txt:

--------------------------------------------------------------------------------

1 | coremltools>=7.0b2

2 | diffusers[torch]

3 | torch

4 | transformers>=4.30.0

5 | scipy

6 | numpy<1.24

7 | pytest

8 | scikit-learn==1.1.2

9 | pytorch_lightning

10 | omegaconf

11 | six

12 | safetensors

13 | pyinstaller

14 |

--------------------------------------------------------------------------------

/GuernikaTools/build.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | pyinstaller GuernikaTools.spec --clean -y --distpath "../GuernikaModelConverter"

4 | codesign -s - -i com.guiyec.GuernikaModelConverter.GuernikaTools -o runtime --entitlements GuernikaTools.entitlements -f "../GuernikaModelConverter/GuernikaTools"

5 |

--------------------------------------------------------------------------------

/GuernikaModelConverter.xcodeproj/project.xcworkspace/xcshareddata/IDEWorkspaceChecks.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | IDEDidComputeMac32BitWarning

6 |

7 |

8 |

9 |

--------------------------------------------------------------------------------

/GenerateApp/exportOptions.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | method

6 | developer-id

7 | signingStyle

8 | automatic

9 | teamID

10 | A5ZC2LG374

11 |

12 |

13 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/LoRAInfo.swift:

--------------------------------------------------------------------------------

1 | //

2 | // LoRAInfo.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 11/8/23.

6 | //

7 |

8 | import Foundation

9 |

10 | struct LoRAInfo: Hashable, Identifiable {

11 | var id: URL { url }

12 | var url: URL

13 | var ratio: Double

14 |

15 | var argument: String {

16 | String(format: "%@:%0.2f", url.path(percentEncoded: false), ratio)

17 | }

18 | }

19 |

--------------------------------------------------------------------------------

/GuernikaModelConverter.xcodeproj/xcuserdata/guiye.xcuserdatad/xcschemes/xcschememanagement.plist:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | SchemeUserState

6 |

7 | GuernikaModelConverter.xcscheme_^#shared#^_

8 |

9 | orderHint

10 | 0

11 |

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/GuernikaModelConverter.entitlements:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | com.apple.security.cs.allow-dyld-environment-variables

6 |

7 | com.apple.security.cs.allow-jit

8 |

9 | com.apple.security.cs.disable-library-validation

10 |

11 | com.apple.security.network.client

12 |

13 |

14 |

15 |

--------------------------------------------------------------------------------

/GenerateApp/GuernikaTools.entitlements:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | com.apple.security.cs.allow-dyld-environment-variables

6 |

7 | com.apple.security.cs.allow-jit

8 |

9 | com.apple.security.cs.disable-library-validation

10 |

11 | com.apple.security.network.client

12 |

13 | com.apple.security.inherit

14 |

15 |

16 |

17 |

--------------------------------------------------------------------------------

/GuernikaTools/GuernikaTools.entitlements:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | com.apple.security.cs.allow-dyld-environment-variables

6 |

7 | com.apple.security.cs.allow-jit

8 |

9 | com.apple.security.cs.disable-library-validation

10 |

11 | com.apple.security.network.client

12 |

13 | com.apple.security.inherit

14 |

15 |

16 |

17 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/Compression.swift:

--------------------------------------------------------------------------------

1 | //

2 | // Compression.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 19/6/23.

6 | //

7 |

8 | import Foundation

9 |

10 | enum Compression: String, CaseIterable, Identifiable, CustomStringConvertible {

11 | case quantizied6bit

12 | case quantizied8bit

13 | case fullSize

14 |

15 | var id: String { rawValue }

16 |

17 | var description: String {

18 | switch self {

19 | case .quantizied6bit: return "6 bit"

20 | case .quantizied8bit: return "8 bit"

21 | case .fullSize: return "Full size"

22 | }

23 | }

24 | }

25 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/ModelOrigin.swift:

--------------------------------------------------------------------------------

1 | //

2 | // ModelOrigin.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 30/3/23.

6 | //

7 |

8 | import Foundation

9 |

10 | enum ModelOrigin: String, CaseIterable, Identifiable, CustomStringConvertible {

11 | case huggingface

12 | case diffusers

13 | case checkpoint

14 |

15 | var id: String { rawValue }

16 |

17 | var description: String {

18 | switch self {

19 | case .huggingface: return "🤗 Hugging Face"

20 | case .diffusers: return "📂 Diffusers"

21 | case .checkpoint: return "💽 Checkpoint"

22 | }

23 | }

24 | }

25 |

--------------------------------------------------------------------------------

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | # These are supported funding model platforms

2 |

3 | github: GuiyeC

4 | patreon: # Replace with a single Patreon username

5 | open_collective: # Replace with a single Open Collective username

6 | ko_fi: # Replace with a single Ko-fi username

7 | tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel

8 | community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry

9 | liberapay: # Replace with a single Liberapay username

10 | issuehunt: # Replace with a single IssueHunt username

11 | otechie: # Replace with a single Otechie username

12 | lfx_crowdfunding: # Replace with a single LFX Crowdfunding project-name e.g., cloud-foundry

13 | custom: # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']

14 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/GuernikaModelConverterApp.swift:

--------------------------------------------------------------------------------

1 | //

2 | // GuernikaModelConverterApp.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | final class AppDelegate: NSObject, NSApplicationDelegate {

11 | func applicationShouldTerminateAfterLastWindowClosed(_ sender: NSApplication) -> Bool {

12 | true

13 | }

14 | }

15 |

16 | @main

17 | struct GuernikaModelConverterApp: App {

18 | @NSApplicationDelegateAdaptor(AppDelegate.self) var delegate

19 |

20 | var body: some Scene {

21 | WindowGroup {

22 | ContentView()

23 | }.commands {

24 | SidebarCommands()

25 |

26 | CommandGroup(replacing: CommandGroupPlacement.newItem) {}

27 | }

28 | }

29 | }

30 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AccentColor.colorset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "colors" : [

3 | {

4 | "color" : {

5 | "color-space" : "srgb",

6 | "components" : {

7 | "alpha" : "1.000",

8 | "blue" : "0.271",

9 | "green" : "0.067",

10 | "red" : "0.522"

11 | }

12 | },

13 | "idiom" : "mac"

14 | },

15 | {

16 | "appearances" : [

17 | {

18 | "appearance" : "luminosity",

19 | "value" : "dark"

20 | }

21 | ],

22 | "color" : {

23 | "color-space" : "srgb",

24 | "components" : {

25 | "alpha" : "1.000",

26 | "blue" : "0.271",

27 | "green" : "0.067",

28 | "red" : "0.522"

29 | }

30 | },

31 | "idiom" : "mac"

32 | }

33 | ],

34 | "info" : {

35 | "author" : "xcode",

36 | "version" : 1

37 | }

38 | }

39 |

--------------------------------------------------------------------------------

/GuernikaModelConverterUITests/GuernikaModelConverterUITestsLaunchTests.swift:

--------------------------------------------------------------------------------

1 | //

2 | // GuernikaModelConverterUITestsLaunchTests.swift

3 | // GuernikaModelConverterUITests

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import XCTest

9 |

10 | final class GuernikaModelConverterUITestsLaunchTests: XCTestCase {

11 |

12 | override class var runsForEachTargetApplicationUIConfiguration: Bool {

13 | true

14 | }

15 |

16 | override func setUpWithError() throws {

17 | continueAfterFailure = false

18 | }

19 |

20 | func testLaunch() throws {

21 | let app = XCUIApplication()

22 | app.launch()

23 |

24 | // Insert steps here to perform after app launch but before taking a screenshot,

25 | // such as logging into a test account or navigating somewhere in the app

26 |

27 | let attachment = XCTAttachment(screenshot: app.screenshot())

28 | attachment.name = "Launch Screen"

29 | attachment.lifetime = .keepAlways

30 | add(attachment)

31 | }

32 | }

33 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Navigation/ContentView.swift:

--------------------------------------------------------------------------------

1 | //

2 | // ContentView.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct ContentView: View {

11 | @State private var selection: Panel = Panel.model

12 | @State private var path = NavigationPath()

13 |

14 | var body: some View {

15 | NavigationSplitView {

16 | Sidebar(path: $path, selection: $selection)

17 | } detail: {

18 | NavigationStack(path: $path) {

19 | DetailColumn(path: $path, selection: $selection)

20 | }

21 | }

22 | .onChange(of: selection) { _ in

23 | path.removeLast(path.count)

24 | }

25 | .frame(minWidth: 800, minHeight: 500)

26 | }

27 | }

28 |

29 | struct ContentView_Previews: PreviewProvider {

30 | struct Preview: View {

31 | var body: some View {

32 | ContentView()

33 | }

34 | }

35 | static var previews: some View {

36 | Preview()

37 | }

38 | }

39 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Navigation/DetailColumn.swift:

--------------------------------------------------------------------------------

1 | //

2 | // DetailColumn.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct DetailColumn: View {

11 | @Binding var path: NavigationPath

12 | @Binding var selection: Panel

13 | @StateObject var modelConverter = ConvertModelViewModel()

14 | @StateObject var controlNetConverter = ConvertControlNetViewModel()

15 |

16 | var body: some View {

17 | switch selection {

18 | case .model:

19 | ConvertModelView(model: modelConverter)

20 | case .controlNet:

21 | ConvertControlNetView(model: controlNetConverter)

22 | case .log:

23 | LogView()

24 | }

25 | }

26 | }

27 |

28 | struct DetailColumn_Previews: PreviewProvider {

29 | struct Preview: View {

30 | @State private var selection: Panel = .model

31 |

32 | var body: some View {

33 | DetailColumn(path: .constant(NavigationPath()), selection: $selection)

34 | }

35 | }

36 | static var previews: some View {

37 | Preview()

38 | }

39 | }

40 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/ComputeUnits.swift:

--------------------------------------------------------------------------------

1 | //

2 | // ComputeUnits.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 30/3/23.

6 | //

7 |

8 | import Foundation

9 |

10 | enum ComputeUnits: String, CaseIterable, Identifiable, CustomStringConvertible {

11 | case cpuAndNeuralEngine, cpuAndGPU, cpuOnly, all

12 |

13 | var id: String { rawValue }

14 |

15 | var description: String {

16 | switch self {

17 | case .cpuAndNeuralEngine: return "CPU and Neural Engine"

18 | case .cpuAndGPU: return "CPU and GPU"

19 | case .cpuOnly: return "CPU only"

20 | case .all: return "All"

21 | }

22 | }

23 |

24 | var shortDescription: String {

25 | switch self {

26 | case .cpuAndNeuralEngine: return "CPU & NE"

27 | case .cpuAndGPU: return "CPU & GPU"

28 | case .cpuOnly: return "CPU"

29 | case .all: return "All"

30 | }

31 | }

32 |

33 | var asCTComputeUnits: String {

34 | switch self {

35 | case .cpuAndNeuralEngine: return "CPU_AND_NE"

36 | case .cpuAndGPU: return "CPU_AND_GPU"

37 | case .cpuOnly: return "CPU_ONLY"

38 | case .all: return "ALL"

39 | }

40 | }

41 | }

42 |

--------------------------------------------------------------------------------

/GuernikaTools/setup.py:

--------------------------------------------------------------------------------

1 | from setuptools import setup, find_packages

2 |

3 | from guernikatools._version import __version__

4 |

5 | setup(

6 | name='guernikatools',

7 | version=__version__,

8 | url='https://github.com/GuernikaCore/GuernikaModelConverter',

9 | description="Run Stable Diffusion on Apple Silicon with Guernika",

10 | author='Guernika',

11 | install_requires=[

12 | "coremltools>=7.0",

13 | "diffusers[torch]",

14 | "torch",

15 | "transformers>=4.30.0",

16 | "scipy",

17 | "numpy",

18 | "pytest",

19 | "scikit-learn==1.1.2",

20 | "pytorch_lightning",

21 | "OmegaConf",

22 | "six",

23 | "safetensors",

24 | "pyinstaller",

25 | ],

26 | packages=find_packages(),

27 | classifiers=[

28 | "Development Status :: 4 - Beta",

29 | "Intended Audience :: Developers",

30 | "Operating System :: MacOS :: MacOS X",

31 | "Programming Language :: Python :: 3",

32 | "Programming Language :: Python :: 3.7",

33 | "Programming Language :: Python :: 3.8",

34 | "Programming Language :: Python :: 3.9",

35 | "Topic :: Artificial Intelligence",

36 | "Topic :: Scientific/Engineering",

37 | "Topic :: Software Development",

38 | ],

39 | )

40 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Views/CircularProgress.swift:

--------------------------------------------------------------------------------

1 | //

2 | // CircularProgress.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 15/12/22.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct CircularProgress: View {

11 | var progress: Float?

12 |

13 | var body: some View {

14 | ZStack {

15 | if let progress, progress != 0 && progress != 1 {

16 | Circle()

17 | .stroke(lineWidth: 4)

18 | .opacity(0.2)

19 | .foregroundColor(Color.primary)

20 | .frame(width: 20, height: 20)

21 | Circle()

22 | .trim(from: 0, to: CGFloat(min(progress, 1)))

23 | .stroke(style: StrokeStyle(lineWidth: 4, lineCap: .round, lineJoin: .round))

24 | .foregroundColor(Color.accentColor)

25 | .rotationEffect(Angle(degrees: 270))

26 | .animation(.linear, value: progress)

27 | .frame(width: 20, height: 20)

28 | } else {

29 | ProgressView()

30 | .progressViewStyle(.circular)

31 | .scaleEffect(0.7)

32 | }

33 | }.frame(width: 24, height: 24)

34 | }

35 | }

36 |

37 | struct CircularProgress_Previews: PreviewProvider {

38 | static var previews: some View {

39 | CircularProgress(progress: 0.5)

40 | }

41 | }

42 |

--------------------------------------------------------------------------------

/GuernikaModelConverterTests/GuernikaModelConverterTests.swift:

--------------------------------------------------------------------------------

1 | //

2 | // GuernikaModelConverterTests.swift

3 | // GuernikaModelConverterTests

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import XCTest

9 | @testable import GuernikaModelConverter

10 |

11 | final class GuernikaModelConverterTests: XCTestCase {

12 |

13 | override func setUpWithError() throws {

14 | // Put setup code here. This method is called before the invocation of each test method in the class.

15 | }

16 |

17 | override func tearDownWithError() throws {

18 | // Put teardown code here. This method is called after the invocation of each test method in the class.

19 | }

20 |

21 | func testExample() throws {

22 | // This is an example of a functional test case.

23 | // Use XCTAssert and related functions to verify your tests produce the correct results.

24 | // Any test you write for XCTest can be annotated as throws and async.

25 | // Mark your test throws to produce an unexpected failure when your test encounters an uncaught error.

26 | // Mark your test async to allow awaiting for asynchronous code to complete. Check the results with assertions afterwards.

27 | }

28 |

29 | func testPerformanceExample() throws {

30 | // This is an example of a performance test case.

31 | self.measure {

32 | // Put the code you want to measure the time of here.

33 | }

34 | }

35 |

36 | }

37 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Xcode

2 | #

3 | # gitignore contributors: remember to update Global/Xcode.gitignore, Objective-C.gitignore & Swift.gitignore

4 |

5 | ## User settings

6 | xcuserdata/

7 |

8 | ## Obj-C/Swift specific

9 | *.hmap

10 |

11 | ## App packaging

12 | *.ipa

13 | *.dSYM.zip

14 | *.dSYM

15 |

16 | ## Playgrounds

17 | timeline.xctimeline

18 | playground.xcworkspace

19 |

20 | # Swift Package Manager

21 | #

22 | # Add this line if you want to avoid checking in source code from Swift Package Manager dependencies.

23 | # Packages/

24 | # Package.pins

25 | # Package.resolved

26 | # *.xcodeproj

27 | #

28 | # Xcode automatically generates this directory with a .xcworkspacedata file and xcuserdata

29 | # hence it is not needed unless you have added a package configuration file to your project

30 | .swiftpm

31 |

32 | .build/

33 |

34 | # fastlane

35 | #

36 | # It is recommended to not store the screenshots in the git repo.

37 | # Instead, use fastlane to re-generate the screenshots whenever they are needed.

38 | # For more information about the recommended setup visit:

39 | # https://docs.fastlane.tools/best-practices/source-control/#source-control

40 |

41 | fastlane/report.xml

42 | fastlane/Preview.html

43 | fastlane/screenshots/**/*.png

44 | fastlane/test_output

45 |

46 | # Code Injection

47 | #

48 | # After new code Injection tools there's a generated folder /iOSInjectionProject

49 | # https://github.com/johnno1962/injectionforxcode

50 |

51 | iOSInjectionProject/

52 | .DS_Store

53 |

54 | __pycache__

55 | GenerateApp/build

56 | GuernikaTools/build

57 | GuernikaTools/guernikatools.egg-info

58 | GuernikaModelConverter/GuernikaTools

59 | *.dmg

60 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Assets.xcassets/AppIcon.appiconset/Contents.json:

--------------------------------------------------------------------------------

1 | {

2 | "images" : [

3 | {

4 | "size" : "16x16",

5 | "idiom" : "mac",

6 | "filename" : "AppIcon-16.png",

7 | "scale" : "1x"

8 | },

9 | {

10 | "size" : "16x16",

11 | "idiom" : "mac",

12 | "filename" : "AppIcon-32.png",

13 | "scale" : "2x"

14 | },

15 | {

16 | "size" : "32x32",

17 | "idiom" : "mac",

18 | "filename" : "AppIcon-32.png",

19 | "scale" : "1x"

20 | },

21 | {

22 | "size" : "32x32",

23 | "idiom" : "mac",

24 | "filename" : "AppIcon-64.png",

25 | "scale" : "2x"

26 | },

27 | {

28 | "size" : "128x128",

29 | "idiom" : "mac",

30 | "filename" : "AppIcon-128.png",

31 | "scale" : "1x"

32 | },

33 | {

34 | "size" : "128x128",

35 | "idiom" : "mac",

36 | "filename" : "AppIcon-256.png",

37 | "scale" : "2x"

38 | },

39 | {

40 | "size" : "256x256",

41 | "idiom" : "mac",

42 | "filename" : "AppIcon-256.png",

43 | "scale" : "1x"

44 | },

45 | {

46 | "size" : "256x256",

47 | "idiom" : "mac",

48 | "filename" : "AppIcon-512.png",

49 | "scale" : "2x"

50 | },

51 | {

52 | "size" : "512x512",

53 | "idiom" : "mac",

54 | "filename" : "AppIcon-512.png",

55 | "scale" : "1x"

56 | },

57 | {

58 | "size" : "512x512",

59 | "idiom" : "mac",

60 | "filename" : "AppIcon-1024.png",

61 | "scale" : "2x"

62 | }

63 | ],

64 | "info" : {

65 | "version" : 1,

66 | "author" : "xcode"

67 | }

68 | }

--------------------------------------------------------------------------------

/GuernikaModelConverterUITests/GuernikaModelConverterUITests.swift:

--------------------------------------------------------------------------------

1 | //

2 | // GuernikaModelConverterUITests.swift

3 | // GuernikaModelConverterUITests

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import XCTest

9 |

10 | final class GuernikaModelConverterUITests: XCTestCase {

11 |

12 | override func setUpWithError() throws {

13 | // Put setup code here. This method is called before the invocation of each test method in the class.

14 |

15 | // In UI tests it is usually best to stop immediately when a failure occurs.

16 | continueAfterFailure = false

17 |

18 | // In UI tests it’s important to set the initial state - such as interface orientation - required for your tests before they run. The setUp method is a good place to do this.

19 | }

20 |

21 | override func tearDownWithError() throws {

22 | // Put teardown code here. This method is called after the invocation of each test method in the class.

23 | }

24 |

25 | func testExample() throws {

26 | // UI tests must launch the application that they test.

27 | let app = XCUIApplication()

28 | app.launch()

29 |

30 | // Use XCTAssert and related functions to verify your tests produce the correct results.

31 | }

32 |

33 | func testLaunchPerformance() throws {

34 | if #available(macOS 10.15, iOS 13.0, tvOS 13.0, watchOS 7.0, *) {

35 | // This measures how long it takes to launch your application.

36 | measure(metrics: [XCTApplicationLaunchMetric()]) {

37 | XCUIApplication().launch()

38 | }

39 | }

40 | }

41 | }

42 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Views/DestinationToolbarButton.swift:

--------------------------------------------------------------------------------

1 | //

2 | // DestinationToolbarButton.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 31/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct DestinationToolbarButton: View {

11 | @Binding var showOutputPicker: Bool

12 | var outputLocation: URL?

13 |

14 | var body: some View {

15 | ZStack(alignment: .leading) {

16 | Image(systemName: "folder")

17 | .padding(.leading, 18)

18 | .frame(width: 16)

19 | .foregroundColor(.secondary)

20 | .onTapGesture {

21 | guard let outputLocation else { return }

22 | NSWorkspace.shared.open(outputLocation)

23 | }

24 | Button {

25 | showOutputPicker = true

26 | } label: {

27 | Text(outputLocation?.lastPathComponent ?? "Select destination")

28 | .frame(minWidth: 200)

29 | }

30 | .foregroundColor(.primary)

31 | .background(Color.primary.opacity(0.1))

32 | .clipShape(RoundedRectangle(cornerRadius: 8, style: .continuous))

33 | .padding(.leading, 34)

34 | }.background {

35 | RoundedRectangle(cornerRadius: 8, style: .continuous)

36 | .stroke(.secondary, lineWidth: 1)

37 | .opacity(0.4)

38 | }

39 | .help("Destination")

40 | .padding(.trailing, 8)

41 | }

42 | }

43 |

44 | struct DestinationToolbarButton_Previews: PreviewProvider {

45 | static var previews: some View {

46 | DestinationToolbarButton(showOutputPicker: .constant(false), outputLocation: nil)

47 | }

48 | }

49 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/Version.swift:

--------------------------------------------------------------------------------

1 | //

2 | // Version.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 14/3/23.

6 | //

7 |

8 | import Foundation

9 |

10 | public struct Version: Comparable, Hashable, CustomStringConvertible {

11 | let components: [Int]

12 | public var major: Int {

13 | guard components.count > 0 else { return 0 }

14 | return components[0]

15 | }

16 | public var minor: Int {

17 | guard components.count > 1 else { return 0 }

18 | return components[1]

19 | }

20 | public var patch: Int {

21 | guard components.count > 2 else { return 0 }

22 | return components[2]

23 | }

24 |

25 | public var description: String {

26 | return components.map { $0.description }.joined(separator: ".")

27 | }

28 |

29 | public init(major: Int, minor: Int, patch: Int) {

30 | self.components = [major, minor, patch]

31 | }

32 |

33 | public static func == (lhs: Version, rhs: Version) -> Bool {

34 | return lhs.major == rhs.major &&

35 | lhs.minor == rhs.minor &&

36 | lhs.patch == rhs.patch

37 | }

38 |

39 | public static func < (lhs: Version, rhs: Version) -> Bool {

40 | return lhs.major < rhs.major ||

41 | (lhs.major == rhs.major && lhs.minor < rhs.minor) ||

42 | (lhs.major == rhs.major && lhs.minor == rhs.minor && lhs.patch < rhs.patch)

43 | }

44 | }

45 |

46 | extension Version: Codable {

47 | public init(from decoder: Decoder) throws {

48 | let container = try decoder.singleValueContainer()

49 | let stringValue = try container.decode(String.self)

50 | self.init(stringLiteral: stringValue)

51 | }

52 |

53 | public func encode(to encoder: Encoder) throws {

54 | var container = encoder.singleValueContainer()

55 | try container.encode(description)

56 | }

57 | }

58 |

59 | extension Version: ExpressibleByStringLiteral {

60 | public typealias StringLiteralType = String

61 |

62 | public init(stringLiteral value: StringLiteralType) {

63 | components = value.split(separator: ".").compactMap { Int($0) }

64 | }

65 | }

66 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Model/Logger.swift:

--------------------------------------------------------------------------------

1 | //

2 | // Logger.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 30/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | class Logger: ObservableObject {

11 | static var shared: Logger = Logger()

12 |

13 | enum LogLevel {

14 | case debug

15 | case info

16 | case warning

17 | case error

18 | case success

19 |

20 | var backgroundColor: Color {

21 | switch self {

22 | case .debug:

23 | return .secondary

24 | case .info:

25 | return .blue

26 | case .warning:

27 | return .orange

28 | case .error:

29 | return .red

30 | case .success:

31 | return .green

32 | }

33 | }

34 | }

35 |

36 | var isEmpty: Bool = true

37 | var previousContent: Text?

38 | @Published var content: Text = Text("")

39 |

40 | func append(_ line: String) {

41 | if line.starts(with: "INFO:") {

42 | append(String(line.replacing(try! Regex(#"INFO:.*:"#), with: "")), level: .info)

43 | } else if line.starts(with: "WARNING:") {

44 | append(String(line.replacing(try! Regex(#"WARNING:.*:"#), with: "")), level: .warning)

45 | } else if line.starts(with: "ERROR:") {

46 | append(String(line.replacing(try! Regex(#"ERROR:.*:"#), with: "")), level: .error)

47 | } else {

48 | append(line, level: .debug)

49 | }

50 | }

51 |

52 | func append(_ line: String, level: LogLevel) {

53 | if level == .success {

54 | if previousContent == nil {

55 | previousContent = content

56 | }

57 | content = previousContent! + Text(line + "\n").foregroundColor(level.backgroundColor)

58 | isEmpty = false

59 | print(line)

60 | } else {

61 | previousContent = nil

62 | if !line.isEmpty {

63 | content = content + Text(line + "\n").foregroundColor(level.backgroundColor)

64 | isEmpty = false

65 | print(line)

66 | }

67 | }

68 | }

69 |

70 | func clear() {

71 | content = Text("")

72 | previousContent = nil

73 | isEmpty = true

74 | }

75 | }

76 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Guernika Model Converter

2 |

3 | This repository contains a model converter compatible with [Guernika](https://apps.apple.com/app/id1660407508).

4 |

5 | ## Converting Models to Guernika

6 |

7 | **WARNING:** Xcode is required to convert models:

8 |

9 | - Make sure you have [Xcode](https://apps.apple.com/app/id497799835) installed.

10 |

11 | - Once installed run the following commands:

12 |

13 | ```shell

14 | sudo xcode-select --switch /Applications/Xcode.app/Contents/Developer/

15 | sudo xcodebuild -license accept

16 | ```

17 |

18 | - You should now be ready to start converting models!

19 |

20 | **Step 1:** Download and install [`Guernika Model Converter`](https://huggingface.co/Guernika/CoreMLStableDiffusion/resolve/main/GuernikaModelConverter.dmg).

21 |

22 | [ ](https://huggingface.co/Guernika/CoreMLStableDiffusion/resolve/main/GuernikaModelConverter.dmg)

23 |

24 | **Step 2:** Launch `Guernika Model Converter` from your `Applications` folder, this app may take a few seconds to load.

25 |

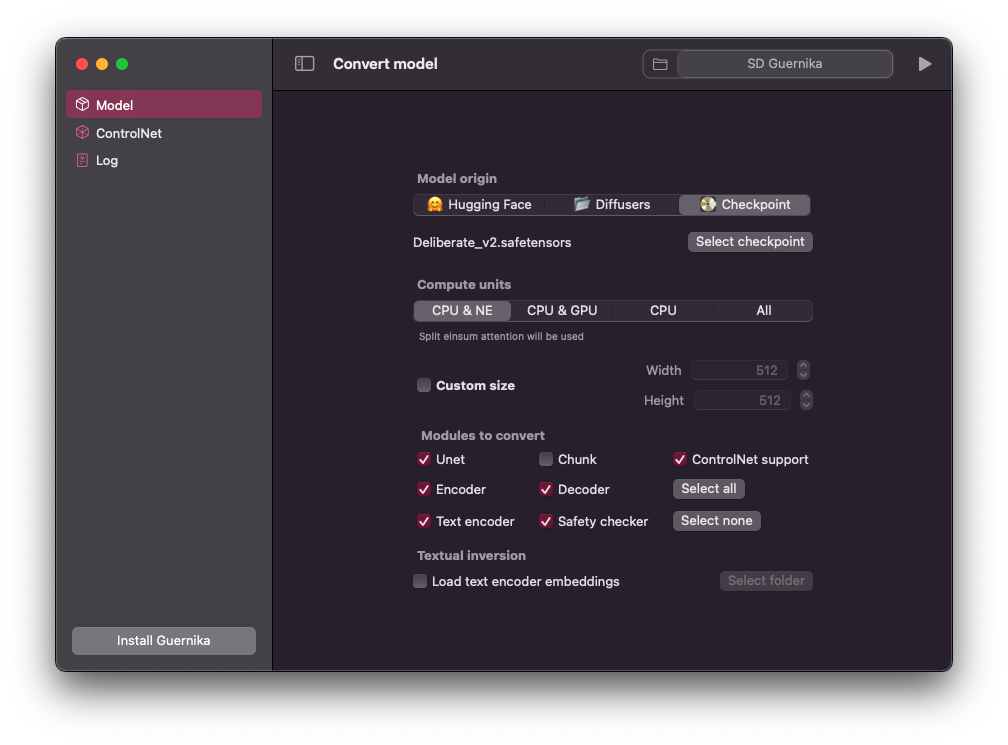

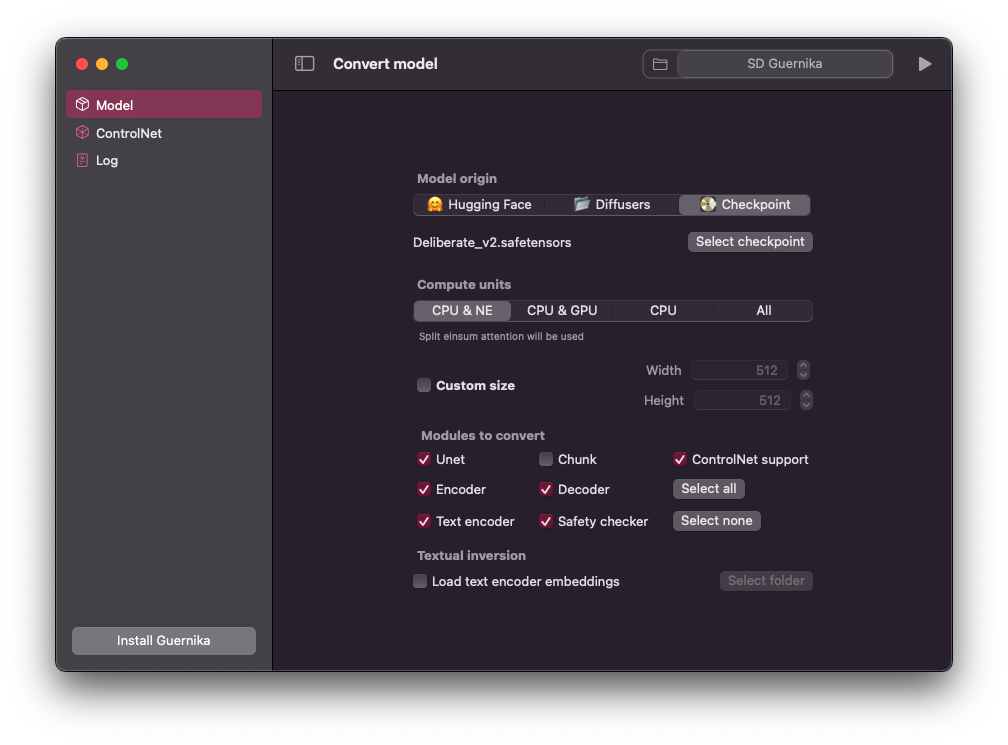

26 | **Step 3:** Once the app has loaded you will be able to select what model you want to convert:

27 |

28 | - You can input the model identifier (e.g. CompVis/stable-diffusion-v1-4) to download from Hugging Face. You may have to log in to or register for your [Hugging Face account](https://huggingface.co), generate a [User Access Token](https://huggingface.co/settings/tokens) and use this token to set up Hugging Face API access by running `huggingface-cli login` in a Terminal window.

29 |

30 | - You can select a local model from your machine: `Select local model`

31 |

32 | - You can select a local .CKPT model from your machine: `Select CKPT`

33 |

34 |

](https://huggingface.co/Guernika/CoreMLStableDiffusion/resolve/main/GuernikaModelConverter.dmg)

23 |

24 | **Step 2:** Launch `Guernika Model Converter` from your `Applications` folder, this app may take a few seconds to load.

25 |

26 | **Step 3:** Once the app has loaded you will be able to select what model you want to convert:

27 |

28 | - You can input the model identifier (e.g. CompVis/stable-diffusion-v1-4) to download from Hugging Face. You may have to log in to or register for your [Hugging Face account](https://huggingface.co), generate a [User Access Token](https://huggingface.co/settings/tokens) and use this token to set up Hugging Face API access by running `huggingface-cli login` in a Terminal window.

29 |

30 | - You can select a local model from your machine: `Select local model`

31 |

32 | - You can select a local .CKPT model from your machine: `Select CKPT`

33 |

34 |  35 |

36 | **Step 4:** Once you've chosen the model you want to convert you can choose what modules to convert and/or if you want to chunk the UNet module (recommended for iOS/iPadOS devices).

37 |

38 | **Step 5:** Once you're happy with your selection click `Convert to Guernika` and wait for the app to complete conversion.

39 | **WARNING:** This command may download several GB worth of PyTorch checkpoints from Hugging Face and may take a long time to complete (15-20 minutes on an M1 machine).

40 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Log/LogView.swift:

--------------------------------------------------------------------------------

1 | //

2 | // LogView.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 30/3/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct LogView: View {

11 | @ObservedObject var logger = Logger.shared

12 | @State var stickToBottom: Bool = true

13 |

14 | var body: some View {

15 | VStack {

16 | if logger.isEmpty {

17 | emptyView

18 | } else {

19 | contentView

20 | }

21 | }.navigationTitle("Log")

22 | .toolbar {

23 | ToolbarItemGroup {

24 | Button {

25 | logger.clear()

26 | } label: {

27 | Image(systemName: "trash")

28 | }.help("Clear log")

29 | .disabled(logger.isEmpty)

30 | Toggle(isOn: $stickToBottom) {

31 | Image(systemName: "dock.arrow.down.rectangle")

32 | }.help("Stick to bottom")

33 | }

34 | }

35 | }

36 |

37 | @ViewBuilder

38 | var emptyView: some View {

39 | Image(systemName: "moon.zzz.fill")

40 | .resizable()

41 | .aspectRatio(contentMode: .fit)

42 | .frame(width: 72)

43 | .opacity(0.3)

44 | .padding(8)

45 | Text("Log is empty")

46 | .font(.largeTitle)

47 | .opacity(0.3)

48 | }

49 |

50 | @ViewBuilder

51 | var contentView: some View {

52 | ScrollViewReader { proxy in

53 | ScrollView {

54 | logger.content

55 | .multilineTextAlignment(.leading)

56 | .font(.body.monospaced())

57 | .textSelection(.enabled)

58 | .frame(maxWidth: .infinity, alignment: .leading)

59 | .padding()

60 |

61 | Divider().opacity(0)

62 | .id("bottom")

63 | }.onChange(of: logger.content) { _ in

64 | if stickToBottom {

65 | proxy.scrollTo("bottom", anchor: .bottom)

66 | }

67 | }.onChange(of: stickToBottom) { newValue in

68 | if newValue {

69 | proxy.scrollTo("bottom", anchor: .bottom)

70 | }

71 | }.onAppear {

72 | if stickToBottom {

73 | proxy.scrollTo("bottom", anchor: .bottom)

74 | }

75 | }

76 | }

77 | }

78 | }

79 |

80 | struct LogView_Previews: PreviewProvider {

81 | static var previews: some View {

82 | LogView()

83 | }

84 | }

85 |

--------------------------------------------------------------------------------

/GuernikaTools/GuernikaTools.spec:

--------------------------------------------------------------------------------

1 | # -*- mode: python ; coding: utf-8 -*-

2 | from PyInstaller.utils.hooks import collect_all

3 |

4 | import sys ; sys.setrecursionlimit(sys.getrecursionlimit() * 5)

5 |

6 | datas = []

7 | binaries = []

8 | hiddenimports = []

9 | tmp_ret = collect_all('regex')

10 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

11 | tmp_ret = collect_all('tqdm')

12 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

13 | tmp_ret = collect_all('requests')

14 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

15 | tmp_ret = collect_all('packaging')

16 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

17 | tmp_ret = collect_all('filelock')

18 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

19 | tmp_ret = collect_all('numpy')

20 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

21 | tmp_ret = collect_all('tokenizers')

22 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

23 | tmp_ret = collect_all('transformers')

24 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

25 | tmp_ret = collect_all('huggingface-hub')

26 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

27 | tmp_ret = collect_all('pyyaml')

28 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

29 | tmp_ret = collect_all('omegaconf')

30 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

31 | tmp_ret = collect_all('pytorch_lightning')

32 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

33 | tmp_ret = collect_all('pytorch-lightning')

34 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

35 | tmp_ret = collect_all('torch')

36 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

37 | tmp_ret = collect_all('safetensors')

38 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

39 | tmp_ret = collect_all('pillow')

40 | datas += tmp_ret[0]; binaries += tmp_ret[1]; hiddenimports += tmp_ret[2]

41 |

42 | block_cipher = None

43 |

44 |

45 | a = Analysis(

46 | ['./guernikatools/torch2coreml.py'],

47 | pathex=[],

48 | binaries=binaries,

49 | datas=datas,

50 | hiddenimports=hiddenimports,

51 | hookspath=[],

52 | hooksconfig={},

53 | runtime_hooks=[],

54 | excludes=[],

55 | win_no_prefer_redirects=False,

56 | win_private_assemblies=False,

57 | cipher=block_cipher,

58 | noarchive=False,

59 | )

60 | pyz = PYZ(a.pure, a.zipped_data, cipher=block_cipher)

61 |

62 | exe = EXE(

63 | pyz,

64 | a.scripts,

65 | a.binaries,

66 | a.zipfiles,

67 | a.datas,

68 | [],

69 | name='GuernikaTools',

70 | debug=False,

71 | bootloader_ignore_signals=False,

72 | strip=False,

73 | upx=True,

74 | upx_exclude=[],

75 | runtime_tmpdir=None,

76 | console=False,

77 | disable_windowed_traceback=False,

78 | argv_emulation=False,

79 | target_arch=None,

80 | codesign_identity=None,

81 | entitlements_file=None,

82 | )

83 |

--------------------------------------------------------------------------------

/GuernikaTools/guernikatools/models/layer_norm.py:

--------------------------------------------------------------------------------

1 | #

2 | # For licensing see accompanying LICENSE.md file.

3 | # Copyright (C) 2022 Apple Inc. All Rights Reserved.

4 | #

5 |

6 | import torch

7 | import torch.nn as nn

8 |

9 |

10 | # Reference: https://github.com/apple/ml-ane-transformers/blob/main/ane_transformers/reference/layer_norm.py

11 | class LayerNormANE(nn.Module):

12 | """ LayerNorm optimized for Apple Neural Engine (ANE) execution

13 |

14 | Note: This layer only supports normalization over the final dim. It expects `num_channels`

15 | as an argument and not `normalized_shape` which is used by `torch.nn.LayerNorm`.

16 | """

17 |

18 | def __init__(self,

19 | num_channels,

20 | clip_mag=None,

21 | eps=1e-5,

22 | elementwise_affine=True):

23 | """

24 | Args:

25 | num_channels: Number of channels (C) where the expected input data format is BC1S. S stands for sequence length.

26 | clip_mag: Optional float value to use for clamping the input range before layer norm is applied.

27 | If specified, helps reduce risk of overflow.

28 | eps: Small value to avoid dividing by zero

29 | elementwise_affine: If true, adds learnable channel-wise shift (bias) and scale (weight) parameters

30 | """

31 | super().__init__()

32 | # Principle 1: Picking the Right Data Format (machinelearning.apple.com/research/apple-neural-engine)

33 | self.expected_rank = len("BC1S")

34 |

35 | self.num_channels = num_channels

36 | self.eps = eps

37 | self.clip_mag = clip_mag

38 | self.elementwise_affine = elementwise_affine

39 |

40 | if self.elementwise_affine:

41 | self.weight = nn.Parameter(torch.Tensor(num_channels))

42 | self.bias = nn.Parameter(torch.Tensor(num_channels))

43 |

44 | self._reset_parameters()

45 |

46 | def _reset_parameters(self):

47 | if self.elementwise_affine:

48 | nn.init.ones_(self.weight)

49 | nn.init.zeros_(self.bias)

50 |

51 | def forward(self, inputs):

52 | input_rank = len(inputs.size())

53 |

54 | # Principle 1: Picking the Right Data Format (machinelearning.apple.com/research/apple-neural-engine)

55 | # Migrate the data format from BSC to BC1S (most conducive to ANE)

56 | if input_rank == 3 and inputs.size(2) == self.num_channels:

57 | inputs = inputs.transpose(1, 2).unsqueeze(2)

58 | input_rank = len(inputs.size())

59 |

60 | assert input_rank == self.expected_rank

61 | assert inputs.size(1) == self.num_channels

62 |

63 | if self.clip_mag is not None:

64 | inputs.clamp_(-self.clip_mag, self.clip_mag)

65 |

66 | channels_mean = inputs.mean(dim=1, keepdim=True)

67 |

68 | zero_mean = inputs - channels_mean

69 |

70 | zero_mean_sq = zero_mean * zero_mean

71 |

72 | denom = (zero_mean_sq.mean(dim=1, keepdim=True) + self.eps).rsqrt()

73 |

74 | out = zero_mean * denom

75 |

76 | if self.elementwise_affine:

77 | out = (out + self.bias.view(1, self.num_channels, 1, 1)) * self.weight.view(1, self.num_channels, 1, 1)

78 |

79 | return out

80 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Navigation/Sidebar.swift:

--------------------------------------------------------------------------------

1 | //

2 | // Sidebar.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 19/3/23.

6 | //

7 |

8 | import Cocoa

9 | import SwiftUI

10 |

11 | enum Panel: Hashable {

12 | case model

13 | case controlNet

14 | case log

15 | }

16 |

17 | struct Sidebar: View {

18 | @Binding var path: NavigationPath

19 | @Binding var selection: Panel

20 | @State var showUpdateButton: Bool = false

21 |

22 | var body: some View {

23 | List(selection: $selection) {

24 | NavigationLink(value: Panel.model) {

25 | Label("Model", systemImage: "shippingbox")

26 | }

27 | NavigationLink(value: Panel.controlNet) {

28 | Label("ControlNet", systemImage: "cube.transparent")

29 | }

30 | NavigationLink(value: Panel.log) {

31 | Label("Log", systemImage: "doc.text.below.ecg")

32 | }

33 | }

34 | .safeAreaInset(edge: .bottom, content: {

35 | VStack(spacing: 12) {

36 | if showUpdateButton {

37 | Button {

38 | NSWorkspace.shared.open(URL(string: "https://huggingface.co/Guernika/CoreMLStableDiffusion/blob/main/GuernikaModelConverter.dmg")!)

39 | } label: {

40 | Text("Update available")

41 | .frame(minWidth: 168)

42 | }.controlSize(.large)

43 | .buttonStyle(.borderedProminent)

44 | }

45 | if !FileManager.default.fileExists(atPath: "/Applications/Guernika.app") {

46 | Button {

47 | NSWorkspace.shared.open(URL(string: "macappstore://apps.apple.com/app/id1660407508")!)

48 | } label: {

49 | Text("Install Guernika")

50 | .frame(minWidth: 168)

51 | }.controlSize(.large)

52 | }

53 | }

54 | .padding(16)

55 | })

56 | .navigationTitle("Guernika Model Converter")

57 | .navigationSplitViewColumnWidth(min: 200, ideal: 200)

58 | .onAppear { checkForUpdate() }

59 | }

60 |

61 | func checkForUpdate() {

62 | Task.detached {

63 | let versionUrl = URL(string: "https://huggingface.co/Guernika/CoreMLStableDiffusion/raw/main/GuernikaModelConverter.version")!

64 | guard let lastVersionString = try? String(contentsOf: versionUrl) else { return }

65 | let lastVersion = Version(stringLiteral: lastVersionString)

66 | let currentVersionString = Bundle.main.object(forInfoDictionaryKey: "CFBundleShortVersionString") as? String ?? ""

67 | let currentVersion = Version(stringLiteral: currentVersionString)

68 | await MainActor.run {

69 | withAnimation {

70 | showUpdateButton = currentVersion < lastVersion

71 | }

72 | }

73 | }

74 | }

75 | }

76 |

77 | struct Sidebar_Previews: PreviewProvider {

78 | struct Preview: View {

79 | @State private var selection: Panel = Panel.model

80 | var body: some View {

81 | Sidebar(path: .constant(NavigationPath()), selection: $selection)

82 | }

83 | }

84 |

85 | static var previews: some View {

86 | NavigationSplitView {

87 | Preview()

88 | } detail: {

89 | Text("Detail!")

90 | }

91 | }

92 | }

93 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Views/IntegerField.swift:

--------------------------------------------------------------------------------

1 | //

2 | // IntegerField.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 23/1/22.

6 | //

7 |

8 | import SwiftUI

9 |

10 | struct LabeledIntegerField: View {

11 | @Binding var value: Int

12 | var step: Int = 1

13 | var minValue: Int?

14 | var maxValue: Int?

15 | @ViewBuilder var label: () -> Content

16 |

17 | init(

18 | _ titleKey: LocalizedStringKey,

19 | value: Binding,

20 | step: Int = 1,

21 | minValue: Int? = nil,

22 | maxValue: Int? = nil

23 | ) where Content == Text {

24 | self.init(value: value, step: step, minValue: minValue, maxValue: maxValue, label: {

25 | Text(titleKey)

26 | })

27 | }

28 |

29 | init(

30 | value: Binding,

31 | step: Int = 1,

32 | minValue: Int? = nil,

33 | maxValue: Int? = nil,

34 | @ViewBuilder label: @escaping () -> Content

35 | ) {

36 | self.label = label

37 | self.step = step

38 | self.minValue = minValue

39 | self.maxValue = maxValue

40 | self._value = value

41 | }

42 |

43 | var body: some View {

44 | LabeledContent(content: {

45 | IntegerField(

46 | value: $value,

47 | step: step,

48 | minValue: minValue,

49 | maxValue: maxValue

50 | )

51 | .frame(maxWidth: 120)

52 | }, label: label)

53 | }

54 | }

55 |

56 | struct IntegerField: View {

57 | @Binding var value: Int

58 | var step: Int = 1

59 | var minValue: Int?

60 | var maxValue: Int?

61 | @State private var text: String

62 | @FocusState private var isFocused: Bool

63 |

64 | init(

65 | value: Binding,

66 | step: Int = 1,

67 | minValue: Int? = nil,

68 | maxValue: Int? = nil

69 | ) {

70 | self.step = step

71 | self.minValue = minValue

72 | self.maxValue = maxValue

73 | self._value = value

74 | let text = String(describing: value.wrappedValue)

75 | self._text = State(wrappedValue: text)

76 | }

77 |

78 | var body: some View {

79 | HStack(spacing: 0) {

80 | TextField("", text: $text, prompt: Text("Value"))

81 | .multilineTextAlignment(.trailing)

82 | .textFieldStyle(.plain)

83 | .padding(.horizontal, 10)

84 | .submitLabel(.done)

85 | .focused($isFocused)

86 | .frame(minWidth: 70)

87 | .labelsHidden()

88 | #if !os(macOS)

89 | .keyboardType(.numberPad)

90 | #endif

91 | Stepper(label: {}, onIncrement: {

92 | if let maxValue {

93 | value = min(value + step, maxValue)

94 | } else {

95 | value += step

96 | }

97 | }, onDecrement: {

98 | if let minValue {

99 | value = max(value - step, minValue)

100 | } else {

101 | value -= step

102 | }

103 | }).labelsHidden()

104 | }

105 | #if os(macOS)

106 | .padding(3)

107 | .background(Color.primary.opacity(0.06))

108 | .clipShape(RoundedRectangle(cornerRadius: 8, style: .continuous))

109 | #else

110 | .padding(2)

111 | .background(Color.primary.opacity(0.05))

112 | .clipShape(RoundedRectangle(cornerRadius: 10, style: .continuous))

113 | #endif

114 | .onSubmit {

115 | updateValue()

116 | isFocused = false

117 | }

118 | .onChange(of: isFocused) { focused in

119 | if !focused {

120 | updateValue()

121 | }

122 | }

123 | .onChange(of: value) { _ in updateText() }

124 | .onChange(of: text) { _ in

125 | if let newValue = Int(text), value != newValue {

126 | value = newValue

127 | }

128 | }

129 | #if !os(macOS)

130 | .toolbar {

131 | if isFocused {

132 | ToolbarItem(placement: .keyboard) {

133 | HStack {

134 | Spacer()

135 | Button("Done") {

136 | isFocused = false

137 | }

138 | }

139 | }

140 | }

141 | }

142 | #endif

143 | }

144 |

145 | private func updateValue() {

146 | if let newValue = Int(text) {

147 | if let maxValue, newValue > maxValue {

148 | value = maxValue

149 | } else if let minValue, newValue < minValue {

150 | value = minValue

151 | } else {

152 | value = newValue

153 | }

154 | }

155 | }

156 |

157 | private func updateText() {

158 | text = String(describing: value)

159 | }

160 | }

161 |

162 | struct ValueField_Previews: PreviewProvider {

163 | static var previews: some View {

164 | LabeledIntegerField("Text", value: .constant(20))

165 | }

166 | }

167 |

168 |

--------------------------------------------------------------------------------

/GuernikaTools/guernikatools/convert/convert_text_encoder.py:

--------------------------------------------------------------------------------

1 | #

2 | # For licensing see accompanying LICENSE.md file.

3 | # Copyright (C) 2022 Apple Inc. All Rights Reserved.

4 | #

5 |

6 | from guernikatools._version import __version__

7 | from guernikatools.utils import utils

8 |

9 | from collections import OrderedDict, defaultdict

10 | from copy import deepcopy

11 | import gc

12 |

13 | import logging

14 |

15 | logging.basicConfig()

16 | logger = logging.getLogger(__name__)

17 | logger.setLevel(logging.INFO)

18 |

19 | import numpy as np

20 | import os

21 |

22 | import torch

23 | import torch.nn as nn

24 | import torch.nn.functional as F

25 |

26 | torch.set_grad_enabled(False)

27 |

28 |

29 | def main(tokenizer, text_encoder, args, model_name="text_encoder"):

30 | """ Converts the text encoder component of Stable Diffusion

31 | """

32 | out_path = utils.get_out_path(args, model_name)

33 | if os.path.exists(out_path):

34 | logger.info(

35 | f"`text_encoder` already exists at {out_path}, skipping conversion."

36 | )

37 | return

38 |

39 | # Create sample inputs for tracing, conversion and correctness verification

40 | text_encoder_sequence_length = tokenizer.model_max_length

41 | text_encoder_hidden_size = text_encoder.config.hidden_size

42 |

43 | sample_text_encoder_inputs = {

44 | "input_ids":

45 | torch.randint(

46 | text_encoder.config.vocab_size,

47 | (1, text_encoder_sequence_length),

48 | # https://github.com/apple/coremltools/issues/1423

49 | dtype=torch.float32,

50 | )

51 | }

52 | sample_text_encoder_inputs_spec = {

53 | k: (v.shape, v.dtype)

54 | for k, v in sample_text_encoder_inputs.items()

55 | }

56 | logger.info(f"Sample inputs spec: {sample_text_encoder_inputs_spec}")

57 |

58 | class TextEncoder(nn.Module):

59 |

60 | def __init__(self):

61 | super().__init__()

62 | self.text_encoder = text_encoder

63 |

64 | def forward(self, input_ids):

65 | return text_encoder(input_ids, return_dict=False)

66 |

67 | class TextEncoderXL(nn.Module):

68 |

69 | def __init__(self):

70 | super().__init__()

71 | self.text_encoder = text_encoder

72 |

73 | def forward(self, input_ids):

74 | output = text_encoder(input_ids, output_hidden_states=True)

75 | return (output.hidden_states[-2], output[0])

76 |

77 | reference_text_encoder = TextEncoderXL().eval() if args.model_is_sdxl else TextEncoder().eval()

78 |

79 | logger.info("JIT tracing {model_name}..")

80 | reference_text_encoder = torch.jit.trace(

81 | reference_text_encoder,

82 | (sample_text_encoder_inputs["input_ids"].to(torch.int32), ),

83 | )

84 | logger.info("Done.")

85 |

86 | sample_coreml_inputs = utils.get_coreml_inputs(sample_text_encoder_inputs)

87 | coreml_text_encoder, out_path = utils.convert_to_coreml(

88 | model_name, reference_text_encoder, sample_coreml_inputs,

89 | ["last_hidden_state", "pooled_outputs"], args.precision_full, args

90 | )

91 |

92 | # Set model metadata

93 | coreml_text_encoder.author = f"Please refer to the Model Card available at huggingface.co/{args.model_version}"

94 | coreml_text_encoder.license = "OpenRAIL (https://huggingface.co/spaces/CompVis/stable-diffusion-license)"

95 | coreml_text_encoder.version = args.model_version

96 | coreml_text_encoder.short_description = \

97 | "Stable Diffusion generates images conditioned on text and/or other images as input through the diffusion process. " \

98 | "Please refer to https://arxiv.org/abs/2112.10752 for details."

99 |

100 | # Set the input descriptions

101 | coreml_text_encoder.input_description["input_ids"] = "The token ids that represent the input text"

102 |

103 | # Set the output descriptions

104 | coreml_text_encoder.output_description["last_hidden_state"] = "The token embeddings as encoded by the Transformer model"

105 | coreml_text_encoder.output_description["pooled_outputs"] = "The version of the `last_hidden_state` output after pooling"

106 |

107 | # Set package version metadata

108 | coreml_text_encoder.user_defined_metadata["identifier"] = args.model_version

109 | coreml_text_encoder.user_defined_metadata["converter_version"] = __version__

110 | coreml_text_encoder.user_defined_metadata["attention_implementation"] = args.attention_implementation

111 | coreml_text_encoder.user_defined_metadata["compute_unit"] = args.compute_unit

112 | coreml_text_encoder.user_defined_metadata["hidden_size"] = str(text_encoder.config.hidden_size)

113 |

114 | coreml_text_encoder.save(out_path)

115 |

116 | logger.info(f"Saved {model_name} into {out_path}")

117 |

118 | # Parity check PyTorch vs CoreML

119 | if args.check_output_correctness:

120 | baseline_out = text_encoder(

121 | sample_text_encoder_inputs["input_ids"].to(torch.int32),

122 | return_dict=False,

123 | )[1].numpy()

124 |

125 | coreml_out = list(coreml_text_encoder.predict({

126 | k: v.numpy() for k, v in sample_text_encoder_inputs.items()

127 | }).values())[0]

128 | utils.report_correctness(baseline_out, coreml_out, "{model_name} baseline PyTorch to reference CoreML")

129 |

130 | del reference_text_encoder, coreml_text_encoder, text_encoder

131 | gc.collect()

132 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/Views/DecimalField.swift:

--------------------------------------------------------------------------------

1 | //

2 | // DecimalField.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 5/2/23.

6 | //

7 |

8 | import SwiftUI

9 |

10 | extension Formatter {

11 | static let decimal: NumberFormatter = {

12 | let formatter = NumberFormatter()

13 | formatter.numberStyle = .decimal

14 | formatter.usesGroupingSeparator = false

15 | formatter.maximumFractionDigits = 2

16 | return formatter

17 | }()

18 | }

19 |

20 | struct LabeledDecimalField: View {

21 | @Binding var value: Double

22 | var step: Double = 1

23 | var minValue: Double?

24 | var maxValue: Double?

25 | @ViewBuilder var label: () -> Content

26 |

27 | init(

28 | _ titleKey: LocalizedStringKey,

29 | value: Binding,

30 | step: Double = 1,

31 | minValue: Double? = nil,

32 | maxValue: Double? = nil

33 | ) where Content == Text {

34 | self.init(value: value, step: step, minValue: minValue, maxValue: maxValue, label: {

35 | Text(titleKey)

36 | })

37 | }

38 |

39 | init(

40 | value: Binding,

41 | step: Double = 1,

42 | minValue: Double? = nil,

43 | maxValue: Double? = nil,

44 | @ViewBuilder label: @escaping () -> Content

45 | ) {

46 | self.label = label

47 | self.step = step

48 | self.minValue = minValue

49 | self.maxValue = maxValue

50 | self._value = value

51 | }

52 |

53 | var body: some View {

54 | LabeledContent(content: {

55 | DecimalField(

56 | value: $value,

57 | step: step,

58 | minValue: minValue,

59 | maxValue: maxValue

60 | )

61 | }, label: label)

62 | }

63 | }

64 |

65 | struct DecimalField: View {

66 | @Binding var value: Double

67 | var step: Double = 1

68 | var minValue: Double?

69 | var maxValue: Double?

70 | @State private var text: String

71 | @FocusState private var isFocused: Bool

72 |

73 | init(

74 | value: Binding,

75 | step: Double = 1,

76 | minValue: Double? = nil,

77 | maxValue: Double? = nil

78 | ) {

79 | self.step = step

80 | self.minValue = minValue

81 | self.maxValue = maxValue

82 | self._value = value

83 | let text = Formatter.decimal.string(from: value.wrappedValue as NSNumber) ?? ""

84 | self._text = State(wrappedValue: text)

85 | }

86 |

87 | var body: some View {

88 | HStack(spacing: 0) {

89 | TextField("", text: $text, prompt: Text("Value"))

90 | .multilineTextAlignment(.trailing)

91 | .textFieldStyle(.plain)

92 | .padding(.horizontal, 10)

93 | .submitLabel(.done)

94 | .focused($isFocused)

95 | .frame(minWidth: 70)

96 | .labelsHidden()

97 | #if !os(macOS)

98 | .keyboardType(.decimalPad)

99 | #endif

100 | Stepper(label: {}, onIncrement: {

101 | if let maxValue {

102 | value = min(value + step, maxValue)

103 | } else {

104 | value += step

105 | }

106 | }, onDecrement: {

107 | if let minValue {

108 | value = max(value - step, minValue)

109 | } else {

110 | value -= step

111 | }

112 | }).labelsHidden()

113 | }

114 | #if os(macOS)

115 | .padding(3)

116 | .background(Color.primary.opacity(0.06))

117 | .clipShape(RoundedRectangle(cornerRadius: 8, style: .continuous))

118 | #else

119 | .padding(2)

120 | .background(Color.primary.opacity(0.05))

121 | .clipShape(RoundedRectangle(cornerRadius: 10, style: .continuous))

122 | #endif

123 | .onSubmit {

124 | updateValue()

125 | isFocused = false

126 | }

127 | .onChange(of: isFocused) { focused in

128 | if !focused {

129 | updateValue()

130 | }

131 | }

132 | .onChange(of: value) { _ in updateText() }

133 | .onChange(of: text) { _ in

134 | if let newValue = Formatter.decimal.number(from: text)?.doubleValue, value != newValue {

135 | value = newValue

136 | }

137 | }

138 | #if !os(macOS)

139 | .toolbar {

140 | if isFocused {

141 | ToolbarItem(placement: .keyboard) {

142 | HStack {

143 | Spacer()

144 | Button("Done") {

145 | isFocused = false

146 | }

147 | }

148 | }

149 | }

150 | }

151 | #endif

152 | }

153 |

154 | private func updateValue() {

155 | if let newValue = Formatter.decimal.number(from: text)?.doubleValue {

156 | if let maxValue, newValue > maxValue {

157 | value = maxValue

158 | } else if let minValue, newValue < minValue {

159 | value = minValue

160 | } else {

161 | value = newValue

162 | }

163 | }

164 | }

165 |

166 | private func updateText() {

167 | text = Formatter.decimal.string(from: value as NSNumber) ?? ""

168 | }

169 | }

170 |

171 |

172 | struct DecimalField_Previews: PreviewProvider {

173 | static var previews: some View {

174 | DecimalField(value: .constant(20))

175 | .padding()

176 | }

177 | }

178 |

--------------------------------------------------------------------------------

/GuernikaModelConverter/ConvertControlNet/ConvertControlNetViewModel.swift:

--------------------------------------------------------------------------------

1 | //

2 | // ConvertControlNetViewModel.swift

3 | // GuernikaModelConverter

4 | //

5 | // Created by Guillermo Cique Fernández on 30/3/23.

6 | //

7 |

8 | import SwiftUI

9 | import Combine

10 |

11 | class ConvertControlNetViewModel: ObservableObject {

12 | @Published var showSuccess: Bool = false

13 | var showError: Bool {

14 | get { error != nil }

15 | set {

16 | if !newValue {

17 | error = nil

18 | isCoreMLError = false

19 | }

20 | }

21 | }

22 | var isCoreMLError: Bool = false

23 | @Published var error: String?

24 |

25 | var isReady: Bool {

26 | switch controlNetOrigin {

27 | case .huggingface: return !huggingfaceIdentifier.isEmpty

28 | case .diffusers: return diffusersLocation != nil

29 | case .checkpoint: return checkpointLocation != nil

30 | }

31 | }

32 | @Published var process: ConverterProcess?

33 | var isRunning: Bool { process != nil }

34 |

35 | @Published var showOutputPicker: Bool = false

36 | @AppStorage("output_location") var outputLocation: URL?

37 |

38 | var controlNetOrigin: ModelOrigin {

39 | get { ModelOrigin(rawValue: controlNetOriginString) ?? .huggingface }

40 | set { controlNetOriginString = newValue.rawValue }

41 | }

42 | @AppStorage("controlnet_origin") var controlNetOriginString: String = ModelOrigin.huggingface.rawValue

43 | @AppStorage("controlnet_huggingface_identifier") var huggingfaceIdentifier: String = ""

44 | @AppStorage("controlnet_diffusers_location") var diffusersLocation: URL?

45 | @AppStorage("controlnet_checkpoint_location") var checkpointLocation: URL?

46 | var selectedControlNet: String? {

47 | switch controlNetOrigin {

48 | case .huggingface: return nil

49 | case .diffusers: return diffusersLocation?.lastPathComponent

50 | case .checkpoint: return checkpointLocation?.lastPathComponent

51 | }

52 | }

53 |

54 | var computeUnits: ComputeUnits {

55 | get { ComputeUnits(rawValue: computeUnitsString) ?? .cpuAndNeuralEngine }

56 | set { computeUnitsString = newValue.rawValue }

57 | }

58 | @AppStorage("compute_units") var computeUnitsString: String = ComputeUnits.cpuAndNeuralEngine.rawValue

59 |

60 | var compression: Compression {

61 | get { Compression(rawValue: compressionString) ?? .fullSize }

62 | set { compressionString = newValue.rawValue }

63 | }

64 | @AppStorage("controlnet_compression") var compressionString: String = Compression.fullSize.rawValue

65 |

66 | @AppStorage("custom_size") var customSize: Bool = false

67 | @AppStorage("custom_size_width") var customWidth: Int = 512

68 | @AppStorage("custom_size_height") var customHeight: Int = 512

69 | @AppStorage("controlnet_multisize") var multisize: Bool = false

70 |

71 | private var cancellables: Set = []

72 |

73 | func start() {

74 | guard checkCoreMLCompiler() else {

75 | isCoreMLError = true

76 | error = "CoreMLCompiler not available.\nMake sure you have Xcode installed and you run \"sudo xcode-select --switch /Applications/Xcode.app/Contents/Developer/\" on a Terminal."

77 | return

78 | }

79 | do {

80 | let process = try ConverterProcess(

81 | outputLocation: outputLocation,

82 | controlNetOrigin: controlNetOrigin,

83 | huggingfaceIdentifier: huggingfaceIdentifier,