├── README.md

├── ctr_data.txt

├── deep nn ctr prediction

├── CTR_prediction_LR_FM_CCPM_PNN.ipynb

├── DeepFM.py

├── DeepFM_NFM_DeepCTR.ipynb

├── run_dfm.ipynb

├── wide_and_deep_model_criteo.ipynb

└── wide_and_deep_model_official.ipynb

└── 从FM推演各深度学习CTR预估模型(附代码).pdf

/README.md:

--------------------------------------------------------------------------------

1 | # CTR_NN

2 | CTR prediction using Neural Network methods

3 | 基于深度学习的CTR预估,从FM推演各深度学习CTR预估模型(附代码)

4 |

5 | 详情请参见博文[《从FM推演各深度学习CTR预估模型(附代码)》](https://blog.csdn.net/han_xiaoyang/article/details/81031961)

6 | 部分代码参考[lambdaji](https://github.com/lambdaji)同学,对此表示感谢

7 |

8 | 代码所需数据可在ctr_data.txt中的网盘地址中下载到。

9 |

--------------------------------------------------------------------------------

/ctr_data.txt:

--------------------------------------------------------------------------------

1 | 链接: https://pan.baidu.com/s/1tIhlbWaVHbKoLd4XAwrUDw 密码: veww

2 |

--------------------------------------------------------------------------------

/deep nn ctr prediction/DeepFM.py:

--------------------------------------------------------------------------------

1 | # -*- coding:utf-8 -*-

2 | #!/usr/bin/env python

3 | """

4 | #1 Input pipline using Dataset high level API, Support parallel and prefetch reading

5 | #2 Train pipline using Coustom Estimator by rewriting model_fn

6 | #3 Support distincted training using TF_CONFIG

7 | #4 Support export_model for TensorFlow Serving

8 |

9 | 方便迁移到其他算法上,只要修改input_fn and model_fn

10 |

11 | """

12 | #from __future__ import absolute_import

13 | #from __future__ import division

14 | #from __future__ import print_function

15 |

16 | #import argparse

17 | import shutil

18 | #import sys

19 | import os

20 | import json

21 | import glob

22 | from datetime import date, timedelta

23 | from time import time

24 | #import gc

25 | #from multiprocessing import Process

26 |

27 | #import math

28 | import random

29 | import pandas as pd

30 | import numpy as np

31 | import tensorflow as tf

32 |

33 | #################### CMD Arguments ####################

34 | FLAGS = tf.app.flags.FLAGS

35 | tf.app.flags.DEFINE_integer("dist_mode", 0, "distribuion mode {0-loacal, 1-single_dist, 2-multi_dist}")

36 | tf.app.flags.DEFINE_string("ps_hosts", '', "Comma-separated list of hostname:port pairs")

37 | tf.app.flags.DEFINE_string("worker_hosts", '', "Comma-separated list of hostname:port pairs")

38 | tf.app.flags.DEFINE_string("job_name", '', "One of 'ps', 'worker'")

39 | tf.app.flags.DEFINE_integer("task_index", 0, "Index of task within the job")

40 | tf.app.flags.DEFINE_integer("num_threads", 16, "Number of threads")

41 | tf.app.flags.DEFINE_integer("feature_size", 0, "Number of features")

42 | tf.app.flags.DEFINE_integer("field_size", 0, "Number of fields")

43 | tf.app.flags.DEFINE_integer("embedding_size", 32, "Embedding size")

44 | tf.app.flags.DEFINE_integer("num_epochs", 10, "Number of epochs")

45 | tf.app.flags.DEFINE_integer("batch_size", 64, "Number of batch size")

46 | tf.app.flags.DEFINE_integer("log_steps", 1000, "save summary every steps")

47 | tf.app.flags.DEFINE_float("learning_rate", 0.0005, "learning rate")

48 | tf.app.flags.DEFINE_float("l2_reg", 0.0001, "L2 regularization")

49 | tf.app.flags.DEFINE_string("loss_type", 'log_loss', "loss type {square_loss, log_loss}")

50 | tf.app.flags.DEFINE_string("optimizer", 'Adam', "optimizer type {Adam, Adagrad, GD, Momentum}")

51 | tf.app.flags.DEFINE_string("deep_layers", '256,128,64', "deep layers")

52 | tf.app.flags.DEFINE_string("dropout", '0.5,0.5,0.5', "dropout rate")

53 | tf.app.flags.DEFINE_boolean("batch_norm", False, "perform batch normaization (True or False)")

54 | tf.app.flags.DEFINE_float("batch_norm_decay", 0.9, "decay for the moving average(recommend trying decay=0.9)")

55 | tf.app.flags.DEFINE_string("data_dir", '', "data dir")

56 | tf.app.flags.DEFINE_string("dt_dir", '', "data dt partition")

57 | tf.app.flags.DEFINE_string("model_dir", '', "model check point dir")

58 | tf.app.flags.DEFINE_string("servable_model_dir", '', "export servable model for TensorFlow Serving")

59 | tf.app.flags.DEFINE_string("task_type", 'train', "task type {train, infer, eval, export}")

60 | tf.app.flags.DEFINE_boolean("clear_existing_model", False, "clear existing model or not")

61 |

62 | #1 1:0.5 2:0.03519 3:1 4:0.02567 7:0.03708 8:0.01705 9:0.06296 10:0.18185 11:0.02497 12:1 14:0.02565 15:0.03267 17:0.0247 18:0.03158 20:1 22:1 23:0.13169 24:0.02933 27:0.18159 31:0.0177 34:0.02888 38:1 51:1 63:1 132:1 164:1 236:1

63 | def input_fn(filenames, batch_size=32, num_epochs=1, perform_shuffle=False):

64 | print('Parsing', filenames)

65 | def decode_libsvm(line):

66 | #columns = tf.decode_csv(value, record_defaults=CSV_COLUMN_DEFAULTS)

67 | #features = dict(zip(CSV_COLUMNS, columns))

68 | #labels = features.pop(LABEL_COLUMN)

69 | columns = tf.string_split([line], ' ')

70 | labels = tf.string_to_number(columns.values[0], out_type=tf.float32)

71 | splits = tf.string_split(columns.values[1:], ':')

72 | id_vals = tf.reshape(splits.values,splits.dense_shape)

73 | feat_ids, feat_vals = tf.split(id_vals,num_or_size_splits=2,axis=1)

74 | feat_ids = tf.string_to_number(feat_ids, out_type=tf.int32)

75 | feat_vals = tf.string_to_number(feat_vals, out_type=tf.float32)

76 | #feat_ids = tf.reshape(feat_ids,shape=[-1,FLAGS.field_size])

77 | #for i in range(splits.dense_shape.eval()[0]):

78 | # feat_ids.append(tf.string_to_number(splits.values[2*i], out_type=tf.int32))

79 | # feat_vals.append(tf.string_to_number(splits.values[2*i+1]))

80 | #return tf.reshape(feat_ids,shape=[-1,field_size]), tf.reshape(feat_vals,shape=[-1,field_size]), labels

81 | return {"feat_ids": feat_ids, "feat_vals": feat_vals}, labels

82 |

83 | # Extract lines from input files using the Dataset API, can pass one filename or filename list

84 | dataset = tf.data.TextLineDataset(filenames).map(decode_libsvm, num_parallel_calls=10).prefetch(500000) # multi-thread pre-process then prefetch

85 |

86 | # Randomizes input using a window of 256 elements (read into memory)

87 | if perform_shuffle:

88 | dataset = dataset.shuffle(buffer_size=256)

89 |

90 | # epochs from blending together.

91 | dataset = dataset.repeat(num_epochs)

92 | dataset = dataset.batch(batch_size) # Batch size to use

93 |

94 | #return dataset.make_one_shot_iterator()

95 | iterator = dataset.make_one_shot_iterator()

96 | batch_features, batch_labels = iterator.get_next()

97 | #return tf.reshape(batch_ids,shape=[-1,field_size]), tf.reshape(batch_vals,shape=[-1,field_size]), batch_labels

98 | return batch_features, batch_labels

99 |

100 | def model_fn(features, labels, mode, params):

101 | """Bulid Model function f(x) for Estimator."""

102 | #------hyperparameters----

103 | field_size = params["field_size"]

104 | feature_size = params["feature_size"]

105 | embedding_size = params["embedding_size"]

106 | l2_reg = params["l2_reg"]

107 | learning_rate = params["learning_rate"]

108 | #batch_norm_decay = params["batch_norm_decay"]

109 | #optimizer = params["optimizer"]

110 | layers = map(int, params["deep_layers"].split(','))

111 | dropout = map(float, params["dropout"].split(','))

112 |

113 | #------bulid weights------

114 | FM_B = tf.get_variable(name='fm_bias', shape=[1], initializer=tf.constant_initializer(0.0))

115 | print "FM_B", FM_B.get_shape()

116 | FM_W = tf.get_variable(name='fm_w', shape=[feature_size], initializer=tf.glorot_normal_initializer())

117 | print "FM_W", FM_W.get_shape()

118 | # F

119 | FM_V = tf.get_variable(name='fm_v', shape=[feature_size, embedding_size], initializer=tf.glorot_normal_initializer())

120 | # F * E

121 | print "FM_V", FM_V.get_shape()

122 | #------build feaure-------

123 | feat_ids = features['feat_ids']

124 | print "feat_ids", feat_ids.get_shape()

125 | feat_ids = tf.reshape(feat_ids,shape=[-1,field_size]) # None * f/K * K

126 | print "feat_ids", feat_ids.get_shape()

127 | feat_vals = features['feat_vals']

128 | print "feat_vals", feat_vals.get_shape()

129 | feat_vals = tf.reshape(feat_vals,shape=[-1,field_size]) # None * f/K * K

130 | print "feat_vals", feat_vals.get_shape()

131 |

132 | #------build f(x)------

133 | with tf.variable_scope("First-order"):

134 | feat_wgts = tf.nn.embedding_lookup(FM_W, feat_ids) # None * f/K * K

135 | print "feat_wgts", feat_wgts.get_shape()

136 | y_w = tf.reduce_sum(tf.multiply(feat_wgts, feat_vals),1)

137 |

138 | with tf.variable_scope("Second-order"):

139 | embeddings = tf.nn.embedding_lookup(FM_V, feat_ids) # None * f/K * K * E

140 | print "embeddings", embeddings.get_shape()

141 | feat_vals = tf.reshape(feat_vals, shape=[-1, field_size, 1]) # None * f/K * K * 1 ?

142 | print "feat_vals", feat_vals.get_shape()

143 | embeddings = tf.multiply(embeddings, feat_vals) #vij*xi

144 | print "embeddings", embeddings.get_shape()

145 | sum_square = tf.square(tf.reduce_sum(embeddings,1)) # None * K * E

146 | print "sum_square", sum_square.get_shape()

147 | square_sum = tf.reduce_sum(tf.square(embeddings),1)

148 | print "square_sum", square_sum.get_shape()

149 | y_v = 0.5*tf.reduce_sum(tf.subtract(sum_square, square_sum),1) # None * 1

150 |

151 | with tf.variable_scope("Deep-part"):

152 | if FLAGS.batch_norm:

153 | #normalizer_fn = tf.contrib.layers.batch_norm

154 | #normalizer_fn = tf.layers.batch_normalization

155 | if mode == tf.estimator.ModeKeys.TRAIN:

156 | train_phase = True

157 | #normalizer_params = {'decay': batch_norm_decay, 'center': True, 'scale': True, 'updates_collections': None, 'is_training': True, 'reuse': None}

158 | else:

159 | train_phase = False

160 | #normalizer_params = {'decay': batch_norm_decay, 'center': True, 'scale': True, 'updates_collections': None, 'is_training': False, 'reuse': True}

161 | else:

162 | normalizer_fn = None

163 | normalizer_params = None

164 |

165 | deep_inputs = tf.reshape(embeddings,shape=[-1,field_size*embedding_size]) # None * (F*K)

166 | for i in range(len(layers)):

167 | #if FLAGS.batch_norm:

168 | # deep_inputs = batch_norm_layer(deep_inputs, train_phase=train_phase, scope_bn='bn_%d' %i)

169 | #normalizer_params.update({'scope': 'bn_%d' %i})

170 | deep_inputs = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=layers[i], \

171 | #normalizer_fn=normalizer_fn, normalizer_params=normalizer_params, \

172 | weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='mlp%d' % i)

173 | if FLAGS.batch_norm:

174 | deep_inputs = batch_norm_layer(deep_inputs, train_phase=train_phase, scope_bn='bn_%d' %i) #放在RELU之后 https://github.com/ducha-aiki/caffenet-benchmark/blob/master/batchnorm.md#bn----before-or-after-relu

175 | if mode == tf.estimator.ModeKeys.TRAIN:

176 | deep_inputs = tf.nn.dropout(deep_inputs, keep_prob=dropout[i]) #Apply Dropout after all BN layers and set dropout=0.8(drop_ratio=0.2)

177 | #deep_inputs = tf.layers.dropout(inputs=deep_inputs, rate=dropout[i], training=mode == tf.estimator.ModeKeys.TRAIN)

178 |

179 | y_deep = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=1, activation_fn=tf.identity, \

180 | weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='deep_out')

181 | y_d = tf.reshape(y_deep,shape=[-1])

182 | #sig_wgts = tf.get_variable(name='sigmoid_weights', shape=[layers[-1]], initializer=tf.glorot_normal_initializer())

183 | #sig_bias = tf.get_variable(name='sigmoid_bias', shape=[1], initializer=tf.constant_initializer(0.0))

184 | #deep_out = tf.nn.xw_plus_b(deep_inputs,sig_wgts,sig_bias,name='deep_out')

185 |

186 | with tf.variable_scope("DeepFM-out"):

187 | #y_bias = FM_B * tf.ones_like(labels, dtype=tf.float32) # None * 1 warning;这里不能用label,否则调用predict/export函数会出错,train/evaluate正常;初步判断estimator做了优化,用不到label时不传

188 | y_bias = FM_B * tf.ones_like(y_d, dtype=tf.float32) # None * 1

189 | y = y_bias + y_w + y_v + y_d

190 | pred = tf.sigmoid(y)

191 |

192 | predictions={"prob": pred}

193 | export_outputs = {tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY: tf.estimator.export.PredictOutput(predictions)}

194 | # Provide an estimator spec for `ModeKeys.PREDICT`

195 | if mode == tf.estimator.ModeKeys.PREDICT:

196 | return tf.estimator.EstimatorSpec(

197 | mode=mode,

198 | predictions=predictions,

199 | export_outputs=export_outputs)

200 |

201 | #------bulid loss------

202 | loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=y, labels=labels)) + \

203 | l2_reg * tf.nn.l2_loss(FM_W) + \

204 | l2_reg * tf.nn.l2_loss(FM_V) #+ \ l2_reg * tf.nn.l2_loss(sig_wgts)

205 |

206 | # Provide an estimator spec for `ModeKeys.EVAL`

207 | eval_metric_ops = {

208 | "auc": tf.metrics.auc(labels, pred)

209 | }

210 | if mode == tf.estimator.ModeKeys.EVAL:

211 | return tf.estimator.EstimatorSpec(

212 | mode=mode,

213 | predictions=predictions,

214 | loss=loss,

215 | eval_metric_ops=eval_metric_ops)

216 |

217 | #------bulid optimizer------

218 | if FLAGS.optimizer == 'Adam':

219 | optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate, beta1=0.9, beta2=0.999, epsilon=1e-8)

220 | elif FLAGS.optimizer == 'Adagrad':

221 | optimizer = tf.train.AdagradOptimizer(learning_rate=learning_rate, initial_accumulator_value=1e-8)

222 | elif FLAGS.optimizer == 'Momentum':

223 | optimizer = tf.train.MomentumOptimizer(learning_rate=learning_rate, momentum=0.95)

224 | elif FLAGS.optimizer == 'ftrl':

225 | optimizer = tf.train.FtrlOptimizer(learning_rate)

226 |

227 | train_op = optimizer.minimize(loss, global_step=tf.train.get_global_step())

228 |

229 | # Provide an estimator spec for `ModeKeys.TRAIN` modes

230 | if mode == tf.estimator.ModeKeys.TRAIN:

231 | return tf.estimator.EstimatorSpec(

232 | mode=mode,

233 | predictions=predictions,

234 | loss=loss,

235 | train_op=train_op)

236 |

237 | # Provide an estimator spec for `ModeKeys.EVAL` and `ModeKeys.TRAIN` modes.

238 | #return tf.estimator.EstimatorSpec(

239 | # mode=mode,

240 | # loss=loss,

241 | # train_op=train_op,

242 | # predictions={"prob": pred},

243 | # eval_metric_ops=eval_metric_ops)

244 |

245 | def batch_norm_layer(x, train_phase, scope_bn):

246 | bn_train = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=True, reuse=None, scope=scope_bn)

247 | bn_infer = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=False, reuse=True, scope=scope_bn)

248 | z = tf.cond(tf.cast(train_phase, tf.bool), lambda: bn_train, lambda: bn_infer)

249 | return z

250 |

251 | def set_dist_env():

252 | if FLAGS.dist_mode == 1: # 本地分布式测试模式1 chief, 1 ps, 1 evaluator

253 | ps_hosts = FLAGS.ps_hosts.split(',')

254 | chief_hosts = FLAGS.chief_hosts.split(',')

255 | task_index = FLAGS.task_index

256 | job_name = FLAGS.job_name

257 | print('ps_host', ps_hosts)

258 | print('chief_hosts', chief_hosts)

259 | print('job_name', job_name)

260 | print('task_index', str(task_index))

261 | # 无worker参数

262 | tf_config = {

263 | 'cluster': {'chief': chief_hosts, 'ps': ps_hosts},

264 | 'task': {'type': job_name, 'index': task_index }

265 | }

266 | print(json.dumps(tf_config))

267 | os.environ['TF_CONFIG'] = json.dumps(tf_config)

268 | elif FLAGS.dist_mode == 2: # 集群分布式模式

269 | ps_hosts = FLAGS.ps_hosts.split(',')

270 | worker_hosts = FLAGS.worker_hosts.split(',')

271 | chief_hosts = worker_hosts[0:1] # get first worker as chief

272 | worker_hosts = worker_hosts[2:] # the rest as worker

273 | task_index = FLAGS.task_index

274 | job_name = FLAGS.job_name

275 | print('ps_host', ps_hosts)

276 | print('worker_host', worker_hosts)

277 | print('chief_hosts', chief_hosts)

278 | print('job_name', job_name)

279 | print('task_index', str(task_index))

280 | # use #worker=0 as chief

281 | if job_name == "worker" and task_index == 0:

282 | job_name = "chief"

283 | # use #worker=1 as evaluator

284 | if job_name == "worker" and task_index == 1:

285 | job_name = 'evaluator'

286 | task_index = 0

287 | # the others as worker

288 | if job_name == "worker" and task_index > 1:

289 | task_index -= 2

290 |

291 | tf_config = {

292 | 'cluster': {'chief': chief_hosts, 'worker': worker_hosts, 'ps': ps_hosts},

293 | 'task': {'type': job_name, 'index': task_index }

294 | }

295 | print(json.dumps(tf_config))

296 | os.environ['TF_CONFIG'] = json.dumps(tf_config)

297 |

298 | def main(_):

299 | tr_files = glob.glob("%s/tr*libsvm" % FLAGS.data_dir)

300 | random.shuffle(tr_files)

301 | print("tr_files:", tr_files)

302 | va_files = glob.glob("%s/va*libsvm" % FLAGS.data_dir)

303 | print("va_files:", va_files)

304 | te_files = glob.glob("%s/te*libsvm" % FLAGS.data_dir)

305 | print("te_files:", te_files)

306 |

307 | if FLAGS.clear_existing_model:

308 | try:

309 | shutil.rmtree(FLAGS.model_dir)

310 | except Exception as e:

311 | print(e, "at clear_existing_model")

312 | else:

313 | print("existing model cleaned at %s" % FLAGS.model_dir)

314 |

315 | set_dist_env()

316 |

317 | model_params = {

318 | "field_size": FLAGS.field_size,

319 | "feature_size": FLAGS.feature_size,

320 | "embedding_size": FLAGS.embedding_size,

321 | "learning_rate": FLAGS.learning_rate,

322 | "batch_norm_decay": FLAGS.batch_norm_decay,

323 | "l2_reg": FLAGS.l2_reg,

324 | "deep_layers": FLAGS.deep_layers,

325 | "dropout": FLAGS.dropout

326 | }

327 | config = tf.estimator.RunConfig().replace(session_config = tf.ConfigProto(device_count={'GPU':0, 'CPU':FLAGS.num_threads}),

328 | log_step_count_steps=FLAGS.log_steps, save_summary_steps=FLAGS.log_steps)

329 | DeepFM = tf.estimator.Estimator(model_fn=model_fn, model_dir=FLAGS.model_dir, params=model_params, config=config)

330 |

331 | if FLAGS.task_type == 'train':

332 | train_spec = tf.estimator.TrainSpec(input_fn=lambda: input_fn(tr_files, num_epochs=FLAGS.num_epochs, batch_size=FLAGS.batch_size))

333 | eval_spec = tf.estimator.EvalSpec(input_fn=lambda: input_fn(va_files, num_epochs=1, batch_size=FLAGS.batch_size), steps=None, start_delay_secs=1000, throttle_secs=1200)

334 | tf.estimator.train_and_evaluate(DeepFM, train_spec, eval_spec)

335 | elif FLAGS.task_type == 'eval':

336 | DeepFM.evaluate(input_fn=lambda: input_fn(va_files, num_epochs=1, batch_size=FLAGS.batch_size))

337 | elif FLAGS.task_type == 'infer':

338 | preds = DeepFM.predict(input_fn=lambda: input_fn(te_files, num_epochs=1, batch_size=FLAGS.batch_size), predict_keys="prob")

339 | with open(FLAGS.data_dir+"/pred.txt", "w") as fo:

340 | for prob in preds:

341 | fo.write("%f\n" % (prob['prob']))

342 | elif FLAGS.task_type == 'export':

343 | #feature_spec = tf.feature_column.make_parse_example_spec(feature_columns)

344 | #feature_spec = {

345 | # 'feat_ids': tf.FixedLenFeature(dtype=tf.int64, shape=[None, FLAGS.field_size]),

346 | # 'feat_vals': tf.FixedLenFeature(dtype=tf.float32, shape=[None, FLAGS.field_size])

347 | #}

348 | #serving_input_receiver_fn = tf.estimator.export.build_parsing_serving_input_receiver_fn(feature_spec)

349 | feature_spec = {

350 | 'feat_ids': tf.placeholder(dtype=tf.int64, shape=[None, FLAGS.field_size], name='feat_ids'),

351 | 'feat_vals': tf.placeholder(dtype=tf.float32, shape=[None, FLAGS.field_size], name='feat_vals')

352 | }

353 | serving_input_receiver_fn = tf.estimator.export.build_raw_serving_input_receiver_fn(feature_spec)

354 | DeepFM.export_savedmodel(FLAGS.servable_model_dir, serving_input_receiver_fn)

355 |

356 | if __name__ == "__main__":

357 | #------check Arguments------

358 | if FLAGS.dt_dir == "":

359 | FLAGS.dt_dir = (date.today() + timedelta(-1)).strftime('%Y%m%d')

360 | FLAGS.model_dir = FLAGS.model_dir + FLAGS.dt_dir

361 | #FLAGS.data_dir = FLAGS.data_dir + FLAGS.dt_dir

362 |

363 | print('task_type ', FLAGS.task_type)

364 | print('model_dir ', FLAGS.model_dir)

365 | print('data_dir ', FLAGS.data_dir)

366 | print('dt_dir ', FLAGS.dt_dir)

367 | print('num_epochs ', FLAGS.num_epochs)

368 | print('feature_size ', FLAGS.feature_size)

369 | print('field_size ', FLAGS.field_size)

370 | print('embedding_size ', FLAGS.embedding_size)

371 | print('batch_size ', FLAGS.batch_size)

372 | print('deep_layers ', FLAGS.deep_layers)

373 | print('dropout ', FLAGS.dropout)

374 | print('loss_type ', FLAGS.loss_type)

375 | print('optimizer ', FLAGS.optimizer)

376 | print('learning_rate ', FLAGS.learning_rate)

377 | print('batch_norm_decay ', FLAGS.batch_norm_decay)

378 | print('batch_norm ', FLAGS.batch_norm)

379 | print('l2_reg ', FLAGS.l2_reg)

380 |

381 | tf.logging.set_verbosity(tf.logging.INFO)

382 | tf.app.run()

383 |

--------------------------------------------------------------------------------

/deep nn ctr prediction/DeepFM_NFM_DeepCTR.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## CTR预估(2)\n",

8 | "\n",

9 | "资料&&代码整理by[@寒小阳](https://blog.csdn.net/han_xiaoyang)(hanxiaoyang.ml@gmail.com)\n",

10 | "\n",

11 | "reference:\n",

12 | "* [《广告点击率预估是怎么回事?》](https://zhuanlan.zhihu.com/p/23499698)\n",

13 | "* [从ctr预估问题看看f(x)设计—DNN篇](https://zhuanlan.zhihu.com/p/28202287)\n",

14 | "* [Atomu2014 product_nets](https://github.com/Atomu2014/product-nets)"

15 | ]

16 | },

17 | {

18 | "cell_type": "markdown",

19 | "metadata": {},

20 | "source": [

21 | "同样以criteo数据为例,我们来看看深度学习的应用。"

22 | ]

23 | },

24 | {

25 | "cell_type": "markdown",

26 | "metadata": {},

27 | "source": [

28 | "### 特征工程\n",

29 | "特征工程是比较重要的数据处理过程,这里对criteo数据依照[paddlepaddle做ctr预估特征工程](https://github.com/PaddlePaddle/models/blob/develop/deep_fm/preprocess.py)完成特征工程"

30 | ]

31 | },

32 | {

33 | "cell_type": "code",

34 | "execution_count": 1,

35 | "metadata": {

36 | "collapsed": true

37 | },

38 | "outputs": [],

39 | "source": [

40 | "#coding=utf8\n",

41 | "\"\"\"\n",

42 | "特征工程参考(https://github.com/PaddlePaddle/models/blob/develop/deep_fm/preprocess.py)完成\n",

43 | "-对数值型特征,normalize处理\n",

44 | "-对类别型特征,对长尾(出现频次低于200)的进行过滤\n",

45 | "\"\"\"\n",

46 | "import os\n",

47 | "import sys\n",

48 | "import random\n",

49 | "import collections\n",

50 | "import argparse\n",

51 | "from multiprocessing import Pool as ThreadPool\n",

52 | "\n",

53 | "# 13个连续型列,26个类别型列\n",

54 | "continous_features = range(1, 14)\n",

55 | "categorial_features = range(14, 40)\n",

56 | "\n",

57 | "# 对连续值进行截断处理(取每个连续值列的95%分位数)\n",

58 | "continous_clip = [20, 600, 100, 50, 64000, 500, 100, 50, 500, 10, 10, 10, 50]\n",

59 | "\n",

60 | "\n",

61 | "class CategoryDictGenerator:\n",

62 | " \"\"\"\n",

63 | " 类别型特征编码字典\n",

64 | " \"\"\"\n",

65 | "\n",

66 | " def __init__(self, num_feature):\n",

67 | " self.dicts = []\n",

68 | " self.num_feature = num_feature\n",

69 | " for i in range(0, num_feature):\n",

70 | " self.dicts.append(collections.defaultdict(int))\n",

71 | "\n",

72 | " def build(self, datafile, categorial_features, cutoff=0):\n",

73 | " with open(datafile, 'r') as f:\n",

74 | " for line in f:\n",

75 | " features = line.rstrip('\\n').split('\\t')\n",

76 | " for i in range(0, self.num_feature):\n",

77 | " if features[categorial_features[i]] != '':\n",

78 | " self.dicts[i][features[categorial_features[i]]] += 1\n",

79 | " for i in range(0, self.num_feature):\n",

80 | " self.dicts[i] = filter(lambda x: x[1] >= cutoff, self.dicts[i].items())\n",

81 | " self.dicts[i] = sorted(self.dicts[i], key=lambda x: (-x[1], x[0]))\n",

82 | " vocabs, _ = list(zip(*self.dicts[i]))\n",

83 | " self.dicts[i] = dict(zip(vocabs, range(1, len(vocabs) + 1)))\n",

84 | " self.dicts[i][''] = 0\n",

85 | "\n",

86 | " def gen(self, idx, key):\n",

87 | " if key not in self.dicts[idx]:\n",

88 | " res = self.dicts[idx]['']\n",

89 | " else:\n",

90 | " res = self.dicts[idx][key]\n",

91 | " return res\n",

92 | "\n",

93 | " def dicts_sizes(self):\n",

94 | " return map(len, self.dicts)\n",

95 | "\n",

96 | "\n",

97 | "class ContinuousFeatureGenerator:\n",

98 | " \"\"\"\n",

99 | " 对连续值特征做最大最小值normalization\n",

100 | " \"\"\"\n",

101 | "\n",

102 | " def __init__(self, num_feature):\n",

103 | " self.num_feature = num_feature\n",

104 | " self.min = [sys.maxint] * num_feature\n",

105 | " self.max = [-sys.maxint] * num_feature\n",

106 | "\n",

107 | " def build(self, datafile, continous_features):\n",

108 | " with open(datafile, 'r') as f:\n",

109 | " for line in f:\n",

110 | " features = line.rstrip('\\n').split('\\t')\n",

111 | " for i in range(0, self.num_feature):\n",

112 | " val = features[continous_features[i]]\n",

113 | " if val != '':\n",

114 | " val = int(val)\n",

115 | " if val > continous_clip[i]:\n",

116 | " val = continous_clip[i]\n",

117 | " self.min[i] = min(self.min[i], val)\n",

118 | " self.max[i] = max(self.max[i], val)\n",

119 | "\n",

120 | " def gen(self, idx, val):\n",

121 | " if val == '':\n",

122 | " return 0.0\n",

123 | " val = float(val)\n",

124 | " return (val - self.min[idx]) / (self.max[idx] - self.min[idx])\n",

125 | "\n",

126 | "\n",

127 | "def preprocess(input_dir, output_dir):\n",

128 | " \"\"\"\n",

129 | " 对连续型和类别型特征进行处理\n",

130 | " \"\"\"\n",

131 | " \n",

132 | " dists = ContinuousFeatureGenerator(len(continous_features))\n",

133 | " dists.build(input_dir + 'train.txt', continous_features)\n",

134 | "\n",

135 | " dicts = CategoryDictGenerator(len(categorial_features))\n",

136 | " dicts.build(input_dir + 'train.txt', categorial_features, cutoff=150)\n",

137 | "\n",

138 | " output = open(output_dir + 'feature_map','w')\n",

139 | " for i in continous_features:\n",

140 | " output.write(\"{0} {1}\\n\".format('I'+str(i), i))\n",

141 | " dict_sizes = dicts.dicts_sizes()\n",

142 | " categorial_feature_offset = [dists.num_feature]\n",

143 | " for i in range(1, len(categorial_features)+1):\n",

144 | " offset = categorial_feature_offset[i - 1] + dict_sizes[i - 1]\n",

145 | " categorial_feature_offset.append(offset)\n",

146 | " for key, val in dicts.dicts[i-1].iteritems():\n",

147 | " output.write(\"{0} {1}\\n\".format('C'+str(i)+'|'+key, categorial_feature_offset[i - 1]+val+1))\n",

148 | "\n",

149 | " random.seed(0)\n",

150 | "\n",

151 | " # 90%的数据用于训练,10%的数据用于验证\n",

152 | " with open(output_dir + 'tr.libsvm', 'w') as out_train:\n",

153 | " with open(output_dir + 'va.libsvm', 'w') as out_valid:\n",

154 | " with open(input_dir + 'train.txt', 'r') as f:\n",

155 | " for line in f:\n",

156 | " features = line.rstrip('\\n').split('\\t')\n",

157 | "\n",

158 | " feat_vals = []\n",

159 | " for i in range(0, len(continous_features)):\n",

160 | " val = dists.gen(i, features[continous_features[i]])\n",

161 | " feat_vals.append(str(continous_features[i]) + ':' + \"{0:.6f}\".format(val).rstrip('0').rstrip('.'))\n",

162 | "\n",

163 | " for i in range(0, len(categorial_features)):\n",

164 | " val = dicts.gen(i, features[categorial_features[i]]) + categorial_feature_offset[i]\n",

165 | " feat_vals.append(str(val) + ':1')\n",

166 | "\n",

167 | " label = features[0]\n",

168 | " if random.randint(0, 9999) % 10 != 0:\n",

169 | " out_train.write(\"{0} {1}\\n\".format(label, ' '.join(feat_vals)))\n",

170 | " else:\n",

171 | " out_valid.write(\"{0} {1}\\n\".format(label, ' '.join(feat_vals)))\n",

172 | "\n",

173 | " with open(output_dir + 'te.libsvm', 'w') as out:\n",

174 | " with open(input_dir + 'test.txt', 'r') as f:\n",

175 | " for line in f:\n",

176 | " features = line.rstrip('\\n').split('\\t')\n",

177 | "\n",

178 | " feat_vals = []\n",

179 | " for i in range(0, len(continous_features)):\n",

180 | " val = dists.gen(i, features[continous_features[i] - 1])\n",

181 | " feat_vals.append(str(continous_features[i]) + ':' + \"{0:.6f}\".format(val).rstrip('0').rstrip('.'))\n",

182 | "\n",

183 | " for i in range(0, len(categorial_features)):\n",

184 | " val = dicts.gen(i, features[categorial_features[i] - 1]) + categorial_feature_offset[i]\n",

185 | " feat_vals.append(str(val) + ':1')\n",

186 | "\n",

187 | " out.write(\"{0} {1}\\n\".format(label, ' '.join(feat_vals)))"

188 | ]

189 | },

190 | {

191 | "cell_type": "code",

192 | "execution_count": null,

193 | "metadata": {},

194 | "outputs": [

195 | {

196 | "name": "stdout",

197 | "output_type": "stream",

198 | "text": [

199 | "开始数据处理与特征工程...\n"

200 | ]

201 | }

202 | ],

203 | "source": [

204 | "input_dir = './criteo_data/'\n",

205 | "output_dir = './criteo_data/'\n",

206 | "print(\"开始数据处理与特征工程...\")\n",

207 | "preprocess(input_dir, output_dir)"

208 | ]

209 | },

210 | {

211 | "cell_type": "code",

212 | "execution_count": 8,

213 | "metadata": {},

214 | "outputs": [

215 | {

216 | "name": "stdout",

217 | "output_type": "stream",

218 | "text": [

219 | "0 1:0.2 2:0.028192 3:0.07 4:0.56 5:0.000562 6:0.056 7:0.04 8:0.86 9:0.094 10:0.25 11:0.2 12:0 13:0.56 14:1 169:1 631:1 1414:1 2534:1 2584:1 4239:1 4991:1 5060:1 5064:1 7141:1 8818:1 9906:1 11250:1 11377:1 12951:1 13833:1 13883:1 14817:1 15204:1 15327:1 16118:1 16128:1 16183:1 17289:1 17361:1\r\n",

220 | "1 1:0 2:0.004975 3:0.11 4:0 5:1.373375 6:0 7:0 8:0.14 9:0 10:0 11:0 12:0 13:0 14:1 181:1 543:1 1379:1 2534:1 2582:1 3632:1 4990:1 5061:1 5217:1 6925:1 8726:1 9705:1 11250:1 11605:1 12849:1 13835:1 13971:1 14816:1 15202:1 15224:1 16118:1 16129:1 16148:1 17280:1 17320:1\r\n",

221 | "0 1:0.1 2:0.008292 3:0.28 4:0.14 5:0.000016 6:0.002 7:0.21 8:0.14 9:0.786 10:0.125 11:0.4 12:0 13:0.02 14:1 209:1 632:1 1491:1 2534:1 2582:1 2719:1 4995:1 5060:1 5069:1 6960:1 8820:1 9727:1 11249:1 11471:1 12933:1 13834:1 13927:1 14817:1 15204:1 15328:1 16118:1 16129:1 16185:1 17282:1 17364:1\r\n",

222 | "1 1:0 2:0.003317 3:0.04 4:0.5 5:0.504031 6:0.222 7:0.02 8:0.72 9:0.16 10:0 11:0.1 12:0 13:0.5 14:1 156:1 529:1 1377:1 2534:1 2583:1 3768:1 4990:1 5060:1 5247:1 7131:1 8711:1 9893:1 11250:1 11361:1 12827:1 13835:1 13888:1 14816:1 15202:1 15207:1 16118:1 16129:1 16145:1 17280:1 17320:1\r\n",

223 | "0 1:0 2:0.004975 3:0.28 4:0.14 5:0.022766 6:0.058 7:0.05 8:0.28 9:0.464 10:0 11:0.3 12:0 13:0.14 15:1 142:1 528:1 1376:1 2535:1 2582:1 2659:1 4997:1 5060:1 5064:1 7780:1 8710:1 9703:1 11250:1 11324:1 12826:1 13834:1 13861:1 14817:1 15203:1 15206:1 16118:1 16128:1 16746:1 17282:1 17320:1\r\n"

224 | ]

225 | }

226 | ],

227 | "source": [

228 | "!head -5 ./criteo_data/tr.libsvm"

229 | ]

230 | },

231 | {

232 | "cell_type": "markdown",

233 | "metadata": {},

234 | "source": [

235 | "### DeepFM\n",

236 | "reference:[常见的计算广告点击率预估算法总结](https://zhuanlan.zhihu.com/p/29053940)\n",

237 | "\n",

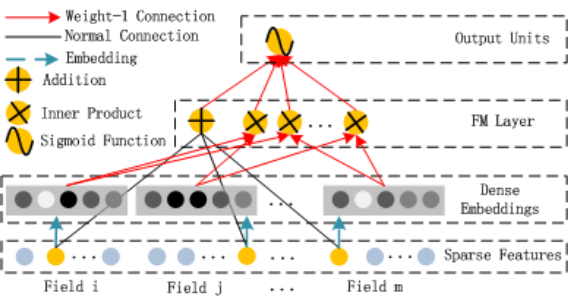

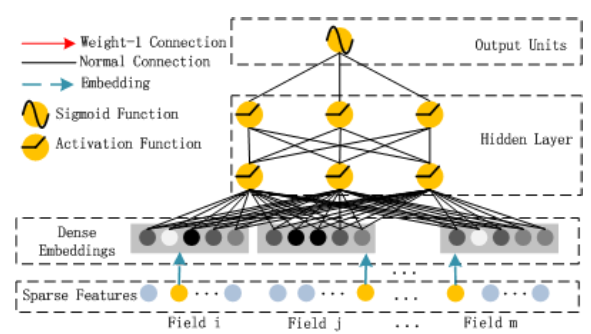

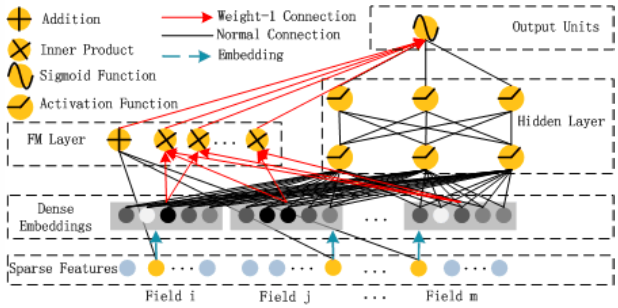

238 | "DeepFM结合了wide and deep和FM,其实就是把PNN和WDL结合了。原始的Wide and Deep,Wide的部分只是LR,构造线性关系,Deep部分建模更高阶的关系,所以在Wide and Deep中还需要做一些特征的东西,如Cross Column的工作,FM是可以建模二阶关系达到Cross column的效果,DeepFM就是把FM和NN结合,无需再对特征做诸如Cross Column的工作了。\n",

239 | "\n",

240 | "FM部分如下:\n",

241 | "\n",

242 | "\n",

243 | "Deep部分如下:\n",

244 | "\n",

245 | "\n",

246 | "总体结构图:\n",

247 | "\n",

248 | "\n",

249 | "DeepFM相对于FNN、PNN,能够利用其Deep部分建模更高阶信息(二阶以上),而相对于Wide and Deep能够减少特征工程的部分工作,wide部分类似FM建模一、二阶特征间关系, 算是NN和FM的一个很好的结合,另外不同的是如下图,DeepFM的wide和deep部分共享embedding向量空间,wide和deep均可以更新embedding部分"

250 | ]

251 | },

252 | {

253 | "cell_type": "code",

254 | "execution_count": null,

255 | "metadata": {

256 | "collapsed": true

257 | },

258 | "outputs": [],

259 | "source": [

260 | "# %load DeepFM.py\n",

261 | "#!/usr/bin/env python\n",

262 | "\"\"\"\n",

263 | "#1 Input pipline using Dataset high level API, Support parallel and prefetch reading\n",

264 | "#2 Train pipline using Coustom Estimator by rewriting model_fn\n",

265 | "#3 Support distincted training using TF_CONFIG\n",

266 | "#4 Support export_model for TensorFlow Serving\n",

267 | "\n",

268 | "方便迁移到其他算法上,只要修改input_fn and model_fn\n",

269 | "by lambdaji\n",

270 | "\"\"\"\n",

271 | "#from __future__ import absolute_import\n",

272 | "#from __future__ import division\n",

273 | "#from __future__ import print_function\n",

274 | "\n",

275 | "#import argparse\n",

276 | "import shutil\n",

277 | "#import sys\n",

278 | "import os\n",

279 | "import json\n",

280 | "import glob\n",

281 | "from datetime import date, timedelta\n",

282 | "from time import time\n",

283 | "#import gc\n",

284 | "#from multiprocessing import Process\n",

285 | "\n",

286 | "#import math\n",

287 | "import random\n",

288 | "import pandas as pd\n",

289 | "import numpy as np\n",

290 | "import tensorflow as tf\n",

291 | "\n",

292 | "#################### CMD Arguments ####################\n",

293 | "FLAGS = tf.app.flags.FLAGS\n",

294 | "tf.app.flags.DEFINE_integer(\"dist_mode\", 0, \"distribuion mode {0-loacal, 1-single_dist, 2-multi_dist}\")\n",

295 | "tf.app.flags.DEFINE_string(\"ps_hosts\", '', \"Comma-separated list of hostname:port pairs\")\n",

296 | "tf.app.flags.DEFINE_string(\"worker_hosts\", '', \"Comma-separated list of hostname:port pairs\")\n",

297 | "tf.app.flags.DEFINE_string(\"job_name\", '', \"One of 'ps', 'worker'\")\n",

298 | "tf.app.flags.DEFINE_integer(\"task_index\", 0, \"Index of task within the job\")\n",

299 | "tf.app.flags.DEFINE_integer(\"num_threads\", 16, \"Number of threads\")\n",

300 | "tf.app.flags.DEFINE_integer(\"feature_size\", 0, \"Number of features\")\n",

301 | "tf.app.flags.DEFINE_integer(\"field_size\", 0, \"Number of fields\")\n",

302 | "tf.app.flags.DEFINE_integer(\"embedding_size\", 32, \"Embedding size\")\n",

303 | "tf.app.flags.DEFINE_integer(\"num_epochs\", 10, \"Number of epochs\")\n",

304 | "tf.app.flags.DEFINE_integer(\"batch_size\", 64, \"Number of batch size\")\n",

305 | "tf.app.flags.DEFINE_integer(\"log_steps\", 1000, \"save summary every steps\")\n",

306 | "tf.app.flags.DEFINE_float(\"learning_rate\", 0.0005, \"learning rate\")\n",

307 | "tf.app.flags.DEFINE_float(\"l2_reg\", 0.0001, \"L2 regularization\")\n",

308 | "tf.app.flags.DEFINE_string(\"loss_type\", 'log_loss', \"loss type {square_loss, log_loss}\")\n",

309 | "tf.app.flags.DEFINE_string(\"optimizer\", 'Adam', \"optimizer type {Adam, Adagrad, GD, Momentum}\")\n",

310 | "tf.app.flags.DEFINE_string(\"deep_layers\", '256,128,64', \"deep layers\")\n",

311 | "tf.app.flags.DEFINE_string(\"dropout\", '0.5,0.5,0.5', \"dropout rate\")\n",

312 | "tf.app.flags.DEFINE_boolean(\"batch_norm\", False, \"perform batch normaization (True or False)\")\n",

313 | "tf.app.flags.DEFINE_float(\"batch_norm_decay\", 0.9, \"decay for the moving average(recommend trying decay=0.9)\")\n",

314 | "tf.app.flags.DEFINE_string(\"data_dir\", '', \"data dir\")\n",

315 | "tf.app.flags.DEFINE_string(\"dt_dir\", '', \"data dt partition\")\n",

316 | "tf.app.flags.DEFINE_string(\"model_dir\", '', \"model check point dir\")\n",

317 | "tf.app.flags.DEFINE_string(\"servable_model_dir\", '', \"export servable model for TensorFlow Serving\")\n",

318 | "tf.app.flags.DEFINE_string(\"task_type\", 'train', \"task type {train, infer, eval, export}\")\n",

319 | "tf.app.flags.DEFINE_boolean(\"clear_existing_model\", False, \"clear existing model or not\")\n",

320 | "\n",

321 | "#1 1:0.5 2:0.03519 3:1 4:0.02567 7:0.03708 8:0.01705 9:0.06296 10:0.18185 11:0.02497 12:1 14:0.02565 15:0.03267 17:0.0247 18:0.03158 20:1 22:1 23:0.13169 24:0.02933 27:0.18159 31:0.0177 34:0.02888 38:1 51:1 63:1 132:1 164:1 236:1\n",

322 | "def input_fn(filenames, batch_size=32, num_epochs=1, perform_shuffle=False):\n",

323 | " print('Parsing', filenames)\n",

324 | " def decode_libsvm(line):\n",

325 | " #columns = tf.decode_csv(value, record_defaults=CSV_COLUMN_DEFAULTS)\n",

326 | " #features = dict(zip(CSV_COLUMNS, columns))\n",

327 | " #labels = features.pop(LABEL_COLUMN)\n",

328 | " columns = tf.string_split([line], ' ')\n",

329 | " labels = tf.string_to_number(columns.values[0], out_type=tf.float32)\n",

330 | " splits = tf.string_split(columns.values[1:], ':')\n",

331 | " id_vals = tf.reshape(splits.values,splits.dense_shape)\n",

332 | " feat_ids, feat_vals = tf.split(id_vals,num_or_size_splits=2,axis=1)\n",

333 | " feat_ids = tf.string_to_number(feat_ids, out_type=tf.int32)\n",

334 | " feat_vals = tf.string_to_number(feat_vals, out_type=tf.float32)\n",

335 | " #feat_ids = tf.reshape(feat_ids,shape=[-1,FLAGS.field_size])\n",

336 | " #for i in range(splits.dense_shape.eval()[0]):\n",

337 | " # feat_ids.append(tf.string_to_number(splits.values[2*i], out_type=tf.int32))\n",

338 | " # feat_vals.append(tf.string_to_number(splits.values[2*i+1]))\n",

339 | " #return tf.reshape(feat_ids,shape=[-1,field_size]), tf.reshape(feat_vals,shape=[-1,field_size]), labels\n",

340 | " return {\"feat_ids\": feat_ids, \"feat_vals\": feat_vals}, labels\n",

341 | "\n",

342 | " # Extract lines from input files using the Dataset API, can pass one filename or filename list\n",

343 | " dataset = tf.data.TextLineDataset(filenames).map(decode_libsvm, num_parallel_calls=10).prefetch(500000) # multi-thread pre-process then prefetch\n",

344 | "\n",

345 | " # Randomizes input using a window of 256 elements (read into memory)\n",

346 | " if perform_shuffle:\n",

347 | " dataset = dataset.shuffle(buffer_size=256)\n",

348 | "\n",

349 | " # epochs from blending together.\n",

350 | " dataset = dataset.repeat(num_epochs)\n",

351 | " dataset = dataset.batch(batch_size) # Batch size to use\n",

352 | "\n",

353 | " #return dataset.make_one_shot_iterator()\n",

354 | " iterator = dataset.make_one_shot_iterator()\n",

355 | " batch_features, batch_labels = iterator.get_next()\n",

356 | " #return tf.reshape(batch_ids,shape=[-1,field_size]), tf.reshape(batch_vals,shape=[-1,field_size]), batch_labels\n",

357 | " return batch_features, batch_labels\n",

358 | "\n",

359 | "def model_fn(features, labels, mode, params):\n",

360 | " \"\"\"Bulid Model function f(x) for Estimator.\"\"\"\n",

361 | " #------hyperparameters----\n",

362 | " field_size = params[\"field_size\"]\n",

363 | " feature_size = params[\"feature_size\"]\n",

364 | " embedding_size = params[\"embedding_size\"]\n",

365 | " l2_reg = params[\"l2_reg\"]\n",

366 | " learning_rate = params[\"learning_rate\"]\n",

367 | " #batch_norm_decay = params[\"batch_norm_decay\"]\n",

368 | " #optimizer = params[\"optimizer\"]\n",

369 | " layers = map(int, params[\"deep_layers\"].split(','))\n",

370 | " dropout = map(float, params[\"dropout\"].split(','))\n",

371 | "\n",

372 | " #------bulid weights------\n",

373 | " FM_B = tf.get_variable(name='fm_bias', shape=[1], initializer=tf.constant_initializer(0.0))\n",

374 | " FM_W = tf.get_variable(name='fm_w', shape=[feature_size], initializer=tf.glorot_normal_initializer())\n",

375 | " # F\n",

376 | " FM_V = tf.get_variable(name='fm_v', shape=[feature_size, embedding_size], initializer=tf.glorot_normal_initializer())\n",

377 | " # F * E \n",

378 | " #------build feaure-------\n",

379 | " feat_ids = features['feat_ids']\n",

380 | " feat_ids = tf.reshape(feat_ids,shape=[-1,field_size]) # None * f/K * K\n",

381 | " feat_vals = features['feat_vals']\n",

382 | " feat_vals = tf.reshape(feat_vals,shape=[-1,field_size]) # None * f/K * K\n",

383 | "\n",

384 | " #------build f(x)------\n",

385 | " with tf.variable_scope(\"First-order\"):\n",

386 | " feat_wgts = tf.nn.embedding_lookup(FM_W, feat_ids) # None * f/K * K\n",

387 | " y_w = tf.reduce_sum(tf.multiply(feat_wgts, feat_vals),1) \n",

388 | "\n",

389 | " with tf.variable_scope(\"Second-order\"):\n",

390 | " embeddings = tf.nn.embedding_lookup(FM_V, feat_ids) # None * f/K * K * E\n",

391 | " feat_vals = tf.reshape(feat_vals, shape=[-1, field_size, 1]) # None * f/K * K * 1 ?\n",

392 | " embeddings = tf.multiply(embeddings, feat_vals) #vij*xi \n",

393 | " sum_square = tf.square(tf.reduce_sum(embeddings,1)) # None * K * E\n",

394 | " square_sum = tf.reduce_sum(tf.square(embeddings),1)\n",

395 | " y_v = 0.5*tf.reduce_sum(tf.subtract(sum_square, square_sum),1)\t# None * 1\n",

396 | "\n",

397 | " with tf.variable_scope(\"Deep-part\"):\n",

398 | " if FLAGS.batch_norm:\n",

399 | " #normalizer_fn = tf.contrib.layers.batch_norm\n",

400 | " #normalizer_fn = tf.layers.batch_normalization\n",

401 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

402 | " train_phase = True\n",

403 | " #normalizer_params = {'decay': batch_norm_decay, 'center': True, 'scale': True, 'updates_collections': None, 'is_training': True, 'reuse': None}\n",

404 | " else:\n",

405 | " train_phase = False\n",

406 | " #normalizer_params = {'decay': batch_norm_decay, 'center': True, 'scale': True, 'updates_collections': None, 'is_training': False, 'reuse': True}\n",

407 | " else:\n",

408 | " normalizer_fn = None\n",

409 | " normalizer_params = None\n",

410 | "\n",

411 | " deep_inputs = tf.reshape(embeddings,shape=[-1,field_size*embedding_size]) # None * (F*K)\n",

412 | " for i in range(len(layers)):\n",

413 | " #if FLAGS.batch_norm:\n",

414 | " # deep_inputs = batch_norm_layer(deep_inputs, train_phase=train_phase, scope_bn='bn_%d' %i)\n",

415 | " #normalizer_params.update({'scope': 'bn_%d' %i})\n",

416 | " deep_inputs = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=layers[i], \\\n",

417 | " #normalizer_fn=normalizer_fn, normalizer_params=normalizer_params, \\\n",

418 | " weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='mlp%d' % i)\n",

419 | " if FLAGS.batch_norm:\n",

420 | " deep_inputs = batch_norm_layer(deep_inputs, train_phase=train_phase, scope_bn='bn_%d' %i) #放在RELU之后 https://github.com/ducha-aiki/caffenet-benchmark/blob/master/batchnorm.md#bn----before-or-after-relu\n",

421 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

422 | " deep_inputs = tf.nn.dropout(deep_inputs, keep_prob=dropout[i]) #Apply Dropout after all BN layers and set dropout=0.8(drop_ratio=0.2)\n",

423 | " #deep_inputs = tf.layers.dropout(inputs=deep_inputs, rate=dropout[i], training=mode == tf.estimator.ModeKeys.TRAIN)\n",

424 | "\n",

425 | " y_deep = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=1, activation_fn=tf.identity, \\\n",

426 | " weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='deep_out')\n",

427 | " y_d = tf.reshape(y_deep,shape=[-1])\n",

428 | " #sig_wgts = tf.get_variable(name='sigmoid_weights', shape=[layers[-1]], initializer=tf.glorot_normal_initializer())\n",

429 | " #sig_bias = tf.get_variable(name='sigmoid_bias', shape=[1], initializer=tf.constant_initializer(0.0))\n",

430 | " #deep_out = tf.nn.xw_plus_b(deep_inputs,sig_wgts,sig_bias,name='deep_out')\n",

431 | "\n",

432 | " with tf.variable_scope(\"DeepFM-out\"):\n",

433 | " #y_bias = FM_B * tf.ones_like(labels, dtype=tf.float32) # None * 1 warning;这里不能用label,否则调用predict/export函数会出错,train/evaluate正常;初步判断estimator做了优化,用不到label时不传\n",

434 | " y_bias = FM_B * tf.ones_like(y_d, dtype=tf.float32) # None * 1\n",

435 | " y = y_bias + y_w + y_v + y_d\n",

436 | " pred = tf.sigmoid(y)\n",

437 | "\n",

438 | " predictions={\"prob\": pred}\n",

439 | " export_outputs = {tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY: tf.estimator.export.PredictOutput(predictions)}\n",

440 | " # Provide an estimator spec for `ModeKeys.PREDICT`\n",

441 | " if mode == tf.estimator.ModeKeys.PREDICT:\n",

442 | " return tf.estimator.EstimatorSpec(\n",

443 | " mode=mode,\n",

444 | " predictions=predictions,\n",

445 | " export_outputs=export_outputs)\n",

446 | "\n",

447 | " #------bulid loss------\n",

448 | " loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=y, labels=labels)) + \\\n",

449 | " l2_reg * tf.nn.l2_loss(FM_W) + \\\n",

450 | " l2_reg * tf.nn.l2_loss(FM_V) #+ \\ l2_reg * tf.nn.l2_loss(sig_wgts)\n",

451 | "\n",

452 | " # Provide an estimator spec for `ModeKeys.EVAL`\n",

453 | " eval_metric_ops = {\n",

454 | " \"auc\": tf.metrics.auc(labels, pred)\n",

455 | " }\n",

456 | " if mode == tf.estimator.ModeKeys.EVAL:\n",

457 | " return tf.estimator.EstimatorSpec(\n",

458 | " mode=mode,\n",

459 | " predictions=predictions,\n",

460 | " loss=loss,\n",

461 | " eval_metric_ops=eval_metric_ops)\n",

462 | "\n",

463 | " #------bulid optimizer------\n",

464 | " if FLAGS.optimizer == 'Adam':\n",

465 | " optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate, beta1=0.9, beta2=0.999, epsilon=1e-8)\n",

466 | " elif FLAGS.optimizer == 'Adagrad':\n",

467 | " optimizer = tf.train.AdagradOptimizer(learning_rate=learning_rate, initial_accumulator_value=1e-8)\n",

468 | " elif FLAGS.optimizer == 'Momentum':\n",

469 | " optimizer = tf.train.MomentumOptimizer(learning_rate=learning_rate, momentum=0.95)\n",

470 | " elif FLAGS.optimizer == 'ftrl':\n",

471 | " optimizer = tf.train.FtrlOptimizer(learning_rate)\n",

472 | "\n",

473 | " train_op = optimizer.minimize(loss, global_step=tf.train.get_global_step())\n",

474 | "\n",

475 | " # Provide an estimator spec for `ModeKeys.TRAIN` modes\n",

476 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

477 | " return tf.estimator.EstimatorSpec(\n",

478 | " mode=mode,\n",

479 | " predictions=predictions,\n",

480 | " loss=loss,\n",

481 | " train_op=train_op)\n",

482 | "\n",

483 | " # Provide an estimator spec for `ModeKeys.EVAL` and `ModeKeys.TRAIN` modes.\n",

484 | " #return tf.estimator.EstimatorSpec(\n",

485 | " # mode=mode,\n",

486 | " # loss=loss,\n",

487 | " # train_op=train_op,\n",

488 | " # predictions={\"prob\": pred},\n",

489 | " # eval_metric_ops=eval_metric_ops)\n",

490 | "\n",

491 | "def batch_norm_layer(x, train_phase, scope_bn):\n",

492 | " bn_train = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=True, reuse=None, scope=scope_bn)\n",

493 | " bn_infer = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=False, reuse=True, scope=scope_bn)\n",

494 | " z = tf.cond(tf.cast(train_phase, tf.bool), lambda: bn_train, lambda: bn_infer)\n",

495 | " return z\n",

496 | "\n",

497 | "def set_dist_env():\n",

498 | " if FLAGS.dist_mode == 1: # 本地分布式测试模式1 chief, 1 ps, 1 evaluator\n",

499 | " ps_hosts = FLAGS.ps_hosts.split(',')\n",

500 | " chief_hosts = FLAGS.chief_hosts.split(',')\n",

501 | " task_index = FLAGS.task_index\n",

502 | " job_name = FLAGS.job_name\n",

503 | " print('ps_host', ps_hosts)\n",

504 | " print('chief_hosts', chief_hosts)\n",

505 | " print('job_name', job_name)\n",

506 | " print('task_index', str(task_index))\n",

507 | " # 无worker参数\n",

508 | " tf_config = {\n",

509 | " 'cluster': {'chief': chief_hosts, 'ps': ps_hosts},\n",

510 | " 'task': {'type': job_name, 'index': task_index }\n",

511 | " }\n",

512 | " print(json.dumps(tf_config))\n",

513 | " os.environ['TF_CONFIG'] = json.dumps(tf_config)\n",

514 | " elif FLAGS.dist_mode == 2: # 集群分布式模式\n",

515 | " ps_hosts = FLAGS.ps_hosts.split(',')\n",

516 | " worker_hosts = FLAGS.worker_hosts.split(',')\n",

517 | " chief_hosts = worker_hosts[0:1] # get first worker as chief\n",

518 | " worker_hosts = worker_hosts[2:] # the rest as worker\n",

519 | " task_index = FLAGS.task_index\n",

520 | " job_name = FLAGS.job_name\n",

521 | " print('ps_host', ps_hosts)\n",

522 | " print('worker_host', worker_hosts)\n",

523 | " print('chief_hosts', chief_hosts)\n",

524 | " print('job_name', job_name)\n",

525 | " print('task_index', str(task_index))\n",

526 | " # use #worker=0 as chief\n",

527 | " if job_name == \"worker\" and task_index == 0:\n",

528 | " job_name = \"chief\"\n",

529 | " # use #worker=1 as evaluator\n",

530 | " if job_name == \"worker\" and task_index == 1:\n",

531 | " job_name = 'evaluator'\n",

532 | " task_index = 0\n",

533 | " # the others as worker\n",

534 | " if job_name == \"worker\" and task_index > 1:\n",

535 | " task_index -= 2\n",

536 | "\n",

537 | " tf_config = {\n",

538 | " 'cluster': {'chief': chief_hosts, 'worker': worker_hosts, 'ps': ps_hosts},\n",

539 | " 'task': {'type': job_name, 'index': task_index }\n",

540 | " }\n",

541 | " print(json.dumps(tf_config))\n",

542 | " os.environ['TF_CONFIG'] = json.dumps(tf_config)\n",

543 | "\n",

544 | "def main(_):\n",

545 | " tr_files = glob.glob(\"%s/tr*libsvm\" % FLAGS.data_dir)\n",

546 | " random.shuffle(tr_files)\n",

547 | " print(\"tr_files:\", tr_files)\n",

548 | " va_files = glob.glob(\"%s/va*libsvm\" % FLAGS.data_dir)\n",

549 | " print(\"va_files:\", va_files)\n",

550 | " te_files = glob.glob(\"%s/te*libsvm\" % FLAGS.data_dir)\n",

551 | " print(\"te_files:\", te_files)\n",

552 | "\n",

553 | " if FLAGS.clear_existing_model:\n",

554 | " try:\n",

555 | " shutil.rmtree(FLAGS.model_dir)\n",

556 | " except Exception as e:\n",

557 | " print(e, \"at clear_existing_model\")\n",

558 | " else:\n",

559 | " print(\"existing model cleaned at %s\" % FLAGS.model_dir)\n",

560 | "\n",

561 | " set_dist_env()\n",

562 | "\n",

563 | " model_params = {\n",

564 | " \"field_size\": FLAGS.field_size,\n",

565 | " \"feature_size\": FLAGS.feature_size,\n",

566 | " \"embedding_size\": FLAGS.embedding_size,\n",

567 | " \"learning_rate\": FLAGS.learning_rate,\n",

568 | " \"batch_norm_decay\": FLAGS.batch_norm_decay,\n",

569 | " \"l2_reg\": FLAGS.l2_reg,\n",

570 | " \"deep_layers\": FLAGS.deep_layers,\n",

571 | " \"dropout\": FLAGS.dropout\n",

572 | " }\n",

573 | " config = tf.estimator.RunConfig().replace(session_config = tf.ConfigProto(device_count={'GPU':0, 'CPU':FLAGS.num_threads}),\n",

574 | " log_step_count_steps=FLAGS.log_steps, save_summary_steps=FLAGS.log_steps)\n",

575 | " DeepFM = tf.estimator.Estimator(model_fn=model_fn, model_dir=FLAGS.model_dir, params=model_params, config=config)\n",

576 | "\n",

577 | " if FLAGS.task_type == 'train':\n",

578 | " train_spec = tf.estimator.TrainSpec(input_fn=lambda: input_fn(tr_files, num_epochs=FLAGS.num_epochs, batch_size=FLAGS.batch_size))\n",

579 | " eval_spec = tf.estimator.EvalSpec(input_fn=lambda: input_fn(va_files, num_epochs=1, batch_size=FLAGS.batch_size), steps=None, start_delay_secs=1000, throttle_secs=1200)\n",

580 | " tf.estimator.train_and_evaluate(DeepFM, train_spec, eval_spec)\n",

581 | " elif FLAGS.task_type == 'eval':\n",

582 | " DeepFM.evaluate(input_fn=lambda: input_fn(va_files, num_epochs=1, batch_size=FLAGS.batch_size))\n",

583 | " elif FLAGS.task_type == 'infer':\n",

584 | " preds = DeepFM.predict(input_fn=lambda: input_fn(te_files, num_epochs=1, batch_size=FLAGS.batch_size), predict_keys=\"prob\")\n",

585 | " with open(FLAGS.data_dir+\"/pred.txt\", \"w\") as fo:\n",

586 | " for prob in preds:\n",

587 | " fo.write(\"%f\\n\" % (prob['prob']))\n",

588 | " elif FLAGS.task_type == 'export':\n",

589 | " #feature_spec = tf.feature_column.make_parse_example_spec(feature_columns)\n",

590 | " #feature_spec = {\n",

591 | " # 'feat_ids': tf.FixedLenFeature(dtype=tf.int64, shape=[None, FLAGS.field_size]),\n",

592 | " # 'feat_vals': tf.FixedLenFeature(dtype=tf.float32, shape=[None, FLAGS.field_size])\n",

593 | " #}\n",

594 | " #serving_input_receiver_fn = tf.estimator.export.build_parsing_serving_input_receiver_fn(feature_spec)\n",

595 | " feature_spec = {\n",

596 | " 'feat_ids': tf.placeholder(dtype=tf.int64, shape=[None, FLAGS.field_size], name='feat_ids'),\n",

597 | " 'feat_vals': tf.placeholder(dtype=tf.float32, shape=[None, FLAGS.field_size], name='feat_vals')\n",

598 | " }\n",

599 | " serving_input_receiver_fn = tf.estimator.export.build_raw_serving_input_receiver_fn(feature_spec)\n",

600 | " DeepFM.export_savedmodel(FLAGS.servable_model_dir, serving_input_receiver_fn)\n",

601 | "\n",

602 | "if __name__ == \"__main__\":\n",

603 | " #------check Arguments------\n",

604 | " if FLAGS.dt_dir == \"\":\n",

605 | " FLAGS.dt_dir = (date.today() + timedelta(-1)).strftime('%Y%m%d')\n",

606 | " FLAGS.model_dir = FLAGS.model_dir + FLAGS.dt_dir\n",

607 | " #FLAGS.data_dir = FLAGS.data_dir + FLAGS.dt_dir\n",

608 | "\n",

609 | " print('task_type ', FLAGS.task_type)\n",

610 | " print('model_dir ', FLAGS.model_dir)\n",

611 | " print('data_dir ', FLAGS.data_dir)\n",

612 | " print('dt_dir ', FLAGS.dt_dir)\n",

613 | " print('num_epochs ', FLAGS.num_epochs)\n",

614 | " print('feature_size ', FLAGS.feature_size)\n",

615 | " print('field_size ', FLAGS.field_size)\n",

616 | " print('embedding_size ', FLAGS.embedding_size)\n",

617 | " print('batch_size ', FLAGS.batch_size)\n",

618 | " print('deep_layers ', FLAGS.deep_layers)\n",

619 | " print('dropout ', FLAGS.dropout)\n",

620 | " print('loss_type ', FLAGS.loss_type)\n",

621 | " print('optimizer ', FLAGS.optimizer)\n",

622 | " print('learning_rate ', FLAGS.learning_rate)\n",

623 | " print('batch_norm_decay ', FLAGS.batch_norm_decay)\n",

624 | " print('batch_norm ', FLAGS.batch_norm)\n",

625 | " print('l2_reg ', FLAGS.l2_reg)\n",

626 | "\n",

627 | " tf.logging.set_verbosity(tf.logging.INFO)\n",

628 | " tf.app.run()\n"

629 | ]

630 | },

631 | {

632 | "cell_type": "code",

633 | "execution_count": null,

634 | "metadata": {},

635 | "outputs": [

636 | {

637 | "name": "stdout",

638 | "output_type": "stream",

639 | "text": [

640 | "('task_type ', 'train')\n",

641 | "('model_dir ', './criteo_model20180503')\n",

642 | "('data_dir ', './criteo_data')\n",

643 | "('dt_dir ', '20180503')\n",

644 | "('num_epochs ', 1)\n",

645 | "('feature_size ', 117581)\n",

646 | "('field_size ', 39)\n",

647 | "('embedding_size ', 32)\n",

648 | "('batch_size ', 256)\n",

649 | "('deep_layers ', '400,400,400')\n",

650 | "('dropout ', '0.5,0.5,0.5')\n",

651 | "('loss_type ', 'log_loss')\n",

652 | "('optimizer ', 'Adam')\n",

653 | "('learning_rate ', 0.0005)\n",

654 | "('batch_norm_decay ', 0.9)\n",

655 | "('batch_norm ', False)\n",

656 | "('l2_reg ', 0.0001)\n",

657 | "('tr_files:', ['./criteo_data/tr.libsvm'])\n",

658 | "('va_files:', ['./criteo_data/va.libsvm'])\n",

659 | "('te_files:', ['./criteo_data/te.libsvm'])\n",

660 | "INFO:tensorflow:Using config: {'_save_checkpoints_secs': 600, '_session_config': device_count {\n",

661 | " key: \"CPU\"\n",

662 | " value: 8\n",

663 | "}\n",

664 | "device_count {\n",

665 | " key: \"GPU\"\n",

666 | "}\n",

667 | ", '_keep_checkpoint_max': 5, '_task_type': 'worker', '_is_chief': True, '_cluster_spec': , '_save_checkpoints_steps': None, '_keep_checkpoint_every_n_hours': 10000, '_service': None, '_num_ps_replicas': 0, '_tf_random_seed': None, '_master': '', '_num_worker_replicas': 1, '_task_id': 0, '_log_step_count_steps': 1000, '_model_dir': './criteo_model', '_save_summary_steps': 1000}\n",

668 | "INFO:tensorflow:Running training and evaluation locally (non-distributed).\n",

669 | "INFO:tensorflow:Start train and evaluate loop. The evaluate will happen after 1200 secs (eval_spec.throttle_secs) or training is finished.\n",

670 | "('Parsing', ['./criteo_data/tr.libsvm'])\n",

671 | "INFO:tensorflow:Create CheckpointSaverHook.\n",

672 | "2018-05-04 23:34:53.147375: I tensorflow/core/platform/cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA\n",

673 | "INFO:tensorflow:Saving checkpoints for 1 into ./criteo_model/model.ckpt.\n",

674 | "INFO:tensorflow:loss = 0.6947804, step = 1\n",

675 | "INFO:tensorflow:loss = 0.51585126, step = 101 (4.948 sec)\n",

676 | "INFO:tensorflow:loss = 0.4950318, step = 201 (4.408 sec)\n",

677 | "INFO:tensorflow:loss = 0.5462832, step = 301 (4.357 sec)\n",

678 | "INFO:tensorflow:loss = 0.5671505, step = 401 (4.368 sec)\n",

679 | "INFO:tensorflow:loss = 0.45424744, step = 501 (4.300 sec)\n",

680 | "INFO:tensorflow:loss = 0.5399899, step = 601 (4.274 sec)\n",

681 | "INFO:tensorflow:loss = 0.49540266, step = 701 (4.234 sec)\n",

682 | "INFO:tensorflow:loss = 0.5175852, step = 801 (4.294 sec)\n",

683 | "INFO:tensorflow:loss = 0.4686305, step = 901 (4.314 sec)\n",

684 | "INFO:tensorflow:global_step/sec: 22.8576\n",

685 | "INFO:tensorflow:loss = 0.5371931, step = 1001 (4.254 sec)\n",

686 | "INFO:tensorflow:loss = 0.49340367, step = 1101 (4.243 sec)\n",

687 | "INFO:tensorflow:loss = 0.49719507, step = 1201 (4.346 sec)\n",

688 | "INFO:tensorflow:loss = 0.48593232, step = 1301 (4.225 sec)\n",

689 | "INFO:tensorflow:loss = 0.48725832, step = 1401 (4.238 sec)\n",

690 | "INFO:tensorflow:loss = 0.4386774, step = 1501 (4.361 sec)\n",

691 | "INFO:tensorflow:loss = 0.49065983, step = 1601 (4.312 sec)\n",

692 | "INFO:tensorflow:loss = 0.53164876, step = 1701 (4.272 sec)\n",

693 | "INFO:tensorflow:loss = 0.40944415, step = 1801 (4.286 sec)\n",

694 | "INFO:tensorflow:loss = 0.521611, step = 1901 (4.270 sec)\n",

695 | "INFO:tensorflow:global_step/sec: 23.327\n",

696 | "INFO:tensorflow:loss = 0.49082595, step = 2001 (4.317 sec)\n",

697 | "INFO:tensorflow:loss = 0.50453734, step = 2101 (4.302 sec)\n",

698 | "INFO:tensorflow:loss = 0.49503702, step = 2201 (4.369 sec)\n",

699 | "INFO:tensorflow:loss = 0.45685932, step = 2301 (4.326 sec)\n",

700 | "INFO:tensorflow:loss = 0.47562104, step = 2401 (4.326 sec)\n",

701 | "INFO:tensorflow:loss = 0.5106457, step = 2501 (4.366 sec)\n",

702 | "INFO:tensorflow:loss = 0.4949795, step = 2601 (4.408 sec)\n",

703 | "INFO:tensorflow:loss = 0.4684176, step = 2701 (4.442 sec)\n",

704 | "INFO:tensorflow:loss = 0.43745354, step = 2801 (4.457 sec)\n",

705 | "INFO:tensorflow:loss = 0.48600715, step = 2901 (4.490 sec)\n",

706 | "INFO:tensorflow:global_step/sec: 22.7801\n",

707 | "INFO:tensorflow:loss = 0.4853104, step = 3001 (4.412 sec)\n",

708 | "INFO:tensorflow:loss = 0.49764964, step = 3101 (4.420 sec)\n",

709 | "INFO:tensorflow:loss = 0.4432894, step = 3201 (4.496 sec)\n",

710 | "INFO:tensorflow:loss = 0.46213925, step = 3301 (4.479 sec)\n",

711 | "INFO:tensorflow:loss = 0.4637582, step = 3401 (4.582 sec)\n",

712 | "INFO:tensorflow:loss = 0.46756223, step = 3501 (4.504 sec)\n",

713 | "INFO:tensorflow:loss = 0.46732077, step = 3601 (4.464 sec)\n"

714 | ]

715 | }

716 | ],

717 | "source": [

718 | "!python DeepFM.py --task_type=train \\\n",

719 | " --learning_rate=0.0005 \\\n",

720 | " --optimizer=Adam \\\n",

721 | " --num_epochs=1 \\\n",

722 | " --batch_size=256 \\\n",

723 | " --field_size=39 \\\n",

724 | " --feature_size=117581 \\\n",

725 | " --deep_layers=400,400,400 \\\n",

726 | " --dropout=0.5,0.5,0.5 \\\n",

727 | " --log_steps=1000 \\\n",

728 | " --num_threads=8 \\\n",

729 | " --model_dir=./criteo_model/DeepFM \\\n",

730 | " --data_dir=./criteo_data"

731 | ]

732 | },

733 | {

734 | "cell_type": "markdown",

735 | "metadata": {},

736 | "source": [

737 | "### NFM\n",

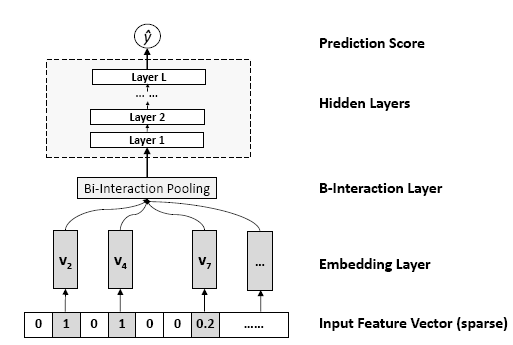

738 | "reference:

[从ctr预估问题看看f(x)设计—DNN篇](https://zhuanlan.zhihu.com/p/28202287)

[深度学习在CTR预估中的应用](https://zhuanlan.zhihu.com/p/35484389)\n",

739 | "\n",

740 | "NFM = LR + Embedding + Bi-Interaction Pooling + MLP\n",

741 | "\n",

742 | "\n",

743 | "\n",

744 | "对不同特征做相同维数的embedding向量。接下来,这些embedding向量两两做element-wise的相乘运算得到B-interaction layer。(element-wide运算举例: (1,2,3)element-wide相乘(4,5,6)结果是(4,10,18)。)\n",

745 | "\n",

746 | "B-interaction Layer 得到的是一个和embedding维数相同的向量。然后后面接几个隐藏层输出结果。\n",

747 | "\n",

748 | "大家思考一下,如果B-interaction layer后面不接隐藏层,直接把向量的元素相加输出结果, 就是一个FM,现在后面增加了隐藏层,相当于做了更高阶的FM,更加增强了非线性表达能力。\n",

749 | "\n",

750 | "NFM 在 embedding 做了 bi-interaction 操作来做特征的交叉处理,优点是网络参数从 n 直接压缩到 k(比 FNN 和 DeepFM 的 f\\*k 还少),降低了网络复杂度,能够加速网络的训练得到模型;但同时这种方法也可能带来较大的信息损失。"

751 | ]

752 | },

753 | {

754 | "cell_type": "code",

755 | "execution_count": null,

756 | "metadata": {

757 | "collapsed": true

758 | },

759 | "outputs": [],

760 | "source": [

761 | "# %load NFM.py\n",

762 | "#!/usr/bin/env python\n",

763 | "\"\"\"\n",

764 | "TensorFlow Implementation of <> with the fellowing features:\n",

765 | "#1 Input pipline using Dataset high level API, Support parallel and prefetch reading\n",

766 | "#2 Train pipline using Coustom Estimator by rewriting model_fn\n",

767 | "#3 Support distincted training by TF_CONFIG\n",

768 | "#4 Support export model for TensorFlow Serving\n",

769 | "\n",

770 | "by lambdaji\n",

771 | "\"\"\"\n",

772 | "#from __future__ import absolute_import\n",

773 | "#from __future__ import division\n",

774 | "#from __future__ import print_function\n",

775 | "\n",

776 | "#import argparse\n",

777 | "import shutil\n",

778 | "#import sys\n",

779 | "import os\n",

780 | "import json\n",

781 | "import glob\n",

782 | "from datetime import date, timedelta\n",

783 | "from time import time\n",

784 | "#import gc\n",

785 | "#from multiprocessing import Process\n",

786 | "\n",

787 | "#import math\n",

788 | "import random\n",

789 | "import pandas as pd\n",

790 | "import numpy as np\n",

791 | "import tensorflow as tf\n",

792 | "\n",

793 | "#################### CMD Arguments ####################\n",

794 | "FLAGS = tf.app.flags.FLAGS\n",

795 | "tf.app.flags.DEFINE_integer(\"dist_mode\", 0, \"distribuion mode {0-loacal, 1-single_dist, 2-multi_dist}\")\n",

796 | "tf.app.flags.DEFINE_string(\"ps_hosts\", '', \"Comma-separated list of hostname:port pairs\")\n",

797 | "tf.app.flags.DEFINE_string(\"worker_hosts\", '', \"Comma-separated list of hostname:port pairs\")\n",

798 | "tf.app.flags.DEFINE_string(\"job_name\", '', \"One of 'ps', 'worker'\")\n",

799 | "tf.app.flags.DEFINE_integer(\"task_index\", 0, \"Index of task within the job\")\n",

800 | "tf.app.flags.DEFINE_integer(\"num_threads\", 16, \"Number of threads\")\n",

801 | "tf.app.flags.DEFINE_integer(\"feature_size\", 0, \"Number of features\")\n",

802 | "tf.app.flags.DEFINE_integer(\"field_size\", 0, \"Number of fields\")\n",

803 | "tf.app.flags.DEFINE_integer(\"embedding_size\", 64, \"Embedding size\")\n",

804 | "tf.app.flags.DEFINE_integer(\"num_epochs\", 10, \"Number of epochs\")\n",

805 | "tf.app.flags.DEFINE_integer(\"batch_size\", 128, \"Number of batch size\")\n",

806 | "tf.app.flags.DEFINE_integer(\"log_steps\", 1000, \"save summary every steps\")\n",

807 | "tf.app.flags.DEFINE_float(\"learning_rate\", 0.05, \"learning rate\")\n",

808 | "tf.app.flags.DEFINE_float(\"l2_reg\", 0.001, \"L2 regularization\")\n",

809 | "tf.app.flags.DEFINE_string(\"loss_type\", 'log_loss', \"loss type {square_loss, log_loss}\")\n",

810 | "tf.app.flags.DEFINE_string(\"optimizer\", 'Adam', \"optimizer type {Adam, Adagrad, GD, Momentum}\")\n",

811 | "tf.app.flags.DEFINE_string(\"deep_layers\", '128,64', \"deep layers\")\n",

812 | "tf.app.flags.DEFINE_string(\"dropout\", '0.5,0.8,0.8', \"dropout rate\")\n",

813 | "tf.app.flags.DEFINE_boolean(\"batch_norm\", False, \"perform batch normaization (True or False)\")\n",

814 | "tf.app.flags.DEFINE_float(\"batch_norm_decay\", 0.9, \"decay for the moving average(recommend trying decay=0.9)\")\n",

815 | "tf.app.flags.DEFINE_string(\"data_dir\", '', \"data dir\")\n",

816 | "tf.app.flags.DEFINE_string(\"dt_dir\", '', \"data dt partition\")\n",

817 | "tf.app.flags.DEFINE_string(\"model_dir\", '', \"model check point dir\")\n",

818 | "tf.app.flags.DEFINE_string(\"servable_model_dir\", '', \"export servable model for TensorFlow Serving\")\n",

819 | "tf.app.flags.DEFINE_string(\"task_type\", 'train', \"task type {train, infer, eval, export}\")\n",

820 | "tf.app.flags.DEFINE_boolean(\"clear_existing_model\", False, \"clear existing model or not\")\n",

821 | "\n",

822 | "#1 1:0.5 2:0.03519 3:1 4:0.02567 7:0.03708 8:0.01705 9:0.06296 10:0.18185 11:0.02497 12:1 14:0.02565 15:0.03267 17:0.0247 18:0.03158 20:1 22:1 23:0.13169 24:0.02933 27:0.18159 31:0.0177 34:0.02888 38:1 51:1 63:1 132:1 164:1 236:1\n",

823 | "def input_fn(filenames, batch_size=32, num_epochs=1, perform_shuffle=False):\n",

824 | " print('Parsing', filenames)\n",

825 | " def decode_libsvm(line):\n",

826 | " #columns = tf.decode_csv(value, record_defaults=CSV_COLUMN_DEFAULTS)\n",

827 | " #features = dict(zip(CSV_COLUMNS, columns))\n",

828 | " #labels = features.pop(LABEL_COLUMN)\n",

829 | " columns = tf.string_split([line], ' ')\n",

830 | " labels = tf.string_to_number(columns.values[0], out_type=tf.float32)\n",

831 | " splits = tf.string_split(columns.values[1:], ':')\n",

832 | " id_vals = tf.reshape(splits.values,splits.dense_shape)\n",

833 | " feat_ids, feat_vals = tf.split(id_vals,num_or_size_splits=2,axis=1)\n",

834 | " feat_ids = tf.string_to_number(feat_ids, out_type=tf.int32)\n",

835 | " feat_vals = tf.string_to_number(feat_vals, out_type=tf.float32)\n",

836 | " return {\"feat_ids\": feat_ids, \"feat_vals\": feat_vals}, labels\n",

837 | "\n",

838 | " # Extract lines from input files using the Dataset API, can pass one filename or filename list\n",

839 | " dataset = tf.data.TextLineDataset(filenames).map(decode_libsvm, num_parallel_calls=10).prefetch(500000) # multi-thread pre-process then prefetch\n",

840 | "\n",

841 | " # Randomizes input using a window of 256 elements (read into memory)\n",

842 | " if perform_shuffle:\n",

843 | " dataset = dataset.shuffle(buffer_size=256)\n",

844 | "\n",

845 | " # epochs from blending together.\n",

846 | " dataset = dataset.repeat(num_epochs)\n",

847 | " dataset = dataset.batch(batch_size) # Batch size to use\n",

848 | "\n",

849 | " iterator = dataset.make_one_shot_iterator()\n",

850 | " batch_features, batch_labels = iterator.get_next()\n",

851 | " #return tf.reshape(batch_ids,shape=[-1,field_size]), tf.reshape(batch_vals,shape=[-1,field_size]), batch_labels\n",

852 | " return batch_features, batch_labels\n",

853 | "\n",

854 | "def model_fn(features, labels, mode, params):\n",

855 | " \"\"\"Bulid Model function f(x) for Estimator.\"\"\"\n",

856 | " #------hyperparameters----\n",

857 | " field_size = params[\"field_size\"]\n",

858 | " feature_size = params[\"feature_size\"]\n",

859 | " embedding_size = params[\"embedding_size\"]\n",

860 | " l2_reg = params[\"l2_reg\"]\n",

861 | " learning_rate = params[\"learning_rate\"]\n",

862 | " #optimizer = params[\"optimizer\"]\n",

863 | " layers = map(int, params[\"deep_layers\"].split(','))\n",

864 | " dropout = map(float, params[\"dropout\"].split(','))\n",

865 | "\n",

866 | " #------bulid weights------\n",

867 | " Global_Bias = tf.get_variable(name='bias', shape=[1], initializer=tf.constant_initializer(0.0))\n",

868 | " Feat_Bias = tf.get_variable(name='linear', shape=[feature_size], initializer=tf.glorot_normal_initializer())\n",

869 | " Feat_Emb = tf.get_variable(name='emb', shape=[feature_size,embedding_size], initializer=tf.glorot_normal_initializer())\n",

870 | "\n",

871 | " #------build feaure-------\n",

872 | " feat_ids = features['feat_ids']\n",

873 | " feat_ids = tf.reshape(feat_ids,shape=[-1,field_size])\n",

874 | " feat_vals = features['feat_vals']\n",

875 | " feat_vals = tf.reshape(feat_vals,shape=[-1,field_size])\n",

876 | "\n",

877 | " #------build f(x)------\n",

878 | " with tf.variable_scope(\"Linear-part\"):\n",

879 | " feat_wgts = tf.nn.embedding_lookup(Feat_Bias, feat_ids) \t\t# None * F * 1\n",

880 | " y_linear = tf.reduce_sum(tf.multiply(feat_wgts, feat_vals),1)\n",

881 | "\n",

882 | " with tf.variable_scope(\"BiInter-part\"):\n",

883 | " embeddings = tf.nn.embedding_lookup(Feat_Emb, feat_ids) \t\t# None * F * K\n",

884 | " feat_vals = tf.reshape(feat_vals, shape=[-1, field_size, 1])\n",

885 | " embeddings = tf.multiply(embeddings, feat_vals) \t\t\t\t# vij * xi\n",

886 | " sum_square_emb = tf.square(tf.reduce_sum(embeddings,1))\n",

887 | " square_sum_emb = tf.reduce_sum(tf.square(embeddings),1)\n",

888 | " deep_inputs = 0.5*tf.subtract(sum_square_emb, square_sum_emb)\t# None * K\n",

889 | "\n",

890 | " with tf.variable_scope(\"Deep-part\"):\n",

891 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

892 | " train_phase = True\n",

893 | " else:\n",

894 | " train_phase = False\n",

895 | "\n",

896 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

897 | " deep_inputs = tf.nn.dropout(deep_inputs, keep_prob=dropout[0]) \t\t\t\t\t\t# None * K\n",

898 | " for i in range(len(layers)):\n",

899 | " deep_inputs = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=layers[i], \\\n",

900 | " weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='mlp%d' % i)\n",

901 | "\n",

902 | " if FLAGS.batch_norm:\n",

903 | " deep_inputs = batch_norm_layer(deep_inputs, train_phase=train_phase, scope_bn='bn_%d' %i) #放在RELU之后 https://github.com/ducha-aiki/caffenet-benchmark/blob/master/batchnorm.md#bn----before-or-after-relu\n",

904 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

905 | " deep_inputs = tf.nn.dropout(deep_inputs, keep_prob=dropout[i]) #Apply Dropout after all BN layers and set dropout=0.8(drop_ratio=0.2)\n",

906 | " #deep_inputs = tf.layers.dropout(inputs=deep_inputs, rate=dropout[i], training=mode == tf.estimator.ModeKeys.TRAIN)\n",

907 | "\n",

908 | " y_deep = tf.contrib.layers.fully_connected(inputs=deep_inputs, num_outputs=1, activation_fn=tf.identity, \\\n",

909 | " weights_regularizer=tf.contrib.layers.l2_regularizer(l2_reg), scope='deep_out')\n",

910 | " y_d = tf.reshape(y_deep,shape=[-1])\n",

911 | "\n",

912 | " with tf.variable_scope(\"NFM-out\"):\n",

913 | " #y_bias = Global_Bias * tf.ones_like(labels, dtype=tf.float32) # None * 1 warning;这里不能用label,否则调用predict/export函数会出错,train/evaluate正常;初步判断estimator做了优化,用不到label时不传\n",

914 | " y_bias = Global_Bias * tf.ones_like(y_d, dtype=tf.float32) \t# None * 1\n",

915 | " y = y_bias + y_linear + y_d\n",

916 | " pred = tf.sigmoid(y)\n",

917 | "\n",

918 | " predictions={\"prob\": pred}\n",

919 | " export_outputs = {tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY: tf.estimator.export.PredictOutput(predictions)}\n",

920 | " # Provide an estimator spec for `ModeKeys.PREDICT`\n",

921 | " if mode == tf.estimator.ModeKeys.PREDICT:\n",

922 | " return tf.estimator.EstimatorSpec(\n",

923 | " mode=mode,\n",

924 | " predictions=predictions,\n",

925 | " export_outputs=export_outputs)\n",

926 | "\n",

927 | " #------bulid loss------\n",

928 | " loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=y, labels=labels)) + \\\n",

929 | " l2_reg * tf.nn.l2_loss(Feat_Bias) + l2_reg * tf.nn.l2_loss(Feat_Emb)\n",

930 | "\n",

931 | " # Provide an estimator spec for `ModeKeys.EVAL`\n",

932 | " eval_metric_ops = {\n",

933 | " \"auc\": tf.metrics.auc(labels, pred)\n",

934 | " }\n",

935 | " if mode == tf.estimator.ModeKeys.EVAL:\n",

936 | " return tf.estimator.EstimatorSpec(\n",

937 | " mode=mode,\n",

938 | " predictions=predictions,\n",

939 | " loss=loss,\n",

940 | " eval_metric_ops=eval_metric_ops)\n",

941 | "\n",

942 | " #------bulid optimizer------\n",

943 | " if FLAGS.optimizer == 'Adam':\n",

944 | " optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate, beta1=0.9, beta2=0.999, epsilon=1e-8)\n",

945 | " elif FLAGS.optimizer == 'Adagrad':\n",

946 | " optimizer = tf.train.AdagradOptimizer(learning_rate=learning_rate, initial_accumulator_value=1e-8)\n",

947 | " elif FLAGS.optimizer == 'Momentum':\n",

948 | " optimizer = tf.train.MomentumOptimizer(learning_rate=learning_rate, momentum=0.95)\n",

949 | " elif FLAGS.optimizer == 'ftrl':\n",

950 | " optimizer = tf.train.FtrlOptimizer(learning_rate)\n",

951 | "\n",

952 | " train_op = optimizer.minimize(loss, global_step=tf.train.get_global_step())\n",

953 | "\n",

954 | " # Provide an estimator spec for `ModeKeys.TRAIN` modes\n",

955 | " if mode == tf.estimator.ModeKeys.TRAIN:\n",

956 | " return tf.estimator.EstimatorSpec(\n",

957 | " mode=mode,\n",

958 | " predictions=predictions,\n",

959 | " loss=loss,\n",

960 | " train_op=train_op)\n",

961 | "\n",

962 | " # Provide an estimator spec for `ModeKeys.EVAL` and `ModeKeys.TRAIN` modes.\n",

963 | " #return tf.estimator.EstimatorSpec(\n",

964 | " # mode=mode,\n",

965 | " # loss=loss,\n",

966 | " # train_op=train_op,\n",

967 | " # predictions={\"prob\": pred},\n",

968 | " # eval_metric_ops=eval_metric_ops)\n",

969 | "\n",

970 | "def batch_norm_layer(x, train_phase, scope_bn):\n",

971 | " bn_train = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=True, reuse=None, scope=scope_bn)\n",

972 | " bn_infer = tf.contrib.layers.batch_norm(x, decay=FLAGS.batch_norm_decay, center=True, scale=True, updates_collections=None, is_training=False, reuse=True, scope=scope_bn)\n",

973 | " z = tf.cond(tf.cast(train_phase, tf.bool), lambda: bn_train, lambda: bn_infer)\n",

974 | " return z\n",

975 | "\n",

976 | "def set_dist_env():\n",

977 | " if FLAGS.dist_mode == 1: # 本地分布式测试模式1 chief, 1 ps, 1 evaluator\n",

978 | " ps_hosts = FLAGS.ps_hosts.split(',')\n",

979 | " chief_hosts = FLAGS.chief_hosts.split(',')\n",

980 | " task_index = FLAGS.task_index\n",