├── 00_atari_dqn.py

├── 01_dqn.py

├── 02_ddqn.py

├── 03_priority_replay.py

├── 04_dueling.py

├── 05_multistep_td.py

├── 06_distributional_rl.py

├── 07_noisynet.py

├── README.md

├── images

├── ddqn.png

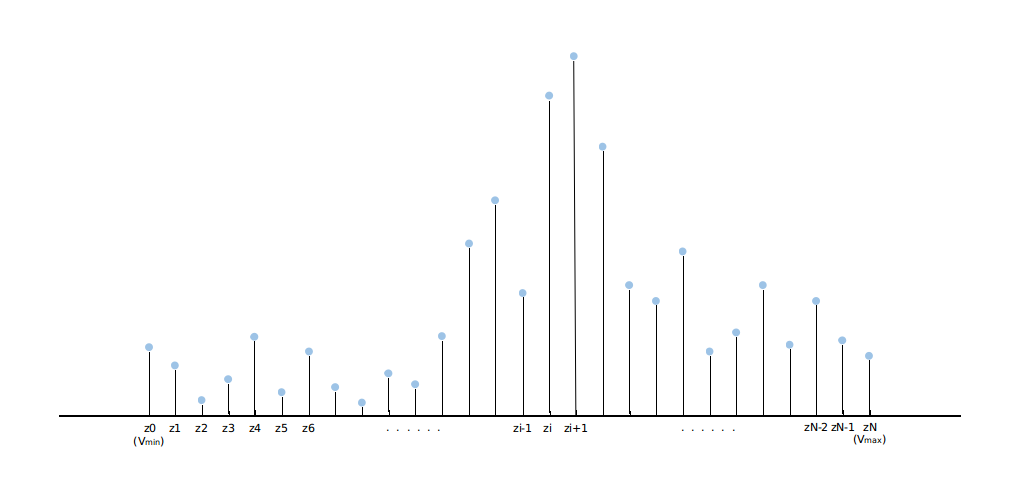

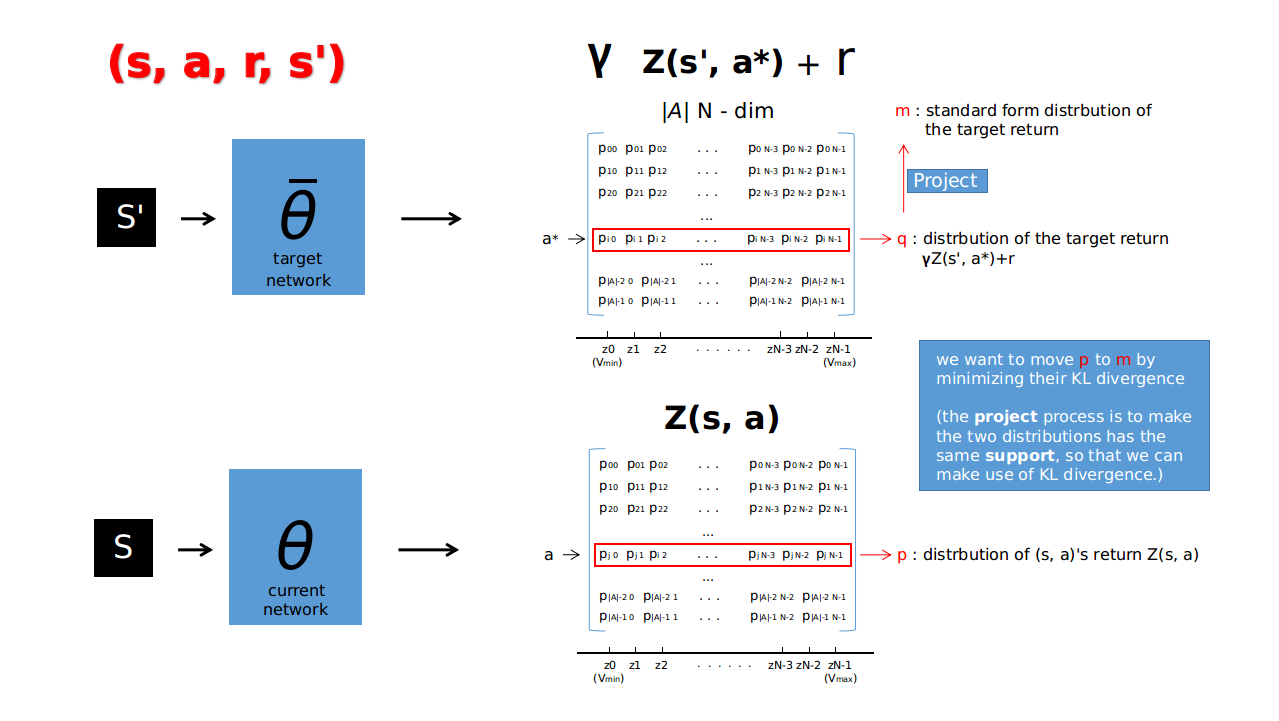

├── distributional_algorithm2.png

├── distributional_learn.png

├── distributional_project.png

├── distributional_projected.png

├── distributional_rl.png

├── dqn.png

├── dqn_algorithm.png

├── dqn_net.png

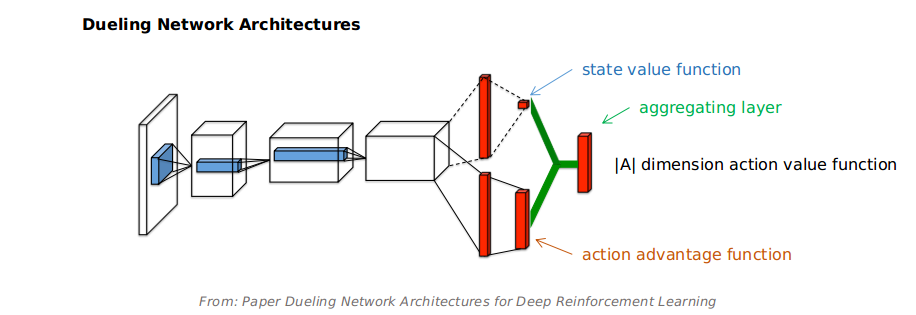

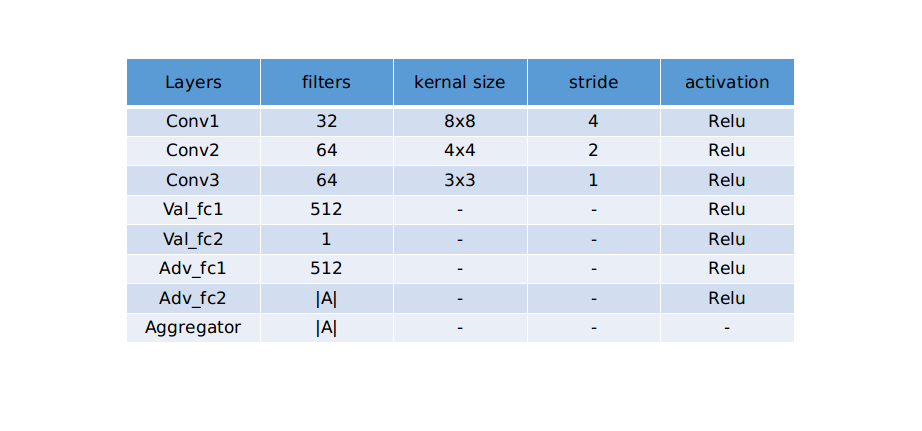

├── dueling_detail.png

├── dueling_details.png

├── dueling_netarch.png

├── gym_cartpole_v0.gif

├── noisy_net_algorithm.png

├── p2.png

├── rlblog_images

│ ├── IS.jpg

│ ├── LSTM.png

│ ├── PPO.png

│ ├── README.md

│ ├── RNN-unrolled.png

│ ├── ppo.png

│ ├── r1.png

│ └── r2.png

└── sards.png

└── tutorial_blogs

├── Building_Rainbow_Step_by_Step_with_TensorFlow2.0.md

└── gym_tutorial.md

/00_atari_dqn.py:

--------------------------------------------------------------------------------

1 | """

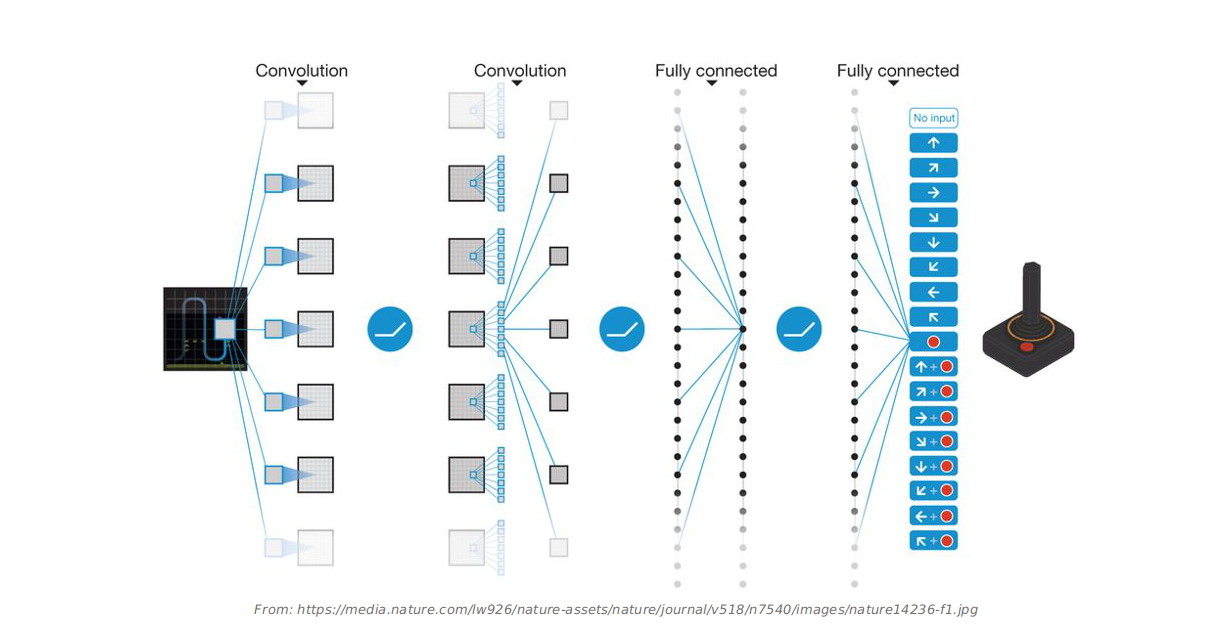

2 | Deep Q-Network(DQN) for Atari Game, which has convolutional layers to handle images input and other preprocessings.

3 |

4 | Using:

5 | TensorFlow 2.0

6 | Numpy 1.16.2

7 | Gym 0.12.1

8 | """

9 |

10 | import tensorflow as tf

11 | print(tf.__version__)

12 |

13 | import gym

14 | import time

15 | import numpy as np

16 | import tensorflow.keras.layers as kl

17 | import tensorflow.keras.optimizers as ko

18 |

19 | np.random.seed(1)

20 | tf.random.set_seed(1)

21 |

22 | # Minor change from cs234:reinforcement learning, assignment 2 -> utils/preprocess.py

23 | def greyscale(state):

24 | """

25 | Preprocess state (210, 160, 3) image into

26 | a (80, 80, 1) image in grey scale

27 | """

28 | state = np.reshape(state, [210, 160, 3]).astype(np.float32)

29 | # grey scale

30 | state = state[:, :, 0] * 0.299 + state[:, :, 1] * 0.587 + state[:, :, 2] * 0.114

31 | # karpathy

32 | state = state[35:195] # crop

33 | state = state[::2,::2] # downsample by factor of 2

34 | state = state[:, :, np.newaxis]

35 | return state.astype(np.float32)

36 |

37 |

38 | class Model(tf.keras.Model):

39 | def __init__(self, num_actions):

40 | super().__init__(name='dqn')

41 | self.conv1 = kl.Conv2D(32, kernel_size=(8, 8), strides=4, activation='relu')

42 | self.conv2 = kl.Conv2D(64, kernel_size=(4, 4), strides=2, activation='relu')

43 | self.conv3 = kl.Conv2D(64, kernel_size=(3, 3), strides=1, activation='relu')

44 | self.flat = kl.Flatten()

45 | self.fc1 = kl.Dense(512, activation='relu')

46 | self.fc2 = kl.Dense(num_actions)

47 |

48 | def call(self, inputs):

49 | # x = tf.convert_to_tensor(inputs, dtype=tf.float32)

50 | x = self.conv1(inputs)

51 | x = self.conv2(x)

52 | x = self.conv3(x)

53 | x = self.flat(x)

54 | x = self.fc1(x)

55 | x = self.fc2(x)

56 | return x

57 |

58 | def action_value(self, obs):

59 | q_values = self.predict(obs)

60 | best_action = np.argmax(q_values, axis=-1)

61 | return best_action[0], q_values[0]

62 |

63 |

64 | class DQNAgent:

65 | def __init__(self, model, target_model, env, buffer_size=1000, learning_rate=.001, epsilon=.1, gamma=.9,

66 | batch_size=4, target_update_iter=20, train_nums=100, start_learning=10):

67 | self.model = model

68 | self.target_model = target_model

69 | self.model.compile(optimizer=ko.Adam(), loss='mse')

70 |

71 | # parameters

72 | self.env = env # gym environment

73 | self.lr = learning_rate # learning step

74 | self.epsilon = epsilon # e-greedy when exploring

75 | self.gamma = gamma # discount rate

76 | self.batch_size = batch_size # batch_size

77 | self.target_update_iter = target_update_iter # target update period

78 | self.train_nums = train_nums # total training steps

79 | self.num_in_buffer = 0 # transitions num in buffer

80 | self.buffer_size = buffer_size # replay buffer size

81 | self.start_learning = start_learning # step to begin learning(save transitions before that step)

82 |

83 | # replay buffer

84 | self.obs = np.empty((self.buffer_size,) + greyscale(self.env.reset()).shape)

85 | self.actions = np.empty((self.buffer_size), dtype=np.int8)

86 | self.rewards = np.empty((self.buffer_size), dtype=np.float32)

87 | self.dones = np.empty((self.buffer_size), dtype=np.bool)

88 | self.next_states = np.empty((self.buffer_size,) + greyscale(self.env.reset()).shape)

89 | self.next_idx = 0

90 |

91 |

92 | # To test whether the model works

93 | def test(self, render=True):

94 | obs, done, ep_reward = self.env.reset(), False, 0

95 | while not done:

96 | obs = greyscale(obs)

97 | # Using [None] to extend its dimension [80, 80, 1] -> [1, 80, 80, 1]

98 | action, _ = self.model.action_value(obs[None])

99 | obs, reward, done, info = self.env.step(action)

100 | ep_reward += reward

101 | if render: # visually

102 | self.env.render()

103 | time.sleep(0.05)

104 | self.env.close()

105 | return ep_reward

106 |

107 | def train(self):

108 | obs = self.env.reset()

109 | obs = greyscale(obs)[None]

110 | for t in range(self.train_nums):

111 | best_action, q_values = self.model.action_value(obs)

112 | action = self.get_action(best_action)

113 | next_obs, reward, done, info = self.env.step(action)

114 | next_obs = greyscale(next_obs)[None]

115 | self.store_transition(obs, action, reward, next_obs, done)

116 | self.num_in_buffer += 1

117 |

118 | if t > self.start_learning: # start learning

119 | losses = self.train_step(t)

120 |

121 | if t % self.target_update_iter == 0:

122 | self.update_target_model()

123 |

124 | obs = next_obs

125 |

126 | def train_step(self, t):

127 | idxes = self.sample(self.batch_size)

128 | self.s_batch = self.obs[idxes]

129 | self.a_batch = self.actions[idxes]

130 | self.r_batch = self.rewards[idxes]

131 | self.ns_batch = self.next_states[idxes]

132 | self.done_batch = self.dones[idxes]

133 |

134 | target_q = self.r_batch + self.gamma * \

135 | np.amax(self.get_target_value(self.ns_batch), axis=1) * (1 - self.done_batch)

136 | target_f = self.model.predict(self.s_batch)

137 | for i, val in enumerate(self.a_batch):

138 | target_f[i][val] = target_q[i]

139 |

140 | losses = self.model.train_on_batch(self.s_batch, target_f)

141 |

142 | return losses

143 |

144 |

145 |

146 | # def loss_function(self, q, target_q):

147 | # n_actions = self.env.action_space.n

148 | # print('action in loss', self.a_batch)

149 | # actions = to_categorical(self.a_batch, n_actions)

150 | # q = np.sum(np.multiply(q, actions), axis=1)

151 | # self.loss = kls.mean_squared_error(q, target_q)

152 |

153 |

154 | def store_transition(self, obs, action, reward, next_state, done):

155 | n_idx = self.next_idx % self.buffer_size

156 | self.obs[n_idx] = obs

157 | self.actions[n_idx] = action

158 | self.rewards[n_idx] = reward

159 | self.next_states[n_idx] = next_state

160 | self.dones[n_idx] = done

161 | self.next_idx = (self.next_idx + 1) % self.buffer_size

162 |

163 | def sample(self, n):

164 | assert n < self.num_in_buffer

165 | res = []

166 | while True:

167 | num = np.random.randint(0, self.num_in_buffer)

168 | if num not in res:

169 | res.append(num)

170 | if len(res) == n:

171 | break

172 | return res

173 |

174 | def get_action(self, best_action):

175 | if np.random.rand() < self.epsilon:

176 | return self.env.action_space.sample()

177 | return best_action

178 |

179 | def update_target_model(self):

180 | print('update_target_mdoel')

181 | self.target_model.set_weights(self.model.get_weights())

182 |

183 | def get_target_value(self, obs):

184 | return self.target_model.predict(obs)

185 |

186 | if __name__ == '__main__':

187 | env = gym.make("Pong-v0")

188 | obs = env.reset()

189 | num_actions = env.action_space.n

190 | model = Model(num_actions)

191 | target_model = Model(num_actions)

192 | agent = DQNAgent(model, target_model, env)

193 | # reward = agent.test()

194 | agent.train()

195 |

--------------------------------------------------------------------------------

/01_dqn.py:

--------------------------------------------------------------------------------

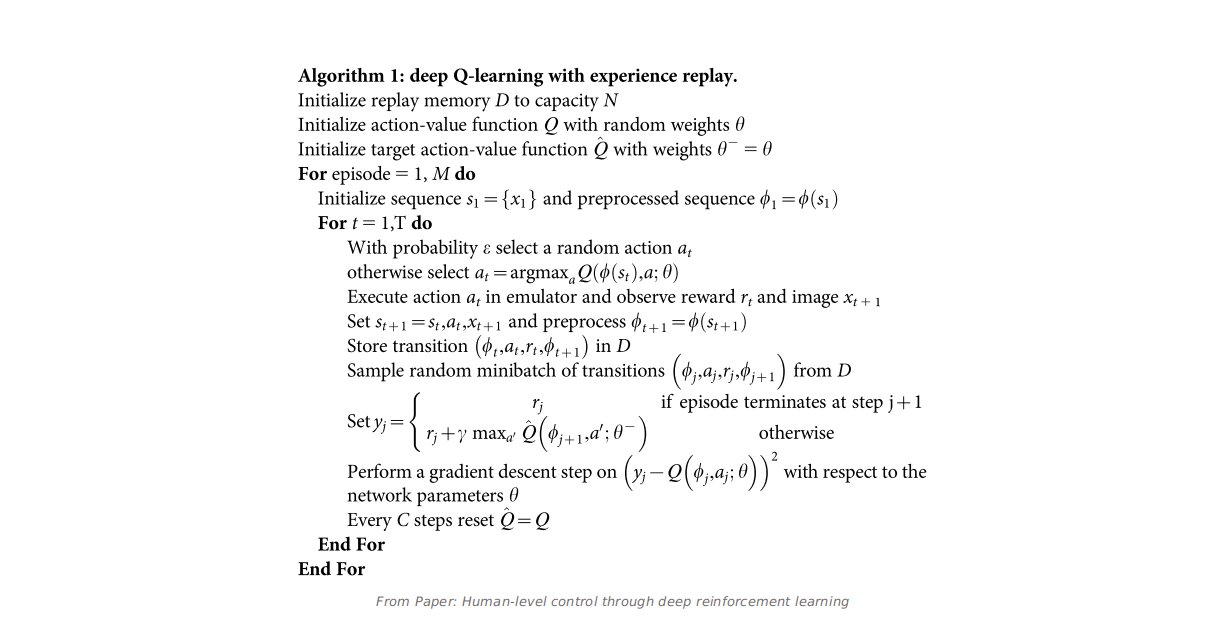

1 | """

2 | A simple version of Deep Q-Network(DQN) including the main tactics mentioned in DeepMind's original paper:

3 | - Experience Replay

4 | - Target Network

5 | To play CartPole-v0.

6 |

7 | > Note: DQN can only handle discrete-env which have a discrete action space, like up, down, left, right.

8 | As for the CartPole-v0 environment, its state(the agent's observation) is a 1-D vector not a 3-D image like

9 | Atari, so in that simple example, there is no need to use the convolutional layer, just fully-connected layer.

10 |

11 | Using:

12 | TensorFlow 2.0

13 | Numpy 1.16.2

14 | Gym 0.12.1

15 | """

16 |

17 | import tensorflow as tf

18 | print(tf.__version__)

19 |

20 | import gym

21 | import time

22 | import numpy as np

23 | import tensorflow.keras.layers as kl

24 | import tensorflow.keras.optimizers as ko

25 |

26 | np.random.seed(1)

27 | tf.random.set_seed(1)

28 |

29 | # Neural Network Model Defined at Here.

30 | class Model(tf.keras.Model):

31 | def __init__(self, num_actions):

32 | super().__init__(name='basic_dqn')

33 | # you can try different kernel initializer

34 | self.fc1 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

35 | self.fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

36 | self.logits = kl.Dense(num_actions, name='q_values')

37 |

38 | # forward propagation

39 | def call(self, inputs):

40 | x = self.fc1(inputs)

41 | x = self.fc2(x)

42 | x = self.logits(x)

43 | return x

44 |

45 | # a* = argmax_a' Q(s, a')

46 | def action_value(self, obs):

47 | q_values = self.predict(obs)

48 | best_action = np.argmax(q_values, axis=-1)

49 | return best_action[0], q_values[0]

50 |

51 | # To test whether the model works

52 | def test_model():

53 | env = gym.make('CartPole-v0')

54 | print('num_actions: ', env.action_space.n)

55 | model = Model(env.action_space.n)

56 |

57 | obs = env.reset()

58 | print('obs_shape: ', obs.shape)

59 |

60 | # tensorflow 2.0: no feed_dict or tf.Session() needed at all

61 | best_action, q_values = model.action_value(obs[None])

62 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

63 |

64 |

65 | class DQNAgent: # Deep Q-Network

66 | def __init__(self, model, target_model, env, buffer_size=100, learning_rate=.0015, epsilon=.1, epsilon_dacay=0.995,

67 | min_epsilon=.01, gamma=.95, batch_size=4, target_update_iter=400, train_nums=5000, start_learning=10):

68 | self.model = model

69 | self.target_model = target_model

70 | # print(id(self.model), id(self.target_model)) # to make sure the two models don't update simultaneously

71 | # gradient clip

72 | opt = ko.Adam(learning_rate=learning_rate, clipvalue=10.0) # do gradient clip

73 | self.model.compile(optimizer=opt, loss='mse')

74 |

75 | # parameters

76 | self.env = env # gym environment

77 | self.lr = learning_rate # learning step

78 | self.epsilon = epsilon # e-greedy when exploring

79 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

80 | self.min_epsilon = min_epsilon # minimum epsilon

81 | self.gamma = gamma # discount rate

82 | self.batch_size = batch_size # batch_size

83 | self.target_update_iter = target_update_iter # target network update period

84 | self.train_nums = train_nums # total training steps

85 | self.num_in_buffer = 0 # transition's num in buffer

86 | self.buffer_size = buffer_size # replay buffer size

87 | self.start_learning = start_learning # step to begin learning(no update before that step)

88 |

89 | # replay buffer params [(s, a, r, ns, done), ...]

90 | self.obs = np.empty((self.buffer_size,) + self.env.reset().shape)

91 | self.actions = np.empty((self.buffer_size), dtype=np.int8)

92 | self.rewards = np.empty((self.buffer_size), dtype=np.float32)

93 | self.dones = np.empty((self.buffer_size), dtype=np.bool)

94 | self.next_states = np.empty((self.buffer_size,) + self.env.reset().shape)

95 | self.next_idx = 0

96 |

97 | def train(self):

98 | # initialize the initial observation of the agent

99 | obs = self.env.reset()

100 | for t in range(1, self.train_nums):

101 | best_action, q_values = self.model.action_value(obs[None]) # input the obs to the network model

102 | action = self.get_action(best_action) # get the real action

103 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

104 | self.store_transition(obs, action, reward, next_obs, done) # store that transition into replay butter

105 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

106 |

107 | if t > self.start_learning: # start learning

108 | losses = self.train_step()

109 | if t % 1000 == 0:

110 | print('losses each 1000 steps: ', losses)

111 |

112 | if t % self.target_update_iter == 0:

113 | self.update_target_model()

114 | if done:

115 | obs = self.env.reset()

116 | else:

117 | obs = next_obs

118 |

119 | def train_step(self):

120 | idxes = self.sample(self.batch_size)

121 | s_batch = self.obs[idxes]

122 | a_batch = self.actions[idxes]

123 | r_batch = self.rewards[idxes]

124 | ns_batch = self.next_states[idxes]

125 | done_batch = self.dones[idxes]

126 |

127 | target_q = r_batch + self.gamma * np.amax(self.get_target_value(ns_batch), axis=1) * (1 - done_batch)

128 | target_f = self.model.predict(s_batch)

129 | for i, val in enumerate(a_batch):

130 | target_f[i][val] = target_q[i]

131 |

132 | losses = self.model.train_on_batch(s_batch, target_f)

133 |

134 | return losses

135 |

136 | def evalation(self, env, render=True):

137 | obs, done, ep_reward = env.reset(), False, 0

138 | # one episode until done

139 | while not done:

140 | action, q_values = self.model.action_value(obs[None]) # Using [None] to extend its dimension (4,) -> (1, 4)

141 | obs, reward, done, info = env.step(action)

142 | ep_reward += reward

143 | if render: # visually show

144 | env.render()

145 | time.sleep(0.05)

146 | env.close()

147 | return ep_reward

148 |

149 | # store transitions into replay butter

150 | def store_transition(self, obs, action, reward, next_state, done):

151 | n_idx = self.next_idx % self.buffer_size

152 | self.obs[n_idx] = obs

153 | self.actions[n_idx] = action

154 | self.rewards[n_idx] = reward

155 | self.next_states[n_idx] = next_state

156 | self.dones[n_idx] = done

157 | self.next_idx = (self.next_idx + 1) % self.buffer_size

158 |

159 | # sample n different indexes

160 | def sample(self, n):

161 | assert n < self.num_in_buffer

162 | res = []

163 | while True:

164 | num = np.random.randint(0, self.num_in_buffer)

165 | if num not in res:

166 | res.append(num)

167 | if len(res) == n:

168 | break

169 | return res

170 |

171 | # e-greedy

172 | def get_action(self, best_action):

173 | if np.random.rand() < self.epsilon:

174 | return self.env.action_space.sample()

175 | return best_action

176 |

177 | # assign the current network parameters to target network

178 | def update_target_model(self):

179 | self.target_model.set_weights(self.model.get_weights())

180 |

181 | def get_target_value(self, obs):

182 | return self.target_model.predict(obs)

183 |

184 | def e_decay(self):

185 | self.epsilon *= self.epsilon_decay

186 |

187 | if __name__ == '__main__':

188 | test_model()

189 |

190 | env = gym.make("CartPole-v0")

191 | num_actions = env.action_space.n

192 | model = Model(num_actions)

193 | target_model = Model(num_actions)

194 | agent = DQNAgent(model, target_model, env)

195 | # test before

196 | rewards_sum = agent.evalation(env)

197 | print("Before Training: %d out of 200" % rewards_sum) # 9 out of 200

198 |

199 | agent.train()

200 | # test after

201 | rewards_sum = agent.evalation(env)

202 | print("After Training: %d out of 200" % rewards_sum) # 200 out of 200

203 |

--------------------------------------------------------------------------------

/02_ddqn.py:

--------------------------------------------------------------------------------

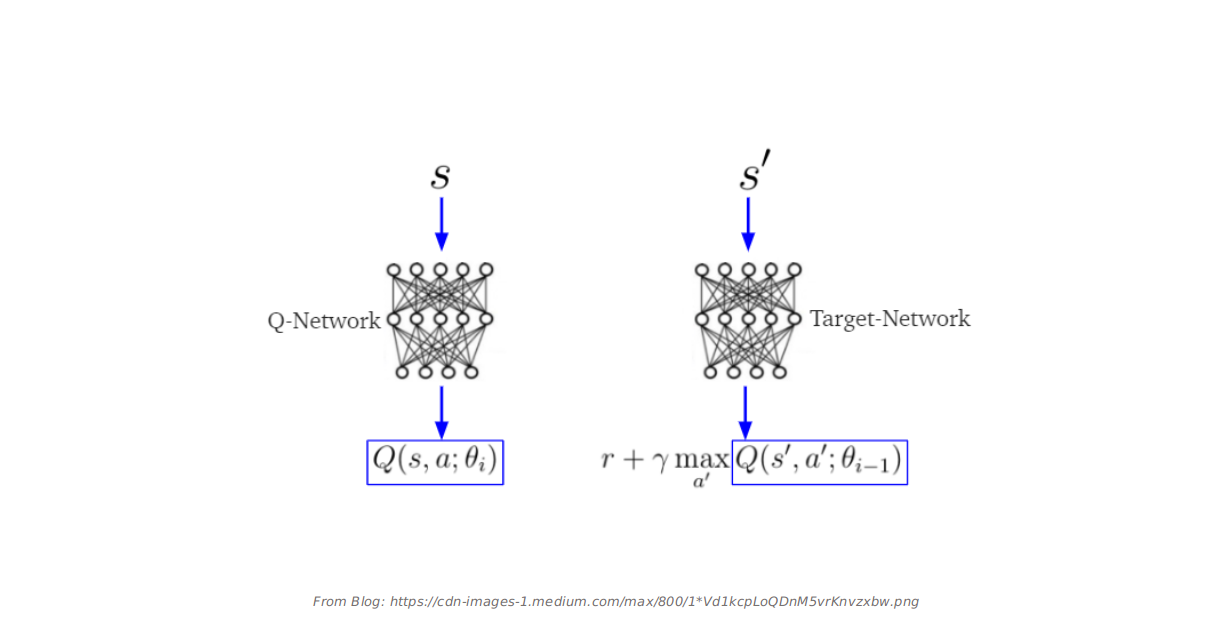

1 | """

2 | A simple version of Double Deep Q-Network(DDQN), minor change to DQN.

3 | To play CartPole-v0.

4 |

5 | Using:

6 | TensorFlow 2.0

7 | Numpy 1.16.2

8 | Gym 0.12.1

9 | """

10 |

11 | import tensorflow as tf

12 | print(tf.__version__)

13 |

14 | import gym

15 | import time

16 | import numpy as np

17 | import tensorflow.keras.layers as kl

18 | import tensorflow.keras.optimizers as ko

19 |

20 | np.random.seed(1)

21 | tf.random.set_seed(1)

22 |

23 | # Neural Network Model Defined at Here.

24 | class Model(tf.keras.Model):

25 | def __init__(self, num_actions):

26 | super().__init__(name='basic_ddqn')

27 | # you can try different kernel initializer

28 | self.fc1 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

29 | self.fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

30 | self.logits = kl.Dense(num_actions, name='q_values')

31 |

32 | # forward propagation

33 | def call(self, inputs):

34 | x = self.fc1(inputs)

35 | x = self.fc2(x)

36 | x = self.logits(x)

37 | return x

38 |

39 | # a* = argmax_a' Q(s, a')

40 | def action_value(self, obs):

41 | q_values = self.predict(obs)

42 | best_action = np.argmax(q_values, axis=-1)

43 | return best_action if best_action.shape[0] > 1 else best_action[0], q_values[0]

44 |

45 | # To test whether the model works

46 | def test_model():

47 | env = gym.make('CartPole-v0')

48 | print('num_actions: ', env.action_space.n)

49 | model = Model(env.action_space.n)

50 |

51 | obs = env.reset()

52 | print('obs_shape: ', obs.shape)

53 |

54 | # tensorflow 2.0: no feed_dict or tf.Session() needed at all

55 | best_action, q_values = model.action_value(obs[None])

56 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

57 |

58 |

59 | class DDQNAgent: # Double Deep Q-Network

60 | def __init__(self, model, target_model, env, buffer_size=200, learning_rate=.0015, epsilon=.1, epsilon_dacay=0.995,

61 | min_epsilon=.01, gamma=.9, batch_size=8, target_update_iter=200, train_nums=5000, start_learning=100):

62 | self.model = model

63 | self.target_model = target_model

64 | # gradient clip

65 | opt = ko.Adam(learning_rate=learning_rate, clipvalue=10.0)

66 | self.model.compile(optimizer=opt, loss='mse')

67 |

68 | # parameters

69 | self.env = env # gym environment

70 | self.lr = learning_rate # learning step

71 | self.epsilon = epsilon # e-greedy when exploring

72 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

73 | self.min_epsilon = min_epsilon # minimum epsilon

74 | self.gamma = gamma # discount rate

75 | self.batch_size = batch_size # batch_size

76 | self.target_update_iter = target_update_iter # target network update period

77 | self.train_nums = train_nums # total training steps

78 | self.num_in_buffer = 0 # transition's num in buffer

79 | self.buffer_size = buffer_size # replay buffer size

80 | self.start_learning = start_learning # step to begin learning(no update before that step)

81 |

82 | # replay buffer params [(s, a, r, ns, done), ...]

83 | self.obs = np.empty((self.buffer_size,) + self.env.reset().shape)

84 | self.actions = np.empty((self.buffer_size), dtype=np.int8)

85 | self.rewards = np.empty((self.buffer_size), dtype=np.float32)

86 | self.dones = np.empty((self.buffer_size), dtype=np.bool)

87 | self.next_states = np.empty((self.buffer_size,) + self.env.reset().shape)

88 | self.next_idx = 0

89 |

90 | def train(self):

91 | # initialize the initial observation of the agent

92 | obs = self.env.reset()

93 | for t in range(1, self.train_nums):

94 | best_action, q_values = self.model.action_value(obs[None]) # input the obs to the network model

95 | action = self.get_action(best_action) # get the real action

96 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

97 | self.store_transition(obs, action, reward, next_obs, done) # store that transition into replay butter

98 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

99 |

100 | if t > self.start_learning: # start learning

101 | losses = self.train_step()

102 | if t % 1000 == 0:

103 | print('losses each 1000 steps: ', losses)

104 |

105 | if t % self.target_update_iter == 0:

106 | self.update_target_model()

107 | if done:

108 | obs = self.env.reset()

109 | else:

110 | obs = next_obs

111 |

112 | def train_step(self):

113 | idxes = self.sample(self.batch_size)

114 | s_batch = self.obs[idxes]

115 | a_batch = self.actions[idxes]

116 | r_batch = self.rewards[idxes]

117 | ns_batch = self.next_states[idxes]

118 | done_batch = self.dones[idxes]

119 | # Double Q-Learning, decoupling selection and evaluation of the bootstrap action

120 | # selection with the current DQN model

121 | best_action_idxes, _ = self.model.action_value(ns_batch)

122 | target_q = self.get_target_value(ns_batch)

123 | # evaluation with the target DQN model

124 | target_q = r_batch + self.gamma * target_q[np.arange(target_q.shape[0]), best_action_idxes] * (1 - done_batch)

125 | target_f = self.model.predict(s_batch)

126 | for i, val in enumerate(a_batch):

127 | target_f[i][val] = target_q[i]

128 |

129 | losses = self.model.train_on_batch(s_batch, target_f)

130 |

131 | return losses

132 |

133 | def evalation(self, env, render=True):

134 | obs, done, ep_reward = env.reset(), False, 0

135 | # one episode until done

136 | while not done:

137 | action, q_values = self.model.action_value(obs[None]) # Using [None] to extend its dimension (4,) -> (1, 4)

138 | obs, reward, done, info = env.step(action)

139 | ep_reward += reward

140 | if render: # visually show

141 | env.render()

142 | time.sleep(0.05)

143 | env.close()

144 | return ep_reward

145 |

146 | # store transitions into replay butter

147 | def store_transition(self, obs, action, reward, next_state, done):

148 | n_idx = self.next_idx % self.buffer_size

149 | self.obs[n_idx] = obs

150 | self.actions[n_idx] = action

151 | self.rewards[n_idx] = reward

152 | self.next_states[n_idx] = next_state

153 | self.dones[n_idx] = done

154 | self.next_idx = (self.next_idx + 1) % self.buffer_size

155 |

156 | # sample n different indexes

157 | def sample(self, n):

158 | assert n < self.num_in_buffer

159 | res = []

160 | while True:

161 | num = np.random.randint(0, self.num_in_buffer)

162 | if num not in res:

163 | res.append(num)

164 | if len(res) == n:

165 | break

166 | return res

167 |

168 | # e-greedy

169 | def get_action(self, best_action):

170 | if np.random.rand() < self.epsilon:

171 | return self.env.action_space.sample()

172 | return best_action

173 |

174 | # assign the current network parameters to target network

175 | def update_target_model(self):

176 | self.target_model.set_weights(self.model.get_weights())

177 |

178 | def get_target_value(self, obs):

179 | return self.target_model.predict(obs)

180 |

181 | def e_decay(self):

182 | self.epsilon *= self.epsilon_decay

183 |

184 | if __name__ == '__main__':

185 | test_model()

186 |

187 | env = gym.make("CartPole-v0")

188 | num_actions = env.action_space.n

189 | model = Model(num_actions)

190 | target_model = Model(num_actions)

191 | agent = DDQNAgent(model, target_model, env)

192 | # test before

193 | rewards_sum = agent.evalation(env)

194 | print("Before Training: %d out of 200" % rewards_sum) # 9 out of 200

195 |

196 | agent.train()

197 | # test after

198 | # env = gym.wrappers.Monitor(env, './recording', force=True) # to record the process

199 | rewards_sum = agent.evalation(env)

200 | print("After Training: %d out of 200" % rewards_sum) # 200 out of 200

201 |

--------------------------------------------------------------------------------

/03_priority_replay.py:

--------------------------------------------------------------------------------

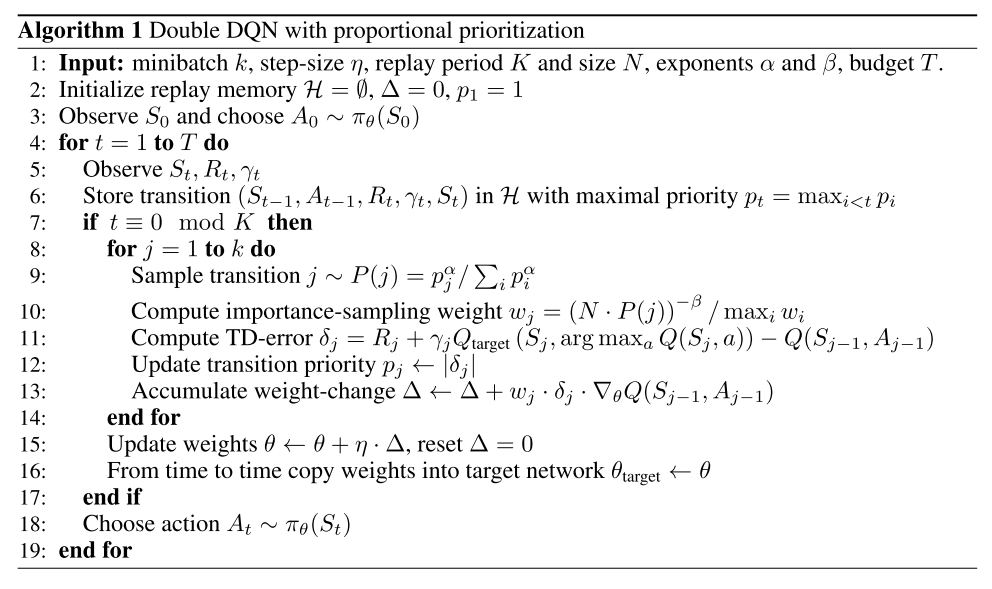

1 | """

2 | A simple version of Prioritized Experience Replay based on Double DQN.

3 | To play CartPole-v0.

4 |

5 | Using:

6 | TensorFlow 2.0

7 | Numpy 1.16.2

8 | Gym 0.12.1

9 | """

10 |

11 | import tensorflow as tf

12 | print(tf.__version__)

13 |

14 | import gym

15 | import time

16 | import numpy as np

17 | import tensorflow.keras.layers as kl

18 | import tensorflow.keras.optimizers as ko

19 |

20 | np.random.seed(1)

21 | tf.random.set_seed(1)

22 |

23 | # Neural Network Model Defined at Here.

24 | class Model(tf.keras.Model):

25 | def __init__(self, num_actions):

26 | super().__init__(name='basic_prddqn')

27 | # you can try different kernel initializer

28 | self.fc1 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

29 | self.fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

30 | self.logits = kl.Dense(num_actions, name='q_values')

31 |

32 | # forward propagation

33 | def call(self, inputs):

34 | x = self.fc1(inputs)

35 | x = self.fc2(x)

36 | x = self.logits(x)

37 | return x

38 |

39 | # a* = argmax_a' Q(s, a')

40 | def action_value(self, obs):

41 | q_values = self.predict(obs)

42 | best_action = np.argmax(q_values, axis=-1)

43 | return best_action if best_action.shape[0] > 1 else best_action[0], q_values[0]

44 |

45 |

46 | # To test whether the model works

47 | def test_model():

48 | env = gym.make('CartPole-v0')

49 | print('num_actions: ', env.action_space.n)

50 | model = Model(env.action_space.n)

51 |

52 | obs = env.reset()

53 | print('obs_shape: ', obs.shape)

54 |

55 | # tensorflow 2.0 eager mode: no feed_dict or tf.Session() needed at all

56 | best_action, q_values = model.action_value(obs[None])

57 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

58 |

59 |

60 | # replay buffer

61 | class SumTree:

62 | # little modified from https://github.com/jaromiru/AI-blog/blob/master/SumTree.py

63 | def __init__(self, capacity):

64 | self.capacity = capacity # N, the size of replay buffer, so as to the number of sum tree's leaves

65 | self.tree = np.zeros(2 * capacity - 1) # equation, to calculate the number of nodes in a sum tree

66 | self.transitions = np.empty(capacity, dtype=object)

67 | self.next_idx = 0

68 |

69 | @property

70 | def total_p(self):

71 | return self.tree[0]

72 |

73 | def add(self, priority, transition):

74 | idx = self.next_idx + self.capacity - 1

75 | self.transitions[self.next_idx] = transition

76 | self.update(idx, priority)

77 | self.next_idx = (self.next_idx + 1) % self.capacity

78 |

79 | def update(self, idx, priority):

80 | change = priority - self.tree[idx]

81 | self.tree[idx] = priority

82 | self._propagate(idx, change) # O(logn)

83 |

84 | def _propagate(self, idx, change):

85 | parent = (idx - 1) // 2

86 | self.tree[parent] += change

87 | if parent != 0:

88 | self._propagate(parent, change)

89 |

90 | def get_leaf(self, s):

91 | idx = self._retrieve(0, s) # from root

92 | trans_idx = idx - self.capacity + 1

93 | return idx, self.tree[idx], self.transitions[trans_idx]

94 |

95 | def _retrieve(self, idx, s):

96 | left = 2 * idx + 1

97 | right = left + 1

98 | if left >= len(self.tree):

99 | return idx

100 | if s <= self.tree[left]:

101 | return self._retrieve(left, s)

102 | else:

103 | return self._retrieve(right, s - self.tree[left])

104 |

105 |

106 | class PERAgent: # Double DQN with Proportional Prioritization

107 | def __init__(self, model, target_model, env, learning_rate=.0012, epsilon=.1, epsilon_dacay=0.995, min_epsilon=.01,

108 | gamma=.9, batch_size=8, target_update_iter=400, train_nums=5000, buffer_size=200, replay_period=20,

109 | alpha=0.4, beta=0.4, beta_increment_per_sample=0.001):

110 | self.model = model

111 | self.target_model = target_model

112 | # gradient clip

113 | opt = ko.Adam(learning_rate=learning_rate) # , clipvalue=10.0

114 | self.model.compile(optimizer=opt, loss=self._per_loss) # loss=self._per_loss

115 |

116 | # parameters

117 | self.env = env # gym environment

118 | self.lr = learning_rate # learning step

119 | self.epsilon = epsilon # e-greedy when exploring

120 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

121 | self.min_epsilon = min_epsilon # minimum epsilon

122 | self.gamma = gamma # discount rate

123 | self.batch_size = batch_size # minibatch k

124 | self.target_update_iter = target_update_iter # target network update period

125 | self.train_nums = train_nums # total training steps

126 |

127 | # replay buffer params [(s, a, r, ns, done), ...]

128 | self.b_obs = np.empty((self.batch_size,) + self.env.reset().shape)

129 | self.b_actions = np.empty(self.batch_size, dtype=np.int8)

130 | self.b_rewards = np.empty(self.batch_size, dtype=np.float32)

131 | self.b_next_states = np.empty((self.batch_size,) + self.env.reset().shape)

132 | self.b_dones = np.empty(self.batch_size, dtype=np.bool)

133 |

134 | self.replay_buffer = SumTree(buffer_size) # sum-tree data structure

135 | self.buffer_size = buffer_size # replay buffer size N

136 | self.replay_period = replay_period # replay period K

137 | self.alpha = alpha # priority parameter, alpha=[0, 0.4, 0.5, 0.6, 0.7, 0.8]

138 | self.beta = beta # importance sampling parameter, beta=[0, 0.4, 0.5, 0.6, 1]

139 | self.beta_increment_per_sample = beta_increment_per_sample

140 | self.num_in_buffer = 0 # total number of transitions stored in buffer

141 | self.margin = 0.01 # pi = |td_error| + margin

142 | self.p1 = 1 # initialize priority for the first transition

143 | # self.is_weight = np.empty((None, 1))

144 | self.is_weight = np.power(self.buffer_size, -self.beta) # because p1 == 1

145 | self.abs_error_upper = 1

146 |

147 | def _per_loss(self, y_target, y_pred):

148 | return tf.reduce_mean(self.is_weight * tf.math.squared_difference(y_target, y_pred))

149 |

150 | def train(self):

151 | # initialize the initial observation of the agent

152 | obs = self.env.reset()

153 | for t in range(1, self.train_nums):

154 | best_action, q_values = self.model.action_value(obs[None]) # input the obs to the network model

155 | action = self.get_action(best_action) # get the real action

156 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

157 | if t == 1:

158 | p = self.p1

159 | else:

160 | p = np.max(self.replay_buffer.tree[-self.replay_buffer.capacity:])

161 | self.store_transition(p, obs, action, reward, next_obs, done) # store that transition into replay butter

162 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

163 |

164 | if t > self.buffer_size:

165 | # if t % self.replay_period == 0: # transition sampling and update

166 | losses = self.train_step()

167 | if t % 1000 == 0:

168 | print('losses each 1000 steps: ', losses)

169 |

170 | if t % self.target_update_iter == 0:

171 | self.update_target_model()

172 | if done:

173 | obs = self.env.reset() # one episode end

174 | else:

175 | obs = next_obs

176 |

177 | def train_step(self):

178 | idxes, self.is_weight = self.sum_tree_sample(self.batch_size)

179 | # Double Q-Learning

180 | best_action_idxes, _ = self.model.action_value(self.b_next_states) # get actions through the current network

181 | target_q = self.get_target_value(self.b_next_states) # get target q-value through the target network

182 | # get td_targets of batch states

183 | td_target = self.b_rewards + \

184 | self.gamma * target_q[np.arange(target_q.shape[0]), best_action_idxes] * (1 - self.b_dones)

185 | predict_q = self.model.predict(self.b_obs)

186 | td_predict = predict_q[np.arange(predict_q.shape[0]), self.b_actions]

187 | abs_td_error = np.abs(td_target - td_predict) + self.margin

188 | clipped_error = np.where(abs_td_error < self.abs_error_upper, abs_td_error, self.abs_error_upper)

189 | ps = np.power(clipped_error, self.alpha)

190 | # priorities update

191 | for idx, p in zip(idxes, ps):

192 | self.replay_buffer.update(idx, p)

193 |

194 | for i, val in enumerate(self.b_actions):

195 | predict_q[i][val] = td_target[i]

196 |

197 | target_q = predict_q # just to change a more explicit name

198 | losses = self.model.train_on_batch(self.b_obs, target_q)

199 |

200 | return losses

201 |

202 | # proportional prioritization sampling

203 | def sum_tree_sample(self, k):

204 | idxes = []

205 | is_weights = np.empty((k, 1))

206 | self.beta = min(1., self.beta + self.beta_increment_per_sample)

207 | # calculate max_weight

208 | min_prob = np.min(self.replay_buffer.tree[-self.replay_buffer.capacity:]) / self.replay_buffer.total_p

209 | max_weight = np.power(self.buffer_size * min_prob, -self.beta)

210 | segment = self.replay_buffer.total_p / k

211 | for i in range(k):

212 | s = np.random.uniform(segment * i, segment * (i + 1))

213 | idx, p, t = self.replay_buffer.get_leaf(s)

214 | idxes.append(idx)

215 | self.b_obs[i], self.b_actions[i], self.b_rewards[i], self.b_next_states[i], self.b_dones[i] = t

216 | # P(j)

217 | sampling_probabilities = p / self.replay_buffer.total_p # where p = p ** self.alpha

218 | is_weights[i, 0] = np.power(self.buffer_size * sampling_probabilities, -self.beta) / max_weight

219 | return idxes, is_weights

220 |

221 | def evaluation(self, env, render=True):

222 | obs, done, ep_reward = env.reset(), False, 0

223 | # one episode until done

224 | while not done:

225 | action, q_values = self.model.action_value(obs[None]) # Using [None] to extend its dimension (4,) -> (1, 4)

226 | obs, reward, done, info = env.step(action)

227 | ep_reward += reward

228 | if render: # visually show

229 | env.render()

230 | time.sleep(0.05)

231 | env.close()

232 | return ep_reward

233 |

234 | # store transitions into replay butter, now sum tree.

235 | def store_transition(self, priority, obs, action, reward, next_state, done):

236 | transition = [obs, action, reward, next_state, done]

237 | self.replay_buffer.add(priority, transition)

238 |

239 | # rank-based prioritization sampling

240 | def rand_based_sample(self, k):

241 | pass

242 |

243 | # e-greedy

244 | def get_action(self, best_action):

245 | if np.random.rand() < self.epsilon:

246 | return self.env.action_space.sample()

247 | return best_action

248 |

249 | # assign the current network parameters to target network

250 | def update_target_model(self):

251 | self.target_model.set_weights(self.model.get_weights())

252 |

253 | def get_target_value(self, obs):

254 | return self.target_model.predict(obs)

255 |

256 | def e_decay(self):

257 | self.epsilon *= self.epsilon_decay

258 |

259 |

260 | if __name__ == '__main__':

261 | test_model()

262 |

263 | env = gym.make("CartPole-v0")

264 | num_actions = env.action_space.n

265 | model = Model(num_actions)

266 | target_model = Model(num_actions)

267 | agent = PERAgent(model, target_model, env)

268 | # test before

269 | rewards_sum = agent.evaluation(env)

270 | print("Before Training: %d out of 200" % rewards_sum) # 9 out of 200

271 |

272 | agent.train()

273 | # test after

274 | # env = gym.wrappers.Monitor(env, './recording', force=True)

275 | rewards_sum = agent.evaluation(env)

276 | print("After Training: %d out of 200" % rewards_sum) # 200 out of 200

277 |

--------------------------------------------------------------------------------

/04_dueling.py:

--------------------------------------------------------------------------------

1 | """

2 | A simple version of Dueling Double DQN with Prioritized Experience Replay. Just slightly modify the network architecture.

3 | To play CartPole-v0.

4 |

5 | Using:

6 | TensorFlow 2.0

7 | Numpy 1.16.2

8 | Gym 0.12.1

9 | """

10 |

11 | import tensorflow as tf

12 | print(tf.__version__)

13 |

14 | import gym

15 | import time

16 | import numpy as np

17 | import tensorflow.keras.layers as kl

18 | import tensorflow.keras.optimizers as ko

19 |

20 | np.random.seed(1)

21 | tf.random.set_seed(1)

22 |

23 | # Neural Network Model Defined at Here.

24 | class Model(tf.keras.Model):

25 | def __init__(self, num_actions):

26 | super().__init__(name='basic_prdddqn')

27 | # you can try different kernel initializer

28 | self.shared_fc1 = kl.Dense(16, activation='relu', kernel_initializer='he_uniform')

29 | self.shared_fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

30 | # there is a trick that combining the two streams' fc layer, then

31 | # the output of that layer is a |A| + 1 dimension tensor: |V|A1|A2| ... |An|

32 | # output[:, 0] is state value, output[:, 1:] is action advantage

33 | self.val_adv_fc = kl.Dense(num_actions + 1, activation='relu', kernel_initializer='he_uniform')

34 |

35 | # forward propagation

36 | def call(self, inputs):

37 | x = self.shared_fc1(inputs)

38 | x = self.shared_fc2(x)

39 | val_adv = self.val_adv_fc(x)

40 | # average version, you can also try the max version.

41 | outputs = tf.expand_dims(val_adv[:, 0], -1) + (val_adv[:, 1:] - tf.reduce_mean(val_adv[:, 1:], -1, keepdims=True))

42 | return outputs

43 |

44 | # a* = argmax_a' Q(s, a')

45 | def action_value(self, obs):

46 | q_values = self.predict(obs)

47 | best_action = np.argmax(q_values, axis=-1)

48 | return best_action if best_action.shape[0] > 1 else best_action[0], q_values[0]

49 |

50 |

51 | # To test whether the model works

52 | def test_model():

53 | env = gym.make('CartPole-v0')

54 | print('num_actions: ', env.action_space.n)

55 | model = Model(env.action_space.n)

56 |

57 | obs = env.reset()

58 | print('obs_shape: ', obs.shape)

59 |

60 | # tensorflow 2.0 eager mode: no feed_dict or tf.Session() needed at all

61 | best_action, q_values = model.action_value(obs[None])

62 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

63 |

64 |

65 | # replay buffer

66 | class SumTree:

67 | # little modified from https://github.com/jaromiru/AI-blog/blob/master/SumTree.py

68 | def __init__(self, capacity):

69 | self.capacity = capacity # N, the size of replay buffer, so as to the number of sum tree's leaves

70 | self.tree = np.zeros(2 * capacity - 1) # equation, to calculate the number of nodes in a sum tree

71 | self.transitions = np.empty(capacity, dtype=object)

72 | self.next_idx = 0

73 |

74 | @property

75 | def total_p(self):

76 | return self.tree[0]

77 |

78 | def add(self, priority, transition):

79 | idx = self.next_idx + self.capacity - 1

80 | self.transitions[self.next_idx] = transition

81 | self.update(idx, priority)

82 | self.next_idx = (self.next_idx + 1) % self.capacity

83 |

84 | def update(self, idx, priority):

85 | change = priority - self.tree[idx]

86 | self.tree[idx] = priority

87 | self._propagate(idx, change) # O(logn)

88 |

89 | def _propagate(self, idx, change):

90 | parent = (idx - 1) // 2

91 | self.tree[parent] += change

92 | if parent != 0:

93 | self._propagate(parent, change)

94 |

95 | def get_leaf(self, s):

96 | idx = self._retrieve(0, s) # from root

97 | trans_idx = idx - self.capacity + 1

98 | return idx, self.tree[idx], self.transitions[trans_idx]

99 |

100 | def _retrieve(self, idx, s):

101 | left = 2 * idx + 1

102 | right = left + 1

103 | if left >= len(self.tree):

104 | return idx

105 | if s <= self.tree[left]:

106 | return self._retrieve(left, s)

107 | else:

108 | return self._retrieve(right, s - self.tree[left])

109 |

110 |

111 | class DDDQNAgent: # Dueling Double DQN with Proportional Prioritization

112 | def __init__(self, model, target_model, env, learning_rate=.001, epsilon=.1, epsilon_dacay=0.995, min_epsilon=.01,

113 | gamma=.9, batch_size=8, target_update_iter=400, train_nums=5000, buffer_size=300, replay_period=20,

114 | alpha=0.4, beta=0.4, beta_increment_per_sample=0.001):

115 | self.model = model

116 | self.target_model = target_model

117 | # gradient clip

118 | opt = ko.Adam(learning_rate=learning_rate, clipvalue=10.0) #, clipvalue=10.0

119 | self.model.compile(optimizer=opt, loss=self._per_loss) #loss=self._per_loss

120 |

121 | # parameters

122 | self.env = env # gym environment

123 | self.lr = learning_rate # learning step

124 | self.epsilon = epsilon # e-greedy when exploring

125 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

126 | self.min_epsilon = min_epsilon # minimum epsilon

127 | self.gamma = gamma # discount rate

128 | self.batch_size = batch_size # minibatch k

129 | self.target_update_iter = target_update_iter # target network update period

130 | self.train_nums = train_nums # total training steps

131 |

132 | # replay buffer params [(s, a, r, ns, done), ...]

133 | self.b_obs = np.empty((self.batch_size,) + self.env.reset().shape)

134 | self.b_actions = np.empty(self.batch_size, dtype=np.int8)

135 | self.b_rewards = np.empty(self.batch_size, dtype=np.float32)

136 | self.b_next_states = np.empty((self.batch_size,) + self.env.reset().shape)

137 | self.b_dones = np.empty(self.batch_size, dtype=np.bool)

138 |

139 | self.replay_buffer = SumTree(buffer_size) # sum-tree data structure

140 | self.buffer_size = buffer_size # replay buffer size N

141 | self.replay_period = replay_period # replay period K

142 | self.alpha = alpha # priority parameter, alpha=[0, 0.4, 0.5, 0.6, 0.7, 0.8]

143 | self.beta = beta # importance sampling parameter, beta=[0, 0.4, 0.5, 0.6, 1]

144 | self.beta_increment_per_sample = beta_increment_per_sample

145 | self.num_in_buffer = 0 # total number of transitions stored in buffer

146 | self.margin = 0.01 # pi = |td_error| + margin

147 | self.p1 = 1 # initialize priority for the first transition

148 | # self.is_weight = np.empty((None, 1))

149 | self.is_weight = np.power(self.buffer_size, -self.beta) # because p1 == 1

150 | self.abs_error_upper = 1

151 |

152 | def _per_loss(self, y_target, y_pred):

153 | return tf.reduce_mean(self.is_weight * tf.math.squared_difference(y_target, y_pred))

154 |

155 | def train(self):

156 | # initialize the initial observation of the agent

157 | obs = self.env.reset()

158 | for t in range(1, self.train_nums):

159 | best_action, q_values = self.model.action_value(obs[None]) # input the obs to the network model

160 | action = self.get_action(best_action) # get the real action

161 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

162 | if t == 1:

163 | p = self.p1

164 | else:

165 | p = np.max(self.replay_buffer.tree[-self.replay_buffer.capacity:])

166 | self.store_transition(p, obs, action, reward, next_obs, done) # store that transition into replay butter

167 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

168 |

169 | if t > self.buffer_size:

170 | # if t % self.replay_period == 0: # transition sampling and update

171 | losses = self.train_step()

172 | if t % 1000 == 0:

173 | print('losses each 1000 steps: ', losses)

174 |

175 | if t % self.target_update_iter == 0:

176 | self.update_target_model()

177 | if done:

178 | obs = self.env.reset() # one episode end

179 | else:

180 | obs = next_obs

181 |

182 | def train_step(self):

183 | idxes, self.is_weight = self.sum_tree_sample(self.batch_size)

184 | # Double Q-Learning

185 | best_action_idxes, _ = self.model.action_value(self.b_next_states) # get actions through the current network

186 | target_q = self.get_target_value(self.b_next_states) # get target q-value through the target network

187 | # get td_targets of batch states

188 | td_target = self.b_rewards + \

189 | self.gamma * target_q[np.arange(target_q.shape[0]), best_action_idxes] * (1 - self.b_dones)

190 | predict_q = self.model.predict(self.b_obs)

191 | td_predict = predict_q[np.arange(predict_q.shape[0]), self.b_actions]

192 | abs_td_error = np.abs(td_target - td_predict) + self.margin

193 | clipped_error = np.where(abs_td_error < self.abs_error_upper, abs_td_error, self.abs_error_upper)

194 | ps = np.power(clipped_error, self.alpha)

195 | # priorities update

196 | for idx, p in zip(idxes, ps):

197 | self.replay_buffer.update(idx, p)

198 |

199 | for i, val in enumerate(self.b_actions):

200 | predict_q[i][val] = td_target[i]

201 |

202 | target_q = predict_q # just to change a more explicit name

203 | losses = self.model.train_on_batch(self.b_obs, target_q)

204 |

205 | return losses

206 |

207 | # proportional prioritization sampling

208 | def sum_tree_sample(self, k):

209 | idxes = []

210 | is_weights = np.empty((k, 1))

211 | self.beta = min(1., self.beta + self.beta_increment_per_sample)

212 | # calculate max_weight

213 | min_prob = np.min(self.replay_buffer.tree[-self.replay_buffer.capacity:]) / self.replay_buffer.total_p

214 | max_weight = np.power(self.buffer_size * min_prob, -self.beta)

215 | segment = self.replay_buffer.total_p / k

216 | for i in range(k):

217 | s = np.random.uniform(segment * i, segment * (i + 1))

218 | idx, p, t = self.replay_buffer.get_leaf(s)

219 | idxes.append(idx)

220 | self.b_obs[i], self.b_actions[i], self.b_rewards[i], self.b_next_states[i], self.b_dones[i] = t

221 | # P(j)

222 | sampling_probabilities = p / self.replay_buffer.total_p # where p = p ** self.alpha

223 | is_weights[i, 0] = np.power(self.buffer_size * sampling_probabilities, -self.beta) / max_weight

224 | return idxes, is_weights

225 |

226 | def evaluation(self, env, render=True):

227 | obs, done, ep_reward = env.reset(), False, 0

228 | # one episode until done

229 | while not done:

230 | action, q_values = self.model.action_value(obs[None]) # Using [None] to extend its dimension (4,) -> (1, 4)

231 | obs, reward, done, info = env.step(action)

232 | ep_reward += reward

233 | if render: # visually show

234 | env.render()

235 | time.sleep(0.05)

236 | env.close()

237 | return ep_reward

238 |

239 | # store transitions into replay butter, now sum tree.

240 | def store_transition(self, priority, obs, action, reward, next_state, done):

241 | transition = [obs, action, reward, next_state, done]

242 | self.replay_buffer.add(priority, transition)

243 |

244 | # rank-based prioritization sampling

245 | def rand_based_sample(self, k):

246 | pass

247 |

248 | # e-greedy

249 | def get_action(self, best_action):

250 | if np.random.rand() < self.epsilon:

251 | return self.env.action_space.sample()

252 | return best_action

253 |

254 | # assign the current network parameters to target network

255 | def update_target_model(self):

256 | self.target_model.set_weights(self.model.get_weights())

257 |

258 | def get_target_value(self, obs):

259 | return self.target_model.predict(obs)

260 |

261 | def e_decay(self):

262 | self.epsilon *= self.epsilon_decay

263 |

264 |

265 | if __name__ == '__main__':

266 | test_model()

267 |

268 | env = gym.make("CartPole-v0")

269 | num_actions = env.action_space.n

270 | model = Model(num_actions)

271 | target_model = Model(num_actions)

272 | agent = DDDQNAgent(model, target_model, env)

273 | # test before

274 | rewards_sum = agent.evaluation(env)

275 | print("Before Training: %d out of 200" % rewards_sum) # 9 out of 200

276 |

277 | agent.train()

278 | # test after

279 | # env = gym.wrappers.Monitor(env, './recording', force=True)

280 | rewards_sum = agent.evaluation(env)

281 | print("After Training: %d out of 200" % rewards_sum) # 200 out of 200

282 |

--------------------------------------------------------------------------------

/05_multistep_td.py:

--------------------------------------------------------------------------------

1 | """

2 | A simple version of Multi-Step TD Learning Based on Dueling Double DQN with Prioritized Experience Replay.

3 | To play CartPole-v0.

4 |

5 | Using:

6 | TensorFlow 2.0

7 | Numpy 1.16.2

8 | Gym 0.12.1

9 | """

10 |

11 | import tensorflow as tf

12 | print(tf.__version__)

13 |

14 | import gym

15 | import time

16 | import numpy as np

17 | import tensorflow.keras.layers as kl

18 | import tensorflow.keras.optimizers as ko

19 |

20 | from collections import deque

21 |

22 | np.random.seed(1)

23 | tf.random.set_seed(1)

24 |

25 | # Neural Network Model Defined at Here.

26 | class Model(tf.keras.Model):

27 | def __init__(self, num_actions):

28 | super().__init__(name='basic_nstepTD')

29 | # you can try different kernel initializer

30 | self.shared_fc1 = kl.Dense(16, activation='relu', kernel_initializer='he_uniform')

31 | self.shared_fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

32 | # there is a trick that combining the two streams' fc layer, then

33 | # the output of that layer is a |A| + 1 dimension tensor: |V|A1|A2| ... |An|

34 | # output[:, 0] is state value, output[:, 1:] is action advantage

35 | self.val_adv_fc = kl.Dense(num_actions + 1, activation='relu', kernel_initializer='he_uniform')

36 |

37 | # forward propagation

38 | def call(self, inputs):

39 | x = self.shared_fc1(inputs)

40 | x = self.shared_fc2(x)

41 | val_adv = self.val_adv_fc(x)

42 | # average version, you can also try the max version.

43 | outputs = tf.expand_dims(val_adv[:, 0], -1) + (val_adv[:, 1:] - tf.reduce_mean(val_adv[:, 1:], -1, keepdims=True))

44 | return outputs

45 |

46 | # a* = argmax_a' Q(s, a')

47 | def action_value(self, obs):

48 | q_values = self.predict(obs)

49 | best_action = np.argmax(q_values, axis=-1)

50 | return best_action if best_action.shape[0] > 1 else best_action[0], q_values[0]

51 |

52 |

53 | # To test whether the model works

54 | def test_model():

55 | env = gym.make('CartPole-v0')

56 | print('num_actions: ', env.action_space.n)

57 | model = Model(env.action_space.n)

58 |

59 | obs = env.reset()

60 | print('obs_shape: ', obs.shape)

61 |

62 | # tensorflow 2.0 eager mode: no feed_dict or tf.Session() needed at all

63 | best_action, q_values = model.action_value(obs[None])

64 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

65 |

66 |

67 | # replay buffer

68 | class SumTree:

69 | # little modified from https://github.com/jaromiru/AI-blog/blob/master/SumTree.py

70 | def __init__(self, capacity):

71 | self.capacity = capacity # N, the size of replay buffer, so as to the number of sum tree's leaves

72 | self.tree = np.zeros(2 * capacity - 1) # equation, to calculate the number of nodes in a sum tree

73 | self.transitions = np.empty(capacity, dtype=object)

74 | self.next_idx = 0

75 |

76 | @property

77 | def total_p(self):

78 | return self.tree[0]

79 |

80 | def add(self, priority, transition):

81 | idx = self.next_idx + self.capacity - 1

82 | self.transitions[self.next_idx] = transition

83 | self.update(idx, priority)

84 | self.next_idx = (self.next_idx + 1) % self.capacity

85 |

86 | def update(self, idx, priority):

87 | change = priority - self.tree[idx]

88 | self.tree[idx] = priority

89 | self._propagate(idx, change) # O(logn)

90 |

91 | def _propagate(self, idx, change):

92 | parent = (idx - 1) // 2

93 | self.tree[parent] += change

94 | if parent != 0:

95 | self._propagate(parent, change)

96 |

97 | def get_leaf(self, s):

98 | idx = self._retrieve(0, s) # from root

99 | trans_idx = idx - self.capacity + 1

100 | return idx, self.tree[idx], self.transitions[trans_idx]

101 |

102 | def _retrieve(self, idx, s):

103 | left = 2 * idx + 1

104 | right = left + 1

105 | if left >= len(self.tree):

106 | return idx

107 | if s <= self.tree[left]:

108 | return self._retrieve(left, s)

109 | else:

110 | return self._retrieve(right, s - self.tree[left])

111 |

112 |

113 | class MSTDAgent: # Multi-Step TD Learning Based on Dueling Double DQN with Proportional Prioritization

114 | def __init__(self, model, target_model, env, learning_rate=.0008, epsilon=.1, epsilon_dacay=0.995, min_epsilon=.01,

115 | gamma=.9, batch_size=8, target_update_iter=400, train_nums=5000, buffer_size=300, replay_period=20,

116 | alpha=0.4, beta=0.4, beta_increment_per_sample=0.001, n_step=3):

117 | self.model = model

118 | self.target_model = target_model

119 | # gradient clip

120 | opt = ko.Adam(learning_rate=learning_rate, clipvalue=10.0) # , clipvalue=10.0

121 | self.model.compile(optimizer=opt, loss=self._per_loss) # loss=self._per_loss

122 |

123 | # parameters

124 | self.env = env # gym environment

125 | self.lr = learning_rate # learning step

126 | self.epsilon = epsilon # e-greedy when exploring

127 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

128 | self.min_epsilon = min_epsilon # minimum epsilon

129 | self.gamma = gamma # discount rate

130 | self.batch_size = batch_size # minibatch k

131 | self.target_update_iter = target_update_iter # target network update period

132 | self.train_nums = train_nums # total training steps

133 |

134 | # replay buffer params [(s, a, r, ns, done), ...]

135 | self.b_obs = np.empty((self.batch_size,) + self.env.reset().shape)

136 | self.b_actions = np.empty(self.batch_size, dtype=np.int8)

137 | self.b_rewards = np.empty(self.batch_size, dtype=np.float32)

138 | self.b_next_states = np.empty((self.batch_size,) + self.env.reset().shape)

139 | self.b_dones = np.empty(self.batch_size, dtype=np.bool)

140 |

141 | self.replay_buffer = SumTree(buffer_size) # sum-tree data structure

142 | self.buffer_size = buffer_size # replay buffer size N

143 | self.replay_period = replay_period # replay period K

144 | self.alpha = alpha # priority parameter, alpha=[0, 0.4, 0.5, 0.6, 0.7, 0.8]

145 | self.beta = beta # importance sampling parameter, beta=[0, 0.4, 0.5, 0.6, 1]

146 | self.beta_increment_per_sample = beta_increment_per_sample

147 | self.num_in_buffer = 0 # total number of transitions stored in buffer

148 | self.margin = 0.01 # pi = |td_error| + margin

149 | self.p1 = 1 # initialize priority for the first transition

150 | # self.is_weight = np.empty((None, 1))

151 | self.is_weight = np.power(self.buffer_size, -self.beta) # because p1 == 1

152 | self.abs_error_upper = 1

153 |

154 | # multi step TD learning

155 | self.n_step = n_step

156 | self.n_step_buffer = deque(maxlen=n_step)

157 |

158 | def _per_loss(self, y_target, y_pred):

159 | return tf.reduce_mean(self.is_weight * tf.math.squared_difference(y_target, y_pred))

160 |

161 | def train(self):

162 | # initialize the initial observation of the agent

163 | obs = self.env.reset()

164 | for t in range(1, self.train_nums):

165 | best_action, q_values = self.model.action_value(obs[None]) # input the obs to the network model

166 | action = self.get_action(best_action) # get the real action

167 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

168 |

169 | # n-step replay buffer

170 | # minor modified from github.com/medipixel/rl_algorithms/blob/master/algorithms/common/helper_functions.py

171 | temp_transition = [obs, action, reward, next_obs, done]

172 | self.n_step_buffer.append(temp_transition)

173 | if len(self.n_step_buffer) == self.n_step: # fill the n-step buffer for the first translation

174 | # add a multi step transition

175 | reward, next_obs, done = self.get_n_step_info(self.n_step_buffer, self.gamma)

176 | obs, action = self.n_step_buffer[0][:2]

177 |

178 | if t == 1:

179 | p = self.p1

180 | else:

181 | p = np.max(self.replay_buffer.tree[-self.replay_buffer.capacity:])

182 | self.store_transition(p, obs, action, reward, next_obs, done) # store that transition into replay butter

183 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

184 |

185 | if t > self.buffer_size:

186 | # if t % self.replay_period == 0: # transition sampling and update

187 | losses = self.train_step()

188 | if t % 1000 == 0:

189 | print('losses each 1000 steps: ', losses)

190 |

191 | if t % self.target_update_iter == 0:

192 | self.update_target_model()

193 | if done:

194 | obs = self.env.reset() # one episode end

195 | else:

196 | obs = next_obs

197 |

198 | def train_step(self):

199 | idxes, self.is_weight = self.sum_tree_sample(self.batch_size)

200 | assert len(idxes) == self.b_next_states.shape[0]

201 |

202 | # Double Q-Learning

203 | best_action_idxes, _ = self.model.action_value(self.b_next_states) # get actions through the current network

204 | target_q = self.get_target_value(self.b_next_states) # get target q-value through the target network

205 | # get td_targets of batch states

206 | td_target = self.b_rewards + \

207 | self.gamma * target_q[np.arange(target_q.shape[0]), best_action_idxes] * (1 - self.b_dones)

208 | predict_q = self.model.predict(self.b_obs)

209 | td_predict = predict_q[np.arange(predict_q.shape[0]), self.b_actions]

210 | abs_td_error = np.abs(td_target - td_predict) + self.margin

211 | clipped_error = np.where(abs_td_error < self.abs_error_upper, abs_td_error, self.abs_error_upper)

212 | ps = np.power(clipped_error, self.alpha)

213 | # priorities update

214 | for idx, p in zip(idxes, ps):

215 | self.replay_buffer.update(idx, p)

216 |

217 | for i, val in enumerate(self.b_actions):

218 | predict_q[i][val] = td_target[i]

219 |

220 | target_q = predict_q # just to change a more explicit name

221 | losses = self.model.train_on_batch(self.b_obs, target_q)

222 |

223 | return losses

224 |

225 | # proportional prioritization sampling

226 | def sum_tree_sample(self, k):

227 | idxes = []

228 | is_weights = np.empty((k, 1))

229 | self.beta = min(1., self.beta + self.beta_increment_per_sample)

230 | # calculate max_weight

231 | min_prob = np.min(self.replay_buffer.tree[-self.replay_buffer.capacity:]) / self.replay_buffer.total_p

232 | max_weight = np.power(self.buffer_size * min_prob, -self.beta)

233 | segment = self.replay_buffer.total_p / k

234 | for i in range(k):

235 | s = np.random.uniform(segment * i, segment * (i + 1))

236 | idx, p, t = self.replay_buffer.get_leaf(s)

237 | idxes.append(idx)

238 | self.b_obs[i], self.b_actions[i], self.b_rewards[i], self.b_next_states[i], self.b_dones[i] = t

239 | # P(j)

240 | sampling_probabilities = p / self.replay_buffer.total_p # where p = p ** self.alpha

241 | is_weights[i, 0] = np.power(self.buffer_size * sampling_probabilities, -self.beta) / max_weight

242 | return idxes, is_weights

243 |

244 | def evaluation(self, env, render=True):

245 | obs, done, ep_reward = env.reset(), False, 0

246 | # one episode until done

247 | while not done:

248 | action, q_values = self.model.action_value(obs[None]) # Using [None] to extend its dimension (4,) -> (1, 4)

249 | obs, reward, done, info = env.step(action)

250 | ep_reward += reward

251 | if render: # visually show

252 | env.render()

253 | time.sleep(0.05)

254 | env.close()

255 | return ep_reward

256 |

257 | # store transitions into replay butter, now sum tree.

258 | def store_transition(self, priority, obs, action, reward, next_state, done):

259 | transition = [obs, action, reward, next_state, done]

260 | self.replay_buffer.add(priority, transition)

261 |

262 | # minor modified from https://github.com/medipixel/rl_algorithms/blob/master/algorithms/common/helper_functions.py

263 | def get_n_step_info(self, n_step_buffer, gamma):

264 | """Return n step reward, next state, and done."""

265 | # info of the last transition

266 | reward, next_state, done = n_step_buffer[-1][-3:]

267 |

268 | for transition in reversed(list(n_step_buffer)[:-1]):

269 | r, n_s, d = transition[-3:]

270 |

271 | reward = r + gamma * reward * (1 - d)

272 | next_state, done = (n_s, d) if d else (next_state, done)

273 |

274 | return reward, next_state, done

275 |

276 |

277 | # rank-based prioritization sampling

278 | def rand_based_sample(self, k):

279 | pass

280 |

281 | # e-greedy

282 | def get_action(self, best_action):

283 | if np.random.rand() < self.epsilon:

284 | return self.env.action_space.sample()

285 | return best_action

286 |

287 | # assign the current network parameters to target network

288 | def update_target_model(self):

289 | self.target_model.set_weights(self.model.get_weights())

290 |

291 | def get_target_value(self, obs):

292 | return self.target_model.predict(obs)

293 |

294 | def e_decay(self):

295 | self.epsilon *= self.epsilon_decay

296 |

297 |

298 | if __name__ == '__main__':

299 | test_model()

300 |

301 | env = gym.make("CartPole-v0")

302 | num_actions = env.action_space.n

303 | model = Model(num_actions)

304 | target_model = Model(num_actions)

305 | agent = MSTDAgent(model, target_model, env)

306 | # test before

307 | rewards_sum = agent.evaluation(env)

308 | print("Before Training: %d out of 200" % rewards_sum) # 9 out of 200

309 |

310 | agent.train()

311 | # test after

312 | # env = gym.wrappers.Monitor(env, './recording', force=True)

313 | rewards_sum = agent.evaluation(env)

314 | print("After Training: %d out of 200" % rewards_sum) # 200 out of 200

315 |

--------------------------------------------------------------------------------

/06_distributional_rl.py:

--------------------------------------------------------------------------------

1 | """

2 | A simple version of Distributional RL Based on Multi-Step Dueling Double DQN with Prioritized Experience Replay.

3 | To play CartPole-v0.

4 |

5 | Using:

6 | TensorFlow 2.0

7 | Numpy 1.16.2

8 | Gym 0.12.1

9 | """

10 |

11 | import tensorflow as tf

12 | print(tf.__version__)

13 |

14 | import gym

15 | import time

16 | import numpy as np

17 | import tensorflow.keras.layers as kl

18 | import tensorflow.keras.optimizers as ko

19 |

20 | from collections import deque

21 |

22 | np.random.seed(1)

23 | tf.random.set_seed(1)

24 |

25 | # Neural Network Model Defined at Here.

26 | class Model(tf.keras.Model):

27 | def __init__(self, num_actions, num_atoms):

28 | super().__init__(name='basic_distributional_rl')

29 | self.num_actions = num_actions

30 | self.num_atoms = num_atoms

31 | # you can try different kernel initializer

32 | self.shared_fc1 = kl.Dense(16, activation='relu', kernel_initializer='he_uniform')

33 | self.shared_fc2 = kl.Dense(32, activation='relu', kernel_initializer='he_uniform')

34 | # still use the dueling network architecture, but now:

35 | # V | v_0| v_1| ... | v_N-1|

36 | # A |a1_0|a1_1| ... |a1_N-1|

37 | # |a2_0|a2_1| ... |a2_N-1|

38 | # . . .

39 | # . . .

40 | # |an_0|an_1| ... |an_N-1|

41 | # the output of that layer is a (|A| + 1) * N dimension tensor

42 | # each column is a |A| + 1 dimension tensor for each atom.

43 | self.val_adv_fc = kl.Dense((num_actions + 1) * num_atoms, activation='relu', kernel_initializer='he_uniform')

44 |

45 | # forward propagation

46 | def call(self, inputs):

47 | x = self.shared_fc1(inputs)

48 | x = self.shared_fc2(x)

49 | val_adv = self.val_adv_fc(x)

50 | # average version, you can also try the max version.

51 | val_adv = tf.reshape(val_adv, [-1, self.num_actions + 1, self.num_atoms])

52 | outputs = tf.expand_dims(val_adv[:, 0], 1) + (val_adv[:, 1:] - tf.reduce_mean(val_adv[:, 1:], -1, keepdims=True))

53 | # you may need tf.nn.log_softmax()

54 | outputs = tf.nn.softmax(outputs, axis=-1)

55 |

56 | return outputs

57 |

58 | # a* = argmax_a' Q(s, a')

59 | def action_value(self, obs, support_z):

60 | r_distribute = self.predict(obs)

61 | q_values = np.sum(r_distribute * support_z, axis=-1)

62 | best_action = np.argmax(q_values, axis=-1)

63 | return best_action if best_action.shape[0] > 1 else best_action[0], q_values[0]

64 |

65 |

66 | # To test whether the model works

67 | def test_model():

68 | num_atoms = 11

69 | support_z = np.linspace(-5.0, 5.0, num_atoms)

70 | env = gym.make('CartPole-v0')

71 | print('num_actions: ', env.action_space.n)

72 | model = Model(env.action_space.n, num_atoms)

73 |

74 | obs = env.reset()

75 | print('obs_shape: ', obs.shape)

76 |

77 | # tensorflow 2.0 eager mode: no feed_dict or tf.Session() needed at all

78 | best_action, q_values = model.action_value(obs[None], support_z)

79 | print('res of test model: ', best_action, q_values) # 0 [ 0.00896799 -0.02111824]

80 |

81 |

82 | # replay buffer

83 | class SumTree:

84 | # little modified from https://github.com/jaromiru/AI-blog/blob/master/SumTree.py

85 | def __init__(self, capacity):

86 | self.capacity = capacity # N, the size of replay buffer, so as to the number of sum tree's leaves

87 | self.tree = np.zeros(2 * capacity - 1) # equation, to calculate the number of nodes in a sum tree

88 | self.transitions = np.empty(capacity, dtype=object)

89 | self.next_idx = 0

90 |

91 | @property

92 | def total_p(self):

93 | return self.tree[0]

94 |

95 | def add(self, priority, transition):

96 | idx = self.next_idx + self.capacity - 1

97 | self.transitions[self.next_idx] = transition

98 | self.update(idx, priority)

99 | self.next_idx = (self.next_idx + 1) % self.capacity

100 |

101 | def update(self, idx, priority):

102 | change = priority - self.tree[idx]

103 | self.tree[idx] = priority

104 | self._propagate(idx, change) # O(logn)

105 |

106 | def _propagate(self, idx, change):

107 | parent = (idx - 1) // 2

108 | self.tree[parent] += change

109 | if parent != 0:

110 | self._propagate(parent, change)

111 |

112 | def get_leaf(self, s):

113 | idx = self._retrieve(0, s) # from root

114 | trans_idx = idx - self.capacity + 1

115 | return idx, self.tree[idx], self.transitions[trans_idx]

116 |

117 | def _retrieve(self, idx, s):

118 | left = 2 * idx + 1

119 | right = left + 1

120 | if left >= len(self.tree):

121 | return idx

122 | if s <= self.tree[left]:

123 | return self._retrieve(left, s)

124 | else:

125 | return self._retrieve(right, s - self.tree[left])

126 |

127 |

128 | class DISTAgent: # Distributional RL Based on Multi-Step Dueling Double DQN with Proportional Prioritization

129 | def __init__(self, model, target_model, env, learning_rate=.001, epsilon=.1, epsilon_dacay=0.995, min_epsilon=.01,

130 | gamma=.9, batch_size=8, target_update_iter=400, train_nums=5000, buffer_size=300, replay_period=20,

131 | alpha=0.4, beta=0.4, beta_increment_per_sample=0.001, n_step=3, atom_num=11, vmin=-3.0, vmax=3.0):

132 | self.model = model

133 | self.target_model = target_model

134 | # gradient clip

135 | opt = ko.Adam(learning_rate=learning_rate, clipvalue=10.0) # , clipvalue=10.0

136 | self.model.compile(optimizer=opt, loss=self._per_loss) # loss=self._per_loss

137 |

138 | # parameters

139 | self.env = env # gym environment

140 | self.lr = learning_rate # learning step

141 | self.epsilon = epsilon # e-greedy when exploring

142 | self.epsilon_decay = epsilon_dacay # epsilon decay rate

143 | self.min_epsilon = min_epsilon # minimum epsilon

144 | self.gamma = gamma # discount rate

145 | self.batch_size = batch_size # minibatch k

146 | self.target_update_iter = target_update_iter # target network update period

147 | self.train_nums = train_nums # total training steps

148 |

149 | # replay buffer params [(s, a, r, ns, done), ...]

150 | self.b_obs = np.empty((self.batch_size,) + self.env.reset().shape)

151 | self.b_actions = np.empty(self.batch_size, dtype=np.int8)

152 | self.b_rewards = np.empty(self.batch_size, dtype=np.float32)

153 | self.b_next_states = np.empty((self.batch_size,) + self.env.reset().shape)

154 | self.b_dones = np.empty(self.batch_size, dtype=np.bool)

155 |

156 | self.replay_buffer = SumTree(buffer_size) # sum-tree data structure

157 | self.buffer_size = buffer_size # replay buffer size N

158 | self.replay_period = replay_period # replay period K

159 | self.alpha = alpha # priority parameter, alpha=[0, 0.4, 0.5, 0.6, 0.7, 0.8]

160 | self.beta = beta # importance sampling parameter, beta=[0, 0.4, 0.5, 0.6, 1]

161 | self.beta_increment_per_sample = beta_increment_per_sample

162 | self.num_in_buffer = 0 # total number of transitions stored in buffer

163 | self.margin = 0.01 # pi = |td_error| + margin

164 | self.p1 = 1 # initialize priority for the first transition

165 | # self.is_weight = np.empty((None, 1))

166 | self.is_weight = np.power(self.buffer_size, -self.beta) # because p1 == 1

167 | self.abs_error_upper = 1

168 |

169 | # multi step TD learning

170 | self.n_step = n_step

171 | self.n_step_buffer = deque(maxlen=n_step)

172 |

173 | # distributional rl

174 | self.atom_num = atom_num

175 | self.vmin = vmin

176 | self.vmax = vmax

177 | self.support_z = np.expand_dims(np.linspace(vmin, vmax, atom_num), 0)

178 | self.delta_z = (vmax - vmin) / (atom_num - 1)

179 |

180 | def _per_loss(self, y_target, y_pred):

181 | return tf.reduce_mean(self.is_weight * tf.math.squared_difference(y_target, y_pred))

182 |

183 | def _kl_loss(self, y_target, y_pred): # cross_entropy loss

184 | return tf.reduce_mean(self.is_weight * tf.nn.softmax_cross_entropy_with_logits(labels=y_pred, logits=y_target))

185 |

186 | def train(self):

187 | # initialize the initial observation of the agent

188 | obs = self.env.reset()

189 | for t in range(1, self.train_nums):

190 | best_action, _ = self.model.action_value(obs[None], self.support_z) # input the obs to the network model

191 | action = self.get_action(best_action) # get the real action

192 | next_obs, reward, done, info = self.env.step(action) # take the action in the env to return s', r, done

193 |

194 | # n-step replay buffer

195 | # minor modified from github.com/medipixel/rl_algorithms/blob/master/algorithms/common/helper_functions.py

196 | temp_transition = [obs, action, reward, next_obs, done]

197 | self.n_step_buffer.append(temp_transition)

198 | if len(self.n_step_buffer) == self.n_step: # fill the n-step buffer for the first translation

199 | # add a multi step transition

200 | reward, next_obs, done = self.get_n_step_info(self.n_step_buffer, self.gamma)

201 | obs, action = self.n_step_buffer[0][:2]

202 |

203 | if t == 1:

204 | p = self.p1

205 | else:

206 | p = np.max(self.replay_buffer.tree[-self.replay_buffer.capacity:])

207 | self.store_transition(p, obs, action, reward, next_obs, done) # store that transition into replay butter

208 | self.num_in_buffer = min(self.num_in_buffer + 1, self.buffer_size)

209 |

210 | if t > self.buffer_size:

211 | # if t % self.replay_period == 0: # transition sampling and update

212 | losses = self.train_step()

213 | if t % 1000 == 0:

214 | print('losses each 1000 steps: ', losses)

215 |

216 | if t % self.target_update_iter == 0:

217 | self.update_target_model()

218 | if done:

219 | obs = self.env.reset() # one episode end

220 | else:

221 | obs = next_obs

222 |

223 | def train_step(self):

224 | idxes, self.is_weight = self.sum_tree_sample(self.batch_size)

225 | assert len(idxes) == self.b_next_states.shape[0]

226 |

227 | # Double Q-Learning

228 | best_action_idxes, _ = self.model.action_value(self.b_next_states, self.support_z) # get actions through the current network