├── .gitignore

├── LICENSE

├── README.md

├── app.py

├── assets

├── 3d_generation_video.mp4

├── car.png

├── dragon.png

├── gradio.png

├── kunkun.png

└── rocket.png

├── cldm

├── ddim_hacked.py

├── hack.py

├── logger.py

├── model.py

└── toss.py

├── config.py

├── datasets

├── __init__.py

├── base.py

├── colmap.py

├── colmap_utils.py

├── depth_utils.py

├── nerfpp.py

├── nsvf.py

├── objaverse.py

├── objaverse800k.py

├── objaverse_car.py

├── ray_utils.py

└── rtmv.py

├── ldm

├── data

│ ├── __init__.py

│ ├── base.py

│ ├── personalized.py

│ └── util.py

├── lr_scheduler.py

├── models

│ ├── autoencoder.py

│ └── diffusion

│ │ ├── __init__.py

│ │ ├── ddim.py

│ │ ├── ddpm.py

│ │ ├── dpm_solver

│ │ ├── __init__.py

│ │ ├── dpm_solver.py

│ │ └── sampler.py

│ │ ├── plms.py

│ │ └── sampling_util.py

├── modules

│ ├── attention.py

│ ├── diffusionmodules

│ │ ├── __init__.py

│ │ ├── model.py

│ │ ├── openaimodel.py

│ │ ├── upscaling.py

│ │ └── util.py

│ ├── distributions

│ │ ├── __init__.py

│ │ └── distributions.py

│ ├── ema.py

│ ├── embedding_manager.py

│ ├── encoders

│ │ ├── __init__.py

│ │ └── modules.py

│ ├── image_degradation

│ │ ├── __init__.py

│ │ ├── bsrgan.py

│ │ ├── bsrgan_light.py

│ │ ├── utils

│ │ │ └── test.png

│ │ └── utils_image.py

│ └── midas

│ │ ├── __init__.py

│ │ ├── api.py

│ │ ├── midas

│ │ ├── __init__.py

│ │ ├── base_model.py

│ │ ├── blocks.py

│ │ ├── dpt_depth.py

│ │ ├── midas_net.py

│ │ ├── midas_net_custom.py

│ │ ├── transforms.py

│ │ └── vit.py

│ │ └── utils.py

└── util.py

├── models

└── toss_vae.yaml

├── opt.py

├── outputs

├── a dragon toy with fire on the back.png

├── a dragon toy with ice on the back.png

├── anya

│ └── 0_95.png

├── backview of a dragon toy with fire on the back.png

├── backview of a dragon toy with ice on the back.png

├── dragon

│ ├── a purple dragon with fire on the back.png

│ ├── a dragon toy with fire on the back.png

│ ├── a dragon with fire on its back.png

│ ├── a dragon with ice on its back.png

│ ├── a dragon with ice on the back.png

│ └── a purple dragon with fire on the back.png

└── minion

│ ├── a dragon toy with fire on its back.png

│ └── a minion with a rocket on the back.png

├── requirements.txt

├── share.py

├── streamlit_app.py

├── train.py

├── tutorial_dataset.py

└── viz.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | share/python-wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 | MANIFEST

28 |

29 | # PyInstaller

30 | # Usually these files are written by a python script from a template

31 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

32 | *.manifest

33 | *.spec

34 |

35 | # Installer logs

36 | pip-log.txt

37 | pip-delete-this-directory.txt

38 |

39 | # Unit test / coverage reports

40 | htmlcov/

41 | .tox/

42 | .nox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | *.py,cover

50 | .hypothesis/

51 | .pytest_cache/

52 | cover/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | .pybuilder/

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | # For a library or package, you might want to ignore these files since the code is

87 | # intended to run in multiple environments; otherwise, check them in:

88 | # .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # poetry

98 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

99 | # This is especially recommended for binary packages to ensure reproducibility, and is more

100 | # commonly ignored for libraries.

101 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

102 | #poetry.lock

103 |

104 | # pdm

105 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

106 | #pdm.lock

107 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

108 | # in version control.

109 | # https://pdm.fming.dev/#use-with-ide

110 | .pdm.toml

111 |

112 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

113 | __pypackages__/

114 |

115 | # Celery stuff

116 | celerybeat-schedule

117 | celerybeat.pid

118 |

119 | # SageMath parsed files

120 | *.sage.py

121 |

122 | # Environments

123 | .env

124 | .venv

125 | env/

126 | venv/

127 | ENV/

128 | env.bak/

129 | venv.bak/

130 |

131 | # Spyder project settings

132 | .spyderproject

133 | .spyproject

134 |

135 | # Rope project settings

136 | .ropeproject

137 |

138 | # mkdocs documentation

139 | /site

140 |

141 | # mypy

142 | .mypy_cache/

143 | .dmypy.json

144 | dmypy.json

145 |

146 | # Pyre type checker

147 | .pyre/

148 |

149 | # pytype static type analyzer

150 | .pytype/

151 |

152 | # Cython debug symbols

153 | cython_debug/

154 |

155 | # PyCharm

156 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

157 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

158 | # and can be added to the global gitignore or merged into this file. For a more nuclear

159 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

160 | #.idea/

161 |

162 | gradio_res/

163 | ckpt/toss.ckpt

164 | exp/

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

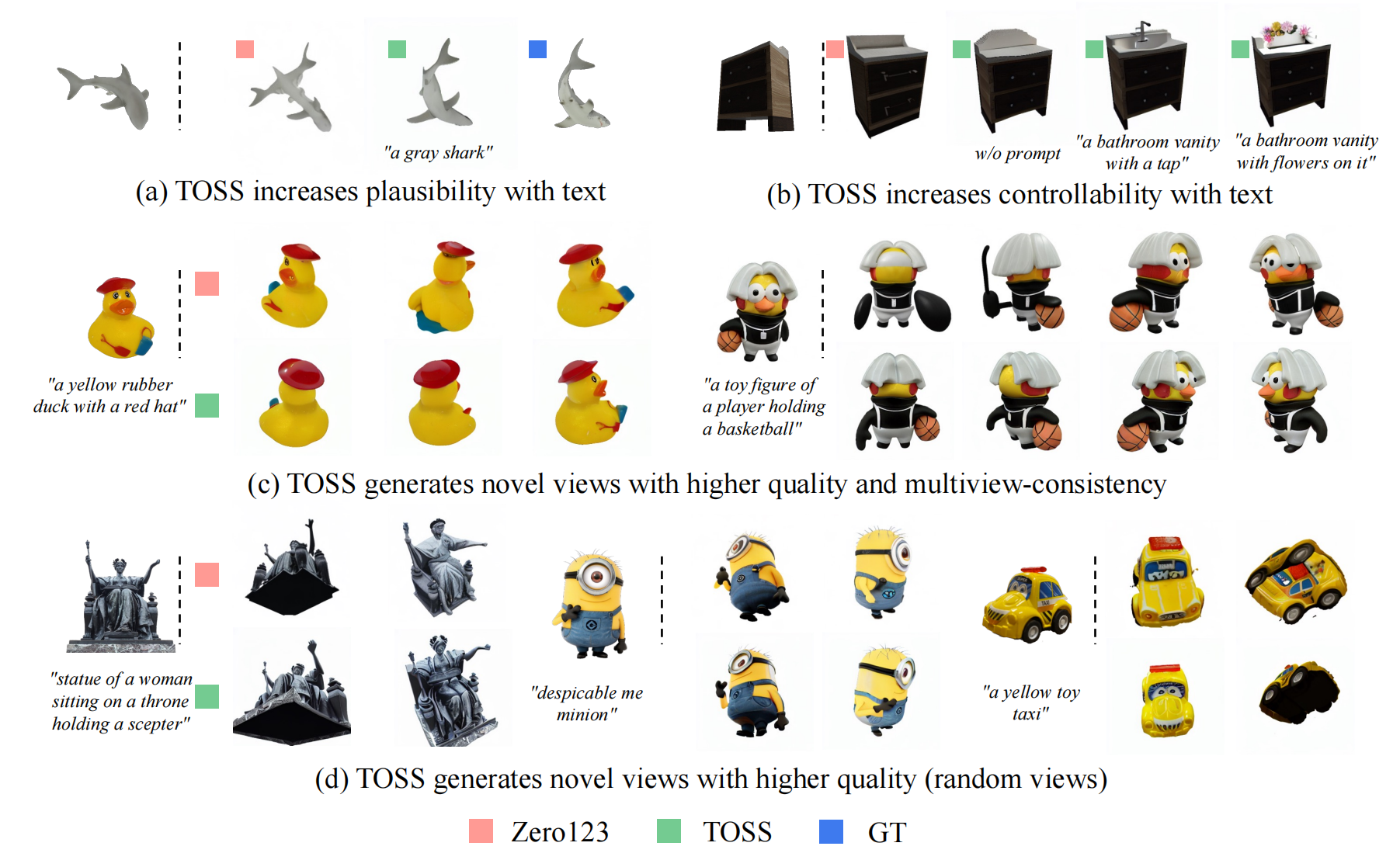

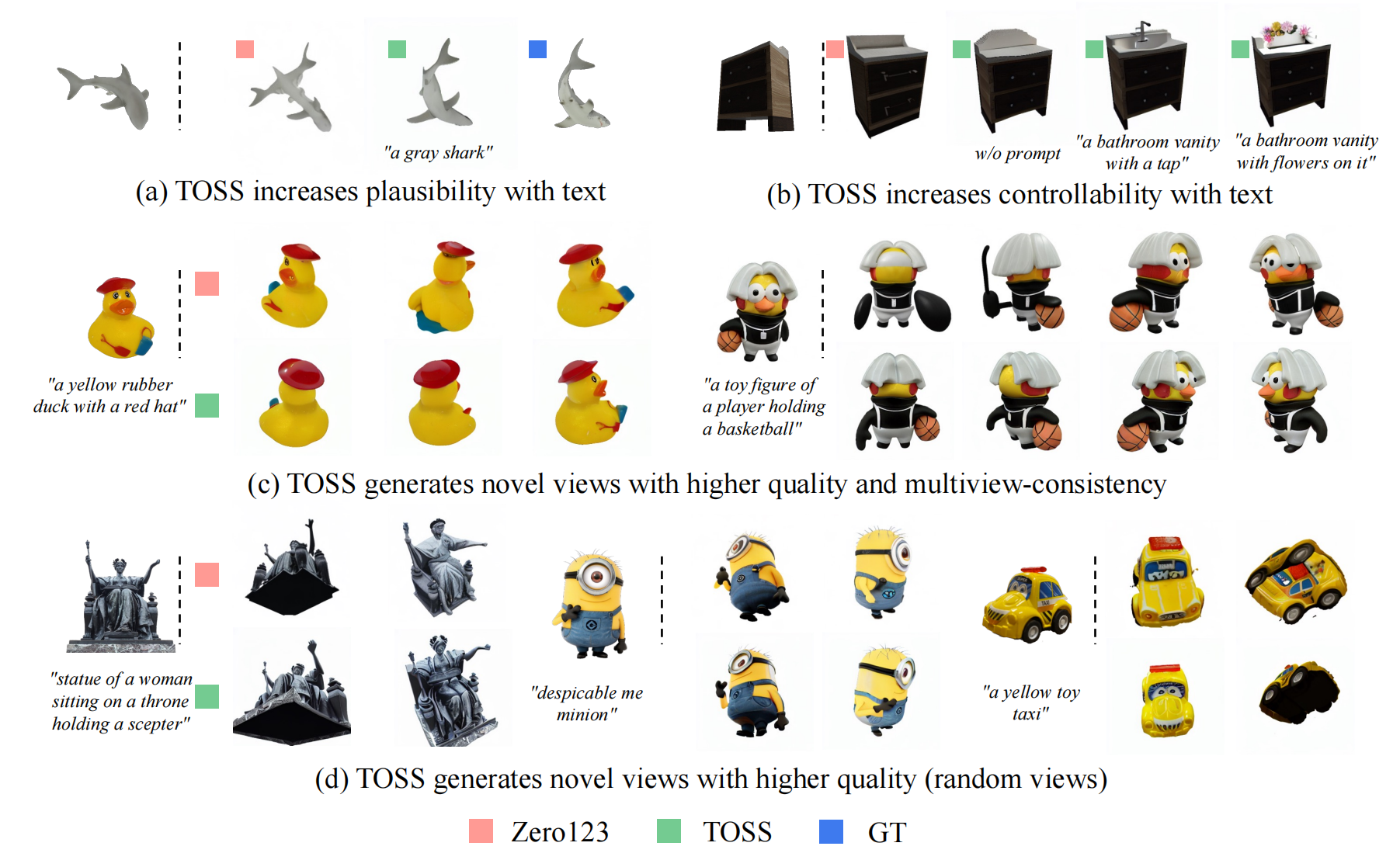

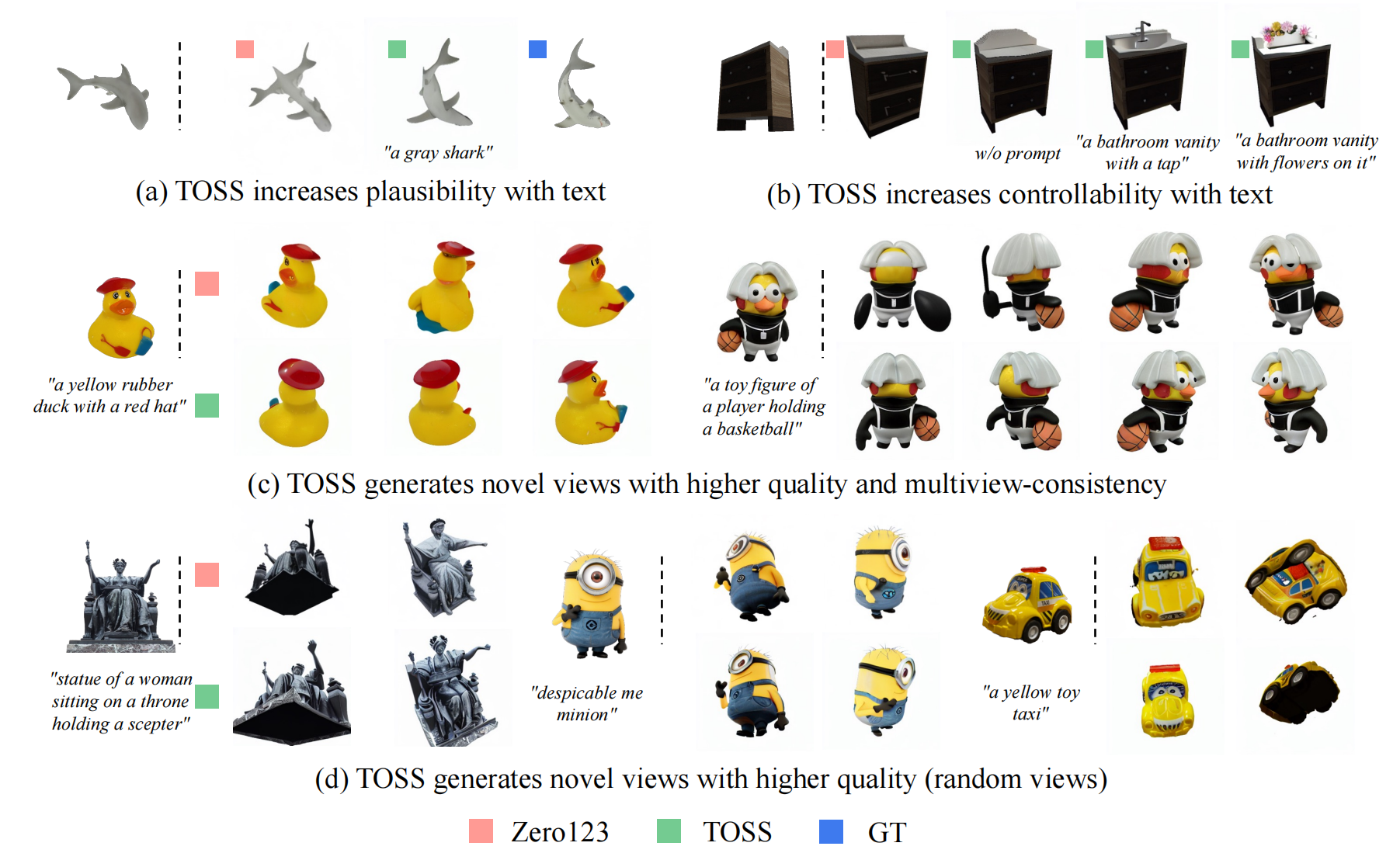

1 | # TOSS: High-quality Text-guided Novel View Synthesis from a Single Image (ICLR2024)

2 |

3 | ##### Yukai Shi, Jianan Wang, He Cao, Boshi Tang, Xianbiao Qi, Tianyu Yang, Yukun Huang, Shilong Liu, Lei Zhang, Heung-Yeung Shum

4 |

5 |  6 |

6 |

7 |

8 | Official implementation for *TOSS: High-quality Text-guided Novel View Synthesis from a Single Image*.

9 |

10 | **TOSS introduces text as high-level sementic information to constraint the NVS solution space for more controllable and more plausible results.**

11 |

12 | ## [Project Page](https://toss3d.github.io/) | [ArXiv](https://arxiv.org/abs/2310.10644) | [Weights](https://drive.google.com/drive/folders/15URQHblOVi_7YXZtgdFpjZAlKsoHylsq?usp=sharing)

13 |

14 |

15 | https://github.com/IDEA-Research/TOSS/assets/54578597/cd64c6c5-fef8-43c2-a223-7930ad6a71b7

16 |

17 |

18 | ## Install

19 |

20 | ### Create environment

21 | ```bash

22 | conda create -n toss python=3.9

23 | conda activate toss

24 | ```

25 |

26 | ### Install packages

27 | ```bash

28 | pip install torch==2.0.0 torchvision==0.15.1 torchaudio==2.0.1 --index-url https://download.pytorch.org/whl/cu118

29 | pip install -r requirements.txt

30 | git clone https://github.com/openai/CLIP.git

31 | pip install -e CLIP/

32 | ```

33 | ### Weights

34 | Download pretrain weights from [this link](https://drive.google.com/drive/folders/15URQHblOVi_7YXZtgdFpjZAlKsoHylsq?usp=sharing) to sub-directory ./ckpt

35 |

36 | ## Inference

37 |

38 | We suggest gradio for a visualized inference and test this demo on a single RTX3090.

39 |

40 | ```

41 | python app.py

42 | ```

43 |

44 |

45 |

46 |

47 | ## Todo List

48 | - [x] Release inference code.

49 | - [x] Release pretrained models.

50 | - [ ] Upload 3D generation code.

51 | - [ ] Upload training code.

52 |

53 | ## Acknowledgement

54 | - [ControlNet](https://github.com/lllyasviel/ControlNet/)

55 | - [Zero123](https://github.com/cvlab-columbia/zero123/)

56 | - [threestudio](https://github.com/threestudio-project/threestudio)

57 |

58 | ## Citation

59 |

60 | ```

61 | @article{shi2023toss,

62 | title={Toss: High-quality text-guided novel view synthesis from a single image},

63 | author={Shi, Yukai and Wang, Jianan and Cao, He and Tang, Boshi and Qi, Xianbiao and Yang, Tianyu and Huang, Yukun and Liu, Shilong and Zhang, Lei and Shum, Heung-Yeung},

64 | journal={arXiv preprint arXiv:2310.10644},

65 | year={2023}

66 | }

67 | ```

68 |

--------------------------------------------------------------------------------

/assets/3d_generation_video.mp4:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/3d_generation_video.mp4

--------------------------------------------------------------------------------

/assets/car.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/car.png

--------------------------------------------------------------------------------

/assets/dragon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/dragon.png

--------------------------------------------------------------------------------

/assets/gradio.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/gradio.png

--------------------------------------------------------------------------------

/assets/kunkun.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/kunkun.png

--------------------------------------------------------------------------------

/assets/rocket.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/IDEA-Research/TOSS/451a1d30f55d2f54f0421db3d0dfd6ed741b23f3/assets/rocket.png

--------------------------------------------------------------------------------

/cldm/hack.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import einops

3 |

4 | import ldm.modules.encoders.modules

5 | import ldm.modules.attention

6 |

7 | from transformers import logging

8 | from ldm.modules.attention import default

9 |

10 |

11 | def disable_verbosity():

12 | logging.set_verbosity_error()

13 | print('logging improved.')

14 | return

15 |

16 |

17 | def enable_sliced_attention():

18 | ldm.modules.attention.CrossAttention.forward = _hacked_sliced_attentin_forward

19 | print('Enabled sliced_attention.')

20 | return

21 |

22 |

23 | def hack_everything(clip_skip=0):

24 | disable_verbosity()

25 | ldm.modules.encoders.modules.FrozenCLIPEmbedder.forward = _hacked_clip_forward

26 | ldm.modules.encoders.modules.FrozenCLIPEmbedder.clip_skip = clip_skip

27 | print('Enabled clip hacks.')

28 | return

29 |

30 |

31 | # Written by Lvmin

32 | def _hacked_clip_forward(self, text):

33 | PAD = self.tokenizer.pad_token_id

34 | EOS = self.tokenizer.eos_token_id

35 | BOS = self.tokenizer.bos_token_id

36 |

37 | def tokenize(t):

38 | return self.tokenizer(t, truncation=False, add_special_tokens=False)["input_ids"]

39 |

40 | def transformer_encode(t):

41 | if self.clip_skip > 1:

42 | rt = self.transformer(input_ids=t, output_hidden_states=True)

43 | return self.transformer.text_model.final_layer_norm(rt.hidden_states[-self.clip_skip])

44 | else:

45 | return self.transformer(input_ids=t, output_hidden_states=False).last_hidden_state

46 |

47 | def split(x):

48 | return x[75 * 0: 75 * 1], x[75 * 1: 75 * 2], x[75 * 2: 75 * 3]

49 |

50 | def pad(x, p, i):

51 | return x[:i] if len(x) >= i else x + [p] * (i - len(x))

52 |

53 | raw_tokens_list = tokenize(text)

54 | tokens_list = []

55 |

56 | for raw_tokens in raw_tokens_list:

57 | raw_tokens_123 = split(raw_tokens)

58 | raw_tokens_123 = [[BOS] + raw_tokens_i + [EOS] for raw_tokens_i in raw_tokens_123]

59 | raw_tokens_123 = [pad(raw_tokens_i, PAD, 77) for raw_tokens_i in raw_tokens_123]

60 | tokens_list.append(raw_tokens_123)

61 |

62 | tokens_list = torch.IntTensor(tokens_list).to(self.device)

63 |

64 | feed = einops.rearrange(tokens_list, 'b f i -> (b f) i')

65 | y = transformer_encode(feed)

66 | z = einops.rearrange(y, '(b f) i c -> b (f i) c', f=3)

67 |

68 | return z

69 |

70 |

71 | # Stolen from https://github.com/basujindal/stable-diffusion/blob/main/optimizedSD/splitAttention.py

72 | def _hacked_sliced_attentin_forward(self, x, context=None, mask=None):

73 | h = self.heads

74 |

75 | q = self.to_q(x)

76 | context = default(context, x)

77 | k = self.to_k(context)

78 | v = self.to_v(context)

79 | del context, x

80 |

81 | q, k, v = map(lambda t: einops.rearrange(t, 'b n (h d) -> (b h) n d', h=h), (q, k, v))

82 |

83 | limit = k.shape[0]

84 | att_step = 1

85 | q_chunks = list(torch.tensor_split(q, limit // att_step, dim=0))

86 | k_chunks = list(torch.tensor_split(k, limit // att_step, dim=0))

87 | v_chunks = list(torch.tensor_split(v, limit // att_step, dim=0))

88 |

89 | q_chunks.reverse()

90 | k_chunks.reverse()

91 | v_chunks.reverse()

92 | sim = torch.zeros(q.shape[0], q.shape[1], v.shape[2], device=q.device)

93 | del k, q, v

94 | for i in range(0, limit, att_step):

95 | q_buffer = q_chunks.pop()

96 | k_buffer = k_chunks.pop()

97 | v_buffer = v_chunks.pop()

98 | sim_buffer = torch.einsum('b i d, b j d -> b i j', q_buffer, k_buffer) * self.scale

99 |

100 | del k_buffer, q_buffer

101 | # attention, what we cannot get enough of, by chunks

102 |

103 | sim_buffer = sim_buffer.softmax(dim=-1)

104 |

105 | sim_buffer = torch.einsum('b i j, b j d -> b i d', sim_buffer, v_buffer)

106 | del v_buffer

107 | sim[i:i + att_step, :, :] = sim_buffer

108 |

109 | del sim_buffer

110 | sim = einops.rearrange(sim, '(b h) n d -> b n (h d)', h=h)

111 | return self.to_out(sim)

112 |

--------------------------------------------------------------------------------

/cldm/logger.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | import numpy as np

4 | import torch

5 | import torchvision

6 | from PIL import Image

7 | from pytorch_lightning.callbacks import Callback

8 | from pytorch_lightning.utilities.distributed import rank_zero_only

9 | import time

10 |

11 |

12 | class ImageLogger(Callback):

13 | def __init__(self, batch_frequency=2000, max_images=4, clamp=True, increase_log_steps=True,

14 | rescale=True, disabled=False, log_on_batch_idx=False, log_first_step=False,

15 | log_images_kwargs=None, epoch_frequency=1):

16 | super().__init__()

17 | self.rescale = rescale

18 | self.batch_freq = batch_frequency

19 | self.max_images = max_images

20 | if not increase_log_steps:

21 | self.log_steps = [self.batch_freq]

22 | self.clamp = clamp

23 | self.disabled = disabled

24 | self.log_on_batch_idx = log_on_batch_idx

25 | self.log_images_kwargs = log_images_kwargs if log_images_kwargs else {}

26 | self.log_first_step = log_first_step

27 | self.epoch = 0

28 | self.epoch_frequency = epoch_frequency

29 | self.start_time = time.time()

30 |

31 | @rank_zero_only

32 | def log_local(self, save_dir, split, images, global_step, current_epoch, batch_idx):

33 | root = os.path.join(save_dir, "image_log", split)

34 | for k in images:

35 | grid = torchvision.utils.make_grid(images[k], nrow=4)

36 | if self.rescale:

37 | grid = (grid + 1.0) / 2.0 # -1,1 -> 0,1; c,h,w

38 | grid = grid.transpose(0, 1).transpose(1, 2).squeeze(-1)

39 | grid = grid.numpy()

40 | grid = (grid * 255).astype(np.uint8)

41 | filename = "epoch{:06}/{}_gs-{:06}_e-{:06}_b-{:06}.png".format(current_epoch,k, global_step, current_epoch, batch_idx)

42 | path = os.path.join(root, filename)

43 | os.makedirs(os.path.split(path)[0], exist_ok=True)

44 | Image.fromarray(grid).save(path)

45 | if current_epoch > self.epoch:

46 | self.epoch += 1

47 |

48 | def log_img(self, pl_module, batch, batch_idx, split="train"):

49 | check_idx = batch_idx if self.log_on_batch_idx else pl_module.global_step

50 | if (self.check_frequency(check_idx) and

51 | hasattr(pl_module, "log_images") and

52 | callable(pl_module.log_images) and

53 | self.max_images > 0):

54 | logger = type(pl_module.logger)

55 |

56 | is_train = pl_module.training

57 | if is_train:

58 | pl_module.eval()

59 |

60 | with torch.no_grad():

61 | images = pl_module.log_images(batch, split=split, **self.log_images_kwargs)

62 |

63 | for k in images:

64 | N = min(images[k].shape[0], self.max_images)

65 | images[k] = images[k][:N]

66 | if isinstance(images[k], torch.Tensor):

67 | images[k] = images[k].detach().cpu()

68 | if self.clamp:

69 | images[k] = torch.clamp(images[k], -1., 1.)

70 |

71 | self.log_local(pl_module.logger.save_dir, split, images,

72 | pl_module.global_step, pl_module.current_epoch, batch_idx)

73 |

74 | if is_train:

75 | pl_module.train()

76 |

77 | def check_frequency(self, check_idx):

78 | return check_idx % self.batch_freq == 0

79 |

80 | def on_train_batch_end(self, trainer, pl_module, outputs, batch, batch_idx, dataloader_idx):

81 | if not self.disabled:

82 | self.log_img(pl_module, batch, batch_idx, split="train")

83 |

84 | def on_validation_batch_end(self, trainer, pl_module, outputs, batch, batch_idx, dataloader_idx):

85 | if not self.disabled:

86 | self.log_img(pl_module, batch, batch_idx, split="val")

87 |

88 | def on_train_epoch_end(self, trainer, pl_module):

89 | end_time = time.time()

90 | self.time_counter(self.start_time, end_time, pl_module.global_step)

91 |

92 | def time_counter(self, begin_time, end_time, step):

93 | run_time = round(end_time - begin_time)

94 | # transfer time

95 | hour = run_time // 3600

96 | minute = (run_time - 3600 * hour) // 60

97 | second = run_time - 3600 * hour - 60 * minute

98 | print(f'Time cost until step {step}:{hour}h:{minute}miin:{second}s')

--------------------------------------------------------------------------------

/cldm/model.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 |

4 | from omegaconf import OmegaConf

5 | from ldm.util import instantiate_from_config

6 |

7 |

8 | def get_state_dict(d):

9 | return d.get('state_dict', d)

10 |

11 |

12 | def load_state_dict(ckpt_path, location='cpu'):

13 | _, extension = os.path.splitext(ckpt_path)

14 | if extension.lower() == ".safetensors":

15 | import safetensors.torch

16 | state_dict = safetensors.torch.load_file(ckpt_path, device=location)

17 | else:

18 | state_dict = get_state_dict(torch.load(ckpt_path, map_location=torch.device(location)))

19 | state_dict = get_state_dict(state_dict)

20 | print(f'Loaded state_dict from [{ckpt_path}]')

21 | return state_dict

22 |

23 |

24 | def create_model(config_path):

25 | config = OmegaConf.load(config_path)

26 | model = instantiate_from_config(config.model).cpu()

27 | print(f'Loaded model config from [{config_path}]')

28 | return model

29 |

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | save_memory = False

2 |

--------------------------------------------------------------------------------

/datasets/__init__.py:

--------------------------------------------------------------------------------

1 | from .nsvf import NSVFDataset, NSVFDataset_v2, NSVFDataset_all

2 | from .colmap import ColmapDataset

3 | from .nerfpp import NeRFPPDataset

4 | from .objaverse import ObjaverseData

5 |

6 |

7 | dataset_dict = {'nsvf': NSVFDataset,

8 | 'nsvf_v2': NSVFDataset_v2,

9 | "nsvf_all": NSVFDataset_all,

10 | 'colmap': ColmapDataset,

11 | 'nerfpp': NeRFPPDataset,

12 | 'objaverse': ObjaverseData,}

--------------------------------------------------------------------------------

/datasets/base.py:

--------------------------------------------------------------------------------

1 | from torchvision import transforms as T

2 |

3 | from torchvision import transforms

4 | from torch.utils.data import Dataset

5 | import numpy as np

6 | import torch

7 | import random

8 | import math

9 | import pdb

10 | from viz import save_image_tensor2cv2

11 |

12 |

13 | class BaseDataset(Dataset):

14 | """

15 | Define length and sampling method

16 | """

17 | def __init__(self, root_dir, split='train', text="a green chair", img_size=512, downsample=1.0):

18 | self.img_w, self.img_h = img_size, img_size

19 | self.root_dir = root_dir

20 | self.split = split

21 | self.downsample = downsample

22 | self.define_transforms()

23 | self.text = text

24 |

25 | def read_intrinsics(self):

26 | raise NotImplementedError

27 |

28 | def define_transforms(self):

29 | self.transform = transforms.Compose([T.ToTensor(), transforms.Resize(size=(self.img_w, self.img_h))])

30 | # self.transform = T.ToTensor()

31 |

32 | def __len__(self):

33 | # if self.split.startswith('train'):

34 | # return 1000

35 | return len(self.poses)

36 |

37 | def cartesian_to_spherical(self, xyz):

38 | ptsnew = np.hstack((xyz, np.zeros(xyz.shape)))

39 | xy = xyz[:,0]**2 + xyz[:,1]**2

40 | z = np.sqrt(xy + xyz[:,2]**2)

41 | theta = np.arctan2(np.sqrt(xy), xyz[:,2]) # for elevation angle defined from Z-axis down

42 | #ptsnew[:,4] = np.arctan2(xyz[:,2], np.sqrt(xy)) # for elevation angle defined from XY-plane up

43 | azimuth = np.arctan2(xyz[:,1], xyz[:,0])

44 | return np.array([theta, azimuth, z])

45 |

46 | def get_T(self, target_RT, cond_RT):

47 | R, T = target_RT[:3, :3], target_RT[:, -1]

48 | T_target = -R.T @ T

49 |

50 | R, T = cond_RT[:3, :3], cond_RT[:, -1]

51 | T_cond = -R.T @ T

52 |

53 | theta_cond, azimuth_cond, z_cond = self.cartesian_to_spherical(T_cond[None, :])

54 | theta_target, azimuth_target, z_target = self.cartesian_to_spherical(T_target[None, :])

55 |

56 | d_theta = theta_target - theta_cond

57 | d_azimuth = (azimuth_target - azimuth_cond) % (2 * math.pi)

58 | d_z = z_target - z_cond

59 |

60 | d_T = torch.tensor([d_theta.item(), d_azimuth.item(), d_z.item()])

61 | return d_T

62 |

63 | def get_T_w2c(self, target_RT, cond_RT):

64 | T_target = target_RT[:, -1]

65 | T_cond = cond_RT[:, -1]

66 |

67 | theta_cond, azimuth_cond, z_cond = self.cartesian_to_spherical(T_cond[None, :])

68 | theta_target, azimuth_target, z_target = self.cartesian_to_spherical(T_target[None, :])

69 |

70 | d_theta = theta_target - theta_cond

71 | d_azimuth = (azimuth_target - azimuth_cond) % (2 * math.pi)

72 | d_z = z_target - z_cond

73 | # d_z = (z_target - z_cond) / np.max(z_cond) * 2

74 | # pdb.set_trace()

75 |

76 | d_T = torch.tensor([d_theta.item(), d_azimuth.item(), d_z.item()])

77 | return d_T

78 |

79 | def __getitem__(self, idx):

80 | # camera pose and img

81 | poses = self.poses[idx]

82 | img = self.imgs[idx]

83 | prompt = self.text

84 |

85 | # condition

86 | # idx_cond = idx % 5

87 | # idx_cond = 1

88 | idx_cond = random.randint(0, 4)

89 | # idx_cond = random.randint(0, len(self.poses)-1)

90 | poses_cond = self.poses[idx_cond]

91 | img_cond = self.imgs[idx_cond]

92 |

93 | # if len(self.imgs)>0: # if ground truth available

94 | # img_rgb = imgs[:, :,:3]

95 | # img_rgb_cond = imgs_cond[:, :,:3]

96 | # if imgs.shape[-1] == 4: # HDR-NeRF data

97 | # sample['exposure'] = rays[0, 3] # same exposure for all rays

98 |

99 | # Normalize target images to [-1, 1].

100 | target = (img.float() / 127.5) - 1.0

101 | # Normalize source images to [0, 1].

102 | condition = img_cond.float() / 255.0

103 | # save_image_tensor2cv2(condition.permute(2,0,1), "./viz_fig/input_cond.png")

104 |

105 | # get delta pose

106 | # delta_pose = self.get_T(target_RT=poses, cond_RT=poses_cond)

107 | delta_pose = self.get_T_w2c(target_RT=poses, cond_RT=poses_cond)

108 |

109 | return dict(jpg=target, txt=prompt, hint=condition, delta_pose=delta_pose)

--------------------------------------------------------------------------------

/datasets/colmap.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | import os

4 | import glob

5 | from PIL import Image

6 | from einops import rearrange

7 | from tqdm import tqdm

8 |

9 | from .ray_utils import *

10 | from .colmap_utils import \

11 | read_cameras_binary, read_images_binary, read_points3d_binary

12 |

13 | from .base import BaseDataset

14 |

15 |

16 | class ColmapDataset(BaseDataset):

17 | def __init__(self, root_dir, split='train', downsample=1.0, **kwargs):

18 | super().__init__(root_dir, split, downsample)

19 |

20 | self.read_intrinsics()

21 |

22 | if kwargs.get('read_meta', True):

23 | self.read_meta(split, **kwargs)

24 |

25 | def read_intrinsics(self):

26 | # Step 1: read and scale intrinsics (same for all images)

27 | camdata = read_cameras_binary(os.path.join(self.root_dir, 'sparse/0/cameras.bin'))

28 | h = int(camdata[1].height*self.downsample)

29 | w = int(camdata[1].width*self.downsample)

30 | self.img_wh = (w, h)

31 |

32 | if camdata[1].model == 'SIMPLE_RADIAL':

33 | fx = fy = camdata[1].params[0]*self.downsample

34 | cx = camdata[1].params[1]*self.downsample

35 | cy = camdata[1].params[2]*self.downsample

36 | elif camdata[1].model in ['PINHOLE', 'OPENCV']:

37 | fx = camdata[1].params[0]*self.downsample

38 | fy = camdata[1].params[1]*self.downsample

39 | cx = camdata[1].params[2]*self.downsample

40 | cy = camdata[1].params[3]*self.downsample

41 | else:

42 | raise ValueError(f"Please parse the intrinsics for camera model {camdata[1].model}!")

43 | self.K = torch.FloatTensor([[fx, 0, cx],

44 | [0, fy, cy],

45 | [0, 0, 1]])

46 | self.directions = get_ray_directions(h, w, self.K)

47 |

48 | def read_meta(self, split, **kwargs):

49 | # Step 2: correct poses

50 | # read extrinsics (of successfully reconstructed images)

51 | imdata = read_images_binary(os.path.join(self.root_dir, 'sparse/0/images.bin'))

52 | img_names = [imdata[k].name for k in imdata]

53 | perm = np.argsort(img_names)

54 | if '360_v2' in self.root_dir and self.downsample<1: # mipnerf360 data

55 | folder = f'images_{int(1/self.downsample)}'

56 | else:

57 | folder = 'images'

58 | # read successfully reconstructed images and ignore others

59 | img_paths = [os.path.join(self.root_dir, folder, name)

60 | for name in sorted(img_names)]

61 | w2c_mats = []

62 | bottom = np.array([[0, 0, 0, 1.]])

63 | for k in imdata:

64 | im = imdata[k]

65 | R = im.qvec2rotmat(); t = im.tvec.reshape(3, 1)

66 | w2c_mats += [np.concatenate([np.concatenate([R, t], 1), bottom], 0)]

67 | w2c_mats = np.stack(w2c_mats, 0)

68 | poses = np.linalg.inv(w2c_mats)[perm, :3] # (N_images, 3, 4) cam2world matrices

69 |

70 | pts3d = read_points3d_binary(os.path.join(self.root_dir, 'sparse/0/points3D.bin'))

71 | pts3d = np.array([pts3d[k].xyz for k in pts3d]) # (N, 3)

72 |

73 | self.poses, self.pts3d = center_poses(poses, pts3d)

74 |

75 | scale = np.linalg.norm(self.poses[..., 3], axis=-1).min()

76 | self.poses[..., 3] /= scale

77 | self.pts3d /= scale

78 |

79 | self.rays = []

80 | if split == 'test_traj': # use precomputed test poses

81 | self.poses = create_spheric_poses(1.2, self.poses[:, 1, 3].mean())

82 | self.poses = torch.FloatTensor(self.poses)

83 | return

84 |

85 | if 'HDR-NeRF' in self.root_dir: # HDR-NeRF data

86 | if 'syndata' in self.root_dir: # synthetic

87 | # first 17 are test, last 18 are train

88 | self.unit_exposure_rgb = 0.73

89 | if split=='train':

90 | img_paths = sorted(glob.glob(os.path.join(self.root_dir,

91 | f'train/*[024].png')))

92 | self.poses = np.repeat(self.poses[-18:], 3, 0)

93 | elif split=='test':

94 | img_paths = sorted(glob.glob(os.path.join(self.root_dir,

95 | f'test/*[13].png')))

96 | self.poses = np.repeat(self.poses[:17], 2, 0)

97 | else:

98 | raise ValueError(f"split {split} is invalid for HDR-NeRF!")

99 | else: # real

100 | self.unit_exposure_rgb = 0.5

101 | # even numbers are train, odd numbers are test

102 | if split=='train':

103 | img_paths = sorted(glob.glob(os.path.join(self.root_dir,

104 | f'input_images/*0.jpg')))[::2]

105 | img_paths+= sorted(glob.glob(os.path.join(self.root_dir,

106 | f'input_images/*2.jpg')))[::2]

107 | img_paths+= sorted(glob.glob(os.path.join(self.root_dir,

108 | f'input_images/*4.jpg')))[::2]

109 | self.poses = np.tile(self.poses[::2], (3, 1, 1))

110 | elif split=='test':

111 | img_paths = sorted(glob.glob(os.path.join(self.root_dir,

112 | f'input_images/*1.jpg')))[1::2]

113 | img_paths+= sorted(glob.glob(os.path.join(self.root_dir,

114 | f'input_images/*3.jpg')))[1::2]

115 | self.poses = np.tile(self.poses[1::2], (2, 1, 1))

116 | else:

117 | raise ValueError(f"split {split} is invalid for HDR-NeRF!")

118 | else:

119 | # use every 8th image as test set

120 | if split=='train':

121 | img_paths = [x for i, x in enumerate(img_paths) if i%8!=0]

122 | self.poses = np.array([x for i, x in enumerate(self.poses) if i%8!=0])

123 | elif split=='test':

124 | img_paths = [x for i, x in enumerate(img_paths) if i%8==0]

125 | self.poses = np.array([x for i, x in enumerate(self.poses) if i%8==0])

126 |

127 | print(f'Loading {len(img_paths)} {split} images ...')

128 | for img_path in tqdm(img_paths):

129 | buf = [] # buffer for ray attributes: rgb, etc

130 |

131 | img = Image.open(img_path).convert('RGB').resize(self.img_wh, Image.LANCZOS)

132 | img = rearrange(self.transform(img), 'c h w -> (h w) c')

133 | buf += [img]

134 |

135 | if 'HDR-NeRF' in self.root_dir: # get exposure

136 | folder = self.root_dir.split('/')

137 | scene = folder[-1] if folder[-1] != '' else folder[-2]

138 | if scene in ['bathroom', 'bear', 'chair', 'desk']:

139 | e_dict = {e: 1/8*4**e for e in range(5)}

140 | elif scene in ['diningroom', 'dog']:

141 | e_dict = {e: 1/16*4**e for e in range(5)}

142 | elif scene in ['sofa']:

143 | e_dict = {0:0.25, 1:1, 2:2, 3:4, 4:16}

144 | elif scene in ['sponza']:

145 | e_dict = {0:0.5, 1:2, 2:4, 3:8, 4:32}

146 | elif scene in ['box']:

147 | e_dict = {0:2/3, 1:1/3, 2:1/6, 3:0.1, 4:0.05}

148 | elif scene in ['computer']:

149 | e_dict = {0:1/3, 1:1/8, 2:1/15, 3:1/30, 4:1/60}

150 | elif scene in ['flower']:

151 | e_dict = {0:1/3, 1:1/6, 2:0.1, 3:0.05, 4:1/45}

152 | elif scene in ['luckycat']:

153 | e_dict = {0:2, 1:1, 2:0.5, 3:0.25, 4:0.125}

154 | e = int(img_path.split('.')[0][-1])

155 | buf += [e_dict[e]*torch.ones_like(img[:, :1])]

156 |

157 | self.rays += [torch.cat(buf, 1)]

158 |

159 | self.rays = torch.stack(self.rays) # (N_images, hw, ?)

160 | self.poses = torch.FloatTensor(self.poses) # (N_images, 3, 4)

--------------------------------------------------------------------------------

/datasets/colmap_utils.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) 2018, ETH Zurich and UNC Chapel Hill.

2 | # All rights reserved.

3 | #

4 | # Redistribution and use in source and binary forms, with or without

5 | # modification, are permitted provided that the following conditions are met:

6 | #

7 | # * Redistributions of source code must retain the above copyright

8 | # notice, this list of conditions and the following disclaimer.

9 | #

10 | # * Redistributions in binary form must reproduce the above copyright

11 | # notice, this list of conditions and the following disclaimer in the

12 | # documentation and/or other materials provided with the distribution.

13 | #

14 | # * Neither the name of ETH Zurich and UNC Chapel Hill nor the names of

15 | # its contributors may be used to endorse or promote products derived

16 | # from this software without specific prior written permission.

17 | #

18 | # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

19 | # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

20 | # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

21 | # ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDERS OR CONTRIBUTORS BE

22 | # LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

23 | # CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

24 | # SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

25 | # INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

26 | # CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

27 | # ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

28 | # POSSIBILITY OF SUCH DAMAGE.

29 | #

30 | # Author: Johannes L. Schoenberger (jsch at inf.ethz.ch)

31 |

32 | import os

33 | import sys

34 | import collections

35 | import numpy as np

36 | import struct

37 |

38 |

39 | CameraModel = collections.namedtuple(

40 | "CameraModel", ["model_id", "model_name", "num_params"])

41 | Camera = collections.namedtuple(

42 | "Camera", ["id", "model", "width", "height", "params"])

43 | BaseImage = collections.namedtuple(

44 | "Image", ["id", "qvec", "tvec", "camera_id", "name", "xys", "point3D_ids"])

45 | Point3D = collections.namedtuple(

46 | "Point3D", ["id", "xyz", "rgb", "error", "image_ids", "point2D_idxs"])

47 |

48 | class Image(BaseImage):

49 | def qvec2rotmat(self):

50 | return qvec2rotmat(self.qvec)

51 |

52 |

53 | CAMERA_MODELS = {

54 | CameraModel(model_id=0, model_name="SIMPLE_PINHOLE", num_params=3),

55 | CameraModel(model_id=1, model_name="PINHOLE", num_params=4),

56 | CameraModel(model_id=2, model_name="SIMPLE_RADIAL", num_params=4),

57 | CameraModel(model_id=3, model_name="RADIAL", num_params=5),

58 | CameraModel(model_id=4, model_name="OPENCV", num_params=8),

59 | CameraModel(model_id=5, model_name="OPENCV_FISHEYE", num_params=8),

60 | CameraModel(model_id=6, model_name="FULL_OPENCV", num_params=12),

61 | CameraModel(model_id=7, model_name="FOV", num_params=5),

62 | CameraModel(model_id=8, model_name="SIMPLE_RADIAL_FISHEYE", num_params=4),

63 | CameraModel(model_id=9, model_name="RADIAL_FISHEYE", num_params=5),

64 | CameraModel(model_id=10, model_name="THIN_PRISM_FISHEYE", num_params=12)

65 | }

66 | CAMERA_MODEL_IDS = dict([(camera_model.model_id, camera_model) \

67 | for camera_model in CAMERA_MODELS])

68 |

69 |

70 | def read_next_bytes(fid, num_bytes, format_char_sequence, endian_character="<"):

71 | """Read and unpack the next bytes from a binary file.

72 | :param fid:

73 | :param num_bytes: Sum of combination of {2, 4, 8}, e.g. 2, 6, 16, 30, etc.

74 | :param format_char_sequence: List of {c, e, f, d, h, H, i, I, l, L, q, Q}.

75 | :param endian_character: Any of {@, =, <, >, !}

76 | :return: Tuple of read and unpacked values.

77 | """

78 | data = fid.read(num_bytes)

79 | return struct.unpack(endian_character + format_char_sequence, data)

80 |

81 |

82 | def read_cameras_text(path):

83 | """

84 | see: src/base/reconstruction.cc

85 | void Reconstruction::WriteCamerasText(const std::string& path)

86 | void Reconstruction::ReadCamerasText(const std::string& path)

87 | """

88 | cameras = {}

89 | with open(path, "r") as fid:

90 | while True:

91 | line = fid.readline()

92 | if not line:

93 | break

94 | line = line.strip()

95 | if len(line) > 0 and line[0] != "#":

96 | elems = line.split()

97 | camera_id = int(elems[0])

98 | model = elems[1]

99 | width = int(elems[2])

100 | height = int(elems[3])

101 | params = np.array(tuple(map(float, elems[4:])))

102 | cameras[camera_id] = Camera(id=camera_id, model=model,

103 | width=width, height=height,

104 | params=params)

105 | return cameras

106 |

107 |

108 | def read_cameras_binary(path_to_model_file):

109 | """

110 | see: src/base/reconstruction.cc

111 | void Reconstruction::WriteCamerasBinary(const std::string& path)

112 | void Reconstruction::ReadCamerasBinary(const std::string& path)

113 | """

114 | cameras = {}

115 | with open(path_to_model_file, "rb") as fid:

116 | num_cameras = read_next_bytes(fid, 8, "Q")[0]

117 | for camera_line_index in range(num_cameras):

118 | camera_properties = read_next_bytes(

119 | fid, num_bytes=24, format_char_sequence="iiQQ")

120 | camera_id = camera_properties[0]

121 | model_id = camera_properties[1]

122 | model_name = CAMERA_MODEL_IDS[camera_properties[1]].model_name

123 | width = camera_properties[2]

124 | height = camera_properties[3]

125 | num_params = CAMERA_MODEL_IDS[model_id].num_params

126 | params = read_next_bytes(fid, num_bytes=8*num_params,

127 | format_char_sequence="d"*num_params)

128 | cameras[camera_id] = Camera(id=camera_id,

129 | model=model_name,

130 | width=width,

131 | height=height,

132 | params=np.array(params))

133 | assert len(cameras) == num_cameras

134 | return cameras

135 |

136 |

137 | def read_images_text(path):

138 | """

139 | see: src/base/reconstruction.cc

140 | void Reconstruction::ReadImagesText(const std::string& path)

141 | void Reconstruction::WriteImagesText(const std::string& path)

142 | """

143 | images = {}

144 | with open(path, "r") as fid:

145 | while True:

146 | line = fid.readline()

147 | if not line:

148 | break

149 | line = line.strip()

150 | if len(line) > 0 and line[0] != "#":

151 | elems = line.split()

152 | image_id = int(elems[0])

153 | qvec = np.array(tuple(map(float, elems[1:5])))

154 | tvec = np.array(tuple(map(float, elems[5:8])))

155 | camera_id = int(elems[8])

156 | image_name = elems[9]

157 | elems = fid.readline().split()

158 | xys = np.column_stack([tuple(map(float, elems[0::3])),

159 | tuple(map(float, elems[1::3]))])

160 | point3D_ids = np.array(tuple(map(int, elems[2::3])))

161 | images[image_id] = Image(

162 | id=image_id, qvec=qvec, tvec=tvec,

163 | camera_id=camera_id, name=image_name,

164 | xys=xys, point3D_ids=point3D_ids)

165 | return images

166 |

167 |

168 | def read_images_binary(path_to_model_file):

169 | """

170 | see: src/base/reconstruction.cc

171 | void Reconstruction::ReadImagesBinary(const std::string& path)

172 | void Reconstruction::WriteImagesBinary(const std::string& path)

173 | """

174 | images = {}

175 | with open(path_to_model_file, "rb") as fid:

176 | num_reg_images = read_next_bytes(fid, 8, "Q")[0]

177 | for image_index in range(num_reg_images):

178 | binary_image_properties = read_next_bytes(

179 | fid, num_bytes=64, format_char_sequence="idddddddi")

180 | image_id = binary_image_properties[0]

181 | qvec = np.array(binary_image_properties[1:5])

182 | tvec = np.array(binary_image_properties[5:8])

183 | camera_id = binary_image_properties[8]

184 | image_name = ""

185 | current_char = read_next_bytes(fid, 1, "c")[0]

186 | while current_char != b"\x00": # look for the ASCII 0 entry

187 | image_name += current_char.decode("utf-8")

188 | current_char = read_next_bytes(fid, 1, "c")[0]

189 | num_points2D = read_next_bytes(fid, num_bytes=8,

190 | format_char_sequence="Q")[0]

191 | x_y_id_s = read_next_bytes(fid, num_bytes=24*num_points2D,

192 | format_char_sequence="ddq"*num_points2D)

193 | xys = np.column_stack([tuple(map(float, x_y_id_s[0::3])),

194 | tuple(map(float, x_y_id_s[1::3]))])

195 | point3D_ids = np.array(tuple(map(int, x_y_id_s[2::3])))

196 | images[image_id] = Image(

197 | id=image_id, qvec=qvec, tvec=tvec,

198 | camera_id=camera_id, name=image_name,

199 | xys=xys, point3D_ids=point3D_ids)

200 | return images

201 |

202 |

203 | def read_points3D_text(path):

204 | """

205 | see: src/base/reconstruction.cc

206 | void Reconstruction::ReadPoints3DText(const std::string& path)

207 | void Reconstruction::WritePoints3DText(const std::string& path)

208 | """

209 | points3D = {}

210 | with open(path, "r") as fid:

211 | while True:

212 | line = fid.readline()

213 | if not line:

214 | break

215 | line = line.strip()

216 | if len(line) > 0 and line[0] != "#":

217 | elems = line.split()

218 | point3D_id = int(elems[0])

219 | xyz = np.array(tuple(map(float, elems[1:4])))

220 | rgb = np.array(tuple(map(int, elems[4:7])))

221 | error = float(elems[7])

222 | image_ids = np.array(tuple(map(int, elems[8::2])))

223 | point2D_idxs = np.array(tuple(map(int, elems[9::2])))

224 | points3D[point3D_id] = Point3D(id=point3D_id, xyz=xyz, rgb=rgb,

225 | error=error, image_ids=image_ids,

226 | point2D_idxs=point2D_idxs)

227 | return points3D

228 |

229 |

230 | def read_points3d_binary(path_to_model_file):

231 | """

232 | see: src/base/reconstruction.cc

233 | void Reconstruction::ReadPoints3DBinary(const std::string& path)

234 | void Reconstruction::WritePoints3DBinary(const std::string& path)

235 | """

236 | points3D = {}

237 | with open(path_to_model_file, "rb") as fid:

238 | num_points = read_next_bytes(fid, 8, "Q")[0]

239 | for point_line_index in range(num_points):

240 | binary_point_line_properties = read_next_bytes(

241 | fid, num_bytes=43, format_char_sequence="QdddBBBd")

242 | point3D_id = binary_point_line_properties[0]

243 | xyz = np.array(binary_point_line_properties[1:4])

244 | rgb = np.array(binary_point_line_properties[4:7])

245 | error = np.array(binary_point_line_properties[7])

246 | track_length = read_next_bytes(

247 | fid, num_bytes=8, format_char_sequence="Q")[0]

248 | track_elems = read_next_bytes(

249 | fid, num_bytes=8*track_length,

250 | format_char_sequence="ii"*track_length)

251 | image_ids = np.array(tuple(map(int, track_elems[0::2])))

252 | point2D_idxs = np.array(tuple(map(int, track_elems[1::2])))

253 | points3D[point3D_id] = Point3D(

254 | id=point3D_id, xyz=xyz, rgb=rgb,

255 | error=error, image_ids=image_ids,

256 | point2D_idxs=point2D_idxs)

257 | return points3D

258 |

259 |

260 | def read_model(path, ext):

261 | if ext == ".txt":

262 | cameras = read_cameras_text(os.path.join(path, "cameras" + ext))

263 | images = read_images_text(os.path.join(path, "images" + ext))

264 | points3D = read_points3D_text(os.path.join(path, "points3D") + ext)

265 | else:

266 | cameras = read_cameras_binary(os.path.join(path, "cameras" + ext))

267 | images = read_images_binary(os.path.join(path, "images" + ext))

268 | points3D = read_points3d_binary(os.path.join(path, "points3D") + ext)

269 | return cameras, images, points3D

270 |

271 |

272 | def qvec2rotmat(qvec):

273 | return np.array([

274 | [1 - 2 * qvec[2]**2 - 2 * qvec[3]**2,

275 | 2 * qvec[1] * qvec[2] - 2 * qvec[0] * qvec[3],

276 | 2 * qvec[3] * qvec[1] + 2 * qvec[0] * qvec[2]],

277 | [2 * qvec[1] * qvec[2] + 2 * qvec[0] * qvec[3],

278 | 1 - 2 * qvec[1]**2 - 2 * qvec[3]**2,

279 | 2 * qvec[2] * qvec[3] - 2 * qvec[0] * qvec[1]],

280 | [2 * qvec[3] * qvec[1] - 2 * qvec[0] * qvec[2],

281 | 2 * qvec[2] * qvec[3] + 2 * qvec[0] * qvec[1],

282 | 1 - 2 * qvec[1]**2 - 2 * qvec[2]**2]])

283 |

284 |

285 | def rotmat2qvec(R):

286 | Rxx, Ryx, Rzx, Rxy, Ryy, Rzy, Rxz, Ryz, Rzz = R.flat

287 | K = np.array([

288 | [Rxx - Ryy - Rzz, 0, 0, 0],

289 | [Ryx + Rxy, Ryy - Rxx - Rzz, 0, 0],

290 | [Rzx + Rxz, Rzy + Ryz, Rzz - Rxx - Ryy, 0],

291 | [Ryz - Rzy, Rzx - Rxz, Rxy - Ryx, Rxx + Ryy + Rzz]]) / 3.0

292 | eigvals, eigvecs = np.linalg.eigh(K)

293 | qvec = eigvecs[[3, 0, 1, 2], np.argmax(eigvals)]

294 | if qvec[0] < 0:

295 | qvec *= -1

296 | return qvec

--------------------------------------------------------------------------------

/datasets/depth_utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import re

3 |

4 |

5 | def read_pfm(path):

6 | """Read pfm file.

7 |

8 | Args:

9 | path (str): path to file

10 |

11 | Returns:

12 | tuple: (data, scale)

13 | """

14 | with open(path, "rb") as file:

15 |

16 | color = None

17 | width = None

18 | height = None

19 | scale = None

20 | endian = None

21 |

22 | header = file.readline().rstrip()

23 | if header.decode("ascii") == "PF":

24 | color = True

25 | elif header.decode("ascii") == "Pf":

26 | color = False

27 | else:

28 | raise Exception("Not a PFM file: " + path)

29 |

30 | dim_match = re.match(r"^(\d+)\s(\d+)\s$", file.readline().decode("ascii"))

31 | if dim_match:

32 | width, height = list(map(int, dim_match.groups()))

33 | else:

34 | raise Exception("Malformed PFM header.")

35 |

36 | scale = float(file.readline().decode("ascii").rstrip())

37 | if scale < 0:

38 | # little-endian

39 | endian = "<"

40 | scale = -scale

41 | else:

42 | # big-endian

43 | endian = ">"

44 |

45 | data = np.fromfile(file, endian + "f")

46 | shape = (height, width, 3) if color else (height, width)

47 |

48 | data = np.reshape(data, shape)

49 | data = np.flipud(data)

50 |

51 | return data, scale

--------------------------------------------------------------------------------

/datasets/nerfpp.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import glob

3 | import numpy as np

4 | import os

5 | from PIL import Image

6 | from einops import rearrange

7 | from tqdm import tqdm

8 |

9 | from .ray_utils import get_ray_directions

10 |

11 | from .base import BaseDataset

12 |

13 |

14 | class NeRFPPDataset(BaseDataset):

15 | def __init__(self, root_dir, split='train', downsample=1.0, **kwargs):

16 | super().__init__(root_dir, split, downsample)

17 |

18 | self.read_intrinsics()

19 |

20 | if kwargs.get('read_meta', True):

21 | self.read_meta(split)

22 |

23 | def read_intrinsics(self):

24 | K = np.loadtxt(glob.glob(os.path.join(self.root_dir, 'train/intrinsics/*.txt'))[0],

25 | dtype=np.float32).reshape(4, 4)[:3, :3]

26 | K[:2] *= self.downsample

27 | w, h = Image.open(glob.glob(os.path.join(self.root_dir, 'train/rgb/*'))[0]).size

28 | w, h = int(w*self.downsample), int(h*self.downsample)

29 | self.K = torch.FloatTensor(K)

30 | self.directions = get_ray_directions(h, w, self.K)

31 | self.img_wh = (w, h)

32 |

33 | def read_meta(self, split):

34 | self.rays = []

35 | self.poses = []

36 |

37 | if split == 'test_traj':

38 | poses_path = \

39 | sorted(glob.glob(os.path.join(self.root_dir, 'camera_path/pose/*.txt')))

40 | self.poses = [np.loadtxt(p).reshape(4, 4)[:3] for p in poses_path]

41 | else:

42 | if split=='trainval':

43 | imgs = sorted(glob.glob(os.path.join(self.root_dir, 'train/rgb/*')))+\

44 | sorted(glob.glob(os.path.join(self.root_dir, 'val/rgb/*')))

45 | poses = sorted(glob.glob(os.path.join(self.root_dir, 'train/pose/*.txt')))+\

46 | sorted(glob.glob(os.path.join(self.root_dir, 'val/pose/*.txt')))

47 | else:

48 | imgs = sorted(glob.glob(os.path.join(self.root_dir, split, 'rgb/*')))

49 | poses = sorted(glob.glob(os.path.join(self.root_dir, split, 'pose/*.txt')))

50 |

51 | print(f'Loading {len(imgs)} {split} images ...')

52 | for img, pose in tqdm(zip(imgs, poses)):

53 | self.poses += [np.loadtxt(pose).reshape(4, 4)[:3]]

54 |

55 | img = Image.open(img).convert('RGB').resize(self.img_wh, Image.LANCZOS)

56 | img = rearrange(self.transform(img), 'c h w -> (h w) c')

57 |

58 | self.rays += [img]

59 |

60 | self.rays = torch.stack(self.rays) # (N_images, hw, ?)

61 | self.poses = torch.FloatTensor(self.poses) # (N_images, 3, 4)

62 |

--------------------------------------------------------------------------------

/datasets/ray_utils.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import numpy as np

3 | from kornia import create_meshgrid

4 | from einops import rearrange

5 |

6 |

7 | @torch.cuda.amp.autocast(dtype=torch.float32)

8 | def get_ray_directions(H, W, K, device='cpu', random=False, return_uv=False, flatten=True):

9 | """

10 | Get ray directions for all pixels in camera coordinate [right down front].

11 | Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/

12 | ray-tracing-generating-camera-rays/standard-coordinate-systems

13 |

14 | Inputs:

15 | H, W: image height and width

16 | K: (3, 3) camera intrinsics

17 | random: whether the ray passes randomly inside the pixel

18 | return_uv: whether to return uv image coordinates

19 |

20 | Outputs: (shape depends on @flatten)

21 | directions: (H, W, 3) or (H*W, 3), the direction of the rays in camera coordinate

22 | uv: (H, W, 2) or (H*W, 2) image coordinates

23 | """

24 | grid = create_meshgrid(H, W, False, device=device)[0] # (H, W, 2)

25 | u, v = grid.unbind(-1)

26 |

27 | fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]

28 | if random:

29 | directions = \

30 | torch.stack([(u-cx+torch.rand_like(u))/fx,

31 | (v-cy+torch.rand_like(v))/fy,

32 | torch.ones_like(u)], -1)

33 | else: # pass by the center

34 | directions = \

35 | torch.stack([(u-cx+0.5)/fx, (v-cy+0.5)/fy, torch.ones_like(u)], -1)

36 | if flatten:

37 | directions = directions.reshape(-1, 3)

38 | grid = grid.reshape(-1, 2)

39 |

40 | if return_uv:

41 | return directions, grid

42 | return directions

43 |

44 |

45 | @torch.cuda.amp.autocast(dtype=torch.float32)

46 | def get_rays(directions, c2w):

47 | """

48 | Get ray origin and directions in world coordinate for all pixels in one image.

49 | Reference: https://www.scratchapixel.com/lessons/3d-basic-rendering/

50 | ray-tracing-generating-camera-rays/standard-coordinate-systems

51 |

52 | Inputs:

53 | directions: (N, 3) ray directions in camera coordinate

54 | c2w: (3, 4) or (N, 3, 4) transformation matrix from camera coordinate to world coordinate

55 |

56 | Outputs:

57 | rays_o: (N, 3), the origin of the rays in world coordinate

58 | rays_d: (N, 3), the direction of the rays in world coordinate

59 | """

60 | if c2w.ndim==2:

61 | # Rotate ray directions from camera coordinate to the world coordinate

62 | rays_d = directions @ c2w[:, :3].T

63 | else:

64 | rays_d = rearrange(directions, 'n c -> n 1 c') @ c2w[..., :3].mT

65 | rays_d = rearrange(rays_d, 'n 1 c -> n c')

66 | # The origin of all rays is the camera origin in world coordinate

67 | rays_o = c2w[..., 3].expand_as(rays_d)

68 |

69 | return rays_o, rays_d

70 |

71 |

72 | @torch.cuda.amp.autocast(dtype=torch.float32)

73 | def axisangle_to_R(v):

74 | """

75 | Convert an axis-angle vector to rotation matrix

76 | from https://github.com/ActiveVisionLab/nerfmm/blob/main/utils/lie_group_helper.py#L47

77 |

78 | Inputs:

79 | v: (B, 3)

80 |

81 | Outputs:

82 | R: (B, 3, 3)

83 | """

84 | zero = torch.zeros_like(v[:, :1]) # (B, 1)

85 | skew_v0 = torch.cat([zero, -v[:, 2:3], v[:, 1:2]], 1) # (B, 3)

86 | skew_v1 = torch.cat([v[:, 2:3], zero, -v[:, 0:1]], 1)

87 | skew_v2 = torch.cat([-v[:, 1:2], v[:, 0:1], zero], 1)

88 | skew_v = torch.stack([skew_v0, skew_v1, skew_v2], dim=1) # (B, 3, 3)

89 |

90 | norm_v = rearrange(torch.norm(v, dim=1)+1e-7, 'b -> b 1 1')

91 | eye = torch.eye(3, device=v.device)

92 | R = eye + (torch.sin(norm_v)/norm_v)*skew_v + \

93 | ((1-torch.cos(norm_v))/norm_v**2)*(skew_v@skew_v)

94 | return R

95 |

96 |

97 | def normalize(v):

98 | """Normalize a vector."""

99 | return v/np.linalg.norm(v)

100 |

101 |

102 | def average_poses(poses, pts3d=None):

103 | """

104 | Calculate the average pose, which is then used to center all poses

105 | using @center_poses. Its computation is as follows:

106 | 1. Compute the center: the average of 3d point cloud (if None, center of cameras).

107 | 2. Compute the z axis: the normalized average z axis.

108 | 3. Compute axis y': the average y axis.

109 | 4. Compute x' = y' cross product z, then normalize it as the x axis.

110 | 5. Compute the y axis: z cross product x.

111 |

112 | Note that at step 3, we cannot directly use y' as y axis since it's

113 | not necessarily orthogonal to z axis. We need to pass from x to y.

114 | Inputs:

115 | poses: (N_images, 3, 4)

116 | pts3d: (N, 3)

117 |

118 | Outputs:

119 | pose_avg: (3, 4) the average pose

120 | """

121 | # 1. Compute the center

122 | if pts3d is not None:

123 | center = pts3d.mean(0)

124 | else:

125 | center = poses[..., 3].mean(0)

126 |

127 | # 2. Compute the z axis

128 | z = normalize(poses[..., 2].mean(0)) # (3)

129 |

130 | # 3. Compute axis y' (no need to normalize as it's not the final output)

131 | y_ = poses[..., 1].mean(0) # (3)

132 |

133 | # 4. Compute the x axis

134 | x = normalize(np.cross(y_, z)) # (3)

135 |

136 | # 5. Compute the y axis (as z and x are normalized, y is already of norm 1)

137 | y = np.cross(z, x) # (3)

138 |

139 | pose_avg = np.stack([x, y, z, center], 1) # (3, 4)

140 |

141 | return pose_avg

142 |

143 |

144 | def center_poses(poses, pts3d=None):

145 | """

146 | See https://github.com/bmild/nerf/issues/34

147 | Inputs:

148 | poses: (N_images, 3, 4)

149 | pts3d: (N, 3) reconstructed point cloud

150 |

151 | Outputs:

152 | poses_centered: (N_images, 3, 4) the centered poses

153 | pts3d_centered: (N, 3) centered point cloud

154 | """

155 |

156 | pose_avg = average_poses(poses, pts3d) # (3, 4)

157 | pose_avg_homo = np.eye(4)

158 | pose_avg_homo[:3] = pose_avg # convert to homogeneous coordinate for faster computation

159 | # by simply adding 0, 0, 0, 1 as the last row

160 | pose_avg_inv = np.linalg.inv(pose_avg_homo)

161 | last_row = np.tile(np.array([0, 0, 0, 1]), (len(poses), 1, 1)) # (N_images, 1, 4)

162 | poses_homo = \

163 | np.concatenate([poses, last_row], 1) # (N_images, 4, 4) homogeneous coordinate

164 |

165 | poses_centered = pose_avg_inv @ poses_homo # (N_images, 4, 4)

166 | poses_centered = poses_centered[:, :3] # (N_images, 3, 4)

167 |

168 | if pts3d is not None:

169 | pts3d_centered = pts3d @ pose_avg_inv[:, :3].T + pose_avg_inv[:, 3:].T

170 | return poses_centered, pts3d_centered

171 |

172 | return poses_centered

173 |

174 | def create_spheric_poses(radius, mean_h, n_poses=120):

175 | """

176 | Create circular poses around z axis.

177 | Inputs:

178 | radius: the (negative) height and the radius of the circle.

179 | mean_h: mean camera height

180 | Outputs:

181 | spheric_poses: (n_poses, 3, 4) the poses in the circular path

182 | """

183 | def spheric_pose(theta, phi, radius):

184 | trans_t = lambda t : np.array([

185 | [1,0,0,0],

186 | [0,1,0,2*mean_h],

187 | [0,0,1,-t]

188 | ])

189 |

190 | rot_phi = lambda phi : np.array([

191 | [1,0,0],

192 | [0,np.cos(phi),-np.sin(phi)],

193 | [0,np.sin(phi), np.cos(phi)]

194 | ])

195 |

196 | rot_theta = lambda th : np.array([

197 | [np.cos(th),0,-np.sin(th)],

198 | [0,1,0],

199 | [np.sin(th),0, np.cos(th)]

200 | ])

201 |

202 | c2w = rot_theta(theta) @ rot_phi(phi) @ trans_t(radius)

203 | c2w = np.array([[-1,0,0],[0,0,1],[0,1,0]]) @ c2w

204 | return c2w

205 |

206 | spheric_poses = []

207 | for th in np.linspace(0, 2*np.pi, n_poses+1)[:-1]:

208 | spheric_poses += [spheric_pose(th, -np.pi/12, radius)]

209 | return np.stack(spheric_poses, 0)

--------------------------------------------------------------------------------

/datasets/rtmv.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import glob

3 | import json

4 | #### Under construction. Don't use now

5 |

6 | import numpy as np

7 | import os

8 | import imageio

9 | import cv2

10 | from einops import rearrange

11 | from tqdm import tqdm

12 |

13 | from .ray_utils import get_ray_directions

14 |

15 | from .base import BaseDataset

16 |

17 |

18 | def srgb_to_linear(img):

19 | limit = 0.04045

20 | return np.where(img > limit, np.power((img + 0.055) / 1.055, 2.4), img / 12.92)

21 |

22 |

23 | def linear_to_srgb(img):

24 | limit = 0.0031308

25 | return np.where(img > limit, 1.055 * (img ** (1.0 / 2.4)) - 0.055, 12.92 * img)

26 |

27 |

28 | class RTMVDataset(BaseDataset):

29 | def __init__(self, root_dir, split='train', downsample=1.0, **kwargs):

30 | super().__init__(root_dir, split, downsample)

31 |

32 | with open(os.path.join(self.root_dir, '00000.json'), 'r') as f:

33 | meta = json.load(f)['camera_data']

34 | self.shift = np.array(meta['scene_center_3d_box'])

35 | self.scale = (np.array(meta['scene_max_3d_box'])-

36 | np.array(meta['scene_min_3d_box'])).max()/2 * 1.05 # enlarge a little

37 |

38 | fx = meta['intrinsics']['fx'] * downsample

39 | fy = meta['intrinsics']['fy'] * downsample

40 | cx = meta['intrinsics']['cx'] * downsample

41 | cy = meta['intrinsics']['cy'] * downsample

42 | w = int(meta['width']*downsample)

43 | h = int(meta['height']*downsample)

44 | K = np.float32([[fx, 0, cx],

45 | [0, fy, cy],

46 | [0, 0, 1]])

47 | self.K = torch.FloatTensor(K)

48 | self.directions = get_ray_directions(h, w, self.K)

49 | self.img_wh = (w, h)

50 |

51 | self.read_meta(split)

52 |

53 | def read_meta(self, split):

54 | self.rays = []

55 | self.poses = []

56 |

57 | if split == 'train': start_idx, end_idx = 0, 100

58 | elif split == 'trainval': start_idx, end_idx = 0, 105

59 | elif split == 'test': start_idx, end_idx = 105, 150

60 | else: raise ValueError(f'{split} split not recognized!')

61 | imgs = sorted(glob.glob(os.path.join(self.root_dir, '*[0-9].exr')))[start_idx:end_idx]

62 | poses = sorted(glob.glob(os.path.join(self.root_dir, '*.json')))[start_idx:end_idx]

63 |

64 | print(f'Loading {len(imgs)} {split} images ...')

65 | for img, pose in tqdm(zip(imgs, poses)):

66 | with open(pose, 'r') as f:

67 | m = json.load(f)['camera_data']

68 | c2w = np.zeros((3, 4), dtype=np.float32)

69 | c2w[:, :3] = -np.array(m['cam2world'])[:3, :3].T