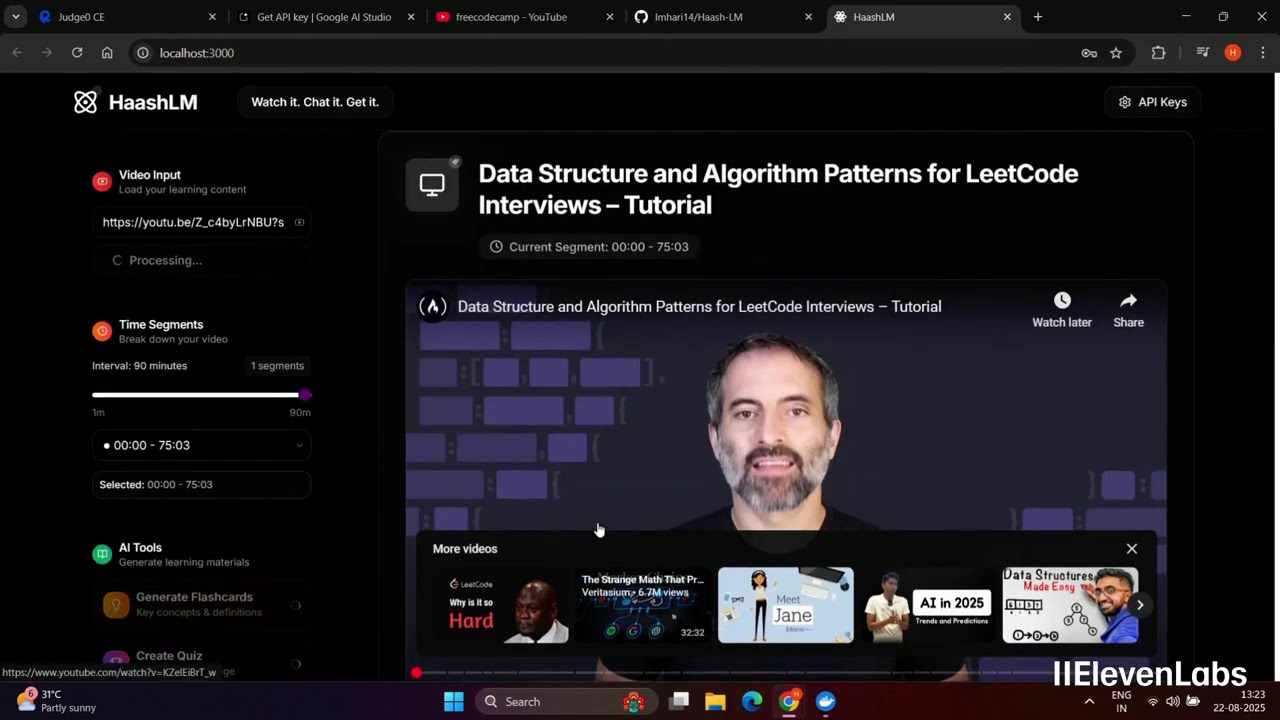

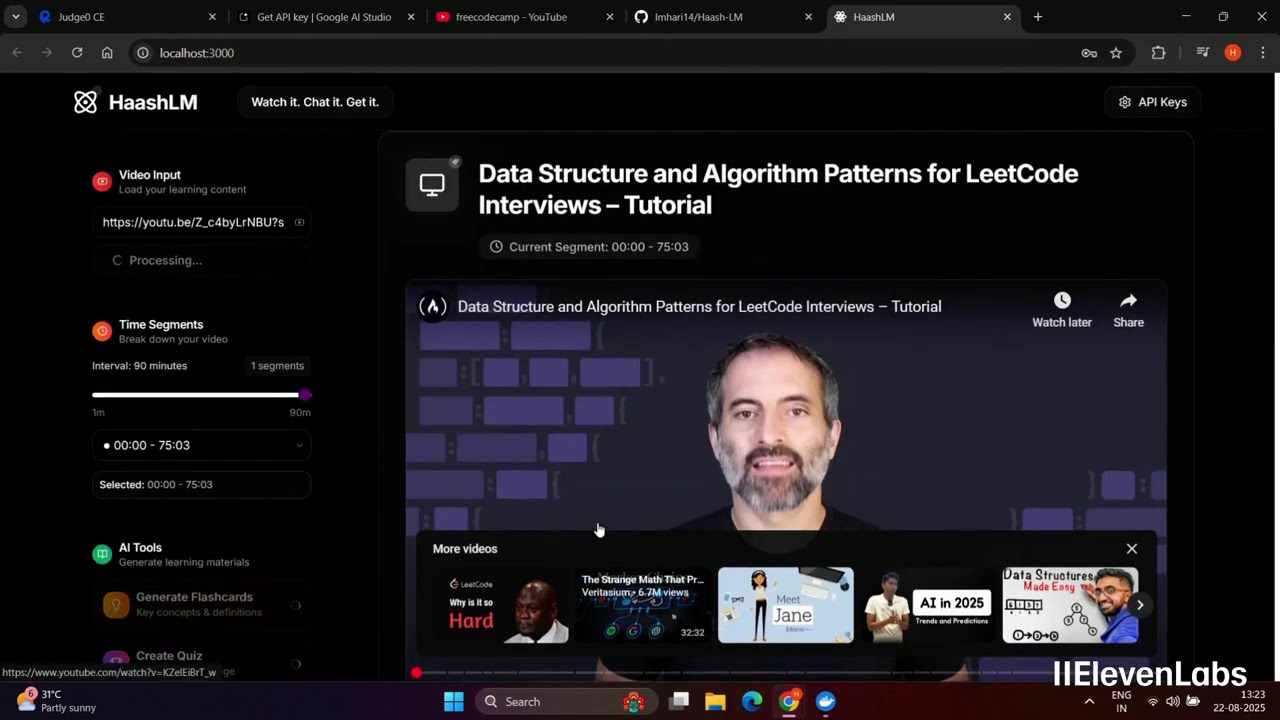

▶️ Watch the Full Demo on YouTube

## 🌐 Access

- **Frontend**: [http://localhost:3000](http://localhost:3000)
- **Health Check**: [http://localhost:8001/health](http://localhost:8001/health)

## 🔧 Configuration

### API Keys (Through Web UI)

1. Start the container
2. Open [http://localhost:3000](http://localhost:3000)
3. Configure API keys in settings

**Required Keys:**

- Gemini API Key ([https://aistudio.google.com/app/apikey](https://aistudio.google.com/app/apikey))
- Judge0 API Key ([https://judge0.com/](https://judge0.com/) or [https://rapidapi.com/judge0-official/api/judge0-ce](https://rapidapi.com/judge0-official/api/judge0-ce))

## 📈 Performance Comparison: HaashLM vs AI Studio

| Feature | **HaashLM** | AI Studio | Improvement |
|---------|-------------|-----------|-------------|
| **Max Video Length** | 90 minutes | 40 minutes | **+125%** |
| **Token Consumption** | ~150K tokens | 700K-800K tokens | **~5x Fewer** |
| **Video Processing Method** | Proprietary Smart Sampling | 1 FPS extraction | **10x More Efficient** |
| **Initial Processing Time** | 3 minutes (90-minute video) | 3 minutes (40-minute video) | **~2.25x Faster** per video minute |
| **Consecutive Responses** | 30-40 seconds | 3+ minutes | **~5x Faster** |
| **Context Handling** | Low hallucination rate (fewer tokens in context) | Hallucinates beyond ~500K tokens | **Rock Solid** |

**Why HaashLM handles longer videos:** AI Studio can technically accept 1-hour videos, but doing so fills the entire context window and leaves no room for chat replies, which makes about 40 minutes the practical limit for interactive use.

🚀 **Here's the game-changer:** HaashLM processes a full 90-minute video using only ~150K tokens. Its video processing samples key frames and optimizes content analysis, so you can learn from feature-length tutorials while keeping plenty of context space for meaningful conversations.

## 📋 Features

- **Video Processing**: Transcription, summarization, multi-language support
- **AI-Powered Generation**: Quizzes, flashcards, code exercises, Colab notebooks
- **Code Execution**: Multi-language support, real-time execution, Judge0 integration
- **Modern Interface**: Responsive design, dark/light themes, intuitive UX

## 🏗️ Architecture

- Frontend: Next.js 14 + TypeScript + Tailwind CSS
- Backend: FastAPI + Python 3.12
- AI Integration: Google Gemini 2.5 Flash API
- Code Execution: Judge0 CE API
- Video Processing: FFmpeg + yt-dlp
- Security: Obfuscated bytecode, non-root user

## 🔍 Health & Logs

```bash
# Health check
curl http://localhost:8001/health

# Logs
docker logs -f haashlm
```

## 🛠️ Troubleshooting

- **Invalid API key**: Check the key's format, permissions, and quota
- **Port conflicts**: Ensure ports 3000 and 8001 are free
- **System requirements**: At least 8-12 CPU cores and 8 GB RAM for a smooth experience
- **Network issues**: Check connectivity to the Gemini and Judge0 APIs

## 📝 Feedback

We’d love to hear your thoughts and suggestions to improve **HaashLM**!
Please take a minute to fill out our short feedback form:

👉 [Give Feedback Here](https://docs.google.com/forms/d/e/1FAIpQLSek_2hkcVyvUhSlnFPQO1H3P-ZK5RviKDQyuHsRoTJObV8q7g/viewform?usp=dialog)

**⭐ Star this repo if you find it useful!**
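
### Appendix: waiting for the health check from a script

The health endpoint from the Health & Logs section can also be polled from a script, for example to wait for the container to finish starting before opening the frontend. The sketch below is a minimal illustration and not part of HaashLM: the names `http_status` and `wait_until_healthy` are hypothetical, and it assumes that an HTTP 200 from `/health` means the backend is ready, which this README does not state explicitly.

```python
import time
import urllib.error
import urllib.request
from typing import Callable

# Health endpoint as listed in the Access section of this README.
HEALTH_URL = "http://localhost:8001/health"


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, just not with a 2xx status.
        return err.code


def wait_until_healthy(
    url: str = HEALTH_URL,
    attempts: int = 10,
    delay_seconds: float = 3.0,
    fetch: Callable[[str], int] = http_status,
) -> bool:
    """Return True as soon as fetch(url) reports 200, False if it never does.

    Assumption: status 200 means "healthy"; the response body is not inspected.
    """
    for attempt in range(1, attempts + 1):
        try:
            if fetch(url) == 200:
                return True
        except OSError:
            # Connection refused or timed out: the container may still be starting.
            pass
        if attempt < attempts:
            time.sleep(delay_seconds)
    return False
```

Calling `wait_until_healthy()` with the defaults tries the endpoint up to 10 times, 3 seconds apart. The `fetch` parameter exists only so the retry logic can be exercised without a running container.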