├── .DS_Store
├── .gitignore
├── NER
│   ├── MSRA
│   │   ├── ReadMe.md
│   │   ├── link.txt
│   │   ├── test1.txt
│   │   ├── testright1.txt
│   │   ├── train1.txt
│   │   └── train2pkl.py
│   ├── boson
│   │   ├── data_util.py
│   │   ├── license.txt
│   │   ├── origindata.txt
│   │   └── readme.md
│   ├── readme.txt
│   ├── renMinRiBao
│   │   ├── data_renmin_word.py
│   │   └── renmin.txt
│   └── weiboNER
│       └── readme.txt
├── THUCNews
│   └── readme.md
├── dialogue
│   └── SMP-2019-NLU
│       └── train.json
├── news_sohusite_xml
│   └── README.md
├── oppo_round1
│   └── README.md
├── pic
│   └── image-20200910233858454.png
├── readme.md
├── toutiao text classfication dataset
│   └── readme.md
└── word_vector
    └── readme.md

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/InsaneLife/ChineseNLPCorpus/65fc49b20af96c9f1ae159bef7f04b9e56157fda/.DS_Store

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | !/*/README.*

2 | !/*/readme.*

3 |

--------------------------------------------------------------------------------

/NER/MSRA/ReadMe.md:

--------------------------------------------------------------------------------

1 | # Dataset size

2 | Training set (46,364), test set (4,365)

3 |

4 |

5 | # reference

6 | - https://faculty.washington.edu/levow/papers/sighan06.pdf

--------------------------------------------------------------------------------

/NER/MSRA/link.txt:

--------------------------------------------------------------------------------

1 | http://www.pudn.com/Download/item/id/2435241.html

--------------------------------------------------------------------------------

/NER/MSRA/train2pkl.py:

--------------------------------------------------------------------------------

1 | #coding:utf-8

2 | from __future__ import print_function

3 | import codecs

4 | import re

5 | import pandas as pd

6 | import numpy as np

7 |

8 | def wordtag():

9 | input_data = codecs.open('train1.txt','r','utf-8')

10 | output_data = codecs.open('wordtag.txt','w','utf-8')

11 | for line in input_data.readlines():

12 | #line=re.split('[,。;!:?、‘’“”]/[o]'.decode('utf-8'),line.strip())

13 | line = line.strip().split()

14 |

15 | if len(line)==0:

16 | continue

17 | for word in line:

18 | word = word.split('/')

19 | if word[1]!='o':

20 | if len(word[0])==1:

21 | output_data.write(word[0]+"/B_"+word[1]+" ")

22 | elif len(word[0])==2:

23 | output_data.write(word[0][0]+"/B_"+word[1]+" ")

24 | output_data.write(word[0][1]+"/E_"+word[1]+" ")

25 | else:

26 | output_data.write(word[0][0]+"/B_"+word[1]+" ")

27 | for j in word[0][1:len(word[0])-1]:

28 | output_data.write(j+"/M_"+word[1]+" ")

29 | output_data.write(word[0][-1]+"/E_"+word[1]+" ")

30 | else:

31 | for j in word[0]:

32 | output_data.write(j+"/o"+" ")

33 | output_data.write('\n')

34 |

35 |

36 | input_data.close()

37 | output_data.close()

38 |

39 | wordtag()

40 | datas = list()

41 | labels = list()

42 | linedata=list()

43 | linelabel=list()

44 |

45 | tag2id = {'' :0,

46 | 'B_ns' :1,

47 | 'B_nr' :2,

48 | 'B_nt' :3,

49 | 'M_nt' :4,

50 | 'M_nr' :5,

51 | 'M_ns' :6,

52 | 'E_nt' :7,

53 | 'E_nr' :8,

54 | 'E_ns' :9,

55 | 'o': 0}

56 |

57 | id2tag = {0:'' ,

58 | 1:'B_ns' ,

59 | 2:'B_nr' ,

60 | 3:'B_nt' ,

61 | 4:'M_nt' ,

62 | 5:'M_nr' ,

63 | 6:'M_ns' ,

64 | 7:'E_nt' ,

65 | 8:'E_nr' ,

66 | 9:'E_ns' ,

67 | 10: 'o'}

68 |

69 |

70 | input_data = codecs.open('wordtag.txt','r','utf-8')

71 | for line in input_data.readlines():

72 | line=re.split(u'[,。;!:?、‘’“”]/[o]',line.strip())

73 | for sen in line:

74 | sen = sen.strip().split()

75 | if len(sen)==0:

76 | continue

77 | linedata=[]

78 | linelabel=[]

79 | num_not_o=0

80 | for word in sen:

81 | word = word.split('/')

82 | linedata.append(word[0])

83 | linelabel.append(tag2id[word[1]])

84 |

85 | if word[1]!='o':

86 | num_not_o+=1

87 | if num_not_o!=0:

88 | datas.append(linedata)

89 | labels.append(linelabel)

90 |

91 | input_data.close()

92 | print(len(datas))

93 | print(len(labels))

94 |

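95 | # compiler.ast no longer exists in Python 3; datas is a list of token lists, so one level of flattening is enough.

96 | all_words = [word for sentence in datas for word in sentence]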

97 | sr_allwords = pd.Series(all_words)

98 | sr_allwords = sr_allwords.value_counts()

99 | set_words = sr_allwords.index

100 | set_ids = range(1, len(set_words)+1)

101 | word2id = pd.Series(set_ids, index=set_words)

102 | id2word = pd.Series(set_words, index=set_ids)

103 |

104 | word2id["unknow"] = len(word2id)+1

105 |

106 |

107 | max_len = 50

108 | def X_padding(words):

109 | """Convert words to ids and pad or truncate the result to max_len."""

110 | ids = list(word2id[words])

111 | if len(ids) >= max_len: # truncate if too long

112 | return ids[:max_len]

113 | ids.extend([0]*(max_len-len(ids))) # pad with 0 if too short

114 | return ids

115 |

116 | def y_padding(ids):

117 | """Pad or truncate the list of tag ids to max_len."""

118 | if len(ids) >= max_len: # truncate if too long

119 | return ids[:max_len]

120 | ids.extend([0]*(max_len-len(ids))) # pad with 0 if too short

121 | return ids

122 |

123 | df_data = pd.DataFrame({'words': datas, 'tags': labels}, index=range(len(datas)))

124 | df_data['x'] = df_data['words'].apply(X_padding)

125 | df_data['y'] = df_data['tags'].apply(y_padding)

126 | x = np.asarray(list(df_data['x'].values))

127 | y = np.asarray(list(df_data['y'].values))

128 |

129 | from sklearn.model_selection import train_test_split

130 | x_train,x_test, y_train, y_test = train_test_split(x, y, test_size=0.1, random_state=43)

131 | x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.2, random_state=43)

132 |

133 |

134 | print('Finished creating the data generator.')

135 | import pickle

136 | import os

137 | with open('../dataMSRA.pkl', 'wb') as outp:

138 | pickle.dump(word2id, outp)

139 | pickle.dump(id2word, outp)

140 | pickle.dump(tag2id, outp)

141 | pickle.dump(id2tag, outp)

142 | pickle.dump(x_train, outp)

143 | pickle.dump(y_train, outp)

144 | pickle.dump(x_test, outp)

145 | pickle.dump(y_test, outp)

146 | pickle.dump(x_valid, outp)

147 | pickle.dump(y_valid, outp)

148 | print('** Finished saving the data.')

149 |

150 |

151 |

--------------------------------------------------------------------------------
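The `wordtag()` step in `train2pkl.py` expands each `word/tag` token into per-character tags: a one-character entity gets `B_`, a two-character entity gets `B_` then `E_`, longer entities get `B_`, one `M_` per inner character, then `E_`, and `o` words are tagged `o` character by character. The following is a minimal sketch of that rule, assuming Python 3; the helper names `expand_token` and `expand_line` are hypothetical and do not appear in the repository.

```python
def expand_token(token):
    """Expand one 'word/tag' token into a list of 'char/tag' strings.

    Follows the branching in wordtag(): non-'o' words are tagged
    B_/M_/E_ per character, 'o' words are tagged 'o' per character.
    """
    parts = token.split('/')
    word, tag = parts[0], parts[1]
    if tag == 'o':
        return [ch + '/o' for ch in word]
    if len(word) == 1:
        return [word + '/B_' + tag]
    # For a two-character word the middle slice is empty, leaving B_ and E_.
    middle = [ch + '/M_' + tag for ch in word[1:-1]]
    return [word[0] + '/B_' + tag] + middle + [word[-1] + '/E_' + tag]


def expand_line(line):
    """Expand a whitespace-separated line of 'word/tag' tokens."""
    out = []
    for token in line.split():
        out.extend(expand_token(token))
    return ' '.join(out)
```

For example, `expand_line('北京市/ns 的/o')` gives `'北/B_ns 京/M_ns 市/E_ns 的/o'`.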

/NER/boson/data_util.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python

2 | # -*- coding: UTF-8 -*-

3 |

4 | from __future__ import print_function

5 | import codecs

6 | import pandas as pd

7 | import numpy as np

8 | import re

9 |

10 | def data2pkl():

11 | datas = list()

12 | labels = list()

13 | linedata=list()

14 | linelabel=list()

15 | tags = set()

16 |

17 | input_data = codecs.open('./wordtagsplit.txt','r','utf-8')

18 | for line in input_data.readlines():

19 | line = line.split()

20 | linedata=[]

21 | linelabel=[]

22 | numNotO=0

23 | for word in line:

24 | word = word.split('/')

25 | linedata.append(word[0])

26 | linelabel.append(word[1])

27 | tags.add(word[1])

28 | if word[1]!='O':

29 | numNotO+=1

30 | if numNotO!=0:

31 | datas.append(linedata)

32 | labels.append(linelabel)

33 |

34 | input_data.close()

35 | print(len(datas),tags)

36 | print(len(labels))

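37 | # compiler.ast no longer exists in Python 3; datas is a list of token lists, so one level of flattening is enough.

38 | all_words = [word for sentence in datas for word in sentence]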

39 | sr_allwords = pd.Series(all_words)

40 | sr_allwords = sr_allwords.value_counts()

41 | set_words = sr_allwords.index

42 | set_ids = range(1, len(set_words)+1)

43 |

44 |

45 | tags = [i for i in tags]

46 | tag_ids = range(len(tags))

47 | word2id = pd.Series(set_ids, index=set_words)

48 | id2word = pd.Series(set_words, index=set_ids)

49 | tag2id = pd.Series(tag_ids, index=tags)

50 | id2tag = pd.Series(tags, index=tag_ids)

51 |

52 | word2id["unknow"] = len(word2id)+1

53 | print(word2id)

54 | max_len = 60

55 | def X_padding(words):

56 | ids = list(word2id[words])

57 | if len(ids) >= max_len:

58 | return ids[:max_len]

59 | ids.extend([0]*(max_len-len(ids)))

60 | return ids

61 |

62 | def y_padding(tags):

63 | ids = list(tag2id[tags])

64 | if len(ids) >= max_len:

65 | return ids[:max_len]

66 | ids.extend([0]*(max_len-len(ids)))

67 | return ids

68 | df_data = pd.DataFrame({'words': datas, 'tags': labels}, index=range(len(datas)))

69 | df_data['x'] = df_data['words'].apply(X_padding)

70 | df_data['y'] = df_data['tags'].apply(y_padding)

71 | x = np.asarray(list(df_data['x'].values))

72 | y = np.asarray(list(df_data['y'].values))

73 |

74 | from sklearn.model_selection import train_test_split

75 | x_train,x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=43)

76 | x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.2, random_state=43)

77 |

78 |

79 | import pickle

80 | import os

81 | with open('../Bosondata.pkl', 'wb') as outp:

82 | pickle.dump(word2id, outp)

83 | pickle.dump(id2word, outp)

84 | pickle.dump(tag2id, outp)

85 | pickle.dump(id2tag, outp)

86 | pickle.dump(x_train, outp)

87 | pickle.dump(y_train, outp)

88 | pickle.dump(x_test, outp)

89 | pickle.dump(y_test, outp)

90 | pickle.dump(x_valid, outp)

91 | pickle.dump(y_valid, outp)

92 | print('** Finished saving the data.')

93 |

94 |

95 |

96 | def origin2tag():

97 | input_data = codecs.open('./origindata.txt','r','utf-8')

98 | output_data = codecs.open('./wordtag.txt','w','utf-8')

99 | for line in input_data.readlines():

100 | line=line.strip()

101 | i=0

102 | while i

25 | - PKU :

26 |

--------------------------------------------------------------------------------

/NER/renMinRiBao/data_renmin_word.py:

--------------------------------------------------------------------------------

1 | # -*- coding: UTF-8 -*-

2 |

3 | from __future__ import print_function

4 | import codecs

5 | import re

6 | import pdb

7 | import pandas as pd

8 | import numpy as np

9 | import collections

10 | def originHandle():

11 | with open('./renmin.txt','r') as inp,open('./renmin2.txt','w') as outp:

12 | for line in inp.readlines():

13 | line = line.split(' ')

14 | i = 1

15 | while i= max_len:

114 | return ids[:max_len]

115 | ids.extend([0]*(max_len-len(ids)))

116 | return ids

117 |

118 | def y_padding(tags):

119 | ids = list(tag2id[tags])

120 | if len(ids) >= max_len:

121 | return ids[:max_len]

122 | ids.extend([0]*(max_len-len(ids)))

123 | return ids

124 | df_data = pd.DataFrame({'words': datas, 'tags': labels}, index=range(len(datas)))

125 | df_data['x'] = df_data['words'].apply(X_padding)

126 | df_data['y'] = df_data['tags'].apply(y_padding)

127 | x = np.asarray(list(df_data['x'].values))

128 | y = np.asarray(list(df_data['y'].values))

129 |

130 | from sklearn.model_selection import train_test_split

131 | x_train,x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=43)

132 | x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.2, random_state=43)

133 |

134 |

135 | import pickle

136 | import os

137 | with open('../renmindata.pkl', 'wb') as outp:

138 | pickle.dump(word2id, outp)

139 | pickle.dump(id2word, outp)

140 | pickle.dump(tag2id, outp)

141 | pickle.dump(id2tag, outp)

142 | pickle.dump(x_train, outp)

143 | pickle.dump(y_train, outp)

144 | pickle.dump(x_test, outp)

145 | pickle.dump(y_test, outp)

146 | pickle.dump(x_valid, outp)

147 | pickle.dump(y_valid, outp)

148 | print('** Finished saving the data.')

149 |

150 |

151 |

152 | originHandle()

153 | originHandle2()

154 | sentence2split()

155 | data2pkl()

156 |

--------------------------------------------------------------------------------
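The three preprocessing scripts above each write ten objects into a single `.pkl` file with consecutive `pickle.dump` calls, so reading the file back takes the same number of `pickle.load` calls in the same order. The sketch below assumes Python 3; the function name `load_corpus_pickle` and the `FIELDS` list are hypothetical helpers, with field names taken from the variable names in the scripts.

```python
import pickle

# Field names follow the order of the pickle.dump calls in the scripts.
FIELDS = ['word2id', 'id2word', 'tag2id', 'id2tag',
          'x_train', 'y_train', 'x_test', 'y_test',
          'x_valid', 'y_valid']


def load_corpus_pickle(path):
    """Read the ten objects back, one pickle.load call per dumped object."""
    with open(path, 'rb') as inp:
        return {name: pickle.load(inp) for name in FIELDS}
```

A file written under Python 2 may additionally need an `encoding` argument to `pickle.load` (for instance `encoding='latin1'`) when read under Python 3; whether that applies depends on how the file was produced.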

/NER/weiboNER/readme.txt:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Weibo named entity recognition

4 | - https://github.com/hltcoe/golden-horse

5 | - https://github.com/hltcoe/golden-horse/tree/master/data

6 |

7 |

8 |

--------------------------------------------------------------------------------

/THUCNews/readme.md:

--------------------------------------------------------------------------------

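1 | ## Open-source license

2 |

3 | 1. THUCTC is open source and free of charge for universities, research institutes, companies, and individual researchers in China and abroad.

4 | 2. Institutions or individuals who intend to use THUCTC commercially should email thunlp@gmail.com to negotiate a technical license agreement.

5 | 3. Comments and suggestions on the toolkit are welcome; please email thunlp@gmail.com.

6 | 4. If you publish a paper or obtain research results based on THUCTC, please state "使用了清华大学THUCTC" ("used Tsinghua University's THUCTC") in the paper or results report, and cite it in the following format:

7 | - **Chinese: 孙茂松,李景阳,郭志芃,赵宇,郑亚斌,司宪策,刘知远. THUCTC:一个高效的中文文本分类工具包. 2016.**

8 | - **English: Maosong Sun, Jingyang Li, Zhipeng Guo, Yu Zhao, Yabin Zheng, Xiance Si, Zhiyuan Liu. THUCTC: An Efficient Chinese Text Classifier. 2016.**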

9 |

10 |

11 |

12 | Download link: http://thuctc.thunlp.org/#%E8%8E%B7%E5%8F%96%E9%93%BE%E6%8E%A5

--------------------------------------------------------------------------------

/news_sohusite_xml/README.md:

--------------------------------------------------------------------------------

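1 | ## Sohu News Data (SogouCS), version 2012

2 |

3 | ##### Introduction:

4 |

5 | News data from 18 Sohu News channels (domestic, international, sports, society, entertainment, and others) collected from June to July 2012, providing the URL and body text of each article.

6 |

7 | ##### Format:

8 |

9 | The data format is: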

10 |

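11 | <doc>

12 |

13 | <url>page URL</url>

14 |

15 | <docno>page ID</docno>

16 |

17 | <contenttitle>page title</contenttitle>

18 |

19 | <content>page content</content>

20 |

21 | </doc>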

22 |

23 | Note: the content field has HTML tags removed and holds the plain text of the news body.
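A rough sketch of reading records in this format follows. It assumes each record is wrapped in `<doc>` with `<url>`, `<docno>`, `<contenttitle>`, and `<content>` children; treat these tag names as an assumption to verify against the downloaded files. A regular expression is used instead of an XML parser because a file of concatenated records has no single root element. The names `DOC_RE` and `iter_docs` are hypothetical.

```python
import re

# One record: <doc> wrapping url, docno, contenttitle, and content fields.
DOC_RE = re.compile(
    r'<doc>\s*<url>(?P<url>.*?)</url>\s*'
    r'<docno>(?P<docno>.*?)</docno>\s*'
    r'<contenttitle>(?P<title>.*?)</contenttitle>\s*'
    r'<content>(?P<content>.*?)</content>\s*</doc>',
    re.DOTALL,
)


def iter_docs(text):
    """Yield one dict (url, docno, title, content) per record in text."""
    for match in DOC_RE.finditer(text):
        yield match.groupdict()
```

The downloaded files may not be UTF-8 encoded, so the text passed to `iter_docs` may first need decoding with the correct codec.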

24 |

25 | ##### Related tasks:

26 |

27 | Text classification

28 |

29 | Event detection and tracking

30 |

31 | New word discovery

32 |

33 | Named entity recognition

34 |

35 | Automatic summarization

36 |

37 | ##### Related resources:

38 |

39 | [Full-web news data](https://www.sogou.com/labs/resource/ca.php) [Internet corpus](https://www.sogou.com/labs/resource/t.php) [Reuters-21578](http://kdd.ics.uci.edu/databases/reuters21578/reuters21578.html) [20 Newsgroups](http://kdd.ics.uci.edu/databases/20newsgroups/20newsgroups.html) [Web KB](http://www-2.cs.cmu.edu/afs/cs.cmu.edu/project/theo-11/www/wwkb/)

40 |

41 | ##### Publications:

42 |

43 | [Automatic Online News Issue Construction in Web Environment](https://www.sogou.com/labs/paper/Automatic_Online_News_Issue_Construction_in_Web_Environment.pdf)

44 |

45 | Canhui Wang, Min Zhang, Shaoping ma, Liyun Ru, the 17th International World Wide Web Conference (WWW08), Beijing, April, 2008.

46 |

47 | ##### Download:

48 |

49 | Before downloading, please read the [Sogou Lab data license agreement (Chinese)](https://www.sogou.com/labs/resource/license.php) carefully.

50 |

51 | Please read the "[License for Use of Sogou Lab Data](https://www.sogou.com/labs/resource/license_en.php)" carefully before downloading.

52 |

53 | Mini version (sample data, 110KB): [tar.gz](http://download.labs.sogou.com/dl/sogoulabdown/SogouCS/news_sohusite_xml.smarty.tar.gz), [zip](http://download.labs.sogou.com/dl/sogoulabdown/SogouCS/news_sohusite_xml.smarty.zip)

54 |

55 | Full version (648MB): [tar.gz](https://www.sogou.com/labs/resource/ftp.php?dir=/Data/SogouCS/news_sohusite_xml.full.tar.gz), [zip](https://www.sogou.com/labs/resource/ftp.php?dir=/Data/SogouCS/news_sohusite_xml.full.zip)

56 |

57 | Previous version: 2008 edition (6KB): full version (also available as a [hard-disk copy](https://www.sogou.com/labs/resource/contact.php), 65GB): [tar.gz](https://www.sogou.com/labs/resource/ftp.php?dir=/Data/SogouCS/SogouCS.tar.gz)

58 |

59 | Mini version (sample data, 1KB): [tar.gz](http://download.labs.sogou.com/dl/sogoulabdown/SogouCS/SogouCS.mini.tar.gz)

60 |

61 | Reduced version (one month of data, 347MB): [tar.gz](https://www.sogou.com/labs/resource/ftp.php?dir=/Data/SogouCS/SogouCS.reduced.tar.gz)

62 |

63 | Special version (data from [Canhui Wang's WWW08 paper](https://www.sogou.com/labs/paper/Automatic_Online_News_Issue_Construction_in_Web_Environment.pdf), 647KB): [tar.gz](http://download.labs.sogou.com/dl/sogoulabdown/SogouCS/SogouCS.WWW08.tar.gz)

64 |

65 |

66 |

67 | Source: https://www.sogou.com/labs/resource/cs.php

68 |

69 |

--------------------------------------------------------------------------------

/oppo_round1/README.md:

--------------------------------------------------------------------------------

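1 | The data comes from a Tianchi big-data competition and addresses query-title semantic matching for OPPO mobile search ranking.

2 |

3 | Data format: tab-separated (\t) columns, namely the four feature columns below plus the label column.

4 |

5 | | Field | Description | Example |

6 | | --- | --- | --- |

7 | | prefix | User input (query prefix) | 刘德 |

8 | | query_prediction | Full queries predicted from the current prefix, at most 10; a predicted query may be the prefix itself, and the numbers are statistical probabilities | {“刘德华”: “0.5”, “刘德华的歌”: “0.3”, …} |

9 | | title | Article title | 刘德华 |

10 | | tag | Article content tag | 百科 |

11 | | label | Whether the result was clicked | 0 or 1 |

12 |

13 | To train a DSSM demo, prefix and title are used as the positive sample, and prefix paired with each query_prediction entry (other than title) as negative samples.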
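The sampling rule above can be sketched as follows. This is an illustration under assumptions, not the repository's code: it assumes five tab-separated fields in the order of the table, and that `query_prediction` is a JSON object written with plain ASCII quotes. The function name `build_pairs` is hypothetical.

```python
import json


def build_pairs(line):
    """Turn one tab-separated record into (prefix, text, label) pairs.

    The title is the positive example; every predicted query that
    differs from the title becomes a negative example.
    """
    prefix, query_prediction, title, _tag, _label = line.rstrip('\n').split('\t')
    predictions = json.loads(query_prediction) if query_prediction else {}
    pairs = [(prefix, title, 1)]
    pairs.extend((prefix, query, 0) for query in predictions if query != title)
    return pairs
```

Note that the click `label` column is not used here, because the rule described above builds positives and negatives from `title` and `query_prediction` only.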

14 |

15 |

16 |

17 | Download link:

18 | Link: https://pan.baidu.com/s/1KzLK_4Iv0CHOkkut7TJBkA?pwd=ju52 (extraction code: ju52)

19 |

20 | This data is for personal experiments only. For any data copyright issue, contact chou.young@qq.com to have it taken down.

21 |

--------------------------------------------------------------------------------

/pic/image-20200910233858454.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/InsaneLife/ChineseNLPCorpus/65fc49b20af96c9f1ae159bef7f04b9e56157fda/pic/image-20200910233858454.png

--------------------------------------------------------------------------------

/readme.md:

--------------------------------------------------------------------------------

1 | [TOC]

2 |

3 |

4 | # ChineseNlpCorpus

5 |

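6 | Chinese natural language processing datasets, collected as material for everyday experiments. Additions via pull request are welcome.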

7 |

8 | # Reading comprehension

9 |

10 | By method, reading comprehension datasets are mainly either extractive or classification-based (opinion extraction). By passage, they are further divided into single-passage and multi-passage. In the multi-passage case, the answer to a question may have to be extracted from several articles, each holding only part of it, so multi-passage extraction must decide how to merge the pieces, dropping duplicates and keeping complementary content.

11 |

12 | | Name | Size | Description | Organization | Paper | Download | Evaluation |

13 | | --- | --- | --- | --- | --- | --- | --- |

14 | | DuReader | 300K questions, 1.4M documents, 660K answers | Question-answering reading comprehension dataset | Baidu | [link](https://www.aclweb.org/anthology/W18-2605.pdf) | [link](https://ai.baidu.com/broad/introduction?dataset=dureader) | [2018 NLP Challenge on MRC](http://mrc2018.cipsc.org.cn/) [2019 Language and Intelligence Challenge on MRC](http://lic2019.ccf.org.cn/) |

15 | | $DuReader_{robust}$ | 22K questions | Single-passage extractive reading comprehension dataset | Baidu | | [link](https://github.com/PaddlePaddle/Research/tree/master/NLP/DuReader-Robust-BASELINE) | [evaluation](https://aistudio.baidu.com/aistudio/competition/detail/49/?isFromLUGE=TRUE) |

16 | | CMRC 2018 | 20K questions | Span-extraction reading comprehension | Joint Laboratory of HIT and iFLYTEK | [link](https://www.aclweb.org/anthology/D19-1600.pdf) | [link](https://github.com/ymcui/cmrc2018) | [Second "iFLYTEK Cup" Chinese Machine Reading Comprehension Evaluation](https://hfl-rc.github.io/cmrc2018/) |

17 | | $DuReader_{yesno}$ | 90K | Opinion (yes/no) reading comprehension dataset | Baidu | | [link](https://aistudio.baidu.com/aistudio/competition/detail/49/?isFromLUGE=TRUE) | [evaluation](https://aistudio.baidu.com/aistudio/competition/detail/49/?isFromLUGE=TRUE) |

18 | | $DuReader_{checklist}$ | 10K | Extractive dataset | Baidu | | [link](https://aistudio.baidu.com/aistudio/competition/detail/49/?isFromLUGE=TRUE) | |

19 |

20 | # Task-oriented dialogue data

21 |

22 | ## Medical DS

23 |

24 | A Chinese medical diagnosis dataset for task-oriented dialogue, released by Fudan University and built from real conversations on Baidu Muzhi Doctor (百度拇指医生).

25 |

26 | | Name | Size | Created | Authors | Organization | Paper | Download |

27 | | --- | --- | --- | --- | --- | --- | --- |

28 | | Medical DS | 710 dialogues, 67 symptoms, 4 diseases | 2018 | Liu et al. | Fudan University | [link](http://www.sdspeople.fudan.edu.cn/zywei/paper/liu-acl2018.pdf) | [link](http://www.sdspeople.fudan.edu.cn/zywei/data/acl2018-mds.zip) |

29 |

30 | ## Qianyan (千言) datasets

31 |

32 | Includes knowledge-grounded dialogue, recommendation dialogue, and persona dialogue. See the [official site](https://aistudio.baidu.com/aistudio/competition/detail/48/?isFromLUGE=TRUE) for details.

33 | Qianyan hosts many more datasets; see [https://www.luge.ai/#/](https://www.luge.ai/#/)

34 | ## [CATSLU](https://dl.acm.org/doi/10.1145/3340555.3356098)

35 |

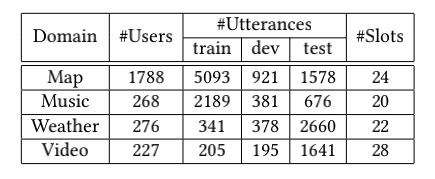

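36 | Earlier dialogue datasets concentrate on semantic understanding, while in real industrial settings ASR also makes errors, which is often overlooked. [CATSLU](https://dl.acm.org/doi/10.1145/3340555.3356098), by contrast, is a Chinese dialogue dataset that pairs speech with NLU text understanding, so experiments can run end to end from the speech signal to understanding, for example by modeling language understanding directly from phonemes (rather than words or tokens).

37 |

38 | Data statistics: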

39 |

40 |

41 |

42 | Official handbook: [CATSLU](https://sites.google.com/view/catslu/handbook)

43 | Data download: [https://sites.google.com/view/CATSLU/home](https://sites.google.com/view/CATSLU/home)

44 |

45 | ## NLPCC2018 Shared Task 4

46 |

47 | Dialogue logs from a real commercial Chinese in-vehicle voice task-oriented dialogue system.

48 |

49 | | Name | Size | Created | Authors | Organization | Paper | Download | Evaluation |

50 | | --- | --- | --- | --- | --- | --- | --- | --- |

51 | | NLPCC2018 Shared Task 4 | 5,800 dialogues, 26K questions | 2018 | Zhao et al. | Tencent | [link](http://tcci.ccf.org.cn/conference/2018/papers/EV33.pdf) | [training and development sets](http://tcci.ccf.org.cn/conference/2018/dldoc/trainingdata04.zip) [test set](http://tcci.ccf.org.cn/conference/2018/dldoc/tasktestdata04.zip) | [NLPCC 2018 Spoken Language Understanding in Task-oriented Dialog Systems](http://tcci.ccf.org.cn/conference/2018/taskdata.php) |

52 |

53 | NLPCC is held every year and releases many Chinese datasets, covering tasks such as dialogue, QA, NER, sentiment detection, and summarization.

54 |

55 | ## SMP

56 |

57 | This is a series of datasets; new ones are released every year.

58 | ### SMP-2020-ECDT few-shot dialogue language understanding dataset

59 | > Called the FewJoint benchmark in the paper. It draws on real user utterances from the iFLYTEK AIUI open platform and expert-constructed utterances (at a ratio of roughly 3:7) and covers 59 real domains, making it one of the dialogue datasets with the most domains to date. This avoids constructing simulated domains, so it is well suited to evaluating few-shot and meta-learning methods. It has 45 training domains, 5 development domains, and 9 test domains.

60 |

61 | Dataset introduction: [news article](https://mp.weixin.qq.com/s?__biz=MzIxMjAzNDY5Mg==&mid=2650799572&idx=1&sn=509e256c62d80e2866f38e9d026d4af3&chksm=8f47683fb830e129f0ac7d2ff294ad1bd2cad5dc2050ae1ab81a7b108b79a6edcdba3d8030f9&mpshare=1&scene=1&srcid=1007YJCULNtwsRCUx7b35S0m&sharer_sharetime=1602603945222&sharer_shareid=904fa30621d7b898b031f4fdb5da41fc&key=9ae93b5dab71cae000c0dd901c537565d9fac572f40bafa92d79cee849b96fddbdece4d7151bec0f9a1c330dc3a9ddfe5ff4d742eef3165a71be493cd344e6ebc0a34dd5ebc61cb3c519f3a1d765f480cd5fd85d6b45655cc09b9816726ff06c2480b5287346c11ef1a18c0195b51259bd768110b49eb4b7583b40580369bcd2&ascene=1&uin=MTAxMzA5NjY2NQ%3D%3D&devicetype=Windows+10+x64&version=6300002f&lang=zh_CN&exportkey=ATbSQY9SBUjBETt7KZpV%2BIk%3D&pass_ticket=gGOfSeYJMhUPfn3Fbu8lBtWlGjw%2BANSIQ4rgajKq6vxzOW%2Fm%2Bwcw3YkXM0bkiM%2Bz&wx_header=0)

62 |

63 | Dataset paper: https://arxiv.org/abs/2009.08138

64 | Dataset download: https://atmahou.github.io/attachments/FewJoint.zip

65 | Few-shot toolkit homepage: https://github.com/AtmaHou/MetaDialog

66 |

67 | ### SMP-2019-NLU

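68 | A dataset with three subtasks: domain classification, intent recognition, and slot filling. Training set download: [train.json](./dialogue/SMP-2019-NLU/train.json). Only the training set has been obtained so far; if anyone has the test set, contributions are welcome.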

69 |

70 | | | Train |

71 | | ------ | ----- |

72 | | Domain | 24 |

73 | | Intent | 29 |

74 | | Slot | 63 |

75 | | Samples | 2579 |
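Counts like those in the table can be recomputed from `train.json` along the following lines. This is only a sketch: it assumes the file is a JSON list of records with `domain`, `intent`, and `slots` keys, where `slots` maps slot names to values. That schema is an assumption and should be checked against the actual file. The function names are hypothetical.

```python
import json


def load_samples(path):
    """Load the training file, assumed to be a JSON list of records."""
    with open(path, encoding='utf-8') as f:
        return json.load(f)


def summarize(samples):
    """Count distinct domains, intents, and slot names, plus sample count."""
    domains = {s.get('domain') for s in samples}
    intents = {s.get('intent') for s in samples}
    slots = {name for s in samples for name in (s.get('slots') or {})}
    return {
        'Domain': len(domains),
        'Intent': len(intents),
        'Slot': len(slots),
        'Samples': len(samples),
    }
```

Calling `summarize(load_samples('./dialogue/SMP-2019-NLU/train.json'))` would then return a dictionary with the same four row labels as the table, provided the schema assumption holds.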

76 |

77 |

78 |

79 | ### SMP-2017

80 | Chinese dialogue intent recognition dataset. Official Git repository and data: [https://github.com/HITlilingzhi/SMP2017ECDT-DATA](https://github.com/HITlilingzhi/SMP2017ECDT-DATA)

81 |

82 | Dataset:

83 |

84 | | | Count |

85 | | ------------- | ----- |

86 | | Train samples | 2299 |

87 | | Dev samples | 770 |

88 | | Test samples | 666 |

89 | | Domain | 31 |

90 |

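91 | Paper: [https://arxiv.org/abs/1709.10217](https://arxiv.org/abs/1709.10217)

92 |

93 | # Text classification

94 |

95 | ## News classification

96 |

97 | - Toutiao Chinese news (short text) classification dataset: https://github.com/fateleak/toutiao-text-classfication-dataset

98 |   - Size: **380K items** across 15 categories.

99 |   - Collected: May 2018.

100 |   - Split 0.7 / 0.15 / 0.15.

101 | - Tsinghua news classification corpus (THUCNews):

102 |   - Built by filtering historical data from the Sina News RSS feeds, 2005 to 2011.

103 |   - Size: **740K news documents** (2.19 GB)

104 |   - For small-scale experiments, a subset of categories can be selected: sports, finance, real estate, home, education, technology, fashion, politics, games, entertainment

105 |   - http://thuctc.thunlp.org/#%E8%8E%B7%E5%8F%96%E9%93%BE%E6%8E%A5

106 |   - RNN and CNN experiments: https://github.com/gaussic/text-classification-cnn-rnn

107 | - USTC (中科大) news classification corpus: http://www.nlpir.org/?action-viewnews-itemid-145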
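The 0.7 / 0.15 / 0.15 split mentioned for the Toutiao dataset can be reproduced with a shuffle and two cuts. The sketch below uses only the standard library. The function name `split_dataset` and the seed value are illustrative assumptions, not something the dataset prescribes.

```python
import random


def split_dataset(items, train=0.7, dev=0.15, seed=43):
    """Shuffle items and cut them into train/dev/test lists.

    Whatever remains after the train and dev cuts becomes the test set.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_dev = int(len(items) * dev)
    return (items[:n_train],
            items[n_train:n_train + n_dev],
            items[n_train + n_dev:])
```

Fixing the seed keeps the split reproducible between runs, which matters when comparing models on the same held-out data.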

108 |

109 |

110 |

111 | ## Sentiment / opinion / review polarity analysis

112 |

113 | | Dataset | Overview | Download |

114 | | --- | --- | --- |

115 | | ChnSentiCorp_htl_all | 7,000+ hotel reviews: 5,000+ positive, 2,000+ negative | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/ChnSentiCorp_htl_all/intro.ipynb) |

116 | | waimai_10k | User reviews collected from a food-delivery platform: 4,000 positive, about 8,000 negative | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/waimai_10k/intro.ipynb) |

117 | | online_shopping_10_cats | 10 categories, 60,000+ reviews in total, about 30,000 each positive and negative; covers books, tablets, mobile phones, fruit, shampoo, water heaters, Mengniu, clothing, computers, hotels | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/online_shopping_10_cats/intro.ipynb) |

118 | | weibo_senti_100k | 100,000+ sentiment-labeled Sina Weibo posts, about 50,000 each positive and negative | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/weibo_senti_100k/intro.ipynb) |

119 | | simplifyweibo_4_moods | 360,000+ sentiment-labeled Sina Weibo posts with 4 emotions: about 200,000 joy, and about 50,000 each for anger, disgust, and depression | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/simplifyweibo_4_moods/intro.ipynb) |

120 | | dmsc_v2 | 28 movies, 700,000+ users, 2 million+ ratings/reviews | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/dmsc_v2/intro.ipynb) |

121 | | yf_dianping | 240,000 restaurants, 540,000 users, 4.4 million reviews/ratings | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/yf_dianping/intro.ipynb) |

122 | | yf_amazon | 520,000 products, 1,100+ categories, 1.42 million users, 7.2 million reviews/ratings | [link](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/yf_amazon/intro.ipynb) |

123 | | Baidu Qianyan sentiment analysis dataset | Includes Sentence-level Sentiment Classification, Aspect-level Sentiment Classification, and Opinion Target Extraction | [link](https://aistudio.baidu.com/aistudio/competition/detail/50/?isFromLUGE=TRUE) |

124 |

125 |

126 |

127 |

128 |

129 | # Named entity recognition & POS tagging & word segmentation

130 |

131 | - ## Weibo named entity recognition

132 |

133 |   - https://github.com/hltcoe/golden-horse

134 |

135 | - ## Boson data

136 |

137 |   - Contains 6 entity types.

138 |   - https://github.com/InsaneLife/ChineseNLPCorpus/tree/master/NER/boson

139 |

140 | - ## People's Daily (人民日报) dataset

141 |

142 |   - Three entity types: person, location, and organization names

143 |   - 1998: [https://github.com/InsaneLife/ChineseNLPCorpus/tree/master/NER/renMinRiBao](https://github.com/InsaneLife/ChineseNLPCorpus/tree/master/NER/renMinRiBao)

144 |   - 2004: https://pan.baidu.com/s/1LDwQjoj7qc-HT9qwhJ3rcA password: 1fa3

145 | - ## MSRA (Microsoft Research Asia) dataset

146 |

147 |   - 50,000+ annotated Chinese named entity recognition examples (covering locations, organizations, and persons)

148 |   - https://github.com/InsaneLife/ChineseNLPCorpus/tree/master/NER/MSRA

149 |

150 | - SIGHAN Bakeoff 2005: four datasets in total, covering Traditional and Simplified Chinese; the Simplified Chinese word segmentation data are listed below.

151 |

152 | - MSR:

153 | - PKU :

154 |

155 | The following three links also offer fairly comprehensive dataset collections:

156 |

157 | - [Word segmentation](https://github.com/luge-ai/luge-ai/blob/master/lexical-analysis/word-segment.md)

158 | - [POS tagging](https://github.com/luge-ai/luge-ai/blob/master/lexical-analysis/part-of-speech-tagging.md)

159 | - [Named entity recognition](https://github.com/luge-ai/luge-ai/blob/master/lexical-analysis/name-entity-recognition.md)

160 |

161 | # Syntactic & semantic parsing

162 |

163 | ## [Dependency parsing](https://github.com/luge-ai/luge-ai/blob/master/dependency-parsing/dependency-parsing.md)

164 |

165 | ## Semantic parsing

166 |

167 | - Judging from the methods used, these tasks are mostly converted into classification and NER tasks. Download: [https://aistudio.baidu.com/aistudio/competition/detail/47/?isFromLUGE=TRUE](https://aistudio.baidu.com/aistudio/competition/detail/47/?isFromLUGE=TRUE)

168 |

169 | | Dataset | Single/multi-table | Language | Complexity | Databases/tables | Training set | Validation set | Test set | Reference |

170 | | :-----: | :-----: | :--: | :----: | :---------: | :----: | :----: | :----: | --- |

171 | | NL2SQL | Single | Chinese | Simple | 5,291/5,291 | 41,522 | 4,396 | 8,141 | [NL2SQL](https://arxiv.org/abs/2006.06434) |

172 | | CSpider | Multi | Chinese and English | Complex | 166/876 | 6,831 | 954 | 1,906 | [CSpider](https://arxiv.org/abs/1909.13293) |

173 | | DuSQL | Multi | Chinese | Complex | 200/813 | 22,521 | 2,482 | 3,759 | [DuSQL](https://www.aclweb.org/anthology/2020.emnlp-main.562.pdf) |

174 |

175 |

176 |

177 | # Information extraction

178 |

179 | - [Entity linking](https://github.com/luge-ai/luge-ai/blob/master/information-extraction/entity_linking.md)

180 | - [Relation extraction](https://github.com/luge-ai/luge-ai/blob/master/information-extraction/relation-extraction.md)

181 | - [Event extraction](https://github.com/luge-ai/luge-ai/blob/master/information-extraction/event-extraction.md)

182 |

183 | # Search matching

184 |

185 | ## Qianyan text similarity

186 |

187 | Baidu Qianyan text similarity mainly comprises LCQMC, BQ Corpus, and PAWS-X; see the [official site](https://aistudio.baidu.com/aistudio/competition/detail/45/?isFromLUGE=TRUE). It enriches the available text-matching data and can serve as source-domain data for a target matching dataset in multi-task or transfer learning.

188 |

189 | ## OPPO mobile search ranking

190 |

191 | Query-title semantic matching dataset for OPPO mobile search ranking.

192 |

193 | Link: https://pan.baidu.com/s/1KzLK_4Iv0CHOkkut7TJBkA?pwd=ju52 (extraction code: ju52)

194 |

195 | ## Web search result evaluation (SogouE)

196 |

197 | - User queries and lists of related URLs

198 |

199 | - https://www.sogou.com/labs/resource/e.php

200 |

201 | # Recommender systems

202 |

203 | | Dataset | Overview | Download |

204 | | --- | --- | --- |

205 | | ez_douban | 50,000+ movies (30,000+ with titles, 20,000+ without), 28,000 users, 2.8 million ratings | [view](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/ez_douban/intro.ipynb) |

206 | | dmsc_v2 | 28 movies, 700,000+ users, 2 million+ ratings/reviews | [view](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/dmsc_v2/intro.ipynb) |

207 | | yf_dianping | 240,000 restaurants, 540,000 users, 4.4 million reviews/ratings | [view](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/yf_dianping/intro.ipynb) |

208 | | yf_amazon | 520,000 products, 1,100+ categories, 1.42 million users, 7.2 million reviews/ratings | [view](https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/yf_amazon/intro.ipynb) |

209 |

210 | # Encyclopedia data

211 |

212 | ## Wikipedia

213 |

214 | Wikipedia periodically packages and publishes its corpus as dumps:

215 |

216 | - [Blog post on processing the data](https://blog.csdn.net/wangyangzhizhou/article/details/78348949)

217 | - https://dumps.wikimedia.org/zhwiki/

218 |

219 | ## Baidu Baike

220 |

221 | This data can only be obtained by crawling. A crawled copy is available at `https://pan.baidu.com/share/init?surl=i3wvfil` (extraction code: neqs).

222 |

223 |

224 |

225 | # Coreference resolution

226 |

227 | CoNLL 2012 :

228 |

229 | # Pre-training (word vectors or models)

230 |

231 | ## BERT

232 |

233 | 1. Source code: https://github.com/google-research/bert

234 | 2. Model download: [**BERT-Base, Chinese**](https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip): Chinese Simplified and Traditional, 12-layer, 768-hidden, 12-heads, 110M parameters

235 |

236 | BERT variants:

237 |

238 | | Model | Parameters | Git repository |

239 | | ------------------------------------------------------------ | ---- | ------------------------------------------------------------ |

240 | | [Chinese-BERT-base](https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip) | 108M | [BERT](https://github.com/google-research/bert) |

241 | | [Chinese-BERT-wwm-ext](https://drive.google.com/open?id=1Jzn1hYwmv0kXkfTeIvNT61Rn1IbRc-o8) | 108M | [Chinese-BERT-wwm](https://github.com/ymcui/Chinese-BERT-wwm) |

242 | | [RBT3](https://drive.google.com/open?id=1-rvV0nBDvRCASbRz8M9Decc3_8Aw-2yi) | 38M | [Chinese-BERT-wwm](https://github.com/ymcui/Chinese-BERT-wwm) |

243 | | [ERNIE 1.0 Base Chinese](https://ernie-github.cdn.bcebos.com/model-ernie1.0.1.tar.gz) | 108M | [ERNIE](https://github.com/PaddlePaddle/ERNIE); ERNIE-to-TensorFlow conversion: [tensorflow_ernie](https://github.com/ArthurRizar/tensorflow_ernie) |

244 | | [RoBERTa-large](https://drive.google.com/open?id=1W3WgPJWGVKlU9wpUYsdZuurAIFKvrl_Y) | 334M | [RoBERTa](https://github.com/brightmart/roberta_zh) |

245 | | [XLNet-mid](https://drive.google.com/open?id=1342uBc7ZmQwV6Hm6eUIN_OnBSz1LcvfA) | 209M | [XLNet-mid](https://github.com/ymcui/Chinese-PreTrained-XLNet) |

246 | | [ALBERT-large](https://storage.googleapis.com/albert_zh/albert_large_zh.zip) | 59M | [Chinese-ALBERT](https://github.com/brightmart/albert_zh) |

247 | | [ALBERT-xlarge](https://storage.googleapis.com/albert_zh/albert_xlarge_zh_183k.zip) | | [Chinese-ALBERT](https://github.com/brightmart/albert_zh) |

248 | | [ALBERT-tiny](https://storage.googleapis.com/albert_zh/albert_tiny_489k.zip) | 4M | [Chinese-ALBERT](https://github.com/brightmart/albert_zh) |

249 | | [chinese-roberta-wwm-ext](https://www.paddlepaddle.org.cn/hubdetail?name=chinese-roberta-wwm-ext&en_category=SemanticModel) | 108M | [Chinese-BERT-wwm](https://github.com/ymcui/Chinese-BERT-wwm) |

250 | | [chinese-roberta-wwm-ext-large](https://www.paddlepaddle.org.cn/hubdetail?name=chinese-roberta-wwm-ext-large&en_category=SemanticModel) | 330M | [Chinese-BERT-wwm](https://github.com/ymcui/Chinese-BERT-wwm) |

251 |

252 | ## ELMO

253 |

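254 | 1. Source code: https://github.com/allenai/bilm-tf

255 | 2. Pre-trained models: https://allennlp.org/elmo

256 |

257 | ## Tencent word vectors

258 |

259 | The Chinese word vector dataset released by Tencent AI Lab covers more than 8 million Chinese words, each mapped to a 200-dimensional vector.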
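With more than 8 million 200-dimensional vectors, loading only part of the file is often enough for experiments. The sketch below assumes the vectors are distributed in the common word2vec text layout (a header line with the entry count and dimension, then one word and its values per line). That layout is an assumption to confirm against the downloaded file, and `read_word_vectors` is a hypothetical helper.

```python
def read_word_vectors(lines, limit=None):
    """Parse word2vec text format from an iterable of lines.

    The first line is 'count dim'; each following line is a word
    followed by dim space-separated floats. Stops after `limit` words.
    """
    lines = iter(lines)
    _count, dim = (int(x) for x in next(lines).split())
    vectors = {}
    for line in lines:
        if limit is not None and len(vectors) >= limit:
            break
        parts = line.rstrip().split(' ')
        # Take the last `dim` fields as the vector so that a word
        # containing spaces is still read as a single key.
        word = ' '.join(parts[:-dim])
        vectors[word] = [float(x) for x in parts[-dim:]]
    return dim, vectors
```

Passing an open file object as `lines` together with a `limit` reads only the first entries instead of holding the whole vocabulary in memory.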

260 |

261 | - 下载地址:~~https://ai.tencent.com/ailab/nlp/embedding.html~~,网页已经失效,有网盘链接同学希望分享下

262 |

263 | 下载地址:[https://ai.tencent.com/ailab/nlp/en/download.html](https://ai.tencent.com/ailab/nlp/en/download.html)
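A minimal sketch of reading such vectors, assuming the downloaded file uses the common word2vec text layout (a header line with the vocabulary size and dimension, then one word followed by its floats per line). That layout is an assumption and is not stated in this README; `load_text_vectors` is a hypothetical helper name.

```python
import io
from typing import Dict, Iterable, List, Optional


def load_text_vectors(lines: Iterable[str], limit: Optional[int] = None) -> Dict[str, List[float]]:
    """Parse word2vec-style text lines into a {word: vector} dict (assumed layout)."""
    it = iter(lines)
    header = next(it).split()
    dim = int(header[1])  # header is assumed to be "<vocab_size> <dim>"
    vectors: Dict[str, List[float]] = {}
    for line in it:
        if limit is not None and len(vectors) >= limit:
            break
        # Split from the right so an entry containing spaces stays in one piece.
        parts = line.rstrip().rsplit(" ", dim)
        if len(parts) != dim + 1:
            continue  # skip malformed lines
        vectors[parts[0]] = [float(v) for v in parts[1:]]
    return vectors


# Tiny in-memory stand-in for the real file (3 dimensions instead of 200).
demo = io.StringIO("2 3\n你好 0.1 0.2 0.3\n世界 0.4 0.5 0.6\n")
vectors = load_text_vectors(demo)
print(len(vectors), vectors["你好"])
```

For the real file, pass an open file handle (`open(path, encoding="utf-8")`) and a `limit`, since loading all 8 million entries into Python lists needs a lot of memory.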

264 |

265 |

266 | ## **100+ pretrained Chinese word vectors**

267 |

268 | [https://github.com/Embedding/Chinese-Word-Vectors](https://link.zhihu.com/?target=https%3A//github.com/Embedding/Chinese-Word-Vectors)

269 |

270 | # **Chinese cloze-style reading comprehension dataset**

271 |

272 | [https://github.com/ymcui/Chinese-RC-Dataset](https://link.zhihu.com/?target=https%3A//github.com/ymcui/Chinese-RC-Dataset)

273 |

274 |

275 |

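276 | # **Chinese classical poetry database**

277 |

278 | The most comprehensive collection of classical Chinese poetry: nearly 14,000 poets from the Tang and Song dynasties, close to 55,000 Tang poems and 260,000 Song poems, plus 21,050 ci by 1,564 ci poets of the Song period.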

279 |

280 | [https://github.com/chinese-poetry/chinese-poetry](https://link.zhihu.com/?target=https%3A//github.com/chinese-poetry/chinese-poetry)

281 |

282 |

283 |

284 |

285 |

286 | # **Insurance industry corpus**

287 |

288 | [https://github.com/Samurais/insuranceqa-corpus-zh](https://link.zhihu.com/?target=https%3A//github.com/Samurais/insuranceqa-corpus-zh)

289 |

290 |

291 |

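292 | # **Chinese character decomposition dictionary**

293 |

294 | English text can use char embeddings; for Chinese, decomposing characters into their components is worth a try.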

295 |

296 | [https://github.com/kfcd/chaizi](https://link.zhihu.com/?target=https%3A//github.com/kfcd/chaizi)

297 |

298 |

299 |

300 |

301 |

302 | # Chinese dataset platforms

303 |

304 | - ## **Sogou Labs**

305 |

306 | Sogou Labs provides several high-quality Chinese text datasets. They are fairly old; most of the data predates 2012.

307 |

308 | [https://www.sogou.com/labs/resource/list_pingce.php](https://link.zhihu.com/?target=https%3A//www.sogou.com/labs/resource/list_pingce.php)

309 |

310 | - ## **NLPIR (中科大自然语言处理与信息检索共享平台, Natural Language Processing and Information Retrieval Sharing Platform)**

311 |

312 | [http://www.nlpir.org/?action-category-catid-28](https://link.zhihu.com/?target=http%3A//www.nlpir.org/%3Faction-category-catid-28)

313 |

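314 | - ## Small Chinese corpora

315 |

316 | - Contains small datasets for Chinese named entity recognition, relation extraction, reading comprehension, and similar tasks.

317 | - https://github.com/crownpku/Small-Chinese-Corpus

318 |

319 | - ## Wikipedia datasets

320 |

321 | - https://dumps.wikimedia.org/

322 | - A filtered dataset of 230,000 high-quality Chinese Wikipedia entries (updated to 2023-07): https://huggingface.co/datasets/pleisto/wikipedia-cn-20230720-filtered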

323 |

324 |

325 |

326 | # NLP tools

327 |

328 | THULAC: [https://github.com/thunlp/THULAC](https://github.com/thunlp/THULAC): Chinese word segmentation and part-of-speech tagging.

329 |

330 | HanLP

331 |

332 | HIT LTP (哈工大LTP)

333 |

334 | NLPIR

335 |

336 | jieba

337 |

338 | Baidu Qianyan (千言) datasets: [https://github.com/luge-ai/luge-ai](https://github.com/luge-ai/luge-ai)

339 |

--------------------------------------------------------------------------------

/toutiao text classfication dataset/readme.md:

--------------------------------------------------------------------------------

1 | # Chinese text classification dataset

2 |

3 | Data source:

4 |

5 | Toutiao (今日头条) client app

6 |

7 |

8 |

9 | Data format:

10 |

11 | ```

12 | 6552431613437805063_!_102_!_news_entertainment_!_谢娜为李浩菲澄清网络谣言,之后她的两个行为给自己加分_!_佟丽娅,网络谣言,快乐大本营,李浩菲,谢娜,观众们

13 | ```

14 |

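15 | Each line is one record with five fields separated by `_!_`. In order: news ID, category code (see below), category name (see below), news text (title only), and news keywords.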
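A minimal parsing sketch for the format described above. `NewsRecord` and `parse_line` are hypothetical names, and splitting the keywords on an ASCII comma is an assumption based on the single sample line.

```python
from typing import List, NamedTuple

SEP = "_!_"


class NewsRecord(NamedTuple):
    news_id: str
    category_code: int
    category_name: str
    title: str
    keywords: List[str]


def parse_line(line: str) -> NewsRecord:
    """Split one `_!_`-separated line into its five fields."""
    parts = line.rstrip("\n").split(SEP)
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    news_id, code, name, title, keywords = parts
    # Assumption: keywords are comma-separated; an empty field yields an empty list.
    return NewsRecord(news_id, int(code), name, title, [k for k in keywords.split(",") if k])


sample = (
    "6552431613437805063_!_102_!_news_entertainment_!_"
    "谢娜为李浩菲澄清网络谣言,之后她的两个行为给自己加分_!_"
    "佟丽娅,网络谣言,快乐大本营,李浩菲,谢娜,观众们"
)
record = parse_line(sample)
print(record.category_code, record.category_name, record.keywords)
```

The news ID is kept as a string so that no digits are lost if the data is later passed through tools with limited integer precision.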

16 |

17 |

18 |

19 | Category codes and names:

20 |

21 | ```

22 | 100 Livelihood Story news_story

23 | 101 Culture Culture news_culture

24 | 102 Entertainment Entertainment news_entertainment

25 | 103 Sports Sports news_sports

26 | 104 Finance Finance news_finance

27 | 106 Property Property news_house

28 | 107 Cars Cars news_car

29 | 108 Education Education news_edu

30 | 109 Technology Technology news_tech

31 | 110 Military Military news_military

32 | 112 Travel Travel news_travel

33 | 113 World World news_world

34 | 114 Securities Stocks stock

35 | 115 Agriculture Rural news_agriculture

36 | 116 Esports Games news_game

37 | ```

38 |

39 |

40 |

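41 | Dataset size:

42 |

43 | 382,688 records in total, spread across 15 categories.

44 |

45 |

46 |

47 | Collection date:

48 |

49 | May 2018

50 |

51 |

52 |

53 | Experimental results:

54 |

55 | The data is split 0.7 / 0.15 / 0.15. You are welcome to submit your own results on this dataset.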
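One way such a split could be produced is sketched below. The README does not say how the records were shuffled or which portion serves which purpose, so the train / validation / test order, the fixed seed, and the `split_dataset` helper are all assumptions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T],
    ratios: Tuple[float, float, float] = (0.7, 0.15, 0.15),
    seed: int = 42,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle the items and cut them into three consecutive parts."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = int(len(shuffled) * ratios[0])
    n_val = int(len(shuffled) * ratios[1])
    # The last part takes whatever remains, so no record is dropped.
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Stand-in for the 382,688 parsed records.
train, val, test = split_dataset(range(382688))
print(len(train), len(val), len(test))
```

Given the class imbalance in this dataset, a stratified split (sampling each category separately) may be preferable to the plain shuffle shown here.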

56 |

57 | ```

58 | Test Loss: 0.57, Test Acc: 83.81%

59 |

60 |                    precision    recall  f1-score   support

61 |

62 |         news_story      0.66      0.75      0.70       848

63 |

64 |       news_culture      0.57      0.83      0.68      1531

65 |

66 | news_entertainment      0.86      0.86      0.86      8078

67 |

68 |        news_sports      0.94      0.91      0.92      7338

69 |

70 |       news_finance      0.59      0.67      0.63      1594

71 |

72 |         news_house      0.84      0.89      0.87      1478

73 |

74 |           news_car      0.92      0.90      0.91      6481

75 |

76 |           news_edu      0.71      0.86      0.77      1425

77 |

78 |          news_tech      0.85      0.84      0.85      6944

79 |

80 |      news_military      0.90      0.78      0.84      6174

81 |

82 |        news_travel      0.58      0.76      0.66      1287

83 |

84 |         news_world      0.72      0.69      0.70      3823

85 |

86 |              stock      0.00      0.00      0.00        53

87 |

88 |   news_agriculture      0.80      0.88      0.84      1701

89 |

90 |          news_game      0.92      0.87      0.89      6244

91 |

92 |        avg / total      0.85      0.84      0.84     54999

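93 | ```

94 |

95 |

96 | Reasons for the relatively low accuracy above:

97 |

98 | 1. The data is imbalanced; some categories have too few samples.

99 |

100 | 2. Some categories are inherently ambiguous with one another, for example story, culture, and travel.

101 |

102 | See the code in text-class xxxx for details.

103 |

104 | Possible improvements:

105 |

106 | 1. More data

107 |

108 | 2. More complete category coverage

109 |

110 | Because the categories are incomplete (food, for example, is missing), some real-world items fit none of the available categories.

111 |

112 | 3. More balanced category data

113 |

114 | 4. Include the article body

115 |

116 |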
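To see how the imbalance affects the headline numbers, the per-class F1 scores from the report above can be averaged in two ways. This is a sketch that assumes the `avg / total` row is a support-weighted mean (the usual behaviour of classification reports of this shape); the README itself does not say how that row was computed.

```python
# (label, f1-score, support) copied from the report above.
report = [
    ("news_story", 0.70, 848),
    ("news_culture", 0.68, 1531),
    ("news_entertainment", 0.86, 8078),
    ("news_sports", 0.92, 7338),
    ("news_finance", 0.63, 1594),
    ("news_house", 0.87, 1478),
    ("news_car", 0.91, 6481),
    ("news_edu", 0.77, 1425),
    ("news_tech", 0.85, 6944),
    ("news_military", 0.84, 6174),
    ("news_travel", 0.66, 1287),
    ("news_world", 0.70, 3823),
    ("stock", 0.00, 53),
    ("news_agriculture", 0.84, 1701),
    ("news_game", 0.89, 6244),
]

total_support = sum(support for _, _, support in report)
# Macro average: every class counts equally, so small classes matter as much as large ones.
macro_f1 = sum(f1 for _, f1, _ in report) / len(report)
# Weighted average: each class counts in proportion to its number of test samples.
weighted_f1 = sum(f1 * support for _, f1, support in report) / total_support

print(f"support={total_support} macro_f1={macro_f1:.2f} weighted_f1={weighted_f1:.2f}")
```

The weighted value comes out at about 0.84, matching the `avg / total` row, while the macro value is about 0.74: the small, poorly predicted classes such as `stock` barely move the weighted figure.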

117 |

118 | Download:

119 |

120 | https://github.com/fateleak/toutiao-text-classfication-dataset

--------------------------------------------------------------------------------

/word_vector/readme.md:

--------------------------------------------------------------------------------

1 | # Pretrained resources (word vectors or models)

2 |

3 | ## BERT

4 |

5 | 1. Source code: https://github.com/google-research/bert

6 | 2. Model download: [**BERT-Base, Chinese**](https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip): Chinese Simplified and Traditional, 12-layer, 768-hidden, 12-heads, 110M parameters

7 |

8 |

9 |

10 | ## ELMO

11 |

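12 | 1. Source code: https://github.com/allenai/bilm-tf

13 | 2. Pretrained models: https://allennlp.org/elmo

14 |

15 | ## Tencent word vectors

16 |

17 | The Chinese word vector dataset released by Tencent AI Lab covers more than 8 million Chinese words and phrases, each mapped to a 200-dimensional vector.

18 |

19 | - Download: https://ai.tencent.com/ailab/nlp/embedding.html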

20 |

21 |

--------------------------------------------------------------------------------