├── demo.png
├── pyproject.toml
├── .gitattributes
├── .github
│   └── workflows
│       ├── publication.yml
│       └── validation.yml
├── tests
│   ├── test___init__.py
│   ├── test_identities.py
│   ├── test_directories.py
│   ├── test_cli.py
│   ├── test__scripts.py
│   ├── test_onefs.py
│   └── conftest.py
├── src
│   └── isilon_hadoop_tools
│       ├── __init__.py
│       ├── _scripts.py
│       ├── cli.py
│       ├── directories.py
│       ├── identities.py
│       └── onefs.py
├── LICENSE
├── tox.ini
├── setup.py
├── .gitignore
├── CONTRIBUTING.md
└── README.md

/demo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/Isilon/isilon_hadoop_tools/HEAD/demo.png

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [build-system]

2 | requires = ["setuptools ~= 67.5.0", "setuptools-scm[toml] ~= 7.1.0", "wheel ~= 0.38.4"]

3 | build-backend = "setuptools.build_meta"

4 |

5 | # https://black.readthedocs.io/en/stable/guides/using_black_with_other_tools.html#pylint

6 | [tool.pylint.design]

7 | max-args = "7"

8 | [tool.pylint.format]

9 | max-line-length = "100"

10 |

11 | [tool.setuptools_scm]

12 |

--------------------------------------------------------------------------------

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Auto detect text files and perform LF normalization

2 | * text=auto

3 |

4 | # Custom for Visual Studio

5 | *.cs diff=csharp

6 |

7 | # Standard to msysgit

8 | *.doc diff=astextplain

9 | *.DOC diff=astextplain

10 | *.docx diff=astextplain

11 | *.DOCX diff=astextplain

12 | *.dot diff=astextplain

13 | *.DOT diff=astextplain

14 | *.pdf diff=astextplain

15 | *.PDF diff=astextplain

16 | *.rtf diff=astextplain

17 | *.RTF diff=astextplain

18 |

--------------------------------------------------------------------------------

/.github/workflows/publication.yml:

--------------------------------------------------------------------------------

1 | name: Publication
2 | on:
3 |   release:
4 |     types: [created]
5 | jobs:
6 |   deploy:
7 |     runs-on: ubuntu-latest
8 |     steps:
9 |     - uses: actions/checkout@v3
10 |     - uses: actions/setup-python@v4
11 |       with:
12 |         python-version: '3.8'
13 |     - run: python -m pip install --upgrade tox-gh-actions
14 |     - env:
15 |         TWINE_USERNAME: ${{ secrets.PYPI_USERNAME }}
16 |         TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}
17 |       run: tox -e publish -- upload
18 |

--------------------------------------------------------------------------------

/.github/workflows/validation.yml:

--------------------------------------------------------------------------------

1 | name: Validation
2 | on: [push, pull_request]
3 | jobs:
4 |   build:
5 |     runs-on: ubuntu-latest
6 |     strategy:
7 |       matrix:
8 |         python-version: ["3.7", "3.8", "3.9", "3.10", "3.11"]
9 |     steps:
10 |     - uses: actions/checkout@v3
11 |     - uses: actions/setup-python@v4
12 |       with:
13 |         python-version: ${{ matrix.python-version }}
14 |     - run: python -m pip install --upgrade tox-gh-actions
15 |     - run: sudo apt install --assume-yes libkrb5-dev
16 |     - run: tox
17 |

--------------------------------------------------------------------------------

/tests/test___init__.py:

--------------------------------------------------------------------------------

1 | """Verify the functionality of isilon_hadoop_tools.__init__."""

2 |

3 |

4 | import pytest

5 |

6 | import isilon_hadoop_tools

7 |

8 |

9 | @pytest.mark.parametrize(

10 | "error, classinfo",

11 | [

12 | (isilon_hadoop_tools.IsilonHadoopToolError, Exception),

13 | ],

14 | )

15 | def test_errors(error, classinfo):

16 | """Ensure that exception types remain consistent."""

17 | assert issubclass(error, isilon_hadoop_tools.IsilonHadoopToolError)

18 | assert issubclass(error, classinfo)

19 |

--------------------------------------------------------------------------------

/tests/test_identities.py:

--------------------------------------------------------------------------------

1 | import pytest

2 |

3 | import isilon_hadoop_tools.identities

4 |

5 |

6 | @pytest.mark.parametrize("zone", ["System", "notSystem"])

7 | @pytest.mark.parametrize(

8 | "identities",

9 | [

10 | isilon_hadoop_tools.identities.cdh_identities,

11 | isilon_hadoop_tools.identities.cdp_identities,

12 | isilon_hadoop_tools.identities.hdp_identities,

13 | ],

14 | )

15 | def test_log_identities(identities, zone):

16 | """Verify that log_identities returns None."""

17 | assert isilon_hadoop_tools.identities.log_identities(identities(zone)) is None

18 |

--------------------------------------------------------------------------------

/src/isilon_hadoop_tools/__init__.py:

--------------------------------------------------------------------------------

1 | """Isilon Hadoop Tools"""
2 |
3 |
4 | from pkg_resources import get_distribution
5 |
6 |
7 | __all__ = [
8 |     # Constants
9 |     "__version__",
10 |     # Exceptions
11 |     "IsilonHadoopToolError",
12 | ]
13 | __version__ = get_distribution(__name__).version
14 |
15 |
16 | class IsilonHadoopToolError(Exception):
17 |     """All Exceptions emitted from this package inherit from this Exception."""
18 |
19 |     def __str__(self):
20 |         return super().__str__() or repr(self)
21 |
22 |     def __repr__(self):
23 |         cause = (
24 |             f" caused by {self.__cause__!r}"  # pylint: disable=no-member
25 |             if getattr(self, "__cause__", None)
26 |             else ""
27 |         )
28 |         return f"{super().__repr__()}{cause}"
29 |

--------------------------------------------------------------------------------
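
The `__str__`/`__repr__` pair in `IsilonHadoopToolError` is easier to follow with a chained exception in hand. The following is a minimal, self-contained sketch rather than the package's code: `ToolError` and `describe` are hypothetical names, and it assumes the intent is to show the parent class's repr followed by the cause, so it calls `super().__repr__()` explicitly (formatting a bare `super()` with `!r` would print the proxy object instead).

```python
class ToolError(Exception):
    """Hypothetical stand-in for a package-wide base exception."""

    def __str__(self):
        # Fall back to the repr when no message was supplied.
        return super().__str__() or repr(self)

    def __repr__(self):
        # Append the chained exception, if any, to the usual repr.
        cause = f" caused by {self.__cause__!r}" if self.__cause__ else ""
        return f"{super().__repr__()}{cause}"


def describe(message=""):
    """Raise a ToolError chained to a KeyError; return (str, repr)."""
    args = (message,) if message else ()
    try:
        try:
            {}["missing"]
        except KeyError as exc:
            raise ToolError(*args) from exc
    except ToolError as err:
        return str(err), repr(err)
```

With a message, `str()` returns the message alone and `repr()` adds the cause; without one, both return the repr, so a log line is never empty.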

/tests/test_directories.py:

--------------------------------------------------------------------------------

1 | import pytest

2 |

3 | from isilon_hadoop_tools import IsilonHadoopToolError, directories

4 |

5 |

6 | def test_directory_identities(users_groups_for_directories):

7 | """

8 | Verify that identities needed by the directories module

9 | are guaranteed to exist by the identities module.

10 | """

11 | (users, groups), dirs = users_groups_for_directories

12 | for hdfs_directory in dirs:

13 | assert hdfs_directory.owner in users

14 | assert hdfs_directory.group in groups

15 |

16 |

17 | @pytest.mark.parametrize(

18 | "error, classinfo",

19 | [

20 | (directories.DirectoriesError, IsilonHadoopToolError),

21 | (directories.HDFSRootDirectoryError, directories.DirectoriesError),

22 | ],

23 | )

24 | def test_errors_cli(error, classinfo):

25 | """Ensure that exception types remain consistent."""

26 | assert issubclass(error, IsilonHadoopToolError)

27 | assert issubclass(error, directories.DirectoriesError)

28 | assert issubclass(error, classinfo)

29 |

--------------------------------------------------------------------------------

/tests/test_cli.py:

--------------------------------------------------------------------------------

1 | """Verify the functionality of isilon_hadoop_tools.cli."""

2 |

3 |

4 | from unittest.mock import Mock

5 |

6 | import pytest

7 |

8 | from isilon_hadoop_tools import IsilonHadoopToolError, cli

9 |

10 |

11 | def test_catches(exception):

12 | """Ensure cli.catches detects the desired exception."""

13 | assert cli.catches(exception)(Mock(side_effect=exception))() == 1

14 |

15 |

16 | def test_not_catches(exception):

17 | """Ensure cli.catches does not catch undesirable exceptions."""

18 | with pytest.raises(exception):

19 | cli.catches(())(Mock(side_effect=exception))()

20 |

21 |

22 | @pytest.mark.parametrize(

23 | "error, classinfo",

24 | [

25 | (cli.CLIError, IsilonHadoopToolError),

26 | (cli.HintedError, cli.CLIError),

27 | ],

28 | )

29 | def test_errors_cli(error, classinfo):

30 | """Ensure that exception types remain consistent."""

31 | assert issubclass(error, IsilonHadoopToolError)

32 | assert issubclass(error, cli.CLIError)

33 | assert issubclass(error, classinfo)

34 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2018 Dell EMC Isilon

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/tox.ini:

--------------------------------------------------------------------------------

1 | [tox]
2 | minversion = 4.0.0
3 | isolated_build = true
4 | envlist = py{37,38,39,310,311}
5 |
6 | [testenv]
7 | deps =
8 |     contextlib2 ~= 21.6.0
9 |     mock ~= 5.0.0
10 |     pytest ~= 7.2.0
11 |     pytest-cov ~= 4.0.0
12 |     pytest-randomly ~= 3.12.0
13 |     git+https://github.com/tucked/python-kadmin.git@8d1f6fe064310be98734e5b2082defac2531e6b6
14 | commands =
15 |     pytest --cov isilon_hadoop_tools --cov-report term-missing {posargs:-r a}
16 |
17 | [gh-actions]
18 | python =
19 |     3.7: py37
20 |     3.8: py38, static, publish
21 |     3.9: py39
22 |     3.10: py310
23 |     3.11: py311
24 |
25 | [testenv:static]
26 | basepython = python3.8
27 | deps =
28 |     black ~= 23.1.0
29 |     flake8 ~= 6.0.0
30 |     pylint ~= 2.16.0
31 | commands =
32 |     black --check src setup.py tests
33 |     flake8 src setup.py tests
34 |     pylint src setup.py
35 |
36 | [flake8]
37 | # https://black.readthedocs.io/en/stable/guides/using_black_with_other_tools.html#flake8
38 | extend-ignore = E203
39 | max-line-length = 100
40 |
41 | [testenv:publish]
42 | basepython = python3.8
43 | passenv = TWINE_*
44 | deps =
45 |     build[virtualenv] ~= 0.10.0
46 |     twine ~= 4.0.0
47 | commands =
48 |     {envpython} -m build --outdir {envtmpdir} .
49 |     twine {posargs:check} {envtmpdir}/*
50 |

--------------------------------------------------------------------------------

/tests/test__scripts.py:

--------------------------------------------------------------------------------

1 | import posixpath

2 | import subprocess

3 | import uuid

4 |

5 | import pytest

6 |

7 |

8 | @pytest.fixture

9 | def empty_hdfs_root(onefs_client):

10 | """Create a temporary directory and make it the HDFS root."""

11 | old_hdfs_root = onefs_client.hdfs_settings()["root_directory"]

12 | new_root_name = str(uuid.uuid4())

13 | onefs_client.mkdir(new_root_name, 0o755)

14 | onefs_client.update_hdfs_settings(

15 | {

16 | "root_directory": posixpath.join(

17 | onefs_client.zone_settings()["path"], new_root_name

18 | ),

19 | }

20 | )

21 | yield

22 | onefs_client.update_hdfs_settings({"root_directory": old_hdfs_root})

23 | onefs_client.rmdir(new_root_name, recursive=True)

24 |

25 |

26 | @pytest.mark.usefixtures("empty_hdfs_root")

27 | @pytest.mark.parametrize("script", ["isilon_create_users", "isilon_create_directories"])

28 | @pytest.mark.parametrize("dist", ["cdh", "cdp", "hdp"])

29 | def test_dry_run(script, onefs_client, dist):

30 | subprocess.check_call(

31 | [

32 | script,

33 | "--append-cluster-name",

34 | str(uuid.uuid4()),

35 | "--dist",

36 | dist,

37 | "--dry",

38 | "--no-verify",

39 | "--onefs-password",

40 | onefs_client.password,

41 | "--onefs-user",

42 | onefs_client.username,

43 | "--zone",

44 | "System",

45 | onefs_client.address,

46 | ]

47 | )

48 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | """Packaging for Isilon Hadoop Tools"""

2 |

3 | import setuptools

4 |

5 | with open("README.md", encoding="utf-8") as readme_file:

6 | README = readme_file.read()

7 |

8 | setuptools.setup(

9 | name="isilon_hadoop_tools",

10 | description="Tools for Using Hadoop with OneFS",

11 | long_description=README,

12 | long_description_content_type="text/markdown",

13 | license="MIT",

14 | url="https://github.com/isilon/isilon_hadoop_tools",

15 | maintainer="Isilon",

16 | maintainer_email="support@isilon.com",

17 | package_dir={"": "src"},

18 | packages=setuptools.find_packages("src"),

19 | include_package_data=True,

20 | python_requires=">=3.7",

21 | install_requires=[

22 | "isi-sdk-7-2 ~= 0.2.11",

23 | "isi-sdk-8-0 ~= 0.2.11",

24 | "isi-sdk-8-0-1 ~= 0.2.11",

25 | "isi-sdk-8-1-0 ~= 0.2.11",

26 | "isi-sdk-8-1-1 ~= 0.2.11",

27 | "isi-sdk-8-2-0 ~= 0.2.11",

28 | "isi-sdk-8-2-1 ~= 0.2.11",

29 | "isi-sdk-8-2-2 ~= 0.2.11",

30 | "requests >= 2.20.0",

31 | "setuptools >= 41.0.0",

32 | "urllib3 >= 1.22.0",

33 | ],

34 | entry_points={

35 | "console_scripts": [

36 | "isilon_create_directories = isilon_hadoop_tools._scripts:isilon_create_directories",

37 | "isilon_create_users = isilon_hadoop_tools._scripts:isilon_create_users",

38 | ],

39 | },

40 | classifiers=[

41 | "Development Status :: 5 - Production/Stable",

42 | "License :: OSI Approved :: MIT License",

43 | "Programming Language :: Python :: 3",

44 | "Programming Language :: Python :: 3.7",

45 | "Programming Language :: Python :: 3.8",

46 | "Programming Language :: Python :: 3.9",

47 | "Programming Language :: Python :: 3.10",

48 | "Programming Language :: Python :: 3.11",

49 | ],

50 | )

51 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | .hypothesis/

51 | .pytest_cache/

52 |

53 | # Translations

54 | *.mo

55 | *.pot

56 |

57 | # Django stuff:

58 | *.log

59 | local_settings.py

60 | db.sqlite3

61 | db.sqlite3-journal

62 |

63 | # Flask stuff:

64 | instance/

65 | .webassets-cache

66 |

67 | # Scrapy stuff:

68 | .scrapy

69 |

70 | # Sphinx documentation

71 | docs/_build/

72 |

73 | # PyBuilder

74 | target/

75 |

76 | # Jupyter Notebook

77 | .ipynb_checkpoints

78 |

79 | # IPython

80 | profile_default/

81 | ipython_config.py

82 |

83 | # pyenv

84 | .python-version

85 |

86 | # pipenv

87 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

88 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

89 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

90 | # install all needed dependencies.

91 | #Pipfile.lock

92 |

93 | # celery beat schedule file

94 | celerybeat-schedule

95 |

96 | # SageMath parsed files

97 | *.sage.py

98 |

99 | # Environments

100 | .env

101 | .venv

102 | env/

103 | venv/

104 | ENV/

105 | env.bak/

106 | venv.bak/

107 |

108 | # Spyder project settings

109 | .spyderproject

110 | .spyproject

111 |

112 | # Rope project settings

113 | .ropeproject

114 |

115 | # mkdocs documentation

116 | /site

117 |

118 | # mypy

119 | .mypy_cache/

120 | .dmypy.json

121 | dmypy.json

122 |

123 | # Pyre type checker

124 | .pyre/

125 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing Guidelines

2 |

3 | [GitHub](https://github.com/) hosts the project.

4 |

5 | ## Development

6 |

7 | Please [open an issue](https://help.github.com/articles/creating-an-issue/) to discuss any concerns or ideas for the project.

8 |

9 | ### Version Control

10 |

11 | Use [`git`](https://git-scm.com/doc) to retrieve and manage the project source code:

12 | ``` sh

13 | git clone https://github.com/Isilon/isilon_hadoop_tools.git

14 | ```

15 |

16 | ### Test Environment

17 |

18 | Use [`tox`](https://tox.readthedocs.io/) to deploy and run the project source code:

19 | ``` sh

20 | # Build and test the entire project:

21 | tox

22 |

23 | # Run a specific test with Python 3.7, and drop into Pdb if it fails:

24 | tox -e py37 -- -k test_catches --pdb

25 |

26 | # Create a Python 3.7 development environment:

27 | tox -e py37 --devenv ./venv

28 |

29 | # Run an IHT console_script in that environment:

30 | venv/bin/isilon_create_users --help

31 | ```

32 |

33 | ## Merges

34 |

35 | 1. All change proposals are submitted by [creating a pull request](https://help.github.com/articles/creating-a-pull-request/) (PR).

36 | - [Branch protection](https://help.github.com/articles/about-protected-branches/) is used to enforce acceptance criteria.

37 |

38 | 2. All PRs are [associated with a milestone](https://help.github.com/articles/associating-milestones-with-issues-and-pull-requests/).

39 | - Milestones serve as a change log for the project.

40 |

41 | 3. Any PR that directly changes any release artifact gets 1 of 3 [labels](https://help.github.com/articles/applying-labels-to-issues-and-pull-requests/): `major`, `minor`, `patch`.

42 | - This helps with release versioning.

43 |

44 | ### Continuous Integration

45 |

46 | [GitHub Actions](https://github.com/features/actions) builds the project and runs the tests on every push and pull request.

47 |

48 | [](https://github.com/Isilon/isilon_hadoop_tools/actions/workflows/validation.yml)

49 |

50 | It also deploys releases to the package repository automatically (see below).

51 |

52 | ## Releases

53 |

54 | 1. Releases are versioned according to [Semantic Versioning](http://semver.org/).

55 | - https://semver.org/#why-use-semantic-versioning

56 |

57 | 2. All releases are [tagged](https://git-scm.com/book/en/v2/Git-Basics-Tagging).

58 | - This permanently associates a version with a commit.

59 |

60 | 3. Every release closes a [milestone](https://help.github.com/articles/about-milestones/).

61 | - This permanently associates a version with a milestone.

62 |

63 | ### Package Repository

64 |

65 | [PyPI](http://pypi.org/) serves releases publicly.

66 |

67 | [](https://pypi.org/project/isilon_hadoop_tools)

68 |

--------------------------------------------------------------------------------
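
The `major`, `minor`, and `patch` labels described in CONTRIBUTING.md map directly onto a Semantic Versioning bump. The sketch below illustrates that mapping only. `bump` is a hypothetical helper, not part of the project, and it assumes plain `X.Y.Z` version strings with no pre-release or build metadata.

```python
def bump(version, label):
    """Return the next semantic version for a major, minor, or patch change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if label == "major":
        # Incompatible change: reset minor and patch.
        return f"{major + 1}.0.0"
    if label == "minor":
        # Backward-compatible feature: reset patch.
        return f"{major}.{minor + 1}.0"
    if label == "patch":
        # Backward-compatible fix.
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown label: {label!r}")
```

For a release that closes a milestone, the highest-ranking label among its PRs would decide the bump: any `major` PR wins over `minor`, which wins over `patch`.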

/src/isilon_hadoop_tools/_scripts.py:

--------------------------------------------------------------------------------

1 | """Command-line interface for entry points"""

2 |

3 | import logging

4 | import os

5 | import sys

6 | import time

7 |

8 | import urllib3

9 |

10 | import isilon_hadoop_tools

11 | import isilon_hadoop_tools.cli

12 | import isilon_hadoop_tools.directories

13 | import isilon_hadoop_tools.identities

14 |

15 |

16 | DRY_RUN = "Had this been for real, this is what would have happened..."

17 | LOGGER = logging.getLogger(__name__)

18 |

19 |

20 | def base_cli(parser=None):

21 | """Define CLI arguments and options for all entry points."""

22 | if parser is None:

23 | parser = isilon_hadoop_tools.cli.base_cli()

24 | parser.add_argument(

25 | "--append-cluster-name",

26 | help="the cluster name to append on identities",

27 | type=str,

28 | )

29 | parser.add_argument(

30 | "--dist",

31 | help="the Hadoop distribution to be deployed",

32 | choices=("cdh", "cdp", "hdp"),

33 | required=True,

34 | )

35 | parser.add_argument(

36 | "--dry",

37 | help="do a dry run (only logs)",

38 | action="store_true",

39 | default=False,

40 | )

41 | parser.add_argument(

42 | "--version",

43 | action="version",

44 | version=f"%(prog)s v{isilon_hadoop_tools.__version__}",

45 | )

46 | return parser

47 |

48 |

49 | def configure_script(args):

50 | """Logic that applies to all scripts goes here."""

51 | if args.no_verify:

52 | urllib3.disable_warnings()

53 |

54 |

55 | def isilon_create_users_cli(parser=None):

56 | """Define CLI arguments and options for isilon_create_users."""

57 | if parser is None:

58 | parser = base_cli()

59 | parser.add_argument(

60 | "--start-gid",

61 | help="the lowest GID to create a group with",

62 | type=int,

63 | default=isilon_hadoop_tools.identities.Creator.default_start_gid,

64 | )

65 | parser.add_argument(

66 | "--start-uid",

67 | help="the lowest UID to create a user with",

68 | type=int,

69 | default=isilon_hadoop_tools.identities.Creator.default_start_uid,

70 | )

71 | parser.add_argument(

72 | "--user-password",

73 | help="the password for users created",

74 | type=str,

75 | default=None,

76 | )

77 | return parser

78 |

79 |

80 | @isilon_hadoop_tools.cli.catches(isilon_hadoop_tools.IsilonHadoopToolError)

81 | def isilon_create_users(argv=None):

82 | """Execute isilon_create_users commands."""

83 |

84 | if argv is None:

85 | argv = sys.argv[1:]

86 | args = isilon_create_users_cli().parse_args(argv)

87 |

88 | isilon_hadoop_tools.cli.configure_logging(args)

89 | configure_script(args)

90 | onefs = isilon_hadoop_tools.cli.hdfs_client(args)

91 |

92 | identities = {

93 | "cdh": isilon_hadoop_tools.identities.cdh_identities,

94 | "cdp": isilon_hadoop_tools.identities.cdp_identities,

95 | "hdp": isilon_hadoop_tools.identities.hdp_identities,

96 | }[args.dist](args.zone)

97 |

98 | name = "-".join(

99 | [

100 | str(int(time.time())),

101 | args.zone,

102 | args.dist,

103 | ]

104 | )

105 |

106 | if args.append_cluster_name is not None:

107 | suffix = args.append_cluster_name

108 | if not suffix.startswith("-"):

109 | suffix = "-" + suffix

110 | identities = isilon_hadoop_tools.identities.with_suffix_applied(

111 | identities, suffix

112 | )

113 | name += suffix

114 |

115 | onefs_and_files = isilon_hadoop_tools.identities.Creator(

116 | onefs=onefs,

117 | onefs_zone=args.zone,

118 | start_uid=args.start_uid,

119 | start_gid=args.start_gid,

120 | script_path=os.path.join(os.getcwd(), name + ".sh"),

121 | user_password=args.user_password,

122 | )

123 | if args.dry:

124 | LOGGER.info(DRY_RUN)

125 | LOGGER.info(

126 | "A script would have been created at %s.", onefs_and_files.script_path

127 | )

128 | LOGGER.info("The following actions would have populated it and OneFS:")

129 | onefs_and_files.log_identities(identities)

130 | else:

131 | onefs_and_files.create_identities(identities)

132 |

133 |

134 | def isilon_create_directories_cli(parser=None):

135 | """Define CLI arguments and options for isilon_create_directories."""

136 | if parser is None:

137 | parser = base_cli()

138 | return parser

139 |

140 |

141 | @isilon_hadoop_tools.cli.catches(isilon_hadoop_tools.IsilonHadoopToolError)

142 | def isilon_create_directories(argv=None):

143 | """Execute isilon_create_directories commands."""

144 |

145 | if argv is None:

146 | argv = sys.argv[1:]

147 | args = isilon_create_directories_cli().parse_args(argv)

148 |

149 | isilon_hadoop_tools.cli.configure_logging(args)

150 | configure_script(args)

151 | onefs = isilon_hadoop_tools.cli.hdfs_client(args)

152 |

153 | suffix = args.append_cluster_name

154 | if suffix is not None and not suffix.startswith("-"):

155 | suffix = "-" + suffix

156 |

157 | directories = {

158 | "cdh": isilon_hadoop_tools.directories.cdh_directories,

159 | "cdp": isilon_hadoop_tools.directories.cdp_directories,

160 | "hdp": isilon_hadoop_tools.directories.hdp_directories,

161 | }[args.dist](identity_suffix=suffix)

162 |

163 | creator = isilon_hadoop_tools.directories.Creator(

164 | onefs=onefs,

165 | onefs_zone=args.zone,

166 | )

167 | try:

168 | if args.dry:

169 | LOGGER.info(DRY_RUN)

170 | creator.log_directories(directories)

171 | else:

172 | creator.create_directories(directories)

173 | except isilon_hadoop_tools.directories.HDFSRootDirectoryError as exc:

174 | raise isilon_hadoop_tools.cli.CLIError(

175 | f"The HDFS root directory must not be {exc}."

176 | ) from exc

177 |

--------------------------------------------------------------------------------
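
Both entry points in `_scripts.py` normalize `--append-cluster-name` the same way, and `isilon_create_users` also builds a timestamped name for the script it writes. The following is a minimal sketch of that logic in isolation. `normalize_suffix` and `script_name` are hypothetical helpers (the module inlines this code), and the `now` parameter is an assumption added so the result is reproducible.

```python
import time


def normalize_suffix(cluster_name):
    """Return cluster_name with a leading hyphen, or None if unset."""
    if cluster_name is None:
        return None
    return cluster_name if cluster_name.startswith("-") else "-" + cluster_name


def script_name(zone, dist, cluster_name=None, now=None):
    """Build "<epoch>-<zone>-<dist>[-<cluster>]" (hypothetical helper)."""
    # Use the supplied timestamp when given, else the current time.
    timestamp = int(time.time() if now is None else now)
    name = "-".join([str(timestamp), zone, dist])
    suffix = normalize_suffix(cluster_name)
    return name if suffix is None else name + suffix
```

In the listing above, `.sh` is appended to this name and joined to the current working directory to form `script_path`.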

/src/isilon_hadoop_tools/cli.py:

--------------------------------------------------------------------------------

1 | """This module defines a CLI common to all command-line tools."""

2 |

3 | import argparse

4 | import getpass

5 | import logging

6 |

7 | import isilon_hadoop_tools.onefs

8 |

9 |

10 | __all__ = [

11 | # Decorators

12 | "catches",

13 | # Exceptions

14 | "CLIError",

15 | "HintedError",

16 | # Functions

17 | "base_cli",

18 | "configure_logging",

19 | "hdfs_client",

20 | "logging_cli",

21 | "onefs_cli",

22 | "onefs_client",

23 | ]

24 |

25 | LOGGER = logging.getLogger(__name__)

26 |

27 |

28 | class CLIError(isilon_hadoop_tools.IsilonHadoopToolError):

29 | """All Exceptions emitted from this module inherit from this Exception."""

30 |

31 |

32 | def catches(exception):

33 | """Create a decorator for functions that emit the specified exception."""

34 |

35 | def decorator(func):

36 | """Decorate a function that should catch instances of the specified exception."""

37 |

38 | def decorated(*args, **kwargs):

39 | """Catch instances of a specified exception that are raised from the function."""

40 | try:

41 | return func(*args, **kwargs)

42 | except exception as ex:

43 | logging.error(ex)

44 | return 1

45 |

46 | return decorated

47 |

48 | return decorator

49 |

50 |

51 | def base_cli(parser=None):

52 | """Define common CLI arguments and options."""

53 | if parser is None:

54 | parser = argparse.ArgumentParser(

55 | formatter_class=argparse.ArgumentDefaultsHelpFormatter

56 | )

57 | onefs_cli(parser.add_argument_group("OneFS"))

58 | logging_cli(parser.add_argument_group("Logging"))

59 | return parser

60 |

61 |

62 | def onefs_cli(parser=None):

63 | """Define OneFS CLI arguments and options."""

64 | if parser is None:

65 | parser = argparse.ArgumentParser(

66 | formatter_class=argparse.ArgumentDefaultsHelpFormatter

67 | )

68 | parser.add_argument(

69 | "--zone",

70 | "-z",

71 | help="Specify a OneFS access zone.",

72 | type=str,

73 | required=True,

74 | )

75 | parser.add_argument(

76 | "--no-verify",

77 | help="Do not verify SSL/TLS certificates.",

78 | default=False,

79 | action="store_true",

80 | )

81 | parser.add_argument(

82 | "--onefs-password",

83 | help="Specify the password for --onefs-user.",

84 | type=str,

85 | )

86 | parser.add_argument(

87 | "--onefs-user",

88 | help="Specify the user to connect to OneFS as.",

89 | type=str,

90 | default="root",

91 | )

92 | parser.add_argument(

93 | "onefs_address",

94 | help="Specify an IP address or FQDN/SmartConnect that "

95 | "can be used to connect to and configure OneFS.",

96 | type=str,

97 | )

98 | return parser

99 |

100 |

101 | class HintedError(CLIError):

102 |

103 | """

104 | This exception is used to modify the error message passed to the user

105 | when a common error occurs that has a possible solution the user will likely want.

106 | """

107 |

108 | def __str__(self):

109 | base_str = super().__str__()

110 | return str(getattr(self, "__cause__", None)) + "\nHint: " + base_str

111 |

112 |

113 | def _client_from_onefs_cli(init, args):

114 | try:

115 | return init(

116 | address=args.onefs_address,

117 | username=args.onefs_user,

118 | password=getpass.getpass()

119 | if args.onefs_password is None

120 | else args.onefs_password,

121 | default_zone=args.zone,

122 | verify_ssl=not args.no_verify,

123 | )

124 | except isilon_hadoop_tools.onefs.OneFSCertificateError as exc:

125 | raise HintedError(

126 | "--no-verify can be used to skip certificate verification."

127 | ) from exc

128 | except isilon_hadoop_tools.onefs.MissingLicenseError as exc:

129 | raise CLIError(

130 | (

131 | isilon_hadoop_tools.onefs.APIError.license_expired_error_format

132 | if isinstance(exc, isilon_hadoop_tools.onefs.ExpiredLicenseError)

133 | else isilon_hadoop_tools.onefs.APIError.license_missing_error_format

134 | ).format(exc),

135 | ) from exc

136 | except isilon_hadoop_tools.onefs.MissingZoneError as exc:

137 | raise CLIError(

138 | isilon_hadoop_tools.onefs.APIError.zone_not_found_error_format.format(exc)

139 | ) from exc

140 |

141 |

142 | def hdfs_client(args):

143 | """Get a onefs.Client.for_hdfs from args parsed by onefs_cli."""

144 | return _client_from_onefs_cli(isilon_hadoop_tools.onefs.Client.for_hdfs, args)

145 |

146 |

147 | def onefs_client(args):

148 | """Get a onefs.Client from args parsed by onefs_cli."""

149 | return _client_from_onefs_cli(isilon_hadoop_tools.onefs.Client, args)

150 |

151 |

152 | def logging_cli(parser=None):

153 | """Define logging CLI arguments and options."""

154 | if parser is None:

155 | parser = argparse.ArgumentParser(

156 | formatter_class=argparse.ArgumentDefaultsHelpFormatter

157 | )

158 | parser.add_argument(

159 | "-q",

160 | "--quiet",

161 | default=False,

162 | action="store_true",

163 | help="Suppress console output.",

164 | )

165 | parser.add_argument(

166 | "--log-file",

167 | type=str,

168 | help="Specify a path to log to.",

169 | )

170 | parser.add_argument(

171 | "--log-level",

172 | help="Specify how verbose logging should be.",

173 | default="info",

174 | choices=("debug", "info", "warning", "error", "critical"),

175 | )

176 | return parser

177 |

178 |

179 | def configure_logging(args):

180 | """Configure logging for command-line tools."""

181 | logging.getLogger().setLevel(logging.getLevelName(args.log_level.upper()))

182 | if not args.quiet:

183 | console_handler = logging.StreamHandler()

184 | console_handler.setFormatter(logging.Formatter("[%(levelname)s] %(message)s"))

185 | logging.getLogger().addHandler(console_handler)

186 | if args.log_file:

187 | logfile_handler = logging.FileHandler(args.log_file)

188 | logfile_handler.setFormatter(

189 | logging.Formatter("[%(asctime)s] %(name)s [%(levelname)s] %(message)s"),

190 | )

191 | logging.getLogger().addHandler(logfile_handler)

192 |

--------------------------------------------------------------------------------
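
The `catches` decorator in `cli.py` turns a raised exception into a return value of `1`. A minimal, self-contained sketch of the same pattern follows. `main` is a hypothetical entry point, and the use of `functools.wraps` is an assumption added here to preserve the wrapped function's name; it is not in the listing above.

```python
import functools
import logging


def catches(exception):
    """Build a decorator that logs `exception` and returns exit status 1."""

    def decorator(func):
        @functools.wraps(func)  # assumption: not present in the original
        def decorated(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except exception as ex:
                logging.error(ex)
                return 1

        return decorated

    return decorator


@catches(ValueError)
def main(argv):
    """Hypothetical entry point that fails when given no arguments."""
    if not argv:
        raise ValueError("no arguments given")
    return None
```

Scripts generated for `console_scripts` entry points pass the function's return value to `sys.exit`, so returning `1` yields a non-zero exit status and `None` yields success. Passing an empty tuple, as `test_not_catches` does, catches nothing and lets every exception propagate.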

/README.md:

--------------------------------------------------------------------------------

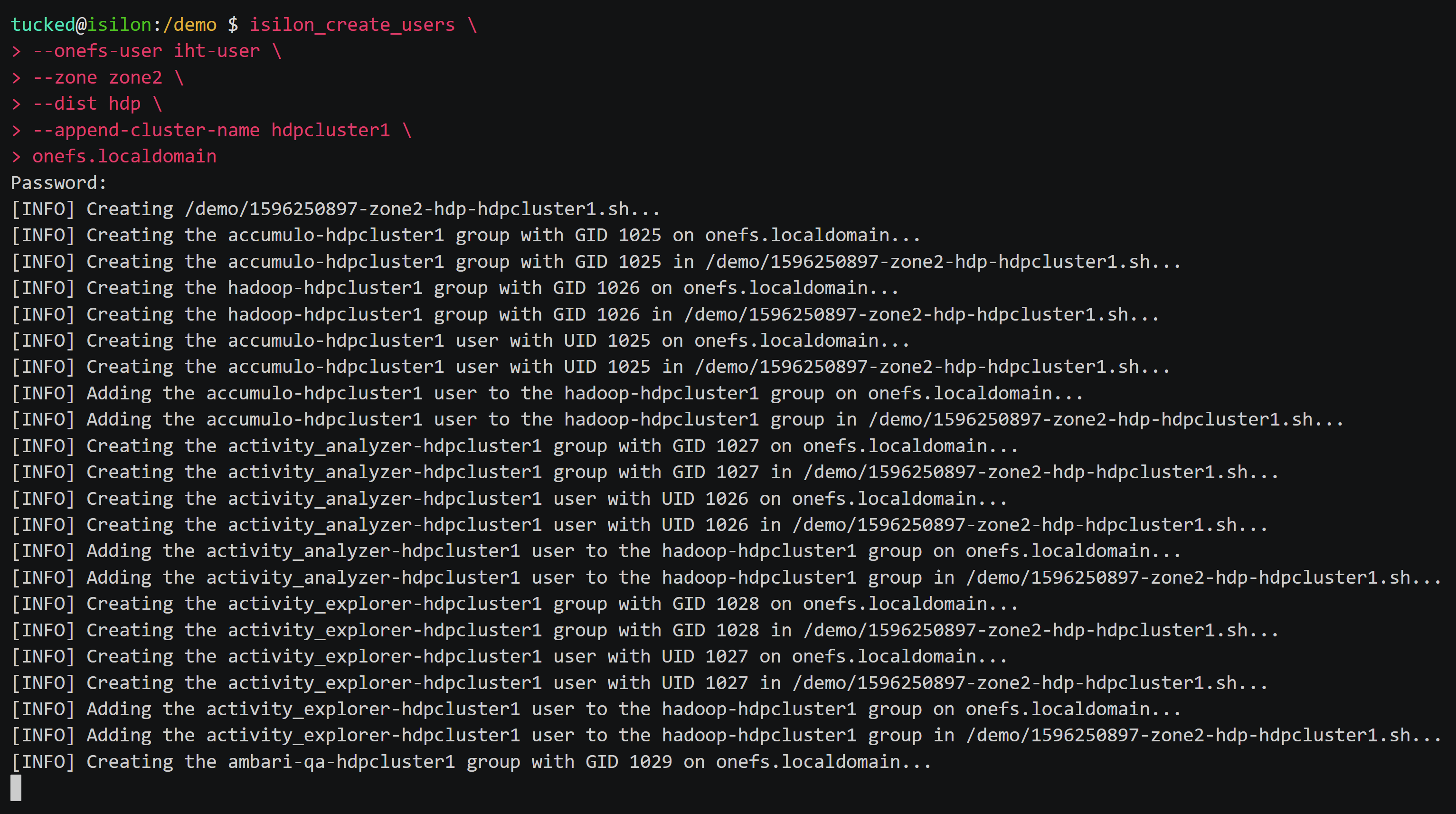

1 | # Isilon Hadoop Tools

2 |

3 | Tools for Using Hadoop with OneFS

4 |

5 | - `isilon_create_users` creates identities needed by Hadoop distributions compatible with OneFS.

6 | - `isilon_create_directories` creates a directory structure with appropriate ownership and permissions in HDFS on OneFS.

7 |

8 |

9 |

10 | ## Installation

11 |

12 | Isilon Hadoop Tools (IHT) currently requires Python 3.7+ and supports OneFS 8+.

13 |

14 | - Python support schedules can be found [in the Python Developer's Guide](https://devguide.python.org/versions/).

15 | - OneFS support schedules can be found in the [PowerScale OneFS Product Availability Guide](https://www.dell.com/support/manuals/en-us/isilon-onefs/ifs_pub_product_availability_9.4.0.0/software?guid=guid-925f6b6a-2882-42b1-8b64-2c5eb2190eb7).

16 |

17 | ### Option 1: Install as a stand-alone command line tool.

18 |

19 |

20 | Use pipx to install IHT.

21 |

22 |

23 | > _`pipx` requires Python 3.7 or later._

24 |

25 | 1. [Install `pipx`:](https://pipxproject.github.io/pipx/installation/)

26 |

27 | ``` sh

28 | python3 -m pip install --user pipx

29 | ```

30 |

31 | - Tip: Newer versions of some Linux distributions (e.g. [Debian 10](https://packages.debian.org/buster/pipx), [Ubuntu 19.04](https://packages.ubuntu.com/disco/pipx), etc.) offer native packages for `pipx`.

32 |

33 |

34 |

35 | ``` sh

36 | python3 -m pipx ensurepath

37 | ```

38 |

39 | - Note: You may need to restart your terminal for the `$PATH` updates to take effect.

40 |

41 | 2. Use `pipx` to install [`isilon_hadoop_tools`](https://pypi.org/project/isilon_hadoop_tools/):

42 |

43 | ``` sh

44 | pipx install isilon_hadoop_tools

45 | ```

46 |

47 | 3. Test the installation:

48 |

49 | ``` sh

50 | isilon_create_users --help

51 | isilon_create_directories --help

52 | ```

53 |

54 | - Use `pipx` to uninstall at any time:

55 |

56 | ``` sh

57 | pipx uninstall isilon_hadoop_tools

58 | ```

59 |

60 | See Python's [Installing stand alone command line tools](https://packaging.python.org/guides/installing-stand-alone-command-line-tools/) guide for more information.

61 |

62 |

63 | ### Option 2: Create an ephemeral installation.

64 |

65 |

66 | Use pip to install IHT in a virtual environment.

67 |

68 |

69 | > Python "Virtual Environments" allow Python packages to be installed in an isolated location for a particular application, rather than being installed globally.

70 |

71 | 1. Use the built-in [`venv`](https://docs.python.org/3/library/venv.html) module to create a virtual environment:

72 |

73 | ``` sh

74 | python3 -m venv ./iht

75 | ```

76 |

77 | 2. Install [`isilon_hadoop_tools`](https://pypi.org/project/isilon_hadoop_tools/) into the virtual environment:

78 |

79 | ``` sh

80 | iht/bin/pip install isilon_hadoop_tools

81 | ```

82 |

83 | - Note: This requires access to an up-to-date Python Package Index (PyPI, usually https://pypi.org/).

84 | For offline installations, necessary resources can be downloaded to a USB flash drive which can be used instead:

85 |

86 | ``` sh

87 | pip3 download --dest /media/usb/iht-dists isilon_hadoop_tools

88 | ```

89 | ``` sh

90 | iht/bin/pip install --no-index --find-links /media/usb/iht-dists isilon_hadoop_tools

91 | ```

92 |

93 | 3. Test the installation:

94 |

95 | ``` sh

96 | iht/bin/isilon_create_users --help

97 | ```

98 |

99 | - Tip: Some users find it more convenient to "activate" the virtual environment (which prepends the virtual environment's `bin/` to `$PATH`):

100 |

101 | ``` sh

102 | source iht/bin/activate

103 | isilon_create_users --help

104 | isilon_create_directories --help

105 | deactivate

106 | ```

107 |

108 | - Remove the virtual environment to uninstall at any time:

109 |

110 | ``` sh

111 | rm --recursive iht/

112 | ```

113 |

114 | See Python's [Installing Packages](https://packaging.python.org/tutorials/installing-packages/) tutorial for more information.

115 |

116 |

117 | ## Usage

118 |

119 | - Tip: `--help` can be used with any IHT script to see extended usage information.

120 |

121 | To use IHT, you will need the following:

122 |

123 | - `$onefs`, an IP address, hostname, or SmartConnect name associated with the OneFS System zone

124 | - Unfortunately, Zone-specific Role-Based Access Control (ZRBAC) is not fully supported by OneFS's RESTful Access to Namespace (RAN) service yet, which is required by `isilon_create_directories`.

125 | - `$iht_user`, a OneFS System zone user with the following privileges:

126 | - `ISI_PRIV_LOGIN_PAPI`

127 | - `ISI_PRIV_AUTH`

128 | - `ISI_PRIV_HDFS`

129 | - `ISI_PRIV_IFS_BACKUP` (only needed by `isilon_create_directories`)

130 | - `ISI_PRIV_IFS_RESTORE` (only needed by `isilon_create_directories`)

131 | - `$zone`, the name of the access zone on OneFS that will host HDFS

132 | - The System zone should **NOT** be used for HDFS.

133 | - `$dist`, the distribution of Hadoop that will be deployed with OneFS (e.g. CDH, HDP, etc.)

134 | - `$cluster_name`, the name of the Hadoop cluster

135 |

136 | ### Connecting to OneFS via HTTPS

137 |

138 | OneFS ships with a self-signed SSL/TLS certificate by default, and such a certificate will not be verifiable by any well-known certificate authority. If you encounter `CERTIFICATE_VERIFY_FAILED` errors while using IHT, it may be because OneFS is still using the default certificate. To remedy the issue, consider encouraging your OneFS administrator to install a verifiable certificate instead. Alternatively, you may choose to skip certificate verification by using the `--no-verify` option, but do so at your own risk!
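To make the trade-off concrete, here is a minimal sketch of what certificate verification means at the Python standard-library level. It is not how IHT implements `--no-verify`; the `make_context` helper and its parameters are hypothetical and exist only for illustration.

```python
import ssl


def make_context(verify=True, ca_file=None):
    """Build an SSL context (hypothetical helper, not part of IHT).

    verify=False approximates the effect of skipping verification:
    the peer's certificate is accepted without being checked.
    """
    context = ssl.create_default_context(cafile=ca_file)
    if not verify:
        # check_hostname must be disabled before verify_mode can be relaxed.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context


strict = make_context()
relaxed = make_context(verify=False)
print(strict.verify_mode, relaxed.verify_mode)
```

With the default (strict) context, a self-signed certificate is rejected unless it, or the authority that signed it, is supplied as trusted, for example through `ca_file`. The relaxed context accepts any certificate, which is why skipping verification carries risk.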

139 |

140 | ### Preparing OneFS for Hadoop Deployment

141 |

142 | _Note: This is not meant to be a complete guide to setting up Hadoop with OneFS. If you stumbled upon this page or have not otherwise consulted the appropriate install guide for your distribution, please do so at https://community.emc.com/docs/DOC-61379._

143 |

144 | There are 2 tools in IHT that are meant to assist with the setup of OneFS as HDFS for a Hadoop cluster:

145 | 1. `isilon_create_users`, which creates users and groups that must exist on all hosts in the Hadoop cluster, including OneFS

146 | 2. `isilon_create_directories`, which sets the correct ownership and permissions on directories in HDFS on OneFS

147 |

148 | These tools must be used _in order_ since a user/group must exist before it can own a directory.

149 |

150 | #### `isilon_create_users`

151 |

152 | Using the information from above, an invocation of `isilon_create_users` could look like this:

153 | ``` sh

154 | isilon_create_users --dry \

155 | --onefs-user "$iht_user" \

156 | --zone "$zone" \

157 | --dist "$dist" \

158 | --append-cluster-name "$cluster_name" \

159 | "$onefs"

160 | ```

161 | - Note: `--dry` causes the script to log without executing. Use it to ensure the script will do what you intend before actually doing it.

162 |

163 | If anything goes wrong (e.g. the script stopped because you forgot to give `$iht_user` the `ISI_PRIV_HDFS` privilege), you can safely rerun with the same options. IHT should figure out that some of its job has been done already and work with what it finds.

164 | - If a particular user/group already exists with a particular UID/GID, the ID it already has will be used.

165 | - If a particular UID/GID is already in use by another user/group, IHT will try again with a different, higher ID.

166 | - IHT may **NOT** detect previous runs that used different options.
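The first two rules above can be sketched as follows. This is an illustration of the described behavior only, not IHT's actual code; `resolve_id` and its arguments are hypothetical names, and the assumption that IHT steps upward one ID at a time is mine.

```python
def resolve_id(name, preferred_id, existing):
    """Pick an ID for `name` (hypothetical helper, not part of IHT).

    existing maps identity names that are already present to their IDs.
    """
    if name in existing:
        # The identity already exists: reuse the ID it already has.
        return existing[name]
    taken = set(existing.values())
    candidate = preferred_id
    # The preferred ID belongs to someone else: try higher IDs until one is free.
    # Stepping by one is an assumption made for this sketch.
    while candidate in taken:
        candidate += 1
    return candidate


existing = {"hdfs": 1001, "yarn": 1002}
print(resolve_id("hdfs", 1500, existing))  # existing identity keeps its ID
print(resolve_id("hive", 1001, existing))  # collision: a higher free ID is chosen
```

Because an existing identity keeps its ID, rerunning with the same inputs gives the same result, which is what makes a rerun with the same options safe.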

167 |

168 | ##### Generated Shell Script

169 |

170 | After running `isilon_create_users`, you will find a new file in `$PWD` named like so:

171 | ``` sh

172 | $unix_timestamp-$zone-$dist-$cluster_name.sh

173 | ```

174 |

175 | This script should be copied to and run on all the other hosts in the Hadoop cluster (excluding OneFS).

176 | It will create the same users/groups with the same UIDs/GIDs and memberships as on OneFS using LSB utilities such as `groupadd`, `useradd`, and `usermod`.
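As a small illustration of the naming pattern, the sketch below composes such a file name. The `script_name` helper is hypothetical, and treating the timestamp as whole seconds since the epoch is an assumption; check the file IHT actually writes.

```python
import time


def script_name(zone, dist, cluster_name, timestamp=None):
    """Compose a name of the form $unix_timestamp-$zone-$dist-$cluster_name.sh.

    Hypothetical helper; integer seconds for the timestamp is an assumption.
    """
    if timestamp is None:
        timestamp = int(time.time())
    return f"{timestamp}-{zone}-{dist}-{cluster_name}.sh"


print(script_name("hadoop-zone", "cdh", "prod", timestamp=1700000000))
```

A name built this way sorts chronologically, so the most recent run for a given zone, distribution, and cluster name is easy to spot in a directory listing.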

177 |

178 | #### `isilon_create_directories`

179 |

180 | Using the information from above, an invocation of `isilon_create_directories` could look like this:

181 | ``` sh

182 | isilon_create_directories --dry \

183 | --onefs-user "$iht_user" \

184 | --zone "$zone" \

185 | --dist "$dist" \

186 | --append-cluster-name "$cluster_name" \

187 | "$onefs"

188 | ```

189 | - Note: `--dry` causes the script to log without executing. Use it to ensure the script will do what you intend before actually doing it.

190 |

191 | If anything goes wrong (e.g. the script stopped because you forgot to run `isilon_create_users` first), you can safely rerun with the same options. IHT should figure out that some of its job has been done already and work with what it finds.

192 | - If a particular directory already exists but does not have the correct ownership or permissions, IHT will correct it.

193 | - If a user/group has been deleted and re-created with a new UID/GID, IHT will adjust ownership accordingly.

194 | - IHT may **NOT** detect previous runs that used different options.
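The permissions IHT applies are ordinary POSIX mode bits, written in octal in `directories.py` (for example `0o755` or `0o1777`). The sketch below renders a few of them in `ls -l` form using the standard `stat` module. The `describe_mode` helper is hypothetical and only meant for reading the `chmod` lines that a `--dry` run logs.

```python
import stat


def describe_mode(mode):
    """Render an octal directory mode in `ls -l` style (hypothetical helper)."""
    # S_IFDIR marks the entry as a directory so the string starts with "d".
    return stat.filemode(stat.S_IFDIR | mode)


for mode in (0o755, 0o775, 0o1777, 0o700):
    print(f"{mode:o} -> {describe_mode(mode)}")
```

The leading `1` in a mode such as `0o1777` is the sticky bit, shown as a trailing `t`. On a world-writable directory like `/tmp`, it prevents users from deleting one another's files.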

195 |

196 | ## Development

197 |

198 | See the [Contributing Guidelines](https://github.com/Isilon/isilon_hadoop_tools/blob/master/CONTRIBUTING.md) for information on project development.

199 |

--------------------------------------------------------------------------------

/src/isilon_hadoop_tools/directories.py:

--------------------------------------------------------------------------------

1 | """Define and create directories with appropriate permissions on OneFS."""

2 |

3 | import logging

4 | import posixpath

5 |

6 | import isilon_hadoop_tools.onefs

7 | from isilon_hadoop_tools import IsilonHadoopToolError

8 |

9 | __all__ = [

10 | # Exceptions

11 | "DirectoriesError",

12 | "HDFSRootDirectoryError",

13 | # Functions

14 | "cdh_directories",

15 | "cdp_directories",

16 | "hdp_directories",

17 | # Objects

18 | "Creator",

19 | "HDFSDirectory",

20 | ]

21 |

22 | LOGGER = logging.getLogger(__name__)

23 |

24 |

25 | class DirectoriesError(IsilonHadoopToolError):

26 | """All exceptions emitted from this module inherit from this Exception."""

27 |

28 |

29 | class HDFSRootDirectoryError(DirectoriesError):

30 | """This exception occurs when the HDFS root directory is not set to a usable path."""

31 |

32 |

33 | class Creator:

34 |

35 | """Create directories with appropriate ownership and permissions on OneFS."""

36 |

37 | def __init__(self, onefs, onefs_zone=None):

38 | self.onefs = onefs

39 | self.onefs_zone = onefs_zone

40 |

41 | def create_directories(

42 | self, directories, setup=None, mkdir=None, chmod=None, chown=None

43 | ):

44 | """Create directories on HDFS on OneFS."""

45 | if self.onefs_zone.lower() == "system":

46 | LOGGER.warning("Deploying in the System zone is not recommended.")

47 | sep = posixpath.sep

48 | zone_root = self.onefs.zone_settings(zone=self.onefs_zone)["path"].rstrip(sep)

49 | hdfs_root = self.onefs.hdfs_settings(zone=self.onefs_zone)[

50 | "root_directory"

51 | ].rstrip(sep)

52 | if hdfs_root == zone_root:

53 | LOGGER.warning("The HDFS root is the same as the zone root.")

54 | if hdfs_root == "/ifs":

55 | # The HDFS root requires non-default ownership/permissions,

56 | # and modifying /ifs can break NFS/SMB.

57 | raise HDFSRootDirectoryError(hdfs_root)

58 | assert hdfs_root.startswith(zone_root)

59 | zone_hdfs = hdfs_root[len(zone_root) :]

60 | if setup:

61 | setup(zone_root, hdfs_root, zone_hdfs)

62 | for directory in directories:

63 | path = posixpath.join(zone_hdfs, directory.path.lstrip(posixpath.sep))

64 | LOGGER.info("mkdir '%s%s'", zone_root, path)

65 | try:

66 | (mkdir or self.onefs.mkdir)(path, directory.mode, zone=self.onefs_zone)

67 | except isilon_hadoop_tools.onefs.APIError as exc:

68 | if exc.dir_path_already_exists_error():

69 | LOGGER.warning("%s%s already exists.", zone_root, path)

70 | else:

71 | raise

72 | LOGGER.info("chmod '%o' '%s%s'", directory.mode, zone_root, path)

73 | (chmod or self.onefs.chmod)(path, directory.mode, zone=self.onefs_zone)

74 | LOGGER.info(

75 | "chown '%s:%s' '%s%s'",

76 | directory.owner,

77 | directory.group,

78 | zone_root,

79 | path,

80 | )

81 | (chown or self.onefs.chown)(

82 | path,

83 | owner=directory.owner,

84 | group=directory.group,

85 | zone=self.onefs_zone,

86 | )

87 |

88 | def log_directories(self, directories):

89 | """Log the actions that would be taken by create_directories."""

90 |

91 | def _pass(*_, **__):

92 | pass

93 |

94 | self.create_directories(

95 | directories, setup=_pass, mkdir=_pass, chmod=_pass, chown=_pass

96 | )

97 |

98 |

99 | class HDFSDirectory: # pylint: disable=too-few-public-methods

100 |

101 | """A Directory on HDFS"""

102 |

103 | def __init__(self, path, owner, group, mode):

104 | self.path = path

105 | self.owner = owner

106 | self.group = group

107 | self.mode = mode

108 |

109 | def apply_identity_suffix(self, suffix):

110 | """Append a suffix to all identities associated with the directory."""

111 | self.owner += suffix

112 | self.group += suffix

113 |

114 |

115 | def cdh_directories(identity_suffix=None):

116 | """Directories needed for Cloudera Distribution including Hadoop"""

117 | directories = [

118 | HDFSDirectory("/", "hdfs", "hadoop", 0o755),

119 | HDFSDirectory("/hbase", "hbase", "hbase", 0o755),

120 | HDFSDirectory("/solr", "solr", "solr", 0o775),

121 | HDFSDirectory("/tmp", "hdfs", "supergroup", 0o1777),

122 | HDFSDirectory("/tmp/hive", "hive", "supergroup", 0o777),

123 | HDFSDirectory("/tmp/logs", "mapred", "hadoop", 0o1777),

124 | HDFSDirectory("/user", "hdfs", "supergroup", 0o755),

125 | HDFSDirectory("/user/flume", "flume", "flume", 0o775),

126 | HDFSDirectory("/user/hdfs", "hdfs", "hdfs", 0o755),

127 | HDFSDirectory("/user/history", "mapred", "hadoop", 0o777),

128 | HDFSDirectory("/user/hive", "hive", "hive", 0o775),

129 | HDFSDirectory("/user/hive/warehouse", "hive", "hive", 0o1777),

130 | HDFSDirectory("/user/hue", "hue", "hue", 0o755),

131 | HDFSDirectory(

132 | "/user/hue/.cloudera_manager_hive_metastore_canary", "hue", "hue", 0o777

133 | ),

134 | HDFSDirectory("/user/impala", "impala", "impala", 0o775),

135 | HDFSDirectory("/user/oozie", "oozie", "oozie", 0o775),

136 | HDFSDirectory("/user/spark", "spark", "spark", 0o751),

137 | HDFSDirectory("/user/spark/applicationHistory", "spark", "spark", 0o1777),

138 | HDFSDirectory("/user/sqoop2", "sqoop2", "sqoop", 0o775),

139 | HDFSDirectory("/user/yarn", "yarn", "yarn", 0o755),

140 | ]

141 | if identity_suffix:

142 | for directory in directories:

143 | directory.apply_identity_suffix(identity_suffix)

144 | return directories

145 |

146 |

147 | def cdp_directories(identity_suffix=None):

148 | """Directories needed for Cloudera Data Platform"""

149 | directories = [

150 | HDFSDirectory("/", "hdfs", "hadoop", 0o755),

151 | HDFSDirectory("/hbase", "hbase", "hbase", 0o755),

152 | HDFSDirectory("/ranger", "hdfs", "supergroup", 0o755),

153 | HDFSDirectory("/ranger/audit", "hdfs", "supergroup", 0o755),

154 | HDFSDirectory("/solr", "solr", "solr", 0o775),

155 | HDFSDirectory("/tmp", "hdfs", "supergroup", 0o1777),

156 | HDFSDirectory("/tmp/hive", "hive", "supergroup", 0o777),

157 | HDFSDirectory("/tmp/logs", "yarn", "hadoop", 0o1777),

158 | HDFSDirectory("/user", "hdfs", "supergroup", 0o755),

159 | HDFSDirectory("/user/flume", "flume", "flume", 0o775),

160 | HDFSDirectory("/user/hdfs", "hdfs", "hdfs", 0o755),

161 | HDFSDirectory("/user/history", "mapred", "hadoop", 0o777),

162 | HDFSDirectory("/user/history/done_intermediate", "mapred", "hadoop", 0o1777),

163 | HDFSDirectory("/user/hive", "hive", "hive", 0o775),

164 | HDFSDirectory("/user/hive/warehouse", "hive", "hive", 0o1777),

165 | HDFSDirectory("/user/hue", "hue", "hue", 0o755),

166 | HDFSDirectory(

167 | "/user/hue/.cloudera_manager_hive_metastore_canary", "hue", "hue", 0o777

168 | ),

169 | HDFSDirectory("/user/impala", "impala", "impala", 0o775),

170 | HDFSDirectory("/user/livy", "livy", "livy", 0o775),

171 | HDFSDirectory("/user/oozie", "oozie", "oozie", 0o775),

172 | HDFSDirectory("/user/spark", "spark", "spark", 0o751),

173 | HDFSDirectory("/user/spark/applicationHistory", "spark", "spark", 0o1777),

174 | HDFSDirectory("/user/spark/spark3ApplicationHistory", "spark", "spark", 0o1777),

175 | HDFSDirectory("/user/spark/driverLogs", "spark", "spark", 0o1777),

176 | HDFSDirectory("/user/spark/driver3Logs", "spark", "spark", 0o1777),

177 | HDFSDirectory("/user/sqoop", "sqoop", "sqoop", 0o775),

178 | HDFSDirectory("/user/sqoop2", "sqoop2", "sqoop", 0o775),

179 | HDFSDirectory("/user/tez", "hdfs", "supergroup", 0o775),

180 | HDFSDirectory("/user/yarn", "hdfs", "supergroup", 0o775),

181 | HDFSDirectory("/user/yarn/mapreduce", "hdfs", "supergroup", 0o775),

182 | HDFSDirectory("/user/yarn/mapreduce/mr-framework", "yarn", "hadoop", 0o775),

183 | HDFSDirectory("/user/yarn/services", "hdfs", "supergroup", 0o775),

184 | HDFSDirectory("/user/yarn/services/service-framework", "hdfs", "supergroup", 0o775),

185 | HDFSDirectory("/user/zeppelin", "zeppelin", "zeppelin", 0o775),

186 | HDFSDirectory("/warehouse", "hdfs", "supergroup", 0o775),

187 | HDFSDirectory("/warehouse/tablespace", "hdfs", "supergroup", 0o775),

188 | HDFSDirectory("/warehouse/tablespace/external", "hdfs", "supergroup", 0o775),

189 | HDFSDirectory("/warehouse/tablespace/managed", "hdfs", "supergroup", 0o775),

190 | HDFSDirectory("/warehouse/tablespace/external/hive", "hive", "hive", 0o1775),

191 | HDFSDirectory("/warehouse/tablespace/managed/hive", "hive", "hive", 0o1775),

192 | HDFSDirectory("/yarn", "yarn", "yarn", 0o700),

193 | HDFSDirectory("/yarn/node-labels", "yarn", "yarn", 0o700),

194 | ]

195 | if identity_suffix:

196 | for directory in directories:

197 | directory.apply_identity_suffix(identity_suffix)

198 | return directories

199 |

200 |

201 | def hdp_directories(identity_suffix=None):

202 | """Directories needed for Hortonworks Data Platform"""

203 | directories = [

204 | HDFSDirectory("/", "hdfs", "hadoop", 0o755),

205 | HDFSDirectory("/app-logs", "yarn", "hadoop", 0o1777),

206 | HDFSDirectory("/app-logs/ambari-qa", "ambari-qa", "hadoop", 0o770),

207 | HDFSDirectory("/app-logs/ambari-qa/logs", "ambari-qa", "hadoop", 0o770),

208 | HDFSDirectory("/apps", "hdfs", "hadoop", 0o755),

209 | HDFSDirectory("/apps/accumulo", "accumulo", "hadoop", 0o750),

210 | HDFSDirectory("/apps/falcon", "falcon", "hdfs", 0o777),

211 | HDFSDirectory("/apps/hbase", "hdfs", "hadoop", 0o755),

212 | HDFSDirectory("/apps/hbase/data", "hbase", "hadoop", 0o775),

213 | HDFSDirectory("/apps/hbase/staging", "hbase", "hadoop", 0o711),

214 | HDFSDirectory("/apps/hive", "hdfs", "hdfs", 0o755),

215 | HDFSDirectory("/apps/hive/warehouse", "hive", "hdfs", 0o777),

216 | HDFSDirectory("/apps/tez", "tez", "hdfs", 0o755),

217 | HDFSDirectory("/apps/webhcat", "hcat", "hdfs", 0o755),

218 | HDFSDirectory("/ats", "yarn", "hdfs", 0o755),

219 | HDFSDirectory("/ats/done", "yarn", "hdfs", 0o775),

220 | HDFSDirectory("/atsv2", "yarn-ats", "hadoop", 0o755),

221 | HDFSDirectory("/mapred", "mapred", "hadoop", 0o755),

222 | HDFSDirectory("/mapred/system", "mapred", "hadoop", 0o755),

223 | HDFSDirectory("/system", "yarn", "hadoop", 0o755),

224 | HDFSDirectory("/system/yarn", "yarn", "hadoop", 0o755),

225 | HDFSDirectory("/system/yarn/node-labels", "yarn", "hadoop", 0o700),

226 | HDFSDirectory("/tmp", "hdfs", "hdfs", 0o1777),

227 | HDFSDirectory("/tmp/hive", "ambari-qa", "hdfs", 0o777),

228 | HDFSDirectory("/user", "hdfs", "hdfs", 0o755),

229 | HDFSDirectory("/user/ambari-qa", "ambari-qa", "hdfs", 0o770),

230 | HDFSDirectory("/user/hcat", "hcat", "hdfs", 0o755),

231 | HDFSDirectory("/user/hdfs", "hdfs", "hdfs", 0o755),

232 | HDFSDirectory("/user/hive", "hive", "hdfs", 0o700),

233 | HDFSDirectory("/user/hue", "hue", "hue", 0o755),

234 | HDFSDirectory("/user/oozie", "oozie", "hdfs", 0o775),

235 | HDFSDirectory("/user/yarn", "yarn", "hdfs", 0o755),

236 | ]

237 | if identity_suffix:

238 | for directory in directories:

239 | directory.apply_identity_suffix(identity_suffix)

240 | return directories

241 |

--------------------------------------------------------------------------------

/tests/test_onefs.py:

--------------------------------------------------------------------------------

1 | """Verify the functionality of isilon_hadoop_tools.onefs."""

2 |

3 |

4 | import socket

5 |

6 | from unittest.mock import Mock

7 | from urllib.parse import urlparse

8 | import uuid

9 |

10 | import isi_sdk_7_2

11 | import isi_sdk_8_0

12 | import isi_sdk_8_0_1

13 | import isi_sdk_8_1_0

14 | import isi_sdk_8_1_1

15 | import isi_sdk_8_2_0

16 | import isi_sdk_8_2_1

17 | import isi_sdk_8_2_2

18 | import pytest

19 |

20 | from isilon_hadoop_tools import IsilonHadoopToolError, onefs

21 |

22 |

23 | def test_init_connection_error(invalid_address, pytestconfig):

24 | """Creating a Client for an unusable host should raise a OneFSConnectionError."""

25 | with pytest.raises(onefs.OneFSConnectionError):

26 | onefs.Client(address=invalid_address, username=None, password=None)

27 |

28 |

29 | @pytest.mark.xfail(

30 | raises=onefs.MalformedAPIError,

31 | reason="https://bugs.west.isilon.com/show_bug.cgi?id=248011",

32 | )

33 | def test_init_bad_creds(pytestconfig):

34 | """Creating a Client with invalid credentials should raise an appropriate exception."""

35 | with pytest.raises(onefs.APIError):

36 | onefs.Client(

37 | address=pytestconfig.getoption("--address", skip=True),

38 | username=str(uuid.uuid4()),

39 | password=str(uuid.uuid4()),

40 | verify_ssl=False, # OneFS uses a self-signed certificate by default.

41 | )

42 |

43 |

44 | def test_init(request):

45 | """Creating a Client should not raise an Exception."""

46 | assert isinstance(request.getfixturevalue("onefs_client"), onefs.Client)

47 |

48 |

49 | def test_api_error_errors(api_error_errors_expectation):

50 | """Verify that APIError.errors raises appropriate exceptions."""

51 | api_error, expectation = api_error_errors_expectation

52 | with expectation:

53 | api_error.errors()

54 |

55 |

56 | def test_api_error_str(api_error):

57 | """Verify that APIErrors can be stringified."""

58 | assert isinstance(str(api_error), str)

59 |

60 |

61 | @pytest.mark.parametrize(

62 | "revision, expected_sdk",

63 | [

64 | (0, isi_sdk_8_2_2),

65 | (onefs.ONEFS_RELEASES["7.2.0.0"], isi_sdk_7_2),

66 | (onefs.ONEFS_RELEASES["8.0.0.0"], isi_sdk_8_0),

67 | (onefs.ONEFS_RELEASES["8.0.0.4"], isi_sdk_8_0),

68 | (onefs.ONEFS_RELEASES["8.0.1.0"], isi_sdk_8_0_1),

69 | (onefs.ONEFS_RELEASES["8.0.1.1"], isi_sdk_8_0_1),

70 | (onefs.ONEFS_RELEASES["8.1.0.0"], isi_sdk_8_1_0),

71 | (onefs.ONEFS_RELEASES["8.1.1.0"], isi_sdk_8_1_1),

72 | (onefs.ONEFS_RELEASES["8.1.2.0"], isi_sdk_8_1_1),

73 | (onefs.ONEFS_RELEASES["8.2.0.0"], isi_sdk_8_2_0),

74 | (onefs.ONEFS_RELEASES["8.2.1.0"], isi_sdk_8_2_1),

75 | (onefs.ONEFS_RELEASES["8.2.2.0"], isi_sdk_8_2_2),

76 | (onefs.ONEFS_RELEASES["8.2.3.0"], isi_sdk_8_2_2),

77 | (float("inf"), isi_sdk_8_2_2),

78 | ],

79 | )

80 | def test_sdk_for_revision(revision, expected_sdk):

81 | """Verify that an appropriate SDK is selected for a given revision."""

82 | assert onefs.sdk_for_revision(revision) is expected_sdk

83 |

84 |

85 | def test_sdk_for_revision_unsupported():

86 | """Ensure that an UnsupportedVersion exception is raised for unsupported revisions."""

87 | with pytest.raises(onefs.UnsupportedVersion):

88 | onefs.sdk_for_revision(revision=0, strict=True)

89 |

90 |

91 | def test_accesses_onefs_connection_error(max_retry_exception_mock, onefs_client):

92 | """Verify that MaxRetryErrors are converted to OneFSConnectionErrors."""

93 | with pytest.raises(onefs.OneFSConnectionError):

94 | onefs.accesses_onefs(max_retry_exception_mock)(onefs_client)

95 |

96 |

97 | def test_accesses_onefs_api_error(empty_api_exception_mock, onefs_client):

98 | """Verify that APIExceptions are converted to APIErrors."""

99 | with pytest.raises(onefs.APIError):

100 | onefs.accesses_onefs(empty_api_exception_mock)(onefs_client)

101 |

102 |

103 | def test_accesses_onefs_try_again(retriable_api_exception_mock, onefs_client):

104 | """Verify that APIExceptions are retried appropriately."""

105 | mock, return_value = retriable_api_exception_mock

106 | assert onefs.accesses_onefs(mock)(onefs_client) == return_value

107 |

108 |

109 | def test_accesses_onefs_other(exception, onefs_client):

110 | """Verify that arbitrary exceptions are not caught."""

111 | with pytest.raises(exception):

112 | onefs.accesses_onefs(Mock(side_effect=exception))(onefs_client)

113 |

114 |

115 | def test_address(onefs_client, pytestconfig):

116 | """Verify that onefs.Client.address is exactly what was passed in."""

117 | assert onefs_client.address == pytestconfig.getoption("--address")

118 |

119 |

120 | def test_username(onefs_client, pytestconfig):

121 | """Verify that onefs.Client.username is exactly what was passed in."""

122 | assert onefs_client.username == pytestconfig.getoption("--username")

123 |

124 |

125 | def test_password(onefs_client, pytestconfig):

126 | """Verify that onefs.Client.password is exactly what was passed in."""

127 | assert onefs_client.password == pytestconfig.getoption("--password")

128 |

129 |

130 | def test_host(onefs_client):

131 | """Verify that onefs.Client.host is a parsable url."""

132 | parsed = urlparse(onefs_client.host)

133 | assert parsed.scheme == "https"

134 | assert socket.gethostbyname(parsed.hostname)

135 | assert parsed.port == 8080

136 |

137 |

138 | def test_create_group(request):

139 | """Ensure that a group can be created successfully."""

140 | request.getfixturevalue("created_group")

141 |

142 |

143 | def test_delete_group(onefs_client, deletable_group):

144 | """Verify that a group can be deleted successfully."""

145 | group_name, _ = deletable_group

146 | assert onefs_client.delete_group(name=group_name) is None

147 |

148 |

149 | def test_gid_of_group(onefs_client, created_group):

150 | """Verify that the correct GID is fetched for an existing group."""

151 | group_name, gid = created_group

152 | assert onefs_client.gid_of_group(group_name=group_name) == gid

153 |

154 |

155 | def test_groups(onefs_client, created_group):

156 | """Verify that a group that is known to exist appears in the list of existing groups."""

157 | group_name, _ = created_group

158 | assert group_name in onefs_client.groups()

159 |

160 |

161 | def test_delete_user(onefs_client, deletable_user):

162 | """Verify that a user can be deleted successfully."""

163 | assert onefs_client.delete_user(name=deletable_user[0]) is None

164 |

165 |

166 | def test_create_user(request):

167 | """Ensure that a user can be created successfully."""

168 | request.getfixturevalue("created_user")

169 |

170 |

171 | def test_add_user_to_group(onefs_client, created_user, created_group):

172 | """Ensure that a user can be added to a group successfully."""

173 | assert (

174 | onefs_client.add_user_to_group(

175 | user_name=created_user[0],

176 | group_name=created_group[0],

177 | )

178 | is None

179 | )

180 |

181 |

182 | def test_create_hdfs_proxy_user(request):

183 | """Ensure that an HDFS proxy user can be created successfully."""

184 | request.getfixturevalue("created_proxy_user")

185 |

186 |

187 | def test_delete_proxy_user(onefs_client, deletable_proxy_user):

188 | """Verify that a proxy user can be deleted successfully."""

189 | assert onefs_client.delete_hdfs_proxy_user(name=deletable_proxy_user[0]) is None

190 |

191 |

192 | def test_uid_of_user(onefs_client, created_user):

193 | """Verify that the correct UID is fetched for an existing user."""

194 | user_name, _, uid = created_user

195 | assert onefs_client.uid_of_user(user_name=user_name) == uid

196 |

197 |

198 | def test_primary_group_of_user(onefs_client, created_user):

199 | """Verify that the correct primary group is fetched for an existing user."""

200 | user_name, primary_group, _ = created_user

201 | assert onefs_client.primary_group_of_user(user_name=user_name) == primary_group

202 |

203 |

204 | def test_create_realm(request):

205 | """Verify that a Kerberos realm can be created successfully."""

206 | request.getfixturevalue("created_realm")

207 |

208 |

209 | def test_delete_realm(onefs_client, deletable_realm):

210 | """Verify that a realm can be deleted successfully."""

211 | onefs_client.delete_realm(name=deletable_realm)

212 |

213 |

214 | def test_create_auth_provider(request):

215 | """Verify that a Kerberos auth provider can be created successfully."""

216 | request.getfixturevalue("created_auth_provider")

217 |

218 |

219 | def test_delete_auth_provider(onefs_client, deletable_auth_provider):

220 | """Verify that a Kerberos auth provider can be deleted successfully."""

221 | onefs_client.delete_auth_provider(name=deletable_auth_provider)

222 |

223 |

224 | def test_delete_spn(onefs_client, deletable_spn):

225 | """Verify that an SPN can be deleted successfully."""

226 | spn, provider = deletable_spn

227 | onefs_client.delete_spn(spn=spn, provider=provider)

228 |

229 |

230 | def test_create_spn(request):

231 | """Verify that a Kerberos SPN can be created successfully."""

232 | request.getfixturevalue("created_spn")

233 |

234 |

235 | def test_list_spns(onefs_client, created_spn):

236 | """Verify that a Kerberos SPN can be listed successfully."""

237 | spn, provider = created_spn

238 | assert (spn + "@" + provider) in onefs_client.list_spns(provider=provider)

239 |

240 |

241 | def test_flush_auth_cache(onefs_client):

242 | """Verify that flushing the auth cache does not raise an exception."""

243 | assert onefs_client.flush_auth_cache() is None

244 |

245 |

246 | def test_flush_auth_cache_unsupported(riptide_client):

247 | """

248 | Verify that trying to flush the auth cache of a non-System zone

249 | before Halfpipe raises an UnsupportedOperation exception.

250 | """

251 | with pytest.raises(onefs.UnsupportedOperation):

252 | riptide_client.flush_auth_cache(zone="notSystem")

253 |

254 |

255 | @pytest.mark.usefixtures("requests_delete_raises")

256 | def test_flush_auth_cache_error(riptide_client):

257 | """

258 | Verify that flushing the auth cache raises an appropriate exception

259 | when things go wrong before Halfpipe.

260 | """

261 | with pytest.raises(onefs.NonSDKAPIError):

262 | riptide_client.flush_auth_cache()

263 |

264 |

265 | def test_hdfs_inotify_settings(onefs_client):

266 | """Ensure hdfs_inotify_settings returns all available settings appropriately."""

267 | try:

268 | hdfs_inotify_settings = onefs_client.hdfs_inotify_settings()

269 | except onefs.UnsupportedOperation:

270 | assert onefs_client.revision() < onefs.ONEFS_RELEASES["8.1.1.0"]

271 | else:

272 | assert isinstance(hdfs_inotify_settings, dict)

273 | assert all(

274 | setting in hdfs_inotify_settings

275 | for setting in ["enabled", "maximum_delay", "retention"]

276 | )

277 |

278 |

279 | @pytest.mark.parametrize(

280 | "setting_and_type",

281 | {

282 | "alternate_system_provider": str,

283 | "auth_providers": list,

284 | "cache_entry_expiry": int,

285 | "create_path": (bool, type(None)),

286 | "groupnet": str,

287 | "home_directory_umask": int,

288 | "id": str,

289 | "map_untrusted": str,

290 | "name": str,

291 | "netbios_name": str,

292 | "path": str,

293 | "skeleton_directory": str,

294 | "system": bool,

295 | "system_provider": str,

296 | "user_mapping_rules": list,

297 | "zone_id": int,

298 | }.items(),

299 | )

300 | def test_zone_settings(onefs_client, setting_and_type):

301 | """Ensure zone_settings returns all available settings appropriately."""

302 | setting, setting_type = setting_and_type

303 | assert isinstance(onefs_client.zone_settings()[setting], setting_type)

304 |

305 |

306 | def test_zone_settings_bad_zone(onefs_client):
307 |     """Ensure zone_settings fails appropriately when given a nonexistent zone."""
308 |     with pytest.raises(onefs.MissingZoneError):
309 |         onefs_client.zone_settings(zone=str(uuid.uuid4()))
310 |
311 |

312 | def test_mkdir(request, onefs_client):
313 |     """Ensure that a directory can be created successfully."""
314 |     path, permissions = request.getfixturevalue("created_directory")
315 |
316 |     def _check_postconditions():
317 |         assert onefs_client.permissions(path) == permissions
318 |
319 |     request.addfinalizer(_check_postconditions)
320 |
321 |

322 | @pytest.mark.parametrize("recursive", [False, True])
323 | def test_rmdir(onefs_client, deletable_directory, recursive, request):
324 |     """Verify that a directory can be deleted successfully."""
325 |     path, _ = deletable_directory
326 |     assert onefs_client.rmdir(path=path, recursive=recursive) is None
327 |
328 |     def _check_postconditions():
329 |         with pytest.raises(onefs.APIError):
330 |             onefs_client.permissions(path)
331 |
332 |     request.addfinalizer(_check_postconditions)
333 |
334 |

335 | def test_permissions(onefs_client, created_directory):
336 |     """Check that permissions returns correct information."""
337 |     path, permissions = created_directory
338 |     assert onefs_client.permissions(path) == permissions
339 |
340 |

341 | def test_chmod(onefs_client, created_directory, max_mode, request):
342 |     """Check that chmod modifies the mode correctly."""
343 |     path, permissions = created_directory
344 |     new_mode = (permissions["mode"] + 1) % (max_mode + 1)
345 |     assert onefs_client.chmod(path, new_mode) is None
346 |
347 |     def _check_postconditions():
348 |         assert onefs_client.permissions(path)["mode"] == new_mode
349 |
350 |     request.addfinalizer(_check_postconditions)
351 |
352 |

353 | @pytest.mark.parametrize("new_owner", [True, False])
354 | @pytest.mark.parametrize("new_group", [True, False])
355 | def test_chown(
356 |     onefs_client,
357 |     created_directory,
358 |     created_user,
359 |     created_group,
360 |     new_owner,
361 |     new_group,
362 |     request,
363 | ):
364 |     """Check that chown modifies ownership correctly."""
365 |     path, permissions = created_directory
366 |     user_name = created_user[0]
367 |     group_name = created_group[0]
368 |     assert (
369 |         onefs_client.chown(
370 |             path,
371 |             owner=user_name if new_owner else None,
372 |             group=group_name if new_group else None,
373 |         )
374 |         is None
375 |     )
376 |
377 |     def _check_postconditions():
378 |         owner = user_name if new_owner else permissions["owner"]
379 |         assert onefs_client.permissions(path)["owner"] == owner
380 |         group = group_name if new_group else permissions["group"]
381 |         assert onefs_client.permissions(path)["group"] == group
382 |
383 |     request.addfinalizer(_check_postconditions)
384 |
385 |

386 | def test_feature_supported(onefs_client, supported_feature):
387 |     """Ensure that feature_is_supported correctly identifies a supported feature."""
388 |     try:
389 |         assert onefs_client.feature_is_supported(supported_feature)
390 |     except onefs.UnsupportedOperation:
391 |         assert onefs_client.revision() < onefs.ONEFS_RELEASES["8.2.0.0"]
392 |
393 |

394 | def test_feature_unsupported(onefs_client, unsupported_feature):
395 |     """Ensure that feature_is_supported correctly identifies an unsupported feature."""
396 |     try:
397 |         assert not onefs_client.feature_is_supported(unsupported_feature)
398 |     except onefs.UnsupportedOperation:
399 |         assert onefs_client.revision() < onefs.ONEFS_RELEASES["8.2.0.0"]
400 |
401 |

402 | @pytest.mark.parametrize(
403 |     "error, classinfo",
404 |     [
405 |         (onefs.APIError, onefs.OneFSError),
406 |         (onefs.ExpiredLicenseError, onefs.MissingLicenseError),
407 |         (onefs.MalformedAPIError, onefs.OneFSError),
408 |         (onefs.MissingLicenseError, onefs.OneFSError),
409 |         (onefs.MissingZoneError, onefs.OneFSError),
410 |         (onefs.MixedModeError, onefs.OneFSError),
411 |         (onefs.OneFSCertificateError, onefs.OneFSConnectionError),
412 |         (onefs.OneFSConnectionError, onefs.OneFSError),
413 |         (onefs.OneFSError, IsilonHadoopToolError),
414 |         (onefs.OneFSValueError, ValueError),
415 |         (onefs.NonSDKAPIError, onefs.OneFSError),
416 |         (onefs.UndecodableAPIError, onefs.MalformedAPIError),
417 |         (onefs.UndeterminableVersion, onefs.OneFSError),
418 |         (onefs.UnsupportedOperation, onefs.OneFSError),
419 |         (onefs.UnsupportedVersion, onefs.OneFSError),
420 |     ],
421 | )
422 | def test_errors_onefs(error, classinfo):
423 |     """Ensure that exception types remain consistent."""
424 |     assert issubclass(error, IsilonHadoopToolError)
425 |     assert issubclass(error, onefs.OneFSError)
426 |     assert issubclass(error, classinfo)
427 |

--------------------------------------------------------------------------------
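The parametrized `test_errors_onefs` check above pins each exception type to an expected base class. The sketch below is a minimal, self-contained illustration of how such a hierarchy could satisfy all three assertions, including the `OneFSValueError`/`ValueError` pair. The class bodies are stand-ins that assume multiple inheritance; the real definitions in `isilon_hadoop_tools` are not shown here and may differ. `find_violations` is a hypothetical helper, not part of the package.

```python
# Stand-in classes (assumptions): they only mirror the names used in the test.
class IsilonHadoopToolError(Exception):
    """Assumed root of all tool errors."""


class OneFSError(IsilonHadoopToolError):
    """Assumed root of all OneFS-related errors."""


class MalformedAPIError(OneFSError):
    """Assumed: raised when an API response cannot be interpreted."""


class UndecodableAPIError(MalformedAPIError):
    """Assumed: raised when an API response cannot be decoded."""


class OneFSValueError(OneFSError, ValueError):
    """Assumed: inherits from both OneFSError and the built-in ValueError."""


def find_violations(pairs, *required_bases):
    """Return the (error, classinfo) pairs that break the expected hierarchy.

    Hypothetical helper: each error must subclass every required base
    as well as the classinfo it is paired with.
    """
    return [
        (error, classinfo)
        for error, classinfo in pairs
        if not all(issubclass(error, base) for base in (*required_bases, classinfo))
    ]


PAIRS = [
    (MalformedAPIError, OneFSError),
    (OneFSError, IsilonHadoopToolError),
    (OneFSValueError, ValueError),
    (UndecodableAPIError, MalformedAPIError),
]

violations = find_violations(PAIRS, IsilonHadoopToolError, OneFSError)
```

With this layout, a caller can catch `OneFSValueError` with either `except ValueError` or `except OneFSError`, which is the property the `(onefs.OneFSValueError, ValueError)` test case appears to protect.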

/src/isilon_hadoop_tools/identities.py:

--------------------------------------------------------------------------------

1 | """Define and create necessary Hadoop users and groups on OneFS."""
2 |
3 | import logging
4 | import os
5 |
6 | import isilon_hadoop_tools.onefs
7 |
8 |
9 | __all__ = [
10 |     # Functions
11 |     "cdh_identities",
12 |     "cdp_identities",
13 |     "hdp_identities",
14 |     "iterate_identities",
15 |     "log_identities",
16 |     "with_suffix_applied",
17 |     # Objects
18 |     "Creator",
19 | ]
20 |
21 | ENCODING = "utf-8"
22 | LOGGER = logging.getLogger(__name__)
23 |
24 |

25 | def _log_create_group(group_name):
26 |     LOGGER.info("Create %s group.", group_name)
27 |
28 |
29 | def _log_create_user(user_name, pgroup_name):
30 |     LOGGER.info("Create %s:%s user.", user_name, pgroup_name)
31 |
32 |
33 | def _log_add_user_to_group(user_name, group_name):
34 |     LOGGER.info("Add %s user to %s group.", user_name, group_name)