├── README.md

├── Readme_Img

├── C_Frame.png

├── Frameworks.png

├── Q_Frame.png

└── qflow.png

├── model.tar.gz

├── model

├── u2_p2

│ ├── config

│ └── model_best.tar

├── u2_p2_n2

│ ├── config

│ └── model_best.tar

├── u4_p2

│ ├── config

│ └── model_best.tar

├── u4_v2

│ ├── config

│ └── model_best.tar

├── v16_u2

│ ├── config

│ └── model_best.tar

└── v16_v2

│ ├── config

│ └── model_best.tar

├── requirements.txt

├── session_1

└── Tutorial_0_Basic_Quantum_Gate.ipynb

├── session_2

├── Tutorial_1_DataPreparation.ipynb

├── Tutorial_2_Hidden_NeuralComp.ipynb

├── Tutorial_3_Full_MNIST_Prediction.ipynb

├── Tutorial_4_QAccelerate.ipynb

└── Tutorial_5_QF-Map-Eval.ipynb

├── session_3

├── EX_1_QF_pNet.ipynb

├── EX_2_QF_hNet.ipynb

├── EX_3_QF_FB.py

├── EX_4_FFNN.ipynb

└── EX_5_VQC.ipynb

└── session_4

├── EX_6_QF_MixNN_V_U.ipynb

└── EX_7_QF_MixNN_U_V.ipynb

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | [](https://jqub.github.io/categories/QF/) [](https://www.nature.com/articles/s41467-020-20729-5) [](https://arxiv.org/pdf/2012.10360.pdf) [](#)

5 |

6 |

7 | # Tutorial of Implemeting Neural Network on Quantum Computer

8 |

9 | ## News

10 | [2021/06/25] A tutorial proposal on QuantumFlow has been accepted by [**IEEE Quantum Week**](https://qce.quantum.ieee.org/). See you then virtually on Oct. 18-22, 2021.

11 |

12 | [2021/06/17] A tutorial proposal on QuantumFlow has been accepted by [**ESWEEK**](https://esweek.org/). See you then virtually on Oct. 08, 2021.

13 |

14 | [2021/06/01] The tutorial on QuantumFlow optimization is released on 06/02/2021! See Tutorial 4.

15 |

16 | [2021/05/01] The tutorial can be executed on Google CoLab now!

17 |

18 | [2021/01/17] Invited to give a talk at **ASP-DAC 2021** for this tutorial work.

19 |

20 | [2021/01/01] [QuantumFlow](https://www.nature.com/articles/s41467-020-20729-5) has been accepted by **Nature Communications**.

21 |

22 | [2020/12/30] The tutorial is released on 12/31/2020! Happy New Year and enjoy the following Tutorial.

23 |

24 | [2020/09/17] Invited to give a talk at **IBM Quantum Summit** about [QuantumFlow](https://www.nature.com/articles/s41467-020-20729-5).

25 |

26 |

27 | ## Overview

28 | Recently, we proposed the first neural network and quantum circuit co-design framework, [QuantumFlow](https://www.nature.com/articles/s41467-020-20729-5), in which we have successfully demonstrated the quantum advatages in performing the basic neural operation from O(N) to O(logN). Based on the understandings from the co-design framework, in this repo, we provide a tutorial on an end-to-end implementation of neural networks onto quantum circuits, which is base of the invited paper at **ASP-DAC 2021**, titled [When Machine Learning Meets Quantum Computers: A Case Study](https://arxiv.org/pdf/2012.10360.pdf).

29 |

30 | This repo aims to demonstrate the workflow of implementing neural network onto quantum circuit and demonstrates the functional correctness. It will provide the basis to understand [QuantumFlow](https://www.nature.com/articles/s41467-020-20729-5). The demonstration of quantum advantage will be included in the repo for [QuantumFlow](https://www.nature.com/articles/s41467-020-20729-5), which will be completed soon at [here](https://github.com/weiwenjiang/QuantumFlow).

31 |

32 | Before introducing the QuantumFlow framework, we povide the implementation of basic quantum cirucit in the following Qikist codes, which can be executed in colab [](https://colab.research.google.com/github/JQub/QuantumFlow_Tutorial/blob/main/session_1/Tutorial_0_Basic_Quantum_Gate.ipynb)

33 |

34 |

35 | ## Framework: from classical to quantum

36 |

37 |

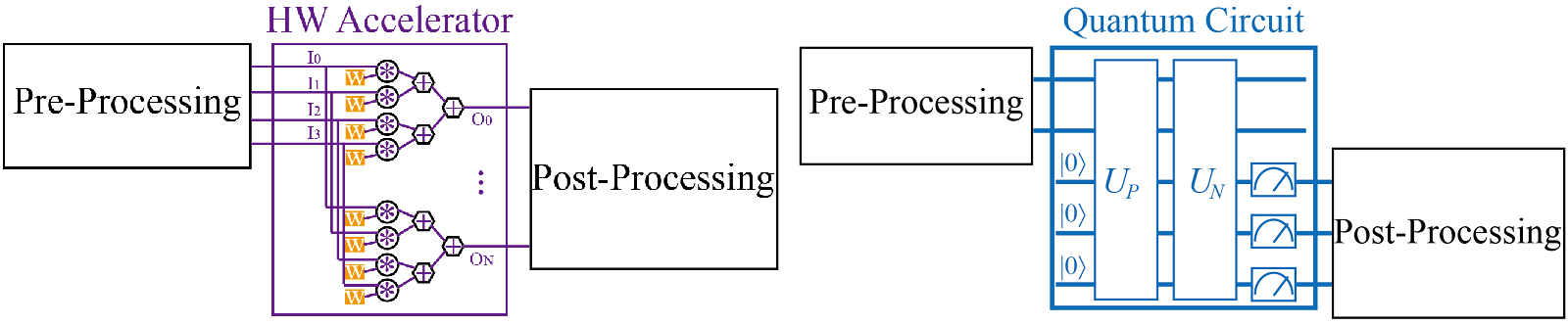

38 | In the above figure, on the left-hand, it is the design framework for classical hardware (HW) accelerators. The whole procedure will take three steps: (1) pre-processing data, (2) accelerating the neural computations, (3) post-processing data.

39 |

40 | Similarly, on the right-hand side, we can build up the workflow for quantum machine learning. It takes 5 steps to complete the whole computation: (1) **PreP** pre-processing data; (2) **UP** data encoding onto quantum, that is quantum-state preparation; (3) **UN** neural operation oracle on quantum computer; (4) **M** data readout, that is quantum measurement; (5) **PostP** post-processing data. Among these stpes, (1) and (5) are conducted on classical computer, and (2-4) are conducted on the quantum circuit.

41 |

42 | ## Tutorial 1: **PreP** + **UP**

43 |

44 | [](https://colab.research.google.com/github/JQub/QuantumFlow_Tutorial/blob/main/session_2/Tutorial_1_DataPreparation.ipynb)

45 |

46 | This tutorial demonstrates how to do data pre-preocessing and encoding it to quantum circuit using Qiskit.

47 |

48 | Let us formulate the problem as follow.

49 |

50 | **Given:** (1) One 28\*28 image from MNIST ; (2) The size to be downsampled, i.e., 4\*4

51 |

52 | **Do:** (1) Downsampling image; (2) Converting classical data to quantum data that can be encoded to quantum circuit; (3) Create quantum circuit and encode 16 pixel data to log16=4 qubits.

53 |

54 | **Check:** Whether the data is correctly encoded.

55 |

56 |

57 | ## Tutorial 2: **PreP** + **UP** + **UN**

58 |

59 | [](https://colab.research.google.com/github/JQub/QuantumFlow_Tutorial/blob/main/session_2/Tutorial_2_Hidden_NeuralComp.ipynb)

60 |

61 |

62 | This tutorial demonstrates how to use the encoded quantum circuit to perform **weighted sum** and **quadratic non-linear** operations, which are the basic operations in machine learning.

63 |

64 | Let us formulate the problem based on the output of Tutorial 1 as follow.

65 |

66 | **Given:** (1) A circuit with encoded input data **x**; (2) the trained binary weights **w** for one neural computation, which will be associated to each data.

67 |

68 | **Do:** (1) Place quantum gates on the qubits, such that it performs **(x\*w)^2/||x||**.

69 |

70 | **Check:** Whether the output data of quantum circuit and the output computed using torch on classical computer are the same.

71 |

72 |

73 |

74 | ## Tutorial 3: **PreP** + **UP** + **UN** + **M** + **PostP**

75 |

76 | [](https://colab.research.google.com/github/JQub/QuantumFlow_Tutorial/blob/main/session_2/Tutorial_3_Full_MNIST_Prediction.ipynb)

77 |

78 | This is a complete tutorial to demonstrates an end-to-end implementation of a two-layer neural network for MNIST sub-dataset of {3,6}. The first layer (hidden layer) is implemented using the one presented in Tutorial 2, and the second layer (output layer) is implemented using the P-LYR and N-LYR proposed in [QuantumFlow](https://arxiv.org/pdf/2006.14815.pdf). The model is pre-trained and the weights for hidden layer, output layer, and normalization are obtained (details will be provided in [QuantumFlow github repo](https://github.com/weiwenjiang/QuantumFlow)).

79 |

80 | Let us formulate the problem from scratch as follow.

81 |

82 | **Given:** (1) An image from MNIST; (2) The trained model.

83 |

84 | **Do:** (1) Construct the quantum circuit; (2) Perform the simulation on Qiskit or execute the circuit on IBM Quantum Processor.

85 |

86 | **Check:** Whether the prediction is correct.

87 |

88 |

89 |

90 | ## Tutorial 4: **PreP** + **UP** + **Optimized UN** + **M** + **PostP**

91 |

92 | [](https://colab.research.google.com/github/JQub/QuantumFlow_Tutorial/blob/main/session_2/Tutorial_4_QAccelerate.ipynb)

93 |

94 | This is a complete tutorial to demonstrates QuantumFlow can optimize the quantum circuit with the same function as the one created in Tutorial 3.

95 | We continously use the settings in Tutorial 3. We do the optimization on the U-Layer of two hidden neurons using the algorithm proposed in [QuantumFlow github repo](https://github.com/weiwenjiang/QuantumFlow).

96 |

97 | Let us formulate the problem from scratch as follow.

98 |

99 | **Given:** (1) An image from MNIST; (2) The trained model.

100 |

101 | **Do:** (1) Construct the quantum circuit with optimized U-Layer; (2) Perform the simulation on Qiskit or execute the circuit on IBM Quantum Processor.

102 |

103 | **Check:** Whether the prediction is correct; whether the results are almost the same with the circuit created in Tutorial 3; compare the reduction on circuit depth.

104 |

105 |

106 | ## Related work on This Tutorial

107 |

108 | The work published at Nature Communications.

109 |

110 | ```

111 | @article{jiang2021co,

112 | title={A co-design framework of neural networks and quantum circuits towards quantum advantage},

113 | author={Jiang, Weiwen and Xiong, Jinjun and Shi, Yiyu},

114 | journal={Nature communications},

115 | volume={12},

116 | number={1},

117 | pages={1--13},

118 | year={2021},

119 | publisher={Nature Publishing Group}

120 | }

121 | ```

122 |

123 | The work invited by ASP-DAC 2021.

124 |

125 | ```

126 | @article{jiang2020machine,

127 | title={When Machine Learning Meets Quantum Computers: A Case Study},

128 | author={Jiang, Weiwen and Xiong, Jinjun and Shi, Yiyu},

129 | journal={arXiv preprint arXiv:2012.10360},

130 | year={2020}

131 | }

132 | ```

133 |

134 | ## Contact

135 | **Weiwen Jiang**

136 |

137 | **Email: wjiang8@gmu.edu**

138 |

139 | **Web: https://jqub.ece.gmu.edu/**

140 |

141 | **Date: 06/17/2021**

142 |

--------------------------------------------------------------------------------

/Readme_Img/C_Frame.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/Readme_Img/C_Frame.png

--------------------------------------------------------------------------------

/Readme_Img/Frameworks.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/Readme_Img/Frameworks.png

--------------------------------------------------------------------------------

/Readme_Img/Q_Frame.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/Readme_Img/Q_Frame.png

--------------------------------------------------------------------------------

/Readme_Img/qflow.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/Readme_Img/qflow.png

--------------------------------------------------------------------------------

/model.tar.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model.tar.gz

--------------------------------------------------------------------------------

/model/u2_p2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers u:2,p:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 2

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1 -1 1 -1, -1 -1

19 | train_ang 0

20 | save_chkp 1

21 | chk_name u2_p2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/u2_p2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/u2_p2/model_best.tar

--------------------------------------------------------------------------------

/model/u2_p2_n2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers u:2,p:2,n:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 2

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1, 1, 1 1

19 | train_ang 1

20 | save_chkp 1

21 | chk_name u2_p2_n2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/u2_p2_n2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/u2_p2_n2/model_best.tar

--------------------------------------------------------------------------------

/model/u4_p2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers u:4,p:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 2

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1 -1 1 -1, -1 -1

19 | train_ang 0

20 | save_chkp 1

21 | chk_name u4_p2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/u4_p2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/u4_p2/model_best.tar

--------------------------------------------------------------------------------

/model/u4_v2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers u:4,p2a:16,v:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 2

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1, 1, 1 1

19 | train_ang 1

20 | save_chkp 1

21 | chk_name u4_v2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/u4_v2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/u4_v2/model_best.tar

--------------------------------------------------------------------------------

/model/v16_u2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers v:16,u:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 10

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1 -1 1 -1, -1 -1

19 | train_ang 0

20 | save_chkp 1

21 | chk_name v16_u2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/v16_u2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/v16_u2/model_best.tar

--------------------------------------------------------------------------------

/model/v16_v2/config:

--------------------------------------------------------------------------------

1 | ===================== Your setting is listed as follows ======================

2 | Attribute Input

3 | device cpu

4 | interest_class 3,6

5 | img_size 4

6 | datapath /home/hzr/Software/quantum/qc_mnist/pytorch/data

7 | preprocessdata 0

8 | num_workers 0

9 | batch_size 32

10 | inference_batch_size 1

11 | neural_in_layers v:16,v:2

12 | init_lr 0.01

13 | milestones 5, 7, 9

14 | max_epoch 2

15 | resume_path

16 | test_only 0

17 | binary 0

18 | given_ang 1 -1 1 -1, -1 -1

19 | train_ang 0

20 | save_chkp 1

21 | chk_name u4_v2

22 | chk_path .

23 | ====================== Exploration will start, have fun ======================

24 | ==============================================================================

25 |

--------------------------------------------------------------------------------

/model/v16_v2/model_best.tar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/JQub/QuantumFlow_Tutorial/488923974fbc783480964d857ad537b46190d277/model/v16_v2/model_best.tar

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | pylatexenc==2.10

2 | qfnn==0.1.10

3 | qiskit==0.20.0

4 | torch==1.9.0

5 | torchvision==0.10.0

6 |

--------------------------------------------------------------------------------

/session_1/Tutorial_0_Basic_Quantum_Gate.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 2,

4 | "metadata": {

5 | "colab": {

6 | "name": "Basic Quantum Gate",

7 | "provenance": [],

8 | "collapsed_sections": [],

9 | "toc_visible": true

10 | },

11 | "kernelspec": {

12 | "name": "python3",

13 | "display_name": "Python 3.8.10 64-bit ('qf': conda)"

14 | },

15 | "language_info": {

16 | "name": "python",

17 | "version": "3.8.10",

18 | "mimetype": "text/x-python",

19 | "codemirror_mode": {

20 | "name": "ipython",

21 | "version": 3

22 | },

23 | "pygments_lexer": "ipython3",

24 | "nbconvert_exporter": "python",

25 | "file_extension": ".py"

26 | },

27 | "interpreter": {

28 | "hash": "f24048f0d5bdb0ff49c5e7c8a9899a65bc3ab13b0f32660a2227453ca6b95fd8"

29 | }

30 | },

31 | "cells": [

32 | {

33 | "cell_type": "markdown",

34 | "source": [

35 | "# Single qubit gate"

36 | ],

37 | "metadata": {

38 | "id": "XhKK71TPv07l"

39 | }

40 | },

41 | {

42 | "cell_type": "code",

43 | "execution_count": 180,

44 | "source": [

45 | "is_colab = True\n",

46 | "\n",

47 | "if is_colab:\n",

48 | " !pip install -q torch==1.9.0\n",

49 | " !pip install -q torchvision==0.10.0\n",

50 | " !pip install -q qiskit==0.20.0\n",

51 | "\n",

52 | "import torch\n",

53 | "import torchvision\n",

54 | "import qiskit \n",

55 | "from qiskit import QuantumCircuit, assemble, Aer\n",

56 | "from qiskit import execute"

57 | ],

58 | "outputs": [],

59 | "metadata": {

60 | "colab": {

61 | "base_uri": "https://localhost:8080/"

62 | },

63 | "id": "jkCtB8IiGu49",

64 | "outputId": "14892b0b-77e8-4953-b7e1-409c7ec91c3b"

65 | }

66 | },

67 | {

68 | "cell_type": "code",

69 | "execution_count": 181,

70 | "source": [

71 | "sim = Aer.get_backend('statevector_simulator') # Tell Qiskit how to simulate our circuit"

72 | ],

73 | "outputs": [],

74 | "metadata": {

75 | "id": "vewjYJIIL4Lu"

76 | }

77 | },

78 | {

79 | "cell_type": "markdown",

80 | "source": [

81 | "### X Gate"

82 | ],

83 | "metadata": {

84 | "id": "rge0n87hJPnt"

85 | }

86 | },

87 | {

88 | "cell_type": "code",

89 | "execution_count": 182,

90 | "source": [

91 | "qc = QuantumCircuit(1)\n",

92 | "initial_state = [1,0] # Define initial_state as |0>\n",

93 | "# qc.initialize(initial_state, 0) # Apply initialisation operation to the 0th qubit\n",

94 | "qc.x(0)\n",

95 | "qc.draw()"

96 | ],

97 | "outputs": [

98 | {

99 | "data": {

100 | "text/plain": " ┌───┐\nq_0: ┤ X ├\n └───┘",

101 | "text/html": " ┌───┐\nq_0: ┤ X ├\n └───┘

"

102 | },

103 | "execution_count": 182,

104 | "metadata": {},

105 | "output_type": "execute_result"

106 | }

107 | ],

108 | "metadata": {

109 | "colab": {

110 | "base_uri": "https://localhost:8080/",

111 | "height": 63

112 | },

113 | "id": "5TlRdJx4ATH9",

114 | "outputId": "f19b14f8-6892-41b0-d8f1-3ca1fdb04914"

115 | }

116 | },

117 | {

118 | "cell_type": "code",

119 | "execution_count": 183,

120 | "source": [

121 | "result = execute(qc, sim).result()\n",

122 | "out_state = result.get_statevector(qc)\n",

123 | "print(\"after X gate, the state is \",out_state)\n",

124 | "\n",

125 | "initial_state_torch = torch.tensor([initial_state])\n",

126 | "x_gate_matrix = torch.tensor([[0,1],[1,0]])\n",

127 | "out_state_torch = torch.mm(x_gate_matrix,initial_state_torch.t()) \n",

128 | "print(\"after X matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

129 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))\n"

130 | ],

131 | "outputs": [

132 | {

133 | "name": "stdout",

134 | "output_type": "stream",

135 | "text": [

136 | "after X gate, the state is [0.+0.j 1.+0.j]\n",

137 | "after X matrix, the state is tensor([[0, 1]])\n",

138 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

139 | ]

140 | }

141 | ],

142 | "metadata": {

143 | "colab": {

144 | "base_uri": "https://localhost:8080/",

145 | "height": 312

146 | },

147 | "id": "EOYG4XTBIyFk",

148 | "outputId": "88d9ac16-0b36-42e0-8fa6-65e8e1b6db38"

149 | }

150 | },

151 | {

152 | "cell_type": "markdown",

153 | "source": [

154 | "### Y Gate"

155 | ],

156 | "metadata": {

157 | "id": "k8z_4_y2LYOB"

158 | }

159 | },

160 | {

161 | "cell_type": "code",

162 | "execution_count": 184,

163 | "source": [

164 | "qc = QuantumCircuit(1)\n",

165 | "initial_state = [1,0] # Define initial_state as |0>\n",

166 | "qc.y(0)\n",

167 | "qc.draw()"

168 | ],

169 | "outputs": [

170 | {

171 | "data": {

172 | "text/plain": " ┌───┐\nq_0: ┤ Y ├\n └───┘",

173 | "text/html": " ┌───┐\nq_0: ┤ Y ├\n └───┘

"

174 | },

175 | "execution_count": 184,

176 | "metadata": {},

177 | "output_type": "execute_result"

178 | }

179 | ],

180 | "metadata": {

181 | "id": "znSu1ETWLOpl"

182 | }

183 | },

184 | {

185 | "cell_type": "code",

186 | "execution_count": 185,

187 | "source": [

188 | "result = execute(qc, sim).result()\n",

189 | "out_state = result.get_statevector(qc)\n",

190 | "print(\"after Y gate, the state is \",out_state) # Display the output state vector\n",

191 | "\n",

192 | "initial_state_torch = torch.tensor([initial_state],dtype=torch.cfloat)\n",

193 | "y_gate_matrix = torch.tensor([[0,-1j],[1j,0]])\n",

194 | "out_state_torch = torch.mm(y_gate_matrix,initial_state_torch.t()) \n",

195 | "print(\"after Y matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

196 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

197 | ],

198 | "outputs": [

199 | {

200 | "name": "stdout",

201 | "output_type": "stream",

202 | "text": [

203 | "after Y gate, the state is [0.-0.j 0.+1.j]\n",

204 | "after Y matrix, the state is tensor([[0.+0.j, 0.+1.j]])\n",

205 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

206 | ]

207 | }

208 | ],

209 | "metadata": {

210 | "id": "7k8E7kGDLQzM"

211 | }

212 | },

213 | {

214 | "cell_type": "markdown",

215 | "source": [

216 | "### Z Gate"

217 | ],

218 | "metadata": {

219 | "id": "1h5MwXQTLa0V"

220 | }

221 | },

222 | {

223 | "cell_type": "code",

224 | "execution_count": 186,

225 | "source": [

226 | "qc = QuantumCircuit(1)\n",

227 | "initial_state = [1,0] # Define initial_state as |0>\n",

228 | "qc.z(0)\n",

229 | "qc.draw()"

230 | ],

231 | "outputs": [

232 | {

233 | "data": {

234 | "text/plain": " ┌───┐\nq_0: ┤ Z ├\n └───┘",

235 | "text/html": " ┌───┐\nq_0: ┤ Z ├\n └───┘

"

236 | },

237 | "execution_count": 186,

238 | "metadata": {},

239 | "output_type": "execute_result"

240 | }

241 | ],

242 | "metadata": {

243 | "id": "q3vUiWKcLfdQ"

244 | }

245 | },

246 | {

247 | "cell_type": "code",

248 | "execution_count": 187,

249 | "source": [

250 | "result = execute(qc, sim).result()\n",

251 | "out_state = result.get_statevector(qc)\n",

252 | "print(\"after Z gate, the state is \",out_state) # Display the output state vector\n",

253 | "\n",

254 | "initial_state_torch = torch.tensor([initial_state])\n",

255 | "z_gate_matrix = torch.tensor([[1,0],[0,-1]])\n",

256 | "out_state_torch = torch.mm(z_gate_matrix,initial_state_torch.t()) \n",

257 | "print(\"after Z matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

258 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

259 | ],

260 | "outputs": [

261 | {

262 | "name": "stdout",

263 | "output_type": "stream",

264 | "text": [

265 | "after Z gate, the state is [ 1.+0.j -0.+0.j]\n",

266 | "after Z matrix, the state is tensor([[1, 0]])\n",

267 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

268 | ]

269 | }

270 | ],

271 | "metadata": {

272 | "id": "BQ0O3msTLgng"

273 | }

274 | },

275 | {

276 | "cell_type": "markdown",

277 | "source": [

278 | "### H Gate"

279 | ],

280 | "metadata": {

281 | "id": "1h5MwXQTLa0V"

282 | }

283 | },

284 | {

285 | "cell_type": "code",

286 | "execution_count": 188,

287 | "source": [

288 | "qc = QuantumCircuit(1)\n",

289 | "initial_state = [1,0] # Define initial_state as |0>\n",

290 | "qc.h(0)\n",

291 | "qc.draw()"

292 | ],

293 | "outputs": [

294 | {

295 | "data": {

296 | "text/plain": " ┌───┐\nq_0: ┤ H ├\n └───┘",

297 | "text/html": " ┌───┐\nq_0: ┤ H ├\n └───┘

"

298 | },

299 | "execution_count": 188,

300 | "metadata": {},

301 | "output_type": "execute_result"

302 | }

303 | ],

304 | "metadata": {}

305 | },

306 | {

307 | "cell_type": "code",

308 | "execution_count": 189,

309 | "source": [

310 | "import math\n",

311 | "result = execute(qc, sim).result()\n",

312 | "out_state = result.get_statevector(qc)\n",

313 | "print(\"after H gate, the state is \",out_state) # Display the output state vector\n",

314 | "\n",

315 | "initial_state_torch = torch.tensor([initial_state],dtype=torch.double)\n",

316 | "h_gate_matrix = torch.tensor([[math.sqrt(0.5),math.sqrt(0.5)],[math.sqrt(0.5),-math.sqrt(0.5)]],dtype=torch.double)\n",

317 | "out_state_torch = torch.mm(h_gate_matrix,initial_state_torch.t()) \n",

318 | "print(\"after H matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

319 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

320 | ],

321 | "outputs": [

322 | {

323 | "name": "stdout",

324 | "output_type": "stream",

325 | "text": [

326 | "after H gate, the state is [0.70710678+0.j 0.70710678+0.j]\n",

327 | "after H matrix, the state is tensor([[0.7071, 0.7071]], dtype=torch.float64)\n",

328 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

329 | ]

330 | }

331 | ],

332 | "metadata": {}

333 | },

334 | {

335 | "cell_type": "markdown",

336 | "source": [

337 | "### U Gate"

338 | ],

339 | "metadata": {

340 | "id": "1h5MwXQTLa0V"

341 | }

342 | },

343 | {

344 | "cell_type": "code",

345 | "execution_count": 190,

346 | "source": [

347 | "qc = QuantumCircuit(1)\n",

348 | "initial_state = [1,0] # Define initial_state as |0>\n",

349 | "qc.u(math.pi/2, 0, math.pi, 0)\n",

350 | "qc.draw()"

351 | ],

352 | "outputs": [

353 | {

354 | "data": {

355 | "text/plain": " ┌──────────────┐\nq_0: ┤ U(pi/2,0,pi) ├\n └──────────────┘",

356 | "text/html": " ┌──────────────┐\nq_0: ┤ U(pi/2,0,pi) ├\n └──────────────┘

"

357 | },

358 | "execution_count": 190,

359 | "metadata": {},

360 | "output_type": "execute_result"

361 | }

362 | ],

363 | "metadata": {}

364 | },

365 | {

366 | "cell_type": "code",

367 | "execution_count": 191,

368 | "source": [

369 | "import cmath\n",

370 | "result = execute(qc, sim).result()\n",

371 | "out_state = result.get_statevector(qc)\n",

372 | "print(\"after U gate, the state is \",out_state) # Display the output state vector\n",

373 | "\n",

374 | "initial_state_torch = torch.tensor([initial_state],dtype=torch.cdouble)\n",

375 | "para = [cmath.pi/2, 0, cmath.pi, 0]\n",

376 | "u_gate_matrix = torch.tensor([[cmath.cos(para[0]/2),-cmath.exp(-1j*para[2])*cmath.sin(para[0]/2)],\n",

377 | " [cmath.exp(1j*para[1])*cmath.sin(para[0]/2),cmath.exp(1j*(para[1]+para[2]))*cmath.cos(para[0]/2)]],dtype=torch.cdouble)\n",

378 | "out_state_torch = torch.mm(u_gate_matrix,initial_state_torch.t()) \n",

379 | "print(\"after U matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

380 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

381 | ],

382 | "outputs": [

383 | {

384 | "name": "stdout",

385 | "output_type": "stream",

386 | "text": [

387 | "after U gate, the state is [0.70710678+0.j 0.70710678+0.j]\n",

388 | "after U matrix, the state is tensor([[0.7071+0.j, 0.7071+0.j]], dtype=torch.complex128)\n",

389 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

390 | ]

391 | }

392 | ],

393 | "metadata": {}

394 | },

395 | {

396 | "cell_type": "markdown",

397 | "source": [

398 | "# Single Qubit Gates in parallel"

399 | ],

400 | "metadata": {}

401 | },

402 | {

403 | "cell_type": "markdown",

404 | "source": [

405 | "### H Gate + H Gate"

406 | ],

407 | "metadata": {}

408 | },

409 | {

410 | "cell_type": "code",

411 | "execution_count": 192,

412 | "source": [

413 | "qc = QuantumCircuit(2)\n",

414 | "initial_state = [1,0] # Define initial_state as |0>\n",

415 | "qc.h(0)\n",

416 | "qc.h(1)\n",

417 | "qc.draw()"

418 | ],

419 | "outputs": [

420 | {

421 | "data": {

422 | "text/plain": " ┌───┐\nq_0: ┤ H ├\n ├───┤\nq_1: ┤ H ├\n └───┘",

423 | "text/html": " ┌───┐\nq_0: ┤ H ├\n ├───┤\nq_1: ┤ H ├\n └───┘

"

424 | },

425 | "execution_count": 192,

426 | "metadata": {},

427 | "output_type": "execute_result"

428 | }

429 | ],

430 | "metadata": {}

431 | },

432 | {

433 | "cell_type": "code",

434 | "execution_count": 193,

435 | "source": [

436 | "import math\n",

437 | "result = execute(qc, sim).result()\n",

438 | "out_state = result.get_statevector(qc)\n",

439 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

440 | "\n",

441 | "initial_state_single_qubit = torch.tensor([initial_state],dtype=torch.double)\n",

442 | "initial_state_torch = torch.kron(initial_state_single_qubit,initial_state_single_qubit)\n",

443 | "\n",

444 | "h_gate_matrix = torch.tensor([[math.sqrt(0.5),math.sqrt(0.5)],[math.sqrt(0.5),-math.sqrt(0.5)]],dtype=torch.double)\n",

445 | "\n",

446 | "double_h_gate_matrix = torch.kron(h_gate_matrix,h_gate_matrix)\n",

447 | "out_state_torch = torch.mm(double_h_gate_matrix,initial_state_torch.t()) \n",

448 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

449 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

450 | ],

451 | "outputs": [

452 | {

453 | "name": "stdout",

454 | "output_type": "stream",

455 | "text": [

456 | "after gate, the state is [0.5+0.j 0.5+0.j 0.5+0.j 0.5+0.j]\n",

457 | "after matrix, the state is tensor([[0.5000, 0.5000, 0.5000, 0.5000]], dtype=torch.float64)\n",

458 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

459 | ]

460 | }

461 | ],

462 | "metadata": {}

463 | },

464 | {

465 | "cell_type": "markdown",

466 | "source": [

467 | "### I+X -> H+Z Gate"

468 | ],

469 | "metadata": {}

470 | },

471 | {

472 | "cell_type": "code",

473 | "execution_count": 194,

474 | "source": [

475 | "qc = QuantumCircuit(2)\n",

476 | "initial_state_1 = [1,0] # Define initial_state as |0>\n",

477 | "initial_state_2 = [1,0] # Define initial_state as |1>\n",

478 | "# qc.initialize(initial_state_1, 0)\n",

479 | "# qc.initialize(initial_state_2, 1)\n",

480 | "qc.x(1)\n",

481 | "qc.barrier()\n",

482 | "qc.h(0)\n",

483 | "qc.z(1)\n",

484 | "qc.draw()"

485 | ],

486 | "outputs": [

487 | {

488 | "data": {

489 | "text/plain": " ░ ┌───┐\nq_0: ──────░─┤ H ├\n ┌───┐ ░ ├───┤\nq_1: ┤ X ├─░─┤ Z ├\n └───┘ ░ └───┘",

490 | "text/html": " ░ ┌───┐\nq_0: ──────░─┤ H ├\n ┌───┐ ░ ├───┤\nq_1: ┤ X ├─░─┤ Z ├\n └───┘ ░ └───┘

"

491 | },

492 | "execution_count": 194,

493 | "metadata": {},

494 | "output_type": "execute_result"

495 | }

496 | ],

497 | "metadata": {}

498 | },

499 | {

500 | "cell_type": "markdown",

501 | "source": [

502 | "Qiskit encoding\n",

503 | "\n",

504 | "A -----\n",

505 | "\n",

506 | "B -----\n",

507 | "\n",

508 | "|BA>"

509 | ],

510 | "metadata": {

511 | "collapsed": false,

512 | "pycharm": {

513 | "name": "#%% md\n"

514 | }

515 | }

516 | },

517 | {

518 | "cell_type": "code",

519 | "execution_count": 195,

520 | "source": [

521 | "import math\n",

522 | "result = execute(qc, sim).result()\n",

523 | "out_state = result.get_statevector(qc)\n",

524 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

525 | "\n",

526 | "initial_state_single_qubit_1 = torch.tensor([initial_state_1],dtype=torch.double)\n",

527 | "initial_state_single_qubit_2 = torch.tensor([initial_state_2],dtype=torch.double)\n",

528 | "initial_state_torch = torch.kron(initial_state_single_qubit_2,initial_state_single_qubit_1)\n",

529 | "\n",

530 | "h_gate_matrix = torch.tensor([[math.sqrt(0.5),math.sqrt(0.5)],[math.sqrt(0.5),-math.sqrt(0.5)]],dtype=torch.double)\n",

531 | "z_gate_matrix = torch.tensor([[1,0],[0,-1]],dtype=torch.double)\n",

532 | "x_gate_matrix = torch.tensor([[0,1],[1,0]],dtype=torch.double)\n",

533 | "i_gate_matrix = torch.tensor([[1,0],[0,1]],dtype=torch.double)\n",

534 | "\n",

535 | "x_i_gate_matrix = torch.kron(x_gate_matrix,i_gate_matrix)\n",

536 | "z_h_gate_matrix = torch.kron(z_gate_matrix,h_gate_matrix)\n",

537 | "\n",

538 | "\n",

539 | "composed_gate = torch.mm(z_h_gate_matrix,x_i_gate_matrix)\n",

540 | "out_state_torch = torch.mm(composed_gate,initial_state_torch.t())\n",

541 | "\n",

542 | "\n",

543 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

544 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))\n"

545 | ],

546 | "outputs": [

547 | {

548 | "name": "stdout",

549 | "output_type": "stream",

550 | "text": [

551 | "after gate, the state is [ 0. +0.j 0. +0.j -0.70710678+0.j -0.70710678+0.j]\n",

552 | "after matrix, the state is tensor([[ 0.0000, 0.0000, -0.7071, -0.7071]], dtype=torch.float64)\n",

553 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

554 | ]

555 | }

556 | ],

557 | "metadata": {}

558 | },

559 | {

560 | "cell_type": "markdown",

561 | "source": [

562 | "# Entanglement"

563 | ],

564 | "metadata": {}

565 | },

566 | {

567 | "cell_type": "markdown",

568 | "source": [

569 | "### CX Gate"

570 | ],

571 | "metadata": {}

572 | },

573 | {

574 | "cell_type": "code",

575 | "execution_count": 196,

576 | "source": [

577 | "qc = QuantumCircuit(2)\n",

578 | "initial_state_1 = [0,1] # Define initial_state as |1>\n",

579 | "# initial_state_2 = [1,0] # Define initial_state as |1>\n",

580 | "qc.initialize(initial_state_1, 0)\n",

581 | "# qc.initialize(initial_state_2, 1)\n",

582 | "qc.cx(0,1)\n",

583 | "qc.draw()"

584 | ],

585 | "outputs": [

586 | {

587 | "data": {

588 | "text/plain": " ┌─────────────────┐ \nq_0: ┤ initialize(0,1) ├──■──\n └─────────────────┘┌─┴─┐\nq_1: ───────────────────┤ X ├\n └───┘",

589 | "text/html": " ┌─────────────────┐ \nq_0: ┤ initialize(0,1) ├──■──\n └─────────────────┘┌─┴─┐\nq_1: ───────────────────┤ X ├\n └───┘

"

590 | },

591 | "execution_count": 196,

592 | "metadata": {},

593 | "output_type": "execute_result"

594 | }

595 | ],

596 | "metadata": {}

597 | },

598 | {

599 | "cell_type": "code",

600 | "execution_count": 197,

601 | "source": [

602 | "result = execute(qc, sim).result()\n",

603 | "out_state = result.get_statevector(qc)\n",

604 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

605 | "\n",

606 | "initial_state_single_qubit_1 = torch.tensor([initial_state_1],dtype=torch.double)\n",

607 | "initial_state_single_qubit_2 = torch.tensor([initial_state_2],dtype=torch.double)\n",

608 | "initial_state_torch = torch.kron(initial_state_single_qubit_2,initial_state_single_qubit_1)\n",

609 | "\n",

610 | "cx_gate_matrix = torch.tensor([[1,0,0,0],\n",

611 | " [0,0,0,1],\n",

612 | " [0,0,1,0],\n",

613 | " [0,1,0,0]],dtype=torch.double)\n",

614 | "\n",

615 | "out_state_torch = torch.mm(cx_gate_matrix,initial_state_torch.t()) \n",

616 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

617 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))\n"

618 | ],

619 | "outputs": [

620 | {

621 | "name": "stdout",

622 | "output_type": "stream",

623 | "text": [

624 | "after gate, the state is [0.+0.j 0.+0.j 0.+0.j 1.+0.j]\n",

625 | "after matrix, the state is tensor([[0., 0., 0., 1.]], dtype=torch.float64)\n",

626 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

627 | ]

628 | }

629 | ],

630 | "metadata": {}

631 | },

632 | {

633 | "cell_type": "markdown",

634 | "source": [

635 | "### CZ Gate"

636 | ],

637 | "metadata": {}

638 | },

639 | {

640 | "cell_type": "code",

641 | "execution_count": 198,

642 | "source": [

643 | "qc = QuantumCircuit(2)\n",

644 | "initial_state = [1,0] # Define initial_state as |0>\n",

645 | "qc.cz(0,1)\n",

646 | "qc.draw()"

647 | ],

648 | "outputs": [

649 | {

650 | "data": {

651 | "text/plain": " \nq_0: ─■─\n │ \nq_1: ─■─\n ",

652 | "text/html": " \nq_0: ─■─\n │ \nq_1: ─■─\n

"

653 | },

654 | "execution_count": 198,

655 | "metadata": {},

656 | "output_type": "execute_result"

657 | }

658 | ],

659 | "metadata": {}

660 | },

661 | {

662 | "cell_type": "code",

663 | "execution_count": 199,

664 | "source": [

665 | "result = execute(qc, sim).result()\n",

666 | "out_state = result.get_statevector(qc)\n",

667 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

668 | "\n",

669 | "initial_state_single_qubit = torch.tensor([initial_state],dtype=torch.double)\n",

670 | "initial_state_torch = torch.kron(initial_state_single_qubit,initial_state_single_qubit)\n",

671 | "\n",

672 | "cz_gate_matrix = torch.tensor([[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,-1]],dtype=torch.double)\n",

673 | "\n",

674 | "out_state_torch = torch.mm(cz_gate_matrix,initial_state_torch.t()) \n",

675 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

676 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

677 | ],

678 | "outputs": [

679 | {

680 | "name": "stdout",

681 | "output_type": "stream",

682 | "text": [

683 | "after gate, the state is [ 1.+0.j 0.+0.j 0.+0.j -0.+0.j]\n",

684 | "after matrix, the state is tensor([[1., 0., 0., 0.]], dtype=torch.float64)\n",

685 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

686 | ]

687 | }

688 | ],

689 | "metadata": {}

690 | },

691 | {

692 | "cell_type": "markdown",

693 | "source": [

694 | "### X Gate + CX Gate"

695 | ],

696 | "metadata": {}

697 | },

698 | {

699 | "cell_type": "code",

700 | "execution_count": 200,

701 | "source": [

702 | "qc = QuantumCircuit(2)\n",

703 | "initial_state = [1,0] # Define initial_state as |0>\n",

704 | "qc.x(0)\n",

705 | "qc.cx(0,1)\n",

706 | "qc.draw()"

707 | ],

708 | "outputs": [

709 | {

710 | "data": {

711 | "text/plain": " ┌───┐ \nq_0: ┤ X ├──■──\n └───┘┌─┴─┐\nq_1: ─────┤ X ├\n └───┘",

712 | "text/html": " ┌───┐ \nq_0: ┤ X ├──■──\n └───┘┌─┴─┐\nq_1: ─────┤ X ├\n └───┘

"

713 | },

714 | "execution_count": 200,

715 | "metadata": {},

716 | "output_type": "execute_result"

717 | }

718 | ],

719 | "metadata": {}

720 | },

721 | {

722 | "cell_type": "code",

723 | "execution_count": 201,

724 | "source": [

725 | "result = execute(qc, sim).result()\n",

726 | "out_state = result.get_statevector(qc)\n",

727 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

728 | "\n",

729 | "initial_state_single_qubit = torch.tensor([initial_state],dtype=torch.double)\n",

730 | "initial_state_torch = torch.kron(initial_state_single_qubit,initial_state_single_qubit)\n",

731 | "x_gate_matrix = torch.tensor([[0,1],[1,0]],dtype=torch.double)\n",

732 | "i_gate_matrix = torch.tensor([[1,0],[0,1]],dtype=torch.double)\n",

733 | "cx_gate_matrix = torch.tensor([[1,0,0,0],\n",

734 | " [0,0,0,1],\n",

735 | " [0,0,1,0],\n",

736 | " [0,1,0,0]],dtype=torch.double)\n",

737 | "\n",

738 | "\n",

739 | "i_x_gate_matrix = torch.kron(i_gate_matrix,x_gate_matrix)\n",

740 | "\n",

741 | "composed_gate = torch.mm( cx_gate_matrix, i_x_gate_matrix)\n",

742 | "out_state_torch = torch.mm(composed_gate ,initial_state_torch.t())\n",

743 | "\n",

744 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

745 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

746 | ],

747 | "outputs": [

748 | {

749 | "name": "stdout",

750 | "output_type": "stream",

751 | "text": [

752 | "after gate, the state is [0.+0.j 0.+0.j 0.+0.j 1.+0.j]\n",

753 | "after matrix, the state is tensor([[0., 0., 0., 1.]], dtype=torch.float64)\n",

754 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

755 | ]

756 | }

757 | ],

758 | "metadata": {}

759 | },

760 | {

761 | "cell_type": "markdown",

762 | "source": [

763 | "### H Gate + CX Gate"

764 | ],

765 | "metadata": {}

766 | },

767 | {

768 | "cell_type": "code",

769 | "execution_count": 202,

770 | "source": [

771 | "qc = QuantumCircuit(2)\n",

772 | "initial_state = [1,0] # Define initial_state as |0>\n",

773 | "qc.h(0)\n",

774 | "qc.cx(0,1)\n",

775 | "qc.draw()"

776 | ],

777 | "outputs": [

778 | {

779 | "data": {

780 | "text/plain": " ┌───┐ \nq_0: ┤ H ├──■──\n └───┘┌─┴─┐\nq_1: ─────┤ X ├\n └───┘",

781 | "text/html": " ┌───┐ \nq_0: ┤ H ├──■──\n └───┘┌─┴─┐\nq_1: ─────┤ X ├\n └───┘

"

782 | },

783 | "execution_count": 202,

784 | "metadata": {},

785 | "output_type": "execute_result"

786 | }

787 | ],

788 | "metadata": {}

789 | },

790 | {

791 | "cell_type": "markdown",

792 | "source": [

793 | "Qiskit encoding\n",

794 | "\n",

795 | "CX = [[1,0,0,0],[0,0,0,1],[0,0,1,0],[0,1,0,0]]"

796 | ],

797 | "metadata": {

798 | "collapsed": false,

799 | "pycharm": {

800 | "name": "#%% md\n"

801 | }

802 | }

803 | },

804 | {

805 | "cell_type": "code",

806 | "execution_count": 203,

807 | "source": [

808 | "import math\n",

809 | "result = execute(qc, sim).result()\n",

810 | "out_state = result.get_statevector(qc)\n",

811 | "print(\"after gate, the state is \",out_state) # Display the output state vector\n",

812 | "\n",

813 | "initial_state_single_qubit = torch.tensor([initial_state],dtype=torch.double)\n",

814 | "initial_state_torch = torch.kron(initial_state_single_qubit,initial_state_single_qubit)\n",

815 | "print(initial_state_torch)\n",

816 | "h_gate_matrix = torch.tensor([[math.sqrt(0.5),math.sqrt(0.5)],[math.sqrt(0.5),-math.sqrt(0.5)]],dtype=torch.double)\n",

817 | "i_gate_matrix = torch.tensor([[1,0],[0,1]],dtype=torch.double)\n",

818 | "cx_gate_matrix = torch.tensor([[1,0,0,0],\n",

819 | " [0,0,0,1],\n",

820 | " [0,0,1,0],\n",

821 | " [0,1,0,0]],dtype=torch.double)\n",

822 | "\n",

823 | "composed_gate = torch.kron(i_gate_matrix,h_gate_matrix)\n",

824 | "composed_gate = torch.mm(cx_gate_matrix,composed_gate)\n",

825 | "out_state_torch = torch.mm(composed_gate,initial_state_torch.t())\n",

826 | "\n",

827 | "print(\"after matrix, the state is \",out_state_torch.t()) # Display the output state vector\n",

828 | "print(\"Equal 0 if correct!\",out_state_torch.t()[0].sub(torch.tensor(out_state)))"

829 | ],

830 | "outputs": [

831 | {

832 | "name": "stdout",

833 | "output_type": "stream",

834 | "text": [

835 | "after gate, the state is [0.70710678+0.j 0. +0.j 0. +0.j 0.70710678+0.j]\n",

836 | "tensor([[1., 0., 0., 0.]], dtype=torch.float64)\n",

837 | "after matrix, the state is tensor([[0.7071, 0.0000, 0.0000, 0.7071]], dtype=torch.float64)\n",

838 | "Equal 0 if correct! tensor([0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j], dtype=torch.complex128)\n"

839 | ]

840 | }

841 | ],

842 | "metadata": {

843 | "pycharm": {

844 | "name": "#%% Qiskit Encoding\n"

845 | }

846 | }

847 | }

848 | ]

849 | }

850 |

--------------------------------------------------------------------------------

/session_2/Tutorial_1_DataPreparation.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {

7 | "pycharm": {

8 | "is_executing": false

9 | },

10 | "id": "BL4fMqPQrXH-"

11 | },

12 | "outputs": [],

13 | "source": [

14 | "# !pip install -q torch==1.9.0\n",

15 | "# !pip install -q torchvision==0.10.0\n",

16 | "!pip install -q qiskit==0.20.0\n",

17 | "\n",

18 | "import warnings\n",

19 | "warnings.filterwarnings(\"ignore\", category=DeprecationWarning) \n",

20 | "\n",

21 | "import torch\n",

22 | "import torchvision\n",

23 | "from torchvision import datasets\n",

24 | "import torchvision.transforms as transforms\n",

25 | "import qiskit \n",

26 | "import sys\n",

27 | "from pathlib import Path\n",

28 | "import numpy as np\n",

29 | "import matplotlib.pyplot as plt\n",

30 | "import numpy as np\n",

31 | "from PIL import Image\n",

32 | "from matplotlib import cm\n",

33 | "import functools\n",

34 | "\n",

35 | "import warnings\n",

36 | "warnings.filterwarnings(\"ignore\", category=DeprecationWarning)\n",

37 | "print = functools.partial(print, flush=True)\n",

38 | "\n",

39 | "interest_num = [3,6]\n",

40 | "ori_img_size = 28\n",

41 | "img_size = 4\n",

42 | "# number of subprocesses to use for data loading\n",

43 | "num_workers = 0\n",

44 | "# how many samples per batch to load\n",

45 | "batch_size = 1\n",

46 | "inference_batch_size = 1\n",

47 | "\n",

48 | "\n",

49 | "\n",

50 | "# Weiwen: modify the target classes starting from 0. Say, [3,6] -> [0,1]\n",

51 | "def modify_target(target):\n",

52 | " for j in range(len(target)):\n",

53 | " for idx in range(len(interest_num)):\n",

54 | " if target[j] == interest_num[idx]:\n",

55 | " target[j] = idx\n",

56 | " break\n",

57 | " new_target = torch.zeros(target.shape[0],2)\n",

58 | " for i in range(target.shape[0]): \n",

59 | " if target[i].item() == 0: \n",

60 | " new_target[i] = torch.tensor([1,0]).clone() \n",

61 | " else:\n",

62 | " new_target[i] = torch.tensor([0,1]).clone()\n",

63 | " \n",

64 | " return target,new_target\n",

65 | "\n",

66 | "# Weiwen: select sub-set from MNIST\n",

67 | "def select_num(dataset,interest_num):\n",

68 | " labels = dataset.targets #get labels\n",

69 | " labels = labels.numpy()\n",

70 | " idx = {}\n",

71 | " for num in interest_num:\n",

72 | " idx[num] = np.where(labels == num)\n",

73 | " fin_idx = idx[interest_num[0]]\n",

74 | " for i in range(1,len(interest_num)): \n",

75 | " fin_idx = (np.concatenate((fin_idx[0],idx[interest_num[i]][0])),)\n",

76 | " \n",

77 | " fin_idx = fin_idx[0] \n",

78 | " dataset.targets = labels[fin_idx]\n",

79 | " dataset.data = dataset.data[fin_idx]\n",

80 | " dataset.targets,_ = modify_target(dataset.targets)\n",

81 | " return dataset\n",

82 | "\n",

83 | "################ Weiwen on 12-30-2020 ################\n",

84 | "# Function: ToQuantumData from Listing 1\n",

85 | "# Note: Coverting classical data to quantum data\n",

86 | "######################################################\n",

87 | "class ToQuantumData(object):\n",

88 | " def __call__(self, tensor):\n",

89 | " device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

90 | " data = tensor.to(device)\n",

91 | " input_vec = data.view(-1)\n",

92 | " vec_len = input_vec.size()[0]\n",

93 | " input_matrix = torch.zeros(vec_len, vec_len)\n",

94 | " input_matrix[0] = input_vec\n",

95 | " input_matrix = np.float64(input_matrix.transpose(0,1))\n",

96 | " u, s, v = np.linalg.svd(input_matrix)\n",

97 | " output_matrix = torch.tensor(np.dot(u, v))\n",

98 | " output_data = output_matrix[:, 0].view(1, img_size,img_size)\n",

99 | " return output_data\n",

100 | "\n",

101 | "################ Weiwen on 12-30-2020 ################\n",

102 | "# Function: ToQuantumData from Listing 1\n",

103 | "# Note: Coverting classical data to quantum matrix\n",

104 | "######################################################\n",

105 | "class ToQuantumMatrix(object):\n",

106 | " def __call__(self, tensor):\n",

107 | " device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

108 | " data = tensor.to(device)\n",

109 | " input_vec = data.view(-1)\n",

110 | " vec_len = input_vec.size()[0]\n",

111 | " input_matrix = torch.zeros(vec_len, vec_len)\n",

112 | " input_matrix[0] = input_vec\n",

113 | " input_matrix = np.float64(input_matrix.transpose(0,1))\n",

114 | " u, s, v = np.linalg.svd(input_matrix)\n",

115 | " output_matrix = torch.tensor(np.dot(u, v))\n",

116 | " return output_matrix "

117 | ]

118 | },

119 | {

120 | "cell_type": "code",

121 | "execution_count": null,

122 | "metadata": {

123 | "pycharm": {

124 | "is_executing": false

125 | },

126 | "id": "oj5JfepLrXID"

127 | },

128 | "outputs": [],

129 | "source": [

130 | "################ Weiwen on 12-30-2020 ################\n",

131 | "# Using torch to load MNIST data\n",

132 | "######################################################\n",

133 | "\n",

134 | "# convert data to torch.FloatTensor\n",

135 | "transform = transforms.Compose([transforms.Resize((ori_img_size,ori_img_size)),\n",

136 | " transforms.ToTensor()])\n",

137 | "# Path to MNIST Dataset\n",

138 | "train_data = datasets.MNIST(root='./data', train=True,\n",

139 | " download=True, transform=transform)\n",

140 | "test_data = datasets.MNIST(root='./data', train=False,\n",

141 | " download=True, transform=transform)\n",

142 | "\n",

143 | "train_data = select_num(train_data,interest_num)\n",

144 | "test_data = select_num(test_data,interest_num)\n",

145 | "\n",

146 | "# prepare data loaders\n",

147 | "train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,\n",

148 | " num_workers=num_workers, shuffle=True, drop_last=True)\n",

149 | "test_loader = torch.utils.data.DataLoader(test_data, batch_size=inference_batch_size, \n",

150 | " num_workers=num_workers, shuffle=True, drop_last=True)\n"

151 | ]

152 | },

153 | {

154 | "cell_type": "code",

155 | "execution_count": null,

156 | "metadata": {

157 | "pycharm": {

158 | "is_executing": false

159 | },

160 | "id": "Eq9L6tffrXIE"

161 | },

162 | "outputs": [],

163 | "source": [

164 | "################ Weiwen on 12-30-2020 ################\n",

165 | "# T1: Downsample the image from 28*28 to 4*4\n",

166 | "# T2: Convert classical data to quantum data which \n",

167 | "# can be encoded to the quantum states (amplitude)\n",

168 | "######################################################\n",

169 | "\n",

170 | "# Process data by hand, we can also integrate ToQuantumData into transform\n",

171 | "def data_pre_pro(img):\n",

172 | " # Print original figure\n",

173 | " img = img\n",

174 | " npimg = img.numpy()\n",

175 | " plt.imshow(np.transpose(npimg, (1, 2, 0))) \n",

176 | " plt.show()\n",

177 | " # Print resized figure\n",

178 | " image = np.asarray(npimg[0] * 255, np.uint8) \n",

179 | " im = Image.fromarray(image,mode=\"L\")\n",

180 | " im = im.resize((4,4),Image.BILINEAR) \n",

181 | " plt.imshow(im,cmap='gray',)\n",

182 | " plt.show()\n",

183 | " # Converting classical data to quantum data\n",

184 | " trans_to_tensor = transforms.ToTensor()\n",

185 | " trans_to_vector = ToQuantumData()\n",

186 | " trans_to_matrix = ToQuantumMatrix() \n",

187 | " print(\"Classical Data: {}\".format(trans_to_tensor(im).flatten()))\n",

188 | " print(\"Quantum Data: {}\".format(trans_to_vector(trans_to_tensor(im)).flatten()))\n",

189 | " return trans_to_matrix(trans_to_tensor(im)),trans_to_vector(trans_to_tensor(im))\n",

190 | "\n",

191 | "# Use the first image from test loader as example\n",

192 | "for batch_idx, (data, target) in enumerate(test_loader):\n",

193 | " torch.set_printoptions(threshold=sys.maxsize)\n",

194 | " print(\"Batch Id: {}, Target: {}\".format(batch_idx,target))\n",

195 | " quantum_matrix,qantum_data = data_pre_pro(torchvision.utils.make_grid(data))\n",

196 | " break"

197 | ]

198 | },

199 | {

200 | "cell_type": "code",

201 | "execution_count": null,

202 | "metadata": {

203 | "pycharm": {

204 | "is_executing": false

205 | },

206 | "id": "ELav6khrrXII"

207 | },

208 | "outputs": [],

209 | "source": [

210 | "################ Weiwen on 12-30-2020 ################\n",

211 | "# Do quantum state preparation and compare it with\n",

212 | "# the original data\n",

213 | "######################################################\n",

214 | "\n",

215 | "# Quantum-State Preparation in IBM Qiskit\n",

216 | "from qiskit import QuantumRegister, QuantumCircuit, ClassicalRegister\n",

217 | "from qiskit.extensions import XGate, UnitaryGate\n",

218 | "from qiskit import Aer, execute\n",

219 | "import qiskit\n",

220 | "# Input: a 4*4 matrix (data) holding 16 input data\n",

221 | "inp = QuantumRegister(4,\"in_qbit\")\n",

222 | "circ = QuantumCircuit(inp)\n",

223 | "data_matrix = quantum_matrix\n",

224 | "circ.append(UnitaryGate(data_matrix, label=\"Input\"), inp[0:4])\n",

225 | "print(circ)\n",

226 | "# Using StatevectorSimulator from the Aer provider\n",

227 | "simulator = Aer.get_backend('statevector_simulator')\n",

228 | "result = execute(circ, simulator).result()\n",

229 | "statevector = result.get_statevector(circ)\n",

230 | "\n",

231 | "print(\"Data to be encoded: \\n {}\\n\".format(qantum_data))\n",

232 | "print(\"Data read from the circuit: \\n {}\".format(statevector))"

233 | ]

234 | }

235 | ],

236 | "metadata": {

237 | "kernelspec": {

238 | "display_name": "PyCharm (qiskit_practice)",

239 | "language": "python",

240 | "name": "pycharm-8213722"

241 | },

242 | "language_info": {

243 | "codemirror_mode": {

244 | "name": "ipython",

245 | "version": 3

246 | },

247 | "file_extension": ".py",

248 | "mimetype": "text/x-python",

249 | "name": "python",

250 | "nbconvert_exporter": "python",

251 | "pygments_lexer": "ipython3",

252 | "version": "3.8.5"

253 | },

254 | "pycharm": {

255 | "stem_cell": {

256 | "cell_type": "raw",

257 | "metadata": {

258 | "collapsed": false

259 | },

260 | "source": []

261 | }

262 | },

263 | "colab": {

264 | "provenance": []

265 | }

266 | },

267 | "nbformat": 4,

268 | "nbformat_minor": 0

269 | }

--------------------------------------------------------------------------------

/session_2/Tutorial_2_Hidden_NeuralComp.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {

7 | "id": "_DmG8bKXrkkU"

8 | },

9 | "outputs": [],

10 | "source": [

11 | "# !pip install -q torch==1.9.0\n",

12 | "# !pip install -q torchvision==0.10.0\n",

13 | "\n",

14 | "!pip install -q qiskit==0.20.0\n",

15 | "\n",

16 | "import warnings\n",

17 | "warnings.filterwarnings(\"ignore\", category=DeprecationWarning) \n",

18 | "\n",

19 | "import torch\n",

20 | "import torchvision\n",

21 | "from torchvision import datasets\n",

22 | "import torchvision.transforms as transforms\n",

23 | "import torch.nn as nn\n",

24 | "import shutil\n",

25 | "import os\n",

26 | "import time\n",

27 | "import sys\n",

28 | "from pathlib import Path\n",

29 | "import numpy as np\n",

30 | "import matplotlib.pyplot as plt\n",

31 | "import numpy as np\n",

32 | "from PIL import Image\n",

33 | "from matplotlib import cm\n",

34 | "import functools\n",

35 | "from qiskit.tools.monitor import job_monitor\n",

36 | "from qiskit import QuantumRegister, QuantumCircuit, ClassicalRegister\n",

37 | "from qiskit.extensions import XGate, UnitaryGate\n",

38 | "from qiskit import Aer, execute\n",

39 | "import qiskit\n",

40 | "\n",

41 | "import warnings\n",

42 | "warnings.filterwarnings(\"ignore\", category=DeprecationWarning)\n",

43 | "print = functools.partial(print, flush=True)\n",

44 | "\n",

45 | "interest_num = [3,6]\n",

46 | "ori_img_size = 28\n",

47 | "img_size = 4\n",

48 | "# number of subprocesses to use for data loading\n",

49 | "num_workers = 0\n",

50 | "# how many samples per batch to load\n",

51 | "batch_size = 1\n",

52 | "inference_batch_size = 1\n",

53 | "\n",

54 | "\n",

55 | "# Weiwen: modify the target classes starting from 0. Say, [3,6] -> [0,1]\n",

56 | "def modify_target(target):\n",

57 | " for j in range(len(target)):\n",

58 | " for idx in range(len(interest_num)):\n",

59 | " if target[j] == interest_num[idx]:\n",

60 | " target[j] = idx\n",

61 | " break\n",

62 | " new_target = torch.zeros(target.shape[0],2)\n",

63 | " for i in range(target.shape[0]): \n",

64 | " if target[i].item() == 0: \n",

65 | " new_target[i] = torch.tensor([1,0]).clone() \n",

66 | " else:\n",

67 | " new_target[i] = torch.tensor([0,1]).clone()\n",

68 | " \n",

69 | " return target,new_target\n",

70 | "\n",

71 | "# Weiwen: select sub-set from MNIST\n",

72 | "def select_num(dataset,interest_num):\n",

73 | " labels = dataset.targets #get labels\n",

74 | " labels = labels.numpy()\n",

75 | " idx = {}\n",

76 | " for num in interest_num:\n",

77 | " idx[num] = np.where(labels == num)\n",

78 | " fin_idx = idx[interest_num[0]]\n",

79 | " for i in range(1,len(interest_num)): \n",

80 | " fin_idx = (np.concatenate((fin_idx[0],idx[interest_num[i]][0])),)\n",

81 | " \n",

82 | " fin_idx = fin_idx[0] \n",

83 | " dataset.targets = labels[fin_idx]\n",

84 | " dataset.data = dataset.data[fin_idx]\n",

85 | " dataset.targets,_ = modify_target(dataset.targets)\n",

86 | " return dataset\n",

87 | "\n",

88 | "################ Weiwen on 12-30-2020 ################\n",

89 | "# Function: ToQuantumData from Listing 1\n",

90 | "# Note: Coverting classical data to quantum data\n",

91 | "######################################################\n",

92 | "class ToQuantumData(object):\n",

93 | " def __call__(self, tensor):\n",

94 | " device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

95 | " data = tensor.to(device)\n",

96 | " input_vec = data.view(-1)\n",

97 | " vec_len = input_vec.size()[0]\n",

98 | " input_matrix = torch.zeros(vec_len, vec_len)\n",

99 | " input_matrix[0] = input_vec\n",

100 | " input_matrix = np.float64(input_matrix.transpose(0,1))\n",

101 | " u, s, v = np.linalg.svd(input_matrix)\n",

102 | " output_matrix = torch.tensor(np.dot(u, v))\n",

103 | " output_data = output_matrix[:, 0].view(1, img_size,img_size)\n",

104 | " return output_data\n",

105 | "\n",

106 | "################ Weiwen on 12-30-2020 ################\n",

107 | "# Function: ToQuantumData from Listing 1\n",

108 | "# Note: Coverting classical data to quantum matrix\n",

109 | "######################################################\n",

110 | "class ToQuantumMatrix(object):\n",

111 | " def __call__(self, tensor):\n",

112 | " device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

113 | " data = tensor.to(device)\n",

114 | " input_vec = data.view(-1)\n",

115 | " vec_len = input_vec.size()[0]\n",

116 | " input_matrix = torch.zeros(vec_len, vec_len)\n",

117 | " input_matrix[0] = input_vec\n",

118 | " input_matrix = np.float64(input_matrix.transpose(0,1))\n",

119 | " u, s, v = np.linalg.svd(input_matrix)\n",

120 | " output_matrix = torch.tensor(np.dot(u, v))\n",

121 | " return output_matrix \n",

122 | " \n",

123 | "################ Weiwen on 12-30-2020 ################\n",

124 | "# Function: fire_ibmq from Listing 6\n",

125 | "# Note: used for execute quantum circuit using \n",

126 | "# simulation or ibm quantum processor\n",

127 | "# Parameters: (1) quantum circuit; \n",

128 | "# (2) number of shots;\n",

129 | "# (3) simulation or quantum processor;\n",

130 | "# (4) backend name if quantum processor.\n",

131 | "######################################################\n",

132 | "def fire_ibmq(circuit,shots,Simulation = False,backend_name='ibmq_essex'): \n",

133 | " count_set = []\n",

134 | " if not Simulation:\n",

135 | " provider = IBMQ.get_provider('ibm-q-academic')\n",

136 | " backend = provider.get_backend(backend_name)\n",

137 | " else:\n",

138 | " backend = Aer.get_backend('qasm_simulator')\n",

139 | " job_ibm_q = execute(circuit, backend, shots=shots)\n",

140 | " job_monitor(job_ibm_q)\n",

141 | " result_ibm_q = job_ibm_q.result()\n",

142 | " counts = result_ibm_q.get_counts()\n",

143 | " return counts\n",

144 | "\n",

145 | "################ Weiwen on 12-30-2020 ################\n",

146 | "# Function: analyze from Listing 6\n",

147 | "# Note: used for analyze the count on states to \n",

148 | "# formulate the probability for each qubit\n",

149 | "# Parameters: (1) counts returned by fire_ibmq; \n",

150 | "######################################################\n",

151 | "def analyze(counts):\n",

152 | " mycount = {}\n",

153 | " for i in range(2):\n",

154 | " mycount[i] = 0\n",

155 | " for k,v in counts.items():\n",

156 | " bits = len(k) \n",

157 | " for i in range(bits): \n",

158 | " if k[bits-1-i] == \"1\":\n",

159 | " if i in mycount.keys():\n",

160 | " mycount[i] += v\n",

161 | " else:\n",

162 | " mycount[i] = v\n",

163 | " return mycount,bits\n",

164 | "\n",

165 | "################ Weiwen on 12-30-2020 ################\n",

166 | "# Function: cccz from Listing 3\n",

167 | "# Note: using the basic Toffoli gates and CZ gate\n",

168 | "# to implement cccz gate, which will flip the\n",

169 | "# sign of state |1111>\n",

170 | "# Parameters: (1) quantum circuit; \n",

171 | "# (2-4) control qubits;\n",

172 | "# (5) target qubits;\n",

173 | "# (6-7) auxiliary qubits.\n",

174 | "######################################################\n",

175 | "def cccz(circ, q1, q2, q3, q4, aux1, aux2):\n",

176 | " # Apply Z-gate to a state controlled by 4 qubits\n",

177 | " circ.ccx(q1, q2, aux1)\n",

178 | " circ.ccx(q3, aux1, aux2)\n",

179 | " circ.cz(aux2, q4)\n",

180 | " # cleaning the aux bits\n",

181 | " circ.ccx(q3, aux1, aux2)\n",

182 | " circ.ccx(q1, q2, aux1)\n",

183 | " return circ\n",

184 | "\n",

185 | "################ Weiwen on 12-30-2020 ################\n",

186 | "# Function: cccz from Listing 4\n",

187 | "# Note: using the basic Toffoli gate to implement ccccx\n",

188 | "# gate. It is used to switch the quantum states\n",

189 | "# of |11110> and |11111>.\n",

190 | "# Parameters: (1) quantum circuit; \n",

191 | "# (2-5) control qubits;\n",

192 | "# (6) target qubits;\n",

193 | "# (7-8) auxiliary qubits.\n",

194 | "######################################################\n",

195 | "def ccccx(circ, q1, q2, q3, q4, q5, aux1, aux2):\n",

196 | " circ.ccx(q1, q2, aux1)\n",

197 | " circ.ccx(q3, q4, aux2)\n",

198 | " circ.ccx(aux2, aux1, q5)\n",

199 | " # cleaning the aux bits\n",

200 | " circ.ccx(q3, q4, aux2)\n",

201 | " circ.ccx(q1, q2, aux1)\n",

202 | " return circ\n",

203 | "\n",

204 | "################ Weiwen on 12-30-2020 ################\n",

205 | "# Function: neg_weight_gate from Listing 3\n",

206 | "# Note: adding NOT(X) gate before the qubits associated\n",

207 | "# with 0 state. For example, if we want to flip \n",

208 | "# the sign of |1101>, we add X gate for q2 before\n",

209 | "# the cccz gate, as follows.\n",

210 | "# --q3-----|---\n",

211 | "# --q2----X|X--\n",

212 | "# --q1-----|---\n",

213 | "# --q0-----z---\n",

214 | "# Parameters: (1) quantum circuit; \n",

215 | "# (2) all qubits, say q0-q3;\n",

216 | "# (3) the auxiliary qubits used for cccz\n",

217 | "# (4) states, say 1101\n",

218 | "######################################################\n",

219 | "def neg_weight_gate(circ,qubits,aux,state):\n",

220 | " idx = 0\n",

221 | " # The index of qubits are reversed in terms of states.\n",

222 | " # As shown in the above example: we put X at q2 not the third position.\n",

223 | " state = state[::-1]\n",

224 | " for idx in range(len(state)):\n",

225 | " if state[idx]=='0':\n",

226 | " circ.x(qubits[idx])\n",

227 | " cccz(circ,qubits[0],qubits[1],qubits[2],qubits[3],aux[0],aux[1])\n",

228 | " for idx in range(len(state)):\n",

229 | " if state[idx]=='0':\n",

230 | " circ.x(qubits[idx])\n"

231 | ]

232 | },

233 | {

234 | "cell_type": "code",

235 | "execution_count": null,

236 | "metadata": {

237 | "id": "qeLLhENSrkka"

238 | },

239 | "outputs": [],

240 | "source": [

241 | "################ Weiwen on 12-30-2020 ################\n",

242 | "# Using torch to load MNIST data\n",

243 | "######################################################\n",

244 | "\n",

245 | "# convert data to torch.FloatTensor\n",

246 | "transform = transforms.Compose([transforms.Resize((ori_img_size,ori_img_size)),\n",

247 | " transforms.ToTensor()])\n",

248 | "# Path to MNIST Dataset\n",

249 | "train_data = datasets.MNIST(root='./data', train=True,\n",

250 | " download=True, transform=transform)\n",

251 | "test_data = datasets.MNIST(root='./data', train=False,\n",

252 | " download=True, transform=transform)\n",

253 | "\n",

254 | "train_data = select_num(train_data,interest_num)\n",

255 | "test_data = select_num(test_data,interest_num)\n",

256 | "\n",

257 | "# prepare data loaders\n",

258 | "train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,\n",

259 | " num_workers=num_workers, shuffle=True, drop_last=True)\n",

260 | "test_loader = torch.utils.data.DataLoader(test_data, batch_size=inference_batch_size, \n",

261 | " num_workers=num_workers, shuffle=True, drop_last=True)\n"

262 | ]

263 | },

264 | {

265 | "cell_type": "code",

266 | "execution_count": null,

267 | "metadata": {

268 | "id": "5ooj924Lrkkb"

269 | },

270 | "outputs": [],

271 | "source": [

272 | "################ Weiwen on 12-30-2020 ################\n",

273 | "# T1: Downsample the image from 28*28 to 4*4\n",

274 | "# T2: Convert classical data to quantum data which \n",

275 | "# can be encoded to the quantum states (amplitude)\n",

276 | "######################################################\n",

277 | "\n",

278 | "# Process data by hand, we can also integrate ToQuantumData into transform\n",

279 | "def data_pre_pro(img):\n",

280 | " # Print original figure\n",

281 | " img = img\n",

282 | " npimg = img.numpy()\n",

283 | " plt.imshow(np.transpose(npimg, (1, 2, 0))) \n",

284 | " plt.show()\n",

285 | " # Print resized figure\n",

286 | " image = np.asarray(npimg[0] * 255, np.uint8) \n",

287 | " im = Image.fromarray(image,mode=\"L\")\n",

288 | " im = im.resize((4,4),Image.BILINEAR) \n",

289 | " plt.imshow(im,cmap='gray',)\n",

290 | " plt.show()\n",

291 | " # Converting classical data to quantum data\n",

292 | " trans_to_tensor = transforms.ToTensor()\n",

293 | " trans_to_vector = ToQuantumData()\n",

294 | " trans_to_matrix = ToQuantumMatrix() \n",

295 | " print(\"Classical Data: {}\".format(trans_to_tensor(im).flatten()))\n",

296 | " print(\"Quantum Data: {}\".format(trans_to_vector(trans_to_tensor(im)).flatten()))\n",

297 | " return trans_to_matrix(trans_to_tensor(im)),trans_to_vector(trans_to_tensor(im))\n",

298 | "\n",

299 | "# Use the first image from test loader as example\n",

300 | "for batch_idx, (data, target) in enumerate(test_loader):\n",

301 | " torch.set_printoptions(threshold=sys.maxsize)\n",

302 | " print(\"Batch Id: {}, Target: {}\".format(batch_idx,target))\n",

303 | " quantum_matrix,qantum_data = data_pre_pro(torchvision.utils.make_grid(data))\n",

304 | " break"

305 | ]

306 | },

307 | {