Prediction of Drug-likeness using Graph Convolutional Attention Network.

4 |

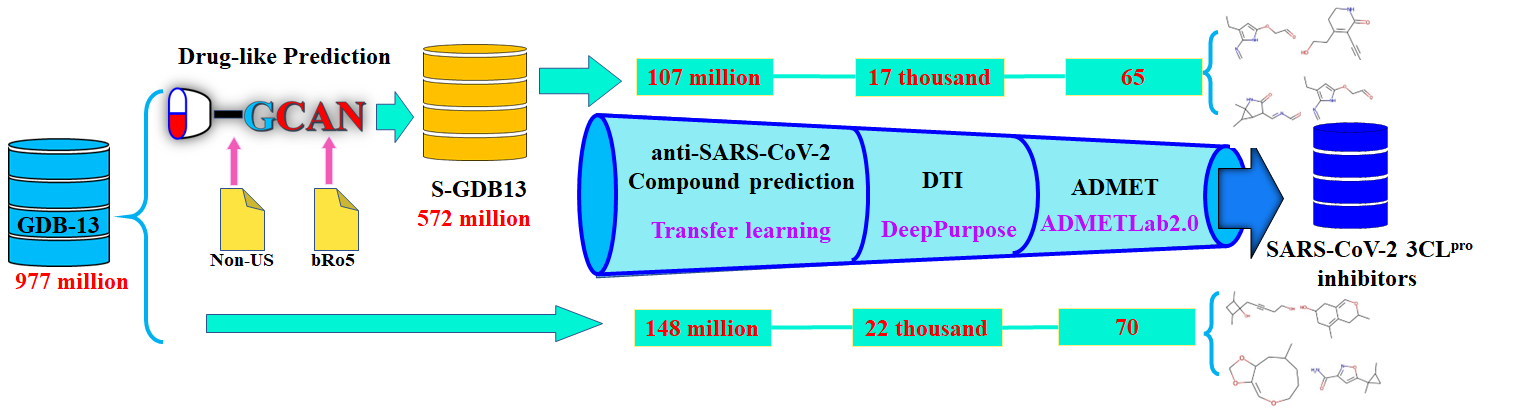

A deep learning-based process related to the screening of SARS-CoV2 3CL inhibitors.

4 |

5 |

6 | ## process

7 |

8 |

9 |

10 | Coronavirus disease 2019 (COVID-19) is a highly infectious disease caused by severe acute respiratory syndrome coronavirus-2 (SARS-CoV-2). It is urgent to find potential antiviral drugs against SARS-CoV-2 in a short time. Deep learning-based virtual screening is one of the approaches that can rapidly search against large molecular libraries. Here, SARS-CoV-2 3C-like protease (SARS-CoV-2 3CLpro) was chosen as the target. As shown in Figure bellow, the utility of D-GCAN is evaluated by comparing the screening results on the GDB-13 and S-GDB13 databases. The process was carried out with the help of the transfer learning method (Wang et al., 2021), DeepPurpose (Huang et al., 2020), and ADMETLab2.0 (Xiong et al., 2021).

11 |

12 | These databases were firstly screened by using a transfer learning method (COVIDVS) proposed by Wang et al (Wang et al., 2021), which was reported for screening inhibitors against SARS-CoV-2. The model was trained on the dataset containing inhibitors against HCoV-OC43, SARS-CoV and MERS-CoV. All of these viruses as well as SARS-CoV-2 belong to β-coronaviruses. They have high consistency in essential functional proteins (Wu et al., 2020; Shen et al.; Pillaiyar et al., 2020). Then, the trained model was fine-tuned by the transfer learning approach with the dataset containing drugs against SARS-CoV-2. In this way, 107 million drug-like molecules were screened out. Then, drug-target interaction prediction (DTI) was carried out based on DeepPurpose (Huang et al., 2020), which provided pretrained model for the interaction prediction between drugs and SARS-CoV-2 3CLpro target. The interaction binding score was evaluated by the dissociation equilibrium constant (Kd). After this step, 17 thousand molecules with high affinity were obtained. Finally, ADMET properties were widely chosen and used for screening SARS-CoV-2 inhibitors (Gajjar et al., 2021; Roy et al., 2021; Dhameliya et al., 2022). These properties were calculated by using ADMETLab2.0 (Xiong et al., 2021), and 65 candidates with good properties were selected.

13 |

14 | ## COVIDVS

15 |

16 | COVIDVS models are Chemprop models trained with anti-beta-coronavirus actives/inactives collected from published papers and fine-tuned with anti-SARS-CoV-2 actives/inactives.

17 |

18 |

19 |

20 | ## DeepPurpose

21 |

22 | DeepPurpose has provied the pretrained model by predicting the interaction between a target (SARS-CoV2 3CL Protease) and a list of repurposing drugs from a curated drug library of 81 antiviral drugs. The Binding Score is the Kd values. Results aggregated from five pretrained model on BindingDB dataset.

23 |

24 |

25 |

26 | ## AMETLab2.0

27 |

28 | Undesirable pharmacokinetics and toxicity of candidate compounds are the main reasons for the failure of drug development, and it has been widely recognized that absorption, distribution, metabolism, excretion and toxicity (ADMET) of chemicals should be evaluated as early as possible. ADMETlab 2.0 is an enhanced version of the widely used [ADMETlab](http://admet.scbdd.com/) for systematical evaluation of ADMET properties, as well as some physicochemical properties and medicinal chemistry friendliness. With significant updates to functional modules, predictive models, explanations, and the user interface, ADMETlab 2.0 has greater capacity to assist medicinal chemists in accelerating the drug research and development process.

29 |

30 |

31 |

32 | ## Acknowledgement

33 |

34 | Dhameliya,T.M. *et al.* (2022) Systematic virtual screening in search of SARS CoV-2 inhibitors against spike glycoprotein: pharmacophore screening, molecular docking, ADMET analysis and MD simulations. *Mol Divers*.

35 |

36 | Gajjar,N.D. *et al.* (2021) In search of RdRp and Mpro inhibitors against SARS CoV-2: Molecular docking, molecular dynamic simulations and ADMET analysis. *Journal of Molecular Structure*, **1239**, 130488.

37 |

38 | Huang,K. *et al.* (2020) DeepPurpose: a deep learning library for drug–target interaction prediction. *Bioinformatics*, **36**, 5545–5547.

39 |

40 | Pillaiyar,T. *et al.* (2020) Recent discovery and development of inhibitors targeting coronaviruses. *Drug Discovery Today*, **25**, 668–688.

41 |

42 | Roy,R. *et al.* (2021) Finding potent inhibitors against SARS-CoV-2 main protease through virtual screening, ADMET, and molecular dynamics simulation studies. *Journal of Biomolecular Structure and Dynamics*, **0**, 1–13.

43 |

44 | Shen,L. *et al.* High-Throughput Screening and Identification of Potent Broad-Spectrum Inhibitors of Coronaviruses. *Journal of Virology*, **93**, e00023-19.

45 |

46 | Wang,S. *et al.* (2021) A transferable deep learning approach to fast screen potential antiviral drugs against SARS-CoV-2. *Briefings in Bioinformatics*.

47 |

48 | Wu,F. *et al.* (2020) A new coronavirus associated with human respiratory disease in China. *Nature*, **579**, 265–269.

49 |

50 | Xiong,G. *et al.* (2021) ADMETlab 2.0: an integrated online platform for accurate and comprehensive predictions of ADMET properties. *Nucleic Acids Research*, **49**, W5–W14.

51 |

52 |

53 |

54 |

--------------------------------------------------------------------------------

/Discussion/preprocess.py:

--------------------------------------------------------------------------------

1 | from collections import defaultdict

2 |

3 | import numpy as np

4 |

5 | from rdkit import Chem

6 |

7 | import torch

8 | atom_dict = defaultdict(lambda: len(atom_dict))

9 | bond_dict = defaultdict(lambda: len(bond_dict))

10 | fingerprint_dict = defaultdict(lambda: len(fingerprint_dict))

11 | edge_dict = defaultdict(lambda: len(edge_dict))

12 | radius=1

13 | if torch.cuda.is_available():

14 | device = torch.device('cuda')

15 | print('The code uses a GPU!')

16 | else:

17 | device = torch.device('cpu')

18 | print('The code uses a CPU...')

19 | def create_atoms(mol, atom_dict):

20 | """Transform the atom types in a molecule (e.g., H, C, and O)

21 | into the indices (e.g., H=0, C=1, and O=2).

22 | Note that each atom index considers the aromaticity.

23 | """

24 | atoms = [a.GetSymbol() for a in mol.GetAtoms()]

25 | for a in mol.GetAromaticAtoms():

26 | i = a.GetIdx()

27 | atoms[i] = (atoms[i], 'aromatic')

28 | atoms = [atom_dict[a] for a in atoms]

29 | return np.array(atoms)

30 |

31 |

32 | def create_ijbonddict(mol, bond_dict):

33 | """Create a dictionary, in which each key is a node ID

34 | and each value is the tuples of its neighboring node

35 | and chemical bond (e.g., single and double) IDs.

36 |

37 | """

38 | i_jbond_dict = defaultdict(lambda: [])

39 | for b in mol.GetBonds():

40 | i, j = b.GetBeginAtomIdx(), b.GetEndAtomIdx()

41 | bond = bond_dict[str(b.GetBondType())]

42 | i_jbond_dict[i].append((j, bond))

43 | i_jbond_dict[j].append((i, bond))

44 | return i_jbond_dict

45 |

46 |

47 | def extract_fingerprints(radius, atoms, i_jbond_dict,

48 | fingerprint_dict, edge_dict):

49 | """Extract the fingerprints from a molecular graph

50 | based on Weisfeiler-Lehman algorithm.

51 |

52 | """

53 |

54 | if (len(atoms) == 1) or (radius == 0):

55 | nodes = [fingerprint_dict[a] for a in atoms]

56 |

57 | else:

58 | nodes = atoms

59 | i_jedge_dict = i_jbond_dict

60 |

61 | for _ in range(radius):

62 |

63 | """Update each node ID considering its neighboring nodes and edges.

64 | The updated node IDs are the fingerprint IDs.。

65 | """

66 | nodes_ = []

67 | for i, j_edge in i_jedge_dict.items():

68 | neighbors = [(nodes[j], edge) for j, edge in j_edge]

69 | fingerprint = (nodes[i], tuple(sorted(neighbors)))

70 | nodes_.append(fingerprint_dict[fingerprint])

71 |

72 | """Also update each edge ID considering

73 | its two nodes on both sides.

74 | """

75 | i_jedge_dict_ = defaultdict(lambda: [])

76 | for i, j_edge in i_jedge_dict.items():

77 | for j, edge in j_edge:

78 | both_side = tuple(sorted((nodes[i], nodes[j])))

79 | edge = edge_dict[(both_side, edge)]

80 | i_jedge_dict_[i].append((j, edge))

81 |

82 | nodes = nodes_

83 | i_jedge_dict = i_jedge_dict_

84 |

85 | return np.array(nodes)

86 |

87 |

88 | def split_dataset(dataset, ratio):

89 | """Shuffle and split a dataset.洗牌和拆分数据集"""

90 | np.random.seed(1234) # fix the seed for shuffle为洗牌修正种子.

91 | np.random.shuffle(dataset)

92 | n = int(ratio * len(dataset))

93 | return dataset[:n], dataset[n:]

94 |

95 |

96 | def create_dataset(filename,path,dataname):

97 | dir_dataset = path+dataname

98 | print(filename)

99 | """Load a dataset."""

100 | with open(dir_dataset + filename, 'r') as f:

101 | smiles_property = f.readline().strip().split()

102 | data_original = f.read().strip().split('\n')

103 |

104 | """Exclude the data contains '.' in its smiles.排除含.的数据"""

105 | data_original = [data for data in data_original

106 | if '.' not in data.split()[0]]

107 | dataset = []

108 | for data in data_original:

109 |

110 | smiles, property = data.strip().split()

111 |

112 | """Create each data with the above defined functions."""

113 | mol = Chem.AddHs(Chem.MolFromSmiles(smiles))

114 | atoms = create_atoms(mol, atom_dict)

115 | molecular_size = len(atoms)

116 | i_jbond_dict = create_ijbonddict(mol, bond_dict)

117 | fingerprints = extract_fingerprints(radius, atoms, i_jbond_dict,

118 | fingerprint_dict, edge_dict)

119 | adjacency = Chem.GetAdjacencyMatrix(mol)

120 |

121 | """Transform the above each data of numpy

122 | to pytorch tensor on a device (i.e., CPU or GPU).

123 | """

124 | fingerprints = torch.LongTensor(fingerprints).to(device)

125 | adjacency = torch.FloatTensor(adjacency).to(device)

126 | property = torch.FloatTensor([int(property)]).to(device)

127 |

128 | dataset.append((smiles,fingerprints, adjacency, molecular_size, property))

129 |

130 | return dataset

131 |

132 | dataset_train = create_dataset('data_train.txt')

133 | dataset_train, dataset_dev = split_dataset(dataset_train, 0.9)

134 | dataset_test = create_dataset('data_test.txt')

135 |

136 | N_fingerprints = len(fingerprint_dict)

137 |

138 | return dataset_train, dataset_dev, dataset_test, N_fingerprints

139 |

--------------------------------------------------------------------------------

/Test/test.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 1,

6 | "id": "65b363bc",

7 | "metadata": {},

8 | "outputs": [],

9 | "source": [

10 | "import predict"

11 | ]

12 | },

13 | {

14 | "cell_type": "code",

15 | "execution_count": 3,

16 | "id": "4cc4f418",

17 | "metadata": {},

18 | "outputs": [

19 | {

20 | "name": "stdout",

21 | "output_type": "stream",

22 | "text": [

23 | "The code uses a GPU!\n",

24 | "../dataset/data_test.txt\n",

25 | "bacc_dev: 0.5119539230602043\n",

26 | "pre_dev: 0.5080213903743316\n",

27 | "rec_dev: 0.8837209302325582\n",

28 | "f1_dev: 0.6451612903225807\n",

29 | "mcc_dev: 0.03575604067764825\n",

30 | "sp_dev: 0.14018691588785046\n",

31 | "q__dev: 0.5454545454545454\n",

32 | "acc_dev: 0.5128205128205128\n"

33 | ]

34 | }

35 | ],

36 | "source": [

37 | "test1 = predict.predict('../dataset/data_test.txt',property=True)#Drugs from FDA"

38 | ]

39 | },

40 | {

41 | "cell_type": "code",

42 | "execution_count": 2,

43 | "id": "8f0eba5e",

44 | "metadata": {},

45 | "outputs": [

46 | {

47 | "name": "stdout",

48 | "output_type": "stream",

49 | "text": [

50 | "The code uses a GPU!\n",

51 | "../dataset/world_wide.txt\n",

52 | "bacc_dev: 0.46604215456674475\n",

53 | "pre_dev: 0.47987043035631655\n",

54 | "rec_dev: 0.8095238095238095\n",

55 | "f1_dev: 0.6025566531086578\n",

56 | "mcc_dev: -0.09345868862125822\n",

57 | "sp_dev: 0.12256049960967993\n",

58 | "q__dev: 0.3915211970074813\n",

59 | "acc_dev: 0.46604215456674475\n"

60 | ]

61 | }

62 | ],

63 | "source": [

64 | "test2 = predict.predict('../dataset/world_wide.txt',property=True)#Drugs from non-US"

65 | ]

66 | },

67 | {

68 | "cell_type": "code",

69 | "execution_count": 4,

70 | "id": "75c3a192",

71 | "metadata": {},

72 | "outputs": [

73 | {

74 | "name": "stdout",

75 | "output_type": "stream",

76 | "text": [

77 | "The code uses a GPU!\n",

78 | "../dataset/beyondRo5.txt\n",

79 | "1\n",

80 | "1\n",

81 | "1\n",

82 | "1\n",

83 | "1\n",

84 | "1\n",

85 | "1\n",

86 | "1\n",

87 | "1\n",

88 | "1\n",

89 | "1\n",

90 | "1\n",

91 | "1\n",

92 | "1\n",

93 | "1\n",

94 | "1\n",

95 | "1\n",

96 | "1\n",

97 | "1\n",

98 | "1\n",

99 | "1\n",

100 | "1\n",

101 | "1\n",

102 | "1\n",

103 | "1\n",

104 | "1\n",

105 | "1\n",

106 | "1\n",

107 | "1\n",

108 | "1\n",

109 | "1\n",

110 | "1\n",

111 | "1\n",

112 | "1\n",

113 | "1\n",

114 | "1\n",

115 | "1\n",

116 | "1\n",

117 | "1\n",

118 | "1\n",

119 | "1\n",

120 | "1\n",

121 | "1\n",

122 | "1\n",

123 | "1\n",

124 | "1\n",

125 | "1\n",

126 | "1\n",

127 | "1\n",

128 | "1\n",

129 | "1\n",

130 | "1\n",

131 | "1\n",

132 | "1\n",

133 | "1\n",

134 | "1\n",

135 | "1\n",

136 | "1\n",

137 | "1\n",

138 | "1\n",

139 | "1\n",

140 | "1\n",

141 | "1\n",

142 | "1\n",

143 | "1\n",

144 | "1\n",

145 | "1\n",

146 | "1\n",

147 | "1\n",

148 | "1\n",

149 | "1\n",

150 | "1\n",

151 | "1\n",

152 | "1\n",

153 | "1\n",

154 | "1\n",

155 | "1\n",

156 | "1\n",

157 | "1\n",

158 | "1\n",

159 | "1\n",

160 | "1\n",

161 | "1\n",

162 | "1\n",

163 | "1\n",

164 | "1\n",

165 | "1\n",

166 | "1\n",

167 | "1\n",

168 | "1\n",

169 | "1\n",

170 | "1\n",

171 | "1\n",

172 | "1\n",

173 | "1\n",

174 | "1\n",

175 | "1\n",

176 | "1\n",

177 | "1\n",

178 | "1\n",

179 | "1\n",

180 | "1\n",

181 | "1\n",

182 | "1\n",

183 | "1\n",

184 | "1\n",

185 | "1\n",

186 | "1\n",

187 | "1\n",

188 | "1\n",

189 | "1\n",

190 | "1\n",

191 | "1\n",

192 | "1\n",

193 | "1\n",

194 | "1\n",

195 | "1\n",

196 | "1\n",

197 | "1\n",

198 | "1\n",

199 | "1\n",

200 | "1\n",

201 | "1\n",

202 | "1\n",

203 | "1\n",

204 | "1\n",

205 | "1\n",

206 | "1\n",

207 | "1\n",

208 | "1\n",

209 | "1\n",

210 | "1\n",

211 | "1\n",

212 | "1\n",

213 | "1\n",

214 | "1\n"

215 | ]

216 | }

217 | ],

218 | "source": [

219 | "test3 = predict.predict('../dataset/beyondRo5.txt',property=False)#Drugs beyond Ro5"

220 | ]

221 | },

222 | {

223 | "cell_type": "code",

224 | "execution_count": null,

225 | "id": "147a96e5",

226 | "metadata": {},

227 | "outputs": [],

228 | "source": []

229 | }

230 | ],

231 | "metadata": {

232 | "kernelspec": {

233 | "display_name": "Python 3",

234 | "language": "python",

235 | "name": "python3"

236 | },

237 | "language_info": {

238 | "codemirror_mode": {

239 | "name": "ipython",

240 | "version": 3

241 | },

242 | "file_extension": ".py",

243 | "mimetype": "text/x-python",

244 | "name": "python",

245 | "nbconvert_exporter": "python",

246 | "pygments_lexer": "ipython3",

247 | "version": "3.8.8"

248 | }

249 | },

250 | "nbformat": 4,

251 | "nbformat_minor": 5

252 | }

253 |

--------------------------------------------------------------------------------

/DGCAN/train.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | """

3 | Created on Wed Apr 27 20:09:31 2022

4 |

5 | @author:Jinyu-Sun

6 | """

7 |

8 | import timeit

9 | import sys

10 | import numpy as np

11 | import math

12 | import torch

13 | import torch.nn as nn

14 | import torch.nn.functional as F

15 | import torch.optim as optim

16 | import pickle

17 | from sklearn.metrics import roc_auc_score, roc_curve,auc

18 | from sklearn.metrics import confusion_matrix

19 | import preprocess as pp

20 | import pandas as pd

21 | import matplotlib.pyplot as plt

22 | from DGCAN import MolecularGraphNeuralNetwork,Trainer,Tester

23 |

24 | def metrics(cnf_matrix):

25 | '''Evaluation Metrics'''

26 | tn = cnf_matrix[0, 0]

27 | tp = cnf_matrix[1, 1]

28 | fn = cnf_matrix[1, 0]

29 | fp = cnf_matrix[0, 1]

30 |

31 | bacc = ((tp / (tp + fn)) + (tn / (tn + fp))) / 2 # balance accurance

32 | pre = tp / (tp + fp) # precision/q+

33 | rec = tp / (tp + fn) # recall/se

34 | sp = tn / (tn + fp)

35 | q_ = tn / (tn + fn)

36 | f1 = 2 * pre * rec / (pre + rec) # f1score

37 | mcc = ((tp * tn) - (fp * fn)) / math.sqrt(

38 | (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)) # Matthews correlation coefficient

39 | acc = (tp + tn) / (tp + fp + fn + tn) # accurancy

40 |

41 | print('bacc:', bacc)

42 | print('pre:', pre)

43 | print('rec:', rec)

44 | print('f1:', f1)

45 | print('mcc:', mcc)

46 | print('sp:', sp)

47 | print('q_:', q_)

48 | print('acc:', acc)

49 |

50 |

51 | def train (test_name, radius, dim, layer_hidden, layer_output, dropout, batch_train,

52 | batch_test, lr, lr_decay, decay_interval, iteration, N , dataset_train):

53 | '''

54 |

55 | Parameters

56 | ----------

57 | data_test='../dataset/data_test.txt', #test set

58 | radius = 1, #hops of radius subgraph: 1, 2

59 | dim = 64, #dimension of graph convolution layers

60 | layer_hidden = 4, #Number of graph convolution layers

61 | layer_output = 10, #Number of dense layers

62 | dropout = 0.45, #drop out rate :0-1

63 | batch_train = 8, # batch of training set

64 | batch_test = 8, #batch of test set

65 | lr =3e-4, #learning rate: 1e-5,1e-4,3e-4, 5e-4, 1e-3, 3e-3,5e-3

66 | lr_decay = 0.85, #Learning rate decay:0.5, 0.75, 0.85, 0.9

67 | decay_interval = 25,#Number of iterations for learning rate decay:10,25,30,50

68 | iteration = 140, #Number of iterations

69 | N = 5000, #length of embedding: 2000,3000,5000,7000

70 | dataset_train='../dataset/data_train.txt') #training set

71 |

72 | Returns

73 | -------

74 | res_test : results

75 | Predicting results.

76 |

77 | '''

78 | dataset_test = test_name

79 | (radius, dim, layer_hidden, layer_output,

80 | batch_train, batch_test, decay_interval,

81 | iteration, dropout) = map(int, [radius, dim, layer_hidden, layer_output,

82 | batch_train, batch_test,

83 | decay_interval, iteration, dropout])

84 | lr, lr_decay = map(float, [lr, lr_decay])

85 | if torch.cuda.is_available():

86 | device = torch.device('cuda')

87 | print('The code uses a GPU!')

88 | else:

89 | device = torch.device('cpu')

90 | print('The code uses a CPU...')

91 |

92 | lr, lr_decay = map(float, [lr, lr_decay])

93 |

94 | print('-' * 100)

95 | print('Just a moment......')

96 | print('-' * 100)

97 | path = ''

98 | dataname = ''

99 |

100 | dataset_train= pp.create_dataset(dataset_train,path,dataname)

101 | #dataset_train,dataset_test = pp.split_dataset(dataset_train,0.9)

102 | #dataset_test= pp.create_dataset(dataset_dev,path,dataname)

103 | dataset_test= pp.create_dataset(dataset_test,path,dataname)

104 | np.random.seed(0)

105 | np.random.shuffle(dataset_train)

106 | print('The preprocess has finished!')

107 | print('-' * 100)

108 |

109 | print('Creating a model.')

110 | torch.manual_seed(0)

111 | model = MolecularGraphNeuralNetwork(

112 | N, dim, layer_hidden, layer_output, dropout).to(device)

113 | trainer = Trainer(model,lr,batch_train)

114 | tester = Tester(model,batch_test)

115 | print('# of model parameters:',

116 | sum([np.prod(p.size()) for p in model.parameters()]))

117 | print('-' * 100)

118 | file_result = path + '../DGCAN/results/AUC' + '.txt'

119 | # file_result = '../output/result--' + setting + '.txt'

120 | result = 'Epoch\tTime(sec)\tLoss_train\tLoss_test\tAUC_train\tAUC_test'

121 | file_test_result = path + 'test_prediction' + '.txt'

122 | file_predictions = path + 'train_prediction' + '.txt'

123 | file_model = '../DGCAN/model/model' + '.pth'

124 | with open(file_result, 'w') as f:

125 | f.write(result + '\n')

126 |

127 | print('Start training.')

128 | print('The result is saved in the output directory every epoch!')

129 |

130 | np.random.seed(0)

131 |

132 | start = timeit.default_timer()

133 |

134 | for epoch in range(iteration):

135 | epoch += 1

136 | if epoch % decay_interval == 0:

137 | trainer.optimizer.param_groups[0]['lr'] *= lr_decay

138 | # [‘amsgrad’, ‘params’, ‘lr’, ‘betas’, ‘weight_decay’, ‘eps’]

139 | prediction_train, loss_train, train_res = trainer.train(dataset_train)

140 | prediction_test, loss_test, test_res = tester.test_classifier(dataset_test)

141 |

142 | time = timeit.default_timer() - start

143 |

144 | if epoch == 1:

145 | minutes = time * iteration / 60

146 | hours = int(minutes / 60)

147 | minutes = int(minutes - 60 * hours)

148 | print('The training will finish in about',

149 | hours, 'hours', minutes, 'minutes.')

150 | print('-' * 100)

151 | print(result)

152 |

153 | result = '\t'.join(map(str, [epoch, time, loss_train, loss_test, prediction_train, prediction_test]))

154 | tester.save_result(result, file_result)

155 | tester.save_model(model, file_model)

156 | print(result)

157 | model.eval()

158 | prediction_test, loss_test, test_res = tester.test_classifier(dataset_test)

159 | res_test = test_res.T

160 |

161 | cnf_matrix = confusion_matrix(res_test[:, 0], res_test[:, 1])

162 | fpr, tpr, thresholds = roc_curve(res_test[:, 0], res_test[:, 1])

163 | AUC = auc(fpr, tpr)

164 | print('auc:',AUC)

165 | metrics(cnf_matrix)

166 | return res_test

--------------------------------------------------------------------------------

/screening/DTI.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "id": "c40219cd",

6 | "metadata": {},

7 | "source": [

8 | "# Drug Target Interaction Prediction by using DeepPurpose"

9 | ]

10 | },

11 | {

12 | "cell_type": "markdown",

13 | "id": "23b43a6f",

14 | "metadata": {},

15 | "source": [

16 | "DeepPurpose has provied the convinient way for DTI prediction especially for SARS_CoV2_Protease. "

17 | ]

18 | },

19 | {

20 | "cell_type": "markdown",

21 | "id": "38ab44af",

22 | "metadata": {},

23 | "source": [

24 | "## Installation"

25 | ]

26 | },

27 | {

28 | "cell_type": "code",

29 | "execution_count": null,

30 | "id": "1ffbb4b3",

31 | "metadata": {},

32 | "outputs": [],

33 | "source": [

34 | "pip\n",

35 | "conda create -n DeepPurpose python=3.6\n",

36 | "conda activate DeepPurpose\n",

37 | "conda install -c conda-forge rdkit\n",

38 | "conda install -c conda-forge notebook\n",

39 | "pip install git+https://github.com/bp-kelley/descriptastorus \n",

40 | "pip install DeepPurpose\n",

41 | "\n",

42 | "or Build from Source\n",

43 | "\n",

44 | "git clone https://github.com/kexinhuang12345/DeepPurpose.git ## Download code repository\n",

45 | "cd DeepPurpose ## Change directory to DeepPurpose\n",

46 | "conda env create -f environment.yml ## Build virtual environment with all packages installed using conda\n",

47 | "conda activate DeepPurpose ## Activate conda environment (use \"source activate DeepPurpose\" for anaconda 4.4 or earlier) \n",

48 | "jupyter notebook ## open the jupyter notebook with the conda env\n",

49 | "\n",

50 | "## run our code, e.g. click a file in the DEMO folder\n",

51 | "... ...\n",

52 | "\n",

53 | "conda deactivate ## when done, exit conda environment "

54 | ]

55 | },

56 | {

57 | "cell_type": "markdown",

58 | "id": "26e590fe",

59 | "metadata": {},

60 | "source": [

61 | "## Run"

62 | ]

63 | },

64 | {

65 | "cell_type": "code",

66 | "execution_count": null,

67 | "id": "55207f2c",

68 | "metadata": {},

69 | "outputs": [],

70 | "source": [

71 | "import os\n",

72 | "os.chdir('../')\n",

73 | "from DeepPurpose import utils\n",

74 | "from DeepPurpose import DTI as models\n",

75 | "X_drug, X_target, y = process_BindingDB(download_BindingDB(SAVE_PATH),\n",

76 | " y = 'Kd', \n",

77 | " binary = False, \n",

78 | " convert_to_log = True)\n",

79 | "\n",

80 | "# Type in the encoding names for drug/protein.\n",

81 | "drug_encoding, target_encoding = 'MPNN', 'CNN'\n",

82 | "\n",

83 | "# Data processing, here we select cold protein split setup.\n",

84 | "train, val, test = data_process(X_drug, X_target, y, \n",

85 | " drug_encoding, target_encoding, \n",

86 | " split_method='cold_protein', \n",

87 | " frac=[0.7,0.1,0.2])\n",

88 | "\n",

89 | "# Generate new model using default parameters; also allow model tuning via input parameters.\n",

90 | "config = generate_config(drug_encoding, target_encoding, transformer_n_layer_target = 8)\n",

91 | "net = models.model_initialize(**config)\n",

92 | "\n",

93 | "# Train the new model.\n",

94 | "# Detailed output including a tidy table storing validation loss, metrics, AUC curves figures and etc. are stored in the ./result folder.\n",

95 | "net.train(train, val, test)\n",

96 | "\n",

97 | "# or simply load pretrained model from a model directory path or reproduced model name such as DeepDTA\n",

98 | "net = models.model_pretrained(MODEL_PATH_DIR or MODEL_NAME)\n",

99 | "\n",

100 | "X_repurpose, drug_name, drug_cid = load_broad_repurposing_hub(SAVE_PATH)\n",

101 | "target, target_name = load_SARS_CoV2_Protease_3CL()\n",

102 | "\n",

103 | "_ = models.virtual_screening(smiles, target, net, drug_name, target_name)"

104 | ]

105 | },

106 | {

107 | "cell_type": "markdown",

108 | "id": "bab0c86d",

109 | "metadata": {},

110 | "source": [

111 | "## Results"

112 | ]

113 | },

114 | {

115 | "cell_type": "code",

116 | "execution_count": null,

117 | "id": "1136255f",

118 | "metadata": {},

119 | "outputs": [],

120 | "source": [

121 | "+-------+-----------+------------------------+---------------+\n",

122 | "| Rank | Drug Name | Target Name | Binding Score |\n",

123 | "+-------+-----------+------------------------+---------------+\n",

124 | "| 1 | Drug 4565 | SARS-CoV2 3CL Protease | 8.96 |\n",

125 | "| 2 | Drug 4570 | SARS-CoV2 3CL Protease | 12.42 |\n",

126 | "| 3 | Drug 3690 | SARS-CoV2 3CL Protease | 12.86 |\n",

127 | "| 4 | Drug 3068 | SARS-CoV2 3CL Protease | 13.36 |\n",

128 | "| 5 | Drug 8387 | SARS-CoV2 3CL Protease | 13.47 |\n",

129 | "| 6 | Drug 5176 | SARS-CoV2 3CL Protease | 14.47 |\n",

130 | "| 7 | Drug 438 | SARS-CoV2 3CL Protease | 14.67 |\n",

131 | "| 8 | Drug 4507 | SARS-CoV2 3CL Protease | 16.11 |\n",

132 | "```\n",

133 | "```\n",

134 | "| 9978 | Drug 1377 | SARS-CoV2 3CL Protease | 460788.11 |\n",

135 | "| 9979 | Drug 3768 | SARS-CoV2 3CL Protease | 479737.13 |\n",

136 | "| 9980 | Drug 5106 | SARS-CoV2 3CL Protease | 485684.14 |\n",

137 | "| 9981 | Drug 3765 | SARS-CoV2 3CL Protease | 505994.35 |\n",

138 | "| 9982 | Drug 2207 | SARS-CoV2 3CL Protease | 510293.39 |\n",

139 | "| 9983 | Drug 1161 | SARS-CoV2 3CL Protease | 525921.93 |\n",

140 | "| 9984 | Drug 2477 | SARS-CoV2 3CL Protease | 533613.12 |\n",

141 | "| 9985 | Drug 3320 | SARS-CoV2 3CL Protease | 538902.46 |\n",

142 | "| 9986 | Drug 3783 | SARS-CoV2 3CL Protease | 542639.17 |\n",

143 | "| 9987 | Drug 4834 | SARS-CoV2 3CL Protease | 603510.00 |\n",

144 | "| 9988 | Drug 9653 | SARS-CoV2 3CL Protease | 611796.89 |\n",

145 | "| 9989 | Drug 6606 | SARS-CoV2 3CL Protease | 671138.31 |\n",

146 | "| 9990 | Drug 160 | SARS-CoV2 3CL Protease | 697775.04 |\n",

147 | "| 9991 | Drug 3851 | SARS-CoV2 3CL Protease | 792134.96 |\n",

148 | "| 9992 | Drug 5208 | SARS-CoV2 3CL Protease | 832708.75 |\n",

149 | "| 9993 | Drug 2786 | SARS-CoV2 3CL Protease | 905739.10 |\n",

150 | "| 9994 | Drug 6612 | SARS-CoV2 3CL Protease | 968825.66 |\n",

151 | "| 9995 | Drug 6609 | SARS-CoV2 3CL Protease | 1088788.87 |\n",

152 | "| 9996 | Drug 801 | SARS-CoV2 3CL Protease | 1186364.21 |\n",

153 | "| 9997 | Drug 3844 | SARS-CoV2 3CL Protease | 1199274.11 |\n",

154 | "| 9998 | Drug 3842 | SARS-CoV2 3CL Protease | 1559694.06 |\n",

155 | "| 9999 | Drug 4486 | SARS-CoV2 3CL Protease | 1619297.87 |\n",

156 | "| 10000 | Drug 800 | SARS-CoV2 3CL Protease | 1623061.65 |\n",

157 | "+-------+-----------+------------------------+---------------+"

158 | ]

159 | },

160 | {

161 | "cell_type": "markdown",

162 | "id": "83ff4364",

163 | "metadata": {},

164 | "source": [

165 | "## Acknowledgement"

166 | ]

167 | },

168 | {

169 | "cell_type": "code",

170 | "execution_count": null,

171 | "id": "ab0dd49f",

172 | "metadata": {},

173 | "outputs": [],

174 | "source": [

175 | "This project incorporates code from the following repo:\n",

176 | " \n",

177 | " https://github.com/kexinhuang12345/DeepPurpose\n",

178 | " "

179 | ]

180 | }

181 | ],

182 | "metadata": {

183 | "kernelspec": {

184 | "display_name": "Python 3 (ipykernel)",

185 | "language": "python",

186 | "name": "python3"

187 | },

188 | "language_info": {

189 | "codemirror_mode": {

190 | "name": "ipython",

191 | "version": 3

192 | },

193 | "file_extension": ".py",

194 | "mimetype": "text/x-python",

195 | "name": "python",

196 | "nbconvert_exporter": "python",

197 | "pygments_lexer": "ipython3",

198 | "version": "3.8.8"

199 | }

200 | },

201 | "nbformat": 4,

202 | "nbformat_minor": 5

203 | }

204 |

--------------------------------------------------------------------------------

/screening/Dataset/finetunev1.csv:

--------------------------------------------------------------------------------

1 | smiles,new_label

2 | CCN(CC)CCCC(C)Nc1ccnc2cc(Cl)ccc12,1

3 | Cc1cccc(C)c1OCC(=O)NC(Cc1ccccc1)C(O)CC(Cc1ccccc1)NC(=O)C(C(C)C)N1CCCNC1=O,1

4 | O=C(Nc1ccc([N+](=O)[O-])cc1Cl)c1cc(Cl)ccc1O,1

5 | CN(C)C(=O)C(CCN1CCC(O)(c2ccc(Cl)cc2)CC1)(c1ccccc1)c1ccccc1,1

6 | CC1OC(OC2CC(O)C3(CO)C4C(O)CC5(C)C(C6=CC(=O)OC6)CCC5(O)C4CCC3(O)C2)C(O)C(O)C1O,1

7 | CCN(CC)CCOc1ccc(C(O)(Cc2ccc(Cl)cc2)c2ccc(C)cc2)cc1,1

8 | COc1ccc2cc1Oc1ccc(cc1)CC1c3cc(c(OC)cc3CCN1C)Oc1c(OC)c(OC)cc3c1C(C2)N(C)CC3,1

9 | CCN(CC)Cc1cc(Nc2ccnc3cc(Cl)ccc23)ccc1O,1

10 | O=C1NCN(c2ccccc2)C12CCN(CCCC(c1ccc(F)cc1)c1ccc(F)cc1)CC2,1

11 | Cc1ccc(NC(=O)c2ccc(CN3CCN(C)CC3)cc2)cc1Nc1nccc(-c2cccnc2)n1,1

12 | CCN(CC)CCOc1ccc2c(c1)C(=O)c1cc(OCCN(CC)CC)ccc1-2,1

13 | COc1cc2c3cc1Oc1c(OC)c(OC)cc4c1C(Cc1ccc(O)c(c1)Oc1ccc(cc1)CC3N(C)CC2)N(C)CC4,1

14 | OC(c1cc(C(F)(F)F)nc2c(C(F)(F)F)cccc12)C1CCCCN1,1

15 | OCCN1CCN(CCCN2c3ccccc3Sc3ccc(C(F)(F)F)cc32)CC1,1

16 | O=C(Nc1cc(Cl)cc(Cl)c1O)c1c(O)c(Cl)cc(Cl)c1Cl,1

17 | CCN(CCO)CCCC(C)Nc1ccnc2cc(Cl)ccc12,1

18 | CC(CN1c2ccccc2Sc2ccccc21)N(C)C,1

19 | CCSc1ccc2c(c1)N(CCCN1CCN(C)CC1)c1ccccc1S2,1

20 | CC1OC(OC2C(O)CC(OC3C(O)CC(OC4CCC5(C)C(CCC6C5CC(O)C5(C)C(C7=CC(=O)OC7)CCC65O)C4)OC3C)OC2C)CC(O)C1O,1

21 | CN(C)CCCN1c2ccccc2CCc2ccc(Cl)cc21,1

22 | CN(C)CCCN1c2ccccc2Sc2ccc(Cl)cc21,1

23 | CCN(CC)CCOc1ccc(C(=C(Cl)c2ccccc2)c2ccccc2)cc1,1

24 | CCC1OC(=O)C(C)C(OC2CC(C)(OC)C(O)C(C)O2)C(C)C(OC2OC(C)CC(N(C)C)C2O)C(C)(O)CC(C)CN(C)C(C)C(O)C1(C)O,1

25 | COc1ncnc(NS(=O)(=O)c2ccc(N)cc2)c1OC,1

26 | COc1ccc2nc(S(=O)Cc3ncc(C)c(OC)c3C)[nH]c2c1,1

27 | CN(C)CCOc1ccc(C(=C(CCCl)c2ccccc2)c2ccccc2)cc1,1

28 | CSc1ccc2c(c1)N(CCC1CCCCN1C)c1ccccc1S2,1

29 | CC=CCC(C)C(O)C1C(=O)NC(CC)C(=O)N(C)CC(=O)N(C)C(CC(C)C)C(=O)NC(C(C)C)C(=O)N(C)C(CC(C)C)C(=O)NC(C)C(=O)NC(C)C(=O)N(C)C(CC(C)C)C(=O)N(C)C(CC(C)C)C(=O)N(C)C(C(C)C)C(=O)N1C,1

30 | CC(C)=CCCC1(C)C=Cc2c(O)c3c(c(CC=C(C)C)c2O1)OC12C(=CC4CC1C(C)(C)OC2(CC=C(C)C(=O)O)C4O)C3=O,1

31 | C=CC1CN2CCC1CC2C(O)c1ccnc2ccc(OC)cc12,1

32 | CCCCCC(=O)OC1(C(C)=O)CCC2C3CCC4=CC(=O)CCC4(C)C3CCC21C,1

33 | COc1ccc2cc1Oc1ccc(cc1)CC1c3c(cc4c(c3Oc3cc5c(cc3OC)CCN(C)C5C2)OCO4)CCN1C,1

34 | CCC(=C(c1ccccc1)c1ccc(OCCN(C)C)cc1)c1ccccc1,1

35 | OC1(c2ccc(Cl)c(C(F)(F)F)c2)CCN(CCCC(c2ccc(F)cc2)c2ccc(F)cc2)CC1,1

36 | Clc1ccc(Cn2c(CN3CCCC3)nc3ccccc32)cc1,1

37 | C1CCC(C(CC2CCCCN2)C2CCCCC2)CC1,1

38 | Oc1c(Cl)cc(Cl)c(Cl)c1Cc1c(O)c(Cl)cc(Cl)c1Cl,1

39 | CCC(C(=O)O)C1CCC(C)C(C(C)C(O)C(C)C(=O)C(CC)C2OC3(C=CC(O)C4(CCC(C)(C5CCC(O)(CC)C(C)O5)O4)O3)C(C)CC2C)O1,1

40 | Cc1cc(Nc2ncc(Cl)c(Nc3ccccc3S(=O)(=O)C(C)C)n2)c(OC(C)C)cc1C1CCNCC1,1

41 | CC1(C)C=Cc2c(c3c(c4c(=O)c(-c5ccc(O)cc5)coc24)OC(C)(C)CC3)O1,1

42 | C=CC(=O)Nc1cc(Nc2nccc(-c3cn(C)c4ccccc34)n2)c(OC)cc1N(C)CCN(C)C,1

43 | Cc1c(-c2ccc(O)cc2)n(Cc2ccc(OCCN3CCCCCC3)cc2)c2ccc(O)cc12,1

44 | CCCCCCOC(C)c1cccc(-c2csc(NC(=O)c3cc(Cl)c(C=C(C)C(=O)O)c(Cl)c3)n2)c1OC,1

45 | CC(C)=CCc1c2c(c3occ(-c4ccc(O)cc4)c(=O)c3c1O)C=CC(C)(C)O2,1

46 | CCCCc1oc2ccc(NS(C)(=O)=O)cc2c1C(=O)c1ccc(OCCCN(CCCC)CCCC)cc1,1

47 | CC(C)C(=O)OCC(=O)C12OC(C3CCCCC3)OC1CC1C3CCC4=CC(=O)C=CC4(C)C3C(O)CC12C,1

48 | CC1(C)C=Cc2c(c3c(c4c(=O)c(-c5ccc(O)c(O)c5)coc24)OC(C)(C)CC3)O1,1

49 | CCCCCOc1ccc(-c2ccc(-c3ccc(C(=O)NC4CC(O)C(O)NC(=O)C5C(O)C(C)CN5C(=O)C(C(C)O)NC(=O)C(C(O)C(O)c5ccc(O)cc5)NC(=O)C5CC(O)CN5C(=O)C(C(C)O)NC4=O)cc3)cc2)cc1,1

50 | CC(C)(C)c1cc(C(C)(C)C)c(NC(=O)c2c[nH]c3ccccc3c2=O)cc1O,1

51 | CCC(=C(c1ccc(OCCN(C)C)cc1)c1cccc(O)c1)c1ccccc1,1

52 | CCN1CCN(Cc2ccc(Nc3ncc(F)c(-c4cc(F)c5nc(C)n(C(C)C)c5c4)n3)nc2)CC1,1

53 | CCc1nc(C(N)=O)c(Nc2ccc(N3CCC(N4CCN(C)CC4)CC3)c(OC)c2)nc1NC1CCOCC1,1

54 | CC(C)(C)c1ccc(C(=O)CCCN2CCC(OC(c3ccccc3)c3ccccc3)CC2)cc1,1

55 | c1ccc2c(c1)Sc1ccccc1N2CC1CN2CCC1CC2,1

56 | CC1=NN(c2ccc(C)c(C)c2)C(=O)C1=NNc1cccc(-c2cccc(C(=O)O)c2)c1O,1

57 | COC(=O)NC(C(=O)NC(Cc1ccccc1)C(O)CN(Cc1ccc(-c2ccccn2)cc1)NC(=O)C(NC(=O)OC)C(C)(C)C)C(C)(C)C,1

58 | CN1C2CCC1CC(OC(c1ccccc1)c1ccccc1)C2,1

59 | CC(C)N1CCN(c2ccc(OCC3COC(Cn4cncn4)(c4ccc(Cl)cc4Cl)O3)cc2)CC1,1

60 | C=CCOc1ccccc1OCC(O)CNC(C)C,1

61 | CCCCCC(O)C=CC1C(O)CC(=O)C1CCCCCCC(=O)O,1

62 | CC1CCOC2Cn3cc(C(=O)NCc4ccc(F)cc4F)c(=O)c(O)c3C(=O)N12,1

63 | OCCN1CCN(CCCN2c3ccccc3C=Cc3ccccc32)CC1,1

64 | CCOC(=O)c1c(CSc2ccccc2)n(C)c2cc(Br)c(O)c(CN(C)C)c12,1

65 | CC(C)c1nc(CN(C)C(=O)NC(C(=O)NC(Cc2ccccc2)CC(O)C(Cc2ccccc2)NC(=O)OCc2cncs2)C(C)C)cs1,1

66 | Cc1c(O)cccc1C(=O)NC(CSc1ccccc1)C(O)CN1CC2CCCCC2CC1C(=O)NC(C)(C)C,1

67 | CC(C)(C)NC(=O)C1CC2CCCCC2CN1CC(O)C(Cc1ccccc1)NC(=O)C(CC(N)=O)NC(=O)c1ccc2ccccc2n1,1

68 | CCCC1(CCc2ccccc2)CC(O)=C(C(CC)c2cccc(NS(=O)(=O)c3ccc(C(F)(F)F)cn3)c2)C(=O)O1,1

69 | CC(C)CN(CC(O)C(Cc1ccccc1)NC(=O)OC1CCOC1)S(=O)(=O)c1ccc(N)cc1,1

70 | CC(C)CN(CC(O)C(Cc1ccccc1)NC(=O)OC1COC2OCCC12)S(=O)(=O)c1ccc(N)cc1,1

71 | CC(C)(C)NC(=O)C1CN(Cc2cccnc2)CCN1CC(O)CC(Cc1ccccc1)C(=O)NC1c2ccccc2CC1O,1

72 | Nc1ccn(C2OC(CO)C(O)C2(F)F)c(=O)n1,0

73 | CCC1CN2CCc3cc(OC)c(OC)cc3C2CC1CC1NCCc2cc(OC)c(OC)cc21,0

74 | CN(C)CCCN1c2ccccc2Sc2ccccc21,0

75 | CC(=O)OC1CC(OC2C(O)CC(OC3C(O)CC(OC4CCC5(C)C(CCC6C5CC(O)C5(C)C(C7=CC(=O)OC7)CCC65O)C4)OC3C)OC2C)OC(C)C1OC1OC(CO)C(O)C(O)C1O,0

76 | COc1ccc(CC2NCC(O)C2OC(C)=O)cc1,0

77 | Nc1ccc(N=Nc2ccccc2)c(N)n1,0

78 | CC(C)NCC(O)COc1cccc2ccccc12,0

79 | CN(C)CCCSC(=N)N,0

80 | CN1CCCC1Cc1c[nH]c2ccc(CCS(=O)(=O)c3ccccc3)cc12,0

81 | NC1CONC1=O,0

82 | Nc1c2c(nc3ccccc13)CCCC2,0

83 | CC(=O)C1CCC2C3CC=C4CC(O)CCC4(C)C3CCC12C,0

84 | O=C1CC2(CCCC2)CC(=O)N1CCCCN1CCN(c2ncccn2)CC1,0

85 | CCN(CC)CCCC(C)Nc1c2ccc(Cl)cc2nc2ccc(OC)cc12,0

86 | CCCCOc1ccc(C(=O)CCN2CCCCC2)cc1,0

87 | O=C(CCCN1CCC2(CC1)C(=O)NCN2c1ccccc1)c1ccc(F)cc1,0

88 | CC(C(O)c1ccc(O)cc1)N1CCC(Cc2ccccc2)CC1,0

89 | Cn1nnnc1SCC1=C(C(=O)[O-])N2C(=O)C(NC(=O)C(O)c3ccccc3)C2SC1,0

90 | CCOc1ccc2nc(S(N)(=O)=O)sc2c1,0

91 | CC=Cc1ccc(OC)cc1,0

92 | CC(CCc1ccccc1)NCC(O)c1ccc(O)c(C(N)=O)c1,0

93 | CC1COc2c(N3CCN(C)CC3)c(F)cc3c(=O)c(C(=O)O)cn1c23,0

94 | CC(C)(N)Cc1ccccc1,0

95 | CC12CCC3C(=CCc4cc(O)ccc43)C1CCC2=O,0

96 | COc1cc2c(cc1OC)C(=O)C(CC1CCN(Cc3ccccc3)CC1)C2,0

97 | CC(CCc1ccccc1)NC(C)C(O)c1ccc(O)cc1,0

98 | C=C1CC2C(CCC3(C)C(=O)CCC23)C2(C)C=CC(=O)C=C12,0

99 | NC(CCC(=O)NC(CSSCC(NC(=O)CCC(N)C(=O)O)C(=O)NCC(=O)O)C(=O)NCC(=O)O)C(=O)O,0

100 | CC(C=CC1=C(C)CCCC1(C)C)=CC=CC(C)=CC(=O)O,0

101 | CC12CCC3C(CCC4CC(O)CCC43C)C1CCC2=O,0

102 | Cc1ccc(C(=O)C(C)CN2CCCCC2)cc1,0

103 | CN(C)CCN(Cc1ccccc1)c1ccccn1,0

104 | COc1ccccc1OCC(O)CN1CCN(CC(=O)Nc2c(C)cccc2C)CC1,0

105 | COc1cc(N)c(Cl)cc1C(=O)NC1CCN(Cc2ccccc2)CC1,0

106 | CC1(C)OC2CC3C4CCC5=CC(=O)C=CC5(C)C4C(O)CC3(C)C2(C(=O)CO)O1,0

107 | CCn1cc(C(=O)O)c(=O)c2cc(F)c(N3CCNCC3)nc21,0

108 | Nc1c(Br)cc(Br)cc1CNC1CCC(O)CC1,0

109 | CC12CCC3C(CCC4=C(O)C(=O)CCC43C)C1CCC2=O,0

110 | NCCc1ccc(O)c(O)c1,0

111 | CC(C)(C)NCC(O)c1ccc(O)c(CO)c1,0

112 | CCCN1CC(CSC)CC2c3cccc4[nH]cc(c34)CC21,0

113 | CN(C)CCOC(=O)C(c1ccccc1)C1(O)CCCC1,0

114 | c1ccc2c(c1)OCC(C1=NCCN1)O2,0

115 | CCCc1cc(C(N)=S)ccn1,0

116 | CN(C)CCOC(c1ccccc1)c1ccccc1,0

117 | O=C1c2c(O)cccc2Cc2cccc(O)c21,0

118 | CN(C)C(=O)COC(=O)Cc1ccc(OC(=O)c2ccc(N=C(N)N)cc2)cc1,0

119 | NC(=O)c1nc(F)c[nH]c1=O,0

120 | COC1C(OC(C)=O)CC(=O)OC(C)CC=CC=CC(OC2CCC(N(C)C)C(C)O2)C(C)CC(CC=O)C1OC1OC(C)C(OC2CC(C)(O)C(O)C(C)O2)C(N(C)C)C1O,0

121 | CC12CC(=O)C3C(CCC4CC(O)CCC43C)C1CCC2C(=O)CO,0

122 | COC(c1ccccc1)(c1ccccc1)C(Oc1nc(C)cc(C)n1)C(=O)O,0

123 | CC1CCC2C(C)C(O)OC3OC4(C)CCC1C32OO4,0

124 | CCCCOc1cc(C(=O)OCCN(CC)CC)ccc1N,0

125 | c1ccc(CNCCNCc2ccccc2)cc1,0

126 | CC(=C(CCOC(=O)c1ccccc1)SSC(CCOC(=O)c1ccccc1)=C(C)N(C=O)Cc1cnc(C)nc1N)N(C=O)Cc1cnc(C)nc1N,0

127 | CC(C)=CC(C)=NNc1nncc2ccccc12,0

128 | CCOc1nc2cccc(C(=O)O)c2n1Cc1ccc(-c2ccccc2-c2nn[nH]n2)cc1,0

129 | COc1ccc(CC2c3cc(OC)c(OC)cc3CC[N+]2(C)CCC(=O)OCCCCCOC(=O)CC[N+]2(C)CCc3cc(OC)c(OC)cc3C2Cc2ccc(OC)c(OC)c2)cc1OC,0

130 | CC(=O)Oc1ccc(C(=C2CCCCC2)c2ccc(OC(C)=O)cc2)cc1,0

131 | CNCC(O)c1ccc(OC(=O)C(C)(C)C)c(OC(=O)C(C)(C)C)c1,0

132 | CCc1ccc(C(=O)C(C)CN2CCCCC2)cc1,0

133 | Oc1ccc(C2CNCCc3c2cc(O)c(O)c3Cl)cc1,0

134 | COc1ccc(CC(C)NCC(O)c2ccc(O)c(NC=O)c2)cc1,0

135 | CCC(=O)OC(OP(=O)(CCCCc1ccccc1)CC(=O)N1CC(C2CCCCC2)CC1C(=O)O)C(C)C,0

136 | CC(=C(CCO)SSCC1CCCO1)N(C=O)Cc1cnc(C)nc1N,0

137 | CC(C)C(=O)c1c(C(C)C)nn2ccccc12,0

138 | CCCNC(C)(C)COC(=O)c1ccccc1,0

139 | CCC1(c2cccc(O)c2)CCCCN(C)C1,0

140 | CN1CCN2c3ncccc3Cc3ccccc3C2C1,0

141 | CCCCC(C)(O)CC=CC1C(O)CC(=O)C1CCCCCCC(=O)OC,0

142 | COc1ccc2cc(CCC(C)=O)ccc2c1,0

143 | C=C1CCC2(O)C3Cc4ccc(O)c5c4C2(CCN3CC2CC2)C1O5,0

144 | Cc1cc2c(s1)Nc1ccccc1N=C2N1CCN(C)CC1,0

145 | CCCc1nc(C(C)(C)O)c(C(=O)O)n1Cc1ccc(-c2ccccc2-c2nn[nH]n2)cc1,0

146 | Cc1nccn1CC1CCc2c(c3ccccc3n2C)C1=O,0

147 | CC12CC(=CO)C(=O)CC1CCC1C2CCC2(C)C1CCC2(C)O,0

148 | O=C1Nc2ccccc2C1(c1ccc(O)cc1)c1ccc(O)cc1,0

149 | CCOC(=O)OC1(C(=O)COC(=O)CC)CCC2C3CCC4=CC(=O)C=CC4(C)C3C(O)CC21C,0

150 | CC1CN(c2c(F)c(N)c3c(=O)c(C(=O)O)cn(C4CC4)c3c2F)CC(C)N1,0

151 | Cc1ccc(O)c(C(CCN(C(C)C)C(C)C)c2ccccc2)c1,0

152 | CNCc1cc(-c2ccccc2F)n(S(=O)(=O)c2cccnc2)c1,0

153 | O=P(O)(O)C(O)(Cn1ccnc1)P(=O)(O)O,0

154 | O=C1OC2(c3cc(Br)c([O-])cc3Oc3c2cc(Br)c([O-])c3[Hg])c2ccccc21,0

155 | CC12C=CC(=O)C=C1CCC1C2CCC2(C)C1CCC2(C)O,0

156 |

--------------------------------------------------------------------------------

/Discussion/GPC.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | """

3 | Created on Tue Apr 27 22:04:01 2021

4 |

5 | @author: BM109X32G-10GPU-02

6 | """

7 |

8 | # -*- coding: utf-8 -*-

9 | """

10 | Created on Sun Nov 15 13:46:29 2020

11 |

12 | @author: de''

13 | """

14 |

15 | # -*- coding: utf-8 -*-

16 | """

17 | Created on Sun Nov 15 10:40:57 2020

18 |

19 | @author: de''

20 | """

21 |

22 | from sklearn.datasets import make_blobs

23 | import json

24 | import numpy as np

25 | import math

26 | from tqdm import tqdm

27 | from scipy import sparse

28 | from sklearn.metrics import roc_auc_score,roc_curve,auc

29 | from sklearn.metrics import confusion_matrix

30 | from sklearn.gaussian_process.kernels import RBF

31 | import pandas as pd

32 | import matplotlib.pyplot as plt

33 | from rdkit import Chem

34 | from sklearn.gaussian_process import GaussianProcessClassifier as GPC

35 | from sklearn.ensemble import RandomForestClassifier

36 | from sklearn.model_selection import train_test_split

37 | from sklearn.preprocessing import MinMaxScaler

38 | from sklearn.neural_network import MLPClassifier

39 | from sklearn.svm import SVC

40 | from tensorflow.keras.models import Model, load_model

41 | from tensorflow.keras.layers import Dense, Input, Flatten, Conv1D, MaxPooling1D, concatenate

42 | from tensorflow.keras import metrics, optimizers

43 | from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau

44 |

45 | def split_smiles(smiles, kekuleSmiles=True):

46 | try:

47 | mol = Chem.MolFromSmiles(smiles)

48 | smiles = Chem.MolToSmiles(mol, kekuleSmiles=kekuleSmiles)

49 | except:

50 | pass

51 | splitted_smiles = []

52 | for j, k in enumerate(smiles):

53 | if len(smiles) == 1:

54 | return [smiles]

55 | if j == 0:

56 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

57 | splitted_smiles.append(k + smiles[j + 1])

58 | else:

59 | splitted_smiles.append(k)

60 | elif j != 0 and j < len(smiles) - 1:

61 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

62 | splitted_smiles.append(k + smiles[j + 1])

63 | elif k.islower() and smiles[j - 1].isupper() and k != "c":

64 | pass

65 | else:

66 | splitted_smiles.append(k)

67 |

68 | elif j == len(smiles) - 1:

69 | if k.islower() and smiles[j - 1].isupper() and k != "c":

70 | pass

71 | else:

72 | splitted_smiles.append(k)

73 | return splitted_smiles

74 |

75 | def get_maxlen(all_smiles, kekuleSmiles=True):

76 | maxlen = 0

77 | for smi in tqdm(all_smiles):

78 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

79 | if spt is None:

80 | continue

81 | maxlen = max(maxlen, len(spt))

82 | return maxlen

83 | def get_dict(all_smiles, save_path, kekuleSmiles=True):

84 | words = [' ']

85 | for smi in tqdm(all_smiles):

86 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

87 | if spt is None:

88 | continue

89 | for w in spt:

90 | if w in words:

91 | continue

92 | else:

93 | words.append(w)

94 | with open(save_path, 'w') as js:

95 | json.dump(words, js)

96 | return words

97 |

98 | def one_hot_coding(smi, words, kekuleSmiles=True, max_len=1000):

99 | coord_j = []

100 | coord_k = []

101 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

102 | if spt is None:

103 | return None

104 | for j,w in enumerate(spt):

105 | if j >= max_len:

106 | break

107 | try:

108 | k = words.index(w)

109 | except:

110 | continue

111 | coord_j.append(j)

112 | coord_k.append(k)

113 | data = np.repeat(1, len(coord_j))

114 | output = sparse.csr_matrix((data, (coord_j, coord_k)), shape=(max_len, len(words)))

115 | return output

116 |

117 | if __name__ == "__main__":

118 | data_train= pd.read_csv('E:/code/drug/drugnn/data_train.csv')

119 | data_test=pd.read_csv('E:/code/drug/drugnn/worddrug.csv')

120 | inchis = list(data_train['SMILES'])

121 | rts = list(data_train['type'])

122 |

123 | smiles, targets = [], []

124 | for i, inc in enumerate(tqdm(inchis)):

125 | mol = Chem.MolFromSmiles(inc)

126 | if mol is None:

127 | continue

128 | else:

129 | smi = Chem.MolToSmiles(mol)

130 | smiles.append(smi)

131 | targets.append(rts[i])

132 |

133 | words = get_dict(smiles, save_path='E:\code\FingerID Reference\drug-likeness/dict.json')

134 |

135 | features = []

136 | for i, smi in enumerate(tqdm(smiles)):

137 | xi = one_hot_coding(smi, words, max_len=600)

138 | if xi is not None:

139 | features.append(xi.todense())

140 | features = np.asarray(features)

141 | targets = np.asarray(targets)

142 | X_train=features

143 | Y_train=targets

144 |

145 |

146 | # physical_devices = tf.config.experimental.list_physical_devices('CPU')

147 | # assert len(physical_devices) > 0, "Not enough GPU hardware devices available"

148 | # tf.config.experimental.set_memory_growth(physical_devices[0], True)

149 |

150 |

151 |

152 | inchis = list(data_test['SMILES'])

153 | rts = list(data_test['type'])

154 |

155 | smiles, targets = [], []

156 | for i, inc in enumerate(tqdm(inchis)):

157 | mol = Chem.MolFromSmiles(inc)

158 | if mol is None:

159 | continue

160 | else:

161 | smi = Chem.MolToSmiles(mol)

162 | smiles.append(smi)

163 | targets.append(rts[i])

164 |

165 | # words = get_dict(smiles, save_path='D:/工作文件/work.Data/CNN/dict.json')

166 |

167 | features = []

168 | for i, smi in enumerate(tqdm(smiles)):

169 | xi = one_hot_coding(smi, words, max_len=600)

170 | if xi is not None:

171 | features.append(xi.todense())

172 | features = np.asarray(features)

173 | targets = np.asarray(targets)

174 | X_test=features

175 | Y_test=targets

176 |

177 | # kernel = 1.0 * RBF(0.8)

178 | #model = RandomForestClassifier(n_estimators=10,max_features='auto', max_depth=None,min_samples_split=2, bootstrap=True)

179 | model = GPC( random_state=111)

180 |

181 | # earlyStopping = EarlyStopping(monitor='val_loss', patience=0.05, verbose=0, mode='min')

182 | #mcp_save = ModelCheckpoint('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5', save_best_only=True, monitor='accuracy', mode='auto')

183 | # reduce_lr_loss = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1, epsilon=1e-4, mode='min')

184 | from tensorflow.keras import backend as K

185 | X_train = K.cast_to_floatx(X_train).reshape((np.size(X_train,0),np.size(X_train,1)*np.size(X_train,2)))

186 |

187 | Y_train = K.cast_to_floatx(Y_train)

188 |

189 | # X_train,Y_train = make_blobs(n_samples=300, n_features=n_features, centers=6)

190 | model.fit(X_train, Y_train)

191 |

192 |

193 | # model = load_model('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5')

194 | Y_predict = model.predict(K.cast_to_floatx(X_test).reshape((np.size(X_test,0),np.size(X_test,1)*np.size(X_test,2))))

195 | #Y_predict = model.predict(X_test)#训练数据

196 | x = list(Y_test)

197 | y = list(Y_predict)

198 | from pandas.core.frame import DataFrame

199 | x=DataFrame(x)

200 | y=DataFrame(y)

201 | # X= pd.concat([x,y], axis=1)

202 | #X.to_csv('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/molecularGNN_smiles-master/0825/single-CNN-seed444.csv')

203 | Y_predict = [1 if i >0.4 else 0 for i in Y_predict]

204 |

205 | cnf_matrix=confusion_matrix(Y_test, Y_predict)

206 | cnf_matrix

207 |

208 | tn = cnf_matrix[0,0]

209 | tp = cnf_matrix[1,1]

210 | fn = cnf_matrix[1,0]

211 | fp = cnf_matrix[0,1]

212 |

213 | bacc = ((tp/(tp+fn))+(tn/(tn+fp)))/2#balance accurance

214 | pre = tp/(tp+fp)#precision/q+

215 | rec = tp/(tp+fn)#recall/se

216 | sp=tn/(tn+fp)

217 | q_=tn/(tn+fn)

218 | f1 = 2*pre*rec/(pre+rec)#f1score

219 | mcc = ((tp*tn) - (fp*fn))/math.sqrt((tp+fp)*(tp+fn)*(tn+fp)*(tn+fn))#Matthews correlation coefficient

220 | acc=(tp+tn)/(tp+fp+fn+tn)#accurancy

221 | fpr, tpr, thresholds =roc_curve(Y_test, Y_predict)

222 | AUC = auc(fpr, tpr)

223 | print('bacc:',bacc)

224 | print('pre:',pre)

225 | print('rec:',rec)

226 | print('f1:',f1)

227 | print('mcc:',mcc)

228 | print('sp:',sp)

229 | print('q_:',q_)

230 | print('acc:',acc)

231 | print('auc:',AUC)

232 |

--------------------------------------------------------------------------------

/Discussion/svc.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | """

3 | Created on Sun Nov 15 13:46:29 2020

4 |

5 | @author: de''

6 | """

7 |

8 | # -*- coding: utf-8 -*-

9 | """

10 | Created on Sun Nov 15 10:40:57 2020

11 |

12 | @author: de''

13 | """

14 |

15 | from sklearn.datasets import make_blobs

16 | import json

17 | import numpy as np

18 | import math

19 | from tqdm import tqdm

20 | from scipy import sparse

21 | from sklearn.metrics import roc_auc_score,roc_curve,auc

22 | from sklearn.metrics import confusion_matrix

23 |

24 | import pandas as pd

25 | import matplotlib.pyplot as plt

26 | from rdkit import Chem

27 |

28 | from sklearn.ensemble import RandomForestClassifier

29 | from sklearn.model_selection import train_test_split

30 | from sklearn.preprocessing import MinMaxScaler

31 | from sklearn.neural_network import MLPClassifier

32 | from sklearn.svm import SVC

33 | from tensorflow.keras.models import Model, load_model

34 | from tensorflow.keras.layers import Dense, Input, Flatten, Conv1D, MaxPooling1D, concatenate

35 | from tensorflow.keras import metrics, optimizers

36 | from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau

37 |

38 | def split_smiles(smiles, kekuleSmiles=True):

39 | try:

40 | mol = Chem.MolFromSmiles(smiles)

41 | smiles = Chem.MolToSmiles(mol, kekuleSmiles=kekuleSmiles)

42 | except:

43 | pass

44 | splitted_smiles = []

45 | for j, k in enumerate(smiles):

46 | if len(smiles) == 1:

47 | return [smiles]

48 | if j == 0:

49 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

50 | splitted_smiles.append(k + smiles[j + 1])

51 | else:

52 | splitted_smiles.append(k)

53 | elif j != 0 and j < len(smiles) - 1:

54 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

55 | splitted_smiles.append(k + smiles[j + 1])

56 | elif k.islower() and smiles[j - 1].isupper() and k != "c":

57 | pass

58 | else:

59 | splitted_smiles.append(k)

60 |

61 | elif j == len(smiles) - 1:

62 | if k.islower() and smiles[j - 1].isupper() and k != "c":

63 | pass

64 | else:

65 | splitted_smiles.append(k)

66 | return splitted_smiles

67 |

68 | def get_maxlen(all_smiles, kekuleSmiles=True):

69 | maxlen = 0

70 | for smi in tqdm(all_smiles):

71 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

72 | if spt is None:

73 | continue

74 | maxlen = max(maxlen, len(spt))

75 | return maxlen

76 | def get_dict(all_smiles, save_path, kekuleSmiles=True):

77 | words = [' ']

78 | for smi in tqdm(all_smiles):

79 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

80 | if spt is None:

81 | continue

82 | for w in spt:

83 | if w in words:

84 | continue

85 | else:

86 | words.append(w)

87 | with open(save_path, 'w') as js:

88 | json.dump(words, js)

89 | return words

90 |

91 | def one_hot_coding(smi, words, kekuleSmiles=True, max_len=1000):

92 | coord_j = []

93 | coord_k = []

94 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

95 | if spt is None:

96 | return None

97 | for j,w in enumerate(spt):

98 | if j >= max_len:

99 | break

100 | try:

101 | k = words.index(w)

102 | except:

103 | continue

104 | coord_j.append(j)

105 | coord_k.append(k)

106 | data = np.repeat(1, len(coord_j))

107 | output = sparse.csr_matrix((data, (coord_j, coord_k)), shape=(max_len, len(words)))

108 | return output

109 |

110 | if __name__ == "__main__":

111 |

112 |

113 | data_train= pd.read_csv('E:/code/drug/drugnn/data_train.csv')

114 | data_test=pd.read_csv('E:/code/drug/drugnn/bro5.csv')

115 | inchis = list(data_train['SMILES'])

116 | rts = list(data_train['type'])

117 |

118 | smiles, targets = [], []

119 | for i, inc in enumerate(tqdm(inchis)):

120 | mol = Chem.MolFromSmiles(inc)

121 | if mol is None:

122 | continue

123 | else:

124 | smi = Chem.MolToSmiles(mol)

125 | smiles.append(smi)

126 | targets.append(rts[i])

127 |

128 | words = get_dict(smiles, save_path='E:\code\FingerID Reference\drug-likeness/dict.json')

129 |

130 | features = []

131 | for i, smi in enumerate(tqdm(smiles)):

132 | xi = one_hot_coding(smi, words, max_len=2000)

133 | if xi is not None:

134 | features.append(xi.todense())

135 | features = np.asarray(features)

136 | targets = np.asarray(targets)

137 | X_train=features

138 | Y_train=targets

139 |

140 |

141 | # physical_devices = tf.config.experimental.list_physical_devices('CPU')

142 | # assert len(physical_devices) > 0, "Not enough GPU hardware devices available"

143 | # tf.config.experimental.set_memory_growth(physical_devices[0], True)

144 |

145 |

146 |

147 | inchis = list(data_test['SMILES'])

148 | rts = list(data_test['type'])

149 |

150 | smiles, targets = [], []

151 | for i, inc in enumerate(tqdm(inchis)):

152 | mol = Chem.MolFromSmiles(inc)

153 | if mol is None:

154 | continue

155 | else:

156 | smi = Chem.MolToSmiles(mol)

157 | smiles.append(smi)

158 | targets.append(rts[i])

159 |

160 | # words = get_dict(smiles, save_path='D:/工作文件/work.Data/CNN/dict.json')

161 |

162 | features = []

163 | for i, smi in enumerate(tqdm(smiles)):

164 | xi = one_hot_coding(smi, words, max_len=2000)

165 | if xi is not None:

166 | features.append(xi.todense())

167 | features = np.asarray(features)

168 | targets = np.asarray(targets)

169 | X_test=features

170 | Y_test=targets

171 |

172 |

173 | #model = RandomForestClassifier(n_estimators=10,max_features='auto', max_depth=None,min_samples_split=2, bootstrap=True)

174 | #model = MLPClassifier(rangdom_state=1,max_iter=300)

175 | model = SVC(C=500, kernel='rbf', gamma='auto',

176 | coef0=0.0, shrinking=True,probability=False, tol=0.0001, cache_size=200, class_weight=None, verbose=False, max_iter=- 1, decision_function_shape='ovr', break_ties=False, random_state=None)

177 |

178 | # earlyStopping = EarlyStopping(monitor='val_loss', patience=0.05, verbose=0, mode='min')

179 | #mcp_save = ModelCheckpoint('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5', save_best_only=True, monitor='accuracy', mode='auto')

180 | # reduce_lr_loss = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1, epsilon=1e-4, mode='min')

181 | from tensorflow.keras import backend as K

182 | X_train = K.cast_to_floatx(X_train).reshape((np.size(X_train,0),np.size(X_train,1)*np.size(X_train,2)))

183 |

184 | Y_train = K.cast_to_floatx(Y_train)

185 |

186 | # X_train,Y_train = make_blobs(n_samples=300, n_features=n_features, centers=6)

187 | model.fit(X_train, Y_train)

188 |

189 |

190 | # model = load_model('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5')

191 | Y_predict = model.predict(K.cast_to_floatx(X_test).reshape((np.size(X_test,0),np.size(X_test,1)*np.size(X_test,2))))

192 | #Y_predict = model.predict(X_test)#训练数据

193 | x = list(Y_test)

194 | y = list(Y_predict)

195 | from pandas.core.frame import DataFrame

196 | x=DataFrame(x)

197 | y=DataFrame(y)

198 | # X= pd.concat([x,y], axis=1)

199 | #X.to_csv('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/molecularGNN_smiles-master/0825/single-CNN-seed444.csv')

200 | Y_predict = [1 if i >0.5 else 0 for i in Y_predict]

201 |

202 | cnf_matrix=confusion_matrix(Y_test, Y_predict)

203 | cnf_matrix

204 |

205 | tn = cnf_matrix[0,0]

206 | tp = cnf_matrix[1,1]

207 | fn = cnf_matrix[1,0]

208 | fp = cnf_matrix[0,1]

209 |

210 | bacc = ((tp/(tp+fn))+(tn/(tn+fp)))/2#balance accurance

211 | pre = tp/(tp+fp)#precision/q+

212 | rec = tp/(tp+fn)#recall/se

213 | sp=tn/(tn+fp)

214 | q_=tn/(tn+fn)

215 | f1 = 2*pre*rec/(pre+rec)#f1score

216 | mcc = ((tp*tn) - (fp*fn))/math.sqrt((tp+fp)*(tp+fn)*(tn+fp)*(tn+fn))#Matthews correlation coefficient

217 | acc=(tp+tn)/(tp+fp+fn+tn)#accurancy

218 | fpr, tpr, thresholds =roc_curve(Y_test, Y_predict)

219 | AUC = auc(fpr, tpr)

220 | print('bacc:',bacc)

221 | print('pre:',pre)

222 | print('rec:',rec)

223 | print('f1:',f1)

224 | print('mcc:',mcc)

225 | print('sp:',sp)

226 | print('q_:',q_)

227 | print('acc:',acc)

228 | print('auc:',AUC)

--------------------------------------------------------------------------------

/DGCAN/preprocess.py:

--------------------------------------------------------------------------------

1 |

2 | # -*- coding: utf-8 -*-

3 | """

4 | Created on Wed Apr 27 20:09:31 2022

5 |

6 | @author:Jinyu-Sun

7 | """

8 |

9 | from collections import defaultdict

10 | import numpy as np

11 | from rdkit import Chem

12 | import torch

13 |

14 | device = torch.device('cuda')

15 |

16 | atom_dict = defaultdict(lambda: len(atom_dict))

17 | bond_dict = defaultdict(lambda: len(bond_dict))

18 | fingerprint_dict = defaultdict(lambda: len(fingerprint_dict))

19 | edge_dict = defaultdict(lambda: len(edge_dict))

20 | radius=1

21 | def create_atoms(mol, atom_dict):

22 | """Transform the atom types in a molecule (e.g., H, C, and O)

23 | into the indices (e.g., H=0, C=1, and O=2).

24 | Note that each atom index considers the aromaticity.

25 | """

26 | atoms = [a.GetSymbol() for a in mol.GetAtoms()]

27 | for a in mol.GetAromaticAtoms():

28 | i = a.GetIdx()

29 | atoms[i] = (atoms[i], 'aromatic')

30 | atoms = [atom_dict[a] for a in atoms]

31 | return np.array(atoms)

32 |

33 |

34 | def create_ijbonddict(mol, bond_dict):

35 | """Create a dictionary, in which each key is a node ID

36 | and each value is the tuples of its neighboring node

37 | and chemical bond (e.g., single and double) IDs.

38 |

39 | """

40 | i_jbond_dict = defaultdict(lambda: [])

41 | for b in mol.GetBonds():

42 | i, j = b.GetBeginAtomIdx(), b.GetEndAtomIdx()

43 | bond = bond_dict[str(b.GetBondType())]

44 | i_jbond_dict[i].append((j, bond))

45 | i_jbond_dict[j].append((i, bond))

46 | return i_jbond_dict

47 |

48 |

49 | def extract_fingerprints(radius, atoms, i_jbond_dict,

50 | fingerprint_dict, edge_dict):

51 | """Extract the fingerprints from a molecular graph

52 | based on Weisfeiler-Lehman algorithm.

53 | """

54 |

55 | if (len(atoms) == 1) or (radius == 0):

56 | nodes = [fingerprint_dict[a] for a in atoms]

57 |

58 | else:

59 | nodes = atoms

60 | i_jedge_dict = i_jbond_dict

61 |

62 | for _ in range(radius):

63 |

64 | """Update each node ID considering its neighboring nodes and edges.

65 | The updated node IDs are the fingerprint IDs.。

66 | """

67 | nodes_ = []

68 | for i, j_edge in i_jedge_dict.items():

69 | neighbors = [(nodes[j], edge) for j, edge in j_edge]

70 | fingerprint = (nodes[i], tuple(sorted(neighbors)))

71 | nodes_.append(fingerprint_dict[fingerprint])

72 |

73 | """Also update each edge ID considering

74 | its two nodes on both sides.

75 | """

76 | i_jedge_dict_ = defaultdict(lambda: [])

77 | for i, j_edge in i_jedge_dict.items():

78 | for j, edge in j_edge:

79 | both_side = tuple(sorted((nodes[i], nodes[j])))

80 | edge = edge_dict[(both_side, edge)]

81 | i_jedge_dict_[i].append((j, edge))

82 |

83 | nodes = nodes_

84 | i_jedge_dict = i_jedge_dict_

85 |

86 | return np.array(nodes)

87 |

88 |

89 | def split_dataset(dataset, ratio):

90 | """Shuffle and split a dataset."""

91 | np.random.seed(1234) # fix the seed for shuffle

92 | # np.random.shuffle(dataset)

93 | n = int(ratio * len(dataset))

94 | return dataset[:n], dataset[n:]

95 | def create_testdataset(filename,path,dataname,property):

96 | dir_dataset = path+dataname

97 | print(filename)

98 | """Load a dataset."""

99 | if property== False:

100 | with open(dir_dataset + filename, 'r') as f:

101 | #smiles_property = f.readline().strip().split()

102 | data_original = f.read().strip().split()

103 | data_original = [data for data in data_original

104 | if '.' not in data.split()[0]]

105 | dataset = []

106 | for data in data_original:

107 | smiles = data

108 | try:

109 | """Create each data with the above defined functions."""

110 | mol = Chem.AddHs(Chem.MolFromSmiles(smiles))

111 | atoms = create_atoms(mol, atom_dict)

112 | molecular_size = len(atoms)

113 | i_jbond_dict = create_ijbonddict(mol, bond_dict)

114 | fingerprints = extract_fingerprints(radius, atoms, i_jbond_dict,

115 | fingerprint_dict, edge_dict)

116 | adjacency = Chem.GetAdjacencyMatrix(mol)

117 | """Transform the above each data of numpy

118 | to pytorch tensor on a device (i.e., CPU or GPU).

119 | """

120 | fingerprints = torch.LongTensor(fingerprints).to(device)

121 | adjacency = torch.FloatTensor(adjacency).to(device)

122 | proper = torch.LongTensor([int(0)]).to(device)

123 | dataset.append((smiles,fingerprints, adjacency, molecular_size,proper ))

124 | except:

125 | print(smiles)

126 | elif property== True:

127 | with open(dir_dataset + filename, 'r') as f:

128 | # smiles_property = f.readline().strip().split()

129 | data_original = f.read().strip().split('\n')

130 |

131 | data_original = [data for data in data_original

132 | if '.' not in data.split()[0]]

133 | dataset = []

134 | for data in data_original:

135 | smiles, proper = data.strip().split()

136 | try:

137 | """Create each data with the above defined functions."""

138 | mol = Chem.AddHs(Chem.MolFromSmiles(smiles))

139 | atoms = create_atoms(mol, atom_dict)

140 | molecular_size = len(atoms)

141 | i_jbond_dict = create_ijbonddict(mol, bond_dict)

142 | fingerprints = extract_fingerprints(radius, atoms, i_jbond_dict,

143 | fingerprint_dict, edge_dict)

144 | adjacency = Chem.GetAdjacencyMatrix(mol)

145 |

146 | """Transform the above each data of numpy

147 | to pytorch tensor on a device (i.e., CPU or GPU).

148 | """

149 | fingerprints = torch.LongTensor(fingerprints).to(device)

150 | adjacency = torch.FloatTensor(adjacency).to(device)

151 | proper = torch.LongTensor([int(proper)]).to(device)

152 | dataset.append((smiles,fingerprints, adjacency, molecular_size, proper))

153 | except:

154 | print(smiles+'is error')

155 | return dataset

156 |

157 | def create_dataset(filename,path,dataname):

158 | dir_dataset = path+dataname

159 | print(filename)

160 | """Load a dataset."""

161 | try:

162 | with open(dir_dataset + filename, 'r') as f:

163 | smiles_property = f.readline().strip().split()

164 | data_original = f.read().strip().split('\n')

165 | except:

166 | with open(dir_dataset + filename, 'r') as f:

167 | smiles_property = f.readline().strip().split()

168 | data_original = f.read().strip().split('\n')

169 |

170 | """Exclude the data contains '.' in its smiles.排除含.的数据"""

171 | data_original = [data for data in data_original

172 | if '.' not in data.split()[0]]

173 | dataset = []

174 | for data in data_original:

175 | smiles, property = data.strip().split()

176 | try:

177 | """Create each data with the above defined functions."""

178 | mol = Chem.AddHs(Chem.MolFromSmiles(smiles))

179 | atoms = create_atoms(mol, atom_dict)

180 | molecular_size = len(atoms)

181 | i_jbond_dict = create_ijbonddict(mol, bond_dict)

182 | fingerprints = extract_fingerprints(radius, atoms, i_jbond_dict,

183 | fingerprint_dict, edge_dict)

184 | adjacency = Chem.GetAdjacencyMatrix(mol)

185 | """

186 | Transform the above each data of numpy

187 | to pytorch tensor on a device (i.e., CPU or GPU).

188 | """

189 | fingerprints = torch.LongTensor(fingerprints).to(device)

190 | adjacency = torch.FloatTensor(adjacency).to(device)

191 | property = torch.LongTensor([int(property)]).to(device)

192 | dataset.append((smiles,fingerprints, adjacency, molecular_size, property))

193 | except:

194 | print(smiles)

195 | return dataset

196 |

197 |

198 |

--------------------------------------------------------------------------------

/Discussion/RF.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | """

3 | Created on Tue Apr 27 22:08:23 2021

4 |

5 | @author:Jinyusun

6 | """

7 |

8 |

9 | from sklearn.datasets import make_blobs

10 | import json

11 | import numpy as np

12 | import math

13 | from tqdm import tqdm

14 | from scipy import sparse

15 | from sklearn.metrics import roc_auc_score,roc_curve,auc

16 | from sklearn.metrics import confusion_matrix

17 |

18 | import pandas as pd

19 | import matplotlib.pyplot as plt

20 | from rdkit import Chem

21 |

22 | from sklearn.ensemble import RandomForestClassifier

23 | from sklearn.model_selection import train_test_split

24 | from sklearn.preprocessing import MinMaxScaler

25 | from sklearn.neural_network import MLPClassifier

26 | from sklearn.svm import SVC

27 | from tensorflow.keras.models import Model, load_model

28 | from tensorflow.keras.layers import Dense, Input, Flatten, Conv1D, MaxPooling1D, concatenate

29 | from tensorflow.keras import metrics, optimizers

30 | from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau

31 |

32 | def split_smiles(smiles, kekuleSmiles=True):

33 | try:

34 | mol = Chem.MolFromSmiles(smiles)

35 | smiles = Chem.MolToSmiles(mol, kekuleSmiles=kekuleSmiles)

36 | except:

37 | pass

38 | splitted_smiles = []

39 | for j, k in enumerate(smiles):

40 | if len(smiles) == 1:

41 | return [smiles]

42 | if j == 0:

43 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

44 | splitted_smiles.append(k + smiles[j + 1])

45 | else:

46 | splitted_smiles.append(k)

47 | elif j != 0 and j < len(smiles) - 1:

48 | if k.isupper() and smiles[j + 1].islower() and smiles[j + 1] != "c":

49 | splitted_smiles.append(k + smiles[j + 1])

50 | elif k.islower() and smiles[j - 1].isupper() and k != "c":

51 | pass

52 | else:

53 | splitted_smiles.append(k)

54 |

55 | elif j == len(smiles) - 1:

56 | if k.islower() and smiles[j - 1].isupper() and k != "c":

57 | pass

58 | else:

59 | splitted_smiles.append(k)

60 | return splitted_smiles

61 |

62 | def get_maxlen(all_smiles, kekuleSmiles=True):

63 | maxlen = 0

64 | for smi in tqdm(all_smiles):

65 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

66 | if spt is None:

67 | continue

68 | maxlen = max(maxlen, len(spt))

69 | return maxlen

70 | def get_dict(all_smiles, save_path, kekuleSmiles=True):

71 | words = [' ']

72 | for smi in tqdm(all_smiles):

73 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

74 | if spt is None:

75 | continue

76 | for w in spt:

77 | if w in words:

78 | continue

79 | else:

80 | words.append(w)

81 | with open(save_path, 'w') as js:

82 | json.dump(words, js)

83 | return words

84 |

85 | def one_hot_coding(smi, words, kekuleSmiles=True, max_len=1000):

86 | coord_j = []

87 | coord_k = []

88 | spt = split_smiles(smi, kekuleSmiles=kekuleSmiles)

89 | if spt is None:

90 | return None

91 | for j,w in enumerate(spt):

92 | if j >= max_len:

93 | break

94 | try:

95 | k = words.index(w)

96 | except:

97 | continue

98 | coord_j.append(j)

99 | coord_k.append(k)

100 | data = np.repeat(1, len(coord_j))

101 | output = sparse.csr_matrix((data, (coord_j, coord_k)), shape=(max_len, len(words)))

102 | return output

103 | def split_dataset(dataset, ratio):

104 | """Shuffle and split a dataset."""

105 | # np.random.seed(111) # fix the seed for shuffle.

106 | #np.random.shuffle(dataset)

107 | n = int(ratio * len(dataset))

108 | return dataset[:n], dataset[n:]

109 | def edit_dataset(drug,non_drug,task):

110 | # np.random.seed(111) # fix the seed for shuffle.

111 |

112 | # np.random.shuffle(non_drug)

113 | non_drug=non_drug[0:len(drug)]

114 |

115 |

116 | # np.random.shuffle(non_drug)

117 | # np.random.shuffle(drug)

118 | dataset_train_drug, dataset_test_drug = split_dataset(drug, 0.9)

119 | # dataset_train_drug,dataset_dev_drug = split_dataset(dataset_train_drug, 0.9)

120 | dataset_train_no, dataset_test_no = split_dataset(non_drug, 0.9)

121 | # dataset_train_no,dataset_dev_no = split_dataset(dataset_train_no, 0.9)

122 | dataset_train = pd.concat([dataset_train_drug,dataset_train_no], axis=0)

123 | dataset_test=pd.concat([ dataset_test_drug,dataset_test_no], axis=0)

124 | # dataset_dev = dataset_dev_drug+dataset_dev_no

125 | return dataset_train, dataset_test

126 | if __name__ == "__main__":

127 | data_train= pd.read_csv('E:/code/drug/drugnn/data_train.csv')

128 | data_test=pd.read_csv('E:/code/drug/drugnn/bro5.csv')

129 | inchis = list(data_train['SMILES'])

130 | rts = list(data_train['type'])

131 |

132 | smiles, targets = [], []

133 | for i, inc in enumerate(tqdm(inchis)):

134 | mol = Chem.MolFromSmiles(inc)

135 | if mol is None:

136 | continue

137 | else:

138 | smi = Chem.MolToSmiles(mol)

139 | smiles.append(smi)

140 | targets.append(rts[i])

141 |

142 | words = get_dict(smiles, save_path='E:\code\FingerID Reference\drug-likeness/dict.json')

143 |

144 | features = []

145 | for i, smi in enumerate(tqdm(smiles)):

146 | xi = one_hot_coding(smi, words, max_len=600)

147 | if xi is not None:

148 | features.append(xi.todense())

149 | features = np.asarray(features)

150 | targets = np.asarray(targets)

151 | X_train=features

152 | Y_train=targets

153 |

154 |

155 | # physical_devices = tf.config.experimental.list_physical_devices('CPU')

156 | # assert len(physical_devices) > 0, "Not enough GPU hardware devices available"

157 | # tf.config.experimental.set_memory_growth(physical_devices[0], True)

158 |

159 |

160 |

161 | inchis = list(data_test['SMILES'])

162 | rts = list(data_test['type'])

163 |

164 | smiles, targets = [], []

165 | for i, inc in enumerate(tqdm(inchis)):

166 | mol = Chem.MolFromSmiles(inc)

167 | if mol is None:

168 | continue

169 | else:

170 | smi = Chem.MolToSmiles(mol)

171 | smiles.append(smi)

172 | targets.append(rts[i])

173 |

174 | # words = get_dict(smiles, save_path='D:/工作文件/work.Data/CNN/dict.json')

175 |

176 | features = []

177 | for i, smi in enumerate(tqdm(smiles)):

178 | xi = one_hot_coding(smi, words, max_len=600)

179 | if xi is not None:

180 | features.append(xi.todense())

181 | features = np.asarray(features)

182 | targets = np.asarray(targets)

183 | X_test=features

184 | Y_test=targets

185 | n_features=10

186 |

187 | model = RandomForestClassifier(n_estimators=5,max_features='auto', max_depth=None,min_samples_split=5, bootstrap=True)

188 | #model = MLPClassifier(rangdom_state=1,max_iter=300)

189 | #model = SVC()

190 |

191 | # earlyStopping = EarlyStopping(monitor='val_loss', patience=0.05, verbose=0, mode='min')

192 | #mcp_save = ModelCheckpoint('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5', save_best_only=True, monitor='accuracy', mode='auto')

193 | # reduce_lr_loss = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1, epsilon=1e-4, mode='min')

194 | from tensorflow.keras import backend as K

195 | X_train = K.cast_to_floatx(X_train).reshape((np.size(X_train,0),np.size(X_train,1)*np.size(X_train,2)))

196 |

197 | Y_train = K.cast_to_floatx(Y_train)

198 |

199 | # X_train,Y_train = make_blobs(n_samples=300, n_features=n_features, centers=6)

200 | model.fit(X_train, Y_train)

201 |

202 |

203 | # model = load_model('C:/Users/sunjinyu/Desktop/FingerID Reference/drug-likeness/CNN/single_model.h5')