├── LICENSE.txt
├── MANIFEST
├── README.md
├── images
│   ├── test_compare.PNG
│   └── test_decorator.PNG
├── pythonbenchmark
│   ├── __init__.py
│   └── pythonbenchmark.py
├── setup.cfg
├── setup.py
└── test_pythonbenchmark.py

/LICENSE.txt:
--------------------------------------------------------------------------------
The MIT License (MIT)

Copyright (c) 2015 Karlheinz Niebuhr

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in
all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN
THE SOFTWARE.
--------------------------------------------------------------------------------

/MANIFEST:
--------------------------------------------------------------------------------
# file GENERATED by distutils, do NOT edit
setup.cfg
setup.py
pythonbenchmark/__init__.py
pythonbenchmark/pythonbenchmark.py
--------------------------------------------------------------------------------

/README.md:
--------------------------------------------------------------------------------
### Learn to write faster code by experimenting with this library

# `pip install pythonbenchmark`

- The `timeit` module that comes with Python is built for timing small snippets of code; using it to time whole functions, and to compare two of them, takes extra boilerplate.

- This library solves that. It provides an intuitive way to measure the execution time of functions and to compare the relative speed of two functions.

- Optimizing your code? Curious how much speed you gained? No problem.

- pythonbenchmark lets you compare two functions that take the same input and measures which one gets the job done faster:
```python
# run each function 10 times and compare the average run times
compare(myFunction, myOptimizedFunction, 10)
```
- Additionally, you can put the `@measure` decorator on your functions; pythonbenchmark will then measure each call and print its execution time to the console:
```python
@measure
def myFunction(something):
    [x*x for x in range(1000000)]
```
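
For comparison, here is roughly the boilerplate needed to time a function that takes arguments with the standard library's `timeit` alone: the call has to be wrapped in a zero-argument callable, and turning the totals into a per-call figure is left to you. This is a minimal sketch; `my_function` and `data` are hypothetical names used only for illustration.

```python
import timeit


# Hypothetical function under test; any function that takes arguments works the same way.
def my_function(data):
    return [x * x for x in data]


data = list(range(10_000))

# timeit accepts a zero-argument callable, so the argument is bound with a lambda.
# repeat() returns the total time of `number` calls for each of `repeat` rounds.
rounds = timeit.repeat(lambda: my_function(data), number=100, repeat=5)

# Take the fastest round (the one least disturbed by other activity) and divide by the call count.
per_call = min(rounds) / 100
print(f"my_function: {per_call:.6f} sec per call (best of {len(rounds)} rounds)")
```

Comparing two functions this way means repeating the whole recipe for each one and computing the ratio yourself, which is the work `compare` wraps into a single call.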

20 |

21 | ### How-To:

22 | A typical use case is: I have functionX, and optimized functionX. Now I want to know if my modified version is faster and how much.

23 |

24 | ```python

25 | from pythonbenchmark import compare, measure

26 | import time

27 |

28 | a,b,c,d,e = 10,10,10,10,10

29 | something = [a,b,c,d,e]

30 |

31 | def myFunction(something):

32 | time.sleep(0.4)

33 |

34 | def myOptimizedFunction(something):

35 | time.sleep(0.2)

36 |

37 | # comparing test

38 | compare(myFunction, myOptimizedFunction, 10, input)

39 | # without input

40 | compare(myFunction, myOptimizedFunction, 100)

41 | ```

42 |

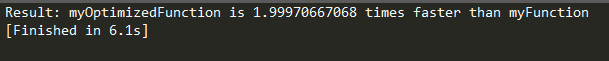

43 | Output

44 |

45 |

46 |

47 |
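
The verdict that `compare` prints comes down to the ratio of the two average run times, with a ratio of 1.001 or less (a difference under 0.1%) reported as equal speed. The sketch below restates that decision rule as a standalone function so it can be checked with fixed numbers; `speed_verdict` is a hypothetical name, not part of pythonbenchmark's API, and it takes averages you have already measured instead of timing anything itself.

```python
def speed_verdict(name_a, avg_a, name_b, avg_b, threshold=1.001):
    """Describe which of two average run times (in seconds) is faster.

    Mirrors the decision rule used by compare(): a ratio at or below
    `threshold` counts as the same speed.
    """
    if avg_a <= 0 or avg_b <= 0:
        raise ValueError("average run times must be positive")
    if avg_a < avg_b and avg_b / avg_a > threshold:
        return f"{name_a} is {avg_b / avg_a} times faster than {name_b}"
    if avg_b < avg_a and avg_a / avg_b > threshold:
        return f"{name_b} is {avg_a / avg_b} times faster than {name_a}"
    return f"{name_a} and {name_b} have the same speed!"


# With the sleep times from the example above (0.4 s vs 0.2 s) the ratio is 2.
print(speed_verdict("myFunction", 0.4, "myOptimizedFunction", 0.2))
```

Real measurements include call and scheduling overhead, so the ratio reported for the `time.sleep` example will be close to 2 rather than exactly 2.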

48 | Measuring execution time with the @measure decorator

49 | ```python

50 | from pythonbenchmark import compare, measure

51 | import time

52 |

53 | a,b,c,d,e = 10,10,10,10,10

54 | something = [a,b,c,d,e]

55 |

56 | @measure

57 | def myFunction(something):

58 | time.sleep(0.4)

59 |

60 | @measure

61 | def myOptimizedFunction(something):

62 | time.sleep(0.2)

63 |

64 | myFunction(input)

65 | myOptimizedFunction(input)

66 |

67 | ```

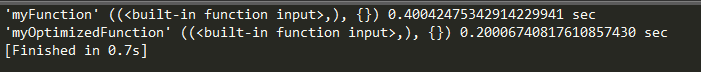

68 | Output

69 |

70 |

71 |

72 |

73 |
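
A timing decorator like `@measure` can be sketched in a few lines: read a clock before and after the call, print the difference, and pass the return value through unchanged. This sketch assumes `time.perf_counter()` as the clock and uses `functools.wraps` so the decorated function keeps its name; `timed` and `square_all` are hypothetical names, not pythonbenchmark's own code.

```python
import functools
import time


def timed(func):
    """Print how long each call to `func` takes and return its result unchanged."""
    @functools.wraps(func)  # keep func.__name__ and the docstring on the wrapper
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        elapsed = time.perf_counter() - start
        print(f"{func.__name__} {args!r} {kwargs!r} {elapsed:.6f} sec")
        return result
    return wrapper


@timed
def square_all(values):
    return [x * x for x in values]


squares = square_all([1, 2, 3])  # prints one timing line, then returns the list
```

Because the wrapper returns the original result, decorating a function only adds the printed timing; callers see the same return value as before.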

Do experiments, have fun!

Make incremental changes to your high-performance code and see how much speed you gain with each modification. This will give you a strong intuition for how to write fast code :)
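
As one example of such an experiment, the sketch below times an explicit `for` loop with `append` against an equivalent list comprehension, interleaving the calls the same way `compare` does. It uses only the standard library; `build_with_loop`, `build_with_comprehension`, and `interleaved_averages` are hypothetical names chosen for this illustration, and the numbers you get depend on your machine and Python version.

```python
import time


def build_with_loop(n):
    result = []
    for x in range(n):
        result.append(x * x)
    return result


def build_with_comprehension(n):
    return [x * x for x in range(n)]


def interleaved_averages(func_a, func_b, repetitions, *args):
    """Average run time of each function, alternating calls A, B, A, B, ..."""
    total_a = total_b = 0.0
    for _ in range(repetitions):
        start = time.perf_counter()
        func_a(*args)
        total_a += time.perf_counter() - start

        start = time.perf_counter()
        func_b(*args)
        total_b += time.perf_counter() - start
    return total_a / repetitions, total_b / repetitions


avg_loop, avg_comp = interleaved_averages(build_with_loop, build_with_comprehension, 20, 100_000)
print(f"loop: {avg_loop:.6f} s, comprehension: {avg_comp:.6f} s, ratio: {avg_loop / avg_comp:.2f}")
```

Changing one thing at a time (the loop body, the data size, a local variable lookup) and rerunning gives you the incremental feedback described above.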

A Spanish-language article about how I came up with this library is on my [blog](http://karlheinzniebuhr.github.io/es/2015/05/10/de-CS101-a-una-libreria-python.html).
--------------------------------------------------------------------------------

/images/test_compare.PNG:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/Karlheinzniebuhr/pythonbenchmark/670a89cbefa22820a50d89f210235481370ccc13/images/test_compare.PNG
--------------------------------------------------------------------------------

/images/test_decorator.PNG:
--------------------------------------------------------------------------------
https://raw.githubusercontent.com/Karlheinzniebuhr/pythonbenchmark/670a89cbefa22820a50d89f210235481370ccc13/images/test_decorator.PNG
--------------------------------------------------------------------------------

/pythonbenchmark/__init__.py:
--------------------------------------------------------------------------------
from .pythonbenchmark import compare, measure
--------------------------------------------------------------------------------

/pythonbenchmark/pythonbenchmark.py:
--------------------------------------------------------------------------------
# Author: Karlheinz Niebuhr
# Copyright (c) 2015
# License: see LICENSE.txt

import functools
import time

# time.perf_counter() (Python 3.3+) is a monotonic, high-resolution clock on every
# platform. It replaces the old split between time.clock() on Windows (removed in
# Python 3.8) and time.time() elsewhere (not monotonic, so it can jump when the
# system clock is adjusted).
_timer = time.perf_counter


# Decorator that measures and prints how long each call to `method` takes
def measure(method):
    @functools.wraps(method)  # keep the wrapped function's __name__ and docstring
    def run(*args, **kwargs):
        start = _timer()
        # run the function and save the result
        result = method(*args, **kwargs)
        elapsed = _timer() - start
        print('%r (%r, %r) %2.20f sec' % (method.__name__, args, kwargs, elapsed))
        return result
    return run


# Return the time, in seconds, that a single call to method(*args, **kwargs) takes
def run_time(method, *args, **kwargs):
    start = _timer()
    method(*args, **kwargs)
    return _timer() - start


def compare(functionA, functionB, times_average, *args, **kwargs):
    if times_average < 1:
        raise ValueError("times_average must be at least 1")

    totalA = 0.0
    totalB = 0.0

    # Normalizer: interleave the function calls, because Python tends to execute
    # the second function faster if we first loop over functionA and then over
    # functionB.
    for _ in range(times_average):
        totalA += run_time(functionA, *args, **kwargs)
        totalB += run_time(functionB, *args, **kwargs)

    totalA = totalA / times_average
    totalB = totalB / times_average

    # A ratio of 1.001 (a 0.1% difference) is the smallest difference I could
    # distinguish when calling compare() with the same function passed twice.
    # So if the speed difference is below 0.1%, we assume both functions take
    # the same time to execute.
    if totalA < totalB and totalB / totalA > 1.001:
        print("Result: " + functionA.__name__ + " is " + str(totalB / totalA) +
              " times faster than " + functionB.__name__)
    elif totalB < totalA and totalA / totalB > 1.001:
        print("Result: " + functionB.__name__ + " is " + str(totalA / totalB) +
              " times faster than " + functionA.__name__)
    else:
        print(functionA.__name__ + " and " + functionB.__name__ + " have the same speed!")
--------------------------------------------------------------------------------

/setup.cfg:
--------------------------------------------------------------------------------
[metadata]
description-file = README.md
--------------------------------------------------------------------------------

/setup.py:
--------------------------------------------------------------------------------
# distutils was removed from the standard library in Python 3.12; setuptools
# provides a compatible setup() function
from setuptools import setup

setup(
    name='pythonbenchmark',
    packages=['pythonbenchmark'],  # the directory that contains the package's code
    version='1.3',
    description='A Python library that makes benchmarking easy and fun.',
    author='Karlheinz Niebuhr',
    author_email='karlheinzniebuhr89@gmail.com',
    url='https://github.com/Karlheinzniebuhr/pythonbenchmark',
    download_url='https://github.com/Karlheinzniebuhr/pythonbenchmark.git',
    keywords=['benchmark', 'python', 'optimization'],
    classifiers=[],
)
--------------------------------------------------------------------------------

/test_pythonbenchmark.py:
--------------------------------------------------------------------------------
from pythonbenchmark import compare, measure
import time

a, b, c, d, e = 10, 10, 10, 10, 10
something = [a, b, c, d, e]

#@measure
def myFunction(something):
    time.sleep(0.4)

#@measure
def myOptimizedFunction(something):
    time.sleep(0.2)

# Run only when executed as a script, not when the module is imported
if __name__ == '__main__':
    # decorator test (uncomment the @measure lines above first)
    #myFunction(something)
    #myOptimizedFunction(something)

    # comparing test
    compare(myFunction, myOptimizedFunction, 10, something)
--------------------------------------------------------------------------------