Custom Containerized Deep Learning Environment

2 | with Docker and Harbor

3 |

4 | - [For Beginners: build FROM a base image](#for-beginners-build-from-a-base-image)

5 | - [Set up the CA certificate to use our Harbor registry](#set-up-the-ca-certificate-to-use-our-harbor-registry)

6 | - [Example](#example)

7 | - [Upload the custom image](#upload-the-custom-image)

8 | - [Use the custom image](#use-the-custom-image)

9 | - [Advanced: build an image from scratch](#advanced-build-an-image-from-scratch)

10 | - [Proxy](#proxy)

11 | - [Set up proxy for the docker daemon](#set-up-proxy-for-the-docker-daemon)

12 | - [Set up proxy in the temporary building container](#set-up-proxy-in-the-temporary-building-container)

13 |

14 | # For Beginners: build FROM a base image

15 |

16 | *Determined AI* provides [*Docker* images](https://hub.docker.com/r/determinedai/environments/tags) that include common deep-learning libraries and frameworks. You can also [develop your custom image](https://gpu.lins.lab/docs/prepare-environment/custom-env.html) based on your project's dependencies.

17 |

18 | For beginners, we recommend basing custom images on one of Determined AI's official images, using the `FROM` instruction.

19 |

20 | ## Set up the CA certificate to use our Harbor registry

21 |

22 | **You can skip this part if you only build your custom docker image on the log-in node.**

23 |

24 | Instead of pulling Determined AI's images from Docker Hub (which now requires setting up a proxy), you can pull them from our Harbor registry.

25 |

26 | Make sure you have configured your `hosts` file with the following settings:

27 |

28 | ```text

29 | 10.0.2.169 lins.lab

30 | 10.0.2.169 harbor.lins.lab

31 | ```

32 |

33 | Check out [here](https://harbor.lins.lab/harbor/projects) to see the available images.

34 |

35 | We have mirrored some of Determined AI's environments in Harbor. [Here is the link](https://harbor.lins.lab/harbor/projects/3/repositories/environments).

36 |

37 | You can also ask the system admin to add or update the images.

38 |

39 | If you want to use images from Docker Hub instead, you will need to [use the proxy service](#proxy).

40 |

41 | To use our Harbor registry, you need to complete the following setup:

42 |

43 | ```bash

44 | sudo mkdir -p /etc/docker/certs.d/harbor.lins.lab

45 | cd /etc/docker/certs.d/harbor.lins.lab

46 | sudo wget https://lins.lab/lins-lab.crt --no-check-certificate

47 | sudo systemctl restart docker

48 | ```

49 |

50 | This configures the CA certificate for Docker.
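Before logging in, it can help to confirm that the certificate actually landed in the directory Docker reads. The following is a minimal sketch; the function name `has_registry_cert` is hypothetical, and the default path is the one created by the commands above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given Docker certs directory
# contains at least one .crt file.
has_registry_cert() {
    local cert_dir="${1:-/etc/docker/certs.d/harbor.lins.lab}"
    local f
    for f in "$cert_dir"/*.crt; do
        # The glob stays unexpanded when nothing matches, so test each candidate.
        [ -f "$f" ] && return 0
    done
    return 1
}

if has_registry_cert; then
    echo "CA certificate found for harbor.lins.lab"
else
    echo "CA certificate missing for harbor.lins.lab"
fi
```

If the check reports a missing certificate, repeat the download step and restart Docker.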

51 |

52 | Then log in to our Harbor registry:

53 |

54 | ```bash

55 | docker login -u <username> -p <password> harbor.lins.lab # You only need to log in once

56 | ```

57 |

58 | Now edit the first `FROM` line in the `Dockerfile`, and change the base image to some existing image in the Harbor registry, for example:

59 |

60 | ```dockerfile

61 | FROM harbor.lins.lab/determinedai/environments:cuda-11.8-pytorch-2.0-gpu-mpi-0.31.1

62 | ```

63 |

64 | ## Example

65 |

66 | Suppose you have `environment.yml` for creating the `conda` environment, `pip_requirements.txt` for `pip` requirements, and some `apt` packages that need to be installed.

67 |

68 | Put these files in a folder, and create a `Dockerfile` with the following contents:

69 |

70 | ```dockerfile

71 | # Determined AI's base image

72 | FROM harbor.lins.lab/determinedai/environments:cuda-11.8-pytorch-2.0-gpu-mpi-0.31.1

73 | # Another of their base images, pulled directly from Docker Hub (same CUDA and PyTorch, older release)

74 | # FROM determinedai/environments:cuda-11.8-pytorch-2.0-gpu-mpi-0.27.1

75 | # You can check out their images here: https://hub.docker.com/r/determinedai/environments/

76 |

77 | # Some important environment variables in Dockerfile

78 | ARG DEBIAN_FRONTEND=noninteractive

79 | ENV TZ=Asia/Shanghai LANG=C.UTF-8 LC_ALL=C.UTF-8 PIP_NO_CACHE_DIR=1

80 | # Custom Configuration

81 | RUN sed -i "s/archive.ubuntu.com/mirrors.ustc.edu.cn/g" /etc/apt/sources.list && \

82 | sed -i "s/security.ubuntu.com/mirrors.ustc.edu.cn/g" /etc/apt/sources.list && \

83 | rm -f /etc/apt/sources.list.d/* && \

84 | apt-get update && \

85 | apt-get -y install tzdata && \

86 | apt-get install -y unzip python-opencv graphviz && \

87 | apt-get clean

88 | COPY environment.yml /tmp/environment.yml

89 | COPY pip_requirements.txt /tmp/pip_requirements.txt

90 | RUN conda env update --name base --file /tmp/environment.yml

91 | RUN conda clean --all --force-pkgs-dirs --yes

92 | RUN eval "$(conda shell.bash hook)" && \

93 | conda activate base && \

94 | pip config set global.index-url https://mirrors.bfsu.edu.cn/pypi/web/simple && \

95 | pip install --requirement /tmp/pip_requirements.txt

96 | ```

97 |

98 | If you want to adapt your custom containerized environment for NVIDIA RTX 4090, `CUDA version >= 11.8` is required.

99 |

100 | Some other Dockerfile examples:

101 | * [svox2](../examples/svox2/)

102 | * [lietorch-opencv](../examples/lietorch-opencv/)

103 |

104 | Notice that we are using the `apt` mirror by `ustc.edu.cn` and the `pip` mirror by `bfsu.edu.cn`. They are currently fast and thus recommended by the system admin.

105 |

106 | To build the image, use the following command:

107 |

108 | ```bash

109 | DOCKER_BUILDKIT=0 docker build -t my_image:v1.0 .

110 | ```

111 |

112 | where `my_image` is your image name, and `v1.0` is the image tag, which usually carries a description and version information. `DOCKER_BUILDKIT=0` is needed if you are using a private Docker registry (i.e. our Harbor) [[Reference]](https://stackoverflow.com/questions/75766469/docker-build-cannot-pull-base-image-from-private-docker-registry-that-requires).

113 |

114 | Don't forget the dot "." at the end of the command!

115 |

116 | # Upload the custom image

117 |

118 | Instead of pushing the image to Docker Hub, it is recommended to use the private Harbor registry: `harbor.lins.lab`.

119 |

120 | You need to ask the system admin to create your Harbor user account. Once you have logged in, you can check out the [public library](https://harbor.lins.lab/harbor/projects/1/repositories).

121 |

122 |

123 |

124 | Note that instead of using the default `library`, you can also create your own *project* in Harbor.

125 |

126 | Also, you need to complete the CA certificate configuration described in the [previous section](#set-up-the-ca-certificate-to-use-our-harbor-registry).

127 |

128 | Now you can create your custom docker image on the login node or your PC following the instructions above, and then push the image to the Harbor registry. For instance:

129 |

130 | ```bash

131 | docker login -u <username> -p <password> harbor.lins.lab # You only need to log in once

132 | docker tag my_image:v1.0 harbor.lins.lab/library/my_image:v1.0

133 | docker push harbor.lins.lab/library/my_image:v1.0

134 | ```

135 |

136 | In the first line, replace `<username>` with your username and `<password>` with your password.

137 |

138 | In the second line, add the prefix `harbor.lins.lab/library/` to your image. Don't worry, this process does not occupy additional storage.

139 |

140 | In the third line, push your new tagged image.

141 |

142 | # Use the custom image

143 |

144 | In the Determined AI configuration `.yaml` file (as mentioned in [the previous tutorial](./Determined_AI_User_Guide.md#task-configuration-template)), use the newly tagged image (like `harbor.lins.lab/library/my_image:v1.0` above) to tell the system to use your new image as the task environment.
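As a minimal sketch, the snippet below writes a task configuration that points at the custom image. The file name `custom_image_task.yaml` and the description `my_task` are placeholders; the resource pool name is taken from the task template in the user guide, and the image reference is the one tagged above.

```bash
#!/usr/bin/env bash
# Write a minimal task configuration that uses the custom image.
# File name and description are placeholders; adjust them to your task.
cat > custom_image_task.yaml <<'EOF'
description: my_task
resources:
    slots: 1
    resource_pool: 64c128t_512_3090
environment:
    image: harbor.lins.lab/library/my_image:v1.0
EOF

# Show the generated file for review.
cat custom_image_task.yaml
```

The file can then be submitted in the usual way, for example with `det shell start --config-file custom_image_task.yaml`.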

145 |

146 | Also note that every time you update an image, you need to change the image name; otherwise the system will not detect the update (probably because it identifies images by name rather than by checksum).

147 |
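One way to make sure each rebuilt image gets a new reference is to derive the tag from the build time. This is only a sketch: the helper name `unique_image_ref` and the timestamp tag scheme are assumptions, not a convention of the cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a Harbor image reference whose tag is derived
# from the current time (one-second resolution).
unique_image_ref() {
    local name="$1"
    local stamp
    stamp="$(date +%Y%m%d-%H%M%S)"
    echo "harbor.lins.lab/library/${name}:v${stamp}"
}

ref="$(unique_image_ref my_image)"
echo "$ref"
# The reference can then be used for tagging and pushing, for example:
#   docker tag my_image:v1.0 "$ref" && docker push "$ref"
```

Remember to put the same reference into `environment.image` of your task configuration.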

148 | # Advanced: build an image from scratch

149 |

150 | To make our life easier, we will build our custom image FROM NVIDIA's base image. You can use the minimum template we provide: [determined-minimum](../examples/determined-minimum/)

151 |

152 | Note that for RTX 4090, we need `CUDA` version >= `11.8`, thus you need to use the base image from [NGC/CUDA](https://catalog.ngc.nvidia.com/orgs/nvidia/containers/cuda) with tags >= 11.8, or [NGC/Pytorch](https://catalog.ngc.nvidia.com/orgs/nvidia/containers/pytorch) with tags >= 22.09.

153 |

154 | Here are some examples tested on RTX 4090:

155 |

156 | 1. [torch-ngp](../examples/torch-ngp/)

157 | 2. [[NEW] nerfstudio](../examples/nerfstudio/)

158 |

159 | # Proxy

160 |

161 | **You can skip this part if you only build your custom docker image on the log-in node.**

162 |

163 | ## Set up proxy for the docker daemon

164 |

165 | You need to set up a proxy for the Docker daemon in order to pull images from Docker Hub (i.e. with the `docker pull <image>` command or `FROM <image>` in the first line of your `Dockerfile`), since Docker Hub has been blocked.

166 |

167 | The status of our public proxies can be monitored here: [Grafana - v2ray-dashboard](https://grafana.lins.lab/d/CCSvIIEZz/v2ray-dashboard)

168 |

169 | 1) To proceed, recursively create the folder:

170 |

171 | ```sh

172 | sudo mkdir -p /etc/systemd/system/docker.service.d

173 | ```

174 |

175 | 2) Add environment variables to the configuration file `/etc/systemd/system/docker.service.d/proxy.conf`:

176 |

177 | ```conf

178 | [Service]

179 | Environment="HTTP_PROXY=http://10.0.2.169:18889"

180 | Environment="HTTPS_PROXY=http://10.0.2.169:18889"

181 | Environment="NO_PROXY=localhost,127.0.0.1,nvcr.io,aliyuncs.com,edu.cn,lins.lab"

182 | ```

183 |

184 | You can change `10.0.2.169` and `18889` to another proxy's address and port, respectively.

185 |

186 | Note that the `http` is intentionally used in `HTTPS_PROXY` - this is how most HTTP proxies work.

187 |

188 | 3) Update configuration and restart `Docker`:

189 |

190 | ```sh

191 | sudo systemctl daemon-reload

192 | sudo systemctl restart docker

193 | ```

194 |

195 | 4) Check the proxy:

196 |

197 | ```sh

198 | docker info

199 | ```
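Steps 2 to 4 can be scripted. The sketch below only renders the drop-in file to standard output; the function name `render_docker_proxy_conf` is hypothetical, and the defaults are the address, port, and `NO_PROXY` list shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a systemd drop-in that sets the Docker daemon proxy.
# Arguments: proxy host and port (defaults are the values used in this guide).
render_docker_proxy_conf() {
    local host="${1:-10.0.2.169}"
    local port="${2:-18889}"
    cat <<EOF
[Service]
Environment="HTTP_PROXY=http://${host}:${port}"
Environment="HTTPS_PROXY=http://${host}:${port}"
Environment="NO_PROXY=localhost,127.0.0.1,nvcr.io,aliyuncs.com,edu.cn,lins.lab"
EOF
}

# Preview the file; nothing is written to /etc here.
render_docker_proxy_conf
```

To install it, the output can be piped through `sudo tee /etc/systemd/system/docker.service.d/proxy.conf`, followed by the reload and restart from step 3. Afterwards, `docker info | grep -i proxy` should list the configured proxy lines.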

200 |

201 | ## Set up proxy in the temporary building container

202 |

203 | If you also need international internet access during the Dockerfile building process, you can add build arguments to use the public proxy services:

204 |

205 | ```bash

206 | DOCKER_BUILDKIT=0 docker build -t my_image:v1.0 --build-arg http_proxy=http://10.0.2.169:18889 --build-arg https_proxy=http://10.0.2.169:18889 .

207 | ```

208 |

--------------------------------------------------------------------------------

/docs/Determined_AI_User_Guide.md:

--------------------------------------------------------------------------------

1 |

Getting started with the batch system:

2 | Determined-AI User Guide

3 |

4 | - [Introduction](#introduction)

5 | - [Monitoring](#monitoring)

6 | - [User Account](#user-account)

7 | - [Ask for your account](#ask-for-your-account)

8 | - [Authentication](#authentication)

9 | - [WebUI](#webui)

10 | - [CLI](#cli)

11 | - [Changing passwords](#changing-passwords)

12 | - [Submitting Tasks](#submitting-tasks)

13 | - [Task Configuration Template](#task-configuration-template)

14 | - [Submit](#submit)

15 | - [Managing Tasks](#managing-tasks)

16 | - [Connect to a shell task](#connect-to-a-shell-task)

17 | - [First-time setup of connecting VS Code to a shell task](#first-time-setup-of-connecting-vs-code-to-a-shell-task)

18 | - [Update the setup of connecting VS Code to a shell task](#update-the-setup-of-connecting-vs-code-to-a-shell-task)

19 | - [Connect PyCharm to a shell task](#connect-pycharm-to-a-shell-task)

20 | - [Port forwarding](#port-forwarding)

21 | - [Experiments](#experiments)

22 |

23 | # Introduction

24 |

25 |

26 |

27 | We are currently using [Determined AI](https://www.determined.ai/) to manage our GPU Cluster.

28 |

29 | You can open the dashboard (a.k.a WebUI) by the following URL and log in:

30 |

31 | [https://gpu.lins.lab/](https://gpu.lins.lab/)

32 |

33 | Determined is a successful (acquired by Hewlett Packard Enterprise in 2021) open-source deep learning training platform that helps researchers train models more quickly, easily share GPU resources, and collaborate more effectively.

34 |

35 | # Monitoring

36 |

37 | You can check the realtime utilization of the cluster in the [grafana dashboard](https://grafana.lins.lab/d/glTohhh7k/cluster-realtime-hardware-utilization-cadvisor-tba-and-dcgm-exporter).

38 |

39 | # User Account

40 |

41 | ## Ask for your account

42 |

43 | You need to ask the [system admin](https://lins-lab-workspace.slack.com/team/U054PUBM7FB) [(Yufan Wang)](mailto:tommark00022@gmail.com) for your user account.

44 |

45 | **Tips**

46 | * Once you have your cluster account, you can configure your own job environment. Some [guidelines](https://github.com/LINs-lab/cluster_tutorial/blob/main/docs/Custom_Containerized_Environment.md) can be found here.

47 | * We have a basic GPU job monitor, and the reserved container will be terminated if all GPUs are idle for _2 hours_.

48 | * We report container status through Slack. If you do not want these notifications in the Slack channel to disturb you, please consider [these settings](Determined_AI_User_Guide/slack_notice_setting.png).

49 |

50 | ## Authentication

51 |

52 | ### WebUI

53 |

54 | The WebUI will automatically redirect users to a login page if there is no valid Determined session established on that browser. After logging in, the user will be redirected to the URL they initially attempted to access.

55 |

56 | ### CLI

57 |

58 | Users can also interact with Determined using a command-line interface (CLI). The CLI is distributed as a Python wheel package; once the wheel has been installed, the CLI can be used via the `det` command.

59 |

60 | You can use the CLI either on the login node or on your local development machine.

61 |

62 | 1) Installation

63 |

64 | The CLI can be installed via pip:

65 |

66 | ```bash

67 | pip install determined

68 | ```

69 |

70 | 2) (Optional) Configure environment variable

71 |

72 | If you are using your own PC, you need to add the environment variable `DET_MASTER=10.0.2.168`. If you are using the login node, no configuration is required, because the system administrator has already configured this globally on the login node.

73 |

74 | For Linux and other *nix systems including macOS: if you are using `bash`, append this line to the end of `~/.bashrc` (most systems) or `~/.bash_profile` (some macOS).

75 |

76 | If you are using `zsh`, append it to the end of `~/.zshrc`:

77 |

78 | ```bash

79 | export DET_MASTER=10.0.2.168

80 | ```

81 |

82 | For Windows, you can follow this tutorial: [tutorial](https://www.architectryan.com/2018/08/31/how-to-change-environment-variables-on-windows-10/)

83 |

84 | 3) Log in

85 |

86 | In the CLI, the user login subcommand can be used to authenticate a user:

87 |

88 | ```bash

89 | det user login

90 | ```

91 |

92 | Note: If you did not configure the environment variable, you need to specify the master's IP:

93 |

94 | ```bash

95 | det -m 10.0.2.168 user login

96 | ```
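The environment-variable setup from step 2 can be made idempotent, so that running it again does not add duplicate lines to your shell configuration. This is a minimal sketch; the function name `add_det_master` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the DET_MASTER export to a shell rc file
# only if that exact line is not there yet.
add_det_master() {
    local rc_file="$1"
    local line='export DET_MASTER=10.0.2.168'
    touch "$rc_file"
    # -x matches whole lines, -F treats the pattern as a fixed string.
    if ! grep -qxF "$line" "$rc_file"; then
        echo "$line" >> "$rc_file"
    fi
}

# Demonstrate on a scratch file: two calls still leave a single line.
demo_rc="$(mktemp)"
add_det_master "$demo_rc"
add_det_master "$demo_rc"
cat "$demo_rc"
rm -f "$demo_rc"
```

On a real machine you would call it with `~/.bashrc` or `~/.zshrc` and then open a new terminal before running `det user login`.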

97 |

98 | ## Changing passwords

99 |

100 | Users have *blank* passwords by default. If desired, a user can change their own password using the user change-password subcommand:

101 |

102 | ```bash

103 | det user change-password

104 | ```

105 |

106 | # Submitting Tasks

107 |

108 |

109 |

110 | ## Task Configuration Template

111 |

112 | Here is a template of a task configuration file, in YAML format:

113 |

114 | ```yaml

115 | description: <task_name>

116 | resources:

117 |     slots: 1

118 |     resource_pool: 64c128t_512_3090

119 |     shm_size: 4G

120 | bind_mounts:

121 |     - host_path: /home/<user_name>/

122 |       container_path: /run/determined/workdir/home/

123 |     - host_path: /labdata0/<user_name>/

124 |       container_path: /run/determined/workdir/data/

125 | environment:

126 |     image: determinedai/environments:cuda-11.3-pytorch-1.10-tf-2.8-gpu-0.19.4

127 | ```

128 |

129 | Notes:

130 |

131 | - You need to change the `task_name` and `user_name` to your own

132 | - Number of `resources.slots` is the number of GPUs you are requesting to use, which is set to `1` here

133 | - `resources.resource_pool` is the resource pool you are requesting to use. Currently, we have two resource pools: `64c128t_512_3090` and `64c128t_512_4090`.

134 | - `resources.shm_size` is set to `4G` by default. You may need a greater size if you use multiple dataloader workers in pytorch, etc.

135 | - `bind_mounts` maps directories on the host, here your home directory and the dataset directory (`/labdata0`), into the container.

136 | - In `environment.image`, an official image by *Determined AI* is used. *Determined AI* provides [*Docker* images](https://hub.docker.com/r/determinedai/environments/tags) that include common deep-learning libraries and frameworks. You can also [develop your custom image](https://gpu.lins.lab/docs/prepare-environment/custom-env.html) based on your project dependency, which will be discussed in this tutorial: [Custom Containerized Environment](./Custom_Containerized_Environment.md)

137 | - How `bind_mounts` works:

138 |

139 |

140 |

141 | ## Submit

142 |

143 | Save the YAML configuration to, let's say, `test_task.yaml`. You can start a Jupyter Notebook (Lab) environment or a simple shell environment. A notebook is a web interface and thus more user-friendly. However, you can use **Visual Studio Code** or **PyCharm** to connect to a shell environment[[3]](https://gpu.lins.lab/docs/interfaces/ide-integration.html), which brings more flexibility and productivity if you are familiar with these editors.

144 |

145 | For notebook **(try to avoid using notebook through DeterminedAI, due to some privacy issues)**:

146 |

147 | ```bash

148 | det notebook start --config-file test_task.yaml

149 | ```

150 |

151 | For shell **(strongly recommended)**:

152 |

153 | ```bash

154 | det shell start --config-file test_task.yaml

155 | ```

156 |

157 | **In order to ensure a pleasant environment**, please

158 | * avoid being a root user in your tasks/pods/containers.

159 | * carefully check your code and avoid occupying too many CPU cores.

160 | * try to use `OMP_NUM_THREADS=2 MKL_NUM_THREADS=2 python `.

161 | * include your name in your `<task_name>`.

162 | * ...
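The thread limits from the list above can also be exported once per shell session, so that every command started afterwards inherits them. A minimal sketch:

```bash
#!/usr/bin/env bash
# Cap the OpenMP and MKL thread pools for every command started from this shell.
export OMP_NUM_THREADS=2
export MKL_NUM_THREADS=2

# Child processes inherit the limits; this prints the values a script would see.
sh -c 'echo "OMP_NUM_THREADS=$OMP_NUM_THREADS MKL_NUM_THREADS=$MKL_NUM_THREADS"'
```

Placing the two `export` lines in the shell startup file inside your task container is one option, assuming your code does not need more threads per process.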

163 |

164 | ## Managing Tasks

165 |

166 | **You are encouraged to check out more operations of Determined.AI** in the [API docs](https://docs.determined.ai/latest/interfaces/commands-and-shells.html), e.g.,

167 | * `det task`

168 | * `det shell open [task id]`

169 | * `det shell kill [task id]`

170 |

171 | Now you can see your task pending/running on the WebUI dashboard. You can manage the tasks on the WebUI.

172 |

173 |

174 | ## Connect to a shell task

175 |

176 | You can use **Visual Studio Code** or **PyCharm** to connect to a shell task.

177 |

178 | You also need to [install](#cli) and use `determined` on your local computer, in order to get the SSH IdentityFile, which is necessary in the next section.

179 |

180 | ### First-time setup of connecting VS Code to a shell task

181 |

182 | 1. First, you need to install the [Remote-SSH](https://code.visualstudio.com/docs/remote/ssh) plugin.

183 |

184 | 2. Check the UUID of your tasks:

185 |

186 | ```bash

187 | det shell list

188 | ```

189 |

190 | 3. Get the ssh command for the task with the UUID above (it also generates an SSH IdentityFile on your PC):

191 |

192 | ```bash

193 | det shell show_ssh_command <UUID>

194 | ```

195 |

196 | The results should follow this pattern:

197 |

198 | ```bash

199 | ssh -o "ProxyCommand=<YOUR PROXY COMMAND>" \

200 |     -o StrictHostKeyChecking=no \

201 |     -tt \

202 |     -o IdentitiesOnly=yes \

203 |     -i <YOUR KEY PATH> \

204 |     -p <YOUR PORT NUMBER> \

205 |     <YOUR USERNAME>@<YOUR SHELL HOST NAME (UUID)>

206 | ```

207 |

208 | 4. Add the shell task as a new SSH task:

209 |

210 | Click the SSH button in the bottom-left corner:

211 |

212 |

213 |

214 | Select connect to host -> +Add New SSH Host:

215 |

216 |

217 |

218 |

219 |

220 | Paste the SSH command generated by `det shell show_ssh_command` above into the dialog window:

221 |

222 |

223 |

224 | Then choose your ssh configuration file to update:

225 |

226 |

227 |

228 | You can continue to edit your ssh configuration file, e.g. add a custom name:

229 |

230 |

231 |

232 |

233 |

234 | ### Update the setup of connecting VS Code to a shell task

235 |

236 | 1. Check the UUID of your tasks:

237 |

238 | ```bash

239 | det shell list

240 | ```

241 |

242 | 2. Get the new ssh command:

243 |

244 | ```bash

245 | det shell show_ssh_command <UUID>

246 | ```

247 |

248 | 3. Replace the old UUID with the new one (with `Ctrl + H`):

249 |

250 |

251 |

252 |

253 | ### Connect PyCharm to a shell task

254 |

255 | 1. As of the current version, PyCharm lacks support for custom options in SSH commands via the UI.

256 | Therefore, you must provide them via an entry in your `ssh_config` file.

257 | You can generate this entry by following the steps in [First-time setup of connecting VS Code to a shell task](#first-time-setup-of-connecting-vs-code-to-a-shell-task).

258 |

259 | 2. In PyCharm, open Settings/Preferences > Tools > SSH Configurations.

260 |

261 | 3. Select the plus icon to add a new configuration.

262 |

263 | 4. Enter `YOUR SHELL HOST NAME (UUID)`, `YOUR PORT NUMBER` (fill in `22` here), and `YOUR USERNAME` in the corresponding fields. (P.S. you can change `YOUR SHELL HOST NAME (UUID)` to the custom name configured in your SSH config, e.g. `TestEnv`, as shown above.)

264 |

265 | 5. Switch the Authentication type dropdown to OpenSSH config and authentication agent.

266 |

267 | 6. You can hit `Test Connection` to test it.

268 |

269 | 7. Save the new configuration by clicking OK. Now you can continue to add Python Interpreters with this SSH configuration.

270 |

271 |

272 |

273 | ## Port forwarding

274 |

275 | You need to set up *port forwarding* from the task container to your personal computer through the SSH tunnel (managed by `determined`) when you want to reach services such as `tensorboard` running in your task container.

276 |

277 | Here is an example. First launch a notebook or shell task with the `proxy_ports` configurations:

278 |

279 | ```yaml

280 | environment:

281 | proxy_ports:

282 | - proxy_port: 6006

283 | proxy_tcp: true

284 | ```

285 | where 6006 is the port used by tensorboard.

286 |

287 | Then launch port forwarding on your personal computer with this command:

288 |

289 | ```bash

290 | python -m determined.cli.tunnel --listener 6006 --auth 10.0.2.168 YOUR_TASK_UUID:6006

291 | ```

292 |

293 | Remember to change **YOUR_TASK_UUID** to your task's UUID.

294 |

295 | Now you can open the tensorboard (http://localhost:6006) with your web browser.
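If you forward ports often, the tunnel command above can be assembled by a small helper. This is a sketch only: the function name `tunnel_command` is hypothetical, and falling back to `10.0.2.168` when `DET_MASTER` is unset is an assumption based on the address used in this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the tunnel command for a task UUID and port.
# It only prints the command, so you can review it before running it.
tunnel_command() {
    local task_uuid="$1"
    local port="${2:-6006}"
    local master="${DET_MASTER:-10.0.2.168}"
    echo "python -m determined.cli.tunnel --listener ${port} --auth ${master} ${task_uuid}:${port}"
}

tunnel_command YOUR_TASK_UUID
```

The port passed as the second argument must match a `proxy_port` declared in the task configuration.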

296 |

297 | Reference: [Exposing custom ports - Determined AI docs](https://docs.determined.ai/latest/interfaces/proxy-ports.html#exposing-custom-ports)

298 |

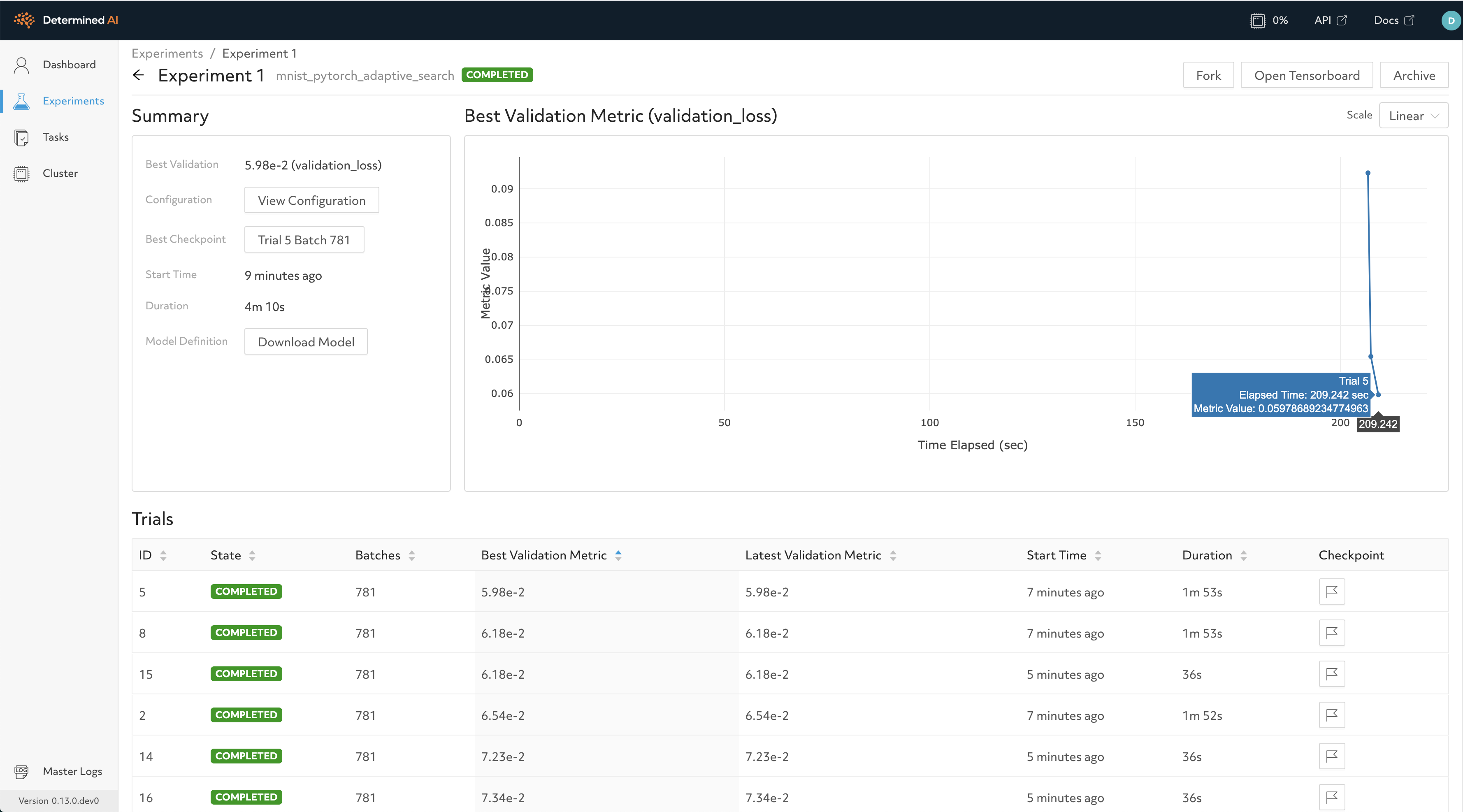

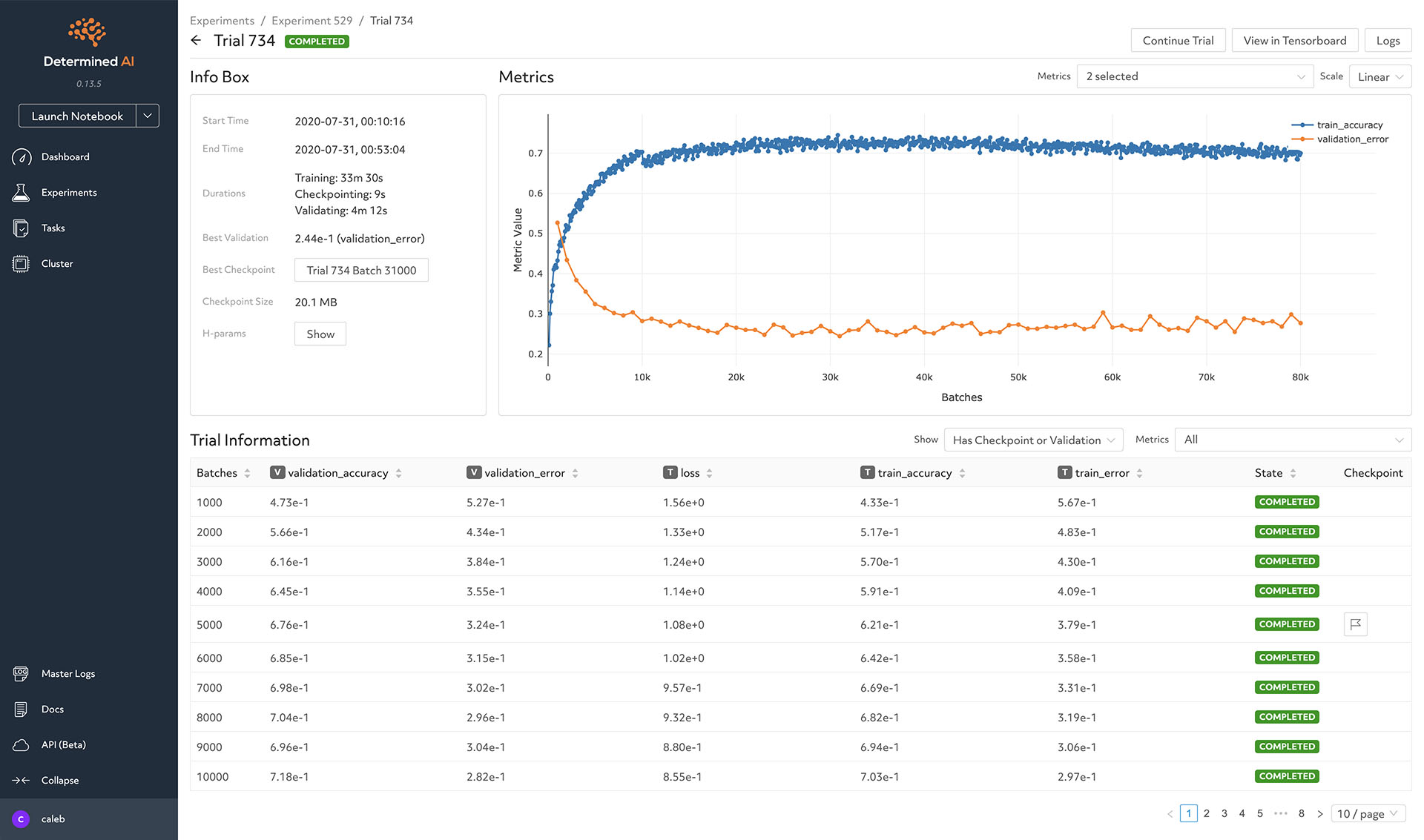

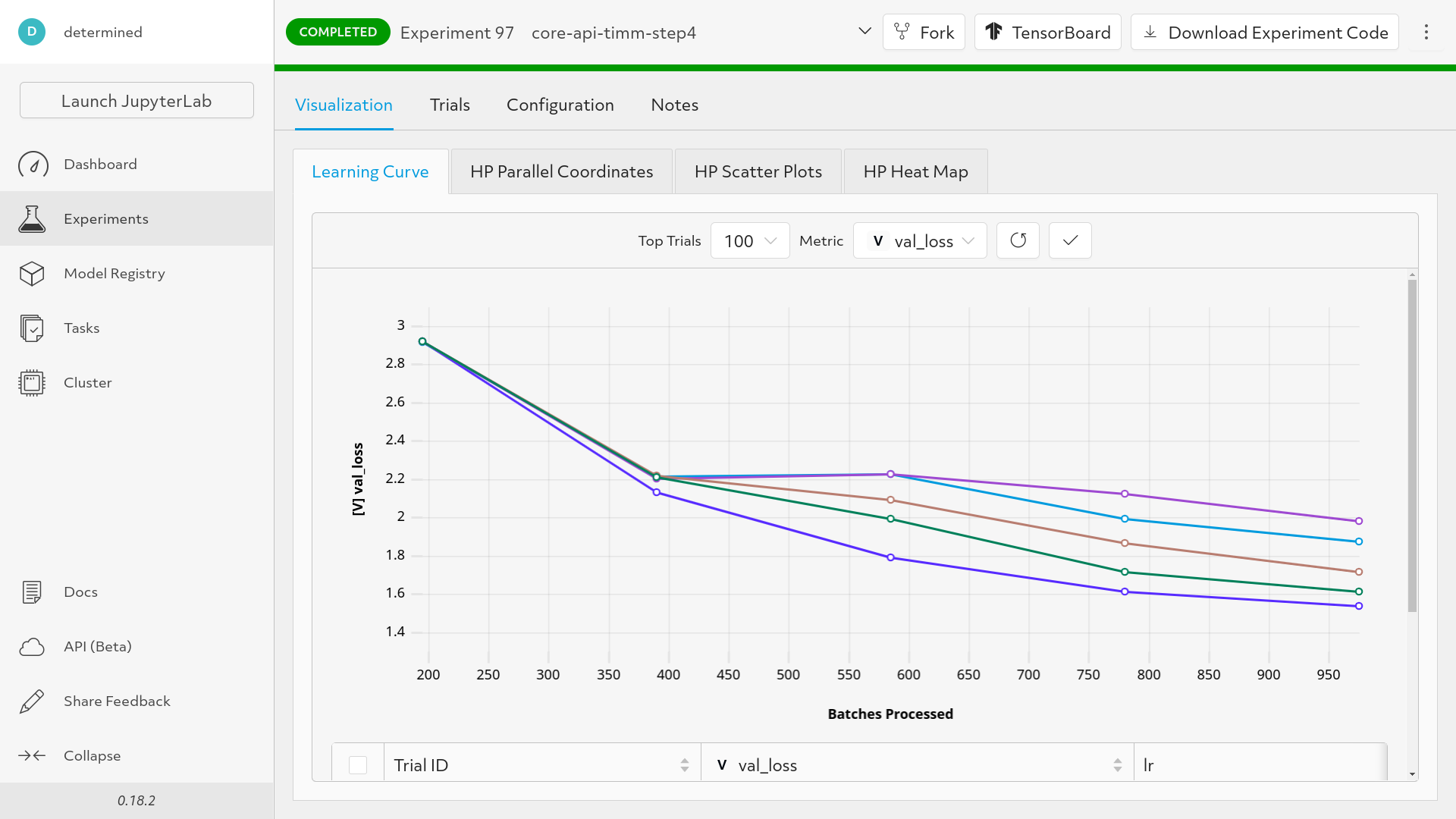

299 | ## Experiments

300 |

301 | (TBA)

302 |

303 |

304 |

305 |

306 |

--------------------------------------------------------------------------------

/docs/Getting_started.md:

--------------------------------------------------------------------------------

1 |

Getting started with the cluster

2 |

3 | - [Requesting accounts](#requesting-accounts)

4 | - [Accessing the cluster](#accessing-the-cluster)

5 | - [Security](#security)

6 | - [Setting up the hosts file](#setting-up-the-hosts-file)

7 | - [For Windows](#for-windows)

8 | - [For Linux, \*nix including macOS](#for-linux-nix-including-macos)

9 | - [Hosts Modification](#hosts-modification)

10 | - [Install the root CA certificate (Optional)](#install-the-root-ca-certificate-optional)

11 | - [SSH](#ssh)

12 | - [SSH in Linux, \*nix including macOS](#ssh-in-linux-nix-including-macos)

13 | - [SSH in Windows](#ssh-in-windows)

14 | - [SSH keys](#ssh-keys)

15 | - [SSH keys on Linux](#ssh-keys-on-linux)

16 | - [SSH keys on Windows](#ssh-keys-on-windows)

17 | - [Safety rules](#safety-rules)

18 | - [How to use keys with non-default names](#how-to-use-keys-with-non-default-names)

19 | - [X11 forwarding and remote desktop](#x11-forwarding-and-remote-desktop)

20 | - [X11 forwarding](#x11-forwarding)

21 | - [Remote desktop via RDP](#remote-desktop-via-rdp)

22 | - [Data management](#data-management)

23 | - [Introduction](#introduction)

24 | - [Uploading and downloading data](#uploading-and-downloading-data)

25 | - [Uploading](#uploading)

26 | - [Downloading](#downloading)

27 | - [Using proxy service](#using-proxy-service)

28 | - [Proxychains](#proxychains)

29 | - [Environment variable](#environment-variable)

30 |

31 | # Requesting accounts

32 |

33 | Accounts that need to be created by the administrator (Peng SUN, sp12138sp@gmail.com) include:

34 |

35 | - A Linux account on the login node (`login.lins.lab`)

36 | - An account for the batch system (Determined AI, [gpu.lins.lab](https://gpu.lins.lab)).

37 |

38 | Note:

39 |

40 | - You can only access the links above after setting up the `hosts` file.

41 |

42 | # Accessing the cluster

43 |

44 | A Web entry point can be found [here](https://lins.lab).

45 |

46 | ## Security

47 |

48 | Accessing the cluster is currently only possible via secure protocols (ssh, scp, rsync). The cluster is only accessible from inside the campus's local area network. If you would like to connect from a computer that is not inside the campus network, you need to establish a [VPN](https://vpn.westlake.edu.cn/) connection first.

49 |

50 | ## Setting up the hosts file

51 |

52 | Since our cluster is only accessible inside the campus's LAN, and we do not administer the DNS server, setting up the `hosts` file is the best way to translate human-friendly hostnames into IP addresses.

53 |

54 | The way to modify the `hosts` file is as follows:

55 |

56 | ### For Windows

57 |

58 | - Press `Win-Key + R`. A small window will pop up.

59 |

60 | - Type in the following command and press `Ctrl+Shift+Enter`, to make notepad run as administrator and edit the `hosts` file.

61 |

62 | ```bat

63 | notepad C:\Windows\System32\drivers\etc\hosts

64 | ```

65 |

66 | ### For Linux, *nix including macOS

67 |

68 | - Edit `/etc/hosts` with root privilege in your favorite way. For example:

69 |

70 | ```bash

71 | sudo vim /etc/hosts

72 | ```

73 |

74 | ### Hosts Modification

75 |

76 | Append these lines to the end of the `hosts` file:

77 |

78 | ```text

79 | 10.0.2.166 login.lins.lab

80 | 10.0.2.169 lins.lab

81 | 10.0.2.169 gpu.lins.lab

82 | 10.0.2.169 harbor.lins.lab

83 | 10.0.2.169 grafana.lins.lab

84 | ```
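On Linux or macOS, the entries can also be appended with a script that skips lines already present. This is a minimal sketch; the function name `add_cluster_hosts` is hypothetical, and it is demonstrated on a scratch file rather than on `/etc/hosts` itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the cluster entries to a hosts-format file,
# skipping any line that is already present.
add_cluster_hosts() {
    local hosts_file="$1"
    local entry
    touch "$hosts_file"
    while IFS= read -r entry; do
        grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
    done <<'EOF'
10.0.2.166 login.lins.lab
10.0.2.169 lins.lab
10.0.2.169 gpu.lins.lab
10.0.2.169 harbor.lins.lab
10.0.2.169 grafana.lins.lab
EOF
}

# Demonstrate on a scratch file: two calls still produce five lines.
scratch="$(mktemp)"
add_cluster_hosts "$scratch"
add_cluster_hosts "$scratch"
wc -l < "$scratch"
rm -f "$scratch"
```

For the real file, one cautious approach is to copy `/etc/hosts` to a temporary location, run the helper on the copy, review it, and then copy it back with `sudo`.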

85 |

86 | ## Install the root CA certificate (Optional)

87 |

88 | Since we are using a self-signed certificate, web browsers will show a security warning saying the certificate is not recognized when you access the services after modifying the `hosts` file. You can suppress this warning by making the system trust the certificate.

89 |

90 | The certificate can be downloaded at: [https://lins.lab/lins-lab.crt](https://lins.lab/lins-lab.crt)

91 |

92 | - For Windows, right-click the CA certificate file and select 'Install Certificate'. Follow the prompts to add the certificate to the **Trusted Root Certification Authorities**. If you are using Git for Windows, you will need to configure Git to use Windows native crypto backend: `git config --global http.sslbackend schannel`

93 |

94 | - For Linux (tested Ubuntu), first, you need the `ca-certificates` package installed, then copy the `.crt` file into the folder `/usr/local/share/ca-certificates`, and update certificates system-wide with the command `sudo update-ca-certificates`. This works for most applications, but browsers like google-chrome and chromium on Linux have their own certificate storage. You need to go to `chrome://settings/certificates`, select "Authorities", and import the `.crt` file. To use our Harbor registry `harbor.lins.lab`, you need to create the folder `/etc/docker/certs.d/harbor.lins.lab/` and copy the certificate into it.

95 |

96 | ## SSH

97 |

98 | You can connect to the cluster via the SSH protocol. For this purpose, it is required that you have an SSH client installed. The information required to connect to the cluster is the hostname (which resolves to an IP address) of the cluster and your account credentials (username, password).

99 |

100 | Since we have set up the `hosts` in the [previous section](#hosts-modification), we can use the human-readable hostname to make our connection.

101 |

102 | | Hostname | IP Address | Port |

103 | | :-- | :-- | :-- |

104 | |login.lins.lab|10.0.2.166|22332|

105 |

106 | ### SSH in Linux, *nix including macOS

107 |

108 | Open a terminal and use the standard ssh command

109 |

110 | ```bash

111 | ssh -p 22332 username@login.lins.lab

112 | ```

113 |

114 | where **username** is your username and the **hostname** can be found in the table shown above. The parameter `-p 22332` is used to declare the SSH port used on the server. For security, we modified the default port. If for instance, user **peter** would like to access the cluster, then the command would be

115 |

116 | ```text

117 | peter@laptop:~$ ssh -p 22332 peter@login.lins.lab

118 | peter@login.lins.lab's password:

119 | Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.4.0-104-generic x86_64)

120 |

121 | * Documentation: https://help.ubuntu.com

122 | * Management: https://landscape.canonical.com

123 | * Support: https://ubuntu.com/advantage

124 |

125 | System information as of Tue 15 Mar 2022 11:51:03 AM UTC

126 |

127 | System load: 0.0 Users logged in: 1

128 | Usage of /: 28.0% of 125.49GB IPv4 address for docker0: 172.17.0.1

129 | Memory usage: 6% IPv4 address for enp1s0: 192.168.122.2

130 | Swap usage: 0% IPv4 address for enp6s0: 10.0.2.166

131 | Processes: 278

132 |

133 | 0 updates can be applied immediately.

134 |

135 | Last login: Tue Mar 15 11:29:19 2022 from 172.16.29.72

136 | ```

137 |

138 | Note that when it prompts to enter the password:

139 |

140 | ```text

141 | peter@login.lins.lab's password:

142 | ```

143 |

144 | there will not be any visual feedback (i.e. asterisks) in order not to show the length of your password.

145 |

146 | ### SSH in Windows

147 |

148 | Since Windows 10, an ssh client is also provided in the operating system, but it is more common to use third-party software to establish ssh connections. Widely used ssh clients are for instance MobaXterm, XShell, FinalShell, Terminus, PuTTY and Cygwin.

149 |

150 | For using MobaXterm, you can either start a local terminal and use the same SSH command as for Linux and Mac OS X, or you can click on the session button, choose SSH and then enter the hostname and username. After clicking on OK, you will be asked to enter your password.

151 |

152 | How to use MobaXterm: [How to access the cluster with MobaXterm - ETHZ](https://scicomp.ethz.ch/wiki/How_to_access_the_cluster_with_MobaXterm) / [Download and setup MobaXterm - CECI](https://support.ceci-hpc.be/doc/_contents/QuickStart/ConnectingToTheClusters/MobaXTerm.html)

153 |

154 | How to use PuTTY: [How to access the cluster with PuTTY - ETHZ](https://scicomp.ethz.ch/wiki/How_to_access_the_cluster_with_PuTTY)

155 |

156 | > An alternative option: use WSL/WSL2 [[CECI Doc]](https://support.ceci-hpc.be/doc/_contents/QuickStart/ConnectingToTheClusters/WSL.html)

157 |

158 | ### SSH keys

159 |

160 | It is recommended to create SSH keys: when the network connection is unstable, typing the password again and again is frustrating. With SSH keys, you do not need to type your account password when logging in. Because it is based on public-key cryptography, key authentication also helps prevent man-in-the-middle attacks, etc.

161 |

162 | The [links](#ssh-in-windows) above demonstrate methods using a GUI. You can also create the keys with the CLI:

163 |

164 | ### SSH keys on Linux

165 |

166 | For security reasons, we recommend that you use a different key pair for every computer you want to connect to:

167 |

168 | ```bash

169 | ssh-keygen -t ed25519 -f $HOME/.ssh/id_lins

170 | ```

171 |

172 | It is recommended to set a passphrase for the private key.

173 |

174 | Once this is done, copy the public key to the cluster:

175 |

176 | ```bash

177 | ssh-copy-id -i $HOME/.ssh/id_lins.pub -p 22332 username@login.lins.lab

178 | ```

179 |

180 | Finally, you can add the private key to the ssh-agent so that you don't need to enter the passphrase every time. The agent only holds the key temporarily, so you need to repeat this step after every reboot.

181 |

182 | ```bash

183 | ssh-add ~/.ssh/id_lins

184 | ```

185 |

186 | ### SSH keys on Windows

187 |

188 | On Windows, third-party software ([PuTTYgen](https://www.puttygen.com/), [MobaXterm](https://mobaxterm.mobatek.net/)) is commonly used to create SSH keys, as demonstrated in the [links above](#ssh-in-windows). Since Windows 10, however, we can also follow similar steps in PowerShell:

189 |

190 | - Step 1. On your PC, create the `.ssh` folder and go to it:

191 |

192 | ```bash

193 | mkdir -Force ~/.ssh; cd ~/.ssh  # -Force: no error if the folder already exists

194 | ```

195 |

196 | - Step 2. Create a public/private key pair:

197 |

198 | ```bash

199 | ssh-keygen -t ed25519 -f id_lins

200 | ```

201 |

202 | It's recommended to set a passphrase for the private key for additional security.

203 |

204 | - Step 3. The program `ssh-copy-id` is not available so we manually copy the public key:

205 |

206 | ```bash

207 | notepad $HOME\.ssh\id_lins.pub

208 | ```

209 |

210 | (Copy)

211 |

212 | - Step 4. On the remote server, create the file `~/.ssh/authorized_keys` and paste the public key into it:

213 |

214 | ```bash

215 | mkdir -p ~/.ssh && vim ~/.ssh/authorized_keys

216 | ```

217 |

218 | (Paste the public key into the file and save)

219 |

220 | - Step 5. Start the ssh-agent and add the private key so that you don't need to enter the passphrase every time (you need to do this every time after the system starts up):

221 |

222 | ```bash

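223 | Start-Service ssh-agent  # on Windows the agent runs as a service; if it is disabled, first run `Set-Service ssh-agent -StartupType Manual` as administrator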

224 |

225 | ssh-add ~/.ssh/id_lins

226 | ```

227 |
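As an alternative to editing the file by hand in Step 4, the public key can be appended from the shell on the server. The sketch below is a minimal example, not the cluster's official procedure: `install_pubkey` is a hypothetical helper name, and it assumes the `.pub` file has already been transferred to the server. It also tightens the permissions, because OpenSSH servers with the default `StrictModes` setting can reject keys when `~/.ssh` or `authorized_keys` is writable by other users.

```bash
# Hypothetical helper: append a public key to authorized_keys (safe to run twice).
# Usage: install_pubkey <pubkey-file> [ssh-dir]   (ssh-dir defaults to ~/.ssh)
install_pubkey() {
    local pubkey_file="$1"
    local ssh_dir="${2:-$HOME/.ssh}"
    local auth_file="$ssh_dir/authorized_keys"

    mkdir -p "$ssh_dir"
    touch "$auth_file"
    # Append the key only if this exact line is not already present
    if ! grep -qxF -e "$(cat "$pubkey_file")" "$auth_file"; then
        cat "$pubkey_file" >> "$auth_file"
    fi
    # Only the owner may read or write these paths
    chmod 700 "$ssh_dir"
    chmod 600 "$auth_file"
}
```

For example, `install_pubkey ~/id_lins.pub` would add the key to `~/.ssh/authorized_keys` of the current user.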

228 | ### Safety rules

229 |

230 | - Always use a (strong) passphrase to protect your SSH key. Do not leave it empty!

231 |

232 | - Never share your private key with anyone else or copy it to another computer. It must only be stored on your personal computer.

233 |

234 | - Use a different key pair for each computer you want to connect to

235 |

236 | - Do not reuse the key pairs for other systems

237 |

238 | - Do not keep open SSH connections in detached `screen` sessions

239 |

240 | - Disable the `ForwardAgent` option in your SSH configuration and do not use `ssh -A` (or use `ssh -a` to disable agent forwarding)

241 |
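The last rule can be checked with a quick scan of your client configuration. The helper below is a sketch under assumptions: `check_forward_agent` is a hypothetical name, and it only inspects a single config file (by default `~/.ssh/config`), not files pulled in through `Include` or system-wide settings.

```bash
# Hypothetical helper: warn if agent forwarding is switched on in an ssh config file.
# Usage: check_forward_agent [config-file]   (defaults to ~/.ssh/config)
check_forward_agent() {
    local config="${1:-$HOME/.ssh/config}"
    # ssh_config keywords are case-insensitive and may use "=" instead of a space
    if [ -f "$config" ] && grep -Eiq '^[[:space:]]*ForwardAgent[[:space:]=]+yes' "$config"; then
        echo "warning: ForwardAgent is enabled in $config"
        return 1
    fi
    echo "ok: ForwardAgent is not enabled in $config"
}
```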

242 | ### How to use keys with non-default names

243 |

244 | If you use different key pairs for different computers (as recommended above), you need to specify the right key when you connect, for instance:

245 |

246 | ```bash

247 | ssh -p 22332 -i $HOME/.ssh/id_lins username@login.lins.lab

248 | ```

249 |

250 | To make your life easier, you can configure your ssh client to use these options automatically by adding the following lines to your `$HOME/.ssh/config` file:

251 |

252 | ```text

253 | Host cluster

254 | HostName login.lins.lab

255 | Port 22332

256 | User username

257 | IdentityFile ~/.ssh/id_lins

258 | ```

259 |

260 | For Windows Users, you need to use the backslash in `IdentityFile`:

261 |

262 | IdentityFile ~\\.ssh\\id_lins

263 |

264 | Then your ssh command simplifies as follows:

265 |

266 | ```bash

267 | ssh cluster

268 | ```

269 |

270 | ## X11 forwarding and remote desktop

271 |

272 | ### X11 forwarding

273 |

274 | Sometimes we need to run GUI applications on the login node. To directly run GUI applications in ssh terminals, you must open an SSH tunnel and redirect all X11 communication through that tunnel.

275 |

276 | Xorg (X11) is normally installed by default as part of most Linux distributions. For Windows, tools such as [vcxsrv](https://sourceforge.net/projects/vcxsrv/) or [x410](https://x410.dev/) can be used. For macOS, since X11 is no longer included, you must install [XQuartz](https://www.xquartz.org/). You may want to check out the [Troubleshooting section](https://scicomp.ethz.ch/wiki/Accessing_the_clusters#Troubleshooting) by ETHZ IT-Services.

277 |
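With an X server running on your computer, X11 forwarding is typically requested with the `-X` option of `ssh` (OpenSSH also offers `-Y` for trusted forwarding). The snippet below is a minimal sketch: the connection line reuses the port and hostname from the earlier examples, `check_x11` is a hypothetical helper name, and whether the login node permits X11 forwarding is an assumption.

```bash
# On your computer: connect with X11 forwarding enabled
#   ssh -X -p 22332 username@login.lins.lab

# On the login node: hypothetical helper that reports whether forwarding is active.
# sshd sets DISPLAY (for example localhost:10.0) when X11 forwarding was granted.
check_x11() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "X11 forwarding is not active: DISPLAY is not set"
        return 1
    fi
    echo "X11 forwarding looks active: DISPLAY=$DISPLAY"
}
```

If `check_x11` reports an active display, GUI programs started in that terminal should open their windows on your local screen.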

278 | ### Remote desktop via RDP

279 |

280 | RDP (Remote Desktop Protocol) provides a more user-friendly remote desktop interface. To connect using RDP, you need an RDP client installed. On Windows, you can use the built-in remote desktop client `mstsc.exe` or download the newer `Microsoft Remote Desktop` from the Microsoft Store.

281 | On Linux, it's recommended to install `Remmina` and `remmina-plugin-rdp`.

282 |

283 | Using the RDP Clients is simple. Following the prompts, type in the server address, user name and password. Then, set the screen resolution and color depth you want.

284 |

285 | For security, RDP is only allowed through SSH tunnels, and the RDP port has been changed from the default 3389 to 23389. You can create the SSH tunnel and forward RDP connections to `localhost:23389` with:

286 |

287 | ```bash

288 | ssh -p 22332 -NL 23389:localhost:23389 username@login.lins.lab

289 | ```

290 |

291 | Note: If you have completed [this step](#how-to-use-keys-with-non-default-names), you can shorten the command:

292 |

293 | ```bash

294 | ssh -NL 23389:localhost:23389 cluster

295 | ```

296 |

297 | Then connect to `localhost:23389` using `mstsc.exe` or the Remote Desktop app from the [Microsoft Store](https://apps.microsoft.com/store/detail/microsoft-remote-desktop/9WZDNCRFJ3PS).

298 |
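Before starting the RDP client, it can help to confirm that the tunnel is actually listening. The sketch below is an illustrative example rather than part of the official setup: `port_open` is a hypothetical helper that relies on bash's `/dev/tcp` redirection and on the `timeout` command from GNU coreutils.

```bash
# Hypothetical helper: succeed if a TCP connection to host:port can be opened.
# Usage: port_open <host> <port>
port_open() {
    local host="$1" port="$2"
    # bash treats /dev/tcp/<host>/<port> as a TCP connection; give up after 2 seconds
    timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Example (run while the SSH tunnel is open in another terminal):
#   port_open localhost 23389 && echo "tunnel is up" || echo "tunnel is down"
```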

299 |


301 |

302 |

303 |

304 |

305 |

306 |

307 | # Data management

308 |

309 | ## Introduction

310 |

311 |

312 |

313 | We currently use NFS to share filesystems between cluster nodes. The storage space of the login node is small (about 100GB), so it is recommended to store code and data in the NFS shared folders: `/datasets` for datasets and `/workspace` for workspaces. Both NFS folders are allocated on the storage server, which currently offers a capacity of 143TB, with data redundancy and snapshot capability powered by TrueNAS ZFS.

314 |

315 | We can check the file systems with the command `df -H`:

316 |

317 | ```text

318 | peter@login.lins.lab: ~ $ df -H

319 |

320 | Filesystem Size Used Avail Use% Mounted on

321 | /dev/nvme0n1p2 138G 25G 113G 19% /

322 | nas.lins.lab:/mnt/Peter/Datasets 143T 4.2T 139T 3% /datasets

323 | nas.lins.lab:/mnt/Peter/Workspace/peter 8.8T 136G 8.7T 2% /workspace/peter

324 | ```

325 |

326 | You need to ask the system admin to create your workspace folder `/workspace/`.

327 |

328 | By default, other users have neither read nor write [permissions](https://scicomp.ethz.ch/wiki/Linux_permissions) on your folder.

329 |
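To see who can access a folder, or to open a single subfolder to other users, the standard Linux permission tools are enough. The following is a sketch with hypothetical helper names (`show_perms`, `share_readonly`); it assumes GNU `stat` and that plain POSIX permissions, not ACLs, govern access on the NFS share. Keep in mind that other users also need execute (`x`) permission on every parent directory to reach a shared subfolder.

```bash
# Hypothetical helper: print mode, owner, group and path of a file or directory
show_perms() {
    stat -c '%A %U:%G %n' "$1"
}

# Hypothetical helper: let other users read (but not modify) a directory tree.
# The capital X adds execute only to directories and to files that are already executable.
share_readonly() {
    chmod -R o+rX,o-w "$1"
}
```

Running `show_perms` before and after `share_readonly` on the same folder makes the change visible in the last three characters of the mode string.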

330 | ## Uploading and downloading data

331 |

332 | ### Uploading

333 |

334 | Transferring data from your personal computer to a storage server is called an upload.

335 | You can use CLI tools such as `scp` and `rsync`, or GUI tools such as `MobaXterm`, `FinalShell`, `VSCode`, `xftp`, and `SSHFS`, to upload files from a personal computer to the data storage.

336 |
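For command-line transfers, both tools accept the non-default SSH port used by the login node. The commands below are a sketch: `username`, the local paths, and the destination under `/workspace/username` are placeholders, and `upload_dir` is a hypothetical wrapper name. Note that `scp` takes the port as `-P` (capital), while `rsync` passes it through to `ssh` with `-e`.

```bash
# Copy a single file with scp (placeholder paths):
#   scp -P 22332 ./model.pt username@login.lins.lab:/workspace/username/

# Hypothetical wrapper: sync a directory with rsync over SSH on port 22332.
# -a keeps permissions and timestamps; -P shows progress and keeps partial files,
# so an interrupted transfer can resume. The trailing slash copies the contents of src.
upload_dir() {
    local src="$1" dest="$2"
    rsync -aP -e 'ssh -p 22332' "$src/" "$dest/"
}

# Example:
#   upload_dir ./my_dataset username@login.lins.lab:/workspace/username/my_dataset
```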

337 | [Screenshot: uploading files with FinalShell]

341 |

342 |

343 |

344 |

345 | [Screenshot: uploading files with SSHFS-win]

349 |

350 |

351 |

352 |

353 | ### Downloading

354 |

355 | Getting data from a service provider such as Baidu Netdisk, Google Drive, Microsoft OneDrive, or Amazon S3 is called a download. For example, you can use the Baidu Netdisk client (already installed).

356 | You can also download datasets directly from the source. For large datasets, it is recommended to use dedicated download software such as aria2 or Motrix (aria2 with a GUI).

357 |
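As a sketch of a resumable command-line download with `aria2c` followed by an integrity check: the URL, target directory, and checksum are placeholders you would take from the dataset's homepage, and `download_dataset` and `verify_sha256` are hypothetical helper names. The `aria2c` options used here are `-c` (continue a partial download), `-x` and `-s` (connections per server and number of splits), and `-d` (target directory).

```bash
# Hypothetical helper: download <url> into <dir> with up to 8 connections, resuming if interrupted
download_dataset() {
    local url="$1" dir="$2"
    aria2c -c -x 8 -s 8 -d "$dir" "$url"
}

# Hypothetical helper: compare a file's SHA-256 digest with the published one
verify_sha256() {
    local file="$1" expected="$2"
    local actual
    actual="$(sha256sum "$file" | cut -d' ' -f1)"
    [ "$actual" = "$expected" ]
}

# Example (placeholder URL, path and checksum):
#   download_dataset https://example.com/dataset.tar.gz /workspace/username/downloads
#   verify_sha256 /workspace/username/downloads/dataset.tar.gz <published-sha256> && echo "checksum OK"
```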

358 | [Screenshot: downloading with Baidu Netdisk]

362 |

363 |

364 |

365 |

366 | ### Using proxy service

367 |

368 | We have configured both HTTP and SOCKS5 proxy services on the cluster:

369 |

370 | - Osaka, central Japan

371 |   - http://192.168.123.169:18889

372 |   - socks5://192.168.123.169:10089

373 |

374 | #### Proxychains

375 |

376 | Project homepage: [proxychains-ng](https://github.com/rofl0r/proxychains-ng)

377 |

378 | Example Usage:

379 |

380 | ```bash

381 | proxychains curl google.com

382 |

383 | proxychains -q curl google.com # Quiet mode

384 |

385 | proxychains git clone https://github.com/LINs-lab/cluster_tutorial

386 | ```

387 |

388 | #### Environment variable

389 |

390 | Export these environment variables before running your program.

391 |

392 | This is useful for programs that `proxychains` cannot hook because they do not use `libc`,

393 | such as many programs written in `python` or `golang`.

394 |

395 | ```bash

396 | export HF_ENDPOINT=https://hf-mirror.com &&\

397 | export http_proxy=http://192.168.123.169:18889 &&\

398 | export https_proxy=http://192.168.123.169:18889 &&\

399 | export HTTP_PROXY=http://192.168.123.169:18889 &&\

400 | export HTTPS_PROXY=http://192.168.123.169:18889 &&\

401 | export NO_PROXY="localhost,127.0.0.0/8,::1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,lins.lab,*.lins.lab,westlake.edu.cn,*.westlake.edu.cn,*.edu.cn,hf-mirror.com" &&\

402 | export no_proxy="localhost,127.0.0.0/8,::1,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,lins.lab,*.lins.lab,westlake.edu.cn,*.westlake.edu.cn,*.edu.cn,hf-mirror.com"

403 | curl google.com

404 | ```

405 |

406 | Outputs:

407 |

408 | ```text

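409 | <HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">

410 | <TITLE>301 Moved</TITLE></HEAD><BODY>

411 | <H1>301 Moved</H1>

412 | The document has moved

413 | <A HREF="http://www.google.com/">here</A>.

414 | </BODY></HTML>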

415 | ```

416 |
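If you switch the proxy on and off often, the exports can be wrapped in a pair of shell functions, for example in your `~/.bashrc`. This is only a sketch: `proxy_on` and `proxy_off` are hypothetical names, the address is the HTTP proxy listed above, and the `no_proxy` list is shortened here for readability.

```bash
PROXY_URL="http://192.168.123.169:18889"   # HTTP proxy from the list above

# Hypothetical helper: route HTTP(S) traffic of this shell session through the proxy
proxy_on() {
    export http_proxy="$PROXY_URL" https_proxy="$PROXY_URL"
    export HTTP_PROXY="$PROXY_URL" HTTPS_PROXY="$PROXY_URL"
    export no_proxy="localhost,127.0.0.0/8,::1,lins.lab,*.lins.lab"
    export NO_PROXY="$no_proxy"
}

# Hypothetical helper: go back to direct connections
proxy_off() {
    unset http_proxy https_proxy HTTP_PROXY HTTPS_PROXY no_proxy NO_PROXY
}

# To proxy a single command only, set the variable just for that command:
#   https_proxy="$PROXY_URL" curl -I https://github.com
```

Keeping the proxy scoped to a session or a single command avoids accidentally routing cluster-internal traffic through it.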

--------------------------------------------------------------------------------

/docs/Getting_started/storage_model.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------