├── .github
│   ├── ISSUE_TEMPLATE.md
│   └── PULL_REQUEST_TEMPLATE.md
├── .gitignore
├── AUTHORS
├── CITATION
├── CODE_OF_CONDUCT.md
├── CONTRIBUTING.md
├── Gemfile
├── LICENSE.md
├── Makefile
├── README.md
├── _config.yml
├── _episodes
│   ├── .gitkeep
│   ├── 01-introduction.md
│   ├── 02-findable.md
│   ├── 03-accessible.md
│   ├── 04-interoperable.md
│   ├── 05-reusable.md
│   ├── 07-assessment.md
│   └── 08-software.md
├── _episodes_rmd
│   ├── .gitkeep
│   └── data
│       └── .gitkeep
├── _extras
│   ├── .gitkeep
│   ├── about.md
│   ├── design.md
│   ├── discuss.md
│   ├── figures.md
│   └── guide.md
├── aio.md
├── bin
│   ├── boilerplate
│   │   ├── .travis.yml
│   │   ├── AUTHORS
│   │   ├── CITATION
│   │   ├── CODE_OF_CONDUCT.md
│   │   ├── CONTRIBUTING.md
│   │   ├── README.md
│   │   ├── _config.yml
│   │   ├── _episodes
│   │   │   └── 01-introduction.md
│   │   ├── _extras
│   │   │   ├── about.md
│   │   │   ├── discuss.md
│   │   │   ├── figures.md
│   │   │   └── guide.md
│   │   ├── aio.md
│   │   ├── index.md
│   │   ├── reference.md
│   │   └── setup.md
│   ├── chunk-options.R
│   ├── generate_md_episodes.R
│   ├── knit_lessons.sh
│   ├── lesson_check.py
│   ├── lesson_initialize.py
│   ├── markdown_ast.rb
│   ├── repo_check.py
│   ├── test_lesson_check.py
│   ├── util.py
│   └── workshop_check.py
├── code
│   └── .gitkeep
├── data
│   └── .gitkeep
├── fig
│   ├── .gitkeep
│   ├── anatomy-of-a-doi.jpg
│   ├── datacite-arxiv-crossref.png
│   ├── datacite_statistics.png
│   ├── el-gebali-research-lifecycle.png
│   ├── file_structures.png
│   ├── pepe_research_lifecycle.png
│   └── rest-api.png
├── files
│   └── .gitkeep
├── index.md
├── reference.md
└── setup.md

/.github/ISSUE_TEMPLATE.md:

--------------------------------------------------------------------------------

1 | Please delete the text below before submitting your contribution.

2 |

3 | ---

4 |

5 | Thanks for contributing! If this contribution is for instructor training, please send an email to checkout@carpentries.org with a link to this contribution so we can record your progress. You’ve completed your contribution step for instructor checkout just by submitting this contribution.

6 |

7 | Please keep in mind that lesson maintainers are volunteers and it may be some time before they can respond to your contribution. Although not all contributions can be incorporated into the lesson materials, we appreciate your time and effort to improve the curriculum. If you have any questions about the lesson maintenance process or would like to volunteer your time as a contribution reviewer, please contact Kate Hertweck (k8hertweck@gmail.com).

8 |

9 | ---

10 |

--------------------------------------------------------------------------------

/.github/PULL_REQUEST_TEMPLATE.md:

--------------------------------------------------------------------------------

1 | Please delete the text below before submitting your contribution.

2 |

3 | ---

4 |

5 | Thanks for contributing! If this contribution is for instructor training, please send an email to checkout@carpentries.org with a link to this contribution so we can record your progress. You’ve completed your contribution step for instructor checkout just by submitting this contribution.

6 |

7 | Please keep in mind that lesson maintainers are volunteers and it may be some time before they can respond to your contribution. Although not all contributions can be incorporated into the lesson materials, we appreciate your time and effort to improve the curriculum. If you have any questions about the lesson maintenance process or would like to volunteer your time as a contribution reviewer, please contact Kate Hertweck (k8hertweck@gmail.com).

8 |

9 | When submitting a pull request with links, we request that you use persistent identifiers (PIDs) to articles, datasets and other research objects, when available. For more information on PIDs, see Persistent identifier. (2020-08-28). In Wikipedia. Retrieved from [https://en.wikipedia.org/wiki/Persistent_identifier](https://en.wikipedia.org/wiki/Persistent_identifier).

10 |

11 | ---

12 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *~

3 | .DS_Store

4 | .ipynb_checkpoints

5 | .sass-cache

6 | .jekyll-cache/

7 | __pycache__

8 | _site

9 | .Rproj.user

10 | .Rhistory

11 | .RData

12 | .bundle/

13 | .vendor/

14 | .docker-vendor/

15 | Gemfile.lock

16 |

--------------------------------------------------------------------------------

/AUTHORS:

--------------------------------------------------------------------------------

1 | FIXME: list authors' names and email addresses.

2 |

--------------------------------------------------------------------------------

/CITATION:

--------------------------------------------------------------------------------

1 | FIXME: describe how to cite this lesson.

2 |

--------------------------------------------------------------------------------

/CODE_OF_CONDUCT.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: page

3 | title: "Contributor Code of Conduct"

4 | ---

5 | As contributors and maintainers of this project,

6 | we pledge to follow the [Carpentry Code of Conduct][coc].

7 |

8 | Instances of abusive, harassing, or otherwise unacceptable behavior

9 | may be reported by following our [reporting guidelines][coc-reporting].

10 |

11 | {% include links.md %}

12 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing

2 |

3 | [Software Carpentry][swc-site] and [Data Carpentry][dc-site] are open source projects,

4 | and we welcome contributions of all kinds:

5 | new lessons,

6 | fixes to existing material,

7 | bug reports,

8 | and reviews of proposed changes are all welcome.

9 |

10 | ## Contributor Agreement

11 |

12 | By contributing,

13 | you agree that we may redistribute your work under [our license](LICENSE.md).

14 | In exchange,

15 | we will address your issues and/or assess your change proposal as promptly as we can,

16 | and help you become a member of our community.

17 | Everyone involved in [Software Carpentry][swc-site] and [Data Carpentry][dc-site]

18 | agrees to abide by our [code of conduct](CODE_OF_CONDUCT.md).

19 |

20 | ## How to Contribute

21 |

22 | The easiest way to get started is to file an issue

23 | to tell us about a spelling mistake,

24 | some awkward wording,

25 | or a factual error.

26 | This is a good way to introduce yourself

27 | and to meet some of our community members.

28 |

29 | 1. If you do not have a [GitHub][github] account,

30 | you can [send us comments by email][email].

31 | However,

32 | we will be able to respond more quickly if you use one of the other methods described below.

33 |

34 | 2. If you have a [GitHub][github] account,

35 | or are willing to [create one][github-join],

36 | but do not know how to use Git,

37 | you can report problems or suggest improvements by [creating an issue][issues].

38 | This allows us to assign the item to someone

39 | and to respond to it in a threaded discussion.

40 |

41 | 3. If you are comfortable with Git,

42 | and would like to add or change material,

43 | you can submit a pull request (PR).

44 | Instructions for doing this are [included below](#using-github).

45 |

46 | ## Where to Contribute

47 |

48 | 1. If you wish to change this lesson,

49 | please work in .

51 |

52 | 2. If you wish to change the example lesson,

53 | please work in ,

54 | which documents the format of our lessons

55 | and can be viewed at .

56 |

57 | 3. If you wish to change the template used for workshop websites,

58 | please work in .

59 | The home page of that repository explains how to set up workshop websites,

60 | while the extra pages in

61 | provide more background on our design choices.

62 |

63 | 4. If you wish to change CSS style files, tools,

64 | or HTML boilerplate for lessons or workshops stored in `_includes` or `_layouts`,

65 | please work in .

66 |

67 | ## What to Contribute

68 |

69 | There are many ways to contribute,

70 | from writing new exercises and improving existing ones

71 | to updating or filling in the documentation

72 | and submitting [bug reports][issues]

73 | about things that don't work, aren't clear, or are missing.

74 | If you are looking for ideas, please see the 'Issues' tab for

75 | a list of issues associated with this repository,

76 | or you may also look at the issues for [Data Carpentry][dc-issues]

77 | and [Software Carpentry][swc-issues] projects.

78 |

79 | Comments on issues and reviews of pull requests are just as welcome:

80 | we are smarter together than we are on our own.

81 | Reviews from novices and newcomers are particularly valuable:

82 | it's easy for people who have been using these lessons for a while

83 | to forget how impenetrable some of this material can be,

84 | so fresh eyes are always welcome.

85 |

86 | ## What *Not* to Contribute

87 |

88 | Our lessons already contain more material than we can cover in a typical workshop,

89 | so we are usually *not* looking for more concepts or tools to add to them.

90 | As a rule,

91 | if you want to introduce a new idea,

92 | you must (a) estimate how long it will take to teach

93 | and (b) explain what you would take out to make room for it.

94 | The first encourages contributors to be honest about requirements;

95 | the second, to think hard about priorities.

96 |

97 | We are also not looking for exercises or other material that only run on one platform.

98 | Our workshops typically contain a mixture of Windows, Mac OS X, and Linux users;

99 | in order to be usable,

100 | our lessons must run equally well on all three.

101 |

102 | ## Using GitHub

103 |

104 | If you choose to contribute via GitHub, you may want to look at

105 | [How to Contribute to an Open Source Project on GitHub][how-contribute].

106 | To manage changes, we follow [GitHub flow][github-flow].

107 | Each lesson has two maintainers who review issues and pull requests or encourage others to do so.

108 | The maintainers are community volunteers and have final say over what gets merged into the lesson.

109 | To use the web interface for contributing to a lesson:

110 |

111 | 1. Fork the originating repository to your GitHub profile.

112 | 2. Within your version of the forked repository, move to the `gh-pages` branch and

113 | create a new branch for each significant change being made.

114 | 3. Navigate to the file(s) you wish to change within the new branches and make revisions as required.

115 | 4. Commit all changed files within the appropriate branches.

116 | 5. Create individual pull requests from each of your changed branches

117 | to the `gh-pages` branch within the originating repository.

118 | 6. If you receive feedback, make changes using your issue-specific branches of the forked

119 | repository and the pull requests will update automatically.

120 | 7. Repeat as needed until all feedback has been addressed.

121 |

122 | When starting work, please make sure your clone of the originating `gh-pages` branch is up-to-date

123 | before creating your own revision-specific branch(es) from there.

124 | Additionally, please only work from your newly-created branch(es) and *not*

125 | your clone of the originating `gh-pages` branch.

126 | Lastly, published copies of all the lessons are available in the `gh-pages` branch of the originating

127 | repository for reference while revising.
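The branch advice above can be sketched as a small shell helper. This is a minimal sketch, not part of the lesson tooling: the function name `start_change_branch`, the remote name `upstream` (standing for the originating repository), and the example branch name are all assumptions made for illustration.

```shell
# Hypothetical helper: create a revision-specific branch from gh-pages.
# Assumes it is run inside a clone of your fork.
start_change_branch() {
    branch_name="$1"

    # Start from the local gh-pages branch.
    git checkout gh-pages || return 1

    # If a remote named "upstream" (assumed name) is configured,
    # bring gh-pages up to date before branching.
    if git remote | grep -qx upstream; then
        git pull --ff-only upstream gh-pages || return 1
    fi

    # Do the actual work on a new branch, never on gh-pages itself.
    git checkout -b "$branch_name"
}
```

Calling `start_change_branch fix-typo` would leave you on a new `fix-typo` branch; after committing there, you push that branch to your fork and open a pull request against `gh-pages` in the originating repository.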

128 |

129 | ## Other Resources

130 |

131 | General discussion of [Software Carpentry][swc-site] and [Data Carpentry][dc-site]

132 | happens on the [discussion mailing list][discuss-list],

133 | which everyone is welcome to join.

134 | You can also [reach us by email][email].

135 |

136 | [email]: mailto:admin@software-carpentry.org

137 | [dc-issues]: https://github.com/issues?q=user%3Adatacarpentry

138 | [dc-lessons]: http://datacarpentry.org/lessons/

139 | [dc-site]: http://datacarpentry.org/

140 | [discuss-list]: http://lists.software-carpentry.org/listinfo/discuss

141 | [github]: https://github.com

142 | [github-flow]: https://guides.github.com/introduction/flow/

143 | [github-join]: https://github.com/join

144 | [how-contribute]: https://egghead.io/series/how-to-contribute-to-an-open-source-project-on-github

145 | [issues]: https://guides.github.com/features/issues/

146 | [swc-issues]: https://github.com/issues?q=user%3Aswcarpentry

147 | [swc-lessons]: https://software-carpentry.org/lessons/

148 | [swc-site]: https://software-carpentry.org/

149 |

--------------------------------------------------------------------------------

/Gemfile:

--------------------------------------------------------------------------------

1 | source 'https://rubygems.org'

2 | gem 'github-pages', group: :jekyll_plugins

3 |

--------------------------------------------------------------------------------

/LICENSE.md:

--------------------------------------------------------------------------------

1 | ---

2 | layout: page

3 | title: "Licenses"

4 | root: .

5 | ---

6 | ## Instructional Material

7 |

8 | All Software Carpentry and Data Carpentry instructional material is

9 | made available under the [Creative Commons Attribution

10 | license][cc-by-human]. The following is a human-readable summary of

11 | (and not a substitute for) the [full legal text of the CC BY 4.0

12 | license][cc-by-legal].

13 |

14 | You are free:

15 |

16 | * to **Share**---copy and redistribute the material in any medium or format

17 | * to **Adapt**---remix, transform, and build upon the material

18 |

19 | for any purpose, even commercially.

20 |

21 | The licensor cannot revoke these freedoms as long as you follow the

22 | license terms.

23 |

24 | Under the following terms:

25 |

26 | * **Attribution**---You must give appropriate credit (mentioning that

27 | your work is derived from work that is Copyright © Software

28 | Carpentry and, where practical, linking to

29 | http://software-carpentry.org/), provide a [link to the

30 | license][cc-by-human], and indicate if changes were made. You may do

31 | so in any reasonable manner, but not in any way that suggests the

32 | licensor endorses you or your use.

33 |

34 | **No additional restrictions**---You may not apply legal terms or

35 | technological measures that legally restrict others from doing

36 | anything the license permits. With the understanding that:

37 |

38 | Notices:

39 |

40 | * You do not have to comply with the license for elements of the

41 | material in the public domain or where your use is permitted by an

42 | applicable exception or limitation.

43 | * No warranties are given. The license may not give you all of the

44 | permissions necessary for your intended use. For example, other

45 | rights such as publicity, privacy, or moral rights may limit how you

46 | use the material.

47 |

48 | ## Software

49 |

50 | Except where otherwise noted, the example programs and other software

51 | provided by Software Carpentry and Data Carpentry are made available under the

52 | [OSI][osi]-approved

53 | [MIT license][mit-license].

54 |

55 | Permission is hereby granted, free of charge, to any person obtaining

56 | a copy of this software and associated documentation files (the

57 | "Software"), to deal in the Software without restriction, including

58 | without limitation the rights to use, copy, modify, merge, publish,

59 | distribute, sublicense, and/or sell copies of the Software, and to

60 | permit persons to whom the Software is furnished to do so, subject to

61 | the following conditions:

62 |

63 | The above copyright notice and this permission notice shall be

64 | included in all copies or substantial portions of the Software.

65 |

66 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

67 | EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF

68 | MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

69 | NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE

70 | LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

71 | OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION

72 | WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

73 |

74 | ## Trademark

75 |

76 | "Software Carpentry" and "Data Carpentry" and their respective logos

77 | are registered trademarks of [Community Initiatives][CI].

78 |

79 | [cc-by-human]: https://creativecommons.org/licenses/by/4.0/

80 | [cc-by-legal]: https://creativecommons.org/licenses/by/4.0/legalcode

81 | [mit-license]: https://opensource.org/licenses/mit-license.html

82 | [ci]: http://communityin.org/

83 | [osi]: https://opensource.org

84 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | ## ========================================

2 | ## Commands for both workshop and lesson websites.

3 |

4 | # Settings

5 | MAKEFILES=Makefile $(wildcard *.mk)

6 | JEKYLL=bundle config --local set path .vendor/bundle && bundle install && bundle update && bundle exec jekyll

7 | PARSER=bin/markdown_ast.rb

8 | DST=_site

9 |

10 | # Check Python 3 is installed and determine if it's called via python3 or python

11 | # (https://stackoverflow.com/a/4933395)

12 | PYTHON3_EXE := $(shell which python3 2>/dev/null)

13 | ifneq (, $(PYTHON3_EXE))

14 | ifeq (,$(findstring Microsoft/WindowsApps/python3,$(subst \,/,$(PYTHON3_EXE))))

15 | PYTHON := python3

16 | endif

17 | endif

18 |

19 | ifeq (,$(PYTHON))

20 | PYTHON_EXE := $(shell which python 2>/dev/null)

21 | ifneq (, $(PYTHON_EXE))

22 | PYTHON_VERSION_FULL := $(wordlist 2,4,$(subst ., ,$(shell python --version 2>&1)))

23 | PYTHON_VERSION_MAJOR := $(word 1,${PYTHON_VERSION_FULL})

24 | ifneq (3, ${PYTHON_VERSION_MAJOR})

25 | $(error "Your system does not appear to have Python 3 installed.")

26 | endif

27 | PYTHON := python

28 | else

29 | $(error "Your system does not appear to have any Python installed.")

30 | endif

31 | endif

32 |

33 |

34 | # Controls

35 | .PHONY : commands clean files

36 |

37 | # Default target

38 | .DEFAULT_GOAL := commands

39 |

40 | ## I. Commands for both workshop and lesson websites

41 | ## =================================================

42 |

43 | ## * serve : render website and run a local server

44 | serve : lesson-md

45 | ${JEKYLL} serve

46 |

47 | ## * site : build website but do not run a server

48 | site : lesson-md

49 | ${JEKYLL} build

50 |

51 | ## * docker-serve : use Docker to serve the site

52 | docker-serve :

53 | docker pull carpentries/lesson-docker:latest

54 | docker run --rm -it \

55 | -v $${PWD}:/home/rstudio \

56 | -p 4000:4000 \

57 | -p 8787:8787 \

58 | -e USERID=$$(id -u) \

59 | -e GROUPID=$$(id -g) \

60 | carpentries/lesson-docker:latest

61 |

62 | ## * repo-check : check repository settings

63 | repo-check :

64 | @${PYTHON} bin/repo_check.py -s .

65 |

66 | ## * clean : clean up junk files

67 | clean :

68 | @rm -rf ${DST}

69 | @rm -rf .sass-cache

70 | @rm -rf bin/__pycache__

71 | @find . -name .DS_Store -exec rm {} \;

72 | @find . -name '*~' -exec rm {} \;

73 | @find . -name '*.pyc' -exec rm {} \;

74 |

75 | ## * clean-rmd : clean intermediate R files (that need to be committed to the repo)

76 | clean-rmd :

77 | @rm -rf ${RMD_DST}

78 | @rm -rf fig/rmd-*

79 |

80 |

81 | ##

82 | ## II. Commands specific to workshop websites

83 | ## =================================================

84 |

85 | .PHONY : workshop-check

86 |

87 | ## * workshop-check : check workshop homepage

88 | workshop-check :

89 | @${PYTHON} bin/workshop_check.py .

90 |

91 |

92 | ##

93 | ## III. Commands specific to lesson websites

94 | ## =================================================

95 |

96 | .PHONY : lesson-check lesson-md lesson-files lesson-fixme install-rmd-deps

97 |

98 | # RMarkdown files

99 | RMD_SRC = $(wildcard _episodes_rmd/??-*.Rmd)

100 | RMD_DST = $(patsubst _episodes_rmd/%.Rmd,_episodes/%.md,$(RMD_SRC))

101 |

102 | # Lesson source files in the order they appear in the navigation menu.

103 | MARKDOWN_SRC = \

104 | index.md \

105 | CODE_OF_CONDUCT.md \

106 | setup.md \

107 | $(sort $(wildcard _episodes/*.md)) \

108 | reference.md \

109 | $(sort $(wildcard _extras/*.md)) \

110 | LICENSE.md

111 |

112 | # Generated lesson files in the order they appear in the navigation menu.

113 | HTML_DST = \

114 | ${DST}/index.html \

115 | ${DST}/conduct/index.html \

116 | ${DST}/setup/index.html \

117 | $(patsubst _episodes/%.md,${DST}/%/index.html,$(sort $(wildcard _episodes/*.md))) \

118 | ${DST}/reference/index.html \

119 | $(patsubst _extras/%.md,${DST}/%/index.html,$(sort $(wildcard _extras/*.md))) \

120 | ${DST}/license/index.html

121 |

122 | ## * install-rmd-deps : Install R packages dependencies to build the RMarkdown lesson

123 | install-rmd-deps:

124 | @${SHELL} bin/install_r_deps.sh

125 |

126 | ## * lesson-md : convert Rmarkdown files to markdown

127 | lesson-md : ${RMD_DST}

128 |

129 | _episodes/%.md: _episodes_rmd/%.Rmd install-rmd-deps

130 | @mkdir -p _episodes

131 | @bin/knit_lessons.sh $< $@

132 |

133 | ## * lesson-check : validate lesson Markdown

134 | lesson-check : lesson-fixme

135 | @${PYTHON} bin/lesson_check.py -s . -p ${PARSER} -r _includes/links.md

136 |

137 | ## * lesson-check-all : validate lesson Markdown, checking line lengths and trailing whitespace

138 | lesson-check-all :

139 | @${PYTHON} bin/lesson_check.py -s . -p ${PARSER} -r _includes/links.md -l -w --permissive

140 |

141 | ## * unittest : run unit tests on checking tools

142 | unittest :

143 | @${PYTHON} bin/test_lesson_check.py

144 |

145 | ## * lesson-files : show expected names of generated files for debugging

146 | lesson-files :

147 | @echo 'RMD_SRC:' ${RMD_SRC}

148 | @echo 'RMD_DST:' ${RMD_DST}

149 | @echo 'MARKDOWN_SRC:' ${MARKDOWN_SRC}

150 | @echo 'HTML_DST:' ${HTML_DST}

151 |

152 | ## * lesson-fixme : show FIXME markers embedded in source files

153 | lesson-fixme :

154 | @grep --fixed-strings --word-regexp --line-number --no-messages FIXME ${MARKDOWN_SRC} || true

155 |

156 | ##

157 | ## IV. Auxiliary (plumbing) commands

158 | ## =================================================

159 |

160 | ## * commands : show all commands.

161 | commands :

162 | @sed -n -e '/^##/s|^##[[:space:]]*||p' $(MAKEFILE_LIST)

163 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Maintainers for Library Carpentry: FAIR Data & Software

2 |

3 | - [Chris Erdmann](https://github.com/libcce) (Lead)

4 | - [Liz Stokes](https://github.com/ragamouf)

5 | - [Kristina Hettne](https://github.com/kmhettne)

6 | - [Carmi Cronje](https://github.com/ccronje)

7 | - [Sara El-Gebali](https://github.com/selgebali)

8 |

9 | Lesson Maintainers communication is via the [team site](https://github.com/orgs/LibraryCarpentry/teams/lc-fair-maintainers).

10 |

11 | ## Library Carpentry

12 |

13 | [Library Carpentry](https://librarycarpentry.org) is a software and data skills training programme for people working in library- and information-related roles. It builds on the work of [Software Carpentry](http://software-carpentry.org/) and [Data Carpentry](http://www.datacarpentry.org/). Library Carpentry is an official Lesson Program of [The Carpentries](https://carpentries.org/).

14 |

15 | ## License

16 |

17 | All Software, Data, and Library Carpentry instructional material is made available under the [Creative Commons Attribution

18 | license](https://github.com/LibraryCarpentry/lc-fair-research/blob/gh-pages/LICENSE.md).

19 |

20 | ## Contributing

21 |

22 | There are many ways to discuss and contribute to Library Carpentry lessons. Visit the lesson [discussion page](https://librarycarpentry.org/lc-fair-research/discuss/index.html) to learn more. Also see [Contributing](https://github.com/LibraryCarpentry/lc-fair-research/blob/gh-pages/CONTRIBUTING.md).

23 |

24 | ## Code of Conduct

25 |

26 | All participants should agree to abide by The Carpentries [Code of Conduct](https://docs.carpentries.org/topic_folders/policies/code-of-conduct.html).

27 |

28 | ## Authors

29 |

30 | Library Carpentry is authored and maintained through issues, commits, and pull requests from the community.

31 |

32 | ## Citation

33 |

34 | Cite as:

35 |

36 | Library Carpentry. September 2019. https://librarycarpentry.org/lc-fair-research.

37 |

38 | ## Checking and Previewing the Lesson

39 |

40 | To check and preview a lesson locally, see [http://carpentries.github.io/lesson-example/07-checking/index.html](http://carpentries.github.io/lesson-example/07-checking/index.html).

41 |

42 |

--------------------------------------------------------------------------------

/_config.yml:

--------------------------------------------------------------------------------

1 | #------------------------------------------------------------

2 | # Values for this lesson.

3 | #------------------------------------------------------------

4 |

5 | # Which carpentry is this ("swc", "dc", "lc", or "cp")?

6 | # swc: Software Carpentry

7 | # dc: Data Carpentry

8 | # lc: Library Carpentry

9 | # cp: Carpentries (to use for instructor training for instance)

10 | carpentry: "lc"

11 |

12 | # Overall title for pages.

13 | title: "Library Carpentry: FAIR Data and Software"

14 |

15 | # Life cycle stage of the lesson

16 | # possible values: "pre-alpha", "alpha", "beta", "stable"

17 | life_cycle: "pre-alpha"

18 |

19 | #------------------------------------------------------------

20 | # Generic settings (should not need to change).

21 | #------------------------------------------------------------

22 |

23 | # What kind of thing is this ("workshop" or "lesson")?

24 | kind: "lesson"

25 |

26 | # Magic to make URLs resolve both locally and on GitHub.

27 | # See https://help.github.com/articles/repository-metadata-on-github-pages/.

28 | # Please don't change it: / is correct.

29 | repository: /

30 |

31 | # Email address, no mailto:

32 | email: "team@carpentries.org"

33 |

34 | # Sites.

35 | amy_site: "https://amy.software-carpentry.org/workshops"

36 | carpentries_github: "https://github.com/carpentries"

37 | carpentries_pages: "https://carpentries.github.io"

38 | carpentries_site: "https://carpentries.org/"

39 | dc_site: "http://datacarpentry.org"

40 | example_repo: "https://github.com/carpentries/lesson-example"

41 | example_site: "https://carpentries.github.io/lesson-example"

42 | lc_site: "https://librarycarpentry.github.io/"

43 | swc_github: "https://github.com/swcarpentry"

44 | swc_pages: "https://swcarpentry.github.io"

45 | swc_site: "https://software-carpentry.org"

46 | template_repo: "https://github.com/carpentries/styles"

47 | training_site: "https://carpentries.github.io/instructor-training"

48 | workshop_repo: "https://github.com/carpentries/workshop-template"

49 | workshop_site: "https://carpentries.github.io/workshop-template"

50 |

51 | # Surveys.

52 | pre_survey: "https://www.surveymonkey.com/r/swc_pre_workshop_v1?workshop_id="

53 | post_survey: "https://www.surveymonkey.com/r/swc_post_workshop_v1?workshop_id="

54 | training_post_survey: "https://www.surveymonkey.com/r/post-instructor-training"

55 |

56 | # Start time in minutes (0 to be clock-independent, 540 to show a start at 09:00 am).

57 | start_time: 0

58 |

59 | # Specify that things in the episodes collection should be output.

60 | collections:

61 | episodes:

62 | output: true

63 | permalink: /:path/index.html

64 | extras:

65 | output: true

66 | permalink: /:path/index.html

67 |

68 | # Set the default layout for things in the episodes collection.

69 | defaults:

70 | - values:

71 | root: .

72 | layout: page

73 | - scope:

74 | path: ""

75 | type: episodes

76 | values:

77 | root: ..

78 | layout: episode

79 | - scope:

80 | path: ""

81 | type: extras

82 | values:

83 | root: ..

84 | layout: page

85 |

86 | # Files and directories that are not to be copied.

87 | exclude:

88 | - Makefile

89 | - bin/

90 | - .Rproj.user/

91 |

92 | # Turn on built-in syntax highlighting.

93 | highlighter: rouge

94 |

95 | remote_theme: carpentries/carpentries-theme

96 |

97 |

--------------------------------------------------------------------------------

/_episodes/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/LibraryCarpentry/lc-fair-research/6dc5a89613cfcd137d835372821f4e532c1ca9bc/_episodes/.gitkeep

--------------------------------------------------------------------------------

/_episodes/01-introduction.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Introduction"

3 | teaching: 0

4 | exercises: 0

5 | questions:

6 | - 'What does the acronym "FAIR" stand for, and what does it mean?'

7 | - "How can library services contribute to FAIR research?"

8 | objectives:

9 | - "Articulate the purpose and value of making research FAIR"

10 | - "Understand that library services impact various parts of the research lifecycle"

11 | keypoints:

12 | - The FAIR principles set out how to make data more usable, by humans and machines,

13 | by making it Findable, Accessible, Interoperable and Reusable.

14 | - Librarians have key expertise in information management that can help researchers

15 | navigate the process of making their research more FAIR

16 | ---

17 |

18 | ## Goals of this lesson:

19 |

20 | - To teach FAIRer research data and software management and development practices

21 | - To focus on practical approaches to being FAIRer, acknowledging that there are no “silver bullets”

22 |

23 | ## Library services across the research lifecycle

24 |

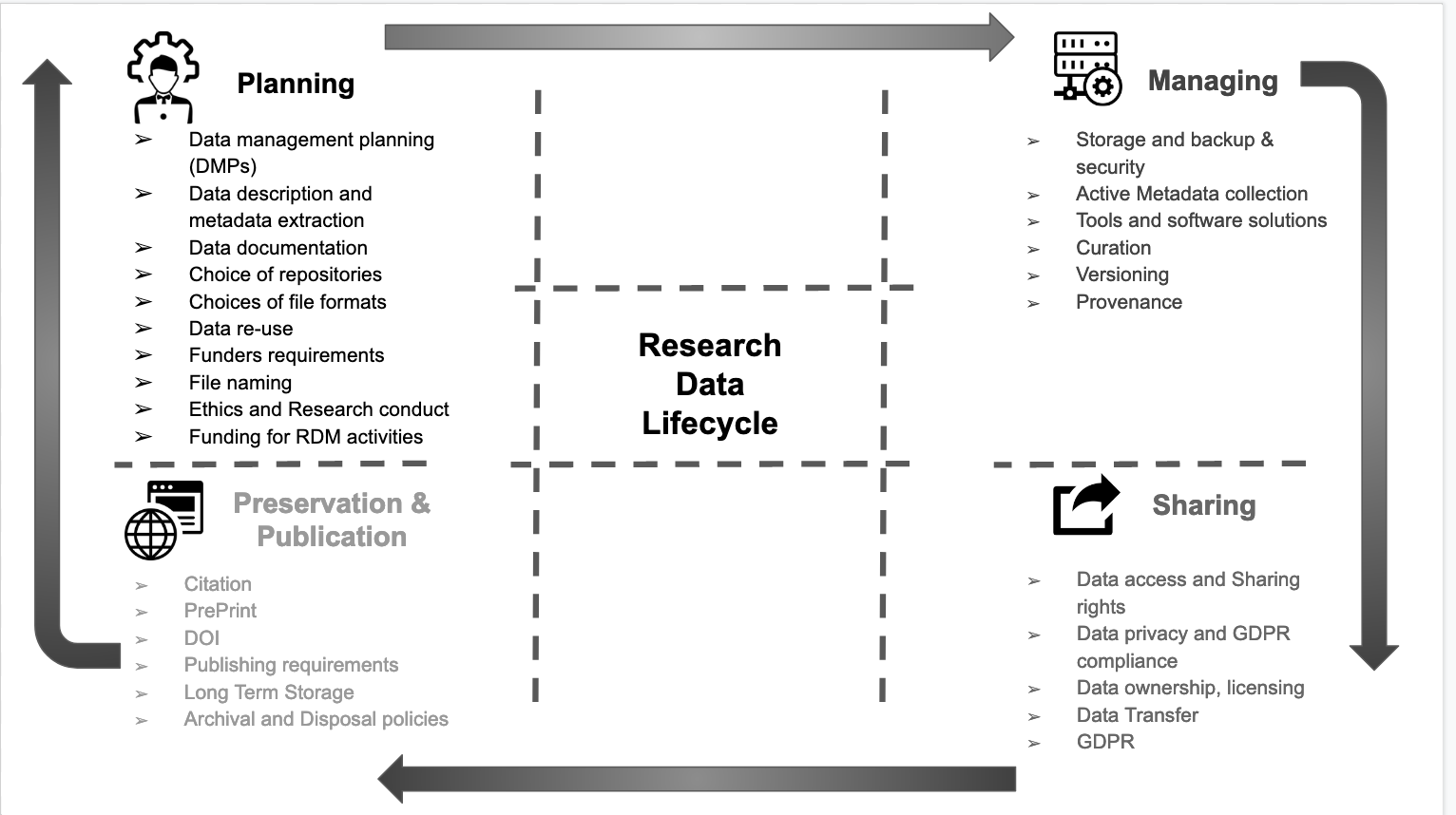

25 | Libraries actively help researchers navigate the requirements, demands, and tools that make up the research data management landscape, particularly when it comes to the organization, preservation, and sharing of research data and software.

26 |

27 | They play a vital role in directly supporting the academic enterprise by promoting data sharing and reproducibility. Through their research training and services, particularly for early career researchers and graduate students, libraries are driving a cultural shift towards more effective data and software stewardship.

28 |

29 | As trusted partners with an embedded understanding of their communities, libraries foster collaboration, facilitate coordination between community stakeholders, and are a critical part of the discussion.

30 |

31 |

32 |

33 | El-Gebali, Sara. (2020, September 29). Research Data Life Cycle. Zenodo. [http://doi.org/10.5281/zenodo.4057867](http://doi.org/10.5281/zenodo.4057867)

34 |

35 | ## FAIR in one sentence

36 |

37 | The FAIR data principles are all about how machines and humans communicate with each other. They are not a standard, but a set of principles for developing robust, extensible infrastructure which facilitates discovery, access and reuse of research data and software.

38 |

39 | ## Where did FAIR come from?

40 |

41 | The FAIR data principles emerged from a FORCE11 workshop in 2014. They were formalised in 2016 with the publication in Scientific Data of the [FAIR Guiding Principles for scientific data management and stewardship](https://doi.org/10.1038/sdata.2016.18). In this article, the authors provide general guidance on machine-actionability and improvements that can be made to streamline the findability, accessibility, interoperability, and reusability (FAIR) of digital assets.

42 |

43 | "as open as possible, as closed as necessary"

44 |

45 | ## FAIR brings all the stakeholders together

46 |

47 | We all win when the outputs of research are properly managed, preserved and reusable. This is applicable from big data of genomic expression all the way through to the ‘small data’ of qualitative research.

48 |

49 | Research is increasingly dependent on computational support and yet there are still many bottlenecks in the process. The overall aim of FAIR is to cut down on inefficient processes in research by taking advantage of linked resources and the exchange of data, so that all stakeholders in the research ecosystem can automate repetitive, boring, error-prone tasks.

50 |

51 | ## Examples of Library Services implementing the FAIR principles

52 |

53 | * If your local data repository shares metadata with other aggregators, it's F for Findable.

54 | * If you advocate for researchers to use ORCIDs and seek DOIs for research data outputs, it's F for Findable

55 | * If your institution mints DOIs for research datasets... that's A for Accessible.

56 | * If your institutional data repository enables metadata for harvest by an aggregator, that's I for Interoperable.

57 | * If you provide advice and consultation services for choosing licences for research data, that's R for Reusable

58 |

59 | ## Further reading following this lesson

60 |

61 | TIB Hannover has provided the following FAIR guide with examples:

62 | [TIB Hannover FAIR Principles Guide](https://blogs.tib.eu/wp/tib/2017/09/12/the-fair-data-principles-for-research-data)

63 |

64 | The European Commission gives tips on implementing FAIR: [Six Recommendations for Implementation of FAIR Practice](https://doi.org/10.2777/986252)

65 |

66 | ## How does “FAIR” translate to your institution or workplace?

67 |

68 | Group exercise

69 | Use an etherpad / whiteboard

70 |

71 | * Does your institutional data management policy refer to FAIR principles?

72 |

73 | * If you have a data management planning tool (e.g. DMPonline), go through the mandatory fields and identify where there are FAIR teaching moments.

74 |

75 | * Compile a list of research management tools that your institution provides access to and brainstorm examples where these tools embody the FAIR data principles.

76 |

77 | * Use the [FAIR data self assessment tool](https://www.ands.org.au/working-with-data/fairdata/fair-data-self-assessment-tool) to help frame your answers.

78 |

--------------------------------------------------------------------------------

/_episodes/02-findable.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Findable"

3 | teaching: 0

4 | exercises: 0

5 | questions:

6 | - "What is a persistent identifier or PID?"

7 | - "What types of PIDs are there?"

8 | objectives:

9 | - "Explain what globally unique, persistent, resolvable identifiers are and how they make data and metadata findable"

10 | - "Articulate what metadata is and how metadata makes data findable"

11 | - "Articulate how metadata can be explicitly linked to data and vice versa"

12 | - "Understand how and where to find data discovery platforms"

13 | - "Articulate the role of data repositories in enabling findable data"

14 | keypoints:

15 | - "First key point."

16 | ---

17 |

18 | > ## For data & software to be findable:

19 | > F1. (meta)data are assigned a globally unique and eternally persistent identifier or PID

20 | > F2. data are described with rich metadata

21 | > F3. (meta)data are registered or indexed in a searchable resource

22 | > F4. metadata specify the data identifier

23 | {: .checklist}

24 |

25 | ## Persistent identifiers (PIDs) 101

26 |

27 | A persistent identifier (PID) is a long-lasting reference to a (digital or physical) resource:

28 |

29 | - Designed to provide access to information about a resource even if the resource it describes has moved location on the web

30 | - Requires technical infrastructure, governance and community support to provide the persistence

31 | - There are many different PIDs available for many different types of scholarly resources e.g. articles, data, samples, authors, grants, projects, conference papers and so much more

32 |

33 | ## Different types of PIDs

34 |

35 | PIDs have community support, organizational commitment and technical infrastructure to ensure persistence of identifiers. They often are created to respond to a community need. For instance, the International Standard Book Number or ISBN was created to assign unique numbers to books, is used by book publishers, and is managed by the International ISBN Agency. Another type of PID, the Open Researcher and Contributor ID or ORCID (iD) was created to help with author disambiguation by providing unique identifiers for authors. The [ODIN Project identifies additional PIDs](https://project-thor.readme.io/docs/project-glossary) along with [Wikipedia's page on PIDs](https://en.wikipedia.org/wiki/Persistent_identifier).

36 |

37 | ## Digital Object Identifiers (DOIs)

38 |

39 | The DOI is a common identifier used for academic, professional, and governmental information such as articles, datasets, reports, and other supplemental information. The [International DOI Foundation (IDF)](https://www.doi.org/) is the agency that oversees DOIs. [CrossRef](https://www.crossref.org/) and [DataCite](https://datacite.org/) are two prominent not-for-profit registries that provide services to create or mint DOIs. Both have membership models where their clients are able to mint DOIs distinguished by their prefix. For example, DataCite features a [statistics page](https://stats.datacite.org/) where you can see registrations by members.

40 |

41 | ## Anatomy of a DOI

42 |

43 | A DOI has three main parts:

44 |

45 | - Proxy or DOI resolver service

46 | - Prefix which is unique to the registrant or member

47 | - Suffix, a unique identifier assigned locally by the registrant to an object
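The three parts listed above can be pulled apart programmatically. The following is a minimal sketch, assuming the DOI is given as a full URL; the helper name `split_doi` is hypothetical, and the example DOI is the Zenodo record cited in the introduction episode.

```python
from urllib.parse import urlparse


def split_doi(doi_url: str) -> dict:
    """Split a DOI URL into resolver, prefix and suffix (illustrative helper)."""
    parsed = urlparse(doi_url)
    # The proxy / resolver service is the scheme plus host, e.g. https://doi.org
    resolver = f"{parsed.scheme}://{parsed.netloc}"
    # The DOI name is the URL path without its leading slash.
    doi_name = parsed.path.lstrip("/")
    # Only the first slash separates prefix from suffix;
    # a suffix may itself contain further slashes.
    prefix, suffix = doi_name.split("/", 1)
    return {"resolver": resolver, "prefix": prefix, "suffix": suffix}


parts = split_doi("https://doi.org/10.5281/zenodo.4057867")
print(parts)
```

Splitting on the first slash only is a deliberate choice here, because the registrant is free to use slashes inside the suffix.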

48 |

49 |

50 |

51 | In the example above, the prefix is used by the Australian National Data Service (ANDS), now called the Australian Research Data Commons (ARDC), and the suffix is a unique identifier for an object at Griffith University. DataCite provides DOI [display guidance](https://support.datacite.org/docs/datacite-doi-display-guidelines) so that they are easy to recognize and use, for both humans and machines.

53 |

54 | > ## Challenge

55 | > arXiv is a preprint repository for physics, math, computer science and related disciplines.

56 | > It allows researchers to share and access their work before it is formally published.

57 | > Visit the arXiv new papers page for [Machine Learning](https://arxiv.org/list/cs.LG/recent).

58 | > Choose any paper by clicking on the 'pdf' link next to it. Now use control + F or command + F and search for 'http'. Did the author use DOIs for their data and software?

59 | >

60 | > > ## Solution

61 | > > Authors will often link to platforms such as GitHub where they have shared their software and/or they will link to their website where they are hosting the data used in the paper. The danger here is that platforms like GitHub and personal websites are not permanent. Instead, authors can use repositories to deposit and preserve their data and software while minting a DOI. Links to software sharing platforms or personal websites might move but DOIs will always resolve to information about the software and/or data. See DataCite's [Best Practices for a Tombstone Page](https://support.datacite.org/docs/tombstone-pages).

62 | > {: .solution}

63 | {: .challenge}

64 |

65 | ## Rich Metadata

66 |

67 | More and more services are using common schemas such as [DataCite's Metadata Schema](https://schema.datacite.org) or [Dublin Core](https://www.dublincore.org) to foster greater use and discovery. A schema provides an overall structure for the metadata and describes core metadata properties. While DataCite's Metadata Schema is more general, there are discipline specific schemas such as [Data Documentation Initiative (DDI) and Darwin Core](https://en.wikipedia.org/wiki/Metadata_standard).

68 |

69 | Thanks to schemas, the process of adding metadata has been standardised to some extent but there is still room for error. For instance, DataCite [reports](https://blog.datacite.org/citation-analysis-scholix-rda/) that links between papers and data are still very low. Publishers and authors are missing this opportunity.
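One place where a schema helps catch errors is checking that required properties are present before a record is submitted. The sketch below is only an illustration: the flat dictionary layout and the `missing_properties` helper are assumptions made for this example and not DataCite's actual XML or JSON format, although the six property names correspond to the properties the DataCite schema marks as mandatory. The record describes the Zenodo item cited in the introduction episode and deliberately leaves out the resource type.

```python
# Simplified, hypothetical representation of DataCite's mandatory properties.
REQUIRED = [
    "identifier",
    "creators",
    "titles",
    "publisher",
    "publicationYear",
    "resourceType",
]

# Values taken from the citation in the introduction episode;
# resourceType is intentionally omitted to show the check at work.
record = {
    "identifier": "10.5281/zenodo.4057867",
    "creators": ["El-Gebali, Sara"],
    "titles": ["Research Data Life Cycle"],
    "publisher": "Zenodo",
    "publicationYear": 2020,
}


def missing_properties(rec: dict) -> list:
    """Return the required property names that are absent or empty."""
    return [name for name in REQUIRED if not rec.get(name)]


missing = missing_properties(record)
print(missing)
```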

70 |

71 | Challenges:

72 | Automatic ORCID profile update when DOI is minted

73 | RelatedIdentifiers linking papers, data, software in Zenodo

74 |

75 | ## Connecting research outputs

76 | DOIs are everywhere. Examples.

77 |

78 | Resource IDs (articles, data, software, …)

79 | Researcher IDs

80 | Organisation IDs, Funder IDs

81 | Projects IDs

82 | Instrument IDs

83 | Ship cruises IDs

84 | Physical sample IDs,

85 | DMP IDs…

86 | videos

87 | images

88 | 3D models

89 | grey literature

90 |

91 |

92 |

93 | https://support.datacite.org/docs/connecting-research-outputs

94 |

95 | Bullet points about the current state of linking...

96 | https://blog.datacite.org/citation-analysis-scholix-rda/

97 |

98 |

99 | ## Provenance?

100 | Provenance means validation & credibility – a researcher should comply with good scientific practice and be sure about what should get a PID (and what should not).

101 | Metadata is central to visibility and citability – metadata behind a PID should be provided with consideration.

102 | Policies behind a PID system ensure persistence on the web. At least the metadata will be available for a long time.

103 | Machine readability will be an essential part of future discoverability – resources should be checked and formats should be adjusted (as far as possible).

104 | Metrics (e.g. altmetrics) are supported by PID systems.

105 |

106 |

107 | ## Publishing behaviour of researchers

108 |

109 | According to:

110 |

111 | Technische Informationsbibliothek (TIB) (conducted by engage AG) (2017): Questionnaire and Dataset of the TIB Survey 2017 on information procurement and publishing behaviour of researchers in the natural sciences and engineering. Technische Informationsbibliothek (TIB). DOI: [https://doi.org/10.22000/54](https://doi.org/10.22000/54)

112 |

113 | - responses from 1400 scientists in the natural sciences & engineering (across Germany)

114 | - 70% of the researchers are using DOIs for journal publications

115 | - less than 10% use DOIs for research data

116 | -- 56% answered that they don’t know about the option to use DOIs for other publications (datasets, conference papers etc.)

117 | -- 57% stated no need for DOI counselling services

118 | -- 40% of the questioned researchers need more information

119 | -- 30% cannot see a benefit from a DOI

120 |

121 | ## Choosing the right repository

122 |

123 | Ask your colleagues & collaborators

124 | Look for institutional repository at your own institution

125 |

126 | Determining the right repository for your research

127 | data are kept safe in a secure environment

128 | data are regularly backed up and preserved (long-term) for future use

129 | data can be easily discovered by search engines and included in online catalogues

130 | intellectual property rights and licencing of data are managed

131 | access to data can be administered and usage monitored

132 | the visibility of data can be enhanced

133 | enables more use and citation

134 | citation of data increases researchers' scientific reputation

135 | Decision for or against a specific repository depends on various criteria, e.g.

136 | Data quality

137 | Discipline

138 | Institutional requirements

139 | Reputation (researcher and/or repository)

140 | Visibility of research

141 | Legal terms and conditions

142 | Data value (FAIR Principles)

143 | Exit strategy (tested?)

144 | Certificate (based only on documents?)

145 |

146 | Some recommendations:

147 | → look for the usage of PIDs

148 | → look for the usage of standards (DataCite, Dublin Core, discipline-specific metadata)

149 | → look for licences offered

150 | → look for certifications (DSA / Core Trust Seal, DINI/nestor, WDS, …)

151 |

152 | Searching re3data w/ exercise

153 | https://www.re3data.org/

154 | Out of more than 2115 repository systems listed in re3data.org in July 2018, only 809 (less than 39 %!) state that they provide a PID service, with 524 of them using the DOI system

155 |

156 | Search open access repos

157 | http://v2.sherpa.ac.uk/opendoar/

158 |

159 | FAIRSharing

160 | https://fairsharing.org/databases/

161 |

162 | ## Data Journals

163 |

164 | Another method available to researchers to cite and give credit to research data is to author works in data journals or supplemental approaches used by publishers, societies, disciplines, and/or journals.

165 |

166 | Articles in data journals allow authors to:

167 | - Describe their research data (including information about process, qualities, etc)

168 | - Explain how the data can be reused

169 | - Improve discoverability (through citation/linking mechanisms and indexing)

170 | - Provide information on data deposit

171 | - Allow for further (peer) review and quality assurance

172 | - Offer the opportunity for further recognition and awards

173 |

174 | Examples:

175 | - [Nature Scientific data](https://www.nature.com/sdata/) - published by Nature and established in 2013

176 | - [Geoscience Data Journal](https://rmets.onlinelibrary.wiley.com/journal/20496060) - published by Wiley and established in 2012

177 | - [Journal of Open Archaeology Data](https://openarchaeologydata.metajnl.com/) - published by Ubiquity and established in 2011

178 | - [Biodiversity Data Journal](https://bdj.pensoft.net/) - published by Pensoft and established in 2013.

179 | - [Earth System Science Data](https://www.earth-system-science-data.net/) - published by Copernicus Publications and established in 2009

180 |

181 | Also, the following study discusses data journals in depth and reviews over 100 data journals:

182 | Candela, L. , Castelli, D. , Manghi, P. and Tani, A. (2015), Data Journals: A Survey. J Assn Inf Sci Tec, 66: 1747-1762. doi:[10.1002/asi.23358](https://doi.org/10.1002/asi.23358)

183 |

184 | > ## How does your discipline share data

185 | >

186 | > Does your discipline have a data journal? Or some other mechanism to share data? For example, the American Astronomical Society (AAS) via the publisher IOP Physics offers a [supplement series](http://iopscience.iop.org/journal/0067-0049/page/article-data) as a way for astronomers to publish data.

187 | {: .discussion}

188 |

189 |

190 | List recent publications re: benefits of data sharing / software sharing

191 |

192 | Questions:

193 | Is FAIRSharing vs re3data comparison slide from TIB findability slides needed here?

194 | Should we include recent thread about handle system vs DOIs in IRs (costs)

195 | Zenodo-GitHub linking is listed in another episode, right?

196 | Include guidance for Google schema indexing...

197 |

198 | Notes:

199 | Note about authors being proactive and working with the journals/societies to improve papers referencing data, software...

200 |

201 | Tombstone

202 |

--------------------------------------------------------------------------------

/_episodes/03-accessible.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Accessible"

3 | teaching: 0

4 | exercises: 0

5 | questions:

6 | - "Key question"

7 | objectives:

8 | - "Understand what a protocol is"

9 | - "Understand authentication protocols and their role in FAIR"

10 | - "Articulate the value of landing pages"

11 | - "Explain closed, open and mediated access to data"

12 | keypoints:

13 | - "First key point."

14 | ---

15 |

16 |

17 | > ## For data & software to be accessible:

18 | > A1. (meta)data are retrievable by their identifier using a standardized communications protocol

19 | > A1.1 the protocol is open, free, and universally implementable

20 | > A1.2 the protocol allows for an authentication and authorization procedure, where necessary

21 | > A2. metadata remain accessible, even when the data are no longer available

22 | {: .checklist}

23 |

24 | ## What is a protocol?

25 | Simply put, it's an access method of exchanging data over a computer network. Each protocol has its rules for how data is formatted, compressed, checked for errors. Research repositories often use the OAI-PMH or REST API protocols to interface with data in the repository. The following image from [TutorialEdge.net: What is a RESTful API by Elliot Forbes](https://tutorialedge.net/general/what-is-a-rest-api/) provides a useful overview of how RESTful interfaces work:

26 |

27 |

28 |

29 | Zenodo offers a visual interface for seeing how formats such as DataCite XML will look when requested for records such as the following record from the Biodiversity Literature Repository:

30 |

31 | [Formiche di Madagascar raccolte dal Sig. A. Mocquerys nei pressi della Baia di Antongil (1897-1898).](https://sandbox.zenodo.org/record/9785/export/dcite4#.W3eDVthKjGI)

32 |

33 | Wikipedia has a list of [commonly used network protocols](https://en.wikipedia.org/wiki/Lists_of_network_protocols) but check the service you are using for documentation on the protocols it uses and whether it corresponds with the FAIR Principles. For instance, see [Zenodo's Principles](http://about.zenodo.org/principles/) page.
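To make the idea of a protocol more concrete, the sketch below builds an OAI-PMH request URL. OAI-PMH requests are ordinary HTTP requests whose `verb` parameter (for example `Identify`, `ListRecords` or `GetRecord`) tells the repository what is being asked for. The endpoint `https://repository.example.org/oai` and the helper `oai_request` are hypothetical; check your repository's documentation for its real base URL. No network call is made here.

```python
from urllib.parse import urlencode

# Hypothetical OAI-PMH endpoint; replace with your repository's base URL.
BASE_URL = "https://repository.example.org/oai"


def oai_request(verb: str, **arguments: str) -> str:
    """Build an OAI-PMH request URL from a verb and its arguments."""
    query = urlencode({"verb": verb, **arguments})
    return f"{BASE_URL}?{query}"


# Ask for all records, described in unqualified Dublin Core (oai_dc).
url = oai_request("ListRecords", metadataPrefix="oai_dc")
print(url)
```

Because the request is just a URL, it can be pasted into a browser or fetched by any HTTP client, which is part of what makes such a protocol open and universally implementable.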

34 |

35 | ## Contributor information

36 | Alternatively, for sensitive/protected data, if the protocol cannot guarantee secure access, an e-mail or other contact information of a person/data manager should be provided, via the metadata, with whom access to the data can be discussed. The [DataCite metadata schema](https://schema.datacite.org/) includes contributor type and name as fields where contact information is included. Collaborative projects such as [THOR](https://project-thor.readme.io/), [FREYA](https://www.project-freya.eu/en/resources), and [ODIN](https://odin-project.eu/project-outputs/deliverables/) are working towards improving the interoperability and exchange of metadata such as contributor information.

37 |

38 | ## Author disambiguation and authentication

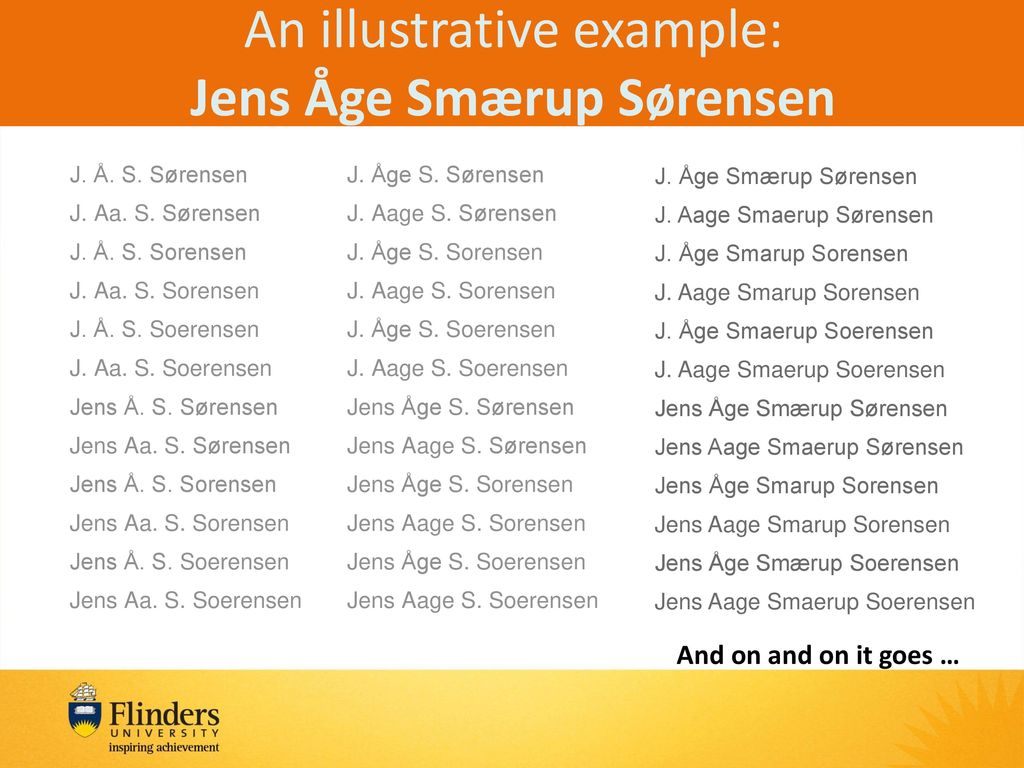

39 | Across the research ecosystem, publishers, repositories, funders, and research information systems have recognized the need to address the problem of author disambiguation. The illustrative example below of the many variations of the name _Jens Åge Smærup Sørensen_ demonstrates the challenge of wrangling the correct name for each individual author or contributor:

40 |

41 |

42 |

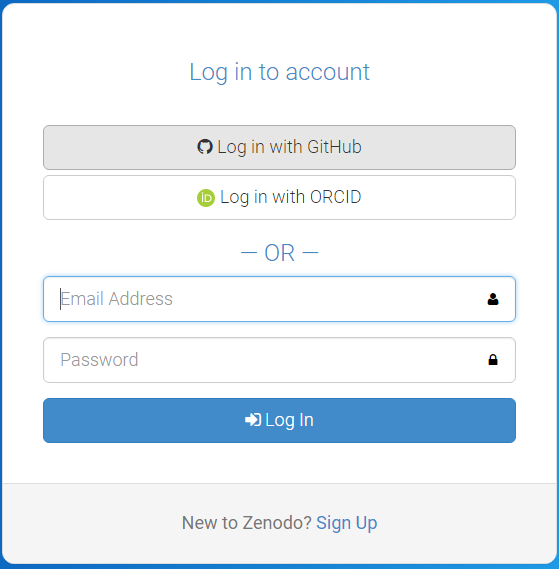

43 | Thankfully, a number of research systems are now integrating ORCID into their authentication systems. Zenodo provides the login ORCID authentication option. Once logged in, your ORCID will be assigned to your authored and deposited works.

44 |

45 | ## Exercise to create ORCID account and authenticate via Zenodo

46 | 1. [Register](https://orcid.org/register) for an ORCID.

47 | 2. You will receive a confirmation email. Click the link in the email to establish your unique 16-digit ORCID.

48 | 3. Go to [Zenodo](https://zenodo.org/) and select Log in (if you are new to Zenodo select Sign up).

49 | 4. Go to [linked accounts](https://zenodo.org/account/settings/linkedaccounts/) and click the Connect button next to ORCID.
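The last character of the 16-character ORCID iD is a check digit computed with the ISO 7064 MOD 11-2 algorithm, which lets software catch mistyped iDs before they reach a metadata record. The following is a minimal sketch of that check; the function names are hypothetical, and `0000-0002-1825-0097` is the sample iD that ORCID uses in its own documentation.

```python
def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    # A result of 10 is written as the letter X.
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Check the length, digits and check character of a hyphenated ORCID iD."""
    compact = orcid.replace("-", "")
    if len(compact) != 16 or not compact[:15].isdigit():
        return False
    return orcid_check_digit(compact[:15]) == compact[15].upper()


print(is_valid_orcid("0000-0002-1825-0097"))
print(is_valid_orcid("0000-0002-1825-0098"))
```

A passing check only shows that the iD is well formed; it does not confirm that the iD is registered or belongs to a particular person.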

50 |

51 | Next time you log into Zenodo you will be able to 'Log in with ORCID':

52 |

53 |

54 |

55 | ## Understanding whether something is open, free, and universally implementable

56 | ORCID features a [principles page](https://orcid.org/about/what-is-orcid/principles) where we can assess where it lies on the spectrum of these criteria. Can you identify statements that speak to these conditions: open, free, and universally implementable?

57 |

58 | Answers:

59 | - ORCID is a non-profit that collects fees from its members to sustain its operations

60 | - [Creative Commons CC0 1.0 Universal (CC0)](https://tldrlegal.com/license/creative-commons-cc0-1.0-universal) license releases data into the public domain, or otherwise grants permission to use it for any purpose

61 | - It is open to any organization and transcends borders

62 |

63 | Challenge Questions:

64 | - Where can you download the freely available data?

65 | - How does ORCID solicit community input outside of its governance?

66 | - Are the tools used to create, read, update, delete ORCID data open?

67 |

68 |

69 | ## Tombstones, a very grave subject

70 |

71 | A tombstone is a placeholder page, with metadata, for a research object that has been removed. There are a variety of reasons why a research object may be removed, including but not limited to staff removal, spam, a request from the owner, or the data center no longer existing. A tombstone page is needed when data and software are no longer accessible. A tombstone page communicates that the record is gone, why it is gone, and, in case you really must know, that there is a copy of the metadata for the record. A tombstone page should include: DOI, date of deaccession, reason for deaccession, message explaining the data center's policies, and a message that a copy of the metadata is kept for record keeping purposes as well as checksums of the files. Zenodo offers us further [explanation of the reasoning behind tombstone pages](https://github.com/zenodo/zenodo/issues/160).
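The elements a tombstone page should carry can be thought of as a small record. The sketch below is purely illustrative: the `Tombstone` class, its field names, the placeholder DOI `10.1234/example`, and the file content are all assumptions made for this example and do not describe how Zenodo or DataCite store tombstones.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Tombstone:
    """Hypothetical record of the information a tombstone page displays."""
    doi: str
    deaccession_date: date
    reason: str
    policy_message: str
    metadata_retained: bool = True
    checksums: dict = field(default_factory=dict)


def sha256_of(content: bytes) -> str:
    """Checksum of a file's bytes, kept even after the file itself is gone."""
    return hashlib.sha256(content).hexdigest()


stone = Tombstone(
    doi="10.1234/example",  # placeholder DOI, not a real record
    deaccession_date=date(2020, 1, 1),  # placeholder date
    reason="request from owner",
    policy_message="See the data center's withdrawal policy.",
    checksums={"data.csv": sha256_of(b"species,count\nant,12\n")},
)
print(stone.reason, stone.checksums["data.csv"][:12])
```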

72 |

73 | DataCite offers [statistics](https://stats.datacite.org/) where the failure to resolve DOIs after a certain number of attempts is reported (see [DataCite statistics support page](https://support.datacite.org/docs/datacite-statistics) for more information). In the case of Zenodo and the GitHub issue above, the hidden field reveals thousands of records that are a result of spam.

74 |

75 |

76 |

77 | If a DOI is no longer available and the data center does not have the resources to create a tombstone page, DataCite provides a generic [tombstone page](https://support.datacite.org/docs/tombstone-pages).

78 |

79 | **See the following tombstone examples:**

80 | - Zenodo tombstone: [https://zenodo.org/record/1098445](https://zenodo.org/record/1098445)

81 | - Figshare tombstone: [https://figshare.com/articles/Climate_Change/1381402](https://figshare.com/articles/Climate_Change/1381402)

82 |

83 | ## Discussion of tombstones

84 |

85 |

--------------------------------------------------------------------------------

/_episodes/04-interoperable.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Interoperable"

3 | teaching: 0

4 | exercises: 0

5 | questions:

6 | - What does interoperability mean?

7 | - What is a controlled vocabulary, a metadata schema and linked data?

8 | - How do I describe data so that humans and computers can understand?

9 | objectives:

10 | - "Explain what makes data and software (more) interoperable for machines"

11 | - "Identify widely used metadata standards for research, including generic and discipline-focussed examples"

12 | - "Explain the role of controlled vocabularies for encoding data and for annotating metadata in enabling interoperability"

13 | - "Understand how linked data standards and conventions for metadata schema documentation relate to interoperability"

14 | keypoints:

15 | - "Understand that FAIR is about both humans and machines understanding data."

16 | - "Interoperability means choosing a data format or knowledge representation language that helps machines to understand the data."

17 | ---

18 |

19 | > ## For data & software to be interoperable:

20 | > I1. (meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation

21 | > I2. (meta)data use vocabularies that follow FAIR principles

22 | > I3. (meta)data include qualified references to other (meta)data

23 | {: .checklist}

24 |

25 | ## What is interoperability for data and software?

26 |

27 | Shared understanding of concepts, for humans as well as machines.

28 |

29 | ### What does it mean to be machine readable vs human readable?

30 |

31 | According to the [Open Data Handbook](http://opendatahandbook.org/glossary/en/):

32 |

33 | > *Human Readable*

34 | > "Data in a format that can be conveniently read by a human. Some human-readable formats, such as PDF, are not machine-readable as they are not structured data, i.e. the representation of the data on disk does not represent the actual relationships present in the data."

35 |

36 | > *Machine Readable*

37 | > "Data in a data format that can be automatically read and processed by a computer, such as CSV, JSON, XML, etc. Machine-readable data must be structured data. Compare human-readable.

38 | > Non-digital material (for example printed or hand-written documents) is by its non-digital nature not machine-readable. But even digital material need not be machine-readable. For example, consider a PDF document containing tables of data. These are definitely digital but are not machine-readable because a computer would struggle to access the tabular information - even though they are very human readable. The equivalent tables in a format such as a spreadsheet would be machine readable.

39 | > As another example scans (photographs) of text are not machine-readable (but are human readable!) but the equivalent text in a format such as a simple ASCII text file can machine readable and processable."
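As a small illustration of what "machine readable" buys you, the sketch below reads a table stored as CSV and sums a column with a few lines of standard-library Python. The table content is invented for this example. Doing the same for a table embedded in a PDF or a scanned image would first require extracting the text and guessing the structure.

```python
import csv
import io

# A tiny, made-up table in CSV form; in practice this would be a file on disk.
table = io.StringIO("species,count\nant,12\nbee,7\n")

# Each row becomes a dictionary keyed by the column headers.
rows = list(csv.DictReader(table))
total = sum(int(row["count"]) for row in rows)

print(rows[0]["species"], total)
```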

40 |

41 |

42 | > Software uses community accepted standards and platforms, making it possible for users to run the software.

43 | [Top 10 FAIR things for research software][10FTRS]

44 |

45 | [10FTRS]: https://librarycarpentry.org/Top-10-FAIR//2018/12/01/research-software/

46 |

47 | ## Describing data and software with shared, controlled vocabularies

48 |

49 | See

50 | -

51 | -

52 | -

53 |

54 | ## Representing knowledge in data and software

55 |

56 | See .

57 |

58 | ### Beyond the PDF

59 | Publishers, librarians, researchers, developers and funders have all been working towards a future where we can move beyond the PDF, from "static and disparate data and knowledge representations to richly integrated content which grows and changes the more we learn." Research objects of the future will capture all aspects of scholarship (hypotheses, data, methods, results, presentations etc.) in forms that are semantically enriched, interoperable and easily transmitted and comprehended.

60 | Attribution, Evaluation, Archiving, Impact

61 | https://sites.google.com/site/beyondthepdf/

62 |

63 | Beyond the PDF has now grown into FORCE...

64 | Towards a vision where research will move from document- to knowledge-based information flows

65 | semantic descriptions of research data & their structures

66 | aggregation, development & teaching of subject-specific vocabularies, ontologies & knowledge graphs

67 | Paper of the Future

68 | https://www.authorea.com/users/23/articles/8762-the-paper-of-the-future to Jupyter Notebooks/Stencila

69 | https://stenci.la/

70 |

71 | ### Knowledge representation languages

72 | provide machine-readable (meta)data with a well-established formalism

73 | structured, using discipline-established vocabularies / ontologies / thesauri (RDF extensible knowledge representation model, OWL, JSON LD, schema.org)

74 | offer (meta)data ingest from relevant sources (Document Information Dictionary or Extensible Metadata Platform from PDF)

75 | provide as precise & complete metadata as possible

76 | look for metrics to evaluate the FAIRness of a controlled vocabulary / ontology / thesaurus

77 | often do not (yet) exist

78 | assist in their development

79 | clearly identify relationships between datasets in the metadata (e.g. “is new version of”, “is supplement to”, “relates to”, etc.)

80 | request support regarding these tasks from the repositories in your field of study

81 | for software: follow established code style guides (thanks to @npch!)

82 |

83 | ## Adding qualified references among data and software

84 |

85 | support referencing metadata fields between datasets via a schema (relatedIdentifier, relationType)
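A qualified reference pairs an identifier with the type of relationship, so that a machine can tell "is a new version of" apart from "is a supplement to". The sketch below groups a record's related identifiers by relation type. The property names `relatedIdentifier` and `relationType` and the relation values `IsNewVersionOf` and `IsSupplementTo` come from the DataCite schema; the DOIs are placeholders and the helper `links_by_relation` is hypothetical.

```python
from collections import defaultdict

# Hypothetical metadata record; the DOIs are placeholders, not real records.
metadata = {
    "identifier": "10.1234/dataset.v2",
    "relatedIdentifiers": [
        {"relatedIdentifier": "10.1234/dataset.v1", "relationType": "IsNewVersionOf"},
        {"relatedIdentifier": "10.1234/paper", "relationType": "IsSupplementTo"},
    ],
}


def links_by_relation(record: dict) -> dict:
    """Group related identifiers by their relation type."""
    grouped = defaultdict(list)
    for link in record.get("relatedIdentifiers", []):
        grouped[link["relationType"]].append(link["relatedIdentifier"])
    return dict(grouped)


links = links_by_relation(metadata)
print(links)
```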

86 |

87 | Data Science and Digital Libraries => (research) knowledge graph(s)

88 | Scientific Data Management

89 | Visual Analytics to expose information within videos as keywords => av.tib.eu

90 | Scientific Knowledge Engineering => ontologies

91 |

92 | Example:

93 | → Automatic ORCID profile update when DOI is minted

94 | DataCite – CrossRef – ORCID

95 | collaboration

96 | → PID of choice for RDM: here, the Digital Object Identifier (DOI)

97 |

98 | Detour: Replication / Reproducibility Crisis

99 | doi.org/10.1073/pnas.1708272114

100 | doi.org/10.1371/journal.pbio.1002165

101 | doi.org/10.12688/f1000research.11334.1

102 | Examples of science failing due to software errors/bugs:

103 | figshare.com/authors/Neil_Chue_Hong/96503

104 |

105 |

106 | “[...] around 70% of research relies on software [...] if almost a half of that software is untested, this is a huge risk to the reliability of research results.”

107 | Results from a US survey about Research Software Engineers

108 | URSSI.us/blog/2018/06/21/results-from-a-us-survey-about-research-software-engineers (Daniel S. Katz, Sandra Gesing, Olivier Philippe, and Simon Hettrick)

109 | Olivier Philippe, Martin Hammitzsch, Stephan Janosch, Anelda van der Walt, Ben van Werkhoven, Simon Hettrick, Daniel S. Katz, Katrin Leinweber, Sandra Gesing, Stephan Druskat. 2018. doi.org/10.5281/zenodo.1194669

110 |

111 | Code style guides & formatters (thanks to Neil Chue Hong)

112 | faster than manual/menial formatting

113 | code looks the same, regardless of author

114 | can be automatically enforced to keep diffs focussed

115 | PyPI.org/project/pycodestyle, /black, etc.

116 | ROpenSci packaging guide

117 | style.tidyverse.org

118 | Google.GitHub.io/styleguide

119 |

120 |

121 | If others can use your code, convey the meaning of updates with SemVer.org (CC BY 3.0)

122 | “version number[ changes] convey meaning about the underlying code” (Tom Preston-Werner)
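Under Semantic Versioning, a change in the MAJOR number signals incompatible changes, MINOR signals backwards-compatible additions, and PATCH signals backwards-compatible bug fixes. The sketch below reads that meaning from two version strings. It is a simplified illustration that assumes plain `MAJOR.MINOR.PATCH` strings without pre-release or build tags; the function names are hypothetical.

```python
def parse_version(version: str) -> tuple:
    """Split 'MAJOR.MINOR.PATCH' into a tuple of three integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def change_type(old: str, new: str) -> str:
    """Name the most significant component that differs between two versions."""
    old_parts, new_parts = parse_version(old), parse_version(new)
    for name, before, after in zip(("major", "minor", "patch"), old_parts, new_parts):
        if before != after:
            return name
    return "none"


print(change_type("1.4.2", "2.0.0"))  # incompatible changes
print(change_type("1.4.2", "1.5.0"))  # new, backwards-compatible functionality
print(change_type("1.4.2", "1.4.3"))  # backwards-compatible bug fix
```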

123 |

124 |

125 | Exercise

126 | Python & R Carpentries lessons

127 |

128 | ## Linked Data

129 |

130 | [Top 10 FAIR things: Linked Open Data](https://librarycarpentry.org/Top-10-FAIR//2019/09/05/linked-open-data/)

131 |

132 | Linked data example

133 | Triples - RDF - SPARQL

134 | Wikidata exercise
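The triple idea behind RDF and SPARQL can be sketched without any special library: every statement is a (subject, predicate, object) triple, and a query is a pattern in which some positions are left open. The example below uses plain Python tuples and is not real RDF; the predicate names `creatorOf`, `publishedBy` and `hasDOI` are invented for illustration, while the facts come from the Zenodo citation in the introduction episode.

```python
# Each statement is a (subject, predicate, object) triple.
triples = [
    ("El-Gebali, Sara", "creatorOf", "Research Data Life Cycle"),
    ("Research Data Life Cycle", "publishedBy", "Zenodo"),
    ("Research Data Life Cycle", "hasDOI", "10.5281/zenodo.4057867"),
]


def match(store, subject=None, predicate=None, obj=None):
    """Return triples matching the pattern; None acts like a query variable."""
    return [
        (s, p, o)
        for (s, p, o) in store
        if (subject is None or s == subject)
        and (predicate is None or p == predicate)
        and (obj is None or o == obj)
    ]


# "What do we know about the resource 'Research Data Life Cycle'?"
about = match(triples, subject="Research Data Life Cycle")
print(about)
```

In real linked data, subjects and predicates would be URIs rather than plain strings, which is what lets statements from different sources be combined.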

135 |

136 | Standards: https://fairsharing.org/standards/

137 | schema.org: http://schema.org/

138 |

139 | ISA framework: 'Investigation' (the project context), 'Study' (a unit of research) and 'Assay' (analytical measurement) - https://isa-tools.github.io/

140 |

141 | Example of schema.org: rOpenSci/codemetar

142 |

143 | Modularity

144 | http://bioschemas.org

145 |

146 | codemeta crosswalks to other standards

147 | https://codemeta.github.io/crosswalk/

148 |

149 | DCAT

150 | https://www.w3.org/TR/vocab-dcat/

151 |

152 | Using community accepted code style guidelines such as PEP 8 for Python (PEP 8 itself is FAIR)

153 |

154 | Scholix - related identifiers - Zenodo example linking data/software to papers

155 | https://dliservice.research-infrastructures.eu/#/

156 | https://authorcarpentry.github.io/dois-citation-data/01-register-doi.html

157 |

158 | Should vocabularies from reusable episode be moved here?

159 |

--------------------------------------------------------------------------------

/_episodes/05-reusable.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Reusable"

3 | teaching: 0

4 | exercises: 0

5 | questions:

6 | - "Key question"

7 | objectives:

8 | - "Explain machine readability in terms of file naming conventions and providing provenance metadata"

9 | - "Explain how data citation works in practice"

10 | - "Understand key components of a data citation"

11 | - "Explore domain-relevant community standards including metadata standards"

12 | - "Understand how proper licensing is essential for reusability"

13 | - "Know about some of the licenses commonly used for data and software"

14 | keypoints:

15 | - "First key point."

16 | ---

17 |

18 | > ## For data & software to be reusable:

19 | > R1. (meta)data have a plurality of accurate and relevant attributes

20 | > R1.1 (meta)data are released with a clear and accessible data usage licence

21 | > R1.2 (meta)data are associated with their provenance

22 | > R1.3 (meta)data meet domain-relevant community standards

23 | {: .checklist}

24 |

25 |

26 | ## What does it mean to be machine readable vs human readable?

27 |

28 | According to the [Open Data Handbook](http://opendatahandbook.org/glossary/en/):

29 |

30 | *Human Readable*

31 | "Data in a format that can be conveniently read by a human. Some human-readable formats, such as PDF, are not machine-readable as they are not structured data, i.e. the representation of the data on disk does not represent the actual relationships present in the data."

32 |

33 | *Machine Readable*

34 | "Data in a data format that can be automatically read and processed by a computer, such as CSV, JSON, XML, etc. Machine-readable data must be structured data. Compare human-readable.

35 |

36 | Non-digital material (for example printed or hand-written documents) is by its non-digital nature not machine-readable. But even digital material need not be machine-readable. For example, consider a PDF document containing tables of data. These are definitely digital but are not machine-readable because a computer would struggle to access the tabular information - even though they are very human readable. The equivalent tables in a format such as a spreadsheet would be machine readable.

37 |

38 | As another example scans (photographs) of text are not machine-readable (but are human readable!) but the equivalent text in a format such as a simple ASCII text file can [be] machine readable and processable."
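To make the distinction concrete, here is a minimal sketch of what "automatically read and processed" can look like for a small CSV table, using Python's standard `csv` module. The column names and values are invented for illustration and do not come from any real dataset.

```python
import csv
import io

# Hypothetical sample data, invented for this example only.
csv_text = "site,date,count\nA,2020-01-01,12\nB,2020-01-02,7\n"

# Because the data is structured, each row becomes a dictionary keyed by column name.
rows = list(csv.DictReader(io.StringIO(csv_text)))

# The program can now address values by meaning rather than by position on a page.
total = sum(int(row["count"]) for row in rows)
print(total)
```

The same table embedded in a PDF or a scanned image would first need text extraction or optical character recognition before any of these steps could run.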

39 |

40 |

41 | ## File naming best practices

42 | A file name should be unique, consistent and descriptive. This increases visibility and discoverability and makes files easy to classify and sort. Remember, a file name is the primary identifier for the file and its contents.

43 | ### Do’s and Don’ts of file naming:

44 | #### Do’s:

45 | - Make use of file naming tools for bulk naming such as Ant Renamer, RenameIT or Rename4Mac.

46 | - Create descriptive, meaningful, easily understood names no less than 12-14 characters.

47 | - Use identifiers to make it easier to classify types of files, e.g. Int1 (interview 1)

48 | - Make sure the 3-letter file format extension is present at the end of the name (e.g. .doc, .xls, .mov, .tif)

49 | - If applicable, include versioning within file names

50 | - For dates, use the ISO 8601 standard (YYYY-MM-DD) and place the date at the end of the file name, unless you need to organise your files chronologically, in which case place it at the start.

51 | - For experimental data files, consider using the project/experiment name and conditions in abbreviations

52 | - Add a README file in your top directory which details your naming convention, directory structure and abbreviations

53 | - When combining elements in a file name, use a common [special letter case](https://en.wikipedia.org/wiki/Letter_case#Special_case_styles) pattern such as kebab-case, CamelCase, or snake_case, preferably separating elements with hyphens (-) or underscores (_)

54 | #### Don’ts:

55 | - Avoid naming files/folders with individual persons' names, as it impedes handover and data sharing.

56 | - Avoid long names

57 | - Avoid using spaces, dots, commas and special characters (e.g. ~ ! @ # $ % ^ & * ( ) ` ; < > ? , [ ] { } ‘ “)

58 | - Avoid repetition, e.g. if the directory is named Electron_Microscopy_Images, you don’t need to name the files ELN_MI_Img_20200101.img
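The do's and don'ts above could be checked automatically along the following lines. This is only a sketch under stated assumptions: the element order (project, description, version, date), the helper names `build_name` and `is_safe`, and the rule of a single extension of one to five characters are all choices made for this example, not part of any standard.

```python
import re
from datetime import date

# Assumed rule: letters, digits, hyphens and underscores only, then a single extension.
SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+\.[A-Za-z0-9]{1,5}")


def build_name(project: str, description: str, day: date, version: int, ext: str) -> str:
    """Join the elements with underscores; the date is written as ISO 8601 (YYYY-MM-DD)."""
    return f"{project}_{description}_v{version:02d}_{day.isoformat()}.{ext}"


def is_safe(name: str) -> bool:
    """Return True if the name has no spaces, extra dots or special characters."""
    return SAFE_NAME.fullmatch(name) is not None


name = build_name("frogsim", "Int1", date(2020, 1, 1), 2, "csv")
print(name, is_safe(name))
print(is_safe("my file (final).csv"))
```

Note that this pattern is deliberately strict: a compound extension such as `.nii.gz` would be rejected, so the rule would need loosening for formats that rely on one.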

59 |

60 | #### Examples:

61 | - Stanford Libraries [guidance on file naming](https://guides.library.stanford.edu/data-best-practices) is a great place to start.

62 | - [Dryad example](http://datadryad.com/pages/reusabilityBestPractices):

63 | - 1900-2000_sasquatch_migration_coordinates.csv

64 | - Smith-fMRI-neural-response-to-cupcakes-vs-vegetables.nii.gz

65 | - 2015-SimulationOfTropicalFrogEvolution.R

66 |

67 | ## Directory structures and README files

68 | A clear directory structure will make it easier to locate files and versions and this is particularly important when collaborating with others. Consider a hierarchical file structure starting from broad topics to more specific ones nested inside, restricting the level of folders to 3 or 4 with a limited number of items inside each of them.

69 |

70 | The UK Data Service offers an example of directory structure and naming: https://ukdataservice.ac.uk/manage-data/format/organising.aspx

71 |

72 | For others to reuse your research, it is important to include a README file and to organize your files in a logical way. Consider the following file structure examples from Dryad:

73 |

74 |

75 |

76 | It is also good practice to include README files to describe how the data was collected, processed, and analyzed. In other words, README files help others correctly interpret and reanalyze your data. A README file can include file names/directory structure, glossary/definitions of acronyms/terms, description of the parameters/variables and units of measurement, report precision/accuracy/uncertainty in measurements, standards/calibrations used, environment/experimental conditions, quality assurance/quality control applied, known problems, research date information, description of relationships/dependencies, additional resources/references, methods/software/data used, example records, and other supplemental information.

77 |

78 | - Dryad README file example:

79 | https://doi.org/10.5061/dryad.j512f21p

80 |

81 | - Awesome README list (for software):

82 | https://github.com/matiassingers/awesome-readme

83 |

84 | - Different Format Types

85 | https://data.library.virginia.edu/data-management/plan/format-types/

86 |

87 |

88 | ## Disciplinary Data Formats

89 |

90 | Many disciplines have developed formal metadata standards that enable re-use of data; however, these standards are not universal, and it often requires background knowledge to identify, contextualize, and interpret the underlying data. Interoperability between disciplines is still a challenge because of the continued use of custom metadata schemes and the development of new, incompatible standards. Thankfully, DataCite is providing a common, overarching metadata standard across disciplinary datasets, albeit at a generic rather than granular level.

91 |

92 | In the meantime, the Research Data Alliance (RDA) Metadata Standards Directory Working Group has developed a collaborative, open directory of metadata standards applicable to scientific data. It helps the research community learn about metadata standards, controlled vocabularies, and the underlying elements across different disciplines, and can potentially help with mapping data elements from different sources.

93 |

94 | Exercise/Quiz?

95 |

96 | [Metadata Standards Directory](http://rd-alliance.github.io/metadata-directory/standards/)

97 | Features: Standards, Extensions, Tools, and Use Cases

98 |

99 | ## Quality Control

100 | Quality control is a fundamental step in research. It safeguards the integrity of the data, affects how the data can be used and reused, and is needed to identify potential problems.

101 |

102 | It is therefore essential to outline how data quality will be controlled at each stage (data collection, digitisation or data entry, checking, and analysis).

103 |

104 | ## Versioning

105 | Versioning is an efficient way to keep track of changes made to a file or dataset and to see who did what and when. This is especially useful in collaborative work.

106 |

107 | A version control strategy allows you to easily identify the most current or final version, and to organize, manage and record the edits made while drafting, editing and analysing the document or data.

108 |

109 | Consider the following practices:

110 | - Identify the master file and the major versions, for instance: original, pre-review, 1st revision, 2nd revision, final revision, submitted.

111 | - Outline a strategy for archiving and storing: where to store the minor and major versions, and how long to retain each.

112 | - Maintain a record of file locations; a good place for this is the README file.

113 |

114 | Example:

115 | UK Data Service version control guide:

116 | https://www.ukdataservice.ac.uk/manage-data/format/versioning.aspx

117 |

118 | ## Research vocabularies

119 | Research Vocabularies Australia https://vocabs.ands.org.au/

120 | AGROVOC & VocBench http://aims.fao.org/vest-registry/vocabularies/agrovoc