├── .gitignore
├── LICENSE
├── README.md
├── data
│   ├── __init__.py
│   ├── base_dataset.py
│   ├── fashion_dataset.py
│   └── pose_utils.py
├── datasets
│   └── fashion
├── model
│   ├── __init__.py
│   ├── base_model.py
│   ├── cocos_model.py
│   ├── contextual_loss.py
│   ├── correspondence_net.py
│   ├── discriminator.py
│   ├── loss.py
│   ├── networks.py
│   └── translation_net.py
├── options.py
├── test.py
└── train.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

131 | # other stuff

132 | **/*.png

133 | **/*.pdf

134 | **/*.pth

135 | **/*.jpg

136 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | GNU AFFERO GENERAL PUBLIC LICENSE

2 | Version 3, 19 November 2007

3 |

4 | Copyright (C) 2007 Free Software Foundation, Inc.

5 | Everyone is permitted to copy and distribute verbatim copies

6 | of this license document, but changing it is not allowed.

7 |

8 | Preamble

9 |

10 | The GNU Affero General Public License is a free, copyleft license for

11 | software and other kinds of works, specifically designed to ensure

12 | cooperation with the community in the case of network server software.

13 |

14 | The licenses for most software and other practical works are designed

15 | to take away your freedom to share and change the works. By contrast,

16 | our General Public Licenses are intended to guarantee your freedom to

17 | share and change all versions of a program--to make sure it remains free

18 | software for all its users.

19 |

20 | When we speak of free software, we are referring to freedom, not

21 | price. Our General Public Licenses are designed to make sure that you

22 | have the freedom to distribute copies of free software (and charge for

23 | them if you wish), that you receive source code or can get it if you

24 | want it, that you can change the software or use pieces of it in new

25 | free programs, and that you know you can do these things.

26 |

27 | Developers that use our General Public Licenses protect your rights

28 | with two steps: (1) assert copyright on the software, and (2) offer

29 | you this License which gives you legal permission to copy, distribute

30 | and/or modify the software.

31 |

32 | A secondary benefit of defending all users' freedom is that

33 | improvements made in alternate versions of the program, if they

34 | receive widespread use, become available for other developers to

35 | incorporate. Many developers of free software are heartened and

36 | encouraged by the resulting cooperation. However, in the case of

37 | software used on network servers, this result may fail to come about.

38 | The GNU General Public License permits making a modified version and

39 | letting the public access it on a server without ever releasing its

40 | source code to the public.

41 |

42 | The GNU Affero General Public License is designed specifically to

43 | ensure that, in such cases, the modified source code becomes available

44 | to the community. It requires the operator of a network server to

45 | provide the source code of the modified version running there to the

46 | users of that server. Therefore, public use of a modified version, on

47 | a publicly accessible server, gives the public access to the source

48 | code of the modified version.

49 |

50 | An older license, called the Affero General Public License and

51 | published by Affero, was designed to accomplish similar goals. This is

52 | a different license, not a version of the Affero GPL, but Affero has

53 | released a new version of the Affero GPL which permits relicensing under

54 | this license.

55 |

56 | The precise terms and conditions for copying, distribution and

57 | modification follow.

58 |

59 | TERMS AND CONDITIONS

60 |

61 | 0. Definitions.

62 |

63 | "This License" refers to version 3 of the GNU Affero General Public License.

64 |

65 | "Copyright" also means copyright-like laws that apply to other kinds of

66 | works, such as semiconductor masks.

67 |

68 | "The Program" refers to any copyrightable work licensed under this

69 | License. Each licensee is addressed as "you". "Licensees" and

70 | "recipients" may be individuals or organizations.

71 |

72 | To "modify" a work means to copy from or adapt all or part of the work

73 | in a fashion requiring copyright permission, other than the making of an

74 | exact copy. The resulting work is called a "modified version" of the

75 | earlier work or a work "based on" the earlier work.

76 |

77 | A "covered work" means either the unmodified Program or a work based

78 | on the Program.

79 |

80 | To "propagate" a work means to do anything with it that, without

81 | permission, would make you directly or secondarily liable for

82 | infringement under applicable copyright law, except executing it on a

83 | computer or modifying a private copy. Propagation includes copying,

84 | distribution (with or without modification), making available to the

85 | public, and in some countries other activities as well.

86 |

87 | To "convey" a work means any kind of propagation that enables other

88 | parties to make or receive copies. Mere interaction with a user through

89 | a computer network, with no transfer of a copy, is not conveying.

90 |

91 | An interactive user interface displays "Appropriate Legal Notices"

92 | to the extent that it includes a convenient and prominently visible

93 | feature that (1) displays an appropriate copyright notice, and (2)

94 | tells the user that there is no warranty for the work (except to the

95 | extent that warranties are provided), that licensees may convey the

96 | work under this License, and how to view a copy of this License. If

97 | the interface presents a list of user commands or options, such as a

98 | menu, a prominent item in the list meets this criterion.

99 |

100 | 1. Source Code.

101 |

102 | The "source code" for a work means the preferred form of the work

103 | for making modifications to it. "Object code" means any non-source

104 | form of a work.

105 |

106 | A "Standard Interface" means an interface that either is an official

107 | standard defined by a recognized standards body, or, in the case of

108 | interfaces specified for a particular programming language, one that

109 | is widely used among developers working in that language.

110 |

111 | The "System Libraries" of an executable work include anything, other

112 | than the work as a whole, that (a) is included in the normal form of

113 | packaging a Major Component, but which is not part of that Major

114 | Component, and (b) serves only to enable use of the work with that

115 | Major Component, or to implement a Standard Interface for which an

116 | implementation is available to the public in source code form. A

117 | "Major Component", in this context, means a major essential component

118 | (kernel, window system, and so on) of the specific operating system

119 | (if any) on which the executable work runs, or a compiler used to

120 | produce the work, or an object code interpreter used to run it.

121 |

122 | The "Corresponding Source" for a work in object code form means all

123 | the source code needed to generate, install, and (for an executable

124 | work) run the object code and to modify the work, including scripts to

125 | control those activities. However, it does not include the work's

126 | System Libraries, or general-purpose tools or generally available free

127 | programs which are used unmodified in performing those activities but

128 | which are not part of the work. For example, Corresponding Source

129 | includes interface definition files associated with source files for

130 | the work, and the source code for shared libraries and dynamically

131 | linked subprograms that the work is specifically designed to require,

132 | such as by intimate data communication or control flow between those

133 | subprograms and other parts of the work.

134 |

135 | The Corresponding Source need not include anything that users

136 | can regenerate automatically from other parts of the Corresponding

137 | Source.

138 |

139 | The Corresponding Source for a work in source code form is that

140 | same work.

141 |

142 | 2. Basic Permissions.

143 |

144 | All rights granted under this License are granted for the term of

145 | copyright on the Program, and are irrevocable provided the stated

146 | conditions are met. This License explicitly affirms your unlimited

147 | permission to run the unmodified Program. The output from running a

148 | covered work is covered by this License only if the output, given its

149 | content, constitutes a covered work. This License acknowledges your

150 | rights of fair use or other equivalent, as provided by copyright law.

151 |

152 | You may make, run and propagate covered works that you do not

153 | convey, without conditions so long as your license otherwise remains

154 | in force. You may convey covered works to others for the sole purpose

155 | of having them make modifications exclusively for you, or provide you

156 | with facilities for running those works, provided that you comply with

157 | the terms of this License in conveying all material for which you do

158 | not control copyright. Those thus making or running the covered works

159 | for you must do so exclusively on your behalf, under your direction

160 | and control, on terms that prohibit them from making any copies of

161 | your copyrighted material outside their relationship with you.

162 |

163 | Conveying under any other circumstances is permitted solely under

164 | the conditions stated below. Sublicensing is not allowed; section 10

165 | makes it unnecessary.

166 |

167 | 3. Protecting Users' Legal Rights From Anti-Circumvention Law.

168 |

169 | No covered work shall be deemed part of an effective technological

170 | measure under any applicable law fulfilling obligations under article

171 | 11 of the WIPO copyright treaty adopted on 20 December 1996, or

172 | similar laws prohibiting or restricting circumvention of such

173 | measures.

174 |

175 | When you convey a covered work, you waive any legal power to forbid

176 | circumvention of technological measures to the extent such circumvention

177 | is effected by exercising rights under this License with respect to

178 | the covered work, and you disclaim any intention to limit operation or

179 | modification of the work as a means of enforcing, against the work's

180 | users, your or third parties' legal rights to forbid circumvention of

181 | technological measures.

182 |

183 | 4. Conveying Verbatim Copies.

184 |

185 | You may convey verbatim copies of the Program's source code as you

186 | receive it, in any medium, provided that you conspicuously and

187 | appropriately publish on each copy an appropriate copyright notice;

188 | keep intact all notices stating that this License and any

189 | non-permissive terms added in accord with section 7 apply to the code;

190 | keep intact all notices of the absence of any warranty; and give all

191 | recipients a copy of this License along with the Program.

192 |

193 | You may charge any price or no price for each copy that you convey,

194 | and you may offer support or warranty protection for a fee.

195 |

196 | 5. Conveying Modified Source Versions.

197 |

198 | You may convey a work based on the Program, or the modifications to

199 | produce it from the Program, in the form of source code under the

200 | terms of section 4, provided that you also meet all of these conditions:

201 |

202 | a) The work must carry prominent notices stating that you modified

203 | it, and giving a relevant date.

204 |

205 | b) The work must carry prominent notices stating that it is

206 | released under this License and any conditions added under section

207 | 7. This requirement modifies the requirement in section 4 to

208 | "keep intact all notices".

209 |

210 | c) You must license the entire work, as a whole, under this

211 | License to anyone who comes into possession of a copy. This

212 | License will therefore apply, along with any applicable section 7

213 | additional terms, to the whole of the work, and all its parts,

214 | regardless of how they are packaged. This License gives no

215 | permission to license the work in any other way, but it does not

216 | invalidate such permission if you have separately received it.

217 |

218 | d) If the work has interactive user interfaces, each must display

219 | Appropriate Legal Notices; however, if the Program has interactive

220 | interfaces that do not display Appropriate Legal Notices, your

221 | work need not make them do so.

222 |

223 | A compilation of a covered work with other separate and independent

224 | works, which are not by their nature extensions of the covered work,

225 | and which are not combined with it such as to form a larger program,

226 | in or on a volume of a storage or distribution medium, is called an

227 | "aggregate" if the compilation and its resulting copyright are not

228 | used to limit the access or legal rights of the compilation's users

229 | beyond what the individual works permit. Inclusion of a covered work

230 | in an aggregate does not cause this License to apply to the other

231 | parts of the aggregate.

232 |

233 | 6. Conveying Non-Source Forms.

234 |

235 | You may convey a covered work in object code form under the terms

236 | of sections 4 and 5, provided that you also convey the

237 | machine-readable Corresponding Source under the terms of this License,

238 | in one of these ways:

239 |

240 | a) Convey the object code in, or embodied in, a physical product

241 | (including a physical distribution medium), accompanied by the

242 | Corresponding Source fixed on a durable physical medium

243 | customarily used for software interchange.

244 |

245 | b) Convey the object code in, or embodied in, a physical product

246 | (including a physical distribution medium), accompanied by a

247 | written offer, valid for at least three years and valid for as

248 | long as you offer spare parts or customer support for that product

249 | model, to give anyone who possesses the object code either (1) a

250 | copy of the Corresponding Source for all the software in the

251 | product that is covered by this License, on a durable physical

252 | medium customarily used for software interchange, for a price no

253 | more than your reasonable cost of physically performing this

254 | conveying of source, or (2) access to copy the

255 | Corresponding Source from a network server at no charge.

256 |

257 | c) Convey individual copies of the object code with a copy of the

258 | written offer to provide the Corresponding Source. This

259 | alternative is allowed only occasionally and noncommercially, and

260 | only if you received the object code with such an offer, in accord

261 | with subsection 6b.

262 |

263 | d) Convey the object code by offering access from a designated

264 | place (gratis or for a charge), and offer equivalent access to the

265 | Corresponding Source in the same way through the same place at no

266 | further charge. You need not require recipients to copy the

267 | Corresponding Source along with the object code. If the place to

268 | copy the object code is a network server, the Corresponding Source

269 | may be on a different server (operated by you or a third party)

270 | that supports equivalent copying facilities, provided you maintain

271 | clear directions next to the object code saying where to find the

272 | Corresponding Source. Regardless of what server hosts the

273 | Corresponding Source, you remain obligated to ensure that it is

274 | available for as long as needed to satisfy these requirements.

275 |

276 | e) Convey the object code using peer-to-peer transmission, provided

277 | you inform other peers where the object code and Corresponding

278 | Source of the work are being offered to the general public at no

279 | charge under subsection 6d.

280 |

281 | A separable portion of the object code, whose source code is excluded

282 | from the Corresponding Source as a System Library, need not be

283 | included in conveying the object code work.

284 |

285 | A "User Product" is either (1) a "consumer product", which means any

286 | tangible personal property which is normally used for personal, family,

287 | or household purposes, or (2) anything designed or sold for incorporation

288 | into a dwelling. In determining whether a product is a consumer product,

289 | doubtful cases shall be resolved in favor of coverage. For a particular

290 | product received by a particular user, "normally used" refers to a

291 | typical or common use of that class of product, regardless of the status

292 | of the particular user or of the way in which the particular user

293 | actually uses, or expects or is expected to use, the product. A product

294 | is a consumer product regardless of whether the product has substantial

295 | commercial, industrial or non-consumer uses, unless such uses represent

296 | the only significant mode of use of the product.

297 |

298 | "Installation Information" for a User Product means any methods,

299 | procedures, authorization keys, or other information required to install

300 | and execute modified versions of a covered work in that User Product from

301 | a modified version of its Corresponding Source. The information must

302 | suffice to ensure that the continued functioning of the modified object

303 | code is in no case prevented or interfered with solely because

304 | modification has been made.

305 |

306 | If you convey an object code work under this section in, or with, or

307 | specifically for use in, a User Product, and the conveying occurs as

308 | part of a transaction in which the right of possession and use of the

309 | User Product is transferred to the recipient in perpetuity or for a

310 | fixed term (regardless of how the transaction is characterized), the

311 | Corresponding Source conveyed under this section must be accompanied

312 | by the Installation Information. But this requirement does not apply

313 | if neither you nor any third party retains the ability to install

314 | modified object code on the User Product (for example, the work has

315 | been installed in ROM).

316 |

317 | The requirement to provide Installation Information does not include a

318 | requirement to continue to provide support service, warranty, or updates

319 | for a work that has been modified or installed by the recipient, or for

320 | the User Product in which it has been modified or installed. Access to a

321 | network may be denied when the modification itself materially and

322 | adversely affects the operation of the network or violates the rules and

323 | protocols for communication across the network.

324 |

325 | Corresponding Source conveyed, and Installation Information provided,

326 | in accord with this section must be in a format that is publicly

327 | documented (and with an implementation available to the public in

328 | source code form), and must require no special password or key for

329 | unpacking, reading or copying.

330 |

331 | 7. Additional Terms.

332 |

333 | "Additional permissions" are terms that supplement the terms of this

334 | License by making exceptions from one or more of its conditions.

335 | Additional permissions that are applicable to the entire Program shall

336 | be treated as though they were included in this License, to the extent

337 | that they are valid under applicable law. If additional permissions

338 | apply only to part of the Program, that part may be used separately

339 | under those permissions, but the entire Program remains governed by

340 | this License without regard to the additional permissions.

341 |

342 | When you convey a copy of a covered work, you may at your option

343 | remove any additional permissions from that copy, or from any part of

344 | it. (Additional permissions may be written to require their own

345 | removal in certain cases when you modify the work.) You may place

346 | additional permissions on material, added by you to a covered work,

347 | for which you have or can give appropriate copyright permission.

348 |

349 | Notwithstanding any other provision of this License, for material you

350 | add to a covered work, you may (if authorized by the copyright holders of

351 | that material) supplement the terms of this License with terms:

352 |

353 | a) Disclaiming warranty or limiting liability differently from the

354 | terms of sections 15 and 16 of this License; or

355 |

356 | b) Requiring preservation of specified reasonable legal notices or

357 | author attributions in that material or in the Appropriate Legal

358 | Notices displayed by works containing it; or

359 |

360 | c) Prohibiting misrepresentation of the origin of that material, or

361 | requiring that modified versions of such material be marked in

362 | reasonable ways as different from the original version; or

363 |

364 | d) Limiting the use for publicity purposes of names of licensors or

365 | authors of the material; or

366 |

367 | e) Declining to grant rights under trademark law for use of some

368 | trade names, trademarks, or service marks; or

369 |

370 | f) Requiring indemnification of licensors and authors of that

371 | material by anyone who conveys the material (or modified versions of

372 | it) with contractual assumptions of liability to the recipient, for

373 | any liability that these contractual assumptions directly impose on

374 | those licensors and authors.

375 |

376 | All other non-permissive additional terms are considered "further

377 | restrictions" within the meaning of section 10. If the Program as you

378 | received it, or any part of it, contains a notice stating that it is

379 | governed by this License along with a term that is a further

380 | restriction, you may remove that term. If a license document contains

381 | a further restriction but permits relicensing or conveying under this

382 | License, you may add to a covered work material governed by the terms

383 | of that license document, provided that the further restriction does

384 | not survive such relicensing or conveying.

385 |

386 | If you add terms to a covered work in accord with this section, you

387 | must place, in the relevant source files, a statement of the

388 | additional terms that apply to those files, or a notice indicating

389 | where to find the applicable terms.

390 |

391 | Additional terms, permissive or non-permissive, may be stated in the

392 | form of a separately written license, or stated as exceptions;

393 | the above requirements apply either way.

394 |

395 | 8. Termination.

396 |

397 | You may not propagate or modify a covered work except as expressly

398 | provided under this License. Any attempt otherwise to propagate or

399 | modify it is void, and will automatically terminate your rights under

400 | this License (including any patent licenses granted under the third

401 | paragraph of section 11).

402 |

403 | However, if you cease all violation of this License, then your

404 | license from a particular copyright holder is reinstated (a)

405 | provisionally, unless and until the copyright holder explicitly and

406 | finally terminates your license, and (b) permanently, if the copyright

407 | holder fails to notify you of the violation by some reasonable means

408 | prior to 60 days after the cessation.

409 |

410 | Moreover, your license from a particular copyright holder is

411 | reinstated permanently if the copyright holder notifies you of the

412 | violation by some reasonable means, this is the first time you have

413 | received notice of violation of this License (for any work) from that

414 | copyright holder, and you cure the violation prior to 30 days after

415 | your receipt of the notice.

416 |

417 | Termination of your rights under this section does not terminate the

418 | licenses of parties who have received copies or rights from you under

419 | this License. If your rights have been terminated and not permanently

420 | reinstated, you do not qualify to receive new licenses for the same

421 | material under section 10.

422 |

423 | 9. Acceptance Not Required for Having Copies.

424 |

425 | You are not required to accept this License in order to receive or

426 | run a copy of the Program. Ancillary propagation of a covered work

427 | occurring solely as a consequence of using peer-to-peer transmission

428 | to receive a copy likewise does not require acceptance. However,

429 | nothing other than this License grants you permission to propagate or

430 | modify any covered work. These actions infringe copyright if you do

431 | not accept this License. Therefore, by modifying or propagating a

432 | covered work, you indicate your acceptance of this License to do so.

433 |

434 | 10. Automatic Licensing of Downstream Recipients.

435 |

436 | Each time you convey a covered work, the recipient automatically

437 | receives a license from the original licensors, to run, modify and

438 | propagate that work, subject to this License. You are not responsible

439 | for enforcing compliance by third parties with this License.

440 |

441 | An "entity transaction" is a transaction transferring control of an

442 | organization, or substantially all assets of one, or subdividing an

443 | organization, or merging organizations. If propagation of a covered

444 | work results from an entity transaction, each party to that

445 | transaction who receives a copy of the work also receives whatever

446 | licenses to the work the party's predecessor in interest had or could

447 | give under the previous paragraph, plus a right to possession of the

448 | Corresponding Source of the work from the predecessor in interest, if

449 | the predecessor has it or can get it with reasonable efforts.

450 |

451 | You may not impose any further restrictions on the exercise of the

452 | rights granted or affirmed under this License. For example, you may

453 | not impose a license fee, royalty, or other charge for exercise of

454 | rights granted under this License, and you may not initiate litigation

455 | (including a cross-claim or counterclaim in a lawsuit) alleging that

456 | any patent claim is infringed by making, using, selling, offering for

457 | sale, or importing the Program or any portion of it.

458 |

459 | 11. Patents.

460 |

461 | A "contributor" is a copyright holder who authorizes use under this

462 | License of the Program or a work on which the Program is based. The

463 | work thus licensed is called the contributor's "contributor version".

464 |

465 | A contributor's "essential patent claims" are all patent claims

466 | owned or controlled by the contributor, whether already acquired or

467 | hereafter acquired, that would be infringed by some manner, permitted

468 | by this License, of making, using, or selling its contributor version,

469 | but do not include claims that would be infringed only as a

470 | consequence of further modification of the contributor version. For

471 | purposes of this definition, "control" includes the right to grant

472 | patent sublicenses in a manner consistent with the requirements of

473 | this License.

474 |

475 | Each contributor grants you a non-exclusive, worldwide, royalty-free

476 | patent license under the contributor's essential patent claims, to

477 | make, use, sell, offer for sale, import and otherwise run, modify and

478 | propagate the contents of its contributor version.

479 |

480 | In the following three paragraphs, a "patent license" is any express

481 | agreement or commitment, however denominated, not to enforce a patent

482 | (such as an express permission to practice a patent or covenant not to

483 | sue for patent infringement). To "grant" such a patent license to a

484 | party means to make such an agreement or commitment not to enforce a

485 | patent against the party.

486 |

487 | If you convey a covered work, knowingly relying on a patent license,

488 | and the Corresponding Source of the work is not available for anyone

489 | to copy, free of charge and under the terms of this License, through a

490 | publicly available network server or other readily accessible means,

491 | then you must either (1) cause the Corresponding Source to be so

492 | available, or (2) arrange to deprive yourself of the benefit of the

493 | patent license for this particular work, or (3) arrange, in a manner

494 | consistent with the requirements of this License, to extend the patent

495 | license to downstream recipients. "Knowingly relying" means you have

496 | actual knowledge that, but for the patent license, your conveying the

497 | covered work in a country, or your recipient's use of the covered work

498 | in a country, would infringe one or more identifiable patents in that

499 | country that you have reason to believe are valid.

500 |

501 | If, pursuant to or in connection with a single transaction or

502 | arrangement, you convey, or propagate by procuring conveyance of, a

503 | covered work, and grant a patent license to some of the parties

504 | receiving the covered work authorizing them to use, propagate, modify

505 | or convey a specific copy of the covered work, then the patent license

506 | you grant is automatically extended to all recipients of the covered

507 | work and works based on it.

508 |

509 | A patent license is "discriminatory" if it does not include within

510 | the scope of its coverage, prohibits the exercise of, or is

511 | conditioned on the non-exercise of one or more of the rights that are

512 | specifically granted under this License. You may not convey a covered

513 | work if you are a party to an arrangement with a third party that is

514 | in the business of distributing software, under which you make payment

515 | to the third party based on the extent of your activity of conveying

516 | the work, and under which the third party grants, to any of the

517 | parties who would receive the covered work from you, a discriminatory

518 | patent license (a) in connection with copies of the covered work

519 | conveyed by you (or copies made from those copies), or (b) primarily

520 | for and in connection with specific products or compilations that

521 | contain the covered work, unless you entered into that arrangement,

522 | or that patent license was granted, prior to 28 March 2007.

523 |

524 | Nothing in this License shall be construed as excluding or limiting

525 | any implied license or other defenses to infringement that may

526 | otherwise be available to you under applicable patent law.

527 |

528 | 12. No Surrender of Others' Freedom.

529 |

530 | If conditions are imposed on you (whether by court order, agreement or

531 | otherwise) that contradict the conditions of this License, they do not

532 | excuse you from the conditions of this License. If you cannot convey a

533 | covered work so as to satisfy simultaneously your obligations under this

534 | License and any other pertinent obligations, then as a consequence you may

535 | not convey it at all. For example, if you agree to terms that obligate you

536 | to collect a royalty for further conveying from those to whom you convey

537 | the Program, the only way you could satisfy both those terms and this

538 | License would be to refrain entirely from conveying the Program.

539 |

540 | 13. Remote Network Interaction; Use with the GNU General Public License.

541 |

542 | Notwithstanding any other provision of this License, if you modify the

543 | Program, your modified version must prominently offer all users

544 | interacting with it remotely through a computer network (if your version

545 | supports such interaction) an opportunity to receive the Corresponding

546 | Source of your version by providing access to the Corresponding Source

547 | from a network server at no charge, through some standard or customary

548 | means of facilitating copying of software. This Corresponding Source

549 | shall include the Corresponding Source for any work covered by version 3

550 | of the GNU General Public License that is incorporated pursuant to the

551 | following paragraph.

552 |

553 | Notwithstanding any other provision of this License, you have

554 | permission to link or combine any covered work with a work licensed

555 | under version 3 of the GNU General Public License into a single

556 | combined work, and to convey the resulting work. The terms of this

557 | License will continue to apply to the part which is the covered work,

558 | but the work with which it is combined will remain governed by version

559 | 3 of the GNU General Public License.

560 |

561 | 14. Revised Versions of this License.

562 |

563 | The Free Software Foundation may publish revised and/or new versions of

564 | the GNU Affero General Public License from time to time. Such new versions

565 | will be similar in spirit to the present version, but may differ in detail to

566 | address new problems or concerns.

567 |

568 | Each version is given a distinguishing version number. If the

569 | Program specifies that a certain numbered version of the GNU Affero General

570 | Public License "or any later version" applies to it, you have the

571 | option of following the terms and conditions either of that numbered

572 | version or of any later version published by the Free Software

573 | Foundation. If the Program does not specify a version number of the

574 | GNU Affero General Public License, you may choose any version ever published

575 | by the Free Software Foundation.

576 |

577 | If the Program specifies that a proxy can decide which future

578 | versions of the GNU Affero General Public License can be used, that proxy's

579 | public statement of acceptance of a version permanently authorizes you

580 | to choose that version for the Program.

581 |

582 | Later license versions may give you additional or different

583 | permissions. However, no additional obligations are imposed on any

584 | author or copyright holder as a result of your choosing to follow a

585 | later version.

586 |

587 | 15. Disclaimer of Warranty.

588 |

589 | THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

590 | APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

591 | HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

592 | OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

593 | THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

594 | PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

595 | IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

596 | ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

597 |

598 | 16. Limitation of Liability.

599 |

600 | IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

601 | WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

602 | THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

603 | GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

604 | USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

605 | DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

606 | PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

607 | EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

608 | SUCH DAMAGES.

609 |

610 | 17. Interpretation of Sections 15 and 16.

611 |

612 | If the disclaimer of warranty and limitation of liability provided

613 | above cannot be given local legal effect according to their terms,

614 | reviewing courts shall apply local law that most closely approximates

615 | an absolute waiver of all civil liability in connection with the

616 | Program, unless a warranty or assumption of liability accompanies a

617 | copy of the Program in return for a fee.

618 |

619 | END OF TERMS AND CONDITIONS

620 |

621 | How to Apply These Terms to Your New Programs

622 |

623 | If you develop a new program, and you want it to be of the greatest

624 | possible use to the public, the best way to achieve this is to make it

625 | free software which everyone can redistribute and change under these terms.

626 |

627 | To do so, attach the following notices to the program. It is safest

628 | to attach them to the start of each source file to most effectively

629 | state the exclusion of warranty; and each file should have at least

630 | the "copyright" line and a pointer to where the full notice is found.

631 |

632 | <one line to give the program's name and a brief idea of what it does.>

633 | Copyright (C) <year> <name of author>

634 |

635 | This program is free software: you can redistribute it and/or modify

636 | it under the terms of the GNU Affero General Public License as published

637 | by the Free Software Foundation, either version 3 of the License, or

638 | (at your option) any later version.

639 |

640 | This program is distributed in the hope that it will be useful,

641 | but WITHOUT ANY WARRANTY; without even the implied warranty of

642 | MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

643 | GNU Affero General Public License for more details.

644 |

645 | You should have received a copy of the GNU Affero General Public License

646 | along with this program. If not, see <https://www.gnu.org/licenses/>.

647 |

648 | Also add information on how to contact you by electronic and paper mail.

649 |

650 | If your software can interact with users remotely through a computer

651 | network, you should also make sure that it provides a way for users to

652 | get its source. For example, if your program is a web application, its

653 | interface could display a "Source" link that leads users to an archive

654 | of the code. There are many ways you could offer source, and different

655 | solutions will be better for different programs; see section 13 for the

656 | specific requirements.

657 |

658 | You should also get your employer (if you work as a programmer) or school,

659 | if any, to sign a "copyright disclaimer" for the program, if necessary.

660 | For more information on this, and how to apply and follow the GNU AGPL, see

661 | <https://www.gnu.org/licenses/>.

662 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [arXiv:2004.05571](https://arxiv.org/abs/2004.05571)

4 |

5 | # CoCosNet

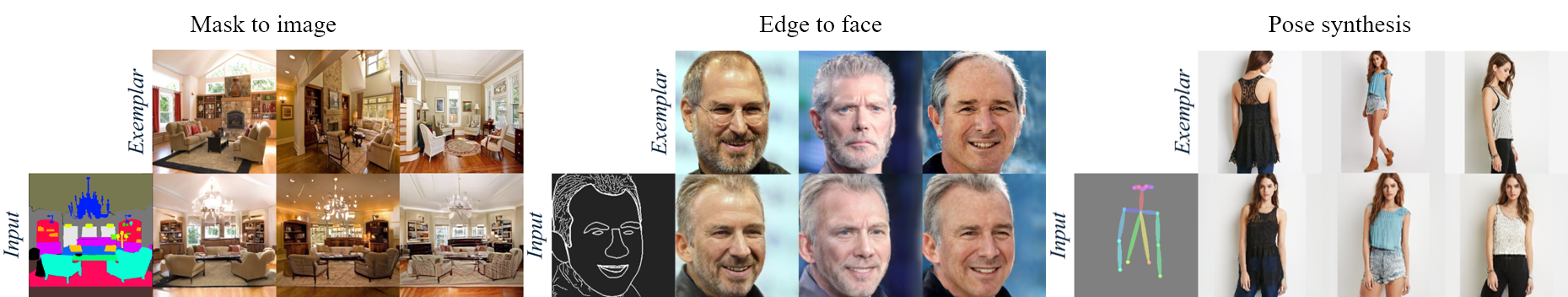

6 | PyTorch implementation of the paper ["Cross-domain Correspondence Learning for Exemplar-based Image Translation"](https://panzhang0212.github.io/CoCosNet) (CVPR 2020 oral).

7 |

8 |

9 |

10 |

11 | ### Update:

12 | 20200525: Training code for DeepFashion is complete. Due to memory limitations, I made the following changes:

13 | - Disable the non-local layer, as its memory cost is infeasible on common hardware. If, as the original paper states, the non-local layer operates on (128-128-256) tensors, then each attention matrix would contain 128^4 elements (about 1 GB at 4 bytes per element).

14 | - Shrink the correspondence map size from 64 to 32, which leaves 4x fewer spatial positions (a dense correspondence matrix over all position pairs shrinks by about 16x).

15 | - Shrink the base number of filters from 64 to 16.

16 |

17 | The truncated model barely fits on a 12 GB GTX Titan X card, and its performance will likely not match the original.
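
As a rough check of the memory figures in the update notes, the sketch below works through the arithmetic. It assumes float32 storage (4 bytes per element), a batch size of 1, and that both the non-local attention and the dense correspondence are stored as full (H·W)×(H·W) matrices; none of these assumptions is confirmed by this repo, and the helper name `dense_pairwise_bytes` is hypothetical.

```python
BYTES_PER_FLOAT32 = 4  # assumption: tensors are stored as float32


def dense_pairwise_bytes(height, width, bytes_per_element=BYTES_PER_FLOAT32):
    """Bytes needed for one (H*W) x (H*W) matrix relating every position to every other."""
    positions = height * width
    return positions * positions * bytes_per_element


non_local = dense_pairwise_bytes(128, 128)  # 128^4 elements
corr_64 = dense_pairwise_bytes(64, 64)      # correspondence on a 64x64 map
corr_32 = dense_pairwise_bytes(32, 32)      # correspondence on a 32x32 map

print("non-local attention: %.1f GiB" % (non_local / 2**30))
print("correspondence at 64: %.1f MiB" % (corr_64 / 2**20))
print("correspondence at 32: %.1f MiB" % (corr_32 / 2**20))
print("reduction factor: %dx" % (corr_64 // corr_32))
```

Under these assumptions the 32x32 map has 4x fewer positions than the 64x64 map, while the matrix over all position pairs shrinks by the square of that factor. Activations kept for the backward pass and larger batch sizes would scale these numbers up further.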

18 |

19 | # Environment

20 | - Ubuntu/CentOS

21 | - Pytorch 1.0+

22 | - opencv-python

23 | - tqdm

24 |

25 | # TODO list

26 | - [x] Prepare dataset

27 | - [x] Implement the network

28 | - [x] Implement the loss functions

29 | - [x] Implement the trainer

30 | - [x] Training on DeepFashion

31 | - [ ] Adjust network architecture to fit on a single 16 GB GPU.

32 | - [ ] Training for other tasks

33 |

34 | # Dataset Preparation

35 | ### DeepFashion

36 | Just follow the routine in [the PATN repo](https://github.com/Lotayou/Pose-Transfer)

37 |

38 | # Pretrained Model

39 | The pretrained model for human pose transfer task: [TO BE RELEASED](https://github.com/Lotayou)

40 |

41 | # Training

42 | Run `python train.py`.

43 |

44 | # Citations

45 | If you find this repo useful for your research, don't forget to cite the original paper:

46 | ```

47 | @article{Zhang2020CrossdomainCL,

48 | title={Cross-domain Correspondence Learning for Exemplar-based Image Translation},

49 | author={Pan Zhang and Bo Zhang and Dong Chen and Lu Yuan and Fang Wen},

50 | journal={ArXiv},

51 | year={2020},

52 | volume={abs/2004.05571}

53 | }

54 | ```

55 |

56 | # Acknowledgement

57 | TODO.

58 |

--------------------------------------------------------------------------------

/data/__init__.py:

--------------------------------------------------------------------------------

1 | """This package includes all the modules related to data loading and preprocessing

2 |

3 | To add a custom dataset class called 'dummy', you need to add a file called 'dummy_dataset.py' and define a subclass 'DummyDataset' inherited from BaseDataset.

4 | You need to implement four functions:

5 | -- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

6 | -- <__len__>: return the size of dataset.

7 | -- <__getitem__>: get a data point from data loader.

8 | -- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

9 |

10 | Now you can use the dataset class by specifying flag '--dataset_mode dummy'.

11 | See our template dataset class 'template_dataset.py' for more details.

12 | """

13 | import importlib

14 | import torch.utils.data

15 | from data.base_dataset import BaseDataset

16 |

17 |

18 | def find_dataset_using_name(dataset_name):

19 | """Import the module "data/[dataset_name]_dataset.py".

20 |

21 | In the file, the class called DatasetNameDataset() will

22 | be instantiated. It has to be a subclass of BaseDataset,

23 | and it is case-insensitive.

24 | """

25 | dataset_filename = "data." + dataset_name + "_dataset"

26 | datasetlib = importlib.import_module(dataset_filename)

27 |

28 | dataset = None

29 | target_dataset_name = dataset_name.replace('_', '') + 'dataset'

30 | for name, cls in datasetlib.__dict__.items():

31 | if name.lower() == target_dataset_name.lower() \

32 | and issubclass(cls, BaseDataset):

33 | dataset = cls

34 |

35 | if dataset is None:

36 | raise NotImplementedError("In %s.py, there should be a subclass of BaseDataset with class name that matches %s in lowercase." % (dataset_filename, target_dataset_name))

37 |

38 | return dataset

39 |

40 |

41 | def get_option_setter(dataset_name):

42 | """Return the static method of the dataset class."""

43 | dataset_class = find_dataset_using_name(dataset_name)

44 | return dataset_class.modify_commandline_options

45 |

46 |

47 | def create_dataset(opt):

48 | """Create a dataset given the option.

49 |

50 | This function wraps the class CustomDatasetDataLoader.

51 | This is the main interface between this package and 'train.py'/'test.py'

52 |

53 | Example:

54 | >>> from data import create_dataset

55 | >>> dataset = create_dataset(opt)

56 | """

57 | data_loader = CustomDatasetDataLoader(opt)

58 | dataset = data_loader.load_data()

59 | return dataset

60 |

61 |

62 | class CustomDatasetDataLoader():

63 | """Wrapper class of Dataset class that performs data loading with multiple worker processes"""

64 |

65 | def __init__(self, opt):

66 | """Initialize this class

67 |

68 | Step 1: create a dataset instance given the name [dataset_mode]

69 | Step 2: create a data loader backed by multiple worker processes.

70 | """

71 | self.opt = opt

72 | dataset_class = find_dataset_using_name(opt.dataset_mode)

73 | self.dataset = dataset_class(opt)

74 | print("dataset [%s] was created" % type(self.dataset).__name__)

75 | self.dataloader = torch.utils.data.DataLoader(

76 | self.dataset,

77 | batch_size=opt.batch_size,

78 | shuffle=not opt.serial_batches,

79 | num_workers=int(opt.num_workers),

80 | drop_last=True,

81 | pin_memory=True)

82 |

83 | def load_data(self):

84 | return self

85 |

86 | def __len__(self):

87 | """Return the number of data in the dataset"""

88 | return min(len(self.dataset), self.opt.max_dataset_size)

89 |

90 | def __iter__(self):

91 | """Return a batch of data"""

92 | for i, data in enumerate(self.dataloader):

93 | if i * self.opt.batch_size >= self.opt.max_dataset_size:

94 | break

95 | yield data

96 |
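
The naming convention used by `find_dataset_using_name` can be tried out without PyTorch. The sketch below is a simplified stand-in, not the repo's code: `BaseDataset` and `DummyDataset` are hypothetical placeholder classes, the module is faked with `types.ModuleType` instead of being imported from `data/`, and an extra `isinstance(cls, type)` guard is added so that non-class attributes are skipped safely.

```python
import types


class BaseDataset:
    """Stand-in for data.base_dataset.BaseDataset (hypothetical, no torch dependency)."""


class DummyDataset(BaseDataset):
    """Hypothetical custom dataset that would live in data/dummy_dataset.py."""


def resolve_dataset_class(module, dataset_name):
    """Find the BaseDataset subclass whose lowercase name is '<dataset_name>dataset'."""
    target = dataset_name.replace('_', '') + 'dataset'
    for name, cls in vars(module).items():
        if (name.lower() == target.lower()
                and isinstance(cls, type)
                and issubclass(cls, BaseDataset)):
            return cls
    raise NotImplementedError(
        "No BaseDataset subclass matching %r in module %r" % (target, module.__name__))


# Fake the module that importlib.import_module("data.dummy_dataset") would return.
fake_module = types.ModuleType('dummy_dataset')
fake_module.BaseDataset = BaseDataset
fake_module.DummyDataset = DummyDataset

print(resolve_dataset_class(fake_module, 'dummy').__name__)
```

With this convention, `--dataset_mode dummy` maps to the file `data/dummy_dataset.py` and the class `DummyDataset`; underscores in the mode name are dropped before the case-insensitive comparison.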

--------------------------------------------------------------------------------

/data/base_dataset.py:

--------------------------------------------------------------------------------

1 | from torch.utils.data import Dataset

2 |

3 | class BaseDataset(Dataset):

4 |     def __init__(self, opt):

5 |         super().__init__()

6 |

7 |     def __getitem__(self, index):

8 |         pass

9 |

10 |     def __len__(self): pass

11 |

--------------------------------------------------------------------------------

/data/fashion_dataset.py:

--------------------------------------------------------------------------------

1 | """

2 | fashion dataset: load deepfashion models

3 | Requires skeleton input as stick figures.

4 | """

5 |

6 | import random

7 | import numpy as np

8 | import torch

9 | import torch.utils.data as data

10 | import cv2

11 | from tqdm import tqdm

12 | import os

13 | from data.base_dataset import BaseDataset

14 | from data.pose_utils import draw_pose_from_cords, load_pose_cords_from_strings

15 |

16 | class FashionDataset(BaseDataset):

17 | # Beware, the pose annotation is fitted for 256*176 images, need additional resizing

18 | def __init__(self, opt):

19 | super().__init__(opt)

20 | self.opt = opt

21 | self.h = opt.image_size

22 | self.w = opt.image_size - 2 * opt.padding

23 | self.size = (self.h, self.w)

24 | self.pd = opt.padding

25 |

26 | self.white = torch.ones((3, self.h, self.h), dtype=torch.float32)

27 | self.black = -1 * self.white

28 |

29 | self.dir_Img = os.path.join(opt.dataroot, opt.phase) # person images (exemplar)

30 | self.dir_Anno = os.path.join(opt.dataroot, opt.phase + '_pose_rgb') # rgb pose images

31 |

32 | pairLst = os.path.join(opt.dataroot, 'fasion-resize-pairs-%s.csv' % opt.phase)

33 | self.init_categories(pairLst)

34 |

35 | if not os.path.isdir(self.dir_Anno):

36 | print('Folder %s not found or annotation incomplete...' % self.dir_Anno)

37 | annotation_csv = os.path.join(opt.dataroot, 'fasion-resize-annotation-%s.csv' % opt.phase)

38 | if os.path.isfile(annotation_csv):

39 | print('Found backup annotation file, start generating required pose images...')

40 | self.draw_stick_figures(annotation_csv, self.dir_Anno)

41 |

42 |

43 | def trans(self, x, bg='black'):

44 | x = torch.from_numpy(x / 127.5 - 1).permute(2, 0, 1).float()

45 | full = torch.ones((3, self.h, self.h), dtype=torch.float32)

46 | if bg == 'black':

47 | full = -1 * full

48 |

49 | full[:,:,self.pd:self.pd+self.w] = x

50 | return full

51 |

52 | def draw_stick_figures(self, annotation, target_dir):

53 | os.makedirs(target_dir, exist_ok=True)

54 | with open(annotation, 'r') as f:

55 | lines = [l.strip() for l in f][1:]

56 |

57 | for l in tqdm(lines):

58 | name, str_y, str_x = l.split(':')

59 | target_name = os.path.join(target_dir, name)

60 | cords = load_pose_cords_from_strings(str_y, str_x)

61 | target_im, _ = draw_pose_from_cords(cords, self.size)

62 | cv2.imwrite(target_name, target_im)

63 |

64 |

65 | def init_categories(self, pairLst):

66 | '''

67 | Using pandas is too f**king slow...

68 |

69 | pairs_file_train = pd.read_csv(pairLst)

70 | self.size = len(pairs_file_train)

71 | self.pairs = []

72 | print('Loading data pairs ...')

73 | for i in range(self.size):

74 | pair = [pairs_file_train.iloc[i]['from'], pairs_file_train.iloc[i]['to']]

75 | self.pairs.append(pair)

76 | '''

77 | with open(pairLst, 'r') as f:

78 | lines = [l for l in f][1:self.opt.max_dataset_size+1]

79 | self.pairs = [l.strip().split(',') for l in lines]

80 | print('Loading data pairs finished ...')

81 |

82 | def __getitem__(self, index):

83 | P1_name, P2_name = self.pairs[index]

84 |

85 | P1 = self.trans(cv2.imread(os.path.join(self.dir_Img, P1_name)), bg='white') # person 1

86 | BP1 = self.trans(cv2.imread(os.path.join(self.dir_Anno, P1_name)), bg='black') # bone of person 1

87 | P2 = self.trans(cv2.imread(os.path.join(self.dir_Img, P2_name)), bg='white') # person 2

88 | BP2 = self.trans(cv2.imread(os.path.join(self.dir_Anno, P2_name)), bg='black') # bone of person 2

89 | # domain x: posemap

90 | # domain y: exemplar

91 | return {'a': BP2, 'b_gt': P2, 'a_exemplar': BP1, 'b_exemplar': P1}

92 |

93 |

94 | def __len__(self):

95 | return len(self.pairs)

96 |

97 |
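
The layout produced by `FashionDataset.trans` can be illustrated on a single image row without torch or OpenCV. The sketch below assumes `image_size=256` and `padding=40`, hypothetical values chosen so that the inner width is 176 and matches the 256*176 note in the class; the helper names `to_unit_range` and `pad_row` are not part of the repo.

```python
def to_unit_range(pixel):
    """Map an 8-bit value in [0, 255] to [-1, 1], as `x / 127.5 - 1` does."""
    return pixel / 127.5 - 1


def pad_row(row, image_size, padding, bg='black'):
    """Place one image row in the middle of a square-canvas row filled with the background."""
    fill = -1.0 if bg == 'black' else 1.0
    width = image_size - 2 * padding
    if len(row) != width:
        raise ValueError("expected a row of %d pixels, got %d" % (width, len(row)))
    canvas = [fill] * image_size
    canvas[padding:padding + width] = [to_unit_range(p) for p in row]
    return canvas


# A fully white 176-pixel row placed on a black 256-pixel canvas row.
row = pad_row([255] * 176, image_size=256, padding=40, bg='black')
print(len(row), row[0], row[40], row[215], row[216])
```

Person images use a white background and pose images a black one, so after normalization the padded columns hold 1 and -1 respectively.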

--------------------------------------------------------------------------------

/data/pose_utils.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from scipy.ndimage import gaussian_filter

3 | from skimage.draw import circle, line_aa, polygon

4 | import json

5 | from pandas import Series

6 | import matplotlib

7 | matplotlib.use('Agg')

8 | import matplotlib.pyplot as plt

9 | import matplotlib.patches as mpatches

10 | from collections import defaultdict

11 | import skimage.measure, skimage.transform

12 | import sys

13 |

14 | LIMB_SEQ = [[1,2], [1,5], [2,3], [3,4], [5,6], [6,7], [1,8], [8,9],

15 | [9,10], [1,11], [11,12], [12,13], [1,0], [0,14], [14,16],

16 | [0,15], [15,17], [2,16], [5,17]]

17 |

18 | COLORS = [[255, 0, 0], [255, 85, 0], [255, 170, 0], [255, 255, 0], [170, 255, 0], [85, 255, 0], [0, 255, 0],

19 | [0, 255, 85], [0, 255, 170], [0, 255, 255], [0, 170, 255], [0, 85, 255], [0, 0, 255], [85, 0, 255],

20 | [170, 0, 255], [255, 0, 255], [255, 0, 170], [255, 0, 85]]

21 |

22 |

23 | LABELS = ['nose', 'neck', 'Rsho', 'Relb', 'Rwri', 'Lsho', 'Lelb', 'Lwri',

24 | 'Rhip', 'Rkne', 'Rank', 'Lhip', 'Lkne', 'Lank', 'Leye', 'Reye', 'Lear', 'Rear']

25 |

26 | MISSING_VALUE = -1

27 | def MISSING(x):

28 | return x == -1 or x == 0

29 |

30 |

31 | def map_to_cord(pose_map, threshold=0.1):

32 | all_peaks = [[] for i in range(18)]

33 | pose_map = pose_map[..., :18]

34 |

35 | y, x, z = np.where(np.logical_and(pose_map == pose_map.max(axis = (0, 1)),

36 | pose_map > threshold))

37 | for x_i, y_i, z_i in zip(x, y, z):

38 | all_peaks[z_i].append([x_i, y_i])

39 |

40 | x_values = []

41 | y_values = []

42 |

43 | for i in range(18):

44 | if len(all_peaks[i]) != 0:

45 | x_values.append(all_peaks[i][0][0])

46 | y_values.append(all_peaks[i][0][1])

47 | else:

48 | x_values.append(MISSING_VALUE)

49 | y_values.append(MISSING_VALUE)

50 |

51 | return np.concatenate([np.expand_dims(y_values, -1), np.expand_dims(x_values, -1)], axis=1)

52 |

53 |

54 | def cords_to_map(cords, img_size, sigma=6):

55 | result = np.zeros(img_size + cords.shape[0:1], dtype='float32')

56 | for i, point in enumerate(cords):

57 | if point[0] == MISSING_VALUE or point[1] == MISSING_VALUE:

58 | continue

59 | xx, yy = np.meshgrid(np.arange(img_size[1]), np.arange(img_size[0]))

60 | result[..., i] = np.exp(-((yy - point[0]) ** 2 + (xx - point[1]) ** 2) / (2 * sigma ** 2))

61 | return result

62 |

63 |

64 | def draw_pose_from_cords(pose_joints, img_size, radius=2, draw_joints=True):

65 | colors = np.zeros(shape=img_size + (3, ), dtype=np.uint8)

66 | mask = np.zeros(shape=img_size, dtype=bool)

67 |

68 | if draw_joints:

69 | for f, t in LIMB_SEQ:

70 | from_missing = MISSING(pose_joints[f][0]) or MISSING(pose_joints[f][1])

71 | to_missing = MISSING(pose_joints[t][0]) or MISSING(pose_joints[t][1])

72 | if from_missing or to_missing:

73 | continue

74 |

75 | '''

76 | Trick, use a 4-polygon with 1 pixel width to represent lines, involve shape control.

77 |

78 | yy, xx = polygon(

79 | [pose_joints[f][0], pose_joints[t][0], pose_joints[t][0]+1, pose_joints[f][0]+1],

80 | [pose_joints[f][1], pose_joints[t][1], pose_joints[t][1]+1, pose_joints[f][1]+1],

81 | shape=img_size

82 | )

83 | '''

84 | yy, xx, val = line_aa(pose_joints[f][0], pose_joints[f][1], pose_joints[t][0], pose_joints[t][1])

85 | valid_ids = [i for i in range(len(yy)) if 0 < yy[i] < img_size[0] and 0 < xx[i] < img_size[1]]

86 | yy, xx, val = yy[valid_ids], xx[valid_ids], val[valid_ids]

87 | colors[yy, xx] = np.expand_dims(val, 1) * 255

88 | mask[yy, xx] = True

89 |

90 | for i, joint in enumerate(pose_joints):

91 | if MISSING(pose_joints[i][0]) or MISSING(pose_joints[i][1]):

92 | continue

93 | yy, xx = circle(joint[0], joint[1], radius=radius, shape=img_size)

94 | colors[yy, xx] = COLORS[i]

95 | mask[yy, xx] = True

96 |

97 | return colors, mask

98 |

99 |

100 | def draw_pose_from_map(pose_map, threshold=0.1, **kwargs):

101 | cords = map_to_cord(pose_map, threshold=threshold)

102 | return draw_pose_from_cords(cords, pose_map.shape[:2], **kwargs)

103 |

104 |

105 | def load_pose_cords_from_strings(y_str, x_str):

106 | ## 20181114: FIX bug, convert pandas.Series object to a string-formatted int list

107 | if isinstance(y_str, Series):

108 | y_str = y_str.values[0]

109 | if isinstance(x_str, Series):

110 | x_str = x_str.values[0]

111 | y_cords = json.loads(y_str)

112 | x_cords = json.loads(x_str)

113 | # 20191117: modify PATN processed coords by adding 40 to non-negative indices

114 | # NOTE: For fasion dataset only.

115 | # print(x_cords)

116 | # 20191123: deprecate this.

117 | # x_cords = [item + 40 if item > 0 else item for item in x_cords]

118 | # print(x_cords)

119 | return np.concatenate([np.expand_dims(y_cords, -1), np.expand_dims(x_cords, -1)], axis=1)

120 |

121 | def mean_inputation(X):

122 | X = X.copy()

123 | for i in range(X.shape[1]):

124 | for j in range(X.shape[2]):

125 | val = np.mean(X[:, i, j][X[:, i, j] != -1])

126 | X[:, i, j][X[:, i, j] == -1] = val

127 | return X

128 |

129 | def draw_legend():

130 | handles = [mpatches.Patch(color=np.array(color) / 255.0, label=name) for color, name in zip(COLORS, LABELS)]

131 | plt.legend(handles=handles, bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.)

132 |

133 | def produce_ma_mask(kp_array, img_size, point_radius=4):

134 | from skimage.morphology import dilation, erosion, square

135 | mask = np.zeros(shape=img_size, dtype=bool)

136 | limbs = [[2,3], [2,6], [3,4], [4,5], [6,7], [7,8], [2,9], [9,10],

137 | [10,11], [2,12], [12,13], [13,14], [2,1], [1,15], [15,17],

138 | [1,16], [16,18], [2,17], [2,18], [9,12], [12,6], [9,3], [17,18]]

139 | limbs = np.array(limbs) - 1

140 | for f, t in limbs:

141 | from_missing = kp_array[f][0] == MISSING_VALUE or kp_array[f][1] == MISSING_VALUE

142 | to_missing = kp_array[t][0] == MISSING_VALUE or kp_array[t][1] == MISSING_VALUE

143 | if from_missing or to_missing:

144 | continue

145 |

146 | norm_vec = kp_array[f] - kp_array[t]

147 | norm_vec = np.array([-norm_vec[1], norm_vec[0]])

148 | norm_vec = point_radius * norm_vec / np.linalg.norm(norm_vec)

149 |

150 |

151 | vetexes = np.array([

152 | kp_array[f] + norm_vec,

153 | kp_array[f] - norm_vec,

154 | kp_array[t] - norm_vec,

155 | kp_array[t] + norm_vec

156 | ])

157 | yy, xx = polygon(vetexes[:, 0], vetexes[:, 1], shape=img_size)

158 | mask[yy, xx] = True

159 |

160 | for i, joint in enumerate(kp_array):

161 | if kp_array[i][0] == MISSING_VALUE or kp_array[i][1] == MISSING_VALUE:

162 | continue

163 | yy, xx = circle(joint[0], joint[1], radius=point_radius, shape=img_size)

164 | mask[yy, xx] = True

165 |

166 | mask = dilation(mask, square(5))

167 | mask = erosion(mask, square(5))

168 | return mask

169 |

170 | if __name__ == "__main__":

171 | import pandas as pd

172 | from skimage.io import imread

173 | import pylab as plt

174 | import os

175 | i = 5

176 | df = pd.read_csv('data/market-annotation-train.csv', sep=':')

177 |

178 | for index, row in df.iterrows():

179 | pose_cords = load_pose_cords_from_strings(row['keypoints_y'], row['keypoints_x'])

180 |

181 | colors, mask = draw_pose_from_cords(pose_cords, (128, 64))

182 |

183 | mmm = produce_ma_mask(pose_cords, (128, 64)).astype(float)[..., np.newaxis].repeat(3, axis=-1)

184 | print(mmm.shape)

185 | img = imread('data/market-dataset/train/' + row['name'])

186 |

187 | mmm[mask] = colors[mask]

188 |

189 | print (mmm)

190 | plt.subplot(1, 1, 1)

191 | plt.imshow(mmm)

192 | plt.show()

193 |
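
The annotation format consumed by `draw_stick_figures` and `load_pose_cords_from_strings` is a colon-separated line holding a file name and two JSON lists (y coordinates, then x coordinates). The sketch below parses such a line with the standard library only; the sample file name and coordinates are made up for illustration, and `parse_annotation_line` and `is_missing` are hypothetical helpers that return plain lists instead of NumPy arrays.

```python
import json

MISSING_VALUE = -1


def is_missing(value):
    """Mirror MISSING(): a coordinate of -1 or 0 marks an undetected joint."""
    return value == MISSING_VALUE or value == 0


def parse_annotation_line(line):
    """Split 'name:[y...]:[x...]' into the name and a list of [y, x] joint pairs."""
    name, str_y, str_x = line.strip().split(':')
    y_cords = json.loads(str_y)
    x_cords = json.loads(str_x)
    if len(y_cords) != len(x_cords):
        raise ValueError("y and x coordinate lists differ in length")
    return name, [[y, x] for y, x in zip(y_cords, x_cords)]


# Made-up sample with three joints; the second one is undetected.
sample = 'person_0001.jpg:[30, -1, 52]:[88, -1, 60]'
name, joints = parse_annotation_line(sample)
visible = [j for j in joints if not (is_missing(j[0]) or is_missing(j[1]))]
print(name, joints, visible)
```

Because the line is split on `:`, this approach only works when file names contain no colon, which is the same restriction the repo's `l.split(':')` has.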

--------------------------------------------------------------------------------

/datasets/fashion:

--------------------------------------------------------------------------------

1 | /backup1/lingboyang/human_image_generation/CVPR2019_pose_transfer/fashion_data

--------------------------------------------------------------------------------

/model/__init__.py:

--------------------------------------------------------------------------------

1 | """This package contains modules related to objective functions, optimizations, and network architectures.

2 | To add a custom model class called 'dummy', you need to add a file called 'dummy_model.py' and define a subclass DummyModel inherited from BaseModel.

3 | You need to implement the following five functions:

4 | -- <__init__>: initialize the class; first call BaseModel.__init__(self, opt).

5 | -- <set_input>: unpack data from dataset and apply preprocessing.

6 | -- <forward>: produce intermediate results.

7 | -- <optimize_parameters>: calculate loss, gradients, and update network weights.

8 | -- <modify_commandline_options>: (optionally) add model-specific options and set default options.

9 | In the function <__init__>, you need to define four lists:

10 | -- self.loss_names (str list): specify the training losses that you want to plot and save.

11 | -- self.model_names (str list): define networks used in our training.

12 | -- self.visual_names (str list): specify the images that you want to display and save.

13 | -- self.optimizers (optimizer list): define and initialize optimizers. You can define one optimizer for each network. If two networks are updated at the same time, you can use itertools.chain to group them. See cycle_gan_model.py for an example.

14 | Now you can use the model class by specifying flag '--model dummy'.

15 | See our template model class 'template_model.py' for an example.

16 | """

17 |

18 | import importlib

19 | from model.base_model import BaseModel

20 |

21 |

22 | def find_model_using_name(model_name):

23 |     """Import the module "model/[model_name]_model.py".

24 |     In the file, the class whose lowercase name is [model_name]model

25 |     will be returned. It has to be a subclass of BaseModel,

26 |     and the name match is case-insensitive.

27 |     """

28 |     model_filename = "model." + model_name + "_model"

29 |     modellib = importlib.import_module(model_filename)

30 |     model = None

31 |     target_model_name = model_name.replace('_', '') + 'model'

32 |     for name, cls in modellib.__dict__.items():

33 |         if name.lower() == target_model_name.lower() \

34 |            and issubclass(cls, BaseModel):

35 |             model = cls

36 |

37 |     if model is None:

38 |         # Raise instead of calling exit(0), so a missing class is reported as a failure.

39 |         raise NotImplementedError("In %s.py, there should be a subclass of BaseModel with class name that matches %s in lowercase." % (model_filename, target_model_name))

40 |

41 |     return model

42 |

43 |

44 | def get_option_setter(model_name):

45 |     """Return the static method <modify_commandline_options> of the model class."""

46 |     model_class = find_model_using_name(model_name)

47 |     return model_class.modify_commandline_options

48 |

49 |

50 | def create_model(opt):

51 |     """Create a model given the option.

52 |     This function looks up the model class named by opt.model and instantiates it.

53 |     This is the main interface between this package and 'train.py'/'test.py'

54 |     Example:

55 |     >>> from model import create_model

56 |     >>> model = create_model(opt)

57 |     """

58 |     model = find_model_using_name(opt.model)

59 |     instance = model(opt)

60 |     print("model [%s] was created" % type(instance).__name__)

61 |     return instance

62 |

--------------------------------------------------------------------------------

/model/base_model.py:

--------------------------------------------------------------------------------

1 | import os

2 | import torch

3 | from collections import OrderedDict

4 | from abc import ABC, abstractmethod

5 | from . import networks

6 |

7 |

8 | class BaseModel(ABC):

9 | """This class is an abstract base class (ABC) for models.

10 | To create a subclass, you need to implement the following five functions:

11 | -- <__init__>: initialize the class; first call BaseModel.__init__(self, opt).

12 | -- <set_input>: unpack data from dataset and apply preprocessing.

13 | -- <forward>: produce intermediate results.

14 | -- <optimize_parameters>: calculate losses, gradients, and update network weights.

15 | -- <modify_commandline_options>: (optionally) add model-specific options and set default options.

16 | """

17 |

18 | def __init__(self, opt):

19 | """Initialize the BaseModel class.

20 |

21 | Parameters:

22 | opt (Option class)-- stores all the experiment flags; needs to be a subclass of BaseOptions

23 |

24 | When creating your custom class, you need to implement your own initialization.

25 | In this function, you should first call `BaseModel.__init__(self, opt)`.

26 | Then, you need to define four lists:

27 | -- self.loss_names (str list): specify the training losses that you want to plot and save.

28 | -- self.model_names (str list): define networks used in our training.

29 | -- self.visual_names (str list): specify the images that you want to display and save.

30 | -- self.optimizers (optimizer list): define and initialize optimizers. You can define one optimizer for each network. If two networks are updated at the same time, you can use itertools.chain to group them. See cycle_gan_model.py for an example.

31 | """

32 | self.opt = opt

33 | self.gpu_ids = opt.gpu_ids

34 | self.isTrain = opt.isTrain

35 | self.device = torch.device('cuda:{}'.format(self.gpu_ids[0])) if self.gpu_ids else torch.device('cpu') # get device name: CPU or GPU

36 | # create checkpoints_dir/name/images, including any missing parent directories

37 | self.mkdir_recursive(opt.checkpoints_dir, opt.name, 'images')

38 | self.save_dir = os.path.join(opt.checkpoints_dir, opt.name)

39 | self.save_image_dir = os.path.join(self.save_dir, 'images')

40 |

41 | self.loss_names = []

42 | self.model_names = []

43 | self.visual_names = []

44 | self.optimizers = []

45 | self.image_paths = []

46 |

47 | @staticmethod

48 | def mkdir_recursive(*folders):

49 | cur_folder = None

50 | for folder in folders:

51 | cur_folder = folder if cur_folder is None else os.path.join(cur_folder, folder)

52 | os.makedirs(cur_folder, exist_ok=True)

53 |

54 | @staticmethod

55 | def modify_commandline_options(parser, is_train):

56 | """Add new model-specific options, and rewrite default values for existing options.

57 |

58 | Parameters:

59 | parser -- original option parser

60 | is_train (bool) -- whether training phase or test phase. You can use this flag to add training-specific or test-specific options.

61 |

62 | Returns:

63 | the modified parser.

64 | """

65 | return parser

66 |

67 | @abstractmethod

68 | def set_input(self, input):

69 | """Unpack input data from the dataloader and perform necessary pre-processing steps.

70 |

71 | Parameters:

72 | input (dict): includes the data itself and its metadata information.

73 | """

74 | pass

75 |

76 | @abstractmethod

77 | def forward(self):

78 | """Run forward pass; called by both functions <optimize_parameters> and <test>."""

79 | pass

80 |

81 | def is_train(self):

82 | """check if the current batch is good for training."""

83 | return True

84 |

85 | @abstractmethod

86 | def optimize_parameters(self):

87 | """Calculate losses, gradients, and update network weights; called in every training iteration"""

88 | pass

89 |

90 | def setup(self, opt):

91 | """Load and print networks; create schedulers

92 |

93 | Parameters:

94 | opt (Option class) -- stores all the experiment flags; needs to be a subclass of BaseOptions

95 | """

96 | if self.isTrain:

97 | self.schedulers = [networks.get_scheduler(optimizer, opt) for optimizer in self.optimizers]

98 | if not self.isTrain or opt.continue_train:

99 | self.load_networks(opt.which_epoch)

100 | else:

101 | self.init_networks(opt)

102 | self.print_networks(opt.verbose)

103 |

104 | def init_networks(self, opt):

105 | print('Initializing models in %s mode and start training from scratch' % opt.init_type)

106 | for name in self.model_names:

107 | net = getattr(self, 'net' + name)

108 | if isinstance(net, torch.nn.DataParallel):

109 | net = net.module

110 | net.to(self.device)

111 | networks.init_weights(net, opt.init_type, opt.init_gain)

112 |

113 | def eval(self):

114 | """Make models eval mode during test time"""

115 | for name in self.model_names:

116 | if isinstance(name, str):

117 | net = getattr(self, 'net' + name)

118 | net.eval()

119 |

120 | def test(self):

121 | """Forward function used in test time.

122 |

123 | This function wraps <forward> function in no_grad() so we don't save intermediate steps for backprop

124 | It also calls <compute_visuals> to produce additional visualization results

125 | """

126 | with torch.no_grad():

127 | self.forward()

128 | self.compute_visuals()

129 |

130 | def compute_visuals(self):

131 | """Calculate additional output images for visdom and HTML visualization"""

132 | pass

133 |

134 | def get_image_paths(self):

135 | """ Return image paths that are used to load current data"""

136 | return self.image_paths

137 |

138 | def update_learning_rate(self):

139 | """Update learning rates for all the networks; called at the end of every epoch"""

140 | for scheduler in self.schedulers:

141 | scheduler.step()

142 | lr = self.optimizers[0].param_groups[0]['lr']

143 | print('learning rate = %.7f' % lr)

144 |

145 | def get_current_visuals(self):

146 | """Return visualization images. train.py will display these images with visdom, and save the images to an HTML file"""

147 | visual_ret = OrderedDict()

148 | for name in self.visual_names:

149 | if isinstance(name, str):

150 | visual_ret[name] = getattr(self, name)

151 | return visual_ret

152 |

153 | def get_current_losses(self):

154 | """Return training losses / errors. train.py will print out these errors on console, and save them to a file"""

155 | errors_ret = OrderedDict()

156 | for name in self.loss_names:

157 | if isinstance(name, str):

158 | errors_ret[name] = float(getattr(self, 'loss_' + name)) # float(...) works for both scalar tensor and float number

159 | return errors_ret

160 |

161 | def save_networks(self, epoch):

162 | """Save all the networks to the disk.

163 |

164 | Parameters:

165 | epoch (int) -- current epoch; used in the file name '%s_net_%s.pth' % (epoch, name)

166 | """

167 | for name in self.model_names:

168 | if isinstance(name, str):

169 | save_filename = '%s_net_%s.pth' % (epoch, name)

170 | save_path = os.path.join(self.save_dir, save_filename)

171 | net = getattr(self, 'net' + name)

172 | torch.save(net.state_dict(), save_path)

173 |

174 | def __patch_instance_norm_state_dict(self, state_dict, module, keys, i=0):

175 | """Fix InstanceNorm checkpoints incompatibility (prior to 0.4)"""

176 | key = keys[i]

177 | if i + 1 == len(keys): # at the end, pointing to a parameter/buffer

178 | if module.__class__.__name__.startswith('InstanceNorm') and \

179 | (key == 'running_mean' or key == 'running_var'):

180 | if getattr(module, key) is None:

181 | state_dict.pop('.'.join(keys))

182 | if module.__class__.__name__.startswith('InstanceNorm') and \

183 | (key == 'num_batches_tracked'):

184 | state_dict.pop('.'.join(keys))

185 | else:

186 | self.__patch_instance_norm_state_dict(state_dict, getattr(module, key), keys, i + 1)

187 |

188 | def load_networks(self, epoch):

189 | """Load all the networks from the disk.

190 |

191 | Parameters:

192 | epoch (int) -- current epoch; used in the file name '%s_net_%s.pth' % (epoch, name)

193 | """

194 | for name in self.model_names:

195 | if isinstance(name, str):

196 | load_filename = '%s_net_%s.pth' % (epoch, name)

197 | load_path = os.path.join(self.save_dir, load_filename)

198 | net = getattr(self, 'net' + name)

199 | if isinstance(net, torch.nn.DataParallel):

200 | net = net.module

201 | print('loading the model from %s' % load_path)

202 | # if you are using PyTorch newer than 0.4 (e.g., built from

203 | # GitHub source), you can remove str() on self.device

204 | state_dict = torch.load(load_path, map_location=str(self.device))

205 | if hasattr(state_dict, '_metadata'):

206 | del state_dict._metadata

207 |

208 | # patch InstanceNorm checkpoints prior to 0.4