├── .editorconfig

├── .gitattributes

├── .github

├── ISSUE_TEMPLATE

│ ├── bug_report.md

│ ├── custom.md

│ └── feature_request.md

└── workflows

│ └── ci.yml

├── .gitignore

├── .pre-commit-config.yaml

├── CHANGELOG.md

├── CONTRIBUTING.md

├── LICENSE

├── README.md

├── data

├── README.md

└── _scripts

│ ├── 1_download_all.sh

│ ├── 2_prepare.py

│ ├── cosmx_convert.py

│ ├── cosmx_download.sh

│ ├── merscope_convert.py

│ ├── merscope_download.sh

│ ├── stereoseq_convert.py

│ ├── stereoseq_download.sh

│ ├── xenium_convert.py

│ └── xenium_download.sh

├── docs

├── api

│ ├── Novae.md

│ ├── dataloader.md

│ ├── metrics.md

│ ├── modules.md

│ ├── plot.md

│ └── utils.md

├── assets

│ ├── Figure1.png

│ ├── banner.png

│ ├── logo_favicon.png

│ ├── logo_small_black.png

│ └── logo_white.png

├── cite_us.md

├── faq.md

├── getting_started.md

├── index.md

├── javascripts

│ └── mathjax.js

└── tutorials

│ ├── LEE_AGING_CEREBELLUM_UP.json

│ ├── hallmarks_pathways.json

│ ├── input_modes.md

│ ├── main_usage.ipynb

│ ├── mouse_hallmarks.json

│ ├── proteins.ipynb

│ └── resolutions.ipynb

├── mkdocs.yml

├── novae

├── __init__.py

├── _constants.py

├── _logging.py

├── _settings.py

├── data

│ ├── __init__.py

│ ├── convert.py

│ ├── datamodule.py

│ └── dataset.py

├── model.py

├── module

│ ├── __init__.py

│ ├── aggregate.py

│ ├── augment.py

│ ├── embed.py

│ ├── encode.py

│ └── swav.py

├── monitor

│ ├── __init__.py

│ ├── callback.py

│ ├── eval.py

│ └── log.py

├── plot

│ ├── __init__.py

│ ├── _bar.py

│ ├── _graph.py

│ ├── _heatmap.py

│ ├── _spatial.py

│ └── _utils.py

└── utils

│ ├── __init__.py

│ ├── _build.py

│ ├── _correct.py

│ ├── _data.py

│ ├── _mode.py

│ ├── _preprocess.py

│ ├── _utils.py

│ └── _validate.py

├── poetry.lock

├── pyproject.toml

├── scripts

├── README.md

├── __init__.py

├── config.py

├── config

│ ├── README.md

│ ├── _example.yaml

│ ├── all_16.yaml

│ ├── all_17.yaml

│ ├── all_brain.yaml

│ ├── all_human.yaml

│ ├── all_human2.yaml

│ ├── all_mouse.yaml

│ ├── all_new.yaml

│ ├── all_ruche.yaml

│ ├── all_spot.yaml

│ ├── brain.yaml

│ ├── brain2.yaml

│ ├── breast.yaml

│ ├── breast_zs.yaml

│ ├── breast_zs2.yaml

│ ├── colon.yaml

│ ├── colon_retrain.yaml

│ ├── colon_zs.yaml

│ ├── colon_zs2.yaml

│ ├── local_tests.yaml

│ ├── lymph_node.yaml

│ ├── missing.yaml

│ ├── ovarian.yaml

│ ├── revision.yaml

│ ├── revision_tests.yaml

│ ├── toy_cpu_seed0.yaml

│ └── toy_missing.yaml

├── missing_domain.py

├── revision

│ ├── cpu.sh

│ ├── heterogeneous.py

│ ├── heterogeneous_start.py

│ ├── mgc.py

│ ├── missing_domains.py

│ ├── perturbations.py

│ └── seg_robustness.py

├── ruche

│ ├── README.md

│ ├── agent.sh

│ ├── convert.sh

│ ├── cpu.sh

│ ├── debug_cpu.sh

│ ├── debug_gpu.sh

│ ├── download.sh

│ ├── gpu.sh

│ ├── prepare.sh

│ └── test.sh

├── sweep

│ ├── README.md

│ ├── cpu.yaml

│ ├── debug.yaml

│ ├── gpu.yaml

│ ├── gpu_ruche.yaml

│ ├── lung.yaml

│ ├── revision.yaml

│ └── toy.yaml

├── toy_missing_domain.py

├── train.py

└── utils.py

├── setup.py

└── tests

├── __init__.py

├── _utils.py

├── test_correction.py

├── test_dataset.py

├── test_metrics.py

├── test_misc.py

├── test_model.py

├── test_plots.py

└── test_utils.py

/.editorconfig:

--------------------------------------------------------------------------------

1 | max_line_length = 120

2 |

3 | [*.json]

4 | indent_style = space

5 | indent_size = 4

6 |

7 | [*.yaml]

8 | indent_size = 2

9 |

--------------------------------------------------------------------------------

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Ignoring notebooks for language statistics on Github

2 | .ipynb -linguist-detectable

3 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: "[Bug]"

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | ## Description

11 | A clear and concise description of what the bug is.

12 |

13 | ## Reproducing the issue

14 | Steps to reproduce the behavior.

15 |

16 | ## Expected behavior

17 | A clear and concise description of what you expected to happen.

18 |

19 | ## System

20 | - OS: [e.g. Linux]

21 | - Python version [e.g. 3.10.7]

22 |

23 | Dependencies versions

24 |

25 | Paste here what 'pip list' gives you.

26 |

27 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/custom.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Custom issue template

3 | about: Describe this issue template's purpose here.

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: "[Feature]"

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | name: test_deploy_publish

2 | on:

3 | push:

4 | tags:

5 | - v*

6 | pull_request:

7 | branches: [main]

8 |

9 | jobs:

10 | pre-commit:

11 | runs-on: ubuntu-latest

12 | steps:

13 | - uses: actions/checkout@v3

14 | - uses: actions/setup-python@v3

15 | - uses: pre-commit/action@v3.0.1

16 |

17 | build:

18 | needs: [pre-commit]

19 | runs-on: ubuntu-latest

20 | strategy:

21 | matrix:

22 | python-version: ["3.10", "3.11", "3.12"]

23 | steps:

24 | - uses: actions/checkout@v4

25 | - uses: actions/setup-python@v5

26 | with:

27 | python-version: ${{ matrix.python-version }}

28 | cache: "pip"

29 | - run: pip install '.[dev]'

30 |

31 | - name: Run tests

32 | run: pytest --cov

33 |

34 | - name: Deploy doc

35 | if: matrix.python-version == '3.10' && contains(github.ref, 'tags')

36 | run: mkdocs gh-deploy --force

37 |

38 | - name: Upload results to Codecov

39 | if: matrix.python-version == '3.10'

40 | uses: codecov/codecov-action@v4

41 | with:

42 | token: ${{ secrets.CODECOV_TOKEN }}

43 |

44 | publish:

45 | needs: [build]

46 | if: contains(github.ref, 'tags')

47 | runs-on: ubuntu-latest

48 | steps:

49 | - uses: actions/checkout@v3

50 | - name: Build and publish to pypi

51 | uses: JRubics/poetry-publish@v1.17

52 | with:

53 | python_version: "3.10"

54 | pypi_token: ${{ secrets.PYPI_TOKEN }}

55 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # OS related

2 | .DS_Store

3 |

4 | # Ruche related

5 | jobs/

6 |

7 | # Tests related

8 | tests/*

9 | !tests/*.py

10 |

11 | # IDE related

12 | .vscode/*

13 | !.vscode/settings.json

14 | !.vscode/tasks.json

15 | !.vscode/launch.json

16 | !.vscode/extensions.json

17 | *.code-workspace

18 | **/.vscode

19 |

20 | # Documentation

21 | site

22 |

23 | # Logs

24 | outputs

25 | multirun

26 | lightning_logs

27 | novae_*

28 | checkpoints

29 |

30 | # Data files

31 | data/*

32 | !data/_scripts

33 | !data/README.md

34 | !data/*.py

35 | !data/*.sh

36 |

37 | # Results files

38 | results/*

39 |

40 | # Jupyter Notebook

41 | .ipynb_checkpoints

42 | *.ipynb

43 | !docs/tutorials/*.ipynb

44 |

45 | # Misc

46 | logs/

47 | test.h5ad

48 | test.ckpt

49 | wandb/

50 | .env

51 | .autoenv

52 | !**/.gitkeep

53 | exploration

54 |

55 | # pyenv

56 | .python-version

57 |

58 | # Byte-compiled / optimized / DLL files

59 | __pycache__/

60 | *.py[cod]

61 | *$py.class

62 |

63 | # C extensions

64 | *.so

65 |

66 | # Distribution / packaging

67 | .Python

68 | build/

69 | develop-eggs/

70 | dist/

71 | downloads/

72 | eggs/

73 | .eggs/

74 | lib/

75 | lib64/

76 | parts/

77 | sdist/

78 | var/

79 | wheels/

80 | pip-wheel-metadata/

81 | share/python-wheels/

82 | *.egg-info/

83 | .installed.cfg

84 | *.egg

85 | MANIFEST

86 |

87 | # PyInstaller

88 | # Usually these files are written by a python script from a template

89 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

90 | *.manifest

91 | *.spec

92 |

93 | # Installer logs

94 | pip-log.txt

95 | pip-delete-this-directory.txt

96 |

97 | # Unit test / coverage reports

98 | htmlcov/

99 | .tox/

100 | .nox/

101 | .coverage

102 | .coverage.*

103 | .cache

104 | nosetests.xml

105 | coverage.xml

106 | *.cover

107 | *.py,cover

108 | .hypothesis/

109 | .pytest_cache/

110 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | fail_fast: false

2 | default_language_version:

3 | python: python3

4 | default_stages:

5 | - pre-commit

6 | - pre-push

7 | minimum_pre_commit_version: 2.16.0

8 | repos:

9 | - repo: https://github.com/astral-sh/ruff-pre-commit

10 | rev: v0.11.5

11 | hooks:

12 | - id: ruff

13 | - id: ruff-format

14 | - repo: https://github.com/pre-commit/pre-commit-hooks

15 | rev: v4.5.0

16 | hooks:

17 | - id: trailing-whitespace

18 | - id: end-of-file-fixer

19 | - id: check-yaml

20 | - id: debug-statements

21 |

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 | ## [0.2.4] - 2025-03-26

2 |

3 | Hotfix (#18)

4 |

5 | ## [0.2.3] - 2025-03-21

6 |

7 | ### Added

8 | - New Visium-HD and Visium tutorials

9 | - Infer default plot size for plots (median neighbors distrance)

10 | - Can filter by technology in `load_dataset`

11 |

12 | ### Fixed

13 | - Fix `model.plot_prototype_covariance` and `model.plot_prototype_weights`

14 | - Edge case: ensure that even negative max-weights are above the prototype threshold

15 |

16 | ### Changed

17 | - `novae.utils.spatial_neighbors` can be now called via `novae.spatial_neighbors`

18 | - Store distances after `spatial_neighbors` instead of `1` when `coord_type="grid"`

19 |

20 | ## [0.2.2] - 2024-12-17

21 |

22 | ### Added

23 | - `load_dataset`: add `custom_filter` and `dry_run` arguments

24 | - added `min_prototypes_ratio` argument in `fine_tune` to run `init_slide_queue`

25 | - Added tutorials for proteins data + minor docs improvements

26 |

27 | ### Fixed

28 | - Ensure reset clustering if multiple zero-shot (#9)

29 |

30 | ### Changed

31 | - Removed the docs formatting (better for autocompletion)

32 | - Reorder parameters in Novae `__init__` (sorted by importance)

33 |

34 | ## [0.2.1] - 2024-12-04

35 |

36 | ### Added

37 | - `novae.utils.quantile_scaling` for proteins expression

38 |

39 | ### Fixed

40 | - Fix autocompletion using `__new__` to trick hugging_face inheritance

41 |

42 |

43 | ## [0.2.0] - 2024-12-03

44 |

45 | ### Added

46 |

47 | - `novae.plot.connectivities(...)` to show the cells neighbors

48 | - `novae.settings.auto_processing = False` to enforce using your own preprocessing

49 | - Tutorials update (more plots and more details)

50 |

51 | ### Fixed

52 |

53 | - Issue with `library_id` in `novae.plot.domains` (#8)

54 | - Set `pandas>=2.0.0` in the dependencies (#5)

55 |

56 | ### Breaking changes

57 |

58 | - `novae.utils.spatial_neighbors` must always be run, to force the user having more control on it

59 | - For multi-slide mode, the `slide_key` argument should now be used in `novae.utils.spatial_neighbors` (and only there)

60 | - Drop python 3.9 support (because dropped in `anndata`)

61 |

62 | ## [0.1.0] - 2024-09-11

63 |

64 | First official `novae` release. Preprint coming soon.

65 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing to *novae*

2 |

3 | Contributions are welcome as we aim to continue improving `novae`. For instance, you can contribute by:

4 |

5 | - Opening an issue

6 | - Discussing the current state of the code

7 | - Making a Pull Request (PR)

8 |

9 | If you want to open a PR, follow the following instructions.

10 |

11 | ## Making a Pull Request (PR)

12 |

13 | To add some new code to **novae**, you should:

14 |

15 | 1. Fork the repository

16 | 2. Install `novae` in editable mode with the `dev` extra (see below)

17 | 3. Create your personal branch from `main`

18 | 4. Implement your changes according to the 'Coding guidelines' below

19 | 5. Create a pull request on the `main` branch of the original repository. Add explanations about your developed features, and wait for discussion and validation of your pull request

20 |

21 | ## Installing `novae` in editable mode

22 |

23 | When contributing, installing `novae` in editable mode is recommended. We also recommend installing the `dev` extra.

24 |

25 | For that, choose between `pip` and `poetry` as below:

26 |

27 | ```sh

28 | git clone https://github.com/MICS-Lab/novae.git

29 | cd novae

30 |

31 | pip install -e '.[dev]' # pip installation

32 | poetry install -E dev # poetry installation

33 | ```

34 |

35 | ## Coding guidelines

36 |

37 | ### Styling and formatting

38 |

39 | We use [`pre-commit`](https://pre-commit.com/) to run code quality controls before the commits. This will run `ruff` and others minor checks.

40 |

41 |

42 | You can set it up at the root of the repository like this:

43 | ```sh

44 | pre-commit install

45 | ```

46 |

47 | Then, it will run the pre-commit automatically before each commit.

48 |

49 | You can also run the pre-commit manually:

50 | ```sh

51 | pre-commit run --all-files

52 | ```

53 |

54 | Apart from this, we recommend to follow the standard styling conventions:

55 | - Follow the [PEP8](https://peps.python.org/pep-0008/) style guide.

56 | - Provide meaningful names to all your variables and functions.

57 | - Provide type hints to your function inputs/outputs.

58 | - Add docstrings in the Google style.

59 | - Try as much as possible to follow the same coding style as the rest of the repository.

60 |

61 | ### Testing

62 |

63 | When create a pull request, tests are run automatically. But you can also run the tests yourself before making the PR. For that, run `pytest` at the root of the repository. You can also add new tests in the `./tests` directory.

64 |

65 | To check the coverage of the tests:

66 |

67 | ```sh

68 | coverage run -m pytest

69 | coverage report # command line report

70 | coverage html # html report

71 | ```

72 |

73 | ### Documentation

74 |

75 | You can update the documentation in the `./docs` directory. Refer to the [mkdocs-material documentation](https://squidfunk.github.io/mkdocs-material/) for more help.

76 |

77 | To serve the documentation locally:

78 |

79 | ```sh

80 | mkdocs serve

81 | ```

82 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2024, Quentin Blampey

4 | All rights reserved.

5 |

6 | Redistribution and use in source and binary forms, with or without

7 | modification, are permitted provided that the following conditions are met:

8 |

9 | 1. Redistributions of source code must retain the above copyright notice, this

10 | list of conditions and the following disclaimer.

11 |

12 | 2. Redistributions in binary form must reproduce the above copyright notice,

13 | this list of conditions and the following disclaimer in the documentation

14 | and/or other materials provided with the distribution.

15 |

16 | 3. Neither the name of the copyright holder nor the names of its

17 | contributors may be used to endorse or promote products derived from

18 | this software without specific prior written permission.

19 |

20 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

21 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

22 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

23 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

24 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

25 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

26 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

27 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

28 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

29 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

30 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 |

5 |

6 |

7 | [](https://pypi.org/project/novae)

8 | [](https://pepy.tech/project/novae)

9 | [](https://mics-lab.github.io/novae)

10 |

11 | [](https://github.com/MICS-Lab/novae/blob/main/LICENSE)

12 | [](https://codecov.io/gh/MICS-Lab/novae)

13 | [](https://github.com/astral-sh/ruff)

14 |

15 |

16 |

17 |

18 | 💫 Graph-based foundation model for spatial transcriptomics data

19 |

20 |

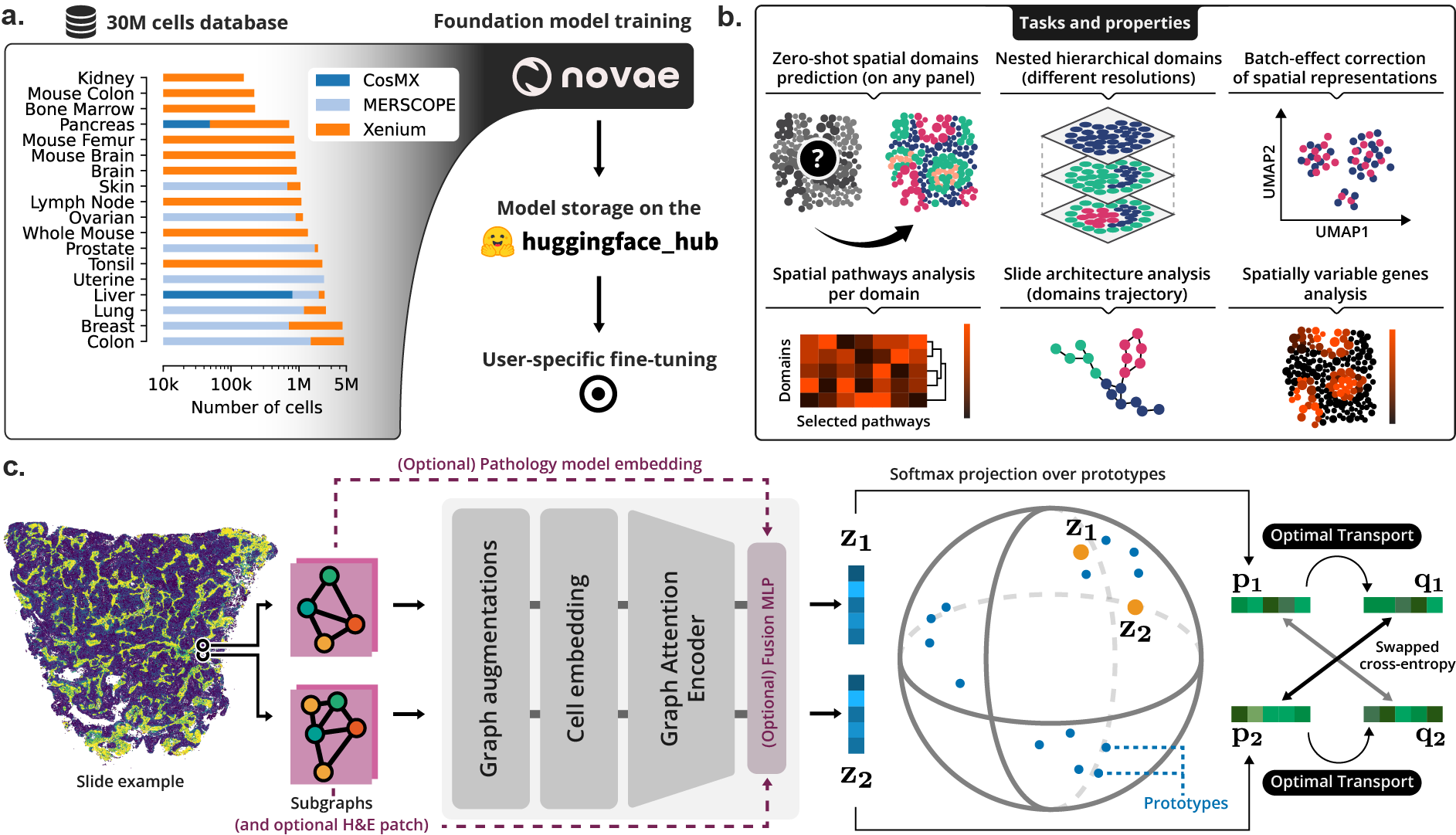

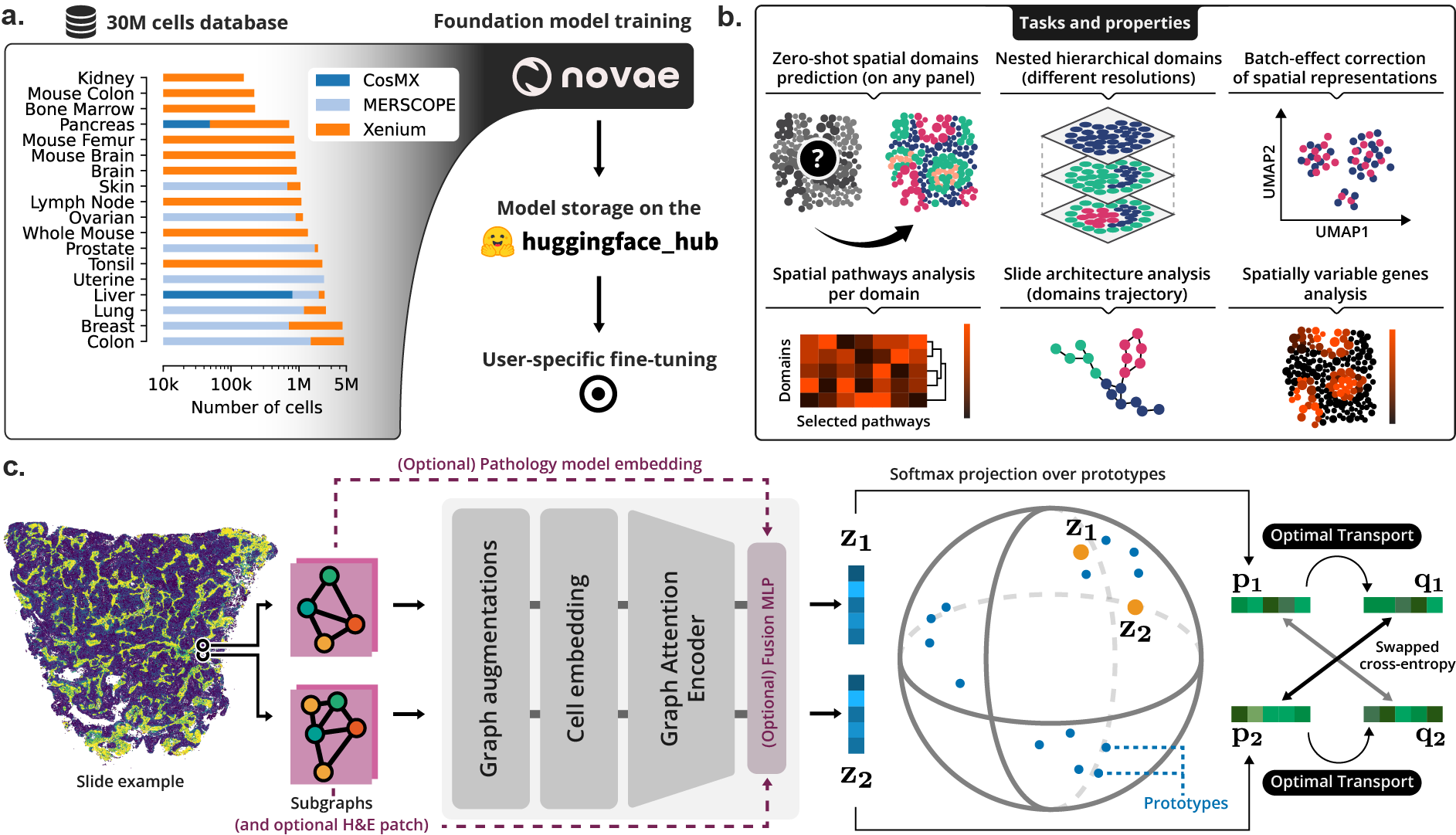

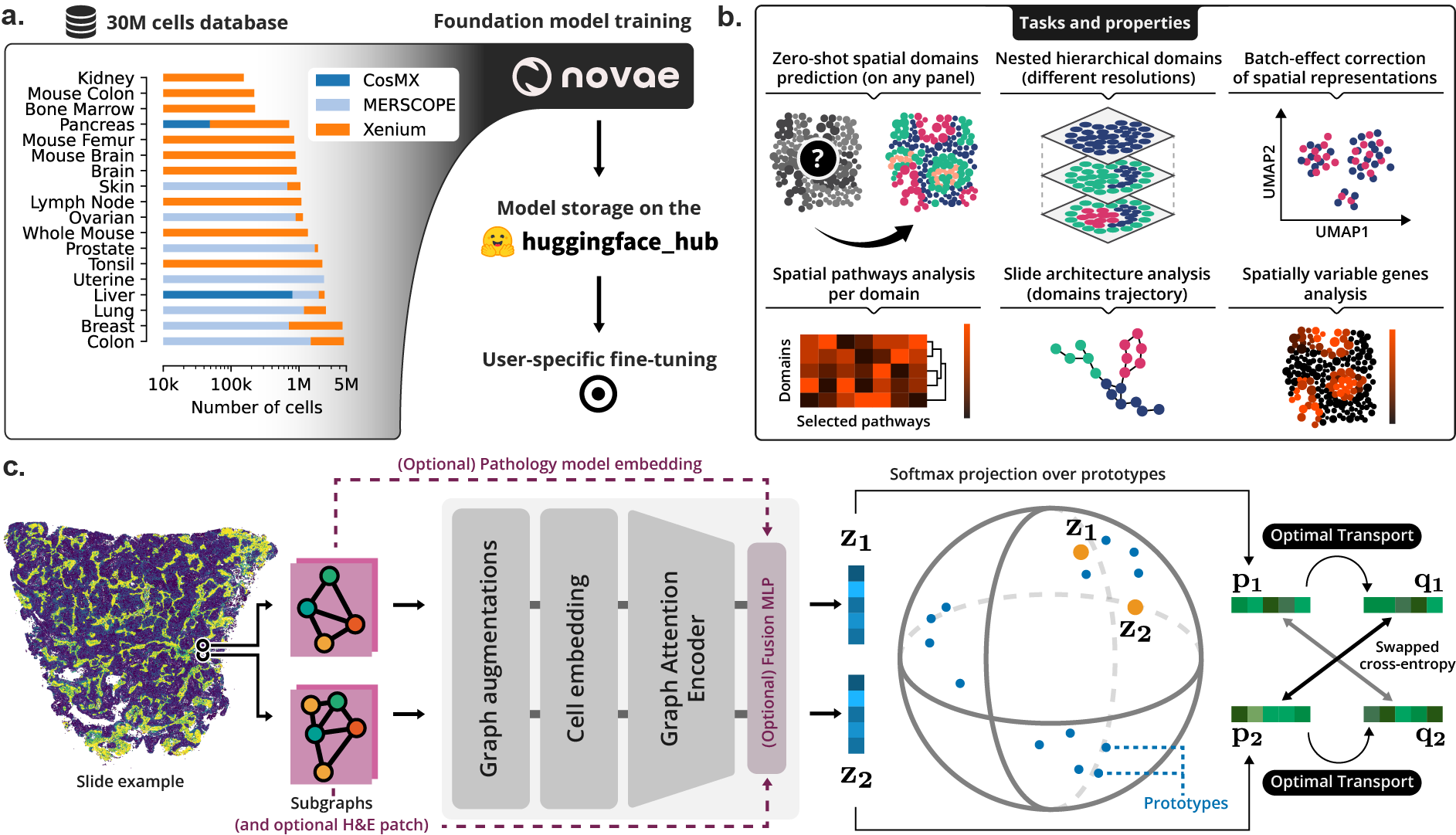

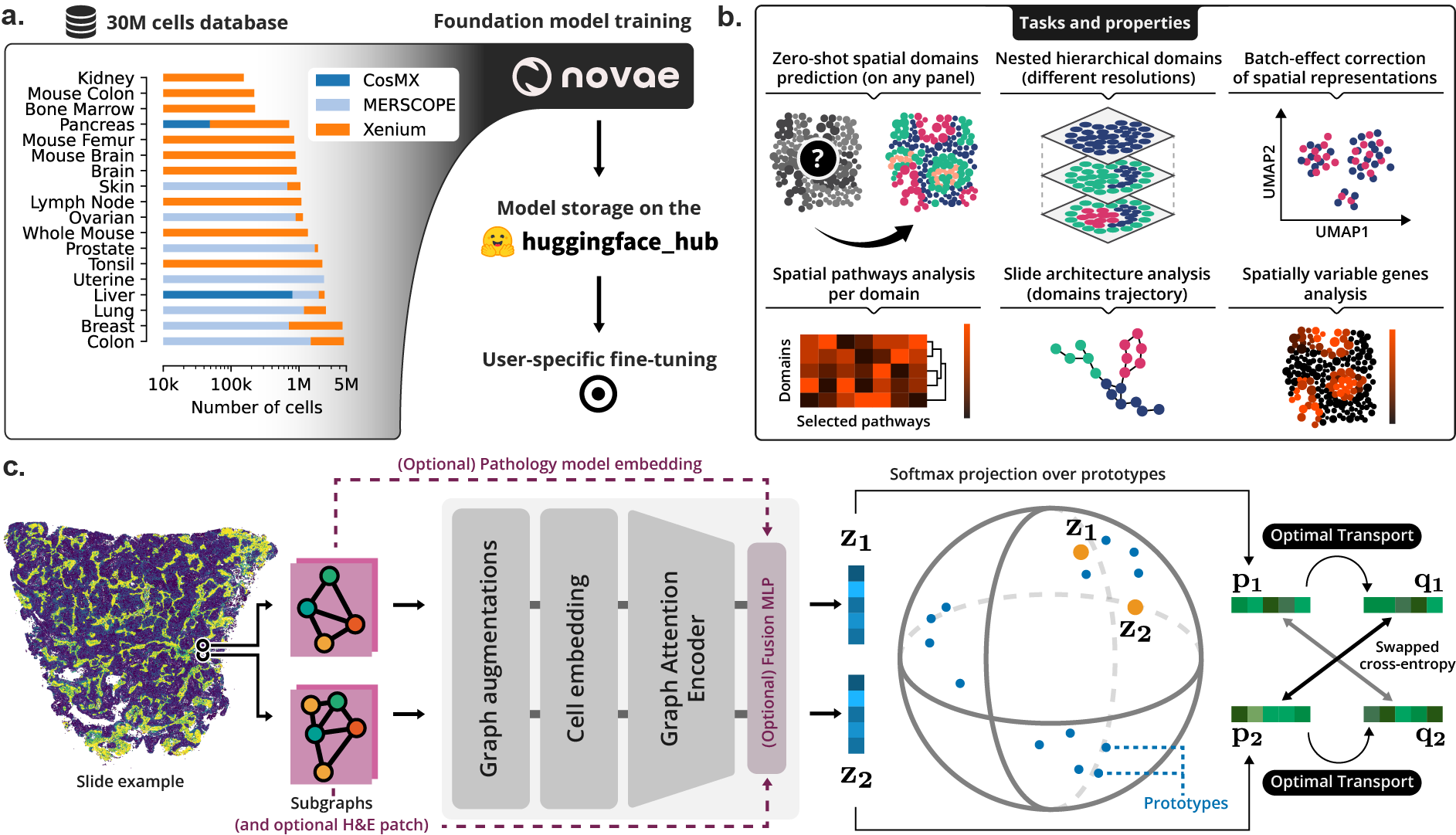

21 | Novae is a deep learning model for spatial domain assignments of spatial transcriptomics data (at both single-cell or spot resolution). It works across multiple gene panels, tissues, and technologies. Novae offers several additional features, including: (i) native batch-effect correction, (ii) analysis of spatially variable genes and pathways, and (iii) architecture analysis of tissue slides.

22 |

23 | ## Documentation

24 |

25 | Check [Novae's documentation](https://mics-lab.github.io/novae/) to get started. It contains installation explanations, API details, and tutorials.

26 |

27 | ## Overview

28 |

29 |

30 |  31 |

31 |

32 |

33 | > **(a)** Novae was trained on a large dataset, and is shared on [Hugging Face Hub](https://huggingface.co/collections/MICS-Lab/novae-669cdf1754729d168a69f6bd). **(b)** Illustration of the main tasks and properties of Novae. **(c)** Illustration of the method behind Novae (self-supervision on graphs, adapted from [SwAV](https://arxiv.org/abs/2006.09882)).

34 |

35 | ## Installation

36 |

37 | ### PyPI

38 |

39 | `novae` can be installed via `PyPI` on all OS, for `python>=3.10`.

40 |

41 | ```

42 | pip install novae

43 | ```

44 |

45 | ### Editable mode

46 |

47 | To install `novae` in editable mode (e.g., to contribute), clone the repository and choose among the options below.

48 |

49 | ```sh

50 | pip install -e . # pip, minimal dependencies

51 | pip install -e '.[dev]' # pip, all extras

52 | poetry install # poetry, minimal dependencies

53 | poetry install --all-extras # poetry, all extras

54 | ```

55 |

56 | ## Usage

57 |

58 | Here is a minimal usage example. For more details, refer to the [documentation](https://mics-lab.github.io/novae/).

59 |

60 | ```python

61 | import novae

62 |

63 | model = novae.Novae.from_pretrained("MICS-Lab/novae-human-0")

64 |

65 | model.compute_representations(adata, zero_shot=True)

66 | model.assign_domains(adata)

67 | ```

68 |

69 | ## Cite us

70 |

71 | You can cite our [preprint](https://www.biorxiv.org/content/10.1101/2024.09.09.612009v1) as below:

72 |

73 | ```txt

74 | @article{blampeyNovae2024,

75 | title = {Novae: A Graph-Based Foundation Model for Spatial Transcriptomics Data},

76 | author = {Blampey, Quentin and Benkirane, Hakim and Bercovici, Nadege and Andre, Fabrice and Cournede, Paul-Henry},

77 | year = {2024},

78 | pages = {2024.09.09.612009},

79 | publisher = {bioRxiv},

80 | doi = {10.1101/2024.09.09.612009},

81 | }

82 | ```

83 |

--------------------------------------------------------------------------------

/data/README.md:

--------------------------------------------------------------------------------

1 | # Public datasets

2 |

3 | We detail below how to download public spatial transcriptomics datasets.

4 |

5 | ## Option 1: Hugging Face Hub

6 |

7 | We store our dataset on [Hugging Face Hub](https://huggingface.co/datasets/MICS-Lab/novae).

8 | To automatically download these slides, you can use the [`novae.utils.load_dataset`](https://mics-lab.github.io/novae/api/utils/#novae.utils.load_dataset) function.

9 |

10 | NB: not all slides are uploaded on Hugging Face yet, but we are progressively adding new slides. To get the full dataset right now, use the "Option 2" below.

11 |

12 | ## Option 2: Download

13 |

14 | For consistency, all the scripts below need to be executed at the root of the `data` directory (i.e., `novae/data`).

15 |

16 | ### MERSCOPE (18 samples)

17 |

18 | Requirements: the `gsutil` command line should be installed (see [here](https://cloud.google.com/storage/docs/gsutil_install)) and a Python environment with `scanpy`.

19 |

20 | ```sh

21 | # download all MERSCOPE datasets

22 | sh _scripts/merscope_download.sh

23 |

24 | # convert all datasets to h5ad files

25 | python _scripts/merscope_convert.py

26 | ```

27 |

28 | ### Xenium (20+ samples)

29 |

30 | Requirements: a Python environment with `spatialdata-io` installed.

31 |

32 | ```sh

33 | # download all Xenium datasets

34 | sh _scripts/xenium_download.sh

35 |

36 | # convert all datasets to h5ad files

37 | python _scripts/xenium_convert.py

38 | ```

39 |

40 | ### CosMX (3 samples)

41 |

42 | Requirements: a Python environment with `scanpy` installed.

43 |

44 | ```sh

45 | # download all CosMX datasets

46 | sh _scripts/cosmx_download.sh

47 |

48 | # convert all datasets to h5ad files

49 | python _scripts/cosmx_convert.py

50 | ```

51 |

52 | ### All datasets

53 |

54 | All above datasets can be downloaded using a single command line. Make sure you have all the requirements listed above.

55 |

56 | ```sh

57 | sh _scripts/1_download_all.sh

58 | ```

59 |

60 | ### Preprocess and prepare for training

61 |

62 | The script bellow will copy all `adata.h5ad` files into a single directory, compute UMAPs, and minor preprocessing. See the `argparse` helper of this script for more details.

63 |

64 | ```sh

65 | python _scripts/2_prepare.py

66 | ```

67 |

68 | ### Usage

69 |

70 | These datasets can be used during training (see the `scripts` directory at the root of the `novae` repository).

71 |

72 | ## Notes

73 | - Missing technologies: CosMX, Curio Seeker, Resolve

74 | - Public institute datasets with [STOmics DB](https://db.cngb.org/stomics/)

75 | - Some Xenium datasets are available outside of the main "10X Datasets" page:

76 | - https://www.10xgenomics.com/products/visium-hd-spatial-gene-expression/dataset-human-crc

77 |

--------------------------------------------------------------------------------

/data/_scripts/1_download_all.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # download all MERSCOPE datasets

4 | sh merscope_download.sh

5 |

6 | # convert all datasets to h5ad files

7 | python merscope_convert.py

8 |

9 | # download all Xenium datasets

10 | sh xenium_download.sh

11 |

12 | # convert all datasets to h5ad files

13 | python xenium_convert.py

14 |

15 | # download all CosMX datasets

16 | sh cosmx_download.sh

17 |

18 | # convert all datasets to h5ad files

19 | python cosmx_convert.py

20 |

--------------------------------------------------------------------------------

/data/_scripts/2_prepare.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from pathlib import Path

3 |

4 | import anndata

5 | import scanpy as sc

6 | from anndata import AnnData

7 |

8 | import novae

9 |

10 | MIN_CELLS = 50

11 | DELAUNAY_RADIUS = 100

12 |

13 |

14 | def preprocess(adata: AnnData, compute_umap: bool = False):

15 | sc.pp.filter_genes(adata, min_cells=MIN_CELLS)

16 | adata.layers["counts"] = adata.X.copy()

17 | sc.pp.normalize_total(adata)

18 | sc.pp.log1p(adata)

19 |

20 | if compute_umap:

21 | sc.pp.neighbors(adata)

22 | sc.tl.umap(adata)

23 |

24 | novae.utils.spatial_neighbors(adata, radius=[0, DELAUNAY_RADIUS])

25 |

26 |

27 | def main(args):

28 | data_path: Path = Path(args.path).absolute()

29 | out_dir = data_path / args.name

30 |

31 | if not out_dir.exists():

32 | out_dir.mkdir()

33 |

34 | for dataset in args.datasets:

35 | dataset_dir: Path = data_path / dataset

36 | for file in dataset_dir.glob("**/adata.h5ad"):

37 | print("Reading file", file)

38 |

39 | adata = anndata.read_h5ad(file)

40 | adata.obs["technology"] = dataset

41 |

42 | if "slide_id" not in adata.obs:

43 | print(" (no slide_id in obs, skipping)")

44 | continue

45 |

46 | out_file = out_dir / f"{adata.obs['slide_id'].iloc[0]}.h5ad"

47 |

48 | if out_file.exists() and not args.overwrite:

49 | print(" (already exists)")

50 | continue

51 |

52 | preprocess(adata, compute_umap=args.umap)

53 | adata.write_h5ad(out_file)

54 |

55 |

56 | if __name__ == "__main__":

57 | parser = argparse.ArgumentParser()

58 | parser.add_argument(

59 | "-p",

60 | "--path",

61 | type=str,

62 | default=".",

63 | help="Path to spatial directory",

64 | )

65 | parser.add_argument(

66 | "-n",

67 | "--name",

68 | type=str,

69 | default="all",

70 | help="Name of the resulting data directory",

71 | )

72 | parser.add_argument(

73 | "-d",

74 | "--datasets",

75 | nargs="+",

76 | default=["xenium", "merscope", "cosmx"],

77 | help="List of dataset names to concatenate",

78 | )

79 | parser.add_argument(

80 | "-o",

81 | "--overwrite",

82 | action="store_true",

83 | help="Overwrite existing output files",

84 | )

85 | parser.add_argument(

86 | "-u",

87 | "--umap",

88 | action="store_true",

89 | help="Whether to compute the UMAP embedding",

90 | )

91 |

92 | args = parser.parse_args()

93 | main(args)

94 |

--------------------------------------------------------------------------------

/data/_scripts/cosmx_convert.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from pathlib import Path

3 |

4 | import anndata

5 | import pandas as pd

6 | from scipy.sparse import csr_matrix

7 |

8 |

9 | def convert_to_h5ad(dataset_dir: Path):

10 | print(f"Reading {dataset_dir}")

11 | res_path = dataset_dir / "adata.h5ad"

12 |

13 | if res_path.exists():

14 | print(f"File {res_path} already existing.")

15 | return

16 |

17 | slide_id = f"cosmx_{dataset_dir.name}"

18 |

19 | counts_files = list(dataset_dir.glob("*exprMat_file.csv"))

20 | metadata_files = list(dataset_dir.glob("*metadata_file.csv"))

21 |

22 | if len(counts_files) != 1 or len(metadata_files) != 1:

23 | print(f"Did not found both exprMat and metadata csv inside {dataset_dir}. Skipping this directory.")

24 | return

25 |

26 | data = pd.read_csv(counts_files[0], index_col=[0, 1])

27 | obs = pd.read_csv(metadata_files[0], index_col=[0, 1])

28 |

29 | data.index = data.index.map(lambda x: f"{x[0]}-{x[1]}")

30 | obs.index = obs.index.map(lambda x: f"{x[0]}-{x[1]}")

31 |

32 | if len(data) != len(obs):

33 | cell_ids = list(set(data.index) & set(obs.index))

34 | data = data.loc[cell_ids]

35 | obs = obs.loc[cell_ids]

36 |

37 | obs.index = obs.index.astype(str) + f"_{slide_id}"

38 | data.index = obs.index

39 |

40 | is_gene = ~data.columns.str.lower().str.contains("SystemControl")

41 |

42 | adata = anndata.AnnData(data.loc[:, is_gene], obs=obs)

43 |

44 | adata.obsm["spatial"] = adata.obs[["CenterX_global_px", "CenterY_global_px"]].values * 0.120280945

45 | adata.obs["slide_id"] = pd.Series(slide_id, index=adata.obs_names, dtype="category")

46 |

47 | adata.X = csr_matrix(adata.X)

48 | adata.write_h5ad(res_path)

49 |

50 | print(f"Created file at path {res_path}")

51 |

52 |

53 | def main(args):

54 | path = Path(args.path).absolute() / "cosmx"

55 |

56 | print(f"Reading all datasets inside {path}")

57 |

58 | for dataset_dir in path.iterdir():

59 | if dataset_dir.is_dir():

60 | convert_to_h5ad(dataset_dir)

61 |

62 |

63 | if __name__ == "__main__":

64 | parser = argparse.ArgumentParser()

65 | parser.add_argument(

66 | "-p",

67 | "--path",

68 | type=str,

69 | default=".",

70 | help="Path to spatial directory (containing the 'cosmx' directory)",

71 | )

72 |

73 | main(parser.parse_args())

74 |

--------------------------------------------------------------------------------

/data/_scripts/cosmx_download.sh:

--------------------------------------------------------------------------------

1 | # Pancreas

2 | PANCREAS_FLAT_FILES="https://smi-public.objects.liquidweb.services/cosmx-wtx/Pancreas-CosMx-WTx-FlatFiles.zip"

3 | PANCREAS_OUTPUT_ZIP="cosmx/pancreas/Pancreas-CosMx-WTx-FlatFiles.zip"

4 |

5 | mkdir -p cosmx/pancreas

6 |

7 | if [ -f $PANCREAS_OUTPUT_ZIP ]; then

8 | echo "File $PANCREAS_OUTPUT_ZIP already exists."

9 | else

10 | echo "Downloading $PANCREAS_FLAT_FILES to $PANCREAS_OUTPUT_ZIP"

11 | curl $PANCREAS_FLAT_FILES -o $PANCREAS_OUTPUT_ZIP

12 | unzip $PANCREAS_OUTPUT_ZIP -d cosmx/pancreas

13 | fi

14 |

15 | # Normal Liver

16 | mkdir -p cosmx/normal_liver

17 | METADATA_FILE="https://nanostring.app.box.com/index.php?rm=box_download_shared_file&shared_name=id16si2dckxqqpilexl2zg90leo57grn&file_id=f_1392279064291"

18 | METADATA_OUTPUT="cosmx/normal_liver/metadata_file.csv"

19 | if [ -f $METADATA_OUTPUT ]; then

20 | echo "File $METADATA_OUTPUT already exists."

21 | else

22 | echo "Downloading $METADATA_FILE to $METADATA_OUTPUT"

23 | curl -L $METADATA_FILE -o $METADATA_OUTPUT

24 | fi

25 | COUNT_FILE="https://nanostring.app.box.com/index.php?rm=box_download_shared_file&shared_name=id16si2dckxqqpilexl2zg90leo57grn&file_id=f_1392318918584"

26 | COUNT_OUTPUT="cosmx/normal_liver/exprMat_file.csv"

27 | if [ -f $COUNT_OUTPUT ]; then

28 | echo "File $COUNT_OUTPUT already exists."

29 | else

30 | echo "Downloading $COUNT_FILE to $COUNT_OUTPUT"

31 | curl -L $COUNT_FILE -o $COUNT_OUTPUT

32 | fi

33 |

34 | # Cancer Liver

35 | mkdir -p cosmx/cancer_liver

36 | METADATA_FILE="https://nanostring.app.box.com/index.php?rm=box_download_shared_file&shared_name=id16si2dckxqqpilexl2zg90leo57grn&file_id=f_1392293795557"

37 | METADATA_OUTPUT="cosmx/cancer_liver/metadata_file.csv"

38 | if [ -f $METADATA_OUTPUT ]; then

39 | echo "File $METADATA_OUTPUT already exists."

40 | else

41 | echo "Downloading $METADATA_FILE to $METADATA_OUTPUT"

42 | curl -L $METADATA_FILE -o $METADATA_OUTPUT

43 | fi

44 | COUNT_FILE="https://nanostring.app.box.com/index.php?rm=box_download_shared_file&shared_name=id16si2dckxqqpilexl2zg90leo57grn&file_id=f_1392441469377"

45 | COUNT_OUTPUT="cosmx/cancer_liver/exprMat_file.csv"

46 | if [ -f $COUNT_OUTPUT ]; then

47 | echo "File $COUNT_OUTPUT already exists."

48 | else

49 | echo "Downloading $COUNT_FILE to $COUNT_OUTPUT"

50 | curl -L $COUNT_FILE -o $COUNT_OUTPUT

51 | fi

52 |

--------------------------------------------------------------------------------

/data/_scripts/merscope_convert.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from pathlib import Path

3 |

4 | import anndata

5 | import numpy as np

6 | import pandas as pd

7 | from scipy.sparse import csr_matrix

8 |

9 |

10 | def convert_to_h5ad(dataset_dir: Path):

11 | res_path = dataset_dir / "adata.h5ad"

12 |

13 | if res_path.exists():

14 | print(f"File {res_path} already existing.")

15 | return

16 |

17 | region = "region_0"

18 | slide_id = f"{dataset_dir.name}_{region}"

19 |

20 | data_dir = dataset_dir / "cell_by_gene.csv"

21 | obs_dir = dataset_dir / "cell_metadata.csv"

22 |

23 | if not data_dir.exists() or not obs_dir.exists():

24 | print(f"Did not found both csv inside {dataset_dir}. Skipping this directory.")

25 | return

26 |

27 | data = pd.read_csv(data_dir, index_col=0, dtype={"cell": str})

28 | obs = pd.read_csv(obs_dir, index_col=0, dtype={"EntityID": str})

29 |

30 | obs.index = obs.index.astype(str) + f"_{slide_id}"

31 | data.index = data.index.astype(str) + f"_{slide_id}"

32 | obs = obs.loc[data.index]

33 |

34 | is_gene = ~data.columns.str.lower().str.contains("blank")

35 |

36 | adata = anndata.AnnData(data.loc[:, is_gene], dtype=np.uint16, obs=obs)

37 |

38 | adata.obsm["spatial"] = adata.obs[["center_x", "center_y"]].values

39 | adata.obs["region"] = pd.Series(region, index=adata.obs_names, dtype="category")

40 | adata.obs["slide_id"] = pd.Series(slide_id, index=adata.obs_names, dtype="category")

41 |

42 | adata.X = csr_matrix(adata.X)

43 | adata.write_h5ad(res_path)

44 |

45 | print(f"Created file at path {res_path}")

46 |

47 |

48 | def main(args):

49 | path = Path(args.path).absolute() / "merscope"

50 |

51 | print(f"Reading all datasets inside {path}")

52 |

53 | for dataset_dir in path.iterdir():

54 | if dataset_dir.is_dir():

55 | convert_to_h5ad(dataset_dir)

56 |

57 |

58 | if __name__ == "__main__":

59 | parser = argparse.ArgumentParser()

60 | parser.add_argument(

61 | "-p",

62 | "--path",

63 | type=str,

64 | default=".",

65 | help="Path to spatial directory (containing the 'merscope' directory)",

66 | )

67 |

68 | main(parser.parse_args())

69 |

--------------------------------------------------------------------------------

/data/_scripts/merscope_download.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | BUCKET_NAME="vz-ffpe-showcase"

4 | OUTPUT_DIR="./merscope"

5 |

6 | for BUCKET_DIR in $(gsutil ls -d gs://$BUCKET_NAME/*); do

7 | DATASET_NAME=$(basename $BUCKET_DIR)

8 | OUTPUT_DATASET_DIR=$OUTPUT_DIR/$DATASET_NAME

9 |

10 | mkdir -p $OUTPUT_DATASET_DIR

11 |

12 | for BUCKET_FILE in ${BUCKET_DIR}{cell_by_gene,cell_metadata}.csv; do

13 | FILE_NAME=$(basename $BUCKET_FILE)

14 |

15 | if [ -f $OUTPUT_DATASET_DIR/$FILE_NAME ]; then

16 | echo "File $FILE_NAME already exists in $OUTPUT_DATASET_DIR"

17 | else

18 | echo "Copying $BUCKET_FILE to $OUTPUT_DATASET_DIR"

19 | gsutil cp "$BUCKET_FILE" $OUTPUT_DATASET_DIR

20 | echo "Copied successfully"

21 | fi

22 | done

23 | done

24 |

--------------------------------------------------------------------------------

/data/_scripts/stereoseq_convert.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from pathlib import Path

3 |

4 | import anndata

5 | import pandas as pd

6 | from scipy.sparse import csr_matrix

7 |

8 |

9 | def convert_to_h5ad(dataset_dir: Path):

10 | res_path = dataset_dir / "adata.h5ad"

11 |

12 | if res_path.exists():

13 | print(f"File {res_path} already existing.")

14 | return

15 |

16 | slide_id = f"stereoseq_{dataset_dir.name}"

17 |

18 | h5ad_files = dataset_dir.glob(".h5ad")

19 |

20 | if len(h5ad_files) != 1:

21 | print(f"Found {len(h5ad_files)} h5ad file inside {dataset_dir}. Skipping this directory.")

22 | return

23 |

24 | adata = anndata.read_h5ad(h5ad_files[0])

25 | adata.X = adata.layers["raw_counts"]

26 | del adata.layers["raw_counts"]

27 |

28 | adata.obsm["spatial"] = adata.obsm["spatial"].astype(float).values

29 | adata.obs["slide_id"] = pd.Series(slide_id, index=adata.obs_names, dtype="category")

30 |

31 | adata.X = csr_matrix(adata.X)

32 | adata.write_h5ad(res_path)

33 |

34 | print(f"Created file at path {res_path}")

35 |

36 |

37 | def main(args):

38 | path = Path(args.path).absolute() / "stereoseq"

39 |

40 | print(f"Reading all datasets inside {path}")

41 |

42 | for dataset_dir in path.iterdir():

43 | if dataset_dir.is_dir():

44 | convert_to_h5ad(dataset_dir)

45 |

46 |

47 | if __name__ == "__main__":

48 | parser = argparse.ArgumentParser()

49 | parser.add_argument(

50 | "-p",

51 | "--path",

52 | type=str,

53 | default=".",

54 | help="Path to spatial directory (containing the 'stereoseq' directory)",

55 | )

56 |

57 | main(parser.parse_args())

58 |

--------------------------------------------------------------------------------

/data/_scripts/stereoseq_download.sh:

--------------------------------------------------------------------------------

1 | H5AD_REMOTE_PATHS=(\

2 | "https://ftp.cngb.org/pub/SciRAID/stomics/STDS0000062/stomics/FP200000498TL_D2_stereoseq.h5ad"\

3 | "https://ftp.cngb.org/pub/SciRAID/stomics/STDS0000062/stomics/FP200000498TL_E4_stereoseq.h5ad"\

4 | "https://ftp.cngb.org/pub/SciRAID/stomics/STDS0000062/stomics/FP200000498TL_E5_stereoseq.h5ad"\

5 | )

6 |

7 | OUTPUT_DIR="stereoseq"

8 | mkdir -p $OUTPUT_DIR

9 |

10 | for H5AD_REMOTE_PATH in "${H5AD_REMOTE_PATHS[@]}"

11 | do

12 | DATASET_NAME=$(basename $H5AD_REMOTE_PATH)

13 | OUTPUT_DATASET_DIR=${OUTPUT_DIR}/${DATASET_NAME%.h5ad}

14 | OUTPUT_DATASET=$OUTPUT_DATASET_DIR/${DATASET_NAME}

15 |

16 | mkdir -p $OUTPUT_DATASET_DIR

17 |

18 | if [ -f $OUTPUT_DATASET ]; then

19 | echo "File $OUTPUT_DATASET_DIR already exists"

20 | else

21 | echo "Downloading $H5AD_REMOTE_PATH to $OUTPUT_DATASET"

22 | # curl $H5AD_REMOTE_PATH -o $OUTPUT_DATASET

23 | echo "Successfully downloaded"

24 | fi

25 | done

26 |

--------------------------------------------------------------------------------

/data/_scripts/xenium_convert.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | from pathlib import Path

3 |

4 | import anndata

5 | import pandas as pd

6 | from spatialdata_io.readers.xenium import _get_tables_and_circles

7 |

8 |

9 | def convert_to_h5ad(dataset_dir: Path):

10 | res_path = dataset_dir / "adata.h5ad"

11 |

12 | if res_path.exists():

13 | print(f"File {res_path} already existing.")

14 | return

15 |

16 | adata: anndata.AnnData = _get_tables_and_circles(dataset_dir, False, {"region": "region_0"})

17 | adata.obs["cell_id"] = adata.obs["cell_id"].apply(lambda x: x if (isinstance(x, (str, int))) else x.decode("utf-8"))

18 |

19 | slide_id = dataset_dir.name

20 | adata.obs.index = adata.obs["cell_id"].astype(str).values + f"_{slide_id}"

21 |

22 | adata.obs["slide_id"] = pd.Series(slide_id, index=adata.obs_names, dtype="category")

23 |

24 | adata.write_h5ad(res_path)

25 |

26 | print(f"Created file at path {res_path}")

27 |

28 |

29 | def main(args):

30 | path = Path(args.path).absolute() / "xenium"

31 |

32 | print(f"Reading all datasets inside {path}")

33 |

34 | for dataset_dir in path.iterdir():

35 | if dataset_dir.is_dir():

36 | print(f"In {dataset_dir}")

37 | try:

38 | convert_to_h5ad(dataset_dir)

39 | except:

40 | print(f"Failed to convert {dataset_dir}")

41 |

42 |

43 | if __name__ == "__main__":

44 | parser = argparse.ArgumentParser()

45 | parser.add_argument(

46 | "-p",

47 | "--path",

48 | type=str,

49 | default=".",

50 | help="Path to spatial directory (containing the 'xenium' directory)",

51 | )

52 |

53 | main(parser.parse_args())

54 |

--------------------------------------------------------------------------------

/docs/api/Novae.md:

--------------------------------------------------------------------------------

1 | ::: novae.Novae

2 |

--------------------------------------------------------------------------------

/docs/api/dataloader.md:

--------------------------------------------------------------------------------

1 | ::: novae.data.AnnDataTorch

2 |

3 | ::: novae.data.NovaeDataset

4 |

5 | ::: novae.data.NovaeDatamodule

6 |

--------------------------------------------------------------------------------

/docs/api/metrics.md:

--------------------------------------------------------------------------------

1 | ::: novae.monitor.jensen_shannon_divergence

2 |

3 | ::: novae.monitor.fide_score

4 |

5 | ::: novae.monitor.mean_fide_score

6 |

--------------------------------------------------------------------------------

/docs/api/modules.md:

--------------------------------------------------------------------------------

1 | ::: novae.module.AttentionAggregation

2 |

3 | ::: novae.module.CellEmbedder

4 |

5 | ::: novae.module.GraphAugmentation

6 |

7 | ::: novae.module.GraphEncoder

8 |

9 | ::: novae.module.SwavHead

10 |

--------------------------------------------------------------------------------

/docs/api/plot.md:

--------------------------------------------------------------------------------

1 | ::: novae.plot.domains

2 |

3 | ::: novae.plot.domains_proportions

4 |

5 | ::: novae.plot.connectivities

6 |

7 | ::: novae.plot.pathway_scores

8 |

9 | ::: novae.plot.paga

10 |

11 | ::: novae.plot.spatially_variable_genes

12 |

--------------------------------------------------------------------------------

/docs/api/utils.md:

--------------------------------------------------------------------------------

1 | ::: novae.spatial_neighbors

2 |

3 | ::: novae.batch_effect_correction

4 |

5 | ::: novae.utils.quantile_scaling

6 |

7 | ::: novae.utils.prepare_adatas

8 |

9 | ::: novae.utils.load_dataset

10 |

11 | ::: novae.utils.toy_dataset

12 |

--------------------------------------------------------------------------------

/docs/assets/Figure1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MICS-Lab/novae/b641a0bd1947759317c9e7ab4d997bb7a4a00932/docs/assets/Figure1.png

--------------------------------------------------------------------------------

/docs/assets/banner.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MICS-Lab/novae/b641a0bd1947759317c9e7ab4d997bb7a4a00932/docs/assets/banner.png

--------------------------------------------------------------------------------

/docs/assets/logo_favicon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MICS-Lab/novae/b641a0bd1947759317c9e7ab4d997bb7a4a00932/docs/assets/logo_favicon.png

--------------------------------------------------------------------------------

/docs/assets/logo_small_black.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MICS-Lab/novae/b641a0bd1947759317c9e7ab4d997bb7a4a00932/docs/assets/logo_small_black.png

--------------------------------------------------------------------------------

/docs/assets/logo_white.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MICS-Lab/novae/b641a0bd1947759317c9e7ab4d997bb7a4a00932/docs/assets/logo_white.png

--------------------------------------------------------------------------------

/docs/cite_us.md:

--------------------------------------------------------------------------------

1 | You can cite our [preprint](https://www.biorxiv.org/content/10.1101/2024.09.09.612009v1) as below:

2 |

3 | ```txt

4 | @article{blampeyNovae2024,

5 | title = {Novae: A Graph-Based Foundation Model for Spatial Transcriptomics Data},

6 | author = {Blampey, Quentin and Benkirane, Hakim and Bercovici, Nadege and Andre, Fabrice and Cournede, Paul-Henry},

7 | year = {2024},

8 | pages = {2024.09.09.612009},

9 | publisher = {bioRxiv},

10 | doi = {10.1101/2024.09.09.612009},

11 | }

12 | ```

13 |

14 | This library has been developed by Quentin Blampey, PhD student in biomathematics and deep learning. The following institutions funded this work:

15 |

16 | - Lab of Mathematics and Computer Science (MICS), **CentraleSupélec** (Engineering School, Paris-Saclay University).

17 | - PRISM center, **Gustave Roussy Institute** (Cancer campus, Paris-Saclay University).

18 |

--------------------------------------------------------------------------------

/docs/faq.md:

--------------------------------------------------------------------------------

1 | # Frequently asked questions

2 |

3 | ### How to use the GPU?

4 |

5 | Using a GPU may significantly speed up Novae's training or inference.

6 |

7 | If you have a valid GPU for PyTorch, you can set the `accelerator` argument (e.g., one of `["cpu", "gpu", "tpu", "hpu", "mps", "auto"]`) in the following methods: [model.fit()](../api/Novae/#novae.Novae.fit), [model.fine_tune()](../api/Novae/#novae.Novae.fine_tune), [model.compute_representations()](../api/Novae/#novae.Novae.compute_representations).

8 |

9 | When using a GPU, we also highly recommend setting multiple workers to speed up the dataset `__getitem__`. For that, you'll need to set the `num_workers` argument in the previous methods, according to the number of CPUs available (`num_workers=8` is usually a good value).

10 |

11 | For more details, refer to the API of the [PyTorch Lightning Trainer](https://lightning.ai/docs/pytorch/stable/common/trainer.html#trainer-class-api) and to the API of the [PyTorch DataLoader](https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader).

12 |

13 | ### How to load a pretrained model?

14 |

15 | We highly recommend loading a pre-trained Novae model instead of re-training from scratch. For that, choose an available Novae model name on [our HuggingFace collection](https://huggingface.co/collections/MICS-Lab/novae-669cdf1754729d168a69f6bd), and provide this name to the [model.save_pretrained()](../api/Novae/#novae.Novae.save_pretrained) method:

16 |

17 | ```python

18 | from novae import Novae

19 |

20 | model = Novae.from_pretrained("MICS-Lab/novae-human-0") # or any valid model name

21 | ```

22 |

23 | ### How to avoid overcorrecting?

24 |

25 | By default, Novae corrects the batch-effect to get shared spatial domains across slides.

26 | The batch information is used only during training (`fit` or `fine_tune`), which should prevent Novae from overcorrecting in `zero_shot` mode.

27 |

28 | If not using the `zero_shot` mode, you can provide the `min_prototypes_ratio` parameter to control batch effect correction: either (i) in the `fine_tune` method itself, or (ii) during the model initialization (if retraining a model from scratch).

29 |

30 | For instance, if `min_prototypes_ratio=0.5`, Novae expects each slide to contain at least 50% of the prototypes (each prototype can be interpreted as an "elementary spatial domain"). Therefore, the lower `min_prototypes_ratio`, the lower the batch-effect correction. Conversely, if `min_prototypes_ratio=1`, all prototypes are expected to be found in all slides (this doesn't mean the proportions will be the same overall slides, though).

31 |

32 | ### How do I save my own model?

33 |

34 | If you have trained or fine-tuned your own Novae model, you can save it for later use. For that, use the [model.save_pretrained()](../api/Novae/#novae.Novae.save_pretrained) method as below:

35 |

36 | ```python

37 | model.save_pretrained(save_directory="./my-model-directory")

38 | ```

39 |

40 | Then, you can load this model back via the [model.from_pretrained()](../api/Novae/#novae.Novae.from_pretrained) method:

41 |

42 | ```python

43 | from novae import Novae

44 |

45 | model = Novae.from_pretrained("./my-model-directory")

46 | ```

47 |

48 | ### How to turn lazy loading on or off?

49 |

50 | By default, lazy loading is used only on large datasets. To enforce a specific behavior, you can do the following:

51 |

52 | ```python

53 | # never use lazy loading

54 | novae.settings.disable_lazy_loading()

55 |

56 | # always use lazy loading

57 | novae.settings.enable_lazy_loading()

58 |

59 | # use lazy loading only for AnnData objects with 1M+ cells

60 | novae.settings.enable_lazy_loading(n_obs_threshold=1_000_000)

61 | ```

62 |

63 | ### How to update the logging level?

64 |

65 | The logging level can be updated as below:

66 |

67 | ```python

68 | import logging

69 | from novae import log

70 |

71 | log.setLevel(logging.ERROR) # or any other level, e.g. logging.DEBUG

72 | ```

73 |

74 | ### How to disable auto-preprocessing

75 |

76 | By default, Novae automatically run data preprocessing for you. If you don't want that, you can run the line below.

77 |

78 | ```python

79 | novae.settings.auto_preprocessing = False

80 | ```

81 |

82 | ### How long does it take to use Novae?

83 |

84 | The `pip` installation of Novae usually takes less than a minute on a standard laptop. The inference time depends on the number of cells, but typically takes 5-20 minutes on a CPUs, or 30sec to 2 minutes on a GPU (expect it to be roughly 10x times faster on a GPU).

85 |

86 | ### How to contribute?

87 |

88 | If you want to contribute, check our [contributing guide](https://github.com/MICS-Lab/novae/blob/main/CONTRIBUTING.md).

89 |

90 | ### How to resolve any other issue?

91 |

92 | If you have any bugs/questions/suggestions, don't hesitate to [open a new issue](https://github.com/MICS-Lab/novae/issues).

93 |

--------------------------------------------------------------------------------

/docs/getting_started.md:

--------------------------------------------------------------------------------

1 | ## Installation

2 |

3 | Novae can be installed on every OS via `pip` or [`poetry`](https://python-poetry.org/docs/), on any Python version from `3.10` to `3.12` (included). By default, we recommend using `python==3.10`.

4 |

5 | !!! note "Advice (optional)"

6 |

7 | We advise creating a new environment via a package manager, except if you use Poetry, which will automatically create the environment.

8 |

9 | For instance, you can create a new `conda` environment:

10 |

11 | ```bash

12 | conda create --name novae python=3.10

13 | conda activate novae

14 | ```

15 |

16 | Choose one of the following, depending on your needs. It should take at most a few minutes.

17 |

18 | === "From PyPI"

19 |

20 | ``` bash

21 | pip install novae

22 | ```

23 |

24 | === "pip (editable mode)"

25 |

26 | ``` bash

27 | git clone https://github.com/MICS-Lab/novae.git

28 | cd novae

29 |

30 | pip install -e . # no extra

31 | pip install -e '.[dev]' # all extras

32 | ```

33 |

34 | === "Poetry (editable mode)"

35 |

36 | ``` bash

37 | git clone https://github.com/MICS-Lab/novae.git

38 | cd novae

39 |

40 | poetry install --all-extras

41 | ```

42 |

43 | ## Next steps

44 |

45 | - We recommend to start with our [first tutorial](../tutorials/main_usage).

46 | - You can also read the [API](../api/Novae).

47 | - If you have questions, please check our [FAQ](../faq) or open an issue on the [GitHub repository](https://github.com/MICS-Lab/novae).

48 | - If you want to contribute, check our [contributing guide](https://github.com/MICS-Lab/novae/blob/main/CONTRIBUTING.md).

49 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 |

5 |

6 | 💫 Graph-based foundation model for spatial transcriptomics data

7 |

8 |

9 | Novae is a deep learning model for spatial domain assignments of spatial transcriptomics data (at both single-cell or spot resolution). It works across multiple gene panels, tissues, and technologies. Novae offers several additional features, including: (i) native batch-effect correction, (ii) analysis of spatially variable genes and pathways, and (iii) architecture analysis of tissue slides.

10 |

11 | ## Overview

12 |

13 |

14 |  15 |

15 |

16 |

17 | > **(a)** Novae was trained on a large dataset, and is shared on [Hugging Face Hub](https://huggingface.co/collections/MICS-Lab/novae-669cdf1754729d168a69f6bd). **(b)** Illustration of the main tasks and properties of Novae. **(c)** Illustration of the method behind Novae (self-supervision on graphs, adapted from [SwAV](https://arxiv.org/abs/2006.09882)).

18 |

19 |

20 | ## Why using Novae

21 |

22 | - It is already pretrained on a large dataset (pan human/mouse tissues, brain, ...). Therefore, you can compute spatial domains in a zero-shot manner (i.e., without fine-tuning).

23 | - It has been developed to find consistent domains across many slides. This also works if you have different technologies (e.g., MERSCOPE/Xenium) and multiple gene panels.

24 | - You can natively correct batch-effect, without using external tools.

25 | - After inference, the spatial domain assignment is super fast, allowing you to try multiple resolutions easily.

26 | - It supports many downstream tasks, all included inside one framework.

27 |

--------------------------------------------------------------------------------

/docs/javascripts/mathjax.js:

--------------------------------------------------------------------------------

1 | window.MathJax = {

2 | tex: {

3 | inlineMath: [["\\(", "\\)"]],

4 | displayMath: [["\\[", "\\]"]],

5 | processEscapes: true,

6 | processEnvironments: true

7 | },

8 | options: {

9 | ignoreHtmlClass: ".*|",

10 | processHtmlClass: "arithmatex"

11 | }

12 | };

13 |

14 | document$.subscribe(() => {

15 | MathJax.typesetPromise()

16 | })

17 |

--------------------------------------------------------------------------------

/docs/tutorials/LEE_AGING_CEREBELLUM_UP.json:

--------------------------------------------------------------------------------

1 | {

2 | "LEE_AGING_CEREBELLUM_UP" : {"systematicName":"MM1023","pmid":"10888876","exactSource":"Table 5S","geneSymbols":["Acadvl","Agt","Amh","Apc","Apoe","Axl","B2m","Bcl2a1a","Bdnf","C1qa","C1qb","C1qc","C4b","Capn2","Ccl21b","Cd24a","Cd68","Cdk4","Cst7","Ctsd","Ctsh","Ctss","Ctsz","Dnajb2","Efs","Eif2b5","Eprs1","Eps15","F2","Fcrl2","Fos","Gbp3","Gck","Gfap","Gnb2","Gng11","H2-Ab1","Hexb","Hmox1","Hnrnph3","Hoxa4","Hoxd12","Hspa8","Iars1","Ifi27","Ifit1","Ighm","Impa1","Irf7","Irgm1","Itgb5","Lgals3","Lgals3bp","Lmnb1","Mnat1","Mpeg1","Myh8","Nfya","Nos3","Notch1","Nr4a1","Or2c1","Pglyrp1","Pigf","Psg-ps1","Ptbp2","Ptpro","Rhog","Rpsa","Selplg","Sez6","Sipa1l2","Slc11a1","Slc7a3","Snta1","Spp1","Tbc1d1","Tbx6","Tgfbr3","Thbs2","Trappc5","Trim30a","Tyms","Ube2h","Wdfy3","Zfp40"],"msigdbURL":"https://www.gsea-msigdb.org/gsea/msigdb/mouse/geneset/LEE_AGING_CEREBELLUM_UP","externalDetailsURL":[],"filteredBySimilarity":[],"externalNamesForSimilarTerms":[],"collection":"M2:CGP"}

3 | }

4 |

--------------------------------------------------------------------------------

/docs/tutorials/input_modes.md:

--------------------------------------------------------------------------------

1 | Depending on your data and preferences, you can use 4 types of inputs.

2 | Specifically, it depends on whether (i) you have one or multiple slides and (ii) you prefer to concatenate your data.

3 |

4 | !!! info

5 | In all cases, the data structure is [AnnData](https://anndata.readthedocs.io/en/latest/). We may support MuData in the future.

6 |

7 | ## 1. One slide mode

8 |

9 | This case is the easiest one. You simply have one `AnnData` object corresponding to one slide.

10 |

11 | You can follow the first section of the [main usage tutorial](../main_usage).

12 |

13 | ## 2. Multiple slides, one AnnData object

14 |

15 | If you have multiple slides with the same gene panel, you can concatenate them into one `AnnData` object. In that case, make sure you keep a column in `adata.obs` that denotes which cell corresponds to which slide.

16 |

17 | Then, remind this column, and pass it to [`novae.spatial_neighbors`](../../api/utils/#novae.spatial_neighbors).

18 |

19 | !!! example

20 | For instance, you can do:

21 | ```python

22 | novae.spatial_neighbors(adata, slide_key="my-slide-id-column")

23 | ```

24 |

25 | ## 3. Multiple slides, one AnnData object per slide

26 |

27 | If you have multiple slides, you may prefer to keep one `AnnData` object for each slide. This is also convenient if you have different gene panels and can't concatenate your data.

28 |

29 | That case is pretty easy, since most functions and methods of Novae also support a **list of `AnnData` objects** as inputs. Therefore, simply pass a list of `AnnData` object, as below:

30 |

31 | !!! example

32 | ```python

33 | adatas = [adata_1, adata_2, ...]

34 |

35 | novae.spatial_neighbors(adatas)

36 |

37 | model.compute_representations(adatas, zero_shot=True)

38 | ```

39 |

40 | ## 4. Multiple slides, multiple slides per AnnData object

41 |

42 | If you have multiple slides and multiple panels, instead of the above option, you could have one `AnnData` object per panel, and multiple slides inside each `AnnData` object. In that case, make sure you keep a column in `adata.obs` that denotes which cell corresponds to which slide.

43 |

44 | Then, remind this column, and pass it to [`novae.spatial_neighbors`](../../api/utils/#novae.spatial_neighbors). The other functions don't need this argument.

45 |

46 | !!! example

47 | For instance, you can do:

48 | ```python

49 | adatas = [adata_1, adata_2, ...]

50 |

51 | novae.spatial_neighbors(adatas, slide_key="my-slide-id-column")

52 |

53 | model.compute_representations(adatas, zero_shot=True)

54 | ```

55 |

--------------------------------------------------------------------------------

/mkdocs.yml:

--------------------------------------------------------------------------------

1 | site_name: Novae

2 | repo_name: MICS-Lab/novae

3 | repo_url: https://github.com/MICS-Lab/novae

4 | copyright: Copyright © 2024 Quentin Blampey

5 | theme:

6 | name: material

7 | logo: assets/logo_small_black.png

8 | favicon: assets/logo_favicon.png

9 | palette:

10 | scheme: slate

11 | primary: white

12 | nav:

13 | - Home: index.md

14 | - Getting started: getting_started.md

15 | - Tutorials:

16 | - Main usage: tutorials/main_usage.ipynb

17 | - Different input modes: tutorials/input_modes.md

18 | - Usage on proteins: tutorials/proteins.ipynb

19 | - Spot/bin technologies: tutorials/resolutions.ipynb

20 | - API:

21 | - Novae model: api/Novae.md

22 | - Utils: api/utils.md

23 | - Plotting: api/plot.md

24 | - Advanced:

25 | - Metrics: api/metrics.md

26 | - Modules: api/modules.md

27 | - Dataloader: api/dataloader.md

28 | - FAQ: faq.md

29 | - Cite us: cite_us.md

30 |

31 | plugins:

32 | - search

33 | - mkdocstrings:

34 | handlers:

35 | python:

36 | options:

37 | show_root_heading: true

38 | heading_level: 3

39 | - mkdocs-jupyter:

40 | include_source: True

41 | markdown_extensions:

42 | - admonition

43 | - attr_list

44 | - md_in_html

45 | - pymdownx.details

46 | - pymdownx.highlight:

47 | anchor_linenums: true

48 | - pymdownx.inlinehilite

49 | - pymdownx.snippets

50 | - pymdownx.superfences

51 | - pymdownx.arithmatex:

52 | generic: true

53 | - pymdownx.tabbed:

54 | alternate_style: true

55 | extra_javascript:

56 | - javascripts/mathjax.js

57 | - https://polyfill.io/v3/polyfill.min.js?features=es6

58 | - https://cdn.jsdelivr.net/npm/mathjax@3/es5/tex-mml-chtml.js

59 |

--------------------------------------------------------------------------------

/novae/__init__.py:

--------------------------------------------------------------------------------

1 | import importlib.metadata

2 | import logging

3 |

4 | __version__ = importlib.metadata.version("novae")

5 |

6 | from ._logging import configure_logger

7 | from ._settings import settings

8 | from .model import Novae

9 | from . import utils

10 | from . import data

11 | from . import monitor

12 | from . import plot

13 | from .utils import spatial_neighbors, batch_effect_correction

14 |

15 | log = logging.getLogger("novae")

16 | configure_logger(log)

17 |

--------------------------------------------------------------------------------

/novae/_constants.py:

--------------------------------------------------------------------------------

1 | class Keys:

2 | # obs keys

3 | LEAVES: str = "novae_leaves"

4 | DOMAINS_PREFIX: str = "novae_domains_"

5 | IS_VALID_OBS: str = "neighborhood_valid"

6 | SLIDE_ID: str = "novae_sid"

7 |

8 | # obsm keys

9 | REPR: str = "novae_latent"

10 | REPR_CORRECTED: str = "novae_latent_corrected"

11 |

12 | # obsp keys

13 | ADJ: str = "spatial_distances"

14 | ADJ_LOCAL: str = "spatial_distances_local"

15 | ADJ_PAIR: str = "spatial_distances_pair"

16 |

17 | # var keys

18 | VAR_MEAN: str = "mean"

19 | VAR_STD: str = "std"

20 | IS_KNOWN_GENE: str = "in_vocabulary"

21 | HIGHLY_VARIABLE: str = "highly_variable"

22 | USE_GENE: str = "novae_use_gene"

23 |

24 | # layer keys

25 | COUNTS_LAYER: str = "counts"

26 |

27 | # misc keys

28 | UNS_TISSUE: str = "novae_tissue"

29 | ADATA_INDEX: str = "adata_index"

30 | N_BATCHES: str = "n_batches"

31 | NOVAE_VERSION: str = "novae_version"

32 |

33 |

34 | class Nums:

35 | # training constants

36 | EPS: float = 1e-8

37 | MIN_DATASET_LENGTH: int = 50_000

38 | MAX_DATASET_LENGTH_RATIO: float = 0.02

39 | DEFAULT_SAMPLE_CELLS: int = 100_000

40 | WARMUP_EPOCHS: int = 1

41 |

42 | # distances constants and thresholds (in microns)

43 | CELLS_CHARACTERISTIC_DISTANCE: int = 20 # characteristic distance between two cells, in microns

44 | MAX_MEAN_DISTANCE_RATIO: float = 8

45 |

46 | # genes constants

47 | N_HVG_THRESHOLD: int = 500

48 | MIN_GENES_FOR_HVG: int = 100

49 | MIN_GENES: int = 20

50 |

51 | # swav head constants

52 | SWAV_EPSILON: float = 0.05

53 | SINKHORN_ITERATIONS: int = 3

54 | QUEUE_SIZE: int = 2

55 | QUEUE_WEIGHT_THRESHOLD_RATIO: float = 0.99

56 |

57 | # misc nums

58 | MEAN_NGH_TH_WARNING: float = 3.5

59 | N_OBS_THRESHOLD: int = 2_000_000 # above this number, lazy loading is used

60 | RATIO_VALID_CELLS_TH: float = 0.7

61 |

--------------------------------------------------------------------------------

/novae/_logging.py:

--------------------------------------------------------------------------------

1 | import logging

2 |

3 | log = logging.getLogger(__name__)

4 |

5 |

6 | class ColorFormatter(logging.Formatter):

7 | grey = "\x1b[38;20m"

8 | blue = "\x1b[36;20m"

9 | yellow = "\x1b[33;20m"

10 | red = "\x1b[31;20m"

11 | bold_red = "\x1b[31;1m"

12 | reset = "\x1b[0m"

13 |

14 | prefix = "[%(levelname)s] (%(name)s)"

15 | suffix = "%(message)s"

16 |

17 | FORMATS = {

18 | logging.DEBUG: f"{grey}{prefix}{reset} {suffix}",

19 | logging.INFO: f"{blue}{prefix}{reset} {suffix}",

20 | logging.WARNING: f"{yellow}{prefix}{reset} {suffix}",

21 | logging.ERROR: f"{red}{prefix}{reset} {suffix}",

22 | logging.CRITICAL: f"{bold_red}{prefix}{reset} {suffix}",

23 | }

24 |

25 | def format(self, record):

26 | log_fmt = self.FORMATS.get(record.levelno)

27 | formatter = logging.Formatter(log_fmt)

28 | return formatter.format(record)

29 |

30 |

31 | def configure_logger(log: logging.Logger):

32 | log.setLevel(logging.INFO)

33 |

34 | consoleHandler = logging.StreamHandler()

35 | consoleHandler.setFormatter(ColorFormatter())

36 |

37 | log.addHandler(consoleHandler)

38 | log.propagate = False

39 |

--------------------------------------------------------------------------------

/novae/_settings.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 |

3 | from ._constants import Nums

4 |

5 |

6 | class Settings:

7 | # misc settings

8 | auto_preprocessing: bool = True

9 |

10 | def disable_lazy_loading(self):

11 | """Disable lazy loading of subgraphs in the NovaeDataset."""

12 | Nums.N_OBS_THRESHOLD = np.inf

13 |

14 | def enable_lazy_loading(self, n_obs_threshold: int = 0):

15 | """Enable lazy loading of subgraphs in the NovaeDataset.

16 |

17 | Args:

18 | n_obs_threshold: Lazy loading is used above this number of cells in an AnnData object.

19 | """

20 | Nums.N_OBS_THRESHOLD = n_obs_threshold

21 |

22 | @property

23 | def warmup_epochs(self):

24 | return Nums.WARMUP_EPOCHS

25 |

26 | @warmup_epochs.setter

27 | def warmup_epochs(self, value: int):

28 | Nums.WARMUP_EPOCHS = value

29 |

30 |

31 | settings = Settings()

32 |

--------------------------------------------------------------------------------

/novae/data/__init__.py:

--------------------------------------------------------------------------------

1 | from .convert import AnnDataTorch

2 | from .dataset import NovaeDataset

3 | from .datamodule import NovaeDatamodule

4 |

--------------------------------------------------------------------------------

/novae/data/convert.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import torch

3 | from anndata import AnnData

4 | from sklearn.preprocessing import LabelEncoder

5 | from torch import Tensor

6 |

7 | from .._constants import Keys, Nums

8 | from ..module import CellEmbedder

9 | from ..utils import sparse_std

10 |

11 |

12 | class AnnDataTorch:

13 | tensors: list[Tensor] | None

14 | genes_indices_list: list[Tensor]

15 |

16 | def __init__(self, adatas: list[AnnData], cell_embedder: CellEmbedder):

17 | """Converting AnnData objects to PyTorch tensors.

18 |

19 | Args:

20 | adatas: A list of `AnnData` objects.

21 | cell_embedder: A [novae.module.CellEmbedder][] object.

22 | """

23 | super().__init__()

24 | self.adatas = adatas

25 | self.cell_embedder = cell_embedder

26 |

27 | self.genes_indices_list = [self._adata_to_genes_indices(adata) for adata in self.adatas]

28 | self.tensors = None

29 |

30 | self.means, self.stds, self.label_encoder = self._compute_means_stds()

31 |

32 | # Tensors are loaded in memory for low numbers of cells

33 | if sum(adata.n_obs for adata in self.adatas) < Nums.N_OBS_THRESHOLD:

34 | self.tensors = [self.to_tensor(adata) for adata in self.adatas]

35 |

36 | def _adata_to_genes_indices(self, adata: AnnData) -> Tensor:

37 | return self.cell_embedder.genes_to_indices(adata.var_names[self._keep_var(adata)])[None, :]

38 |

39 | def _keep_var(self, adata: AnnData) -> AnnData:

40 | return adata.var[Keys.USE_GENE]

41 |

42 | def _compute_means_stds(self) -> tuple[Tensor, Tensor, LabelEncoder]:

43 | means, stds = {}, {}

44 |

45 | for adata in self.adatas:

46 | slide_ids = adata.obs[Keys.SLIDE_ID]

47 | for slide_id in slide_ids.cat.categories:

48 | adata_slide = adata[adata.obs[Keys.SLIDE_ID] == slide_id, self._keep_var(adata)]

49 |

50 | mean = adata_slide.X.mean(0)

51 | mean = mean.A1 if isinstance(mean, np.matrix) else mean

52 | means[slide_id] = mean.astype(np.float32)

53 |

54 | std = adata_slide.X.std(0) if isinstance(adata_slide.X, np.ndarray) else sparse_std(adata_slide.X, 0).A1

55 | stds[slide_id] = std.astype(np.float32)

56 |

57 | label_encoder = LabelEncoder()

58 | label_encoder.fit(list(means.keys()))

59 |

60 | means = [torch.tensor(means[slide_id]) for slide_id in label_encoder.classes_]

61 | stds = [torch.tensor(stds[slide_id]) for slide_id in label_encoder.classes_]

62 |

63 | return means, stds, label_encoder

64 |

65 | def to_tensor(self, adata: AnnData) -> Tensor:

66 | """Get the normalized gene expressions of the cells in the dataset.

67 | Only the genes of interest are kept (known genes and highly variable).

68 |

69 | Args:

70 | adata: An `AnnData` object.

71 |

72 | Returns:

73 | A `Tensor` containing the normalized gene expresions.

74 | """

75 | adata = adata[:, self._keep_var(adata)]

76 |

77 | if len(np.unique(adata.obs[Keys.SLIDE_ID])) == 1:

78 | slide_id_index = self.label_encoder.transform([adata.obs.iloc[0][Keys.SLIDE_ID]])[0]

79 | mean, std = self.means[slide_id_index], self.stds[slide_id_index]

80 | else:

81 | slide_id_indices = self.label_encoder.transform(adata.obs[Keys.SLIDE_ID])

82 | mean = torch.stack([self.means[i] for i in slide_id_indices]) # TODO: avoid stack (only if not fast enough)

83 | std = torch.stack([self.stds[i] for i in slide_id_indices])

84 |

85 | X = adata.X if isinstance(adata.X, np.ndarray) else adata.X.toarray()

86 | X = torch.tensor(X, dtype=torch.float32)

87 | X = (X - mean) / (std + Nums.EPS)

88 |

89 | return X

90 |

91 | def __getitem__(self, item: tuple[int, slice]) -> tuple[Tensor, Tensor]:

92 | """Get the expression values for a subset of cells (corresponding to a subgraph).

93 |

94 | Args: