├── nets

├── __init__.py

├── inception.py

├── inception_utils.py

├── nets_factory.py

├── resnet_utils.py

├── vgg.py

├── resnet_v1.py

├── resnet_v2.py

├── inception_v1.py

├── inception_resnet_v2.py

└── inception_v4.py

├── datasets

├── __init__.py

├── dataset_factory.py

├── custom.py

├── dataset_utils.py

└── convert_tfrecord.py

├── preprocessing

├── __init__.py

├── preprocessing_factory.py

├── inception_preprocessing.py

└── vgg_preprocessing.py

├── run_train.sh

├── run_test.sh

├── run_eval.sh

├── set_eval_env.sh

├── set_test_env.sh

├── set_train_env.sh

├── convert_data.py

├── eval.py

├── README.md

├── test.py

└── train.py

/nets/__init__.py:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/datasets/__init__.py:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/preprocessing/__init__.py:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/run_train.sh:

--------------------------------------------------------------------------------

1 | source set_train_env.sh

2 | python train.py --dataset_dir=$DATASET_DIR \

3 | --train_dir=$TRAIN_DIR \

4 | --checkpoint_path=$CHECKPOINT_PATH \

5 | --labels_file=$LABELS_FILE

6 |

--------------------------------------------------------------------------------

/run_test.sh:

--------------------------------------------------------------------------------

1 | source set_test_env.sh

2 | python train.py --dataset_dir=$DATASET_DIR \

3 | --train_dir=$TRAIN_DIR \

4 | --checkpoint_path=$CHECKPOINT_PATH \

5 | --labels_file=$LABELS_FILE \

6 | --log_test=$TRAIN_DIR/test

7 |

--------------------------------------------------------------------------------

/run_eval.sh:

--------------------------------------------------------------------------------

1 | source set_eval_env.sh

2 | python -u eval.py --dataset_name=$DATASET_NAME \

3 | --dataset_dir=$DATASET_DIR \

4 | --dataset_split_name=validation \

5 | --model_name=$MODLE_NAME \

6 | --checkpoint_path=$TRAIN_DIR \

7 | --eval_dir=$TRAIN_DIR/validation

8 |

--------------------------------------------------------------------------------

/set_eval_env.sh:

--------------------------------------------------------------------------------

1 | #Validation dataset

2 | export DATASET_NAME=origin

3 |

4 | export DATASET_DIR=/../pack

5 |

6 | export CHECKPOINT_PATH=/../inception_resnet_v2.ckpt

7 | #export CHECKPOINT_PATH=/../inception_v3.ckpt

8 | #export CHECKPOINT_PATH=/../vgg_16.ckpt

9 | #export CHECKPOINT_PATH=/../vgg_19.ckpt

10 | #export CHECKPOINT_PATH=/../resnet_v1_50.ckpt

11 |

12 | export TRAIN_DIR=/../100_inception_resnet_v2_150

13 |

14 | #export MODEL_NAME=inception_v3

15 | #export MODEL_NAME=inception_v4

16 | export MODEL_NAME=inception_resnet_v2

17 | #export MODEL_NAME=vgg_16

18 | #export MODEL_NAME=vgg_19

19 | #export MODEL_NAME=resnet_v1_50

20 |

--------------------------------------------------------------------------------

/set_test_env.sh:

--------------------------------------------------------------------------------

1 | #Test dataset

2 | export DATASET_NAME=origin

3 |

4 | export DATASET_DIR=/../pack

5 |

6 | export CHECKPOINT_PATH=/../inception_resnet_v2.ckpt

7 | #export CHECKPOINT_PATH=/../inception_v3.ckpt

8 | #export CHECKPOINT_PATH=/../vgg_16.ckpt

9 | #export CHECKPOINT_PATH=/../vgg_19.ckpt

10 | #export CHECKPOINT_PATH=/../resnet_v1_50.ckpt

11 |

12 | export TRAIN_DIR=/../100_inception_resnet_v2_150

13 |

14 | export LABELS_FILE=/../labels.txt

15 |

16 | #export MODEL_NAME=inception_v3

17 | #export MODEL_NAME=inception_v4

18 | export MODEL_NAME=inception_resnet_v2

19 | #export MODEL_NAME=vgg_16

20 | #export MODEL_NAME=vgg_19

21 | #export MODEL_NAME=resnet_v1_50

22 |

--------------------------------------------------------------------------------

/set_train_env.sh:

--------------------------------------------------------------------------------

1 | #Training dataset

2 | #If Training dataset and Validation dataset in the same folder, you don't have to set "set_eval_env.sh" file

3 | export DATASET_NAME=origin

4 |

5 | export DATASET_DIR=/../pack

6 |

7 | export CHECKPOINT_PATH=/../inception_resnet_v2.ckpt

8 | #export CHECKPOINT_PATH=/../inception_v3.ckpt

9 | #export CHECKPOINT_PATH=/../vgg_16.ckpt

10 | #export CHECKPOINT_PATH=/../vgg_19.ckpt

11 | #export CHECKPOINT_PATH=/../resnet_v1_50.ckpt

12 |

13 | export TRAIN_DIR=/../100_inception_resnet_v2_150

14 |

15 | export LABELS_FILE=/../labels.txt

16 |

17 | #export MODEL_NAME=inception_v3

18 | #export MODEL_NAME=inception_v4

19 | export MODEL_NAME=inception_resnet_v2

20 | #export MODEL_NAME=vgg_16

21 | #export MODEL_NAME=vgg_19

22 | #export MODEL_NAME=resnet_v1_50

23 |

--------------------------------------------------------------------------------

/nets/inception.py:

--------------------------------------------------------------------------------

1 | """Brings all inception models under one namespace."""

2 |

3 | from __future__ import absolute_import

4 | from __future__ import division

5 | from __future__ import print_function

6 |

7 | # pylint: disable=unused-import

8 | from nets.inception_resnet_v2 import inception_resnet_v2

9 | from nets.inception_resnet_v2 import inception_resnet_v2_arg_scope

10 | from nets.inception_resnet_v2 import inception_resnet_v2_base

11 | from nets.inception_v1 import inception_v1

12 | from nets.inception_v1 import inception_v1_arg_scope

13 | from nets.inception_v1 import inception_v1_base

14 | from nets.inception_v2 import inception_v2

15 | from nets.inception_v2 import inception_v2_arg_scope

16 | from nets.inception_v2 import inception_v2_base

17 | from nets.inception_v3 import inception_v3

18 | from nets.inception_v3 import inception_v3_arg_scope

19 | from nets.inception_v3 import inception_v3_base

20 | from nets.inception_v4 import inception_v4

21 | from nets.inception_v4 import inception_v4_arg_scope

22 | from nets.inception_v4 import inception_v4_base

23 | # pylint: enable=unused-import

24 |

--------------------------------------------------------------------------------

/datasets/dataset_factory.py:

--------------------------------------------------------------------------------

1 | """A factory-pattern class which returns classification image/label pairs."""

2 |

3 | from __future__ import absolute_import

4 | from __future__ import division

5 | from __future__ import print_function

6 |

7 | from datasets import custom

8 |

9 |

10 | def get_dataset(name, split_name, dataset_dir, file_pattern=None, reader=None):

11 | """Given a dataset name and a split_name returns a Dataset.

12 |

13 | Args:

14 | name: String, the name of the dataset.

15 | split_name: A train/test split name.

16 | dataset_dir: The directory where the dataset files are stored.

17 | file_pattern: The file pattern to use for matching the dataset source files.

18 | reader: The subclass of tf.ReaderBase. If left as `None`, then the default

19 | reader defined by each dataset is used.

20 |

21 | Returns:

22 | A `Dataset` class.

23 |

24 | Raises:

25 | ValueError: If the dataset `name` is unknown.

26 | """

27 | return custom.get_split(

28 | split_name,

29 | dataset_dir,

30 | name,

31 | file_pattern,

32 | reader)

33 |

34 |

--------------------------------------------------------------------------------

/convert_data.py:

--------------------------------------------------------------------------------

1 | r"""Converts your own dataset.

2 |

3 | Usage:

4 | ```shell

5 |

6 | $ python download_and_convert_data.py \

7 | --dataset_name=mnist \

8 | --dataset_dir=/tmp/mnist

9 |

10 | $ python download_and_convert_data.py \

11 | --dataset_name=cifar10 \

12 | --dataset_dir=/tmp/cifar10

13 |

14 | $ python download_and_convert_data.py \

15 | --dataset_name=flowers \

16 | --dataset_dir=/tmp/flowers

17 | ```

18 | """

19 | from __future__ import absolute_import

20 | from __future__ import division

21 | from __future__ import print_function

22 |

23 | import tensorflow as tf

24 |

25 | from datasets import convert_tfrecord

26 |

27 | FLAGS = tf.app.flags.FLAGS

28 |

29 | tf.app.flags.DEFINE_string(

30 | 'dataset_name',

31 | None,

32 | '')

33 |

34 | tf.app.flags.DEFINE_string(

35 | 'dataset_dir',

36 | None,

37 | 'The directory where the output TFRecords and temporary files are saved.')

38 |

39 |

40 | def main(_):

41 | if not FLAGS.dataset_name:

42 | raise ValueError('You must supply the dataset name with --dataset_name')

43 | if not FLAGS.dataset_dir:

44 | raise ValueError('You must supply the dataset directory with --dataset_dir')

45 |

46 | if FLAGS.dataset_name == 'custom':

47 | convert_tfrecord.run(FLAGS.dataset_dir, dataset_name=FLAGS.dataset_name)

48 | else:

49 | convert_tfrecord.run(FLAGS.dataset_dir, dataset_name=FLAGS.dataset_name)

50 |

51 | if __name__ == '__main__':

52 | tf.app.run()

53 |

54 |

--------------------------------------------------------------------------------

/preprocessing/preprocessing_factory.py:

--------------------------------------------------------------------------------

1 | """Contains a factory for building various models."""

2 |

3 | from __future__ import absolute_import

4 | from __future__ import division

5 | from __future__ import print_function

6 |

7 | import tensorflow as tf

8 |

9 | from preprocessing import inception_preprocessing

10 | from preprocessing import vgg_preprocessing

11 |

12 | slim = tf.contrib.slim

13 |

14 |

15 | def get_preprocessing(name, is_training=False):

16 | """Returns preprocessing_fn(image, height, width, **kwargs).

17 |

18 | Args:

19 | name: The name of the preprocessing function.

20 | is_training: `True` if the model is being used for training and `False`

21 | otherwise.

22 |

23 | Returns:

24 | preprocessing_fn: A function that preprocessing a single image (pre-batch).

25 | It has the following signature:

26 | image = preprocessing_fn(image, output_height, output_width, ...).

27 |

28 | Raises:

29 | ValueError: If Preprocessing `name` is not recognized.

30 | """

31 | preprocessing_fn_map = {

32 | 'inception': inception_preprocessing,

33 | 'inception_v1': inception_preprocessing,

34 | 'inception_v2': inception_preprocessing,

35 | 'inception_v3': inception_preprocessing,

36 | 'inception_v4': inception_preprocessing,

37 | 'inception_resnet_v2': inception_preprocessing,

38 | 'mobilenet_v1': inception_preprocessing,

39 | 'resnet_v1_50': vgg_preprocessing,

40 | 'resnet_v1_101': vgg_preprocessing,

41 | 'resnet_v1_152': vgg_preprocessing,

42 | 'vgg': vgg_preprocessing,

43 | 'vgg_a': vgg_preprocessing,

44 | 'vgg_16': vgg_preprocessing,

45 | 'vgg_19': vgg_preprocessing,

46 | }

47 |

48 | if name not in preprocessing_fn_map:

49 | raise ValueError('Preprocessing name [%s] was not recognized' % name)

50 |

51 | def preprocessing_fn(image, output_height, output_width, **kwargs):

52 | return preprocessing_fn_map[name].preprocess_image(

53 | image, output_height, output_width, is_training=is_training, **kwargs)

54 |

55 | return preprocessing_fn

56 |

--------------------------------------------------------------------------------

/nets/inception_utils.py:

--------------------------------------------------------------------------------

1 | """Contains common code shared by all inception models.

2 |

3 | Usage of arg scope:

4 | with slim.arg_scope(inception_arg_scope()):

5 | logits, end_points = inception.inception_v3(images, num_classes,

6 | is_training=is_training)

7 |

8 | """

9 | from __future__ import absolute_import

10 | from __future__ import division

11 | from __future__ import print_function

12 |

13 | import tensorflow as tf

14 |

15 | slim = tf.contrib.slim

16 |

17 |

18 | def inception_arg_scope(weight_decay=0.00004,

19 | use_batch_norm=True,

20 | batch_norm_decay=0.9997,

21 | batch_norm_epsilon=0.001,

22 | activation_fn=tf.nn.relu):

23 | """Defines the default arg scope for inception models.

24 |

25 | Args:

26 | weight_decay: The weight decay to use for regularizing the model.

27 | use_batch_norm: "If `True`, batch_norm is applied after each convolution.

28 | batch_norm_decay: Decay for batch norm moving average.

29 | batch_norm_epsilon: Small float added to variance to avoid dividing by zero

30 | in batch norm.

31 | activation_fn: Activation function for conv2d.

32 |

33 | Returns:

34 | An `arg_scope` to use for the inception models.

35 | """

36 | batch_norm_params = {

37 | # Decay for the moving averages.

38 | 'decay': batch_norm_decay,

39 | # epsilon to prevent 0s in variance.

40 | 'epsilon': batch_norm_epsilon,

41 | # collection containing update_ops.

42 | 'updates_collections': tf.GraphKeys.UPDATE_OPS,

43 | # use fused batch norm if possible.

44 | 'fused': None,

45 | }

46 | if use_batch_norm:

47 | normalizer_fn = slim.batch_norm

48 | normalizer_params = batch_norm_params

49 | else:

50 | normalizer_fn = None

51 | normalizer_params = {}

52 | # Set weight_decay for weights in Conv and FC layers.

53 | with slim.arg_scope([slim.conv2d, slim.fully_connected],

54 | weights_regularizer=slim.l2_regularizer(weight_decay)):

55 | with slim.arg_scope(

56 | [slim.conv2d],

57 | weights_initializer=slim.variance_scaling_initializer(),

58 | activation_fn=activation_fn,

59 | normalizer_fn=normalizer_fn,

60 | normalizer_params=normalizer_params) as sc:

61 | return sc

62 |

--------------------------------------------------------------------------------

/datasets/custom.py:

--------------------------------------------------------------------------------

1 | """Provides data for the flowers dataset.

2 |

3 | The dataset scripts used to create the dataset can be found at:

4 | tensorflow/models/slim/datasets/download_and_convert_flowers.py

5 | """

6 |

7 | from __future__ import absolute_import

8 | from __future__ import division

9 | from __future__ import print_function

10 |

11 | import os

12 | import tensorflow as tf

13 |

14 | from datasets import dataset_utils

15 |

16 | slim = tf.contrib.slim

17 |

18 | _FILE_PATTERN = '%s_%s_*.tfrecord'

19 |

20 | _ITEMS_TO_DESCRIPTIONS = {

21 | 'image': 'A color image of varying size.',

22 | 'label': 'A single integer between 0 and 4',

23 | }

24 |

25 |

26 | def get_split(split_name, dataset_dir, dataset_name,

27 | file_pattern=None, reader=None):

28 | """Gets a dataset tuple with instructions for reading flowers.

29 |

30 | Args:

31 | split_name: A train/validation split name.

32 | dataset_dir: The base directory of the dataset sources.

33 | file_pattern: The file pattern to use when matching the dataset sources.

34 | It is assumed that the pattern contains a '%s' string so that the split

35 | name can be inserted.

36 | reader: The TensorFlow reader type.

37 |

38 | Returns:

39 | A `Dataset` namedtuple.

40 |

41 | Raises:

42 | ValueError: if `split_name` is not a valid train/validation split.

43 | """

44 |

45 | # load dataset info from file

46 | dataset_info_file_path = os.path.join(dataset_dir, dataset_name + '.info')

47 | with open(dataset_info_file_path, 'r') as f:

48 | contents = f.read().split('\n')

49 | splits_to_sizes = {}

50 | for line in contents:

51 | info = line.split(':')

52 | splits_to_sizes[info[0]] = int(info[1])

53 |

54 | num_classes = splits_to_sizes['label']

55 |

56 | if split_name not in splits_to_sizes:

57 | raise ValueError('split name %s was not recognized.' % split_name)

58 |

59 | if not file_pattern:

60 | file_pattern = _FILE_PATTERN

61 | file_pattern = os.path.join(dataset_dir,

62 | file_pattern % (dataset_name, split_name))

63 |

64 | # Allowing None in the signature so that dataset_factory can use the default.

65 | if reader is None:

66 | reader = tf.TFRecordReader

67 |

68 | keys_to_features = {

69 | 'image/encoded': tf.FixedLenFeature((), tf.string, default_value=''),

70 | 'image/format': tf.FixedLenFeature((), tf.string, default_value='png'),

71 | 'image/class/label': tf.FixedLenFeature(

72 | [], tf.int64, default_value=tf.zeros([], dtype=tf.int64)),

73 | }

74 |

75 | items_to_handlers = {

76 | 'image': slim.tfexample_decoder.Image(),

77 | 'label': slim.tfexample_decoder.Tensor('image/class/label'),

78 | }

79 |

80 | decoder = slim.tfexample_decoder.TFExampleDecoder(

81 | keys_to_features, items_to_handlers)

82 |

83 | labels_to_names = None

84 | if dataset_utils.has_labels(dataset_dir):

85 | labels_to_names = dataset_utils.read_label_file(dataset_dir)

86 |

87 | return slim.dataset.Dataset(

88 | data_sources=file_pattern,

89 | reader=reader,

90 | decoder=decoder,

91 | num_samples=splits_to_sizes[split_name],

92 | items_to_descriptions=_ITEMS_TO_DESCRIPTIONS,

93 | num_classes=num_classes,

94 | labels_to_names=labels_to_names)

95 |

--------------------------------------------------------------------------------

/datasets/dataset_utils.py:

--------------------------------------------------------------------------------

1 | """Contains utilities for downloading and converting datasets."""

2 | from __future__ import absolute_import

3 | from __future__ import division

4 | from __future__ import print_function

5 |

6 | import os

7 | import sys

8 | import importlib

9 | importlib.reload(sys)

10 |

11 | import tarfile

12 |

13 | from six.moves import urllib

14 | import tensorflow as tf

15 |

16 | LABELS_FILENAME = 'labels.txt'

17 |

18 |

19 | def int64_feature(values):

20 | """Returns a TF-Feature of int64s.

21 |

22 | Args:

23 | values: A scalar or list of values.

24 |

25 | Returns:

26 | a TF-Feature.

27 | """

28 | if not isinstance(values, (tuple, list)):

29 | values = [values]

30 | return tf.train.Feature(int64_list=tf.train.Int64List(value=values))

31 |

32 |

33 | def bytes_feature(values):

34 | """Returns a TF-Feature of bytes.

35 |

36 | Args:

37 | values: A string.

38 |

39 | Returns:

40 | a TF-Feature.

41 | """

42 | return tf.train.Feature(bytes_list=tf.train.BytesList(value=[values]))

43 |

44 |

45 | def image_to_tfexample(image_data, image_format, height, width, class_id):

46 | return tf.train.Example(features=tf.train.Features(feature={

47 | 'image/encoded': bytes_feature(image_data),

48 | 'image/format': bytes_feature(image_format),

49 | 'image/class/label': int64_feature(class_id),

50 | 'image/height': int64_feature(height),

51 | 'image/width': int64_feature(width),

52 | }))

53 |

54 |

55 | def download_and_uncompress_tarball(tarball_url, dataset_dir):

56 | """Downloads the `tarball_url` and uncompresses it locally.

57 |

58 | Args:

59 | tarball_url: The URL of a tarball file.

60 | dataset_dir: The directory where the temporary files are stored.

61 | """

62 | filename = tarball_url.split('/')[-1]

63 | filepath = os.path.join(dataset_dir, filename)

64 |

65 | def _progress(count, block_size, total_size):

66 | sys.stdout.write('\r>> Downloading %s %.1f%%' % (

67 | filename, float(count * block_size) / float(total_size) * 100.0))

68 | sys.stdout.flush()

69 | if not os.path.exists(file_path):

70 | filepath, _ = urllib.request.urlretrieve(tarball_url, filepath, _progress)

71 | print()

72 | statinfo = os.stat(filepath)

73 | print('Successfully downloaded', filename, statinfo.st_size, 'bytes.')

74 | tarfile.open(filepath, 'r:gz').extractall(dataset_dir)

75 |

76 |

77 | def write_label_file(labels_to_class_names, dataset_dir,

78 | filename=LABELS_FILENAME):

79 | """Writes a file with the list of class names.

80 |

81 | Args:

82 | labels_to_class_names: A map of (integer) labels to class names.

83 | dataset_dir: The directory in which the labels file should be written.

84 | filename: The filename where the class names are written.

85 | """

86 | labels_filename = os.path.join(dataset_dir, filename)

87 | with tf.gfile.Open(labels_filename, 'w') as f:

88 | for label in labels_to_class_names:

89 | class_name = labels_to_class_names[label]

90 | f.write('%d:%s\n' % (label, class_name))

91 |

92 |

93 | def has_labels(dataset_dir, filename=LABELS_FILENAME):

94 | """Specifies whether or not the dataset directory contains a label map file.

95 |

96 | Args:

97 | dataset_dir: The directory in which the labels file is found.

98 | filename: The filename where the class names are written.

99 |

100 | Returns:

101 | `True` if the labels file exists and `False` otherwise.

102 | """

103 | return tf.gfile.Exists(os.path.join(dataset_dir, filename))

104 |

105 |

106 | def read_label_file(dataset_dir, filename=LABELS_FILENAME):

107 | """Reads the labels file and returns a mapping from ID to class name.

108 |

109 | Args:

110 | dataset_dir: The directory in which the labels file is found.

111 | filename: The filename where the class names are written.

112 |

113 | Returns:

114 | A map from a label (integer) to class name.

115 | """

116 | labels_filename = os.path.join(dataset_dir, filename)

117 | with tf.gfile.Open(labels_filename, 'rb') as f:

118 | lines = f.read().decode()

119 | lines = lines.split('\n')

120 | lines = filter(None, lines)

121 |

122 | labels_to_class_names = {}

123 | for line in lines:

124 | index = line.index(':')

125 | labels_to_class_names[int(line[:index])] = line[index+1:]

126 | return labels_to_class_names

127 |

--------------------------------------------------------------------------------

/nets/nets_factory.py:

--------------------------------------------------------------------------------

1 | """Contains a factory for building various models."""

2 |

3 | from __future__ import absolute_import

4 | from __future__ import division

5 | from __future__ import print_function

6 | import functools

7 |

8 | import tensorflow as tf

9 |

10 | from nets import alexnet

11 | from nets import cifarnet

12 | from nets import inception

13 | from nets import lenet

14 | from nets import mobilenet_v1

15 | from nets import overfeat

16 | from nets import resnet_v1

17 | from nets import resnet_v2

18 | from nets import vgg

19 | from nets.nasnet import nasnet

20 |

21 | slim = tf.contrib.slim

22 |

23 | networks_map = {'alexnet_v2': alexnet.alexnet_v2,

24 | 'cifarnet': cifarnet.cifarnet,

25 | 'overfeat': overfeat.overfeat,

26 | 'vgg_a': vgg.vgg_a,

27 | 'vgg_16': vgg.vgg_16,

28 | 'vgg_19': vgg.vgg_19,

29 | 'inception_v1': inception.inception_v1,

30 | 'inception_v2': inception.inception_v2,

31 | 'inception_v3': inception.inception_v3,

32 | 'inception_v4': inception.inception_v4,

33 | 'inception_resnet_v2': inception.inception_resnet_v2,

34 | 'lenet': lenet.lenet,

35 | 'resnet_v1_50': resnet_v1.resnet_v1_50,

36 | 'resnet_v1_101': resnet_v1.resnet_v1_101,

37 | 'resnet_v1_152': resnet_v1.resnet_v1_152,

38 | 'resnet_v1_200': resnet_v1.resnet_v1_200,

39 | 'resnet_v2_50': resnet_v2.resnet_v2_50,

40 | 'resnet_v2_101': resnet_v2.resnet_v2_101,

41 | 'resnet_v2_152': resnet_v2.resnet_v2_152,

42 | 'resnet_v2_200': resnet_v2.resnet_v2_200,

43 | 'mobilenet_v1': mobilenet_v1.mobilenet_v1,

44 | 'mobilenet_v1_075': mobilenet_v1.mobilenet_v1_075,

45 | 'mobilenet_v1_050': mobilenet_v1.mobilenet_v1_050,

46 | 'mobilenet_v1_025': mobilenet_v1.mobilenet_v1_025,

47 | 'nasnet_cifar': nasnet.build_nasnet_cifar,

48 | 'nasnet_mobile': nasnet.build_nasnet_mobile,

49 | 'nasnet_large': nasnet.build_nasnet_large,

50 | }

51 |

52 | arg_scopes_map = {'alexnet_v2': alexnet.alexnet_v2_arg_scope,

53 | 'cifarnet': cifarnet.cifarnet_arg_scope,

54 | 'overfeat': overfeat.overfeat_arg_scope,

55 | 'vgg_a': vgg.vgg_arg_scope,

56 | 'vgg_16': vgg.vgg_arg_scope,

57 | 'vgg_19': vgg.vgg_arg_scope,

58 | 'inception_v1': inception.inception_v3_arg_scope,

59 | 'inception_v2': inception.inception_v3_arg_scope,

60 | 'inception_v3': inception.inception_v3_arg_scope,

61 | 'inception_v4': inception.inception_v4_arg_scope,

62 | 'inception_resnet_v2':

63 | inception.inception_resnet_v2_arg_scope,

64 | 'lenet': lenet.lenet_arg_scope,

65 | 'resnet_v1_50': resnet_v1.resnet_arg_scope,

66 | 'resnet_v1_101': resnet_v1.resnet_arg_scope,

67 | 'resnet_v1_152': resnet_v1.resnet_arg_scope,

68 | 'resnet_v1_200': resnet_v1.resnet_arg_scope,

69 | 'resnet_v2_50': resnet_v2.resnet_arg_scope,

70 | 'resnet_v2_101': resnet_v2.resnet_arg_scope,

71 | 'resnet_v2_152': resnet_v2.resnet_arg_scope,

72 | 'resnet_v2_200': resnet_v2.resnet_arg_scope,

73 | 'mobilenet_v1': mobilenet_v1.mobilenet_v1_arg_scope,

74 | 'mobilenet_v1_075': mobilenet_v1.mobilenet_v1_arg_scope,

75 | 'mobilenet_v1_050': mobilenet_v1.mobilenet_v1_arg_scope,

76 | 'mobilenet_v1_025': mobilenet_v1.mobilenet_v1_arg_scope,

77 | 'nasnet_cifar': nasnet.nasnet_cifar_arg_scope,

78 | 'nasnet_mobile': nasnet.nasnet_mobile_arg_scope,

79 | 'nasnet_large': nasnet.nasnet_large_arg_scope,

80 | }

81 |

82 |

83 | def get_network_fn(name, num_classes, weight_decay=0.0, is_training=False):

84 | """Returns a network_fn such as `logits, end_points = network_fn(images)`.

85 |

86 | Args:

87 | name: The name of the network.

88 | num_classes: The number of classes to use for classification. If 0 or None,

89 | the logits layer is omitted and its input features are returned instead.

90 | weight_decay: The l2 coefficient for the model weights.

91 | is_training: `True` if the model is being used for training and `False`

92 | otherwise.

93 |

94 | Returns:

95 | network_fn: A function that applies the model to a batch of images. It has

96 | the following signature:

97 | net, end_points = network_fn(images)

98 | The `images` input is a tensor of shape [batch_size, height, width, 3]

99 | with height = width = network_fn.default_image_size. (The permissibility

100 | and treatment of other sizes depends on the network_fn.)

101 | The returned `end_points` are a dictionary of intermediate activations.

102 | The returned `net` is the topmost layer, depending on `num_classes`:

103 | If `num_classes` was a non-zero integer, `net` is a logits tensor

104 | of shape [batch_size, num_classes].

105 | If `num_classes` was 0 or `None`, `net` is a tensor with the input

106 | to the logits layer of shape [batch_size, 1, 1, num_features] or

107 | [batch_size, num_features]. Dropout has not been applied to this

108 | (even if the network's original classification does); it remains for

109 | the caller to do this or not.

110 |

111 | Raises:

112 | ValueError: If network `name` is not recognized.

113 | """

114 | if name not in networks_map:

115 | raise ValueError('Name of network unknown %s' % name)

116 | func = networks_map[name]

117 | @functools.wraps(func)

118 | def network_fn(images, **kwargs):

119 | arg_scope = arg_scopes_map[name](weight_decay=weight_decay)

120 | with slim.arg_scope(arg_scope):

121 | return func(images, num_classes, is_training=is_training, **kwargs)

122 | if hasattr(func, 'default_image_size'):

123 | network_fn.default_image_size = func.default_image_size

124 |

125 | return network_fn

126 |

--------------------------------------------------------------------------------

/eval.py:

--------------------------------------------------------------------------------

1 | import math

2 | import tensorflow as tf

3 | import os

4 |

5 | from datasets import dataset_factory

6 | from nets import nets_factory

7 | from preprocessing import preprocessing_factory

8 |

9 | slim = tf.contrib.slim

10 |

11 | tf.app.flags.DEFINE_integer(

12 | 'batch_size', 20, 'The number of samples in each batch.')

13 |

14 | tf.app.flags.DEFINE_integer(

15 | 'max_num_batches', None,

16 | 'Max number of batches to evaluate by default use all.')

17 |

18 | tf.app.flags.DEFINE_string(

19 | 'master', '', 'The address of the TensorFlow master to use.')

20 |

21 | tf.app.flags.DEFINE_string(

22 | 'checkpoint_path', '/tmp/tfmodel/',

23 | 'The directory where the model was written to or an absolute path to a '

24 | 'checkpoint file.')

25 |

26 | tf.app.flags.DEFINE_string(

27 | 'eval_dir', '/tmp/tfmodel/', 'Directory where the results are saved to.')

28 |

29 | tf.app.flags.DEFINE_integer(

30 | 'num_preprocessing_threads', 4,

31 | 'The number of threads used to create the batches.')

32 |

33 | tf.app.flags.DEFINE_string(

34 | 'dataset_name', 'imagenet', 'The name of the dataset to load.')

35 |

36 | tf.app.flags.DEFINE_string(

37 | 'dataset_split_name', 'test', 'The name of the train/test split.')

38 |

39 | tf.app.flags.DEFINE_string(

40 | 'dataset_dir', None, 'The directory where the dataset files are stored.')

41 |

42 | tf.app.flags.DEFINE_integer(

43 | 'labels_offset', 0,

44 | 'An offset for the labels in the dataset. This flag is primarily used to '

45 | 'evaluate the VGG and ResNet architectures which do not use a background '

46 | 'class for the ImageNet dataset.')

47 |

48 | tf.app.flags.DEFINE_string(

49 | 'model_name', 'inception_v3', 'The name of the architecture to evaluate.')

50 |

51 | tf.app.flags.DEFINE_string(

52 | 'preprocessing_name', None, 'The name of the preprocessing to use. If left '

53 | 'as `None`, then the model_name flag is used.')

54 |

55 | tf.app.flags.DEFINE_float(

56 | 'moving_average_decay', None,

57 | 'The decay to use for the moving average.'

58 | 'If left as None, then moving averages are not used.')

59 |

60 | tf.app.flags.DEFINE_integer(

61 | 'eval_image_size', None, 'Eval image size')

62 |

63 | tf.app.flags.DEFINE_integer(

64 | 'eval_interval_secs', 600,

65 | 'The minimum number of seconds between evaluations')

66 |

67 | FLAGS = tf.app.flags.FLAGS

68 |

69 |

70 | def main(_):

71 | if not FLAGS.dataset_dir:

72 | raise ValueError('You must supply the dataset directory with --dataset_dir')

73 |

74 | tf.logging.set_verbosity(tf.logging.INFO)

75 | with tf.Graph().as_default():

76 | tf_global_step = slim.get_or_create_global_step()

77 |

78 | ######################

79 | # Select the dataset #

80 | ######################

81 | dataset = dataset_factory.get_dataset(

82 | FLAGS.dataset_name, FLAGS.dataset_split_name, FLAGS.dataset_dir)

83 |

84 | ####################

85 | # Select the model #

86 | ####################

87 | network_fn = nets_factory.get_network_fn(

88 | FLAGS.model_name,

89 | num_classes=(dataset.num_classes - FLAGS.labels_offset),

90 | is_training=False)

91 |

92 | ##############################################################

93 | # Create a dataset provider that loads data from the dataset #

94 | ##############################################################

95 | provider = slim.dataset_data_provider.DatasetDataProvider(

96 | dataset,

97 | shuffle=False,

98 | common_queue_capacity=2 * FLAGS.batch_size,

99 | common_queue_min=FLAGS.batch_size)

100 | [image, label] = provider.get(['image', 'label'])

101 | label -= FLAGS.labels_offset

102 |

103 | #####################################

104 | # Select the preprocessing function #

105 | #####################################

106 | preprocessing_name = FLAGS.preprocessing_name or FLAGS.model_name

107 | image_preprocessing_fn = preprocessing_factory.get_preprocessing(

108 | preprocessing_name,

109 | is_training=False)

110 |

111 | eval_image_size = FLAGS.eval_image_size or network_fn.default_image_size

112 |

113 | image = image_preprocessing_fn(image, eval_image_size, eval_image_size)

114 |

115 | images, labels = tf.train.batch(

116 | [image, label],

117 | batch_size=FLAGS.batch_size,

118 | num_threads=FLAGS.num_preprocessing_threads,

119 | capacity=5 * FLAGS.batch_size)

120 |

121 | ####################

122 | # Define the model #

123 | ####################

124 | logits, _ = network_fn(images)

125 |

126 | if FLAGS.moving_average_decay:

127 | variable_averages = tf.train.ExponentialMovingAverage(

128 | FLAGS.moving_average_decay, tf_global_step)

129 | variables_to_restore = variable_averages.variables_to_restore(

130 | slim.get_model_variables())

131 | variables_to_restore[tf_global_step.op.name] = tf_global_step

132 | else:

133 | variables_to_restore = slim.get_variables_to_restore()

134 |

135 | predictions = tf.argmax(logits, 1)

136 | labels = tf.squeeze(labels)

137 |

138 | # Define the metrics:

139 | names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({

140 | 'Accuracy': slim.metrics.streaming_accuracy(predictions, labels),

141 | 'Recall_5': slim.metrics.streaming_recall_at_k(

142 | logits, labels, 5),

143 | #'Precision': slim.metrics.streaming_precision(predictions, labels),

144 | #'confusion_matrix': slim.metrics.confusion_matrix(

145 | # labels, predictions, num_classes=102, dtype=tf.int32, name=None, weights=None)

146 | })

147 |

148 | # Print the summaries to screen.

149 | for name, value in names_to_values.items():

150 | summary_name = 'eval/%s' % name

151 | op = tf.summary.scalar(summary_name, value, collections=[])

152 | op = tf.Print(op, [value], summary_name)

153 | tf.add_to_collection(tf.GraphKeys.SUMMARIES, op)

154 |

155 | # TODO(sguada) use num_epochs=1

156 | if FLAGS.max_num_batches:

157 | num_batches = FLAGS.max_num_batches

158 | else:

159 | # This ensures that we make a single pass over all of the data.

160 | num_batches = math.ceil(dataset.num_samples / float(FLAGS.batch_size))

161 |

162 | if tf.gfile.IsDirectory(FLAGS.checkpoint_path):

163 | if FLAGS.eval_interval_secs:

164 | checkpoint_path = FLAGS.checkpoint_path

165 | else:

166 | checkpoint_path = tf.train.latest_checkpoint(FLAGS.checkpoint_path)

167 | else:

168 | if FLAGS.eval_interval_secs:

169 | checkpoint_path, _ = os.path.split(FLAGS.checkpoint_path)

170 | else:

171 | checkpoint_path = FLAGS.checkpoint_path

172 |

173 | tf.logging.info('Evaluating %s' % checkpoint_path)

174 | # mask GPUs visible to the session so it falls back on CPU

175 | config = tf.ConfigProto(device_count={'GPU':0})

176 | if not FLAGS.eval_interval_secs:

177 | slim.evaluation.evaluate_once(

178 | master=FLAGS.master,

179 | checkpoint_path=checkpoint_path,

180 | logdir=FLAGS.eval_dir,

181 | num_evals=num_batches,

182 | eval_op=list(names_to_updates.values()),

183 | variables_to_restore=variables_to_restore,

184 | session_config=config)

185 | #)

186 | else:

187 | slim.evaluation.evaluation_loop(

188 | master=FLAGS.master,

189 | checkpoint_dir=checkpoint_path,

190 | logdir=FLAGS.eval_dir,

191 | num_evals=num_batches,

192 | eval_op=list(names_to_updates.values()),

193 | eval_interval_secs=60,

194 | variables_to_restore=variables_to_restore,

195 | session_config=config)

196 | #)

197 |

198 | if __name__ == '__main__':

199 | tf.app.run()

200 |

--------------------------------------------------------------------------------

/datasets/convert_tfrecord.py:

--------------------------------------------------------------------------------

1 | r"""Converts your own dataset to TFRecords of TF-Example protos.

2 |

3 | This module reads the files

4 | that make up the data and creates two TFRecord datasets: one for train

5 | and one for test. Each TFRecord dataset is comprised of a set of TF-Example

6 | protocol buffers, each of which contain a single image and label.

7 |

8 | The script should take about a minute to run.

9 |

10 | """

11 |

12 | from __future__ import absolute_import

13 | from __future__ import division

14 | from __future__ import print_function

15 |

16 | import math

17 | import os

18 | import random

19 | import sys

20 |

21 | import tensorflow as tf

22 |

23 | from datasets import dataset_utils

24 |

25 |

26 | # The number of images in the validation set.

27 | #_NUM_VALIDATION = 180

28 | PERCENT_VALIDATION = 2.5

29 |

30 | # Seed for repeatability.

31 | _RANDOM_SEED = 0

32 |

33 | # The number of shards per dataset split.

34 | _NUM_SHARDS = None

35 |

36 |

37 | class ImageReader(object):

38 | """Helper class that provides TensorFlow image coding utilities."""

39 |

40 | def __init__(self):

41 | # Initializes function that decodes RGB JPEG data.

42 | self._decode_jpeg_data = tf.placeholder(dtype=tf.string)

43 | self._decode_jpeg = tf.image.decode_jpeg(self._decode_jpeg_data, channels=3)

44 |

45 | def read_image_dims(self, sess, image_data):

46 | image = self.decode_jpeg(sess, image_data)

47 | return image.shape[0], image.shape[1]

48 |

49 | def decode_jpeg(self, sess, image_data):

50 | image = sess.run(self._decode_jpeg,

51 | feed_dict={self._decode_jpeg_data: image_data})

52 | assert len(image.shape) == 3

53 | assert image.shape[2] == 3

54 | return image

55 |

56 |

57 | def _get_filenames_and_classes(dataset_dir, dataset_name):

58 | """Returns a list of filenames and inferred class names.

59 |

60 | Args:

61 | dataset_dir: A directory containing a set of subdirectories representing

62 | class names. Each subdirectory should contain PNG or JPG encoded images.

63 |

64 | Returns:

65 | A list of image file paths, relative to `dataset_dir` and the list of

66 | subdirectories, representing class names.

67 | """

68 | dataset_root = os.path.join(dataset_dir, dataset_name)

69 | print('processing data in [%s] :' % dataset_root)

70 | directories = []

71 | class_names = []

72 | for filename in os.listdir(dataset_root):

73 | path = os.path.join(dataset_root, filename)

74 | if os.path.isdir(path):

75 | directories.append(path)

76 | class_names.append(filename)

77 |

78 | photo_filenames = []

79 | for directory in directories:

80 | for filename in os.listdir(directory):

81 | path = os.path.join(directory, filename)

82 | photo_filenames.append(path)

83 |

84 | return photo_filenames, sorted(class_names)

85 |

86 |

87 | def _get_dataset_filename(dataset_dir, dataset_name, split_name, shard_id):

88 | output_filename = '%s_%s_%05d-of-%05d.tfrecord' % (

89 | dataset_name, split_name, shard_id, _NUM_SHARDS)

90 | return os.path.join(dataset_dir, output_filename)

91 |

92 |

93 | def _convert_dataset(split_name,

94 | filenames,

95 | class_names_to_ids,

96 | dataset_dir,

97 | dataset_name):

98 | """Converts the given filenames to a TFRecord dataset.

99 |

100 | Args:

101 | split_name: The name of the dataset, either 'train' or 'validation'.

102 | filenames: A list of absolute paths to png or jpg images.

103 | class_names_to_ids: A dictionary from class names (strings) to ids

104 | (integers).

105 | dataset_dir: The directory where the converted datasets are stored.

106 | """

107 | assert split_name in ['train', 'validation']

108 |

109 | num_per_shard = int(math.ceil(len(filenames) / float(_NUM_SHARDS)))

110 |

111 | with tf.Graph().as_default():

112 | image_reader = ImageReader()

113 |

114 | with tf.Session('') as sess:

115 |

116 | for shard_id in range(_NUM_SHARDS):

117 | output_filename = _get_dataset_filename(

118 | dataset_dir, dataset_name, split_name, shard_id)

119 |

120 | with tf.python_io.TFRecordWriter(output_filename) as tfrecord_writer:

121 | start_ndx = shard_id * num_per_shard

122 | end_ndx = min((shard_id+1) * num_per_shard, len(filenames))

123 | for i in range(start_ndx, end_ndx):

124 | sys.stdout.write('\r>> Converting image %d/%d shard %d' % (

125 | i+1, len(filenames), shard_id))

126 | sys.stdout.flush()

127 |

128 | # Read the filename:

129 | image_data = tf.gfile.FastGFile(filenames[i], 'rb').read()

130 | height, width = image_reader.read_image_dims(sess, image_data)

131 |

132 | class_name = os.path.basename(os.path.dirname(filenames[i]))

133 | class_id = class_names_to_ids[class_name]

134 |

135 | example = dataset_utils.image_to_tfexample(

136 | image_data, b'jpg', height, width, class_id)

137 | tfrecord_writer.write(example.SerializeToString())

138 |

139 | sys.stdout.write('\n')

140 | sys.stdout.flush()

141 |

142 |

143 |

144 | def _dataset_exists(dataset_dir, dataset_name, split_name):

145 | for shard_id in range(_NUM_SHARDS):

146 | output_filename = _get_dataset_filename(

147 | dataset_dir, dataset_name, split_name, shard_id)

148 | if not tf.gfile.Exists(output_filename):

149 | return False

150 | return True

151 |

152 |

153 | def run(dataset_dir, dataset_name='dataset'):

154 | """Runs the download and conversion operation.

155 |

156 | Args:

157 | dataset_dir: The dataset directory where the dataset is stored.

158 | """

159 | if not tf.gfile.Exists(dataset_dir):

160 | tf.gfile.MakeDirs(dataset_dir)

161 |

162 |

163 | photo_filenames, class_names = _get_filenames_and_classes(dataset_dir,

164 | dataset_name)

165 | class_names_to_ids = dict(zip(class_names, range(len(class_names))))

166 |

167 | # Divide into train and test:

168 | random.seed(_RANDOM_SEED)

169 | random.shuffle(photo_filenames)

170 | #number_validation = len(photo_filenames) * PERCENT_VALIDATION //100

171 | number_validation = 1000

172 | print(' total pics number %d' % len(photo_filenames))

173 | print(' valid number: %d' % number_validation)

174 | training_filenames = photo_filenames[number_validation:]

175 | validation_filenames = photo_filenames[:number_validation]

176 |

177 | # First, convert the training and validation sets.

178 | global _NUM_SHARDS

179 | _NUM_SHARDS = len(training_filenames) // 1024

180 | _NUM_SHARDS = _NUM_SHARDS if _NUM_SHARDS else 1

181 | if _dataset_exists(dataset_dir, dataset_name, 'train'):

182 | print('Dataset files already exist. Exiting without re-creating them.')

183 | return

184 | _convert_dataset('train', training_filenames, class_names_to_ids,

185 | dataset_dir, dataset_name=dataset_name)

186 | _NUM_SHARDS = len(validation_filenames) // 1024

187 | _NUM_SHARDS = _NUM_SHARDS if _NUM_SHARDS else 1

188 | if _dataset_exists(dataset_dir, dataset_name, 'validation'):

189 | print('Dataset files already exist. Exiting without re-creating them.')

190 | return

191 | _convert_dataset('validation', validation_filenames, class_names_to_ids,

192 | dataset_dir, dataset_name=dataset_name)

193 |

194 | # write dataset info

195 | dataset_info = "label:%d\ntrain:%d\nvalidation:%d" % (

196 | len(class_names),

197 | len(training_filenames),

198 | len(validation_filenames))

199 | dataset_info_file_path = os.path.join(dataset_dir, dataset_name + '.info')

200 | with open(dataset_info_file_path, 'w') as f:

201 | f.write(dataset_info)

202 | f.flush()

203 |

204 |

205 | # Finally, write the labels file:

206 | labels_to_class_names = dict(zip(range(len(class_names)), class_names))

207 | dataset_utils.write_label_file(labels_to_class_names, dataset_dir)

208 |

209 | # _clean_up_temporary_files(dataset_dir)

210 | print('\nFinished converting the dataset!')

211 |

212 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Deep-Model-Transfer

2 | []()

3 | []()

4 |

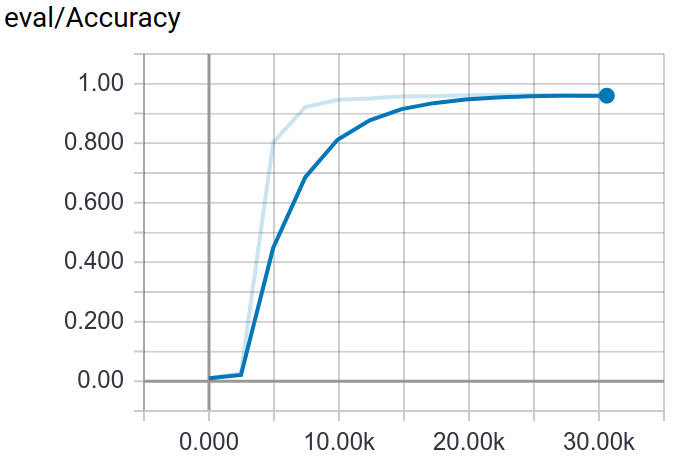

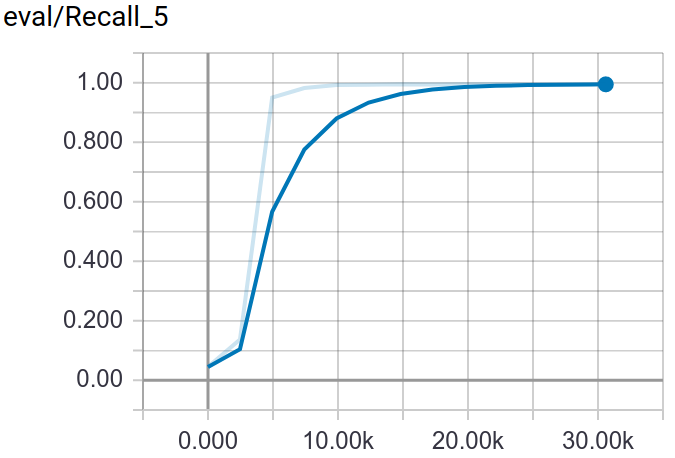

5 | > A method for Fine-Grained image classification implemented by TensorFlow. The best accuracy we have got are 73.33%(Bird-200), 91.03%(Car-196), 72.23%(Dog-120), 96.27%(Flower-102), 86.07%(Pet-37).

6 |

7 | ------------------

8 |

9 | **Note**: For Fine-Grained image classification problem, our solution is combining deep model and transfer learning. Firstly, the deep model, e.g., [vgg-16](https://arxiv.org/abs/1409.1556), [vgg-19](https://arxiv.org/abs/1409.1556), [inception-v1](https://arxiv.org/abs/1409.4842), [inception-v2](https://arxiv.org/abs/1502.03167), [inception-v3](https://arxiv.org/abs/1512.00567), [inception-v4](https://arxiv.org/abs/1602.07261), [inception-resnet-v2](https://arxiv.org/abs/1602.07261), [resnet50](https://arxiv.org/abs/1512.03385), is pretrained in [ImageNet](http://image-net.org/challenges/LSVRC/2014/browse-synsets) dataset to gain the feature extraction abbility. Secondly, transfer the pretrained model to Fine-Grained image dataset, e.g., 🕊️[Bird-200](http://www.vision.caltech.edu/visipedia/CUB-200.html), 🚗[Car-196](https://ai.stanford.edu/~jkrause/cars/car_dataset.html), 🐶[Dog-120](http://vision.stanford.edu/aditya86/ImageNetDogs/), 🌸[Flower-102](http://www.robots.ox.ac.uk/~vgg/data/flowers/102/), 🐶🐱[Pet-37](http://www.robots.ox.ac.uk/~vgg/data/pets/).

10 |

11 | ## Installation

12 | 1. Install the requirments:

13 | - Ubuntu 16.04+

14 | - TensorFlow 1.5.0+

15 | - Python 3.5+

16 | - NumPy

17 | - Nvidia GPU(optional)

18 |

19 | 2. Clone the repository

20 | ```Shell

21 | $ git clone https://github.com/MacwinWin/Deep-Model-Transfer.git

22 | ```

23 |

24 | ## Pretrain

25 | Slim provide a log of pretrained [models](https://github.com/tensorflow/models/tree/master/research/slim). What we need is just downloading the .ckpt files and then using them. Make a new folder, download and extract the .ckpt file to the folder.

26 | ```

27 | pretrained

28 | ├── inception_v1.ckpt

29 | ├── inception_v2.ckpt

30 | ├── inception_v3.ckpt

31 | ├── inception_v4.ckpt

32 | ├── inception_resnet_v2.ckpt

33 | | └── ...

34 | ```

35 |

36 | ## Transfer

37 | 1. set environment variables

38 | - Edit the [set_train_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_train_env.sh) and [set_eval_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_eval_env.sh) files to specify the "DATASET_NAME", "DATASET_DIR", "CHECKPOINT_PATH", "TRAIN_DIR", "MODEL_NAME".

39 |

40 | - "DATASET_NAME" and "DATASET_DIR" define the dataset name and location. For example, the dataset structure is shown below. "DATASET_NAME" is "origin", "DATASET_DIR" is "/../Flower_102"

41 | ```

42 | Flower_102

43 | ├── _origin

44 | | ├── _class1

45 | | ├── image1.jpg

46 | | ├── image2.jpg

47 | | └── ...

48 | | └── _class2

49 | | └── ...

50 | ```

51 | - "CHECKPOINT_PATH" is the path to pretrained model. For example, '/../pretrained/inception_v1.ckpt'.

52 | - "TRAIN_DIR" stores files generated during training.

53 | - "MODEL_NAME" is the name of pretrained model, such as resnet_v1_50, vgg_19, vgg_16, inception_resnet_v2, inception_v1, inception_v2, inception_v3, inception_v4.

54 | - Source the [set_train_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_train_env.sh) and [set_eval_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_eval_env.sh)

55 | ```Shell

56 | $ source set_train_env.sh

57 | ```

58 | 2. prepare data

59 |

60 | We use the tfrecord format to feed the model, so we should convert the .jpg file to tfrecord file.

61 | - After downloading the dataset, arrange the iamges like the structure below.

62 | ```

63 | Flower_102

64 | ├── _origin

65 | | ├── _class1

66 | | ├── image1.jpg

67 | | ├── image2.jpg

68 | | └── ...

69 | | └── _class2

70 | | └── ...

71 |

72 | Flower_102_eval

73 | ├── _origin

74 | | ├── _class1

75 | | ├── image1.jpg

76 | | ├── image2.jpg

77 | | └── ...

78 | | └── _class2

79 | | └── ...

80 |

81 | Flower_102_test

82 | ├── _origin

83 | | ├── _class1

84 | | ├── image1.jpg

85 | | ├── image2.jpg

86 | | └── ...

87 | | └── _class2

88 | | └── ...

89 | ```

90 | If the dataset doesn't have validation set, we can extract some images from test set. The percentage or quantity is defined at [./datasets/convert_tfrecord.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/datasets/convert_tfrecord.py) line [170](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/datasets/convert_tfrecord.py#L170) [171](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/datasets/convert_tfrecord.py#L171).

91 |

92 | - Run [./convert_data.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/convert_data.py)

93 | ```Shell

94 | $ python convert_data.py \

95 | --dataset_name=$DATASET_NAME \

96 | --dataset_dir=$DATASET_DIR

97 | ```

98 | 3. train and evaluate

99 |

100 | - Edit [./train.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/train.py) to specify "image_size", "num_classes".

101 | - Edit [./train.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/train.py) line [162](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/train.py#L162) to selecet image preprocessing method.

102 | - Edit [./train.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/train.py) line [203](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/train.py#L203) to create the model inference.

103 | - Edit [./train.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/train.py) line [219](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/train.py#L219) to define the scopes that you want to exclude for restoration

104 | - Edit [./set_train_env.sh]

105 | - Run script[./run_train.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/run_train.sh) to start training.

106 | - Create a new terminal window and set the [./set_eval_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_eval_env.sh) to satisfy validation set.

107 | - Create a new terminal, edit [./set_eval_env.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/set_eval_env.sh), and run script[./run_eval.sh](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/run_eval.sh) as the following command.

108 |

109 | **Note**: If you have 2 GPU, you can evaluate with GPU by changing [[./eval.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/eval.py)] line [175](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/eval.py#L175)-line[196](https://github.com/MacwinWin/Deep-Model-Transfer/blob/f399fd6011bc35e42e8b6559ea3846ed0d6a57c0/eval.py#L196) as shown below

110 | ```python

111 | #config = tf.ConfigProto(device_count={'GPU':0})

112 | if not FLAGS.eval_interval_secs:

113 | slim.evaluation.evaluate_once(

114 | master=FLAGS.master,

115 | checkpoint_path=checkpoint_path,

116 | logdir=FLAGS.eval_dir,

117 | num_evals=num_batches,

118 | eval_op=list(names_to_updates.values()),

119 | variables_to_restore=variables_to_restore

120 | #session_config=config)

121 | )

122 | else:

123 | slim.evaluation.evaluation_loop(

124 | master=FLAGS.master,

125 | checkpoint_dir=checkpoint_path,

126 | logdir=FLAGS.eval_dir,

127 | num_evals=num_batches,

128 | eval_op=list(names_to_updates.values()),

129 | eval_interval_secs=60,

130 | variables_to_restore=variables_to_restore

131 | #session_config=config)

132 | )

133 | ```

134 | 4. test

135 |

136 | The [./test.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/test.py) looks like [./train.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/train.py), so edit [./set_test_env.sh] as shown before to satisfy your environment. Then run [./run_test.py](https://github.com/MacwinWin/Deep-Model-Transfer/blob/master/run_test.sh).

137 | **Note**: After test, you can get 2 .txt file. One is ground truth lable, another is predicted lable. Edit line[303](https://github.com/MacwinWin/Deep-Model-Transfer/blob/1045afed03b0dbbe317b91b416fb9b937da40649/test.py#L303) and line[304](https://github.com/MacwinWin/Deep-Model-Transfer/blob/1045afed03b0dbbe317b91b416fb9b937da40649/test.py#L304) to change the store path.

138 |

139 | ## Visualization

140 |

141 | Through tensorboard, you can visualization the training and testing process.

142 | ```Shell

143 | $ tensorboard --logdir $TRAIN_DIR

144 | ```

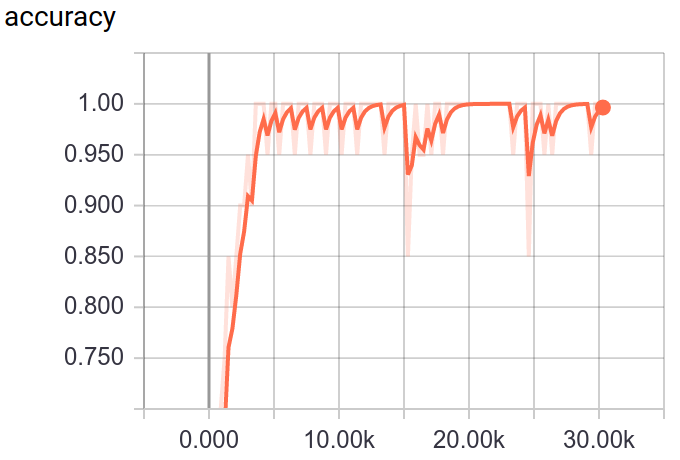

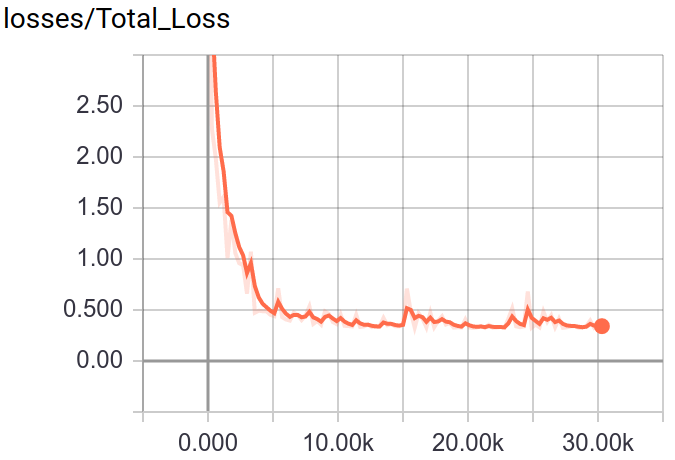

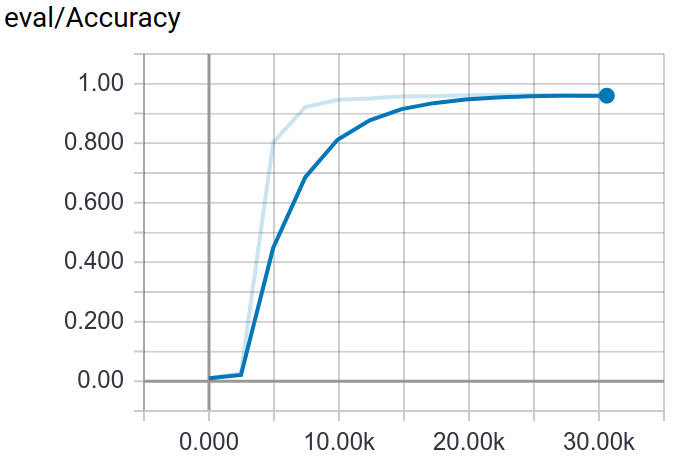

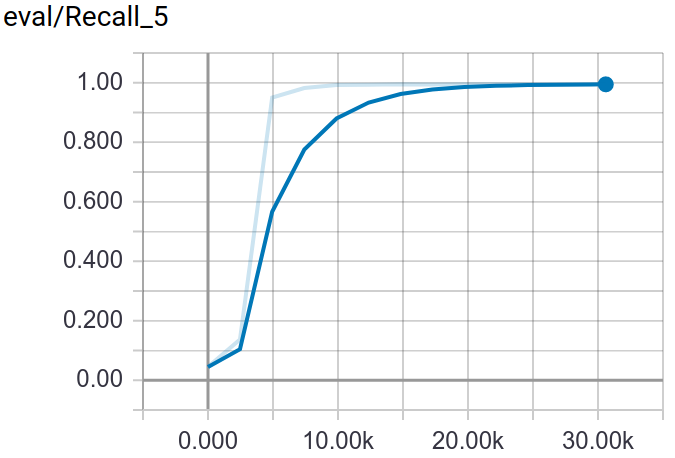

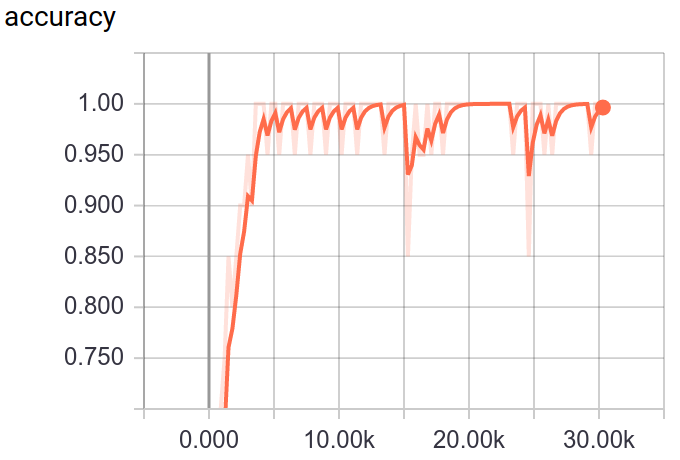

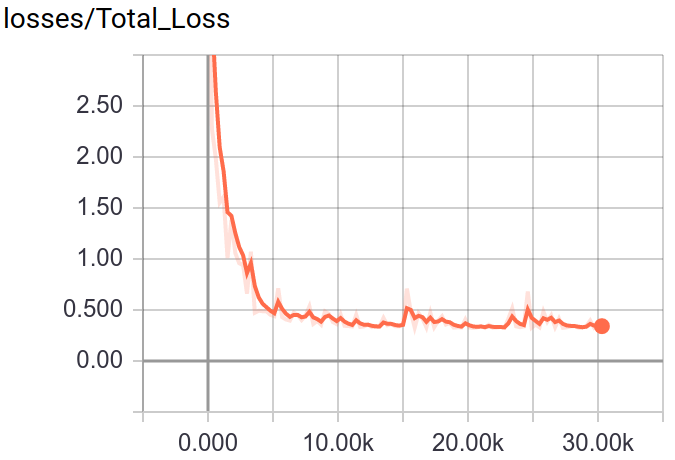

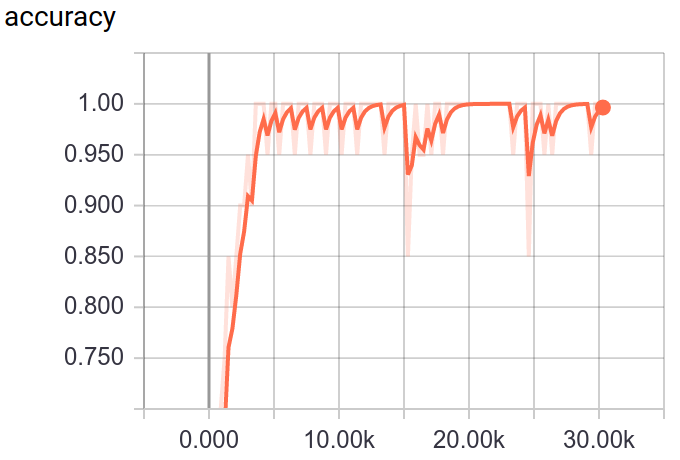

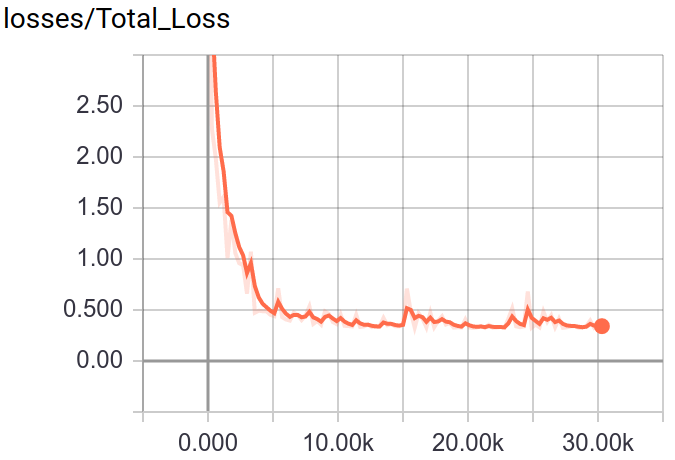

145 | :point_down:Screenshot:

146 |

147 |

148 |

148 |

149 |

150 |

151 |

151 |

152 |

153 | ## Deploy

154 |

155 | Deploy methods support html and api. Through html method, you can upload image file and get result in web browser. If you want to get result in json format, you can use api method.

156 | Because I'm not good at front-end and back-end skill, so the code not looks professional.

157 |

158 | The deployment repository: https://github.com/MacwinWin/Deep-Model-Transfer-Deployment

159 |

160 |

161 |  162 |

162 |

163 |

164 |  165 |

165 |

166 |

--------------------------------------------------------------------------------

/nets/resnet_utils.py:

--------------------------------------------------------------------------------

1 | """Contains building blocks for various versions of Residual Networks.

2 |

3 | Residual networks (ResNets) were proposed in:

4 | Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun

5 | Deep Residual Learning for Image Recognition. arXiv:1512.03385, 2015

6 |

7 | More variants were introduced in:

8 | Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun

9 | Identity Mappings in Deep Residual Networks. arXiv: 1603.05027, 2016

10 |

11 | We can obtain different ResNet variants by changing the network depth, width,

12 | and form of residual unit. This module implements the infrastructure for

13 | building them. Concrete ResNet units and full ResNet networks are implemented in

14 | the accompanying resnet_v1.py and resnet_v2.py modules.

15 |

16 | Compared to https://github.com/KaimingHe/deep-residual-networks, in the current

17 | implementation we subsample the output activations in the last residual unit of

18 | each block, instead of subsampling the input activations in the first residual

19 | unit of each block. The two implementations give identical results but our

20 | implementation is more memory efficient.

21 | """

22 | from __future__ import absolute_import

23 | from __future__ import division

24 | from __future__ import print_function

25 |

26 | import collections

27 | import tensorflow as tf

28 |

29 | slim = tf.contrib.slim

30 |

31 |

32 | class Block(collections.namedtuple('Block', ['scope', 'unit_fn', 'args'])):

33 | """A named tuple describing a ResNet block.

34 |

35 | Its parts are:

36 | scope: The scope of the `Block`.

37 | unit_fn: The ResNet unit function which takes as input a `Tensor` and

38 | returns another `Tensor` with the output of the ResNet unit.

39 | args: A list of length equal to the number of units in the `Block`. The list

40 | contains one (depth, depth_bottleneck, stride) tuple for each unit in the

41 | block to serve as argument to unit_fn.

42 | """

43 |

44 |

45 | def subsample(inputs, factor, scope=None):

46 | """Subsamples the input along the spatial dimensions.

47 |

48 | Args:

49 | inputs: A `Tensor` of size [batch, height_in, width_in, channels].

50 | factor: The subsampling factor.

51 | scope: Optional variable_scope.

52 |

53 | Returns:

54 | output: A `Tensor` of size [batch, height_out, width_out, channels] with the

55 | input, either intact (if factor == 1) or subsampled (if factor > 1).

56 | """

57 | if factor == 1:

58 | return inputs

59 | else:

60 | return slim.max_pool2d(inputs, [1, 1], stride=factor, scope=scope)

61 |

62 |

63 | def conv2d_same(inputs, num_outputs, kernel_size, stride, rate=1, scope=None):

64 | """Strided 2-D convolution with 'SAME' padding.

65 |

66 | When stride > 1, then we do explicit zero-padding, followed by conv2d with

67 | 'VALID' padding.

68 |

69 | Note that

70 |

71 | net = conv2d_same(inputs, num_outputs, 3, stride=stride)

72 |

73 | is equivalent to

74 |

75 | net = slim.conv2d(inputs, num_outputs, 3, stride=1, padding='SAME')

76 | net = subsample(net, factor=stride)

77 |

78 | whereas

79 |

80 | net = slim.conv2d(inputs, num_outputs, 3, stride=stride, padding='SAME')

81 |

82 | is different when the input's height or width is even, which is why we add the

83 | current function. For more details, see ResnetUtilsTest.testConv2DSameEven().

84 |

85 | Args:

86 | inputs: A 4-D tensor of size [batch, height_in, width_in, channels].

87 | num_outputs: An integer, the number of output filters.

88 | kernel_size: An int with the kernel_size of the filters.

89 | stride: An integer, the output stride.

90 | rate: An integer, rate for atrous convolution.

91 | scope: Scope.

92 |

93 | Returns:

94 | output: A 4-D tensor of size [batch, height_out, width_out, channels] with

95 | the convolution output.

96 | """

97 | if stride == 1:

98 | return slim.conv2d(inputs, num_outputs, kernel_size, stride=1, rate=rate,

99 | padding='SAME', scope=scope)

100 | else:

101 | kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)

102 | pad_total = kernel_size_effective - 1

103 | pad_beg = pad_total // 2

104 | pad_end = pad_total - pad_beg

105 | inputs = tf.pad(inputs,

106 | [[0, 0], [pad_beg, pad_end], [pad_beg, pad_end], [0, 0]])

107 | return slim.conv2d(inputs, num_outputs, kernel_size, stride=stride,

108 | rate=rate, padding='VALID', scope=scope)

109 |

110 |

111 | @slim.add_arg_scope

112 | def stack_blocks_dense(net, blocks, output_stride=None,

113 | outputs_collections=None):

114 | """Stacks ResNet `Blocks` and controls output feature density.

115 |

116 | First, this function creates scopes for the ResNet in the form of

117 | 'block_name/unit_1', 'block_name/unit_2', etc.

118 |

119 | Second, this function allows the user to explicitly control the ResNet

120 | output_stride, which is the ratio of the input to output spatial resolution.

121 | This is useful for dense prediction tasks such as semantic segmentation or

122 | object detection.

123 |

124 | Most ResNets consist of 4 ResNet blocks and subsample the activations by a

125 | factor of 2 when transitioning between consecutive ResNet blocks. This results

126 | to a nominal ResNet output_stride equal to 8. If we set the output_stride to

127 | half the nominal network stride (e.g., output_stride=4), then we compute

128 | responses twice.

129 |

130 | Control of the output feature density is implemented by atrous convolution.

131 |

132 | Args:

133 | net: A `Tensor` of size [batch, height, width, channels].

134 | blocks: A list of length equal to the number of ResNet `Blocks`. Each

135 | element is a ResNet `Block` object describing the units in the `Block`.

136 | output_stride: If `None`, then the output will be computed at the nominal

137 | network stride. If output_stride is not `None`, it specifies the requested

138 | ratio of input to output spatial resolution, which needs to be equal to

139 | the product of unit strides from the start up to some level of the ResNet.

140 | For example, if the ResNet employs units with strides 1, 2, 1, 3, 4, 1,

141 | then valid values for the output_stride are 1, 2, 6, 24 or None (which

142 | is equivalent to output_stride=24).

143 | outputs_collections: Collection to add the ResNet block outputs.

144 |

145 | Returns:

146 | net: Output tensor with stride equal to the specified output_stride.

147 |

148 | Raises:

149 | ValueError: If the target output_stride is not valid.

150 | """

151 | # The current_stride variable keeps track of the effective stride of the

152 | # activations. This allows us to invoke atrous convolution whenever applying

153 | # the next residual unit would result in the activations having stride larger

154 | # than the target output_stride.

155 | current_stride = 1

156 |

157 | # The atrous convolution rate parameter.

158 | rate = 1

159 |

160 | for block in blocks:

161 | with tf.variable_scope(block.scope, 'block', [net]) as sc:

162 | for i, unit in enumerate(block.args):

163 | if output_stride is not None and current_stride > output_stride:

164 | raise ValueError('The target output_stride cannot be reached.')

165 |

166 | with tf.variable_scope('unit_%d' % (i + 1), values=[net]):

167 | # If we have reached the target output_stride, then we need to employ

168 | # atrous convolution with stride=1 and multiply the atrous rate by the

169 | # current unit's stride for use in subsequent layers.

170 | if output_stride is not None and current_stride == output_stride:

171 | net = block.unit_fn(net, rate=rate, **dict(unit, stride=1))

172 | rate *= unit.get('stride', 1)

173 |

174 | else:

175 | net = block.unit_fn(net, rate=1, **unit)

176 | current_stride *= unit.get('stride', 1)

177 | net = slim.utils.collect_named_outputs(outputs_collections, sc.name, net)

178 |

179 | if output_stride is not None and current_stride != output_stride:

180 | raise ValueError('The target output_stride cannot be reached.')

181 |

182 | return net

183 |

184 |

185 | def resnet_arg_scope(weight_decay=0.0001,

186 | batch_norm_decay=0.997,

187 | batch_norm_epsilon=1e-5,

188 | batch_norm_scale=True,

189 | activation_fn=tf.nn.relu,

190 | use_batch_norm=True):

191 | """Defines the default ResNet arg scope.

192 |

193 | TODO(gpapan): The batch-normalization related default values above are

194 | appropriate for use in conjunction with the reference ResNet models

195 | released at https://github.com/KaimingHe/deep-residual-networks. When

196 | training ResNets from scratch, they might need to be tuned.

197 |

198 | Args:

199 | weight_decay: The weight decay to use for regularizing the model.

200 | batch_norm_decay: The moving average decay when estimating layer activation

201 | statistics in batch normalization.

202 | batch_norm_epsilon: Small constant to prevent division by zero when

203 | normalizing activations by their variance in batch normalization.

204 | batch_norm_scale: If True, uses an explicit `gamma` multiplier to scale the

205 | activations in the batch normalization layer.

206 | activation_fn: The activation function which is used in ResNet.

207 | use_batch_norm: Whether or not to use batch normalization.

208 |

209 | Returns:

210 | An `arg_scope` to use for the resnet models.

211 | """

212 | batch_norm_params = {

213 | 'decay': batch_norm_decay,

214 | 'epsilon': batch_norm_epsilon,

215 | 'scale': batch_norm_scale,

216 | 'updates_collections': tf.GraphKeys.UPDATE_OPS,

217 | 'fused': None, # Use fused batch norm if possible.

218 | }

219 |

220 | with slim.arg_scope(

221 | [slim.conv2d],

222 | weights_regularizer=slim.l2_regularizer(weight_decay),

223 | weights_initializer=slim.variance_scaling_initializer(),

224 | activation_fn=activation_fn,

225 | normalizer_fn=slim.batch_norm if use_batch_norm else None,

226 | normalizer_params=batch_norm_params):

227 | with slim.arg_scope([slim.batch_norm], **batch_norm_params):

228 | # The following implies padding='SAME' for pool1, which makes feature

229 | # alignment easier for dense prediction tasks. This is also used in

230 | # https://github.com/facebook/fb.resnet.torch. However the accompanying

231 | # code of 'Deep Residual Learning for Image Recognition' uses

232 | # padding='VALID' for pool1. You can switch to that choice by setting

233 | # slim.arg_scope([slim.max_pool2d], padding='VALID').

234 | with slim.arg_scope([slim.max_pool2d], padding='SAME') as arg_sc:

235 | return arg_sc

236 |

--------------------------------------------------------------------------------

/preprocessing/inception_preprocessing.py:

--------------------------------------------------------------------------------

1 | """Provides utilities to preprocess images for the Inception networks."""

2 |

3 | from __future__ import absolute_import

4 | from __future__ import division

5 | from __future__ import print_function

6 |

7 | import tensorflow as tf

8 |

9 | from tensorflow.python.ops import control_flow_ops

10 |

11 |

12 | def apply_with_random_selector(x, func, num_cases):

13 | """Computes func(x, sel), with sel sampled from [0...num_cases-1].

14 |

15 | Args:

16 | x: input Tensor.

17 | func: Python function to apply.

18 | num_cases: Python int32, number of cases to sample sel from.

19 |

20 | Returns:

21 | The result of func(x, sel), where func receives the value of the

22 | selector as a python integer, but sel is sampled dynamically.

23 | """

24 | sel = tf.random_uniform([], maxval=num_cases, dtype=tf.int32)

25 | # Pass the real x only to one of the func calls.

26 | return control_flow_ops.merge([

27 | func(control_flow_ops.switch(x, tf.equal(sel, case))[1], case)

28 | for case in range(num_cases)])[0]

29 |

30 |

31 | def distort_color(image, color_ordering=0, fast_mode=False, scope=None):

32 | """Distort the color of a Tensor image.

33 |

34 | Each color distortion is non-commutative and thus ordering of the color ops

35 | matters. Ideally we would randomly permute the ordering of the color ops.

36 | Rather then adding that level of complication, we select a distinct ordering

37 | of color ops for each preprocessing thread.

38 |

39 | Args:

40 | image: 3-D Tensor containing single image in [0, 1].

41 | color_ordering: Python int, a type of distortion (valid values: 0-3).

42 | fast_mode: Avoids slower ops (random_hue and random_contrast)

43 | scope: Optional scope for name_scope.

44 | Returns:

45 | 3-D Tensor color-distorted image on range [0, 1]

46 | Raises:

47 | ValueError: if color_ordering not in [0, 3]

48 | """

49 | with tf.name_scope(scope, 'distort_color', [image]):

50 | if fast_mode:

51 | if color_ordering == 0:

52 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

53 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

54 | else:

55 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

56 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

57 | else:

58 | if color_ordering == 0:

59 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

60 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

61 | image = tf.image.random_hue(image, max_delta=0.2)

62 | image = tf.image.random_contrast(image, lower=0.5, upper=1.5)

63 | elif color_ordering == 1:

64 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

65 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

66 | image = tf.image.random_contrast(image, lower=0.5, upper=1.5)

67 | image = tf.image.random_hue(image, max_delta=0.2)

68 | elif color_ordering == 2:

69 | image = tf.image.random_contrast(image, lower=0.5, upper=1.5)

70 | image = tf.image.random_hue(image, max_delta=0.2)

71 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

72 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

73 | elif color_ordering == 3:

74 | image = tf.image.random_hue(image, max_delta=0.2)

75 | image = tf.image.random_saturation(image, lower=0.5, upper=1.5)

76 | image = tf.image.random_contrast(image, lower=0.5, upper=1.5)

77 | image = tf.image.random_brightness(image, max_delta=32. / 255.)

78 | else:

79 | raise ValueError('color_ordering must be in [0, 3]')

80 |

81 | # The random_* ops do not necessarily clamp.

82 | return tf.clip_by_value(image, 0.0, 1.0)

83 |

84 |

85 | def distorted_bounding_box_crop(image,

86 | bbox,

87 | min_object_covered=0.1,

88 | aspect_ratio_range=(0.75, 1.33),

89 | area_range=(0.05, 1.0),

90 | max_attempts=100,

91 | scope=None):

92 | """Generates cropped_image using a one of the bboxes randomly distorted.

93 |

94 | See `tf.image.sample_distorted_bounding_box` for more documentation.

95 |

96 | Args:

97 | image: 3-D Tensor of image (it will be converted to floats in [0, 1]).

98 | bbox: 3-D float Tensor of bounding boxes arranged [1, num_boxes, coords]

99 | where each coordinate is [0, 1) and the coordinates are arranged

100 | as [ymin, xmin, ymax, xmax]. If num_boxes is 0 then it would use the whole

101 | image.

102 | min_object_covered: An optional `float`. Defaults to `0.1`. The cropped

103 | area of the image must contain at least this fraction of any bounding box

104 | supplied.

105 | aspect_ratio_range: An optional list of `floats`. The cropped area of the

106 | image must have an aspect ratio = width / height within this range.

107 | area_range: An optional list of `floats`. The cropped area of the image

108 | must contain a fraction of the supplied image within in this range.

109 | max_attempts: An optional `int`. Number of attempts at generating a cropped

110 | region of the image of the specified constraints. After `max_attempts`

111 | failures, return the entire image.

112 | scope: Optional scope for name_scope.

113 | Returns:

114 | A tuple, a 3-D Tensor cropped_image and the distorted bbox

115 | """

116 | with tf.name_scope(scope, 'distorted_bounding_box_crop', [image, bbox]):

117 | # Each bounding box has shape [1, num_boxes, box coords] and

118 | # the coordinates are ordered [ymin, xmin, ymax, xmax].

119 |

120 | # A large fraction of image datasets contain a human-annotated bounding

121 | # box delineating the region of the image containing the object of interest.

122 | # We choose to create a new bounding box for the object which is a randomly

123 | # distorted version of the human-annotated bounding box that obeys an

124 | # allowed range of aspect ratios, sizes and overlap with the human-annotated

125 | # bounding box. If no box is supplied, then we assume the bounding box is

126 | # the entire image.

127 | sample_distorted_bounding_box = tf.image.sample_distorted_bounding_box(

128 | tf.shape(image),

129 | bounding_boxes=bbox,

130 | min_object_covered=min_object_covered,

131 | aspect_ratio_range=aspect_ratio_range,

132 | area_range=area_range,

133 | max_attempts=max_attempts,

134 | use_image_if_no_bounding_boxes=True)

135 | bbox_begin, bbox_size, distort_bbox = sample_distorted_bounding_box

136 |

137 | # Crop the image to the specified bounding box.

138 | cropped_image = tf.slice(image, bbox_begin, bbox_size)

139 | return cropped_image, distort_bbox

140 |

141 |

142 | def preprocess_for_train(image, height, width, bbox,

143 | fast_mode=False,

144 | scope=None):

145 | """Distort one image for training a network.

146 |

147 | Distorting images provides a useful technique for augmenting the data

148 | set during training in order to make the network invariant to aspects

149 | of the image that do not effect the label.

150 |

151 | Additionally it would create image_summaries to display the different

152 | transformations applied to the image.

153 |

154 | Args:

155 | image: 3-D Tensor of image. If dtype is tf.float32 then the range should be

156 | [0, 1], otherwise it would converted to tf.float32 assuming that the range

157 | is [0, MAX], where MAX is largest positive representable number for

158 | int(8/16/32) data type (see `tf.image.convert_image_dtype` for details).

159 | height: integer

160 | width: integer

161 | bbox: 3-D float Tensor of bounding boxes arranged [1, num_boxes, coords]