├── requirements

├── prod.txt

├── dev.in

├── ci.in

├── dev.txt

└── ci.txt

├── tests

├── examples

│ ├── cm-2-classes.json

│ ├── perm.json

│ └── wili-labels.csv

├── test_utils.py

├── test_distribution.py

├── test_optimize.py

├── test_cli.py

├── test_clustering.py

├── test_get_cm.py

└── test_visualize.py

├── MANIFEST.in

├── docs

├── cm.png

├── cm-interface.png

├── cm-wili-2018.png

├── mnist_confusion_matrix.png

├── mnist_confusion_matrix_labels.png

├── requirements.txt

├── mnist

│ ├── labels.csv

│ └── cm.json

├── source

│ ├── io.rst

│ ├── get_cm.rst

│ ├── utils.rst

│ ├── distribution.rst

│ ├── visualize_cm.rst

│ ├── get_cm_simple.rst

│ ├── index.rst

│ ├── file_formats.rst

│ ├── mnist_example.rst

│ └── conf.py

├── Makefile

├── make.bat

├── visualizations.md

└── mnist_example.py

├── setup.py

├── clana

├── __main__.py

├── __init__.py

├── cm_metrics.py

├── config.yaml

├── distribution.py

├── get_cm.py

├── utils.py

├── templates

│ └── base.html

├── get_cm_simple.py

├── cli.py

├── io.py

├── clustering.py

├── optimize.py

└── visualize_cm.py

├── tox.ini

├── .travis.yml

├── conftest.py

├── .isort.cfg

├── .readthedocs.yml

├── Makefile

├── LICENSE

├── .github

└── workflows

│ └── python.yaml

├── requirements.txt

├── .pre-commit-config.yaml

├── .gitignore

├── setup.cfg

└── README.md

/requirements/prod.txt:

--------------------------------------------------------------------------------

1 | -r ../requirements.txt

2 |

--------------------------------------------------------------------------------

/tests/examples/cm-2-classes.json:

--------------------------------------------------------------------------------

1 | [[10, 6], [10, 42]]

2 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include README.md

2 | include clana/config.yaml

3 |

--------------------------------------------------------------------------------

/requirements/dev.in:

--------------------------------------------------------------------------------

1 | pip-tools

2 | pre-commit

3 | twine

4 | wheel

5 |

--------------------------------------------------------------------------------

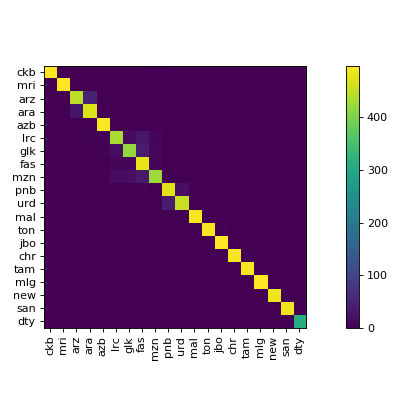

/docs/cm.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MartinThoma/clana/HEAD/docs/cm.png

--------------------------------------------------------------------------------

/docs/cm-interface.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MartinThoma/clana/HEAD/docs/cm-interface.png

--------------------------------------------------------------------------------

/docs/cm-wili-2018.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MartinThoma/clana/HEAD/docs/cm-wili-2018.png

--------------------------------------------------------------------------------

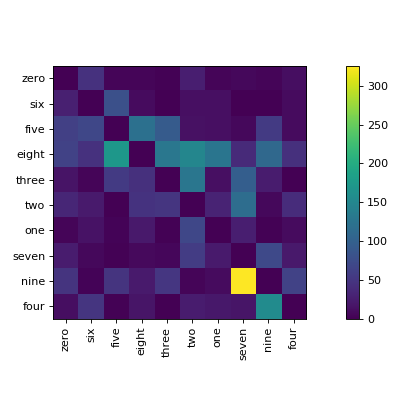

/docs/mnist_confusion_matrix.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MartinThoma/clana/HEAD/docs/mnist_confusion_matrix.png

--------------------------------------------------------------------------------

/docs/mnist_confusion_matrix_labels.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MartinThoma/clana/HEAD/docs/mnist_confusion_matrix_labels.png

--------------------------------------------------------------------------------

/docs/requirements.txt:

--------------------------------------------------------------------------------

1 | numpydoc==0.9.1

2 |

3 | sphinx>=3.0.4 # not directly required, pinned by Snyk to avoid a vulnerability

4 |

--------------------------------------------------------------------------------

/docs/mnist/labels.csv:

--------------------------------------------------------------------------------

1 | Labels

2 | zero

3 | one

4 | two

5 | three

6 | four

7 | five

8 | six

9 | seven

10 | eight

11 | nine

12 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | """CLANA is a toolkit for classifier analysis."""

2 |

3 | # Third party

4 | from setuptools import setup

5 |

6 | setup()

7 |

--------------------------------------------------------------------------------

/docs/source/io.rst:

--------------------------------------------------------------------------------

1 | clana.io

2 | --------

3 |

4 | .. automodule:: clana.io

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/docs/source/get_cm.rst:

--------------------------------------------------------------------------------

1 | clana.get_cm

2 | ------------

3 |

4 | .. automodule:: clana.get_cm

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/docs/source/utils.rst:

--------------------------------------------------------------------------------

1 | clana.utils

2 | -----------

3 |

4 | .. automodule:: clana.utils

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/clana/__main__.py:

--------------------------------------------------------------------------------

1 | """Execute clana as a module."""

2 |

3 | # First party

4 | from clana.cli import entry_point

5 |

6 | if __name__ == "__main__":

7 | entry_point()

8 |

--------------------------------------------------------------------------------

/tests/test_utils.py:

--------------------------------------------------------------------------------

1 | # First party

2 | import clana.utils

3 |

4 |

5 | def test_load_labels() -> None:

6 | clana.utils.load_labels("~/.clana/data/labels.csv", 10)

7 |

--------------------------------------------------------------------------------

/docs/source/distribution.rst:

--------------------------------------------------------------------------------

1 | clana.distribution

2 | ------------------

3 |

4 | .. automodule:: clana.distribution

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/docs/source/visualize_cm.rst:

--------------------------------------------------------------------------------

1 | clana.visualize_cm

2 | ------------------

3 |

4 | .. automodule:: clana.visualize_cm

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/docs/source/get_cm_simple.rst:

--------------------------------------------------------------------------------

1 | clana.get_cm_simple

2 | -------------------

3 |

4 | .. automodule:: clana.get_cm_simple

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

--------------------------------------------------------------------------------

/tox.ini:

--------------------------------------------------------------------------------

1 | [tox]

2 | envlist = linter,py37,py38,py39

3 |

4 | [testenv]

5 | deps =

6 | -r requirements/ci.txt

7 | commands =

8 | pip install -e .

9 | pytest .

10 | flake8

11 | black --check .

12 | pydocstyle

13 | mypy .

14 |

--------------------------------------------------------------------------------

/clana/__init__.py:

--------------------------------------------------------------------------------

1 | """Get the version."""

2 |

3 | # Third party

4 | import pkg_resources

5 |

6 | try:

7 | __version__ = pkg_resources.get_distribution("clana").version

8 | except pkg_resources.DistributionNotFound:

9 | __version__ = "not installed"

10 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: python

2 | before_install:

3 | - sudo apt-get install -y libblas3 liblapack3 liblapack-dev libblas-dev

4 | python:

5 | - 3.7

6 | - 3.8

7 | - 3.9

8 | install:

9 | - pip install coveralls tox-travis

10 | script:

11 | - tox

12 | after_success:

13 | - coveralls

14 |

--------------------------------------------------------------------------------

/docs/mnist/cm.json:

--------------------------------------------------------------------------------

1 | [[5808,5,28,2,12,5,47,7,5,4],[5,6586,70,2,9,3,16,27,22,2],[35,32,5602,50,42,1,24,117,48,7],[17,14,128,5742,1,56,5,98,45,25],[12,21,25,0,5537,2,50,19,19,157],[62,13,15,93,9,4977,70,7,120,55],[29,14,13,0,9,80,5763,0,10,0],[25,24,58,6,22,2,7,6041,8,72],[64,127,150,129,45,173,47,39,4967,110],[49,9,4,52,64,49,3,326,23,5370]]

2 |

--------------------------------------------------------------------------------

/conftest.py:

--------------------------------------------------------------------------------

1 | """Configure pytest."""

2 |

3 | # Core Library

4 | import logging

5 | from typing import Any, Dict

6 |

7 |

8 | def pytest_configure(config: Dict[str, Any]) -> None:

9 | """Flake8 is to verbose. Mute it."""

10 | logging.getLogger("flake8").setLevel(logging.WARN)

11 | logging.getLogger("pydocstyle").setLevel(logging.INFO)

12 |

--------------------------------------------------------------------------------

/tests/test_distribution.py:

--------------------------------------------------------------------------------

1 | # Third party

2 | import pkg_resources

3 | from click.testing import CliRunner

4 |

5 | # First party

6 | import clana.cli

7 |

8 |

9 | def test_cli() -> None:

10 | runner = CliRunner()

11 |

12 | path = "examples/wili-y_train.txt"

13 | y_train_path = pkg_resources.resource_filename(__name__, path)

14 | _ = runner.invoke(clana.cli.distribution, ["--gt", y_train_path])

15 |

--------------------------------------------------------------------------------

/.isort.cfg:

--------------------------------------------------------------------------------

1 | [settings]

2 | line_length=79

3 | indent=' '

4 | multi_line_output=3

5 | length_sort=0

6 | import_heading_stdlib=Core Library

7 | import_heading_firstparty=First party

8 | import_heading_thirdparty=Third party

9 | import_heading_localfolder=Local

10 | known_third_party = click,jinja2,keras,matplotlib,mpl_toolkits,numpy,pkg_resources,setuptools,sklearn,yaml

11 | include_trailing_comma=True

12 | skip=docs

13 |

--------------------------------------------------------------------------------

/tests/test_optimize.py:

--------------------------------------------------------------------------------

1 | # Third party

2 | import numpy as np

3 |

4 | # First party

5 | import clana.optimize

6 |

7 |

8 | def test_move_1d() -> None:

9 | perm = np.array([8, 7, 6, 1, 2])

10 | from_start = 1

11 | from_end = 2

12 | insert_pos = 0

13 | new_perm = clana.optimize.move_1d(perm, from_start, from_end, insert_pos)

14 | new_perm = new_perm.tolist()

15 | assert new_perm == [7, 6, 8, 1, 2]

16 |

--------------------------------------------------------------------------------

/requirements/ci.in:

--------------------------------------------------------------------------------

1 | -r prod.txt

2 | black

3 | coverage

4 | flake8<4.0.0

5 | flake8_implicit_str_concat

6 | flake8-bugbear

7 | flake8-builtins

8 | flake8-comprehensions

9 | flake8-eradicate

10 | flake8-executable

11 | flake8-isort

12 | flake8-pytest-style

13 | flake8-raise

14 | flake8-simplify

15 | flake8-string-format

16 | lxml

17 | mypy

18 | pydocstyle

19 | pytest

20 | pytest-cov

21 | pytest-mccabe

22 | pytest-timeout

23 | types-pkg-resources

24 | types-PyYAML

25 | types-setuptools

26 |

--------------------------------------------------------------------------------

/.readthedocs.yml:

--------------------------------------------------------------------------------

1 | # .readthedocs.yml

2 | # Read the Docs configuration file

3 | # See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

4 |

5 | # Required

6 | version: 2

7 |

8 | # Build documentation in the docs/ directory with Sphinx

9 | sphinx:

10 | configuration: docs/source/conf.py

11 |

12 | # Optionally build your docs in additional formats such as PDF and ePub

13 | formats: all

14 |

15 | # Optionally set the version of Python and requirements required to build your docs

16 | python:

17 | version: 3.6

18 | install:

19 | - requirements: docs/requirements.txt

20 |

--------------------------------------------------------------------------------

/tests/test_cli.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 |

3 | """Test the CLI functions."""

4 |

5 | # Third party

6 | from click.testing import CliRunner

7 | from pkg_resources import resource_filename

8 |

9 | # First party

10 | import clana.cli

11 |

12 |

13 | def test_visualize() -> None:

14 | runner = CliRunner()

15 | cm_path = resource_filename(__name__, "examples/cm-2-classes.json")

16 | commands = ["visualize", "--cm", cm_path]

17 | result = runner.invoke(clana.cli.entry_point, commands)

18 | assert result.exit_code == 0

19 | assert "Accuracy: 76.47%" in result.output, "clana" + " ".join(commands)

20 |

--------------------------------------------------------------------------------

/tests/test_clustering.py:

--------------------------------------------------------------------------------

1 | # Third party

2 | import numpy as np

3 |

4 | # First party

5 | import clana.clustering

6 |

7 |

8 | def test_extract_clusters_local() -> None:

9 | n = 10

10 | cm = np.random.randint(low=0, high=100, size=(n, n))

11 | clana.clustering.extract_clusters(

12 | cm, labels=list(range(n)), steps=10, method="local-connectivity" # type: ignore

13 | )

14 |

15 |

16 | def test_extract_clusters_energy() -> None:

17 | n = 10

18 | cm = np.random.randint(low=0, high=100, size=(n, n))

19 | clana.clustering.extract_clusters(

20 | cm, labels=list(range(n)), steps=10, method="energy" # type: ignore

21 | )

22 |

--------------------------------------------------------------------------------

/docs/source/index.rst:

--------------------------------------------------------------------------------

1 | .. clana documentation master file, created by

2 | sphinx-quickstart on Tue Jul 2 22:30:39 2019.

3 | You can adapt this file completely to your liking, but it should at least

4 | contain the root `toctree` directive.

5 |

6 | Welcome to clana's documentation!

7 | =================================

8 |

9 | .. toctree::

10 | :maxdepth: 2

11 | :caption: Contents:

12 |

13 | mnist_example

14 | file_formats

15 | distribution

16 | io

17 | get_cm

18 | get_cm_simple

19 | utils

20 | visualize_cm

21 |

22 |

23 | Indices and tables

24 | ==================

25 |

26 | * :ref:`genindex`

27 | * :ref:`modindex`

28 | * :ref:`search`

29 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line.

5 | SPHINXOPTS =

6 | SPHINXBUILD = sphinx-build

7 | SPHINXPROJ = clana

8 | SOURCEDIR = source

9 | BUILDDIR = build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help:

13 | @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

14 |

15 | .PHONY: help Makefile

16 |

17 | # Catch-all target: route all unknown targets to Sphinx using the new

18 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

19 | %: Makefile

20 | @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

21 |

--------------------------------------------------------------------------------

/clana/cm_metrics.py:

--------------------------------------------------------------------------------

1 | """Metrics for confusion matrices."""

2 |

3 | # Third party

4 | import numpy.typing as npt

5 |

6 |

7 | def get_accuracy(cm: npt.NDArray) -> float:

8 | """

9 | Get the accuaracy by the confusion matrix cm.

10 |

11 | Parameters

12 | ----------

13 | cm : ndarray

14 |

15 | Returns

16 | -------

17 | accuracy : float

18 |

19 | Examples

20 | --------

21 | >>> import numpy as np

22 | >>> cm = np.array([[10, 20], [30, 40]])

23 | >>> get_accuracy(cm)

24 | 0.5

25 | >>> cm = np.array([[20, 10], [30, 40]])

26 | >>> get_accuracy(cm)

27 | 0.6

28 | """

29 | return float(sum(cm[i][i] for i in range(len(cm)))) / float(cm.sum())

30 |

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=source

11 | set BUILDDIR=build

12 | set SPHINXPROJ=clana

13 |

14 | if "%1" == "" goto help

15 |

16 | %SPHINXBUILD% >NUL 2>NUL

17 | if errorlevel 9009 (

18 | echo.

19 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

20 | echo.installed, then set the SPHINXBUILD environment variable to point

21 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

22 | echo.may add the Sphinx directory to PATH.

23 | echo.

24 | echo.If you don't have Sphinx installed, grab it from

25 | echo.http://sphinx-doc.org/

26 | exit /b 1

27 | )

28 |

29 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

30 | goto end

31 |

32 | :help

33 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

34 |

35 | :end

36 | popd

37 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | maint:

2 | pip install -r requirements/dev.txt

3 | pre-commit autoupdate && pre-commit run --all-files

4 | pip-compile -U setup.py

5 | pip-compile -U requirements/ci.in

6 | pip-compile -U requirements/dev.in

7 |

8 | upload:

9 | make clean

10 | python setup.py sdist bdist_wheel && twine upload -s dist/*

11 |

12 | test_upload:

13 | make clean

14 | python setup.py sdist bdist_wheel && twine upload --repository pypitest -s dist/*

15 |

16 | clean:

17 | python setup.py clean --all

18 | pyclean .

19 | rm -rf clana.egg-info dist tests/reports tests/__pycache__ clana.errors.log clana.info.log clana/cm_analysis.html dist __pycache__ clana/__pycache__ build docs/build

20 |

21 | muation-test:

22 | mutmut run

23 |

24 | mutmut-results:

25 | mutmut junitxml --suspicious-policy=ignore --untested-policy=ignore > mutmut-results.xml

26 | junit2html mutmut-results.xml mutmut-results.html

27 |

28 | bandit:

29 | # Not a security application: B311 and B303 should be save

30 | # Python3 only: B322 is save

31 | bandit -r clana -s B311,B303,B322

32 |

--------------------------------------------------------------------------------

/tests/examples/perm.json:

--------------------------------------------------------------------------------

1 | [0, 161, 39, 3, 171, 5, 130, 7, 8, 35, 17, 18, 40, 159, 196, 197, 16, 19, 146, 15, 132, 113, 80, 74, 22, 24, 98, 206, 169, 155, 114, 172, 49, 175, 174, 176, 177, 140, 27, 180, 78, 145, 183, 26, 137, 138, 139, 178, 28, 210, 211, 212, 213, 62, 215, 188, 217, 41, 143, 10, 25, 37, 86, 214, 38, 101, 199, 201, 51, 43, 128, 168, 46, 162, 88, 126, 21, 136, 96, 208, 173, 129, 224, 194, 195, 89, 164, 54, 106, 218, 32, 33, 220, 121, 70, 71, 142, 68, 190, 166, 216, 202, 103, 63, 48, 95, 90, 85, 104, 109, 153, 229, 102, 97, 167, 99, 58, 61, 47, 64, 118, 158, 50, 105, 165, 231, 2, 59, 72, 73, 148, 82, 185, 6, 31, 198, 179, 141, 29, 30, 60, 193, 69, 81, 163, 83, 170, 12, 13, 203, 135, 14, 181, 204, 205, 53, 56, 100, 36, 75, 9, 117, 67, 192, 65, 66, 87, 182, 127, 44, 108, 93, 157, 225, 23, 11, 111, 184, 1, 20, 107, 186, 55, 115, 77, 207, 52, 189, 94, 134, 84, 147, 200, 79, 144, 149, 4, 223, 152, 156, 120, 150, 160, 57, 92, 76, 232, 187, 112, 116, 119, 209, 235, 191, 222, 45, 227, 228, 151, 91, 154, 221, 124, 125, 110, 131, 219, 133, 226, 230, 34, 42, 233, 234, 122, 123]

2 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 Martin Thoma

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/.github/workflows/python.yaml:

--------------------------------------------------------------------------------

1 | # This workflow will install Python dependencies, run tests and lint with a variety of Python versions

2 | # For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

3 |

4 | name: Python package

5 |

6 | on:

7 | push:

8 | branches: [ master ]

9 | pull_request:

10 | branches: [ master ]

11 |

12 | jobs:

13 | build:

14 |

15 | runs-on: ubuntu-latest

16 | strategy:

17 | matrix:

18 | python-version: [3.7, 3.8, 3.9]

19 |

20 | steps:

21 | - uses: actions/checkout@v2

22 | - name: Set up Python ${{ matrix.python-version }}

23 | uses: actions/setup-python@v2

24 | with:

25 | python-version: ${{ matrix.python-version }}

26 | - name: Install dependencies

27 | run: |

28 | python -m pip install --upgrade pip

29 | pip install -r requirements/ci.txt

30 | pip install .

31 | - name: Test with pytest

32 | run: pytest

33 | - name: Test with mypy

34 | run: mypy . --exclude=build

35 | - name: Test with flake8

36 | run: flake8

37 | - name: Test with black

38 | run: black --check .

39 |

--------------------------------------------------------------------------------

/tests/test_get_cm.py:

--------------------------------------------------------------------------------

1 | # Third party

2 | import numpy as np

3 | import numpy.testing

4 | import pkg_resources

5 | from click.testing import CliRunner

6 |

7 | # First party

8 | import clana.cli

9 |

10 |

11 | def test_calculate_cm() -> None:

12 | labels = ["en", "de"]

13 | truths = ["de", "de", "en", "de", "en"]

14 | predictions = ["de", "en", "en", "de", "en"]

15 | res = clana.get_cm_simple.calculate_cm(labels, truths, predictions)

16 | numpy.testing.assert_array_equal(res, np.array([[2, 0], [1, 2]])) # type: ignore[no-untyped-call]

17 |

18 |

19 | def test_main() -> None:

20 | path = "examples/wili-labels.csv"

21 | labels_path = pkg_resources.resource_filename(__name__, path)

22 | path = "examples/wili-y_test.txt"

23 | gt_filepath = pkg_resources.resource_filename(__name__, path)

24 | path = "examples/cld2_results.txt"

25 | predictions_filepath = pkg_resources.resource_filename(__name__, path)

26 |

27 | runner = CliRunner()

28 | _ = runner.invoke(

29 | clana.cli.get_cm_simple,

30 | [

31 | "--labels",

32 | labels_path,

33 | "--gt",

34 | gt_filepath,

35 | "--predictions",

36 | predictions_filepath,

37 | ],

38 | )

39 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | #

2 | # This file is autogenerated by pip-compile with python 3.7

3 | # To update, run:

4 | #

5 | # pip-compile setup.py

6 | #

7 | click==8.0.3

8 | # via clana (setup.py)

9 | cycler==0.11.0

10 | # via matplotlib

11 | fonttools==4.29.0

12 | # via matplotlib

13 | importlib-metadata==4.10.1

14 | # via click

15 | jinja2==3.0.3

16 | # via clana (setup.py)

17 | joblib==1.1.0

18 | # via scikit-learn

19 | kiwisolver==1.3.2

20 | # via matplotlib

21 | markupsafe==2.0.1

22 | # via jinja2

23 | matplotlib==3.5.1

24 | # via clana (setup.py)

25 | numpy==1.22.0

26 | # via

27 | # clana (setup.py)

28 | # matplotlib

29 | # scikit-learn

30 | # scipy

31 | packaging==21.3

32 | # via matplotlib

33 | pillow==9.0.1

34 | # via matplotlib

35 | pyparsing==3.0.7

36 | # via

37 | # matplotlib

38 | # packaging

39 | python-dateutil==2.8.2

40 | # via matplotlib

41 | pyyaml==6.0

42 | # via clana (setup.py)

43 | scikit-learn==1.0.2

44 | # via clana (setup.py)

45 | scipy==1.7.3

46 | # via

47 | # clana (setup.py)

48 | # scikit-learn

49 | six==1.16.0

50 | # via python-dateutil

51 | threadpoolctl==3.0.0

52 | # via scikit-learn

53 | typing-extensions==4.0.1

54 | # via importlib-metadata

55 | zipp==3.7.0

56 | # via importlib-metadata

57 |

--------------------------------------------------------------------------------

/docs/visualizations.md:

--------------------------------------------------------------------------------

1 | # Confusion Matrices

2 |

3 | This module expects the Ground Truth labels to have exactly one value `1` and

4 | only zeros for each data element.

5 |

6 | See [File Formats](file-formats.md).

7 |

8 | ## Static Confusion Matrix

9 |

10 | ```

11 | $ clan --labels labels.csv --gt gt.csv --preds preds.csv

12 | ```

13 |

14 | Produces a static confusion matrix:

15 |

16 |

17 |

18 | The labels are by default the short version, but a switch `--long` changes them

19 | to the long version.

20 |

21 | Optionally, the command accepts a `permutations.json` which defines another

22 | order of the elements.

23 |

24 |

25 | ## Interactive Confusion Matrix

26 |

27 | ```

28 | $ clan cm --labels labels.csv --gt gt.csv --preds preds.csv --interactive --viz data_viz.py

29 | ```

30 |

31 | Starts a webserver with an interactive version of the confusion matrix. The

32 | user can click on each of the confusions and get a list of of the identifiers.

33 | Each identifier has a link on which the user can click again. This will call

34 | the `visualize(identifier)` function within `data_viz.py`.

35 | `visualize(identifier)` has to return an HTML page. That page could simply

36 | contain a string (e.g. for NLP), an image (e.g. Computer Vision) or audio files

37 | (e.g. ASR).

38 |

39 | The interface could look like this:

40 |

41 |

42 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # pre-commit run --all-files

2 | repos:

3 | - repo: https://github.com/pre-commit/pre-commit-hooks

4 | rev: v4.1.0

5 | hooks:

6 | - id: check-ast

7 | - id: check-byte-order-marker

8 | - id: check-case-conflict

9 | - id: check-docstring-first

10 | - id: check-executables-have-shebangs

11 | - id: check-json

12 | - id: check-yaml

13 | - id: debug-statements

14 | - id: detect-private-key

15 | - id: end-of-file-fixer

16 | - id: trailing-whitespace

17 | - id: mixed-line-ending

18 |

19 | - repo: https://github.com/pre-commit/mirrors-mypy

20 | rev: v0.931

21 | hooks:

22 | - id: mypy

23 | args: [--ignore-missing-imports, --no-warn-unused-ignores, --install-types]

24 | additional_dependencies: [types-PyYAML, types-setuptools]

25 | - repo: https://github.com/asottile/seed-isort-config

26 | rev: v2.2.0

27 | hooks:

28 | - id: seed-isort-config

29 | - repo: https://github.com/pre-commit/mirrors-isort

30 | rev: v5.10.1

31 | hooks:

32 | - id: isort

33 | - repo: https://github.com/psf/black

34 | rev: 22.1.0

35 | hooks:

36 | - id: black

37 | - repo: https://github.com/asottile/pyupgrade

38 | rev: v2.31.0

39 | hooks:

40 | - id: pyupgrade

41 | args: [--py36-plus]

42 | - repo: https://github.com/asottile/blacken-docs

43 | rev: v1.12.0

44 | hooks:

45 | - id: blacken-docs

46 | additional_dependencies: [black==19.10b0]

47 |

--------------------------------------------------------------------------------

/tests/test_visualize.py:

--------------------------------------------------------------------------------

1 | # Third party

2 | import numpy as np

3 | import pkg_resources

4 | from click.testing import CliRunner

5 |

6 | # First party

7 | import clana.cli

8 |

9 |

10 | def test_get_cm_problems1() -> None:

11 | cm = np.array([[0, 100], [0, 10]])

12 | labels = ["0", "1"]

13 | clana.visualize_cm.get_cm_problems(cm, labels)

14 |

15 |

16 | def test_get_cm_problems2() -> None:

17 | cm = np.array([[12, 100], [0, 0]])

18 | labels = ["0", "1"]

19 | clana.visualize_cm.get_cm_problems(cm, labels)

20 |

21 |

22 | def test_simulated_annealing() -> None:

23 | n = 10

24 | cm = np.random.randint(low=0, high=100, size=(n, n))

25 | clana.visualize_cm.simulated_annealing(cm, steps=10)

26 | clana.visualize_cm.simulated_annealing(cm, steps=10, deterministic=True)

27 |

28 |

29 | def test_create_html_cm() -> None:

30 | n = 10

31 | cm = np.random.randint(low=0, high=100, size=(n, n))

32 | clana.visualize_cm.create_html_cm(cm, zero_diagonal=True)

33 |

34 |

35 | def test_plot_cm() -> None:

36 | n = 25

37 | cm = np.random.randint(low=0, high=100, size=(n, n))

38 | clana.visualize_cm.plot_cm(cm, zero_diagonal=True, labels=None)

39 |

40 |

41 | def test_plot_cm_big() -> None:

42 | n = 5

43 | cm = np.random.randint(low=0, high=100, size=(n, n))

44 | clana.visualize_cm.plot_cm(cm, zero_diagonal=True, labels=None)

45 |

46 |

47 | def test_main() -> None:

48 | path = "examples/wili-cld2-cm.json"

49 | cm_path = pkg_resources.resource_filename(__name__, path)

50 |

51 | path = "examples/perm.json"

52 | perm_path = pkg_resources.resource_filename(__name__, path)

53 |

54 | runner = CliRunner()

55 | _ = runner.invoke(

56 | clana.cli.visualize, ["--cm", cm_path, "--steps", "100", "--perm", perm_path]

57 | )

58 |

--------------------------------------------------------------------------------

/clana/config.yaml:

--------------------------------------------------------------------------------

1 | visualize:

2 | threshold: 0.1

3 | save_path: 'clana_cm.pdf' # make sure this is consistent with the format

4 | html_save_path: 'cm_analysis.html'

5 | hierarchy_path: 'hierarchy.tmp.json'

6 | xlabels_rotation: -45

7 | ylabels_rotation: 0

8 | norm: LogNorm # null or LogNorm

9 | # See https://matplotlib.org/2.0.2/examples/color/colormaps_reference.html

10 | # a sequential colormap is highly recommended

11 | # best are: viridis, plasma, inferno, magma

12 | colormap: viridis

13 | # See https://matplotlib.org/3.1.1/api/_as_gen/matplotlib.pyplot.imshow.html

14 | interpolation: "nearest"

15 | LOGGING:

16 | version: 1

17 | disable_existing_loggers: False

18 | formatters:

19 | simple:

20 | format: "%(asctime)s - %(name)s - %(levelname)s - %(message)s"

21 |

22 | handlers:

23 | console:

24 | class: logging.StreamHandler

25 | level: DEBUG

26 | formatter: simple

27 | stream: ext://sys.stdout

28 |

29 | info_file_handler:

30 | class: logging.handlers.RotatingFileHandler

31 | level: INFO

32 | formatter: simple

33 | filename: clana.info.log

34 | maxBytes: 10485760 # 10MB

35 | backupCount: 20

36 | encoding: utf8

37 |

38 | error_file_handler:

39 | class: logging.handlers.RotatingFileHandler

40 | level: ERROR

41 | formatter: simple

42 | filename: clana.errors.log

43 | maxBytes: 10485760 # 10MB

44 | backupCount: 20

45 | encoding: utf8

46 |

47 | loggers:

48 | my_module:

49 | level: ERROR

50 | handlers: [console]

51 | propagate: no

52 |

53 | root:

54 | level: DEBUG

55 | handlers: [console, info_file_handler, error_file_handler]

56 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 |

49 | # Translations

50 | *.mo

51 | *.pot

52 |

53 | # Django stuff:

54 | *.log

55 | local_settings.py

56 |

57 | # Flask stuff:

58 | instance/

59 | .webassets-cache

60 |

61 | # Scrapy stuff:

62 | .scrapy

63 |

64 | # Sphinx documentation

65 | docs/_build/

66 |

67 | # PyBuilder

68 | target/

69 |

70 | # Jupyter Notebook

71 | .ipynb_checkpoints

72 |

73 | # pyenv

74 | .python-version

75 |

76 | # celery beat schedule file

77 | celerybeat-schedule

78 |

79 | # SageMath parsed files

80 | *.sage.py

81 |

82 | # dotenv

83 | .env

84 |

85 | # virtualenv

86 | .venv

87 | venv/

88 | ENV/

89 |

90 | # Spyder project settings

91 | .spyderproject

92 | .spyproject

93 |

94 | # Rope project settings

95 | .ropeproject

96 |

97 | # mkdocs documentation

98 | /site

99 |

100 | # mypy

101 | .mypy_cache/

102 | tests/reports/

103 | mypy-report/

104 | .clana

105 | .mutmut-cache

106 |

107 | mutmut-results.html

108 | mutmut-results.xml

109 | clana_cm.pdf

110 | cm_analysis.html

111 | hierarchy.tmp.json

112 |

--------------------------------------------------------------------------------

/clana/distribution.py:

--------------------------------------------------------------------------------

1 | """Get the distribution of classes in a dataset."""

2 |

3 | # Core Library

4 | from typing import Dict, List

5 |

6 |

7 | def main(gt_filepath: str) -> None:

8 | """

9 | Get the distribution of classes in a file.

10 |

11 | Parameters

12 | ----------

13 | gt_filepath : str

14 | List of ground truth; one label per line

15 | """

16 | # Read text file

17 | with open(gt_filepath) as fp:

18 | read_lines = fp.readlines()

19 | labels_str = [line.rstrip("\n") for line in read_lines]

20 |

21 | distribution = get_distribution(labels_str)

22 | labels = sorted(distribution.items(), key=lambda n: (-n[1], n[0]))

23 | label_len = max(len(label[0]) for label in labels)

24 | count_len = max(len(str(label[1])) for label in labels)

25 | total_count = sum(label[1] for label in labels)

26 | for label, count in labels:

27 | print(

28 | "{percentage:5.2f}% {label:<{label_len}} "

29 | "({count:>{count_len}} elements)".format(

30 | label=label,

31 | count=count,

32 | percentage=count / float(total_count) * 100.0,

33 | label_len=label_len,

34 | count_len=count_len,

35 | )

36 | )

37 |

38 |

39 | def get_distribution(labels: List[str]) -> Dict[str, int]:

40 | """

41 | Get the distribution of the labels.

42 |

43 | Prameters

44 | ---------

45 | labels : List[str]

46 | This list is non-unique.

47 |

48 | Returns

49 | -------

50 | distribution : Dict[str, int]

51 | Maps (label => count)

52 |

53 | Examples

54 | --------

55 | >>> dist = get_distribution(['de', 'de', 'en'])

56 | >>> sorted(dist.items())

57 | [('de', 2), ('en', 1)]

58 | """

59 | distribution: Dict[str, int] = {}

60 | for label in labels:

61 | if label not in distribution:

62 | distribution[label] = 1

63 | else:

64 | distribution[label] += 1

65 | return distribution

66 |

--------------------------------------------------------------------------------

/clana/get_cm.py:

--------------------------------------------------------------------------------

1 | """Calculate the confusion matrix (CSV inputs)."""

2 |

3 | # Core Library

4 | import csv

5 | import logging

6 | from typing import List, Tuple

7 |

8 | # Third party

9 | import numpy as np

10 | import numpy.typing as npt

11 |

12 | # First party

13 | import clana.io

14 |

15 | logger = logging.getLogger(__name__)

16 |

17 |

18 | def main(predictions_filepath: str, gt_filepath: str, n: int) -> None:

19 | """

20 | Calculate a confusion matrix.

21 |

22 | Parameters

23 | ----------

24 | predictions_filepath : str

25 | CSV file with delimter ; and quoting char "

26 | The first field is an identifier, the second one is the index of the

27 | predicted label

28 | gt_filepath : str

29 | CSV file with delimter ; and quoting char "

30 | The first field is an identifier, the second one is the index of the

31 | ground truth

32 | n : int

33 | Number of classes

34 | """

35 | # Read CSV files

36 | with open(predictions_filepath) as fp:

37 | reader = csv.reader(fp, delimiter=";", quotechar='"')

38 | predictions = [tuple(row) for row in reader]

39 |

40 | with open(gt_filepath) as fp:

41 | reader = csv.reader(fp, delimiter=";", quotechar='"')

42 | truths = [tuple(row) for row in reader]

43 |

44 | cm = calculate_cm(predictions, truths, n)

45 | path = "cm.json"

46 | clana.io.write_cm(path, cm)

47 | logger.info(f"cm was written to '{path}'")

48 |

49 |

50 | def calculate_cm(

51 | truths: List[Tuple[str, ...]], predictions: List[Tuple[str, ...]], n: int

52 | ) -> npt.NDArray:

53 | """

54 | Calculate a confusion matrix.

55 |

56 | Parameters

57 | ----------

58 | truths : List[Tuple[str, str]]

59 | predictions : List[Tuple[str, str]]

60 | n : int

61 | Number of classes

62 |

63 | Returns

64 | -------

65 | confusion_matrix : numpy array (n x n)

66 | """

67 | cm = np.zeros((n, n), dtype=int)

68 |

69 | ident2truth_index = {}

70 | for identifier, truth_index in truths:

71 | ident2truth_index[identifier] = int(truth_index)

72 |

73 | if len(predictions) != len(truths):

74 | msg = f'len(predictions) = {len(predictions)} != {len(truths)} = len(truths)"'

75 | raise ValueError(msg)

76 |

77 | for ident, pred_index in predictions:

78 | cm[ident2truth_index[ident]][int(pred_index)] += 1

79 |

80 | return cm

81 |

--------------------------------------------------------------------------------

/clana/utils.py:

--------------------------------------------------------------------------------

1 | """Utility functions for clana."""

2 |

3 | # Core Library

4 | import csv

5 | import os

6 | from typing import Any, Dict, List, Optional

7 |

8 | # Third party

9 | import yaml

10 | from pkg_resources import resource_filename

11 |

12 |

13 | def load_labels(labels_file: str, n: int) -> List[str]:

14 | """

15 | Load labels from a CSV file.

16 |

17 | Parameters

18 | ----------

19 | labels_file : str

20 | n : int

21 |

22 | Returns

23 | -------

24 | labels : List[str]

25 | """

26 | if n < 0:

27 | raise ValueError(f"n={n} needs to be non-negative")

28 | if os.path.isfile(labels_file):

29 | # Read CSV file

30 | with open(labels_file) as fp:

31 | reader = csv.reader(fp, delimiter=";", quotechar='"')

32 | next(reader, None) # skip the headers

33 | parsed_csv = list(reader)

34 | labels = [el[0] for el in parsed_csv] # short by default

35 | else:

36 | labels = [str(el) for el in range(n)]

37 | return labels

38 |

39 |

40 | def load_cfg(

41 | yaml_filepath: Optional[str] = None, verbose: bool = False

42 | ) -> Dict[str, Any]:

43 | """

44 | Load a YAML configuration file.

45 |

46 | Parameters

47 | ----------

48 | yaml_filepath : str, optional (default: package config file)

49 |

50 | Returns

51 | -------

52 | cfg : Dict[str, Any]

53 | """

54 | if yaml_filepath is None:

55 | yaml_filepath = resource_filename("clana", "config.yaml")

56 | # Read YAML experiment definition file

57 | if verbose:

58 | print(f"Load config from {yaml_filepath}...")

59 | with open(yaml_filepath) as stream:

60 | cfg = yaml.safe_load(stream)

61 | cfg = make_paths_absolute(os.path.dirname(yaml_filepath), cfg)

62 | return cfg

63 |

64 |

65 | def make_paths_absolute(dir_: str, cfg: Dict[str, Any]) -> Dict[str, Any]:

66 | """

67 | Make all values for keys ending with `_path` absolute to dir_.

68 |

69 | Parameters

70 | ----------

71 | dir_ : str

72 | cfg : Dict[str, Any]

73 |

74 | Returns

75 | -------

76 | cfg : Dict[str, Any]

77 | """

78 | for key in cfg.keys():

79 | if hasattr(key, "endswith") and key.endswith("_path"):

80 | if cfg[key].startswith("~"):

81 | cfg[key] = os.path.expanduser(cfg[key])

82 | else:

83 | cfg[key] = os.path.join(dir_, cfg[key])

84 | cfg[key] = os.path.abspath(cfg[key])

85 | if type(cfg[key]) is dict:

86 | cfg[key] = make_paths_absolute(dir_, cfg[key])

87 | return cfg

88 |

--------------------------------------------------------------------------------

/requirements/dev.txt:

--------------------------------------------------------------------------------

1 | #

2 | # This file is autogenerated by pip-compile with python 3.7

3 | # To update, run:

4 | #

5 | # pip-compile requirements/dev.in

6 | #

7 | bleach==4.1.0

8 | # via readme-renderer

9 | certifi==2021.10.8

10 | # via requests

11 | cffi==1.15.0

12 | # via cryptography

13 | cfgv==3.3.1

14 | # via pre-commit

15 | charset-normalizer==2.0.10

16 | # via requests

17 | click==8.0.3

18 | # via pip-tools

19 | colorama==0.4.4

20 | # via twine

21 | cryptography==36.0.1

22 | # via secretstorage

23 | distlib==0.3.4

24 | # via virtualenv

25 | docutils==0.18.1

26 | # via readme-renderer

27 | filelock==3.4.2

28 | # via virtualenv

29 | identify==2.4.6

30 | # via pre-commit

31 | idna==3.3

32 | # via requests

33 | importlib-metadata==4.10.1

34 | # via

35 | # click

36 | # keyring

37 | # pep517

38 | # pre-commit

39 | # twine

40 | # virtualenv

41 | jeepney==0.7.1

42 | # via

43 | # keyring

44 | # secretstorage

45 | keyring==23.5.0

46 | # via twine

47 | nodeenv==1.6.0

48 | # via pre-commit

49 | packaging==21.3

50 | # via bleach

51 | pep517==0.12.0

52 | # via pip-tools

53 | pip-tools==6.4.0

54 | # via -r requirements/dev.in

55 | pkginfo==1.8.2

56 | # via twine

57 | platformdirs==2.4.1

58 | # via virtualenv

59 | pre-commit==2.17.0

60 | # via -r requirements/dev.in

61 | pycparser==2.21

62 | # via cffi

63 | pygments==2.11.2

64 | # via readme-renderer

65 | pyparsing==3.0.7

66 | # via packaging

67 | pyyaml==6.0

68 | # via pre-commit

69 | readme-renderer==32.0

70 | # via twine

71 | requests==2.27.1

72 | # via

73 | # requests-toolbelt

74 | # twine

75 | requests-toolbelt==0.9.1

76 | # via twine

77 | rfc3986==2.0.0

78 | # via twine

79 | secretstorage==3.3.1

80 | # via keyring

81 | six==1.16.0

82 | # via

83 | # bleach

84 | # virtualenv

85 | toml==0.10.2

86 | # via pre-commit

87 | tomli==2.0.0

88 | # via pep517

89 | tqdm==4.62.3

90 | # via twine

91 | twine==3.7.1

92 | # via -r requirements/dev.in

93 | typing-extensions==4.0.1

94 | # via importlib-metadata

95 | urllib3==1.26.8

96 | # via requests

97 | virtualenv==20.13.0

98 | # via pre-commit

99 | webencodings==0.5.1

100 | # via bleach

101 | wheel==0.37.1

102 | # via

103 | # -r requirements/dev.in

104 | # pip-tools

105 | zipp==3.7.0

106 | # via

107 | # importlib-metadata

108 | # pep517

109 |

110 | # The following packages are considered to be unsafe in a requirements file:

111 | # pip

112 | # setuptools

113 |

--------------------------------------------------------------------------------

/docs/source/file_formats.rst:

--------------------------------------------------------------------------------

1 | The following file formats are used within ``clana``.

2 |

3 | Label Format

4 | ============

5 |

6 | The label file format is a text format. It is used to make sense of the

7 | prediction. The order matters.

8 |

9 | Specification

10 | -------------

11 |

12 | - One label per line

13 | - It is a CSV file with ``;`` as the delimiter and ``"`` as the quoting

14 | character.

15 | - The first value is a short version of the label. It has to be unique

16 | over all short versions.

17 | - The second value is a long version of the label. It has to be unique

18 | over all long versions.

19 |

20 | Example

21 | -------

22 |

23 | Computer Vision

24 | ~~~~~~~~~~~~~~~

25 |

26 | ::

27 |

28 | car;car

29 | cat;cat

30 | dog;dog

31 | mouse;mouse

32 |

33 | mnist.csv:

34 |

35 | ::

36 |

37 | 0;0

38 | 1;1

39 | 2;2

40 | 3;3

41 | 4;4

42 | 5;5

43 | 6;6

44 | 7;7

45 | 8;8

46 | 9;9

47 |

48 | Language Identification

49 | ~~~~~~~~~~~~~~~~~~~~~~~

50 |

51 | ::

52 |

53 | German;de

54 | English;en

55 | French;fr

56 |

57 | Classification Dump Format

58 | ==========================

59 |

60 | TODO: THIS IS WAY TOO BIG!

61 |

62 | The classification dump format is a text format. It describes what the

63 | output of a classifier for some inputs.

64 |

65 | .. _specification-1:

66 |

67 | Specification

68 | -------------

69 |

70 | The Classification Dump Format is a text format.

71 |

72 | - Each line contains exactly one output of the classifier for one

73 | input.

74 | - It is a CSV file with ``;`` as the delimiter and ``"`` as the quoting

75 | character.

76 | - The first value is an identifier for the input. It is no longer than

77 | 60 characters.

78 | - The second and following values are the outputs for each label. Each

79 | of those values is a number in ``[0, 1]``.

80 | - The outputs are in the same order as in the related ``label.csv``

81 | file.

82 |

83 | .. _example-1:

84 |

85 | Example

86 | -------

87 |

88 | ::

89 |

90 | identifier 1;0.1;0.3;0.6

91 | ident 2;0.8;0.1;0.1

92 |

93 | Ground Truth Format

94 | ===================

95 |

96 | The Ground Truth Format is a text file format. It is used to describe

97 | the ground truth of data.

98 |

99 | .. _specification-2:

100 |

101 | Specification

102 | -------------

103 |

104 | - Each line contains the ground truth of exactly one element.

105 | - It is a CSV file with ``;`` as the delimiter and ``"`` as the quoting

106 | character.

107 | - The first value is an identifier for the input. It is no longer than

108 | 60 characters.

109 | - The second and following values are the outputs for each label. Each

110 | of those values is a number in ``[0, 1]``.

111 | - The outputs are in the same order as in the related ``label.csv``

112 | file.

113 |

114 | .. _example-2:

115 |

116 | Example

117 | -------

118 |

119 | ::

120 |

121 | identifier 1;1;0;1

122 | identifier 1;0.5;0;0.5

123 |

--------------------------------------------------------------------------------

/clana/templates/base.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

30 |

31 |

32 |

33 |

34 |

35 |

36 | | |

37 | {% for cell in header_cells %}

38 | {{ cell['label'] }} |

39 | {% endfor %}

40 | support |

41 |

42 |

43 |

44 | {% for row in body_rows %}

45 | {% set outer_loop = loop %}

46 |

47 | {% for cell in row['row'] %}

48 | {% if loop.index == 1 %}

49 | | {{ cell['label'] }} |

50 | {% else %}

51 | {{ cell['label'] }} |

52 | {% endif %}

53 | {% endfor %}

54 | {{ row['support']}} |

55 |

56 | {% endfor %}

57 |

58 |

59 |

88 |

89 |

90 |

91 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | # https://setuptools.readthedocs.io/en/latest/setuptools.html#configuring-setup-using-setup-cfg-files

3 | name = clana

4 |

5 | author = Martin Thoma

6 | author_email = info@martin-thoma.de

7 | maintainer = Martin Thoma

8 | maintainer_email = info@martin-thoma.de

9 |

10 | version = 0.4.0

11 |

12 | description = CLANA is a toolkit for classifier analysis.

13 | long_description = file: README.md

14 | long_description_content_type = text/markdown

15 |

16 | platforms = Linux

17 |

18 | url = https://github.com/MartinThoma/clana

19 | download_url = https://github.com/MartinThoma/clana

20 |

21 | license = MIT

22 |

23 | keywords =

24 | Machine Learning

25 | Data Science

26 | classifiers

27 | Classification

28 | Classifier Analysis

29 |

30 | classifiers =

31 | Development Status :: 4 - Beta

32 | Environment :: Console

33 | Intended Audience :: Developers

34 | Intended Audience :: Information Technology

35 | Intended Audience :: Science/Research

36 | License :: OSI Approved :: MIT License

37 | Natural Language :: English

38 | Operating System :: OS Independent

39 | Programming Language :: Python :: 3

40 | Programming Language :: Python :: 3 :: Only

41 | Programming Language :: Python :: 3.7

42 | Programming Language :: Python :: 3.8

43 | Programming Language :: Python :: 3.9

44 | Topic :: Software Development :: Libraries :: Python Modules

45 | Topic :: Scientific/Engineering :: Information Analysis

46 | Topic :: Scientific/Engineering :: Visualization

47 | Topic :: Software Development

48 | Topic :: Utilities

49 |

50 | [options]

51 | zip_safe = false

52 | include_package_data = true

53 | packages = find:

54 | python_requires = >=3.6

55 | install_requires =

56 | click>=6.7

57 | jinja2

58 | matplotlib>=2.1.1

59 | numpy>=1.20.0

60 | PyYAML>=5.1.1

61 | scikit-learn>=0.19.1

62 | scipy>=1.0.0

63 |

64 | [options.entry_points]

65 | console_scripts =

66 | clana = clana.cli:entry_point

67 |

68 | [files]

69 | package-data = clana = clana/config.yaml

70 |

71 | [tool:pytest]

72 | addopts = --doctest-modules --mccabe --cov=./clana --cov-report html:tests/reports/coverage-html --cov-report term-missing --ignore=docs/ --ignore=clana/__main__.py --durations=3 --timeout=30

73 | doctest_encoding = utf-8

74 | mccabe-complexity=10

75 |

76 | [pydocstyle]

77 | match_dir = clana

78 | ignore = D105, D413, D107, D416, D212, D203, D417

79 |

80 | [flake8]

81 | match_dir = clana

82 | max-line-length = 80

83 | exclude = tests/*,.tox/*,.nox/*,docs/*,build/*

84 | ignore = E501,SIM106

85 |

86 | [mutmut]

87 | backup = False

88 | runner = python -m pytest

89 | tests_dir = tests/

90 |

91 | [bandit]

92 | ignore = B311 # See https://github.com/PyCQA/bandit/issues/212

93 |

94 | [mypy]

95 | ignore_missing_imports=true

96 | check_untyped_defs=true

97 | disallow_untyped_defs=true

98 | disallow_any_generics=true

99 | warn_unused_ignores=true

100 | strict_optional=true

101 | python_version=3.8

102 | warn_redundant_casts=true

103 | warn_unused_configs=true

104 | disallow_untyped_calls=true

105 | disallow_incomplete_defs=true

106 | follow_imports=skip

107 |

108 | [mypy-tests.*]

109 | ignore_errors = True

110 |

--------------------------------------------------------------------------------

/docs/source/mnist_example.rst:

--------------------------------------------------------------------------------

1 | How to use clana with MNIST

2 | ===========================

3 |

4 | Prerequesites

5 | -------------

6 |

7 | Install ``clana`` and execute the example:

8 |

9 | ::

10 |

11 | $ pip install clana

12 | $ python mnist_example.py

13 |

14 | This will generate the clana files.

15 |

16 | Usage

17 | -----

18 |

19 | distribution

20 | ~~~~~~~~~~~~

21 |

22 | ::

23 |

24 | $ clana distribution --gt gt-test.csv

25 | 11.35% 1 (1135 elements)

26 | 10.32% 2 (1032 elements)

27 | 10.28% 7 (1028 elements)

28 | 10.10% 3 (1010 elements)

29 | 10.09% 9 (1009 elements)

30 | 9.82% 4 ( 982 elements)

31 | 9.80% 0 ( 980 elements)

32 | 9.74% 8 ( 974 elements)

33 | 9.58% 6 ( 958 elements)

34 | 8.92% 5 ( 892 elements)

35 |

36 | get-cm

37 | ~~~~~~

38 |

39 | This is an intermediate step required for the visualization.

40 |

41 | ::

42 |

43 | $ clana get-cm --predictions train-pred.csv --gt gt-train.csv --n 10

44 | 2019-07-02 21:53:40,547 - root - INFO - cm was written to 'cm.json'

45 |

46 | visualize

47 | ~~~~~~~~~

48 |

49 | ::

50 |

51 | $ clana visualize --cm cm.json

52 | Score: 12634

53 | 2019-07-02 22:13:54,987 - root - INFO - n=10

54 | 2019-07-02 22:13:54,987 - root - INFO - ## Starting Score: 12634.00

55 | 2019-07-02 22:13:54,988 - root - INFO - Current: 12249.00 (best: 12249.00, hot_prob_thresh=100.0000%, step=0, swap=False)

56 | 2019-07-02 22:13:54,988 - root - INFO - Current: 10457.00 (best: 10457.00, hot_prob_thresh=100.0000%, step=1, swap=False)

57 | 2019-07-02 22:13:54,988 - root - INFO - Current: 10453.00 (best: 10453.00, hot_prob_thresh=100.0000%, step=3, swap=False)

58 | 2019-07-02 22:13:54,988 - root - INFO - Current: 10340.00 (best: 10340.00, hot_prob_thresh=100.0000%, step=6, swap=True)

59 | 2019-07-02 22:13:54,989 - root - INFO - Current: 10166.00 (best: 10166.00, hot_prob_thresh=100.0000%, step=14, swap=True)

60 | 2019-07-02 22:13:54,989 - root - INFO - Current: 9644.00 (best: 9644.00, hot_prob_thresh=100.0000%, step=17, swap=True)

61 | 2019-07-02 22:13:54,989 - root - INFO - Current: 9617.00 (best: 9617.00, hot_prob_thresh=100.0000%, step=19, swap=True)

62 | 2019-07-02 22:13:54,990 - root - INFO - Current: 9528.00 (best: 9528.00, hot_prob_thresh=100.0000%, step=38, swap=False)

63 | 2019-07-02 22:13:54,992 - root - INFO - Current: 9297.00 (best: 9297.00, hot_prob_thresh=100.0000%, step=86, swap=True)

64 | 2019-07-02 22:13:54,993 - root - INFO - Current: 9092.00 (best: 9092.00, hot_prob_thresh=100.0000%, step=109, swap=True)

65 | 2019-07-02 22:13:54,994 - root - INFO - Current: 9018.00 (best: 9018.00, hot_prob_thresh=100.0000%, step=123, swap=True)

66 | Score: 9018

67 | Perm: [0, 6, 5, 3, 8, 1, 2, 7, 9, 4]

68 | 2019-07-02 22:13:55,029 - root - INFO - Classes: [0, 6, 5, 3, 8, 1, 2, 7, 9, 4]

69 | Accuracy: 94.34%

70 | 2019-07-02 22:13:55,152 - root - INFO - Save figure at '/home/moose/confusion_matrix.tmp.pdf'

71 | 2019-07-02 22:13:55,269 - root - INFO - Found threshold for local connection: 258

72 | 2019-07-02 22:13:55,269 - root - INFO - Found 9 clusters

73 | 2019-07-02 22:13:55,270 - root - INFO - silhouette_score=-0.0067092812311967

74 | 1: [0]

75 | 1: [6]

76 | 1: [5]

77 | 1: [3]

78 | 1: [8]

79 | 1: [1]

80 | 1: [2]

81 | 2: [7, 9]

82 | 1: [4]

83 |

--------------------------------------------------------------------------------

/docs/mnist_example.py:

--------------------------------------------------------------------------------

1 | """

2 | Trains a simple neural network on the MNIST dataset.

3 |

4 | Gets to 97.54% test accuracy after 10 epochs.

5 | 22 seconds per epoch on a NVIDIA Geforce 940MX.

6 | """

7 |

8 | # Core Library

9 | from typing import Any, Tuple

10 |

11 | # Third party

12 | import keras

13 | import numpy as np

14 | import numpy.typing as npt

15 | from keras import backend as K

16 | from keras.datasets import mnist

17 | from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D

18 | from keras.models import Sequential

19 |

20 | # First party

21 | from clana.io import write_gt, write_predictions

22 |

23 |

24 | def main() -> None:

25 | batch_size = 128

26 | num_classes = 10

27 | epochs = 1

28 |

29 | # input image dimensions

30 | img_rows, img_cols = 28, 28

31 |

32 | # the data, split between train and test sets

33 | (x_train, y_train), (x_test, y_test) = mnist.load_data()

34 |

35 | # Write gt for CLANA

36 | write_gt(dict(enumerate(y_train)), "gt-train.csv") # type: ignore

37 | write_gt(dict(enumerate(y_test)), "gt-test.csv") # type: ignore

38 |

39 | x_train, y_train = preprocess(x_train, y_train, img_rows, img_cols, num_classes)

40 | x_test, y_test = preprocess(x_test, y_test, img_rows, img_cols, num_classes)

41 | input_shape = get_shape(img_rows, img_cols)

42 | model = create_model(input_shape, num_classes)

43 |

44 | model.fit(

45 | x_train,

46 | y_train,

47 | batch_size=batch_size,

48 | epochs=epochs,

49 | verbose=1,

50 | validation_data=(x_test, y_test),

51 | )

52 |

53 | y_train_pred = model.predict(x_train)

54 | y_test_pred = model.predict(x_train)

55 |

56 | # Write gt for CLANA

57 | y_train_pred_a = np.argmax(y_train_pred, axis=1)

58 | y_test_pred_a = np.argmax(y_test_pred, axis=1)

59 | write_predictions(dict(enumerate(y_train_pred_a)), "train-pred.csv") # type: ignore

60 | write_predictions(dict(enumerate(y_test_pred_a)), "test-pred.csv") # type: ignore

61 |

62 | score = model.evaluate(x_test, y_test, verbose=0)

63 | print("Test loss:", score[0])

64 | print("Test accuracy:", score[1])

65 |

66 |

67 | def get_shape(img_rows: int, img_cols: int) -> Tuple[int, int, int]:

68 | if K.image_data_format() == "channels_first":

69 | input_shape = (1, img_rows, img_cols)

70 | else:

71 | input_shape = (img_rows, img_cols, 1)

72 | return input_shape

73 |

74 |

75 | def preprocess(

76 | features: npt.NDArray,

77 | targets: npt.NDArray,

78 | img_rows: int,

79 | img_cols: int,

80 | num_classes: int,

81 | ) -> Tuple[Any, Any]:

82 | if K.image_data_format() == "channels_first":

83 | features = features.reshape(features.shape[0], 1, img_rows, img_cols)

84 | else:

85 | features = features.reshape(features.shape[0], img_rows, img_cols, 1)

86 | features = features.astype("float32")

87 | features /= 255

88 | print("x shape:", features.shape)

89 | print(f"{features.shape[0]} samples")

90 |

91 | # convert class vectors to binary class matrices

92 | targets = keras.utils.to_categorical(targets, num_classes)

93 | return features, targets

94 |

95 |

96 | def create_model(input_shape: Tuple[int, int, int], num_classes: int) -> Any:

97 | model = Sequential()

98 | model.add(

99 | Conv2D(32, kernel_size=(3, 3), activation="relu", input_shape=input_shape)

100 | )

101 | model.add(MaxPooling2D(pool_size=(2, 2)))

102 | model.add(Dropout(0.25))

103 | model.add(Flatten())

104 | model.add(Dense(16, activation="relu"))

105 | model.add(Dropout(0.5))

106 | model.add(Dense(num_classes, activation="softmax"))

107 |

108 | model.compile(

109 | loss=keras.losses.categorical_crossentropy,

110 | optimizer=keras.optimizers.Adadelta(),

111 | metrics=["accuracy"],

112 | )

113 | return model

114 |

115 |

116 | if __name__ == "__main__":

117 | main()

118 |

--------------------------------------------------------------------------------

/requirements/ci.txt:

--------------------------------------------------------------------------------

1 | #

2 | # This file is autogenerated by pip-compile with python 3.7

3 | # To update, run:

4 | #

5 | # pip-compile requirements/ci.in

6 | #

7 | astor==0.8.1

8 | # via flake8-simplify

9 | attrs==20.3.0

10 | # via

11 | # flake8-bugbear

12 | # flake8-eradicate

13 | # flake8-implicit-str-concat

14 | # pytest

15 | black==22.1.0

16 | # via -r ci.in

17 | click==8.0.3

18 | # via

19 | # -r ../requirements.txt

20 | # black

21 | coverage[toml]==6.3

22 | # via

23 | # -r ci.in

24 | # pytest-cov

25 | cycler==0.11.0

26 | # via

27 | # -r ../requirements.txt

28 | # matplotlib

29 | eradicate==2.0.0

30 | # via flake8-eradicate

31 | flake8==3.9.2

32 | # via

33 | # -r ci.in

34 | # flake8-bugbear

35 | # flake8-builtins

36 | # flake8-comprehensions

37 | # flake8-eradicate

38 | # flake8-executable

39 | # flake8-isort

40 | # flake8-raise

41 | # flake8-simplify

42 | # flake8-string-format

43 | flake8-bugbear==22.1.11

44 | # via -r ci.in

45 | flake8-builtins==1.5.3

46 | # via -r ci.in

47 | flake8-comprehensions==3.8.0

48 | # via -r ci.in

49 | flake8-eradicate==1.2.0

50 | # via -r ci.in

51 | flake8-executable==2.1.1

52 | # via -r ci.in

53 | flake8-implicit-str-concat==0.2.0

54 | # via -r ci.in

55 | flake8-isort==4.1.1

56 | # via -r ci.in

57 | flake8-plugin-utils==1.3.2

58 | # via flake8-pytest-style

59 | flake8-pytest-style==1.6.0

60 | # via -r ci.in

61 | flake8-raise==0.0.5

62 | # via -r ci.in

63 | flake8-simplify==0.15.1

64 | # via -r ci.in

65 | flake8-string-format==0.3.0

66 | # via -r ci.in

67 | fonttools==4.29.0

68 | # via

69 | # -r ../requirements.txt

70 | # matplotlib

71 | importlib-metadata==4.10.1

72 | # via -r ../requirements.txt

73 | iniconfig==1.1.1

74 | # via pytest

75 | isort==5.10.1

76 | # via flake8-isort

77 | jinja2==3.0.3

78 | # via -r ../requirements.txt

79 | joblib==1.1.0

80 | # via

81 | # -r ../requirements.txt

82 | # scikit-learn

83 | kiwisolver==1.3.2

84 | # via

85 | # -r ../requirements.txt

86 | # matplotlib

87 | lxml==4.7.1

88 | # via -r ci.in

89 | markupsafe==2.0.1

90 | # via

91 | # -r ../requirements.txt

92 | # jinja2

93 | matplotlib==3.5.1

94 | # via -r ../requirements.txt

95 | mccabe==0.6.1

96 | # via

97 | # flake8

98 | # pytest-mccabe

99 | more-itertools==8.12.0

100 | # via flake8-implicit-str-concat

101 | mypy==0.931

102 | # via -r ci.in

103 | mypy-extensions==0.4.3

104 | # via

105 | # black

106 | # mypy

107 | numpy==1.21.5

108 | # via

109 | # -r ../requirements.txt

110 | # matplotlib

111 | # scikit-learn

112 | # scipy

113 | packaging==21.3

114 | # via

115 | # -r ../requirements.txt

116 | # matplotlib

117 | # pytest

118 | pathspec==0.9.0

119 | # via black

120 | pillow==9.0.1

121 | # via

122 | # -r ../requirements.txt

123 | # matplotlib

124 | platformdirs==2.4.1

125 | # via black

126 | pluggy==1.0.0

127 | # via pytest

128 | py==1.11.0

129 | # via pytest

130 | pycodestyle==2.7.0

131 | # via flake8

132 | pydocstyle==6.1.1

133 | # via -r ci.in

134 | pyflakes==2.3.1

135 | # via flake8

136 | pyparsing==3.0.7

137 | # via

138 | # -r ../requirements.txt

139 | # matplotlib

140 | # packaging

141 | pytest==6.2.5

142 | # via

143 | # -r ci.in

144 | # pytest-cov

145 | # pytest-mccabe

146 | # pytest-timeout

147 | pytest-cov==3.0.0

148 | # via -r ci.in

149 | pytest-mccabe==2.0

150 | # via -r ci.in

151 | pytest-timeout==2.1.0

152 | # via -r ci.in

153 | python-dateutil==2.8.2

154 | # via

155 | # -r ../requirements.txt

156 | # matplotlib

157 | pyyaml==6.0

158 | # via -r ../requirements.txt

159 | scikit-learn==1.0.2

160 | # via -r ../requirements.txt

161 | scipy==1.7.3

162 | # via

163 | # -r ../requirements.txt

164 | # scikit-learn

165 | six==1.16.0

166 | # via

167 | # -r ../requirements.txt

168 | # python-dateutil

169 | snowballstemmer==2.2.0

170 | # via pydocstyle

171 | testfixtures==6.18.3

172 | # via flake8-isort

173 | threadpoolctl==3.0.0

174 | # via

175 | # -r ../requirements.txt

176 | # scikit-learn

177 | toml==0.10.2

178 | # via pytest

179 | tomli==2.0.0

180 | # via

181 | # black

182 | # mypy

183 | types-pkg-resources==0.1.3

184 | # via -r ci.in

185 | types-pyyaml==6.0.3

186 | # via -r ci.in

187 | types-setuptools==57.4.8

188 | # via -r ci.in

189 | typing-extensions==4.0.1

190 | # via

191 | # -r ../requirements.txt

192 | # mypy

193 | zipp==3.7.0

194 | # via

195 | # -r ../requirements.txt

196 | # importlib-metadata

197 |

--------------------------------------------------------------------------------

/clana/get_cm_simple.py:

--------------------------------------------------------------------------------

1 | """Calculate the confusion matrix (one label per line)."""

2 |

3 | # Core Library

4 | import csv

5 | import json

6 | import logging

7 | import os

8 | import sys

9 | from typing import Dict, List, Tuple

10 |

11 | # Third party

12 | import numpy as np

13 | import numpy.typing as npt

14 | import sklearn.metrics

15 |

16 | # First party

17 | import clana.utils

18 |

19 | logger = logging.getLogger(__name__)

20 |

21 |

22 | def main(

23 | label_filepath: str, gt_filepath: str, predictions_filepath: str, clean: bool

24 | ) -> None:

25 | """

26 | Get a simple confunsion matrix.

27 |

28 | Parameters

29 | ----------

30 | label_filepath : str

31 | Path to a CSV file with delimiter ;

32 | gt_filepath : str

33 | Path to a CSV file with delimiter ;

34 | predictions : str

35 | Path to a CSV file with delimiter ;

36 | clean : bool, optional (default: False)

37 | Remove classes that the classifier doesn't know

38 | """

39 | label_filepath = os.path.abspath(label_filepath)

40 | labels = clana.utils.load_labels(label_filepath, 0)

41 |

42 | # Read CSV files

43 | with open(gt_filepath) as fp:

44 | reader = csv.reader(fp, delimiter=";", quotechar='"')

45 | truths = [row[0] for row in reader]

46 |

47 | with open(predictions_filepath) as fp:

48 | reader = csv.reader(fp, delimiter=";", quotechar='"')

49 | predictions = [row[0] for row in reader]

50 |

51 | cm = calculate_cm(labels, truths, predictions, clean=False)

52 | # Write JSON file

53 | cm_filepath = os.path.abspath("cm.json")

54 | logger.info(f"Write results to '{cm_filepath}'.")

55 | with open(cm_filepath, "w") as outfile:

56 | str_ = json.dumps(

57 | cm.tolist(), indent=2, separators=(",", ": "), ensure_ascii=False

58 | )

59 | outfile.write(str_)

60 | print(cm)

61 |

62 |

63 | def calculate_cm(

64 | labels: List[str],

65 | truths: List[str],

66 | predictions: List[str],

67 | replace_unk_preds: bool = False,

68 | clean: bool = False,

69 | ) -> npt.NDArray:

70 | """

71 | Calculate a confusion matrix.

72 |

73 | Parameters

74 | ----------

75 | labels : List[int]

76 | truths : List[int]

77 | predictions : List[int]

78 | replace_unk_preds : bool, optional (default: True)

79 | If a prediction is not in the labels in label_filepath, replace it

80 | with UNK

81 | clean : bool, optional (default: False)

82 | Remove classes that the classifier doesn't know

83 |

84 | Returns

85 | -------

86 | confusion_matrix : numpy array (n x n)

87 | """

88 | # Check data

89 | if len(predictions) != len(truths):

90 | msg = f"len(predictions) = {len(predictions)} != {len(truths)} = len(truths)"

91 | raise ValueError(msg)

92 |

93 | label2i = {} # map a label to 0, ..., n

94 | for i, label in enumerate(labels):

95 | label2i[label] = i

96 |

97 | if clean:

98 | truths, predictions = clean_truths(truths, predictions)

99 |

100 | if replace_unk_preds:

101 | predictions = clean_preds(predictions, label2i)

102 |