├── SUq.gif
├── .gitignore
├── .npmignore
├── recipes
│   ├── request-options.js
│   ├── microformat-dump.js
│   ├── youtube.js
│   ├── generic.js
│   ├── images.js
│   └── wordpress-microformat.js
├── bin
│   ├── usage.txt
│   └── suq.js
├── lib
│   ├── parseMeta.js
│   ├── parseTwitterCard.js
│   ├── parseOpenGraph.js
│   ├── parseOembed.js
│   ├── parseTags.js
│   ├── cleanMicrodata.js
│   └── cleanMicroformats.js
├── tests
│   ├── cleanMicrodata.js
│   ├── parseTwitterCard.js
│   ├── parseMeta.js
│   ├── parseOembed.js
│   ├── cleanMicroformats.js
│   ├── parseTags.js
│   ├── parseOpenGraph.js
│   └── fixtures
│       ├── sample.js
│       ├── cleanedMicrodata.json
│       └── rawMicrodata.json
├── LICENSE
├── package.json
├── index.js
└── README.md

/SUq.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MattMcFarland/SUq/HEAD/SUq.gif

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | node_modules

2 | .idea

3 | .c9

4 | npm-debug.log

5 | tests/sites/*.json

6 | .npmrc

7 |

--------------------------------------------------------------------------------

/.npmignore:

--------------------------------------------------------------------------------

1 | node_modules

2 | .idea

3 | .c9

4 | npm-debug.log

5 | task.txt

6 | tests/sites/*.json

--------------------------------------------------------------------------------

/recipes/request-options.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 |

3 | suq('http://www.nytimes.com/2016/01/31/books/review/the-powers-that-were.html', function (err, data, body) {

4 |

5 | console.log(data);

6 |

7 | }, { jar: true });

--------------------------------------------------------------------------------

/recipes/microformat-dump.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 | var cheerio = require('cheerio');

3 | var _ = require('lodash');

4 |

5 | suq('https://blog.agilebits.com/2015/06/17/1password-inter-process-communication-discussion/', function (err, data, body) {

6 |

7 | console.log(JSON.stringify(data));

8 |

9 | });

--------------------------------------------------------------------------------

/bin/usage.txt:

--------------------------------------------------------------------------------

1 | Usage: suq [url] {OPTIONS}

2 |

3 | Options:

4 |

5 | --url, -u Scrapes the url provided.

6 | Optionally url can be the first parameter.

7 |

8 | --output, -o Writes the scraped data to a file

9 | If unspecified, suq prints to stdout.

10 |

11 | --version, -v Displays version information.

12 |

13 | --help, -h Show this message

14 |

15 | Specify a parameter.

16 |

--------------------------------------------------------------------------------

/recipes/youtube.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 |

3 | suq('https://www.youtube.com/watch?v=Xft3asYLKo0', function (err, data) {

4 |

5 | if (!err) {

6 | var props = data.microdata.items[0].properties;

7 | console.log('\n\ntitle:', props.name[0]);

8 | console.log('\nthumbnail:', props.thumbnailUrl[0]);

9 | console.log('embedURL:', props.embedURL[0]);

10 | console.log('\ndescription:', props.description[0]);

11 | console.log('\ndatePublished:', props.datePublished[0]);

12 | }

13 | });

--------------------------------------------------------------------------------

/lib/parseMeta.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 |

5 | try {

6 |

7 | var

8 | $head = $('head'),

9 | result = {};

10 |

11 |

12 | $head.find('meta').each(function(i, el) {

13 | var $el = $(el);

14 |

15 | if ($el.attr('name') && $el.attr('content')) {

16 | result[$el.attr('name')] = $el.attr('content');

17 | }

18 |

19 | });

20 |

21 | callback(null, result);

22 |

23 | } catch (e) {

24 | console.log(e);

25 | callback(e);

26 | }

27 |

28 | };

--------------------------------------------------------------------------------

/recipes/generic.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 | var cheerio = require('cheerio');

3 | var _ = require('lodash');

4 |

5 | suq('http://odonatagame.blogspot.com/2015/07/oh-thats-right-were-not-dead.html', function (err, data, body) {

6 |

7 | var $ = cheerio.load(body);

8 |

9 | var scraped = {

10 | title: data.meta.title || data.headers.h1[0],

11 | description: data.meta.description || $('p').text().replace(/([\r\n\t])+/ig,'').substring(0,255) +'...',

12 | images: _.sampleSize(data.images, 8)

13 | };

14 |

15 | console.log(scraped);

16 |

17 | });

--------------------------------------------------------------------------------

/tests/cleanMicrodata.js:

--------------------------------------------------------------------------------

1 | const test = require('tape');

2 | const cleanMicrodata = require('../lib/cleanMicrodata');

3 | const rawMicrodata = require('./fixtures/rawMicrodata.json');

4 | const cleanedMicrodata = require('./fixtures/cleanedMicrodata.json');

5 |

6 | test('cleanMicrodata.js', function (t) {

7 | t.plan(2);

8 |

9 | cleanMicrodata(rawMicrodata, (err, data) => {

10 | t.equal(err, null, 'should return callback without error');

11 | t.deepEqual(data, cleanedMicrodata, 'should return callback with cleaned microdata');

12 | });

13 | });

14 |

15 |

16 |

--------------------------------------------------------------------------------

/lib/parseTwitterCard.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 |

5 | try {

6 |

7 | var

8 | $head = $('head'),

9 | result = {};

10 |

11 |

12 | $head.find('meta').each(function(i, el) {

13 | var $el = $(el);

14 |

15 | if ($el.attr('name') && $el.attr('content') && $el.attr('name').indexOf('twitter:') > -1) {

16 | result[$el.attr('name')] = $el.attr('content');

17 | }

18 |

19 | });

20 |

21 | callback(null, result);

22 |

23 | } catch (e) {

24 | console.log(e);

25 | callback(e);

26 | }

27 |

28 | };

29 |

--------------------------------------------------------------------------------

/lib/parseOpenGraph.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 |

5 | try {

6 |

7 | var

8 | $head = $('head'),

9 | result = {};

10 |

11 |

12 | $head.find('meta').each(function(i, el) {

13 | var $el = $(el);

14 |

15 | if ($el.attr('property') && $el.attr('content') && $el.attr('property').indexOf('og:') > -1) {

16 | result[$el.attr('property')] = $el.attr('content');

17 | }

18 |

19 | });

20 |

21 | callback(null, result);

22 |

23 | } catch (e) {

24 | console.log(e);

25 | callback(e);

26 | }

27 |

28 | };

29 |

--------------------------------------------------------------------------------
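
The head parsers above (`parseMeta`, `parseTwitterCard`, `parseOpenGraph`) share one shape: walk the `meta` tags and keep key/content pairs, optionally filtered by a prefix such as `twitter:` or `og:`. Below is a minimal sketch of that filtering step on plain objects, with no cheerio dependency. `pickByPrefix` and the sample `tags` array are hypothetical and not part of SUq. Unlike the `indexOf(...) > -1` test used in the files above, this sketch matches the prefix only at the start of the key.

```javascript
// Hypothetical helper (not part of SUq): keep key/content pairs whose key
// starts with `prefix`. Each entry in `tags` stands in for the attributes
// that would be read off one <meta> element.
function pickByPrefix(tags, prefix) {
  var result = {};
  tags.forEach(function (tag) {
    // Twitter Card tags use `name`; Open Graph tags use `property`.
    var key = tag.name || tag.property;
    if (key && tag.content && key.indexOf(prefix) === 0) {
      result[key] = tag.content;
    }
  });
  return result;
}

// Sample data, assumed for illustration only.
var tags = [
  { name: 'description', content: 'Free Web tutorials' },
  { name: 'twitter:card', content: 'summary' },
  { property: 'og:title', content: 'A Twitter for My Sister' },
  { name: 'empty' } // no content attribute, so it is skipped
];

console.log(pickByPrefix(tags, 'twitter:'));
console.log(pickByPrefix(tags, 'og:'));
```

Passing an empty prefix keeps every pair that has both a key and a content value.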

/tests/parseTwitterCard.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseTwitterCard = require('../lib/parseTwitterCard');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | 'twitter:card': 'summary',

7 | 'twitter:site': '@nytimesbits',

8 | 'twitter:creator': '@nickbilton',

9 | }

10 |

11 | test('parseTwitterCard.js', function (t) {

12 | t.plan(2);

13 | parseTwitterCard(parsedHtml, (err, data) => {

14 | t.equal(err, null, 'should return callback without error');

15 | t.deepEqual(expected, data);

16 | })

17 | });

18 |

--------------------------------------------------------------------------------

/tests/parseMeta.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseMeta = require('../lib/parseMeta');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | description: 'Free Web tutorials',

7 | keywords: 'HTML,CSS,XML,JavaScript',

8 | author: 'Hege Refsnes',

9 | 'twitter:card': 'summary',

10 | 'twitter:site': '@nytimesbits',

11 | 'twitter:creator': '@nickbilton'

12 | }

13 |

14 | test('parseMeta.js', function (t) {

15 | t.plan(2);

16 | parseMeta(parsedHtml, (err, data) => {

17 | t.equal(err, null, 'should return callback without error');

18 | t.deepEqual(expected, data);

19 | })

20 | });

21 |

22 |

23 |

--------------------------------------------------------------------------------

/tests/parseOembed.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseOembed = require('../lib/parseOembed');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 |

6 | const expected = {

7 | 'text/xml+oembed': "https://namchey.com/api/oembed?url=https%3A%2F%2Fnamchey.com%2Fitineraries%2Ftilicho&format=xml",

8 | 'text/json+oembed': "https://namchey.com/api/oembed?url=https%3A%2F%2Fnamchey.com%2Fitineraries%2Ftilicho&format=json"

9 | };

10 |

11 | test('parseOembed.js', function (t) {

12 | t.plan(2);

13 | parseOembed(parsedHtml, (err, data) => {

14 | t.equal(err, null, 'should return callback without error');

15 | t.deepEqual(expected, data);

16 | });

17 | });

18 |

--------------------------------------------------------------------------------

/recipes/images.js:

--------------------------------------------------------------------------------

1 | // How to scrape image tag URLS from a website:

2 |

3 | var suq = require('suq');

4 | var _ = require('underscore');

5 |

6 | var url = "http://www.ufirstgroup.com";

7 |

8 | suq(url, function (err, json, body) {

9 |

10 | if (!err) {

11 | var images = json.images;

12 |

13 | console.log('\nThe Image tag URLs in the page, converted to json: \n\n', JSON.stringify(images, null, 2));

14 |

15 | console.log('\n\nList of individual Image tag URLs, pulled from the JSON using Underscore.js and converted into valid HTML: \n\n');

16 |

17 | _.each(images, function (src) {

18 | console.log('<img src="' + src + '">');

19 | });

20 |

21 | }

22 |

23 | });

--------------------------------------------------------------------------------

/tests/cleanMicroformats.js:

--------------------------------------------------------------------------------

1 | const fs = require("fs");

2 | const test = require("tape");

3 | const cleanMicroformats = require("../lib/cleanMicroformats");

4 |

5 | test("cleanMicroformats.js", function(t) {

6 | t.plan(2);

7 | const body = '<a class="h-card" href="http://glennjones.net">Glenn</a>';

8 |

9 | cleanMicroformats(body, (err, data) => {

10 | t.equal(err, null, "should return callback without error");

11 |

12 | const expected = [

13 | {

14 | id: 1,

15 | type: "h-card",

16 | props: { name: "Glenn", url: "http://glennjones.net" },

17 | path: ["0", "type"],

18 | length: 2,

19 | level: 2

20 | }

21 | ];

22 | t.deepEqual(data, expected);

23 | });

24 | });

25 |

--------------------------------------------------------------------------------

/tests/parseTags.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseTags = require('../lib/parseTags');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | title: 'My cool website',

7 | headers: { h1: [ 'Lorem' ], h2: [ 'images' ], h3: [], h4: [], h5: [], h6: [] },

8 | images: [ '/cat1.jpg', '/cat2.jpg', '/cat3.jpg', '/cat4.jpg' ],

9 | links: [ { text: '', title: undefined, href: '#' },

10 | { text: '', title: undefined, href: '#' },

11 | { text: '', title: undefined, href: '#' },

12 | { text: '', title: undefined, href: '#' },

13 | { text: 'more stuff', title: undefined, href: '/more' } ]

14 | }

15 |

16 | test('parseTags.js', function (t) {

17 | t.plan(2);

18 | parseTags(parsedHtml, (err, data) => {

19 | t.equal(err, null, 'should return callback without error');

20 | t.deepEqual(expected, data);

21 | })

22 | });

23 |

--------------------------------------------------------------------------------

/tests/parseOpenGraph.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseOpenGraph = require('../lib/parseOpenGraph');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | 'og:url': 'http://bits.blogs.nytimes.com/2011/12/08/a-twitter-for-my-sister/',

7 | 'og:title': 'A Twitter for My Sister',

8 | 'og:description': 'In the early days, Twitter grew so quickly that it was almost impossible to add new features because engineers spent their time trying to keep the rocket ship from stalling.',

9 | 'og:image': 'http://graphics8.nytimes.com/images/2011/12/08/technology/bits-newtwitter/bits-newtwitter-tmagArticle.jpg'

10 | }

11 |

12 | test('parseOpenGraph.js', function (t) {

13 | t.plan(2);

14 | parseOpenGraph(parsedHtml, (err, data) => {

15 | t.equal(err, null, 'should return callback without error');

16 | t.deepEqual(expected, data);

17 | })

18 | });

19 |

--------------------------------------------------------------------------------

/lib/parseOembed.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 |

5 | try {

6 |

7 | var

8 | $head = $('head'),

9 | result = {};

10 |

11 |

12 | $head.find('link').each(function(i, el) {

13 | var $el = $(el);

14 |

15 | //xml type

16 | if ($el.attr('type') && $el.attr('href') && $el.attr('rel') === 'alternate' && $el.attr('type').indexOf('text/xml+oembed') > -1) {

17 | result[$el.attr('type')] = $el.attr('href');

18 | }

19 |

20 | //json type

21 | if ($el.attr('type') && $el.attr('href') && $el.attr('rel') === 'alternate' && ($el.attr('type').indexOf('text/json+oembed') > -1 || $el.attr('type').indexOf('application/json+oembed') > -1)) {

22 | result[$el.attr('type')] = $el.attr('href');

23 | }

24 |

25 | });

26 |

27 | callback(null, result);

28 |

29 | } catch (e) {

30 | console.log(e);

31 | callback(e);

32 | }

33 |

34 | };

35 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | This software is released under the MIT license:

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy of

4 | this software and associated documentation files (the "Software"), to deal in

5 | the Software without restriction, including without limitation the rights to

6 | use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of

7 | the Software, and to permit persons to whom the Software is furnished to do so,

8 | subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in all

11 | copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS

15 | FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR

16 | COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER

17 | IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

18 | CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

19 |

--------------------------------------------------------------------------------

/recipes/wordpress-microformat.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 | var _ = require('lodash');

3 |

4 | function hget(model) {

5 | if (model && model[0]) {

6 | return (model[0])

7 | } else {

8 | return {}

9 | }

10 | }

11 |

12 |

13 | suq('/a-wordpress/blog-post', function (err, data) {

14 |

15 | var feed, posts, postData, postProps, author, post;

16 |

17 | feed = hget(_.filter(data.microformat.items, _.matches({type: ["h-feed"]})));

18 |

19 | if (feed) {

20 |

21 | posts = _.filter(feed.children, _.matches({type: ["h-entry"]}));

22 |

23 | if (posts) {

24 | postData = hget(posts);

25 | }

26 |

27 | if (postData) {

28 |

29 | postProps = postData.properties;

30 |

31 | if (postProps) {

32 |

33 | author = hget(_.filter(postProps.author, _.matches({type: ["h-card"]})));

34 |

35 | post = {

36 | title: hget(postProps.name),

37 | category: hget(postProps.category),

38 | excerpt: hget(postProps.content).value.substring(0, 150) + '...',

39 | author: hget(author.properties.name),

40 | url: hget(data.microformat.rels.canonical),

41 | images: _.sampleSize(data.images, 4)

42 | };

43 |

44 | console.log(post);

45 |

46 | }

47 |

48 | }

49 | }

50 |

51 | });

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "suq",

3 | "main": "./index",

4 | "version": "1.4.1",

5 | "description": "A Scraping Utility for lazy people",

6 | "scripts": {

7 | "test": "tape tests/*.js | tap-diff"

8 | },

9 | "keywords": [

10 | "htmlparser",

11 | "jquery",

12 | "selector",

13 | "scraper",

14 | "parser",

15 | "html",

16 | "microdata",

17 | "microformats",

18 | "opengraph",

19 | "twittercard",

20 | "meta"

21 | ],

22 | "bin": {

23 | "suq": "./bin/suq.js"

24 | },

25 | "files": [

26 | "index.js",

27 | "lib",

28 | "bin",

29 | "recipes"

30 | ],

31 | "author": {

32 | "name": "Matt McFarland",

33 | "email": "contact@mattmcfarland.com"

34 | },

35 | "contributors": [

36 | {

37 | "name": "Matt McFarland",

38 | "email": "contact@mattmcfarland.com"

39 | },

40 | {

41 | "name": "Tom Sutton",

42 | "url": "https://github.com/tomsutton1984"

43 | },

44 | {

45 | "name": "Oscar Illescas",

46 | "url": "https://github.com/oillescas"

47 | },

48 | {

49 | "name": "Gary Moon",

50 | "url": "https://github.com/garymoon"

51 | }

52 | ],

53 | "license": "MIT",

54 | "dependencies": {

55 | "cheerio": "^0.22.0",

56 | "lodash": "^4.17.11",

57 | "microdata-node": "^1.0.0",

58 | "microformat-node": "^2.0.1",

59 | "minimist": "^1.2.0",

60 | "request": "^2.88.0",

61 | "traverse": "^0.6.6",

62 | "xss": "^1.0.6"

63 | },

64 | "repository": {

65 | "type": "git",

66 | "url": "git://github.com/MattMcFarland/SUq.git"

67 | },

68 | "devDependencies": {

69 | "chalk": "^2.4.2",

70 | "tap-diff": "^0.1.1",

71 | "tape": "^4.10.2"

72 | }

73 | }

74 |

--------------------------------------------------------------------------------

/lib/parseTags.js:

--------------------------------------------------------------------------------

var _ = require('lodash');

// Strip the newlines and tabs that markup indentation leaves in text nodes.
function cleanText(text) {
  return text.replace(/\n|\t/g, '');
}

module.exports = function ($, callback) {
  var result;

  try {
    var $head = $('head'),
        $body = $('body');

    result = {
      title: cleanText($head.find('title').text()),
      headers: { h1: [], h2: [], h3: [], h4: [], h5: [], h6: [] },
      images: [],
      links: []
    };

    // Collect the text of every heading level, h1 through h6.
    Object.keys(result.headers).forEach(function (tag) {
      $body.find(tag).each(function (i, el) {
        result.headers[tag].push(cleanText($(el).text()));
      });
    });

    $body.find('img').each(function (i, el) {
      result.images.push($(el).attr('src'));
    });

    $body.find('a[href!=""]').each(function (i, el) {
      result.links.push({
        text: $(el).text().trim(),
        title: $(el).attr('title'),
        href: $(el).attr('href')
      });
    });

    // Drop images that have no src attribute.
    result.images = _.compact(result.images);
  } catch (e) {
    return callback(e);
  }

  // Called outside the try block so an error thrown by the callback
  // itself does not trigger a second callback from the catch clause.
  callback(null, result);
};

--------------------------------------------------------------------------------

/bin/suq.js:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env node

2 |

3 | var argv = require('minimist')(process.argv.slice(2)),

4 | url = argv.url || argv.u || process.argv.slice(2)[0],

5 | output = argv.output || argv.o || false,

6 | fs = require('fs'),

7 | path = require('path'),

8 | suq = require('../');

9 |

10 |

11 | if (argv._[0] === 'help' || argv.h || argv.help

12 | || (process.argv.length <= 1 && process.stdin.isTTY)) {

13 | return fs.createReadStream(__dirname + '/usage.txt')

14 | .pipe(process.stdout)

15 | .on('close', function () { process.exit(1) });

16 | }

17 |

18 | if (argv.version || argv.v) {

19 | return console.log(require('../package.json').version);

20 | }

21 |

22 |

23 | if (url) {

24 |

25 | if (!/^https?:\/\//i.test(url)) {

26 | console.error('SUq Error ['+ url + ']\n', 'Be sure to use http:// or https://');

27 | } else {

28 | suq(url, function (err, data) {

29 |

30 | if (!err) {

31 | if (!data) {

32 | console.error('SUq Error ['+ url + ']\n', 'response empty');

33 | } else {

34 | if (!output) {

35 | console.log(JSON.stringify(data, null, 2));

36 | } else {

37 | fs.writeFile(path.resolve(output), JSON.stringify(data), function (err) {

38 | console.log(err ? 'SUq Error [' + output + ']\n' + err : 'File ' + output + ' successfully written!');

39 | })

40 | }

41 | }

42 | } else {

43 | console.log('SUq Error ['+ url + ']\n', err);

44 | }

45 | });

46 | }

47 |

48 |

49 | } else {

50 | return fs.createReadStream(__dirname + '/usage.txt')

51 | .pipe(process.stdout)

52 | .on('close', function () { process.exit(1) });

53 | }

54 |

55 |

--------------------------------------------------------------------------------
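
The CLI in `bin/suq.js` asks for an `http://` or `https://` URL before scraping. One way to make that check strict is to parse the argument with Node's built-in `URL` class and inspect the protocol. This is a sketch under that assumption; `isHttpUrl` is a hypothetical helper and not part of SUq.

```javascript
// Hypothetical helper (not part of SUq): true only for absolute http(s) URLs.
function isHttpUrl(value) {
  if (typeof value !== 'string') {
    return false; // e.g. a flag passed without a value parses as `true`
  }
  try {
    var parsed = new URL(value);
    return parsed.protocol === 'http:' || parsed.protocol === 'https:';
  } catch (e) {
    return false; // the URL constructor throws on input it cannot parse
  }
}

console.log(isHttpUrl('https://www.youtube.com/watch?v=Xft3asYLKo0')); // true
console.log(isHttpUrl('ftp://example.com/http'));                      // false
console.log(isHttpUrl('example.com'));                                 // false
```

Checking the parsed protocol rejects strings that merely contain `http` somewhere, such as the second example.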

/tests/fixtures/sample.js:

--------------------------------------------------------------------------------

1 | const html = `

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 | My cool website

18 |

19 |

20 |

21 |

22 |

');

19 | });

20 |

21 | }

22 |

23 | });

--------------------------------------------------------------------------------

/tests/cleanMicroformats.js:

--------------------------------------------------------------------------------

1 | const fs = require("fs");

2 | const test = require("tape");

3 | const cleanMicroformats = require("../lib/cleanMicroformats");

4 |

5 | test("cleanMicroformats.js", function(t) {

6 | t.plan(2);

7 | const body = 'Glenn';

8 |

9 | cleanMicroformats(body, (err, data) => {

10 | t.equal(err, null, "should return callback without error");

11 |

12 | const expected = [

13 | {

14 | id: 1,

15 | type: "h-card",

16 | props: { name: "Glenn", url: "http://glennjones.net" },

17 | path: ["0", "type"],

18 | length: 2,

19 | level: 2

20 | }

21 | ];

22 | t.deepEqual(data, expected);

23 | });

24 | });

25 |

--------------------------------------------------------------------------------

/tests/parseTags.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseTags = require('../lib/parseTags');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | title: 'My cool website',

7 | headers: { h1: [ 'Lorem' ], h2: [ 'images' ], h3: [], h4: [], h5: [], h6: [] },

8 | images: [ '/cat1.jpg', '/cat2.jpg', '/cat3.jpg', '/cat4.jpg' ],

9 | links: [ { text: '', title: undefined, href: '#' },

10 | { text: '', title: undefined, href: '#' },

11 | { text: '', title: undefined, href: '#' },

12 | { text: '', title: undefined, href: '#' },

13 | { text: 'more stuff', title: undefined, href: '/more' } ]

14 | }

15 |

16 | test('parseTags.js', function (t) {

17 | t.plan(2);

18 | parseTags(parsedHtml, (err, data) => {

19 | t.equal(err, null, 'should return callback without error');

20 | t.deepEqual(expected, data);

21 | })

22 | });

23 |

--------------------------------------------------------------------------------

/tests/parseOpenGraph.js:

--------------------------------------------------------------------------------

1 | const fs = require('fs');

2 | const test = require('tape');

3 | const parseOpenGraph = require('../lib/parseOpenGraph');

4 | const { $: parsedHtml } = require('./fixtures/sample');

5 | const expected = {

6 | 'og:url': 'http://bits.blogs.nytimes.com/2011/12/08/a-twitter-for-my-sister/',

7 | 'og:title': 'A Twitter for My Sister',

8 | 'og:description': 'In the early days, Twitter grew so quickly that it was almost impossible to add new features because engineers spent their time trying to keep the rocket ship from stalling.',

9 | 'og:image': 'http://graphics8.nytimes.com/images/2011/12/08/technology/bits-newtwitter/bits-newtwitter-tmagArticle.jpg'

10 | }

11 |

12 | test('parseTags.js', function (t) {

13 | t.plan(2);

14 | parseOpenGraph(parsedHtml, (err, data) => {

15 | t.equal(err, null, 'should return callback without error');

16 | t.deepEqual(expected, data);

17 | })

18 | });

19 |

--------------------------------------------------------------------------------

/lib/parseOembed.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 |

5 | try {

6 |

7 | var

8 | $head = $('head'),

9 | result = {};

10 |

11 |

12 | $head.find('link').each(function(i, el) {

13 | var $el = $(el);

14 |

15 | //xml type

16 | if ($el.attr('type') && $el.attr('href') && $el.attr('rel') === 'alternate' && $el.attr('type').indexOf('text/xml+oembed') > -1) {

17 | result[$el.attr('type')] = $el.attr('href');

18 | }

19 |

20 | //json type

21 | if ($el.attr('type') && $el.attr('href') && $el.attr('rel') === 'alternate' && ($el.attr('type').indexOf('text/json+oembed') > -1 || $el.attr('type').indexOf('application/json+oembed') > -1)) {

22 | result[$el.attr('type')] = $el.attr('href');

23 | }

24 |

25 | });

26 |

27 | callback(null, result);

28 |

29 | } catch (e) {

30 | console.log(e);

31 | callback(e);

32 | }

33 |

34 | };

35 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | This software is released under the MIT license:

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy of

4 | this software and associated documentation files (the "Software"), to deal in

5 | the Software without restriction, including without limitation the rights to

6 | use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of

7 | the Software, and to permit persons to whom the Software is furnished to do so,

8 | subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in all

11 | copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS

15 | FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR

16 | COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER

17 | IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

18 | CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

19 |

--------------------------------------------------------------------------------

/recipes/wordpress-microformat.js:

--------------------------------------------------------------------------------

1 | var suq = require('suq');

2 | var _ = require('lodash');

3 |

4 | function hget(model) {

5 | if (model[0]) {

6 | return (model[0])

7 | } else {

8 | return {}

9 | }

10 | }

11 |

12 |

13 | suq('/a-wordpress/blog-post', function (err, data) {

14 |

15 | var feed, posts, postData, postProps, author, post;

16 |

17 | feed = hget(_.filter(data.microformat.items, _.matches({type: ["h-feed"]})));

18 |

19 | if (feed) {

20 |

21 | posts = _.filter(feed.children, _.matches({type: ["h-entry"]}));

22 |

23 | if (posts) {

24 | postData = hget(posts);

25 | }

26 |

27 | if (postData) {

28 |

29 | postProps = postData.properties;

30 |

31 | if (postProps) {

32 |

33 | author = hget(_.filter(postProps.author, _.matches({type: ["h-card"]})));

34 |

35 | post = {

36 | title: hget(postProps.name),

37 | category: hget(postProps.category),

38 | excerpt: hget(postProps.content).value.substring(0, 150) + '...',

39 | author: hget(author.properties.name),

40 | url: hget(data.microformat.rels.canonical),

41 | images: _.sample(data.images, 4)

42 | };

43 |

44 | console.log(post);

45 |

46 | }

47 |

48 | }

49 | }

50 |

51 | });

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "suq",

3 | "main": "./index",

4 | "version": "1.4.1",

5 | "description": "A Scraping Utility for lazy people",

6 | "scripts": {

7 | "test": "tape tests/*.js | tap-diff"

8 | },

9 | "keywords": [

10 | "htmlparser",

11 | "jquery",

12 | "selector",

13 | "scraper",

14 | "parser",

15 | "html",

16 | "microdata",

17 | "microformats",

18 | "opengraph",

19 | "twittercard",

20 | "meta"

21 | ],

22 | "bin": {

23 | "suq": "./bin/suq.js"

24 | },

25 | "files": [

26 | "index.js",

27 | "lib",

28 | "bin",

29 | "recipes"

30 | ],

31 | "author": {

32 | "name": "Matt McFarland",

33 | "email": "contact@mattmcfarland.com"

34 | },

35 | "contributors": [

36 | {

37 | "name": "Matt McFarland",

38 | "email": "contact@mattmcfarland.com"

39 | },

40 | {

41 | "name": "Tom Sutton",

42 | "url": "https://github.com/tomsutton1984"

43 | },

44 | {

45 | "name": "Oscar Illescas",

46 | "url": "https://github.com/oillescas"

47 | },

48 | {

49 | "name": "Gary Moon",

50 | "url": "https://github.com/garymoon"

51 | }

52 | ],

53 | "license": "MIT",

54 | "dependencies": {

55 | "cheerio": "^0.22.0",

56 | "lodash": "^4.17.11",

57 | "microdata-node": "^1.0.0",

58 | "microformat-node": "^2.0.1",

59 | "minimist": "^1.2.0",

60 | "request": "^2.88.0",

61 | "traverse": "^0.6.6",

62 | "xss": "^1.0.6"

63 | },

64 | "repository": {

65 | "type": "git",

66 | "url": "git://github.com/MattMcFarland/SUq.git"

67 | },

68 | "devDependencies": {

69 | "chalk": "^2.4.2",

70 | "tap-diff": "^0.1.1",

71 | "tape": "^4.10.2"

72 | }

73 | }

74 |

--------------------------------------------------------------------------------

/lib/parseTags.js:

--------------------------------------------------------------------------------

1 | var _ = require('lodash');

2 |

3 | module.exports = function ($, callback) {

4 | try {

5 |

6 | var

7 | $head = $('head'),

8 | $body = $('body'),

9 | result = {

10 | title: '',

11 | headers: { h1: [], h2: [], h3: [], h4: [], h5: [], h6: [] },

12 | images: [],

13 | links :[]

14 | };

15 |

16 | result.title = $head.find('title').text().replace(/\n|\t/g, "");

17 |

18 | $body.find('h1').each(function(i, el) {

19 |

20 | result.headers.h1.push($(el).text().replace(/\n|\t/g, ""));

21 |

22 | });

23 |

24 | $body.find('h2').each(function(i, el) {

25 |

26 | result.headers.h2.push($(el).text().replace(/\n|\t/g, ""));

27 |

28 | });

29 |

30 | $body.find('h3').each(function(i, el) {

31 |

32 | result.headers.h3.push($(el).text().replace(/\n|\t/g, ""));

33 |

34 | });

35 |

36 | $body.find('h4').each(function(i, el) {

37 |

38 | result.headers.h4.push($(el).text().replace(/\n|\t/g, ""));

39 |

40 | });

41 |

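42 | $body.find('h5').each(function(i, el) {

43 | result.headers.h5.push($(el).text().replace(/\n|\t/g, ""));

44 | });

45 | $body.find('h6').each(function(i, el) {

46 | result.headers.h6.push($(el).text().replace(/\n|\t/g, ""));

47 | });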

48 | $body.find('img').each(function(i, el) {

49 |

50 | result.images.push($(el).attr('src'));

51 |

52 | });

53 |

54 | $body.find('a[href!=""]').each(function(i, el) {

55 |

56 | result.links.push({

57 | text: $(el).text().trim(),

58 | title: $(el).attr('title'),

59 | href: $(el).attr('href')

60 | });

61 |

62 | });

63 |

64 | result.links = _.compact(result.links);

65 | result.images = _.compact(result.images);

66 |

67 | callback(null, result);

68 |

69 | } catch (e) {

70 | callback(e);

71 | }

72 |

73 | };

--------------------------------------------------------------------------------

/bin/suq.js:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env node

2 |

3 | var argv = require('minimist')(process.argv.slice(2)),

4 | url = argv.url || argv.u || process.argv.slice(2)[0],

5 | output = argv.output || argv.o || false,

6 | fs = require('fs'),

7 | path = require('path'),

8 | suq = require('../');

9 |

10 |

11 | if (argv._[0] === 'help' || argv.h || argv.help

12 | || (process.argv.length <= 2 && process.stdin.isTTY)) {

13 | return fs.createReadStream(__dirname + '/usage.txt')

14 | .pipe(process.stdout)

15 | .on('close', function () { process.exit(1) });

16 | }

17 |

18 | if (argv.version || argv.v) {

19 | return console.log(require('../package.json').version);

20 | }

21 |

22 |

23 | if (url) {

24 |

25 | if (!/^https?:\/\//i.test(url)) {

26 | console.error('SUq Error ['+ url + ']\n', 'Be sure to use http:// or https://');

27 | } else {

28 | suq(url, function (err, data) {

29 |

30 | if (!err) {

31 | if (!data) {

32 | console.error('SUq Error ['+ url + ']\n', 'response empty');

33 | } else {

34 | if (!output) {

35 | console.log(JSON.stringify(data, null, 2));

36 | } else {

37 | fs.writeFile(path.join(__dirname, output), JSON.stringify(data), function(err){

38 | err ? console.error('SUq Error ['+ output + ']\n', err) : console.log('File ' + output + ' successfully written!');

39 | })

40 | }

41 | }

42 | } else {

43 | console.error('SUq Error ['+ url + ']\n', err);

44 | }

45 | });

46 | }

47 |

48 |

49 | } else {

50 | return fs.createReadStream(__dirname + '/usage.txt')

51 | .pipe(process.stdout)

52 | .on('close', function () { process.exit(1) });

53 | }

54 |

55 |

--------------------------------------------------------------------------------

/tests/fixtures/sample.js:

--------------------------------------------------------------------------------

1 | const html = `

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 | My cool website

18 |

19 |

20 |

21 |

22 | Lorem

23 | Ipsum???

24 | images

25 |

31 |

34 |

35 |

36 |

37 | `

38 | module.exports = {

39 | html,

40 | $: require('cheerio').load(html)

41 | }

42 |

--------------------------------------------------------------------------------

/lib/cleanMicrodata.js:

--------------------------------------------------------------------------------

1 | var traverse = require('traverse');

2 | var xss = require('xss');

3 | var _ = require('lodash');

4 |

5 | module.exports = function (mdata, callback) {

6 |

7 | var index = 0;

8 | mdata = traverse(mdata).forEach(function(item) {

9 | if (this.key === 'type' && this.notLeaf) {

10 | index ++;

11 | this.node.push(index);

12 | //console.log(this.node);

13 | }

14 | });

15 |

16 | var flattenProps = function (props) {

17 | return traverse(props).reduce(function (acc) {

18 |

19 |

20 | // If its an array, it is not empty, and it has only one item.

21 | if (Array.isArray(this.node) &&

22 | this.node.length === 1 &&

23 | this.level < 3 &&

24 | !this.node[0].properties && this.parent && this.parent.node) {

25 | acc[this.key] = (

26 | (typeof this.node[0] === "string") ?

27 | xss(this.node[0].replace(/(<([^>]+)>)|\n|\t/ig,"")) :

28 | this.node[0]

29 | );

30 | }

31 | if (this.level === 3) {

32 | var node = _.get(this, 'parent.parent.node');

33 |

34 | if (node && node[0] && node[0].type && node[0].type[1])

35 | acc[node.key] = '__ref__' + node[0].type[1];

36 | }

37 | return acc;

38 |

39 | }, {});

40 |

41 | };

42 |

43 | var result = traverse(mdata).reduce(function (acc) {

44 |

45 | if (this.key === 'type' && this.notLeaf) {

46 |

47 | var props = this.parent.node['properties'],

48 | size = props.length ?

49 | props.length :

50 | Object.keys(props).length || 0;

51 |

52 |

53 | if (size) {

54 | acc.push({

55 | id: this.node[1],

56 | type: this.node[0],

57 | props: flattenProps(props),

58 | path: this.path,

59 | length: size,

60 | level: this.level

61 | });

62 | }

63 | }

64 | return acc;

65 | }, []);

66 |

67 | callback(null, result);

68 | };

--------------------------------------------------------------------------------

/index.js:

--------------------------------------------------------------------------------

1 | /*

2 | Required Modules

3 | */

4 |

5 | var

6 | cheerio = require('cheerio'),

7 | request = require('request'),

8 | microdata = require('microdata-node'),

9 | _ = require('lodash');

10 |

11 |

12 | var

13 | cleanMicrodata = require('./lib/cleanMicrodata'),

14 | cleanMicroformats = require('./lib/cleanMicroformats'),

15 | parseMeta = require('./lib/parseMeta'),

16 | parseTags = require('./lib/parseTags'),

17 | parseTwitterCard = require('./lib/parseTwitterCard'),

18 | parseOpenGraph = require('./lib/parseOpenGraph'),

19 | parseOembed = require('./lib/parseOembed');

20 |

21 | // Build a fresh result object for every parse so that concurrent calls

22 | // do not overwrite each other's data.

23 | var createResult = function () {

24 | return {

25 | meta: {}, microdata: {}, microformat: {}, tags: {},

26 | opengraph: {}, twittercard: {}, oembed: {}

27 | };

28 | };

29 |

30 |

31 |

32 | module.exports = function (url, callback, opts) {

33 | request(_.extend({"url": url}, opts || {}), function (err, res, body) {

34 | if (err) {

35 | callback(err, null);

36 | } else if (body && res) {

37 | module.exports.parse(body, callback);

38 | } else {

39 | callback('No Response');

40 | }

41 | });

42 | };

43 |

44 | module.exports.parse = function (body, callback) {

45 | var populate = createResult();

46 | cleanMicrodata(microdata.toJson(body), function (err, cleanData) {

47 | if (!err && cleanData) {

48 | populate.microdata = cleanData;

49 | var $ = cheerio.load(body);

50 | parseMeta($, function (err, meta) {

51 | populate.meta = meta;

52 | parseTags($, function(err, tags) {

53 | populate.tags = tags;

54 | cleanMicroformats(body, function(err, mfats) {

55 | populate.microformat = mfats;

56 | parseOpenGraph($, function(err, og) {

57 | populate.opengraph = og;

58 | parseTwitterCard($, function(err, twittercard) {

59 | populate.twittercard = twittercard;

60 | parseOembed($, function(err, oembed) {

61 | populate.oembed = oembed;

62 | callback(null, populate, body);

63 | });

64 | });

65 | });

66 | })

67 | })

68 | });

69 | } else {

70 | callback(err || 'CleanData fail');

71 | }

72 | });

73 | };

74 |

--------------------------------------------------------------------------------

/lib/cleanMicroformats.js:

--------------------------------------------------------------------------------

1 | var traverse = require("traverse");

2 | var _ = require("lodash");

3 | var microformat = require("microformat-node");

4 | var xss = require("xss");

5 |

6 | module.exports = function(body, callback) {

7 | microformat.get({ html: body, logger: false }, function(err, data) {

8 | if (err) {

9 | callback(err);

10 | return;

11 | }

12 | var index = 0;

13 |

14 | var items = traverse(data.items).forEach(function(item) {

15 | if (this.key === "type" && this.notLeaf) {

16 | index++;

17 | this.node.push(index);

18 | }

19 | });

20 |

21 | var flattenProps = function(props) {

22 | return traverse(props).reduce(function(acc) {

23 | if (this.key === "content" && this.notLeaf) {

24 | if (

25 | this.node[0] &&

26 | this.node[0].html &&

27 | typeof this.node[0].html === "string"

28 | ) {

29 | this.node[0] = xss(this.node[0].html).replace(/\n|\t/g, "");

30 | } else {

31 | if (

32 | this.node[0] &&

33 | this.node[0].value &&

34 | typeof this.node[0].value === "string"

35 | ) {

36 | this.node[0] = xss(this.node[0].value).replace(/\n|\t/g, "");

37 | }

38 | }

39 | }

40 | // If its an array, it is not empty, and it has only one item.

41 | if (

42 | Array.isArray(this.node) &&

43 | this.node.length === 1 &&

44 | this.level === 1 &&

45 | !this.node[0].properties &&

46 | this.parent &&

47 | this.parent.node

48 | ) {

49 | acc[this.key] =

50 | typeof this.node[0] === "string"

51 | ? xss(this.node[0].replace(/(<([^>]+)>)|\n|\t/gi, ""))

52 | : this.node[0];

53 | }

54 | return acc;

55 | }, {});

56 | };

57 |

58 | var result = traverse(items).reduce(function(acc) {

59 | if (this.key === "type" && this.notLeaf) {

60 | var props = this.parent.node["properties"],

61 | size = props.length ? props.length : Object.keys(props).length || 0;

62 |

63 | if (size) {

64 | acc.push({

65 | id: this.node[1],

66 | type: this.node[0],

67 | props: flattenProps(props),

68 | path: this.path,

69 | length: size,

70 | level: this.level

71 | });

72 | }

73 | }

74 | return acc;

75 | }, []);

76 |

77 | callback(null, result);

78 | });

79 | };

80 |

--------------------------------------------------------------------------------

/tests/fixtures/cleanedMicrodata.json:

--------------------------------------------------------------------------------

1 | [

2 | {

3 | "id": 1,

4 | "type": "http://schema.org/ImageObject",

5 | "props": {

6 | "height": "720",

7 | "width": "1280",

8 | "url": "https://i.ytimg.com/vi/Xft3asYLKo0/maxresdefault.jpg"

9 | },

10 | "path": [

11 | "items",

12 | "0",

13 | "properties",

14 | "thumbnail",

15 | "0",

16 | "type"

17 | ],

18 | "length": 3,

19 | "level": 6

20 | },

21 | {

22 | "id": 2,

23 | "type": "http://schema.org/Person",

24 | "props": {

25 | "url": "http://www.youtube.com/user/packt1000"

26 | },

27 | "path": [

28 | "items",

29 | "0",

30 | "properties",

31 | "author",

32 | "0",

33 | "type"

34 | ],

35 | "length": 1,

36 | "level": 6

37 | },

38 | {

39 | "id": 3,

40 | "type": "http://schema.org/Person",

41 | "props": {

42 | "url": "https://plus.google.com/102849454411878407512"

43 | },

44 | "path": [

45 | "items",

46 | "0",

47 | "properties",

48 | "author",

49 | "1",

50 | "type"

51 | ],

52 | "length": 1,

53 | "level": 6

54 | },

55 | {

56 | "id": 4,

57 | "type": "http://schema.org/VideoObject",

58 | "props": {

59 | "thumbnailUrl": "https://i.ytimg.com/vi/Xft3asYLKo0/maxresdefault.jpg",

60 | "genre": "Science & Technology",

61 | "undefined": "__ref__2",

62 | "url": "https://www.youtube.com/watch?v=Xft3asYLKo0",

63 | "embedURL": "https://www.youtube.com/embed/Xft3asYLKo0",

64 | "description": "Part of Rapid Lo-Dash video series. For the full Course visit: https://www.packtpub.com/web-development/rapid-lo-dash-video?utm_source=youtube&utm_medium=vid...",

65 | "playerType": "HTML5 Flash",

66 | "channelId": "UC3VydBGBl132baPCLeDspMQ",

67 | "width": "1280",

68 | "duration": "PT5M1S",

69 | "height": "720",

70 | "name": "Rapid Lo-Dash Tutorial: Creating and Using Objects | packtpub.com",

71 | "datePublished": "2014-11-26",

72 | "interactionCount": "3534",

73 | "regionsAllowed": "AD,AE,AF,AG,AI,AL,AM,AO,AQ,AR,AS,AT,AU,AW,AX,AZ,BA,BB,BD,BE,BF,BG,BH,BI,BJ,BL,BM,BN,BO,BQ,BR,BS,BT,BV,BW,BY,BZ,CA,CC,CD,CF,CG,CH,CI,CK,CL,CM,CN,CO,CR,CU,CV,CW,CX,CY,CZ,DE,DJ,DK,DM,DO,DZ,EC,EE,EG,EH,ER,ES,ET,FI,FJ,FK,FM,FO,FR,GA,GB,GD,GE,GF,GG,GH,GI,GL,GM,GN,GP,GQ,GR,GS,GT,GU,GW,GY,HK,HM,HN,HR,HT,HU,ID,IE,IL,IM,IN,IO,IQ,IR,IS,IT,JE,JM,JO,JP,KE,KG,KH,KI,KM,KN,KP,KR,KW,KY,KZ,LA,LB,LC,LI,LK,LR,LS,LT,LU,LV,LY,MA,MC,MD,ME,MF,MG,MH,MK,ML,MM,MN,MO,MP,MQ,MR,MS,MT,MU,MV,MW,MX,MY,MZ,NA,NC,NE,NF,NG,NI,NL,NO,NP,NR,NU,NZ,OM,PA,PE,PF,PG,PH,PK,PL,PM,PN,PR,PS,PT,PW,PY,QA,RE,RO,RS,RU,RW,SA,SB,SC,SD,SE,SG,SH,SI,SJ,SK,SL,SM,SN,SO,SR,SS,ST,SV,SX,SY,SZ,TC,TD,TF,TG,TH,TJ,TK,TL,TM,TN,TO,TR,TT,TV,TW,TZ,UA,UG,UM,US,UY,UZ,VA,VC,VE,VG,VI,VN,VU,WF,WS,YE,YT,ZA,ZM,ZW",

74 | "isFamilyFriendly": "True",

75 | "paid": "False",

76 | "unlisted": "False",

77 | "videoId": "Xft3asYLKo0"

78 | },

79 | "path": [

80 | "items",

81 | "0",

82 | "type"

83 | ],

84 | "length": 20,

85 | "level": 3

86 | }

87 | ]

88 |

--------------------------------------------------------------------------------

/tests/fixtures/rawMicrodata.json:

--------------------------------------------------------------------------------

1 | {

2 | "items": [

3 | {

4 | "properties": {

5 | "thumbnailUrl": [

6 | "https://i.ytimg.com/vi/Xft3asYLKo0/maxresdefault.jpg"

7 | ],

8 | "genre": [

9 | "Science & Technology"

10 | ],

11 | "thumbnail": [

12 | {

13 | "properties": {

14 | "height": [

15 | "720"

16 | ],

17 | "width": [

18 | "1280"

19 | ],

20 | "url": [

21 | "https://i.ytimg.com/vi/Xft3asYLKo0/maxresdefault.jpg"

22 | ]

23 | },

24 | "type": [

25 | "http://schema.org/ImageObject"

26 | ]

27 | }

28 | ],

29 | "url": [

30 | "https://www.youtube.com/watch?v=Xft3asYLKo0"

31 | ],

32 | "embedURL": [

33 | "https://www.youtube.com/embed/Xft3asYLKo0"

34 | ],

35 | "description": [

36 | "Part of Rapid Lo-Dash video series. For the full Course visit: https://www.packtpub.com/web-development/rapid-lo-dash-video?utm_source=youtube&utm_medium=vid..."

37 | ],

38 | "playerType": [

39 | "HTML5 Flash"

40 | ],

41 | "channelId": [

42 | "UC3VydBGBl132baPCLeDspMQ"

43 | ],

44 | "width": [

45 | "1280"

46 | ],

47 | "duration": [

48 | "PT5M1S"

49 | ],

50 | "height": [

51 | "720"

52 | ],

53 | "author": [

54 | {

55 | "properties": {

56 | "url": [

57 | "http://www.youtube.com/user/packt1000"

58 | ]

59 | },

60 | "type": [

61 | "http://schema.org/Person"

62 | ]

63 | },

64 | {

65 | "properties": {

66 | "url": [

67 | "https://plus.google.com/102849454411878407512"

68 | ]

69 | },

70 | "type": [

71 | "http://schema.org/Person"

72 | ]

73 | }

74 | ],

75 | "name": [

76 | "Rapid Lo-Dash Tutorial: Creating and Using Objects | packtpub.com"

77 | ],

78 | "datePublished": [

79 | "2014-11-26"

80 | ],

81 | "interactionCount": [

82 | "3534"

83 | ],

84 | "regionsAllowed": [

85 | "AD,AE,AF,AG,AI,AL,AM,AO,AQ,AR,AS,AT,AU,AW,AX,AZ,BA,BB,BD,BE,BF,BG,BH,BI,BJ,BL,BM,BN,BO,BQ,BR,BS,BT,BV,BW,BY,BZ,CA,CC,CD,CF,CG,CH,CI,CK,CL,CM,CN,CO,CR,CU,CV,CW,CX,CY,CZ,DE,DJ,DK,DM,DO,DZ,EC,EE,EG,EH,ER,ES,ET,FI,FJ,FK,FM,FO,FR,GA,GB,GD,GE,GF,GG,GH,GI,GL,GM,GN,GP,GQ,GR,GS,GT,GU,GW,GY,HK,HM,HN,HR,HT,HU,ID,IE,IL,IM,IN,IO,IQ,IR,IS,IT,JE,JM,JO,JP,KE,KG,KH,KI,KM,KN,KP,KR,KW,KY,KZ,LA,LB,LC,LI,LK,LR,LS,LT,LU,LV,LY,MA,MC,MD,ME,MF,MG,MH,MK,ML,MM,MN,MO,MP,MQ,MR,MS,MT,MU,MV,MW,MX,MY,MZ,NA,NC,NE,NF,NG,NI,NL,NO,NP,NR,NU,NZ,OM,PA,PE,PF,PG,PH,PK,PL,PM,PN,PR,PS,PT,PW,PY,QA,RE,RO,RS,RU,RW,SA,SB,SC,SD,SE,SG,SH,SI,SJ,SK,SL,SM,SN,SO,SR,SS,ST,SV,SX,SY,SZ,TC,TD,TF,TG,TH,TJ,TK,TL,TM,TN,TO,TR,TT,TV,TW,TZ,UA,UG,UM,US,UY,UZ,VA,VC,VE,VG,VI,VN,VU,WF,WS,YE,YT,ZA,ZM,ZW"

86 | ],

87 | "isFamilyFriendly": [

88 | "True"

89 | ],

90 | "paid": [

91 | "False"

92 | ],

93 | "unlisted": [

94 | "False"

95 | ],

96 | "videoId": [

97 | "Xft3asYLKo0"

98 | ]

99 | },

100 | "type": [

101 | "http://schema.org/VideoObject"

102 | ]

103 | }

104 | ]

105 | }

106 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

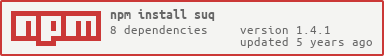

1 | [](https://npmjs.org/package/suq)

2 |

3 | ## SUq

4 |

5 | Scraping Utility for lazy people.

6 | MIT Licensed

7 |

8 | Here's a simple node module that will allow you to asynchronously scrape opengraph tags, microformats, microdata, header tags, images, classic meta, and whatever else you want with minimal effort.

9 | You can output the scraped data in the command line, or you can output scraped data as a JSON object.

10 | If the scraped data is not enough and you want to fine-tune or pull more out of the HTML, no problem: the raw body is passed to your callback as well, so you can extend SUq as much as you like.

11 |

12 | * [Recipes](./recipes)

13 | * [Command line Usage](#command-line-usage)

14 | * [Basic Usage](#basic-usage)

15 | * [Opengraph](#opengraph)

16 | * [TwitterCard](#twittercard)

17 | * [Microformat](#microformat)

18 | * [Microdata](#microdata)

19 | * [Headers](#headers)

20 | * [Images](#images)

21 | * [Meta](#meta)

22 | * [Signature](#signature)

23 | * [Extending](#extending)

24 | * [Mentions](#mentions)

25 |

26 | ### Command line usage:

27 |

28 | Scrape a website and output the data to command line.

29 |

30 | suq can be used in the command line when installed globally, outputting scraped data to `stdout`

31 |

32 | ```

33 | npm install suq -g

34 |

35 | suq http://www.example.com > example.json

36 |

37 | suq -u http://www.example.com -o example.json

38 |

39 | suq --url http://www.example.com --output example.json

40 |

41 | ```

42 |

43 |

44 |

45 | ### Basic usage

46 |

47 | How to scrape a website and convert structured data to json, and keep the html data as well (in case you're not done with it yet)

48 |

49 |

50 | ```javascript

51 | var suq = require('suq');

52 |

53 | var url = "http://www.example.com";

54 |

55 | suq(url, function (err, json, body) {

56 |

57 | if (!err) {

58 | console.log('scraped json is:', JSON.stringify(json, null, 2));

59 | console.log('html body is', body);

60 | }

61 |

62 | });

63 |

64 | ```

65 |

66 |

67 | ### Opengraph

68 |

69 | How to scrape a website and store its opengraph tags.

70 |

71 |

72 | ```javascript

73 | var suq = require('suq');

74 | var url = "http://www.example.com";

75 |

76 | suq(url, function (err, json, body) {

77 |

78 | if (!err) {

79 | var openGraphTags = json.opengraph;

80 | console.log(JSON.stringify(openGraphTags, null, 2));

81 | }

82 |

83 | });

84 |

85 | ```

86 |

87 | ### TwitterCard

88 |

89 | How to scrape a website and store its twitter card tags.

90 |

91 |

92 | ```javascript

93 | var suq = require('suq');

94 | var url = "http://www.example.com";

95 |

96 | suq(url, function (err, json, body) {

97 |

98 | if (!err) {

99 | var twitterCardTags = json.twittercard;

100 | console.log(JSON.stringify(twitterCardTags, null, 2));

101 | }

102 |

103 | });

104 |

105 | ```

106 |

107 |

108 | ### Oembed

109 |

110 | How to scrape a website and store its oembed links.

111 | https://oembed.com/

112 |

113 |

114 | ```javascript

115 | var suq = require('suq');

116 | var url = "http://www.example.com";

117 |

118 | suq(url, function (err, json, body) {

119 |

120 | if (!err) {

121 | var oembedLinks = json.oembed;

122 | console.log(JSON.stringify(oembedLinks, null, 2));

123 | }

124 |

125 | });

126 |

127 | ```

128 |

129 | ### Microformat

130 |

131 | How to scrape a website and store its microformats version 1 and 2 data.

132 |

133 |

134 | ```javascript

135 | var suq = require('suq');

136 | var url = "http://www.example.com";

137 |

138 | suq(url, function (err, json, body) {

139 |

140 | if (!err) {

141 | var microformat = json.microformat;

142 | console.log(JSON.stringify(microformat, null, 2));

143 | }

144 |

145 | });

146 |

147 | ```

148 |

149 | ### Microdata

150 |

151 | How to scrape a website and store its schema.org microdata.

152 |

153 |

154 | ```javascript

155 | var suq = require('suq');

156 | var url = "http://www.example.com";

157 |

158 | suq(url, function (err, json, body) {

159 |

160 | if (!err) {

161 | var microdata = json.microdata;

162 | DoSomethingCool(microdata);

163 | }

164 |

165 | });

166 |

167 | ```
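Judging by `tests/fixtures/cleanedMicrodata.json`, the cleaned microdata is a flat array whose entries carry `id`, `type`, `props`, `path`, `length` and `level`. The sketch below shows one way to pick entries by schema.org type. The helper name `findByType` and the trimmed sample data are illustrative assumptions, not part of SUq.

```javascript
// Hypothetical helper (not part of SUq): select cleaned microdata entries by type.
function findByType(microdata, type) {
  return (microdata || []).filter(function (item) {
    return item.type === type;
  });
}

// Trimmed sample in the shape of tests/fixtures/cleanedMicrodata.json.
var sample = [
  { id: 2, type: 'http://schema.org/Person', props: { url: 'http://www.youtube.com/user/packt1000' } },
  { id: 3, type: 'http://schema.org/Person', props: { url: 'https://plus.google.com/102849454411878407512' } },
  { id: 4, type: 'http://schema.org/VideoObject', props: { videoId: 'Xft3asYLKo0', duration: 'PT5M1S' } }
];

var people = findByType(sample, 'http://schema.org/Person');
var videos = findByType(sample, 'http://schema.org/VideoObject');

console.log(people.length);            // 2
console.log(videos[0].props.videoId);  // Xft3asYLKo0
```

In real use you would pass `json.microdata` from the `suq()` callback instead of `sample`.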

168 |

169 | ### Headers

170 |

171 | How to scrape header tags from a URL:

172 |

173 |

174 | ```javascript

175 | var suq = require('suq');

176 | var url = "http://www.example.com";

177 |

178 | suq(url, function (err, json, body) {

179 |

180 | if (!err) {

181 | var headers = json.tags.headers;

182 |

183 | var title = headers.h1[0];

184 | var subtitle = headers.h2[0];

185 |

186 | }

187 |

188 | });

189 |

190 | ```
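`lib/parseTags.js` groups heading text by level under `tags.headers`, and any level can be an empty array. As a rough sketch, a fallback lookup for "the first heading that has any text" could look like this. `firstHeading` is a hypothetical helper and the sample object is made up for illustration.

```javascript
// Hypothetical helper (not part of SUq): first non-empty heading, searching h1 to h6.
function firstHeading(tags) {
  var levels = ['h1', 'h2', 'h3', 'h4', 'h5', 'h6'];
  var headers = (tags && tags.headers) || {};
  for (var i = 0; i < levels.length; i++) {
    var list = headers[levels[i]] || [];
    for (var j = 0; j < list.length; j++) {
      var text = String(list[j]).trim();
      if (text) return { level: levels[i], text: text };
    }
  }
  return null; // no heading text found at any level
}

// Made-up sample in the shape produced by parseTags.
var tags = {
  title: 'My cool website',
  headers: { h1: [], h2: ['  Lorem '], h3: ['Ipsum???'], h4: [], h5: [], h6: [] },
  images: [],
  links: []
};

console.log(firstHeading(tags)); // { level: 'h2', text: 'Lorem' }
```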

191 |

192 | ### Images

193 |

194 | How to scrape image tag URLS from a website:

195 |

196 | ```javascript

197 | var suq = require('suq');

198 | var _ = require('lodash');

199 | var url = "http://www.example.com";

200 |

201 | suq(url, function (err, json, body) {

202 |

203 | if (!err) {

204 | var images = json.tags.images;

205 |

206 | _.each(images, function (src) {

207 | makeSomeHTML('<img src="' + src + '"/>');

208 | });

209 |

210 | }

211 |

212 | });

213 |

214 | ```
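The image list holds the raw `src` attribute values, so relative paths stay relative. A minimal sketch of resolving them against the page URL, using Node's built-in WHATWG `URL` class, is shown below. `absoluteImages` is a hypothetical helper, not something SUq provides.

```javascript
// Hypothetical helper (not part of SUq): resolve scraped image paths against the page URL.
function absoluteImages(images, pageUrl) {
  return (images || []).reduce(function (out, src) {
    try {
      out.push(new URL(src, pageUrl).href);
    } catch (e) {
      // Skip values that cannot be parsed as a URL.
    }
    return out;
  }, []);
}

var resolved = absoluteImages(
  ['/img/logo.png', 'photo.jpg', 'https://cdn.example.com/a.gif'],
  'http://www.example.com/blog/post.html'
);

console.log(resolved);
// [ 'http://www.example.com/img/logo.png',
//   'http://www.example.com/blog/photo.jpg',
//   'https://cdn.example.com/a.gif' ]
```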

215 |

216 | ### Meta

217 |

218 | How to scrape meta title and description from a URL:

219 |

220 |

221 | ```javascript

222 | var suq = require('suq');

223 | var url = "http://www.example.com";

224 |

225 | suq(url, function (err, json, body) {

226 |

227 | if (!err) {

228 | var title = json.meta.title;

229 | var description = json.meta.description;

230 | }

231 |

232 | });

233 | ```

234 |

235 |

236 | ### Signature

237 |

238 | If you are familiar with signature patterns, you may find this helpful. If not, you may ignore this :)

239 |

240 | ```javascript

241 | suq(String url, Callback( Error|String err, Object json, String body ) callback, Object opts /* optional */);

242 | ```
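Because the callback receives two result values (`json` and `body`), a generic promisifier would drop the second one. If you prefer promises, a small wrapper along these lines may help. This is only a sketch: `promisifySuq` and `fakeSuq` are hypothetical names, and `fakeSuq` stands in for `require('suq')` so the example runs without network access.

```javascript
// Hypothetical wrapper (not part of SUq): adapt a suq-style function to return a Promise.
// `scrape` is any function with the shape scrape(url, callback, opts).
function promisifySuq(scrape) {
  return function (url, opts) {
    return new Promise(function (resolve, reject) {
      scrape(url, function (err, json, body) {
        // SUq may report errors as plain strings, so normalise them to Error objects.
        if (err) return reject(err instanceof Error ? err : new Error(String(err)));
        resolve({ json: json, body: body });
      }, opts);
    });
  };
}

// Stand-in for require('suq'); returns canned data asynchronously.
function fakeSuq(url, callback, opts) {
  setImmediate(function () {
    callback(null, { tags: { title: 'Example Domain' } }, '<html></html>');
  });
}

var suqAsync = promisifySuq(fakeSuq);

suqAsync('http://www.example.com', { jar: true }).then(function (result) {
  console.log(result.json.tags.title); // Example Domain
});
```

With the real module you would write `var suqAsync = promisifySuq(require('suq'));`.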

243 |

244 |

245 | ### Extending

246 |

247 | SUq is a node module that lets you scrape website data and customize what you want, because it doesn't drop the html body from the request.

248 |

249 | In this example we scrape an unordered list with the class "grocerylist" and scrape all the p tags too for fun.

250 |

251 | ```javascript

252 | var suq = require('suq');

253 | var cheerio = require('cheerio');

254 | var url = "http://www.example.com";

255 |

256 | suq(url, function (err, json, body) {

257 |

258 | var $ = cheerio.load(body);

259 | json.pTags = []; // create the arrays before pushing into them

260 | json.groceryList = [];

261 | $('body').find('p').each(function(i, el) {

262 |

263 | json.pTags.push($(el).text().trim());

264 |

265 | });

266 |

267 | $('body').find('ul.grocerylist').find('li').each(function(i, el) {

268 |

269 | json.groceryList.push($(el).text().trim());

270 |

271 | });

272 |

273 | NowDoSomethingCool(json);

274 | });

275 | ```

276 | ### Request options

277 |

278 | SUq uses the [request](https://github.com/request/request) library to retrieve the HTML of the given site. The default options may not always be ideal, so you can pass any [options](https://github.com/request/request#requestoptions-callback) to `request()` using an optional third argument to `suq()`. A prominent example is the NYTimes, where you must accept cookies to get past the paywall to the content.

279 |

280 | ```javascript

281 | var suq = require('suq');

282 | var url = "http://www.example.com";

283 |

284 | suq(url, function (err, json, body) {

285 | NowDoSomethingCool(json);

286 | }, { jar: true });

287 | ```
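In `index.js` the third argument is merged over `{ url: url }` with lodash's `_.extend` before being handed to `request()`. The sketch below mimics that merge with `Object.assign` so it runs without dependencies. `buildRequestOptions` is a hypothetical name used only for illustration.

```javascript
// Sketch of the option merge; Object.assign stands in for lodash's _.extend.
function buildRequestOptions(url, opts) {
  return Object.assign({ url: url }, opts || {});
}

var options = buildRequestOptions('http://www.example.com', {
  jar: true,      // keep cookies between redirects
  timeout: 5000   // give up after five seconds
});

console.log(options);
// { url: 'http://www.example.com', jar: true, timeout: 5000 }
```

Since later keys win in this kind of merge, a `url` key inside the options object would replace the first argument, so it is probably best left out.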

288 |

289 | ### Handling requests yourself

290 |

291 | If you pass URLs that don't send HTML back, one of SUq's dependencies will return an error. SUq therefore exposes

292 | its `parse` function so you can handle these cases yourself (for example, when you don't want to validate the URL

293 | being passed to SUq), like so:

294 |

295 | ```javascript

296 | var request = require('request');

297 | var suq = require('suq');

298 | // `callback` stands for your own handler: function (err, json, body) { ... }

299 | request("http://www.example.com/image.jpeg", function (err, res, body) {

300 | if (err) return callback(err);

301 | else if (!res || !res.statusCode) return callback(new Error('No response'));

302 | else if (!/^text\/html\b/i.test(res.headers['content-type'] || '')) return callback(null, {}, body);

303 | else suq.parse(body, callback);

304 | });

305 | ```

306 |

307 | ### Mentions

308 |

309 | SUq was made possible by:

310 |

311 | * [cheerio by Matt Mueller](https://github.com/cheeriojs/cheerio.git)

312 |

313 | * [lodash by John-David Dalton](https://lodash.com/)

314 |

315 | * [microdata-node by Jan Potoms](https://github.com/Janpot/microdata-node)

316 |

317 | * [microformat-node by Glenn Jones](https://github.com/glennjones/microformat-node#readme)

318 |

319 | * [minimist and traverse by James Halliday](https://github.com/substack/minimist)

320 |

321 | * [request by Mikeal Rogers](https://github.com/request/request#readme)

322 |

323 | * And of course the awesome folks over at nodeJS.org

324 |

325 |

326 | A huge THANK YOU goes out to all of you for making this easy for me.. :)

327 |

328 |

329 | ### Contributors

330 |

331 | - Matt McFarland

332 | - Tom Sutton

333 | - Oscar Illescas

334 | - Gary Moon

335 |

336 | ### TODOS:

337 |

338 | - Add more explanations regarding options

339 |

340 |

341 | ### Changelog

342 |

343 | #### v1.3.0

344 | - Backfill unit tests, remove microformat truncation.

345 |

346 | #### v1.2.0

347 | - Add new request and documentation for using it.

348 |

349 | #### v1.1.0

350 | - Add anchor tag links thanks to Oscar Illescas

351 |

352 |

353 | #### v1.0.1

354 |

355 | - Fixed issue with missing body (only populate data was coming in) thanks Tom Sutton

356 |

357 | #### v1.0.0

358 |

359 | - Cleaned up Microdata to a much more manageable state.

360 |

361 | - Cleaned up Microformats to a much more manageable state.

362 |

363 | - Cleaned up meta tag scraping

364 |

365 | - Reworked Opengraph tag scraping

366 |

367 | - Removed options support due to async bugs (may add back in later)

368 |

369 | - Added some (not all) XSS protection

370 |

371 | - Added trimming/whitespace removal

372 |

373 | - Remove options support.

374 |

375 | - Fails are graceful, resulting in at least some data returning if an error occurs

376 |

--------------------------------------------------------------------------------
