├── .gitignore

├── LICENSE

├── README.md

├── imgs

└── noe2e_learning_curve.png

└── src

├── deep_dialog

├── __init__.py

├── agents

│ ├── __init__.py

│ ├── agent.py

│ ├── agent_baselines.py

│ ├── agent_cmd.py

│ └── agent_dqn.py

├── checkpoints

│ └── rl_agent

│ │ ├── e2e

│ │ └── agt_9_performance_records.json

│ │ └── noe2e

│ │ ├── agt_9_478_500_0.98000.p

│ │ └── agt_9_performance_records.json

├── data

│ ├── dia_act_nl_pairs.v6.json

│ ├── dia_acts.txt

│ ├── dicts.v3.json

│ ├── dicts.v3.p

│ ├── movie_kb.1k.json

│ ├── movie_kb.1k.p

│ ├── movie_kb.v2.json

│ ├── movie_kb.v2.p

│ ├── slot_set.txt

│ ├── user_goals_all_turns_template.p

│ ├── user_goals_first_turn_template.part.movie.v1.p

│ └── user_goals_first_turn_template.v2.p

├── dialog_config.py

├── dialog_system

│ ├── __init__.py

│ ├── dialog_manager.py

│ ├── dict_reader.py

│ ├── kb_helper.py

│ ├── state_tracker.py

│ └── utils.py

├── models

│ ├── nlg

│ │ └── lstm_tanh_relu_[1468202263.38]_2_0.610.p

│ └── nlu

│ │ └── lstm_[1468447442.91]_39_80_0.921.p

├── nlg

│ ├── __init__.py

│ ├── decoder.py

│ ├── lstm_decoder_tanh.py

│ ├── nlg.py

│ └── utils.py

├── nlu

│ ├── __init__.py

│ ├── bi_lstm.py

│ ├── lstm.py

│ ├── nlu.py

│ ├── seq_seq.py

│ └── utils.py

├── qlearning

│ ├── __init__.py

│ ├── dqn.py

│ └── utils.py

└── usersims

│ ├── __init__.py

│ ├── usersim.py

│ └── usersim_rule.py

├── draw_learning_curve.py

└── run.py

/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2017 MiuLab and Microsoft Research

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

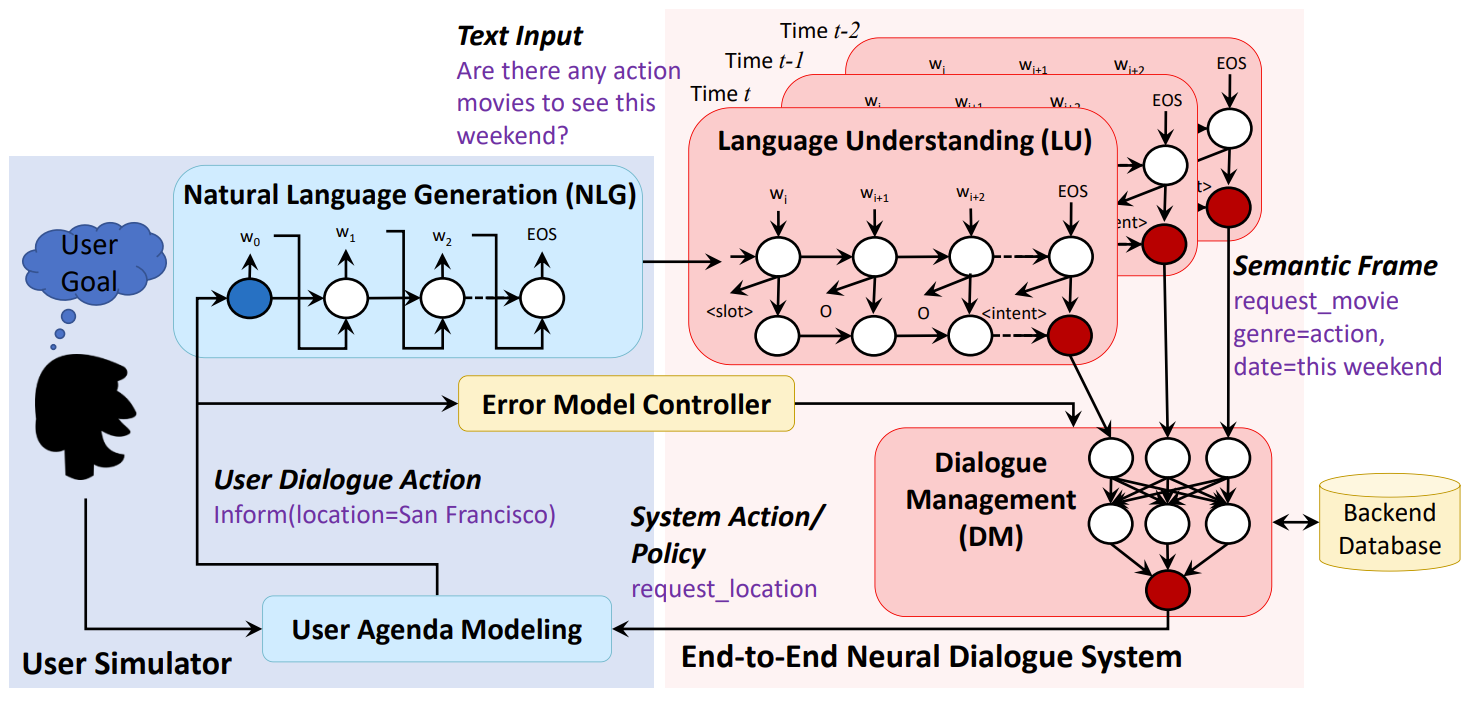

1 | # End-to-End Task-Completion Neural Dialogue Systems

2 | *An implementation of the

3 | [End-to-End Task-Completion Neural Dialogue Systems](http://arxiv.org/abs/1703.01008) and

4 | [A User Simulator for Task-Completion Dialogues](http://arxiv.org/abs/1612.05688).*

5 |

6 |

7 |

8 | This document describes how to run the simulation and different dialogue agents (rule-based, command line, reinforcement learning). More instructions to plug in your customized agents or user simulators are in the Recipe section of the paper.

9 |

10 | ## Content

11 | * [Data](#data)

12 | * [Parameter](#parameter)

13 | * [Running Dialogue Agents](#running-dialogue-agents)

14 | * [Evaluation](#evaluation)

15 | * [Reference](#reference)

16 |

17 | ## Data

18 | all the data is under this folder: ./src/deep_dialog/data

19 |

20 | * Movie Knowledge Bases

21 | `movie_kb.1k.p` --- 94% success rate (for `user_goals_first_turn_template_subsets.v1.p`)

22 | `movie_kb.v2.p` --- 36% success rate (for `user_goals_first_turn_template_subsets.v1.p`)

23 |

24 | * User Goals

25 | `user_goals_first_turn_template.v2.p` --- user goals extracted from the first user turn

26 | `user_goals_first_turn_template.part.movie.v1.p` --- a subset of user goals [Please use this one, the upper bound success rate on movie_kb.1k.json is 0.9765.]

27 |

28 | * NLG Rule Template

29 | `dia_act_nl_pairs.v6.json` --- some predefined NLG rule templates for both User simulator and Agent.

30 |

31 | * Dialog Act Intent

32 | `dia_acts.txt`

33 |

34 | * Dialog Act Slot

35 | `slot_set.txt`

36 |

37 | ## Parameter

38 |

39 | ### Basic setting

40 |

41 | `--agt`: the agent id

42 | `--usr`: the user (simulator) id

43 | `--max_turn`: maximum turns

44 | `--episodes`: how many dialogues to run

45 | `--slot_err_prob`: slot level err probability

46 | `--slot_err_mode`: which kind of slot err mode

47 | `--intent_err_prob`: intent level err probability

48 |

49 |

50 | ### Data setting

51 |

52 | `--movie_kb_path`: the movie kb path for agent side

53 | `--goal_file_path`: the user goal file path for user simulator side

54 |

55 | ### Model setting

56 |

57 | `--dqn_hidden_size`: hidden size for RL (DQN) agent

58 | `--batch_size`: batch size for DQN training

59 | `--simulation_epoch_size`: how many dialogue to be simulated in one epoch

60 | `--warm_start`: use rule policy to fill the experience replay buffer at the beginning

61 | `--warm_start_epochs`: how many dialogues to run in the warm start

62 |

63 | ### Display setting

64 |

65 | `--run_mode`: 0 for display mode (NL); 1 for debug mode (Dia_Act); 2 for debug mode (Dia_Act and NL); >3 for no display (i.e. training)

66 | `--act_level`: 0 for user simulator is Dia_Act level; 1 for user simulator is NL level

67 | `--auto_suggest`: 0 for no auto_suggest; 1 for auto_suggest

68 | `--cmd_input_mode`: 0 for NL input; 1 for Dia_Act input. (this parameter is for AgentCmd only)

69 |

70 | ### Others

71 |

72 | `--write_model_dir`: the directory to write the models

73 | `--trained_model_path`: the path of the trained RL agent model; load the trained model for prediction purpose.

74 |

75 | `--learning_phase`: train/test/all, default is all. You can split the user goal set into train and test set, or do not split (all); We introduce some randomness at the first sampled user action, even for the same user goal, the generated dialogue might be different.

76 |

77 | ## Running Dialogue Agents

78 |

79 | ### Rule Agent

80 | ```sh

81 | python run.py --agt 5 --usr 1 --max_turn 40

82 | --episodes 150

83 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

84 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

85 | --intent_err_prob 0.00

86 | --slot_err_prob 0.00

87 | --episodes 500

88 | --act_level 0

89 | ```

90 |

91 | ### Cmd Agent

92 | NL Input

93 | ```sh

94 | python run.py --agt 0 --usr 1 --max_turn 40

95 | --episodes 150

96 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

97 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

98 | --intent_err_prob 0.00

99 | --slot_err_prob 0.00

100 | --episodes 500

101 | --act_level 0

102 | --run_mode 0

103 | --cmd_input_mode 0

104 | ```

105 | Dia_Act Input

106 | ```sh

107 | python run.py --agt 0 --usr 1 --max_turn 40

108 | --episodes 150

109 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

110 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

111 | --intent_err_prob 0.00

112 | --slot_err_prob 0.00

113 | --episodes 500

114 | --act_level 0

115 | --run_mode 0

116 | --cmd_input_mode 1

117 | ```

118 |

119 | ### End2End RL Agent

120 | Train End2End RL Agent without NLU and NLG (with simulated noise in NLU)

121 | ```sh

122 | python run.py --agt 9 --usr 1 --max_turn 40

123 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

124 | --dqn_hidden_size 80

125 | --experience_replay_pool_size 1000

126 | --episodes 500

127 | --simulation_epoch_size 100

128 | --write_model_dir ./deep_dialog/checkpoints/rl_agent/

129 | --run_mode 3

130 | --act_level 0

131 | --slot_err_prob 0.00

132 | --intent_err_prob 0.00

133 | --batch_size 16

134 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

135 | --warm_start 1

136 | --warm_start_epochs 120

137 | ```

138 | Train End2End RL Agent with NLU and NLG

139 | ```sh

140 | python run.py --agt 9 --usr 1 --max_turn 40

141 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

142 | --dqn_hidden_size 80

143 | --experience_replay_pool_size 1000

144 | --episodes 500

145 | --simulation_epoch_size 100

146 | --write_model_dir ./deep_dialog/checkpoints/rl_agent/

147 | --run_mode 3

148 | --act_level 1

149 | --slot_err_prob 0.00

150 | --intent_err_prob 0.00

151 | --batch_size 16

152 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

153 | --warm_start 1

154 | --warm_start_epochs 120

155 | ```

156 | Test RL Agent with N dialogues:

157 | ```sh

158 | python run.py --agt 9 --usr 1 --max_turn 40

159 | --movie_kb_path ./deep_dialog/data/movie_kb.1k.p

160 | --dqn_hidden_size 80

161 | --experience_replay_pool_size 1000

162 | --episodes 300

163 | --simulation_epoch_size 100

164 | --write_model_dir ./deep_dialog/checkpoints/rl_agent/

165 | --slot_err_prob 0.00

166 | --intent_err_prob 0.00

167 | --batch_size 16

168 | --goal_file_path ./deep_dialog/data/user_goals_first_turn_template.part.movie.v1.p

169 | --trained_model_path ./deep_dialog/checkpoints/rl_agent/noe2e/agt_9_478_500_0.98000.p

170 | --run_mode 3

171 | ```

172 |

173 | ## Evaluation

174 | To evaluate the performance of agents, three metrics are available: success rate, average reward, average turns. Here we show the learning curve with success rate.

175 |

176 | 1. Plotting Learning Curve

177 | ``` python draw_learning_curve.py --result_file ./deep_dialog/checkpoints/rl_agent/noe2e/agt_9_performance_records.json```

178 | 2. Pull out the numbers and draw the curves in Excel

179 |

180 | ## Reference

181 |

182 | Main papers to be cited

183 | ```

184 | @inproceedings{li2017end,

185 | title={End-to-End Task-Completion Neural Dialogue Systems},

186 | author={Li, Xuijun and Chen, Yun-Nung and Li, Lihong and Gao, Jianfeng and Celikyilmaz, Asli},

187 | booktitle={Proceedings of The 8th International Joint Conference on Natural Language Processing},

188 | year={2017}

189 | }

190 |

191 | @article{li2016user,

192 | title={A User Simulator for Task-Completion Dialogues},

193 | author={Li, Xiujun and Lipton, Zachary C and Dhingra, Bhuwan and Li, Lihong and Gao, Jianfeng and Chen, Yun-Nung},

194 | journal={arXiv preprint arXiv:1612.05688},

195 | year={2016}

196 | }

197 |

--------------------------------------------------------------------------------

/imgs/noe2e_learning_curve.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MiuLab/TC-Bot/071a5a08dc85dc80adf51284d6f15f2b491098be/imgs/noe2e_learning_curve.png

--------------------------------------------------------------------------------

/src/deep_dialog/__init__.py:

--------------------------------------------------------------------------------

1 | #

--------------------------------------------------------------------------------

/src/deep_dialog/agents/__init__.py:

--------------------------------------------------------------------------------

1 | from .agent_cmd import *

2 | from .agent_baselines import *

3 | from .agent_dqn import *

--------------------------------------------------------------------------------

/src/deep_dialog/agents/agent.py:

--------------------------------------------------------------------------------

1 | """

2 | Created on May 17, 2016

3 |

4 | @author: xiul, t-zalipt

5 | """

6 |

7 | from deep_dialog import dialog_config

8 |

9 | class Agent:

10 | """ Prototype for all agent classes, defining the interface they must uphold """

11 |

12 | def __init__(self, movie_dict=None, act_set=None, slot_set=None, params=None):

13 | """ Constructor for the Agent class

14 |

15 | Arguments:

16 | movie_dict -- This is here now but doesn't belong - the agent doesn't know about movies

17 | act_set -- The set of acts. #### Shouldn't this be more abstract? Don't we want our agent to be more broadly usable?

18 | slot_set -- The set of available slots

19 | """

20 | self.movie_dict = movie_dict

21 | self.act_set = act_set

22 | self.slot_set = slot_set

23 | self.act_cardinality = len(act_set.keys())

24 | self.slot_cardinality = len(slot_set.keys())

25 |

26 | self.epsilon = params['epsilon']

27 | self.agent_run_mode = params['agent_run_mode']

28 | self.agent_act_level = params['agent_act_level']

29 |

30 |

31 | def initialize_episode(self):

32 | """ Initialize a new episode. This function is called every time a new episode is run. """

33 | self.current_action = {} # TODO Changed this variable's name to current_action

34 | self.current_action['diaact'] = None # TODO Does it make sense to call it a state if it has an act? Which act? The Most recent?

35 | self.current_action['inform_slots'] = {}

36 | self.current_action['request_slots'] = {}

37 | self.current_action['turn'] = 0

38 |

39 | def state_to_action(self, state, available_actions):

40 | """ Take the current state and return an action according to the current exploration/exploitation policy

41 |

42 | We define the agents flexibly so that they can either operate on act_slot representations or act_slot_value representations.

43 | We also define the responses flexibly, returning a dictionary with keys [act_slot_response, act_slot_value_response]. This way the command-line agent can continue to operate with values

44 |

45 | Arguments:

46 | state -- A tuple of (history, kb_results) where history is a sequence of previous actions and kb_results contains information on the number of results matching the current constraints.

47 | user_action -- A legacy representation used to run the command line agent. We should remove this ASAP but not just yet

48 | available_actions -- A list of the allowable actions in the current state

49 |

50 | Returns:

51 | act_slot_action -- An action consisting of one act and >= 0 slots as well as which slots are informed vs requested.

52 | act_slot_value_action -- An action consisting of acts slots and values in the legacy format. This can be used in the future for training agents that take value into account and interact directly with the database

53 | """

54 | act_slot_response = None

55 | act_slot_value_response = None

56 | return {"act_slot_response": act_slot_response, "act_slot_value_response": act_slot_value_response}

57 |

58 |

59 | def register_experience_replay_tuple(self, s_t, a_t, reward, s_tplus1, episode_over):

60 | """ Register feedback from the environment, to be stored as future training data

61 |

62 | Arguments:

63 | s_t -- The state in which the last action was taken

64 | a_t -- The previous agent action

65 | reward -- The reward received immediately following the action

66 | s_tplus1 -- The state transition following the latest action

67 | episode_over -- A boolean value representing whether the this is the final action.

68 |

69 | Returns:

70 | None

71 | """

72 | pass

73 |

74 |

75 | def set_nlg_model(self, nlg_model):

76 | self.nlg_model = nlg_model

77 |

78 | def set_nlu_model(self, nlu_model):

79 | self.nlu_model = nlu_model

80 |

81 |

82 | def add_nl_to_action(self, agent_action):

83 | """ Add NL to Agent Dia_Act """

84 |

85 | if agent_action['act_slot_response']:

86 | agent_action['act_slot_response']['nl'] = ""

87 | user_nlg_sentence = self.nlg_model.convert_diaact_to_nl(agent_action['act_slot_response'], 'agt') #self.nlg_model.translate_diaact(agent_action['act_slot_response']) # NLG

88 | agent_action['act_slot_response']['nl'] = user_nlg_sentence

89 | elif agent_action['act_slot_value_response']:

90 | agent_action['act_slot_value_response']['nl'] = ""

91 | user_nlg_sentence = self.nlg_model.convert_diaact_to_nl(agent_action['act_slot_value_response'], 'agt') #self.nlg_model.translate_diaact(agent_action['act_slot_value_response']) # NLG

92 | agent_action['act_slot_response']['nl'] = user_nlg_sentence

--------------------------------------------------------------------------------

/src/deep_dialog/agents/agent_baselines.py:

--------------------------------------------------------------------------------

1 | """

2 | Created on May 25, 2016

3 |

4 | @author: xiul, t-zalipt

5 | """

6 |

7 | import copy, random

8 | from deep_dialog import dialog_config

9 | from agent import Agent

10 |

11 |

12 | class InformAgent(Agent):

13 | """ A simple agent to test the system. This agent should simply inform all the slots and then issue: taskcomplete. """

14 |

15 | def initialize_episode(self):

16 | self.state = {}

17 | self.state['diaact'] = ''

18 | self.state['inform_slots'] = {}

19 | self.state['request_slots'] = {}

20 | self.state['turn'] = -1

21 | self.current_slot_id = 0

22 |

23 | def state_to_action(self, state):

24 | """ Run current policy on state and produce an action """

25 |

26 | self.state['turn'] += 2

27 | if self.current_slot_id < len(self.slot_set.keys()):

28 | slot = self.slot_set.keys()[self.current_slot_id]

29 | self.current_slot_id += 1

30 |

31 | act_slot_response = {}

32 | act_slot_response['diaact'] = "inform"

33 | act_slot_response['inform_slots'] = {slot: "PLACEHOLDER"}

34 | act_slot_response['request_slots'] = {}

35 | act_slot_response['turn'] = self.state['turn']

36 | else:

37 | act_slot_response = {'diaact': "thanks", 'inform_slots': {}, 'request_slots': {}, 'turn': self.state['turn']}

38 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

39 |

40 |

41 |

42 | class RequestAllAgent(Agent):

43 | """ A simple agent to test the system. This agent should simply request all the slots and then issue: thanks(). """

44 |

45 | def initialize_episode(self):

46 | self.state = {}

47 | self.state['diaact'] = ''

48 | self.state['inform_slots'] = {}

49 | self.state['request_slots'] = {}

50 | self.state['turn'] = -1

51 | self.current_slot_id = 0

52 |

53 | def state_to_action(self, state):

54 | """ Run current policy on state and produce an action """

55 |

56 | self.state['turn'] += 2

57 | if self.current_slot_id < len(dialog_config.sys_request_slots):

58 | slot = dialog_config.sys_request_slots[self.current_slot_id]

59 | self.current_slot_id += 1

60 |

61 | act_slot_response = {}

62 | act_slot_response['diaact'] = "request"

63 | act_slot_response['inform_slots'] = {}

64 | act_slot_response['request_slots'] = {slot: "PLACEHOLDER"}

65 | act_slot_response['turn'] = self.state['turn']

66 | else:

67 | act_slot_response = {'diaact': "thanks", 'inform_slots': {}, 'request_slots': {}, 'turn': self.state['turn']}

68 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

69 |

70 |

71 |

72 | class RandomAgent(Agent):

73 | """ A simple agent to test the interface. This agent should choose actions randomly. """

74 |

75 | def initialize_episode(self):

76 | self.state = {}

77 | self.state['diaact'] = ''

78 | self.state['inform_slots'] = {}

79 | self.state['request_slots'] = {}

80 | self.state['turn'] = -1

81 |

82 |

83 | def state_to_action(self, state):

84 | """ Run current policy on state and produce an action """

85 |

86 | self.state['turn'] += 2

87 | act_slot_response = copy.deepcopy(random.choice(dialog_config.feasible_actions))

88 | act_slot_response['turn'] = self.state['turn']

89 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

90 |

91 |

92 |

93 | class EchoAgent(Agent):

94 | """ A simple agent that informs all requested slots, then issues inform(taskcomplete) when the user stops making requests. """

95 |

96 | def initialize_episode(self):

97 | self.state = {}

98 | self.state['diaact'] = ''

99 | self.state['inform_slots'] = {}

100 | self.state['request_slots'] = {}

101 | self.state['turn'] = -1

102 |

103 |

104 | def state_to_action(self, state):

105 | """ Run current policy on state and produce an action """

106 | user_action = state['user_action']

107 |

108 | self.state['turn'] += 2

109 | act_slot_response = {}

110 | act_slot_response['inform_slots'] = {}

111 | act_slot_response['request_slots'] = {}

112 | ########################################################################

113 | # find out if the user is requesting anything

114 | # if so, inform it

115 | ########################################################################

116 | if user_action['diaact'] == 'request':

117 | requested_slot = user_action['request_slots'].keys()[0]

118 |

119 | act_slot_response['diaact'] = "inform"

120 | act_slot_response['inform_slots'][requested_slot] = "PLACEHOLDER"

121 | else:

122 | act_slot_response['diaact'] = "thanks"

123 |

124 | act_slot_response['turn'] = self.state['turn']

125 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

126 |

127 |

128 | class RequestBasicsAgent(Agent):

129 | """ A simple agent to test the system. This agent should simply request all the basic slots and then issue: thanks(). """

130 |

131 | def initialize_episode(self):

132 | self.state = {}

133 | self.state['diaact'] = 'UNK'

134 | self.state['inform_slots'] = {}

135 | self.state['request_slots'] = {}

136 | self.state['turn'] = -1

137 | self.current_slot_id = 0

138 | self.request_set = ['moviename', 'starttime', 'city', 'date', 'theater', 'numberofpeople']

139 | self.phase = 0

140 |

141 | def state_to_action(self, state):

142 | """ Run current policy on state and produce an action """

143 |

144 | self.state['turn'] += 2

145 | if self.current_slot_id < len(self.request_set):

146 | slot = self.request_set[self.current_slot_id]

147 | self.current_slot_id += 1

148 |

149 | act_slot_response = {}

150 | act_slot_response['diaact'] = "request"

151 | act_slot_response['inform_slots'] = {}

152 | act_slot_response['request_slots'] = {slot: "UNK"}

153 | act_slot_response['turn'] = self.state['turn']

154 | elif self.phase == 0:

155 | act_slot_response = {'diaact': "inform", 'inform_slots': {'taskcomplete': "PLACEHOLDER"}, 'request_slots': {}, 'turn':self.state['turn']}

156 | self.phase += 1

157 | elif self.phase == 1:

158 | act_slot_response = {'diaact': "thanks", 'inform_slots': {}, 'request_slots': {}, 'turn': self.state['turn']}

159 | else:

160 | raise Exception("THIS SHOULD NOT BE POSSIBLE (AGENT CALLED IN UNANTICIPATED WAY)")

161 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

162 |

163 |

--------------------------------------------------------------------------------

/src/deep_dialog/agents/agent_cmd.py:

--------------------------------------------------------------------------------

1 | """

2 | Created on May 17, 2016

3 |

4 | @author: xiul, t-zalipt

5 | """

6 |

7 |

8 | from agent import Agent

9 |

10 | class AgentCmd(Agent):

11 |

12 | def __init__(self, movie_dict=None, act_set=None, slot_set=None, params=None):

13 | """ Constructor for the Agent class """

14 |

15 | self.movie_dict = movie_dict

16 | self.act_set = act_set

17 | self.slot_set = slot_set

18 | self.act_cardinality = len(act_set.keys())

19 | self.slot_cardinality = len(slot_set.keys())

20 |

21 | self.agent_run_mode = params['agent_run_mode']

22 | self.agent_act_level = params['agent_act_level']

23 | self.agent_input_mode = params['cmd_input_mode']

24 |

25 |

26 | def state_to_action(self, state):

27 | """ Generate an action by getting input interactively from the command line """

28 |

29 | user_action = state['user_action']

30 | # get input from the command line

31 | print "Turn", user_action['turn'] + 1, "sys:",

32 | command = raw_input()

33 |

34 | if self.agent_input_mode == 0: # nl

35 | act_slot_value_response = self.generate_diaact_from_nl(command)

36 | elif self.agent_input_mode == 1: # dia_act

37 | act_slot_value_response = self.parse_str_to_diaact(command)

38 |

39 | return {"act_slot_response": act_slot_value_response, "act_slot_value_response": act_slot_value_response}

40 |

41 | def parse_str_to_diaact(self, string):

42 | """ Parse string into Dia_Act Form """

43 |

44 | annot = string.strip(' ').strip('\n').strip('\r')

45 | act = annot

46 |

47 | if annot.find('(') > 0 and annot.find(')') > 0:

48 | act = annot[0: annot.find('(')].strip(' ').lower() #Dia act

49 | annot = annot[annot.find('(')+1:-1].strip(' ') #slot-value pairs

50 | else: annot = ''

51 |

52 | act_slot_value_response = {}

53 | act_slot_value_response['diaact'] = 'UNK'

54 | act_slot_value_response['inform_slots'] = {}

55 | act_slot_value_response['request_slots'] = {}

56 |

57 | if act in self.act_set: # dialog_config.all_acts

58 | act_slot_value_response['diaact'] = act

59 | else:

60 | print ("Something wrong for your input dialog act! Please check your input ...")

61 |

62 | if len(annot) > 0: # slot-pair values: slot[val] = id

63 | annot_segs = annot.split(';') #slot-value pairs

64 | sent_slot_vals = {} # slot-pair real value

65 | sent_rep_vals = {} # slot-pair id value

66 |

67 | for annot_seg in annot_segs:

68 | annot_seg = annot_seg.strip(' ')

69 | annot_slot = annot_seg

70 | if annot_seg.find('=') > 0:

71 | annot_slot = annot_seg[:annot_seg.find('=')]

72 | annot_val = annot_seg[annot_seg.find('=')+1:]

73 | else: #requested

74 | annot_val = 'UNK' # for request

75 | if annot_slot == 'taskcomplete': annot_val = 'FINISH'

76 |

77 | if annot_slot == 'mc_list': continue

78 |

79 | # slot may have multiple values

80 | sent_slot_vals[annot_slot] = []

81 | sent_rep_vals[annot_slot] = []

82 |

83 | if annot_val.startswith('{') and annot_val.endswith('}'):

84 | annot_val = annot_val[1:-1]

85 |

86 | if annot_slot == 'result':

87 | result_annot_seg_arr = annot_val.strip(' ').split('&')

88 | if len(annot_val.strip(' '))> 0:

89 | for result_annot_seg_item in result_annot_seg_arr:

90 | result_annot_seg_arr = result_annot_seg_item.strip(' ').split('=')

91 | result_annot_seg_slot = result_annot_seg_arr[0]

92 | result_annot_seg_slot_val = result_annot_seg_arr[1]

93 |

94 | if result_annot_seg_slot_val == 'UNK': act_slot_value_response['request_slots'][result_annot_seg_slot] = 'UNK'

95 | else: act_slot_value_response['inform_slots'][result_annot_seg_slot] = result_annot_seg_slot_val

96 | else: # result={}

97 | pass

98 | else: # multi-choice or mc_list

99 | annot_val_arr = annot_val.split('#')

100 | act_slot_value_response['inform_slots'][annot_slot] = []

101 | for annot_val_ele in annot_val_arr:

102 | act_slot_value_response['inform_slots'][annot_slot].append(annot_val_ele)

103 | else: # single choice

104 | if annot_slot in self.slot_set.keys():

105 | if annot_val == 'UNK':

106 | act_slot_value_response['request_slots'][annot_slot] = 'UNK'

107 | else:

108 | act_slot_value_response['inform_slots'][annot_slot] = annot_val

109 |

110 | return act_slot_value_response

111 |

112 | def generate_diaact_from_nl(self, string):

113 | """ Generate Dia_Act Form with NLU """

114 |

115 | agent_action = {}

116 | agent_action['diaact'] = 'UNK'

117 | agent_action['inform_slots'] = {}

118 | agent_action['request_slots'] = {}

119 |

120 | if len(string) > 0:

121 | agent_action = self.nlu_model.generate_dia_act(string)

122 |

123 | agent_action['nl'] = string

124 | return agent_action

125 |

126 | def add_nl_to_action(self, agent_action):

127 | """ Add NL to Agent Dia_Act """

128 |

129 | if self.agent_input_mode == 1:

130 | if agent_action['act_slot_response']:

131 | agent_action['act_slot_response']['nl'] = ""

132 | user_nlg_sentence = self.nlg_model.convert_diaact_to_nl(agent_action['act_slot_response'], 'agt')

133 | agent_action['act_slot_response']['nl'] = user_nlg_sentence

134 | elif agent_action['act_slot_value_response']:

135 | agent_action['act_slot_value_response']['nl'] = ""

136 | user_nlg_sentence = self.nlg_model.convert_diaact_to_nl(agent_action['act_slot_value_response'], 'agt')

137 | agent_action['act_slot_response']['nl'] = user_nlg_sentence

138 |

--------------------------------------------------------------------------------

/src/deep_dialog/agents/agent_dqn.py:

--------------------------------------------------------------------------------

1 | '''

2 | Created on Jun 18, 2016

3 |

4 | An DQN Agent

5 |

6 | - An DQN

7 | - Keep an experience_replay pool: training_data

8 | - Keep a copy DQN

9 |

10 | Command: python .\run.py --agt 9 --usr 1 --max_turn 40 --movie_kb_path .\deep_dialog\data\movie_kb.1k.json --dqn_hidden_size 80 --experience_replay_pool_size 1000 --replacement_steps 50 --per_train_epochs 100 --episodes 200 --err_method 2

11 |

12 |

13 | @author: xiul

14 | '''

15 |

16 |

17 | import random, copy, json

18 | import cPickle as pickle

19 | import numpy as np

20 |

21 | from deep_dialog import dialog_config

22 |

23 | from agent import Agent

24 | from deep_dialog.qlearning import DQN

25 |

26 |

27 |

28 | class AgentDQN(Agent):

29 | def __init__(self, movie_dict=None, act_set=None, slot_set=None, params=None):

30 | self.movie_dict = movie_dict

31 | self.act_set = act_set

32 | self.slot_set = slot_set

33 | self.act_cardinality = len(act_set.keys())

34 | self.slot_cardinality = len(slot_set.keys())

35 |

36 | self.feasible_actions = dialog_config.feasible_actions

37 | self.num_actions = len(self.feasible_actions)

38 |

39 | self.epsilon = params['epsilon']

40 | self.agent_run_mode = params['agent_run_mode']

41 | self.agent_act_level = params['agent_act_level']

42 | self.experience_replay_pool = [] #experience replay pool

43 |

44 | self.experience_replay_pool_size = params.get('experience_replay_pool_size', 1000)

45 | self.hidden_size = params.get('dqn_hidden_size', 60)

46 | self.gamma = params.get('gamma', 0.9)

47 | self.predict_mode = params.get('predict_mode', False)

48 | self.warm_start = params.get('warm_start', 0)

49 |

50 | self.max_turn = params['max_turn'] + 4

51 | self.state_dimension = 2 * self.act_cardinality + 7 * self.slot_cardinality + 3 + self.max_turn

52 |

53 | self.dqn = DQN(self.state_dimension, self.hidden_size, self.num_actions)

54 | self.clone_dqn = copy.deepcopy(self.dqn)

55 |

56 | self.cur_bellman_err = 0

57 |

58 | # Prediction Mode: load trained DQN model

59 | if params['trained_model_path'] != None:

60 | self.dqn.model = copy.deepcopy(self.load_trained_DQN(params['trained_model_path']))

61 | self.clone_dqn = copy.deepcopy(self.dqn)

62 | self.predict_mode = True

63 | self.warm_start = 2

64 |

65 |

66 | def initialize_episode(self):

67 | """ Initialize a new episode. This function is called every time a new episode is run. """

68 |

69 | self.current_slot_id = 0

70 | self.phase = 0

71 | self.request_set = ['moviename', 'starttime', 'city', 'date', 'theater', 'numberofpeople']

72 |

73 |

74 | def state_to_action(self, state):

75 | """ DQN: Input state, output action """

76 |

77 | self.representation = self.prepare_state_representation(state)

78 | self.action = self.run_policy(self.representation)

79 | act_slot_response = copy.deepcopy(self.feasible_actions[self.action])

80 | return {'act_slot_response': act_slot_response, 'act_slot_value_response': None}

81 |

82 |

83 | def prepare_state_representation(self, state):

84 | """ Create the representation for each state """

85 |

86 | user_action = state['user_action']

87 | current_slots = state['current_slots']

88 | kb_results_dict = state['kb_results_dict']

89 | agent_last = state['agent_action']

90 |

91 | ########################################################################

92 | # Create one-hot of acts to represent the current user action

93 | ########################################################################

94 | user_act_rep = np.zeros((1, self.act_cardinality))

95 | user_act_rep[0,self.act_set[user_action['diaact']]] = 1.0

96 |

97 | ########################################################################

98 | # Create bag of inform slots representation to represent the current user action

99 | ########################################################################

100 | user_inform_slots_rep = np.zeros((1, self.slot_cardinality))

101 | for slot in user_action['inform_slots'].keys():

102 | user_inform_slots_rep[0,self.slot_set[slot]] = 1.0

103 |

104 | ########################################################################

105 | # Create bag of request slots representation to represent the current user action

106 | ########################################################################

107 | user_request_slots_rep = np.zeros((1, self.slot_cardinality))

108 | for slot in user_action['request_slots'].keys():

109 | user_request_slots_rep[0, self.slot_set[slot]] = 1.0

110 |

111 | ########################################################################

112 | # Creat bag of filled_in slots based on the current_slots

113 | ########################################################################

114 | current_slots_rep = np.zeros((1, self.slot_cardinality))

115 | for slot in current_slots['inform_slots']:

116 | current_slots_rep[0, self.slot_set[slot]] = 1.0

117 |

118 | ########################################################################

119 | # Encode last agent act

120 | ########################################################################

121 | agent_act_rep = np.zeros((1,self.act_cardinality))

122 | if agent_last:

123 | agent_act_rep[0, self.act_set[agent_last['diaact']]] = 1.0

124 |

125 | ########################################################################

126 | # Encode last agent inform slots

127 | ########################################################################

128 | agent_inform_slots_rep = np.zeros((1, self.slot_cardinality))

129 | if agent_last:

130 | for slot in agent_last['inform_slots'].keys():

131 | agent_inform_slots_rep[0,self.slot_set[slot]] = 1.0

132 |

133 | ########################################################################

134 | # Encode last agent request slots

135 | ########################################################################

136 | agent_request_slots_rep = np.zeros((1, self.slot_cardinality))

137 | if agent_last:

138 | for slot in agent_last['request_slots'].keys():

139 | agent_request_slots_rep[0,self.slot_set[slot]] = 1.0

140 |

141 | turn_rep = np.zeros((1,1)) + state['turn'] / 10.

142 |

143 | ########################################################################

144 | # One-hot representation of the turn count?

145 | ########################################################################

146 | turn_onehot_rep = np.zeros((1, self.max_turn))

147 | turn_onehot_rep[0, state['turn']] = 1.0

148 |

149 | ########################################################################

150 | # Representation of KB results (scaled counts)

151 | ########################################################################

152 | kb_count_rep = np.zeros((1, self.slot_cardinality + 1)) + kb_results_dict['matching_all_constraints'] / 100.

153 | for slot in kb_results_dict:

154 | if slot in self.slot_set:

155 | kb_count_rep[0, self.slot_set[slot]] = kb_results_dict[slot] / 100.

156 |

157 | ########################################################################

158 | # Representation of KB results (binary)

159 | ########################################################################

160 | kb_binary_rep = np.zeros((1, self.slot_cardinality + 1)) + np.sum( kb_results_dict['matching_all_constraints'] > 0.)

161 | for slot in kb_results_dict:

162 | if slot in self.slot_set:

163 | kb_binary_rep[0, self.slot_set[slot]] = np.sum( kb_results_dict[slot] > 0.)

164 |

165 | self.final_representation = np.hstack([user_act_rep, user_inform_slots_rep, user_request_slots_rep, agent_act_rep, agent_inform_slots_rep, agent_request_slots_rep, current_slots_rep, turn_rep, turn_onehot_rep, kb_binary_rep, kb_count_rep])

166 | return self.final_representation

167 |

168 | def run_policy(self, representation):

169 | """ epsilon-greedy policy """

170 |

171 | if random.random() < self.epsilon:

172 | return random.randint(0, self.num_actions - 1)

173 | else:

174 | if self.warm_start == 1:

175 | if len(self.experience_replay_pool) > self.experience_replay_pool_size:

176 | self.warm_start = 2

177 | return self.rule_policy()

178 | else:

179 | return self.dqn.predict(representation, {}, predict_model=True)

180 |

181 | def rule_policy(self):

182 | """ Rule Policy """

183 |

184 | if self.current_slot_id < len(self.request_set):

185 | slot = self.request_set[self.current_slot_id]

186 | self.current_slot_id += 1

187 |

188 | act_slot_response = {}

189 | act_slot_response['diaact'] = "request"

190 | act_slot_response['inform_slots'] = {}

191 | act_slot_response['request_slots'] = {slot: "UNK"}

192 | elif self.phase == 0:

193 | act_slot_response = {'diaact': "inform", 'inform_slots': {'taskcomplete': "PLACEHOLDER"}, 'request_slots': {} }

194 | self.phase += 1

195 | elif self.phase == 1:

196 | act_slot_response = {'diaact': "thanks", 'inform_slots': {}, 'request_slots': {} }

197 |

198 | return self.action_index(act_slot_response)

199 |

200 | def action_index(self, act_slot_response):

201 | """ Return the index of action """

202 |

203 | for (i, action) in enumerate(self.feasible_actions):

204 | if act_slot_response == action:

205 | return i

206 | print act_slot_response

207 | raise Exception("action index not found")

208 | return None

209 |

210 |

211 | def register_experience_replay_tuple(self, s_t, a_t, reward, s_tplus1, episode_over):

212 | """ Register feedback from the environment, to be stored as future training data """

213 |

214 | state_t_rep = self.prepare_state_representation(s_t)

215 | action_t = self.action

216 | reward_t = reward

217 | state_tplus1_rep = self.prepare_state_representation(s_tplus1)

218 | training_example = (state_t_rep, action_t, reward_t, state_tplus1_rep, episode_over)

219 |

220 | if self.predict_mode == False: # Training Mode

221 | if self.warm_start == 1:

222 | self.experience_replay_pool.append(training_example)

223 | else: # Prediction Mode

224 | self.experience_replay_pool.append(training_example)

225 |

226 | def train(self, batch_size=1, num_batches=100):

227 | """ Train DQN with experience replay """

228 |

229 | for iter_batch in range(num_batches):

230 | self.cur_bellman_err = 0

231 | for iter in range(len(self.experience_replay_pool)/(batch_size)):

232 | batch = [random.choice(self.experience_replay_pool) for i in xrange(batch_size)]

233 | batch_struct = self.dqn.singleBatch(batch, {'gamma': self.gamma}, self.clone_dqn)

234 | self.cur_bellman_err += batch_struct['cost']['total_cost']

235 |

236 | print ("cur bellman err %.4f, experience replay pool %s" % (float(self.cur_bellman_err)/len(self.experience_replay_pool), len(self.experience_replay_pool)))

237 |

238 |

239 | ################################################################################

240 | # Debug Functions

241 | ################################################################################

242 | def save_experience_replay_to_file(self, path):

243 | """ Save the experience replay pool to a file """

244 |

245 | try:

246 | pickle.dump(self.experience_replay_pool, open(path, "wb"))

247 | print 'saved model in %s' % (path, )

248 | except Exception, e:

249 | print 'Error: Writing model fails: %s' % (path, )

250 | print e

251 |

252 | def load_experience_replay_from_file(self, path):

253 | """ Load the experience replay pool from a file"""

254 |

255 | self.experience_replay_pool = pickle.load(open(path, 'rb'))

256 |

257 |

258 | def load_trained_DQN(self, path):

259 | """ Load the trained DQN from a file """

260 |

261 | trained_file = pickle.load(open(path, 'rb'))

262 | model = trained_file['model']

263 |

264 | print "trained DQN Parameters:", json.dumps(trained_file['params'], indent=2)

265 | return model

--------------------------------------------------------------------------------

/src/deep_dialog/checkpoints/rl_agent/noe2e/agt_9_performance_records.json:

--------------------------------------------------------------------------------

1 | {"ave_turns": {"0": 42.0, "1": 27.34, "2": 36.84, "3": 41.36, "4": 42.0, "5": 41.52, "6": 35.46, "7": 42.0, "8": 40.16, "9": 38.76, "10": 36.82, "11": 37.94, "12": 35.76, "13": 35.52, "14": 35.84, "15": 38.14, "16": 33.12, "17": 28.24, "18": 29.04, "19": 34.1, "20": 35.12, "21": 28.56, "22": 27.78, "23": 25.58, "24": 28.78, "25": 27.02, "26": 22.84, "27": 25.28, "28": 29.58, "29": 26.78, "30": 25.64, "31": 22.22, "32": 23.96, "33": 20.1, "34": 22.64, "35": 16.12, "36": 15.96, "37": 21.06, "38": 29.94, "39": 15.32, "40": 20.36, "41": 22.36, "42": 17.12, "43": 18.18, "44": 15.2, "45": 13.92, "46": 16.42, "47": 19.98, "48": 20.1, "49": 18.42, "50": 15.84, "51": 15.4, "52": 16.84, "53": 14.74, "54": 16.72, "55": 16.88, "56": 14.58, "57": 15.98, "58": 17.06, "59": 18.18, "60": 15.0, "61": 17.34, "62": 16.7, "63": 15.14, "64": 15.4, "65": 15.96, "66": 16.46, "67": 17.14, "68": 16.04, "69": 17.0, "70": 15.78, "71": 16.62, "72": 17.74, "73": 16.94, "74": 18.16, "75": 17.04, "76": 17.1, "77": 14.12, "78": 16.42, "79": 16.3, "80": 16.08, "81": 15.86, "82": 17.52, "83": 15.48, "84": 15.56, "85": 17.16, "86": 15.24, "87": 15.42, "88": 15.16, "89": 17.56, "90": 15.78, "91": 15.04, "92": 15.28, "93": 15.98, "94": 16.48, "95": 15.52, "96": 14.82, "97": 15.72, "98": 13.94, "99": 17.32, "100": 15.98, "101": 15.0, "102": 13.54, "103": 13.78, "104": 16.0, "105": 14.52, "106": 14.88, "107": 15.94, "108": 14.78, "109": 15.9, "110": 15.6, "111": 16.62, "112": 15.06, "113": 16.9, "114": 14.36, "115": 16.24, "116": 15.04, "117": 15.3, "118": 14.24, "119": 15.36, "120": 14.74, "121": 15.42, "122": 18.52, "123": 15.82, "124": 17.86, "125": 13.16, "126": 15.42, "127": 14.42, "128": 15.12, "129": 13.9, "130": 16.68, "131": 14.42, "132": 14.56, "133": 14.7, "134": 17.06, "135": 15.92, "136": 15.16, "137": 14.08, "138": 15.94, "139": 14.38, "140": 15.6, "141": 17.02, "142": 15.24, "143": 14.48, "144": 15.06, "145": 15.4, "146": 16.16, "147": 14.56, "148": 14.18, "149": 14.46, "150": 13.72, "151": 13.84, "152": 15.04, "153": 16.02, "154": 15.54, "155": 14.94, "156": 13.86, "157": 16.02, "158": 14.42, "159": 15.1, "160": 15.76, "161": 14.3, "162": 12.88, "163": 14.86, "164": 13.0, "165": 14.02, "166": 14.44, "167": 16.58, "168": 15.14, "169": 15.26, "170": 15.28, "171": 14.26, "172": 16.56, "173": 14.3, "174": 15.26, "175": 14.06, "176": 16.8, "177": 15.12, "178": 15.22, "179": 15.98, "180": 14.6, "181": 15.36, "182": 15.44, "183": 13.1, "184": 14.6, "185": 15.1, "186": 14.54, "187": 15.92, "188": 14.96, "189": 13.56, "190": 15.7, "191": 15.22, "192": 14.46, "193": 15.28, "194": 14.28, "195": 13.28, "196": 14.68, "197": 13.76, "198": 14.04, "199": 16.78, "200": 16.02, "201": 13.84, "202": 15.22, "203": 14.76, "204": 14.54, "205": 15.76, "206": 14.98, "207": 13.58, "208": 15.14, "209": 15.48, "210": 14.44, "211": 14.3, "212": 13.66, "213": 14.64, "214": 14.08, "215": 13.32, "216": 13.68, "217": 13.22, "218": 14.8, "219": 14.34, "220": 12.76, "221": 15.5, "222": 13.38, "223": 15.26, "224": 13.26, "225": 14.36, "226": 13.58, "227": 15.04, "228": 12.44, "229": 14.58, "230": 14.04, "231": 12.76, "232": 13.36, "233": 14.52, "234": 12.1, "235": 13.7, "236": 13.78, "237": 15.1, "238": 13.74, "239": 15.9, "240": 12.92, "241": 14.38, "242": 14.98, "243": 16.86, "244": 13.1, "245": 14.62, "246": 14.54, "247": 16.12, "248": 14.52, "249": 14.62, "250": 13.72, "251": 14.84, "252": 13.46, "253": 13.1, "254": 13.4, "255": 14.34, "256": 13.92, "257": 14.4, "258": 14.92, "259": 13.14, "260": 12.92, "261": 15.1, "262": 13.38, "263": 13.68, "264": 15.58, "265": 12.8, "266": 14.28, "267": 13.1, "268": 13.9, "269": 14.26, "270": 14.6, "271": 13.3, "272": 13.26, "273": 13.04, "274": 14.36, "275": 12.42, "276": 13.86, "277": 14.98, "278": 11.78, "279": 15.42, "280": 13.06, "281": 13.56, "282": 13.96, "283": 14.04, "284": 12.72, "285": 13.9, "286": 13.98, "287": 14.26, "288": 15.42, "289": 14.96, "290": 14.4, "291": 13.08, "292": 14.28, "293": 14.84, "294": 13.92, "295": 12.5, "296": 14.7, "297": 13.82, "298": 13.12, "299": 12.68, "300": 12.7, "301": 13.32, "302": 14.48, "303": 12.6, "304": 13.52, "305": 13.68, "306": 12.42, "307": 14.26, "308": 13.9, "309": 14.98, "310": 14.04, "311": 12.64, "312": 12.54, "313": 14.8, "314": 12.8, "315": 12.94, "316": 13.72, "317": 13.52, "318": 12.26, "319": 14.72, "320": 13.74, "321": 13.4, "322": 12.72, "323": 13.28, "324": 13.54, "325": 13.68, "326": 14.2, "327": 12.78, "328": 12.64, "329": 13.14, "330": 13.6, "331": 13.22, "332": 13.5, "333": 14.0, "334": 13.3, "335": 14.38, "336": 13.76, "337": 12.2, "338": 13.44, "339": 13.42, "340": 14.36, "341": 14.58, "342": 12.8, "343": 12.54, "344": 13.24, "345": 11.76, "346": 12.98, "347": 13.06, "348": 15.0, "349": 15.18, "350": 14.3, "351": 13.74, "352": 14.56, "353": 13.54, "354": 12.04, "355": 14.28, "356": 13.18, "357": 12.28, "358": 12.8, "359": 12.96, "360": 15.24, "361": 14.18, "362": 15.76, "363": 13.76, "364": 14.58, "365": 13.36, "366": 12.34, "367": 12.02, "368": 14.06, "369": 14.7, "370": 13.92, "371": 12.86, "372": 14.3, "373": 13.56, "374": 14.28, "375": 14.92, "376": 14.86, "377": 12.54, "378": 13.4, "379": 13.28, "380": 14.68, "381": 13.68, "382": 14.66, "383": 12.04, "384": 13.86, "385": 12.22, "386": 13.3, "387": 13.56, "388": 12.36, "389": 13.02, "390": 12.5, "391": 13.0, "392": 13.8, "393": 13.94, "394": 13.12, "395": 13.46, "396": 12.62, "397": 14.04, "398": 13.1, "399": 13.44, "400": 13.38, "401": 13.14, "402": 13.02, "403": 13.3, "404": 13.54, "405": 12.74, "406": 12.62, "407": 13.98, "408": 14.32, "409": 13.08, "410": 12.14, "411": 14.02, "412": 13.42, "413": 12.78, "414": 14.08, "415": 14.8, "416": 14.62, "417": 12.88, "418": 13.84, "419": 13.26, "420": 13.68, "421": 13.08, "422": 14.94, "423": 14.22, "424": 12.88, "425": 13.2, "426": 14.2, "427": 14.1, "428": 14.92, "429": 12.8, "430": 13.78, "431": 13.24, "432": 14.36, "433": 15.2, "434": 12.64, "435": 14.12, "436": 14.04, "437": 13.6, "438": 13.8, "439": 14.26, "440": 12.76, "441": 13.48, "442": 12.8, "443": 13.8, "444": 12.94, "445": 13.8, "446": 14.52, "447": 14.3, "448": 13.28, "449": 13.7, "450": 15.18, "451": 14.32, "452": 13.36, "453": 15.7, "454": 15.18, "455": 12.2, "456": 13.0, "457": 12.84, "458": 13.84, "459": 14.88, "460": 17.34, "461": 14.76, "462": 12.06, "463": 13.64, "464": 13.1, "465": 14.16, "466": 13.54, "467": 12.82, "468": 12.36, "469": 13.78, "470": 13.9, "471": 14.6, "472": 11.9, "473": 14.18, "474": 13.84, "475": 14.32, "476": 12.92, "477": 12.84, "478": 11.18, "479": 14.7, "480": 14.34, "481": 13.32, "482": 12.52, "483": 13.48, "484": 13.2, "485": 12.0, "486": 15.96, "487": 13.8, "488": 15.06, "489": 12.38, "490": 14.9, "491": 13.52, "492": 11.68, "493": 14.74, "494": 13.5, "495": 15.46, "496": 14.28, "497": 13.54, "498": 13.84, "499": 12.74, "500": 15.26, "501": 13.62, "502": 13.28, "503": 14.34, "504": 13.28, "505": 12.38, "506": 16.44, "507": 15.34, "508": 13.32, "509": 13.24, "510": 14.34, "511": 12.68, "512": 13.16, "513": 13.98, "514": 14.26, "515": 14.44, "516": 13.96, "517": 15.44, "518": 13.08, "519": 13.18, "520": 15.2, "521": 15.94, "522": 13.54, "523": 13.7, "524": 14.44, "525": 12.52, "526": 13.44, "527": 13.32, "528": 13.54, "529": 13.42, "530": 12.84, "531": 13.28, "532": 13.84, "533": 13.3, "534": 14.46, "535": 11.36, "536": 14.74, "537": 13.26, "538": 12.26, "539": 13.62, "540": 13.86, "541": 15.36, "542": 13.94, "543": 13.2, "544": 12.16, "545": 13.92, "546": 13.76, "547": 14.2, "548": 13.58, "549": 12.28, "550": 13.36, "551": 12.9, "552": 13.76, "553": 14.18, "554": 12.72, "555": 12.98, "556": 13.68, "557": 13.8, "558": 12.62, "559": 13.24, "560": 13.66, "561": 12.46, "562": 12.5, "563": 14.66, "564": 14.0, "565": 13.7, "566": 13.28, "567": 11.68, "568": 13.2, "569": 13.82, "570": 12.38, "571": 13.82, "572": 12.5, "573": 12.68, "574": 13.26, "575": 14.72, "576": 12.26, "577": 14.4, "578": 12.6, "579": 14.88, "580": 12.74, "581": 14.54, "582": 14.94, "583": 13.16, "584": 11.7, "585": 15.2, "586": 12.06, "587": 14.04, "588": 13.04, "589": 15.66, "590": 14.36, "591": 12.56, "592": 13.18, "593": 14.22, "594": 13.48, "595": 13.14, "596": 13.88, "597": 12.28, "598": 13.08, "599": 13.8}, "ave_reward": {"0": -60.0, "1": -52.67, "2": -57.42, "3": -59.68, "4": -60.0, "5": -59.76, "6": -53.13, "7": -60.0, "8": -54.28, "9": -43.98, "10": -39.41, "11": -41.17, "12": -31.68, "13": -32.76, "14": -43.72, "15": -37.67, "16": -18.36, "17": 5.68, "18": -0.72, "19": -23.65, "20": -19.36, "21": 1.92, "22": 2.31, "23": 7.01, "24": -0.59, "25": 3.89, "26": 16.78, "27": 9.56, "28": 1.41, "29": 6.41, "30": 17.78, "31": 14.69, "32": 23.42, "33": 28.95, "34": 14.48, "35": 29.74, "36": 40.62, "37": 32.07, "38": 0.03, "39": 37.34, "40": 36.02, "41": 25.42, "42": 44.84, "43": 41.91, "44": 49.4, "45": 53.64, "46": 39.19, "47": 26.61, "48": 42.15, "49": 39.39, "50": 49.08, "51": 49.3, "52": 52.18, "53": 50.83, "54": 47.44, "55": 44.96, "56": 58.11, "57": 53.81, "58": 50.87, "59": 43.11, "60": 59.1, "61": 47.13, "62": 51.05, "63": 59.03, "64": 56.5, "65": 47.82, "66": 53.57, "67": 48.43, "68": 53.78, "69": 49.7, "70": 53.91, "71": 51.09, "72": 48.13, "73": 50.93, "74": 46.72, "75": 52.08, "76": 49.65, "77": 63.14, "78": 51.19, "79": 53.65, "80": 54.96, "81": 56.27, "82": 47.04, "83": 57.66, "84": 56.42, "85": 50.82, "86": 56.58, "87": 56.49, "88": 57.82, "89": 48.22, "90": 55.11, "91": 60.28, "92": 58.96, "93": 56.21, "94": 53.56, "95": 55.24, "96": 60.39, "97": 56.34, "98": 63.23, "99": 47.14, "100": 55.01, "101": 59.1, "102": 64.63, "103": 64.51, "104": 53.8, "105": 59.34, "106": 59.16, "107": 53.83, "108": 60.41, "109": 52.65, "110": 52.8, "111": 51.09, "112": 59.07, "113": 47.35, "114": 60.62, "115": 51.28, "116": 56.68, "117": 56.55, "118": 61.88, "119": 56.52, "120": 59.23, "121": 55.29, "122": 44.14, "123": 53.89, "124": 45.67, "125": 66.02, "126": 56.49, "127": 59.39, "128": 57.84, "129": 62.05, "130": 49.86, "131": 60.59, "132": 59.32, "133": 59.25, "134": 48.47, "135": 55.04, "136": 55.42, "137": 61.96, "138": 52.63, "139": 59.41, "140": 55.2, "141": 49.69, "142": 56.58, "143": 60.56, "144": 56.67, "145": 55.3, "146": 53.72, "147": 59.32, "148": 61.91, "149": 60.57, "150": 63.34, "151": 62.08, "152": 57.88, "153": 51.39, "154": 55.23, "155": 57.93, "156": 62.07, "157": 52.59, "158": 59.39, "159": 57.85, "160": 53.92, "161": 59.45, "162": 67.36, "163": 57.97, "164": 66.1, "165": 60.79, "166": 60.58, "167": 51.11, "168": 56.63, "169": 56.57, "170": 56.56, "171": 60.67, "172": 51.12, "173": 59.45, "174": 56.57, "175": 63.17, "176": 48.6, "177": 57.84, "178": 56.59, "179": 52.61, "180": 60.5, "181": 56.52, "182": 56.48, "183": 66.05, "184": 60.5, "185": 56.65, "186": 60.53, "187": 53.84, "188": 57.92, "189": 64.62, "190": 53.95, "191": 57.79, "192": 59.37, "193": 56.56, "194": 60.66, "195": 65.96, "196": 59.26, "197": 64.52, "198": 61.98, "199": 48.61, "200": 53.79, "201": 62.08, "202": 57.79, "203": 59.22, "204": 60.53, "205": 55.12, "206": 56.71, "207": 62.21, "208": 56.63, "209": 56.46, "210": 59.38, "211": 60.65, "212": 64.57, "213": 59.28, "214": 61.96, "215": 64.74, "216": 64.56, "217": 64.79, "218": 58.0, "219": 59.43, "220": 67.42, "221": 55.25, "222": 64.71, "223": 57.77, "224": 65.97, "225": 60.62, "226": 64.61, "227": 56.68, "228": 68.78, "229": 58.11, "230": 61.98, "231": 68.62, "232": 65.92, "233": 59.34, "234": 70.15, "235": 63.35, "236": 63.31, "237": 59.05, "238": 63.33, "239": 52.65, "240": 67.34, "241": 61.81, "242": 57.91, "243": 49.77, "244": 67.25, "245": 59.29, "246": 60.53, "247": 52.54, "248": 59.34, "249": 60.49, "250": 63.34, "251": 59.18, "252": 63.47, "253": 66.05, "254": 64.7, "255": 60.63, "256": 63.24, "257": 60.6, "258": 59.14, "259": 67.23, "260": 67.34, "261": 57.85, "262": 64.71, "263": 64.56, "264": 54.01, "265": 67.4, "266": 61.86, "267": 66.05, "268": 62.05, "269": 61.87, "270": 59.3, "271": 64.75, "272": 65.97, "273": 66.08, "274": 60.62, "275": 68.79, "276": 63.27, "277": 57.91, "278": 71.51, "279": 56.49, "280": 66.07, "281": 62.22, "282": 62.02, "283": 61.98, "284": 67.44, "285": 62.05, "286": 62.01, "287": 60.67, "288": 56.49, "289": 57.92, "290": 60.6, "291": 66.06, "292": 60.66, "293": 59.18, "294": 63.24, "295": 68.75, "296": 59.25, "297": 63.29, "298": 66.04, "299": 67.46, "300": 66.25, "301": 64.74, "302": 60.56, "303": 67.5, "304": 63.44, "305": 63.36, "306": 68.79, "307": 58.27, "308": 60.85, "309": 56.71, "310": 60.78, "311": 67.48, "312": 67.53, "313": 58.0, "314": 67.4, "315": 66.13, "316": 63.34, "317": 64.64, "318": 70.07, "319": 59.24, "320": 63.33, "321": 64.7, "322": 67.44, "323": 65.96, "324": 62.23, "325": 63.36, "326": 60.7, "327": 68.61, "328": 67.48, "329": 66.03, "330": 63.4, "331": 65.99, "332": 64.65, "333": 62.0, "334": 64.75, "335": 60.61, "336": 63.32, "337": 70.1, "338": 65.88, "339": 64.69, "340": 60.62, "341": 59.31, "342": 67.4, "343": 68.73, "344": 64.78, "345": 71.52, "346": 66.11, "347": 66.07, "348": 57.9, "349": 57.81, "350": 61.85, "351": 63.33, "352": 59.32, "353": 63.43, "354": 68.98, "355": 60.66, "356": 66.01, "357": 68.86, "358": 67.4, "359": 66.12, "360": 56.58, "361": 60.71, "362": 53.92, "363": 63.32, "364": 58.11, "365": 64.72, "366": 67.63, "367": 70.19, "368": 63.17, "369": 59.25, "370": 62.04, "371": 67.37, "372": 60.65, "373": 63.42, "374": 59.46, "375": 57.94, "376": 57.97, "377": 66.33, "378": 63.5, "379": 64.76, "380": 58.06, "381": 62.16, "382": 58.07, "383": 70.18, "384": 63.27, "385": 70.09, "386": 64.75, "387": 63.42, "388": 68.82, "389": 66.09, "390": 67.55, "391": 66.1, "392": 63.3, "393": 62.03, "394": 66.04, "395": 63.47, "396": 67.49, "397": 61.98, "398": 64.85, "399": 64.68, "400": 65.91, "401": 64.83, "402": 66.09, "403": 64.75, "404": 62.23, "405": 66.23, "406": 67.49, "407": 62.01, "408": 60.64, "409": 67.26, "410": 70.13, "411": 60.79, "412": 64.69, "413": 66.21, "414": 60.76, "415": 58.0, "416": 59.29, "417": 66.16, "418": 62.08, "419": 65.97, "420": 63.36, "421": 66.06, "422": 57.93, "423": 60.69, "424": 68.56, "425": 64.8, "426": 60.7, "427": 61.95, "428": 57.94, "429": 67.4, "430": 62.11, "431": 63.58, "432": 59.42, "433": 56.6, "434": 67.48, "435": 60.74, "436": 61.98, "437": 63.4, "438": 63.3, "439": 59.47, "440": 67.42, "441": 64.66, "442": 66.2, "443": 62.1, "444": 66.13, "445": 62.1, "446": 59.34, "447": 59.45, "448": 63.56, "449": 63.35, "450": 56.61, "451": 59.44, "452": 63.52, "453": 53.95, "454": 56.61, "455": 68.9, "456": 66.1, "457": 67.38, "458": 62.08, "459": 59.16, "460": 48.33, "461": 58.02, "462": 68.97, "463": 63.38, "464": 64.85, "465": 60.72, "466": 62.23, "467": 66.19, "468": 67.62, "469": 62.11, "470": 62.05, "471": 56.9, "472": 70.25, "473": 60.71, "474": 64.48, "475": 60.64, "476": 66.14, "477": 66.18, "478": 73.01, "479": 56.85, "480": 60.63, "481": 63.54, "482": 67.54, "483": 64.66, "484": 66.0, "485": 69.0, "486": 53.82, "487": 62.1, "488": 57.87, "489": 68.81, "490": 56.75, "491": 63.44, "492": 71.56, "493": 58.03, "494": 63.45, "495": 55.27, "496": 60.66, "497": 63.43, "498": 62.08, "499": 67.43, "500": 56.57, "501": 63.39, "502": 63.56, "503": 59.43, "504": 63.56, "505": 67.61, "506": 51.18, "507": 56.53, "508": 63.54, "509": 64.78, "510": 60.63, "511": 67.46, "512": 64.82, "513": 62.01, "514": 60.67, "515": 59.38, "516": 62.02, "517": 55.28, "518": 64.86, "519": 64.81, "520": 56.6, "521": 52.63, "522": 64.63, "523": 63.35, "524": 59.38, "525": 66.34, "526": 64.68, "527": 63.54, "528": 63.43, "529": 65.89, "530": 66.18, "531": 64.76, "532": 60.88, "533": 64.75, "534": 59.37, "535": 72.92, "536": 59.23, "537": 64.77, "538": 68.87, "539": 62.19, "540": 62.07, "541": 56.52, "542": 62.03, "543": 64.8, "544": 68.92, "545": 62.04, "546": 63.32, "547": 60.7, "548": 59.81, "549": 68.86, "550": 64.72, "551": 67.35, "552": 63.32, "553": 60.71, "554": 67.44, "555": 66.11, "556": 62.16, "557": 62.1, "558": 66.29, "559": 64.78, "560": 63.37, "561": 68.77, "562": 68.75, "563": 59.27, "564": 62.0, "565": 64.55, "566": 64.76, "567": 71.56, "568": 66.0, "569": 63.29, "570": 68.81, "571": 62.09, "572": 67.55, "573": 65.06, "574": 63.57, "575": 58.04, "576": 68.87, "577": 59.4, "578": 68.7, "579": 55.56, "580": 67.43, "581": 59.33, "582": 56.73, "583": 64.82, "584": 71.55, "585": 56.6, "586": 68.97, "587": 60.78, "588": 63.68, "589": 53.97, "590": 60.62, "591": 67.52, "592": 64.81, "593": 60.69, "594": 64.66, "595": 64.83, "596": 62.06, "597": 68.86, "598": 64.86, "599": 62.1}, "success_rate": {"0": 0.0, "1": 0.0, "2": 0.0, "3": 0.0, "4": 0.0, "5": 0.0, "6": 0.03, "7": 0.0, "8": 0.04, "9": 0.12, "10": 0.15, "11": 0.14, "12": 0.21, "13": 0.2, "14": 0.11, "15": 0.17, "16": 0.31, "17": 0.49, "18": 0.44, "19": 0.27, "20": 0.31, "21": 0.46, "22": 0.46, "23": 0.49, "24": 0.44, "25": 0.47, "26": 0.56, "27": 0.51, "28": 0.46, "29": 0.49, "30": 0.58, "31": 0.54, "32": 0.62, "33": 0.65, "34": 0.54, "35": 0.64, "36": 0.73, "37": 0.68, "38": 0.45, "39": 0.7, "40": 0.71, "41": 0.63, "42": 0.77, "43": 0.75, "44": 0.8, "45": 0.83, "46": 0.72, "47": 0.63, "48": 0.76, "49": 0.73, "50": 0.8, "51": 0.8, "52": 0.83, "53": 0.81, "54": 0.79, "55": 0.77, "56": 0.87, "57": 0.84, "58": 0.82, "59": 0.76, "60": 0.88, "61": 0.79, "62": 0.82, "63": 0.88, "64": 0.86, "65": 0.79, "66": 0.84, "67": 0.8, "68": 0.84, "69": 0.81, "70": 0.84, "71": 0.82, "72": 0.8, "73": 0.82, "74": 0.79, "75": 0.83, "76": 0.81, "77": 0.91, "78": 0.82, "79": 0.84, "80": 0.85, "81": 0.86, "82": 0.79, "83": 0.87, "84": 0.86, "85": 0.82, "86": 0.86, "87": 0.86, "88": 0.87, "89": 0.8, "90": 0.85, "91": 0.89, "92": 0.88, "93": 0.86, "94": 0.84, "95": 0.85, "96": 0.89, "97": 0.86, "98": 0.91, "99": 0.79, "100": 0.85, "101": 0.88, "102": 0.92, "103": 0.92, "104": 0.84, "105": 0.88, "106": 0.88, "107": 0.84, "108": 0.89, "109": 0.83, "110": 0.83, "111": 0.82, "112": 0.88, "113": 0.79, "114": 0.89, "115": 0.82, "116": 0.86, "117": 0.86, "118": 0.9, "119": 0.86, "120": 0.88, "121": 0.85, "122": 0.77, "123": 0.84, "124": 0.78, "125": 0.93, "126": 0.86, "127": 0.88, "128": 0.87, "129": 0.9, "130": 0.81, "131": 0.89, "132": 0.88, "133": 0.88, "134": 0.8, "135": 0.85, "136": 0.85, "137": 0.9, "138": 0.83, "139": 0.88, "140": 0.85, "141": 0.81, "142": 0.86, "143": 0.89, "144": 0.86, "145": 0.85, "146": 0.84, "147": 0.88, "148": 0.9, "149": 0.89, "150": 0.91, "151": 0.9, "152": 0.87, "153": 0.82, "154": 0.85, "155": 0.87, "156": 0.9, "157": 0.83, "158": 0.88, "159": 0.87, "160": 0.84, "161": 0.88, "162": 0.94, "163": 0.87, "164": 0.93, "165": 0.89, "166": 0.89, "167": 0.82, "168": 0.86, "169": 0.86, "170": 0.86, "171": 0.89, "172": 0.82, "173": 0.88, "174": 0.86, "175": 0.91, "176": 0.8, "177": 0.87, "178": 0.86, "179": 0.83, "180": 0.89, "181": 0.86, "182": 0.86, "183": 0.93, "184": 0.89, "185": 0.86, "186": 0.89, "187": 0.84, "188": 0.87, "189": 0.92, "190": 0.84, "191": 0.87, "192": 0.88, "193": 0.86, "194": 0.89, "195": 0.93, "196": 0.88, "197": 0.92, "198": 0.9, "199": 0.8, "200": 0.84, "201": 0.9, "202": 0.87, "203": 0.88, "204": 0.89, "205": 0.85, "206": 0.86, "207": 0.9, "208": 0.86, "209": 0.86, "210": 0.88, "211": 0.89, "212": 0.92, "213": 0.88, "214": 0.9, "215": 0.92, "216": 0.92, "217": 0.92, "218": 0.87, "219": 0.88, "220": 0.94, "221": 0.85, "222": 0.92, "223": 0.87, "224": 0.93, "225": 0.89, "226": 0.92, "227": 0.86, "228": 0.95, "229": 0.87, "230": 0.9, "231": 0.95, "232": 0.93, "233": 0.88, "234": 0.96, "235": 0.91, "236": 0.91, "237": 0.88, "238": 0.91, "239": 0.83, "240": 0.94, "241": 0.9, "242": 0.87, "243": 0.81, "244": 0.94, "245": 0.88, "246": 0.89, "247": 0.83, "248": 0.88, "249": 0.89, "250": 0.91, "251": 0.88, "252": 0.91, "253": 0.93, "254": 0.92, "255": 0.89, "256": 0.91, "257": 0.89, "258": 0.88, "259": 0.94, "260": 0.94, "261": 0.87, "262": 0.92, "263": 0.92, "264": 0.84, "265": 0.94, "266": 0.9, "267": 0.93, "268": 0.9, "269": 0.9, "270": 0.88, "271": 0.92, "272": 0.93, "273": 0.93, "274": 0.89, "275": 0.95, "276": 0.91, "277": 0.87, "278": 0.97, "279": 0.86, "280": 0.93, "281": 0.9, "282": 0.9, "283": 0.9, "284": 0.94, "285": 0.9, "286": 0.9, "287": 0.89, "288": 0.86, "289": 0.87, "290": 0.89, "291": 0.93, "292": 0.89, "293": 0.88, "294": 0.91, "295": 0.95, "296": 0.88, "297": 0.91, "298": 0.93, "299": 0.94, "300": 0.93, "301": 0.92, "302": 0.89, "303": 0.94, "304": 0.91, "305": 0.91, "306": 0.95, "307": 0.87, "308": 0.89, "309": 0.86, "310": 0.89, "311": 0.94, "312": 0.94, "313": 0.87, "314": 0.94, "315": 0.93, "316": 0.91, "317": 0.92, "318": 0.96, "319": 0.88, "320": 0.91, "321": 0.92, "322": 0.94, "323": 0.93, "324": 0.9, "325": 0.91, "326": 0.89, "327": 0.95, "328": 0.94, "329": 0.93, "330": 0.91, "331": 0.93, "332": 0.92, "333": 0.9, "334": 0.92, "335": 0.89, "336": 0.91, "337": 0.96, "338": 0.93, "339": 0.92, "340": 0.89, "341": 0.88, "342": 0.94, "343": 0.95, "344": 0.92, "345": 0.97, "346": 0.93, "347": 0.93, "348": 0.87, "349": 0.87, "350": 0.9, "351": 0.91, "352": 0.88, "353": 0.91, "354": 0.95, "355": 0.89, "356": 0.93, "357": 0.95, "358": 0.94, "359": 0.93, "360": 0.86, "361": 0.89, "362": 0.84, "363": 0.91, "364": 0.87, "365": 0.92, "366": 0.94, "367": 0.96, "368": 0.91, "369": 0.88, "370": 0.9, "371": 0.94, "372": 0.89, "373": 0.91, "374": 0.88, "375": 0.87, "376": 0.87, "377": 0.93, "378": 0.91, "379": 0.92, "380": 0.87, "381": 0.9, "382": 0.87, "383": 0.96, "384": 0.91, "385": 0.96, "386": 0.92, "387": 0.91, "388": 0.95, "389": 0.93, "390": 0.94, "391": 0.93, "392": 0.91, "393": 0.9, "394": 0.93, "395": 0.91, "396": 0.94, "397": 0.9, "398": 0.92, "399": 0.92, "400": 0.93, "401": 0.92, "402": 0.93, "403": 0.92, "404": 0.9, "405": 0.93, "406": 0.94, "407": 0.9, "408": 0.89, "409": 0.94, "410": 0.96, "411": 0.89, "412": 0.92, "413": 0.93, "414": 0.89, "415": 0.87, "416": 0.88, "417": 0.93, "418": 0.9, "419": 0.93, "420": 0.91, "421": 0.93, "422": 0.87, "423": 0.89, "424": 0.95, "425": 0.92, "426": 0.89, "427": 0.9, "428": 0.87, "429": 0.94, "430": 0.9, "431": 0.91, "432": 0.88, "433": 0.86, "434": 0.94, "435": 0.89, "436": 0.9, "437": 0.91, "438": 0.91, "439": 0.88, "440": 0.94, "441": 0.92, "442": 0.93, "443": 0.9, "444": 0.93, "445": 0.9, "446": 0.88, "447": 0.88, "448": 0.91, "449": 0.91, "450": 0.86, "451": 0.88, "452": 0.91, "453": 0.84, "454": 0.86, "455": 0.95, "456": 0.93, "457": 0.94, "458": 0.9, "459": 0.88, "460": 0.8, "461": 0.87, "462": 0.95, "463": 0.91, "464": 0.92, "465": 0.89, "466": 0.9, "467": 0.93, "468": 0.94, "469": 0.9, "470": 0.9, "471": 0.86, "472": 0.96, "473": 0.89, "474": 0.92, "475": 0.89, "476": 0.93, "477": 0.93, "478": 0.98, "479": 0.86, "480": 0.89, "481": 0.91, "482": 0.94, "483": 0.92, "484": 0.93, "485": 0.95, "486": 0.84, "487": 0.9, "488": 0.87, "489": 0.95, "490": 0.86, "491": 0.91, "492": 0.97, "493": 0.87, "494": 0.91, "495": 0.85, "496": 0.89, "497": 0.91, "498": 0.9, "499": 0.94, "500": 0.86, "501": 0.91, "502": 0.91, "503": 0.88, "504": 0.91, "505": 0.94, "506": 0.82, "507": 0.86, "508": 0.91, "509": 0.92, "510": 0.89, "511": 0.94, "512": 0.92, "513": 0.9, "514": 0.89, "515": 0.88, "516": 0.9, "517": 0.85, "518": 0.92, "519": 0.92, "520": 0.86, "521": 0.83, "522": 0.92, "523": 0.91, "524": 0.88, "525": 0.93, "526": 0.92, "527": 0.91, "528": 0.91, "529": 0.93, "530": 0.93, "531": 0.92, "532": 0.89, "533": 0.92, "534": 0.88, "535": 0.98, "536": 0.88, "537": 0.92, "538": 0.95, "539": 0.9, "540": 0.9, "541": 0.86, "542": 0.9, "543": 0.92, "544": 0.95, "545": 0.9, "546": 0.91, "547": 0.89, "548": 0.88, "549": 0.95, "550": 0.92, "551": 0.94, "552": 0.91, "553": 0.89, "554": 0.94, "555": 0.93, "556": 0.9, "557": 0.9, "558": 0.93, "559": 0.92, "560": 0.91, "561": 0.95, "562": 0.95, "563": 0.88, "564": 0.9, "565": 0.92, "566": 0.92, "567": 0.97, "568": 0.93, "569": 0.91, "570": 0.95, "571": 0.9, "572": 0.94, "573": 0.92, "574": 0.91, "575": 0.87, "576": 0.95, "577": 0.88, "578": 0.95, "579": 0.85, "580": 0.94, "581": 0.88, "582": 0.86, "583": 0.92, "584": 0.97, "585": 0.86, "586": 0.95, "587": 0.89, "588": 0.91, "589": 0.84, "590": 0.89, "591": 0.94, "592": 0.92, "593": 0.89, "594": 0.92, "595": 0.92, "596": 0.9, "597": 0.95, "598": 0.92, "599": 0.9}}

--------------------------------------------------------------------------------

/src/deep_dialog/data/dia_acts.txt:

--------------------------------------------------------------------------------

1 | request

2 | inform

3 | confirm_question

4 | confirm_answer

5 | greeting

6 | closing

7 | multiple_choice

8 | thanks

9 | welcome

10 | deny

11 | not_sure

--------------------------------------------------------------------------------

/src/deep_dialog/data/slot_set.txt:

--------------------------------------------------------------------------------

1 | actor

2 | actress

3 | city

4 | closing

5 | critic_rating

6 | date

7 | description

8 | distanceconstraints

9 | genre

10 | greeting

11 | implicit_value

12 | movie_series

13 | moviename

14 | mpaa_rating

15 | numberofpeople

16 | numberofkids

17 | taskcomplete

18 | other

19 | price

20 | seating

21 | starttime

22 | state

23 | theater

24 | theater_chain

25 | video_format

26 | zip

27 | result

28 | ticket

29 | mc_list

--------------------------------------------------------------------------------

/src/deep_dialog/dialog_config.py:

--------------------------------------------------------------------------------

1 | '''

2 | Created on May 17, 2016

3 |

4 | @author: xiul, t-zalipt

5 | '''

6 |

7 | sys_request_slots = ['moviename', 'theater', 'starttime', 'date', 'numberofpeople', 'genre', 'state', 'city', 'zip', 'critic_rating', 'mpaa_rating', 'distanceconstraints', 'video_format', 'theater_chain', 'price', 'actor', 'description', 'other', 'numberofkids']

8 | sys_inform_slots = ['moviename', 'theater', 'starttime', 'date', 'genre', 'state', 'city', 'zip', 'critic_rating', 'mpaa_rating', 'distanceconstraints', 'video_format', 'theater_chain', 'price', 'actor', 'description', 'other', 'numberofkids', 'taskcomplete', 'ticket']

9 |

10 | start_dia_acts = {

11 | #'greeting':[],

12 | 'request':['moviename', 'starttime', 'theater', 'city', 'state', 'date', 'genre', 'ticket', 'numberofpeople']

13 | }

14 |

15 | ################################################################################

16 | # Dialog status

17 | ################################################################################

18 | FAILED_DIALOG = -1

19 | SUCCESS_DIALOG = 1

20 | NO_OUTCOME_YET = 0

21 |

22 | # Rewards

23 | SUCCESS_REWARD = 50

24 | FAILURE_REWARD = 0

25 | PER_TURN_REWARD = 0

26 |

27 | ################################################################################

28 | # Special Slot Values

29 | ################################################################################

30 | I_DO_NOT_CARE = "I do not care"

31 | NO_VALUE_MATCH = "NO VALUE MATCHES!!!"

32 | TICKET_AVAILABLE = 'Ticket Available'

33 |

34 | ################################################################################

35 | # Constraint Check

36 | ################################################################################

37 | CONSTRAINT_CHECK_FAILURE = 0

38 | CONSTRAINT_CHECK_SUCCESS = 1

39 |

40 | ################################################################################

41 | # NLG Beam Search

42 | ################################################################################

43 | nlg_beam_size = 10

44 |

45 | ################################################################################

46 | # run_mode: 0 for dia-act; 1 for NL; 2 for no output

47 | ################################################################################

48 | run_mode = 0

49 | auto_suggest = 0

50 |

51 | ################################################################################

52 | # A Basic Set of Feasible actions to be Consdered By an RL agent

53 | ################################################################################

54 | feasible_actions = [

55 | ############################################################################

56 | # greeting actions

57 | ############################################################################

58 | #{'diaact':"greeting", 'inform_slots':{}, 'request_slots':{}},

59 | ############################################################################

60 | # confirm_question actions

61 | ############################################################################

62 | {'diaact':"confirm_question", 'inform_slots':{}, 'request_slots':{}},

63 | ############################################################################

64 | # confirm_answer actions

65 | ############################################################################

66 | {'diaact':"confirm_answer", 'inform_slots':{}, 'request_slots':{}},

67 | ############################################################################

68 | # thanks actions

69 | ############################################################################

70 | {'diaact':"thanks", 'inform_slots':{}, 'request_slots':{}},

71 | ############################################################################

72 | # deny actions

73 | ############################################################################

74 | {'diaact':"deny", 'inform_slots':{}, 'request_slots':{}},

75 | ]

76 | ############################################################################

77 | # Adding the inform actions

78 | ############################################################################

79 | for slot in sys_inform_slots:

80 | feasible_actions.append({'diaact':'inform', 'inform_slots':{slot:"PLACEHOLDER"}, 'request_slots':{}})

81 |

82 | ############################################################################

83 | # Adding the request actions

84 | ############################################################################

85 | for slot in sys_request_slots:

86 | feasible_actions.append({'diaact':'request', 'inform_slots':{}, 'request_slots': {slot: "UNK"}})

87 |

--------------------------------------------------------------------------------

/src/deep_dialog/dialog_system/__init__.py:

--------------------------------------------------------------------------------

1 | from .kb_helper import *

2 | from .state_tracker import *

3 | from .dialog_manager import *

4 | from .dict_reader import *

5 | from .utils import *

--------------------------------------------------------------------------------

/src/deep_dialog/dialog_system/dialog_manager.py:

--------------------------------------------------------------------------------

1 | """

2 | Created on May 17, 2016

3 |

4 | @author: xiul, t-zalipt

5 | """

6 |

7 | import json

8 | from . import StateTracker

9 | from deep_dialog import dialog_config

10 |

11 |

12 | class DialogManager:

13 | """ A dialog manager to mediate the interaction between an agent and a customer """

14 |

15 | def __init__(self, agent, user, act_set, slot_set, movie_dictionary):

16 | self.agent = agent

17 | self.user = user

18 | self.act_set = act_set

19 | self.slot_set = slot_set

20 | self.state_tracker = StateTracker(act_set, slot_set, movie_dictionary)

21 | self.user_action = None

22 | self.reward = 0

23 | self.episode_over = False

24 |

25 | def initialize_episode(self):

26 | """ Refresh state for new dialog """

27 |

28 | self.reward = 0

29 | self.episode_over = False

30 | self.state_tracker.initialize_episode()

31 | self.user_action = self.user.initialize_episode()

32 | self.state_tracker.update(user_action = self.user_action)

33 |

34 | if dialog_config.run_mode < 3:

35 | print ("New episode, user goal:")

36 | print json.dumps(self.user.goal, indent=2)

37 | self.print_function(user_action = self.user_action)

38 |

39 | self.agent.initialize_episode()

40 |

41 | def next_turn(self, record_training_data=True):

42 | """ This function initiates each subsequent exchange between agent and user (agent first) """

43 |

44 | ########################################################################

45 | # CALL AGENT TO TAKE HER TURN

46 | ########################################################################

47 | self.state = self.state_tracker.get_state_for_agent()

48 | self.agent_action = self.agent.state_to_action(self.state)

49 |

50 | ########################################################################

51 | # Register AGENT action with the state_tracker

52 | ########################################################################

53 | self.state_tracker.update(agent_action=self.agent_action)

54 |

55 | self.agent.add_nl_to_action(self.agent_action) # add NL to Agent Dia_Act

56 | self.print_function(agent_action = self.agent_action['act_slot_response'])

57 |

58 | ########################################################################

59 | # CALL USER TO TAKE HER TURN

60 | ########################################################################

61 | self.sys_action = self.state_tracker.dialog_history_dictionaries()[-1]

62 | self.user_action, self.episode_over, dialog_status = self.user.next(self.sys_action)

63 | self.reward = self.reward_function(dialog_status)

64 |

65 | ########################################################################

66 | # Update state tracker with latest user action

67 | ########################################################################

68 | if self.episode_over != True: