├── LICENSE

├── README.md

├── README_CN.md

├── calflops

├── .DS_Store

├── __init__.py

├── __pycache__

│ ├── __init__.cpython-311.pyc

│ ├── __init__.cpython-39.pyc

│ ├── big_modeling.cpython-39.pyc

│ ├── calculate_pipline.cpython-311.pyc

│ ├── calculate_pipline.cpython-39.pyc

│ ├── estimate.cpython-311.pyc

│ ├── estimate.cpython-39.pyc

│ ├── estimate.py

│ ├── flops_counter.cpython-311.pyc

│ ├── flops_counter.cpython-39.pyc

│ ├── flops_counter_hf.cpython-311.pyc

│ ├── flops_counter_hf.cpython-39.pyc

│ ├── pytorch_ops.cpython-311.pyc

│ ├── pytorch_ops.cpython-39.pyc

│ ├── utils.cpython-311.pyc

│ └── utils.cpython-39.pyc

├── calculate_pipline.py

├── estimate.py

├── flops_counter.py

├── flops_counter_hf.py

├── pytorch_ops.py

└── utils.py

├── screenshot

├── .DS_Store

├── alxnet_print_detailed.png

├── alxnet_print_result.png

├── calflops_hf1.png

├── calflops_hf2.png

├── calflops_hf3.png

├── calflops_hf4.png

├── huggingface_model_name.png

├── huggingface_model_name2.png

├── huggingface_model_name3.png

└── huggingface_model_names.png

├── test_examples

├── test_bert.py

├── test_cnn.py

└── test_llm.py

└── test_llm_huggingface.py

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) [2023] [MrYXJ]

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | calflops: a FLOPs and Params calculate tool for neural networks

7 |

8 |

9 |

10 |

11 | [](https://pypi.org/project/calflops/)

12 | [](https://github.com/MrYxJ/calculate-flops.pytorch/blob/main/LICENSE)

13 |

14 |

15 |

16 | English |

17 | 中文

18 |

19 |

20 |

21 |

22 | # Introduction

23 | This tool(calflops) is designed to compute the theoretical amount of FLOPs(floating-point operations)、MACs(multiply-add operations) and Parameters in all various neural networks, such as Linear、 CNN、 RNN、 GCN、**Transformer(Bert、LlaMA etc Large Language Model)**,even including **any custom models** via ```torch.nn.function.*``` as long as based on the Pytorch implementation. Meanwhile this tool supports the printing of FLOPS, Parameter calculation value and proportion of each submodule of the model, it is convient for users to understand the performance consumption of each part of the model.

24 |

25 | Latest news, calflops has launched a tool on Huggingface Space, which is more convenient for computing FLOPS in the model of 🤗Huggingface Platform. Welcome to use it:https://huggingface.co/spaces/MrYXJ/calculate-model-flops

26 |

27 |  28 |

29 |

30 |

31 | For LLM, this is probably the easiest tool to calculate FLOPs and it is very convenient for **huggingface** platform models. You can use ```calflops.calculate_flops_hf(model_name)``` by `model_name` which in [huggingface models](https://huggingface.co/models) to calculate model FLOPs without downloading entire model weights locally.Notice this method requires the model to support the empty model being created for model inference in meta device.

32 |

33 |

34 |

35 | ``` python

36 | from calflops import calculate_flops_hf

37 |

38 | model_name = "meta-llama/Llama-2-7b"

39 | access_token = "..." # your application token for using llama2

40 | flops, macs, params = calculate_flops_hf(model_name=model_name, access_token=access_token) # default input shape: (1, 128)

41 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

42 | ```

43 |

44 |

45 | If model can't inference in meta device, you just need assign llm corresponding tokenizer to the parameter: ```transformers_tokenizer``` to pass in funcional of ```calflops.calculate_flops()```, and it will automatically help you build the model input data whose size is input_shape. Alternatively, you also can pass in the input data of models which need multi data as input that you have constructed.

46 |

47 |

48 | In addition, the implementation process of this package inspired by [ptflops](https://github.com/sovrasov/flops-counter.pytorch)、[deepspeed](https://github.com/microsoft/DeepSpeed/tree/master/deepspeed)、[hf accelerate](https://github.com/huggingface/accelerate) libraries, Thanks for their great efforts, they are both very good work. Meanwhile this package also improves some aspects to calculate FLOPs based on them.

49 |

50 | ## How to install

51 | ### Install the latest version

52 | #### From PyPI:

53 |

54 | ```python

55 | pip install --upgrade calflops

56 | ```

57 |

58 | And you also can download latest `calflops-*-py3-none-any.whl` files from https://pypi.org/project/calflops/

59 |

60 | ```python

61 | pip install calflops-*-py3-none-any.whl

62 | ```

63 |

64 | ## How to use calflops

65 |

66 | ### Example

67 | ### CNN Model

68 | If model has only one input, you just need set the model input size by parameter ```input_shape``` , it can automatically generate random model input to complete the calculation:

69 |

70 | ```python

71 | from calflops import calculate_flops

72 | from torchvision import models

73 |

74 | model = models.alexnet()

75 | batch_size = 1

76 | input_shape = (batch_size, 3, 224, 224)

77 | flops, macs, params = calculate_flops(model=model,

78 | input_shape=input_shape,

79 | output_as_string=True,

80 | output_precision=4)

81 | print("Alexnet FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

82 | #Alexnet FLOPs:4.2892 GFLOPS MACs:2.1426 GMACs Params:61.1008 M

83 | ```

84 |

85 | If the model has multiple inputs, use the parameters ```args``` or ```kargs```, as shown in the Transfomer Model below.

86 |

87 |

88 | ### Calculate Huggingface Model By Model Name(Online)

89 |

90 | No need to download the entire parameter weight of the model to the local, just by the model name can test any open source large model on the huggingface platform.

91 |

92 |

93 |

94 |

95 | ```python

96 | from calflops import calculate_flops_hf

97 |

98 | batch_size, max_seq_length = 1, 128

99 | model_name = "baichuan-inc/Baichuan-13B-Chat"

100 |

101 | flops, macs, params = calculate_flops_hf(model_name=model_name, input_shape=(batch_size, max_seq_length))

102 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

103 | ```

104 |

105 | You can also use this model urls of huggingface platform to calculate it FLOPs.

106 |

107 |

108 |

109 | ```python

110 | from calflops import calculate_flops_hf

111 |

112 | batch_size, max_seq_length = 1, 128

113 | model_name = "https://huggingface.co/THUDM/glm-4-9b-chat" # THUDM/glm-4-9b-chat

114 | flops, macs, params = calculate_flops_hf(model_name=model_name, input_shape=(batch_size, max_seq_length))

115 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

116 | ```

117 |

118 | ```

119 | ------------------------------------- Calculate Flops Results -------------------------------------

120 | Notations:

121 | number of parameters (Params), number of multiply-accumulate operations(MACs),

122 | number of floating-point operations (FLOPs), floating-point operations per second (FLOPS),

123 | fwd FLOPs (model forward propagation FLOPs), bwd FLOPs (model backward propagation FLOPs),

124 | default model backpropagation takes 2.00 times as much computation as forward propagation.

125 |

126 | Total Training Params: 9.4 B

127 | fwd MACs: 1.12 TMACs

128 | fwd FLOPs: 2.25 TFLOPS

129 | fwd+bwd MACs: 3.37 TMACs

130 | fwd+bwd FLOPs: 6.74 TFLOPS

131 |

132 | -------------------------------- Detailed Calculated FLOPs Results --------------------------------

133 | Each module caculated is listed after its name in the following order:

134 | params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

135 |

136 | Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

137 | They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

138 | 2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

139 |

140 | ChatGLMForConditionalGeneration(

141 | 9.4 B = 100% Params, 1.12 TMACs = 100% MACs, 2.25 TFLOPS = 50% FLOPs

142 | (transformer): ChatGLMModel(

143 | 9.4 B = 100% Params, 1.12 TMACs = 100% MACs, 2.25 TFLOPS = 50% FLOPs

144 | (embedding): Embedding(

145 | 620.76 M = 6.6% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs

146 | (word_embeddings): Embedding(620.76 M = 6.6% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 151552, 4096)

147 | )

148 | (rotary_pos_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

149 | (encoder): GLMTransformer(

150 | 8.16 B = 86.79% Params, 1.04 TMACs = 92.93% MACs, 2.09 TFLOPS = 46.46% FLOPs

151 | (layers): ModuleList(

152 | (0-39): 40 x GLMBlock(

153 | 203.96 M = 2.17% Params, 26.11 GMACs = 2.32% MACs, 52.21 GFLOPS = 1.16% FLOPs

154 | (input_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

155 | (self_attention): SelfAttention(

156 | 35.66 M = 0.38% Params, 4.56 GMACs = 0.41% MACs, 9.13 GFLOPS = 0.2% FLOPs

157 | (query_key_value): Linear(18.88 M = 0.2% Params, 2.42 GMACs = 0.22% MACs, 4.83 GFLOPS = 0.11% FLOPs, in_features=4096, out_features=4608, bias=True)

158 | (core_attention): CoreAttention(

159 | 0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs

160 | (attention_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

161 | )

162 | (dense): Linear(16.78 M = 0.18% Params, 2.15 GMACs = 0.19% MACs, 4.29 GFLOPS = 0.1% FLOPs, in_features=4096, out_features=4096, bias=False)

163 | )

164 | (post_attention_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

165 | (mlp): MLP(

166 | 168.3 M = 1.79% Params, 21.54 GMACs = 1.92% MACs, 43.09 GFLOPS = 0.96% FLOPs

167 | (dense_h_to_4h): Linear(112.2 M = 1.19% Params, 14.36 GMACs = 1.28% MACs, 28.72 GFLOPS = 0.64% FLOPs, in_features=4096, out_features=27392, bias=False)

168 | (dense_4h_to_h): Linear(56.1 M = 0.6% Params, 7.18 GMACs = 0.64% MACs, 14.36 GFLOPS = 0.32% FLOPs, in_features=13696, out_features=4096, bias=False)

169 | )

170 | )

171 | )

172 | (final_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

173 | )

174 | (output_layer): Linear(620.76 M = 6.6% Params, 79.46 GMACs = 7.07% MACs, 158.91 GFLOPS = 3.54% FLOPs, in_features=4096, out_features=151552, bias=False)

175 | )

176 | )

177 | ```

178 |

179 |

180 | There are some model uses that require an application first, and you only need to pass the application in through the ```access_token``` to calculate its FLOPs.

181 |

182 |

183 |

184 |

185 | ```python

186 | from calflops import calculate_flops_hf

187 |

188 | batch_size, max_seq_length = 1, 128

189 | model_name = "meta-llama/Llama-2-7b"

190 | access_token = "" # your application for using llama2

191 |

192 | flops, macs, params = calculate_flops_hf(model_name=model_name,

193 | access_token=access_token,

194 | input_shape=(batch_size, max_seq_length))

195 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

196 | ```

197 |

198 |

199 | ### Transformer Model (Local)

200 |

201 | Compared to the CNN Model, Transformer Model if you want to use the parameter ```input_shape``` to make calflops automatically generating the input data, you should pass its corresponding tokenizer through the parameter ```transformer_tokenizer```.

202 |

203 | ``` python

204 | # Transformers Model, such as bert.

205 | from calflops import calculate_flops

206 | from transformers import AutoModel

207 | from transformers import AutoTokenizer

208 |

209 | batch_size, max_seq_length = 1, 128

210 | model_name = "hfl/chinese-roberta-wwm-ext/"

211 | model_save = "../pretrain_models/" + model_name

212 | model = AutoModel.from_pretrained(model_save)

213 | tokenizer = AutoTokenizer.from_pretrained(model_save)

214 |

215 | flops, macs, params = calculate_flops(model=model,

216 | input_shape=(batch_size,max_seq_length),

217 | transformer_tokenizer=tokenizer)

218 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

219 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:67.1 GFLOPS MACs:33.52 GMACs Params:102.27 M

220 | ```

221 |

222 | If you want to use your own generated specific data to calculate FLOPs, you can use

223 | parameter ```args``` or ```kwargs```,and parameter ```input_shape``` can no longer be assigned to pass in this case. Here is an example that can be seen is inconvenient comparedt to use parameter```transformer_tokenizer```.

224 |

225 |

226 | ``` python

227 | # Transformers Model, such as bert.

228 | from calflops import calculate_flops

229 | from transformers import AutoModel

230 | from transformers import AutoTokenizer

231 |

232 |

233 | batch_size, max_seq_length = 1, 128

234 | model_name = "hfl/chinese-roberta-wwm-ext/"

235 | model_save = "/code/yexiaoju/generate_tags/models/pretrain_models/" + model_name

236 | model = AutoModel.from_pretrained(model_save)

237 | tokenizer = AutoTokenizer.from_pretrained(model_save)

238 |

239 | text = ""

240 | inputs = tokenizer(text,

241 | add_special_tokens=True,

242 | return_attention_mask=True,

243 | padding=True,

244 | truncation="longest_first",

245 | max_length=max_seq_length)

246 |

247 | if len(inputs["input_ids"]) < max_seq_length:

248 | apply_num = max_seq_length-len(inputs["input_ids"])

249 | inputs["input_ids"].extend([0]*apply_num)

250 | inputs["token_type_ids"].extend([0]*apply_num)

251 | inputs["attention_mask"].extend([0]*apply_num)

252 |

253 | inputs["input_ids"] = torch.tensor([inputs["input_ids"]])

254 | inputs["token_type_ids"] = torch.tensor([inputs["token_type_ids"]])

255 | inputs["attention_mask"] = torch.tensor([inputs["attention_mask"]])

256 |

257 | flops, macs, params = calculate_flops(model=model,

258 | kwargs = inputs,

259 | print_results=False)

260 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

261 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:22.36 GFLOPS MACs:11.17 GMACs Params:102.27 M

262 | ```

263 |

264 |

265 | ### Large Language Model

266 |

267 | #### Online

268 |

269 | ```python

270 | from calflops import calculate_flops_hf

271 |

272 | batch_size, max_seq_length = 1, 128

273 | model_name = "meta-llama/Llama-2-7b"

274 | access_token = "" # your application for using llama

275 |

276 | flops, macs, params = calculate_flops_hf(model_name=model_name,

277 | access_token=access_token,

278 | input_shape=(batch_size, max_seq_length))

279 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

280 | ```

281 |

282 | #### Local

283 | Note here that the tokenizer must correspond to the llm model because llm tokenizer processes maybe are different.

284 |

285 | ``` python

286 | #Large Languase Model, such as llama2-7b.

287 | from calflops import calculate_flops

288 | from transformers import LlamaTokenizer

289 | from transformers import LlamaForCausalLM

290 |

291 | batch_size, max_seq_length = 1, 128

292 | model_name = "llama2_hf_7B"

293 | model_save = "../model/" + model_name

294 | model = LlamaForCausalLM.from_pretrained(model_save)

295 | tokenizer = LlamaTokenizer.from_pretrained(model_save)

296 | flops, macs, params = calculate_flops(model=model,

297 | input_shape=(batch_size, max_seq_length),

298 | transformer_tokenizer=tokenizer)

299 | print("Llama2(7B) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

300 | #Llama2(7B) FLOPs:1.7 TFLOPS MACs:850.00 GMACs Params:6.74 B

301 | ```

302 |

303 | ### Show each submodule result of FLOPs、MACs、Params

304 |

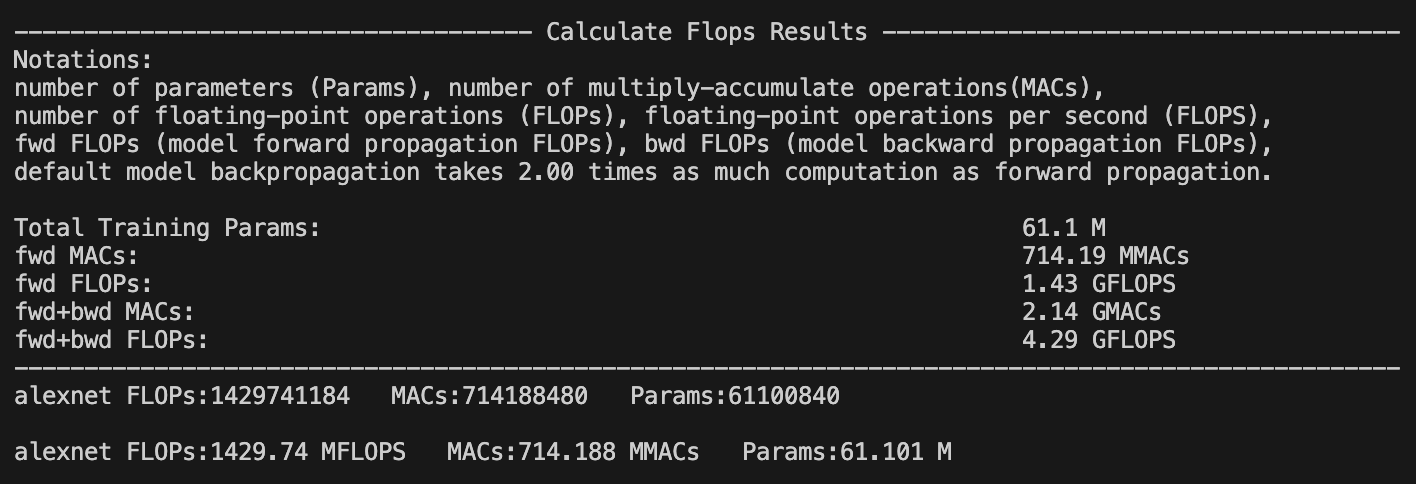

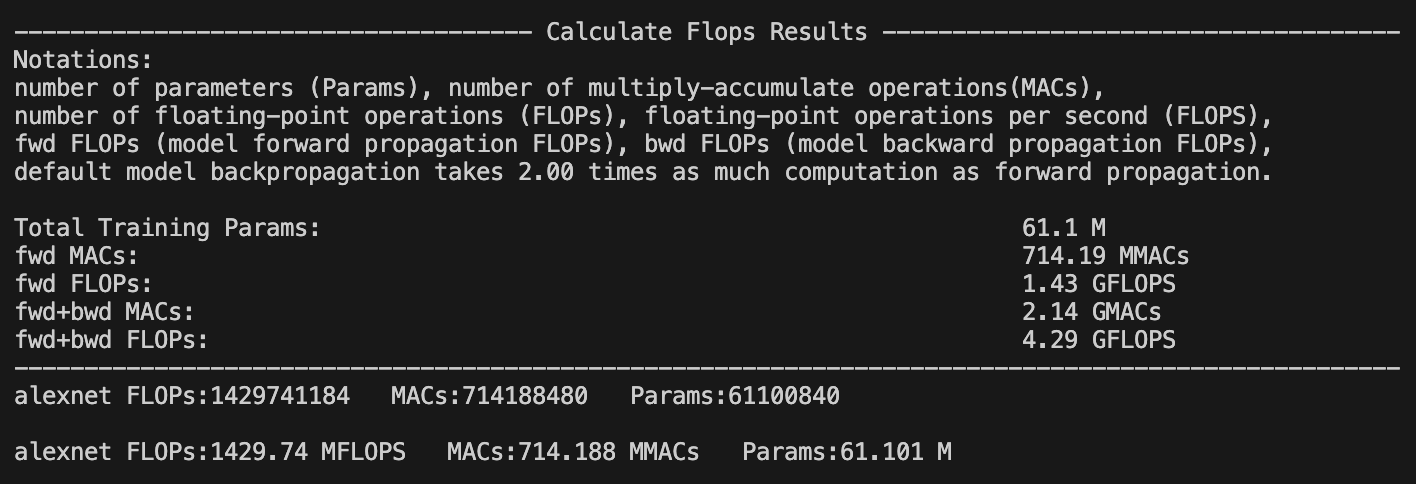

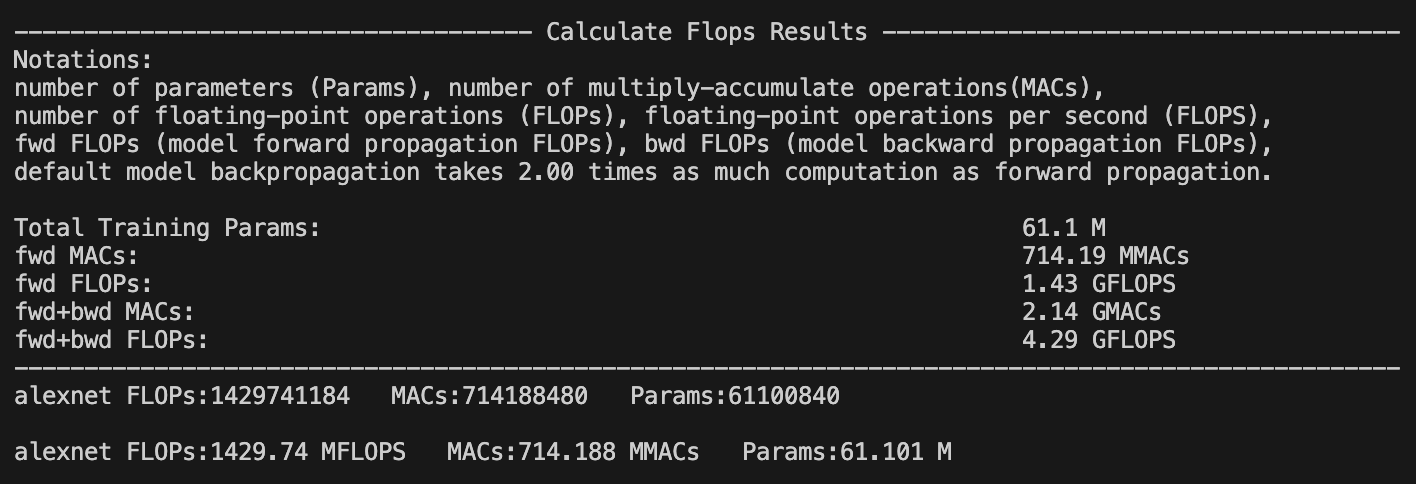

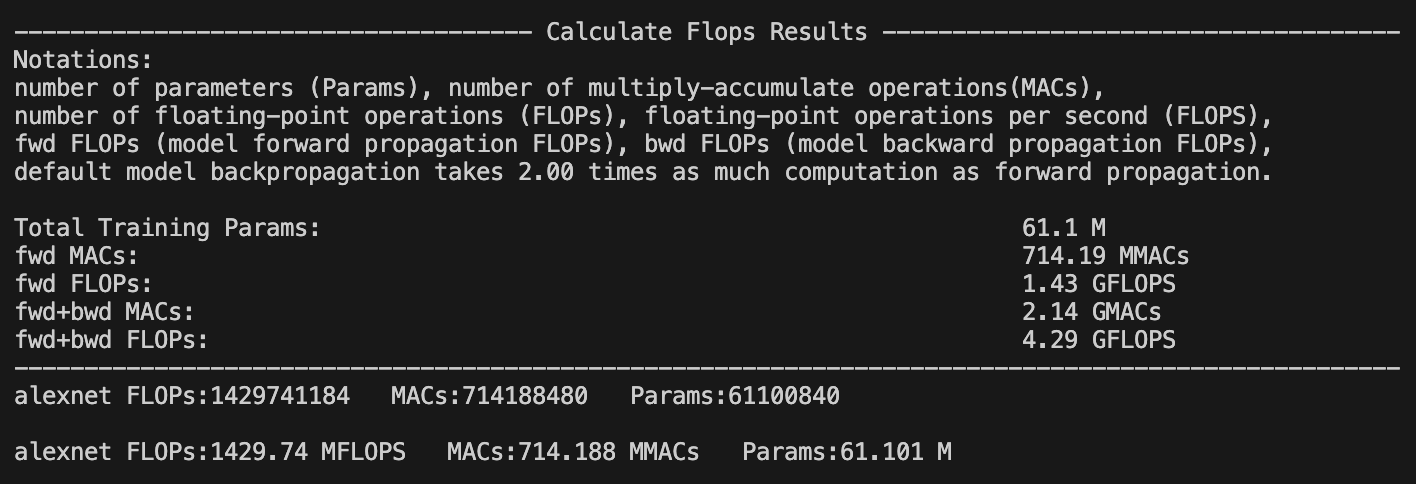

305 | The calflops provides a more detailed display of model FLOPs calculation information. By setting the parameter ```print_result=True```, which defaults to True, flops of the model will be printed in the terminal or jupyter interface.

306 |

307 |

308 |

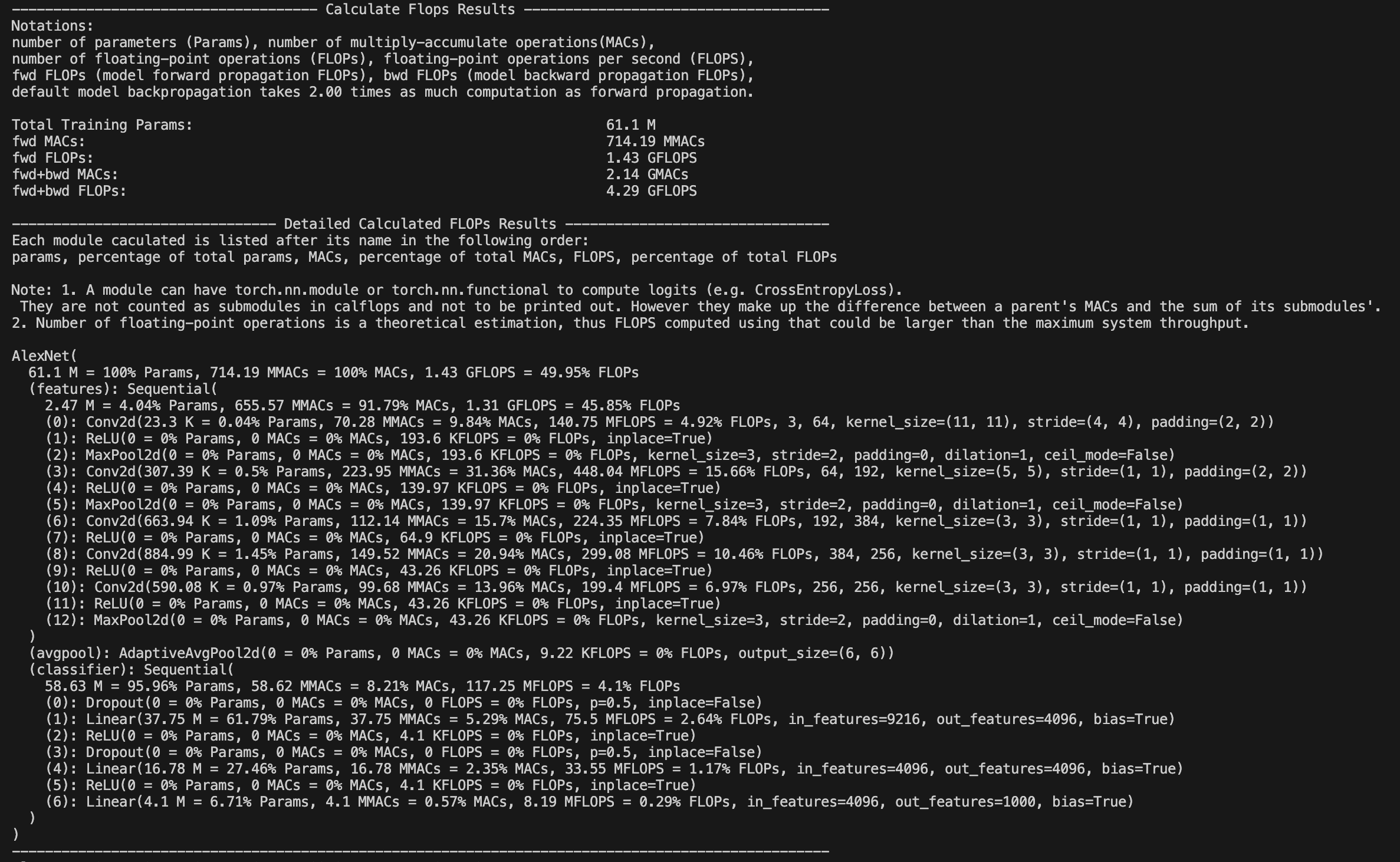

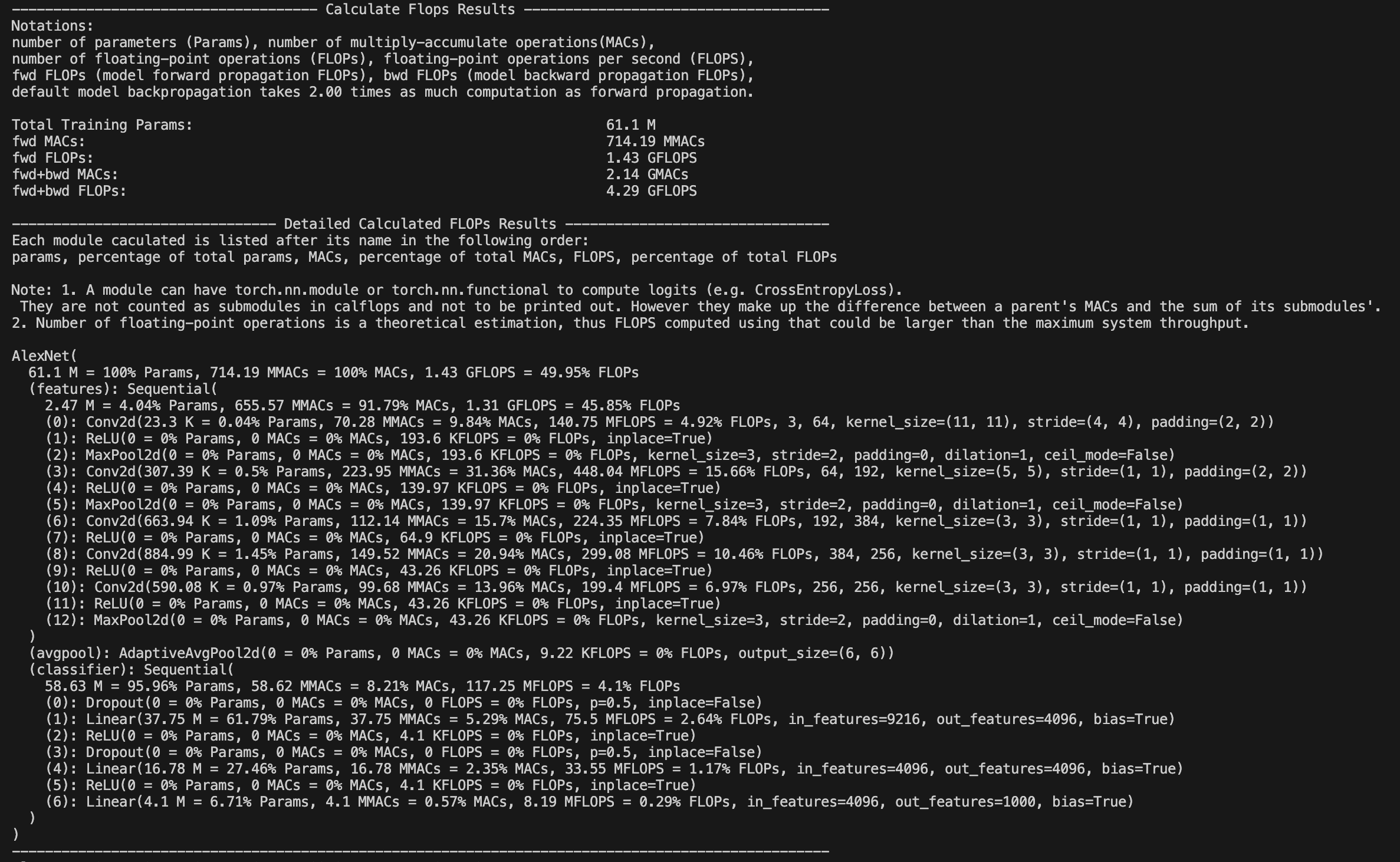

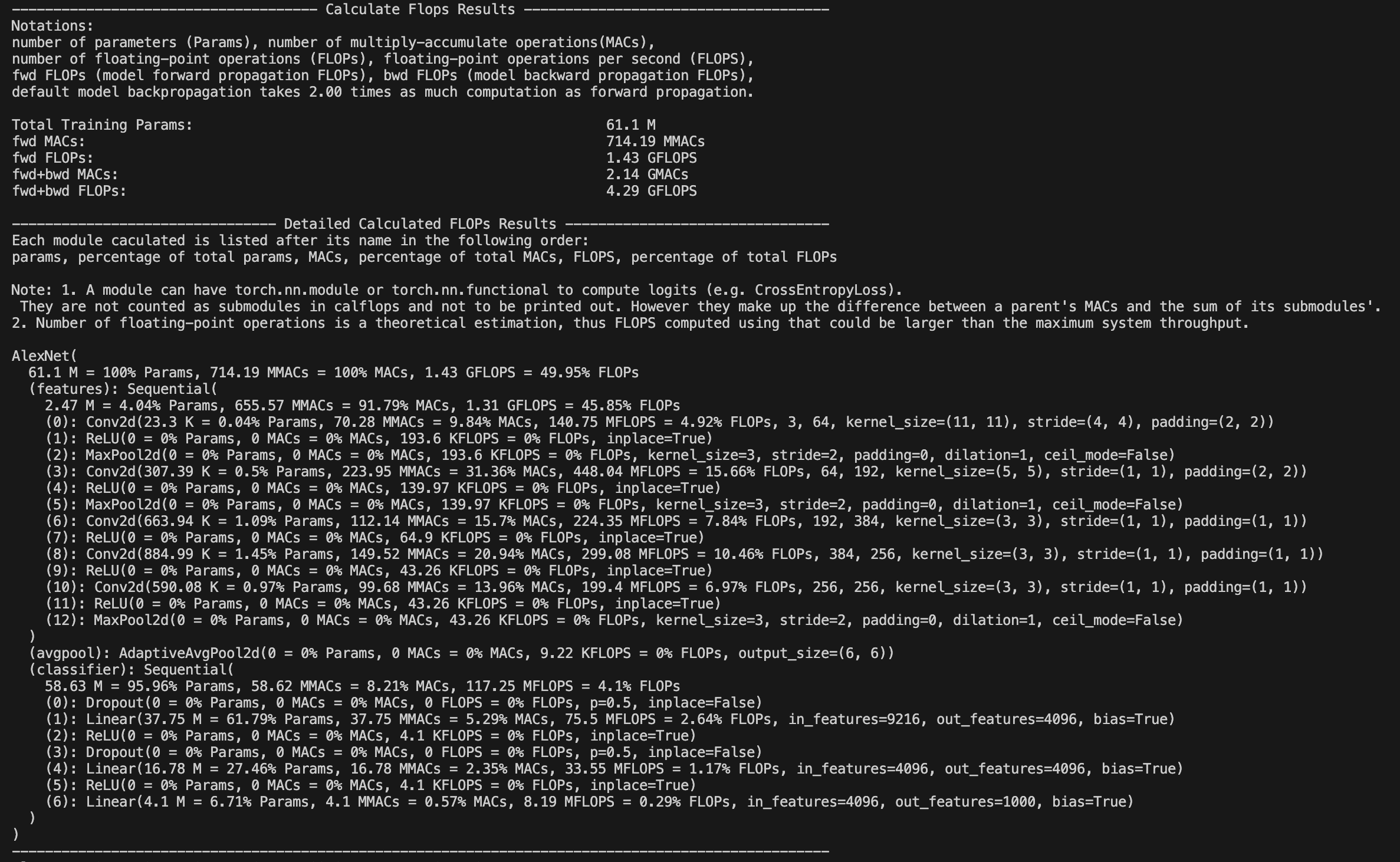

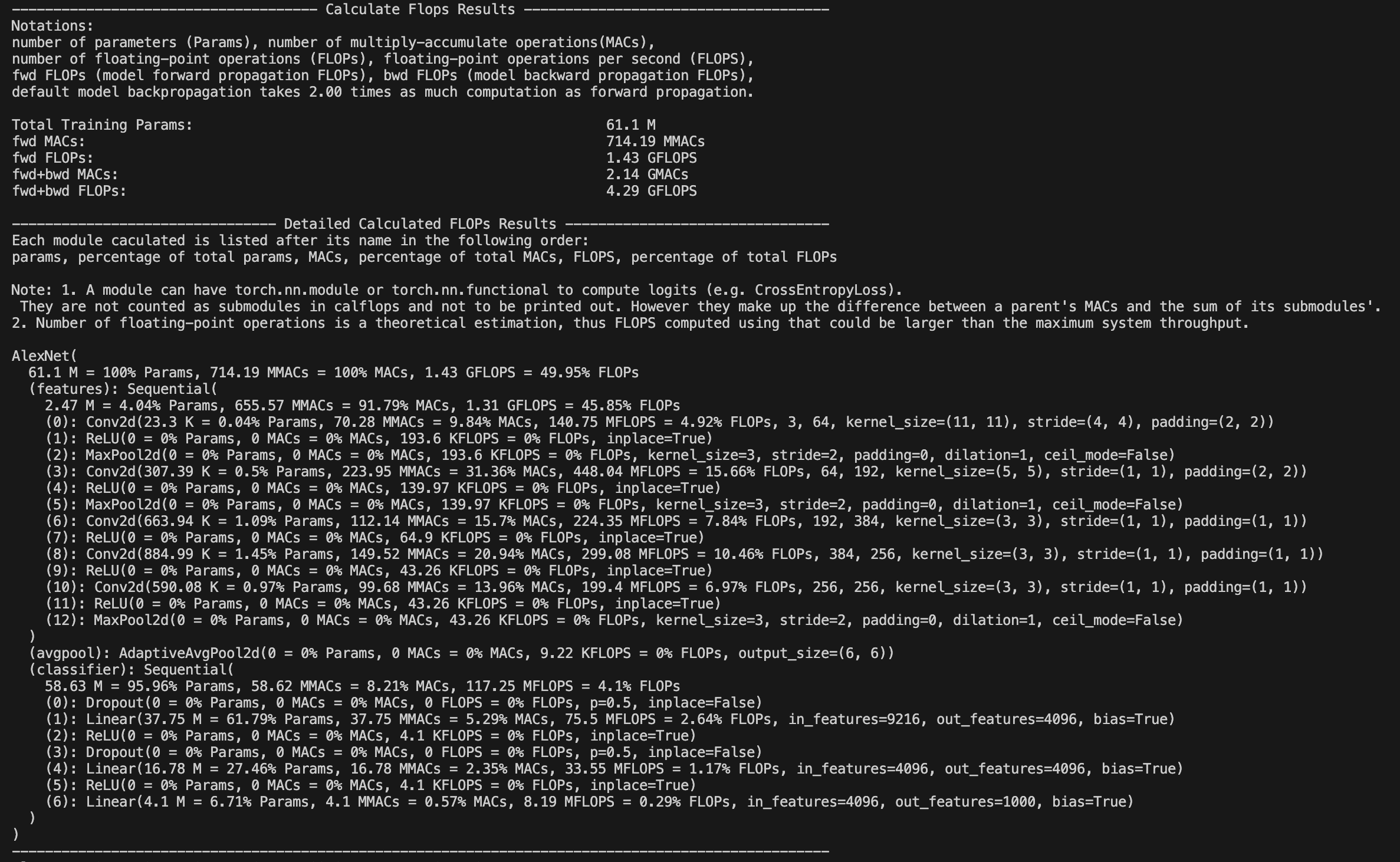

309 | Meanwhile, by setting the parameter ```print_detailed=True``` which default to True, the calflops supports the display of the calculation results and proportion of FLOPs、MACs and Parameter in each submodule of the entire model, so that it is convenient to see the largest part of the calculation consumption in the entire model.

310 |

311 |

312 |

313 | ### More use introduction

314 |

315 |

28 |

29 |

30 |

31 | For LLM, this is probably the easiest tool to calculate FLOPs and it is very convenient for **huggingface** platform models. You can use ```calflops.calculate_flops_hf(model_name)``` by `model_name` which in [huggingface models](https://huggingface.co/models) to calculate model FLOPs without downloading entire model weights locally.Notice this method requires the model to support the empty model being created for model inference in meta device.

32 |

33 |

34 |

35 | ``` python

36 | from calflops import calculate_flops_hf

37 |

38 | model_name = "meta-llama/Llama-2-7b"

39 | access_token = "..." # your application token for using llama2

40 | flops, macs, params = calculate_flops_hf(model_name=model_name, access_token=access_token) # default input shape: (1, 128)

41 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

42 | ```

43 |

44 |

45 | If model can't inference in meta device, you just need assign llm corresponding tokenizer to the parameter: ```transformers_tokenizer``` to pass in funcional of ```calflops.calculate_flops()```, and it will automatically help you build the model input data whose size is input_shape. Alternatively, you also can pass in the input data of models which need multi data as input that you have constructed.

46 |

47 |

48 | In addition, the implementation process of this package inspired by [ptflops](https://github.com/sovrasov/flops-counter.pytorch)、[deepspeed](https://github.com/microsoft/DeepSpeed/tree/master/deepspeed)、[hf accelerate](https://github.com/huggingface/accelerate) libraries, Thanks for their great efforts, they are both very good work. Meanwhile this package also improves some aspects to calculate FLOPs based on them.

49 |

50 | ## How to install

51 | ### Install the latest version

52 | #### From PyPI:

53 |

54 | ```python

55 | pip install --upgrade calflops

56 | ```

57 |

58 | And you also can download latest `calflops-*-py3-none-any.whl` files from https://pypi.org/project/calflops/

59 |

60 | ```python

61 | pip install calflops-*-py3-none-any.whl

62 | ```

63 |

64 | ## How to use calflops

65 |

66 | ### Example

67 | ### CNN Model

68 | If model has only one input, you just need set the model input size by parameter ```input_shape``` , it can automatically generate random model input to complete the calculation:

69 |

70 | ```python

71 | from calflops import calculate_flops

72 | from torchvision import models

73 |

74 | model = models.alexnet()

75 | batch_size = 1

76 | input_shape = (batch_size, 3, 224, 224)

77 | flops, macs, params = calculate_flops(model=model,

78 | input_shape=input_shape,

79 | output_as_string=True,

80 | output_precision=4)

81 | print("Alexnet FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

82 | #Alexnet FLOPs:4.2892 GFLOPS MACs:2.1426 GMACs Params:61.1008 M

83 | ```

84 |

85 | If the model has multiple inputs, use the parameters ```args``` or ```kargs```, as shown in the Transfomer Model below.

86 |

87 |

88 | ### Calculate Huggingface Model By Model Name(Online)

89 |

90 | No need to download the entire parameter weight of the model to the local, just by the model name can test any open source large model on the huggingface platform.

91 |

92 |

93 |

94 |

95 | ```python

96 | from calflops import calculate_flops_hf

97 |

98 | batch_size, max_seq_length = 1, 128

99 | model_name = "baichuan-inc/Baichuan-13B-Chat"

100 |

101 | flops, macs, params = calculate_flops_hf(model_name=model_name, input_shape=(batch_size, max_seq_length))

102 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

103 | ```

104 |

105 | You can also use this model urls of huggingface platform to calculate it FLOPs.

106 |

107 |

108 |

109 | ```python

110 | from calflops import calculate_flops_hf

111 |

112 | batch_size, max_seq_length = 1, 128

113 | model_name = "https://huggingface.co/THUDM/glm-4-9b-chat" # THUDM/glm-4-9b-chat

114 | flops, macs, params = calculate_flops_hf(model_name=model_name, input_shape=(batch_size, max_seq_length))

115 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

116 | ```

117 |

118 | ```

119 | ------------------------------------- Calculate Flops Results -------------------------------------

120 | Notations:

121 | number of parameters (Params), number of multiply-accumulate operations(MACs),

122 | number of floating-point operations (FLOPs), floating-point operations per second (FLOPS),

123 | fwd FLOPs (model forward propagation FLOPs), bwd FLOPs (model backward propagation FLOPs),

124 | default model backpropagation takes 2.00 times as much computation as forward propagation.

125 |

126 | Total Training Params: 9.4 B

127 | fwd MACs: 1.12 TMACs

128 | fwd FLOPs: 2.25 TFLOPS

129 | fwd+bwd MACs: 3.37 TMACs

130 | fwd+bwd FLOPs: 6.74 TFLOPS

131 |

132 | -------------------------------- Detailed Calculated FLOPs Results --------------------------------

133 | Each module caculated is listed after its name in the following order:

134 | params, percentage of total params, MACs, percentage of total MACs, FLOPS, percentage of total FLOPs

135 |

136 | Note: 1. A module can have torch.nn.module or torch.nn.functional to compute logits (e.g. CrossEntropyLoss).

137 | They are not counted as submodules in calflops and not to be printed out. However they make up the difference between a parent's MACs and the sum of its submodules'.

138 | 2. Number of floating-point operations is a theoretical estimation, thus FLOPS computed using that could be larger than the maximum system throughput.

139 |

140 | ChatGLMForConditionalGeneration(

141 | 9.4 B = 100% Params, 1.12 TMACs = 100% MACs, 2.25 TFLOPS = 50% FLOPs

142 | (transformer): ChatGLMModel(

143 | 9.4 B = 100% Params, 1.12 TMACs = 100% MACs, 2.25 TFLOPS = 50% FLOPs

144 | (embedding): Embedding(

145 | 620.76 M = 6.6% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs

146 | (word_embeddings): Embedding(620.76 M = 6.6% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, 151552, 4096)

147 | )

148 | (rotary_pos_emb): RotaryEmbedding(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

149 | (encoder): GLMTransformer(

150 | 8.16 B = 86.79% Params, 1.04 TMACs = 92.93% MACs, 2.09 TFLOPS = 46.46% FLOPs

151 | (layers): ModuleList(

152 | (0-39): 40 x GLMBlock(

153 | 203.96 M = 2.17% Params, 26.11 GMACs = 2.32% MACs, 52.21 GFLOPS = 1.16% FLOPs

154 | (input_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

155 | (self_attention): SelfAttention(

156 | 35.66 M = 0.38% Params, 4.56 GMACs = 0.41% MACs, 9.13 GFLOPS = 0.2% FLOPs

157 | (query_key_value): Linear(18.88 M = 0.2% Params, 2.42 GMACs = 0.22% MACs, 4.83 GFLOPS = 0.11% FLOPs, in_features=4096, out_features=4608, bias=True)

158 | (core_attention): CoreAttention(

159 | 0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs

160 | (attention_dropout): Dropout(0 = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs, p=0.0, inplace=False)

161 | )

162 | (dense): Linear(16.78 M = 0.18% Params, 2.15 GMACs = 0.19% MACs, 4.29 GFLOPS = 0.1% FLOPs, in_features=4096, out_features=4096, bias=False)

163 | )

164 | (post_attention_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

165 | (mlp): MLP(

166 | 168.3 M = 1.79% Params, 21.54 GMACs = 1.92% MACs, 43.09 GFLOPS = 0.96% FLOPs

167 | (dense_h_to_4h): Linear(112.2 M = 1.19% Params, 14.36 GMACs = 1.28% MACs, 28.72 GFLOPS = 0.64% FLOPs, in_features=4096, out_features=27392, bias=False)

168 | (dense_4h_to_h): Linear(56.1 M = 0.6% Params, 7.18 GMACs = 0.64% MACs, 14.36 GFLOPS = 0.32% FLOPs, in_features=13696, out_features=4096, bias=False)

169 | )

170 | )

171 | )

172 | (final_layernorm): RMSNorm(4.1 K = 0% Params, 0 MACs = 0% MACs, 0 FLOPS = 0% FLOPs)

173 | )

174 | (output_layer): Linear(620.76 M = 6.6% Params, 79.46 GMACs = 7.07% MACs, 158.91 GFLOPS = 3.54% FLOPs, in_features=4096, out_features=151552, bias=False)

175 | )

176 | )

177 | ```

178 |

179 |

180 | There are some model uses that require an application first, and you only need to pass the application in through the ```access_token``` to calculate its FLOPs.

181 |

182 |

183 |

184 |

185 | ```python

186 | from calflops import calculate_flops_hf

187 |

188 | batch_size, max_seq_length = 1, 128

189 | model_name = "meta-llama/Llama-2-7b"

190 | access_token = "" # your application for using llama2

191 |

192 | flops, macs, params = calculate_flops_hf(model_name=model_name,

193 | access_token=access_token,

194 | input_shape=(batch_size, max_seq_length))

195 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

196 | ```

197 |

198 |

199 | ### Transformer Model (Local)

200 |

201 | Compared to the CNN Model, Transformer Model if you want to use the parameter ```input_shape``` to make calflops automatically generating the input data, you should pass its corresponding tokenizer through the parameter ```transformer_tokenizer```.

202 |

203 | ``` python

204 | # Transformers Model, such as bert.

205 | from calflops import calculate_flops

206 | from transformers import AutoModel

207 | from transformers import AutoTokenizer

208 |

209 | batch_size, max_seq_length = 1, 128

210 | model_name = "hfl/chinese-roberta-wwm-ext/"

211 | model_save = "../pretrain_models/" + model_name

212 | model = AutoModel.from_pretrained(model_save)

213 | tokenizer = AutoTokenizer.from_pretrained(model_save)

214 |

215 | flops, macs, params = calculate_flops(model=model,

216 | input_shape=(batch_size,max_seq_length),

217 | transformer_tokenizer=tokenizer)

218 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

219 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:67.1 GFLOPS MACs:33.52 GMACs Params:102.27 M

220 | ```

221 |

222 | If you want to use your own generated specific data to calculate FLOPs, you can use

223 | parameter ```args``` or ```kwargs```,and parameter ```input_shape``` can no longer be assigned to pass in this case. Here is an example that can be seen is inconvenient comparedt to use parameter```transformer_tokenizer```.

224 |

225 |

226 | ``` python

227 | # Transformers Model, such as bert.

228 | from calflops import calculate_flops

229 | from transformers import AutoModel

230 | from transformers import AutoTokenizer

231 |

232 |

233 | batch_size, max_seq_length = 1, 128

234 | model_name = "hfl/chinese-roberta-wwm-ext/"

235 | model_save = "/code/yexiaoju/generate_tags/models/pretrain_models/" + model_name

236 | model = AutoModel.from_pretrained(model_save)

237 | tokenizer = AutoTokenizer.from_pretrained(model_save)

238 |

239 | text = ""

240 | inputs = tokenizer(text,

241 | add_special_tokens=True,

242 | return_attention_mask=True,

243 | padding=True,

244 | truncation="longest_first",

245 | max_length=max_seq_length)

246 |

247 | if len(inputs["input_ids"]) < max_seq_length:

248 | apply_num = max_seq_length-len(inputs["input_ids"])

249 | inputs["input_ids"].extend([0]*apply_num)

250 | inputs["token_type_ids"].extend([0]*apply_num)

251 | inputs["attention_mask"].extend([0]*apply_num)

252 |

253 | inputs["input_ids"] = torch.tensor([inputs["input_ids"]])

254 | inputs["token_type_ids"] = torch.tensor([inputs["token_type_ids"]])

255 | inputs["attention_mask"] = torch.tensor([inputs["attention_mask"]])

256 |

257 | flops, macs, params = calculate_flops(model=model,

258 | kwargs = inputs,

259 | print_results=False)

260 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

261 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:22.36 GFLOPS MACs:11.17 GMACs Params:102.27 M

262 | ```

263 |

264 |

265 | ### Large Language Model

266 |

267 | #### Online

268 |

269 | ```python

270 | from calflops import calculate_flops_hf

271 |

272 | batch_size, max_seq_length = 1, 128

273 | model_name = "meta-llama/Llama-2-7b"

274 | access_token = "" # your application for using llama

275 |

276 | flops, macs, params = calculate_flops_hf(model_name=model_name,

277 | access_token=access_token,

278 | input_shape=(batch_size, max_seq_length))

279 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

280 | ```

281 |

282 | #### Local

283 | Note here that the tokenizer must correspond to the llm model because llm tokenizer processes maybe are different.

284 |

285 | ``` python

286 | #Large Languase Model, such as llama2-7b.

287 | from calflops import calculate_flops

288 | from transformers import LlamaTokenizer

289 | from transformers import LlamaForCausalLM

290 |

291 | batch_size, max_seq_length = 1, 128

292 | model_name = "llama2_hf_7B"

293 | model_save = "../model/" + model_name

294 | model = LlamaForCausalLM.from_pretrained(model_save)

295 | tokenizer = LlamaTokenizer.from_pretrained(model_save)

296 | flops, macs, params = calculate_flops(model=model,

297 | input_shape=(batch_size, max_seq_length),

298 | transformer_tokenizer=tokenizer)

299 | print("Llama2(7B) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

300 | #Llama2(7B) FLOPs:1.7 TFLOPS MACs:850.00 GMACs Params:6.74 B

301 | ```

302 |

303 | ### Show each submodule result of FLOPs、MACs、Params

304 |

305 | The calflops provides a more detailed display of model FLOPs calculation information. By setting the parameter ```print_result=True```, which defaults to True, flops of the model will be printed in the terminal or jupyter interface.

306 |

307 |

308 |

309 | Meanwhile, by setting the parameter ```print_detailed=True``` which default to True, the calflops supports the display of the calculation results and proportion of FLOPs、MACs and Parameter in each submodule of the entire model, so that it is convenient to see the largest part of the calculation consumption in the entire model.

310 |

311 |

312 |

313 | ### More use introduction

314 |

315 |

316 | How to make output format more elegant

317 | You can use parameter output_as_string、output_precision、output_unit to determine the format of output data is value or string, if it is string, how many bits of precision to retain and the unit of value, such as FLOPs, the unit of result is "TFLOPs" or "GFLOPs", "MFLOPs".

318 |

319 |

320 |

321 |

322 | How do deal with model has multiple inputs

323 | The calflops support multiple inputs of model, just use parameter args or kwargs to construct multiple inputs can be passed in as model inference.

324 |

325 |

326 |

327 | How to calculate the results of FLOPS include forward and backward pass of the model

328 | You can use the parameter include_backPropagation to select whether the calculation of FLOPs results includes the process of model backpropagation. The default is False, that is result of FLOPs only include forward pass.

329 |

330 | In addition, the parameter compute_bp_factor to determine how many times backward as much computation as forward pass.The defaults that is 2.0, according to https://epochai.org/blog/backward-forward-FLOP-ratio

331 |

332 |

333 |

334 | How to calculate FLOPs for only part of the model module

335 | You can use the parameter ignore_modules to select which modules of model are ignored during FLOPs calculation. The default is [], that is all modules of model would be calculated in results.

336 |

337 |

338 |

339 | How to calculate FLOPs of the generate function in LLM

340 | You just need to assign "generate" to parameter forward_mode.

341 |

342 |

343 | ### **API** of the **calflops**

344 |

345 |

346 | calflops.calculate_flops()

347 |

348 | ``` python

349 | from calflops import calculate_flops

350 |

351 | def calculate_flops(model,

352 | input_shape=None,

353 | transformer_tokenizer=None,

354 | args=[],

355 | kwargs={},

356 | forward_mode="forward",

357 | include_backPropagation=False,

358 | compute_bp_factor=2.0,

359 | print_results=True,

360 | print_detailed=True,

361 | output_as_string=True,

362 | output_precision=2,

363 | output_unit=None,

364 | ignore_modules=None):

365 |

366 | """Returns the total floating-point operations, MACs, and parameters of a model.

367 |

368 | Args:

369 | model ([torch.nn.Module]): The model of input must be a PyTorch model.

370 | input_shape (tuple, optional): Input shape to the model. If args and kwargs is empty, the model takes a tensor with this shape as the only positional argument. Default to [].

371 | transformers_tokenizer (None, optional): Transforemrs Toekenizer must be special if model type is transformers and args、kwargs is empty. Default to None

372 | args (list, optinal): list of positional arguments to the model, such as bert input args is [input_ids, token_type_ids, attention_mask]. Default to []

373 | kwargs (dict, optional): dictionary of keyword arguments to the model, such as bert input kwargs is {'input_ids': ..., 'token_type_ids':..., 'attention_mask':...}. Default to {}

374 | forward_mode (str, optional): To determine the mode of model inference, Default to 'forward'. And use 'generate' if model inference uses model.generate().

375 | include_backPropagation (bool, optional): Decides whether the final return FLOPs computation includes the computation for backpropagation.

376 | compute_bp_factor (float, optional): The model backpropagation is a multiple of the forward propagation computation. Default to 2.

377 | print_results (bool, optional): Whether to print the model profile. Defaults to True.

378 | print_detailed (bool, optional): Whether to print the detailed model profile. Defaults to True.

379 | output_as_string (bool, optional): Whether to print the output as string. Defaults to True.

380 | output_precision (int, optional) : Output holds the number of decimal places if output_as_string is True. Default to 2.

381 | output_unit (str, optional): The unit used to output the result value, such as T, G, M, and K. Default is None, that is the unit of the output decide on value.

382 | ignore_modules ([type], optional): the list of modules to ignore during profiling. Defaults to None.

383 | ```

384 |

385 |

386 |

387 |

388 | calflops.calculate_flops_hf()

389 |

390 | ``` python

391 | def calculate_flops_hf(model_name,

392 | input_shape=None,

393 | library_name="transformers",

394 | trust_remote_code=True,

395 | access_token="",

396 | forward_mode="forward",

397 | include_backPropagation=False,

398 | compute_bp_factor=2.0,

399 | print_results=True,

400 | print_detailed=True,

401 | output_as_string=True,

402 | output_precision=2,

403 | output_unit=None,

404 | ignore_modules=None):

405 |

406 | """Returns the total floating-point operations, MACs, and parameters of a model.

407 |

408 | Args:

409 | model_name (str): The model name in huggingface platform https://huggingface.co/models, such as meta-llama/Llama-2-7b、baichuan-inc/Baichuan-13B-Chat etc.

410 | input_shape (tuple, optional): Input shape to the model. If args and kwargs is empty, the model takes a tensor with this shape as the only positional argument. Default to [].

411 | trust_remote_code (bool, optional): Trust the code in the remote library for the model structure.

412 | access_token (str, optional): Some models need to apply for a access token, such as meta llama2 etc.

413 | forward_mode (str, optional): To determine the mode of model inference, Default to 'forward'. And use 'generate' if model inference uses model.generate().

414 | include_backPropagation (bool, optional): Decides whether the final return FLOPs computation includes the computation for backpropagation.

415 | compute_bp_factor (float, optional): The model backpropagation is a multiple of the forward propagation computation. Default to 2.

416 | print_results (bool, optional): Whether to print the model profile. Defaults to True.

417 | print_detailed (bool, optional): Whether to print the detailed model profile. Defaults to True.

418 | output_as_string (bool, optional): Whether to print the output as string. Defaults to True.

419 | output_precision (int, optional) : Output holds the number of decimal places if output_as_string is True. Default to 2.

420 | output_unit (str, optional): The unit used to output the result value, such as T, G, M, and K. Default is None, that is the unit of the output decide on value.

421 | ignore_modules ([type], optional): the list of modules to ignore during profiling. Defaults to None.

422 |

423 | Example:

424 | .. code-block:: python

425 | from calflops import calculate_flops_hf

426 |

427 | batch_size = 1

428 | max_seq_length = 128

429 | model_name = "baichuan-inc/Baichuan-13B-Chat"

430 | flops, macs, params = calculate_flops_hf(model_name=model_name,

431 | input_shape=(batch_size, max_seq_length))

432 | print("%s FLOPs:%s MACs:%s Params:%s \n" %(model_name, flops, macs, params))

433 |

434 | Returns:

435 | The number of floating-point operations, multiply-accumulate operations (MACs), and parameters in the model.

436 | """

437 | ```

438 |

439 |

440 |

441 |

442 | calflops.generate_transformer_input()

443 |

444 | ``` python

445 | def generate_transformer_input(model_tokenizer, input_shape, device):

446 | """Automatically generates data in the form of transformes model input format.

447 |

448 | Args:

449 | input_shape (tuple):transformers model input shape: (batch_size, seq_len).

450 | tokenizer (transformer.model.tokenization): transformers model tokenization.tokenizer.

451 |

452 | Returns:

453 | dict: data format of transformers model input, it is a dict which contain 'input_ids', 'attention_mask', 'token_type_ids' etc.

454 | """

455 | ```

456 |

457 |

458 |

459 |

460 |

461 | ## Citation

462 | if calflops was useful for your paper or tech report, please cite me:

463 | ```

464 | @online{calflops,

465 | author = {xiaoju ye},

466 | title = {calflops: a FLOPs and Params calculate tool for neural networks in pytorch framework},

467 | year = 2023,

468 | url = {https://github.com/MrYxJ/calculate-flops.pytorch},

469 | }

470 | ```

471 |

472 | ## Common model calculate flops

473 |

474 | ### Large Language Model

475 | Input data format: batch_size=1, seq_len=128

476 |

477 | - fwd FLOPs: The FLOPs of the model forward propagation

478 |

479 | - bwd + fwd FLOPs: The FLOPs of model forward and backward propagation

480 |

481 | In addition, note that fwd + bwd does not include calculation losses for model parameter activation recomputation, if the results wants to include activation recomputation that is only necessary to multiply the result of fwd FLOPs by 4(According to the paper: https://arxiv.org/pdf/2205.05198.pdf), and in calflops you can easily set parameter ```computer_bp_factor=3 ```to make the result of including the activate the recalculation.

482 |

483 |

484 | Model | Input Shape | Params(B)|Params(Total)| fwd FLOPs(G) | fwd MACs(G) | fwd + bwd FLOPs(G) | fwd + bwd MACs(G) |

485 | --- |--- |--- |--- |--- |--- |--- |---

486 | bloom-1b7 |(1,128) | 1.72B | 1722408960 | 310.92 | 155.42 | 932.76 | 466.27

487 | bloom-7b1 |(1,128) | 7.07B | 7069016064 | 1550.39 | 775.11 | 4651.18 | 2325.32

488 | bloomz-1b7 |(1,128) | 1.72B | 1722408960 | 310.92 | 155.42 | 932.76 | 466.27

489 | baichuan-7B |(1,128) | 7B | 7000559616 | 1733.62 | 866.78 | 5200.85 | 2600.33

490 | chatglm-6b |(1,128) | 6.17B | 6173286400 | 1587.66 | 793.75 | 4762.97 | 2381.24

491 | chatglm2-6b |(1,128) | 6.24B | 6243584000 | 1537.68 | 768.8 | 4613.03 | 2306.4

492 | Qwen-7B |(1,128) | 7.72B | 7721324544 | 1825.83 | 912.88 | 5477.48 | 2738.65

493 | llama-7b |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

494 | llama2-7b |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

495 | llama2-7b-chat |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

496 | chinese-llama-7b | (1,128) | 6.89B | 6885486592 | 1718.89 | 859.41 |5156.67 | 2578.24

497 | chinese-llama-plus-7b| (1,128) | 6.89B | 6885486592 | 1718.89 | 859.41 |5156.67 | 2578.24

498 | EleutherAI/pythia-1.4b | (1,128) | 1.31B | 1311625216 | 312.54 | 156.23 |937.61 | 468.69

499 | EleutherAI/pythia-12b | (1,128) | 11.59B | 11586549760 | 2911.47 | 1455.59 | 8734,41 | 4366.77

500 | moss-moon-003-sft |(1,128) | 16.72B | 16717980160 | 4124.93 | 2062.39 | 12374.8 | 6187.17

501 | moss-moon-003-sft-plugin |(1,128) | 16.06B | 16060416000 | 3956.62 | 1978.24 | 11869.9 | 5934.71

502 |

503 | We can draw some simple and interesting conclusions from the table above:

504 | - The chatglm2-6b in the model of the same scale, the model parameters are smaller, and FLOPs is also smaller, which has certain advantages in speed performance.

505 | - The parameters of the llama1-7b, llama2-7b, and llama2-7b-chat models did not change at all, and FLOPs remained consistent. The structure of the model that conforms to the 7b described by [meta in its llama2 report](https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/) has not changed, the main difference is the increase of training data tokens.

506 | - Similarly, it can be seen from the table that the chinese-llama-7b and chinese-llama-plus-7b data are also in line with [cui's report](https://arxiv.org/pdf/2304.08177v1.pdf), just more chinese data tokens are added for training, and the model structure and parameters do not change.

507 |

508 | - ......

509 |

510 | More model FLOPs would be updated successively, see github [calculate-flops.pytorch](https://github.com/MrYxJ/calculate-flops.pytorch)

511 |

512 | ### Bert

513 |

514 | Input data format: batch_size=1, seq_len=128

515 |

516 | Model | Input Shape | Params(M)|Params(Total)| fwd FLOPs(G) | fwd MACs(G) | fwd + bwd FLOPs(G) | fwd + bwd MACs(G) |

517 | --- |--- |--- |--- |--- |--- |--- |---

518 | hfl/chinese-roberta-wwm-ext | (1,128)| 102.27M | 102267648 | 22.363 | 11.174 | 67.089 | 33.523 |

519 | ......

520 |

521 | You can use calflops to calculate the more different transformer models based bert, look forward to updating in this form.

522 |

523 |

524 | ## Benchmark

525 | ### [torchvision](https://pytorch.org/docs/1.0.0/torchvision/models.html)

526 |

527 | Input data format: batch_size = 1, actually input_shape = (1, 3, 224, 224)

528 |

529 | Note: The FLOPs in the table only takes into account the computation of forward propagation of the model, **Total** refers to the total numerical representation without unit abbreviations.

530 |

531 | Model | Input Resolution | Params(M)|Params(Total) | FLOPs(G) | FLOPs(Total) | Macs(G) | Macs(Total)

532 | --- |--- |--- |--- |--- |--- |--- |---

533 | alexnet |224x224 | 61.10 | 61100840 | 1.43 | 1429740000 | 741.19 | 7418800000

534 | vgg11 |224x224 | 132.86 | 132863000 | 15.24 | 15239200000 | 7.61 | 7609090000

535 | vgg13 |224x224 | 133.05 | 133048000 | 22.65 | 22647600000 | 11.31 | 11308500000

536 | vgg16 |224x224 | 138.36 | 138358000 | 30.97 | 30973800000 | 15.47 | 15470300000

537 | vgg19 |224x224 | 143.67 | 143667000 | 39.30 | 39300000000 | 19.63 | 19632100000

538 | vgg11_bn |224x224 | 132.87 | 132869000 | 15.25 | 15254000000 | 7.61 | 7609090000

539 | vgg13_bn |224x224 | 133.05 | 133054000 | 22.67 | 22672100000 | 11.31 | 11308500000

540 | vgg16_bn |224x224 | 138.37 | 138366000 | 31.00 | 31000900000 | 15.47 | 15470300000

541 | vgg19_bn |224x224 | 143.68 | 143678000 | 39.33 | 39329700000 | 19.63 | 19632100000

542 | resnet18 |224x224 | 11.69 | 11689500 | 3.64 | 3636250000 | 1.81 | 1814070000

543 | resnet34 |224x224 | 21.80 | 21797700 | 7.34 | 7339390000 | 3.66 | 3663760000

544 | resnet50 |224x224 | 25.56 | 25557000 | 8.21 | 8211110000 | 4.09 | 4089180000

545 | resnet101 |224x224 | 44.55 | 44549200 | 15.65 | 15690900000 | 7.80 | 7801410000

546 | resnet152 |224x224 | 60.19 | 60192800 | 23.09 | 23094300000 | 11.51 | 11513600000

547 | squeezenet1_0 |224x224 | 1.25 | 1248420 | 1.65 | 1648970000 | 0.82 | 818925000

548 | squeezenet1_1 |224x224 | 1.24 | 1235500 | 0.71 | 705014000 | 0.35 | 349152000

549 | densenet121 |224x224 | 7.98 | 7978860 | 5.72 | 5716880000 | 2.83 | 2834160000

550 | densenet169 |224x224 | 14.15 | 14195000 | 6.78 | 6778370000 | 3.36 | 3359840000

551 | densenet201 |224x224 | 20.01 | 20013900 | 8.66 | 8658520000 | 4.29 | 4291370000

552 | densenet161 |224x224 | 28.68 | 28681000 | 15.55 | 1554650000 | 7.73 | 7727900000

553 | inception_v3 |224x224 | 27.16 | 27161300 | 5.29 | 5692390000 | 2.84 | 2837920000

554 |

555 | Thanks to @[zigangzhao-ai](https://github.com/zigangzhao-ai) use ```calflops``` to static torchvision form.

556 |

557 | You also can compare torchvision results of calculate FLOPs with anthoer good tool: [ptflops readme.md](https://github.com/sovrasov/flops-counter.pytorch/).

558 |

559 |

560 |

561 | ## Concact Author

562 |

563 | Author: [MrYXJ](https://github.com/MrYxJ/)

564 |

565 | Mail: yxj2017@gmail.com

566 |

--------------------------------------------------------------------------------

/README_CN.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | calflops: a FLOPs and Params calculate tool for neural networks

7 |

8 |

9 |

10 |

11 | [](https://pypi.org/project/calflops/)

12 | [](https://github.com/MrYxJ/calculate-flops.pytorch/blob/main/LICENSE)

13 |

14 |

15 |

16 | English|

17 | 中文

18 |

19 |

20 |

21 | # 介绍

22 | 这个工具(calflops)的作用是通过对模型结构与实现上统计计算各种神经网络中的FLOPs(浮点运算),mac(乘加运算)和模型参数的理论量,支持模型包括:Linear, CNN, RNN, GCN, **Transformer(Bert, LlaMA等大型语言模型)** 等等, 甚至**任何自定义模型**。这是因为caflops支持基于Pytorch的```torch.nn.function.*```实现的计算操作。同时该工具支持打印模型各子模块的FLOPS、参数计算值和比例,方便用户了解模型各部分的性能消耗情况。

23 |

24 | 对于大模型,```calflops```相比其他工具可以更方便计算FLOPs,通过```calflops.calculate_flops()```您只需要通过参数```transformers_tokenizer```传递需要计算的transformer模型相应的```tokenizer```,它将自动帮助您构建```input_shape```模型输入。或者,您还可以通过``` args```, ```kwargs ```处理需要具有多个输入的模型,例如bert模型的输入需要```input_ids```, ```attention_mask```等多个字段。详细信息请参见下面```calflops.calculate_flops()```的api。

25 |

26 | 另外,这个包的实现过程受到[ptflops](https://github.com/sovrasov/flops-counter.pytorch)和[deepspeed](https://github.com/microsoft/DeepSpeed/tree/master/deepspeed)库实现的启发,他们也都是非常好的工作。同时,calflops包也在他们基础上改进了一些方面(更简单的使用,更多的模型支持),详细可以使用```pip install calflops```体验一下。

27 |

28 |

29 | ## 安装最新的版本

30 | #### From PyPI:

31 |

32 | ```python

33 | pip install calflops

34 | ```

35 |

36 | 同时你也可以从pypi calflops官方网址: https://pypi.org/project/calflops/

37 | 上下载最新版本的whl文件 `calflops-*-py3-none-any.whl` 到本地进行离线安装:

38 |

39 | ```python

40 | pip install calflops-*-py3-none-any.whl

41 | ```

42 | ## 如何使用calflops

43 | ### 举个例子

44 | ### CNN Model

45 |

46 | 如果模型的输入只有一个参数,你只需要通过对传入参数```input_shape```设置参数的大小即可,calflops会根据设定维度自动生成一个随机值作为模型的输入进行计算FLOPs。

47 |

48 | ```python

49 | from calflops import calculate_flops

50 | from torchvision import models

51 |

52 | model = models.alexnet()

53 | batch_size = 1

54 | input_shape = (batch_size, 3, 224, 224)

55 | flops, macs, params = calculate_flops(model=model,

56 | input_shape=input_shape,

57 | output_as_string=True,

58 | output_precision=4)

59 | print("Alexnet FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

60 | #Alexnet FLOPs:4.2892 GFLOPS MACs:2.1426 GMACs Params:61.1008 M

61 | ```

62 |

63 | 如果需要计算FLOPs的模型有多个输入,你也只需要通过传入参数 ```args``` 或 ```kargs```进行构造, 具体可以见下面Tranformer Model给出的例子。

64 |

65 | ### Transformer Model

66 |

67 | 相比CNN Model,Transformer Model如果想使用参数 ```input_shape``` 指定输入数据的大小自动生成输入数据时额外还需要将其对应的```tokenizer```通过参数```transformer_tokenizer```进行传入,当然这种方式相比下面通过```kwargs```传入已构造输入数据方式更方便。

68 |

69 | ``` python

70 | # Transformers Model, such as bert.

71 | from calflops import calculate_flops

72 | from transformers import AutoModel

73 | from transformers import AutoTokenizer

74 |

75 | batch_size = 1

76 | max_seq_length = 128

77 | model_name = "hfl/chinese-roberta-wwm-ext/"

78 | model_save = "../pretrain_models/" + model_name

79 | model = AutoModel.from_pretrained(model_save)

80 | tokenizer = AutoTokenizer.from_pretrained(model_save)

81 |

82 | flops, macs, params = calculate_flops(model=model,

83 | input_shape=(batch_size,max_seq_length),

84 | transformer_tokenizer=tokenizer)

85 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

86 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:67.1 GFLOPS MACs:33.52 GMACs Params:102.27 M

87 | ```

88 |

89 | 如果希望使用自己生成的特定数据来计算FLOPs,可以使用参数```args```或```kwargs```,这种情况参数```input_shape```不能再传入值。下面给出一个例子,可以看出没有通过```transformer_tokenizer```方便。

90 |

91 | ``` python

92 | # Transformers Model, such as bert.

93 | from calflops import calculate_flops

94 | from transformers import AutoModel

95 | from transformers import AutoTokenizer

96 |

97 | batch_size = 1

98 | max_seq_length = 128

99 | model_name = "hfl/chinese-roberta-wwm-ext/"

100 | model_save = "/code/yexiaoju/generate_tags/models/pretrain_models/" + model_name

101 | model = AutoModel.from_pretrained(model_save)

102 | tokenizer = AutoTokenizer.from_pretrained(model_save)

103 |

104 | text = ""

105 | inputs = tokenizer(text,

106 | add_special_tokens=True,

107 | return_attention_mask=True,

108 | padding=True,

109 | truncation="longest_first",

110 | max_length=max_seq_length)

111 |

112 | if len(inputs["input_ids"]) < max_seq_length:

113 | apply_num = max_seq_length-len(inputs["input_ids"])

114 | inputs["input_ids"].extend([0]*apply_num)

115 | inputs["token_type_ids"].extend([0]*apply_num)

116 | inputs["attention_mask"].extend([0]*apply_num)

117 |

118 | inputs["input_ids"] = torch.tensor([inputs["input_ids"]])

119 | inputs["token_type_ids"] = torch.tensor([inputs["token_type_ids"]])

120 | inputs["attention_mask"] = torch.tensor([inputs["attention_mask"]])

121 |

122 | flops, macs, params = calculate_flops(model=model,

123 | kwargs = inputs,

124 | print_results=False)

125 | print("Bert(hfl/chinese-roberta-wwm-ext) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

126 | #Bert(hfl/chinese-roberta-wwm-ext) FLOPs:22.36 GFLOPS MACs:11.17 GMACs Params:102.27 M

127 | ```

128 |

129 |

130 | ### Large Language Model

131 |

132 | 请注意,传入参数```transfromer_tokenizer```与大模型的tokenzier必须是一致匹配的。

133 |

134 |

135 | ``` python

136 | #Large Languase Model, such as llama2-7b.

137 | from calflops import calculate_flops

138 | from transformers import LlamaTokenizer

139 | from transformers import LlamaForCausalLM

140 |

141 | batch_size = 1

142 | max_seq_length = 128

143 | model_name = "llama2_hf_7B"

144 | model_save = "../model/" + model_name

145 | model = LlamaForCausalLM.from_pretrained(model_save)

146 | tokenizer = LlamaTokenizer.from_pretrained(model_save)

147 | flops, macs, params = calculate_flops(model=model,

148 | input_shape=(batch_size, max_seq_length),

149 | transformer_tokenizer=tokenizer)

150 | print("Llama2(7B) FLOPs:%s MACs:%s Params:%s \n" %(flops, macs, params))

151 | #Llama2(7B) FLOPs:1.7 TFLOPS MACs:850.00 GMACs Params:6.74 B

152 | ```

153 |

154 | ### 显示每个子模块的FLOPs, mac, Params

155 |

156 | calflops提供了更详细的显示模型FLOPs计算信息。通过设置参数```print_result=True```,默认为True。如下图所示,在终端或jupyter界面打印模型的FLOPs。

157 |

158 |

159 |

160 | 同时,通过设置参数```print_detailed =True```,默认为True。 calflops支持显示整个模型各子模块中FLOPs、NACs和Parameter的计算结果和占比的比例,这可以直接查看整个模型哪部分的消耗计算量最大,方便后续性能的优化。

161 |

162 |

163 |

164 | ### 更多使用介绍

165 |

166 |

167 | 如何使输出格式更优雅

168 | 您可以使用参数```output_as_string```, ```output_precision```, ```output_unit```来确定输出数据的格式是value还是string,如果是string,则保留多少位精度和值的单位,例如FLOPs的单位是“TFLOPs”或“GFLOPs”,“MFLOPs”。

169 |

170 |

171 |

172 |

173 | 如何处理有多个输入的模型

174 | calflops支持具有多个输入的模型,你只需使用参数args或kwargs进行构造,即可将多个输入作为模型推理的传入。

175 |

176 |

177 |

178 | 如何让计算FLOPS的结果包括模型的正向和反向传播

179 |

180 | 你可以使用参数include_backPropagation来选择FLOPs结果的计算是否包含模型反向传播的过程,默认缺省值为False,即FLOPs只包含模型前向传播的过程。

181 |

182 | 此外,参数compute_bp_factor用于确定向后传播的计算次数与向前传播的计算次数相同。默认值缺省值是2.0,根据技术报告:https://epochai.org/blog/backward-forward-FLOP-ratio

183 |

184 |

185 |

186 | 如何仅计算部分模型模块的FLOPs

187 | 你可以通过参数ignore_modules可以选择在计算FLOPs时忽略model中的哪些模块。默认为[],即在计算结果包括模型的所有模块。

188 |

189 |

190 |

191 | 如何计算LLM中生成函数(model.generate())的FLOPs

192 | 你只需要将“generate”赋值给参数forward_mode。

193 |

194 |

195 | ### **Api** of the **calflops**

196 |

197 |

198 | calflops.calculate_flops()

199 |

200 | ``` python

201 | from calflops import calculate_flops

202 |

203 | def calculate_flops(model,

204 | input_shape=None,

205 | transformer_tokenizer=None,

206 | args=[],

207 | kwargs={},

208 | forward_mode="forward",

209 | include_backPropagation=False,

210 | compute_bp_factor=2.0,

211 | print_results=True,

212 | print_detailed=True,

213 | output_as_string=True,

214 | output_precision=2,

215 | output_unit=None,

216 | ignore_modules=None):

217 |

218 | """Returns the total floating-point operations, MACs, and parameters of a model.

219 |

220 | Args:

221 | model ([torch.nn.Module]): The model of input must be a PyTorch model.

222 | input_shape (tuple, optional): Input shape to the model. If args and kwargs is empty, the model takes a tensor with this shape as the only positional argument. Default to [].

223 | transformers_tokenizer (None, optional): Transforemrs Toekenizer must be special if model type is transformers and args、kwargs is empty. Default to None

224 | args (list, optinal): list of positional arguments to the model, such as bert input args is [input_ids, token_type_ids, attention_mask]. Default to []

225 | kwargs (dict, optional): dictionary of keyword arguments to the model, such as bert input kwargs is {'input_ids': ..., 'token_type_ids':..., 'attention_mask':...}. Default to {}

226 | forward_mode (str, optional): To determine the mode of model inference, Default to 'forward'. And use 'generate' if model inference uses model.generate().

227 | include_backPropagation (bool, optional): Decides whether the final return FLOPs computation includes the computation for backpropagation.

228 | compute_bp_factor (float, optional): The model backpropagation is a multiple of the forward propagation computation. Default to 2.

229 | print_results (bool, optional): Whether to print the model profile. Defaults to True.

230 | print_detailed (bool, optional): Whether to print the detailed model profile. Defaults to True.

231 | output_as_string (bool, optional): Whether to print the output as string. Defaults to True.

232 | output_precision (int, optional) : Output holds the number of decimal places if output_as_string is True. Default to 2.

233 | output_unit (str, optional): The unit used to output the result value, such as T, G, M, and K. Default is None, that is the unit of the output decide on value.

234 | ignore_modules ([type], optional): the list of modules to ignore during profiling. Defaults to None.

235 | ```

236 |

237 |

238 |

239 | calflops.generate_transformer_input()

240 |

241 | ``` python

242 | def generate_transformer_input(model_tokenizer, input_shape, device):

243 | """Automatically generates data in the form of transformes model input format.

244 |

245 | Args:

246 | input_shape (tuple):transformers model input shape: (batch_size, seq_len).

247 | tokenizer (transformer.model.tokenization): transformers model tokenization.tokenizer.

248 |

249 | Returns:

250 | dict: data format of transformers model input, it is a dict which contain 'input_ids', 'attention_mask', 'token_type_ids' etc.

251 | """

252 | ```

253 |

254 |

255 |

256 |

257 |

258 | ## Citation

259 | if calflops was useful for your paper or tech report, please cite me:

260 | ```

261 | @online{calflops,

262 | author = {xiaoju ye},

263 | title = {calflops: a FLOPs and Params calculate tool for neural networks in pytorch framework},

264 | year = 2023,

265 | url = {https://github.com/MrYxJ/calculate-flops.pytorch},

266 | }

267 | ```

268 |

269 | ## 常见模型的FLOPs

270 |

271 | ### Large Language Model

272 | Input data format: batch_size=1, seq_len=128

273 |

274 | - fwd FLOPs: The FLOPs of the model forward propagation

275 |

276 | - bwd + fwd FLOPs: The FLOPs of model forward and backward propagation

277 |

278 | 另外注意这里fwd + bwd 没有包括模型参数激活的计算损耗,如果包括的对fwd的结果乘4即可。根据论文:https://arxiv.org/pdf/2205.05198.pdf

279 |

280 | Model | Input Shape | Params(B)|Params(Total)| fwd FLOPs(G) | fwd MACs(G) | fwd + bwd FLOPs(G) | fwd + bwd MACs(G) |

281 | --- |--- |--- |--- |--- |--- |--- |---

282 | bloom-1b7 |(1,128) | 1.72B | 1722408960 | 310.92 | 155.42 | 932.76 | 466.27

283 | bloom-7b1 |(1,128) | 7.07B | 7069016064 | 1550.39 | 775.11 | 4651.18 | 2325.32

284 | baichuan-7B |(1,128) | 7B | 7000559616 | 1733.62 | 866.78 | 5200.85 | 2600.33

285 | chatglm-6b |(1,128) | 6.17B | 6173286400 | 1587.66 | 793.75 | 4762.97 | 2381.24

286 | chatglm2-6b |(1,128) | 6.24B | 6243584000 | 1537.68 | 768.8 | 4613.03 | 2306.4

287 | Qwen-7B |(1,128) | 7.72B | 7721324544 | 1825.83 | 912.88 | 5477.48 | 2738.65

288 | llama-7b |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

289 | llama2-7b |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

290 | llama2-7b-chat |(1,128) | 6.74B | 6738415616 | 1700.06 | 850 | 5100.19 | 2550

291 | chinese-llama-7b | (1,128) | 6.89B | 6885486592 | 1718.89 | 859.41 |5156.67 | 2578.24

292 | chinese-llama-plus-7b| (1,128) | 6.89B | 6885486592 | 1718.89 | 859.41 |5156.67 | 2578.24

293 | moss-moon-003-sft |(1,128) | 16.72B | 16717980160 | 4124.93 | 2062.39 | 12374.8 | 6187.17

294 |

295 | 从上表中我们可以得出一些简单而有趣的发现:

296 | - chatglm2-6b在相同比例的模型中,模型参数更小,FLOPs也更小,在速度性能上具有一定的优势。

297 | - llama1-7b、llama2-7b和llama2-7b-chat模型参数一点没变、FLOPs也保持一致。符合[meta在其llama2报告](https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/)中描述的llama2-7b的模型结构没有改变,主要区别是训练数据token的增加。

298 | - 类似的从表中可以看出,chinese-llama-7b和chinese-llama-plus-7b数据也符合[cui的报告](https://arxiv.org/pdf/2304.08177v1.pdf),只是增加了更多的中文数据token进行训练,模型没有改变。

299 | - ......

300 |

301 | 更多的模型FLOPs将陆续更新,参见github

302 | [calculate-flops.pytorch](https://github.com/MrYxJ/calculate-flops.pytorch)

303 |

304 | ### Bert

305 |

306 | Input data format: batch_size=1, seq_len=128

307 |

308 | Model | Input Shape | Params(M)|Params(Total)| fwd FLOPs(G) | fwd MACs(G) | fwd + bwd FLOPs() | fwd + bwd MACs(G) |

309 | --- |--- |--- |--- |--- |--- |--- |---

310 | hfl/chinese-roberta-wwm-ext | (1,128)| 102.27M | 102267648 | 67.1 | 33.52 | 201.3 | 100.57

311 | ......

312 |

313 | 你可以使用calflops来计算基于bert的更多不同模型,期待你更新在此表中。

314 |

315 |

316 | ## Benchmark

317 | ### [torchvision](https://pytorch.org/docs/1.0.0/torchvision/models.html)

318 |

319 | Input data format: batch_size = 1, actually input_shape = (1, 3, 224, 224)

320 |

321 | 注:表中FLOPs仅考虑模型正向传播的计算,**Total**为不含单位缩写的总数值表示。

322 |

323 | Model | Input Resolution | Params(M)|Params(Total) | FLOPs(G) | FLOPs(Total) | Macs(G) | Macs(Total)

324 | --- |--- |--- |--- |--- |--- |--- |---

325 | alexnet |224x224 | 61.10 | 61100840 | 1.43 | 1429740000 | 741.19 | 7418800000

326 | vgg11 |224x224 | 132.86 | 132863000 | 15.24 | 15239200000 | 7.61 | 7609090000

327 | vgg13 |224x224 | 133.05 | 133048000 | 22.65 | 22647600000 | 11.31 | 11308500000

328 | vgg16 |224x224 | 138.36 | 138358000 | 30.97 | 30973800000 | 15.47 | 15470300000

329 | vgg19 |224x224 | 143.67 | 143667000 | 39.30 | 39300000000 | 19.63 | 19632100000

330 | vgg11_bn |224x224 | 132.87 | 132869000 | 15.25 | 15254000000 | 7.61 | 7609090000

331 | vgg13_bn |224x224 | 133.05 | 133054000 | 22.67 | 22672100000 | 11.31 | 11308500000

332 | vgg16_bn |224x224 | 138.37 | 138366000 | 31.00 | 31000900000 | 15.47 | 15470300000

333 | vgg19_bn |224x224 | 143.68 | 143678000 | 39.33 | 39329700000 | 19.63 | 19632100000

334 | resnet18 |224x224 | 11.69 | 11689500 | 3.64 | 3636250000 | 1.81 | 1814070000

335 | resnet34 |224x224 | 21.80 | 21797700 | 7.34 | 7339390000 | 3.66 | 3663760000

336 | resnet50 |224x224 | 25.56 | 25557000 | 8.21 | 8211110000 | 4.09 | 4089180000

337 | resnet101 |224x224 | 44.55 | 44549200 | 15.65 | 15690900000 | 7.80 | 7801410000

338 | resnet152 |224x224 | 60.19 | 60192800 | 23.09 | 23094300000 | 11.51 | 11513600000

339 | squeezenet1_0 |224x224 | 1.25 | 1248420 | 1.65 | 1648970000 | 0.82 | 818925000

340 | squeezenet1_1 |224x224 | 1.24 | 1235500 | 0.71 | 705014000 | 0.35 | 349152000

341 | densenet121 |224x224 | 7.98 | 7978860 | 5.72 | 5716880000 | 2.83 | 2834160000

342 | densenet169 |224x224 | 14.15 | 14195000 | 6.78 | 6778370000 | 3.36 | 3359840000

343 | densenet201 |224x224 | 20.01 | 20013900 | 8.66 | 8658520000 | 4.29 | 4291370000

344 | densenet161 |224x224 | 28.68 | 28681000 | 15.55 | 1554650000 | 7.73 | 7727900000

345 | inception_v3 |224x224 | 27.16 | 27161300 | 5.29 | 5692390000 | 2.84 | 2837920000

346 |

347 | 感谢 @[zigangzhao-ai](https://github.com/zigangzhao-ai) 帮忙使用 ```calflops``` 去统计表 torchvision的结果.

348 |

349 | 你也可以将calflops计算FLOPs的结果与其他优秀的工具计算结果进行比较

350 | : [ptflops readme.md](https://github.com/sovrasov/flops-counter.pytorch/).

351 |

352 |

353 | ## Concact Author

354 |

355 | Author: [MrYXJ](https://github.com/MrYxJ/)

356 |

357 | Mail: yxj2017@gmail.com

358 |

--------------------------------------------------------------------------------

/calflops/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/.DS_Store

--------------------------------------------------------------------------------

/calflops/__init__.py:

--------------------------------------------------------------------------------

1 | # !usr/bin/env python

2 | # -*- coding:utf-8 -*-

3 |

4 | '''

5 | Description :

6 | Version : 1.0

7 | Author : MrYXJ

8 | Mail : yxj2017@gmail.com

9 | Github : https://github.com/MrYxJ

10 | Date : 2023-08-19 10:27:55

11 | LastEditTime : 2023-09-05 15:31:43

12 | Copyright (C) 2023 mryxj. All rights reserved.

13 | '''

14 |

15 | from .flops_counter import calculate_flops

16 | from .flops_counter_hf import calculate_flops_hf

17 |

18 | from .utils import generate_transformer_input

19 | from .utils import number_to_string

20 | from .utils import flops_to_string

21 | from .utils import macs_to_string

22 | from .utils import params_to_string

23 | from .utils import bytes_to_string

24 |

25 | from .estimate import create_empty_model

--------------------------------------------------------------------------------

/calflops/__pycache__/__init__.cpython-311.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/__init__.cpython-311.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/__init__.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/__init__.cpython-39.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/big_modeling.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/big_modeling.cpython-39.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/calculate_pipline.cpython-311.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/calculate_pipline.cpython-311.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/calculate_pipline.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/calculate_pipline.cpython-39.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/estimate.cpython-311.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/estimate.cpython-311.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/estimate.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MrYxJ/calculate-flops.pytorch/027e89a24daf23ee7ed79ca4abee3fb59b5b23cd/calflops/__pycache__/estimate.cpython-39.pyc

--------------------------------------------------------------------------------

/calflops/__pycache__/estimate.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 |

3 | # Copyright 2023 The HuggingFace Team. All rights reserved.

4 | #

5 | # Licensed under the Apache License, Version 2.0 (the "License");

6 | # you may not use this file except in compliance with the License.

7 | # You may obtain a copy of the License at

8 | #

9 | # http://www.apache.org/licenses/LICENSE-2.0

10 | #

11 | # Unless required by applicable law or agreed to in writing, software

12 | # distributed under the License is distributed on an "AS IS" BASIS,

13 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

14 | # See the License for the specific language governing permissions and

15 | # limitations under the License.

16 | #import argparse

17 |

18 | from huggingface_hub import model_info

19 | from huggingface_hub.utils import GatedRepoError, RepositoryNotFoundError

20 |

21 | from accelerate import init_empty_weights

22 | from accelerate.utils import (

23 | # calculate_maximum_sizes,

24 | # convert_bytes,

25 | is_timm_available,

26 | is_transformers_available,

27 | )

28 |

29 |

30 | if is_transformers_available():

31 | import transformers

32 | from transformers import AutoConfig, AutoModel

33 |

34 | if is_timm_available():

35 | import timm

36 |

37 |

38 | def verify_on_hub(repo: str, token: str = None):

39 | "Verifies that the model is on the hub and returns the model info."

40 | try:

41 | return model_info(repo, token=token)

42 | except GatedRepoError:

43 | return "gated"

44 | except RepositoryNotFoundError:

45 | return "repo"

46 |

47 |

48 | def check_has_model(error):

49 | """

50 | Checks what library spawned `error` when a model is not found

51 | """

52 | if is_timm_available() and isinstance(error, RuntimeError) and "Unknown model" in error.args[0]:

53 | return "timm"

54 | elif (

55 | is_transformers_available()

56 | and isinstance(error, OSError)

57 | and "does not appear to have a file named" in error.args[0]

58 | ):

59 | return "transformers"

60 | else:

61 | return "unknown"

62 |

63 |

64 | def create_empty_model(model_name: str, library_name: str, trust_remote_code: bool = False, access_token: str = None):

65 | """

66 | Creates an empty model from its parent library on the `Hub` to calculate the overall memory consumption.

67 |

68 | Args:

69 | model_name (`str`):

70 | The model name on the Hub

71 | library_name (`str`):

72 | The library the model has an integration with, such as `transformers`. Will be used if `model_name` has no

73 | metadata on the Hub to determine the library.

74 | trust_remote_code (`bool`, `optional`, defaults to `False`):

75 | Whether or not to allow for custom models defined on the Hub in their own modeling files. This option

76 | should only be set to `True` for repositories you trust and in which you have read the code, as it will

77 | execute code present on the Hub on your local machine.

78 | access_token (`str`, `optional`, defaults to `None`):

79 | The access token to use to access private or gated models on the Hub. (for use on the Gradio app)

80 |

81 | Returns:

82 | `torch.nn.Module`: The torch model that has been initialized on the `meta` device.

83 |

84 | """

85 | model_info = verify_on_hub(model_name, access_token)

86 | # Simplified errors

87 | if model_info == "gated":

88 | raise GatedRepoError(

89 | f"Repo for model `{model_name}` is gated. You must be authenticated to access it. Please run `huggingface-cli login`."

90 | )

91 | elif model_info == "repo":

92 | raise RepositoryNotFoundError(

93 | f"Repo for model `{model_name}` does not exist on the Hub. If you are trying to access a private repo,"

94 | " make sure you are authenticated via `huggingface-cli login` and have access."

95 | )

96 | if library_name is None:

97 | library_name = getattr(model_info, "library_name", False)

98 | if not library_name:

99 | raise ValueError(

100 | f"Model `{model_name}` does not have any library metadata on the Hub, please manually pass in a `--library_name` to use (such as `transformers`)"

101 | )

102 | if library_name == "transformers":

103 | if not is_transformers_available():

104 | raise ImportError(

105 | f"To check `{model_name}`, `transformers` must be installed. Please install it via `pip install transformers`"

106 | )

107 | print(f"Loading pretrained config for `{model_name}` from `transformers`...")

108 |

109 | #auto_map = model_info.config.get("auto_map", False)

110 | auto_map = model_info.config.get("auto_map", True)

111 | config = AutoConfig.from_pretrained(model_name, trust_remote_code=trust_remote_code)

112 |

113 | with init_empty_weights():

114 | # remote code could specify a specific `AutoModel` class in the `auto_map`

115 | constructor = AutoModel

116 | if isinstance(auto_map, dict):

117 | value = None

118 | for key in auto_map.keys():

119 | if key.startswith("AutoModelFor"):

120 | value = key

121 | break

122 | if value is not None:

123 | constructor = getattr(transformers, value)

124 | model = constructor.from_config(config, trust_remote_code=trust_remote_code)

125 | elif library_name == "timm":

126 | if not is_timm_available():

127 | raise ImportError(

128 | f"To check `{model_name}`, `timm` must be installed. Please install it via `pip install timm`"

129 | )

130 | print(f"Loading pretrained config for `{model_name}` from `timm`...")

131 | with init_empty_weights():