├── CONTRIBUTING.md

├── MANIFEST.in

├── README.md

├── YOLOv8_Object_Tracking_Blurring_Counting.ipynb

├── figure

├── figure1.png

└── figure2.png

├── mkdocs.yml

├── requirements.txt

├── setup.cfg

├── setup.py

└── ultralytics

├── __init__.py

├── hub

├── __init__.py

├── auth.py

├── session.py

└── utils.py

├── models

├── README.md

├── v3

│ ├── yolov3-spp.yaml

│ ├── yolov3-tiny.yaml

│ └── yolov3.yaml

├── v5

│ ├── yolov5l.yaml

│ ├── yolov5m.yaml

│ ├── yolov5n.yaml

│ ├── yolov5s.yaml

│ └── yolov5x.yaml

└── v8

│ ├── cls

│ ├── yolov8l-cls.yaml

│ ├── yolov8m-cls.yaml

│ ├── yolov8n-cls.yaml

│ ├── yolov8s-cls.yaml

│ └── yolov8x-cls.yaml

│ ├── seg

│ ├── yolov8l-seg.yaml

│ ├── yolov8m-seg.yaml

│ ├── yolov8n-seg.yaml

│ ├── yolov8s-seg.yaml

│ └── yolov8x-seg.yaml

│ ├── yolov8l.yaml

│ ├── yolov8m.yaml

│ ├── yolov8n.yaml

│ ├── yolov8s.yaml

│ ├── yolov8x.yaml

│ └── yolov8x6.yaml

├── nn

├── __init__.py

├── autobackend.py

├── modules.py

└── tasks.py

└── yolo

├── cli.py

├── configs

├── __init__.py

├── default.yaml

└── hydra_patch.py

├── data

├── __init__.py

├── augment.py

├── base.py

├── build.py

├── dataloaders

│ ├── __init__.py

│ ├── stream_loaders.py

│ ├── v5augmentations.py

│ └── v5loader.py

├── dataset.py

├── dataset_wrappers.py

├── datasets

│ ├── Argoverse.yaml

│ ├── GlobalWheat2020.yaml

│ ├── ImageNet.yaml

│ ├── Objects365.yaml

│ ├── SKU-110K.yaml

│ ├── VOC.yaml

│ ├── VisDrone.yaml

│ ├── coco.yaml

│ ├── coco128-seg.yaml

│ ├── coco128.yaml

│ └── xView.yaml

├── scripts

│ ├── download_weights.sh

│ ├── get_coco.sh

│ ├── get_coco128.sh

│ └── get_imagenet.sh

└── utils.py

├── engine

├── __init__.py

├── exporter.py

├── model.py

├── predictor.py

├── trainer.py

└── validator.py

├── utils

├── __init__.py

├── autobatch.py

├── callbacks

│ ├── __init__.py

│ ├── base.py

│ ├── clearml.py

│ ├── comet.py

│ ├── hub.py

│ └── tensorboard.py

├── checks.py

├── dist.py

├── downloads.py

├── files.py

├── instance.py

├── loss.py

├── metrics.py

├── ops.py

├── plotting.py

├── tal.py

└── torch_utils.py

└── v8

└── detect

├── __init__.py

├── predict.py

├── train.py

└── val.py

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | ## Contributing to YOLOv8 🚀

2 |

3 | We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:

4 |

5 | - Reporting a bug

6 | - Discussing the current state of the code

7 | - Submitting a fix

8 | - Proposing a new feature

9 | - Becoming a maintainer

10 |

11 | YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be

12 | helping push the frontiers of what's possible in AI 😃!

13 |

14 | ## Submitting a Pull Request (PR) 🛠️

15 |

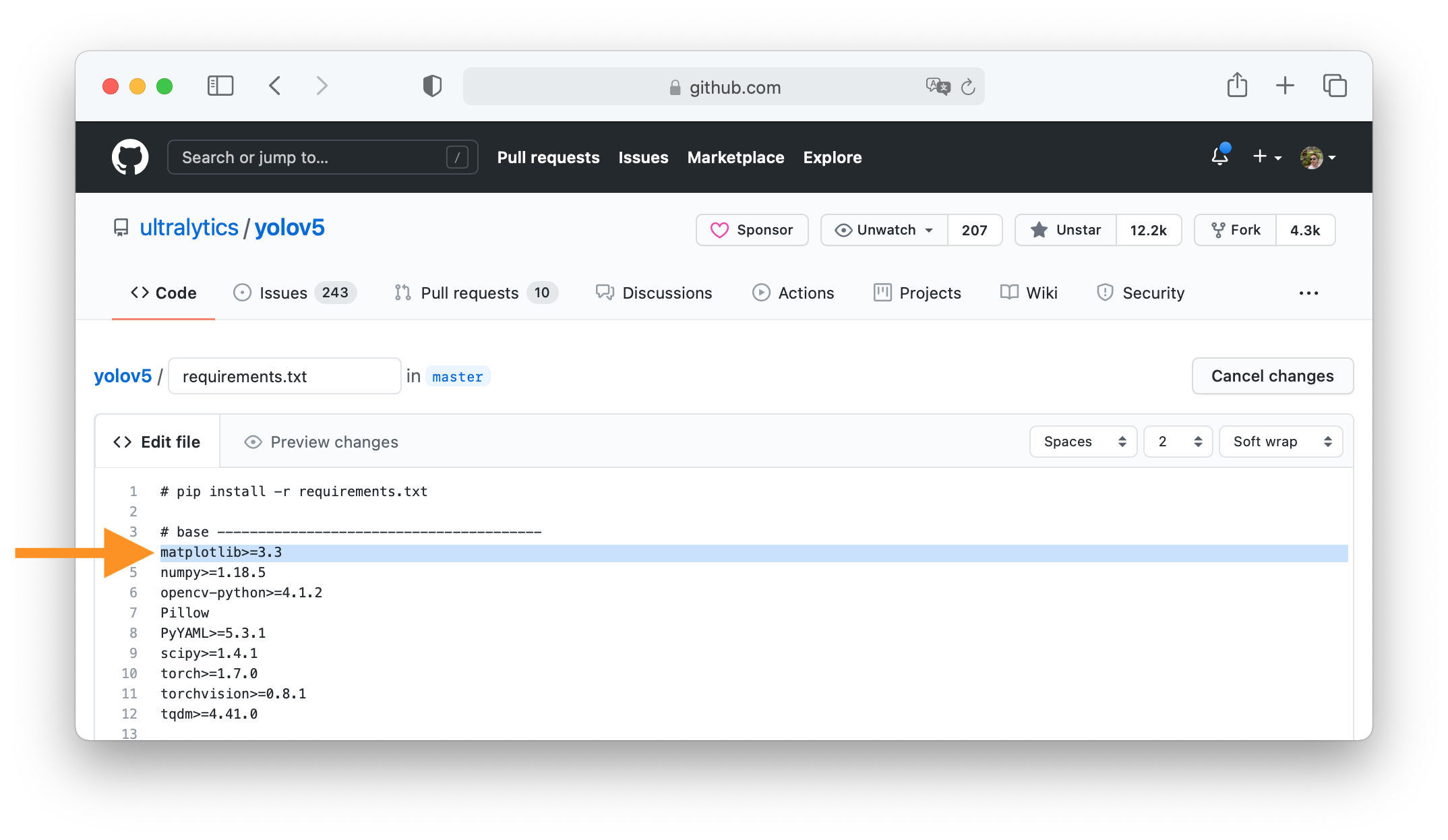

16 | Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

17 |

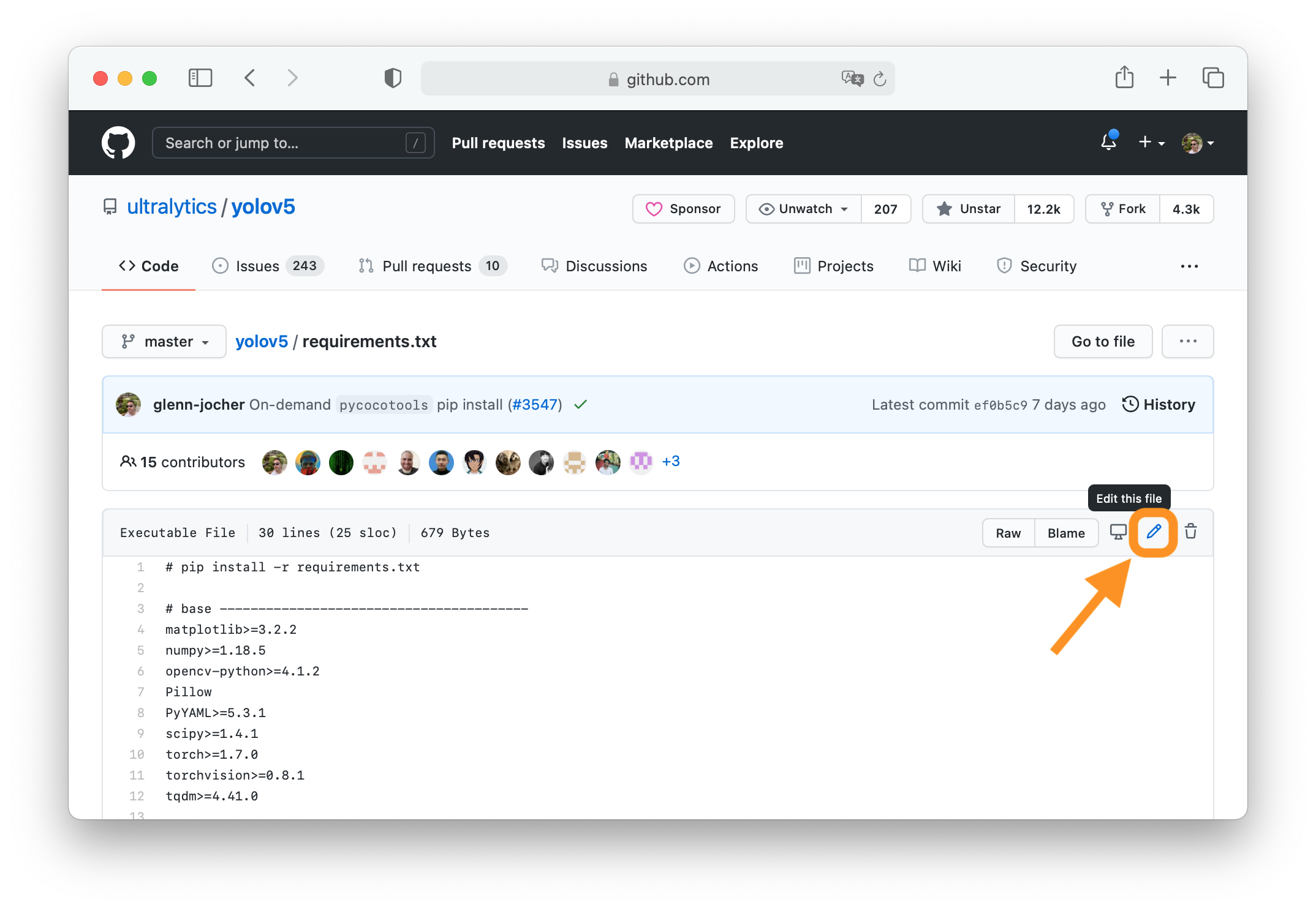

18 | ### 1. Select File to Update

19 |

20 | Select `requirements.txt` to update by clicking on it in GitHub.

21 |

22 |

23 |

24 | ### 2. Click 'Edit this file'

25 |

26 | Button is in top-right corner.

27 |

28 |

29 |

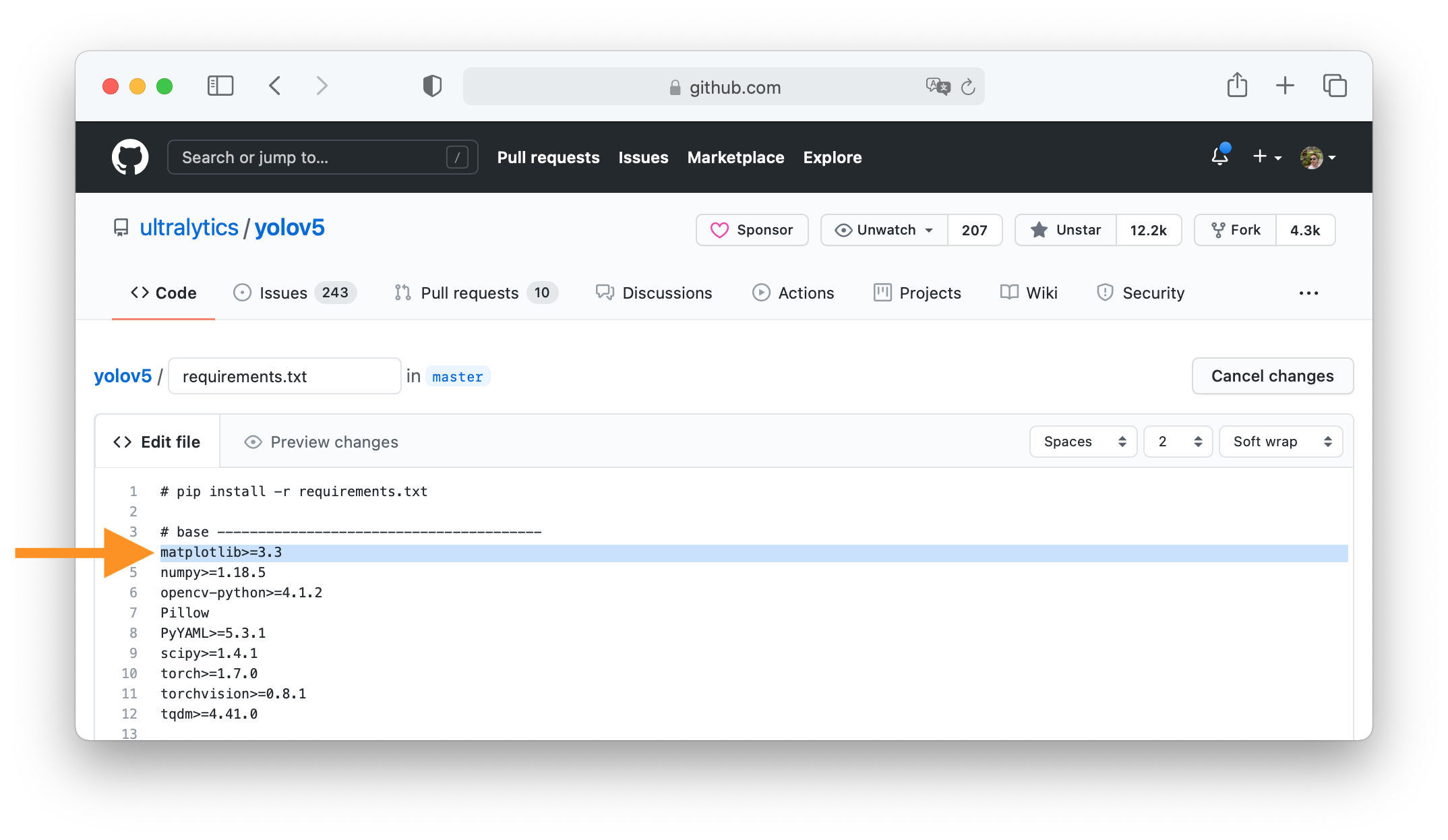

30 | ### 3. Make Changes

31 |

32 | Change `matplotlib` version from `3.2.2` to `3.3`.

33 |

34 |

35 |

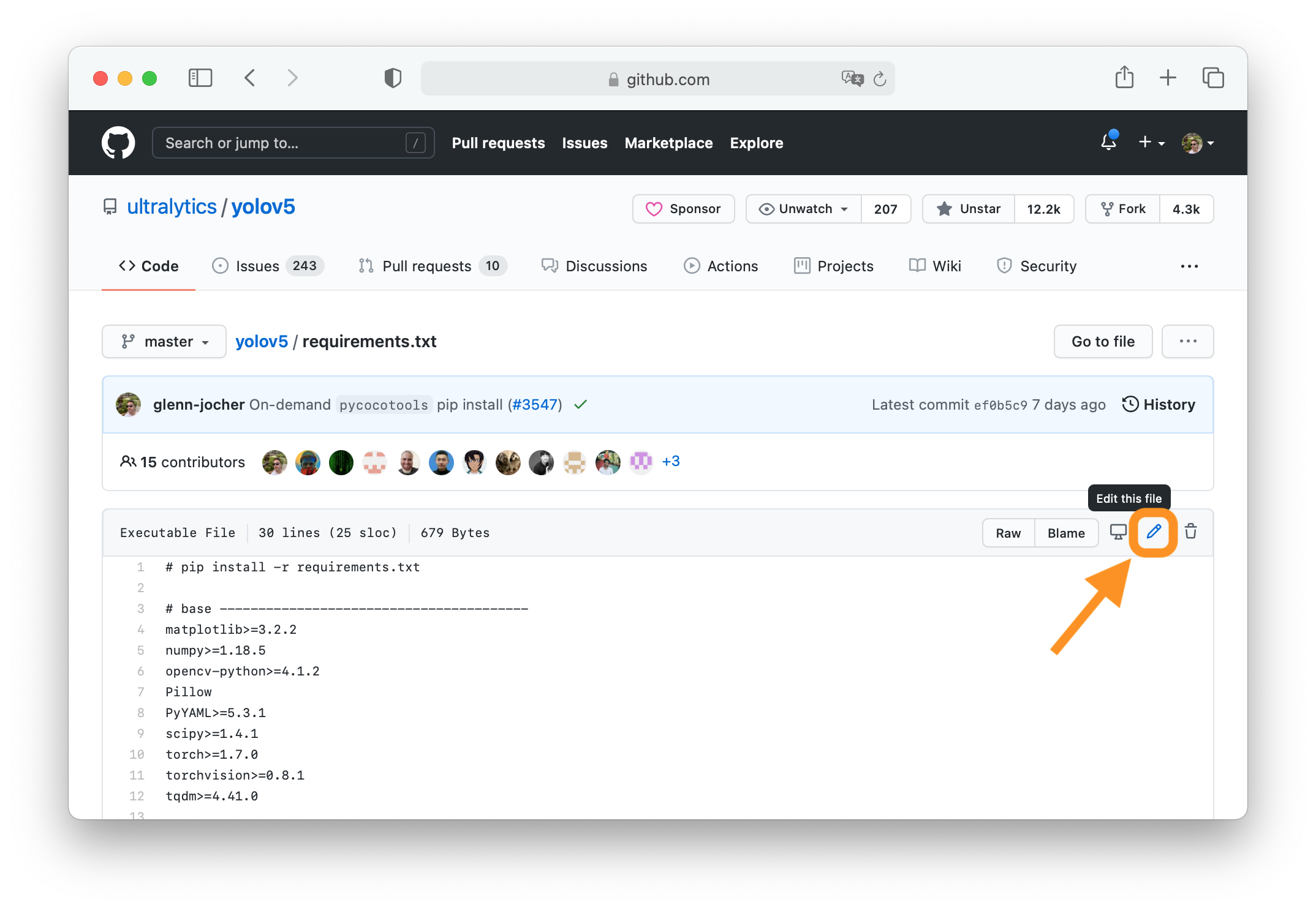

36 | ### 4. Preview Changes and Submit PR

37 |

38 | Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**

39 | for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose

40 | changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!

41 |

42 |

43 |

44 | ### PR recommendations

45 |

46 | To allow your work to be integrated as seamlessly as possible, we advise you to:

47 |

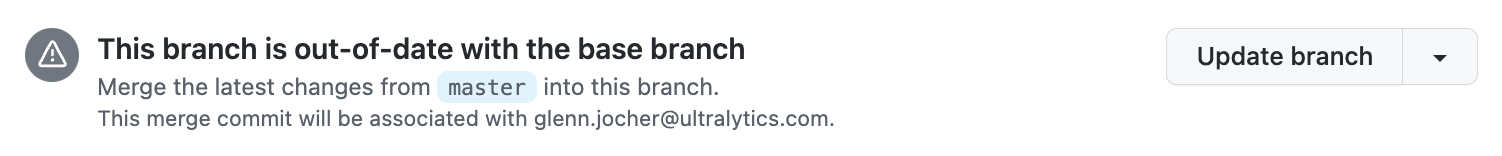

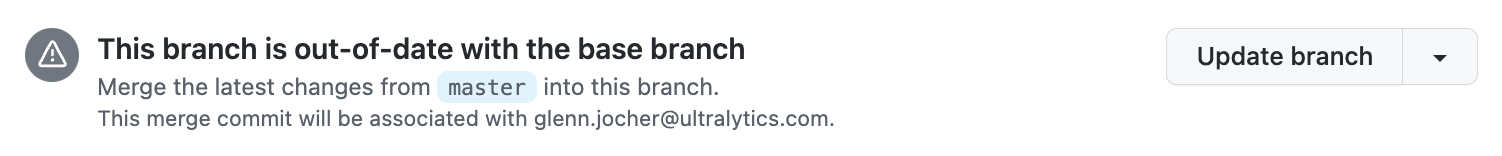

48 | - ✅ Verify your PR is **up-to-date** with `ultralytics/ultralytics` `master` branch. If your PR is behind you can update

49 | your code by clicking the 'Update branch' button or by running `git pull` and `git merge master` locally.

50 |

51 |

52 |

53 | - ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.

54 |

55 |

56 |

57 | - ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase

58 | but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

59 |

60 | ### Docstrings

61 |

62 | Not all functions or classes require docstrings but when they do, we follow [google-stlye docstrings format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings). Here is an example:

63 |

64 | ```python

65 | """

66 | What the function does - performs nms on given detection predictions

67 |

68 | Args:

69 | arg1: The description of the 1st argument

70 | arg2: The description of the 2nd argument

71 |

72 | Returns:

73 | What the function returns. Empty if nothing is returned

74 |

75 | Raises:

76 | Exception Class: When and why this exception can be raised by the function.

77 | """

78 | ```

79 |

80 | ## Submitting a Bug Report 🐛

81 |

82 | If you spot a problem with YOLOv8 please submit a Bug Report!

83 |

84 | For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few

85 | short guidelines below to help users provide what we need in order to get started.

86 |

87 | When asking a question, people will be better able to provide help if you provide **code** that they can easily

88 | understand and use to **reproduce** the problem. This is referred to by community members as creating

89 | a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example). Your code that reproduces

90 | the problem should be:

91 |

92 | - ✅ **Minimal** – Use as little code as possible that still produces the same problem

93 | - ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

94 | - ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

95 |

96 | In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code

97 | should be:

98 |

99 | - ✅ **Current** – Verify that your code is up-to-date with current

100 | GitHub [master](https://github.com/ultralytics/ultralytics/tree/main), and if necessary `git pull` or `git clone` a new

101 | copy to ensure your problem has not already been resolved by previous commits.

102 | - ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

103 | repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

104 |

105 | If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛

106 | **Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose) and providing

107 | a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us better

108 | understand and diagnose your problem.

109 |

110 | ## License

111 |

112 | By contributing, you agree that your contributions will be licensed under

113 | the [GPL-3.0 license](https://choosealicense.com/licenses/gpl-3.0/)

114 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include *.md

2 | include requirements.txt

3 | include LICENSE

4 | include setup.py

5 | recursive-include ultralytics *.yaml

6 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

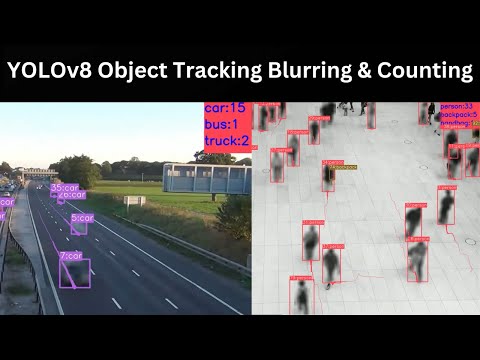

2 | YOLOv8 Object Tracking (ID + Trails) Blurring and Counting

3 |

4 | ## Google Colab File Link (A Single Click Solution)

5 | The google colab file link for yolov8 object tracking, blurring and counting is provided below, you can check the implementation in Google Colab, and its a single click implementation

6 | ,you just need to select the Run Time as GPU, and click on Run All.

7 |

8 | [`Google Colab File`](https://colab.research.google.com/drive/1haDui8z7OvITbOpGL1d0NFf6M4BxcI-y?usp=sharing)

9 |

10 | ## YOLOv8 Segmentation with DeepSORT Object Tracking

11 |

12 | [`Github Repo Link`](https://github.com/MuhammadMoinFaisal/YOLOv8_Segmentation_DeepSORT_Object_Tracking.git)

13 |

14 | ## Steps to run Code

15 |

16 | - Clone the repository

17 | ```

18 | git clone https://github.com/MuhammadMoinFaisal/YOLOv8-object-tracking-blurring-counting.git

19 | ```

20 | - Goto the cloned folder.

21 | ```

22 | cd YOLOv8-object-tracking-blurring-counting

23 | ```

24 | - Install the dependecies

25 | ```

26 | pip install -e '.[dev]'

27 |

28 | ```

29 |

30 | - Setting the Directory.

31 | ```

32 | cd ultralytics/yolo/v8/detect

33 |

34 | ```

35 | - Downloading the DeepSORT Files From The Google Drive

36 | ```

37 |

38 | https://drive.google.com/drive/folders/1kna8eWGrSfzaR6DtNJ8_GchGgPMv3VC8?usp=sharing

39 | ```

40 | - After downloading the DeepSORT Zip file from the drive, unzip it go into the subfolders and place the deep_sort_pytorch folder into the yolo/v8/detect folder

41 |

42 | - Downloading a Sample Video from the Google Drive

43 | ```

44 | gdown https://drive.google.com/uc?id=1_kt1alzcLRVxet-Drx0mt_KFSd3vrtHU

45 | ```

46 |

47 | - Run the code with mentioned command below.

48 |

49 | - For yolov8 object detection, Tracking, blurring and object counting

50 | ```

51 | python predict.py model=yolov8l.pt source="test1.mp4" show=True

52 | ```

53 |

54 | ### RESULTS

55 |

56 | #### YOLOv8 Object Detection, Tracking, Blurring and Counting

57 |

58 |

59 | #### YOLOv8 Object Detection, Tracking, Blurring and Counting

60 |

61 |

62 |

63 | ### Watch the Complete Step by Step Explanation

64 |

65 | - Video Tutorial Link [`YouTube Link`](https://www.youtube.com/watch?v=QWrP77qXEMA)

66 |

67 |

68 | []([https://www.youtube.com/watch?v=StTqXEQ2l-Y](https://www.youtube.com/watch?v=QWrP77qXEMA))

69 |

70 |

--------------------------------------------------------------------------------

/figure/figure1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MuhammadMoinFaisal/YOLOv8-object-tracking-blurring-counting/6ad9082f7d0022b22a2325e1078dc3534113f7df/figure/figure1.png

--------------------------------------------------------------------------------

/figure/figure2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/MuhammadMoinFaisal/YOLOv8-object-tracking-blurring-counting/6ad9082f7d0022b22a2325e1078dc3534113f7df/figure/figure2.png

--------------------------------------------------------------------------------

/mkdocs.yml:

--------------------------------------------------------------------------------

1 | site_name: Ultralytics Docs

2 | repo_url: https://github.com/ultralytics/ultralytics

3 | repo_name: Ultralytics

4 |

5 | theme:

6 | name: "material"

7 | logo: https://github.com/ultralytics/assets/raw/main/logo/Ultralytics-logomark-white.png

8 | icon:

9 | repo: fontawesome/brands/github

10 | admonition:

11 | note: octicons/tag-16

12 | abstract: octicons/checklist-16

13 | info: octicons/info-16

14 | tip: octicons/squirrel-16

15 | success: octicons/check-16

16 | question: octicons/question-16

17 | warning: octicons/alert-16

18 | failure: octicons/x-circle-16

19 | danger: octicons/zap-16

20 | bug: octicons/bug-16

21 | example: octicons/beaker-16

22 | quote: octicons/quote-16

23 |

24 | palette:

25 | # Palette toggle for light mode

26 | - scheme: default

27 | toggle:

28 | icon: material/brightness-7

29 | name: Switch to dark mode

30 |

31 | # Palette toggle for dark mode

32 | - scheme: slate

33 | toggle:

34 | icon: material/brightness-4

35 | name: Switch to light mode

36 | features:

37 | - content.code.annotate

38 | - content.tooltips

39 | - search.highlight

40 | - search.share

41 | - search.suggest

42 | - toc.follow

43 |

44 | extra_css:

45 | - stylesheets/style.css

46 |

47 | markdown_extensions:

48 | # Div text decorators

49 | - admonition

50 | - pymdownx.details

51 | - pymdownx.superfences

52 | - tables

53 | - attr_list

54 | - def_list

55 | # Syntax highlight

56 | - pymdownx.highlight:

57 | anchor_linenums: true

58 | - pymdownx.inlinehilite

59 | - pymdownx.snippets

60 |

61 | # Button

62 | - attr_list

63 |

64 | # Content tabs

65 | - pymdownx.superfences

66 | - pymdownx.tabbed:

67 | alternate_style: true

68 |

69 | # Highlight

70 | - pymdownx.critic

71 | - pymdownx.caret

72 | - pymdownx.keys

73 | - pymdownx.mark

74 | - pymdownx.tilde

75 | plugins:

76 | - mkdocstrings

77 |

78 | # Primary navigation

79 | nav:

80 | - Quickstart: quickstart.md

81 | - CLI: cli.md

82 | - Python Interface: sdk.md

83 | - Configuration: config.md

84 | - Customization Guide: engine.md

85 | - Ultralytics HUB: hub.md

86 | - iOS and Android App: app.md

87 | - Reference:

88 | - Python Model interface: reference/model.md

89 | - Engine:

90 | - Trainer: reference/base_trainer.md

91 | - Validator: reference/base_val.md

92 | - Predictor: reference/base_pred.md

93 | - Exporter: reference/exporter.md

94 | - nn Module: reference/nn.md

95 | - operations: reference/ops.md

96 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | # Ultralytics requirements

2 | # Usage: pip install -r requirements.txt

3 |

4 | # Base ----------------------------------------

5 | hydra-core>=1.2.0

6 | matplotlib>=3.2.2

7 | numpy>=1.18.5

8 | opencv-python>=4.1.1

9 | Pillow>=7.1.2

10 | PyYAML>=5.3.1

11 | requests>=2.23.0

12 | scipy>=1.4.1

13 | torch>=1.7.0

14 | torchvision>=0.8.1

15 | tqdm>=4.64.0

16 |

17 | # Logging -------------------------------------

18 | tensorboard>=2.4.1

19 | # clearml

20 | # comet

21 |

22 | # Plotting ------------------------------------

23 | pandas>=1.1.4

24 | seaborn>=0.11.0

25 |

26 | # Export --------------------------------------

27 | # coremltools>=6.0 # CoreML export

28 | # onnx>=1.12.0 # ONNX export

29 | # onnx-simplifier>=0.4.1 # ONNX simplifier

30 | # nvidia-pyindex # TensorRT export

31 | # nvidia-tensorrt # TensorRT export

32 | # scikit-learn==0.19.2 # CoreML quantization

33 | # tensorflow>=2.4.1 # TF exports (-cpu, -aarch64, -macos)

34 | # tensorflowjs>=3.9.0 # TF.js export

35 | # openvino-dev # OpenVINO export

36 |

37 | # Extras --------------------------------------

38 | ipython # interactive notebook

39 | psutil # system utilization

40 | thop>=0.1.1 # FLOPs computation

41 | # albumentations>=1.0.3

42 | # pycocotools>=2.0.6 # COCO mAP

43 | # roboflow

44 |

45 | # HUB -----------------------------------------

46 | GitPython>=3.1.24

47 |

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | # Project-wide configuration file, can be used for package metadata and other toll configurations

2 | # Example usage: global configuration for PEP8 (via flake8) setting or default pytest arguments

3 | # Local usage: pip install pre-commit, pre-commit run --all-files

4 |

5 | [metadata]

6 | license_file = LICENSE

7 | description_file = README.md

8 |

9 | [tool:pytest]

10 | norecursedirs =

11 | .git

12 | dist

13 | build

14 | addopts =

15 | --doctest-modules

16 | --durations=25

17 | --color=yes

18 |

19 | [flake8]

20 | max-line-length = 120

21 | exclude = .tox,*.egg,build,temp

22 | select = E,W,F

23 | doctests = True

24 | verbose = 2

25 | # https://pep8.readthedocs.io/en/latest/intro.html#error-codes

26 | format = pylint

27 | # see: https://www.flake8rules.com/

28 | ignore = E731,F405,E402,F401,W504,E127,E231,E501,F403

29 | # E731: Do not assign a lambda expression, use a def

30 | # F405: name may be undefined, or defined from star imports: module

31 | # E402: module level import not at top of file

32 | # F401: module imported but unused

33 | # W504: line break after binary operator

34 | # E127: continuation line over-indented for visual indent

35 | # E231: missing whitespace after ‘,’, ‘;’, or ‘:’

36 | # E501: line too long

37 | # F403: ‘from module import *’ used; unable to detect undefined names

38 |

39 | [isort]

40 | # https://pycqa.github.io/isort/docs/configuration/options.html

41 | line_length = 120

42 | # see: https://pycqa.github.io/isort/docs/configuration/multi_line_output_modes.html

43 | multi_line_output = 0

44 |

45 | [yapf]

46 | based_on_style = pep8

47 | spaces_before_comment = 2

48 | COLUMN_LIMIT = 120

49 | COALESCE_BRACKETS = True

50 | SPACES_AROUND_POWER_OPERATOR = True

51 | SPACE_BETWEEN_ENDING_COMMA_AND_CLOSING_BRACKET = False

52 | SPLIT_BEFORE_CLOSING_BRACKET = False

53 | SPLIT_BEFORE_FIRST_ARGUMENT = False

54 | # EACH_DICT_ENTRY_ON_SEPARATE_LINE = False

55 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | import re

4 | from pathlib import Path

5 |

6 | import pkg_resources as pkg

7 | from setuptools import find_packages, setup

8 |

9 | # Settings

10 | FILE = Path(__file__).resolve()

11 | ROOT = FILE.parent # root directory

12 | README = (ROOT / "README.md").read_text(encoding="utf-8")

13 | REQUIREMENTS = [f'{x.name}{x.specifier}' for x in pkg.parse_requirements((ROOT / 'requirements.txt').read_text())]

14 |

15 |

16 | def get_version():

17 | file = ROOT / 'ultralytics/__init__.py'

18 | return re.search(r'^__version__ = [\'"]([^\'"]*)[\'"]', file.read_text(), re.M)[1]

19 |

20 |

21 | setup(

22 | name="ultralytics", # name of pypi package

23 | version=get_version(), # version of pypi package

24 | python_requires=">=3.7.0",

25 | license='GPL-3.0',

26 | description='Ultralytics YOLOv8 and HUB',

27 | long_description=README,

28 | long_description_content_type="text/markdown",

29 | url="https://github.com/ultralytics/ultralytics",

30 | project_urls={

31 | 'Bug Reports': 'https://github.com/ultralytics/ultralytics/issues',

32 | 'Funding': 'https://ultralytics.com',

33 | 'Source': 'https://github.com/ultralytics/ultralytics',},

34 | author="Ultralytics",

35 | author_email='hello@ultralytics.com',

36 | packages=find_packages(), # required

37 | include_package_data=True,

38 | install_requires=REQUIREMENTS,

39 | extras_require={

40 | 'dev':

41 | ['check-manifest', 'pytest', 'pytest-cov', 'coverage', 'mkdocs', 'mkdocstrings[python]', 'mkdocs-material'],},

42 | classifiers=[

43 | "Intended Audience :: Developers", "Intended Audience :: Science/Research",

44 | "License :: OSI Approved :: GNU General Public License v3 (GPLv3)", "Programming Language :: Python :: 3",

45 | "Programming Language :: Python :: 3.7", "Programming Language :: Python :: 3.8",

46 | "Programming Language :: Python :: 3.9", "Programming Language :: Python :: 3.10",

47 | "Topic :: Software Development", "Topic :: Scientific/Engineering",

48 | "Topic :: Scientific/Engineering :: Artificial Intelligence",

49 | "Topic :: Scientific/Engineering :: Image Recognition", "Operating System :: POSIX :: Linux",

50 | "Operating System :: MacOS", "Operating System :: Microsoft :: Windows"],

51 | keywords="machine-learning, deep-learning, vision, ML, DL, AI, YOLO, YOLOv3, YOLOv5, YOLOv8, HUB, Ultralytics",

52 | entry_points={

53 | 'console_scripts': ['yolo = ultralytics.yolo.cli:cli', 'ultralytics = ultralytics.yolo.cli:cli'],})

54 |

--------------------------------------------------------------------------------

/ultralytics/__init__.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | __version__ = "8.0.3"

4 |

5 | from ultralytics.hub import checks

6 | from ultralytics.yolo.engine.model import YOLO

7 | from ultralytics.yolo.utils import ops

8 |

9 | __all__ = ["__version__", "YOLO", "hub", "checks"] # allow simpler import

10 |

--------------------------------------------------------------------------------

/ultralytics/hub/__init__.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | import os

4 | import shutil

5 |

6 | import psutil

7 | import requests

8 | from IPython import display # to display images and clear console output

9 |

10 | from ultralytics.hub.auth import Auth

11 | from ultralytics.hub.session import HubTrainingSession

12 | from ultralytics.hub.utils import PREFIX, split_key

13 | from ultralytics.yolo.utils import LOGGER, emojis, is_colab

14 | from ultralytics.yolo.utils.torch_utils import select_device

15 | from ultralytics.yolo.v8.detect import DetectionTrainer

16 |

17 |

18 | def checks(verbose=True):

19 | if is_colab():

20 | shutil.rmtree('sample_data', ignore_errors=True) # remove colab /sample_data directory

21 |

22 | if verbose:

23 | # System info

24 | gib = 1 << 30 # bytes per GiB

25 | ram = psutil.virtual_memory().total

26 | total, used, free = shutil.disk_usage("/")

27 | display.clear_output()

28 | s = f'({os.cpu_count()} CPUs, {ram / gib:.1f} GB RAM, {(total - free) / gib:.1f}/{total / gib:.1f} GB disk)'

29 | else:

30 | s = ''

31 |

32 | select_device(newline=False)

33 | LOGGER.info(f'Setup complete ✅ {s}')

34 |

35 |

36 | def start(key=''):

37 | # Start training models with Ultralytics HUB. Usage: from src.ultralytics import start; start('API_KEY')

38 | def request_api_key(attempts=0):

39 | """Prompt the user to input their API key"""

40 | import getpass

41 |

42 | max_attempts = 3

43 | tries = f"Attempt {str(attempts + 1)} of {max_attempts}" if attempts > 0 else ""

44 | LOGGER.info(f"{PREFIX}Login. {tries}")

45 | input_key = getpass.getpass("Enter your Ultralytics HUB API key:\n")

46 | auth.api_key, model_id = split_key(input_key)

47 | if not auth.authenticate():

48 | attempts += 1

49 | LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")

50 | if attempts < max_attempts:

51 | return request_api_key(attempts)

52 | raise ConnectionError(emojis(f"{PREFIX}Failed to authenticate ❌"))

53 | else:

54 | return model_id

55 |

56 | try:

57 | api_key, model_id = split_key(key)

58 | auth = Auth(api_key) # attempts cookie login if no api key is present

59 | attempts = 1 if len(key) else 0

60 | if not auth.get_state():

61 | if len(key):

62 | LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")

63 | model_id = request_api_key(attempts)

64 | LOGGER.info(f"{PREFIX}Authenticated ✅")

65 | if not model_id:

66 | raise ConnectionError(emojis('Connecting with global API key is not currently supported. ❌'))

67 | session = HubTrainingSession(model_id=model_id, auth=auth)

68 | session.check_disk_space()

69 |

70 | # TODO: refactor, hardcoded for v8

71 | args = session.model.copy()

72 | args.pop("id")

73 | args.pop("status")

74 | args.pop("weights")

75 | args["data"] = "coco128.yaml"

76 | args["model"] = "yolov8n.yaml"

77 | args["batch_size"] = 16

78 | args["imgsz"] = 64

79 |

80 | trainer = DetectionTrainer(overrides=args)

81 | session.register_callbacks(trainer)

82 | setattr(trainer, 'hub_session', session)

83 | trainer.train()

84 | except Exception as e:

85 | LOGGER.warning(f"{PREFIX}{e}")

86 |

87 |

88 | def reset_model(key=''):

89 | # Reset a trained model to an untrained state

90 | api_key, model_id = split_key(key)

91 | r = requests.post('https://api.ultralytics.com/model-reset', json={"apiKey": api_key, "modelId": model_id})

92 |

93 | if r.status_code == 200:

94 | LOGGER.info(f"{PREFIX}model reset successfully")

95 | return

96 | LOGGER.warning(f"{PREFIX}model reset failure {r.status_code} {r.reason}")

97 |

98 |

99 | def export_model(key='', format='torchscript'):

100 | # Export a model to all formats

101 | api_key, model_id = split_key(key)

102 | formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',

103 | 'ultralytics_tflite', 'ultralytics_coreml')

104 | assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"

105 |

106 | r = requests.post('https://api.ultralytics.com/export',

107 | json={

108 | "apiKey": api_key,

109 | "modelId": model_id,

110 | "format": format})

111 | assert r.status_code == 200, f"{PREFIX}{format} export failure {r.status_code} {r.reason}"

112 | LOGGER.info(f"{PREFIX}{format} export started ✅")

113 |

114 |

115 | def get_export(key='', format='torchscript'):

116 | # Get an exported model dictionary with download URL

117 | api_key, model_id = split_key(key)

118 | formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',

119 | 'ultralytics_tflite', 'ultralytics_coreml')

120 | assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"

121 |

122 | r = requests.post('https://api.ultralytics.com/get-export',

123 | json={

124 | "apiKey": api_key,

125 | "modelId": model_id,

126 | "format": format})

127 | assert r.status_code == 200, f"{PREFIX}{format} get_export failure {r.status_code} {r.reason}"

128 | return r.json()

129 |

130 |

131 | # temp. For checking

132 | if __name__ == "__main__":

133 | start(key="b3fba421be84a20dbe68644e14436d1cce1b0a0aaa_HeMfHgvHsseMPhdq7Ylz")

134 |

--------------------------------------------------------------------------------

/ultralytics/hub/auth.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | import requests

4 |

5 | from ultralytics.hub.utils import HUB_API_ROOT, request_with_credentials

6 | from ultralytics.yolo.utils import is_colab

7 |

8 | API_KEY_PATH = "https://hub.ultralytics.com/settings?tab=api+keys"

9 |

10 |

11 | class Auth:

12 | id_token = api_key = model_key = False

13 |

14 | def __init__(self, api_key=None):

15 | self.api_key = self._clean_api_key(api_key)

16 | self.authenticate() if self.api_key else self.auth_with_cookies()

17 |

18 | @staticmethod

19 | def _clean_api_key(key: str) -> str:

20 | """Strip model from key if present"""

21 | separator = "_"

22 | return key.split(separator)[0] if separator in key else key

23 |

24 | def authenticate(self) -> bool:

25 | """Attempt to authenticate with server"""

26 | try:

27 | header = self.get_auth_header()

28 | if header:

29 | r = requests.post(f"{HUB_API_ROOT}/v1/auth", headers=header)

30 | if not r.json().get('success', False):

31 | raise ConnectionError("Unable to authenticate.")

32 | return True

33 | raise ConnectionError("User has not authenticated locally.")

34 | except ConnectionError:

35 | self.id_token = self.api_key = False # reset invalid

36 | return False

37 |

38 | def auth_with_cookies(self) -> bool:

39 | """

40 | Attempt to fetch authentication via cookies and set id_token.

41 | User must be logged in to HUB and running in a supported browser.

42 | """

43 | if not is_colab():

44 | return False # Currently only works with Colab

45 | try:

46 | authn = request_with_credentials(f"{HUB_API_ROOT}/v1/auth/auto")

47 | if authn.get("success", False):

48 | self.id_token = authn.get("data", {}).get("idToken", None)

49 | self.authenticate()

50 | return True

51 | raise ConnectionError("Unable to fetch browser authentication details.")

52 | except ConnectionError:

53 | self.id_token = False # reset invalid

54 | return False

55 |

56 | def get_auth_header(self):

57 | if self.id_token:

58 | return {"authorization": f"Bearer {self.id_token}"}

59 | elif self.api_key:

60 | return {"x-api-key": self.api_key}

61 | else:

62 | return None

63 |

64 | def get_state(self) -> bool:

65 | """Get the authentication state"""

66 | return self.id_token or self.api_key

67 |

68 | def set_api_key(self, key: str):

69 | """Get the authentication state"""

70 | self.api_key = key

71 |

--------------------------------------------------------------------------------

/ultralytics/hub/session.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | import signal

4 | import sys

5 | from pathlib import Path

6 | from time import sleep

7 |

8 | import requests

9 |

10 | from ultralytics import __version__

11 | from ultralytics.hub.utils import HUB_API_ROOT, check_dataset_disk_space, smart_request

12 | from ultralytics.yolo.utils import LOGGER, is_colab, threaded

13 |

14 | AGENT_NAME = f'python-{__version__}-colab' if is_colab() else f'python-{__version__}-local'

15 |

16 | session = None

17 |

18 |

19 | def signal_handler(signum, frame):

20 | """ Confirm exit """

21 | global hub_logger

22 | LOGGER.info(f'Signal received. {signum} {frame}')

23 | if isinstance(session, HubTrainingSession):

24 | hub_logger.alive = False

25 | del hub_logger

26 | sys.exit(signum)

27 |

28 |

29 | signal.signal(signal.SIGTERM, signal_handler)

30 | signal.signal(signal.SIGINT, signal_handler)

31 |

32 |

33 | class HubTrainingSession:

34 |

35 | def __init__(self, model_id, auth):

36 | self.agent_id = None # identifies which instance is communicating with server

37 | self.model_id = model_id

38 | self.api_url = f'{HUB_API_ROOT}/v1/models/{model_id}'

39 | self.auth_header = auth.get_auth_header()

40 | self.rate_limits = {'metrics': 3.0, 'ckpt': 900.0, 'heartbeat': 300.0} # rate limits (seconds)

41 | self.t = {} # rate limit timers (seconds)

42 | self.metrics_queue = {} # metrics queue

43 | self.alive = True # for heartbeats

44 | self.model = self._get_model()

45 | self._heartbeats() # start heartbeats

46 |

47 | def __del__(self):

48 | # Class destructor

49 | self.alive = False

50 |

51 | def upload_metrics(self):

52 | payload = {"metrics": self.metrics_queue.copy(), "type": "metrics"}

53 | smart_request(f'{self.api_url}', json=payload, headers=self.auth_header, code=2)

54 |

55 | def upload_model(self, epoch, weights, is_best=False, map=0.0, final=False):

56 | # Upload a model to HUB

57 | file = None

58 | if Path(weights).is_file():

59 | with open(weights, "rb") as f:

60 | file = f.read()

61 | if final:

62 | smart_request(f'{self.api_url}/upload',

63 | data={

64 | "epoch": epoch,

65 | "type": "final",

66 | "map": map},

67 | files={"best.pt": file},

68 | headers=self.auth_header,

69 | retry=10,

70 | timeout=3600,

71 | code=4)

72 | else:

73 | smart_request(f'{self.api_url}/upload',

74 | data={

75 | "epoch": epoch,

76 | "type": "epoch",

77 | "isBest": bool(is_best)},

78 | headers=self.auth_header,

79 | files={"last.pt": file},

80 | code=3)

81 |

82 | def _get_model(self):

83 | # Returns model from database by id

84 | api_url = f"{HUB_API_ROOT}/v1/models/{self.model_id}"

85 | headers = self.auth_header

86 |

87 | try:

88 | r = smart_request(api_url, method="get", headers=headers, thread=False, code=0)

89 | data = r.json().get("data", None)

90 | if not data:

91 | return

92 | assert data['data'], 'ERROR: Dataset may still be processing. Please wait a minute and try again.' # RF fix

93 | self.model_id = data["id"]

94 |

95 | return data

96 | except requests.exceptions.ConnectionError as e:

97 | raise ConnectionRefusedError('ERROR: The HUB server is not online. Please try again later.') from e

98 |

99 | def check_disk_space(self):

100 | if not check_dataset_disk_space(self.model['data']):

101 | raise MemoryError("Not enough disk space")

102 |

103 | # COMMENT: Should not be needed as HUB is now considered an integration and is in integrations_callbacks

104 | # import ultralytics.yolo.utils.callbacks.hub as hub_callbacks

105 | # @staticmethod

106 | # def register_callbacks(trainer):

107 | # for k, v in hub_callbacks.callbacks.items():

108 | # trainer.add_callback(k, v)

109 |

110 | @threaded

111 | def _heartbeats(self):

112 | while self.alive:

113 | r = smart_request(f'{HUB_API_ROOT}/v1/agent/heartbeat/models/{self.model_id}',

114 | json={

115 | "agent": AGENT_NAME,

116 | "agentId": self.agent_id},

117 | headers=self.auth_header,

118 | retry=0,

119 | code=5,

120 | thread=False)

121 | self.agent_id = r.json().get('data', {}).get('agentId', None)

122 | sleep(self.rate_limits['heartbeat'])

123 |

--------------------------------------------------------------------------------

/ultralytics/hub/utils.py:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | import os

4 | import shutil

5 | import threading

6 | import time

7 |

8 | import requests

9 |

10 | from ultralytics.yolo.utils import DEFAULT_CONFIG_DICT, LOGGER, RANK, SETTINGS, TryExcept, colorstr, emojis

11 |

12 | PREFIX = colorstr('Ultralytics: ')

13 | HELP_MSG = 'If this issue persists please visit https://github.com/ultralytics/hub/issues for assistance.'

14 | HUB_API_ROOT = os.environ.get("ULTRALYTICS_HUB_API", "https://api.ultralytics.com")

15 |

16 |

17 | def check_dataset_disk_space(url='https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip', sf=2.0):

18 | # Check that url fits on disk with safety factor sf, i.e. require 2GB free if url size is 1GB with sf=2.0

19 | gib = 1 << 30 # bytes per GiB

20 | data = int(requests.head(url).headers['Content-Length']) / gib # dataset size (GB)

21 | total, used, free = (x / gib for x in shutil.disk_usage("/")) # bytes

22 | LOGGER.info(f'{PREFIX}{data:.3f} GB dataset, {free:.1f}/{total:.1f} GB free disk space')

23 | if data * sf < free:

24 | return True # sufficient space

25 | LOGGER.warning(f'{PREFIX}WARNING: Insufficient free disk space {free:.1f} GB < {data * sf:.3f} GB required, '

26 | f'training cancelled ❌. Please free {data * sf - free:.1f} GB additional disk space and try again.')

27 | return False # insufficient space

28 |

29 |

30 | def request_with_credentials(url: str) -> any:

31 | """ Make an ajax request with cookies attached """

32 | from google.colab import output # noqa

33 | from IPython import display # noqa

34 | display.display(

35 | display.Javascript("""

36 | window._hub_tmp = new Promise((resolve, reject) => {

37 | const timeout = setTimeout(() => reject("Failed authenticating existing browser session"), 5000)

38 | fetch("%s", {

39 | method: 'POST',

40 | credentials: 'include'

41 | })

42 | .then((response) => resolve(response.json()))

43 | .then((json) => {

44 | clearTimeout(timeout);

45 | }).catch((err) => {

46 | clearTimeout(timeout);

47 | reject(err);

48 | });

49 | });

50 | """ % url))

51 | return output.eval_js("_hub_tmp")

52 |

53 |

54 | # Deprecated TODO: eliminate this function?

55 | def split_key(key=''):

56 | """

57 | Verify and split a 'api_key[sep]model_id' string, sep is one of '.' or '_'

58 |

59 | Args:

60 | key (str): The model key to split. If not provided, the user will be prompted to enter it.

61 |

62 | Returns:

63 | Tuple[str, str]: A tuple containing the API key and model ID.

64 | """

65 |

66 | import getpass

67 |

68 | error_string = emojis(f'{PREFIX}Invalid API key ⚠️\n') # error string

69 | if not key:

70 | key = getpass.getpass('Enter model key: ')

71 | sep = '_' if '_' in key else '.' if '.' in key else None # separator

72 | assert sep, error_string

73 | api_key, model_id = key.split(sep)

74 | assert len(api_key) and len(model_id), error_string

75 | return api_key, model_id

76 |

77 |

78 | def smart_request(*args, retry=3, timeout=30, thread=True, code=-1, method="post", verbose=True, **kwargs):

79 | """

80 | Makes an HTTP request using the 'requests' library, with exponential backoff retries up to a specified timeout.

81 |

82 | Args:

83 | *args: Positional arguments to be passed to the requests function specified in method.

84 | retry (int, optional): Number of retries to attempt before giving up. Default is 3.

85 | timeout (int, optional): Timeout in seconds after which the function will give up retrying. Default is 30.

86 | thread (bool, optional): Whether to execute the request in a separate daemon thread. Default is True.

87 | code (int, optional): An identifier for the request, used for logging purposes. Default is -1.

88 | method (str, optional): The HTTP method to use for the request. Choices are 'post' and 'get'. Default is 'post'.

89 | verbose (bool, optional): A flag to determine whether to print out to console or not. Default is True.

90 | **kwargs: Keyword arguments to be passed to the requests function specified in method.

91 |

92 | Returns:

93 | requests.Response: The HTTP response object. If the request is executed in a separate thread, returns None.

94 | """

95 | retry_codes = (408, 500) # retry only these codes

96 |

97 | def func(*func_args, **func_kwargs):

98 | r = None # response

99 | t0 = time.time() # initial time for timer

100 | for i in range(retry + 1):

101 | if (time.time() - t0) > timeout:

102 | break

103 | if method == 'post':

104 | r = requests.post(*func_args, **func_kwargs) # i.e. post(url, data, json, files)

105 | elif method == 'get':

106 | r = requests.get(*func_args, **func_kwargs) # i.e. get(url, data, json, files)

107 | if r.status_code == 200:

108 | break

109 | try:

110 | m = r.json().get('message', 'No JSON message.')

111 | except AttributeError:

112 | m = 'Unable to read JSON.'

113 | if i == 0:

114 | if r.status_code in retry_codes:

115 | m += f' Retrying {retry}x for {timeout}s.' if retry else ''

116 | elif r.status_code == 429: # rate limit

117 | h = r.headers # response headers

118 | m = f"Rate limit reached ({h['X-RateLimit-Remaining']}/{h['X-RateLimit-Limit']}). " \

119 | f"Please retry after {h['Retry-After']}s."

120 | if verbose:

121 | LOGGER.warning(f"{PREFIX}{m} {HELP_MSG} ({r.status_code} #{code})")

122 | if r.status_code not in retry_codes:

123 | return r

124 | time.sleep(2 ** i) # exponential standoff

125 | return r

126 |

127 | if thread:

128 | threading.Thread(target=func, args=args, kwargs=kwargs, daemon=True).start()

129 | else:

130 | return func(*args, **kwargs)

131 |

132 |

133 | @TryExcept()

134 | def sync_analytics(cfg, all_keys=False, enabled=False):

135 | """

136 | Sync analytics data if enabled in the global settings

137 |

138 | Args:

139 | cfg (DictConfig): Configuration for the task and mode.

140 | all_keys (bool): Sync all items, not just non-default values.

141 | enabled (bool): For debugging.

142 | """

143 | if SETTINGS['sync'] and RANK in {-1, 0} and enabled:

144 | cfg = dict(cfg) # convert type from DictConfig to dict

145 | if not all_keys:

146 | cfg = {k: v for k, v in cfg.items() if v != DEFAULT_CONFIG_DICT.get(k, None)} # retain non-default values

147 | cfg['uuid'] = SETTINGS['uuid'] # add the device UUID to the configuration data

148 |

149 | # Send a request to the HUB API to sync analytics

150 | smart_request(f'{HUB_API_ROOT}/v1/usage/anonymous', json=cfg, headers=None, code=3, retry=0, verbose=False)

151 |

--------------------------------------------------------------------------------

/ultralytics/models/README.md:

--------------------------------------------------------------------------------

1 | ## Models

2 |

3 | Welcome to the Ultralytics Models directory! Here you will find a wide variety of pre-configured model configuration

4 | files (`*.yaml`s) that can be used to create custom YOLO models. The models in this directory have been expertly crafted

5 | and fine-tuned by the Ultralytics team to provide the best performance for a wide range of object detection and image

6 | segmentation tasks.

7 |

8 | These model configurations cover a wide range of scenarios, from simple object detection to more complex tasks like

9 | instance segmentation and object tracking. They are also designed to run efficiently on a variety of hardware platforms,

10 | from CPUs to GPUs. Whether you are a seasoned machine learning practitioner or just getting started with YOLO, this

11 | directory provides a great starting point for your custom model development needs.

12 |

13 | To get started, simply browse through the models in this directory and find one that best suits your needs. Once you've

14 | selected a model, you can use the provided `*.yaml` file to train and deploy your custom YOLO model with ease. See full

15 | details at the Ultralytics [Docs](https://docs.ultralytics.com), and if you need help or have any questions, feel free

16 | to reach out to the Ultralytics team for support. So, don't wait, start creating your custom YOLO model now!

17 |

18 | ### Usage

19 |

20 | Model `*.yaml` files may be used directly in the Command Line Interface (CLI) with a `yolo` command:

21 |

22 | ```bash

23 | yolo task=detect mode=train model=yolov8n.yaml data=coco128.yaml epochs=100

24 | ```

25 |

26 | They may also be used directly in a Python environment, and accepts the same

27 | [arguments](https://docs.ultralytics.com/config/) as in the CLI example above:

28 |

29 | ```python

30 | from ultralytics import YOLO

31 |

32 | model = YOLO("yolov8n.yaml") # build a YOLOv8n model from scratch

33 |

34 | model.info() # display model information

35 | model.train(data="coco128.yaml", epochs=100) # train the model

36 | ```

37 |

--------------------------------------------------------------------------------

/ultralytics/models/v3/yolov3-spp.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.0 # model depth multiple

6 | width_multiple: 1.0 # layer channel multiple

7 |

8 | # darknet53 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [32, 3, 1]], # 0

12 | [-1, 1, Conv, [64, 3, 2]], # 1-P1/2

13 | [-1, 1, Bottleneck, [64]],

14 | [-1, 1, Conv, [128, 3, 2]], # 3-P2/4

15 | [-1, 2, Bottleneck, [128]],

16 | [-1, 1, Conv, [256, 3, 2]], # 5-P3/8

17 | [-1, 8, Bottleneck, [256]],

18 | [-1, 1, Conv, [512, 3, 2]], # 7-P4/16

19 | [-1, 8, Bottleneck, [512]],

20 | [-1, 1, Conv, [1024, 3, 2]], # 9-P5/32

21 | [-1, 4, Bottleneck, [1024]], # 10

22 | ]

23 |

24 | # YOLOv3-SPP head

25 | head:

26 | [[-1, 1, Bottleneck, [1024, False]],

27 | [-1, 1, SPP, [512, [5, 9, 13]]],

28 | [-1, 1, Conv, [1024, 3, 1]],

29 | [-1, 1, Conv, [512, 1, 1]],

30 | [-1, 1, Conv, [1024, 3, 1]], # 15 (P5/32-large)

31 |

32 | [-2, 1, Conv, [256, 1, 1]],

33 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

34 | [[-1, 8], 1, Concat, [1]], # cat backbone P4

35 | [-1, 1, Bottleneck, [512, False]],

36 | [-1, 1, Bottleneck, [512, False]],

37 | [-1, 1, Conv, [256, 1, 1]],

38 | [-1, 1, Conv, [512, 3, 1]], # 22 (P4/16-medium)

39 |

40 | [-2, 1, Conv, [128, 1, 1]],

41 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

42 | [[-1, 6], 1, Concat, [1]], # cat backbone P3

43 | [-1, 1, Bottleneck, [256, False]],

44 | [-1, 2, Bottleneck, [256, False]], # 27 (P3/8-small)

45 |

46 | [[27, 22, 15], 1, Detect, [nc]], # Detect(P3, P4, P5)

47 | ]

48 |

--------------------------------------------------------------------------------

/ultralytics/models/v3/yolov3-tiny.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.0 # model depth multiple

6 | width_multiple: 1.0 # layer channel multiple

7 |

8 | # YOLOv3-tiny backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [16, 3, 1]], # 0

12 | [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 1-P1/2

13 | [-1, 1, Conv, [32, 3, 1]],

14 | [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 3-P2/4

15 | [-1, 1, Conv, [64, 3, 1]],

16 | [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 5-P3/8

17 | [-1, 1, Conv, [128, 3, 1]],

18 | [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 7-P4/16

19 | [-1, 1, Conv, [256, 3, 1]],

20 | [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 9-P5/32

21 | [-1, 1, Conv, [512, 3, 1]],

22 | [-1, 1, nn.ZeroPad2d, [[0, 1, 0, 1]]], # 11

23 | [-1, 1, nn.MaxPool2d, [2, 1, 0]], # 12

24 | ]

25 |

26 | # YOLOv3-tiny head

27 | head:

28 | [[-1, 1, Conv, [1024, 3, 1]],

29 | [-1, 1, Conv, [256, 1, 1]],

30 | [-1, 1, Conv, [512, 3, 1]], # 15 (P5/32-large)

31 |

32 | [-2, 1, Conv, [128, 1, 1]],

33 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

34 | [[-1, 8], 1, Concat, [1]], # cat backbone P4

35 | [-1, 1, Conv, [256, 3, 1]], # 19 (P4/16-medium)

36 |

37 | [[19, 15], 1, Detect, [nc]], # Detect(P4, P5)

38 | ]

39 |

--------------------------------------------------------------------------------

/ultralytics/models/v3/yolov3.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.0 # model depth multiple

6 | width_multiple: 1.0 # layer channel multiple

7 |

8 | # darknet53 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [32, 3, 1]], # 0

12 | [-1, 1, Conv, [64, 3, 2]], # 1-P1/2

13 | [-1, 1, Bottleneck, [64]],

14 | [-1, 1, Conv, [128, 3, 2]], # 3-P2/4

15 | [-1, 2, Bottleneck, [128]],

16 | [-1, 1, Conv, [256, 3, 2]], # 5-P3/8

17 | [-1, 8, Bottleneck, [256]],

18 | [-1, 1, Conv, [512, 3, 2]], # 7-P4/16

19 | [-1, 8, Bottleneck, [512]],

20 | [-1, 1, Conv, [1024, 3, 2]], # 9-P5/32

21 | [-1, 4, Bottleneck, [1024]], # 10

22 | ]

23 |

24 | # YOLOv3 head

25 | head:

26 | [[-1, 1, Bottleneck, [1024, False]],

27 | [-1, 1, Conv, [512, 1, 1]],

28 | [-1, 1, Conv, [1024, 3, 1]],

29 | [-1, 1, Conv, [512, 1, 1]],

30 | [-1, 1, Conv, [1024, 3, 1]], # 15 (P5/32-large)

31 |

32 | [-2, 1, Conv, [256, 1, 1]],

33 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

34 | [[-1, 8], 1, Concat, [1]], # cat backbone P4

35 | [-1, 1, Bottleneck, [512, False]],

36 | [-1, 1, Bottleneck, [512, False]],

37 | [-1, 1, Conv, [256, 1, 1]],

38 | [-1, 1, Conv, [512, 3, 1]], # 22 (P4/16-medium)

39 |

40 | [-2, 1, Conv, [128, 1, 1]],

41 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

42 | [[-1, 6], 1, Concat, [1]], # cat backbone P3

43 | [-1, 1, Bottleneck, [256, False]],

44 | [-1, 2, Bottleneck, [256, False]], # 27 (P3/8-small)

45 |

46 | [[27, 22, 15], 1, Detect, [nc]], # Detect(P3, P4, P5)

47 | ]

48 |

--------------------------------------------------------------------------------

/ultralytics/models/v5/yolov5l.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.0 # model depth multiple

6 | width_multiple: 1.0 # layer channel multiple

7 |

8 | # YOLOv5 v6.0 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

12 | [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

13 | [-1, 3, C3, [128]],

14 | [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

15 | [-1, 6, C3, [256]],

16 | [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

17 | [-1, 9, C3, [512]],

18 | [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

19 | [-1, 3, C3, [1024]],

20 | [-1, 1, SPPF, [1024, 5]], # 9

21 | ]

22 |

23 | # YOLOv5 v6.0 head

24 | head:

25 | [[-1, 1, Conv, [512, 1, 1]],

26 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

27 | [[-1, 6], 1, Concat, [1]], # cat backbone P4

28 | [-1, 3, C3, [512, False]], # 13

29 |

30 | [-1, 1, Conv, [256, 1, 1]],

31 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

32 | [[-1, 4], 1, Concat, [1]], # cat backbone P3

33 | [-1, 3, C3, [256, False]], # 17 (P3/8-small)

34 |

35 | [-1, 1, Conv, [256, 3, 2]],

36 | [[-1, 14], 1, Concat, [1]], # cat head P4

37 | [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

38 |

39 | [-1, 1, Conv, [512, 3, 2]],

40 | [[-1, 10], 1, Concat, [1]], # cat head P5

41 | [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

42 |

43 | [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

44 | ]

--------------------------------------------------------------------------------

/ultralytics/models/v5/yolov5m.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.67 # model depth multiple

6 | width_multiple: 0.75 # layer channel multiple

7 |

8 | # YOLOv5 v6.0 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

12 | [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

13 | [-1, 3, C3, [128]],

14 | [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

15 | [-1, 6, C3, [256]],

16 | [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

17 | [-1, 9, C3, [512]],

18 | [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

19 | [-1, 3, C3, [1024]],

20 | [-1, 1, SPPF, [1024, 5]], # 9

21 | ]

22 |

23 | # YOLOv5 v6.0 head

24 | head:

25 | [[-1, 1, Conv, [512, 1, 1]],

26 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

27 | [[-1, 6], 1, Concat, [1]], # cat backbone P4

28 | [-1, 3, C3, [512, False]], # 13

29 |

30 | [-1, 1, Conv, [256, 1, 1]],

31 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

32 | [[-1, 4], 1, Concat, [1]], # cat backbone P3

33 | [-1, 3, C3, [256, False]], # 17 (P3/8-small)

34 |

35 | [-1, 1, Conv, [256, 3, 2]],

36 | [[-1, 14], 1, Concat, [1]], # cat head P4

37 | [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

38 |

39 | [-1, 1, Conv, [512, 3, 2]],

40 | [[-1, 10], 1, Concat, [1]], # cat head P5

41 | [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

42 |

43 | [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

44 | ]

--------------------------------------------------------------------------------

/ultralytics/models/v5/yolov5n.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.33 # model depth multiple

6 | width_multiple: 0.25 # layer channel multiple

7 |

8 | # YOLOv5 v6.0 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

12 | [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

13 | [-1, 3, C3, [128]],

14 | [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

15 | [-1, 6, C3, [256]],

16 | [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

17 | [-1, 9, C3, [512]],

18 | [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

19 | [-1, 3, C3, [1024]],

20 | [-1, 1, SPPF, [1024, 5]], # 9

21 | ]

22 |

23 | # YOLOv5 v6.0 head

24 | head:

25 | [[-1, 1, Conv, [512, 1, 1]],

26 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

27 | [[-1, 6], 1, Concat, [1]], # cat backbone P4

28 | [-1, 3, C3, [512, False]], # 13

29 |

30 | [-1, 1, Conv, [256, 1, 1]],

31 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

32 | [[-1, 4], 1, Concat, [1]], # cat backbone P3

33 | [-1, 3, C3, [256, False]], # 17 (P3/8-small)

34 |

35 | [-1, 1, Conv, [256, 3, 2]],

36 | [[-1, 14], 1, Concat, [1]], # cat head P4

37 | [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

38 |

39 | [-1, 1, Conv, [512, 3, 2]],

40 | [[-1, 10], 1, Concat, [1]], # cat head P5

41 | [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

42 |

43 | [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

44 | ]

--------------------------------------------------------------------------------

/ultralytics/models/v5/yolov5s.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.33 # model depth multiple

6 | width_multiple: 0.50 # layer channel multiple

7 |

8 |

9 | # YOLOv5 v6.0 backbone

10 | backbone:

11 | # [from, number, module, args]

12 | [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

13 | [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

14 | [-1, 3, C3, [128]],

15 | [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

16 | [-1, 6, C3, [256]],

17 | [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

18 | [-1, 9, C3, [512]],

19 | [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

20 | [-1, 3, C3, [1024]],

21 | [-1, 1, SPPF, [1024, 5]], # 9

22 | ]

23 |

24 | # YOLOv5 v6.0 head

25 | head:

26 | [[-1, 1, Conv, [512, 1, 1]],

27 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

28 | [[-1, 6], 1, Concat, [1]], # cat backbone P4

29 | [-1, 3, C3, [512, False]], # 13

30 |

31 | [-1, 1, Conv, [256, 1, 1]],

32 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

33 | [[-1, 4], 1, Concat, [1]], # cat backbone P3

34 | [-1, 3, C3, [256, False]], # 17 (P3/8-small)

35 |

36 | [-1, 1, Conv, [256, 3, 2]],

37 | [[-1, 14], 1, Concat, [1]], # cat head P4

38 | [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

39 |

40 | [-1, 1, Conv, [512, 3, 2]],

41 | [[-1, 10], 1, Concat, [1]], # cat head P5

42 | [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

43 |

44 | [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

45 | ]

--------------------------------------------------------------------------------

/ultralytics/models/v5/yolov5x.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.33 # model depth multiple

6 | width_multiple: 1.25 # layer channel multiple

7 |

8 | # YOLOv5 v6.0 backbone

9 | backbone:

10 | # [from, number, module, args]

11 | [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

12 | [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

13 | [-1, 3, C3, [128]],

14 | [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

15 | [-1, 6, C3, [256]],

16 | [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

17 | [-1, 9, C3, [512]],

18 | [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

19 | [-1, 3, C3, [1024]],

20 | [-1, 1, SPPF, [1024, 5]], # 9

21 | ]

22 |

23 | # YOLOv5 v6.0 head

24 | head:

25 | [[-1, 1, Conv, [512, 1, 1]],

26 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

27 | [[-1, 6], 1, Concat, [1]], # cat backbone P4

28 | [-1, 3, C3, [512, False]], # 13

29 |

30 | [-1, 1, Conv, [256, 1, 1]],

31 | [-1, 1, nn.Upsample, [None, 2, 'nearest']],

32 | [[-1, 4], 1, Concat, [1]], # cat backbone P3

33 | [-1, 3, C3, [256, False]], # 17 (P3/8-small)

34 |

35 | [-1, 1, Conv, [256, 3, 2]],

36 | [[-1, 14], 1, Concat, [1]], # cat head P4

37 | [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

38 |

39 | [-1, 1, Conv, [512, 3, 2]],

40 | [[-1, 10], 1, Concat, [1]], # cat head P5

41 | [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

42 |

43 | [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

44 | ]

--------------------------------------------------------------------------------

/ultralytics/models/v8/cls/yolov8l-cls.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 1000 # number of classes

5 | depth_multiple: 1.00 # scales module repeats

6 | width_multiple: 1.00 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 |

21 | # YOLOv8.0n head

22 | head:

23 | - [-1, 1, Classify, [nc]]

24 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/cls/yolov8m-cls.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 1000 # number of classes

5 | depth_multiple: 0.67 # scales module repeats

6 | width_multiple: 0.75 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 |

21 | # YOLOv8.0n head

22 | head:

23 | - [-1, 1, Classify, [nc]]

24 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/cls/yolov8n-cls.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 1000 # number of classes

5 | depth_multiple: 0.33 # scales module repeats

6 | width_multiple: 0.25 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 |

21 | # YOLOv8.0n head

22 | head:

23 | - [-1, 1, Classify, [nc]]

24 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/cls/yolov8s-cls.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 1000 # number of classes

5 | depth_multiple: 0.33 # scales module repeats

6 | width_multiple: 0.50 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 |

21 | # YOLOv8.0n head

22 | head:

23 | - [-1, 1, Classify, [nc]]

24 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/cls/yolov8x-cls.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 1000 # number of classes

5 | depth_multiple: 1.00 # scales module repeats

6 | width_multiple: 1.25 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 |

21 | # YOLOv8.0n head

22 | head:

23 | - [-1, 1, Classify, [nc]]

24 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/seg/yolov8l-seg.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.00 # scales module repeats

6 | width_multiple: 1.00 # scales convolution channels

7 |

8 | # YOLOv8.0l backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [512, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [512, True]]

20 | - [-1, 1, SPPF, [512, 5]] # 9

21 |

22 | # YOLOv8.0l head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [512]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/seg/yolov8m-seg.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.67 # scales module repeats

6 | width_multiple: 0.75 # scales convolution channels

7 |

8 | # YOLOv8.0m backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [768, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [768, True]]

20 | - [-1, 1, SPPF, [768, 5]] # 9

21 |

22 | # YOLOv8.0m head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [768]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/seg/yolov8n-seg.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.33 # scales module repeats

6 | width_multiple: 0.25 # scales convolution channels

7 |

8 | # YOLOv8.0n backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 | - [-1, 1, SPPF, [1024, 5]] # 9

21 |

22 | # YOLOv8.0n head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [1024]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/seg/yolov8s-seg.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.33 # scales module repeats

6 | width_multiple: 0.50 # scales convolution channels

7 |

8 | # YOLOv8.0s backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [1024, True]]

20 | - [-1, 1, SPPF, [1024, 5]] # 9

21 |

22 | # YOLOv8.0s head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [1024]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/seg/yolov8x-seg.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.00 # scales module repeats

6 | width_multiple: 1.25 # scales convolution channels

7 |

8 | # YOLOv8.0x backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [512, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [512, True]]

20 | - [-1, 1, SPPF, [512, 5]] # 9

21 |

22 | # YOLOv8.0x head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [512]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/yolov8l.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 1.00 # scales module repeats

6 | width_multiple: 1.00 # scales convolution channels

7 |

8 | # YOLOv8.0l backbone

9 | backbone:

10 | # [from, repeats, module, args]

11 | - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

12 | - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

13 | - [-1, 3, C2f, [128, True]]

14 | - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

15 | - [-1, 6, C2f, [256, True]]

16 | - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

17 | - [-1, 6, C2f, [512, True]]

18 | - [-1, 1, Conv, [512, 3, 2]] # 7-P5/32

19 | - [-1, 3, C2f, [512, True]]

20 | - [-1, 1, SPPF, [512, 5]] # 9

21 |

22 | # YOLOv8.0l head

23 | head:

24 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

25 | - [[-1, 6], 1, Concat, [1]] # cat backbone P4

26 | - [-1, 3, C2f, [512]] # 13

27 |

28 | - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

29 | - [[-1, 4], 1, Concat, [1]] # cat backbone P3

30 | - [-1, 3, C2f, [256]] # 17 (P3/8-small)

31 |

32 | - [-1, 1, Conv, [256, 3, 2]]

33 | - [[-1, 12], 1, Concat, [1]] # cat head P4

34 | - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

35 |

36 | - [-1, 1, Conv, [512, 3, 2]]

37 | - [[-1, 9], 1, Concat, [1]] # cat head P5

38 | - [-1, 3, C2f, [512]] # 23 (P5/32-large)

39 |

40 | - [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)

41 |

--------------------------------------------------------------------------------

/ultralytics/models/v8/yolov8m.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 |

3 | # Parameters

4 | nc: 80 # number of classes

5 | depth_multiple: 0.67 # scales module repeats

6 | width_multiple: 0.75 # scales convolution channels

7 |