143 |

15 |

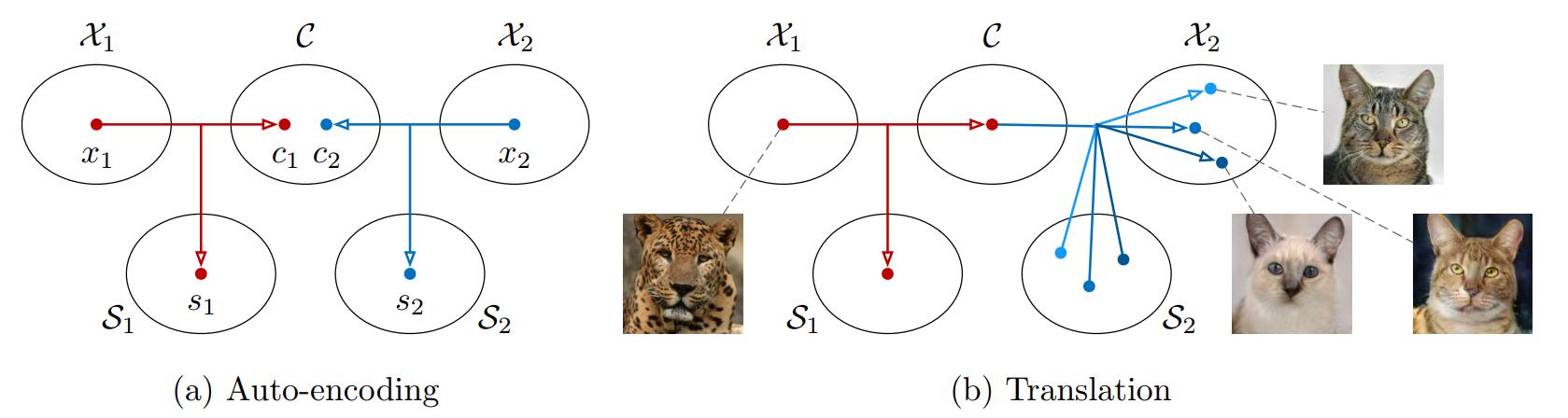

16 | MUNIT is based on the partially shared latent space assumption, illustrated in (a) of the image above. It assumes that the latent representation of an image can be decomposed into two parts: a content code that is shared across domains, and a style code that is specific to each domain. To realize this assumption, MUNIT uses three networks for each domain:

17 |

18 | 1. content encoder (for extracting a domain-shared latent code, content code)

19 | 2. style encoder (for extracting a domain-specific latent code, style code)

20 | 3. decoder (for generating an image using a content code and a style code)

21 |

22 | At test time, as illustrated in (b) of the image above, to translate an input image from the first domain (source domain) to a corresponding image in the second domain (target domain), MUNIT first uses the source-domain content encoder to extract a content code, combines it with a style code randomly sampled from the target domain, and feeds both to the target-domain decoder to generate the translation. By sampling different style codes, MUNIT generates different translations. Since the style space is continuous, MUNIT essentially maps an input image in the source domain to a distribution of images in the target domain.
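
The test-time procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `encode_content_src` and `decode_tgt` are hypothetical stand-ins for the source-domain content encoder and the target-domain decoder (they are not the repository's networks), and the style code is assumed to be drawn from a standard normal prior of length 8, matching `style_dim` in the configs.

```python
import random

STYLE_DIM = 8  # assumed to match `style_dim` in the config files


def sample_style_code(rng, dim=STYLE_DIM):
    """Draw one style code; a standard normal prior is assumed here."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def translate(image, encode_content_src, decode_tgt, num_styles, rng):
    """Return `num_styles` translations of `image` into the target domain."""
    content = encode_content_src(image)  # extracted once, shared by all outputs
    return [decode_tgt(content, sample_style_code(rng)) for _ in range(num_styles)]


# Hypothetical toy stand-ins so the sketch runs without any trained model.
def toy_content_encoder(image):
    return list(image)


def toy_decoder(content, style):
    shift = sum(style) / len(style)  # the "style" only shifts every value
    return [value + shift for value in content]


rng = random.Random(0)
outputs = translate([0.1, 0.5, 0.9], toy_content_encoder, toy_decoder, 10, rng)
print(len(outputs))
```

Each output keeps the structure of the input (the content) while the sampled style changes its appearance, which is the sense in which one input maps to a distribution of outputs.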

23 |

24 | ### Requirements

25 |

26 |

27 | - Hardware: PC with an NVIDIA Titan GPU. For high-resolution images, you need a GPU with 16GB+ of memory, such as an NVIDIA Tesla P100 or V100.

28 | - Software: *Ubuntu 16.04*, *CUDA 9.1*, *Anaconda3*, *pytorch 0.4.1*

29 | - System package

30 |   - `sudo apt-get install -y axel imagemagick` (Only used for demo)

31 | - Python package

32 |   - `conda install pytorch=0.4.1 torchvision cuda91 -y -c pytorch`

33 |   - `conda install -y -c anaconda pip`

34 |   - `conda install -y -c anaconda pyyaml`

35 |   - `pip install tensorboard tensorboardX`

36 |

37 | ### Docker Image

38 |

39 | We also provide a [Dockerfile](Dockerfile) for building an environment for running the MUNIT code.

40 |

41 | 1. Install docker-ce. Follow the instructions on the [Docker page](https://docs.docker.com/install/linux/docker-ce/ubuntu/#install-docker-ce-1).

42 | 2. Install nvidia-docker. Follow the instructions in the [NVIDIA-DOCKER README page](https://github.com/NVIDIA/nvidia-docker).

43 | 3. Build the docker image: `docker build -t your-docker-image:v1.0 .`

44 | 4. Run an interactive session: `docker run -v YOUR_PATH:YOUR_PATH --runtime=nvidia -i -t your-docker-image:v1.0 /bin/bash`

45 | 5. `cd YOUR_PATH`

46 | 6. Follow the rest of the tutorial.

47 |

48 | ### Training

49 |

50 | We provide several training scripts as usage examples. They are located under the `scripts` folder.

51 | - `bash scripts/demo_train_edges2handbags.sh` trains a model for multimodal translation from sketches of handbags to images of handbags.

52 | - `bash scripts/demo_train_edges2shoes.sh` trains a model for multimodal translation from sketches of shoes to images of shoes.

53 | - `bash scripts/demo_train_summer2winter_yosemite256.sh` trains a model for multimodal translation from 256x256 Yosemite summer images to 256x256 Yosemite winter images.

54 |

55 | If you break down the command lines in the scripts, you will find that training a multimodal unsupervised image-to-image translation model takes the following steps:

56 |

57 | 1. Download the dataset you want to use.

58 |

59 | 2. Set up the yaml file. Check out `configs/demo_edges2handbags_folder.yaml` for folder-based dataset organization. Change the `data_root` field to the path of your downloaded dataset. For list-based dataset organization, check out `configs/demo_edges2handbags_list.yaml`.

60 |

61 | 3. Start training

62 | ```

63 | python train.py --config configs/edges2handbags_folder.yaml

64 | ```

65 |

66 | 4. Intermediate image outputs and model binary files are stored in `outputs/edges2handbags_folder`
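
Before starting a long training run, it can help to check that `data_root` has the layout the data loader expects. The sketch below assumes the folder-based organization uses the same `trainA`, `trainB`, `testA`, and `testB` subfolder names that appear in the list-based config; `missing_subfolders` is a hypothetical helper, not part of the repository.

```python
import os
import tempfile

EXPECTED_SUBFOLDERS = ("trainA", "trainB", "testA", "testB")  # assumed layout


def missing_subfolders(data_root, expected=EXPECTED_SUBFOLDERS):
    """Return the expected subfolders that do not exist under `data_root`."""
    return [name for name in expected
            if not os.path.isdir(os.path.join(data_root, name))]


# Demonstration on a throwaway directory that only has the training folders.
with tempfile.TemporaryDirectory() as root:
    for name in ("trainA", "trainB"):
        os.makedirs(os.path.join(root, name))
    missing = missing_subfolders(root)
    print(missing)
```

An empty result means every expected subfolder is present.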

67 |

68 | ### Testing

69 |

70 | First, download our pretrained models for the edges2shoes task and put them in the `models` folder.

71 |

72 | ### Pretrained models

73 |

74 | | Dataset | Model Link |

75 | |-------------|----------------|

76 | | edges2shoes | [model](https://drive.google.com/drive/folders/10IEa7gibOWmQQuJUIUOkh-CV4cm6k8__?usp=sharing) |

77 | | edges2handbags | coming soon |

78 | | summer2winter_yosemite256 | coming soon |

79 |

80 |

81 | #### Multimodal Translation

82 |

83 | Run the following command to translate edges to shoes:

84 |

85 |     python test.py --config configs/edges2shoes_folder.yaml --input inputs/edges2shoes_edge.jpg --output_folder results/edges2shoes --checkpoint models/edges2shoes.pt --a2b 1

86 |

87 | The results are stored in the `results/edges2shoes` folder. By default, it produces 10 random translation outputs.

88 |

89 | | Input | Translation 1 | Translation 2 | Translation 3 | Translation 4 | Translation 5 |

90 | |-------|---------------|---------------|---------------|---------------|---------------|

91 | |


92 |

93 |

94 | #### Example-guided Translation

95 |

96 | The above command outputs diverse shoes from an edge input. In addition, it is possible to control the style of output using an example shoe image.

97 |

98 |     python test.py --config configs/edges2shoes_folder.yaml --input inputs/edges2shoes_edge.jpg --output_folder results --checkpoint models/edges2shoes.pt --a2b 1 --style inputs/edges2shoes_shoe.jpg
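
The two test modes differ only in the optional `--style` flag. If you script many translations, a small helper can assemble the argument list. The sketch below uses only the flags shown in the commands in this section; `build_test_command` is a hypothetical name rather than part of the repository.

```python
import shlex


def build_test_command(config, input_image, output_folder, checkpoint,
                       a2b=1, style_image=None):
    """Assemble the argv list for test.py from the flags shown in this section."""
    argv = [
        "python", "test.py",
        "--config", config,
        "--input", input_image,
        "--output_folder", output_folder,
        "--checkpoint", checkpoint,
        "--a2b", str(a2b),
    ]
    if style_image is not None:
        # Example-guided translation: take the style from a reference image.
        argv += ["--style", style_image]
    return argv


random_cmd = build_test_command(
    "configs/edges2shoes_folder.yaml", "inputs/edges2shoes_edge.jpg",
    "results/edges2shoes", "models/edges2shoes.pt")
guided_cmd = build_test_command(
    "configs/edges2shoes_folder.yaml", "inputs/edges2shoes_edge.jpg",
    "results", "models/edges2shoes.pt",
    style_image="inputs/edges2shoes_shoe.jpg")
print(shlex.join(guided_cmd))
```

The returned list can be passed directly to `subprocess.run` to launch the translation.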

99 |

100 | | Input Photo | Style Photo | Output Photo |

101 | |-------|---------------|---------------|

102 | |


103 |

104 | ### Yosemite Summer2Winter HD dataset

105 |

106 | Coming soon.

107 |

108 |

109 |

--------------------------------------------------------------------------------

/USAGE.md:

--------------------------------------------------------------------------------

1 | [](https://raw.githubusercontent.com/NVIDIA/FastPhotoStyle/master/LICENSE.md)

2 |

3 |

4 | ## MUNIT: Multimodal UNsupervised Image-to-image Translation

5 |

6 | ### License

7 |

8 | Copyright (C) 2018 NVIDIA Corporation. All rights reserved.

9 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

10 |

11 | ### Dependency

12 |

13 |

14 | pytorch, yaml, tensorboard (from https://github.com/dmlc/tensorboard), and tensorboardX (from https://github.com/lanpa/tensorboard-pytorch).

15 |

16 |

17 | The code base was developed using Anaconda with the following packages.

18 | ```

19 | conda install pytorch=0.4.1 torchvision cuda91 -c pytorch;

20 | conda install -y -c anaconda pip;

21 | conda install -y -c anaconda pyyaml;

22 | pip install tensorboard tensorboardX;

23 | ```

24 |

25 | We also provide a [Dockerfile](Dockerfile) for building an environment for running the MUNIT code.

26 |

27 | ### Example Usage

28 |

29 | #### Testing

30 |

31 | First, download the [pretrained models](https://drive.google.com/drive/folders/10IEa7gibOWmQQuJUIUOkh-CV4cm6k8__?usp=sharing) and put them in the `models` folder.

32 |

33 | ###### Multimodal Translation

34 |

35 | Run the following command to translate edges to shoes:

36 |

37 |     python test.py --config configs/edges2shoes_folder.yaml --input inputs/edge.jpg --output_folder outputs --checkpoint models/edges2shoes.pt --a2b 1

38 |

39 | The results are stored in the `outputs` folder. By default, it produces 10 random translation outputs.

40 |

41 | ###### Example-guided Translation

42 |

43 | The above command outputs diverse shoes from an edge input. In addition, it is possible to control the style of output using an example shoe image.

44 |

45 |     python test.py --config configs/edges2shoes_folder.yaml --input inputs/edge.jpg --output_folder outputs --checkpoint models/edges2shoes.pt --a2b 1 --style inputs/shoe.jpg

46 |

47 |

48 | #### Training

49 | 1. Download the dataset you want to use. For example, you can use the edges2shoes dataset provided by [Zhu et al.](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix)

50 |

51 | 2. Set up the yaml file. Check out `configs/edges2handbags_folder.yaml` for folder-based dataset organization. Change the `data_root` field to the path of your downloaded dataset. For list-based dataset organization, check out `configs/edges2handbags_list.yaml`.

52 |

53 | 3. Start training

54 | ```

55 | python train.py --config configs/edges2handbags_folder.yaml

56 | ```

57 |

58 | 4. Intermediate image outputs and model binary files are stored in `outputs/edges2handbags_folder`

59 |

--------------------------------------------------------------------------------

/configs/demo_edges2handbags_folder.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2017 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 1000 # How often do you want to save output images during training

6 | image_display_iter: 100 # How often do you want to display output images during training

7 | display_size: 8 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 1 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 0 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 0 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 256 # first resize the shortest image side to this size

52 | crop_image_height: 256 # random crop image of this height

53 | crop_image_width: 256 # random crop image of this width

54 | data_root: ./datasets/demo_edges2handbags/ # dataset folder location
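
To see what `lr_policy: step` implies with the values above, the sketch below computes the learning rate at a given iteration. It assumes the usual step-decay rule (multiply by `gamma` once every `step_size` iterations); the function name `step_lr` is hypothetical, and the training script may implement the schedule differently.

```python
def step_lr(base_lr, step_size, gamma, iteration):
    """Learning rate after `iteration` steps under step decay (assumed rule)."""
    return base_lr * gamma ** (iteration // step_size)


# Values taken from the config: lr=0.0001, step_size=100000, gamma=0.5.
for iteration in (0, 99_999, 100_000, 250_000):
    print(iteration, step_lr(0.0001, 100_000, 0.5, iteration))
```

Under this rule the rate is halved at iteration 100000 and again at 200000, so with `max_iter: 1000000` it would be halved nine times before training ends.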

--------------------------------------------------------------------------------

/configs/demo_edges2handbags_list.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2017 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 1000 # How often do you want to save output images during training

6 | image_display_iter: 100 # How often do you want to display output images during training

7 | display_size: 8 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 1 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 0 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 0 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 256 # first resize the shortest image side to this size

52 | crop_image_height: 256 # random crop image of this height

53 | crop_image_width: 256 # random crop image of this width

54 |

55 | data_folder_train_a: ./datasets/demo_edges2handbags/trainA

56 | data_list_train_a: ./datasets/demo_edges2handbags/list_trainA.txt

57 | data_folder_test_a: ./datasets/demo_edges2handbags/testA

58 | data_list_test_a: ./datasets/demo_edges2handbags/list_testA.txt

59 | data_folder_train_b: ./datasets/demo_edges2handbags/trainB

60 | data_list_train_b: ./datasets/demo_edges2handbags/list_trainB.txt

61 | data_folder_test_b: ./datasets/demo_edges2handbags/testB

62 | data_list_test_b: ./datasets/demo_edges2handbags/list_testB.txt

63 |
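
In the list-based organization, each `data_list_*` file names the images to use and the matching `data_folder_*` entry gives the directory they are resolved against. Below is a minimal sketch of that pairing, assuming one relative image path per line. The helper names and the image file names are made up for the demonstration, and unlike the repository's reader this version skips blank lines.

```python
import os
import tempfile


def read_image_list(list_path):
    """Return the stripped, non-empty lines of a list file."""
    with open(list_path, "r") as handle:
        return [line.strip() for line in handle if line.strip()]


def resolve_paths(folder, list_path):
    """Join every listed relative path with its data folder."""
    return [os.path.join(folder, rel) for rel in read_image_list(list_path)]


# Demonstration with a temporary list file holding two hypothetical entries.
with tempfile.TemporaryDirectory() as tmp:
    list_file = os.path.join(tmp, "list_trainA.txt")
    with open(list_file, "w") as handle:
        handle.write("bag_0001.jpg\nbag_0002.jpg\n")
    paths = resolve_paths(os.path.join(tmp, "trainA"), list_file)
    names = [os.path.basename(p) for p in paths]
    print(names)
```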

--------------------------------------------------------------------------------

/configs/edges2handbags_folder.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2017 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 10000 # How often do you want to save output images during training

6 | image_display_iter: 500 # How often do you want to display output images during training

7 | display_size: 16 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 10 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 0 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 0 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 256 # first resize the shortest image side to this size

52 | crop_image_height: 256 # random crop image of this height

53 | crop_image_width: 256 # random crop image of this width

54 | data_root: ./datasets/edges2handbags/ # dataset folder location

--------------------------------------------------------------------------------

/configs/edges2shoes_folder.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2017 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 10000 # How often do you want to save output images during training

6 | image_display_iter: 500 # How often do you want to display output images during training

7 | display_size: 16 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 10 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 0 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 0 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 256 # first resize the shortest image side to this size

52 | crop_image_height: 256 # random crop image of this height

53 | crop_image_width: 256 # random crop image of this width

54 | data_root: ./datasets/edges2shoes/ # dataset folder location

--------------------------------------------------------------------------------

/configs/summer2winter_yosemite256_folder.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2018 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 10000 # How often do you want to save output images during training

6 | image_display_iter: 100 # How often do you want to display output images during training

7 | display_size: 16 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 1 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 10 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 0 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 256 # first resize the shortest image side to this size

52 | crop_image_height: 256 # random crop image of this height

53 | crop_image_width: 256 # random crop image of this width

54 | data_root: ./datasets/summer2winter_yosemite256/summer2winter_yosemite # dataset folder location
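
The `*_w` options weight the individual loss terms. One plausible reading, sketched below, is that the generator objective is a weighted sum of the terms, so a weight of 0 switches a term off. The loss values are made-up numbers and `weighted_total` is a hypothetical helper; the exact set of terms the trainer combines is not shown in this section.

```python
def weighted_total(weights, losses):
    """Weighted sum of loss terms; a term with weight 0 contributes nothing."""
    return sum(weights[name] * losses[name] for name in weights)


# Weights copied from the config above.
weights = {
    "gan_w": 1, "recon_x_w": 10, "recon_s_w": 1,
    "recon_c_w": 1, "recon_x_cyc_w": 10, "vgg_w": 0,
}
# Made-up per-term loss values, keyed by the weight they pair with.
losses = {
    "gan_w": 0.5, "recon_x_w": 0.25, "recon_s_w": 0.5,
    "recon_c_w": 0.5, "recon_x_cyc_w": 0.125, "vgg_w": 2.0,
}
total = weighted_total(weights, losses)
print(total)
```

Compared with the edges2shoes and edges2handbags configs, the only weight that differs here is `recon_x_cyc_w`, which is 10 rather than 0.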

--------------------------------------------------------------------------------

/configs/synthia2cityscape_folder.yaml:

--------------------------------------------------------------------------------

1 | # Copyright (C) 2017 NVIDIA Corporation. All rights reserved.

2 | # Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

3 |

4 | # logger options

5 | image_save_iter: 10000 # How often do you want to save output images during training

6 | image_display_iter: 500 # How often do you want to display output images during training

7 | display_size: 16 # How many images do you want to display each time

8 | snapshot_save_iter: 10000 # How often do you want to save trained models

9 | log_iter: 10 # How often do you want to log the training stats

10 |

11 | # optimization options

12 | max_iter: 1000000 # maximum number of training iterations

13 | batch_size: 1 # batch size

14 | weight_decay: 0.0001 # weight decay

15 | beta1: 0.5 # Adam parameter

16 | beta2: 0.999 # Adam parameter

17 | init: kaiming # initialization [gaussian/kaiming/xavier/orthogonal]

18 | lr: 0.0001 # initial learning rate

19 | lr_policy: step # learning rate scheduler

20 | step_size: 100000 # how often to decay learning rate

21 | gamma: 0.5 # how much to decay learning rate

22 | gan_w: 1 # weight of adversarial loss

23 | recon_x_w: 10 # weight of image reconstruction loss

24 | recon_s_w: 1 # weight of style reconstruction loss

25 | recon_c_w: 1 # weight of content reconstruction loss

26 | recon_x_cyc_w: 10 # weight of explicit style augmented cycle consistency loss

27 | vgg_w: 1 # weight of domain-invariant perceptual loss

28 |

29 | # model options

30 | gen:

31 |   dim: 64 # number of filters in the bottommost layer

32 |   mlp_dim: 256 # number of filters in MLP

33 |   style_dim: 8 # length of style code

34 |   activ: relu # activation function [relu/lrelu/prelu/selu/tanh]

35 |   n_downsample: 2 # number of downsampling layers in content encoder

36 |   n_res: 4 # number of residual blocks in content encoder/decoder

37 |   pad_type: reflect # padding type [zero/reflect]

38 | dis:

39 |   dim: 64 # number of filters in the bottommost layer

40 |   norm: none # normalization layer [none/bn/in/ln]

41 |   activ: lrelu # activation function [relu/lrelu/prelu/selu/tanh]

42 |   n_layer: 4 # number of layers in D

43 |   gan_type: lsgan # GAN loss [lsgan/nsgan]

44 |   num_scales: 3 # number of scales

45 |   pad_type: reflect # padding type [zero/reflect]

46 |

47 | # data options

48 | input_dim_a: 3 # number of image channels [1/3]

49 | input_dim_b: 3 # number of image channels [1/3]

50 | num_workers: 8 # number of data loading threads

51 | new_size: 512 # first resize the shortest image side to this size

52 | crop_image_height: 512 # random crop image of this height

53 | crop_image_width: 512 # random crop image of this width

54 | data_root: ./datasets/synthia2cityscape/ # dataset folder location

55 |
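The optimization options above describe a step learning-rate policy (`lr_policy: step`, `step_size`, `gamma`). The code that consumes these options is not part of this listing, so the following is only a sketch of how such a schedule typically behaves, assuming the same rule as PyTorch's `StepLR` (multiply the rate by `gamma` once every `step_size` iterations). The helper name `lr_at` is hypothetical.

```python
# Values copied from the config above. The decay rule is an assumption
# (step decay in the style of torch.optim.lr_scheduler.StepLR).
LR = 0.0001
STEP_SIZE = 100000
GAMMA = 0.5


def lr_at(iteration, lr=LR, step_size=STEP_SIZE, gamma=GAMMA):
    """Learning rate in effect at a given iteration under step decay."""
    return lr * gamma ** (iteration // step_size)


if __name__ == "__main__":
    # Print the rate at a few iterations, including both sides of a decay step.
    for it in (0, 99999, 100000, 250000, 999999):
        print(it, lr_at(it))
```

Under this assumption the rate halves every 100000 iterations, so with `max_iter: 1000000` it is halved nine times before training stops.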

--------------------------------------------------------------------------------

/data.py:

--------------------------------------------------------------------------------

1 | """

2 | Copyright (C) 2018 NVIDIA Corporation. All rights reserved.

3 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

4 | """

5 | import torch.utils.data as data

6 | import os.path

7 |

8 | def default_loader(path):

9 | return Image.open(path).convert('RGB')

10 |

11 |

12 | def default_flist_reader(flist):

13 | """

14 | flist format: impath label\nimpath label\n ... (same as Caffe's filelist)

15 | """

16 | imlist = []

17 | with open(flist, 'r') as rf:

18 | for line in rf.readlines():

19 | impath = line.strip()

20 | imlist.append(impath)

21 |

22 | return imlist

23 |

24 |

25 | class ImageFilelist(data.Dataset):

26 | def __init__(self, root, flist, transform=None,

27 | flist_reader=default_flist_reader, loader=default_loader):

28 | self.root = root

29 | self.imlist = flist_reader(flist)

30 | self.transform = transform

31 | self.loader = loader

32 |

33 | def __getitem__(self, index):

34 | impath = self.imlist[index]

35 | img = self.loader(os.path.join(self.root, impath))

36 | if self.transform is not None:

37 | img = self.transform(img)

38 |

39 | return img

40 |

41 | def __len__(self):

42 | return len(self.imlist)

43 |

44 |

45 | class ImageLabelFilelist(data.Dataset):

46 | def __init__(self, root, flist, transform=None,

47 | flist_reader=default_flist_reader, loader=default_loader):

48 | self.root = root

49 | self.imlist = flist_reader(os.path.join(self.root, flist))

50 | self.transform = transform

51 | self.loader = loader

52 | self.classes = sorted(list(set([path.split('/')[0] for path in self.imlist])))

53 | self.class_to_idx = {self.classes[i]: i for i in range(len(self.classes))}

54 | self.imgs = [(impath, self.class_to_idx[impath.split('/')[0]]) for impath in self.imlist]

55 |

56 | def __getitem__(self, index):

57 | impath, label = self.imgs[index]

58 | img = self.loader(os.path.join(self.root, impath))

59 | if self.transform is not None:

60 | img = self.transform(img)

61 | return img, label

62 |

63 | def __len__(self):

64 | return len(self.imgs)

65 |

66 | ###############################################################################

67 | # Code from

68 | # https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py

69 | # Modified the original code so that it loads images from the current

70 | # directory as well as from its subdirectories

71 | ###############################################################################

72 |

73 | import torch.utils.data as data

74 |

75 | from PIL import Image

76 | import os

77 | import os.path

78 |

79 | IMG_EXTENSIONS = [

80 | '.jpg', '.JPG', '.jpeg', '.JPEG',

81 | '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',

82 | ]

83 |

84 |

85 | def is_image_file(filename):

86 | return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

87 |

88 |

89 | def make_dataset(dir):

90 | images = []

91 | assert os.path.isdir(dir), '%s is not a valid directory' % dir

92 |

93 | for root, _, fnames in sorted(os.walk(dir)):

94 | for fname in fnames:

95 | if is_image_file(fname):

96 | path = os.path.join(root, fname)

97 | images.append(path)

98 |

99 | return images

100 |

101 |

102 | class ImageFolder(data.Dataset):

103 |

104 | def __init__(self, root, transform=None, return_paths=False,

105 | loader=default_loader):

106 | imgs = sorted(make_dataset(root))

107 | if len(imgs) == 0:

108 | raise(RuntimeError("Found 0 images in: " + root + "\n"

109 | "Supported image extensions are: " +

110 | ",".join(IMG_EXTENSIONS)))

111 |

112 | self.root = root

113 | self.imgs = imgs

114 | self.transform = transform

115 | self.return_paths = return_paths

116 | self.loader = loader

117 |

118 | def __getitem__(self, index):

119 | path = self.imgs[index]

120 | img = self.loader(path)

121 | if self.transform is not None:

122 | img = self.transform(img)

123 | if self.return_paths:

124 | return img, path

125 | else:

126 | return img

127 |

128 | def __len__(self):

129 | return len(self.imgs)

130 |
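`ImageLabelFilelist` in the file above derives class labels from the first path component of every entry in the file list. The sketch below reproduces only that bookkeeping in plain Python, without PyTorch or PIL, to make the mapping easier to inspect. The function name `build_label_index` and the example paths are hypothetical and do not come from the repository.

```python
def build_label_index(imlist):
    """Mirror how ImageLabelFilelist turns relative paths into (path, label) pairs."""
    # The class name is whatever precedes the first '/' in each path.
    classes = sorted({path.split('/')[0] for path in imlist})
    class_to_idx = {name: i for i, name in enumerate(classes)}
    imgs = [(path, class_to_idx[path.split('/')[0]]) for path in imlist]
    return classes, class_to_idx, imgs


# Hypothetical file list, for illustration only.
example = ["shoes/0001.jpg", "bags/0007.jpg", "shoes/0002.jpg"]
classes, class_to_idx, imgs = build_label_index(example)
print(classes)
print(imgs)
```

Labels follow the sorted order of the class folder names. Entries written with a leading `./`, such as `./00002.jpg`, would all fall into a single class named `.`, so this loader expects lists of the form `class_name/file.jpg`.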

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/list_testA.txt:

--------------------------------------------------------------------------------

1 | ./00002.jpg

2 | ./00006.jpg

3 | ./00004.jpg

4 | ./00000.jpg

5 | ./00007.jpg

6 | ./00001.jpg

7 | ./00003.jpg

8 | ./00005.jpg

9 | ./00008.jpg

10 |

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/list_testB.txt:

--------------------------------------------------------------------------------

1 | ./00002.jpg

2 | ./00006.jpg

3 | ./00004.jpg

4 | ./00000.jpg

5 | ./00007.jpg

6 | ./00001.jpg

7 | ./00003.jpg

8 | ./00005.jpg

9 | ./00008.jpg

10 |

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/list_trainA.txt:

--------------------------------------------------------------------------------

1 | ./00002.jpg

2 | ./00006.jpg

3 | ./00004.jpg

4 | ./00000.jpg

5 | ./00010.jpg

6 | ./00014.jpg

7 | ./00008.jpg

8 | ./00012.jpg

9 |

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/list_trainB.txt:

--------------------------------------------------------------------------------

1 | ./00009.jpg

2 | ./00007.jpg

3 | ./00001.jpg

4 | ./00003.jpg

5 | ./00013.jpg

6 | ./00005.jpg

7 | ./00011.jpg

8 | ./00015.jpg

9 |

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00000.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00000.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00001.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00001.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00002.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00002.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00003.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00003.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00004.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00004.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00005.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00005.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00006.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00006.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00007.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00007.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testA/00008.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testA/00008.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00000.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00000.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00001.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00001.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00002.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00002.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00003.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00003.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00004.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00004.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00005.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00005.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00006.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00006.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00007.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00007.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/testB/00008.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/testB/00008.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00000.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00000.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00002.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00002.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00004.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00004.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00006.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00006.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00008.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00008.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00010.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00010.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00012.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00012.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainA/00014.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainA/00014.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00001.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00001.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00003.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00003.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00005.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00005.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00007.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00007.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00009.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00009.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00011.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00011.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00013.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00013.jpg

--------------------------------------------------------------------------------

/datasets/demo_edges2handbags/trainB/00015.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/datasets/demo_edges2handbags/trainB/00015.jpg

--------------------------------------------------------------------------------

/docs/munit_assumption.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/docs/munit_assumption.jpg

--------------------------------------------------------------------------------

/inputs/edges2handbags_edge.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/inputs/edges2handbags_edge.jpg

--------------------------------------------------------------------------------

/inputs/edges2handbags_handbag.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/inputs/edges2handbags_handbag.jpg

--------------------------------------------------------------------------------

/inputs/edges2shoes_edge.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/inputs/edges2shoes_edge.jpg

--------------------------------------------------------------------------------

/inputs/edges2shoes_shoe.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NVlabs/MUNIT/a82e222bc359892bd0f522d7a0f1573f3ec4a485/inputs/edges2shoes_shoe.jpg

--------------------------------------------------------------------------------

/networks.py:

--------------------------------------------------------------------------------

1 | """

2 | Copyright (C) 2018 NVIDIA Corporation. All rights reserved.

3 | Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

4 | """

5 | from torch import nn

6 | from torch.autograd import Variable

7 | import torch

8 | import torch.nn.functional as F

9 | try:

10 | from itertools import izip as zip

11 | except ImportError: # will be 3.x series

12 | pass

13 |

14 | ##################################################################################

15 | # Discriminator

16 | ##################################################################################

17 |

18 | class MsImageDis(nn.Module):

19 | # Multi-scale discriminator architecture

20 | def __init__(self, input_dim, params):

21 | super(MsImageDis, self).__init__()

22 | self.n_layer = params['n_layer']

23 | self.gan_type = params['gan_type']

24 | self.dim = params['dim']

25 | self.norm = params['norm']

26 | self.activ = params['activ']

27 | self.num_scales = params['num_scales']

28 | self.pad_type = params['pad_type']

29 | self.input_dim = input_dim

30 | self.downsample = nn.AvgPool2d(3, stride=2, padding=[1, 1], count_include_pad=False)

31 | self.cnns = nn.ModuleList()

32 | for _ in range(self.num_scales):

33 | self.cnns.append(self._make_net())

34 |

35 | def _make_net(self):

36 | dim = self.dim

37 | cnn_x = []

38 | cnn_x += [Conv2dBlock(self.input_dim, dim, 4, 2, 1, norm='none', activation=self.activ, pad_type=self.pad_type)]

39 | for i in range(self.n_layer - 1):

40 | cnn_x += [Conv2dBlock(dim, dim * 2, 4, 2, 1, norm=self.norm, activation=self.activ, pad_type=self.pad_type)]

41 | dim *= 2

42 | cnn_x += [nn.Conv2d(dim, 1, 1, 1, 0)]

43 | cnn_x = nn.Sequential(*cnn_x)

44 | return cnn_x

45 |

46 | def forward(self, x):

47 | outputs = []

48 | for model in self.cnns:

49 | outputs.append(model(x))

50 | x = self.downsample(x)

51 | return outputs

52 |

53 | def calc_dis_loss(self, input_fake, input_real):

54 | # calculate the loss to train D

55 | outs0 = self.forward(input_fake)

56 | outs1 = self.forward(input_real)

57 | loss = 0

58 |

59 | for it, (out0, out1) in enumerate(zip(outs0, outs1)):

60 | if self.gan_type == 'lsgan':

61 | loss += torch.mean((out0 - 0)**2) + torch.mean((out1 - 1)**2)

62 | elif self.gan_type == 'nsgan':

63 | all0 = Variable(torch.zeros_like(out0.data).cuda(), requires_grad=False)

64 | all1 = Variable(torch.ones_like(out1.data).cuda(), requires_grad=False)

65 | loss += torch.mean(F.binary_cross_entropy(F.sigmoid(out0), all0) +

66 | F.binary_cross_entropy(F.sigmoid(out1), all1))

67 | else:

68 | assert 0, "Unsupported GAN type: {}".format(self.gan_type)

69 | return loss

70 |

71 | def calc_gen_loss(self, input_fake):

72 | # calculate the loss to train G

73 | outs0 = self.forward(input_fake)

74 | loss = 0

75 | for it, (out0) in enumerate(outs0):

76 | if self.gan_type == 'lsgan':

77 | loss += torch.mean((out0 - 1)**2) # LSGAN

78 | elif self.gan_type == 'nsgan':

79 | all1 = Variable(torch.ones_like(out0.data).cuda(), requires_grad=False)

80 | loss += torch.mean(F.binary_cross_entropy(F.sigmoid(out0), all1))

81 | else:

82 | assert 0, "Unsupported GAN type: {}".format(self.gan_type)

83 | return loss

84 |
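The `lsgan` branches of `calc_dis_loss` and `calc_gen_loss` above reduce to simple squared-error targets summed over the discriminator scales. The following plain-Python sketch restates that arithmetic on small lists instead of tensors. The function names and the example values are illustrative assumptions, and each inner list stands in for one flattened per-scale output map.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_dis_loss(outs_fake, outs_real):
    """Discriminator loss: fake outputs are pushed toward 0, real outputs toward 1."""
    loss = 0.0
    for out_fake, out_real in zip(outs_fake, outs_real):
        loss += mean([(v - 0.0) ** 2 for v in out_fake])
        loss += mean([(v - 1.0) ** 2 for v in out_real])
    return loss


def lsgan_gen_loss(outs_fake):
    """Generator loss: fake outputs are pushed toward 1 at every scale."""
    loss = 0.0
    for out_fake in outs_fake:
        loss += mean([(v - 1.0) ** 2 for v in out_fake])
    return loss


# Two scales with made-up discriminator outputs.
outs_fake = [[0.0, 0.5], [1.0]]
outs_real = [[1.0, 1.0], [0.5]]
print(lsgan_dis_loss(outs_fake, outs_real))
print(lsgan_gen_loss(outs_fake))
```

Because the per-scale terms are added rather than averaged, the loss magnitude grows with `num_scales`.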

85 | ##################################################################################

86 | # Generator

87 | ##################################################################################

88 |

89 | class AdaINGen(nn.Module):

90 | # AdaIN auto-encoder architecture

91 | def __init__(self, input_dim, params):

92 | super(AdaINGen, self).__init__()

93 | dim = params['dim']

94 | style_dim = params['style_dim']

95 | n_downsample = params['n_downsample']

96 | n_res = params['n_res']

97 | activ = params['activ']

98 | pad_type = params['pad_type']

99 | mlp_dim = params['mlp_dim']

100 |

101 | # style encoder

102 | self.enc_style = StyleEncoder(4, input_dim, dim, style_dim, norm='none', activ=activ, pad_type=pad_type)

103 |

104 | # content encoder

105 | self.enc_content = ContentEncoder(n_downsample, n_res, input_dim, dim, 'in', activ, pad_type=pad_type)

106 | self.dec = Decoder(n_downsample, n_res, self.enc_content.output_dim, input_dim, res_norm='adain', activ=activ, pad_type=pad_type)

107 |

108 | # MLP to generate AdaIN parameters

109 | self.mlp = MLP(style_dim, self.get_num_adain_params(self.dec), mlp_dim, 3, norm='none', activ=activ)

110 |

111 | def forward(self, images):

112 | # reconstruct an image

113 | content, style_fake = self.encode(images)

114 | images_recon = self.decode(content, style_fake)

115 | return images_recon

116 |

117 | def encode(self, images):

118 | # encode an image to its content and style codes

119 | style_fake = self.enc_style(images)

120 | content = self.enc_content(images)

121 | return content, style_fake

122 |

123 | def decode(self, content, style):

124 | # decode content and style codes to an image

125 | adain_params = self.mlp(style)

126 | self.assign_adain_params(adain_params, self.dec)

127 | images = self.dec(content)

128 | return images

129 |

130 | def assign_adain_params(self, adain_params, model):

131 | # assign the adain_params to the AdaIN layers in model

132 | for m in model.modules():

133 | if m.__class__.__name__ == "AdaptiveInstanceNorm2d":

134 | mean = adain_params[:, :m.num_features]

135 | std = adain_params[:, m.num_features:2*m.num_features]

136 | m.bias = mean.contiguous().view(-1)

137 | m.weight = std.contiguous().view(-1)

138 | if adain_params.size(1) > 2*m.num_features:

139 | adain_params = adain_params[:, 2*m.num_features:]

140 |

141 | def get_num_adain_params(self, model):

142 | # return the number of AdaIN parameters needed by the model

143 | num_adain_params = 0

144 | for m in model.modules():

145 | if m.__class__.__name__ == "AdaptiveInstanceNorm2d":

146 | num_adain_params += 2*m.num_features

147 | return num_adain_params

148 |

149 |
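`get_num_adain_params` and `assign_adain_params` above treat the MLP output as one flat vector that is consumed layer by layer: the first `num_features` values become the bias ("mean") and the next `num_features` become the weight ("std") of each AdaIN layer. The sketch below shows that slicing on plain lists for a single sample. The original works on batched tensors and flattens them with `view(-1)`, and the helper names here are hypothetical.

```python
def num_adain_params(layer_features):
    """Total number of values the MLP must emit: 2 per feature per AdaIN layer."""
    return sum(2 * n for n in layer_features)


def split_adain_params(params, layer_features):
    """Slice one flat parameter vector into (bias, weight) pairs, in layer order."""
    assignments = []
    offset = 0
    for n in layer_features:
        bias = params[offset:offset + n]            # called "mean" in the original
        weight = params[offset + n:offset + 2 * n]  # called "std" in the original
        assignments.append((bias, weight))
        offset += 2 * n
    return assignments


# Assumed layout for dim=64, n_downsample=2, n_res=4: the decoder has 4 residual
# blocks with 2 AdaIN conv blocks each, all with 64 * 2**2 = 256 features.
print(num_adain_params([256] * 8))  # 4096

# Tiny example with two layers of 2 and 4 features.
print(split_adain_params(list(range(12)), [2, 4]))
```

Tracking an offset is equivalent to the original's repeated truncation of `adain_params` after each layer.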

150 | class VAEGen(nn.Module):

151 | # VAE architecture

152 | def __init__(self, input_dim, params):

153 | super(VAEGen, self).__init__()

154 | dim = params['dim']

155 | n_downsample = params['n_downsample']

156 | n_res = params['n_res']

157 | activ = params['activ']

158 | pad_type = params['pad_type']

159 |

160 | # content encoder

161 | self.enc = ContentEncoder(n_downsample, n_res, input_dim, dim, 'in', activ, pad_type=pad_type)

162 | self.dec = Decoder(n_downsample, n_res, self.enc.output_dim, input_dim, res_norm='in', activ=activ, pad_type=pad_type)

163 |

164 | def forward(self, images):

165 | # This is a reduced VAE implementation where we assume the outputs are multivariate Gaussian distribution with mean = hiddens and std_dev = all ones.

166 | hiddens, _ = self.encode(images) # encode() returns (hiddens, noise); only hiddens is needed here

167 | if self.training:

168 | noise = Variable(torch.randn(hiddens.size()).cuda(hiddens.data.get_device()))

169 | images_recon = self.decode(hiddens + noise)

170 | else:

171 | images_recon = self.decode(hiddens)

172 | return images_recon, hiddens

173 |

174 | def encode(self, images):

175 | hiddens = self.enc(images)

176 | noise = Variable(torch.randn(hiddens.size()).cuda(hiddens.data.get_device()))

177 | return hiddens, noise

178 |

179 | def decode(self, hiddens):

180 | images = self.dec(hiddens)

181 | return images

182 |

183 |

184 | ##################################################################################

185 | # Encoder and Decoders

186 | ##################################################################################

187 |

188 | class StyleEncoder(nn.Module):

189 | def __init__(self, n_downsample, input_dim, dim, style_dim, norm, activ, pad_type):

190 | super(StyleEncoder, self).__init__()

191 | self.model = []

192 | self.model += [Conv2dBlock(input_dim, dim, 7, 1, 3, norm=norm, activation=activ, pad_type=pad_type)]

193 | for i in range(2):

194 | self.model += [Conv2dBlock(dim, 2 * dim, 4, 2, 1, norm=norm, activation=activ, pad_type=pad_type)]

195 | dim *= 2

196 | for i in range(n_downsample - 2):

197 | self.model += [Conv2dBlock(dim, dim, 4, 2, 1, norm=norm, activation=activ, pad_type=pad_type)]

198 | self.model += [nn.AdaptiveAvgPool2d(1)] # global average pooling

199 | self.model += [nn.Conv2d(dim, style_dim, 1, 1, 0)]

200 | self.model = nn.Sequential(*self.model)

201 | self.output_dim = dim

202 |

203 | def forward(self, x):

204 | return self.model(x)

205 |

206 | class ContentEncoder(nn.Module):

207 | def __init__(self, n_downsample, n_res, input_dim, dim, norm, activ, pad_type):

208 | super(ContentEncoder, self).__init__()

209 | self.model = []

210 | self.model += [Conv2dBlock(input_dim, dim, 7, 1, 3, norm=norm, activation=activ, pad_type=pad_type)]

211 | # downsampling blocks

212 | for i in range(n_downsample):

213 | self.model += [Conv2dBlock(dim, 2 * dim, 4, 2, 1, norm=norm, activation=activ, pad_type=pad_type)]

214 | dim *= 2

215 | # residual blocks

216 | self.model += [ResBlocks(n_res, dim, norm=norm, activation=activ, pad_type=pad_type)]

217 | self.model = nn.Sequential(*self.model)

218 | self.output_dim = dim

219 |

220 | def forward(self, x):

221 | return self.model(x)

222 |

223 | class Decoder(nn.Module):

224 | def __init__(self, n_upsample, n_res, dim, output_dim, res_norm='adain', activ='relu', pad_type='zero'):

225 | super(Decoder, self).__init__()

226 |

227 | self.model = []

228 | # AdaIN residual blocks

229 | self.model += [ResBlocks(n_res, dim, res_norm, activ, pad_type=pad_type)]

230 | # upsampling blocks

231 | for i in range(n_upsample):

232 | self.model += [nn.Upsample(scale_factor=2),

233 | Conv2dBlock(dim, dim // 2, 5, 1, 2, norm='ln', activation=activ, pad_type=pad_type)]

234 | dim //= 2

235 | # last conv layer; its padding follows pad_type (e.g. reflect)

236 | self.model += [Conv2dBlock(dim, output_dim, 7, 1, 3, norm='none', activation='tanh', pad_type=pad_type)]

237 | self.model = nn.Sequential(*self.model)

238 |

239 | def forward(self, x):

240 | return self.model(x)

241 |
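To see how `ContentEncoder` and `Decoder` above change the tensor shape, the sketch below traces channel count and spatial size through their layers using the standard convolution output-size formula. It is an illustration in plain Python rather than part of the repository: the helper names are hypothetical, and the padding that `Conv2dBlock` applies in a separate layer is folded into the formula.

```python
def conv_out(size, kernel, stride, padding):
    """Standard convolution output size along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def content_encoder_shape(size, dim=64, n_downsample=2):
    """Channels and spatial size of the content code for a square input."""
    size = conv_out(size, 7, 1, 3)      # 7x7 stem keeps the resolution
    for _ in range(n_downsample):
        size = conv_out(size, 4, 2, 1)  # each 4x4 stride-2 block halves it
        dim *= 2                        # and doubles the channel count
    return dim, size                    # residual blocks leave both unchanged


def decoder_shape(dim, size, n_upsample=2):
    """Channels and spatial size just before the final 7x7 output conv."""
    for _ in range(n_upsample):
        size *= 2                       # nn.Upsample(scale_factor=2)
        size = conv_out(size, 5, 1, 2)  # 5x5 conv keeps the resolution
        dim //= 2
    return dim, size


code_dim, code_size = content_encoder_shape(512)
print(code_dim, code_size)
print(decoder_shape(code_dim, code_size))
```

With the 512x512 crops from the config above, the content code has 256 channels at 128x128, and the decoder returns to 64 channels at 512x512 before the last layer maps them to the output image channels.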

242 | ##################################################################################

243 | # Sequential Models

244 | ##################################################################################

245 | class ResBlocks(nn.Module):

246 | def __init__(self, num_blocks, dim, norm='in', activation='relu', pad_type='zero'):

247 | super(ResBlocks, self).__init__()

248 | self.model = []

249 | for i in range(num_blocks):

250 | self.model += [ResBlock(dim, norm=norm, activation=activation, pad_type=pad_type)]

251 | self.model = nn.Sequential(*self.model)

252 |

253 | def forward(self, x):

254 | return self.model(x)

255 |

256 | class MLP(nn.Module):

257 | def __init__(self, input_dim, output_dim, dim, n_blk, norm='none', activ='relu'):

258 |

259 | super(MLP, self).__init__()

260 | self.model = []

261 | self.model += [LinearBlock(input_dim, dim, norm=norm, activation=activ)]

262 | for i in range(n_blk - 2):

263 | self.model += [LinearBlock(dim, dim, norm=norm, activation=activ)]

264 | self.model += [LinearBlock(dim, output_dim, norm='none', activation='none')] # no output activations

265 | self.model = nn.Sequential(*self.model)

266 |

267 | def forward(self, x):

268 | return self.model(x.view(x.size(0), -1))

269 |

270 | ##################################################################################

271 | # Basic Blocks

272 | ##################################################################################

273 | class ResBlock(nn.Module):

274 | def __init__(self, dim, norm='in', activation='relu', pad_type='zero'):

275 | super(ResBlock, self).__init__()

276 |

277 | model = []

278 | model += [Conv2dBlock(dim ,dim, 3, 1, 1, norm=norm, activation=activation, pad_type=pad_type)]

279 | model += [Conv2dBlock(dim ,dim, 3, 1, 1, norm=norm, activation='none', pad_type=pad_type)]

280 | self.model = nn.Sequential(*model)

281 |

282 | def forward(self, x):

283 | residual = x

284 | out = self.model(x)

285 | out += residual

286 | return out

287 |

class Conv2dBlock(nn.Module):
    def __init__(self, input_dim, output_dim, kernel_size, stride,
                 padding=0, norm='none', activation='relu', pad_type='zero'):
        super(Conv2dBlock, self).__init__()
        self.use_bias = True
        # initialize padding
        if pad_type == 'reflect':
            self.pad = nn.ReflectionPad2d(padding)
        elif pad_type == 'replicate':
            self.pad = nn.ReplicationPad2d(padding)
        elif pad_type == 'zero':
            self.pad = nn.ZeroPad2d(padding)
        else:
            assert 0, "Unsupported padding type: {}".format(pad_type)

        # initialize normalization
        norm_dim = output_dim
        if norm == 'bn':
            self.norm = nn.BatchNorm2d(norm_dim)
        elif norm == 'in':
            #self.norm = nn.InstanceNorm2d(norm_dim, track_running_stats=True)
            self.norm = nn.InstanceNorm2d(norm_dim)
        elif norm == 'ln':
            self.norm = LayerNorm(norm_dim)
        elif norm == 'adain':
            self.norm = AdaptiveInstanceNorm2d(norm_dim)
        elif norm == 'none' or norm == 'sn':
            self.norm = None
        else:
            assert 0, "Unsupported normalization: {}".format(norm)

        # initialize activation
        if activation == 'relu':
            self.activation = nn.ReLU(inplace=True)
        elif activation == 'lrelu':
            self.activation = nn.LeakyReLU(0.2, inplace=True)
        elif activation == 'prelu':
            self.activation = nn.PReLU()
        elif activation == 'selu':
            self.activation = nn.SELU(inplace=True)
        elif activation == 'tanh':
            self.activation = nn.Tanh()
        elif activation == 'none':
            self.activation = None
        else:
            assert 0, "Unsupported activation: {}".format(activation)

        # initialize convolution
        if norm == 'sn':
            self.conv = SpectralNorm(nn.Conv2d(input_dim, output_dim, kernel_size, stride, bias=self.use_bias))
        else:
            self.conv = nn.Conv2d(input_dim, output_dim, kernel_size, stride, bias=self.use_bias)

    def forward(self, x):
        x = self.conv(self.pad(x))
        if self.norm:
            x = self.norm(x)
        if self.activation:
            x = self.activation(x)
        return x

class LinearBlock(nn.Module):
    def __init__(self, input_dim, output_dim, norm='none', activation='relu'):
        super(LinearBlock, self).__init__()
        use_bias = True
        # initialize fully connected layer
        if norm == 'sn':
            self.fc = SpectralNorm(nn.Linear(input_dim, output_dim, bias=use_bias))
        else:
            self.fc = nn.Linear(input_dim, output_dim, bias=use_bias)

        # initialize normalization
        norm_dim = output_dim
        if norm == 'bn':
            self.norm = nn.BatchNorm1d(norm_dim)
        elif norm == 'in':
            self.norm = nn.InstanceNorm1d(norm_dim)
        elif norm == 'ln':
            self.norm = LayerNorm(norm_dim)
        elif norm == 'none' or norm == 'sn':
            self.norm = None
        else:
            assert 0, "Unsupported normalization: {}".format(norm)

        # initialize activation
        if activation == 'relu':
            self.activation = nn.ReLU(inplace=True)
        elif activation == 'lrelu':
            self.activation = nn.LeakyReLU(0.2, inplace=True)
        elif activation == 'prelu':
            self.activation = nn.PReLU()
        elif activation == 'selu':
            self.activation = nn.SELU(inplace=True)
        elif activation == 'tanh':
            self.activation = nn.Tanh()
        elif activation == 'none':
            self.activation = None
        else:
            assert 0, "Unsupported activation: {}".format(activation)

    def forward(self, x):
        out = self.fc(x)
        if self.norm:
            out = self.norm(out)
        if self.activation:
            out = self.activation(out)
        return out

##################################################################################
# VGG network definition
##################################################################################
class Vgg16(nn.Module):
    def __init__(self):
        super(Vgg16, self).__init__()
        self.conv1_1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)
        self.conv1_2 = nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1)

        self.conv2_1 = nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1)
        self.conv2_2 = nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1)

        self.conv3_1 = nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1)
        self.conv3_2 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1)
        self.conv3_3 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1)

        self.conv4_1 = nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1)
        self.conv4_2 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)
        self.conv4_3 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

        self.conv5_1 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)
        self.conv5_2 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)
        self.conv5_3 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

    def forward(self, X):
        h = F.relu(self.conv1_1(X), inplace=True)
        h = F.relu(self.conv1_2(h), inplace=True)
        # relu1_2 = h
        h = F.max_pool2d(h, kernel_size=2, stride=2)

        h = F.relu(self.conv2_1(h), inplace=True)
        h = F.relu(self.conv2_2(h), inplace=True)
        # relu2_2 = h
        h = F.max_pool2d(h, kernel_size=2, stride=2)

        h = F.relu(self.conv3_1(h), inplace=True)
        h = F.relu(self.conv3_2(h), inplace=True)
        h = F.relu(self.conv3_3(h), inplace=True)
        # relu3_3 = h
        h = F.max_pool2d(h, kernel_size=2, stride=2)

        h = F.relu(self.conv4_1(h), inplace=True)
        h = F.relu(self.conv4_2(h), inplace=True)
        h = F.relu(self.conv4_3(h), inplace=True)
        # relu4_3 = h

        h = F.relu(self.conv5_1(h), inplace=True)
        h = F.relu(self.conv5_2(h), inplace=True)
        h = F.relu(self.conv5_3(h), inplace=True)
        relu5_3 = h

        return relu5_3
        # return [relu1_2, relu2_2, relu3_3, relu4_3]

##################################################################################
# Normalization layers
##################################################################################
class AdaptiveInstanceNorm2d(nn.Module):
    def __init__(self, num_features, eps=1e-5, momentum=0.1):
        super(AdaptiveInstanceNorm2d, self).__init__()
        self.num_features = num_features
        self.eps = eps
        self.momentum = momentum
        # weight and bias are dynamically assigned
        self.weight = None
        self.bias = None
        # just dummy buffers, not used
        self.register_buffer('running_mean', torch.zeros(num_features))
        self.register_buffer('running_var', torch.ones(num_features))

    def forward(self, x):
        assert self.weight is not None and self.bias is not None, "Please assign weight and bias before calling AdaIN!"
        b, c = x.size(0), x.size(1)
        running_mean = self.running_mean.repeat(b)
        running_var = self.running_var.repeat(b)

        # Apply instance norm: fold the batch into the channel axis so that
        # batch_norm in training mode computes statistics per sample and per
        # channel. weight and bias must therefore each hold b * c values.
        x_reshaped = x.contiguous().view(1, b * c, *x.size()[2:])

        out = F.batch_norm(
            x_reshaped, running_mean, running_var, self.weight, self.bias,
            True, self.momentum, self.eps)

        return out.view(b, c, *x.size()[2:])

    def __repr__(self):
        return self.__class__.__name__ + '(' + str(self.num_features) + ')'


class LayerNorm(nn.Module):
    def __init__(self, num_features, eps=1e-5, affine=True):
        super(LayerNorm, self).__init__()
        self.num_features = num_features
        self.affine = affine
        self.eps = eps

        if self.affine:
            self.gamma = nn.Parameter(torch.Tensor(num_features).uniform_())
            self.beta = nn.Parameter(torch.zeros(num_features))

    def forward(self, x):
        # Statistics are computed per sample over all non-batch dimensions;
        # `shape` lets them broadcast back against x.
        shape = [-1] + [1] * (x.dim() - 1)
        # print(x.size())
        if x.size(0) == 1:
            # These two lines run much faster in pytorch 0.4 than the two lines listed below.
            mean = x.view(-1).mean().view(*shape)
            std = x.view(-1).std().view(*shape)
        else:
            mean = x.view(x.size(0), -1).mean(1).view(*shape)
            std = x.view(x.size(0), -1).std(1).view(*shape)

        x = (x - mean) / (std + self.eps)

        if self.affine:
            shape = [1, -1] + [1] * (x.dim() - 2)