The Netflora project applies geotechnologies to forest automation and to carbon-stock mapping in native forest areas of the Western Amazon. It is an initiative developed by Embrapa Acre with support from the JBS Fund for the Amazon.

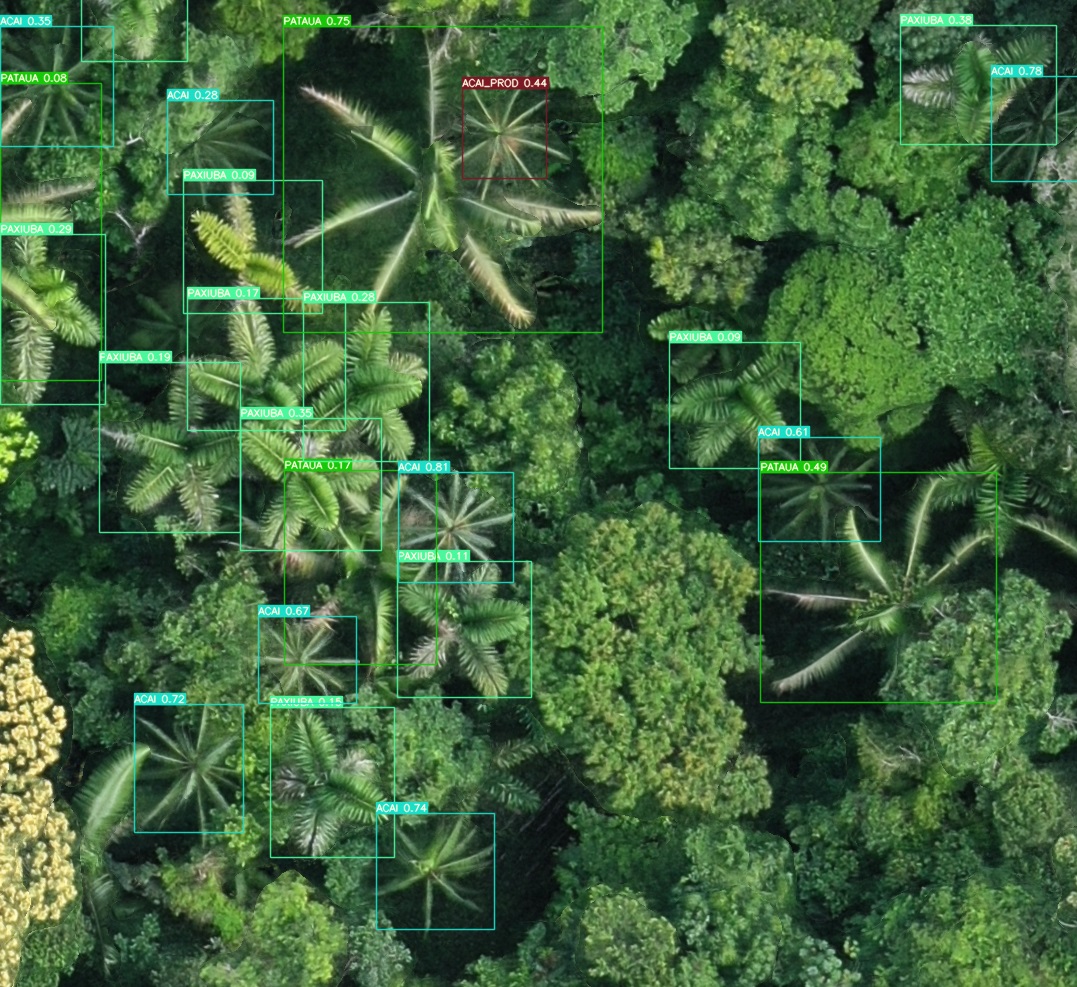

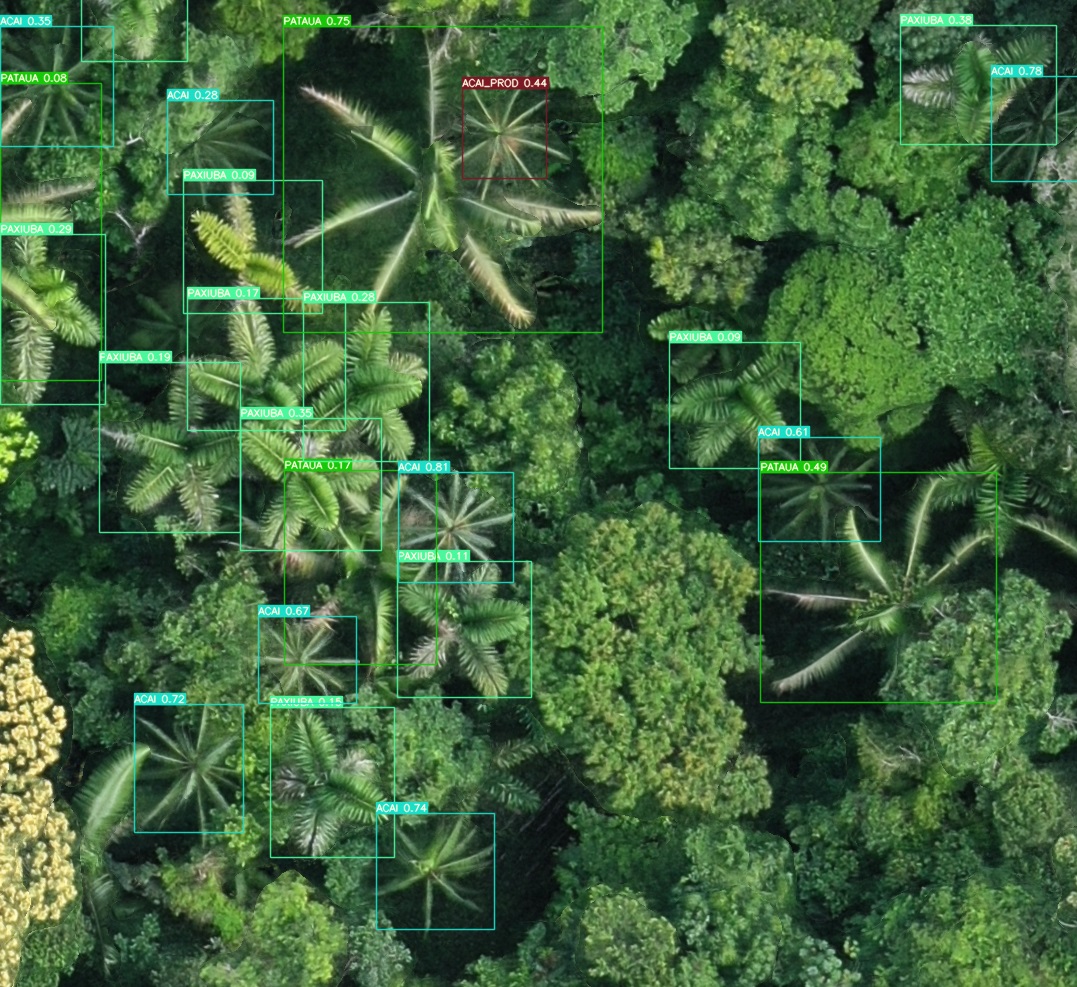

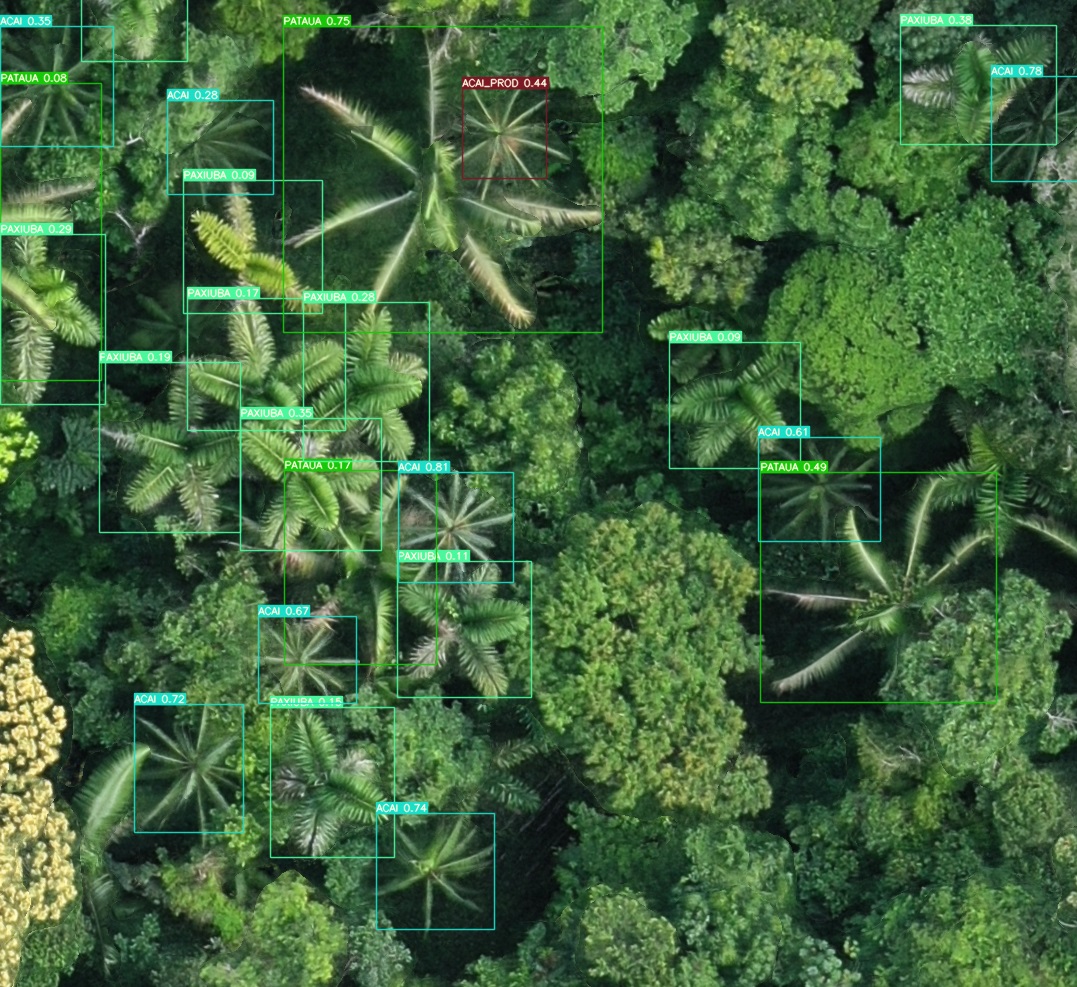

Here we cover the "Forest Inventory using drones" component. Drones and artificial intelligence are used to automate stages of the forest inventory, in particular the identification of strategic species. More than 40,000 hectares of forest have already been mapped to collect data for the Netflora dataset.


* [https://github.com/AlexeyAB/darknet](https://github.com/AlexeyAB/darknet)
* [https://github.com/WongKinYiu/yolov7](https://github.com/WongKinYiu/yolov7)

# **Netflora**


**Read this in other languages**: [Português](README.pt.md), [Español](README.es.md).


The Netflora Project involves the application of geotechnologies in forest automation and carbon stock mapping in native forest areas in Western Amazonia. It is an initiative developed by Embrapa Acre with sponsorship from the JBS Fund for the Amazon.

Here we discuss the "Forest Inventory using drones" component. Drones and artificial intelligence are used to automate stages of the forest inventory, in particular the identification of strategic species. More than 50,000 hectares of forest have already been mapped to collect data for the Netflora dataset.
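The text states what the AI does but not how its output becomes inventory data. As a hedged illustration of one possible downstream step, the sketch below tallies detections per species from YOLO-style text files. It assumes the per-line layout that YOLOv7's `detect.py` writes when run with `--save-txt --save-conf` (`class x_center y_center width height confidence`, normalized coordinates). The class-id mapping `SPECIES`, the helper names `count_species` and `count_species_in_dir`, and the 0.5 confidence cutoff are hypothetical placeholders, not Netflora's actual classes or pipeline.

```python
"""Hypothetical sketch: tally per-species detections from YOLO-style label files.

Assumed input: one detection per line, "class x_center y_center width height confidence".
Species names are placeholders, not Netflora's real class list.
"""
from collections import Counter
from pathlib import Path
from typing import Iterable

# Hypothetical class-id -> species mapping (placeholder names).
SPECIES = {0: "species_a", 1: "species_b", 2: "species_c"}


def count_species(lines: Iterable[str], min_conf: float = 0.5) -> Counter:
    """Count detections per species, keeping only those with confidence >= min_conf."""
    counts: Counter = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 6:
            continue  # skip blank lines or rows without a confidence column
        class_id, conf = int(fields[0]), float(fields[5])
        if conf >= min_conf:
            # Unknown ids are kept under a generic label instead of being dropped.
            counts[SPECIES.get(class_id, f"class_{class_id}")] += 1
    return counts


def count_species_in_dir(label_dir: str, min_conf: float = 0.5) -> Counter:
    """Sum the counts over every *.txt detection file in a directory (e.g. one per image tile)."""
    total: Counter = Counter()
    for path in sorted(Path(label_dir).glob("*.txt")):
        total += count_species(path.read_text().splitlines(), min_conf)
    return total


if __name__ == "__main__":
    sample = [
        "0 0.51 0.42 0.10 0.12 0.91",
        "1 0.20 0.33 0.08 0.09 0.47",  # below the 0.5 cutoff, ignored
        "0 0.75 0.60 0.11 0.10 0.66",
        "2 0.10 0.90 0.05 0.06 0.88",
    ]
    print(count_species(sample))  # Counter({'species_a': 2, 'species_c': 1})
```

Raising `min_conf` trades missed trees for fewer false detections; the right cutoff would depend on the trained model and the species of interest.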


