├── .github

└── FUNDING.yml

├── .gitignore

├── LICENSE

├── MANIFEST.in

├── Makefile

├── OTHER_LICENSES

├── README.md

├── diffuzers.ipynb

├── docs

├── Makefile

├── conf.py

├── index.rst

└── make.bat

├── requirements.txt

├── setup.cfg

├── setup.py

├── stablefusion

├── Home.py

├── __init__.py

├── api

│ ├── __init__.py

│ ├── main.py

│ ├── schemas.py

│ └── utils.py

├── cli

│ ├── __init__.py

│ ├── main.py

│ ├── run_api.py

│ └── run_app.py

├── data

│ ├── artists.txt

│ ├── flavors.txt

│ ├── mediums.txt

│ └── movements.txt

├── model_list.txt

├── models

│ ├── ckpt_interference

│ │ └── t

│ ├── cpkt_models

│ │ └── t

│ ├── diffusion_models

│ │ └── t.txt

│ ├── realesrgan

│ │ ├── inference_realesrgan.py

│ │ ├── options

│ │ │ ├── finetune_realesrgan_x4plus.yml

│ │ │ ├── finetune_realesrgan_x4plus_pairdata.yml

│ │ │ ├── train_realesrgan_x2plus.yml

│ │ │ ├── train_realesrgan_x4plus.yml

│ │ │ ├── train_realesrnet_x2plus.yml

│ │ │ └── train_realesrnet_x4plus.yml

│ │ ├── realesrgan

│ │ │ ├── __init__.py

│ │ │ ├── archs

│ │ │ │ ├── __init__.py

│ │ │ │ ├── discriminator_arch.py

│ │ │ │ └── srvgg_arch.py

│ │ │ ├── data

│ │ │ │ ├── __init__.py

│ │ │ │ ├── realesrgan_dataset.py

│ │ │ │ └── realesrgan_paired_dataset.py

│ │ │ ├── models

│ │ │ │ ├── __init__.py

│ │ │ │ ├── realesrgan_model.py

│ │ │ │ └── realesrnet_model.py

│ │ │ ├── train.py

│ │ │ └── utils.py

│ │ └── weights

│ │ │ └── README.md

│ └── safetensors_models

│ │ └── t

├── pages

│ ├── 10_Utilities.py

│ ├── 1_Text2Image.py

│ ├── 2_Image2Image.py

│ ├── 3_Inpainting.py

│ ├── 4_ControlNet.py

│ ├── 5_OpenPose_Editor.py

│ ├── 6_Textual Inversion.py

│ ├── 7_Upscaler.py

│ ├── 8_Convertor.py

│ └── 9_Train.py

├── scripts

│ ├── blip.py

│ ├── ckpt_to_diffusion.py

│ ├── clip_interrogator.py

│ ├── controlnet.py

│ ├── dreambooth

│ │ ├── README.md

│ │ ├── convert_weights_to_ckpt.py

│ │ ├── requirements_flax.txt

│ │ ├── train_dreambooth.py

│ │ ├── train_dreambooth_flax.py

│ │ └── train_dreambooth_lora.py

│ ├── gfp_gan.py

│ ├── gradio_app.py

│ ├── image_info.py

│ ├── img2img.py

│ ├── inpainting.py

│ ├── interrogator.py

│ ├── model_adding.py

│ ├── model_removing.py

│ ├── pose_editor.py

│ ├── pose_html.py

│ ├── safetensors_to_diffusion.py

│ ├── text2img.py

│ ├── textual_inversion.py

│ ├── upscaler.py

│ └── x2image.py

└── utils.py

└── static

├── .keep

├── Screenshot1.png

├── Screenshot2.png

├── Screenshot3.png

└── screenshot4.png

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | github: everydaycodings

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # local stuff

2 | .vscode/

3 | examples/.logs/

4 | *.bin

5 | *.csv

6 | input/

7 | logs/

8 | gfpgan/

9 | *.pkl

10 | *.pt

11 | *.pth

12 | abhishek/

13 | diffout/

14 | datasets/*

15 | experiments/*

16 | results/*

17 | tb_logger/*

18 | wandb/*

19 | tmp/*

20 | weights/*

21 | # Byte-compiled / optimized / DLL files

22 | __pycache__/

23 | *.py[cod]

24 | *$py.class

25 | .pypirc

26 | # C extensions

27 | *.so

28 | 7_Train.py

29 |

30 | # Distribution / packaging

31 | .Python

32 | build/

33 | develop-eggs/

34 | dist/

35 | downloads/

36 | eggs/

37 | .eggs/

38 | lib/

39 | lib64/

40 | parts/

41 | sdist/

42 | var/

43 | pip-wheel-metadata/

44 | share/python-wheels/

45 | *.egg-info/

46 | .installed.cfg

47 | *.egg

48 | MANIFEST

49 | src/

50 |

51 | # PyInstaller

52 | # Usually these files are written by a python script from a template

53 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

54 | *.manifest

55 | *.spec

56 |

57 | # Installer logs

58 | pip-log.txt

59 | pip-delete-this-directory.txt

60 |

61 | # Unit test / coverage reports

62 | htmlcov/

63 | .tox/

64 | .nox/

65 | .coverage

66 | .coverage.*

67 | .cache

68 | nosetests.xml

69 | coverage.xml

70 | *.cover

71 | *.py,cover

72 | .hypothesis/

73 | .pytest_cache/

74 |

75 | # Translations

76 | *.mo

77 | *.pot

78 |

79 | # Django stuff:

80 | *.log

81 | local_settings.py

82 | db.sqlite3

83 | db.sqlite3-journal

84 |

85 | # Flask stuff:

86 | instance/

87 | .webassets-cache

88 |

89 | # Scrapy stuff:

90 | .scrapy

91 |

92 | # Sphinx documentation

93 | docs/_build/

94 |

95 | # PyBuilder

96 | target/

97 |

98 | # Jupyter Notebook

99 | .ipynb_checkpoints

100 |

101 | # IPython

102 | profile_default/

103 | ipython_config.py

104 |

105 | # pyenv

106 | .python-version

107 |

108 | # pipenv

109 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

110 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

111 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

112 | # install all needed dependencies.

113 | #Pipfile.lock

114 |

115 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

116 | __pypackages__/

117 |

118 | # Celery stuff

119 | celerybeat-schedule

120 | celerybeat.pid

121 |

122 | # SageMath parsed files

123 | *.sage.py

124 |

125 | # Environments

126 | .env

127 | .venv

128 | env/

129 | venv/

130 | ENV/

131 | env.bak/

132 | venv.bak/

133 |

134 | # Spyder project settings

135 | .spyderproject

136 | .spyproject

137 |

138 | # Rope project settings

139 | .ropeproject

140 |

141 | # mkdocs documentation

142 | /site

143 |

144 | # mypy

145 | .mypy_cache/

146 | .dmypy.json

147 | dmypy.json

148 |

149 | # Pyre type checker

150 | .pyre/

151 | test.ipynb

152 | # vscode

153 | .vscode/

154 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include stablefusion/pages/*.py

2 | include stablefusion/data/*.txt

3 | include stablefusion/*.txt

4 | recursive-include stablefusion/scripts *

5 | recursive-include stablefusion/models *

6 | include OTHER_LICENSES

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | .PHONY: quality style

2 |

3 | quality:

4 | python -m black --check --line-length 119 --target-version py38 .

5 | python -m isort --check-only .

6 | python -m flake8 --max-line-length 119 .

7 |

8 | style:

9 | python -m black --line-length 119 --target-version py38 .

10 | python -m isort .

--------------------------------------------------------------------------------

/OTHER_LICENSES:

--------------------------------------------------------------------------------

1 | clip_interrogator licence

2 | for diffuzes/clip_interrogator.py

3 | for diffuzes/data/*.txt

4 | -------------------------

5 | MIT License

6 |

7 | Copyright (c) 2022 pharmapsychotic

8 |

9 | Permission is hereby granted, free of charge, to any person obtaining a copy

10 | of this software and associated documentation files (the "Software"), to deal

11 | in the Software without restriction, including without limitation the rights

12 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

13 | copies of the Software, and to permit persons to whom the Software is

14 | furnished to do so, subject to the following conditions:

15 |

16 | The above copyright notice and this permission notice shall be included in all

17 | copies or substantial portions of the Software.

18 |

19 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

20 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

21 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

22 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

23 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

24 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

25 | SOFTWARE.

26 |

27 | blip licence

28 | for diffuzes/blip.py

29 | ------------

30 | Copyright (c) 2022, Salesforce.com, Inc.

31 | All rights reserved.

32 |

33 | Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

34 |

35 | * Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

36 |

37 | * Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

38 |

39 | * Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

40 |

41 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

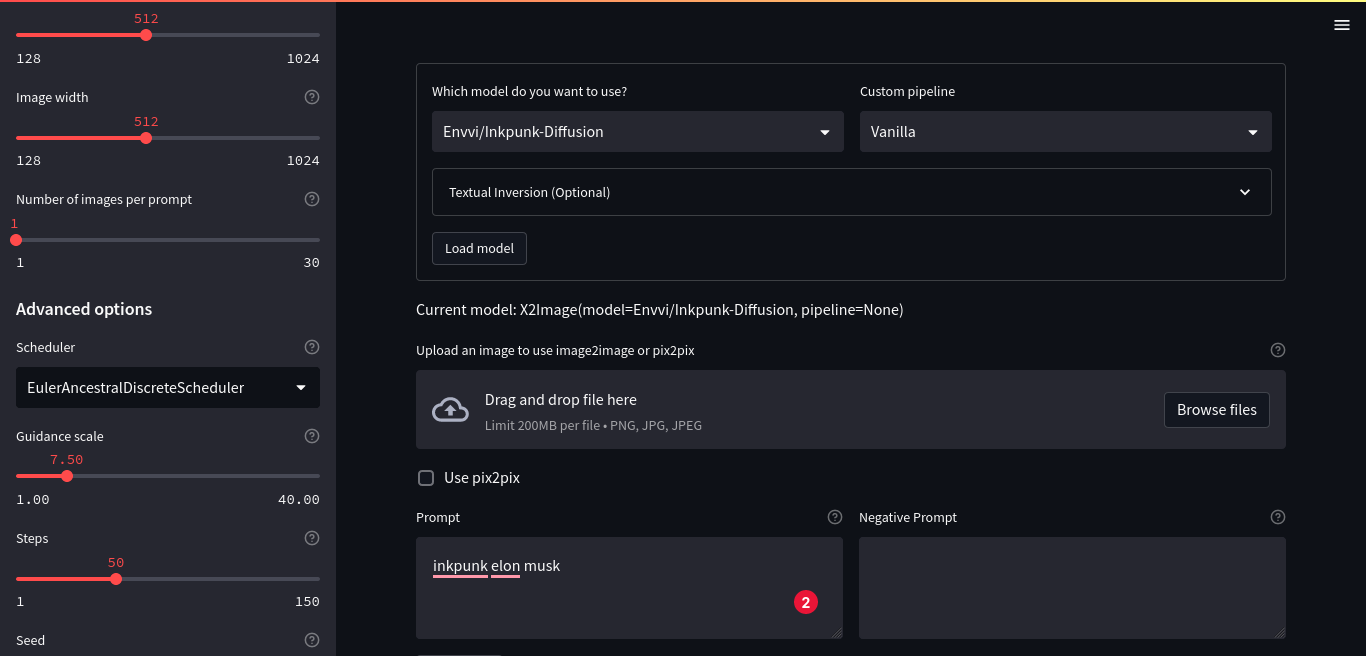

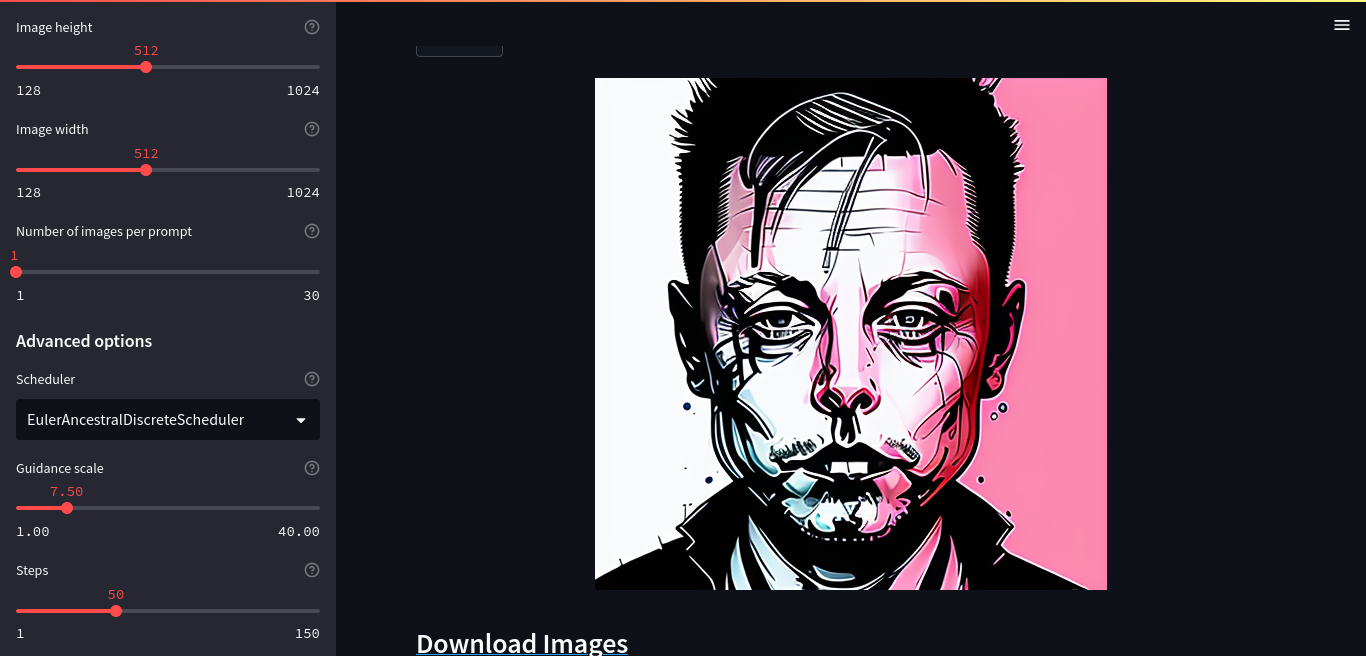

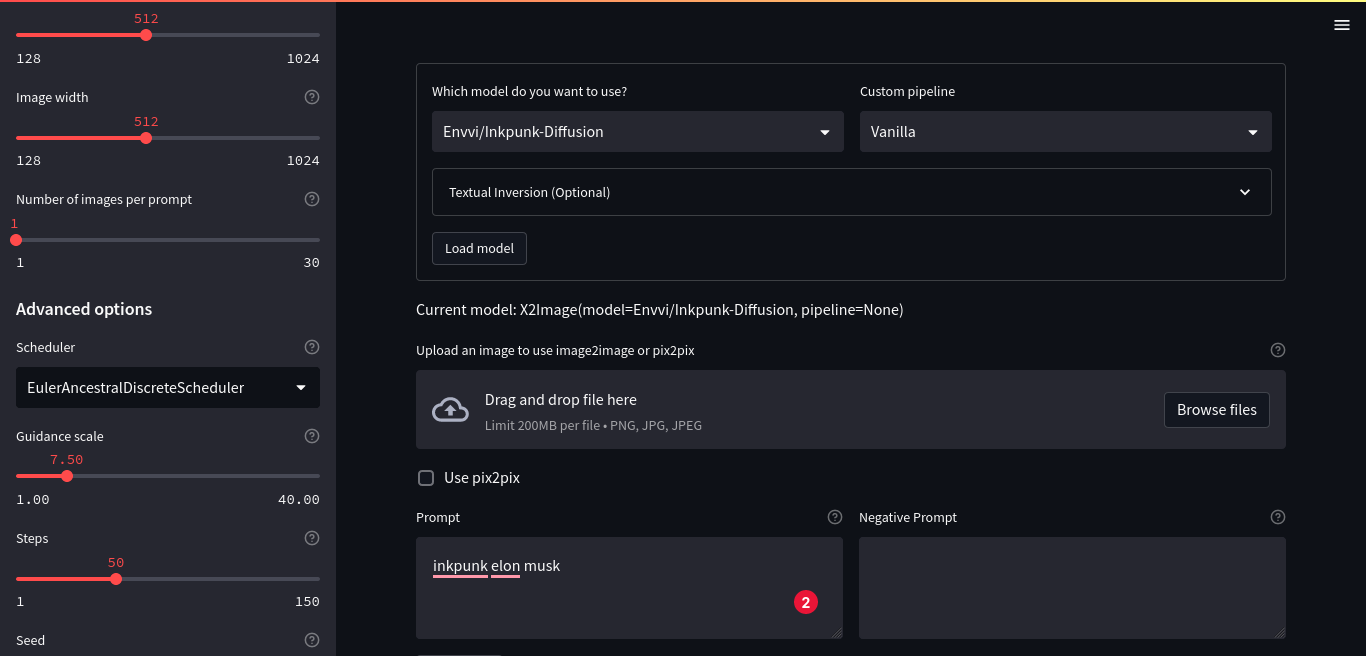

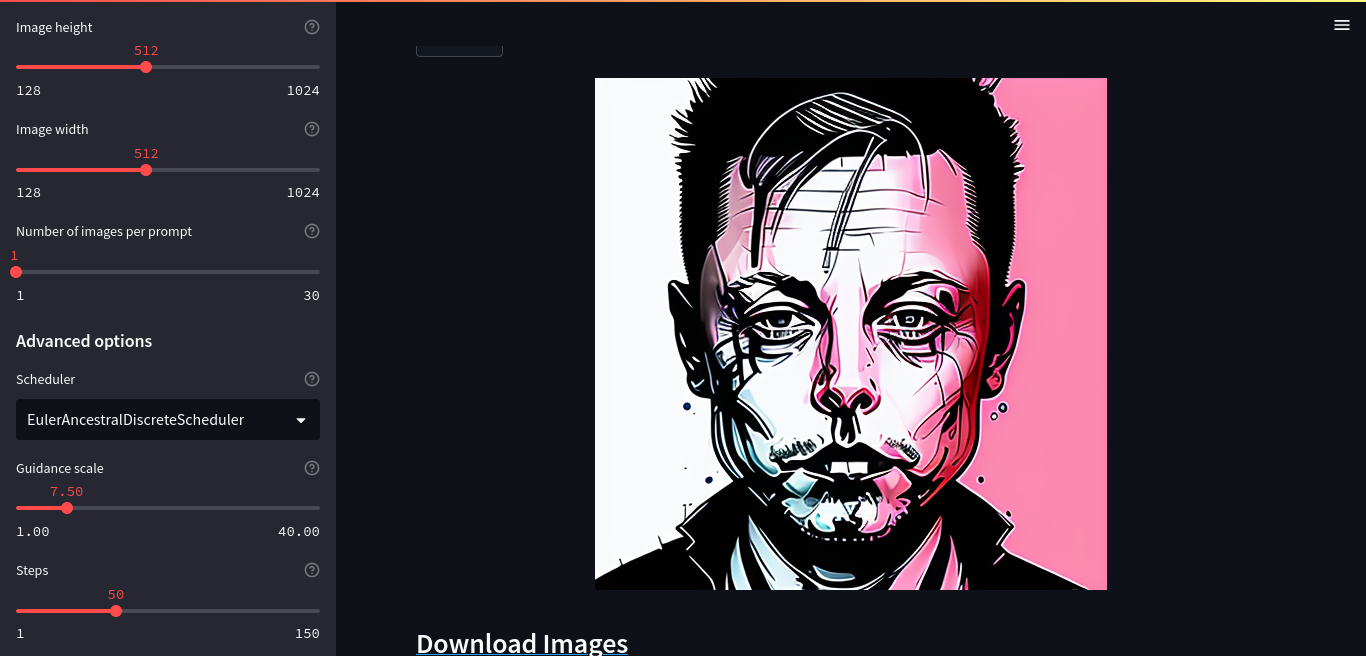

1 | # StableFusion

2 |

3 | A Web ui for **Stable Diffusion Models**.

4 |

5 | ## Update (Version 0.1.4)

6 |

7 | In this version, we've added two major features to the project:

8 |

9 | - **ControlNet Option**

10 | - **OpenPose Editor**

11 |

12 | We've also made the following improvements and bug fixes:

13 |

14 | - **Improved File Arrangement**: We've rearranged the files in the project to make it more organized and convenient for users to navigate.

15 | - **Fixed Random Seed Generator**: We've fixed a bug in the random seed generator that was causing incorrect results. This fix ensures that the project operates more accurately and reliably.

16 |

17 | We hope these updates will improve the overall functionality and user experience of the project. As always, please feel free to reach out to us if you have any questions or feedback.

18 |

19 |

20 |

21 |  22 |

23 |

24 |

25 |

26 |

27 |

28 | If something doesnt work as expected, or if you need some features which are not available, then create request using [github issues](https://github.com/NeuralRealm/StableFusion/issues)

29 |

30 |

31 | ## Features available in the app:

32 |

33 | - text to image

34 | - image to image

35 | - Inpainting

36 | - instruct pix2pix

37 | - textual inversion

38 | - ControlNet

39 | - OpenPose Editor

40 | - image info

41 | - Upscale Your Image

42 | - clip interrogator

43 | - Convert ckpt file to diffusers

44 | - Convert safetensors file to diffusers

45 | - Add your own diffusers model

46 | - more coming soon!

47 |

48 |

49 |

50 | ## Installation

51 |

52 | To install bleeding edge version of StableFusion, clone the repo and install it using pip.

53 |

54 | ```bash

55 | git clone https://github.com/NeuralRealm/StableFusion

56 | cd StableFusion

57 | pip install -e .

58 | ```

59 |

60 | Installation using pip:

61 |

62 | ```bash

63 | pip install stablefusion

64 | ```

65 |

66 | ## Usage

67 |

68 | ### Web App

69 | To run the web app, run the following command:

70 |

71 | For Local Host

72 | ```bash

73 | stablefusion app

74 | ```

75 | or

76 |

77 | For Public Shareable Link

78 | ```bash

79 | stablefusion app --port 10000 --ngrok_key YourNgrokAuthtoken --share

80 | ```

81 |

82 | ## All CLI Options for running the app:

83 |

84 | ```bash

85 | ❯ stablefusion app --help

86 | usage: stablefusion [] app [-h] [--output OUTPUT] [--share] [--port PORT] [--host HOST]

87 | [--device DEVICE] [--ngrok_key NGROK_KEY]

88 |

89 | ✨ Run stablefusion app

90 |

91 | optional arguments:

92 | -h, --help show this help message and exit

93 | --output OUTPUT Output path is optional, but if provided, all generations will automatically be saved to this

94 | path.

95 | --share Share the app

96 | --port PORT Port to run the app on

97 | --host HOST Host to run the app on

98 | --device DEVICE Device to use, e.g. cpu, cuda, cuda:0, mps (for m1 mac) etc.

99 | --ngrok_key NGROK_KEY

100 | Ngrok key to use for sharing the app. Only required if you want to share the app

101 | ```

102 |

103 |

104 | ## Using private models from huggingface hub

105 |

106 | If you want to use private models from huggingface hub, then you need to login using `huggingface-cli login` command.

107 |

108 | Note: You can also save your generations directly to huggingface hub if your output path points to a huggingface hub dataset repo and you have access to push to that repository. Thus, you will end up saving a lot of disk space.

109 |

110 | ## Acknowledgements

111 |

112 | I would like to express my gratitude to the following individuals and organizations for sharing their code, which formed the basis of the implementation used in this project:

113 |

114 | - [Tencent ARC](https://github.com/TencentARC) for their code for the [GFPGAN](https://github.com/TencentARC/GFPGAN) package, which was used for image super-resolution.

115 | - [LexKoin](https://github.com/LexKoin) for their code for the [Real-ESRGAN-UpScale](https://github.com/LexKoin/Real-ESRGAN-UpScale) package, which was used for image enhancement.

116 | - [Hugging Face](https://github.com/huggingface) for their code for the [diffusers](https://github.com/huggingface/diffusers) package, which was used for optimizing the model's parameters.

117 | - [Abhishek Thakur](https://github.com/abhishekkrthakur) for sharing his code for the [diffuzers](https://github.com/abhishekkrthakur/diffuzers) package, which was also used for optimizing the model's parameters.

118 |

119 | I am grateful for their contributions to the open source community, which made this project possible.

120 |

121 | ## Contributing

122 |

123 | StableFusion is an open-source project, and we welcome contributions from the community. Whether you're a developer, designer, or user, there are many ways you can help make this project better. Here are a few ways you can get involved:

124 |

125 | - **Report issues:** If you find a bug or have a feature request, please open an issue on our [GitHub repository](https://github.com/NeuralRealm/StableFusion/issues). We appreciate detailed bug reports and constructive feedback.

126 | - **Submit pull requests:** If you're interested in contributing code, we welcome pull requests for bug fixes, new features, and documentation improvements.

127 | - **Spread the word:** If you enjoy using StableFusion, please help us spread the word by sharing it with your friends, colleagues, and social media networks. We appreciate any support you can give us!

128 |

129 | ### We believe that open-source software is the future of technology, and we're excited to have you join us in making StableFusion a success. Thank you for your support!

130 |

--------------------------------------------------------------------------------

/diffuzers.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {},

7 | "outputs": [],

8 | "source": [

9 | "!pip3 install stablefusion -q"

10 | ]

11 | },

12 | {

13 | "cell_type": "code",

14 | "execution_count": 2,

15 | "metadata": {},

16 | "outputs": [

17 | {

18 | "name": "stdout",

19 | "output_type": "stream",

20 | "text": [

21 | "/bin/bash: line 1: diffuzers: command not found\n"

22 | ]

23 | }

24 | ],

25 | "source": [

26 | "!stablefusion app --port 10000 --ngrok_key YOUR_NGROK_AUTHTOKEN --share"

27 | ]

28 | }

29 | ],

30 | "metadata": {

31 | "kernelspec": {

32 | "display_name": "diffuzers",

33 | "language": "python",

34 | "name": "python3"

35 | },

36 | "language_info": {

37 | "codemirror_mode": {

38 | "name": "ipython",

39 | "version": 3

40 | },

41 | "file_extension": ".py",

42 | "mimetype": "text/x-python",

43 | "name": "python",

44 | "nbconvert_exporter": "python",

45 | "pygments_lexer": "ipython3",

46 | "version": "3.9.16"

47 | },

48 | "orig_nbformat": 4,

49 | "vscode": {

50 | "interpreter": {

51 | "hash": "e667c4130092153eeed1faae4ad5e1fb640895671448a3853c00b657af26211d"

52 | }

53 | }

54 | },

55 | "nbformat": 4,

56 | "nbformat_minor": 2

57 | }

58 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line, and also

5 | # from the environment for the first two.

6 | SPHINXOPTS ?=

7 | SPHINXBUILD ?= sphinx-build

8 | SOURCEDIR = .

9 | BUILDDIR = _build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help:

13 | @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

14 |

15 | .PHONY: help Makefile

16 |

17 | # Catch-all target: route all unknown targets to Sphinx using the new

18 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

19 | %: Makefile

20 | @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

21 |

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

1 | # Configuration file for the Sphinx documentation builder.

2 | #

3 | # This file only contains a selection of the most common options. For a full

4 | # list see the documentation:

5 | # https://www.sphinx-doc.org/en/master/usage/configuration.html

6 |

7 | # -- Path setup --------------------------------------------------------------

8 |

9 | # If extensions (or modules to document with autodoc) are in another directory,

10 | # add these directories to sys.path here. If the directory is relative to the

11 | # documentation root, use os.path.abspath to make it absolute, like shown here.

12 | #

13 | # import os

14 | # import sys

15 | # sys.path.insert(0, os.path.abspath('.'))

16 |

17 |

18 | # -- Project information -----------------------------------------------------

19 |

20 | project = "StableFusion: webapp and api for 🤗 diffusers"

21 | copyright = "2023, NeuralRealm"

22 | author = "NeuralRealm"

23 |

24 |

25 | # -- General configuration ---------------------------------------------------

26 |

27 | # Add any Sphinx extension module names here, as strings. They can be

28 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

29 | # ones.

30 | extensions = []

31 |

32 | # Add any paths that contain templates here, relative to this directory.

33 | templates_path = ["_templates"]

34 |

35 | # List of patterns, relative to source directory, that match files and

36 | # directories to ignore when looking for source files.

37 | # This pattern also affects html_static_path and html_extra_path.

38 | exclude_patterns = ["_build", "Thumbs.db", ".DS_Store"]

39 |

40 |

41 | # -- Options for HTML output -------------------------------------------------

42 |

43 | # The theme to use for HTML and HTML Help pages. See the documentation for

44 | # a list of builtin themes.

45 | #

46 | html_theme = "alabaster"

47 |

48 | # Add any paths that contain custom static files (such as style sheets) here,

49 | # relative to this directory. They are copied after the builtin static files,

50 | # so a file named "default.css" will overwrite the builtin "default.css".

51 | html_static_path = ["_static"]

52 |

--------------------------------------------------------------------------------

/docs/index.rst:

--------------------------------------------------------------------------------

1 | Welcome to StableFusion' documentation!

2 | ======================================================================

3 |

4 | Diffuzers offers web app and also api for 🤗 diffusers. Installation is very simple.

5 | You can install via pip:

6 |

7 | .. code-block:: bash

8 |

9 | pip install stablefusion

10 |

11 | .. toctree::

12 | :maxdepth: 2

13 | :caption: Contents:

14 |

15 |

16 |

17 | Indices and tables

18 | ==================

19 |

20 | * :ref:`genindex`

21 | * :ref:`modindex`

22 | * :ref:`search`

23 |

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=.

11 | set BUILDDIR=_build

12 |

13 | if "%1" == "" goto help

14 |

15 | %SPHINXBUILD% >NUL 2>NUL

16 | if errorlevel 9009 (

17 | echo.

18 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

19 | echo.installed, then set the SPHINXBUILD environment variable to point

20 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

21 | echo.may add the Sphinx directory to PATH.

22 | echo.

23 | echo.If you don't have Sphinx installed, grab it from

24 | echo.https://www.sphinx-doc.org/

25 | exit /b 1

26 | )

27 |

28 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

29 | goto end

30 |

31 | :help

32 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

33 |

34 | :end

35 | popd

36 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | accelerate==0.15.0

2 | basicsr>=1.4.2

3 | diffusers>=0.14.0

4 | facexlib>=0.2.5

5 | fairscale==0.4.4

6 | fastapi==0.88.0

7 | gfpgan>=1.3.7

8 | huggingface_hub>=0.11.1

9 | loguru==0.6.0

10 | open_clip_torch==2.9.1

11 | opencv-python

12 | protobuf==3.20

13 | pyngrok==5.2.1

14 | python-multipart==0.0.5

15 | realesrgan>=0.2.5

16 | streamlit==1.16.0

17 | streamlit-drawable-canvas==0.9.0

18 | st-clickable-images==0.0.3

19 | timm==0.4.12

20 | torch>=1.12.0

21 | torchvision>=0.13.0

22 | transformers==4.25.1

23 | uvicorn==0.15.0

24 | OmegaConf

25 | tqdl

26 | xformers

27 | bitsandbytes

28 | safetensors

29 | controlnet_aux

--------------------------------------------------------------------------------

/setup.cfg:

--------------------------------------------------------------------------------

1 | [metadata]

2 | version = attr: stablefusion.__version__

3 |

4 | [isort]

5 | ensure_newline_before_comments = True

6 | force_grid_wrap = 0

7 | include_trailing_comma = True

8 | line_length = 119

9 | lines_after_imports = 2

10 | multi_line_output = 3

11 | use_parentheses = True

12 |

13 | [flake8]

14 | ignore = E203, E501, W503

15 | max-line-length = 119

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | # Lint as: python3

2 | # pylint: enable=line-too-long

3 |

4 | from setuptools import find_packages, setup

5 |

6 |

7 | with open("README.md" , encoding='utf-8') as f:

8 | long_description = f.read()

9 |

10 | QUALITY_REQUIRE = [

11 | "black~=22.0",

12 | "isort==5.8.0",

13 | "flake8==3.9.2",

14 | "mypy==0.901",

15 | ]

16 |

17 | TEST_REQUIRE = ["pytest", "pytest-cov"]

18 |

19 | EXTRAS_REQUIRE = {

20 | "dev": QUALITY_REQUIRE,

21 | "quality": QUALITY_REQUIRE,

22 | "test": TEST_REQUIRE,

23 | "docs": [

24 | "recommonmark",

25 | "sphinx==3.1.2",

26 | "sphinx-markdown-tables",

27 | "sphinx-rtd-theme==0.4.3",

28 | "sphinx-copybutton",

29 | ],

30 | }

31 |

32 | with open("requirements.txt") as f:

33 | INSTALL_REQUIRES = f.read().splitlines()

34 |

35 | setup(

36 | name="stablefusion",

37 | description="StableFusion",

38 | long_description=long_description,

39 | long_description_content_type="text/markdown",

40 | author="NeuralRealm",

41 | url="https://github.com/NeuralRealm/StableFusion",

42 | packages=find_packages("."),

43 | entry_points={"console_scripts": ["stablefusion=stablefusion.cli.main:main"]},

44 | install_requires=INSTALL_REQUIRES,

45 | extras_require=EXTRAS_REQUIRE,

46 | python_requires=">=3.7",

47 | classifiers=[

48 | "Intended Audience :: Developers",

49 | "Intended Audience :: Education",

50 | "Intended Audience :: Science/Research",

51 | "License :: OSI Approved :: Apache Software License",

52 | "Operating System :: OS Independent",

53 | "Programming Language :: Python :: 3.8",

54 | "Programming Language :: Python :: 3.9",

55 | "Programming Language :: Python :: 3.10",

56 | "Topic :: Scientific/Engineering :: Artificial Intelligence",

57 | ],

58 | keywords="stablefusion",

59 | include_package_data=True,

60 | )

61 |

--------------------------------------------------------------------------------

/stablefusion/Home.py:

--------------------------------------------------------------------------------

1 | import argparse

2 |

3 | import streamlit as st

4 | from loguru import logger

5 |

6 | from stablefusion import utils

7 | from stablefusion.scripts.x2image import X2Image

8 | import ast

9 | import os

10 |

11 | def read_model_list():

12 |

13 | try:

14 | with open('{}/model_list.txt'.format(os.path.dirname(__file__)), 'r') as f:

15 | contents = f.read()

16 | except:

17 | with open('stablefusion/model_list.txt', 'r') as f:

18 | contents = f.read()

19 |

20 | model_list = ast.literal_eval(contents)

21 |

22 | return model_list

23 |

24 | def parse_args():

25 | parser = argparse.ArgumentParser()

26 | parser.add_argument(

27 | "--output",

28 | type=str,

29 | required=False,

30 | default=None,

31 | help="Output path",

32 | )

33 | parser.add_argument(

34 | "--device",

35 | type=str,

36 | required=True,

37 | help="Device to use, e.g. cpu, cuda, cuda:0, mps etc.",

38 | )

39 | return parser.parse_args()

40 |

41 |

42 | def x2img_app():

43 | with st.form("x2img_model_form"):

44 | col1, col2 = st.columns(2)

45 | with col1:

46 | model = st.selectbox(

47 | "Which model do you want to use?",

48 | options=read_model_list(),

49 | )

50 | with col2:

51 | custom_pipeline = st.selectbox(

52 | "Custom pipeline",

53 | options=[

54 | "Vanilla",

55 | "Long Prompt Weighting",

56 | ],

57 | index=0 if st.session_state.get("x2img_custom_pipeline") in (None, "Vanilla") else 1,

58 | )

59 |

60 | with st.expander("Textual Inversion (Optional)"):

61 | token_identifier = st.text_input(

62 | "Token identifier",

63 | placeholder=""

64 | if st.session_state.get("textual_inversion_token_identifier") is None

65 | else st.session_state.textual_inversion_token_identifier,

66 | )

67 | embeddings = st.text_input(

68 | "Embeddings",

69 | placeholder="https://huggingface.co/sd-concepts-library/axe-tattoo/resolve/main/learned_embeds.bin"

70 | if st.session_state.get("textual_inversion_embeddings") is None

71 | else st.session_state.textual_inversion_embeddings,

72 | )

73 | submit = st.form_submit_button("Load model")

74 |

75 | if submit:

76 | st.session_state.x2img_model = model

77 | st.session_state.x2img_custom_pipeline = custom_pipeline

78 | st.session_state.textual_inversion_token_identifier = token_identifier

79 | st.session_state.textual_inversion_embeddings = embeddings

80 | cpipe = "lpw_stable_diffusion" if custom_pipeline == "Long Prompt Weighting" else None

81 | with st.spinner("Loading model..."):

82 | x2img = X2Image(

83 | model=model,

84 | device=st.session_state.device,

85 | output_path=st.session_state.output_path,

86 | custom_pipeline=cpipe,

87 | token_identifier=token_identifier,

88 | embeddings_url=embeddings,

89 | )

90 | st.session_state.x2img = x2img

91 | if "x2img" in st.session_state:

92 | st.write(f"Current model: {st.session_state.x2img}")

93 | st.session_state.x2img.app()

94 |

95 |

96 | def run_app():

97 | utils.create_base_page()

98 | x2img_app()

99 |

100 |

101 | if __name__ == "__main__":

102 | args = parse_args()

103 | logger.info(f"Args: {args}")

104 | logger.info(st.session_state)

105 | st.session_state.device = args.device

106 | st.session_state.output_path = args.output

107 | run_app()

108 |

--------------------------------------------------------------------------------

/stablefusion/__init__.py:

--------------------------------------------------------------------------------

1 | import sys

2 |

3 | from loguru import logger

4 |

5 |

6 | logger.configure(handlers=[dict(sink=sys.stderr, format="> {level:<7} {message}")])

7 |

8 | __version__ = "0.1.4"

9 |

--------------------------------------------------------------------------------

/stablefusion/api/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NeuralRealm/StableFusion/9268d686f49b45194a2251c6728e74191f298654/stablefusion/api/__init__.py

--------------------------------------------------------------------------------

/stablefusion/api/main.py:

--------------------------------------------------------------------------------

1 | import io

2 | import os

3 |

4 | from fastapi import Depends, FastAPI, File, UploadFile

5 | from loguru import logger

6 | from PIL import Image

7 | from starlette.middleware.cors import CORSMiddleware

8 |

9 | from stablefusion.api.schemas import Img2ImgParams, ImgResponse, InpaintingParams, InstructPix2PixParams, Text2ImgParams

10 | from stablefusion.api.utils import convert_to_b64_list

11 | from stablefusion.scripts.inpainting import Inpainting

12 | from stablefusion.scripts.x2image import X2Image

13 |

14 |

15 | app = FastAPI(

16 | title="StableFusion api",

17 | license_info={

18 | "name": "Apache 2.0",

19 | "url": "https://www.apache.org/licenses/LICENSE-2.0.html",

20 | },

21 | )

22 | app.add_middleware(CORSMiddleware, allow_origins=["*"], allow_methods=["*"], allow_headers=["*"])

23 |

24 |

25 | @app.on_event("startup")

26 | async def startup_event():

27 |

28 | x2img_model = os.environ.get("X2IMG_MODEL")

29 | x2img_pipeline = os.environ.get("X2IMG_PIPELINE")

30 | inpainting_model = os.environ.get("INPAINTING_MODEL")

31 | device = os.environ.get("DEVICE")

32 | output_path = os.environ.get("OUTPUT_PATH")

33 | ti_identifier = os.environ.get("TOKEN_IDENTIFIER", "")

34 | ti_embeddings_url = os.environ.get("TOKEN_EMBEDDINGS_URL", "")

35 | logger.info("@@@@@ Starting Diffuzes API @@@@@ ")

36 | logger.info(f"Text2Image Model: {x2img_model}")

37 | logger.info(f"Text2Image Pipeline: {x2img_pipeline if x2img_pipeline is not None else 'Vanilla'}")

38 | logger.info(f"Inpainting Model: {inpainting_model}")

39 | logger.info(f"Device: {device}")

40 | logger.info(f"Output Path: {output_path}")

41 | logger.info(f"Token Identifier: {ti_identifier}")

42 | logger.info(f"Token Embeddings URL: {ti_embeddings_url}")

43 |

44 | logger.info("Loading x2img model...")

45 | if x2img_model is not None:

46 | app.state.x2img_model = X2Image(

47 | model=x2img_model,

48 | device=device,

49 | output_path=output_path,

50 | custom_pipeline=x2img_pipeline,

51 | token_identifier=ti_identifier,

52 | embeddings_url=ti_embeddings_url,

53 | )

54 | else:

55 | app.state.x2img_model = None

56 | logger.info("Loading inpainting model...")

57 | if inpainting_model is not None:

58 | app.state.inpainting_model = Inpainting(

59 | model=inpainting_model,

60 | device=device,

61 | output_path=output_path,

62 | )

63 | logger.info("API is ready to use!")

64 |

65 |

66 | @app.post("/text2img")

67 | async def text2img(params: Text2ImgParams) -> ImgResponse:

68 | logger.info(f"Params: {params}")

69 | if app.state.x2img_model is None:

70 | return {"error": "x2img model is not loaded"}

71 |

72 | images, _ = app.state.x2img_model.text2img_generate(

73 | params.prompt,

74 | num_images=params.num_images,

75 | steps=params.steps,

76 | seed=params.seed,

77 | negative_prompt=params.negative_prompt,

78 | scheduler=params.scheduler,

79 | image_size=(params.image_height, params.image_width),

80 | guidance_scale=params.guidance_scale,

81 | )

82 | base64images = convert_to_b64_list(images)

83 | return ImgResponse(images=base64images, metadata=params.dict())

84 |

85 |

86 | @app.post("/img2img")

87 | async def img2img(params: Img2ImgParams = Depends(), image: UploadFile = File(...)) -> ImgResponse:

88 | if app.state.x2img_model is None:

89 | return {"error": "x2img model is not loaded"}

90 | image = Image.open(io.BytesIO(image.file.read()))

91 | images, _ = app.state.x2img_model.img2img_generate(

92 | image=image,

93 | prompt=params.prompt,

94 | negative_prompt=params.negative_prompt,

95 | num_images=params.num_images,

96 | steps=params.steps,

97 | seed=params.seed,

98 | scheduler=params.scheduler,

99 | guidance_scale=params.guidance_scale,

100 | strength=params.strength,

101 | )

102 | base64images = convert_to_b64_list(images)

103 | return ImgResponse(images=base64images, metadata=params.dict())

104 |

105 |

106 | @app.post("/instruct-pix2pix")

107 | async def instruct_pix2pix(params: InstructPix2PixParams = Depends(), image: UploadFile = File(...)) -> ImgResponse:

108 | if app.state.x2img_model is None:

109 | return {"error": "x2img model is not loaded"}

110 | image = Image.open(io.BytesIO(image.file.read()))

111 | images, _ = app.state.x2img_model.pix2pix_generate(

112 | image=image,

113 | prompt=params.prompt,

114 | negative_prompt=params.negative_prompt,

115 | num_images=params.num_images,

116 | steps=params.steps,

117 | seed=params.seed,

118 | scheduler=params.scheduler,

119 | guidance_scale=params.guidance_scale,

120 | image_guidance_scale=params.image_guidance_scale,

121 | )

122 | base64images = convert_to_b64_list(images)

123 | return ImgResponse(images=base64images, metadata=params.dict())

124 |

125 |

126 | @app.post("/inpainting")

127 | async def inpainting(

128 | params: InpaintingParams = Depends(), image: UploadFile = File(...), mask: UploadFile = File(...)

129 | ) -> ImgResponse:

130 | if app.state.inpainting_model is None:

131 | return {"error": "inpainting model is not loaded"}

132 | image = Image.open(io.BytesIO(image.file.read()))

133 | mask = Image.open(io.BytesIO(mask.file.read()))

134 | images, _ = app.state.inpainting_model.generate_image(

135 | image=image,

136 | mask=mask,

137 | prompt=params.prompt,

138 | negative_prompt=params.negative_prompt,

139 | scheduler=params.scheduler,

140 | height=params.image_height,

141 | width=params.image_width,

142 | num_images=params.num_images,

143 | guidance_scale=params.guidance_scale,

144 | steps=params.steps,

145 | seed=params.seed,

146 | )

147 | base64images = convert_to_b64_list(images)

148 | return ImgResponse(images=base64images, metadata=params.dict())

149 |

150 |

151 | @app.get("/")

152 | def read_root():

153 | return {"Hello": "World"}

154 |

--------------------------------------------------------------------------------

/stablefusion/api/schemas.py:

--------------------------------------------------------------------------------

1 | from typing import Dict, List

2 |

3 | from pydantic import BaseModel, Field

4 |

5 |

6 | class Text2ImgParams(BaseModel):

7 | prompt: str = Field(..., description="Text prompt for the model")

8 | negative_prompt: str = Field(None, description="Negative text prompt for the model")

9 | scheduler: str = Field("EulerAncestralDiscreteScheduler", description="Scheduler to use for the model")

10 | image_height: int = Field(512, description="Image height")

11 | image_width: int = Field(512, description="Image width")

12 | num_images: int = Field(1, description="Number of images to generate")

13 | guidance_scale: float = Field(7, description="Guidance scale")

14 | steps: int = Field(50, description="Number of steps to run the model for")

15 | seed: int = Field(42, description="Seed for the model")

16 |

17 |

18 | class Img2ImgParams(BaseModel):

19 | prompt: str = Field(..., description="Text prompt for the model")

20 | negative_prompt: str = Field(None, description="Negative text prompt for the model")

21 | scheduler: str = Field("EulerAncestralDiscreteScheduler", description="Scheduler to use for the model")

22 | strength: float = Field(0.7, description="Strength")

23 | num_images: int = Field(1, description="Number of images to generate")

24 | guidance_scale: float = Field(7, description="Guidance scale")

25 | steps: int = Field(50, description="Number of steps to run the model for")

26 | seed: int = Field(42, description="Seed for the model")

27 |

28 |

29 | class InstructPix2PixParams(BaseModel):

30 | prompt: str = Field(..., description="Text prompt for the model")

31 | negative_prompt: str = Field(None, description="Negative text prompt for the model")

32 | scheduler: str = Field("EulerAncestralDiscreteScheduler", description="Scheduler to use for the model")

33 | num_images: int = Field(1, description="Number of images to generate")

34 | guidance_scale: float = Field(7, description="Guidance scale")

35 | image_guidance_scale: float = Field(1.5, description="Image guidance scale")

36 | steps: int = Field(50, description="Number of steps to run the model for")

37 | seed: int = Field(42, description="Seed for the model")

38 |

39 |

40 | class ImgResponse(BaseModel):

41 | images: List[str] = Field(..., description="List of images in base64 format")

42 | metadata: Dict = Field(..., description="Metadata")

43 |

44 |

45 | class InpaintingParams(BaseModel):

46 | prompt: str = Field(..., description="Text prompt for the model")

47 | negative_prompt: str = Field(None, description="Negative text prompt for the model")

48 | scheduler: str = Field("EulerAncestralDiscreteScheduler", description="Scheduler to use for the model")

49 | image_height: int = Field(512, description="Image height")

50 | image_width: int = Field(512, description="Image width")

51 | num_images: int = Field(1, description="Number of images to generate")

52 | guidance_scale: float = Field(7, description="Guidance scale")

53 | steps: int = Field(50, description="Number of steps to run the model for")

54 | seed: int = Field(42, description="Seed for the model")

55 |

--------------------------------------------------------------------------------

/stablefusion/api/utils.py:

--------------------------------------------------------------------------------

1 | import base64

2 | import io

3 |

4 |

5 | def convert_to_b64_list(images):

6 | base64images = []

7 | for image in images:

8 | buf = io.BytesIO()

9 | image.save(buf, format="PNG")

10 | byte_im = base64.b64encode(buf.getvalue())

11 | base64images.append(byte_im)

12 | return base64images

13 |

--------------------------------------------------------------------------------

/stablefusion/cli/__init__.py:

--------------------------------------------------------------------------------

1 | from abc import ABC, abstractmethod

2 | from argparse import ArgumentParser

3 |

4 |

5 | class BaseStableFusionCommand(ABC):

6 | @staticmethod

7 | @abstractmethod

8 | def register_subcommand(parser: ArgumentParser):

9 | raise NotImplementedError()

10 |

11 | @abstractmethod

12 | def run(self):

13 | raise NotImplementedError()

14 |

--------------------------------------------------------------------------------

/stablefusion/cli/main.py:

--------------------------------------------------------------------------------

1 | import argparse

2 |

3 | from .. import __version__

4 | from .run_api import RunStableFusionAPICommand

5 | from .run_app import RunStableFusionAppCommand

6 |

7 |

8 | def main():

9 | parser = argparse.ArgumentParser(

10 | "StableFusion CLI",

11 | usage="stablefusion []",

12 | epilog="For more information about a command, run: `stablefusion --help`",

13 | )

14 | parser.add_argument("--version", "-v", help="Display stablefusion version", action="store_true")

15 | commands_parser = parser.add_subparsers(help="commands")

16 |

17 | # Register commands

18 | RunStableFusionAppCommand.register_subcommand(commands_parser)

19 | #RunStableFusionAPICommand.register_subcommand(commands_parser)

20 |

21 | args = parser.parse_args()

22 |

23 | if args.version:

24 | print(__version__)

25 | exit(0)

26 |

27 | if not hasattr(args, "func"):

28 | parser.print_help()

29 | exit(1)

30 |

31 | command = args.func(args)

32 | command.run()

33 |

34 |

35 | if __name__ == "__main__":

36 | main()

37 |

--------------------------------------------------------------------------------

/stablefusion/cli/run_api.py:

--------------------------------------------------------------------------------

1 | import subprocess

2 | from argparse import ArgumentParser

3 |

4 | import torch

5 |

6 | from . import BaseStableFusionCommand

7 |

8 |

9 | def run_api_command_factory(args):

10 | return RunStableFusionAPICommand(

11 | args.output,

12 | args.port,

13 | args.host,

14 | args.device,

15 | args.workers,

16 | args.ssl_certfile,

17 | args.ssl_keyfile,

18 | )

19 |

20 |

21 | class RunStableFusionAPICommand(BaseStableFusionCommand):

22 | @staticmethod

23 | def register_subcommand(parser: ArgumentParser):

24 | run_api_parser = parser.add_parser(

25 | "api",

26 | description="✨ Run StableFusion api",

27 | )

28 | run_api_parser.add_argument(

29 | "--output",

30 | type=str,

31 | required=False,

32 | help="Output path is optional, but if provided, all generations will automatically be saved to this path.",

33 | )

34 | run_api_parser.add_argument(

35 | "--port",

36 | type=int,

37 | default=10000,

38 | help="Port to run the app on",

39 | required=False,

40 | )

41 | run_api_parser.add_argument(

42 | "--host",

43 | type=str,

44 | default="127.0.0.1",

45 | help="Host to run the app on",

46 | required=False,

47 | )

48 | run_api_parser.add_argument(

49 | "--device",

50 | type=str,

51 | required=False,

52 | help="Device to use, e.g. cpu, cuda, cuda:0, mps (for m1 mac) etc.",

53 | )

54 | run_api_parser.add_argument(

55 | "--workers",

56 | type=int,

57 | required=False,

58 | default=1,

59 | help="Number of workers to use",

60 | )

61 | run_api_parser.add_argument(

62 | "--ssl_certfile",

63 | type=str,

64 | required=False,

65 | help="the path to your ssl cert",

66 | )

67 | run_api_parser.add_argument(

68 | "--ssl_keyfile",

69 | type=str,

70 | required=False,

71 | help="the path to your ssl key",

72 | )

73 | run_api_parser.set_defaults(func=run_api_command_factory)

74 |

75 | def __init__(self, output, port, host, device, workers, ssl_certfile, ssl_keyfile):

76 | self.output = output

77 | self.port = port

78 | self.host = host

79 | self.device = device

80 | self.workers = workers

81 | self.ssl_certfile = ssl_certfile

82 | self.ssl_keyfile = ssl_keyfile

83 |

84 | if self.device is None:

85 | self.device = "cuda" if torch.cuda.is_available() else "cpu"

86 |

87 | self.port = str(self.port)

88 | self.workers = str(self.workers)

89 |

90 | def run(self):

91 | cmd = [

92 | "uvicorn",

93 | "stablefusion.api.main:app",

94 | "--host",

95 | self.host,

96 | "--port",

97 | self.port,

98 | "--workers",

99 | self.workers,

100 | ]

101 | if self.ssl_certfile is not None:

102 | cmd.extend(["--ssl-certfile", self.ssl_certfile])

103 | if self.ssl_keyfile is not None:

104 | cmd.extend(["--ssl-keyfile", self.ssl_keyfile])

105 |

106 | proc = subprocess.Popen(

107 | cmd,

108 | stdout=subprocess.PIPE,

109 | stderr=subprocess.STDOUT,

110 | shell=False,

111 | universal_newlines=True,

112 | bufsize=1,

113 | )

114 | with proc as p:

115 | try:

116 | for line in p.stdout:

117 | print(line, end="")

118 | except KeyboardInterrupt:

119 | print("Killing api")

120 | p.kill()

121 | p.wait()

122 | raise

123 |

--------------------------------------------------------------------------------

/stablefusion/cli/run_app.py:

--------------------------------------------------------------------------------

1 | import subprocess

2 | from argparse import ArgumentParser

3 |

4 | import torch

5 | from pyngrok import ngrok

6 |

7 | from . import BaseStableFusionCommand

8 |

9 |

10 | def run_app_command_factory(args):

11 | return RunStableFusionAppCommand(

12 | args.output,

13 | args.share,

14 | args.port,

15 | args.host,

16 | args.device,

17 | args.ngrok_key,

18 | )

19 |

20 |

21 | class RunStableFusionAppCommand(BaseStableFusionCommand):

22 | @staticmethod

23 | def register_subcommand(parser: ArgumentParser):

24 | run_app_parser = parser.add_parser(

25 | "app",

26 | description="✨ Run stablefusion app",

27 | )

28 | run_app_parser.add_argument(

29 | "--output",

30 | type=str,

31 | required=False,

32 | help="Output path is optional, but if provided, all generations will automatically be saved to this path.",

33 | )

34 | run_app_parser.add_argument(

35 | "--share",

36 | action="store_true",

37 | help="Share the app",

38 | )

39 | run_app_parser.add_argument(

40 | "--port",

41 | type=int,

42 | default=10000,

43 | help="Port to run the app on",

44 | required=False,

45 | )

46 | run_app_parser.add_argument(

47 | "--host",

48 | type=str,

49 | default="127.0.0.1",

50 | help="Host to run the app on",

51 | required=False,

52 | )

53 | run_app_parser.add_argument(

54 | "--device",

55 | type=str,

56 | required=False,

57 | help="Device to use, e.g. cpu, cuda, cuda:0, mps (for m1 mac) etc.",

58 | )

59 | run_app_parser.add_argument(

60 | "--ngrok_key",

61 | type=str,

62 | required=False,

63 | help="Ngrok key to use for sharing the app. Only required if you want to share the app",

64 | )

65 |

66 | run_app_parser.set_defaults(func=run_app_command_factory)

67 |

68 | def __init__(self, output, share, port, host, device, ngrok_key):

69 | self.output = output

70 | self.share = share

71 | self.port = port

72 | self.host = host

73 | self.device = device

74 | self.ngrok_key = ngrok_key

75 |

76 | if self.device is None:

77 | self.device = "cuda" if torch.cuda.is_available() else "cpu"

78 |

79 | if self.share:

80 | if self.ngrok_key is None:

81 | raise ValueError(

82 | "ngrok key is required if you want to share the app. Get it for free from https://dashboard.ngrok.com/get-started/your-authtoken"

83 | )

84 |

85 | def run(self):

86 | # from ..app import stablefusion

87 |

88 | # print(self.share)

89 | # app = stablefusion(self.model, self.output).app()

90 | # app.launch(show_api=False, share=self.share, server_port=self.port, server_name=self.host)

91 | import os

92 |

93 | dirname = os.path.dirname(__file__)

94 | filename = os.path.join(dirname, "..", "Home.py")

95 | cmd = [

96 | "streamlit",

97 | "run",

98 | filename,

99 | "--browser.gatherUsageStats",

100 | "false",

101 | "--browser.serverAddress",

102 | self.host,

103 | "--server.port",

104 | str(self.port),

105 | "--theme.base",

106 | "dark",

107 | "--",

108 | "--device",

109 | self.device,

110 | ]

111 | if self.output is not None:

112 | cmd.extend(["--output", self.output])

113 |

114 | if self.share:

115 | ngrok.set_auth_token(self.ngrok_key)

116 | public_url = ngrok.connect(self.port).public_url

117 | print(f"Sharing app at {public_url}")

118 |

119 | proc = subprocess.Popen(

120 | cmd,

121 | stdout=subprocess.PIPE,

122 | stderr=subprocess.STDOUT,

123 | shell=False,

124 | universal_newlines=True,

125 | bufsize=1,

126 | )

127 | with proc as p:

128 | try:

129 | for line in p.stdout:

130 | print(line, end="")

131 | except KeyboardInterrupt:

132 | print("Killing streamlit app")

133 | p.kill()

134 | if self.share:

135 | print("Killing ngrok tunnel")

136 | ngrok.kill()

137 | p.wait()

138 | raise

139 |

--------------------------------------------------------------------------------

/stablefusion/data/mediums.txt:

--------------------------------------------------------------------------------

1 | a 3D render

2 | a black and white photo

3 | a bronze sculpture

4 | a cartoon

5 | a cave painting

6 | a character portrait

7 | a charcoal drawing

8 | a child's drawing

9 | a color pencil sketch

10 | a colorized photo

11 | a comic book panel

12 | a computer rendering

13 | a cross stitch

14 | a cubist painting

15 | a detailed drawing

16 | a detailed matte painting

17 | a detailed painting

18 | a diagram

19 | a digital painting

20 | a digital rendering

21 | a drawing

22 | a fine art painting

23 | a flemish Baroque

24 | a gouache

25 | a hologram

26 | a hyperrealistic painting

27 | a jigsaw puzzle

28 | a low poly render

29 | a macro photograph

30 | a manga drawing

31 | a marble sculpture

32 | a matte painting

33 | a microscopic photo

34 | a mid-nineteenth century engraving

35 | a minimalist painting

36 | a mosaic

37 | a painting

38 | a pastel

39 | a pencil sketch

40 | a photo

41 | a photocopy

42 | a photorealistic painting

43 | a picture

44 | a pointillism painting

45 | a polaroid photo

46 | a pop art painting

47 | a portrait

48 | a poster

49 | a raytraced image

50 | a renaissance painting

51 | a screenprint

52 | a screenshot

53 | a silk screen

54 | a sketch

55 | a statue

56 | a still life

57 | a stipple

58 | a stock photo

59 | a storybook illustration

60 | a surrealist painting

61 | a surrealist sculpture

62 | a tattoo

63 | a tilt shift photo

64 | a watercolor painting

65 | a wireframe diagram

66 | a woodcut

67 | an abstract drawing

68 | an abstract painting

69 | an abstract sculpture

70 | an acrylic painting

71 | an airbrush painting

72 | an album cover

73 | an ambient occlusion render

74 | an anime drawing

75 | an art deco painting

76 | an art deco sculpture

77 | an engraving

78 | an etching

79 | an illustration of

80 | an impressionist painting

81 | an ink drawing

82 | an oil on canvas painting

83 | an oil painting

84 | an ultrafine detailed painting

85 | chalk art

86 | computer graphics

87 | concept art

88 | cyberpunk art

89 | digital art

90 | egyptian art

91 | graffiti art

92 | lineart

93 | pixel art

94 | poster art

95 | vector art

96 |

--------------------------------------------------------------------------------

/stablefusion/data/movements.txt:

--------------------------------------------------------------------------------

1 | abstract art

2 | abstract expressionism

3 | abstract illusionism

4 | academic art

5 | action painting

6 | aestheticism

7 | afrofuturism

8 | altermodern

9 | american barbizon school

10 | american impressionism

11 | american realism

12 | american romanticism

13 | american scene painting

14 | analytical art

15 | antipodeans

16 | arabesque

17 | arbeitsrat für kunst

18 | art & language

19 | art brut

20 | art deco

21 | art informel

22 | art nouveau

23 | art photography

24 | arte povera

25 | arts and crafts movement

26 | ascii art

27 | ashcan school

28 | assemblage

29 | australian tonalism

30 | auto-destructive art

31 | barbizon school

32 | baroque

33 | bauhaus

34 | bengal school of art

35 | berlin secession

36 | black arts movement

37 | brutalism

38 | classical realism

39 | cloisonnism

40 | cobra

41 | color field

42 | computer art

43 | conceptual art

44 | concrete art

45 | constructivism

46 | context art

47 | crayon art

48 | crystal cubism

49 | cubism

50 | cubo-futurism

51 | cynical realism

52 | dada

53 | danube school

54 | dau-al-set

55 | de stijl

56 | deconstructivism

57 | digital art

58 | ecological art

59 | environmental art

60 | excessivism

61 | expressionism

62 | fantastic realism

63 | fantasy art

64 | fauvism

65 | feminist art

66 | figuration libre

67 | figurative art

68 | figurativism

69 | fine art

70 | fluxus

71 | folk art

72 | funk art

73 | furry art

74 | futurism

75 | generative art

76 | geometric abstract art

77 | german romanticism

78 | gothic art

79 | graffiti

80 | gutai group

81 | happening

82 | harlem renaissance

83 | heidelberg school

84 | holography

85 | hudson river school

86 | hurufiyya

87 | hypermodernism

88 | hyperrealism

89 | impressionism

90 | incoherents

91 | institutional critique

92 | interactive art

93 | international gothic

94 | international typographic style

95 | kinetic art

96 | kinetic pointillism

97 | kitsch movement

98 | land art

99 | les automatistes

100 | les nabis

101 | letterism

102 | light and space

103 | lowbrow

104 | lyco art

105 | lyrical abstraction

106 | magic realism

107 | magical realism

108 | mail art

109 | mannerism

110 | massurrealism

111 | maximalism

112 | metaphysical painting

113 | mingei

114 | minimalism

115 | modern european ink painting

116 | modernism

117 | modular constructivism

118 | naive art

119 | naturalism

120 | neo-dada

121 | neo-expressionism

122 | neo-fauvism

123 | neo-figurative

124 | neo-primitivism

125 | neo-romanticism

126 | neoclassicism

127 | neogeo

128 | neoism

129 | neoplasticism

130 | net art

131 | new objectivity

132 | new sculpture

133 | northwest school

134 | nuclear art

135 | objective abstraction

136 | op art

137 | optical illusion

138 | orphism

139 | panfuturism

140 | paris school

141 | photorealism

142 | pixel art

143 | plasticien

144 | plein air

145 | pointillism

146 | pop art

147 | pop surrealism

148 | post-impressionism

149 | postminimalism

150 | pre-raphaelitism

151 | precisionism

152 | primitivism

153 | private press

154 | process art

155 | psychedelic art

156 | purism

157 | qajar art

158 | quito school

159 | rasquache

160 | rayonism

161 | realism

162 | regionalism

163 | remodernism

164 | renaissance

165 | retrofuturism

166 | rococo

167 | romanesque

168 | romanticism

169 | samikshavad

170 | serial art

171 | shin hanga

172 | shock art

173 | socialist realism

174 | sots art

175 | space art

176 | street art

177 | stuckism

178 | sumatraism

179 | superflat

180 | suprematism

181 | surrealism

182 | symbolism

183 | synchromism

184 | synthetism

185 | sōsaku hanga

186 | tachisme

187 | temporary art

188 | tonalism

189 | toyism

190 | transgressive art

191 | ukiyo-e

192 | underground comix

193 | unilalianism

194 | vancouver school

195 | vanitas

196 | verdadism

197 | video art

198 | viennese actionism

199 | visual art

200 | vorticism

201 |

--------------------------------------------------------------------------------

/stablefusion/model_list.txt:

--------------------------------------------------------------------------------

1 | ['runwayml/stable-diffusion-v1-5', 'stabilityai/stable-diffusion-2-1', 'Linaqruf/anything-v3.0', 'Envvi/Inkpunk-Diffusion', 'prompthero/openjourney', 'darkstorm2150/Protogen_v2.2_Official_Release', 'darkstorm2150/Protogen_x3.4_Official_Release']

--------------------------------------------------------------------------------

/stablefusion/models/ckpt_interference/t:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NeuralRealm/StableFusion/9268d686f49b45194a2251c6728e74191f298654/stablefusion/models/ckpt_interference/t

--------------------------------------------------------------------------------

/stablefusion/models/cpkt_models/t:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NeuralRealm/StableFusion/9268d686f49b45194a2251c6728e74191f298654/stablefusion/models/cpkt_models/t

--------------------------------------------------------------------------------

/stablefusion/models/diffusion_models/t.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NeuralRealm/StableFusion/9268d686f49b45194a2251c6728e74191f298654/stablefusion/models/diffusion_models/t.txt

--------------------------------------------------------------------------------

/stablefusion/models/realesrgan/inference_realesrgan.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import cv2

3 | import glob

4 | import os

5 | from basicsr.archs.rrdbnet_arch import RRDBNet

6 | from basicsr.utils.download_util import load_file_from_url

7 | import tempfile

8 | from realesrgan import RealESRGANer

9 | from realesrgan.archs.srvgg_arch import SRVGGNetCompact

10 | import numpy as np

11 | from stablefusion import utils

12 | import streamlit as st

13 | from PIL import Image

14 | from io import BytesIO

15 | import base64

16 | import datetime

17 |

18 | def main(model_name, denoise_strength, tile, tile_pad, pre_pad, fp32, gpu_id, face_enhance, outscale, input_image, model_path):

19 | # determine models according to model names

20 | model_name = model_name.split('.')[0]

21 | if model_name == 'RealESRGAN_x4plus': # x4 RRDBNet model

22 | model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=4)

23 | netscale = 4

24 | file_url = ['https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth']

25 | elif model_name == 'RealESRNet_x4plus': # x4 RRDBNet model

26 | model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=4)

27 | netscale = 4

28 | file_url = ['https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.1/RealESRNet_x4plus.pth']

29 | elif model_name == 'RealESRGAN_x4plus_anime_6B': # x4 RRDBNet model with 6 blocks

30 | model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=6, num_grow_ch=32, scale=4)

31 | netscale = 4

32 | file_url = ['https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth']

33 | elif model_name == 'RealESRGAN_x2plus': # x2 RRDBNet model

34 | model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=2)

35 | netscale = 2

36 | file_url = ['https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.1/RealESRGAN_x2plus.pth']

37 | elif model_name == 'realesr-animevideov3': # x4 VGG-style model (XS size)

38 | model = SRVGGNetCompact(num_in_ch=3, num_out_ch=3, num_feat=64, num_conv=16, upscale=4, act_type='prelu')

39 | netscale = 4

40 | file_url = ['https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-animevideov3.pth']

41 | elif model_name == 'realesr-general-x4v3': # x4 VGG-style model (S size)

42 | model = SRVGGNetCompact(num_in_ch=3, num_out_ch=3, num_feat=64, num_conv=32, upscale=4, act_type='prelu')

43 | netscale = 4

44 | file_url = [

45 | 'https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-general-wdn-x4v3.pth',

46 | 'https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-general-x4v3.pth'

47 | ]

48 |

49 | # determine model paths

50 | if model_path is not None:

51 | model_path = model_path

52 | else:

53 | model_path = os.path.join('weights', model_name + '.pth')

54 | if not os.path.isfile(model_path):

55 | ROOT_DIR = os.path.dirname(os.path.abspath(__file__))

56 | for url in file_url:

57 | # model_path will be updated

58 | model_path = load_file_from_url(

59 | url=url, model_dir=os.path.join(ROOT_DIR, 'weights'), progress=True, file_name=None)

60 |

61 | # use dni to control the denoise strength

62 | dni_weight = None

63 | if model_name == 'realesr-general-x4v3' and denoise_strength != 1:

64 | wdn_model_path = model_path.replace('realesr-general-x4v3', 'realesr-general-wdn-x4v3')

65 | model_path = [model_path, wdn_model_path]

66 | dni_weight = [denoise_strength, 1 - denoise_strength]

67 |

68 | # restorer

69 | upsampler = RealESRGANer(

70 | scale=netscale,

71 | model_path=model_path,

72 | dni_weight=dni_weight,

73 | model=model,

74 | tile=tile,

75 | tile_pad=tile_pad,

76 | pre_pad=pre_pad,

77 | half=not fp32,

78 | gpu_id=gpu_id)

79 |

80 | if face_enhance: # Use GFPGAN for face enhancement

81 | from gfpgan import GFPGANer

82 | face_enhancer = GFPGANer(

83 | model_path='https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth',

84 | upscale=outscale,

85 | arch='clean',

86 | channel_multiplier=2,

87 | bg_upsampler=upsampler)

88 |

89 |

90 |

91 | image_array = np.asarray(bytearray(input_image.read()), dtype=np.uint8)

92 |

93 | img = cv2.imdecode(image_array, cv2.IMREAD_UNCHANGED)

94 |

95 |

96 | try:

97 | if face_enhance:

98 | _, _, output = face_enhancer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

99 | else:

100 | output, _ = upsampler.enhance(img, outscale=outscale)

101 |

102 |

103 | img = cv2.cvtColor(output, cv2.COLOR_BGR2RGB)

104 | st.image(img)

105 |

106 | output_img = Image.fromarray(img)

107 | buffered = BytesIO()

108 | output_img.save(buffered, format="PNG")

109 | img_str = base64.b64encode(buffered.getvalue()).decode()

110 | now = datetime.datetime.now()

111 | formatted_date_time = now.strftime("%Y-%m-%d_%H_%M_%S")

112 | href = f'

22 |

23 |

24 |

25 |

26 |

27 |

28 | If something doesnt work as expected, or if you need some features which are not available, then create request using [github issues](https://github.com/NeuralRealm/StableFusion/issues)

29 |

30 |

31 | ## Features available in the app:

32 |

33 | - text to image

34 | - image to image

35 | - Inpainting

36 | - instruct pix2pix

37 | - textual inversion

38 | - ControlNet

39 | - OpenPose Editor

40 | - image info

41 | - Upscale Your Image

42 | - clip interrogator

43 | - Convert ckpt file to diffusers

44 | - Convert safetensors file to diffusers

45 | - Add your own diffusers model

46 | - more coming soon!

47 |

48 |

49 |

50 | ## Installation

51 |

52 | To install bleeding edge version of StableFusion, clone the repo and install it using pip.

53 |

54 | ```bash

55 | git clone https://github.com/NeuralRealm/StableFusion

56 | cd StableFusion

57 | pip install -e .

58 | ```

59 |

60 | Installation using pip:

61 |

62 | ```bash

63 | pip install stablefusion

64 | ```

65 |

66 | ## Usage

67 |

68 | ### Web App

69 | To run the web app, run the following command:

70 |

71 | For Local Host

72 | ```bash

73 | stablefusion app

74 | ```

75 | or

76 |

77 | For Public Shareable Link

78 | ```bash

79 | stablefusion app --port 10000 --ngrok_key YourNgrokAuthtoken --share

80 | ```

81 |

82 | ## All CLI Options for running the app:

83 |

84 | ```bash

85 | ❯ stablefusion app --help

86 | usage: stablefusion [] app [-h] [--output OUTPUT] [--share] [--port PORT] [--host HOST]

87 | [--device DEVICE] [--ngrok_key NGROK_KEY]

88 |

89 | ✨ Run stablefusion app

90 |

91 | optional arguments:

92 | -h, --help show this help message and exit

93 | --output OUTPUT Output path is optional, but if provided, all generations will automatically be saved to this

94 | path.

95 | --share Share the app

96 | --port PORT Port to run the app on

97 | --host HOST Host to run the app on

98 | --device DEVICE Device to use, e.g. cpu, cuda, cuda:0, mps (for m1 mac) etc.

99 | --ngrok_key NGROK_KEY

100 | Ngrok key to use for sharing the app. Only required if you want to share the app

101 | ```

102 |

103 |

104 | ## Using private models from huggingface hub

105 |

106 | If you want to use private models from huggingface hub, then you need to login using `huggingface-cli login` command.

107 |

108 | Note: You can also save your generations directly to huggingface hub if your output path points to a huggingface hub dataset repo and you have access to push to that repository. Thus, you will end up saving a lot of disk space.

109 |

110 | ## Acknowledgements

111 |

112 | I would like to express my gratitude to the following individuals and organizations for sharing their code, which formed the basis of the implementation used in this project:

113 |

114 | - [Tencent ARC](https://github.com/TencentARC) for their code for the [GFPGAN](https://github.com/TencentARC/GFPGAN) package, which was used for image super-resolution.

115 | - [LexKoin](https://github.com/LexKoin) for their code for the [Real-ESRGAN-UpScale](https://github.com/LexKoin/Real-ESRGAN-UpScale) package, which was used for image enhancement.

116 | - [Hugging Face](https://github.com/huggingface) for their code for the [diffusers](https://github.com/huggingface/diffusers) package, which was used for optimizing the model's parameters.

117 | - [Abhishek Thakur](https://github.com/abhishekkrthakur) for sharing his code for the [diffuzers](https://github.com/abhishekkrthakur/diffuzers) package, which was also used for optimizing the model's parameters.

118 |

119 | I am grateful for their contributions to the open source community, which made this project possible.

120 |

121 | ## Contributing

122 |

123 | StableFusion is an open-source project, and we welcome contributions from the community. Whether you're a developer, designer, or user, there are many ways you can help make this project better. Here are a few ways you can get involved:

124 |

125 | - **Report issues:** If you find a bug or have a feature request, please open an issue on our [GitHub repository](https://github.com/NeuralRealm/StableFusion/issues). We appreciate detailed bug reports and constructive feedback.

126 | - **Submit pull requests:** If you're interested in contributing code, we welcome pull requests for bug fixes, new features, and documentation improvements.

127 | - **Spread the word:** If you enjoy using StableFusion, please help us spread the word by sharing it with your friends, colleagues, and social media networks. We appreciate any support you can give us!

128 |

129 | ### We believe that open-source software is the future of technology, and we're excited to have you join us in making StableFusion a success. Thank you for your support!

130 |

--------------------------------------------------------------------------------

/diffuzers.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": null,

6 | "metadata": {},

7 | "outputs": [],

8 | "source": [

9 | "!pip3 install stablefusion -q"

10 | ]

11 | },

12 | {

13 | "cell_type": "code",

14 | "execution_count": 2,

15 | "metadata": {},

16 | "outputs": [

17 | {

18 | "name": "stdout",

19 | "output_type": "stream",

20 | "text": [

21 | "/bin/bash: line 1: diffuzers: command not found\n"

22 | ]

23 | }

24 | ],

25 | "source": [

26 | "!stablefusion app --port 10000 --ngrok_key YOUR_NGROK_AUTHTOKEN --share"

27 | ]

28 | }

29 | ],

30 | "metadata": {

31 | "kernelspec": {

32 | "display_name": "diffuzers",

33 | "language": "python",

34 | "name": "python3"

35 | },

36 | "language_info": {

37 | "codemirror_mode": {

38 | "name": "ipython",

39 | "version": 3

40 | },

41 | "file_extension": ".py",

42 | "mimetype": "text/x-python",

43 | "name": "python",

44 | "nbconvert_exporter": "python",

45 | "pygments_lexer": "ipython3",

46 | "version": "3.9.16"

47 | },

48 | "orig_nbformat": 4,

49 | "vscode": {

50 | "interpreter": {

51 | "hash": "e667c4130092153eeed1faae4ad5e1fb640895671448a3853c00b657af26211d"

52 | }

53 | }

54 | },

55 | "nbformat": 4,

56 | "nbformat_minor": 2

57 | }

58 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line, and also

5 | # from the environment for the first two.

6 | SPHINXOPTS ?=

7 | SPHINXBUILD ?= sphinx-build

8 | SOURCEDIR = .

9 | BUILDDIR = _build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help: