├── images

├── ThematicPoem.png

└── ThematicAnalysis.png

├── web_app

├── requirements_app.txt

├── config.py

├── core

│ ├── __init__.py

│ └── predictor.py

├── pages

│ ├── Analyze_Poem.py

│ └── Explore_Corpus.py

└── app.py

├── LICENSE

├── .gitignore

├── .dvcignore

├── dvc.yaml

├── src

├── __init__.py

├── utils.py

├── dataset.py

├── data_processing.py

├── segmentation.py

├── hpo.py

├── embedding.py

├── trainer.py

└── model.py

├── test

├── test_data_processing.py

└── test_model.py

└── README.md

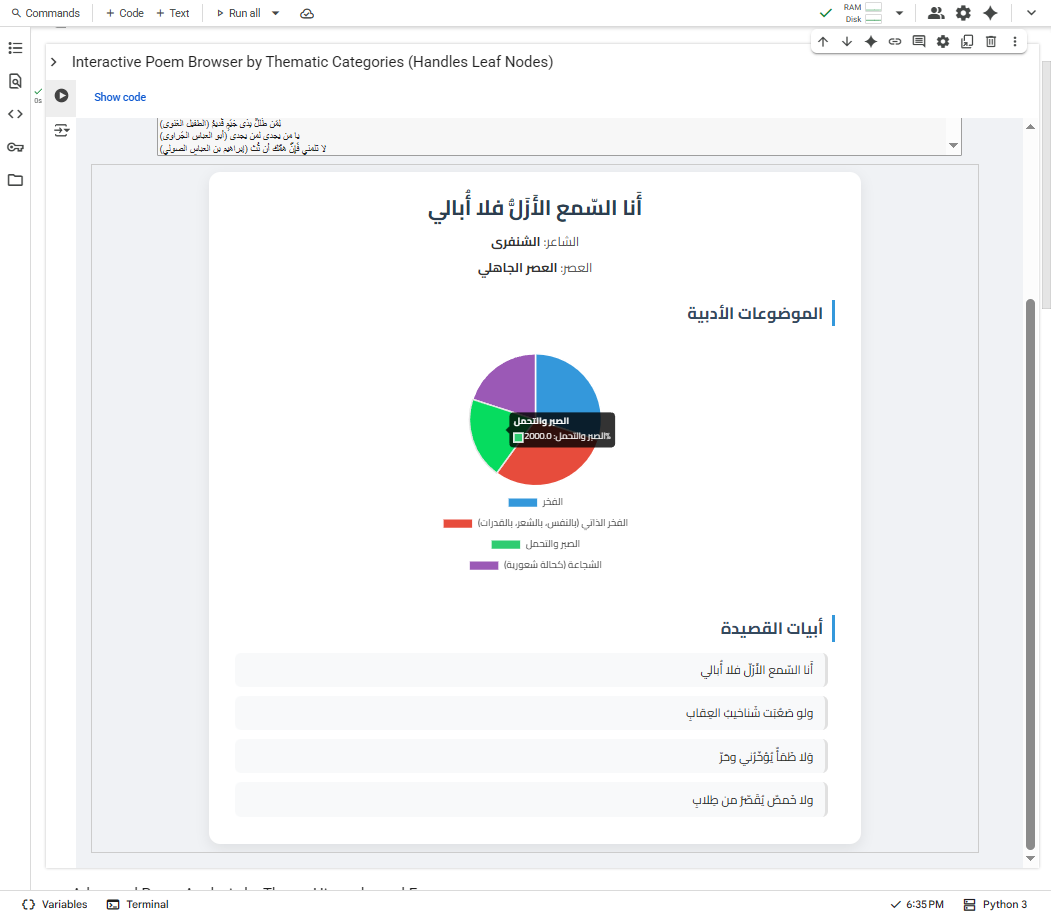

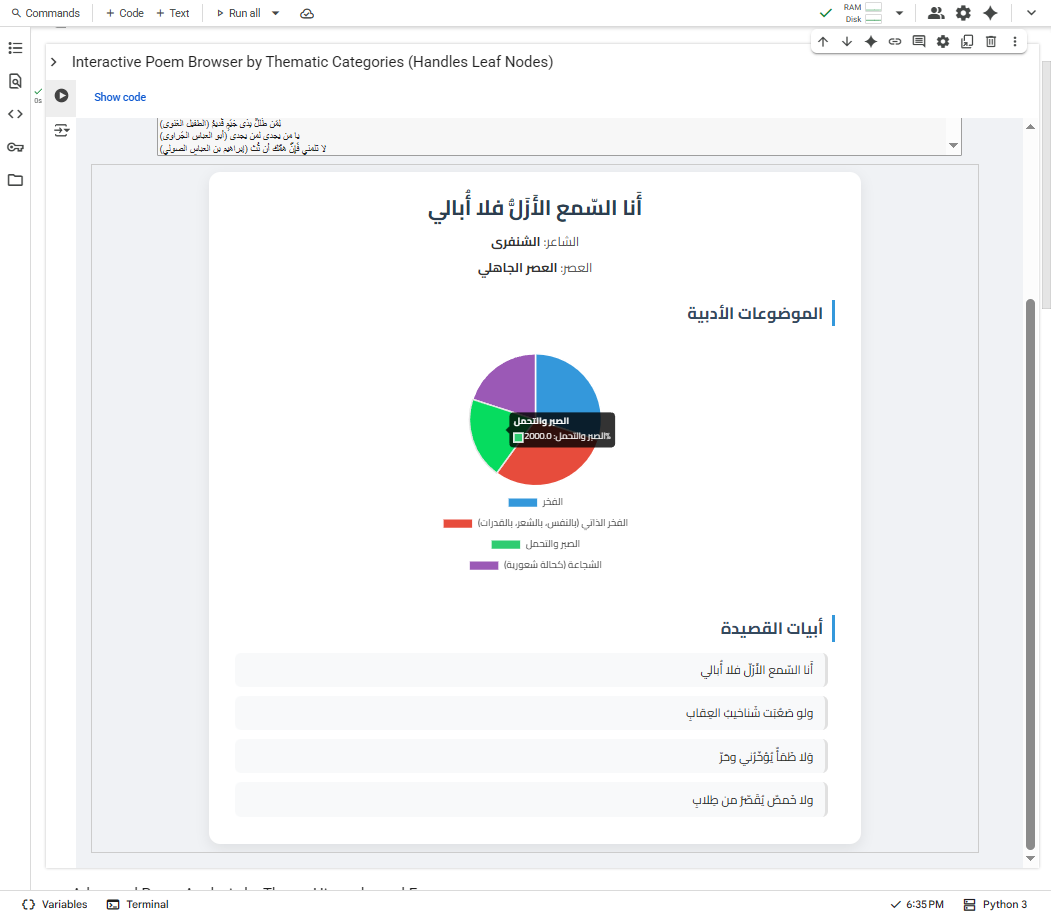

/images/ThematicPoem.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NoorBayan/Maqasid/HEAD/images/ThematicPoem.png

--------------------------------------------------------------------------------

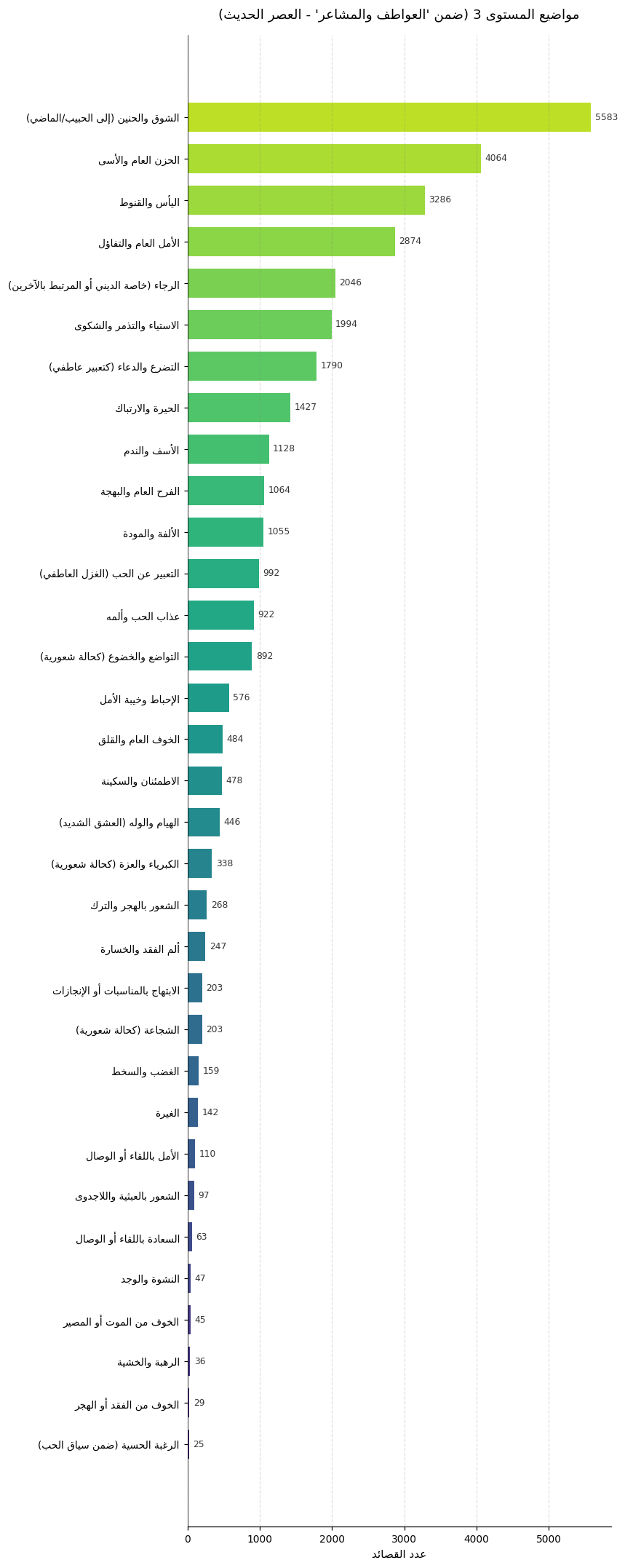

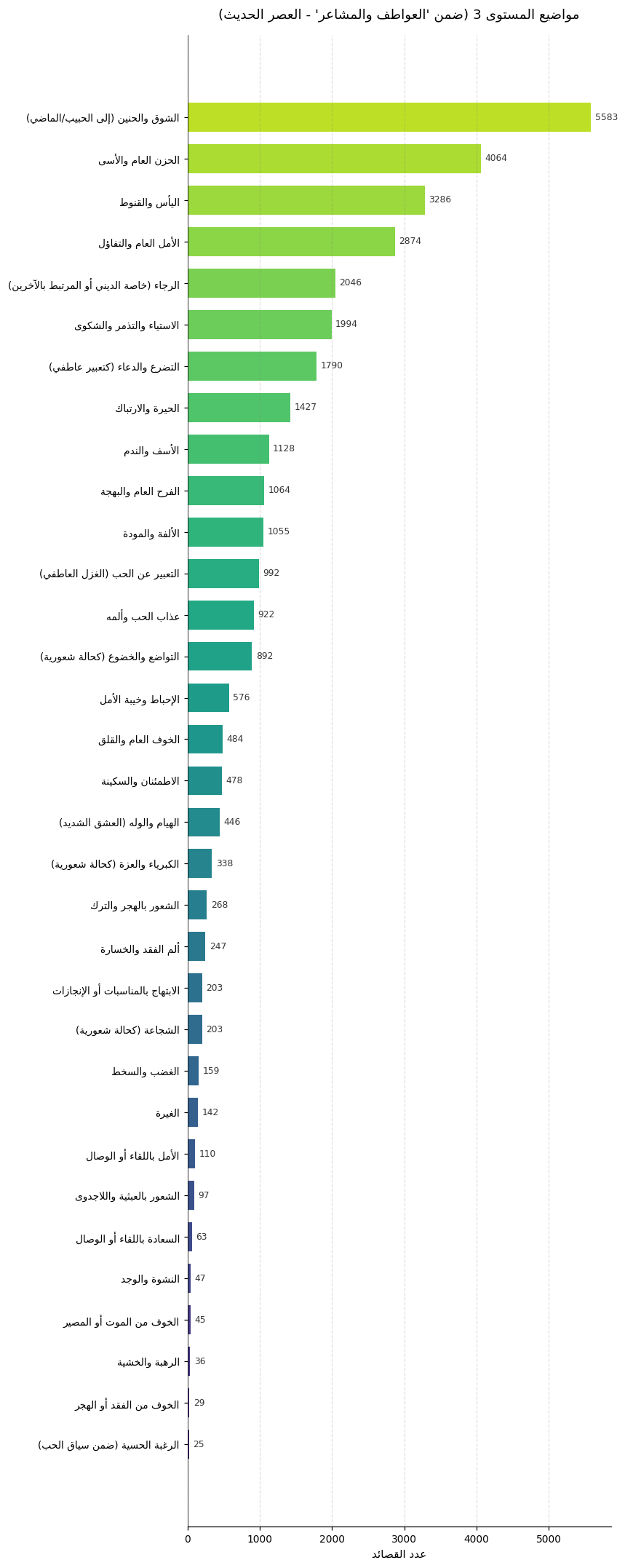

/images/ThematicAnalysis.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NoorBayan/Maqasid/HEAD/images/ThematicAnalysis.png

--------------------------------------------------------------------------------

/web_app/requirements_app.txt:

--------------------------------------------------------------------------------

1 | streamlit==1.33.0

2 | pandas==2.2.1

3 | plotly==5.20.0

4 | torch==2.2.1

5 | scikit-learn==1.4.1.post1

6 | gensim==4.3.2

7 | numpy==1.26.4

8 | pyfarasa==0.1.3

9 | PyYAML==6.0.1

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2025 NoorBayan

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Python Virtual Environments

2 | venv/

3 | /venv/

4 | .venv/

5 | /.venv/

6 | env/

7 | /env/

8 | .env/

9 | /.env/

10 | ENV/

11 | /ENV/

12 |

13 | # Python Bytecode and Cache

14 | __pycache__/

15 | *.pyc

16 | *.pyo

17 | *.pyd

18 | .Python

19 |

20 | # Environment Variables

21 | # The .env.example file SHOULD be committed.

22 | .env

23 | .env.*

24 | !.env.example

25 |

26 | # Large Data and Model Files (Handled by DVC)

27 | # DVC creates small .dvc pointer files which SHOULD be committed.

28 | /data/

29 | /saved_models/

30 |

31 | # Keep the directory structure but ignore the contents initially.

32 | !/data/.gitkeep

33 | !/saved_models/.gitkeep

34 |

35 | # DVC internal cache directory. This should NEVER be committed.

36 | .dvc/cache

37 |

38 | # IDE and Editor Configuration Files

39 | .idea/

40 | .vscode/

41 | *.swp

42 | *.swo

43 |

44 | # Operating System Generated Files

45 | .DS_Store

46 | Thumbs.db

47 | ._*

48 |

49 | # Build, Distribution, and Installation Artifacts

50 | build/

51 | dist/

52 | *.egg-info/

53 | *.egg

54 | *.whl

55 |

56 | # Test and Coverage Reports

57 | .pytest_cache/

58 | htmlcov/

59 | .coverage

60 | *.cover

61 |

62 | # Temporary Files and Logs

63 | *.log

64 | logs/

65 | *.tmp

66 | temp/

67 |

68 | # HPO and Notebook Checkpoints

69 | hpo_results/

70 | hpo_run_example/

71 | .ipynb_checkpoints/

72 |

--------------------------------------------------------------------------------

/.dvcignore:

--------------------------------------------------------------------------------

1 | # ==========================================================

2 | # DVC Ignore File

3 | # ==========================================================

4 | # This file specifies files and directories that DVC should

5 | # ignore and not attempt to track. The syntax is the same

6 | # as .gitignore.

7 |

8 | # --- Directories managed by Git ---

9 | # We explicitly tell DVC to ignore our source code, tests, and web app code,

10 | # as these are handled by Git.

11 | src/

12 | tests/

13 | web_app/

14 | pages/

15 | core/

16 | notebooks/

17 |

18 | # --- Project Configuration and Metadata Files ---

19 | # These are small text files tracked by Git.

20 | .gitignore

21 | .dvcignore

22 | dvc.yaml

23 | dvc.lock

24 | README.md

25 | LICENSE

26 | Makefile

27 | requirements.txt

28 | requirements_app.txt

29 | config.yaml

30 | params.yaml # Common file for DVC pipeline parameters

31 |

32 | # --- Python-related files and directories ---

33 | # These should be ignored by both Git and DVC.

34 | __pycache__/

35 | *.pyc

36 | venv/

37 | .env

38 | .env.example

39 |

40 | # --- Temporary Files and Logs ---

41 | # Ignore any log files generated during pipeline runs.

42 | *.log

43 | logs/

44 |

45 | # --- HPO and Experimentation Artifacts ---

46 | # Ignore temporary outputs from HPO runs that are not the final model.

47 | # The final selected model should be added to DVC manually or via the pipeline.

48 | hpo_results/

49 | hpo_run_example/

50 |

51 | # --- Locally Generated Plots and Reports ---

52 | # Any plots or reports generated for local analysis that are not part of the

53 | # official pipeline outputs.

54 | local_plots/

55 | *.png

56 | *.html

57 |

58 | # --- IDE and OS-specific files ---

59 | .idea/

60 | .vscode/

61 | *.DS_Store

--------------------------------------------------------------------------------

/dvc.yaml:

--------------------------------------------------------------------------------

1 | # ==========================================================

2 | # DVC Pipeline Definition

3 | # ==========================================================

4 | # This file defines the stages of the machine learning pipeline.

5 | # To run the entire pipeline, use the command `dvc repro`.

6 |

7 | stages:

8 | # --- Stage 1: Preprocess Raw Data ---

9 | preprocess:

10 | cmd: python scripts/01_preprocess_data.py --input data/raw/diwan_corpus.csv --output data/processed/preprocessed_poems.csv

11 | deps:

12 | - data/raw/diwan_corpus.csv

13 | - scripts/01_preprocess_data.py

14 | - src/poetry_classifier/data_processing.py

15 | outs:

16 | - data/processed/preprocessed_poems.csv

17 |

18 | # --- Stage 2: Train FastText Embeddings ---

19 | train_embeddings:

20 | cmd: python scripts/02_train_embeddings.py --corpus data/processed/preprocessed_poems.csv --output saved_models/embeddings/fasttext_poetry.bin --vector-size ${train_embeddings.vector_size} --epochs ${train_embeddings.epochs}

21 | deps:

22 | - data/processed/preprocessed_poems.csv

23 | - scripts/02_train_embeddings.py

24 | - src/poetry_classifier/embedding.py

25 | params:

26 | - train_embeddings.vector_size

27 | - train_embeddings.epochs

28 | outs:

29 | - saved_models/embeddings/fasttext_poetry.bin

30 |

31 | # --- Stage 3: Prepare Final Datasets (including segmentation and splitting) ---

32 | prepare_final_data:

33 | cmd: python scripts/03_prepare_final_data.py --input data/processed/preprocessed_poems.csv --embedder saved_models/embeddings/fasttext_poetry.bin --output-dir data/annotated --eps ${segment_and_embed.eps}

34 | deps:

35 | - data/processed/preprocessed_poems.csv

36 | - saved_models/embeddings/fasttext_poetry.bin

37 | - scripts/03_prepare_final_data.py

38 | - src/poetry_classifier/segmentation.py

39 | params:

40 | - segment_and_embed.eps

41 | - segment_and_embed.min_samples

42 | outs:

43 | - data/annotated/train.csv

44 | - data/annotated/validation.csv

45 | - data/annotated/test.csv

46 |

47 | # --- Stage 4: Train the Final Classifier Model ---

48 | train_model:

49 | cmd: python scripts/05_train_final_model.py --train-data data/annotated/train.csv --val-data data/annotated/validation.csv --output-dir saved_models/classifier --params-file params.yaml

50 | deps:

51 | - data/annotated/train.csv

52 | - data/annotated/validation.csv

53 | - scripts/05_train_final_model.py

54 | - src/poetry_classifier/model.py

55 | - src/poetry_classifier/trainer.py

56 | - src/poetry_classifier/dataset.py

57 | params:

58 | - train_model

59 | outs:

60 | - saved_models/classifier/best_poetry_classifier.pth

61 | - saved_models/classifier/model_config.json

62 | - reports/training_history.json

63 |

64 | # --- Stage 5: Evaluate the Final Model on the Test Set ---

65 | evaluate:

66 | cmd: python scripts/evaluate.py --model-dir saved_models/classifier --test-data data/annotated/test.csv --output-file reports/metrics.json

67 | deps:

68 | - saved_models/classifier/best_poetry_classifier.pth

69 | - data/annotated/test.csv

70 | - scripts/evaluate.py

71 | metrics:

72 | - reports/metrics.json:

73 | cache: false

--------------------------------------------------------------------------------

/src/__init__.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | """

4 | Poetry Classifier Package

5 | =========================

6 |

7 | This package provides a comprehensive toolkit for multi-label thematic classification

8 | of Arabic poetry. It includes modules for data processing, embedding, segmentation,

9 | model definition, and training.

10 |

11 | To get started, you can directly import the main classes from this top-level package.

12 |

13 | Example:

14 | from poetry_classifier import (

15 | PoetryPreprocessor,

16 | PoetryEmbedder,

17 | PoemSegmenter,

18 | HybridPoetryClassifier,

19 | ModelTrainer,

20 | PoetryThematicDataset

21 | )

22 |

23 | This __init__.py file handles:

24 | - Defining package-level metadata like version and author.

25 | - Setting up a default logger for the package.

26 | - Making key classes and functions available at the top level for a cleaner API.

27 | """

28 |

29 | import logging

30 | import os

31 |

32 | # --- Package Metadata ---

33 | # This is a good practice for package management and distribution.

34 | __version__ = "1.0.0"

35 | __author__ = "Your Name / Your Team"

36 | __email__ = "your.email@example.com"

37 |

38 |

39 | # --- Setup a Null Logger ---

40 | # This prevents log messages from being propagated to the root logger if the

41 | # library user has not configured logging. It's a standard practice for libraries.

42 | # The user of the library can then configure logging as they see fit.

43 | logging.getLogger(__name__).addHandler(logging.NullHandler())

44 |

45 |

46 | # --- Cleaner API: Import key classes to the top level ---

47 | # This allows users to import classes directly from the package,

48 | # e.g., `from poetry_classifier import PoetryPreprocessor`

49 | # instead of `from poetry_classifier.data_processing import PoetryPreprocessor`.

50 |

51 | # A try-except block is used here to handle potential circular imports or

52 | # missing dependencies gracefully, although it's less likely with a flat structure.

53 | try:

54 | from .data_processing import PoetryPreprocessor

55 | from .embedding import PoetryEmbedder

56 | from .segmentation import PoemSegmenter

57 | from .model import HybridPoetryClassifier

58 | from .dataset import PoetryThematicDataset

59 | from .trainer import ModelTrainer

60 | from .utils import setup_logging, set_seed, load_config

61 |

62 | except ImportError as e:

63 | # This error might occur if a dependency is not installed.

64 | # For example, if `pyfarasa` is missing, `data_processing` might fail to import.

65 | logging.getLogger(__name__).warning(

66 | f"Could not import all modules from the poetry_classifier package. "

67 | f"Please ensure all dependencies are installed. Original error: {e}"

68 | )

69 |

70 | # --- Define what is exposed with `from poetry_classifier import *` ---

71 | # It's a good practice to explicitly define `__all__` to control what gets imported.

72 | __all__ = [

73 | # Metadata

74 | "__version__",

75 | "__author__",

76 | "__email__",

77 |

78 | # Core Classes

79 | "PoetryPreprocessor",

80 | "PoetryEmbedder",

81 | "PoemSegmenter",

82 | "HybridPoetryClassifier",

83 | "PoetryThematicDataset",

84 | "ModelTrainer",

85 |

86 | # Utility Functions

87 | "setup_logging",

88 | "set_seed",

89 | "load_config"

90 | ]

91 |

92 | logger = logging.getLogger(__name__)

93 | logger.info(f"Poetry Classifier package version {__version__} loaded.")

--------------------------------------------------------------------------------

/web_app/config.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import os

4 | import logging

5 | from pathlib import Path

6 |

7 | # Configure a logger specific to the configuration module

8 | logger = logging.getLogger(__name__)

9 |

10 | # --- Dynamic Path Configuration ---

11 | # This is the most robust way to handle paths. It ensures that no matter where

12 | # you run the app from (e.g., from the root directory or from within web_app),

13 | # the paths to your data and models will always be correct.

14 |

15 | try:

16 | # The base directory of the entire project (the parent of 'src' and 'web_app')

17 | # It goes up two levels from this file's location (web_app/config.py -> web_app -> project_root)

18 | PROJECT_ROOT = Path(__file__).resolve().parent.parent.parent

19 | except NameError:

20 | # Fallback for interactive environments like Jupyter notebooks where __file__ is not defined

21 | PROJECT_ROOT = Path('.').resolve()

22 |

23 | logger.info(f"Project Root determined as: {PROJECT_ROOT}")

24 |

25 |

26 | # --- Helper function for path validation ---

27 | def validate_path(path: Path, description: str):

28 | """Checks if a given path exists and logs a warning if it doesn't."""

29 | if not path.exists():

30 | logger.warning(

31 | f"Configuration Warning: The path for '{description}' does not exist. "

32 | f"Path: '{path}'"

33 | )

34 | return path

35 |

36 |

37 | # ==============================================================================

38 | # 1. MODEL AND DATA PATHS CONFIGURATION

39 | # ==============================================================================

40 | # All paths are constructed relative to the PROJECT_ROOT.

41 |

42 | # --- Saved Models Paths ---

43 | SAVED_MODELS_DIR = PROJECT_ROOT / "saved_models"

44 |

45 | # Classifier model

46 | CLASSIFIER_MODEL_PATH = validate_path(

47 | SAVED_MODELS_DIR / "classifier" / "best_poetry_classifier.pth",

48 | "Classifier Model Weights"

49 | )

50 | CLASSIFIER_CONFIG_PATH = validate_path(

51 | SAVED_MODELS_DIR / "classifier" / "model_config.json",

52 | "Classifier Model Configuration"

53 | )

54 |

55 | # Embedding model

56 | EMBEDDING_MODEL_PATH = validate_path(

57 | SAVED_MODELS_DIR / "embeddings" / "fasttext_poetry.bin",

58 | "FastText Embedding Model"

59 | )

60 |

61 |

62 | # --- Data and Schema Paths ---

63 | DATA_DIR = PROJECT_ROOT / "data"

64 |

65 | # The main annotated corpus for the exploration dashboard

66 | ANNOTATED_CORPUS_PATH = validate_path(

67 | DATA_DIR / "annotated" / "diwan_corpus_annotated.csv",

68 | "Annotated Corpus CSV"

69 | )

70 |

71 | # The hierarchical schema file for mapping labels to names

72 | LABEL_SCHEMA_PATH = validate_path(

73 | DATA_DIR / "schema" / "thematic_schema.json",

74 | "Thematic Schema JSON"

75 | )

76 |

77 | # Example poems for the analysis page

78 | EXAMPLE_POEMS_PATH = validate_path(

79 | PROJECT_ROOT / "web_app" / "static" / "example_poems.json",

80 | "Example Poems JSON"

81 | )

82 |

83 |

84 | # ==============================================================================

85 | # 2. MODEL AND PREDICTION PARAMETERS

86 | # ==============================================================================

87 |

88 | # --- Segmentation Parameters ---

89 | # Hyperparameters for the DBSCAN segmenter used in the predictor.

90 | # These should ideally match the values that yielded the best results in your research.

91 | SEGMENTER_EPS = 0.4

92 | SEGMENTER_MIN_SAMPLES = 1

93 |

94 |

95 | # --- Classification Parameters ---

96 | # The confidence threshold for a theme to be considered "predicted".

97 | # A value between 0.0 and 1.0.

98 | CLASSIFICATION_THRESHOLD = 0.5

99 |

100 |

101 | # ==============================================================================

102 | # 3. WEB APPLICATION UI CONFIGURATION

103 | # ==============================================================================

104 |

105 | # --- Page Titles and Icons ---

106 | # Centralize UI elements for consistency across the app.

107 | APP_TITLE = "Arabic Poetry Thematic Classifier"

108 | APP_ICON = "📜"

109 |

110 | # Page-specific configurations

111 | PAGE_CONFIG = {

112 | "home": {

113 | "title": APP_TITLE,

114 | "icon": APP_ICON

115 | },

116 | "analyze_poem": {

117 | "title": "Analyze a Poem",

118 | "icon": "✍️"

119 | },

120 | "explore_corpus": {

121 | "title": "Explore The Corpus",

122 | "icon": "📚"

123 | }

124 | }

125 |

126 |

127 | # --- UI Defaults ---

128 | # Default values for interactive widgets.

129 | DEFAULT_TOP_K_THEMES = 7

130 | MAX_TOP_K_THEMES = 20

131 |

132 |

133 | # --- External Links ---

134 | # Centralize URLs for easy updates.

135 | RESEARCH_PAPER_URL = "https://your-paper-link.com"

136 | GITHUB_REPO_URL = "https://github.com/your-username/your-repo"

137 |

138 | # ==============================================================================

139 | # END OF CONFIGURATION

140 | # ==============================================================================

141 |

142 | # You could add a final check here if needed, for example:

143 | # if not all([CLASSIFIER_MODEL_PATH.exists(), CLASSIFIER_CONFIG_PATH.exists()]):

144 | # raise FileNotFoundError("Critical model files are missing. The application cannot start.")

--------------------------------------------------------------------------------

/test/test_data_processing.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import json

4 | import os

5 | import sys

6 | from unittest.mock import MagicMock, patch

7 |

8 | import numpy as np

9 | import pandas as pd

10 | import pytest

11 |

12 | # Add the source directory to the Python path

13 | sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..', 'src')))

14 |

15 | from poetry_classifier.data_processing import PoetryPreprocessor

16 |

17 | # --- Helper Function to Load Test Data ---

18 |

19 | def load_test_data(file_name: str = "processing_examples.json"):

20 | """Loads test examples from a JSON file."""

21 | current_dir = os.path.dirname(os.path.abspath(__file__))

22 | file_path = os.path.join(current_dir, "test_data", file_name)

23 | try:

24 | with open(file_path, 'r', encoding='utf-8') as f:

25 | return json.load(f)

26 | except FileNotFoundError:

27 | pytest.fail(f"Test data file not found at {file_path}. Please create it.")

28 | except json.JSONDecodeError:

29 | pytest.fail(f"Could not decode JSON from {file_path}. Please check its syntax.")

30 |

31 | # Load all test data once, making it available to all tests

32 | test_data = load_test_data()

33 |

34 | # --- Fixtures for Pytest ---

35 |

36 | @pytest.fixture(scope="module")

37 | def segmentation_map_from_data():

38 | """

39 | Creates the segmentation map for the mock segmenter directly from the test data file.

40 | This fixture is now the single source of truth for the mock's behavior.

41 | """

42 | pipeline_cases = test_data.get("full_pipeline", [])

43 | if not pipeline_cases:

44 | pytest.fail("No 'full_pipeline' cases found in test data file.")

45 |

46 | return {

47 | case['expected_pre_segmentation']: case['expected_final']

48 | for case in pipeline_cases

49 | }

50 |

51 | @pytest.fixture(scope="module")

52 | def mock_farasa_segmenter(segmentation_map_from_data):

53 | """Mocks the FarasaSegmenter in a purely data-driven way using the segmentation map."""

54 | mock_segmenter = MagicMock()

55 |

56 | def mock_segment(text_to_segment):

57 | return segmentation_map_from_data.get(text_to_segment, text_to_segment)

58 |

59 | mock_segmenter.segment.side_effect = mock_segment

60 | return mock_segmenter

61 |

62 |

63 | @pytest.fixture

64 | def preprocessor(mock_farasa_segmenter):

65 | """Provides a PoetryPreprocessor instance with a mocked FarasaSegmenter."""

66 | with patch('poetry_classifier.data_processing.FarasaSegmenter', return_value=mock_farasa_segmenter):

67 | yield PoetryPreprocessor()

68 |

69 |

70 | # --- Test Cases ---

71 |

72 | class TestPoetryPreprocessor:

73 |

74 | @pytest.mark.parametrize("case", test_data.get("cleaning", []), ids=[c['name'] for c in test_data.get("cleaning", [])])

75 | def test_clean_text(self, preprocessor, case):

76 | """Test the _clean_text method using data-driven cases."""

77 | if 'input' in case and case['input'] is None:

78 | assert preprocessor._clean_text(None) == case['expected']

79 | else:

80 | assert preprocessor._clean_text(case['input']) == case['expected']

81 |

82 | @pytest.mark.parametrize("case", test_data.get("normalization", []), ids=[c['name'] for c in test_data.get("normalization", [])])

83 | def test_normalize_arabic(self, preprocessor, case):

84 | """Test the _normalize_arabic method using data-driven cases."""

85 | assert preprocessor._normalize_arabic(case['input']) == case['expected']

86 |

87 | @pytest.mark.parametrize("case", test_data.get("stopwords", []), ids=[c['name'] for c in test_data.get("stopwords", [])])

88 | def test_remove_stopwords(self, preprocessor, case):

89 | """Test the _remove_stopwords method using data-driven cases."""

90 | assert preprocessor._remove_stopwords(case['input']) == case['expected']

91 |

92 | @pytest.mark.parametrize("case", test_data.get("full_pipeline", []), ids=[c['name'] for c in test_data.get("full_pipeline", [])])

93 | def test_process_text_full_pipeline(self, preprocessor, case):

94 | """Test the end-to-end process_text method using purely data-driven cases."""

95 | processed_output = preprocessor.process_text(case['input'])

96 | assert processed_output == case['expected_final']

97 |

98 | def test_process_dataframe(self, preprocessor):

99 | """Test processing a full pandas DataFrame in a data-driven way."""

100 | pipeline_cases = test_data.get("full_pipeline", [])

101 |

102 | sample_data = {

103 | 'id': [i+1 for i in range(len(pipeline_cases))],

104 | 'raw_verse': [case['input'] for case in pipeline_cases]

105 | }

106 | df = pd.DataFrame(sample_data)

107 |

108 | processed_df = preprocessor.process_dataframe(df, text_column='raw_verse')

109 |

110 | assert 'processed_text' in processed_df.columns

111 | assert len(processed_df) == len(pipeline_cases)

112 | for i, case in enumerate(pipeline_cases):

113 | assert processed_df.loc[i, 'processed_text'] == case['expected_final']

114 |

115 | def test_process_dataframe_with_missing_column(self, preprocessor):

116 | """Test that processing a DataFrame with a missing column raises a ValueError."""

117 | df = pd.DataFrame({'id': [1], 'another_col': ['text']})

118 |

119 | with pytest.raises(ValueError, match="Column 'non_existent_column' not found"):

120 | preprocessor.process_dataframe(df, text_column='non_existent_column')

--------------------------------------------------------------------------------

/web_app/core/__init__.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import logging

4 | import time

5 |

6 | import pandas as pd

7 | import streamlit as st

8 |

9 | # Assuming your predictor is in the core directory relative to the web_app folder

10 | from core.predictor import PoetryPredictor

11 |

12 | # Configure logger for this page

13 | logger = logging.getLogger(__name__)

14 |

15 | # --- Page-Specific Configuration ---

16 | st.set_page_config(

17 | page_title="Analyze Poem - Arabic Poetry Classifier",

18 | page_icon="✍️",

19 | layout="wide",

20 | )

21 |

22 | # --- Helper Functions ---

23 |

24 | @st.cache_resource

25 | def get_predictor():

26 | """

27 | Factory function to load and cache the PoetryPredictor instance.

28 | This ensures the model is loaded only once across all pages of the app.

29 | """

30 | try:

31 | return PoetryPredictor()

32 | except Exception as e:

33 | st.error(f"Fatal Error: Could not initialize the model predictor. Please check logs. Error: {e}")

34 | logger.error(f"Failed to load predictor: {e}", exc_info=True)

35 | return None

36 |

37 | def display_results(results: list):

38 | """

39 | Renders the prediction results in a structured and user-friendly format.

40 | """

41 | if not results:

42 | st.warning("The model did not predict any themes above the confidence threshold for the given text.")

43 | return

44 |

45 | st.success("Analysis Complete!")

46 |

47 | # Create a DataFrame for better visualization

48 | # Convert probability to a more readable format

49 | df_data = [

50 | {"Theme": r["theme"], "Confidence": f"{r['probability']:.2%}"}

51 | for r in results

52 | ]

53 | df = pd.DataFrame(df_data)

54 |

55 | st.markdown("#### Predicted Themes:")

56 |

57 | # Use columns for a cleaner layout

58 | col1, col2 = st.columns([2, 1])

59 |

60 | with col1:

61 | st.dataframe(df, use_container_width=True, hide_index=True)

62 |

63 | with col2:

64 | top_theme = results[0]["theme"]

65 | top_prob = results[0]["probability"]

66 | st.metric(label="Top Predicted Theme", value=top_theme, delta=f"{top_prob:.2%}")

67 | st.info("Confidence indicates the model's certainty for each predicted theme.")

68 |

69 | # --- Main Page UI and Logic ---

70 |

71 | def main():

72 | """Main function to render the 'Analyze Poem' page."""

73 |

74 | st.title("✍️ Analyze a New Poem")

75 | st.markdown("Enter the verses of an Arabic poem below to classify its thematic content. "

76 | "For best results, please place each verse on a new line.")

77 |

78 | # --- Load Predictor ---

79 | predictor = get_predictor()

80 | if not predictor:

81 | st.stop() # Stop execution of the page if the predictor failed to load

82 |

83 | # --- UI Components for Analysis ---

84 | st.markdown("---")

85 |

86 | col1, col2 = st.columns(2)

87 |

88 | with col1:

89 | # --- Poem Input Area ---

90 | placeholder_poem = (

91 | "عَلَى قَدْرِ أَهْلِ العَزْمِ تَأْتِي العَزَائِمُ\n"

92 | "وَتَأْتِي عَلَى قَدْرِ الكِرَامِ المَكَارِمُ\n"

93 | "وَتَعْظُمُ فِي عَيْنِ الصَّغِيرِ صِغَارُهَا\n"

94 | "وَتَصْغُرُ فِي عَيْنِ العَظِيمِ العَظَائِمُ"

95 | )

96 | poem_input = st.text_area(

97 | label="**Enter Poem Text Here:**",

98 | value=placeholder_poem,

99 | height=250,

100 | placeholder="Paste or type the poem here..."

101 | )

102 |

103 | with col2:

104 | # --- Analysis Options ---

105 | st.markdown("**Analysis Options**")

106 | top_k = st.slider(

107 | label="Number of top themes to display:",

108 | min_value=1,

109 | max_value=10,

110 | value=5, # Default value

111 | help="Adjust this slider to control how many of the most confident themes are shown."

112 | )

113 |

114 | # The main action button

115 | analyze_button = st.button("Analyze Poem", type="primary", use_container_width=True)

116 |

117 | st.markdown("---")

118 |

119 | # --- Analysis Execution and Output ---

120 | if analyze_button:

121 | # Validate input

122 | if not poem_input or not poem_input.strip():

123 | st.warning("⚠️ Input is empty. Please enter a poem to analyze.")

124 | return # Stop execution for this run

125 |

126 | # Show a spinner while processing

127 | with st.spinner("🔍 Analyzing the poetic essence... Please wait."):

128 | try:

129 | start_time = time.time()

130 |

131 | # Call the predictor

132 | results = predictor.predict(poem_text=poem_input, top_k=top_k)

133 |

134 | end_time = time.time()

135 | logger.info(f"Prediction for input text completed in {end_time - start_time:.2f} seconds.")

136 |

137 | # Display the results

138 | display_results(results)

139 |

140 | except (ValueError, TypeError) as e:

141 | # Handle specific, expected errors from the predictor

142 | st.error(f"❌ Input Error: {e}")

143 | logger.warning(f"User input error: {e}")

144 | except Exception as e:

145 | # Handle unexpected errors

146 | st.error("An unexpected error occurred during analysis. Please try again or check the logs.")

147 | logger.error(f"Unexpected prediction error: {e}", exc_info=True)

148 |

149 |

150 | if __name__ == "__main__":

151 | main()

--------------------------------------------------------------------------------

/web_app/pages/Analyze_Poem.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import json

4 | import logging

5 | import os

6 | import time

7 |

8 | import pandas as pd

9 | import plotly.express as px

10 | import streamlit as st

11 |

12 | # Path adjustments for importing from the project's src directory

13 | import sys

14 | sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..', '..')))

15 |

16 | try:

17 | from web_app.core.predictor import PoetryPredictor

18 | except ImportError:

19 | st.error("Could not import the PoetryPredictor. Ensure the project structure is correct.")

20 | st.stop()

21 |

22 |

23 | # --- Configure logger ---

24 | logger = logging.getLogger(__name__)

25 |

26 |

27 | # --- Page Configuration ---

28 | try:

29 | st.set_page_config(

30 | page_title="Analyze Poem",

31 | page_icon="✍️",

32 | layout="wide",

33 | )

34 | except st.errors.StreamlitAPIException:

35 | pass

36 |

37 |

38 | # --- Caching and Data Loading ---

39 |

40 | @st.cache_resource

41 | def get_predictor():

42 | """Factory function to load and cache the PoetryPredictor instance."""

43 | try:

44 | return PoetryPredictor()

45 | except Exception as e:

46 | logger.error(f"Failed to load predictor: {e}", exc_info=True)

47 | return None

48 |

49 | @st.cache_data

50 | def load_example_poems(file_path: str = "web_app/static/example_poems.json"):

51 | """

52 | Loads example poems from an external JSON file and caches the result.

53 | This makes the app data-driven and easy to update.

54 | """

55 | try:

56 | with open(file_path, 'r', encoding='utf-8') as f:

57 | examples_list = json.load(f)

58 | # Convert list of dicts to a single dict for easy lookup

59 | return {item['key']: item['value'] for item in examples_list}

60 | except (FileNotFoundError, json.JSONDecodeError, KeyError) as e:

61 | logger.error(f"Failed to load or parse example poems from {file_path}: {e}")

62 | st.error("Could not load example poems file.")

63 | return {"Default": "Please add examples to static/example_poems.json"}

64 |

65 |

66 | # --- UI Helper Functions ---

67 |

68 | def render_results(results):

69 | """Renders the analysis results in a visually appealing and informative way."""

70 | if not results:

71 | st.warning("The model did not predict any themes above the confidence threshold.")

72 | return

73 |

74 | st.success("Analysis Complete!")

75 |

76 | df = pd.DataFrame(results)

77 | df['probability_percent'] = df['probability'].apply(lambda p: f"{p:.2%}")

78 |

79 | col1, col2 = st.columns([1, 1])

80 |

81 | with col1:

82 | st.markdown("#### **Top Predicted Themes**")

83 | st.dataframe(

84 | df[['theme', 'probability_percent']],

85 | use_container_width=True,

86 | hide_index=True,

87 | column_config={

88 | "theme": st.column_config.TextColumn("Theme", width="large"),

89 | "probability_percent": st.column_config.TextColumn("Confidence"),

90 | }

91 | )

92 |

93 | with col2:

94 | st.markdown("#### **Confidence Distribution**")

95 | fig = px.bar(

96 | df, x='probability', y='theme', orientation='h', text='probability_percent',

97 | labels={'probability': 'Confidence Score', 'theme': 'Thematic Category'},

98 | )

99 | fig.update_layout(yaxis={'categoryorder':'total ascending'}, showlegend=False, margin=dict(l=0, r=0, t=30, b=0))

100 | fig.update_traces(marker_color='#0068c9', textposition='outside')

101 | st.plotly_chart(fig, use_container_width=True)

102 |

103 |

104 | # --- Main Page Application ---

105 |

106 | def main():

107 | """Main function to render the 'Analyze Poem' page."""

108 |

109 | st.title("✍️ Thematic Analysis of a Poem")

110 | st.markdown("Paste or select an example of Arabic poetry below to classify its thematic content.")

111 |

112 | if "analysis_results" not in st.session_state:

113 | st.session_state.analysis_results = None

114 |

115 | predictor = get_predictor()

116 | example_poems = load_example_poems()

117 |

118 | if predictor is None:

119 | st.error("The analysis model is not available. Application cannot proceed.")

120 | st.stop()

121 |

122 | # --- Input Section ---

123 | st.markdown("---")

124 |

125 | input_col, example_col = st.columns([2, 1])

126 |

127 | with example_col:

128 | st.markdown("##### **Try an Example**")

129 | if example_poems:

130 | selected_example_key = st.selectbox(

131 | "Choose a famous verse:",

132 | options=list(example_poems.keys()),

133 | index=0,

134 | label_visibility="collapsed"

135 | )

136 | # Use the selected key to get the poem text

137 | default_text = example_poems.get(selected_example_key, "")

138 | else:

139 | default_text = "Examples could not be loaded."

140 | st.warning("No examples available.")

141 |

142 | with input_col:

143 | st.markdown("##### **Enter Your Poem**")

144 | poem_input = st.text_area(

145 | "Paste the poem text here, with each verse on a new line.",

146 | value=default_text,

147 | height=200,

148 | key="poem_input_area"

149 | )

150 |

151 | # --- Options and Action Button ---

152 | st.markdown("##### **Analysis Configuration**")

153 | opts_col1, opts_col2 = st.columns([1, 3])

154 | with opts_col1:

155 | top_k = st.number_input(

156 | "Number of themes to show:", min_value=1, max_value=20, value=7,

157 | help="The maximum number of most confident themes to display."

158 | )

159 |

160 | with opts_col2:

161 | analyze_button = st.button("Analyze Poem", type="primary", use_container_width=True)

162 |

163 | # --- Analysis and Result Display Section ---

164 | st.markdown("---")

165 |

166 | if analyze_button:

167 | if not poem_input or not poem_input.strip():

168 | st.warning("⚠️ Please enter a poem to analyze.")

169 | else:

170 | with st.spinner("🔬 Performing deep semantic analysis..."):

171 | try:

172 | results = predictor.predict(poem_text=poem_input, top_k=top_k)

173 | st.session_state.analysis_results = results

174 | except Exception as e:

175 | st.error(f"An unexpected error occurred: {e}")

176 | st.session_state.analysis_results = None

177 |

178 | if st.session_state.analysis_results is not None:

179 | render_results(st.session_state.analysis_results)

180 |

181 | if __name__ == "__main__":

182 | main()

--------------------------------------------------------------------------------

/web_app/app.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import logging

4 | import os

5 | import sys

6 |

7 | import streamlit as st

8 |

9 | # --- Path Setup ---

10 | # Add the project root to the Python path. This allows importing from 'src' and 'web_app.core'.

11 | # This should be done once in the main entry point of the application.

12 | sys.path.insert(0, os.path.abspath(os.path.join(os.path.dirname(__file__), '..')))

13 |

14 | try:

15 | from web_app.core.predictor import PoetryPredictor

16 | except ImportError:

17 | # This is a critical failure, the app cannot run without the predictor.

18 | st.error(

19 | "**Fatal Error:** Could not import `PoetryPredictor` from `web_app.core`."

20 | "Please ensure the project structure is correct and all dependencies are installed."

21 | )

22 | st.stop()

23 |

24 |

25 | # --- Application-Wide Configuration ---

26 |

27 | # 1. Page Configuration (must be the first Streamlit command)

28 | st.set_page_config(

29 | page_title="Arabic Poetry Thematic Classifier",

30 | page_icon="📜",

31 | layout="wide",

32 | initial_sidebar_state="expanded",

33 | menu_items={

34 | 'Get Help': 'https://github.com/your-username/your-repo/issues',

35 | 'Report a bug': "https://github.com/your-username/your-repo/issues",

36 | 'About': """

37 | ## Nuanced Thematic Classification of Arabic Poetry

38 | This is an interactive platform demonstrating a deep learning model for classifying themes in Arabic poetry.

39 | Developed as part of our research paper.

40 | """

41 | }

42 | )

43 |

44 | # 2. Setup application-wide logging

45 | # You can use your utility function here if you have one

46 | logging.basicConfig(

47 | level=logging.INFO,

48 | format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'

49 | )

50 | logger = logging.getLogger(__name__)

51 |

52 |

53 | # --- Resource Caching ---

54 |

55 | @st.cache_resource

56 | def load_resources():

57 | """

58 | Loads all heavy, application-wide resources once and caches them.

59 | This includes the main model predictor. This function will be called by

60 | all pages to ensure they share the same cached resources.

61 | """

62 | logger.info("Main app: Loading application-wide resources...")

63 | try:

64 | predictor = PoetryPredictor()

65 | logger.info("Main app: PoetryPredictor loaded and cached successfully.")

66 | return {"predictor": predictor}

67 | except Exception as e:

68 | logger.error(f"Main app: A fatal error occurred during resource loading: {e}", exc_info=True)

69 | # Display the error prominently on the main page

70 | st.error(

71 | "**Application Failed to Start**\n\n"

72 | f"A critical error occurred while loading the AI models: **{e}**\n\n"

73 | "Please check the application logs for more details. The app will not be functional."

74 | )

75 | return {"predictor": None}

76 |

77 |

78 | # --- Main Application UI ---

79 |

80 | def main():

81 | """

82 | Renders the main landing page of the application.

83 | This page introduces the project and guides the user to the sub-pages.

84 | """

85 |

86 | # --- Load shared resources ---

87 | # This call ensures that the models are loaded when the user first visits the app.

88 | # The result is cached, so subsequent calls on this or other pages are instantaneous.

89 | resources = load_resources()

90 | if resources["predictor"] is None:

91 | st.stop() # Halt execution if models failed to load.

92 |

93 | # --- Header and Introduction ---

94 | st.title("📜 Nuanced Thematic Classification of Arabic Poetry")

95 |

96 | st.markdown(

97 | """

98 | Welcome to the interactive platform for our research on the thematic analysis of Arabic poetry.

99 | This application serves as a practical demonstration of our hybrid deep learning model,

100 | designed to understand the rich and complex themes inherent in this literary tradition.

101 | """

102 | )

103 |

104 | st.info(

105 | "**👈 To get started, please select a tool from the sidebar on the left.**",

106 | icon="ℹ️"

107 | )

108 |

109 | # --- Page Navigation Guide ---

110 | st.header("Available Tools")

111 |

112 | col1, col2 = st.columns(2)

113 |

114 | with col1:

115 | with st.container(border=True):

116 | st.markdown("#### ✍️ Analyze a New Poem")

117 | st.markdown(

118 | "Input your own Arabic poetry verses or choose from our examples to get an "

119 | "instant thematic analysis, complete with confidence scores for each predicted theme."

120 | )

121 |

122 | with col2:

123 | with st.container(border=True):

124 | st.markdown("#### 📚 Explore the Corpus")

125 | st.markdown(

126 | "Dive into our richly annotated dataset. Use interactive filters to explore "

127 | "thematic trends across different poets, historical eras, and literary genres."

128 | )

129 |

130 | st.markdown("---")

131 |

132 | # --- Project Background and Resources ---

133 | st.header("About The Project")

134 |

135 | with st.expander("Click here to learn more about the project's methodology and contributions"):

136 | st.markdown(

137 | """

138 | This project was born out of the need to address the significant challenges in the computational

139 | analysis of Arabic poetry, namely its thematic complexity, frequent thematic overlap, and the

140 | scarcity of comprehensively annotated data.

141 |

142 | #### Key Contributions:

143 | 1. **A Novel Annotated Corpus:** We meticulously created a large-scale, multi-label Arabic poetry corpus,

144 | annotated according to a new, literature-grounded hierarchical thematic taxonomy and validated by domain experts.

145 |

146 | 2. **An Optimized Hybrid Model:** We developed a hybrid deep learning model that synergistically integrates

147 | Convolutional Neural Networks (CNNs) for local feature extraction with Bidirectional Long Short-Term

148 | Memory (Bi-LSTM) networks for sequential context modeling. This model leverages custom FastText embeddings

149 | trained from scratch on our poetic corpus.

150 |

151 | 3. **This Interactive Platform:** This web application makes our model and data accessible, fostering

152 | more sophisticated, data-driven research in Arabic digital humanities.

153 | """

154 | )

155 |

156 | st.markdown("#### Project Links")

157 | st.link_button("📄 View the Research Paper", "https://your-paper-link.com")

158 | st.link_button("💻 Browse the Source Code on GitHub", "https://github.com/your-username/your-repo")

159 |

160 | # --- Sidebar Content ---

161 | st.sidebar.success("Select a page above to begin.")

162 | st.sidebar.markdown("---")

163 | st.sidebar.info(

164 | "This application is a research prototype. "

165 | "For questions or feedback, please open an issue on our GitHub repository."

166 | )

167 |

168 |

169 | if __name__ == "__main__":

170 | main()

--------------------------------------------------------------------------------

/src/utils.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import json

4 | import logging

5 | import os

6 | import pickle

7 | import random

8 | from typing import Any, Dict, List, Optional

9 |

10 | import numpy as np

11 | import torch

12 | import yaml

13 | from torch.utils.data import DataLoader, Dataset

14 |

15 |

16 | # Configure a root logger for the utility functions

17 | logger = logging.getLogger(__name__)

18 |

19 |

20 | def setup_logging(level: int = logging.INFO, log_file: Optional[str] = None):

21 | """

22 | Sets up a standardized logging configuration for the entire project.

23 |

24 | Args:

25 | level (int): The logging level (e.g., logging.INFO, logging.DEBUG).

26 | log_file (Optional[str]): Path to a file to save logs. If None, logs to console only.

27 | """

28 | log_format = '%(asctime)s - %(name)s - %(levelname)s - %(message)s'

29 |

30 | # Get the root logger

31 | root_logger = logging.getLogger()

32 | root_logger.setLevel(level)

33 |

34 | # Clear existing handlers to avoid duplicate logs

35 | if root_logger.hasHandlers():

36 | root_logger.handlers.clear()

37 |

38 | # Console handler

39 | console_handler = logging.StreamHandler()

40 | console_handler.setFormatter(logging.Formatter(log_format))

41 | root_logger.addHandler(console_handler)

42 |

43 | # File handler

44 | if log_file:

45 | try:

46 | # Ensure directory exists

47 | os.makedirs(os.path.dirname(log_file), exist_ok=True)

48 | file_handler = logging.FileHandler(log_file, mode='a', encoding='utf-8')

49 | file_handler.setFormatter(logging.Formatter(log_format))

50 | root_logger.addHandler(file_handler)

51 | logger.info(f"Logging is set up. Logs will also be saved to {log_file}")

52 | except Exception as e:

53 | logger.error(f"Failed to set up file logger at {log_file}. Error: {e}")

54 |

55 | logger.info(f"Logging level set to {logging.getLevelName(level)}.")

56 |

57 |

58 | def set_seed(seed: int = 42):

59 | """

60 | Sets the random seed for reproducibility across all relevant libraries.

61 |

62 | Args:

63 | seed (int): The seed value.

64 | """

65 | random.seed(seed)

66 | np.random.seed(seed)

67 | torch.manual_seed(seed)

68 | if torch.cuda.is_available():

69 | torch.cuda.manual_seed(seed)

70 | torch.cuda.manual_seed_all(seed) # if you are using multi-GPU.

71 | # The following two lines are needed for full reproducibility with CUDA

72 | torch.backends.cudnn.deterministic = True

73 | torch.backends.cudnn.benchmark = False

74 | logger.info(f"Random seed set to {seed} for reproducibility.")

75 |

76 |

77 | def load_config(config_path: str) -> Dict[str, Any]:

78 | """

79 | Loads a configuration file in YAML format.

80 |

81 | Args:

82 | config_path (str): The path to the YAML configuration file.

83 |

84 | Returns:

85 | Dict[str, Any]: A dictionary containing the configuration.

86 |

87 | Raises:

88 | FileNotFoundError: If the config file does not exist.

89 | yaml.YAMLError: If the file is not a valid YAML.

90 | """

91 | logger.info(f"Loading configuration from {config_path}")

92 | try:

93 | with open(config_path, 'r', encoding='utf-8') as f:

94 | config = yaml.safe_load(f)

95 | if not isinstance(config, dict):

96 | raise TypeError("Configuration file content is not a dictionary.")

97 | return config

98 | except FileNotFoundError:

99 | logger.error(f"Configuration file not found at: {config_path}")

100 | raise

101 | except yaml.YAMLError as e:

102 | logger.error(f"Error parsing YAML file at {config_path}. Please check syntax. Error: {e}")

103 | raise

104 | except TypeError as e:

105 | logger.error(f"Error in config file structure: {e}")

106 | raise

107 |

108 |

109 | def save_object(obj: Any, filepath: str):

110 | """

111 | Saves a Python object to a file using pickle.

112 |

113 | Args:

114 | obj (Any): The Python object to save.

115 | filepath (str): The path where the object will be saved.

116 | """

117 | logger.info(f"Saving object to {filepath}")

118 | try:

119 | # Ensure the directory exists

120 | os.makedirs(os.path.dirname(filepath), exist_ok=True)

121 | with open(filepath, 'wb') as f:

122 | pickle.dump(obj, f)

123 | except (IOError, pickle.PicklingError) as e:

124 | logger.error(f"Failed to save object to {filepath}. Error: {e}")

125 | raise

126 |

127 |

128 | def load_object(filepath: str) -> Any:

129 | """

130 |

131 | Loads a Python object from a pickle file.

132 |

133 | Args:

134 | filepath (str): The path to the pickle file.

135 |

136 | Returns:

137 | Any: The loaded Python object.

138 | """

139 | logger.info(f"Loading object from {filepath}")

140 | try:

141 | with open(filepath, 'rb') as f:

142 | obj = pickle.load(f)

143 | return obj

144 | except FileNotFoundError:

145 | logger.error(f"Object file not found at: {filepath}")

146 | raise

147 | except (IOError, pickle.UnpicklingError) as e:

148 | logger.error(f"Failed to load object from {filepath}. Error: {e}")

149 | raise

150 |

151 |

152 | # --- Data Handling Utilities ---

153 |

154 | class PoetryDataset(Dataset):

155 | """

156 | A custom PyTorch Dataset for poetry classification.

157 | Assumes data is pre-processed and consists of feature tensors and label tensors.

158 | """

159 | def __init__(self, features: Union[np.ndarray, torch.Tensor], labels: Union[np.ndarray, torch.Tensor]):

160 | if len(features) != len(labels):

161 | raise ValueError("Features and labels must have the same length.")

162 |

163 | self.features = torch.tensor(features, dtype=torch.float32) if isinstance(features, np.ndarray) else features

164 | self.labels = torch.tensor(labels, dtype=torch.float32) if isinstance(labels, np.ndarray) else labels

165 | logger.info(f"Dataset created with {len(self.features)} samples.")

166 |

167 | def __len__(self) -> int:

168 | return len(self.features)

169 |

170 | def __getitem__(self, idx: int) -> Tuple[torch.Tensor, torch.Tensor]:

171 | return self.features[idx], self.labels[idx]

172 |

173 |

174 | def pad_collate_fn(batch: List[Tuple[torch.Tensor, torch.Tensor]], pad_value: float = 0.0) -> Tuple[torch.Tensor, torch.Tensor]:

175 | """

176 | A custom collate_fn for a DataLoader to handle padding of sequences with variable lengths.

177 | This is crucial if your poems (or segments) have different numbers of vectors.

178 |

179 | Args:

180 | batch (List[Tuple[torch.Tensor, torch.Tensor]]): A list of (features, label) tuples.

181 | pad_value (float): The value to use for padding.

182 |

183 | Returns:

184 | Tuple[torch.Tensor, torch.Tensor]: Padded features and their corresponding labels.

185 | """

186 | # Separate features and labels

187 | features, labels = zip(*batch)

188 |

189 | # Pad the features (sequences)

190 | # torch.nn.utils.rnn.pad_sequence handles padding efficiently

191 | features_padded = torch.nn.utils.rnn.pad_sequence(features, batch_first=True, padding_value=pad_value)

192 |

193 | # Stack the labels

194 | labels = torch.stack(labels, 0)

195 |

196 | return features_padded, labels

--------------------------------------------------------------------------------

/web_app/pages/Explore_Corpus.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import logging

4 | from typing import List

5 |

6 | import pandas as pd

7 | import plotly.express as px

8 | import streamlit as st

9 |

10 | # Configure logger for this page

11 | logger = logging.getLogger(__name__)

12 |

13 |

14 | # --- Page Configuration ---

15 | try:

16 | st.set_page_config(

17 | page_title="Explore The Corpus",

18 | page_icon="📚",

19 | layout="wide",

20 | )

21 | except st.errors.StreamlitAPIException:

22 | # This error is expected if set_page_config is already called in the main app.py

23 | pass

24 |

25 |

26 | # --- Caching and Data Loading ---

27 |

28 | @st.cache_data

29 | def load_annotated_corpus(file_path: str = "data/annotated/diwan_corpus_annotated.csv") -> pd.DataFrame:

30 | """

31 | Loads the main annotated corpus from a CSV file and caches it.

32 | This function is the single source of truth for the dashboard's data.

33 | """

34 | try:

35 | logger.info(f"Loading annotated corpus from {file_path}...")

36 | df = pd.read_csv(file_path)

37 | # Basic validation

38 | if 'poet_name' not in df.columns or 'era' not in df.columns:

39 | raise ValueError("Required columns 'poet_name' or 'era' not found.")

40 | logger.info(f"Corpus loaded successfully with {len(df)} poems.")

41 | return df

42 | except FileNotFoundError:

43 | st.error(f"Data file not found at '{file_path}'. Please ensure the annotated corpus is available.")

44 | logger.error(f"Data file not found: {file_path}")

45 | return pd.DataFrame() # Return empty dataframe to prevent crash

46 | except Exception as e:

47 | st.error(f"An error occurred while loading the data: {e}")

48 | logger.error(f"Error loading corpus: {e}", exc_info=True)

49 | return pd.DataFrame()

50 |

51 |

52 | @st.cache_data

53 | def get_theme_columns(df: pd.DataFrame) -> List[str]:

54 | """Extracts theme column names from the DataFrame."""

55 | return [col for col in df.columns if col.startswith('theme_')]

56 |

57 |

58 | # --- UI and Plotting Functions ---

59 |

60 | def display_main_metrics(df: pd.DataFrame, theme_cols: List[str]):

61 | """Displays key statistics about the corpus."""

62 | st.header("Corpus Overview")

63 |

64 | col1, col2, col3, col4 = st.columns(4)

65 | col1.metric("Total Poems", f"{len(df):,}")

66 | col2.metric("Unique Poets", f"{df['poet_name'].nunique():,}")

67 | col3.metric("Historical Eras", f"{df['era'].nunique():,}")

68 | col4.metric("Annotated Themes", f"{len(theme_cols):,}")

69 |

70 |

71 | def display_theme_distribution(df: pd.DataFrame, theme_cols: List[str]):

72 | """Calculates and displays the overall distribution of themes."""

73 | st.subheader("Overall Theme Distribution")

74 |

75 | if not theme_cols:

76 | st.warning("No theme columns found to analyze.")

77 | return

78 |

79 | # Calculate theme counts

80 | theme_counts = df[theme_cols].sum().sort_values(ascending=False)

81 | theme_counts.index = theme_counts.index.str.replace('theme_', '') # Clean up names for display

82 |

83 | # Create a bar chart

84 | fig = px.bar(

85 | theme_counts,

86 | x=theme_counts.values,

87 | y=theme_counts.index,

88 | orientation='h',

89 | labels={'x': 'Number of Poems', 'y': 'Theme'},

90 | title="Frequency of Each Theme in the Corpus"

91 | )

92 | fig.update_layout(yaxis={'categoryorder': 'total ascending'})

93 | st.plotly_chart(fig, use_container_width=True)

94 |

95 |

96 | def display_interactive_filters(df: pd.DataFrame, theme_cols: List[str]):

97 | """Creates and manages the interactive filters in the sidebar."""

98 | st.sidebar.header("🔍 Interactive Filters")

99 |

100 | # Theme filter

101 | # Clean up names for the multiselect widget

102 | cleaned_theme_names = [name.replace('theme_', '') for name in theme_cols]

103 | selected_themes_cleaned = st.sidebar.multiselect(

104 | "Filter by Theme(s):",

105 | options=cleaned_theme_names,

106 | help="Select one or more themes to view poems containing them."

107 | )

108 | # Map back to original column names

109 | selected_themes_original = ['theme_' + name for name in selected_themes_cleaned]

110 |

111 | # Poet filter

112 | all_poets = sorted(df['poet_name'].unique())

113 | selected_poets = st.sidebar.multiselect(

114 | "Filter by Poet(s):",

115 | options=all_poets

116 | )

117 |

118 | # Era filter

119 | all_eras = sorted(df['era'].unique())

120 | selected_eras = st.sidebar.multiselect(

121 | "Filter by Era(s):",

122 | options=all_eras

123 | )

124 |

125 | return selected_themes_original, selected_poets, selected_eras

126 |

127 |

128 | def apply_filters(df: pd.DataFrame, themes: List[str], poets: List[str], eras: List[str]) -> pd.DataFrame:

129 | """Applies the selected filters to the DataFrame."""

130 | filtered_df = df.copy()

131 |

132 | # Apply theme filter (poems must contain ALL selected themes)

133 | if themes:

134 | for theme in themes:

135 | filtered_df = filtered_df[filtered_df[theme] == 1]

136 |

137 | # Apply poet filter

138 | if poets:

139 | filtered_df = filtered_df[filtered_df['poet_name'].isin(poets)]

140 |

141 | # Apply era filter

142 | if eras:

143 | filtered_df = filtered_df[filtered_df['era'].isin(eras)]

144 |

145 | return filtered_df

146 |

147 |

148 | # --- Main Page Application ---

149 |

150 | def main():

151 | """Main function to render the 'Explore Corpus' page."""

152 |

153 | st.title("📚 Explore the Annotated Poetry Corpus")

154 | st.markdown(

155 | "This interactive dashboard allows you to explore the thematic landscape of our annotated "

156 | "Arabic poetry corpus. Use the filters in the sidebar to drill down into the data."

157 | )

158 | st.markdown("---")

159 |

160 | # Load data

161 | corpus_df = load_annotated_corpus()

162 |

163 | # Stop if data loading failed

164 | if corpus_df.empty:

165 | st.warning("Cannot display dashboard because the data could not be loaded.")

166 | st.stop()

167 |

168 | theme_columns = get_theme_columns(corpus_df)

169 |

170 | # Display widgets and get filter selections

171 | selected_themes, selected_poets, selected_eras = display_interactive_filters(corpus_df, theme_columns)

172 |

173 | # Apply filters

174 | filtered_data = apply_filters(corpus_df, selected_themes, selected_poets, selected_eras)

175 |

176 | # --- Main Content Area ---

177 |

178 | # Display high-level stats of the *original* corpus

179 | display_main_metrics(corpus_df, theme_columns)

180 | st.markdown("---")

181 |

182 | # Display analysis based on the *filtered* data

183 | st.header("Filtered Results")

184 | st.write(f"**Showing {len(filtered_data)} of {len(corpus_df)} poems based on your selections.**")

185 |

186 | if len(filtered_data) > 0:

187 | # Display theme distribution for the filtered subset

188 | display_theme_distribution(filtered_data, theme_columns)

189 |

190 | # Display a sample of the filtered data

191 | st.subheader("Filtered Data Sample")

192 | st.dataframe(

193 | filtered_data[['poet_name', 'era', 'poem_text']].head(20),

194 | use_container_width=True,

195 | hide_index=True

196 | )

197 | else:

198 | st.info("No poems match the selected filter criteria. Please broaden your search.")

199 |

200 |

201 | if __name__ == "__main__":

202 | main()

--------------------------------------------------------------------------------

/src/dataset.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import logging

4 | from typing import Tuple, List, Optional, Union

5 |

6 | import numpy as np

7 | import pandas as pd

8 | import torch

9 | from torch.utils.data import Dataset

10 |

11 | # Configure logging

12 | logger = logging.getLogger(__name__)

13 |

14 |

15 | class PoetryThematicDataset(Dataset):

16 | """

17 | A robust, custom PyTorch Dataset for the Arabic Poetry Thematic Classification task.

18 |

19 | This class is responsible for:

20 | 1. Loading data from a CSV file.

21 | 2. Validating the necessary columns.

22 | 3. Converting data into PyTorch Tensors.

23 | 4. Optionally caching the processed data in memory for faster access during training.

24 | """

25 |

26 | def __init__(

27 | self,

28 | csv_path: str,

29 | vector_column: str,

30 | label_columns: List[str],

31 | text_column: Optional[str] = None,

32 | use_caching: bool = True

33 | ):

34 | """

35 | Initializes the Dataset.

36 |

37 | Args:

38 | csv_path (str): Path to the CSV file containing the dataset (e.g., train.csv).

39 | vector_column (str): The name of the column containing the pre-computed segment embeddings.

40 | The data in this column should be list-like or array-like.

41 | label_columns (List[str]): A list of column names representing the multi-label targets.

42 | text_column (Optional[str]): The name of the column containing the original text.

43 | Useful for debugging and analysis, but not used by the model.

44 | use_caching (bool): If True, the entire dataset will be loaded and converted to tensors

45 | in memory upon initialization for faster access. Recommended for

46 | datasets that fit in RAM.

47 | """

48 | super().__init__()

49 |

50 | self.csv_path = csv_path

51 | self.vector_column = vector_column

52 | self.label_columns = label_columns

53 | self.text_column = text_column

54 | self.use_caching = use_caching

55 |

56 | self.data = self._load_and_validate_data()

57 | self.num_samples = len(self.data)

58 |

59 | self._cached_data: Optional[List[Tuple[torch.Tensor, torch.Tensor]]] = None

60 | if self.use_caching:

61 | logger.info("Caching enabled. Pre-loading and converting all data to tensors...")

62 | self._cache_data()

63 | logger.info(f"Successfully cached {self.num_samples} samples.")

64 |

65 | logger.info(f"Dataset initialized from {csv_path}. Found {self.num_samples} samples.")

66 |

67 | def _load_and_validate_data(self) -> pd.DataFrame:

68 | """Loads the CSV file and validates its structure."""

69 | try:

70 | df = pd.read_csv(self.csv_path)

71 | except FileNotFoundError:

72 | logger.error(f"Dataset file not found at: {self.csv_path}")

73 | raise

74 | except Exception as e:

75 | logger.error(f"Failed to read CSV file at {self.csv_path}. Error: {e}")

76 | raise

77 |

78 | # Validate required columns

79 | required_cols = [self.vector_column] + self.label_columns

80 | if self.text_column:

81 | required_cols.append(self.text_column)

82 |

83 | missing_cols = [col for col in required_cols if col not in df.columns]

84 | if missing_cols:

85 | raise ValueError(f"Missing required columns in {self.csv_path}: {missing_cols}")

86 |

87 | return df

88 |

89 | def _parse_row(self, index: int) -> Tuple[torch.Tensor, torch.Tensor, Optional[str]]:

90 | """

91 | Parses a single row from the DataFrame and converts it to tensors.

92 |

93 | Returns:

94 | A tuple of (feature_tensor, label_tensor, optional_text).

95 | """

96 | row = self.data.iloc[index]

97 |

98 | # --- Process Features (Vectors) ---

99 | try:

100 | # Vectors might be stored as string representations of lists, e.g., '[1, 2, 3]'

101 | # We need to handle this safely.

102 | vector_data = row[self.vector_column]

103 | if isinstance(vector_data, str):

104 | # A common case when reading from CSV

105 | import json

106 | feature_list = json.loads(vector_data)

107 | else:

108 | # Assumes it's already a list or numpy array

109 | feature_list = vector_data

110 |

111 | features = torch.tensor(feature_list, dtype=torch.float32)

112 |

113 | except Exception as e:

114 | logger.error(f"Failed to parse vector data at index {index} from column '{self.vector_column}'. Error: {e}")

115 | # Return a dummy tensor to avoid crashing the loader, or raise an error

116 | # For now, we'll raise to highlight the data quality issue.

117 | raise TypeError(f"Invalid data format in vector column at index {index}") from e

118 |

119 | # --- Process Labels ---

120 | try:

121 | labels = row[self.label_columns].values.astype(np.float32)

122 | labels = torch.from_numpy(labels)

123 | except Exception as e:

124 | logger.error(f"Failed to parse label data at index {index}. Error: {e}")

125 | raise TypeError(f"Invalid data format in label columns at index {index}") from e

126 |

127 | # --- Process Optional Text ---

128 | text = row[self.text_column] if self.text_column else None

129 |

130 | return features, labels, text

131 |

132 | def _cache_data(self):

133 | """Pre-processes and stores all samples in a list for fast retrieval."""

134 | self._cached_data = []

135 | for i in range(self.num_samples):

136 | try:

137 | # We only cache features and labels, not the text, to save memory

138 | features, labels, _ = self._parse_row(i)

139 | self._cached_data.append((features, labels))

140 | except TypeError as e:

141 | logger.error(f"Skipping sample at index {i} due to parsing error: {e}")

142 | # In a real scenario, you might want to handle this more gracefully,

143 | # e.g., by removing bad samples from self.data

144 | continue

145 |

146 | def __len__(self) -> int:

147 | """Returns the total number of samples in the dataset."""

148 | return self.num_samples

149 |

150 | def __getitem__(self, index: int) -> Union[Tuple[torch.Tensor, torch.Tensor], Dict]:

151 | """

152 | Retrieves a single sample from the dataset.

153 |

154 | If caching is enabled, it fetches pre-processed tensors from memory.

155 | Otherwise, it parses the row from the DataFrame on the fly.

156 |

157 | Returns:

158 | If text_column is provided, returns a dictionary.

159 | Otherwise, returns a tuple of (features, labels).

160 | This dictionary format is more flexible and explicit.

161 | """

162 | if self.use_caching and self._cached_data:

163 | if index >= len(self._cached_data):

164 | raise IndexError(f"Index {index} out of range for cached data of size {len(self._cached_data)}")

165 | features, labels = self._cached_data[index]

166 |

167 | # If text is needed, we still have to fetch it from the DataFrame

168 | if self.text_column:

169 | text = self.data.iloc[index][self.text_column]

170 | return {"features": features, "labels": labels, "text": text}

171 | else:

172 | return features, labels

173 | else:

174 | # Process on the fly

175 | features, labels, text = self._parse_row(index)

176 | if self.text_column:

177 | return {"features": features, "labels": labels, "text": text}

178 | else:

179 | return features, labels

--------------------------------------------------------------------------------

/src/data_processing.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | import pandas as pd

4 | import re

5 | import string

6 | import logging

7 | from typing import List, Optional

8 |

9 | # Set up logging

10 | logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

11 |

12 | try:

13 | from pyfarasa.segmenter import FarasaSegmenter

14 | except ImportError:

15 | logging.error("pyfarasa is not installed. Please install it using 'pip install pyfarasa'.")

16 | # You might want to raise the error to stop execution if Farasa is critical

17 | # raise ImportError("pyfarasa is not installed.")

18 | FarasaSegmenter = None # Set to None to handle cases where it's not present

19 |

20 |

21 | class PoetryPreprocessor:

22 | """

23 | A robust class to preprocess Arabic poetry text.

24 | Handles cleaning, normalization, stopword removal, and morphological segmentation.

25 | Includes error handling and logging.

26 | """

27 |

28 | def __init__(self, stopwords_path: Optional[str] = None):

29 | """

30 | Initializes the preprocessor, setting up the Farasa segmenter and loading stopwords.

31 |

32 | Args:

33 | stopwords_path (Optional[str]): Path to a file containing custom stopwords, one per line.

34 | If None, a default internal list is used.

35 | """

36 | self.segmenter = self._initialize_farasa()

37 | self.stopwords = self._load_stopwords(stopwords_path)

38 |

39 | def _initialize_farasa(self):

40 | """

41 | Initializes the FarasaSegmenter and handles potential errors if pyfarasa is not installed

42 | or if the JAR files are not found.

43 | """

44 | if FarasaSegmenter is None:

45 | logging.warning("FarasaSegmenter is not available. Segmentation step will be skipped.")

46 | return None

47 | try:

48 | # interactive=True avoids re-initializing the JVM for each call, making it much faster.

49 | return FarasaSegmenter(interactive=True)

50 | except Exception as e:

51 | logging.error(f"Failed to initialize FarasaSegmenter. Error: {e}")

52 | logging.warning("Segmentation step will be skipped. Ensure Java is installed and Farasa's dependencies are correct.")

53 | return None

54 |

55 | def _load_stopwords(self, path: Optional[str]) -> List[str]:

56 | """

57 | Loads stopwords from a specified file path or returns a default list.

58 | """

59 | if path:

60 | try:

61 | with open(path, 'r', encoding='utf-8') as f:

62 | stopwords = [line.strip() for line in f if line.strip()]

63 | logging.info(f"Successfully loaded {len(stopwords)} stopwords from {path}.")

64 | return stopwords

65 | except FileNotFoundError:

66 | logging.error(f"Stopwords file not found at {path}. Falling back to default list.")

67 |

68 | # Fallback to default list

69 | logging.info("Using default internal stopwords list.")

70 | return [

71 | "من", "في", "على", "إلى", "عن", "و", "ف", "ثم", "أو", "ب", "ك", "ل",

72 | "يا", "ما", "لا", "إن", "أن", "كان", "قد", "لقد", "لكن", "هذا", "هذه",

73 | "ذلك", "تلك", "هنا", "هناك", "هو", "هي", "هم", "هن", "أنا", "نحن",

74 | "أنت", "أنتي", "أنتما", "أنتن", "الذي", "التي", "الذين", "اللاتي",

75 | "كل", "بعض", "غير", "سوى", "مثل", "كيف", "متى", "أين", "كم", "أي",

76 | "حتى", "إذ", "إذا", "إذن", "بعد", "قبل", "حين", "بين", "مع", "عند"

77 | ]

78 |

79 | def _clean_text(self, text: str) -> str:

80 | """

81 | Applies basic cleaning to the text: removes diacritics, non-Arabic chars,

82 | tatweel, and extra whitespaces.

83 | Handles non-string inputs gracefully.

84 | """

85 | if not isinstance(text, str):

86 | logging.warning(f"Input is not a string (type: {type(text)}), returning empty string.")

87 | return ""

88 |

89 | # 1. Remove diacritics (tashkeel)

90 | text = re.sub(r'[\u064B-\u0652]', '', text)

91 |

92 | # 2. Remove tatweel (elongation)

93 | text = re.sub(r'\u0640', '', text)

94 |

95 | # 3. Remove punctuation and non-Arabic characters (keeps only Arabic letters and spaces)

96 | # This regex is more comprehensive and includes a wider range of punctuation.

97 | arabic_only_pattern = re.compile(r'[^\u0621-\u064A\s]')

98 | text = arabic_only_pattern.sub('', text)

99 |

100 | # 4. Remove extra whitespaces

101 | text = re.sub(r'\s+', ' ', text).strip()

102 |

103 | return text

104 |

105 | def _normalize_arabic(self, text: str) -> str:

106 | """

107 | Normalizes Arabic characters: unifies Alef forms, Yaa/Alef Maqsura, and Taa Marbuta.

108 | """

109 | text = re.sub(r'[إأآ]', 'ا', text)

110 | text = re.sub(r'ى', 'ي', text)

111 | text = re.sub(r'ة', 'ه', text)

112 | return text

113 |

114 | def _remove_stopwords(self, text: str) -> str:

115 | """Removes stopwords from a space-tokenized text."""

116 | words = text.split()

117 | filtered_words = [word for word in words if word not in self.stopwords]

118 | return " ".join(filtered_words)

119 |

120 | def _segment_text(self, text: str) -> str:

121 | """

122 |

123 | Segments the text using the initialized Farasa segmenter.

124 | Returns the original text if the segmenter is not available.