├── .github

└── workflows

│ └── testing.yml

├── .gitignore

├── LICENSE.txt

├── README.md

├── data

├── crunchbase_companies.json.gz

├── first_names.json

├── form_frequencies.json

├── geonames.json

├── model.PNG

├── products.json

├── reuters_small.tar.gz

├── sentiment

│ ├── lexicons

│ │ ├── IBM_Debater

│ │ │ └── no_unigram.txt

│ │ ├── NRC_Sentiment_Emotion

│ │ │ ├── NRC-Emotion-Lexicon-Wordlevel-v0.92.txt

│ │ │ └── no_sent.txt

│ │ ├── NRC_VAD_Lexicon

│ │ │ └── Norwegian-no-NRC-VAD-Lexicon.txt

│ │ └── socal

│ │ │ ├── no_adj.txt

│ │ │ ├── no_adv.txt

│ │ │ ├── no_google.txt

│ │ │ ├── no_int.txt

│ │ │ ├── no_noun.txt

│ │ │ └── no_verb.txt

│ └── norec_sentence

│ │ ├── dev.txt

│ │ ├── labels.json

│ │ ├── test.txt

│ │ └── train.txt

├── skweak_logo.jpg

├── skweak_logo_thumbnail.jpg

├── skweak_procedure.png

└── wikidata_small_tokenised.json.gz

├── examples

├── ner

│ ├── Step by step NER.ipynb

│ ├── __init__.py

│ ├── conll2003_ner.py

│ ├── conll2003_prep.py

│ ├── data_utils.py

│ ├── eval_utils.py

│ └── muc6_ner.py

├── quick_start.ipynb

└── sentiment

│ ├── Step_by_step.ipynb

│ ├── __init__.py

│ ├── norec_sentiment.py

│ ├── sentiment_lexicons.py

│ ├── sentiment_models.py

│ ├── transformer_model.py

│ └── weak_supervision_sentiment.py

├── poetry.lock

├── poetry.toml

├── pyproject.toml

├── skweak

├── __init__.py

├── aggregation.py

├── analysis.py

├── base.py

├── doclevel.py

├── gazetteers.py

├── generative.py

├── heuristics.py

├── spacy.py

├── utils.py

└── voting.py

└── tests

├── __init__.py

├── conftest.py

├── test_aggregation.py

├── test_analysis.py

├── test_doclevel.py

├── test_gazetteers.py

├── test_heuristics.py

└── test_utils.py

/.github/workflows/testing.yml:

--------------------------------------------------------------------------------

1 | name: testing

2 |

3 | on: [ push ]

4 |

5 | jobs:

6 | build:

7 |

8 | runs-on: ubuntu-latest

9 | strategy:

10 | matrix:

11 | python-version: [ "3.7", "3.8", "3.9", "3.10", "3.11" ]

12 | fail-fast: false

13 |

14 | steps:

15 | - uses: actions/checkout@v3

16 | name: Checkout

17 |

18 | - name: Set up Python ${{ matrix.python-version }}

19 | uses: actions/setup-python@v4

20 | with:

21 | python-version: ${{ matrix.python-version }}

22 | cache: 'pip'

23 |

24 | - uses: Gr1N/setup-poetry@v8

25 | with:

26 | poetry-version: 1.5.1

27 |

28 | - name: Install Python dependencies

29 | run: |

30 | poetry run pip install -U pip

31 | poetry install --with dev

32 |

33 | # TODO: add mkdocs documentation, make sure examples work

34 |

35 | # - name: Lint with flake8 #TODO: use ruff

36 | # run: |

37 | # # stop the build if there are Python syntax errors or undefined names

38 | # flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

39 | # # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

40 | # flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

41 |

42 | - name: Test with pytest

43 | run: poetry run pytest

44 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .idea/

2 | .venv/

3 | build/

4 | sdist/

5 | dist/

6 |

--------------------------------------------------------------------------------

/LICENSE.txt:

--------------------------------------------------------------------------------

1 | The MIT License (MIT)

2 |

3 | Copyright (C) 2021-2026 Norsk Regnesentral

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

6 |

7 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

8 |

9 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # skweak: Weak supervision for NLP

2 |

3 | [](https://github.com/NorskRegnesentral/skweak/blob/main/LICENSE.txt)

4 | [](https://github.com/NorskRegnesentral/skweak/stargazers)

5 |

6 |

7 |

8 |

9 |

10 |  11 |

11 |

12 |

13 | **Skweak is no longer actively maintained** (if you are interested to take over the project, give us a shout).

14 |

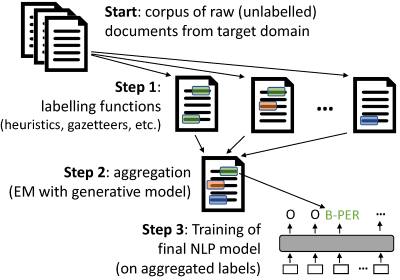

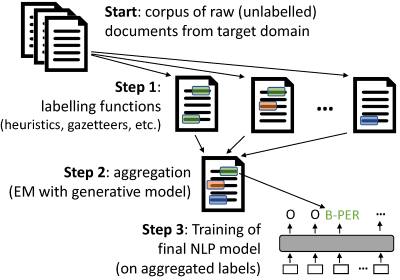

15 | Labelled data remains a scarce resource in many practical NLP scenarios. This is especially the case when working with resource-poor languages (or text domains), or when using task-specific labels without pre-existing datasets. The only available option is often to collect and annotate texts by hand, which is expensive and time-consuming.

16 |

17 | `skweak` (pronounced `/skwi:k/`) is a Python-based software toolkit that provides a concrete solution to this problem using weak supervision. `skweak` is built around a very simple idea: Instead of annotating texts by hand, we define a set of _labelling functions_ to automatically label our documents, and then _aggregate_ their results to obtain a labelled version of our corpus.

18 |

19 | The labelling functions may take various forms, such as domain-specific heuristics (like pattern-matching rules), gazetteers (based on large dictionaries), machine learning models, or even annotations from crowd-workers. The aggregation is done using a statistical model that automatically estimates the relative accuracy (and confusions) of each labelling function by comparing their predictions with one another.

20 |

21 | `skweak` can be applied to both sequence labelling and text classification, and comes with a complete API that makes it possible to create, apply and aggregate labelling functions with just a few lines of code. The toolkit is also tightly integrated with [SpaCy](http://www.spacy.io), which makes it easy to incorporate into existing NLP pipelines. Give it a try!

22 |

23 |

24 |

25 | **Full Paper**:

26 | Pierre Lison, Jeremy Barnes and Aliaksandr Hubin (2021), "[skweak: Weak Supervision Made Easy for NLP](https://aclanthology.org/2021.acl-demo.40/)", *ACL 2021 (System demonstrations)*.

27 |

28 | **Documentation & API**: See the [Wiki](https://github.com/NorskRegnesentral/skweak/wiki) for details on how to use `skweak`.

29 |

30 |

31 |

32 |

33 | https://user-images.githubusercontent.com/11574012/114999146-e0995300-9ea1-11eb-8288-2bb54dc043e7.mp4

34 |

35 |

36 |

37 |

38 |

39 | ## Dependencies

40 |

41 | - `spacy` >= 3.0.0

42 | - `hmmlearn` >= 0.3.0

43 | - `pandas` >= 0.23

44 | - `numpy` >= 1.18

45 |

46 | You also need Python >= 3.6.

47 |

48 |

49 | ## Install

50 |

51 | The easiest way to install `skweak` is through `pip`:

52 |

53 | ```shell

54 | pip install skweak

55 | ```

56 |

57 | or if you want to install from the repo:

58 |

59 | ```shell

60 | pip install --user git+https://github.com/NorskRegnesentral/skweak

61 | ```

62 |

63 | The above installation only includes the core library (not the additional examples in `examples`).

64 |

65 | Note: some examples and tests may require trained spaCy pipelines. These can be downloaded automatically using the syntax (for the pipeline `en_core_web_sm`)

66 | ```shell

67 | python -m spacy download en_core_web_sm

68 | ```

69 |

70 |

71 | ## Basic Overview

72 |

73 |

74 |

75 |  76 |

76 |

77 |

78 | Weak supervision with `skweak` goes through the following steps:

79 | - **Start**: First, you need raw (unlabelled) data from your text domain. `skweak` is build on top of [SpaCy](http://www.spacy.io), and operates with Spacy `Doc` objects, so you first need to convert your documents to `Doc` objects using SpaCy.

80 | - **Step 1**: Then, we need to define a range of labelling functions that will take those documents and annotate spans with labels. Those labelling functions can comes from heuristics, gazetteers, machine learning models, etc. See the  for more details.

81 | - **Step 2**: Once the labelling functions have been applied to your corpus, you need to _aggregate_ their results in order to obtain a single annotation layer (instead of the multiple, possibly conflicting annotations from the labelling functions). This is done in `skweak` using a generative model that automatically estimates the relative accuracy and possible confusions of each labelling function.

82 | - **Step 3**: Finally, based on those aggregated labels, we can train our final model. Step 2 gives us a labelled corpus that (probabilistically) aggregates the outputs of all labelling functions, and you can use this labelled data to estimate any kind of machine learning model. You are free to use whichever model/framework you prefer.

83 |

84 | ## Quickstart

85 |

86 | Here is a minimal example with three labelling functions (LFs) applied on a single document:

87 |

88 | ```python

89 | import spacy, re

90 | from skweak import heuristics, gazetteers, generative, utils

91 |

92 | # LF 1: heuristic to detect occurrences of MONEY entities

93 | def money_detector(doc):

94 | for tok in doc[1:]:

95 | if tok.text[0].isdigit() and tok.nbor(-1).is_currency:

96 | yield tok.i-1, tok.i+1, "MONEY"

97 | lf1 = heuristics.FunctionAnnotator("money", money_detector)

98 |

99 | # LF 2: detection of years with a regex

100 | lf2= heuristics.TokenConstraintAnnotator("years", lambda tok: re.match("(19|20)\d{2}$",

101 | tok.text), "DATE")

102 |

103 | # LF 3: a gazetteer with a few names

104 | NAMES = [("Barack", "Obama"), ("Donald", "Trump"), ("Joe", "Biden")]

105 | trie = gazetteers.Trie(NAMES)

106 | lf3 = gazetteers.GazetteerAnnotator("presidents", {"PERSON":trie})

107 |

108 | # We create a corpus (here with a single text)

109 | nlp = spacy.load("en_core_web_sm")

110 | doc = nlp("Donald Trump paid $750 in federal income taxes in 2016")

111 |

112 | # apply the labelling functions

113 | doc = lf3(lf2(lf1(doc)))

114 |

115 | # create and fit the HMM aggregation model

116 | hmm = generative.HMM("hmm", ["PERSON", "DATE", "MONEY"])

117 | hmm.fit([doc]*10)

118 |

119 | # once fitted, we simply apply the model to aggregate all functions

120 | doc = hmm(doc)

121 |

122 | # we can then visualise the final result (in Jupyter)

123 | utils.display_entities(doc, "hmm")

124 | ```

125 |

126 | Obviously, to get the most out of `skweak`, you will need more than three labelling functions. And, most importantly, you will need a larger corpus including as many documents as possible from your domain, so that the model can derive good estimates of the relative accuracy of each labelling function.

127 |

128 | ## Documentation

129 |

130 | See the [Wiki](https://github.com/NorskRegnesentral/skweak/wiki).

131 |

132 |

133 | ## License

134 |

135 | `skweak` is released under an MIT License.

136 |

137 | The MIT License is a short and simple permissive license allowing both commercial and non-commercial use of the software. The only requirement is to preserve

138 | the copyright and license notices (see file [License](https://github.com/NorskRegnesentral/skweak/blob/main/LICENSE.txt)). Licensed works, modifications, and larger works may be distributed under different terms and without source code.

139 |

140 | ## Citation

141 |

142 | See our paper describing the framework:

143 |

144 | Pierre Lison, Jeremy Barnes and Aliaksandr Hubin (2021), "[skweak: Weak Supervision Made Easy for NLP](https://aclanthology.org/2021.acl-demo.40/)", *ACL 2021 (System demonstrations)*.

145 |

146 | ```bibtex

147 | @inproceedings{lison-etal-2021-skweak,

148 | title = "skweak: Weak Supervision Made Easy for {NLP}",

149 | author = "Lison, Pierre and

150 | Barnes, Jeremy and

151 | Hubin, Aliaksandr",

152 | booktitle = "Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: System Demonstrations",

153 | month = aug,

154 | year = "2021",

155 | address = "Online",

156 | publisher = "Association for Computational Linguistics",

157 | url = "https://aclanthology.org/2021.acl-demo.40",

158 | doi = "10.18653/v1/2021.acl-demo.40",

159 | pages = "337--346",

160 | }

161 | ```

162 |

--------------------------------------------------------------------------------

/data/crunchbase_companies.json.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/crunchbase_companies.json.gz

--------------------------------------------------------------------------------

/data/model.PNG:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/model.PNG

--------------------------------------------------------------------------------

/data/reuters_small.tar.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/reuters_small.tar.gz

--------------------------------------------------------------------------------

/data/sentiment/lexicons/socal/no_adv.txt:

--------------------------------------------------------------------------------

1 | vidunderlig 5

2 | herlig 5

3 | nydelig 5

4 | unntaksvis 5

5 | utmerket 5

6 | fantastisk 5

7 | fantastisk 5

8 | spektakulært 5

9 | feilfritt 5

10 | nydelig 5

11 | enestående 5

12 | perfekt 5

13 | fantastisk 5

14 | utrolig 5

15 | guddommelig 5

16 | fantastisk 5

17 | upåklagelig 5

18 | utelukkende 5

19 | fabelaktig 5

20 | bedårende 5

21 | strålende 5

22 | utrolig 5

23 | blendende 5

24 | utrolig 5

25 | strålende 5

26 | veldig bra 5

27 | orgasmisk 5

28 | hyggelig 4

29 | behagelig 4

30 | jublende 4

31 | flott 4

32 | magisk 4

33 | pent 4

34 | sprudlende 4

35 | engasjerende 4

36 | elegant 4

37 | beundringsverdig 4

38 | ypperlig 4

39 | elskelig 4

40 | mesterlig 4

41 | genialt 4

42 | fantastisk 4

43 | forfriskende 4

44 | lykkelig 4

45 | kjærlig 4

46 | høyest 4

47 | fenomenalt 4

48 | sjarmerende 4

49 | innovativt 4

50 | deilig 4

51 | mirakuløst 4

52 | fortryllende 4

53 | engrossingly 4

54 | morsomt 4

55 | vakkert 4

56 | intelligent 4

57 | gledelig 4

58 | attraktivt 4

59 | utsøkt 4

60 | kjevefall 4

61 | velvillig 4

62 | strålende 4

63 | entusiastisk 4

64 | oppladbart 4

65 | fredelig 3

66 | stilig 3

67 | eksotisk 3

68 | omfattende 3

69 | omhyggelig 3

70 | søtt 3

71 | fantasifullt 3

72 | prisverdig 3

73 | enormt 3

74 | høflig 3

75 | kjærlig 3

76 | populært 3

77 | søt 3

78 | lett 3

79 | bra 3

80 | rikt 3

81 | robust 3

82 | tilfredsstillende 3

83 | nylig 3

84 | gratis 3

85 | gripende 3

86 | muntert 3

87 | nøyaktig 3

88 | positivt 3

89 | spennende 3

90 | spennende 3

91 | gunstig 3

92 | kreativt 3

93 | festlig 3

94 | lidenskapelig 3

95 | fagmessig 3

96 | fengslende 3

97 | elegant 3

98 | kunstnerisk 3

99 | behendig 3

100 | imponerende 3

101 | intellektuelt 3

102 | levende 3

103 | ekstraordinært 3

104 | smart 3

105 | fantasifullt 3

106 | ergonomisk 3

107 | riktig 3

108 | sømløst 3

109 | fritt 3

110 | vittig 3

111 | uredd 3

112 | lyst 3

113 | fleksibelt 3

114 | adeptly 3

115 | ømt 3

116 | klokt 3

117 | triumferende 3

118 | uanstrengt 3

119 | hyggelig 3

120 | uproariously 3

121 | enormt 3

122 | morsomt 3

123 | hjertelig 3

124 | rikelig 3

125 | vellykket 3

126 | humoristisk 3

127 | tålmodig 3

128 | minneverdig 3

129 | uvurderlig 3

130 | underholdende 3

131 | ergonomisk 3

132 | dristig 3

133 | kraftig 3

134 | beleilig 3

135 | rungende 3

136 | adroitly 3

137 | romantisk 3

138 | forbløffende 3

139 | heroisk 3

140 | energisk 3

141 | sjelelig 3

142 | sjenerøst 3

143 | modig 3

144 | tappert 3

145 | rimelig 3

146 | pålitelig 3

147 | rimelig 3

148 | billig 3

149 | heldigvis 2

150 | profesjonelt 2

151 | bemerkelsesverdig 2

152 | elegant 2

153 | suspensivt 2

154 | intrikat 2

155 | treffende 2

156 | pent ferdig 2

157 | konsekvent 2

158 | pålitelig 2

159 | lyrisk 2

160 | passende 2

161 | virkelig 2

162 | riktig 2

163 | intensivt 2

164 | hjertelig 2

165 | evig 2

166 | gjerne 2

167 | modig 2

168 | forseggjort 2

169 | fersk 2

170 | godt 2

171 | overbærende 2

172 | overbevisende 2

173 | effektivt 2

174 | fargerikt 2

175 | gradvis 2

176 | rolig 2

177 | hederlig 2

178 | kjærlig 2

179 | dyktig 2

180 | liberalt 2

181 | lekent 2

182 | omtenksomt 2

183 | nøyaktig 2

184 | sannferdig 2

185 | målrettet 2

186 | heldigvis 2

187 | forsiktig 2

188 | komfortabelt 2

189 | grundig 2

190 | ivrig 2

191 | pent 2

192 | kompetent 2

193 | lovende 2

194 | pen 2

195 | nøye 2

196 | fantastisk 2

197 | delikat 2

198 | aktivt 2

199 | uskyldig 2

200 | kjærlig 2

201 | umåtelig 2

202 | trofast 2

203 | kapabel 2

204 | sammenhengende 2

205 | vanedannende 2

206 | oppfinnsomt 2

207 | reflekterende 2

208 | hjelpsomt 2

209 | nobelt 2

210 | ydmykt 2

211 | dyptgående 2

212 | ivrig 2

213 | oppriktig 2

214 | smart 2

215 | høflig 2

216 | interessant 2

217 | mystisk 2

218 | sentimentalt 2

219 | smart 2

220 | formidabelt 2

221 | fint 2

222 | lett 2

223 | eksepsjonell 2

224 | eterisk 2

225 | hovedsakelig 2

226 | ridderlig 2

227 | strategisk 2

228 | greit 2

229 | elektronisk 2

230 | kunstnerisk 2

231 | moralsk 2

232 | erotisk 2

233 | rørende 2

234 | kraftig 2

235 | optimistisk 2

236 | sterk 2

237 | spirituelt 2

238 | sympatisk 2

239 | nostalgisk 2

240 | smakfullt 2

241 | trygt 2

242 | monumentalt 2

243 | hjerteskjærende 2

244 | pent 2

245 | trygt 2

246 | avgjørende 2

247 | ansvarlig 2

248 | stolt 2

249 | forståelig nok 2

250 | mektig 2

251 | autentisk 2

252 | kompromissløst 2

253 | bedre 2

254 | digitali 2

255 | rask 2

256 | gratis 2

257 | klar 2

258 | rent 2

259 | universelt 1

260 | intuitivt 1

261 | forbausende 1

262 | ren 1

263 | stilistisk 1

264 | kjent 1

265 | rikelig 1

266 | digitalt 1

267 | lydløst 1

268 | andpusten 1

269 | naturlig 1

270 | komisk 1

271 | svimmel 1

272 | realistisk 1

273 | nøye 1

274 | skarpt 1

275 | uskyldig 1

276 | intimt 1

277 | helhet 1

278 | offisielt 1

279 | troverdig 1

280 | straks 1

281 | musikalsk 1

282 | merkbart 1

283 | unikt 1

284 | logisk 1

285 | lunefullt 1

286 | lett 1

287 | passende 1

288 | klassisk 1

289 | effektivt 1

290 | slående 1

291 | helst 1

292 | første 1

293 | hovedsakelig 1

294 | beskjedent 1

295 | rimelig 1

296 | tilstrekkelig 1

297 | elektrisk 1

298 | betydelig 1

299 | gjenkjennelig 1

300 | i det vesentlige 1

301 | tilstrekkelig 1

302 | mykt 1

303 | sikkert 1

304 | intenst 1

305 | solid 1

306 | umåtelig 1

307 | høytidelig 1

308 | varmt 1

309 | relevant 1

310 | rettferdig 1

311 | dyktig 1

312 | sikkert 1

313 | ordentlig 1

314 | normalt 1

315 | rent 1

316 | overbevisende 1

317 | billig 1

318 | sentralt 1

319 | tydelig 1

320 | bevisst 1

321 | sannsynlig 1

322 | forholdsvis 1

323 | nok 1

324 | rett frem 1

325 | sammenlignbart 1

326 | responsivt 1

327 | utpreget 1

328 | raskt 1

329 | følsomt 1

330 | spontant 1

331 | villig 1

332 | anstendig 1

333 | brønn 1

334 | dyrt 1

335 | smart 1

336 | virkelig 1

337 | legitimt 1

338 | uendelig 1

339 | raskt 1

340 | lett 1

341 | fremtredende 1

342 | verdifullt 1

343 | tilfeldig 1

344 | nyttig 1

345 | jevnt 1

346 | skyll 1

347 | gradvis 1

348 | spesielt 1

349 | sterkt 1

350 | jevnt og trutt 1

351 | automatisk 1

352 | stille 1

353 | troverdig 1

354 | tilfredsstillende 1

355 | flørtende 1

356 | uvanlig 1

357 | med rette 1

358 | globalt 1

359 | med respekt 1

360 | quirkily 1

361 | uavhengig 1

362 | enormt 1

363 | ekte 1

364 | realistisk 1

365 | myk 1

366 | stor 1

367 | rettferdig 1

368 | uheldig 1

369 | komisk 1

370 | ukonvensjonelt 1

371 | vitenskapelig 1

372 | uforutsigbart 1

373 | vanlig 1

374 | klar 1

375 | forførende 1

376 | muntert 1

377 | hypnotisk 1

378 | pålitelig 1

379 | ambisiøst 1

380 | nonchalant 1

381 | sikkert 1

382 | kompakt 1

383 | ekstra 1

384 | akseptabelt 1

385 | økonomisk 1

386 | funksjonelt 1

387 | leselig 1

388 | valgfritt 1

389 | konkurransedyktig 1

390 | merkbart 1

391 | glatt -1

392 | kjølig -1

393 | hysterisk -1

394 | stort -1

395 | blinkende -1

396 | overfladisk -1

397 | ially -1

398 | dramatisk -1

399 | rett ut -1

400 | underlig -1

401 | nedslående -1

402 | tvangsmessig -1

403 | sjelden -1

404 | tett -1

405 | kaldt -1

406 | marginalt -1

407 | skarpt -1

408 | ostelig -1

409 | snevert -1

410 | sjokkerende -1

411 | kort -1

412 | forbausende -1

413 | tydelig -1

414 | foruroligende -1

415 | svakt -1

416 | alvorlig -1

417 | løst -1

418 | opprørende -1

419 | ujevnt -1

420 | tung -1

421 | hard -1

422 | uunngåelig -1

423 | nervøst -1

424 | kommersielt -1

425 | nølende -1

426 | lite -1

427 | eksternt -1

428 | vilt -1

429 | trist -1

430 | fantastisk -1

431 | uendelig -1

432 | tomgang -1

433 | negativt -1

434 | minimalt -1

435 | ekstremt -1

436 | beskyttende -1

437 | rampete -1

438 | tett -1

439 | motvillig -1

440 | sakte -1

441 | unødvendig -1

442 | lurt -1

443 | gjennomsiktig -1

444 | gal -1

445 | følelsesmessig -1

446 | urolig -1

447 | knapt -1

448 | omtrent -1

449 | hakkete -1

450 | inkonsekvent -1

451 | tungt -1

452 | rastløs -1

453 | kompleks -1

454 | merkelig -1

455 | konvensjonelt -1

456 | spesielt -1

457 | stereotyp -1

458 | utenfor emnet -1

459 | trendig -1

460 | lang -1

461 | klinisk -1

462 | forsiktig -1

463 | politisk -1

464 | religiøst -1

465 | vanskelig -1

466 | radikalt -1

467 | feilaktig -1

468 | gjentatt -1

469 | uhyggelig -1

470 | uinteressant -1

471 | svakt -1

472 | overflødig -1

473 | mørkt -1

474 | kryptisk -1

475 | løs -1

476 | kunstig -1

477 | campily -1

478 | sporadisk -1

479 | forenklet -1

480 | sterkt -1

481 | unnskyldende -1

482 | uløselig -1

483 | flamboyant -1

484 | idealistisk -1

485 | vantro -1

486 | vanlig -1

487 | billig -1

488 | ulykkelig -1

489 | sakte -1

490 | sent -1

491 | vedvarende -1

492 | ufullstendig -1

493 | temperamentsfull -1

494 | ironisk nok -1

495 | merkelig -1

496 | blindende -1

497 | trassig -1

498 | uklart -1

499 | mørk -1

500 | innfødt -1

501 | uregelmessig -1

502 | urealistisk -1

503 | gratis -2

504 | kjølig -2

505 | heldigvis -2

506 | urimelig -2

507 | repeterende -2

508 | upassende -2

509 | uforklarlig -2

510 | unødvendig -2

511 | brashly -2

512 | dårlig -2

513 | ubarmhjertig -2

514 | ubehagelig -2

515 | lat -2

516 | støyende -2

517 | bare -2

518 | alvorlig -2

519 | voldsomt -2

520 | beryktet -2

521 | grovt -2

522 | likegyldig -2

523 | naken -2

524 | klønete -2

525 | lunken -2

526 | ulogisk -2

527 | mindnumbingly -2

528 | amatøraktig -2

529 | latterlig -2

530 | klumpete -2

531 | uberørt -2

532 | urettmessig -2

533 | umulig -2

534 | feil -2

535 | dessverre -2

536 | angivelig -2

537 | forutsigbart -2

538 | flatt -2

539 | skyldfølende -2

540 | vanvittig -2

541 | innblandet -2

542 | tregt -2

543 | uvillig -2

544 | uavbrutt -2

545 | urettferdig -2

546 | tåpelig -2

547 | dessverre -2

548 | engstelig -2

549 | sappily -2

550 | takknemlig -2

551 | urettferdig -2

552 | sårt -2

553 | icily -2

554 | hardt -2

555 | knapt -2

556 | upassende -2

557 | høyt -2

558 | lystig -2

559 | unnvikende -2

560 | kjedelig -2

561 | apprehensive -2

562 | neppe -2

563 | vagt -2

564 | overbevisende -2

565 | utålmodig -2

566 | unøyaktig -2

567 | dessverre -2

568 | voldsomt -2

569 | overdreven -2

570 | uintelligent -2

571 | feil -2

572 | skissert -2

573 | kjedelig -2

574 | sjalu -2

575 | svakt -2

576 | offensivt -2

577 | vilkårlig -2

578 | ubarmhjertig -2

579 | kjedelig -2

580 | desperat -2

581 | tankeløst -2

582 | beklager -2

583 | altfor -2

584 | mislykket -2

585 | skjelvende -2

586 | lam -2

587 | tre -2

588 | ukontrollerbart -2

589 | strengt -2

590 | desperat -2

591 | tøft -2

592 | forvirrende -2

593 | fantasiløst -2

594 | negativt -2

595 | rotete -2

596 | mistenkelig -2

597 | ulovlig -2

598 | feil -2

599 | overveldende -2

600 | sauete -2

601 | tankeløst -2

602 | ['d | ville] _rather -2

603 | generisk -2

604 | akutt -2

605 | nerdete -2

606 | urolig -2

607 | mutt -2

608 | høyt -2

609 | morsomt -2

610 | deprimerende -2

611 | uforståelig -2

612 | katatonisk -2

613 | endimensjonalt -2

614 | syk -2

615 | generelt -2

616 | ikke tiltalende -2

617 | sta -2

618 | møysommelig -2

619 | ugunstig -2

620 | tilfeldig -2

621 | frekt -2

622 | dystert -2

623 | slurvet -2

624 | sinnsykt -2

625 | latterlig -2

626 | beruset -2

627 | impulsivt -2

628 | vanskelig -2

629 | vakuum -2

630 | grådig -2

631 | naivt -2

632 | syndig -3

633 | uendelig -3

634 | uhyrlig -3

635 | lurt -3

636 | aggressivt -3

637 | kynisk -3

638 | sint -3

639 | ubehagelig -3

640 | terminalt -3

641 | dystert -3

642 | søppel -3

643 | motbydelig -3

644 | frekt -3

645 | skummelt -3

646 | frekt -3

647 | skremmende -3

648 | inhabil -3

649 | for -3

650 | blindt -3

651 | håpløst -3

652 | sinnsløs -3

653 | pretensiøst -3

654 | vilt -3

655 | skuffende -3

656 | absurd -3

657 | tunghendt -3

658 | kjedelig -3

659 | tett -3

660 | truende -3

661 | b-film -3

662 | farlig -3

663 | illevarslende -3

664 | utilgivende -3

665 | grovt -3

666 | rabiat -3

667 | hjemsøkende -3

668 | fryktelig -3

669 | uheldigvis -3

670 | urovekkende -3

671 | kjedelig -3

672 | skamløst -3

673 | krøllete -3

674 | dystert -3

675 | blatant -3

676 | egoistisk -3

677 | dårlig -3

678 | bisarrt -3

679 | grafisk -3

680 | tragisk -3

681 | problematisk -3

682 | kronisk -3

683 | død -3

684 | irriterende -3

685 | irriterende -3

686 | analt -3

687 | dødelig -3

688 | meningsløst -3

689 | arrogant -3

690 | skammelig -3

691 | dårlig -3

692 | latterlig -4

693 | uutholdelig -4

694 | unnskyldelig -4

695 | djevelsk -4

696 | ukikkelig -4

697 | avskrivning -4

698 | pervers -4

699 | dumt -4

700 | uakseptabelt -4

701 | kriminelt -4

702 | grusomt -4

703 | latterlig -4

704 | smertefullt -4

705 | notorisk -4

706 | inanely -4

707 | patetisk -4

708 | uanstendig -4

709 | meningsløst -4

710 | ynkelig -4

711 | livløst -4

712 | fornærmende -4

713 | ondsinnet -4

714 | psykotisk -4

715 | opprørende -4

716 | patologisk -4

717 | utnyttende -4

718 | idiotisk -4

719 | fryktelig -5

720 | kvalmende -5

721 | utilgivelig -5

722 | katastrofalt -5

723 | fryktelig -5

724 | sykt -5

725 | veldig -5

726 | brutalt -5

727 | forferdelig -5

728 | forferdelig -5

729 | elendig -5

730 | fryktelig -5

731 | forferdelig -5

732 | ondskapsfullt -5

733 | frastøtende -5

734 | trist -5

735 | fryktelig -5

736 | skjemmende -5

737 | ulykkelig -5

738 | grotesk -5

739 | alvorlig -5

740 | ondskapsfullt -5

741 | motbydelig -5

742 | ulidelig -5

743 | forferdelig -5

744 | fryktelig -5

745 | forferdelig -5

746 | styggt -5

747 | nøye 2

748 | kort -1

749 | ensom -1

750 | streetwise 1

751 | slu -1

752 | vital 1

753 | mind-blowingly 2

754 | melodramatisk -2

755 | ulastelig 5

756 | nasalt -1

757 | dyktig 2

758 | hjerteskjærende -2

759 | uredelig -3

760 | plettfritt 4

761 | tynt -1

762 | lystig 2

763 | vakkert 4

764 | utsmykket 2

765 | pent 2

766 | dynamisk 2

767 | kjedelig -3

768 | utilstrekkelig -1

769 | sportslig 2

770 | usammenhengende -2

771 | rettferdig -2

772 | veldedig 1

773 | flittig 2

774 | del tomt -2

775 | greit 1

776 | skjevt -1

777 | fristende 1

778 | klokt 2

779 | kostbar -1

780 | uoverkommelig -3

781 | tomt -1

782 | naturskjønt 4

783 | seremonielt 1

784 | surrealistisk -1

785 | prisbelønt 5

786 | fascinerende 5

787 | frustrerende -2

788 | moro 3

789 | periodevis -1

790 | vennlig 2

791 | kraftig 2

792 | veltalende 3

793 | freakishly -3

794 | skremmende -1

795 | bare 1

796 | omrørende 2

797 | etisk 1

798 | forsvarlig 1

799 | hensynsløs -2

800 | litt -1

801 | utrolig -2

802 | fiendishly -3

803 | skikkelig 1

804 | sørgelig -4

805 | kjapt 1

806 | rausende 3

807 | gledelig 4

808 | motbydelig -3

809 | nådeløst -3

810 | rettferdig 1

811 | nådig 2

812 | frodig 3

813 | lykksalig 4

814 | historisk 1

815 | kortfattet -1

816 | svakt -1

817 | halvhjertet -1

818 | raskt 1

819 | skremmende -2

820 | banebrytende 3

821 | på villspor -1

822 | bittert -2

823 | besatt -3

824 | hjelpeløst -3

825 | hilsen -2

826 | fortjent 1

827 | rasende -4

828 | ubønnhørlig -2

829 | uelegant -1

830 | rørende 3

831 | rolig 1

832 | spent 3

833 | godartet 1

834 | målløst -1

835 | forvirrende -2

836 | skjemmende -4

837 | raskt -2

838 | moderat 1

839 | grovt -2

840 | fantastisk 5

841 | stolt -1

842 | beroligende 1

843 | svakt -2

844 | majestetisk 4

845 | snikende -4

846 | distraherende -1

847 | skummelt -1

848 | skrytende -1

849 | utmerket 4

850 | uklokt -2

851 | iherdig 3

852 | rasende -2

853 | ufarlig 1

854 | forgjeves -2

855 | lakonisk -1

856 | oppgitt -2

857 | lønnsomt 1

858 | forvirrende -2

859 | bekymringsfullt -3

860 | kvalmende -3

861 | lunefull -2

862 | fanatisk -3

863 | uforsiktig -1

864 | abysmalt -4

865 | bærekraftig 2

866 | foraktelig -3

867 | glumly -2

868 | uberegnelig -1

869 | sparsommelig 1

870 | torturøst -4

871 | ublu -4

872 | selvtilfreds -2

873 | feil -1

874 | skadelig -2

875 | smertefritt 1

876 | feil -1

877 | luskent -1

878 | episk 4

879 |

--------------------------------------------------------------------------------

/data/sentiment/lexicons/socal/no_int.txt:

--------------------------------------------------------------------------------

1 | minst -3

2 | mindre -1.5

3 | knapt -1.5

4 | neppe -1.5

5 | nesten -1.5

6 | ikke for -1.5

7 | ikke bare 0.5

8 | ikke bare 0.5

9 | ikke bare 0.5

10 | bare -0.5

11 | litt -0.5

12 | litt -0.5

13 | litt -0.5

14 | marginalt -0.5

15 | relativt -0.3

16 | mildt -0.3

17 | moderat -0.3

18 | noe -0.3

19 | delvis -0.3

20 | litt -0.3

21 | uten tvil -0.2

22 | stort sett -0.2

23 | hovedsakelig -0.2

24 | minst -0.9

25 | til en viss grad -0.2

26 | til en viss grad -0.2

27 | slags -0.3

28 | sorta -0.3

29 | slags -0.3

30 | ganske -0.3

31 | ganske -0.2

32 | pen -0.1

33 | heller -0.1

34 | umiddelbart 0.1

35 | ganske 0.1

36 | perfekt 0.1

37 | konsekvent 0.1

38 | virkelig 0.2

39 | klart 0.2

40 | åpenbart 0.2

41 | absolutt 0.2

42 | helt 0.2

43 | definitivt 0.2

44 | absolutt 0.2

45 | konstant 0.2

46 | høyt 0.2

47 | veldig 0.2

48 | betydelig 0.2

49 | merkbart 0.2

50 | karakteristisk 0.2

51 | ofte 0.2

52 | forferdelig 0.2

53 | totalt 0.2

54 | stort sett 0.2

55 | fullt 0.2

56 | ekstra 0.3

57 | virkelig 0.3

58 | spesielt 0.3

59 | spesielt 0.3

60 | jævla 0.3

61 | intensivt 0.3

62 | rett og slett 0.3

63 | helt 0.3

64 | sterkt 0.3

65 | bemerkelsesverdig 0.3

66 | stort sett 0.3

67 | utrolig 0.3

68 | påfallende 0.3

69 | fantastisk 0.3

70 | i det vesentlige 0.3

71 | uvanlig 0.3

72 | dramatisk 0.3

73 | intenst 0.3

74 | ekstremt 0.4

75 | så 0.4

76 | utrolig 0.4

77 | fryktelig 0.4

78 | enormt 0.4

79 | umåtelig 0.4

80 | slik 0.4

81 | utrolig 0.4

82 | sinnsykt 0.4

83 | opprørende 0.4

84 | radikalt 0.4

85 | blærende 0.4

86 | unntaksvis 0.4

87 | overstigende 0.4

88 | uten tvil 0.4

89 | vei 0.4

90 | langt 0.4

91 | dypt 0.4

92 | super 0.4

93 | dypt 0.4

94 | universelt 0.4

95 | rikelig 0.4

96 | uendelig 0.4

97 | eksponentielt 0.4

98 | enormt 0.4

99 | grundig 0.4

100 | lidenskapelig 0.4

101 | voldsomt 0.4

102 | latterlig 0.4

103 | uanstendig 0.4

104 | vilt 0.4

105 | ekstraordinært 0.5

106 | spektakulært 0.5

107 | fenomenalt 0.5

108 | monumentalt 0.5

109 | utrolig 0.5

110 | helt 0.5

111 | mer -0.5

112 | enda mer 0.5

113 | mer enn 0.5

114 | mest 1

115 | ytterste 1

116 | totalt 0.5

117 | monumental 0.5

118 | flott 0.5

119 | enorm 0.5

120 | enorme 0.5

121 | massiv 0.5

122 | fullført 0.4

123 | uendelig 0.4

124 | uendelig 0.4

125 | absolutt 0.5

126 | rungende 0.4

127 | uskadd 0.4

128 | drop dead 0.4

129 | massiv 0.5

130 | kollossal 0.5

131 | utrolig 0.5

132 | ufattelig 0.5

133 | abject 0.5

134 | en slik 0.4

135 | en slik 0.4

136 | fullstendig 0.4

137 | dobbelt 0.3

138 | klar 0.3

139 | klarere 0.2

140 | klareste 0.5

141 | stor 0.3

142 | større 0.2

143 | største 0.5

144 | åpenbart 0.03

145 | alvorlig 0.3

146 | dyp 0.3

147 | dypere 0.2

148 | dypeste 0.5

149 | betydelig 0.2

150 | viktig 0.3

151 | større 0.2

152 | avgjørende 0.3

153 | umiddelbar 0.1

154 | synlig 0.1

155 | merkbar 0.1

156 | konsistent 0.1

157 | høy 0.2

158 | høyere 0.1

159 | høyeste 0.5

160 | ekte 0.2

161 | sant 0.2

162 | ren 0.2

163 | bestemt 0.2

164 | mye 0.2

165 | liten -0.3

166 | mindre -0.2

167 | minste -0.5

168 | moll -0.3

169 | moderat -0.3

170 | mild -0.3

171 | lett -0.5

172 | minste -0.9

173 | ubetydelig -0.5

174 | ubetydelig -0.5

175 | lav -2

176 | lavere -1.5

177 | laveste -3

178 | få -2

179 | færre -1.5

180 | færrest -3

181 | mye 0.3

182 | mange 0.3

183 | flere 0.2

184 | flere 0.2

185 | forskjellige 0.2

186 | noen få -0.3

187 | et par -0.3

188 | et par -0.3

189 | mye 0.3

190 | masse 0.3

191 | i det hele tatt -0.5

192 | mye 0.5

193 | en hel masse 0.5

194 | en enorm mengde på 0.5

195 | enorme antall på 0.5

196 | en pokker på 0.5

197 | en mengde på 0.5

198 | en mutltid på 0.5

199 | tonn 0.5

200 | tonn 0.5

201 | en haug med 0.3

202 | hauger på 0.3

203 | rikelig med 0.3

204 | en viss mengde -0.2

205 | noen -0.2

206 | litt av -0.5

207 | litt av -0.5

208 | litt av -0.5

209 | vanskelig å -1.5

210 | vanskelig til -1.5

211 | tøff til -1.5

212 | ikke i nærheten av -3

213 | ikke alt det -1.2

214 | ikke det -1.5

215 | ut av -2

216 |

--------------------------------------------------------------------------------

/data/sentiment/lexicons/socal/no_verb.txt:

--------------------------------------------------------------------------------

1 | kulminerer 4

2 | opphøyelse 4

3 | glede 4

4 | ære 4

5 | stein 4

6 | elsker 4

7 | enthrall 4

8 | ærefrykt 4

9 | fascinere 4

10 | enthrall 4

11 | enthrall 4

12 | elat 4

13 | extol 3

14 | helliggjøre 3

15 | transcend 3

16 | oppnå 3

17 | beundre 3

18 | forbløffe 3

19 | verne om 3

20 | ros 3

21 | glede 3

22 | vie 3

23 | fortrylle 3

24 | elske 3

25 | energiser 3

26 | nyt 3

27 | underholde 3

28 | utmerke seg 3

29 | imponere 3

30 | innovere 3

31 | ivrig 3

32 | kjærlighet 3

33 | tryllebinde 3

34 | ros 3

35 | premie 3

36 | rave 3

37 | glede 3

38 | klang 3

39 | respekt 3

40 | gjenopprette 3

41 | revitalisere 3

42 | smak 3

43 | lykkes 3

44 | overvinne 3

45 | overgå 3

46 | trives 3

47 | triumf 3

48 | vidunder 3

49 | løft 3

50 | capitivere 3

51 | wow 3

52 | spenning 3

53 | vant 3

54 | bekrefte 3

55 | glad 3

56 | forskjønne 3

57 | skatt 3

58 | stavebind 3

59 | trollbundet 3

60 | spennende 3

61 | blende 3

62 | gush 3

63 | hjelp 2

64 | more 2

65 | applaudere 2

66 | setter pris på 2

67 | tiltrekke 2

68 | gi 2

69 | skryte av 2

70 | boost 2

71 | stell 2

72 | kjærtegn 2

73 | feire 2

74 | sjarm 2

75 | koordinere 2

76 | samarbeide 2

77 | minnes 2

78 | kompliment 2

79 | gratulerer 2

80 | erobre 2

81 | bidra 2

82 | samarbeide 2

83 | opprett 2

84 | kreditt 2

85 | dyrke 2

86 | dedikere 2

87 | fortjener 2

88 | omfavne 2

89 | oppmuntre 2

90 | godkjenne 2

91 | engasjere 2

92 | forbedre 2

93 | berike 2

94 | fremkalle 2

95 | legge til rette for 2

96 | favorisere 2

97 | passform 2

98 | oppfylle 2

99 | få 2

100 | glad 2

101 | harmoniser 2

102 | helbrede 2

103 | høydepunkt 2

104 | ære 2

105 | lys 2

106 | senk 2

107 | inspirere 2

108 | interesse 2

109 | intriger 2

110 | le 2

111 | maske 2

112 | motivere 2

113 | pleie 2

114 | overvinne 2

115 | overvant 2

116 | vær så snill 2

117 | fremgang 2

118 | blomstre 2

119 | rens 2

120 | utstråle 2

121 | rally 2

122 | høste 2

123 | forene 2

124 | innløsning 2

125 | avgrense 2

126 | kongelig 2

127 | fornyelse 2

128 | reparasjon 2

129 | løse 2

130 | gjenforene 2

131 | svale 2

132 | belønning 2

133 | rival 2

134 | gnisten 2

135 | underbygge 2

136 | søte 2

137 | svimle 2

138 | sympatisere 2

139 | tillit 2

140 | løft 2

141 | ærverdig 2

142 | vinn 2

143 | verdt 2

144 | aktelse 2

145 | styrke 2

146 | frigjør 2

147 | anbefaler 2

148 | master 2

149 | forbedre 2

150 | overgå 2

151 | skinne 2

152 | pioner 2

153 | fortjeneste 2

154 | styrke 2

155 | extol 2

156 | extoll 2

157 | takk 2

158 | oppdater 2

159 | fortjeneste 2

160 | livne opp 2

161 | frigjør 2

162 | godkjenne 2

163 | forbedre 2

164 | frita 1

165 | godta 1

166 | bekrefte 1

167 | lindre 1

168 | forbedre 1

169 | forutse 1

170 | blidgjøre 1

171 | håpe 1

172 | assistere 1

173 | passer 1

174 | bli venn 1

175 | fange 1

176 | rens 1

177 | komfort 1

178 | kommune 1

179 | kommunisere 1

180 | kompensere 1

181 | kompromiss 1

182 | kondone 1

183 | overbevise 1

184 | råd 1

185 | korstog 1

186 | verdig 1

187 | doner 1

188 | spare 1

189 | forseggjort 1

190 | pynt ut 1

191 | styrke 1

192 | aktivere 1

193 | gi 1

194 | opplyse 1

195 | overlate 1

196 | tenke 1

197 | etablere 1

198 | utvikle seg 1

199 | opphisse 1

200 | opplevelse 1

201 | bli kjent 1

202 | flatere 1

203 | tilgi 1

204 | befeste 1

205 | foster 1

206 | boltre seg 1

207 | pynt 1

208 | generere 1

209 | glans 1

210 | glitter 1

211 | glød 1

212 | tilfredsstille 1

213 | guide 1

214 | sele 1

215 | informer 1

216 | arve 1

217 | spøk 1

218 | siste 1

219 | som 1

220 | formidle 1

221 | nominere 1

222 | gi næring 1

223 | adlyde 1

224 | tilbud 1

225 | overliste 1

226 | holde ut 1

227 | seire 1

228 | utsette 1

229 | beskytt 1

230 | purr 1

231 | reaktiver 1

232 | berolige 1

233 | gjenvinne 1

234 | tilbakelent 1

235 | gjenopprette 1

236 | slapp av 1

237 | avlaste 1

238 | oppussing 1

239 | renovere 1

240 | omvende deg 1

241 | hvile 1

242 | redning 1

243 | gjenopplive 1

244 | modnes 1

245 | hilsen 1

246 | tilfredsstille 1

247 | sikker 1

248 | del 1

249 | betyr 1

250 | forenkle 1

251 | smil 1

252 | krydder 1

253 | stabiliser 1

254 | standardisere 1

255 | stimulere 1

256 | stiver 1

257 | avta 1

258 | tilstrekkelig 1

259 | dress 1

260 | støtte 1

261 | tåle 1

262 | hyllest 1

263 | oppgradere 1

264 | overliste 1

265 | promotere 1

266 | empati 1

267 | rette 1

268 | overladning 1

269 | plass til 1

270 | multitask 1

271 | oppnå 1

272 | utdannet 1

273 | strømlinjeforme 1

274 | effektivitet 1

275 | blomstre 1

276 | tjen 1

277 | innkvartering 1

278 | berolige 1

279 | oppbygg 1

280 | bli venn 1

281 | mykgjøre 1

282 | felicitate 1

283 | frikoble 1

284 | overstige 1

285 | avmystifisere 1

286 | verdi 1

287 | titillate 1

288 | reienforce 1

289 | hjelp 1

290 | garanti 1

291 | komplement 1

292 | kapitaliser 1

293 | pris 1

294 | oppnå 1

295 | argumentere -1

296 | kamp -1

297 | uskarphet -1

298 | svak -1

299 | brudd -1

300 | blåmerke -1

301 | feil -1

302 | avbryt -1

303 | utfordring -1

304 | chide -1

305 | tette -1

306 | kollidere -1

307 | kamp -1

308 | tvinge -1

309 | komplisere -1

310 | concoct -1

311 | samsvar -1

312 | konfrontere -1

313 | krever -1

314 | kvake -1

315 | dawdle -1

316 | reduksjon -1

317 | forsinkelse -1

318 | død -1

319 | avskrive -1

320 | avvik -1

321 | diktere -1

322 | motet -1

323 | avskjed -1

324 | dispensere -1

325 | misfornøyde -1

326 | kast -1

327 | tvist -1

328 | distrahere -1

329 | grøft -1

330 | skilsmisse -1

331 | dominere -1

332 | nedskift -1

333 | svindle -1

334 | fare -1

335 | håndheve -1

336 | oppsluk -1

337 | vikle -1

338 | misunnelse -1

339 | slett -1

340 | feil -1

341 | unngå -1

342 | overdrive -1

343 | ekskluder -1

344 | utføre -1

345 | eksponere -1

346 | slukk -1

347 | feign -1

348 | fidget -1

349 | flykte -1

350 | forby -1

351 | bekymre -1

352 | skremme -1

353 | rynke pannen -1

354 | fumle -1

355 | gamble -1

356 | forherlige -1

357 | grip -1

358 | grip -1

359 | stønn -1

360 | knurring -1

361 | brummen -1

362 | hamstring -1

363 | vondt -1

364 | ignorere -1

365 | implikere -1

366 | bønnfall -1

367 | fengsel -1

368 | indusere -1

369 | betennelse -1

370 | forstyrre -1

371 | avbryt -1

372 | rus -1

373 | trenge inn -1

374 | oversvømmet -1

375 | klagesang -1

376 | lekkasje -1

377 | avvikle -1

378 | blander -1

379 | oppfører seg feil -1

380 | feilkast -1

381 | villede -1

382 | villedet -1

383 | feilinformasjon -1

384 | Mishandle -1

385 | feil -1

386 | mistrust -1

387 | misforstå -1

388 | misbruk -1

389 | stønn -1

390 | mønstre -1

391 | mutter -1

392 | nøytralisere -1

393 | oppheve -1

394 | utelat -1

395 | utgang -1

396 | overoppnå -1

397 | overløp -1

398 | overse -1

399 | overmakt -1

400 | overkjørt -1

401 | overreagerer -1

402 | overforenkle -1

403 | overvelde -1

404 | skjemme bort -1

405 | omkomme -1

406 | forfølge -1

407 | plod -1

408 | forby -1

409 | lirke -1

410 | avslutt -1

411 | rasjonalisere -1

412 | tilbakevise -1

413 | trekke seg tilbake -1

414 | avstå -1

415 | rehash -1

416 | gjengjelde -1

417 | retrett -1

418 | kvitt -1

419 | rip -1

420 | risiko -1

421 | romantiser -1

422 | sag -1

423 | skåld -1

424 | skremme -1

425 | svi -1

426 | scowl -1

427 | skrape -1

428 | granske -1

429 | sjokk -1

430 | skråstrek -1

431 | slug -1

432 | smugle -1

433 | snappe -1

434 | snike -1

435 | sob -1

436 | forstuing -1

437 | stammer -1

438 | stikk -1

439 | stjal -1

440 | bortkommen -1

441 | fast -1

442 | stunt -1

443 | undertrykke -1

444 | snuble -1

445 | sverget -1

446 | rive -1

447 | erte -1

448 | dekk -1

449 | revet -1

450 | overtredelse -1

451 | felle -1

452 | overtredelse -1

453 | triks -1

454 | trudge -1

455 | angre -1

456 | underbruk -1

457 | angre -1

458 | unravel -1

459 | røtter -1

460 | avta -1

461 | varp -1

462 | sutre -1

463 | pisk -1

464 | wince -1

465 | sår -1

466 | gjesp -1

467 | kjef -1

468 | lengter -1

469 | idolize -1

470 | hemme -1

471 | pålegge -1

472 | bekymring -1

473 | emne -1

474 | tåle -1

475 | fluster -1

476 | snivel -1

477 | insinuere -1

478 | coddle -1

479 | oppscenen -1

480 | underutnytte -1

481 | squirm -1

482 | mikromanage -1

483 | hund -1

484 | hollywoodise -1

485 | sidespor -1

486 | karikatur -1

487 | uenighet -1

488 | standard -1

489 | dø -1

490 | problemer -1

491 | mistillit -1

492 | skyld -1

493 | lekter -1

494 | overoppblås -1

495 | tømme -1

496 | vondt -1

497 | krampe -1

498 | jostle -1

499 | rasle -1

500 | uklar -1

501 | rust -1

502 | feil -1

503 | lur -1

504 | knuse -1

505 | placate -1

506 | overoppheting -1

507 | døve -1

508 | prute -1

509 | cuss -1

510 | uenighet -1

511 | uoverensstemmelse -1

512 | slapp -1

513 | misfarging -1

514 | avslutte -1

515 | tretthet -1

516 | motbevise -1

517 | syltetøy -1

518 | bolt -1

519 | offer -1

520 | sverte -1

521 | belch -1

522 | feiltolke -1

523 | forlenge -1

524 | typecast -1

525 | klynge -1

526 | gjennomsyre -1

527 | koble fra -1

528 | susing -1

529 | hobble -1

530 | drivhjul -1

531 | liten -1

532 | overreach -1

533 | deform -1

534 | rangel -1

535 | prevaricate -1

536 | forhåndsdømme -1

537 | raske -1

538 | peeve -1

539 | misforstå -1

540 | misforstått -1

541 | feil fremstilling -1

542 | jabber -1

543 | irk -1

544 | impinge -1

545 | hoodwink -1

546 | gawk -1

547 | frazzle -1

548 | dupe -1

549 | desorienterende -1

550 | lure -1

551 | skremmende -1

552 | karpe -1

553 | tukt -1

554 | blab -1

555 | blabber -1

556 | beleirer -1

557 | belabor -1

558 | bjørn -1

559 | avskaffe -2

560 | anklage -2

561 | agitere -2

562 | hevder -2

563 | bakhold -2

564 | amputere -2

565 | sinne -2

566 | irritere -2

567 | motvirke -2

568 | angrep -2

569 | avverge -2

570 | babble -2

571 | grevling -2

572 | balk -2

573 | forvis -2

574 | slo -2

575 | tro -2

576 | pass opp -2

577 | bite -2

578 | blære -2

579 | blokk -2

580 | tabbe -2

581 | bry -2

582 | skryte -2

583 | bestikkelse -2

584 | bust -2

585 | feil -2

586 | gnage -2

587 | billigere -2

588 | kvele -2

589 | sammenstøt -2

590 | tvinge -2

591 | kollaps -2

592 | commiserate -2

593 | skjul -2

594 | begrense -2

595 | konflikt -2

596 | forvirre -2

597 | konspirere -2

598 | begrense -2

599 | motsier -2

600 | contrive -2

601 | begjære -2

602 | krympe -2

603 | lamme -2

604 | kritisere -2

605 | knuse -2

606 | begrense -2

607 | skade -2

608 | forfall -2

609 | lure -2

610 | nederlag -2

611 | tømme -2

612 | trykk -2

613 | frata -2

614 | latterliggjøre -2

615 | forringe -2

616 | skuffe -2

617 | ikke godkjenner -2

618 | diskreditere -2

619 | diskriminere -2

620 | motløs -2

621 | misliker -2

622 | forstyrre -2

623 | misfornøyd -2

624 | forvreng -2

625 | nød -2

626 | forstyrr -2

627 | undergang -2

628 | avløp -2

629 | drukner -2

630 | dump -2

631 | eliminere -2

632 | flau -2

633 | emote -2

634 | inngrep -2

635 | erodere -2

636 | kaste ut -2

637 | eksos -2

638 | utvise -2

639 | fabrikere -2

640 | falsk -2

641 | vakle -2

642 | flaunt -2

643 | flyndre -2

644 | kraft -2

645 | taper -2

646 | forsak -2

647 | sørge -2

648 | hemme -2

649 | sikring -2

650 | hindre -2

651 | sult -2

652 | kjas -2

653 | svekke -2

654 | hindre -2

655 | pådra -2

656 | inept -2

657 | infisere -2

658 | angrep -2

659 | påføre -2

660 | skade -2

661 | invadere -2

662 | irritere -2

663 | fare -2

664 | mangel -2

665 | lyve -2

666 | taper -2

667 | tapte -2

668 | manipulere -2

669 | rot -2

670 | spotte -2

671 | drap -2

672 | nag -2

673 | negere -2

674 | forsømmelse -2

675 | besatt -2

676 | hindre -2

677 | fornærme -2

678 | motsette -2

679 | overaktiv -2

680 | overskygge -2

681 | lamme -2

682 | nedlatende -2

683 | perplex -2

684 | overhode -2

685 | forstyrrelse -2

686 | plyndre -2

687 | pontifikat -2

688 | pout -2

689 | preen -2

690 | late som -2

691 | tiltale -2

692 | provosere -2

693 | straffe -2

694 | avvis -2

695 | rekyl -2

696 | nekte -2

697 | regress -2

698 | tilbakefall -2

699 | si fra deg -2

700 | undertrykk -2

701 | bebreid -2

702 | mislik -2

703 | begrense -2

704 | forsinke -2

705 | hevn -2

706 | gå tilbake -2

707 | tilbakekalle -2

708 | opprør -2

709 | brudd -2

710 | sap -2

711 | skjelle -2

712 | skru -2

713 | gripe -2

714 | skill -2

715 | knuse -2

716 | skjul -2

717 | makulere -2

718 | unngå -2

719 | skulk -2

720 | baktalelse -2

721 | spor -2

722 | smøre -2

723 | hån -2

724 | snorke -2

725 | spank -2

726 | gyte -2

727 | sputter -2

728 | sløse -2

729 | stilk -2

730 | skremme -2

731 | stjele -2

732 | kveler -2

733 | stagnere -2

734 | kvele -2

735 | stamme -2

736 | strekke -2

737 | sliter -2

738 | bukke under -2

739 | lider -2

740 | kvele -2

741 | tukle -2

742 | hån -2

743 | true -2

744 | thrash -2

745 | slit -2

746 | tråkke -2

747 | bagatellisere -2

748 | undergrave -2

749 | underwhelm -2

750 | vex -2

751 | bryte -2

752 | skeptisk -2

753 | avfall -2

754 | svekk -2

755 | vilje -2

756 | vri deg -2

757 | myrde -2

758 | blind -2

759 | uenig -2

760 | utstøte -2

761 | vandre -2

762 | klage -2

763 | disenchant -2

764 | revulse -2

765 | duehull -2

766 | flabbergast -2

767 | harry -2

768 | piss -2

769 | feil -2

770 | ødelegge -2

771 | skadedyr -2

772 | skjevhet -2

773 | panorere -2

774 | dra -2

775 | _ned -2

776 | mar -2

777 | klage -2

778 | skade -2

779 | forverre -2

780 | vandalisere -2

781 | avslutt -2

782 | funksjonsfeil -2

783 | tosk -2

784 | slave -2

785 | taint -2

786 | ødelagt -2

787 | flekk -2

788 | rykket ned -2

789 | sprit -2

790 | utukt -2

791 | rane -2

792 | trist -2

793 | diss -2

794 | medskyldig -2

795 | ondskap -2

796 | manglende evne -2

797 | sverte -2

798 | forurense -2

799 | smerte -2

800 | feilberegne -2

801 | mope -2

802 | plage -2

803 | accost -2

804 | unnerve -2

805 | skam -2

806 | irettesett -2

807 | overdrive -2

808 | feilbehandling -2

809 | myr -2

810 | ondartet -2

811 | trussel -2

812 | jeer -2

813 | ugyldiggjøre -2

814 | innflytelse -2

815 | heckle -2

816 | hamstrung -2

817 | gripe -2

818 | ryper -2

819 | flout -2

820 | enervate -2

821 | emasculate -2

822 | manglende respekt -2

823 | vanære -2

824 | nedsett -2

825 | debase -2

826 | kolliderer -2

827 | bungle -2

828 | besmirch -2

829 | aunguish -2

830 | fornærme -2

831 | forverre -2

832 | forfalle -2

833 | bash -2

834 | bar -3

835 | misbruk -3

836 | forverre -3

837 | alarm -3

838 | fremmedgjøre -3

839 | atrofi -3

840 | baffel -3

841 | tro -3

842 | nedgjøre -3

843 | forvirret -3

844 | eksplosjon -3

845 | bombardere -3

846 | brutalisere -3

847 | kantrer -3

848 | careen -3

849 | jukse -3

850 | fordømme -3

851 | forvirre -3

852 | korroderer -3

853 | korrupt -3

854 | stapp -3

855 | forbannelse -3

856 | utartet -3

857 | fornedre -3

858 | fordømme -3

859 | beklager -3

860 | forverres -3

861 | fortvilelse -3

862 | forkaste -3

863 | avsky -3

864 | rasende -3

865 | slaveri -3

866 | utrydde -3

867 | irritere -3

868 | utnytte -3

869 | utrydde -3

870 | mislykkes -3

871 | frustrer -3

872 | gløtt -3

873 | vevstol -3

874 | mangel -3

875 | molest -3

876 | utslette -3

877 | undertrykke -3

878 | pervertere -3

879 | plagerize -3

880 | pest -3

881 | herjing -3

882 | irettesette -3

883 | angre -3

884 | vekke opp igjen -3

885 | avvis -3

886 | avvise -3

887 | latterliggjøring -3

888 | rue -3

889 | sabotasje -3

890 | spott -3

891 | skrik -3

892 | koke -3

893 | skrumpe -3

894 | smelle -3

895 | kvele -3

896 | spyd -3

897 | sulte -3

898 | stink -3

899 | underkaste -3

900 | undergrave -3

901 | hindre -3

902 | pine -3

903 | opprørt -3

904 | usurp -3

905 | jamre -3

906 | forverres -3

907 | overfall -3

908 | halshugge -3

909 | ærekrenke -3

910 | nedbryter -3

911 | rive -3

912 | demoralisere -3

913 | fornærmelse -3

914 | råte -3

915 | suger -3

916 | bastardize -3

917 | kvalme -3

918 | plyndring -3

919 | ydmyke -3

920 | hvem_ PRP _ reek -3

921 | not_ dritt -3

922 | desillusjon -3

923 | forårsake -3

924 | stank -3

925 | stinket -3

926 | voldtekt -3

927 | kvinnelig -3

928 | ødelegge -3

929 | håpløshet -3

930 | røkelse -3

931 | fattige -3

932 | trakassere -3

933 | forurense -3

934 | ødelegge -3

935 | traumatisere -3

936 | skandalisere -3

937 | repugn -3

938 | raseri -3

939 | plagiere -3

940 | lambaste -3

941 | imperil -3

942 | glødere -3

943 | excoriate -3

944 | rådgiver -3

945 | nedsettelse -3

946 | despoil -3

947 | vanhellige -3

948 | demonisere -3

949 | bespottelse -3

950 | hjernevask -3

951 | browbeat -3

952 | appal -3

953 | forferdelig -3

954 | befoul -3

955 | plage -4

956 | utslette -4

957 | forråde -4

958 | jævla -4

959 | avskyr -4

960 | gruer -4

961 | hater -4

962 | forferdelig -4

963 | rasende -4

964 | mortify -4

965 | frastøtte -4

966 | ruin -4

967 | slakter -4

968 | ødelegge -4

969 | slakt -4

970 | forferdelig -4

971 | terrorisere -4

972 | oppkast -4

973 | kunne_ panikk -4

974 | opprør -4

975 | tortur -4

976 | spott -4

977 | avsky -4

978 | utføre -4

979 | vanære -4

980 | avsky -5

981 | forferdelig -5

982 | avsky -5

983 | kannibalisere -5

984 | uren -5

985 | forakte -5

986 |

--------------------------------------------------------------------------------

/data/sentiment/norec_sentence/labels.json:

--------------------------------------------------------------------------------

1 | {"Negative": "0", "Neutral": "1", "Positive": "2"}

--------------------------------------------------------------------------------

/data/skweak_logo.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/skweak_logo.jpg

--------------------------------------------------------------------------------

/data/skweak_logo_thumbnail.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/skweak_logo_thumbnail.jpg

--------------------------------------------------------------------------------

/data/skweak_procedure.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/skweak_procedure.png

--------------------------------------------------------------------------------

/data/wikidata_small_tokenised.json.gz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/NorskRegnesentral/skweak/2b6db15e8429dbda062b2cc9cc74e69f51a0a8b6/data/wikidata_small_tokenised.json.gz

--------------------------------------------------------------------------------

/examples/ner/__init__.py:

--------------------------------------------------------------------------------

1 | from . import data_utils, conll2003_ner, eval_utils, muc6_ner, conll2003_prep

--------------------------------------------------------------------------------

/examples/ner/conll2003_ner.py:

--------------------------------------------------------------------------------

1 | from typing import Iterable, Tuple

2 | import re, json, os

3 | import snips_nlu_parsers

4 | from skweak.base import CombinedAnnotator, SpanAnnotator

5 | from skweak.spacy import ModelAnnotator, TruecaseAnnotator

6 | from skweak.heuristics import FunctionAnnotator, TokenConstraintAnnotator, SpanConstraintAnnotator, SpanEditorAnnotator

7 | from skweak.gazetteers import GazetteerAnnotator, extract_json_data

8 | from skweak.doclevel import DocumentHistoryAnnotator, DocumentMajorityAnnotator

9 | from skweak.aggregation import MajorityVoter

10 | from skweak import utils

11 | from spacy.tokens import Doc, Span # type: ignore

12 | from . import data_utils

13 |

14 | # Data files for gazetteers

15 | WIKIDATA = os.path.dirname(__file__) + "/../../data/wikidata_tokenised.json"

16 | WIKIDATA_SMALL = os.path.dirname(__file__) + "/../../data/wikidata_small_tokenised.json"

17 | COMPANY_NAMES = os.path.dirname(__file__) + "/../../data/company_names_tokenised.json"

18 | GEONAMES = os.path.dirname(__file__) + "/../../data/geonames.json"

19 | CRUNCHBASE = os.path.dirname(__file__) + "/../../data/crunchbase.json"

20 | PRODUCTS = os.path.dirname(__file__) + "/../../data/products.json"

21 | FIRST_NAMES = os.path.dirname(__file__) + "/../../data/first_names.json"

22 | FORM_FREQUENCIES = os.path.dirname(__file__) + "/../../data/form_frequencies.json"

23 |

24 |

25 | ############################################

26 | # Combination of all annotators

27 | ############################################

28 |

29 |

30 | class NERAnnotator(CombinedAnnotator):

31 | """Annotator of entities in documents, combining several sub-annotators (such as gazetteers,

32 | spacy models etc.). To add all annotators currently implemented, call add_all(). """

33 |

34 | def add_all(self):

35 | """Adds all implemented annotation functions, models and filters"""

36 |

37 | print("Loading shallow functions")

38 | self.add_shallow()

39 | print("Loading Spacy NER models")

40 | self.add_models()

41 | print("Loading gazetteer supervision modules")

42 | self.add_gazetteers()

43 | print("Loading document-level supervision sources")

44 | self.add_doc_level()

45 |

46 | return self

47 |

48 | def add_shallow(self):

49 | """Adds shallow annotation functions"""

50 |

51 | # Detection of dates, time, money, and numbers

52 | self.add_annotator(FunctionAnnotator("date_detector", date_generator))

53 | self.add_annotator(FunctionAnnotator("time_detector", time_generator))

54 | self.add_annotator(FunctionAnnotator("money_detector", money_generator))

55 |

56 | # Detection based on casing

57 | proper_detector = TokenConstraintAnnotator("proper_detector", utils.is_likely_proper, "ENT")

58 |

59 | # Detection based on casing, but allowing some lowercased tokens

60 | proper2_detector = TokenConstraintAnnotator("proper2_detector", utils.is_likely_proper, "ENT")

61 | proper2_detector.add_gap_tokens(data_utils.LOWERCASED_TOKENS | data_utils.NAME_PREFIXES)

62 |

63 | # Detection based on part-of-speech tags

64 | nnp_detector = TokenConstraintAnnotator("nnp_detector", lambda tok: tok.tag_ in {"NNP", "NNPS"}, "ENT")

65 |

66 | # Detection based on dependency relations (compound phrases)

67 | compound = lambda tok: utils.is_likely_proper(tok) and utils.in_compound(tok)

68 | compound_detector = TokenConstraintAnnotator("compound_detector", compound, "ENT")

69 |

70 | exclusives = ["date_detector", "time_detector", "money_detector"]

71 | for annotator in [proper_detector, proper2_detector, nnp_detector, compound_detector]:

72 | annotator.add_incompatible_sources(exclusives)

73 | annotator.add_gap_tokens(["'s", "-"])

74 | self.add_annotator(annotator)

75 |

76 | # We add one variants for each NE detector, looking at infrequent tokens

77 | infrequent_name = "infrequent_%s" % annotator.name

78 | self.add_annotator(SpanConstraintAnnotator(infrequent_name, annotator.name, utils.is_infrequent))

79 |

80 | # Other types (legal references etc.)

81 | misc_detector = FunctionAnnotator("misc_detector", misc_generator)

82 | legal_detector = FunctionAnnotator("legal_detector", legal_generator)

83 |

84 | # Detection of companies with a legal type

85 | ends_with_legal_suffix = lambda x: x[-1].lower_.rstrip(".") in data_utils.LEGAL_SUFFIXES

86 | company_type_detector = SpanConstraintAnnotator("company_type_detector", "proper2_detector",

87 | ends_with_legal_suffix, "COMPANY")

88 |

89 | # Detection of full person names

90 | full_name_detector = SpanConstraintAnnotator("full_name_detector", "proper2_detector",

91 | FullNameDetector(), "PERSON")

92 |

93 | for annotator in [misc_detector, legal_detector, company_type_detector, full_name_detector]:

94 | annotator.add_incompatible_sources(exclusives)

95 | self.add_annotator(annotator)

96 |

97 | # General number detector

98 | number_detector = FunctionAnnotator("number_detector", number_generator)

99 | number_detector.add_incompatible_sources(exclusives + ["legal_detector", "company_type_detector"])

100 | self.add_annotator(number_detector)

101 |

102 | self.add_annotator(SnipsAnnotator("snips"))

103 | return self

104 |

105 | def add_models(self):

106 | """Adds Spacy NER models to the annotator"""

107 |

108 | self.add_annotator(ModelAnnotator("core_web_md", "en_core_web_md"))

109 | self.add_annotator(TruecaseAnnotator("core_web_md_truecase", "en_core_web_md", FORM_FREQUENCIES))

110 | self.add_annotator(ModelAnnotator("BTC", os.path.dirname(__file__) + "/../../data/btc"))

111 | self.add_annotator( TruecaseAnnotator("BTC_truecase", os.path.dirname(__file__) + "/../../data/btc", FORM_FREQUENCIES))

112 |

113 | # Avoid spans that start with an article

114 | editor = lambda span: span[1:] if span[0].lemma_ in {"the", "a", "an"} else span

115 | self.add_annotator(SpanEditorAnnotator("edited_BTC", "BTC", editor))

116 | self.add_annotator(SpanEditorAnnotator("edited_BTC_truecase", "BTC_truecase", editor))

117 | self.add_annotator(SpanEditorAnnotator("edited_core_web_md", "core_web_md", editor))

118 | self.add_annotator(SpanEditorAnnotator("edited_core_web_md_truecase", "core_web_md_truecase", editor))

119 |

120 | return self

121 |

122 | def add_gazetteers(self, full_load=True):

123 | """Adds gazetteer supervision models (company names and wikidata)."""

124 |

125 | # Annotation of company names based on a large list of companies

126 | # company_tries = extract_json_data(COMPANY_NAMES) if full_load else {}

127 |

128 | # Annotation of company, person and location names based on wikidata

129 | wiki_tries = extract_json_data(WIKIDATA) if full_load else {}

130 |

131 | # Annotation of company, person and location names based on wikidata (only entries with descriptions)

132 | wiki_small_tries = extract_json_data(WIKIDATA_SMALL)

133 |

134 | # Annotation of location names based on geonames

135 | geo_tries = extract_json_data(GEONAMES)

136 |

137 | # Annotation of organisation and person names based on crunchbase open data

138 | crunchbase_tries = extract_json_data(CRUNCHBASE)

139 |

140 | # Annotation of product names

141 | products_tries = extract_json_data(PRODUCTS)

142 |

143 | exclusives = ["date_detector", "time_detector", "money_detector", "number_detector"]

144 | for name, tries in {"wiki":wiki_tries, "wiki_small":wiki_small_tries,

145 | "geo":geo_tries, "crunchbase":crunchbase_tries, "products":products_tries}.items():

146 |

147 | # For each KB, we create two gazetters (case-sensitive or not)

148 | cased_gazetteer = GazetteerAnnotator("%s_cased"%name, tries, case_sensitive=True)

149 | uncased_gazetteer = GazetteerAnnotator("%s_uncased"%name, tries, case_sensitive=False)

150 | cased_gazetteer.add_incompatible_sources(exclusives)

151 | uncased_gazetteer.add_incompatible_sources(exclusives)

152 | self.add_annotators(cased_gazetteer, uncased_gazetteer)

153 |

154 | # We also add new sources for multitoken entities (which have higher confidence)

155 | multitoken_cased = SpanConstraintAnnotator("multitoken_%s"%(cased_gazetteer.name),

156 | cased_gazetteer.name, lambda s: len(s) > 1)

157 | multitoken_uncased = SpanConstraintAnnotator("multitoken_%s"%(uncased_gazetteer.name),

158 | uncased_gazetteer.name, lambda s: len(s) > 1)

159 | self.add_annotators(multitoken_cased, multitoken_uncased)

160 |

161 | return self

162 |

163 | def add_doc_level(self):

164 | """Adds document-level supervision sources"""

165 |

166 | self.add_annotator(ConLL2003Standardiser())

167 |

168 | maj_voter = MajorityVoter("doclevel_voter", ["LOC", "MISC", "ORG", "PER"],

169 | initial_weights={"doc_history":0, "doc_majority":0})

170 | maj_voter.add_underspecified_label("ENT", {"LOC", "MISC", "ORG", "PER"})

171 | self.add_annotator(maj_voter)

172 |

173 | self.add_annotator(DocumentHistoryAnnotator("doc_history_cased", "doclevel_voter", ["PER", "ORG"]))

174 | self.add_annotator(DocumentHistoryAnnotator("doc_history_uncased", "doclevel_voter", ["PER", "ORG"],

175 | case_sentitive=False))

176 |

177 | maj_voter = MajorityVoter("doclevel_voter", ["LOC", "MISC", "ORG", "PER"],

178 | initial_weights={"doc_majority":0})

179 | maj_voter.add_underspecified_label("ENT", {"LOC", "MISC", "ORG", "PER"})

180 | self.add_annotator(maj_voter)

181 |

182 | self.add_annotator(DocumentMajorityAnnotator("doc_majority_cased", "doclevel_voter"))

183 | self.add_annotator(DocumentMajorityAnnotator("doc_majority_uncased", "doclevel_voter",

184 | case_sensitive=False))

185 | return self

186 |

187 |

188 | ############################################

189 | # Heuristics

190 | ############################################

191 |

192 |

193 | def date_generator(doc):

194 | """Searches for occurrences of date patterns in text"""

195 |

196 | spans = []

197 |

198 | i = 0

199 | while i < len(doc):

200 | tok = doc[i]

201 | if tok.lemma_ in data_utils.DAYS | data_utils.DAYS_ABBRV:

202 | spans.append((i, i + 1, "DATE"))

203 | elif tok.is_digit and re.match("\\d+$", tok.text) and int(tok.text) > 1920 and int(tok.text) < 2040:

204 | spans.append((i, i + 1, "DATE"))

205 | elif tok.lemma_ in data_utils.MONTHS | data_utils.MONTHS_ABBRV:

206 | if tok.tag_ == "MD": # Skipping "May" used as auxiliary

207 | pass

208 | elif i > 0 and re.match("\\d+$", doc[i - 1].text) and int(doc[i - 1].text) < 32:

209 | spans.append((i - 1, i + 1, "DATE"))

210 | elif i > 1 and re.match("\\d+(?:st|nd|rd|th)$", doc[i - 2].text) and doc[i - 1].lower_ == "of":

211 | spans.append((i - 2, i + 1, "DATE"))

212 | elif i < len(doc) - 1 and re.match("\\d+$", doc[i + 1].text) and int(doc[i + 1].text) < 32:

213 | spans.append((i, i + 2, "DATE"))

214 | i += 1

215 | else: