4 | " %}

2 | {% assign url = include.content | remove: "" | remove: "" %}

3 | {% else %}

4 | {% assign url = include.content | remove: "" | split: ">" | last %}

5 | {% endif %}

6 |

7 | {% twitter url %}

8 |

9 |

--------------------------------------------------------------------------------

/.github/workflows/check_cdns.yaml:

--------------------------------------------------------------------------------

1 | name: Check CDN

2 | on:

3 |   # push:

4 |   # schedule:

5 |   #   - cron: '1 7,14,21 * * *'

6 |   workflow_dispatch:

7 |

8 | jobs:

9 |   run:

10 |     runs-on: ubuntu-latest

11 |     steps:

12 |       - uses: actions/checkout@main

13 |       - run: ./_action_files/check_js.sh

14 |

--------------------------------------------------------------------------------

/_notebooks/.virtual_documents/2024-08-26-python-hunting.ipynb:

--------------------------------------------------------------------------------

1 |

2 | import base64

3 |

4 | ZWHmjDkfVvkVdclV = 'bWdzdHN0dWRpby5zaG9w'

5 | RYBTeAeZbiUOxeloi = base64.b64decode(ZWHmjDkfVvkVdclV).decode()

6 |

7 | print(f'https://{RYBTeAeZbiUOxeloi}/sunrise')

8 | print(f'https://{RYBTeAeZbiUOxeloi}/luminous')

9 |

10 |

11 |

12 |
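The notebook cell above hides its host name behind a Base64 string. A minimal sketch of the same decoding step follows, wrapped in helpers that also defang the result so the indicator is not clickable in notes. The names `decode_host` and `defang`, and the `hxxps` convention, are illustrative additions and not part of the notebook.

```python
import base64


def decode_host(encoded: str) -> str:
    """Decode a Base64-encoded host name, as the notebook cell does."""
    return base64.b64decode(encoded).decode()


def defang(host: str) -> str:
    """Replace dots so the indicator cannot be followed by accident."""
    return host.replace(".", "[.]")


# Same encoded value and paths as in the notebook cell.
host = decode_host("bWdzdHN0dWRpby5zaG9w")
for path in ("sunrise", "luminous"):
    print(f"hxxps://{defang(host)}/{path}")
```

Defanging is only a presentation choice for research notes; the decoding itself is the single `b64decode` call.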

--------------------------------------------------------------------------------

/_plugins/footnote-detail.rb:

--------------------------------------------------------------------------------

1 | module Jekyll

2 | module AssetFilter

3 | def fndetail(input, id)

4 | "#{id}. #{input}↩"

5 | end

6 | end

7 | end

8 |

9 | Liquid::Template.register_filter(Jekyll::AssetFilter)

10 |

--------------------------------------------------------------------------------

/_includes/post_list.html:

--------------------------------------------------------------------------------

1 |

2 |

3 | {{ post.title | escape }}

4 |

5 |

6 | {%- if site.show_description and post.description -%}

7 |

8 | {%- endif -%}

9 |

10 |

--------------------------------------------------------------------------------

/_includes/image-r:

--------------------------------------------------------------------------------

1 |

2 |

7 | {% if include.caption %}

8 | {{ include.caption }}

9 | {% endif %}

10 |

11 |

12 |

--------------------------------------------------------------------------------

/_includes/utterances.html:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/_includes/notebook_colab_link.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |  4 |

5 |

--------------------------------------------------------------------------------

/_includes/notebook_binder_link.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.swp

2 | ~*

3 | *~

4 | _site

5 | .sass-cache

6 | .jekyll-cache

7 | .jekyll-metadata

8 | *.xml

9 | vendor

10 | _notebooks/.ipynb_checkpoints

11 | # Local Netlify folder

12 | .netlify

13 | .tweet-cache

14 | __pycache__

15 |

16 | .ipynb_checkpoints

17 | */.ipynb_checkpoints/*

18 |

19 | # IPython

20 | profile_default/

21 | ipython_config.py

22 |

23 | # MacOS crap

24 | .DS_Store

25 |

26 | # Python trash

27 | *.py[co]

28 |
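Several of the patterns above are plain shell-style globs. As a rough illustration only, the sketch below checks a few file names against a subset of them with Python's `fnmatch`. Git's own matching has additional rules (directory-only patterns, negation, slash handling) that this does not model, and `is_ignored` is a hypothetical helper name.

```python
from fnmatch import fnmatchcase

# A subset of the patterns from the .gitignore above.
PATTERNS = ["*.swp", "*~", "*.py[co]", ".DS_Store"]


def is_ignored(filename: str) -> bool:
    """Return True if the bare file name matches any of the glob patterns."""
    return any(fnmatchcase(filename, pattern) for pattern in PATTERNS)


for name in ("module.pyc", "module.pyo", "module.py", "notes.md~"):
    print(name, is_ignored(name))
```

The character class `[co]` is why `*.py[co]` covers both `.pyc` and `.pyo` files while leaving `.py` sources tracked.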

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/upgrade.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: "[fastpages] Automated Upgrade"

3 | about: "Trigger a PR for upgrading fastpages"

4 | title: "[fastpages] Automated Upgrade"

5 | labels: fastpages-automation

6 | assignees: ''

7 |

8 | ---

9 |

10 | Opening this issue will trigger GitHub Actions to fetch the latest version of [fastpages](https://github.com/fastai/fastpages). More information will be provided in forthcoming comments below.

11 |

--------------------------------------------------------------------------------

/_plugins/footnote.rb:

--------------------------------------------------------------------------------

1 | module Jekyll

2 | class FootNoteTag < Liquid::Tag

3 |

4 | def initialize(tag_name, text, tokens)

5 | super

6 | @text = text.strip

7 | end

8 |

9 | def render(context)

10 | "#{@text}"

11 | end

12 | end

13 | end

14 |

15 | Liquid::Template.register_tag('fn', Jekyll::FootNoteTag)

16 |

--------------------------------------------------------------------------------

/_includes/notebook_deepnote_link.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

--------------------------------------------------------------------------------

/_includes/image.html:

--------------------------------------------------------------------------------

1 |

2 | {% if {{include.url}} %}{% endif %}

3 |

4 | {% if {{include.url}} %}{% endif %}

5 | {% if {{include.caption}} %}

6 | {{include.caption}}

7 | {% endif %}

8 |

9 |

--------------------------------------------------------------------------------

/.devcontainer.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "fastpages-codespaces",

3 | "dockerComposeFile": "docker-compose.yml",

4 | "service": "watcher",

5 | "mounts": [ "source=/var/run/docker.sock,target=/var/run/docker.sock,type=bind" ],

6 | "forwardPorts": [4000, 8080],

7 | "appPort": [4000, 8080],

8 | "extensions": ["ms-python.python",

9 | "ms-azuretools.vscode-docker"],

10 | "runServices": ["converter", "notebook", "jekyll", "watcher"]

11 | }

12 |

13 |

--------------------------------------------------------------------------------

/_includes/youtube.html:

--------------------------------------------------------------------------------

1 | {% if include.content contains "" %}

2 | {% assign base_url = include.content | remove: "" | remove: "" | split: "/" | last %}

3 | {% else %}

4 | {% assign url = include.content | remove: "" | split: ">" | last %}

5 | {% assign base_url = url | split: "/" | last %}

6 | {% endif %}

7 |

8 |

9 |

10 |
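Both branches of the include above end with `split: "/" | last`, which keeps only the final path segment of the URL. A minimal sketch of that one step in Python is shown here; the function name and the example URL are hypothetical.

```python
def last_segment(url: str) -> str:
    """Mirror Liquid's `split: "/" | last`: keep what follows the final slash."""
    return url.split("/")[-1]


# Hypothetical short-link style URL, used only for illustration.
print(last_segment("https://youtu.be/abc123"))
```

As in the Liquid version, a URL that ends with a trailing slash would yield an empty string, so the include presumably relies on clean input.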

--------------------------------------------------------------------------------

/_includes/google-analytics.html:

--------------------------------------------------------------------------------

1 |

2 |

3 | {% if site.google_analytics %}

4 |

5 |

6 |

7 |

14 |

15 | {% endif %}

16 |

--------------------------------------------------------------------------------

/_pages/404.html:

--------------------------------------------------------------------------------

1 | ---

2 | permalink: /404.html

3 | layout: default

4 | search_exclude: true

5 | ---

6 |

7 |

20 |

21 |

22 | 404

23 | Page not found :(

24 | The requested page could not be found.

25 |

26 |

--------------------------------------------------------------------------------

/_includes/head.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | {%- seo -%}

7 |

8 | {%- feed_meta -%}

9 | {%- if jekyll.environment == 'production' and site.google_analytics -%}

10 | {%- include google-analytics.html -%}

11 | {%- endif -%}

12 |

13 | {%- include custom-head.html -%}

14 |

15 |

16 |

--------------------------------------------------------------------------------

/.github/workflows/gh-page.yaml:

--------------------------------------------------------------------------------

1 | name: GH-Pages Status

2 | on:

3 |   page_build

4 |

5 | jobs:

6 |   see-page-build-payload:

7 |     runs-on: ubuntu-latest

8 |     steps:

9 |       - name: check status

10 |         run: |

11 |           import os

12 |           status, errormsg = os.getenv('STATUS'), os.getenv('ERROR')

13 |           assert status == 'built', 'There was an error building the page on GitHub Pages.\n\nStatus: {}\n\nError message: {}'.format(status, errormsg)

14 |         shell: python

15 |         env:

16 |           STATUS: ${{ github.event.build.status }}

17 |           ERROR: ${{ github.event.build.error.message }}

18 |
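The inline step above asserts that the build status equals `built`. The sketch below expresses the same check as a small function that returns a message instead of asserting, which keeps it testable and unaffected by `python -O`. The name `build_failure` is an assumption for illustration; the workflow itself uses the inline `assert`.

```python
from typing import Optional


def build_failure(status: Optional[str], error: Optional[str]) -> Optional[str]:
    """Return None for a successful build, otherwise a readable failure message."""
    if status == "built":
        return None
    return (
        "There was an error building the page on GitHub Pages.\n\n"
        f"Status: {status}\n\nError message: {error}"
    )


# In a workflow step the two values would come from the STATUS and ERROR
# environment variables, e.g. build_failure(os.getenv("STATUS"), os.getenv("ERROR")).
print(build_failure("built", None))
```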

--------------------------------------------------------------------------------

/_includes/post_list_image_card.html:

--------------------------------------------------------------------------------

1 |

2 | {%- if post.image -%}

3 |

4 |

5 |

6 | {%- endif -%}

7 |

8 |

9 |

10 | {{ post.title | escape }}

11 |

12 |

13 |

14 |

15 |

16 |

--------------------------------------------------------------------------------

/_includes/notebook_github_link.html:

--------------------------------------------------------------------------------

1 |

2 | {% if page.layout == 'notebook' %}

3 |

4 | {% elsif page.layout == 'post' %}

5 |

6 | {% endif -%}

7 |  8 |

9 |

10 |

--------------------------------------------------------------------------------

/_action_files/action.yml:

--------------------------------------------------------------------------------

1 | name: 'fastpages: An easy to use blogging platform with support for Jupyter Notebooks.'

2 | description: Converts Jupyter notebooks and Word docs into Jekyll blog posts.

3 | author: Hamel Husain

4 | inputs:

5 |   BOOL_SAVE_MARKDOWN:

6 |     description: Either 'true' or 'false'. Whether or not to commit converted markdown files from notebooks and Word documents into the _posts directory in your repo. This is useful for debugging.

7 |     required: false

8 |     default: false

9 |   SSH_DEPLOY_KEY:

10 |     description: An SSH deploy key is required if BOOL_SAVE_MARKDOWN = 'true'

11 |     required: false

12 | branding:

13 |   color: 'blue'

14 |   icon: 'book'

15 | runs:

16 |   using: 'docker'

17 |   image: 'Dockerfile'

18 |

--------------------------------------------------------------------------------

/_action_files/fastpages.tpl:

--------------------------------------------------------------------------------

1 | {%- extends 'hide.tpl' -%}

2 | {%- block body -%}

3 | {%- set internals = ["metadata", "output_extension", "inlining",

4 | "raw_mimetypes", "global_content_filter"] -%}

5 | ---

6 | {%- for k in resources |reject("in", internals) %}

7 | {% if k == "summary" and "description" not in resources %}description{% else %}{{ k }}{% endif %}: {{ resources[k] }}

8 | {%- endfor %}

9 | layout: notebook

10 | ---

11 |

12 |

18 |

19 |

20 | {{ super() }}

21 |

22 | {%- endblock body %}
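The template above writes a YAML front matter block from `resources`: it skips a fixed list of internal keys, renames `summary` to `description` when no `description` exists, and always appends `layout: notebook`. A minimal plain-Python sketch of that logic, without Jinja, might look like this. The function name `front_matter` is hypothetical, and values are written as-is without YAML quoting, as in the template.

```python
# Keys the template treats as nbconvert internals and never emits.
INTERNALS = {
    "metadata", "output_extension", "inlining",
    "raw_mimetypes", "global_content_filter",
}


def front_matter(resources: dict) -> str:
    """Build the front matter text the template would emit for `resources`."""
    lines = ["---"]
    for key, value in resources.items():
        if key in INTERNALS:
            continue
        # `summary` doubles as `description` only when none was given.
        if key == "summary" and "description" not in resources:
            key = "description"
        lines.append(f"{key}: {value}")
    lines.append("layout: notebook")
    lines.append("---")
    return "\n".join(lines)


print(front_matter({"title": "Demo", "summary": "A short note", "metadata": {}}))
```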

--------------------------------------------------------------------------------

/_posts/README.md:

--------------------------------------------------------------------------------

1 | ⚠️ Do not delete this directory! You can delete the blog post files in this directory, but you should still keep this directory around as Jekyll expects this folder to exist.

2 |

3 | # Auto-Convert Markdown Files to Posts

4 |

5 | [`fastpages`](https://github.com/fastai/fastpages) will automatically convert markdown files saved in this directory into blog posts!

6 |

7 | You must save your markdown file with the naming convention `YYYY-MM-DD-*.md`. Examples of valid filenames are:

8 |

9 | ```shell

10 | 2020-01-28-My-First-Post.md

11 | 2012-09-12-how-to-write-a-blog.md

12 | ```
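The naming convention can be checked before committing a file. The sketch below is one possible validator, assuming the part after the date must be non-empty and that the date must be a real calendar date; neither assumption is stated in this README, and `is_valid_post_name` is a hypothetical helper.

```python
import re
from datetime import datetime

# Date prefix, a hyphen, at least one more character, then the .md suffix.
_POST_NAME = re.compile(r"^(\d{4}-\d{2}-\d{2})-.+\.md$")


def is_valid_post_name(filename: str) -> bool:
    """Check a file name against the YYYY-MM-DD-*.md convention."""
    match = _POST_NAME.match(filename)
    if match is None:
        return False
    try:
        # Reject impossible dates such as month 13.
        datetime.strptime(match.group(1), "%Y-%m-%d")
    except ValueError:
        return False
    return True


for name in ("2020-01-28-My-First-Post.md", "My-First-Post.md"):
    print(name, is_valid_post_name(name))
```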

13 |

14 | # Resources

15 |

16 | - [Jekyll posts](https://jekyllrb.com/docs/posts/)

17 | - [Example markdown post](https://github.com/fastai/fastpages/blob/master/_posts/2020-01-14-test-markdown-post.md)

18 |

--------------------------------------------------------------------------------

/_includes/toc.html:

--------------------------------------------------------------------------------

1 |

2 |

19 |

20 |

21 |

--------------------------------------------------------------------------------

/index.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: home

3 | search_exclude: true

4 | image: images/logo.png

5 | ---

6 |

7 | [](https://www.youtube.com/c/OALabs)

8 | [](https://www.twitch.tv/oalabslive)

9 | [](https://discord.gg/cw4U3WHvpn)

10 | [](https://www.patreon.com/oalabs)

11 |

12 | This is a collection of our raw research notes. Each post is generated from a [Jupyter Notebook](https://jupyter.org/) that can be found in our GitHub [Research](https://github.com/OALabs/research/tree/master/_notebooks) repository. Notes may contain errors, spelling mistakes, grammar mistakes, and incorrect code. Please keep in mind these are all rough drafts. Pull requests are welcome!

13 |

14 | # Notes

15 |

--------------------------------------------------------------------------------

/_sass/minima/custom-styles.scss:

--------------------------------------------------------------------------------

1 | /*-----------------------------------*/

2 | /*--- IMPORT STYLES FOR FASTPAGES ---*/

3 | @import 'minima/fastpages-styles';

4 |

5 | /*-----------------------------------*/

6 | /*----- ADD YOUR STYLES BELOW -------*/

7 |

8 | @import 'minima/dark-mode';

9 |

10 | .site-header {

11 | font-family: 'IBM Plex Mono', monospace;

12 | border: none;

13 | }

14 |

15 | .site-header a:hover {

16 | color: $med-emph !important;

17 | text-decoration: none !important;

18 | }

19 |

20 | .home {

21 | font-family: 'Roboto Mono', monospace !important;

22 | }

23 |

24 | .page-content {

25 | font-family: 'Roboto', sans-serif;

26 | }

27 |

28 | .site-footer {

29 | font-family: 'IBM Plex Mono', monospace;

30 | border: none;

31 | }

32 |

33 | // If you want to turn off dark background for syntax highlighting, comment or delete the below line.

34 | @import 'minima/fastpages-dracula-highlight';

35 |

--------------------------------------------------------------------------------

/_action_files/nb2post.py:

--------------------------------------------------------------------------------

1 | """Converts Jupyter Notebooks to Jekyll compliant blog posts"""

2 | from datetime import datetime

3 | import re, os, logging

4 | from nbdev import export2html

5 | from nbdev.export2html import Config, Path, _to_html, _re_block_notes

6 | from fast_template import rename_for_jekyll

7 |

8 | warnings = set()

9 |

10 | # Modify the naming process such that destination files get named properly for Jekyll _posts

11 | def _nb2htmlfname(nb_path, dest=None):

12 |     fname = rename_for_jekyll(nb_path, warnings=warnings)

13 |     if dest is None: dest = Config().doc_path

14 |     return Path(dest)/fname

15 |

16 | # TODO: Open a GitHub Issue in addition to printing warnings

17 | for original, new in warnings:

18 |     print(f'{original} has been renamed to {new} to be compliant with Jekyll naming conventions.\n')

19 |

20 | ## apply monkey patches

21 | export2html._nb2htmlfname = _nb2htmlfname

22 | export2html.notebook2html(fname='_notebooks/*.ipynb', dest='_posts/', template_file='/fastpages/fastpages.tpl', execute=False)

23 |

--------------------------------------------------------------------------------

/_action_files/pr_comment.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Make a comment on a PR.

4 | # Usage:

5 | # > pr_comment.sh <MESSAGE>

6 |

7 | set -e

8 |

9 | # This is populated by our secret from the Workflow file.

10 | if [[ -z "${GITHUB_TOKEN}" ]]; then

11 | echo "Set the GITHUB_TOKEN env variable."

12 | exit 1

13 | fi

14 |

15 | if [[ -z "${ISSUE_NUMBER}" ]]; then

16 | echo "Set the ISSUE_NUMBER env variable."

17 | exit 1

18 | fi

19 |

20 | if [ -z "$1" ]

21 | then

22 | echo "No MESSAGE argument supplied. Usage: pr_comment.sh <MESSAGE>"

23 | exit 1

24 | fi

25 |

26 | MESSAGE=$1

27 |

28 | ## Set Vars

29 | URI=https://api.github.com

30 | API_VERSION=v3

31 | API_HEADER="Accept: application/vnd.github.${API_VERSION}+json"

32 | AUTH_HEADER="Authorization: token ${GITHUB_TOKEN}"

33 |

34 | # Create a comment with APIv3 # POST /repos/:owner/:repo/issues/:issue_number/comments

35 | curl -XPOST -sSL \

36 | -d "{\"body\": \"$MESSAGE\"}" \

37 | -H "${AUTH_HEADER}" \

38 | -H "${API_HEADER}" \

39 | "${URI}/repos/${GITHUB_REPOSITORY}/issues/${ISSUE_NUMBER}/comments"

40 |
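The script above interpolates `$MESSAGE` directly into a JSON string, so a message containing double quotes or newlines would likely produce an invalid request body. As a hedged alternative sketch, the Python below builds the same request with `json.dumps` handling the escaping. It only constructs the request and does not send it; `build_comment_request` and the example arguments are hypothetical.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_comment_request(repository, issue_number, message, token):
    """Build (but do not send) a POST request that comments on an issue or PR."""
    body = json.dumps({"body": message}).encode("utf-8")  # escapes quotes/newlines
    return urllib.request.Request(
        url=f"{API_ROOT}/repos/{repository}/issues/{issue_number}/comments",
        data=body,
        method="POST",
        headers={
            "Authorization": f"token {token}",
            "Accept": "application/vnd.github.v3+json",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; a real call would pass the result to urllib.request.urlopen.
request = build_comment_request("owner/repo", 1, 'Build "done"\nSee logs.', "TOKEN")
print(request.full_url)
```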

--------------------------------------------------------------------------------

/_pages/tags.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: categories

3 | permalink: /categories/

4 | title: Tags

5 | search_exclude: true

6 | ---

7 |

8 | {% if site.categories.size > 0 %}

9 | Contents

10 |

11 | {% assign categories = "" | split:"" %}

12 | {% for c in site.categories %}

13 | {% assign categories = categories | push: c[0] %}

14 | {% endfor %}

15 | {% assign categories = categories | sort_natural %}

16 |

17 |

18 | {% for category in categories %}

19 | - {{ category }}

20 | {% endfor %}

21 |

22 |

23 | {% for category in categories %}

24 | {{ category }}

25 |

26 | {% for post in site.categories[category] %}

27 | {% if post.hide != true %}

28 | {%- assign date_format = site.minima.date_format | default: "%b %-d, %Y" -%}

29 |

30 |

31 |

32 | {% endif %}

33 | {% endfor %}

34 | {% endfor %}

35 |

36 | {% endif %}

37 |
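The page above first collects every category name, sorts the names case-insensitively with `sort_natural`, and then lists each category's posts while skipping those marked `hide: true`. A rough Python equivalent of that grouping is sketched below. The post dictionaries and the function name are illustrative and do not reflect Jekyll's actual data model.

```python
def posts_by_category(posts):
    """Group visible post titles under each category, names sorted naturally."""
    grouped = {}
    for post in posts:
        for category in post.get("categories", []):
            # The category is listed even if all of its posts are hidden.
            titles = grouped.setdefault(category, [])
            if post.get("hide") is not True:
                titles.append(post["title"])
    return {name: grouped[name] for name in sorted(grouped, key=str.casefold)}


example = [
    {"title": "Loader notes", "categories": ["zeus", "Emotet"]},
    {"title": "Draft", "categories": ["zeus"], "hide": True},
]
print(posts_by_category(example))
```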

--------------------------------------------------------------------------------

/docker-compose.yml:

--------------------------------------------------------------------------------

1 | version: "3"

2 | services:

3 |   fastpages: &fastpages

4 |     working_dir: /data

5 |     environment:

6 |       - INPUT_BOOL_SAVE_MARKDOWN=false

7 |     build:

8 |       context: ./_action_files

9 |       dockerfile: ./Dockerfile

10 |     image: fastpages-dev

11 |     logging:

12 |       driver: json-file

13 |       options:

14 |         max-size: 50m

15 |     stdin_open: true

16 |     tty: true

17 |     volumes:

18 |       - .:/data/

19 |

20 |   converter:

21 |     <<: *fastpages

22 |     command: /fastpages/action_entrypoint.sh

23 |

24 |   notebook:

25 |     <<: *fastpages

26 |     command: jupyter notebook --allow-root --no-browser --ip=0.0.0.0 --port=8080 --NotebookApp.token='' --NotebookApp.password=''

27 |     ports:

28 |       - "8080:8080"

29 |

30 |   watcher:

31 |     <<: *fastpages

32 |     command: watchmedo shell-command --command /fastpages/action_entrypoint.sh --pattern *.ipynb --recursive --drop

33 |     network_mode: host # for GitHub Codespaces https://github.com/features/codespaces/

34 |

35 |   jekyll:

36 |     working_dir: /data

37 |     image: fastai/fastpages-jekyll

38 |     restart: unless-stopped

39 |     ports:

40 |       - "4000:4000"

41 |     volumes:

42 |       - .:/data/

43 |     command: >

44 |       bash -c "chmod -R u+rw . && jekyll serve --host 0.0.0.0 --trace --strict_front_matter"

45 |
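The `converter`, `notebook`, and `watcher` services above reuse the `fastpages` service through a YAML anchor and the merge key `<<: *fastpages`. The merge is shallow, and keys written on the service itself take precedence. The Python sketch below mimics that with dictionaries; the reduced service definitions are abbreviated stand-ins, not the full compose file.

```python
def merge_service(base: dict, overrides: dict) -> dict:
    """Shallow merge in the style of the YAML merge key: explicit keys win."""
    return {**base, **overrides}


# Abbreviated stand-in for the anchored `fastpages` service.
fastpages = {"working_dir": "/data", "image": "fastpages-dev", "tty": True}

converter = merge_service(fastpages, {"command": "/fastpages/action_entrypoint.sh"})
notebook = merge_service(fastpages, {"ports": ["8080:8080"]})
print(converter)
```

Because the merge is shallow, a service that redefines a nested key such as `volumes` would replace the inherited list rather than extend it.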

--------------------------------------------------------------------------------

/_fastpages_docs/_upgrade_pr.md:

--------------------------------------------------------------------------------

1 | Hello :wave: @{_username_}!

2 |

3 | This PR pulls the most recent files from [fastpages](https://github.com/fastai/fastpages), and attempts to replace relevant files in your repository, without changing the content of your blog posts. This allows you to receive bug fixes and feature updates.

4 |

5 | ## Warning

6 |

7 | If you have applied **customizations to the HTML or styling of your site, they may be lost if you merge this PR. Please review the changes this PR makes carefully before merging!** However, for people who only write content and don't change the styling of their site, this method is recommended.

8 |

9 | If you would like more fine-grained control over what changes to accept or decline, consider [following this approach](https://stackoverflow.com/questions/56577184/github-pull-changes-from-a-template-repository/56577320) instead.

10 |

11 | ### What to Expect After Merging This PR

12 |

13 | - GitHub Actions will build your site, which will take 3-4 minutes to complete. **This will happen anytime you push changes to the master branch of your repository.** You can monitor the logs of this if you like on the [Actions tab of your repo](https://github.com/{_username_}/{_repo_name_}/actions).

14 | - You can monitor the status of your site in the GitHub Pages section of your [repository settings](https://github.com/{_username_}/{_repo_name_}/settings).

15 |

--------------------------------------------------------------------------------

/_fastpages_docs/NOTEBOOK_FOOTNOTES.md:

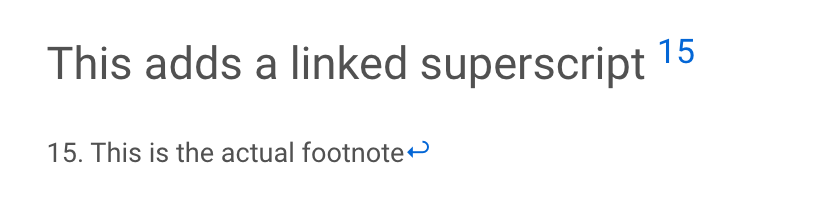

--------------------------------------------------------------------------------

1 | # Detailed Guide To Footnotes in Notebooks

2 |

3 | Notebook -> HTML footnotes don't work the same way as Markdown footnotes. There isn't a good solution, so we made these Jekyll plugins as a workaround.

4 |

5 | ```

6 | This adds a linked superscript {% fn 15 %}

7 |

8 | {{ "This is the actual footnote" | fndetail: 15 }}

9 | ```

10 |

11 |

12 |

13 | You can have links, but then you have to use **single quotes** to escape the link.

14 | ```

15 | This adds a linked superscript {% fn 20 %}

16 |

17 | {{ 'This is the actual footnote with a [link](www.github.com) as well!' | fndetail: 20 }}

18 | ```

19 |

20 |

21 | However, what if you want a single quote in your footnote? There is no easy way to escape it. Fortunately, you can use the HTML character entity `&#39;` (you must keep the semicolon!). For example, you can include a single quote like this:

22 |

23 |

24 | ```

25 | This adds a linked superscript {% fn 20 %}

26 |

27 | {{ 'This is the actual footnote; with a [link](www.github.com) as well! and a single quote &#39; too!' | fndetail: 20 }}

28 | ```
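To see why a numeric character reference works where a literal quote does not, the short sketch below decodes one the way a browser would. It assumes the entity meant here is `&#39;`, the decimal reference for the apostrophe; `as_displayed` is a hypothetical helper name.

```python
import html


def as_displayed(text: str) -> str:
    """Decode HTML character references, roughly as a browser renders them."""
    return html.unescape(text)


footnote = "a single quote &#39; too!"
print(as_displayed(footnote))
```

The Liquid string never contains a literal `'`, so its single-quoted delimiters stay balanced, and the quote only appears once the page is rendered.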

29 |

30 |

31 |

--------------------------------------------------------------------------------

/_action_files/hide.tpl:

--------------------------------------------------------------------------------

1 | {%- extends 'basic.tpl' -%}

2 |

3 | {% block codecell %}

4 | {{ "{% raw %}" }}

5 | {{ super() }}

6 | {{ "{% endraw %}" }}

7 | {% endblock codecell %}

8 |

9 | {% block input_group -%}

10 | {%- if cell.metadata.collapse_show -%}

11 |

12 |

13 | {{ super() }}

14 |

15 | {%- elif cell.metadata.collapse_hide -%}

16 |

17 |

18 | {{ super() }}

19 |

20 | {%- elif cell.metadata.hide_input or nb.metadata.hide_input -%}

21 | {%- else -%}

22 | {{ super() }}

23 | {%- endif -%}

24 | {% endblock input_group %}

25 |

26 | {% block output_group -%}

27 | {%- if cell.metadata.collapse_output -%}

28 |

29 |

30 | {{ super() }}

31 |

32 | {%- elif cell.metadata.hide_output -%}

33 | {%- else -%}

34 | {{ super() }}

35 | {%- endif -%}

36 | {% endblock output_group %}

37 |

38 | {% block output_area_prompt %}

39 | {%- if cell.metadata.hide_input or nb.metadata.hide_input -%}

40 |

41 | {%- else -%}

42 | {{ super() }}

43 | {%- endif -%}

44 | {% endblock output_area_prompt %}
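The `input_group` block above picks one of four treatments for a cell's input based on metadata flags, checked in a fixed order. The sketch below restates that precedence in Python. The returned labels are assumptions, since the wrapping markup is not visible in this listing, and `input_mode` is a hypothetical name.

```python
def input_mode(cell_meta: dict, nb_meta: dict = None) -> str:
    """Return how a cell's input would be treated, following the template's order."""
    nb_meta = nb_meta or {}
    if cell_meta.get("collapse_show"):
        return "collapsible, initially shown"   # assumed meaning of collapse_show
    if cell_meta.get("collapse_hide"):
        return "collapsible, initially hidden"  # assumed meaning of collapse_hide
    if cell_meta.get("hide_input") or nb_meta.get("hide_input"):
        return "omitted"
    return "shown"


print(input_mode({"collapse_hide": True}))
print(input_mode({}, {"hide_input": True}))
```

Note the precedence: a cell marked `collapse_show` keeps its input even when the notebook-level `hide_input` flag is set, because that branch is tested first.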

--------------------------------------------------------------------------------

/Gemfile:

--------------------------------------------------------------------------------

1 | source "https://rubygems.org"

2 | # Hello! This is where you manage which Jekyll version is used to run.

3 | # When you want to use a different version, change it below, save the

4 | # file and run `bundle install`. Run Jekyll with `bundle exec`, like so:

5 | #

6 | # bundle exec jekyll serve

7 | #

8 | # This will help ensure the proper Jekyll version is running.

9 | # Happy Jekylling!

10 | gem "jekyll", "~> 4.1.0"

11 | # This is the default theme for new Jekyll sites. You may change this to anything you like.

12 | gem "minima"

13 | # To upgrade, run `bundle update github-pages`.

14 | # gem "github-pages", group: :jekyll_plugins

15 | # If you have any plugins, put them here!

16 | group :jekyll_plugins do

17 | gem "jekyll-feed", "~> 0.12"

18 | gem 'jekyll-octicons'

19 | gem 'jekyll-remote-theme'

20 | gem "jekyll-twitter-plugin"

21 | gem 'jekyll-relative-links'

22 | gem 'jekyll-seo-tag'

23 | gem 'jekyll-toc'

24 | gem 'jekyll-gist'

25 | gem 'jekyll-paginate'

26 | gem 'jekyll-sitemap'

27 | end

28 |

29 | gem "kramdown-math-katex"

30 | gem "jemoji"

31 |

32 | # Windows and JRuby do not include zoneinfo files, so bundle the tzinfo-data gem

33 | # and associated library.

34 | install_if -> { RUBY_PLATFORM =~ %r!mingw|mswin|java! } do

35 | gem "tzinfo", "~> 1.2"

36 | gem "tzinfo-data"

37 | end

38 |

39 | # Performance-booster for watching directories on Windows

40 | gem "wdm", "~> 0.1.1", :install_if => Gem.win_platform?

41 |

42 | gem "faraday", "< 1.0"

43 |

44 |

--------------------------------------------------------------------------------

/_action_files/check_js.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | # The purpose of this script is to check parity between official hosted third party js libraries, and alternative CDNs used on this site.

3 |

4 | function compare {

5 | printf "=================\ncomparing:\n%s vs. %s\n" "$1" "$2"

6 | wget "$1" -O f1 &> /dev/null

7 | wget "$2" -O f2 &> /dev/null

8 | if ! cmp f1 f2;

9 | then

10 | printf "Files are NOT the same!\n"

11 | exit 1;

12 | else

13 | printf "Files are the same.\n"

14 | fi

15 | }

16 |

17 | compare "https://unpkg.com/@primer/css/dist/primer.css" "https://cdnjs.cloudflare.com/ajax/libs/Primer/15.2.0/primer.css"

18 | #compare "https://hypothes.is/embed.js" "https://cdn.jsdelivr.net/npm/hypothesis/build/boot.js"

19 | compare "https://cdn.jsdelivr.net/npm/katex@0.12.0/dist/contrib/auto-render.min.js" "https://cdnjs.cloudflare.com/ajax/libs/KaTeX/0.12.0/contrib/auto-render.min.js"

20 | compare "https://cdn.jsdelivr.net/npm/katex@0.12.0/dist/katex.min.css" "https://cdnjs.cloudflare.com/ajax/libs/KaTeX/0.12.0/katex.min.css"

21 | compare "https://cdn.jsdelivr.net/npm/katex@0.12.0/dist/katex.min.js" "https://cdnjs.cloudflare.com/ajax/libs/KaTeX/0.12.0/katex.min.js"

22 | compare "https://cdn.jsdelivr.net/npm/mathjax@2.7.5/MathJax.js" "https://cdnjs.cloudflare.com/ajax/libs/mathjax/2.7.5/MathJax.js"

23 |

24 | # Remove files created for comparison

25 | rm f1 f2

26 |

--------------------------------------------------------------------------------

/_action_files/fast_template.py:

--------------------------------------------------------------------------------

1 | from datetime import datetime

2 | import re, os

3 | from pathlib import Path

4 | from typing import Tuple, Set

5 |

6 | # Check for YYYY-MM-DD

7 | _re_blog_date = re.compile(r'([12]\d{3}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])-)')

8 | # Check for leading dashes or numbers

9 | _re_numdash = re.compile(r'(^[-\d]+)')

10 |

11 | def rename_for_jekyll(nb_path: Path, warnings: Set[Tuple[str, str]]=None) -> str:

12 | """

13 | Return a Path's filename string (as a .md name), prepended with its modified time in YYYY-MM-DD format if it lacks a date prefix.

14 | """

15 | assert nb_path.exists(), f'{nb_path} could not be found.'

16 |

17 | # Checks if filename is compliant with Jekyll blog posts

18 | if _re_blog_date.match(nb_path.name): return nb_path.with_suffix('.md').name.replace(' ', '-')

19 |

20 | else:

21 | clean_name = _re_numdash.sub('', nb_path.with_suffix('.md').name).replace(' ', '-')

22 |

23 | # Gets the file's last modified time and and append YYYY-MM-DD- to the beginning of the filename

24 | mdate = os.path.getmtime(nb_path) - 86400 # subtract one day b/c dates in the future break Jekyll

25 | dtnm = datetime.fromtimestamp(mdate).strftime("%Y-%m-%d-") + clean_name

26 | assert _re_blog_date.match(dtnm), f'{dtnm} is not a valid name, filename must be pre-pended with YYYY-MM-DD-'

27 | # push this into a set b/c _nb2htmlfname gets called multiple times per conversion

28 | if warnings: warnings.add((nb_path, dtnm))

29 | return dtnm

30 |

--------------------------------------------------------------------------------

/_action_files/settings.ini:

--------------------------------------------------------------------------------

1 | [DEFAULT]

2 | lib_name = nbdev

3 | user = fastai

4 | branch = master

5 | version = 0.2.10

6 | description = Writing a library entirely in notebooks

7 | keywords = jupyter notebook

8 | author = Sylvain Gugger and Jeremy Howard

9 | author_email = info@fast.ai

10 | baseurl =

11 | title = nbdev

12 | copyright = fast.ai

13 | license = apache2

14 | status = 2

15 | min_python = 3.6

16 | audience = Developers

17 | language = English

18 | requirements = nbformat>=4.4.0 nbconvert>=5.6.1 pyyaml fastscript packaging

19 | console_scripts = nbdev_build_lib=nbdev.cli:nbdev_build_lib

20 | nbdev_update_lib=nbdev.cli:nbdev_update_lib

21 | nbdev_diff_nbs=nbdev.cli:nbdev_diff_nbs

22 | nbdev_test_nbs=nbdev.cli:nbdev_test_nbs

23 | nbdev_build_docs=nbdev.cli:nbdev_build_docs

24 | nbdev_nb2md=nbdev.cli:nbdev_nb2md

25 | nbdev_trust_nbs=nbdev.cli:nbdev_trust_nbs

26 | nbdev_clean_nbs=nbdev.clean:nbdev_clean_nbs

27 | nbdev_read_nbs=nbdev.cli:nbdev_read_nbs

28 | nbdev_fix_merge=nbdev.cli:nbdev_fix_merge

29 | nbdev_install_git_hooks=nbdev.cli:nbdev_install_git_hooks

30 | nbdev_bump_version=nbdev.cli:nbdev_bump_version

31 | nbdev_new=nbdev.cli:nbdev_new

32 | nbdev_detach=nbdev.cli:nbdev_detach

33 | nbs_path = nbs

34 | doc_path = images/copied_from_nb

35 | doc_host = https://nbdev.fast.ai

36 | doc_baseurl = %(baseurl)s/images/copied_from_nb/

37 | git_url = https://github.com/fastai/nbdev/tree/master/

38 | lib_path = nbdev

39 | tst_flags = fastai2

40 | custom_sidebar = False

41 | cell_spacing = 1

42 | monospace_docstrings = False

43 | jekyll_styles = note,warning,tip,important,youtube,twitter

44 |

45 |

--------------------------------------------------------------------------------

/_word/README.md:

--------------------------------------------------------------------------------

1 | # Automatically Convert MS Word (*.docx) Documents To Blog Posts

2 |

3 | _Note: You can convert Google Docs to Word Docs by navigating to the File menu, and selecting Download > Microsoft Word (.docx)_

4 |

5 | [`fastpages`](https://github.com/fastai/fastpages) will automatically convert Word documents (.docx) saved into this directory to blog posts! Furthermore, images in your document are saved and displayed on your blog post automatically.

6 |

7 | ## Usage

8 |

9 | 1. Create a Word Document (must be .docx) with the contents of your blog post.

10 |

11 | 2. Save your file with the naming convention `YYYY-MM-DD-*.docx` into the `/_word` folder of this repo. For example `2020-01-28-My-First-Post.docx`. This [naming convention is required by Jekyll](https://jekyllrb.com/docs/posts/) to render your blog post.

12 | - Be careful to name your file correctly! It is easy to forget the last dash in `YYYY-MM-DD-`. Furthermore, the character immediately following the dash should only be an alphabetical letter. Examples of valid filenames are:

13 |

14 | ```shell

15 | 2020-01-28-My-First-Post.docx

16 | 2012-09-12-how-to-write-a-blog.docx

17 | ```

18 |

19 | - If you fail to name your file correctly, `fastpages` will automatically attempt to fix the problem by prepending the last modified date of your document to your generated blog post. However, it is recommended that you name your files properly yourself for more transparency.

20 |

21 | 3. Synchronize your files with GitHub by [following the instructions in this blog post](https://www.fast.ai/2020/01/18/gitblog/).

22 |
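The naming rule in step 2 can be checked before committing a file. The following is a minimal sketch, not part of `fastpages`: the helper name `is_valid_post_name` and the pattern `_VALID_DOCX` are hypothetical, and the pattern assumes the rule exactly as stated above (a `YYYY-MM-DD-` prefix, then a letter, then a `.docx` extension).

```python
import re

# Hypothetical pattern (an assumption, not taken from fastpages):
# YYYY-MM-DD- prefix, then an alphabetical letter, ending in .docx
_VALID_DOCX = re.compile(
    r"^[12]\d{3}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])-[A-Za-z].*\.docx$"
)


def is_valid_post_name(filename: str) -> bool:
    """Return True if the filename follows the YYYY-MM-DD-Name.docx convention."""
    return bool(_VALID_DOCX.match(filename))


if __name__ == "__main__":
    for name in (
        "2020-01-28-My-First-Post.docx",   # valid
        "2020-01-28My-First-Post.docx",    # missing the last dash
        "2020-01-28-1st-post.docx",        # digit right after the dash
    ):
        print(name, is_valid_post_name(name))
```

Running a check like this locally only reports the problem; it does not rename anything, so the published filename stays under your control.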

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | help:

2 | cat Makefile

3 |

4 | # start (or restart) the services

5 | server: .FORCE

6 | docker-compose down --remove-orphans || true;

7 | docker-compose up

8 |

9 | # start (or restart) the services in detached mode

10 | server-detached: .FORCE

11 | docker-compose down || true;

12 | docker-compose up -d

13 |

14 | # build or rebuild the services WITHOUT cache

15 | build: .FORCE

16 | chmod 777 Gemfile.lock

17 | docker-compose stop || true; docker-compose rm || true;

18 | docker build --no-cache -t fastai/fastpages-jekyll -f _action_files/fastpages-jekyll.Dockerfile .

19 | docker-compose build --force-rm --no-cache

20 |

21 | # rebuild the services WITH cache

22 | quick-build: .FORCE

23 | docker-compose stop || true;

24 | docker build -t fastai/fastpages-jekyll -f _action_files/fastpages-jekyll.Dockerfile .

25 | docker-compose build

26 |

27 | # convert word & nb without Jekyll services

28 | convert: .FORCE

29 | docker-compose up converter

30 |

31 | # stop all containers

32 | stop: .FORCE

33 | docker-compose stop

34 | docker ps | grep fastpages | awk '{print $1}' | xargs docker stop

35 |

36 | # remove all containers

37 | remove: .FORCE

38 | docker-compose stop || true; docker-compose rm || true;

39 |

40 | # get shell inside the notebook converter service (Must already be running)

41 | bash-nb: .FORCE

42 | docker-compose exec watcher /bin/bash

43 |

44 | # get shell inside jekyll service (Must already be running)

45 | bash-jekyll: .FORCE

46 | docker-compose exec jekyll /bin/bash

47 |

48 | # restart just the Jekyll server

49 | restart-jekyll: .FORCE

50 | docker-compose restart jekyll

51 |

52 | .FORCE:

53 | chmod -R u+rw .

54 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [](https://discord.gg/UWdMC3W2qn)

4 | [](https://www.patreon.com/oalabs)

5 |

6 |

7 | [**research.openanalysis.net**](https://research.openanalysis.net)

8 |

9 | # Research Notes

10 |

11 | This is our research notes repository, formerly known as "Lab-Notes". We use [Jupyter Notebooks](https://jupyter.org/) for all of our notes so we can directly include code. The raw notes can be found in this repository in the [_notebooks](https://github.com/OALabs/research/tree/master/_notebooks) directory and our full blog is available online at [research.openanalysis.net](https://research.openanalysis.net).

12 |

13 |

14 | ## How To Add Notes

15 |

16 | Adding a new note is as simple as cloning our repository and launching `jupyter-lab` from the [_notebooks](https://github.com/OALabs/research/tree/master/_notebooks) directory. You can then edit notes, or add new ones.

17 |

18 | The note filename must start with the date in the format `yyyy-mm-dd-`, for example `2022-02-22-my_note.ipynb`.

19 |

20 | Each note must include a special markdown cell as the first cell in the notebook. The cell contains the markdown used to generate our blog posts.

21 | ```

22 | # Blog Title

23 | > Blog Subtitle

24 |

25 | - toc: true

26 | - badges: true

27 | - categories: [tagone,tag two]

28 | ```

29 | The blog is generated using fastpages. Full documentation can be found on the [fastpages](https://github.com/fastai/fastpages) GitHub.
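The first-cell format described above can be checked programmatically before pushing a note. This is a minimal sketch under stated assumptions: the function name `front_matter_problems` is hypothetical, the notebook is passed in as an already-parsed JSON dictionary, and the three keys `toc`, `badges`, and `categories` are treated as expected only because they appear in the template above (fastpages itself may accept fewer).

```python
import re
from typing import List


def front_matter_problems(nb: dict) -> List[str]:
    """Return a list of problems found in the notebook's first cell (empty if none)."""
    cells = nb.get("cells", [])
    if not cells or cells[0].get("cell_type") != "markdown":
        return ["first cell must be a markdown cell"]

    # Notebook JSON stores cell source as a list of lines or a single string
    source = cells[0].get("source", "")
    text = "".join(source) if isinstance(source, list) else source
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]

    problems = []
    if not lines or not lines[0].startswith("# "):
        problems.append("missing '# Blog Title' line")
    if len(lines) < 2 or not lines[1].startswith("> "):
        problems.append("missing '> Blog Subtitle' line")
    # Assumption: the keys shown in the README template are all expected
    for key in ("toc", "badges", "categories"):
        if not any(re.match(rf"-\s*{key}\s*:", ln) for ln in lines):
            problems.append(f"missing '- {key}:' entry")
    return problems


if __name__ == "__main__":
    example = {
        "cells": [
            {
                "cell_type": "markdown",
                "source": [
                    "# Blog Title\n",
                    "> Blog Subtitle\n",
                    "\n",
                    "- toc: true\n",
                    "- badges: true\n",
                    "- categories: [tagone,tag two]",
                ],
            }
        ]
    }
    print(front_matter_problems(example))
```

To check a real note, load it with `json.load` and pass the resulting dictionary to the function.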

30 |

31 |

32 |

--------------------------------------------------------------------------------

/_action_files/word2post.sh:

--------------------------------------------------------------------------------

1 | #!/bin/sh

2 |

3 | # This sets the environment variable when testing locally and not in a GitHub Action

4 | if [ -z "$GITHUB_ACTIONS" ]; then

5 | GITHUB_WORKSPACE='/data'

6 | echo "=== Running Locally: All assets expected to be in the directory /data ==="

7 | fi

8 |

9 | # Loops through directory of *.docx files and converts to markdown

10 | # markdown files are saved in _posts, media assets are saved in assets/img//media

11 | for FILENAME in "${GITHUB_WORKSPACE}"/_word/*.docx; do

12 | [ -e "$FILENAME" ] || continue # skip when glob doesn't match

13 | NAME=${FILENAME##*/} # Get filename without the directory

14 | NEW_NAME=$(python3 "/fastpages/word2post.py" "${FILENAME}") # clean filename to be Jekyll compliant for posts

15 | BASE_NEW_NAME=${NEW_NAME%.md} # Strip the file extension

16 |

17 | if [ -z "$NEW_NAME" ]; then

18 | echo "Unable To Rename: ${FILENAME} to a Jekyll compliant filename for blog posts"

19 | exit 1

20 | fi

21 |

22 | echo "Converting: ${NAME} ---to--- ${NEW_NAME}"

23 | cd ${GITHUB_WORKSPACE} || { echo "Failed to change to Github workspace directory"; exit 1; }

24 | pandoc --from docx --to gfm --output "${GITHUB_WORKSPACE}/_posts/${NEW_NAME}" --columns 9999 \

25 | --extract-media="assets/img/${BASE_NEW_NAME}" --standalone "${FILENAME}"

26 |

27 | # Inject correction to image links in markdown

28 | sed -i.bak 's/!\[\](assets/!\[\]({{ site.url }}{{ site.baseurl }}\/assets/g' "_posts/${NEW_NAME}"

29 | # Remove intermediate files

30 | rm _posts/*.bak 2> /dev/null || true

31 |

32 | cat "${GITHUB_WORKSPACE}/_action_files/word_front_matter.txt" "_posts/${NEW_NAME}" > temp && mv temp "_posts/${NEW_NAME}"

33 | done

34 |

--------------------------------------------------------------------------------

/_action_files/action_entrypoint.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -e

3 |

4 | # setup ssh: allow key to be used without a prompt and start ssh agent

5 | export GIT_SSH_COMMAND="ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no"

6 | eval "$(ssh-agent -s)"

7 |

8 | ######## Run notebook/word converter ########

9 | # word converter using pandoc

10 | /fastpages/word2post.sh

11 | # notebook converter using nbdev

12 | cp /fastpages/settings.ini .

13 | python /fastpages/nb2post.py

14 |

15 |

16 | ######## Optionally save files and build GitHub Pages ########

17 | if [[ "$INPUT_BOOL_SAVE_MARKDOWN" == "true" ]];then

18 |

19 | if [ -z "$INPUT_SSH_DEPLOY_KEY" ];then

20 | echo "You must set the SSH_DEPLOY_KEY input if BOOL_SAVE_MARKDOWN is set to true.";

21 | exit 1;

22 | fi

23 |

24 | # Get user's email from commit history

25 | if [[ "$GITHUB_EVENT_NAME" == "push" ]];then

26 | USER_EMAIL=$(jq -r '.commits | .[0] | .author.email' < "$GITHUB_EVENT_PATH") # -r emits the raw string without JSON quotes

27 | else

28 | USER_EMAIL="actions@github.com"

29 | fi

30 |

31 | # Setup Git credentials if we are planning to change the data in the repo

32 | git config --global user.name "$GITHUB_ACTOR"

33 | git config --global user.email "$USER_EMAIL"

34 | git remote add fastpages-origin "git@github.com:$GITHUB_REPOSITORY.git"

35 | echo "${INPUT_SSH_DEPLOY_KEY}" > _mykey

36 | chmod 400 _mykey

37 | ssh-add _mykey

38 |

39 | # Optionally save intermediate markdown

40 | if [[ "$INPUT_BOOL_SAVE_MARKDOWN" == "true" ]]; then

41 | git pull fastpages-origin "${GITHUB_REF}" --ff-only

42 | git add _posts

43 | git commit -m "[Bot] Update $INPUT_FORMAT blog posts" --allow-empty

44 | git push fastpages-origin HEAD:"$GITHUB_REF"

45 | fi

46 | fi

47 |

48 |

49 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yaml:

--------------------------------------------------------------------------------

1 | name: CI

2 | on:

3 | push:

4 | branches:

5 | - master # need to filter here so we only deploy when there is a push to master

6 | # no filters on pull requests, so intentionally left blank

7 | pull_request:

8 | workflow_dispatch:

9 |

10 | jobs:

11 | build-site:

12 | if: ( github.event.commits[0].message != 'Initial commit' ) || github.run_number > 1

13 | runs-on: ubuntu-latest

14 | steps:

15 |

16 | - name: Check if secret exists

17 | if: github.event_name == 'push'

18 | run: |

19 | if [ -z "$deploy_key" ]

20 | then

21 | echo "You do not have a secret named SSH_DEPLOY_KEY. This means you did not follow the setup instructions carefully. Please try setting up your repo again with the right secrets."

22 | exit 1;

23 | fi

24 | env:

25 | deploy_key: ${{ secrets.SSH_DEPLOY_KEY }}

26 |

27 |

28 | - name: Copy Repository Contents

29 | uses: actions/checkout@main

30 | with:

31 | persist-credentials: false

32 |

33 | - name: convert notebooks and word docs to posts

34 | uses: ./_action_files

35 |

36 | - name: setup directories for Jekyll build

37 | run: |

38 | rm -rf _site

39 | sudo chmod -R 777 .

40 |

41 | - name: Jekyll build

42 | uses: docker://herrcore/fastpages-jekyll-image

43 | with:

44 | args: bash -c "jekyll build -V --strict_front_matter --trace"

45 | env:

46 | JEKYLL_ENV: 'production'

47 |

48 | - name: copy CNAME file into _site if CNAME exists

49 | run: |

50 | sudo chmod -R 777 _site/

51 | cp CNAME _site/ 2>/dev/null || :

52 |

53 | - name: Deploy

54 | if: github.event_name == 'push'

55 | uses: peaceiris/actions-gh-pages@v3

56 | with:

57 | deploy_key: ${{ secrets.SSH_DEPLOY_KEY }}

58 | publish_dir: ./_site

59 |

--------------------------------------------------------------------------------

/assets/badges/github.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/assets/js/search-data.json:

--------------------------------------------------------------------------------

1 | ---

2 | ---

3 | {

4 | {% assign comma = false %}

5 | {%- assign date_format = site.minima.date_format | default: "%b %-d, %Y" -%}

6 | {% for post in site.posts %}

7 | {% if post.search_exclude != true %}

8 | {% if comma == true%},{% endif %}"post{{ forloop.index0 }}": {

9 | "title": "{{ post.title | replace: '&', '&' }}",

10 | "content": "{{ post.content | markdownify | replace: ' li > div {

87 | box-shadow: none !important;

88 | background-color: $overlay;

89 | border: none !important;

90 | }

91 |

92 | li .post-meta-description {

93 | color: $med-emph !important;

94 | }

95 |

--------------------------------------------------------------------------------

/assets/badges/colab.svg:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/_fastpages_docs/_manual_setup.md:

--------------------------------------------------------------------------------

1 | # Manual Setup Instructions

2 |

3 | These are the setup steps that are automated by [setup.yaml](.github/workflows/setup.yaml)

4 |

5 | 1. Click the [](https://github.com/fastai/fastpages/generate) button to create a copy of this repo in your account.

6 |

7 | 2. [Follow these instructions to create an ssh-deploy key](https://developer.github.com/v3/guides/managing-deploy-keys/#deploy-keys). Make sure you **select Allow write access** when adding this key to your GitHub account.

8 |

9 | 3. [Follow these instructions to upload your deploy key](https://help.github.com/en/actions/configuring-and-managing-workflows/creating-and-storing-encrypted-secrets#creating-encrypted-secrets) as an encrypted secret on GitHub. Make sure you name your key `SSH_DEPLOY_KEY`. Note: The deploy key secret is your **private key** (NOT the public key).

10 |

11 | 4. [Create a branch](https://help.github.com/en/github/collaborating-with-issues-and-pull-requests/creating-and-deleting-branches-within-your-repository#creating-a-branch) named `gh-pages`.

12 |

13 | 5. Change the badges on this README to point to **your** repository instead of `fastai/fastpages`. Badges are organized in a section at the beginning of this README. For example, you should replace `fastai` and `fastpages` in the below url:

14 |

15 | ``

16 |

17 | to

18 |

19 | ``

20 |

21 | 6. Change `baseurl:` in `_config.yaml` to the name of your repository. For example, instead of

22 |

23 | `baseurl: "/fastpages"`

24 |

25 | this should be

26 |

27 | `baseurl: "/your-repo-name"`

28 |

29 | 7. Similarly, change the `url:` parameter in `_config.yaml` to the url your blog will be served on. For example, instead of

30 |

31 | `url: "https://fastpages.fast.ai/"`

32 |

33 | this should be

34 |

35 | `url: "https://.github.io"`

36 |

37 | 8. Read through `_config.yaml` carefully as there may be other options that must be set. The comments in this file will provide instructions.

38 |

39 | 9. Delete the `CNAME` file from the root of your `master` branch (or change it if you are using a custom domain)

40 |

41 | 10. Go to your [repository settings and enable GitHub Pages](https://help.github.com/en/enterprise/2.13/user/articles/configuring-a-publishing-source-for-github-pages) with the `gh-pages` branch you created earlier.

--------------------------------------------------------------------------------

/_layouts/home.html:

--------------------------------------------------------------------------------

1 | ---

2 | layout: default

3 | ---

4 |

5 |

6 | {%- if page.title -%}

7 | {{ page.title }}

8 | {%- endif -%}

9 |

10 | {{ content | markdownify }}

11 |

12 |

13 | {% if site.paginate %}

14 | {% assign rawposts = paginator.posts %}

15 | {% else %}

16 | {% assign rawposts = site.posts %}

17 | {% endif %}

18 |

19 |

20 | {% assign posts = ''|split:'' %}

21 | {% for post in rawposts %}

22 | {% if post.hide != true %}

23 | {% assign posts = posts|push:post%}

24 | {% endif %}

25 | {% endfor %}

26 |

27 |

28 | {% assign grouped_posts = posts | group_by: "sticky_rank" | sort: "name", "last" %}

29 | {% assign sticky_posts = ''|split:'' %}

30 | {% assign non_sticky_posts = '' | split:'' %}

31 |

32 |

33 | {% for gp in grouped_posts %}

34 | {%- if gp.name == "" -%}

35 | {% assign non_sticky_posts = gp.items | sort: "date" | reverse %}

36 | {%- else %}

37 | {% assign sticky_posts = sticky_posts | concat: gp.items %}

38 | {%- endif -%}

39 | {% endfor %}

40 |

41 |

42 | {% assign sticky_posts = sticky_posts | sort: "sticky_rank", "last" %}

43 | {% assign posts = sticky_posts | concat: non_sticky_posts %}

44 |

45 | {%- if posts.size > 0 -%}

46 | {%- if page.list_title -%}

47 | {{ page.list_title }}

48 | {%- endif -%}

49 |

50 | {%- assign date_format = site.minima.date_format | default: "%b %-d, %Y" -%}

51 | {%- for post in posts -%}

52 | -

53 | {%- if site.show_image -%}

54 | {%- include post_list_image_card.html -%}

55 | {% else %}

56 | {%- include post_list.html -%}

57 | {%- endif -%}

58 |

59 | {%- endfor -%}

60 |

61 |

62 | {% if site.paginate and site.posts.size > site.paginate %}

63 |

64 |

65 | {%- if paginator.previous_page %}

66 | - {{ paginator.previous_page }}

67 | {%- else %}

68 | - •

69 | {%- endif %}

70 | - {{ paginator.page }}

71 | {%- if paginator.next_page %}

72 | - {{ paginator.next_page }}

73 | {%- else %}

74 | - •

75 | {%- endif %}

76 |

77 |

78 | {%- endif %}

79 |

80 | {%- endif -%}

81 |

82 |

--------------------------------------------------------------------------------

/_fastpages_docs/_setup_pr_template.md:

--------------------------------------------------------------------------------

1 | Hello :wave: @OALabs! Thank you for using fastpages!

2 |

3 | ## Before you merge this PR

4 |

5 | 1. Create an ssh key-pair. Open this utility. Select: `RSA` and `4096` and leave `Passphrase` blank. Click the blue button `Generate-SSH-Keys`.

6 |

7 | 2. Navigate to this link and click `New repository secret`. Copy and paste the **Private Key** into the `Value` field. This includes the "-----BEGIN RSA PRIVATE KEY-----" and "-----END RSA PRIVATE KEY-----" portions. **In the `Name` field, name the secret `SSH_DEPLOY_KEY`.**

8 |

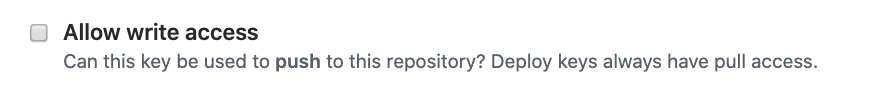

9 | 3. Navigate to this link and click the `Add deploy key` button. Paste your **Public Key** from step 1 into the `Key` box. In the `Title`, name the key anything you want, for example `fastpages-key`. Finally, **make sure you click the checkbox next to `Allow write access`** (pictured below), and click `Add key` to save the key.

10 |

11 |

12 |

13 |

14 | ### What to Expect After Merging This PR

15 |

16 | - GitHub Actions will build your site, which will take 2-3 minutes to complete. **This will happen anytime you push changes to the master branch of your repository.** You can monitor the logs of this if you like on the [Actions tab of your repo](https://github.com/OALabs/research/actions).

17 | - Your GH-Pages Status badge on your README will eventually appear and be green, indicating your first successful build.

18 | - You can monitor the status of your site in the GitHub Pages section of your [repository settings](https://github.com/OALabs/research/settings).

19 |

20 | If you are not using a custom domain, your website will appear at:

21 |

22 | #### https://OALabs.github.io/research

23 |

24 |

25 | ## Optional: Using a Custom Domain

26 |

27 | 1. After merging this PR, add a file named `CNAME` at the root of your repo. For example, the `fastpages` blog is hosted at `https://fastpages.fast.ai`, which means [our CNAME](https://github.com/fastai/fastpages/blob/master/CNAME) contains the following contents:

28 |

29 |

30 | >`fastpages.fast.ai`

31 |

32 |

33 | 2. Change the `url` and `baseurl` parameters in your `/_config.yml` file to reflect your custom domain.

34 |

35 |

36 | Wondering how to set up a custom domain? See [this article](https://dev.to/trentyang/how-to-setup-google-domain-for-github-pages-1p58). You must add a CNAME file to the root of your master branch for the instructions in the article to work correctly.

37 |

38 |

39 | ## Questions

40 |

41 | Please use the [nbdev & blogging channel](https://forums.fast.ai/c/fastai-users/nbdev/48) in the fastai forums for any questions or feature requests.

42 |

--------------------------------------------------------------------------------

/assets/badges/binder.svg:

--------------------------------------------------------------------------------

1 | launch launch binder binder

5 |

6 |

7 | {{ page.title | escape }}

8 | {%- if page.description -%}

9 | {%- if site.html_escape.description -%}

10 | {{ page.description | escape }}

11 | {%- else -%}

12 | {{ page.description }}

13 | {%- endif -%}

14 | {%- endif -%}

15 |

36 |

37 | {% if page.categories.size > 0 and site.show_tags %}

38 |

44 | {% endif %}

45 |

46 | {% if page.layout == 'notebook' %}

47 | {% if page.badges or page.badges == nil %}

48 |

49 |

50 |

51 |

52 |

53 |

54 |

55 |

56 |

57 |

58 |

59 |

60 |

61 |

62 |

63 |

64 |

65 |

66 |

67 |

68 |

69 | {% unless page.hide_github_badge or site.default_badges.github != true %}{% include notebook_github_link.html %}{% endunless %}

70 | {% unless page.hide_binder_badge or site.default_badges.binder != true %}{% include notebook_binder_link.html %}{% endunless %}

71 | {% unless page.hide_colab_badge or site.default_badges.colab != true %}{% include notebook_colab_link.html %}{% endunless %}

72 | {% unless page.hide_deepnote_badge or site.default_badges.deepnote != true %}{% include notebook_deepnote_link.html %}{% endunless %}

73 |

74 | {% endif -%}

75 | {% endif -%}

76 |

77 |

78 |

79 | {{ content | toc }}

80 |

81 | {%- if page.comments -%}

82 | {%- include utterances.html -%}

83 | {%- endif -%}

84 | {%- if site.disqus.shortname -%}

85 | {%- include disqus_comments.html -%}

86 | {%- endif -%}

87 |

88 |

89 |

--------------------------------------------------------------------------------

/_fastpages_docs/DEVELOPMENT.md:

--------------------------------------------------------------------------------

1 | # Development Guide

2 | - [Seeing All Commands In The Terminal](#seeing-all-commands-in-the-terminal)

3 | - [Basic usage: viewing your blog](#basic-usage-viewing-your-blog)

4 | - [Converting the pages locally](#converting-the-pages-locally)

5 | - [Visual Studio Code integration](#visual-studio-code-integration)

6 | - [Advanced usage](#advanced-usage)

7 | - [Rebuild all the containers](#rebuild-all-the-containers)

8 | - [Removing all the containers](#removing-all-the-containers)

9 | - [Attaching a shell to a container](#attaching-a-shell-to-a-container)

10 |

11 | You can run your fastpages blog on your local machine, and view any changes you make to your posts, including Jupyter Notebooks and Word documents, live.

12 | The live preview requires that you have Docker installed on your machine. [Follow the instructions on this page if you need to install Docker.](https://www.docker.com/products/docker-desktop)

13 |

14 | ## Seeing All Commands In The Terminal

15 |

16 | There are many different `docker-compose` commands that are necessary to manage the lifecycle of the fastpages Docker containers. To make this easier, we aliased common commands in a [Makefile](https://www.gnu.org/software/make/manual/html_node/Introduction.html).

17 |

18 | You can quickly see all available commands by running this command in the root of your repository:

19 |

20 | `make`

21 |

22 | ## Basic usage: viewing your blog

23 |

24 | All of the commands in this block assume that you're in your blog root directory.

25 | To run the blog with live preview:

26 |

27 | ```bash

28 | make server

29 | ```

30 |

31 | When you run this command for the first time, it'll build the required Docker images, and the process might take a couple minutes.

32 |

33 | This command will build all the necessary containers and run the following services:

34 | 1. A service that monitors any changes in `./_notebooks/*.ipynb` and `./_word/*.docx;*.doc` and rebuilds the blog on change.

35 | 2. A Jekyll server on http://127.0.0.1:4000; use this to preview your blog.

36 |

37 | The services will output to your terminal. If you close the terminal or hit `Ctrl-C`, the services will stop.

38 | If you want to run the services in the background:

39 |

40 | ```bash

41 | # run all services in the background

42 | make server-detached

43 |

44 | # stop the services

45 | make stop

46 | ```

47 |

48 | If the Jekyll server is running in the background and you need to restart just that service, run `make restart-jekyll`.

49 |

50 | _Note that the blog won't autoreload on change; you'll have to refresh your browser manually._

51 |

52 | **If containers won't start**: try `make build` first. This rebuilds all the containers from scratch, which might fix the majority of update problems.

53 |

54 | ## Converting the pages locally

55 |

56 | If you just want to convert your notebooks and word documents to `.md` posts in `_posts`, this command will do it for you:

57 |

58 | ```bash

59 | make convert

60 | ```

61 |

62 | You can launch just the jekyll server with `make server`.

63 |

64 | ## Visual Studio Code integration

65 |

66 | If you're using VSCode with the Docker extension, you can run these containers from the sidebar: `fastpages_watcher_1` and `fastpages_jekyll_1`.

67 | The containers only show up in the list after you run or build them for the first time. If they're not in the list, try `make build` in the console.

68 |

69 | ## Advanced usage

70 |

71 | ### Rebuild all the containers

72 | If you changed files in the `_action_files` directory, you might need to rebuild the containers manually, without cache.

73 |

74 | ```bash

75 | make build

76 | ```

77 |

78 | ### Removing all the containers

79 | Want to start from scratch and remove all the containers?

80 |

81 | ```bash

82 | make remove

83 | ```

84 |

85 | ### Attaching a shell to a container

86 | You can attach a terminal to a running service:

87 |

88 | ```bash

89 |

90 | # If the container is already running:

91 |

92 | # attach to a bash shell in the jekyll service

93 | make bash-jekyll

94 |

95 | # attach to a bash shell in the watcher service.

96 | make bash-nb

97 | ```

98 |

99 | _Note: you can use `docker-compose run` instead of `make bash-nb` or `make bash-jekyll` to start a service and then attach to it.

100 | Or you can run all your services in the background, `make server-detached`, and then use `make bash-nb` or `make bash-jekyll` as in the examples above._

101 |

102 |

--------------------------------------------------------------------------------

/_notebooks/2023-07-13-truebot.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "id": "342ed7fc-d919-47f4-be62-2d33e458f872",

6 | "metadata": {},

7 | "source": [

8 | "# Truebot\n",

9 | "> Truly a simple malware leading to ransomware\n",

10 | "\n",

11 | "- toc: true \n",

12 | "- badges: true\n",

13 | "- categories: [truebot,config,triage]"

14 | ]

15 | },

16 | {

17 | "cell_type": "markdown",

18 | "id": "45880ffe-5309-4602-bf7e-369a8ae3768f",

19 | "metadata": {},

20 | "source": [

21 | "## Overview\n",

22 | "\n",

23 | "Truebot (aka Silence) is primarily a downloader associated with the threat actor group [TA505](https://malpedia.caad.fkie.fraunhofer.de/actor/ta505). Recently there was a CISA alert [Increased Truebot Activity Infects U.S. and Canada Based Networks](https://www.cisa.gov/news-events/cybersecurity-advisories/aa23-187a) which described ransomware/extortion activity associated with the use of Truebot.\n",

24 | "\n",

25 | "### References\n",

26 | "- [Increased Truebot Activity Infects U.S. and Canada Based Networks](https://www.cisa.gov/news-events/cybersecurity-advisories/aa23-187a) \n",

27 | "- [TrueBot Analysis Part III - Capabilities](https://malware.love/malware_analysis/reverse_engineering/2023/03/31/analyzing-truebot-capabilities.html)\n",

28 | "- [10445155.r1.v1 (pdf)](https://www.cisa.gov/sites/default/files/2023-07/MAR-10445155.r1.v1.CLEAR_.pdf)\n",

29 | "- [A Truly Graceful Wipe Out](https://thedfirreport.com/2023/06/12/a-truly-graceful-wipe-out/)\n",

30 | "\n",

31 | "### Samples\n",

32 | "- [717beedcd2431785a0f59d194e47970e9544fbf398d462a305f6ad9a1b1100cb](https://www.unpac.me/results/8d1eb4c3-cbb0-4b32-8d52-6f142c836d0f?hash=717beedcd2431785a0f59d194e47970e9544fbf398d462a305f6ad9a1b1100cb#/)"

33 | ]

34 | },

35 | {

36 | "cell_type": "markdown",

37 | "id": "40019d1c-7c62-412b-bc5b-eff15ec576d2",

38 | "metadata": {},

39 | "source": [

40 | "## Analysis\n",

41 | "\n",

42 | "- The binary uses an Adobe PDF icon, possibly to trick victims into opening it. It also displays a fake error message: `\"There was an error opening this document. The file is damaged and could not be repaired. Adobe Acrobat\"`\n",

43 | "- The binary is padded with a significant amount of junk code that is not relevant to its operation. \n",

44 | "- The main code checks for debugging tools and AV; if any are detected, the malware executes a decoy process (calc.exe)\n",

45 | "- Multiple anti-emulation techniques are used, including:\n",

46 | " - Reading from a fake named pipe\n",

47 | " - Calling EraseTape\n",

48 | " - Checking for a valid code page with GetACP\n",

49 | " - Loading `user32`\n",

50 | " - Trying to open a random invalid file\n",

51 | "- The C2 host and URL path are obfuscated using base64, URL encoding, and RC4 with a hard-coded key\n",

52 | " - `essadonio.com`\n",

53 | " - `/538332[.]php`\n",

54 | "- A hard-coded mutex `OrionStartWorld#666` is used to ensure only one copy of the malware is running \n",

55 | "- A GUID is generated for the victim and stored in a randomly named file with the extension `.JSONMSDN` in the `%APPDATA%` directory. This GUID is also used in the C2 communications.\n",

56 | "- A list of processes running on the host is collected and combined with the GUID. The result is base64-encoded and then sent to the C2 server in a POST request.\n",

57 | "- The C2 can send the following commands:\n",

58 | " - **LSEL** - delete yourself and exit\n",

59 | " - **TFOUN** - array of commands\n",

60 | " - **EFE** - download payload, decrypt with RC4 (hard-coded key), and execute PE\n",

61 | " - **S66** - download, decrypt with RC4 (hard-coded key), and inject shellcode into `cmd.exe`\n",

62 | " - **Z66** - download, decrypt with RC4 (hard-coded key), and run shellcode \n",

63 | "\n",

64 | "\n"

65 | ]

66 | },

67 | {

68 | "cell_type": "code",

69 | "execution_count": null,

70 | "id": "20e99156-df59-471a-8973-70e02fd7e3de",

71 | "metadata": {},

72 | "outputs": [],

73 | "source": []

74 | }

75 | ],

76 | "metadata": {

77 | "kernelspec": {

78 | "display_name": "Python 3",

79 | "language": "python",

80 | "name": "python3"

81 | },

82 | "language_info": {

83 | "codemirror_mode": {

84 | "name": "ipython",

85 | "version": 3

86 | },

87 | "file_extension": ".py",

88 | "mimetype": "text/x-python",

89 | "name": "python",