├── assets
│   ├── intel.png
│   ├── oatml.png
│   ├── oxcs.png
│   └── turing.png
├── leaderboard
│   ├── diabetic_retinopathy_diagnosis
│   │   ├── auc
│   │   │   ├── mfvi.csv
│   │   │   ├── random.csv
│   │   │   ├── deep_ensemble.csv
│   │   │   ├── deterministic.csv
│   │   │   ├── mc_dropout.csv
│   │   │   └── ensemble_mc_dropout.csv
│   │   ├── accuracy
│   │   │   ├── mfvi.csv
│   │   │   ├── random.csv
│   │   │   ├── deep_ensemble.csv
│   │   │   ├── deterministic.csv
│   │   │   ├── mc_dropout.csv
│   │   │   └── ensemble_mc_dropout.csv
│   │   └── README.md
│   ├── aptos2019
│   │   ├── auc
│   │   │   ├── random.csv
│   │   │   ├── ensemble_mc_dropout.csv
│   │   │   ├── deterministic.csv
│   │   │   ├── mfvi.csv
│   │   │   ├── deep_ensemble.csv
│   │   │   └── mc_dropout.csv
│   │   └── accuracy
│   │       ├── deterministic.csv
│   │       ├── ensemble_mc_dropout.csv
│   │       ├── deep_ensemble.csv
│   │       ├── mc_dropout.csv
│   │       ├── random.csv
│   │       └── mfvi.csv
│   └── pretraining
│       ├── auc
│       │   ├── mc_dropout.csv
│       │   ├── random.csv
│       │   └── deterministic.csv
│       └── accuracy
│           ├── deterministic.csv
│           ├── mc_dropout.csv
│           └── random.csv
├── baselines
│   ├── diabetic_retinopathy_diagnosis
│   │   ├── mfvi
│   │   │   ├── configs
│   │   │   │   ├── medium.cfg
│   │   │   │   └── realworld.cfg
│   │   │   ├── __init__.py
│   │   │   ├── main.py
│   │   │   └── model.py
│   │   ├── mc_dropout
│   │   │   ├── configs
│   │   │   │   ├── medium.cfg
│   │   │   │   └── realworld.cfg
│   │   │   ├── __init__.py
│   │   │   ├── main.py
│   │   │   └── model.py
│   │   ├── deep_ensembles
│   │   │   ├── __init__.py
│   │   │   ├── model.py
│   │   │   └── main.py
│   │   ├── deterministic
│   │   │   ├── __init__.py
│   │   │   ├── model.py
│   │   │   └── main.py
│   │   ├── ensemble_mc_dropout
│   │   │   ├── __init__.py
│   │   │   ├── model.py
│   │   │   └── main.py
│   │   └── README.md
│   └── __init__.py
├── bdlb
│   ├── core
│   │   ├── __init__.py
│   │   ├── constants.py
│   │   ├── benchmark.py
│   │   ├── levels.py
│   │   ├── registered.py
│   │   ├── transforms.py
│   │   └── plotting.py
│   ├── diabetic_retinopathy_diagnosis
│   │   ├── __init__.py
│   │   ├── tfds_adapter.py
│   │   └── benchmark.py
│   └── __init__.py
├── setup.py
├── .gitignore
├── README.md
└── LICENSE

/assets/intel.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OATML/bdl-benchmarks/HEAD/assets/intel.png

--------------------------------------------------------------------------------

/assets/oatml.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OATML/bdl-benchmarks/HEAD/assets/oatml.png

--------------------------------------------------------------------------------

/assets/oxcs.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OATML/bdl-benchmarks/HEAD/assets/oxcs.png

--------------------------------------------------------------------------------

/assets/turing.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/OATML/bdl-benchmarks/HEAD/assets/turing.png

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/auc/mfvi.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,86.6,0.9

3 | 0.6,85.4,1.2

4 | 0.7,84.0,1.0

5 | 0.8,83.0,0.9

6 | 0.9,81.8,0.8

7 | 1.0,82.1,1.3

--------------------------------------------------------------------------------
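Every leaderboard file shares the header `retained_data,mean,std`, so one small reader covers all of them. The sketch below is illustrative and not part of the repository; the `LeaderboardRow` and `parse_leaderboard` names are hypothetical, and only the standard-library `csv` module is used. The sample text is the `auc/mfvi.csv` file listed above.

```python
import csv
import io
from typing import List, NamedTuple


class LeaderboardRow(NamedTuple):
  """One leaderboard row: a retained-data fraction and its summary stats."""
  retained_data: float
  mean: float
  std: float


def parse_leaderboard(text: str) -> List[LeaderboardRow]:
  """Parses CSV text whose header is `retained_data,mean,std`."""
  reader = csv.DictReader(io.StringIO(text))
  return [
      LeaderboardRow(
          retained_data=float(record["retained_data"]),
          mean=float(record["mean"]),
          std=float(record["std"]),
      ) for record in reader
  ]


# Contents of leaderboard/diabetic_retinopathy_diagnosis/auc/mfvi.csv.
MFVI_AUC = """retained_data,mean,std
0.5,86.6,0.9
0.6,85.4,1.2
0.7,84.0,1.0
0.8,83.0,0.9
0.9,81.8,0.8
1.0,82.1,1.3
"""

rows = parse_leaderboard(MFVI_AUC)
best = max(rows, key=lambda r: r.mean)
print(best)  # LeaderboardRow(retained_data=0.5, mean=86.6, std=0.9)
```

To read a file from disk instead, pass `open(path, newline="").read()` to `parse_leaderboard`.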

/leaderboard/diabetic_retinopathy_diagnosis/auc/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,81.8,1.2

3 | 0.6,82.1,1.1

4 | 0.7,82.0,1.3

5 | 0.8,81.8,1.1

6 | 0.9,82.1,1.0

7 | 1.0,82.0,0.9

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/mfvi.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,88.1,1.1

3 | 0.6,86.9,0.9

4 | 0.7,85.0,1.0

5 | 0.8,84.5,0.7

6 | 0.9,84.4,0.6

7 | 1.0,84.3,0.7

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,84.8,0.9

3 | 0.6,84.6,0.8

4 | 0.7,84.3,0.7

5 | 0.8,84.6,0.7

6 | 0.9,84.3,0.6

7 | 1.0,84.2,0.5

--------------------------------------------------------------------------------
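The `retained_data` column is most plausibly the fraction of test images kept after referring the most uncertain ones to an expert, with the metric computed on the cases that remain. That reading is an assumption inferred from the file layout and from the `random` rows staying roughly flat. Under it, a minimal sketch of how one accuracy curve could be produced looks as follows; the function names and toy data are hypothetical, and the result is a fraction in [0, 1], whereas the leaderboard reports percentages.

```python
from typing import List, Sequence


def accuracy_at_retained(y_true: Sequence[int], y_pred: Sequence[int],
                         uncertainty: Sequence[float],
                         fraction: float) -> float:
  """Accuracy on the `fraction` of examples with the lowest uncertainty.

  The remaining, most uncertain examples are treated as referred to an
  expert and excluded from the metric.
  """
  if not 0.0 < fraction <= 1.0:
    raise ValueError("fraction must lie in (0, 1]")
  n = len(y_true)
  n_retained = max(1, int(round(fraction * n)))
  # Example indices ordered from most certain to least certain.
  order = sorted(range(n), key=lambda i: uncertainty[i])
  kept = order[:n_retained]
  correct = sum(1 for i in kept if y_true[i] == y_pred[i])
  return correct / n_retained


def retained_data_curve(y_true, y_pred, uncertainty,
                        fractions: Sequence[float]) -> List[float]:
  """Evaluates `accuracy_at_retained` at each retained-data fraction."""
  return [
      accuracy_at_retained(y_true, y_pred, uncertainty, f) for f in fractions
  ]


# Made-up toy data: the two wrong predictions are also the two most uncertain.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1]
uncertainty = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.90, 0.95]
fractions = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
curve = retained_data_curve(y_true, y_pred, uncertainty, fractions)
```

Referring cases at random instead of by uncertainty leaves the expected accuracy of the retained set equal to the overall accuracy at every fraction, which is consistent with the nearly constant `random` rows.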

/leaderboard/diabetic_retinopathy_diagnosis/auc/deep_ensemble.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,87.2,0.9

3 | 0.6,86.1,1.1

4 | 0.7,84.9,0.8

5 | 0.8,83.8,0.9

6 | 0.9,82.7,0.8

7 | 1.0,81.8,1.1

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/auc/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,84.9,1.1

3 | 0.6,83.4,1.3

4 | 0.7,82.3,1.2

5 | 0.8,81.8,1.2

6 | 0.9,81.7,1.4

7 | 1.0,82.0,1.0

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/auc/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,87.8,1.1

3 | 0.6,86.2,0.8

4 | 0.7,85.2,0.8

5 | 0.8,84.1,0.7

6 | 0.9,83.0,0.8

7 | 1.0,82.1,1.2

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/deep_ensemble.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,89.9,0.9

3 | 0.6,88.3,1.1

4 | 0.7,86.1,1.0

5 | 0.8,85.1,0.9

6 | 0.9,84.7,0.8

7 | 1.0,84.6,0.7

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,86.1,0.6

3 | 0.6,85.2,0.5

4 | 0.7,84.9,0.5

5 | 0.8,84.4,0.5

6 | 0.9,84.3,0.5

7 | 1.0,84.2,0.6

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,91.3,0.7

3 | 0.6,88.9,0.9

4 | 0.7,87.1,0.9

5 | 0.8,86.4,0.7

6 | 0.9,85.2,0.8

7 | 1.0,84.5,0.9

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/auc/ensemble_mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,88.1,1.2

3 | 0.6,86.7,1.0

4 | 0.7,85.4,1.0

5 | 0.8,84.4,0.6

6 | 0.9,83.2,0.8

7 | 1.0,82.5,1.1

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/accuracy/ensemble_mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,92.4,0.9

3 | 0.6,90.2,1.0

4 | 0.7,88.1,1.0

5 | 0.8,87.1,0.9

6 | 0.9,86.7,0.8

7 | 1.0,85.3,1.0

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/mfvi/configs/medium.cfg:

--------------------------------------------------------------------------------

1 | --level=medium

2 | --output_dir=tmp/medium.mfvi

3 | --batch_size=64

4 | --num_epochs=50

5 | --num_base_filters=42

6 | --learning_rate=4e-4

7 |

--------------------------------------------------------------------------------
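The `.cfg` files hold one `--name=value` flag per line. If `main.py` defines these flags with absl-py (not visible in this excerpt), such a file could be passed directly via absl's built-in `--flagfile` option. The sketch below instead parses the format by hand, for example to inspect or compare configurations; `parse_flagfile` is a hypothetical helper, and values stay strings because their types are defined elsewhere.

```python
from typing import Dict


def parse_flagfile(text: str) -> Dict[str, str]:
  """Parses `--name=value` lines into a dict of raw string values.

  Blank lines and lines starting with `#` are skipped.
  """
  flags = {}
  for line in text.splitlines():
    line = line.strip()
    if not line or line.startswith("#"):
      continue
    if not line.startswith("--") or "=" not in line:
      raise ValueError(f"unrecognised line: {line!r}")
    name, value = line[2:].split("=", 1)
    flags[name] = value
  return flags


# Contents of baselines/diabetic_retinopathy_diagnosis/mfvi/configs/medium.cfg.
MEDIUM_CFG = """--level=medium
--output_dir=tmp/medium.mfvi
--batch_size=64
--num_epochs=50
--num_base_filters=42
--learning_rate=4e-4
"""

flags = parse_flagfile(MEDIUM_CFG)
learning_rate = float(flags["learning_rate"])  # "4e-4" -> 0.0004
batch_size = int(flags["batch_size"])
```

Diffing the parsed dicts makes differences between configurations explicit: the MFVI `realworld.cfg` differs from `medium.cfg` only in `level` and `output_dir`.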

/baselines/diabetic_retinopathy_diagnosis/mfvi/configs/realworld.cfg:

--------------------------------------------------------------------------------

1 | --level=realworld

2 | --output_dir=tmp/realworld.mfvi

3 | --batch_size=64

4 | --num_epochs=50

5 | --num_base_filters=42

6 | --learning_rate=4e-4

7 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,74.1115282530884,1.2

3 | 0.6,73.98926352594685,1.1

4 | 0.7,74.31505347450982,1.3

5 | 0.8,73.63684036945445,1.1

6 | 0.9,73.70576881316478,1.0

7 | 1.0,74.44604600876292,0.9

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/auc/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,90.50087157323037,1.1

3 | 0.6,89.27465772369939,0.8

4 | 0.7,87.98128411710026,1.0

5 | 0.8,86.90757174461723,0.8

6 | 0.9,85.8656455334597,1.0

7 | 1.0,86.05092622000835,1.2

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/auc/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,84.69111482898622,1.2

3 | 0.6,84.80000240671389,1.1

4 | 0.7,84.74939574019838,1.3

5 | 0.8,84.64244653558231,1.1

6 | 0.9,84.86491640995733,1.0

7 | 1.0,84.75539927127676,0.9

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,77.5813901979666,1.2

3 | 0.6,76.49447682269734,1.1

4 | 0.7,77.70305205105299,1.1

5 | 0.8,76.14151490098507,1.1

6 | 0.9,76.72487320864245,1.1

7 | 1.0,76.5230841411558,1.2

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/auc/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,87.7653697027395,1.1

3 | 0.6,86.29581740991412,1.3

4 | 0.7,85.37533171580017,1.2

5 | 0.8,84.60957109993197,1.2

6 | 0.9,84.51952536111276,1.4

7 | 1.0,84.98894869973235,1.0

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/ensemble_mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,82.37751333962768,1.2

3 | 0.6,81.29736671796819,1.0

4 | 0.7,79.82695138171496,1.0

5 | 0.8,79.00445952639875,0.6

6 | 0.9,77.87282433308359,0.8

7 | 1.0,77.21169988706856,1.1

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/accuracy/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,89.81101313374596,0.6

3 | 0.6,88.20880358604539,0.5

4 | 0.7,88.67156209245978,0.5

5 | 0.8,88.3554739574559,0.5

6 | 0.9,88.26438144807636,0.5

7 | 1.0,87.48948402164251,0.6

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/accuracy/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,95.37552651460484,0.7

3 | 0.6,92.84161334475907,0.9

4 | 0.7,91.12201822951694,0.9

5 | 0.8,89.73503652410145,0.7

6 | 0.9,88.79844947088918,0.8

7 | 1.0,87.85851249188208,0.9

8 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/mc_dropout/configs/medium.cfg:

--------------------------------------------------------------------------------

1 | --level=medium

2 | --output_dir=tmp/medium.mc_dropout

3 | --batch_size=64

4 | --num_epochs=50

5 | --num_base_filters=64

6 | --learning_rate=4e-4

7 | --dropout_rate=0.1

8 | --l2_reg=5e-5

9 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/ensemble_mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,87.1431711534417,1.3

3 | 0.6,84.93029656023879,1.4

4 | 0.7,82.88382528204772,1.4

5 | 0.8,81.23390412356164,1.3

6 | 0.9,80.87747459924013,1.2

7 | 1.0,79.40399028140253,1.4

8 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/mc_dropout/configs/realworld.cfg:

--------------------------------------------------------------------------------

1 | --level=realworld

2 | --output_dir=tmp/realworld.mc_dropout

3 | --batch_size=64

4 | --num_epochs=50

5 | --num_base_filters=64

6 | --learning_rate=4e-4

7 | --dropout_rate=0.1

8 | --l2_reg=5e-5

9 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/deep_ensemble.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,84.33166109450175,0.7

3 | 0.6,82.4987348870786,0.9

4 | 0.7,80.4561312734997,0.8

5 | 0.8,79.53716354380433,0.7

6 | 0.9,79.30688328092553,0.6

7 | 1.0,78.8957482417067,0.5

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,84.10168287156655,1.5

3 | 0.6,82.37704127549415,1.7

4 | 0.7,79.93894164742707,1.7

5 | 0.8,80.56345114826215,1.5

6 | 0.9,78.32015795734496,1.6

7 | 1.0,78.6132934251819,1.7

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/deterministic.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,76.35435199554918,0.5

3 | 0.6,74.31748839691352,0.7

4 | 0.7,73.55963606499797,0.6

5 | 0.8,71.63022802104602,0.6

6 | 0.9,73.83468349205513,0.8

7 | 1.0,73.85692043415344,0.4

8 |

--------------------------------------------------------------------------------

/leaderboard/pretraining/accuracy/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,87.84742483344976,0.6

3 | 0.6,88.01581754189371,0.5

4 | 0.7,87.20753593919196,0.4

5 | 0.8,87.61709745818298,0.4

6 | 0.9,86.91129532409715,0.3

7 | 1.0,87.23000053924596,0.2

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/mfvi.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,77.85178917697054,0.1

3 | 0.6,77.86896735637504,0.3

4 | 0.7,75.55112560598127,0.0

5 | 0.8,75.2693398565609,0.1

6 | 0.9,73.87362012532374,0.0

7 | 1.0,74.5137868570405,0.3

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/random.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,76.91767480701789,0.3

3 | 0.6,76.73097026546617,0.2

4 | 0.7,76.41935840750058,0.1

5 | 0.8,75.93500461156489,0.1

6 | 0.9,76.26363128215164,0.0

7 | 1.0,76.93718103862291,-0.1

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/deep_ensemble.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,80.82331632570184,0.7

3 | 0.6,80.61568433971902,0.9

4 | 0.7,78.56652659837609,0.6

5 | 0.8,77.46110698253457,0.7

6 | 0.9,77.00546441449661,0.6

7 | 1.0,76.21950318328321,0.9

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/auc/mc_dropout.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,79.92363409730275,0.3

3 | 0.6,78.50683527663989,0.0

4 | 0.7,76.86254965867806,0.2

5 | 0.8,74.95801354030414,0.0

6 | 0.9,75.30579744841387,0.2

7 | 1.0,74.0241101850024,0.4

8 |

--------------------------------------------------------------------------------

/leaderboard/aptos2019/accuracy/mfvi.csv:

--------------------------------------------------------------------------------

1 | retained_data,mean,std

2 | 0.5,81.15193369214985,0.3

3 | 0.6,80.18774373202086,0.1

4 | 0.7,78.61797765336982,0.2

5 | 0.8,76.99668588324471,-0.1

6 | 0.9,77.59646926912359,-0.2

7 | 1.0,77.24944531248897,-0.1

8 |

--------------------------------------------------------------------------------
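Because the leaderboard files are plain CSV, a short validation pass can catch rows that cannot be right, such as a negative standard deviation. The checker below is an illustrative sketch, not part of the repository; `find_invalid_rows` is a hypothetical name, and the ranges it enforces (fractions in (0, 1], percentages in [0, 100]) are assumptions inferred from the files listed here. The sample rows come from `leaderboard/aptos2019/accuracy/mfvi.csv`, with `std` values rounded to one decimal.

```python
import csv
import io
import math
from typing import List, Tuple


def find_invalid_rows(text: str) -> List[Tuple[int, str]]:
  """Returns (line_number, reason) pairs for rows that fail basic checks."""
  problems = []
  reader = csv.DictReader(io.StringIO(text))
  for record in reader:
    # DictReader.line_num counts the header as line 1.
    line = reader.line_num
    retained = float(record["retained_data"])
    mean = float(record["mean"])
    std = float(record["std"])
    if not 0.0 < retained <= 1.0:
      problems.append((line, "retained_data outside (0, 1]"))
    if not 0.0 <= mean <= 100.0:
      problems.append((line, "mean outside [0, 100]"))
    if not math.isfinite(std) or std < 0.0:
      problems.append((line, "std is negative or not finite"))
  return problems


APTOS_MFVI_ACCURACY = """retained_data,mean,std
0.5,81.15193369214985,0.3
0.6,80.18774373202086,0.1
0.7,78.61797765336982,0.2
0.8,76.99668588324471,-0.1
0.9,77.59646926912359,-0.2
1.0,77.24944531248897,-0.1
"""

issues = find_invalid_rows(APTOS_MFVI_ACCURACY)
```

The reported line numbers follow the same numbering as the file listings in this dump, so each flagged row can be located directly.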

/baselines/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/bdlb/core/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/bdlb/diabetic_retinopathy_diagnosis/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/mfvi/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/mc_dropout/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/deep_ensembles/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/deterministic/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/ensemble_mc_dropout/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

--------------------------------------------------------------------------------

/bdlb/__init__.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Global API."""

16 |

17 | from .core.registered import load

18 |

--------------------------------------------------------------------------------

/bdlb/core/constants.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Default values for some parameters of the API when no values are passed."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | import os

22 |

23 | # Root directory of the BDL Benchmarks project (two levels above `bdlb/core`).

24 | BDLB_ROOT_DIR: str = os.path.abspath(

25 |     os.path.join(os.path.dirname(__file__), os.path.pardir, os.path.pardir))

26 |

27 | # Directory in which processed datasets are stored.

28 | DATA_DIR: str = os.path.join(BDLB_ROOT_DIR, "data")

29 |

30 | # URL of the hosted Diabetic Retinopathy Diagnosis dataset (medium level).

31 | DIABETIC_RETINOPATHY_DIAGNOSIS_URL_MEDIUM: str = "https://drive.google.com/uc?id=1WAvS-pQsVLxUJiClmKLnVNQkoKmRt2I_"

32 |

33 | # URL of the hosted leaderboard directory.

34 | LEADERBOARD_DIR_URL: str = "https://drive.google.com/uc?id=1LQeAfqMQa4lot09qAuzWa3t6attmCeG-"

35 |

--------------------------------------------------------------------------------
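`BDLB_ROOT_DIR` is computed by climbing two directories up from `constants.py`, and `DATA_DIR` is a `data` folder beneath it. The sketch below mirrors that path arithmetic for an arbitrary file location; `resolve_dirs` and the example paths are hypothetical. It uses `posixpath.normpath` rather than `os.path.abspath` so the result does not depend on the working directory or OS; for an absolute POSIX path the two agree.

```python
import posixpath
from typing import Tuple


def resolve_dirs(constants_file: str) -> Tuple[str, str]:
  """Mirrors the BDLB_ROOT_DIR / DATA_DIR computation for a given path."""
  root = posixpath.normpath(
      posixpath.join(
          posixpath.dirname(constants_file), posixpath.pardir,
          posixpath.pardir))
  return root, posixpath.join(root, "data")


# Hypothetical source checkout.
root, data = resolve_dirs("/home/user/bdl-benchmarks/bdlb/core/constants.py")
print(root)  # /home/user/bdl-benchmarks
print(data)  # /home/user/bdl-benchmarks/data
```

The same arithmetic applied to a regular (non-editable) installation points at the `site-packages` directory itself, so `DATA_DIR` would resolve to `site-packages/data` rather than a folder inside a source checkout.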

/bdlb/core/benchmark.py:

--------------------------------------------------------------------------------

1 | # Copyright 2018 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Data structures and API for general benchmarks."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | import collections

22 |

23 | from .levels import Level

24 |

25 | # Container for benchmark metadata.

26 | BenchmarkInfo = collections.namedtuple("BenchmarkInfo", [

27 |     "description",

28 |     "urls",

29 |     "setup",

30 |     "citation",

31 | ])

32 |

33 | # Container for train, validation and test sets.

34 | DataSplits = collections.namedtuple("DataSplits", [

35 |     "train",

36 |     "validation",

37 |     "test",

38 | ])

39 |

40 |

41 | class Benchmark(object):

42 |   """Abstract class for benchmark objects, specifying the core API."""

43 |

44 |   def download_and_prepare(self):

45 |     """Downloads and prepares the datasets required by the benchmark."""

46 |     raise NotImplementedError()

47 |

48 |   @property

49 |   def info(self) -> BenchmarkInfo:

50 |     """Benchmark metadata: description, URLs, setup and citation."""

51 |     raise NotImplementedError()

52 |

53 |   @property

54 |   def level(self) -> Level:

55 |     """The downstream task level."""

56 |     raise NotImplementedError()

57 |

--------------------------------------------------------------------------------
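A concrete benchmark subclasses `Benchmark` and implements `download_and_prepare`, `info` and `level`. The sketch below shows that contract with a hypothetical in-memory `ToyBenchmark`. So that it runs on its own, it re-declares stand-ins for `BenchmarkInfo`, `DataSplits`, `Level` and `Benchmark`; the `Level` member values and the extra `datasets` property are assumptions for illustration, not the library's definitions.

```python
import collections
import enum

# Stand-ins mirroring bdlb/core/benchmark.py and bdlb/core/levels.py.
BenchmarkInfo = collections.namedtuple(
    "BenchmarkInfo", ["description", "urls", "setup", "citation"])
DataSplits = collections.namedtuple("DataSplits",
                                    ["train", "validation", "test"])


class Level(enum.IntEnum):
  TOY = 0  # Assumed values.
  MEDIUM = 1
  REALWORLD = 2


class Benchmark(object):

  def download_and_prepare(self):
    raise NotImplementedError()

  @property
  def info(self) -> BenchmarkInfo:
    raise NotImplementedError()

  @property
  def level(self) -> Level:
    raise NotImplementedError()


class ToyBenchmark(Benchmark):
  """Hypothetical in-memory benchmark illustrating the interface."""

  def __init__(self, level: Level = Level.TOY):
    self._level = level
    self._splits = None

  def download_and_prepare(self):
    # A real benchmark would download and preprocess data here; this toy
    # version only builds tiny in-memory splits.
    data = list(range(10))
    self._splits = DataSplits(
        train=data[:6], validation=data[6:8], test=data[8:])

  @property
  def datasets(self) -> DataSplits:
    # Hypothetical accessor, not part of the abstract API above.
    if self._splits is None:
      raise RuntimeError("call download_and_prepare() first")
    return self._splits

  @property
  def info(self) -> BenchmarkInfo:
    return BenchmarkInfo(
        description="Toy benchmark for illustration.",
        urls=[],
        setup="",
        citation="")

  @property
  def level(self) -> Level:
    return self._level


benchmark = ToyBenchmark()
benchmark.download_and_prepare()
print(benchmark.level.name, len(benchmark.datasets.test))  # TOY 2
```

Calling any of the three API methods on the bare base class raises `NotImplementedError`, which is how the abstract class signals an incomplete subclass.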

/setup.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | import os

17 |

18 | from setuptools import find_packages

19 | from setuptools import setup

20 |

21 | here = os.path.abspath(os.path.dirname(__file__))

22 |

23 | # Get the long description from the README file

24 | with open(os.path.join(here, "README.md"), encoding="utf-8") as f:

25 |   long_description = f.read()

26 |

27 | setup(

28 | name="bdlb",

29 | version="0.0.2",

30 | description="BDL Benchmarks",

31 | long_description=long_description,

32 | long_description_content_type="text/markdown",

33 | url="https://github.com/oatml/bdl-benchmarks",

34 | author="Oxford Applied and Theoretical Machine Learning Group",

35 | author_email="oatml@googlegroups.com",

36 | license="Apache-2.0",

37 | packages=find_packages(),

38 | install_requires=[

39 | "numpy==1.18.5",

40 | "scipy==1.4.1",

41 | "pandas==1.0.4",

42 | "matplotlib==3.2.1",

43 | "seaborn==0.10.1",

44 | "scikit-learn==0.21.3",

45 | "kaggle==1.5.6",

46 | "opencv-python==4.2.0.34",

47 | "tensorflow-gpu==2.0.0-beta0",

48 | "tensorflow-probability==0.7.0",

49 | "tensorflow-datasets==1.1.0",

50 | ],

51 | classifiers=[

52 | "Development Status :: 3 - Alpha",

53 | "Intended Audience :: Science/Research",

54 | "Topic :: Software Development :: Build Tools",

55 | "License :: OSI Approved :: Apache Software License",

56 | "Programming Language :: Python :: 3.6",

57 | "Programming Language :: Python :: 3.7",

58 | ],

59 | )

60 |

--------------------------------------------------------------------------------

/bdlb/core/levels.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Downstream tasks levels."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | import enum

22 | from typing import Text

23 |

24 |

25 | class Level(enum.IntEnum):

26 | """Downstream task levels.

27 |

28 | TOY: Fewer examples and drastically reduced input dimensionality.

29 | This version is intended for sanity checks and debugging only.

30 | Training on a modern CPU should take five to ten minutes.

31 | MEDIUM: Fewer examples and reduced input dimensionality.

32 | This version is intended for prototyping before moving on to the

33 | real-world scale data. Training on a single modern GPU should take

34 | five or six hours.

35 | REALWORLD: The full dataset and input dimensionality. This version is

36 | intended for the evaluation of proposed techniques at a scale applicable

37 | to the real world. There are no guidelines for training time for the real-world

38 | version of the task, reflecting the fact that any improvement will translate to

39 | safer, more robust and reliable systems.

40 | """

41 |

42 | TOY = 0

43 | MEDIUM = 1

44 | REALWORLD = 2

45 |

46 | @classmethod

47 | def from_str(cls, strvalue: Text) -> "Level":

48 | """Parses a string value to ``Level``.

49 |

50 | Args:

51 | strvalue: `str`, the level in string format.

52 |

53 | Returns:

54 | The `IntEnum` ``Level`` object.

55 | """

56 | strvalue = strvalue.lower()

57 | if strvalue == "toy":

58 | return cls.TOY

59 | elif strvalue == "medium":

60 | return cls.MEDIUM

61 | elif strvalue == "realworld":

62 | return cls.REALWORLD

63 | else:

64 | raise ValueError(

65 | "Unrecognized level value '{}' provided.".format(strvalue))

66 |
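The `if`/`elif` chain in `from_str` can also be expressed with Enum's built-in name lookup (`Level["MEDIUM"]`). The sketch below is a standalone illustration rather than the package's code: it re-declares a `Level` with the same three members and assumes, as the original does, that level names are matched case-insensitively.

```python
import enum


class Level(enum.IntEnum):
    """Standalone copy of the three task levels, for illustration only."""

    TOY = 0
    MEDIUM = 1
    REALWORLD = 2

    @classmethod
    def from_str(cls, strvalue: str) -> "Level":
        """Parses a case-insensitive level name such as "medium"."""
        try:
            # Enum members can be indexed by name, e.g. Level["MEDIUM"].
            return cls[strvalue.upper()]
        except KeyError:
            raise ValueError(
                "Unrecognized level value '{}' provided.".format(strvalue)) from None


level = Level.from_str("Medium")
# IntEnum members compare as integers, so levels are ordered by scale.
is_larger_than_toy = level > Level.TOY
```

Because `Level` is an `IntEnum`, comparisons such as `level >= Level.MEDIUM` work directly, which is convenient for code that branches on task scale.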

--------------------------------------------------------------------------------

/leaderboard/diabetic_retinopathy_diagnosis/README.md:

--------------------------------------------------------------------------------

1 | # Diabetic Retinopathy Diagnosis

2 | The baseline results we evaluated on this benchmark are ranked below by AUC@50% data retained:

3 |

4 | | Method | AUC (50% data retained) | Accuracy (50% data retained) | AUC (100% data retained) | Accuracy (100% data retained) |

5 | | ------------------- | :-------------------------: | :-----------------------------: | :-------------------------: | :-------------------------------: |

6 | | Ensemble MC Dropout | 88.1 ± 1.2 | 92.4 ± 0.9 | 82.5 ± 1.1 | 85.3 ± 1.0 |

7 | | MC Dropout | 87.8 ± 1.1 | 91.3 ± 0.7 | 82.1 ± 0.9 | 84.5 ± 0.9 |

8 | | Deep Ensembles | 87.2 ± 0.9 | 89.9 ± 0.9 | 81.8 ± 1.1 | 84.6 ± 0.7 |

9 | | Mean-field VI | 86.6 ± 1.1 | 88.1 ± 1.1 | 82.1 ± 1.2 | 84.3 ± 0.7 |

10 | | Deterministic | 84.9 ± 1.1 | 86.1 ± 0.6 | 82.0 ± 1.0 | 84.2 ± 0.6 |

11 | | Random | 81.8 ± 1.2 | 84.8 ± 0.9 | 82.0 ± 0.9 | 84.2 ± 0.5 |

12 |

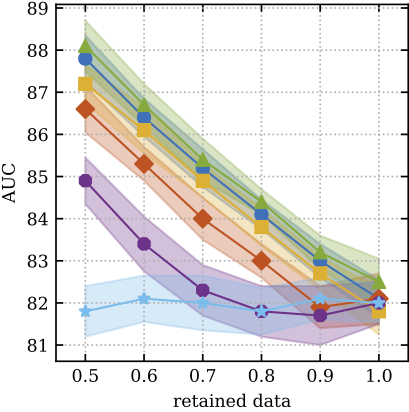

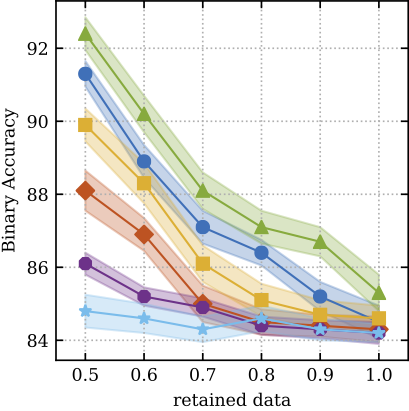

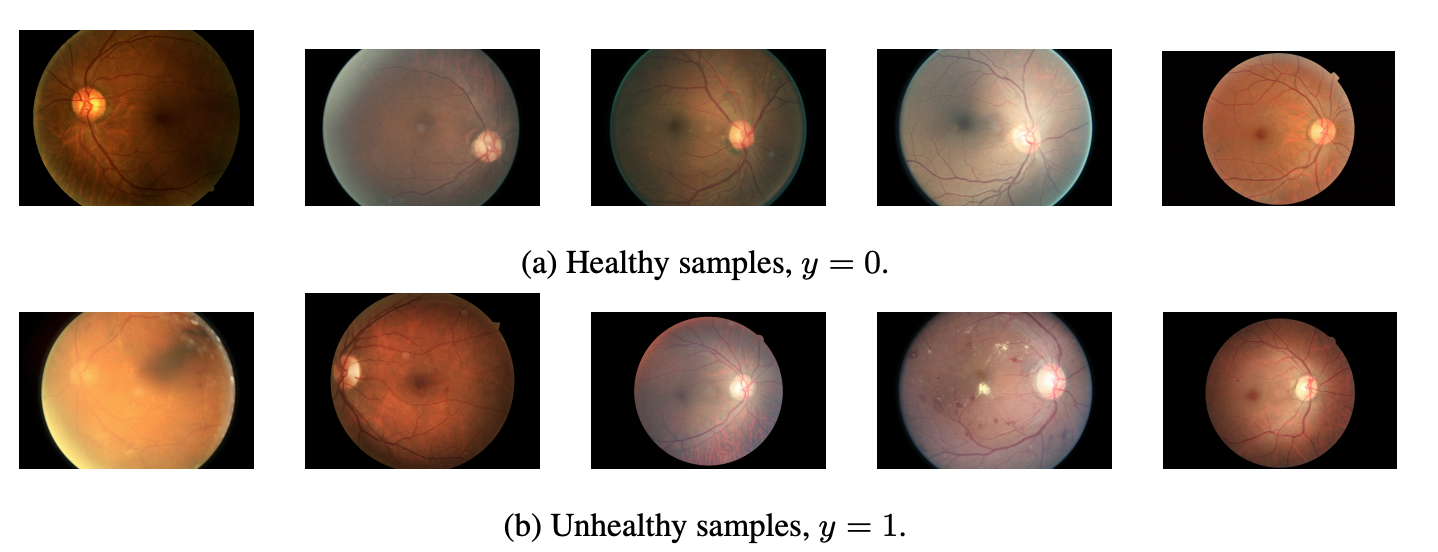

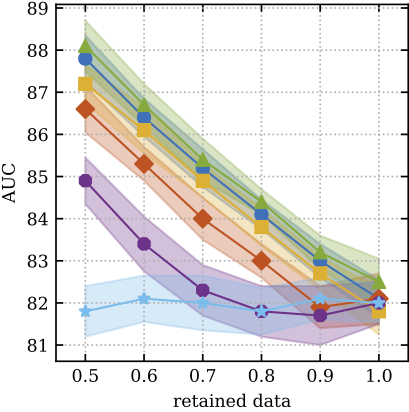

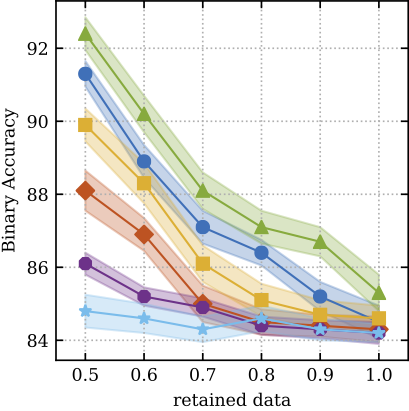

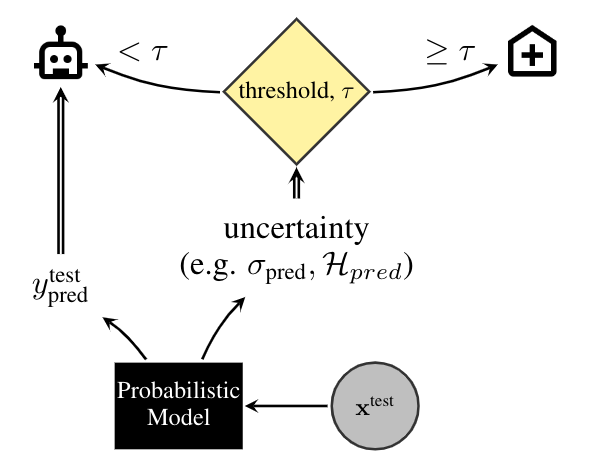

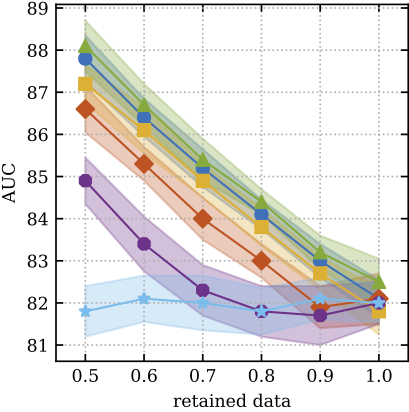

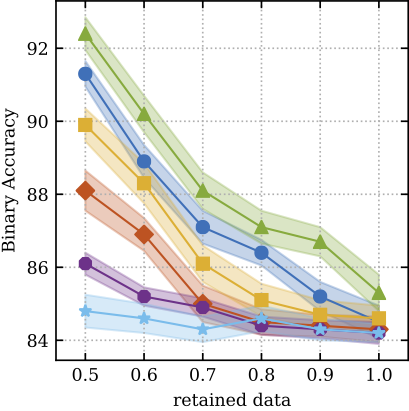

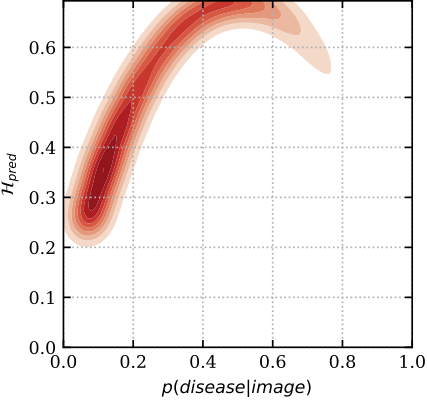

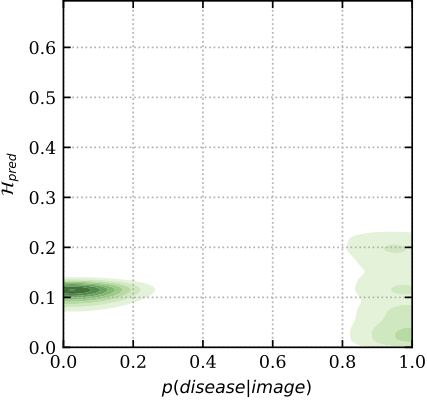

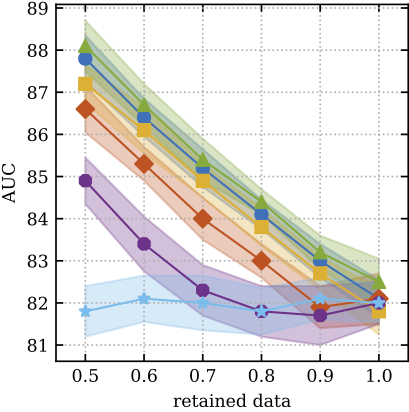

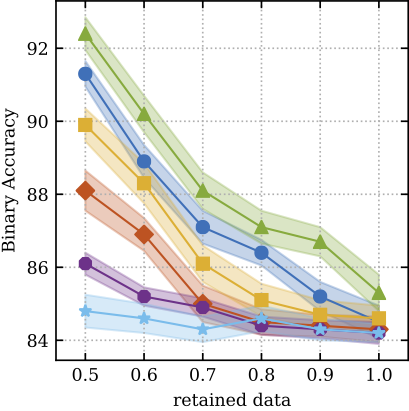

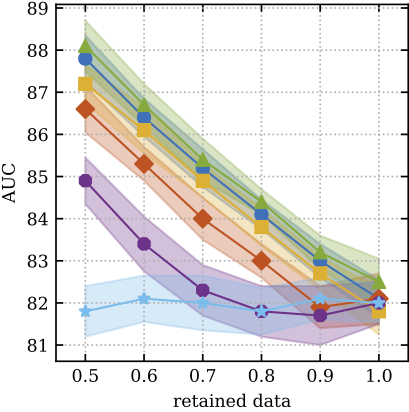

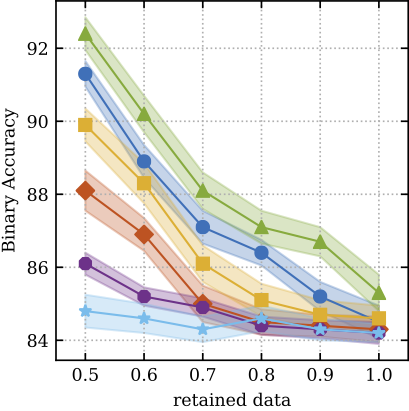

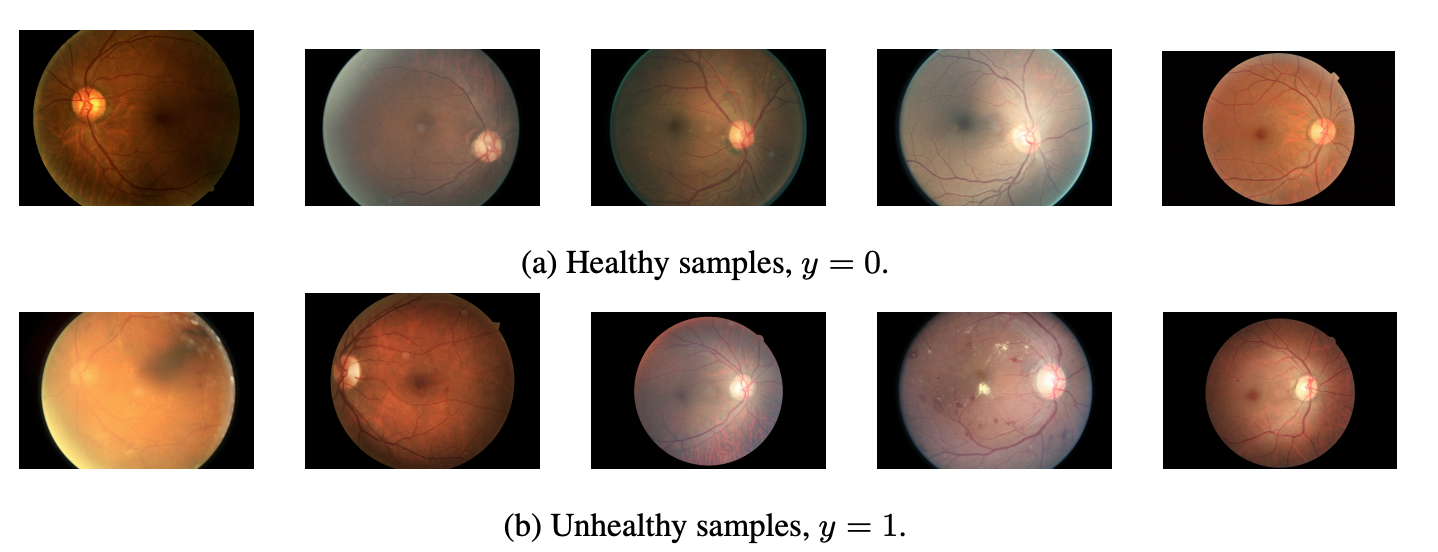

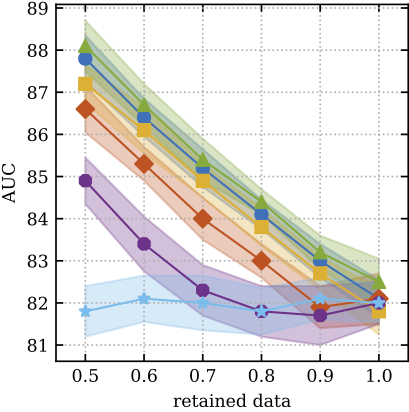

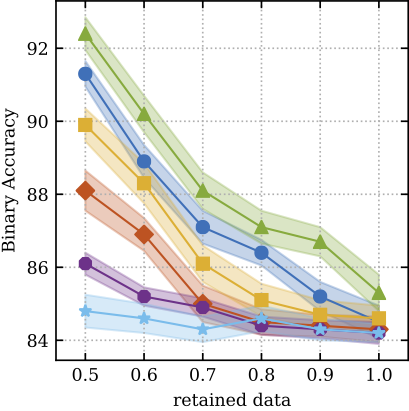

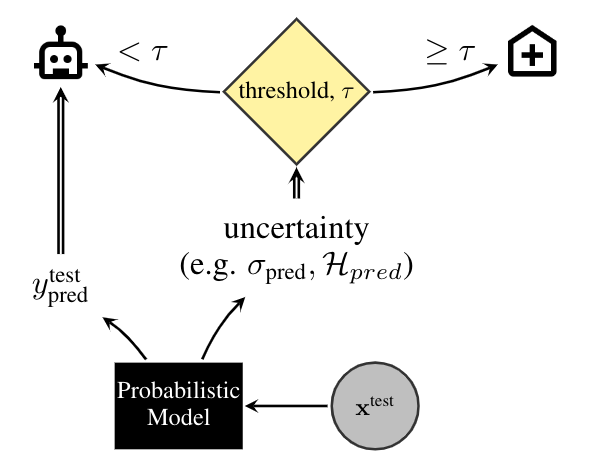

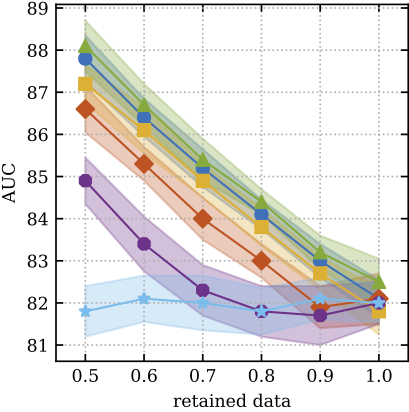

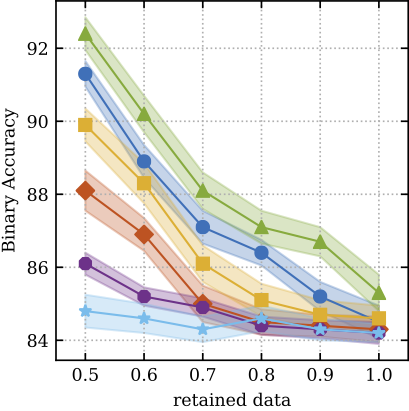

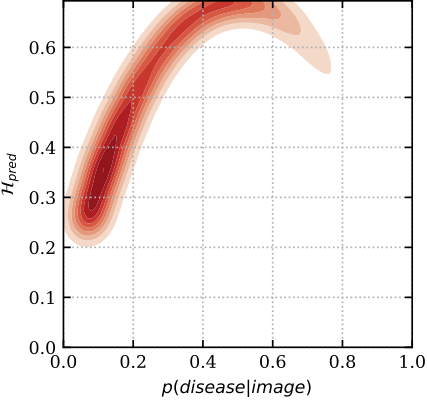

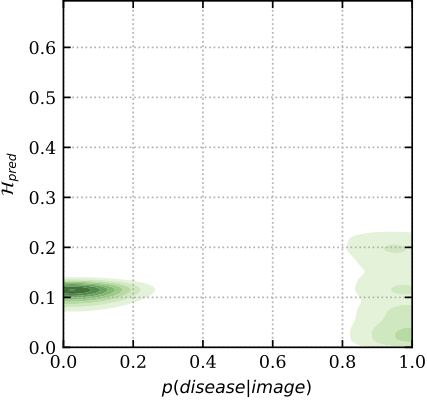

13 | The area under the receiver-operating characteristic curve (AUC) and the binary accuracy of the different baselines are also plotted below. Methods that capture uncertainty score better when less data is retained, because the least certain patients are referred to expert doctors. The best-scoring methods, _MC Dropout_, _mean-field variational inference_ and _Deep Ensembles_ (and their combination, _Ensemble MC Dropout_), estimate and use the predictive uncertainty. The deterministic deep model regularized by _standard dropout_ uses only aleatoric uncertainty and performs worse. Shading shows the standard error.

14 |

15 |

16 | [Figures: AUC and binary accuracy of the baselines; shading shows the standard error.]

19 |

20 |
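The "data retained" columns can be read as follows: predictions are ranked by uncertainty, the least certain ones are referred to an expert, and AUC and accuracy are computed on the rest. Below is a minimal NumPy sketch of that protocol, assuming that retaining X% of the data means keeping the X% of test examples with the lowest uncertainty and that accuracy uses a 0.5 threshold. `binary_auc` and `retained_data_metrics` are hypothetical helpers, not the bdlb evaluation code, which is not shown in this section.

```python
import numpy as np


def binary_auc(y_true, y_prob):
    """ROC AUC as the probability that a random positive example is scored
    above a random negative one (ties count one half)."""
    pos = y_prob[y_true == 1]
    neg = y_prob[y_true == 0]
    if len(pos) == 0 or len(neg) == 0:
        return float("nan")  # undefined when only one class is retained
    diff = pos[:, None] - neg[None, :]  # all positive/negative pairs
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def retained_data_metrics(y_true, y_prob, uncertainty, fraction):
    """AUC and accuracy on the `fraction` of examples with the lowest
    uncertainty; the remaining examples are treated as referred."""
    y_true = np.asarray(y_true)
    y_prob = np.asarray(y_prob, dtype=float)
    uncertainty = np.asarray(uncertainty, dtype=float)
    num_retained = int(round(fraction * len(y_true)))
    keep = np.argsort(uncertainty, kind="stable")[:num_retained]
    y_t, y_p = y_true[keep], y_prob[keep]
    accuracy = float(np.mean(y_t == (y_p >= 0.5).astype(int)))
    return binary_auc(y_t, y_p), accuracy


# Toy data: the two uncertain predictions are also the two wrong ones.
y_true = [0, 1, 0, 1]
y_prob = [0.1, 0.9, 0.6, 0.4]
uncertainty = [0.1, 0.1, 0.9, 0.9]
auc_50, acc_50 = retained_data_metrics(y_true, y_prob, uncertainty, 0.5)
auc_100, acc_100 = retained_data_metrics(y_true, y_prob, uncertainty, 1.0)
```

On this toy data, keeping only the 50% most certain predictions lifts AUC from 0.75 and accuracy from 0.5 (all data) to 1.0 for both, which is the effect the table above measures.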

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # General

2 | .DS_Store

3 | .AppleDouble

4 | .LSOverride

5 | .vscode

6 |

7 | # Icon must end with two \r

8 | Icon

9 |

10 | # Thumbnails

11 | ._*

12 |

13 | # Files that might appear in the root of a volume

14 | .DocumentRevisions-V100

15 | .fseventsd

16 | .Spotlight-V100

17 | .TemporaryItems

18 | .Trashes

19 | .VolumeIcon.icns

20 | .com.apple.timemachine.donotpresent

21 |

22 | # Directories potentially created on remote AFP share

23 | .AppleDB

24 | .AppleDesktop

25 | Network Trash Folder

26 | Temporary Items

27 | .apdisk

28 |

29 | # Byte-compiled / optimized / DLL files

30 | __pycache__/

31 | *.py[cod]

32 | *$py.class

33 |

34 | # C extensions

35 | *.so

36 |

37 | # Distribution / packaging

38 | .Python

39 | build/

40 | develop-eggs/

41 | dist/

42 | downloads/

43 | eggs/

44 | .eggs/

45 | lib/

46 | lib64/

47 | parts/

48 | sdist/

49 | var/

50 | wheels/

51 | *.egg-info/

52 | .installed.cfg

53 | *.egg

54 | MANIFEST

55 |

56 | # PyInstaller

57 | # Usually these files are written by a python script from a template

58 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

59 | *.manifest

60 | *.spec

61 |

62 | # Installer logs

63 | pip-log.txt

64 | pip-delete-this-directory.txt

65 |

66 | # Unit test / coverage reports

67 | htmlcov/

68 | .tox/

69 | .coverage

70 | .coverage.*

71 | .cache

72 | nosetests.xml

73 | coverage.xml

74 | *.cover

75 | .hypothesis/

76 |

77 | # Translations

78 | *.mo

79 | *.pot

80 |

81 | # Django stuff:

82 | *.log

83 | .static_storage/

84 | .media/

85 | local_settings.py

86 |

87 | # Flask stuff:

88 | instance/

89 | .webassets-cache

90 |

91 | # Scrapy stuff:

92 | .scrapy

93 |

94 | # Sphinx documentation

95 | docs/_build/

96 |

97 | # PyBuilder

98 | target/

99 |

100 | # Jupyter Notebook

101 | .ipynb_checkpoints

102 |

103 | # pyenv

104 | .python-version

105 |

106 | # celery beat schedule file

107 | celerybeat-schedule

108 |

109 | # SageMath parsed files

110 | *.sage.py

111 |

112 | # Environments

113 | .env

114 | .venv

115 | env/

116 | venv/

117 | ENV/

118 | env.bak/

119 | venv.bak/

120 |

121 | # Spyder project settings

122 | .spyderproject

123 | .spyproject

124 |

125 | # Rope project settings

126 | .ropeproject

127 |

128 | # mkdocs documentation

129 | /site

130 |

131 | # mypy

132 | .mypy_cache/

133 |

134 | # temporary files

135 | tmp

136 |

137 | # direnv

138 | .envrc

139 |

140 | # Alpha release

141 | assets/*.graffle

142 | data

143 |

144 | # workstation files

145 | .style.yapf

146 | Makefile

147 | matplotlibrc

148 |

149 | # checkpoints

150 | baselines/*/*/checkpoints

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/deterministic/model.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Uncertainty estimator for the deterministic deep model baseline."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 |

22 | def predict(x, model, num_samples, type="entropy"):

23 | """Simple sigmoid uncertainty estimator.

24 |

25 | Args:

26 | x: `numpy.ndarray`, datapoints from input space,

27 | with shape [B, H, W, 3], where B is the batch size and

28 | H, W are the input image height and width, respectively.

29 | model: `tensorflow.keras.Model`, a probabilistic model,

30 | which accepts input with shape [B, H, W, 3] and

31 | outputs sigmoid probability [0.0, 1.0], and also

32 | accepts boolean arguments `training=False` for

33 | disabling dropout at test time.

34 | type: (optional) `str`, type of uncertainty to return,

35 | one of {"entropy", "stddev"}.

36 |

37 | Returns:

38 | mean: `numpy.ndarray`, predictive mean, with shape [B].

39 | uncertainty: `numpy.ndarray`, uncertainty in prediction,

40 | with shape [B].

41 | """

42 | import numpy as np

43 | import scipy.stats

44 |

45 | # Get shapes of data

46 | B, _, _, _ = x.shape

47 |

48 | # Single forward pass from the deterministic model

49 | p = np.asarray(model(x, training=False)).reshape(B)

50 |

51 | # Bernoulli output distribution

52 | dist = scipy.stats.bernoulli(p)

53 |

54 | # Predictive mean calculation

55 | mean = dist.mean()

56 |

57 | # Use predictive entropy for uncertainty

58 | if type == "entropy":

59 | uncertainty = dist.entropy()

60 | # Use predictive standard deviation for uncertainty

61 | elif type == "stddev":

62 | uncertainty = dist.std()

63 | else:

64 | raise ValueError(

65 | "Unrecognized type={} provided, use one of {{'entropy', 'stddev'}}".

66 | format(type))

67 |

68 | return mean, uncertainty

69 |
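For a single sigmoid output p, the predictive distribution is Bernoulli(p): its mean is p, its entropy in nats (the quantity `scipy.stats.bernoulli(p).entropy()` returns) is -p log p - (1 - p) log(1 - p), and its standard deviation is sqrt(p (1 - p)). The NumPy sketch below computes the same quantities without SciPy; `bernoulli_uncertainty` and `fake_model` are hypothetical names, and the fake model stands in for a Keras model returning shape [B, 1].

```python
import numpy as np


def bernoulli_uncertainty(p, type="entropy"):
    """Mean and uncertainty of Bernoulli(p), computed element-wise."""
    p = np.asarray(p, dtype=float).reshape(-1)  # [B, 1] -> [B]
    q = 1.0 - p
    if type == "entropy":
        # Entropy in nats; 0 * log(0) is taken as 0 by substituting log(1).
        uncertainty = -(p * np.log(np.where(p > 0, p, 1.0)) +
                        q * np.log(np.where(q > 0, q, 1.0)))
    elif type == "stddev":
        uncertainty = np.sqrt(p * q)
    else:
        raise ValueError(
            "Unrecognized type={} provided, use one of {{'entropy', 'stddev'}}"
            .format(type))
    return p, uncertainty  # the mean of Bernoulli(p) is p itself


def fake_model(x, training=False):
    """Hypothetical stand-in for a Keras model: one sigmoid output per image."""
    return np.array([[0.5], [0.9], [1.0]])[: x.shape[0]]


x = np.zeros((3, 64, 64, 3))
mean, entropy = bernoulli_uncertainty(fake_model(x, training=False))
_, stddev = bernoulli_uncertainty(fake_model(x, training=False), type="stddev")
```

Both measures peak at p = 0.5 (ln 2 nats and 0.5 respectively) and vanish for a fully confident output, so a deterministic model can only look uncertain when its single sigmoid output is near 0.5.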

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/deep_ensembles/model.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Uncertainty estimator for the Deep Ensemble baseline."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 |

22 | def predict(x, models, type="entropy"):

23 | """Deep Ensembles uncertainty estimator.

24 |

25 | Args:

26 | x: `numpy.ndarray`, datapoints from input space,

27 | with shape [B, H, W, 3], where B is the batch size and

28 | H, W are the input image height and width, respectively.

29 | models: `iterable` of `tensorflow.keras.Model`,

30 | each a probabilistic model, which accepts input with

31 | shape [B, H, W, 3] and outputs sigmoid probability

32 | [0.0, 1.0], and also accepts boolean arguments

33 | `training=False` for disabling dropout at test time.

34 | type: (optional) `str`, type of uncertainty to return,

35 | one of {"entropy", "stddev"}.

36 |

37 | Returns:

38 | mean: `numpy.ndarray`, predictive mean, with shape [B].

39 | uncertainty: `numpy.ndarray`, uncertainty in prediction,

40 | with shape [B].

41 | """

42 | import numpy as np

43 | import scipy.stats

44 |

45 | # Get shapes of data

46 | B, _, _, _ = x.shape

47 |

48 | # Monte Carlo samples from different deterministic models

49 | mc_samples = np.asarray([model(x, training=False) for model in models

50 | ]).reshape(-1, B)

51 |

52 | # Bernoulli output distribution

53 | dist = scipy.stats.bernoulli(mc_samples.mean(axis=0))

54 |

55 | # Predictive mean calculation

56 | mean = dist.mean()

57 |

58 | # Use predictive entropy for uncertainty

59 | if type == "entropy":

60 | uncertainty = dist.entropy()

61 | # Use predictive standard deviation for uncertainty

62 | elif type == "stddev":

63 | uncertainty = dist.std()

64 | else:

65 | raise ValueError(

66 | "Unrecognized type={} provided, use one of {{'entropy', 'stddev'}}".

67 | format(type))

68 |

69 | return mean, uncertainty

70 |
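The Deep Ensembles estimator averages the members' sigmoid outputs before building the Bernoulli distribution, so members that confidently disagree produce a mean near 0.5 and therefore high entropy, even though each member alone is confident. A NumPy sketch of that averaging step; `ensemble_entropy` is a hypothetical helper and the nested lists stand in for the outputs of `model(x, training=False)`.

```python
import numpy as np


def ensemble_entropy(member_probs):
    """Predictive mean and entropy (nats) of the member-averaged Bernoulli.

    member_probs: array-like of shape [M, B] or [M, B, 1], one entry per
    ensemble member (hypothetical stand-in for the models' sigmoid outputs).
    """
    probs = np.asarray(member_probs, dtype=float)
    probs = probs.reshape(probs.shape[0], -1)  # [M, B]
    p = probs.mean(axis=0)  # average over ensemble members -> [B]
    q = 1.0 - p
    # 0 * log(0) is taken as 0 by substituting log(1) where p or q is 0.
    entropy = -(p * np.log(np.where(p > 0, p, 1.0)) +
                q * np.log(np.where(q > 0, q, 1.0)))
    return p, entropy


# Two members agree on the first image but disagree on the second.
mean, entropy = ensemble_entropy([[[0.99], [0.99]],
                                  [[0.99], [0.01]]])
```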

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/ensemble_mc_dropout/model.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Uncertainty estimator for the Ensemble Monte Carlo Dropout baseline."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 |

22 | def predict(x, models, num_samples, type="entropy"):

23 | """Ensemble Monte Carlo Dropout uncertainty estimator.

24 |

25 | Args:

26 | x: `numpy.ndarray`, datapoints from input space,

27 | with shape [B, H, W, 3], where B is the batch size and

28 | H, W are the input image height and width, respectively.

29 | models: `iterable` of `tensorflow.keras.Model`,

30 | each a probabilistic model, which accepts input with shape

31 | [B, H, W, 3] and outputs sigmoid probability [0.0, 1.0],

32 | and also accepts boolean arguments `training=True` for

33 | enabling dropout at test time.

34 | num_samples: `int`, number of Monte Carlo samples

35 | (i.e. forward passes with dropout) per model used for

36 | the calculation of predictive mean and uncertainty.

37 | type: (optional) `str`, type of uncertainty to return,

38 | one of {"entropy", "stddev"}.

39 |

40 | Returns:

41 | mean: `numpy.ndarray`, predictive mean, with shape [B].

42 | uncertainty: `numpy.ndarray`, uncertainty in prediction,

43 | with shape [B].

44 | """

45 | import numpy as np

46 | import scipy.stats

47 |

48 | # Get shapes of data

49 | B, _, _, _ = x.shape

50 |

51 | # Monte Carlo samples from different dropout masks at test time, from each model

52 | mc_samples = np.asarray([

53 | model(x, training=True) for _ in range(num_samples) for model in models

54 | ]).reshape(-1, B)

55 |

56 | # Bernoulli output distribution

57 | dist = scipy.stats.bernoulli(mc_samples.mean(axis=0))

58 |

59 | # Predictive mean calculation

60 | mean = dist.mean()

61 |

62 | # Use predictive entropy for uncertainty

63 | if type == "entropy":

64 | uncertainty = dist.entropy()

65 | # Use predictive standard deviation for uncertainty

66 | elif type == "stddev":

67 | uncertainty = dist.std()

68 | else:

69 | raise ValueError(

70 | "Unrecognized type={} provided, use one of {{'entropy', 'stddev'}}".

71 | format(type))

72 |

73 | return mean, uncertainty

74 |
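The list comprehension nests `for _ in range(num_samples)` outside `for model in models`, so every model is called `num_samples` times with dropout active. That gives `num_samples * len(models)` stochastic forward passes, stacked into an array of shape [num_samples * len(models), B] and averaged over the first axis. The sketch below reproduces the stacking with a hypothetical `FakeDropoutModel` (NumPy noise standing in for dropout), so shapes and call counts can be checked without TensorFlow.

```python
import numpy as np


class FakeDropoutModel:
    """Hypothetical stand-in for a Keras model with dropout: with
    training=True every call returns a slightly different sigmoid output."""

    def __init__(self, base_prob, seed):
        self.base_prob = base_prob
        self.rng = np.random.default_rng(seed)
        self.calls = 0

    def __call__(self, x, training=False):
        self.calls += 1
        probs = np.full((x.shape[0], 1), self.base_prob)  # shape [B, 1]
        if training:  # "dropout" active: perturb the output
            probs = probs + self.rng.uniform(-0.05, 0.05, size=probs.shape)
        return np.clip(probs, 0.0, 1.0)


def ensemble_mc_mean(x, models, num_samples):
    """Stacks MC samples the same way as the baseline and averages them."""
    B = x.shape[0]
    mc_samples = np.asarray([
        model(x, training=True) for _ in range(num_samples) for model in models
    ]).reshape(-1, B)  # shape [num_samples * len(models), B]
    return mc_samples, mc_samples.mean(axis=0)


models = [FakeDropoutModel(0.2, seed=0), FakeDropoutModel(0.8, seed=1)]
x = np.zeros((4, 8, 8, 3))
samples, mean = ensemble_mc_mean(x, models, num_samples=5)
```

With the script's default `num_mc_samples=10` and, for example, three checkpoints, each test batch costs 30 forward passes, so evaluation time grows linearly in both quantities.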

--------------------------------------------------------------------------------

/bdlb/core/registered.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Benchmarks registry handlers and definitions."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | from typing import Dict

22 | from typing import Optional

23 | from typing import Text

24 | from typing import Union

25 |

26 | from ..core.benchmark import Benchmark

27 | from ..core.levels import Level

28 | from ..diabetic_retinopathy_diagnosis.benchmark import \

29 | DiabeticRetinopathyDiagnosisBecnhmark

30 |

31 | # Internal registry mapping benchmark names to `Benchmark` classes

32 | _BENCHMARK_REGISTRY: Dict[Text, Benchmark] = {

33 | "diabetic_retinopathy_diagnosis": DiabeticRetinopathyDiagnosisBecnhmark

34 | }

35 |

36 |

37 | def load(

38 | benchmark: Text,

39 | level: Union[Text, Level] = "realworld",

40 | data_dir: Optional[Text] = None,

41 | download_and_prepare: bool = True,

42 | **dtask_kwargs,

43 | ) -> Benchmark:

44 | """Loads the named benchmark into a `bdlb.Benchmark`.

45 |

46 | Args:

47 | benchmark: `str`, the registered name of `bdlb.Benchmark`.

48 | level: `bdlb.Level` or `str`, which level of the benchmark to load.

49 | data_dir: `str` (optional), directory to read/write data.

50 | Defaults to "~/.bdlb/data".

51 | download_and_prepare: (optional) `bool`, if the data is not available

52 | it downloads and preprocesses it.

53 | dtask_kwargs: keyword arguments for the benchmark constructor.

54 |

55 | Returns:

56 | A registered `bdlb.Benchmark` with `level` at `data_dir`.

57 |

58 | Raises:

59 | BenchmarkNotFoundError: if `benchmark` is unrecognised.

60 | """

61 | if benchmark not in _BENCHMARK_REGISTRY:

62 | raise BenchmarkNotFoundError(benchmark)

63 | # Fetch benchmark object

64 | return _BENCHMARK_REGISTRY.get(benchmark)(

65 | level=level,

66 | data_dir=data_dir,

67 | download_and_prepare=download_and_prepare,

68 | **dtask_kwargs,

69 | )

70 |

71 |

72 | class BenchmarkNotFoundError(ValueError):

73 | """The requested `bdlb.Benchmark` was not found."""

74 |

75 | def __init__(self, name: Text):

76 | all_benchmarks_str = "\n\t- ".join([""] + list(_BENCHMARK_REGISTRY.keys()))

77 | error_str = (

78 | "Benchmark {name} not found. Available benchmarks: {benchmarks}\n".

79 | format(name=name, benchmarks=all_benchmarks_str))

80 | super(BenchmarkNotFoundError, self).__init__(error_str)

81 |

--------------------------------------------------------------------------------

/bdlb/core/transforms.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Data augmentation and transformations."""

16 |

17 | from __future__ import absolute_import

18 | from __future__ import division

19 | from __future__ import print_function

20 |

21 | from typing import Optional

22 | from typing import Sequence

23 | from typing import Tuple

24 |

25 | import numpy as np

26 | import tensorflow as tf

27 |

28 |

29 | class Transform(object):

30 | """Abstract transformation class."""

31 |

32 | def __call__(

33 | self,

34 | x: tf.Tensor,

35 | y: Optional[tf.Tensor] = None,

36 | ) -> Tuple[tf.Tensor, tf.Tensor]:

37 | raise NotImplementedError()

38 |

39 |

40 | class Compose(Transform):

41 | """Composite transformation, applying a sequence of transformations in order."""

42 |

43 | def __init__(self, transforms: Sequence[Transform]):

44 | """Constructs a composition of transformations.

45 |

46 | Args:

47 | transforms: `iterable`, sequence of transformations to be composed.

48 | """

49 | self.transforms = transforms

50 |

51 | def __call__(

52 | self,

53 | x: tf.Tensor,

54 | y: Optional[tf.Tensor] = None,

55 | ) -> Tuple[tf.Tensor, tf.Tensor]:

56 | """Applies each transformation in turn to `(x, y)`.

57 |

58 | Args:

59 | x: `any`, raw data format.

60 | y: `optional`, raw data format.

61 |

62 | Returns:

63 | The transformed `(x, y)` pair; a `Compose` instance can be passed to `tf.data.Dataset.map()`.

64 | """

65 | for f in self.transforms:

66 | x, y = f(x, y)

67 | return x, y

68 |

69 |

70 | class RandomAugment(Transform):

71 |

72 | def __init__(self, **config):

73 | """Constructs a transformer from `config`.

74 |

75 | Args:

76 | **config: keyword arguments for

77 | `tensorflow.keras.preprocessing.image.ImageDataGenerator`

78 | """

79 | self.idg = tf.keras.preprocessing.image.ImageDataGenerator(**config)

80 |

81 | def __call__(self, x: tf.Tensor, y: tf.Tensor) -> Tuple[tf.Tensor, tf.Tensor]:

82 | """Returns a randomly augmented image and its label.

83 |

84 | Args:

85 | x: `tensorflow.Tensor`, an image, with shape [height, width, channels].

86 | y: `tensorflow.Tensor`, a target, with shape [].

87 |

88 |

89 | Returns:

90 | The processed tuple:

91 | * `x`: `tensorflow.Tensor`, the randomly augmented image,

92 | with shape [height, width, channels].

93 | * `y`: `tensorflow.Tensor`, the unchanged target, with shape [].

94 | """

95 | return tf.py_function(self._transform, inp=[x], Tout=tf.float32), y

96 |

97 | def _transform(self, x: tf.Tensor) -> tf.Tensor:

98 | """Helper function for `tensorflow.py_function`, will be removed when

99 | TensorFlow 2.0 is released."""

100 | return tf.cast(self.idg.random_transform(x.numpy()), tf.float32)

101 |

102 |

103 | class Resize(Transform):

104 |

105 | def __init__(self, target_height: int, target_width: int):

106 | """Constructs an image resizer.

107 |

108 | Args:

109 | target_height: `int`, number of pixels in height.

110 | target_width: `int`, number of pixels in width.

111 | """

112 | self.target_height = target_height

113 | self.target_width = target_width

114 |

115 | def __call__(self, x: tf.Tensor, y: tf.Tensor) -> Tuple[tf.Tensor, tf.Tensor]:

116 | """Returns the resized image and the unchanged target."""

117 | return tf.image.resize(x, size=[self.target_height, self.target_width]), y

118 |

119 |

120 | class Normalize(Transform):

121 |

122 | def __init__(self, loc: np.ndarray, scale: np.ndarray):

123 | self.loc = loc

124 | self.scale = scale

125 |

126 | def __call__(self, x: tf.Tensor, y: tf.Tensor) -> Tuple[tf.Tensor, tf.Tensor]:

127 | return (x - self.loc) / self.scale, y

128 |
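`Compose` threads the `(x, y)` pair through each transformation in order, so any callable with the `(x, y) -> (x, y)` signature can be chained. A framework-free sketch on NumPy arrays, where `normalize` and `flip_horizontal` are hypothetical transformations (not part of `bdlb.core.transforms`):

```python
from typing import Callable, Sequence

import numpy as np


class Compose:
    """Applies a sequence of (x, y) -> (x, y) callables in order."""

    def __init__(self, transforms: Sequence[Callable]):
        self.transforms = list(transforms)

    def __call__(self, x, y=None):
        for f in self.transforms:
            x, y = f(x, y)
        return x, y


def normalize(loc, scale):
    """Hypothetical factory for an element-wise normalization transform."""

    def _normalize(x, y=None):
        return (x - loc) / scale, y

    return _normalize


def flip_horizontal(x, y=None):
    """Hypothetical transform: reverses the width axis of an [H, W, C] image."""
    return x[:, ::-1, :], y


image = np.arange(12, dtype=float).reshape(2, 2, 3)
pipeline = Compose([normalize(loc=1.0, scale=2.0), flip_horizontal])
out, label = pipeline(image, 1)
```

Because a `Compose` instance takes `(x, y)` positionally, it can be handed to `tf.data.Dataset.map` for a dataset of `(image, label)` pairs, which unpacks each tuple element into a separate argument.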

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/deep_ensembles/main.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Script for evaluating the Deep Ensemble baseline for the Diabetic

16 | Retinopathy Diagnosis benchmark from trained model checkpoints."""

17 |

18 | from __future__ import absolute_import

19 | from __future__ import division

20 | from __future__ import print_function

21 |

22 | import functools

23 | import os

24 |

25 | import tensorflow as tf

26 | from absl import app

27 | from absl import flags

28 | from absl import logging

29 |

30 | import bdlb

31 | from baselines.diabetic_retinopathy_diagnosis.deep_ensembles.model import \

32 | predict

33 | from baselines.diabetic_retinopathy_diagnosis.mc_dropout.model import VGGDrop

34 | from bdlb.core import plotting

35 |

36 | tfk = tf.keras

37 |

38 | ##########################

39 | # Command line arguments #

40 | ##########################

41 | FLAGS = flags.FLAGS

42 | flags.DEFINE_spaceseplist(

43 | name="model_checkpoints",

44 | default=None,

45 | help="Paths to checkpoints of the models.",

46 | )

47 | flags.DEFINE_string(

48 | name="output_dir",

49 | default="/tmp",

50 | help="Path to store model, tensorboard and report outputs.",

51 | )

52 | flags.DEFINE_enum(

53 | name="level",

54 | default="medium",

55 | enum_values=["realworld", "medium"],

56 | help="Downstream task level, one of {'medium', 'realworld'}.",

57 | )

58 | flags.DEFINE_integer(

59 | name="batch_size",

60 | default=128,

61 | help="Batch size used for evaluation.",

62 | )

63 | flags.DEFINE_integer(

64 | name="num_epochs",

65 | default=50,

66 | help="Number of epochs of training over the whole training set.",

67 | )

68 | flags.DEFINE_enum(

69 | name="uncertainty",

70 | default="entropy",

71 | enum_values=["stddev", "entropy"],

72 | help="Uncertainty type, one of those defined "

73 | "by the `predict` function.",

74 | )

75 | flags.DEFINE_integer(

76 | name="num_base_filters",

77 | default=32,

78 | help="Number of base filters in convolutional layers.",

79 | )

80 | flags.DEFINE_float(

81 | name="learning_rate",

82 | default=4e-4,

83 | help="ADAM optimizer learning rate.",

84 | )

85 | flags.DEFINE_float(

86 | name="dropout_rate",

87 | default=0.1,

88 | help="The rate of dropout, between [0.0, 1.0).",

89 | )

90 | flags.DEFINE_float(

91 | name="l2_reg",

92 | default=5e-5,

93 | help="The L2-regularization coefficient.",

94 | )

95 |

96 |

97 | def main(argv):

98 |

99 | print(argv)

100 | print(FLAGS)

101 |

102 | ##########################

103 | # Hyperparameters & Model #

104 | ##########################

105 | input_shape = dict(medium=(256, 256, 3), realworld=(512, 512, 3))[FLAGS.level]

106 |

107 | hparams = dict(dropout_rate=FLAGS.dropout_rate,

108 | num_base_filters=FLAGS.num_base_filters,

109 | learning_rate=FLAGS.learning_rate,

110 | l2_reg=FLAGS.l2_reg,

111 | input_shape=input_shape)

112 | classifiers = list()

113 | for checkpoint in FLAGS.model_checkpoints:

114 | classifier = VGGDrop(**hparams)

115 | classifier.load_weights(checkpoint)

116 | classifier.summary()

117 | classifiers.append(classifier)

118 |

119 | #############

120 | # Load Task #

121 | #############

122 | dtask = bdlb.load(

123 | benchmark="diabetic_retinopathy_diagnosis",

124 | level=FLAGS.level,

125 | batch_size=FLAGS.batch_size,

126 | download_and_prepare=False, # do not download data from this script

127 | )

128 | _, _, ds_test = dtask.datasets

129 |

130 | ##############

131 | # Evaluation #

132 | ##############

133 | dtask.evaluate(functools.partial(predict,

134 | models=classifiers,

135 | type=FLAGS.uncertainty),

136 | dataset=ds_test,

137 | output_dir=FLAGS.output_dir)

138 |

139 |

140 | if __name__ == "__main__":

141 | app.run(main)

142 |
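`functools.partial` fixes the `models` and `type` keyword arguments of `predict`, leaving a callable of the input batch alone, which is what `dtask.evaluate` receives. The sketch below assumes the evaluator only ever calls `estimator(x)`; `fake_evaluate` and `toy_predict` are hypothetical stand-ins, since bdlb's `evaluate` is not shown in this section.

```python
import functools

import numpy as np


def toy_predict(x, models, type="entropy"):
    """Hypothetical stand-in with the same keyword arguments as `predict`:
    returns the member-averaged output and echoes the bound `type`."""
    mean = np.mean([model(x) for model in models], axis=0)
    return mean, type


def fake_evaluate(estimator, batches):
    """Hypothetical evaluation loop that only ever calls estimator(x)."""
    return [estimator(x) for x in batches]


models = [lambda x: np.full(x.shape[0], 0.2),
          lambda x: np.full(x.shape[0], 0.6)]
estimator = functools.partial(toy_predict, models=models, type="stddev")
results = fake_evaluate(estimator, [np.zeros((3, 4)), np.zeros((2, 4))])
```

Binding by keyword keeps the call site robust to argument order, which matters here because the two baselines' `predict` functions do not share the same positional signature.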

--------------------------------------------------------------------------------

/baselines/diabetic_retinopathy_diagnosis/ensemble_mc_dropout/main.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Script for evaluating the Ensemble Monte Carlo Dropout baseline for the

16 | Diabetic Retinopathy Diagnosis benchmark from trained model checkpoints."""

17 |

18 | from __future__ import absolute_import

19 | from __future__ import division

20 | from __future__ import print_function

21 |

22 | import functools

23 | import os

24 |

25 | import tensorflow as tf

26 | from absl import app

27 | from absl import flags

28 | from absl import logging

29 |

30 | import bdlb

31 | from baselines.diabetic_retinopathy_diagnosis.ensemble_mc_dropout.model import \

32 | predict

33 | from baselines.diabetic_retinopathy_diagnosis.mc_dropout.model import VGGDrop

34 | from bdlb.core import plotting

35 |

36 | tfk = tf.keras

37 |

38 | ##########################

39 | # Command line arguments #

40 | ##########################

41 | FLAGS = flags.FLAGS

42 | flags.DEFINE_spaceseplist(

43 | name="model_checkpoints",

44 | default=None,

45 | help="Paths to checkpoints of the models.",

46 | )

47 | flags.DEFINE_string(

48 | name="output_dir",

49 | default="/tmp",

50 | help="Path to store model, tensorboard and report outputs.",

51 | )

52 | flags.DEFINE_enum(

53 | name="level",

54 | default="medium",

55 | enum_values=["realworld", "medium"],

56 | help="Downstream task level, one of {'medium', 'realworld'}.",

57 | )

58 | flags.DEFINE_integer(

59 | name="batch_size",

60 | default=128,

61 | help="Batch size used for evaluation.",

62 | )

63 | flags.DEFINE_integer(

64 | name="num_epochs",

65 | default=50,

66 | help="Number of epochs of training over the whole training set.",

67 | )

68 | flags.DEFINE_integer(

69 | name="num_mc_samples",

70 | default=10,

71 | help="Number of Monte Carlo samples used for uncertainty estimation.",

72 | )

73 | flags.DEFINE_enum(

74 | name="uncertainty",

75 | default="entropy",

76 | enum_values=["stddev", "entropy"],

77 | help="Uncertainty type, one of those defined "

78 | "with `estimator` function.",

79 | )

80 | flags.DEFINE_integer(

81 | name="num_base_filters",

82 | default=32,

83 | help="Number of base filters in convolutional layers.",

84 | )

85 | flags.DEFINE_float(

86 | name="learning_rate",

87 | default=4e-4,

88 | help="Adam optimizer learning rate.",

89 | )

90 | flags.DEFINE_float(

91 | name="dropout_rate",

92 | default=0.1,

93 | help="The rate of dropout, between [0.0, 1.0).",

94 | )

95 | flags.DEFINE_float(

96 | name="l2_reg",

97 | default=5e-5,

98 | help="The L2-regularization coefficient.",

99 | )

100 |

101 |

102 | def main(argv):

103 |

104 |   print(argv)

105 |   print(FLAGS)

106 |

107 |   ###########################

108 |   # Hyperparameters & Model #

109 |   ###########################

110 |   input_shape = dict(medium=(256, 256, 3), realworld=(512, 512, 3))[FLAGS.level]

111 |

112 |   hparams = dict(dropout_rate=FLAGS.dropout_rate,

113 |                  num_base_filters=FLAGS.num_base_filters,

114 |                  learning_rate=FLAGS.learning_rate,

115 |                  l2_reg=FLAGS.l2_reg,

116 |                  input_shape=input_shape)

117 |   classifiers = list()

118 |   for checkpoint in FLAGS.model_checkpoints:

119 |     classifier = VGGDrop(**hparams)

120 |     classifier.load_weights(checkpoint)

121 |     classifier.summary()

122 |     classifiers.append(classifier)

123 |

124 |   #############

125 |   # Load Task #

126 |   #############

127 |   dtask = bdlb.load(

128 |       benchmark="diabetic_retinopathy_diagnosis",

129 |       level=FLAGS.level,

130 |       batch_size=FLAGS.batch_size,

131 |       download_and_prepare=False,  # do not download data from this script

132 |   )

133 |   _, _, ds_test = dtask.datasets

134 |

135 |   ##############

136 |   # Evaluation #

137 |   ##############

138 |   dtask.evaluate(functools.partial(predict,

139 |                                    models=classifiers,

140 |                                    num_samples=FLAGS.num_mc_samples,

141 |                                    type=FLAGS.uncertainty),

142 |                  dataset=ds_test,

143 |                  output_dir=FLAGS.output_dir)

144 |

145 |

146 | if __name__ == "__main__":

147 |   app.run(main)

148 |

--------------------------------------------------------------------------------
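The ensemble script above evaluates `functools.partial(predict, models=classifiers, num_samples=FLAGS.num_mc_samples, type=FLAGS.uncertainty)`, but `predict` itself lives in `ensemble_mc_dropout/model.py`, which is not shown here. The sketch below illustrates one plausible way such a function could pool Monte Carlo Dropout samples across ensemble members and turn them into the two uncertainty types the `--uncertainty` flag accepts (`stddev` and `entropy`). It is an assumption, not the repository's implementation: the name `ensemble_mc_predict` is hypothetical, each model is assumed to be a callable returning positive-class probabilities with dropout kept active, and NumPy stands in for TensorFlow.

```python
import numpy as np


def ensemble_mc_predict(x, models, num_samples, type="entropy"):
  """Hypothetical sketch of ensemble MC Dropout prediction (not the repo's code).

  Assumes every model maps a batch `x` to positive-class probabilities of
  shape (batch,) and keeps dropout active, so repeated calls are stochastic.
  The keyword `type` mirrors the call site in the script above.
  """
  # Pool num_samples stochastic forward passes from every ensemble member.
  # Resulting shape: (len(models) * num_samples, batch).
  samples = np.stack(
      [np.asarray(model(x)) for model in models for _ in range(num_samples)])
  mean = samples.mean(axis=0)  # predictive mean probability per input
  if type == "stddev":
    # Spread of the pooled samples around the predictive mean.
    uncertainty = samples.std(axis=0)
  elif type == "entropy":
    # Binary entropy (in nats) of the predictive mean; clip to avoid log(0).
    p = np.clip(mean, 1e-12, 1.0 - 1e-12)
    uncertainty = -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))
  else:
    raise ValueError("Unrecognized uncertainty type: {}".format(type))
  return mean, uncertainty
```

With two stub models that always return `[0.2, 0.9]` and `[0.4, 0.9]`, the pooled mean is `[0.3, 0.9]`; the first input, on which the members disagree, receives the larger stddev and the larger entropy.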

/baselines/diabetic_retinopathy_diagnosis/deterministic/main.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019 BDL Benchmarks Authors. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 | """Script for training and evaluating a deterministic baseline on the Diabetic

16 | Retinopathy Diagnosis benchmark."""

17 |

18 | from __future__ import absolute_import

19 | from __future__ import division

20 | from __future__ import print_function

21 |

22 | import functools

23 | import os

24 |

25 | import tensorflow as tf

26 | from absl import app

27 | from absl import flags

28 | from absl import logging

29 |

30 | import bdlb

31 | from baselines.diabetic_retinopathy_diagnosis.deterministic.model import \

32 |     predict

33 | from baselines.diabetic_retinopathy_diagnosis.mc_dropout.model import VGGDrop

34 | from bdlb.core import plotting

35 |

36 | tfk = tf.keras

37 |

38 | ##########################

39 | # Command line arguments #

40 | ##########################

41 | FLAGS = flags.FLAGS

42 | flags.DEFINE_string(

43 | name="output_dir",

44 | default="/tmp",

45 | help="Path to store model, tensorboard and report outputs.",

46 | )

47 | flags.DEFINE_enum(

48 | name="level",

49 | default="medium",

50 | enum_values=["realworld", "medium"],

51 | help="Downstream task level, one of {'medium', 'realworld'}.",

52 | )

53 | flags.DEFINE_integer(

54 | name="batch_size",

55 | default=128,

56 | help="Batch size used for training.",

57 | )

58 | flags.DEFINE_integer(

59 | name="num_epochs",

60 | default=50,

61 | help="Number of epochs of training over the whole training set.",

62 | )

63 | flags.DEFINE_enum(

64 | name="uncertainty",

65 | default="entropy",

66 | enum_values=["stddev", "entropy"],

67 | help="Uncertainty type, one of those defined "

68 | "with `estimator` function.",

69 | )

70 | flags.DEFINE_integer(

71 | name="num_base_filters",

72 | default=32,

73 | help="Number of base filters in convolutional layers.",

74 | )

75 | flags.DEFINE_float(

76 | name="learning_rate",

77 | default=4e-4,

78 | help="Adam optimizer learning rate.",

79 | )

80 | flags.DEFINE_float(

81 | name="dropout_rate",

82 | default=0.1,

83 | help="The rate of dropout, between [0.0, 1.0).",

84 | )

85 | flags.DEFINE_float(

86 | name="l2_reg",

87 | default=5e-5,

88 | help="The L2-regularization coefficient.",

89 | )

90 |

91 |

92 | def main(argv):

93 |

94 |   print(argv)

95 |   print(FLAGS)

96 |

97 |   ###########################

98 |   # Hyperparameters & Model #

99 |   ###########################

100 |   input_shape = dict(medium=(256, 256, 3), realworld=(512, 512, 3))[FLAGS.level]

101 |

102 |   hparams = dict(dropout_rate=FLAGS.dropout_rate,

103 |                  num_base_filters=FLAGS.num_base_filters,

104 |                  learning_rate=FLAGS.learning_rate,

105 |                  l2_reg=FLAGS.l2_reg,

106 |                  input_shape=input_shape)

107 |   classifier = VGGDrop(**hparams)

108 |   classifier.summary()

109 |

110 |   #############

111 |   # Load Task #

112 |   #############